DELL EMC ECS WITH F5

Deployment Reference Guide

February 2021

A Dell EMC Whitepaper

Internal Use - Confidential

Date

Description

August 2017

Initial release

October 2017

Corrected initial release date year to 2017 from 2016

June 2018

Clarified S3Ping unauthenticated - no credentials required

Revisions

The information in this publication is provided “as is.” Dell Inc. makes no representations or warranties of any kind with respect to the information in this

publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose.

Use, copying, and distribution of any software described in this publication requires an applicable software license.

Copyright © 2021 Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other

trademarks may be the property of their respective owners. Published in the USA [2/17/2021] [Whitepaper] [H16294.3]

Dell believes the information in this document is accurate as of its publication date. The information is subject to change without notice.

This document may contain language from third party content that is not under Dell's control and is not consistent with Dell's current guidelines for Dell's

own content. When such third-party content is updated by the relevant third parties, this document will be revised accordingly.

2 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Table of contents

Revisions............................................................................................................................................................................. 2

1 Introduction ................................................................................................................................................................... 4

1.1 Audience ............................................................................................................................................................ 4

1.2 Scope ................................................................................................................................................................. 4

2 ECS Overview .............................................................................................................................................................. 5

2.1 ECS Constructs.................................................................................................................................................. 6

3 F5 Overview ................................................................................................................................................................. 8

3.1 F5 Networking Constructs .................................................................................................................................. 9

3.2 F5 Traffic Management Constructs.................................................................................................................. 11

3.3 F5 Device Redundancy .................................................................................................................................... 13

4 ECS Configuration ...................................................................................................................................................... 15

5 F5 Configuration ......................................................................................................................................................... 19

5.1 F5 BIG-IP LTM ................................................................................................................................................. 19

5.1.1 Example: S3 to Single VDC with Active/Standby LTM Pair ............................................................................ 20

5.1.2 Example: LTM-terminated SSL Communication ............................................................................................. 30

5.1.3 Example: NFS via LTM .................................................................................................................................... 35

5.1.4 Example: Geo-affinity via iRule on LTM .......................................................................................................... 43

5.2 F5 BIG-IP DNS................................................................................................................................................. 47

5.2.1 Example: Roaming Client Geo Environment ................................................................................................... 53

5.2.2 Example: Application Traffic Management for XOR Efficiency Gains ............................................................. 54

6 Best Practices............................................................................................................................................................. 55

7 Conclusion .................................................................................................................................................................. 56

8 References ................................................................................................................................................................. 57

A Creating a Custom S3 Monitor ................................................................................................................................... 58

B BIG-IP DNS and LTM CLI Configuration Snippets ..................................................................................................... 61

B.1 BIG-IP DNS ...................................................................................................................................................... 61

B.1.1 bigip.conf .......................................................................................................................................................... 61

B.1.2 bigip_base.conf................................................................................................................................................. 61

B.1.3 bigip_gtm.conf .................................................................................................................................................. 62

B.2 BIG-IP LTM ...................................................................................................................................................... 65

B.2.1 bigip.conf .......................................................................................................................................................... 65

B.2.2 bigip_base.conf................................................................................................................................................. 68

3 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

1 Introduction

The explosive growth of unstructured data and cloud-native applications has created demand for scalable

cloud storage infrastructure in the modern datacenter. ECS

EMC™ designed from the ground up to take advantage of modern cloud storage APIs and distributed data

protection, providing active/active availability spanning multiple datacenters.

Managing application traffic both locally and globally can provide high availability (HA) and efficient use of

ECS storage resources. HA is obtained by directing application traffic to known-to-be-available local or global

storage resources. Optimal efficiency can be gained by balancing application load across local storage

resources.

Organizations often choose F5® BIG-IP® DNS (formerly Global Traffic Manager™) and Local Traffic

Manager™ (LTM®) products to manage client traffic between and within data centers. BIG-IP authoritatively

resolves domain names such as s3.dell.com or nfs.emc.com. BIG-IP DNS systems return IP addresses with

the intent to direct stateless client sessions to an ECS system at a particular data center based on monitoring

the availability and performance of individual ECS data center locations, nodes, and client locations. LTMs

can apply load balancing services based on availability, performance, and persistence, to proxy client traffic to

an ECS node.

TM

is the third generation object store by Dell

1.1 Audience

This document is targeted for customers interested in a reference deployment of ECS with F5.

1.2 Scope

This whitepaper is meant to be a reference guide for deploying F5 with ECS. An external load balancer

(traffic manager) is highly recommended with ECS for applications that do not proactively monitor ECS node

availability or natively manage traffic load to ECS nodes. Directing application traffic to ECS nodes using

local DNS queries, as opposed to a traffic manager, can lead to failed connection attempts to unavailable

nodes and unevenly distributed application load on ECS.

The ECS HDFS client, CAS SDK and ECS S3 API extensions are outside of the scope of this paper. The

ECS HDFS client, which is required for Hadoop connectivity to ECS, handles load balancing natively.

Similarly, the Centera Software Development Kit for CAS access to ECS has a built-in load balancer. The

ECS S3 API also has extensions leveraged by certain ECS S3 client SDKs which allow for balancing load to

ECS at the application level.

Dell EMC takes no responsibility for customer load balancing configurations. All customer networks are

unique, with their own requirements. It’s extremely important for customers to configure their load balancers

according to their own circumstance. We only provide this paper as a guide. F5 or a qualified network

administrator should be consulted before making any changes to your current load balancer configuration.

Related to the BIG-IP DNS and LTM, and outside this paper’s scope, is the BIG-IP Application Acceleration

Manager™ that provides encrypted, accelerated WAN optimization service. F5’s BIG-IP Advanced Firewall

Manager™ which provides network security and DDoS mitigation services, is also outside of the scope of this

paper.

4 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Transport Protocol or

Daemon Service

HTTP

9020

HTTPS

9021

HTTP

9022

HTTPS

9023

HTTP

9024

HTTPS

9025

portmap

111

mountd, nfsd

2049

lockd

10000

2 ECS Overview

ECS provides a complete software-defined strongly-consistent, indexed, cloud storage platform that supports

the storage, manipulation, and analysis of unstructured data on a massive scale. Client access protocols

include S3, with additional Dell EMC extensions to the S3 protocol, Dell EMC Atmos, Swift, Dell EMC CAS

(Centera), NFS, and HDFS. Object access for S3, Atmos, and Swift is achieved via REST APIs. Objects are

written, retrieved, updated and deleted via HTTP or HTTPS calls using REST verbs such as GET, POST,

PUT, DELETE, and HEAD. For file access, ECS provides NFS version 3 natively and a Hadoop Compatible

File System (HCFS).

ECS was built as a completely distributed system following the principle of cloud applications. In this model,

all hardware nodes provide the core storage services. Without dedicated index or metadata nodes the

system has limitless capacity and scalability.

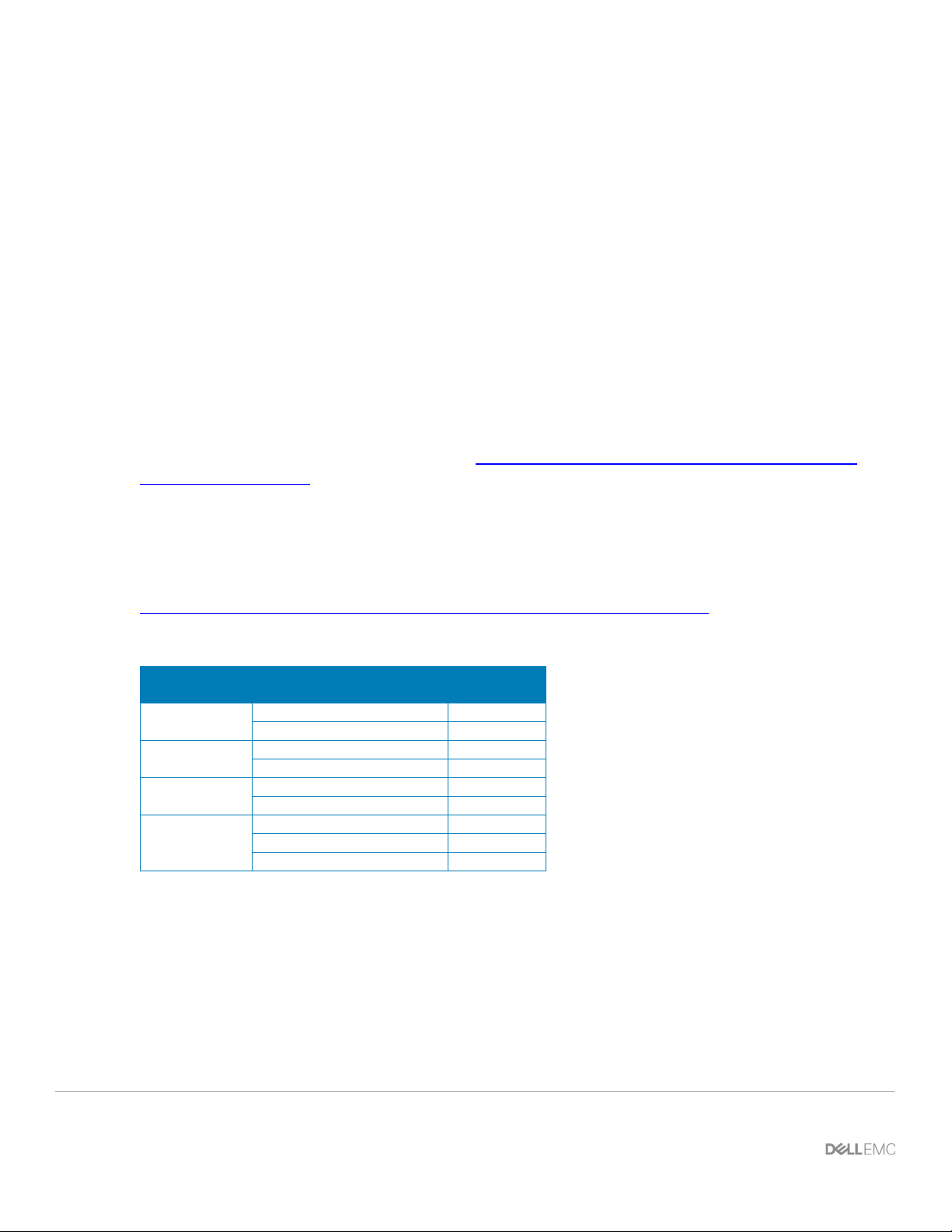

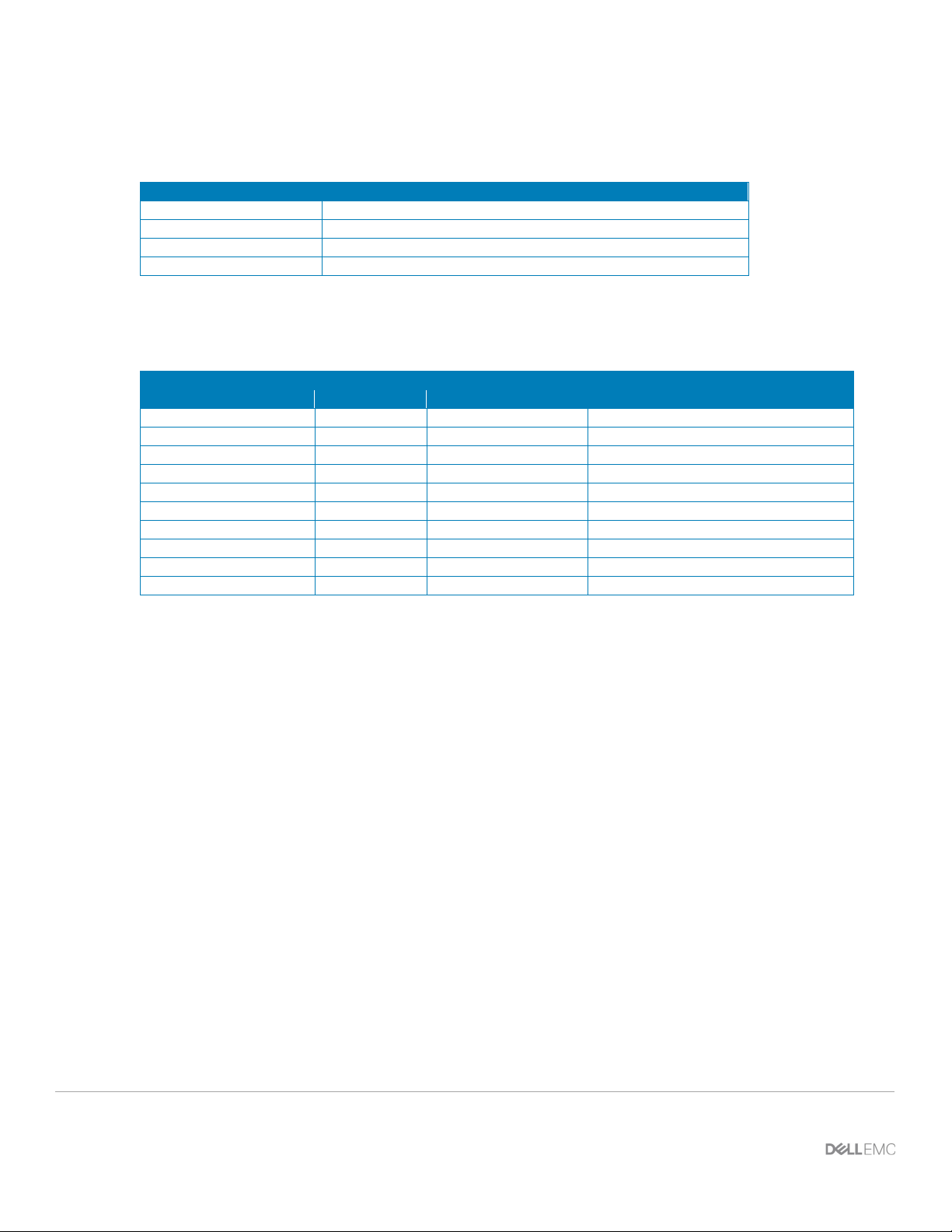

Service communication ports are integral in the F5 LTM configuration. See Table 1 below for a complete list

of protocols used with ECS and their associated ports. In addition to managing traffic flow, port access is a

critical piece to consider when firewalls are in the communication path. For more information on ECS ports

refer to the ECS Security Configuration Guide at

Configuration-Guide-.pdf.

https://support.emc.com/docu88141_ECS-3.2-Security-

For a more thorough ECS overview, please review ECS Overview and Architecture whitepaper at

http://www.emc.com/collateral/white-papers/h14071-ecs-architectural-guide-wp.pdf

Table 1 - ECS protocols and associated ports

Protocol

S3

Atmos

Swift

NFS

Port

.

5 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

2.1 ECS Constructs

Understanding the main ECS constructs is necessary in managing application workflow and load balancing.

This section details each of the upper-level ECS constructs.

Figure 1 - ECS upper-level constructs

• Storage pool - The first step in provisioning a site is creating a storage pool. Storage pools form the

basic building blocks of an ECS cluster. They are logical containers for some or all nodes at a site.

ECS storage pools identify which nodes will be used when storing object fragments for data

protection at a site. Data protection at the storage pool level is rack, node, and drive aware. System

metadata, user data and user metadata all coexist on the same disk infrastructure.

Storage pools provide a means to separate data on a cluster, if required. By using storage pools,

organizations can organize storage resources based on business requirements. For example, if

separation of data is required, storage can be partitioned into multiple different storage pools.

Erasure coding (EC) is configured at the storage pool level. The two EC options on ECS are 12+4 or

10+2 (aka cold storage). EC configuration cannot be changed after storage pool creation.

Only one storage pool is required in a VDC. Generally, at most two storage pools should be created,

one for each EC configuration, and only when necessary. Additional storage pools should only be

implemented when there is a use case to do so, for example, to accommodate physical data

separation requirements. This is because each storage pool has unique indexing requirements. As

such, each storage pool adds overhead to the core ECS index structure.

A storage pool should have a minimum of five nodes and must have at least three or more nodes with

more than 10% free space in order to allow writes.

• Virtual Data Center (VDC) - VDCs are the top-level ECS resources and are also generally referred

to as a site or zone. They are logical constructs that represent the collection of ECS infrastructure

you want to manage as a cohesive unit. A VDC is made up of one or more storage pools.

Between two and eight VDCs can be federated. Federation of VDCs centralizes and thereby

simplifies many management tasks associated with administering ECS storage. In addition,

federation of sites allows for expanded data protection domains that include separate locations.

6 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

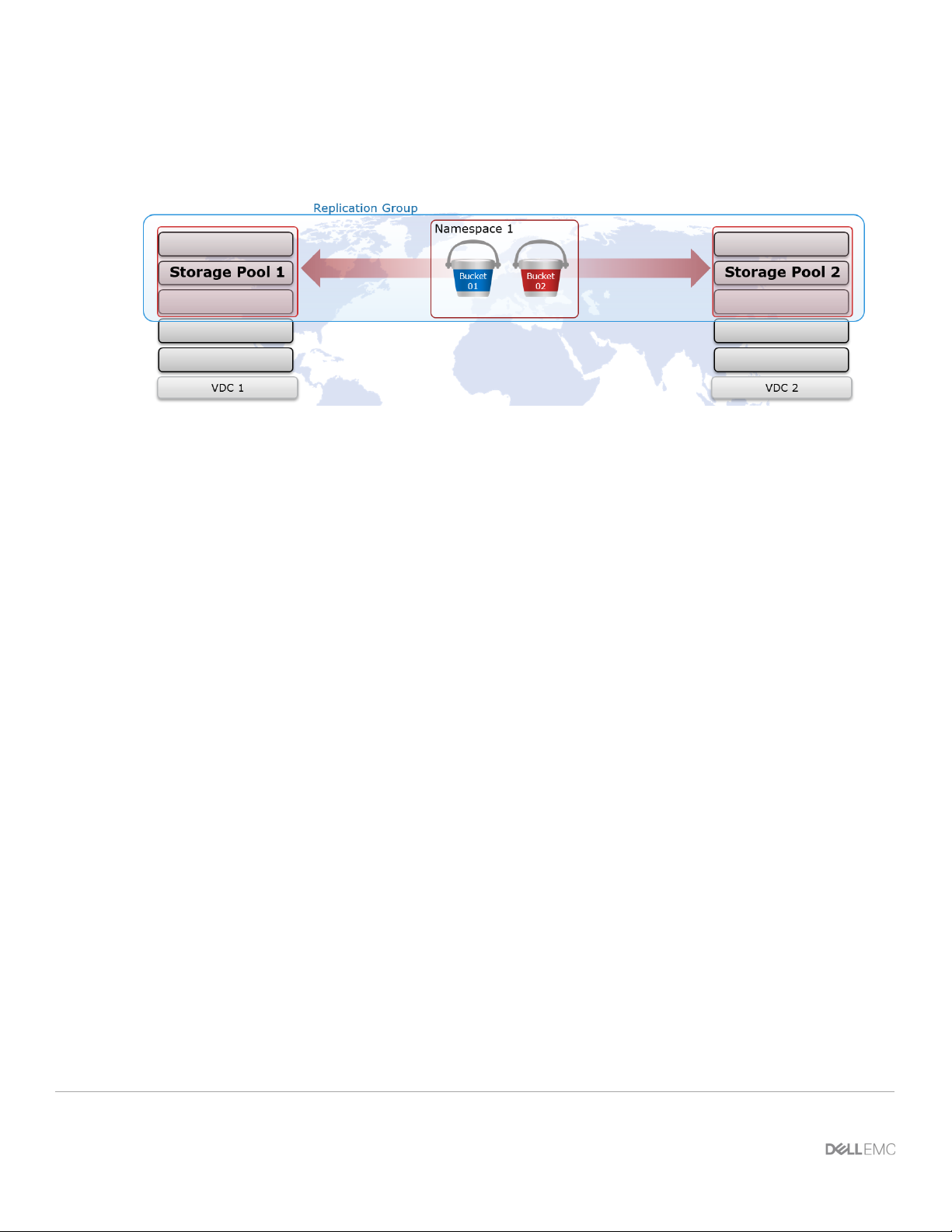

• Replication Group - Replication groups are logical constructs that define where data is protected

and accessed. Replication groups can be local or global. Local replication groups protect objects

within the same VDC against disk or node failures. Global replication groups span two or more

federated VDCs and protect objects against disk, node, and site failures.

The strategy for defining replication groups depends on multiple factors including requirements for

data resiliency, the cost of storage, and physical versus logical separation of data. As with storage

pools, the minimum number of replication groups required should be implemented. At the core ECS

indexing level, each storage pool and replication group pairing is tracked and adds significant

overhead. It is best practice to create the absolute minimum number of replication groups required.

Generally there is one replication group for each local VDC, if necessary, and one replication group

that contains all sites. Deployments with more than two sites may consider additional replication

groups, for example, in scenarios where only a subset of VDCs should participate in data replication,

but, this decision should not be made lightly.

• Namespace - Namespaces enable ECS to handle multi-tenant operations. Each tenant is defined by

a namespace and a set of users who can store and access objects within that namespace.

Namespaces can represent a department within an enterprise, can be created for each unique

enterprise or business unit, or can be created for each user. There is no limit to the number of

namespaces that can be created from a performance perspective. Time to manage an ECS

deployment, on the other hand, or, management overhead, may be a concern in creating and

managing many namespaces.

• Bucket - Buckets are containers for object data. Each bucket is assigned to one replication group.

Namespace users with the appropriate privileges can create buckets and objects within buckets for

each object protocol using its API. Buckets can be configured to support NFS and HDFS. Within a

namespace, it is possible to use buckets as a way of creating subtenants. It is not recommended to

have more than 1000 buckets per namespace. Generally a bucket is created per application,

workflow, or user.

7 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

3 F5 Overview

The F5 BIG-IP platform is a blend of software and hardware that forms the foundation of the current iteration

of F5’s Application Delivery Controller (ADC) technology. On top of the BIG-IP platform are a suite of

products that provide a wide range of application services. Two of the BIG-IP products often used in building

an organization’s foundation for local and global traffic management include:

• F5 BIG-IP Local Traffic Manager (LTM) - Local application traffic load balancing based on a full-

proxy architecture.

• F5 BIG-IP DNS (formerly Global Traffic Manager (GTM)) - DNS services for application requests

F5 offers the products in both physical and virtual form. This paper makes no recommendation between

choosing physical or virtual F5 systems. We do recommend that F5 documentation and product

specifications be consulted to properly size F5 systems. Sizing a BIG-IP system should be based on the

cumulative current and projected workload traffic bandwidth (MB/s), quantity of operations (Ops/sec) or

transactions per second (TPS), and concurrent client sessions. Properly sized BIG-IP systems should not

add any significant transactional overhead or limitation to workflows using ECS. The next few paragraphs

briefly describe considerations when sizing ECS and associated traffic managers.

based on user, network, and cloud performance conditions.

There are two components to each transaction with ECS storage, one, metadata operations, and two, data

movement. Metadata operations include both reading and writing or updating the metadata associated with

an object and we refer to these operations as transactional overhead. Every transaction on the system will

have some form of metadata overhead and the size of an object along with the transaction type determines

the associated level of resource utilization. Data movement is required for each transaction also and refers to

the receipt or delivery of client data. We refer to this component in terms of throughput or bandwidth,

generally in megabytes per second (MB/s).

To put this into an equation, response time, or total time to complete a transaction, is the result of the time to

perform the transaction's required metadata operations, or the transaction's transactional overheard, plus, the

time it takes to move the data between client and through ECS, the transaction's data throughput.

Total response time = transactional overhead time + data movement time

For small objects transactional overhead is similar and is the largest, or limiting, factor to performance. Since

every transaction has a metadata component, and the metadata operations have a minimum amount of time

to complete, at objects with similar sizes that component, the transactional overhead, will be similar. On the

other end of the spectrum, for large objects, the transactional overhead is minimal compared to the amount of

time required to physically move the data between client and the ECS system. For larger objects the major

factor in response time is the throughput which is why at certain large object sizes the throughput potential is

similar.

Object size and thread counts, along with transaction type, are the primary factors which dictate performance

on ECS. Because of this in order to size for workloads, object sizes and their transaction types along with

related application thread counts should be cataloged for all workloads that will utilize the ECS system.

BIG-IP DNS and LTM each provide very different functions and can be used together or independently.

When used together BIG-IP DNS can make decisions based on data received directly from LTMs. BIG-IP

8 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

DNS is recommended if you want to either globally distribute application load, or to ensure seamless failover

during site outage conditions where static failover processes are not feasible or desirable. BIG-IP LTM can

manage client traffic based on results of monitoring both network and application layers and is largely

mandatory where performance and client connectivity is required.

With ECS, monitoring application availability to the data services across all ECS nodes is necessary. This is

done using application level queries to the actual data services that handle transactions as opposed to relying

only on lower network or transport queries which only report IP and port availability.

A major difference between BIG-IP DNS and LTM is that traffic flows through an LTM whereas the BIG-IP

DNS only informs a client which IP to route to and does not handle client application data traffic.

3.1 F5 Networking Constructs

A general understanding of the basic BIG-IP network constructs is critical to a successful architecture and

implementation. Here is a list of the most common of the BIG-IP networking constructs:

• Virtual Local Area Network (VLAN) - A VLAN is required and associated with each non-

management network interface in use on a BIG-IP system.

• Self IP - F5 terminology for an IP address on a BIG-IP system that is associated with a VLAN, to

access hosts in the VLAN. A self IP represents an address space as determined by the associated

netmask.

• Static self IP - A minimum of one IP address is required for each VLAN in use in a BIG-IP system.

Static self IP addresses are unit-specific in that they are unique to the assigned device. For example,

if two BIG-IP devices are paired together for HA, each device’s configuration will have at least one

self IP for each VLAN. The static self IP configuration is not sync’d or shared between devices.

• Floating self IP - Traffic groups, which are logical containers for virtual servers, can float between

BIG-IP LTM devices. Floating self IP addresses are created similarly to static addresses, except that

they are assigned to a floating traffic group. With this, during failover the self IP floats between

devices. The floating self IP addresses are shared between devices. Each traffic group that is part of

an HA system can use a floating self IP address. When in use the device actively serving the virtual

servers on the traffic group hosts the floating self IP.

• MAC Masquerading Address - An optional virtual layer two Media Access Control (MAC) address,

otherwise known as a MAC masquerade, which floats with a traffic group.

MAC masquerade addresses serve to minimize ARP communications or dropped packets during a

failover event. A MAC masquerade address ensures that any traffic destined for the relevant traffic

group reaches an available device after failover has occurred, because the MAC masquerade

address floats to the available device along with the traffic group. Without a MAC masquerade

address, on failover the sending host must relearn the MAC address for the newly-active device,

either by sending an ARP request for the IP address for the traffic or by relying on the gratuitous ARP

from the newly-active device to refresh its stale ARP entry.

9 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

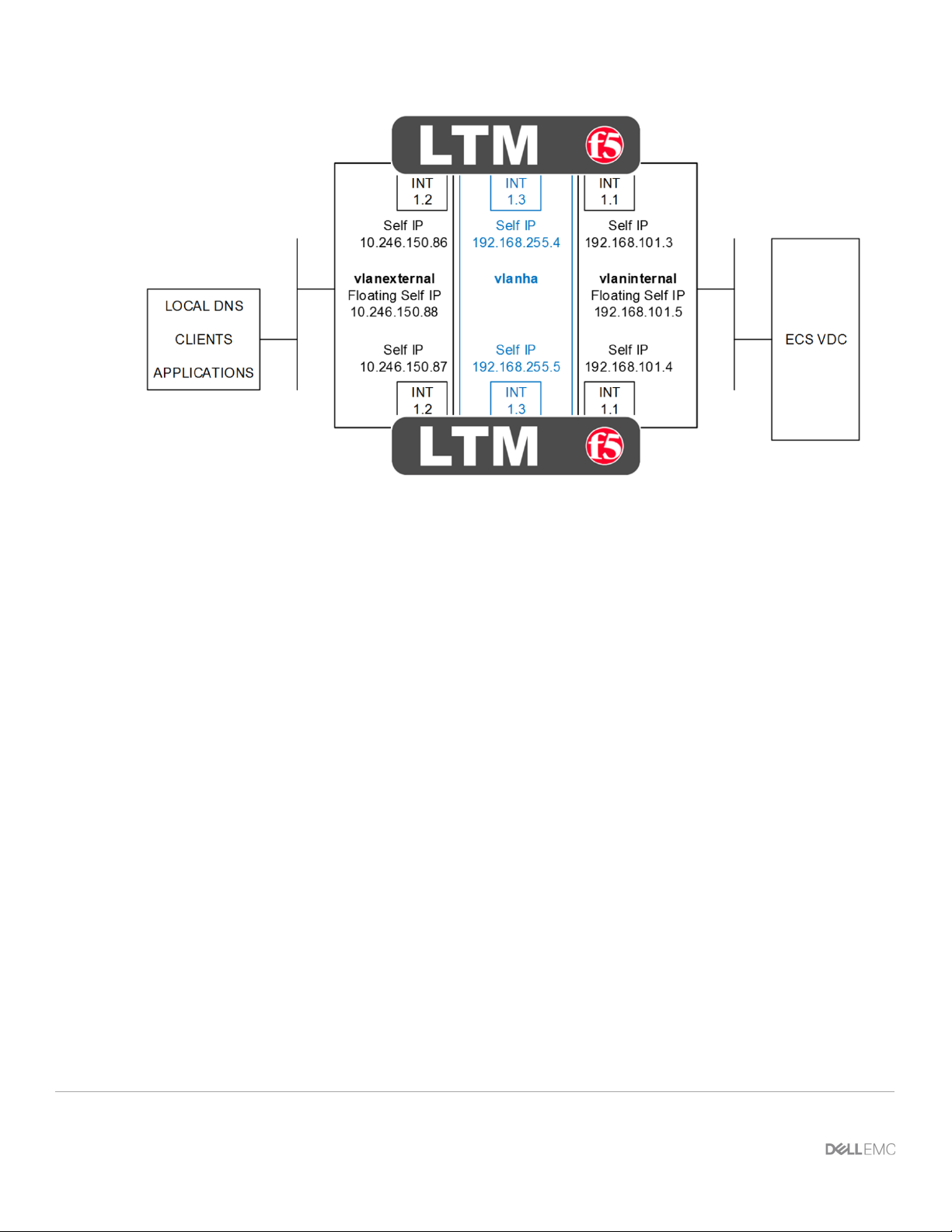

Figure 2 - HA pair of LTM with physical interfaces, VLAN and self IP addresses

Figure 2 above shows a HA pair of LTM. The physical interfaces as shown are 1.1, 1.2, and 1.3. In addition

each device has a management interface which is not shown. Each interface has an associated VLAN and

self IP address. Floating self IP addresses are created in redundant configurations as is a VLAN dedicated

for HA. Not shown are any MAC masquerading addresses. MAC masquerading addresses are associated

with traffic groups. Traffic groups are explained in the next section on F5 device redundancy.

BIG-IP LTM, like with other Application Delivery Controllers, can be deployed in a variety of deployment

architectures. Two commonly used network deployment architectures are:

1. One-arm - A one-arm deployment is where the LTM has only a single VLAN and interface configured

for application traffic. The LTM both receives and forwards traffic using a single network interface.

The LTM sits on the same subnet as the destination servers. In this scenario applications direct their

traffic to virtual servers on the LTM which use virtual IP addresses that are on the same subnet as the

destination nodes. Traffic received by the LTM is forwarded to the target ECS node.

2. Two-arm - The examples we use throughout this paper are two-arm implementations. A two-arm

deployment is where the LTM sits on the same subnet as the destination servers, as above, but also

listens for the application traffic on an entirely different subnet. The LTM uses two interfaces, each on

unique subnets, to receive and forward traffic. In this scenario two VLANs exist on the LTM, one for

external, client-side traffic, and the other one for internal, server-side traffic. Figure 2 above is an

example of a two-arm architecture.

10 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Traffic routing between the client, LTM, and ECS nodes is important. Two primary options for routing exist for

the pool members, in our case, ECS nodes:

1. The default route on the ECS nodes point to a self IP address on the LTM. With this, as the nodes

return traffic to the clients, because the default route points to the LTM, traffic will route back to the

clients the same way it came, through the LTM. In our lab setup we set the default route on the ECS

nodes to point to the self IP address on the LTM. For Site 1, our site with a pair of LTMs configured

for HA, we used the floating self IP address as the default gateway. For Site 2, the ECS nodes point

to the self IP address of the single LTM.

2. The default route on the ECS nodes point to an IP address on a device other than the LTM, such

as a router. This is often referred to as Direct Server Return routing. In this scenario application

traffic is received by the node from the LTM, but due to the default route entry, is returned to the client

bypassing the LTM. The routing is asymmetrical and it is possible that clients will reject the return

traffic. LTM may be configured with address and port rewriting disabled which may allow clients to

accept return traffic. F5 documentation or experts should be referenced for detailed understanding.

3.2 F5 Traffic Management Constructs

The key F5 BIG-IP traffic management logical constructs for the BIG-IP DNS and/or LTM are:

• Pool - Pools are logical sets of devices that are grouped together to receive and process traffic.

Pools are used to efficiently distribute load across grouped server resources.

• Virtual server - Virtual servers are a traffic-management object that is represented in the BIG-IP

system by a destination IP address and service port. Virtual servers require one or more pools.

• Virtual address - A virtual address is an IP address associated with a virtual server, commonly

referred to as a VIP (virtual IP). In the BIG-IP system a virtual addresses are generally created by the

BIG-IP system when a virtual server is created. Many virtual servers can share the same IP address.

• Node - A BIG-IP node is a logical object on the BIG-IP system that identifies the IP address or a fully-

qualified domain name (FQDN) of a server that hosts applications. Nodes can be created explicitly or

automatically when a pool member is added to a load balancing pool.

• Pool member - A pool member is a service on a node and is designated by an IP address and

service (port).

• Monitor - Monitors are pre-configured (system provided) or user created associations with a node,

pool, or pool member to determine availability and performance levels. Health or performance

monitors check status on an ongoing basis, at a set interval. Monitors allow intelligent decision

making by BIG-IP LTM to direct traffic away from unavailable or unresponsive nodes, pools, or pool

members. Multiple monitors can be associated with a node, pool, or pool member.

• Data Center - BIG-IP DNS only. All of the BIG-IP DNS resources are associated with a data center.

BIG-IP DNS consolidates the paths and metrics data collected from the servers, virtual servers, and

links in the data center. BIG-IP DNS uses that data to conduct load balancing and route client

requests to the best-performing resource.

11 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

• Wide IP (WIP) - BIG-IP DNS only. Wide IP addresses (WIP) are one of the main BIG-IP DNS

configuration elements. WIP are identified by a fully qualified domain name (FQDN). Each WIP is

configured with one or more pools (global). The global pool members are LTM-hosted virtual servers.

BIG-IP DNS servers act as an authority for the FQDN WIP and return the IP address of the selected

LTM virtual server to use.

• Listener - BIG-IP DNS only. A listener is a specialized virtual server that passively checks for DNS

packets on port 53 and the IP address assigned to the listener. When a DNS query is sent to the IP

address of the listener, BIG-IP DNS either handles the request locally or forwards the request to the

appropriate resource.

• Probe - BIG-IP DNS only. A probe is an action taken by a BIG-IP system to acquire data from other

network resources.

Figure 3 below shows the relationships between applications, virtual servers, pools, pool members (ECS

node services), and health monitors.

Figure 3 - Relationships between LTM, applications, virtual servers, pools, pool members and health monitors

12 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

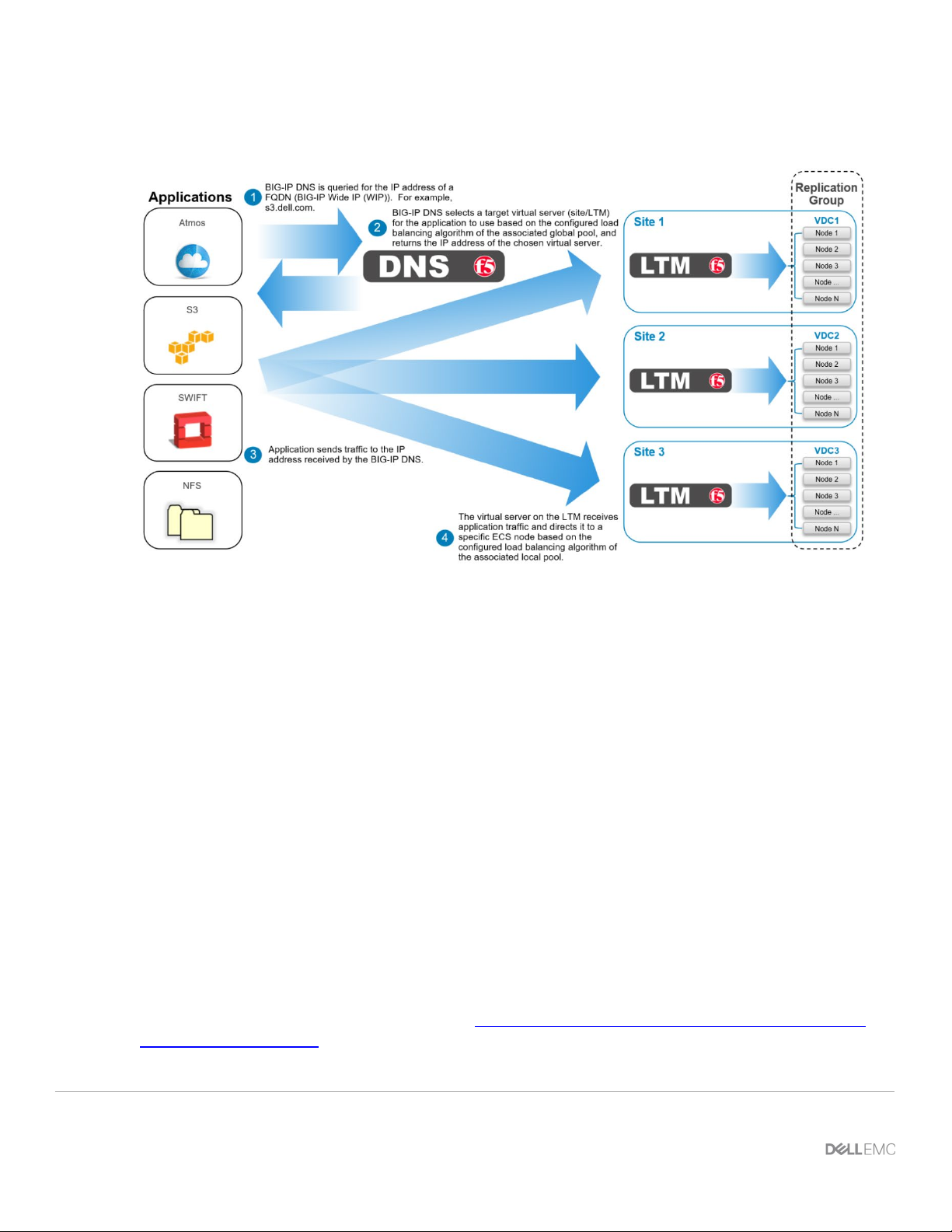

Figure 4 below shows the BIG-IP Wide IP (FQDN) to global pool member (LTM/site/virtual server)

association.

Figure 4 - BIG-IP Wide IP (FQDN) to global pool member (LTM/site/virtual server) association

3.3 F5 Device Redundancy

The BIG-IP DNS and LTM can be deployed as a standalone device or as a group of two or more identical

devices for redundancy. In the single-device, or standalone scenario, one device handles all application

traffic. The obvious downside with this is that if the device fails applications may experience a complete

interruption in service. In a single-site, single-LTM deployment all application traffic will fail if the LTM

becomes unavailable.

In multiple-site ECS deployments if each site contains a single LTM and a site’s LTM fails, BIG-IP DNS can

direct applications to use the LTM at an alternate site that is a member of the same replication group. So as

long as an application can access storage at non-local sites within an acceptable level of performance, if a

BIG-IP DNS is in use, deploying a single LTM at each site may allow for significant cost savings. That is, so

long as an application is tolerant of any increased latency caused by accessing data using a non-local site,

purchasing two or more LTM for each site may not be necessary, providing that a BIG-IP DNS or global load

balancing mechanism is in place. Understanding the tradeoffs between implementing single or multiple

devices, and the related application performance requirements, is important in developing a deployment best

suited for your needs. Also, an understanding of the concept of object owner on ECS, the access during

outage (ADO) configuration and impact to object accessibility during temporary site outage (TSO) are all

critical to consider when planning for multisite multi-access object namespace. Refer to the ECS Architectural

and Overview whitepaper here for more details:

architectural-guide-wp.pdf.

http://www.emc.com/collateral/white-papers/h14071-ecs-

13 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

BIG-IP HA key constructs are:

• Device trust - Device trust establishes trust relationships between BIG-IP devices through mutual

certificate-based authentication. A trust domain is a collection of BIG-IP devices that trust one

another and is a prerequisite for creating a device group for ConfigSync and failover operations. The

trust domain is represented by a special system-generated and system-managed device group

named device_trust_group, which is used to synchronize trust domain information across all devices.

• Device group - A collection of BIG-IP devices that trust each other and can synchronize, and

sometimes fail over, their BIG-IP configuration data. There are two types of device groups:

1. A Sync-Only device group contains devices that synchronize configuration data, such as policy

data, but do not synchronize failover objects.

2. A Sync-Failover device group contains devices that synchronize configuration data and support

traffic groups for failover purposes when a device becomes unavailable.

• Traffic group - A traffic group is a collection of related configuration objects (such as a floating self IP

address and MAC masquerading address) that run on a BIG-IP system and process a particular type

of application traffic. When a BIG-IP system becomes unavailable, a floating traffic group can migrate

to another device in a device group to ensure that the application traffic being handled by the traffic

group continues to be processed with little to no interruption in service.

With two or more local devices, a logical BIG-IP device group can allow application traffic to be processed by

more than one device. In a HA setup, during service interruption of a BIG-IP device, traffic can be routed to

the remaining BIG-IP device(s) which allows applications to experience little if any interruption to service. The

HA failover type discussed in this paper is sync-failover. This type of failover allows traffic to be routed to

working BIG-IP devices during failure.

There are two redundancy modes available when using the sync-failover type of traffic group. They are:

1. Active/Standby - Two or more BIG-IP DNS or LTM devices belong to a device group. For the BIGIP DNS, only one device actively listens and responds to DNS queries. The other device(s) are in

standby mode and available to take over the active role in a failover event. For the LTM, one device

in each floating traffic group actively processes application traffic. The other devices associated with

the floating traffic group are in standby mode and available to take over the active role in a failover

event.

2. Active/Active - Two or more LTM devices belong to a device group. Both devices can actively

process application traffic. This is accomplished by creating two or more BIG-IP floating traffic

groups. Each traffic group can contain and processes traffic for one or more virtual servers.

It is key to understand that a single virtual IP address can be shared by many virtual servers,

however, a virtual IP address may only be associated with one traffic group. If for example a pair of

LTMs are configured with many virtual servers that all share the same virtual IP address, all virtual

servers can only be served by one LTM at a time regardless of the redundancy mode configured.

This means in order to distribute application traffic to more than one LTM device, two or more unique

virtual IP addresses must be in use between two or more virtual servers.

14 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

4 ECS Configuration

There is generally no special configuration required to support load balancing strategies within ECS. ECS is

not aware of any BIG-IP DNS or LTM systems and is strictly concerned, and configured with, ECS node IP

addresses, not, virtual addresses of any kind. Regardless of whether the data flow includes a traffic manager,

each application that utilizes ECS will generally have access to one or more buckets within a namespace.

Each bucket belongs to a replication group and it is the replication group which determines both the local and

potentially global protection domain of its data as well as its accessibility. Local protection involves mirroring

and erasure coding data inside disks, nodes, and racks that are contained in an ECS storage pool. Geoprotection is available in replication groups that are configured within two or more federated VDCs. They

extend protection domains to include redundancy at the site level.

Buckets are generally configured for a single object API. A bucket can be an S3 bucket, an Atmos bucket, or

a Swift bucket, and each bucket is accessed using the appropriate object API. As of ECS version 3.2 objects

can be accessed using S3 and/or Swift in the same bucket. Buckets can also be file enabled. Enabling a

bucket for file access provides additional bucket configuration and allows application access to objects using

NFS and/or HDFS.

Application workflow planning with ECS is generally broken down to the bucket level. The ports associated

with each object access method, along with the node IP addresses for each member of the bucket’s local and

remote ECS storage pools, are the target for client application traffic. This information is what is required

during LTM configuration. In ECS, data access is available via any node in any site that serves the bucket.

In directing the application traffic to an F5 virtual address, instead of directly to an ECS node, load balancing

decisions can be made which support HA and provide the potential for improved utility and performance of the

ECS cluster.

The following tables provide the ECS configuration used for application access via S3 and NFS utilizing the

BIG-IP DNS and LTM devices as described in this document. Note the configuration is sufficient for use by

applications whether they connect directly to ECS nodes, they connect to ECS nodes via LTMs, and/or they

are directed to LTMs via a BIG-IP DNS.

In our reference example, two five node ECS VDCs were deployed and federated using the ECS Community

Edition 3.0 software on top of the CentOS 7.x operating system inside a VMWare ESXi lab environment.

Virtual systems were used to ensure readers could successfully deploy a similar environment for testing and

to gain hands-on experience with the products. A critical difference in using virtual ECS nodes, as opposed to

ECS appliances, is that the primary and recommended method for monitoring an S3 service relies upon the

underlying ECS fabric layer which is not in place in virtual systems. Because of this monitoring using the S3

Ping method is shown against physical ECS hardware in Appendix A, Creating a Custom S3 Monitor.

15 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Site 1 (federated with Site 2)

Storage Pool (SP)

https://192.168.101.11/#/vdc//provisioning/storagePools

Name

s1-ecs1-sp1

ecs-1-1.kraft101.net 192.168.101.11

ecs-1-2.kraft101.net 192.168.101.12

ecs-1-3.kraft101.net 192.168.101.13

ecs-1-4.kraft101.net 192.168.101.14

ecs-1-5.kraft101.net 192.168.101.15

Site 2 (federated with Site 1)

Storage Pool (SP)

https://192.168.102.11/#/vdc//provisioning/storagePools

Name

s2-ecs1-sp1

ecs-2-1.kraft102.net 192.168.102.11

ecs-2-2.kraft102.net 192.168.102.12

ecs-2-3.kraft102.net 192.168.102.13

ecs-2-4.kraft102.net 192.168.102.14

ecs-2-5.kraft102.net 192.168.102.15

Site 1

Virtual Data Center (VDC)

https://192.168.101.11/#/vdc//provisioning/virtualdatacenter

Name

s1-ecs1-vdc1

Replication and Management

Endpoints

192.168.101.11,192.168.101.12,192.168.101.13,192.168.101.14,

192.168.101.15

Site 2

Name

s2-ecs1-vdc1

Replication and Management

Endpoints

192.168.102.11,192.168.102.12,192.168.102.13,192.168.102.14,

192.168.102.15

Replication Group (RG)

https://192.168.101.11/#/vdc//provisioning/replicationGroups//

Name

ecs-rg1-all-sites

s1-ecs1-vdc1: s1-ecs1-sp1

s2-ecs1-vdc1: s2-ecs1-sp1

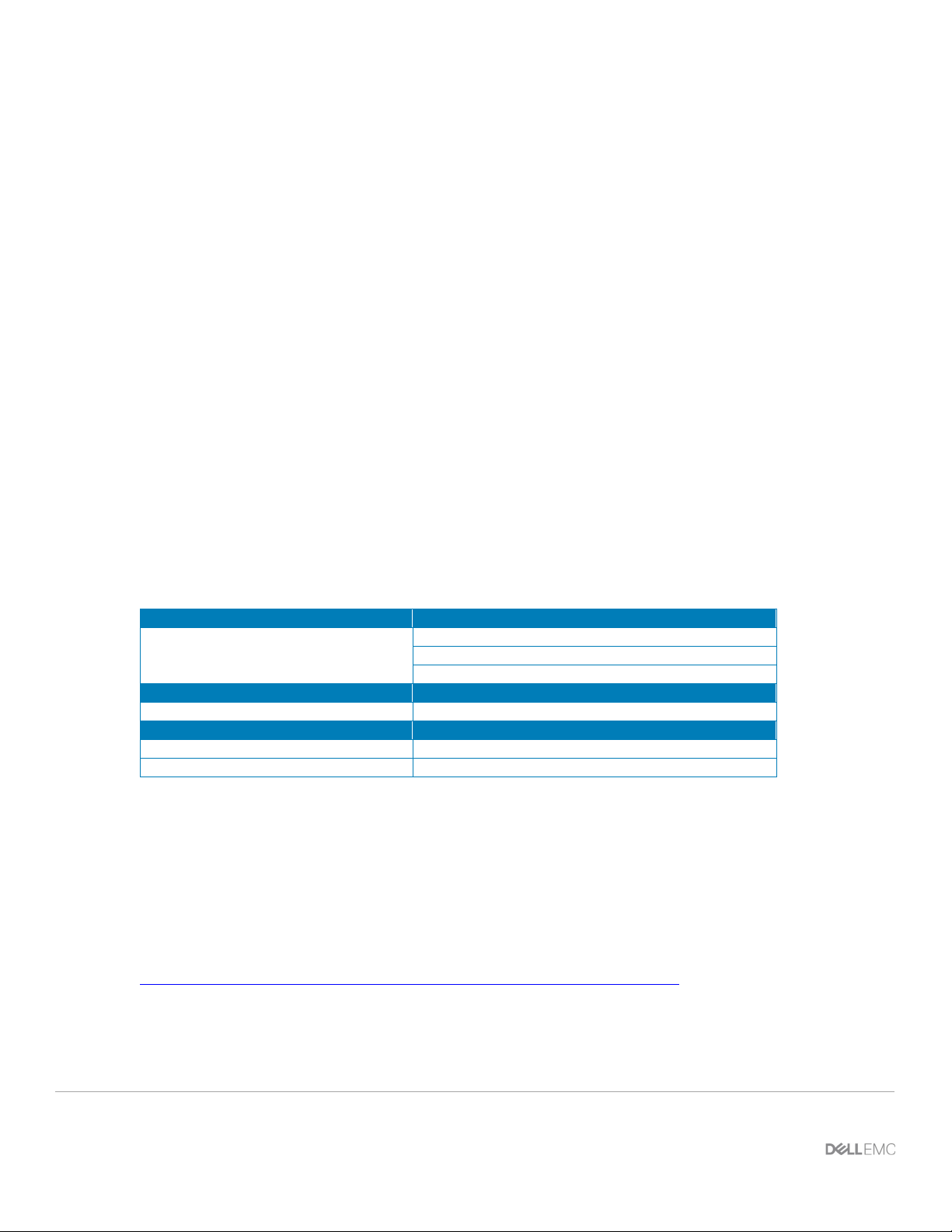

What follows are several tables with the ECS configuration used in our examples. Each table is preceded by

a brief description.

Each site of the two sites has a single storage pool that contains all five of the site’s ECS nodes.

Table 2 - ECS site’s storage pools

Nodes

Nodes

The first VDC is created at Site 1 after the storage pools have been initialized. A VDC access key is copied

from Site 2 and used to create the second VDC at Site 1 as well.

Table 3 - VDC name and endpoints

A replication group is created and populated with the two VDCs and their storage pools. Data stored using

this replication group is protected both locally, at each site, and globally through replication to the second site.

Applications can access all data in the replication group via any of the nodes in either of the VDC’s associated

storage pool.

Table 4 - Replication group

VDC: SP

16 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Namespace (NS)

https://192.168.101.11/#/vdc//provisioning/namespace

Name

webapp1

Replication group

ecs-rg1-all-sites

Access During Outage

Enabled

User

https://192.168.101.11/#/vdc//provisioning/users/object

Name

webapp1_user1

NS

webapp1

Object access

S3

User key

Akc0GMp2W4jZyu/07A+HdRjLtamiRp2p8xp3at7b

File user/Group mapping

https://192.168.101.11/#/vdc//provisioning/file/ns1/userMapping/

User

Name: webapp1_user1, ID: 1000, Type: User, NS: webapp1

Group

Name: webapp1_group1, ID: 1000, Type: Group, NS: webapp1

Bucket

https://192.168.101.11/#/vdc//provisioning/buckets/

Name

s3_webapp1

NS: RG

webapp1: ecs-rg1-all-sites

Bucket owner

webapp1_user1

File system

Enabled

Default bucket group

webapp1_group1

Group file permissions

RWX

Group directory permissions

RWX

Access During Outage

Enable

A namespace is created and associated with the replication group. This namespace will be used for S3 and

NFS traffic.

Table 5 - Namespace

An object user is required for accessing the namespace and created as per Table 6 below.

Table 6 - Object user

An NFS user and group are created as shown in Table 7 below. The NFS user is specifically tied to the

object user and namespace created above in Table 6.

Table 7 - NFS user and group

A file-enabled S3 bucket is created inside the previously created namespace using the object user as bucket

owner. The bucket is associated with the namespace and replication group. Table 8 below shows the bucket

configuration.

Table 8 - S3 bucket configuration

17 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

File export

https://10.10.10.101/#/vdc//provisioning/file//exports

Namespace

webapp1

Bucket

s3_webapp1

Export path

/webapp1/s3_webapp1

Export host options

Host: * Summary: rw,async,authsys

DNS records (corresponding reverse entries not shown but are required)

DNS record entry

Record type

Record data

Comments

ecs-1-1.kraft101.net

A

192.168.101.11

Public interface node 1 ecs1 site1

ecs-1-2.kraft101.net

A

192.168.101.12

Public interface node 2 ecs1 site1

ecs-1-3.kraft101.net

A

192.168.101.13

Public interface node 3 ecs1 site1

ecs-1-4.kraft101.net

A

192.168.101.14

Public interface node 4 ecs1 site1

ecs-1-5.kraft101.net

A

192.168.101.15

Public interface node 5 ecs1 site1

ecs-2-1.kraft102.net

A

192.168.102.11

Public interface node 1 ecs1 site2

ecs-2-2.kraft102.net

A

192.168.102.12

Public interface node 2 ecs1 site2

ecs-2-3.kraft102.net

A

192.168.102.13

Public interface node 3 ecs1 site2

ecs-2-4.kraft102.net

A

192.168.102.14

Public interface node 4 ecs1 site2

ecs-2-5.kraft102.net

A

192.168.102.15

Public interface node 5 ecs1 site2

To allow for access to the bucket by NFS clients, a file export is created as per Table 9 below.

Table 9 - NFS export configuration

Table 10 below lists the DNS records for the ECS nodes. The required reverse lookup entries are not shown.

Table 10 - DNS records for ECS nodes and Site 1 and Site 2

18 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

5 F5 Configuration

Two configuration sections follow, the first for BIG-IP LTM and the second for BIG-IP DNS. Each section

provides a couple examples along with the reference architecture. Context around related deployment

options are also mentioned.

We make the assumption that whether BIG-IP devices are deployed in isolation or as a group, the general

guidelines provided are the same. F5 documentation should be reviewed so that any differences between

deploying single and redundant devices are understood.

All of the examples provided use the ECS VDCs as configured in the tables above. The examples provided

were deployed in a lab environment using the ECS Community Edition, v3.0, and virtual F5 edition, version

13.0.0 (Build 2.0.1671 HF2). Unlike above in the ECS configuration section, steps are provided in the

examples below on how to configure the F5 BIG-IP traffic management constructs. We do not provide BIG-IP

base configuration steps such as licensing, naming, NTP, and DNS.

For each site BIG-IP LTM is deployed and configured to balance the load to ECS nodes. In federated ECS

deployments a BIG-IP DNS can be used to direct application traffic to the most suitable site.

5.1 F5 BIG-IP LTM

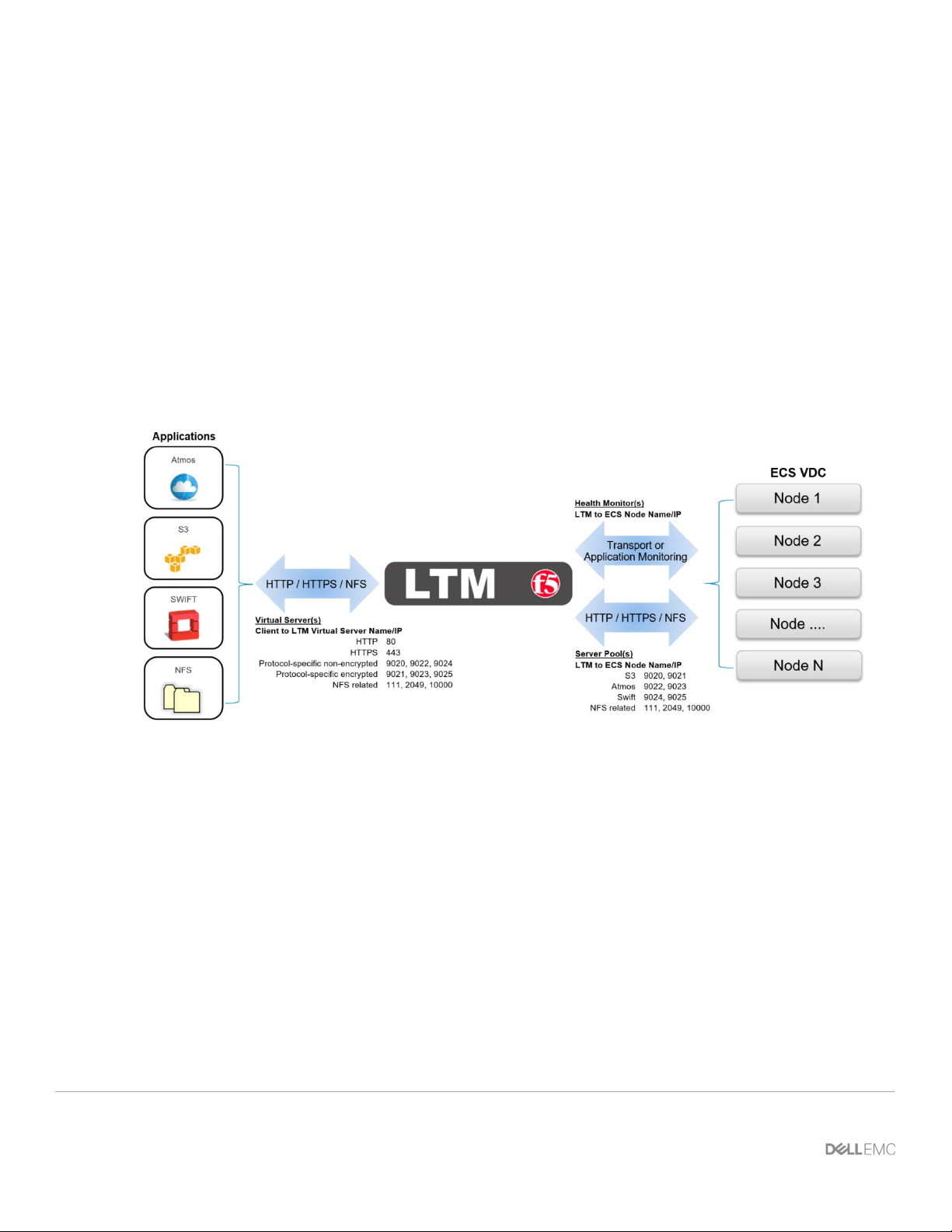

Figure 5 - LTM high-level overview

A local traffic manager, as seen in the middle of Figure 5 above, listens and receives client application traffic

on one or more virtual servers. Virtual servers are generally identified by a FQDN and port, for example,

s3.site1.ecstme.org:9020. Virtual servers process traffic for either one service port, or all service ports.

Virtual servers are backed by one or more pools. The pools have members assigned to them. The pool

members consist of ECS nodes and identified by their hostname and port, for example, ecs-1-1:9020. The

configuration determines whether all traffic is directed to a specific port on pool members or whether the port

of the original request is kept.

An LTM makes a decision for each application message it receives on a virtual server to determine which

specific pool member in the virtual server’s configured pool(s) should receive the message. The LTM uses

the configured load balancing algorithm to make this determination.

The LTM uses health monitors to keep an up-to-date status of all individual pool members and their relevant

services. As a node or node’s required service(s) become unavailable the LTM ceases to send application

19 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

traffic to the pool member and subsequent messages are forwarded to the next available pool member per

the configured load balancing algorithm.

In this document examples are shown with virtual servers and pool members that service single ports, S3 with

Secure Socket Layer (SSL) at port 9021, for example. It is possible for a virtual server to listen traffic on all

service ports. This would allow for all application traffic to point to one virtual server that services all the

related ports. F5 BIG-IP systems have a configuration element called iRule. Using iRules allows

administrators to detail rules, for example, to only process traffic on specific ports and to drop all other traffic.

Using an iRule could allow a virtual server to listen on all ports but only process traffic for ports 111, 2049,

and 10000, the NFS-related ports ECS uses. Readers are encouraged to research iRules to determine if they

are worthwhile for use in their BIG-IP configuration.

Note: Local applications may use the S3-specific application ports, 9020 and 9021. For workflows over the

Internet it is recommended to use ports 80 and 443 on the front end and ports 9020 and 9021 on the

backend. This is because the Internet can handle these ports without problem. Using 9020 or 9021 may

pose issues when used across the Internet.

5.1.1 Example: S3 to Single VDC with Active/Standby LTM Pair

Figure 6 - LTM in active/standby redundancy mode

Figure 6 above shows the basic architecture for this example of an S3 application access to ECS via an

Active/Standby HA pair of LTM at a single site. A client application is shown on the far left. In our case it

resides in the same subnet as the LTM but it could be on any local or global network. Each of our LTM

devices has four network interfaces. The first, not shown above, is used for management access. Interfaces

1.1 are assigned to a VLAN named vlaninternal. In general load balancing terms this is often also called the

back-end or server-side network. Interfaces 1.2 are assigned to VLAN vlanexter nal. Generally this is referred

to as the front-end or client-side network. Interfaces 1.3 are assigned to VLAN vlanha. The HA VLAN is used

for high availability functionality along with synchronization of the device’s configuration and client connection

mirroring. A BIG-IP device group, device-group-a, in our case, logically contains both devices. The device

group’s failover type, sync-failover, is configured with the redundancy mode set to Active/Standby. In

20 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Devices

System ›› Platform ›› Configuration

f5-ltm-s1-1.ecstme.org: 172.16.3.2

f5-ltm-s1-2.ecstme.org: 172.16.3.3

f5-ltm-s2-1.ecstme.org: 172.16.3.5

NTP

System ›› Configuration : Device : NTP

Time servers

10.254.140.21

DNS

System ›› Configuration : Device : DNS

DNS lookup servers list

10.246.150.22

DNS search domain list

localhost, ecstme.org

Active/Standby redundancy mode, one device actively processes all application traffic at any given time. The

other device is idle and in standby mode waiting to take over processing application traffic if necessary. On

the far right is our VDC at Site 1. All of the ECS nodes are each put in to two BIG-IP pools as referenced in

Figure 6 above using an asterisk. One pool is configured with port 9020 and the other pool for 9021.

In between the client and LTM, a BIG-IP traffic group, traffic-group-1, in our example, is shown. It contains

two virtual servers, one for each type of S3 traffic we provide access for in this example. Applications will

point to the FQDN and appropriate port to reach the VDC.

In between the LTM and VDC two pools are shown, one for each service port we required. Each of these

pools contain all VDC nodes. Not shown is the health monitoring. Monitoring will be discussed further down

as the example continues.

Here is a general overview of the steps in this example:

1. Configure the first LTM.

2. Configure the second LTM and pair the devices for HA.

Step 1: Configure the first LTM.

GUI screenshots are used to demonstrate LTM configuration. Not shown are the initial F5 BIG-IP Virtual

Edition basic setup of licensing and core infrastructure components such as NTP and DNS. Table 11 below

shows these details used for each LTM device.

Table 11 - LTM base device details

Hostname: Management IP

Figure 7 below shows three VLANs and associated network interfaces used. At this point in our example, and

during single device configuration, the third interface and associated VLAN is configured but not used until

later in the HA example. VLAN configuration is simple and consists of naming the VLAN and assigning a

physical network interface, either tagged or untagged, as the resource. We use an untagged interface as

each interface in our design only services one VLAN. None of the devices aside from the F5 BIG-IP DNS and

LTM in our test environment use VLAN tagging. If an interface belongs to multiple VLANs it should be

tagged. For more information on tagged versus untagged interfaces, and when to use each, refer to F5

documentation. In addition, refer to Dell EMC ECS Networking and Best Practices whitepaper here:

https://www.emc.com/collateral/white-paper/h15718-ecs-networking-bp-wp.pdf

.

21 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Figure 7 - LTM VLANs with interfaces

Figure 8 below shows the non-management network interfaces on this LTM. As mentioned above, during

single device deployment only two interfaces are required to carry application traffic in a typical two-arm

architecture as described earlier in the F5 Networking Constructs section. The third, in our example, is

configured in advance in preparation for HA.

Figure 8 - LTM non-management network interfaces with MAC addresses

Figure 9 below shows the self IP addresses required for this example. Each of the interfaces used for

application traffic are assigned two self IP addresses. The local addresses are assigned to the interface and

are assigned to the built-in traffic group named traffic-group-local-only. In preparation for HA a floating self IP

address is also created for each interface carrying application traffic. In HA they will move between devices

as needed in service to the active unit. The floating self IP addresses are associated with traffic group named

traffic-group-1. Also shown in the graphic is the self IP address for HA. At this point, in a single device

deployment configuration, only two self IP addresses are required for a two-arm architecture, one for each

VLAN and interface that will handle application traffic. Later when we synchronize configuration between the

LTM pair, the static self IP addresses will not be part of the settings shared between the two devices. That is,

these self IP addresses are local only to the device they’re created on.

22 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Figure 9 - LTM static and floating self IP addresses

Figure 10 below begins to show the creation of one of the pools.

Note: Prior to performing any L3-L7 application configuration, such as the creation of pools, it may be

advisable to create and verify the device trust first. See Step 2 below. This would allow for the verification

of L2/L3 sanity prior to troubleshooting application traffic issues such as failing monitors.

Figure 10 - LTM pool creation

23 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

The pool is named, assigned a health monitor, and configured with “Reject” as the desired “Action on Service

Down” method. Built-in TCP health monitoring is used for the lab. All of the other settings in the upper

‘Configuration’ section for new pool creation dialog are default. When using F5 with a real hardware

appliance the best practice is to use S3 Ping. This is explained further and demonstrated in Appendix A:

Creating a Custom S3 Monitor.

The "Action on Service Down" setting controls LTM's connection management behavior when a monitor

transitions a pool member to a DOWN state after it has been selected as the load balancing target for a

connection. The best option is entirely dependent on that application's client and server implementations.

The possible options are:

• None - Default setting. The LTM will continue to send data on established connections as long as

client is sending and server is responding.

• Reject - Most commonly used option. Used to explicitly close both sides of the connection when the

server goes DOWN. This option often results in the quickest recovery of a failed connection since it

forces the client side of the connection to close.

• Drop - The LTM will silently drop any new client data sent on established connections.

• Reselect - The LTM will choose another pool member if one is available and rebind the client

connection to a new server-side flow.

In the lower section of the new pool creation dialog the load balancing method is selected, and pool members

are assigned. Figure 11 below shows a list of all available load balancing methods. We use the often utilized

least connections option. Consult F5 directly for the specific details and reasons why any of the available

options may be desirable for a given workload.

Figure 11 - LTM load balancing methods available

24 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Figure 12 - LTM pool final configuration

Figure 12 above shows the final configuration selections with all pool members. The S3 with SSL pool is

configured in the same manner using port 9021. The S3 with SSL pool allows for the encryption of traffic

between the client and ECS node using an ECS-generated certificate. In the lab we use a self-signed

certificate. In production environments it is recommended to use a certified signing authority.

It is generally recommended however that SSL connections be terminated at the LTM. Use of SSL encryption

during transport is CPU intensive and the F5 BIG-IP software is specially built to handle this processing

efficiently. F5 hardware also has dedicated Application-Specific Integrated Circuits (ASIC) to further enhance

efficiency in processing this traffic. SSL can be terminated at ECS, the LTM, or both. By terminating SSL at

both places, a client will establish a secure connection to the F5 using an F5 certificate, and the LTM will

establish a secure connection to the ECS node using the ECS certificate. Twice the amount of processing is

required when encrypting each of the two connections individually. Traffic encryption options should be

analyzed for each application workflow. For some workflows, for example when no personally identifiable

information is transmitted, no encryption may be suitable. All traffic will pass over the wire in clear text

fashion and no encryption-related CPU processing is required. For other workflows encryption on the

external connection is appropriate but non-encrypted connectivity on the internal network may be fine. Some

banks and other highly secure facilities may use encryption for each of the two connections.

Figure 13 below shows both pools in the system. Although there are only five ECS nodes, ten members are

shown for each pool. This is because the system lists both ephemeral and non-ephemeral members. Per the

F5 documentation, during pool creation, when the default setting of “Auto Populate on” is used, the system

creates an ephemeral node for each IP address returned as an answer to a DNS query. Also, when a DNS

answer shows that the IP address of an ephemeral node doesn't exist anymore, the system deletes the

ephemeral node.

25 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

These two pools can handle all of our S3 traffic needs. Traffic to pool members listening on port 9020 is not

encrypted. Traffic to pool members listening on port 9021 is encrypted.

Figure 13 - LTM S3-related pools

Next is the creation of a virtual server. Figure 14 below shows the assigned name, destination address, and

service port for an S3 virtual server that serves non-encrypted traffic. A source address or network can

optionally be added as well. The destination IP address shown is the Virtual IP address that will be created

on the system. Client applications will point to this IP address. We optionally assign a name to the address in

DNS to allow clients to use a FQDN.

Figure 14 - LTM new virtual server creation dialog

The type of virtual server we use is Standard. Several other types exist and Figure 15 shows the list of

options provided in the GUI. F5 documentation should be reviewed to understand the available virtual server

types. We choose Standard however using another type of virtual server, such as Performance (HTTP), may

be more suitable for production access.

26 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Figure 15 - LTM virtual server options

The remaining values are not shown. For this example, TCP is selected for protocol. Other protocol options

exist and are UDP, SCTP, or All. We’ll use “All” option when configuring NFS-related virtual servers in the

NFS example. The pool we selected was pool-all-nodes-9020. We also selected a “Default Persistence

Profile” of source_addr. Figure 16 below shows a list of available values for this setting. F5 documentation

should be consulted for details of each to see if they are more appropriate for a given workload. We selected

and recommend source_addr as the persistence profile because it keeps a client’s application traffic pinned to

the same pool member which allows for efficient use of ECS cache. We generally recommend source_addr

as the persistence profile for all virtual servers in use with ECS. Source address affinity persistence directs

session requests to the same server based solely on the source IP address of a packet. In circumstances

where many applications on a few number of clients connect to ECS through an LTM another persistence

profile may be better suited because it could cause an uneven load distribution across ECS nodes. As with

all configuration choices, be sure to understand the options available to make appropriate traffic management

decisions for each workflow and architecture deployed.

Figure 16 - LTM persistence profile options

Figure 17 below shows the vitual server for non-encrypted S3 traffic and some other virtual servers configured

to this point. Three other virtual servers exist on this LTM and are not covered in our example. By default

BIG-IP LTM has a default deny all policy. In order for traffic to traverse through an LTM a rule must explicitly

exist. The topology used in our lab does not have a router in the mix so we created virtual servers to allow

traffic to be forwarded as needed. The additonal rules shown allow, for example, our two ECS VDCs to

communicate with each other and for the underlying CentOS to access the Internet for application binaries

and such.

27 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Figure 17 - Configured virtual servers

Step 2: Configure second LTM, pair the devices for HA.

We add a second LTM to the same network as the first and configure the network interfaces similar to the

first, using unique self IP addresses. To create the pair, in the WebUI, Device Management is selected and

Device Trust then Device Trust Members is chosen. The Add button is selected in the Peer and Subordinate

Devices section to add the peer device. This was done from our first LTM set up, however, it can be

accomplished via either device. The second device’s management IP address, administrator username, and

administrator password are entered. A retrieve Device Information button appears as shown below in Figure

18. Once clicked, some of the remote devices information is displayed. These actions are only required on

one of the devices.

Figure 18 - Retrieve device information button

After the two devices trust each other, a device group can be created. In our environment we called the

device group device-group-a. The group type of Sync-Failover is chosen and both devices are added as

members. The Network Failover box is checked. For now we will sync manually so none of the other boxes

require check marks. A traffic group, traffic-group-1, is created automatically during this process.

Device Management, Devices, Device List, select (self), leads to Properties page along with Device

Connectivity list menu. The list menu contains, ConfigSync, Failover Network and Mirroring. For the

ConfigSync configuration we select our HA local address. For the Failover Network configuration, we choose

28 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Device Group

Device Management ›› Device Groups

Name

device-group-a

Group type

Sync-Failover

Sync type

Manual with incremental sync

Members

f5-ltm-s1-1, f5-ltm-s1-2

Traffic Group

Device Management ›› Traffic Groups

Name

traffic-group-1

Pools

Local Traffic ›› Pools

Name

pool-all-nodes-9020

Health monitors

tcp

Action on service down

Reject

Members

ecs-1-1:9020, ecs-1-2:9020, ecs-1-3:9020, ecs-1-4:9020, ecs-1-5:9020

Virtual Servers

Local Traffic ›› Virtual Servers

Name

virtual-server-80-to-9020

Type

Standard

Destination address

10.246.150.90

Service port

9020

Protocol

TCP

Default pool

pool-all-nodes-9020

Default persistence profile

source_addr

DNS record entry

Record type

Record data

Comments

f5-ltm-s1-1.ecstme.org

A

10.246.150.86

F5 LTM Site 1 (1 of 2) self IP address

f5-ltm-s1-2.ecstme.org

A

10.246.150.87

F5 LTM Site 1 (2 of 2) self IP address

f5-ltm-s1-floater.ecstme.org

A

10.246.150.88

F5 LTM Site 1 floating self IP address

f5-ltm-s1-virtual.ecstme.org

A

10.246.150.90

F5 LTM Site 1 virtual IP address

HA for the unicast address. For Mirroring we chose HA IP address for the Primary, and vlanexternal address

for the secondary. The connection state mirroring feature allows the standby unit to maintain all of the current

connection and persistence information. If the active unit fails and the standby unit takes over, all

connections continue, virtually uninterrupted.

With all of the required configuration we sync the first LTM to the group. This is done via Device

Management, Overview, select self, choose sync device to group, and clicking Sync. Then we can sync the

group to the second device.

Here is a table which provides a summary all configuration elements to this point.

Table 12 - LTM configuration elements

At this point we have two LTM devices configured for HA at Site 1. In this Active/Standby scenario only one

device handles application traffic at any time. Client connections and application sessions are mirrored to the

standby device to allow failover with minimal interruption. Applications point to the single virtual server name

or IP address. With source_addr as the default persistence configuration chosen, connections from each

client are directed by the LTM to a single ECS node. If that ECS node should fail the connections are reset

and subsequent application traffic is pointed to the next indicated ECS node.

Table 13 below shows the LTM-related DNS entries.

Table 13 - LTM-related DNS entries

29 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Organizations may choose to consider a VDC unavailable if less than a required minimum number of nodes

are up. A three node minimum may be chosen as it is the minimum number of nodes required for writes to

complete. ECS customers decide on their own logic and configuration on how best to accomplish this.

One approach may involve the creation of an object whose existence can be queried on each node. A

monitor which queries for the object on each node can be customized to look for a minimum number of

successful responses in order to consider the pool healthy.

When considering this type of rule, it is important to understand the significance of enabling ADO during

bucket creation. If enabled, objects can be accessed RW (or RO if file-enabled) after 15 minutes (by default)

when the site where the object was written is not reachable. If not enabled, object access is unavailable if the

object owner's site is not reachable.

If a rule exists to send requests to an alternate site when a subset of a site's nodes are unavailable, but the

site remains reachable, a TSO wouldn't be triggered but some requests will fail even if they are sent to

alternate site. Losing more than one node is rare so it is not generally recommended to create rules for this.

5.1.2 Example: LTM-terminated SSL Communication

Three primary configuration options are available for encrypting client traffic to ECS. They are:

1. ECS-terminated SSL connectivity. End-to-end traffic encryption between client and ECS.

2. LTM-terminated SSL connectivity. Encrypted traffic between the client and LTM. No encryption

between LTM and ECS.

3. ECS-terminated and LTM-terminated SSL connectivity. Traffic is encrypted twice, first between

client and LTM, and second between LTM and ECS.

As previously mentioned, SSL termination is a CPU intensive task. LTM hardware has dedicated hardware

processors specializing in SSL processing. LTM software also has mechanisms for efficient SSL processing.

It is recommended, when appropriate, to terminate SSL on the LTM and offload encryption processing

overhead off of the ECS storage. Each workflow should be assessed to determine if traffic requires

encryption at any point in the communication path.

Generally, storage administrators use SSL certificates signed by a trusted Certificate Authority (CA). A CAsigned or trusted certificate is highly recommended for production environments. For one, they can generally

be validated by clients without any extra steps. Also, some applications may generate an error message

when encountering a self-signed certificate. In our example we generate and use a self-signed certificate.

Both the LTM and ECS software have mechanisms to produce the required SSL keys and certificates.

Private keys remain on the LTM and/or ECS. Clients must have a means to trust a device’s certificate. This

is one disadvantage to using self-signed certificates. A self-signed certificate is its own root certificate and as

such client systems will not have it in their cache of known (and trusted) root certificates. Self-signed

certificates must be installed in the certificate store of any machines that will access ECS.

The first step in configuring any of the three SSL connectivity scenarios described above is the generation of

the required SSL keys and associated signed certificates. Each SSL key and certificate pair can then be

added to a custom SSL profile on the BIG-IP LTM. Custom SSL profiles are part of the virtual server

configuration.

30 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

SSL connectivity between a client and LTM requires the creation of a key and certificate pair for the LTM.

This pair is then added to an SSL profile and associated with the virtual server which hosts the associated

traffic.

Note: Although heavily CPU intensive, terminating a client's connection twice, both on the LTM and then

again on an ECS node, may be required if complete end-to-end encryption is required, AND, http header

information requires processing by the LTM. For example, when an iRule is used to hash an object's name

to determine the destination pool for which to direct the application's request to.

The simplest and most common use is for the client to LTM traffic to be encrypted and LTM to ECS is not. In

this scenario the LTM offloads the CPU-intensive SSL processing from ECS.

Here is a general overview of the steps we’ll walk through in this example:

1. Create SSL key and self-signed certificate on the LTM.

2. Create a custom SSL profile and configure with the key/certificate pair.

3. Create a virtual server for LTM-terminated SSL connectivity to ECS.

Step 1: Create SSL key and self-signed certificate on the LTM.

A single self-signed certificate is used for all three LTM devices in our lab deployment. Subject Alternate

Names (SAN) are populated during the creation of the certificate to include all possible combinations of

names and addresses that may be used during client connectivity through the LTM to ECS. Using a single

certificate pair for all devices isn’t necessary. A key and certificate can be uniquely created for each BIG-IP

device. Because self-signed certificates are used in our lab, additional client-side steps are required to allow

the LTM to be trusted by the client. In production environments, or, when using widely recognized public

signing authorities such as CA, there shouldn’t be any need for special client-side accommodations to accept

the granting authority.

Creating a self-signed digital certificate on an LTM device can be done at the command prompt using

OpenSSL, or, via the WebUI by clicking on the Create button located by navigating to the System, Certificate

Management, Traffic Certificate Management, SSL Certificate List page.

A new SSL Certificate configuration page is presented and the Common Name (CN) and SAN fields are

populated. The end result is a new self-signed certificate which is then exported and imported in to the other

two LTM devices. Figure 19 shows the fields as used in the lab. The SSL key was also exported and

imported to all three LTM under the same name for consistency, along with the new certificate.

Note: SSL keys can be generated on any device with the proper tools such as OpenSSL.

31 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

Figure 19 - Custom SSL certificate fields

At this point a newly-created self-signed certificate exists on all three LTM in the lab deployment. Clients will

be able to negotiate an encrypted session to any of the lab LTMs via SSL using any of the names or IP

addresses in the SAN field.

Step 2: Create a custom SSL client profile and configure with the key/certificate pair.

There are two types of SSL profiles for use in LTM virtual servers:

1. Client SSL Profile. A client SSL profile is used to enable SSL from the client to the virtual server.

2. Server SSL Profile. A server SSL profile is used to enable SSL from the LTM to the pool member.

32 DELL EMC ECS WITH F5 | H16294.3

Internal Use - Confidential

In this example we create a new custom SSL client profile to allow for encrypted communication between the

client and LTM.

A new profile can be generated by clicking the Create button on the Local Traffic, Profiles: SSL: Client page.

Profile configuration has four sections: General Properties, Configuration (Basic or Advanced), Client

Authentication, and SSL Forward Proxy (Basic or Advanced). In the General Properties section a name is

provided for the new profile along with the parent profile to be used which serves as a template. Figure 20

shows the values used for the example.

Figure 20 - LTM client SSL profile naming

Only two other values are edited for the example, specifying the ssl-key-ecs.key and the ssl-certificate-ecs.crt

files as shown in Figure 21 below.

Figure 21 - CA certificate and key selection

The custom SSL client profile is generated on all three LTM in our reference deployment. ConfigSync could

also be used to deploy the profile. Each LTM has a similar new custom SSL Client profile. This profile can

now be associated with a virtual server.

Step 3: Create a virtual server for LTM-terminated SSL connectivity to ECS.

A second S3 virtual server is created in this example to demonstrate the scenario where an LTM offloads the