Page 1

Dell/EMC AX4-5 Fibre Channel Storage Arrays With Microsoft® Windows Server® Failover Clusters

Hardware Installation and Troubleshooting Guide

Page 2

Notes, Cautions, and Warnings

NOTE: A NOTE indicates important information that helps you make better use

of your computer.

CAUTION: A CAUTION indicates potential damage to hardware or loss of data

if instructions are not followed.

WARNING: A WARNING indicates a potential for property damage, personal

injury, or death.

____________________

Information in this document is subject to change without notice.

© 2008-2010 Dell Inc. All rights reserved.

Reproduction of these materials in any manner whatsoever without the written permission of Dell Inc.

is strictly forbidden.

Trademarks used in this text: Dell, the DELL logo, PowerEdge, and PowerVault are trademarks of

Dell Inc.; Active Directory, Microsoft, Windows, Windows Server, and Windows NT are either

trademarks or registered trademarks of Microsoft Corporation in the United States and/or other

countries.; EMC, Navisphere, and PowerPath are registered trademarks and MirrorView, SAN

Copy, and SnapView are trademarks of EMC Corporation.

Other trademarks and trade names may be used in this document to refer to either the entities

claiming the marks and names or their products. Dell Inc. disclaims any proprietary interest in

trademarks and trade names other than its own.

January 2010 Rev A01

Page 3

Contents

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . 7
    Cluster Solution . . . . . . . . . . . . . . . . . . . . . . 8
    Cluster Hardware Requirements . . . . . . . . . . . . . 8
        Cluster Nodes . . . . . . . . . . . . . . . . . . . . . 9
        Cluster Storage . . . . . . . . . . . . . . . . . . . 10
    Supported Cluster Configurations . . . . . . . . . . . . 11
        Direct-Attached Cluster . . . . . . . . . . . . . . 11
        SAN-Attached Cluster . . . . . . . . . . . . . . . 12
    Other Documents You May Need . . . . . . . . . . . . 13

2 Cabling Your Cluster Hardware . . . . . . . . 15
    Cabling the Mouse, Keyboard, and Monitor . . . . . . 15
    Cabling the Power Supplies . . . . . . . . . . . . . . . 15
    Cabling Your Cluster for Public and Private Networks . . . . 17
        Cabling the Public Network . . . . . . . . . . . . 19
        Cabling the Private Network . . . . . . . . . . . . 19
        NIC Teaming . . . . . . . . . . . . . . . . . . . . 20
    Cabling the Storage Systems . . . . . . . . . . . . . . 20
        Cabling Storage for Your Direct-Attached Cluster . . . . 20
        Cabling Storage for Your SAN-Attached Cluster . . . . . 25
        Cabling a SAN-Attached Cluster to an AX4-5F Storage System . . . . 27

Page 4

3 Preparing Your Systems for Clustering . . . . . . . . . . . . 35
    Cluster Configuration Overview . . . . . . . . . . . . . 35
    Installation Overview . . . . . . . . . . . . . . . . . . 37
    Installing the Fibre Channel HBAs . . . . . . . . . . . . 38
        Installing the Fibre Channel HBA Drivers . . . . . . 38
    Installing EMC PowerPath . . . . . . . . . . . . . . . . 38
    Implementing Zoning on a Fibre Channel Switched Fabric . . . . 39
        Using Worldwide Port Name Zoning . . . . . . . . 39
    Installing and Configuring the Shared Storage System . . . . 41
        Installing Navisphere Storage System Initialization Utility . . . . 41
        Installing the Expansion Pack Using Navisphere Express . . . . 42
        Installing Navisphere Server Utility . . . . . . . . . 43
        Registering a Server With a Storage System . . . . 43
        Assigning the Virtual Disks to Cluster Nodes . . . . 44
        Advanced or Optional Storage Features . . . . . . 44
    Installing and Configuring a Failover Cluster . . . . . . 46

Page 5

A Troubleshooting . . . . . . . . . . . . . . . . . . . . 47

B Cluster Data Form . . . . . . . . . . . . . . . . . . 55

C Zoning Configuration Form . . . . . . . . . . . 57

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Page 6


Page 7

Introduction

A failover cluster combines specific hardware and software components to
provide enhanced availability for applications and services that run on the
cluster. A failover cluster is designed to reduce the possibility of any single
point of failure within the system that can cause the clustered applications or
services to become unavailable. It is recommended that you use redundant
components in your cluster, such as server and storage power supplies,
connections between the nodes and the storage array(s), and connections to
client systems or other servers in a multi-tier enterprise application
architecture.

This document provides information and specific configuration tasks that
enable you to configure your Microsoft® Windows Server® failover cluster
with Dell/EMC AX4-5F (2 Fibre Channel ports per Storage Processor) or
Dell/EMC AX4-5FX (4 Fibre Channel ports per Storage Processor) storage
array(s).

NOTE: Throughout this document, Dell/EMC AX4-5 refers to Dell/EMC AX4-5F and
Dell/EMC AX4-5FX storage arrays.

NOTE: Throughout this document, Windows Server 2008 refers to Windows Server
2008 and Windows Server 2008 R2 operating systems.

For more information on deploying your cluster with Windows Server 2003
operating systems, see the Dell™ Failover Clusters with Microsoft Windows
Server 2003 Installation and Troubleshooting Guide on the Dell Support
website at support.dell.com/manuals. For more information on deploying
your cluster with Windows Server 2008 operating systems, see the Dell
Failover Clusters with Microsoft Windows Server 2008 Installation and
Troubleshooting Guide on the Dell Support website at
support.dell.com/manuals.

For a list of recommended operating systems, hardware components, and
driver or firmware versions for your Dell Windows Server Failover Cluster, see
the Dell Cluster Configuration Support Matrices located on the Dell High
Availability Clustering website at dell.com/ha.

Page 8

Cluster Solution

Your cluster supports a minimum of two nodes and a maximum of either eight
nodes (for Windows Server 2003) or sixteen nodes (for Windows Server 2008),
and provides the following features:

• 8-Gbps and 4-Gbps Fibre Channel technologies

• High availability of resources to network clients

• Redundant paths to the shared storage

• Failure recovery for applications and services

• Flexible maintenance capabilities, allowing you to repair, maintain, or

upgrade a node or storage system without taking the entire cluster offline
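The node limits stated above can be expressed as a quick planning check. The following is a minimal sketch, not a Dell tool: the function name and dictionary keys are illustrative, and it ignores the additional topology-dependent limits mentioned in Table 1-1.

```python
# Node-count limits quoted in this guide; the keys are illustrative labels.
MIN_NODES = 2
MAX_NODES = {"Windows Server 2003": 8, "Windows Server 2008": 16}


def node_count_supported(os_name, nodes):
    """Return True if `nodes` falls within the range stated for `os_name`."""
    return MIN_NODES <= nodes <= MAX_NODES[os_name]


if __name__ == "__main__":
    print(node_count_supported("Windows Server 2003", 8))
    print(node_count_supported("Windows Server 2003", 9))
```

Always confirm the supported node count for your exact configuration in the Dell Cluster Configuration Support Matrices.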

Implementing Fibre Channel technology in a cluster provides the following

advantages:

• Flexibility: Fibre Channel allows a distance of up to 10 km between
switches without degrading the signal.
• Availability: Fibre Channel components use redundant connections,
providing multiple data paths and greater availability for clients.
• Connectivity: Fibre Channel allows more device connections than
SCSI. Because Fibre Channel devices are hot-swappable, you can add or
remove devices from the nodes without bringing down the cluster.

Cluster Hardware Requirements

Your cluster requires the following hardware components:

• Cluster nodes

• Cluster storage


Page 9

Cluster Nodes

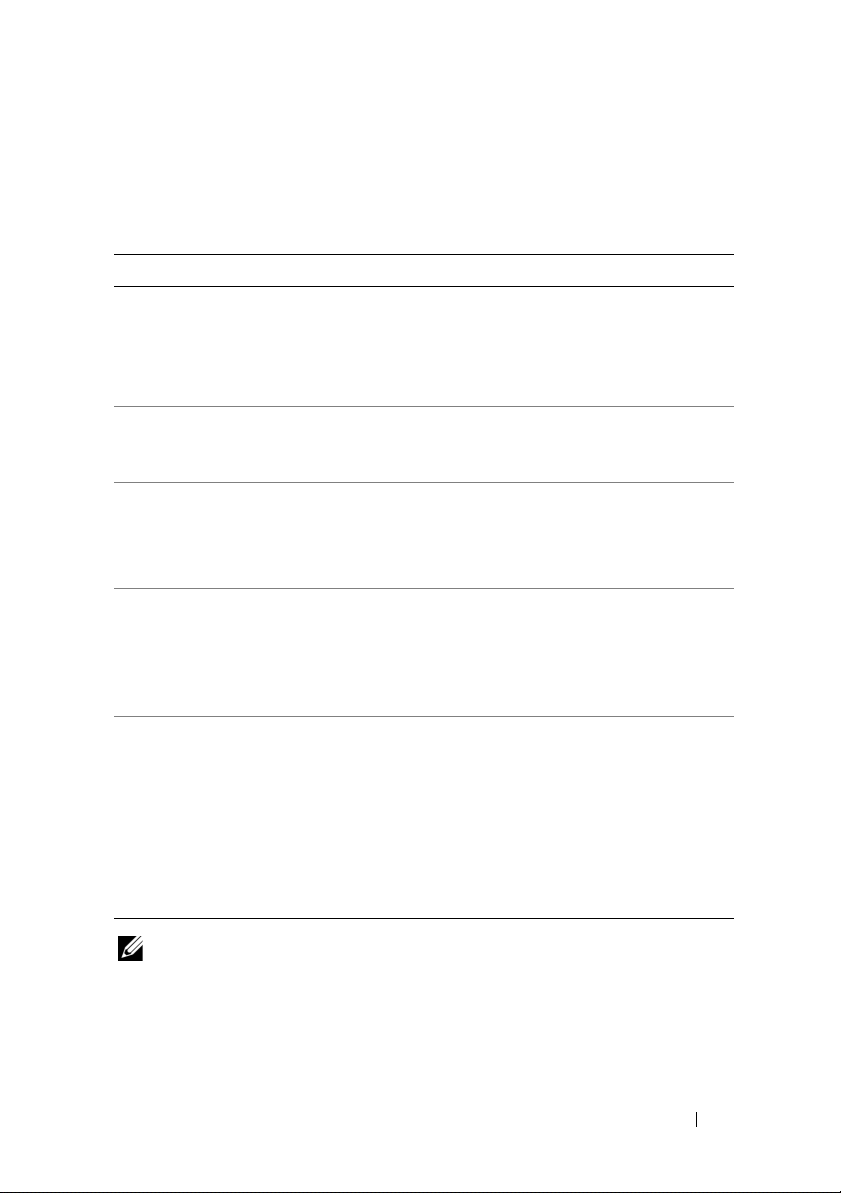

Table 1-1 lists the hardware requirements for the cluster nodes.

Table 1-1. Cluster Node Requirements

| Component | Minimum Requirement |
| --- | --- |
| Cluster nodes | A minimum of two identical Dell™ PowerEdge™ servers are required. The maximum number of nodes that is supported depends on the variant of the Windows Server operating system used in your cluster, and on the physical topology in which the storage system and nodes are interconnected. |
| RAM | The variant of the Windows Server operating system that is installed on your cluster nodes determines the minimum required amount of system RAM. |
| HBA ports | Two Fibre Channel HBAs per node, unless the server employs an integrated or supported dual-port Fibre Channel HBA. Where possible, place the HBAs on separate PCI buses to improve availability and performance. |
| NICs (public and private networks) | At least two NICs: one NIC for the public network and another NIC for the private network. NOTE: It is recommended that the NICs on each public network are identical, and that the NICs on each private network are identical. |
| Internal disk controller | One controller connected to at least two internal hard drives for each node. Use any supported RAID controller or disk controller. Two hard drives are required for mirroring (RAID 1) and at least three are required for disk striping with parity (RAID 5). NOTE: It is strongly recommended that you use hardware-based RAID or software-based disk-fault tolerance for the internal drives. |

NOTE: For more information about supported systems, HBAs and operating system

variants, see the Dell Cluster Configuration Support Matrices located on the Dell

High Availability Clustering website at dell.com/ha.


Page 10

Cluster Storage

Cluster nodes can share access to external storage systems. However, only one

of the nodes can own any redundant array of independent disks (RAID)

volume in the external storage system at any time. Microsoft Cluster Services

(MSCS) controls which node has access to each RAID volume in the shared

storage system.

Table 1-2 lists supported storage systems and the configuration requirements

for the cluster nodes and stand-alone systems connected to the storage

systems.

Table 1-2. Cluster Storage Requirements

| Hardware Components | Requirement |
| --- | --- |
| Supported storage systems | One to four supported Dell/EMC storage systems. For specific storage system requirements, see Table 1-3. |
| Cluster nodes | All nodes must be directly attached to a single storage system or attached to one or more storage systems through a SAN. |
| Multiple clusters and stand-alone systems | Can share one or more supported storage systems using optional software that is available for your storage system. See "Installing and Configuring the Shared Storage System" on page 41. |

The storage systems work together with the following hardware components:

• Disk processor enclosure (DPE)—Configured with storage processors that

control the RAID arrays in the storage system and provide storage

functionalities such as snapshots, LUN masking, and remote mirroring.

• Disk array enclosure (DAE)—Provides additional storage and is attached

to the disk processor enclosure.

• Standby power supply (SPS)—Provides backup power to protect the

integrity of the disk processor write cache. The SPS is connected to the
disk processor enclosure.

Page 11

Table 1-3 lists hardware requirements for the disk processor enclosure (DPE),
DAE, and SPS.

Table 1-3. Dell/EMC Storage System Requirements

| Storage System | Minimum Required Storage | Possible Storage Expansion | SPS |
| --- | --- | --- | --- |
| AX4-5 | 1 DPE with at least 4 and up to 12 hard drives | Up to 3 DAE with a maximum of 12 hard drives each | First is required, the second SPS is optional |

NOTE: Ensure that the core software version running on the storage system is
supported by Dell. For specific version requirements, see the Dell Cluster
Configuration Support Matrices located on the Dell High Availability Clustering
website at dell.com/ha.
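The drive counts in Table 1-3 imply a simple upper bound on the number of hard drives in one AX4-5 storage system. The sketch below only restates that arithmetic; the constant and function names are illustrative and not part of any Dell or EMC software.

```python
# Limits taken from Table 1-3; the names are illustrative.
DPE_MIN_DRIVES = 4
DPE_MAX_DRIVES = 12
MAX_DAES = 3
DAE_MAX_DRIVES = 12


def max_drives(daes):
    """Largest drive count for one DPE plus `daes` expansion enclosures."""
    if not 0 <= daes <= MAX_DAES:
        raise ValueError("Table 1-3 allows 0 to 3 DAEs")
    return DPE_MAX_DRIVES + daes * DAE_MAX_DRIVES


if __name__ == "__main__":
    for daes in range(MAX_DAES + 1):
        print(daes, "DAE(s):", max_drives(daes), "drives at most")
```

With no DAE the ceiling is the 12 drives of the DPE itself, and each DAE adds up to 12 more.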

Supported Cluster Configurations

Direct-Attached Cluster

In a direct-attached cluster, all nodes of the cluster are directly attached to a

single storage system. In this configuration, the RAID controllers (or storage

processors) on the storage systems are connected by cables directly to the

Fibre Channel HBA ports in the nodes.

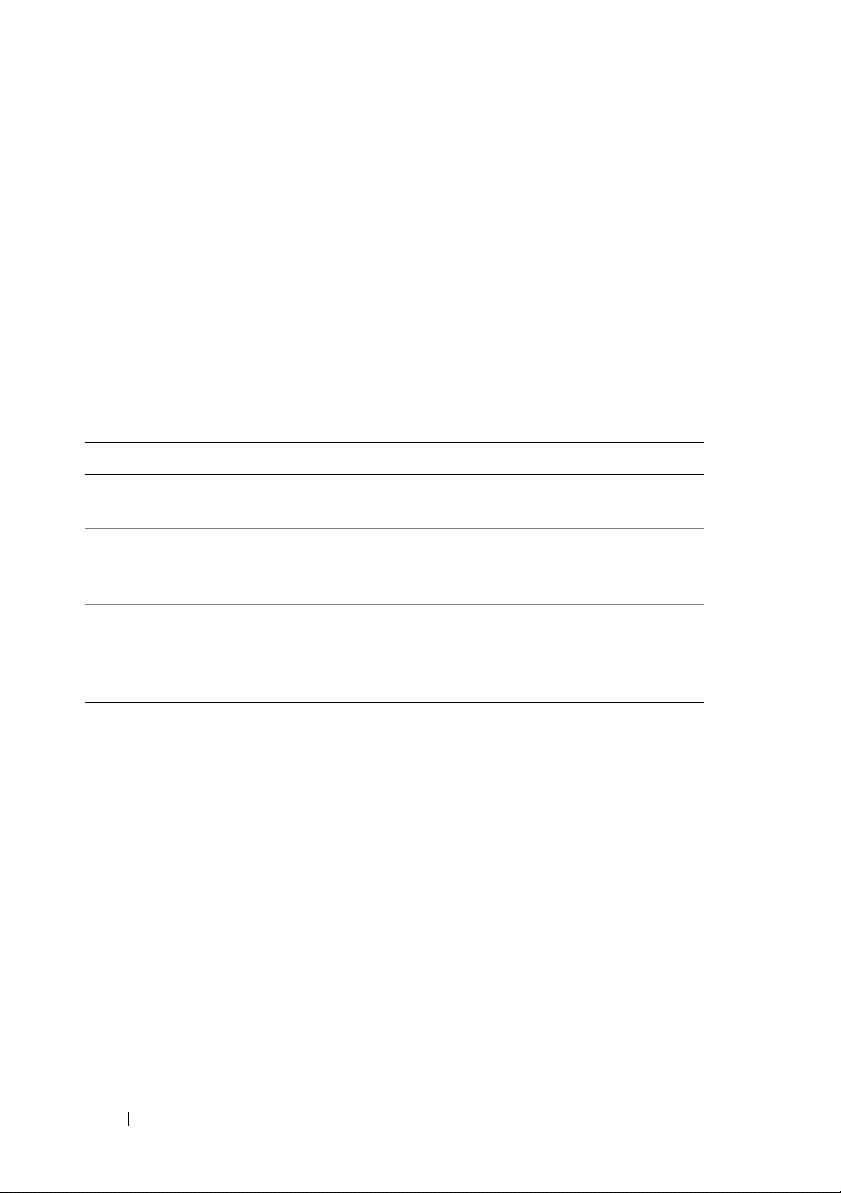

Figure 1-1 shows a basic direct-attached, single-cluster configuration.


Page 12

Figure 1-1. Direct-Attached, Single-Cluster Configuration

[Diagram labels: cluster node (2), public network, private network, Fibre Channel connections, storage system]

EMC® PowerPath® Limitations in a Direct-Attached Cluster

EMC PowerPath provides failover capabilities and multiple path detection as

well as dynamic load balancing between multiple ports on the same storage

processor. However, direct-attached clusters supported by Dell connect to a

single port on each storage processor in the storage system. Because of the

single port limitation, PowerPath can provide only failover protection, not

load balancing, in a direct-attached configuration.
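The limitation described above follows from how many ports on each storage processor a node can reach. The following sketch models only that reasoning; it does not call PowerPath, and the function name and feature strings are assumptions made for illustration.

```python
def expected_powerpath_features(ports_per_sp):
    """Features this guide attributes to PowerPath for a node that reaches
    `ports_per_sp` ports on each storage processor (illustrative model only)."""
    if ports_per_sp < 1:
        raise ValueError("a node needs at least one port on each storage processor")
    features = {"failover"}
    if ports_per_sp > 1:
        # Load balancing is described as operating between multiple ports
        # on the same storage processor.
        features.add("load balancing")
    return features


if __name__ == "__main__":
    print(sorted(expected_powerpath_features(1)))  # direct-attached case
    print(sorted(expected_powerpath_features(2)))
```

In the direct-attached configurations supported by Dell, `ports_per_sp` is 1, so only failover protection applies.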

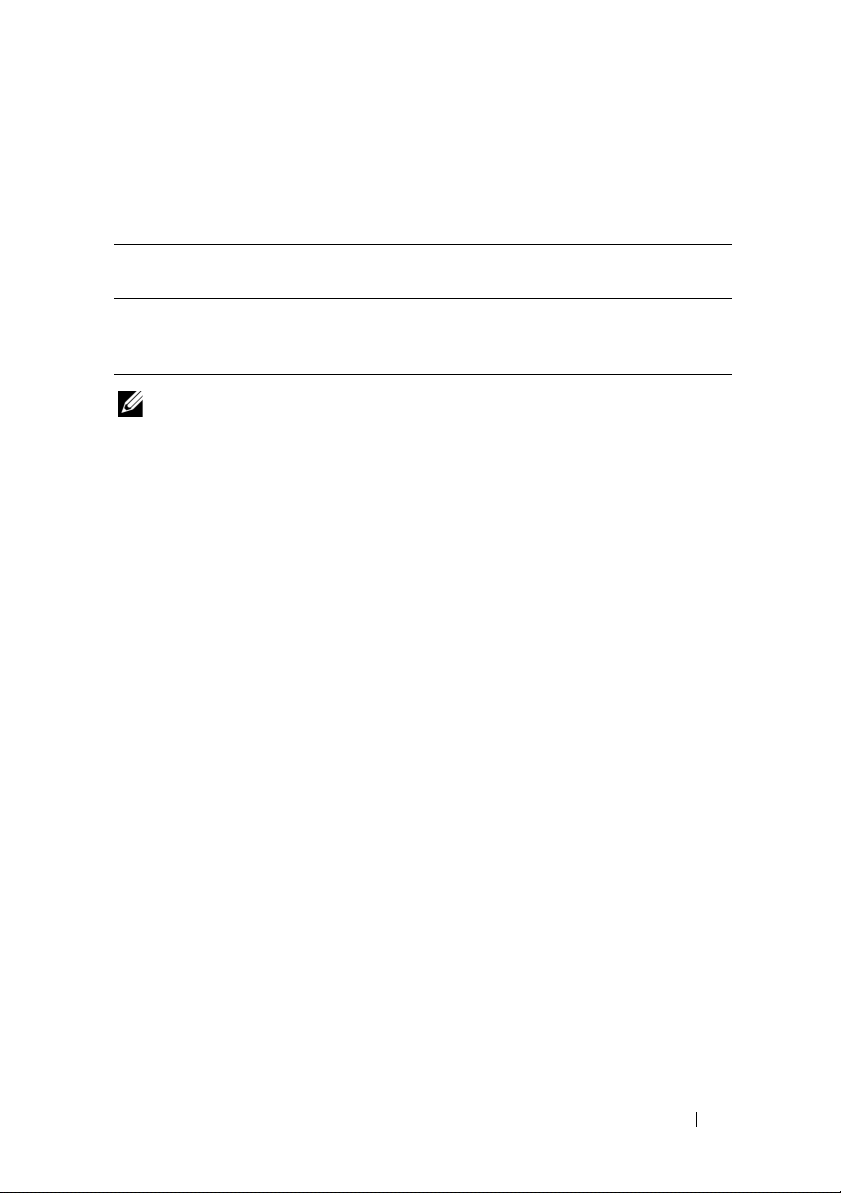

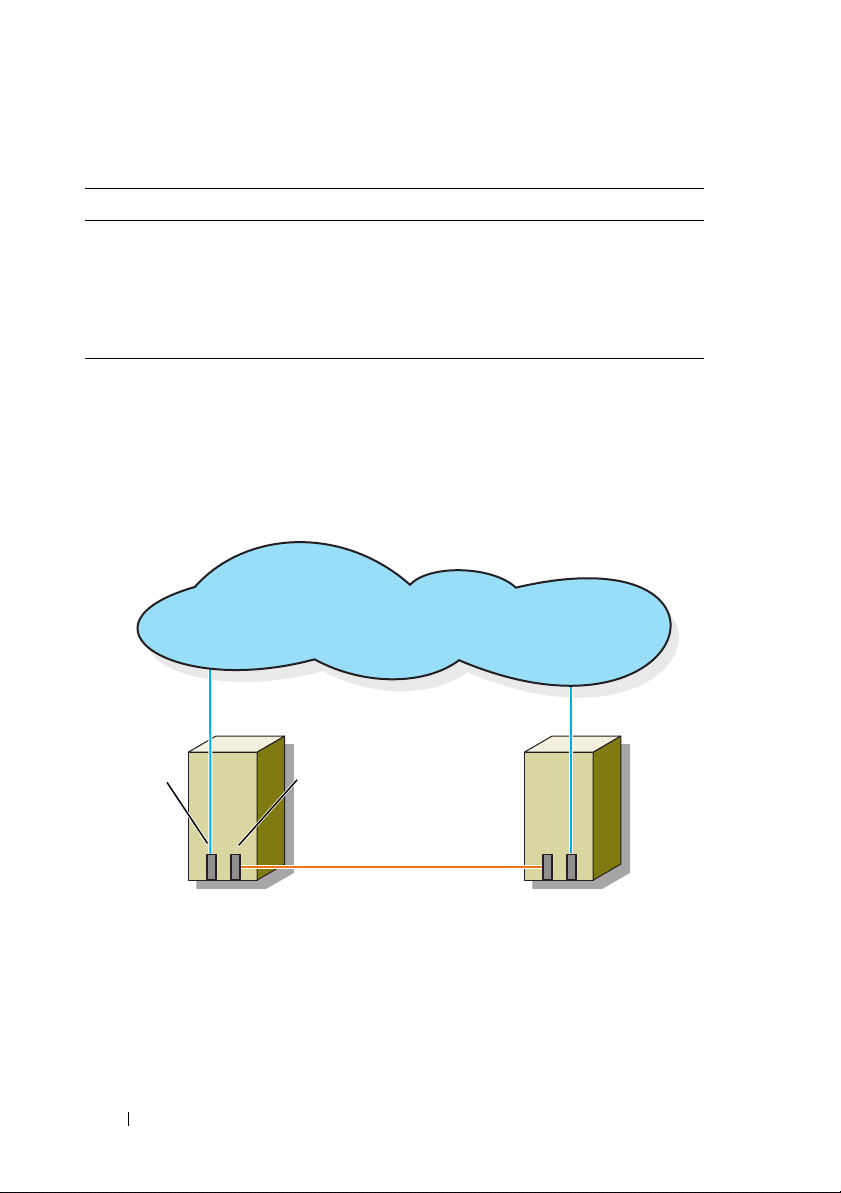

SAN-Attached Cluster

In a SAN-attached cluster, all of the nodes are attached to a single storage

system or to multiple storage systems through a SAN using redundant switch

fabrics. SAN-attached clusters are superior to direct-attached clusters in

configuration flexibility, expandability, and performance.

Figure 1-2 shows a SAN-attached cluster.


Page 13

Figure 1-2. SAN-Attached Cluster

[Diagram labels: cluster node (2), public network, private network, Fibre Channel connections, Fibre Channel switch (2), storage system]

Other Documents You May Need

CAUTION: The safety information that is shipped with your system provides

important safety and regulatory information. Warranty information may be

included within this document or as a separate document.

NOTE: To configure Dell blade server modules in a Dell PowerEdge cluster, see the
Using Dell Blade Servers in a Dell PowerEdge High Availability Cluster document
located on the Dell Support website at support.dell.com/manuals.

• The Rack Installation Guide included with your rack solution describes
how to install your system into a rack.
• The Getting Started Guide provides an overview of initially setting up your
system.
• The Dell Failover Clusters with Microsoft Windows Server 2003 Installation
and Troubleshooting Guide provides more information on deploying your
cluster with the Windows Server 2003 operating system.
• The Dell Failover Clusters with Microsoft Windows Server 2008 Installation
and Troubleshooting Guide provides more information on deploying your
cluster with the Windows Server 2008 operating system.

Page 14

• The Dell Cluster Configuration Support Matrices provide a list of
recommended operating systems, hardware components, and driver or
firmware versions for your Dell Windows Server Failover Cluster.

• The HBA documentation provides installation instructions for the HBAs.

• Systems management software documentation describes the features,

requirements, installation, and basic operation of the software.

• Operating system documentation describes how to install (if necessary),

configure, and use the operating system software.

• The Dell PowerVault™ tape library documentation provides information

for installing, troubleshooting, and upgrading the tape library.

• The EMC PowerPath documentation that came with your HBA kit(s) and

Dell/EMC Storage Enclosure User’s Guides.

• Updates are sometimes included with the system to describe changes to

the system, software, and/or documentation.

NOTE: Always read the updates first because they often supersede

information in other documents.

• Release notes or readme files may be included to provide last-minute

updates to the system or documentation, or advanced technical reference

material intended for experienced users or technicians.


Page 15

Cabling Your Cluster Hardware

NOTE: To configure Dell blade server modules in a Dell™ PowerEdge™ cluster, see

the Using Dell Blade Servers in a Dell PowerEdge High Availability Cluster

document located on the Dell Support website at support.dell.com/manuals.

Cabling the Mouse, Keyboard, and Monitor

When installing a cluster configuration in a rack, you must include a switch

box to connect the mouse, keyboard, and monitor to the nodes. See the

documentation included with your rack for instructions on cabling each

node’s connections to the switch box.

Cabling the Power Supplies

Refer to the documentation for each component in your cluster solution to

ensure that the specific power requirements are satisfied.

The following guidelines are recommended to protect your cluster solution

from power-related failures:

• For nodes with multiple power supplies, plug each power supply into a

separate AC circuit.

• Use uninterruptible power supplies (UPS).

• For some environments, consider having backup generators and power

from separate electrical substations.

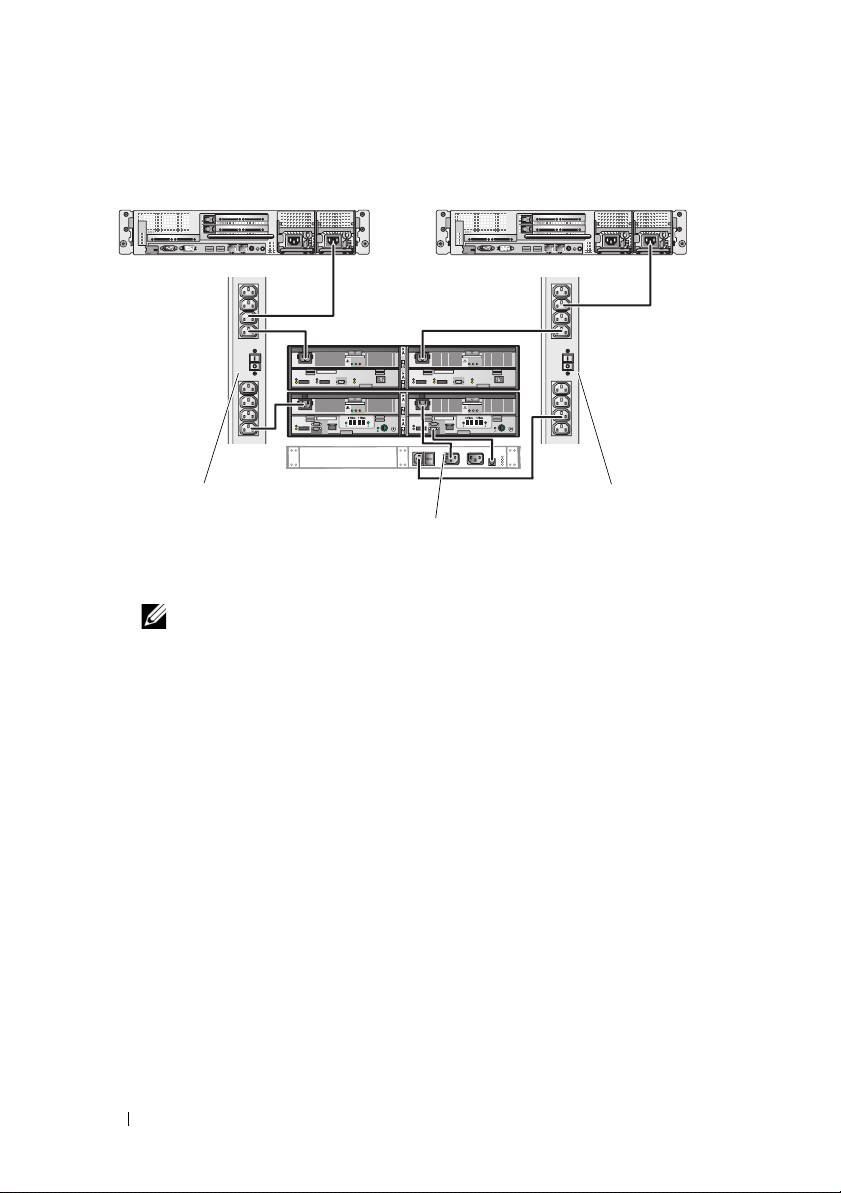

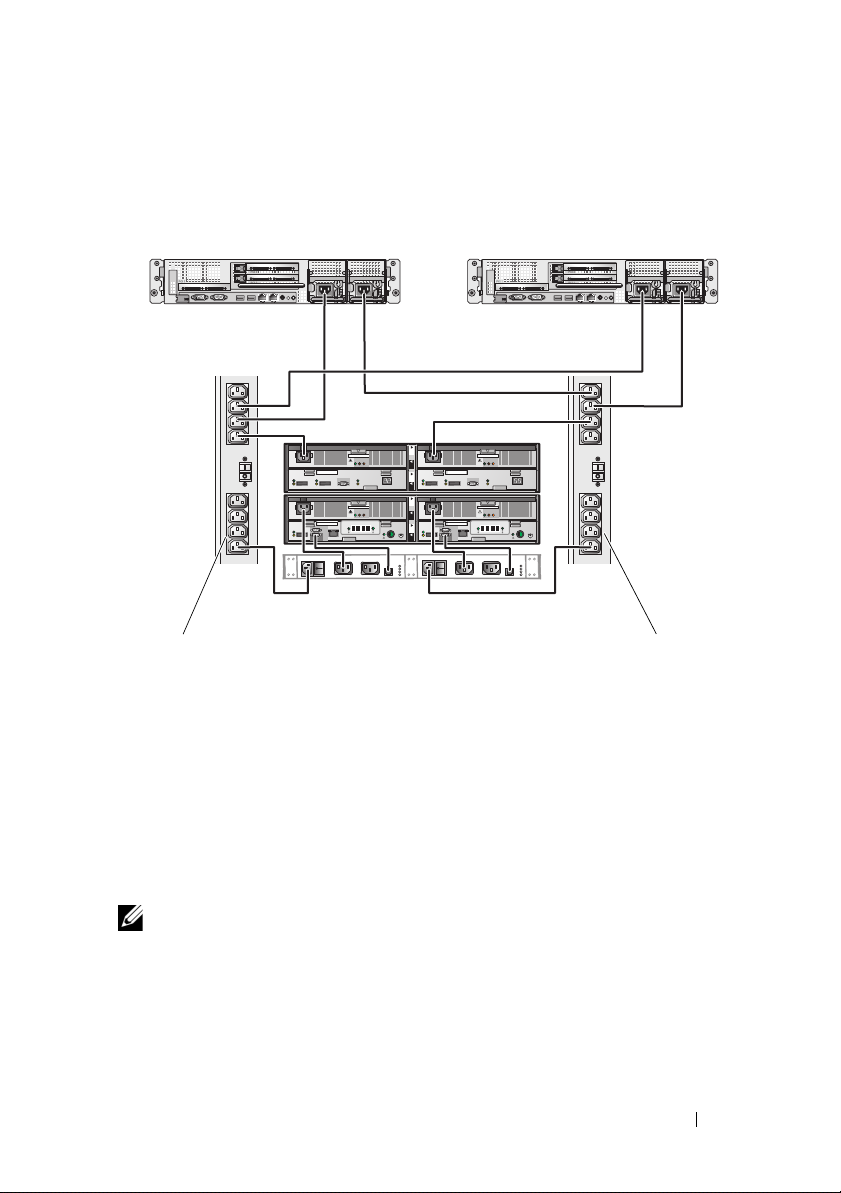

Figure 2-1 and Figure 2-2 illustrate recommended methods for power cabling
for a cluster solution consisting of two PowerEdge systems and two storage
systems. To ensure redundancy, the primary power supplies of all the
components are grouped onto one or two circuits and the redundant power
supplies are grouped onto a different circuit.
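One way to review a planned power layout against these guidelines is to list, for each component, the circuit that feeds each of its power supplies and flag any component that depends on a single circuit. The sketch below assumes a hypothetical dictionary format and hypothetical circuit names; it is a planning aid, not a Dell utility.

```python
def single_circuit_components(power_map):
    """Return components whose power supplies do not span two or more circuits.

    power_map maps a component name to a list of circuit names,
    one entry per power supply (hypothetical input format).
    """
    return sorted(
        name for name, circuits in power_map.items() if len(set(circuits)) < 2
    )


if __name__ == "__main__":
    plan = {
        "node 1": ["circuit A", "circuit B"],
        "node 2": ["circuit A", "circuit A"],  # both supplies on one circuit
        "storage system": ["circuit A", "circuit B"],
    }
    print(single_circuit_components(plan))
```

A component with only one power supply is always reported, because a single supply cannot be split across circuits.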


Page 16

Figure 2-1. Power Cabling Example With One Power Supply in the PowerEdge Systems
and One Standby Power Supply (SPS) in an AX4-5 Storage System

[Diagram labels: primary power supplies on one AC power strip (or on one AC PDU [not shown]); redundant power supplies on one AC power strip (or on one AC PDU [not shown]); SPS]

NOTE: This illustration is intended only to demonstrate the power
distribution of the components.

Page 17

Figure 2-2. Power Cabling Example With Two Power Supplies in the PowerEdge
Systems and Two SPS(s) in an AX4-5 Storage System

[Diagram labels: primary power supplies on one AC power strip (or on one AC PDU [not shown]); redundant power supplies on one AC power strip (or on one AC PDU [not shown])]

Cabling Your Cluster for Public and Private Networks

The network adapters in the cluster nodes provide at least two network

connections for each node, as described in Table 2-1.

NOTE: To configure Dell blade server modules in a Dell PowerEdge cluster, see the

Using Dell Blade Servers in a Dell PowerEdge High Availability Cluster document

located on the Dell Support website at support.dell.com/manuals.


Page 18

Table 2-1. Network Connections

| Network Connection | Description |
| --- | --- |
| Public network | All connections to the client LAN. At least one public network must be configured for Mixed mode for private network failover. |
| Private network | A dedicated connection for sharing cluster health and status information only. |

Figure 2-3 shows an example of cabling in which dedicated network adapters
in each node are connected to each other (for the private network) and the
remaining network adapters are connected to the public network.

Figure 2-3. Example of Network Cabling Connection

[Diagram labels: cluster node 1, cluster node 2, public network, private network, public network adapter, private network adapter]

Page 19

Cabling the Public Network

Any network adapter supported by a system running TCP/IP may be used to

connect to the public network segments. You can install additional network

adapters to support additional public network segments or to provide

redundancy in the event of a faulty primary network adapter or switch port.

Cabling the Private Network

The private network connection to the nodes is provided by a different

network adapter in each node. This network is used for intra-cluster

communications. Table 2-2 describes two possible private network

configurations.

Table 2-2. Private Network Hardware Components and Connections

| Method | Hardware Components | Connection |
| --- | --- | --- |
| Network switch | Gigabit or 10 Gigabit Ethernet network adapters and switches | Depending on the hardware, connect the CAT5e or CAT6 cables, the multimode optical cables with LC (Local Connector) connectors, or the twin-ax cables from the network adapters in the nodes to a switch. |
| Point-to-Point (two-node clusters only) | Gigabit or 10 Gigabit Ethernet network adapters with RJ-45 connectors | Connect a standard CAT5e or CAT6 Ethernet cable between the network adapters in both nodes. |
| | 10 Gigabit Ethernet network adapters with SFP+ connectors | Connect a twin-ax cable between the network adapters in both nodes. |
| | Optical Gigabit or 10 Gigabit Ethernet network adapters with LC connectors | Connect a multi-mode optical cable between the network adapters in both nodes. |

NOTE: For more information on the supported cable types, see your system or NIC

documentation.


Page 20

Using Dual-Port Network Adapters

You can configure your cluster to use the public network as a failover for

private network communications. If dual-port network adapters are used, do

not use both ports simultaneously to support both the public and private

networks.

NIC Teaming

NIC teaming combines two or more NICs to provide load balancing and fault

tolerance. Your cluster supports NIC teaming, but only in a public network;

NIC teaming is not supported in a private network.

You should use the same brand of NICs in a team, and you cannot mix brands

of teaming drivers.
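The dual-port and NIC teaming rules above can be checked against a planned network layout. The following sketch assumes a hypothetical `Port` record and hypothetical adapter names; it encodes only the two rules stated in this section and does not query any operating system or driver.

```python
from dataclasses import dataclass


@dataclass
class Port:
    adapter: str          # physical adapter the port belongs to (hypothetical name)
    network: str          # "public" or "private"
    teamed: bool = False  # True if the port is a member of a NIC team


def rule_violations(ports):
    """Return messages for ports that break the rules in this section."""
    problems = []
    networks_by_adapter = {}
    for port in ports:
        if port.teamed and port.network == "private":
            problems.append(
                f"{port.adapter}: NIC teaming is not supported in a private network"
            )
        networks_by_adapter.setdefault(port.adapter, set()).add(port.network)
    for adapter, networks in sorted(networks_by_adapter.items()):
        if {"public", "private"} <= networks:
            problems.append(
                f"{adapter}: one adapter carries both public and private networks"
            )
    return problems


if __name__ == "__main__":
    layout = [Port("nic1", "public", teamed=True), Port("nic2", "private")]
    print(rule_violations(layout))
```

An empty list means the layout does not break either rule; it does not confirm that the adapters or teaming drivers themselves are supported.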

Cabling the Storage Systems

This section provides information for connecting your cluster to a storage

system in a direct-attached configuration, or to one or more storage systems

in a SAN-attached configuration.

Cabling Storage for Your Direct-Attached Cluster

NOTE: Ensure that the management port on each storage processor is connected

to the storage server with the management station, using an Ethernet network

cable.

A direct-attached cluster configuration consists of redundant Fibre Channel

host bus adapter (HBA) ports cabled directly to a Dell/EMC storage system.

Direct-attached configurations are self-contained and do not share any

physical resources with other server or storage systems outside of the cluster.

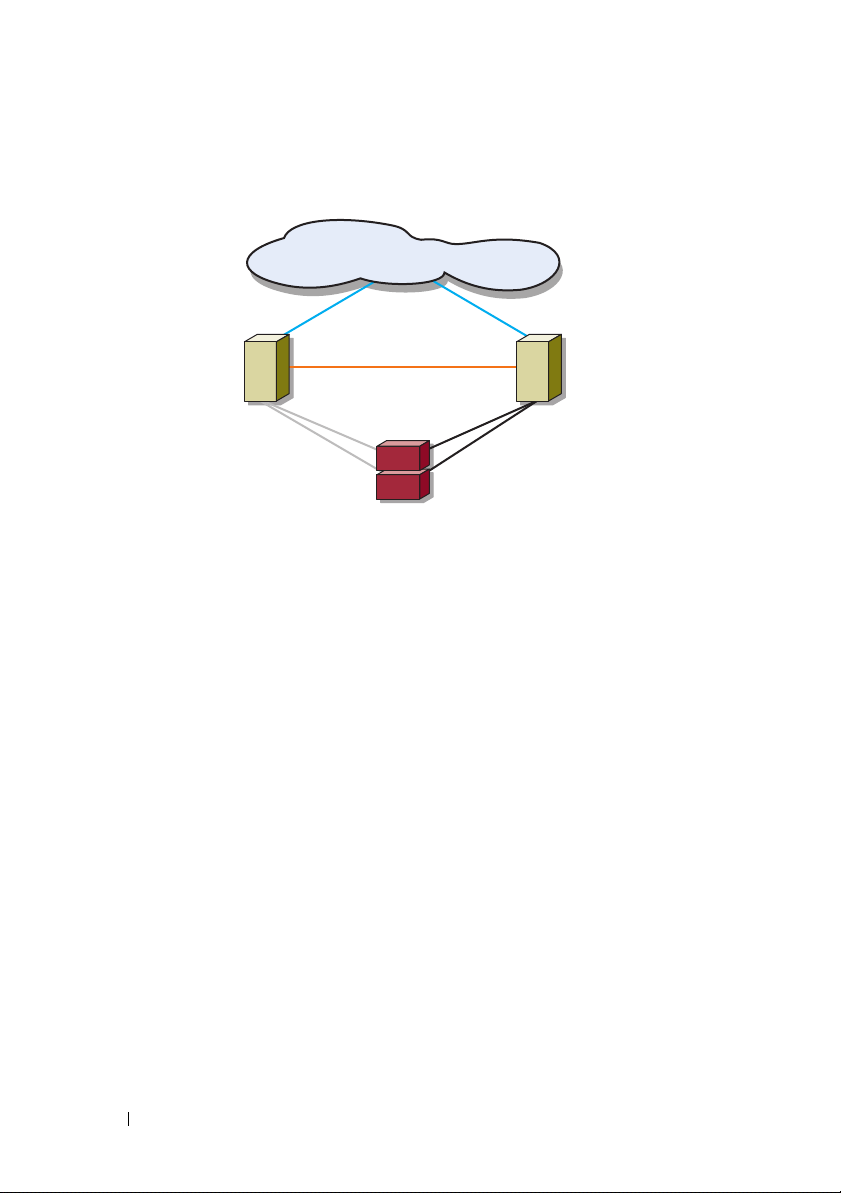

Figure 2-4 shows an example of a direct-attached, single cluster configuration

with redundant HBA ports installed in each cluster node.


Page 21

Figure 2-4. Direct-Attached Cluster Configuration

[Diagram labels: cluster node (2), public network, private network, Fibre Channel connections, storage system]

Each cluster node attaches to the storage system using two multi-mode

optical cables with LC connectors that attach to the HBA ports in the cluster

nodes and the storage processor (SP) ports in the Dell/EMC storage system.

These connectors consist of two individual Fibre optic connectors with

indexed tabs that must be aligned properly into the HBA ports and SP ports.

WARNING: Do not remove the connector covers until you are ready to insert the

connectors into the HBA port, SP port, or tape library port.

Cabling a Two-Node Cluster to an AX4-5F Storage System

NOTE: The connections listed in this section are representative of one proven

method of ensuring redundancy in the connections between the cluster nodes and

the storage system. Other methods that achieve the same type of redundant

connectivity may be acceptable.

1 Connect cluster node 1 to the storage system.
   a Install a cable from cluster node 1 HBA port 0 to SP-A Fibre port 0
     (first fibre port).
   b Install a cable from cluster node 1 HBA port 1 to SP-B Fibre port 0
     (first fibre port).
2 Connect cluster node 2 to the storage system.

Page 22

   a Install a cable from cluster node 2 HBA port 0 to SP-A Fibre port 1
     (second fibre port).
   b Install a cable from cluster node 2 HBA port 1 to SP-B Fibre port 1
     (second fibre port).

Figure 2-5 and Figure 2-6 illustrate the method of cabling a two-node
direct-attached cluster to an AX4-5F and AX4-5FX storage system
respectively.

NOTE: The cables are connected to the storage processor ports in sequential
order for illustrative purposes. While the available ports in your storage system may
vary, HBA port 0 and HBA port 1 must be connected to SP-A and SP-B, respectively.

Figure 2-5. Cabling a Two-Node Cluster to an AX4-5F Storage System

[Diagram labels: cluster node 1, cluster node 2, HBA ports (2) on each node, SP-A, SP-B, AX4-5F storage system]

Page 23

Figure 2-6. Cabling a Two-node Cluster to an AX4-5FX Storage System

[Diagram labels: HBA ports (2) on each node, SP-A, SP-B, AX4-5FX storage system]

Cabling a Four-Node Cluster to a Dell/EMC AX4-5FX Storage System

You can configure a 4-node cluster in a direct-attached configuration using a

Dell/EMC AX4-5FX storage system:

1 Connect cluster node 1 to the storage system:
   a Install a cable from cluster node 1 HBA port 0 to the first front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 1 HBA port 1 to the first front-end
     fibre channel port on SP-B.
2 Connect cluster node 2 to the storage system:
   a Install a cable from cluster node 2 HBA port 0 to the second front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 2 HBA port 1 to the second front-end
     fibre channel port on SP-B.
3 Connect cluster node 3 to the storage system:
   a Install a cable from cluster node 3 HBA port 0 to the third front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 3 HBA port 1 to the third front-end
     fibre channel port on SP-B.
4 Connect cluster node 4 to the storage system:

Page 24

   a Install a cable from cluster node 4 HBA port 0 to the fourth front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 4 HBA port 1 to the fourth front-end
     fibre channel port on SP-B.
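The direct-attached steps follow one pattern: node N uses the Nth front-end port on each storage processor, with HBA port 0 cabled to SP-A and HBA port 1 to SP-B. The sketch below generates that cable list. The function name is illustrative, and the zero-based port labels assume the numbering used in the two-node procedure (Fibre port 0 is the first fibre port).

```python
def direct_attach_plan(node_count, ports_per_sp):
    """List (from, to) cable runs for a direct-attached cluster.

    ports_per_sp is 2 for an AX4-5F and 4 for an AX4-5FX, as stated in
    the Introduction.
    """
    if not 1 <= node_count <= ports_per_sp:
        raise ValueError("each node needs its own port on every storage processor")
    plan = []
    for node in range(1, node_count + 1):
        sp_port = node - 1  # first node uses Fibre port 0, and so on
        plan.append((f"node {node} HBA port 0", f"SP-A Fibre port {sp_port}"))
        plan.append((f"node {node} HBA port 1", f"SP-B Fibre port {sp_port}"))
    return plan


if __name__ == "__main__":
    for source, target in direct_attach_plan(node_count=4, ports_per_sp=4):
        print(source, "->", target)
```

This reproduces the sequential port order shown in the procedures; other port assignments that keep HBA port 0 on SP-A and HBA port 1 on SP-B may be equally valid.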

Cabling 2 Two-Node Clusters to a Dell/EMC AX4-5FX Storage System

The following steps are an example of how to cable two two-node clusters to a
Dell/EMC AX4-5FX storage system:

1 In the first cluster, connect cluster node 1 to the storage system:
   a Install a cable from cluster node 1 HBA port 0 to the first front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 1 HBA port 1 to the first front-end
     fibre channel port on SP-B.
2 In the first cluster, connect cluster node 2 to the storage system:
   a Install a cable from cluster node 2 HBA port 0 to the second front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 2 HBA port 1 to the second front-end
     fibre channel port on SP-B.
3 In the second cluster, connect cluster node 1 to the storage system:
   a Install a cable from cluster node 1 HBA port 0 to the third front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 1 HBA port 1 to the third front-end
     fibre channel port on SP-B.
4 In the second cluster, connect cluster node 2 to the storage system:
   a Install a cable from cluster node 2 HBA port 0 to the fourth front-end
     fibre channel port on SP-A.
   b Install a cable from cluster node 2 HBA port 1 to the fourth front-end
     fibre channel port on SP-B.
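When several clusters share one storage system, two things are easy to get wrong: reusing a storage processor port and leaving a node attached to only one storage processor. The following sketch checks a cable list for both. The tuple layout is a hypothetical format chosen for this example, and the port numbers are zero-based.

```python
def check_cabling(cables):
    """Check (cluster, node, hba_port, sp, sp_port) tuples for two mistakes:
    a storage processor port cabled twice, or a node missing SP-A or SP-B."""
    problems = []
    used_ports = set()
    sps_by_node = {}
    for cluster, node, hba_port, sp, sp_port in cables:
        if (sp, sp_port) in used_ports:
            problems.append(f"{sp} port {sp_port} is cabled more than once")
        used_ports.add((sp, sp_port))
        sps_by_node.setdefault((cluster, node), set()).add(sp)
    for (cluster, node), sps in sorted(sps_by_node.items()):
        if sps != {"SP-A", "SP-B"}:
            problems.append(
                f"cluster {cluster} node {node} does not reach both storage processors"
            )
    return problems


if __name__ == "__main__":
    # The example procedure above, written out as data.
    cables = [
        (1, 1, 0, "SP-A", 0), (1, 1, 1, "SP-B", 0),
        (1, 2, 0, "SP-A", 1), (1, 2, 1, "SP-B", 1),
        (2, 1, 0, "SP-A", 2), (2, 1, 1, "SP-B", 2),
        (2, 2, 0, "SP-A", 3), (2, 2, 1, "SP-B", 3),
    ]
    print(check_cabling(cables))
```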

Page 25

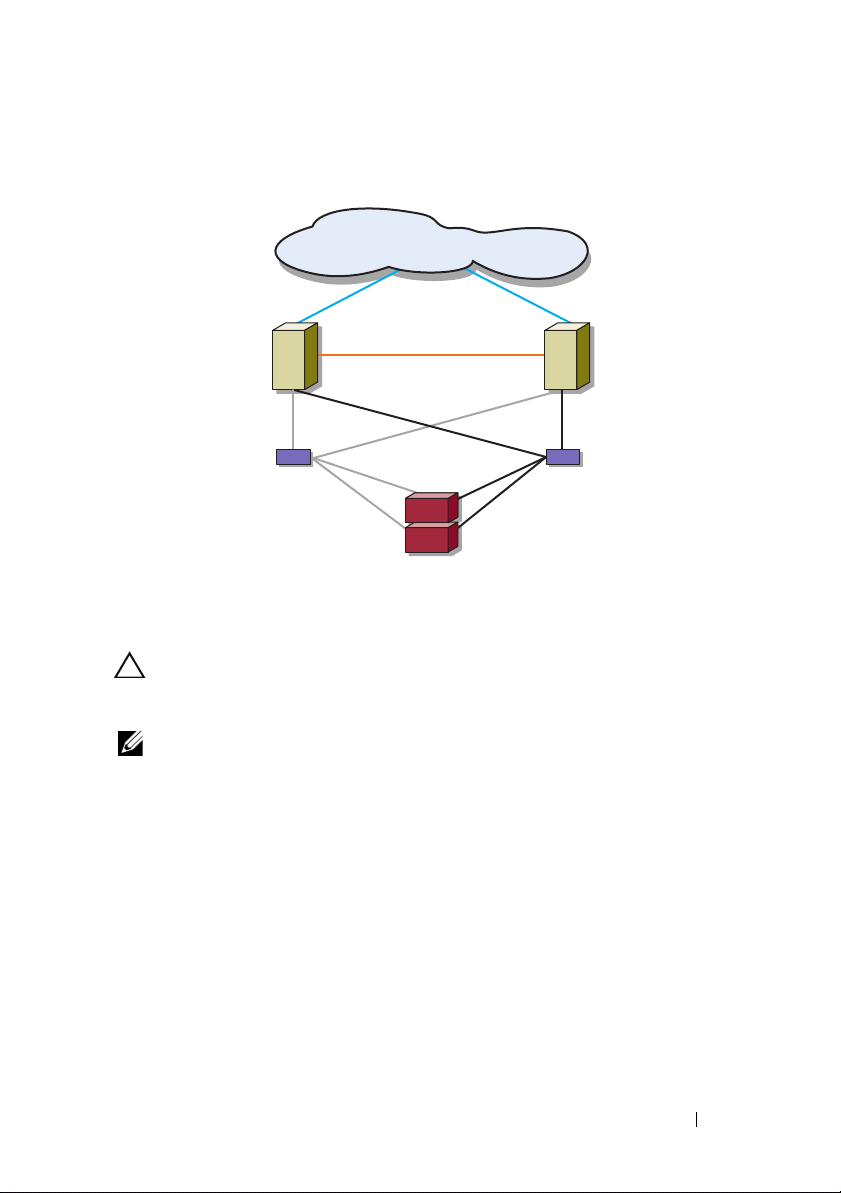

Cabling Storage for Your SAN-Attached Cluster

A SAN-attached cluster is a cluster configuration where all cluster nodes are
attached to a single storage system or to multiple storage systems through a
SAN using a redundant switch fabric.

SAN-attached cluster configurations provide more flexibility, expandability,
and performance than direct-attached configurations.

Figure 2-7 shows an example of a two-node, SAN-attached cluster.
Figure 2-8 shows an example of an eight-node, SAN-attached cluster.

Similar cabling concepts can be applied to clusters that contain a different
number of nodes.

NOTE: The connections listed in this section are representative of one proven
method of ensuring redundancy in the connections between the cluster nodes and
the storage system. Other methods that achieve the same type of redundant
connectivity may be acceptable.

Figure 2-7. Two-Node SAN-Attached Cluster

[Diagram labels: cluster node (2), public network, private network, Fibre Channel connections, Fibre Channel switch (2), storage system]

Page 26

Figure 2-8. Eight-Node SAN-Attached Cluster

[Diagram labels: cluster nodes (8), public network, private network, Fibre Channel switch (2), storage system]

Each HBA port is cabled to a port on a Fibre Channel switch. One or more

cables connect from the outgoing ports on a switch to a storage processor on a

Dell/EMC storage system.


Page 27

Cabling a SAN-Attached Cluster to an AX4-5F Storage System

1 Connect cluster node 1 to the SAN.
   a Connect a cable from HBA port 0 to Fibre Channel switch 0 (sw0).
   b Connect a cable from HBA port 1 to Fibre Channel switch 1 (sw1).
2 Repeat step 1 for each cluster node.
3 Connect the storage system to the SAN.
   a Connect a cable from Fibre Channel switch 0 (sw0) to SP-A Fibre
     port 0 (first fibre port).
   b Connect a cable from Fibre Channel switch 0 (sw0) to SP-B Fibre
     port 1 (second fibre port).
   c Connect a cable from Fibre Channel switch 1 (sw1) to SP-A Fibre
     port 1 (second fibre port).
   d Connect a cable from Fibre Channel switch 1 (sw1) to SP-B Fibre
     port 0 (first fibre port).
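The AX4-5F procedure above connects each switch to both storage processors, so the loss of one switch should not cut off either storage processor. The sketch below encodes step 3 as data and checks that property; the variable and function names are illustrative.

```python
# Switch-to-storage links from step 3 of the AX4-5F procedure.
SWITCH_TO_SP = {
    "sw0": [("SP-A", 0), ("SP-B", 1)],
    "sw1": [("SP-A", 1), ("SP-B", 0)],
}


def reachable_sps(failed_switch=None):
    """Storage processors still reachable when `failed_switch` is down."""
    return {
        sp
        for switch, links in SWITCH_TO_SP.items()
        if switch != failed_switch
        for sp, _port in links
    }


if __name__ == "__main__":
    for failed in (None, "sw0", "sw1"):
        print(failed, sorted(reachable_sps(failed)))
```

Because each node also has one HBA port on each switch (step 1), a node keeps a path to both storage processors through the surviving switch.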

Page 28

Figure 2-9. Cabling a SAN-Attached Cluster to an AX4-5F Storage System

[Diagram labels: cluster node 1, cluster node 2, HBA ports (2) on each node, sw0, sw1, SP-A, SP-B, AX4-5F storage system]

Cabling a SAN-Attached Cluster to an AX4-5FX Storage System

1 Connect cluster node 1 to the SAN.
   a Connect a cable from HBA port 0 to Fibre Channel switch 0 (sw0).
   b Connect a cable from HBA port 1 to Fibre Channel switch 1 (sw1).
2 Repeat step 1 for each cluster node.
3 Connect the storage system to the SAN.
   a Connect a cable from Fibre Channel switch 0 (sw0) to SP-A Fibre port
     0 (first fibre port).
   b Connect a cable from Fibre Channel switch 0 (sw0) to SP-A Fibre port
     1 (second fibre port).
   c Connect a cable from Fibre Channel switch 0 (sw0) to SP-B Fibre port
     2 (third fibre port).

Page 29

   d Connect a cable from Fibre Channel switch 0 (sw0) to SP-B Fibre port
     3 (fourth fibre port).
   e Connect a cable from Fibre Channel switch 1 (sw1) to SP-A Fibre port
     2 (third fibre port).
   f Connect a cable from Fibre Channel switch 1 (sw1) to SP-A Fibre port
     3 (fourth fibre port).
   g Connect a cable from Fibre Channel switch 1 (sw1) to SP-B Fibre port
     0 (first fibre port).
   h Connect a cable from Fibre Channel switch 1 (sw1) to SP-B Fibre port
     1 (second fibre port).

Figure 2-10. Cabling a SAN-Attached Cluster to an AX4-5FX Storage System

[Diagram labels: cluster node 1, cluster node 2, HBA ports (2) on each node, sw0, sw1, SP-A, SP-B, AX4-5FX storage system]
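With the AX4-5FX cabling above, each switch has two links to each storage processor. The sketch below enumerates the physical paths from one node to the storage system, before any zoning is applied. The data simply restates steps 1 and 3; the names are illustrative.

```python
# Node-to-switch cabling from step 1 and switch-to-storage cabling from step 3.
HBA_TO_SWITCH = {0: "sw0", 1: "sw1"}
SWITCH_TO_SP_PORTS = {
    "sw0": [("SP-A", 0), ("SP-A", 1), ("SP-B", 2), ("SP-B", 3)],
    "sw1": [("SP-A", 2), ("SP-A", 3), ("SP-B", 0), ("SP-B", 1)],
}


def node_paths():
    """All (hba_port, switch, sp, sp_port) paths available to one node."""
    return [
        (hba_port, switch, sp, sp_port)
        for hba_port, switch in HBA_TO_SWITCH.items()
        for sp, sp_port in SWITCH_TO_SP_PORTS[switch]
    ]


if __name__ == "__main__":
    for path in node_paths():
        print(path)
```

Zoning on the switches determines which of these physical paths a node can actually use.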

Page 30

Cabling Multiple SAN-Attached Clusters to a Dell/EMC Storage System

To cable multiple clusters to the storage system, connect the cluster nodes to

the appropriate Fibre Channel switches and then connect the Fibre Channel

switches to the appropriate storage processors on the processor enclosure.

See the Dell Cluster Configuration Support Matrices located on the Dell High

Availability Clustering website at dell.com/ha for rules and guidelines for

SAN-attached clusters.

NOTE: The following procedure uses Figure 2-9 as an example for cabling

additional clusters.

Cabling Multiple SAN-Attached Clusters to the AX4-5 Storage System

1

In the first cluster, connect cluster node 1 to the SAN.

a

Connect a cable from HBA port 0 to Fibre Channel switch 0 (sw0).

b

Connect a cable from HBA port 1 to Fibre Channel switch 1 (sw1).

2

In the first cluster, repeat step 1 for each node.

3

For each additional cluster, repeat step 1 and step 2.

4

Connect the storage system to the SAN.

a

Connect a cable from Fibre Channel switch 0 (sw0) to SP-A Fibre port 0 (first fibre port).

b

Connect a cable from Fibre Channel switch 0 (sw0) to SP-B Fibre port 1 (second fibre port).

c

Connect a cable from Fibre Channel switch 1 (sw1) to SP-A Fibre port 1 (second fibre port).

d

Connect a cable from Fibre Channel switch 1 (sw1) to SP-B Fibre port 0 (first fibre port).
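The switch-to-storage cabling in step 4 can be written down as a small table and checked for redundancy before any cables are connected. The following Python sketch is illustrative only: the CABLES list mirrors the four connections in step 4, and the function name single_points_of_failure is an assumption made for this example, not part of any Dell or EMC tool. It reports any switch whose failure would leave a storage processor unreachable.

```python
# Switch-to-SP cabling from step 4 (a-d): (switch, storage processor, SP port).
CABLES = [
    ("sw0", "SP-A", 0),
    ("sw0", "SP-B", 1),
    ("sw1", "SP-A", 1),
    ("sw1", "SP-B", 0),
]


def single_points_of_failure(cables):
    """Return the switches whose loss would cut off at least one SP."""
    switches = {sw for sw, _, _ in cables}
    sps = {sp for _, sp, _ in cables}
    risky = []
    for failed in sorted(switches):
        # SPs still reachable through the switches that remain.
        reachable = {sp for sw, sp, _ in cables if sw != failed}
        if reachable != sps:
            risky.append(failed)
    return risky


if __name__ == "__main__":
    # An empty list means every SP stays reachable if either switch fails.
    print(single_points_of_failure(CABLES))
```

An empty result indicates that each storage processor is cabled to both switches, which is the property the redundant fabric is meant to provide.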

Connecting a PowerEdge Cluster to Multiple Storage Systems

You can increase your cluster storage capacity by attaching multiple storage

systems to your cluster using a redundant switch fabric. PowerEdge cluster

systems can support configurations with multiple storage units attached to

clustered servers. In this scenario, the MSCS software can fail over disk drives

in any cluster-attached shared storage array between the cluster nodes. When

attaching multiple storage systems with your cluster, the following rules apply:

• There is a maximum of four storage systems per cluster.


Page 31

• The shared storage systems and firmware must be identical. Using dissimilar storage systems and firmware for your shared storage is not supported.

• MSCS is limited to 22 drive letters. Because drive letters A through D are reserved for local disks, a maximum of 22 drive letters (E to Z) can be used for your storage system disks.

• Windows Server 2003 and 2008 support mount points, allowing greater than 22 drives per cluster.

For more information, see the "Assigning Drive Letters and Mount Points" section of the Dell Failover Clusters with Microsoft Windows Server 2003 Installation and Troubleshooting Guide or the Dell Failover Clusters with Microsoft Windows Server 2008 Installation and Troubleshooting Guide on the Dell Support website at support.dell.com/manuals.

Figure 2-11 provides an example of cabling the cluster nodes to four Dell/EMC storage systems.

Figure 2-11. PowerEdge Cluster Nodes Cabled to Four Storage Systems

[Figure labels: private network; cluster node (2); Fibre Channel switch (2); storage systems (4)]
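The drive-letter rule in the list above is simple arithmetic: 26 letters minus the four reserved for local disks leaves 22. A minimal Python sketch of that calculation follows. The function names are hypothetical and only illustrate when mount points would be needed.

```python
import string

# Letters A through D are reserved for local disks, per the rule above.
RESERVED = set("ABCD")


def available_drive_letters():
    """Return the drive letters left for shared storage disks (E to Z)."""
    return [c for c in string.ascii_uppercase if c not in RESERVED]


def needs_mount_points(shared_disk_count):
    """True when there are more shared disks than available drive letters."""
    return shared_disk_count > len(available_drive_letters())


if __name__ == "__main__":
    letters = available_drive_letters()
    print(len(letters), letters[0], letters[-1])
    print(needs_mount_points(30))
```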


Page 32

Connecting a PowerEdge Cluster to a Tape Library

To provide additional backup for your cluster, you can add tape backup devices to your cluster configuration. The Dell PowerVault™ tape libraries may contain an integrated Fibre Channel bridge, or Storage Network Controller (SNC), that connects directly to your Dell/EMC Fibre Channel switch.

Figure 2-12 shows a supported PowerEdge cluster configuration using redundant Fibre Channel switches and a tape library. In this configuration, each of the cluster nodes can access the tape library to provide backup for your local disk resources, as well as your cluster disk resources. Using this configuration allows you to add more servers and storage systems in the future, if needed.

NOTE: While tape libraries can be connected to multiple fabrics, they do not provide path failover.

Figure 2-12. Cabling a Storage System and a Tape Library

[Figure labels: private network; cluster node (2); Fibre Channel switch (2); storage systems; tape library]

Obtaining More Information

See the storage and tape backup documentation for more information on

configuring these components.


Page 33

Configuring Your Cluster With SAN Backup

You can provide centralized backup for your clusters by sharing your SAN with multiple clusters, storage systems, and a tape library.

Figure 2-13 provides an example of cabling the cluster nodes to your storage systems and SAN backup with a tape library.

Figure 2-13. Cluster Configuration Using SAN-Based Backup

[Figure labels: cluster 1; cluster 2; Fibre Channel switch (2); storage systems; tape library]


Page 34


Page 35

Preparing Your Systems for Clustering

CAUTION: Only trained service technicians are authorized to remove and access

any of the components inside the system. See the safety information that shipped

with your system for complete information about safety precautions, working

inside the computer, and protecting against electrostatic discharge.

Cluster Configuration Overview

1

Ensure that your site can handle the cluster’s power requirements.

Contact your sales representative for information about your region's

power requirements.

2

Install the systems, the shared storage array(s), and the interconnect

switches (example: in an equipment rack), and ensure that all these

components are powered on.

NOTE: For more information on step 3 to step 7 and step 10 to step 13, see

"Preparing your systems for clustering" section of Dell Failover Clusters with

Microsoft Windows Server 2003 Installation and Troubleshooting Guide or Dell

Failover Clusters with Microsoft Windows Server 2008 Installation and

Troubleshooting Guide located on the Dell Support website at

support.dell.com/manuals.

3

Deploy the operating system (including any relevant service pack and

hotfixes), network adapter drivers, and storage adapter drivers (including

Multipath I/O (MPIO) drivers) on each of the systems that will become

cluster nodes. Depending on the deployment method that is used, it may

be necessary to provide a network connection to successfully complete this

step.

NOTE: To help in planning and deployment of your cluster, record the relevant

cluster configuration information in the "Cluster Data Form" on page 55 and

zoning information in the "Zoning Configuration Form" on page 57.


Page 36

4

Establish the physical network topology and the TCP/IP settings for

network adapters on each server node to provide access to the cluster

public and private networks.

5

Configure each cluster node as a member in the same Microsoft® Windows Active Directory® Domain.

NOTE: You can configure the cluster nodes as Domain Controllers. For more information, see the “Selecting a Domain Model” section of the Dell Failover Clusters with Microsoft Windows Server 2003 Installation and Troubleshooting Guide or the Dell Failover Clusters with Microsoft Windows Server 2008 Installation and Troubleshooting Guide located on the Dell Support website at support.dell.com/manuals.

6

Establish the physical storage topology and any required storage network settings to provide connectivity between the storage array and the servers that will be configured as cluster nodes. Configure the storage system(s) as described in your storage system documentation.

7

Use storage array management tools to create at least one logical unit

number (LUN). The LUN is used as a cluster Quorum disk for Windows

Server 2003 Failover cluster and as a Witness disk for Windows Server

2008 Failover cluster. Ensure that this LUN is presented to the servers that

will be configured as cluster nodes.

NOTE: For security reasons, it is recommended that you configure the LUN on

a single node as mentioned in step 8 when you are setting up the cluster.

Later, you can configure the LUN as mentioned in step 9 so that other cluster

nodes can access it.

8

Select one of the systems and form a new failover cluster by configuring

the cluster name, cluster management IP, and quorum resource. For more

information, see "Preparing Your Systems for Clustering" on page 35.

NOTE: For Windows Server® 2008 Failover Clusters, run the Cluster

Validation Wizard to ensure that your system is ready to form the cluster.

9

Join the remaining node(s) to the failover cluster. For more information,

see "Preparing Your Systems for Clustering" on page 35.

10

Configure roles for cluster networks. Take any network interfaces that are

used for iSCSI storage (or for other purposes outside of the cluster) out of

the control of the cluster.

11

Test the failover capabilities of your new cluster.


Page 37

NOTE: For Windows Server 2008 Failover Clusters, you can also use the

Cluster Validation Wizard.

12

Configure highly-available applications and services on your failover

cluster. Depending on your configuration, this may also require providing

additional LUNs to the cluster or creating new cluster resource groups.

Test the failover capabilities of the new resources.

13

Configure client systems to access the highly available applications and

services that are hosted on your failover cluster.

Installation Overview

Each node in your Dell Windows Server failover cluster must have the same

release, edition, service pack, and processor architecture of the Windows

Server operating system installed. For example, all nodes in your cluster may

be configured with Windows Server 2003 R2, Enterprise x64 Edition. If the

operating system varies among nodes, it is not possible to configure a failover

cluster successfully. It is recommended to establish server roles prior to

configuring a failover cluster, depending on the operating system configured

on your cluster.
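The requirement that every node runs the same release, edition, service pack, and processor architecture can be checked from a simple inventory before the cluster is formed. The sketch below is a hedged illustration in Python: the NodeOS record, the node names, and the "SP2" value are assumptions made for the example, and gathering the real values from each server is left out.

```python
from collections import namedtuple

# One record per node; field names are illustrative, not a Dell or Microsoft schema.
NodeOS = namedtuple("NodeOS", "release edition service_pack architecture")


def mismatched_nodes(nodes):
    """Return the names of nodes whose OS details differ from the first node.

    nodes: dict mapping a node name to a NodeOS record.
    """
    if not nodes:
        return []
    names = sorted(nodes)
    reference = nodes[names[0]]
    return [name for name in names if nodes[name] != reference]


if __name__ == "__main__":
    # Hypothetical inventory; the service pack value is an assumption.
    inventory = {
        "node1": NodeOS("Windows Server 2003 R2", "Enterprise", "SP2", "x64"),
        "node2": NodeOS("Windows Server 2003 R2", "Enterprise", "SP2", "x86"),
    }
    print(mismatched_nodes(inventory))
```

Any name returned by the check points to a node that should be brought in line before a failover cluster is configured.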

For a list of Dell PowerEdge servers, Fibre Channel HBAs and switches, and the recommended operating system variants and specific driver and firmware revisions, see the Cluster Configuration Support Matrices located on the Dell High Availability Clustering website at dell.com/ha.

For more information on deploying your cluster with Windows Server 2003

operating systems, see the Dell Failover Clusters with Microsoft Windows

Server 2003 Installation and Troubleshooting Guide. For more information on

deploying your cluster with Windows Server 2008 operating systems, see the

Dell Failover Clusters with Microsoft Windows Server 2008 Installation and

Troubleshooting Guide.

The following sub-sections describe steps that must be taken to enable

communication between the cluster nodes and your shared Dell/EMC AX4-5

Fibre Channel storage array, and to present disks from the storage array to the

cluster. Install and configure the following components on each node:

1

The Fibre Channel HBA(s) and driver on each cluster node

2

EMC PowerPath on each cluster node

3

Zoning, if applicable


Page 38

4

The shared storage system

5

A failover cluster

Installing the Fibre Channel HBAs

For dual-HBA configurations, it is recommended that you install the Fibre

Channel HBAs on separate peripheral component interconnect (PCI) buses.

Placing the adapters on separate buses improves availability and performance.

See the Dell Cluster Configuration Support Matrices located on the Dell High

Availability Clustering website at dell.com/ha for more information about

your system's PCI bus configuration and supported HBAs.

Installing the Fibre Channel HBA Drivers

See the EMC documentation that is included with your HBA kit for more

information.

See the Emulex support website located at emulex.com or the Dell Support

website at support.dell.com for information about installing and configuring

Emulex HBAs and EMC-approved drivers.

See the QLogic support website at qlogic.com or the Dell Support website at

support.dell.com for information about installing and configuring QLogic

HBAs and EMC-approved drivers.

See the Dell Cluster Configuration Support Matrices located on the Dell High

Availability Clustering website at dell.com/ha for information about

supported HBA controllers and drivers.

Installing EMC PowerPath

EMC® PowerPath® detects a failed storage path and automatically re-routes

I/Os through an alternate path. PowerPath also provides load balancing of

data from the server to the storage system. To install PowerPath:

1

Insert the PowerPath installation media in the CD/DVD drive.

2

On the Getting Started screen, go to the Installation section, and click the appropriate link for the operating system that is running on the node.

3

Select Run this program from its current location and click OK.

4

In the Choose Language Setup screen, select the required language, and click OK.

Page 39

5

In the Welcome window of the setup wizard, click Next.

6

In the CLARiiON AX-Series window, select and click Next. Follow the onscreen instructions to complete the installation.

7

Click Yes to reboot the system.

Implementing Zoning on a Fibre Channel Switched Fabric

A Fibre Channel switched fabric consists of one or more Fibre Channel

switches that provide high-speed connections between servers and storage

devices. The switches in a Fibre Channel fabric provide a connection through

inbound and outbound points from one device (sender) to another device or

switch (receiver) on the network. If the data is sent to another switch, the

process repeats itself until a connection is established between the sender and

the receiver.

Fibre Channel switches provide you with the ability to set up barriers between

different devices and operating environments. These barriers create logical

fabric subsets with minimal software and hardware intervention. Similar to

subnets in the client/server network, logical fabric subsets divide a fabric into

similar groups of components, regardless of their proximity to one another.

The logical subsets that form these barriers are called zones.

Zoning automatically and transparently enforces access of information to the

zone devices. More than one PowerEdge cluster configuration can share

Dell/EMC storage system(s) in a switched fabric using Fibre Channel switch

zoning. By using Fibre Channel switches to implement zoning, you can

segment the SANs to isolate heterogeneous servers and storage systems from

each other.

Using Worldwide Port Name Zoning

PowerEdge cluster configurations support worldwide port name zoning.

A worldwide name (WWN) is a unique numeric identifier assigned to Fibre

Channel interfaces, such as HBA ports, storage processor (SP) ports, and

Fibre Channel to SCSI bridges or storage network controllers (SNCs).


Page 40

A WWN consists of an 8-byte hexadecimal number with each byte separated

by a colon. For example, 10:00:00:60:69:00:00:8a is a valid WWN. Using

WWN port name zoning allows you to move cables between switch ports

within the fabric without having to update the zones.
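The WWN format described above (eight bytes in hexadecimal, separated by colons) is easy to validate before a value is typed into a zone definition. The following Python sketch shows one way to do that. The function name is_valid_wwn is an assumption made for this example.

```python
import re

# Eight two-digit hexadecimal bytes separated by colons.
WWN_PATTERN = re.compile(r"[0-9A-Fa-f]{2}(?::[0-9A-Fa-f]{2}){7}")


def is_valid_wwn(text):
    """Return True if text is an 8-byte, colon-separated hexadecimal WWN."""
    return WWN_PATTERN.fullmatch(text) is not None


if __name__ == "__main__":
    print(is_valid_wwn("10:00:00:60:69:00:00:8a"))  # the example WWN from the text
    print(is_valid_wwn("10:00:00:60:69:00:00"))     # only seven bytes
```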

Table 3-1 provides a list of WWN identifiers that you can find in the

Dell/EMC cluster environment.

Table 3-1. Port Worldwide Names in a SAN Environment

Identifier                 Description

xx:xx:00:60:69:xx:xx:xx    Dell/EMC or Brocade switch

xx:xx:xx:00:88:xx:xx:xx    McData switch

50:06:01:6x:xx:xx:xx:xx    Dell/EMC storage processor

xx:xx:00:00:C9:xx:xx:xx    Emulex HBA ports

xx:xx:00:E0:8B:xx:xx:xx    QLogic HBA ports (non-embedded)

xx:xx:00:0F:1F:xx:xx:xx    Dell 2362M HBA port

xx:xx:xx:60:45:xx:xx:xx    PowerVault 132T and 136T tape libraries

xx:xx:xx:E0:02:xx:xx:xx    PowerVault 128T tape autoloader

xx:xx:xx:C0:01:xx:xx:xx    PowerVault 160T tape library and Fibre Channel tape drives

xx:xx:xx:C0:97:xx:xx:xx    PowerVault ML6000 Fibre Channel tape drives
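The identifier patterns in Table 3-1 can be used to guess what kind of device a WWN seen on the fabric belongs to. The Python sketch below treats each x in a pattern as a wildcard for one hexadecimal digit. The pattern list is copied from Table 3-1; the function names are assumptions made for this example, and a match is only a hint, not a definitive identification.

```python
# Patterns and descriptions from Table 3-1; "x" stands for any hex digit.
WWN_PATTERNS = [
    ("xx:xx:00:60:69:xx:xx:xx", "Dell/EMC or Brocade switch"),
    ("xx:xx:xx:00:88:xx:xx:xx", "McData switch"),
    ("50:06:01:6x:xx:xx:xx:xx", "Dell/EMC storage processor"),
    ("xx:xx:00:00:C9:xx:xx:xx", "Emulex HBA ports"),
    ("xx:xx:00:E0:8B:xx:xx:xx", "QLogic HBA ports (non-embedded)"),
    ("xx:xx:00:0F:1F:xx:xx:xx", "Dell 2362M HBA port"),
    ("xx:xx:xx:60:45:xx:xx:xx", "PowerVault 132T and 136T tape libraries"),
    ("xx:xx:xx:E0:02:xx:xx:xx", "PowerVault 128T tape autoloader"),
    ("xx:xx:xx:C0:01:xx:xx:xx",
     "PowerVault 160T tape library and Fibre Channel tape drives"),
    ("xx:xx:xx:C0:97:xx:xx:xx", "PowerVault ML6000 Fibre Channel tape drives"),
]


def pattern_matches(pattern, wwn):
    """Compare character by character, treating 'x' in the pattern as a wildcard."""
    p, w = pattern.lower(), wwn.lower()
    return len(p) == len(w) and all(pc == "x" or pc == wc for pc, wc in zip(p, w))


def describe_wwn(wwn):
    """Return the Table 3-1 descriptions whose pattern matches the WWN."""
    return [desc for pattern, desc in WWN_PATTERNS if pattern_matches(pattern, wwn)]


if __name__ == "__main__":
    print(describe_wwn("10:00:00:60:69:00:00:8a"))
```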

WARNING: When you replace a switch module, a storage controller, or a Fibre

Channel HBA in a PowerEdge server, reconfigure your zones to provide continuous

client data access.

Single Initiator Zoning

Each host HBA port in a SAN must be configured in a separate zone on the

switch with the appropriate storage ports. This zoning configuration, known

as single initiator zoning, prevents different hosts from communicating with

each other, thereby ensuring that Fibre Channel communications between

the HBAs and their target storage systems do not affect each other.

When you create your single-initiator zones, follow these guidelines:


Page 41

• Create a zone for each HBA port and its target storage devices.

• Each AX4-5 storage processor port can be connected to a maximum of 64

HBA ports in a SAN-attached environment.

• Each host can be connected to a maximum of four storage systems.

• The integrated bridge/SNC or fibre-channel interface on a tape library can be added to any zone.
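The guidelines above can be sketched as a small planning helper that produces one zone per HBA port. This Python example is illustrative only: the zone naming scheme, the labels, and the WWN values are hypothetical, and the output is a plan to enter with your switch vendor's own tools, not switch configuration syntax.

```python
MAX_HBA_PORTS_PER_SP_PORT = 64  # AX4-5 guideline from the list above


def build_single_initiator_zones(hba_ports, sp_ports):
    """Return one zone per HBA port: that port's WWN plus the storage port WWNs.

    hba_ports: dict mapping a label to an HBA port WWN.
    sp_ports:  list of storage processor port WWNs.
    """
    if len(hba_ports) > MAX_HBA_PORTS_PER_SP_PORT:
        raise ValueError("more HBA ports than one SP port supports")
    return {
        "zone_" + label: [wwn] + list(sp_ports)
        for label, wwn in sorted(hba_ports.items())
    }


# Hypothetical WWNs, for illustration only.
HBA_PORTS = {
    "node1_hba0": "10:00:00:00:c9:00:00:01",
    "node2_hba0": "10:00:00:00:c9:00:00:02",
}
SP_PORTS = ["50:06:01:60:00:00:00:01", "50:06:01:68:00:00:00:01"]

if __name__ == "__main__":
    zones = build_single_initiator_zones(HBA_PORTS, SP_PORTS)
    for name, members in zones.items():
        print(name, members)
```

Because each zone holds exactly one initiator, two hosts never share a zone, which is the isolation that single initiator zoning is meant to provide.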

Installing and Configuring the Shared Storage System

NOTE: You must configure the network settings and create a user account to

manage the AX4-5 storage system from the network.

To install and configure the Dell/EMC storage system in your cluster:

1

Install and use Navisphere® Storage System Initialization Utility from a node or management station to initialize your AX4-5 storage system.

2

Install the expansion pack using Navisphere Express (optional).

3

Install the Navisphere Server Utility on each cluster node.

4

Register the cluster node with the storage system.

5

Assign the virtual disks to the cluster nodes.

Installing Navisphere Storage System Initialization Utility

The Navisphere Storage System Initialization Utility provides a user interface to initialize your AX4-5 storage system. Using the utility, you can configure the IP address, subnet mask, and default gateway address for the storage system’s SPs, and assign user names and passwords for storage system access.

1

To install the software from the support media that is shipped with the storage system:

a

Insert the installation media in the CD/DVD drive.

b

In the Choose Language Setup screen, select the appropriate language and click OK.

c

On the Server screen, click Install Products.

d

In the Install Products menu, click Navisphere Storage System Initialization Utility.


Page 42

e

Follow the on-screen instructions to complete the installation.

2

To initialize the storage system:

a

Select Start→ Programs→ EMC→ Navisphere→ Navisphere Storage System Initialization.

b

Read the license agreement, click I accept and then click Next.

c

From the Uninitialized Systems list, select the storage system to be initialized, and click Next.

d

Follow the on-screen instructions to complete the initialization.
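Because the initialization utility asks for an IP address, subnet mask, and default gateway for the storage processors, it can help to sanity-check the planned values first. The Python sketch below uses the standard ipaddress module to confirm that the gateway lies in the same subnet as the SP address, which is a general networking convention rather than a documented AX4-5 requirement. The function name and the sample addresses are assumptions made for the example.

```python
import ipaddress


def gateway_in_subnet(ip, netmask, gateway):
    """Return True if the gateway is inside the subnet defined by ip and netmask."""
    # strict=False lets a host address (not only a network address) define the subnet.
    network = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    return ipaddress.ip_address(gateway) in network


if __name__ == "__main__":
    # Hypothetical planned settings for one storage processor.
    print(gateway_in_subnet("192.168.1.50", "255.255.255.0", "192.168.1.1"))
    print(gateway_in_subnet("192.168.1.50", "255.255.255.0", "192.168.2.1"))
```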

Installing the Expansion Pack Using Navisphere Express

Each storage system in the cluster is centrally managed by one host system

(also called a management station) running EMC Navisphere Express—a

centralized storage management application used to configure Dell/EMC

storage systems.

If you have an expansion pack option for the storage system and it has not

been installed, install it now by following the steps listed below:

1

From the management host, open an Internet browser.

2

Enter the IP address of an SP in the storage system.

3

Log in to Navisphere Express with the username and password that you specified during the storage system initialization.

4

In the Navisphere Express navigation pane under System, click Software.

5

In the System Software screen, click Upgrade Software.

6

Insert the expansion pack media into the CD/DVD drive on the host from which you are running Navisphere Express.

7

Browse for the expansion tier enabler software file (.ena file), and click Upgrade.

You can use Navisphere Express to perform tasks such as creating disk pools, binding the virtual disks, and downloading the firmware. Additionally, you can use Snapshot Management to capture point-in-time images of a virtual disk for backups or testing without affecting the contents of the source virtual disk. You can also use the SAN Copy™ feature in Navisphere Express to move data from the virtual disks on one storage system to the virtual disks on another storage system without using the host CPU cycles.


Page 43

Installing Navisphere Server Utility

The Navisphere Server Utility registers the cluster node HBAs with the

storage systems, allowing the nodes to access the cluster storage data. The

tool is also used for the following cluster node maintenance procedures:

• Updating the cluster node host name and/or IP address on the storage

array

• Updating file system information

• Adding, removing, or replacing an HBA

• Starting and stopping a snapshot

To install Navisphere Server Utility:

1

Log in to the Windows server using an account with administrative

privileges.

2

Insert the installation media in the CD/DVD drive.

3

In the Choose Language Setup screen, select the appropriate language and click OK.

4

In the main menu, click Install Products on Server.

5

In the Install Products menu, click Navisphere Server Utility.

6

Follow the on-screen instructions to complete the installation.

Registering a Server With a Storage System

To register the server with the storage system:

1

Start the Navisphere Server Utility by clicking Start→ Programs→ EMC→ Navisphere→ Navisphere Server Utility.

2

In the Choose Language Setup screen, select the appropriate language and click OK.

3

In the Navisphere Server Utility dialog window, select Register this server to all connected storage systems. The utility automatically scans for all connected storage systems and lists them under Connected Storage Systems.

4

Select the WWN of the HBA you just installed. The HBA should appear once for every SP port it is connected to.

5

Click Next to register the server with the storage system.

6

Click Finish to exit the utility.


Page 44

Assigning the Virtual Disks to Cluster Nodes

NOTE: For best practice, have at least one virtual disk for each application. If

multiple NTFS partitions are created on a single LUN or virtual disk, these partitions

will not be able to fail over individually from node-to-node.

To perform data I/O to the virtual disks, assign the virtual disks to a cluster

node by performing the following steps:

1

Open a Web browser.

2

Type the storage system IP address in the Address field. The Navisphere Express console is displayed.

3

Log in with the user name and password that were created during the storage initialization.

4

In the Manage menu:

a

Click Disk Pools. You can create multiple disk pools.

b

Click Virtual Disks. You can create multiple virtual disks for each disk pool.

c

In the Virtual Disks screen, select the virtual disks that you want to assign to the cluster node and click Assign Server.

5

In the Assign Server screen, select the cluster nodes that you want to assign to the virtual disk and click Apply.

6

Repeat step 4 and step 5 for each virtual disk.

7

Close the Navisphere Express window.

8

Verify that the PowerPath on the cluster nodes can access all paths to the virtual disks.

Advanced or Optional Storage Features

Your Dell/EMC AX4-5 storage array may be configured to provide optional

features that can be used with your cluster. These features include

Snapshot Management, SAN Copy, Navisphere Manager, and MirrorView™.


Page 45

Snapshot Management

Snapshot Management captures images of a virtual disk and retains the image

independently of subsequent changes to the files. The images can be used to

share virtual disks with another system without affecting the contents of the

source virtual disk. Snapshot Management creates copies of either virtual

disks or snapshots. Snapshots are virtual copies that create an image of the

source virtual disk at the time the snapshot was created. This snapshot is

retained independently of subsequent changes to the source virtual disk. You

can use snapshots to facilitate backups or to allow multiple hosts to access

data without affecting the contents of the source virtual disk.

WARNING: To avoid data corruption, do not access a snapshot from the same

node as its source.

SAN Copy

SAN Copy allows you to move data between storage systems without using

host processor cycles or LAN bandwidth. It can be used in conjunction with

SnapView™ or MirrorView and is managed from within Navisphere Manager.

Navisphere Manager

Optionally, you can also upgrade Navisphere Express to EMC Navisphere®

Manager—a centralized storage management application used to configure

Dell/EMC storage systems.

EMC Navisphere Manager adds the support for EMC MirrorView, an

optional software that enables synchronous or asynchronous mirroring

between two storage systems.

MirrorView

MirrorView automatically duplicates primary storage system data from a

cluster or stand-alone system to a secondary storage system. It can be used in

conjunction with SnapView and is managed from within Navisphere Manager.


Page 46

Installing and Configuring a Failover Cluster

You can configure the operating system services on your Dell Windows Server

failover cluster, after you have established the private and public networks

and have assigned the shared disks from the storage array to the cluster nodes.

The procedures for configuring the failover cluster are different depending on

the Windows Server operating system you use.

For more information on deploying your cluster with Windows Server 2003

operating systems, see the Dell Failover Clusters with Microsoft Windows

Server 2003 Installation and Troubleshooting Guide. For more information on

deploying your cluster with Windows Server 2008 operating systems, see the

Dell Failover Clusters with Microsoft Windows Server 2008 Installation and

Troubleshooting Guide.


Page 47

Troubleshooting

This section provides troubleshooting information for your cluster

configuration.

Table A-1 describes general cluster problems you may encounter and the

probable causes and solutions for each problem.

Table A-1. General Cluster Troubleshooting

Problem: The nodes cannot access the storage system, or the cluster software is not functioning with the storage system.

Probable Cause: The storage system is not cabled properly to the nodes or the cabling between the storage components is incorrect.
Corrective Action: Ensure that the cables are connected properly from the node to the storage system. See "Cabling Your Cluster for Public and Private Networks" for more information.

Probable Cause: The length of the interface cables exceeds the maximum allowable length.
Corrective Action: Ensure that the fibre optic cables do not exceed 300 m (multimode) or 10 km (single mode switch-to-switch connections only).

Probable Cause: One of the cables is faulty.
Corrective Action: Replace the faulty cable.

Probable Cause: Switching zone is not configured correctly.
Corrective Action: Verify that all switched zones are configured correctly.

Probable Cause: LUNs are not assigned to the hosts.
Corrective Action: Verify that all LUNs are assigned to the hosts.

Probable Cause: The cluster is in a SAN, and one or more zones are not configured correctly.
Corrective Action: Verify the following:
• Each zone contains only one initiator (Fibre Channel daughter card).
• Each zone contains the correct initiator and the correct storage port(s).

Appendix:Troubleshooting 47

Page 48

Problem: One of the nodes takes a long time to join the cluster, or one of the nodes fails to join the cluster.

Probable Cause: The node-to-node network has failed due to a cabling or hardware failure.
Corrective Action: Check the network cabling. Ensure that the node-to-node interconnection and the public network are connected to the correct NICs.

Probable Cause: Long delays in node-to-node communications may be normal.
Corrective Action: Verify that the nodes can communicate with each other by running the ping command from each node to the other node. Try both the host name and IP address when using the ping command.

Probable Cause: One or more nodes may have the Internet Connection Firewall enabled, blocking Remote Procedure Call (RPC) communications between the nodes.
Corrective Action: Configure the Internet Connection Firewall to allow communications that are required by the Microsoft® Cluster Service (MSCS) and the clustered applications or services. See Microsoft Knowledge Base article KB883398 at the Microsoft Support website at support.microsoft.com for more information.


Page 49

Problem: Attempts to connect to a cluster using Cluster Administrator fail.

Probable Cause: The Cluster Service has not been started. A cluster has not been formed on the system. The system has just been booted and services are still starting.
Corrective Action: Verify that the Cluster Service is running and that a cluster has been formed. Use the Event Viewer and look for the following events logged by the Cluster Service:
Microsoft Cluster Service successfully formed a cluster on this node.
or
Microsoft Cluster Service successfully joined the cluster.
If these events do not appear in Event Viewer, see the Microsoft Cluster Service Administrator’s Guide for instructions on setting up the cluster on your system and starting the Cluster Service.

Probable Cause: The cluster network name is not responding on the network because the Internet Connection Firewall is enabled on one or more nodes.
Corrective Action: Configure the Internet Connection Firewall to allow communications that are required by MSCS and the clustered applications or services. See Microsoft Knowledge Base article KB883398 at the Microsoft Support website at support.microsoft.com for more information.


Page 50

Problem: You are prompted to configure one network instead of two during MSCS installation.

Probable Cause: The TCP/IP configuration is incorrect.
Corrective Action: The node-to-node network and public network must be assigned static IP addresses on different subnets. For information about assigning the network IPs with a specific variant of the Windows Server operating system (for example: Windows Server 2003 or Windows Server 2008), see Dell Failover Clusters with Microsoft Windows Server Installation and Troubleshooting Guide.

Probable Cause: The private (point-to-point) network is disconnected.
Corrective Action: Ensure that all systems are powered on so that the NICs in the private network are available.

Problem: Using Microsoft Windows NT® 4.0 to remotely administer a Windows Server 2003 cluster generates error messages.

Probable Cause: Normal. Some resources in Windows Server 2003 are not supported in Windows NT 4.0.
Corrective Action: Dell strongly recommends that you use Windows XP Professional or Windows Server 2003 for remote administration of a cluster running Windows Server 2003.

Page 51

Problem: Unable to add a node to the cluster.

Probable Cause: The new node cannot access the shared disks. The shared disks are enumerated by the operating system differently on the cluster nodes.
Corrective Action: Ensure that the new cluster node can enumerate the cluster disks using Windows Disk Administration. If the disks do not appear in Disk Administration, check the following:
• Check all cable connections
• Check all zone configurations
• Check the LUN Settings. Use the "Advanced" with "Minimum" option

Probable Cause: One or more nodes may have the Internet Connection Firewall enabled, blocking RPC communications between the nodes.
Corrective Action: Configure the Internet Connection Firewall to allow communications that are required by the MSCS and the clustered applications or services. See Microsoft Knowledge Base article KB883398 at the Microsoft Support website at support.microsoft.com for more information.

Problem: The disks on the shared cluster storage appear unreadable or uninitialized in Windows Disk Administration.

Probable Cause: This situation is normal if you stopped the Cluster Service. If you are running Windows Server 2003, this situation is normal if the cluster node does not own the cluster disk.
Corrective Action: No action required.

Page 52

Problem: Cluster Services does not operate correctly on a cluster running Windows Server 2003 with the Internet Firewall enabled.

Probable Cause: The Windows Internet Connection Firewall is enabled, which may conflict with Cluster Services.

Corrective Action: Perform the following steps:

1 On the Windows desktop, right-click My Computer and click Manage.

2 In the Computer Management window, double-click Services.

3 In the Services window, double-click Cluster Services.

4 In the Cluster Services window, click the Recovery tab.

5 Click the First Failure drop-down arrow and select Restart the Service.

6 Click the Second Failure drop-down arrow and select Restart the Service.

7 Click OK.

For information on how to configure your cluster with the Windows Internet Connection Firewall enabled, see Microsoft Knowledge Base (KB) articles 258469 and 883398 at the Microsoft Support website at support.microsoft.com and the Microsoft Windows Server 2003 TechNet website at www.microsoft.com/technet.
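The recovery settings in the steps above can also be applied from a script. The following is a minimal sketch, not a Dell-documented procedure: it assumes the Cluster Service is registered under the short name ClusSvc and uses the Windows sc failure command. The failure-count reset period (86400 seconds) and the restart delay (60000 ms) are illustrative values that the guide does not specify.

```python
import subprocess
from typing import List


def build_recovery_command(service: str = "ClusSvc",
                           reset_seconds: int = 86400,
                           restart_delay_ms: int = 60000) -> List[str]:
    """Build an `sc failure` command line that restarts the service
    on the first and on the second failure.

    The service name, reset period, and delay are assumptions; adjust
    them to match your environment.
    """
    action = f"restart/{restart_delay_ms}"
    return [
        "sc", "failure", service,
        "reset=", str(reset_seconds),      # seconds before the failure count resets
        "actions=", f"{action}/{action}",  # first failure / second failure
    ]


def apply_recovery(command: List[str]) -> int:
    """Run the command on a Windows node (administrator rights needed)
    and return the exit code of sc."""
    return subprocess.run(command, check=False).returncode
```

Calling apply_recovery(build_recovery_command()) on each node should correspond to steps 5 and 6; verify the result on the Recovery tab before relying on it.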

Page 53

Problem: Public network clients cannot access the applications or services that are provided by the cluster.

Probable Cause: One or more nodes may have the Internet Connection Firewall enabled, blocking RPC communications between the nodes.

Corrective Action: Configure the Internet Connection Firewall to allow communications that are required by the MSCS and the clustered applications or services. See Microsoft Knowledge Base article KB883398 at the Microsoft Support website at support.microsoft.com for more information.
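Before changing firewall rules, it can help to confirm that a firewall is blocking traffic between the nodes. The sketch below is an illustrative check, not part of the Dell procedure: it tries a TCP connection to port 135, which the Windows RPC endpoint mapper normally listens on. The function names and node names are hypothetical. A successful connection does not prove that every port required by MSCS is open, because RPC also uses dynamically assigned ports.

```python
import socket
from typing import Dict, Iterable


def rpc_port_reachable(host: str, port: int = 135, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_nodes(nodes: Iterable[str], port: int = 135) -> Dict[str, bool]:
    """Map each node name to whether its RPC endpoint mapper port answered."""
    return {node: rpc_port_reachable(node, port) for node in nodes}


# Example use (hypothetical node names):
#   check_nodes(["node1.example.local", "node2.example.local"])
```

Run the check from each node against every other node; a result of False for a node that is powered on and cabled correctly suggests that a firewall or a network fault is in the path.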

Page 54

Page 55

Cluster Data Form

You can attach the following form in a convenient location near each cluster node or rack to record information about the cluster. Use the form when you call for technical support.

Table B-1.

Cluster Information | Cluster Solution

Cluster name and IP address

Server type

Installer

Date installed

Applications

Location

Notes

Table B-2.

Node Name | Service Tag Number | Public IP Address | Private IP Address

Page 56

Additional Networks

Table B-3.

Array | Array xPE Type | Array Service Tag Number or World Wide Name Seed | Number of Attached DAEs

1

2

3

4

Page 57

Zoning Configuration Form

Node | HBA WWPNs or Alias Names | Storage WWPNs or Alias Names | Zone Name | Zone Set for Configuration Name
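If you keep the zoning form electronically, one row can be modeled as a small record. The sketch below is illustrative only: the class, field, and method names are hypothetical and simply mirror the form columns. It assumes WWPNs are written as eight colon-separated hexadecimal bytes and treats any other entry as an alias name. It also flags rows with more than one HBA entry, in line with single initiator zoning.

```python
import re
from dataclasses import dataclass
from typing import List

# A WWPN is a 64-bit name, commonly written as eight colon-separated hex bytes.
WWPN_PATTERN = re.compile(r"^[0-9A-Fa-f]{2}(:[0-9A-Fa-f]{2}){7}$")


def is_wwpn(value: str) -> bool:
    """Return True if value looks like a colon-separated WWPN."""
    return bool(WWPN_PATTERN.match(value))


@dataclass
class ZoneRecord:
    """One row of the zoning configuration form (hypothetical layout)."""
    node: str
    hba_entries: List[str]      # HBA WWPNs or alias names
    storage_entries: List[str]  # storage WWPNs or alias names
    zone_name: str
    zone_set: str

    def aliases(self) -> List[str]:
        """Entries that are not WWPN-formatted, so presumably alias names."""
        return [e for e in self.hba_entries + self.storage_entries if not is_wwpn(e)]

    def is_single_initiator(self) -> bool:
        """True if the zone lists exactly one HBA (initiator) entry."""
        return len(self.hba_entries) == 1


# Example row with made-up values:
example = ZoneRecord(
    node="node1",
    hba_entries=["10:00:00:00:C9:12:34:56"],
    storage_entries=["50:06:01:60:41:E0:12:34", "SPA_port0"],
    zone_name="node1_hba0_zone",
    zone_set="cluster_zoneset",
)
```

Reviewing aliases() before you call for support helps catch WWPNs that were mistyped on the form, since a malformed WWPN is reported as an alias.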

Page 58

Page 59

Index

C

cable configurations
  cluster interconnect, 19
  for client networks, 19
  for mouse, keyboard, and monitor, 15
  for power supplies, 15

cluster configurations
  connecting to multiple shared storage systems, 30
  connecting to one shared storage system, 11
  direct-attached, 11, 20
  SAN-attached, 12

cluster storage
  requirements, 10

clustering
  overview, 7

D

Dell|EMC CX3-40
  Cabling a two-node cluster, 21

Dell|EMC CX3-20
  Cabling a two-node cluster, 21

Dell|EMC CX3-80
  configuring, 41
  installing, 41

direct-attached cluster
  about, 20

drivers
  installing and configuring Emulex, 38

E

Emulex HBAs
  installing and configuring, 38
  installing and configuring drivers, 38

H

HBA drivers
  installing and configuring, 38

host bus adapter
  configuring the Fibre Channel HBA, 38

K

keyboard
  cabling, 15

M

monitor
  cabling, 15

Page 60

mouse
  cabling, 15

MSCS
  installing and configuring, 46

N

Navisphere Manager
  about, 45
  hardware view, 45
  storage view, 45

network adapters
  cabling the private network, 18-19
  cabling the public network, 19

O

operating system
  Windows Server 2003, Enterprise Edition
    installing, 37

P

power supplies
  cabling, 15

private network
  cabling, 17, 19
  hardware components, 19
  hardware components and connections, 19

public network
  cabling, 17

S

SAN
  configuring SAN backup in your cluster, 33

SAN-attached cluster
  about, 25
  configurations, 11

single initiator zoning
  about, 40

T

tape library
  connecting to a PowerEdge cluster, 32

troubleshooting
  connecting to a cluster, 49
  shared storage subsystem, 47

W

warranty, 13

worldwide port name zoning, 39

Z

zones
  implementing on a Fibre Channel switched fabric, 39
  using worldwide port names, 39
