Page 1

ECS

Version 3.4.0.1

Administration Guide

302-999-901

02

February 2020

Page 2

Copyright © 2019-2020 Dell Inc. or its subsidiaries. All rights reserved.

Dell believes the information in this publication is accurate as of its publication date. The information is subject to change without notice.

THE INFORMATION IN THIS PUBLICATION IS PROVIDED “AS-IS.” DELL MAKES NO REPRESENTATIONS OR WARRANTIES OF ANY KIND

WITH RESPECT TO THE INFORMATION IN THIS PUBLICATION, AND SPECIFICALLY DISCLAIMS IMPLIED WARRANTIES OF

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. USE, COPYING, AND DISTRIBUTION OF ANY DELL SOFTWARE DESCRIBED

IN THIS PUBLICATION REQUIRES AN APPLICABLE SOFTWARE LICENSE.

Dell Technologies, Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be the property

of their respective owners. Published in the USA.

Dell EMC

Hopkinton, Massachusetts 01748-9103

1-508-435-1000 In North America 1-866-464-7381

www.DellEMC.com

2 ECS Administration Guide

Page 3

CONTENTS

Figures

Tables

Chapter 1

Chapter 2

7

9

Overview 11

Introduction.......................................................................................................12

ECS platform.....................................................................................................12

ECS data protection.......................................................................................... 14

Configurations for availability, durability, and resilience........................15

ECS network..................................................................................................... 17

Load balancing considerations........................................................................... 17

Getting Started with ECS 19

Initial configuration........................................................................................... 20

Log in to the ECS Portal................................................................................... 20

View the Getting Started Task Checklist........................................................... 21

View the ECS Portal Dashboard........................................................................22

Upper-right menu bar...........................................................................22

View requests...................................................................................... 22

View capacity utilization.......................................................................22

View performance................................................................................ 23

View storage efficiency........................................................................23

View geo monitoring.............................................................................23

View node and disk health.................................................................... 23

View alerts........................................................................................... 23

Chapter 3

Chapter 4

Storage Pools, VDCs, and Replication Groups 25

Introduction to storage pools, VDCs, and replication groups.............................26

Working with storage pools in the ECS Portal...................................................27

Create a storage pool...........................................................................28

Edit a storage pool............................................................................... 29

Working with VDCs in the ECS Portal .............................................................. 29

Create a VDC for a single site............................................................... 31

Add a VDC to a federation.................................................................... 31

Edit a VDC........................................................................................... 33

Remove VDC from a Replication Group................................................35

Fail a VDC (PSO)................................................................................. 36

Guidelines to check failover and bootstrap process............................. 36

Working with replication groups in the ECS Portal............................................37

Create a replication group....................................................................38

Edit a replication group........................................................................ 40

Authentication Providers 41

Introduction to authentication providers........................................................... 42

Working with authentication providers in the ECS Portal..................................42

Considerations when adding Active Directory authentication providers...

42

ECS Administration Guide 3

Page 4

Contents

AD or LDAP authentication provider settings....................................... 43

Add an AD or LDAP authentication provider.........................................46

Add a Keystone authentication provider...............................................46

Chapter 5

Chapter 6

Namespaces 49

Introduction to namespaces..............................................................................50

Namespace tenancy.............................................................................50

Working with namespaces in the ECS Portal..................................................... 51

Namespace settings............................................................................. 51

Create a namespace............................................................................ 55

Edit a namespace................................................................................. 57

Delete a namespace............................................................................. 58

Users and Roles 59

Introduction to users and roles......................................................................... 60

Users in ECS.....................................................................................................60

Management users...............................................................................60

Default management users.................................................................. 60

Object users......................................................................................... 61

Domain and local users.........................................................................62

User scope........................................................................................... 63

User tags............................................................................................. 64

Management roles in ECS.................................................................................64

System Administrator.......................................................................... 64

System Monitor................................................................................... 65

Namespace Administrator....................................................................65

Lock Administrator...............................................................................65

Tasks performed by role...................................................................... 65

Working with users in the ECS Portal............................................................... 68

Add an object user............................................................................... 70

Add a domain user as an object user..................................................... 71

Add domain users into a namespace.....................................................72

Create a local management user or assign a domain user or AD group to

a management role...............................................................................72

Assign the Namespace Administrator role to a user or AD group..........73

Chapter 7

4 ECS Administration Guide

Buckets 75

Introduction to buckets.....................................................................................76

Working with buckets in the ECS Portal........................................................... 76

Bucket settings.................................................................................... 76

Create a bucket....................................................................................79

Edit a bucket........................................................................................ 81

Set ACLs..............................................................................................82

Set bucket policies...............................................................................85

Create a bucket using the S3 API (with s3curl)................................................ 89

Bucket HTTP headers...........................................................................91

Bucket, object, and namespace naming conventions........................................ 92

S3 bucket and object naming in ECS....................................................92

OpenStack Swift container and object naming in ECS......................... 93

Atmos bucket and object naming in ECS..............................................93

CAS pool and object naming in ECS..................................................... 93

Disable unused services.................................................................................... 94

Page 5

Contents

Chapter 8

Chapter 9

File Access 97

Introduction to file access.................................................................................98

ECS multi-protocol access................................................................................98

S3/NFS multi-protocol access to directories and files......................... 98

Multi-protocol access permissions....................................................... 99

Working with NFS exports in the ECS Portal................................................... 101

Working with user/group mappings in the ECS Portal..................................... 101

ECS NFS configuration tasks.......................................................................... 102

Create a bucket for NFS using the ECS Portal................................... 102

Add an NFS export using the ECS Portal............................................104

Add a user or group mapping using the ECS Portal.............................105

Configure ECS NFS with Kerberos security........................................106

Mount an NFS export example......................................................................... 112

Best practices for mounting ECS NFS exports....................................114

NFS access using the ECS Management REST API......................................... 114

NFS WORM (Write Once, Read Many)............................................................115

S3A support..................................................................................................... 118

Configuration at ECS.......................................................................... 118

Configuration at Ambari Node............................................................. 118

Geo-replication status......................................................................................119

Certificates 121

Introduction to certificates..............................................................................122

Generate certificates.......................................................................................122

Create a private key............................................................................123

Generate a SAN configuration............................................................ 123

Create a self-signed certificate...........................................................124

Create a certificate signing request....................................................126

Upload a certificate......................................................................................... 130

Authenticate with the ECS Management REST API........................... 130

Upload a management certificate........................................................131

Upload a data certificate for data access endpoints........................... 132

Add custom LDAP certificate..............................................................133

Verify installed certificates.............................................................................. 136

Verify the management certificate..................................................... 136

Verify the object certificate................................................................ 137

Chapter 10

ECS Settings 139

Introduction to ECS settings........................................................................... 140

Object base URL..............................................................................................140

Bucket and namespace addressing..................................................... 140

DNS configuration.............................................................................. 142

Add a Base URL.................................................................................. 142

Key Management.............................................................................................143

Native Key Management.....................................................................146

External Key Management..................................................................146

External Key Manager Configuration.................................................. 147

Key Rotation.......................................................................................152

EMC Secure Remote Services.........................................................................152

ESRS prerequisites ............................................................................153

Add an ESRS Server...........................................................................154

Verify that ESRS call home works...................................................... 155

Disable call home................................................................................ 155

Alert policy...................................................................................................... 156

ECS Administration Guide 5

Page 6

Contents

New alert policy..................................................................................156

Event notification servers................................................................................157

SNMP servers.................................................................................... 157

Syslog servers.................................................................................... 162

Platform locking.............................................................................................. 165

Lock and unlock nodes using the ECS Portal...................................... 166

Lock and unlock nodes using the ECS Management REST API...........166

Licensing......................................................................................................... 167

Obtain the Dell EMC ECS license file..................................................168

Upload the ECS license file.................................................................168

Security...........................................................................................................168

Password............................................................................................169

Password Rules.................................................................................. 169

Sessions.............................................................................................. 171

User Agreement.................................................................................. 171

About this VDC................................................................................................ 172

Chapter 11

Chapter 12

ECS Outage and Recovery 173

Introduction to ECS site outage and recovery................................................. 174

TSO behavior...................................................................................................174

TSO behavior with the ADO bucket setting turned off........................174

TSO behavior with the ADO bucket setting turned on........................ 176

TSO considerations............................................................................ 182

NFS file system access during a TSO................................................. 182

PSO behavior.................................................................................................. 182

Recovery on disk and node failures..................................................................183

NFS file system access during a node failure...................................... 183

Data rebalancing after adding new nodes........................................................ 184

Advanced Monitoring 185

Advanced Monitoring...................................................................................... 186

View Advanced Monitoring Dashboards..............................................186

Share Advanced Monitoring Dashboards............................................ 192

Flux API........................................................................................................... 192

List of metrics for performance-related data......................................195

Dashboard API's to be deprecated or changed in the next release.................. 198

6 ECS Administration Guide

Page 7

FIGURES

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

ECS component layers...................................................................................................... 13

Guide icon .........................................................................................................................21

Getting Started Task Checklist..........................................................................................21

Upper-right menu bar....................................................................................................... 22

Replication group spanning three sites and replication group spanning two sites.............. 27

Adding a subset of domain users into a namespace using one AD attribute.......................62

Adding a subset of domain users into a namespace using multiple AD attributes.............. 63

Bucket Policy Editor code view.........................................................................................86

Bucket Policy Editor tree view.......................................................................................... 86

Data encryption using system-generated keys................................................................ 145

Encryption of the master key in a geo-replicated environment........................................145

Native key management.................................................................................................. 146

Read/write request fails during TSO when data is accessed from non-owner site and

owner site is unavailable.................................................................................................. 175

Read/write request succeeds during TSO when data is accessed from owner site and non-

owner site is unavailable.................................................................................................. 176

Read/write request succeeds during TSO when ADO-enabled data is accessed from non-

owner site and owner site is unavailable...........................................................................177

Object ownership example for a write during a TSO in a two-site federation...................179

Read request workflow example during a TSO in a three-site federation.........................180

Passive replication in normal state................................................................................... 181

TSO for passive replication.............................................................................................. 181

ECS Administration Guide 7

Page 8

Figures

8 ECS Administration Guide

Page 9

TABLES

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

ECS supported data services.............................................................................................13

Erasure encoding requirements for regular and cold archives ........................................... 14

Storage overhead.............................................................................................................. 15

ECS data protection schemes........................................................................................... 16

Storage pool properties.....................................................................................................27

VDC properties................................................................................................................. 30

Replication Group properties............................................................................................ 38

Authentication provider properties....................................................................................42

AD or LDAP authentication provider settings.................................................................... 43

Keystone authentication provider settings........................................................................ 47

Namespace properties.......................................................................................................51

Namespace settings.......................................................................................................... 51

Default management users................................................................................................ 61

Tasks performed by ECS management user role...............................................................66

Object user properties...................................................................................................... 69

Management user properties............................................................................................ 69

Bucket settings................................................................................................................. 77

Bucket ACLs..................................................................................................................... 83

Bucket headers..................................................................................................................91

NFS export properties......................................................................................................101

ECS Management REST API calls for managing NFS access........................................... 114

Autocommit terms........................................................................................................... 115

Key Management properties............................................................................................148

Create cluster..................................................................................................................148

New external key servers.................................................................................................149

Key Management properties............................................................................................ 151

ESRS properties.............................................................................................................. 152

Syslog facilities used by ECS...........................................................................................164

Syslog severity keywords................................................................................................ 164

ECS Management REST API calls for managing node locking .........................................167

Password rules................................................................................................................ 169

Sessions........................................................................................................................... 171

User agreement................................................................................................................171

Advanced monitoring dashboards....................................................................................186

Advanced monitoring dashboard fields............................................................................ 186

Metrics for performance-related data............................................................................. 195

API - Remove.................................................................................................................. 198

API - Change................................................................................................................... 198

API - No change.............................................................................................................. 199

ECS Administration Guide 9

Page 10

Tables

10 ECS Administration Guide

Page 11

CHAPTER 1

Overview

l

Introduction........................................................................................................................... 12

l

ECS platform......................................................................................................................... 12

l

ECS data protection...............................................................................................................14

l

ECS network..........................................................................................................................17

l

Load balancing considerations................................................................................................17

ECS Administration Guide 11

Page 12

Overview

Introduction

Dell EMC ECS provides a complete software-defined cloud storage platform that supports the

storage, manipulation, and analysis of unstructured data on a massive scale on commodity

hardware. You can deploy ECS as a turnkey storage appliance or as a software product that is

installed on a set of qualified commodity servers and disks. ECS offers the cost advantages of a

commodity infrastructure and the enterprise reliability, availability, and serviceability of traditional

arrays.

ECS uses a scalable architecture that includes multiple nodes and attached storage devices. The

nodes and storage devices are commodity components, similar to devices that are generally

available, and are housed in one or more racks.

A rack and its components that are supplied by Dell EMC and that have preinstalled software, is

referred to as an ECS

referred to as a Dell EMC ECS

A rack, or multiple joined racks, with processing and storage that is handled as a coherent unit by

the ECS infrastructure software is referred to as a

Data Center

Management users can access the ECS UI, which is referred to as the ECS Portal, to perform

administration tasks. Management users include the System Administrator, Namespace

Administrator, and System Monitor roles. Management tasks that can be performed in the ECS

Portal can also be performed by using the ECS Management REST API.

ECS administrators can perform the following tasks in the ECS Portal:

l

l

Object users cannot access the ECS Portal, but can access the object store to read and write

objects and buckets by using clients that support the following data access protocols:

l

l

l

l

For more information about object user tasks, see the

ECS Product Documentation page.

For more information about System Monitor tasks, see the

the ECS Product Documentation page.

appliance

. A rack and commodity nodes that are not supplied by Dell EMC, is

software only solution

. Multiple racks are referred to as a

site

, and at the ECS software level as a

cluster

Virtual

(VDC).

Configure and manage the object store infrastructure (compute and storage resources) for

object users.

Manage users, roles, and buckets within namespaces. Namespaces are equivalent to tenants.

Amazon Simple Storage Service (Amazon S3)

EMC Atmos

OpenStack Swift

ECS CAS (content-addressable storage)

ECS Data Access Guide

ECS Monitoring Guide

, available from the

, available from

.

ECS platform

The ECS platform is composed of the data services, portal, storage engine, fabric, infrastructure,

and hardware component layers.

12 ECS Administration Guide

Page 13

Figure 1 ECS component layers

Data

Services

Portal

Storage Engine

Fabric

Infrastructure

Hardware

ECS

Software

Data services

The data services component layer provides support for access to the ECS object store

through object, HDFS, and NFS v3 protocols. In general, ECS provides multi-protocol access,

data that is ingested through one protocol can be accessed through another. For example,

data that is ingested through S3 can be modified through Swift, NFS v3, or HDFS. This multiprotocol access has some exceptions due to protocol semantics and representations of how

the protocol was designed.

The following table shows the object APIs and the protocols that are supported and that

interoperate.

Overview

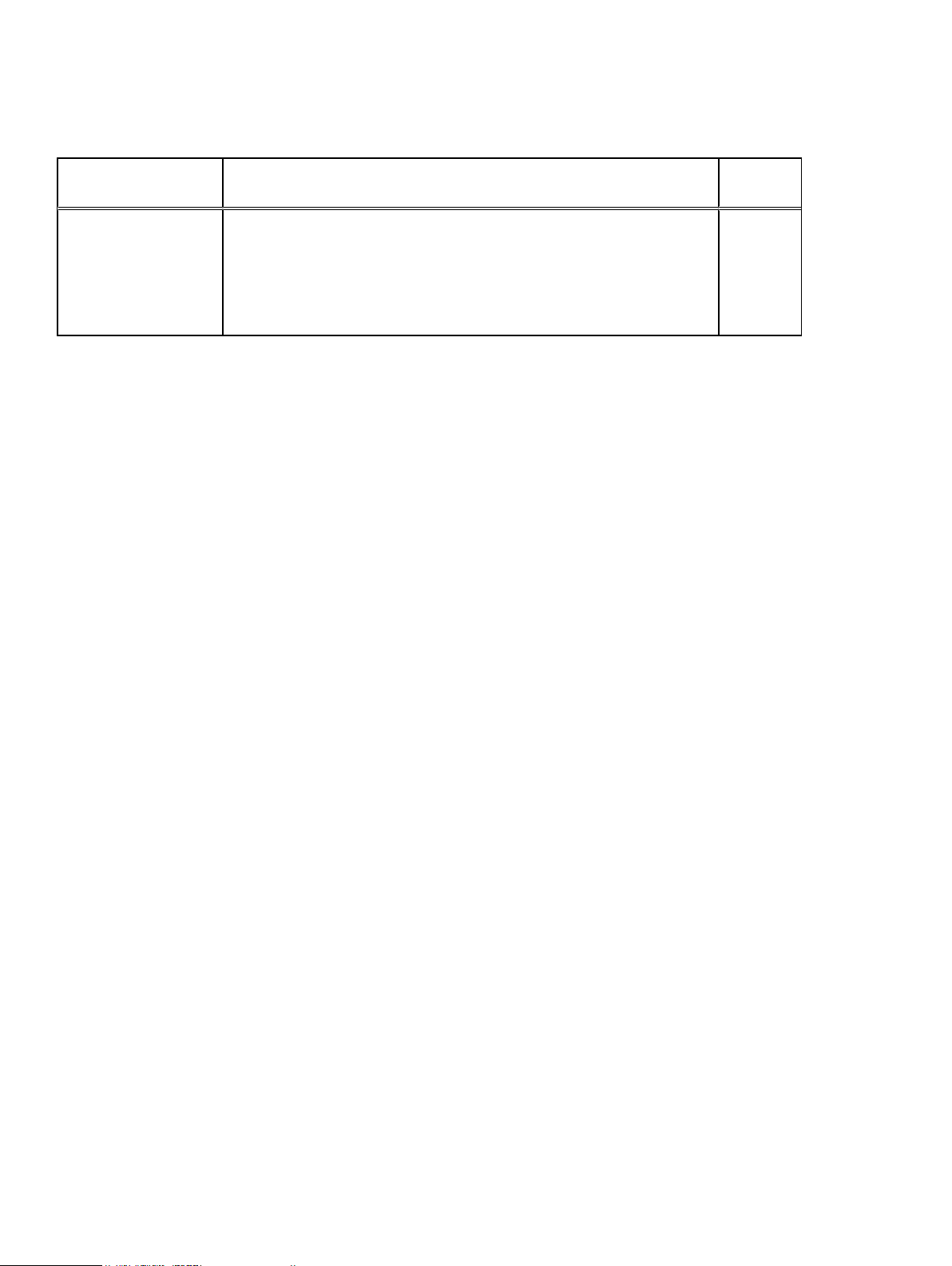

Table 1

ECS supported data services

Protocols Support Interoperability

Object S3 Additional capabilities such as byte range

updates and rich ACLS

Atmos Version 2.0 NFS (only path-based objects, not object ID

Swift V2 APIs, Swift, and Keystone v3

authentication

CAS SDK v3.1.544 and later Not applicable

HDFS Hadoop 2.6.2 compatibility S3, Swift*

NFS NFSv3 S3, Swift, Atmos (only path-based objects)

* When a bucket is enabled for file system access, permissions set using HDFS are in effect when you access the

bucket as an NFS file system, and vice versa.

File systems (HDFS and NFS), Swift

style objects)

File systems (HDFS and NFS), S3

Portal

The ECS Portal component layer provides a Web-based GUI that allows you to manage,

license, and provision ECS nodes. The portal has the following comprehensive reporting

capabilities:

l Capacity utilization for each site, storage pool, node, and disk

l Performance monitoring on latency, throughput, transactions per second, and replication

progress and rate

l Diagnostic information, such as node and disk recovery status and statistics on hardware

and process health for each node, which helps identify performance and system

bottlenecks

ECS Administration Guide 13

Page 14

Overview

Storage engine

The storage engine component layer provides an unstructured storage engine that is

responsible for storing and retrieving data, managing transactions, and protecting and

replicating data. The storage engine provides access to objects ingested using multiple object

storage protocols and the NFS and HDFS file protocols.

Fabric

The fabric component layer provides cluster health management, software management,

configuration management, upgrade capabilities, and alerting. The fabric layer is responsible

for keeping the services running and managing resources such as the disks, containers,

firewall, and network. It tracks and reacts to environment changes such as failure detection

and provides alerts that are related to system health. The 9069 and 9099 ports are public IP

ports protected by Fabric Firewall manager. Port is not available outside of the cluster.

Infrastructure

The infrastructure component layer uses SUSE Linux Enterprise Server 12 as the base

operating system for the ECS appliance, or qualified Linux operating systems for commodity

hardware configurations. Docker is installed on the infrastructure to deploy the other ECS

component layers. The Java Virtual Machine (JVM) is installed as part of the infrastructure

because ECS software is written in Java.

Hardware

The hardware component layer is an ECS appliance or qualified industry standard hardware.

For more information about ECS hardware, see the

from the ECS Product Documentation page.

ECS data protection

ECS protects data within a site by mirroring the data onto multiple nodes, and by using erasure

coding to break down data chunks into multiple fragments and distribute the fragments across

nodes. Erasure coding (EC) reduces the storage overhead and ensures data durability and

resilience against disk and node failures.

By default, the storage engine implements the Reed-Solomon 12 + 4 erasure coding scheme in

which an object is broken into 12 data fragments and 4 coding fragments. The resulting 16

fragments are dispersed across the nodes in the local site. When an object is erasure-coded, ECS

can read the object directly from the 12 data fragments without any decoding or reconstruction.

The code fragments are used only for object reconstruction when a hardware failure occurs. ECS

also supports a 10 + 2 scheme for use with cold storage archives to store objects that do not

change frequently and do not require the more robust default EC scheme.

The following table shows the requirements for the supported erasure coding schemes.

Table 2

Erasure encoding requirements for regular and cold archives

ECS Hardware and Cabling Guide

, available

Use case Minimum

required nodes

Regular archive 4 16 32 1.33 12 + 4

Cold archive 6 12 24 1.2 10 + 2

Minimum

required disks

Recommended

disks

EC efficiency EC scheme

Sites can be federated, so that data is replicated to another site to increase availability and data

durability, and to ensure that ECS is resilient against site failure. For three or more sites, in

addition to the erasure coding of chunks at a site, chunks that are replicated to other sites are

combined using a technique called XOR to provide increased storage efficiency.

14 ECS Administration Guide

Page 15

Overview

The following table shows the storage efficiency that can be achieved by ECS where multiple sites

are used.

Table 3 Storage overhead

Number of sites in

replication group

1 1.33 1.2

2 2.67 2.4

3 2.00 1.8

4 1.77 1.6

5 1.67 1.5

6 1.60 1.44

7 1.55 1.40

8 1.52 1.37

Storage overhead

Default (Erasure Code: 12+4) Cold archive (Erasure Code:

10+2)

If you have one site, with erasure coding the object data chunks use more space (1.33 or 1.2 times

storage overhead) than the raw data bytes require. If you have two sites, the storage overhead is

doubled (2.67 or 2.4 times storage overhead) because both sites store a replica of the data, and

the data is erasure coded at both sites. If you have three or more sites, ECS combines the

replicated chunks so that, counter intuitively, the storage overhead reduces.

When one node is down in a four nodes system, ECS starts to rebuild the EC on priority to avoid

DU. As one node is down, EC segment separates to other three nodes, which results in segment

number being greater than the EC code number. If the down node comes back, things go back to

normal. When another node with the most number of EC segments goes down, the DU window is

as large as the node NA window, when the node does not recover it causes DL.

EC retiring feature converts unsaved EC chunk into three mirror copies chunk for data safety.

However, EC retiring has some limitations:

l

It increases system capacity, and protection overhead from 1.33 to 3.

l

When there is no node down situation, EC retiring introduce unnecessary IO.

l

The feature applies to four nodes system. EC retiring does not automatically trigger, you need

to trigger it on demand using an API through service console.

For a detailed description of the mechanism used by ECS to provide data durability, resilience, and

availability, see the

ECS High Availability Design White Paper

.

Configurations for availability, durability, and resilience

Depending on the number of sites in the ECS system, different data protection schemes can

increase availability and balance the data protection requirements against performance. ECS uses

the replication group to configure the data protection schemes (see Introduction to storage pools,

VDCs, and replication groups on page 26). The following table shows the data protection schemes

that are available.

ECS Administration Guide 15

Page 16

Overview

Table 4 ECS data protection schemes

Number of sites Data protection scheme

Local Protection Full Copy

Protection*

1 Yes Not applicable Not applicable Not applicable

2 Yes Always Not applicable Not applicable

3 or more Yes Optional Normal Optional

* Full Copy Protection can be selected with Active. Full Copy Protection is not available if

Passive is selected.

Active Passive

Local Protection

Data is protected locally by using triple mirroring and erasure coding which provides resilience

against disk and node failures, but not against site failure.

Full Copy Protection

When the Replicate to All Sites setting is turned on for a replication group, the replication

group makes a full readable copy of all objects to all sites within the replication group. Having

full readable copies of objects on all VDCs in the replication group provides data durability and

improves local performance at all sites at the cost of storage efficiency.

Active

Active is the default ECS configuration. When a replication group is configured as Active, data

is replicated to federated sites and can be accessed from all sites with strong consistency. If

you have two sites, full copies of data chunks are copied to the other site. If you have three or

more sites, the replicated chunks are combined (XOR'ed) to provide increased storage

efficiency.

When data is accessed from a site that is not the owner of the data, until that data is cached

at the non-owner site, the access time increases. Similarly, if the owner site that contains the

primary copy of the data fails, and if you have a global load balancer that directs requests to a

non-owner site, the non-owner site must recreate the data from XOR'ed chunks, and the

access time increases.

Passive

The Passive configuration includes two, three, or four active sites with an additional passive

site that is a replication target (backup site). The minimum number of sites for a Passive

configuration is three (two active, one passive) and the maximum number of sites is five (four

active, one passive). Passive configurations have the same storage efficiency as Active

configurations. For example, the Passive three-site configuration has the same storage

efficiency as the Active three-site configuration (2.0 times storage overhead).

In the Passive configuration, all replication data chunks are sent to the passive site and XOR

operations occur only at the passive site. In the Active configuration, the XOR operations

occur at all sites.

If all sites are on-premise, you can designate any of the sites as the replication target.

If there is a backup site hosted off-premise by a third-party data center, ECS automatically

selects it as the replication target when you create a Passive geo replication group (see

Create a replication group on page 38). If you want to change the replication target from a

hosted site to an on-premise site, you can do so using the ECS Management REST API.

16 ECS Administration Guide

Page 17

ECS network

ECS network infrastructure consists of top of rack switches allowing for the following types of

network connections:

l

Public network – connects ECS nodes to your organization's network, providing data.

l

Internal private network – manages nodes and switches within the rack and across racks.

For more information about ECS networking, see the

Paper

.

CAUTION It is required to have connections from the customer's network to both front end

switches (rabbit and hare) in order to maintain the high availability architecture of the ECS

appliance. If the customer chooses not to connect to their network in the required HA manner,

there is no guarantee of high data availability for the use of this product.

Load balancing considerations

It is recommended that a load balancer is used in front of ECS.

In addition to distributing the load across ECS cluster nodes, a load balancer provides High

Availability (HA) for the ECS cluster by routing traffic to healthy nodes. Where network separation

is implemented, and data and management traffic are separated, the load balancer must be

configured so that user requests, using the supported data access protocols, are balanced across

the IP addresses of the data network. ECS Management REST API requests can be made directly

to a node IP on the management network or can be load balanced across the management network

for HA.

The load balancer configuration is dependent on the load balancer type. For information about

tested configurations and best practice, contact your customer support representative.

Overview

ECS Networking and Best Practices White

ECS Administration Guide 17

Page 18

Overview

18 ECS Administration Guide

Page 19

CHAPTER 2

Getting Started with ECS

l

Initial configuration................................................................................................................20

l

Log in to the ECS Portal........................................................................................................20

l

View the Getting Started Task Checklist............................................................................... 21

l

View the ECS Portal Dashboard............................................................................................ 22

ECS Administration Guide 19

Page 20

Getting Started with ECS

Initial configuration

The initial configuration steps that are required to get started with ECS include logging in to the

ECS Portal for the first time, using the ECS Portal Getting Started Task Checklist and Dashboard,

uploading a license, and setting up an ECS virtual data center (VDC).

About this task

To initially configure ECS, the root user or System Administrator must at a minimum:

Procedure

1. Upload an ECS license.

See Licensing on page 167.

2. Select a set of nodes to create at least one storage pool.

See Create a storage pool on page 28.

3. Create a VDC.

See Create a VDC for a single site on page 31.

4. Create at least one replication group.

See Create a replication group on page 38.

a. Optional: Set authentication.

You can add Active Directory (AD), LDAP, or Keystone authentication providers to ECS

to enable users to be authenticated by systems external to ECS. See Introduction to

authentication providers on page 42.

5. Create at least one namespace. A namespace is the equivalent of a tenant.

See Create a namespace on page 55.

a. Optional: Create object and/or management users.

See Working with users in the ECS Portal on page 68.

6. Create at least one bucket.

See Create a bucket on page 79.

After you configure the initial VDC, if you want to create an additional VDC and federate it

with the first VDC, see Add a VDC to a federation on page 31.

Log in to the ECS Portal

Log in to the ECS Portal to set up the initial configuration of a VDC. Log in to the ECS Portal from

the browser by specifying the IP address or fully qualified domain name (FQDN) of any node, or

the load balancer that acts as the front end to ECS. The login procedure is described below.

Before you begin

Logging in to the ECS Portal requires the System Administrator, System Monitor, Lock

Administrator (emcsecurity user), or Namespace Administrator role.

Note:

configure the system, only with the System Administrator role.

20 ECS Administration Guide

You can login to the ECS Portal for the first time with any valid login. However, you can

Page 21

Procedure

1. Type the public IP address of the first node in the system, or the address of the load

balancer that is configured as the front-end, in the address bar of your browser:

https://<node1_public_ip>.

2. Log in with the default root credentials:

l

User Name: root

l

Password: ChangeMe

You are prompted to change the password for the root user immediately.

3. After you change the password at first login, click Save.

You are logged out and the ECS login screen is displayed.

4. Type the User Name and Password.

5. To log out of the ECS Portal, in the upper-right menu bar, click the arrow beside your user

name, and then click logout.

View the Getting Started Task Checklist

Getting Started with ECS

The Getting Started Task Checklist in the ECS Portal guides you through the initial ECS

configuration. The checklist appears when you first log in and when the portal detects that the

initial configuration is not complete. The checklist automatically appears until you dismiss it. On

any ECS Portal page, in the upper-right menu bar, click the Guide icon to open the checklist.

Figure 2

Guide icon

The Getting Started Task Checklist displays in the portal.

Figure 3

Getting Started Task Checklist

1. The current step in the checklist.

2. An optional step. This step does not display a check mark even if you have completed the step.

3. Information about the current step.

4. Available actions.

5. Dismiss the checklist.

A completed step appears in green color.

ECS Administration Guide 21

Page 22

Getting Started with ECS

A completed checklist gives you the option to browse the list again or recheck your configuration.

View the ECS Portal Dashboard

The ECS Portal Dashboard provides critical information about the ECS processes on the VDC you

are currently logged in to.

The Dashboard is the first page you see after you log in. The title of each panel (box) links to the

portal monitoring page that shows more detail for the monitoring area.

Upper-right menu bar

The upper-right menu bar appears on each ECS Portal page.

Figure 4 Upper-right menu bar

Menu items include the following icons and menus:

1. The Alert icon displays a number that indicates how many unacknowledged alerts are pending

for the current VDC. The number displays 99+ if there are more than 99 alerts. You can click

the Alert icon to see the Alert menu, which shows the five most recent alerts for the current

VDC.

2. The Help icon brings up the online documentation for the current portal page.

3. The Guide icon brings up the Getting Started Task Checklist.

4. The VDC menu displays the name of the current VDC. If your AD or LDAP credentials allow you

to access more than one VDC, you can switch the portal view to the other VDCs without

entering your credentials.

5. The User menu displays the current user and allows you to log out. The User menu displays the

last login time for the user.

View requests

The Requests panel displays the total requests, successful requests, and failed requests.

Failed requests are organized by system error and user error. User failures are typically HTTP 400

errors. System failures are typically HTTP 500 errors. Click Requests to see more request

metrics.

Request statistics do not include replication traffic.

View capacity utilization

The Capacity Utilization panel displays the total, used, available, reserved, and percent full

capacity.

Capacity amounts are shown in gibibytes (GiB) and tibibytes (TiB). One GiB is approximately equal

to 1.074 gigabytes (GB). One TiB is approximately equal to 1.1 terabytes (TB).

The Used capacity indicates the amount of capacity that is in use. Click Capacity Utilization to

see more capacity metrics.

The capacity metrics are available in the left menu.

22 ECS Administration Guide

Page 23

View performance

The Performance panel displays how network read and write operations are currently performing,

and the average read/write performance statistics over the last 24 hours for the VDC.

Click Performance to see more comprehensive performance metrics.

View storage efficiency

The Storage Efficiency panel displays the efficiency of the erasure coding (EC) process.

The chart shows the progress of the current EC process, and the other values show the total

amount of data that is subject to EC, the amount of EC data waiting for the EC process, and the

current rate of the EC process. Click Storage Efficiency to see more storage efficiency metrics.

View geo monitoring

The Geo Monitoring panel displays how much data from the local VDC is waiting for georeplication, and the rate of the replication.

Recovery Point Objective (RPO) refers to the point in time in the past to which you can recover.

The value is the oldest data at risk of being lost if a local VDC fails before replication is complete.

Failover Progress shows the progress of any active failover that is occurring in the federation

involving the local VDC. Bootstrap Progress shows the progress of any active process to add a

new VDC to the federation. Click Geo Monitoring to see more geo-replication metrics.

Getting Started with ECS

View node and disk health

The Node & Disks panel displays the health status of disks and nodes.

A green check mark beside the node or disk number indicates the number of nodes or disks in good

health. A red x indicates bad health. Click Node & Disks to see more hardware health metrics. If

the number of bad disks or nodes is a number other than zero, clicking on the count takes you to

the corresponding Hardware Health tab (Offline Disks or Offline Nodes) on the System Health

page.

View alerts

The Alerts panel displays a count of critical alerts and errors.

Click Alerts to see the full list of current alerts. Any Critical or Error alerts are linked to the Alerts

tab on the Events page where only the alerts with a severity of Critical or Error are filtered and

displayed.

ECS Administration Guide 23

Page 24

Getting Started with ECS

24 ECS Administration Guide

Page 25

CHAPTER 3

Storage Pools, VDCs, and Replication Groups

l

Introduction to storage pools, VDCs, and replication groups................................................. 26

l

Working with storage pools in the ECS Portal....................................................................... 27

l

Working with VDCs in the ECS Portal .................................................................................. 29

l

Working with replication groups in the ECS Portal................................................................ 37

ECS Administration Guide 25

Page 26

Storage Pools, VDCs, and Replication Groups

Introduction to storage pools, VDCs, and replication groups

This topic provides conceptual information on storage pools, virtual data centers (VDCs), and

replication groups and the following topics describe the operations required to configure them:

l

Working with storage pools at the ECS Portal

l

Working with VDCs at the ECS Portal

l

Working with replication groups at the ECS Portal

The storage that is associated with a VDC must be assigned to a storage pool and the storage pool

must be assigned to one or more replication groups to allow the creation of buckets and objects.

A storage pool can be associated with more than one replication group. A best practice is to have a

single storage pool for a site. However, you can have as many storage pools as required, with a

minimum of four nodes (and 16 disks) in each pool.

You might need to create more than one storage pool at a site for the following reasons:

l

The storage pool is used for Cold Archive. The erasure coding scheme used for cold archive

uses 10+2 coding rather than the default ECS 12+4 scheme.

l

A tenant requires the data to be stored on separate physical media.

A storage pool must have a minimum of four nodes and must have three or more nodes with more

than 10% free capacity in order to allow writes. This reserved space is required to ensure that ECS

does not run out of space while persisting system metadata. If this criteria is not met, the write will

fail. The ability of a storage pool to accept writes does not affect the ability of other pools to

accept writes. For example, if you have a load balancer that detects a failed write, the load

balancer can redirect the write to another VDC.

The replication group is used by ECS for replicating data to other sites so that the data is

protected and can be accessed from other, active sites. When you create a bucket, you specify

the replication group it is in. ECS ensures that the bucket and the objects in the bucket are

replicated to all the sites in the replication group.

ECS can be configured to use more than one replication scheme, depending on the requirements

to access and protect the data. The following figure shows a replication group (RG 1) that spans all

three sites. RG 1 takes advantage of the XOR storage efficiency provided by ECS when using

three or more sites. In the figure, the replication group that spans two sites (RG 2), contains full

copies of the object data chunks and does not use XOR'ing to improve storage efficiency.

26 ECS Administration Guide

Page 27

VDC C

VDC A

VDC B

SP 1

VDC C

SP 2

Federation

RG 1 (SP 1,2,3)

RG 2 (SP 1,3)

Rack 1

Rack 1

Rack 1

RG 1

RG 1

SP 3

Storage Pools, VDCs, and Replication Groups

Figure 5 Replication group spanning three sites and replication group spanning two sites

The physical storage that the replication group uses at each site is determined by the storage pool

that is included in the replication group. The storage pool aggregates the disk storage of each of

the minimum of four nodes to ensure that it can handle the placement of erasure coding

fragments. A node cannot exist in more than one storage pool. The storage pool can span racks,

but it is always within a site.

Working with storage pools in the ECS Portal

You can use storage pools to organize storage resources based on business requirements. For

example, if you require physical separation of data, you can partition the storage into multiple

storage pools.

You can use the Storage Pool Management page available from Manage > Storage Pools to view

the details of existing storage pools, to create storage pools, and to edit existing storage pools.

You cannot delete storage pools in this release.

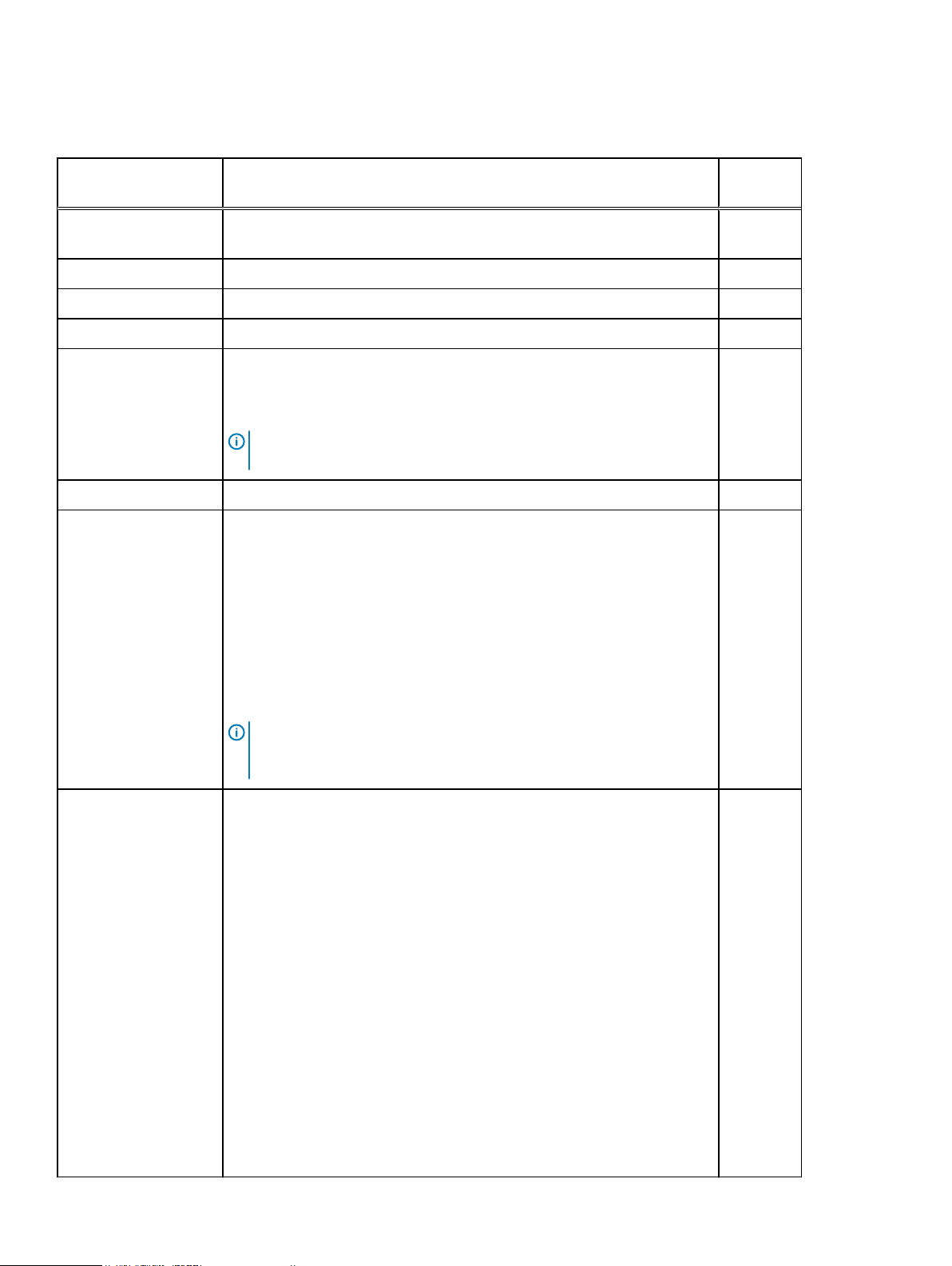

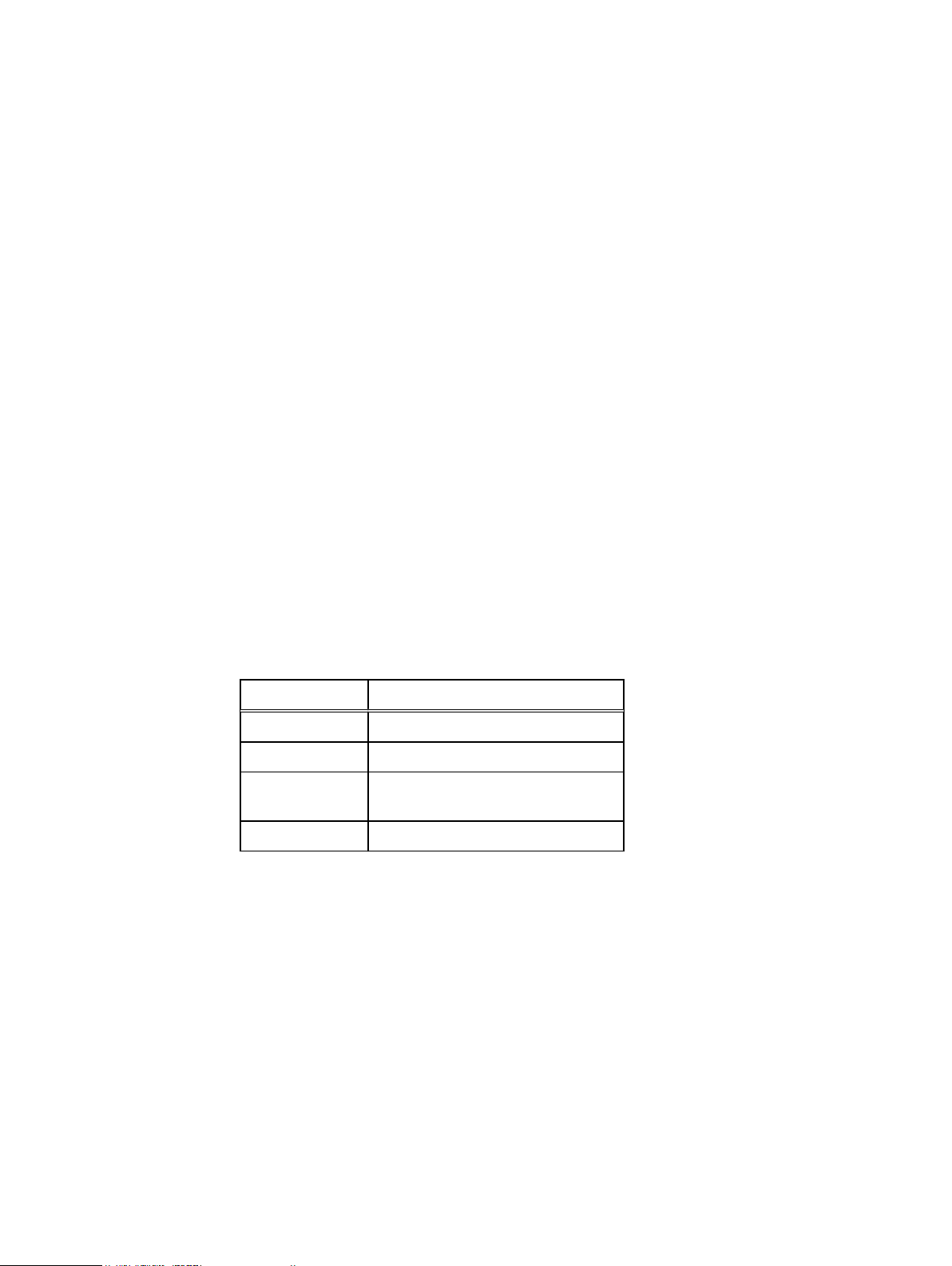

Table 5

Field Description

Name The name of the storage pool.

Nodes The number of nodes that are assigned to the storage pool.

Status The state of the storage pool and of the nodes.

Host The fully qualified host name that is assigned to the node.

Data IP The public IP address that is assigned to the node or the data IP address in network separation

Rack ID The name that is assigned to the rack that contains the nodes.

Actions The actions that can be completed for the storage pool.

Storage pool properties

l

Ready: At least four nodes are installed and all nodes are in the ready to use state.

l

Not Ready: A node in the storage pool is not in the ready to use state.

l

Partially Ready: Less than four nodes, and all nodes are in the ready to use state.

environment.

ECS Administration Guide 27

Page 28

Storage Pools, VDCs, and Replication Groups

Table 5 Storage pool properties (continued)

Field Description

l

Edit: Change the storage pool name or modify the set of nodes that are included in the

storage pool.

l

Delete: Used by Customer Support to delete the storage pool. System Administrators or

root users should not attempt to delete the storage pool. If you attempt this operation in

the ECS Portal, you receive an error message that states this operation is not supported.

If you must delete a storage pool, contact your customer support representative.

Cold Storage A storage pool that is specified as Cold Storage. Cold Storage pools use an erasure coding

(EC) scheme that is more efficient for infrequently accessed objects. Cold Storage is also

known as a Cold Archive. After a storage pool is created, this setting cannot be changed.

Create a storage pool

Storage pools must contain a minimum of four nodes. The first storage pool that is created is

known as the system storage pool because it stores system metadata.

Before you begin

This operation requires the System Administrator role in ECS.

Procedure

1. In the ECS Portal, select Manage > Storage Pools.

2. On the Storage Pool Management page, click New Storage Pool.

3. On the New Storage Pool page, in the Name field, type the storage pool name (for

example, StoragePool1).

4. In the Cold Storage field, specify if this storage pool is Cold Storage. Cold storage contains

infrequently accessed data. The ECS data protection scheme for cold storage is optimized

to increase storage efficiency. After a storage pool is created, this setting cannot be

changed.

Note:

Cold storage requires a minimum hardware configuration of six nodes. For more

information, see ECS data protection on page 14.

5. From the Available Nodes list, select the nodes to add to the storage pool.

a. To select nodes one-by-one, click the + icon beside each node.

b. To select all available nodes, click the + icon at the top of the Available Nodes list.

c. To narrow the list of available nodes, in the search field, type the public IP address for

the node or the host name.

6. In the Available Capacity Alerting fields, select the applicable available capacity thresholds

that will trigger storage pool capacity alerts:

a. In the Critical field, select 10 %, 15 %, or No Alert.

b. In the Error field, select 20 %, 25 %, 30 %, or No Alert.

28 ECS Administration Guide

For example, if you select 10 %, that means a Critical alert will be triggered when the

available storage pool capacity is less than 10 percent.

For example, if you select 25 %, that means an Error alert will be triggered when the

available storage pool capacity is less than 25 percent.

Page 29

7. Click Save.

8. Wait 10 minutes after the storage pool is in the Ready state before you perform other

Edit a storage pool

You can change the name of a storage pool or change the set of nodes included in the storage

pool.

Storage Pools, VDCs, and Replication Groups

c. In the Warning field, select 35 %, 40 %, or No Alert.

For example, if you select 40 %, that means a Warning alert will be triggered when the

available storage pool capacity is less than 40 percent.

When a capacity alert is generated, a call home alert is also generated that alerts ECS

customer support that the ECS system is reaching its capacity limit.

configuration tasks, to allow the storage pool time to initialize.

If you receive the following error, wait a few more minutes before you attempt any further

configuration. Error 7000 (http: 500): An error occurred in the API

Service. An error occurred in the API service.Cause: error

insertVdcInfo. Virtual Data Center creation failure may occur when

Data Services has not completed initialization.

Before you begin

This operation requires the System Administrator role in ECS.

Procedure

1. In the ECS Portal, select Manage > Storage Pools.

2. On the Storage Pool Management page, locate the storage pool you want to edit in the

table. Click Edit in the Actions column beside the storage pool you want to edit.

3. On the Edit Storage Pool page:

l

To modify the storage pool name, in the Name field, type the new name.

l

To modify the nodes included in the storage pool:

n

In the Selected Nodes list, remove an existing node in the storage pool by clicking

the - icon beside the node.

n

In the Available Nodes list, add a node to the storage pool by clicking the + icon

beside the node.

l

To modify the available capacity thresholds that will trigger storage pool capacity alerts,

select the applicable alert thresholds in the Available Capacity Alerting fields.

4. Click Save.

Working with VDCs in the ECS Portal

An ECS virtual data center (VDC) is the top-level resource that represents the collection of ECS

infrastructure components to manage as a unit.

You can use the Virtual Data Center Management page available from Manage > Virtual Data

Center to view VDC details, to create a VDC, to edit an existing VDC, to update endpoints in

multiple VDCs, delete VDCs, and to federate multiple VDCs for a multisite deployment. The

following example shows the Virtual Data Center Management page for a federated deployment.

It is configured with two VDCs named vdc1 and vdc2.

ECS Administration Guide 29

Page 30

Storage Pools, VDCs, and Replication Groups

Table 6 VDC properties

Field Description

Name The name of the VDC.

Type The type of VDC is automatically set and can be either Hosted or On-Premise.

l

A Hosted VDC is hosted off-premise by a third-party data center (a backup site).

Replication Endpoints Endpoints for communication of replication data between VDCs when an ECS federation is

configured.

l

By default, replication traffic runs between VDCs over the public network. By default

the public network IP address for each node is used as the Replication Endpoints.

l

If a separate replication network is configured, the network IP address that is configured

for replication traffic of each node is used as the Replication Endpoints.

l

If a load balancer is configured to distribute the load between the replication IP

addresses of the nodes, the address that is configured on the load balancer is displayed.

Management

Endpoints

Endpoints for communication of management commands between VDCs when an ECS

federation is configured.

l

By default, management traffic runs between VDCs over the public network. By default

the public network IP address for each node is used as the Management Endpoints.

l

If a separate management network is configured, the network IP address that is

configured for management traffic of each node is used for the Management Endpoints.

Status The state of the VDC.

l

Online

l

Permanently Failed: The VDC was deleted.

Actions The actions that can be completed for the VDC.

l

Edit: Change the name of a VDC, the VDC access key, and the VDC replication and

management endpoints.

l

Delete: Delete the VDC. The delete operation triggers permanent failover of the VDC,

You cannot add the VDC again by using the same name. You cannot delete a VDC that is

part of a replication group until you first remove it from the replication group. You

cannot delete a VDC when you are logged in to the VDC you are trying to delete.

l

Fail this VDC:

WARNING Failing a VDC is permanent. The site cannot be added back.

Note: Fail this VDC is available when there is more than one VDC.

30 ECS Administration Guide

n

Ensure that Geo replication is up-to-date. Stop all writes to the VDC.

n

Ensure that all nodes of the VDC are shutdown.

n

Replication to/from the VDC will be disabled for all replication groups.

n

Recovery is initiated only when the VDC is removed from the replication group.

Proceed to do that next.

n

This VDC will display a status of Permanently Failed in any replication group

to which it belongs.

n

To Reconstruct this VDC, it must be added as a new site. Any previous data will

be lost, as that data will have failed over to other sites in the federation.

Page 31

Create a VDC for a single site

You can create a VDC for a single-site deployment, or when you create the first VDC in a multi-site

federation.

Before you begin

This operation requires the System Administrator role in ECS.

Ensure that one or more storage pools are available and in the Ready state.

Procedure

1. In the ECS Portal, select Manage > Virtual Data Center.

2. On the Virtual Data Center Management page, click New Virtual Data Center.

3. On the New Virtual Data Center page, in the Name field, type the VDC name (for example:

VDC1).

4. To create an access key for the VDC, either:

l

Type the VDC access key value in the Key field, or

l

Click Generate to generate a VDC access key.

The VDC Access Key is used as a symmetric key for encrypting replication traffic between

VDCs in a multi-site federation.

Storage Pools, VDCs, and Replication Groups

5. In the Replication Endpoints field, type the replication IP address of each node assigned to

the VDC. Type them as a comma-separated list.

By default replication traffic runs on the public network. Therefore by default, the IP

address configured for the public network on each node is entered here. If the replication

network was separated from the public or management network, each node's replication IP

address is entered here.

If a load balancer is configured to distribute the load between the replication IP addresses of

the nodes, the address configured on the load balancer is displayed.

6. In the Management Endpoints field, type the management IP address of each node

assigned to the VDC. Type them as a comma-separated list.

By default management traffic runs on the public network. Therefore by default, the IP

address configured for the public network on each node is entered here. If the management

network was separated from the public or replication network, each node's management IP

address is entered here.

7. Click Save.

When the VDC is created, ECS automatically sets the VDC's Type to either On-Premise or

Hosted.

A Hosted VDC is hosted off-premise by a third-party data center (a backup site).

Add a VDC to a federation

You can add a VDC to an existing VDC (for example, VDC1) to create a federation. It is important

when you perform this procedure that you DO NOT create a VDC on the rack you want to add.

Retrieve only the VDC Access Key from it, and then proceed from the existing VDC (VDC1).

Before you begin

Obtain the ECS Portal credentials for the root user, or for a user with System Administrator

credentials, to log in to both VDCs.

ECS Administration Guide 31

Page 32

Storage Pools, VDCs, and Replication Groups

In an ECS geo-federated system with multiple VDCs, the IP addresses for the replication and

management networks are used for connectivity of replication and management traffic between

VDC endpoints. If the VDC you are adding to the federation is configured with:

l

Replication or management traffic running on the public network (default), you need the public

network IP address that is used by each node.

l

Separate networks for replication or management traffic, you need the IP addresses of the

separated network for each node.

If a load balancer is configured to distribute the load between the replication IP addresses of the

nodes, you need the IP address that is configured on the load balancer.

Ensure that the VDC you are adding has a valid ECS license that is uploaded and has at least one

storage pool in the Ready state.

Procedure

1. On the VDC you want to add (for example, VDC2):

a. Log in to the ECS Portal.

b. In the ECS Portal, select Manage > Virtual Data Center.

c. On the Virtual Data Center Management page, click Get VDC Access Key.

d. Select the key, and press Ctrl-c to copy it.

Important: You are only obtaining and copying the key of the VDC you want to add, you

are not creating a VDC on the site you are logged in to.

e. Log out of the ECS Portal on the site you are adding.

2. On the existing VDC (for example, VDC1):

a. Log in to the ECS Portal.

b. Select Manage > Virtual Data Center.

c. On the Virtual Data Center Management page, click New Virtual Data Center.

d. On the New Virtual Data Center page, in the Name field, type the name of the new VDC

you are adding.

e. Click in the Key field, and then press Ctrl-v to paste the access key you copied from

the VDC you are adding (from step 1d).

3. In the Replication Endpoints field, enter the replication IP address of each node in the

storage pools that are assigned to the site you are adding (for example, VDC2). Use the:

l

Public IP addresses for the network if the replication network has not been separated.

l

IP address configured for replication traffic, if you have separated the replication

network.

l

If a load balancer is configured to distribute the load between the replication IP

addresses of the nodes, the replication endpoint is the IP address that is configured on

the load balancer.

Use a comma to separate IP addresses within the text box.

4. In the Management Endpoints fields, enter the management IP address of each node in the

storage pools that are assigned to the site you are adding (for example, VDC2). Use the:

l

l

Use a comma to separate IP addresses within the text box.

32 ECS Administration Guide

Public IP addresses for the network if the management network has not been separated.

IP address configured for management traffic, if you have separated the management

network.

Page 33

Storage Pools, VDCs, and Replication Groups

5. Click Save.

Results

The new VDC is added to the existing federation. The ECS system is now a geo-federated system.

When you add the VDC to the federation, ECS automatically sets the type of the VDC to either

On-Premise or Hosted.

After you finish

Note: If External Key Manager (EKM) feature is activated for the federation, and then you

have to add the necessary VDC to EKM mapping for the newly added VDC in the key

management section.

To complete the configuration of the geo-federated system, you must create a replication group

that spans multiple VDCs so that data can be replicated between the VDCs. To do this, you must

ensure that:

l

You have created storage pools in the VDCs that will be in the replication group (see Create a

storage pool on page 28).

l

You create the replication group, selecting the VDCs that provide the storage pools for the

replication group (see Create a replication group on page 38).

l

After you create the replication group, you can monitor the copying of user data and metadata

to the new VDC that you added to the replication group on the Monitor > Geo Replication >

Geo Bootstrap Processing tab. When all the user data and metadata are successfully

replicated to the new VDC, the Bootstrap State is Done and the Bootstrap Progress (%) is

100 on all the VDCs.

Edit a VDC

You can change the name, the access key, or the replication and management endpoints of the

VDC.

Before you begin

This operation requires the System Administrator role in ECS.

If you have an ECS geo-federated system and you want to update VDC endpoints after you:

l

separated the replication or management networks for a VDC, or

l

changed the IP addresses of multiple nodes in a VDC

you must use the update endpoints in multiple VDCs procedure. If you attempt to update the VDC

endpoints by editing the settings for an individual VDC from the Edit Virtual Data Center <

name

> page, you will lose connectivity between VDCs.

VDC

Procedure

1. In the ECS Portal, select Manage > Virtual Data Center.

2. On the Virtual Data Center Management page, locate the VDC you want to edit in the

table. Click Edit in the Actions column beside the VDC you want to edit.

3. On the Edit Virtual Data Center <

l

to modify the VDC name, in the Name field, type the new name.

l

to modify the VDC access key for the node you are logged into, in the Key field, type the

VDC name

> page:

new key value, or click Generate to generate a new VDC access key.

l

To modify the replication and management endpoints:

ECS Administration Guide 33

Page 34

Storage Pools, VDCs, and Replication Groups

Option Description

for a single VDC that is not

part of an ECS federation

for a VDC that is part of an

ECS federation, and for

which you have separated

the replication or

management networks or

changed multiple node IP

addresses

a. In the Replication Endpoints field, enter the IP addresses for the replication endpoints.

Use the:

l

Public IP addresses for the network, if you did not separate the replication network.

l

IP address configured for replication traffic, if you separated the replication network.

l

IP address configured on the load balancer, if you configured a load balancer to

distribute the load between the replication IP addresses of the nodes.

b. In the Management Endpoints field, enter the IP addresses for the management

endpoints. Use the:

l

Public IP addresses for the network, if you did not separate the management

network.

l

IP address configured for management traffic, if you separated the management

network.

Use a comma to separate IP addresses within the text boxes.

Do not modify endpoints on the Edit Virtual Data Center <

modify the endpoints on the Update All VDC Endpoints page as described in Update

replication and management endpoints in multiple VDCs on page 34.

VDC name

> page, you must

4. Click Save.

Update replication and management endpoints in multiple VDCs

In an ECS geo-federated system, you can use the Update All VDC Endpoints page to update

replication and management endpoints in multiple VDCs.

Before you begin

This operation requires the System Administrator role in ECS.

You must update the VDC endpoints from the Update All VDC Endpoints page after you have:

l

Separated the replication or management networks of a VDC in an existing ECS federation.

l

Changed the IP address of multiple nodes in a VDC.