Dell Active System Manager White Paper

Use Case Study: Implementing a VMware ESXi Cluster with Dell Active System Manager

Author: Amy Reed

Dell Group: Software Systems Engineering

Use Case Study: Using Active System For VMware Cluster Environment Configuration

This document is for informational purposes only and may contain typographical errors and

technical inaccuracies. The content is provided as is, without express or implied warranties of any

kind.

© 2012 Dell Inc. All rights reserved. Dell and its affiliates cannot be responsible for errors or omissions

in typography or photography. Dell and the Dell logo are trademarks of Dell Inc. Microsoft is a

registered trademark of Microsoft Corporation in the United States and/or other countries. VMware,

vSphere, and vMotion are trademarks of VMware, Inc. Other trademarks and trade names may be used

in this document to refer to either the entities claiming the marks and names or their products. Dell

disclaims proprietary interest in the marks and names of others.

December 2012 | Rev 1.0

Contents

Introduction

Active Infrastructure

Active System Manager (ASM)

Fabric A, iSCSI-Only Configuration (No Data Center Bridging)

Fabric B, LAN-Only Configuration

Network Partitioning (NPAR)

Pre-Requisites Introduction

Infrastructure Setup Using Active System

  Create Networks and Identity Pools

  Discover Chassis

  Create Management Template

  Create Deployment Template

  Configure Chassis

  Deploy Servers

Cluster Setup and Configuration

  Configure ESX Management Network

  Create Cluster

  Add Hosts to Cluster

  Configure Management Network, Storage Network, vMotion Network, and Customer Networks

  Using A Host Profile

  Deploying Virtual Machines

  Expanding Cluster Capacity

Glossary

Introduction

This white paper describes how to use Active System Manager (ASM) Management and Deployment

templates to configure a blade system infrastructure to host a VMware cluster. The servers in this

cluster will be configured by Active System Manager to boot from the ESXi 5.1 image on their internal

redundant SD cards, which were pre-installed with ESXi 5.1 at the factory. Active System Manager

will assist with enabling Converged Network Adapter (CNA) iSCSI connectivity and configuring the I/O

module networking to support the iSCSI data volume connections to the required shared storage for the

cluster. The network containing only the storage traffic is the iSCSI-only network. Active System will

also assist with configuration of the CNA partitioning for the networking required for hypervisor

management, vMotion, and the virtual machine networks. The network containing all other traffic

aside from the storage traffic is the LAN-only network. This white paper introduces several Active

System concepts for infrastructure management and walks through the real-world use case of Active

System iSCSI-Only and LAN-only infrastructure setup, blade environment setup with Active System

Manager, and finally VMware host and cluster configuration.

Active Infrastructure

Dell’s Active Infrastructure is a family of converged infrastructure offerings that combine servers,

storage, networking, and infrastructure management into an integrated system that provides general

purpose virtual resource pools for applications and private clouds. These systems blend intuitive

infrastructure management, an open architecture, flexible delivery models, and a unified support

model to allow IT to rapidly respond to dynamic business needs, maximize efficiency, and strengthen IT

service quality.

In this use case Active Infrastructure is used to provide compute resources and required connectivity to

both storage networks and standard Ethernet networks in order to support a VMware cluster. The

infrastructure to support these connections is provided via two separate fabrics in the blade chassis,

one containing only iSCSI traffic and one containing only standard Ethernet networking traffic. Fabric A

in the blade chassis provides two independent Dell Force10 PowerEdge M I/O Aggregators (IOAs) for

redundant connections to the storage distribution devices. Fabric B in the blade chassis provides two

independent IOAs for redundant connections to the LAN distribution devices. The iSCSI Storage Area

Network (SAN) will allow the VMware ESXi 5.1 hosts to connect to the two or more shared volumes on

the EqualLogic storage array required to create a cluster. The LAN network will carry the standard

Ethernet traffic to support various required networks such as the hypervisor management network, the

vMotion network, and the VM Networks which will provide networking for the virtual machines running

on the various hosts.

Active System Manager (ASM)

Active System Manager simplifies infrastructure configuration, collapses management tools, and drives

automation and consistency. Through capabilities such as template-based provisioning, automated

configuration, and infrastructure lifecycle management, Active System Manager enables IT to respond

rapidly to business needs, maximize data center efficiency, and strengthen quality of IT service

delivery.

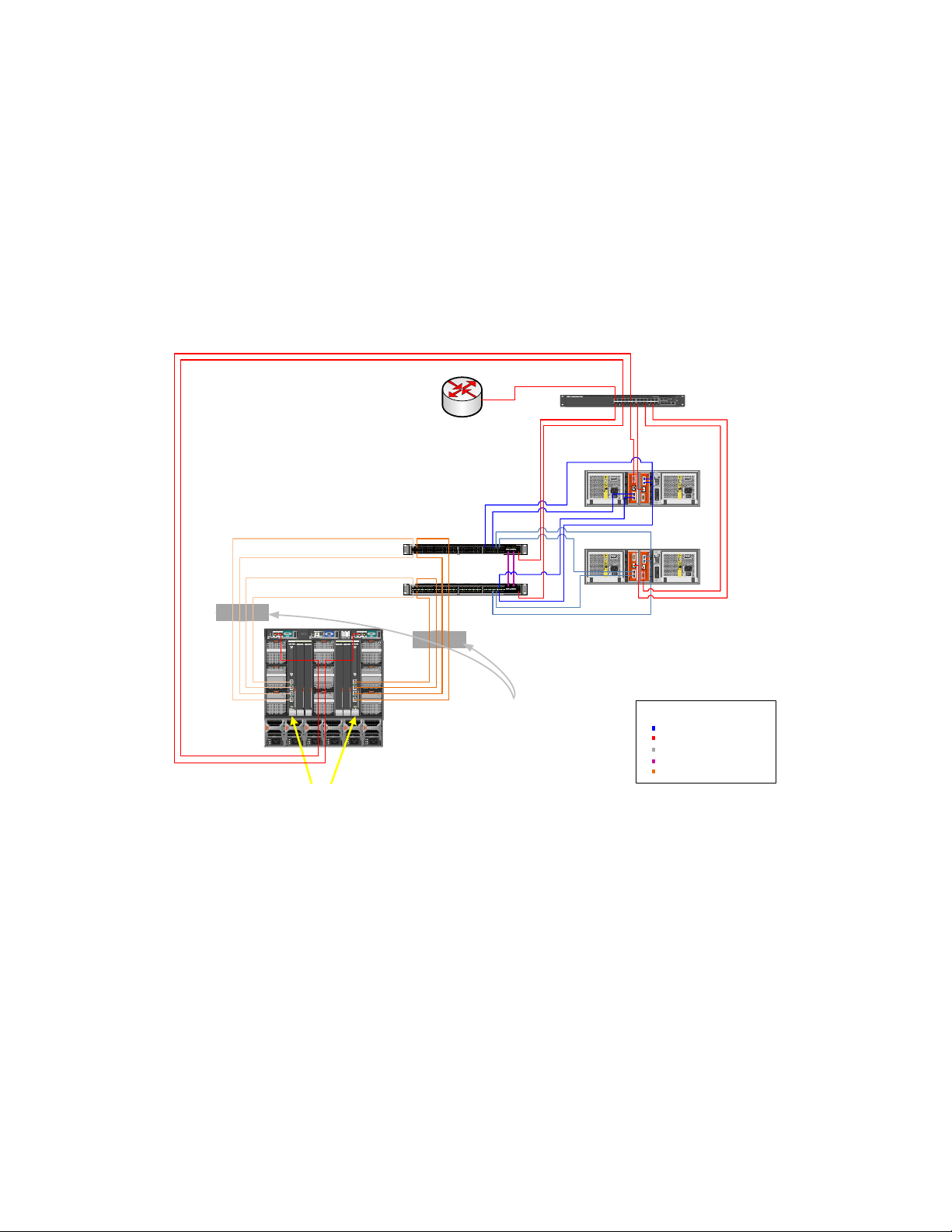

Fabric A, iSCSI-Only Configuration (No Data Center Bridging)

For this use case, Fabric A will be set up by Active System Manager for an iSCSI-only configuration. An

iSCSI-only network contains iSCSI traffic and no other traffic. In the absence of technology such as Data

Center Bridging (DCB), which enables converging LAN and SAN (iSCSI) traffic on the same fabric, an

iSCSI-only network configuration is required to ensure reliability for high-priority SAN traffic.

All devices in the storage data path must be enabled for flow control and jumbo frames. It is also

recommended (but not required) that DCB be disabled on all network devices from within Active

System Manager, since the network will carry only one type of traffic. This will ensure that all devices—

including CNAs, iSCSI initiators, I/O aggregators, Top-of-Rack (ToR) switches, and storage arrays—share

the same DCB-disabled configuration. DCB is an all-or-nothing configuration; in other words, if you choose

to enable DCB, it must be enabled end-to-end from your storage to your CNA. The ASM template

configuration and ToR switch configuration of DCB in this case will drive the configuration of your CNAs

as they should operate in “willing” mode and obtain their DCB configuration from the upstream switch.

EqualLogic storage also operates in “willing” mode and will obtain its DCB or non-DCB configuration

from the ToR distribution switches to which it is connected.
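
The all-or-nothing rule can be expressed as a simple consistency check. The following is a minimal sketch, not an Active System Manager feature: the `PathDevice` type, the `dcb_mismatches` helper, and the device names are all hypothetical and exist only to illustrate that every device in the storage data path should report the same DCB setting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathDevice:
    """One device in the storage data path (names here are illustrative)."""
    name: str
    dcb_enabled: bool


def dcb_mismatches(devices):
    """Return the names of devices whose DCB setting differs from the first device.

    An empty result means the path is consistent: DCB is either enabled
    everywhere or disabled everywhere.
    """
    if not devices:
        return []
    expected = devices[0].dcb_enabled
    return [d.name for d in devices if d.dcb_enabled != expected]


# Hypothetical inventory for the iSCSI-only fabric, with DCB disabled end to end.
storage_path = [
    PathDevice("cna-port", dcb_enabled=False),
    PathDevice("io-aggregator-a1", dcb_enabled=False),
    PathDevice("tor-s4810-1", dcb_enabled=False),
    PathDevice("equallogic-array", dcb_enabled=False),
]

print(dcb_mismatches(storage_path))
```

An empty list indicates a consistent path; any name returned identifies a device to review before storage traffic is placed on the fabric.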

In this configuration, the jumbo frame MTU size is set on the switches to 12000 to accommodate the

largest possible packet size allowed by the S4810 switches. Make sure to set the MTU size in your own

environment based on the packet size your devices support. In this example, the specific ESXi hosts being

configured for the cluster support an MTU of only 9000; as a result, the overall MTU for these paths

will adjust down to 9000.
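
As a rough illustration of why the path settles at 9000, the usable MTU of a path is bounded by the smallest MTU of any device on it. The sketch below assumes a hypothetical helper, `effective_path_mtu`, and hypothetical device labels; only the values 12000 and 9000 come from this example.

```python
def effective_path_mtu(device_mtus):
    """Return the largest frame size that every device on the path can carry."""
    if not device_mtus:
        raise ValueError("at least one device MTU is required")
    return min(device_mtus.values())


# Values from this example: switches set to 12000, ESXi hosts limited to 9000.
path = {
    "s4810-distribution-switch": 12000,
    "esxi-host": 9000,
}

print(effective_path_mtu(path))  # 9000
```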

Active System Manager will only configure hardware within the Active Infrastructure blade chassis

environment, so the distribution layer and above must be configured manually or by other tools.

Active System Manager does not manage storage devices, so these must also be configured by

the system administrator. In this example, two Dell Force10 S4810 switches are used for the

distribution layer devices and two Dell EqualLogic PS6010X are used as the storage arrays. The S4810

switches are configured as a set of VLT peers, and the storage arrays are connected directly to the

distribution layer device. These switches connect the downstream Dell Force10 PowerEdge M I/O

aggregator switches in the chassis with the upstream EqualLogic storage arrays.

In an iSCSI-only configuration, the distribution switches have only four types of ports:

Out-of-band management

VLT peer ports

Downlinks to the I/O aggregator in the M1000e chassis

Connections to the Dell EqualLogic iSCSI storage array

The Virtual Link Trunking (VLT) peer link is configured using 40GbE QSFP ports of the S4810 distribution

layer switches. VLT is a Dell Force10 technology that allows you to create a single link aggregated

(LAG) port channel using ports from two different switch peers while providing load balancing and

redundancy in case of a switch failure. This configuration also provides a loop-free environment

without the use of a spanning-tree. The ports are identified in the same manner as two switches not

connected via VLT (port numbers do not change like they would if stacked). You can keep one peer

switch up while updating the firmware on the other peer. In contrast to stacking, these switches

maintain their own identities and act as two independent switches that work together, so they must be

configured and managed separately.

Downlinks to the I/O aggregators must be configured as LACP-enabled LAGs. LACP must also be enabled

on the storage distribution switches to allow auto-configuration of downstream I/O aggregators and to

tag storage VLANs on the LAGs, since they will be used to configure VLANs on the downstream switch.

Figure 1. iSCSI-Only Network Diagram

Diagram labels: Dell M1000e Blade Enclosure with two PowerEdge M I/O Aggregators; Uplinks from IOA to iSCSI-Only Distribution VLT Peers (orange); S4810P switches; EqualLogic Storage; Routed Core

Legend: iSCSI (blues); OOB Mgmt Connection; LACP Enabled LAG; 40GbE VLT Domain Link; Uplinks from IOA (oranges)

Callout: VLT peer set LAG connections are seen as originating from a single network device. This allows connections from switches with separate management planes to be aggregated into a resilient, LACP-enabled link aggregation.

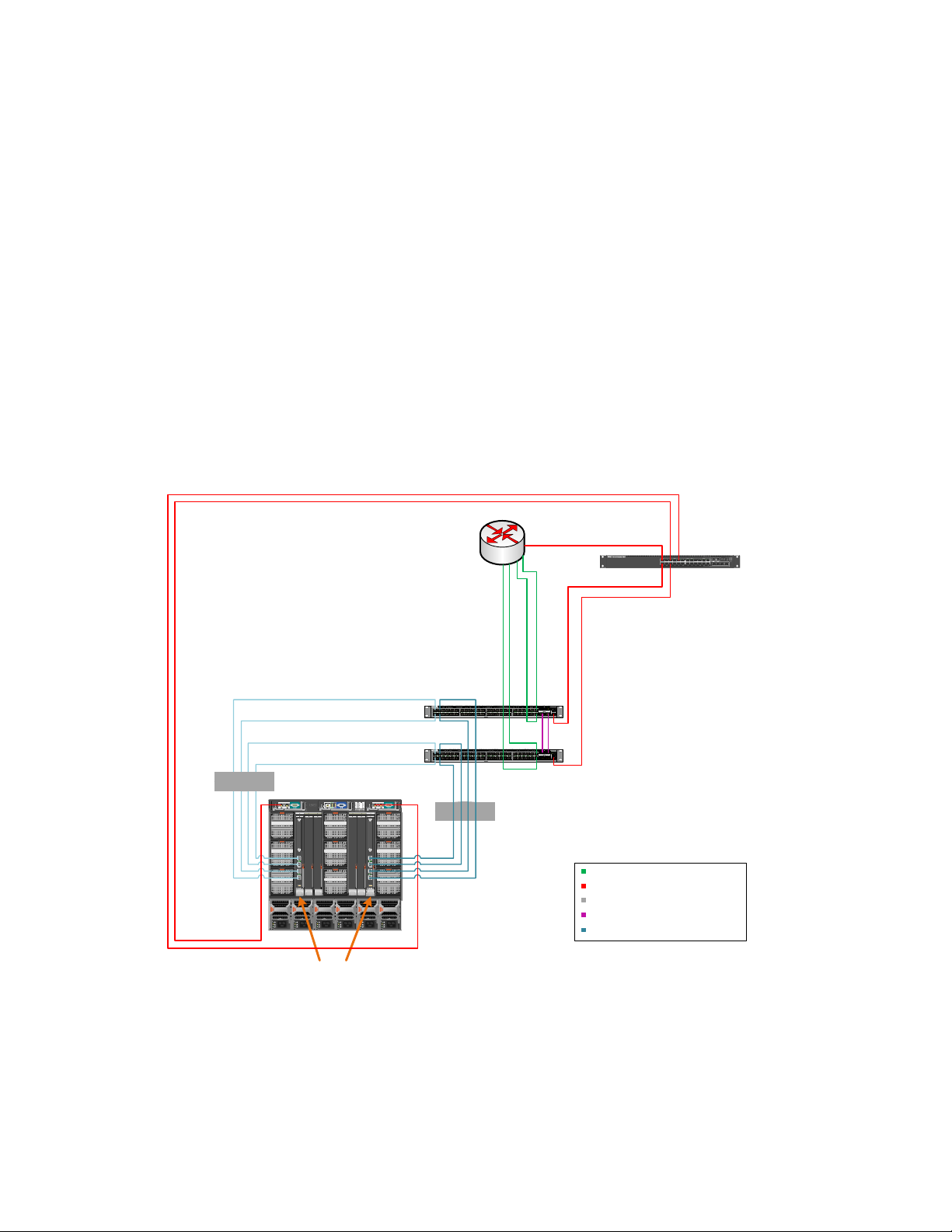

Fabric B, LAN-Only Configuration

For this use case, Fabric B will be set up by Active System Manager for a LAN-only configuration. A LAN-only network contains all other Ethernet traffic (but not iSCSI storage traffic). In this example, the LAN

distribution layer switches are a set of Dell Force10 S4810 switches that are configured as a stack.

Stacking allows these switches to be managed as a single, logical switch. Active System Manager will

only configure hardware within the Active Infrastructure blade chassis environment, thus the

distribution layer and above must be configured manually or by other tools. These switches connect

the downstream Dell Force10 PowerEdge M I/O aggregator switches in the chassis with the upstream

routed network.

In a LAN-only configuration, the LAN distribution switches have only four types of ports:

Out-of-band management

Stacking ports

Downlinks to the I/O aggregator in the M1000e chassis

Uplinks to the routed network

Downlinks to the I/O aggregators must be configured as LACP-enabled LAGs. LACP must also be enabled

on the LAN distribution switches to allow auto-configuration of downstream I/O aggregators and to tag

network VLANs on the LAGs, since they will be used to configure VLANs on the downstream switch.

This example shows multiple ports of the Force10 S4810 configured to connect to the I/O Aggregator.

Each port channel is also configured with the appropriate VLANs to carry the environment’s Ethernet

traffic. These ports drive auto-configuration of VLANs on the I/O aggregator LAGs.

Figure 2. LAN-Only Network Diagram

Diagram labels: Dell M1000e Blade Enclosure with two PowerEdge M I/O Aggregators; Uplinks from IOA to LAN-Only Distribution (teal); S4810P switches; Routed Core; Out of Band Management

Legend: LAN from Routed Core; OOB Mgmt Connection; LACP Enabled LAG; 40GbE Stacking Connections; Uplinks from IOA (teals)

Network Partitioning (NPAR)

Network or NIC partitioning (NPAR) divides a network adapter into multiple independent partitions. In

the case of the Broadcom 57810 CNA, these partitions can each support concurrent network and

storage functions, which appear as independent devices to the host operating system. With iSCSI

offload enabled on the CNA, one partition can appear as a storage controller while another partition

with network enabled appears as a standard Ethernet NIC.

NPAR supports the TCP/IP networking protocol and the iSCSI and FCoE storage protocols. It is

important to note that different CNAs support NPAR differently—for example, not all CNAs support all

protocols on all partitions. Refer to the documentation for your device, in this use case a Broadcom

57810, to determine which protocols are available.
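
The idea that each partition supports only certain protocols can be sketched as a small data model. Everything below is illustrative: the `CnaPort` class, the port name, the four-partition layout, and especially the support matrix are assumptions made for this sketch and do not describe the actual capabilities of the Broadcom 57810 or any other CNA.

```python
from dataclasses import dataclass, field


@dataclass
class CnaPort:
    """One physical CNA port split into NPAR partitions."""
    name: str
    # Partition number -> protocols that partition may run.
    # These values are placeholders, NOT a real adapter's capabilities.
    supported: dict
    assigned: dict = field(default_factory=dict)

    def assign(self, partition, protocol):
        """Enable one protocol on a partition, rejecting unsupported choices."""
        allowed = self.supported.get(partition)
        if allowed is None:
            raise ValueError(f"{self.name}: no partition {partition}")
        if protocol not in allowed:
            raise ValueError(
                f"{self.name}: partition {partition} does not support {protocol}"
            )
        self.assigned.setdefault(partition, set()).add(protocol)


# Hypothetical support matrix for a port with four partitions.
port = CnaPort(
    name="fabric-a-port-1",
    supported={
        1: {"ethernet"},
        2: {"ethernet", "iscsi"},
        3: {"ethernet"},
        4: {"ethernet", "fcoe"},
    },
)
port.assign(1, "ethernet")  # appears to the host as a standard Ethernet NIC
port.assign(2, "iscsi")     # appears to the host as a storage controller
print(port.assigned)
```

Requesting a protocol that the matrix does not list for a partition raises `ValueError`, which mirrors the need to check the device documentation before planning partition roles.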

Pre-Requisites Introduction

Before you begin configuration of your environment with Active System Manager, you must ensure that

the following items are in place:

Top of Rack Switch Configuration

Out of Band Management Connectivity and Chassis Management Controller Firmware

ESX Images on Managed Server’s SD Card or Hard Disk

VMware vCenter Server

Deploy Active System Manager

Create EqualLogic Storage Volumes

EqualLogic Multipathing Extension Module

Top of Rack Switch Configuration

The configuration of the top of rack distribution switches provides the access to the various iSCSI and

standard Ethernet networks, while the configuration of the top of rack switches drives the auto-configuration of the Dell Force10 PowerEdge M I/O Aggregators (IOA). It is important to ensure that

each IOA has exactly one uplink to the desired top of rack distribution switch and that this uplink is

configured with LACP enabled as well as the VLANs needing to be accessed from that fabric. The uplink

can be configured using combinations of ports on the IOA, from a single 10 Gb connection up to six

40 Gb connections. Regardless of the number of connections, only a single uplink can be configured on the

IOA.
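
The uplink rules above (exactly one uplink per IOA, LACP enabled, required VLANs tagged) lend themselves to a pre-flight check of a planned configuration. This is a minimal sketch under stated assumptions: the `UplinkLag` type, the `validate_uplinks` helper, the IOA names, and the VLAN IDs are hypothetical and are not part of Active System Manager or the switch software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UplinkLag:
    """A planned uplink LAG from one IOA to its top of rack switch."""
    ioa: str              # IOA slot name, e.g. "A1" (illustrative)
    lacp_enabled: bool
    vlans: frozenset      # VLAN IDs tagged on the LAG
    member_ports: int     # number of physical links in the LAG


def validate_uplinks(ioa_names, lags, required_vlans):
    """Return a list of problems; an empty list means the plan meets the rules."""
    problems = []
    for ioa in ioa_names:
        own = [lag for lag in lags if lag.ioa == ioa]
        if len(own) != 1:
            problems.append(f"{ioa}: expected exactly one uplink, found {len(own)}")
            continue
        lag = own[0]
        if not lag.lacp_enabled:
            problems.append(f"{ioa}: LACP is not enabled on the uplink")
        missing = sorted(set(required_vlans.get(ioa, ())) - lag.vlans)
        if missing:
            problems.append(f"{ioa}: VLANs not tagged on the uplink: {missing}")
    return problems


# Hypothetical plan for the two Fabric A aggregators; VLAN 16 is a made-up iSCSI VLAN.
plan = [
    UplinkLag("A1", lacp_enabled=True, vlans=frozenset({16}), member_ports=2),
    UplinkLag("A2", lacp_enabled=True, vlans=frozenset({16}), member_ports=2),
]
required = {"A1": {16}, "A2": {16}}

print(validate_uplinks(["A1", "A2"], plan, required))
```

Note that `member_ports` may be greater than one without violating the rule: several physical links still form a single uplink as long as they belong to the same LAG.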

M1000e Out of Band Management Connectivity and CMC Firmware

The out of band management network is the primary network which the Active System Manager will use

to configure the Chassis, I/O Modules, and Servers in the environment. A simple flat network with no

VLANs is used for access to the management interfaces for these devices. In Active Infrastructure the

Active System Manager will need to be deployed to an ESXi Host with access to this management

network.
