
IP Compiler for PCI Express User Guide


101 Innovation Drive

San Jose, CA 95134

www.altera.com

UG-PCI10605-2014.08.18

Document publication date: August 2014


© 2014 Altera Corporation. All rights reserved. ALTERA, ARRIA, CYCLONE, HARDCOPY, MAX, MEGACORE, NIOS, QUARTUS and STRATIX are Reg. U.S. Pat. & Tm. Off. and/or trademarks of Altera Corporation in the U.S. and other countries. All other trademarks and service marks are the property of their respective holders as described at www.altera.com/common/legal.html. Altera warrants performance of its semiconductor products to current specifications in accordance with Altera’s standard warranty, but reserves the right to make changes to any products and services at any time without notice. Altera assumes no responsibility or liability arising out of the application or use of any information, product, or service described herein except as expressly agreed to in writing by Altera. Altera customers are advised to obtain the latest version of device specifications before relying on any published information and before placing orders for products or services.

IP Compiler for PCI Express User Guide August 2014 Altera Corporation

Page 3

August 2014

<edit Part Number variable in chapter>

This document describes the Altera® IP Compiler for PCI Express IP core. PCI Express

is a high-performance interconnect protocol for use in a variety of applications

including network adapters, storage area networks, embedded controllers, graphic

accelerator boards, and audio-video products. The PCI Express protocol is software

backwards-compatible with the earlier PCI and PCI-X protocols, but is significantly

different from its predecessors. It is a packet-based, serial, point-to-point interconnect

between two devices. The performance is scalable based on the number of lanes and

the generation that is implemented. Altera offers both endpoints and root ports that

are compliant with PCI Express Base Specification 1.0a or 1.1 for Gen1 and PCI Express

Base Specification 2.0 for Gen1 or Gen2. Both endpoints and root ports can be

implemented as a configurable hard IP block rather than programmable logic, saving

significant FPGA resources. The IP Compiler for PCI Express is available in ×1, ×2, ×4,

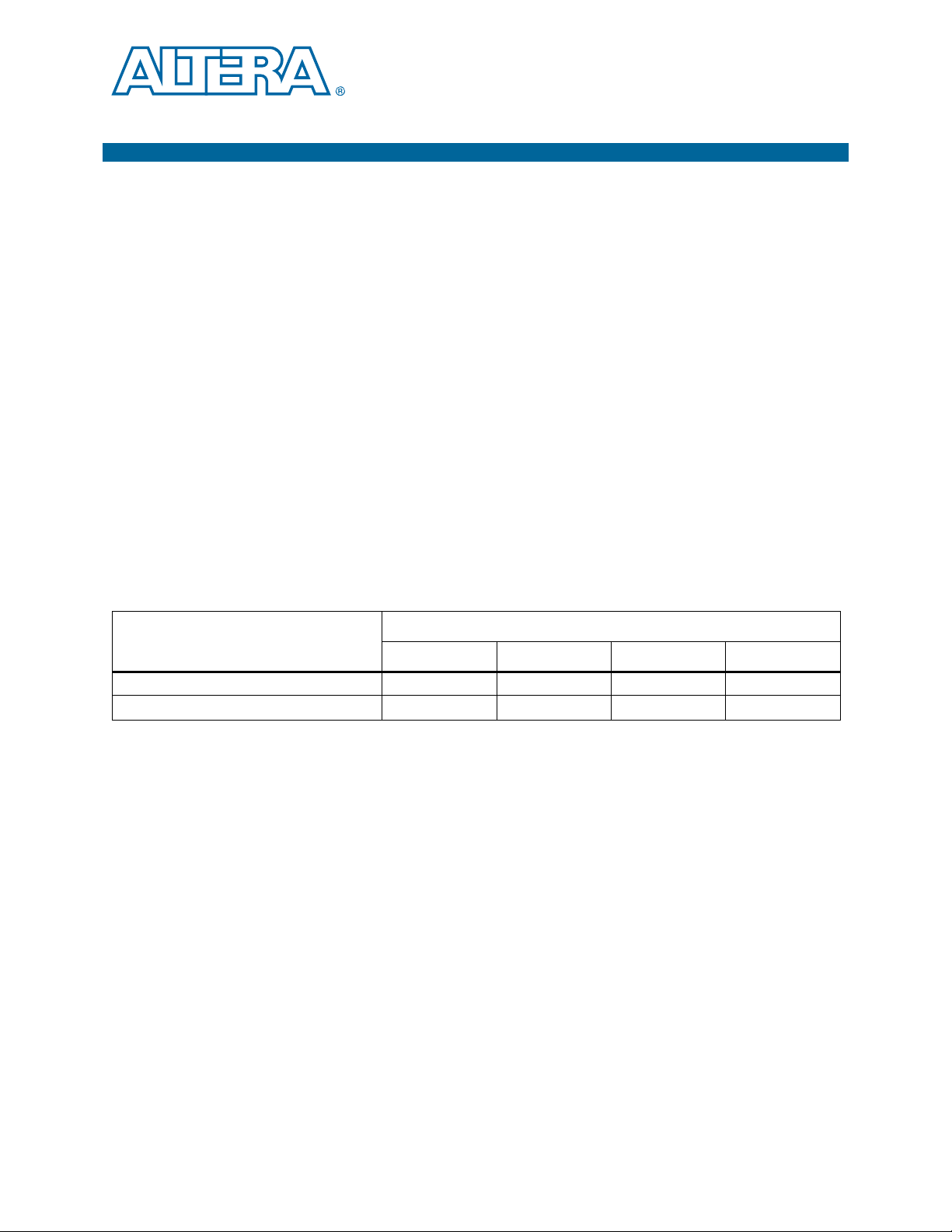

and ×8 configurations. Ta bl e 1– 1 shows the aggregate bandwidth of a PCI Express

link for Gen1 and Gen2 IP Compilers for PCI Express for 1, 2, 4, and 8 lanes. The

protocol specifies 2.5 giga-transfers per second for Gen1 and 5 giga-transfers per

second for Gen2. Because the PCI Express protocol uses 8B/10B encoding, there is a

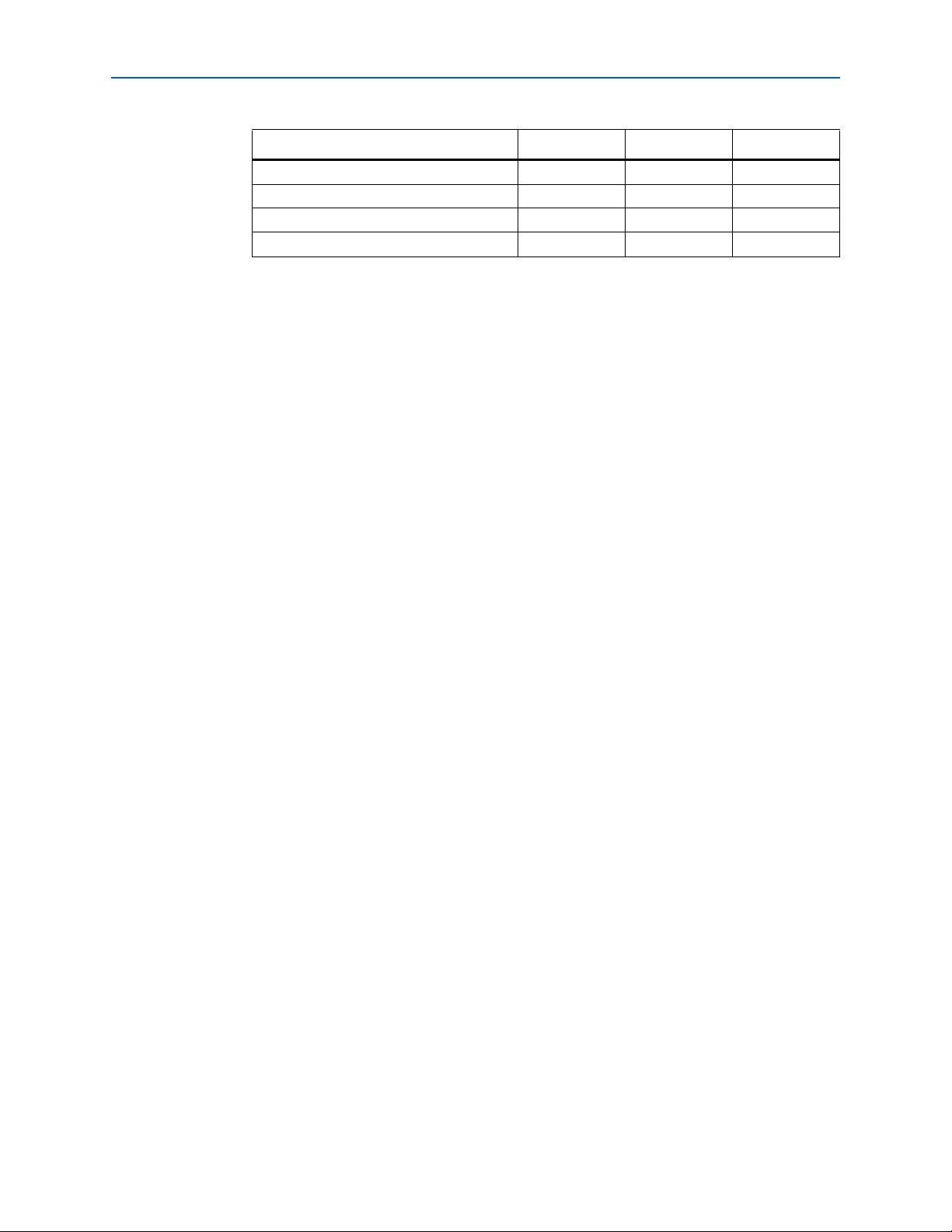

20% overhead which is included in the figures in Tab le 1 –1 . Tab le 1 –1 provides

bandwidths for a single TX or RX channel, so that the numbers in Tab le 1– 1 would be

doubled for duplex operation.

1. Datasheet

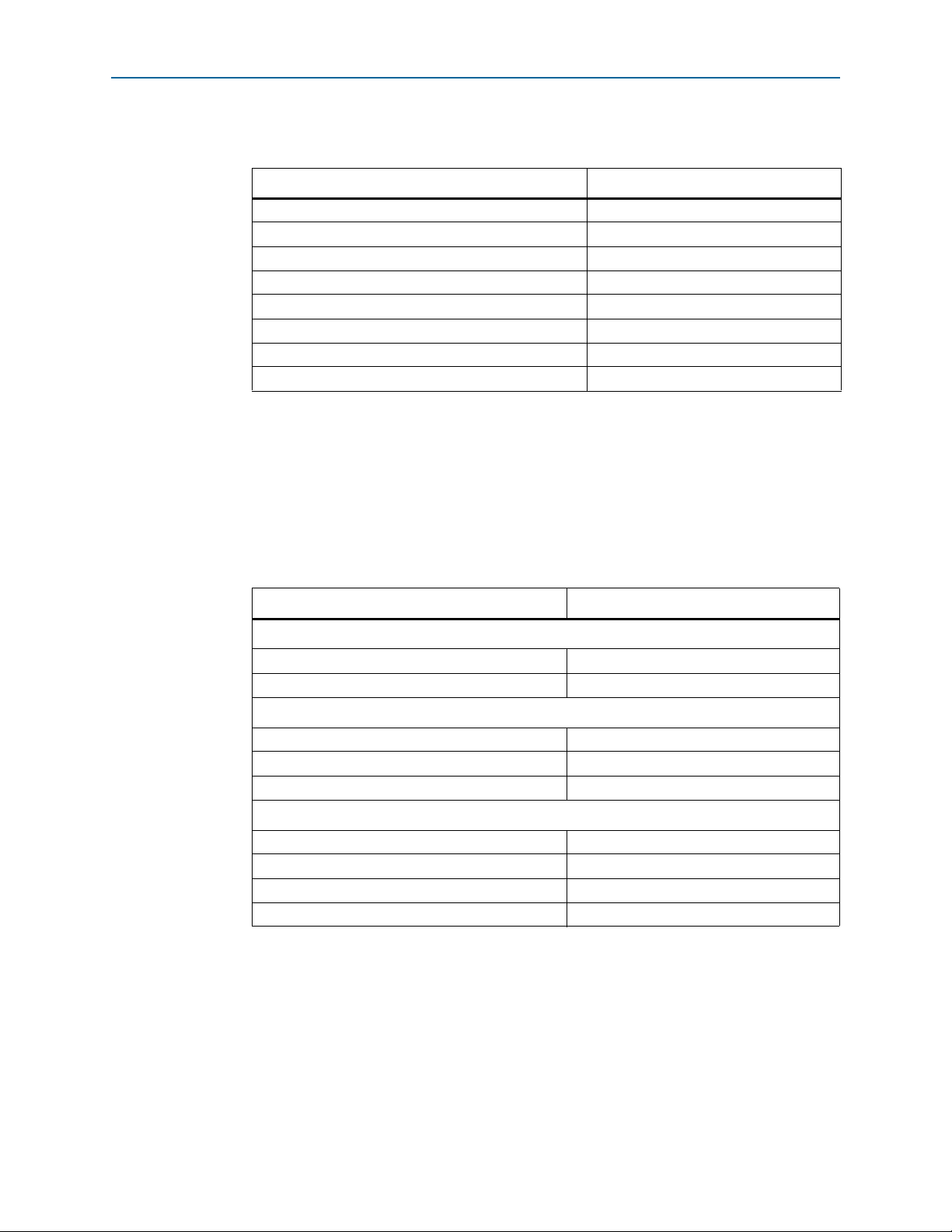

Table 1–1. IP Compiler for PCI Express Throughput

| Link Width | ×1 | ×2 | ×4 | ×8 |
|---|---|---|---|---|
| PCI Express Gen1 Gbps (1.x compliant) | 2 | 4 | 8 | 16 |
| PCI Express Gen2 Gbps (2.0 compliant) | 4 | 8 | 16 | 32 |
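The figures in Table 1–1 follow directly from the per-lane transfer rate, the 8B/10B encoding ratio, and the lane count. The Python sketch below reproduces them; the function name `link_bandwidth_gbps` is purely illustrative and is not part of any Altera tool. It uses integer arithmetic in megabits so that the table values come out exactly.

```python
# Per-lane signaling rate in mega-transfers per second (2.5 GT/s Gen1, 5 GT/s Gen2).
RAW_RATE_MTPS = {1: 2500, 2: 5000}


def link_bandwidth_gbps(gen: int, lanes: int, duplex: bool = False) -> float:
    """Aggregate data bandwidth of a Gen1 or Gen2 link, in Gbps.

    8B/10B encoding sends 10 line bits for every 8 data bits, so only 8/10
    of the raw rate carries data (the 20% overhead). The result covers one
    direction (TX or RX) unless duplex is True.
    """
    if gen not in RAW_RATE_MTPS:
        raise ValueError(f"unsupported generation: Gen{gen}")
    if lanes not in (1, 2, 4, 8):
        raise ValueError(f"unsupported link width: x{lanes}")
    data_mbps = RAW_RATE_MTPS[gen] * 8 // 10 * lanes  # integer math, no rounding error
    one_way_gbps = data_mbps / 1000
    return 2 * one_way_gbps if duplex else one_way_gbps


# Rebuild the rows of Table 1-1.
for gen in (1, 2):
    print(f"Gen{gen}:", [link_bandwidth_gbps(gen, n) for n in (1, 2, 4, 8)])
```

Passing `duplex=True` applies the doubling for full-duplex operation described above.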

Refer to the PCI Express High Performance Reference Design for bandwidth numbers for the hard IP implementation in Stratix® IV GX and Arria® II GX devices.

Features

Altera’s IP Compiler for PCI Express offers extensive support across multiple device families. It supports the following key features:

■ Hard IP implementation: PCI Express Base Specification 1.1 or 2.0. The PCI Express protocol stack, including the transaction, data link, and physical layers, is hardened in the device.

■ Soft IP implementation:

■ PCI Express Base Specification 1.0a or 1.1.

■ Many device families supported. Refer to Table 1–4.

■ The PCI Express protocol stack, including the transaction, data link, and physical layers, is implemented using FPGA fabric logic elements.


■ Feature rich:


■ Support for ×1, ×2, ×4, and ×8 configurations. You can select the ×2 lane configuration for the Cyclone® IV GX without down-configuring a ×4 configuration.

■ Optional end-to-end cyclic redundancy code (ECRC) generation and checking

and advanced error reporting (AER) for high reliability applications.

■ Extensive maximum payload size support:

■ Stratix IV GX hard IP: up to 2 KBytes (128, 256, 512, 1,024, or 2,048 bytes).

■ Arria II GX, Arria II GZ, and Cyclone IV GX hard IP: up to 256 bytes (128 or 256 bytes).

■ Soft IP implementations: up to 2 KBytes (128, 256, 512, 1,024, or 2,048 bytes).

■ Easy to use:

■ Easy parameterization.

■ Substantial on-chip resource savings and guaranteed timing closure using the IP Compiler for PCI Express hard IP implementation.

■ Easy adoption with no license requirement for the hard IP implementation.

■ Example designs to get started.

■ Qsys support.

■ Stratix V support is provided by the Stratix V Hard IP for PCI Express.

■ Stratix V support is not available with the IP Compiler for PCI Express.

■ The Stratix V Hard IP for PCI Express is documented in the Stratix V Hard IP for PCI Express User Guide.

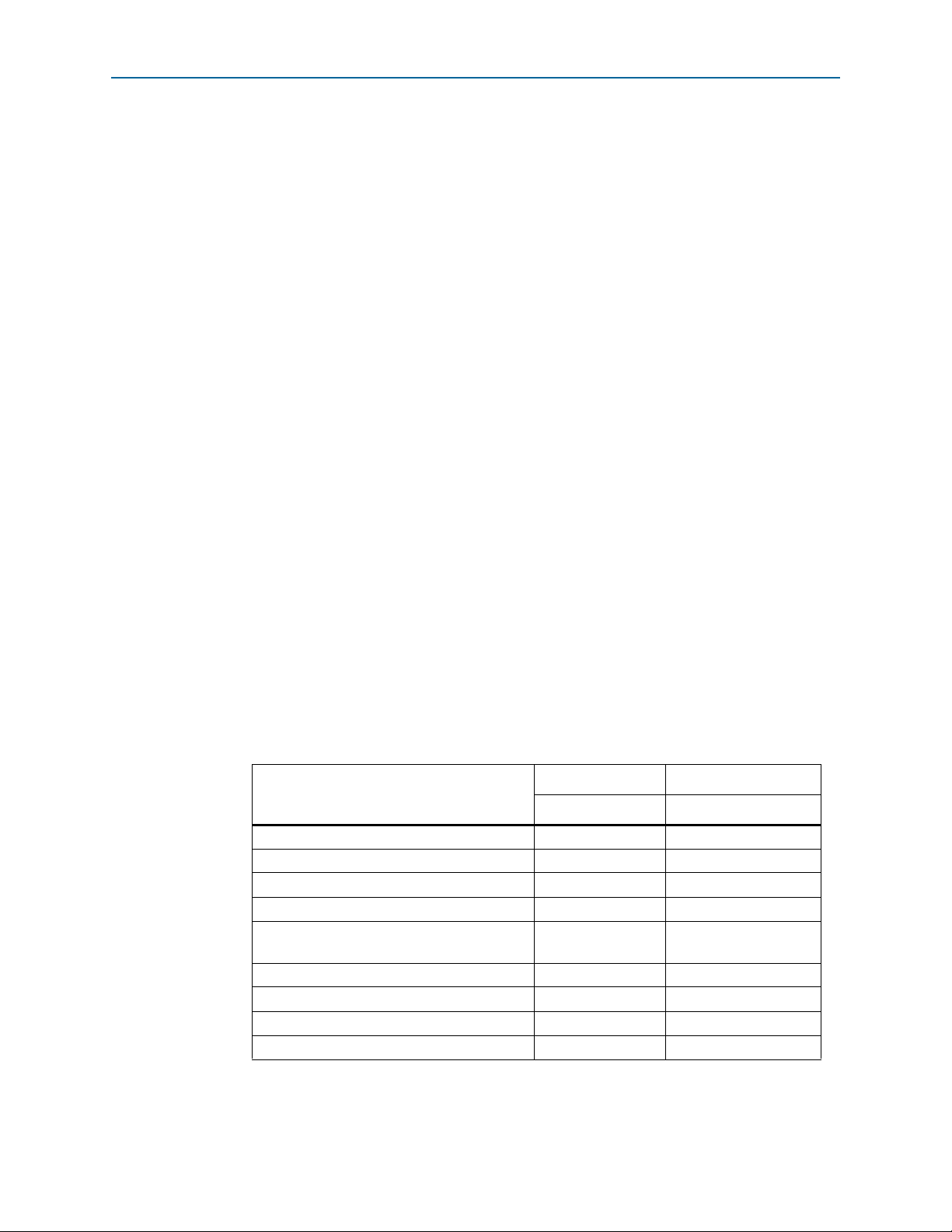

Different features are available for the soft and hard IP implementations and for the

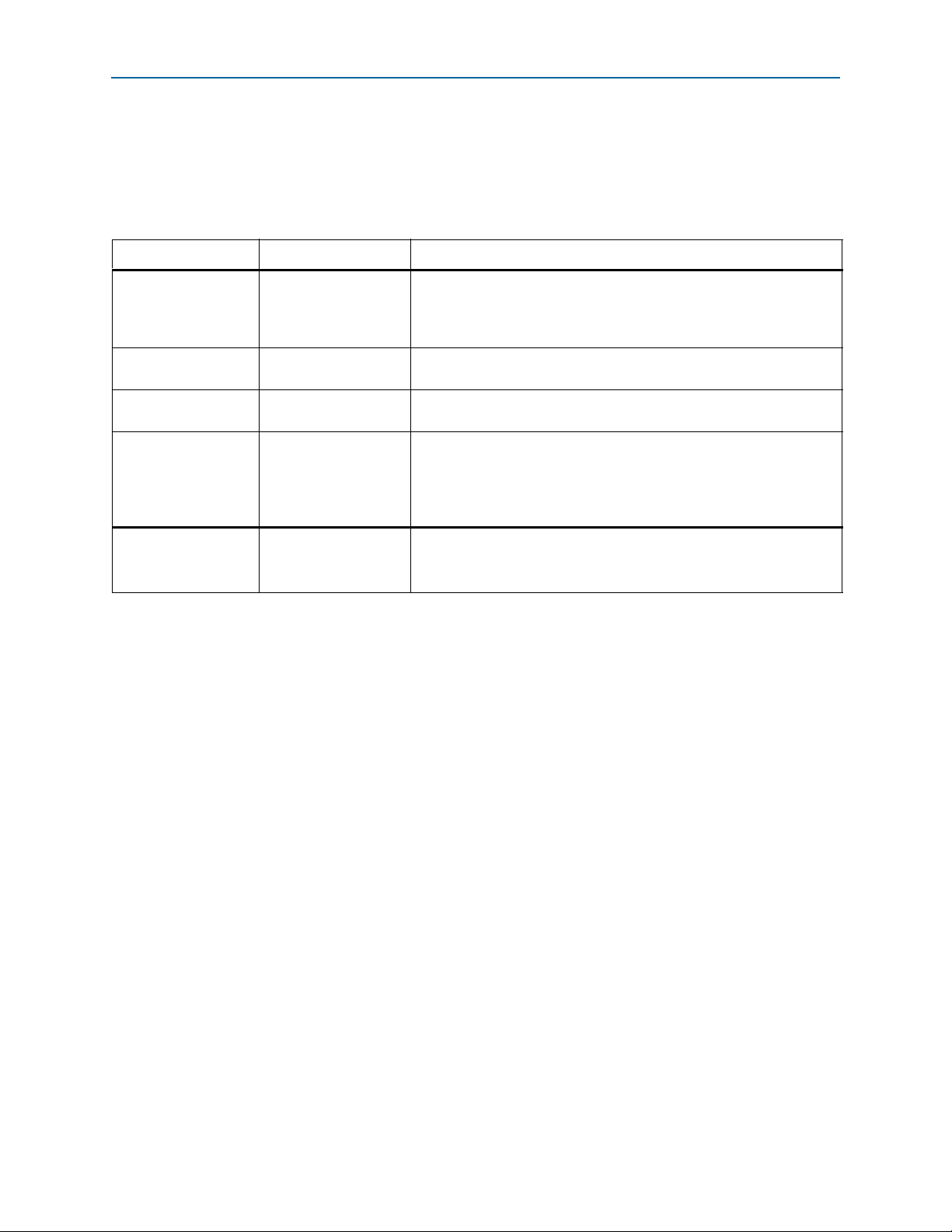

three possible design flows. Table 1–2 outlines these different features.

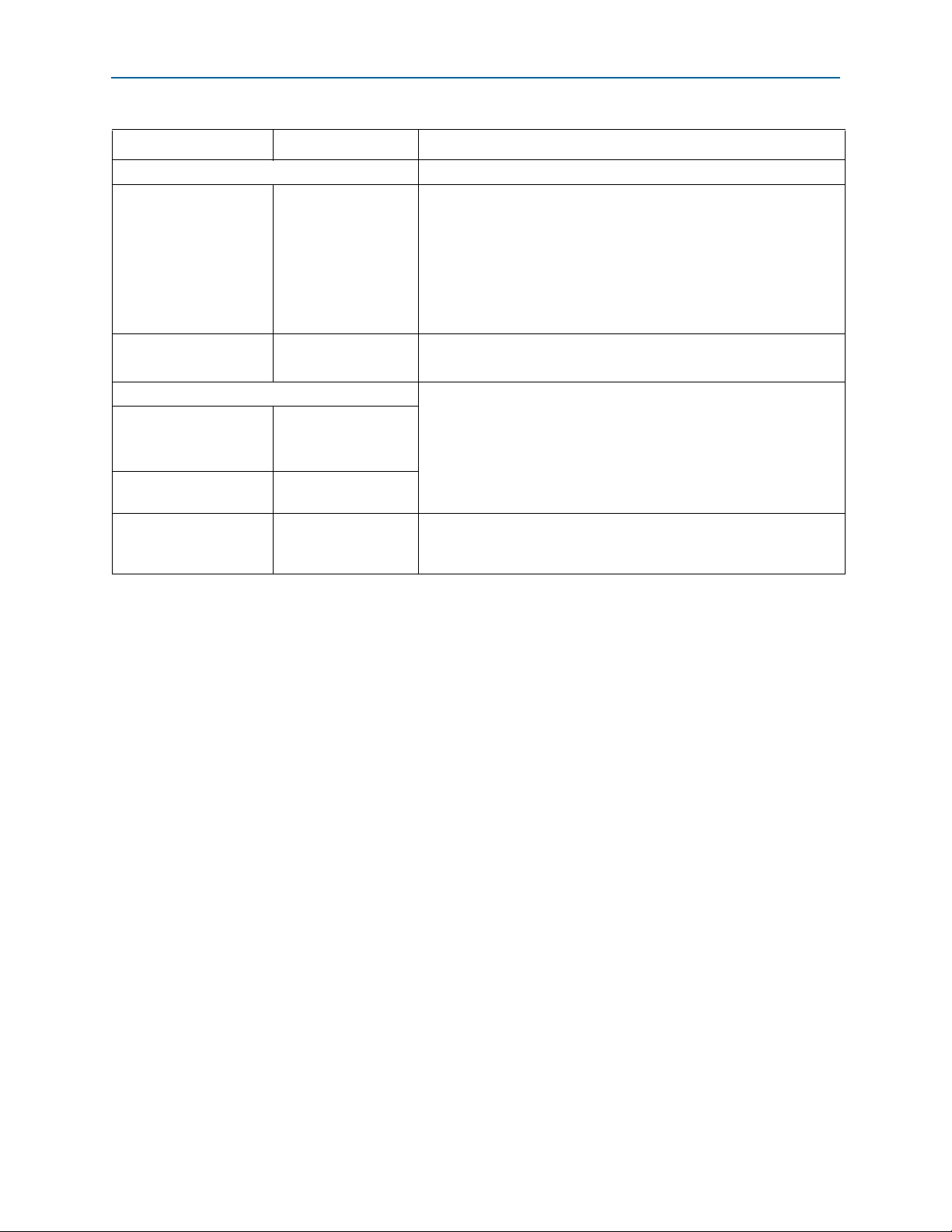

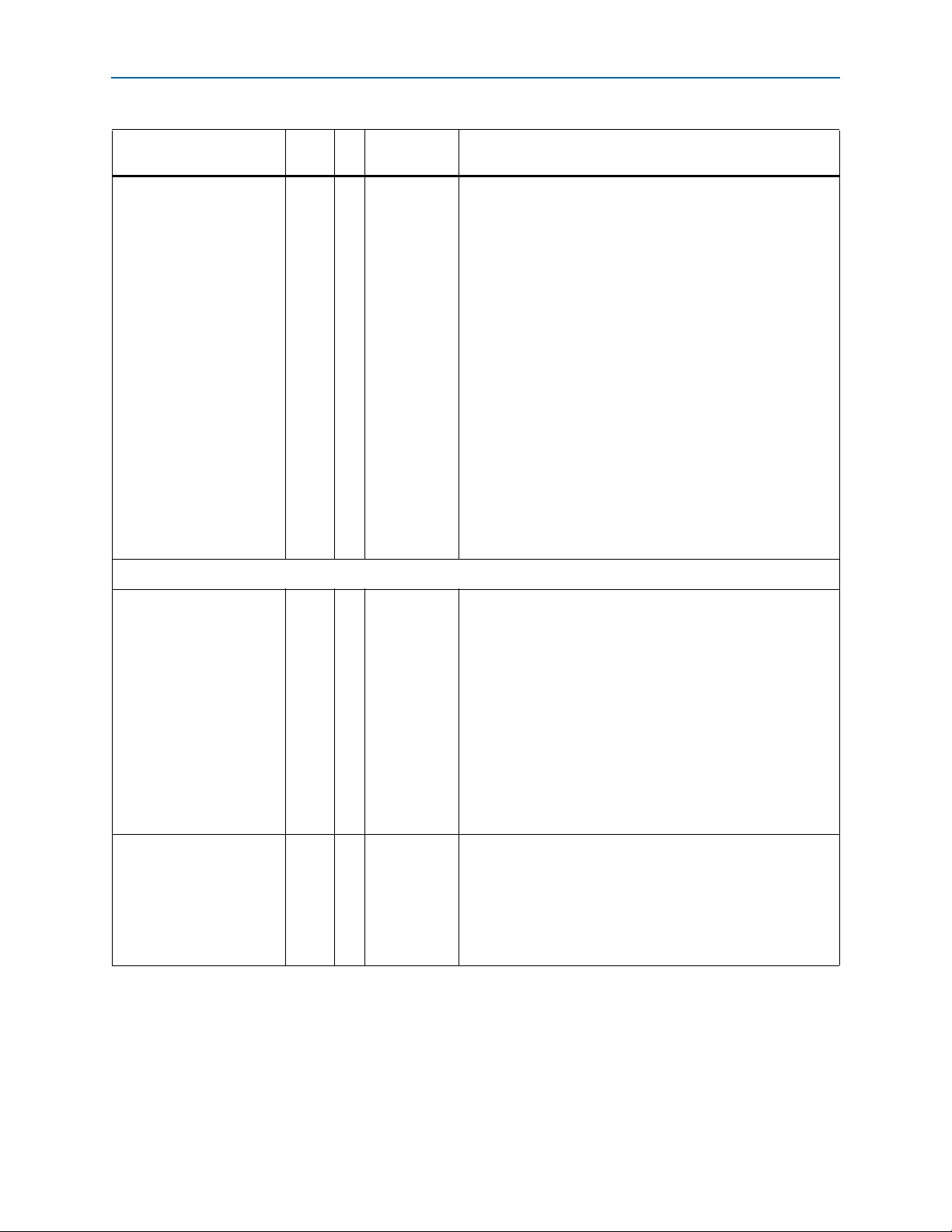

Table 1–2. IP Compiler for PCI Express Features

| Feature | Hard IP | Soft IP |
|---|---|---|
| MegaCore License | Free | Required |
| Root port | Not supported | Not supported |
| Gen1 | ×1, ×2, ×4, ×8 | ×1, ×4 |
| Gen2 | ×1, ×4 | No |
| Avalon Memory-Mapped (Avalon-MM) Interface | Supported | Supported |
| 64-bit Avalon Streaming (Avalon-ST) Interface | Not supported | Not supported |
| 128-bit Avalon-ST Interface | Not supported | Not supported |
| Descriptor/Data Interface (1) | Not supported | Not supported |
| Legacy Endpoint | Not supported | Not supported |
| Transaction layer packet type (TLP) (2) | Memory read request; memory write request; completion with or without data | Memory read request; memory write request; completion with or without data |
| Maximum payload size | 128–256 bytes | 128–256 bytes |
| Number of virtual channels | 1 | 1 |
| Reordering of out-of-order completions (transparent to the application layer) | Supported | Supported |
| Requests that cross 4 KByte address boundary (transparent to the application layer) | Supported | Supported |
| Number of tags supported for non-posted requests | 16 | 16 |
| ECRC forwarding on RX and TX | Not supported | Not supported |
| MSI-X | Not supported | Not supported |

Notes to Table 1–2:

(1) Not recommended for new designs.

(2) Refer to Appendix A, Transaction Layer Packet (TLP) Header Formats for the layout of TLP headers.
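Table 1–2 lists requests that cross a 4 KByte address boundary as handled transparently to the application layer. The PCI Express Base Specification does not allow a single memory request TLP to cross a 4 KByte boundary, so a larger transfer has to be carried by several TLPs. The Python sketch below illustrates that splitting rule combined with a size limit; it is a conceptual model under these assumptions, not the IP core's internal logic, and `split_request` and `max_size` are hypothetical names.

```python
from typing import List, Tuple

BOUNDARY = 4096  # a single memory request TLP must not cross a 4 KByte boundary


def split_request(address: int, length: int, max_size: int) -> List[Tuple[int, int]]:
    """Split a transfer into (address, length) pieces that each fit in one TLP.

    max_size stands for the maximum payload size (writes) or the maximum
    read request size (reads). No piece exceeds max_size or crosses a
    4 KByte address boundary.
    """
    if length <= 0:
        raise ValueError("length must be positive")
    if max_size <= 0 or max_size & (max_size - 1):
        raise ValueError("max_size must be a positive power of two")
    pieces = []
    while length > 0:
        to_boundary = BOUNDARY - (address % BOUNDARY)  # bytes left before the next 4 KByte line
        size = min(length, max_size, to_boundary)
        pieces.append((address, size))
        address += size
        length -= size
    return pieces


# A 512-byte write starting 128 bytes below a 4 KByte boundary, with a 256-byte payload limit.
for addr, size in split_request(0x0F80, 512, 256):
    print(hex(addr), size)
```

In this example the first piece stops at the boundary after 128 bytes, and the remaining 384 bytes are carried as one full 256-byte piece and one 128-byte piece.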

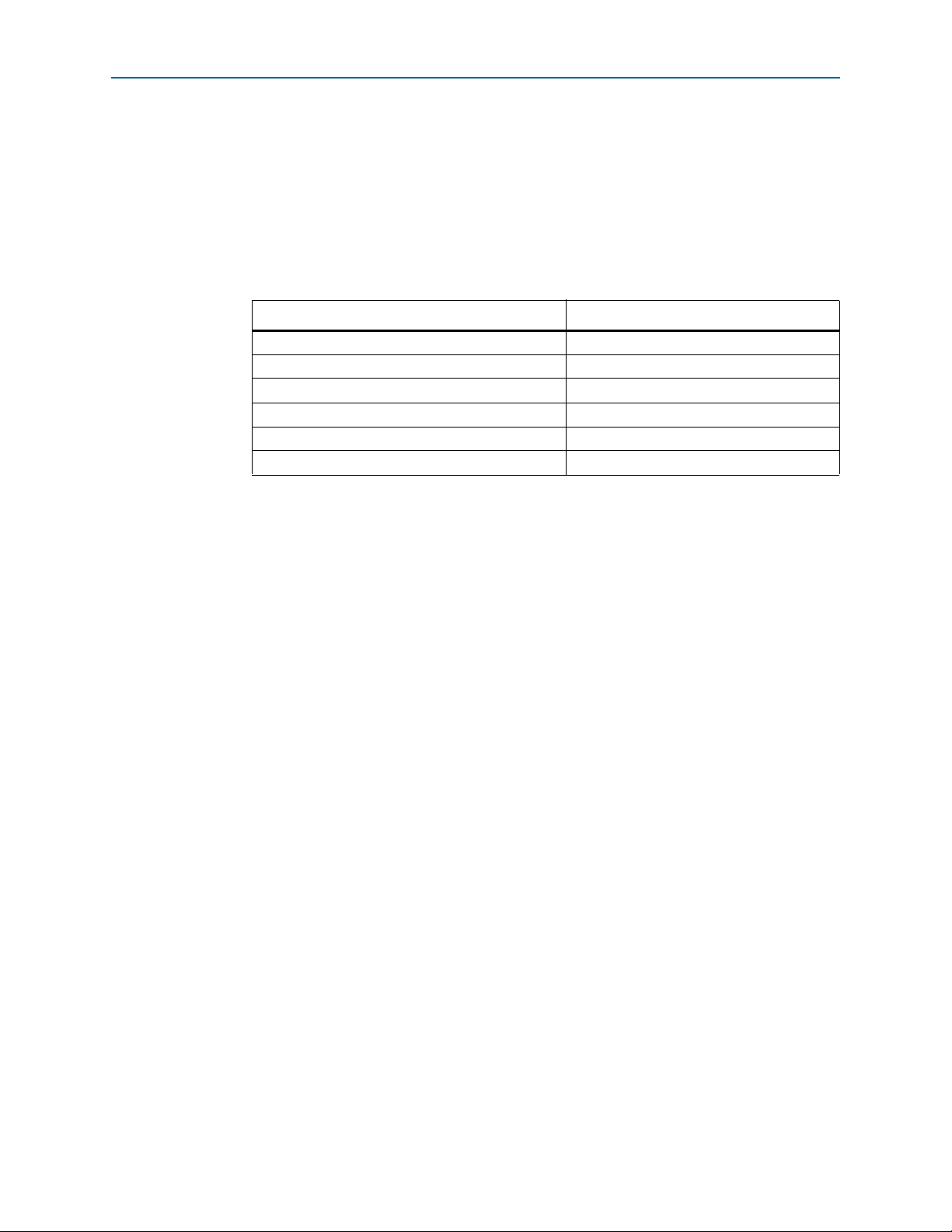

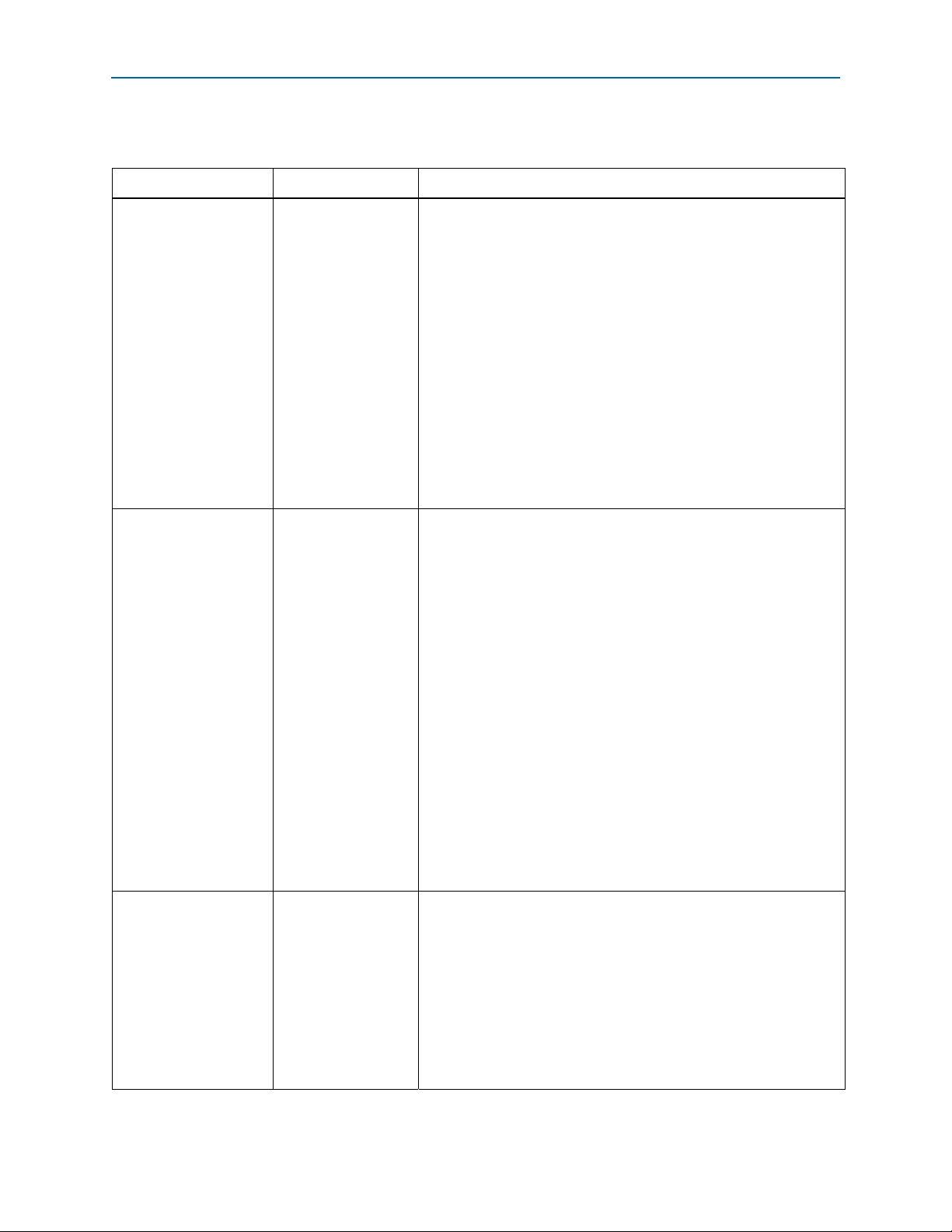

Release Information

Tab le 1– 3 provides information about this release of the IP Compiler for PCI Express.

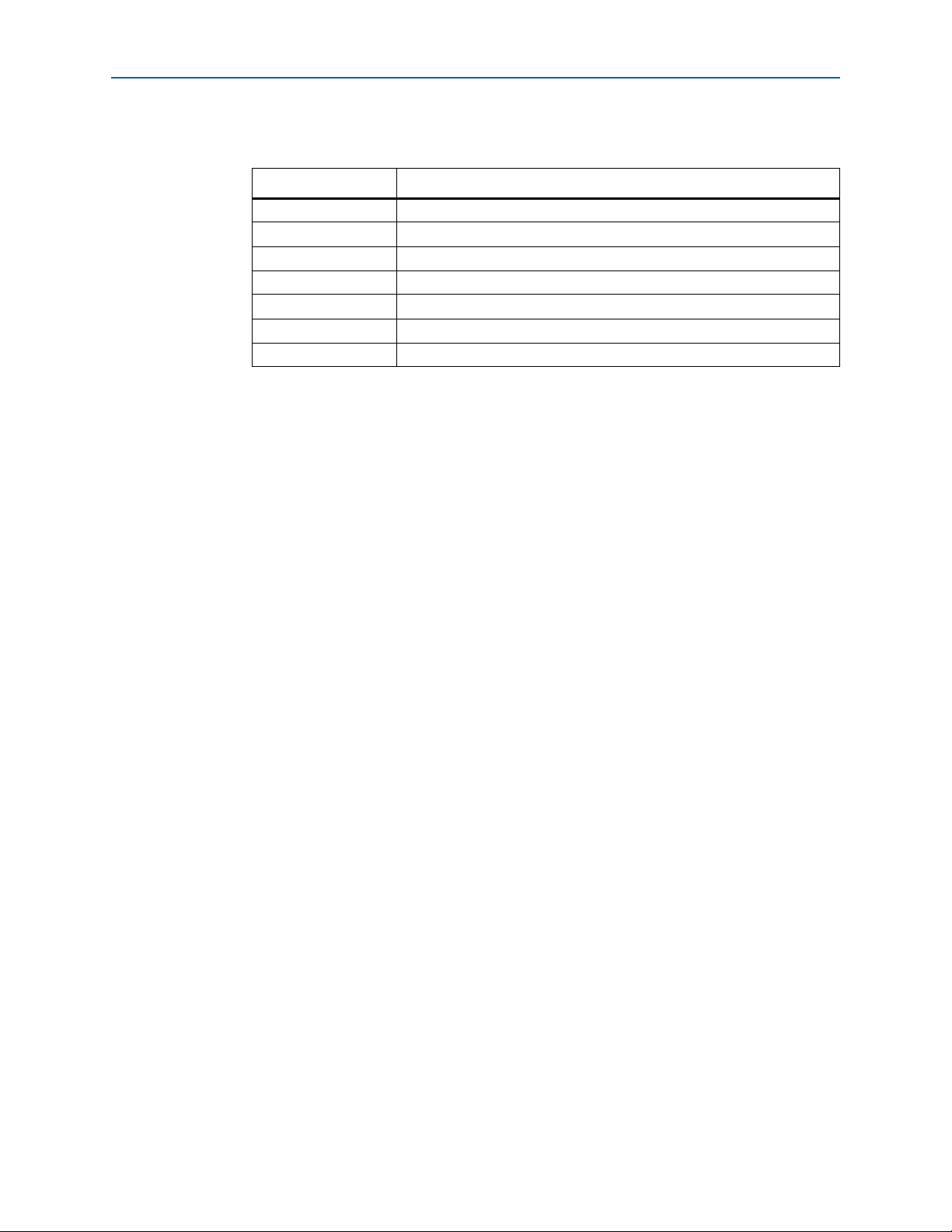

Table 1–3. IP Compiler for PCI Express Release Information

| Item | Description |
|---|---|
| Version | 14.0 |
| Release Date | June 2014 |
| Ordering Codes | IP-PCIE/1, IP-PCIE/4, IP-PCIE/8, IP-AGX-PCIE/1, IP-AGX-PCIE/4. No ordering code is required for the hard IP implementation. |
| Product IDs: Hard IP Implementation | FFFF |
| Product IDs: Soft IP Implementation | ×1: 00A9; ×4: 00AA; ×8: 00AB |
| Vendor ID: Hard IP Implementation | 6AF7 |
| Vendor ID: Soft IP Implementation | 6A66 |


Device Family Support

Altera verifies that the current version of the Quartus® II software compiles the previous version of each IP core. Any exceptions to this verification are reported in the MegaCore IP Library Release Notes and Errata. Altera does not verify compilation with IP core versions older than one release. Table 1–4 shows the level of support offered by the IP Compiler for PCI Express for each Altera device family.

Table 1–4. Device Family Support

| Device Family | Support (1) |
|---|---|
| Arria II GX | Final |
| Arria II GZ | Final |
| Cyclone IV GX | Final |
| Stratix IV E, GX | Final |
| Stratix IV GT | Final |
| Other device families | No support |

Note to Table 1–4:

(1) Refer to the What's New for IP in Quartus II page for device support level information.

In the Quartus II 11.0 release, support for Stratix V devices is offered with the Stratix V Hard IP for PCI Express, and not with the IP Compiler for PCI Express. For more information, refer to the Stratix V Hard IP for PCI Express User Guide.

General Description

The IP Compiler for PCI Express generates customized variations you use to design PCI Express root ports or endpoints, including non-transparent bridges, or truly unique designs combining multiple IP Compiler for PCI Express variations in a single Altera device. The IP Compiler for PCI Express implements all required and most optional features of the PCI Express specification for the transaction, data link, and physical layers.


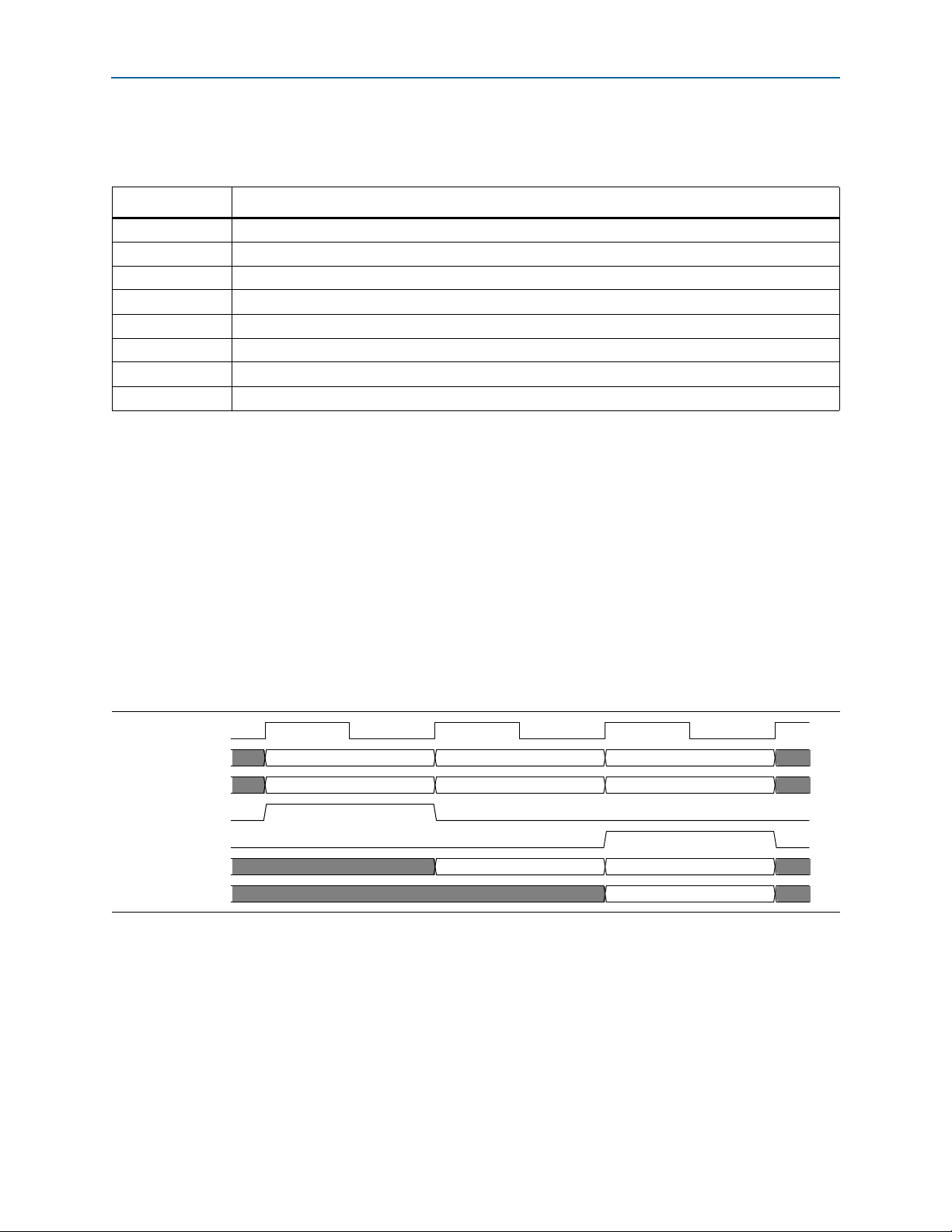

PCI Express

Protocol Stack

Adapter

Clock & Re se t

Se lectio n

PCIe Hard IP Block

TL

Interface

FPGA Fabric Interface

PIPE Interface

LMI

PCIe

Reconfig

Buffer

Virtual

Channel

Buffer

Retry

PCIe Hard IP Block Reconfiguration

RX

FPGA Fabric

Application

Layer

Test, Debug &

Configuration

Logic

PMA

PCS

Transceivers

General Description

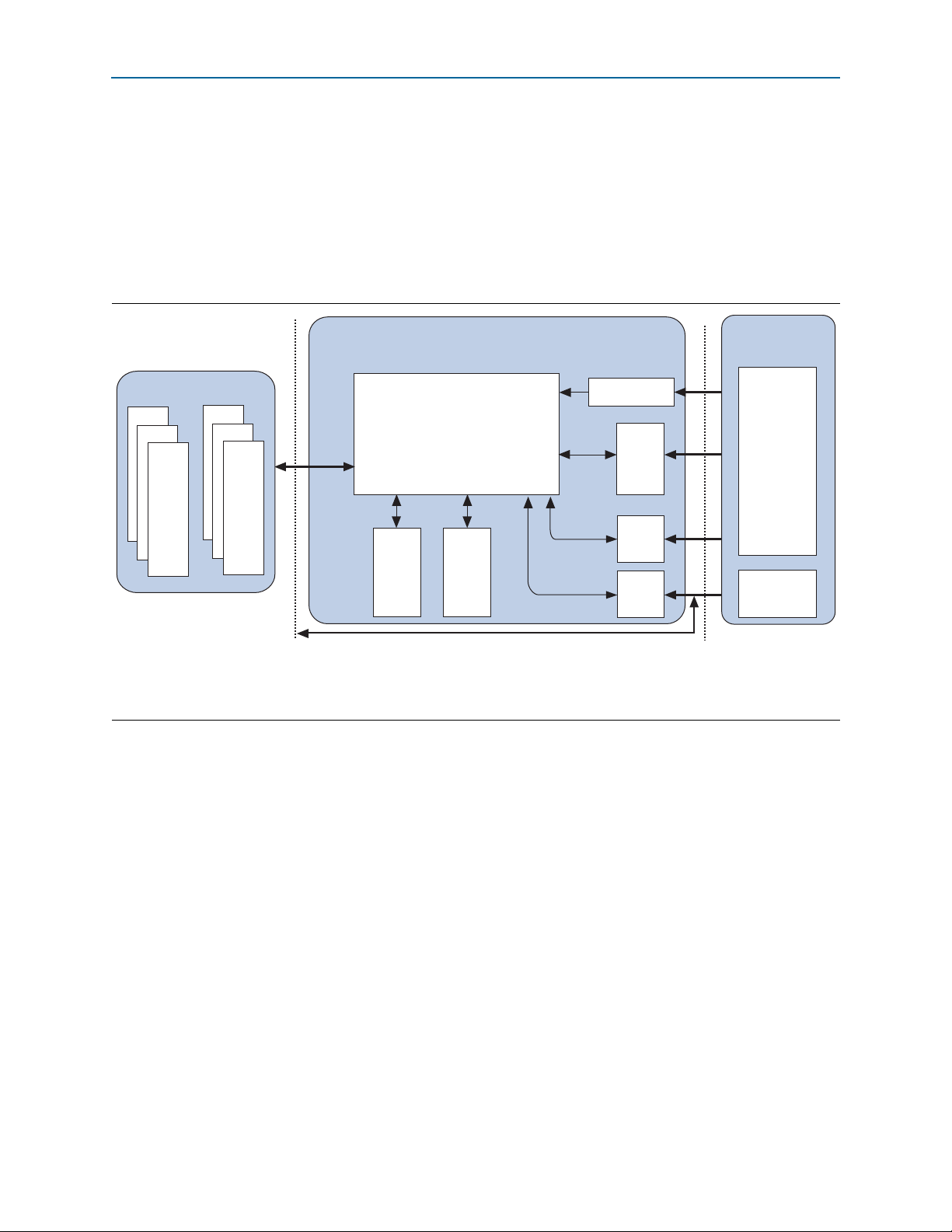

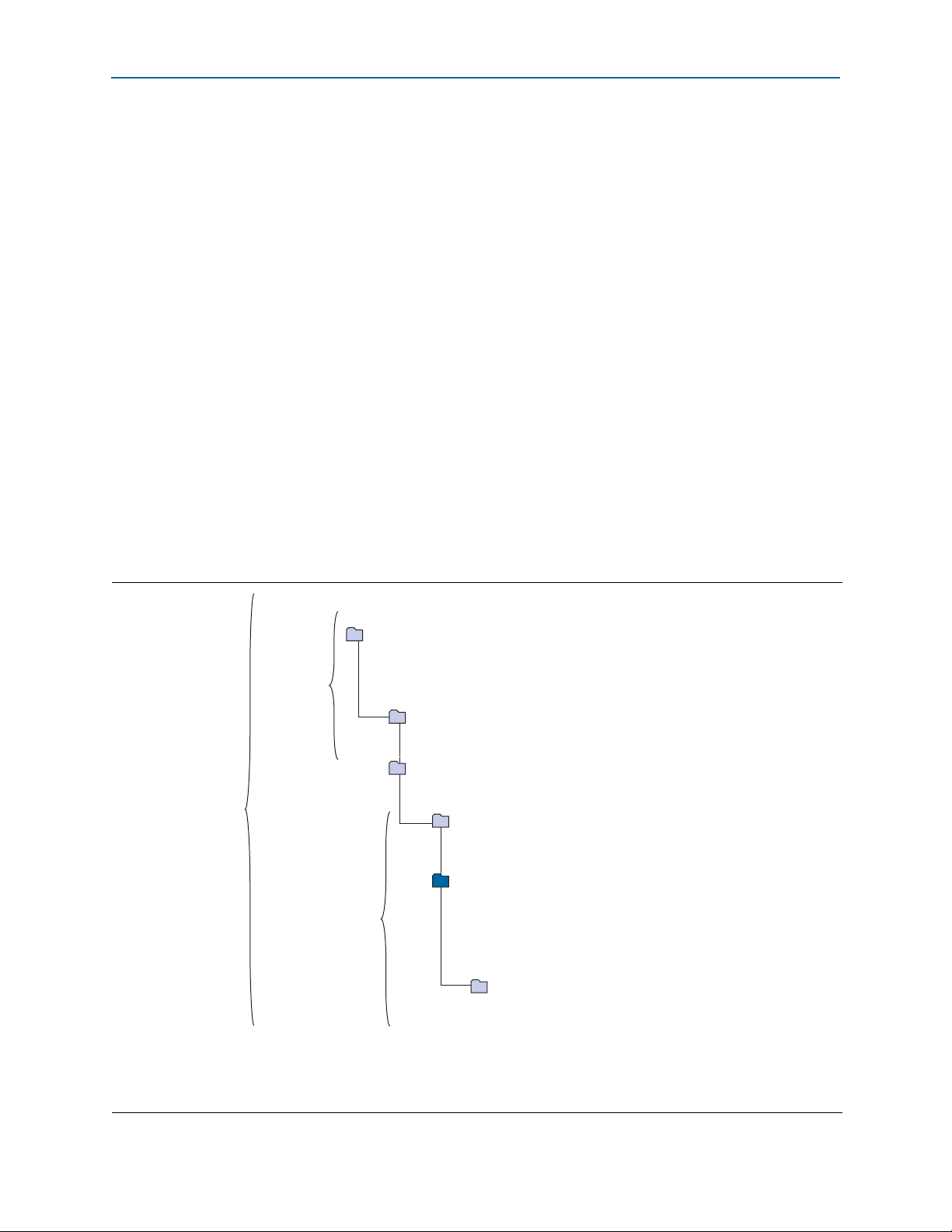

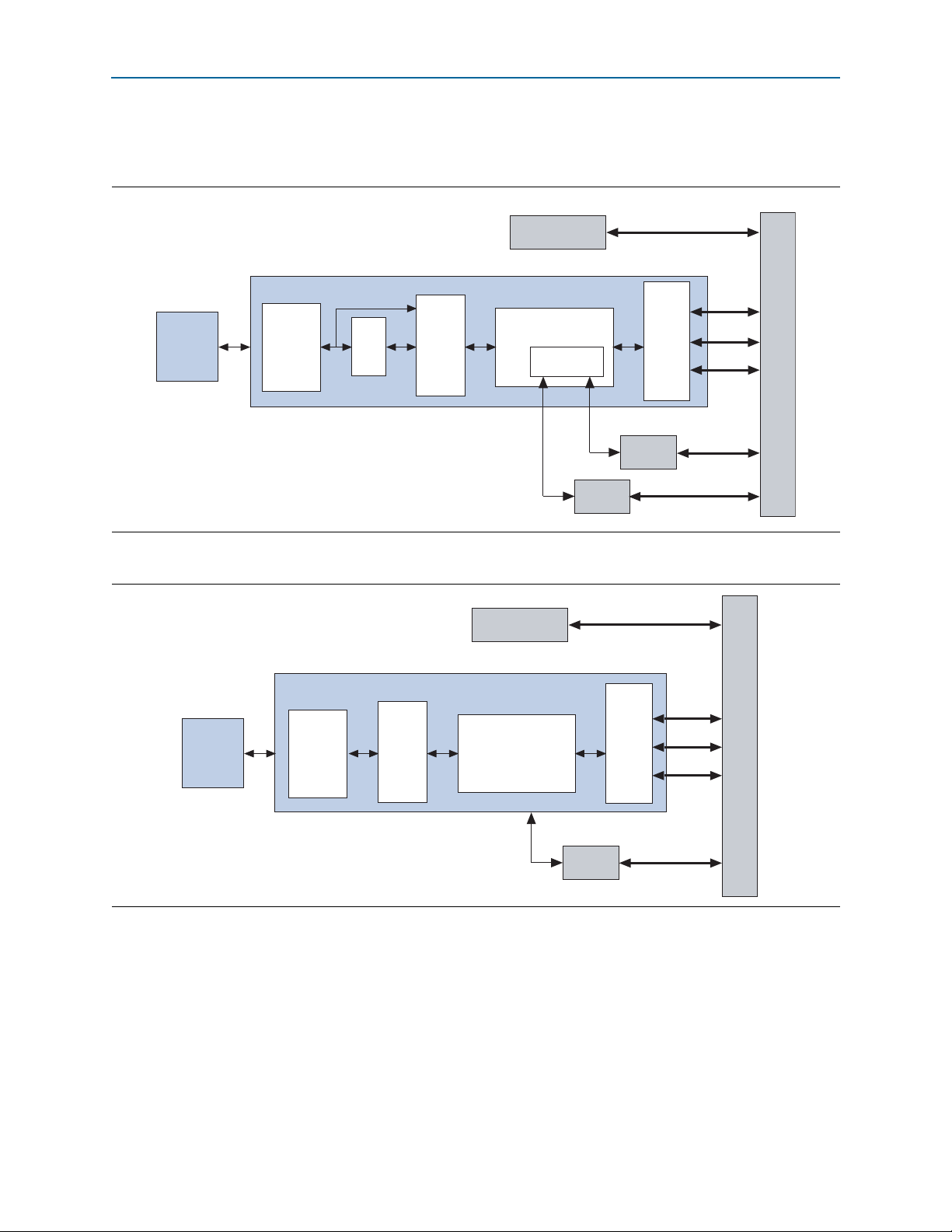

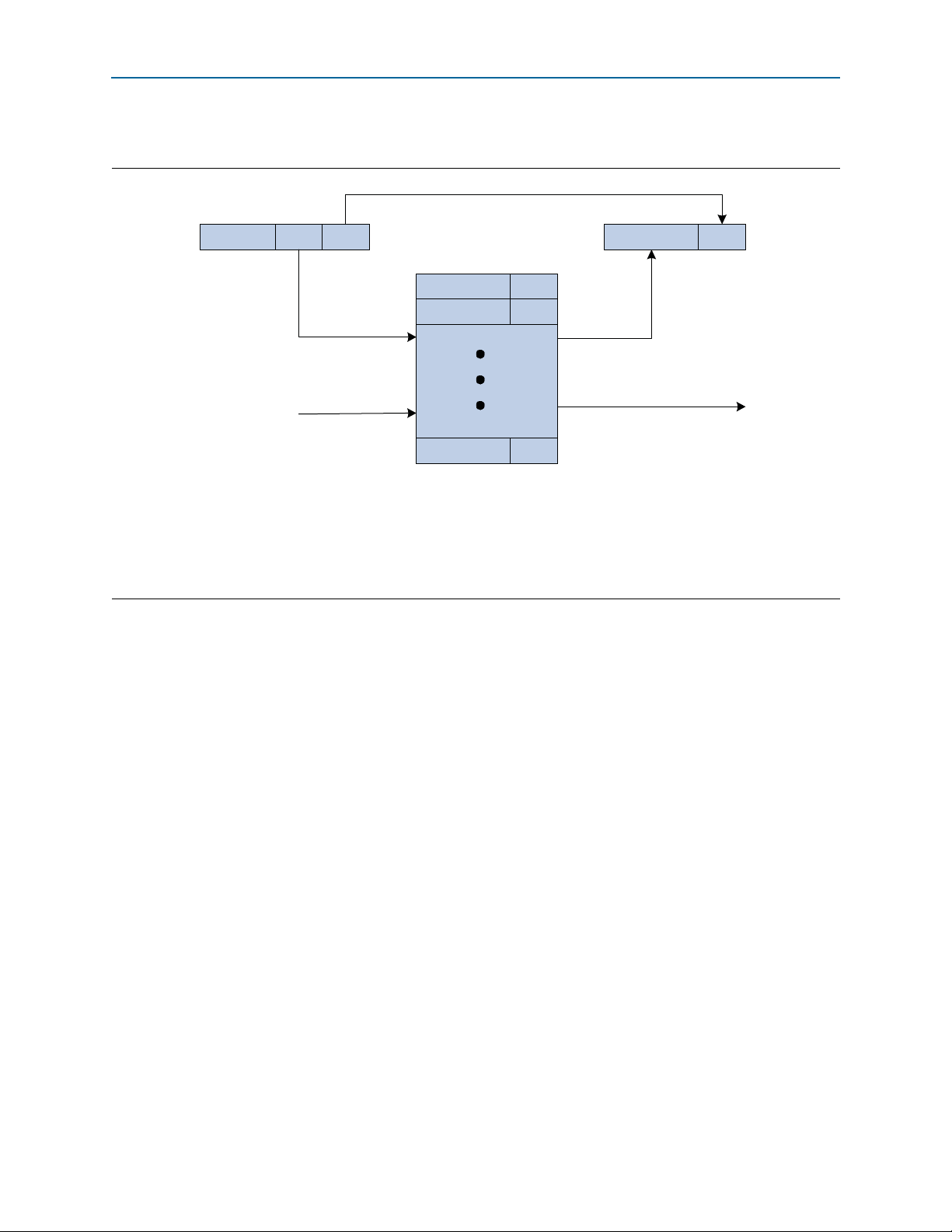

The hard IP implementation includes all of the required and most of the optional features of the specification for the transaction, data link, and physical layers. Depending upon the device you choose, one to four instances of the IP Compiler for PCI Express hard IP implementation are available. You can configure these instances to include any combination of root port and endpoint designs to meet your system requirements. A single device can also use instances of both the soft and hard implementations of the IP Compiler for PCI Express. Figure 1–1 provides a high-level block diagram of the hard IP implementation.

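Figure 1–1. IP Compiler for PCI Express Hard IP Implementation High-Level Block Diagram (Notes 1, 2)

(Diagram blocks: the application layer in the FPGA fabric connects through the FPGA fabric interface to the PCIe hard IP block, which contains the TL interface, adapter, PCI Express protocol stack, virtual channel RX buffer, retry buffer, clock and reset selection, test, debug, and configuration logic, LMI, and PCIe hard IP block reconfiguration; the hard IP block connects through the PIPE interface to the transceivers (PCS and PMA).)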

Notes to Figure 1–1:

(1) Stratix IV GX devices have two virtual channels.

(2) LMI stands for Local Management Interface.

This user guide includes a design example and testbench that you can configure as a root port (RP) or endpoint (EP). You can use these design examples as a starting point to create and test your own root port and endpoint designs.

The purpose of the IP Compiler for PCI Express User Guide is to explain how to use the IP Compiler for PCI Express, not to explain the PCI Express protocol. Although there is inevitable overlap between this user guide and the specifications, use this document in conjunction with an understanding of the following PCI Express specifications: PHY Interface for the PCI Express Architecture PCI Express 3.0, and PCI Express Base Specification 1.0a, 1.1, or 2.0.

Support for IP Compiler for PCI Express Hard IP

If you target an Arria II GX, Arria II GZ, Cyclone IV GX, or Stratix IV GX device, you can parameterize the IP core to include a full hard IP implementation of the PCI Express stack, including the following layers:

■ Physical (PHY)

■ Physical Media Attachment (PMA)

■ Physical Coding Sublayer (PCS)

■ Media Access Control (MAC)

■ Data link

■ Transaction

Optimized for Altera devices, the hard IP implementation supports all memory, I/O,

configuration, and message transactions. The IP cores have a highly optimized

application interface to achieve maximum effective throughput. Because the compiler

is parameterizeable, you can customize the IP cores to meet your design

requirements.Table 1–5 lists the configurations that are available for the IP Compiler

for PCI Express hard IP implementation.

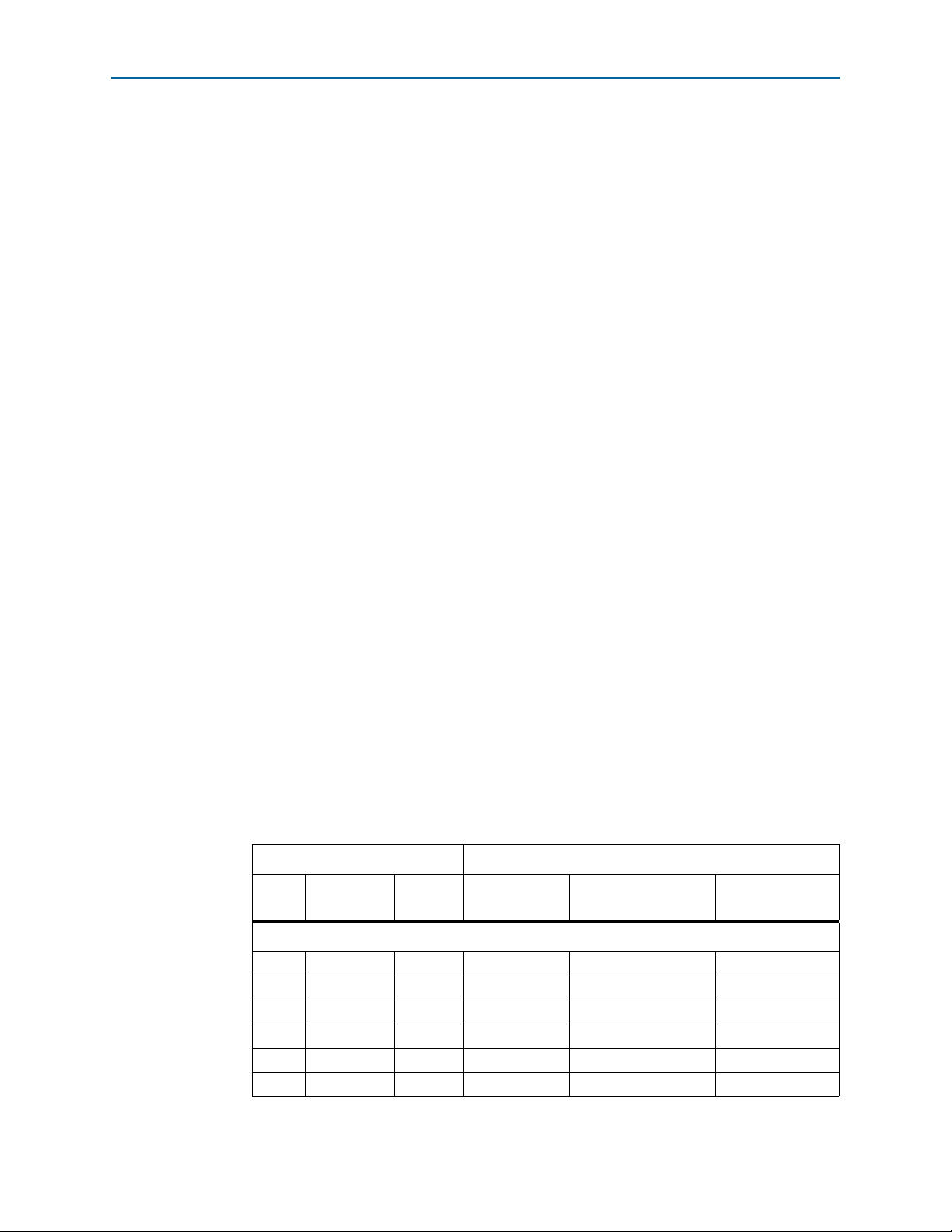

Table 1–5. Hard IP Configurations for the IP Compiler for PCI Express in Quartus II Software Version 11.0

| Device | Link Rate (Gbps) | ×1 | ×2 (1) | ×4 | ×8 |
|---|---|---|---|---|---|
| Avalon Streaming (Avalon-ST) Interface | | | | | |
| Arria II GX | 2.5 | yes | no | yes | yes (2) |
| Arria II GX | 5.0 | no | no | no | no |
| Arria II GZ | 2.5 | yes | no | yes | yes (2) |
| Arria II GZ | 5.0 | yes | no | yes (2) | no |
| Cyclone IV GX | 2.5 | yes | yes | yes | no |
| Cyclone IV GX | 5.0 | no | no | no | no |
| Stratix IV GX | 2.5 | yes | no | yes | yes |
| Stratix IV GX | 5.0 | yes | no | yes | yes |
| Avalon-MM Interface using Qsys Design Flow (3) | | | | | |
| Arria II GX | 2.5 | yes | no | yes | no |
| Cyclone IV GX | 2.5 | yes | yes | yes | no |
| Stratix IV GX | 2.5 | yes | no | yes | yes |
| Stratix IV GX | 5.0 | yes | no | yes | no |

Notes to Table 1–5:

(1) For devices that do not offer a ×2 initial configuration, you can use a ×4 configuration with the upper two lanes left unconnected at the device pins. The link will negotiate to ×2 if the attached device is ×2 native or capable of negotiating to ×2.

(2) The ×8 support uses a 128-bit bus at 125 MHz.

(3) The Qsys design flow supports the generation of endpoint variations only.
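Note (1) of Table 1–5 relies on link width negotiation: during link training, the two ports settle on a width that both can use. The Python sketch below models only the outcome, under the simplifying assumption that each side offers a set of usable widths and the link trains to the widest common one. Real link training also depends on which lanes are physically wired and on lane reversal, which this sketch ignores; `negotiated_width` and the example sets are hypothetical.

```python
from typing import Iterable


def negotiated_width(local_widths: Iterable[int], partner_widths: Iterable[int]) -> int:
    """Widest link width that both ends can operate at (simplified model).

    Real link training also depends on which lanes are physically wired and
    on lane reversal; this sketch only intersects the usable widths.
    """
    common = set(local_widths) & set(partner_widths)
    if not common:
        raise ValueError("the two ports share no link width")
    return max(common)


# Hypothetical case from note (1): a x4 variation with its upper two lanes left
# unconnected can train on at most the two wired lanes, so model it as {1, 2}.
wired_x4_variation = {1, 2}
x2_native_partner = {1, 2}
print(negotiated_width(wired_x4_variation, x2_native_partner))  # x2 link
```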

Table 1–6 lists the total RX buffer space, retry buffer size, and maximum payload size for device families that include the hard IP implementation. You can find these parameters on the Buffer Setup page of the parameter editor.

Table 1–6. IP Compiler for PCI Express Buffer and Payload Information (Part 1 of 2)

Devices Family Total RX Buffer Space Retry Buffer Max Payload Size

Arria II GX 4 KBytes 2 KBytes 256 Bytes

IP Compiler for PCI Express User Guide August 2014 Altera Corporation

Page 9

Chapter 1: Datasheet 1–7

Altera FPGA with Embedded

PCIe Hard IP Block

User Application

Logic

PCIe

Hard IP

Block

PCIe

Hard IP

Block

RP EP

User Application

Logic

PCI Express Link

Altera FPGA with Embedded

PCIe Hard IP Block

General Description

Table 1–6. IP Compiler for PCI Express Buffer and Payload Information (Part 2 of 2)

Devices Family Total RX Buffer Space Retry Buffer Max Payload Size

Arria II GZ 16 KBytes 16 KBytes 2 KBytes

Cyclone IV GX 4 KBytes 2 KBytes 256 Bytes

Stratix IV GX 16 KBytes 16 KBytes 2 KBytes

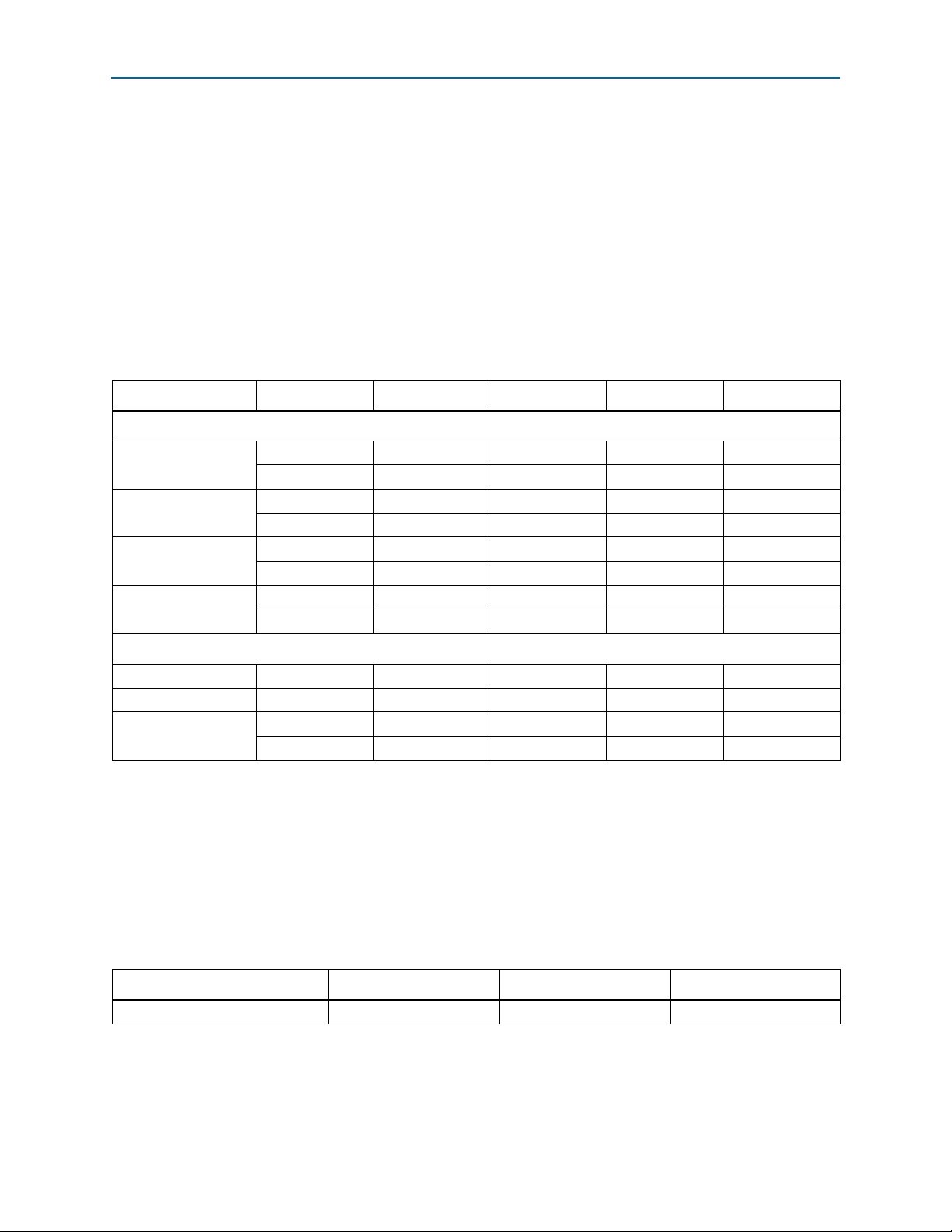

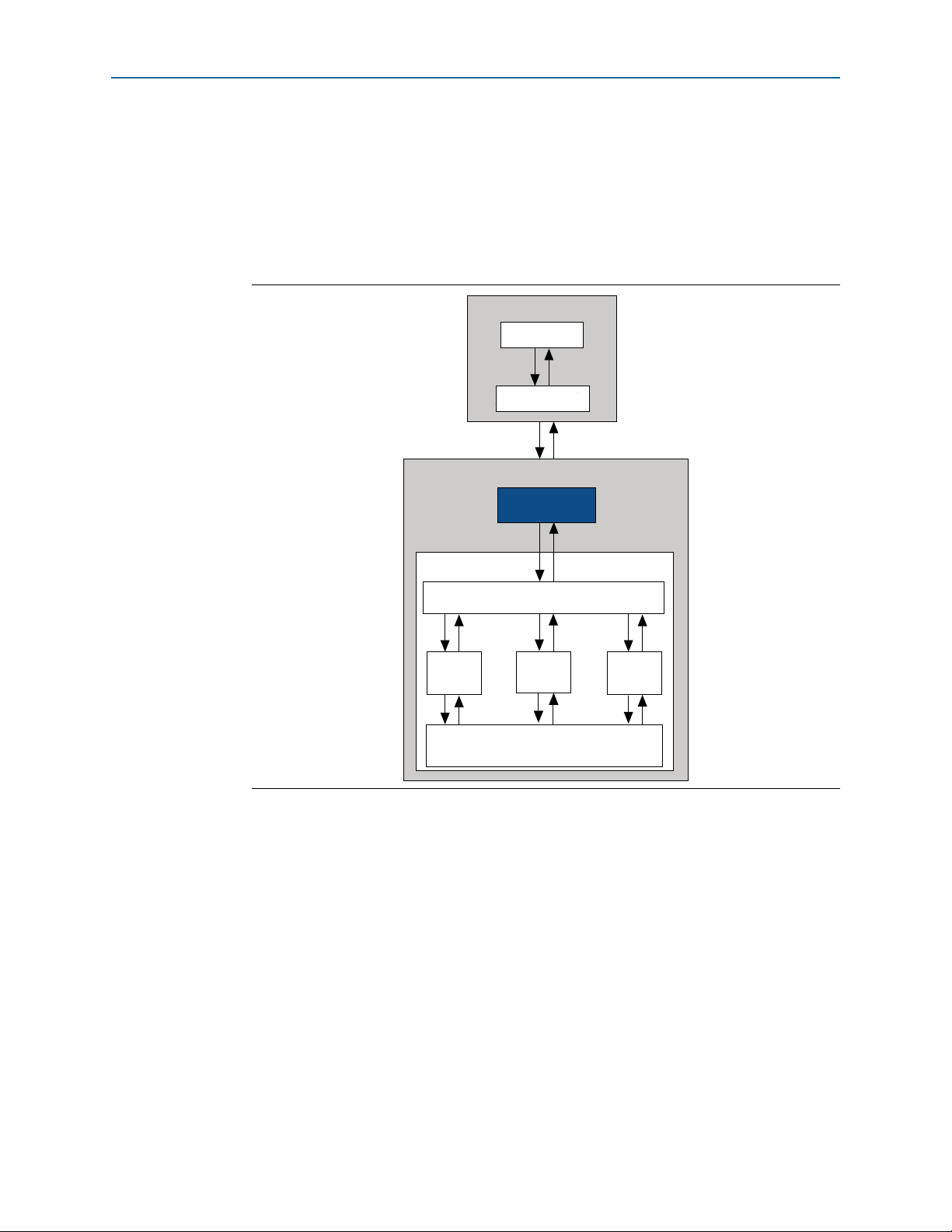

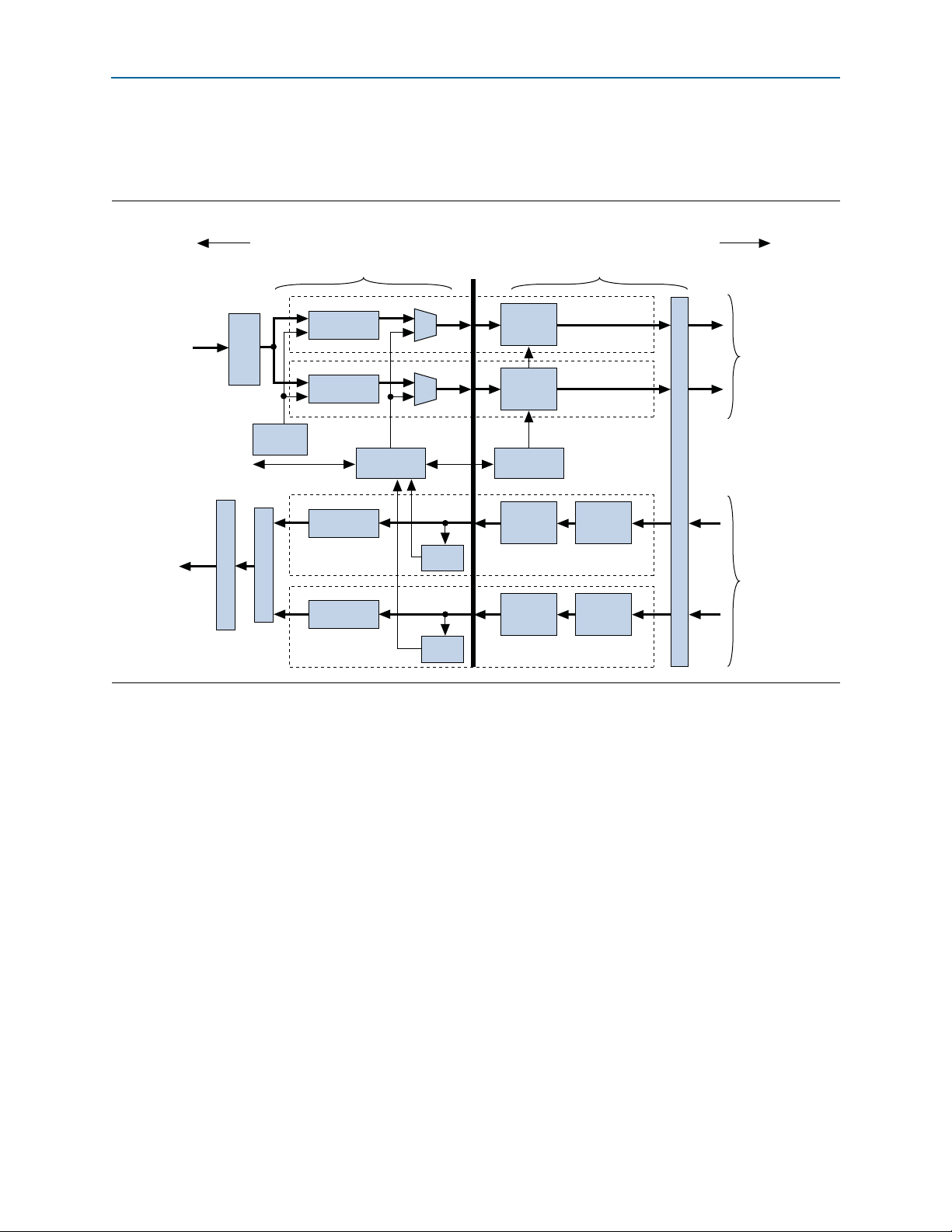

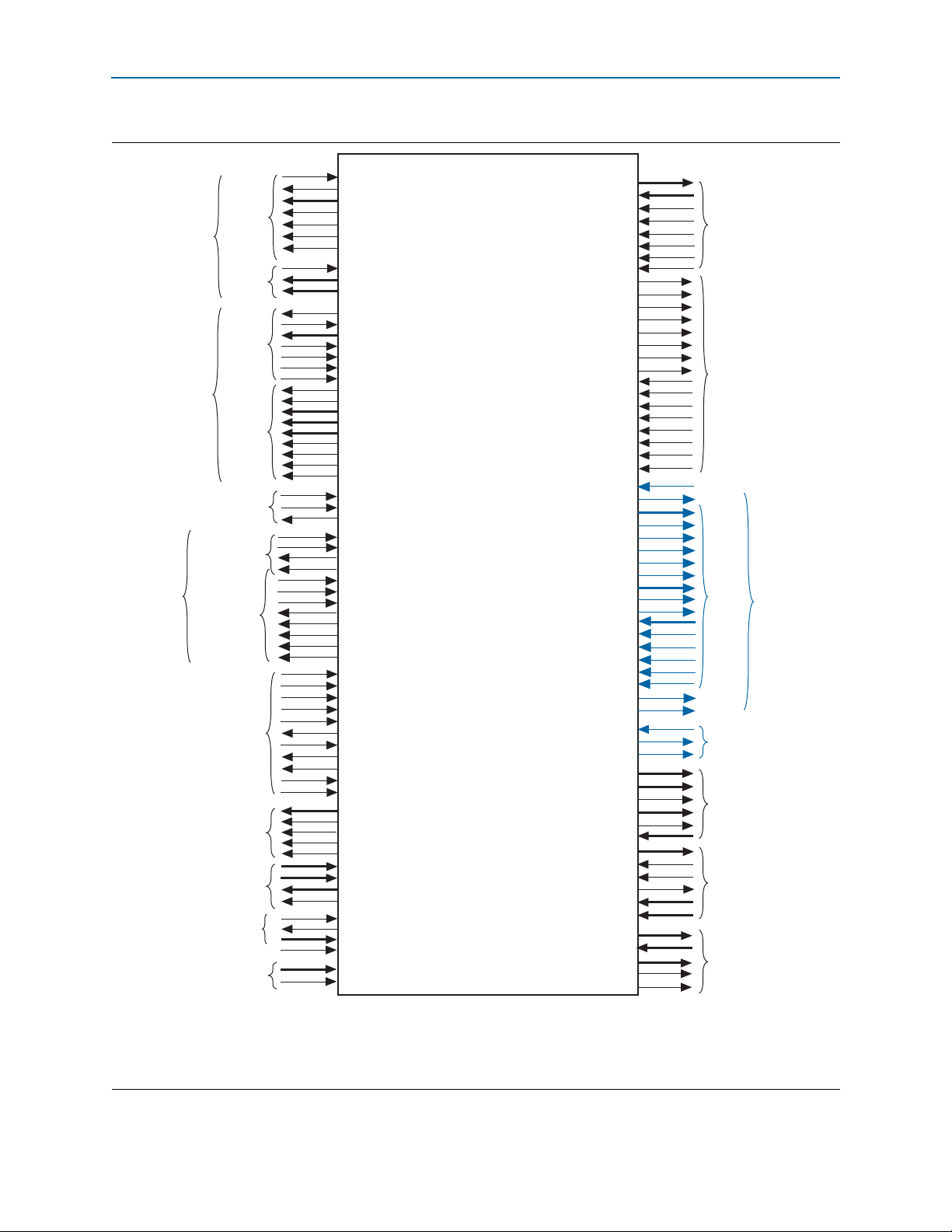

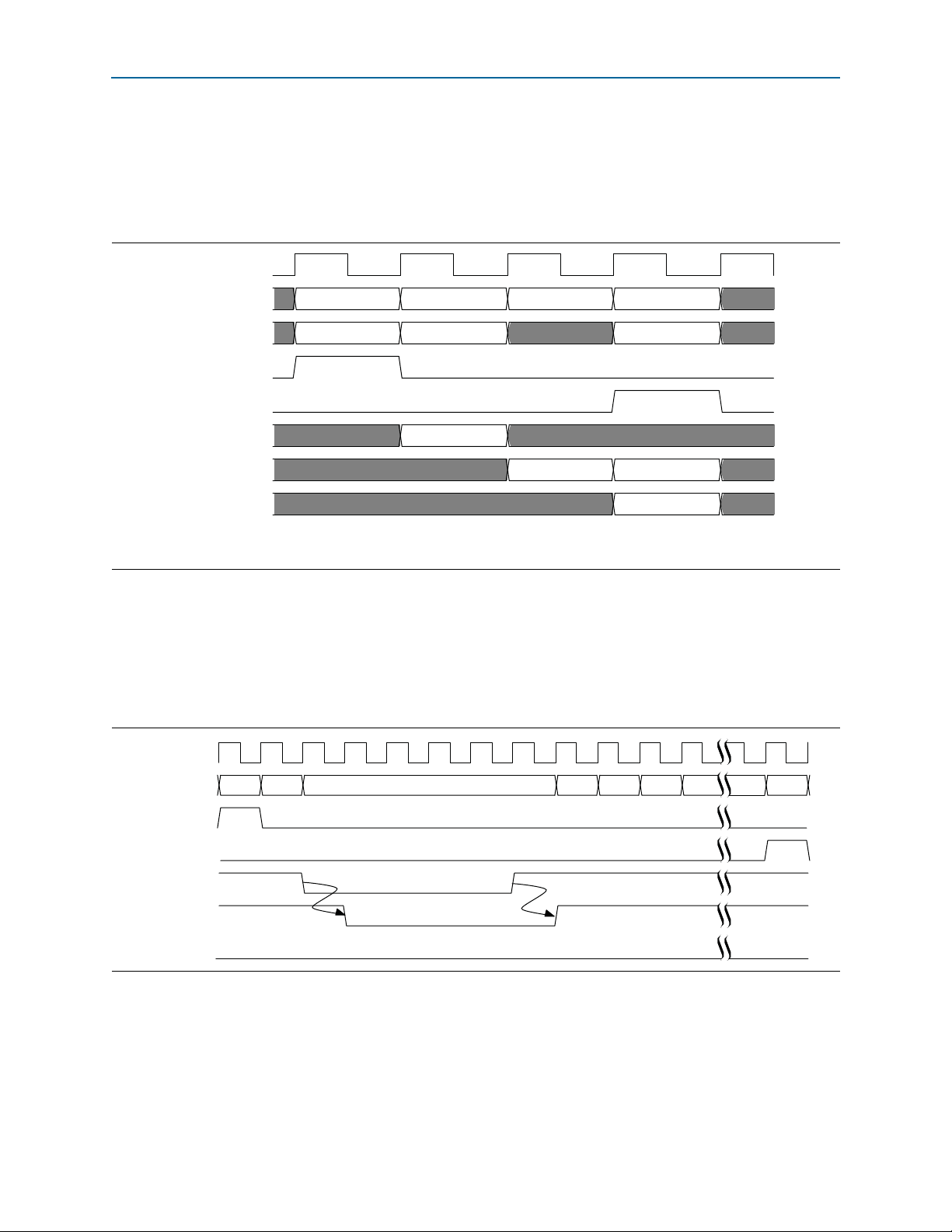
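The maximum payload size chosen on the Buffer Setup page must be one of the supported sizes (128, 256, 512, 1,024, or 2,048 bytes) and must not exceed the Max Payload Size limit in Table 1–6 for the target family. The Python sketch below encodes that check with the Table 1–6 values; the dictionary and function names are illustrative assumptions, and the parameter editor remains the authoritative check.

```python
# Max Payload Size column of Table 1-6, in bytes.
MAX_PAYLOAD_BYTES = {
    "Arria II GX": 256,
    "Arria II GZ": 2048,
    "Cyclone IV GX": 256,
    "Stratix IV GX": 2048,
}

# Payload sizes the IP core can be parameterized with.
LEGAL_PAYLOAD_SIZES = (128, 256, 512, 1024, 2048)


def payload_size_ok(family: str, payload_bytes: int) -> bool:
    """Return True if payload_bytes is a legal setting for the device family."""
    limit = MAX_PAYLOAD_BYTES.get(family)
    if limit is None:
        raise KeyError(f"no hard IP buffer data for {family!r}")
    return payload_bytes in LEGAL_PAYLOAD_SIZES and payload_bytes <= limit


print(payload_size_ok("Stratix IV GX", 2048), payload_size_ok("Cyclone IV GX", 512))
```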

The IP Compiler for PCI Express supports ×1, ×2, ×4, and ×8 variations (Table 1–7 on page 1–8) that are suitable for either root port or endpoint applications. You can use the parameter editor to customize the IP core. The Qsys design flow does not support root port variations. Figure 1–2 shows a relatively simple application that includes two IP Compilers for PCI Express, one configured as a root port and the other as an endpoint.

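Figure 1–2. PCI Express Application with a Single Root Port and Endpoint

(Diagram: two Altera FPGAs, each with an embedded PCIe hard IP block and user application logic; one hard IP block is configured as a root port (RP) and the other as an endpoint (EP), connected by a PCI Express link.)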


PCIe Link

PCIe Hard IP Block

RP

Switch

PCIe

Hard IP

Block

RP

User Application

Logic

PCIe Hard IP Block

EP

PCIe

Hard IP

Block

EP

User Application

Logic

IP Compiler

for

PCI Express

Soft IP

Implementation

EP

User Application

Logic

PHY

PIPE

Interface

User

Application

Logic

PCIe Link

PCIe Link

PCIe Link

PCIe Link

User Application

Logic

Altera FPGA with Embedded PCIe

Hard IP Blocks

Altera FPGA with Embedded PCIe

Hard IP Blocks

Altera FPGA with Embedded PCIe

Hard IP Blocks

Altera FPGA Supporting IP Compiler for

PCI Express Soft IP Implementation

IP Compiler

for

PCI Express

Soft IP

Implementation

General Description

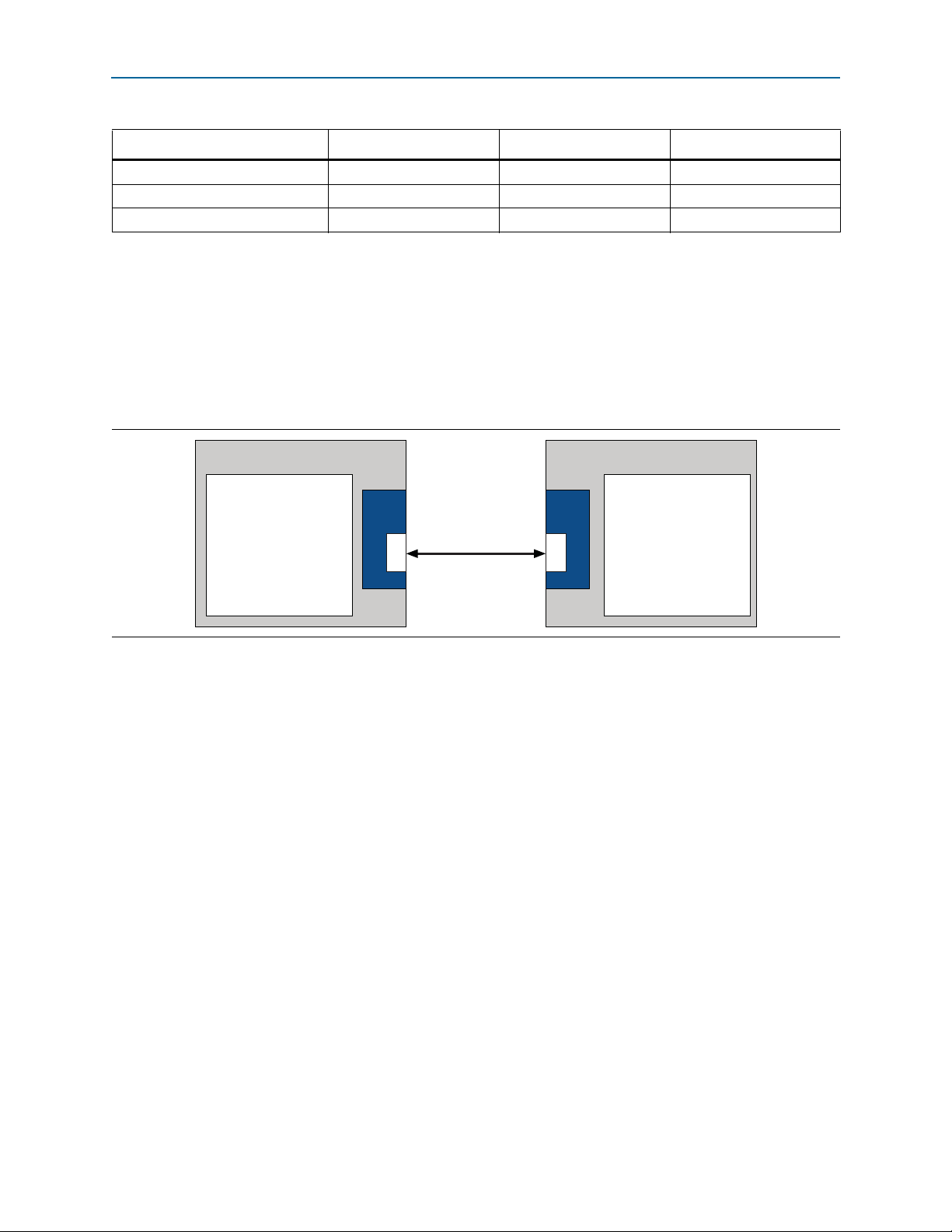

Figure 1–3 illustrates a heterogeneous topology, including an Altera device with two PCIe hard IP root ports. One root port connects directly to a second FPGA that includes an endpoint implemented using the hard IP implementation. The second root port connects to a switch that multiplexes among three PCI Express endpoints.

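Figure 1–3. PCI Express Application with Two Root Ports

(Diagram: an Altera FPGA with embedded PCIe hard IP blocks provides two root ports (RP). One PCIe link leads to an endpoint (EP) in a second Altera FPGA with embedded PCIe hard IP blocks; the other leads to a switch, whose PCIe links lead to endpoints implemented with PCIe hard IP blocks and with the IP Compiler for PCI Express soft IP implementation, one of which uses a PHY connected through the PIPE interface. Each device contains user application logic.)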


If you target a device that includes an internal transceiver, you can parameterize the IP Compiler for PCI Express to include a complete PHY layer, including the MAC, PCS, and PMA layers. If you target other device architectures, the IP Compiler for PCI Express generates the IP core with the Intel-designed PIPE interface, making the IP core usable with other PIPE-compliant external PHY devices.

Table 1–7 lists the protocol support for devices that include HSSI transceivers.

Table 1–7. Operation in Devices with HSSI Transceivers (Note 1)

| Device Family | ×1 | ×4 | ×8 |
|---|---|---|---|
| Stratix IV GX hard IP–Gen1 | Yes | Yes | Yes |
| Stratix IV GX hard IP–Gen2 | Yes (2) | Yes (2) | Yes (3) |
| Stratix IV soft IP–Gen1 | Yes | Yes | No |
| Cyclone IV GX hard IP–Gen1 | Yes | Yes | No |
| Arria II GX–Gen1 Hard IP Implementation | Yes | Yes | Yes |
| Arria II GX–Gen1 Soft IP Implementation | Yes | Yes | No |
| Arria II GZ–Gen1 Hard IP Implementation | Yes | Yes | Yes |
| Arria II GZ–Gen2 Hard IP Implementation | Yes | Yes | No |

Notes to Table 1–7:

(1) Refer to Table 1–2 on page 1–2 for a list of features available in the different implementations and design flows.

(2) Not available in –4 speed grade. Requires –2 or –3 speed grade.

(3) Gen2 ×8 is only available in the –2 and I3 speed grades.

The device names and part numbers for Altera FPGAs that include internal transceivers always include the letters GX, GT, or GZ. If you select a device that does not include an internal transceiver, you can use the PIPE interface to connect to an external PHY. Table 3–9 on page 3–8 lists the available external PHY types.

You can customize the payload size, buffer sizes, and configuration space (base address register support and other registers). Additionally, the IP Compiler for PCI Express supports end-to-end cyclic redundancy code (ECRC) and advanced error reporting (AER) for ×1, ×2, ×4, and ×8 configurations.

External PHY Support

Altera IP Compiler for PCI Express variations support a wide range of PHYs, including:

■ The TI XIO1100 PHY in 8-bit DDR/SDR mode or 16-bit SDR mode.

■ The NXP PX1011A in 8-bit SDR mode.

■ A serial PHY.

■ A range of custom PHYs using 8-bit/16-bit SDR or 8-bit DDR, each with or without source synchronous transmit clock modes.

You can constrain TX I/Os by turning on the Fast Output Enable Register option in the parameter editor, or by editing this setting in the Quartus II Settings File (.qsf). This constraint ensures the fastest tCO (clock-to-output) timing.

Debug Features

The IP Compiler for PCI Express also includes debug features that allow observation and control of the IP cores for faster debugging of system-level problems.

For more information about debugging, refer to Chapter 17, Debugging.

IP Core Verification

To ensure compliance with the PCI Express specification, Altera performs extensive validation of the IP Compiler for PCI Express. Validation includes both simulation and hardware testing.


Simulation Environment

Altera’s verification simulation environment for the IP Compiler for PCI Express uses multiple testbenches that consist of industry-standard bus functional models (BFMs) driving the PCI Express link interface. A custom BFM connects to the application-side interface.

Altera performs the following tests in the simulation environment:

■ Directed tests that test all types and sizes of transaction layer packets and all bits of the configuration space

■ Error injection tests that inject errors in the link, transaction layer packets, and data link layer packets, and check for the proper response from the IP cores

■ PCI-SIG® Compliance Checklist tests that specifically test the items in the checklist

■ Random tests that test a wide range of traffic patterns across one or more virtual channels

Compatibility Testing Environment

Altera has performed significant hardware testing of the IP Compiler for PCI Express to ensure a reliable solution. The IP cores have been tested at various PCI-SIG PCI Express Compliance Workshops in 2005–2009 with Arria GX, Arria II GX, Cyclone IV GX, Stratix II GX, and Stratix IV GX devices and various external PHYs. They have passed all PCI-SIG gold tests and interoperability tests with a wide selection of motherboards and test equipment. In addition, Altera internally tests every release with motherboards and switch chips from a variety of manufacturers. All PCI-SIG compliance tests are also run with each IP core release.

Performance and Resource Utilization

The hard IP implementation of the IP Compiler for PCI Express is available in

Arria II GX, Arria II GZ, Cyclone IV GX, and Stratix IV GX devices.

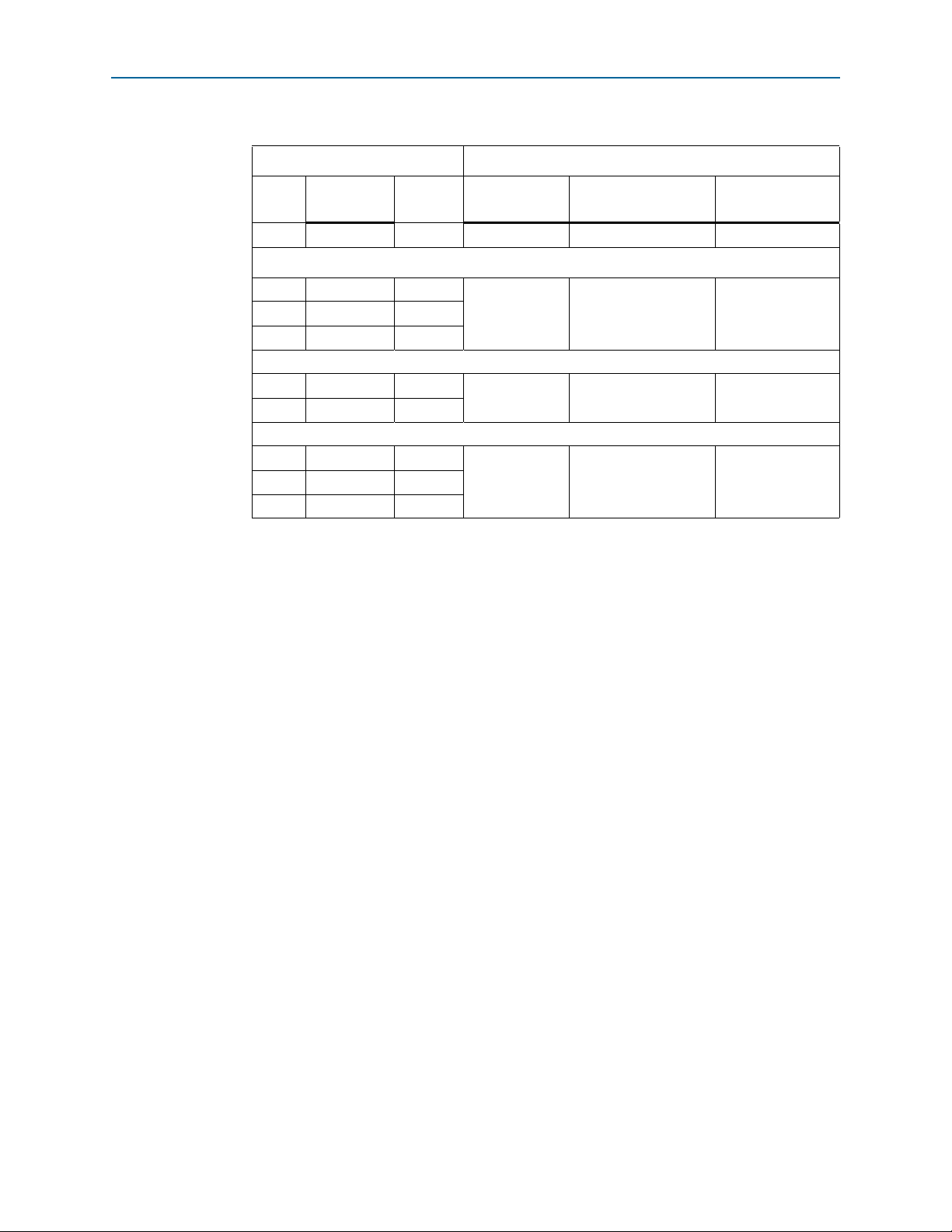

Tab le 1– 8 shows the resource utilization for the hard IP implementation using either

the Avalon-ST or Avalon-MM interface with a maximum payload of 256 bytes and 32

tags for the Avalon-ST interface and 16 tags for the Avalon-MM interface.

Table 1–8. Performance and Resource Utilization in Arria II GX, Arria II GZ, Cyclone IV GX, and Stratix IV GX Devices (Part 1 of 2)

| Lane Width | Internal Clock (MHz) | Virtual Channel | Combinational ALUTs | Dedicated Registers | Memory Blocks (M9K) |
|---|---|---|---|---|---|
| Avalon-ST Interface | | | | | |
| ×1 | 125 | 1 | 100 | 100 | 0 |
| ×1 | 125 | 2 | 100 | 100 | 0 |
| ×4 | 125 | 1 | 200 | 200 | 0 |
| ×4 | 125 | 2 | 200 | 200 | 0 |
| ×8 | 250 | 1 | 200 | 200 | 0 |
| ×8 | 250 | 2 | 200 | 200 | 0 |


Table 1–8. Performance and Resource Utilization in Arria II GX, Arria II GZ, Cyclone IV GX, and

Stratix IV GX Devices (Part 2 of 2)

Parameters Size

Lane

Width

×4 125 1

×1 125 1

×8 250 1

×1 125 1

×4 125 1

×1 125 1

×4 250 1

Note to Table 1–8:

(1) The transaction layer of the Avalon-MM implementation is implemented in programmable logic to improve latency.

Internal

Clock (MHz)

Avalon-MM Interface–Qsys Design Flow - Completer Only Single Dword

Virtual

Channel

Avalon-MM Interface–Qsys Design Flow

Avalon-MM Interface–Qsys Design Flow - Completer Only

Combinational

ALUTs

1600 1600 18 ×4 125 1

1000 1150 10

430 450 0 ×4 125 1

Dedicated

Registers

Memory Blocks

M9K

Refer to Appendix C, Performance and Resource Utilization Soft IP Implementation, for performance and resource utilization for the soft IP implementation.

Recommended Speed Grades

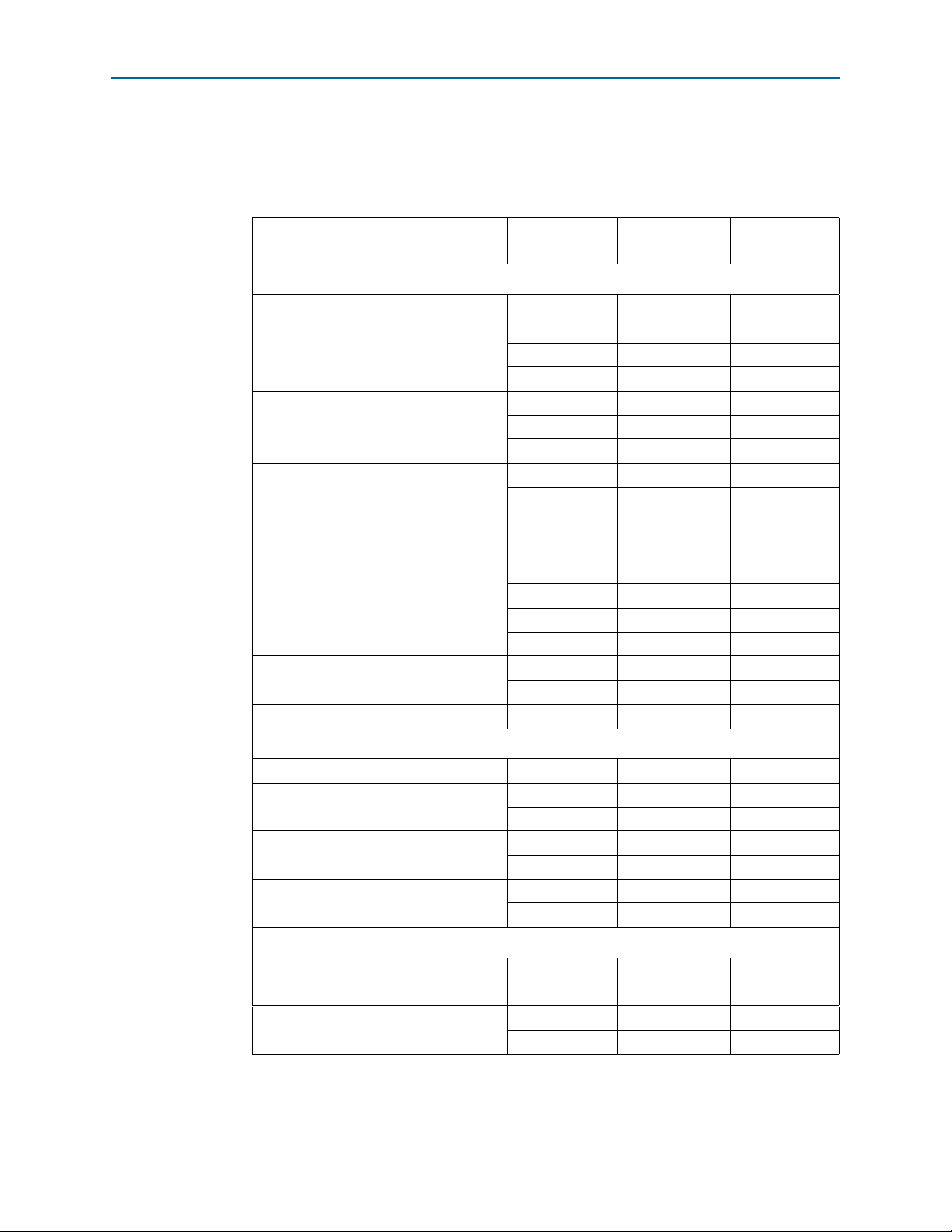

Tab le 1– 9 shows the recommended speed grades for each device family for the

supported link widths and internal clock frequencies. For soft IP implementations of

the IP Compiler for PCI Express, the table lists speed grades that are likely to meet

timing; it may be possible to close timing in a slower speed grade. For the hard IP

implementation, the speed grades listed are the only speed grades that close timing.

When the internal clock frequency is 125 MHz or 250 MHz, Altera recommends

setting the Quartus II Analysis & Synthesis Settings Optimization Technique to

Speed.
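The internal clock frequencies in Table 1–9 are tied to the link bandwidth and to the width of the application-side datapath: the clock must be fast enough to move the link's data rate (Table 1–1) across that datapath. The Python sketch below computes this lower bound, assuming a 64-bit or 128-bit datapath carrying one word per clock and ignoring protocol overhead. It is only a minimum; several configurations in Table 1–9 run faster than it (for example, the ×1 rows at 62.5 MHz and 125 MHz). `min_clock_mhz` is a hypothetical name.

```python
# Per-lane signaling rate in mega-transfers per second (Gen1, Gen2).
RAW_RATE_MTPS = {1: 2500, 2: 5000}


def min_clock_mhz(gen: int, lanes: int, datapath_bits: int) -> float:
    """Lowest application clock (MHz) whose datapath can carry the link's data rate.

    Assumes one datapath word per clock and ignores TLP/DLLP overhead, so the
    result is a lower bound rather than the frequency the IP core actually uses.
    """
    data_mbps = RAW_RATE_MTPS[gen] * 8 // 10 * lanes  # remove 8B/10B overhead
    return data_mbps / datapath_bits


for gen, lanes, bits in [(1, 4, 64), (1, 8, 64), (1, 8, 128), (2, 4, 64)]:
    print(f"Gen{gen} x{lanes}, {bits}-bit datapath: >= {min_clock_mhz(gen, lanes, bits)} MHz")
```

The Gen1 ×8 case illustrates note (2) of Table 1–5: widening the bus to 128 bits halves the required clock from 250 MHz to 125 MHz.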


f Refer to “Setting Up and Running Analysis and Synthesis” in Quartus II Help and

Area and Timing Optimization in volume 2 of the Quartus II Handbook for more

information about how to effect this setting.

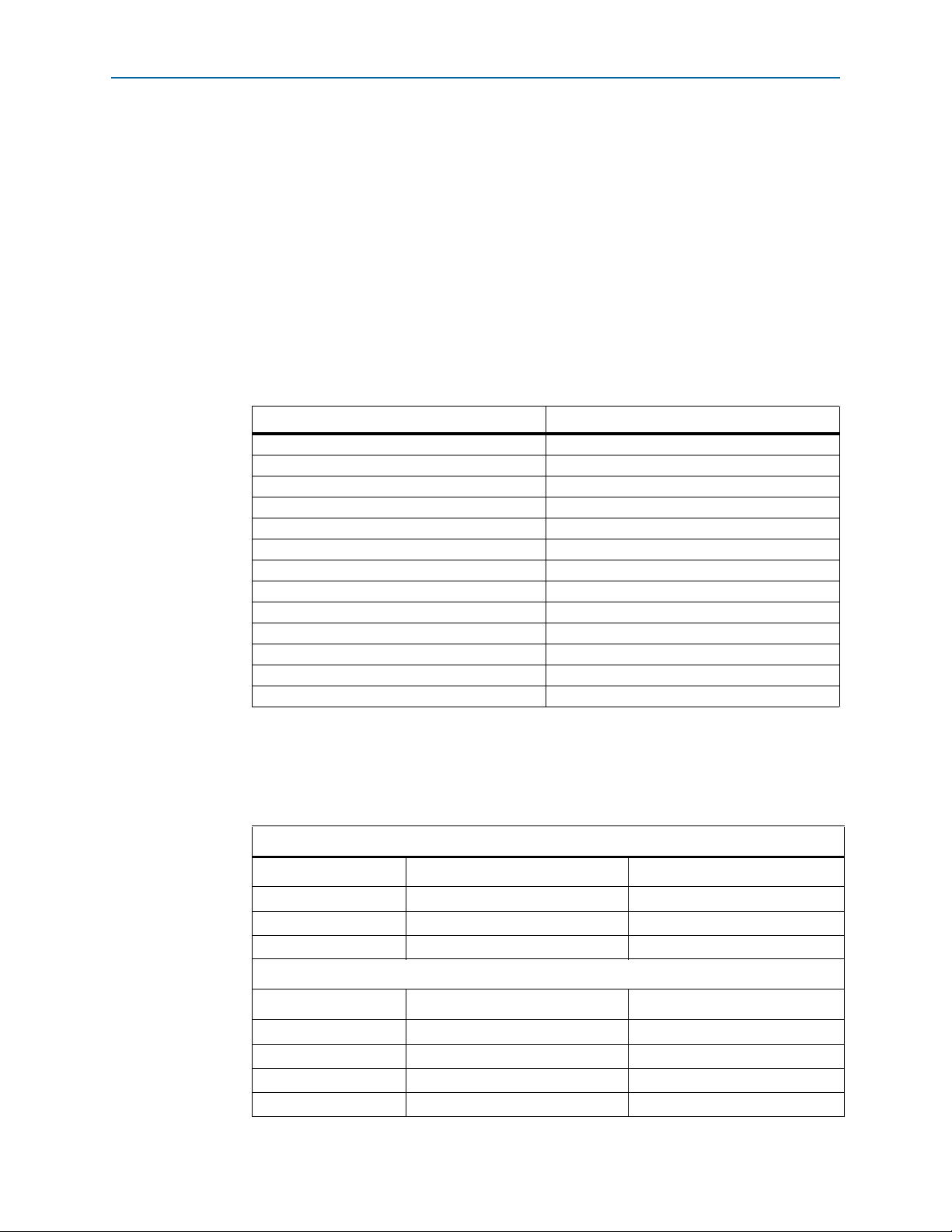

Table 1–9. Recommended Device Family Speed Grades (Part 1 of 2)

Device Family Link Width

Internal Clock

Frequency (MHz)

Recommended

Speed Grades

Avalon-ST Hard IP Implementation

×1 62.5 (2) –4,–5,–6

Arria II GX Gen1 with ECC Support (1)

×1 125 –4,–5,–6

×4 125 –4,–5,–6

×8 125 –4,–5,–6

×1 125 -3, -4

Arria II GZ Gen1 with ECC Support

×4 125 -3, -4

×8 125 -3, -4

Arria II GZ Gen 2 with ECC Support

Cyclone IV GX Gen1 with ECC Support

×1 125 -3

×4 125 -3

×1 62.5 (2) all speed grades

×1, ×2, ×4 125 all speed grades

×1 62.5 (2) –2, –3 (3)

Stratix IV GX Gen1 with ECC Support (1)

×1 125 –2, –3, –4

×4 125 –2, –3, –4

×8 250 –2, –3, –4 (3)

Stratix IV GX Gen2 with ECC Support (1)

×1 125 –2, –3 (3)

×4 250 –2, –3 (3)

Stratix IV GX Gen2 without ECC Support ×8 500 –2, I3 (4)

Avalon–MM Interface–Qsys Flow

Arria II GX ×1, ×4 125 –6

Cyclone IV GX

Stratix IV GX Gen1

Stratix IV GX Gen2

×1, ×2, ×4 125 –6, –7

×1 62.5 –6, –7, –8

×1, ×4 125 –2, –3, –4

×8 250 –2, –3

×1 125 –2, –3

×4 250 –2, –3

Avalon-ST or Descriptor/Data Interface Soft IP Implementation

Arria II GX ×1, ×4 125 –4. –5 (5)

Cyclone IV GX ×1 125 –6, –7 (5)

Stratix IV E Gen1

IP Compiler for PCI Express User Guide August 2014 Altera Corporation

×1 62.5 all speed grades

×1, ×4 125 all speed grades

Page 15

Chapter 1: Datasheet 1–13

Recommended Speed Grades

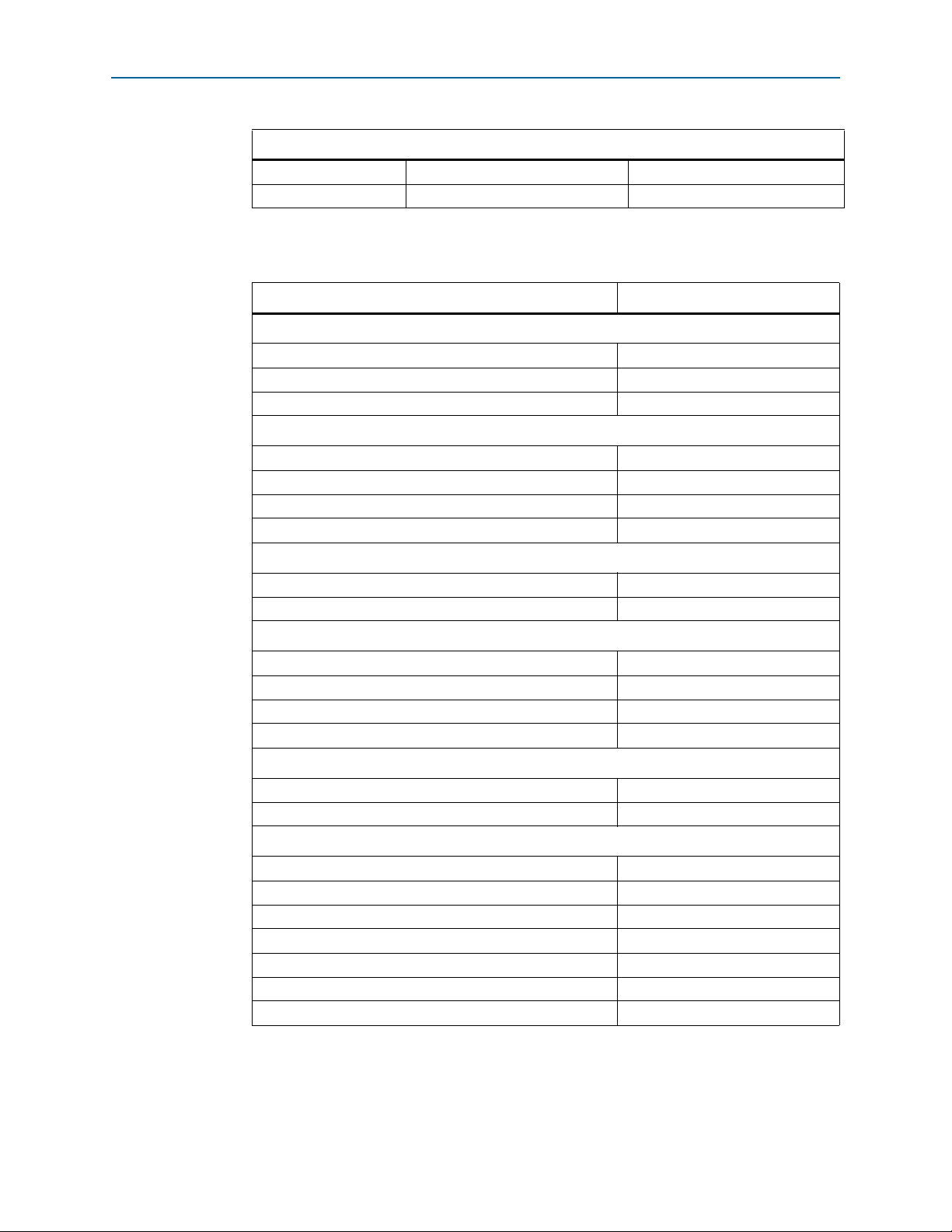

Table 1–9. Recommended Device Family Speed Grades (Part 2 of 2)

Device Family Link Width

Stratix IV GX Gen1

Notes to Table 1–9:

(1) The RX Buffer and Retry Buffer ECC options are only available in the hard IP implementation.

(2) This is a power-saving mode of operation.

(3) Final results pending characterization by Altera for speed grades -2, -3, and -4. Refer to the .fit.rpt file generated

by the Quartus II software.

(4) Closing timing for the –3 speed grades in the provided endpoint example design requires seed sweeping.

(5) You must turn on the following Physical Synthesis settings in the Quartus II Fitter Settings to achieve timing

closure for these speed grades and variations: Perform physical synthesis for combinational logic, Perform

register duplication, and Perform register retiming. In addition, you can use the Quartus II Design Space

Explorer or Quartus II seed sweeping methodology. Refer to the Netlist Optimizations and Physical Synthesis

chapter in volume 2 of the Quartus II Handbook for more information about how to set these options.

(6) Altera recommends disabling the OpenCore Plus feature for the ×8 soft IP implementation because including this

feature makes it more difficult to close timing.

×1 62.5 all speed grades

×4 125 all speed grades

Internal Clock

Frequency (MHz)

Recommended

Speed Grades


2. Getting Started

This chapter provides step-by-step instructions to help you quickly set up and simulate the IP Compiler for PCI Express testbench. The IP Compiler for PCI Express provides numerous configuration options. The parameters chosen in this chapter are the same as those chosen in the PCI Express High-Performance Reference Design available on the Altera website.

Installing and Licensing IP Cores

The Altera IP Library provides many useful IP core functions for production use without purchasing an additional license. You can evaluate any Altera IP core in simulation and compilation in the Quartus II software using the OpenCore evaluation feature.

Some Altera IP cores, such as MegaCore® functions, require that you purchase a separate license for production use. You can use the OpenCore Plus feature to evaluate IP that requires purchase of an additional license until you are satisfied with the functionality and performance. After you purchase a license, visit the Self Service Licensing Center to obtain a license number for any Altera product. For additional information, refer to Altera Software Installation and Licensing.

Figure 2–1. IP Core Installation Path

■ acds/quartus: Contains the Quartus II software

■ acds/ip: Contains the Altera IP Library and third-party IP cores

■ acds/ip/altera: Contains the Altera IP Library source code

■ acds/ip/altera/<IP core name>: Contains the IP core source files

The default installation directory on Windows is <drive>:\altera\<version number>; on Linux it is <home directory>/altera/<version number>.

OpenCore Plus IP Evaluation

Altera's free OpenCore Plus feature allows you to evaluate licensed MegaCore IP cores in simulation and hardware before purchase. You need to purchase a license for MegaCore IP cores only if you decide to take your design to production. OpenCore Plus supports the following evaluations:

■ Simulate the behavior of a licensed IP core in your system.

■ Verify the functionality, size, and speed of the IP core quickly and easily.

■ Generate time-limited device programming files for designs that include IP cores.

■ Program a device with your IP core and verify your design in hardware.

OpenCore Plus evaluation supports the following two operation modes:

■ Untethered: run the design containing the licensed IP for a limited time.

■ Tethered: run the design containing the licensed IP for a longer time or indefinitely. This requires a connection between your board and the host computer.

All IP cores that use OpenCore Plus time out simultaneously when any IP core in the

design times out.

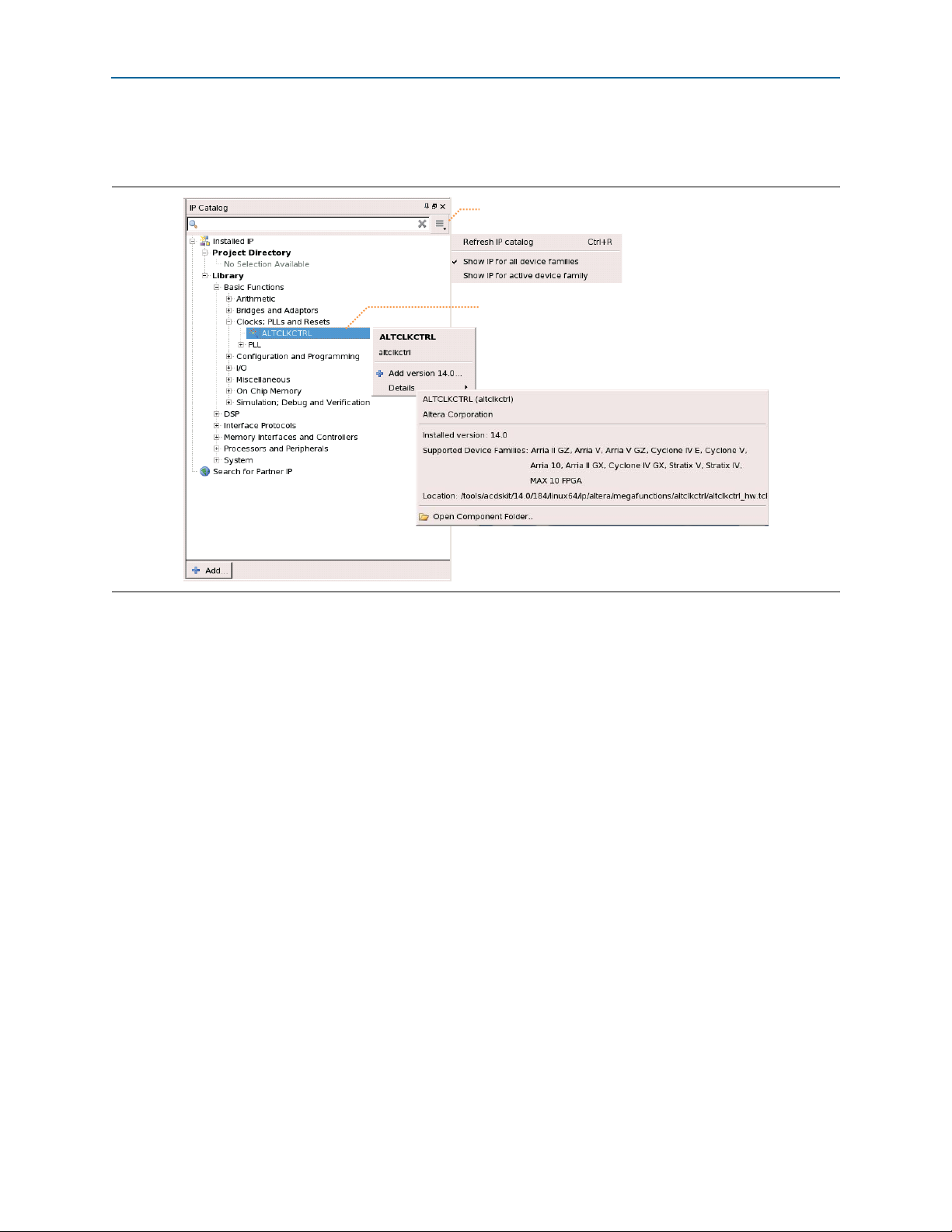

IP Catalog and Parameter Editor

The Quartus II IP Catalog (Tools > IP Catalog) and parameter editor help you easily customize and integrate IP cores into your project. You can use the IP Catalog and parameter editor to select, customize, and generate files representing your custom IP variation.

The IP Catalog (Tools > IP Catalog) and parameter editor replace the MegaWizard™ Plug-In Manager for IP selection and parameterization, beginning in Quartus II software version 14.0. Use the IP Catalog and parameter editor to locate and parameterize Altera IP cores.

The IP Catalog lists IP cores available for your design. Double-click any IP core to launch the parameter editor and generate files representing your IP variation. The parameter editor prompts you to specify an IP variation name, optional ports, and output file generation options. The parameter editor generates a top-level Qsys system file (.qsys) or Quartus II IP file (.qip) representing the IP core in your project. You can also parameterize an IP variation without an open project.

Use the following features to help you quickly locate and select an IP core:

■ Filter IP Catalog to Show IP for active device family or Show IP for all device families.

■ Search to locate any full or partial IP core name in IP Catalog. Click Search for Partner IP to access partner IP information on the Altera website.

■ Right-click an IP core name in IP Catalog to display details about supported devices, installation location, and links to documentation.

Figure 2–2. Quartus II IP Catalog

(Callouts: Search and filter IP for your target device. Double-click to customize, right-click for information.)

1 The IP Catalog is also available in Qsys (View > IP Catalog). The Qsys IP Catalog

includes exclusive system interconnect, video and image processing, and other

system-level IP that are not available in the Quartus II IP Catalog.

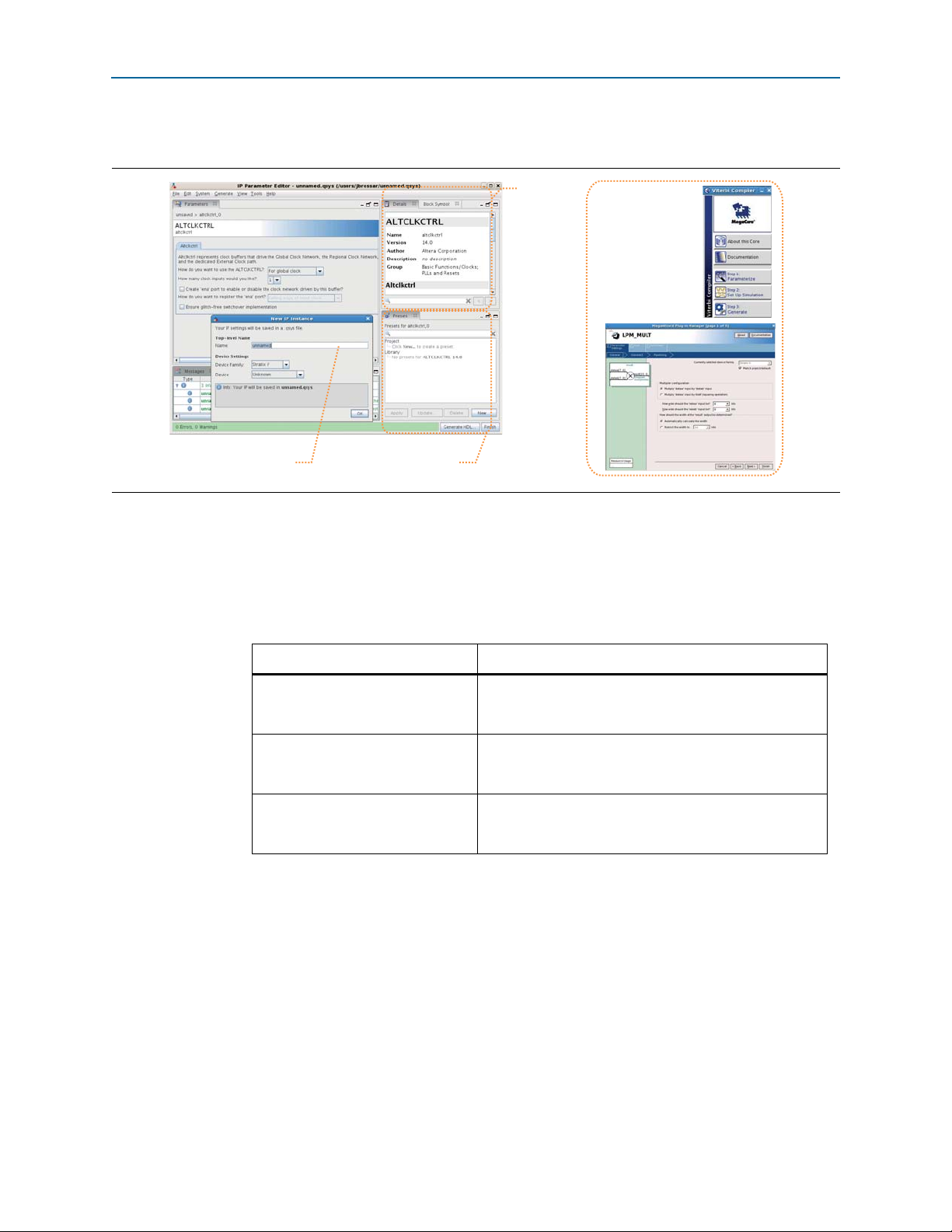

Using the Parameter Editor

The parameter editor helps you to configure your IP variation ports, parameters,

architecture features, and output file generation options:

■ Use preset settings in the parameter editor (where provided) to instantly apply

preset parameter values for specific applications.

■ View port and parameter descriptions and links to detailed documentation.


■ Generate testbench systems or example designs (where provided).

Figure 2–3. IP Parameter Editors (callouts: view IP port and parameter details; apply preset parameters for specific applications; specify your IP variation name and target device; legacy parameter editors)

Modifying an IP Variation

You can easily modify the parameters of any Altera IP core variation in the parameter

editor to match your design requirements. Use any of the following methods to

modify an IP variation in the parameter editor.

Table 2–1. Modifying an IP Variation

| Menu Command | Action |
|---|---|
| File > Open | Select the top-level HDL (.v or .vhd) IP variation file to launch the parameter editor and modify the IP variation. Regenerate the IP variation to implement your changes. |
| View > Utility Windows > Project Navigator > IP Components | Double-click the IP variation to launch the parameter editor and modify the IP variation. Regenerate the IP variation to implement your changes. |
| Project > Upgrade IP Components | Select the IP variation and click Upgrade in Editor to launch the parameter editor and modify the IP variation. Regenerate the IP variation to implement your changes. |

Upgrading Outdated IP Cores

IP core variants generated with a previous version of the Quartus II software may require upgrading before use in the current version of the Quartus II software. Click Project > Upgrade IP Components to identify and upgrade IP core variants.

The Upgrade IP Components dialog box provides instructions when IP upgrade is required, optional, or unsupported for specific IP cores in your design. You must upgrade IP cores that require it before you can compile the IP variation in the current version of the Quartus II software. Many Altera IP cores support automatic upgrade.


The upgrade process renames and preserves the existing variation file (.v, .sv, or .vhd) as <my_ip>_BAK.v, .sv, or .vhd in the project directory.

Table 2–2. IP Core Upgrade Status

| IP Core Status | Corrective Action |
|---|---|
| Required Upgrade IP Components | You must upgrade the IP variation before compiling in the current version of the Quartus II software. |
| Optional Upgrade IP Components | Upgrade is optional for this IP variation in the current version of the Quartus II software. You can upgrade this IP variation to take advantage of the latest development of this IP core. Alternatively, you can retain previous IP core characteristics by declining to upgrade. |
| Upgrade Unsupported | Upgrade of the IP variation is not supported in the current version of the Quartus II software due to IP core end of life or incompatibility with the current version of the Quartus II software. You are prompted to replace the obsolete IP core with a current equivalent IP core from the IP Catalog. |

Before you begin

■ Archive the Quartus II project containing outdated IP cores in the original version

of the Quartus II software: Click Project > Archive Project to save the project in

your previous version of the Quartus II software. This archive preserves your

original design source and project files.

■ Restore the archived project in the latest version of the Quartus II software: Click

Project > Restore Archived Project. Click OK if prompted to change to a

supported device or overwrite the project database. File paths in the archive must

be relative to the project directory. File paths in the archive must reference the IP

variation .v or .vhd file or .qsys file (not the .qip file).

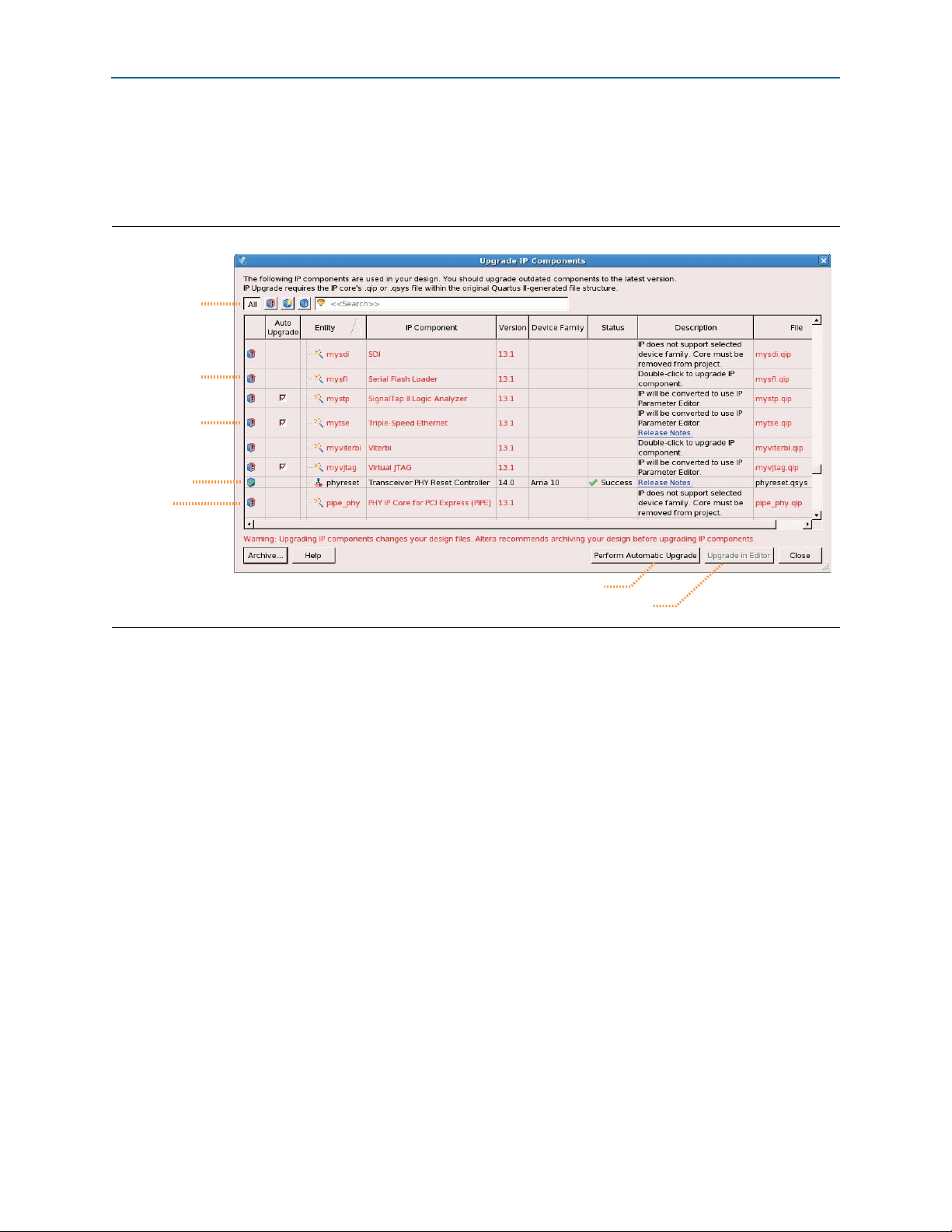

1. In the latest version of the Quartus II software, open the Quartus II project

containing an outdated IP core variation. The Upgrade IP Components dialog

automatically displays the status of IP cores in your project, along with

instructions for upgrading each core. Click Project > Upgrade IP Components to

access this dialog box manually.


2. To simultaneously upgrade all IP cores that support automatic upgrade, click Perform Automatic Upgrade. The Status and Version columns update when upgrade is complete. Example designs provided with any Altera IP core regenerate automatically whenever you upgrade the IP core.

Figure 2–4. Upgrading IP Cores (callouts: displays upgrade status for all IP cores in the project; upgrades all IP cores that support “Auto Upgrade”; upgrades individual IP cores unsupported by “Auto Upgrade”; checked IP cores support “Auto Upgrade”; successful “Auto Upgrade”; upgrade unavailable; double-click to individually migrate)

Upgrading IP Cores at the Command Line

You can upgrade IP cores that support auto upgrade at the command line. IP cores that do not support automatic upgrade do not support command line upgrade.

■ To upgrade a single IP core that supports auto-upgrade, type the following command:

quartus_sh -ip_upgrade -variation_files <my_ip_filepath/my_ip>.<hdl> <qii_project>

Example:

quartus_sh -ip_upgrade -variation_files mega/pll25.v hps_testx

■ To simultaneously upgrade multiple IP cores that support auto-upgrade, type the following command:

quartus_sh -ip_upgrade -variation_files "<my_ip_filepath/my_ip1>.<hdl>;<my_ip_filepath/my_ip2>.<hdl>" <qii_project>

Example:

quartus_sh -ip_upgrade -variation_files "mega/pll_tx2.v;mega/pll3.v" hps_testx

Note: IP cores older than Quartus II software version 12.0 do not support upgrade. Altera verifies that the current version of the Quartus II software compiles the previous version of each IP core. The MegaCore IP Library Release Notes report any verification exceptions for MegaCore IP. The Quartus II Software and Device Support Release Notes report any verification exceptions for other IP cores. Altera does not verify compilation for IP cores older than the previous two releases.
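When you script upgrades for several variations, the main pitfall is quoting: the semicolon-separated file list must reach quartus_sh as a single argument, or the shell treats the semicolon as a command separator. The following Python sketch builds the argument list for the multiple-file command shown above. The helper name build_ip_upgrade_cmd is hypothetical, and the sketch only constructs the command; it does not invoke quartus_sh.

```python
import shlex


def build_ip_upgrade_cmd(variation_files, project):
    """Return an argv list for auto-upgrading one or more IP variations.

    variation_files: paths to IP variation files (.v, .sv, or .vhd).
    project: Quartus II project name, for example "hps_testx".
    (Hypothetical helper; it mirrors the command syntax shown above.)
    """
    if not variation_files:
        raise ValueError("at least one variation file is required")
    # Multiple variation files travel as ONE semicolon-separated argument.
    return ["quartus_sh", "-ip_upgrade",
            "-variation_files", ";".join(variation_files),
            project]


cmd = build_ip_upgrade_cmd(["mega/pll_tx2.v", "mega/pll3.v"], "hps_testx")
# shlex.join quotes the file list so a POSIX shell keeps it as one word.
print(shlex.join(cmd))
# quartus_sh -ip_upgrade -variation_files 'mega/pll_tx2.v;mega/pll3.v' hps_testx
```

To run the upgrade from a script, you could pass the list to subprocess.run(cmd, check=True), assuming quartus_sh is on your PATH; passing a list rather than a single string avoids shell quoting altogether.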


Parameterizing the IP Compiler for PCI Express

This section guides you through the process of parameterizing the IP Compiler for

PCI Express as an endpoint, using the same options that are chosen in Chapter 15,

Testbench and Design Example. Complete the following steps to specify the

parameters:

1. In the IP Catalog (Tools > IP Catalog), locate and double-click the name of the IP

core to customize. The parameter editor appears.

2. Specify a top-level name for your custom IP variation. This name identifies the IP

core variation files in your project. For this walkthrough, specify top.v for the

name of the IP core file: <working_dir>\top.v.

3. Specify the following values in the parameter editor:

Table 2–3. System Settings Parameters

| Parameter | Value |
|---|---|
| PCIe Core Type | PCI Express hard IP |
| PHY type | Stratix IV GX |
| PHY interface | serial |
| Configure transceiver block | Use default settings. |
| Lanes | ×8 |
| Xcvr ref_clk | 100 MHz |
| Application interface | Avalon-ST 128-bit |
| Port type | Native Endpoint |
| PCI Express version | 2.0 |
| Application clock | 250 MHz |
| Max rate | Gen 2 (5.0 Gbps) |
| Test out width | 64 bits |
| HIP reconfig | Disable |
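The 128-bit Avalon-ST interface at 250 MHz is sized to keep pace with a Gen2 ×8 link. The following Python sketch compares the link's per-direction data rate after 8b/10b encoding with the raw throughput of the application interface. The function names are illustrative, and both figures ignore TLP headers, DLLPs, and other protocol overhead, so sustained payload throughput is lower than either number.

```python
def link_bandwidth_gbps(rate_gtps, lanes):
    """Per-direction data rate of a Gen1/Gen2 link after 8b/10b encoding."""
    # Gen1 (2.5 GT/s) and Gen2 (5.0 GT/s) carry 8 data bits per 10 line bits.
    return rate_gtps * lanes * 8 / 10


def app_interface_gbps(width_bits, clock_mhz):
    """Peak throughput of a datapath that moves width_bits every clock."""
    return width_bits * clock_mhz / 1000


link = link_bandwidth_gbps(5.0, 8)     # Gen2 x8, from Table 2-3
app = app_interface_gbps(128, 250)     # Avalon-ST 128-bit at 250 MHz
print(f"link: {link} Gbps, application interface: {app} Gbps")
# link: 32.0 Gbps, application interface: 32.0 Gbps
```

Because the two rates match, the 128-bit interface can absorb a fully utilized Gen2 ×8 link without becoming the bottleneck.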

4. To enable all of the tests in the provided testbench and chaining DMA example design, make the base address register (BAR) assignments. BAR2 or BAR3 is required. Table 2–4 provides the BAR assignments.

Table 2–4. PCI Registers

PCI Base Registers (Type 0 Configuration Space)

| BAR | BAR Type | BAR Size |
|---|---|---|
| 0 | 32-bit Non-Prefetchable Memory | 256 MBytes (28 bits) |
| 1 | 32-bit Non-Prefetchable Memory | 256 KBytes (18 bits) |
| 2 | 32-bit Non-Prefetchable Memory | 256 KBytes (18 bits) |

PCI Read-Only Registers

| Register Name | Value |
|---|---|
| Device ID | 0xE001 |
| Subsystem ID | 0x2801 |
| Revision ID | 0x01 |
| Vendor ID | 0x1172 |
| Subsystem vendor ID | 0x5BDE |
| Class code | 0xFF0000 |
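The BAR Size column gives both the size and the number of low-order address bits the BAR decodes, because a BAR of 2^n bytes hardwires its low n address bits to zero. Host software discovers the size by writing all ones to the BAR and reading back the resulting mask. The following Python sketch illustrates that standard PCI sizing convention for the 32-bit non-prefetchable memory BARs in Table 2–4; it models the configuration-space encoding only, is not taken from the IP core's implementation, and uses hypothetical function names.

```python
def bar_size_bytes(address_bits):
    """Size of a BAR that decodes address_bits low-order address bits."""
    return 1 << address_bits


def bar_readback_32bit_mem(address_bits, prefetchable=False):
    """Value read from a 32-bit memory BAR after software writes 0xFFFFFFFF.

    Bit 0 = 0 (memory space), bits [2:1] = 00 (32-bit decoder),
    bit 3 = prefetchable flag. The low address_bits bits read as zero.
    """
    mask = ~(bar_size_bytes(address_bits) - 1) & 0xFFFFFFFF
    return mask | (0x8 if prefetchable else 0x0)


def size_from_readback(value):
    """Recover the BAR size from a 32-bit memory BAR readback value."""
    address_mask = value & 0xFFFFFFF0          # drop the four type bits
    return (~address_mask & 0xFFFFFFFF) + 1


# BAR number and decoded address bits, as listed in Table 2-4.
for bar, bits in [(0, 28), (1, 18), (2, 18)]:
    rb = bar_readback_32bit_mem(bits)
    print(f"BAR{bar}: {bar_size_bytes(bits)} bytes, readback 0x{rb:08X}")
```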

5. Specify the following settings for the Capabilities parameters.

Table 2–5. Capabilities Parameters

| Group | Parameter | Value |
|---|---|---|
| Device Capabilities | Tags supported | 32 |
| | Implement completion timeout disable | On |
| | Completion timeout range | ABCD |
| Error Reporting | Implement advanced error reporting | Off |
| | Implement ECRC check | Off |
| | Implement ECRC generation | Off |
| | Implement ECRC forwarding | Off |
| MSI Capabilities | MSI messages requested | 4 |
| | MSI message 64-bit address capable | On |
| Link Capabilities | Link common clock | On |
| | Data link layer active reporting | Off |
| | Surprise down reporting | Off |
| | Link port number | 0x01 |
| Slot Capabilities | Enable slot capability | Off |
| | Slot capability register | 0x0000000 |
| MSI-X Capabilities | Implement MSI-X | Off |
| | Table size | 0x000 |
| | Offset | 0x00000000 |
| | BAR indicator (BIR) | 0 |
| | Pending Bit Array (PBA) offset | 0x00000000 |
| | Pending Bit Array (PBA) BAR indicator | 0 |
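The MSI capability encodes the vector count as a power-of-two exponent, so software that grants all four requested vectors writes 2 to the Multiple Message Enable field; the simulation transcript in Example 2–1 reports multi_message_enable = 0x0002. With multiple vectors enabled, the function signals vector k by modifying only the low-order bits of the Message Data value. The following Python sketch reproduces the msi_expected values 0xB0FC (msi_number 0) and 0xB0FD (msi_number 1) from that transcript. The base value 0xB0FC is read from the transcript, how the BFM chooses it is not described here, and the helper names are hypothetical.

```python
def mmc_encoding(num_messages):
    """Encode a vector count for the MSI Multiple Message Capable/Enable
    fields: the field holds log2 of the count (1, 2, 4, 8, 16, or 32)."""
    if not 1 <= num_messages <= 32 or num_messages & (num_messages - 1):
        raise ValueError("MSI supports 1, 2, 4, 8, 16, or 32 vectors")
    return num_messages.bit_length() - 1


def msi_data(base_data, mme, vector):
    """Message Data the function sends for a given vector.

    With 2**mme vectors enabled, only the low mme bits of the 16-bit
    Message Data programmed by software may be replaced.
    """
    if not 0 <= vector < (1 << mme):
        raise ValueError("vector exceeds the number of enabled messages")
    low_mask = (1 << mme) - 1
    return ((base_data & ~low_mask) | vector) & 0xFFFF


mme = mmc_encoding(4)   # "MSI messages requested = 4" in Table 2-5
print(mme, hex(msi_data(0xB0FC, mme, 0)), hex(msi_data(0xB0FC, mme, 1)))
# 2 0xb0fc 0xb0fd
```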


6. Click the Buffer Setup tab to specify settings on the Buffer Setup page.

Table 2–6. Buffer Setup Parameters

| Parameter | Value |
|---|---|
| Maximum payload size | 512 bytes |
| Number of virtual channels | 1 |
| Number of low-priority VCs | None |
| Auto configure retry buffer size | On |
| Retry buffer size | 16 KBytes |
| Maximum retry packets | 64 |
| Desired performance for received requests | Maximum |
| Desired performance for received completions | Maximum |

Note: For the PCI Express hard IP implementation, the RX Buffer Space Allocation is fixed at Maximum performance. This setting determines the values for a read-only table that lists the number of posted header credits, posted data credits, non-posted header credits, completion header credits, completion data credits, total header credits, and total RX buffer space.
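To interpret the read-only credit table, recall the PCI Express flow control units: each TLP consumes one header credit of its type, and each data credit covers 4 DWORDs (16 bytes) of payload. The following Python sketch, assuming those standard units, computes the credits that one posted memory write consumes at the 512-byte maximum payload size from Table 2–6. It does not reproduce the IP core's buffer allocation, and the function names are hypothetical.

```python
import math

DATA_CREDIT_BYTES = 16   # one PCIe data credit covers 4 DWORDs (16 bytes)


def data_credits(payload_bytes):
    """Data credits consumed by one TLP carrying payload_bytes of data."""
    return math.ceil(payload_bytes / DATA_CREDIT_BYTES)


def credits_for_write(payload_bytes):
    """(posted header credits, posted data credits) for one memory write."""
    return 1, data_credits(payload_bytes)


print(credits_for_write(512))   # maximum payload size from Table 2-6
# (1, 32)
```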

7. Specify the following power management settings.

Table 2–7. Power Management Parameters

| Group | Parameter | Value |
|---|---|---|
| L0s Active State Power Management (ASPM) | Idle threshold for L0s entry | 8,192 ns |
| | Endpoint L0s acceptable latency | < 64 ns |
| | Number of fast training sequences (N_FTS), common clock | Gen2: 255 |
| | Number of fast training sequences (N_FTS), separate clock | Gen2: 255 |
| | Electrical idle exit (EIE) before FTS | 4 |
| L1 Active State Power Management (ASPM) | Enable L1 ASPM | Off |
| | Endpoint L1 acceptable latency | < 1 µs |
| | L1 exit latency, common clock | > 64 µs |
| | L1 exit latency, separate clock | > 64 µs |
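N_FTS advertises how many Fast Training Sequences this port's receiver needs to re-acquire bit and symbol lock when the link exits L0s; the link partner sends that many. As a rough estimate, the following Python sketch converts N_FTS into transmission time: each FTS ordered set is 4 symbols, and with 8b/10b encoding each symbol is 10 unit intervals. The estimate ignores the electrical idle exit sequences, SKP ordered sets, and receiver settling, so treat it as a lower bound rather than the core's actual L0s exit latency. The function name is hypothetical.

```python
def fts_duration_ns(n_fts, rate_gtps):
    """Approximate time to transmit n_fts Fast Training Sequences.

    Each FTS ordered set is 4 symbols; each 8b/10b symbol is 10 unit
    intervals (UI), and one UI lasts 1/rate_gtps nanoseconds.
    """
    symbols = n_fts * 4
    ui_per_symbol = 10
    return symbols * ui_per_symbol / rate_gtps


print(fts_duration_ns(255, 5.0))   # Gen2, N_FTS = 255 -> 2040.0 ns
print(fts_duration_ns(255, 2.5))   # same count at Gen1 -> 4080.0 ns
```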

8. On the EDA tab, turn on Generate simulation model to generate an IP functional

simulation model for the IP core. An IP functional simulation model is a

cycle-accurate VHDL or Verilog HDL model produced by the Quartus II software.

Caution: Use the simulation models only for simulation and not for synthesis or any other purposes. Using these models for synthesis creates a non-functional design.


<working_dir>

<variation>.v = top.v, the parameterized PCI Express IP Core

<variation>.sdc = top.sdc, the timing constraints file

<variation>.tcl = top.tcl, general Quartus II settings

<variation>_examples = top_examples

ip_compiler_for_pci_express-library

contains local copy of the pci express library files needed for

simulation, or compilation, or both

Testbench and

Design Example

Files

IP Compiler for

PCI Express

Files

Includes testbench and incremental compile directories

common

chaining_dma, files to implement the chaining DMA

top_example_chaining_top.qpf, the Quartus II project file

top_example_chaining_top.qsf, the Quartus II settings file

<variation>

_plus.v = top_plus.v,

the parameterized PCI Express IP Core including reset and

calibration circuitry

testbench, scripts to run the testbench

runtb.do, script to run the testbench

<variation>_chaining_testbench = top_chaining_testbench.v

altpcietb_bfm_driver_chaining.v , provides test stimulus

Simulation and

Quartus II

Compilation

(1) (2)

Viewing the Generated Files

9. On the Summary tab, select the files you want to generate. A gray checkmark

indicates a file that is automatically generated. All other files are optional.

10. Click Finish to generate the IP core, testbench, and supporting files.

1 A report file,

<

variation name>.html, in your project directory lists each file

generated and provides a description of its contents.

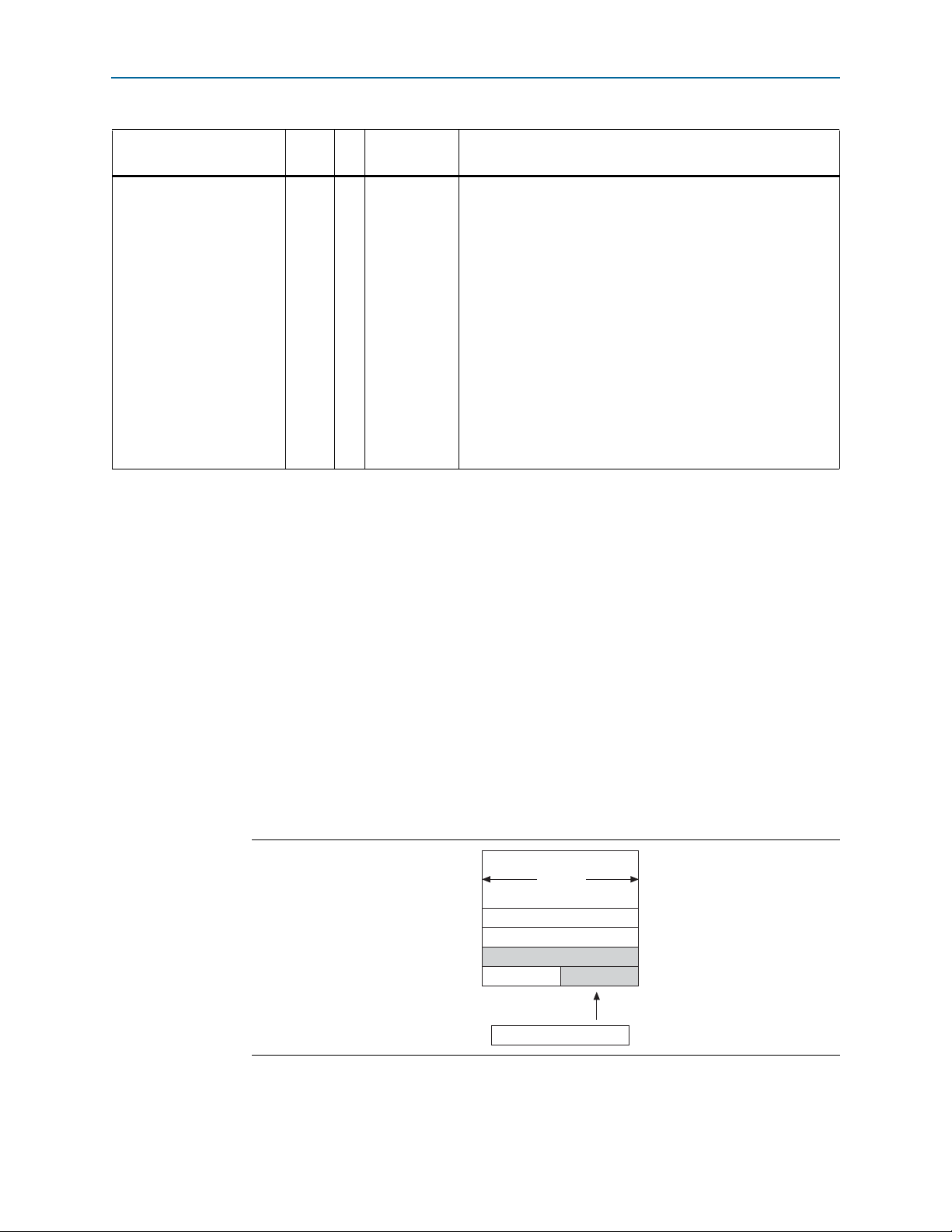

Viewing the Generated Files

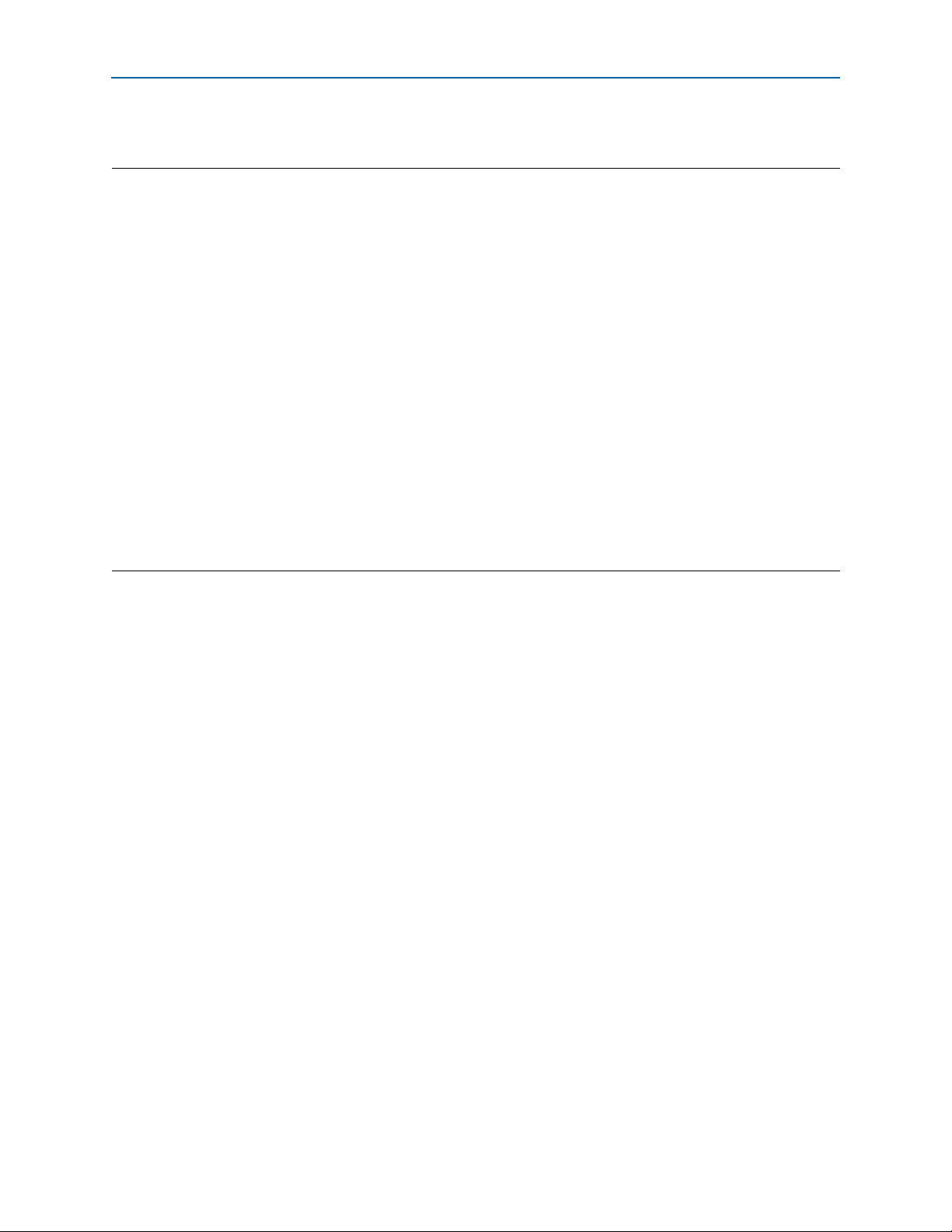

Figure 2–5 illustrates the directory structure created for this design after you generate

the IP Compiler for PCI Express. The directories includes the following files:

■ The IP Compiler for PCI Express design files, stored in <working_dir>.

■ The chaining DMA design example file, stored in the

<working_dir>\top_examples\chaining_dma directory. This design example tests

your generated IP Compiler for PCI Express variation. For detailed information

about this design example, refer to Chapter 15, Testbench and Design Example.

■ The simulation files for the chaining DMA design example, stored in the

<working_dir>\top_examples\chaining_dma\testbench directory. The Quartus II

software generates the testbench files if you turn on Generate simulation model

on the EDA tab while generating the IP Compiler for PCI Express.

0

Figure 2–5. Directory Structure for IP Compiler for PCI Express and Testbench

Notes to Figure 2–5:

(1) The chaining_dma directory contains the Quartus II project and settings files.

(2) <variation>_plus.v is only available for the hard IP implementation.
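After generation, a quick scripted check can confirm that the expected files exist before you start simulation or compilation. The following Python sketch assumes the variation name top used in this walkthrough and lists only files whose location this chapter states explicitly; runtb.do appears only if you turned on Generate simulation model. The function name and file list are illustrative and are not part of the Quartus II software.

```python
from pathlib import Path

# Paths relative to <working_dir> for a variation named "top"
# (illustrative list based on the locations described in this chapter).
EXPECTED_FILES = [
    "top.v",
    "top.sdc",
    "top.tcl",
    "top_examples/chaining_dma/top_example_chaining_top.qpf",
    "top_examples/chaining_dma/top_example_chaining_top.qsf",
    "top_examples/chaining_dma/testbench/runtb.do",
]


def missing_generated_files(working_dir, expected=EXPECTED_FILES):
    """Return the expected files that do not exist under working_dir."""
    root = Path(working_dir)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    # Run from <working_dir>; prints nothing when every file is present.
    for rel in missing_generated_files("."):
        print("missing:", rel)
```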


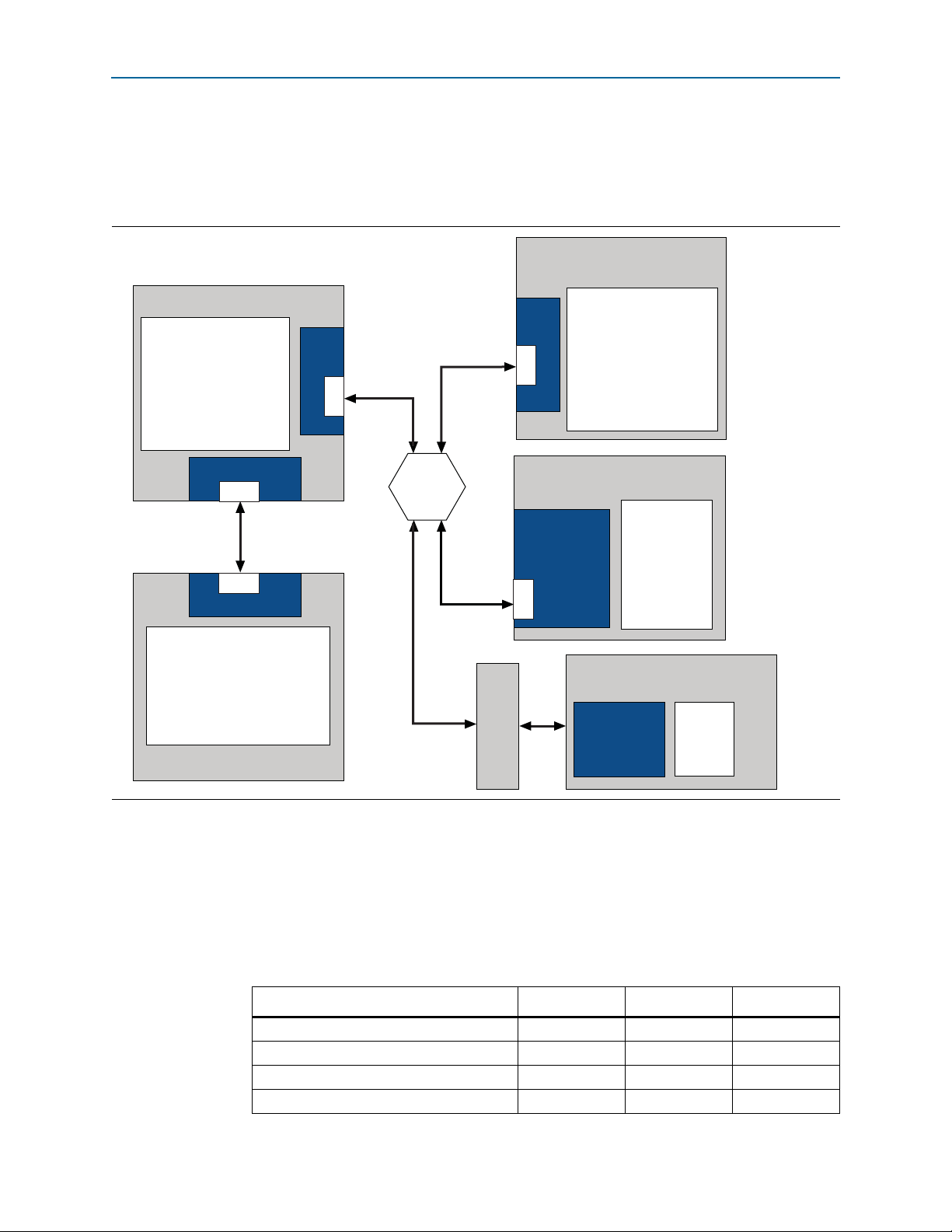

Figure 2–6 illustrates the top-level modules of this design. As this figure illustrates,

the IP Compiler for PCI Express connects to a basic root port bus functional model

(BFM) and an application layer high-performance DMA engine. These two modules,

when combined with the IP Compiler for PCI Express, comprise the complete

example design. The test stimulus is contained in altpcietb_bfm_driver_chaining.v.

The script to run the tests is runtb.do. For a detailed explanation of this example

design, refer to Chapter 15, Testbench and Design Example.

Figure 2–6. Testbench for the Chaining DMA Design Example. Blocks shown: Root Port BFM (Root Port Driver, ×8 Root Port Model); PCI Express Link; Endpoint Example (IP Compiler for PCI Express; Endpoint Application Layer Example with Traffic Control/Virtual Channel Mapping, Request/Completion Routing, RC Slave (Optional), DMA Write, DMA Read, and 32-KByte Endpoint Memory).

Note: The design files used in this design example are the same files that are used for the PCI Express High-Performance Reference Design. You can download the required files from the PCI Express High-Performance Reference Design product page. This product page includes design files for various devices. The example in this document uses the Stratix IV GX files. You can generate, simulate, and compile the design example with the files and capabilities provided in your Quartus II software and IP installation. However, to configure the example on a device, you must also download altpcie_demo.zip, which includes a software driver that the example design uses, from the PCI Express High-Performance Reference Design product page.


The Stratix IV .zip file includes files for Gen1 and Gen2 ×1, ×4, and ×8 variants. The

example in this document demonstrates the Gen2 ×8 variant. After you download

and unzip this .zip file, you can copy the files for this variant to your project directory,

<working_dir>. The files for the example in this document are included in the

hip_s4gx_gen2x8_128 directory. The Quartus II project file, top.qsf, is contained in

<working_dir>. You can use this project file as a reference for the .qsf file for your own

design.

Simulating the Design

As Figure 2–5 illustrates, the scripts to run the simulation files are located in the

<working_dir>\top_examples\chaining_dma\testbench directory. Follow these

steps to run the chaining DMA testbench.

1. Start your simulation tool. This example uses the ModelSim® software.

Note: The endpoint chaining DMA design example DMA controller requires the use of BAR2 or BAR3.

2. In the testbench directory, <working_dir>\top_examples\chaining_dma\testbench, type the following command:

do runtb.do

This script compiles the testbench for simulation and runs the chaining DMA tests.

Example 2–1 shows the partial transcript from a successful simulation. As this

transcript illustrates, the simulation includes the following stages:

■ Link training

■ Configuration

■ DMA reads and writes


■ Root port to endpoint memory reads and writes

Example 2–1. Excerpts from Transcript of Successful Simulation Run

Time: 56000 Instance: top_chaining_testbench.ep.epmap.pll_250mhz_to_500mhz.altpll_component.pll0

# INFO: 464 ns Completed initial configuration of Root Port.

# INFO: Core Clk Frequency: 251.00 Mhz

# INFO: 3608 ns EP LTSSM State: DETECT.ACTIVE

# INFO: 3644 ns EP LTSSM State: POLLING.ACTIVE

# INFO: 3660 ns RP LTSSM State: DETECT.ACTIVE

# INFO: 3692 ns RP LTSSM State: POLLING.ACTIVE

# INFO: 6012 ns RP LTSSM State: POLLING.CONFIG

# INFO: 6108 ns EP LTSSM State: POLLING.CONFIG

# INFO: 7388 ns EP LTSSM State: CONFIG.LINKWIDTH.START

# INFO: 7420 ns RP LTSSM State: CONFIG.LINKWIDTH.START

# INFO: 7900 ns EP LTSSM State: CONFIG.LINKWIDTH.ACCEPT

# INFO: 8316 ns RP LTSSM State: CONFIG.LINKWIDTH.ACCEPT

# INFO: 8508 ns RP LTSSM State: CONFIG.LANENUM.WAIT

# INFO: 9004 ns EP LTSSM State: CONFIG.LANENUM.WAIT

# INFO: 9196 ns EP LTSSM State: CONFIG.LANENUM.ACCEPT

# INFO: 9356 ns RP LTSSM State: CONFIG.LANENUM.ACCEPT

# INFO: 9548 ns RP LTSSM State: CONFIG.COMPLETE

# INFO: 9964 ns EP LTSSM State: CONFIG.COMPLETE

# INFO: 11052 ns EP LTSSM State: CONFIG.IDLE

# INFO: 11276 ns RP LTSSM State: CONFIG.IDLE

# INFO: 11356 ns RP LTSSM State: L0

# INFO: 11580 ns EP LTSSM State: L0


# INFO: 12536 ns

# INFO: 15896 ns EP PCI Express Link Status Register (1081):

# INFO: 15896 ns Negotiated Link Width: x8

# INFO: 15896 ns Slot Clock Config: System Reference Clock Used

# INFO: 16504 ns RP LTSSM State: RECOVERY.RCVRLOCK

# INFO: 16840 ns EP LTSSM State: RECOVERY.RCVRLOCK

# INFO: 17496 ns EP LTSSM State: RECOVERY.RCVRCFG

# INFO: 18328 ns RP LTSSM State: RECOVERY.RCVRCFG

# INFO: 20440 ns RP LTSSM State: RECOVERY.SPEED

# INFO: 20712 ns EP LTSSM State: RECOVERY.SPEED

# INFO: 21600 ns EP LTSSM State: RECOVERY.RCVRLOCK

# INFO: 21614 ns RP LTSSM State: RECOVERY.RCVRLOCK

# INFO: 22006 ns RP LTSSM State: RECOVERY.RCVRCFG

# INFO: 22052 ns EP LTSSM State: RECOVERY.RCVRCFG

# INFO: 22724 ns EP LTSSM State: RECOVERY.IDLE

# INFO: 22742 ns RP LTSSM State: RECOVERY.IDLE

# INFO: 22846 ns RP LTSSM State: L0

# INFO: 22900 ns EP LTSSM State: L0

# INFO: 23152 ns Current Link Speed: 5.0GT/s

# INFO: 27936 ns ---------

# INFO: 27936 ns TASK:dma_set_header READ

# INFO: 27936 ns Writing Descriptor header

# INFO: 27976 ns data content of the DT header

# INFO: 27976 ns

# INFO: 27976 ns Shared Memory Data Display:

# INFO: 27976 ns Address Data

# INFO: 27976 ns ------- ----

# INFO: 27976 ns 00000900 00000003 00000000 00000900 CAFEFADE

# INFO: 27976 ns ---------

# INFO: 27976 ns TASK:dma_set_rclast

# INFO: 27976 ns Start READ DMA : RC issues MWr (RCLast=0002)

# INFO: 27992 ns ---------

# INFO: 28000 ns TASK:msi_poll Polling MSI Address:07F0---> Data:FADE......

# INFO: 28092 ns TASK:rcmem_poll Polling RC Address0000090C current data (0000FADE) expected data (00000002)

# INFO: 29592 ns TASK:rcmem_poll Polling RC Address0000090C current data (00000000) expected data (00000002)

# INFO: 31392 ns TASK:rcmem_poll Polling RC Address0000090C current data (00000002) expected data (00000002)

# INFO: 31392 ns TASK:rcmem_poll ---> Received Expected Data (00000002)

# INFO: 31440 ns TASK:msi_poll Received DMA Read MSI(0000) : B0FC

# INFO: 31448 ns Completed DMA Read

# INFO: 31448 ns ---------

# INFO: 31448 ns TASK:chained_dma_test

# INFO: 31448 ns DMA: Write

# INFO: 31448 ns ---------

# INFO: 31448 ns TASK:dma_wr_test

# INFO: 31448 ns DMA: Write

# INFO: 31448 ns ---------

# INFO: 31448 ns TASK:dma_set_wr_desc_data

# INFO: 31448 ns ---------

# INFO: 31448 ns TASK:dma_set_msi WRITE

# INFO: 31448 ns Message Signaled Interrupt Configuration

# INFO: 1448 ns msi_address (RC memory)= 0x07F0

# INFO: 31760 ns msi_control_register = 0x00A5

# INFO: 32976 ns msi_expected = 0xB0FD


# INFO: 32976 ns msi_capabilities address = 0x0050

# INFO: 32976 ns multi_message_enable = 0x0002

# INFO: 32976 ns msi_number = 0001

# INFO: 32976 ns msi_traffic_class = 0000

# INFO: 32976 ns ---------

# INFO: 26416 ns TASK:chained_dma_test

# INFO: 26416 ns DMA: Read

# INFO: 26416 ns --------

# INFO: 26416 ns TASK:dma_rd_test

# INFO: 26416 ns ---------

# INFO: 26416 ns TASK:dma_set_rd_desc_data

# INFO: 26416 ns ---------

# INFO: 26416 ns TASK:dma_set_msi READ

# INFO: 26416 ns Message Signaled Interrupt Configuration

# INFO: 26416 ns msi_address (RC memory)= 0x07F0

# INFO: 26720 ns msi_control_register = 0x0084

# INFO: 27936 ns msi_expected = 0xB0FC

# INFO: 27936 ns msi_capabilities address = 0x0050

# INFO: 27936 ns multi_message_enable = 0x0002

# INFO: 27936 ns msi_number = 0000

# INFO: 27936 ns msi_traffic_class = 0000

# INFO: 32976 ns TASK:dma_set_header WRITE

# INFO: 32976 ns Writing Descriptor header

# INFO: 33016 ns data content of the DT header

# INFO: 33016 ns

# INFO: 33016 ns Shared Memory Data Display:

# INFO: 33016 ns Address Data

# INFO: 33016 ns ------- ----

# INFO: 33016 ns 00000800 10100003 00000000 00000800 CAFEFADE

# INFO: 33016 ns ---------

# INFO: 33016 ns TASK:dma_set_rclast

# INFO: 33016 ns Start WRITE DMA : RC issues MWr (RCLast=0002)

# INFO: 33032 ns ---------

# INFO: 33038 ns TASK:msi_poll Polling MSI Address:07F0---> Data:FADE......

# INFO: 33130 ns TASK:rcmem_poll Polling RC Address0000080C current data (0000FADE) expected data (00000002)

# INFO: 34130 ns TASK:rcmem_poll Polling RC Address0000080C current data (00000000) expected data (00000002)

# INFO: 35910 ns TASK:msi_poll Received DMA Write MSI(0000) : B0FD

# INFO: 35930 ns TASK:rcmem_poll Polling RC Address0000080C current data (00000002) expected data (00000002)

# INFO: 35930 ns TASK:rcmem_poll ---> Received Expected Data (00000002)

# INFO: 35938 ns ---------

# INFO: 35938 ns Completed DMA Write

# INFO: 35938 ns ---------

# INFO: 35938 ns TASK:check_dma_data

# INFO: 35938 ns Passed : 0644 identical dwords.

# INFO: 35938 ns ---------

# INFO: 35938 ns TASK:downstream_loop

# INFO: 36386 ns Passed: 0004 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 36826 ns Passed: 0008 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 37266 ns Passed: 0012 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 37714 ns Passed: 0016 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 38162 ns Passed: 0020 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 38618 ns Passed: 0024 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 39074 ns Passed: 0028 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 39538 ns Passed: 0032 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 40010 ns Passed: 0036 same bytes in BFM mem addr 0x00000040 and 0x00000840

# INFO: 40482 ns Passed: 0040 same bytes in BFM mem addr 0x00000040 and 0x00000840

# SUCCESS: Simulation stopped due to successful completion!
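Because the full transcript is long, it can help to check a run programmatically. The following Python sketch, with hypothetical helper names, extracts the LTSSM state history for the endpoint (EP) and root port (RP) from transcript lines in the format shown in Example 2–1, and treats a run as passing when both ports reach L0 and the # SUCCESS line appears. That pass criterion is an assumption for illustration; the BFM's own completion message remains the result to rely on.

```python
import re

# Matches lines such as "# INFO: 11580 ns EP LTSSM State: L0".
LTSSM_RE = re.compile(r"#\s*INFO:\s*(\d+) ns (EP|RP) LTSSM State: (\S+)")


def ltssm_history(transcript):
    """Map 'EP' and 'RP' to ordered lists of (time_ns, state) entries."""
    history = {"EP": [], "RP": []}
    for match in LTSSM_RE.finditer(transcript):
        time_ns, side, state = match.groups()
        history[side].append((int(time_ns), state))
    return history


def run_passed(transcript):
    """True if both ports reached L0 and the BFM reported success."""
    hist = ltssm_history(transcript)
    reached_l0 = all(any(state == "L0" for _, state in hist[side])
                     for side in ("EP", "RP"))
    return reached_l0 and "# SUCCESS:" in transcript


sample = """\
# INFO: 11356 ns RP LTSSM State: L0
# INFO: 11580 ns EP LTSSM State: L0
# SUCCESS: Simulation stopped due to successful completion!
"""
print(ltssm_history(sample)["EP"])   # [(11580, 'L0')]
print(run_passed(sample))            # True
```

To check a real run, you could read the simulator's saved transcript file into a string and pass it to run_passed.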


Constraining the Design

The Quartus II project directory for the chaining DMA design example is <working_dir>\top_examples\chaining_dma\. Before compiling the design using the Quartus II software, you must apply appropriate design constraints, such as timing constraints. The Quartus II software automatically generates the constraint files when you generate the IP Compiler for PCI Express.

Table 2–8 describes these constraint files.

Table 2–8. Automatically Generated Constraints Files

| Constraint Type | File | Description |
|---|---|---|
| General | <working_dir>/<variation>.tcl (top.tcl) | This file includes various Quartus II constraints. In particular, it includes virtual pin assignments. Virtual pin assignments allow you to avoid making specific pin assignments for top-level signals while you are simulating and not yet ready to map the design to hardware. |
| Timing | <working_dir>/<variation>.sdc (top.sdc) | This file is the Synopsys Design Constraints File (.sdc), which includes timing constraints. |

If you want to perform an initial compilation to check any potential issues without

creating pin assignments for a specific board, you can do so after running the

following two steps that constrain the chaining DMA design example:

1. To apply Quartus II constraint files, type the following command at the Tcl console command prompt:

source ../../top.tcl

Note: To display the Quartus II Tcl Console, on the View menu, point to Utility Windows and click Tcl Console.

2. To add the Synopsys timing constraints to your design, follow these steps:

a. On the Assignments menu, click Settings.

b. Click TimeQuest Timing Analyzer.

c. Under SDC files to include in the project, click the Browse button. Browse to

your <working_dir> to add top.sdc.

d. Click Add.

e. Click OK.
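Steps a through e add top.sdc to the project settings. The same setting can also be written directly in the project .qsf file with the SDC_FILE global assignment. The following Python sketch, a hypothetical helper, computes the path of top.sdc relative to the chaining DMA project directory and formats that assignment. The directory name work stands in for <working_dir>, and the sketch assumes relative paths in the .qsf resolve from the project directory, which is also why source ../../top.tcl in step 1 reaches <working_dir>.

```python
import posixpath


def sdc_file_assignment(project_dir, sdc_path):
    """Format a .qsf SDC_FILE assignment whose path is relative to
    project_dir (hypothetical helper for illustration)."""
    rel = posixpath.relpath(sdc_path, project_dir)
    return f"set_global_assignment -name SDC_FILE {rel}"


line = sdc_file_assignment("work/top_examples/chaining_dma", "work/top.sdc")
print(line)   # set_global_assignment -name SDC_FILE ../../top.sdc
```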


Example 2–2 illustrates the Synopsys timing constraints.

Example 2–2. Synopsys Timing Constraints

derive_pll_clocks

derive_clock_uncertainty

create_clock -period "100 MHz" -name {refclk} {refclk}

set_clock_groups -exclusive -group [get_clocks { refclk*clkout }] -group [get_clocks { *div0*coreclkout }]

set_clock_groups -exclusive -group [get_clocks { *central_clk_div0* }] -group [get_clocks { *_hssi_pcie_hip* }] -group [get_clocks { *central_clk_div1* }]

# The following four additional constraints are for Stratix IV ES silicon only

set_multicycle_path -from [get_registers *delay_reg*] -to [get_registers *all_one*] -hold -start 1

set_multicycle_path -from [get_registers *delay_reg*] -to [get_registers *all_one*] -setup -start 2

set_multicycle_path -from [get_registers *align*chk_cnt*] -to [get_registers *align*chk_cnt*] -hold -start 1

set_multicycle_path -from [get_registers *align*chk_cnt*] -to [get_registers *align*chk_cnt*] -setup -start 2

Specifying Device and Pin Assignments

If you want to download the design to a board, you must specify the device and pin

assignments for the chaining DMA example design. To make device and pin

assignments, follow these steps:

1. To select the device, on the Assignments menu, click Device.

2. In the Family list, select Stratix IV (GT/GX/E).

3. Scroll through the Available devices to select EP4SGX230KF40C2.

4. To add pin assignments for the EP4SGX230KF40C2 device, copy all of the pin assignments in the chaining DMA design example .qsf file, <working_dir>\top_examples\chaining_dma\top_example_chaining_top.qsf, to your project .qsf file.


Note: The pin assignments provided in the .qsf are valid for the Stratix IV GX FPGA Development Board and the EP4SGX230KF40C2 device. If you are using different hardware, you must determine the correct pin assignments.

Example 2–3. Pin Assignments for the Stratix IV GX (EP4SGX230KF40C2) FPGA Development Board

set_location_assignment PIN_AK35 -to local_rstn_ext

set_location_assignment PIN_R32 -to pcie_rstn

set_location_assignment PIN_AN38 -to refclk

set_location_assignment PIN_AU38 -to rx_in0

set_location_assignment PIN_AR38 -to rx_in1

set_location_assignment PIN_AJ38 -to rx_in2

set_location_assignment PIN_AG38 -to rx_in3

set_location_assignment PIN_AE38 -to rx_in4

set_location_assignment PIN_AC38 -to rx_in5

set_location_assignment PIN_U38 -to rx_in6

set_location_assignment PIN_R38 -to rx_in7

set_instance_assignment -name INPUT_TERMINATION DIFFERENTIAL -to free_100MHz -disable

set_location_assignment PIN_AT36 -to tx_out0

set_location_assignment PIN_AP36 -to tx_out1

set_location_assignment PIN_AH36 -to tx_out2

set_location_assignment PIN_AF36 -to tx_out3

set_location_assignment PIN_AD36 -to tx_out4

set_location_assignment PIN_AB36 -to tx_out5

set_location_assignment PIN_T36 -to tx_out6

set_location_assignment PIN_P36 -to tx_out7

set_location_assignment PIN_AB28 -to gen2_led

set_location_assignment PIN_F33 -to L0_led

set_location_assignment PIN_AK33 -to alive_led

set_location_assignment PIN_W28 -to comp_led

set_location_assignment PIN_R29 -to lane_active_led[0]

set_location_assignment PIN_AH35 -to lane_active_led[2]

set_location_assignment PIN_AE29 -to lane_active_led[3]

set_location_assignment PIN_AL35 -to usr_sw[0]

set_location_assignment PIN_AC35 -to usr_sw[1]

set_location_assignment PIN_J34 -to usr_sw[2]

set_location_assignment PIN_AN35 -to usr_sw[3]

set_location_assignment PIN_G33 -to usr_sw[4]

set_location_assignment PIN_K35 -to usr_sw[5]

set_location_assignment PIN_AG34 -to usr_sw[6]

set_location_assignment PIN_AG31 -to usr_sw[7]

set_instance_assignment -name IO_STANDARD "2.5 V" -to local_rstn_ext

set_instance_assignment -name IO_STANDARD "2.5 V" -to pcie_rstn

set_instance_assignment -name INPUT_TERMINATION OFF -to refclk

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in0

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in1

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in2

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in3

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in4

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in5

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in6

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to rx_in7

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out0

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out1

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out2

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out3

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out4

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out5

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out6

set_instance_assignment -name IO_STANDARD "1.4-V PCML" -to tx_out7


set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[0]

set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[1]

set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[2]

set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[3]

set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[4]

set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[5]

set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[6]

set_instance_assignment -name IO_STANDARD "2.5 V" -to usr_sw[7]

set_instance_assignment -name IO_STANDARD "2.5 V" -to lane_active_led[0]

set_instance_assignment -name IO_STANDARD "2.5 V" -to lane_active_led[2]

set_instance_assignment -name IO_STANDARD "2.5 V" -to lane_active_led[3]

set_instance_assignment -name IO_STANDARD "2.5 V" -to L0_led

set_instance_assignment -name IO_STANDARD "2.5 V" -to alive_led

set_instance_assignment -name IO_STANDARD "2.5 V" -to comp_led

# Note reclk_free uses 100 MHz input

# On the S4GX Dev kit make sure that

# SW4.5 = ON

# SW4.6 = ON

set_instance_assignment -name IO_STANDARD LVDS -to free_100MHz

set_location_assignment PIN_AV22 -to free_100MHz
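The I/O-standard assignments in this listing repeat one pattern per lane and per switch. The sketch below is a plain Tcl procedure that generates those lines so you can append them to the .qsf file. It assumes the same signal names as the listing (rx_in<i>, tx_out<i>, usr_sw[<i>]), and the procedure name pcie_io_standard_lines is hypothetical. In a Quartus II Tcl session with the project open, the loop bodies could instead call set_instance_assignment directly.

```tcl
# Sketch: build the repetitive IO_STANDARD assignments as QSF text.
# The proc name is hypothetical; signal names follow the listing above.
proc pcie_io_standard_lines {num_lanes num_switches} {
    set lines {}
    # Transceiver receive and transmit pins use the 1.4-V PCML standard.
    foreach prefix {rx_in tx_out} {
        for {set i 0} {$i < $num_lanes} {incr i} {
            lappend lines "set_instance_assignment -name IO_STANDARD \"1.4-V PCML\" -to $prefix$i"
        }
    }
    # The user switches use the 2.5 V standard. The brackets are escaped so
    # Tcl does not treat usr_sw[i] as command substitution.
    for {set i 0} {$i < $num_switches} {incr i} {
        lappend lines "set_instance_assignment -name IO_STANDARD \"2.5 V\" -to usr_sw\[$i\]"
    }
    return $lines
}

# Usage: print the lines for an x8 link and eight switches, for example to
# redirect them into a file that you then append to the .qsf.
foreach line [pcie_io_standard_lines 8 8] {
    puts $line
}
```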

Specifying QSF Constraints

This section describes two additional constraints that improve performance in specific cases.

■ Constraints for Stratix IV GX ES silicon: add the following constraint to your .qsf file:

set_instance_assignment -name GLOBAL_SIGNAL "GLOBAL CLOCK" -to *wire_central_clk_div*_coreclkout

This constraint aligns the PIPE clocks (core_clk_out) from each quad to reduce clock skew in ×8 variants.

■ Constraints for designs running at frequencies higher than 250 MHz:

set_global_assignment -name PHYSICAL_SYNTHESIS_ASYNCHRONOUS_SIGNAL_PIPELINING ON

This constraint improves performance for designs in which asynchronous signals in very fast clock domains cannot be distributed across the FPGA fast enough due to long global network delays. This optimization performs automatic pipelining of these signals while attempting to minimize the total number of registers inserted.

Compiling the Design

To test your IP Compiler for PCI Express in hardware, your initial Quartus II compilation includes all of the directories shown in Figure 2–5. After you have fully tested your customized design, you can exclude the testbench directory from the Quartus II compilation.

On the Processing menu, click Start Compilation to compile your design.
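The following sketch is a scripted alternative to Start Compilation. It is a Quartus II Tcl script that applies the two optional constraints described above and then runs the full flow. It assumes the standard ::quartus::project and ::quartus::flow packages available under quartus_sh. The project name pcie_top and the two flags are hypothetical placeholders. Enable a constraint only when its case applies to your design.

```tcl
# Sketch: apply the optional constraints and compile from the command line
# with "quartus_sh -t compile_pcie.tcl". The project name and the two flags
# below are hypothetical placeholders.
package require ::quartus::project
package require ::quartus::flow

set project_name  pcie_top
set es_silicon    0   ;# 1 only for Stratix IV GX ES silicon
set above_250_mhz 0   ;# 1 only if clocks run faster than 250 MHz

project_open $project_name

if {$es_silicon} {
    # Align the PIPE clocks (core_clk_out) from each quad.
    set_instance_assignment -name GLOBAL_SIGNAL "GLOBAL CLOCK" \
        -to *wire_central_clk_div*_coreclkout
}
if {$above_250_mhz} {
    # Let physical synthesis pipeline asynchronous signals.
    set_global_assignment -name PHYSICAL_SYNTHESIS_ASYNCHRONOUS_SIGNAL_PIPELINING ON
}

# Save the assignments to the .qsf, then run the full compilation flow
# (the scripted equivalent of Processing > Start Compilation).
export_assignments
if {[catch {execute_flow -compile} result]} {
    puts "Compilation failed: $result"
}
project_close
```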


Reusing the Example Design

To use this example design as the basis of your own design, replace the endpoint application layer example shown in Figure 2–6 with your own application layer design. Then, modify the BFM driver to generate the transactions needed to test your application layer.


3. Parameter Settings

You customize the IP Compiler for PCI Express by specifying parameters in the IP Compiler for PCI Express parameter editor, which you access from the IP Catalog. Some IP Compiler for PCI Express variations are supported in only one or two of the design flows. Soft IP implementations are supported only in the Quartus II IP Catalog. For more information about the hard IP implementation variations available in the different design flows, refer to Table 1–5 on page 1–6.

This chapter describes the parameters and how they affect the behavior of the IP core.

The IP Compiler for PCI Express parameter editor that appears in the Qsys flow is different from the parameter editor that appears in the other two design flows. The Qsys design flow supports only a subset of the variations supported in the other two flows, and it generates only hard IP implementations with specific characteristics. For this reason, the Qsys flow parameter editor supports only a subset of the parameters described in this chapter.

Parameters in the Qsys Design Flow

The following sections describe the IP Compiler for PCI Express parameters available in the Qsys design flow. Separate sections describe the parameters available in different sections of the IP Compiler for PCI Express parameter editor.

The available parameters reflect the fact that the Qsys design flow supports only the following functionality:

■ Hard IP implementation
■ Native endpoint, with no support for:
    ■ I/O space BAR
    ■ 32-bit prefetchable memory
■ 16 tags
■ 1 Message Signaled Interrupt (MSI)
■ 1 virtual channel
■ Up to 256 bytes maximum payload
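The list above can be read as a set of checks against a requested endpoint configuration. The following sketch reports which requested features fall outside the Qsys flow. The procedure name qsys_flow_violations and the dict keys are hypothetical, and the code assumes Tcl 8.5 or later for dict.

```tcl
# Sketch: list the ways a requested configuration falls outside the Qsys
# flow limits above. The proc name and dict keys are hypothetical.
proc qsys_flow_violations {cfg} {
    set v {}
    if {![dict get $cfg hard_ip]} {
        lappend v "Qsys generates only hard IP implementations"
    }
    if {[dict get $cfg port_type] ne "native_endpoint"} {
        lappend v "Qsys supports only a native endpoint"
    }
    if {[dict get $cfg io_bar]} {
        lappend v "I/O space BARs are not supported"
    }
    if {[dict get $cfg prefetch32]} {
        lappend v "32-bit prefetchable memory is not supported"
    }
    if {[dict get $cfg tags] > 16} {
        lappend v "at most 16 tags"
    }
    if {[dict get $cfg msi] > 1} {
        lappend v "only 1 MSI"
    }
    if {[dict get $cfg vcs] > 1} {
        lappend v "only 1 virtual channel"
    }
    if {[dict get $cfg max_payload] > 256} {
        lappend v "maximum payload is limited to 256 bytes"
    }
    return $v
}

# Usage: 32 tags and a 512-byte payload both exceed the Qsys limits.
set cfg [dict create hard_ip 1 port_type native_endpoint io_bar 0 \
    prefetch32 0 tags 32 msi 1 vcs 1 max_payload 512]
foreach msg [qsys_flow_violations $cfg] {
    puts "not available in Qsys: $msg"
}
```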


System Settings

The first parameter section of the IP Compiler for PCI Express parameter editor in the Qsys flow contains the parameters for the overall system settings. Table 3–1 describes these settings.

Table 3–1. Qsys Flow System Settings Parameters

Parameter | Value | Description

Gen2 Lane Rate Mode | Off/On | Specifies the maximum data rate at which the link can operate. Turning on Gen2 Lane Rate Mode sets the Gen2 rate, and turning it off sets the Gen1 rate. Refer to Table 1–5 on page 1–6 for a complete list of Gen1 and Gen2 support.

Number of Lanes | ×1, ×2, ×4, ×8 | Specifies the maximum number of lanes supported. Refer to Table 1–5 on page 1–6 for a complete list of device support for numbers of lanes.

Reference clock frequency | 100 MHz, 125 MHz | You can select either a 100 MHz or 125 MHz reference clock for Gen1 operation; Gen2 requires a 100 MHz clock.

Use 62.5 MHz application clock | Off/On | Specifies whether the application interface clock operates at the slower 62.5 MHz frequency to support power saving. This parameter can only be turned on for some Gen1 ×1 variations. Refer to Table 4–1 on page 4–4 for a list of the supported application interface clock frequencies in different device families.

Test out width | None, 9 bits, or 64 bits | Indicates the width of the test_out signal. Most of these signals are reserved. Refer to Table 5–33 on page 5–59 for more information. Altera recommends that you configure the 64-bit width.
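The clock and lane rules in Table 3–1 can be checked before you open the parameter editor. The following is a minimal sketch. The procedure name check_system_settings and its argument order are hypothetical, and it assumes Tcl 8.5 or later for the ni operator. Because only some Gen1 ×1 variations support the 62.5 MHz application clock, passing this check is necessary but not sufficient. Table 4–1 remains the authority.

```tcl
# Sketch: check a system-settings combination against the rules in
# Table 3-1. Returns a list of problems; an empty list means none were found.
# The proc name and argument order are hypothetical.
proc check_system_settings {gen2 lanes refclk_mhz app_clk_62_5} {
    set problems {}
    if {$lanes ni {1 2 4 8}} {
        lappend problems "number of lanes must be x1, x2, x4, or x8"
    }
    if {$refclk_mhz ni {100 125}} {
        lappend problems "reference clock must be 100 MHz or 125 MHz"
    }
    if {$gen2 && $refclk_mhz != 100} {
        lappend problems "Gen2 requires a 100 MHz reference clock"
    }
    # Only some Gen1 x1 variations allow the 62.5 MHz application clock,
    # so passing this check does not guarantee the option is available.
    if {$app_clk_62_5 && ($gen2 || $lanes != 1)} {
        lappend problems "the 62.5 MHz application clock needs a Gen1 x1 variation"
    }
    return $problems
}

# Usage: Gen2 with a 125 MHz reference clock is rejected.
foreach p [check_system_settings 1 4 125 0] {
    puts "invalid: $p"
}
```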

PCI Base Address Registers

The ×1 and ×4 IP cores support memory space BARs ranging in size from 128 bytes to the maximum allowed by a 32-bit or 64-bit BAR. The ×8 IP cores support memory space BARs from 4 KBytes to the maximum allowed by a 32-bit or 64-bit BAR.
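The size limits above can be written as a simple check. The following is a minimal sketch, and it depends on assumptions taken from the PCI specification rather than from this guide. First, BAR sizes are powers of two. Second, a 32-bit memory BAR can request at most 2 GBytes (2^31 bytes), and a 64-bit BAR can request at most 2^63 bytes. The text gives no minimum for ×2 cores, so the sketch rejects that case. The procedure name bar_size_ok is hypothetical, and it assumes Tcl 8.5 or later for the ** operator.

```tcl
# Sketch: check a memory BAR size in bytes against the limits above.
# Assumed from the PCI specification, not this guide: sizes are powers of
# two, and a 32-bit BAR can request at most 2^31 bytes while a 64-bit BAR
# can request at most 2^63 bytes. The proc name is hypothetical.
proc bar_size_ok {lanes bar_bits size} {
    switch -- $lanes {
        1 - 4   { set min 128 }
        8       { set min 4096 }
        default { error "no minimum BAR size stated here for x$lanes cores" }
    }
    set max [expr {$bar_bits == 64 ? 2**63 : 2**31}]
    if {$size < $min || $size > $max} {
        return 0
    }
    # A power of two has exactly one bit set.
    return [expr {($size & ($size - 1)) == 0}]
}

# Usage: 2 KBytes is too small for an x8 core but acceptable for an x4 core.
puts [bar_size_ok 8 32 2048] ;# prints 0
puts [bar_size_ok 4 32 2048] ;# prints 1
```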

The available BARs reflect the fact that the Qsys design flow supports only native endpoints, with no support for I/O space BARs or 32-bit prefetchable memory.

When a request received from the PCI Express link hits a BAR, the Avalon-MM address is the translated base address corresponding to that BAR.
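The following minimal sketch models one common form of this translation. It assumes a naturally aligned, power-of-two BAR. In this model, the offset of the request within the BAR is added to a per-BAR Avalon-MM base address. The procedure name pcie_to_avalon and its arguments are hypothetical. The sketch illustrates the idea and does not describe the internal logic of the IP core.

```tcl
# Sketch: translate a PCI Express address that hits a BAR into an Avalon-MM
# address. Assumes a naturally aligned, power-of-two BAR; avalon_base is a
# hypothetical per-BAR translation base.
proc pcie_to_avalon {pcie_addr bar_base bar_size avalon_base} {
    # Confirm that the request actually hits this BAR.
    if {$pcie_addr < $bar_base || $pcie_addr >= $bar_base + $bar_size} {
        error "address $pcie_addr does not hit this BAR"
    }
    # Keep the offset within the BAR and add it to the Avalon-MM base.
    set offset [expr {$pcie_addr & ($bar_size - 1)}]
    return [expr {$avalon_base + $offset}]
}

# Usage: a 1 MByte BAR at 0xF0000000 mapped to Avalon-MM address 0x00100000.
puts [format 0x%08X [pcie_to_avalon 0xF0012340 0xF0000000 0x100000 0x00100000]] ;# prints 0x00112340
```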

In the Qsys design flow, the PCI Base Address Registers (Type 0 Configuration Space) Bar Size and Avalon Base Address information is populated from Qsys. You cannot enter this information in the IP Compiler for PCI Express parameter editor. After you set the base addresses in Qsys, either automatically or by entering them manually, the values appear when you reopen the parameter editor.

Altera recommends setting the base addresses automatically with the Qsys Assign Base Addresses command (on the System menu, click Assign Base Addresses). If you decide to enter the address translation entries manually, you must avoid conflicts in address assignment when adding other components, making interconnections, and assigning base addresses.
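If you enter base addresses manually, a quick overlap check can catch conflicts before you generate the system. The following is a minimal sketch that compares every pair of half-open address ranges. The procedure name find_overlaps and the component names in the usage example are hypothetical, and the code assumes Tcl 8.5 or later for lassign. The check does not replace the validation that Qsys itself performs.

```tcl
# Sketch: report overlapping address ranges before entering base addresses
# by hand. Each range is {name base size}; all names are hypothetical.
proc find_overlaps {ranges} {
    set conflicts {}
    set n [llength $ranges]
    for {set i 0} {$i < $n} {incr i} {
        lassign [lindex $ranges $i] name_a base_a size_a
        for {set j [expr {$i + 1}]} {$j < $n} {incr j} {
            lassign [lindex $ranges $j] name_b base_b size_b
            # Two half-open ranges [base, base+size) overlap if each one
            # starts before the other one ends.
            if {$base_a < $base_b + $size_b && $base_b < $base_a + $size_a} {
                lappend conflicts [list $name_a $name_b]
            }
        }
    }
    return $conflicts
}

# Usage: onchip_mem starts inside bar0_target, so one conflict is reported.
set map {
    {bar0_target 0x00000000 0x10000}
    {onchip_mem  0x00008000 0x8000}
    {dma_csr     0x00020000 0x100}
}
foreach pair [find_overlaps $map] {
    puts "conflict: [lindex $pair 0] overlaps [lindex $pair 1]"
}
```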


Table 3–2 describes the PCI register parameters. You can configure a BAR with a value other than Not used only if the preceding BARs are configured. When an even-numbered BAR is set to 64 bit Prefetchable, the following BAR is labeled Occupied and forced to the value Not used.
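The two BAR rules above can be modeled as a pass over BAR0 through BAR5. The following is a minimal sketch. The type tokens mem64p, mem32, and unused and the procedure name resolve_bars are hypothetical. The sketch also assumes, as the text implies, that a 64-bit BAR must start on an even-numbered BAR. It requires Tcl 8.5 or later for the ni operator.

```tcl
# Sketch of the BAR rules above. Requested types use hypothetical tokens:
# mem64p (64-bit prefetchable), mem32 (32-bit non-prefetchable), unused.
# Returns the effective type of BAR0 through BAR5.
proc resolve_bars {requested} {
    if {[llength $requested] != 6} {
        error "expected six BAR types, BAR0 through BAR5"
    }
    set result {}
    set seen_unused 0
    for {set i 0} {$i < 6} {incr i} {
        # The BAR after a 64-bit BAR is Occupied, whatever was requested.
        if {[lindex $result end] eq "mem64p"} {
            lappend result occupied
            continue
        }
        set type [lindex $requested $i]
        if {$type ni {mem64p mem32 unused}} {
            error "BAR$i: unknown type $type"
        }
        if {$type eq "unused"} {
            set seen_unused 1
        } elseif {$seen_unused} {
            error "BAR$i is configured, but a preceding BAR is Not used"
        } elseif {$type eq "mem64p" && $i % 2 != 0} {
            # Assumption: 64-bit BARs start on an even-numbered BAR.
            error "BAR$i: a 64-bit BAR must start on an even-numbered BAR"
        }
        lappend result $type
    }
    return $result
}

# Usage: BAR0 is 64-bit, so BAR1 becomes Occupied.
puts [resolve_bars {mem64p unused mem32 unused unused unused}] ;# prints mem64p occupied mem32 unused unused unused
```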

Table 3–2. PCI Registers (Note 1), (2)

Parameter | Value | Description

PCI Base Address Registers (0x10, 0x14, 0x18, 0x1C, 0x20, 0x24)

BAR Table (BAR0) | BAR Type
BAR Table (BAR1) | BAR Type
BAR Table (BAR2) | BAR Type
BAR Table (BAR3) | BAR Type
BAR Table (BAR4) | BAR Type
BAR Table (BAR5) | BAR Type

Notes to Table 3–2:

(1) A prefetchable 64-bit BAR is supported. A non-prefetchable 64-bit BAR is not supported because, in a typical system, the root port's Type 1 configuration register sets the maximum non-prefetchable memory window to 32 bits.
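For background on the BAR types in Note (1), the sketch below uses the PCI Local Bus Specification encoding of a memory BAR. This guide does not describe that encoding. Bit 0 is 0 for memory space, bits 2:1 give the type (00 for 32-bit, 10 for 64-bit), and bit 3 marks the BAR as prefetchable. The sketch also computes the value that enumeration software reads back from the lower BAR dword after writing all 1s, which is how the software discovers the BAR size. The procedure names are hypothetical.

```tcl
# Sketch: low four bits of a memory BAR, per the PCI specification.
# Bit 0 = 0 (memory space), bits 2:1 = type (00 = 32-bit, 10 = 64-bit),
# bit 3 = prefetchable. Proc names are hypothetical.
proc bar_low_bits {is_64bit prefetchable} {
    set type [expr {$is_64bit ? 0x2 : 0x0}]
    return [expr {($prefetchable ? 0x8 : 0x0) | ($type << 1)}]
}

# Value read back from the lower BAR dword after software writes all 1s,
# for a power-of-two BAR size of at least 16 bytes.
proc bar_sizing_readback {size is_64bit prefetchable} {
    set mask [expr {~($size - 1) & 0xFFFFFFF0}]
    return [expr {$mask | [bar_low_bits $is_64bit $prefetchable]}]
}

# Usage: a 1 MByte 64-bit prefetchable BAR.
puts [format 0x%08lX [bar_sizing_readback 0x100000 1 1]] ;# prints 0xFFF0000C
```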