Page 1

TMS320 DSP Algorithm Standard

Rules and Guidelines

User's Guide

Literature Number: SPRU352G

June 2005 – Revised February 2007

Page 2

2 SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 3

Contents

Preface ............................................................................................................................... 7

1 Overview ................................................................................................................... 9

1.1 Scope of the Standard ............................................................................................ 10

1.1.1 Rules and Guidelines .................................................................................... 11

1.2 Requirements of the Standard .................................................................................. 11

1.3 Goals of the Standard ............................................................................................ 12

1.4 Intentional Omissions ............................................................................................ 12

1.5 System Architecture ............................................................................................... 13

1.5.1 Frameworks ............................................................................................... 13

1.5.2 Algorithms ................................................................................................. 14

1.5.3 Core Run-Time Support ................................................................................. 14

2 General Programming Guidelines ............................................................................... 15

2.1 Use of C Language ............................................................................................... 16

2.2 Threads and Reentrancy ......................................................................................... 16

2.2.1 Threads .................................................................................................... 16

2.2.2 Preemptive vs. Non-Preemptive Multitasking......................................................... 17

2.2.3 Reentrancy ................................................................................................ 17

2.2.4 Example ................................................................................................... 18

2.3 Data Memory....................................................................................................... 19

2.3.1 Memory Spaces .......................................................................................... 20

2.3.2 Scratch versus Persistent ............................................................................... 20

2.3.3 Algorithm versus Application ............................................................................ 22

2.4 Program Memory ................................................................................................. 23

2.5 ROM-ability ........................................................................................................ 23

2.6 Use of Peripherals ................................................................................................ 24

3 Algorithm Component Model ..................................................................................... 25

3.1 Interfaces and Modules ........................................................................................... 26

3.1.1 External Identifiers ....................................................................................... 27

3.1.2 Naming Conventions ..................................................................................... 28

3.1.3 Module Initialization and Finalization .................................................................. 28

3.1.4 Module Instance Objects ................................................................................ 28

3.1.5 Design-Time Object Creation ........................................................................... 29

3.1.6 Run-Time Object Creation and Deletion .............................................................. 29

3.1.7 Module Configuration .................................................................................... 30

3.1.8 Example Module .......................................................................................... 30

3.1.9 Multiple Interface Support ............................................................................... 31

3.1.10 Interface Inheritance .................................................................................... 32

3.1.11 Summary ................................................................................................. 32

3.2 Algorithms .......................................................................................................... 33

3.3 Packaging .......................................................................................................... 34

3.3.1 Object Code ............................................................................................... 34

3.3.2 Header Files .............................................................................................. 35

3.3.3 Debug Verses Release .................................................................................. 35

SPRU352G – June 2005 – Revised February 2007 Contents 3

Submit Documentation Feedback

Page 4

4 Algorithm Performance Characterization ..................................................................... 37

4.1 Data Memory....................................................................................................... 38

4.1.1 Heap Memory ............................................................................................. 38

4.1.2 Stack Memory ............................................................................................ 39

4.1.3 Static Local and Global Data Memory ................................................................. 39

4.2 Program Memory .................................................................................................. 40

4.3 Interrupt Latency .................................................................................................. 41

4.4 Execution Time .................................................................................................... 41

4.4.1 MIPS Is Not Enough ..................................................................................... 41

4.4.2 Execution Time Model ................................................................................... 42

5 DSP-Specific Guidelines ............................................................................................ 45

5.1 CPU Register Types .............................................................................................. 46

5.2 Use of Floating Point .............................................................................................. 47

5.3 TMS320C6xxx Rules and Guidelines........................................................................... 47

5.3.1 Endian Byte Ordering .................................................................................... 47

5.3.2 Data Models ............................................................................................... 47

5.3.3 Program Model ........................................................................................... 47

5.3.4 Register Conventions .................................................................................... 48

5.3.5 Status Register ........................................................................................... 48

5.3.6 Interrupt Latency ......................................................................................... 49

5.4 TMS320C54xx Rules and Guidelines .......................................................................... 49

5.4.1 Data Models ............................................................................................... 49

5.4.2 Program Models .......................................................................................... 49

5.4.3 Register Conventions .................................................................................... 51

5.4.4 Status Registers .......................................................................................... 51

5.4.5 Interrupt Latency ......................................................................................... 52

5.5 TMS320C55x Rules and Guidelines ............................................................................ 52

5.5.1 Stack Architecture ........................................................................................ 52

5.5.2 Data Models ............................................................................................... 52

5.5.3 Program Models .......................................................................................... 53

5.5.4 Relocatability .............................................................................................. 53

5.5.5 Register Conventions .................................................................................... 54

5.5.6 Status Bits ................................................................................................. 55

5.6 TMS320C24xx Guidelines ....................................................................................... 57

5.6.1 General .................................................................................................... 57

5.6.2 Data Models ............................................................................................... 57

5.6.3 Program Models .......................................................................................... 57

5.6.4 Register Conventions .................................................................................... 57

5.6.5 Status Registers .......................................................................................... 58

5.6.6 Interrupt Latency ......................................................................................... 58

5.7 TMS320C28x Rules and Guidelines ............................................................................ 58

5.7.1 Data Models ............................................................................................... 58

5.7.2 Program Models .......................................................................................... 59

5.7.3 Register Conventions .................................................................................... 59

5.7.4 Status Registers .......................................................................................... 59

5.7.5 Interrupt Latency ......................................................................................... 60

6 Use of the DMA Resource .......................................................................................... 61

6.1 Overview ............................................................................................................ 62

4 Contents SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 5

6.2 Algorithm and Framework ........................................................................................ 62

6.3 Requirements for the Use of the DMA Resource ............................................................. 63

6.4 Logical Channel ................................................................................................... 63

6.5 Data Transfer Properties ......................................................................................... 64

6.6 Data Transfer Synchronization .................................................................................. 64

6.7 Abstract Interface .................................................................................................. 65

6.8 Resource Characterization ....................................................................................... 66

6.9 Runtime APIs ...................................................................................................... 67

6.10 Strong Ordering of DMA Transfer Requests ................................................................... 67

6.11 Submitting DMA Transfer Requests ............................................................................ 68

6.12 Device Independent DMA Optimization Guideline ............................................................ 68

6.13 C6xxx Specific DMA Rules and Guidelines .................................................................... 69

6.13.1 Cache Coherency Issues for Algorithm Producers ................................................. 69

6.14 C55x Specific DMA Rules and Guidelines ..................................................................... 70

6.14.1 Supporting Packed/Burst Mode DMA Transfers .................................................... 70

6.14.2 Minimizing Logical Channel Reconfiguration Overhead ........................................... 71

6.14.3 Addressing Automatic Endianism Conversion Issues ............................................. 71

6.15 Inter-Algorithm Synchronization ................................................................................. 71

6.15.1 Non-Preemptive System ............................................................................... 71

6.15.3 Preemptive System ..................................................................................... 72

A Rules and Guidelines ................................................................................................ 75

A.1 General Rules ..................................................................................................... 76

A.2 Performance Characterization Rules ........................................................................... 77

A.3 DMA Rules ......................................................................................................... 77

A.4 General Guidelines ................................................................................................ 78

A.5 DMA Guidelines ................................................................................................... 79

B Core Run-Time APIs ................................................................................................. 81

B.1 TI C-Language Run-Time Support Library ..................................................................... 82

B.2 DSP/BIOS Run-time Support Library ........................................................................... 82

C Bibliography ............................................................................................................ 83

C.1 Books ............................................................................................................... 83

C.2 URLS ................................................................................................................ 83

D Glossary .................................................................................................................. 85

D.1 Glossary of Terms ................................................................................................. 85

SPRU352G – June 2005 – Revised February 2007 Contents 5

Submit Documentation Feedback

Page 6

List of Figures

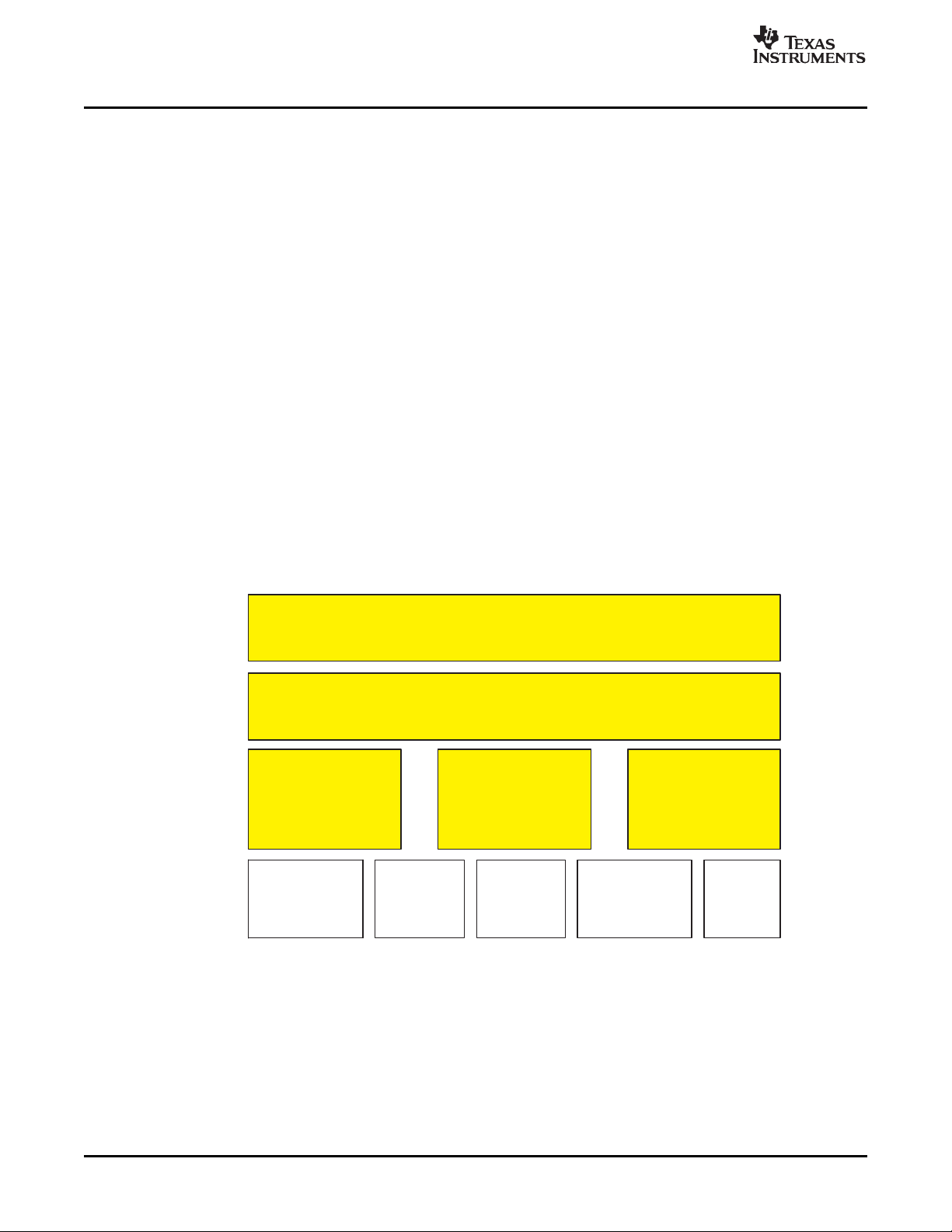

1-1 TMS320 DSP Algorithm Standard Elements ........................................................................... 10

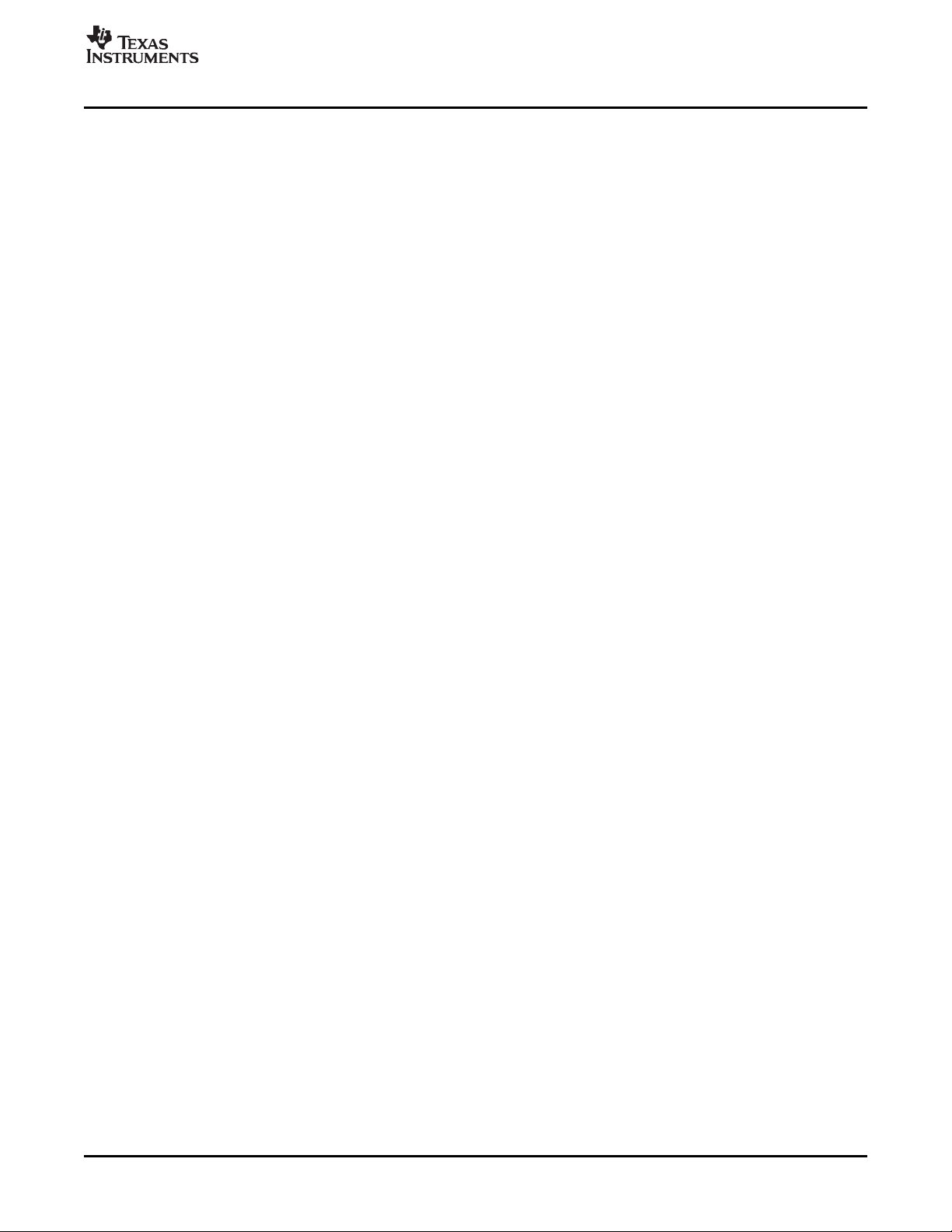

1-2 DSP Software Architecture ................................................................................................ 13

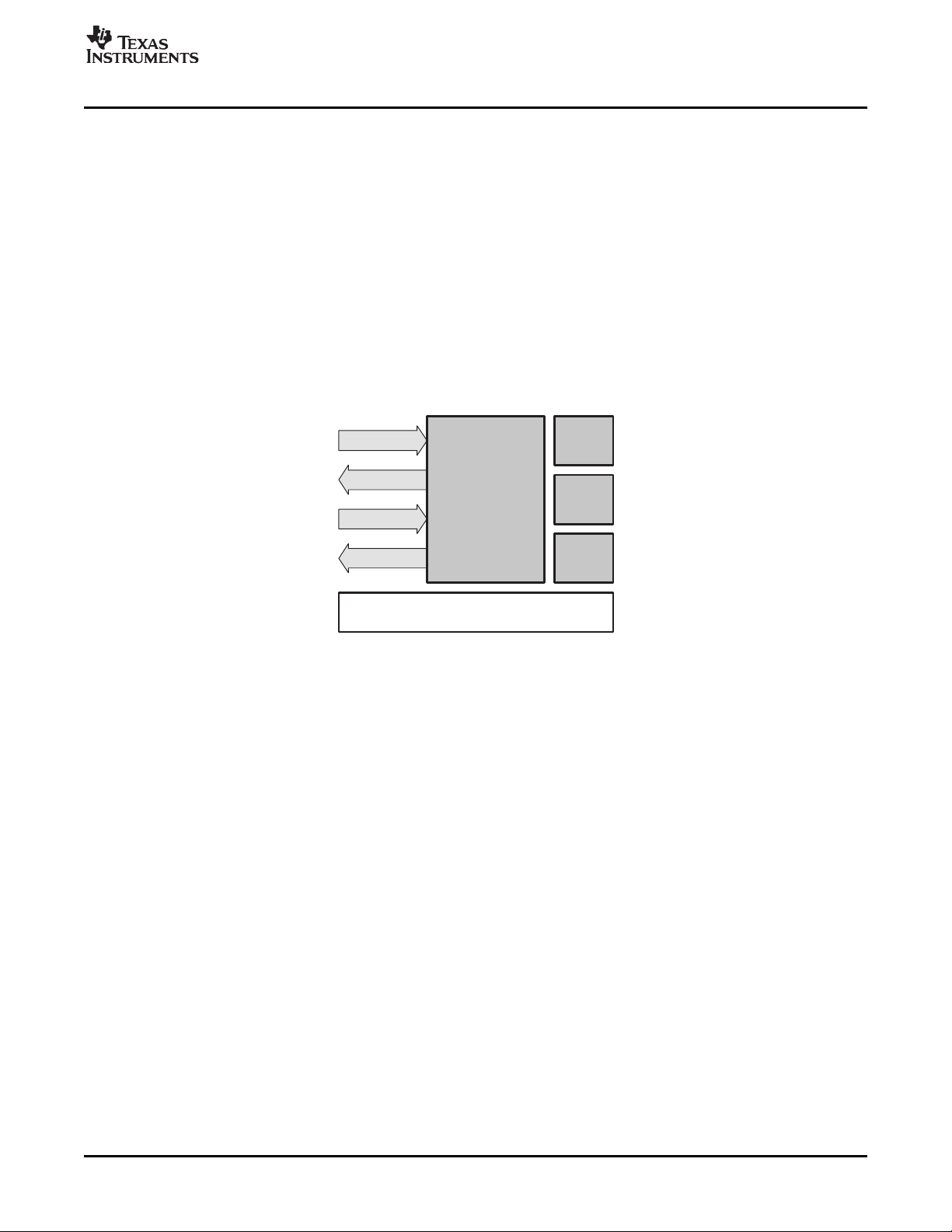

2-1 Scratch vs Persistent Memory Allocation ............................................................................... 21

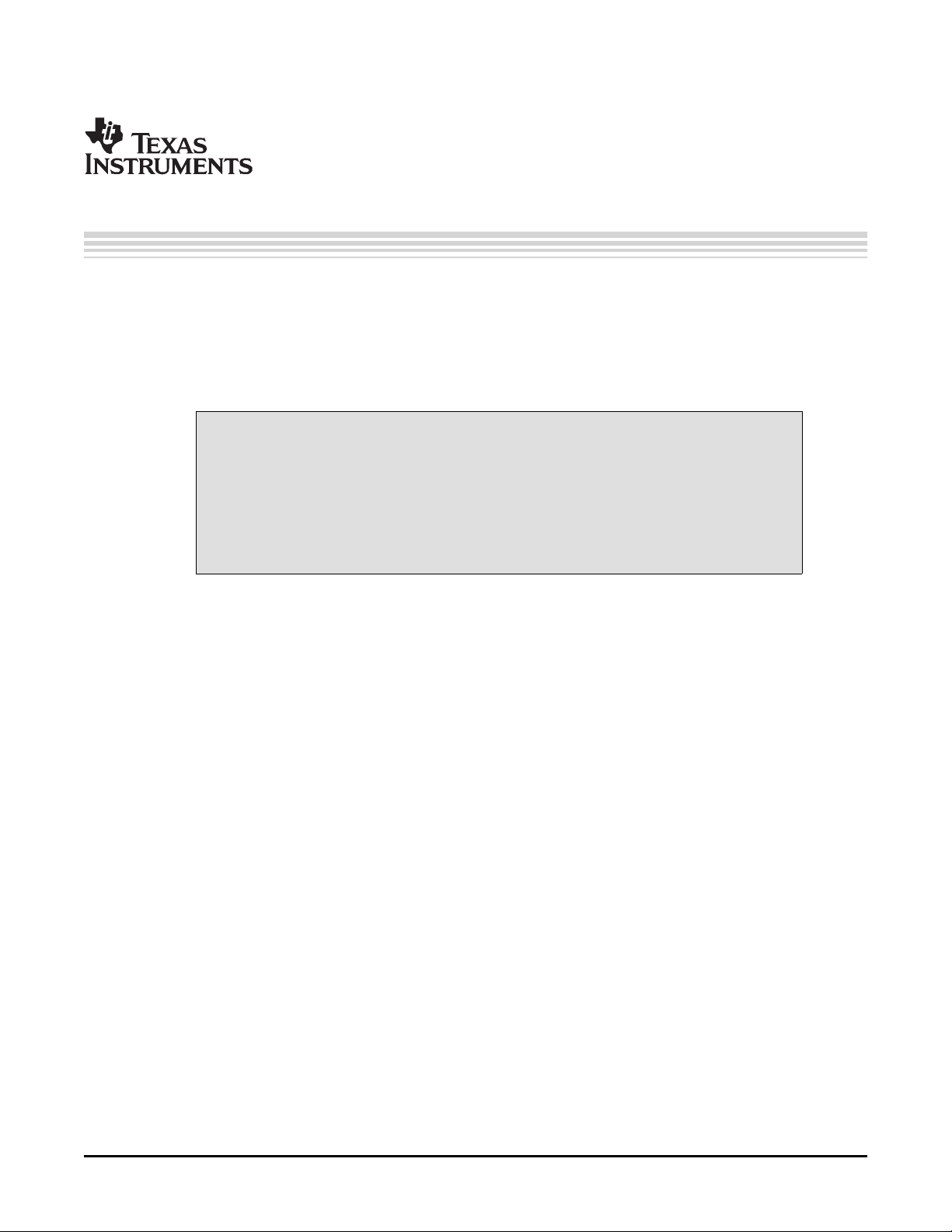

2-2 Data Memory Types ....................................................................................................... 22

3-1 Module Interface and Implementation ................................................................................... 26

3-2 Module Object Creation ................................................................................................... 29

3-3 Example Module Object ................................................................................................... 29

3-4 Example Implementation of IALG Interface ............................................................................. 33

4-1 Execution Timeline for Two Periodic Tasks ............................................................................ 42

5-1 Register Types .............................................................................................................. 46

6-1 Transfer Properties for a 1-D Frame ..................................................................................... 64

6-2 Frame Index and 2-D Transfer of N-1 Frames ......................................................................... 64

6 List of Figures SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 7

Preface

SPRU352G – June 2005 – Revised February 2007

Read This First

This document defines a set of requirements for DSP algorithms that, if followed, allow

system integrators to quickly assemble production-quality systems from one or more

such algorithms. Thus, this standard is intended to enable a rich commercial

off-the-shelf (COTS) marketplace for DSP algorithm technology and to significantly

reduce the time-to-market for new DSP-based products.

The TMS320 DSP Algorithm Standard is part of TI's eXpressDSP technology initiative.

Algorithms that comply with the standard are tested and awarded an

"eXpressDSP-compliant" mark upon successful completion of the test.

In describing these requirements and their purpose, it is often necessary to describe

how applications might be structured to take advantage of eXpressDSP-compliant

algorithms. It is important to keep in mind, however, that the TMS320 DSP Algorithm

Standards make no substantive demands on the clients of these algorithms.

Intended Audience

This document assumes that the reader is fluent in the C programming language, has a good working

knowledge of digital signal processing (DSP) and the requirements of DSP applications, and has some

exposure to the principles and practices of object-oriented programming.

This document describes the rules that must be followed by all eXpressDSP-compliant algorithm software

and interfaces between algorithms and applications that use these algorithms. There are two audiences

for this document:

• Algorithm writers learn how to ensure that an algorithm can coexist with other algorithms in a single

system and how to package an algorithm for deployment into a wide variety of systems.

• System integrators learn how to incorporate multiple algorithms from separate sources into a complete

system.

Document Overview

Throughout this document, the rules and guidelines of the TMS320 DSP Algorithm Standard (referred to

as XDAIS) are highlighted. Rules must be followed to be compliant with the TMS320 DSP Algorithm

Standard Guidelines. Guidelines should be obeyed but may be violated by eXpressDSP-compliant

software. A complete list of all rules and guidelines is provided in Appendix A. Electronic versions of this

document contain hyperlinks from each rule and guideline in Appendix A to the main body of the

document.

This document contains the following chapters:

• Chapter 1 - Overview, provides the motivation for the standard and describes how algorithms (as

defined by the TMS320 DSP Algorithm Standard) are used in DSP systems.

• Chapter 2 - General Programming Guidelines, describes a general programming model for DSP

software and contains rules and guidelines that apply to all eXpressDSP-compliant software.

• Chapter 3 - Algorithm Component Model, describes rules and guidelines that enable

eXpressDSP-compliant algorithms from multiple sources to operate harmoniously in a single system.

• Chapter 4 - Algorithm Performance Characterization, describes how an eXpressDSP-compliant

algorithm's performance must be characterized.

• Chapter 5 - DSP-Specific Guidelines, defines a model for describing the DSP's on-chip registers and

contains rules and guidelines for each specific DSP architecture covered by this specification.

SPRU352G – June 2005 – Revised February 2007 Read This First 7

Submit Documentation Feedback

Page 8

www.ti.com

Related Documentation

• Chapter 6 - Use of the DMA Resource, develops guidelines and rules for creating

eXpressDSP-compliant algorithms that utilize the DMA resource.

• Appendix A - Rules and Guidelines, contains a complete list of all rules and guidelines in this

specification.

• Appendix B - Core Run-time Support APIs, contains a complete description of the APIs that an

eXpressDSP-compliant algorithm may reference.

Related Documentation

The TMS320 DSP Algorithm Standard is documented in the following manuals:

• TMS320 DSP Algorithm Standard Rules and Guidelines (this document). Describes all the rules

and guidelines that make up the TMS320 DSP Algorithm Standard (may be referred to as XDAIS

throughout this document).

• TMS320 DSP Algorithm Standard API Reference (SPRU360). Contains APIs that are required by

the TMS320 DSP Algorithm Standard and full source examples of eXpressDSP-compliant algorithm

components.

• TMS320 DSP Algorithm Standard Developer's Guide (SPRU424). Contains examples that assist the

developer in implementing the XDAIS interface and to create a test application.

• Using DMA with Framework Components for C64x+ Application Report (SPRAAG1). Describes

the standard DMA software abstractions and interfaces for TMS320 DSP Algorithm Standard (XDAIS)

compliant algorithms designed for the C64x+ EDMA3 controller using DMA Framework Components

utilities.

Although these documents are largely self-contained, there are times when it is best not to duplicate

documentation that exists in other documents. The following documents contain supplementary

information necessary to adhere to the TMS320 DSP Algorithm Standards.

• DSP/BIOS User's Guide

• TMS320 C54x/C6x/C2x Optimizing C Compiler User's Guide

Text Conventions

The following typographical conventions are used in this specification:

• Text inside back-quotes (") represents pseudo-code

• Program source code, function and macro names, parameters, and command line commands are

shown in a mono-spaced font.

Rule n

Text is shown like this to indicate a requirement of the TMS320 DSP Algorithm Standard.

Guideline n

Text is shown like this to indicate a recommendation of the TMS320 DSP Algorithm Standard.

Read This First8 SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 9

SPRU352G – June 2005 – Revised February 2007

This chapter provides an overview of the TMS320 DSP Algorithm Standard.

Topic .................................................................................................. Page

1.1 Scope of the Standard ............................................................... 10

1.2 Requirements of the Standard ................................................... 11

1.3 Goals of the Standard ................................................................ 12

1.4 Intentional Omissions ............................................................... 12

1.5 System Architecture .................................................................. 13

Chapter 1

Overview

SPRU352G – June 2005 – Revised February 2007 Overview 9

Submit Documentation Feedback

Page 10

www.ti.com

Rules for TMS320C2x

Level 1

Level 2

Level 3

Level 4

Telecom

Rules for TMS320C5x Rules for TMS320C6x

Imaging Audio Automotive Other

Algorithm Component Model

General Programming Guidelines

S C callable

S No hard coded addresses

S Reentrant

S etc.

S Modules

S Generic interfaces

S Packaging

S etc.

S Interrupt usage

S Memory usage

S Register usage

S etc.

S Interrupt usage

S Memory usage

S Register usage

S etc.

S Interrupt usage

S Memory usage

S Register usage

S etc.

S vocoders

S echo cancel

S etc.

S JPEG

S etc.

S coders

S etc.

S etc.

Scope of the Standard

Digital Signal Processors (DSPs) are often programmed like "traditional" embedded microprocessors. That

is, they are programmed in a mix of C and assembly language, they directly access hardware peripherals,

and, for performance reasons, almost always have little or no standard operating system support. Thus,

like traditional microprocessors, there is very little use of commercial off-the-shelf (COTS) software

components for DSPs.

However, unlike general-purpose embedded microprocessors, DSPs are designed to run sophisticated

signal processing algorithms and heuristics. For example, they may be used to detect DTMF digits in the

presence of noise, to compress toll quality speech by a factor of 20, or for speech recognition in a noisy

automobile traveling at 65 miles per hour.

Such algorithms are often the result of many years of doctoral research. However, because of the lack of

consistent standards, it is not possible to use an algorithm in more than one system without significant

reengineering. Since few companies can afford a team of DSP PhDs, and the reuse of DSP algorithms is

so labor intensive, the time-to-market for a new DSP-based product is measured in years rather than in

months.

This document defines a set of requirements for DSP algorithms that, if followed, allow system integrators

to quickly assemble production-quality systems from one or more such algorithms. Thus, this standard is

intended to enable a rich COTS marketplace for DSP algorithm technology and to significantly reduce the

time-to-market for new DSP-based products.

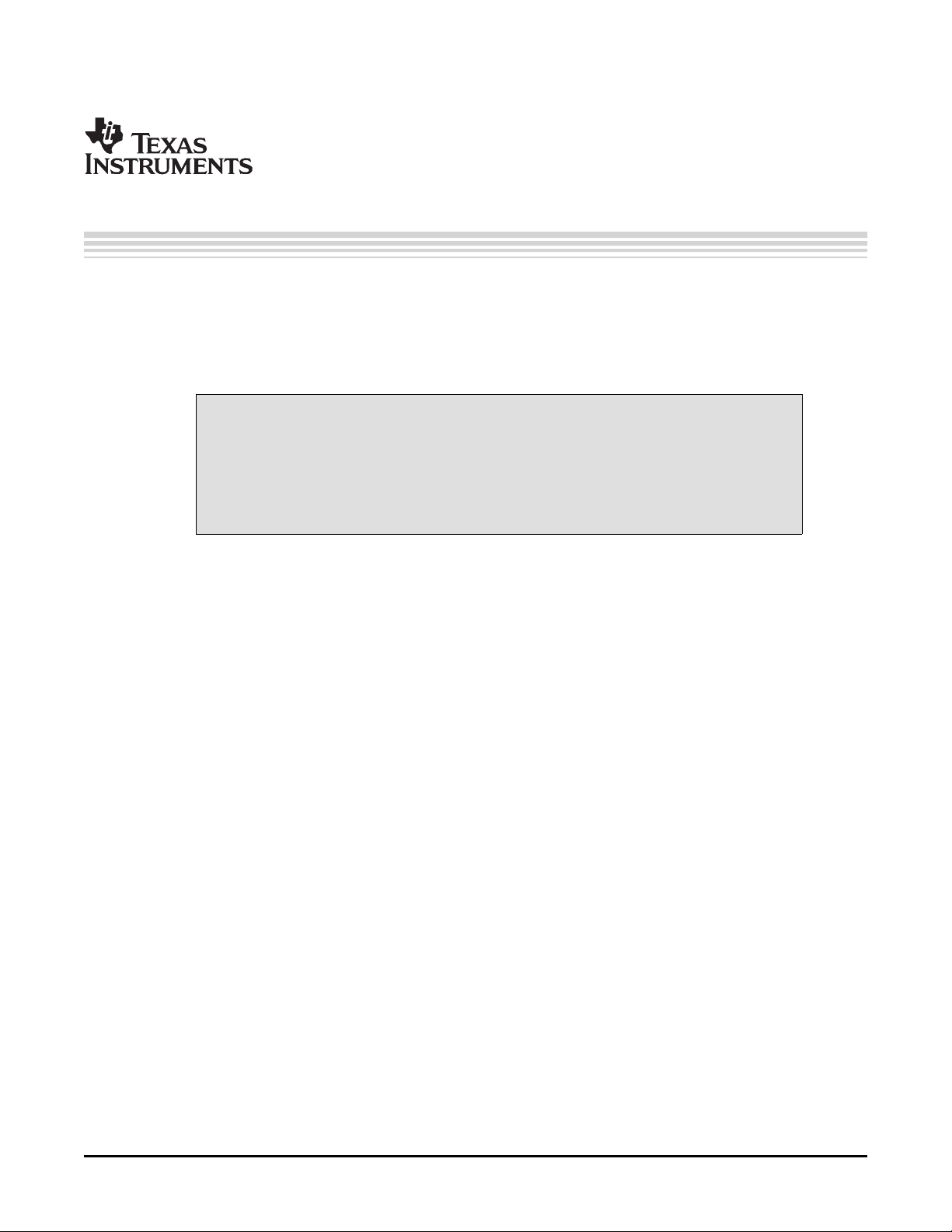

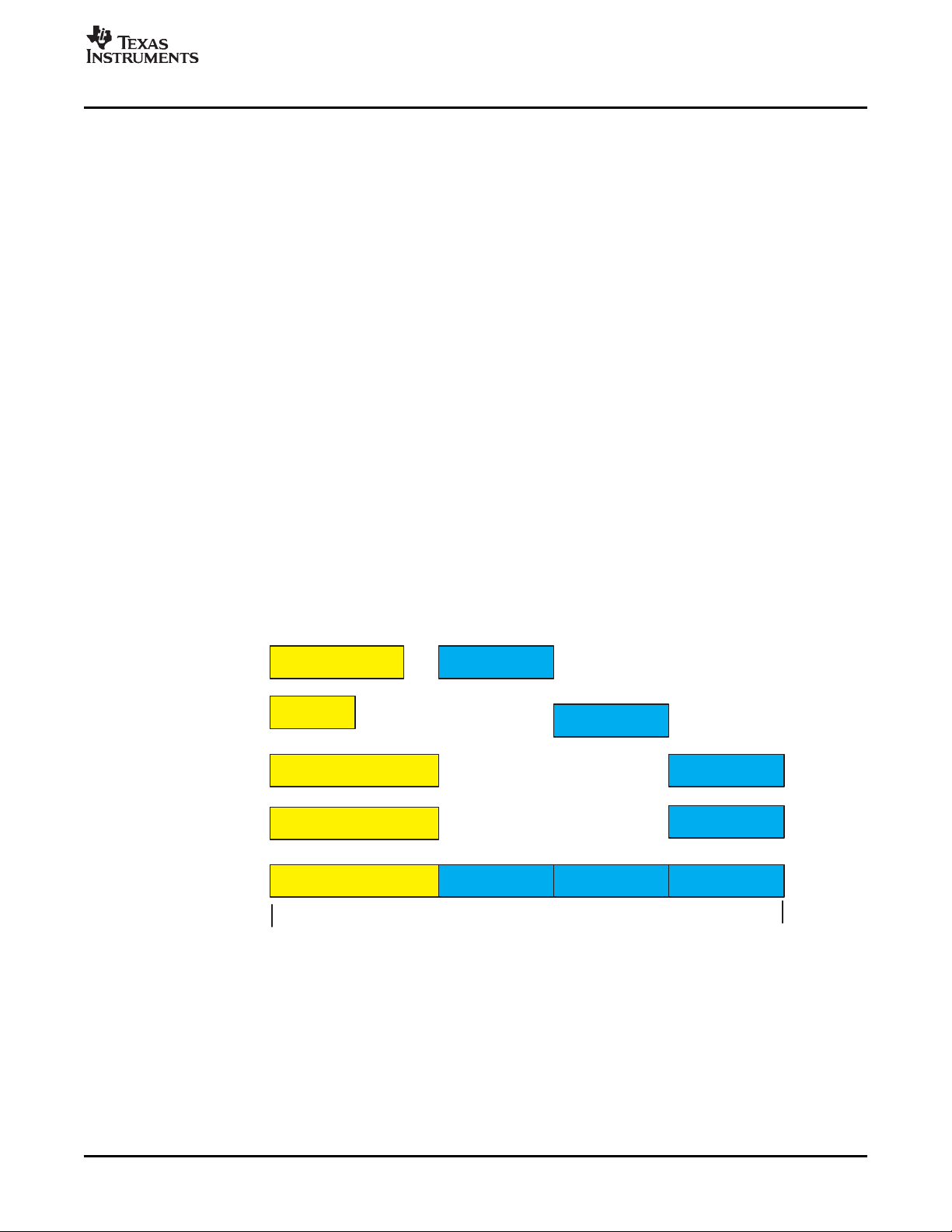

1.1 Scope of the Standard

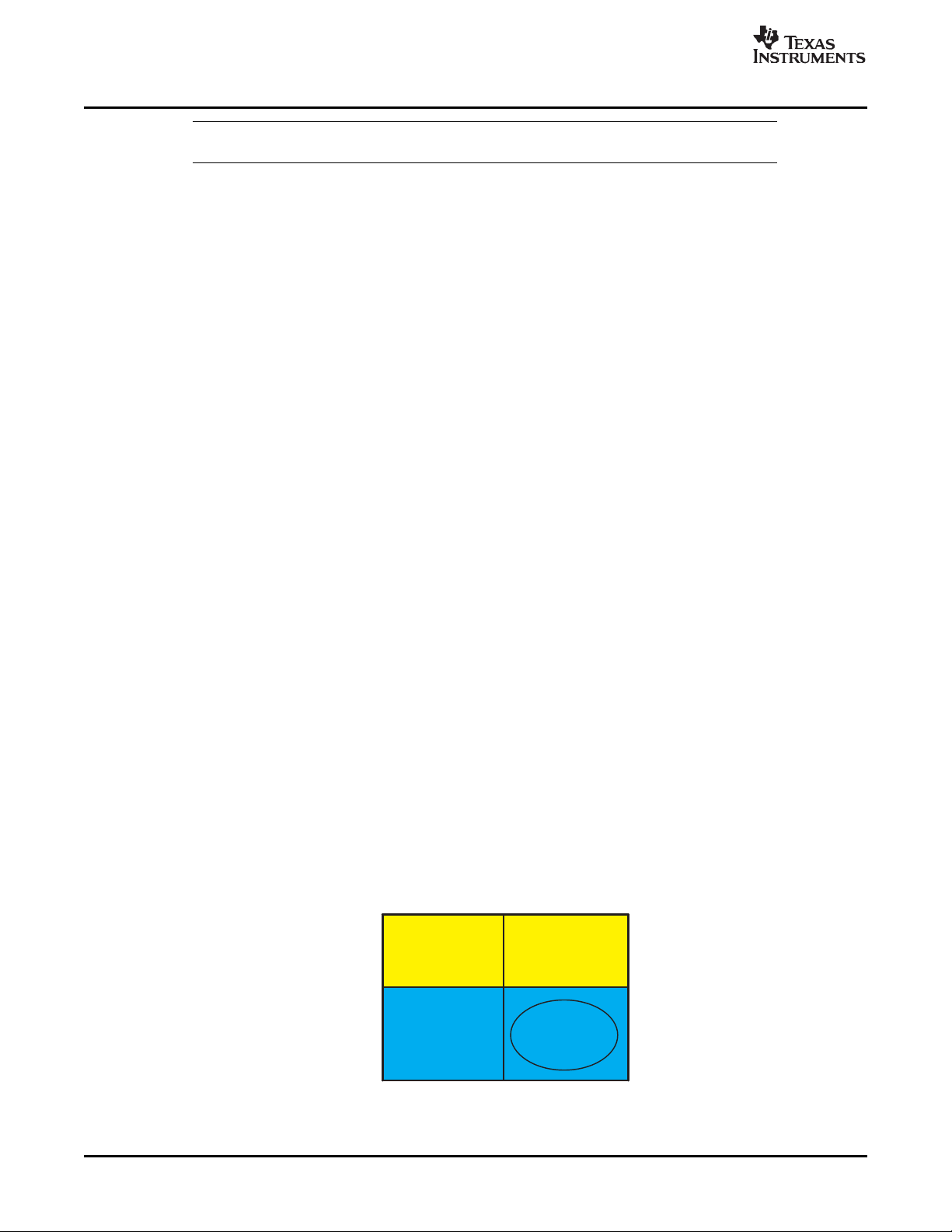

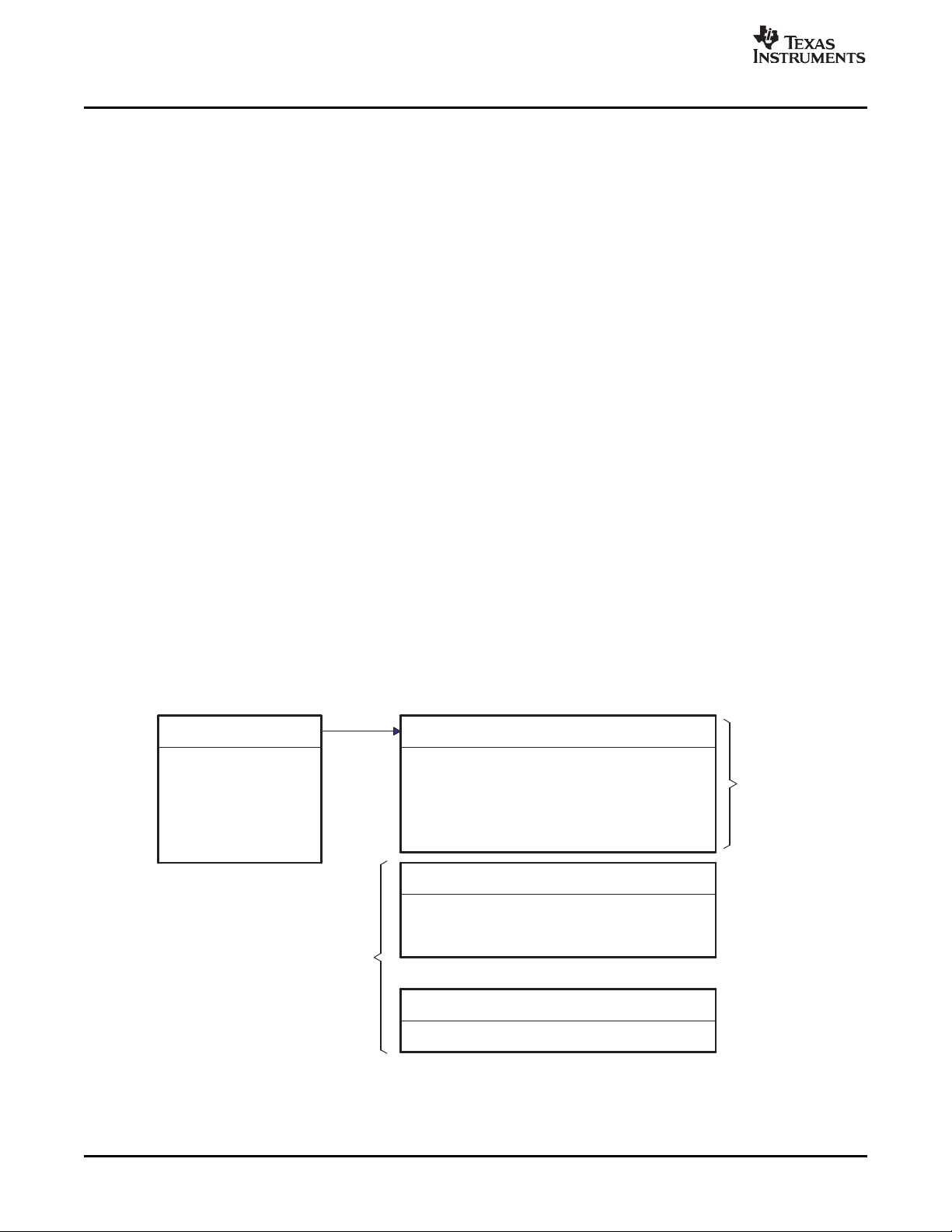

The TMS320 DSP Algorithm Standard defines three levels of guidelines.

Figure 1-1. TMS320 DSP Algorithm Standard Elements

Level 1 contains programming guidelines that apply to all algorithms on all DSP architectures regardless

of application area. Almost all recently developed software modules follow these common sense

guidelines already, so this level just formalizes them.

Level 2 contains rules and guidelines that enable all algorithms to operate harmoniously within a single

system. Conventions are established for the algorithm's use of data memory and names for external

identifiers, for example. In addition, simple rules for how algorithms are packaged are also specified.

10 Overview SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 11

www.ti.com

Level 3 contains the guidelines for specific families of DSPs. Today, there are no agreed-upon guidelines

for algorithms with regard to the use of processor resources. These guidelines will provide guidance on

the dos and don'ts for the various architectures. There is always the possibility that deviations from these

guidelines will occur, but then the algorithm vendor can explicitly draw attention to the deviation in the

relevant documentation or module headers.

The shaded boxes represent the areas that are covered in this version of the specification.

Level 4 contains the various vertical markets. Due to the inherently different nature of each of these

businesses, it seems appropriate for the stakeholders in each of these markets to define the interfaces for

groups of algorithms based on the vertical market. If each unique algorithm were specified with an

interface, the standard would never be able to keep up and thus not be effective. It is important to note

that at this level, any algorithm that conforms to the rules defined in the top three levels is considered

eXpressDSP-compliant.

1.1.1 Rules and Guidelines

The TMS320 DSP Algorithm Standard specifies both rules and guidelines. Rules must be followed in

order for software to be eXpressDSP-compliant. Guidelines, on the other hand, are strongly suggested

recommendations that should be obeyed, but are not required, in order for software to be

eXpressDSP-compliant.

1.2 Requirements of the Standard

This section lists the required elements of the TMS320 DSP Algorithm Standard. These requirements are

used throughout the remainder of the document to motivate design choices. They also help clarify the

intent of many of the stated rules and guidelines.

• Algorithms from multiple vendors can be integrated into a single system.

• Algorithms are framework-agnostic. That is, the same algorithm can be efficiently used in virtually any

application or framework.

• Algorithms can be deployed in purely static as well as dynamic run-time environments.

• Algorithms can be distributed in binary form.

• Integration of algorithms does not require recompilation of the client application, although

reconfiguration and relinking may be required.

A huge number of DSP algorithms are needed in today's marketplace, including modems, vocoders,

speech recognizers, echo cancellation, and text-to-speech. It is not possible for a product developer, who

wants to leverage this rich set of algorithms, to obtain all the necessary algorithms from a single source.

On the other hand, integrating algorithms from multiple vendors is often impossible due to incompatibilities

between the various implementations. In order to break this Catch-22, eXpressDSP-compliant algorithms

from different vendors must all interoperate.

Dozens of distinct third-party DSP frameworks exist in the telephony vertical market alone. Each vendor

has hundreds and sometimes thousands of customers. Yet, no one framework dominates the market. To

achieve the goal of algorithm reuse, the same algorithm must be usable in all frameworks.

Marketplace fragmentation by various frameworks has a legitimate technical basis. Each framework

optimizes performance for an intended class of systems. For example, client systems are designed as

single-channel systems with limited memory, limited power, and lower-cost DSPs. As a result, they are

quite sensitive to performance degradation. Server systems, on the other hand, use a single DSP to

handle multiple channels, thus reducing the cost per channel. As a result, they must support a dynamic

environment. Yet, both client-side and server-side systems may require exactly the same vocoders.

It is important that algorithms be deliverable in binary form. This not only protects the algorithm vendor's

intellectual property; it also improves the reusability of the algorithm. If source code were required, all

clients would require recompilation. In addition to being destabilizing for the clients, version control for the

algorithms would be close to impossible.

Requirements of the Standard

SPRU352G – June 2005 – Revised February 2007 Overview 11

Submit Documentation Feedback

Page 12

www.ti.com

Goals of the Standard

1.3 Goals of the Standard

This section contains the goals of this standard. While it may not be possible to perfectly attain these

goals, they represent the primary concerns that should be addressed after addressing the required

elements described in the previous section.

• Easy to adhere to the standard

• Possible to verify conformance to standard

• Enable system integrators to easily migrate between TI DSPs

• Enable host tools to simplify a system integrator's tasks, including configuration, performance

• Incur little or no "overhead" for static systems

Although TI currently enjoys a leadership role in the DSP marketplace, it cannot directly control the

algorithm software base. This is especially true for relatively mature DSPs, such as the C54xx family,

where significant algorithm technology currently exists. Thus, for any specification to achieve the status of

a standard, it must represent a low hurdle for the legacy code base.

While we can all agree to a guideline that states that every algorithm must be of high quality, this type of

guideline cannot be measured or verified. This non-verification or non-measurement enables system

integrators to claim that all their algorithms are of high quality, and therefore will not place a value on the

guideline in this instance. Thus, it is important that each guideline be measurable or, in some sense,

verifiable.

While this standard does define an algorithm's APIs in a DSP-independent manner, it does not seek to

solve the DSP migration problem. For example, it does not require that algorithms be provided on both a

C54x and a C6x platform. It does not specify a multiple binary object file format to enable a single binary

to be used in both a C5x and a C6x design. Nor does it supply tools to translate code from one

architecture to another or require the use of an architecture independent language (such as C) in the

implementation of algorithms.

Wherever possible, this standard tries to anticipate the needs of the system integrator and provide rules

for the development of algorithms that allow host tools to be created that will assist the integration of these

algorithms. For example, rules related to algorithm naming conventions enable tools that automatically

resolve name conflicts and select alternate implementations as appropriate.

modeling, standard conformance, and debugging.

1.4 Intentional Omissions

Maurice Wilkes once said, "There is no problem in computer programming that cannot be solved by an

added level of indirection." Frameworks are perfect examples of how indirection is used to "solve" DSP

software architecture problems; device independence is achieved by adding a level of indirection between

algorithms and physical peripherals, and algorithm interchangeability is achieved by using function

pointers.

On the other hand, Jim Gray has been quoted as saying, "There is no performance problem that cannot

be solved by eliminating a level of indirection." It is essential that the TMS320 DSP Algorithm Standard

remain true to the spirit of the DSP developer: any overhead incurred by adherence to the standard must

be minimized.

In this section, we describe those aspects of the standard that are intentionally omitted. This is not to say

that these issues are not important, but in the interest of timeliness, this version does not make any

recommendation. Future versions will address these omissions.

• Version control

• Licensing, encryption, and IP protection

• Installation and verification (i.e., digital signatures)

• Documentation and online help

Like all software, algorithms evolve over time, and therefore require version control. Moreover, as the

TMS320 DSP Algorithm Standard evolves, older algorithm components may fail to be compliant with the

latest specification. Ideally, a version numbering scheme would be specified that allowed host-based tools

to automatically detect incompatible versions of algorithm components.

12 Overview SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 13

www.ti.com

ALG

ALG

ALG

Framework

Status

Cmd

Status

Cmd

Core run time support

System Architecture

To support the ability of a system integrator to rapidly evaluate algorithms from various vendors, a

mechanism should be defined that allows a component to be used for evaluation only. This would

encourage algorithm vendors to provide free evaluations of their technology. It is important to provide

mechanisms, such as encryption of the code, that protect the vendor's IP; otherwise, vendors will not

make their components readily available.

Each algorithm component is typically delivered with documentation, on-line help files, and example

programs. Ideally, this set of files would be standardized for each algorithm, and installation into the Code

Composer Studio environment would be standardized. The standardization will greatly simplify the rapid

evaluation and system integration process. In addition, it is important that when a component is obtained,

its origin can be reliably determined, to prevent component theft among algorithm vendors.

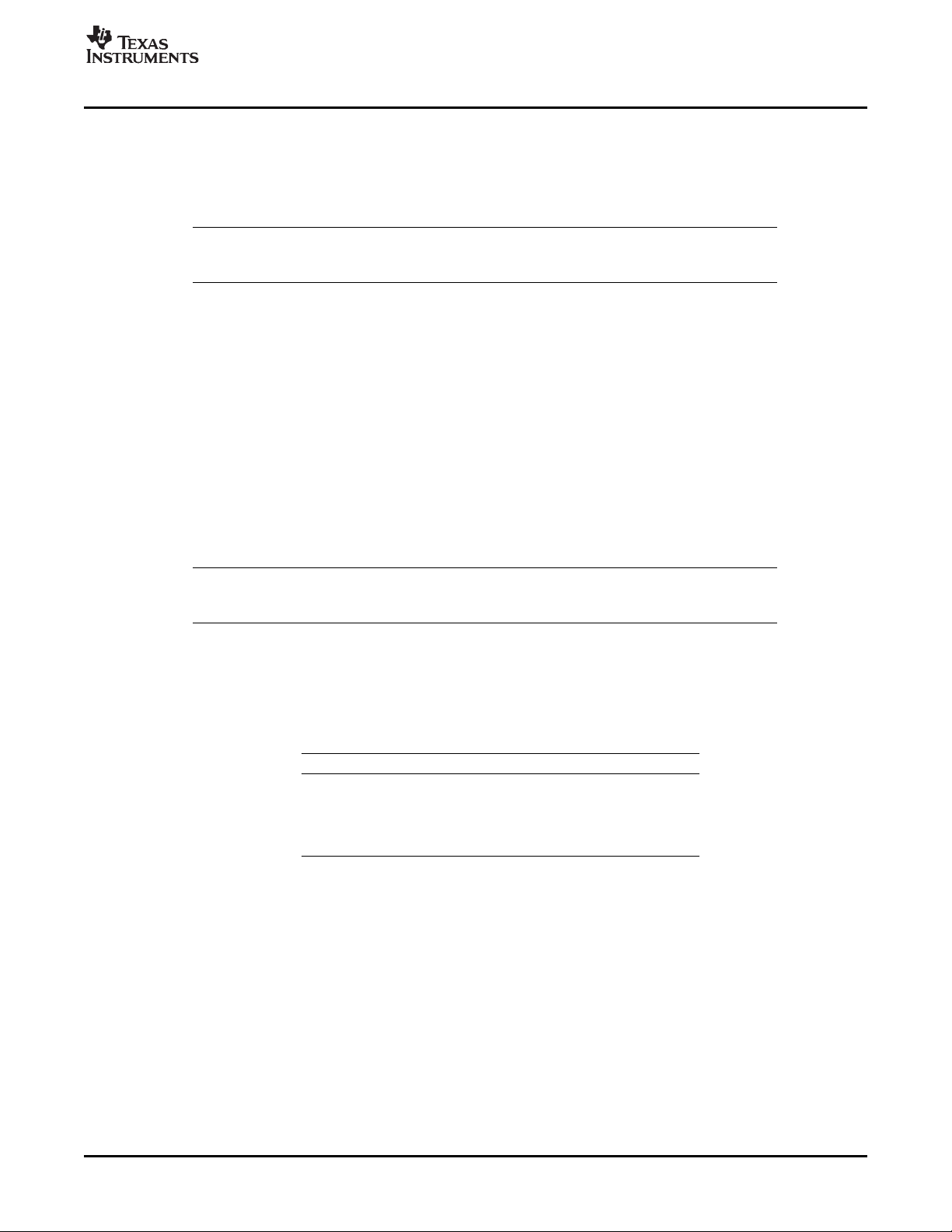

1.5 System Architecture

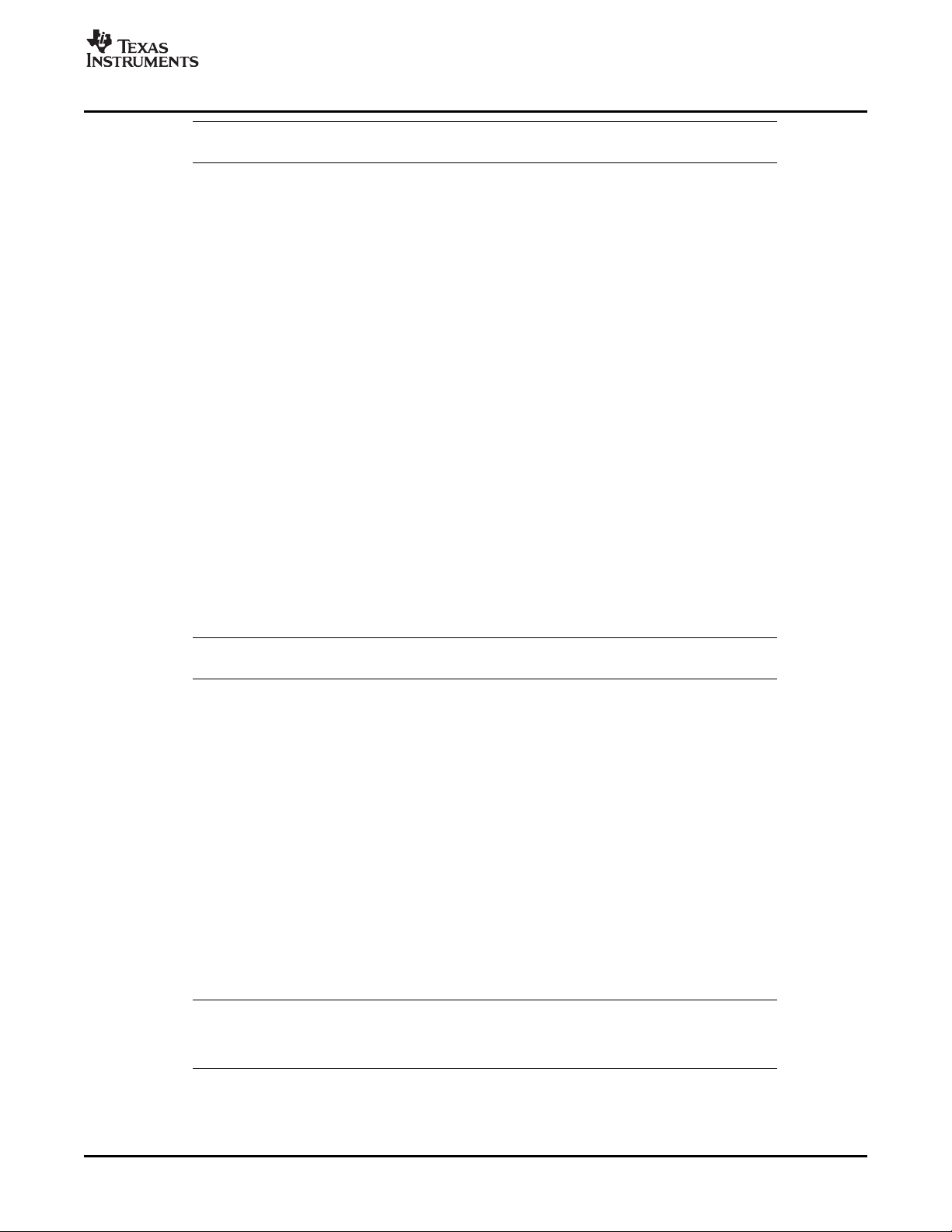

Many modern DSP system architectures can be partitioned along the lines depicted in Figure 1-2 .

Figure 1-2. DSP Software Architecture

1.5.1 Frameworks

Algorithms are "pure" data transducers; i.e., they simply take input data buffers and produce some number

of output data buffers. The core run-time support includes functions that copy memory, and functions to

enable and disable interrupts. The framework is the "glue" that integrates the algorithms with the real-time

data sources and links using the core run time support, to create a complete DSP sub-system.

Frameworks for the DSP often interact with the real-time peripherals (including other processors in the

system) and often define the I/O interfaces for the algorithm components.

Unfortunately, for performance reasons, many DSP systems do not enforce a clear line between algorithm

code and the system-level code (i.e., the framework). Thus, it is not possible to easily reuse an algorithm

in more than one system. The TMS320 DSP Algorithm Standard is intended to clearly define this line in

such a way that performance is not sacrificed and algorithm reusability is significantly enhanced.

Frameworks often define a device independent I/O sub-system and specify how essential algorithms

interact with this sub-system. For example, does the algorithm call functions to request data or does the

framework call the algorithm with data buffers to process? Frameworks also define the degree of

modularity within the application; i.e., which components can be replaced, added, removed, and when can

components be replaced (compile time, link time, or real-time).

Even within the telephony application space, there are a number of different frameworks available and

each is optimized for a particular application segment (e.g., large volume client-side products and low

volume high-density server-side products). Given the large number of incompatibilities between these

various frameworks and the fact that each framework has enjoyed success in the market, this standard

does not make any significant requirements of a framework.

SPRU352G – June 2005 – Revised February 2007 Overview 13

Submit Documentation Feedback

Page 14

www.ti.com

System Architecture

1.5.2 Algorithms

Careful inspection of the various frameworks in use reveals that, at some level, they all have algorithm

components. While there are differences in each of the frameworks, the algorithm components share

many common attributes.

• Algorithms are C callable

• Algorithms are reentrant

• Algorithms are independent of any particular I/O peripheral

• Algorithms are characterized by their memory and MIPS requirements

In approximately half of the frameworks reviewed, algorithms are also required to simply process data

passed to the algorithm. The others assume that the algorithm will actively acquire data by calling

framework-specific, hardware-independent, I/O functions. In all cases, algorithms are designed to be

independent of the I/O peripherals in the system.

In an effort to minimize framework dependencies, this standard requires that algorithms process data that

is passed to them via parameters. It seems likely that conversion of an "active" algorithm to one that

simply accepts data in the form of parameters is straightforward and little or no loss of performance will be

incurred.

Given the similarities between the various frameworks, it seems possible to standardize at the level of the

algorithm. Moreover, there is real benefit to the framework vendors and system integrators to this

standardization: algorithm integration time will be reduced, it will be possible to easily comparison shop for

the "best" algorithm, and more algorithms will be available.

It is important to realize that each particular implementation of, say a speech detector, represents a

complex set of engineering trade-offs between code size, data size, MIPS, and quality. Moreover,

depending on the system designed, the system integrator may prefer an algorithm with lower quality and

smaller footprint to one with higher quality detection and larger footprint (e.g., an electronic toy doll verses

a corporate voice mail system). Thus, multiple implementations of exactly the same algorithm sometimes

make sense; there is no single best implementation of many algorithms.

Unfortunately, the system integrator is often faced with choosing all algorithms from a single vendor to

ensure compatibility between the algorithms and to minimize the overhead of managing disparate APIs.

Moreover, no single algorithm vendor has all the algorithms for all their customers. The system integrator

is, therefore, faced with having to chose a vendor that has "most" of the required algorithms and negotiate

with that vendor to implement the remaining DSP algorithms.

By enabling system integrators to plug or replace one algorithm for another, we reduce the time to market

because the system integrator can chose algorithms from multiple vendors. We effectively create a huge

catalog of interoperable parts from which any system can be built.

1.5.3 Core Run-Time Support

In order to enable algorithms to satisfy the minimum requirements of reentrancy, I/O peripheral

independence, and debuggability, algorithms must rely on a core set of services that are always present.

Since most algorithms are still produced using assembly language, many of the services provided by the

core must be accessible and appropriate for assembly language.

The core run-time support includes a subset of HWI functions of DSP/BIOS to support atomic modification

of control/status registers (to set the overflow mode, for example). It also includes a subset of the standard

C language run-time support libraries; e.g., memcpy, strcpy, etc. The run-time support is described in

detail in Appendix B of this document.

14 Overview SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 15

SPRU352G – June 2005 – Revised February 2007

General Programming Guidelines

In this chapter, we develop programming guidelines that apply to all algorithms on all

DSP architectures, regardless of application area.

Topic .................................................................................................. Page

2.1 Use of C Language .................................................................... 16

2.2 Threads and Reentrancy ............................................................ 16

2.3 Data Memory ............................................................................ 19

2.4 Program Memory ..................................................................... 23

2.5 ROM-ability .............................................................................. 23

2.6 Use of Peripherals .................................................................... 24

Chapter 2

SPRU352G – June 2005 – Revised February 2007 General Programming Guidelines 15

Submit Documentation Feedback

Page 16

www.ti.com

Use of C Language

2.1 Use of C Language

Almost all recently developed software modules follow these common sense guidelines already, so this

chapter just formalizes them. In addition to these guidelines, we also develop a general model of data

memory that enables applications to efficiently manage an algorithm's memory requirements.

All algorithms will follow the run-time conventions imposed by the C programming language. This ensures

that the system integrator is free to use C to "bind" various algorithms together, control the flow of data

between algorithms, and interact with other processors in the system easily.

Rule 1

All algorithms must follow the run-time conventions imposed by TI’s implementation of the C

programming language.

It is very important to note that this does not mean that algorithms must be written in the C language.

Algorithms may be implemented entirely in assembly language. They must, however, be callable from the

C language and respect the C language run-time conventions. Most significant algorithms are not

implemented as a single function; like any sophisticated software, they are composed of many interrelated

internal functions. Again, it is important to note that these internal functions do not need to follow the C

language conventions; only the top-most interfaces must obey the C language conventions. On the other

hand, these internal functions must be careful not to cause the top-most function to violate the C run-time

conventions; e.g., no called function may use a word on the stack with interrupts enabled without first

updating the stack pointer.

2.2 Threads and Reentrancy

Because of the variety of frameworks available for DSP systems, there are many differing types of

threads, and therefore, reentrancy requirements. In this section, we try to precisely define the types of

threads supported by this standard and the reentrancy requirements of algorithms.

2.2.1 Threads

A thread is an encapsulation of the flow of control in a program. Most people are accustomed to writing

single-threaded programs; i.e., programs that only execute one path through their code "at a time."

Multi-threaded programs may have several threads running through different code paths "simultaneously."

Why are some phrases above in quotes? In a typical multi-threaded program, zero or more threads may

actually be running at any one time. This depends on the number of CPUs in the system in which the

process is running, and on how the thread system is implemented. A system with n CPUs can, intuitively

run no more than n threads in parallel, but it may give the appearance of running many more than n

"simultaneously," by sharing the CPUs among threads. The most common case is that of n equal to one;

i.e., a single CPU running all the threads of an application.

Why are threads interesting? An OS or framework can schedule them, relieving the developer of an

individual thread from having to know about all the other threads in the system. In a multi-CPU system,

communicating threads can be moved among the CPUs to maximize system performance without having

to modify the application code. In the more common case of a single CPU, the ability to create

multi-threaded applications allows the CPU to be used more effectively; while one thread is waiting for

data, another can be processing data.

Virtually all DSP systems are multi-threaded; even the simplest systems consist of a main program and

one or more hardware interrupt service routines. Additionally, many DSP systems are designed to manage

multiple "channels" or "ports," i.e., they perform the same processing for two or more independent data

streams.

General Programming Guidelines16 SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 17

www.ti.com

2.2.2 Preemptive vs. Non-Preemptive Multitasking

Non-preemptive multitasking relies on each thread to voluntarily relinquish control to the operating system

before letting another thread execute. This is usually done by requiring threads to periodically call an

operating system function, say yield(), to allow another thread to take control of the CPU or by simply

requiring all threads to complete within a specified short period. In a non-preemptive multi-threading

environment, the amount of time a thread is allowed to run is determined by the thread, whereas in a

preemptive environment, the time is determined by the operating system and the entire set of tasks that

are ready to run.

Note that the difference between those two flavors of multi-threading can be a very big one; for example,

under a non-preemptive system, you can safely assume that no other thread executes while a particular

algorithm processes data using on-chip data memory. Under preemptive execution, this is not true; a

thread may be preempted while it is in the middle of processing. Thus, if your application relies on the

assumption that things do not change in the middle of processing some data, it might break under a

preemptive execution scheme.

Since preemptive systems are designed to preserve the state of a preempted thread and restore it when

its execution continues, threads can safely assume that most registers and all of the thread's data memory

remain unchanged. What would cause an application to fail? Any assumptions related to the maximum

amount of time that can elapse between any two instructions, the state of any global system resource

such as a data cache, or the state of a global variable accessed by multiple threads, can cause an

application to fail in a preemptive environment.

Non-preemptive environments incur less overhead and often result in higher performance systems; for

example, data caches are much more effective in non-preemptive systems since each thread can control

when preemption (and therefore, cache flushing) will occur.

On the other hand, non-preemptive environments require that either each thread complete within a

specified maximum amount of time, or explicitly relinquish control of the CPU to the framework (or

operating system) at some minimum periodic rate. By itself, this is not a problem since most DSP threads

are periodic with real-time deadlines. However, this minimum rate is a function of the other threads in the

system and, consequently, non-preemptive threads are not completely independent of one another; they

must be sensitive to the scheduling requirements of the other threads in the system. Thus, systems that

are by their nature multirate and multichannel often require preemption; otherwise, all of the algorithms

used would have to be rewritten whenever a new algorithm is added to the system.

If we want all algorithms to be framework-independent, we must either define a framework-neutral way for

algorithms to relinquish control, or assume that algorithms used in a non-preemptive environment always

complete in less than the required maximum scheduling latency time. Since we require documentation of

worst-case execution times, it is possible for system integrators to quickly determine if an algorithm will

cause a non-preemptive system to violate its scheduling latency requirements. Thus, the TMS320 DSP

Algorithm Standard does not define a framework-neutral "yield" operation for algorithms.

Since algorithms can be used in both preemptive and non-preemptive environments, it is important that all

algorithms be designed to support both. This means that algorithms should minimize the maximum time

that they can delay other algorithms in a non-preemptive system.

Threads and Reentrancy

2.2.3 Reentrancy

Reentrancy is the attribute of a program or routine that allows the same copy of the program or routine to

be used concurrently by two or more threads.

Reentrancy is an extremely valuable property for functions. In multichannel systems, for example, any

function that can be invoked as part of one channel's processing must be reentrant ; otherwise, that

function would not be usable for other channels. In single channel multirate systems, any function that

must be used at two different rates must be reentrant; for example, a general digital filter function used for

both echo cancellation and pre-emphasis for a vocoder. Unfortunately, it is not always easy to determine if

a function is reentrant.

SPRU352G – June 2005 – Revised February 2007 General Programming Guidelines 17

Submit Documentation Feedback

Page 18

www.ti.com

yn+ xn* x

n*1

)

13

32

x

n*2

Threads and Reentrancy

The definition of reentrant code often implies that the code does not retain "state" information. That is, if

you invoke the code with the same data at different times, by the same or other thread, it will yield the

same results. This is not always true, however. How can a function maintain state and still be reentrant?

Consider the rand() function. Perhaps a better example is a function with state that protects that state by

disabling scheduling around its critical sections. These examples illustrate some of the subtleties of

reentrant programming.

The property of being reentrant is a function of the threading model; after all, before you can determine

whether multiple threads can use a particular function, you must know what types of threads are possible

in a system. For example, if threads are not preemptive, a function may freely use global variables if it

uses them for scratch storage only; i.e., it does not assume these variables have any values upon entry to

the function. In a preemptive environment, however, use of these global variables must be protected by a

critical section or they must be part of the context of every thread that uses them.

Although there are exceptions, reentrancy usually requires that algorithms:

• only modify data on the stack or in an instance "object"

• treat global and static variables as read-only data

• never employ self modifying code

These rules can sometimes be relaxed by disabling all interrupts (and therefore, disabling all thread

scheduling) around the critical sections that violate the rules above. Since algorithms are not permitted to

directly manipulate the interrupt state of the processor, the allowed DSP/BIOS HWI module functions (or

equivalent implementations) must be called to create these critical sections.

Rule 2

All algorithms must be reentrant within a preemptive environment (including time-sliced preemption).

2.2.4 Example

In the remainder of this section we consider several implementations of a simple algorithm, digital filtering

of an input speech data stream, and show how the algorithm can be made reentrant and maintain

acceptable levels of performance. It is important to note that, although these examples are written in C,

the principles and techniques apply equally well to assembly language implementations.

Speech signals are often passed through a pre-emphasis filter to flatten their spectrum prior to additional

processing. Pre-emphasis of a signal can be accomplished by applying the following difference equation

to the input data:

The following implementation is not reentrant because it references and updates the global variables z0

and z1. Even in a non-preemptive environment, this function is not reentrant; it is not possible to use this

function to operate on more than one data stream since it retains state for a particular data stream in two

fixed variables (z0 and z1).

int z0 = 0, z1 = 0; /* previous input values */

void PRE_filter(int input[], int length)

{

int I, tmp;

for (I = 0; I < length; I++) {

tmp = input[i] - z0 + (13 * z1 + 16) / 32;

z1 = z0;

z0 = input[i];

input[i] = tmp;

}

}

We can make this function reentrant by requiring the caller to supply previous values as arguments to the

function. This way, PRE_filter1 no longer references any global data and can be used, therefore, on any

number of distinct input data streams.

18 General Programming Guidelines SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 19

www.ti.com

Data Memory

void PRE_filter1(int input[], int length, int *z)

{

int I, tmp;

for (I = 0; I

< length; I++) {

tmp = input[i] - z[0] + (13 * z[1] + 16) / 32;

z[1] = z[0];

z[0] = input[i];

input[i] = tmp;

}

}

This technique of replacing references to global data with references to parameters illustrates a general

technique that can be used to make virtually any Code reentrant. One simply defines a "state object" as

one that contains all of the state necessary for the algorithm; a pointer to this state is passed to the

algorithm (along with the input and output data).

typedef struct

PRE_Obj { /* state obj for pre-emphasis alg */

int z0;

int z1;

} PRE_Obj;

void

PRE_filter2(PRE_Obj *pre, int input[], int length)

{

int I, tmp;

for (I = 0; I < length; I++)

{

tmp = input[i] - pre->z0 + (13 * pre->z1 + 16) /

32;

pre->z1 = pre->z0;

pre->z0 = input[i];

input[i] = tmp;

}

}

Although the C Code looks more complicated than our original implementation, its performance is

comparable, it is fully reentrant, and its performance can be configured on a "per data object" basis. Since

each state object can be placed in any data memory, it is possible to place some objects in on-chip

memory and others in external memory. The pointer to the state object is, in effect, the function's private

"data page pointer." All of the function's data can be efficiently accessed by a constant offset from this

pointer.

Notice that while performance is comparable to our original implementation, it is slightly larger and slower

because of the state object redirection. Directly referencing global data is often more efficient than

referencing data via an address register. On the other hand, the decrease in efficiency can usually be

factored out of the time-critical loop and into the loop-setup Code. Thus, the incremental performance cost

is minimal and the benefit is that this same Code can be used in virtually any system—independent of

whether the system must support a single channel or multiple channels, or whether it is preemptive or

non-preemptive.

"We should forget about small efficiencies, say about 97% of the time: premature optimization is the root

of all evil." —Donald Knuth "Structured Programming with go to Statements," Computing Surveys, Vol. 6,

No. 4, December, 1974, page 268.

2.3 Data Memory

The large performance difference between on-chip data memory and off-chip memory (even 0 wait-state

SRAM) is so large that every algorithm vendor designs their Code to operate as much as possible within

the on-chip memory. Since the performance gap is expected to increase dramatically in the next 3-5

years, this trend will continue for the foreseeable future. The TMS320C6000 series, for example, incurs a

25 wait state penalty for external SDRAM data memory access. Future processors may see this penalty

increase to 80 or even 100 wait states!

SPRU352G – June 2005 – Revised February 2007 General Programming Guidelines 19

Submit Documentation Feedback

Page 20

www.ti.com

Data Memory

While the amount of on-chip data memory may be adequate for each algorithm in isolation, the increased

number of MIPS available on modern DSPs encourages systems to perform multiple algorithms

concurrently with a single chip. Thus, some mechanism must be provided to efficiently share this precious

resource among algorithm components from one or more third parties.

2.3.1 Memory Spaces

In an ideal DSP, there would be an unlimited amount of on-chip memory and algorithms would simply

always use this memory. In practice, however, the amount of on-chip memory is very limited and there are

even two common types of on-chip memory with very different performance characteristics: dual-access

memory which allows simultaneous read and write operations in a single instruction cycle, and single

access memory that only allows a single access per instruction cycle.

Because of these practical considerations, most DSP algorithms are designed to operate with a

combination of on-chip and external memory. This works well when there is sufficient on-chip memory for

all the algorithms that need to operate concurrently; the system developer simply dedicates portions of

on-chip memory to each algorithm. It is important, however, that no algorithm assume specific region of

on-chip memory or contain any "hard Coded" addresses; otherwise the system developer will not be able

to optimally allocate the on-chip memory among all algorithms.

Note that algorithms can directly access data contained in a static data structure located by the linker. This

rule only requires that all such references be done symbolically; i.e., via a relocatable label rather than a

fixed numerical address.

In systems where the set of algorithms is not known in advance or when there is insufficient on-chip

memory for the worst-case working set of algorithms, more sophisticated management of this precious

resource is required. In particular, we need to describe how the on-chip memory can be shared at run-time

among an arbitrary number of algorithms.

Rule 3

Algorithm data references must be fully relocatable (subject to alignment requirements). That is, there

must be no “hard-coded” data memory locations.

2.3.2 Scratch versus Persistent

In this section, we develop a general model for sharing regions of memory among algorithms. This model

is used to share the on-chip memory of a DSP, for example. This model is essentially a generalization of

the technique commonly used by compilers to share CPU registers among functions. Compilers often

partition the CPU registers into two groups: "scratch" and "preserve." Scratch registers can be freely used

by a function without having to preserve their value upon return from the function. Preserve registers, on

the other hand, must be saved prior to being modified and restored prior to returning from the function. By

partitioning the register set in this way, significant optimizations are possible; functions do not need to

save and restore scratch registers, and callers do not need to save preserve registers prior to calling a

function and restore them after the return.

Consider the program execution trace of an application that calls two distinct functions, say a() and b().

Void main()

{

... /* use scratch registers r1 and r2 */

/* call function

a() */

a() {

... /* use scratch registers r0, r1, and r2 */

}

/* call function b()

*/

b() {

... /* use scratch registers r0 and r1*/

}

}

20 General Programming Guidelines SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 21

www.ti.com

Scratch

Algorithm A

Scratch

Algorithm B

Write-Once C

Scratch

Algorithm C

Scratch

Physical

Memory

Persistent B

Persistent A

Persistent A Persistent B Write-Once C

0000

FFFF

0

1

Algorithm C

Scratch

Scratch

Write-Once C

Data Memory

Notice that both a() and b() freely use some of the same scratch registers and no saving and restoring of

these registers is necessary. This is possible because both functions, a() and b(), agree on the set of

scratch registers and that values in these registers are indeterminate at the beginning of each function.

By analogy, we partition all memory into two groups: scratch and persistent.

• Scratch memory is memory that is freely used by an algorithm without regard to its prior contents, i.e.,

no assumptions about the content can be made by the algorithm and the algorithm is free to leave it in

any state.

• Persistent memory is used to store state information while an algorithm instance is not executing.

Persistent memory is any area of memory that an algorithm can write to assume that the contents are

unchanged between successive invocations of the algorithm within an application. All physical memory

has this behavior, but applications that share memory among multiple algorithms may opt to overwrite

some regions of memory (e.g., on-chip DARAM).

A special variant of persistent memory is the write-once persistent memory. An algorithm's initialization

function ensures that its write-once buffers are initialized during instance creation and that all subsequent

accesses by the algorithm's processing to write-once buffers are strictly read-only. Additionally, the

algorithm can link its own statically allocated write-once buffers and provide the buffer addresses to the

client. The client is free to use provided buffers or allocate its own. Frameworks can optimize memory

allocation by arranging multiple instances of the same algorithm, created with identical creation

parameters, to share write-once buffers.

Note that a simpler alternative to declaring write-once buffers for sharing statically initialized read-only

data is to use global statically linked constant tables and publish their alignment and memory space

requirements in the required standard algorithm documentation. If data has to be computed or relocated at

run-time, the write-once buffers approach can be employed.

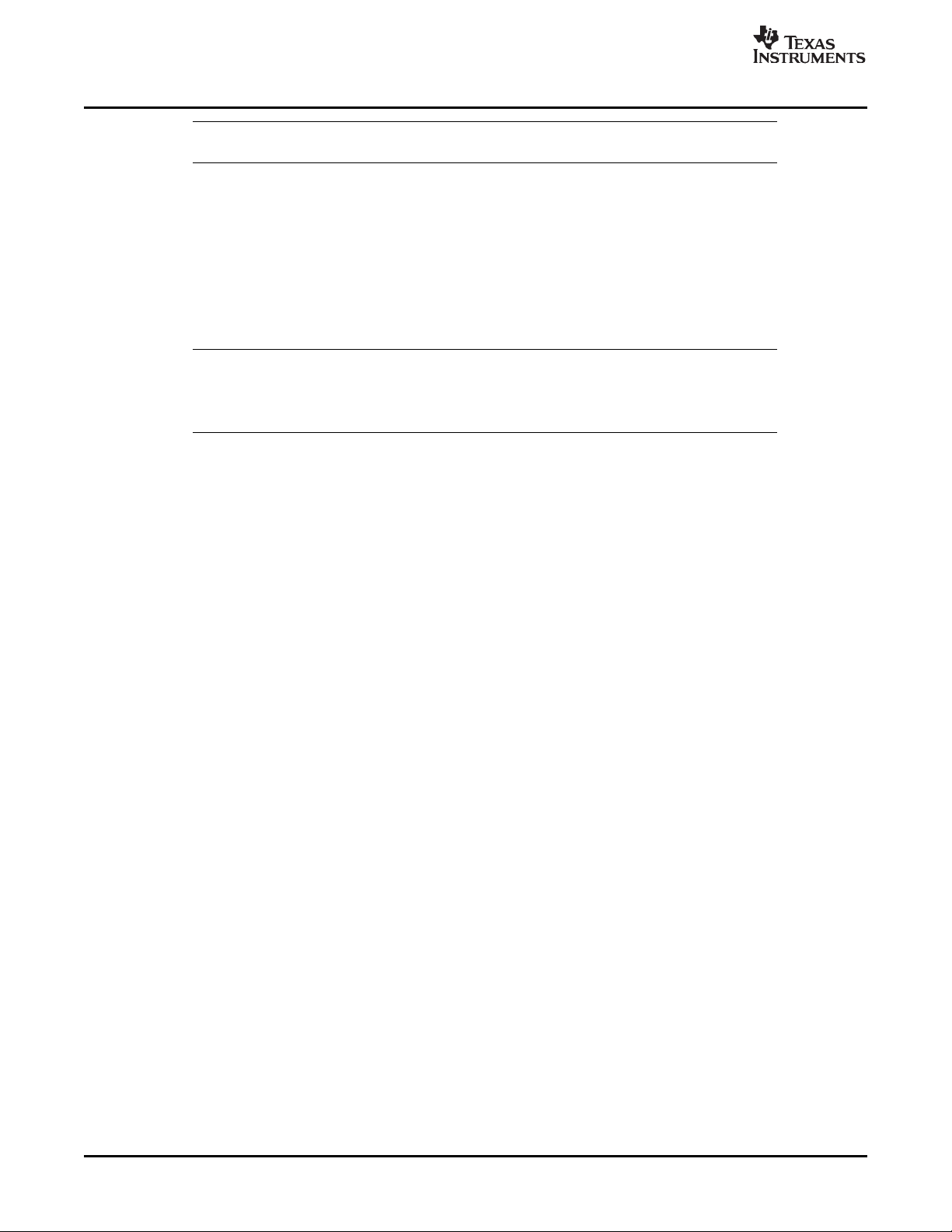

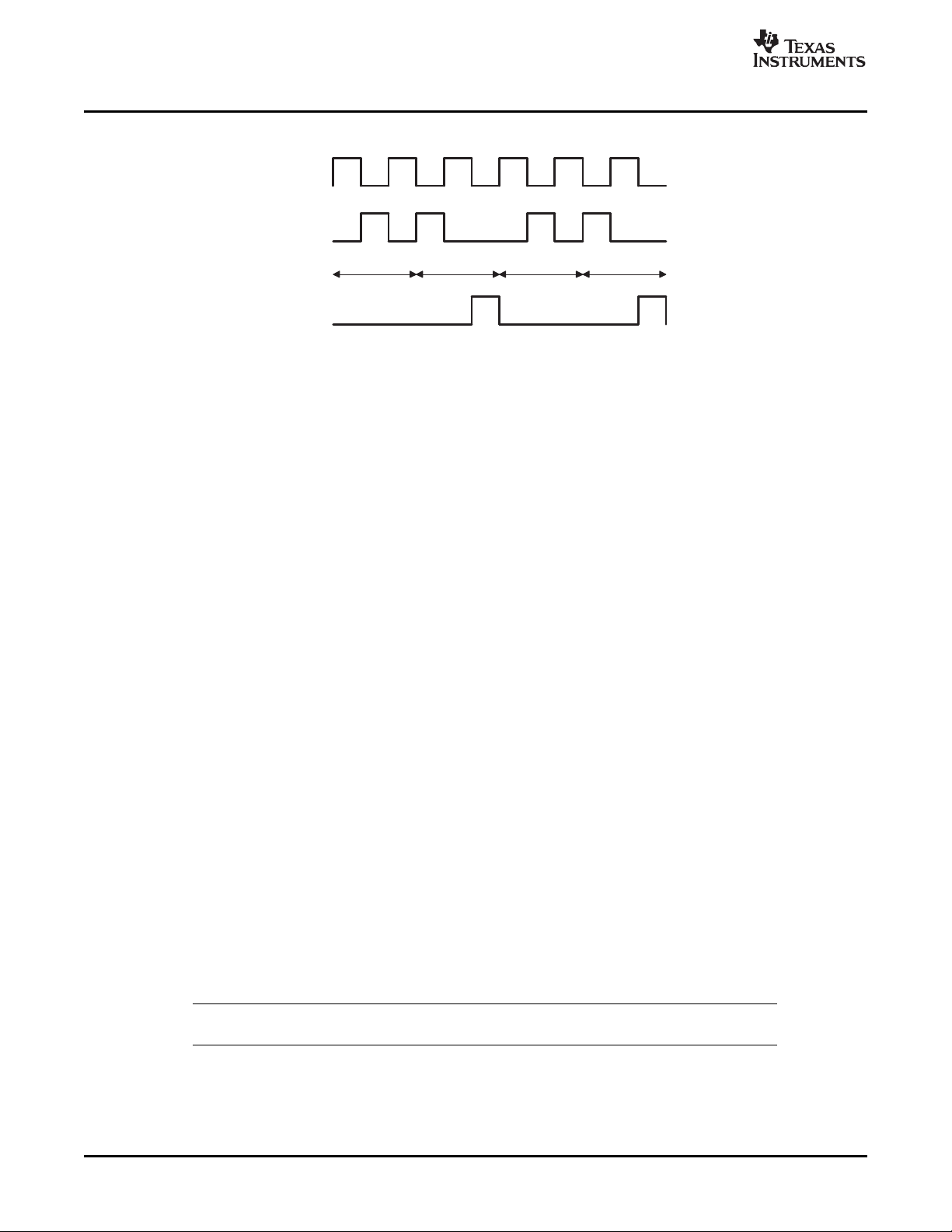

The importance of making a distinction between scratch memory and persistent memory is illustrated in

Figure 2-1 .

Figure 2-1. Scratch vs Persistent Memory Allocation

All algorithm scratch memory can be "overlaid" on the same physical memory. Without the distinction

between scratch and persistent memory, it would be necessary to strictly partition memory among

algorithms, making the total memory requirement the sum of all algorithms' memory requirements. On the

other hand, by making the distinction, the total memory requirement for a collection of algorithms is the

sum of each algorithm's distinct persistent memory, plus any shared write-once persistent memory, plus

the maximum scratch memory requirement of any of these algorithms.

SPRU352G – June 2005 – Revised February 2007 General Programming Guidelines 21

Submit Documentation Feedback

Page 22

www.ti.com

Persistent

Scratch

Shared Private

Shadow

Data Memory

In addition to the types of memory described above, there are often several memory spaces provided by a

DSP to algorithms.

• Dual-access memory (DARAM) is on-chip memory that allows two simultaneous accesses in a single

instruction cycle.

• Single-access memory (SARAM) is on-chip memory that allows only a single access per instruction

cycle.

• External memory is memory that is external to the DSP and may require more than zero wait states

per access.

These memory spaces are often treated very differently by algorithm implementations; in order to optimize

performance, frequently accessed data is placed in on-chip memory, for example. The scratch versus

persistent attribute of a block of memory is independent of the memory space. Thus, there are six distinct

memory classes; scratch and persistent for each of the three memory spaces described above.

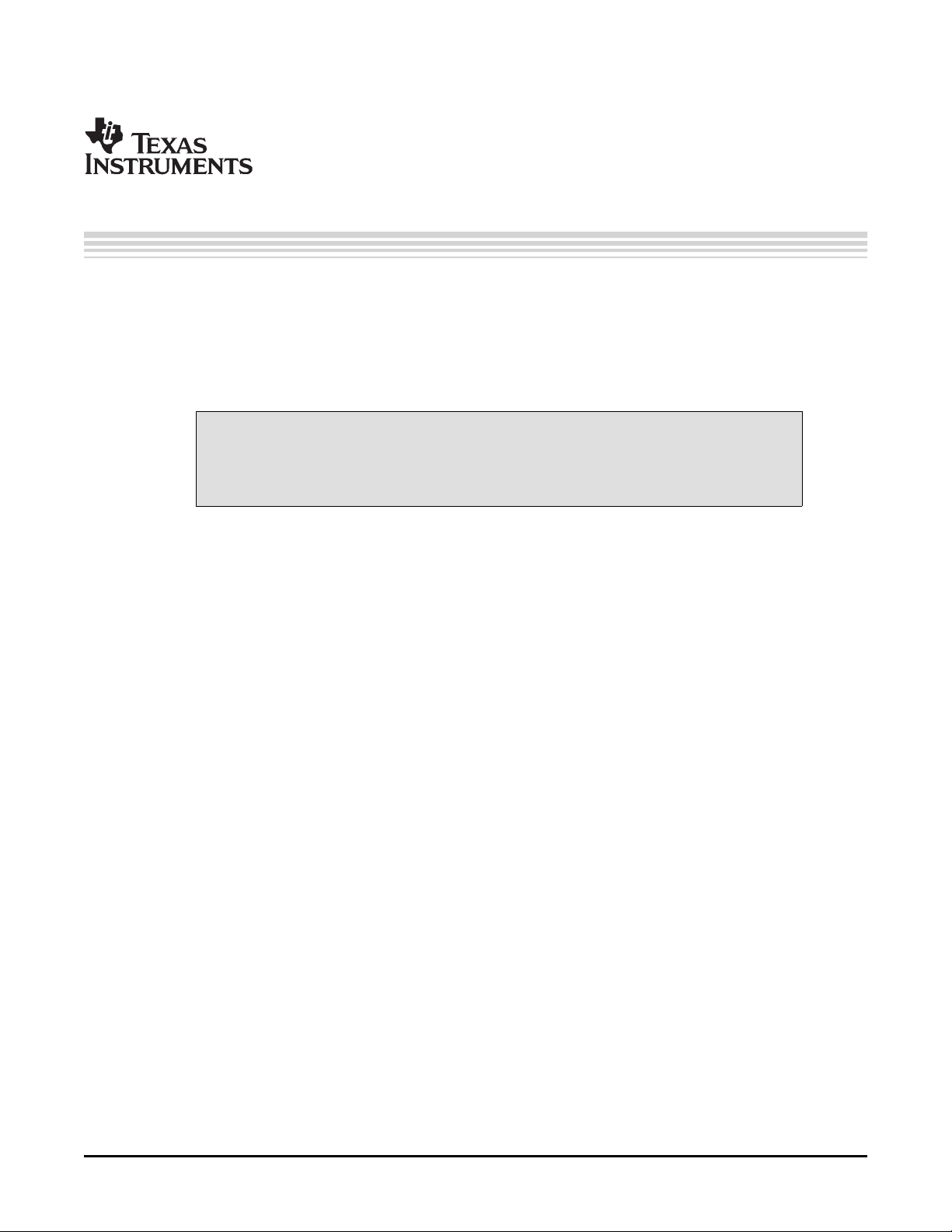

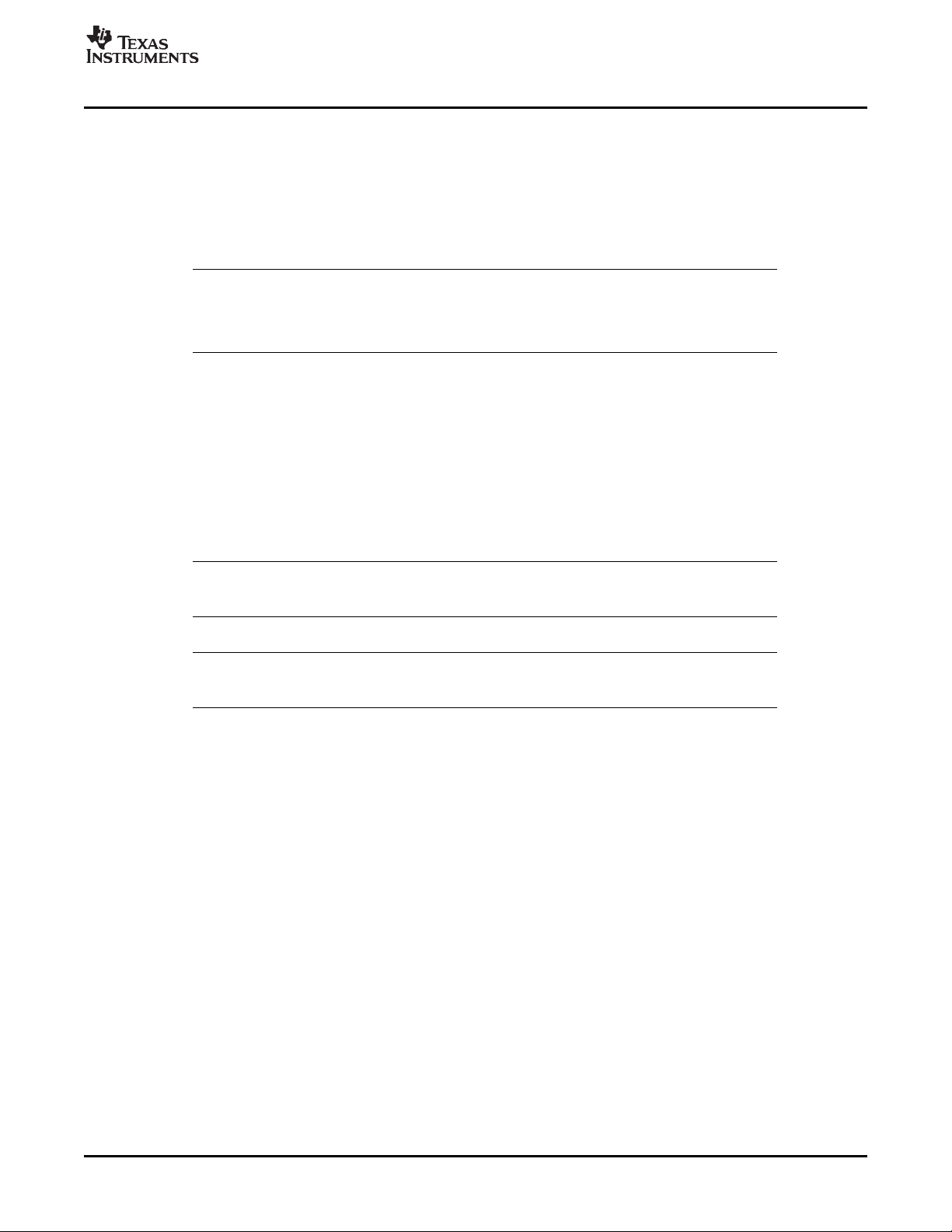

2.3.3 Algorithm versus Application

Other than a memory block's size, alignment, and memory space, three independent questions must be

answered before a client can properly manage a block of an algorithm's data memory.

• Is the block of memory treated as scratch or persistent by the algorithm?

• Is the block of memory shared by more than one algorithm?

• Do the algorithms that share the block preempt one another?

The first question is determined by the implementation of the algorithm; the algorithm must be written with

assumptions about the contents of certain memory buffers. We've argued that there is significant benefit to

distinguish between scratch memory and persistent memory, but it is up to the algorithm implementation

to trade the benefits of increasing scratch, and decreasing persistent memory against the potential

performance overhead incurred by re-computing intermediate results.

The second two questions regarding sharing and preemption, can only be answered by the client of an

eXpressDSP-compliant algorithm. The client decides whether preemption is required for the system and

the client allocates all memory. Thus, only the client knows whether memory is shared among algorithms.

Some frameworks, for example, never share any allocated memory among algorithms whereas others

always share scratch memory.

There is a special type of persistent memory managed by clients of algorithms that is worth distinguishing:

shadow memory is unshared persistent memory that is used to shadow or save the contents of shared

registers and memory in a system. Shadow memory is not used by algorithms; it is used by their clients to

save the memory regions shared by various algorithms.

Figure 2-2 illustrates the relationship between the various types of memory.

Guideline 1

Algorithms should minimize their persistent data memory requirements in favor of scratch memory.

Figure 2-2. Data Memory Types

General Programming Guidelines22 SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 23

www.ti.com

2.4 Program Memory

Program Memory

Like the data memory requirements described in the previous section, it is important that all

eXpressDSP-compliant algorithms are fully relocatable; i.e., there should never be any assumption about

the specific placement of an algorithm at a particular address. Alignment on a specified page size is

permitted, however.

Rule 4

All algorithm code must be fully relocatable. That is, there can be no hard coded program memory

locations.

As with the data memory requirements, this rule only requires that Code be relocated via a linker. For

example, it is not necessary to always use PC-relative branches. This requirement allows the system

developer to optimally allocate program space to the various algorithms in the system.

Algorithm modules sometimes require initialization Code that must be executed prior to any other

algorithm method being used by a client. Often this Code is only run once during the lifetime of an

application. This Code is effectively "dead" once it has been run at startup. The space allocated for this

Code can be reused in many systems by placing the "run-once" Code in data memory and using the data

memory during algorithm operation.

A similar situation occurs in "finalization" Code. Debug versions of algorithms, for example, sometimes

implement functions that, when called when a system exits, can provide valuable debug information; e.g.,

the existence of objects or objects that have not been properly deleted. Since many systems are designed

to never exit (i.e., exit by power-off), finalization Code should be placed in a separate object module. This

allows the system integrator to avoid including Code that can never be executed.

Guideline 2

Each initialization and finalization function should be defined in a separate object module; these

modules must not contain any other code.

In some cases, it is awkward to place each function in a separate file. Doing so may require making some

identifiers globally visible or require significant changes to an existing Code base. The TI C compiler

supports a pragma directive that allows you to place specified functions in distinct COFF output sections.

This pragma directive may be used in lieu of placing functions in separate files. The table below

summarizes recommended section names and their purpose.

Section Name Purpose

.text:init Run-once initialization code

.text:exit Run-once finalization code

.text:create Run-time object creation

.text:delete Run-time object deletion

2.5 ROM-ability

There are several addressing modes used by algorithms to access data memory. Sometimes the data is

referenced by a pointer to a buffer passed to the algorithm, and sometimes an algorithm simply references

global variables directly. When an algorithm references global data directly, the instruction that operates

on the data often contains the address of the data (rather than an offset from a data page register, for

example). Thus, this Code cannot be placed in ROM without also requiring that the referenced data be

placed in a fixed location in a system. If a module has configuration parameters that result in variable

length data structures and these structures are directly referenced, such Code is not considered

ROM-able; the offsets in the Code are fixed and the relative positions of the data references may change.

Alternatively, algorithm Code can be structured to always use offsets from a data page for all fixed length

references and place a pointer in this page to any variable length structures. In this case, it is possible to

configure and locate the data anywhere in the system, provided the data page is appropriately set.

SPRU352G – June 2005 – Revised February 2007 General Programming Guidelines 23

Submit Documentation Feedback

Page 24

www.ti.com

Use of Peripherals

Rule 5

Algorithms must characterize their ROM-ability; i.e., state whether or not they are ROM-able.

Obviously, self-modifying Code is not ROM-able. We do not require that no algorithm employ

self-modifying Code; we only require documentation of the ROM-ability of an algorithm. It is also worth

pointing out that if self-modifying Code is used, it must be done "atomically," i.e., with all interrupts

disabled; otherwise this Code would fail to be reentrant.

2.6 Use of Peripherals

To ensure the interoperability of eXpressDSP-compliant algorithms, it is important that algorithms never

directly access any peripheral device.

Rule 6

Algorithms must never directly access any peripheral device. This includes, but is not limited to on-chip

DMAs, timers, I/O devices, and cache control registers. Note, however, algorithms can utilize the DMA

resource by implementing the IDMA2 interface on C64x and C5000 devices, and the IDMA3 interface

on C64x+ devices using the EDMA3 controller. See Chapter 6 for details.

In order for an algorithm to be framework-independent, it is important that no algorithm directly calls any

device interface to read or write data. All data produced or consumed by an algorithm must be explicitly

passed to the algorithm by the client. For example, no algorithm should call a device-independent I/O

library function to get data; this is the responsibility of the client or framework.

24 General Programming Guidelines SPRU352G – June 2005 – Revised February 2007

Submit Documentation Feedback

Page 25

SPRU352G – June 2005 – Revised February 2007

Algorithm Component Model

In this chapter, we develop additional rules and guidelines that apply to all algorithms

on all DSP architectures regardless of application area.

Topic .................................................................................................. Page

3.1 Interfaces and Modules .............................................................. 26

3.2 Algorithms ............................................................................... 33

3.3 Packaging ................................................................................ 34

Chapter 3

SPRU352G – June 2005 – Revised February 2007 Algorithm Component Model 25

Submit Documentation Feedback

Page 26

www.ti.com

client.c

#include <fir.h>

...

FIR_apply();

}

fir.h

typedef struct FIR_obj *FIR_Handle;

extern void FIR_init();

extern void FIR_exit();

extern FIR_HandleFIR_create();

fir_apply.asm

FIR_apply:

.globalFIR_apply

fir_create.c

FIR_HandleFIR_create() {

Includes

Interface

Implementation

Interfaces and Modules

These rules and guidelines enable many of the benefits normally associated with object-oriented and

component-based programming but with little or no overhead. More importantly, these guidelines are