TMS320C55x DSP

Programmer’s Guide

Preliminary Draft

This document contains preliminary data

current as of the publication date and is

subject to change without notice.

SPRU376A

August 2001

IMPORTANT NOTICE

Texas Instruments and its subsidiaries (TI) reserve the right to make changes to their products

or to discontinue any product or service without notice, and advise customers to obtain the latest

version of relevant information to verify, before placing orders, that information being relied on

is current and complete. All products are sold subject to the terms and conditions of sale supplied

at the time of order acknowledgment, including those pertaining to warranty, patent infringement,

and limitation of liability.

TI warrants performance of its products to the specifications applicable at the time of sale in

accordance with TI’s standard warranty. Testing and other quality control techniques are utilized

to the extent TI deems necessary to support this warranty. Specific testing of all parameters of

each device is not necessarily performed, except those mandated by government requirements.

Customers are responsible for their applications using TI components.

In order to minimize risks associated with the customer’s applications, adequate design and

operating safeguards must be provided by the customer to minimize inherent or procedural

hazards.

TI assumes no liability for applications assistance or customer product design. TI does not

warrant or represent that any license, either express or implied, is granted under any patent right,

copyright, mask work right, or other intellectual property right of TI covering or relating to any

combination, machine, or process in which such products or services might be or are used. TI’s

publication of information regarding any third party’s products or services does not constitute TI’s

approval, license, warranty or endorsement thereof.

Reproduction of information in TI data books or data sheets is permissible only if reproduction

is without alteration and is accompanied by all associated warranties, conditions, limitations and

notices. Representation or reproduction of this information with alteration voids all warranties

provided for an associated TI product or service, is an unfair and deceptive business practice,

and TI is not responsible nor liable for any such use.

Resale of TI’s products or services with statements different from or beyond the parameters stated

by TI for that product or service voids all express and any implied warranties for the associated

TI product or service, is an unfair and deceptive business practice, and TI is not responsible nor

liable for any such use.

Also see: Standard Terms and Conditions of Sale for Semiconductor Products.

www.ti.com/sc/docs/stdterms.htm

Mailing Address:

Texas Instruments

Post Office Box 655303

Dallas, Texas 75265

Copyright 2001, Texas Instruments Incorporated

Preface

Read This First

About This Manual

This manual describes ways to optimize C and assembly code for the

TMS320C55x DSPs and recommends ways to write TMS320C55x code for

specific applications.

Notational Conventions

This document uses the following conventions.

- The device number TMS320C55x is often abbreviated as C55x.

- Program listings, program examples, and interactive displays are shown

in a special typeface similar to a typewriter’s. Examples use a bold

version of the special typeface for emphasis; interactive displays use a

bold version of the special typeface to distinguish commands that you

enter from items that the system displays (such as prompts, command

output, error messages, etc.).

Here is a sample program listing:

0011 0005 0001 .field 1, 2

0012 0005 0003 .field 3, 4

0013 0005 0006 .field 6, 3

0014 0006 .even

Here is an example of a system prompt and a command that you might

enter:

C: csr -a /user/ti/simuboard/utilities

- In syntax descriptions, the instruction, command, or directive is in a bold

typeface font and parameters are in an italic typeface. Portions of a syntax

that are in bold should be entered as shown; portions of a syntax that are

in italics describe the type of information that should be entered. Here is

an example of a directive syntax:

.asect “section name”, address

.asect is the directive. This directive has two parameters, indicated by section

name and address. When you use .asect, the first parameter must be

an actual section name, enclosed in double quotes; the second parameter

must be an address.

- Some directives can have a varying number of parameters. For example,

the .byte directive can have up to 100 parameters. The syntax for this directive is:

.byte value1 [, ... , valuen]

This syntax shows that .byte must have at least one value parameter, but

you have the option of supplying additional value parameters, separated

by commas.

- In most cases, hexadecimal numbers are shown with the suffix h. For example,

the following number is a hexadecimal 40 (decimal 64):

40h

Similarly, binary numbers usually are shown with the suffix b. For example,

the following number is the decimal number 4 shown in binary form:

0100b

- Bits are sometimes referenced with the following notation:

Notation         Description                      Example

Register(n−m)    Bits n through m of Register     AC0(15−0) represents the 16 least significant bits of the register AC0.
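
The numeric and bit-range conventions above can be illustrated with a short C sketch. This is not part of the original manual: the function names parse_suffixed and bit_range are hypothetical, and the sketch assumes a register value that fits in a 64-bit unsigned integer (wide enough for a 40-bit accumulator such as AC0).

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: parse a literal written with the suffix convention
 * described above. "40h" is hexadecimal, "0100b" is binary, and a literal
 * with no suffix is treated as decimal.
 * Returns 0 and stores the value in *out on success, -1 on malformed input. */
int parse_suffixed(const char *text, unsigned long *out)
{
    char digits[40];
    char *end;
    int base = 10;
    size_t len = strlen(text);

    if (len == 0 || len >= sizeof digits)
        return -1;

    /* The last character selects the base; strip it before converting. */
    char last = text[len - 1];
    if (last == 'h' || last == 'H') {
        base = 16;
        len--;
    } else if (last == 'b' || last == 'B') {
        base = 2;
        len--;
    }
    if (len == 0)
        return -1;

    memcpy(digits, text, len);
    digits[len] = '\0';

    *out = strtoul(digits, &end, base);
    /* Reject input that strtoul could not consume completely. */
    return (*end == '\0') ? 0 : -1;
}

/* Hypothetical helper: return bits n through m of reg, right-justified,
 * mirroring the Register(n-m) notation. Assumes 63 >= n >= m. */
uint64_t bit_range(uint64_t reg, unsigned n, unsigned m)
{
    assert(n >= m && n < 64);
    unsigned width = n - m + 1;
    uint64_t mask = (width >= 64) ? UINT64_MAX
                                  : ((UINT64_C(1) << width) - 1);
    return (reg >> m) & mask;
}
```

With these helpers, parse_suffixed("40h", &v) stores 64 in v, parse_suffixed("0100b", &v) stores 4, and bit_range(x, 15, 0) plays the role of AC0(15−0) for a value x standing in for the accumulator contents.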

Related Documentation From Texas Instruments

The following books describe the TMS320C55x devices and related support

tools. To obtain a copy of any of these TI documents, call the Texas

Instruments Literature Response Center at (800) 477-8924. When ordering,

please identify the book by its title and literature number.

TMS320C55x Technical Overview (literature number SPRU393). This overview

is an introduction to the TMS320C55x digital signal processor

(DSP). The TMS320C55x is the latest generation of fixed-point DSPs in

the TMS320C5000 DSP platform. Like the previous generations, this

processor is optimized for high performance and low-power operation.

This book describes the CPU architecture, low-power enhancements,

and embedded emulation features of the TMS320C55x.

TMS320C55x DSP CPU Reference Guide (literature number SPRU371)

describes the architecture, registers, and operation of the CPU.

TMS320C55x DSP Mnemonic Instruction Set Reference Guide (literature

number SPRU374) describes the mnemonic instructions individually. It

also includes a summary of the instruction set, a list of the instruction

opcodes, and a cross-reference to the algebraic instruction set.

TMS320C55x DSP Algebraic Instruction Set Reference Guide (literature

number SPRU375) describes the algebraic instructions individually. It

also includes a summary of the instruction set, a list of the instruction

opcodes, and a cross-reference to the mnemonic instruction set.

TMS320C55x Optimizing C Compiler User’s Guide (literature number

SPRU281) describes the C55x C compiler. This C compiler accepts

ANSI standard C source code and produces assembly language source

code for TMS320C55x devices.

TMS320C55x Assembly Language Tools User’s Guide (literature number

SPRU280) describes the assembly language tools (assembler, linker,

and other tools used to develop assembly language code), assembler

directives, macros, common object file format, and symbolic debugging

directives for TMS320C55x devices.

TMS320C55x DSP Library Programmer’s Reference (literature number

SPRU422) describes the optimized DSP Function Library for C programmers on the TMS320C55x DSP.

The CPU, the registers, and the instruction sets are also described in online

documentation contained in Code Composer Studio.

Trademarks

Code Composer Studio, TMS320C54x, C54x, TMS320C55x, and C55x are

trademarks of Texas Instruments.

Contents

1 Introduction
  Lists some key features of the TMS320C55x DSP architecture and recommends a process for code development.
  1.1 TMS320C55x Architecture
  1.2 Code Development Flow for Best Performance

2 Tutorial
  Uses example code to walk you through the code development flow for the TMS320C55x DSP.
  2.1 Introduction
  2.2 Writing Assembly Code
    2.2.1 Allocate Sections for Code, Constants, and Variables
    2.2.2 Processor Mode Initialization
    2.2.3 Setting up Addressing Modes
  2.3 Understanding the Linking Process
  2.4 Building Your Program
    2.4.1 Creating a Project
    2.4.2 Adding Files to the Workspace
    2.4.3 Modifying Build Options
    2.4.4 Building the Program
  2.5 Testing Your Code
  2.6 Benchmarking Your Code

3 Optimizing C Code
  Describes how you can maximize the performance of your C code by using certain compiler options, C code transformations, and compiler intrinsics.
  3.1 Introduction to Writing C/C++ Code for a C55x DSP
    3.1.1 Tips on Data Types
    3.1.2 How to Write Multiplication Expressions Correctly in C Code
    3.1.3 Memory Dependences
    3.1.4 Analyzing C Code Performance
  3.2 Compiling the C/C++ Code
    3.2.1 Compiler Options
    3.2.2 Performing Program-Level Optimization (−pm Option)
    3.2.3 Using Function Inlining
  3.3 Profiling Your Code
    3.3.1 Using the clock() Function to Profile
    3.3.2 Using CCS 2.0 to Profile
  3.4 Refining the C/C++ Code
    3.4.1 Generating Efficient Loop Code
    3.4.2 Efficient Use of MAC hardware
    3.4.3 Using Intrinsics
    3.4.4 Using Long Data Accesses for 16-Bit Data
    3.4.5 Simulating Circular Addressing in C
    3.4.6 Generating Efficient Control Code
    3.4.7 Summary of Coding Idioms for C55x
  3.5 Memory Management Issues
    3.5.1 Avoiding Holes Caused by Data Alignment
    3.5.2 Local vs. Global Symbol Declarations
    3.5.3 Stack Configuration
    3.5.4 Allocating Code and Data in the C55x Memory Map
    3.5.5 Allocating Function Code to Different Sections

4 Optimizing Assembly Code
  Describes some of the opportunities for optimizing TMS320C55x assembly code and provides corresponding code examples.
  4.1 Efficient Use of the Dual-MAC Hardware
    4.1.1 Implicit Algorithm Symmetry
    4.1.2 Loop Unrolling
    4.1.3 Multichannel Applications
    4.1.4 Multi-Algorithm Applications
  4.2 Using Parallel Execution Features
    4.2.1 Built-In Parallelism
    4.2.2 User-Defined Parallelism
    4.2.3 Architectural Features Supporting Parallelism
    4.2.4 User-Defined Parallelism Rules
    4.2.5 Process for Implementing User-Defined Parallelism
    4.2.6 Parallelism Tips
    4.2.7 Examples of Parallel Optimization Within CPU Functional Units
    4.2.8 Example of Parallel Optimization Across the A-Unit, P-Unit, and D-Unit
  4.3 Implementing Efficient Loops
    4.3.1 Nesting of Loops
    4.3.2 Efficient Use of repeat(CSR) Looping
    4.3.3 Avoiding Pipeline Delays When Accessing Loop-Control Registers
  4.4 Minimizing Pipeline and IBQ Delays
    4.4.1 Process to Resolve Pipeline and IBQ Conflicts
    4.4.2 Recommendations for Preventing Pipeline Delays
    4.4.3 Memory Accesses and the Pipeline
    4.4.4 Recommendations for Preventing IBQ Delays

5 Fixed-Point Arithmetic
  Explains important considerations for doing fixed-point arithmetic with the TMS320C55x DSP. Includes code examples.
  5.1 Fixed-Point Arithmetic − a Tutorial
    5.1.1 2s-Complement Numbers
    5.1.2 Integers Versus Fractions
    5.1.3 2s-Complement Arithmetic
  5.2 Extended-Precision Addition and Subtraction
    5.2.1 A 64-Bit Addition Example
    5.2.2 A 64-Bit Subtraction Example
  5.3 Extended-Precision Multiplication
  5.4 Division
    5.4.1 Integer Division
    5.4.2 Fractional Division
  5.5 Methods of Handling Overflows
    5.5.1 Hardware Features for Overflow Handling

6 Bit-Reversed Addressing
  Introduces bit-reverse addressing and its implementation on the TMS320C55x DSP. Includes code examples.
  6.1 Introduction to Bit-Reverse Addressing
  6.2 Using Bit-Reverse Addressing In FFT Algorithms
  6.3 In-Place Versus Off-Place Bit-Reversing
  6.4 Using the C55x DSPLIB for FFTs and Bit-Reversing

7 Application-Specific Instructions
  Explains how to implement some common DSP algorithms using specialized TMS320C55x instructions. Includes code examples.
  7.1 Symmetric and Asymmetric FIR Filtering (FIRS, FIRSN)
    7.1.1 Symmetric FIR Filtering With the firs Instruction
    7.1.2 Antisymmetric FIR Filtering With the firsn Instruction
    7.1.3 Implementation of a Symmetric FIR Filter on the TMS320C55x DSP
  7.2 Adaptive Filtering (LMS)
    7.2.1 Delayed LMS Algorithm
  7.3 Convolutional Encoding (BFXPA, BFXTR)
    7.3.1 Bit-Stream Multiplexing and Demultiplexing
  7.4 Viterbi Algorithm for Channel Decoding (ADDSUB, SUBADD, MAXDIFF)

8 TI C55x DSPLIB
  Introduces the features and the C functions of the TI TMS320C55x DSP function library.
  8.1 Features and Benefits
  8.2 DSPLIB Data Types
  8.3 DSPLIB Arguments
  8.4 Calling a DSPLIB Function from C
  8.5 Calling a DSPLIB Function from Assembly Language Source Code
  8.6 Where to Find Sample Code
  8.7 DSPLIB Functions

A Special D-Unit Instructions
  Lists the D-unit instructions where A-unit registers are read (“pre-fetched”) in the Read phase of the execution pipeline.

B Algebraic Instructions Code Examples
  Shows the algebraic instructions code examples that correspond to the mnemonic instructions code examples shown in Chapters 2 through 7.

Figures

1−1 Code Development Flow
2−1 Section Allocation
2−2 Extended Auxiliary Registers Structure (XARn)
2−3 Project Creation Dialog Box
2−4 Add tutor.asm to Project
2−5 Add tutor.cmd to Project
2−6 Build Options Dialog Box
2−7 Rebuild Complete Screen
3−1 Dependence Graph for Vector Sum
4−1 Data Bus Usage During a Dual-MAC Operation
4−2 Computation Groupings for a Block FIR (4-Tap Filter Shown)
4−3 Computation Groupings for a Single-Sample FIR With an Even Number of TAPS (4-Tap Filter Shown)
4−4 Computation Groupings for a Single-Sample FIR With an Odd Number of TAPS (5-Tap Filter Shown)
4−5 Matrix to Find Operators That Can Be Used in Parallel
4−6 CPU Operators and Buses
4−7 Process for Applying User-Defined Parallelism
4−8 First Segment of the Pipeline (Fetch Pipeline)
4−9 Second Segment of the Pipeline (Execution Pipeline)
5−1 4-Bit 2s-Complement Integer Representation
5−2 8-Bit 2s-Complement Integer Representation
5−3 4-Bit 2s-Complement Fractional Representation
5−4 8-Bit 2s-Complement Fractional Representation
5−5 Effect on CARRY of Addition Operations
5−6 Effect on CARRY of Subtraction Operations
5−7 32-Bit Multiplication
6−1 FFT Flow Graph Showing Bit-Reversed Input and In-Order Output
7−1 Symmetric and Antisymmetric FIR Filters
7−2 Adaptive FIR Filter Implemented With the Least-Mean-Squares (LMS) Algorithm
7−3 Example of a Convolutional Encoder
7−4 Generation of an Output Stream G0
7−5 Bit Stream Multiplexing Concept
7−6 Butterfly Structure for K = 5, Rate 1/2 GSM Convolutional Encoder

Tables

3−1 Compiler Options to Avoid on Performance-Critical Code
3−2 Compiler Options for Performance
3−3 Compiler Options That May Degrade Performance and Improve Code Size
3−4 Compiler Options for Information
3−5 Summary of C/C++ Code Optimization Techniques
3−6 TMS320C55x C Compiler Intrinsics
3−7 C Coding Methods for Generating Efficient C55x Assembly Code
3−8 Section Descriptions
3−9 Possible Operand Combinations
4−1 CPU Data Buses and Constant Buses
4−2 Basic Parallelism Rules
4−3 Advanced Parallelism Rules
4−4 Steps in Process for Applying User-Defined Parallelism
4−5 Pipeline Activity Examples
4−6 Recommendations for Preventing Pipeline Delays
4−7 Bit Groups for STx Registers
4−8 Pipeline Register Groups
4−9 Memory Accesses
4−10 C55x Data and Program Buses
4−11 Half-Cycle Accesses to Dual-Access Memory (DARAM) and the Pipeline
4−12 Memory Accesses and the Pipeline
4−13 Cross-Reference Table Documented By Software Developers to Help Software Integrators Generate an Optional Application Mapping
6−1 Syntaxes for Bit-Reverse Addressing Modes
6−2 Bit-Reversed Addresses
6−3 Typical Bit-Reverse Initialization Requirements
7−1 Operands to the firs or firsn Instruction

Examples

2−1 Final Assembly Code of tutor.asm
2−2 Partial Assembly Code of tutor.asm (Step 1)
2−3 Partial Assembly Code of tutor.asm (Step 2)
2−4 Partial Assembly Code of tutor.asm (Part 3)
2−5 Linker command file (tutor.cmd)
2−6 Linker map file (test.map)
2−7 x Memory Window
3−1 Generating a 16x16−>32 Multiply
3−2 C Code for Vector Sum
3−3 Main Function File
3−4 Sum Function File
3−5 Assembly Code Generated With −o3 and −pm Options
3−6 Assembly Generated Using −o3, −pm, and −oi50
3−7 Using the clock() Function
3−8 Simple Loop That Allows Use of localrepeat
3−9 Assembly Code for localrepeat Generated by the Compiler
3−10 Inefficient Loop Code for Loop Variable and Constraints (C)
3−11 Inefficient Loop Code for Variable and Constraints (Assembly)
3−12 Using the MUST_ITERATE Pragma
3−13 Assembly Code Generated With the MUST_ITERATE Pragma
3−14 Use Local Rather Than Global Summation Variables
3−15 Returning Q15 Result for Multiply Accumulate
3−16 C Code for an FIR Filter
3−17 FIR C Code After Unroll-and-Jam Transformation
3−18 FIR Filter With MUST_ITERATE Pragma and restrict Qualifier
3−19 Generated Assembly for FIR Filter Showing Dual-MAC
3−20 Implementing Saturated Addition in C
3−21 Inefficient Assembly Code Generated by C Version of Saturated Addition
3−22 Single Call to _sadd Intrinsic
3−23 Assembly Code Generated When Using Compiler Intrinsic for Saturated Add
3−24 Using ETSI Functions to Implement sadd

3−25 Block Copy Using Long Data Access 3-34. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3−26 Simulating Circular Addressing in C 3-35. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3−27 Assembly Output for Circular Addressing C Code 3-36. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3−28 Circular Addressing Using Modulus Operator 3-37. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3−29 Assembly Output for Circular Addressing Using Modulus Operator 3-38. . . . . . . . . . . . . . . . .

3−30 Considerations for Long Data Objects in Structures 3-42. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3−31 Declaration Using DATA_SECTION Pragma 3-46. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3−32 Sample Linker Command File 3-47. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−1 Complex Vector Multiplication Code 4-6. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−2 Block FIR Filter Code (Not Optimized) 4-9. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−3 Block FIR Filter Code (Optimized) 4-10. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−4 A-Unit Code With No User-Defined Parallelism 4-26. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−5 A-Unit Code in Example 4−4 Modified to Take Advantage of Parallelism 4-28. . . . . . . . . . . . .

4−6 P-Unit Code With No User-Defined Parallelism 4-31. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−7 P-Unit Code in Example 4−6 Modified to Take Advantage of Parallelism 4-32. . . . . . . . . . . . .

4−8 D-Unit Code With No User-Defined Parallelism 4-34. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−9 D-Unit Code in Example 4−8 Modified to Take Advantage of Parallelism 4-35. . . . . . . . . . . . .

4−10 Code That Uses Multiple CPU Units But No User-Defined Parallelism 4-36. . . . . . . . . . . . . . .

4−11 Code in Example 4−10 Modified to Take Advantage of Parallelism 4-39. . . . . . . . . . . . . . . . . .

4−12 Nested Loops 4-43. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−13 Branch-On-Auxiliary-Register-Not-Zero Construct (Shown in Complex FFT Loop Code) 4-44. . . . . . . . . . . . . . . . . . . . . . . . . . .

4−14 Use of CSR (Shown in Real Block FIR Loop Code) 4-47. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−15 A-Unit Register (Write in X Phase/Read in AD Phase) Sequence 4-56. . . . . . . . . . . . . . . . . . .

4−16 A-Unit Register Read/(Write in AD Phase) Sequence 4-58. . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−17 Register (Write in X Phase)/(Read in R Phase) Sequence 4-59. . . . . . . . . . . . . . . . . . . . . . . . .

4−18 Good Use of MAR Instruction (Write/Read Sequence) 4-60. . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−19 Bad Use of MAR Instruction (Read/Write Sequence) 4-61. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−20 Solution for Bad Use of MAR Instruction (Read/Write Sequence) 4-61. . . . . . . . . . . . . . . . . . .

4−21 Stall During Decode Phase 4-62. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−22 Unprotected BRC Write 4-65. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−23 BRC Initialization 4-66. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−24 CSR Initialization 4-67. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−25 Condition Evaluation Preceded by a X-Phase Write to the Register Affecting the Condition 4-68. . . . . . . . . . . . . . . . . . . . . . . .

4−26 Making an Operation Conditional With XCC 4-69. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−27 Making an Operation Conditional With execute(D_unit) 4-70. . . . . . . . . . . . . . . . . . . . . . . . . . .

4−28 Conditional Parallel Write Operation Followed by an AD-Phase Write to the Register Affecting the Condition 4-71. . . . . . . . . . . . . . . . . . . . . . . . . . .

4−29 A Write Pending Case 4-76. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4−30 A Memory Bypass Case 4-77. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3−33 Allocation of Functions Using CODE_SECTION Pragma 3-48. . . . . . . . . . . . . . . . . . . . . . . . . .

5−1 Signed 2s-Complement Binary Number Expanded to Decimal Equivalent 5-3. . . . . . . . . . . .

5−2 Computing the Negative of a 2s-Complement Number 5-3. . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−3 Addition With 2s-Complement Binary Numbers 5-6. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−4 Subtraction With 2s-Complement Binary Numbers 5-7. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−5 Multiplication With 2s-Complement Binary Numbers 5-8. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−6 64-Bit Addition 5-10. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−7 64-Bit Subtraction 5-12. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−8 32-Bit Integer Multiplication 5-15. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−9 32-Bit Fractional Multiplication 5-16. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−10 Unsigned, 16-Bit By 16-Bit Integer Division 5-18. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−11 Unsigned, 32-Bit By 16-Bit Integer Division 5-19. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−12 Signed, 16-Bit By 16-Bit Integer Division 5-21. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5−13 Signed, 32-Bit By 16-Bit Integer Division 5-22. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

6−1 Sequence of Auxiliary Registers Modifications in Bit-Reversed Addressing 6-2. . . . . . . . . . .

6−2 Off-Place Bit Reversing of a Vector Array (in Assembly) 6-6. . . . . . . . . . . . . . . . . . . . . . . . . . . .

6−3 Using DSPLIB cbrev() Routine to Bit Reverse a Vector Array (in C) 6-7. . . . . . . . . . . . . . . . . .

7−1 Symmetric FIR Filter 7-5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7−2 Delayed LMS Implementation of an Adaptive Filter 7-9. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7−3 Generation of Output Streams G0 and G1 7-11. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7−4 Multiplexing Two Bit Streams With the Field Expand Instruction 7-13. . . . . . . . . . . . . . . . . . . .

7−5 Demultiplexing a Bit Stream With the Field Extract Instruction 7-15. . . . . . . . . . . . . . . . . . . . . .

7−6 Viterbi Butterflies for Channel Coding 7-19. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7−7 Viterbi Butterflies Using Instruction Parallelism 7-20. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−1 Partial Assembly Code of test.asm (Step 1) B-2. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−2 Partial Assembly Code of test.asm (Step 2) B-2. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−3 Partial Assembly Code of test.asm (Part3) B-3. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−4 Assembly Code Generated With −o3 and −pm Options B-4. . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−5 Assembly Generated Using −o3, −pm, and −oi50 B-5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−6 Assembly Code for localrepeat Generated by the Compiler B-5. . . . . . . . . . . . . . . . . . . . . . . . .

B−7 Inefficient Loop Code for Variable and Constraints (Assembly) B-6. . . . . . . . . . . . . . . . . . . . . .

B−8 Assembly Code Generated With the MUST_ITERATE Pragma B-6. . . . . . . . . . . . . . . . . . . . . .

B−9 Generated Assembly for FIR Filter Showing Dual-MAC B-7. . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−10 Inefficient Assembly Code Generated by C Version of Saturated Addition B-8. . . . . . . . . . . . .

B−11 Assembly Code Generated When Using Compiler Intrinsic for Saturated Add B-9. . . . . . . . .

B−12 Assembly Output for Circular Addressing C Code B-9. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−13 Assembly Output for Circular Addressing Using Modulo B-10. . . . . . . . . . . . . . . . . . . . . . . . . . .

B−14 Complex Vector Multiplication Code B-11. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−15 Block FIR Filter Code (Not Optimized) B-12. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−16 Block FIR Filter Code (Optimized) B-13. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−17 A-Unit Code With No User-Defined Parallelism B-14. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−18 A-Unit Code in Example B−17 Modified to Take Advantage of Parallelism B-16. . . . . . . . . . .

B−19 P-Unit Code With No User-Defined Parallelism B-18. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−20 P-Unit Code in Example B−19 Modified to Take Advantage of Parallelism B-19. . . . . . . . . . .

B−21 D-Unit Code With No User-Defined Parallelism B-20. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−22 D-Unit Code in Example B−21 Modified to Take Advantage of Parallelism B-21. . . . . . . . . . .

B−23 Code That Uses Multiple CPU Units But No User-Defined Parallelism B-22. . . . . . . . . . . . . . .

B−24 Code in Example B−23 Modified to Take Advantage of Parallelism B-25. . . . . . . . . . . . . . . . .

B−25 Nested Loops B-28. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−26 Branch-On-Auxiliary-Register-Not-Zero Construct (Shown in Complex FFT Loop Code) B-28. . . . . . . . . . . . . . . . . . . . . . . . . . .

B−27 64-Bit Addition B-30. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−28 64-Bit Subtraction B-31. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−29 32-Bit Integer Multiplication B-32. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−30 32-Bit Fractional Multiplication B-33. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−31 Unsigned, 16-Bit By 16-Bit Integer Division B-33. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−32 Unsigned, 32-Bit By 16-Bit Integer Division B-34. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−33 Signed, 16-Bit By 16-Bit Integer Division B-35. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−34 Signed, 32-Bit By 16-Bit Integer Division B-36. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−35 Off-Place Bit Reversing of a Vector Array (in Assembly) B-37. . . . . . . . . . . . . . . . . . . . . . . . . . .

B−36 Delayed LMS Implementation of an Adaptive Filter B-38. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−37 Generation of Output Streams G0 and G1 B-39. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−38 Viterbi Butterflies for Channel Coding B-39. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

B−39 Viterbi Butterflies Using Instruction Parallelism B-40. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Chapter 1

Introduction

This chapter lists some of the key features of the TMS320C55x (C55x) DSP

architecture and shows a recommended process for creating code that runs

efficiently.

Topic Page

1.1 TMS320C55x Architecture 1-2. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1.2 Code Development Flow for Best Performance 1-3. . . . . . . . . . . . . . . . . .

1.1 TMS320C55x Architecture

The TMS320C55x device is a fixed-point digital signal processor (DSP). The

main block of the DSP is the central processing unit (CPU), which has the following characteristics:

- A unified program/data memory map. In program space, the map contains

16M bytes that are accessible at 24-bit addresses. In data space, the map

contains 8M words that are accessible at 23-bit addresses.

- An input/output (I/O) space of 64K words for communication with peripherals.

- Software stacks that support 16-bit and 32-bit push and pop operations. You can use these stacks for data storage and retrieval. The CPU uses these stacks for automatic context saving (in response to a call or interrupt) and restoring (when returning to the calling or interrupted code sequence).

- A large number of data and address buses, to provide a high level of parallelism. One 32-bit data bus and one 24-bit address bus support instruction fetching. Three 16-bit data buses and three 24-bit address buses are used to transport data to the CPU. Two 16-bit data buses and two 24-bit address buses are used to transport data from the CPU.

- An instruction buffer and a separate fetch mechanism, so that instruction

fetching is decoupled from other CPU activities.

- The following computation blocks: one 40-bit arithmetic logic unit (ALU),

one 16-bit ALU, one 40-bit shifter, and two multiply-and-accumulate units

(MACs). In a single cycle, each MAC can perform a 17-bit by 17-bit multiplication (fractional or integer) and a 40-bit addition or subtraction with optional 32-/40-bit saturation.

- An instruction pipeline that is protected. The pipeline protection mechanism inserts delay cycles as necessary to prevent read operations and write operations from happening out of the intended order.

- Data address generation units that support linear, circular, and bit-reverse

addressing.

- Interrupt-control logic that can block (or mask) certain interrupts known as

the maskable interrupts.

- A TMS320C54x-compatible mode to support code originally written for a

TMS320C54x DSP.
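To make the MAC description in the list above more concrete, the following is a minimal C sketch of one fractional multiply-accumulate step with optional 32-bit saturation. It is a behavioral model only, not TI code: the names sat32 and mac_q15 are hypothetical, a 64-bit integer stands in for the 40-bit accumulator, and the left shift by one assumes the fractional (Q15) interpretation of the operands.

```c
#include <stdint.h>

/* Saturate a wide accumulator value to the signed 32-bit range. */
static int64_t sat32(int64_t acc)
{
    if (acc > INT32_MAX) return INT32_MAX;
    if (acc < INT32_MIN) return INT32_MIN;
    return acc;
}

/* One fractional multiply-accumulate step (hypothetical helper).
 * a and b are Q15 operands; acc holds a Q31 value in a wider type
 * that stands in for the 40-bit accumulator. */
static int64_t mac_q15(int64_t acc, int16_t a, int16_t b, int saturate)
{
    /* Q15 x Q15 gives Q30; doubling the product aligns it to Q31. */
    int64_t product = (int64_t)a * (int64_t)b * 2;

    acc += product;
    return saturate ? sat32(acc) : acc;
}
```

With saturation enabled, the worst-case product (−1.0 times −1.0 in Q15) is clamped to the largest positive 32-bit value instead of overflowing into the guard bits of the model.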

1.2 Code Development Flow for Best Performance

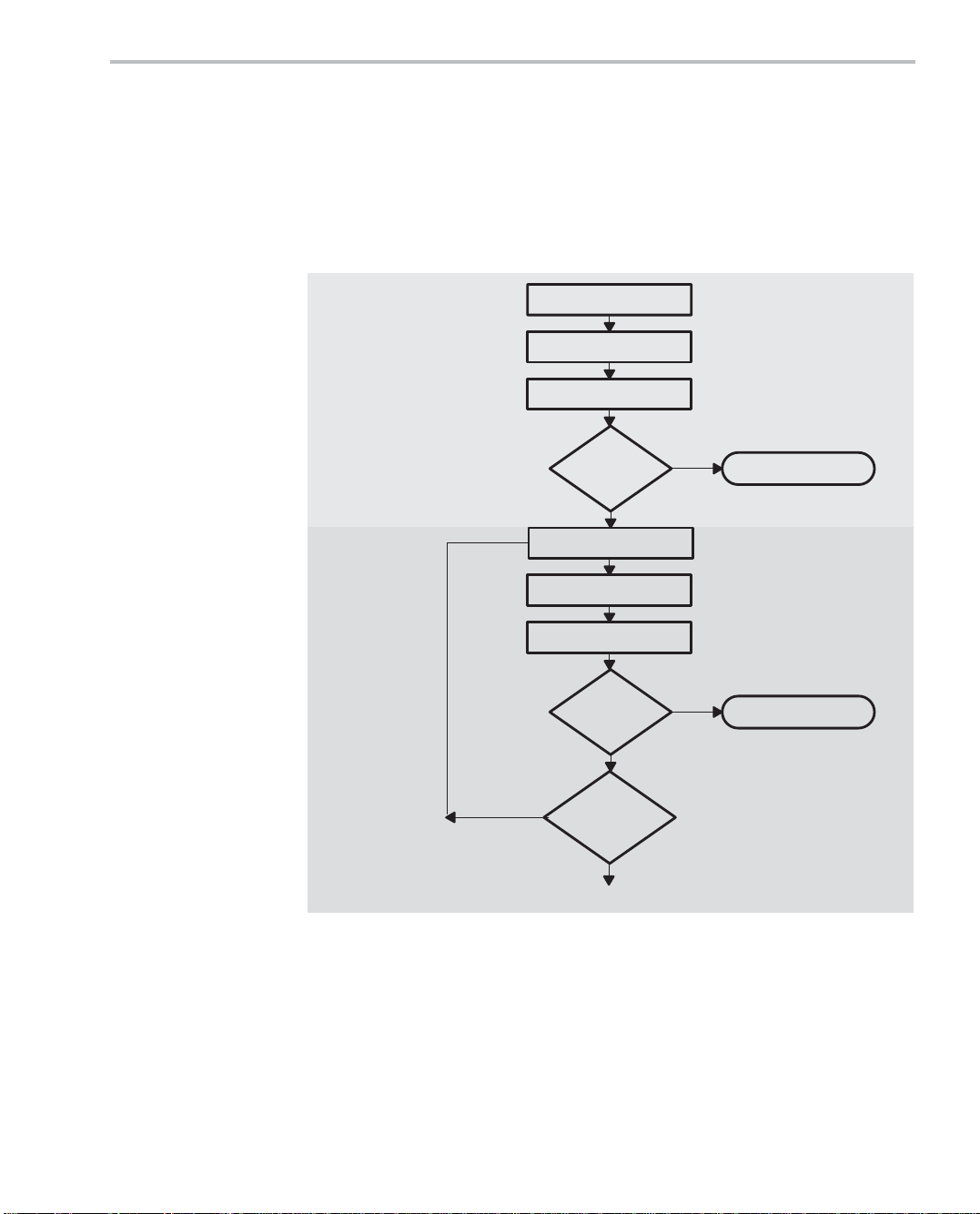

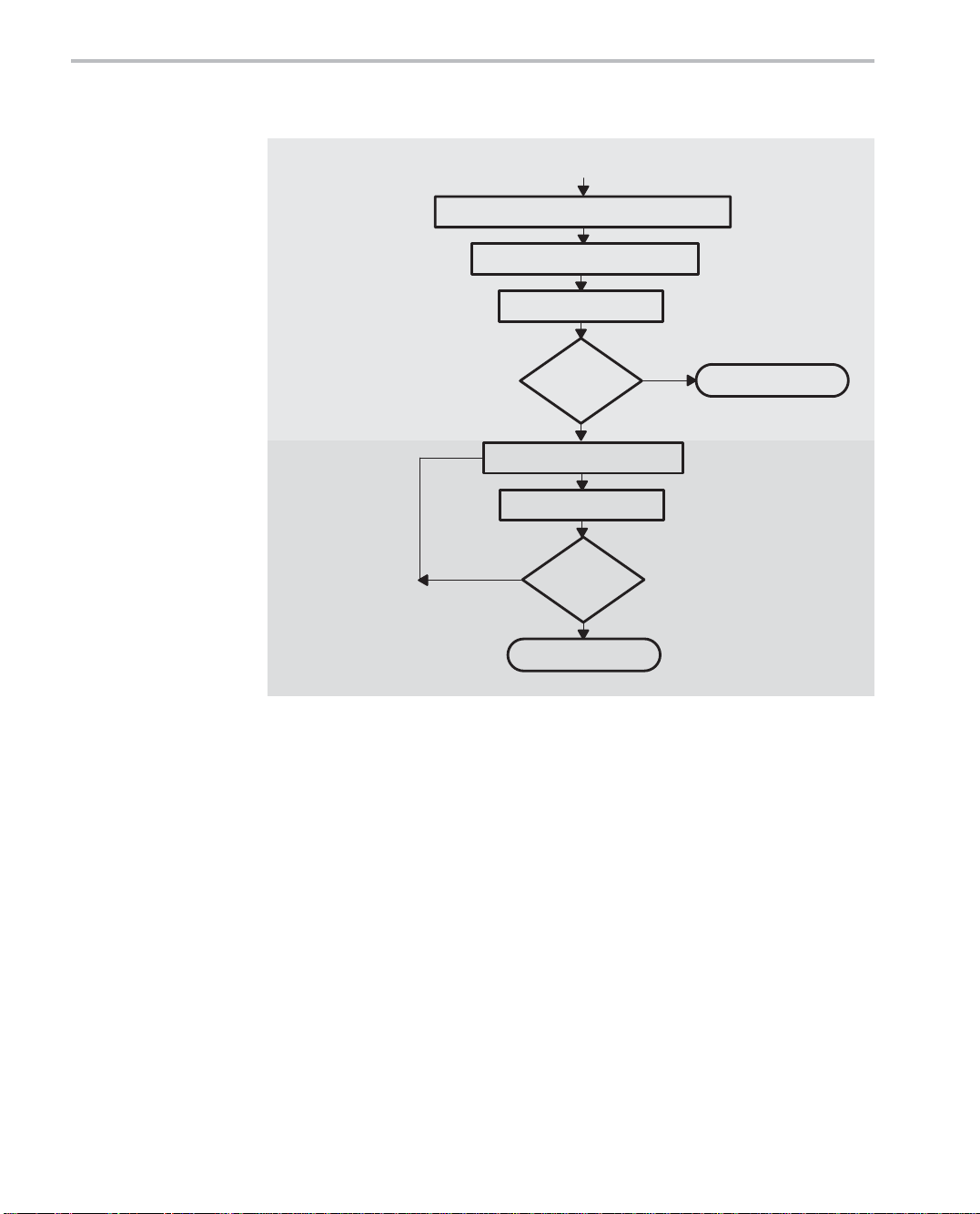

The following flow chart shows how to achieve the best performance and code-generation efficiency from your code. The table after the chart describes the phases of the flow.

Figure 1−1. Code Development Flow

Step 1: Write C Code

- Write C code, compile, and profile.

- Efficient enough? Yes: done. No: go to Step 2.

Step 2: Optimize C Code

- Optimize C code, compile, and profile.

- Efficient enough? Yes: done. No: if more C optimization is possible, repeat Step 2; otherwise go to Step 3.

Step 3: Write Assembly Code

- Identify time-critical portions of C code and write them in assembly code.

- Profile. Efficient enough? Yes: done. No: go to Step 4.

Step 4: Optimize Assembly Code

- Optimize assembly code and profile.

- Efficient enough? Yes: done. No: repeat Step 4.

Step

Goal

1 Write C Code: You can develop your code in C using the ANSI-compliant C55x C compiler without any knowledge of the C55x DSP. Use Code Composer Studio to identify any inefficient areas in your C code. After making your code functional, you can improve its performance by selecting higher-level optimization compiler options. If your code is still not as efficient as you would like it to be, proceed to step 2.

2 Optimize C Code: Explore potential modifications to your C code

to achieve better performance. Some of the techniques you can apply include (see Chapter 3):

- Use specific types (register, volatile, const).

- Modify the C code to better suit the C55x architecture.

- Use an ETSI intrinsic when applicable.

- Use C55x compiler intrinsics.

After modifying your code, use the C55x profiling tools again, to

check its performance. If your code is still not as efficient as you

would like it to be, proceed to step 3.

3 Write Assembly Code: Identify the time-critical portions of your C

code and rewrite them as C-callable assembly-language functions.

Again, profile your code, and if it is still not as efficient as you would

like it to be, proceed to step 4.

4 Optimize Assembly Code: After making your assembly code functional, try to optimize the assembly-language functions by using some of the techniques described in Chapter 4, Optimizing Your Assembly Code. The techniques include:

- Place instructions in parallel.

- Rewrite or reorganize code to avoid pipeline protection delays.

- Minimize stalls in instruction fetching.

Chapter 2

Tutorial

This tutorial walks you through the code development flow introduced in Chapter 1 and introduces basic concepts of TMS320C55x (C55x) DSP programming. It uses step-by-step instructions and code examples to show you how to use the software development tools integrated under Code Composer Studio (CCS).

Install CCS before beginning the tutorial so that you can edit, build, and debug DSP target programs. For more information about CCS features, see the CCS Tutorial, which you can open within CCS by choosing Help→Tutorial.

The examples in this tutorial use instructions from the mnemonic instruction set, but the concepts apply equally to the algebraic instruction set.

Topic Page

2.1 Introduction 2-2. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.2 Writing Assembly Code 2-3. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.3 Understanding the Linking Process 2-10. . . . . . . . . . . . . . . . . . . . . . . . . . .

2.4 Building Your Program 2-13. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.5 Testing Your Code 2-19. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.6 Benchmarking Your Code 2-21. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.1 Introduction

This tutorial presents a simple assembly code example that adds four numbers together (y = x0 + x3 + x1 + x2). This example helps you become familiar

with the basics of C55x programming.

After completing the tutorial, you should know:

- The four common C55x addressing modes and when to use them.

- The basic C55x tools required to develop and test your software.

This tutorial does not replace the information presented in other C55x documentation and is not intended to cover all the topics required to program the

C55x efficiently.

Refer to the related documentation listed in the preface of this book for more

information about programming the C55x DSP. Much of this information has

been consolidated as part of the C55x Code Composer Studio online help.

For your convenience, all the files required to run this example can be downloaded with the TMS320C55x Programmer’s Guide (SPRU376) from

http://www.ti.com/sc/docs/schome.htm. The examples in this chapter can be

found in the 55xprgug_srccode\tutor directory.

2.2 Writing Assembly Code

Writing your assembly code involves the following steps:

- Allocate sections for code, constants, and variables.

- Initialize the processor mode.

- Set up addressing modes and add the following values: x0 + x1 + x2 + x3.

The following rules should be considered when writing C55x assembly code:

- Labels

The first character of a label must be a letter, or an underscore ( _ ) followed by a letter, and the label must begin in the first column of the text file. Labels can contain up to 32 alphanumeric characters.

- Comments

When preceded by a semicolon ( ; ), a comment may begin in any column.

When preceded by an asterisk ( * ), a comment must begin in the first

column.

The final assembly code product of this tutorial is displayed in Example 2−1,

Final Assembly Code of tutor.asm. This code performs the addition of the elements in vector x. Sections of this code are highlighted in the three steps used

to create this example.

For more information about assembly syntax, see the TMS320C55x Assembly

Language Tools User’s Guide (SPRU280).

Example 2−1. Final Assembly Code of tutor.asm

* Step 1: Section allocation
* ------
        .def x,y,init
x       .usect "vars",4         ; reserve 4 uninitialized 16-bit locations for x
y       .usect "vars",1         ; reserve 1 uninitialized 16-bit location for y
        .sect "table"           ; create initialized section "table" to
init    .int 1,2,3,4            ; contain initialization values for x
        .text                   ; create code section (default is .text)
        .def start              ; define label to the start of the code
start
* Step 2: Processor mode initialization
* ------
        BCLR C54CM              ; set processor to '55x native mode instead of
                                ; '54x compatibility mode (reset value)
        BCLR AR0LC              ; set AR0 register in linear mode
        BCLR AR6LC              ; set AR6 register in linear mode
* Step 3a: Copy initialization values to vector x using indirect addressing
* -------
copy
        AMOV #x, XAR0           ; XAR0 pointing to variable x
        AMOV #init, XAR6        ; XAR6 pointing to initialization table
        MOV *AR6+, *AR0+        ; copy starts from "init" to "x"
        MOV *AR6+, *AR0+
        MOV *AR6+, *AR0+
        MOV *AR6, *AR0
* Step 3b: Add values of vector x elements using direct addressing
* -------
add
        AMOV #x, XDP            ; XDP pointing to variable x
        .dp x                   ; and the assembler is notified
        MOV @x, AC0
        ADD @(x+3), AC0
        ADD @(x+1), AC0
        ADD @(x+2), AC0
* Step 3c: Write the result to y using absolute addressing
* -------
        MOV AC0, *(#y)
end
        NOP
        B end

2.2.1 Allocate Sections for Code, Constants, and Variables

The first step in writing this assembly code is to allocate memory space for the

different sections of your program.

Sections are modules consisting of code, constants, or variables needed to

successfully run your application. These modules are defined in the source file

using assembler directives. The following basic assembler directives are used

to create sections and initialize values in the example code.

- .sect "section_name" creates an initialized named section for code or data. Initialized sections define their initial values.

- .usect "section_name", size creates an uninitialized named section for data. Uninitialized sections declare only their size in 16-bit words; they do not define initial values.

- .int value reserves a 16-bit word in memory and defines its initialization value.

- .def symbol makes a symbol global (known to external files) and indicates that the symbol is defined in the current file. External files can access the symbol by using the .ref directive. A symbol can be a label or a variable.

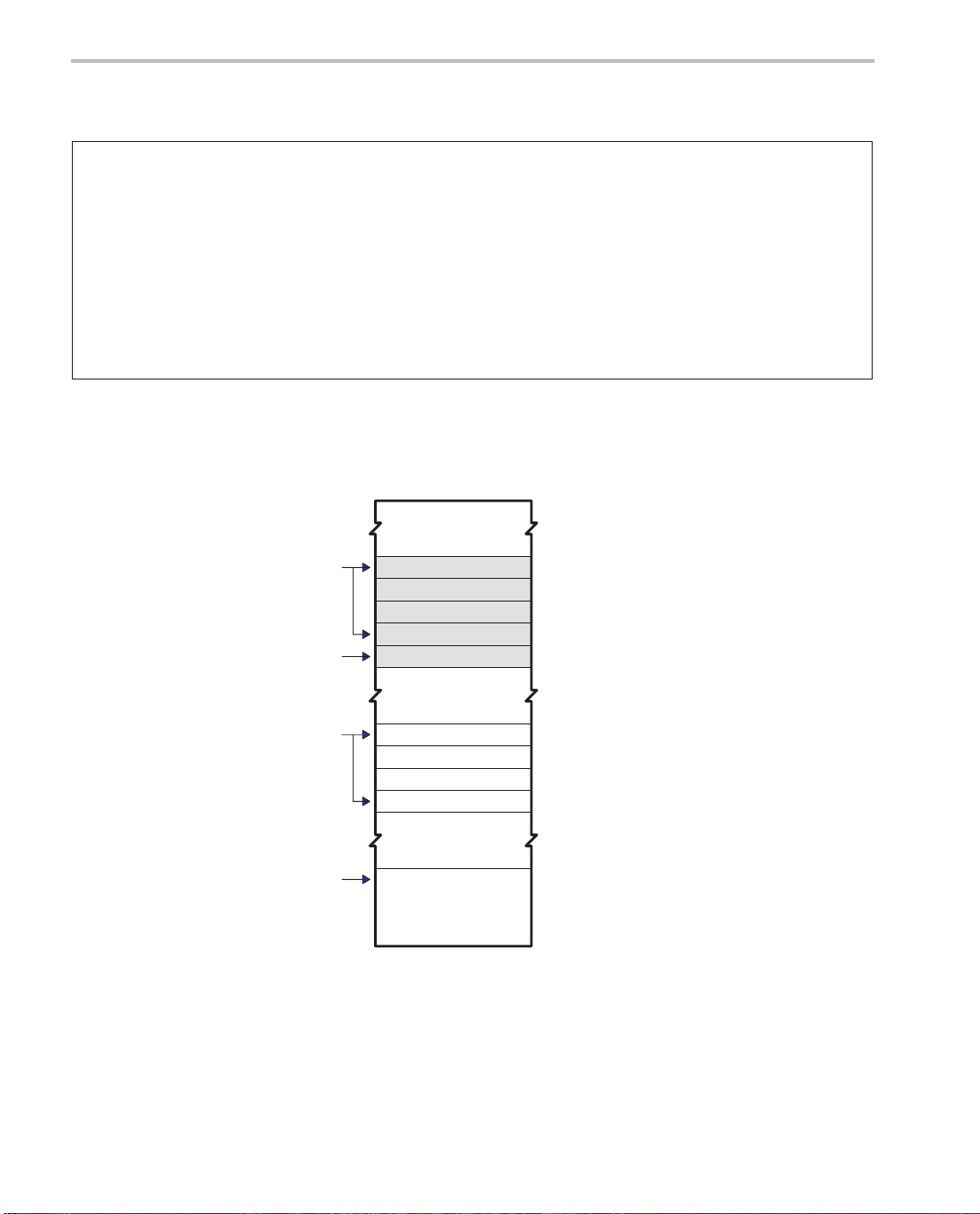

As shown in Example 2−2 and Figure 2−1, the example file tutor.asm contains

three sections:

- vars, containing five uninitialized memory locations:

  - The first four are reserved for vector x (the input vector to add).

  - The last location, y, will be used to store the result of the addition.

- table, to hold the initialization values for x. The init label points to the beginning of section table.

- .text, which contains the assembly code.

Example 2−2 shows the partial assembly code used for allocating sections.

Example 2−2. Partial Assembly Code of tutor.asm (Step 1)

* Step 1: Section allocation
* ------
        .def x, y, init
x       .usect "vars", 4        ; reserve 4 uninitialized 16-bit locations for x
y       .usect "vars", 1        ; reserve 1 uninitialized 16-bit location for y
        .sect "table"           ; create initialized section "table" to
init    .int 1, 2, 3, 4         ; contain initialization values for x
        .text                   ; create code section (default is .text)
        .def start              ; define label to the start of the code
start

Note: The algebraic instructions code example for Partial Assembly Code of tutor.asm (Step 1) is shown in Example B−1 on

page B-2.

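Figure 2−1. Section Allocation

[Figure: the vars section holds x (four locations) followed by y; the table section starts at label init and holds the values 1, 2, 3, 4; the .text section holds the code, starting at label start.]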

2.2.2 Processor Mode Initialization

The second step is to make sure the status registers (ST0_55, ST1_55, ST2_55, and ST3_55) are set to configure your processor as required. You either set these values explicitly or rely on the default values, which are placed in the registers after processor reset. You can find the default register values after reset in the TMS320C55x DSP CPU Reference Guide (SPRU371).

As shown in Example 2−3:

- The AR0 and AR6 registers are set to linear addressing (instead of circular

addressing) using bit addressing mode to modify the status register bits.

- The processor has been set in C55x native mode instead of C54x-compatible mode.

Example 2−3. Partial Assembly Code of tutor.asm (Step 2)

* Step 2: Processor mode initialization
* ------
        BCLR C54CM              ; set processor to '55x native mode instead of
                                ; '54x compatibility mode (reset value)
        BCLR AR0LC              ; set AR0 register in linear mode
        BCLR AR6LC              ; set AR6 register in linear mode

Note: The algebraic instructions code example for Partial Assembly Code of tutor.asm (Step 2) is shown in Example B−2 on page B-2.
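The effect of a bit-clear instruction such as BCLR on a status register can be pictured with a small C model that clears one bit of a 16-bit register image and leaves the other bits unchanged. This is only a sketch: bit_clear is a hypothetical helper, the register is an ordinary variable rather than a real status register, and the bit positions below are assumptions that should be checked against the TMS320C55x DSP CPU Reference Guide (SPRU371).

```c
#include <stdint.h>

/* Assumed bit positions, for illustration only; verify in SPRU371. */
#define C54CM_BIT 5u   /* assumed position of C54CM in ST1_55 */
#define AR0LC_BIT 0u   /* assumed position of AR0LC in ST2_55 */
#define AR6LC_BIT 6u   /* assumed position of AR6LC in ST2_55 */

/* Clear one bit of a 16-bit register image; all other bits are kept. */
static uint16_t bit_clear(uint16_t reg, unsigned bit)
{
    return (uint16_t)(reg & ~(1u << bit));
}
```

For example, clearing AR0LC_BIT and AR6LC_BIT in an image of ST2_55 models selecting linear addressing for AR0 and AR6 while the other addressing-mode bits keep their values.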

2.2.3 Setting up Addressing Modes

Four of the most common C55x addressing modes are used in this code:

- ARn indirect addressing (identified by *), in which you use auxiliary registers (ARn) as pointers.

- DP direct addressing (identified by @), which provides a positive offset from a base address specified by the DP register. The offset is calculated by the assembler and defined by a 7-bit value embedded in the instruction.

- k23 absolute addressing (identified by #), which allows you to specify the entire 23-bit data address with a label.

- Bit addressing (identified by the bit instruction), which allows you to modify a single bit of a memory location or memory-mapped register (MMR).

For further details on these addressing modes, refer to the TMS320C55x DSP

CPU Reference Guide (SPRU371). Example 2−4 demonstrates the use of the

addressing modes discussed in this section.

In Step 3a, initialization values from the table section are copied to vector x (the vector on which the addition is performed) using indirect addressing. Figure 2−2 illustrates the structure of the extended auxiliary registers (XARn). The XARn register is used only during register initialization. Subsequent operations use ARn because only the lower 16 bits are affected (ARn operations are restricted to a 64K-word main data page). AR6 is used to hold the address of table, and AR0 is used to hold the address of x.

In Step 3b, direct addressing is used to add the four values. Notice that the XDP register was initialized to point to variable x. The .dp assembler directive tells the assembler the value of XDP, so that the assembler can compute the correct offset at assembly time.

Finally, in Step 3c, the result is stored in y using absolute addressing. Absolute addressing provides an easy way to access a memory location without having to make XDP changes, but at the expense of increased code size.
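For readers more at home in C, the data flow of Steps 3a through 3c can be sketched as the following model. It mirrors only what the steps compute, not how the C55x executes them: tutor_model is a hypothetical function name, the pointers src and dst play the roles of AR6 and AR0, and a 32-bit integer stands in for accumulator AC0.

```c
#include <stdint.h>

static const int16_t init[4] = {1, 2, 3, 4};  /* "table" section */
static int16_t x[4];                          /* "vars" section: vector x */
static int16_t y;                             /* "vars" section: result y */

/* Hypothetical C model of Steps 3a to 3c of the tutorial code. */
static void tutor_model(void)
{
    const int16_t *src = init;  /* plays the role of AR6 */
    int16_t *dst = x;           /* plays the role of AR0 */
    int32_t acc;                /* stands in for accumulator AC0 */

    /* Step 3a: copy with post-increment, as in MOV *AR6+, *AR0+ */
    *dst++ = *src++;
    *dst++ = *src++;
    *dst++ = *src++;
    *dst = *src;

    /* Step 3b: add in the same order as the assembly code */
    acc = x[0];
    acc += x[3];
    acc += x[1];
    acc += x[2];

    /* Step 3c: store the result in y */
    y = (int16_t)acc;
}
```

The model makes the three roles explicit: pointer-based access for the copy, fixed offsets from the start of x for the sum, and a direct store to a named location for the result.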

Example 2−4. Partial Assembly Code of tutor.asm (Part3)

* Step 3a: Copy initialization values to vector x using indirect addressing
* -------
copy
        AMOV #x, XAR0           ; XAR0 pointing to variable x
        AMOV #init, XAR6        ; XAR6 pointing to initialization table
        MOV *AR6+, *AR0+        ; copy starts from "init" to "x"
        MOV *AR6+, *AR0+
        MOV *AR6+, *AR0+
        MOV *AR6, *AR0
* Step 3b: Add values of vector x elements using direct addressing
* -------
add
        AMOV #x, XDP            ; XDP pointing to variable x
        .dp x                   ; and the assembler is notified
        MOV @x, AC0
        ADD @(x+3), AC0
        ADD @(x+1), AC0
        ADD @(x+2), AC0
* Step 3c: Write the result to y using absolute addressing
* -------
        MOV AC0, *(#y)
end
        NOP
        B end

Note: The algebraic instructions code example for Partial Assembly Code of tutor.asm (Part3) is shown in Example B−3 on page B-3.

Figure 2−2. Extended Auxiliary Registers Structure (XARn)

        Bits 22−16    Bits 15−0
XARn    ARnH          ARn

Note: ARnH (upper 7 bits) specifies the 7-bit main data page. ARn (16-bit register) specifies a 16-bit offset to the 7-bit main data page to form a 23-bit address.
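The way ARnH and ARn combine into a 23-bit address can be sketched in C as follows. The function names xar_address and xar_post_increment are hypothetical, and the post-increment model assumes, in line with the restriction to one main data page, that only the low 16 bits change and that the offset wraps within the page.

```c
#include <stdint.h>

/* Combine a 7-bit main data page (ARnH) and a 16-bit offset (ARn)
 * into a 23-bit word address. */
static uint32_t xar_address(uint8_t arnh, uint16_t arn)
{
    return ((uint32_t)(arnh & 0x7Fu) << 16) | arn;
}

/* Model of an ARn post-increment (assumption: only the low 16 bits
 * change, so the address wraps inside its main data page). */
static uint32_t xar_post_increment(uint32_t xar)
{
    uint16_t arn = (uint16_t)((xar & 0xFFFFu) + 1u);

    return (xar & 0x7F0000u) | arn;
}
```

This is why the tutorial loads the full XARn register once and then works with ARn alone: as long as the data stays within one main data page, the upper 7 bits never need to change.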

2.3 Understanding the Linking Process

The linker (lnk55.exe) assigns the final addresses to your code and data sections. This is necessary for your code to execute.

The file that instructs the linker to assign the addresses is called the linker command file (tutor.cmd) and is shown in Example 2−5. The linker command file

syntax is covered in detail in the TMS320C55x Assembly Language Tools

User’s Guide (SPRU280).

- All addresses and lengths given in the linker command file are byte addresses and byte lengths. This is in contrast to a TMS320C54x linker command file, which uses 16-bit word addresses and word lengths.

- The MEMORY linker directive declares all the physical memory available in your system (for example, a DARAM memory block at location 0x100 of length 0x8000 bytes). Memory blocks cannot overlap.

- The SECTIONS linker directive lists all the sections contained in your input

files and where you want the linker to allocate them.

When you build your project in Section 2.4, the linker produces two files, tutor.out and tutor.map. Review the map file, shown as test.map in Example 2−6, to verify the addresses for x, y, and the table section (label init). Notice that the linker reports byte addresses for program labels such as start and .text, and 16-bit word addresses for data labels such as x, y, and init. The C55x DSP uses byte addressing to access variable-length instructions. Instructions can be 1 to 6 bytes long.
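The relationship between the two kinds of address can be checked with a small Python sketch. It assumes only that a data word is 16 bits (two bytes); the helper names are hypothetical, and the sample values are the ones that appear in Examples 2−5 and 2−6.

```python
def word_to_byte(word_addr):
    """Convert a 16-bit word address (data labels) to a byte address."""
    return word_addr * 2


def byte_to_word(byte_addr):
    """Convert an even byte address or length to 16-bit words."""
    if byte_addr % 2:
        raise ValueError("byte address is not word aligned")
    return byte_addr // 2


# x is reported at word address 0x80, which is the DARAM origin in bytes
print(hex(word_to_byte(0x80)))
# init is reported at word address 0x8000, which is the SARAM origin in bytes
print(hex(word_to_byte(0x8000)))
```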

Example 2−5. Linker command file (tutor.cmd)

MEMORY /* byte address, byte len */
{
    DARAM: org = 000100h, len = 8000h
    SARAM: org = 010000h, len = 8000h
}

SECTIONS /* byte address, byte len */
{
    vars  : > DARAM
    table : > SARAM
    .text : > SARAM
}
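To make the roles of the two directives concrete, the following Python sketch imitates what the linker does with this command file. It is a simplified model under stated assumptions, not a description of lnk55: sections are placed back to back in the order listed, alignment is ignored, and the names MEMORY, SECTIONS, check_no_overlap, and allocate are hypothetical. The section sizes passed in are the byte lengths implied by the map file in Example 2−6.

```python
# Origins and lengths in bytes, copied from tutor.cmd
MEMORY = {"DARAM": (0x000100, 0x8000), "SARAM": (0x010000, 0x8000)}
SECTIONS = [("vars", "DARAM"), ("table", "SARAM"), (".text", "SARAM")]


def check_no_overlap(memory):
    """Raise an error if any two memory blocks share a byte address."""
    ranges = sorted(memory.values())
    for (org_a, len_a), (org_b, _) in zip(ranges, ranges[1:]):
        if org_a + len_a > org_b:
            raise ValueError("memory blocks overlap")


def allocate(section_sizes):
    """Place each section at the next free byte of its memory block."""
    check_no_overlap(MEMORY)
    next_free = {name: org for name, (org, _) in MEMORY.items()}
    placed = {}
    for section, block in SECTIONS:
        org, length = MEMORY[block]
        start = next_free[block]
        size = section_sizes[section]
        if start + size > org + length:
            raise ValueError(f"{section} does not fit in {block}")
        placed[section] = start
        next_free[block] = start + size
    return placed


# vars is 5 words (10 bytes), table is 4 words (8 bytes), .text is 0x38 bytes
layout = allocate({"vars": 10, "table": 8, ".text": 0x38})
for name, addr in layout.items():
    print(f"{name:6s} {addr:08x}")
```

With these sizes the model places .text at byte address 0x10008, directly after the 8-byte table section, which agrees with the entry point address reported in the map file.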


Example 2−6. Linker map file (test.map)

******************************************************************************
TMS320C55xx COFF Linker
******************************************************************************
>> Linked Mon Feb 14 14:52:21 2000

OUTPUT FILE NAME: <tutor.out>
ENTRY POINT SYMBOL: "start" address: 00010008

MEMORY CONFIGURATION

name    org (bytes)   len (bytes)   used (bytes)   attributes   fill
−−−−    −−−−−−−−−−−   −−−−−−−−−−−   −−−−−−−−−−−−   −−−−−−−−−−   −−−−
DARAM   00000100      000008000     0000000a       RWIX
SARAM   00010000      000008000     00000040       RWIX

SECTION ALLOCATION MAP

output                                                             attributes/
section   page   orgn(bytes)   orgn(words)   len(bytes)   len(words)   input sections
−−−−−−−−  −−−−   −−−−−−−−−−−   −−−−−−−−−−−   −−−−−−−−−−   −−−−−−−−−−   −−−−−−−−−−−−−−
vars      0                    00000080                   00000005     UNINITIALIZED
                               00000080                   00000005     test.obj (vars)
table     0                    00008000                   00000004
                               00008000                   00000004     test.obj (table)
.text     0      00010008                    00000038
                 00010008                    00000037                  test.obj (.text)
                 0001003f                    00000001                  −−HOLE−− [fill = 2020]
.data     0                    00000000                   00000000     UNINITIALIZED
                               00000000                   00000000     test.obj (.data)
.bss      0                    00000000                   00000000     UNINITIALIZED
                               00000000                   00000000     test.obj (.bss)


GLOBAL SYMBOLS: SORTED ALPHABETICALLY BY Name

abs. value/
byte addr   word addr   name
−−−−−−−−−   −−−−−−−−−   −−−−
            00000000    .bss
            00000000    .data
00010008                .text
            00000000    ___bss__
            00000000    ___data__
            00000000    ___edata__
            00000000    ___end__
00010040                ___etext__
00010008                ___text__
            00000000    edata
            00000000    end
00010040                etext
            00008000    init
00010008                start
            00000080    x
            00000084    y

GLOBAL SYMBOLS: SORTED BY Symbol Address

abs. value/
byte addr   word addr   name
−−−−−−−−−   −−−−−−−−−   −−−−
            00000000    ___end__
            00000000    ___edata__
            00000000    end
            00000000    edata
            00000000    ___data__
            00000000    .data
            00000000    .bss
            00000000    ___bss__
            00000080    x
            00000084    y
            00008000    init
00010008                start
00010008                .text
00010008                ___text__
00010040                ___etext__
00010040                etext

[16 symbols]


2.4 Building Your Program

At this point, you should have already installed CCS and selected the C55x Simulator as the CCS configuration driver. You can select the configuration driver in the CCS setup.

Before building your program, you must set up your work environment and

create a .pjt file. Setting up your work environment involves the following tasks:

- Creating a project

- Adding files to the workspace

- Modifying the build options

- Building your program

2.4.1 Creating a Project

Create a new project called tutor.pjt.

1) From the Project menu, choose New and enter the values shown in

Figure 2−3.


2) Select Finish.

You have now created a project named tutor.pjt and saved it in the new

c:\ti\myprojects\tutor folder.


Figure 2−3. Project Creation Dialog Box

2.4.2 Adding Files to the Workspace

Copy the tutorial files (tutor.asm and tutor.cmd) to the tutor project directory.

1) Navigate to the directory where the tutorial files are located (the

55xprgug_srccode\tutor directory) and copy them into the c:\ti\myprojects\tutor directory. As an alternative, you can create your own source

files by choosing File→New→Source File and typing the source code from

the examples in this book.

2) Add the two files to the tutor.pjt project. Highlight tutor.pjt, right-click the

mouse, select Add Files, browse for the tutor.asm file, select it, and click

Open, as shown in Figure 2−4. Do the same for tutor.cmd, as shown in

Figure 2−5.


Figure 2−4. Add tutor.asm to Project


Figure 2−5. Add tutor.cmd to Project


2.4.3 Modifying Build Options

Modify the Linker options.

1) From the Project menu, choose Build Options.

2) Select the Linker tab and enter fields as shown in Figure 2−6.

3) Click OK when finished.

Figure 2−6. Build Options Dialog Box


2.4.4 Building the Program

From the Project menu, choose Rebuild All. After the Rebuild process

completes, the screen shown in Figure 2−7 should display.

When you build your project, CCS compiles, assembles, and links your code

in one step. The assembler reads the assembly source file and converts C55x

instructions to their corresponding binary encoding. The result of the assembly

process is an object file, tutor.obj, in industry-standard COFF binary format.

The object file contains all of your code and variables, but the addresses for

the different sections of code are not assigned. This assignment takes place

during the linking process.

Because there is no C code in your project, no compiler options were used.

Figure 2−7. Rebuild Complete Screen


2.5 Testing Your Code

To test your code, inspect its execution using the C55x Simulator.

Load tutor.out

1) From the File menu, choose Load program.

2) Navigate to and select tutor.out (in the \debug directory), then choose Open.

CCS now displays the tutor.asm source code at the beginning of the start label because of the entry symbol defined in the linker command file (-e start). Otherwise, it would have shown the location pointed to by the reset vector.

Display arrays x, y, and init by setting Memory Window options

1) From the View menu, choose Memory.

2) In the Title field, type x.

3) In the Address field, type x.

4) Repeat 1−3 for y.

5) Display the init array by selecting View→ Memory.

6) In the Title field, type Table.

7) In the Address field, type init.

8) Display AC0 by selecting View→CPU Registers→CPU Registers.

The labels x, y, and init are visible to the simulator (using View→Memory) because they were exported as symbols (using the .def directive in tutor.asm). The -g option was used to enable assembly source debugging.

Now, single-step through the code to the end label by selecting Debug→Step Into. Examine the x Memory window to verify that the table values populate x and that y gets the value 0xa (1 + 2 + 3 + 4 = 10 = 0xa), as shown in Example 2−7.


Example 2−7. x Memory Window


2.6 Benchmarking Your Code

After verifying the correct functional operation of your code, you can use CCS

to calculate the number of cycles your code takes to execute.

Reload your code

From the File menu, choose Reload Program.

Enable clock for profiling

1) From the Profiler menu, choose Enable Clock.

2) From the Profiler menu, choose View Clock.

Set breakpoints

1) Select the tutor.asm window.

2) Set one breakpoint at the beginning of the code you want to benchmark

(first instruction after start): Right-click on the instruction next to the copy

label and choose Toggle Breakpoint.


3) Set one breakpoint marking the end: Right-click on the instruction next to

the end label and choose Toggle Breakpoint.

Benchmark your code

1) Run to the first breakpoint by selecting Debug→ Run.

2) Double-click in the Clock Window to clear the cycle count.

3) Run to the second breakpoint by selecting Debug→ Run.

4) The Clock Window displays the number of cycles the code took to execute

between the breakpoints, which was approximately 17.


Chapter 3

You can maximize the performance of your C code by using certain compiler

options, C code transformations, and compiler intrinsics. This chapter discusses features of the C language relevant to compilation on the

TMS320C55x (C55x) DSP, performance-enhancing options for the compiler,

and C55x-specific code transformations that improve C code performance. All

assembly language examples were generated for the large memory model via

the −ml compiler option.

Topic

3.1 Introduction to Writing C/C++ Code for a C55x DSP

3.2 Compiling the C/C++ Code

3.3 Profiling Your Code

3.4 Refining the C/C++ Code

3.5 Memory Management Issues


3.1 Introduction to Writing C/C++ Code for a C55x DSP