OES Novell Cluster Services 1.8.2 Administration Guide for Linux

Novell

Open Enterprise Server

NOVELL CLUSTER SERVICES™ 1.8.2

April, 2007

ADMINISTRATION GUIDE FOR LINUX*

novdocx (ENU) 29 January 2007

www.novell.com

Legal Notices

Novell, Inc. makes no representations or warranties with respect to the contents or use of this documentation, and

specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose.

Further, Novell, Inc. reserves the right to revise this publication and to make changes to its content, at any time,

without obligation to notify any person or entity of such revisions or changes.

Further, Novell, Inc. makes no representations or warranties with respect to any software, and specifically disclaims

any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc.

reserves the right to make changes to any and all parts of Novell software, at any time, without any obligation to

notify any person or entity of such changes.

Any products or technical information provided under this Agreement may be subject to U.S. export controls and the

trade laws of other countries. You agree to comply with all export control regulations and to obtain any required

licenses or classification to export, re-export, or import deliverables. You agree not to export or re-export to entities

on the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export

laws. You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses.

Please refer to www.novell.com/info/exports/ for more information on exporting Novell software. Novell assumes no

responsibility for your failure to obtain any necessary export approvals.

Copyright © 2007 Novell, Inc. All rights reserved. No part of this publication may be reproduced, photocopied,

stored on a retrieval system, or transmitted without the express written consent of the publisher.

Novell, Inc. has intellectual property rights relating to technology embodied in the product that is described in this

document. In particular, and without limitation, these intellectual property rights may include one or more of the U.S.

patents listed at http://www.novell.com/company/legal/patents/ and one or more additional patents or pending patent

applications in the U.S. and in other countries.

Novell, Inc.

404 Wyman Street, Suite 500

Waltham, MA 02451

U.S.A.

www.novell.com

Online Documentation: To access the online documentation for this and other Novell products, and to get

updates, see www.novell.com/documentation.

Novell Trademarks

ConsoleOne is a registered trademark of Novell, Inc. in the United States and other countries.

eDirectory is a trademark of Novell, Inc.

GroupWise is a registered trademark of Novell, Inc. in the United States and other countries.

NetWare is a registered trademark of Novell, Inc. in the United States and other countries.

NetWare Core Protocol and NCP are trademarks of Novell, Inc.

Novell is a registered trademark of Novell, Inc. in the United States and other countries.

Novell Authorized Reseller is a service mark of Novell, Inc.

Novell Cluster Services is a trademark of Novell, Inc.

Novell Directory Services and NDS are registered trademarks of Novell, Inc. in the United States and other countries.

Novell Storage Services is a trademark of Novell, Inc.

SUSE is a registered trademark of Novell, Inc. in the United States and other countries.

Third-Party Materials

All third-party trademarks are the property of their respective owners.

Contents

About This Guide

1 Overview
1.1 Product Features
1.2 Product Benefits
1.3 Cluster Configuration
1.3.1 Cluster Components

2 What’s New

3 Installation and Setup
3.1 Hardware Requirements
3.2 Software Requirements
3.3 Shared Disk System Requirements
3.4 Rules for Operating a Novell Cluster Services SAN
3.5 Installing Novell Cluster Services
3.5.1 Novell Cluster Services Licensing
3.5.2 Installing Novell Cluster Services during the OES Installation
3.5.3 Installing Novell Cluster Services after the OES Installation
3.5.4 Starting and Stopping Novell Cluster Services
3.6 Converting a NetWare Cluster to Linux
3.6.1 Changing Existing NetWare Cluster Nodes to Linux (Rolling Cluster Conversion)
3.6.2 Adding New Linux Nodes to Your NetWare Cluster
3.6.3 Mixed NetWare and Linux Clusters
3.6.4 Finalizing the Cluster Conversion
3.7 Setting Up Novell Cluster Services
3.7.1 Creating NSS Shared Disk Partitions and Pools
3.7.2 Creating NSS Volumes
3.7.3 Cluster Enabling NSS Pools and Volumes
3.7.4 Creating Traditional Linux Volumes on Shared Disks
3.7.5 Expanding EVMS Volumes on Shared Disks
3.7.6 Cluster Enabling Traditional Linux Volumes on Shared Disks
3.7.7 Creating Cluster Resource Templates
3.7.8 Creating Cluster Resources
3.7.9 Configuring Load Scripts
3.7.10 Configuring Unload Scripts
3.7.11 Setting Start, Failover, and Failback Modes
3.7.12 Assigning Nodes to a Resource
3.8 Configuration Settings
3.8.1 Editing Quorum Membership and Timeout Properties
3.8.2 Cluster Protocol Properties
3.8.3 Cluster IP Address and Port Properties
3.8.4 Resource Priority
3.8.5 Cluster E-Mail Notification
3.8.6 Cluster Node Properties
3.9 Additional Information

4 Managing Novell Cluster Services
4.1 Migrating Resources
4.2 Identifying Cluster and Resource States
4.3 Novell Cluster Services Console Commands
4.4 Customizing Cluster Services Management
4.5 Novell Cluster Services File Locations
4.6 Additional Cluster Operating Instructions
4.6.1 Connecting to an iSCSI Target
4.6.2 Adding a Node That Was Previously in the Cluster
4.6.3 Cluster Maintenance Mode
4.6.4 Shutting Down Linux Servers When Servicing Shared Storage
4.6.5 Preventing Cluster Node Reboot after Node Shutdown
4.6.6 Problems Authenticating to Remote Servers during Cluster Configuration
4.6.7 Reconfiguring a Cluster Node
4.6.8 Device Name Required to Create a Cluster Partition
4.6.9 Creating a Cluster Partition (SBD Partition) after Installation
4.6.10 Mirroring SBD (Cluster) Partitions

A Documentation Updates
A.1 December 23, 2005 (Open Enterprise Server SP2)

About This Guide

This guide describes how to install, upgrade, configure, and manage Novell® Cluster Services™. It

is intended for cluster administrators and is divided into the following sections:

Chapter 1, “Overview,” on page 9

Chapter 2, “What’s New,” on page 15

Chapter 3, “Installation and Setup,” on page 17

Chapter 4, “Managing Novell Cluster Services,” on page 49

Appendix A, “Documentation Updates,” on page 61

Audience

This guide is intended for anyone involved in installing, configuring, and managing

Novell Cluster Services.

Feedback

We want to hear your comments and suggestions about this manual and the other documentation

included with this product. Please use the User Comments feature at the bottom of each page of the

online documentation, or go to www.novell.com/documentation/feedback.html and enter your

comments there.

Documentation Updates

The latest version of this Novell Cluster Services for Linux Administration Guide is available on the

OES documentation Web site (http://www.novell.com/documentation/lg/oes).

Documentation Conventions

In Novell documentation, a greater-than symbol (>) is used to separate actions within a step and

items in a cross-reference path.

A trademark symbol (®, ™, etc.) denotes a Novell trademark. An asterisk (*) denotes a third-party trademark.

1 Overview

Novell® Cluster Services™ is a server clustering system that ensures high availability and manageability of critical network resources including data, applications, and services. It is a multinode clustering product for Linux that is enabled for Novell eDirectory™ and supports failover, failback, and migration (load balancing) of individually managed cluster resources.

1.1 Product Features

Novell Cluster Services includes several important features to help you ensure and manage the

availability of your network resources. These include:

Support for shared SCSI, iSCSI or fibre channel storage area networks.

Multinode all-active cluster (up to 32 nodes). Any server in the cluster can restart resources

(applications, services, IP addresses, and file systems) from a failed server in the cluster.

A single point of administration through the browser-based Novell iManager cluster

configuration and monitoring GUI. iManager also lets you remotely manage your cluster.

The ability to tailor a cluster to the specific applications and hardware infrastructure that fit

your organization.

Dynamic assignment and reassignment of server storage on an as-needed basis.

The ability to automatically notify administrators through e-mail of cluster events and cluster

state changes.

1.2 Product Benefits

Novell Cluster Services allows you to configure up to 32 Linux servers into a high-availability

cluster, where resources can be dynamically switched or moved to any server in the cluster.

Resources can be configured to automatically switch or be moved in the event of a server failure, or

they can be moved manually to troubleshoot hardware or balance the workload.

Novell Cluster Services provides high availability from commodity components. Lower costs are

obtained through the consolidation of applications and operations onto a cluster. The ability to

manage a cluster from a single point of control and to adjust resources to meet changing workload

requirements (thus, manually “load balance” the cluster) are also important benefits of Novell

Cluster Services.

An equally important benefit of implementing Novell Cluster Services is that you can reduce

unplanned service outages as well as planned outages for software and hardware maintenance and

upgrades.

Reasons you would want to implement Novell Cluster Services include the following:

Increased availability

Improved performance

Low cost of operation

Scalability

Disaster recovery

Data protection

Server Consolidation

Storage Consolidation

Shared disk fault tolerance can be obtained by implementing RAID on the shared disk subsystem.

The following scenario illustrates the benefits that Novell Cluster Services provides.

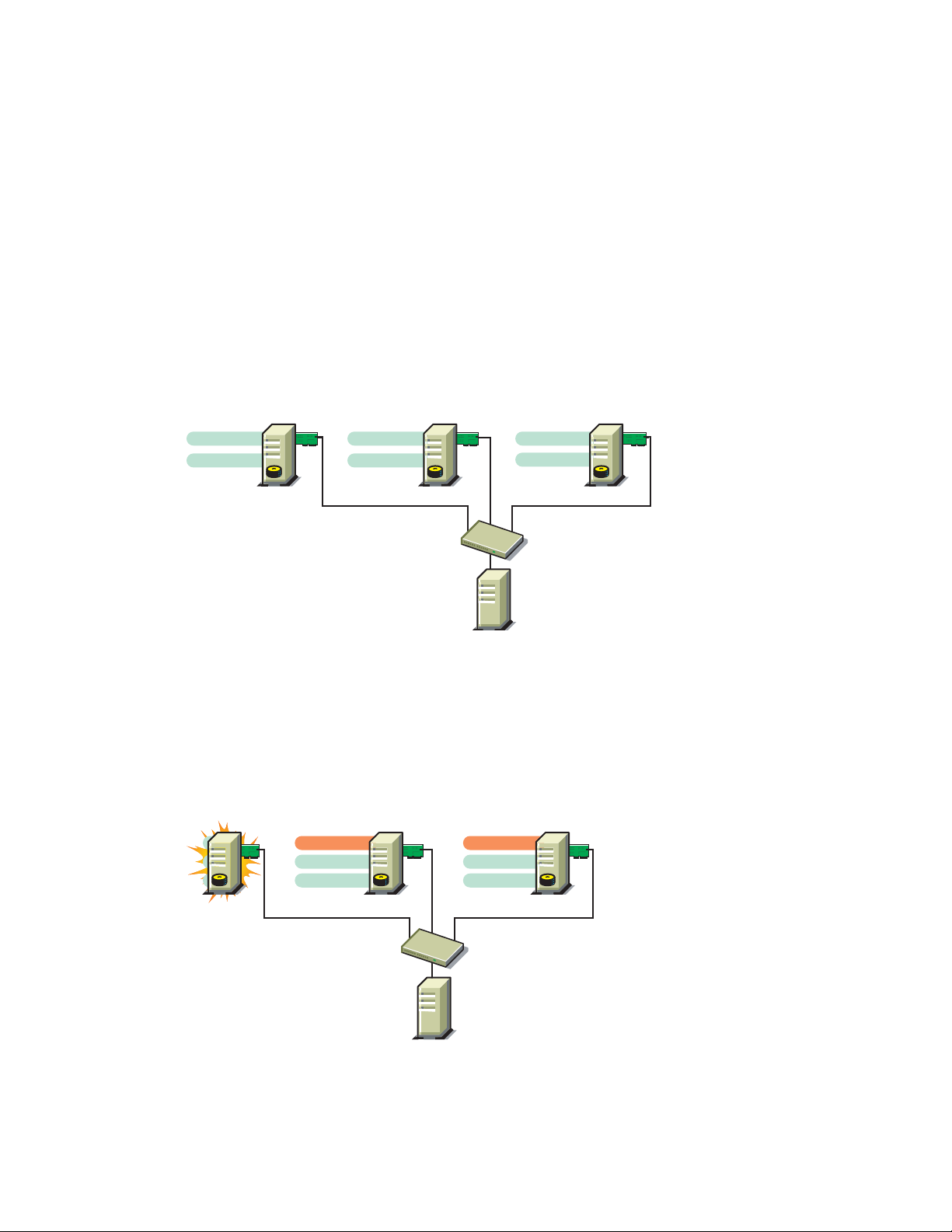

Suppose you have configured a three-server cluster, with a Web server installed on each of the three

servers in the cluster. Each of the servers in the cluster hosts two Web sites. All the data, graphics,

and Web page content for each Web site is stored on a shared disk subsystem connected to each of

the servers in the cluster. The following figure depicts how this setup might look.

Figure 1-1 Three-Server Cluster

[Figure: Web Server 1 hosts Web Site A and Web Site B, Web Server 2 hosts Web Site C and Web Site D, and Web Server 3 hosts Web Site E and Web Site F. All three servers connect through a Fibre Channel switch to the shared disk system.]

During normal cluster operation, each server is in constant communication with the other servers in

the cluster and performs periodic polling of all registered resources to detect failure.

Suppose Web Server 1 experiences hardware or software problems and the users depending on Web

Server 1 for Internet access, e-mail, and information lose their connections. The following figure

shows how resources are moved when Web Server 1 fails.

Figure 1-2 Three-Server Cluster after One Server Fails

[Figure: Web Server 1 has failed. Web Server 2 now hosts Web Site A, Web Site C, and Web Site D. Web Server 3 now hosts Web Site B, Web Site E, and Web Site F. The servers connect through a Fibre Channel switch to the shared disk system.]

Web Site A moves to Web Server 2 and Web Site B moves to Web Server 3. IP addresses and

certificates also move to Web Server 2 and Web Server 3.

When you configured the cluster, you decided where the Web sites hosted on each Web server would

go should a failure occur. In the previous example, you configured Web Site A to move to Web

Server 2 and Web Site B to move to Web Server 3. This way, the workload once handled by Web

Server 1 is evenly distributed.

When Web Server 1 failed, Novell Cluster Services software

Detected a failure.

Remounted the shared data directories (that were formerly mounted on Web Server 1) on Web

Server 2 and Web Server 3 as specified.

Restarted applications (that were running on Web Server 1) on Web Server 2 and Web Server 3

as specified.

Transferred IP addresses to Web Server 2 and Web Server 3 as specified.

In this example, the failover process happened quickly and users regained access to Web site

information within seconds, and in most cases, without having to log in again.

Now suppose the problems with Web Server 1 are resolved, and Web Server 1 is returned to a normal operating state. Web Site A and Web Site B automatically fail back (that is, they are moved back) to Web Server 1, and Web server operation returns to the way it was before Web Server 1 failed.
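The failover and failback behavior in this scenario can be sketched as a small model. This is an illustration only, not how Novell Cluster Services is implemented: the `Resource` class, the `assign` function, and the ordered `preferred_nodes` list are hypothetical names, and the model assumes that failback is automatic, as it is in the scenario above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Resource:
    name: str
    preferred_nodes: List[str]          # ordered, most preferred node first
    current_node: Optional[str] = None  # None means the resource is not running


def assign(resources: List[Resource], nodes_up: List[str]) -> Dict[str, Optional[str]]:
    """Place each resource on its most preferred node that is currently up."""
    up = set(nodes_up)
    for res in resources:
        res.current_node = next((n for n in res.preferred_nodes if n in up), None)
    return {res.name: res.current_node for res in resources}


# Hypothetical configuration that mirrors the scenario: Web Site A fails over
# to Web Server 2 and Web Site B fails over to Web Server 3.
sites = [
    Resource("Web Site A", ["Web Server 1", "Web Server 2"]),
    Resource("Web Site B", ["Web Server 1", "Web Server 3"]),
]
all_nodes = ["Web Server 1", "Web Server 2", "Web Server 3"]

print(assign(sites, all_nodes))                         # normal operation
print(assign(sites, ["Web Server 2", "Web Server 3"]))  # Web Server 1 has failed
print(assign(sites, all_nodes))                         # Web Server 1 is back (failback)
```

Listing a different second choice for each site is what spreads the workload of the failed server across the surviving servers.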

Novell Cluster Services also provides resource migration capabilities. You can move applications,

Web sites, etc. to other servers in your cluster without waiting for a server to fail.

For example, you could have manually moved Web Site A or Web Site B from Web Server 1 to

either of the other servers in the cluster. You might want to do this to upgrade or perform scheduled

maintenance on Web Server 1, or just to increase performance or accessibility of the Web sites.

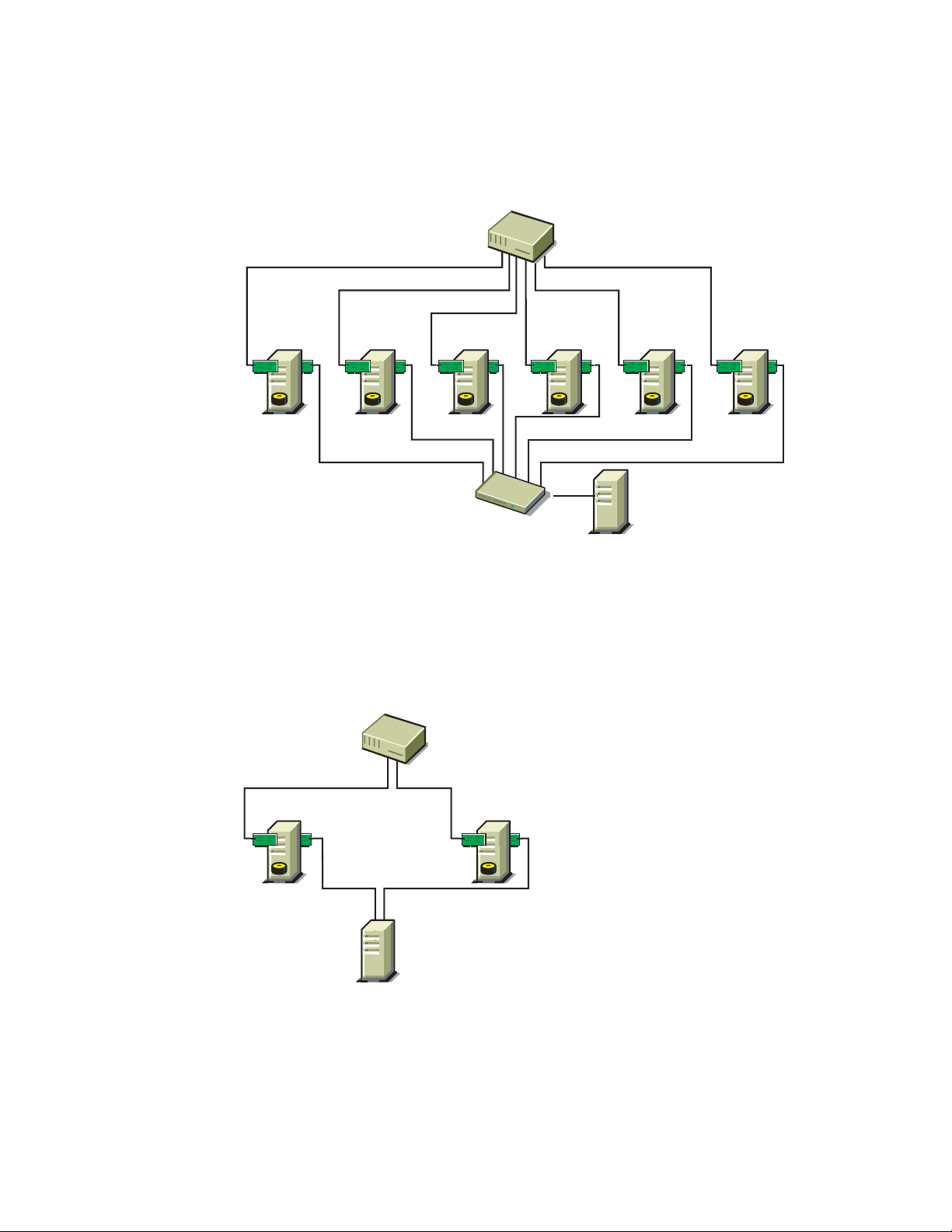

1.3 Cluster Configuration

Typical cluster configurations normally include a shared disk subsystem connected to all servers in

the cluster. The shared disk subsystem can be connected via high-speed fibre channel cards, cables,

and switches, or it can be configured to use shared SCSI or iSCSI. If a server fails, another

designated server in the cluster automatically mounts the shared disk directories previously mounted

on the failed server. This gives network users continuous access to the directories on the shared disk

subsystem.

Typical resources might include data, applications, and services. The following figure shows how a typical fibre channel cluster configuration might look.

Figure 1-3 Typical Fibre Channel Cluster Configuration

[Figure labels: Network Hub; Network Interface Card(s); Server 1 through Server 6; Fibre Channel Card(s); Fibre Channel Switch; Shared Disk System.]

Although fibre channel provides the best performance, you can also configure your cluster to use

shared SCSI or iSCSI. The following figure shows how a typical shared SCSI cluster configuration

might look.

Figure 1-4 Typical Shared SCSI Cluster Configuration

[Figure labels: Network Hub; Server 1 and Server 2, each with a Network Interface Card and a SCSI Adapter; Shared Disk System.]

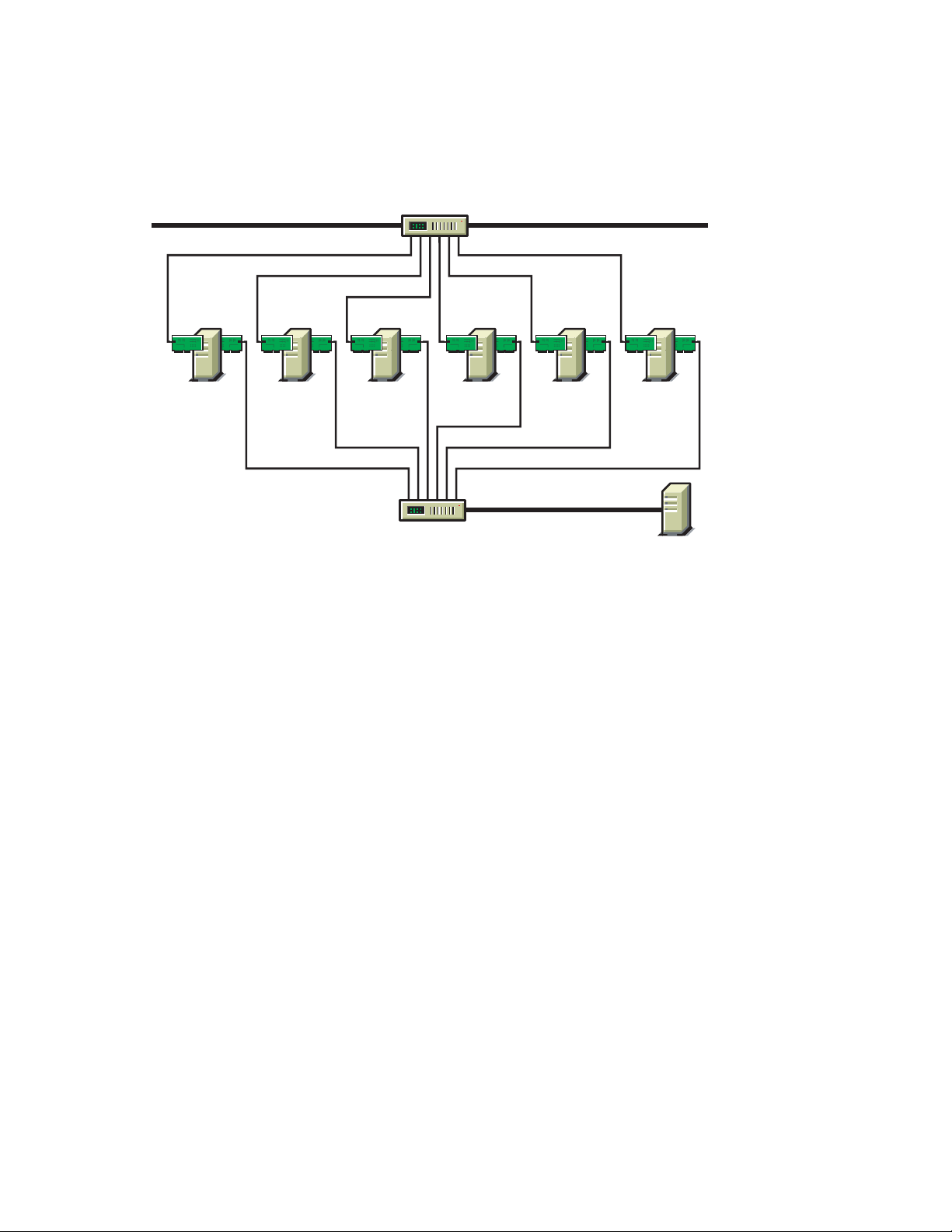

iSCSI is an alternative to fibre channel that can be used to create a low-cost SAN. The following figure shows how a typical iSCSI cluster configuration might look.

Figure 1-5 Typical iSCSI Cluster Configuration

[Figure labels: Ethernet Switch; Network Backbone; Server 1 through Server 6, each with Ethernet Card(s) and an iSCSI Initiator; Storage System.]

1.3.1 Cluster Components

The following components make up a Novell Cluster Services cluster:

From 2 to 32 Linux servers, each containing at least one local disk device.

Novell Cluster Services software running on each Linux server in the cluster.

A shared disk subsystem connected to all servers in the cluster (optional, but recommended for

most configurations).

High-speed fibre channel cards, cables, and switches, or SCSI cards and cables, used to connect the servers to the shared disk subsystem.

2 What’s New

The following changes and enhancements were added to Novell® Cluster Services™ for Linux for

Novell Open Enterprise Server (OES) Support Pack 2.

It is now possible to choose a device for the SBD partition from a list rather than entering it

manually. See Section 3.5, “Installing Novell Cluster Services,” on page 18.

Some iManager cluster option names and locations have changed to make cluster configuration

and management easier.

It is now possible to upgrade a cluster node directly from NetWare 6.0 to OES Linux without

first upgrading to NetWare 6.5. See Section 3.6, “Converting a NetWare Cluster to Linux,” on

page 21.

3 Installation and Setup

Novell® Cluster Services™ can be installed during the Open Enterprise Server (OES) installation or after. OES is now part of the SUSE® Linux Enterprise Server (SLES) 9 installation. During the Novell Cluster Services part of the OES installation, you are prompted for configuration information that is necessary for Novell Cluster Services to function properly. This chapter contains information to help you install, set up, and configure Novell Cluster Services.

3.1 Hardware Requirements

The following list specifies hardware requirements for installing Novell Cluster Services. These

requirements represent the minimum hardware configuration. Additional hardware might be

necessary depending on how you intend to use Novell Cluster Services.

A minimum of two Linux servers

At least 512 MB of memory on each server in the cluster.

NOTE: While identical hardware for each cluster server is not required, having servers with the

same or similar processors and memory can reduce differences in performance between cluster

nodes and make it easier to manage your cluster. There are fewer variables to consider when

designing your cluster and failover rules if each cluster node has the same processor and amount of

memory.

If you have a fibre channel SAN, the host bus adapters (HBAs) for each cluster node should be

identical.

3.2 Software Requirements

Novell Cluster Services is installed as part of the OES installation. OES must be installed and

running on each cluster server. In addition to OES, ensure that the following requirements are met:

All servers in the cluster are configured with a static IP address and are on the same IP subnet

There is an additional IP address for the cluster and for each cluster resource and cluster-

enabled pool

All servers in the cluster are in the same Novell eDirectory™ tree

If the servers in the cluster are in separate eDirectory containers, each server must have rights to the other servers' containers and to the containers where any cluster-enabled pool objects are stored. You can do this by adding trustee assignments for all cluster servers to a parent container of the containers where the cluster server objects reside. See eDirectory Rights (http://www.novell.com/documentation/edir873/edir873/data/fbachifb.html#fbachifb) in the eDirectory 8.7.3 Administration Guide for more information.

The browser that will be used to manage Novell Cluster Services is set to a supported language.

The iManager plug-in for Novell Cluster Services might not operate properly if the highest priority Language setting for your Web browser is set to a language other than one of the supported languages. To avoid problems, in your Web browser, click Tools > Options > Languages, and then set the first language preference in the list to a supported language.
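The IP address requirements in Section 3.2 can be checked before installation with a short script. The following is a minimal sketch, not a Novell tool: the function name `check_cluster_addresses`, the node names, and the addresses are hypothetical, and the subnet is assumed to be supplied in CIDR notation. It checks only that every node address and the cluster address fall in one subnet and that the cluster address is not also a node address.

```python
import ipaddress
from typing import Dict, List


def check_cluster_addresses(subnet: str, node_ips: Dict[str, str], cluster_ip: str) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    net = ipaddress.ip_network(subnet)
    cluster_addr = ipaddress.ip_address(cluster_ip)
    problems = []

    # Every server in the cluster must be on the same IP subnet.
    for name, ip in node_ips.items():
        if ipaddress.ip_address(ip) not in net:
            problems.append(f"{name} ({ip}) is outside {net}")

    # The cluster needs its own address on that subnet.
    if cluster_addr not in net:
        problems.append(f"cluster IP {cluster_ip} is outside {net}")
    if any(ipaddress.ip_address(ip) == cluster_addr for ip in node_ips.values()):
        problems.append(f"cluster IP {cluster_ip} is already used by a node")

    return problems


# Hypothetical two-node cluster.
nodes = {"node1": "10.10.1.11", "node2": "10.10.1.12"}
print(check_cluster_addresses("10.10.1.0/24", nodes, "10.10.1.50"))
```

The script does not verify that the addresses are static or that each cluster resource and cluster-enabled pool has its own address. Those requirements still need to be confirmed separately.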

3.3 Shared Disk System Requirements

A shared disk system (Storage Area Network, or SAN) is required for each cluster if you want data

to be highly available. If a shared disk subsystem is used, ensure the following:

At least 20 MB of free disk space on the shared disk system for creating a special cluster

partition

The Novell Cluster Services installation automatically allocates one cylinder on one drive of

the shared disk system for the special cluster partition. Depending on the location of the

cylinder, the actual amount of space used by the cluster partition may be less than 20 MB.

The shared disk system is properly set up and functional according to the manufacturer's

instructions before installing Novell Cluster Services.

We recommend that the disks contained in the shared disk system are configured to use

mirroring or RAID to add fault tolerance to the shared disk system.

If you are using iSCSI for shared disk system access, ensure you have configured iSCSI initiators and targets prior to installing Novell Cluster Services. See Accessing iSCSI Targets on NetWare Servers from Linux Initiators (http://www.novell.com/documentation/iscsi1_nak/iscsi/data/bswmaoa.html#bt8cyhf) for more information.

3.4 Rules for Operating a Novell Cluster Services SAN

When you create a Novell Cluster Services system that utilizes shared storage space (a Storage Area

Network, or SAN), it is important to remember that all servers attached to the shared disks, whether

in the cluster or not, have access to all of the data on the shared storage space unless you specifically

prevent such access. Novell Cluster Services arbitrates access to shared data for all cluster nodes,

but cannot protect shared data from being corrupted by noncluster servers.

3.5 Installing Novell Cluster Services

It is necessary to install SLES 9/OES on every server you want to add to a cluster. You can install

Novell Cluster Services and create a new cluster, or add a server to an existing cluster either during

the SLES 9/OES installation or afterwards, using YaST.

If you are creating a new cluster, the YaST setup tool

Creates a new Cluster object and Cluster Node object in eDirectory.

Installs Novell Cluster Services software on the server.

Creates a special cluster partition if you have a shared disk system.

If you are adding a server to an existing cluster, the YaST setup tool

Creates a new Cluster Node object in eDirectory.

Installs Novell Cluster Services software on the server.

You can install up to 32 nodes in each cluster.

3.5.1 Novell Cluster Services Licensing

You can add up to 32 nodes to a cluster. Novell Cluster Services for Linux includes licenses for two

cluster nodes. You only need additional Cluster Server Licenses if you have a three-node or larger

cluster. A paper license for additional cluster nodes can be obtained from Novell or from your

Novell Authorized Reseller℠.

3.5.2 Installing Novell Cluster Services during the OES Installation

1 Start the SUSE Linux Enterprise Server 9 (SLES 9) installation and continue until you get to

the Installation Settings screen, then click Software.

OES is part of the SLES 9 install.

The SLES 9/OES installation includes several steps not described here because they do not

directly relate to Novell Cluster Services. For more detailed instructions on installing OES with

SLES 9, see the OES Linux Installation Guide.

2 On the Software Selection screen, click Detailed Selection.

3 In the Selection window, click Novell Cluster Services and any other OES components that you

want to install, then click Accept.

NSS is a required component for Novell Cluster Services and it is automatically selected when

you select Novell Cluster Services. Installing NSS also allows you to create cluster-enabled

NSS pools (virtual servers).

iManager is required to configure and manage Novell Cluster Services, and must be installed

on at least one server.

4 Continue through the installation process until you reach the Installation Settings screen, then

click the Cluster Services link.

5 Choose whether eDirectory is installed locally or remotely, accept or change the Admin name

and enter the Admin password, then click Next.

eDirectory is automatically selected when NSS is selected.

6 Choose to either create a new cluster, configure Novell Cluster Services on a server that you

will add to an existing cluster, or configure Novell Cluster Services later.

7 Enter the fully distinguished name (FDN) of the cluster.

IMPORTANT: Use the dot format illustrated in the example. Do not use commas.

If you are creating a new cluster, this is the name you will give the new cluster and the

eDirectory context where the new Cluster object will reside. You must specify an existing

context. Specifying a new context does not create a new context.

If you are adding a server to an existing cluster, this is the name and eDirectory context of the

cluster that you are adding this server to.

Cluster names must be unique. You cannot create two clusters with the same name in the same

eDirectory tree.

8 (Conditional) If you are creating a new cluster, enter a unique IP address for the cluster.

The cluster IP address is separate from the server IP address, is required to be on the same IP

subnet as the other cluster servers, and is required for certain external network management

programs to get cluster status alerts. The cluster IP address provides a single point for cluster

access, configuration, and management. A Master IP Address resource that makes this possible

is created automatically during the Cluster Services installation.

The cluster IP address will be bound to the master node and will remain with the master node

regardless of which server is the master node.

9 (Conditional) If you are creating a new cluster, select the device where the SBD partition will

be created.

For example, the device might be something similar to sdc.

If you have a shared disk system or SAN attached to your cluster servers, Novell Cluster

Services will create a small cluster partition on that shared disk system. This small cluster

partition is referred to as the Split Brain Detector (SBD) partition. Specify the drive or device

where you want the small cluster partition created.

If you do not have a shared disk system connected to your cluster servers, accept the default

(none).

IMPORTANT: You must have at least 20 MB of free space on one of the shared disk drives to

create the cluster partition. If no free space is available, the shared disk drives can't be used by

Novell Cluster Services.

10 (Conditional) If you want to mirror the SBD partition for greater fault tolerance, select the device that you want to mirror to, then click Next.

You can also mirror SBD partitions after installing Novell Cluster Services. See Section 4.6.10,

“Mirroring SBD (Cluster) Partitions,” on page 60.

11 Select the IP address Novell Cluster Services will use for this node.

Some servers have multiple IP addresses. This step lets you choose which IP address Novell

Cluster Services will use.

12 Choose whether to start Novell Cluster Services software after configuring it, then click Next.

This option applies only to installing Novell Cluster Services after the OES installation,

because it starts automatically when the server reboots during the OES installation.

If you choose to not start Novell Cluster Services software, you need to either manually start it

after the installation, or reboot the cluster server to automatically start it.

You can manually start Novell Cluster Services by going to the /etc/init.d directory and

entering ./novell-ncs start at the server console of the cluster server.

13 Continue through the rest of the OES installation.

3.5.3 Installing Novell Cluster Services after the OES Installation

If you did not install Novell Cluster Services during the OES installation, you can install it later by

completing the following steps:

1 At the Linux server console, type yast2 ncs.

This installs the Novell Cluster Services software component and takes you to the cluster

configuration screen.

You must be logged in as root to access the cluster configuration screen.

2 Continue by completing Step 5 on page 19 through Step 12 on page 20.

3.5.4 Starting and Stopping Novell Cluster Services

Novell Cluster Services automatically starts after it is installed. Novell Cluster Services also

automatically starts when you reboot your OES Linux server. If you need to manually start Novell

Cluster Services, go to the /etc/init.d directory and run ./novell-ncs start. You must

be logged in as root to run novell-ncs start.

IMPORTANT: If you are using iSCSI for shared disk system access, ensure you have configured

iSCSI initiators and targets to start prior to starting Novell Cluster Services. You can do this by

entering chkconfig iscsi on at the Linux server console.

To have Novell Cluster Services not start automatically after rebooting your OES Linux server, enter

chkconfig novell-ncs off at the Linux server console before rebooting the server. You can

also enter chkconfig novell-ncs on to again cause Novell Cluster Services to automatically

start.
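
The start and autostart commands in this section can be collected into a small helper. The following is a minimal sketch, not part of Novell Cluster Services: the function names and the DRY_RUN variable are hypothetical, and only the chkconfig and novell-ncs commands come from this section. Run it as root.

```shell
# Hypothetical helper: print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Enable or disable automatic start of Novell Cluster Services at boot.
# $1 must be "on" or "off".
ncs_set_autostart() {
    case "${1:-}" in
        on|off) run chkconfig novell-ncs "$1" ;;
        *) echo "usage: ncs_set_autostart on|off" >&2; return 2 ;;
    esac
}

# Start Novell Cluster Services manually.
# Pass "iscsi" as $1 when shared disks are reached through iSCSI, so that
# iSCSI is configured to start first, as the IMPORTANT note requires.
ncs_start() {
    if [ "${1:-}" = "iscsi" ]; then
        run chkconfig iscsi on || return 1
    fi
    run /etc/init.d/novell-ncs start
}
```

Setting DRY_RUN=1 before calling either function prints the commands that would be run, which is a convenient way to review them before making changes on a production node.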

3.6 Converting a NetWare Cluster to Linux

This section covers the following information to help you convert and manage a mixed NetWare 6.5

and Linux cluster.

Section 3.6.1, “Changing Existing NetWare Cluster Nodes to Linux (Rolling Cluster

Conversion),” on page 21

Section 3.6.2, “Adding New Linux Nodes to Your NetWare Cluster,” on page 23

Section 3.6.3, “Mixed NetWare and Linux Clusters,” on page 24

Section 3.6.4, “Finalizing the Cluster Conversion,” on page 26

If you have a NetWare 5.1 cluster, you must upgrade to a NetWare 6.5 cluster before adding new

Linux cluster nodes or converting existing NetWare cluster nodes to Linux cluster nodes.

See “Upgrading Novell Cluster Services” in the Novell Cluster Services Administration Guide for

information on upgrading Novell Cluster Services.

IMPORTANT: You cannot add additional NetWare nodes to your cluster after adding a new Linux

node or changing an existing NetWare cluster node to a Linux cluster node. If you want to add

NetWare cluster nodes after converting part of your cluster to Linux, you must first remove the

Linux nodes from the cluster.

3.6.1 Changing Existing NetWare Cluster Nodes to Linux (Rolling Cluster Conversion)

Performing a rolling cluster conversion from NetWare 6.5 to Linux lets you keep your cluster up and

running and lets your users continue to access cluster resources while the conversion is being

performed.

During a rolling cluster conversion, one server is converted to Linux while the other servers in the

cluster continue running NetWare 6.5. Then, if desired, another server can be converted to Linux,

and then another, until all servers in the cluster have been converted to Linux. You can also leave the

cluster as a mixed NetWare and Linux cluster.

NOTE: The process for converting NetWare 6.0 cluster nodes to OES Linux cluster nodes is the

same as for converting NetWare 6.5 cluster nodes to OES Linux cluster nodes.

IMPORTANT: Mixed NetWare 6.5 and OES Linux clusters are supported, and mixed NetWare 6.0

and OES Linux clusters are also supported. Mixed clusters consisting of NetWare 6.0 servers,

NetWare 6.5 servers, and OES Linux servers are not supported. All NetWare servers must be either

version 6.5 or 6.0 in order to exist in a mixed NetWare and OES Linux cluster.
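
The version rule above can be checked before you start a conversion. This is a minimal sketch with a hypothetical function name. It assumes you supply the NetWare version of each NetWare node yourself, and it does not query the cluster.

```shell
# Hypothetical check: succeed only if every NetWare version given as an
# argument is 6.5, or every one is 6.0. A mix of 6.0 and 6.5, or any other
# version, fails.
netware_versions_supported() {
    first=""
    for v in "$@"; do
        case "$v" in
            6.5|6.0) ;;
            *) return 1 ;;
        esac
        if [ -z "$first" ]; then
            first="$v"
        elif [ "$v" != "$first" ]; then
            return 1
        fi
    done
    return 0
}
```

For example, netware_versions_supported 6.5 6.5 succeeds, while netware_versions_supported 6.0 6.5 fails.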

When converting NetWare cluster servers to Linux, do not convert the server that has the master

eDirectory replica first. If the server with the master eDirectory replica is a cluster node, convert it at

the end of the rolling cluster conversion.
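
The ordering rule above can be expressed as a short planning helper. This is only a sketch: the function name and the node names in the usage example are hypothetical, and you must determine which server holds the master eDirectory replica yourself.

```shell
# Print the cluster nodes in a safe conversion order.
# $1 is the server holding the master eDirectory replica; the remaining
# arguments are the cluster nodes to convert. If the master replica holder
# is among them, it is printed last.
conversion_order() {
    master="$1"
    shift
    for node in "$@"; do
        if [ "$node" != "$master" ]; then
            echo "$node"
        fi
    done
    for node in "$@"; do
        if [ "$node" = "$master" ]; then
            echo "$node"
        fi
    done
}
```

For example, conversion_order nw2 nw1 nw2 nw3 lists nw1 and nw3 first and nw2 last.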

To perform a rolling cluster conversion from NetWare 6.5 to Linux:

1 On the NetWare server you want to convert to Linux, run NWConfig and remove eDirectory.

You can do this by selecting the option in NWConfig to remove eDirectory from the server.

2 Bring down the NetWare server you want to convert to Linux.

Any cluster resources that were running on the server should fail over to another server in the

cluster.

3 In eDirectory, remove (delete) the Cluster Node object, the Server object, and all corresponding

objects relating to the downed NetWare server.

Depending on your configuration, there could be 10 or more objects that relate to the

downed NetWare server.

4 Run DSRepair on another server in the eDirectory tree to fix any directory problems.

If DSRepair finds errors or problems, run it multiple times until no errors are returned.

5 Install SLES 9 and OES on the server, but do not install the Cluster Services component of

OES.

You can use the same server name and IP address that were used on the NetWare server. This is

suggested, but not required.

See the OES Linux Installation Guide for more information.

6 Set up and verify SAN connectivity for the Linux node.

Consult your SAN vendor documentation for SAN setup and connectivity instructions.

7 Install Cluster Services and add the node to your existing NetWare 6.5 cluster.

See Section 3.5.3, “Installing Novell Cluster Services after the OES Installation,” on page 20

for more information.

8 Enter sbdutil -f at the Linux server console to verify that the node can see the cluster

(SBD) partition on the SAN.

sbdutil -f also tells you the device on the SAN where the SBD partition is located.

9 Start cluster software by going to the /etc/init.d directory and running ./novell-ncs

start.

You must be logged in as root to run novell-ncs start.

10 (Conditional) If necessary, manually migrate the resources that were on the former NetWare

server to this Linux server.

The resources will automatically fail back if all of the following apply:

The failback mode for the resources was set to Auto.

You used the same node number for this Linux server as was used for the former NetWare

server. This condition applies only if this Linux server is the next server added to the cluster.

This Linux server is the preferred node for the resources.
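
The three failback conditions above must all hold at once. The following sketch, with a hypothetical function name and argument order, makes that explicit. It takes the values as arguments and does not read them from the cluster.

```shell
# Succeeds only when a resource will fail back automatically.
# $1 failback mode of the resource (for example Auto)
# $2 node number of the former NetWare server
# $3 node number of this Linux server
# $4 "yes" if this Linux server is the preferred node for the resource
will_fail_back_automatically() {
    [ "$1" = "Auto" ] && [ "$2" = "$3" ] && [ "$4" = "yes" ]
}
```

If the function fails for a resource, plan to migrate that resource manually as described in Step 10.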

Simultaneously Changing Multiple NetWare Cluster Nodes to Linux

If you attempt to simultaneously convert multiple NetWare cluster servers to Linux, we strongly

recommend that you use the old NetWare node IP addresses for your Linux cluster servers. You

should record the NetWare node IP addresses before converting them to Linux.

If you must assign new node IP addresses, we recommend that you only convert one node at a time.

Another option if new cluster node IP addresses are required and new server hardware is being used

is to shut down the NetWare nodes that are to be removed and then add the new Linux cluster nodes.

After adding the new Linux cluster nodes, you can remove the NetWare cluster node-related objects

as described in Step 3 on page 22.

Failure to follow these recommendations might result in NetWare server abends and Linux server

restarts.

3.6.2 Adding New Linux Nodes to Your NetWare Cluster

You can add new Linux cluster nodes to your existing NetWare 6.5 cluster without bringing down

the cluster. To add new Linux cluster nodes to your NetWare 6.5 cluster:

1 Install SLES 9 and OES on the new node, but do not install the Cluster Services component of

OES.

See the “OES Linux Installation Guide” for more information.

2 Set up and verify SAN connectivity for the new Linux node.

Consult your SAN vendor documentation for SAN setup and connectivity instructions.

3 Install Cluster Services and add the new node to your existing NetWare 6.5 cluster.

See Section 3.5.3, “Installing Novell Cluster Services after the OES Installation,” on page 20

for more information.

4 Enter sbdutil -f at the Linux server console to verify that the node can see the cluster

(SBD) partition on the SAN.

sbdutil -f will also tell you the device on the SAN where the SBD partition is located.

5 Start cluster software by going to the /etc/init.d directory and running ./novell-ncs

start.

You must be logged in as root to run novell-ncs start.

6 Add and assign cluster resources to the new Linux cluster node.
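
Steps 4 and 5 can be combined so that the cluster software starts only when the node can see the SBD partition. This is a minimal sketch. The function name and the SBDUTIL and NCS_INIT override variables are hypothetical, and it assumes that sbdutil -f exits with a nonzero status when no SBD partition is found, which this guide does not state.

```shell
# The commands can be overridden for a trial run; the defaults are the
# commands named in this section. Run as root.
SBDUTIL="${SBDUTIL:-sbdutil}"
NCS_INIT="${NCS_INIT:-/etc/init.d/novell-ncs}"

start_node_after_sbd_check() {
    # Assumption: sbdutil -f returns nonzero when it finds no SBD partition.
    if ! "$SBDUTIL" -f; then
        echo "SBD partition not visible from this node; check SAN connectivity" >&2
        return 1
    fi
    "$NCS_INIT" start
}
```

If your version of sbdutil does not report failure through its exit status, check its output by hand before starting the cluster software.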

3.6.3 Mixed NetWare and Linux Clusters

Novell Cluster Services includes some specialized functionality to help NetWare and Linux servers

coexist in the same cluster. This functionality is also beneficial as you migrate NetWare cluster

servers to Linux. It automates the conversion of the Master IP Address resource and cluster-enabled

NSS pool resource load and unload scripts from NetWare to Linux. The NetWare load and unload

scripts are read from eDirectory, converted, and written into Linux load and unload script files.

Those Linux load and unload script files are then searched for NetWare-specific command strings,

and the command strings are then either deleted or replaced with Linux-specific command strings.

Separate Linux-specific commands are also added, and the order of certain lines in the scripts is also

changed to function with Linux.

Cluster resources that were originally created on Linux cluster nodes cannot be migrated or failed

over to NetWare cluster nodes. Cluster resources that were created on NetWare cluster nodes and

migrated or failed over to Linux cluster nodes can be migrated or failed back to NetWare cluster

nodes. If you want resources that can run on both NetWare and Linux cluster nodes, create them on

a NetWare server.

If you migrate an NSS pool from a NetWare cluster server to a Linux cluster server, it could take

several minutes for volume trustee assignments to synchronize after the migration. Users might have

limited access to migrated volumes until after the synchronization process is complete.

WARNING: Changing existing shared pools or volumes (storage reconfiguration) in a mixed

NetWare/Linux cluster is not possible. If you need to make changes to existing pools or volumes,

you must temporarily bring down either all Linux cluster nodes or all NetWare cluster nodes prior to

making changes. Attempting to reconfigure shared pools or volumes in a mixed cluster can cause

data loss.

The following table identifies some of the NetWare specific cluster load and unload script

commands that are searched for and the Linux commands that they are replaced with (unless

deleted).

Table 3-1. Cluster Script Command Comparison

Action   NetWare Cluster Command               Linux Cluster Command

Replace  IGNORE_ERROR add secondary ipaddress  ignore_error add_secondary_ipaddress
Replace  IGNORE_ERROR del secondary ipaddress  ignore_error del_secondary_ipaddress
Replace  del secondary ipaddress               ignore_error del_secondary_ipaddress
Replace  add secondary ipaddress               exit_on_error add_secondary_ipaddress
Delete   IGNORE_ERROR NUDP                     (deletes entire line)
Delete   IGNORE_ERROR HTTP                     (deletes entire line)
Replace  nss /poolactivate=                    nss /poolact=
Replace  nss /pooldeactivate=                  nss /pooldeact=
Replace  mount volume_name VOLID=number        exit_on_error ncpcon mount volume_name=number
Replace  NUDP ADD clusterservername ipaddress  exit_on_error ncpcon bind --ncpservername=ncpservername -ipaddress=ipaddress
Replace  NUDP DEL clusterservername ipaddress  ignore_error ncpcon unbind --ncpservername=ncpservername -ipaddress=ipaddress
Delete   CLUSTER CVSBIND                       (deletes entire line)
Delete   CIFS                                  (deletes entire line)
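
To make the substitutions in Table 3-1 concrete, the following sketch applies them with sed. It is an illustration only, not the converter that Novell Cluster Services uses, and the function name is hypothetical. It applies just the replacements and deletions listed in the table, spelled as they are printed there. It does not add the #!/bin/bash line, the ncsfuncs include, or the exit 0 line, it does not reorder lines, and it passes through unchanged any line that the table does not list.

```shell
# Read a NetWare load or unload script on stdin and write the result of the
# Table 3-1 substitutions to stdout.
convert_netware_script() {
    sed \
        -e '/^IGNORE_ERROR NUDP/d' \
        -e '/^IGNORE_ERROR HTTP/d' \
        -e '/CLUSTER CVSBIND/d' \
        -e '/^CIFS /d' \
        -e 's/^IGNORE_ERROR add secondary ipaddress/ignore_error add_secondary_ipaddress/' \
        -e 's/^IGNORE_ERROR del secondary ipaddress/ignore_error del_secondary_ipaddress/' \
        -e 's/^del secondary ipaddress/ignore_error del_secondary_ipaddress/' \
        -e 's/^add secondary ipaddress/exit_on_error add_secondary_ipaddress/' \
        -e 's|^nss /poolactivate=|nss /poolact=|' \
        -e 's|^nss /pooldeactivate=|nss /pooldeact=|' \
        -e 's/^mount \([^ ]*\) VOLID=\([0-9]*\)/exit_on_error ncpcon mount \1=\2/' \
        -e 's/^NUDP ADD \([^ ]*\) \([^ ]*\)/exit_on_error ncpcon bind --ncpservername=\1 -ipaddress=\2/' \
        -e 's/^NUDP DEL \([^ ]*\) \([^ ]*\)/ignore_error ncpcon unbind --ncpservername=\1 -ipaddress=\2/'
}
```

The rules for lines that begin with IGNORE_ERROR come before the rules for the bare commands, so each line is rewritten by exactly one rule. To try the sketch, save a NetWare script to a file (the name netware_load.txt here is hypothetical) and run convert_netware_script < netware_load.txt.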

Unlike NetWare cluster load and unload scripts, which are stored in eDirectory, the Linux cluster

load and unload scripts are stored in files on Linux cluster servers. The files are automatically

updated each time you make changes to resource load and unload scripts for NetWare cluster

resources. The cluster resource name is used in the load and unload script filenames. The path to the

files is /etc/opt/novell/ncs/.

The following examples provide a sample comparison between NetWare cluster load and unload

scripts, and their corresponding Linux cluster load and unload scripts.

Master IP Address Resource Load Script

NetWare

IGNORE_ERROR set allow ip address duplicates = on

IGNORE_ERROR CLUSTER CVSBIND ADD BCCP_Cluster 10.1.1.175

IGNORE_ERROR NUDP ADD BCCP_Cluster 10.1.1.175

IGNORE_ERROR add secondary ipaddress 10.1.1.175

IGNORE_ERROR HTTPBIND 10.1.1.175 /KEYFILE:"SSL CertificateIP"

IGNORE_ERROR set allow ip address duplicates = off

Linux

#!/bin/bash

. /opt/novell/ncs/lib/ncsfuncs

ignore_error add_secondary_ipaddress 10.1.1.175 -np

exit 0

Master IP Address Resource Unload Script

NetWare

IGNORE_ERROR HTTPUNBIND 10.1.1.175

IGNORE_ERROR del secondary ipaddress 10.1.1.175

IGNORE_ERROR NUDP DEL BCCP_Cluster 10.1.1.175

IGNORE_ERROR CLUSTER CVSBIND DEL BCCP_Cluster 10.1.1.175

Linux

#!/bin/bash

. /opt/novell/ncs/lib/ncsfuncs

ignore_error del_secondary_ipaddress 10.1.1.175

exit 0

NSS Pool Resource Load Script

NetWare

nss /poolactivate=HOMES_POOL

mount HOMES VOLID=254

CLUSTER CVSBIND ADD BCC_CLUSTER_HOMES_SERVER 10.1.1.180

NUDP ADD BCC_CLUSTER_HOMES_SERVER 10.1.1.180

add secondary ipaddress 10.1.1.180

CIFS ADD .CN=BCC_CLUSTER_HOMES_SERVER.OU=servers.O=lab.T=TEST_TREE.

Linux

#!/bin/bash

. /opt/novell/ncs/lib/ncsfuncs

exit_on_error nss /poolact=HOMES_POOL

exit_on_error ncpcon mount HOMES=254

exit_on_error add_secondary_ipaddress 10.1.1.180

exit_on_error ncpcon bind --ncpservername=BCC_CLUSTER_HOMES_SERVER -ipaddress=10.1.1.180

exit 0

NSS Pool Resource Unload Script

NetWare

del secondary ipaddress 10.1.1.180

CLUSTER CVSBIND DEL BCC_CLUSTER_HOMES_SERVER 10.1.1.180

NUDP DEL BCC_CLUSTER_HOMES_SERVER 10.1.1.180

nss /pooldeactivate=HOMES_POOL /overridetype=question

CIFS DEL .CN=BCC_CLUSTER_HOMES_SERVER.OU=servers.O=lab.T=TEST_TREE.

Linux

#!/bin/bash

. /opt/novell/ncs/lib/ncsfuncs

ignore_error ncpcon unbind --ncpservername=BCC_CLUSTER_HOMES_SERVER -ipaddress=10.1.1.180

ignore_error del_secondary_ipaddress 10.1.1.180

exit_on_error nss /pooldeact=HOMES_POOL

exit 0

3.6.4 Finalizing the Cluster Conversion

If you have converted all nodes in a former NetWare cluster to Linux, you must finalize the

conversion process by issuing the cluster convert command on one Linux cluster node. The

cluster convert command moves cluster resource load and unload scripts from the files

where they were stored on Linux cluster nodes into eDirectory. This enables a Linux cluster that has

been converted from NetWare to utilize eDirectory like the former NetWare cluster.

To finalize the cluster conversion:

1 Run cluster convert preview resource_name at the server console of one Linux

cluster node.

Replace resource_name with the name of a resource that you want to preview.

The preview switch lets you view the resource load and unload script changes that will be made

when the conversion is finalized. You can preview all cluster resources.

2 Run cluster convert commit at the server console of one Linux cluster node to finalize

the conversion.
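
The two steps above can be wrapped so that every resource is previewed before anything is committed. This is a minimal sketch. The function names, the CLUSTER_CMD override variable, and the --yes confirmation argument are hypothetical, and it assumes that cluster convert preview exits with a nonzero status on failure, which this guide does not state.

```shell
# The cluster command can be overridden for a trial run.
CLUSTER_CMD="${CLUSTER_CMD:-cluster}"

# Preview each resource named on the command line; stop at the first failure.
preview_resources() {
    for res in "$@"; do
        echo "== preview: $res"
        "$CLUSTER_CMD" convert preview "$res" || return 1
    done
}

# Finalize the conversion, but only when --yes is given explicitly.
commit_conversion() {
    if [ "${1:-}" != "--yes" ]; then
        echo "refusing to commit without --yes" >&2
        return 2
    fi
    "$CLUSTER_CMD" convert commit
}
```

Requiring an explicit argument before the commit leaves time to review the previewed script changes first.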

Generating Linux Cluster Resource Templates

After converting all nodes in a former NetWare cluster to Linux, you might want to generate the

cluster resource templates that are included with Novell Cluster Services for Linux. These templates

are automatically created when you create a new Linux cluster, but are not created when you convert

an existing NetWare cluster to Linux.

To generate or regenerate the cluster resource templates that are included with Novell Cluster

Services for Linux, enter the following command on a Linux cluster server:

/opt/novell/ncs/bin/ncs-configd.py -install_templates

In addition to generating Linux cluster resource templates, this command deletes all NetWare cluster

resource templates. Because of this, use this command only after all nodes in the former NetWare

cluster are converted to Linux.

3.7 Setting Up Novell Cluster Services

If you created a new cluster, you now need to create and configure cluster resources. You might also

need to create shared disk partitions and NSS pools if they do not already exist and, if necessary,

configure the shared disk NSS pools to work with Novell Cluster Services. Configuring shared disk

NSS pools to work with Novell Cluster Services can include cluster enabling the pools.

You must use iManager or NSSMU to cluster enable shared NSS disk pools. iManager must be used

to create cluster resources.

3.7.1 Creating NSS Shared Disk Partitions and Pools

Before creating disk partitions and pools on shared storage (storage area network or SAN), Novell

Cluster Services must be installed. You should carefully plan how you want to configure your shared

storage prior to installing Novell Cluster Services. For information on configuring access to a

NetWare server functioning as an iSCSI target, see Accessing iSCSI Targets on NetWare Servers

from Linux Initiators (http://www.novell.com/documentation/iscsi1_nak/iscsi/data/

bswmaoa.html#bt8cyhf).

To create NSS pools on shared storage, use either the server-based NSS Management Utility

(NSSMU) or iManager. These tools can also be used to create NSS volumes on shared storage.

NSS pools can be cluster enabled at the same time they are created or they can be cluster enabled at

a later time after they are created. To learn more about NSS pools, see “Pools” in the Novell Storage

Services Administration Guide.

Creating Shared NSS Pools Using NSSMU

1 Start NSSMU by entering nssmu at the server console of a cluster server.

2 Select Devices from the NSSMU main menu and mark all shared devices as sharable for

clustering.

On Linux, shared disks are not by default marked sharable for clustering. If a device is marked

as sharable for clustering, all partitions on that device will automatically be sharable.

You can press F6 to individually mark devices as sharable.

3 From the NSSMU main menu, select Pools, press Insert, and then type a name for the new pool

you want to create.

4 Select the device on your shared storage where you want the pool created.

Device names might be labelled something like /dev/sdc.

5 Choose whether you want the pool to be activated and cluster enabled when it is created.

The Activate on Creation feature is enabled by default. This causes the pool to be activated as

soon as it is created. If you choose not to activate the pool, you will have to manually activate it

later before it can be used.

The Cluster Enable on Creation feature is also enabled by default. If you want to cluster enable

the pool at the same time it is created, accept the default entry (Yes) and continue with Step 6.

If you want to cluster enable the pool at a later date, change the default entry from Yes to No,

select Create, and then go to “Creating NSS Volumes” on page 29.

6 Specify the virtual server name, IP address, and advertising protocols.

NOTE: The CIFS and AFP check boxes can be checked, but CIFS and AFP functionality does

not apply to Linux. Checking the check boxes has no effect.

When you cluster enable a pool, a virtual Server object is automatically created and given the

name of the Cluster object plus the cluster-enabled pool. For example, if the cluster name is

cluster1 and the cluster-enabled pool name is pool1, then the default virtual server name will be

cluster1_pool1_server. You can edit the field to change the default virtual server name.

Each cluster-enabled NSS pool requires its own IP address. The IP address is used to provide

access and failover capability to the cluster-enabled pool (virtual server). The IP address you

assign to the pool remains assigned to the pool regardless of which server in the cluster is

accessing the pool.

You can select or deselect NCP. NCP™ is selected by default, and is the protocol used by

Novell clients. Selecting NCP will cause commands to be added to the pool resource load and

unload scripts to activate the NCP protocol on the cluster. This lets you ensure that the

cluster-enabled pool you just created is highly available to Novell clients.

7 Select Create to create and cluster enable the pool.

Repeat the above steps for each additional pool you want to create on shared storage.

Continue with “Creating NSS Volumes” on page 29.
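
When planning several cluster-enabled pools, it can help to list the default virtual server names and confirm that no two pools share an IP address. The following sketch does both from a plain list. The function name and the input format (one "pool_name ip_address" pair per line) are hypothetical. The naming pattern is the default described in Step 6.

```shell
# $1 is the cluster name. Reads "pool_name ip_address" pairs on stdin.
# Prints "virtual_server_name ip_address" for each pool and returns a
# nonzero status if an IP address is assigned to more than one pool.
plan_pool_resources() {
    awk -v cluster="$1" '
        NF < 2 { next }
        seen[$2]++ {
            printf "duplicate IP address %s for pool %s\n", $2, $1 > "/dev/stderr"
            bad = 1
        }
        { printf "%s_%s_server %s\n", cluster, $1, $2 }
        END { exit bad }
    '
}
```

For cluster1 with pool1 at 10.1.1.180, the sketch prints cluster1_pool1_server 10.1.1.180, matching the default name given in the example above.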

Creating Shared NSS Pools Using iManager

1 Start your Internet browser and enter the URL for iManager.

The URL is http://server_ip_address/nps/imanager.html. Replace server_ip_address with the

IP address or DNS name of a Linux server in the cluster that has iManager installed or with the

IP address for Apache-based services.

2 Enter your username and password.

3 In the left column, locate Storage, then click the Pools link.

4 Enter a cluster server name or browse and select one, then click the New link.

5 Specify the new pool name, then click Next.

6 Check the box next to the device where you want to create the pool, then specify the size of the

pool.

7 Choose whether you want the pool to be activated and cluster-enabled when it is created, then

click Next.

The Activate On Creation check box is used to determine if the pool you are creating is to be

activated as soon as it is created. The Activate On Creation check box is checked by default. If

you uncheck the check box, you must manually activate the pool later before it can be used.

If you want to cluster enable the pool at the same time it is created, leave the Cluster Enable on

Creation check box checked and continue with Step 8 on page 29.

If you want to cluster enable the pool at a later date, uncheck the check box, click Create, and

continue with “Cluster Enabling NSS Pools and Volumes” on page 31.

8 Specify the virtual server name, pool IP address, and advertising protocols, then click Finish.

NOTE: The CIFS and AFP check boxes can be checked, but CIFS and AFP functionality does

not apply to Linux. Checking the check boxes has no effect.

When you cluster-enable a pool, a virtual Server object is automatically created and given the

name of the Cluster object plus the cluster-enabled pool. For example, if the cluster name is

cluster1 and the cluster-enabled pool name is pool1, then the default virtual server name will be

cluster1_pool1_server. You can edit the field to change the default virtual server name.

Each cluster-enabled NSS pool requires its own IP address. The IP address is used to provide

access and failover capability to the cluster-enabled pool (virtual server). The IP address you

assign to the pool remains assigned to the pool regardless of which server in the cluster is

accessing the pool.

You can select or deselect NCP. NCP is selected by default, and is the protocol used by Novell

clients. Selecting NCP causes commands to be added to the pool resource load and unload

scripts to activate the NCP protocol on the cluster. This lets you ensure that the cluster-enabled

pool you just created is highly available to Novell clients.

3.7.2 Creating NSS Volumes

If you plan on using a shared disk system in your cluster and need to create new NSS pools or

volumes after installing Novell Cluster Services, the server used to create the volumes should

already have NSS installed and running.

Using NSSMU

1 From the NSSMU main menu, select Volumes, then press Insert and type a name for the new

volume you want to create.

Each shared volume in the cluster must have a unique name.

2 Select the pool where you want the volume to reside.

3 Review and change volume attributes as necessary.

You might want to enable the Flush Files Immediately feature. This will help ensure the

integrity of volume data. Enabling the Flush Files Immediately feature improves file system

reliability but hampers performance. You should consider this option only if necessary.

4 Either specify a quota for the volume or accept the default of 0 to allow the volume to grow to

the pool size, then select Create.

The quota is the maximum possible size of the volume. If you have more than one volume per

pool, you should specify a quota for each volume rather than allowing multiple volumes to

grow to the pool size.

5 Repeat the above steps for each cluster volume you want to create.

Depending on your configuration, the new volumes will either mount automatically when resources

that require them start or will have to be mounted manually on individual servers after they are up.
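
The quota guidance in Step 4 can be checked against a volume plan before you create the volumes. This sketch uses a hypothetical function name and input format (one "pool volume quota" line per volume, with a quota of 0 meaning the volume can grow to the pool size). It reads only the list you give it, not the NSS configuration.

```shell
# Reads "pool volume quota" lines on stdin and prints, in sorted order, each
# pool that holds more than one volume while at least one of its volumes has
# a quota of 0.
pools_needing_quotas() {
    awk '
        NF >= 3 { count[$1]++; if ($3 == 0) unlimited[$1] = 1 }
        END { for (p in count) if (count[p] > 1 && unlimited[p]) print p }
    ' | sort
}
```

A pool with a single volume and a quota of 0 is not reported, because the guidance applies only when a pool contains more than one volume.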

Using iManager

1 Start your Internet browser and enter the URL for iManager.

The URL is http://server_ip_address/nps/imanager.html. Replace server_ip_address with the

IP address or DNS name of a Linux server in the cluster that has iManager installed or with the

IP address for Apache-based services.

2 Enter your username and password.

3 In the left column, locate Storage, then click the Volumes link.

4 Enter a cluster server name or browse and select one, then click the New link.

5 Specify the new volume name, then click Next.

6 Check the box next to the cluster pool where you want to create the volume and either specify

the size of the volume (Volume Quota) or check the box to allow the volume to grow to the size

of the pool, then click Next.

The volume quota is the maximum possible size of the volume. If you have more than one

volume per pool, you should specify a quota for each volume rather than allowing multiple

volumes to grow to the pool size.

7 Review and change volume attributes as necessary.

The Flush Files Immediately feature helps ensure the integrity of volume data. Enabling the

Flush Files Immediately feature improves file system reliability but hampers performance. You

should consider this option only if necessary.

8 Choose whether you want the volume activated and mounted when it is created, then click

Finish.

3.7.3 Cluster Enabling NSS Pools and Volumes

If you have a shared disk system that is part of your cluster and you want the pools and volumes on

the shared disk system to be highly available to NCP clients, you will need to cluster enable those

pools and volumes. Cluster enabling a pool or volume allows it to be moved or mounted on different

servers in the cluster in a manner that supports transparent client reconnect.

Cluster-enabled volumes do not appear as cluster resources. NSS pools are resources, and load and

unload scripts apply to pools and are automatically generated for them. Each cluster-enabled NSS

pool requires its own IP address. This means that each cluster-enabled volume does not have an

associated load and unload script or an assigned IP address.

NSS pools can be cluster enabled at the same time they are created. If you did not cluster enable a

pool at creation time, the first volume you cluster enable in the pool automatically cluster enables

the pool where the volume resides. After a pool has been cluster enabled, you need to cluster enable

the other volumes in the pool if you want them to be mounted on another server during a failover.

When a server fails, any cluster-enabled pools being accessed by that server will fail over to other

servers in the cluster. Because the cluster-enabled pool fails over, all volumes in the pool will also

fail over, but only the volumes that have been cluster enabled will be mounted. Any volumes in the

pool that have not been cluster enabled will have to be mounted manually. For this reason, volumes

that aren't cluster enabled should be in separate pools that are not cluster enabled.

If you want each cluster-enabled volume to be its own cluster resource, each volume must have its

own pool.

Some server applications don't require NCP client access to NSS volumes, so cluster enabling pools

and volumes might not be necessary. Pools should be deactivated and volumes should be

dismounted before being cluster enabled.

1 Start your Internet browser and enter the URL for iManager.

The URL is http://server_ip_address/nps/imanager.html. Replace server_ip_address with the

IP address or DNS name of a Linux server in the cluster that has iManager installed or with the

IP address for Apache-based services.

2 Enter your username and password.

3 In the left column, locate Clusters, then click the Cluster Options link.

iManager displays four links under Clusters that you can use to configure and manage your

cluster.

4 Enter the cluster name or browse and select it, then click the New link.

5 Specify Pool as the resource type you want to create by clicking the Pool radio button, then

click Next.

6 Enter the name of the pool you want to cluster-enable, or browse and select one.

7 (Optional) Change the default name of the virtual Server object.

When you cluster enable a pool, a Virtual Server object is automatically created and given the

name of the Cluster object plus the cluster-enabled pool. For example, if the cluster name is

cluster1 and the cluster-enabled pool name is pool1, then the default virtual server name will be

cluster1_pool1_server.

If you are cluster-enabling a volume in a pool that has already been cluster-enabled, the virtual

Server object has already been created, and you can't change the virtual Server object name.

8 Enter an IP address for the pool.

Each cluster-enabled NSS pool requires its own IP address. The IP address is used to provide

access and failover capability to the cluster-enabled pool (virtual server). The IP address

assigned to the pool remains assigned to the pool regardless of which server in the cluster is

accessing the pool.

9 Select an advertising protocol.

NOTE: The CIFS and AFP check boxes can be checked, but CIFS and AFP functionality does

not apply to Linux. Checking the check boxes has no effect.

You can select or deselect NCP. NCP™ is selected by default, and is the protocol used by

Novell clients. Selecting NCP will cause commands to be added to the pool resource load and

unload scripts to activate the NCP protocol on the cluster. This lets you ensure that the

cluster-enabled pool you just created is highly available to Novell clients.

10 (Optional) Check the Online Resource after Create check box.

This causes the NSS volume to automatically mount when the resource is created.

11 Ensure that the Define Additional Properties check box is checked, then click Next and

continue with “Setting Start, Failover, and Failback Modes” on page 42.

NOTE: Cluster resource load and unload scripts are automatically generated for pools when they

are cluster-enabled.

When the volume resource is brought online, the pool will automatically be activated. You don't

need to activate the pool at the server console.

If you delete a cluster-enabled volume, Novell Cluster Services automatically removes the volume

mount command from the resource load script. If you delete a cluster-enabled pool, Novell Cluster

Services automatically removes the Pool Resource object and the virtual server object from

eDirectory. If you rename a cluster-enabled pool, Novell Cluster Services automatically updates the

pool resource load and unload scripts to reflect the name change. Also, NSS automatically changes

the Pool Resource object name in eDirectory.

3.7.4 Creating Traditional Linux Volumes on Shared Disks

Although you can use the same Linux tools and procedures used to create partitions on local drives

to create Linux file system partitions on shared storage, EVMS is the recommended tool. Using

EVMS to create partitions, volumes, and file systems will help prevent data corruption caused by

multiple nodes accessing the same data. You can create partitions and volumes using any of the

journaled Linux file systems (EXT3, Reiser, etc.). To cluster-enable Linux volumes, see

Section 3.7.6, “Cluster Enabling Traditional Linux Volumes on Shared Disks,” on page 36.

TIP: EVMS virtual volumes are recommended for Novell Cluster Services because they can more

easily be expanded and failed over to different cluster servers than physical devices. You can enter

man evms at the Linux server console to reference the evms man page, which provides additional

instructions and examples for evms.

You can also enter man mount at the Linux server console to reference the mount man page,

which provides additional instructions and examples for the mount command.

The following sections provide the necessary information for using EVMS to create a traditional

Linux volume and file system on a shared disk:

“Ensuring That the Shared Disk Is not a Compatibility Volume” on page 33

“Removing Other Segment Managers” on page 33

“Creating a Cluster Segment Manager Container” on page 34

“Adding an Additional Segment Manager” on page 34

“Creating an EVMS Volume” on page 35

“Creating a File System on the EVMS Volume” on page 35

WARNING: EVMS administration utilities (evms, evmsgui, and evmsn) should not be running

when they are not being used. EVMS utilities lock the EVMS engine, which prevents other EVMS-related actions from being performed. This affects both NSS and traditional Linux volume actions.

NSS and traditional Linux volume cluster resources should not be migrated while any of the EVMS

administration utilities are running.
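
Before migrating a cluster resource, you might confirm that none of the utilities named in the warning above are running. The following is a minimal sketch, not a Novell-supplied tool. The function names are hypothetical, and the sketch assumes a Python interpreter and the standard ps command are available on the node.

```python
import subprocess

# Utility names taken from the warning above.
EVMS_UTILITIES = {"evms", "evmsgui", "evmsn"}


def running_evms_utilities(process_names):
    """Return the EVMS utilities that appear in an iterable of process names."""
    return sorted(EVMS_UTILITIES.intersection(process_names))


def current_process_names():
    """List the command names of all running processes.

    Assumption: `ps -e -o comm=` is available; it prints one command name
    per line with no header.
    """
    result = subprocess.run(
        ["ps", "-e", "-o", "comm="],
        capture_output=True,
        text=True,
        check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]
```

Calling running_evms_utilities(current_process_names()) returns an empty list when no EVMS administration utility is running. Any name it does return identifies a utility to close before migrating NSS or traditional Linux volume resources.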

Ensuring That the Shared Disk Is not a Compatibility Volume

New EVMS volumes are by default configured as compatibility volumes. If any of the volumes on

your shared disk (that you plan to use in your cluster) are compatibility volumes, you must delete

them.

1 At the Linux server console, enter evmsgui.

2 Click the Volumes tab, then right-click the volume on the shared disk and select Display details.

3 Click the Page 2 tab and determine from the Status field if the volume is a compatibility

volume.

If the volume is a compatibility volume or has another segment manager on it, continue with

Step 3a below.

3a Click the Volumes tab, right-click the volume, then select Delete.

3b Select the volume, then click Recursive Delete.

3c (Conditional) If a Response Required pop-up appears, click the Write zeros button.

3d (Conditional) If another pop-up appears, click Continue to write 1024 bytes to the end of

the volume.

Removing Other Segment Managers

If any of the shared disks you plan to use with your cluster have other segment managers, you must

delete them as well.

1 In evmsgui, click the Disks tab, then right-click the disk you plan to use for a cluster resource.

2 Select Remove segment manager from Object.

This option only appears if there is another segment manager for the selected disk.

3 Select the listed segment manager and click Remove.

Creating a Cluster Segment Manager Container

To use a traditional Linux volume with EVMS as a cluster resource, you must use the Cluster

Segment Manager (CSM) plug-in for EVMS to create a CSM container.

NOTE: CSM containers require Novell Cluster Services (NCS) to be running on all nodes that

access the CSM container. Do not make modifications to EVMS objects unless NCS is running.

CSM containers can provide exclusive access to shared storage.

1 In evmsgui, click Actions, select Create, then select Container.

2 Select Cluster Segment Manager, then click Next.

3 Select the disks (storage objects) you want to place in the container, then click Next.

4 On the Configuration Options page, select the node where you are creating the container,

specify Private as the type, then choose a name for the container.

The name must be one word, must consist of standard alphanumeric characters, and must not

be any of the following reserved words:

Container

Disk

EVMS

Plugin

Region

Segment

Volume

5 Click Save to save your changes.
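
The naming rules in Step 4 can be checked before you type a name into evmsgui. The following sketch is illustrative only: the function name is hypothetical, and it assumes that "standard alphanumeric characters" means ASCII letters and digits and that reserved words are matched without regard to case. Neither assumption is confirmed by this guide.

```python
import re

# Reserved words listed in Step 4, stored in lowercase for comparison.
RESERVED_WORDS = {
    "container", "disk", "evms", "plugin", "region", "segment", "volume",
}


def is_valid_container_name(name: str) -> bool:
    """Check a proposed CSM container name against the rules in Step 4.

    Assumptions: only ASCII letters and digits are allowed, and reserved
    words are rejected regardless of letter case.
    """
    if not re.fullmatch(r"[A-Za-z0-9]+", name):
        # Empty, more than one word, or contains other characters.
        return False
    return name.lower() not in RESERVED_WORDS
```

For example, is_valid_container_name("csm1") returns True, while "Volume" and "my container" are both rejected.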

Adding an Additional Segment Manager

After creating a CSM container, you can optionally add an additional non-CSM segment manager

container on top of the CSM container you just created. The benefit of this is that other non-CSM

segment manager containers allow you to create multiple smaller EVMS volumes on your EVMS

disk. You can then add additional EVMS volumes or expand or shrink existing EVMS volumes to

utilize or create additional free space on your EVMS disk. In addition, this means that you can also

have different file system types on your EVMS disk.

A CSM container uses the entire EVMS disk, which means that creating additional volumes or

expanding or shrinking volumes is not possible. And, because only one EVMS volume is possible in

the container, only one file system type is allowed in that container.

1 In evmsgui, click Actions, select Add, then select Segment Manager to Storage Object.

2 Choose the desired segment manager, then click Next.

Most of the segment managers will work. The DOS segment manager is added by default for

some EVMS operations.

3 Choose the storage object (container) you want to add the segment manager to, then click Next.

4 Select the disk type (Linux is the default), click Add, then click OK.

5 Click Save to save your changes.

Creating an EVMS Volume

1 In evmsgui, click Actions, select Create, then select EVMS Volume.

2 Select the container you just created (either the CSM container or the additional segment

manager container) and specify a volume name.

3 Click Create, then click Save.

Creating a File System on the EVMS Volume

1 In evmsgui, click the Volumes tab and right-click the volume you just created.

2 Select Make File System, choose a traditional Linux file system from the list, then click Next.

3 Specify a volume label, then click Make.

4 Save your changes by clicking Save.

3.7.5 Expanding EVMS Volumes on Shared Disks

As your storage needs increase, it might become necessary to add more disk space or drives to your

shared storage system. EVMS provides features that allow you to expand or move existing volumes.

The two supported methods for creating additional space for an existing volume are:

Expanding the volume to a separate disk

Moving the volume to a larger disk

Expanding a Volume to a Separate Disk

1 Unmount the file system for the volume you want to expand.

2 In evmsgui, click the Volumes tab, right-click the volume you want to expand, then select Add

Feature.

3 Select Drive Linking Feature, then click Next.

4 Provide a name for the drive link, click Add, then save your changes.

5 Click Actions, select Create, and then click Container.

6 Select the Cluster Segment Manager, click Next, then select the disk you want to expand the

volume to.

The entire disk is used for the expansion, so you must select a disk that does not have other

volumes on it.

7 Provide the same settings information (name, type, etc.) as the existing container for the

volume and save your changes.

8 Click the Volumes tab, right-click the volume, then click Expand.

9 Select the volume that you are expanding, then click Next.

10 Verify the current volume size and the size of the volume after it is expanded, then click Next.

The expanded volume size should include the size of the disk the volume is being expanded to.

11 Select the storage device the volume is being expanded to, select Expand, and save your

changes.

12 Click Save and exit evmsgui.
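
The size check in Step 10 is simple arithmetic: the expanded volume should be about the current volume size plus the size of the disk it is expanded to. The sketch below shows that check. The function names and the 5 percent tolerance are assumptions made for illustration, and the figure evmsgui reports may not match the sum exactly.

```python
def expected_expanded_size(current_size_mb: float, new_disk_size_mb: float) -> float:
    """Approximate size after expansion: current volume plus the whole new disk."""
    return current_size_mb + new_disk_size_mb


def looks_expanded(reported_size_mb: float, current_size_mb: float,
                   new_disk_size_mb: float, tolerance: float = 0.05) -> bool:
    """Return True if the reported size is within `tolerance` of the expected total.

    Assumption: the default 5 percent tolerance is arbitrary and only
    allows for small differences in how sizes are reported.
    """
    expected = expected_expanded_size(current_size_mb, new_disk_size_mb)
    return abs(reported_size_mb - expected) <= tolerance * expected
```

If the reported size still equals the original volume size, the expansion was probably not applied to the new disk.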

Moving a Volume to a Larger Disk

1 Unmount the file system for the volume you want to move.

2 Add a larger disk to the CSM container.

2a In evmsgui, click Actions, select Create, then click Container.

2b Select the Cluster Segment Manager, then click Next.

2c Select the larger disk you want to move the volume to.

The entire disk is used for the expansion, so you must select a disk that does not have

other volumes on it.

2d Provide the same settings information (name, type, etc.) as the existing container for the

volume, then save your changes.

2e Click Save and exit evmsgui.

3 Restart evmsgui, click the Containers tab, then expand the container so that the objects under

the container appear.

The new disk should appear as part of the container.

4 Right-click the object for the disk where the volume resides and select Replace.

5 Select the object for the disk where the volume will be moved, then click Next.

6 Save your changes.

Saving your changes could take a while, depending on volume size and other factors.

7 Click Save, exit evmsgui, then restart evmsgui.