
Intel® Server Board S5500HV/Intel® Server System SR1670HV

Technical Product Specification

Intel order number E69391-006

Revision 1.2

May, 2010

Enterprise Platforms and Services Division


Revision History Intel® Server Board S5500HV/Intel® Server System SR1670HV TPS

Revision History

| Date | Revision Number | Modifications |
|---|---|---|
| June 2009 | 1.0 | 1st Production Release |
| July 2009 | 1.1 | Corrected typos and power connector pin-out definitions |
| March 2010 | 1.2 | Updated Intel® Xeon® Processor 5600 Series support information and revised CNCA certification |

Disclaimers

Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The Intel® Server Board S5500HV and Intel® Server System SR1670HV may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Intel Corporation server boards contain a number of high-density VLSI and power delivery components that need adequate airflow to cool. Intel’s own chassis are designed and tested to meet the intended thermal requirements of these components when the fully integrated system is used together. It is the responsibility of the system integrator who chooses not to use Intel-developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of airflow required for their specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the server board does not operate correctly when used outside any of their published operating or non-operating limits.

Intel, Pentium, Itanium, and Xeon are trademarks or registered trademarks of Intel Corporation.

*Other brands and names may be claimed as the property of others.

Copyright © Intel Corporation 2010.

Intel Confidential Revision 1.2


Intel order number E69391-006


Table of Contents

1. Introduction
1.1 Chapter Outline
1.2 Server Board Use Disclaimer
2. Intel® Server Board S5500HV Overview
2.1 Intel® Server Board S5500HV Feature Set
2.2 Server Board Layout
2.2.1 Server Board Connector and Component Layout
2.2.2 Server Board Rear I/O Layout
3. Functional Architecture
3.1 Processor Support
3.1.1 Intel® Xeon® Processor 5500 Series and 5600 Series
3.1.2 Processor Population Rules
3.1.3 Unified Retention System Support
3.2 Intel® QuickPath Memory Controller and Memory Subsystem
3.2.1 Intel® Server Board S5500HV Memory Support
3.3 Intel® 5500 Chipset IOH
3.4 Intel® 82801Jx I/O Controller Hub (ICH10R)
3.4.2 USB 2.0 Support
3.4.3 Keyboard and Mouse Support
3.5 Network Interface Controller (NIC)
3.6 ASPEED* AST2050 Graphics and Remote Management Processor
3.6.1 Video Support
3.6.2 Baseboard Management Controller & BMC Module
4. Platform Management
4.1 System Fan Control
4.2 On-board/System Sensor Information
4.3 Error Handling and Messaging
5. Connector/Header Locations and Pin-outs
5.1 Power Connectors
5.2 Serial ATA Connectors
5.3 Front Panel Headers


5.4 System Management Headers
5.4.1 SGPIO Header
5.4.2 Power Supply SMBus Connectors
5.5 I/O Connectors
5.5.1 VGA Connector
5.5.2 NIC Ports
5.5.3 Serial Port Connector
5.5.4 USB Connectors
5.6 Fan Headers
6. Configuration Jumpers
6.1 Clear RTC RAM (CLRTC1) Jumper
6.2 VGA Controller Enable/Disable Jumper
6.3 NIC 1 & NIC 2 Enable/Disable Jumper
6.4 Embedded RAID Option Select Jumper
6.5 DDR3 voltage control jumpers (**Future Support Only)
6.6 BIOS Recovery Jumper
7. Intel® Light-Guided Diagnostics
7.1 Standby Voltage LED
7.2 CPU Fault LED
7.3 System Identification LED
7.4 BMC LED
8. Intel® Server System SR1670HV Overview
8.1 Front Panel Features
8.2 Rear Panel Features
8.3 System LED Overview
8.3.1 Front Control Panel LEDs
8.3.2 LAN Port LEDs
8.3.3 Hard Drive Status LEDs
8.4 System Storage
8.5 System Cooling
8.6 System Power
8.6.1 Power Supply Module Specification
8.6.2 AC Power Cord Specification Requirements
8.7 Add-in Card Support
9. Environmental Specifications


9.1 Intel® Server Board S5500HV Environmental Specifications
9.2 Processor Power Support (Server Board Only)
9.3 Intel® Server System SR1670HV Environmental Specifications
9.4 Processor Power Support (Integrated System)
10. Regulatory and Certification
10.1 Intel® Server Board S5500HV Regulatory and Certification
10.1.1 Electromagnetic Compatibility Notices
10.1.2 FCC Verification Statement
10.1.3 ICES-003 (Canada)
10.1.4 Europe (CE Declaration of Conformity)
10.1.5 Japan EMC Compatibility
10.1.6 BSMI (Taiwan)
10.1.7 RRL (Korea)
10.2 Intel® Server System SR1670HV Regulatory and Certification
10.2.1 Product Regulatory Compliance
10.2.2 Use of Specified Regulated Components
10.2.3 Electromagnetic Compatibility Notices
10.3 Product Ecology Compliance
10.4 Other Markings
10.5 Component Regulatory Requirements to Support System and/or Baseboard Level Certifications
11. Product Safety Information
11.1 Safety Warnings & Cautions
11.2 Site Selection
11.3 Equipment Handling Practices
11.4 Power and Electrical Warnings
11.4.1 Replacing the Back up Battery
11.4.2 Power Cord Warnings
11.5 System Access Warnings
11.6 Rack Mount Warnings
11.7 Cooling and Airflow
11.8 Laser Peripherals or Devices
Appendix A: Product Usage Tips
Glossary
Reference Documents


List of Figures

Figure 1. Intel® Server Board S5500HV
Figure 2. Intel® Server Board S5500HV Layout
Figure 3. Intel® Server Board S5500HV Rear I/O Layout
Figure 4. Intel® Server Board S5500HV Functional Block Diagram
Figure 5. Processor Architecture
Figure 6. Unified Retention System and Unified Backplate Assembly
Figure 7. Conceptual Memory Layout Diagram
Figure 8. DIMM Slot Nomenclature
Figure 9. Intel® Server Board S5500HV Memory Slot Layout
Figure 10. Mirror Channel Mode Memory Population
Figure 11. RAID Option Jumper Block
Figure 12. Internal USB Port Locations
Figure 13. NIC Enable/Disable Jumper Block
Figure 14. Video Enable/Disable Jumper Block
Figure 15. Baseboard Management Module
Figure 16. Management NIC
Figure 17. System Fan Connector Locations
Figure 18. System Fan Connector Pin-out Definition
Figure 19. 20-pin ATX Main Power Connector Pin-out and Location
Figure 20. 4-pin Peripheral Power Connector Pin-out and Location
Figure 21. SATA Connector Location and Pin-out
Figure 22. Front Panel Header Location and Pin-out
Figure 23. AUX Front Panel Header Location and Pin-out
Figure 24. SGPIO Header Location and Pin-out
Figure 25. Power Supply SMBus Connector Location and Pin-out
Figure 26. Clear RTC Jumper Block
Figure 27. Video Enable/Disable Jumper
Figure 28. NIC1 & NIC2 Enable/Disable Jumper
Figure 29. Embedded RAID Option Select Jumper
Figure 30. BIOS Recovery Jumper
Figure 31. Stand-by Voltage LED
Figure 32. CPU Fault LED


Figure 33. System ID LED
Figure 34. BMC LED
Figure 35. Intel® Server System SR1670HV
Figure 36. Intel® Server System SR1670HV - Front Panel Overview
Figure 37. Intel® Server System SR1670HV - Back Panel Overview
Figure 38. Intel® Server System SR1670HV - Front Control Panel LEDs
Figure 39. LAN Port LED Identification
Figure 40. Hard Drive LED Identification
Figure 41. Hard Drive Assembly
Figure 42. Hard Drive Bay Assembly
Figure 43. System Fan Assembly
Figure 44. System Fan Removal
Figure 45. Intel® Server System SR1670HV - Power Sub-system
Figure 46. Power Module Removal
Figure 47. AC Cord Specification
Figure 48. Intel® Server System SR1670HV - Add-in Card Support


List of Tables

Table 1. Intel® Server Board S5500HV Feature Set
Table 2. Major Board Components
Table 3. Supported RDIMM configurations
Table 4. Supported UDIMM configurations
Table 5. Memory Population Table
Table 6. Supported Mirrored DIMM Population
Table 7. NIC Status LEDs
Table 8. System Sensors
Table 9. LED/Power Module Sensors
Table 10. Backplane Sensors
Table 11. Power Module Sensors
Table 12. System Fan Sensors
Table 13. FRU Sensors
Table 14. BIOS Error Messages and Checkpoints
Table 15. VGA Connector Pin-out
Table 16. RJ-45 10/100/1000 NIC Connector Pin-out
Table 17. Serial Port Pin-out
Table 18. External USB Connector Pin-out
Table 19. 4-pin System Fan Connector Pin-out
Table 20. Intel® Server System SR1670HV Feature Set
Table 21. Power Supply Module Specification
Table 22. Over Current Protection Limits
Table 23. Over Voltage Protection Limits
Table 24. AC Cord Specification
Table 25. Server Board Environmental Limits Summary
Table 26. Intel® Xeon® Processor TDP Guidelines
Table 27. Server System Environmental Limits Summary
Table 28. Server Board Product Safety & Electromagnetic (EMC) Compliance
Table 29. Server System Product Safety & Electromagnetic (EMC) Compliance


1. Introduction

This Technical Product Specification (TPS) provides information detailing the features, functionality, and high-level architecture of the Intel® Server Board S5500HV and the Intel® Server System SR1670HV.

Additional product information can be found at the following Intel website:

http://support.intel.com/support/motherboards/server/SR1670HV/

1.1 Chapter Outline

This document is divided into the following chapters:

Chapter 1 – Introduction
Chapter 2 – Intel® Server Board S5500HV Overview
Chapter 3 – Functional Architecture
Chapter 4 – Platform Management
Chapter 5 – Connector/Header Locations and Pin-outs
Chapter 6 – Configuration Jumpers
Chapter 7 – Intel® Light-Guided Diagnostics
Chapter 8 – Intel® Server System SR1670HV Overview
Chapter 9 – Environmental Specifications
Chapter 10 – Regulatory and Certification
Chapter 11 – Product Safety Information
Appendix A – Product Usage Tips
Glossary of Terms
Product Reference Documents

1.2 Server Board Use Disclaimer

Intel Corporation server boards contain a number of high-density VLSI and power delivery components that need adequate airflow to cool. Intel ensures through its own chassis development and testing that when Intel server building blocks are used together, the fully integrated system meets the intended thermal requirements of these components. It is the responsibility of the system integrator who chooses not to use Intel-developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of airflow required for their specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the server board does not operate correctly when used outside any of their published operating or non-operating limits.


2. Intel® Server Board S5500HV Overview

The Intel® Server Board S5500HV is designed for the high-density rack server market. Its half-width board design (6.3” x 16.7”) makes dual server node configurations possible within a single rack-mount chassis.

2.1 Intel® Server Board S5500HV Feature Set

Table 1. Intel® Server Board S5500HV Feature Set

| Feature | Description |
|---|---|
| Processors | Support for one or two Intel® Xeon® Processors 5500 Series and 5600 Series in FC-LGA 1366 Socket B package with up to 130 W Thermal Design Power (TDP); 4.8 GT/s, 5.86 GT/s and 6.4 GT/s Intel® QuickPath Interconnect (Intel® QPI) |
| Memory | Support for 800/1066/1333 MT/s ECC registered (RDIMM) or unbuffered (UDIMM) DDR3 memory; 12 DIMMs total across 6 memory channels (3 channels per processor) |
| Chipset | Intel® 5500 Chipset IOH; Intel® 82801Jx I/O Controller Hub (ICH10R) |
| I/O Control | External connections: DB-15 Video connector; DB-9 COM1 Serial Port connector; two RJ-45 Network Interface Connectors (stacked) for 10/100/1000 Mb; one RJ-45 Management Network Interface Connector; two USB 2.0 connectors. Internal connections: one USB 1x5 pin header, supporting one USB 2.0 port; one USB 2.0 Type F Connector; four SATA II Connectors; 20-pin ATX Main Power Connector(s); 4-pin Peripheral Drive Power Connector; Power Supply SMBus Interface Header; Front Panel Headers providing support for control button and LED options |
| System Fan Support | Four 4-pin managed system fan headers |
| Add-in Adapter Support | One riser slot supporting low-profile X16 GEN2 PCI Express* riser cards |
| Video | On-board ASPEED* AST2050 with integrated Video Controller; Integrated 2D Video Controller; 8 MB Video Memory |
| Hard Drive | Support for four ICH10R SATA II ports with support for the following integrated RAID solutions: Intel® Matrix Storage Manager supporting Software RAID levels 0/1/5/10 (Windows* only); LSI* SATA Software RAID supporting Software RAID levels 0/1/10 (Windows and Linux) |
| LAN | Two 10/100/1000 Ethernet LAN ports provided by Intel® 82574L PHYs with Intel® I/O Acceleration Technology |
| Server Management | On-board ASPEED AST2050 with integrated Baseboard Management Controller; BMC Management Module with IPMI 2.0 support (included); 10/100 Management NIC port |
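Table 1 lists two software RAID stacks for the four ICH10R SATA II ports, each with its own RAID levels and operating system support. The Python sketch below encodes that matrix to show which stack covers a given level on a given OS. The `RAID_OPTIONS` layout and the `raid_stacks_for` helper are hypothetical illustrations, not an Intel utility; only the stack names, RAID levels, and OS lists come from the table.

```python
# Software RAID options from Table 1 (four ICH10R SATA II ports).
# RAID_OPTIONS and raid_stacks_for are hypothetical; only the stack names,
# RAID levels, and OS lists are taken from the table.
RAID_OPTIONS = {
    "Intel Matrix Storage Manager": {"levels": {0, 1, 5, 10}, "os": {"Windows"}},
    "LSI SATA Software RAID": {"levels": {0, 1, 10}, "os": {"Windows", "Linux"}},
}


def raid_stacks_for(level: int, os_name: str) -> list[str]:
    """Return, sorted by name, the stacks that list both this level and this OS."""
    return sorted(
        name
        for name, option in RAID_OPTIONS.items()
        if level in option["levels"] and os_name in option["os"]
    )


if __name__ == "__main__":
    print(raid_stacks_for(10, "Windows"))  # both stacks
    print(raid_stacks_for(1, "Linux"))     # LSI stack only
    print(raid_stacks_for(5, "Linux"))     # no match: RAID 5 is listed as Windows-only
```

In this matrix, RAID 5 appears only under the Intel Matrix Storage Manager on Windows, while Linux users are limited to the LSI stack's levels 0/1/10.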


2.2 Server Board Layout


Figure 1. Intel® Server Board S5500HV


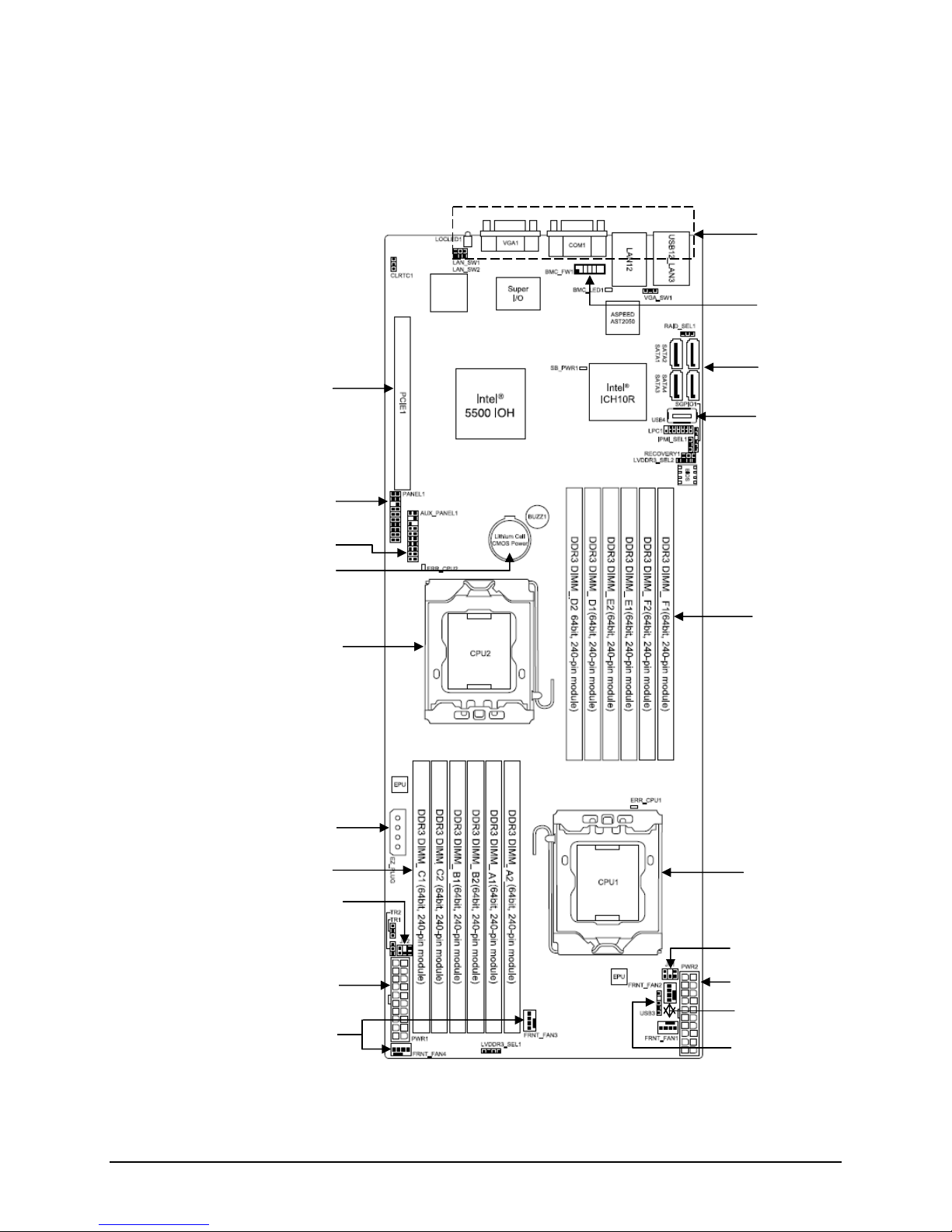

2.2.1 Server Board Connector and Component Layout

The following figure shows the layout of the server board. Each connector and major component is identified by a letter and described in Table 2.


Figure 2. Intel® Server Board S5500HV Layout


Table 2. Major Board Components

| Callout | Description |
|---|---|
| A | Rear I/O Connectors |
| B | BMC Management Module connector |
| C | SATA Ports 1-4 |
| D | Internal USB(4) 2.0 Port |
| E | CPU 2 DIMM Slots (Slots D1–F2) |
| F | CPU 1 - LGA 1366 Socket |
| G | Power Supply SMBus - 2x3 Pin Header |
| H | Main Power Connector – 20 pin |
| I | System Fan Connectors |
| J | USB(3) 2.0 - 1x5 Pin Header |
| K | CPU 1 DIMM Slots (Slots A1–C2) |
| L | Peripheral Drive Power Connector – 4 pin |
| M | CPU 2 - LGA 1366 Socket |
| N | CMOS Battery |
| O | Auxiliary Front Panel Header |
| P | Front Panel Header |
| Q | X16 GEN 2 PCI Express* Riser Card Slot |
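Table 2 assigns DIMM slots A1–C2 to CPU 1 and D1–F2 to CPU 2, and Table 1 gives 12 DIMMs across 6 memory channels (3 per processor). The Python sketch below enumerates the slot labels under the assumption, consistent with the paired DDR3 slots in the functional block diagram, that each letter names one channel holding slots 1 and 2. The names `CHANNELS_BY_CPU` and `dimm_slots` are hypothetical and used only for illustration.

```python
from itertools import product

# Assumption: each slot letter is one DDR3 channel with two slots (1 and 2).
# CPU 1 owns channels A-C and CPU 2 owns D-F, per Table 2.
CHANNELS_BY_CPU = {"CPU 1": "ABC", "CPU 2": "DEF"}
SLOTS_PER_CHANNEL = 2


def dimm_slots() -> dict[str, tuple[str, str]]:
    """Map each slot label (e.g. 'A1') to its (processor, channel) pair."""
    slots = {}
    for cpu, letters in CHANNELS_BY_CPU.items():
        for letter, index in product(letters, range(1, SLOTS_PER_CHANNEL + 1)):
            slots[f"{letter}{index}"] = (cpu, letter)
    return slots


if __name__ == "__main__":
    slots = dimm_slots()
    print(len(slots))                             # 12 slots in total
    print(len({ch for _, ch in slots.values()}))  # 6 channels
    print(slots["E2"])                            # ('CPU 2', 'E')
```

The totals the sketch produces (12 slots, 6 channels, 6 slots per processor) match the counts stated in Table 1.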

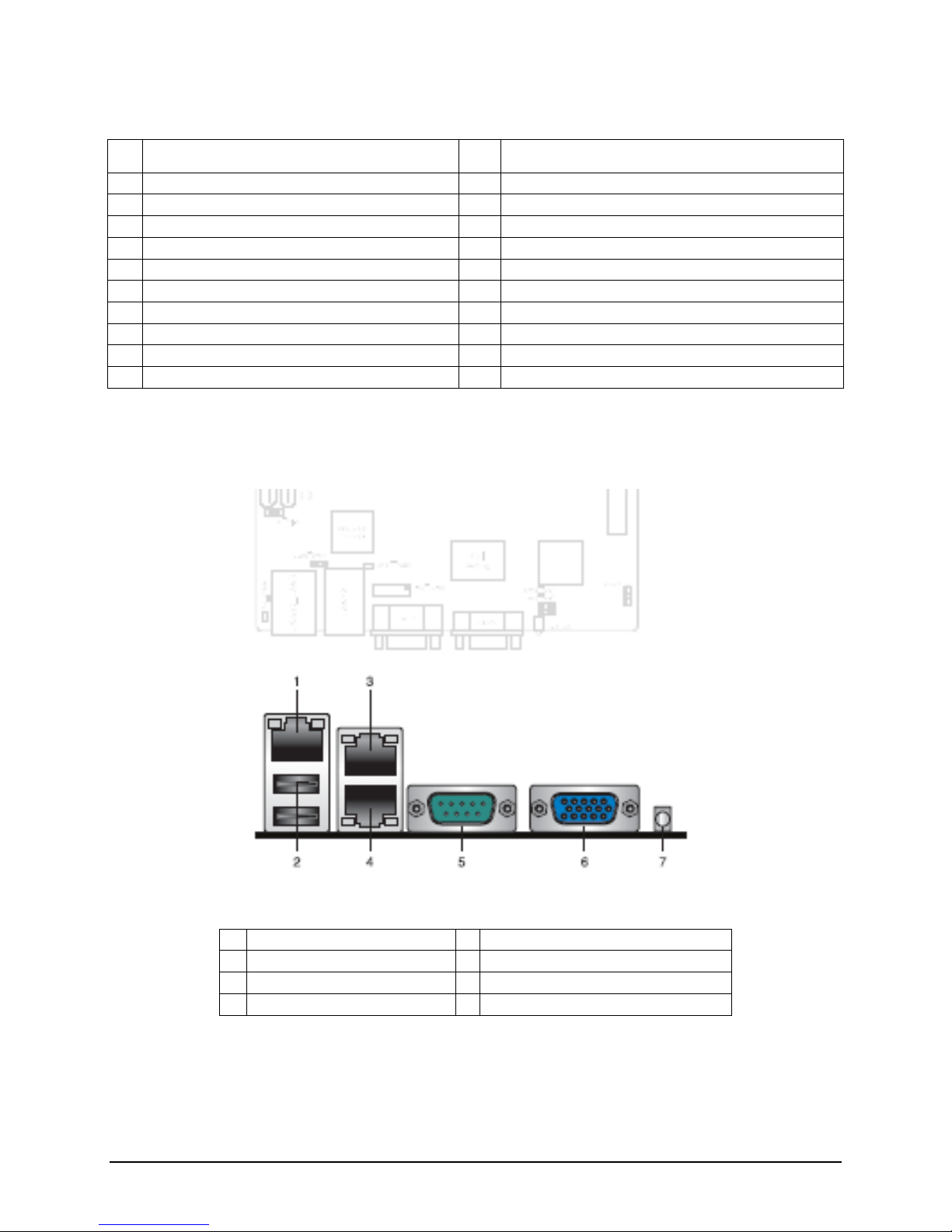

2.2.2 Server Board Rear I/O Layout

The following figure shows the layout of the rear I/O components for the server board.

| Callout | Description |
|---|---|
| 1 | BMC Management NIC Port |
| 2 | Stacked USB 2.0 Ports |
| 3 | LAN 1 Port 10/100/1000 |
| 4 | LAN 2 Port 10/100/1000 |
| 5 | COM 1 Serial Port |
| 6 | Video Connector |
| 7 | Blue System ID LED |

Figure 3. Intel® Server Board S5500HV Rear I/O Layout


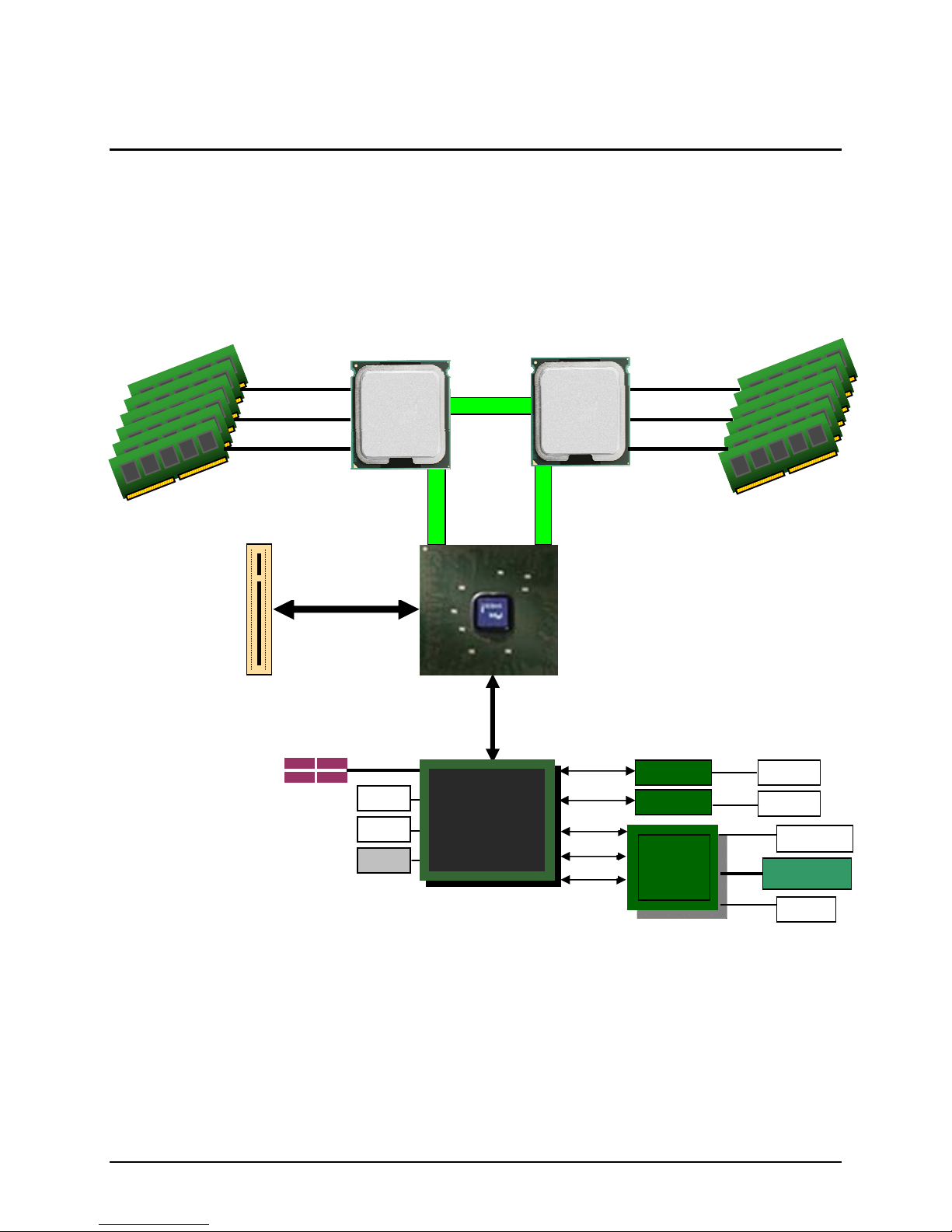

3. Functional Architecture

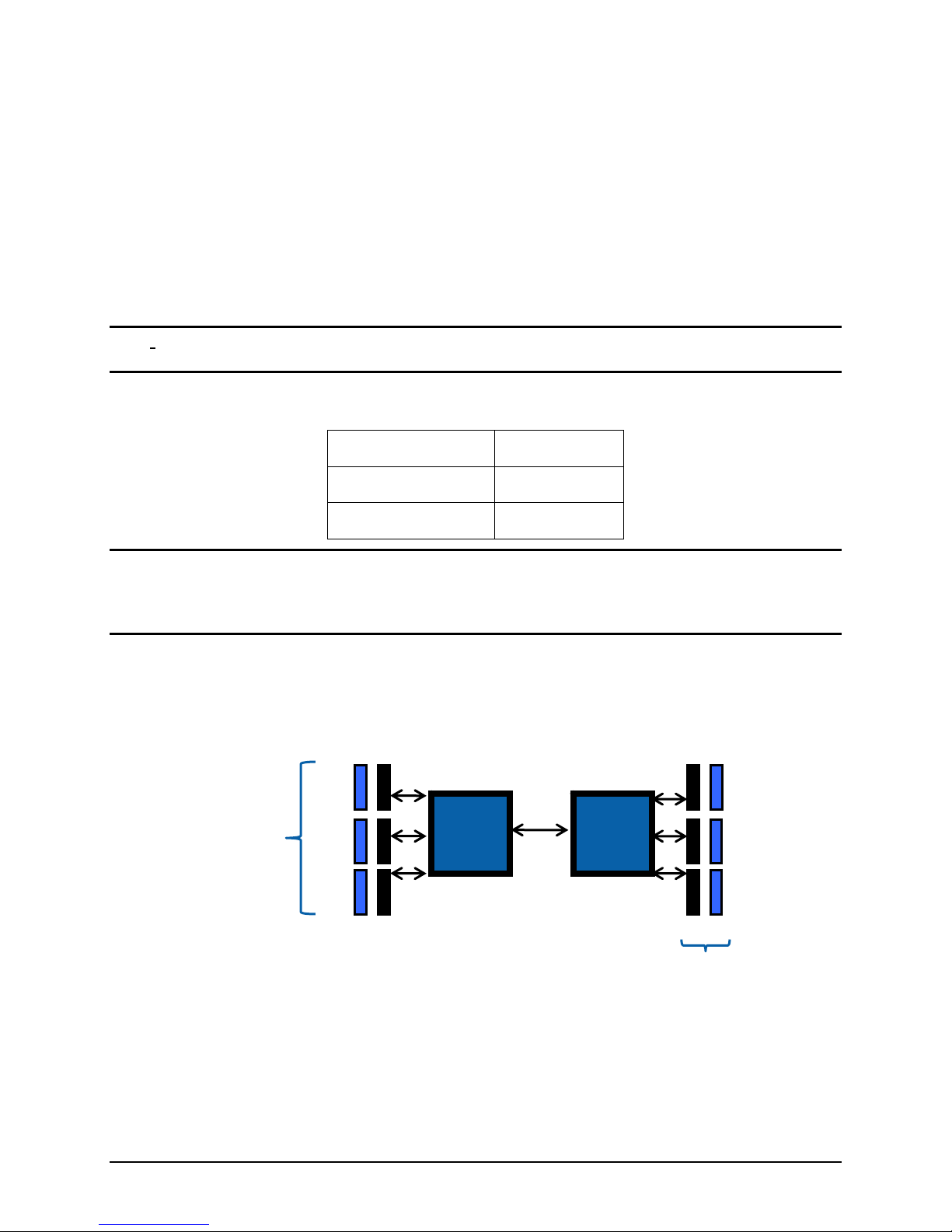

The Intel® Server Board S5500HV is based on Intel architecture, with I/O and performance features provided by the Intel® Xeon® Processor 5500 Series and 5600 Series, featuring Intel® QuickPath Interconnect (Intel® QPI), and by the Intel® 5500 Chipset I/O Hub (IOH) and the Intel® ICH10R I/O Controller Hub.

This chapter provides a high-level description of the functionality associated with each chipset component and the architectural blocks that make up the server board.

Figure 4. Intel® Server Board S5500HV Functional Block Diagram (CPU 1 and CPU 2, each with three DDR3 channels, linked to each other and to the Intel® 5500 Chipset IOH by Intel® QuickPath Interconnect; x16 PCI Express* Gen 2 riser card slot on the IOH; ESI link to the Intel® ICH10R with 4 x SATA, USB, PCI 33, and LPC; AST2050 BMC module with management NIC)

3.1 Processor Support

The server board supports the following:

Two on-board FC-LGA 1366 Socket B processor sockets

Functional support for one or two Intel® Xeon® Processor 5500 Series and 5600 Series processors with Intel® QuickPath Interconnect at 4.8 GT/s, 5.86 GT/s, or 6.4 GT/s

Up to 130 W Thermal Design Power (TDP)

NOTE: Previous generations of Intel® Xeon® processors are not supported on this server board.

3.1.1 Intel® Xeon® Processor 5500 Series and 5600 Series

The Intel® Xeon® Processor 5500 Series and 5600 Series are based on a new microarchitecture built on Intel 45 nm (5500 Series) and 32 nm (5600 Series) process technology. Their enhancements are the result of six major technology developments:

New processor architecture

Intel® QuickPath Technology

Hyper-Threading

Intelligent Power

Turbo Boost

Integrated Memory Controller

The processor supports all the existing Streaming SIMD Extensions 2 (SSE2), Streaming SIMD Extensions 3 (SSE3), and Streaming SIMD Extensions 4 (SSE4), in addition to several advanced technologies, including:

Execute Disable Bit

Intel® Extended Memory 64 Technology (Intel® EM64T)

Enhanced Intel SpeedStep® Technology

Intel® Virtualization Technology (Intel® VT)

Simultaneous Multithreading
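On a Linux host, the instruction-set extensions and technologies listed above can usually be confirmed from the `flags` line of `/proc/cpuinfo`. The following is a minimal sketch, assuming x86 Linux kernel flag names (Linux reports SSE3 as `pni`, and `ht` is only the CPUID capability bit, not proof that Hyper-Threading is enabled); the `FEATURE_FLAGS` table and `detected_features` helper are illustrative, not part of any Intel tool.

```python
# Linux /proc/cpuinfo flag names for the features listed above.
# Assumption: x86 Linux kernel naming ("pni" is how Linux reports SSE3;
# "ht" is the CPUID HTT capability bit, not proof that HT is enabled).
FEATURE_FLAGS = {
    "sse2": "SSE2",
    "pni": "SSE3",
    "sse4_1": "SSE4.1",
    "sse4_2": "SSE4.2",
    "nx": "Execute Disable Bit",
    "lm": "Intel EM64T (64-bit long mode)",
    "est": "Enhanced Intel SpeedStep Technology",
    "vmx": "Intel Virtualization Technology (VT-x)",
    "ht": "Multithreading capability (HTT bit)",
}


def detected_features(cpuinfo_text: str) -> dict[str, bool]:
    """Report which listed features appear in the first 'flags' line."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags = set(value.split())
            break
    return {name: flag in flags for flag, name in FEATURE_FLAGS.items()}
```

Typical use is `detected_features(open("/proc/cpuinfo").read())`; the parser takes text rather than a path so it can be checked against sample input on any machine.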

3.1.1.1 New Processor Architecture

The Intel® Xeon® Processor 5500 Series and 5600 Series feature several new architectural innovations, including:

A distinction between the core and "uncore" in terms of chip design

Four distinct cores per processor

New Level 3 shared Smart Cache

More parallelism: 33% more micro-ops in flight than the 45 nm Intel® Xeon® Processor 5400 Series

Enhanced algorithms and branch prediction capabilities

High-bandwidth Intel® QuickPath Memory Controller: up to 25.6 GB/s

An Integrated Memory Controller

Figure 5. Processor Architecture (core: four cores; uncore: 8 MB shared cache, memory controller with three DDR3 channels, and link controller with two Intel® QuickPath interconnects)

The Intel Smart Cache architecture features a new three-level hierarchy.

1st Level Cache

32 KB instruction cache

32 KB data cache (which supports more L1 misses in parallel than Core 2)

2nd Level Cache

New cache introduced in the Intel® Xeon® Processor 5500 Series and 5600 Series

Unified (holds code and data)

256 KB per core

Performance: very low latency (the new dedicated L2 cache improves performance by reducing latency to frequently used data)

Scalability: as core count increases, pressure on the shared cache is reduced

3rd Level Cache

Shared across all cores

Size depends on the number of cores

Quad-core: up to 8 MB

Scalability: built to vary in size with core count, with the ability to easily increase L3 size in future parts

Inclusive cache policy for best performance

Data residing in L1/L2 must also be present in the 3rd level cache

Shares memory among all cores
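The inclusive policy described above means that when a line leaves the shared L3, it must also be removed from every core's private caches. The toy model below is a minimal sketch of that back-invalidation rule only; the class name, the LRU replacement choice, and the unbounded private caches are simplifying assumptions for illustration, not the processor's real geometry or replacement policy.

```python
from collections import OrderedDict


class InclusiveHierarchy:
    """Toy model of an inclusive shared L3 cache.

    Each core's private set stands in for its L1/L2 (unbounded here, for
    brevity). The shared L3 holds at most `l3_lines` lines and evicts in LRU
    order. To keep the inclusion property (every line in L1/L2 is also in
    L3), an L3 eviction back-invalidates the line in every core.
    """

    def __init__(self, cores: int, l3_lines: int):
        self.l3 = OrderedDict()          # line address -> None, oldest first
        self.l3_lines = l3_lines
        self.private = [set() for _ in range(cores)]

    def access(self, core: int, addr: int) -> None:
        if addr in self.l3:
            self.l3.move_to_end(addr)    # mark as most recently used
        else:
            if len(self.l3) >= self.l3_lines:
                victim, _ = self.l3.popitem(last=False)
                for cache in self.private:
                    cache.discard(victim)  # back-invalidation
            self.l3[addr] = None
        self.private[core].add(addr)

    def is_inclusive(self) -> bool:
        return all(cache.issubset(self.l3) for cache in self.private)
```

With a two-line L3, accessing lines 100 (core 0), 200 (core 1), and then 300 (core 0) evicts line 100 from L3, and the model removes it from core 0 as well, so inclusion still holds.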


3.1.1.2 Intel® QuickPath Interconnect (Intel® QPI)

Intel® QPI is a cache-coherent, link-based interconnect specification for processor, chipset, and I/O bridge components. Intel® QPI can be used in a wide variety of desktop, mobile, and server platforms spanning IA-32 and Intel® Itanium® architectures. Intel® QPI also provides support for high-performance I/O transfer between I/O nodes. It allows connection to standard I/O buses such as PCI Express*, PCI-X, PCI (including peer-to-peer communication support), AGP, etc., through appropriate bridges.

Each Intel® QPI link consists of 20 pairs of uni-directional differential lanes for the transmitter and receiver, plus a differential forwarded clock. A full-width Intel® QPI link pair consists of 84 signals (20 differential pairs in each direction) plus a forwarded differential clock in each direction. Each Intel® Xeon® Processor 5500 Series and 5600 Series processor supports two Intel® QPI links, one going to the other processor and the other to the Intel® 5500 Chipset IOH.

In the current implementation, Intel® QPI ports are capable of operating at transfer rates of up to 6.4 GT/s. Intel® QPI ports operate at multiple lane widths (full: 20 lanes, half: 10 lanes, quarter: 5 lanes) independently in each direction between a pair of devices communicating via Intel® QPI. The server board supports full-width communication only.
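The 84-signal figure follows from the lane counts above: 20 differential pairs (two wires each) per direction, in two directions, plus one differential clock pair per direction. The sketch below checks that arithmetic and estimates link bandwidth, assuming (as is commonly cited for this generation, but not stated in this document) that 16 of the 20 full-width lanes carry payload, i.e. 2 bytes per transfer, with the rest used for CRC and link-layer information.

```python
def qpi_signal_count(lanes_per_direction: int = 20) -> int:
    """Signals in a full-width link pair: data lanes plus forwarded clocks."""
    wires_per_pair = 2                                         # differential
    data_signals = 2 * lanes_per_direction * wires_per_pair    # both directions
    clock_signals = 2 * wires_per_pair                         # one clock pair each way
    return data_signals + clock_signals


def qpi_bandwidth_gbs(gt_per_s: float, payload_bytes_per_transfer: int = 2) -> tuple[float, float]:
    """Return (per-direction, combined) bandwidth in GB/s.

    Assumption: 2 payload bytes per transfer on a full-width link.
    """
    per_direction = gt_per_s * payload_bytes_per_transfer
    return per_direction, 2 * per_direction
```

Under that assumption, a 6.4 GT/s link moves about 12.8 GB/s in each direction (25.6 GB/s combined), and the 4.8 GT/s and 5.86 GT/s options scale proportionally.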

3.1.1.3 Intel® Hyper-Threading Technology

Intel® Hyper-Threading Technology lets each core run two threads at the same time. This capability lets the processor take better advantage of its 4-wide execution engine, effectively hiding the latency of a single thread.

This is the most power-efficient performance feature of the Intel® Xeon® Processor 5500 Series and 5600 Series. It is a very efficient performance boost that comes at a very low die-area cost. Depending on the application, it can provide a significant performance benefit and is much more efficient than adding an entire core. It is complemented by the processor's larger caches and massive memory bandwidth.
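Because each core runs two threads, the operating system sees twice as many logical processors as physical cores when Hyper-Threading is enabled. A minimal sketch, assuming a Linux host: the `siblings` (logical CPUs per package) and `cpu cores` (physical cores per package) fields of `/proc/cpuinfo` differ when it is on. The helper names are illustrative.

```python
def ht_enabled(cpuinfo_text: str) -> bool:
    """Compare 'siblings' with 'cpu cores' in the first processor entry of
    /proc/cpuinfo text; more siblings than cores means two threads per core."""
    siblings = cores = None
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue
        key = key.strip()
        if key == "siblings":
            siblings = int(value)
        elif key == "cpu cores":
            cores = int(value)
        if siblings is not None and cores is not None:
            return siblings > cores
    raise ValueError("no 'siblings'/'cpu cores' fields found")


def logical_processors(sockets: int, cores_per_socket: int, ht: bool) -> int:
    """Logical CPUs the OS sees: two per core when Hyper-Threading is on."""
    return sockets * cores_per_socket * (2 if ht else 1)
```

For example, two quad-core processors with Hyper-Threading enabled present 16 logical processors to the operating system.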

3.1.1.4 Intel® Intelligent Power Technology

The Intel® Xeon® Processor 5500 Series and 5600 Series feature two Intel® Intelligent Power Technologies:

Integrated Power Gates: this feature lets individual idle cores independently reduce power to nearly zero. Core power can be controlled automatically or manually.

Automated Low Power States: this feature provides more, and lower, CPU power states with reduced latency during transitions. It also extends power management to the memory and I/O chips as well as the processor, adjusting system power consumption based on real-time load.

3.1.1.5 Turbo Mode

Intel® Turbo Boost Technology opportunistically and automatically allows the processor to run faster than the marked frequency if the part is operating below power, temperature, and current limits. This can increase the performance of both multi-threaded and single-threaded workloads.

Turbo Boost operation:

Operates under OS control: only entered when the OS requests the highest performance state (P0).

Turbo Boost availability is independent of the number of active cores.

Maximum Turbo Boost frequency depends on the number of active cores and varies by processor configuration.

The amount of time the system spends in Turbo Boost depends on the workload, operating environment, and platform design.

Turbo Boost can be enabled or disabled by BIOS.
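The dependence of maximum Turbo Boost frequency on active cores can be pictured as a per-SKU table of extra multiplier steps ("bins") above the marked ratio. The sketch below assumes the 133.33 MHz base clock used by this processor family; the bin table and the base ratio in the example are hypothetical values for illustration, since real tables are SKU-specific.

```python
BCLK_MHZ = 133.33  # base clock; one Turbo "bin" is one multiplier step

# Hypothetical bin table for an example SKU: extra multiplier steps allowed
# with 1, 2, 3, or 4 cores active. Real values are SKU-specific.
EXAMPLE_TURBO_BINS = {1: 3, 2: 3, 3: 2, 4: 2}


def max_turbo_mhz(base_ratio: int, active_cores: int,
                  bins: dict[int, int] = EXAMPLE_TURBO_BINS) -> float:
    """Highest Turbo frequency for the given number of active cores."""
    if active_cores not in bins:
        raise ValueError(f"no Turbo bin entry for {active_cores} active cores")
    return (base_ratio + bins[active_cores]) * BCLK_MHZ
```

With a marked ratio of 22 (about 2.93 GHz), this hypothetical table gives about 3.33 GHz with one core active and about 3.2 GHz with all four active; the OS and BIOS conditions listed above still decide whether Turbo Boost is entered at all.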

3.1.1.6 Integrated Memory Controller

The Intel® 5500 Chipset Series platform employs a new design in which the memory controller, referred to as the Intel® QuickPath Memory Controller, is embedded as part of the processor architecture.

The Intel® QuickPath Memory Controller provides up to three memory channels per processor, supporting up to 32 GB/s bandwidth per memory controller. Supported memory follows the DDR3 specification and supports the following characteristics:

800 MHz, 1066 MHz, and 1333 MHz operating frequencies

Single-rank (SR), dual-rank (DR), and quad-rank (QR)

Registered DIMM (RDIMM) or Unbuffered DIMM (UDIMM)
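The 32 GB/s figure is consistent with a simple peak calculation: three channels, each 64 bits (8 bytes) wide, at DDR3-1333 transfer rates. The sketch below computes that theoretical peak, which ignores refresh, command overhead, and other real-world efficiency losses; the function name is illustrative.

```python
def peak_dram_bandwidth_gbs(channels: int, mega_transfers_per_s: float,
                            bus_bytes: int = 8) -> float:
    """Theoretical peak in GB/s: channels x transfer rate x 8-byte channel width."""
    return channels * mega_transfers_per_s * bus_bytes / 1000
```

Three channels give about 32.0 GB/s at 1333 MT/s, 25.6 GB/s at 1066 MT/s, and 19.2 GB/s at 800 MT/s, which is why the memory speed rules later in this chapter directly affect available bandwidth.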

3.1.2 Processor Population Rules

Note: Although the server board does support dual-processor configurations consisting of different processors that meet the criteria defined below, Intel does not perform validation testing of this configuration. For optimal system performance in dual-processor configurations, Intel recommends that identical processors be installed.

When using a single-processor configuration, the processor can be installed into either the CPU1 or CPU2 processor socket.

When two processors are installed, the following population rules apply:

Both processors must have the same Intel® QuickPath Interconnect frequency

Both processors must have the same core frequency

Both processors must have the same internal cache sizes

Both processors must have the same Thermal Design Power (TDP - Watts) rating

Processor stepping within a common processor family can be mixed as long as it is listed in the processor specification updates published by Intel Corporation.
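The dual-processor rules above amount to a field-by-field comparison of the two processors. A minimal sketch, with hypothetical field names whose values would come from each processor's specification; a stepping mismatch is reported as something to confirm against the specification update rather than as an outright failure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Processor:
    # Hypothetical fields, filled in from each processor's specification.
    qpi_gts: float      # Intel QPI frequency, GT/s
    core_ghz: float     # marked core frequency
    cache_mb: int       # internal (L3) cache size
    tdp_w: int          # Thermal Design Power rating
    stepping: str


def population_issues(cpu1: Processor, cpu2: Processor) -> list[str]:
    """List dual-processor population rule violations (empty list = OK)."""
    issues = []
    checks = [
        ("qpi_gts", "Intel QPI frequency"),
        ("core_ghz", "core frequency"),
        ("cache_mb", "internal cache size"),
        ("tdp_w", "TDP rating"),
    ]
    for attr, label in checks:
        if getattr(cpu1, attr) != getattr(cpu2, attr):
            issues.append(f"{label} differs")
    if cpu1.stepping != cpu2.stepping:
        # Mixed steppings are allowed only if the specification update lists them.
        issues.append("steppings differ: confirm against the processor specification update")
    return issues
```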

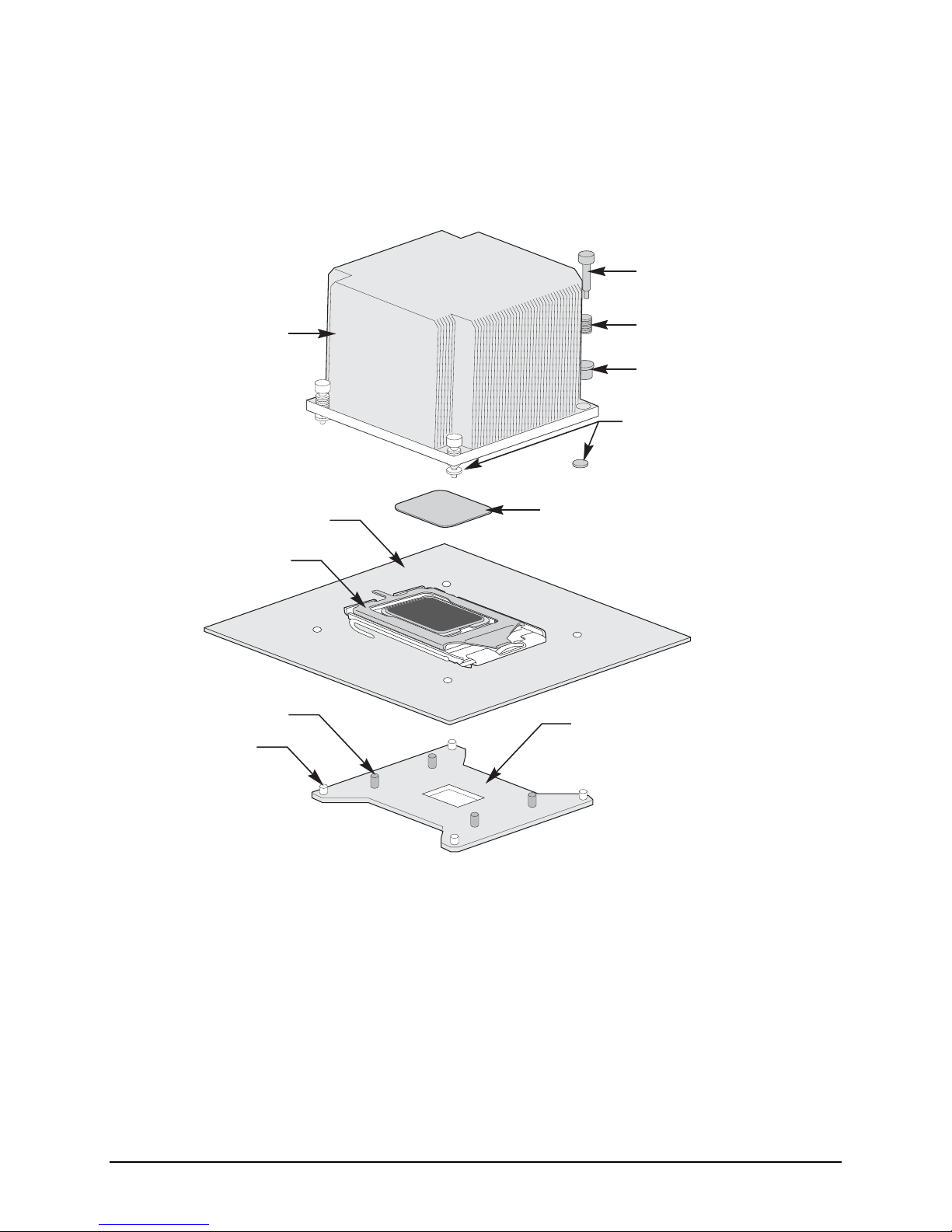

3.1.3 Unified Retention System Support

The server board complies with Intel's Unified Retention System (URS) and the Unified Backplate Assembly. The server board ships with a pre-assembled Independent Loading Mechanism (ILM) and Unified Backplate for each processor socket.


The URS retention transfers load to the server board through the Unified Backplate Assembly. The URS spring, captive in the heatsink, provides the necessary compressive load for the thermal interface material. All components of the URS heatsink solution are captive to the heatsink and only require a Phillips* screwdriver to attach to the Unified Backplate Assembly.

See the following figure for the stacking order of the URS components.

Figure 6. Unified Retention System and Unified Backplate Assembly (components: screw, heatsink, compression spring, retention cup, retaining ring, thermal interface material (TIM), ILM and socket, ILM attach studs, heatsink attach studs, server board, and unified backplate)

3.2 Intel® QuickPath Memory Controller and Memory Subsystem

The Intel® QuickPath Memory Controller provides up to three memory channels per processor, supporting up to 32 GB/s bandwidth per memory controller. Supported memory follows the DDR3 specification and supports the following characteristics:

800 MHz, 1066 MHz, and 1333 MHz operating frequencies

Single-rank (SR), dual-rank (DR), and quad-rank (QR)

Registered DIMM (RDIMM) or Unbuffered DIMM (UDIMM)

RDIMMs must be ECC only

UDIMMs can be ECC or non-ECC and can be mixed within a common configuration

Channel Independent mode is the only memory RAS mode that supports non-ECC DIMMs.

The presence of a single non-ECC UDIMM disables ECC functionality.

RDIMMs and UDIMMs cannot be mixed within a common system memory configuration

Note: Although non-ECC memory can be used on this server board, Intel does not validate it and strongly discourages its use in a working server environment.
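The DIMM type rules above can be expressed as a small configuration check. A minimal sketch: the `Dimm` record and the `ras_mode` labels (`"independent"`, `"mirrored"`) are hypothetical names for illustration, and the check covers only the mixing rules listed in this section, not speed, rank, or slot placement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimm:
    registered: bool  # True = RDIMM, False = UDIMM
    ecc: bool


def check_dimm_mix(dimms: list[Dimm], ras_mode: str) -> tuple[list[str], bool]:
    """Apply the mixing rules above. ras_mode is a hypothetical label,
    'independent' or 'mirrored'. Returns (errors, ecc_active)."""
    errors = []
    if len({d.registered for d in dimms}) > 1:
        errors.append("RDIMMs and UDIMMs cannot be mixed")
    if any(d.registered and not d.ecc for d in dimms):
        errors.append("RDIMMs must be ECC")
    any_non_ecc = any(not d.ecc for d in dimms)
    if any_non_ecc and ras_mode != "independent":
        errors.append("non-ECC DIMMs require Independent Channel Mode")
    # A single non-ECC UDIMM disables ECC for the whole system.
    ecc_active = bool(dimms) and not any_non_ecc
    return errors, ecc_active
```

Mixing ECC and non-ECC UDIMMs therefore passes the check in Independent Channel Mode, but reports ECC as inactive.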

The following table shows the maximum memory amounts possible using RDIMM type memory:

| Single Rank RDIMMs (800 MHz and 1066 MHz) | Dual Rank RDIMMs (800 MHz and 1066 MHz) | Quad Rank RDIMMs (1) (800 MHz only) |
|---|---|---|
| 48 GB (12x 4GB DIMMs) | 96 GB (12x 8GB DIMMs) | 96 GB (12x 8GB DIMMs) |

NOTE: (1) Due to the thermal requirements of Quad Rank x4 DDR3 DIMMs, this memory type is not supported in the Intel® Server System SR1670HV. Support for this memory type should be verified with other server chassis/system manufacturers planning to support this server board.

3.2.1 Intel® Server Board S5500HV Memory Support

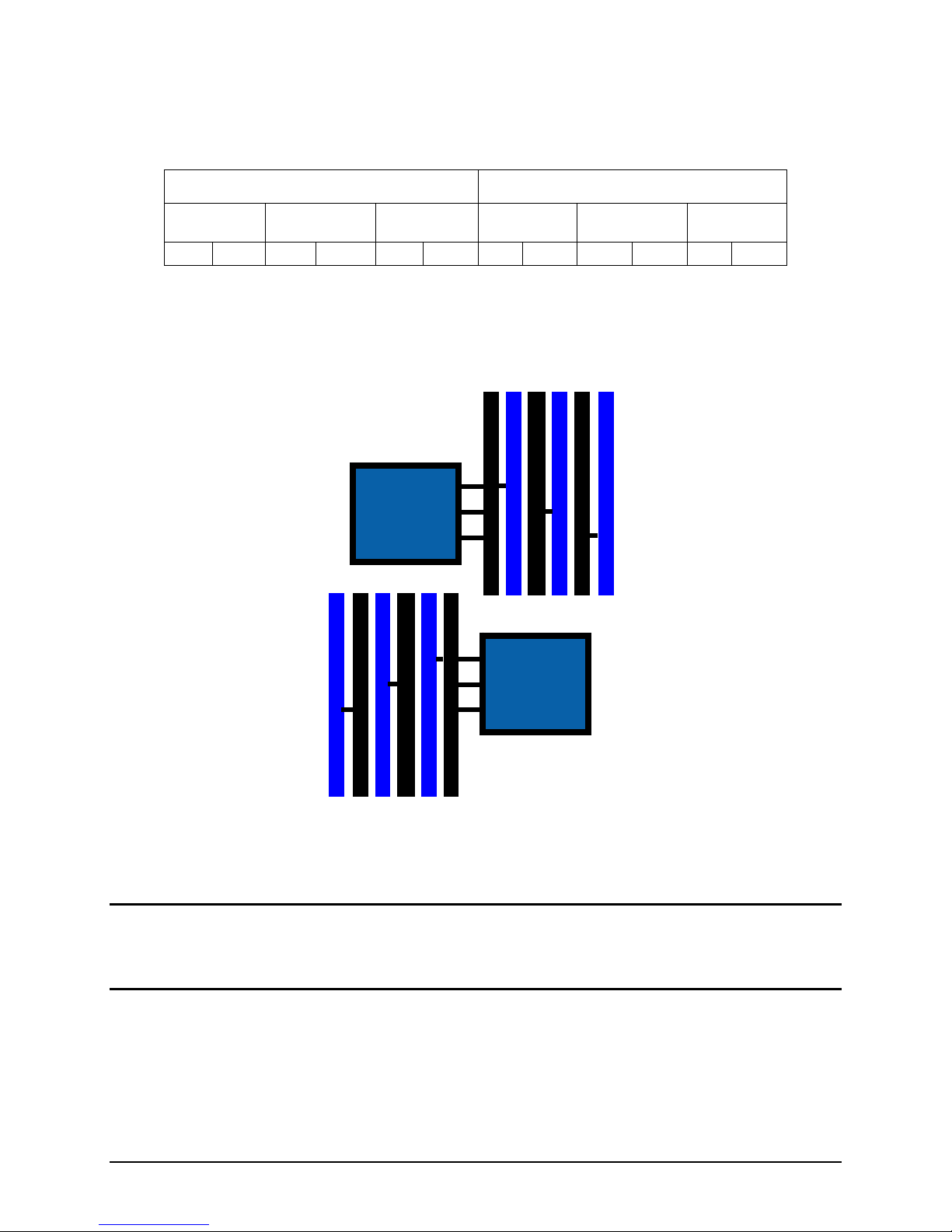

The Intel® Server Board S5500HV provides six DIMM slots across 3 memory channels per

processor, for a total of twelve DIMM slots.

Figure 7. Conceptual Memory Layout Diagram (three channels per CPU, two DIMMs per channel; CPU 1 and CPU 2 linked by Intel® QPI)

The nomenclature for DIMM slots implemented on the Intel® Server Board S5500HV is detailed

in the following figure.

| Processor Socket | Channel | DIMM Slots |
|---|---|---|
| 1 | Channel 0 (A) | A1, A2 |
| 1 | Channel 1 (B) | B1, B2 |
| 1 | Channel 2 (C) | C1, C2 |
| 2 | Channel 0 (D) | D1, D2 |
| 2 | Channel 1 (E) | E1, E2 |
| 2 | Channel 2 (F) | F1, F2 |

Figure 8. DIMM Slot Nomenclature

On the Intel® Server Board S5500HV the DIMM slots are identified as shown below:

Figure 9. Intel® Server Board S5500HV Memory Slot Layout

Note: Populate only the DIMM slots associated with an installed processor. For example, with only one processor installed, only the DIMM slots associated with that processor should be populated. Memory installed in DIMM slots for a processor that is not installed is non-functional.

DIMMs are organized into physical slots on DDR3 memory channels that belong to

processor sockets.

The memory channels from processor socket 1 are identified as Channels A, B, and C.

The memory channels from processor socket 2 are identified as Channels D, E, and F.


The memory slots associated with a given processor are unavailable if the given

processor socket is not populated.

A processor may be installed without populating the associated memory slots provided a

second processor is installed with associated memory. In this case, the memory is

shared by the processors. However, this is not a recommended configuration due to the

associated latency which will affect performance.

Processor sockets are self-contained and autonomous. However, all memory subsystem settings in BIOS Setup (for example, Memory RAS and Error Management) are applied commonly across processor sockets.
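The slot naming above encodes the socket, channel, and slot position: letters A-C belong to processor socket 1 and D-F to socket 2, in channel order 0-2. The sketch below decodes a slot name and flags populated slots whose processor is not installed (that memory is non-functional). The function names and the optional `DIMM_` prefix handling are assumptions for illustration.

```python
import re

SLOT_RE = re.compile(r"^(?:DIMM_)?([A-F])([12])$")


def slot_location(slot: str) -> tuple[int, int, int]:
    """Map 'A1'..'F2' (or 'DIMM_A1') to (socket 1-2, channel 0-2, slot 1-2)."""
    m = SLOT_RE.match(slot.upper())
    if not m:
        raise ValueError(f"unknown DIMM slot {slot!r}")
    index = "ABCDEF".index(m.group(1))
    return index // 3 + 1, index % 3, int(m.group(2))


def unusable_slots(populated: list[str], installed_sockets: set[int]) -> list[str]:
    """Populated slots whose processor socket is empty (that memory is non-functional)."""
    return [s for s in populated if slot_location(s)[0] not in installed_sockets]
```

For example, `slot_location("E1")` returns `(2, 1, 1)`: socket 2, channel 1, first slot.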

3.2.1.1 Memory Population Rules

DIMM population requirements depend on the number of slots per channel, the number of DIMMs installed, and the rank type. When installing memory, consider the following:

Populate DIMMs by channel, starting with the blue slot farthest from the CPU.

All channels in a system will run at the fastest common frequency.

RDIMMs and UDIMMs may not be mixed.

If two 1333 MHz capable UDIMMs or RDIMMs are detected in the same channel, BIOS will flag this as a warning and force the speed down to 1066 MHz.
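The two speed rules above (one common frequency for every channel, and a 1066 MHz limit when a channel holds two DIMMs) combine as a simple minimum. A minimal sketch, assuming the input lists each DIMM's rated speed per channel; the quad-rank restrictions in Table 3 are not modeled.

```python
def effective_memory_speed(channels: dict[str, list[int]]) -> int:
    """channels maps a channel name to the rated speeds (MHz) of its DIMMs.

    Applies only the rules above: all channels share the fastest common
    frequency, and a channel holding two DIMMs is limited to 1066 MHz.
    """
    limits = []
    for speeds in channels.values():
        if not speeds:
            continue                      # empty channel imposes no limit
        limit = min(speeds)
        if len(speeds) == 2:
            limit = min(limit, 1066)
        limits.append(limit)
    if not limits:
        raise ValueError("no DIMMs installed")
    return min(limits)
```

One 1333 MHz DIMM in each of channels A, B, and C keeps the system at 1333 MHz; adding a second DIMM to channel A drops every channel to 1066 MHz.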

3.2.1.1.1 Supported RDIMM configurations:

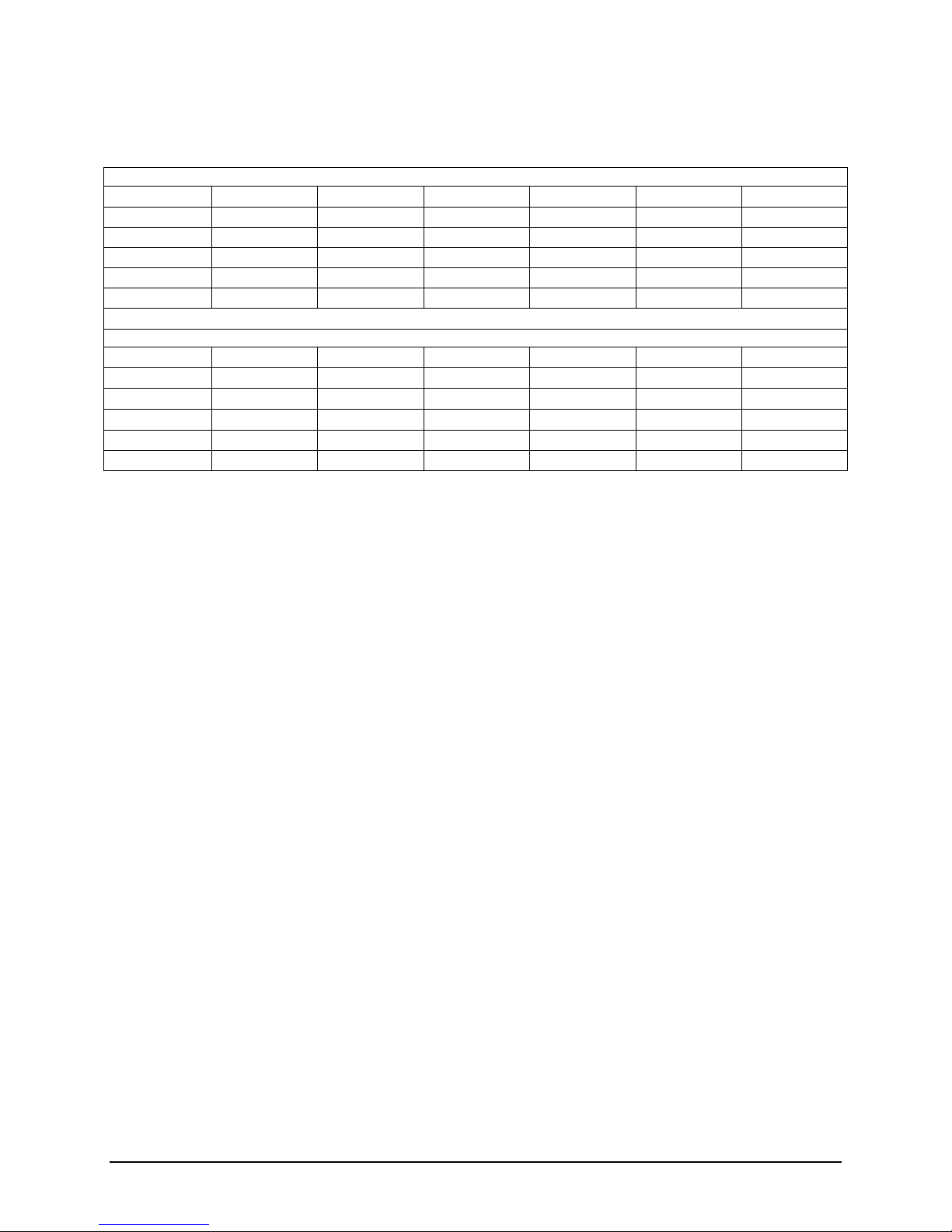

Table 3 Supported RDIMM configurations

DIMM Slots

per Channel

2 1 Registered

2 1 Registered

2 2 Registered

2 2 Registered

DIMMs Populated

per Channel

DIMM Type Speeds Ranks per

DIMM

DDR3 ECC

DDR3 ECC

DDR3 ECC

DDR3 ECC

800,

1066,

1333

800, 1066 QR Only

800, 1066 Mixing SR,

800 Mixing SR,

SR or DR

DR

DR, QR

Population Rules

1. Any combination of x4 and x8

RDIMMs, with 1Gb or 2Gb DRAM

density

256 Mb, 512 Mb and 4 Gb DRAM technologies and x16 DRAM on RDIMM are NOT

supported

If a quad rank RDIMM is mixed with a single rank or dual rank DIMM on given channel,

the quad rank DIMM must be populated in the lowest numbered slot.


3.2.1.1.2 Supported UDIMM configurations:

Table 4 Supported UDIMM configurations

| DIMM Slots per Channel | DIMMs Populated per Channel | DIMM Type | Speeds (MHz) | Ranks per DIMM |
|---|---|---|---|---|
| 2 | 1 | Unbuffered DDR3 (with or without ECC) | 800, 1066, 1333 | SR or DR |
| 2 | 2 | Unbuffered DDR3 (with or without ECC) | 800, 1066 | Mixing SR, DR |

Population Rules (all configurations): 1. Any combination of x8 UDIMMs with 1 Gb or 2 Gb DRAM density.

256 Mb, 512 Mb, and 4 Gb DRAM technologies, x4 DRAM on UDIMMs, and quad-rank UDIMMs are not supported.

Mixing ECC and non-ECC UDIMMs anywhere on the platform will force the system to run in non-ECC mode.

No RAS support for non-ECC UDIMMs.

x4 SDDC is not supported with ECC UDIMMs; however, x8 SDDC is supported in lockstep mode with x8 ECC UDIMMs.

Note: Although non-ECC memory can be used on this server board, Intel does not plan to validate it and strongly discourages its use in a working server environment.

When installing DIMMs, follow these population rules to deliver the best performance:

Maximize the number of channels populated first.

Balance the DIMM population across channels and sockets.


Table 5 Memory Population Table (recommended slot population for 1, 2, 3, 4, and 6 DIMMs per processor; CPU 1 configuration columns DIMM_A2, DIMM_A1, DIMM_B2, DIMM_B1, DIMM_C2, DIMM_C1; CPU 2 configuration columns DIMM_D2, DIMM_D1, DIMM_E2, DIMM_E1, DIMM_F2, DIMM_F1)

With two processors installed, the system will operate if only the DIMM slots of one processor

are populated. In this case, memory is shared between the two processors. However, due to the

associated latency of this configuration, this is NOT a recommended operating mode.

3.2.1.2 Memory RAS Modes

The server board supports the following memory RAS Modes:

Independent Channel Mode

Mirrored Channel Mode

Mirrored Channel Mode requires that all installed DIMMs support ECC and that DIMM populations match between channels. Matching DIMMs must have the same size and organization (ranks, banks, rows, columns). DIMM timings do not have to match; timings will be set to support all populated DIMMs.

Independent Channel Mode is the only memory RAS mode that supports either non-ECC or ECC DIMMs.

Independent Channel Mode

In the Channel Independent mode, channels can be populated in any order (e.g., channels B

and C can be populated while channel A is empty). All three channels may be populated in any

order and have no matching requirements. All channels must run at the same interface

frequency, but individual channels may run at different DIMM timings (RAS latency, CAS

latency, and so on).

The single channel mode is established using the Channel Independent mode by populating

DIMM slots from channel A only.


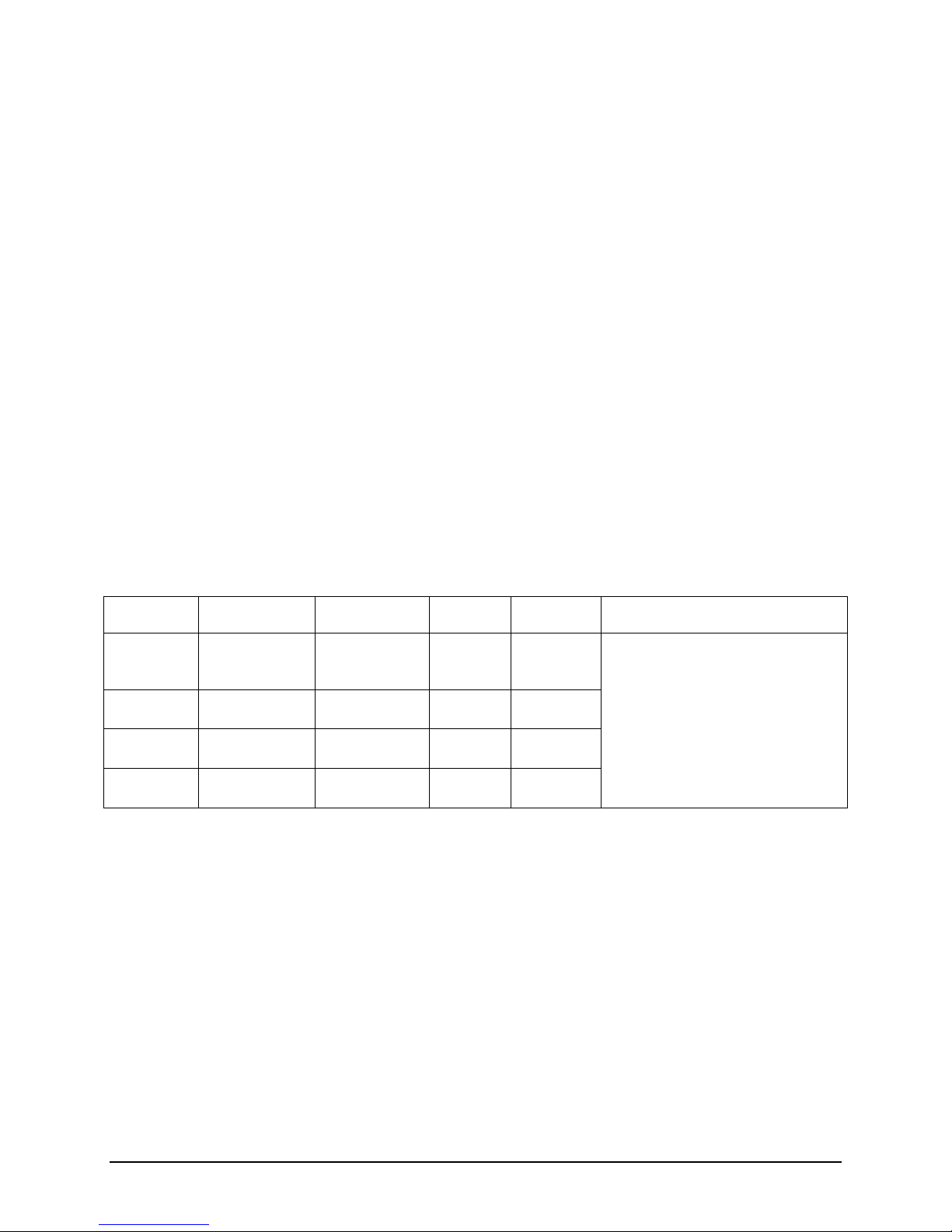

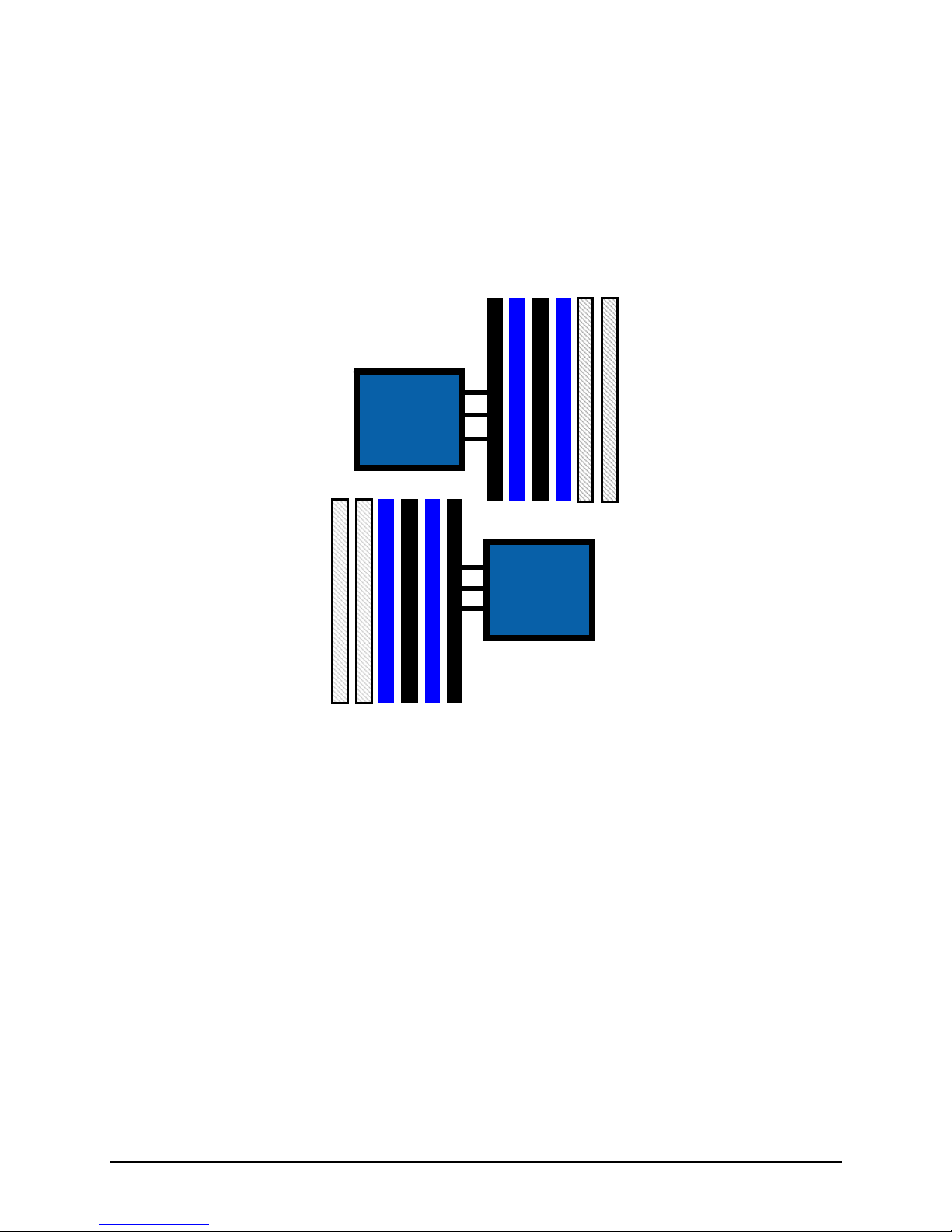

Mirrored Channel Mode

In Mirrored Channel Mode, the memory controller supports mirroring across channels, but not across CPU sockets. The contents are mirrored between the first two channels of a given processor. For CPU1, the mirrored slot pairs are {A1, B1} and {A2, B2}. For CPU2, the mirrored slot pairs are {D1, E1} and {D2, E2}. The DIMM slots of the third channel of each CPU (C1, C2, F1, F2) are not used in this mode.

Figure 10. Mirror Channel Mode Memory Population

Mirrored channel mode requires the following memory population rules:

Channel 0 and Channel 1 of a given processor must be populated identically.

DIMM slot populations within a channel do not have to be identical, but the same DIMM

slot location across Channel 0 and Channel 1 must be populated the same.

For example, DIMM slots A1 and B1 must have identical DIMMs installed to be mirrored together, and DIMM slots A2 and B2 must have identical DIMMs installed to be mirrored together. However, the DIMMs used in mirrored pair {A1, B1} can be different from those used in mirrored pair {A2, B2}.

With two processors installed, the DIMM slots associated with each processor must have a valid mirroring configuration for memory channels 0 and 1. However, the memory configuration of each processor can differ from the other.

The exception to this rule is when one processor has no memory installed. Because of the associated latency, this is NOT a recommended operating mode.
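The mirroring rules above reduce to pairwise comparisons of slot contents per processor. A minimal sketch, assuming a configuration is described as a mapping from slot name to a DIMM description (for example a part number), with empty slots omitted; a processor with no memory passes because both members of each pair are empty. It does not check ECC support or how many processors are installed.

```python
MIRROR_PAIRS = {
    1: [("A1", "B1"), ("A2", "B2")],
    2: [("D1", "E1"), ("D2", "E2")],
}
THIRD_CHANNEL = {1: ("C1", "C2"), 2: ("F1", "F2")}


def mirroring_issues(population: dict[str, str]) -> list[str]:
    """population maps a slot name to a DIMM description; empty slots are
    absent. Only the slot-matching rules listed above are checked."""
    issues = []
    for socket, pairs in MIRROR_PAIRS.items():
        for left, right in pairs:
            if population.get(left) != population.get(right):
                issues.append(f"CPU{socket}: {left} and {right} must hold identical DIMMs")
        for slot in THIRD_CHANNEL[socket]:
            if slot in population:
                issues.append(f"CPU{socket}: {slot} is not used in Mirrored Channel Mode")
    return issues
```

Identical DIMMs in A1 and B1 return no issues, while a DIMM in A1 alone is reported as an unmatched pair.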


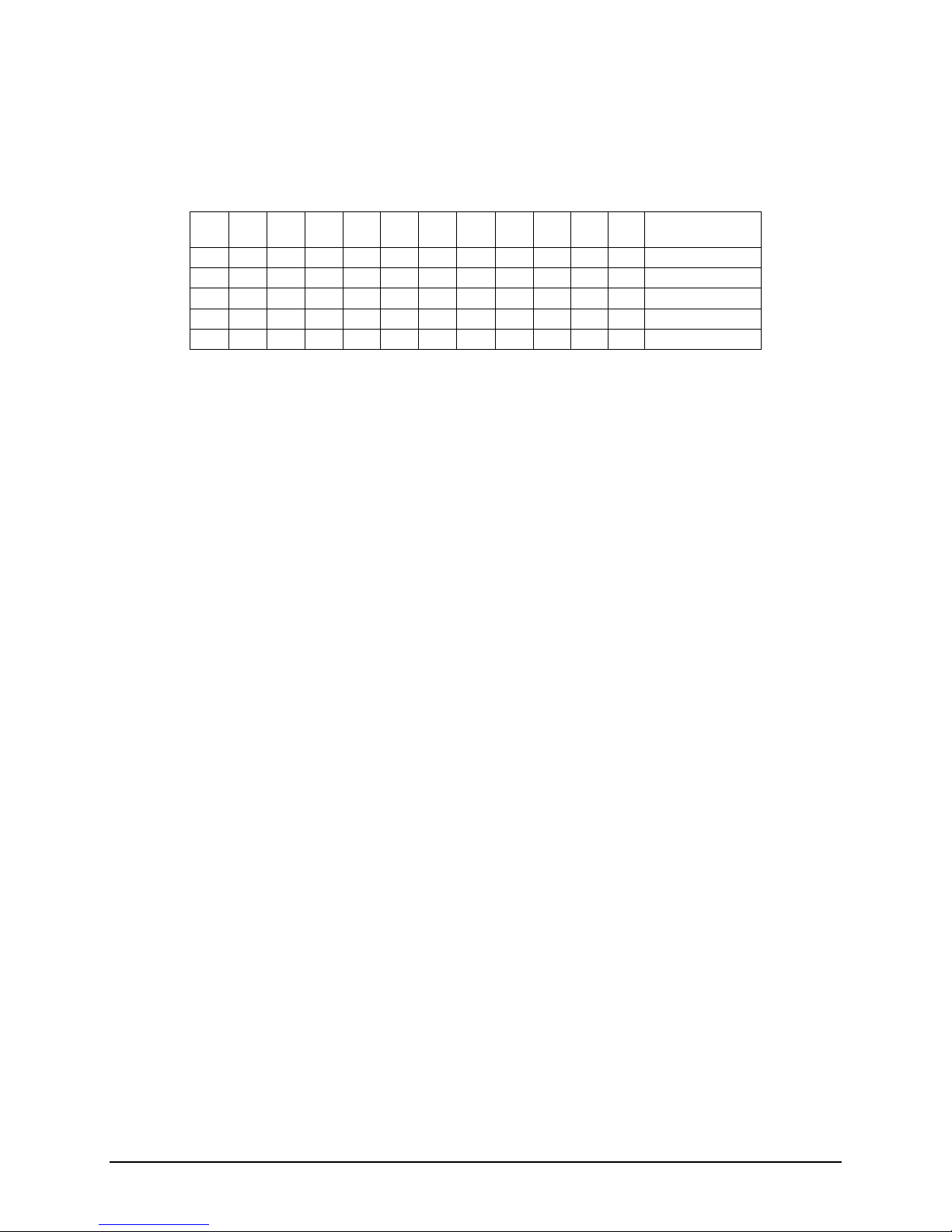

The following table illustrates possible DIMM configurations that can be mirrored, with the following assumptions: two processors are installed, and all installed DIMMs are identical.

Table 6. Supported Mirrored DIMM Population (five example populations across slots A1 through F2; the Mirroring Possible? column is Yes for all five)

3.3 Intel® 5500 Chipset IOH

The Intel® 5500 Chipset I/O Hub (IOH) provides a connection point between various I/O components and Intel® QuickPath Interconnect (Intel® QPI) based processors. It is capable of interfacing with up to 24 PCI Express* lanes, which can be configured in various combinations of x4, x8, x16 and limited x2 and x1 devices.

On the Intel® Server Board S5500HV the IOH provides the following:

Two Intel® QuickPath Interconnect (Intel® QPI) interfaces

One x16 PCI Express* Gen 2 port supporting a single PCI Express Gen 2 compliant x16 riser card slot (compliant with the PCI Express Base Specification, Revision 2.0)

One x4 ESI link interface to the Intel® ICH10R I/O controller hub
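For sizing the x16 Gen 2 riser slot, a rough per-direction bandwidth follows from the signaling rate and the 8b/10b line encoding used by PCI Express Gen 1 (2.5 GT/s) and Gen 2 (5.0 GT/s). The sketch below computes that theoretical figure only; packet and protocol overhead are ignored, and the function name is illustrative.

```python
# Signaling rates per PCI Express generation (GT/s); Gen 1 and Gen 2 use
# 8b/10b encoding, so 8 of every 10 transferred bits are payload.
GT_PER_S = {1: 2.5, 2: 5.0}


def pcie_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Theoretical per-direction bandwidth in GB/s.

    8b/10b: GT/s * 8/10 payload bits, divided by 8 bits per byte,
    which simplifies to GT/s / 10 bytes per lane.
    """
    return GT_PER_S[gen] / 10 * lanes
```

The x16 Gen 2 riser slot therefore offers about 8 GB/s in each direction, compared with about 4 GB/s for the same slot running at Gen 1 rates.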

3.4 Intel® 82801Jx I/O Controller Hub (ICH10R)

The server board utilizes features of the Intel® 82801Jx I/O Controller Hub (ICH10R). Supported

features include the following:

PCI Express* Base Specification, Revision 1.1 support

PCI Local Bus Specification, Revision 2.3 support for 33-MHz PCI operations (supports

up to four REQ#/GNT# pairs)

ACPI Power Management Logic Support, Revision 3.0a

Enhanced DMA controller, interrupt controller, and timer functions

Integrated Serial ATA host controllers with independent DMA operation on up to four

ports and AHCI support

USB host interface with support for four USB 2.0 ports (two external, two internal)

System Management Bus (SMBus) Specification, Version 2.0 with additional support for I²C devices

Low Pin Count (LPC) interface support

Firmware Hub (FWH) interface support

Serial Peripheral Interface (SPI) support


3.4.1.1 Serial ATA Support

The ICH10R has an integrated Serial ATA (SATA) controller that supports independent DMA

operation on four ports with data transfer rates of up to 3.0 Gb/s. The four SATA ports on the

server board are numbered SATA-1 through SATA-4. The SATA ports can be enabled or

disabled and/or configured by accessing the BIOS setup utility during POST.

3.4.1.2 Integrated RAID Support

The server board has embedded support for two RAID options:

Intel® Matrix Storage Manager with support for RAID levels 0, 1, 5, and 10 (Windows* support only)

LSI* MegaRAID (Default) with support for RAID levels 0, 1, and 10 (Windows* and Linux)

By default the server board is configured to support the LSI* MegaRAID option. To change this,

a jumper block on the board needs to be changed. The following diagram shows the location of

the jumper block and its settings.

Jumper positions: LSI* (Default) or Intel®

Figure 11. RAID Option Jumper Block

By default, BIOS does NOT enable RAID support. To enable this feature, an option in BIOS Setup must be set as described in the following procedure:

1. Enter BIOS Setup (press F2) during POST.

2. Go to the MAIN menu > IDE Configuration, and press <Enter>.

3. Set the Configure SATA As option to [RAID].

4. Save changes and then exit BIOS Setup.

Note: Refer to the Intel® Server System SR1670HV Service Guide for RAID setup information.

3.4.2 USB 2.0 Support

The USB controller functionality integrated into ICH10R provides the server board with an

interface for 4 USB 2.0 ports. All ports are high-speed, full-speed and low-speed capable.

Two external connectors are located on the back edge of the server board.

Two internal connectors allow for optional USB support: a Type-A connector (USB 4) and a 5x1-pin header (USB 3).

Figure 12. Internal USB Port Locations (USB 4: Type-A connector; USB 3: 5x1-pin header with Power, USB Port A-, USB Port A+, and GND signals)

3.4.3 Keyboard and Mouse Support

The server board does not support PS/2 interface keyboards and mice. However, the system BIOS recognizes USB specification-compliant keyboards and mice.

3.5 Network Interface Controller (NIC)

This server board supports two external Gigabit Ethernet ports in a stacked housing on the back edge of the server board. The network interface is provided using two on-board Intel® 82574L Gigabit Ethernet Controllers supporting 10/100/1000 Mbps operation.

The Intel® 82574L device provides an MDI (copper) standard IEEE 802.3 Ethernet interface for 1000BASE-T, 100BASE-TX, and 10BASE-T applications (802.3, 802.3u, and 802.3ab).

Each network interface controller (NIC) drives two status LEDs located on each external network interface connector. The activity/link LED (at the left of the connector) indicates network connection when on, and transmit/receive activity when blinking. The speed LED (at the right of the connector) indicates 1000-Mbps operation when green, 100-Mbps operation when orange, and 10-Mbps operation when off. The following table provides an overview of the LEDs.

Table 7. NIC Status LEDs

| Act/Link LED Status | Description | Speed LED Status | Description |
|---|---|---|---|
| OFF | No Link | OFF | 10 Mbps connection |
| GREEN | Linked | ORANGE | 100 Mbps connection |
| BLINKING | Data Activity | GREEN | 1000 Mbps connection |
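Table 7 can be read as two independent lookups, one per LED. The sketch below decodes an observed LED pair into a status string; the color keywords and the choice to ignore the speed LED when there is no link are assumptions for illustration, not documented firmware behavior.

```python
ACT_LINK = {"off": "No link", "green": "Linked", "blinking": "Data activity"}
SPEED = {"off": "10 Mbps", "orange": "100 Mbps", "green": "1000 Mbps"}


def describe_nic_leds(act_link: str, speed: str) -> str:
    """Decode the two port LEDs per Table 7."""
    link = ACT_LINK[act_link.lower()]
    if link == "No link":
        # Assumption: with no link, the speed LED carries no information.
        return link
    return f"{link}, {SPEED[speed.lower()]} connection"
```

For example, a green activity/link LED with an orange speed LED decodes to a linked 100 Mbps connection.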

Each LAN port can be enabled (default) or disabled via a jumper block, identified in the

following diagram.

Jumper positions for NIC 1 and NIC 2: Enabled (default) or Disabled

Figure 13. NIC Enable/Disabled Jumper Block


3.6 ASPEED* AST2050 Graphics and Remote Management Processor

This server board utilizes the graphics and baseboard management features of the ASPEED*

AST2050 Graphics and Remote Management Processor. Features utilized include:

Embedded 2D VGA Controller

Baseboard Management Controller

10/100 Mbps MAC (option)

3.6.1 Video Support

Video support on this server board is provided using the video controller features embedded in

the ASPEED* AST2050. Video support includes a 2D VGA controller capable of supporting

video resolutions up to and including 1600x1200@60Hz 16bpp.
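The maximum mode above implies a concrete frame size and display refresh rate. The sketch below does that arithmetic; it estimates only the memory one frame occupies and the bytes read per second to refresh the display, and makes no claim about how much video memory the AST2050 has or how it is organized.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory needed to hold one full frame."""
    return width * height * bits_per_pixel // 8


def scanout_mb_per_s(width: int, height: int, bits_per_pixel: int, refresh_hz: int) -> float:
    """Bytes read per second to refresh the display, in MB/s (10**6 bytes)."""
    return framebuffer_bytes(width, height, bits_per_pixel) * refresh_hz / 1e6
```

At 1600x1200 and 16 bpp, one frame is 3,840,000 bytes (about 3.7 MiB), and refreshing it at 60 Hz reads about 230 MB/s.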

The video is accessed using a standard 15-pin VGA connector found on the back edge of the

server board. The on-board video controller can be enabled (default) or disabled via a jumper

on the baseboard, identified in the following diagram:

Jumper positions: Enable (Default) or Disable

Figure 14. Video Enable/Disable Jumper Block

3.6.2 Baseboard Management Controller & BMC Module

This server board uses the Baseboard Management Controller feature of the ASPEED* AST2050, along with a Winbond* 83795ADG hardware monitoring chip, to monitor various server board sensors, including processor temperature, fan tachometer, and DC voltage sensors. Sensor data can be accessed in-band with or without the included Baseboard Management Module. For out-of-band access to this sensor data, the included Baseboard Management Module must be installed.
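For in-band access, one common approach on Linux is the open-source `ipmitool` utility talking to the BMC through the operating system's IPMI driver. The sketch below assumes that tool and driver are present; `ipmitool sdr list` prints one sensor per line as `name | reading | status`. The sensor names used in the usage example are hypothetical, since this document does not list the board's SDR names.

```python
import subprocess


def parse_sdr_list(output: str) -> list[dict[str, str]]:
    """Parse `ipmitool sdr list` text, one sensor per line: 'name | reading | status'."""
    sensors = []
    for line in output.splitlines():
        fields = [field.strip() for field in line.split("|")]
        if len(fields) >= 3:
            sensors.append({"name": fields[0], "reading": fields[1], "status": fields[2]})
    return sensors


def read_sensors_in_band() -> list[dict[str, str]]:
    """Query the local BMC through the OS IPMI driver (needs ipmitool and root)."""
    result = subprocess.run(["ipmitool", "sdr", "list"],
                            capture_output=True, text=True, check=True)
    return parse_sdr_list(result.stdout)
```

A line such as `CPU1 Temp | 45 degrees C | ok` (hypothetical name) parses into a dictionary with `name`, `reading`, and `status` keys, which can then be filtered or logged.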


3.6.2.1 Baseboard Management Module

The server board includes an IPMI 2.0/1.5 compliant Baseboard Management Module which

provides support for out-of-band system access.

Figure 15. Baseboard Management Module

Additional features of the BMC Module include the following:

IPMI 2.0

Advanced Encryption Standard (AES) support

Secure Socket Layer (SSL) support

Dynamic Host Configuration Protocol (DHCP) support

Telnet Access, SSH support

SMASH CLP support

ARC support

Remote Monitor support

Remote Re-Direct (SOL for Text Mode only)

Remote Update Firmware support

Remote CMOS Setting (Text Mode SOL)

Automatic System Recovery (ASR) by watchdog timer

Remote Reboot

Remote Power On/Off

PEF Configuration

SMTP (Email) support

Platform Event Trap (PET) support


3.6.2.2 Management NIC

The server board provides Serial-Over-LAN support via a dedicated 10/100 Mbps Management

NIC. The management port is located on the back edge of the server board in a stacked

housing over the USB ports as shown in the following diagram.

Management NIC

Figure 16. Management NIC

Note: The Management NIC is only enabled with the BMC Module installed.


4. Platform Management

The platform management subsystem consists of several components of the server board, including the embedded BMC features of the ASPEED* AST2050, the Winbond* 83795ADG hardware monitoring chip, the BMC Module, on-board sensors, BIOS, and firmware. Together these components provide several platform management features, including:

Note: The following feature list is only supported with the BMC Module installed.

In-band/Out-of-Band Sensor monitoring

PMBus support

PSMI support

PET

SNMP Trap

E-Mail Notification

Fan Control

Error Handling and Reporting

IPMI 2.0 support

System Interface (KCS)

LAN Interface (RMCP+ support)

System Event Log (SEL)

Sensor Data Records (SDR)

Field Replaceable Unit (FRU)

Remote Power on/off, reboot

Serial Over LAN (SOL)

Authentication Type: RAKP-HMAC_SHA1

Encryption (AES)

Platform Event Filtering (PEF)

Platform Event Trap (PET)

Watchdog Timer

See also the IPMI 2.0 Specification.
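Out-of-band features such as remote power control, SEL retrieval, and Serial Over LAN are commonly exercised over the IPMI 2.0 LAN interface (RMCP+) with `ipmitool`. The sketch below only builds the argument list; it assumes `ipmitool` is installed on the management station, and the host address and user name in the example are hypothetical. The `-E` option tells `ipmitool` to read the password from the `IPMI_PASSWORD` environment variable, which keeps it off the command line.

```python
def ipmitool_lan(host: str, user: str, *command: str) -> list[str]:
    """Build an out-of-band ipmitool argument list over IPMI 2.0 LAN (RMCP+).

    '-I lanplus' selects the RMCP+ interface; '-E' makes ipmitool read the
    password from the IPMI_PASSWORD environment variable instead of argv.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *command]
```

For example, `subprocess.run(ipmitool_lan("192.0.2.10", "admin", "chassis", "power", "status"), env={**os.environ, "IPMI_PASSWORD": secret})` queries power state; `"sel", "list"` reads the System Event Log and `"sol", "activate"` opens a Serial Over LAN session. This requires the BMC Module and Management NIC described earlier.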


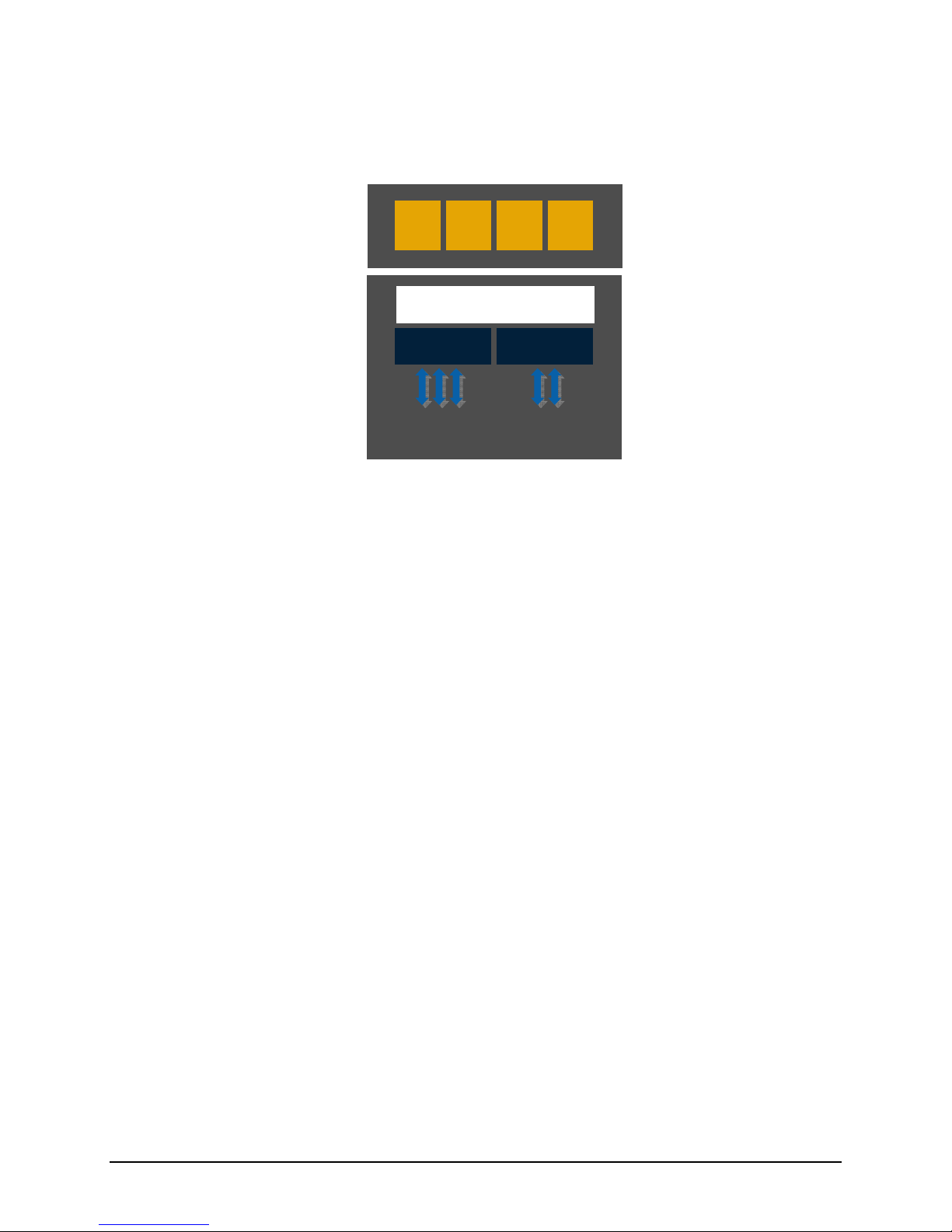

4.1 System Fan Control

The server board provides four 4-pin system fan connectors.

Figure 17. System Fan Connector Locations (FAN 1 through FAN 4)

Each system fan connector has the following pin-out definition:

Figure 18. System Fan Connector Pin-out Definition

Pin 1 – GND

Pin 2 – Fan Power

Pin 3 – Fan Speed

Pin 4 – PWM Control
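The BMC derives each fan's speed from the Fan Speed (tachometer) signal on pin 3. A minimal sketch of that conversion follows. It assumes the common 4-wire fan convention of two tachometer pulses per revolution, which this document does not specify, so check the fan's datasheet; the function name and sampling window are illustrative.

```python
PULSES_PER_REVOLUTION = 2  # assumption: typical 4-wire fan tachometer output


def tach_to_rpm(pulse_count: int, window_s: float,
                pulses_per_rev: int = PULSES_PER_REVOLUTION) -> float:
    """Convert tachometer pulses counted over window_s seconds to RPM."""
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    if pulses_per_rev <= 0:
        raise ValueError("pulses_per_rev must be positive")
    revolutions = pulse_count / pulses_per_rev
    return revolutions / window_s * 60.0


if __name__ == "__main__":
    # 150 pulses in one second -> 75 rev/s -> 4500 RPM
    print(tach_to_rpm(150, 1.0))
```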

The speed of all system fans is controlled by a single pulse-width modulation (PWM) signal and monitored by the BMC. BIOS Setup provides two fan speed options: Full Speed Mode and High Density Mode.

In Full Speed Mode, the system fans always run at maximum speed, providing maximum airflow.

In High Density Mode, fan speed varies with CPU temperature, allowing for a quieter system. At nominal CPU temperatures, the fans run at 50% of maximum speed. As CPU temperatures rise, fan speed gradually increases up to 100%; as CPU temperatures fall, fan speed automatically decreases again.
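The two BIOS fan modes can be modeled as a duty-cycle function of CPU temperature. The sketch below is illustrative only: the 50% floor and 100% ceiling come from the description above, but the linear ramp and the breakpoint temperatures (`NOMINAL_C`, `MAX_C`) are hypothetical, since the actual BMC fan curve is not specified here. Because a single PWM signal drives all system fans, the sketch uses the hottest CPU reading.

```python
from typing import Iterable

NOMINAL_C = 50.0  # hypothetical: upper end of the "nominal" CPU temperature range
MAX_C = 80.0      # hypothetical: temperature at which fans reach 100%


def high_density_duty(cpu_temp_c: float) -> float:
    """PWM duty (%) in High Density Mode: 50% at nominal, ramping to 100%."""
    if cpu_temp_c <= NOMINAL_C:
        return 50.0
    if cpu_temp_c >= MAX_C:
        return 100.0
    # Linear interpolation between the two breakpoints (an assumption).
    fraction = (cpu_temp_c - NOMINAL_C) / (MAX_C - NOMINAL_C)
    return 50.0 + 50.0 * fraction


def system_fan_duty(cpu_temps_c: Iterable[float], mode: str) -> float:
    """Single PWM output for all system fans, driven by the hottest CPU."""
    if mode == "full_speed":
        return 100.0
    if mode == "high_density":
        return high_density_duty(max(cpu_temps_c))
    raise ValueError(f"unknown fan mode: {mode}")


if __name__ == "__main__":
    for temps in ([42.0, 45.0], [61.0, 65.0], [78.0, 85.0]):
        print(temps, system_fan_duty(temps, "high_density"))
```

Because the function is stateless, falling temperatures lower the duty cycle automatically, matching the behavior described for High Density Mode.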

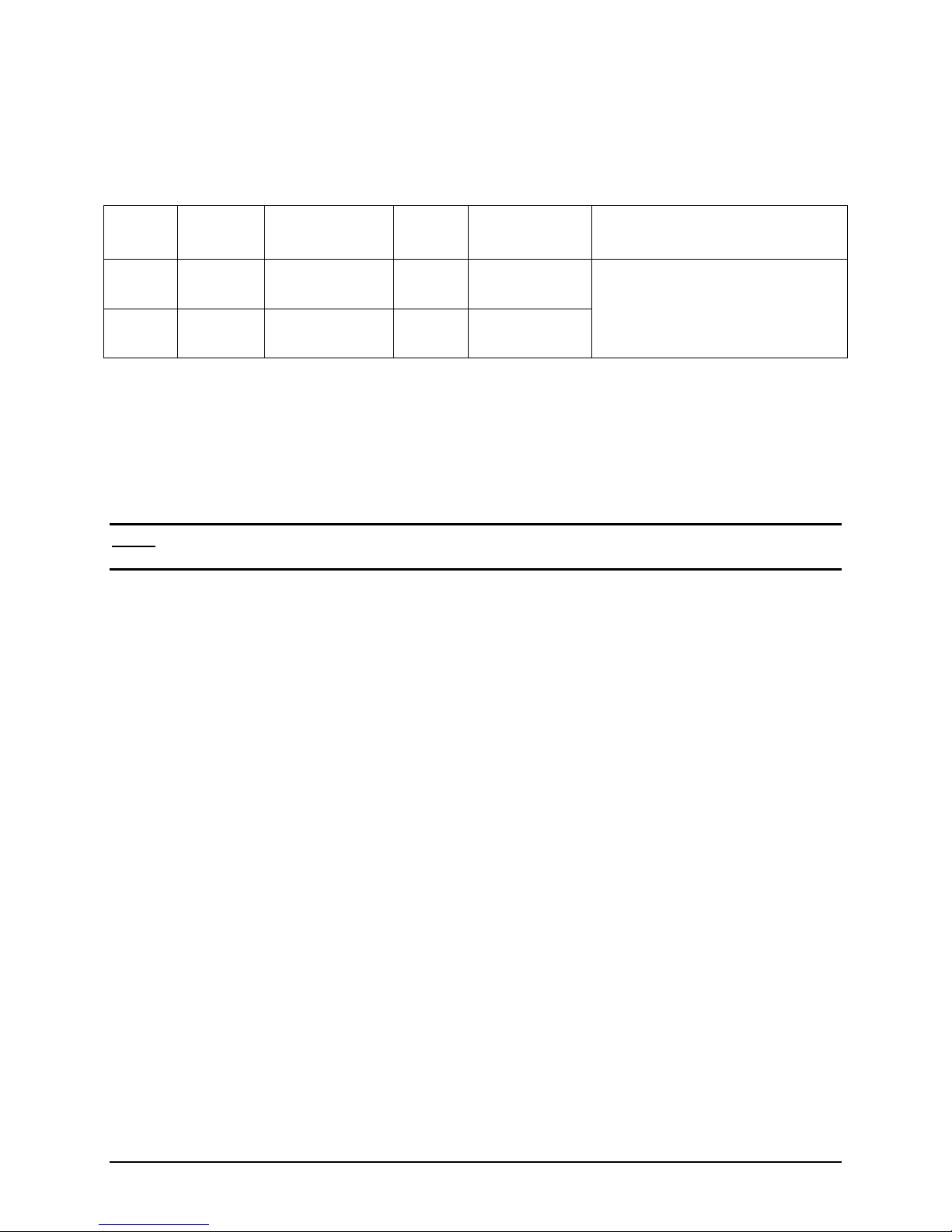

4.2 On-board/System Sensor Information

The server board includes many on-board sensors that the BMC monitors for various server management functions.


The following tables list each sensor's identification number, name, type, supported thresholds, and assertion/de-assertion information. See the Intelligent Platform Management Interface Specification, Version 2.0, for sensor and event/reading-type table information.

Sensor Type

The Sensor Type values are those enumerated in the Sensor Type Codes table of the IPMI specification. The Sensor Type provides the context in which to interpret the sensor, such as the physical entity or characteristic that the sensor represents.

Event/Reading Type

The Event/Reading Type values are taken from the Event/Reading Type Code Ranges and Generic Event/Reading Type Codes tables in the IPMI specification. Digital sensors are a specific type of discrete sensor that has only two states.

Event Offset/Triggers

Event Thresholds are the event-generating thresholds of threshold-type sensors.

- [u,l][nr,c,nc]: upper non-recoverable, upper critical, upper non-critical, lower non-recoverable, lower critical, lower non-critical

- uc, lc: upper critical, lower critical

Event Triggers are the supported event-generating offsets of discrete-type sensors. The offsets can be found in the Generic Event/Reading Type Codes or Sensor Type Codes tables in the IPMI specification, depending on whether the sensor's event/reading type is generic or sensor-specific.

MLED

The MLED column indicates whether a sensor event causes the Message LED on the system front panel to illuminate; for threshold sensors, it lists the thresholds that do so.
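The threshold abbreviations used in the tables below (for example, `UC, UNC, LC, LNC`) follow the `[u,l][nr,c,nc]` pattern defined above. A small helper such as the following, written for illustration and not part of any IPMI tool, can expand them when post-processing the tables:

```python
SIDES = {"U": "upper", "L": "lower"}
LEVELS = {"NR": "non-recoverable", "C": "critical", "NC": "non-critical"}


def expand_threshold(abbrev: str) -> str:
    """Expand one abbreviation such as 'UNC' to 'upper non-critical'."""
    code = abbrev.strip().upper()
    side, level = code[:1], code[1:]
    if side not in SIDES or level not in LEVELS:
        raise ValueError(f"not a threshold abbreviation: {abbrev!r}")
    return f"{SIDES[side]} {LEVELS[level]}"


def expand_threshold_list(cell: str) -> list[str]:
    """Expand a comma-separated table cell such as 'UC, UNC, LC, LNC'."""
    return [expand_threshold(item) for item in cell.split(",") if item.strip()]


if __name__ == "__main__":
    print(expand_threshold_list("UC, UNC, LC, LNC"))
```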


Table 8. System Sensors

| No | Sensor Name | Sensor Type | Sensor Type Code | Event/Reading Type | Event Offset/Triggers | MLED |
|---|---|---|---|---|---|---|
| 30h | Reserved | | | | | |
| 31h | CPU1 Temperature | Temperature | 01h | Threshold (01h) | UC, UNC | UC |
| 32h | CPU2 Temperature | Temperature | 01h | Threshold (01h) | UC, UNC | UC |
| CCh | TR1 Temperature | Temperature | 01h | Threshold (01h) | UC, UNC | UC |
| CDh | TR2 Temperature | Temperature | 01h | Threshold (01h) | UC, UNC | UC |
| 34h | VCORE1 | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 35h | VCORE2 | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 36h | +3.3V | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 37h | +5V | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 38h | +12V | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 39h | +1.5V_ICH | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 3Ah | +1.1V_IOH | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 3Bh | +5VSB | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 3Ch | VBAT | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 3Dh | P1VTT | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 3Eh | +1.5V_P1DDR3 | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 3Fh | P2VTT | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 40h | +3.3VSB | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 41h | +1.5V_P2DDR3 | Voltage | 02h | Threshold (01h) | UC, UNC, LC, LNC | UC, LC |
| 4Fh | Chassis Intrusion | | | Discrete (6Fh) | 01h: General Chassis Intrusion, 02h: Drive Bay Intrusion | |

Threshold sensors in this table use the generic threshold event offsets (event/reading type 01h):

00h: Lower Non-critical - going low

01h: Lower Non-critical - going high

02h: Lower Critical - going low

03h: Lower Critical - going high

04h: Lower Non-recoverable - going low

05h: Lower Non-recoverable - going high

06h: Upper Non-critical - going low

07h: Upper Non-critical - going high

08h: Upper Critical - going low

09h: Upper Critical - going high

0Ah: Upper Non-recoverable - going low

0Bh: Upper Non-recoverable - going high
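The twelve generic threshold event offsets listed above follow a regular pattern: the offset divided by two selects the threshold (lower non-critical through upper non-recoverable), and the low bit selects the direction (0 = going low, 1 = going high). The following sketch decodes an offset using that pattern; it is derived from the list above rather than from any particular BMC firmware interface, and the function name is illustrative.

```python
THRESHOLD_NAMES = (
    "Lower Non-critical",
    "Lower Critical",
    "Lower Non-recoverable",
    "Upper Non-critical",
    "Upper Critical",
    "Upper Non-recoverable",
)


def decode_threshold_offset(offset: int) -> str:
    """Decode a generic threshold event offset (00h-0Bh) to its description."""
    if not 0x00 <= offset <= 0x0B:
        raise ValueError(f"offset out of range: {offset:#04x}")
    name = THRESHOLD_NAMES[offset // 2]
    direction = "going high" if offset & 1 else "going low"
    return f"{name} - {direction}"


if __name__ == "__main__":
    for off in range(0x0C):
        print(f"{off:02X}h: {decode_threshold_offset(off)}")
```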


Table 9. LED/Power Module Sensors

| No | Sensor Name | Sensor Type | Sensor Type Code | Event Type | Event Offset/Triggers | MLED |
|---|---|---|---|---|---|---|
| 53h | Message LED | OEM Message LED | C0h | Read sensor | 0: off, 1: on | |
| 54h | Locate LED | OEM Locate LED | C0h | Read sensor | 0: off, 1: on | |

Table 10. Backplane Sensors

| No | Sensor Name | Sensor Type | Sensor Type Code | Event Type | Event Offset/Triggers | MLED |
|---|---|---|---|---|---|---|
| 68h | Backplane1 HD1 | Drive Slot | 0Dh | Discrete (6Fh) | 01h: Drive Presence, 02h: Drive Fault | |
| 69h | Backplane1 HD2 | Drive Slot | 0Dh | Discrete (6Fh) | 01h: Drive Presence, 02h: Drive Fault | |
| 6Ah | Backplane1 HD3 | Drive Slot | 0Dh | Discrete (6Fh) | 01h: Drive Presence, 02h: Drive Fault | |
| 6Bh | Backplane1 HD4 | Drive Slot | 0Dh | Discrete (6Fh) | 01h: Drive Presence, 02h: Drive Fault | |

Table 11. Power Module Sensors

| No | Sensor Name | Sensor Type | Sensor Type Code | Event Type | Event Offset/Triggers | MLED |
|---|---|---|---|---|---|---|
| 90h | PSU1 PSON | Power Supply | 08h | Discrete (6Fh) | 01h: Presence detected, 02h: Power Supply Failure detected | |
| 91h | PSU1 PWRGOOD | Power Supply | 08h | Discrete (6Fh) | 01h: Presence detected, 02h: Power Supply Failure detected | |
| 92h | PSU1 Over Temp | Temperature | 01h | Discrete (07h) | 01h: Transition to OK, 02h: Transition to Non-Critical from OK, 04h: Transition to Critical from less severe | |
| 93h | PSU1 FAN Low | Fan | 04h | Discrete (07h) | 01h: Transition to OK, 02h: Transition to Non-Critical from OK | |
| 94h | PSU1 AC Lost | Power Supply | 08h | Discrete (6Fh) | 01h: Presence detected, 08h: Power Supply input lost (AC/DC) | |
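The discrete event triggers in Tables 10 and 11 (and the chassis intrusion sensor in Table 8) are listed with values 01h, 02h, 04h, and 08h, which read naturally as single-bit masks in which bit n corresponds to IPMI event offset n (for example, 08h = bit 3 = power supply input lost). Assuming that interpretation, which this document does not state explicitly, a sensor's state byte can be decoded as shown below; the dictionaries copy the trigger lists from the tables, and the function name is illustrative.

```python
# Trigger lists copied from the sensor tables (values treated as bit masks).
PSU_AC_LOST = {
    0x01: "Presence detected",
    0x08: "Power Supply input lost (AC/DC)",
}
PSU_OVER_TEMP = {
    0x01: "Transition to OK",
    0x02: "Transition to Non-Critical from OK",
    0x04: "Transition to Critical from less severe",
}
DRIVE_SLOT = {
    0x01: "Drive Presence",
    0x02: "Drive Fault",
}


def decode_state_mask(mask: int, triggers: dict[int, str]) -> list[str]:
    """Return the description of every trigger bit that is set in mask."""
    return [name for bit, name in sorted(triggers.items()) if mask & bit]


if __name__ == "__main__":
    # Power supply present but its input lost: bits 0 and 3 set.
    print(decode_state_mask(0x09, PSU_AC_LOST))
    # Drive present and faulted: bits 0 and 1 set.
    print(decode_state_mask(0x03, DRIVE_SLOT))
```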


Table 12. System Fan Sensors


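| No | Sensor Name | Sensor Type | Sensor Type Code | Event Type | Event Thresholds | MLED |
|---|---|---|---|---|---|---|
| A2h | FRNT_FAN1 | Fan | 04h | Threshold (01h) | LC, LNC | |
| A3h | FRNT_FAN2 | Fan | 04h | Threshold (01h) | LC, LNC | |
| A4h | FRNT_FAN3 | Fan | 04h | Threshold (01h) | LC, LNC | |
| A5h | FRNT_FAN4 | Fan | 04h | Threshold (01h) | LC, LNC | |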

Table 13. FRU Sensors

| FRU Name | FRU Type | SDR Type Code | FRU ID |
|---|---|---|---|
| MB FRU | MB FRU | 11h | 0Ah |

4.3 Error Handling and Messaging

The BIOS can generate a number of error messages and initialization checkpoints during POST. The following table describes each possible BIOS-generated error message and checkpoint.

Note: Some of the listed error messages and checkpoints may not be supported on this server board.

Table 14. BIOS Error Messages and Checkpoints