Front cover

The IBM TotalStorage

DS8000 Series:

Concepts and Architecture

Advanced features and performance breakthrough with POWER5 technology

Configuration flexibility with LPAR and virtualization

Highly scalable solutions for on demand storage

Cathy Warrick

Olivier Alluis

Werner Bauer

Heinz Blaschek

Andre Fourie

Juan Antonio Garay

Torsten Knobloch

Donald C Laing

Christine O’Sullivan

Stu S Preacher

Torsten Rothenwaldt

Tetsuroh Sano

Jing Nan Tang

Anthony Vandewerdt

Alexander Warmuth

Roland Wolf

ibm.com/redbooks

International Technical Support Organization

The IBM TotalStorage DS8000 Series:

Concepts and Architecture

April 2005

SG24-6452-00

Note: Before using this information and the product it supports, read the information in “Notices” on page xiii.

First Edition (April 2005)

This edition applies to the DS8000 series per the October 12, 2004 announcement. Please note that pre-release code was used for the screen captures and command output; some details may vary from the generally available product.

Note: This book is based on a pre-GA version of a product and may not apply when the product becomes generally available. We recommend that you consult the product documentation or follow-on versions of this redbook for more current information.

© Copyright International Business Machines Corporation 2005. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiv

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

The team that wrote this redbook. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xv

Become a published author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

Part 1. Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

Chapter 1. Introduction to the DS8000 series. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1 The DS8000, a member of the TotalStorage DS family . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.1.1 Infrastructure Simplification. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.1.2 Business Continuity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.1.3 Information Lifecycle Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.2 Overview of the DS8000 series. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.2.1 Hardware overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.2.2 Storage capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.2.3 Storage system logical partitions (LPARs) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.2.4 Supported environments. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.2.5 Resiliency Family for Business Continuity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.2.6 Interoperability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

1.2.7 Service and setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

1.3 Positioning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.3.1 Common set of functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.3.2 Common management functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.3.3 Scalability and configuration flexibility. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.3.4 Future directions of storage system LPARs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.4 Performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.4.1 Sequential Prefetching in Adaptive Replacement Cache (SARC) . . . . . . . . . . . . 14

1.4.2 IBM TotalStorage Multipath Subsystem Device Driver (SDD) . . . . . . . . . . . . . . . 14

1.4.3 Performance for zSeries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.5 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Part 2. Architecture. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Chapter 2. Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

2.1 Frames . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.1.1 Base frame . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.1.2 Expansion frame . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.1.3 Rack operator panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.2 Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.2.1 Server-based SMP design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.2.2 Cache management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.3 Processor complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.3.1 RIO-G . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.3.2 I/O enclosures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.4 Disk subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.4.1 Device adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.4.2 Disk enclosures. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

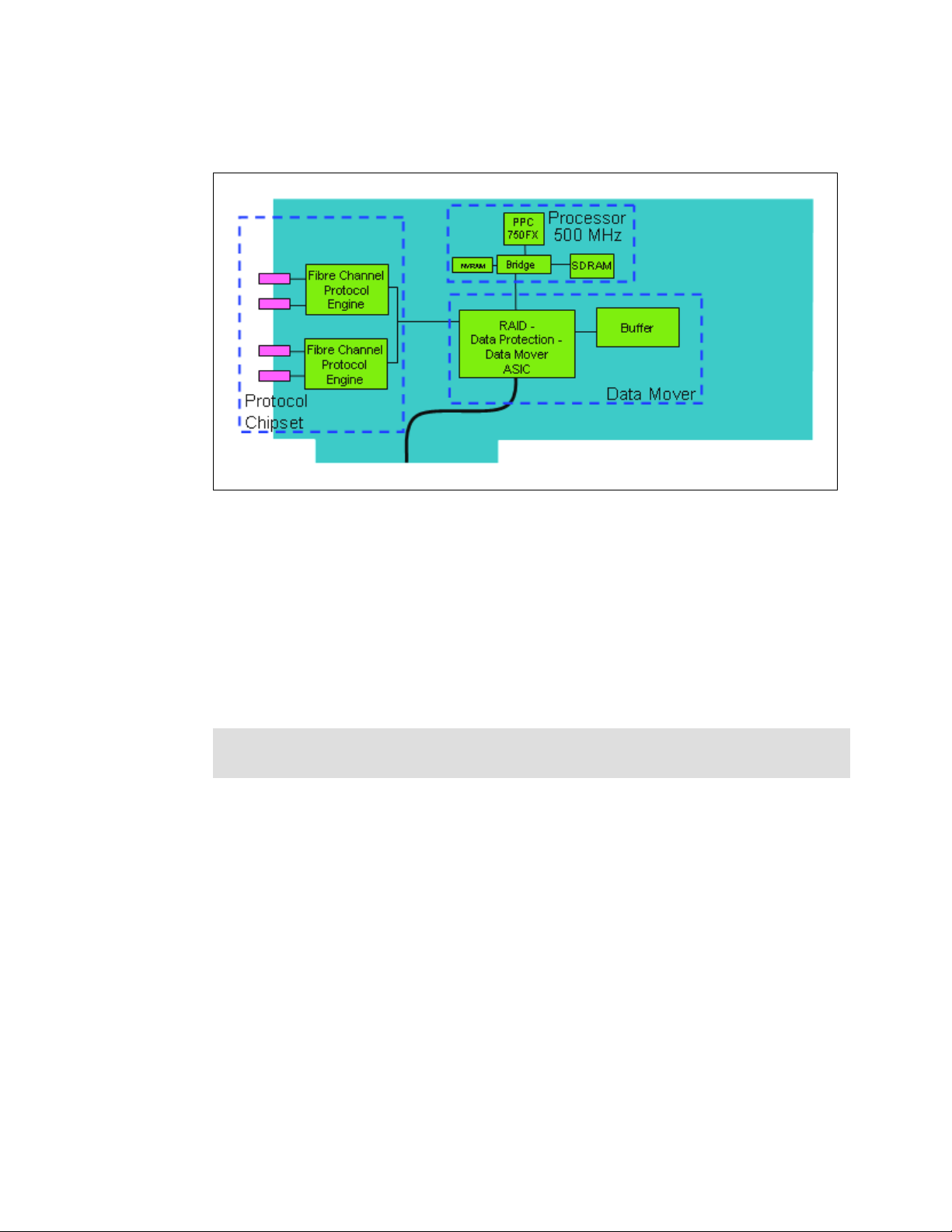

2.5 Host adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

2.5.1 FICON and Fibre Channel protocol host adapters . . . . . . . . . . . . . . . . . . . . . . . . 38

2.6 Power and cooling. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

2.7 Management console network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

2.8 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Chapter 3. Storage system LPARs (Logical partitions). . . . . . . . . . . . . . . . . . . . . . . . . 43

3.1 Introduction to logical partitioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.1.1 Virtualization Engine technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.1.2 Partitioning concepts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.1.3 Why Logically Partition? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.2 DS8000 and LPAR . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.2.1 LPAR and storage facility images . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.2.2 DS8300 LPAR implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

3.2.3 Storage facility image hardware components . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

3.2.4 DS8300 Model 9A2 configuration options. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

3.3 LPAR security through POWER™ Hypervisor (PHYP). . . . . . . . . . . . . . . . . . . . . . . . . 54

3.4 LPAR and Copy Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

3.5 LPAR benefits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

3.6 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Chapter 4. RAS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

4.1 Naming . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

4.2 Processor complex RAS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

4.3 Hypervisor: Storage image independence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

4.3.1 RIO-G - a self-healing interconnect. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.3.2 I/O enclosure. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.4 Server RAS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.4.1 Metadata checks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.4.2 Server failover and failback. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

4.4.3 NVS recovery after complete power loss . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

4.5 Host connection availability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

4.5.1 Open systems host connection. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

4.5.2 zSeries host connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

4.6 Disk subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

4.6.1 Disk path redundancy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

4.6.2 RAID-5 overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

4.6.3 RAID-10 overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

4.6.4 Spare creation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

4.6.5 Predictive Failure Analysis® (PFA) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

4.6.6 Disk scrubbing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

4.7 Power and cooling. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

4.7.1 Building power loss . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

4.7.2 Power fluctuation protection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

4.7.3 Power control of the DS8000 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

4.7.4 Emergency power off (EPO) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

4.8 Microcode updates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

4.9 Management console . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

4.10 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Chapter 5. Virtualization concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

5.1 Virtualization definition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

5.2 Storage system virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

5.3 The abstraction layers for disk virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

5.3.1 Array sites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

5.3.2 Arrays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

5.3.3 Ranks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

5.3.4 Extent pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

5.3.5 Logical volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

5.3.6 Logical subsystems (LSS). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

5.3.7 Volume access . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

5.3.8 Summary of the virtualization hierarchy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

5.3.9 Placement of data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

5.4 Benefits of virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Chapter 6. IBM TotalStorage DS8000 model overview and scalability. . . . . . . . . . . . 103

6.1 DS8000 highlights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

6.1.1 Model naming conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

6.1.2 DS8100 Model 921 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

6.1.3 DS8300 Models 922 and 9A2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

6.2 Model comparison . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

6.3 Designed for scalability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

6.3.1 Scalability for capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

6.3.2 Scalability for performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

6.3.3 Model upgrades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

Chapter 7. Copy Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

7.1 Introduction to Copy Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

7.2 Copy Services functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

7.2.1 Point-in-Time Copy (FlashCopy). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

7.2.2 FlashCopy options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

7.2.3 Remote Mirror and Copy (Peer-to-Peer Remote Copy) . . . . . . . . . . . . . . . . . . . 123

7.2.4 Comparison of the Remote Mirror and Copy functions. . . . . . . . . . . . . . . . . . . . 130

7.2.5 What is a Consistency Group? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

7.3 Interfaces for Copy Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

7.3.1 Storage Hardware Management Console (S-HMC) . . . . . . . . . . . . . . . . . . . . . . 136

7.3.2 DS Storage Manager Web-based interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

7.3.3 DS Command-Line Interface (DS CLI) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

7.3.4 DS Open application programming Interface (API). . . . . . . . . . . . . . . . . . . . . . . 138

7.4 Interoperability with ESS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

7.5 Future Plans . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Part 3. Planning and configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

Chapter 8. Installation planning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

8.1 General considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

8.2 Delivery requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

8.3 Installation site preparation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

8.3.1 Floor and space requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

8.3.2 Power requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

8.3.3 Environmental requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

8.4 Host attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

8.4.1 Attaching to open systems hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

8.4.2 ESCON-attached S/390 and zSeries hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

8.4.3 FICON-attached S/390 and zSeries hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

8.4.4 Where to get the updated information for host attachment . . . . . . . . . . . . . . . . . 152

8.5 Network and SAN requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

8.5.1 S-HMC network requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

8.5.2 Remote support connection requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

8.5.3 Remote power control requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

8.5.4 SAN requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

Chapter 9. Configuration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

9.1 Configuration planning overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

9.2 Storage Hardware Management Console (S-HMC) . . . . . . . . . . . . . . . . . . . . . . . . . . 158

9.2.1 External S-HMC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

9.2.2 S-HMC software components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

9.2.3 S-HMC network topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

9.2.4 FTP Offload option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

9.3 DS8000 licensed functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

9.3.1 Operating environment license (OEL) - required feature . . . . . . . . . . . . . . . . . . 167

9.3.2 Point-in-Time Copy function (2244 Model PTC) . . . . . . . . . . . . . . . . . . . . . . . . . 168

9.3.3 Remote Mirror and Copy functions (2244 Model RMC) . . . . . . . . . . . . . . . . . . . 169

9.3.4 Remote Mirror for z/OS (2244 Model RMZ) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 169

9.3.5 Parallel Access Volumes (2244 Model PAV) . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

9.3.6 Ordering licensed functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

9.3.7 Disk storage feature activation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

9.3.8 Scenarios for managing licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

9.4 Capacity planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

9.4.1 Logical configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

9.4.2 Sparing rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

9.4.3 Sparing examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

9.4.4 IBM Standby Capacity on Demand (Standby CoD) . . . . . . . . . . . . . . . . . . . . . . 180

9.4.5 Capacity and well-balanced configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

9.5 Data migration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

9.5.1 Operating system mirroring. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

9.5.2 Basic commands. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

9.5.3 Software packages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

9.5.4 Remote copy technologies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

9.5.5 Migration services and appliances . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

9.5.6 z/OS data migration methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

9.6 Planning for performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

9.6.1 Disk Magic . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

9.6.2 Size of cache storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

9.6.3 Number of host ports/channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

9.6.4 Remote copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

9.6.5 Parallel Access Volumes (z/OS only) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

9.6.6 I/O priority queuing (z/OS only). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

9.6.7 Monitoring performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

9.6.8 Hot spot avoidance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

Chapter 10. The DS Storage Manager - logical configuration. . . . . . . . . . . . . . . . . . . 189

10.1 Configuration hierarchy, terminology, and concepts . . . . . . . . . . . . . . . . . . . . . . . . . 190

10.1.1 Storage configuration terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

10.1.2 Summary of the DS Storage Manager logical configuration steps . . . . . . . . . . 199

10.2 Introducing the GUI and logical configuration panels . . . . . . . . . . . . . . . . . . . . . . . . 202

10.2.1 Connecting to the DS8000 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

10.2.2 The Welcome panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

10.2.3 Navigating the GUI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

10.3 The logical configuration process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

10.3.1 Configuring a storage complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

10.3.2 Configuring the storage unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

10.3.3 Configuring the logical host systems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

10.3.4 Creating arrays from array sites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

10.3.5 Creating extent pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

10.3.6 Creating FB volumes from extents . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

10.3.7 Creating volume groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

10.3.8 Assigning LUNs to the hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

10.3.9 Deleting LUNs and recovering space in the extent pool . . . . . . . . . . . . . . . . . . 226

10.3.10 Creating CKD LCUs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

10.3.11 Creating CKD volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

10.3.12 Displaying the storage unit WWNN. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

10.4 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

Chapter 11. DS CLI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

11.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

11.2 Functionality . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

11.3 Supported environments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

11.4 Installation methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

11.5 Command flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

11.6 User security . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

11.7 Usage concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

11.7.1 Command modes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

11.7.2 Syntax conventions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

11.7.3 User assistance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

11.7.4 Return codes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

11.8 Usage examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

11.9 Mixed device environments and migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

11.9.1 Migration tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

11.10 DS CLI migration example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

11.10.1 Determining the saved tasks to be migrated. . . . . . . . . . . . . . . . . . . . . . . . . . 245

11.10.2 Collecting the task details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

11.10.3 Converting the saved task to a DS CLI command . . . . . . . . . . . . . . . . . . . . . 247

11.10.4 Using DS CLI commands via a single command or script . . . . . . . . . . . . . . . 249

11.11 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

Chapter 12. Performance considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

12.1 What is the challenge? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 254

12.1.1 Speed gap between server and disk storage . . . . . . . . . . . . . . . . . . . . . . . . . . 254

12.1.2 New and enhanced functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 254

12.2 Where do we start? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 255

12.2.1 SSA backend interconnection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

12.2.2 Arrays across loops . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

12.2.3 Switch from ESCON to FICON ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

12.2.4 PPRC over Fibre Channel links . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

12.2.5 Fixed LSS to RAID rank affinity and increasing DDM size . . . . . . . . . . . . . . . . 256

12.3 How does the DS8000 address the challenge? . . . . . . . . . . . . . . . . . . . . . . . . . . . . 257

12.3.1 Fibre Channel switched disk interconnection at the back end . . . . . . . . . . . . . 257

12.3.2 Fibre Channel device adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

12.3.3 New four-port host adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

12.3.4 POWER5 - Heart of the DS8000 dual cluster design . . . . . . . . . . . . . . . . . . . . 261

12.3.5 Vertical growth and scalability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 264

12.4 Performance and sizing considerations for open systems . . . . . . . . . . . . . . . . . . . . 264

12.4.1 Workload characteristics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

12.4.2 Cache size considerations for open systems . . . . . . . . . . . . . . . . . . . . . . . . . . 265

12.4.3 Data placement in the DS8000 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

12.4.4 LVM striping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 266

12.4.5 Determining the number of connections between the host and DS8000 . . . . . 267

12.4.6 Determining the number of paths to a LUN. . . . . . . . . . . . . . . . . . . . . . . . . . . . 268

12.4.7 Determining where to attach the host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 268

12.5 Performance and sizing considerations for z/OS . . . . . . . . . . . . . . . . . . . . . . . . . . . 269

12.5.1 Connect to zSeries hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 269

12.5.2 Performance potential in z/OS environments . . . . . . . . . . . . . . . . . . . . . . . . . . 270

12.5.3 Appropriate DS8000 size in z/OS environments. . . . . . . . . . . . . . . . . . . . . . . . 271

12.5.4 Configuration recommendations for z/OS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 274

12.6 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 278

Part 4. Implementation and management in the z/OS environment. . . . . . . . . . . . . . . . . . . . . . . . . . . 279

Chapter 13. zSeries software enhancements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 281

13.1 Software enhancements for the DS8000 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 282

13.2 z/OS enhancements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 282

13.2.1 Scalability support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 282

13.2.2 Large Volume Support (LVS) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 283

13.2.3 Read availability mask support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 283

13.2.4 Initial Program Load (IPL) enhancements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

13.2.5 DS8000 definition to host software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

13.2.6 Read control unit and device recognition for DS8000. . . . . . . . . . . . . . . . . . . . 284

13.2.7 New performance statistics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 285

13.2.8 Resource Management Facility (RMF) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 289

13.2.9 Migration considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

13.2.10 Coexistence considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

13.3 z/VM enhancements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

13.4 z/VSE enhancements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

13.5 TPF enhancements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 291

Chapter 14. Data migration in zSeries environments . . . . . . . . . . . . . . . . . . . . . . . . . 293

14.1 Define migration objectives in z/OS environments . . . . . . . . . . . . . . . . . . . . . . . . . . 294

14.1.1 Consolidate storage subsystems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 294

14.1.2 Consolidate logical volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

14.1.3 Keep source and target volume size at the current size . . . . . . . . . . . . . . . . . . 297

14.1.4 Summary of data migration objectives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

14.2 Data migration based on physical migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

14.2.1 Physical migration with DFSMSdss and other storage software. . . . . . . . . . . . 298

14.2.2 Software- and hardware-based data migration. . . . . . . . . . . . . . . . . . . . . . . . . 299

14.2.3 Hardware- or microcode-based migration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 302

14.3 Data migration based on logical migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 307

14.3.1 Data Set Services Utility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 307

14.3.2 Hierarchical Storage Manager, DFSMShsm . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

14.3.3 System utilities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

14.3.4 Data migration within the System-managed storage environment . . . . . . . . . . 308

14.3.5 Summary of logical data migration based on software utilities . . . . . . . . . . . . . 314

14.4 Combine physical and logical data migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 314

14.5 z/VM and VSE/ESA data migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 315

14.6 Summary of data migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 315

Part 5. Implementation and management in the open systems environment. . . . . . . . . . . . . . . . . . . 317

Chapter 15. Open systems support and software . . . . . . . . . . . . . . . . . . . . . . . . . . . . 319

15.1 Open systems support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 320

15.1.1 Supported operating systems and servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . 320

15.1.2 Where to look for updated and detailed information . . . . . . . . . . . . . . . . . . . . . 320

15.1.3 Differences to the ESS 2105. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 322

15.1.4 Boot support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 323

15.1.5 Additional supported configurations (RPQ). . . . . . . . . . . . . . . . . . . . . . . . . . . . 323

15.1.6 Differences in interoperability between the DS8000 and DS6000 . . . . . . . . . . 323

15.2 Subsystem Device Driver . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 324

15.3 Other multipathing solutions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 325

15.4 DS CLI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 325

15.5 IBM TotalStorage Productivity Center . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 326

15.5.1 Device Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 328

15.5.2 TPC for Disk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 329

15.5.3 TPC for Replication. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 330

15.6 Global Mirror Utility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 330

15.7 Enterprise Remote Copy Management Facility (eRCMF) . . . . . . . . . . . . . . . . . . . . . 331

15.8 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 331

Chapter 16. Data migration in the open systems environment. . . . . . . . . . . . . . . . . . 333

16.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 334

16.2 Comparison of migration methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

16.2.1 Host operating system-based migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

16.2.2 Subsystem-based data migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 339

16.2.3 IBM Piper migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 341

16.2.4 Other migration applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 342

16.3 IBM migration services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 342

16.4 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 342

Appendix A. Open systems operating systems specifics. . . . . . . . . . . . . . . . . . . . . . 343

General considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 344

The DS8000 Host Systems Attachment Guide . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 344

Planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 344

UNIX performance monitoring tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 345

IOSTAT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 345

System Activity Report (SAR) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 346

VMSTAT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 347

IBM AIX . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 347

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

The AIX host attachment scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

Finding the World Wide Port Names. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

Managing multiple paths . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

LVM configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 352

AIX access methods for I/O . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 352

Boot device support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 353

AIX on IBM iSeries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 353

Monitoring I/O performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 354

Linux. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 356

Support issues that distinguish Linux from other operating systems . . . . . . . . . . . . . . 356

Existing reference material . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 357

Important Linux issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 358

Linux on IBM iSeries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 363

Troubleshooting and monitoring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 364

Microsoft Windows 2000/2003 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 366

HBA and operating system settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 366

SDD for Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 366

Windows Server 2003 VDS support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 367

HP OpenVMS. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 368

FC port configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 368

Volume configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 369

Command Console LUN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 370

OpenVMS volume shadowing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 370

Appendix B. Using DS8000 with iSeries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 373

Supported environment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 374

Hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 374

Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 374

Logical volume sizes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 374

Protected versus unprotected volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 375

Changing LUN protection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 375

Adding volumes to iSeries configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 376

Using 5250 interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 376

Adding volumes to an Independent Auxiliary Storage Pool . . . . . . . . . . . . . . . . . . . . . 378

Multipath. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 386

Avoiding single points of failure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 386

Configuring multipath . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 387

Adding multipath volumes to iSeries using 5250 interface . . . . . . . . . . . . . . . . . . . . . . 388

Adding volumes to iSeries using iSeries Navigator. . . . . . . . . . . . . . . . . . . . . . . . . . . . 390

Managing multipath volumes using iSeries Navigator . . . . . . . . . . . . . . . . . . . . . . . . . 392

Multipath rules for multiple iSeries systems or partitions . . . . . . . . . . . . . . . . . . . . . . . 395

Changing from single path to multipath. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 396

Sizing guidelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 396

Planning for arrays and DDMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 397

Cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 397

Number of iSeries Fibre Channel adapters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 398

Size and number of LUNs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 398

Recommended number of ranks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 399

Sharing ranks between iSeries and other servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . 399

Connecting via SAN switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 400

Migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 400

OS/400 mirroring. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 400

Metro Mirror and Global Copy. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 400

OS/400 data migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 401

Copy Services for iSeries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 403

FlashCopy. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 403

Remote Mirror and Copy. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 403

iSeries toolkit for Copy Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

AIX on IBM iSeries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

Linux on IBM iSeries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 405

Appendix C. Service and support offerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

IBM Web sites for service offerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 408

IBM service offerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 408

IBM Operational Support Services - Support Line . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 410

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 413

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 413

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 413

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 414

How to get IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 417

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area.

Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM

product, program, or service may be used. Any functionally equivalent product, program, or service that does

not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to

evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The

furnishing of this document does not give you any license to these patents. You can send license inquiries, in

writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions

are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS

PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED,

INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of

express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may make

improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time

without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any

manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the

materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without

incurring any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm the

accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the

capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrates programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in

any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the sample

programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore,

cannot guarantee or imply reliability, serviceability, or function of these programs. You may copy, modify, and

distribute these sample programs in any form without payment to IBM for the purposes of developing, using,

marketing, or distributing application programs conforming to IBM's application programming interfaces.

Trademarks

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

Eserver®

Redbooks (logo)™

ibm.com®

iSeries™

i5/OS™

pSeries®

xSeries®

z/OS®

z/VM®

zSeries®

AIX 5L™

AIX®

AS/400®

BladeCenter™

Chipkill™

CICS®

DB2®

DFSMS/MVS®

DFSMS/VM®

DFSMSdss™

DFSMShsm™

DFSORT™

Enterprise Storage Server®

Enterprise Systems Connection Architecture®

ESCON®

FlashCopy®

Footprint®

FICON®

Geographically Dispersed Parallel Sysplex™

GDPS®

Hypervisor™

HACMP™

IBM®

IMS™

Lotus Notes®

Lotus®

Micro-Partitioning™

Multiprise®

MVS™

Notes®

OS/390®

OS/400®

Parallel Sysplex®

PowerPC®

Predictive Failure Analysis®

POWER™

POWER5™

Redbooks™

RMF™

RS/6000®

S/390®

Seascape®

System/38™

Tivoli®

TotalStorage Proven™

TotalStorage®

Virtualization Engine™

VSE/ESA™

The following terms are trademarks of other companies:

Java and all Java-based trademarks and logos are trademarks or registered trademarks of Sun

Microsystems, Inc. in the United States, other countries, or both.

Microsoft, Windows, Windows NT, and the Windows logo are trademarks of Microsoft Corporation in the

United States, other countries, or both.

Intel, Intel Inside (logos), and Pentium are trademarks of Intel Corporation in the United States, other

countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, and service names may be trademarks or service marks of others.

Preface

This IBM® Redbook describes the IBM TotalStorage® DS8000 series of storage servers, its

architecture, logical design, hardware design and components, advanced functions,

performance features, and specific characteristics. The information contained in this redbook

is useful for those who need a general understanding of this powerful new series of disk

enterprise storage servers, as well as for those looking for a more detailed understanding of

how the DS8000 series is designed and operates.

The DS8000 series is a follow-on product to the IBM TotalStorage Enterprise Storage

Server® with new functions related to storage virtualization and flexibility. This book

describes the virtualization hierarchy that now includes virtualization of a whole storage

subsystem. This is made possible by IBM’s pSeries® POWER5™-based server technology

and its Virtualization Engine™ LPAR technology. This LPAR technology offers totally new

options to configure and manage storage.

In addition to the logical and physical description of the DS8000 series, the fundamentals of

the configuration process are also described in this redbook. This is useful information for

proper planning and configuration for installing the DS8000 series, as well as for the efficient

management of this powerful storage subsystem.

Characteristics of the DS8000 series described in this redbook also include the DS8000 copy

functions: FlashCopy®, Metro Mirror, Global Copy, Global Mirror and z/OS® Global Mirror.

The performance features, particularly the new switched FC-AL implementation of the

DS8000 series, are also explained, so that the user can better optimize the storage resources

of the computing center.

The team that wrote this redbook

This redbook was produced by a team of specialists from around the world working at the

Washington Systems Center in Gaithersburg, MD.

Cathy Warrick is a project leader and Certified IT Specialist in the IBM International

Technical Support Organization. She has over 25 years of experience in IBM with large

systems, open systems, and storage, including education on products internally and for the

field. Prior to joining the ITSO two years ago, she developed the Technical Leadership

education program for the IBM and IBM Business Partner’s technical field force and was the

program manager for the Storage Top Gun classes.

Olivier Alluis has worked in the IT field for nearly seven years. After starting his career in the

French Atomic Research Industry (CEA - Commissariat à l'Energie Atomique), he joined IBM

in 1998. He has been a Product Engineer for the IBM High End Systems, specializing in the

development of the IBM DWDM solution. Four years ago, he joined the SAN pre-sales

support team in the Product and Solution Support Center in Montpellier working in the

Advanced Technical Support organization for EMEA. He is now responsible for the Early

Shipment Programs for the Storage Disk systems in EMEA. Olivier’s areas of expertise

include: high-end storage solutions (IBM ESS), virtualization (SAN Volume Controller), SAN

and interconnected product solutions (CISCO, McDATA, CNT, Brocade, ADVA, NORTEL,

DWDM technology, CWDM technology). His areas of interest include storage remote copy on

long-distance connectivity for business continuance and disaster recovery solutions.

Werner Bauer is a certified IT specialist in Germany. He has 25 years of experience in

storage software and hardware, as well as S/390®. He holds a degree in Economics from the

University of Heidelberg. His areas of expertise include disaster recovery solutions in

enterprises utilizing the unique capabilities and features of the IBM Enterprise Storage

Server, ESS. He has written extensively in various redbooks, including Technical Updates on

DFSMS/MVS® 1.3, 1.4, 1.5, and Transactional VSAM.

Heinz Blaschek is an IT DASD Support Specialist in Germany. He has 11 years of

experience in S/390 customer environments as a HW-CE. Starting in 1997 he was a member

of the DASD EMEA Support Group in Mainz, Germany. In 1999, he became a member of the

DASD Backoffice in Mainz, Germany (support center EMEA for ESS) with the current focus of

supporting the remote copy functions for the ESS. Since 2004 he has been a member of the

VET (Virtual EMEA Team), which is responsible for the EMEA support of DASD systems. His

areas of expertise include all large and medium-system DASD products, particularly the IBM

TotalStorage Enterprise Storage Server.

Andre Fourie is a Senior IT Specialist at IBM Global Services, South Africa. He holds a BSc

(Computer Science) degree from the University of South Africa (UNISA) and has more than

14 years of experience in the IT industry. Before joining IBM he worked as an Application

Programmer and later as a Systems Programmer, where his responsibilities included MVS,

OS/390®, z/OS, and storage implementation and support services. His areas of expertise

include IBM S/390 Advanced Copy Services, as well as high-end disk and tape solutions. He

has co-authored one previous zSeries® Copy Services redbook.

Juan Antonio Garay is a Storage Systems Field Technical Sales Specialist in Germany. He

has five years of experience in supporting and implementing z/OS and Open Systems

storage solutions and providing technical support in IBM. His areas of expertise include the

IBM TotalStorage Enterprise Storage Server, when attached to various server platforms, and

the design and support of Storage Area Networks. He is currently engaged in providing

support for open systems storage across multiple platforms and a wide customer base.

Torsten Knobloch has worked for IBM for six years. Currently he is an IT Specialist on the

Customer Solutions Team at the Mainz TotalStorage Interoperability Center (TIC) in

Germany. There he performs Proof of Concept and System Integration Tests in the Disk

Storage area. Before joining the TIC he worked in Disk Manufacturing in Mainz as a Process

Engineer.

Donald (Chuck) Laing is a Senior Systems Management Integration Professional,

specializing in open systems UNIX® disk administration in the IBM South Delivery Center

(SDC). He has co-authored four previous IBM Redbooks™ on the IBM TotalStorage

Enterprise Storage Server. He holds a degree in Computer Science. Chuck’s responsibilities

include planning and implementation of midrange storage products. His responsibilities also

include department-wide education and cross training on various storage products such as

the ESS and FAStT. He has worked at IBM for six and a half years. Before joining IBM,

Chuck was a hardware CE on UNIX systems for ten years and taught basic UNIX at Midland

College for six and a half years in Midland, Texas.

Christine O’Sullivan is an IT Storage Specialist in the ATS PSSC storage benchmark center

at Montpellier, France. She joined IBM in 1988 and was a System Engineer during her first six

years. She has seven years of experience in pSeries systems and storage. Her areas of

expertise and main responsibilities are ESS, storage performance, disaster recovery

solutions, AIX® and Oracle databases. She is involved in proof of concept and benchmarks

for tuning and optimizing storage environments. She has written several papers about ESS

Copy Services and disaster recovery solutions in an Oracle/pSeries environment.

Stu Preacher has worked for IBM for over 30 years, starting as a Computer Operator before

becoming a Systems Engineer. Much of his time has been spent in the midrange area,

working on System/34, System/38™, AS/400®, and iSeries™. Most recently, he has focused

on iSeries Storage, and at the beginning of 2004, he transferred into the IBM TotalStorage

division. Over the years, Stu has been a co-author for many Redbooks, including “iSeries in

Storage Area Networks” and “Moving Applications to Independent ASPs.” His work in these

areas has formed a natural base for working with the new TotalStorage DS6000 and DS8000.

Torsten Rothenwaldt is a Storage Architect in Germany. He holds a degree in mathematics

from Friedrich Schiller University at Jena, Germany. His areas of interest are high availability

solutions and databases, primarily for the Windows® operating systems. Before joining IBM

in 1996, he worked in industrial research in electron optics, and as a Software Developer and

System Manager in OpenVMS environments.

Tetsuroh Sano has worked in AP Advanced Technical Support in Japan for the last five

years. His focus areas are open system storage subsystems (especially the IBM

TotalStorage Enterprise Storage Server) and SAN hardware. His responsibilities include

product introduction, skill transfer, technical support for sales opportunities, solution

assurance, and critical situation support.

Jing Nan Tang is an Advisory IT Specialist working in ATS for the TotalStorage team of IBM

China. He has nine years of experience in the IT field. His main job responsibility is providing

technical support and IBM storage solutions to IBM professionals, Business Partners, and

Customers. His areas of expertise include solution design and implementation for IBM

TotalStorage Disk products (Enterprise Storage Server, FAStT, Copy Services, Performance

Tuning), SAN Volume Controller, and Storage Area Networks across open systems.

Anthony Vandewerdt is an Accredited IT Specialist who has worked for IBM Australia for 15

years. He has worked on a wide variety of IBM products and for the last four years has

specialized in storage systems problem determination. He has extensive experience on the

IBM ESS, SAN, 3494 VTS and wave division multiplexors. He is a founding member of the

Australian Storage Central team, responsible for screening and managing all storage-related

service calls for Australia/New Zealand.

Alexander Warmuth is an IT Specialist who joined IBM in 1993. Since 2001 he has worked

in Technical Sales Support for IBM TotalStorage. He holds a degree in Electrical Engineering

from the University of Erlangen, Germany. His areas of expertise include Linux® and IBM

storage as well as business continuity solutions for Linux and other open system

environments.

Roland Wolf has been with IBM for 18 years. He started his work in IBM Germany in

second-level support for VM. After five years he shifted to S/390 hardware support for three years.

For the past ten years he has worked as a Systems Engineer in Field Technical Support for

Storage, focusing on the disk products. His areas of expertise include mainly high-end disk

storage systems with PPRC, FlashCopy, and XRC, but he is also experienced in SAN and

midrange storage systems in the Open Storage environment. He holds a Ph.D. in Theoretical

Physics and is an IBM Certified IT Specialist.

Front row - Cathy, Torsten R, Torsten K, Andre, Toni, Werner, Tetsuroh. Back row - Roland, Olivier,

Anthony, Tang, Christine, Alex, Stu, Heinz, Chuck.

We want to thank all the members of John Amann’s team at the Washington Systems Center

in Gaithersburg, MD for hosting us. Craig Gordon and Rosemary McCutchen were especially

helpful in getting us access to beta code and hardware.

Thanks to the following people for their contributions to this project:

Susan Barrett

IBM Austin

James Cammarata

IBM Chicago

Dave Heggen

IBM Dallas

John Amann, Craig Gordon, Rosemary McCutchen

IBM Gaithersburg

Hartmut Bohnacker, Michael Eggloff, Matthias Gubitz, Ulrich Rendels, Jens Wissenbach,

Dietmar Zeller

IBM Germany

Brian Sherman

IBM Markham

Ray Koehler

IBM Minneapolis

John Staubi

IBM Poughkeepsie

Steve Grillo, Duikaruna Soepangkat, David Vaughn

IBM Raleigh

Amit Dave, Selwyn Dickey, Chuck Grimm, Nick Harris, Andy Kulich, Joe Prisco, Jim Tuckwell,

Joe Writz

IBM Rochester

Charlie Burger, Gene Cullum, Michael Factor, Brian Kraemer, Ling Pong, Jeff Steffan, Pete

Urbisci, Steve Van Gundy, Diane Williams

IBM San Jose

Jana Jamsek

IBM Slovenia

Gerry Cote

IBM Southfield

Dari Durnas

IBM Tampa

Linda Benhase, Jerry Boyle, Helen Burton, John Elliott, Kenneth Hallam, Lloyd Johnson, Carl

Jones, Arik Kol, Rob Kubo, Lee La Frese, Charles Lynn, Dave Mora, Bonnie Pulver, Nicki

Rich, Rick Ripberger, Gail Spear, Jim Springer, Teresa Swingler, Tony Vecchiarelli, John

Walkovich, Steve West, Glenn Wightwick, Allen Wright, Bryan Wright

IBM Tucson

Nick Clayton

IBM United Kingdom

Steve Chase

IBM Waltham

Rob Jackard

IBM Wayne

Many thanks to the graphics editor, Emma Jacobs, and the editor, Alison Chandler.

Become a published author

Join us for a two- to six-week residency program! Help write an IBM Redbook dealing with

specific products or solutions, while getting hands-on experience with leading-edge

technologies. You'll team with IBM technical professionals, Business Partners and/or

customers.

Your efforts will help increase product acceptance and customer satisfaction. As a bonus,

you'll develop a network of contacts in IBM development labs, and increase your productivity

and marketability.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our Redbooks to be as helpful as possible. Send us your comments about this or

other Redbooks in one of the following ways:

Use the online Contact us review redbook form found at:

ibm.com/redbooks

Send your comments in an email to:

redbook@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. QXXE Building 80-E2

650 Harry Road

San Jose, California 95120-6099

Part 1 Introduction

In this part we introduce the IBM TotalStorage DS8000 series and its key features. These

include:

Product overview

Positioning

Performance

© Copyright IBM Corp. 2005. All rights reserved.

Chapter 1. Introduction to the DS8000 series

This chapter provides an overview of the features, functions, and benefits of the IBM

TotalStorage DS8000 series of storage servers. The topics covered include:

The IBM on demand marketing strategy regarding the DS8000

Overview of the DS8000 components and features

Positioning and benefits of the DS8000

The performance features of the DS8000

1.1 The DS8000, a member of the TotalStorage DS family

IBM has a wide range of product offerings that are based on open standards and that share a

common set of tools, interfaces, and innovative features. The IBM TotalStorage DS family

and its new member, the DS8000, give you the freedom to choose the right combination of

solutions for your current needs and the flexibility to help your infrastructure evolve as your

needs change. The TotalStorage DS family is designed to offer high availability, multiplatform

support, and simplified management tools, all to help you cost-effectively adjust to an on

demand world.

1.1.1 Infrastructure Simplification

The DS8000 series is designed to break through to a new dimension of on demand storage,

offering an extraordinary opportunity to consolidate existing heterogeneous storage

environments, helping lower costs, improve management efficiency, and free valuable floor

space. Incorporating IBM’s first implementation of storage system Logical Partitions (LPARs)

means that two independent workloads can be run on completely independent and separate

virtual DS8000 storage systems, with independent operating environments, all within a single

physical DS8000. This unique feature of the DS8000 series, which will be available in the

DS8300 Model 9A2, helps deliver opportunities for new levels of efficiency and cost

effectiveness.

1.1.2 Business Continuity

The DS8000 series is designed for the most demanding, mission-critical environments

requiring extremely high availability, performance, and scalability. The DS8000 series is

designed to avoid single points of failure and provide outstanding availability. With the

additional advantages of IBM FlashCopy, data availability can be enhanced even further; for

instance, production workloads can continue execution concurrent with data backups. Metro

Mirror and Global Mirror business continuity solutions are designed to provide the advanced

functionality and flexibility needed to tailor a business continuity environment for almost any

recovery point or recovery time objective. The addition of IBM solution integration packages

spanning a variety of heterogeneous operating environments offers even more cost-effective

ways to implement business continuity solutions.

1.1.3 Information Lifecycle Management

The DS8000 is designed as the solution for data in the most on demand, highest-priority

phase of its life cycle. One of the advantages IBM offers is the complete set of

disk, tape, and software solutions designed to allow customers to create storage

environments that support optimal life cycle management and cost requirements.

1.2 Overview of the DS8000 series

The IBM TotalStorage DS8000 is a new high-performance, high-capacity series of disk

storage systems. An example is shown in Figure 1-1 on page 5. It offers balanced

performance that is up to 6 times higher than the previous IBM TotalStorage Enterprise

Storage Server (ESS) Model 800. The capacity scales linearly from 1.1 TB up to 192 TB.

With the implementation of the POWER5 Server Technology in the DS8000 it is possible to

create storage system logical partitions (LPARs) that can be used for completely separate

production, test, or other unique storage environments.

The DS8000 is a flexible and extendable disk storage subsystem because it is designed to

add and adapt to new technologies as they become available.

In the entirely new packaging there are also new management tools, like the DS Storage

Manager and the DS Command-Line Interface (CLI), which allow for the management and

configuration of the DS8000 series as well as the DS6000 series.

The DS8000 series is designed for 24x7 environments in terms of availability while still

providing industry-leading remote mirror and copy functions to ensure business continuity.

Figure 1-1 DS8000 - Base frame

The IBM TotalStorage DS8000 highlights include that it:

Delivers robust, flexible, and cost-effective disk storage for mission-critical workloads

Helps to ensure exceptionally high system availability for continuous operations

Scales to 192 TB and facilitates unprecedented asset protection with model-to-model field upgrades

Supports storage sharing and consolidation for a wide variety of operating systems and mixed server environments

Helps increase storage administration productivity with centralized and simplified management

Supports the creation of multiple storage system LPARs that can be used for completely separate production, test, or other unique storage environments

Occupies 20 percent less floor space than the ESS Model 800's base frame, and holds even more capacity

Provides the industry’s first four-year warranty