IBM TotalStorage DS6000

Host Systems Attachment Guide

GC26-7680-03

Note:

Before using this information and the product it supports, read the information in the Safety and environmental notices and Notices sections.

Fourth Edition (May 2005)

This edition replaces GC26-7680-02 and all previous versions of GC26-7680.

© Copyright International Business Machines Corporation 2004, 2005. All rights reserved.

US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . .xi

Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

About this guide . . . . . . . . . . . . . . . . . . . . . . . .xv

Safety and environmental notices . . . . . . . . . . . . . . . . xvii

Safety notices . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Environmental notices . . . . . . . . . . . . . . . . . . . . . . xvii

Product recycling . . . . . . . . . . . . . . . . . . . . . . . xvii

Disposing of products . . . . . . . . . . . . . . . . . . . . . xvii

Conventions used in this guide . . . . . . . . . . . . . . . . . . . xvii

Related information . . . . . . . . . . . . . . . . . . . . . . . xviii

DS6000 series library . . . . . . . . . . . . . . . . . . . . . xviii

Other IBM publications . . . . . . . . . . . . . . . . . . . . . xix

Ordering IBM publications . . . . . . . . . . . . . . . . . . . xxiv

Web sites . . . . . . . . . . . . . . . . . . . . . . . . . . xxiv

How to send your comments . . . . . . . . . . . . . . . . . . . xxv

Summary of Changes for GC26-7680-03 IBM TotalStorage DS6000 Host

Systems Attachment Guide . . . . . . . . . . . . . . . . . . xxvii

Chapter 1. Introduction . . . . . . . . . . . . . . . . . . . . . .1

Overview of the DS6000 series models . . . . . . . . . . . . . . . .1

DS6800 (Model 1750-511) . . . . . . . . . . . . . . . . . . . .2

DS6000 expansion enclosure (Model 1750-EX1) . . . . . . . . . . . .3

Performance features . . . . . . . . . . . . . . . . . . . . . . .4

Data availability features . . . . . . . . . . . . . . . . . . . . . .5

RAID implementation . . . . . . . . . . . . . . . . . . . . . .5

Overview of Copy Services . . . . . . . . . . . . . . . . . . . .6

FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . .6

Subsystem Device Driver for open-systems . . . . . . . . . . . . . .8

Multiple allegiance for FICON hosts . . . . . . . . . . . . . . . . .8

DS6000 Interfaces . . . . . . . . . . . . . . . . . . . . . . . .8

IBM TotalStorage DS Storage Manager . . . . . . . . . . . . . . .8

DS Open application programming interface . . . . . . . . . . . . .9

DS command-line interface . . . . . . . . . . . . . . . . . . . .9

Software Requirements . . . . . . . . . . . . . . . . . . . . . .10

Host systems that DS6000 series supports . . . . . . . . . . . . . .10

Fibre channel host attachments . . . . . . . . . . . . . . . . . .10

Attaching a DS6000 series to an open-systems host with fibre channel

adapters . . . . . . . . . . . . . . . . . . . . . . . . .11

FICON-attached S/390 and zSeries hosts that the storage unit supports . . .11

General information about attaching to open-systems host with fibre-channel

adapters . . . . . . . . . . . . . . . . . . . . . . . . . .12

Fibre-channel architecture . . . . . . . . . . . . . . . . . . . .12

Fibre-channel cables and adapter types . . . . . . . . . . . . . . .15

Fibre-channel node-to-node distances . . . . . . . . . . . . . . .15

LUN affinity for fibre-channel attachment . . . . . . . . . . . . . .16

Targets and LUNs for fibre-channel attachment . . . . . . . . . . . .16

LUN access modes for fibre-channel attachment . . . . . . . . . . .16

Fibre-channel storage area networks . . . . . . . . . . . . . . . .17

Chapter 2. Attaching to an Apple Macintosh server . . . . . . . . . . .19

Supported fibre-channel adapters for the Apple Macintosh server . . . . . .19

Chapter 3. Attaching to a Fujitsu PRIMEPOWER host system . . . . . .21

Supported fibre-channel adapters for PRIMEPOWER . . . . . . . . . . .21

Fibre-channel attachment requirements for PRIMEPOWER . . . . . . . .21

Installing the Emulex adapter card for a PRIMEPOWER host system . . . . .21

Downloading the Emulex adapter driver for a PRIMEPOWER host system . . .22

Installing the Emulex adapter driver for a PRIMEPOWER host system . . . .22

Configuring host device drivers for PRIMEPOWER . . . . . . . . . . .22

Parameter settings for the Emulex LP9002L adapter . . . . . . . . . . .24

Setting parameters for Emulex adapters . . . . . . . . . . . . . . .26

Chapter 4. Attaching to a Hewlett-Packard AlphaServer Tru64 UNIX host 27

Attaching to an HP AlphaServer Tru64 UNIX host system with fibre-channel

adapters . . . . . . . . . . . . . . . . . . . . . . . . . .27

Supported fibre-channel adapters for the HP AlphaServer Tru64 UNIX host

system . . . . . . . . . . . . . . . . . . . . . . . . . .27

Supported operating system levels for fibre-channel attachment to an HP

Tru64 UNIX host . . . . . . . . . . . . . . . . . . . . . .27

Fibre-channel Tru64 UNIX attachment requirements . . . . . . . . . .27

Fibre-channel Tru64 UNIX attachment considerations . . . . . . . . . .28

Supporting the AlphaServer Console for Tru64 UNIX . . . . . . . . . .28

Attaching the HP AlphaServer Tru64 UNIX host to a storage unit using

fibre-channel adapters . . . . . . . . . . . . . . . . . . . .29

Installing the KGPSA-xx adapter card in a Tru64 UNIX host system . . . .30

Setting the mode for the KGPSA-xx host adapter . . . . . . . . . . .31

Setting up the storage unit to attach to an HP AlphaServer Tru64 UNIX host

system with fibre-channel adapters . . . . . . . . . . . . . . . .32

Configuring the storage for fibre-channel Tru64 UNIX hosts . . . . . . .36

Removing persistent reserves for Tru64 UNIX 5.x . . . . . . . . . . . .37

Limitations for Tru64 UNIX . . . . . . . . . . . . . . . . . . . .40

Chapter 5. Attaching to a Hewlett-Packard AlphaServer OpenVMS host 41

Supported fibre-channel adapters for the HP AlphaServer OpenVMS host

system . . . . . . . . . . . . . . . . . . . . . . . . . . .41

Fibre-channel OpenVMS attachment requirements . . . . . . . . . . . .41

Fibre-channel OpenVMS attachment considerations . . . . . . . . . . .41

Supported OpenVMS feature codes . . . . . . . . . . . . . . . . .42

Supported microcode levels for the HP OpenVMS host system . . . . . . .42

Supported switches for the HP OpenVMS host system . . . . . . . . . .42

Attaching the HP AlphaServer OpenVMS host to a storage unit using

fibre-channel adapters . . . . . . . . . . . . . . . . . . . . .42

Supported operating system levels for fibre-channel attachment to an HP

OpenVMS host . . . . . . . . . . . . . . . . . . . . . . .42

Confirming the installation of the OpenVMS operating system . . . . . . .43

Installing the KGPSA-xx adapter card in an OpenVMS host system . . . . .44

Setting the mode for the KGPSA-xx host adapter in an OpenVMS host system 44

Setting up the storage unit to attach to an HP AlphaServer OpenVMS host

system with fibre-channel adapters . . . . . . . . . . . . . . . . .45

Adding or modifying AlphaServer fibre-channel connections for the OpenVMS

host . . . . . . . . . . . . . . . . . . . . . . . . . . .45

Defining OpenVMS fibre-channel adapters to the storage unit . . . . . . .45

Configuring fibre-channel host adapter ports for OpenVMS . . . . . . . .46

OpenVMS fibre-channel considerations . . . . . . . . . . . . . . .46

OpenVMS UDID Support . . . . . . . . . . . . . . . . . . . .46

OpenVMS LUN 0 - Command Control LUN . . . . . . . . . . . . .48

Confirming fibre-channel switch connectivity for OpenVMS . . . . . . . .48

Confirming fibre-channel storage connectivity for OpenVMS . . . . . . .49

OpenVMS World Wide Node Name hexadecimal representations . . . . .50

Verifying the fibre-channel attachment of the storage unit volumes for

OpenVMS . . . . . . . . . . . . . . . . . . . . . . . . .50

Configuring the storage for fibre-channel OpenVMS hosts . . . . . . . . .51

OpenVMS fibre-channel restrictions . . . . . . . . . . . . . . . . .51

Troubleshooting fibre-channel attached volumes for the OpenVMS host system 52

Chapter 6. Attaching to a Hewlett-Packard Servers (HP-UX) host . . . . .55

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . .55

Supported fibre-channel adapters for HP-UX hosts . . . . . . . . . . .55

Fibre-channel attachment requirements for HP-UX hosts . . . . . . . .55

Installing the fibre-channel adapter drivers for HP-UX 11i and HP-UX 11iv2 56

Setting the queue depth for the HP-UX operating system with fibre-channel

adapters . . . . . . . . . . . . . . . . . . . . . . . . .56

Configuring the storage unit for clustering on the HP-UX 11iv2 operating system 56

Chapter 7. Attaching to an IBM iSeries host . . . . . . . . . . . . .59

Attaching with fibre-channel adapters to the IBM iSeries host system . . . . .59

Supported fibre-channel adapter cards for IBM iSeries hosts . . . . . . .59

Fibre-channel attachment requirements for IBM iSeries hosts . . . . . . .59

Fibre-channel attachment considerations for IBM iSeries hosts . . . . . .59

Host limitations for IBM iSeries hosts . . . . . . . . . . . . . . . .60

IBM iSeries hardware . . . . . . . . . . . . . . . . . . . . .61

IBM iSeries software . . . . . . . . . . . . . . . . . . . . . .61

General information for configurations for IBM iSeries hosts . . . . . . .61

Recommended configurations for IBM iSeries hosts . . . . . . . . . .62

Running the Linux operating system on an IBM i5 server . . . . . . . . .64

Supported fibre-channel adapters for IBM i5 servers running the Linux

operating system . . . . . . . . . . . . . . . . . . . . . .64

Running the Linux operating system in a guest partition on an IBM i5 server 65

Planning to run Linux in a hosted or nonhosted guest partition . . . . . .65

Creating a guest partition to run Linux . . . . . . . . . . . . . . .66

Managing Linux in a guest partition . . . . . . . . . . . . . . . .67

Ordering a new server or upgrading an existing server to run a guest partition 68

Chapter 8. Attaching to an IBM NAS Gateway 500 host . . . . . . . . .69

Supported adapter cards for IBM NAS Gateway 500 hosts . . . . . . . . .69

Finding the worldwide port name . . . . . . . . . . . . . . . . . .69

Obtaining WWPNs using a Web browser . . . . . . . . . . . . . .69

Obtaining WWPNs through the command-line interface . . . . . . . . .69

Multipathing support for NAS Gateway 500 . . . . . . . . . . . . . .71

Multipath I/O and SDD considerations for NAS Gateway 500 . . . . . . .71

Host attachment multipathing scripts . . . . . . . . . . . . . . . .71

Multipathing with the Subsystem Device Driver . . . . . . . . . . . .73

Chapter 9. Attaching to an IBM RS/6000 or IBM eServer pSeries host . . .75

Installing the 1750 host attachment package on IBM pSeries AIX hosts . . . .75

Preparing for installation of the host attachment package on IBM pSeries AIX

hosts . . . . . . . . . . . . . . . . . . . . . . . . . . .75

Installing the host attachment package on IBM pSeries AIX hosts . . . . .75

Upgrading the host attachment package on IBM pSeries AIX hosts . . . . .76

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . .76

Supported fibre-channel adapter cards for IBM pSeries hosts . . . . . . .76

Fibre-channel attachment requirements for IBM pSeries hosts . . . . . .77

Fibre-channel attachment considerations for IBM pSeries hosts . . . . . .77

Verifying the configuration of the storage unit for fibre-channel adapters on

the AIX host system . . . . . . . . . . . . . . . . . . . . .77

Making SAN changes for IBM pSeries hosts . . . . . . . . . . . . .78

Support for fibre-channel boot . . . . . . . . . . . . . . . . . . .79

Prerequisites for setting up the IBM pSeries host as a fibre-channel boot

device . . . . . . . . . . . . . . . . . . . . . . . . . .79

Fibre-channel boot considerations for IBM pSeries hosts . . . . . . . .79

Supported IBM RS/6000 or IBM pSeries hosts for fibre-channel boot . . . .79

Supported levels of firmware for fibre-channel boot on IBM pSeries hosts 80

Supported levels of fibre-channel adapter microcode on IBM pSeries hosts 80

Installation mechanisms that PSSP supports for boot install from

fibre-channel SAN DASD on IBM pSeries hosts . . . . . . . . . . .80

Support for disk configurations for RS/6000 for a fibre-channel boot install 80

Support for fibre-channel boot when a disk subsystem is attached on IBM

pSeries hosts . . . . . . . . . . . . . . . . . . . . . . .80

Attaching to multiple RS/6000 or pSeries hosts without the HACMP host system 81

Considerations for attaching to multiple RS/6000 or pSeries hosts without the

HACMP host system . . . . . . . . . . . . . . . . . . . . .81

Saving data on the storage unit when attaching multiple RS/6000 or pSeries

host systems to the storage unit . . . . . . . . . . . . . . . . .81

Restoring data on the storage unit when attaching multiple RS/6000 or

pSeries host systems to the storage unit . . . . . . . . . . . . .82

Running the Linux operating system on an IBM pSeries host . . . . . . . .82

Attachment considerations for running the Linux operating system on an IBM

pSeries host . . . . . . . . . . . . . . . . . . . . . . . .82

Hardware requirements for the Linux operating system on the pSeries host 83

Software requirement for the Linux operating system on the pSeries host 83

Preparing to install the Subsystem Device Driver for the Linux operating

system on the pSeries host . . . . . . . . . . . . . . . . . .84

Installing the Subsystem Device Driver on the pSeries host running the Linux

operating system . . . . . . . . . . . . . . . . . . . . . .84

Upgrading the Subsystem Device Driver for the Linux operating system on

the pSeries host . . . . . . . . . . . . . . . . . . . . . .85

Verifying the Subsystem Device Driver for the Linux operating system on the

pSeries host . . . . . . . . . . . . . . . . . . . . . . . .85

Configuring the Subsystem Device Driver . . . . . . . . . . . . . .86

Migrating with AIX 5L Version 5.2 . . . . . . . . . . . . . . . . . .86

Storage unit migration issues when you upgrade to AIX 5L Version 5.2

maintenance release 5200-01 . . . . . . . . . . . . . . . . .86

Storage unit migration issues when you remove the AIX 5L Version 5.2 with

the 5200-01 Recommended Maintenance package support . . . . . . .88

Chapter 10. Attaching to an IBM S/390 or IBM eServer zSeries host . . . .89

Migrating from a FICON bridge to a native FICON attachment . . . . . . .89

Migrating from a FICON bridge to a native FICON attachment on zSeries

hosts: FICON bridge overview . . . . . . . . . . . . . . . . .89

Migrating from a FICON bridge to a native FICON attachment on zSeries

hosts: FICON bridge configuration . . . . . . . . . . . . . . . .89

Migrating from a FICON bridge to a native FICON attachment on zSeries

hosts: Mixed configuration . . . . . . . . . . . . . . . . . . .90

Migrating from a FICON bridge to a native FICON attachment on zSeries

hosts: Native FICON configuration . . . . . . . . . . . . . . . .91

Attaching with FICON adapters . . . . . . . . . . . . . . . . . . .92

Configuring the storage unit for FICON attachment on zSeries hosts . . . .92

Attachment considerations for attaching with FICON adapters on zSeries

hosts . . . . . . . . . . . . . . . . . . . . . . . . . . .92

Attaching to a FICON channel on an S/390 or zSeries host . . . . . . . .94

Registered state-change notifications (RSCNs) on zSeries hosts . . . . . .95

Linux for S/390 and zSeries . . . . . . . . . . . . . . . . . . . .96

Attaching a storage unit to an S/390 or zSeries host running Linux . . . . .96

Running Linux on an S/390 or zSeries host . . . . . . . . . . . . .96

Attaching FCP adapters on zSeries hosts . . . . . . . . . . . . . .97

Chapter 11. Attaching to an Intel host running Linux . . . . . . . . . 103

Supported adapter cards for an Intel host running Linux . . . . . . . . . 103

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . 103

Attachment requirements for an Intel host running Linux . . . . . . . . 103

Attachment considerations for an Intel host running Linux . . . . . . . . 104

Installing the Emulex adapter card for an Intel host running Linux . . . . . 105

Downloading the Emulex adapter driver for an Intel host running Linux 105

Installing the Emulex adapter driver for an Intel host running Linux . . . . 105

Installing the QLogic adapter card for an Intel host running Linux . . . . . 106

Downloading the current QLogic adapter driver for an Intel host running

Linux . . . . . . . . . . . . . . . . . . . . . . . . . . 107

Installing the QLogic adapter driver for an Intel host running Linux . . . . 107

Defining the number of disk devices on Linux . . . . . . . . . . . . . 108

Configuring the storage unit . . . . . . . . . . . . . . . . . . . . 109

Configuring the storage unit for an Intel host running Linux . . . . . . . 109

Partitioning storage unit disks for an Intel host running Linux . . . . . . 109

Assigning the system ID to the partition for an Intel host running Linux 110

Creating and using file systems on the storage unit for an Intel host running

Linux . . . . . . . . . . . . . . . . . . . . . . . . . . 111

SCSI disk considerations for an Intel host running Linux . . . . . . . . .112

Manually adding and removing SCSI disks . . . . . . . . . . . . .112

LUN identification for the Linux host system . . . . . . . . . . . . .112

SCSI disk problem identification and resolution . . . . . . . . . . . .114

Support for fibre-channel boot . . . . . . . . . . . . . . . . . . .114

Creating a modules disk for SUSE LINUX Enterprise Server 9.0 . . . . .114

Installing Linux over the SAN without an IBM Subsystem Device Driver 114

Updating to a more recent module without an IBM Subsystem Device Driver 116

Installing Linux over the SAN with an IBM Subsystem Device Driver . . . .116

Chapter 12. Attaching to an Intel host running VMware ESX server . . . 123

Supported adapter cards for an Intel host running VMware ESX Server . . . 123

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . 123

Attachment requirements for an Intel host running VMware ESX server 123

Attachment considerations for an Intel host running VMware ESX Server 124

Installing the Emulex adapter card for an Intel host running VMware ESX

Server . . . . . . . . . . . . . . . . . . . . . . . . . . 124

Installing the QLogic adapter card for an Intel host running VMware ESX

Server . . . . . . . . . . . . . . . . . . . . . . . . . . 125

Defining the number of disk devices on VMware ESX Server . . . . . . . 126

SCSI disk considerations for an Intel host running VMware ESX server . . . 126

LUN identification for the VMware ESX console . . . . . . . . . . . 126

Disk device discovery on VMware ESX . . . . . . . . . . . . . . 129

Persistent binding . . . . . . . . . . . . . . . . . . . . . . 129

Configuring the storage unit . . . . . . . . . . . . . . . . . . . . 130

Configuring the storage unit for an Intel host running VMware ESX Server 130

Partitioning storage unit disks for an Intel host running VMware ESX Server 130

Creating and using VMFS on the storage unit for an Intel host running

VMware ESX Server . . . . . . . . . . . . . . . . . . . . 132

Copy Services considerations . . . . . . . . . . . . . . . . . . . 132

Chapter 13. Attaching to a Novell NetWare host . . . . . . . . . . . 135

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . 135

Attaching a Novell NetWare host with fibre-channel adapters . . . . . . 135

Fibre-channel attachment considerations for a Novell NetWare host . . . . 135

Installing the Emulex adapter card for a Novell NetWare host . . . . . . 135

Downloading the current Emulex adapter driver for a Novell NetWare host 136

Installing the Emulex adapter driver for a Novell NetWare host . . . . . . 136

Downloading the current QLogic adapter driver for a Novell NetWare host 136

Installing the QLogic QLA23xx adapter card for a Novell NetWare host 137

Installing the adapter drivers for a Novell NetWare host . . . . . . . . 138

Chapter 14. Attaching to an iSCSI Gateway host . . . . . . . . . . . 141

Attachment considerations . . . . . . . . . . . . . . . . . . . . 141

Attachment overview of the iSCSI Gateway host . . . . . . . . . . . 141

Attachment requirements for the iSCSI Gateway host . . . . . . . . . 141

Ethernet adapter attachment considerations for the iSCSI Gateway host 142

Configuring storage for the iSCSI Gateway host . . . . . . . . . . . 142

iSCSI Gateway operation through the IP Service Module . . . . . . . . . 143

Chapter 15. Attaching to an IBM SAN File System . . . . . . . . . . 145

Attaching to an IBM SAN File System metadata server with fibre-channel

adapters . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Configuring a storage unit for attachment to the SAN File System metadata

node . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Chapter 16. Attaching to an IBM SAN Volume Controller host . . . . . . 147

Attaching to an IBM SAN Volume Controller host with fibre-channel adapters 147

Configuring the storage unit for attachment to the SAN Volume Controller host

system . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Chapter 17. Attaching an SGI host system . . . . . . . . . . . . . 149

Attaching an SGI host system with fibre-channel adapters . . . . . . . . 149

Fibre-channel attachment considerations for the SGI host system . . . . . . 149

Fibre-channel attachment requirements for the SGI host system . . . . . . 149

Checking the version of the IRIX operating system for the SGI host system 150

Installing a fibre-channel adapter card for the SGI host system . . . . . . . 150

Verifying the installation of a fibre-channel adapter card for SGI . . . . . . 150

Configuring the fibre-channel adapter drivers for SGI . . . . . . . . . . 151

Installing an optical cable for SGI in a switched-fabric topology . . . . . . . 151

Installing an optical cable for SGI in an arbitrated loop topology . . . . . . 151

Confirming switch connectivity for SGI . . . . . . . . . . . . . . . . 151

Displaying zoning information for the switch . . . . . . . . . . . . . . 152

Confirming storage connectivity . . . . . . . . . . . . . . . . . . 153

Confirming storage connectivity for SGI in a switched-fabric topology . . . 153

Confirming storage connectivity for SGI in a fibre-channel arbitrated loop

topology . . . . . . . . . . . . . . . . . . . . . . . . . 154

Configuring the storage unit for host failover . . . . . . . . . . . . . 154

Configuring the storage unit for host failover . . . . . . . . . . . . 154

Confirming the availability of failover . . . . . . . . . . . . . . . 155

Making a connection through a switched-fabric topology . . . . . . . . 155

Making a connection through an arbitrated-loop topology . . . . . . . . 156

Switching I/O operations between the primary and secondary paths . . . . 156

Configuring storage . . . . . . . . . . . . . . . . . . . . . . . 156

Configuration considerations . . . . . . . . . . . . . . . . . . 156

Configuring storage in a switched fabric topology . . . . . . . . . . . 157

Configuring storage in an arbitrated loop topology . . . . . . . . . . 159

Chapter 18. Attaching to a Sun host . . . . . . . . . . . . . . . . 163

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . 163

Supported fibre-channel adapters for Sun . . . . . . . . . . . . . 163

Fibre-channel attachment requirements for Sun . . . . . . . . . . . 163

Installing the Emulex adapter card for a Sun host system . . . . . . . . 164

Downloading the Emulex adapter driver for a Sun host system . . . . . . 165

Installing the Emulex adapter driver for a Sun host system . . . . . . . 165

Installing the AMCC PCI adapter card for Sun . . . . . . . . . . . . 166

Downloading the current AMCC PCI adapter driver for Sun . . . . . . . 166

Installing the AMCC PCI adapter driver for Sun . . . . . . . . . . . 166

Installing the AMCC SBUS adapter card for Sun . . . . . . . . . . . 166

Downloading the current AMCC SBUS adapter driver for Sun . . . . . . 166

Installing the AMCC SBUS adapter driver for Sun . . . . . . . . . . 167

Installing the QLogic adapter card in the Sun host . . . . . . . . . . 167

Downloading the QLogic adapter driver for Sun . . . . . . . . . . . 167

Installing the QLogic adapter driver package for Sun . . . . . . . . . 167

Configuring host device drivers . . . . . . . . . . . . . . . . . . 168

Configuring host device drivers for Sun . . . . . . . . . . . . . . 168

Parameter settings for the Emulex adapters for the Sun host system . . . 170

Parameter settings for the AMCC adapters for the Sun host system . . . . 171

Parameter settings for the QLogic QLA23xxF adapters . . . . . . . . . 174

Parameter settings for the QLogic QLA23xx adapters for San Surf

configuration (4.06+ driver) . . . . . . . . . . . . . . . . . . 175

Setting the Sun host system parameters . . . . . . . . . . . . . . . 177

Setting parameters for AMCC adapters . . . . . . . . . . . . . . 177

Setting parameters for Emulex or QLogic adapters . . . . . . . . . . 178

Installing the Subsystem Device Driver . . . . . . . . . . . . . . . 179

Attaching a storage unit to a Sun host using Storage Traffic Manager System 179

Configuring the Sun STMS host settings . . . . . . . . . . . . . . 179

Attaching a storage unit to a Sun host using Sun Cluster . . . . . . . . . 180

Chapter 19. Attaching to a Windows 2000 host . . . . . . . . . . . 183

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . 183

Supported fibre-channel adapters for Windows 2000 . . . . . . . . . 183

Fibre-channel attachment requirements for the Windows 2000 host system 183

Fibre-channel attachment considerations for Windows 2000 . . . . . . . 184

Installing and configuring the Emulex adapter card . . . . . . . . . . 184

Installing and configuring the Netfinity adapter card for Windows 2000 187

Updating the Windows 2000 device driver . . . . . . . . . . . . . 189

Installing and configuring the QLogic adapter cards in Windows 2000 . . . 189

Verifying that Windows 2000 is configured for storage . . . . . . . . . 192

Configuring for availability and recoverability . . . . . . . . . . . . . 192

Configuration considerations . . . . . . . . . . . . . . . . . . 192

Setting the TimeOutValue registry for Windows 2000 . . . . . . . . . 192

Installing remote fibre-channel boot support for a Windows 2000 host system 193

Configure zoning and obtain storage . . . . . . . . . . . . . . . 193

Flash QLogic host adapter . . . . . . . . . . . . . . . . . . . 194

Configure QLogic host adapters . . . . . . . . . . . . . . . . . 194

Windows 2000 Installation . . . . . . . . . . . . . . . . . . . 194

Windows 2000 Post Installation . . . . . . . . . . . . . . . . . 195

Chapter 20. Attaching to a Windows Server 2003 host . . . . . . . . . 197

Attaching with fibre-channel adapters . . . . . . . . . . . . . . . . 197

Supported fibre-channel adapters for Windows Server 2003 . . . . . . . 197

Fibre-channel attachment requirements for the Windows Server 2003 host

system . . . . . . . . . . . . . . . . . . . . . . . . . 197

Fibre-channel attachment considerations for Windows Server 2003 . . . . 198

Installing and configuring the Emulex adapter card . . . . . . . . . . 198

Installing and configuring the Netfinity adapter card . . . . . . . . . . 201

Updating the Windows Server 2003 device driver . . . . . . . . . . . 203

Installing and configuring the QLogic adapter cards . . . . . . . . . . 203

Verifying that Windows Server 2003 is configured for storage . . . . . . 206

Configuring for availability and recoverability . . . . . . . . . . . . . 206

Configuration considerations . . . . . . . . . . . . . . . . . . 206

Setting the TimeOutValue registry for Windows Server 2003 . . . . . . . 206

Installing remote fibre-channel boot support for a Windows Server 2003 host

system . . . . . . . . . . . . . . . . . . . . . . . . . . 207

Configure zoning and obtain storage . . . . . . . . . . . . . . . 207

Flash QLogic host adapter . . . . . . . . . . . . . . . . . . . 208

Configure QLogic host adapters . . . . . . . . . . . . . . . . . 208

Windows 2003 Installation . . . . . . . . . . . . . . . . . . . 208

Windows 2003 Post Installation . . . . . . . . . . . . . . . . . 209

Appendix. Locating the worldwide port name (WWPN) . . . . . . . .211

Fibre-channel port name identification . . . . . . . . . . . . . . . .211

Locating the WWPN for a Fujitsu PRIMEPOWER host . . . . . . . . . .211

Locating the WWPN for a Hewlett-Packard AlphaServer host . . . . . . . 212

Locating the WWPN for a Hewlett-Packard host . . . . . . . . . . . . 212

Locating the WWPN for an IBM eServer iSeries host . . . . . . . . . . 213

Locating the WWPN for an IBM eServer pSeries or an RS/6000 host . . . . 213

Locating the WWPN for a Linux host . . . . . . . . . . . . . . . . 214

Locating the WWPN for a Novell NetWare host . . . . . . . . . . . . 215

Locating the WWPN for an SGI host . . . . . . . . . . . . . . . . 215

Locating the WWPN for a Sun host . . . . . . . . . . . . . . . . . 216

Locating the WWPN for a Windows 2000 host . . . . . . . . . . . . . 216

Locating the WWPN for a Windows Server 2003 host . . . . . . . . . . 217

Accessibility . . . . . . . . . . . . . . . . . . . . . . . . . 219

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

Terms and conditions for downloading and printing publications . . . . . . 222

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . 223

Electronic emission notices . . . . . . . . . . . . . . . . . . . . 224

Federal Communications Commission (FCC) statement . . . . . . . . 224

Industry Canada compliance statement . . . . . . . . . . . . . . 224

European community compliance statement . . . . . . . . . . . . . 224

Japanese Voluntary Control Council for Interference (VCCI) class A

statement . . . . . . . . . . . . . . . . . . . . . . . . 225

Korean Ministry of Information and Communication (MIC) statement . . . . 225

Taiwan class A compliance statement . . . . . . . . . . . . . . . 226

Glossary . . . . . . . . . . . . . . . . . . . . . . . . . . 227

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . 255

Figures

1. Point-to-point topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . .13

2. Switched-fabric topology . . . . . . . . . . . . . . . . . . . . . . . . . . . .14

3. Arbitrated loop topology . . . . . . . . . . . . . . . . . . . . . . . . . . . .15

4. Example of sd.conf file entries for fibre-channel . . . . . . . . . . . . . . . . . . .23

5. Example of a start lpfc auto-generated configuration . . . . . . . . . . . . . . . . . .24

6. Confirming the storage unit licensed machine code on an HP AlphaServer through the telnet

command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .29

7. Example of the sizer -v command . . . . . . . . . . . . . . . . . . . . . . . .30

8. Example of the set mode diag command and the wwidmgr -show adapter command . . . . . .31

9. Example results of the wwidmgr command. . . . . . . . . . . . . . . . . . . . . .31

10. Example of the switchshow command . . . . . . . . . . . . . . . . . . . . . . .33

11. Example of storage unit volumes on the AlphaServer console . . . . . . . . . . . . . .33

12. Example of a hex string for a storage unit volume on an AlphaServer Tru64 UNIX console 34

13. Example of a hex string that identifies the decimal volume number for a storage unit volume on an AlphaServer console or Tru64 UNIX . . . . . . . . . . . . . . . . . . . . . .34

14. Example of hex representation of last 5 characters of a storage unit volume serial number on an AlphaServer console . . . . . . . . . . . . . . . . . . . . . . . . . . . . .34

15. Example of the hwmgr command to verify attachment . . . . . . . . . . . . . . . . .35

16. Example of a Korn shell script to display a summary of storage unit volumes . . . . . . . .36

17. Example of the Korn shell script output . . . . . . . . . . . . . . . . . . . . . . .36

18. Example of the essvol script . . . . . . . . . . . . . . . . . . . . . . . . . .38

19. Example of the scu command . . . . . . . . . . . . . . . . . . . . . . . . . .38

20. Example of the scu command . . . . . . . . . . . . . . . . . . . . . . . . . .39

21. Example of the scu clear command . . . . . . . . . . . . . . . . . . . . . . . .39

22. Example of the scu command to show persistent reserves . . . . . . . . . . . . . . .40

23. Example of show system command to show system command on the OpenVMS operating system 43

24. Example of the product show history command to check the versions of patches already installed 43

25. Example of the set mode diag command and the wwidmgr -show adapter command . . . . . .44

26. Example results of the wwidmgr command. . . . . . . . . . . . . . . . . . . . . .45

27. Example of the switchshow command . . . . . . . . . . . . . . . . . . . . . . .49

28. Example of storage unit volumes on the AlphaServer console . . . . . . . . . . . . . .49

29. Example of a World Wide Node Name for the storage unit volume on an AlphaServer console 50

30. Example of a volume number for the storage unit volume on an AlphaServer console . . . . .50

31. Example of what is displayed when you use OpenVMS storage configuration utilities . . . . . .51

32. Example of the display for the auxiliary storage hardware resource detail for a 2766 or 2787 adapter card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .60

33. Example of the logical hardware resources associated with an IOP . . . . . . . . . . . .62

34. Example of the display for the auxiliary storage hardware resource detail for the storage unit 63

35. Example output from the lscfg -vpl “fcs*” |grep Network command. . . . . . . . . . . . .70

36. Example output saved to a text file . . . . . . . . . . . . . . . . . . . . . . . .70

37. Example of installed file set . . . . . . . . . . . . . . . . . . . . . . . . . . .72

38. Example environment where all MPIO devices removed . . . . . . . . . . . . . . . .72

39. Example of installed host attachment scripts . . . . . . . . . . . . . . . . . . . . .73

40. Example of extracting the SDD archive . . . . . . . . . . . . . . . . . . . . . . .74

41. Example of a list of devices displayed when you use the lsdev -Cc disk | grep 1750 command for fibre-channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .78

42. Example of a list of other devices displayed when you use the lsdisk command for fibre-channel. 78

43. Example of how to configure a FICON bridge from an S/390 or zSeries host system to a storage unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .90

44. Example of how to add a FICON director and a FICON host adapter . . . . . . . . . . .91

45. Example of the configuration after the FICON bridge is removed . . . . . . . . . . . . .92

46. Example of prerequisite information for FCP Linux on zSeries . . . . . . . . . . . . . .98

47. Example of prerequisite information for FCP Linux on zSeries . . . . . . . . . . . . . .99

Page 14

48. Example of prerequisite information for FCP Linux on zSeries . . . . . . . . . . . . . . 100

49. Example of prerequisite information for FCP Linux on zSeries . . . . . . . . . . . . . . 101

50. Example of a script to add more than one device . . . . . . . . . . . . . . . . . . 101

51. Example of how to add SCSI devices through the add_map command . . . . . . . . . . 102

52. Saving the module parameters in the /etc/zfcp.conf file . . . . . . . . . . . . . . . . 102

53. Example of Logical Volume Manager Multipathing . . . . . . . . . . . . . . . . . . 102

54. Example of range of devices for a Linux host . . . . . . . . . . . . . . . . . . . . 109

55. Example of different options for the fdisk utility . . . . . . . . . . . . . . . . . . .110

56. Example of a primary partition on the disk /dev/sdb . . . . . . . . . . . . . . . . . .110

57. Example of assigning a Linux system ID to the partition . . . . . . . . . . . . . . . . 111

58. Example of creating a file with the mke2fs command . . . . . . . . . . . . . . . . . 111

59. Example of creating a file with the mkfs command . . . . . . . . . . . . . . . . . .112

60. Example output for a Linux host that only configures LUN 0 . . . . . . . . . . . . . .113

61. Example output for a Linux host with configured LUNs . . . . . . . . . . . . . . . .113

62. Example of a complete linuxrc file for Red Hat . . . . . . . . . . . . . . . . . . . 121

63. Example of a complete linuxrc file for SUSE . . . . . . . . . . . . . . . . . . . . 122

64. Example of QLogic Output: . . . . . . . . . . . . . . . . . . . . . . . . . . 127

65. Example of Emulex Output: . . . . . . . . . . . . . . . . . . . . . . . . . . 128

66. Example listing of a Vmhba directory . . . . . . . . . . . . . . . . . . . . . . . 128

67. Example of Vmhba entries . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

68. Example of the different options for the fdisk utility: . . . . . . . . . . . . . . . . . . 131

69. Example of primary partition on the disk /dev/vsd71 . . . . . . . . . . . . . . . . . 131

70. Example of PCI bus slots . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

71. Example results for the switchshow command . . . . . . . . . . . . . . . . . . . 152

72. Example results for the cfgShow command . . . . . . . . . . . . . . . . . . . . . 153

73. Example of commands to turn failover on . . . . . . . . . . . . . . . . . . . . . 155

74. Example of an edited /etc/failover.conf file . . . . . . . . . . . . . . . . . . . . . 156

75. Example of an edited /etc/failover.conf file for an arbitrated loop connection . . . . . . . . . 156

76. Example commands for the IRIX switched fabric storage configuration utility . . . . . . . . 158

77. Example commands for the IRIX switched fabric storage configuration utility, part 2 . . . . . . 159

78. Example commands for the IRIX arbitrated loop storage configuration utility . . . . . . . . 160

79. Example commands for the IRIX arbitrated loop storage configuration utility, part 2 . . . . . . 161

80. Example of sd.conf file entries for fibre-channel . . . . . . . . . . . . . . . . . . . 169

81. Example of a start lpfc auto-generated configuration . . . . . . . . . . . . . . . . . 169

82. Example binding inserts for qlaxxxx.conf . . . . . . . . . . . . . . . . . . . . . . 177

83. Example of what is displayed when you start the Windows 2000 host . . . . . . . . . . . 188

84. Example of what the system displays when you start the Windows Server 2003 host . . . . . 202

85. Example of the output from the Hewlett-Packard AlphaServer wwidmgr -show command 212

86. Example of the output from the Hewlett-Packard #fgrep wwn /var/adm/messages command 212

87. Example of the output from the Hewlett-Packard fcmsutil /dev/td1 | grep world command. 213

88. Example of what is displayed in the /var/log/messages file . . . . . . . . . . . . . . . 215

89. Example of the scsiha — bus_number device | command . . . . . . . . . . . . . . . 215

Page 15

Tables

1. Recommended configuration file parameters for the Emulex LP9002L adapter . . . . . . . .25

2. Maximum number of adapters you can use for an AlphaServer . . . . . . . . . . . . . .28

3. Maximum number of adapters you can use for an AlphaServer . . . . . . . . . . . . . .41

4. Host system limitations for the IBM iSeries host system . . . . . . . . . . . . . . . .60

5. Capacity and models of disk volumes for IBM iSeries . . . . . . . . . . . . . . . . .63

6. Recommended settings for the QLogic adapter card for an Intel host running Linux . . . . . . 106

7. Recommended settings for the QLogic adapter card for an Intel host running VMware ESX Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

8. Recommended settings for the QLogic QLA23xx adapter card for a Novell NetWare host 137

9. Solaris 8 minimum revision level patches for fibre-channel . . . . . . . . . . . . . . . 164

10. Recommended configuration file parameters for the Emulex LP9002DC, LP9002L, LP9002S, LP9402DC and LP9802 adapters . . . . . . . . . . . . . . . . . . . . . . . . 170

11. Recommended configuration file parameters for an AMCC FCX-6562, AMCC FCX2-6562, AMCC FCE-6460, or an AMCC FCE-1473 adapter . . . . . . . . . . . . . . . . . . . . . 172

12. Recommended configuration file parameters for the QLogic QLA2310F, QLA2340, and QLA2342 adapters with driver level 4.03 . . . . . . . . . . . . . . . . . . . . . . . . . 174

13. Parameter settings for the QLogic QLA23xx host adapters for San Surf Configuration (4.06+) 175

14. Recommended configuration file parameters for the Emulex LP9002L, LP9002DC, LP9402DC, LP9802, LP10000, and LP10000DC adapters . . . . . . . . . . . . . . . . . . . . 186

15. Recommended settings for the QLogic QLA23xx adapter card for Windows 2000 . . . . . . 189

16. Recommended configuration file parameters for the Emulex LP9002L, LP9002DC, LP9402DC, LP9802, LP10000, and LP10000DC adapters . . . . . . . . . . . . . . . . . . . . 200

17. Recommended settings for the QLogic QLA23xx adapter card for a Windows Server 2003 host 204

Page 16

Page 17

About this guide

This guide provides information about the following host attachment issues:

• Attaching the storage unit to an open-systems host with fibre-channel adapters

• Connecting IBM fibre-channel connection (FICON) cables to your S/390 and zSeries host systems

You can attach the following host systems to a storage unit:

• Apple Macintosh

• Fujitsu PRIMEPOWER

• Hewlett-Packard

• IBM eServer iSeries

• IBM NAS Gateway 500

• IBM RS/6000 and IBM eServer pSeries

• IBM S/390 and IBM eServer zSeries

• IBM SAN File System

• IBM SAN Volume Controller

• Linux

• Microsoft Windows 2000

• Microsoft Windows Server 2003

• Novell NetWare

• Silicon Graphics

• Sun

For a list of open-systems hosts, operating systems, adapters, and switches that

IBM supports, see the Interoperability Matrix at

http://www.ibm.com/servers/storage/disk/ds6000/interop.html.

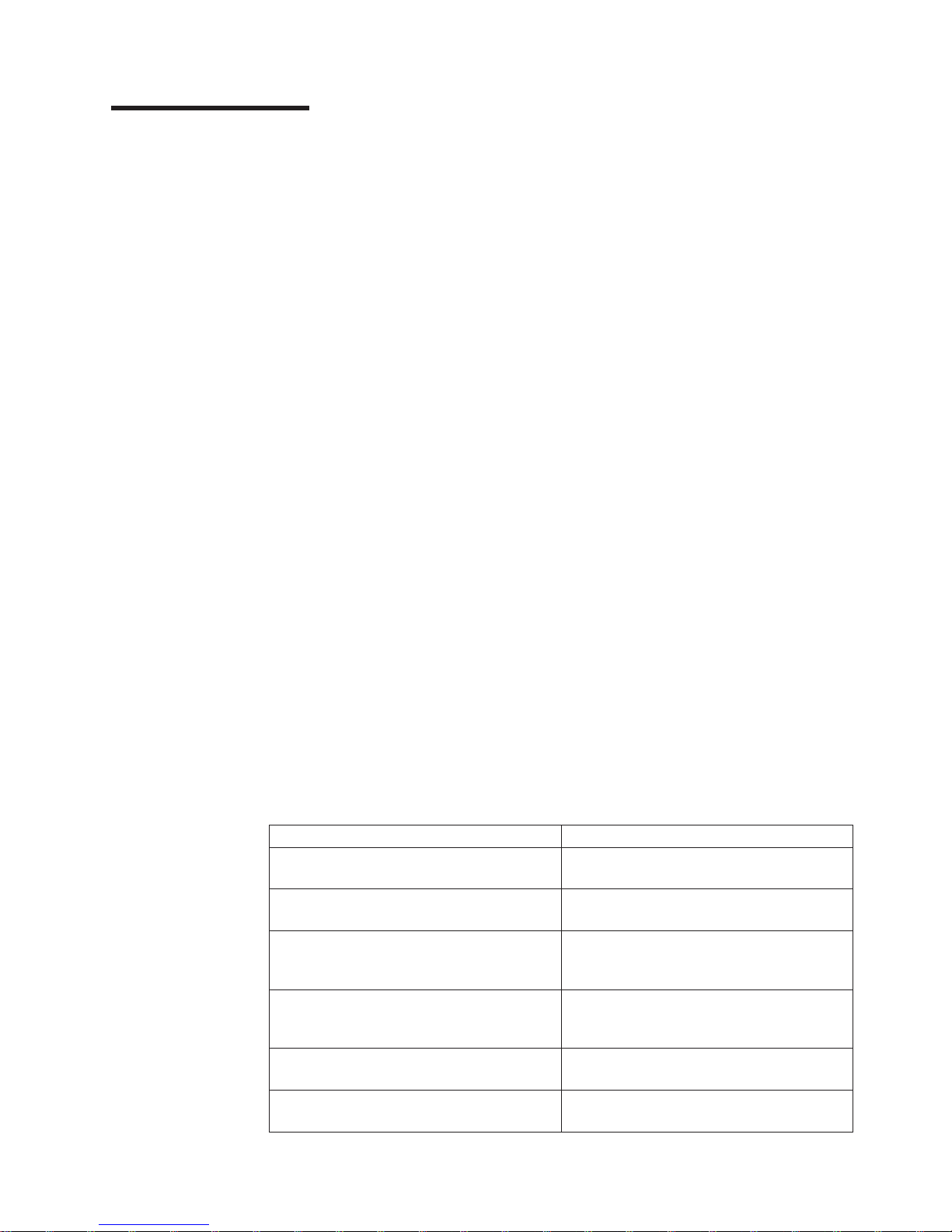

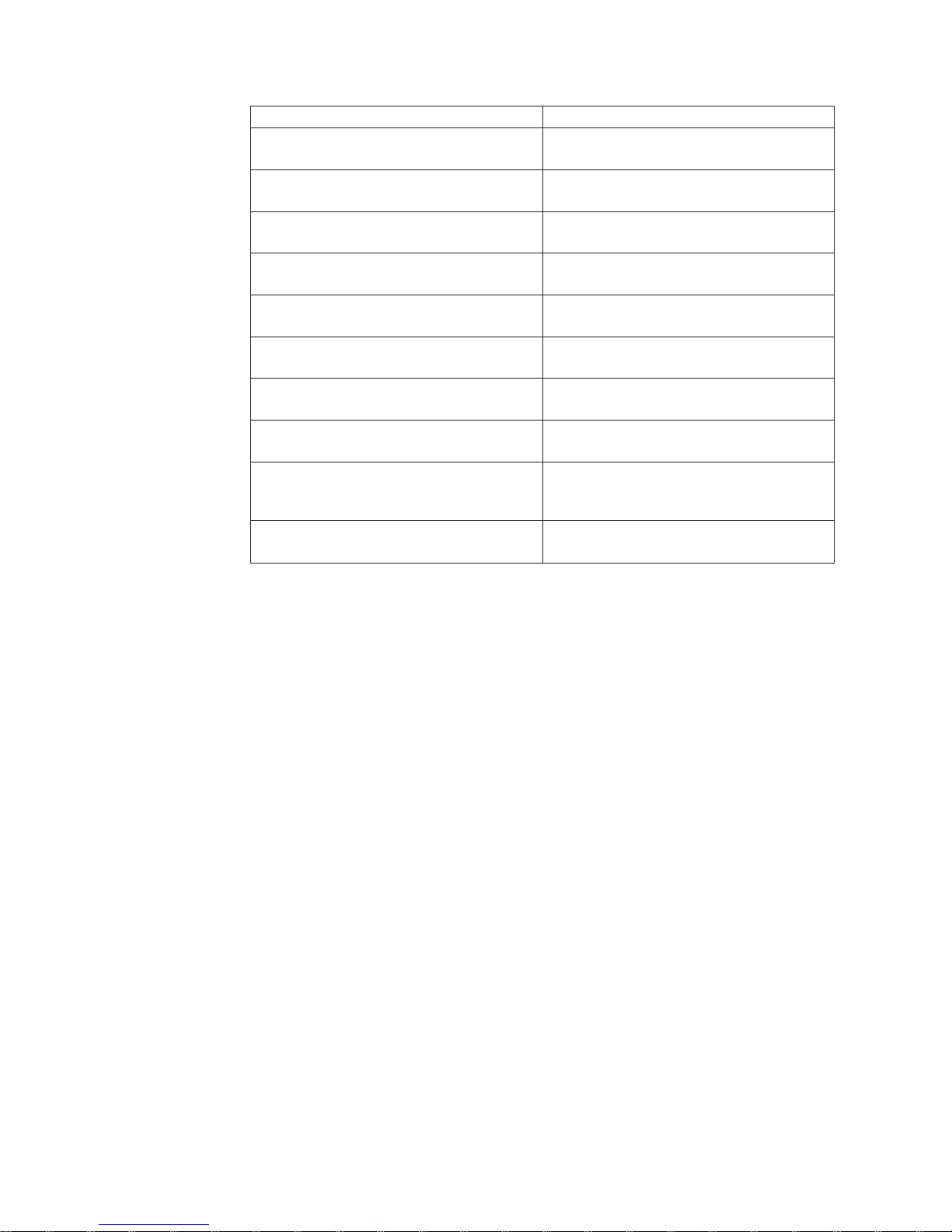

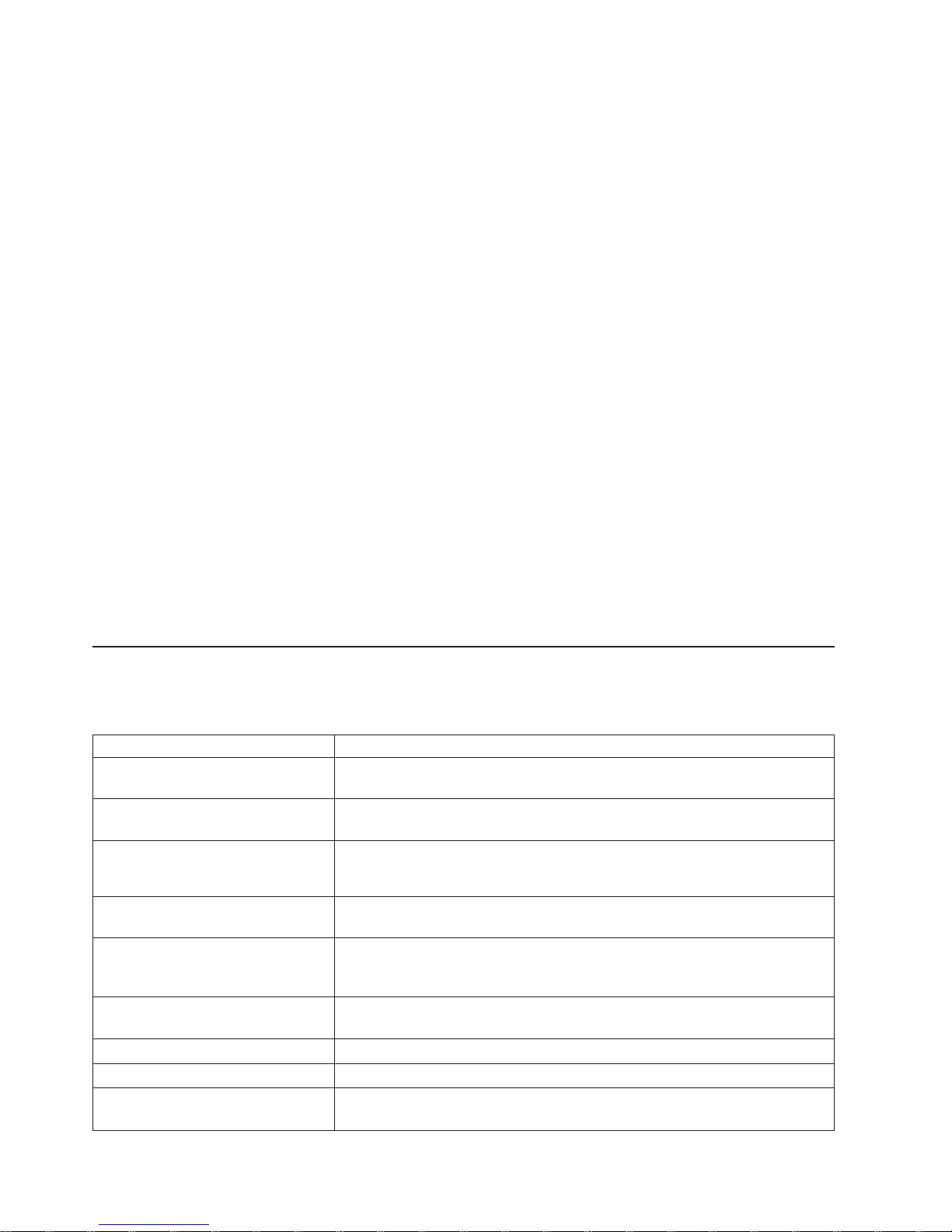

Finding attachment information in the Host Systems Attachment Guide

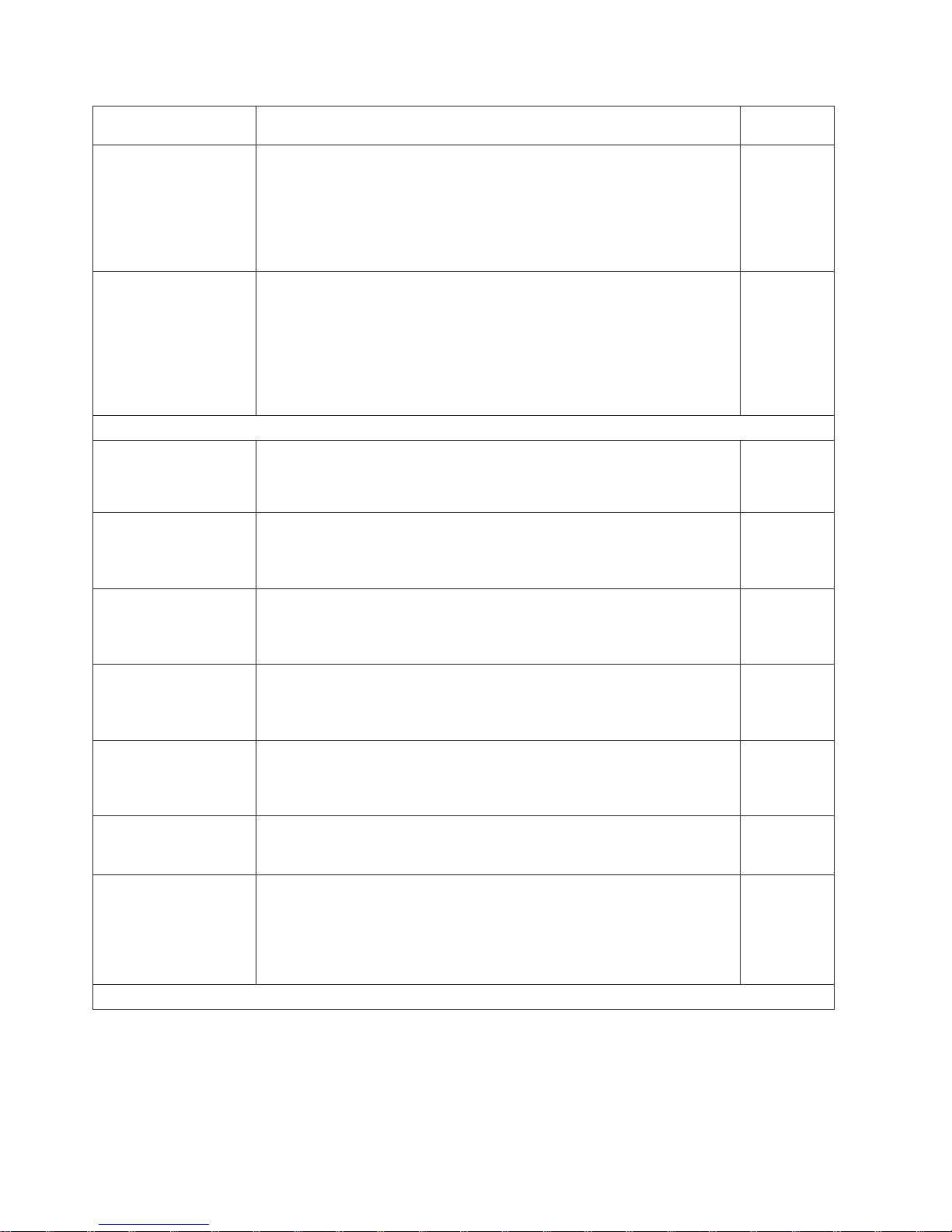

The following table provides information about how to find attachment information

quickly in this guide.

Host                                      Fibre-channel

Apple Macintosh Servers                   “Supported fibre-channel adapters for the Apple Macintosh server” on page 19

Fujitsu PRIMEPOWER                        “Supported fibre-channel adapters for PRIMEPOWER” on page 21

Hewlett-Packard AlphaServer Tru64 UNIX®   “Supported fibre-channel adapters for the HP AlphaServer Tru64 UNIX host system” on page 27

Hewlett-Packard AlphaServer OpenVMS       “Supported fibre-channel adapters for the HP AlphaServer OpenVMS host system” on page 41

Hewlett-Packard Servers (HP-UX)           “Attaching with fibre-channel adapters” on page 55

iSCSI Gateway                             “Attachment overview of the iSCSI Gateway host” on page 141

© Copyright IBM Corp. 2004, 2005 xv

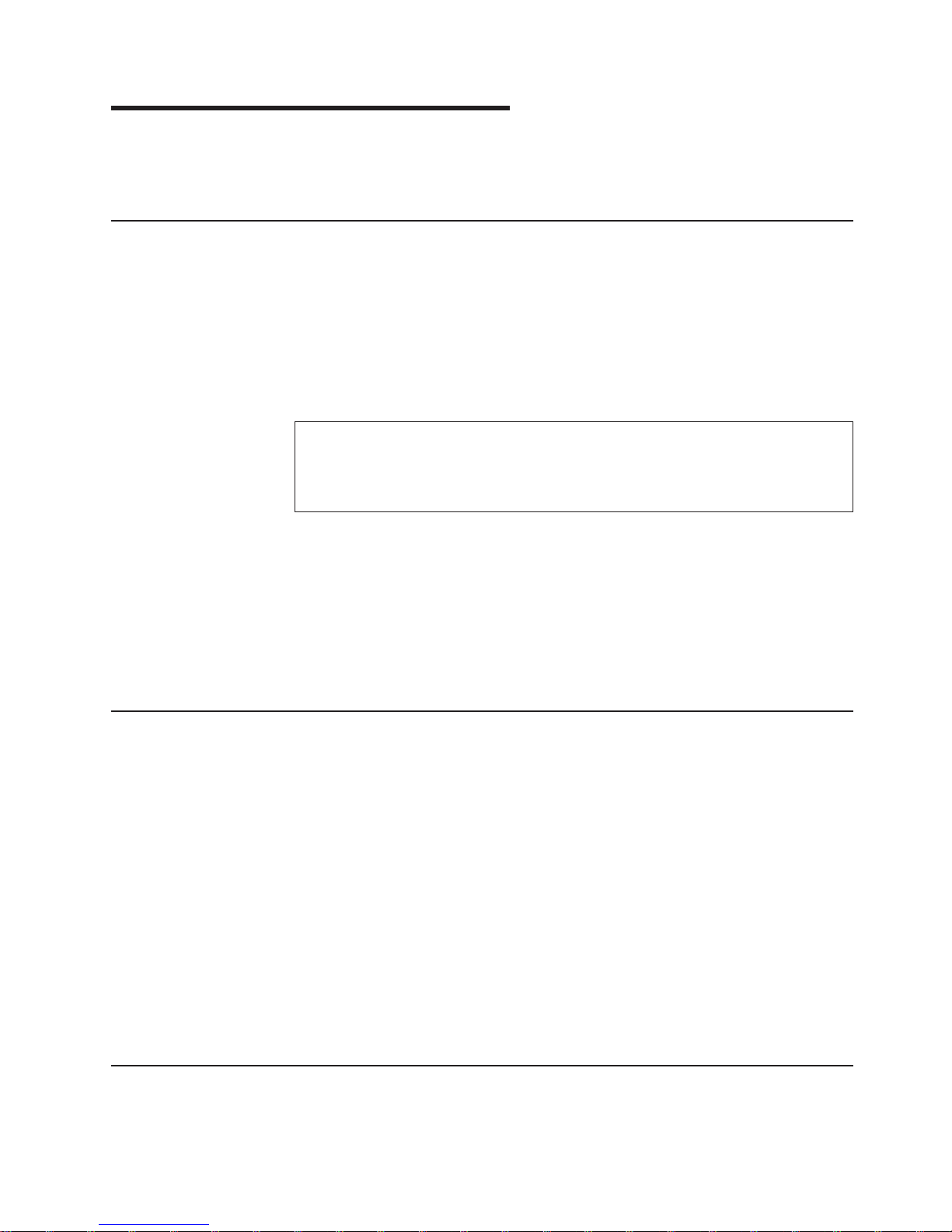

Page 18

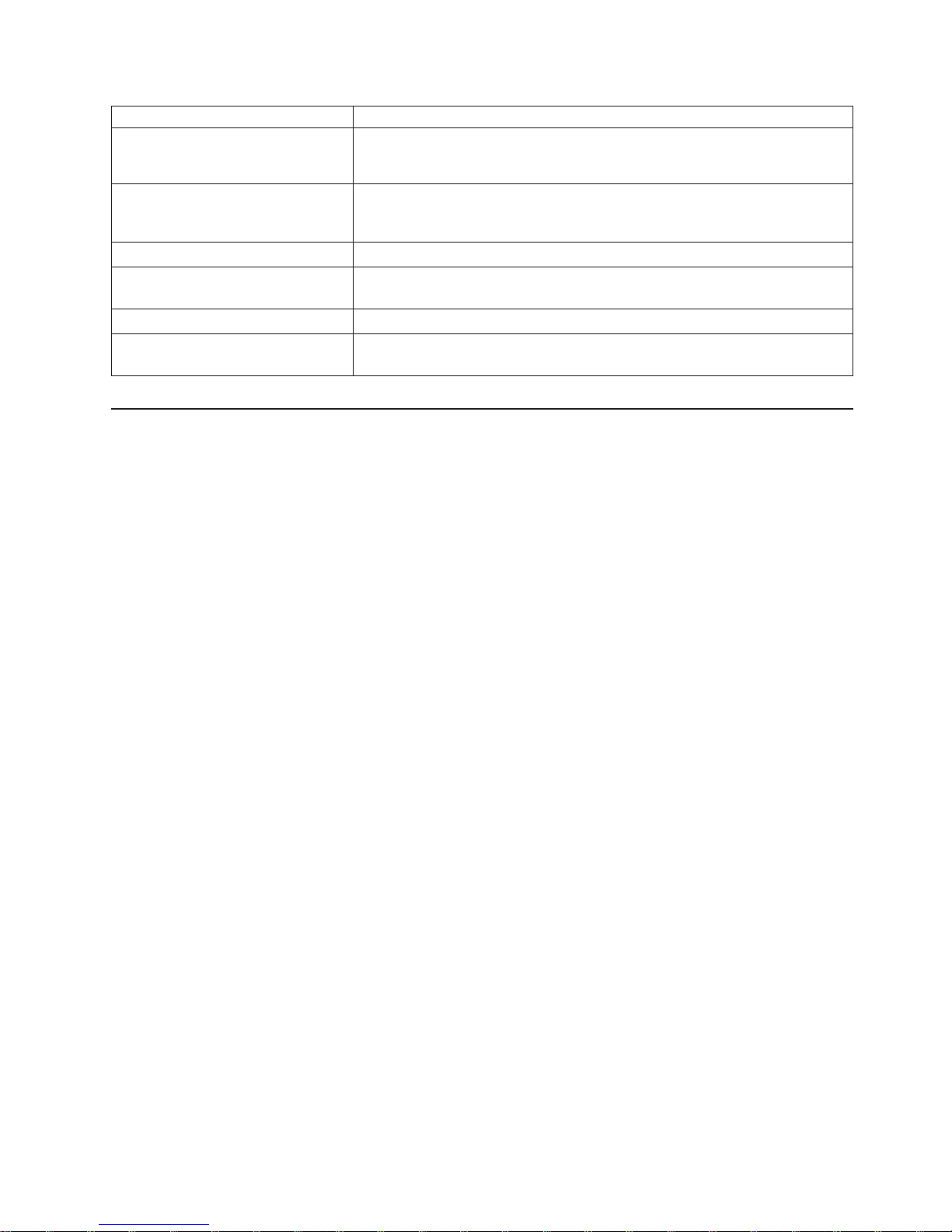

Host                                      Fibre-channel

IBM® eServer™ iSeries™                    “Attaching with fibre-channel adapters to the IBM iSeries host system” on page 59

IBM NAS Gateway 500                       “Supported adapter cards for IBM NAS Gateway 500 hosts” on page 69

IBM eServer pSeries™ or IBM RS/6000®      “Attaching with fibre-channel adapters” on page 76

IBM eServer zSeries® or S/390®            “Attaching FCP adapters on zSeries hosts running Linux” on page 97 (for zSeries only)

Linux                                     “Attaching with fibre-channel adapters” on page 103

Microsoft® Windows® 2000                  “Attaching with fibre-channel adapters” on page 183

Microsoft Windows Server 2003             “Attaching with fibre-channel adapters” on page 197

Novell NetWare                            “Attaching with fibre-channel adapters” on page 135

IBM SAN Volume Controller                 “Attaching to an IBM SAN Volume Controller host with fibre-channel adapters” on page 147

Sun                                       “Supported fibre-channel adapters for Sun” on page 163

Page 19

Safety and environmental notices

This section contains information about safety notices that are used in this guide

and environmental notices for this product.

Safety notices

Use this process to find information about safety notices.

To find the translated text for a danger or caution notice:

1. Look for the identification number at the end of each danger notice or each

caution notice. In the following examples, the numbers 1000 and 1001 are the

identification numbers.

DANGER

A danger notice indicates the presence of a hazard that has the

potential of causing death or serious personal injury.

1000

CAUTION:

A caution notice indicates the presence of a hazard that has the potential

of causing moderate or minor personal injury.

1001

2. Find the number that matches in the IBM TotalStorage Solutions Safety Notices

for IBM Versatile Storage Server and IBM TotalStorage Enterprise Storage

Server, GC26-7229.

Environmental notices

This section identifies the environmental guidelines that pertain to this product.

Product recycling

This unit contains recyclable materials.

Recycle these materials at your local recycling sites. Recycle the materials

according to local regulations. In some areas, IBM provides a product take-back

program that ensures proper handling of the product. Contact your IBM

representative for more information.

Disposing of products

This topic contains information about how to dispose of products.

This unit might contain batteries. Remove and discard these batteries, or recycle

them, according to local regulations.

Conventions used in this guide

The following typefaces are used to show emphasis:

Page 20

boldface

Text in boldface represents menu items and lowercase or mixed-case

command names.

italics

Text in italics is used to emphasize a word. In command syntax, it is used

for variables for which you supply actual values.

monospace

Text in monospace identifies the data or commands that you type, samples of

command output, or examples of program code or messages from the

system.

Related information

The tables in this section list and describe the following publications:

• The publications that make up the IBM® TotalStorage™ DS6000 series library

• Other IBM publications that relate to the DS6000 series

• Non-IBM publications that relate to the DS6000 series

See “Ordering IBM publications” on page xxiv for information about how to order

publications in the IBM TotalStorage DS6000 series publication library. See “How to

send your comments” on page xxv for information about how to send comments

about the publications.

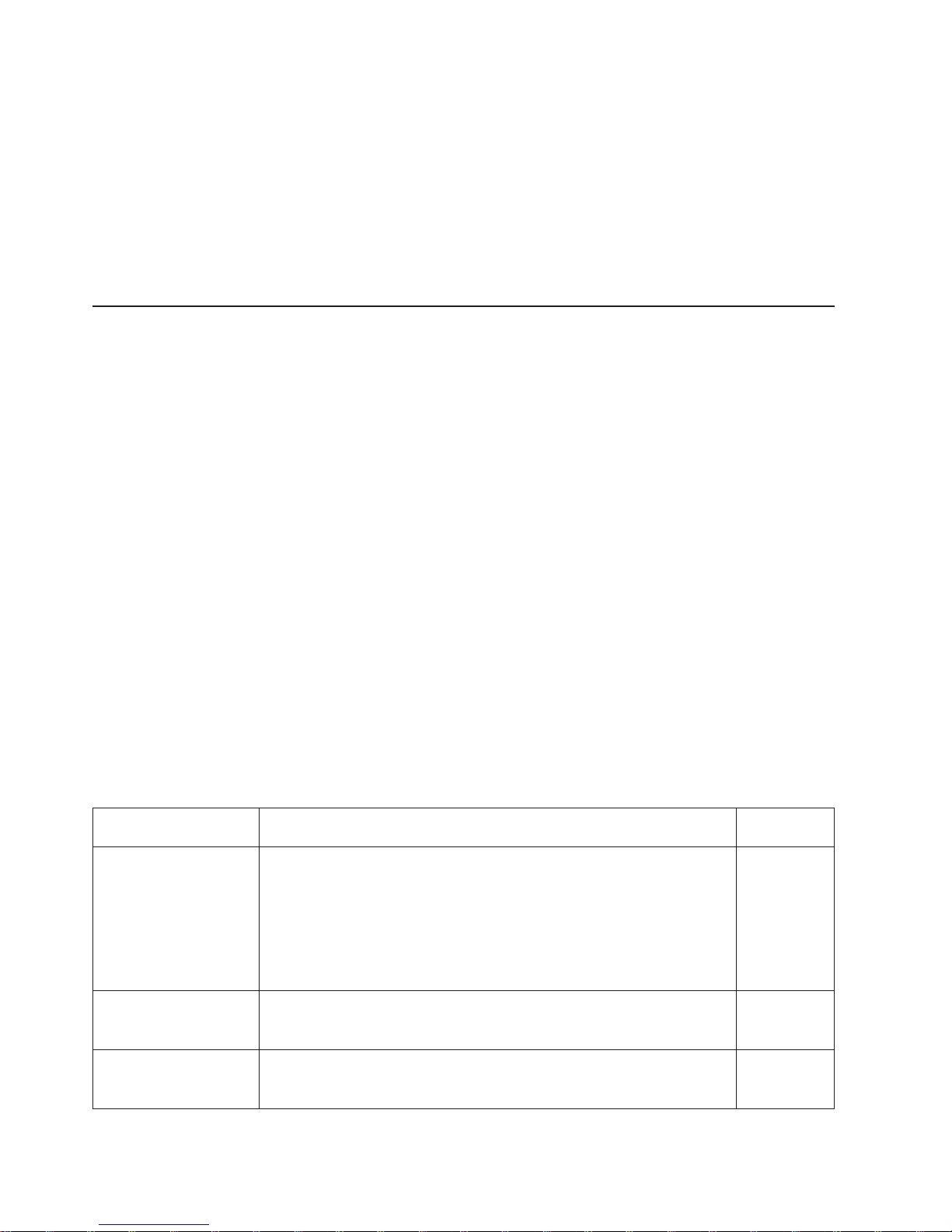

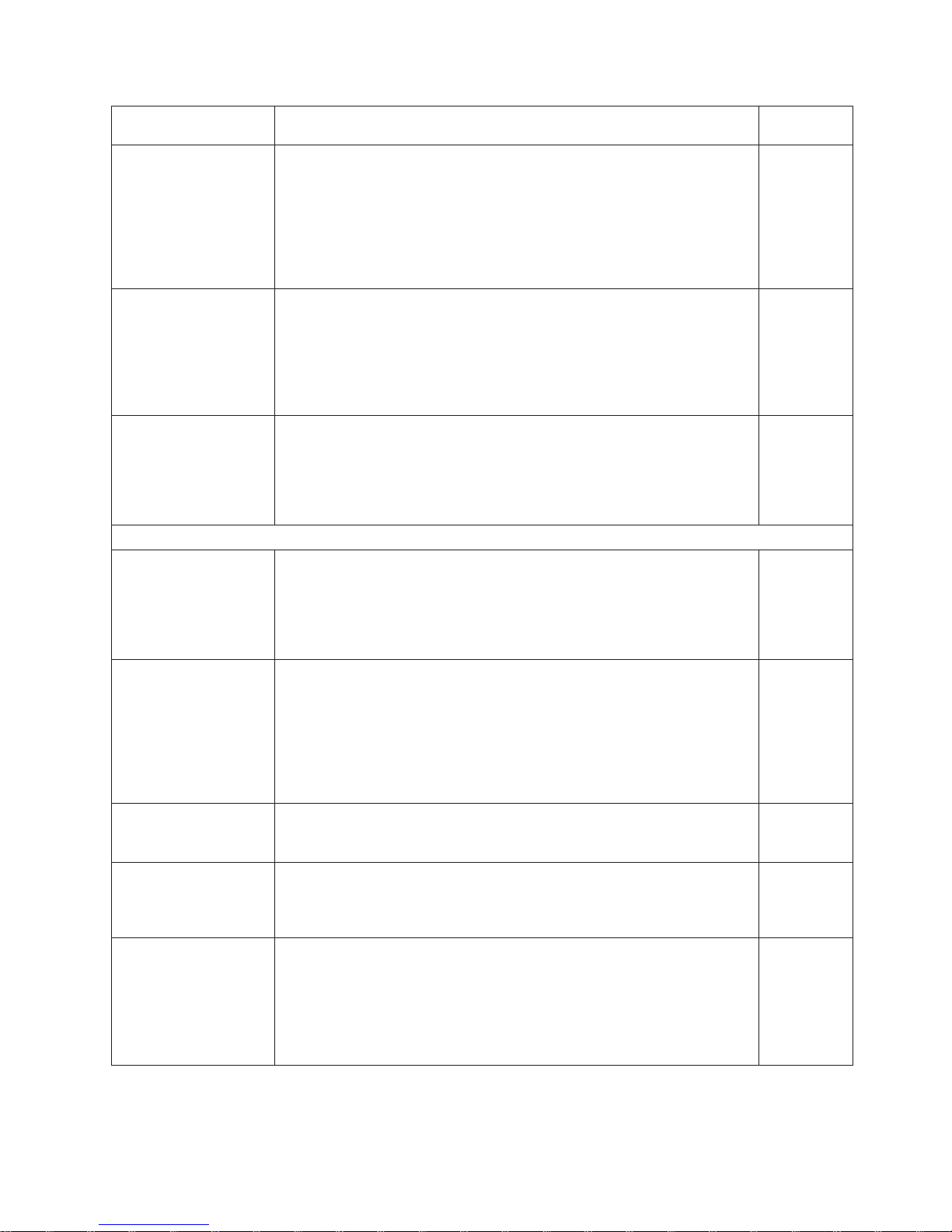

DS6000 series library

These customer publications make up the DS6000 series library.

Unless otherwise noted, these publications are available in Adobe portable

document format (PDF) on a compact disc (CD) that comes with the storage unit. If

you need additional copies of this CD, the order number is SK2T-8803. These

publications are also available as PDF files by clicking on the Documentation link

on the following Web site:

http://www-1.ibm.com/servers/storage/support/disk/ds6800/index.html

See “Ordering IBM publications” on page xxiv for information about ordering these

and other IBM publications.

Title    Description    Order Number

IBM TotalStorage® DS:

Command-Line Interface

User’s Guide

This guide describes the commands that you can use from the

command-line interface (CLI) for managing your DS6000 configuration and

Copy Services relationships. The CLI application provides a set of

commands that you can use to write customized scripts for a host system.

The scripts initiate predefined tasks in a Copy Services server application.

You can use the CLI commands to indirectly control Remote Mirror and

Copy and FlashCopy® configuration tasks within a Copy Services server

group.

GC26-7681

(See Note.)

IBM TotalStorage

DS6000: Host Systems

Attachment Guide

This guide provides guidelines for attaching the DS6000 to your host

system and for migrating to fibre-channel attachment from a small

computer system interface.

GC26-7680

(See Note.)

IBM TotalStorage

DS6000: Introduction

and Planning Guide

This guide introduces the DS6000 product and lists the features you can

order. It also provides guidelines for planning the installation and

configuration of the storage unit.

GC26-7679

xviii DS6000 Host Systems Attachment Guide

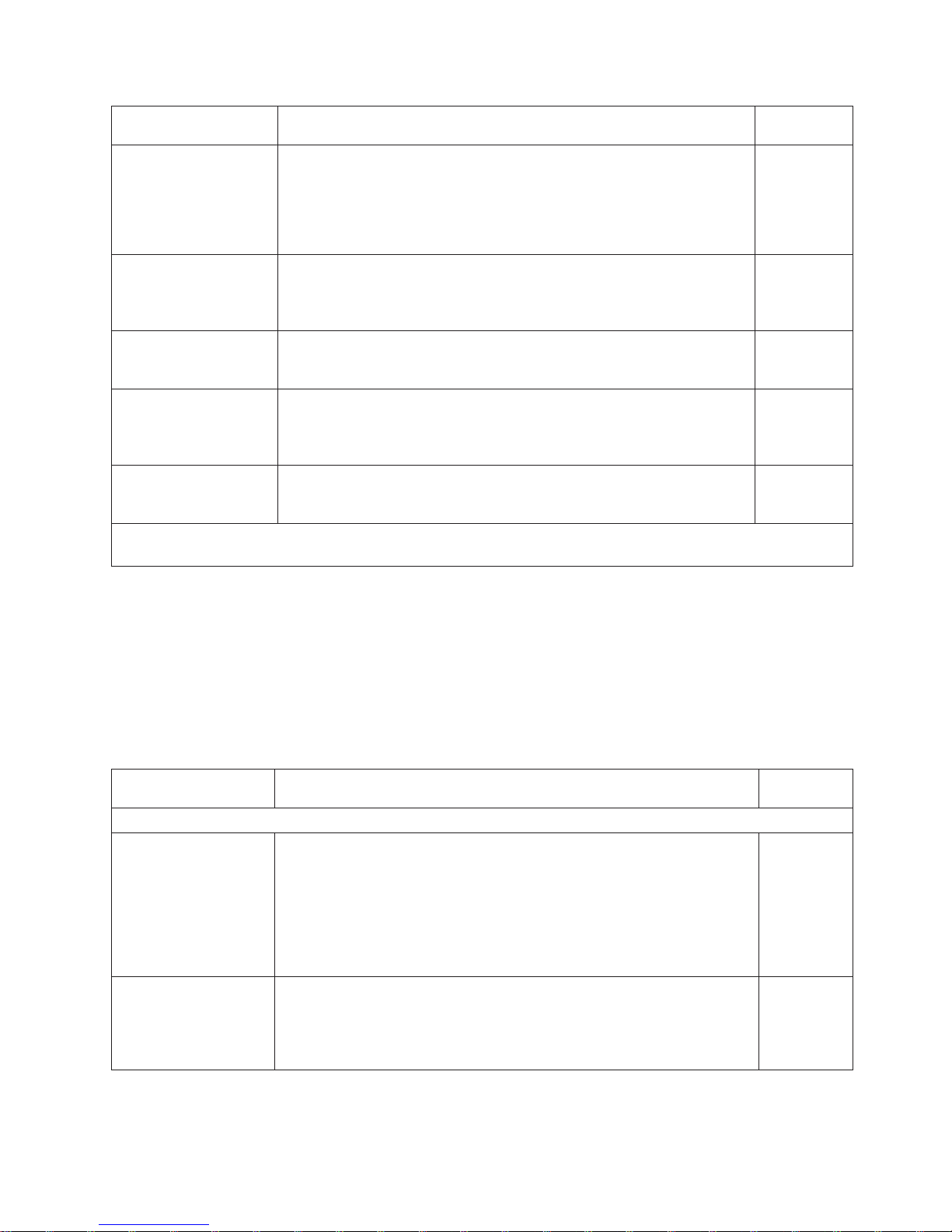

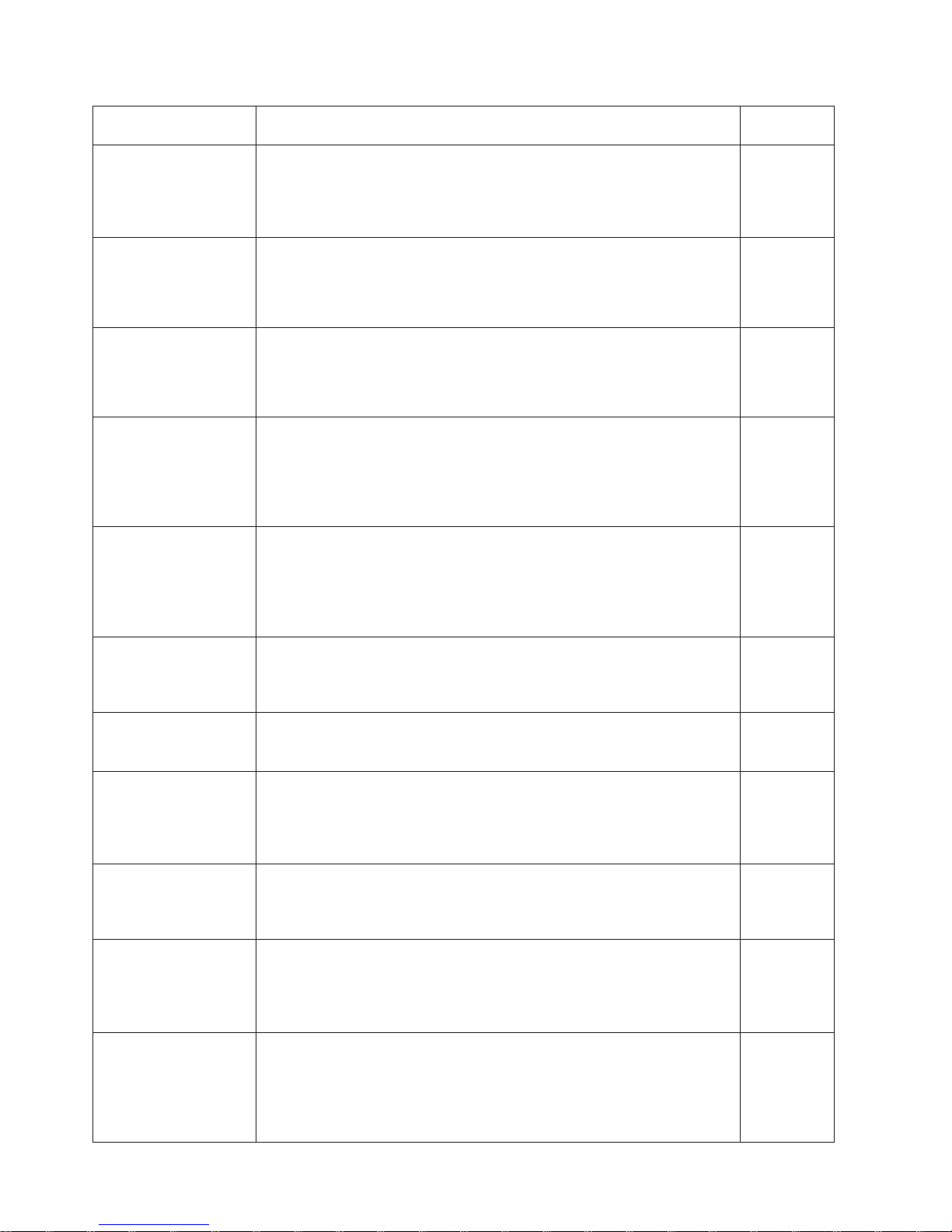

Page 21

Title    Description    Order Number

IBM TotalStorage

Multipath Subsystem

Device Driver User’s

Guide

This publication describes how to use the IBM Subsystem Device Driver

(SDD) on open-systems hosts to enhance performance and availability on

the DS6000. SDD creates redundant paths for shared logical unit

numbers. SDD permits applications to run without interruption when path

errors occur. It balances the workload across paths, and it transparently

integrates with applications.

SC30-4096

IBM TotalStorage DS

Application Programming

Interface Reference

This publication provides reference information for the IBM TotalStorage

DS application programming interface (API) and provides instructions for

installing the Common Information Model Agent, which implements the

API.

GC35-0493

IBM TotalStorage

DS6000 Messages

Reference

This publication provides explanations of error, information, and warning

messages that are issued from the DS6000 user interfaces.

GC26-7682

IBM TotalStorage

DS6000 Installation,

Troubleshooting, and

Recovery Guide

This publication provides reference information for installing and

troubleshooting the DS6000. It also discusses disaster recovery using

Copy Services.

GC26-7678

IBM TotalStorage

DS6000 Quick Start

Card

This is a quick start guide for use in installing and configuring the DS6000

series.

GC26-7685

Note: No hardcopy book is produced for this publication. However, a PDF file is available from the following Web

site: http://www-1.ibm.com/servers/storage/support/disk/ds6800/index.html

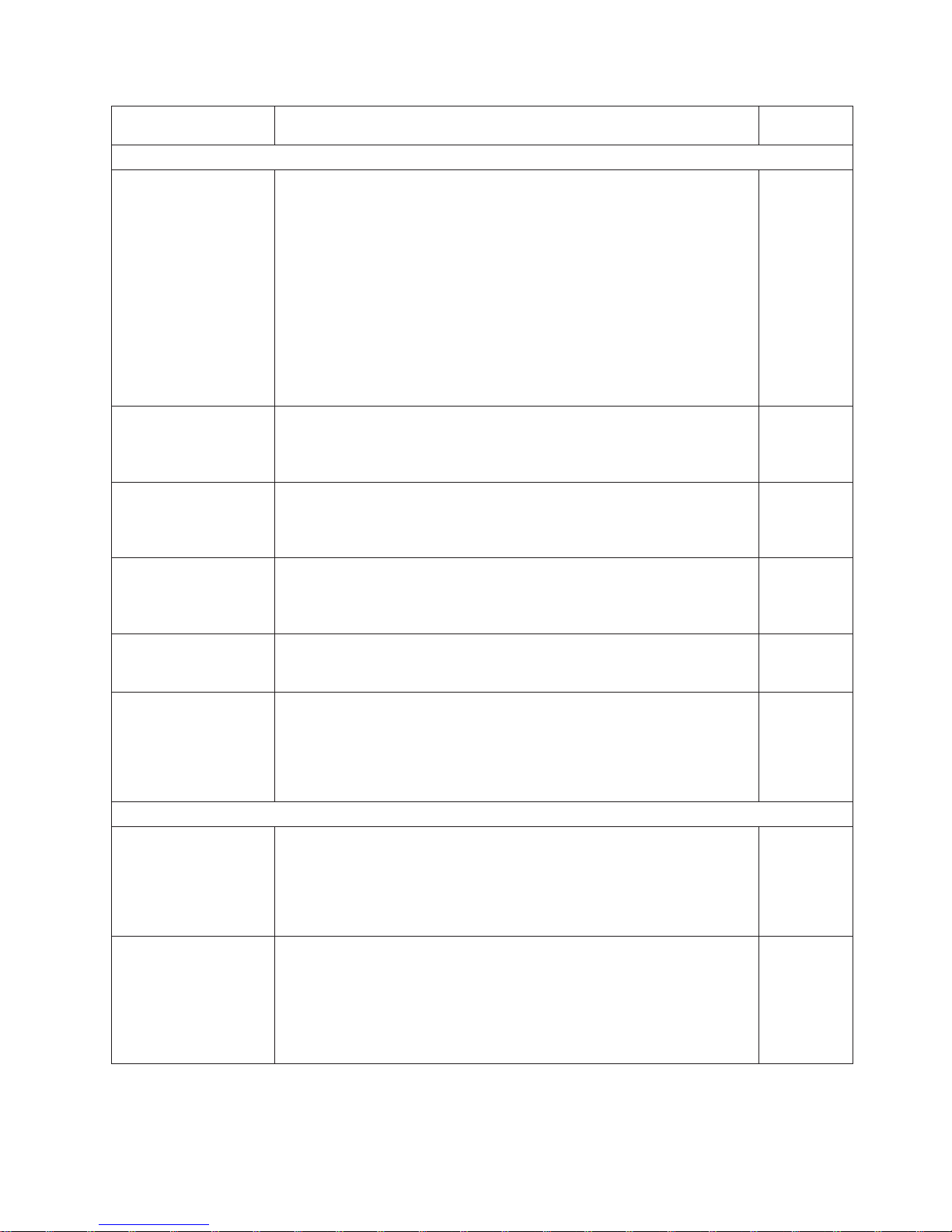

Other IBM publications

Other IBM publications contain additional information that is related to the DS

product library.

The following list is divided into categories to help you find publications that are

related to specific topics. Some of the publications are listed under more than one

category. See “Ordering IBM publications” on page xxiv for information about

ordering these and other IBM publications.

Title    Description    Order Number

Data-copy services

z/OS DFSMS Advanced

Copy Services

This publication helps you understand and use IBM Advanced Copy

Services functions. It describes three dynamic copy functions and several

point-in-time copy functions. These functions provide backup and recovery

of data if a disaster occurs to your data center. The dynamic copy functions

are peer-to-peer remote copy, extended remote copy, and coupled

extended remote copy. Collectively, these functions are known as remote

copy. FlashCopy, SnapShot, and concurrent copy are the point-in-time copy

functions.

SC35-0428

IBM Enterprise Storage

Server

This publication, from the IBM International Technical Support Organization,

introduces the Enterprise Storage Server and provides an understanding of

its benefits. It also describes in detail the architecture, hardware, and

functions, including the advanced copy functions, of the Enterprise Storage

Server.

SG24-5465

Page 22

Title    Description    Order Number

Implementing Copy

Services on S/390

This publication, from the IBM International Technical Support Organization,

tells you how to install, customize, and configure Copy Services on an

Enterprise Storage Server that is attached to an S/390 or zSeries host

system. Copy Services functions include peer-to-peer remote copy,

extended remote copy, FlashCopy®, and concurrent copy. This publication

describes the functions, prerequisites, and corequisites and describes how

to implement each function into your environment.

SG24-5680

IBM TotalStorage ESS

Implementing Copy

Services in an Open

Environment

This publication, from the IBM International Technical Support Organization,

tells you how to install, customize, and configure Copy Services on UNIX,

Windows NT®, Windows 2000, Sun Solaris, HP-UX, Tru64, OpenVMS, and

iSeries host systems. The Copy Services functions that are described

include peer-to-peer remote copy and FlashCopy. This publication describes

the functions and shows you how to implement them into your environment.

It also shows you how to implement these functions in a high-availability

cluster multiprocessing environment.

SG24-5757

Fibre channel

Fibre Channel

Connection (FICON) I/O

Interface: Physical Layer

This publication provides information about the fibre-channel I/O interface.

This book is also available as a PDF file from the following Web site:

http://www.ibm.com/servers/resourcelink/

SA24-7172

Fibre Transport Services

(FTS): Physical and

Configuration Planning

Guide

This publication provides information about fibre-optic and ESCON-trunking

systems.

GA22-7234

IBM SAN Fibre Channel

Switch: 2109 Model S08

Installation and Service

Guide

This guide describes how to install and maintain the IBM SAN Fibre

Channel Switch 2109 Model S08.

SC26-7350

IBM SAN Fibre Channel

Switch: 2109 Model S08

User’s Guide

This guide describes the IBM SAN Fibre Channel Switch and the IBM

TotalStorage ESS Specialist. It provides information about the commands

and how to manage the switch with Telnet and the Simple Network

Management Protocol.

SC26-7349

IBM SAN Fibre Channel

Switch: 2109 Model S16

Installation and Service

Guide

This publication describes how to install and maintain the IBM SAN Fibre

Channel Switch 2109 Model S16. It is intended for trained service

representatives and service providers.

SC26-7352

IBM SAN Fibre Channel

Switch: 2109 Model S16

User’s Guide

This guide introduces the IBM SAN Fibre Channel Switch 2109 Model S16

and tells you how to manage and monitor the switch using zoning and how

to manage the switch remotely.

SC26-7351

Implementing Fibre

Channel Attachment on

the ESS

This publication, from the IBM International Technical Support Organization,

helps you install, tailor, and configure fibre-channel attachment of

open-systems hosts to the Enterprise Storage Server. It provides you with a

broad understanding of the procedures that are involved and describes the

prerequisites and requirements. It also shows you how to implement

fibre-channel attachment.

SG24-6113

Open-systems hosts

Page 23

Title    Description    Order Number

ESS Solutions for Open

Systems Storage:

Compaq AlphaServer,

HP, and Sun

This publication, from the IBM International Technical Support Organization,

helps you install, tailor, and configure the Enterprise Storage Server when

you attach Compaq AlphaServer (running Tru64 UNIX), HP, and Sun hosts.

This book does not cover Compaq AlphaServer that is running the

OpenVMS operating system. This book also focuses on the settings that

are required to give optimal performance and on the settings for device

driver levels. This book is for the experienced UNIX professional who has a

broad understanding of storage concepts.

SG24-6119

IBM TotalStorage ESS

Implementing Copy

Services in an Open

Environment

This publication, from the IBM International Technical Support Organization,

tells you how to install, customize, and configure Copy Services on UNIX or

Windows 2000 host systems. The Copy Services functions that are

described include peer-to-peer remote copy and FlashCopy. This

publication describes the functions and shows you how to implement them

into your environment. It also shows you how to implement these functions

in a high-availability cluster multiprocessing environment.

SG24-5757

Implementing Fibre

Channel Attachment on

the ESS

This publication, from the IBM International Technical Support Organization,

helps you install, tailor, and configure fibre-channel attachment of

open-systems hosts to the Enterprise Storage Server. It gives you a broad

understanding of the procedures that are involved and describes the

prerequisites and requirements. It also shows you how to implement

fibre-channel attachment.

SG24-6113

S/390 and zSeries hosts

Device Support

Facilities: User’s Guide

and Reference

This publication describes the IBM Device Support Facilities (ICKDSF)

product that is used with IBM direct access storage device (DASD)

subsystems. ICKDSF is a program that you can use to perform functions

that are needed for the installation, the use, and the maintenance of IBM

DASD. You can also use it to perform service functions, error detection, and

media maintenance.

GC35-0033

z/OS Advanced Copy

Services

This publication helps you understand and use IBM Advanced Copy

Services functions. It describes three dynamic copy functions and several

point-in-time copy functions. These functions provide backup and recovery

of data if a disaster occurs to your data center. The dynamic copy functions

are peer-to-peer remote copy, extended remote copy, and coupled

extended remote copy. Collectively, these functions are known as remote

copy. FlashCopy, SnapShot, and concurrent copy are the point-in-time copy

functions.

SC35-0428

DFSMS/MVS V1:

Remote Copy Guide

and Reference

This publication provides guidelines for using remote copy functions with

S/390 and zSeries hosts.

SC35-0169

Fibre Transport Services

(FTS): Physical and

Configuration Planning

Guide

This publication provides information about fibre-optic and ESCON-trunking

systems.

GA22-7234

Implementing ESS Copy

Services on S/390

This publication, from the IBM International Technical Support Organization,

tells you how to install, customize, and configure Copy Services on an

Enterprise Storage Server that is attached to an S/390 or zSeries host

system. Copy Services functions include peer-to-peer remote copy,

extended remote copy, FlashCopy, and concurrent copy. This publication

describes the functions, prerequisites, and corequisites and describes how

to implement each function into your environment.

SG24-5680

Page 24

Title    Description    Order Number

ES/9000, ES/3090:

IOCP User Guide

Volume A04

This publication describes the Input/Output Configuration Program that

supports the Enterprise Systems Connection (ESCON) architecture. It

describes how to define, install, and configure the channels or channel

paths, control units, and I/O devices on the ES/9000 processors and the

IBM ES/3090 Processor Complex.

GC38-0097

IOCP User’s Guide, IBM

eServer zSeries

800 and 900

This publication describes the Input/Output Configuration Program that

supports the zSeries 800 and 900 servers. This publication is available in

PDF format by accessing ResourceLink at the following Web site:

www.ibm.com/servers/resourcelink/

SB10-7029

IOCP User’s Guide, IBM

eServer zSeries

This publication describes the Input/Output Configuration Program that

supports the zSeries server. This publication is available in PDF format by

accessing ResourceLink at the following Web site:

www.ibm.com/servers/resourcelink/

SB10-7037

S/390: Input/Output

Configuration Program

User’s Guide and

ESCON

Channel-to-Channel

Reference

This publication describes the Input/Output Configuration Program that

supports ESCON architecture and the ESCON multiple image facility.

GC38-0401

IBM z/OS Hardware

Configuration Definition

User’s Guide

This guide provides conceptual and procedural information to help you use

the z/OS Hardware Configuration Definition (HCD) application. It also

explains:

• How to migrate existing IOCP/MVSCP definitions

• How to use HCD to dynamically activate a new configuration

• How to resolve problems in conjunction with MVS/ESA HCD

SC33-7988

OS/390: Hardware

Configuration Definition

User’s Guide

This guide provides detailed information about the input/output definition file

and about how to configure parallel access volumes. This guide discusses

how to use Hardware Configuration Definition for both OS/390® and z/OS V1R1.

SC28-1848

OS/390 V2R10.0: MVS

System Messages

Volume 1 (ABA - ASA)

This publication lists OS/390 MVS™ system messages ABA to ASA.

GC28-1784

Using IBM 3390 Direct

Access Storage in a VM

Environment

This publication provides device-specific information for the various models

of the 3390 and describes methods you can use to manage storage

efficiently using the VM operating system. It provides guidance on

managing system performance, availability, and space through effective use

of the direct access storage subsystem.

GG26-4575

Using IBM 3390 Direct

Access Storage in a

VSE Environment

This publication helps you use the 3390 in a VSE environment. It includes

planning information for adding new 3390 units and instructions for

installing devices, migrating data, and performing ongoing storage

management activities.

GC26-4576

Using IBM 3390 Direct

Access Storage in an

MVS Environment

This publication helps you use the 3390 in an MVS environment. It includes

device-specific information for the various models of the 3390 and

illustrates techniques for more efficient storage management. It also offers

guidance on managing system performance, availability, and space

utilization through effective use of the direct access storage subsystem.

GC26-4574

z/Architecture Principles

of Operation

This publication provides a detailed definition of the z/Architecture™. It is

written as a reference for use primarily by assembler language

programmers and describes each function at the level of detail needed to

prepare an assembler language program that relies on a particular function.

However, anyone concerned with the functional details of z/Architecture will

find this publication useful.

SA22-7832

Page 25

Title    Description    Order Number

SAN

IBM OS/390 Hardware

Configuration Definition

User’s Guide

This guide explains how to use the Hardware Configuration Definition

application to perform the following tasks:

• Define new hardware configurations

• View and modify existing hardware configurations

• Activate configurations

• Query supported hardware

• Maintain input/output definition files (IODFs)

• Compare two IODFs or compare an IODF with an actual configuration

• Print reports of configurations

• Create graphical reports of a configuration

• Migrate existing configuration data

SC28-1848

IBM SAN Fibre Channel Switch: 2109 Model S08 Installation and Service Guide

This guide describes how to install and maintain the IBM SAN Fibre

Channel Switch 2109 Model S08.

SC26-7350

IBM SAN Fibre Channel Switch: 2109 Model S08 User’s Guide

This guide describes the IBM SAN Fibre Channel Switch and the IBM

TotalStorage ESS Specialist. It provides information about the commands

and how to manage the switch with Telnet and the Simple Network

Management Protocol (SNMP).

SC26-7349

IBM SAN Fibre Channel Switch: 2109 Model S16 Installation and Service Guide

This publication describes how to install and maintain the IBM SAN Fibre

Channel Switch 2109 Model S16. It is intended for trained service

representatives and service providers.

SC26-7352

IBM SAN Fibre Channel Switch: 2109 Model S16 User’s Guide

This guide introduces the IBM SAN Fibre Channel Switch 2109 Model S16

and tells you how to manage and monitor the switch using zoning and how

to manage the switch remotely.

SC26-7351

Implementing Fibre Channel Attachment on the ESS

This publication, from the IBM International Technical Support Organization,

helps you install, tailor, and configure fibre-channel attachment of

open-systems hosts to the Enterprise Storage Server. It provides you with a

broad understanding of the procedures that are involved and describes the

prerequisites and requirements. It also shows you how to implement

fibre-channel attachment.

SG24-6113

Storage management

Device Support Facilities: User’s Guide and Reference

This publication describes the IBM Device Support Facilities (ICKDSF)

product used with IBM direct access storage device (DASD) subsystems.

ICKDSF is a program that you can use to perform functions that are

needed for the installation, the use, and the maintenance of IBM DASD.

You can also use it to perform service functions, error detection, and media

maintenance.

GC35-0033

IBM TotalStorage Solutions Handbook

This handbook, from the IBM International Technical Support Organization,

helps you understand what makes up enterprise storage management. The

concepts include the key technologies that you must know and the IBM

subsystems, software, and solutions that are available today. It also

provides guidelines for implementing various enterprise storage

administration tasks so that you can establish your own enterprise storage

management environment.

SG24-5250

Ordering IBM publications

This section tells you how to order copies of IBM publications and how to set up a

profile to receive notifications about new or changed publications.

IBM publications center

The publications center is a worldwide central repository for IBM product

publications and marketing material.

The IBM publications center offers customized search functions to help you find the

publications that you need. Some publications are available for you to view or

download free of charge. You can also order publications. The publications center

displays prices in your local currency. You can access the IBM publications center

through the following Web site:

http://www.ibm.com/shop/publications/order

Publications notification system

The IBM publications center Web site offers you a notification system for IBM

publications.

If you register, you can create your own profile of publications that interest you. The

publications notification system sends you a daily e-mail that contains information

about new or revised publications that are based on your profile.

If you want to subscribe, you can access the publications notification system from

the IBM publications center at the following Web site:

http://www.ibm.com/shop/publications/order

Web sites

The following Web sites provide information about the IBM TotalStorage DS6000

series and other IBM storage products.

Type of Storage Information Web Site

Concurrent Copy for S/390 and zSeries host systems http://www.storage.ibm.com/software/sms/sdm/

Copy Services command-line interface (CLI) http://www-1.ibm.com/servers/storage/support/software/cscli.html

DS6000 series publications http://www-1.ibm.com/servers/storage/support/disk/ds6800/index.html

Click Documentation.

FlashCopy for S/390 and zSeries host systems http://www.storage.ibm.com/software/sms/sdm/

Host system models, operating systems, and adapters that the storage unit supports http://www.ibm.com/servers/storage/disk/ds6000/interop.html

Click Interoperability matrix.

IBM Disk Storage Feature Activation (DSFA) http://www.ibm.com/storage/dsfa

IBM storage products http://www.storage.ibm.com/

IBM TotalStorage DS6000 series http://www-1.ibm.com/servers/storage/disk/ds6000

IBM version of the Java (JRE) that is often required for IBM products http://www-106.ibm.com/developerworks/java/jdk/

Multiple Device Manager (MDM) http://www.ibm.com/servers/storage/support/

Click Storage Virtualization.

Remote Mirror and Copy (formerly PPRC) for S/390 and zSeries host systems http://www.storage.ibm.com/software/sms/sdm/

SAN fibre channel switches http://www.ibm.com/storage/fcswitch/

Storage Area Network Gateway and Router http://www-1.ibm.com/servers/storage/support/san/index.html

Subsystem Device Driver (SDD) http://www-1.ibm.com/servers/storage/support/software/sdd.html

z/OS Global Mirror (formerly XRC) for S/390 and zSeries host systems http://www.storage.ibm.com/software/sms/sdm/

How to send your comments

Your feedback is important to help us provide the highest quality information. If you

have any comments about this information or any other DS6000 series

documentation, you can submit them in the following ways:

• E-mail

Submit your comments electronically to the following e-mail address:

starpubs@us.ibm.com

Be sure to include the name and order number of the book and, if applicable, the

specific location of the text you are commenting on, such as a page number or

table number.

• Mail

Fill out the Readers’ Comments form (RCF) at the back of this book. Return it by

mail or give it to an IBM representative. If the RCF has been removed, you can

address your comments to:

International Business Machines Corporation

RCF Processing Department

Department 61C

9032 South Rita Road

TUCSON AZ 85775-4401

Summary of Changes for GC26-7680-03 IBM TotalStorage

DS6000 Host Systems Attachment Guide

This document contains terminology, maintenance, and editorial changes. Technical

changes or additions to the text and illustrations are indicated by a vertical line to

the left of the change. This summary of changes describes new functions that have

been added to this release.

Changed Information

This edition includes the following changed information:

• Support is added for SUSE SLES 9 and Red Hat Enterprise Linux 4.0 for select

hosts.

• The DS CLI command example in the Hewlett-Packard OpenVMS chapter was

updated.

• The URL for the most current host attachment firmware and device driver

information, including driver downloads, changed to:

http://knowledge.storage.ibm.com/servers/storage/support/hbasearch/interop/hbaSearch.do

Chapter 1. Introduction

The IBM® TotalStorage® DS6000 series is a member of the family of DS products

and is built upon 2 Gbps fibre channel technology that provides RAID-protected

storage with advanced functionality, scalability, and increased addressing

capabilities.

The DS6000 series offers a highly reliable, high-performance midrange storage

solution through the use of hot-swappable redundant RAID controllers in a

space-efficient modular design. The DS6000 series provides storage sharing and

consolidation for a wide variety of operating systems and mixed server

environments.

The DS6000 series offers high scalability while maintaining excellent performance.

With the DS6800 (Model 1750-511), you can install up to 16 disk drive modules

(DDMs). The minimum storage capability with 8 DDMs is 584 GB. The maximum

storage capability with 16 DDMs for the DS6800 model is 4.8 TB.

To connect more than 16 disks, use the optional DS6000 expansion

enclosures (Model 1750-EX1) that allow a maximum of 224 DDMs per storage

system and provide a maximum storage capability of 67 TB.
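The capacity figures above can be cross-checked with simple arithmetic. The following is a minimal sketch, not part of the product documentation: it assumes decimal units (1 TB = 1000 GB), and the names CONFIGS and implied_ddm_size_gb are hypothetical helpers introduced only for this illustration. It derives the per-DDM capacity that each quoted total implies.

```python
# Capacity totals quoted in the text, expressed in decimal gigabytes.
# Assumption: 1 TB = 1000 GB, the usual convention for disk capacities.
CONFIGS = {
    "minimum (8 DDMs)": (584, 8),
    "DS6800 maximum (16 DDMs)": (4_800, 16),
    "system maximum (224 DDMs)": (67_000, 224),
}


def implied_ddm_size_gb(total_gb: float, ddm_count: int) -> float:
    """Return the per-DDM capacity implied by a quoted total capacity."""
    if ddm_count <= 0:
        raise ValueError("ddm_count must be positive")
    return total_gb / ddm_count


# Map each configuration name to its implied per-DDM capacity in GB.
implied = {
    name: implied_ddm_size_gb(total_gb, ddm_count)
    for name, (total_gb, ddm_count) in CONFIGS.items()
}

for name, size_gb in implied.items():
    print(f"{name}: about {size_gb:.1f} GB per DDM")
```

Under these assumptions, the 8-DDM and 16-DDM figures divide evenly, while the 224-DDM figure comes out slightly below the 16-DDM value, which would be consistent with the 67 TB total being a rounded number.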

The DS6800 is 5.25 in. high and is available in a 19-in. rack-mountable

package. An optional modular expansion enclosure of the same size adds

capacity to help meet your growing business needs.

The DS6000 series addresses business efficiency needs through its heterogeneous

connectivity, high performance and manageability functions, thereby helping to

reduce total cost of ownership.

The DS6000 series offers the following major features:

• PowerPC 750GX processors

• Dual active controllers that provide continuous operations through the use of two

processors that form a pair and back each other up

• A selection of 2 Gbps fibre channel (FC) disk drives, including 73 GB, 146 GB, and