
How to Get to DB2 from VSE/VSAM

Using DB2 VSAM Transparency for VSE/ESA

April 1997

SG24-4931-00


International Technical Support Organization


Take Note!

Before using this information and the product it supports, be sure to read the general information in

Appendix C, “Special Notices” on page 113.

First Edition (April 1997)

This edition applies to Version 5 Release 1 of DB2 VSAM Transparency for VSE/ESA, Program Number 5697-B88,

for use with the VSE/ESA operating system.

Comments may be addressed to:

IBM Corporation, International Technical Support Organization

Dept. 3222 Building 71032-02

Postfach 1380

71032 Böblingen, Germany

When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any

way it believes appropriate without incurring any obligation to you.

Copyright International Business Machines Corporation 1997. All rights reserved.

Note to U.S. Government Users — Documentation related to restricted rights — Use, duplication or disclosure is

subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

Contents

Figures
Preface
   The Team That Wrote This Redbook
   Comments Welcome

Part 1. General Conversion Considerations

Chapter 1. Introduction
   1.1 Value of DB2 over VSAM
   1.2 Management and Personnel
   1.3 Conversion Effort
      1.3.1 Planning
      1.3.2 Data Migration
      1.3.3 Application Conversion
      1.3.4 Testing
   1.4 Problem Area: Coexistence
   1.5 Consultants
   1.6 Positioning of DB2 VSAM Transparency for VSE/ESA

Chapter 2. Planning for Conversion
   2.1 Why Relational
      2.1.1 Value
      2.1.2 Inhibitors
   2.2 Conversion Principles
      2.2.1 Conversion Phases
      2.2.2 Switchover Strategies
      2.2.3 Functional Changes
   2.3 Conversion Methods
   2.4 Conversion Personnel
   2.5 VSAM Application Systems Inventory
      2.5.1 Application Inventory
      2.5.2 VSAM File Inventory
      2.5.3 Program Inventory
   2.6 Coexistence Strategies
      2.6.1 Coexistence Scenarios
   2.7 Conversion Considerations
      2.7.1 Tools
      2.7.2 Performance
      2.7.3 Processor Requirements
      2.7.4 DASD Requirements
   2.8 Conversion With DB2 VSAM Transparency for VSE/ESA

Chapter 3. Database Design
   3.1 Types of Database Conversion
   3.2 Objectives of Conversion
   3.3 Database Design Philosophy
      3.3.1 Design Information Sources
      3.3.2 Design Sequence
      3.3.3 Data Naming Considerations
   3.4 VSAM to Relational Considerations
   3.5 Logical Database Design
      3.5.1 Designing Tables
      3.5.2 Field to Column Mapping
   3.6 Data Security
   3.7 Designing for Data Related Between VSAM and DB2
   3.8 Physical Database Design
   3.9 Estimating Performance
   3.10 Design Review

Chapter 4. Testing
   4.1 Testing Methodology
   4.2 Performance
   4.3 Data Sampling
   4.4 Integration Test Procedures
      4.4.1 Compare Application Results
      4.4.2 Compare Database Contents
      4.4.3 Identical Function Testing
   4.5 Preparing for Cutover to Production
      4.5.1 Online System Verification
      4.5.2 Batch System Verification
   4.6 Cutover to Production
      4.6.1 Post Cutover

Part 2. Conversion Using DB2 VSAM Transparency for VSE/ESA

Chapter 5. Overview of DB2 VSAM Transparency for VSE/ESA
   5.1 Introduction
   5.2 Components of DB2 VSAM Transparency
   5.3 Steps to Enable DB2 VSAM Transparency for a VSAM File

Chapter 6. Installation of DB2 VSAM Transparency for VSE/ESA
   6.1 Prepare Installation
   6.2 Install DB2 VSAM Transparency
      6.2.1 Define New Sublibrary
      6.2.2 Install from Product Tape
   6.3 Acquire Dbspace
   6.4 Create Tables
   6.5 Reload DB2 VSAM Transparency Packages into the Database
   6.6 Change the CICS Configuration Tables
      6.6.1 CICS Installation Using RDO
      6.6.2 Compiling CICS PPT and PCT Tables
      6.6.3 Customize CICS Operating Environment
   6.7 Customize the Execution JCL Set
   6.8 Guest Sharing

Chapter 7. Using DB2 VSAM Transparency for VSE/ESA
      7.1.1 File Types Supported
      7.1.2 Capabilities
      7.1.3 Limitations
   7.2 Defining VSAM Files
      7.2.1 Main Screen
      7.2.2 List of Files
      7.2.3 File Description
      7.2.4 Subset Definition
      7.2.5 Columns Description
      7.2.6 Columns Default Values Description
      7.2.7 Columns Format Description
      7.2.8 User Exit Definition
   7.3 DEFINE
   7.4 Acquire Dbspace
   7.5 MIGRATE
   7.6 CREATE
   7.7 LOAD
   7.8 GENERATE
   7.9 Define the List of VSAM Files to Process
   7.10 INIT
   7.11 Implementing Transparency for Batch Applications
      7.11.1 Loading Programs into SVA
      7.11.2 Start Batch Transparency
      7.11.3 Executing a Batch Program Using Transparency
      7.11.4 Stop Batch Transparency
   7.12 Implementing Transparency for CICS Applications
      7.12.1 Modify PPT Table
      7.12.2 Loading Program into SVA
      7.12.3 Start Transparency for CICS Processing
      7.12.4 Execute a Transaction using Transparency
      7.12.5 Stopping Transparency for CICS Processing
      7.12.6 Disable Transparency for Online Processing
   7.13 Summary of DB2 VSAM Transparency Step by Step

Chapter 8. Beyond Transparency

Appendix A. Environment Used
   A.1 Installation

Appendix B. Performance Statistics

Appendix C. Special Notices

Appendix D. Related Publications
   D.1 International Technical Support Organization Publications
   D.2 Redbooks on CD-ROMs
   D.3 Other Publications

How to Get ITSO Redbooks
   How IBM Employees Can Get ITSO Redbooks
   How Customers Can Get ITSO Redbooks
   IBM Redbook Order Form

Glossary

List of Abbreviations

Index

ITSO Redbook Evaluation

Figures

1. The Path through the Mountains
2. Positive Thinking
3. Conversion Phases
4. Conversion Methods in Perspective
5. Coexistence Situation
6. Migration with DB2 VSAM Transparency
7. How Transparency Works
8. Options for Periodic Fields
9. Equivalent Testing Flow
10. Data Access with DB2 VSAM Transparency
11. Sample JCL to Define a SUBLIB
12. Install Panel for DB2 VSAM Transparency
13. Sample for DBSPACE DB2 VSAM Transparency
14. Sample ISQL Command Sequence for Acquire Dbspace
15. Sample for CREATE TABLES DB2 VSAM Transparency
16. Sample for Reloading DB2 VSAM Transparency into Database
17. Sample to Update CICS Tables via RDO DB2 VSAM Transparency into Database
18. DFHPCT Modifications to Install Transparency
19. DFHPPT Modifications to Install Transparency
20. DFHSIT Modifications to Install Transparency
21. Screen to Customize JCL
22. Main Screen of DB2 VSAM Transparency for VSE/ESA
23. Sample List of Files Screen of DB2 VSAM Transparency
24. Sample File Description Screen for MULT
25. Sample File Description Screen for SUBS with a NEXT File
26. Sample Subset Definition Screen
27. Sample of a Columns Description Screen
28. Sample Columns Default Values Description Screen
29. Sample Columns Format Description Screen
30. Sample XTSTDEF JCL
31. Sample Acquire Dbspace JCL
32. Sample XTSTMIGR JCL
33. Sample XTSTCREA JCL
34. Sample XTSTLOAD JCL
35. Sample XTSTGEN JCL
36. Sample XTSTPRM JCL
37. Sample XTSTINIT JCL
38. Sample XTSTSDL2 JCL for Batch and Online Transparency
39. Sample XTSTSDL JCL for Batch Transparency
40. Sample LIBSDL2 Job Including Transparency LIBDEF for SVA Load
41. Extract from Sample Startup Job JCL02 Including SVA Load
42. Sample XTSTSTRT JCL
43. Sample XTSTSTOP JCL
44. Sample DFHPPT Changes
45. Sample XTSTSDL2 JCL for Online Transparency Only
46. Sample DFHPLTPI Entry
47. Steps for Using DB2 VSAM Transparency
48. Database Layout
49. VSAM Files and DB2 Tables Used
50. Application Performance With Access Modules in COBOL
51. Application Performance With Access Modules in Assembler

Preface

Many customers have already recognized the advantages of DB2 Server for

VSE & VM over VSE/VSAM, but could not spend the effort to convert their

business data and applications. With DB2 VSAM Transparency there is a tool

that eases conversion to DB2. After the manual input of the data structures in

VSAM and DB2, it performs the data migration automatically and offers

transparent access to DB2 from the unchanged VSAM applications. DB2 VSAM

Transparency can be used as a first step to exploit DB2; the applications can

later be modified step by step to further optimize the data and application

structure to the individual needs.

This redbook points to the critical areas in a conversion process, and explains
in detail how to use DB2 VSAM Transparency. It describes the steps in
using data migration and application Transparency, puts them in the context of
the overall conversion process and gives invaluable hints and tips.

The redbook was written primarily for application programmers and system

programmers, but also for database administrators, managers and all those who

are responsible for planning and performing a database conversion. Basic

knowledge of VSE, DB2, and application programming is assumed.

The Team That Wrote This Redbook

This redbook was produced by a team of specialists from around the world

working at the International Technical Support Organization Böblingen Center.

Gys Brummer from IBM South Africa.

Vijaykumar Singh from ML Sultan Technikon in Durban, South Africa.

Clara Yu from IBM China.

Eberhard Lange from the International Technical Support Organization Böblingen

Center was the project leader.

We want to especially thank Rolf Löben from IBM Germany for his invaluable

advice.


Comments Welcome

Your comments are important to us!

We want our redbooks to be as helpful as possible. Please send us your

comments about this or other redbooks in one of the following ways:

• Fax the evaluation form found in “ITSO Redbook Evaluation” on page 129 to
  the fax number shown on the form.

• Use the electronic evaluation form found on the Redbooks Home Pages at
  the following URLs:

  For Internet users: http://www.redbooks.ibm.com

  For IBM Intranet users: http://w3.itso.ibm.com/redbooks

• Send us a note at the following address:

  redbook@vnet.ibm.com


Part 1. General Conversion Considerations

Part 1, “General Conversion Considerations” describes the conversion process

in general. It highlights the problem areas and gives an idea where DB2 VSAM

Transparency for VSE/ESA can help.

• Chapter 1, “Introduction”

  This chapter is an executive overview of a conversion from VSAM to DB2 on
  the VSE/ESA platform.

• Chapter 2, “Planning for Conversion”

  This chapter gives an overview of the planning necessary for a successful
  conversion. This includes aspects such as project phases, conversion
  methods, inventory needs, and coexistence strategies.

• Chapter 3, “Database Design”

  This chapter describes the task of converting VSAM files to a relational
  design.

• Chapter 4, “Testing”

  This chapter details testing methodology and some procedures with a
  concentration on system integration testing.


Chapter 1. Introduction

Converting to a database system is a major decision. It affects business
flexibility, costs, and efficiency. Many customers have already recognized the
advantages that DB2 Server for VSE & VM offers over keeping the enterprise
data in VSE/VSAM files, but have not converted because of the large effort
involved.

This chapter is an executive overview of a conversion from VSAM to DB2 on the

VSE/ESA platform.

In this manual we use the term “conversion” for the whole project of moving

data and applications from VSAM to DB2. The word “migration” names the

process of moving the data from VSAM into DB2 tables.

1.1 Value of DB2 over VSAM

The advantage of a relational database lies in a data structure which is easy to

understand. Therefore, the data and applications can be more quickly and

cheaply adapted to the changing needs of your business. This is achieved with

full integrity and with much higher consistency of your data than you can ever

achieve with VSAM.

Additionally, a DB2 database combined with other IBM software provides you

with the capability to build:

• Data warehouses

• Executive information systems

• Data marts

• Client/server applications

• Network computing applications

• Mobile computing applications.

To summarize:

A DB2 database makes information out of your data.

Naturally, you will be looking for a way to get these advantages while leveraging

your existing assets in information and applications. This can be achieved, as

you will see, using the DB2 VSAM Transparency for VSE/ESA.

1.2 Management and Personnel

It is obvious that the project manager is a key person; many conversion projects
have failed because the project manager was not suited to the job.

Less obvious is the following fact: for a successful conversion it is crucial that
management understands the need for the conversion and backs the effort
and the costs involved. Without the support of upper management, a
conversion project cannot succeed. The reason lies in the large effort, the long
duration and the high costs involved, and in the number of problems that can
arise even with thorough and careful planning. All this may lead management
to expect results in the short run, and these expectations are not met.

It is important that management understands the purpose of the conversion
to the relational database management system and values its long-term
advantages. Because the short term brings high costs and no big visible
advantages (actually none in the first phase), such projects are often
deferred indefinitely, or are started and then fail for lack of management
backing.

1.3 Conversion Effort

Disregarding differences between individual customer installations, generally the

overall effort spreads over 9 months and can be estimated as follows:

Planning                  30%

Data Migration            12%

Application Conversion     8%

Test                      50%
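To make these percentages more tangible, the following minimal Python sketch converts them into months. It assumes, purely for illustration, that the shares can be applied directly to the nine-month duration mentioned above; the function name `months_per_phase` is hypothetical, and real projects will differ.

```python
# Effort shares as quoted in section 1.3 (percent of the overall effort).
EFFORT_SHARES = {
    "Planning": 30,
    "Data Migration": 12,
    "Application Conversion": 8,
    "Test": 50,
}

# General estimate from the text; an individual installation may differ.
TOTAL_MONTHS = 9


def months_per_phase(shares, total_months):
    """Return the number of months attributed to each phase.

    Assumption (illustration only): the effort shares map one-to-one
    onto the elapsed project duration.
    """
    if sum(shares.values()) != 100:
        raise ValueError("effort shares must add up to 100 percent")
    return {phase: total_months * pct / 100 for phase, pct in shares.items()}


result = months_per_phase(EFFORT_SHARES, TOTAL_MONTHS)
for phase, months in result.items():
    print(f"{phase:<24}{months:5.2f} months")
```

Under this assumption, testing alone accounts for half of the project, which is why the text insists that test planning starts at the beginning.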

1.3.1 Planning

For a conversion project to finish successfully, major effort has to be spent
on careful planning. A first phase, a study that typically takes around a week,
must therefore be followed by a second phase consisting of a pilot and a
detailed analysis of the feasibility of the strategy. This second phase can take
several months. Only then can the third phase, the actual implementation, be
started.

Although the purpose of a conversion normally is to make extensions, changes
and new applications feasible, the implementation of any application change
has to wait until the end of the conversion. It is important that no changes at
all are intermixed with the conversion project; they have to be held back until
the conversion project is finished. Otherwise testing of the conversion not only
becomes more difficult and more expensive; a complete, high-quality test
becomes impossible.

1.3.2 Data Migration

The effort that is involved to migrate the data can be split into the following

areas:

1. Describe the current structure of the VSAM data.

2. Define the future data structure in DB2.

3. Set up the database and implement the structure.

4. Migrate the data from VSAM to DB2.

5. Repair the data.

Define the Current Structure

The first step can be very time consuming. Even if there is a detailed
description of all the fields of a VSAM data set, including all the special
meanings and the different formats the same field can have depending on
various conditions, you must make sure that this description is correct, up to
date, and in synchronization with the applications accessing the VSAM file to be
migrated.

Often, however, such a description does not exist in a concise form, so the
information must be extracted from all applications reading and writing the
VSAM file. This can be a huge task.

The time spent on this is not at all lost because without this information no

extension to any existing business data or application could be made anyway.

Therefore, from a management point of view, this effort should not be rated as

part of the conversion effort but rather as a necessary step in further developing

the data structure and the applications. The conversion project is just a means

to bring this step forward because it needs this description as a prerequisite.
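As a rough illustration of what such a field description captures, the Python sketch below decodes one fixed-length record into named columns. The layout, the field names, and the sample record are all invented for this example. The sketch also assumes EBCDIC character data (code page 037) and a packed-decimal amount, which is common for mainframe files but must be verified for each real file. It is not how DB2 VSAM Transparency itself works.

```python
from decimal import Decimal

# Hypothetical record layout: (column name, offset, length, kind).
# "packed2" means packed decimal with two decimal places (assumption).
FIELDS = [
    ("CUST_NO", 0, 6, "char"),
    ("NAME", 6, 20, "char"),
    ("BALANCE", 26, 4, "packed2"),
]


def unpack_packed_decimal(raw: bytes, scale: int) -> Decimal:
    """Decode a packed-decimal field: two digits per byte, sign in the last nibble."""
    nibbles = []
    for byte in raw:
        nibbles.append(byte >> 4)
        nibbles.append(byte & 0x0F)
    *digits, sign = nibbles
    if any(d > 9 for d in digits) or sign not in (0x0C, 0x0D, 0x0F):
        raise ValueError("not a valid packed-decimal field")
    value = int("".join(str(d) for d in digits))
    if sign == 0x0D:  # 0x0D marks a negative number
        value = -value
    return Decimal(value).scaleb(-scale)


def record_to_row(record: bytes) -> dict:
    """Map one fixed-length record to a dictionary of column values."""
    row = {}
    for name, offset, length, kind in FIELDS:
        raw = record[offset:offset + length]
        if kind == "char":
            row[name] = raw.decode("cp037").rstrip()
        elif kind == "packed2":
            row[name] = unpack_packed_decimal(raw, 2)
    return row


# Invented sample record: customer 000042, name SMITH, balance -123.45.
sample = (
    "000042".encode("cp037")
    + "SMITH".ljust(20).encode("cp037")
    + bytes([0x00, 0x12, 0x34, 0x5D])
)
print(record_to_row(sample))
```

A real description also has to cover fields whose meaning or format depends on other fields, which a single static table like `FIELDS` cannot express.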

Repair the Data

The last step can also be very time consuming. Putting the data into a more
structured, more logical form, for example into a “normal form” (normalization),
very often leads to the detection of data errors. Fixing these can take a lot of
time, depending on the quality and consistency of the data.

1.3.3 Application Conversion

At first glance, manually changing all the data-accessing modules might be
considered the largest effort, because its complexity is the easiest to imagine.
However, this task can be assisted by tools so that, compared to the other
tasks, it is the smallest effort. This shows how important good planning and
testing are.

1.3.4 Testing

Testing must be planned from the beginning. Before the first migration is done,

all test tools must be available. The test tools must be run at least once before

the actual migration is started. The following areas must be covered by the

tests:

• Function

• Performance

• Concurrency

Function Test

The test tools must be able to prove the correct and complete functionality of the
applications after the conversion. Alternatively, you can test that the
applications behave identically before and after the change, provided that they
were in good shape and had no problems before the conversion.

In most cases this can be considered the easiest part.
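The before-and-after comparison can be sketched as follows. This is a minimal illustration in Python, not a description of any particular test tool: it assumes that the output of the original VSAM-based run and the output of the converted run have each been captured as an ordered list of records. The function name `compare_results` and the sample data are hypothetical.

```python
def compare_results(before, after):
    """Return (record number, before, after) for every record that differs.

    Records are compared position by position; a record that is missing
    on one side is reported with None on that side.
    """
    mismatches = []
    for index in range(max(len(before), len(after))):
        old = before[index] if index < len(before) else None
        new = after[index] if index < len(after) else None
        if old != new:
            mismatches.append((index + 1, old, new))
    return mismatches


# Invented sample output from the original and the converted application.
vsam_run = ["000042 SMITH   -123.45", "000043 JONES     10.00"]
db2_run = ["000042 SMITH   -123.45", "000043 JONES     10.01", "000044 MEYER      0.00"]

differences = compare_results(vsam_run, db2_run)
for number, old, new in differences:
    print(f"record {number}: before={old!r} after={new!r}")
```

An empty result only shows that the two runs agree on the data used. It says nothing about paths the test data did not exercise, and it is only meaningful if the original application was correct.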

Performance Test

Since the relational database management system performs many tasks, it takes

some processing capacity. Therefore, the applications have to be run and both

response time and processor capacity have to be verified. On the other hand,

applications can be relieved of many tasks that are performed by DB2.


Concurrency Test

Testing the behavior of the new applications and the relational database
management system under concurrent access to data is more complex than the
pure performance and capacity analysis. DB2 can lock data at a finer
granularity than VSAM. Therefore, concurrency can generally be improved
when migrating to DB2.

1.4 Problem Area: Coexistence

During the conversion process, there will be data and programs that need to
span both VSAM and DB2 concurrently. In some cases, this may be a short step
towards full conversion; in other cases, coexistence may be a way of life for a
longer period of time. The choices for handling this include:

• Extract data from existing VSAM files on a regular basis, for example for
  query and decision support systems. No update to the DB2 data from the
  applications is allowed. The update from the VSAM copy to DB2 has to
  occur regularly.

• Update of one database management system with read-only access to the
  other. Such applications can heavily increase the time and costs of a
  conversion project and should be avoided where possible.

• Updates to both database management systems.

1.5 Consultants

IBM and many consultants in many countries are experienced and willing to help
you during a conversion from VSAM to DB2. There are also various tools to
assist you in one step or another. For example, a vendor or consultant may
have tools to test the function of an application, guaranteeing that all paths are
run through. This can ease the function test for you.

It is recommended that you involve external help at least in planning a
conversion project. The savings due to the experience of a consultant who has
performed several conversions can by far outweigh the costs.

You can call IBM for consulting, or to be put in contact with another experienced
consultant. You can also contact a local user group or one of the worldwide user
groups such as GUIDE, SHARE or WAVV to get such contacts. IBM is also
willing to help you get in touch with user groups in your area.

1.6 Positioning of DB2 VSAM Transparency for VSE/ESA

With DB2 VSAM Transparency for VSE/ESA there is a tool that can assist
dramatically in a conversion. With comparatively low effort you can migrate all
your data and, leaving your applications unchanged, transparently access DB2.
Because all your data is already in DB2, coexistence problems can be avoided.

The data structure can already be modified to a large extent during this
migration step. The data structure and the applications can later be modified
step by step to further optimize performance and adapt to the individual
customer's needs. For example, it is possible to keep some VSAM accesses
while other VSAM accesses are changed to DB2 accesses within the same
application. In this case, the older VSAM accesses continue to be transparently
intercepted, whereas the changed application statements access DB2 data
directly using SQL, bypassing Transparency.

Using DB2 VSAM Transparency might therefore reduce the effort required from
an external consultant.


Chapter 2. Planning for Conversion

This chapter gives an overview of the planning necessary for a successful
conversion. This includes aspects such as project phases, conversion methods,
inventory needs, and coexistence strategies. It is strongly recommended to use
the ITSO Redbook “Planning for Conversion to the DB2 Family: Methodology and
Practice”, GG24-4445 for that purpose. It gives a more detailed description than
this overview. Although it was written for a VSAM to DB2 conversion on MVS,
most of it is valid for a conversion from VSAM to DB2 on VSE/ESA. Some of that
information is repeated here to help customers to:

• Get an overview of the conversion process.

• Learn the steps to be performed.

• Value the DB2 VSAM Transparency for VSE/ESA within that context.

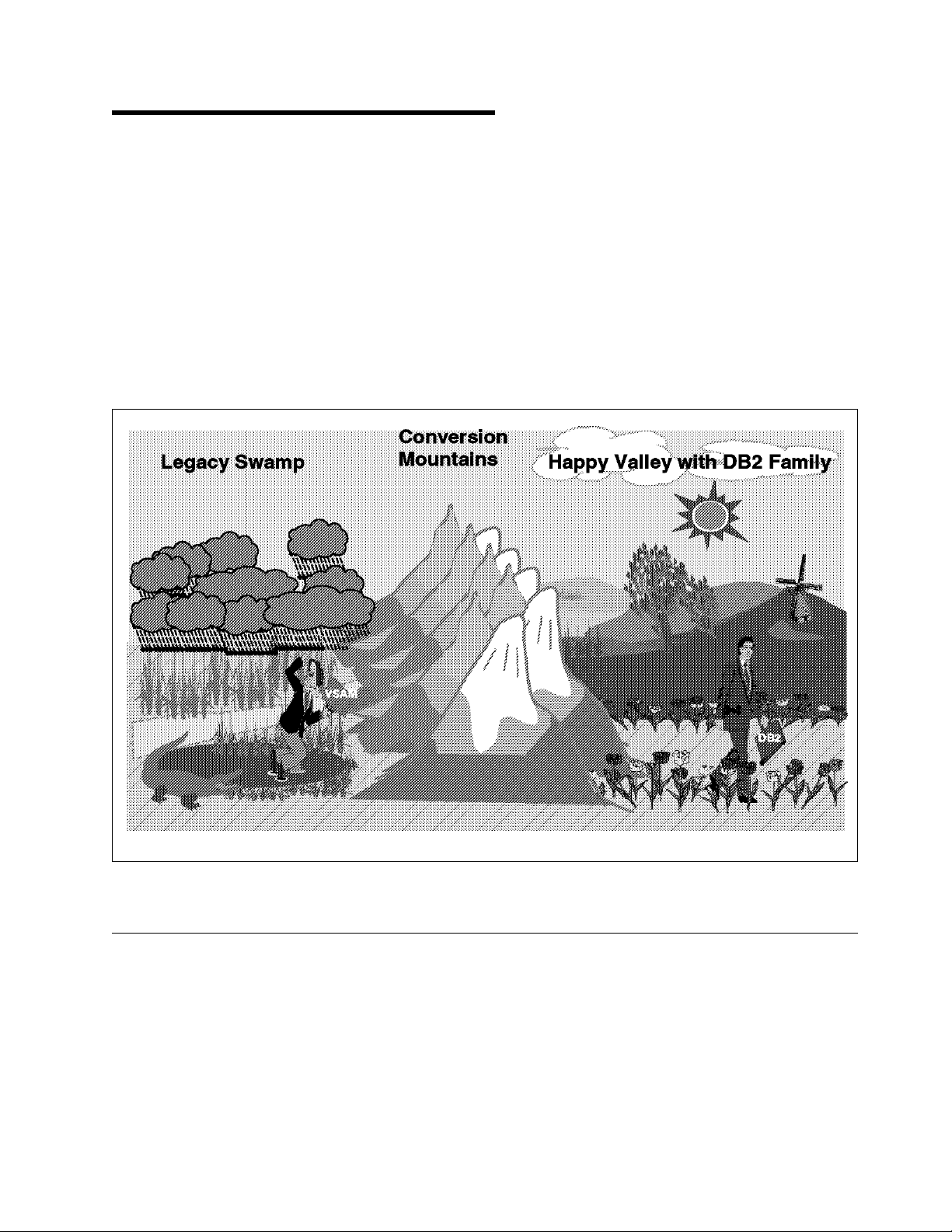

Figure 1. The Path through the Mountains

2.1 Why Relational

Conversion of an application from VSAM, a file management system, to DB2, a
relational database management system, is not necessarily a productive effort.
There are, however, a number of relational advantages which justify the
conversion of existing applications with little or no change in function. In the
case of converting to DB2, these reasons are essentially the same reasons that
lead to the choice of DB2 for new applications. Typically, the choice is to do new
application development and major rewrites with DB2 and permit existing
applications to atrophy. Additional factors then come into play: data
interchange and consistency between the two systems can also be costly and
complex.


2.1.1 Value

Problems with VSAM

In many cases, the problems with heritage systems are that they are vital and

form a huge investment, but lack flexibility to implement changes or to add new

applications. Maintenance costs can take up to 80% of the development budget,

but data processing is under pressure to cut costs.

Value of Relational

Generally, the relational model provides significant value for an application

implemented in DB2. It can be shown to add value and reduce cost.

• Data in a relational database management system such as DB2 is
  understandable for end users and programmers.

• Data is extendable.

• Data in DB2 is globally accessible, from batch, online transactions,
  interactive end users and remote systems.

• Data replication to many platforms is possible, allowing for better
  performance and availability by remote systems.

• SQL language applications can easily be ported.

• DB2 offers good recovery management.

• DB2 offers the ability to protect various levels of data. You can keep all your
  data in a centralized table and still have the flexibility of restricting access to
  sensitive fields.

This leads to the following advantages:

•

Reduced maintenance costs for applications.

•

Reduced operational effort.

•

The possibility to prepare for the year 2000.

•

Additional applications are offered, such as decision support tools.

•

The capability to build:

1. Data warehouses

2. Executive information systems

3. Data marts

4. Client/server applications

5. Network computing applications

6. Mobile computing applications.

•

Higher productivity for the end users.

2.1.2 Inhibitors

Although DB2 is obviously a much better platform for data, various reasons

might be given for not converting to DB2:

•

Costs of conversion

•

Lack of skill

•

Uncertainty about costs and results

•

Resistance from personnel and executives

•

Lack of comprehensive help

•

Impact on end users

But IBM and many consultants offer comprehensive help, tools can reduce the

conversion costs dramatically, and the systematic analysis of the value of a

conversion should help to overcome resistance. Good planning can size the

costs, minimize the risks and reduce the impact on end users.

Costs

The additional data processing costs caused by a new database platform with

additional applications can be split into the following areas:

1. One-time conversion costs. For an effort split in percentage, see 1.3,

“Conversion Effort” on page 4. Depending on the individual customer

situation, the one-time conversion costs may be caused by one or more of

the following:

•

Additional people

•

Consultants to assist in planning or execution of parts of the conversion.

•

Additional disk space during the conversion time for tools and duplicate

copies of application and data, see 2.7.4, “DASD Requirements” on

page 22.

•

Additional processor capacity (including follow-on costs mentioned

below) to perform the conversion of data and applications, see 2.7.3,

“Processor Requirements” on page 22.

2. Costs for additional software: DB2, related features, tools and applications.

3. Possibly a larger processor, depending on the current processor size and

load. If this happens, increased software licence costs for existing software

will follow.

4. Additional disk space for the data. A relational database management

system creates control space, redundancy and requires some spare disk

space. Additionally, the new software will take extra disk space.

5. Other. This list is only meant to give you an idea of which areas should be

considered. It is not necessarily complete and can vary to a great degree

according to the individual customer environment and situation.

2.2 Conversion Principles

The following is a recommended strategy for a conversion:

•

Decide why you want to move.

− Use business needs to drive a strategy rather than technology.

•

Decide where you are now.

•

Decide where you want to be.

•

Evaluate the alternatives.

•

Decide the best route, taking everything into account.

− Use a phased approach rather than a giant leap.

For each of the phases, clearly identify:

•

Objectives

•

Inputs

•

Deliverables

•

Checkpoints

In particular, the end of the conversion process needs to be

clearly defined.

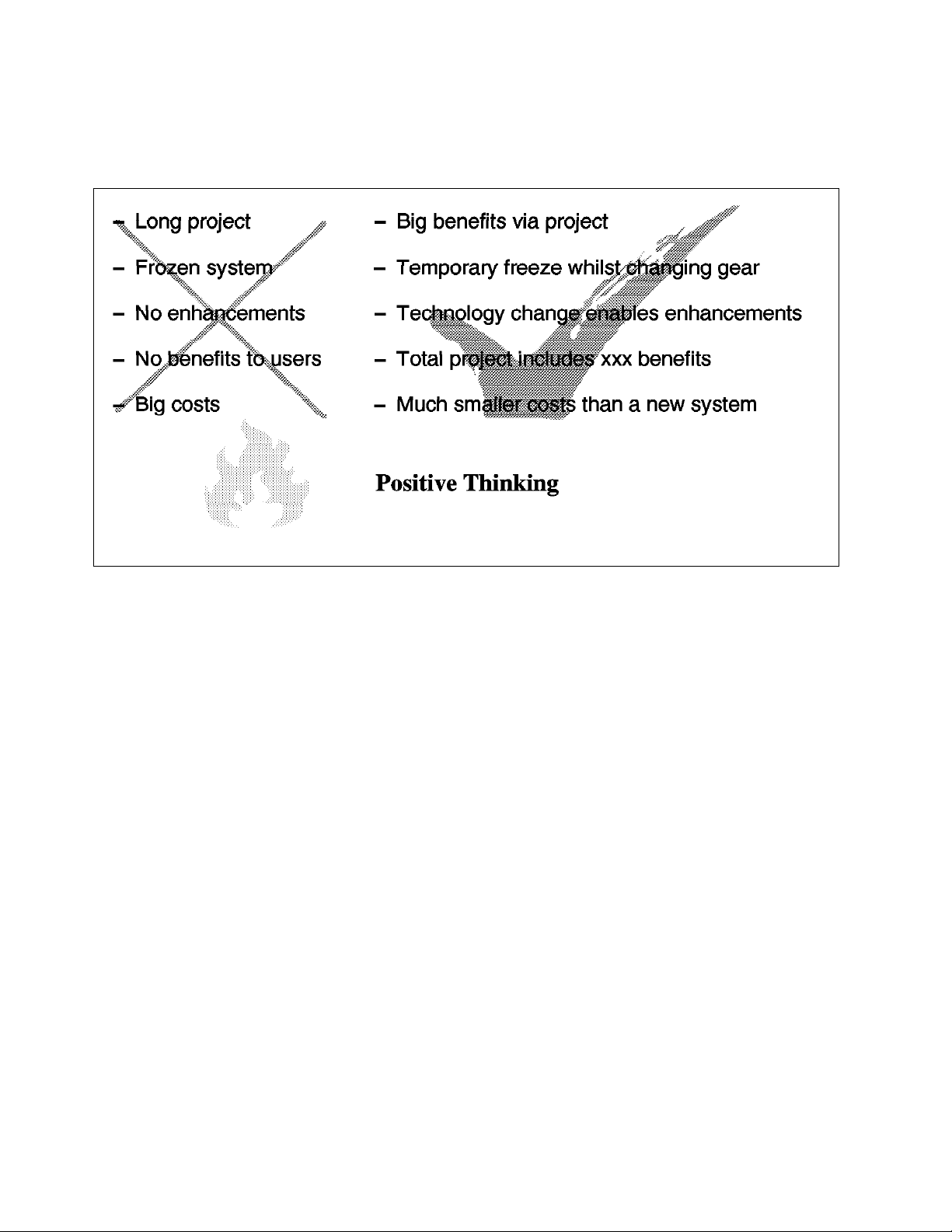

Figure 2. Positive Thinking

2.2.1 Conversion Phases

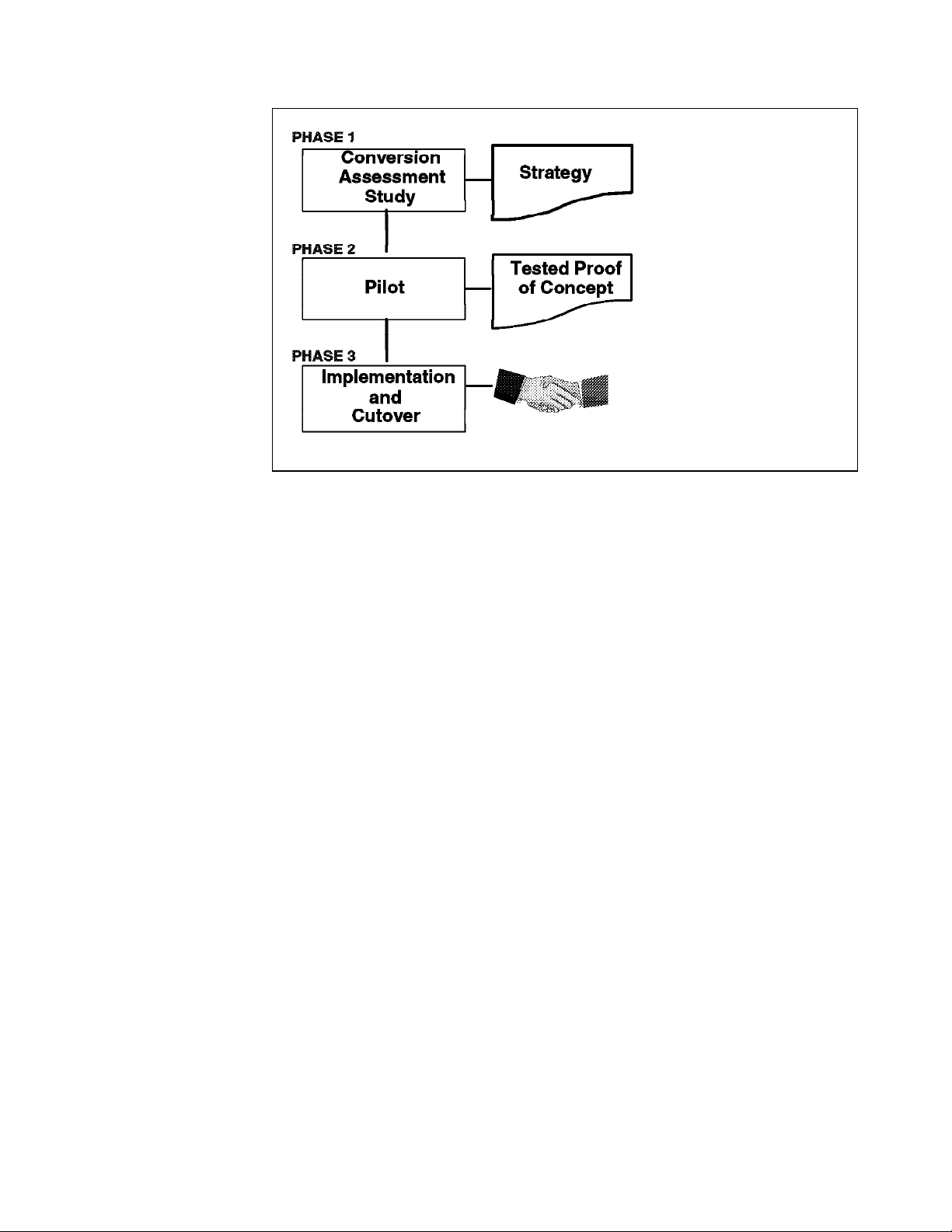

The steps in Figure 3 on page 13 have proven useful in many conversion

projects:

Phase 1 “Conversion Assessment Study” means a portfolio analysis of the

system to ensure that the start point is defined, the scope is understood, the

client′s objectives can be met and the appropriate strategy is set.

The pilot in Phase 2 is to provide proof of the concept by validating the technical

findings of the assessment study. The pilot completes the analysis of Phase 1

and involves setting up a plan.

Phase 3 is the main conversion. If the project is well-managed, it can be a

smooth and efficient process.

Figure 3. Conversion Phases

2.2.2 Switchover Strategies

During the study, various strategies are examined in order to find the best one

for a particular situation.

•

Management Information System

It may be questioned if report writers are to be converted, or to be replaced

by reporting systems such as QMF.

•

Big-Bang

When you switch over from the old to the new system from Saturday to

Sunday, you take a high risk. Therefore, a fallback plan is required. On the

other hand, coexistence problems do not appear, which can save a lot of

extra work.

•

Piece at a time

One application or group of applications is moved at a time. What if a

program needs access to data under control of both technologies? This kind

of coexistence problem can require a high investment in code which will be

dropped afterwards.

2.2.3 Functional Changes

It is understood that one of the reasons for the conversion is to more readily

accommodate changes to the database.

However, testing the conversion is a significant part of the overall effort. The

effect of the addition of new functions with changes to data, programs, input and

output dramatically complicates the effort of verifying the conversion. The

easiest means of testing the converted system is to use identical input to both

the former VSAM system and the new DB2 system and then compare the data

and output of both systems. It is desirable to automate this as much as possible.

We strongly recommend, therefore, that no changes be permitted which will

affect these variables during the conversion. As needs are recognized during

the conversion, they should be logged for later implementation. Failure to follow

this recommendation will extend the period of the conversion, increase the cost

and might eventually cause the conversion project to fail.
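The comparison of both systems described above can be automated with very little code. The following is a minimal sketch in Python, assuming that the output of the former VSAM system and of the new DB2 system has already been captured as lists of text lines for identical input. The function name compare_outputs and the sample report lines are hypothetical.

```python
from itertools import zip_longest


def compare_outputs(vsam_lines, db2_lines):
    """Return (line_number, old_line, new_line) for every line that differs.

    A missing line on either side is reported as None.
    """
    differences = []
    pairs = zip_longest(vsam_lines, db2_lines)
    for number, (old, new) in enumerate(pairs, start=1):
        if old != new:
            differences.append((number, old, new))
    return differences


# Hypothetical report output produced by both systems from identical input.
old_report = ["CUST 0001 BALANCE 100.00", "CUST 0002 BALANCE 250.50"]
new_report = ["CUST 0001 BALANCE 100.00", "CUST 0002 BALANCE 250.05"]

mismatches = compare_outputs(old_report, new_report)
for number, old, new in mismatches:
    print(f"line {number}: VSAM={old!r} DB2={new!r}")
```

An empty result means both systems produced the same output for that test case. Such a check is only meaningful as long as no functional changes are introduced during the conversion.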

2.3 Conversion Methods

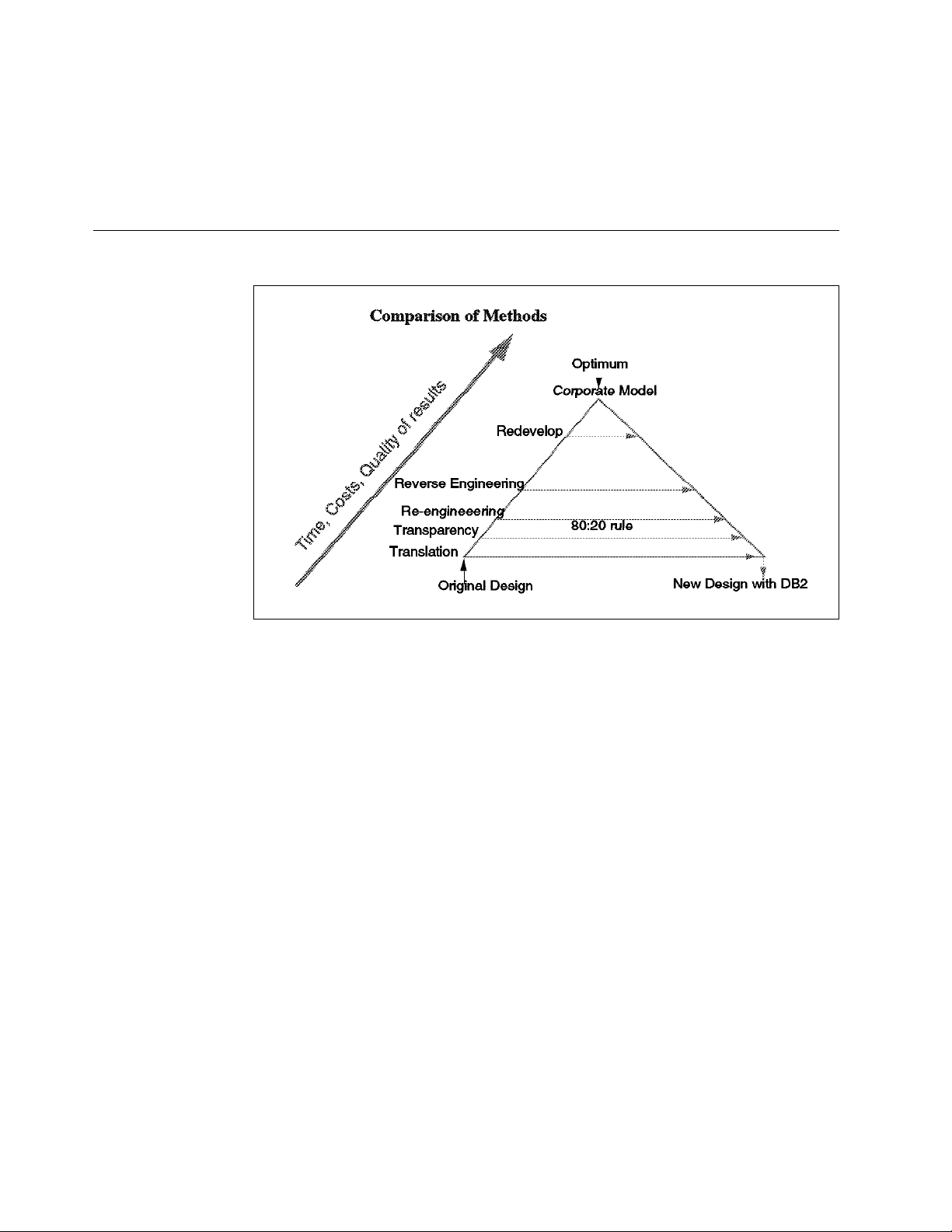

Figure 4. Conversion Methods in Perspective

To decide upon the right conversion method, it is important to understand the

different approaches and their results.

1. Translation is the easiest to understand. It takes the VSAM calls and

translates them to DB2. The data structure is left completely unchanged; that

is, the VSAM records are stored as long character strings in DB2

tables.

This is the easiest method, but it offers few of the advantages of DB2.

2. Transparency intercepts VSAM calls and re-routes them to database calls.

The data can be remodeled to suit a relational structure; using

Transparency, the application can function as before. Performance can be

an issue because applications still contain code that is executed frequently

but would no longer be needed with direct DB2 access.

Applications can later on be modified step by step without coexistence

issues.

•

Data propagation is a form of Transparency. It keeps two copies of the

data, and updates are propagated (automatically or at regular times

manually) to the new platform.

3. Re-engineering means minimum change consistent with DB2. This means

changing enough to remove dependencies on the old structures and become

compatible with good DB2 design, but not rethinking the whole data design.

It offers the main advantages of DB2 and allows limited redesign to take

advantage of special situations, but takes more time than translation. The

data design still reflects old data structures.

4. Reverse engineering means to capture the old designs into models, modify

them, and generate new programs and new database designs.

This method requires that really effective, good tools are available. There

are some, but they must be compatible with the programming languages

used. This method needs more development time, but future maintenance

will be easier. Testing for identical results is unlikely to be useful.

5. Redevelopment of the applications can be needed if old code is in a poor

state. Data models are re-thought from scratch.

This means a huge investment with longer development times, but with the

chance to use a 4th generation language and new tools. Testing for identical

results is unlikely to be useful.

6. The Corporate Model means that not only the applications are redeveloped

but, from the modeling of the business and data, a completely new structure

of the data and the applications is created.

Some intermixtures between methods are possible, for example application

re-engineering combined with reverse engineering of the data. For more

details, refer to the redbook ″Planning for Conversion to the DB2

Family: Methodology and Practice″, GG24-4445.

The “80:20 rule” should be a guideline to help decide: As in many other areas,

you can achieve 80% of the benefits with 20% of the costs.
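The difference between storing records unchanged (translation) and remodeling the data can be made concrete with a small sketch. It uses Python with SQLite purely as a runnable stand-in for DB2. The 30-byte record layout, the table names and the column names are all assumptions made for illustration.

```python
import sqlite3

# SQLite stands in for DB2 only so that the sketch can be run anywhere.
conn = sqlite3.connect(":memory:")

# Hypothetical 30-byte VSAM record: key (6), name (20), balance in cents (4).
record = "000042" + "SMITH".ljust(20) + "0150"

# Translation: the record is stored unchanged as one long character string.
conn.execute(
    "CREATE TABLE cust_translated (rec_key CHAR(6) PRIMARY KEY, rec_data CHAR(30))"
)
conn.execute("INSERT INTO cust_translated VALUES (?, ?)", (record[:6], record))

# Remodeled data: every field of the record becomes a column.
conn.execute(
    "CREATE TABLE customer ("
    "cust_no CHAR(6) PRIMARY KEY, name VARCHAR(20), balance_cents INTEGER)"
)
conn.execute(
    "INSERT INTO customer VALUES (?, ?, ?)",
    (record[0:6], record[6:26].rstrip(), int(record[26:30])),
)

# Only the remodeled table can answer a field-level question directly in SQL.
rich = conn.execute(
    "SELECT name FROM customer WHERE balance_cents > 100"
).fetchall()

# With the translated table, the program must still decode the string itself.
raw = conn.execute("SELECT rec_data FROM cust_translated").fetchone()[0]
balance_from_raw = int(raw[26:30])
```

The translated table can be loaded with almost no design work, but query tools see only an opaque string. This is why translation offers few of the advantages of DB2.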

2.4 Conversion Personnel

It is obvious that the project manager is a key person; many conversion projects

failed because the project manager was not suited for the job.

Less obvious is the fact that for a successful conversion it is crucial to have an

executive sponsor who wants and needs the project to succeed. The

management must understand the need for the conversion and back the efforts

and the costs involved. If the upper management does not support the project, a

conversion cannot be successful since in the short term, there are high costs

involved and no big advantages visible - in the first phase no advantages at all.

Naturally, technically capable people are needed with various skills; since

special skills are involved with a conversion process which will not be needed

before or afterwards, it might be wise to use external people assisting the

in-house team for the conversion.

2.5 VSAM Application Systems Inventory

In order to prepare accurate estimates of workloads, costs and schedules, it is

necessary to set up a thorough inventory of each application to be converted.

This section provides direction and guidelines for conducting an inventory of the

VSAM applications, analyzing the results of the inventory and ranking each

application in the order of conversion difficulty. The data that will be collected

and the criteria that apply to that data will help make the final ranking and

selection as objective as possible.

If you are a data dictionary user, its reports can be used for some of this

inventory. It should be noted that if the data dictionary is current and complete,

it can be a major tool during the database design and data conversion phases

from VSAM to DB2. The conversion team should consider bringing the data

dictionary up-to-date. The two primary advantages of doing so are:

•

The capability to obtain quick answers to ″What uses″ type questions, and

•

The assurance that all data entities are available for inventory.

The best source of information for the application inventory and resulting

analysis may be a VSAM programmer who is knowledgeable in the VSAM data

management system and the applications.

We cannot overemphasize the importance of this inventory. You should not

attempt to do any steps of the conversion without completing this inventory.

The specific skills needed by members of the conversion team responsible for

the inventory are:

•

Strong VSAM knowledge, especially of the various data organizations.

•

Ability to use VSAM utilities.

•

Host language (for example, COBOL) programming knowledge.

•

Experience with the VSAM interfaces used in the installation (for example,

CSP).

•

Ability to retrieve data from the data dictionary, VSAM catalog, CSP Member

Specification Library (MSL) and Application Load File (ALF), or other sources

as appropriate.

2.5.1 Application Inventory

Minimum documentation points for each application are:

•

The actual application name that is to be used for migration.

•

A description which is a brief paragraph stating the primary business

requirements and functions of the application.

Other information that should be recorded here is how critical and visible

each application is to the business and users.

•

Document the number of files for each application. You should be alert to

two conditions that could impact the conversion time or complexity. These

are:

1. A very high number of files

2. Inter-relationships between files.

•

File isolation is important when considering an application for conversion.

Isolation refers to the degree of sharing of files between applications.

•

Document the number of programs for the application by language.

If COBOL programs are registered in the data dictionary, they can be listed

from there. This approach assumes the data contained in the dictionary is

current and complete. If not, a physical count against the production library

is needed along with details about which applications use which COBOL

programs.

A list of the CSP programs may be generated through the Application Load

File Utility (ALFUTIL) or the where-used capability of the List Processor

Facility.

•

The application should have few outstanding change requests. Users tend to

expect changes to be made during conversion. Applying changes during the

conversion increases programming time and can dramatically increase the

complexity and the cost of the testing effort.

A better strategy is first to convert the application to relational and later

make the needed changes, taking advantage of the productivity and flexibility

that the relational database provides.

•

Often business issues dictate the timing of the conversion effort. For

example, conversion of the budget application should be completed before

the fiscal year ends.

It would be convenient if a simple application could be selected for the first

conversion. This gives the staff experience and confidence with minimum

risk. However, business priorities may not permit this.
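Once these documentation points are collected, a simple score can help rank the applications by conversion difficulty. The following Python sketch is one possible way to do this. The class ApplicationEntry, the sample figures and the weights in difficulty_score are all hypothetical; they are not taken from this document and would have to be calibrated for each installation.

```python
from dataclasses import dataclass


@dataclass
class ApplicationEntry:
    name: str
    file_count: int
    shared_file_count: int      # files also used by other applications
    program_count: int
    open_change_requests: int


def difficulty_score(app):
    """Higher score means a harder conversion (weights are assumptions)."""
    return (
        app.file_count
        + 3 * app.shared_file_count      # poor file isolation weighs heavily
        + app.program_count
        + 2 * app.open_change_requests
    )


# Hypothetical inventory figures for three applications.
inventory = [
    ApplicationEntry("BUDGET", 4, 0, 12, 1),
    ApplicationEntry("ORDERS", 25, 9, 80, 14),
    ApplicationEntry("PAYROLL", 10, 2, 30, 3),
]

# Easiest candidates first.
ranked = sorted(inventory, key=difficulty_score)
for app in ranked:
    print(app.name, difficulty_score(app))
```

The ranking is only an aid. Business priorities, such as the fiscal year deadline mentioned above, may still dictate a different order.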

2.5.2 VSAM File Inventory

Difference Between File and Table

When creating the VSAM file inventory, it is important to recognize the difference

between a VSAM file and a DB2 table. VSAM files often contain several types of

records in the same file. These may be control records, or redefined records

containing different information about the same entity. In the relational model,

all rows in a table must contain the same columns. Because no variation is

permitted, one or more tables are normally defined for each VSAM record type.

Another important difference is the level of data definition. In DB2 each column

is defined in the DB2 catalog. In VSAM, data is defined at the record level, and

field definitions are left to the individual program. To create an inventory of

VSAM fields, each field must have a unique and consistent name. Therefore,

special attention must be given to homonyms (different fields having the same

names) and to synonyms (a field being addressed by different names). If

consistent field (column) names have not been established through a data

dictionary or other means, they will have to be established for the purposes of

the inventory. Since it may be appropriate to use those same names

consistently in the converted programs and as DB2 column names, their

selection should be done with care. See 3.3.3, “Data Naming Considerations” on

page 29.

Possible sources for these names are data dictionaries, COBOL copy library

definitions, other source library definitions, CSP definitions, and COBOL program

definitions.
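Candidate homonyms and synonyms can be found mechanically once the field definitions from these sources have been collected in one list. The sketch below, in Python, assumes a hypothetical list of tuples (program, file, start position, length, field name). The program, file and field names are invented for illustration.

```python
from collections import defaultdict

# (program, file, start position, length, field name); all values hypothetical.
field_usages = [
    ("PGM001", "CUSTFILE", 1, 6, "CUST-NO"),
    ("PGM002", "CUSTFILE", 1, 6, "CUSTOMER-ID"),   # same bytes, other name
    ("PGM001", "CUSTFILE", 7, 20, "NAME"),
    ("PGM003", "ORDFILE", 11, 30, "NAME"),          # same name, other field
]


def find_synonyms(usages):
    """Locations in a file that are addressed by more than one name."""
    names_by_location = defaultdict(set)
    for _program, file_name, start, length, name in usages:
        names_by_location[(file_name, start, length)].add(name)
    return {
        location: names
        for location, names in names_by_location.items()
        if len(names) > 1
    }


def find_homonyms(usages):
    """Names that are used for more than one location."""
    locations_by_name = defaultdict(set)
    for _program, file_name, start, length, name in usages:
        locations_by_name[name].add((file_name, start, length))
    return {
        name: locations
        for name, locations in locations_by_name.items()
        if len(locations) > 1
    }


synonyms = find_synonyms(field_usages)
homonyms = find_homonyms(field_usages)
```

The results are candidates for review only. Two fields with the same name in different files may well describe the same business item, which a person who knows the application has to decide.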

File Inventory Creation

For each application, the files, record types, fields, and indexes need to be

identified. VSAM catalog listings can provide a complete list of VSAM files and

indexes if more application oriented information is not available or reliable.

They also show the location of prime and alternate key fields, which must also

be identified, but not the names of those fields. Record types may be derived

from COBOL copy library entries, other source libraries, CSP data, or program

listings.

For CSP applications, the Application Load File Utility (ALFUTIL) or the

where-used capability of the List Processor Facility can greatly facilitate the

scanning of applications and the documentation of application to file

relationships, as well as record and field definitions.

Caution: In some cases a record in a file may be defined as one long field. In

other cases there may be different record types within one file with a flag or

indicator that the program must read and understand. If either case exists,

investigation of the record layouts in each program accessing this file may be

necessary to achieve accurate record descriptions.

The following information is considered important and should be shown for each

file as a spreadsheet or table form for easy reading and understanding:

•

File name

•

File identifier

•

Catalog name

•

Type of VSAM file

•

Record key and alternate index

•

Number of record types

•

Names of the different record structures

•

Names of the source programs accessing the file

•

Name or number of the library in which the source programs are stored

(name for CSP in VSE library, number of ICCF library)

•

For each record structure, list of fields with start positions and length

•

For each record structure, number of fields

•

Number of records in the file (to calculate dbspace).
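The field lists with start positions and lengths can be used directly to decode records and to separate the record types of one file, which is a first step toward one table per record type. The following Python sketch assumes a hypothetical order file with a one-byte record type indicator in position 1. The layouts, field names and sample records are invented.

```python
# Hypothetical layouts per record type: field name -> (start position, length).
# Start positions are 1-based, as they would be recorded in the inventory.
LAYOUTS = {
    "H": {"REC_TYPE": (1, 1), "ORDER_NO": (2, 5), "CUST_NO": (7, 6)},
    "D": {"REC_TYPE": (1, 1), "ORDER_NO": (2, 5), "ITEM_NO": (7, 4),
          "QUANTITY": (11, 3)},
}


def parse_record(record):
    """Decode one record using the layout selected by its type indicator."""
    layout = LAYOUTS[record[0]]
    return {
        name: record[start - 1:start - 1 + length]
        for name, (start, length) in layout.items()
    }


def split_by_type(records):
    """Group decoded records by record type, one future table per type."""
    tables = {record_type: [] for record_type in LAYOUTS}
    for record in records:
        tables[record[0]].append(parse_record(record))
    return tables


# One header record followed by two detail records of the same order.
records = ["H00017000042", "D000170815003", "D000170042010"]
tables = split_by_type(records)
```

Each key of tables then corresponds to one candidate DB2 table, and the number of entries per key gives a first indication of the row counts needed for the dbspace calculation.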

2.5.3 Program Inventory

All programs that make up a selected application must be inventoried to ensure

complete conversion. This section describes the data that is needed and how to

collect it.

All CSP programs can be identified with the Application Load File Utility

(ALFUTIL) or the where-used capability of the List Processor Facility. A complete

inventory of the COBOL programs may or may not be available through a data

dictionary. If the COBOL programs are not registered in the data dictionary, it

may be necessary to list all COBOL programs in the source library, in order to

locate the programs that apply to the selected application and record their

names.

Hints:

•

A pragmatic way to determine which programs are used is through

the accounting information. All important programs will be listed

there. Every program that has not been run, for example during the

past two years, is not worth converting.

•

There are also some vendor tools available that will assist in

performing this task.

Information that should be listed for each application is as follows:

•

Inventory of all batch and online programs by program name.

•

JCL of all batch programs.

•

CICS transaction id for all online programs and maps.

•

Library name or number where source programs are stored.

•

For each of the programs within each application, the names of all

VSAM files accessed.

2.6 Coexistence Strategies

During the conversion process, there will be data and programs that need to

span both VSAM and DB2, as shown in Figure 5.

Figure 5. Coexistence Situation

In some instances, coexistence will merely be a stepping stone to full migration

but in other cases, coexistence may be a way of life for a longer period of time.

Therefore, a plan for dealing with data coexistence issues is outlined here.

There are a number of different approaches or strategies that can be defined as

coexistence. The level of complexity and applicability of these strategies varies

depending on the installation′s requirement. It matters less whether the

data is actually replicated (that is, duplicated in both systems) or whether

some files have already been migrated while others have not.

This section will cover various flavors of coexistence, beginning with the simpler

ones and moving to the more complex ones. Most installations will find that as

they move through the conversion cycle, they will be implementing several

variations of coexistence, depending upon business situations, application types

and use of the data.

2.6.1 Coexistence Scenarios

An initial activity for any form of coexistence is the mapping of VSAM file

structures into the DB2 tables. This task is described in Chapter 3, “Database

Design” on page 27. After data mapping has been decided, the remaining tasks

for managing coexistence can be automated to some degree, depending upon

the tools and aids in the installation.

Extract Data for Query and Decision Support Systems

The simplest approach is to extract data from existing VSAM files and load it into

DB2 tables. The tables could then be accessed by a number of tools for query

and decision support applications. This approach might be applied to data that is

maintained in programs that are not to be converted immediately, yet the ability

to get to the data with end user tools is a requirement.

Update of One Data Management System With Read-only of the Other

These applications (often called “Bridge Applications”) contain data access and

logic for both DB2 and VSAM. This scenario may be the most pervasive during

the conversion effort because of the inter-relationship of data and the length of

the conversion effort. However, the conversion effort will be most cost-effective

if the need for these applications can be kept at a minimum because they will

require at least some rework when all data has been moved to DB2.

Consideration should be given to locking algorithms, commit strategies and

recovery methods when mixing the two data management technologies within

the same program.

Updates to Both Data Management Systems

Updates to both copies of data occur when data is replicated in VSAM and DB2.

The approach is dependent upon the timing of the updates. The first

determination that must be made is: must the updates be real time

(synchronous), or can a time lag be tolerated. Both approaches are discussed

below.

Time Delayed Updates

Time delayed updates of DB2 data can be handled in one of three ways. All

methods require a DB2 update program to be written.

1. The first method requires that the VSAM source program be altered and a

transaction file be written which reflects the updates. This transaction file

would then be the input to a DB2 batch or online updating program. Such a

program might be initiated by JCL, time initiated, or be a long running

program waiting for data to appear in a queue.

2. A different approach can be used by having the VSAM program spawn an

online transaction that will immediately schedule a DB2 updating transaction

to cause the corresponding update at transaction commit.

3. If the requirement exists to propagate updates of DB2 data back to VSAM

data, the solution is simpler. Since the DB2 programs will be in the process

of being written, logic to write a transaction file can be included from the

beginning. As the conversion process unfolds and the requirement to update

VSAM is discontinued, the code can then be removed or the output file

dummied.
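The first method, a transaction file that feeds a DB2 updating program, can be sketched as follows. The sketch uses Python with SQLite as a runnable stand-in for DB2. The comma-separated transaction file format, the operation codes ADD, UPD and DEL, and the table layout are assumptions made for illustration.

```python
import sqlite3

# SQLite stands in for DB2 only so that the sketch can be run anywhere.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (cust_no TEXT PRIMARY KEY, balance INTEGER)")
conn.execute("INSERT INTO customer VALUES ('000042', 100)")
conn.commit()

# Hypothetical transaction file written by the altered VSAM program:
# one line per update, in the form operation,key,value.
transaction_file = [
    "UPD,000042,150",
    "ADD,000043,20",
    "DEL,000042,",
]


def apply_transactions(connection, lines):
    """Apply all logged updates as one unit of work; return the count."""
    applied = 0
    with connection:  # commits on success, rolls back on any exception
        for line in lines:
            operation, key, value = line.split(",")
            if operation == "ADD":
                connection.execute(
                    "INSERT INTO customer VALUES (?, ?)", (key, int(value))
                )
            elif operation == "UPD":
                connection.execute(
                    "UPDATE customer SET balance = ? WHERE cust_no = ?",
                    (int(value), key),
                )
            elif operation == "DEL":
                connection.execute(
                    "DELETE FROM customer WHERE cust_no = ?", (key,)
                )
            else:
                raise ValueError(f"unknown operation {operation!r}")
            applied += 1
    return applied


applied_count = apply_transactions(conn, transaction_file)
rows = conn.execute(
    "SELECT cust_no, balance FROM customer ORDER BY cust_no"
).fetchall()
```

Applying the whole file as one unit of work keeps the DB2 copy consistent if the updating program fails partway. Whether one commit per file or more frequent commits are appropriate depends on the volume and on the locking behavior that can be tolerated.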

Synchronous Updates

Another situation would be transactions that update both VSAM and DB2

synchronously with full integrity. This approach may be a requirement if related

production data resides in both DB2 and VSAM and a single transaction must

update both.

Programs will have to be changed multiple times. If a program updates only

replicated data, once the old VSAM data is no longer required, the logic for

updating that data may be removed. If the program updates related data, it must

be modified to update using SQL once the data is converted to DB2.

In either case, online programs can be written to update both VSAM and DB2

data concurrently. CICS two-phase commit can be used so that integrity

between VSAM and DB2 data can be guaranteed.

Batch programs that update both VSAM and DB2 data must be given special

attention. The two-phase commit and dynamic backout of VSAM data provided

by CICS (which are required to guarantee data integrity) are not available in

batch. If such a batch program abends, the DB2 databases will automatically be

restored to the beginning of the program (or the last COMMIT point), but the

VSAM file will not. Recovery procedures must be developed and executed very

carefully in order to maintain data integrity.
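The batch problem described above can be reproduced in a small simulation. In this Python sketch, SQLite stands in for DB2 and a plain dictionary stands in for a VSAM file without dynamic backout. Both stand-ins, the account data and the function batch_update are assumptions for illustration only.

```python
import sqlite3

# SQLite stands in for DB2; it can roll back to the last commit point.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE account (acct TEXT PRIMARY KEY, balance INTEGER)")
conn.execute("INSERT INTO account VALUES ('A1', 100)")
conn.commit()

# A dictionary stands in for a VSAM file that has no backout in batch.
vsam_file = {"A1": 100}


def batch_update(amount, fail=False):
    """Update both copies; optionally fail before the commit point."""
    vsam_file["A1"] += amount  # takes effect immediately
    conn.execute(
        "UPDATE account SET balance = balance + ? WHERE acct = 'A1'",
        (amount,),
    )
    if fail:
        raise RuntimeError("simulated abend before COMMIT")
    conn.commit()


try:
    batch_update(50, fail=True)
except RuntimeError:
    conn.rollback()  # the database returns to the last commit point

db_balance = conn.execute("SELECT balance FROM account").fetchone()[0]
out_of_step = vsam_file["A1"] != db_balance
print(db_balance, vsam_file["A1"], out_of_step)
```

After the simulated abend the two copies disagree, which is exactly the situation that the recovery procedures for batch programs have to detect and correct.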

2.7 Conversion Considerations

Many more areas have to be planned for up front to make the conversion

successful. Here we will briefly mention the areas of tools, performance and

hardware requirements. For more details, and for other areas that are not

covered here such as

•

education

•

end point of the conversion

•

recovery concept changes

refer to the redbook ″Planning for Conversion to the DB2 Family: Methodology

and Practice″, GG24-4445.

2.7.1 Tools

A number of tools have emerged over the past few years, each of which can

help to reduce the effort by automating parts of the process. The areas include:

•

Database design tools

•

Transparency tools can speed the data move during the conversion process.

Applications can be changed later.

•

Data movement tools

•

Data propagation tools, synchronous and asynchronous

•

Testing

− Online identical function

Ensure that the new code still provides the same function as the old one.

− Full testing analysis

- Ensure that all parts of a program are tested.

- Ensure that the test data used will test every case possible.

− Managing multiple tests

− Batch output comparisons

− Stress testing of systems

•

Real-time performance monitoring

2.7.2 Performance

Performance is often considered to be critical. But compared with the overall

question of operating system and database platform, performance is a problem

that can be solved - through faster hardware if not otherwise. DB2 has many

choices to influence and optimize performance, especially in the database

design. Often a small change, even invisible to the end user, can make a large

difference in performance. For more details refer to 3.9, “Estimating

Performance” on page 47 and the redbook ″SQL/DS Version 3 Release 4

Performance Guide″, GG24-4047.

There is a performance estimator tool for MVS DB2. The tool runs on a

workstation. You can fetch it from the download library on the World Wide Web

under the IBM software home page at:

http://www.software.ibm.com

Support comes from an Internet ID of:

estimate@vnet.ibm.com

There is also the IBM VNET id “ESTIMATE at STLVM14.” The DB2 Estimator

Version 5 runs with either OS/2 Warp, Windows 3.1, or Win95 and requires 4MB

of memory and 5MB of hard disk space, 10MB while installing.

Although performance numbers cannot easily be correlated between MVS and

VSE, the relative performance behavior can be estimated with this tool. You can

have a first run with a primitive design of a one-to-one relationship of VSAM

contents to DB2 table format, for example one large column with characters only,

and then with your proposed database design alternatives. You will then be able

to predict the relative performance of your designs compared to the primitive

design and to one another.

2.7.3 Processor Requirements

In general the processor requirements for conversions are not significantly

different from normal development efforts. Actual requirements depend on the

specific application.

The final testing and production cutover with both VSAM and DB2 running similar

work loads will likely be the peak CPU load. This peak load may be estimated

by summing the time for applications running concurrently on both systems.
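As a worked example of this summation, the following Python sketch adds up the CPU time of applications that run concurrently on both systems during cutover. All figures are hypothetical, and expressing the result as the utilization of a single processor over one hour is an assumption made only to give the sum a scale.

```python
# Hypothetical CPU seconds consumed per hour by applications that run
# concurrently during final testing and production cutover.
vsam_cpu_seconds = {"ORDERS": 900, "BILLING": 600}
db2_cpu_seconds = {"ORDERS": 1100, "BILLING": 750}


def peak_load_estimate(*workloads):
    """Sum the CPU time of all workloads that run at the same time."""
    return sum(sum(workload.values()) for workload in workloads)


peak = peak_load_estimate(vsam_cpu_seconds, db2_cpu_seconds)

# Share of one processor over a one-hour interval (3600 seconds).
utilization = peak / 3600
print(peak, round(utilization, 2))
```

With these invented figures, the parallel run would use most of one processor, which suggests scheduling the cutover tests outside the normal peak hours.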

2.7.4 DASD Requirements

Both VSAM files and DB2 will exist in the system during the conversion. Some

portion of the data must be replicated for the purpose of development, test and

production cutover. Most development and test may be done with minimal size

test data. During the initial phases the amount of test data may remain small.

At the point of production cutover, the data used by an application is loaded into

DB2 tables. After cutover verification, the VSAM version of the data can be

archived, thus minimizing the time when duplicate data is online. Planning to

convert data and applications in phases rather than all at once may reduce

DASD requirements.

DB2 VSAM Transparency is independent of DASD device type; any DASD device

can be used that is supported by the VSE/ESA operating system. In addition,

DB2 VSAM Transparency has no specific hardware prerequisites and will

function in any environment that supports DB2.

2.8 Conversion With DB2 VSAM Transparency for VSE/ESA

In principle, a conversion with DB2 VSAM Transparency is done in the same way

as a conversion without it, but with considerably less effort and risk because

you migrate the VSAM files rather than the application programs, as shown in

Figure 6.

Figure 6. Migration with DB2 VSAM Transparency

The same project phases apply, and the same aspects have to be considered. It

must be checked whether the principle of Transparency fits into the overall

project situation, purpose and targets. In particular, the limitations of DB2 VSAM

Transparency in database design must be considered, as described in 7.1.3,

“Limitations” on page 76. If the limitations apply, you have four choices:

1. Retreat from the requirements.

This is a choice if the requirement is either not really strong, or it can be

fulfilled later.

2. Consider other methods and tools rather than DB2 VSAM Transparency.

This might be chosen if many applications and data are hit by this limitation

and no circumvention is possible. Avoid the duplicate effort of testing.

3. Use DB2 VSAM Transparency and move the remaining design changes into

a follow-on project.

This might be useful if you do not have a real alternative in tools available,

and many applications and data are hit by this limitation. This might lead

you relatively quickly to an intermediate state, but be aware that you

duplicate the huge testing effort.

4. Circumvent and change

Use DB2 VSAM Transparency and include the remaining design changes into

in-between and follow-on steps within the same project, because the large

testing effort should not be duplicated. For examples, see Chapter 8,

“Beyond Transparency” on page 103.

Figure 7. How Transparency Works

The advantages of DB2 VSAM Transparency are:

•

The data may be remodeled to suit relational structures.

•

Tools may be used for database design, refer to 2.7.1, “Tools” on page 21.

•

Once one application suite has been successfully migrated, others can easily

follow.

•

New applications can be written taking advantage of the new data structures

in DB2.

•

After Transparency has gone live, each program can be reworked separately

to access DB2 directly.

Hint: In any single application program you can mix:

− VSAM accesses (to files not enabled for Transparency)

− Intercepted VSAM accesses (to files enabled for Transparency)

− SQL accesses to DB2

These different access modes do not interfere with each other, as

shown in Figure 10 on page 60. This means that after migration using

Transparency, you can change an application even partially, step by

step.

•

Transparency is suited especially for very large databases, with high

availability requirements.

•

Transparency offers a lower-risk path because less is changed in any one

step and fallback is easy.

The disadvantages of Transparency are:

•

Performance is more likely to become an issue because applications still

contain code that is executed frequently but would no longer be needed with

direct DB2 access.

− But performance issues can be solved, see 2.7.2, “Performance” on

page 22.

•

The conversion might take longer because it now has two stages. It may be

hard to ensure that the subsequent steps are taken and that the conversion

is completed with good quality.

− But you are on the new platform more quickly and with less risk.

Using DB2 VSAM Transparency lets you take advantage of all DB2 usability

improvements, without any reprogramming, recompiling, or relinking. When

VSAM programs run under DB2 VSAM Transparency, VSAM access requests are

intercepted and redirected to access DB2 data tables. In fact, an application

program can access any mixture of:

•

Unconverted VSAM data

•

Converted VSAM data through DB2 VSAM Transparency

•

DB2 tables through native SQL.

This flexibility removes the need for replicated data and for coexistence efforts.

Where coexistence issues may be a problem, Transparency should seriously be

considered as a migration aid.
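
The three access paths that one program can mix may be pictured with a small conceptual model. This is only a sketch: the file names, the `TRANSPARENCY_ENABLED` set, and the `route_request` function are hypothetical, and the code does not describe how the product intercepts requests internally.

```python
# Conceptual model only: all names are hypothetical and do not describe
# the internal implementation of DB2 VSAM Transparency.
TRANSPARENCY_ENABLED = {"CUSTFILE"}  # files whose data now resides in DB2


def route_request(kind: str, target: str) -> str:
    """Return the path that serves one data request from an application."""
    if kind == "SQL":
        return "DB2 (native SQL)"
    if kind == "VSAM" and target in TRANSPARENCY_ENABLED:
        return "DB2 (intercepted VSAM request)"
    if kind == "VSAM":
        return "VSAM (unchanged)"
    raise ValueError(f"unknown request kind: {kind}")


# One application issuing all three kinds of request.
requests = [("VSAM", "CUSTFILE"), ("VSAM", "HISTFILE"), ("SQL", "ORDERS")]
paths = [route_request(kind, target) for kind, target in requests]
for (kind, target), path in zip(requests, paths):
    print(f"{kind:4} {target:8} -> {path}")
```

In this model, moving another file to DB2 amounts to adding its name to the enabled set; the requests issued by the application stay the same.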

Chapter 3. Database Design

This chapter describes the task of converting VSAM files to a relational design.

It covers the translation of both the logical data and the physical structures to

DB2 tables with the same meaning. This document will discuss only those

techniques that are unique to converting an existing VSAM file to DB2 tables. For

general DB2 database design techniques, refer to existing DB2 design

guidelines.

3.1 Types of Database Conversion

Conversions may be done to different degrees.

1. Translation. Conversion may be of a “translation” nature where the VSAM

records are mapped directly to DB2 tables.

•

If the data has been designed and maintained as normalized data, this

approach will be appropriate.

•

In those cases where data is not normalized, this is unlikely to provide

optimum performance characteristics and the resulting system loses the

productivity advantages of the relational model. Thus, while the

migration is simplified, it is quite possible that the end result will be

unsatisfactory for either current performance or future additions.

2. Compatibility. As a next level, it is possible to do a simple conversion with

minimal analysis of the data. This provides essentially a relational result

from the VSAM data but may still not optimize performance or application

design.

3. Redesign. The best, but most expensive, conversion is an intelligent analysis

of the data, its logical structure, business rules and usages in existing and

planned applications. While such a complete analysis is the most expensive,

it should be understood that the cost of this conversion will still be less than

the cost of a new database design. Most of the required data fields have

been defined and the data exists in a processable form.

4. New design. A new database design according to newly defined business

requirements offers the largest flexibility, but will also have the largest costs.
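
As an illustration of the first degree, a “translation” conversion can be sketched as a direct mapping of each fixed-length record to one table row. The record layout, the field names, and the `record_to_row` function below are assumptions made for this example; they are not taken from any real VSAM file or tool.

```python
# Hypothetical fixed-length record layout: (column name, offset, length).
# The fields are illustrative only.
LAYOUT = [
    ("cust_no", 0, 6),
    ("name", 6, 20),
    ("city", 26, 15),
]


def record_to_row(record: str) -> dict:
    """Map one fixed-length record directly to one table row."""
    return {
        name: record[offset:offset + length].rstrip()
        for name, offset, length in LAYOUT
    }


# Build a sample 41-character record and translate it field by field.
record = "000123" + "Smith".ljust(20) + "Boeblingen".ljust(15)
row = record_to_row(record)
print(row)
```

Because every field becomes exactly one column, the mapping needs little analysis, but any lack of normalization in the record is carried over unchanged into the table.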

It should be remembered that in terms of the overall cost of the conversion, the

database conversion is typically a small part.

A major difference exists in the resources required between doing a

“compatibility” type conversion (2) and a true redesign (3). In the case of a true

database redesign, the availability of one or more persons fully familiar with the

data and its usages is required. This knowledge need not be provided full time

to the conversion team, but must be available on a demand basis.

Documentation rarely provides all the information necessary to do an intelligent

conversion.

Compared to the conversion methods described in 2.3, “Conversion Methods” on

page 14, the “Translation” above maps to “Translation” there, “Compatibility”

here maps to “Re-engineering” there, “Redesign” here maps to “Reverse

Engineering” there, and “New Design” here is used in “Redevelop” and

“Corporate Model” there. “Transparency” can be seen somewhere between

“Compatibility” and “Redesign” above, because it allows limited changes in the

data structure, as described in 7.1.2, “Capabilities” on page 76, 7.1.3,

“Limitations” on page 76, and Chapter 8, “Beyond Transparency” on page 103.

3.2 Objectives of Conversion

The primary objective is to optimize both the performance and the application

design for further usage and expandability. The approach is to do a limited

redesign of the database and reprogram the parts of the applications that are

product sensitive. Again, note that no attempt is made to change the application

data requirements. The assumption is that the application will continue to need

and use the data that is currently stored.

It is axiomatic that there should be no application changes or extensions

during the conversion except what may be mandatory to tolerate the changes in

the database.

There will be cases where the designer recognizes that better ways exist to

store the data or that some other change should be made to improve the format

or structure of the stored data. Despite the temptation to make the changes

that appear minor, the designer must recognize that making such changes can

seriously impact the application conversion and the testing procedures.

Assuming that the objective is to minimize the time and cost to make the

conversion, such changes should be delayed until the application enters the

maintenance cycle. Such opportunities for improvements should be noted in a

journal of future potential enhancements. This ensures that the knowledge of

potential improvements is not lost and the changes can be incorporated when

appropriate.

3.3 Database Design Philosophy

At first glance it seems possible to treat each VSAM file as a relational table. In

fact it quickly becomes apparent that this is not completely possible. Conditions

that are not acceptable in a relational table are acceptable in a VSAM file.

Particularly, many VSAM file designs gather as much data into a single record

as possible; the opposite is true of relational normalization philosophy.

A relational system associates data by relating tables with minimal redundancy. This

major difference in the models means that in many cases it will be necessary to

split VSAM files into several tables in order to get the full benefit of the relational

model. Further, a relational system allows a user to interrogate any column by

name, based on its data value. This prohibits ambiguous data formats which

might be acceptable in VSAM such as COBOL REDEFINES, repeating groups of

fields, or multiple occurring fields.
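
The need to split a file can be shown with a record that carries a repeating group. The following is a minimal sketch, assuming a hypothetical order record with repeated item fields; SQLite is used only as a stand-in for DB2 so that the example is self-contained, and all table and column names are invented for illustration.

```python
import sqlite3

# Hypothetical VSAM-style record: one order with a repeating group of items.
vsam_record = {
    "order_no": 1001,
    "customer": "Smith",
    "items": [("A10", 2), ("B20", 1), ("C30", 5)],  # repeating group
}

conn = sqlite3.connect(":memory:")  # SQLite stands in for DB2 here
conn.executescript("""
    CREATE TABLE orders (
        order_no INTEGER PRIMARY KEY,
        customer TEXT NOT NULL
    );
    CREATE TABLE order_items (
        order_no INTEGER NOT NULL REFERENCES orders(order_no),
        line_no  INTEGER NOT NULL,
        part_no  TEXT NOT NULL,
        quantity INTEGER NOT NULL,
        PRIMARY KEY (order_no, line_no)
    );
""")

# The fixed part of the record becomes one parent row.
conn.execute(
    "INSERT INTO orders VALUES (?, ?)",
    (vsam_record["order_no"], vsam_record["customer"]),
)
# Each occurrence of the repeating group becomes one child row.
conn.executemany(
    "INSERT INTO order_items VALUES (?, ?, ?, ?)",
    [
        (vsam_record["order_no"], line_no, part_no, quantity)
        for line_no, (part_no, quantity) in enumerate(vsam_record["items"], start=1)
    ],
)

# Any column can now be interrogated by name, based on its data value.
rows = conn.execute(
    "SELECT order_no, line_no FROM order_items WHERE part_no = ?", ("B20",)
).fetchall()
print(rows)
```

With the repeating group held inside a single record, the same question would require knowledge of the position of each occurrence; after the split, it is an ordinary query on a named column.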

The VSAM files, then, become a starting point in a relational design. The intent

is to make a table from each file, but each file will be analyzed as to normal

form, redundancy, and conformance with relational standards.

3.3.1 Design Information Sources

Data dictionaries are potentially a primary source of information. If installation

standards have made the use of a data dictionary a requirement, information

stored here can normally be relied upon to reflect the data that is presently in

the VSAM files.

If an inventory of the data has been done, most of the information required will

already have been organized in a format that makes it readily available for the

design process. When it is complete, the inventory will replace, in most cases,

the need for interrogating the dictionary. As the design progresses, decisions

will be recorded in the inventory tracking tables so that they become the record

of the conversion.

3.3.2 Design Sequence

The following are the basic steps required to convert VSAM data to DB2 data:

1. Verify that each VSAM record type is in third normal form. It should be

normalized if it is not already.

2. For each resulting record type, create a DB2 table.

3. Translate each VSAM field to a DB2 column.

4. Identify a primary key for all tables where it is desirable.

5. If referential integrity is to be implemented, a foreign key must be defined for