Page 1

SG24-5131-00

International Technical Support Organization

http://www.redbooks.ibm.com

IBM Certification Study Guide

AIX HACMP

David Thiessen, Achim Rehor, Reinhard Zettler

Page 2

Page 3

IBM Certification Study Guide

AIX HACMP

May 1999

SG24-5131-00

International Technical Support Organization

Page 4

© Copyright International Business Machines Corpora tion 1999. All rights reserved.

Note to U.S Government Users – Do cum entation r elated to r estric ted righ ts – Us e, duplication or dis closu re is

subject to restrictions set forth in GSA ADP Sc hedule Contra ct with IBM Co rp .

First Edition (May 1 999)

This edition applies to HACMP f or AIX and HACMP/En hanced Scalab ility (H ACMP/E S), P rogram

Number 5765-D28, for use with t he A IX Operat ing System Version 4.3.2 and lat er.

Comments may be addressed to:

IBM Corporation, Internation al Technical Support Organization

Dept. JN9B Building 003 Interna l Zip 2834

11400 Burnet Road

Austin, Texas 78758-3493

When you send information to IBM, you gra nt I BM a non-exclusive right to use or di strib ute t he

information in any way it belie ves appropr iate without incur ring any ob ligati on to you.

Before using this information and t he product it suppor ts, be sure t o re ad th e gen eral infor mation in

Appendix A, “Special Notices” on page 205.

Take Note!

Page 5

© Copyright IBM Corp. 1999 iii

Contents

Figures. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .ix

Tables. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xi

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xiii

The Team That Wrote This Redbook. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xiv

Comments Welcome . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Chapter 1. Certification Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1

1.1 IBM Certified Specialist - AIX HACMP . . . . . . . . . . . . . . . . . . . . . . . . .1

1.2 Certification Exam Objectives. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2

1.3 Certification Education Courses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4

Chapter 2. Cluster Planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7

2.1 Cluster Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7

2.1.1 CPU Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7

2.1.2 Cluster Node Considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . .8

2.2 Cluster Networks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .11

2.2.1 TCP/IP Networks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .11

2.2.2 Non-TCPIP Networks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .14

2.3 Cluster Disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .16

2.3.1 SSA Disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .16

2.3.2 SCSI Disks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .26

2.4 Resource Planning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .28

2.4.1 Resource Group Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .28

2.4.2 Shared LVM Components. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .30

2.4.3 IP Address Takeover . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .34

2.4.4 NFS Exports and NFS Mounts . . . . . . . . . . . . . . . . . . . . . . . . . .41

2.5 Application Planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .41

2.5.1 Performance Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . .42

2.5.2 Application Startup and Shutdown Routines. . . . . . . . . . . . . . . .42

2.5.3 Licensing Methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .43

2.5.4 Coexistence with other Applications . . . . . . . . . . . . . . . . . . . . . .43

2.5.5 Critical/Non-Critical Prioritizations . . . . . . . . . . . . . . . . . . . . . . .43

2.6 Customization Planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .44

2.6.1 Event Customization. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .44

2.6.2 Error Notification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .45

2.7 User ID Planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .48

2.7.1 Cluster User and Group IDs . . . . . . . . . . . . . . . . . . . . . . . . . . . .48

2.7.2 Cluster Passwords . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .49

2.7.3 User Home Directory Planning . . . . . . . . . . . . . . . . . . . . . . . . . .49

Page 6

iv IBM Certification Study Guide A IX HAC MP

Chapter 3. Cluster Hardware and Software Preparation . . . . . . . . . . .51

3.1 Cluster Node Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .51

3.1.1 Adapter Slot Placement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .51

3.1.2 Rootvg Mirroring. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .51

3.1.3 AIX Prerequisite LPPs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .55

3.1.4 AIX Parameter Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .56

3.2 Network Connection and Testing . . . . . . . . . . . . . . . . . . . . . . . . . . . .60

3.2.1 TCP/IP Networks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .60

3.2.2 Non TCP/IP Networks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .63

3.3 Cluster Disk Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .66

3.3.1 SSA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .66

3.3.2 SCSI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .72

3.4 Shared LVM Component Configuration . . . . . . . . . . . . . . . . . . . . . . .81

3.4.1 Creating Shared VGs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .82

3.4.2 Creating Shared LVs and File Systems . . . . . . . . . . . . . . . . . . .84

3.4.3 Mirroring Strategies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .86

3.4.4 Importing to Other Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .86

3.4.5 Quorum . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .88

3.4.6 Alternate Method - TaskGuide . . . . . . . . . . . . . . . . . . . . . . . . . .90

Chapter 4. HACMP Installation and Cluster Definition. . . . . . . . . . . . .93

4.1 Installing HACMP. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .93

4.1.1 First Time Installs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .93

4.1.2 Upgrading From a Previous Version. . . . . . . . . . . . . . . . . . . . . .96

4.2 Defining Cluster Topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .100

4.2.1 Defining the Cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

4.2.2 Defining Nodes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .101

4.2.3 Defining Adapters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

4.2.4 Configuring Network Modules. . . . . . . . . . . . . . . . . . . . . . . . . .105

4.2.5 Synchronizing the Cluster Definition Across Nodes . . . . . . . . .106

4.3 Defining Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .108

4.3.1 Configuring Resource Groups . . . . . . . . . . . . . . . . . . . . . . . . .108

4.4 Initial Testing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .111

4.4.1 Clverify. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

4.4.2 Initial Startup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .112

4.4.3 Takeover and Reintegration . . . . . . . . . . . . . . . . . . . . . . . . . . .112

4.5 Cluster Snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .113

4.5.1 Applying a Cluster Snapshot. . . . . . . . . . . . . . . . . . . . . . . . . . .114

Chapter 5. Cluster Customization. . . . . . . . . . . . . . . . . . . . . . . . . . . .117

5.1 Event Customization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .117

5.1.1 Predefined Cluster Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

5.1.2 Pre- and Post-Event Processing. . . . . . . . . . . . . . . . . . . . . . . .122

Page 7

v

5.1.3 Event Notification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

5.1.4 Event Recovery and Retry . . . . . . . . . . . . . . . . . . . . . . . . . . . .122

5.1.5 Notes on Customizing Event Processing . . . . . . . . . . . . . . . . . 123

5.1.6 Event Emulator. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .123

5.2 Error Notification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

5.3 Network Modules/Topology Services and Group Services . . . . . . . . 124

5.4 NFS considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .125

5.4.1 Creating Shared Volume Groups . . . . . . . . . . . . . . . . . . . . . . .125

5.4.2 Exporting NFS File Systems. . . . . . . . . . . . . . . . . . . . . . . . . . .126

5.4.3 NFS Mounting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .126

5.4.4 Cascading Takeover with Cross Mounted NFS File Systems . . 126

5.4.5 Cross Mounted NFS File Systems and the Network Lock Manager.

128

Chapter 6. Cluster Testing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .131

6.1 Node Verification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .131

6.1.1 Device State. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .131

6.1.2 System Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .132

6.1.3 Process State. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

6.1.4 Network State. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .132

6.1.5 LVM State . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .133

6.1.6 Cluster State . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .133

6.2 Simulate Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .134

6.2.1 Adapter Failure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .134

6.2.2 Node Failure / Reintegration . . . . . . . . . . . . . . . . . . . . . . . . . . .137

6.2.3 Network Failure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .138

6.2.4 Disk Failure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .139

6.2.5 Application Failure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .141

Chapter 7. Cluster Troubleshootin g . . . . . . . . . . . . . . . . . . . . . . . . . .143

7.1 Cluster Log Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .143

7.2 config_too_long . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .144

7.3 Deadman Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .145

7.3.1 Tuning the System Using I/O Pacing . . . . . . . . . . . . . . . . . . . .146

7.3.2 Extending the syncd Frequency . . . . . . . . . . . . . . . . . . . . . . . .146

7.3.3 Increase Amount of Memory for Communications Subsystem. . 146

7.3.4 Changing the Failure Detection Rate . . . . . . . . . . . . . . . . . . . .147

7.4 Node Isolation and Partitioned Clusters . . . . . . . . . . . . . . . . . . . . . .147

7.5 The DGSP Message. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .148

7.6 User ID Problems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

7.7 Troubleshooting Strategy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .149

Chapter 8. Cluster Management and Administration. . . . . . . . . . . . . 151

8.1 Monitoring the Cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Page 8

vi IBM Certification Study Guide A IX HAC MP

8.1.1 The clstat Command. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .152

8.1.2 Monitoring Clusters using HAView . . . . . . . . . . . . . . . . . . . . . .152

8.1.3 Cluster Log Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .153

8.2 Starting and Stopping HACMP on a Node or a Client. . . . . . . . . . . .154

8.2.1 HACMP Daemons . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .155

8.2.2 Starting Cluster Services on a Node. . . . . . . . . . . . . . . . . . . . . 156

8.2.3 Stopping Cluster Services on a Node . . . . . . . . . . . . . . . . . . . .157

8.2.4 Starting and Stopping Cluster Services on Clients . . . . . . . . . .159

8.3 Replacing Failed Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . .160

8.3.1 Nodes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .160

8.3.2 Adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .160

8.3.3 Disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .161

8.4 Changing Shared LVM Components. . . . . . . . . . . . . . . . . . . . . . . . .163

8.4.1 Manual Update. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .163

8.4.2 Lazy Update. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .164

8.4.3 C-SPOC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

8.4.4 TaskGuide . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .167

8.5 Changing Cluster Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

8.5.1 Add/Change/Remove Cluster Resources . . . . . . . . . . . . . . . . . 168

8.5.2 Synchronize Cluster Resources . . . . . . . . . . . . . . . . . . . . . . . .168

8.5.3 DARE Resource Migration Utility . . . . . . . . . . . . . . . . . . . . . . .169

8.6 Applying Software Maintenance to an HACMP Cluster. . . . . . . . . . . 174

8.7 Backup Strategies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .176

8.7.1 Split-Mirror Backups. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .176

8.7.2 Using Events to Schedule a Backup. . . . . . . . . . . . . . . . . . . . .178

8.8 User Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .178

8.8.1 Listing Users On All Cluster Nodes. . . . . . . . . . . . . . . . . . . . . .179

8.8.2 Adding User Accounts on all Cluster Nodes . . . . . . . . . . . . . . .179

8.8.3 Changing Attributes of Users in a Cluster. . . . . . . . . . . . . . . . . 180

8.8.4 Removing Users from a Cluster . . . . . . . . . . . . . . . . . . . . . . . . 180

8.8.5 Managing Group Accounts . . . . . . . . . . . . . . . . . . . . . . . . . . . .181

8.8.6 C-SPOC Log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .181

Chapter 9. Special RS/6000 SP Topics . . . . . . . . . . . . . . . . . . . . . . . . 183

9.1 High Availability Control Workstation (HACWS) . . . . . . . . . . . . . . . . 183

9.1.1 Hardware Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .183

9.1.2 Software Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .184

9.1.3 Configuring the Backup CWS . . . . . . . . . . . . . . . . . . . . . . . . . .184

9.1.4 Install High Availability Software. . . . . . . . . . . . . . . . . . . . . . . . 185

9.1.5 HACWS Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

9.1.6 Setup and Test HACWS. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .186

9.2 Kerberos Security. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .187

9.2.1 Configuring Kerberos Security with HACMP Version 4.3. . . . . . 189

Page 9

vii

9.3 VSDs - RVSDs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .190

9.3.1 Virtual Shared Disk (VSDs) . . . . . . . . . . . . . . . . . . . . . . . . . . .190

9.3.2 Recoverable Virtual Shared Disk . . . . . . . . . . . . . . . . . . . . . . .193

9.4 SP Switch as an HACMP Network . . . . . . . . . . . . . . . . . . . . . . . . . .195

9.4.1 Switch Basics Within HACMP. . . . . . . . . . . . . . . . . . . . . . . . . . 195

9.4.2 Eprimary Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

9.4.3 Switch Failures. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .196

Chapter 10. HACMP Classic vs. HACMP/ES vs. HANFS. . . . . . . . . . .199

10.1 HACMP for AIX Classic . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

10.2 HACMP for AIX / Enhanced Scalability. . . . . . . . . . . . . . . . . . . . . . 199

10.2.1 IBM RISC System Cluster Technology (RSCT). . . . . . . . . . . .200

10.2.2 Enhanced Cluster Security . . . . . . . . . . . . . . . . . . . . . . . . . . .201

10.3 High Availability for Network File System for AIX . . . . . . . . . . . . . .201

10.4 Similarities and Differences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .202

10.5 Decision Criteria . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .202

Appendix A. Special Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

Appendix B. Related Publications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

B.1 International Technical Support Organization Publications . . . . . . . . . . 209

B.2 Redbooks on CD-ROMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

B.3 Other Publications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

How to Get ITSO Redb ook s . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .211

How IBM Employees Can Get ITSO Redbooks. . . . . . . . . . . . . . . . . . . . . . . 211

How Customers Can Get ITSO Redbooks. . . . . . . . . . . . . . . . . . . . . . . . . . . 212

IBM Redbook Order Form . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

List of Abbreviations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .215

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .217

ITSO Redbook Evaluation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .221

Page 10

viii IBM Certification Stud y Gu ide AIX HACMP

Page 11

© Copyright IBM Corp. 1999 ix

Figures

1. Basic SSA Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2. Hot-Standby Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

3. Mutual Takeover Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

4. Third-Party Takeover Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

5. Single-Network Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

6. Dual-Network Setup. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

7. A Point-to-Point Connection. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

8. Sample Screen for Add a Notification Method. . . . . . . . . . . . . . . . . . . . . . 46

9. Connecting Networks to a Hub . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

10. 7135-110 RAIDiant Arrays Connected on Two Shared 8-Bit SCSI Buses 74

11. 7135-110 RAIDiant Arrays Connected on Two Shared 16-Bit SCSI Buses77

12. Termination on the SCSI-2 Differential Controller . . . . . . . . . . . . . . . . . . . 78

13. Termination on the SCSI-2 Differential Fast/Wide Adapters . . . . . . . . . . . 78

14. NFS Cross Mounts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

15. Applying a PTF to a Cluster Node. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

16. A Simple HACWS Environment. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

17. VSD Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

18. VSD State Transitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

19. RVSD Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

20. RVSD Subsystem and HA Infrastructure. . . . . . . . . . . . . . . . . . . . . . . . . 194

Page 12

x IBM Certification Study Guide A IX HA CMP

Page 13

© Copyright IBM Corp. 1999 xi

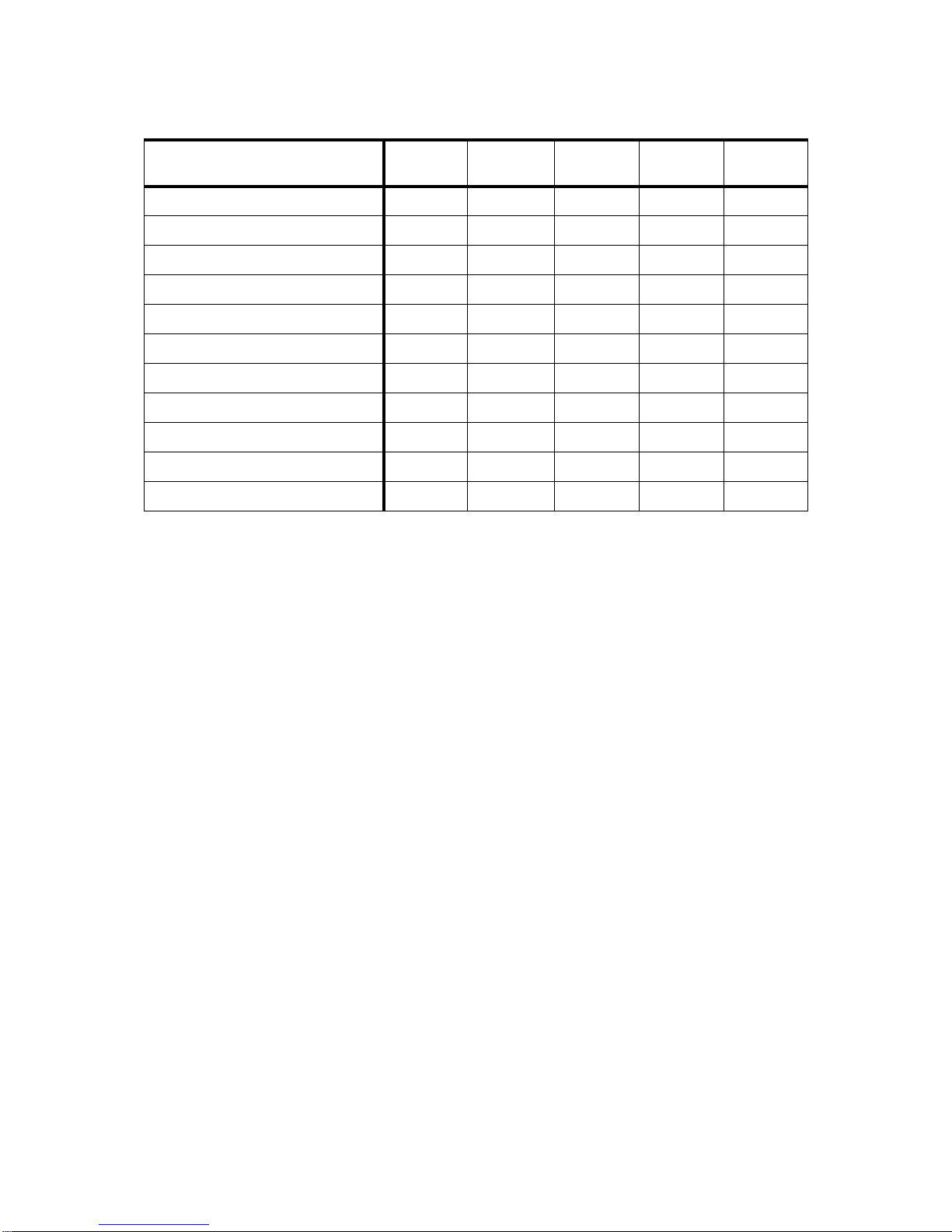

Tables

1. AIX Version 4 HACMP Installation and Implementation . . . . . . . . . . . . . . . 4

2. AIX Version 4 HACMP System Administration . . . . . . . . . . . . . . . . . . . . . . 5

3. Hardware Requirements for the Different HACMP Versions . . . . . . . . . . . . 8

4. Number of Adapter Slots in Each Model . . . . . . . . . . . . . . . . . . . . . . . . . . 10

5. Number of Available Serial Ports in Each Model. . . . . . . . . . . . . . . . . . .15

6. 7131-Model 405 SSA Multi-Storage Tower Specifications . . . . . . . . . . . . 18

7. 7133 Models 010, 020, 500, 600, D40, T40 Specifications. . . . . . . . . . . . 18

8. SSA Disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

9. SSA Adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

10. The Advantages and Disadvantages of the Different RAID Levels . . . . . 24

11. Necessary APAR Fixes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

12. AIX Prerequisite LPPs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

13. smit mkvg Options (Non-Concurrent) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

14. smit mkvg Options (Concurrent, Non-RAID) . . . . . . . . . . . . . . . . . . . . . . . 83

15. smit mkvg Options (Concurrent, RAID) . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

16. smit crjfs Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

17. smit importvg Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

18. smit crjfs Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

19. Options for Synchronization of the Cluster Topology. . . . . . . . . . . . . . . . 107

20. Options Configuring Resources for a Resource Group . . . . . . . . . . . . . . 109

21. HACMP Log Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Page 14

xii IBM Certification Study Gu ide AIX HACMP

Page 15

xiii

Preface

The AIX and RS/6000 Certifications offered through the Professional

Certification Program from IBM are designed to validate the skills required of

technical professionals who work in the powerful and often complex

environments of AIX and RS/6000. A complete set of professional

certifications is available. It includes:

• IBM Certified AIX User

• IBM Certified Specialist - RS/6000 Solution Sales

• IBM Certified Specialist - AIX V4.3 System Administration

• IBM Certified Specialist - AIX V4.3 System Support

• IBM Certified Specialist - RS/6000 SP

• IBM Certified Specialist - AIX H ACMP

• IBM Certified Specialist - Domino for RS/6000

• IBM Certified Specialist - Web Server for RS/6000

• IBM Certified Specialist - Business Intelligence for RS/6000

• IBM Certified Advanced Technical Expert - RS/6000 AIX

Each certification is developed by following a thorough and rigorous process

to ensure the exam is applicable to the job role and is a meaningful and

appropriate assessment of skill. Subject Matter Experts who successfully

perform the job participate throughout the entire development process. These

job incumbents bring a wealth of experience into the development process,

thus making the exams much more meaningful than the typical test, which

only captures classroom knowledge. These Subject Matter experts ensure

the exams are relevant to the

real world

and that the test content is both

useful and valid. The result is a certification of value that appropriately

measures the skill required to perform the job role.

This redbook is designed as a study guide for professionals wishing to

prepare for the certification exam to achieve IBM Certified Specialist - AIX

HACMP.

The AIX HACMP certification validates the skills required to successfully

plan, install, configure, and support an AIX HACMP cluster installation. The

requirements for this include a working knowledge of the following:

• Hardware options supported for use in a cluster, along with the

considerations that affect the choices made

Page 16

xiv IBM Certification Study Guide AIX HACM P

• AIX parameters that are affected by an HACMP installation, and their

correct settings

• The cluster and resource configuration process, including how to choose

the best resource configuration for a customer requirement

• Customization of the standard HACMP facilities to satisfy special

customer requirements

• Diagnosis and troubleshooting knowledge and skills

This redbook helps AIX professionals seeking a comprehensive and

task-oriented guide for developing the knowledge and skills required for the

certification. It is designed to provide a combination of theory and practical

experience. It also provides sample questions that will help in the evaluation

of personal progress and provide familiarity with the types of questions that

will be encountered in the exam.

This redbook will not replace the practical experience you should have, but,

when combined with educational activities and experience, should prove to

be a very useful preparation guide for the exam. Due to the practical nature of

the certification content, this publication can also be used as a desk-side

reference. So, whether you are planning to take the AIX HACMP certification

exam, or just want to validate your HACMP skills, this book is for you.

For additional information about certification and instructions on How to

Register for an exam, call IBM at 1-800-426-8322 or visit our Web site at:

http://www.ibm.com/certify

The Team That Wrote This Redbook

This redbook was produced by a team of specialists from around the world

working at the International Technical Support Organization Austin Center.

David Thiessen is an Advisory Software Engineer at the International

Techni cal Support Organization, Austin Center. He writes extensively and

teaches IBM classes worldwide on all areas of high availability and clustering.

Before joining the ITSO six years ago, David worked in Vancouver, Canada

as an AIX Systems Engineer.

Achim Rehor is a Software Service Specialist in Mainz/Germany. He is Team

Leader of the HACMP/SP Software Support Group in the European Central

Region (Germany, Austria and Switzerland). Achim started working with AIX

in 1990, just as AIX Version 3 and the RISC System/6000 were first being

introduced. Since 1993, he has specialized in the 9076 RS/6000 Scalable

Page 17

xv

POWERparallel Systems area, known as the SP1 at that time. In 1997 he

began working on HACMP as the Service Groups for HACMP and RS/6000

SP merged into one. He holds a diploma in Computer Science from the

University of Frankfurt in Germany. This is his first redbook.

Reinhard Zettler is an AIX Software Engineer in Munich, Germany. He has

two years of experience working with AIX and HACMP. He has worked at IBM

for two years. He holds a degree in Telecommunication Technology. This is

his first redbook.

Thanks to the following people for their invaluable contributions to this

project:

Marcus Brewer

International Technic al Support Organization, Austin Center

Rebecca Gonzalez

IBM AIX Certification Project Manager, Austin

Milos Radosavljevic

International Technic al Support Organization, Austin Center

Comments Welcome

Your comments are important to us!

We want our redbooks to be as helpful as possible. Please send us your

comments about this or other redbooks in one of the following ways:

• Fax the evaluation form found in “ITSO Redbook Evaluation” on page 221

to the fax number shown on the form.

• Use the electronic evaluation form found on the Redbooks Web sites:

For Internet users

http://www.redbook s.ibm.com

For IBM Intranet users http://w3.itso.ibm .com

• Send us a note at the following address:

redbook@us.ibm.com

Page 18

xvi IBM Certification Study Guide AIX HACM P

Page 19

© Copyright IBM Corp. 1999 1

Chapter 1. Certification Overview

This chapter provides an overview of the skill requirements for obtaining an

IBM Certified Specialist - AIX HACMP certification. The following chapters

are designed to provide a comprehensive review of specific topics that are

essential for obtaining the certification.

1.1 IBM Certified Specialist - AIX HACMP

This certification demonstrates a proficiency in the implementation skills

required to plan, install, and configure AIX High Availability Cluster

Multi-Processing (HACMP) systems, and to perform the diagnostic activities

needed to support Highly Available Clusters.

Certification Requirement (two Tests):

To attain the IBM Certified Specialist - AIX HACMP certification, candidates

must first obtain the AIX System Administration or the AIX System Support

certification. In order to obtain one of these prerequisite certifications, the

candidate must pass one of the following two exams:

Test 181: AIX V4.3 System Administration

or

Test 189: AIX V4.3 System Support.

Following this, the candidate must pass the following exam:

Test 167: HACMP for AIX V 4.2.

Recommended Prerequisites

A minimum of six to twelve months implementation experience installing,

configuring, and testing/supporting HACMP for AIX.

Registration for the Certification Exam

For information about how to register for the certification exam, please visit

the following Web site:

http://www.ibm.com/c ertify

Page 20

2 IBM Certification Study Guide A IX HA CMP

1.2 Certification Exam Objective s

The following objectives were used as a basis for what is required when the

certification exam was developed. Some of these topics have been

regrouped to provide better organization when discussed in this publication.

Section 1 - Preinstallation

The following items should be considered as part of the preinstallation plan:

• Conduct a Planning Session.

• Set customer expectations at the beginning of the planning session.

• Gather customer's availability requirements.

• Articulate trade-offs of different HA configurations.

• Assist customers in identifying HA applications.

• Evaluate the Customer Environment and Tailorable Components.

• Evaluate the configuration and identify Single Points of Failure (SPOF).

• Define and analyze NFS requirements.

• Identify components affecting HACMP.

• Identify HACMP event logic customizations.

• Plan for Installation.

• Develop a disk management modification plan.

• Understand issues regarding single adapter solutions.

• Produce a Test Plan.

Section 2 - HACMP Implementation

The following items should be considered for proper implementation:

• Configure HACMP Solutions.

• Install HACMP Code.

• Configure an IP Address Takeover (IPAT).

• Configure non-IP heartbeat paths.

• Configure a network adapter.

• Customize/tailor AIX.

• Set up a shared disk (SSA).

• Set up a shared disk (SCSI).

• Verify a cluster configuration.

Page 21

Certification Overview 3

• Create an application server.

• Set up Event Notification.

• Set up event notification and pre/post event scripts.

• Set up error notification.

• Post Configuration Activities.

• Configure a client notification and ARP update.

• Implement a test plan.

• Create a snapshot.

• Create a customization document.

• Perform Testing and Troubleshooting.

• Troubleshoot a failed IPAT failover.

• Troubleshoot failed shared volume groups.

• Troubleshoot a failed network configuration.

• Troubleshoot failed shared disk tests.

• Troubleshoot a failed application.

• Troubleshoot failed Pre/Post event scripts.

• Troubleshoot failed error notifications.

• Troubleshoot errors reported by cluster verification.

Section 3 - System Management

The following items should be considered for System Management:

• Communicate with the Customer.

• Conduct a turnover session.

• Provide hands-on customer education.

• Set customer expectations of their HACMP solution's capabilities.

• Perform Systems Maintenance.

• Perform HACMP maintenance tasks (PTFs, adding products, replacing

disks, adapters).

• Perform AIX maintenance tasks.

• Dynamically update the cluster configuration.

• Perform testing and troubleshooting as a result of changes.

Page 22

4 IBM Certification Study Guide A IX HA CMP

1.3 Certification Educ ation Courses

Courses and publications are offered to help you prepare for the certification

tests. These courses are recommended, but not required, before taking a

certification test. At the printing of this guide, the following courses are

available. For a current list, please visit the following Web site:

http://www.ibm.com/certify

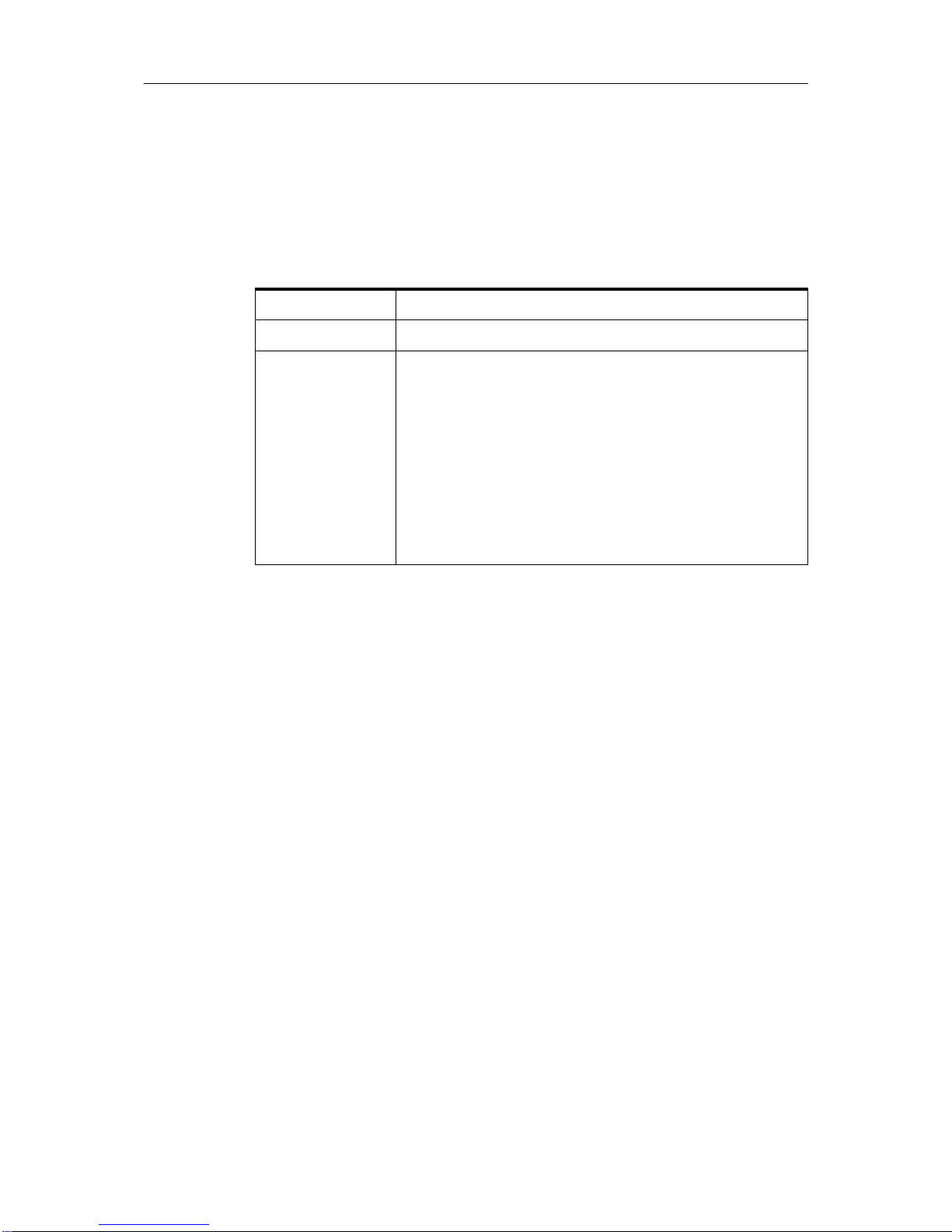

Table 1. AIX Version 4 HACMP Installation and Impl emen tation

Course Number Q1054 (USA) AU54 (Worldwide)

Course Duration Five days

Course Abstract This course provides a detailed understanding of the

High Availability Clustered Multi-Processing for AIX.

The course is supplemented with a series of laboratory

exercises to configure the hardware and software

environments for HACMP. Additionally, the labs provide

the opportunity to:

• Install the product.

• Define networks.

• Create file systems.

• Complete several modes of HACMP installations.

Page 23

Certification Overview 5

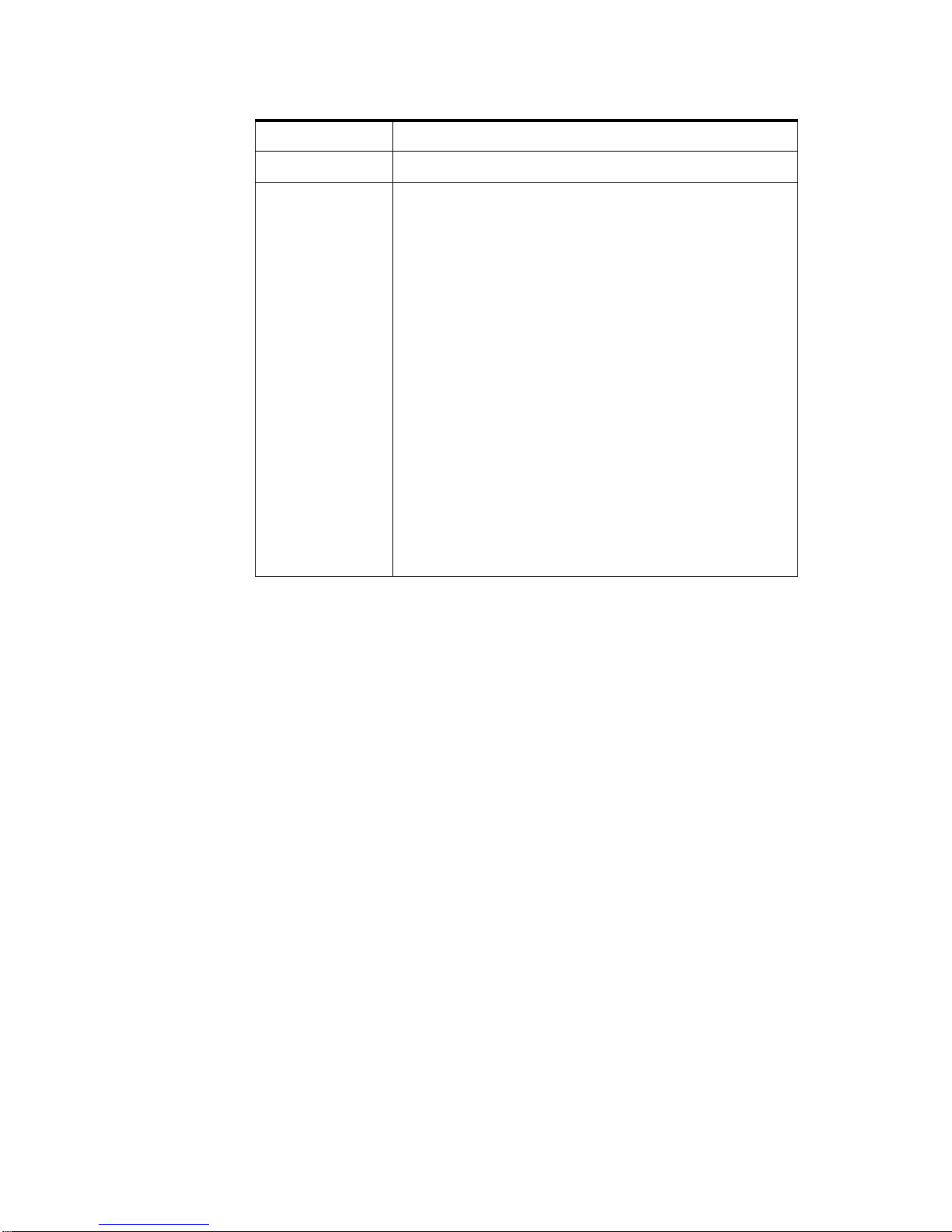

The following table outlines information about the next course.

Table 2. AIX Version 4 HACMP System A dmin istration

Course Number

Q1150 (USA); AU50 (Worldwide)

Course Duration

Five days

Course Abstract

This course teaches the student the skills required to

administer an HACMP cluster on an ongoing basis

after it is installed. The skills that are developed in this

course include:

• Integrating the cluster with existing network

services (DNS, NIS, etc.)

• Monitoring tools for the cluster, including HAView

for Netview

• Maintaining user IDs and passwords across the

cluster

• Recovering from script failures

• Making configuration or resource changes in the

cluster

• Repairing failed hardware

• Maintaining required cluster documentation

The course involves a significant number of hands-on

exercises to reinforce the concepts. Students are

expected to have completed the course AU54

(Q1054) HACMP Installation and Implementation

before attending this course.

Page 24

6 IBM Certification Study Guide A IX HA CMP

Page 25

© Copyright IBM Corp. 1999 7

Chapter 2. Cluster Planning

The area of cluster planning is a large one. Not only does it include planning

for the types of hardware (CPUs, networks, disks) to be used in the cluster,

but it also includes other aspects. These include resource planning, that is,

planning the desired behavior of the cluster in failure situations. Resource

planning must take into account application loads and characteristics, as well

as priorities. This chapter will cover all of these areas, as well as planning for

event customizations and user id planning issues.

2.1 Cluster Nodes

One of HACMP’s key design strengths is its ability to provide support across

the entire range of RISC System/6000 products. Because of this built-in

flexibility and the facility to mix and match RISC System/6000 products, the

effort required to design a highly available cluster is significantly reduced.

In this chapter, we shall outline the various hardware options supported by

HACMP for AIX and HACMP/ES. We realize that the rapid pace of change in

products will almost certainly render any snapshot of the options out of date

by the time it is published. This is true of almost all technical writing, though

to yield to the spoils of obsolescence would probably mean nothing would

ever make it to the printing press.

The following sections will deal with the various:

• CPU Options

• Cluster Node Considerations

available to you when you are planning your HACMP cluster.

2.1.1 CPU Options

HACMP is designed to execute with RISC System/6000 uniprocessors,

Symmetric Multi-Processor (SMP) servers and the RS/6000 Scalable

POWERparallel Systems (RS/6000 SP) in a

no single point of failure

server

configuration. The minimum configuration and sizing of each system CPU is

highly dependent on the user's application and data requirements.

Nevertheless, systems with 32 MB of main storage and 1 GB of disk storage

would be practical, minimum configurations.

Almost any model of the RISC System/6000 POWERserver family can be

included in an HACMP environment and new models continue to be added to

the list. The following table gives you an overview of the currently supported

Page 26

8 IBM Certification Study Guide A IX HA CMP

RISC System/6000 models as nodes in an HACMP 4.1 for AIX, HACMP 4.2

for AIX, or HACMP 4.3 for AIX cluster.

Table 3. Hardware Requ irements for the Differ ent HA CMP Versions

1

AIX 4.3.2 required

For a detailed description of system models supported by HACMP/6000 and

HACMP/ES, you should refer to the current Announcement Letters for

HACMP/6000 and HACMP/ES.

HACMP/ES 4.3 further enhances cluster design flexibility even further by

including support for the RISC System/6000 family of machines and the

Compact Server C20. Since the introduction of HACMP 4.1 for AIX, you have

been able to mix uniprocessor and multiprocessor machines in a single

cluster. Even a mixture of “normal” RS/6000 machines and RS/6000 SP

nodes is possible.

2.1.2 Cluster Node Con siderations

It is important to understand that selecting the system components for a

cluster requires careful consideration of factors and information that may not

be considered in the selection of equipment for a single-system environment.

In this section, we will offer some guidelines to assist you in choosing and

sizing appropriate machine models to build your clusters.

HACMP Version 4.1 4.2 4.3 4.2/ES 4.3/ES

7009 Mod. CXX yes yes yes no yes

1

7011 Mod. 2XX yes yes yes no yes

1

7012 Mod. 3XX and GXX yes yes yes no yes

1

7013 Mod. 5XX and JXX yes yes yes no yes

1

7015 Mod. 9XX and RXX yes yes yes no yes

1

7017 Mod. S7X yes yes yes no yes

1

7024 Mod. EXX yes yes yes no yes

1

7025 Mod. FXX yes yes yes no yes

1

7026 Mod. HXX yes yes yes no yes

1

7043 Mod. 43P, 260 yes yes yes no yes

1

9076 RS/6000 SP yes yes yes yes yes

1

Page 27

Cluster Planning 9

Much of the decision centers around the following areas:

• Processor capacity

• Application requirements

• Anticipated growth requirements

• I/O slot requirements

These paradigms are certainly not new ones, and are also important

considerations when choosing a processor for a single-system environment.

However, when designing a cluster, you must car efully consider the

requirements of the cluster as a total entity. This includes understanding

system capacity requirements of other nodes in the cluster beyond the

requirements of each system's prescribed normal load. You must consider

the required performance of the solution during and after failover, when a

surviving node has to add the workload of a failed node to its own workload.

For example, in a two node cluster, where applications running on both nodes

are critical to the business, each of the two nodes functions as a backup for

the other, in a mutual takeover configuration. If a node is required to provide

failover support for all the applications on the other node, then its capacity

calculation needs to take that into account. Essentially, the choice of a model

depends on the requirements of highly available applications, not only in

terms of CPU cycles, but also of memory and possibly disk space.

Approximately 50 MB of disk storage is required for full installation of the

HACMP software.

A major consideration in the selection of models will be the number of I/O

expansion slots they provide. The model selected must have enough slots to

house the components required to remove single points of failure (SPOFs)

and provide the desired level of availability. A single point of failure is defined

as any single component in a cluster whose failure would cause a service to

become unavailable to end users. The more single points of failure you can

eliminate, the higher your level of availability will be. Typically, you need to

consider the number of slots required to support network adapters and disk

I/O adapters. Your slot configuration must provide for at least two network

adapters to provide adapter redundancy for one service network. If your

system needs to be able to take over an IP address for more than one other

system in the cluster at a time, you will want to configure more standby

network adapters. A node can have up to seven standby adapters for each

network it connects to. Again, if that is your requirement, you will need to

select models as nodes where the number of slots will accomodate the

requirement.

Page 28

10 IBM Certification Study Gu ide AIX HACMP

Your slot configuration must also allow for the disk I/O adapters you need to

support the cluster’s shared disk (volume group) configuration. If you intend

to use disk mirroring for shared volume groups, whic h i s strongly

recommended, then you will need to use slots for additional disk I/O

adapters, providing I/O adapter redundancy across separate buses.

The following table tells you the number of additional adapters you can put

into the different RS/6000 models. Ethernet environments can sometimes

make use of the integrated ethernet port provided by some models. No such

feature is available for token-ring, FDDI or ATM; you must use an I/O slot to

provide token-ring adapter redundancy.

Table 4. Number of Adap ter Slo ts in Eac h Mod el

1

The switch adapter is onboard and does not need an extra slot.

RS/6000 Model Number of Slots Integrated Ethernet Port

7006 4 x MCA yes

7009 C10, C20 4x PCI no

7012 Mod. 3XX and GXX 4 x MCA yes

7013 Mod. 5XX 7 x MCA no

7013 Mod. JXX 6 x MCA, 14 x MCA

with expansion unit J01

no

7015 Mod. R10, R20, R21 8 x MCA no

7015 Mod. R30, R40, R50 16 x MCA no

7017 Mod. S7X 52 x PCI no

7024 EXX 5 x PCI, 1 x PCI/ISA 2 x

ISA

no

7025 F50 6 x PCI, 2 x ISA/PCI yes

7026 Mod. H50 6 x PCI, 2 x ISA/PCI yes

7043Mod. 3 x PCI, 2 x ISA/PCI yes

9076 thin node 4 x MCA yes

9076 wide node 7 x MCA no

9076 high node 15 x MCA no

9076 thin node (silver) 2 x PCI yes

1

9076 wide node (silver) 10 x PCI yes

1

Page 29

Cluster Planning 11

2.2 Cluster Networks

HACMP differentiates between two major types of networks: TCP/IP networks

and non-TCP/IP networks. HACMP utilizes both of them for exchanging

heartbeats. HACMP uses these heartbeats to diagnose failures in the cluster.

Non-TCP/IP networks are used to distinguish an actual hardware failure from

the failure of the TCP/IP software. If there were only TCP/IP networks being

used, and the TCP/IP software failed, causing heartbeats to stop, HACMP

could falsely diagnose a node failure when the node was really still

functioning. Since a non-TCP/IP network would continue working in this

event, the correct diagnosis could be made by HACMP. In general, all

networks are also used for verification, synchronization, communication and

triggering events between nodes. Of course, TCP/IP networks are used for

communication with client machines as well.

At the time of publication, the HACMP/ES Version 4.3 product does not use

non-TCP/IP networks for node-to-node communications in triggering,

synchronizing, and executing event reactions. This can be an issue if you are

configuring a cluster with only one TCP/IP network. This limitation of

HACMP/ES is planned to be removed in a future release. You would be

advised to check on the status of this issue if you are planning a new

installation, and to plan your cluster networks accordingly.

2.2.1 TCP/IP Networks

The following sections describe supported TCP/IP network types and network

considerations.

2.2.1.1 Supported TCP/IP Network Types

Basically every adapter that is capable of running the TCP/IP protocol is a

supported HACMP network type. There are some special considerations for

certain types of adapters however . The following gives a brief overview on the

supported adapters and their special considerations.

Below is a list of TCP/IP network types as you will find them at the

configuration time of an adapter for HACMP. You will find the non-TCP/IP

network types in 2.2.2.1, “Supported Non-TCP/IP Network Types” on page

14.

• Generic IP

•ATM

• Ethernet

•FCS

Page 30

12 IBM Certification Study Gu ide AIX HACMP

• FDDI

• SP Switch

•SLIP

•SOCC

• Token-Ring

As an independent, layered component of AIX, the HACMP for AIX software

works with most TCP/IP-based networks. HACMP for AIX has been tested

with standard Ethernet interfaces (en*) but not with IEEE 802.3 Ethernet

interfaces (et*), where * reflects the interface number. HACMP for AIX also

has been tested with Token-Ring and Fiber Distributed Data Interchange

(FDDI) networks, with IBM Serial Optical Channel Converter (SOCC), Serial

Line Internet Protocol (SLIP), and Asynchronous Transfer Mode (ATM)

point-to-point connections.

The HACMP for AIX software supports a maximum of 32 networks per cluster

and 24 TCP/IP network adapters on each node. These numbers provide a

great deal of flexibility in designing a network configuration. The network

design affects the degree of system availability in that the more

communication paths that connect clustered nodes and clients, the greater

the degree of network availability.

2.2.1.2 Specia l Network Considerat ions

Each type of interface has different characteristics concerning speed, MAC

addresses, ARP, and so on. In case there is a limitation you will have to work

around, you need to be aware of the characteristics of the adapters you plan

to use. In the next paragraphs, we summarize some of the considerations

that are known.

Hardware Address Swapping is one issue. If you enable HACMP to put one

address on another adapter, it would need something like a boot and a

service address for IPAT, but on the hardware layer. So, in addition to the

manufacturers burnt-in address, there has to be an alternate address

configured.

The speed of the network can be another issue. Your application may have

special network throughput requirements that must be taken into account.

ATM and SP Switch networks are special cases of point-to-point, private

networks that can connect clients

Note

Page 31

Cluster Planning 13

Network types also differentiate themselves in the maximum distance they

allow between adapters, and in the maximum number of adapters allowed on

a physical network.

• Ethernet supports 10 and 100 Mbps currently, and supports hardware

address swapping. Alternate hardware addresses should be in the form

xxxxxxxxxxyy , where xx xxxxxxxx is replaced with the first five pairs of digits

of the original burned-in MAC address and

yy can be chosen freely. There

is a limit of 29 adapters on one physical network, unless a network

repeater is used.

• Token-Ring supports 4 or 16 Mbps, but 4 Mbps is very rarely used now. It

also supports hardware address swapping, but here the convention is to

use 42 as the first two characters of the alternate address, since this

indicates that it is a locally set address.

• FDDI is a 100 Mbps optical LAN interface, that supports hardware address

takeover as well. For FDDI adapters you should leave the last six digits of

the burned-in address as they are, and use a 4, 5, 6, or 7 as the first digit

of the rest. FDDI can connect as many as 500 stations with a maximum

link-to-link distance of two kilometers and a total LAN circumference of

100 kilometers.

•ATM is a point-to-point connection network. It currently supports the OC3

and the OC12 standard, which is 155 Mbps or 625 Mbps. You cannot use

hardware address swapping with ATM. ATM doesn’t support broadcasts,

so it must be configured as a private network to HACMP. However, if you

are using LAN Emulation on an existing ATM network, you can use the

emulated ethernet or Token-Ring interfaces just as if they were real ones,

except that you cannot use hardware address swapping.

• FCS is a fiber channel network, currently available as two adapters for

either MCA or PCI technology. The Fibre Channel Adapter /1063-MCA,

runs up to 1063 Mb/second, and the Gigabit Fibre Channel Adapter for

PCI Bus (#6227), announced on October 5th 1998, will run with 100 MBps.

Both of them support TCP/IP, but not hardware address swapping.

•SLIP runs at up to 38400 bps. Since it is a point-to-point connection and

very slow, it is rarely used as an HACMP network. An HACMP cluster is

much more likely to use the serial port as a non-TCP/IP connection. See

below for details.

• SOCC is a fast optical connection, again point-to-point. This is an optical

line with a serial protocol running on it. However, the SOCC Adapter

(Feature 2860) has been withdrawn from marketing for some years now.

Some models, like 7013 5xx, offer SOCC as an option onboard, but these

are rarely used today.

Page 32

14 IBM Certification Study Gu ide AIX HACMP

• SP Switch is a high-speed packet switching network, running on the

RS/6000 SP system only. It runs bidirectionally up to 80 MBps, which adds

up to 160 MBps of capacity per adapter. This is node-to-node

communication and can be done in parallel between every pair of nodes

inside an SP. The SP Switch network has to be defined as a private

Network, and ARP must be enabled. This network is restricted to one

adapter per node, thus, it has to be considered as a Single Point Of

Failure. Therefore, it is strongly recommended to use AIX Error

Notification to propagate a switch failure into a node failure when

appropriate. As there is only one adapter per node, HACMP uses the

ifconfig alias addresses for IPAT on the switch; so, a standby address is

not necessary and, therefore, not used on the switch network. Hardware

address swapping also is not supported on the SP Switch.

For IP Address Takeover (IPAT), in general, there are two adapters per

cluster node and network recommended in order to eliminate single points of

failure. The only exception to this rule is the SP Switch because of hardware

limitations.

2.2.2 Non-TCPIP Ne tworks

Non-TCP/IP networks in HACMP are used as an independent path for

exchanging messages or heartbeats between cluster nodes. In case of an IP

subsystem failure, HACMP can still differentiate between a network failure

and a node failure when an independent path is available and functional.

Below is a short description of the three currently available non-TCP/IP

network types and their characteristics. Even though HACMP works without

one, it is strongly recommended that you use at least one non-TCP/IP

connection between the cluster nodes.

2.2.2.1 Supported Non-TCP/IP Network Types

Currently HACMP supports the following types of networks for non-TCP/IP

heartbeat exchange between cluster nodes:

• Serial (RS232)

• Target-mode SCSI

• Target-mode SSA

All of them must be configured as Network Type: serial in the HACMP

definitions.

Page 33

Cluster Planning 15

2.2.2.2 Specia l Consider ations

As for TCP/IP networks, there are a number of restrictions on non-TCP/IP

networks. These are explained for the three different types in more detail

below.

Serial (RS232)

A serial (RS232) network needs at least one available serial port per cluster

node. In case of a cluster consisting of more than two nodes, a ring of nodes

is established through serial connections, which r equires two seri al ports per

node. Table 3 shows a list of possible cluster nodes and the number of native

serial ports for each:

Table 5. Number of Available Se rial P orts in Each M odel

.

1

serial port can be multiplexed through a dual-port cable, thus offering two

ports

RS/6000 Model Number of Serial Ports Available

7006 1

1

7009 C10, C20 1

1

7012 Mod. 3XX and GXX 2

7013 Mod. 5XX 2

7013 Mod. JXX 3

7015 Mod. R1 0, R20, R21 3

7015 Mod. R3 0, R40, R50 3

7013,7015,7017 Mod. S7X 0

2

7024 EXX 1

1

7025 F50 2

7026 Mod. H50 3

7043Mod. 2

9076 thin node 2

3

9076 wide node 2

3

9076 high node 3

3

9076 thin node (silver) 2

3

9076 wide node (silver) 2

3

Page 34

16 IBM Certification Study Gu ide AIX HACMP

2

a PCI Multiport Async Card is required in an S7X model, no native ports

3

only one serial port available for customer use, i.e. HACMP

In case the number of native serial ports doesn’t match your HACMP cluster

configuration needs, you can extend it by adding an eight-port asynchronous

adapter, thus reducing the number of available MCA slots, or the

corresponding PCI Multiport Async Card for PCI Machines, like the S7X

model.

Target-mode SCSI

Another possibility for a non-TCP/IP network is a target mode SCSI

connection. Whenever you make use of a shared SCSI device, you can also

use the SCSI bus for exchanging heartbeats.

Target Mode SCSI is only supported with SCSI-2 Differential or SCSI-2

Differential Fast/Wide devices. SCSI/SE or SCSI-2/SE are not supported for

HACMP serial networks.

The recommendation is to not use more than 4 target mode SCSI networks in

a cluster.

Target-mode SSA

If you are using shared SSA devices, target mode SSA is the third possibility

for a serial network within HACMP. In order to use target-mode SSA, you

must use the Enhanced RAID-5 Adapter (#6215 or #6219), since these are

the only current adapters that support the Multi-Initiator Feature. The

microcode level of the adapter must be 1801 or higher.

2.3 Cluster Disks

This section describes the various choices you have in selecting the type of

shared disks to use in your cluster.

2.3.1 SSA Disks

The following is a brief description of SSA and the basic rules to follow when

designing SSA networks. For a full description of SSA and its functionality,

please read

Monitoring and Managing IBM SSA Disk Subsystems,

SG24-5251.

SSA is a high-performance, serial interconnect technology used to connect

disk devices and host adapters. SSA is an open standard, and SSA

specifications have been approved by the SSA Industry Association and also

as an ANSI standard through the ANSI X3T10.1 subcommittee.

Page 35

Cluster Planning 17

SSA subsystems are built up from loops of adapters and disks. A simple

example is shown in Figure 1.

Figure 1. Basic SSA Co nfigur ation

Here, a single adapter controls one SSA loop of eight disks. Data can be

transferred around the loop, in either direction, at 20 MBps. Consequently,

the peak transfer rate of the adapter is 80 MBps. The adapter contains two

SSA nodes and can support two SSA loops. Each disk drive also contains a

single SSA node. A node can be either an initiator or a target. An

initiator

issues commands, while a

target

responds with data and status information.

The SSA nodes in the adapter are therefore initiators, while the SSA nodes in

the disk drives are targets.

There are two types of SSA Disk Subsystems for RISC System/6000

available:

• 7131 SSA Multi-Storage Tower Model 405

2

0

M

B

p

s

2

0

M

B

p

s

2

0

M

B

p

s

2

0

M

B

p

s

HOST

SSA Architecture

l

High performance 80 MB/s interface

l

Loop architecture with up to 127 nodes per loop

l

Up to 25 m (82 ft) between SSA devices with copper cables

l

Up to 2.4 km (1.5 mi) between SSA devices with optical extender

l

Spatial reuse (multiple simultaneous transmissions)

Page 36

18 IBM Certification Study Gu ide AIX HACMP

• 7133 Serial Storage Architecture (SSA) Disk Subsystem Models 010, 500,

020, 600, D40 and T40.

The 7133 models 010 and 500 were the first SSA products announced in

1995 with the revolutionary new Serial Storage Architecture. Some IBM

customers still use the Models 010 and 500, but these have been replaced by

7133 Model 020, and 7133 Model 600 respectively. More recently, in

November 1998, the models D40 and T40 were announced.

All 7133 Models have redundant power and cooling, which is hot-swappable.

The following tables give you more configuration information about the

different models:

Table 6. 7131-Model 405 S SA Multi- Storage Tower Specifications

Table 7. 7133 Models 010, 020, 500 , 600, D40, T4 0 Spec ifications

Item Specification

Transfer rate SSA

interface

80 MB

Configuration 2 to 5 disk drives (2.2 GB, 4.5 GB or 9.1 GB) per subsystem

Configuration range 4.4 to 1 1 GB (with 2.2 GB disk drives )

9.0 to 22.5 GB (With 4.5 GB disk drives)

18.2 to 45.5 GB (With 9.1 GB disk drives)

Supported RAID

levels

5

Supported adapters 6214, 6216, 6217, 6218

Hot-swap disks Yes

Item Specification

Transfer rate SSA

interface

80 MB/s

Configuration 4 to 16 disks

- 1.1 GB, 2.2 GB, 4.5 GB, for Models 10, 20, 500, and 600

- 9.1 GB for Models 20, 600, D40 and T40

- With 1.1 GB disk drives you must have 8 to 16 disks)

Configuration range 8.8 to 17.6 GB (with 1.1 GB disks)

8.8 to 35.2 GB (with 2.2 GB disks)

18 to 72 GB (with 4.5 GB disks)

36.4 to 145.6 GB (with 9.1 GB disks)

72.8 to 291.2 GB (with 18.2 GB disks)

Page 37

Cluster Planning 19

2.3.1.1 Disk C apaciti es

Table 8 lists the different SSA disks, and provides an overview of their

characteristics.

Table 8. SSA Disks

2.3.1.2 Supported and Non-Supported Adapters

Table 9 lists the different SSA adapters and presents an overview of their

characteristics.

Table 9. SSA Adapters

Supported RAID level 5

Supported adapters all

Hot-swappable disk Yes (and hot-swappable, redundant power and cooling)

Name Capacities (GB) Buffer size (KB) Maximum Transfer rate

(MBps)

Starfire 1100 1.1 0 20

Starfire 2200 2.2 0 20

Starfire 4320 4.5 512 20

Scorpion 4500 4.5 512 80

Scorpion 9100 9.1 512 160

Sailfin 9100 9.1 1024 160

Thresher 9100 9.1 1024 160

Ultrastar 9.1, 18.2 4096 160

Feature Code Adapter

Label

Bus Adapter Description Number of

Adapters per

Loop

Hardware

Raid Types

6214 4-D MCA Classic 2 n/a

6215 4-N PCI Enhanced RAID-5 8

1

5

6216 4-G MCA Enhanced 8 n/a

6217 4-I MCA RAID-5 1 5

6218 4-J PCI RAID-5 1 5

Item Specification

Page 38

20 IBM Certification Study Gu ide AIX HACMP

1

See 2.3.1.3, “Rules for SSA Loops” on page 20 for more information.

The following rules apply to SSA Adapters:

• You cannot have more than four adapters in a single system.

• The MCA SSA 4-Port RAID Adapter (FC 6217) and PCI SSA 4-Port RAID

Adapter (FC 6218) are not useful for HACMP, because only one can be in

a loop.

• Only the PCI Multi Initiator/RAID Adapter (FC 6215) and the MCA Multi

Initiator/RAID EL Adapter (FC 6219) support target mode SSA (for more

information about target mode SSA see 3.2.2, “Non TCP/IP Networks” on

page 63).

2.3.1.3 Rules for SSA Loops

The following rules must be followed when configuring and connecting SSA

loops:

• Each SSA loop must be connected to a valid pair of connectors on the

SSA adapter (that is, either Connectors A1 and A2, or Connectors B1 and

B2).

• Only one of the two pairs of connectors on an adapter card can be

connected in a single SSA loop.

• A maximum of 48 devices can be connected in a single SSA loop.

• A maximum of two adapters can be connected in a particular loop if one

adapter is an SSA 4-Port adapter, Feature 6214.

• A maximum of eight adapters can be connected in a particular loop if all

the adapters are Enhanced SSA 4-Port Adapters, Feature 6216.

• A maximum of two SSA adapters, both connected in the same SSA loop,

can be installed in the same system.

For SSA loops that include an SSA Four-Port RAID adapter (Feature 6217) or

a PCI SSA Four-Port RAID adapter (Feature 6218), the following rules apply:

• Each SSA loop must be connected to a valid pair of connectors on the

SSA adapter (that is, either Connectors A1 and A2, or Connectors B1 and

B2).

6219 4-M MCA Enhanced RAID-5 8

1

5

Feature Code Adapter

Label

Bus Adapter Description Number of

Adapters per

Loop

Hardware

Raid Types

Page 39

Cluster Planning 21

• A maximum of 48 devices can be connected in a particular SSA loop.

• Only one pair of adapter connectors can be connected in a particular SSA

loop.

• Member disk drives of an array can be on either SSA loop.

For SSA loops that include a Micro Channel Enhanced SSA

Multi-initiator/RAID EL adapter, Feature 6215 or a PCI SSA

Multi-initiator/RAID EL adapter, Feature 6219, the following rules apply:

• Each SSA loop must be connected to a valid pair of connectors on the

SSA adapter (that is, either Connectors A1 and A2, or Connectors B1 and

B2).

• A maximum of eight adapters can be connected in a particular loop if none

of the disk drives in the loops are array disk drives and none of them is

configured for fast-write operations. The adapters can be up to eight

Micro Channel Enhanced SSA Multi-initiator/RAID EL Adapters, up to

eight PCI Multi-initiator/RAID EL Adapters, or a mixture of the two types.

• A maximum of two adapters can be connected in a particular loop if one or

more of the disk drives in the loop are array disk drives that are not

configured for fast-write operations. The adapters can be two Micro

Channel Enhanced SSA Multi-initiator/RAID EL Adapters, two PCI

Multi-initiator/RAID EL Adapters, or one adapter of each type.

• Only one Micro Channel Enhanced SSA Multi-initiator/RAID EL Adapter or

PCI SSA Multi-initiator/RAID EL Adapter can be connected in a particular

loop if any disk drives in the loops are members of a RAID-5 array, and are

configured for fast-write operations.

• All member disk drives of an array must be on the same SSA loop.

• A maximum of 48 devices can be connected in a particular SSA loop.

• Only one pair of adapter connectors can be connected in a particular loop.

• When an SSA adapter is connected to two SSA loops, and each loop is

connected to a second adapter, both adapters must be connected to both

loops.

For the IBM 7190-100 SCSI to SSA converter, the following rules apply:

• There can be up to 48 disk drives per loop.

• There can be up to four IBM 7190-100 attached to any one SSA loop.

Page 40

22 IBM Certification Study Gu ide AIX HACMP

2.3.1.4 RAID vs . Non-RAI D

RAID Technology

RAID is an acronym for Redundant Array of Independent Disks. Disk arrays

are groups of disk drives that work together to achieve higher data-transfer

and I/O rates than those provided by single large drives.

Arrays can also provide data redundancy so that no data is lost if a single

drive (physical disk) in the array should fail. Depending on the RAID level,

data is either mirrored or striped. The following gives you more information

about the different RAID levels.

RAID Level 0

RAID 0 is also known as data striping. Conventionally, a file is written out

sequentially to a single disk. With striping, the information is split into chunks

(fixed amounts of data usually called blocks) and the chunks are written to (or

read from) a series of disks in parallel. There are two main performance

advantages to this:

1. Data transfer rates are higher for sequential operations due to the

overlapping of multiple I/O streams.

2. Random access throughput is higher because access pattern skew is

eliminated due to the distribution of the data. This means that with data

distributed evenly across a number of disks, random accesses will most

likely find the required information spread across multiple disks and thus

benefit from the increased throughput of more than one drive.

RAID 0 is only designed to increase performance. There is no redundancy; so

any disk failures will require reloading from backups.

RAID Level 1

RAID 1 is also known as disk mirroring. In this implementation, identical

copies of each chunk of data are kept on separate disks, or more commonly,

each disk has a twin that contains an exact replica (or mirror image) of the

information. If any disk in the array fails, then the mirrored twin can take over.

Read performance can be enhanced because the disk with its actuator

closest to the required data is always used, thereby minimizing seek times.

The response time for writes can be somewhat slower than for a single disk,

depending on the write policy; the writes can either be executed in parallel for

speed or serially for safety.

RAID Level 1 has data redundancy, but data should be regularly backed up

on the array. This is the only way to recover data in the event that a file or

directory is accidentally deleted.

Page 41

Cluster Planning 23

RAID Levels 2 and 3

RAID 2 and RAID 3 are parallel process array mechanisms, where all drives

in the array operate in unison. Similar to data striping, information to be

written to disk is split into chunks (a fixed amount of data), and each chunk is

written out to the same physical position on separate disks (in parallel). When

a read occurs, simultaneous requests for the data can be sent to each disk.

This architecture requires parity information to be written for each stripe of

data; the difference between RAID 2 and RAID 3 is that RAID 2 can utilize

multiple disk drives for parity, while RAID 3 can use only one. If a drive should

fail, the system can reconstruct the missing data from the parity and

remaining drives.

Performance is very good for large amounts of data but poor for small

requests since every drive is always involved, and there can be no

overlapped or independent operation.

RAID Level 4

RAID 4 addresses some of the disadvantages of RAID 3 by using larger

chunks of data and striping the data across all of the drives except the one

reserved for parity. Using disk striping means that I/O requests need only

reference the drive that the required data is actually on. This means that

simultaneous, as well as independent reads, are possible. Write requests,

however, require a read/modify/update cycle that creates a bottleneck at the

single parity drive. Each stripe must be read, the new data inserted and the

new parity then calculated before writing the stripe back to the disk. The

parity disk is then updated with the new parity, but cannot be used for other

writes until this has completed. This bottleneck means that RAID 4 is not

used as often as RAID 5, which implements the same process but without the

bottleneck. RAID 5 is discussed in the next section.

RAID Level 5

RAID 5, as has been mentioned, is very similar to RAID 4. The difference is

that the parity information is distributed across the same disks used for the

data, thereby eliminating the bottleneck. Parity data is never stored on the

same drive as the chunks that it protects. This means that concurrent read

and write operations can now be performed, and there are performance

increases due to the availability of an extra disk (the disk previously used for

parity). There are other enhancements possible to further increase data

transfer rates, such as caching simultaneous reads from the disks and

transferring that information while reading the next blocks. This can generate

data transfer rates that approach the adapter speed.

Page 42

24 IBM Certification Study Gu ide AIX HACMP

As with RAID 3, in the event of disk failure, the information can be r ebuilt from

the remaining drives. RAID level 5 array also uses parity information, though

it is still important to make regular backups of the data in the array . RAID level

5 stripes data across all of the drives in the array, one segment at a time (a

segment can contain multiple blocks). In an array with n drives, a stripe

consists of data segments written to n-1 of the drives and a parity segment

written to the nth drive. This mechanism also means that not all of the disk

space is available for data. For example, in an array with five 2 GB disks,

although the total storage is 10 GB, only 8 GB are available for data.

The advantages and disadvantages of the various RAID levels are

summarized in the following table:

Table 10. The Advantages and Dis advanta ges of the Different RAID Levels

RAID on the 7133 Disk Subsys tem

The only RAID level supported by the 7133 SSA disk subsystem is RAID 5.

RAID 0 and RAID 1 can be achieved with the striping and mirroring facility of

the Logical Volume Manager (LVM).

RAID 0 does not provide data redundancy, so it is not recommended for use

with HACMP, because the shared disks would be a single point of failure. The

possible configurations to use with the 7133 SSA disk subsystem are RAID 1

(mirroring) or RAID 5. Consider the following points before you make your

decision:

• Mirroring is more expensive than RAID, but it provides higher data

redundancy. Even if more than one disk fails, you may still have access to

all of your data. In a RAID, more than one broken disk means that the data

are lost.

• The SSA loop can include a maximum of two SSA adapters if you use

RAID. So, if you want to connect more than two nodes into the loop,

mirroring is the way to go.

• A RAID array can consist of three to 16 disks.

RAID Level Availability

Mechanism

Capacity Performance Cost

0 none 100% high medium

1 mirroring 50% medium/high high

3 parity 80% medium medium

5 parity 80% medium medium

Page 43

Cluster Planning 25

• Array member drives and spares must be on same loop (cannot span A

and B loops) on the adapter.

• You cannot boot (ipl) from a RAID.

2.3.1.5 Adva ntages

Because SSA allows SCSI-2 mapping, all functions associated with initiators,

targets, and logical units are translatable. Therefore, SSA can use the same

command descriptor blocks, status codes, command queuing, and all other

aspects of current SCSI systems. The effect of this is to make the type of disk

subsystem transparent to the application. No porting of applications is

required to move from traditional SCSI I/O subsystems to high-performance

SSA. SSA and SCSI I/O systems can coexist on the same host running the

same applications.

The advantages of SSA are summarized as follows:

• Dual paths to devices.

• Simplified cabling - cheaper, smaller cables and connectors, no separate

terminators.

• Faster interconnect technology.

• Not an arbitrated system.

• Full duplex, frame multiplexed serial links.

• 40 MBps total per port, resulting in 80 MBps total per node, and 160 MBps

total per adapter.

• Concurrent access to disks.

• Hot-pluggable cables and disks.

• Very high capacity per adapter - up to 127 devices per loop, although most

adapter implementations limit this. For example, current IBM SSA

adapters provide 96 disks per Micro Channel or PCI slot.

• Distance between devices of up to 25 meters with copper cables, 10km

with optical links.

• Auto-configuring - no manual address allocation.

• SSA is an open standard.

• SSA switches can be introduced to produce even greater fan-out and

more complex topologies.

Page 44

26 IBM Certification Study Gu ide AIX HACMP

2.3.2 SCSI Disks

After the announcement of the 7133 SSA Disk Subsystems, the SCSI Disk

subsystems became less common in HACMP clusters. However, the 7135

RAIDiant Array (Model 110 and 210) and other SCSI Subsystems are still in

use at many customer sites. We will not describe other SCSI Subsystems

such as 9334 External SCSI Disk Storage. See the appropriate

documentation if you need information about these SCSI Subsystems.

The 7135 RAIDiant Array is offered with a range of features, with a maximum

capacity of 135 GB (RAID 0) or 108 GB (RAID-5) in a single unit, and uses

the 4.5 GB disk drive modules. The array enclosure can be integrated into a

RISC System/6000 system rack, or into a deskside mini-rack. It can attach to

multiple systems through a SCSI-2 Differential 8-bit or 16-bit bus.

2.3.2.1 Capa cities

Disks