Page 1

HP XC System Software

User's Guide

Version 3.2.1

HP Part Number: A-XCUSR-321

Published: October 2007

Page 2

© Copyright 2003, 2005, 2006, 2007 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

AMD and AMD Opteron are trademarks or registered trademarks of Advanced Micro Devices, Inc.

Intel, the Intel logo, Itanium, and Xeon are trademarks or registered trademarks of Intel Corporation in the United States and other countries.

Linux is a U.S. registered trademark of Linus Torvalds.

LSF, Platform Computing, and the LSF and Platform Computing logos are trademarks or registered trademarks of Platform Computing Corporation.

Lustre is a trademark of Cluster File Systems, Inc.

Myrinet and Myricom are registered trademarks of Myricom, Inc.

Quadrics and QSNet II are registered trademarks of Quadrics, Ltd.

The Portland Group and PGI are trademarks or registered trademarks of The Portland Group Compiler Technology, STMicroelectronics, Inc.

Red Hat is a registered trademark of Red Hat Inc.

syslog-ng is a copyright of BalaBit IT Security.

SystemImager is a registered trademark of Brian Finley.

TotalView is a registered trademark of Etnus, Inc.

UNIX is a registered trademark of The Open Group.

Page 3

Table of Contents

About This Document.......................................................................................................15

Intended Audience................................................................................................................................15

New and Changed Information in This Edition...................................................................................15

Typographic Conventions.....................................................................................................................15

HP XC and Related HP Products Information.....................................................................................16

Related Information..............................................................................................................................17

Manpages..............................................................................................................................................20

HP Encourages Your Comments..........................................................................................................21

1 Overview of the User Environment.............................................................................23

1.1 System Architecture........................................................................................................................23

1.1.1 HP XC System Software..........................................................................................................23

1.1.2 Operating System....................................................................................................................23

1.1.3 Node Platforms.......................................................................................................................23

1.1.4 Node Specialization.................................................................................................................24

1.1.5 Storage and I/O........................................................................................................................25

1.1.6 File System...............................................................................................................................25

1.1.7 System Interconnect Network.................................................................................................26

1.1.8 Network Address Translation (NAT)......................................................................................27

1.2 Determining System Configuration Information............................................................................27

1.3 User Environment...........................................................................................................................27

1.3.1 LVS...........................................................................................................................................27

1.3.2 Modules...................................................................................................................................27

1.3.3 Commands..............................................................................................................................28

1.4 Application Development Environment.........................................................................................28

1.4.1 Parallel Applications...............................................................................................................28

1.4.2 Serial Applications..................................................................................................................29

1.5 Run-Time Environment...................................................................................................................29

1.5.1 SLURM....................................................................................................................................29

1.5.2 Load Sharing Facility (LSF-HPC)............................................................................................29

1.5.3 Standard LSF...........................................................................................................................30

1.5.4 How LSF-HPC and SLURM Interact.......................................................................................30

1.5.5 HP-MPI....................................................................................................................................30

1.6 Components, Tools, Compilers, Libraries, and Debuggers............................................................31

2 Using the System..........................................................................................................33

2.1 Logging In to the System.................................................................................................................33

2.1.1 LVS Login Routing..................................................................................................................33

2.1.2 Using the Secure Shell to Log In.............................................................................................33

2.2 Overview of Launching and Managing Jobs..................................................................................33

2.2.1 Introduction.............................................................................................................................34

2.2.2 Getting Information About Queues........................................................................................34

2.2.3 Getting Information About Resources....................................................................................34

2.2.4 Getting Information About System Partitions........................................................................35

2.2.5 Launching Jobs........................................................................................................................35

2.2.6 Getting Information About Your Jobs.....................................................................................35

2.2.7 Stopping and Suspending Jobs...............................................................................................35

2.2.8 Resuming Suspended Jobs......................................................................................................35

2.3 Performing Other Common User Tasks..........................................................................................35

Page 4

2.3.1 Determining the LSF Cluster Name and the LSF Execution Host..........................................36

2.4 Getting System Help and Information............................................................................................36

3 Configuring Your Environment with Modulefiles.......................................................37

3.1 Overview of Modules......................................................................................................................37

3.2 Supplied Modulefiles......................................................................................................................38

3.3 Modulefiles Automatically Loaded on the System.........................................................................40

3.4 Viewing Available Modulefiles.......................................................................................................40

3.5 Viewing Loaded Modulefiles..........................................................................................................40

3.6 Loading a Modulefile......................................................................................................................40

3.6.1 Loading a Modulefile for the Current Session........................................................................40

3.6.2 Automatically Loading a Modulefile at Login........................................................................40

3.7 Unloading a Modulefile..................................................................................................................41

3.8 Viewing Modulefile-Specific Help..................................................................................................41

3.9 Modulefile Conflicts........................................................................................................................41

3.10 Creating a Modulefile....................................................................................................................42

4 Developing Applications.............................................................................................43

4.1 Application Development Environment Overview........................................................................43

4.2 Compilers........................................................................................................................................44

4.2.1 MPI Compiler..........................................................................................................................44

4.3 Examining Nodes and Partitions Before Running Jobs..................................................................45

4.4 Interrupting a Job............................................................................................................................45

4.5 Setting Debugging Options.............................................................................................................45

4.6 Developing Serial Applications.......................................................................................................45

4.6.1 Serial Application Build Environment....................................................................................46

4.6.2 Building Serial Applications...................................................................................................46

4.6.2.1 Compiling and Linking Serial Applications...................................................................46

4.7 Developing Parallel Applications....................................................................................................46

4.7.1 Parallel Application Build Environment.................................................................................46

4.7.1.1 Modulefiles......................................................................................................................47

4.7.1.2 HP-MPI............................................................................................................................47

4.7.1.3 OpenMP..........................................................................................................................47

4.7.1.4 Pthreads...........................................................................................................................47

4.7.1.5 Quadrics SHMEM...........................................................................................................47

4.7.1.6 MPI Library.....................................................................................................................48

4.7.1.7 Intel Fortran and C/C++ Compilers..................................................................................48

4.7.1.8 PGI Fortran and C/C++ Compilers..................................................................................48

4.7.1.9 GNU C and C++ Compilers.............................................................................................48

4.7.1.10 Pathscale Compilers......................................................................................................48

4.7.1.11 GNU Parallel Make.......................................................................................................48

4.7.1.12 MKL Library..................................................................................................................49

4.7.1.13 ACML Library...............................................................................................................49

4.7.1.14 Other Libraries..............................................................................................................49

4.7.2 Building Parallel Applications................................................................................................49

4.7.2.1 Compiling and Linking Non-MPI Applications.............................................................49

4.7.2.2 Compiling and Linking HP-MPI Applications...............................................................49

4.7.2.3 Examples of Compiling and Linking HP-MPI Applications..........................................49

4.8 Developing Libraries.......................................................................................................................50

4.8.1 Designing Libraries for the CP4000 Platform.........................................................................50

5 Submitting Jobs............................................................................................................53

5.1 Overview of Job Submission...........................................................................................................53

Page 5

5.2 Submitting a Serial Job Using LSF-HPC.........................................................................................53

5.2.1 Submitting a Serial Job with the LSF bsub Command............................................................53

5.2.2 Submitting a Serial Job Through SLURM Only......................................................................54

5.3 Submitting a Parallel Job.................................................................................................................55

5.3.1 Submitting a Non-MPI Parallel Job.........................................................................................55

5.3.2 Submitting a Parallel Job That Uses the HP-MPI Message Passing Interface.........................56

5.3.3 Submitting a Parallel Job Using the SLURM External Scheduler...........................................57

5.4 Submitting a Batch Job or Job Script...............................................................................................60

5.5 Submitting Multiple MPI Jobs Across the Same Set of Nodes........................................................62

5.5.1 Using a Script to Submit Multiple Jobs...................................................................................62

5.5.2 Using a Makefile to Submit Multiple Jobs..............................................................................62

5.6 Submitting a Job from a Host Other Than an HP XC Host.............................................................65

5.7 Running Preexecution Programs....................................................................................................65

6 Debugging Applications.............................................................................................67

6.1 Debugging Serial Applications.......................................................................................................67

6.2 Debugging Parallel Applications....................................................................................................67

6.2.1 Debugging with TotalView.....................................................................................................68

6.2.1.1 SSH and TotalView..........................................................................................................68

6.2.1.2 Setting Up TotalView......................................................................................................68

6.2.1.3 Using TotalView with SLURM........................................................................................69

6.2.1.4 Using TotalView with LSF-HPC.....................................................................................69

6.2.1.5 Setting TotalView Preferences.........................................................................................69

6.2.1.6 Debugging an Application..............................................................................................70

6.2.1.7 Debugging Running Applications..................................................................................71

6.2.1.8 Exiting TotalView............................................................................................................71

7 Monitoring Node Activity............................................................................................73

7.1 Installing the Node Activity Monitoring Software.........................................................................73

7.2 Using the xcxclus Utility to Monitor Nodes....................................................................................73

7.3 Plotting the Data from the xcxclus Datafiles...................................................................................76

7.4 Using the xcxperf Utility to Display Node Performance................................................................77

7.5 Plotting the Node Performance Data..............................................................................................79

7.6 Running Performance Health Tests.................................................................................................80

8 Tuning Applications.....................................................................................................85

8.1 Using the Intel Trace Collector and Intel Trace Analyzer...............................................................85

8.1.1 Building a Program – Intel Trace Collector and HP-MPI......................................................85

8.1.2 Running a Program – Intel Trace Collector and HP-MPI.......................................................86

8.2 The Intel Trace Collector and Analyzer with HP-MPI on HP XC...................................................87

8.2.1 Installation Kit.........................................................................................................................87

8.2.2 HP-MPI and the Intel Trace Collector.....................................................................................87

8.3 Visualizing Data – Intel Trace Analyzer and HP-MPI....................................................................89

9 Using SLURM................................................................................................................91

9.1 Introduction to SLURM...................................................................................................................91

9.2 SLURM Utilities...............................................................................................................................91

9.3 Launching Jobs with the srun Command.......................................................................................91

9.3.1 The srun Roles and Modes......................................................................................................92

9.3.1.1 The srun Roles.................................................................................................................92

9.3.1.2 The srun Modes...............................................................................................................92

9.3.2 Using the srun Command with HP-MPI................................................................................92

Page 6

9.3.3 Using the srun Command with LSF-HPC...............................................................................92

9.4 Monitoring Jobs with the squeue Command..................................................................................92

9.5 Terminating Jobs with the scancel Command.................................................................................93

9.6 Getting System Information with the sinfo Command...................................................................93

9.7 Job Accounting................................................................................................................................94

9.8 Fault Tolerance................................................................................................................................94

9.9 Security............................................................................................................................................94

10 Using LSF-HPC............................................................................................................95

10.1 Information for LSF-HPC..............................................................................................................95

10.2 Overview of LSF-HPC Integrated with SLURM...........................................................................96

10.3 Differences Between LSF-HPC and LSF-HPC Integrated with SLURM.......................................98

10.4 Job Terminology............................................................................................................................99

10.5 Using LSF-HPC Integrated with SLURM in the HP XC Environment.......................................101

10.5.1 Useful Commands...............................................................................................................101

10.5.2 Job Startup and Job Control.................................................................................................101

10.5.3 Preemption..........................................................................................................................101

10.6 Submitting Jobs............................................................................................................................101

10.7 LSF-SLURM External Scheduler..................................................................................................102

10.8 How LSF-HPC and SLURM Launch and Manage a Job.............................................................102

10.9 Determining the LSF Execution Host..........................................................................................104

10.10 Determining Available System Resources.................................................................................104

10.10.1 Examining System Core Status..........................................................................................105

10.10.2 Getting Information About the LSF Execution Host Node...............................................105

10.10.3 Getting Host Load Information.........................................................................................106

10.10.4 Examining System Queues................................................................................................106

10.10.5 Getting Information About the lsf Partition...................................................................106

10.11 Getting Information About Jobs................................................................................................107

10.11.1 Getting Job Allocation Information...................................................................................107

10.11.2 Examining the Status of a Job............................................................................................108

10.11.3 Viewing the Historical Information for a Job....................................................................109

10.12 Translating SLURM and LSF-HPC JOBIDs...............................................................................110

10.13 Working Interactively Within an Allocation..............................................................................111

10.14 LSF-HPC Equivalents of SLURM srun Options........................................................................114

11 Advanced Topics......................................................................................................117

11.1 Enabling Remote Execution with OpenSSH................................................................................117

11.2 Running an X Terminal Session from a Remote Node................................................................117

11.3 Using the GNU Parallel Make Capability...................................................................................119

11.3.1 Example Procedure 1...........................................................................................................121

11.3.2 Example Procedure 2...........................................................................................................121

11.3.3 Example Procedure 3...........................................................................................................122

11.4 Local Disks on Compute Nodes..................................................................................................122

11.5 I/O Performance Considerations.................................................................................................123

11.5.1 Shared File View..................................................................................................................123

11.5.2 Private File View..................................................................................................................123

11.6 Communication Between Nodes.................................................................................................123

11.7 Using MPICH on the HP XC System...........................................................................................123

11.7.1 Using MPICH with SLURM Allocation..............................................................................124

11.7.2 Using MPICH with LSF Allocation.....................................................................................124

A Examples....................................................................................................................125

A.1 Building and Running a Serial Application.................................................................................125

Page 7

A.2 Launching a Serial Interactive Shell Through LSF-HPC..............................................................125

A.3 Running LSF-HPC Jobs with a SLURM Allocation Request........................................................126

A.3.1 Example 1. Two Cores on Any Two Nodes..........................................................................126

A.3.2 Example 2. Four Cores on Two Specific Nodes....................................................................127

A.4 Launching a Parallel Interactive Shell Through LSF-HPC...........................................................127

A.5 Submitting a Simple Job Script with LSF-HPC............................................................................129

A.6 Submitting an Interactive Job with LSF-HPC...............................................................................130

A.7 Submitting an HP-MPI Job with LSF-HPC..................................................................................132

A.8 Using a Resource Requirements String in an LSF-HPC Command.............................................133

Glossary.........................................................................................................................135

Index...............................................................................................................................141

Page 8

Page 9

List of Figures

4-1 Library Directory Structure...........................................................................................................51

4-2 Recommended Library Directory Structure..................................................................................51

7-1 The xcxclus Utility Display...........................................................................................................74

7-2 The xcxclus Utility Display Icon....................................................................................................74

7-3 Balloon Display in the xcxclus Utility...........................................................................................75

7-4 Plotting the Data from the xcxclus Utility.................................................................................77

7-5 The xcxperf Utility Display...........................................................................................................78

7-6 xcxperf System Information Dialog Box.......................................................................................79

7-7 Plotting Node Data from the xcxperf Utility.................................................................................80

10-1 How LSF-HPC and SLURM Launch and Manage a Job.............................................................103

11-1 MPICH Wrapper Script...............................................................................................................124

Page 10

Page 11

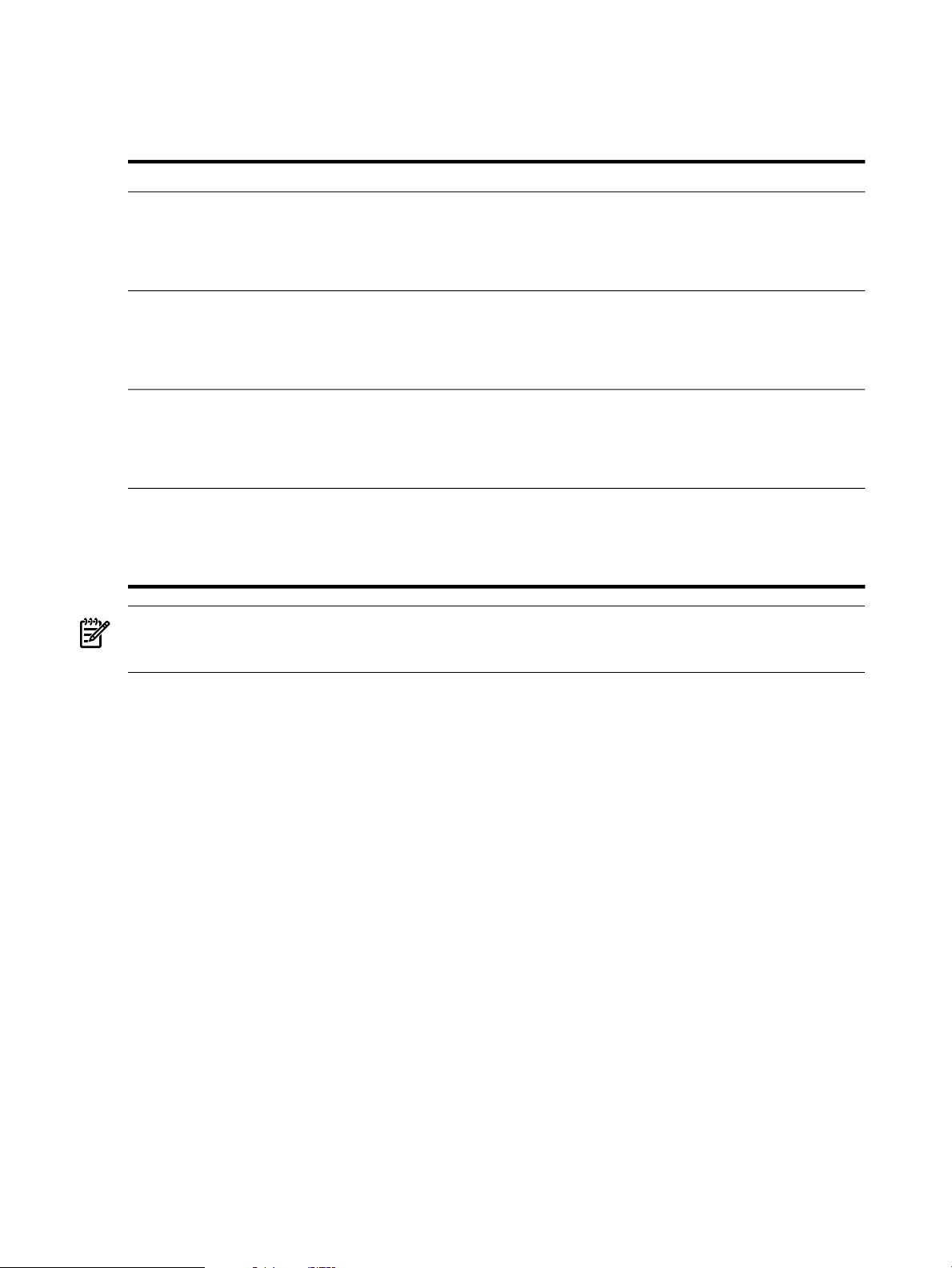

List of Tables

1-1 Determining the Node Platform...................................................................................................24

1-2 HP XC System Interconnects.........................................................................................................26

3-1 Supplied Modulefiles....................................................................................................................38

4-1 Compiler Commands....................................................................................................................44

5-1 Arguments for the SLURM External Scheduler............................................................................58

10-1 LSF-HPC with SLURM Job Launch Exit Codes..........................................................................102

10-2 Output Provided by the bhist Command....................................................................................110

10-3 LSF-HPC Equivalents of SLURM srun Options..........................................................................114

Page 13

List of Examples

5-1 Submitting a Job from the Standard Input....................................................................................54

5-2 Submitting a Serial Job Using LSF-HPC .......................................................................................54

5-3 Submitting an Interactive Serial Job Using LSF-HPC only...........................................................54

5-4 Submitting an Interactive Serial Job Using LSF-HPC and the LSF-SLURM External Scheduler.......................................................................................................................................54

5-5 Submitting a Non-MPI Parallel Job...............................................................................................56

5-6 Submitting a Non-MPI Parallel Job to Run One Task per Node...................................................56

5-7 Submitting an MPI Job..................................................................................................................57

5-8 Submitting an MPI Job with the LSF-SLURM External Scheduler Option...................................57

5-9 Using the External Scheduler to Submit a Job to Run on Specific Nodes.....................................59

5-10 Using the External Scheduler to Submit a Job to Run One Task per Node..................................59

5-11 Using the External Scheduler to Submit a Job That Excludes One or More Nodes.....................59

5-12 Using the External Scheduler to Launch a Command in Parallel on Ten Nodes.........................59

5-13 Using the External Scheduler to Constrain Launching to Nodes with a Given Feature..............60

5-14 Submitting a Job Script..................................................................................................................60

5-15 Submitting a Batch Script with the LSF-SLURM External Scheduler Option...............................61

5-16 Submitting a Batch Job Script That Uses a Subset of the Allocation.............................................61

5-17 Submitting a Batch job Script That Uses the srun --overcommit Option......................................61

5-18 Environment Variables Available in a Batch Job Script.................................................................62

8-1 The vtjacobic Example Program....................................................................................................86

8-2 C Example – Running the vtjacobic Example Program................................................................86

9-1 Simple Launch of a Serial Program...............................................................................................92

9-2 Displaying Queued Jobs by Their JobIDs.....................................................................................93

9-3 Reporting on Failed Jobs in the Queue.........................................................................................93

9-4 Terminating a Job by Its JobID......................................................................................................93

9-5 Cancelling All Pending Jobs..........................................................................................................93

9-6 Sending a Signal to a Job...............................................................................................................93

9-7 Using the sinfo Command (No Options)......................................................................................93

9-8 Reporting Reasons for Downed, Drained, and Draining Nodes..................................................94

10-1 Examples of LSF-HPC Job Launch................................................................................................97

10-2 Examples of Launching LSF-HPC Jobs Without the srun Command..........................................98

10-3 Job Allocation Information for a Running Job............................................................................108

10-4 Job Allocation Information for a Finished Job.............................................................................108

10-5 Using the bjobs Command (Short Output).................................................................................109

10-6 Using the bjobs Command (Long Output)..................................................................................109

10-7 Using the bhist Command (Short Output)..................................................................................109

10-8 Using the bhist Command (Long Output)..................................................................................110

10-9 Launching an Interactive MPI Job...............................................................................................112

10-10 Launching an Interactive MPI Job on All Cores in the Allocation..............................................113

Page 15

About This Document

This document provides information about using the features and functions of the HP XC System

Software. It describes how the HP XC user and programming environments differ from standard

Linux® system environments. In addition, this manual focuses on building and running

applications in the HP XC environment and is intended to guide an application developer to

take maximum advantage of HP XC features and functions by providing an understanding of

the underlying mechanisms of the HP XC programming environment.

An HP XC system is integrated with several open source software components. Some open source

software components are being used for underlying technology, and their deployment is

transparent. Some open source software components require user-level documentation specific

to HP XC systems, and that kind of information is included in this document, when required.

HP relies on the documentation provided by the open source developers to supply the information

you need to use their product. For links to open source software documentation for products

that are integrated with the HP XC system, see “Supplementary Software Products” (page 18).

Documentation for third-party hardware and software components that are supported on the

HP XC system is supplied by the third-party vendor. However, information about the operation

of third-party software is included in this document if the functionality of the third-party

component differs from standard behavior when used in the XC environment. In this case, HP

XC documentation supersedes information supplied by the third-party vendor. For links to

related third-party Web sites, see “Supplementary Software Products” (page 18).

Standard Linux® administrative tasks or the functions provided by standard Linux tools and

commands are documented in commercially available Linux reference manuals and on various

Web sites. For more information about obtaining documentation for standard Linux administrative

tasks and associated topics, see the list of Web sites and additional publications provided in

“Related Software Products and Additional Publications” (page 19).

Intended Audience

This document is intended for experienced Linux users who run applications developed by

others, and for experienced system or application developers who develop, build, and run

application code on an HP XC system.

This document assumes that the user understands, and has experience with, multiprocessor

systems and the Message Passing Interface (MPI), and is familiar with HP XC architecture and

concepts.

New and Changed Information in This Edition

• Chapter 7 contains updated information on xcxclus and xcxperf commands.

• A new section on Unified Parallel C was added.

• There is a description of the ovp utility --opts=--queue option that allows you to specify

the LSF queue for performance health tests.

• A note regarding which ovp utility's performance health tests apply to Standard LSF and

which apply to LSF-HPC incorporated with SLURM was added.

Typographic Conventions

This document uses the following typographical conventions:

%, $, or #

A percent sign represents the C shell system prompt. A dollar

sign represents the system prompt for the Korn, POSIX, and

Bourne shells. A number sign represents the superuser prompt.


Page 16

audit(5) A manpage. The manpage name is audit, and it is located in

Section 5.

Command A command name or qualified command phrase.

Computer output Text displayed by the computer.

Ctrl+x A key sequence. A sequence such as Ctrl+x indicates that you

must hold down the key labeled Ctrl while you press another

key or mouse button.

ENVIRONMENT VARIABLE The name of an environment variable, for example, PATH.

[ERROR NAME]

The name of an error, usually returned in the errno variable.

Key The name of a keyboard key. Return and Enter both refer to the

same key.

Term The defined use of an important word or phrase.

User input Commands and other text that you type.

Variable The name of a placeholder in a command, function, or other

syntax display that you replace with an actual value.

[ ] The contents are optional in syntax. If the contents are a list

separated by |, you can choose one of the items.

{ } The contents are required in syntax. If the contents are a list

separated by |, you must choose one of the items.

. . . The preceding element can be repeated an arbitrary number of

times.

| Separates items in a list of choices.

WARNING A warning calls attention to important information that if not

understood or followed will result in personal injury or

nonrecoverable system problems.

CAUTION A caution calls attention to important information that if not

understood or followed will result in data loss, data corruption,

or damage to hardware or software.

IMPORTANT This alert provides essential information to explain a concept or

to complete a task.

NOTE A note contains additional information to emphasize or

supplement important points of the main text.

HP XC and Related HP Products Information

The HP XC System Software Documentation Set, the Master Firmware List, and HP XC HowTo

documents are available at this HP Technical Documentation Web site:

http://www.docs.hp.com/en/linuxhpc.html

The HP XC System Software Documentation Set includes the following core documents:

HP XC System Software Release Notes

Describes important, last-minute information about firmware, software, or hardware that might affect the system. This document is not shipped on the HP XC documentation CD. It is available only on line.

HP XC Hardware Preparation Guide

Describes hardware preparation tasks specific to HP XC that are required to prepare each supported hardware model for installation and configuration, including required node and switch connections.

HP XC System Software Installation Guide

Provides step-by-step instructions for installing the HP XC System Software on the head node and configuring the system.

Page 17

HP XC System Software Administration Guide

Provides an overview of the HP XC system administrative environment, cluster administration tasks, node maintenance tasks, LSF® administration tasks, and troubleshooting procedures.

HP XC System Software User's Guide

Provides an overview of managing the HP XC user environment with modules, managing jobs with LSF, and describes how to build, run, debug, and troubleshoot serial and parallel applications on an HP XC system.

QuickSpecs for HP XC System Software

Provides a product overview, hardware requirements, software requirements, software licensing information, ordering information, and information about commercially available software that has been qualified to interoperate with the HP XC System Software. The QuickSpecs are located on line:

http://www.hp.com/go/clusters

See the following sources for information about related HP products.

HP XC Program Development Environment

The Program Development Environment home page provides pointers to tools that have been

tested in the HP XC program development environment (for example, TotalView® and other

debuggers, compilers, and so on).

http://h20311.www2.hp.com/HPC/cache/276321-0-0-0-121.html

HP Message Passing Interface

HP Message Passing Interface (HP-MPI) is an implementation of the MPI standard that has been

integrated in HP XC systems. The home page and documentation are located at the following Web

site:

http://www.hp.com/go/mpi

HP Serviceguard

HP Serviceguard is a service availability tool supported on an HP XC system. HP Serviceguard

enables some system services to continue if a hardware or software failure occurs. The HP

Serviceguard documentation is available at the following Web site:

http://www.docs.hp.com/en/ha.html

HP Scalable Visualization Array

The HP Scalable Visualization Array (SVA) is a scalable visualization solution that is integrated

with the HP XC System Software. The SVA documentation is available at the following Web site:

http://www.docs.hp.com/en/linuxhpc.html

HP Cluster Platform

The cluster platform documentation describes site requirements, shows you how to set up the

servers and additional devices, and provides procedures to operate and manage the hardware.

These documents are available at the following Web site:

http://www.docs.hp.com/en/linuxhpc.html

HP Integrity and HP ProLiant Servers

Documentation for HP Integrity and HP ProLiant servers is available at the following Web site:

http://www.docs.hp.com/en/hw.html

Related Information

This section provides useful links to third-party, open source, and other related software products.


Page 18

Supplementary Software Products This section provides links to third-party and open source

software products that are integrated into the HP XC System Software core technology. In the

HP XC documentation, except where necessary, references to third-party and open source

software components are generic, and the HP XC adjective is not added to any reference to a

third-party or open source command or product name. For example, the SLURM srun command

is simply referred to as the srun command.

The location of each Web site or link to a particular topic listed in this section is subject to change

without notice by the site provider.

• http://www.platform.com

Home page for Platform Computing Corporation, the developer of the Load Sharing Facility

(LSF). LSF-HPC with SLURM, the batch system resource manager used on an HP XC system,

is tightly integrated with the HP XC and SLURM software. Documentation specific to

LSF-HPC with SLURM is provided in the HP XC documentation set.

Standard LSF is also available as an alternative resource management system (instead of

LSF-HPC with SLURM) for HP XC. This is the version of LSF that is widely discussed on

the Platform Web site.

For your convenience, the following Platform Computing Corporation LSF documents are

shipped on the HP XC documentation CD in PDF format:

— Administering Platform LSF

— Administration Primer

— Platform LSF Reference

— Quick Reference Card

— Running Jobs with Platform LSF

LSF procedures and information supplied in the HP XC documentation, particularly the

documentation relating to the LSF-HPC integration with SLURM, supersede the information

supplied in the LSF manuals from Platform Computing Corporation.

The Platform Computing Corporation LSF manpages are installed by default. lsf_diff(7)

supplied by HP describes LSF command differences when using LSF-HPC with SLURM on

an HP XC system.

The following documents in the HP XC System Software Documentation Set provide

information about administering and using LSF on an HP XC system:

— HP XC System Software Administration Guide

— HP XC System Software User's Guide


• http://www.llnl.gov/LCdocs/slurm/

Documentation for the Simple Linux Utility for Resource Management (SLURM), which is

integrated with LSF to manage job and compute resources on an HP XC system.

• http://www.nagios.org/

Home page for Nagios®, a system and network monitoring application that is integrated

into an HP XC system to provide monitoring capabilities. Nagios watches specified hosts

and services and issues alerts when problems occur and when problems are resolved.

• http://oss.oetiker.ch/rrdtool

Home page of RRDtool, a round-robin database tool and graphing system. In the HP XC

system, RRDtool is used with Nagios to provide a graphical view of system status.

• http://supermon.sourceforge.net/

Home page for Supermon, a high-speed cluster monitoring system that emphasizes low

perturbation, high sampling rates, and an extensible data protocol and programming

interface. Supermon works in conjunction with Nagios to provide HP XC system monitoring.

Page 19

• http://www.llnl.gov/linux/pdsh/

Home page for the parallel distributed shell (pdsh), which executes commands across HP

XC client nodes in parallel.

• http://www.balabit.com/products/syslog_ng/

Home page for syslog-ng, a logging tool that replaces the traditional syslog functionality.

The syslog-ng tool is a flexible and scalable audit trail processing tool. It provides a

centralized, securely stored log of all devices on the network.

• http://systemimager.org

Home page for SystemImager®, which is the underlying technology that distributes the

golden image to all nodes and distributes configuration changes throughout the system.

• http://linuxvirtualserver.org

Home page for the Linux Virtual Server (LVS), the load balancer running on the Linux

operating system that distributes login requests on the HP XC system.

• http://www.macrovision.com

Home page for Macrovision®, developer of the FLEXlm™ license management utility, which

is used for HP XC license management.

• http://sourceforge.net/projects/modules/

Web site for Modules, which provide for easy dynamic modification of a user's environment

through modulefiles, which typically instruct the module command to alter or set shell

environment variables.

• http://dev.mysql.com/

Home page for MySQL AB, developer of the MySQL database. This Web site contains a link

to the MySQL documentation, particularly the MySQL Reference Manual.

Related Software Products and Additional Publications This section provides pointers to Web

sites for related software products and provides references to useful third-party publications.

The location of each Web site or link to a particular topic is subject to change without notice by

the site provider.

Linux Web Sites

• http://www.redhat.com

Home page for Red Hat®, distributors of Red Hat Enterprise Linux Advanced Server, a

Linux distribution with which the HP XC operating environment is compatible.

• http://www.linux.org/docs/index.html

This Web site for the Linux Documentation Project (LDP) contains guides that describe

aspects of working with Linux, from creating your own Linux system from scratch to bash

script writing. This site also includes links to Linux HowTo documents, frequently asked

questions (FAQs), and manpages.

• http://www.linuxheadquarters.com

Web site providing documents and tutorials for the Linux user. Documents contain

instructions on installing and using applications for Linux, configuring hardware, and a

variety of other topics.

• http://www.gnu.org

Home page for the GNU Project. This site provides online software and information for

many programs and utilities that are commonly used on GNU/Linux systems. Online

information includes guides for using the bash shell, emacs, make, cc, gdb, and more.


Page 20

MPI Web Sites

• http://www.mpi-forum.org

Contains the official MPI standards documents, errata, and archives of the MPI Forum. The

MPI Forum is an open group with representatives from many organizations that define and

maintain the MPI standard.

• http://www-unix.mcs.anl.gov/mpi/

A comprehensive site containing general information, such as the specification and FAQs,

and pointers to other resources, including tutorials, implementations, and other MPI-related

sites.

Compiler Web Sites

• http://www.intel.com/software/products/compilers/index.htm

Web site for Intel® compilers.

• http://support.intel.com/support/performancetools/

Web site for general Intel software development information.

• http://www.pgroup.com/

Home page for The Portland Group™, supplier of the PGI® compiler.

Debugger Web Site

http://www.etnus.com

Home page for Etnus, Inc., maker of the TotalView® parallel debugger.

Software RAID Web Sites

• http://www.tldp.org/HOWTO/Software-RAID-HOWTO.html and

http://www.ibiblio.org/pub/Linux/docs/HOWTO/other-formats/pdf/Software-RAID-HOWTO.pdf

A document (in two formats: HTML and PDF) that describes how to use software RAID

under a Linux operating system.

• http://www.linuxdevcenter.com/pub/a/linux/2002/12/05/RAID.html

Provides information about how to use the mdadm RAID management utility.

Additional Publications

For more information about standard Linux system administration or other related software

topics, consider using one of the following publications, which must be purchased separately:

• Linux Administration Unleashed, by Thomas Schenk, et al.

• Linux Administration Handbook, by Evi Nemeth, Garth Snyder, Trent R. Hein, et al.

• Managing NFS and NIS, by Hal Stern, Mike Eisler, and Ricardo Labiaga (O'Reilly)

• MySQL, by Paul DuBois

• MySQL Cookbook, by Paul DuBois

• High Performance MySQL, by Jeremy Zawodny and Derek J. Balling (O'Reilly)

• Perl Cookbook, Second Edition, by Tom Christiansen and Nathan Torkington

• Perl in a Nutshell: A Desktop Quick Reference, by Ellen Siever, et al.

Manpages

Manpages provide online reference and command information from the command line. Manpages

are supplied with the HP XC system for standard HP XC components, Linux user commands,

LSF commands, and other software components that are distributed with the HP XC system.


Page 21

Manpages for third-party software components might be provided as a part of the deliverables

for that component.

Using discover(8) as an example, you can use either one of the following commands to display a

manpage:

$ man discover

$ man 8 discover

If you are not sure about a command you need to use, enter the man command with the -k option

to obtain a list of commands that are related to a keyword. For example:

$ man -k keyword

HP Encourages Your Comments

HP encourages comments concerning this document. We are committed to providing

documentation that meets your needs. Send any errors found, suggestions for improvement, or

compliments to:

feedback@fc.hp.com

Include the document title, manufacturing part number, and any comment, error found, or

suggestion for improvement you have concerning this document.

Page 23

1 Overview of the User Environment

The HP XC system is a collection of computer nodes, networks, storage, and software, built into

a cluster, that work together. It is designed to maximize workload and I/O performance, and to

provide the efficient management of large, complex, and dynamic workloads.

This chapter addresses the following topics:

• “System Architecture” (page 23)

• “User Environment” (page 27)

• “Application Development Environment” (page 28)

• “Run-Time Environment” (page 29)

• “Components, Tools, Compilers, Libraries, and Debuggers” (page 31)

1.1 System Architecture

The HP XC architecture is designed as a clustered system with single system traits. From a user

perspective, this architecture achieves a single system view, providing capabilities such as the

following:

• Single user login

• Single file system namespace

• Integrated view of system resources

• Integrated program development environment

• Integrated job submission environment

1.1.1 HP XC System Software

The HP XC System Software enables the nodes in the platform to run cohesively to achieve a

single system view. You can determine the version of the HP XC System Software from the

/etc/hptc-release file.

$ cat /etc/hptc-release

HP XC V#.# RCx PKnn date

Where:

#.# Is the version of the HP XC System Software.

RCx Is the release candidate of the version.

PKnn Indicates there was a cumulative patch kit for the HP XC System Software installed. For example, if PK02 appears in the output, it indicates that both cumulative patch kits PK01 and PK02 have been installed. This field is blank if no patch kits are installed.

date Is the date (in yyyymmdd format) the software was released.
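The field layout described above can be picked apart with a small shell function. The following is only a sketch: the function name parse_hptc_release and the sample release line in the usage note are hypothetical, and it assumes the fields are separated by white space and that the patch kit field may be absent.

```shell
# Sketch: split an HP XC release line into its fields.
# parse_hptc_release is a hypothetical helper, not an HP XC command.
# Assumes white-space-separated fields and an optional PKnn field.
parse_hptc_release() {
    version="" rc="" pk="none" date=""
    # Rely on word splitting to walk the fields of the line.
    for field in $1; do
        case "$field" in
            V[0-9]*)  version=${field#V} ;;   # V#.#  -> version number
            RC*)      rc=${field#RC} ;;       # RCx   -> release candidate
            PK[0-9]*) pk=${field#PK} ;;       # PKnn  -> cumulative patch kit
            [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]) date=$field ;;  # yyyymmdd
        esac
    done
    printf 'version=%s rc=%s patchkit=%s date=%s\n' "$version" "$rc" "$pk" "$date"
}
```

On a system where the file exists, the function could be called as parse_hptc_release "$(cat /etc/hptc-release)". A release line without a PKnn field is reported with patchkit=none.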

1.1.2 Operating System

The HP XC system is a high-performance compute cluster that runs HP XC Linux for High

Performance Computing Version 1.0 (HPC Linux) as its software base. Any serial or thread-parallel

applications, or applications built shared with HP-MPI that run correctly on Red Hat Enterprise

Linux Advanced Server Version 3.0 or Version 4.0, also run correctly on HPC Linux.

1.1.3 Node Platforms

The HP XC System Software is available on several platforms. You can determine the platform

by examining the top few fields of the /proc/cpuinfo file, for example, by using the head

command:


Page 24

$ head /proc/cpuinfo

Table 1-1 presents the representative output for each of the platforms. This output may differ

according to changes in models and so on.

Table 1-1 Determining the Node Platform

Platform: CP3000
Partial Output of /proc/cpuinfo:
processor : 0
vendor_id : GenuineIntel
cpu family : 15
model : 3
model name : Intel(R) Xeon(TM)

Platform: CP4000
Partial Output of /proc/cpuinfo:
processor : 0
vendor_id : AuthenticAMD
cpu family : 15
model : 5
model name : AMD Opteron(tm)

Platform: CP6000
Partial Output of /proc/cpuinfo:
processor : 0
vendor : GenuineIntel
arch : IA-64
family : Itanium 2
model : 1

Platform: CP3000BL (Blade-only XC systems)
Partial Output of /proc/cpuinfo:
processor : 0
vendor_id : GenuineIntel
cpu family : 15
model : 6
model name : Intel(R) Xeon(TM) CPU 3.73GHz

Note: The /proc/cpuinfo file is dynamic.
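The lookup in Table 1-1 can be automated roughly as follows. This is a sketch under stated assumptions: the function name guess_platform is hypothetical, the mapping uses only the vendor and arch fields shown in the table, and it cannot tell a blade-only system from a CP3000 system because both report an Intel Xeon processor.

```shell
# Sketch: guess the platform family from /proc/cpuinfo-style text on stdin.
# guess_platform is a hypothetical helper; the mapping follows Table 1-1 only.
guess_platform() {
    awk -F': *' '
        # Strip trailing blanks or tabs from the field name.
        { key = $1; sub(/[ \t]+$/, "", key) }
        key == "vendor_id" || key == "vendor" { vendor = $2 }
        key == "arch"                         { arch = $2 }
        END {
            if (arch == "IA-64")               print "CP6000"
            else if (vendor == "AuthenticAMD") print "CP4000"
            else if (vendor == "GenuineIntel") print "CP3000 family"
            else                               print "unknown"
        }'
}
```

A typical call would be guess_platform < /proc/cpuinfo. Because the output of /proc/cpuinfo can change between processor models, treat the result as a hint rather than a definitive identification.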

1.1.4 Node Specialization

The HP XC system is implemented as a sea-of-nodes. Each node in the system contains the same

software image on its local disk. There are two types of nodes in the system — a head node and

client nodes.

head node The node that is installed with the HP XC System Software first; it is used to

generate other HP XC (client) nodes. The head node is generally of interest

only to the administrator of the HP XC system.

client nodes All the other nodes that make up the system. They are replicated from

the head node and are usually given one or more specialized roles to perform

various system functions, such as logging into the system or running jobs.

The HP XC system allows for the specialization of client nodes to enable efficient and flexible

distribution of the workload. Nodes can be assigned one or more specialized roles that determine

how a particular node is used and what system services it provides. Of the many different roles

that can be assigned to a client node, the following roles contain services that are of special interest

to the general user:

login role The role most visible to users is on nodes that have the login role. Nodes with the login role are where you log in and interact with the system to perform various tasks. For example, once logged in to a node with the login role, you can execute commands, build applications, or submit jobs to compute nodes for execution. There can be one or several nodes with the login role in an HP XC system, depending upon cluster size and requirements. Nodes with the login role are a part of the Linux Virtual Server ring, which distributes login requests from users. A node with the login role is referred to as a login node in this manual.

Page 25

compute role The compute role is assigned to nodes where jobs are to be distributed and run. Although all nodes in the HP XC system are capable of carrying out computations, the nodes with the compute role are the primary nodes used to run jobs. Nodes with the compute role become a part of the resource pool used by LSF-HPC and SLURM, which manage and distribute the job workload. Jobs that are submitted to compute nodes must be launched from nodes with the login role. Nodes with the compute role are referred to as compute nodes in this manual.

1.1.5 Storage and I/O

The HP XC system supports both shared (global) and private (local) disks and file systems. Shared file systems can be mounted on all the other nodes by means of Lustre™ or NFS. This gives users a single view of all the shared data on disks attached to the HP XC system.

SAN Storage

The HP XC system uses the HP StorageWorks Scalable File Share (HP StorageWorks SFS), which is based on Lustre technology and uses the Lustre File System from Cluster File Systems, Inc. This is a turnkey Lustre system from HP. It supplies access to Lustre file systems through Lustre client/server protocols over various system interconnects. The HP XC system is a client to the HP StorageWorks SFS server.

Local Storage

Local storage for each node holds the operating system, a copy of the HP XC System Software,

and temporary space that can be used by jobs running on the node.

HP XC file systems are described in detail in “File System”.

1.1.6 File System

Each node of the HP XC system has its own local copy of all the HP XC System Software files

including the Linux distribution; it also has its own local user files. Every node can also import

files from NFS or Lustre file servers. HP XC System Software uses NFS 3, including both client

and server functionality. HP XC System Software also enables Lustre client services for

high-performance and high-availability file I/O. These Lustre client services require the separate

installation of Lustre software, provided with the HP StorageWorks Scalable File Share (SFS).

NFS files can be shared exclusively among the nodes of the HP XC System or can be shared

between the HP XC and external systems. External NFS files can be shared with any node having

a direct external network connection. It is also possible to set up NFS to import external files to

HP XC nodes without external network connections, by routing through a node with an external

network connection. Your system administrator can choose to use either the HP XC administrative

network or the HP XC system interconnect for NFS operations. The HP XC system interconnect

can potentially offer higher performance, but possibly at the cost of reduced performance

of application communications.

For high-performance or high-availability file I/O, the Lustre file system is available on HP XC.

The Lustre file system uses POSIX-compliant syntax and semantics. The HP XC System Software

includes kernel modifications required for Lustre client services, which enable the operation of

the separately installable Lustre client software. The Lustre file server product used on HP XC

is the HP StorageWorks Scalable File Share (SFS), which fully supports the HP XC System

Software.
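To see which shared file systems a node has actually imported, the mount table can be filtered by file system type. The following is a minimal sketch: the function name list_shared_mounts is hypothetical, and it assumes input in the format of the Linux /proc/mounts file, with the file system type in the third field reported as nfs or lustre.

```shell
# Sketch: list NFS and Lustre mounts from /proc/mounts-style input on stdin.
# list_shared_mounts is a hypothetical helper, not an HP XC command.
# Assumes field 2 is the mount point and field 3 is the file system type.
list_shared_mounts() {
    awk '$3 == "nfs" || $3 == "lustre" { print $3, $2 }'
}
```

A typical call would be list_shared_mounts < /proc/mounts, which prints one line per shared mount showing the type and the mount point. Local file systems are not listed.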

The SFS also includes HP XC Lustre client software. The SFS can be integrated with the HP XC

so that Lustre I/O is performed over the same high-speed system interconnect fabric used by


Page 26

the HP XC. So, for example, if the HP XC system interconnect is based on a Quadrics® QsNet

II® switch, then the SFS will serve files over ports on that switch. The file operations are able to

proceed at the full bandwidth of the HP XC system interconnect because these operations are

implemented directly over the low-level communications libraries. Further optimizations of file

I/O can be achieved at the application level using special file system commands – implemented

as ioctls – which allow a program to interrogate the attributes of the file system, modify the

stripe size and other attributes of new (zero-length) files, and so on. Some of these optimizations

are implicit in the HP-MPI I/O library, which implements the MPI-2 file I/O standard.

File System Layout

In an HP XC system, the basic file system layout is the same as that of the Red Hat Advanced

Server 3.0 Linux file system.

The HP XC file system is structured to separate cluster-specific files, base operating system files,

and user-installed software files. This separation simplifies upgrades of the system software

and keeps system software from conflicting with user-installed software. Files are

segregated into the following types and locations:

• Software specific to HP XC is located in /opt/hptc

• HP XC configuration data is located in /opt/hptc/etc

• Clusterwide directory structure (file system) is located in /hptc_cluster

Be aware of the following information about the HP XC file system layout:

• Open source software that by default would be installed under the /usr/local directory

is instead installed in the /opt/hptc directory.

• Software installed in the /opt/hptc directory is not intended to be updated by users.

• Software packages are installed in directories under the /opt/hptc directory under their

own names. The exception to this is third-party software, which usually goes in /opt/r.

• Four directories under the /opt/hptc directory contain symbolic links to files included in

the packages:

— /opt/hptc/bin

— /opt/hptc/sbin

— /opt/hptc/lib

— /opt/hptc/man

Each package directory should contain a subdirectory corresponding to each of these directories;

every file in such a subdirectory has a symbolic link in the matching directory under /opt/hptc/.
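The symbolic-link arrangement described above can be illustrated with a small shell sketch. It builds a throwaway directory tree with mktemp rather than touching /opt/hptc. The package name mypkg, the file names, and the link_package function are hypothetical and chosen only for illustration; this is not necessarily how the HP XC installation tools create the links.

```shell
#!/bin/sh
# Sketch: mirror the /opt/hptc symlink layout inside a scratch directory.
# The package name, file names, and function name are hypothetical.

# link_package ROOT PKG
# For each of bin, sbin, lib, and man under ROOT/PKG, create a symbolic
# link in ROOT/<dir> for every entry found there.
link_package() {
    root=$1
    pkg=$2
    for sub in bin sbin lib man; do
        [ -d "$root/$pkg/$sub" ] || continue     # package lacks this directory
        mkdir -p "$root/$sub"
        for f in "$root/$pkg/$sub"/*; do
            [ -e "$f" ] || continue              # skip empty directories
            ln -sf "$f" "$root/$sub/$(basename "$f")"
        done
    done
}

# Build a throwaway tree that stands in for /opt/hptc.
demo_root=$(mktemp -d)
mkdir -p "$demo_root/mypkg/bin" "$demo_root/mypkg/man"
printf '#!/bin/sh\necho hello\n' > "$demo_root/mypkg/bin/hello"
: > "$demo_root/mypkg/man/hello.1"

link_package "$demo_root" mypkg

# Show the resulting links.
ls -l "$demo_root/bin" "$demo_root/man"
```

Because the shared directories hold only links, a single entry such as /opt/hptc/bin in PATH is enough to reach the commands of every package installed this way.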

1.1.7 System Interconnect Network

The HP XC system interconnect provides high-speed connectivity for parallel applications. The

system interconnect network provides a high-speed communications path used primarily for

user file service and for communications within user applications that are distributed among

nodes of the system. The system interconnect network is a private network within the HP XC.

Typically, every node in the HP XC is connected to the system interconnect.

Table 1-2 indicates the types of system interconnects that are used on HP XC systems.

Table 1-2 HP XC System Interconnects

CP6000 CP4000 CP3000

X X Quadrics QSNet II®

X X Myrinet®

X X X Gigabit Ethernet®

X X X InfiniBand®

Additional information on supported system interconnects is provided in the HP XC Hardware

Preparation Guide.

1.1.8 Network Address Translation (NAT)

The HP XC system uses Network Address Translation (NAT) to enable nodes in the HP XC

system that do not have direct external network connections to open outbound network

connections to external network resources.

1.2 Determining System Configuration Information

You can determine various system configuration parameters with a few commands:

Use the following command to display the version of the HP XC System Software:

    cat /etc/hptc-release

Use either of these commands to display the kernel version:

    uname -r
    cat /proc/version

Use the following command to display the RPMs:

    rpm -qa

Use the following command to display the amount of free and used memory in megabytes:

    free -m

Use the following command to display the disk partitions and their sizes:

    cat /proc/partitions

Use the following command to display the swap usage summary by device:

    swapon -s

Use the following commands to display the cache information; this is not available on all systems:

    cat /proc/pal/cpu0/cache_info
    cat /proc/pal/cpu1/cache_info

1.3 User Environment

This section introduces some general information about logging in, configuring, and using the
HP XC environment.

1.3.1 LVS

The HP XC system uses the Linux Virtual Server (LVS) to present a single host name for user
logins. LVS is a highly scalable virtual server built on a system of real servers. By using LVS, the
architecture of the HP XC system is transparent to end users, and they see only a single virtual
server. This eliminates the need for users to know how the system is configured in order to
successfully log in and use the system. Any changes in the system configuration are transparent
to end users. LVS also provides load balancing across login nodes, which distributes login requests
to different servers.

1.3.2 Modules

The HP XC system provides the Modules Package (not to be confused with Linux kernel modules)

to configure and modify the user environment. The Modules Package enables dynamic

modification of a user’s environment by means of modulefiles. Modulefiles provide a convenient

means for users to tailor their working environment as necessary. One of the key features of

modules is to allow multiple versions of the same software to be used in a controlled manner.

A modulefile contains information to configure the shell for an application. Typically, a modulefile

contains instructions that alter or set shell environment variables, such as PATH and MANPATH,

to enable access to various installed software. Many users on a system can share modulefiles,

and users may have their own collection to supplement or replace the shared modulefiles.

Modulefiles can be loaded into your environment automatically when you log in to the

system, or any time you need to alter the environment. The HP XC system does not preload

modulefiles.

See Chapter 3 “Configuring Your Environment with Modulefiles” for more information.
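To make the effect of a modulefile concrete, the following shell sketch imitates what loading and unloading a modulefile typically does to a path-style variable such as PATH or MANPATH. The functions prepend_path and remove_path and the directory /opt/example/1.0 are hypothetical and exist only for this illustration; on a real system the Modules commands make the equivalent changes for you.

```shell
#!/bin/sh
# Sketch of the environment changes a modulefile typically makes.
# prepend_path, remove_path, and /opt/example/1.0 are hypothetical.

# prepend_path VAR DIR
# Put DIR at the front of the colon-separated list in the variable VAR.
prepend_path() {
    eval "current=\${$1:-}"
    if [ -n "$current" ]; then
        eval "$1=\$2:\$current"
    else
        eval "$1=\$2"
    fi
    export "$1"
}

# remove_path VAR DIR
# Drop every occurrence of DIR from the colon-separated list in VAR.
remove_path() {
    eval "current=\${$1:-}"
    result=$(
        IFS=:                       # split the list on colons (subshell only)
        r=
        for entry in $current; do
            if [ "$entry" != "$2" ]; then
                r=${r:+$r:}$entry
            fi
        done
        printf '%s' "$r"
    )
    eval "$1=\$result"
    export "$1"
}

# "Loading": make a particular software version visible.
prepend_path PATH /opt/example/1.0/bin
prepend_path MANPATH /opt/example/1.0/man
echo "after load:   PATH=$PATH"

# "Unloading": restore the previous search paths.
remove_path PATH /opt/example/1.0/bin
remove_path MANPATH /opt/example/1.0/man
echo "after unload: PATH=$PATH"
```

Keeping each software version under its own directory and switching it in or out of the search paths is what allows multiple versions of the same software to coexist in a controlled manner.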

1.3.3 Commands

The HP XC user environment includes standard Linux commands, LSF commands, SLURM

commands, HP-MPI commands, and modules commands. This section provides a brief overview

of these command sets.

Linux commands
    You can use standard Linux user commands and tools on the HP XC
    system. Standard Linux commands are not described in this document,
    but you can access Linux command descriptions in Linux
    documentation and manpages. Run the Linux man command with the
    Linux command name to display the corresponding manpage.

LSF commands
    HP XC supports LSF-HPC and the use of standard LSF commands,
    some of which operate differently in the HP XC environment from
    standard LSF behavior. The use of LSF-HPC commands in the HP XC
    environment is described in Chapter 10 “Using LSF-HPC”, and in the
    HP XC lsf_diff manpage. Information about standard LSF
    commands is available in Platform Computing Corporation LSF
    documentation, and in the LSF manpages. For your convenience, the
    HP XC Documentation CD contains XC LSF manuals from Platform
    Computing. LSF manpages are available on the HP XC system.

SLURM commands
    HP XC uses the Simple Linux Utility for Resource Management
    (SLURM) for system resource management and job scheduling.
    Standard SLURM commands are available through the command line.
    SLURM functionality is described in Chapter 9 “Using SLURM”.
    Descriptions of SLURM commands are available in the SLURM
    manpages. Invoke the man command with the SLURM command name
    to access them.

HP-MPI commands
    You can run standard HP-MPI commands from the command line.
    Descriptions of HP-MPI commands are available in the HP-MPI
    documentation, which is supplied with the HP XC system software.

Modules commands
    The HP XC system uses standard Modules commands to load and
    unload modulefiles, which are used to configure and modify the user
    environment. Modules commands are described in “Overview of
    Modules”.

1.4 Application Development Environment

The HP XC system provides an environment that enables developing, building, and running

applications using multiple nodes with multiple cores. These applications can range from parallel

applications using many cores to serial applications using a single core.

1.4.1 Parallel Applications

The HP XC parallel application development environment allows parallel application processes

to be started and stopped together on a large number of application processors, along with the

I/O and process control structures to manage these kinds of applications.

Full details and examples of how to build, run, debug, and troubleshoot parallel applications

are provided in “Developing Parallel Applications”.

1.4.2 Serial Applications

You can build and run serial applications under the HP XC development environment. A serial

application is a command or application that does not use any form of parallelism.

Full details and examples of how to build, run, debug, and troubleshoot serial applications are

provided in “Building Serial Applications”.

1.5 Run-Time Environment

This section describes LSF-HPC, SLURM, and HP-MPI, and how these components work together

to provide the HP XC run-time environment. LSF-HPC focuses on scheduling (and managing

the workload) and SLURM provides efficient and scalable resource management of the compute

nodes.

Another HP XC environment features standard LSF without the interaction with the SLURM

resource manager.

1.5.1 SLURM

Simple Linux Utility for Resource Management (SLURM) is a resource management system that

is integrated into the HP XC system. SLURM is suitable for use on large and small Linux clusters.

It was developed by Lawrence Livermore National Laboratory and Linux NetworX. As a resource

manager, SLURM allocates exclusive or unrestricted access to resources (application and compute

nodes) for users to perform work, and provides a framework to start, execute and monitor work

(normally a parallel job) on the set of allocated nodes.

A SLURM system consists of two daemons, one configuration file, and a set of commands and

APIs. The central controller daemon, slurmctld, maintains the global state and directs

operations. A slurmd daemon is deployed to each computing node and responds to job-related

requests, such as launching jobs, signalling, and terminating jobs. End users and system software

(such as LSF-HPC) communicate with SLURM by means of commands or APIs — for example,

allocating resources, launching parallel jobs on allocated resources, and terminating running

jobs.

SLURM groups compute nodes (the nodes where jobs are run) together into “partitions”. The

HP XC system can have one or several partitions. When HP XC is installed, a single partition of

compute nodes is created by default for LSF-HPC batch jobs. The system administrator has the

option of creating additional partitions. For example, another partition could be created for

interactive jobs.
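As a minimal sketch of how a user might look at the partitions and jobs described above, the following shell function calls two standard SLURM commands, sinfo and squeue. The function name slurm_overview is hypothetical, and the sketch assumes the SLURM user commands are on your PATH; the partitions and jobs reported depend entirely on how your site is configured.

```shell
#!/bin/sh
# Sketch: inspect SLURM partitions and your own jobs.
# slurm_overview is a hypothetical helper name used for illustration.

slurm_overview() {
    # Bail out cleanly on nodes where the SLURM commands are not installed.
    if ! command -v sinfo >/dev/null 2>&1; then
        echo "SLURM commands not found on this node" >&2
        return 1
    fi

    # List the partitions and the state of the nodes in each one.
    sinfo

    # List the jobs that belong to the current user.
    squeue -u "$(id -un)"
}

slurm_overview || echo "skipping SLURM overview"
```

Both commands are read-only, so the sketch does not allocate resources or start jobs.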

1.5.2 Load Sharing Facility (LSF-HPC)

The Load Sharing Facility for High Performance Computing (LSF-HPC) from Platform Computing

Corporation is a batch system resource manager that has been integrated with SLURM for use

on the HP XC system. LSF-HPC for SLURM is included with the HP XC System Software, and

is an integral part of the HP XC environment. LSF-HPC interacts with SLURM to obtain and

allocate available resources, and to launch and control all the jobs submitted to LSF-HPC. LSF-HPC

accepts, queues, schedules, dispatches, and controls all the batch jobs that users submit, according

to policies and configurations established by the HP XC site administrator. On an HP XC system,