Page 1

HP XC System Software

Hardware Preparation Guide

Version 3.2.1

HP Part Number: A-XCHWP-321c

Published: October 2008

Page 2

© Copyright 2003, 2004, 2005, 2006, 2007, 2008 Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express

warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP

shall not be liable for technical or editorial errors or omissions contained herein. Intel, Pentium, Intel Inside, and the Intel Inside logo are trademarks

or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

AMD and AMD Opteron are trademarks or registered trademarks of Advanced Micro Devices, Inc.

FLEXlm and Macrovision are trademarks or registered trademarks of Macrovision Corporation.

InfiniBand is a registered trademark and service mark of the InfiniBand Trade Association.

Intel, Itanium, and Xeon are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a U.S. registered trademark of Linus Torvalds.

LSF and Platform Computing are trademarks or registered trademarks of Platform Computing Corporation.

Lustre is a registered trademark of Cluster File Systems, Inc.

Myrinet and Myricom are registered trademarks of Myricom, Inc.

Nagios is a registered trademark of Ethan Galstad.

The Portland Group and PGI are trademarks or registered trademarks of The Portland Group Compiler Technology, STMicroelectronics, Inc.

Quadrics and QsNetII are registered trademarks of Quadrics, Ltd.

Red Hat and RPM are registered trademarks of Red Hat, Inc.

syslog-ng is a copyright of BalaBit IT Security.

SystemImager is a registered trademark of Brian Finley.

TotalView is a registered trademark of Etnus, Inc.

UNIX is a registered trademark of The Open Group.

Page 3

Table of Contents

About This Document.......................................................................................................11

Intended Audience................................................................................................................................11

New and Changed Information in This Edition...................................................................................11

Typographic Conventions.....................................................................................................................11

HP XC and Related HP Products Information.....................................................................................12

Related Information..............................................................................................................................14

Manpages..............................................................................................................................................17

HP Encourages Your Comments..........................................................................................................17

1 Hardware and Network Overview............................................................................19

1.1 Supported Cluster Platforms...........................................................................................................19

1.1.1 Supported Processor Architectures and Hardware Models...................................................19

1.1.2 Supported Server Blade Combinations...................................................................................21

1.2 Server Blade Enclosure Components..............................................................................................22

1.2.1 HP BladeSystem c7000 Enclosure...........................................................................................22

1.2.2 HP BladeSystem c3000 Enclosure...........................................................................................25

1.2.3 HP BladeSystem c-Class Onboard Administrator .................................................................27

1.2.4 Insight Display........................................................................................................................28

1.3 Server Blade Mezzanine Cards........................................................................................................28

1.4 Server Blade Interconnect Modules.................................................................................................28

1.5 Supported Console Management Devices......................................................................................29

1.6 Administration Network Overview................................................................................................30

1.7 Administration Network: Console Branch......................................................................................31

1.8 Interconnect Network......................................................................................................................31

1.9 Large-Scale Systems........................................................................................................................32

2 Cabling Server Blades.................................................................................................33

2.1 Blade Enclosure Overview..............................................................................................................33

2.2 Network Overview .........................................................................................................................33

2.3 Cabling for the Administration Network........................................................................................37

2.4 Cabling for the Console Network...................................................................................................38

2.5 Cabling for the Interconnect Network............................................................................................39

2.5.1 Configuring a Gigabit Ethernet Interconnect..........................................................................39

2.5.2 Configuring an InfiniBand Interconnect.................................................................................40

2.5.3 Configuring the Interconnect Network Over the Administration Network..........................41

2.6 Cabling for the External Network...................................................................................................41

2.6.1 Configuring the External Network: Option 1.........................................................................41

2.6.2 Configuring the External Network: Option 2.........................................................................42

2.6.3 Configuring the External Network: Option 3 - Non Gigabit Ethernet Interconnect Clusters............................................................................................................................................43

2.6.4 Creating VLANs......................................................................................................................44

3 Making Node and Switch Connections....................................................................45

3.1 Cabinets...........................................................................................................................................45

3.2 Trunking and Switch Choices..........................................................................................................45

3.3 Switches...........................................................................................................................................46

3.3.1 Specialized Switch Use............................................................................................................46

3.3.2 Administrator Passwords on ProCurve Switches...................................................................47

Page 4

3.3.3 Switch Port Connections.........................................................................................................47

3.3.3.1 Switch Connections and HP Workstations.....................................................................49

3.3.4 Super Root Switch...................................................................................................................49

3.3.5 Root Administration Switch....................................................................................................50

3.3.6 Root Console Switches............................................................................................................51

3.3.6.1 ProCurve 2650 Switch.....................................................................................................52

3.3.6.2 ProCurve 2610-48 Switch................................................................................................52

3.3.6.3 ProCurve 2626 Switch.....................................................................................................53

3.3.6.4 ProCurve 2610-24 Switch................................................................................................54

3.3.7 Branch Administration Switches.............................................................................................54

3.3.8 Branch Console Switches.........................................................................................................55

3.4 Interconnect Connections................................................................................................................56

3.4.1 QsNet Interconnect Connections............................................................................................57

3.4.2 Gigabit Ethernet Interconnect Connections............................................................................57

3.4.3 Administration Network Interconnect Connections..............................................................57

3.4.4 Myrinet Interconnect Connections..........................................................................................58

3.4.5 InfiniBand Interconnect Connections......................................................................................58

4 Preparing Individual Nodes........................................................................................59

4.1 Firmware Requirements and Dependencies...................................................................................59

4.2 Ethernet Port Connections on the Head Node................................................................................61

4.3 General Hardware Preparations for All Cluster Platforms............................................................61

4.4 Setting the Onboard Administrator Password................................................................................62

4.5 Preparing the Hardware for CP3000 (Intel Xeon with EM64T) Systems........................................63

4.5.1 Preparing HP ProLiant DL140 G2 and G3 Nodes...................................................................63

4.5.2 Preparing HP ProLiant DL160 G5 Nodes...............................................................................66

4.5.3 Preparing HP ProLiant DL360 G4 Nodes...............................................................................68

4.5.4 Preparing HP ProLiant DL360 G5 Nodes...............................................................................70

4.5.5 Preparing HP ProLiant DL380 G4 and G5 Nodes...................................................................72

4.5.6 Preparing HP ProLiant DL580 G4 Nodes...............................................................................75

4.5.7 Preparing HP ProLiant DL580 G5 Nodes...............................................................................78

4.5.8 Preparing HP xw8200 and xw8400 Workstations...................................................................80

4.5.9 Preparing HP xw8600 Workstations.......................................................................................82

4.6 Preparing the Hardware for CP3000BL Systems............................................................................84

4.7 Preparing the Hardware for CP4000 (AMD Opteron) Systems......................................................87

4.7.1 Preparing HP ProLiant DL145 Nodes.....................................................................................87

4.7.2 Preparing HP ProLiant DL145 G2 and DL145 G3 Nodes.......................................................89

4.7.3 Preparing HP ProLiant DL165 G5 Nodes...............................................................................93

4.7.4 Preparing HP ProLiant DL365 Nodes.....................................................................................94

4.7.5 Preparing HP ProLiant DL365 G5 Nodes...............................................................................97

4.7.6 Preparing HP ProLiant DL385 and DL385 G2 Nodes...........................................................100

4.7.7 Preparing HP ProLiant DL385 G5 Nodes.............................................................................104

4.7.8 Preparing HP ProLiant DL585 and DL585 G2 Nodes...........................................................106

4.7.9 Preparing HP ProLiant DL585 G5 Nodes.............................................................................110

4.7.10 Preparing HP ProLiant DL785 G5 Nodes............................................................................113

4.7.11 Preparing HP xw9300 and xw9400 Workstations...............................................................116

4.8 Preparing the Hardware for CP4000BL Systems..........................................................................119

4.9 Preparing the Hardware for CP6000 (Intel Itanium) Systems......................................................122

4.9.1 Setting Static IP Addresses on Integrity Servers...................................................................122

4.9.2 Preparing HP Integrity rx1620 and rx2600 Nodes................................................................122

4.9.3 Preparing HP Integrity rx2620 Nodes...................................................................................125

4.9.4 Preparing HP Integrity rx2660 Nodes...................................................................................127

4.9.5 Preparing HP Integrity rx4640 Nodes...................................................................................129

4.9.6 Preparing HP Integrity rx8620 Nodes...................................................................................131

Page 5

4.10 Preparing the Hardware for CP6000BL Systems.........................................................................136

5 Troubleshooting..........................................................................................................139

5.1 iLO2 Devices..................................................................................................................................139

5.1.1 iLO2 Devices Can Become Unresponsive.............................................................................139

A Establishing a Connection Through a Serial Port...................................................141

B Server Blade Configuration Examples.....................................................................143

B.1 Gigabit Ethernet Interconnect With Half-Height Server Blades...................................................143

B.2 InfiniBand Interconnect With Full-Height Server Blades.............................................................143

B.3 InfiniBand Interconnect With Mixed Height Server Blades.........................................................144

Glossary.........................................................................................................................147

Index...............................................................................................................................153

Page 6

List of Figures

1-1 HP BladeSystem c7000 Enclosure (Front and Rear Views)...........................................................22

1-2 HP BladeSystem c7000 Enclosure Bay Locations (Front View)....................................................22

1-3 HP BladeSystem c7000 Enclosure Bay Numbering for Half Height and Full Height Server Blades.............................................................................................................................................23

1-4 HP BladeSystem c7000 Enclosure Bay Locations (Rear View)......................................................24

1-5 HP BladeSystem c3000 Enclosure (Front and Rear Views)...........................................................25

1-6 HP BladeSystem c3000 Enclosure Tower Model...........................................................................25

1-7 HP BladeSystem c3000 Enclosure Bay Locations (Front View)....................................................26

1-8 HP BladeSystem c3000 Enclosure Bay Numbering.......................................................................26

1-9 HP BladeSystem c3000 Enclosure Bay Locations (Rear View)......................................................27

1-10 Server Blade Insight Display.........................................................................................................28

1-11 Administration Network: Console Branch (Without HP Server Blades)......................................31

2-1 Interconnection Diagram for a Small HP XC Cluster of Server Blades........................................35

2-2 Interconnection Diagram for a Medium Sized HP XC Cluster of Server Blades..........................36

2-3 Interconnection Diagram for a Large HP XC Cluster of Server Blades........................................37

2-4 Administration Network Connections..........................................................................................38

2-5 Console Network Connections......................................................................................................39

2-6 Gigabit Ethernet Interconnect Connections..................................................................................40

2-7 InfiniBand Interconnect Connections............................................................................................40

2-8 External Network Connections: Full-Height Server Blades and NIC1 and NIC2 in Use.............42

2-9 External Network Connections: Half-Height Server Blades and NIC1 and NIC2 in Use............43

2-10 External Network Connections: Half and Full-Height Server Blades and NIC1 in Use...............44

3-1 Application and Utility Cabinets..................................................................................................45

3-2 Node and Switch Connections on a Typical System.....................................................................48

3-3 Switch Connections for a Large-Scale System...............................................................................48

3-4 ProCurve 2848 Super Root Switch................................................................................................49

3-5 ProCurve 2848 Root Administration Switch.................................................................................50

3-6 ProCurve 2824 Root Administration Switch.................................................................................51

3-7 ProCurve 2650 Root Console Switch.............................................................................................52

3-8 ProCurve 2610-48 Root Console Switch........................................................................................53

3-9 ProCurve 2626 Root Console Switch.............................................................................................53

3-10 ProCurve 2610-24 Root Console Switch........................................................................................54

3-11 ProCurve 2848 Branch Administration Switch.............................................................................55

3-12 ProCurve 2824 Branch Administration Switch.............................................................................55

3-13 ProCurve 2650 Branch Console Switch.........................................................................................56

3-14 ProCurve 2610-48 Branch Console Switch....................................................................................56

4-1 HP ProLiant DL140 G2 and DL140 G3 Server Rear View.............................................................63

4-2 HP ProLiant DL160 G5 Server Rear View.....................................................................................66

4-3 HP ProLiant DL360 G4 Server Rear View.....................................................................................68

4-4 HP ProLiant DL360 G5 Server Rear View.....................................................................................70

4-5 HP ProLiant DL380 G4 Server Rear View.....................................................................................72

4-6 HP ProLiant DL380 G5 Server Rear View.....................................................................................73

4-7 HP ProLiant DL580 G4 Server Rear View.....................................................................................76

4-8 HP ProLiant DL580 G5 Server Rear View.....................................................................................79

4-9 HP xw8200 and xw8400 Workstation Rear View..........................................................................81

4-10 HP xw8600 Workstation Rear View..............................................................................................82

4-11 HP ProLiant DL145 Server Rear View..........................................................................................87

4-12 HP ProLiant DL145 G2 Server Rear View.....................................................................................89

4-13 HP ProLiant DL145 G3 Server Rear View ....................................................................................90

4-14 HP ProLiant DL165 G5 Server Rear View ....................................................................................93

4-15 HP ProLiant DL365 Server Rear View..........................................................................................95

4-16 HP ProLiant DL365 G5 Server Rear View.....................................................................................97

Page 7

4-17 HP ProLiant DL385 Server Rear View.........................................................................................100

4-18 HP ProLiant DL385 G2 Server Rear View...................................................................................101

4-19 HP ProLiant DL385 G5 Server Rear View ..................................................................................105

4-20 HP ProLiant DL585 Server Rear View.........................................................................................106

4-21 HP ProLiant DL585 G2 Server Rear View...................................................................................106

4-22 HP ProLiant DL585 G5 Server Rear View ..................................................................................111

4-23 HP ProLiant DL785 G5 Server Rear View ..................................................................................113

4-24 xw9300 Workstation Rear View...................................................................................................116

4-25 xw9400 Workstation Rear View ..................................................................................................116

4-26 HP Integrity rx1620 Server Rear View.........................................................................................123

4-27 HP Integrity rx2600 Server Rear View.........................................................................................123

4-28 HP Integrity rx2620 Server Rear View.........................................................................................125

4-29 HP Integrity rx2660 Server Rear View ........................................................................................127

4-30 HP Integrity rx4640 Server Rear View ........................................................................................129

4-31 HP Integrity rx8620 Core IO Board Connections........................................................................132

B-1 Gigabit Ethernet Interconnect With Half-Height Server Blades.................................................143

B-2 InfiniBand Interconnect With Full-Height Server Blades...........................................................144

B-3 InfiniBand Interconnect With Mixed Height Server Blades........................................................145

Page 8

List of Tables

1-1 Supported Processor Architectures and Hardware Models.........................................................20

1-2 Supported HP ProLiant Server Blade Models...............................................................................21

1-3 Supported Console Management Devices....................................................................................29

1-4 Supported Interconnects...............................................................................................................32

3-1 Supported Switch Models.............................................................................................................47

3-2 Trunking Port Use on Large-Scale Systems with Multiple Regions.............................................49

4-1 Firmware Dependencies................................................................................................................59

4-2 Ethernet Ports on the Head Node.................................................................................................61

4-3 BIOS Settings for HP ProLiant DL140 G2 Nodes..........................................................................64

4-4 BIOS Settings for HP ProLiant DL140 G3 Nodes..........................................................................65

4-5 BIOS Settings for HP ProLiant DL160 G5 Nodes..........................................................................67

4-6 iLO Settings for HP ProLiant DL360 G4 Nodes ...........................................................................69

4-7 BIOS Settings for HP ProLiant DL360 G4 Nodes..........................................................................69

4-8 iLO Settings for HP ProLiant DL360 G5 Nodes ...........................................................................71

4-9 BIOS Settings for HP ProLiant DL360 G5 Nodes .........................................................................71

4-10 iLO Settings for HP ProLiant DL380 G4 and G5 Nodes...............................................................73

4-11 BIOS Settings for HP ProLiant DL380 G4 Nodes..........................................................................74

4-12 BIOS Settings for HP ProLiant DL380 G5 Nodes .........................................................................74

4-13 iLO Settings for HP ProLiant DL580 G4 Nodes............................................................................77

4-14 BIOS Settings for HP ProLiant DL580 G4 Nodes..........................................................................78

4-15 iLO Settings for HP ProLiant DL580 G5 Nodes............................................................................79

4-16 BIOS Settings for HP ProLiant DL580 G5 Nodes..........................................................................80

4-17 BIOS Settings for xw8200 Workstations........................................................................................81

4-18 BIOS Settings for xw8400 Workstations........................................................................................82

4-19 BIOS Settings for xw8600 Workstations........................................................................................83

4-20 Boot Order for HP ProLiant Server Blades...................................................................................84

4-21 BIOS Settings for HP ProLiant DL145 Nodes...............................................................................88

4-22 BIOS Settings for HP ProLiant DL145 G2 Nodes..........................................................................90

4-23 BIOS Settings for HP ProLiant DL145 G3 Nodes..........................................................................92

4-24 BIOS Settings for HP ProLiant DL165 G5 Nodes..........................................................................94

4-25 iLO Settings for HP ProLiant DL365 Nodes.................................................................................95

4-26 RBSU Settings for HP ProLiant DL365 Nodes..............................................................................96

4-27 iLO Settings for HP ProLiant DL365 G5 Nodes............................................................................98

4-28 RBSU Settings for HP ProLiant DL365 G5 Nodes.........................................................................99

4-29 iLO Settings for HP ProLiant DL385 Nodes................................................................................101

4-30 iLO Settings for HP ProLiant DL385 G2 Nodes .........................................................................102

4-31 RBSU Settings for HP ProLiant DL385 Nodes............................................................................103

4-32 RBSU Settings for HP ProLiant DL385 G2 Nodes ......................................................................103

4-33 iLO Settings for HP ProLiant DL385 G5 Nodes .........................................................................105

4-34 iLO Settings for HP ProLiant DL585 Nodes................................................................................107

4-35 iLO Settings for HP ProLiant DL585 G2 Nodes .........................................................................107

4-36 RBSU Settings for HP ProLiant DL585 Nodes............................................................................109

4-37 RBSU Settings for HP ProLiant DL585 G2 Nodes.......................................................................109

4-38 iLO Settings for HP ProLiant DL585 G5 Nodes .........................................................................111

4-39 RBSU Settings for HP ProLiant DL585 G5 Nodes.......................................................................112

4-40 iLO Settings for HP ProLiant DL785 G5 Nodes .........................................................................114

4-41 RBSU Settings for HP ProLiant DL785 G5 Nodes.......................................................................115

4-42 Setup Utility Settings for xw9300 Workstations..........................................................................117

4-43 Setup Utility Settings for xw9400 Workstations..........................................................................117

4-44 Boot Order for HP ProLiant Server Blades..................................................................................119

4-45 Additional BIOS Setting for HP ProLiant BL685c Nodes...........................................................121

4-46 Setting Static IP Addresses for MP Power Management Devices...............................................122

Page 9

4-47 Adding a Boot Entry and Setting the Boot Order on HP Integrity Server Blades......................137

Page 10

Page 11

About This Document

This document describes how to prepare the nodes in your HP cluster platform before installing

HP XC System Software.

An HP XC system is integrated with several open source software components. Some open source

software components are being used for underlying technology, and their deployment is

transparent. Some open source software components require user-level documentation specific

to HP XC systems, and that kind of information is included in this document when required.

HP relies on the documentation provided by the open source developers to supply the information

you need to use their product. For links to open source software documentation for products

that are integrated with the HP XC system, see “Supplementary Software Products” (page 14).

Documentation for third-party hardware and software components that are supported on the

HP XC system is supplied by the third-party vendor. However, information about the operation

of third-party software is included in this document if the functionality of the third-party

component differs from standard behavior when used in the XC environment. In this case, HP

XC documentation supersedes information supplied by the third-party vendor. For links to

related third-party Web sites, see “Supplementary Software Products” (page 14).

Standard Linux® administrative tasks or the functions provided by standard Linux tools and

commands are documented in commercially available Linux reference manuals and on various

Web sites. For more information about obtaining documentation for standard Linux administrative

tasks and associated topics, see the list of Web sites and additional publications provided in

“Related Software Products and Additional Publications” (page 15).

Intended Audience

The information in this document is written for technicians or administrators who have the task

of preparing the hardware on which the HP XC System Software will be installed.

Before beginning, you must meet the following requirements:

• You are familiar with accessing BIOS and consoles with either Ethernet or serial port

connections and terminal emulators.

• You have access to and have read the HP Cluster Platform documentation.

• You have access to and have read the HP server blade documentation if the hardware

configuration contains HP server blade models.

• You have previous experience with a Linux operating system.

New and Changed Information in This Edition

• This document was updated to include the following servers:

— CP3000BL platform:

◦ HP ProLiant BL2x220c G5 server blade

Typographic Conventions

This document uses the following typographical conventions:

%, $, or # A percent sign represents the C shell system prompt. A dollar sign represents the system prompt for the Korn, POSIX, and Bourne shells. A number sign represents the superuser prompt.

audit(5) A manpage. The manpage name is audit, and it is located in Section 5.

Command A command name or qualified command phrase.

Computer output Text displayed by the computer.

Page 12

Ctrl+x A key sequence. A sequence such as Ctrl+x indicates that you

must hold down the key labeled Ctrl while you press another

key or mouse button.

ENVIRONMENT VARIABLE The name of an environment variable, for example, PATH.

[ERROR NAME]

The name of an error, usually returned in the errno variable.

Key The name of a keyboard key. Return and Enter both refer to the

same key.

Term The defined use of an important word or phrase.

User input Commands and other text that you type.

Variable The name of a placeholder in a command, function, or other syntax display that you replace with an actual value.

[ ] The contents are optional in syntax. If the contents are a list

separated by |, you can choose one of the items.

{ } The contents are required in syntax. If the contents are a list

separated by |, you must choose one of the items.

. . . The preceding element can be repeated an arbitrary number of

times.

| Separates items in a list of choices.

WARNING A warning calls attention to important information that if not

understood or followed will result in personal injury or

nonrecoverable system problems.

CAUTION A caution calls attention to important information that if not

understood or followed will result in data loss, data corruption,

or damage to hardware or software.

IMPORTANT This alert provides essential information to explain a concept or

to complete a task.

NOTE A note contains additional information to emphasize or

supplement important points of the main text.

HP XC and Related HP Products Information

The HP XC System Software Documentation Set, the Master Firmware List, and HP XC HowTo

documents are available at this HP Technical Documentation Web site:

http://docs.hp.com/en/linuxhpc.html

The HP XC System Software Documentation Set includes the following core documents:

HP XC System Software Release Notes

Describes important, last-minute information about firmware, software, or hardware that might affect the system. This document is not shipped on the HP XC documentation CD. It is available only on line.

HP XC Hardware Preparation Guide

Describes hardware preparation tasks specific to HP XC that are required to prepare each supported hardware model for installation and configuration, including required node and switch connections.

HP XC System Software Installation Guide

Provides step-by-step instructions for installing the HP XC System Software on the head node and configuring the system.

HP XC System Software Administration Guide

Provides an overview of the HP XC system administrative environment, cluster administration tasks, node maintenance tasks, LSF® administration tasks, and troubleshooting procedures.

Page 13

HP XC System Software User's Guide

Provides an overview of managing the HP XC user environment with modules and managing jobs with LSF, and describes how to build, run, debug, and troubleshoot serial and parallel applications on an HP XC system.

QuickSpecs for HP XC System Software

Provides a product overview, hardware requirements, software requirements, software licensing information, ordering information, and information about commercially available software that has been qualified to interoperate with the HP XC System Software. The QuickSpecs are located on line:

http://www.hp.com/go/clusters

See the following sources for information about related HP products.

HP XC Program Development Environment

The Program Development Environment home page provides pointers to tools that have been

tested in the HP XC program development environment (for example, TotalView® and other

debuggers, compilers, and so on).

http://h20311.www2.hp.com/HPC/cache/276321-0-0-0-121.html

HP Message Passing Interface

HP Message Passing Interface (HP-MPI) is an implementation of the MPI standard that has been

integrated in HP XC systems. The home page and documentation are located at the following Web

site:

http://www.hp.com/go/mpi

HP Serviceguard

HP Serviceguard is a service availability tool supported on an HP XC system. HP Serviceguard

enables some system services to continue if a hardware or software failure occurs. The HP

Serviceguard documentation is available at the following Web site:

http://docs.hp.com/en/ha.html

HP Scalable Visualization Array

The HP Scalable Visualization Array (SVA) is a scalable visualization solution that is integrated

with the HP XC System Software. The SVA documentation is available at the following Web site:

http://docs.hp.com/en/linuxhpc.html

HP Cluster Platform

The cluster platform documentation describes site requirements, shows you how to set up the

servers and additional devices, and provides procedures to operate and manage the hardware.

These documents are available at the following Web site:

http://www.docs.hp.com/en/highperfcomp.html

HP Integrity and HP ProLiant Servers

Documentation for HP Integrity and HP ProLiant servers is available at the following web

address:

http://docs.hp.com/en/hw.html

For c-Class Server BladeSystems, see also the installation, administration, and user guides for

the following components:

• HP (ProLiant or Integrity) C-Class Server Blades

• HP BladeSystem c-Class Onboard Administrator

Page 14

• HP Server Blade c7000 Enclosure

• HP BladeSystem c3000 Enclosure

Related Information

This section provides useful links to third-party, open source, and other related software products.

Supplementary Software Products This section provides links to third-party and open source

software products that are integrated into the HP XC System Software core technology. In the

HP XC documentation, except where necessary, references to third-party and open source

software components are generic, and the HP XC adjective is not added to any reference to a

third-party or open source command or product name. For example, the SLURM srun command

is simply referred to as the srun command.

The location of each web address or link to a particular topic listed in this section is subject to

change without notice by the site provider.

• http://www.platform.com

Home page for Platform Computing Corporation, the developer of the Load Sharing Facility

(LSF). LSF-HPC with SLURM, the batch system resource manager used on an HP XC system,

is tightly integrated with the HP XC and SLURM software. Documentation specific to

LSF-HPC with SLURM is provided in the HP XC documentation set.

Standard LSF is also available as an alternative resource management system (instead of

LSF-HPC with SLURM) for HP XC. This is the version of LSF that is widely discussed on

the Platform web address.

For your convenience, the following Platform Computing Corporation LSF documents are

shipped on the HP XC documentation CD in PDF format:

— Administering Platform LSF

— Administration Primer

— Platform LSF Reference

— Quick Reference Card

— Running Jobs with Platform LSF

LSF procedures and information supplied in the HP XC documentation, particularly the

documentation relating to the LSF-HPC integration with SLURM, supersede the information

supplied in the LSF manuals from Platform Computing Corporation.

The Platform Computing Corporation LSF manpages are installed by default. lsf_diff(7)

supplied by HP describes LSF command differences when using LSF-HPC with SLURM on

an HP XC system.

The following documents in the HP XC System Software Documentation Set provide

information about administering and using LSF on an HP XC system:

— HP XC System Software Administration Guide

— HP XC System Software User's Guide

• https://computing.llnl.gov/linux/slurm/documentation.html

Documentation for the Simple Linux Utility for Resource Management (SLURM), which is

integrated with LSF to manage job and compute resources on an HP XC system.

• http://www.nagios.org/

Home page for Nagios®, a system and network monitoring application that is integrated

into an HP XC system to provide monitoring capabilities. Nagios watches specified hosts

and services and issues alerts when problems occur and when problems are resolved.

• http://oss.oetiker.ch/rrdtool

Home page of RRDtool, a round-robin database tool and graphing system. In the HP XC

system, RRDtool is used with Nagios to provide a graphical view of system status.

Page 15

• http://supermon.sourceforge.net/

Home page for Supermon, a high-speed cluster monitoring system that emphasizes low

perturbation, high sampling rates, and an extensible data protocol and programming

interface. Supermon works in conjunction with Nagios to provide HP XC system monitoring.

• http://www.llnl.gov/linux/pdsh/

Home page for the parallel distributed shell (pdsh), which executes commands across HP

XC client nodes in parallel.

• http://www.balabit.com/products/syslog_ng/

Home page for syslog-ng, a logging tool that replaces the traditional syslog functionality.

The syslog-ng tool is a flexible and scalable audit trail processing tool. It provides a

centralized, securely stored log of all devices on the network.

• http://systemimager.org

Home page for SystemImager®, which is the underlying technology that distributes the

golden image to all nodes and distributes configuration changes throughout the system.

• http://linuxvirtualserver.org

Home page for the Linux Virtual Server (LVS), the load balancer running on the Linux

operating system that distributes login requests on the HP XC system.

• http://www.macrovision.com

Home page for Macrovision®, developer of the FLEXlm™ license management utility, which

is used for HP XC license management.

• http://sourceforge.net/projects/modules/

Web address for Modules, which provide for easy dynamic modification of a user's

environment through modulefiles, which typically instruct the module command to alter

or set shell environment variables.

• http://dev.mysql.com/

Home page for MySQL AB, developer of the MySQL database. This web address contains

a link to the MySQL documentation, particularly the MySQL Reference Manual.

Related Software Products and Additional Publications This section provides pointers to web

addresses for related software products and provides references to useful third-party publications.

The location of each web address or link to a particular topic is subject to change without notice

by the site provider.

Linux Web Addresses

• http://www.redhat.com

Home page for Red Hat®, distributors of Red Hat Enterprise Linux Advanced Server, a

Linux distribution with which the HP XC operating environment is compatible.

• http://www.linux.org/docs/index.html

This web address for the Linux Documentation Project (LDP) contains guides that describe

aspects of working with Linux, from creating your own Linux system from scratch to bash

script writing. This site also includes links to Linux HowTo documents, frequently asked

questions (FAQs), and manpages.

Page 16

• http://www.linuxheadquarters.com

Web address providing documents and tutorials for the Linux user. Documents contain

instructions for installing and using applications for Linux, configuring hardware, and a

variety of other topics.

• http://www.gnu.org

Home page for the GNU Project. This site provides online software and information for

many programs and utilities that are commonly used on GNU/Linux systems. Online

information includes guides for using the bash shell, emacs, make, cc, gdb, and more.

MPI Web Addresses

• http://www.mpi-forum.org

Contains the official MPI standards documents, errata, and archives of the MPI Forum. The

MPI Forum is an open group with representatives from many organizations that define and

maintain the MPI standard.

• http://www-unix.mcs.anl.gov/mpi/

A comprehensive site containing general information, such as the specification and FAQs,

and pointers to other resources, including tutorials, implementations, and other MPI-related

sites.

Compiler Web Addresses

• http://www.intel.com/software/products/compilers/index.htm

Web address for Intel® compilers.

• http://support.intel.com/support/performancetools/

Web address for general Intel software development information.

• http://www.pgroup.com/

Home page for The Portland Group™, supplier of the PGI® compiler.

Debugger Web Address

http://www.etnus.com

Home page for Etnus, Inc., maker of the TotalView® parallel debugger.

Software RAID Web Addresses

• http://www.tldp.org/HOWTO/Software-RAID-HOWTO.html and http://www.ibiblio.org/pub/Linux/docs/HOWTO/other-formats/pdf/Software-RAID-HOWTO.pdf

A document (in two formats: HTML and PDF) that describes how to use software RAID

under a Linux operating system.

• http://www.linuxdevcenter.com/pub/a/linux/2002/12/05/RAID.html

Provides information about how to use the mdadm RAID management utility.

Additional Publications

For more information about standard Linux system administration or other related software

topics, consider using one of the following publications, which must be purchased separately:

• Linux Administration Unleashed, by Thomas Schenk, et al.

• Linux Administration Handbook, by Evi Nemeth, Garth Snyder, Trent R. Hein, et al.

• Managing NFS and NIS, by Hal Stern, Mike Eisler, and Ricardo Labiaga (O'Reilly)

• MySQL, by Paul DuBois

Page 17

• MySQL Cookbook, by Paul DuBois

• High Performance MySQL, by Jeremy Zawodny and Derek J. Balling (O'Reilly)

• Perl Cookbook, Second Edition, by Tom Christiansen and Nathan Torkington

• Perl in a Nutshell: A Desktop Quick Reference, by Ellen Siever, et al.

Manpages

Manpages provide online reference and command information from the command line. Manpages

are supplied with the HP XC system for standard HP XC components, Linux user commands,

LSF commands, and other software components that are distributed with the HP XC system.

Manpages for third-party software components might be provided as a part of the deliverables

for that component.

Using discover(8) as an example, you can use either one of the following commands to display a

manpage:

$ man discover

$ man 8 discover

If you are not sure about a command you need to use, enter the man command with the -k option

to obtain a list of commands that are related to a keyword. For example:

$ man -k keyword
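The name(section) notation used in this guide, such as discover(8), maps directly onto the arguments of the man command. The following Python sketch is illustrative only: the helper name man_command is hypothetical and is not part of the HP XC System Software. It converts a manpage reference into the equivalent command line without running it.

```python
import re


def man_command(reference):
    """Build the man command line for a reference such as 'discover(8)'.

    Hypothetical helper for illustration; it only builds the argument list.
    """
    match = re.fullmatch(r"([\w.+-]+)(?:\((\w+)\))?", reference.strip())
    if match is None:
        raise ValueError(f"not a manpage reference: {reference!r}")
    name, section = match.groups()
    # With a section the form is 'man SECTION NAME'; otherwise 'man NAME'.
    return ["man", section, name] if section else ["man", name]


print(man_command("discover(8)"))
print(man_command("discover"))
```

The returned list could be passed to subprocess.run on a system where the manpage is installed.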

HP Encourages Your Comments

HP encourages comments concerning this document. We are committed to providing

documentation that meets your needs. Send any errors found, suggestions for improvement, or

compliments to:

docsfeedback@hp.com

Include the document title, manufacturing part number, and any comment, error found, or

suggestion for improvement you have concerning this document.

Page 18

Page 19

1 Hardware and Network Overview

This chapter addresses the following topics:

• “Supported Cluster Platforms” (page 19)

• “Server Blade Enclosure Components” (page 22)

• “Server Blade Mezzanine Cards” (page 28)

• “Server Blade Interconnect Modules” (page 28)

• “Supported Console Management Devices” (page 29)

• “Administration Network Overview” (page 30)

• “Administration Network: Console Branch” (page 31)

• “Interconnect Network” (page 31)

• “Large-Scale Systems” (page 32)

1.1 Supported Cluster Platforms

An HP XC system is made up of interconnected servers.

A typical HP XC hardware configuration (on systems other than Server Blade c-Class servers)

contains from 5 to 512 nodes. To allow systems of a greater size, an HP XC system can be arranged

into a large-scale configuration with up to 1,024 compute nodes (HP might consider larger systems

as special cases).

HP Server Blade c-Class servers (hereafter called server blades) are perfectly suited to form HP

XC systems. Physical characteristics make it possible to have many tightly interconnected nodes

while at the same time reducing cabling requirements. Typically, server blades are used as

compute nodes but they can also function as the head node and service nodes. The hardware

and network configuration on an HP XC system with HP server blades differs from that of a

traditional HP XC system, and those differences are described in this document.

You can install and configure HP XC System Software on the following platforms:

• HP Cluster Platform 3000 (CP3000)

• HP Cluster Platform 3000BL (CP3000BL) with HP c-Class server blades

• HP Cluster Platform 4000 (CP4000)

• HP Cluster Platform 4000BL (CP4000BL) with HP c-Class server blades

• HP Cluster Platform 6000 (CP6000)

• HP Cluster Platform 6000BL (CP6000BL) with HP c-Class server blades

For more information about the cluster platforms, see the documentation that was shipped with

the hardware.

1.1.1 Supported Processor Architectures and Hardware Models

Table 1-1 lists the hardware models that are supported for each HP cluster platform.

Page 20

IMPORTANT: A hardware configuration can contain a mixture of Opteron and Xeon nodes,

but not Itanium nodes.

Table 1-1 Supported Processor Architectures and Hardware Models

| Server Type | Cluster Platform | Processor Architecture | Hardware Model |
| --- | --- | --- | --- |
| Blade | CP3000BL | Intel® Xeon™ with EM64T | HP ProLiant BL2x220c G5, HP ProLiant BL260c G5, HP ProLiant BL460c, HP ProLiant BL480c, HP ProLiant BL680c G5 |
| Blade | CP4000BL | AMD Opteron® | HP ProLiant BL465c, HP ProLiant BL465c G5, HP ProLiant BL685c, HP ProLiant BL685c G5 |
| Blade | CP6000BL | Intel Itanium® | HP Integrity BL860c |
| Non-Blade | CP3000 | Intel Xeon with EM64T | HP ProLiant DL140 G2, HP ProLiant DL140 G3, HP ProLiant DL160 G5, HP ProLiant DL360 G4, HP ProLiant DL360 G4p, HP ProLiant DL360 G5, HP ProLiant DL380 G4, HP ProLiant DL380 G5, HP ProLiant DL580 G4, HP ProLiant DL580 G5, HP xw8200 Workstation, HP xw8400 Workstation, HP xw8600 Workstation |
| Non-Blade | CP4000 | AMD Opteron | HP ProLiant DL145, HP ProLiant DL145 G2, HP ProLiant DL145 G3, HP ProLiant DL165 G5, HP ProLiant DL365, HP ProLiant DL365 G5, HP ProLiant DL385, HP ProLiant DL385 G2, HP ProLiant DL385 G5, HP ProLiant DL585, HP ProLiant DL585 G2, HP ProLiant DL585 G5, HP ProLiant DL785 G5, HP xw9300 Workstation, HP xw9400 Workstation |
| Non-Blade | CP6000 | Intel Itanium | HP Integrity rx1620, HP Integrity rx2600, HP Integrity rx2620, HP Integrity rx2660, HP Integrity rx4640, HP Integrity rx8620 |
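Table 1-1 pairs each cluster platform with one server type and one processor architecture. As a rough illustration, that pairing can be written as a lookup table. This is a minimal Python sketch: the names CLUSTER_PLATFORMS and cluster_platform and the short architecture keys are hypothetical and are not part of the HP XC System Software.

```python
# Platform names follow Table 1-1; the lowercase keys are illustrative labels.
CLUSTER_PLATFORMS = {
    ("blade", "xeon"): "CP3000BL",
    ("blade", "opteron"): "CP4000BL",
    ("blade", "itanium"): "CP6000BL",
    ("non-blade", "xeon"): "CP3000",
    ("non-blade", "opteron"): "CP4000",
    ("non-blade", "itanium"): "CP6000",
}


def cluster_platform(server_type, architecture):
    """Return the cluster platform for a server type and processor architecture."""
    key = (server_type.lower(), architecture.lower())
    if key not in CLUSTER_PLATFORMS:
        raise ValueError(f"no platform listed for {server_type} / {architecture}")
    return CLUSTER_PLATFORMS[key]


print(cluster_platform("blade", "Opteron"))
print(cluster_platform("non-blade", "Itanium"))
```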

Page 21

HP server blades offer an entirely modular computing system with separate computing and

physical I/O modules that are connected and shared through a common chassis, called an

enclosure; for more information on enclosures, see “Server Blade Enclosure Components”

(page 22). Full-height Opteron server blades can take up to four dual core CPUs and Xeon server

blades can take up to two quad cores.

Table 1-2 lists the HP ProLiant hardware models supported for use in an HP XC hardware

configuration.

Table 1-2 Supported HP ProLiant Server Blade Models

| HP ProLiant Blade Model Number | Height | Processor | Core | Built-In NICs | Hot Plug Drives | Mezzanine Slots |
| --- | --- | --- | --- | --- | --- | --- |
| BL2x220c G5 | half | Intel Xeon | up to two quad core or up to two dual core per server node | 2 (1 per server node) | 0 | 2 (1 per server node) |
| BL260c G5 | half | Intel Xeon | up to two quad core or up to two dual core | 1 | 0 | 1 |
| BL460c | half | Intel Xeon | up to two quad core or up to two dual core | 2 | 2 | 2 |
| BL465c | half | AMD Opteron | up to two single core or up to two dual core | 2 | 2 | 2 |
| BL465c G5 | half | AMD Opteron | up to two single core or up to two dual core | 2 | 2 | 2 |
| BL480c | full | Intel Xeon | up to two quad core or up to two dual core | 4 | 4 | 3 |
| BL680c G5 | full | Intel Xeon | two quad core or four quad core | 4 | 2 | 3 |
| BL685c | full | AMD Opteron | up to four dual core | 4 | 2 | 3 |
| BL685c G5 | full | AMD Opteron | up to four dual core | 4 | 2 | 3 |
| BL860c | full | Intel Itanium® | up to two quad core or dual core | 4 | 2 | 3 |

For more information on an individual server blade, see the QuickSpec for your model. The

QuickSpecs are located at the following Web address:

http://www.hp.com/go/clusters

1.1.2 Supported Server Blade Combinations

The HP XC System Software supports the following server blade hardware configurations:

• A hardware configuration composed entirely of HP server blades, that is, the head node,

the service nodes, and all compute nodes are server blades.

• A hardware configuration can contain a mixture of Opteron and Xeon server blades, but

not Itanium server blades.

• A mixed hardware configuration of HP server blades and non-blade servers where:

— The head node can be either a server blade or a non-blade server

— Service nodes can be either server blades or non-blade servers

— All compute nodes are server blades
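The combination rules above can be expressed as a small check. The following Python sketch is one reading of those rules, not an HP-supplied tool: the Node class, its field values, and the function name check_blade_configuration are all hypothetical. It reports a problem when Itanium nodes are mixed with Opteron or Xeon nodes, and when a mixed blade and non-blade configuration contains a compute node that is not a server blade.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    role: str          # "head", "service", or "compute" (illustrative labels)
    architecture: str  # "opteron", "xeon", or "itanium"
    is_blade: bool


def check_blade_configuration(nodes):
    """Return a list of rule violations; an empty list means none were found."""
    problems = []
    architectures = {node.architecture for node in nodes}
    # Opteron and Xeon can be mixed with each other, but not with Itanium.
    if "itanium" in architectures and len(architectures) > 1:
        problems.append("Itanium nodes cannot be mixed with Opteron or Xeon nodes")
    has_blade = any(node.is_blade for node in nodes)
    has_non_blade = any(not node.is_blade for node in nodes)
    # In a mixed blade/non-blade system, every compute node must be a blade.
    if has_blade and has_non_blade:
        if any(node.role == "compute" and not node.is_blade for node in nodes):
            problems.append("all compute nodes must be server blades")
    return problems


mixed = [
    Node("head", "xeon", is_blade=False),
    Node("compute", "opteron", is_blade=True),
    Node("compute", "xeon", is_blade=True),
]
print(check_blade_configuration(mixed))
```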

Page 22

1.2 Server Blade Enclosure Components

HP server blades are contained in an enclosure, which is a chassis that houses and connects blade

hardware components. An enclosure is managed by an Onboard Administrator. The HP

BladeSystem c7000 and c3000 enclosures are supported under HP XC.

This section discusses the following topics:

• “HP BladeSystem c7000 Enclosure” (page 22)

• “HP BladeSystem c3000 Enclosure” (page 25)

• “HP BladeSystem c-Class Onboard Administrator” (page 27)

• “Insight Display” (page 28)

For more information about enclosures and their related components, see the HP Server Blade

c7000 Enclosure Setup and Installation Guide.

1.2.1 HP BladeSystem c7000 Enclosure

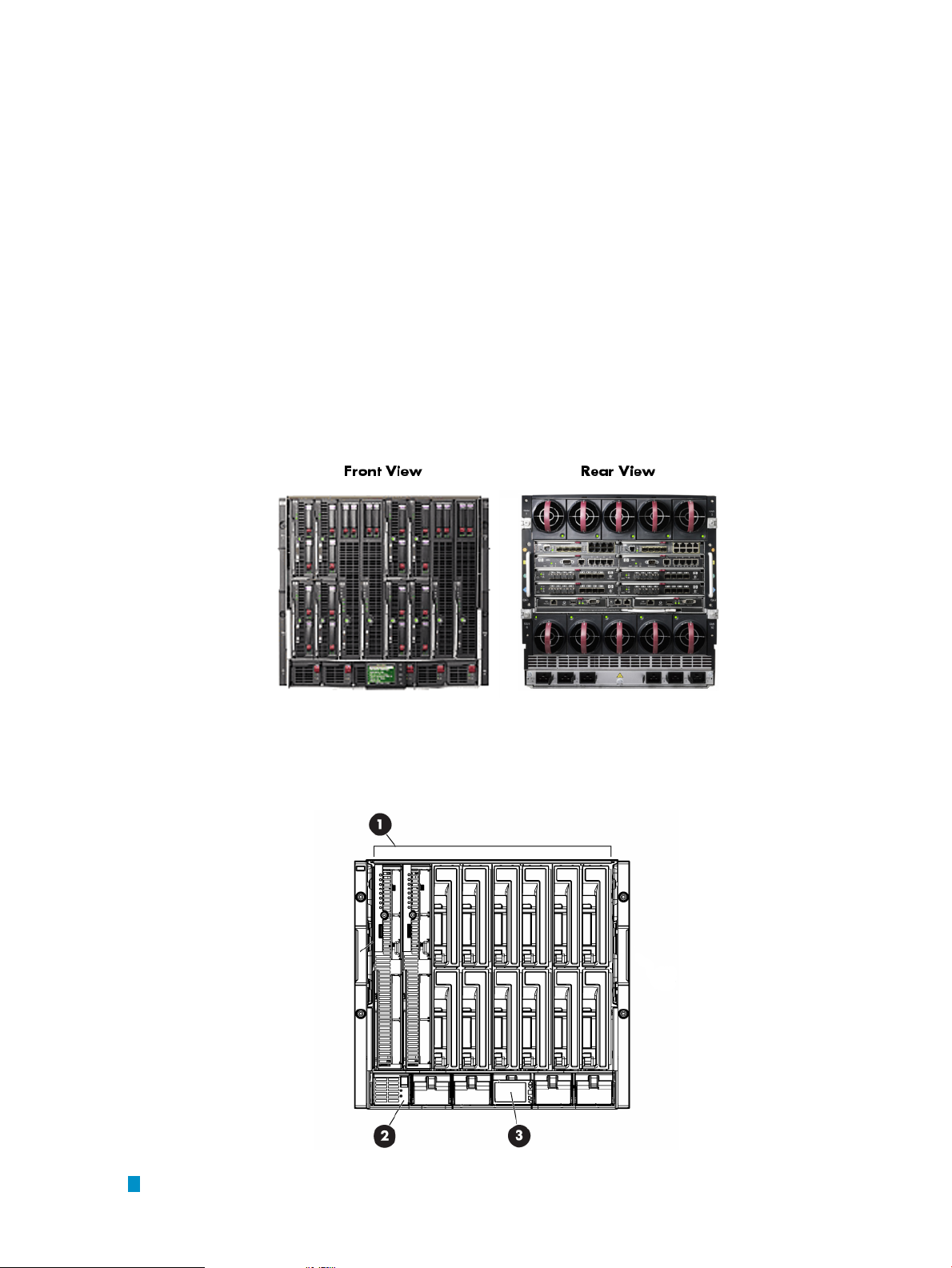

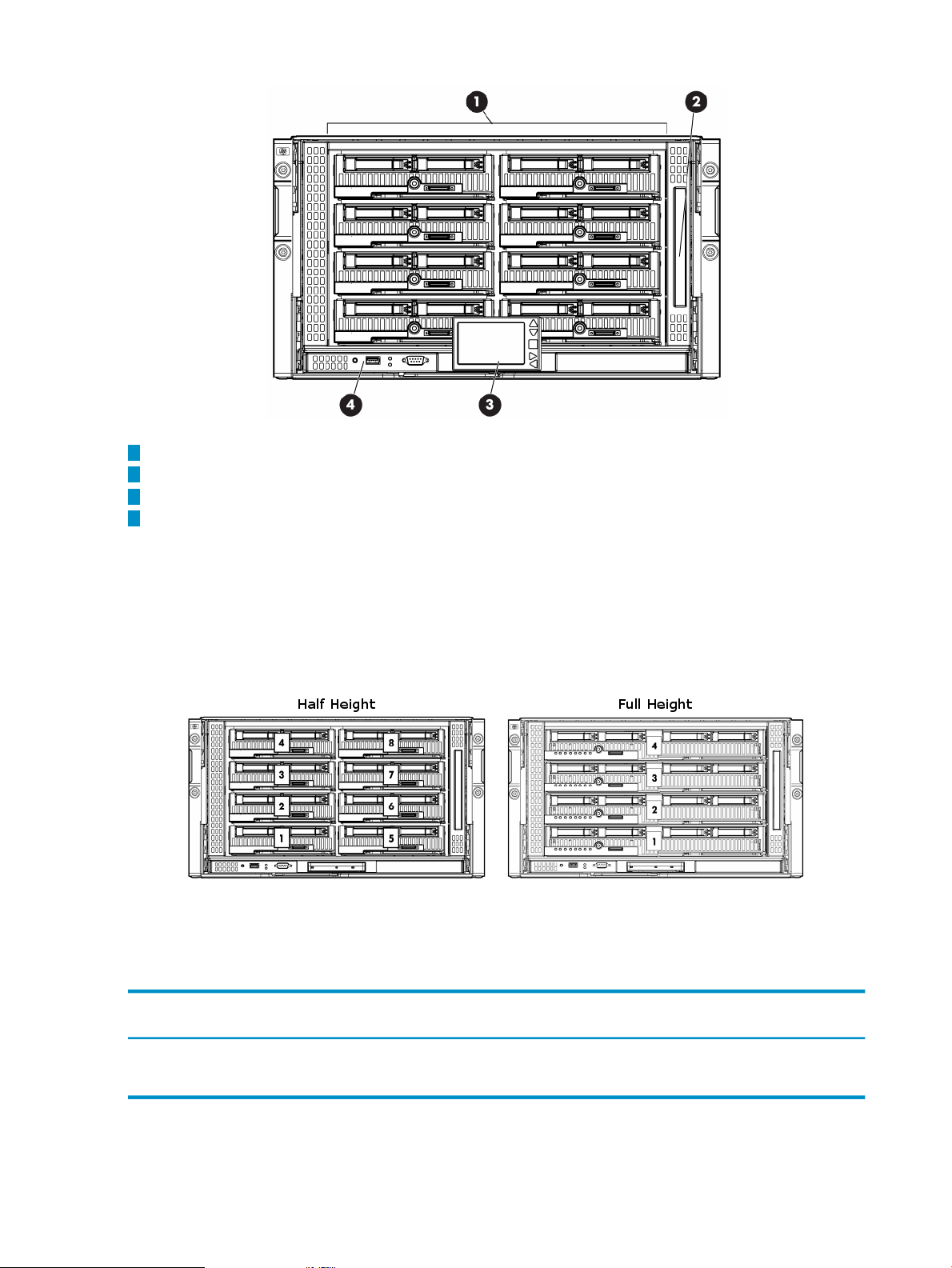

Figure 1-1 shows front and rear views of the HP BladeSystem c7000 enclosure.

Figure 1-1 HP BladeSystem c7000 enclosure (Front and Rear Views)

Figure 1-2 is an illustration showing the location of the device bays, power supply bays, and the

Insight Display at the front of the HP BladeSystem c7000 enclosure.

Figure 1-2 HP BladeSystem c7000 Enclosure Bay Locations (Front View)

1 Device bays

Page 23

2 Power supply bays

3 Insight Display. For more information, see “Insight Display” (page 28).

As shown in Figure 1-3, the HP BladeSystem c7000 enclosure can house a maximum of 16

half-height or 8 full-height server blades. The c7000 enclosure can contain a maximum of 6 power

supplies and 10 fans. Figure 1-3 also illustrates the numbering scheme for the server bays in

which server blades are inserted. The numbering scheme differs for half height and full height

server blades.

Figure 1-3 HP BladeSystem c7000 Enclosure Bay Numbering for Half Height and Full Height Server

Blades

The number of fans in the enclosure influences the placement of the server blades. Use the

following table and the numbering scheme in Figure 1-3 to determine the placement of the server

blades in the enclosure, based on the number of fans.

| Number of Fans | Insert Half-Height Server Blades in These Bays | Insert Full-Height Server Blades in These Bays |
| --- | --- | --- |
| 4 fans | 1, 2, 9, 10 ¹ | 1 or 2 |
| 6 fans | 1, 2, 3, 4, 9, 10, 11, 12 | 1, 2, 3, 4 |
| 8 or 10 fans | all server bays | all server bays |

¹ Only two servers are supported in this configuration. They can be inserted in any two of these bays.
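The fan-count table above amounts to a lookup from the number of fans and the blade height to a set of usable bays. The following Python sketch shows one way to encode it. The function name c7000_bays is hypothetical, and the bay ranges used for "all server bays" (1 to 16 for half-height, 1 to 8 for full-height) are an assumption based on the maximum blade counts stated earlier; confirm them against Figure 1-3. The footnote that limits the 4-fan configuration to two servers is not modeled.

```python
# Bays listed in the table for partially populated fan configurations.
C7000_BAYS_BY_FANS = {
    4: {"half": [1, 2, 9, 10], "full": [1, 2]},
    6: {"half": [1, 2, 3, 4, 9, 10, 11, 12], "full": [1, 2, 3, 4]},
}

# Assumed numbering for "all server bays" (8 or 10 fans).
C7000_ALL_BAYS = {"half": list(range(1, 17)), "full": list(range(1, 9))}


def c7000_bays(fans, height):
    """Return the bays in which blades of the given height can be inserted."""
    if height not in C7000_ALL_BAYS:
        raise ValueError("height must be 'half' or 'full'")
    if fans in (8, 10):
        return C7000_ALL_BAYS[height]
    if fans in C7000_BAYS_BY_FANS:
        return C7000_BAYS_BY_FANS[fans][height]
    raise ValueError(f"no placement rule for {fans} fans")


print(c7000_bays(6, "half"))
print(c7000_bays(4, "full"))
```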

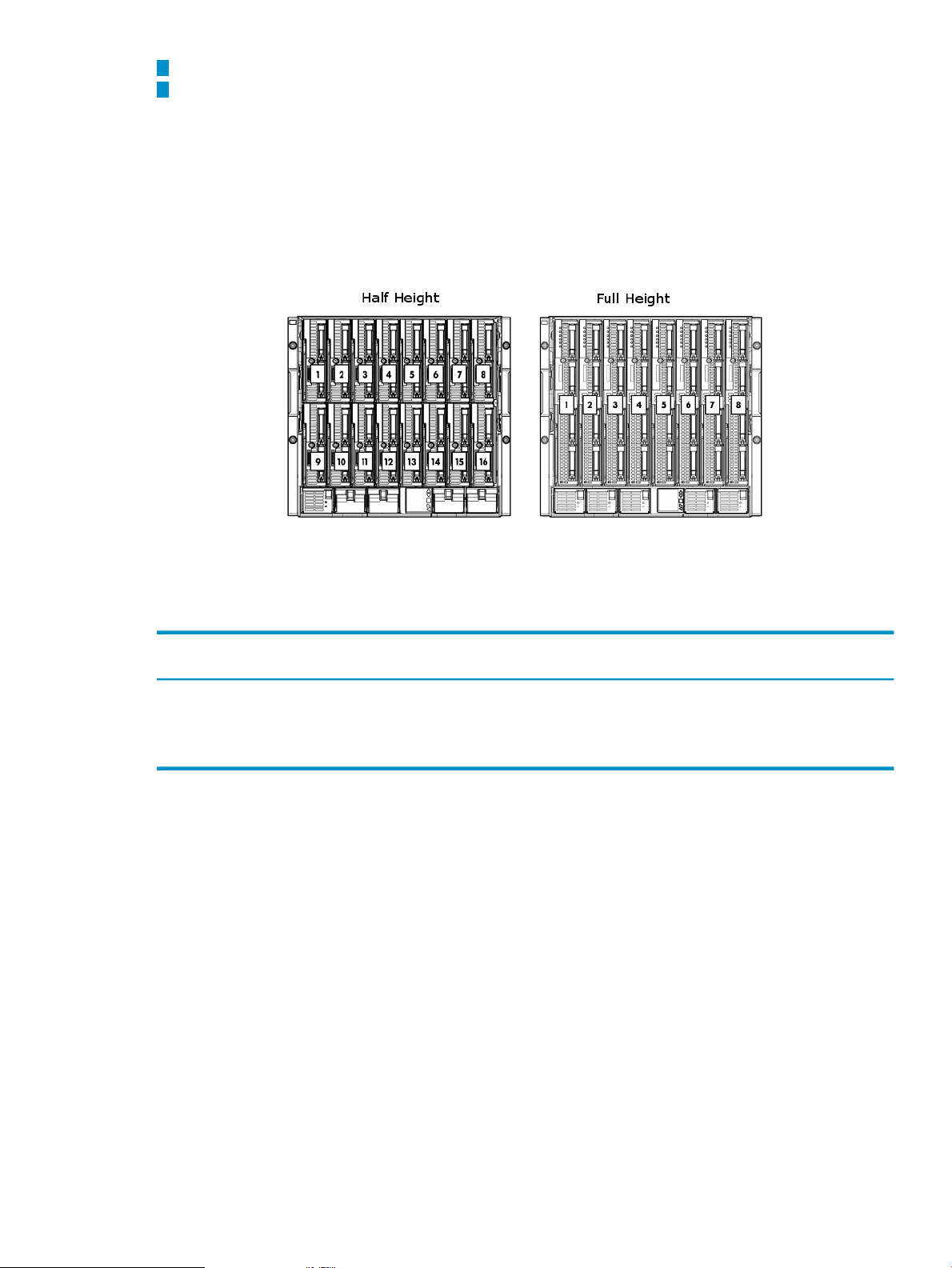

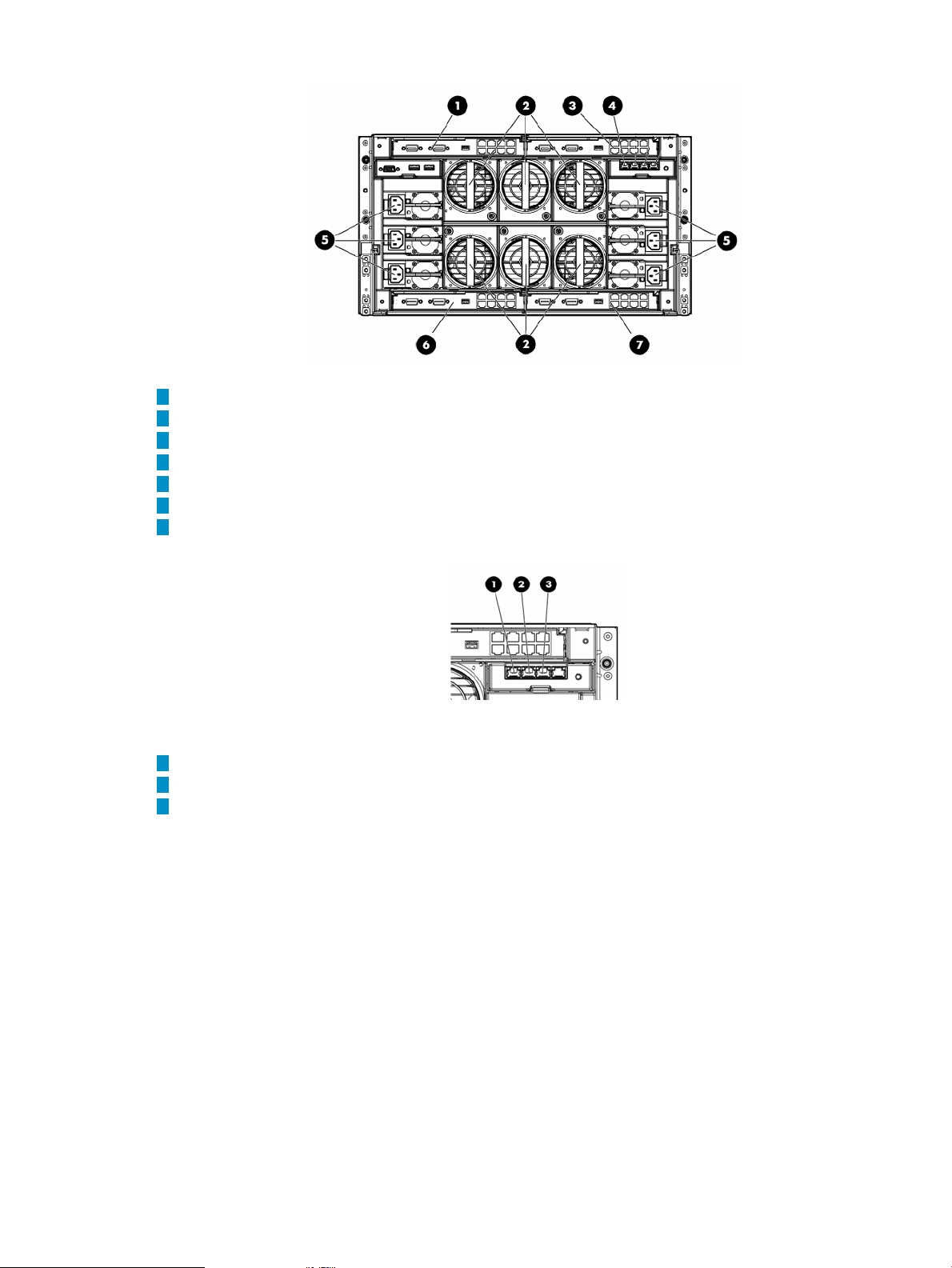

Figure 1-4 is an illustration showing the location of the fan bays, interconnect bays, onboard

administrator bays, the power supply exhaust vent, and the AC power connections at the rear

of the HP BladeSystem c7000 enclosure. This figure includes an inset showing the serial connector,

onboard administrator/iLO port, and the enclosure Uplink and Downlink ports.

Page 24

Figure 1-4 HP BladeSystem c7000 Enclosure Bay Locations (Rear View)

1 Fan Bays

2 Interconnect Bay #1

3 Interconnect Bay #2

4 Interconnect Bay #3

5 Interconnect Bay #4

6 Interconnect Bay #5

7 Interconnect Bay #6

8 Interconnect Bay #7

9 Interconnect Bay #8

10 Onboard Administrator Bay 1

11 Onboard Administrator Bay 2

12 Power Supply Exhaust Vent

13 AC Power Connections

Inset callouts:

1 Onboard Administrator/Integrated Lights Out port

2 Serial connector

3 Enclosure Downlink port

4 Enclosure Uplink port

General Configuration Guidelines

The following are general guidelines for configuring HP BladeSystem c7000 enclosures:

• Up to four enclosures can be mounted in an HP 42U Infrastructure Rack.

• If an enclosure is not fully populated with fans and power supplies, see the positioning

guidelines in the HP BladeSystem c7000 enclosure documentation.

• Enclosures are cabled together using their uplink and downlink ports.

• The top uplink port in each rack is used as a service port to attach a laptop or other device

for initial configuration or subsequent debugging.

Page 25

Specific HP XC Setup Guidelines

The following enclosure setup guidelines are specific to HP XC:

• On every HP BladeSystem c7000 enclosure, an Ethernet interconnect module (either a switch

or pass-through module) is installed in interconnect bay #1 (see callout 2 in Figure 1-4) for

the administration network.

• Hardware configurations that use Gigabit Ethernet as the interconnect require an additional

Ethernet interconnect module (either a switch or pass-through module) to be installed in

interconnect bay #2 (see callout 3 in Figure 1-4) for the interconnect network.

• Systems that use InfiniBand as the interconnect require a double-wide InfiniBand interconnect

switch module installed in interconnect bays #5 and #6 (see callouts 6 and 7 in Figure 1-4).

• Some systems might need an additional Ethernet interconnect module to support server

blades that require external connections. For more information about external connections,

see “Cabling for the External Network” (page 41).
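The bay assignments in these guidelines can be summarized as a mapping from the interconnect type to the interconnect bays that must be populated in a c7000 enclosure. The following Python sketch is illustrative only: the function name c7000_interconnect_plan and the interconnect labels are hypothetical. It does not cover the optional additional Ethernet module for external connections.

```python
def c7000_interconnect_plan(interconnect):
    """Return {bay number: module} for a c7000 enclosure in an HP XC system."""
    # Bay #1 always carries the administration network.
    plan = {1: "Ethernet module (switch or pass-through), administration network"}
    kind = interconnect.lower()
    if kind == "gigabit ethernet":
        # A second Ethernet module carries the interconnect network.
        plan[2] = "Ethernet module (switch or pass-through), interconnect network"
    elif kind == "infiniband":
        # The double-wide InfiniBand switch module occupies two bays.
        plan[5] = "double-wide InfiniBand switch module"
        plan[6] = "double-wide InfiniBand switch module"
    else:
        raise ValueError(f"interconnect not covered by this sketch: {interconnect}")
    return plan


for bay, module in sorted(c7000_interconnect_plan("InfiniBand").items()):
    print(f"Interconnect bay #{bay}: {module}")
```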

1.2.2 HP BladeSystem c3000 Enclosure

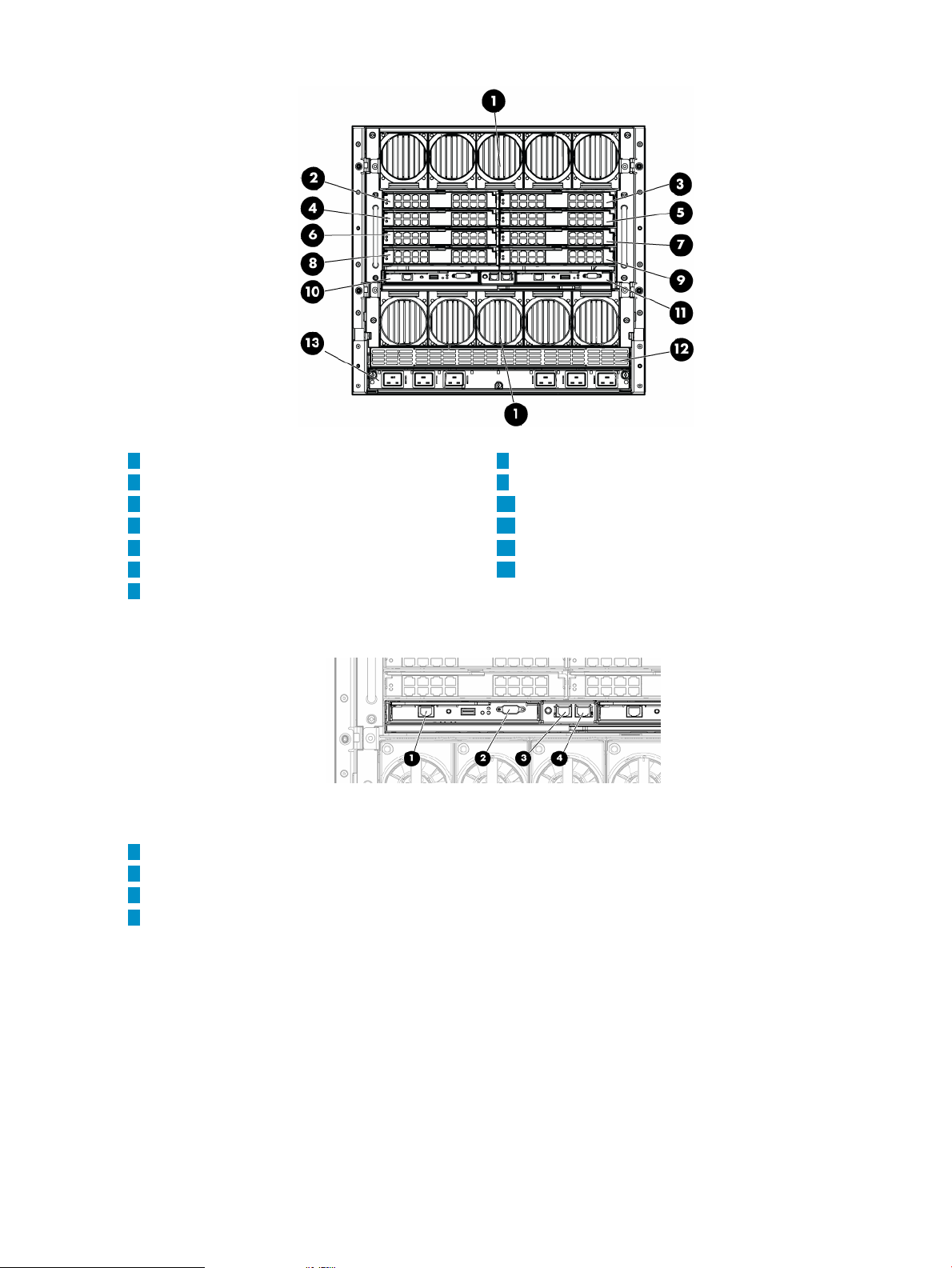

Figure 1-5 shows the front and rear views of the HP BladeSystem c3000 enclosure.

Figure 1-5 HP BladeSystem c3000 Enclosure (Front and Rear Views)

The HP BladeSystem c3000 Enclosure is available as a tower model, as shown in Figure 1-6.

Figure 1-6 HP BladeSystem c3000 Enclosure Tower Model

Figure 1-7 is an illustration showing the location of the device bays, optional DVD drive, Insight

display, and Onboard Administrator at the front of the HP BladeSystem c3000 Enclosure.

Page 26

Figure 1-7 HP BladeSystem c3000 Enclosure Bay Locations (Front View)

1 Device bays

2 DVD drive (optional)

3 Insight Display. For more information, see “Insight Display” (page 28).

4 Onboard Administrator (OA)

The HP BladeSystem c3000 enclosure can house a maximum of 8 half-height or 4 full-height

server blades. Additionally, the c3000 enclosure contains an integrated DVD drive, which is

useful for installing the HP XC System Software. Figure 1-8 illustrates the numbering of the

server bays of the HP BladeSystem c3000 enclosure for both half height and full height server

blades.

Figure 1-8 HP BladeSystem c3000 Enclosure Bay Numbering

The number of fans in the enclosure influences the placement of the server blades. Use the

following table and the numbering scheme in Figure 1-8 to determine the placement of the server

blades in the enclosure, based on the number of fans.

| Number of Fans | Insert Half-Height Server Blades in These Bays | Insert Full-Height Server Blades in These Bays |
| --- | --- | --- |
| 4 fan configuration | 1, 2, 5, 6 | 1, 2 |
| 6 fan configuration | any | any |

Figure 1-9 is an illustration showing the location of the interconnect bays, fan bays, onboard

administrator bays, the enclosure/onboard administrator link module, and power supplies at

the rear of the HP BladeSystem c3000 enclosure. This figure includes an inset showing the onboard

administrator/iLO port and the enclosure Uplink and Downlink ports.

Page 27

Figure 1-9 HP BladeSystem c3000 Enclosure Bay Locations (Rear View)

1 Interconnect bay #1

2 Fans

3 Interconnect bay #2

4 Enclosure/Onboard Administrator Link Module

5 Power Supplies

6 Interconnect bay #3

7 Interconnect bay #4

Inset callouts:

1 Enclosure Downlink port

2 Enclosure Uplink port

3 Onboard Administrator/Integrated Lights Out port

Specific HP XC Setup Guidelines

The following enclosure setup guidelines are specific to HP XC:

• On every enclosure, an Ethernet interconnect module (either a switch or pass-through

module) is installed in interconnect bay #1 (see callout 1 in Figure 1-9) for the administration

network.

• Hardware configurations that use Gigabit Ethernet as the interconnect can share with the

administration network in interconnect bay #1.

• Systems that use InfiniBand as the interconnect require a double-wide InfiniBand interconnect

switch module installed in interconnect bays #3 and #4 (see callouts 6 and 7 in Figure 1-9).

• Some systems might need an additional Ethernet interconnect module to support server

blades that require external connections. For more information about external connections,

see “Cabling for the External Network” (page 41).

1.2.3 HP BladeSystem c-Class Onboard Administrator

The Onboard Administrator is the management device for an enclosure, and at least one Onboard

Administrator is installed in every enclosure.


Page 28

You can access the Onboard Administrator through a graphical Web-based user interface, a

command-line interface, or the Simple Object Access Protocol (SOAP) to configure and monitor

the enclosure.

You can add a second Onboard Administrator to provide redundancy.

The Onboard Administrator requires a password. For information on setting the Onboard

Administrator password, see “Setting the Onboard Administrator Password” (page 62).

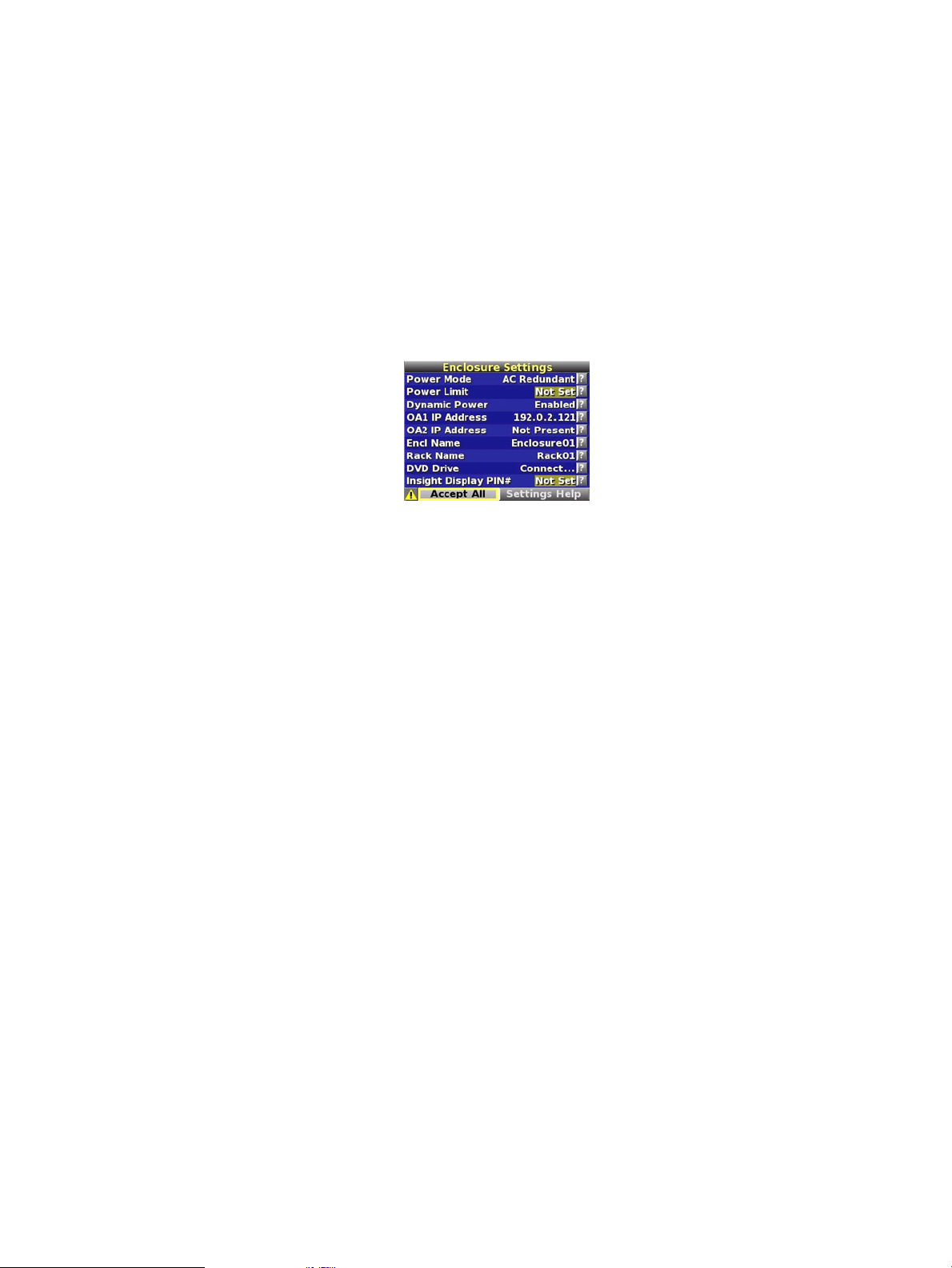

1.2.4 Insight Display

The Insight Display is a small LCD panel on the front of an enclosure that provides instant access

to important information about the enclosure such as the IP address and color-coded status.

Figure 1-10 depicts the Insight Display.

Figure 1-10 Server Blade Insight Display

You can use the Insight Display panel to make some basic enclosure settings.

1.3 Server Blade Mezzanine Cards

The mezzanine slots on each server blade provide additional I/O capability.

Mezzanine cards are PCI-Express cards that attach inside the server blade through a special

connector and have no physical I/O ports on them.

Card types include Ethernet, Fibre Channel, and 10 Gigabit Ethernet.

1.4 Server Blade Interconnect Modules

An interconnect module provides the physical I/O for the built-in NICs or the supplemental

mezzanine cards on the server blades. An interconnect module can be either a switch or a pass-thru

module.

A switch provides local switching and minimizes cabling. Switch models that are supported as

interconnect modules include, but are not limited to:

• Nortel GbE2c Gigabit Ethernet switch

• Cisco Catalyst Gigabit Ethernet switch

• HP 4x DDR InfiniBand switch

• Brocade SAN switch

A pass-thru module provides direct connections to the individual ports on each node and does

not provide any local switching.

Bays in the back of each enclosure correspond to specific interfaces on the server blades. Thus,

all I/O devices that correspond to a specific interconnect bay must be the same type.

Interconnect Bay Port Mapping

Connections between the server blades and the interconnect bays are hard wired. Each of the 8

interconnect bays in the back of the enclosure has a connection to each of the 16 server bays in

the front of the enclosure. The built-in NIC or mezzanine card into which the interconnect blade

connects depends on which interconnect bay it is plugged into. Because full-height blades consume

two server bays, they have twice as many connections to each of the interconnect bays.


Page 29

See the HP BladeSystem Onboard Administrator User Guide for illustrations of interconnect bay

port mapping connections on half- and full-height server blades.
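The connection counts implied by the port mapping description can be worked out with simple arithmetic. The following Python sketch assumes, as the text states, 8 interconnect bays with one hard-wired connection to each server bay; the names used are hypothetical, and the actual port assignments are documented in the Onboard Administrator user guide.

```python
# Counting model taken from the description above: every interconnect bay has
# one hard-wired connection to every server bay.
INTERCONNECT_BAYS = 8
SERVER_BAYS_USED = {"half-height": 1, "full-height": 2}


def connections_per_interconnect_bay(blade_height):
    """Connections from one blade to a single interconnect bay."""
    return SERVER_BAYS_USED[blade_height]


def total_connections(blade_height):
    """Connections from one blade to all interconnect bays."""
    return INTERCONNECT_BAYS * connections_per_interconnect_bay(blade_height)


for height in ("half-height", "full-height"):
    print(height, connections_per_interconnect_bay(height), total_connections(height))
```

Under this model, a full-height blade has two connections to each interconnect bay, twice as many as a half-height blade.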

1.5 Supported Console Management Devices

Table 1-3 lists the supported console management device for each hardware model within each

cluster platform. The console management device provides remote access to the console of each

node, enabling functions such as remote power management, remote console logging, and remote

boot.

HP workstation models do not have console ports.

HP ProLiant servers provide remote management features through a baseboard management

controller (BMC). The BMC enables functions such as remote power management and remote

boot. HP ProLiant BMCs comply with a specified release of the industry-standard Intelligent

Platform Management Interface (IPMI). HP XC supports two IPMI-compliant BMCs: Integrated

Lights Out (iLO and iLO2) and Lights-Out 100i (LO-100i), depending on the server model.

Each HP ProLiant server blade has a built-in Integrated Lights Out (iLO2) device that provides

full remote power control and serial console access. You can access the iLO2 device through the

Onboard Administrator. On server blades, iLO2 advanced features are enabled by default and

include the following:

• Full remote graphics console access including full keyboard, video, mouse (KVM) access

through a Web browser

• Support for remote virtual media, which enables you to mount a local CD or diskette and

serve it to the server blade over the network

Each HP Integrity server blade has a built-in management processor (MP) device that provides

full remote power control and serial console access. You can access the MP device by connecting

a serial terminal or laptop serial port to the local I/O cable that is connected to the server blade.

Hardware models that use iLO and iLO2 need certain settings that cannot be made until the iLO

has an IP address. The HP XC System Software Installation Guide provides instructions for using

a browser to connect to the iLO and iLO2 to enable telnet access.

Table 1-3 Supported Console Management Devices

| Cluster Platform | Hardware Component | Firmware Dependency |
| --- | --- | --- |
| CP3000 | HP ProLiant DL140 G2 | Lights-out 100i management (LO-100i), system BIOS |
| | HP ProLiant DL140 G3 | LO-100i, system BIOS |
| | HP ProLiant DL160 G5 | LO-100i, system BIOS |
| | HP ProLiant DL360 G4 | Integrated lights out (iLO), system BIOS |
| | HP ProLiant DL360 G4p | iLO, system BIOS |
| | HP ProLiant DL360 G5 | iLO2, system BIOS |
| | HP ProLiant DL380 G4 | iLO, system BIOS |
| | HP ProLiant DL380 G5 | iLO2, system BIOS |
| | HP ProLiant DL580 G4 | iLO2, system BIOS |
| | HP ProLiant DL580 G5 | iLO2, system BIOS |
| CP3000BL | HP ProLiant BL2x220c G5 | iLO2, system BIOS, Onboard Administrator (OA) |
| | HP ProLiant BL260c G5 | iLO2, system BIOS, Onboard Administrator (OA) |
| | HP ProLiant BL460c | iLO2, system BIOS, Onboard Administrator (OA) |
| | HP ProLiant BL480c | iLO2, system BIOS, OA |
| | HP ProLiant BL680c G5 | iLO2, system BIOS, Onboard Administrator (OA) |
| CP4000 | HP ProLiant DL145 | LO-100i |
| | HP ProLiant DL145 G2 | LO-100i |
| | HP ProLiant DL145 G3 | LO-100i |
| | HP ProLiant DL165 G5 | LO-100i |
| | HP ProLiant DL365 | iLO2 |
| | HP ProLiant DL365 G5 | iLO2 |
| | HP ProLiant DL385 | iLO2 |
| | HP ProLiant DL385 G2 | iLO2 |
| | HP ProLiant DL385 G5 | iLO2 |
| | HP ProLiant DL585 | iLO2 |
| | HP ProLiant DL585 G2 | iLO2 |
| | HP ProLiant DL585 G5 | iLO2 |
| | HP ProLiant DL785 G5 | iLO2 |
| CP4000BL | HP ProLiant BL465c | iLO2 |
| | HP ProLiant BL465c G5 | iLO2 |
| | HP ProLiant BL685c | iLO2 |
| | HP ProLiant BL685c G5 | iLO2 |
| CP6000 | HP Integrity rx1620 | Management Processor (MP) |
| | HP Integrity rx2600 | MP |
| | HP Integrity rx2620 | MP |
| | HP Integrity rx2660 | MP |
| | HP Integrity rx4640 | MP |
| | HP Integrity rx8620 | MP |
| CP6000BL | HP Integrity BL860c Server Blade (Full-height) | MP |

Page 30
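For scripting purposes, Table 1-3 can be treated as a lookup from hardware model to console management device. The following Python sketch is illustrative only: it encodes a small subset of the table, and the names `CONSOLE_DEVICE`, `console_device`, and `needs_ip_before_setup` are hypothetical rather than part of the HP XC software. The second function reflects the statement in this section that iLO and iLO2 settings cannot be made until the device has an IP address.

```python
# Subset of Table 1-3: hardware model -> console management device.
CONSOLE_DEVICE = {
    "HP ProLiant DL140 G3": "LO-100i",
    "HP ProLiant DL360 G4": "iLO",
    "HP ProLiant DL380 G5": "iLO2",
    "HP ProLiant BL460c": "iLO2",
    "HP ProLiant DL145 G2": "LO-100i",
    "HP Integrity rx2660": "MP",
}


def console_device(model):
    """Return the console management device for a model, or None if not listed here."""
    return CONSOLE_DEVICE.get(model)


def needs_ip_before_setup(model):
    """True for iLO and iLO2 models, which need an IP address before being configured."""
    return console_device(model) in ("iLO", "iLO2")


print(console_device("HP ProLiant DL380 G5"))        # iLO2
print(needs_ip_before_setup("HP ProLiant DL380 G5"))  # True
print(needs_ip_before_setup("HP Integrity rx2660"))   # False
```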

1.6 Administration Network Overview

The administration network is a private network within the HP XC system that is used primarily for administrative operations. This network is treated as a flat network during run time (that is, communication between any two points in the network takes the same time as communication between any other two points in the network). However, during the installation and configuration of the HP XC system, the administrative tools probe and discover the topology of the administration network. The administration network requires and uses Gigabit Ethernet.

Page 31

The administration network has at least one Root Administration Switch and can have multiple Branch Administration Switches. These switches are discussed in “Switches” (page 46).

1.7 Administration Network: Console Branch

The console branch is part of the private administration network within an HP XC system that is used primarily for managing and monitoring the consoles of the nodes that comprise the HP XC system. This branch of the network uses 10/100 Mbps Ethernet.

During the installation and configuration of the HP XC system, the administrative tools probe and discover the topology of the entire administration network, including the console branch.

A (nonblade) HP XC system has at least one Root Console Switch with the potential for multiple Branch Console Switches. Figure 1-11 shows a graphical representation of the console branch.

Figure 1-11 Administration Network: Console Branch (Without HP Server Blades)

Figure labels: Head Node, Root Administration Switch, Root Console Switch, Branch Console Switches, Compute Nodes, Specialized Role Nodes

1.8 Interconnect Network

The interconnect network is a private network within the HP XC system. Typically, every node

in the HP XC system is connected to the interconnect.

The interconnect network is dedicated to communication between processors and access to data

in storage areas. It provides a high-speed communications path used primarily for user file

service and for communications within applications that are distributed among nodes of the

cluster.

Table 1-4 lists the supported interconnect types on each cluster platform. The interconnect types

are displayed in the context of an interconnect family, in which InfiniBand products constitute

one family, Quadrics® QsNetII® constitutes another interconnect family, and so on. For more

information about the interconnect types on individual hardware models, see the cluster platform

documentation.


Page 32

Table 1-4 Supported Interconnects

INTERCONNECT FAMILIES

InfiniBand PCI

Cluster

Platform and

Hardware

Model

1 Mellanox ConnectX Infiniband Cards require OFED Version 1.2.5 or later.

2 The HP ProLiant DL385 G2 and DL145 G3 servers require a PCI Express card in order to use this interconnect.

3 This interconnect is supported by CP6000 hardware models with PCI Express.

Gigabit

Ethernet

InfiniBand®

PCI-X

XXCP6000

Express Single

Data Rate and

Double Data

Rate (DDR)

3

X

InfiniBand

ConnectX

Double Data

Rate (DDR)

XXXCP3000BL

XXXCP4000BL

3

Myrinet® (Rev.

1

D, E, and F)

QsNet

XXXXXCP3000

2

XXXXXCP4000

X

XX

Mixing Adapters

Within a given interconnect family, several different adapters can be supported. However,

HP requires that all adapters be from the same interconnect family; a mix of adapters

from different interconnect families is not supported.

InfiniBand Double Data Rate

All components in a network must be DDR to achieve DDR performance levels.

ConnectX InfiniBand Double Data Rate

Currently, ConnectX adapters cannot be mixed with other types of adapters.
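The mixing and DDR rules above can be sketched as a pair of checks. The following Python example is a minimal illustration under stated assumptions: the `Component` record, its field names, and both function names are hypothetical and are not part of the HP XC software.

```python
from typing import NamedTuple


class Component(NamedTuple):
    family: str  # interconnect family, for example "InfiniBand" or "Myrinet"
    kind: str    # adapter type within the family, for example "ConnectX"
    ddr: bool    # True if the component is DDR-capable


def mixing_problems(adapters):
    """Return a list of violations of the adapter mixing rules."""
    problems = []
    families = sorted({a.family for a in adapters})
    if len(families) > 1:
        problems.append(
            "adapters from different interconnect families: " + ", ".join(families)
        )
    kinds = {a.kind for a in adapters}
    if "ConnectX" in kinds and len(kinds) > 1:
        problems.append("ConnectX adapters mixed with other adapter types")
    return problems


def achieves_ddr(components):
    """DDR performance requires every component in the network to be DDR."""
    return bool(components) and all(c.ddr for c in components)


mixed = [
    Component("InfiniBand", "ConnectX", True),
    Component("InfiniBand", "PCI Express", False),
]
print(mixing_problems(mixed))
print(achieves_ddr(mixed))
```

For the `mixed` list, the first check reports the ConnectX mixing violation and the second returns `False`, because one component is not DDR.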

Myrinet Adapters

The Myrinet adapters can be either the single-port M3F-PCIXD-2 (Rev. D) or the dual port