Page 1

HP VAN SDN Controller Programming Guide

Document version: 1

Abstract

The HP VAN SDN Controller is a Java-based OpenFlow controller enabling SDN solutions such as network

controllers for the data center, public cloud, private cloud, and campus edge networks. This includes

providing an open platform for developing experimental and special-purpose network control protocols using

a built-in OpenFlow controller. This document provides detailed documentation for writing applications to run

on the HP VAN SDN Controller platform.

Part number: 5998-6079

Software version: 2.3.0

1

Page 2

© Copyright 2013, 2 014 Hewlett-Packard Development Company, L.P.

No part of this documentation may be reproduced or transmitted in any form or by any means without prior

written consent of Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice.

HEWLETT-PACKARD COMPANY MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS

MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND

FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for

incidental or consequential damages in connection with the furnishing, performance, or use of this material.

The only warranties for HP products and services are set forth in the express warranty statements

accompanying such products and services. Nothing herein should be construed as constituting an additional

warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

ii

Page 3

Contents

1 Introduction ··································································································································································· 1

Overview ··········································································································································································· 1

Basic Architecture ····························································································································································· 2

Internal Applications vs. External Applications ············································································································· 5

Acronyms and Abbreviations ·········································································································································· 6

2 Establishing Your Test and Development Environments ··························································································· 7

Test Environment ······························································································································································· 7

Installing HP VAN SDN Controller ························································································································· 7

Authentication Configuration ·································································································································· 7

Development Environment················································································································································ 7

Pre-requisites ····························································································································································· 7

HP VAN SDN Controller SDK ································································································································ 8

3 Developing Applications ··········································································································································· 10

Introduction ······································································································································································ 10

Web Layer ······························································································································································ 12

Business Logic Layer ·············································································································································· 12

Persistence Layer ···················································································································································· 13

Authentication ································································································································································· 13

REST API··········································································································································································· 15

REST API Documentation ······································································································································· 16

Rsdoc ······································································································································································· 16

Rsdoc Extension ······················································································································································ 17

Rsdoc Live Reference ············································································································································· 17

Audit Logging ·································································································································································· 19

Alert Logging ··································································································································································· 20

Configuration ·································································································································································· 21

High Availability ····························································································································································· 23

Role orchestration ·················································································································································· 23

OpenFlow ········································································································································································ 26

Message Library ····················································································································································· 27

Core Controller······················································································································································· 33

Flow Rules ······························································································································································· 43

Metrics Framework ························································································································································· 46

External View·························································································································································· 46

GUI ··················································································································································································· 59

SKI Framework - Overview ··································································································································· 59

SKI Framework - Navigation Tree ························································································································ 60

SKI Framework - Hash Navigation ······················································································································· 61

SKI Framework - View Life-Cycle ·························································································································· 64

SKI Framework - Live Reference Application ······································································································· 64

UI Extension ···························································································································································· 65

Introduction ····························································································································································· 66

Controller Teaming ················································································································································ 67

Distributed Coordination Service ························································································································· 67

Persistence ······································································································································································· 85

iii

Page 4

Distributed Persistence Overview ························································································································· 85

Backup and Restore ····················································································································································· 111

Backup ································································································································································· 111

Restore ·································································································································································· 112

Device Driver Framework············································································································································ 114

Device Driver Framework Overview ················································································································· 114

Facets and Handler Facets ································································································································· 114

Device Type Information ····································································································································· 115

Component Responsibilities ······························································································································· 117

Example Operation ············································································································································ 118

Port-Interface Discovery ······································································································································ 119

Chassis Devices ··················································································································································· 120

Device Objects ···················································································································································· 120

Using the Device Driver Framework·················································································································· 121

4 Application Security ················································································································································ 126

Introduction ··································································································································································· 126

SDN Application Layer ··············································································································································· 126

Application Security ···················································································································································· 126

Assumptions ························································································································································· 127

Distributed Coordination and Uptime ··············································································································· 127

Secure Configuration ·········································································································································· 127

Management Interfaces ······································································································································ 128

System Integrity ··················································································································································· 129

Secure Upgrade ·················································································································································· 129

5 Including Debian Packages with Applications ····································································································· 130

Required Services ························································································································································ 130

AppService ·························································································································································· 130

AdminRest ···························································································································································· 130

Application zip file ······················································································································································ 130

Programming Your Application to Install a Debian Package on the Controller ··················································· 131

Determining when to install the Debian Package ···························································································· 131

AdminRest Interactions ······································································································································· 132

Removing the Debian Package ·································································································································· 134

App Event Listener ··············································································································································· 135

Uploading and Installing the Debian Package ································································································ 135

6 Sample Application ················································································································································ 137

Application Description ··············································································································································· 137

Creating Application Development Workspace······································································································· 137

Creating Application Directory Structure·········································································································· 138

Creating Configuration Files ······························································································································ 139

Creating Module Directory Structure ················································································································ 144

Application Generator (Automatic Workspace Creation)······················································································· 144

Creating Eclipse Projects ············································································································································· 145

Updating Project Dependencies ································································································································· 146

Building the Application ·············································································································································· 146

Installing the Application ············································································································································ 147

Application Code ························································································································································ 149

Defining Model Objects ····································································································································· 150

Controller Teaming ············································································································································· 152

Distributed Coordination Service ······················································································································ 152

iv

Page 5

Creating Domain Service (Business Logic) ······································································································· 156

Creating a REST API ··········································································································································· 169

Creating RSdoc ··················································································································································· 193

Creating a GUI···················································································································································· 197

Using SDN Controller Services ·························································································································· 208

Role orchestration ··············································································································································· 218

7 Testing Applications ················································································································································ 229

Unit Testing ··································································································································································· 229

Remote Debugging with Eclipse ································································································································· 232

8 Built-In Applications ················································································································································· 238

Node Manager ···························································································································································· 238

OpenFlow Node Discovery ········································································································································ 238

Link Manager ······························································································································································· 239

OpenFlow Link Discovery ··········································································································································· 240

Topology Manager ······················································································································································ 240

Path Diagnostics ··························································································································································· 241

Path Daemon ································································································································································ 241

Appendix A ································································································································································· 243

Using the Eclipse Application Environment ··············································································································· 243

Importing Java Projects ······································································································································· 243

Setting M2_REPO Classpath Variable ·············································································································· 246

Installing Eclipse Plug-ins ···································································································································· 246

Eclipse Perspectives ············································································································································ 248

Attaching Source Files when Debugging ········································································································· 248

Appendix B ·································································································································································· 251

Troubleshooting ···························································································································································· 251

Maven Cannot Download Required Libraries·································································································· 251

Path Errors in Eclipse Projects after Importing ·································································································· 252

Bibliography ································································································································································ 254

v

Page 6

1 Introduction

This document describes the process of developing applications to run on the HP VAN SDN

Controller platform.

The base SDN Controller serves as a delivery vehicle for SDN solutions. It provides a platform for

developing various types of network controllers, e.g. data-center, public cloud, private cloud,

campus edge networks, etc. This includes being an open platform for development of experimental

and special-purpose network control protocols using a built-in OpenFlow controller.

The SDN Controller meets certain minimum scalability requirements and it provides the ability to

achieve higher scaling and high-availability requirements via a scale-out teaming model. In this

model, the same set of policies are applied to a region of network infrastructure by a team of such

appliances, which will coordinate and divide their control responsibilities into separate partitions

of the control domain for scaling, load-balancing and fail-over purposes.

Overview

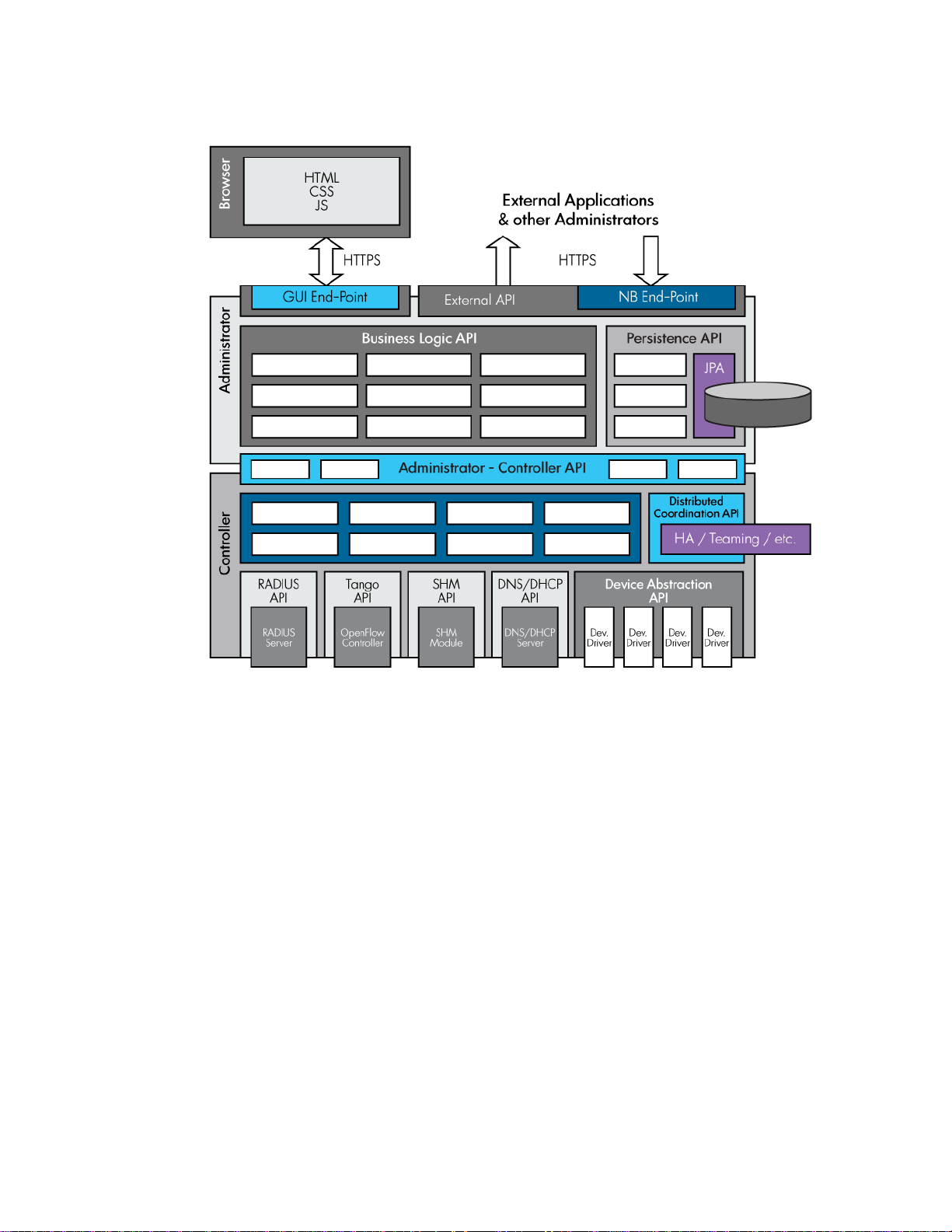

Regardless of the specific personality of the controller, the software stack consists of two major

tiers. The upper Administrator tier hosts functionality related to policy deployment, management,

personae interactions and external application interactions, for example slow-path, deliberating

operations. The lower Controller tier, on the other hand, hosts policy enforcement, sensing, device

interactions, flow interactions, for example fast-path, reflex, muscle-memory like operations. The

interface(s) between the two tiers provide a design firewall and are elastic in that they can change

along with the personality of the overall controller. Also, they are governed by a rule that no

enforcement-related synchronous interaction will cross from the Controller to Administrator tier.

Figure 1 Controller Tiers

1

Page 7

The Administration tier of the controller will host a web-layer through which software modules

installed on the appliance can expose REST APIs [1] [2] (or RESTful web services) to other external

entities. Similarly, modules can extend the available web-based GUI to allow network

administrators and other personae to directly interact with the features of the software running on

the SDN Controller.

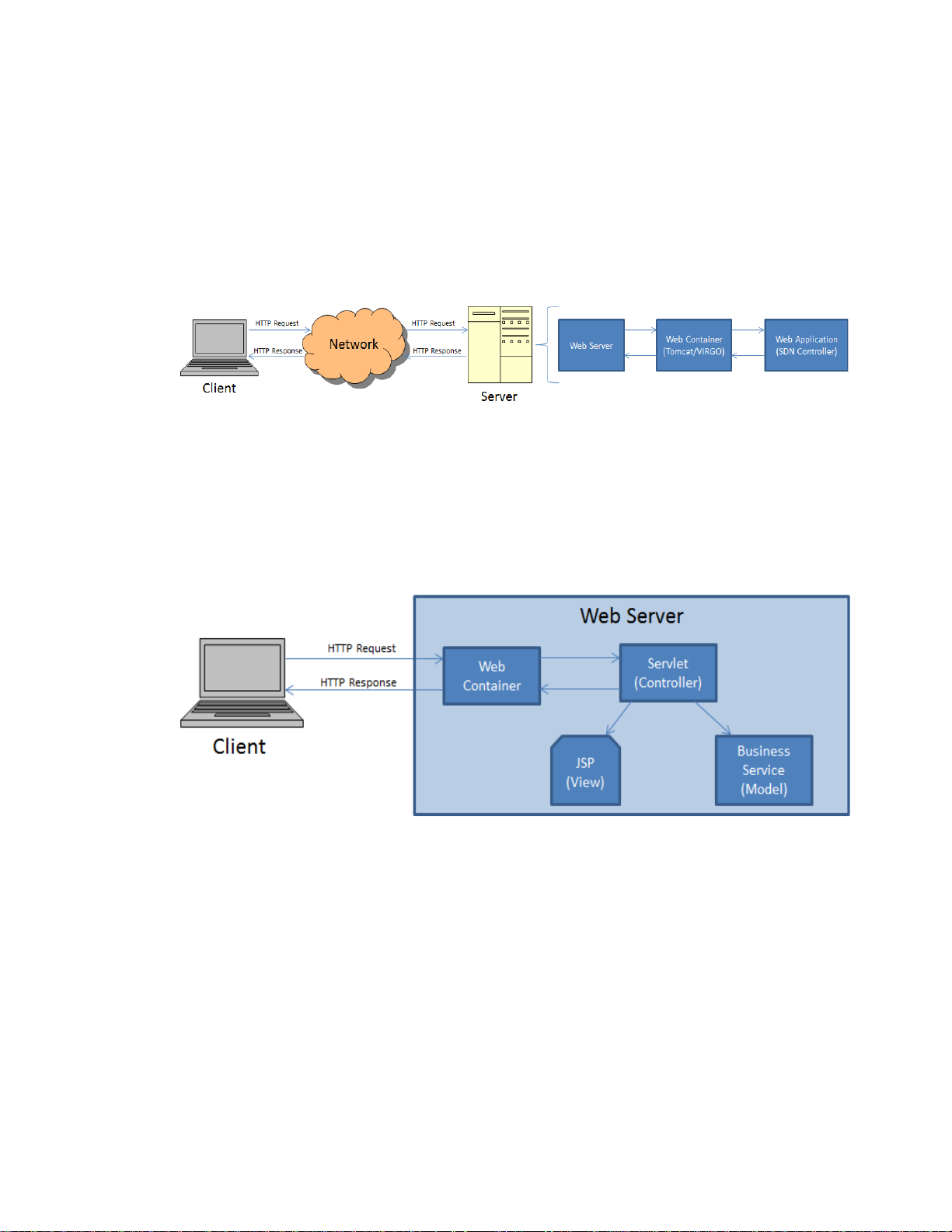

A web application is an application that is accessed by users over a network such as the Internet

or an intranet. The HP VAN SDN Controller runs on a web server as illustrated in Figure 2.

Figure 2 Web Application Architecture

Servlets [3] [4] is the technology used for extending the functionality of the web server and for

accessing business systems. Servlets provide a component-based, platform-independent method for

building Web-based applications.

SDN applications do not implement Servlets directly but instead they implement RESTful web

services [1] [2] which are based on Servlets; however RESTful web services also act as controllers

as described in the pattern from Figure 3.

Figure 3 Web Application Model View Controller Pattern

Basic Architecture

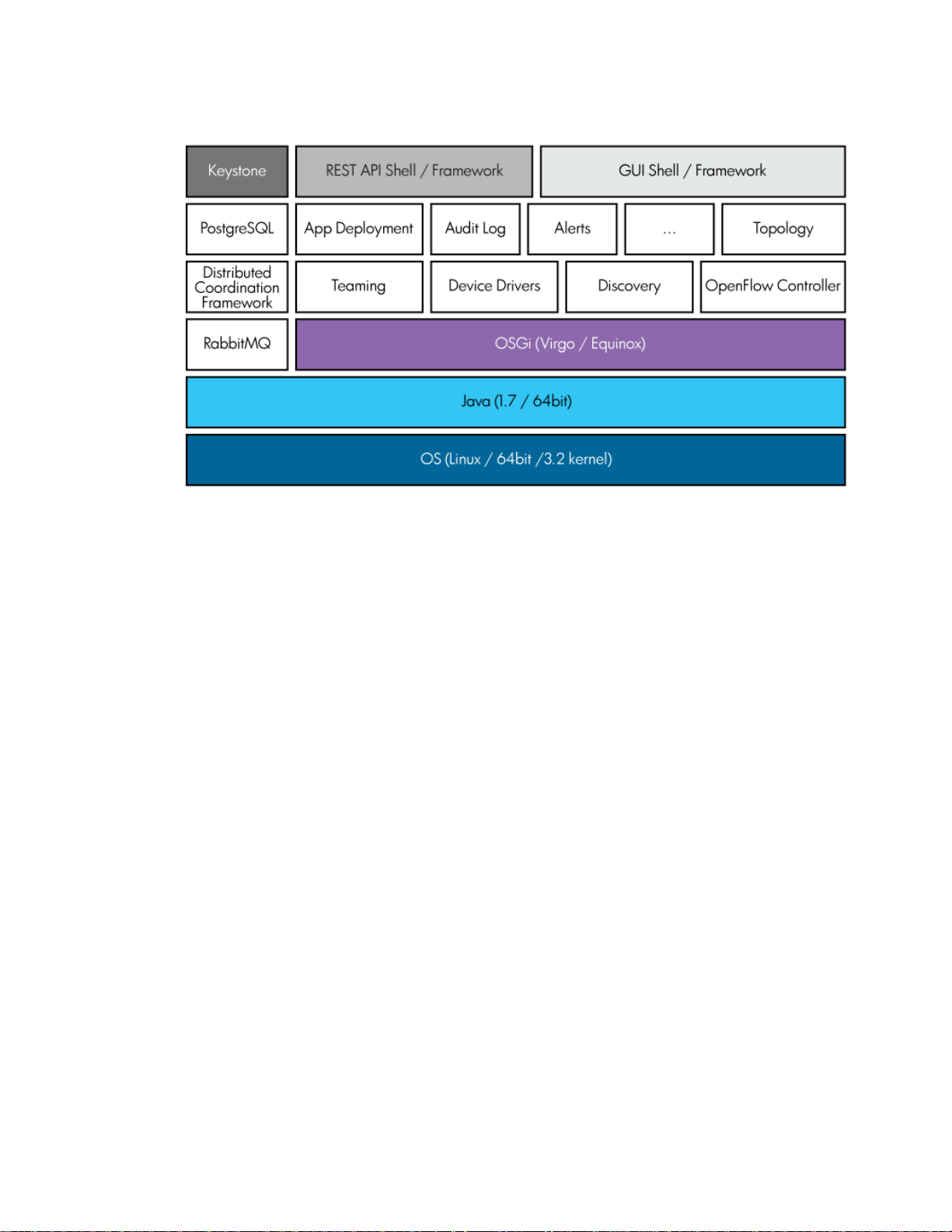

The principal software stack of the appliance uses OSGi framework (Equinox) [5] [6] and a

container (Virgo) [7] as a basis for modular software deployment and to enforce service

provider/consumer separation. The software running in the principal OSGi container can interact

with other components running as other processes on the appliance. Preferably, such IPC

interactions will occur using a standard off-the shelf mechanism, for instance RabbitMQ, but they

can exploit any means of IPC best suited to the external component at hand. Virgo, based on

Tomcat [8], is a module-based Java application server that is designed to run enterprise Java

applications with a high degree of flexibility and reliability. Figure 4 illustrates the HP VAN SDN

Controller software stack.

2

Page 8

Figure 4 HP VAN SDN Controller Software Stack

Jersey [2] is a JAX-RS (JSR 311) reference Implementation for building RESTful Web services. In

Representational State Transfer (REST) architectural style, data and functionality are considered

resources, and these resources are accessed using Uniform Resource Identifiers (URIs), typically

links on the web. REST-style architectures conventionally consist of clients and servers and they are

designed to use a stateless communication protocol, typically HTTP. Clients initiate requests to

servers; servers process requests and return appropriate responses. Requests and responses are

built around the transfer of representations of resources. Clients and servers exchange

representations of resources using a standardized interface and protocol. These principles

encourage RESTful applications to be simple, lightweight, and have high performance.

The HP VAN SDN Controller also offers a framework to develop Web User Interfaces - HP SKI. The

SKI Framework provides a foundation on which developers can create a browser-based web

application.

The HP VAN SDN Controller makes use of external services providing APIs that allow SDN

applications to make use of them.

Keystone [9] is an external service that provides authentication and high level authorization

services. It supports token-based authentication scheme which is used to secure the RESTful web

services (Or REST APIs) and the web user interfaces.

Hazelcast[10] is an in-memory data grid management software that enables: Scale-out

computing, resilience and fast, big data.

Apache Cassandra [10] is a high performance, extremely scalable, fault tolerant (no single point

of failure), distributed post-relational database solution. Cassandra combines all the benefits of

Google Bigtable and Amazon Dynamo to handle the types of database management needs that

traditional RDBMS vendors cannot support.

Figure 5 illustrates with more detail the tiers that compose the HP VAN SDN Controller. It shows

the principal interfaces and their roles in connecting components within each tier, the tiers to each

other and the entire system to the external world.

3

Page 9

The approach aims to achieve connectivity in a controlled manner and without creating undue

dependencies on specifics of component implementations. The separate tiers are expected to

interact over well-defined mutual interfaces, with decreasing coarseness from top to bottom. This

means that on the way down, high-level policy communicated as part of the deployment

interaction over the external APIs is broken down by the upper tier into something similar to a

specific plan, which gets in turn communicated over the inter-tier API to the lower controller tier.

The controller then turns this plan into detailed instructions which are either pre-emptively

disseminated to the network infrastructure or are used to prime the RADIUS or OpenFlow [11] [ 12 ]

controllers so that they are able to answer future switch (other network infrastructure device)

queries.

Similarly, on the way up, the various data sensed by the controller from the network infrastructure,

regarding its state, health and performance, gets aggregated at administrator tier. Only the

administrator tier interfaces with the user or other external applications. Conversely, only the

controller tier interfaces with the network infrastructure devices and other supporting controller

entities, such as RADIUS, OpenFlow [11] [12], MSM controller software, and so on.

4

Page 10

Figure 5 HP VAN SDN Controller Tiers

•

•

•

•

•

•

•

•

Internal Applications vs. External Applications

Internal applications (“Native” Applications / Modules) are ideal to exert relatively fine-grained,

frequent and low-latency control interactions with the environment, for example, handling packet-in

events. Some key points to consider when developing internal applications:

Authored in Java or a byte-code compatible language, e.g. Scala, or Scala DSL.

Deployed on the SDN Controller platform as collections of OSGi bundles.

Built atop services (Java APIs) exported and advertised by the platform and by other

applications.

Export and advertise services (Java APIs) to allow interactions with other applications.

Dynamically extend SDN Controller REST API surface.

Dynamically extend SDN Controller GUI by adding navigation categories, items, views, and

so on.

Integrate with the SDN Controller authentication & authorization framework.

Integrate with the SDN Controller Persistency & Distributed Coordination API.

Internal applications are deployed on the HP VAN SDN Controller and they interact with it by

consuming business services (Java APIs) published by the controller in the SDK.

5

Page 11

External applications are suitable to exert relatively coarse-grained, infrequent, and high-latency

•

•

•

•

Acronym

Description

DTO

Data Transfer Object

HP

Hewlett-Packard

HTTP

Hypertext Transfer Protocol

HTTPS

Hypertext Transfer Protocol Secure

HW

Hardware

LAN

Local Area Network

OF

OpenFlow

OSGi

Open Service Gatway Initiative

OWASP

Open Web Application Security Project

SNMP

Simple Network Management Protocol

VLAN

Virtual LAN

control interactions with the environment, such as path provisioning and flow inspections. External

applications can have these characteristics:

This can be written any language capable of establishing a secure HTTP connection.

Example: Java, C, C++, Python, Ruby, C#, bash, and so on.

They can be deployed on a platform of choice outside of the SDN Controller platform.

They use REST API services exported and advertised by the platform and by other

applications.

They do not extend the Java APIs, REST APIs, or GUI of the controller.

This guide describes writing and deploying internal applications. For information about the REST

APIs you can use for external applications, see the HP VAN SDN Controller REST API Reference

Guide.

Acronyms and Abbreviations

There are many acronyms and abbreviations that are used in this document. Table 1 contains some

of the more commonly used acronyms and abbreviations.

Table 1 Commonly Used Acronyms and Abbreviations

CLI Command Line Interface

6

Page 12

2 Establishing Your Test and Development

•

•

Environments

The suggested development environment contains two separate environments, a Test Environment

and a Development Environment. It is recommended to use a different machine for each of these

environments. The Test Environment is where the HP VAN SDN Controller and all the dependency

systems will be installed; it will be very similar to a real deployment, however virtual machines [13]

are useful during development phase. The Development Environment will be formed by the tools

needed to create, build and package the application. Once the application is ready for

deployment, the test environment will be used to install it.

One reason to keep these environments separated is because distributed applications may need a

team set up to test the application (Cluster of controllers). Another reason is that some unit test

and/or integration tests (RESTful Web Services [1] [2] for example) might open ports that are

reserved for services offered or consumed by the controller.

Test Environment

Installing HP VAN SDN Controller

To install the SDN controller follow the instructions from the HP VAN SDN Controller Installation

Guide [14].

Authentication Configuration

The HP VAN SDN Controller uses Keystone [9] for identity management. When it is installed, two

users are created, "sdn" and "rsdoc", both with a default password of "skyline". This password

can be changed using the keystone command-line interface from a shell on the system where the

controller was installed: Follow the instructions from the HP VAN SDN Controller Installation Guide

[14].

Development Environment

Pre-requisites

The development environment requirements are relatively minimal. They comprise of the following:

Operating System

Supported operating systems include:

Windows 7or later with MKS 9.4p1

Ubuntu 10.10 or later

7

Page 13

•

Java

Maven

Curl

OSX Snow Leopard or later.

The Software Development Language used is Java SE SDK 1.6 or later. To install Java go to [ 15 ]

and follow the download and installation instructions.

Apache Maven is a software project management and comprehension tool. Based on the concept

of a project object model (POM), Maven can manage a project's build, reporting and

documentation from a central piece of information [16].

To install Maven go to [16] and follow the download and installation instructions. Note that if you

are behind a fire-wall, you may need to configure your ~/.m2/settings.xml appropriately to

access the Internet-based Maven repositories via proxy, for more information see Maven Cannot

Download Required Libraries on page 251.

Maven 3.0.4 or newer is needed. To verify the installed version of Maven execute the following

command:

$ mvn –version

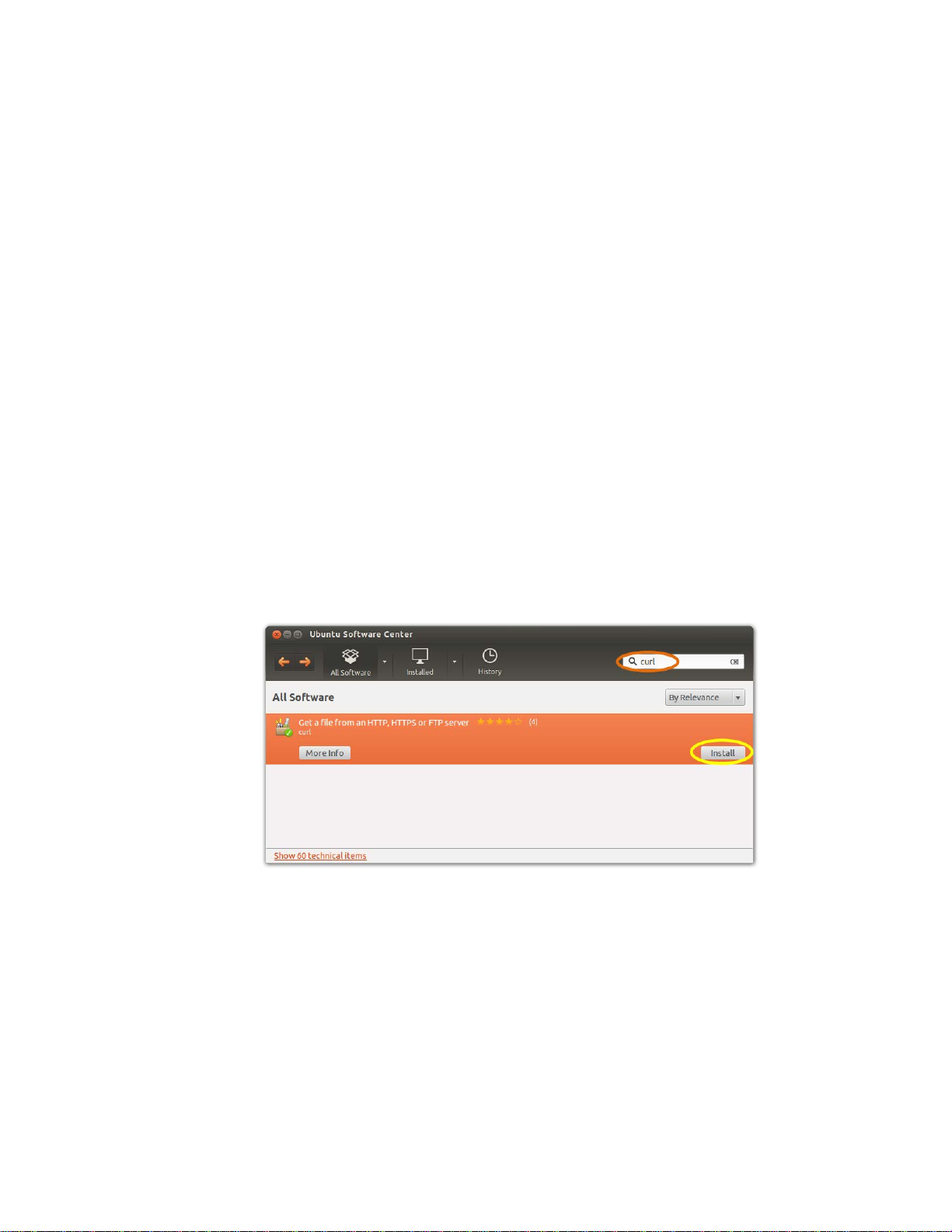

Curl (or cURL) is a command line tool for transferring data with URL syntax. This tool is optional.

Follow the instruction from [17] to install Curl, or if you use Linux Ubuntu as development

environment you may use the Ubuntu Software Center to install it as illustrated in Figure 6.

Figure 6 Installing Curl via Ubuntu Software Center

IDE

An IDE, or an Integrated Development Environment, is a software application that provides a

programmer with many different tools useful for developing. Tools that bundled with an IDE may

include: an editor, a debugger, a compi ler, and more. Eclipse is a popular IDE that can be used to

program in Java and for developing applications. Eclipse might be referenced in this guide.

HP VAN SDN Controller SDK

Download the HP VAN SDN Controller SDK from [18]. The SDK is contained in the hp-sdn-sdk-*.zip

file (for example: hp-sdn-sdk-2.0.0.zip). Unzip its contents in any location. To install the SDN

Controller SDK jar files into the local Maven repository, execute the SDK install tool from the

8

Page 14

Javadoc

directory where the SDK was unzipped, as follows (Note: Java SDK and Maven must already be

installed and properly configured):

$ bin/install-sdk

To verify that the SDK has been properly installed look for the HP SDN libraries installed in the local

Maven repository at:

~/.m2/repository/com/hp.

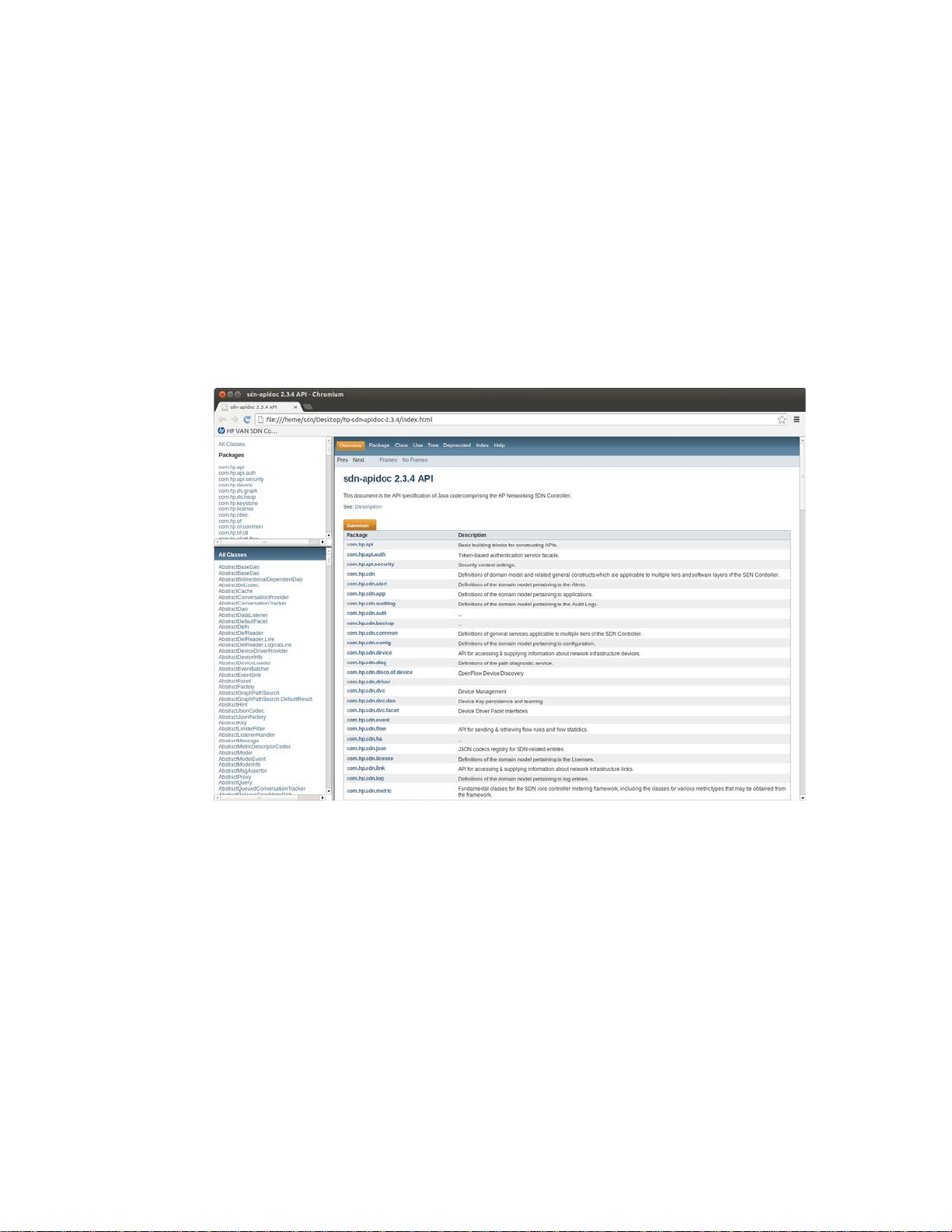

The controller Java APIs are documented in Javadoc format in the hp-sdn-apidoc-*.jar file.

Download the file and unzip its contents. To view the Java API documentation, open the index.html

file. Figure 7 illustrates an example of the HP VAN SDN Controller documentation.

Figure 7 HP VAN SDN Controller Javadoc

9

Page 15

3 Developing Applications

•

•

•

•

•

•

•

•

Internal applications (“Native” Applications / Modules) are ideal to exert relatively fine-grained,

frequent and low-latency control interactions with the environment, for example, handling packet-in

events. Some key points to consider when developing internal applications:

Authored in Java or a byte-code compatible language, e.g. Scala, or Scala DSL.

Deployed on the SDN Controller platform as collections of OSGi bundles.

Built atop services (Java APIs) exported and advertised by the platform and by other

applications.

Export and advertise services (Java APIs) to allow interactions with other applications.

Dynamically extend SDN Controller REST API surface.

Dynamically extend SDN Controller GUI by adding navigation categories, items, views, and

so on.

Integrate with the SDN Controller authentication & authorization framework.

Integrate with the SDN Controller Persistency & Distributed Coordination API.

Internal applications are deployed on the HP VAN SDN Controller and they interact with it by

consuming business services (Java APIs) published by the controller in the SDK.

Introduction

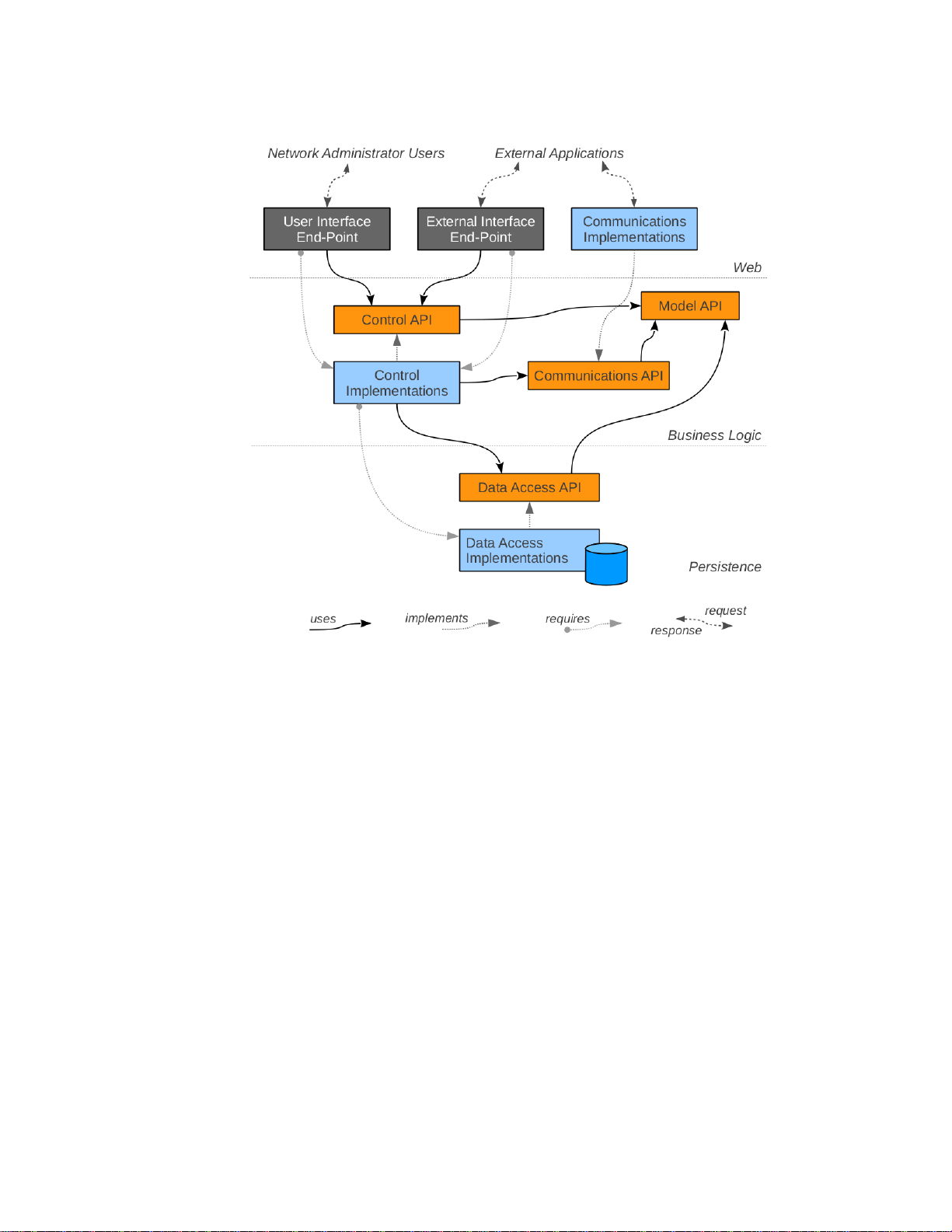

Figure 8 illustrates the various classes of software modules categorized by the nature of their

responsibilities and capabilities and the categories of the software layers to which they belong.

Also shown are the permitted dependencies among the classes of such modules. Note the explicit

separation of the implementations from interfaces (APIs). This separation principle is strictly

enforced in order to maintain modularity and elasticity of the application. Also note that these

represent categories, not necessarily the actual modules or components. This diagram only aims to

highlight the classes of software modules.

10

Page 16

Figure 8 HP Application Modules

11

Page 17

Web Layer

Components in this layer are responsible for receiving and consuming appropriate external

representations (XML, JSON, binary...) suitable for communicating with various external entities

and, if applicable, for utilizing the APIs from the business logic layer to appropriately interact with

the business logic services to achieve the desired tasks and/or to obtain or process the desired

information.

User Interface End-Point (REST API) and end-point resources for handling inbound requests

providing control and data access capabilities to the administrative GUI.

External Interface End-Point (REST API) are end-point resources for handling inbound requests

providing control and data access capabilities to external applications, including other

orchestration and administrative tools (for example IMC, OpenStack , etc.)

Business Logic Layer

Components in this layer fall into two fundamental categories: model control services and

outbound communications services, and each of these are further subdivided into public APIs and

private implementations.

The public APIs are composed of interfaces and passive POJOs [19], which provide the domain

model and services, while the private implementations contain the modules that implement the

various domain model and service interfaces. All interactions between different components must

occur solely using the public API mechanisms.

Model API—Interfaces & objects comprising the domain model. For example: the devices, ports,

network topology and related information about the discovered network environment.

Control API—Interfaces to access the modeled entities, control their life-cycles and in general to

provide the basis for the product features to interact with each other.

Communications API—Interfaces which define the outbound forms of interactions to control,

monitor and discover the network environment.

Control Implementations—Implementations of the control API services and domain model.

Communications Implementations—Implementations of the outbound communications API

services. They are responsible for encoding / transmitting requests and receiving / decoding

responses.

Health Service API—Allows an application to report its health to the controller (via the

HealthMonitorable interface or proactively submitting health information to the HealthService

directly via the updateHealth method) and/or listen to health events from the controller and other

applications (via the HealthListener interface). There are 3 types of health statuses:

• OK – A healthy status to denote that an application is functioning as expected.

• WARN – An unhealthy status to denote that an application is not functioning as expected

and needs attention. This status is usually accompanied by a reason as to why the

application reports this status to provide clues to remedy the situation.

• CRITICAL – An unhealthy status to denote that some catastrophic event has happened to

the application that affects the controller’s functionality. When the controller receives a

CRITICAL event, it will assume that its functionality has been affected, and will proceed to

12

Page 18

shutdown the Openflow port to stop processing Openflow events. If in a teaming

•

•

•

•

•

•

•

•

•

•

environment, the controller will remove itself from the team.

Persistence Layer

Data Access API—Interfaces, which prescribe how to persist and retrieve the domain model

information, such as locations, devices, topology, etc. This can also include any prescribed routing

and flow control policies.

Data Access Implementations—Implementations of the persistence services to store and

retrieve the SDN-related information in a database or other non-volatile form.

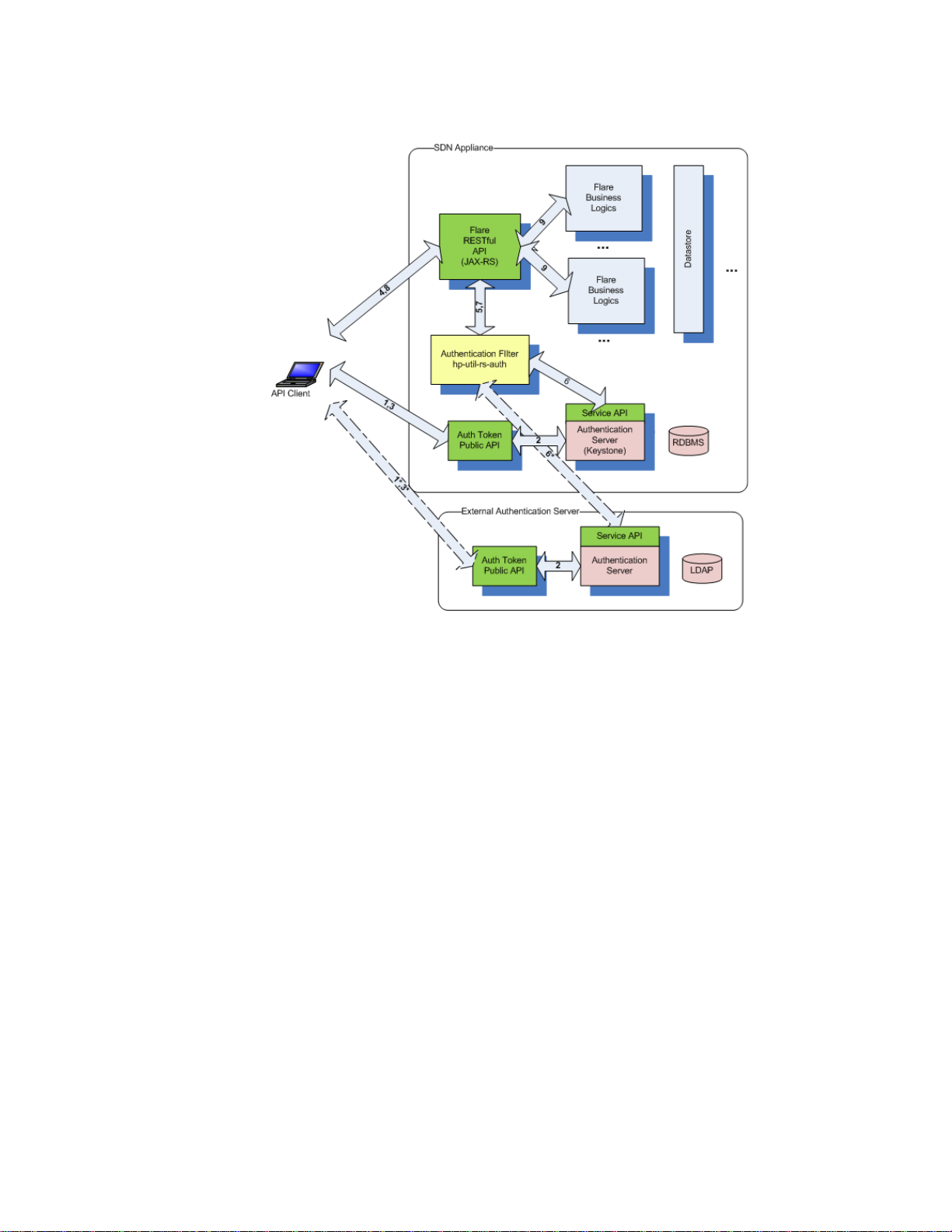

Authentication

Controller REST APIs are secured via a token-based authentication scheme. OpenStack Keystone

[9] is used to provide the token-based authentication.

This security mechanism:

Provides user authentication functionality with RBAC support.

Completely isolates the security mechanism from the underlying REST API.

Works with OpenStack Keystone.

Exposes a REST API to allow any authentication server that implements this REST API to be

hosted elsewhere (outside the SDN appliance).

This security mechanism does not:

Provide authorization. Authorization needs to be provided by the application based on the

authenticated subject's roles.

Support filtering functionality such as black-listing or rate-limiting.

To achieve isolation of security aspects from the API, authentication information is encapsulated by

a token that a user receives by presenting his/her credentials to an Authentication Server. The user

then uses this token (via header X-Auth-Token) in any API call that requires authentication. The

token is validated by an Authentication Filter that fronts the requested API resource. Upon

successful authentication, requests are forwarded to the RESTful APIs with the principal's

information such as:

User ID

User name

User roles

Expiration Date

Upon unsuccessful authentication (either no token or invalid token), it is up to the application to

deny or allow access to its resource. This flexibility allows the application to implement its own

authorization mechanism, such as ACL-based or even allow anonymous operations on certain

resources.

The flow of token-based authentication in the HP VAN SDN Controller can be summarized as

illustrated in Figure 9.

13

Page 19

Figure 9 Token-based Authentication Flow

1) API Client presents credentials (username/password) to the AuthToken REST API.

2) Authentication is performed by the backing Authentication Server. The SDN Appliance

includes a local Keystone-based Authentication Server, but the Authentication Server may also

be hosted elsewhere by the customer (and maybe integrated with an enterprise directory such

as LDAP for example), as long as it implements the AuthToken REST API (described elsewhere).

The external Authentication Server use-case is shown by the dotted-line interactions. If the user

is authenticated, the Authentication Server will return a token.

3) The token is returned back to the API client.

4) The API client includes this token in the X-Auth-Token header when making a request to the HP

VAN SDN Controller’s RESTful API.

5) The token is intercepted by the Authentication Filter (Servlet Filter).

6) The Authentication Filter validates the token with the Authentication Server via another

AuthToken REST API.

7) The validation status is returned back to the REST API.

8) If the validation is unsuccessful (no token or invalid token), the HP VAN SDN Controller will

return a 401 (Unauthorized) status back to the caller.

9) If the validation is successful, the actual the HP VAN SDN Controller REST API will be invoked

and business logics ensue.

In order to isolate services and applications from Keystone specifics, two APIs in charge of

providing authentication services (AuthToken REST API's) are published:

14

Page 20

Public API:

•

•

•

•

•

•

•

•

1) Create token. This accepts username/password credentials and return back a unique token with

some expiration.

Service API:

1) Revoke token. This revokes a given token.

2) Validate token. This validates a given token and returns back the appropriate principal's

information.

Authentication services have been split into these two APIs to limit sensitive services (Service API) to

only authorized clients.

REST API

Internal applications do not make use of the HP VAN SDN Controller’s REST API, they extend it by

defining their own RESTful Web Services. Internal applications make use of the business services

(Java APIs) published by the controller. For external applications consult the RESTful API

documentation (or Rsdoc) as described at Rsdoc Live Reference on page 17.

Representational State Transfer (REST) defines a set of architectural principles by which Web

services are designed focusing on a system's resources, including how resource states are

addressed and transferred over HTTP by a wide range of clients written in different languages

[20].

Concrete implementation of a REST Web service follows four basic design principles:

Use HTTP methods explicitly.

Be stateless.

Expose directory structure-like URIs.

Transfer XML, JavaScript Object Notation (JSON), or both.

One of the key characteristics of a RESTful Web service is the explicit use of HTTP. HTTP GET, for

instance, is defined as a data-producing method that's intended to be used by a client application

to retrieve a resource, to fetch data from a Web server, or to execute a query with the expectation

that the Web server will look for and respond with a set of matching resources [20].

REST asks developers to use HTTP methods explicitly and in a way that's consistent with the

protocol definition. This basic REST design principle establishes a one-to-one mapping between

create, read, update, and delete (CRUD) operations and HTTP methods. According to this

mapping:

To create a resource on the server, use POST.

To retrieve a resource, use GET.

To change the state of a resource or to update it, use PUT.

To remove or delete a resource, use DELETE.

See [1] for guidelines to design REST APIs or RESTful Web Services and Creating a REST API on

page 169 for an example.

15

Page 21

REST API Documentation

In addition to the Rsdoc, the HP VAN SDN Controller REST API provides information for interacting

with the controller’s REST API.

Rsdoc

Rsdoc is a semi-automated interactive RESTful API documentation. It offers a useful way to interact

with REST APIs.

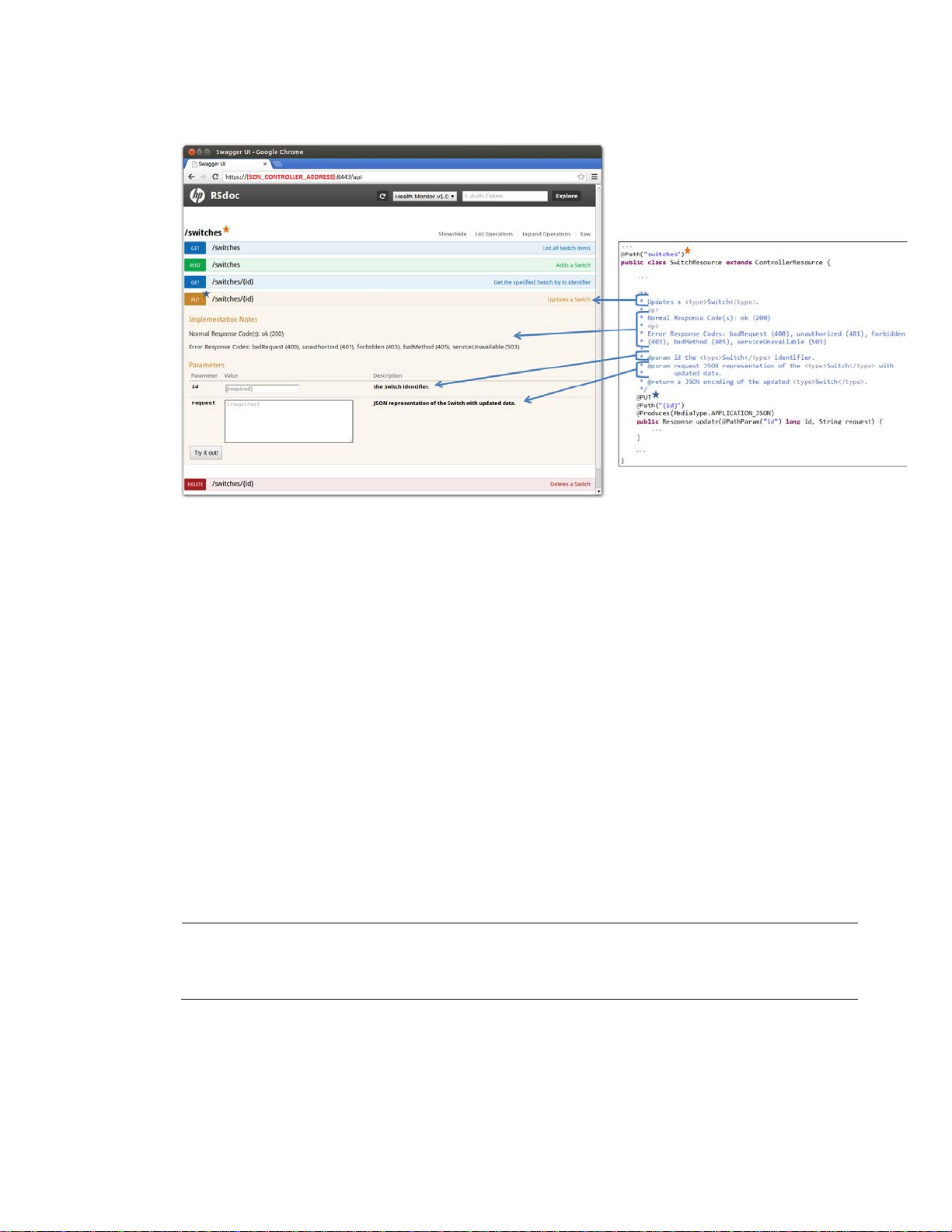

Figure 10 RSdoc

It is called RSdoc because is a combination of JAX-RS annotations [2] and Javadoc [21] (Illustrated

in F i g u r e 11 ).

16

Page 22

Figure 11 RSdoc, JAX-RS and Javadoc

NOTE

Use the correct password if it was changed following instructions from

on

page 7.

JAX-RS annotations and Javadoc are already written when implementing RESTful Web Services, and

they are re-used to generate an interactive API documentation.

Rsdoc Extension

The HP VAN SDN Controller SDK offers a method to extend the Rsdoc to include applications

specific RESTful Web Services (As the example illustrated in F i g u r e 11). Since JAX-RS annotations and

Javadoc are already written when implementing RESTful Web Services, in order to enable an

application to extend the RSdoc is relatively easy and automatic: a few configuration files need to

be updated. See Creating RSdoc on page 193 for an example.

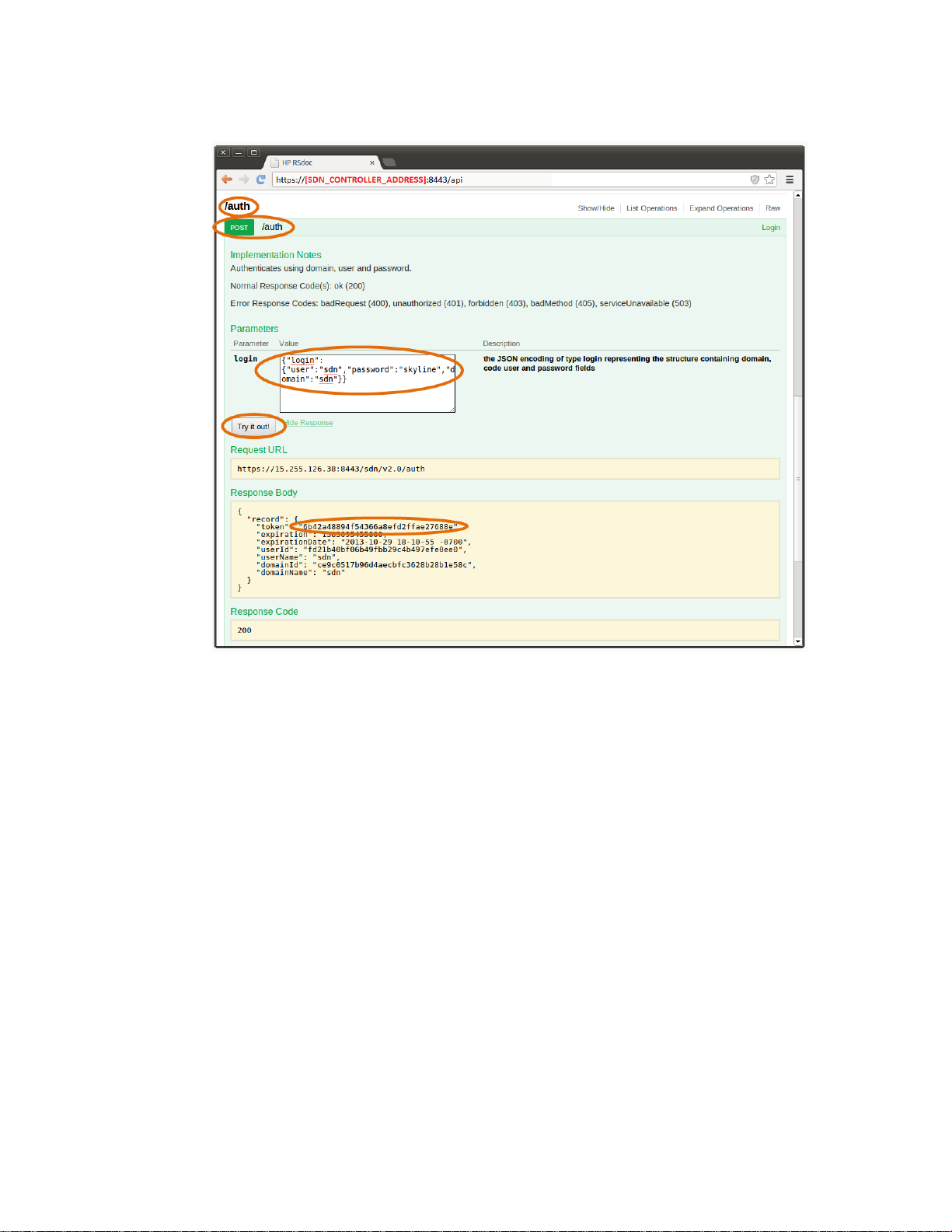

Rsdoc Live Reference

To access the HP VAN SDN Controller’s Rsdoc (including extensions by applications):

1. Open a browser at https

2. Get an authentication token by entering the following authentication JSON document:

{"login":{"user":"sdn","password":"skyline","domain":"sdn"}} (as illustrated in Fi g ur e 12 ).

://SDN_CONTROLLER_ADDRESS:8443/api (As illustrated in F igu re 10 ).

Authentication Configuration

17

Page 23

Figure 12 Authenticating via RSdoc Step 1

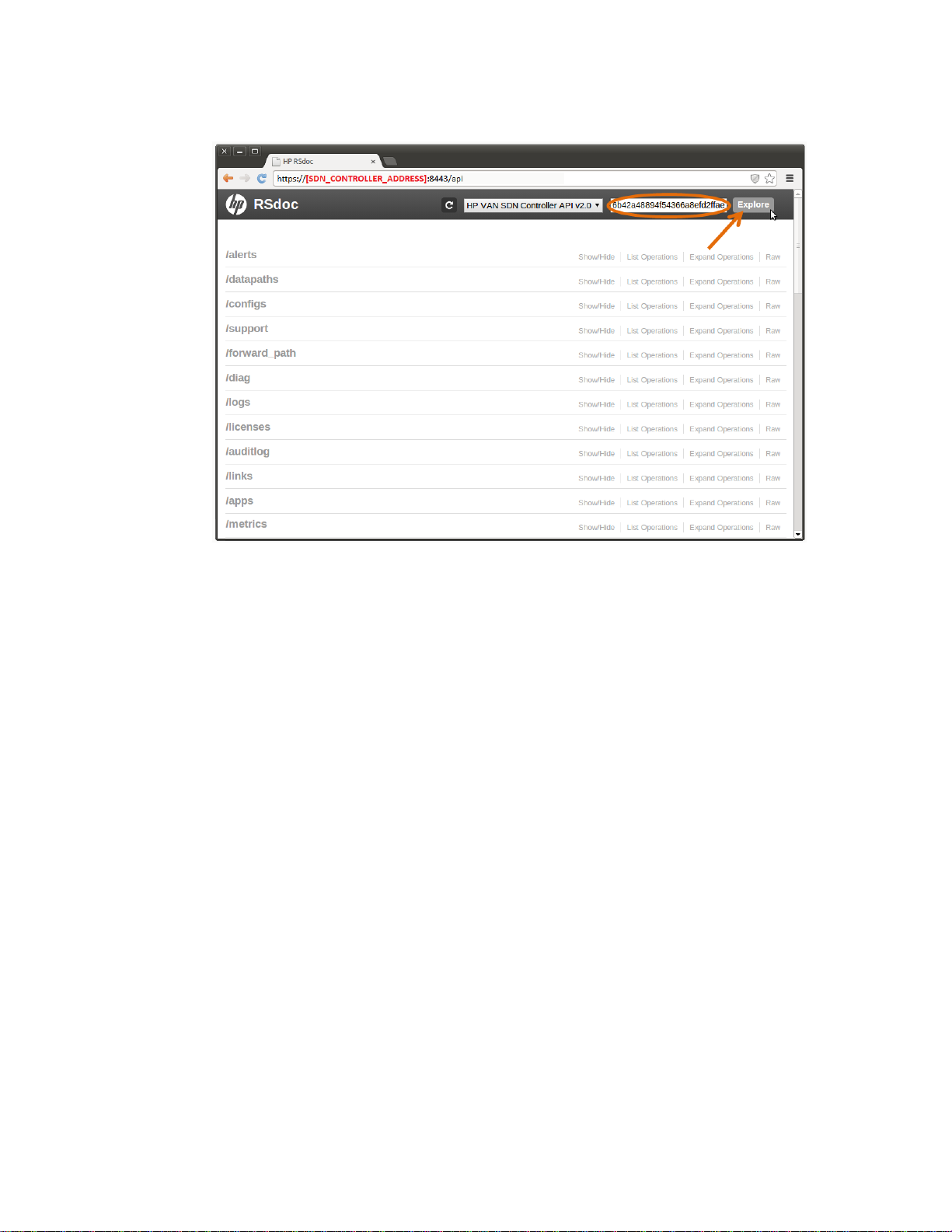

3. Set the authentication token as the X-AUTH-TOKEN in the RSdoc and then click “Explore,” as

illustrated in Fi g u re 13. From this point all requests done via RSdoc will be authenticated as long

as the token is valid.

18

Page 24

Figure 13 Authenticating via RSdoc Step 2

•

•

•

•

Audit Logging

The Audit Log retains information concerning activities, operations and configuration changes that

have been performed by an authorized end user. The purpose of this subsystem is to allow tracking

of significant system changes. This subsystem provides an API which various components can use to

record the fact that some important operation occurred, when and who triggered the operation and

potentially why. The subsystem also provides means to track and retrieve the recorded information

via an internal API as well as via external REST API. An audit log entry, once created, may not be

modified. Audit log entries, once created, may not be selectively deleted. Audit log entries are only

removed based on the age out policy defined by the administrator.

Audit Log data is maintained in persistence storage (default retention period is one year) and is

presented to the end user via both the UI and the REST API layers.

The audit log framework provides a cleanup task that is executed daily (by default) that ages out

audit log entries from persistent storage based on the policy set by the administrator.

An audit log entry consists of the following:

User—a string representation of the user that performed the operation which triggered the

audit log entry.

Time-stamp—the time that the audit log entry was created. The time information is persisted

in an UTC format.

Activity—a string representation of the activity the user was doing that triggered this audit log

entr y.

Data—a string description for the audit log entry. Typically, this contains the data associated

with the operation.

19

Page 25

•

•

•

•

•

•

•

•

Origin—a string representation of the application or component that originated this audit log

entr y.

Controller ID—the unique identification of the controller that originated the audit log entry.

Applications may contribute to the Audit Log via the Audit Log service. When creating an audit log

entry the user, activity, origin and data must be provided. The time-stamp and controller

identification is populated by the audit log framework. To contribute an audit log entry, use the

post(String us er, String origi n, String activi ty, String descr ip tion)

method provided by the AuditLogService API. This method will return the object that was created.

The strings associated with the user, origin and activity are restricted to a maximum of 255

characters, whereas the description string is restricted to a maximum of 4096 characters.

An example of an application consuming the Audit Log service is described at Auditing with Logs on

page 215.

Alert Logging

The purpose of this subsystem is to allow for management of alert data. The subsystem comprises of

an API which various components can use to generate alert data. The subsystem also provides

means to track and retrieve the recorded information via an internal API as well as via external REST

API. Once an alert entry has been created the state of the alert (active or not) is the only

modification that is allowed.

Alert data is maintained in persistent storage (default retention period is 14 days) and is presented

to the end user via both the UI and REST API layers. The alert framework provides a cleanup task

that is executed daily (by default) that ages out alert data from persistent storage based on the

policy set by the administrator.

An alert consists of the following:

Severity—one of Informational, Warning or Critical

Time-stamp—The time the alert was created. The time information is persisted in an UTC

format.

Description—a string description for the alert

Origin—a string representation of the application or component that originated the alert

Topic—the topic related to the alert. Users can register for notification when alerts related to

a given topic or set of topics occur

Controller ID—the unique identification of the controller that originated the alert

Applications may contribute alerts via the Alert service. When creating an alert the severity, topic,

origin and data must be provided. The time-stamp and controller identification is populated by the

alert framework. To contribute an alert, use the

post(Severity severity, Ale rtTopic topic, String origin, Stri ng data)

method provided by the AlertService API. This method returns the Alert DTO object that was created.

The string associated with the origin is restricted to a maximum of 255 characters, as well as the

data string.

An example of an application consuming the Alert service is described at Posting Alerts on page

212 .

20

Page 26

Configuration

The SDN controller presents configurable properties and allows the end user to modify

configurations via both the UI and REST API layers. The HP VAN SDN Controller uses the OSGi

Configuration Admin [22] [23] and MetaType [24] [25] services to present the configuration data.

For an application to provide configuration properties that are automatically presented by the SDN

controller, they must provide the MetaType information for the configurable properties. The metatype

information is contained in a “metatype.xml” file that must be present in the OSGI-INF/metatype

folder of the application bundle.

The necessary metatype.xml can be automatically generated via the use of the Maven SCR

annotations [26] and Maven SCR [27] plugin in a Maven pom.xml file for the application (See Root

POM File on page 139). The SCR annotations must be included as a dependency, and the SCR

plug-in is a build plugin.

Application pom.xml Example:

<?xml versio n="1.0" encoding="UTF-8"?>

<project xmlns=http://ma ven .apache.org/POM/4.0.0

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0

http://maven.apache.org/maven-v4_0_0.xsd">

...

<dependencies>

...

<dependency>

<groupId>org.apache.felix</groupId>

<artifactId>org.apache.felix.scr.annotations</artifactId>

<version>1.9.4</version>

</dependency>

</dependencies>

<build>

<plugins>

...

<plugin>

<groupId>org.apache.felix</groupId>

<artifactId>maven-scr-plugin</artifactId>

<version>1.13.0</version>

<executions>

<execution>

<id>generate-scr-srcdescriptor</id>

<goals>

<goal>scr</goal>

</goals>

</execution>

</executions>

<configuration>

<supportedProjectTypes>

21

Page 27

<supportedProjectType>bundle</supportedProjectType>

<supportedProjectType>war</supportedProjectType>

</supportedProjectTypes>

</configuration>

</plugin>

</plugins>

</build>

</project>

The component can then use Annotations to define the configuration properties as illustrated in the

following listing.

Configurable Property Key Definition Example:

package com.hp.hm.impl;

import org.apache.felix. scr .annotations.*;

...

@Component (metatype=true)

public class SwitchComponen t im plements SwitchService {

@Property(intValue = 100, description="Some Configuration")

protected static final St ring CONFIG_KE Y = " cfg.key";

...

}

The component is provided the configuration data by the OSGi framework as a Java Dictionary

object, which can be referenced as a basic Map of key -> value pairs. The key will always be a

Java String object, and the value will be a Java Object. A component will be provided the

configuration data at component initialization via an annotated “activate” method. Live updates to

a components configuration will be provided via an annotated “modified” method. Both of these

annotated methods should define a Map<String, Object> as an input parameter. The following

listing shows an example.

Configurable Property Example:

...

import com.hp.sdn.misc.C onf igUtils;

@Component (metatype=tru e)

public class SwitchComponen t im plements SwitchService {

@Property(intValue = 100, description="Some Configuration")

protected static final Stri ng CONFIG_KEY = "cfg.key";

private int someCf gVariable;

@Activate

protected void activate(Map<String, Object> config) {

someIntVariable = ConfigUtils.readInt(config, CONF IG_KEY, null, 100);

}

@Modified

protected void modified(Map<String, Object> config) {

22

Page 28

someIntVariable = ConfigUtils.readInt(config, CONF IG_KEY, null, 100);

•

•

•

•

}

...

}

As the configuration property value can one of several different kinds of Java object (Integer, Long,

String, etc.) a utility class is provided to read the appropriate Java object type from the configuration

map. The ConfigUtils.java class provides methods to read integers, longs, strings, Booleans and

ports from the configuration map of key -> value pairs. The caller must provide the following

information:

The configuration map

The key (string) for the desired property in the configuration map

A data Validator object (can be null)

A default value. The default value is returned if the provided key is not found in the

configuration map, if the key does not map to an Object of the desired type, or if a provided

data validator object rejects the value.

A Validator is a typed class which performs custom validation on a given configuration value. For

example, a data validator which only allows integer values between 10 and 20 is illustrated in the

following listing.

Configurable Property Validator Example:

...

import com.hp.sdn.misc.Validator;

public class MyValidator impl em ents Validator<Integer> {

@Override

public boolean isValid( In teger value) {

return ((10 <= value) && (value <= 20));

}

}

To use this validator with the ConfigUtils class to obtain the configuration value from the

configuration map, just include it in the method call:

MyValidato r myValidator = new My Validator();

ConfigUtils.readInt(config, CONFIG_KEY, myValidator, 15);

High Availability

Role orchestration

Role Orchestration Service provides a federated mechanism to define the role of teamed controllers

with respect to the network elements in the controlled domain. The role that a controller assumes in

relation to a network element would determine whether it has abilities to write and modify the

configurations on the network element, or has only read-only access to it.

As a preparation to exercise the Role Orchestration Service (ROS) in the HP VAN SDN Controller,

there are two pre-requisite operations that needs to be carried out beforehand:

23

Page 29

1) Create controller team: Using the teaming interfaces, a team of controllers need to be defined

for leveraging High Availability features.

2) Create Region: the network devices for which the given controller has been identified as a

master are grouped into “regions”. This grouping is defined in the HP VAN SDN Controller

using the Region interface detailed in subsequent sections.

Once the region definition(s) are in place, the ROS would take care of ensuring that a master

controller is always available to the respective network element(s) even when the configured master

experiences a failure or there is effectively a disruption of the communication channel between the

controller and the network device(s).

Failover: ROS would trigger the failover operation in two situations:

1) Controller failure: The ROS detects the failure of a controller in a team via notifications from

the teaming subsystem. If the ROS determines that the failed controller instance was master to

any region, it would immediately elect one of the backup (slave) controllers to assume the

mastership over the affected region.

2) Device disconnect: The ROS instance in a controller would get notified of a communication

failure with network device(s) via the Controller Service notifications. It would instantly federate

with all ROS instances in the team to determine if the network device(s) in question are still

connected to any of the backup (slave) controllers within the team. If that is the case, it would

elect one of the slaves to assume mastership over the affected network device(s).

Failback: When the configured master recovers from a failure and joins the team again, or when

the connection from the disconnected device(s) with the original master is resumed, ROS would

initiate a failback operation i.e. the mastership is restored back to the configured master as defined

in the region definition.

ROS exposes API’s through which interested applications can:

1) Create, delete or update a region definition

2) Determine the current master for a given device identified by a datapathId or IP address

3) Determine the slave(s) for a given device identified by a datapathId or IP address

4) Determine if the local controller is a master to a given device identified by a datapath

5) Determine the set of devices that a given controller is playing the master or slave role.

6) Register for region and role change notifications.

Details of the RegionService and RoleService APIs may be found at the Javadocs provided with the

SDK. See Javadoc on page 9 for details.

Illustrative usages of Role Service API’s

- To determine the controller which is currently playing the role of Master to a given datapath,

applications can use the following API’s depending on the specific need:

import com.hp.sdn.adm.ro le. RoleService;

import com.hp.sdn.adm.sy ste m.SystemInforamationServ ice;

…

public class SampleService {

// Mandatory dependency.

private final Sy stemInformationService sysIn foService;

24

Page 30

// Mandatory dependency.

private final Ro leService roleService;

public void doAct() {

IpAddress masterIp = roleService.getM aster(dpid).ip();

if(masterIp.equals(sysInfoService.

getSystem().getAddress())){

log.debug(“this cont ro ller is the master to {}”,

dpid);

// now that we know this controller has master privil ages

// we could for exam ple initiate wri te operations on the

// datapath – like sending flow-mods

}

}

}

- To determine the role that a controller is playing with respect to a given datapath

import com.hp.of.lib.msg .Co ntrollerRole;

import com.hp.sdn.adm.ro le. RoleService;

import com.hp.sdn.region .Co ntrollerNode;

import com.hp.sdn.region.ControllerNodeModel;

…

public class SampleService {

// Mandatory dependency.

private final Ro leService roleService;

public void doAc t() {

...

ControllerNode co ntroller = new Cont ro llerNodeModel(“10.1.1.1” );

Contro llerRole role = ro leService.ge tC urrentRole(controller,de viceIp);

switch(role){

case MASTER:

// the given controll er has master privilages

// we can trigger write-operations from that co ntroller

...

Break;

Case SLAVE:

// we have only read priv ileges

...

break;

default:

// indicates the cont roller and device are not associated

// to any region.

break;

25

Page 31

}

}

Notification on Region and Role changes

Applications can express interest in region change notifications using the addListener(...) API in

RegionService and providing an implementation of the RegionListener. A sample listener

implementation is illustrated in the following listing:

Region Listener Example:

import com.hp.sdn.adm.re gio n.RegionListener;

import com.hp.sdn.region .Re gion;

...

public class RegionListener Im pl implements Re gionListener {

...

@Override

public void added(Regio n re gion) {

log.debug(“Mast e r of ne w region: {}”, region.master() );

}

@Override

public void removed(Reg io n region) {

log.debug(“Mast e r of re moved region: {}”, region.mast er());

}

}

Similarly applications can express interest in role change notifications using the addListener(...) API

in RoleService and providing an implementation of the RoleListener. A sample listener

implementation is illustrated in the following listing:

Role Listener Example:

import com.hp.sdn.adm.ro le. RoleEvent;

import com.hp.sdn.adm.ro le. RoleListener;

...

public class RoleListenerIm pl implements RoleListener {

...

@Override

public void rolesAsserted(RoleEvent roleEvent) {

log.debug(“Previous master: {}”, roleEvent.oldMaster());

log.debug(“New m ast er: {}”, roleEvent.newMaster ());

log.debug(“Affected datapaths: {}”, roleEvent.data paths());

}

}

OpenFlow

OpenFlow messages are sent and received between the controller and the switches (datapaths) it

manages. These messages are byte streams, the structure of which is documented in the OpenFlow

Protocol Specification documents published by the Open Networking Foundation (ONF) [28].

26

Page 32

The Message Library is a Java implementation of the OpenFlow specification, providing facilities for

•

•

•

•

•

•

encoding and decoding OpenFlow messages from and to Java rich data types.

The controller handles the connections from OpenFlow switches and provides the means for upper

layers of software to interact with those switches via the ControllerService API.

The following figure illustrates this:

Figure 14 OpenFlow Controller

Message Library

The Message Library is a Java implementation of the OpenFlow specification, providing facilities for

encoding and decoding OpenFlow messages from and to Java rich data types.

Design Goals

The following are the overall design goals of the library:

To span all OpenFlow protocol versions

However, actively supporting just 1.0.0 and 1.3.2

To be extensible

Easily accommodating future versions

To provide an elegant, yet simple, API for handling with OpenFlow messages

To reduce the burden on application developers

Insulating developers from differences across protocol versions, as much as possible

To expose the semantics but hide the syntax details

Developers will not be required to encode and decode bitmasks, calculate message

lengths, insert padding, etc.

To be robust and type-safe

Working with Java enumerations and types

27

Page 33

Design Choices

•

•

•

•

•

•

•

Some specific design choices were made to establish the underlying principles of the

implementation, to help meet the goals specified above.

All OpenFlow messages are fully creatable/encodable/decodable, making the library

completely symmetrical in this respect.

The controller (or app) never creates certain messages (such as PortStatus, FlowRemoved,

However, providing a complete solution allows us to emulate OpenFlow switches in Java

Message instances, for the most part, are immutable.

This means a single instance can be shared safely across multiple applications (and

This implies that the structures that make up the message (ports, instructions, actions, etc.)

Where possible, “Data Types” will be used to encourage API type-safety – see the

Where bitmasks are defined in the protocol, Java enumerations are defined with a constant

for each bit.

MultipartReply, etc.) as these are only ever generated by the switch. Technically, we

would only need to decode those messages, never encode them.

code. This facilitates the writing of automated tests to verify switch/controller interactions

in a deterministic manner.

multiple threads) without synchronization.

must also be immutable.

Javadocs for com.hp.util.ip and com.hp.of.lib.dt.

A specific bitmask value is represented by a Set of the appropriate enumeration

constants.

For example: Set<PortConfig>

A message instance is mutable only while the message is under construction (for example, an

application composing a FlowMod message). To be sent through the system it must be

converted to its immutable form first.

To create and send a message, an application will:

Use the Message Factory to create a mutable message of the required type

Set the state (payload) of the message

Make the message immutable

Send the message via the ControllerService API.

The Core Controller will use the Message Factory to encode the message into its byte-stream

form, for transmitting to the switch.

The Core Controller will use the Message Factory to decode incoming messages from their

byte-stream form into their (immutable) rich data type form.

28

Page 34

Figure 15 Message Factory Role

•

•

•

•

•

•

Message Composition and Type Hierarchy

All OpenFlow message instances are subclasses of the OpenflowMessage abstract class. Every

message includes an internal Header instance that encapsulates:

The protocol version

The message type

The message length (in bytes)

The transaction ID (XID)

In addition to the header, specific messages may include:

Data values, such as “port number”, “# bytes processed”, “metadata mask”, “h/w address”,

etc.

These values are represented by Java primitives, enumeration constants, or data types.

Other common structures, such as Ports, Matches, Instructions, Actions, etc.

These structure instances are all subclasses of the OpenflowStructure abstract class.

For each defined OpenFlow message type (see com.hp.of.lib.msg.MessageType) there are

corresponding concrete classes representing the immutable and mutable versions of the message.

For a given message type (denoted below as “Foo”) the following class relationships exist:

29

Page 35

Figure 16 OpenFlow Message Class Diagram

Each mutable subclass includes a private Mutable object that determines whether the instance is still

“writable”. While writable, the “payload” of the mutable message can be set. Once the message

has been made immutable, the mutable instance is marked as “no longer writable”; any attempt to

change its state will result in an InvalidMutableException being thrown.

Note that messages are passive in nature as they are simply data carriers.

Note also that structures (e.g. a Match) have a very similar class relationship.

Factories

Messages and structures are parsed or created by factories. Since the factories are all about

processing, but contain no state, the APIs consist entirely of static methods. Openflow messages are

created, encoded, or parsed by the MessageFactory class. Supporting structures are created,

encoded, or parsed by supporting factories, e.g. MatchFactory, FieldFactory, PortFactory, etc.

The main factory that application developers will deal with is the MessageFactory:

30

Page 36

Figure 17 Message Factory Class Diagram

•

•

•

•

•

•

•

•

•

The other factories that a developer might use are:

MatchFactory—creates matches, used in FlowMods

FieldFactory—creates match fields, used in Matches

InstructionFactory—creates instructions for FlowMods

ActionFactory—creates actions for instructions, (1.0 flowmods), and group buckets

PortFactory—creates port descriptions

Note that there are “reserved” values (special port numbers) defined on the Port class

(MAX, IN_PORT, TABLE, NORMAL, FLOOD, ALL, CONTROLLER, LOCAL, ANY)—see

com.hp.of.lib.msg.Port Javadocs

QueueFactory—creates queue descriptions

MeterBandFactory—creates meter bands, used in MeterMod messages

BucketFactory—creates buckets, used in GroupMod messages

TableFeatureFactory—creates table feature descriptions

Note that application developers should not ever need to invoke “parse” or “encode” methods on

any of the factories; those methods are reserved for use by the Core Controller.

An example: creating a FlowMod message

The following listing shows an example of how to create a flowmod message:

Flowmod Message Example:

public class SampleFlowModM es sageCreation {

private static fin al ProtocolVersi on PV = ProtocolVersion.V_1_3;

private static final long COOKIE = 0x00002468;

private static fin al TableId TABLE_I D = TableId.valueOf(200);

31

Page 37

private static final int FL OW_IDLE_TIME OU T = 300;

private static final int FL OW_HARD_TIME OU T = 600;

private static final int FL OW_PRIORITY = 50 ;

private static fin al Set<FlowModFl ag> FLAGS = EnumSet. of(

FlowModFlag.SEND_FLOW_REM,

FlowModFlag.CHECK_OVERLAP,

FlowModFlag.NO_BYTE_COUNTS

);

private static final MacAddress MAC =

MacAddress.valueOf("00001e:000000");

private static final MacAddress MAC_MASK =

MacAddress.valueOf("ffffff:000000");

private static final PortNumber SMTP_PORT = PortNumb er.valueOf(25);

private static final MacAddress MAC_DEST = MacAddress.BROADCAST;

private static final IpAd dress IP_DEST = Ip A ddress.LOOPBACK_IPv4;

private OfmFlowMod sampleFlowModCreation() {

// Create a 1.3 FlowMod ADD message...