Page 1

HP Scalable File Share User's Guide

G3.1-0

HP Part Number: SFSUGG31-E

Published: June 2009

Edition: 5

Page 2

© Copyright 2009 Hewlett-Packard Development Company, L.P.

Confidential computersoftware. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211and 12.212,Commercial

Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under

vendor's standardcommercial license.The informationcontained hereinis subject to change without notice. The only warranties forHP products

and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as

constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Intel, Intel Xeon, and Itanium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the U.S. and other countries.

InfiniBand is a registered trademark and service mark of the InfiniBand Trade Association.

Lustre and the Lustre Logo are trademarks of Sun Microsystems.

Myrinet and Myricom are registered trademarks of Myricom Inc.

Quadrics and QsNetII are trademarks or registered trademarks of Quadrics, Ltd.

UNIX is a registered trademark of The Open Group.

Voltaire, ISR 9024, Voltaire HCA 400, and VoltaireVision are all registered trademarks of Voltaire, Inc.

Red Hat is a registered trademark of Red Hat, Inc.

Fedora is a trademark of Red Hat, Inc.

SUSE is a registered trademark of SUSE AG, a Novell business.

AMD Opteron is a trademark of Advanced Micro Devices, Inc.

Sun and Solaris are trademarks or registered trademarks of Sun Microsystems, Inc. in the United States and other countries.

Page 3

Table of Contents

About This Document.........................................................................................................9

Intended Audience.................................................................................................................................9

New and Changed Information in This Edition.....................................................................................9

Typographic Conventions......................................................................................................................9

Related Information..............................................................................................................................10

Structure of This Document..................................................................................................................11

Documentation Updates.......................................................................................................................11

HP Encourages Your Comments..........................................................................................................11

1 What's In This Version.................................................................................................13

1.1 About This Product.........................................................................................................................13

1.2 Benefits and Features......................................................................................................................13

1.3 Supported Configurations ..............................................................................................................13

1.3.1 Hardware Configuration.........................................................................................................14

1.3.1.1 Fibre Channel Switch Zoning..........................................................................................16

1.4 Server Security Policy......................................................................................................................16

2 Installing and Configuring MSA Arrays.....................................................................19

2.1 Installation.......................................................................................................................................19

2.2 Accessing the MSA2000fc CLI.........................................................................................................19

2.3 Using the CLI to Configure Multiple MSA Arrays.........................................................................19

2.3.1 Configuring New Volumes.....................................................................................................19

2.3.2 Creating New Volumes...........................................................................................................20

3 Installing and Configuring HP SFS Software on Server Nodes..............................23

3.1 Supported Firmware ......................................................................................................................24

3.2 Installation Requirements...............................................................................................................25

3.2.1 Kickstart Template Editing......................................................................................................25

3.3 Installation Phase 1..........................................................................................................................26

3.3.1 DVD/NFS Kickstart Procedure................................................................................................26

3.3.2 DVD/USB Drive Kickstart Procedure.....................................................................................27

3.3.3 Network Installation Procedure..............................................................................................28

3.4 Installation Phase 2..........................................................................................................................28

3.4.1 Patch Download and Installation Procedure..........................................................................29

3.4.2 Run the install2.sh Script.................................................................................................29

3.4.3 10 GigE Installation.................................................................................................................29

3.5 Configuration Instructions..............................................................................................................30

3.5.1 Configuring Ethernet and InfiniBand or 10 GigE Interfaces..................................................30

3.5.2 Creating the /etc/hosts file................................................................................................30

3.5.3 Configuring pdsh...................................................................................................................31

3.5.4 Configuring ntp......................................................................................................................31

3.5.5 Configuring User Credentials.................................................................................................31

3.5.6 Verifying Digital Signatures (optional)...................................................................................32

3.5.6.1 Verifying the HP Public Key (optional)..........................................................................32

3.5.6.2 Verifying the Signed RPMs (optional)............................................................................32

3.6 Upgrade Installation........................................................................................................................32

3.6.1 Rolling Upgrades.....................................................................................................................33

3.6.2 Client Upgrades.......................................................................................................................35

Table of Contents 3

Page 4

4 Installing and Configuring HP SFS Software on Client Nodes...............................37

4.1 Installation Requirements...............................................................................................................37

4.1.1 Client Operating System and Interconnect Software Requirements......................................37

4.1.2 InfiniBand Clients....................................................................................................................37

4.1.3 10 GigE Clients........................................................................................................................37

4.2 Installation Instructions...................................................................................................................38

4.3 Custom Client Build Procedures.....................................................................................................39

4.3.1 CentOS 5.2/RHEL5U2 Custom Client Build Procedure..........................................................39

4.3.2 SLES10 SP2 Custom Client Build Procedure...........................................................................39

5 Using HP SFS Software................................................................................................41

5.1 Creating a Lustre File System..........................................................................................................41

5.1.1 Creating the Lustre Configuration CSV File...........................................................................41

5.1.1.1 Multiple File Systems......................................................................................................43

5.1.2 Creating and Testing the Lustre File System...........................................................................43

5.2 Configuring Heartbeat....................................................................................................................44

5.2.1 Preparing Heartbeat................................................................................................................45

5.2.2 Generating Heartbeat Configuration Files Automatically......................................................45

5.2.3 Configuration Files..................................................................................................................45

5.2.3.1 Generating the cib.xml File..........................................................................................47

5.2.3.2 Editing cib.xml.............................................................................................................47

5.2.4 Copying Files...........................................................................................................................47

5.2.5 Starting Heartbeat...................................................................................................................48

5.2.6 Monitoring Failover Pairs........................................................................................................48

5.2.7 Moving and Starting Lustre Servers Using Heartbeat............................................................48

5.2.8 Things to Double-Check..........................................................................................................49

5.2.9 Things to Note.........................................................................................................................49

5.3 Starting the File System...................................................................................................................49

5.4 Stopping the File System.................................................................................................................50

5.5 Testing Your Configuration.............................................................................................................50

5.5.1 Examining and Troubleshooting.............................................................................................50

5.5.1.1 On the Server...................................................................................................................50

5.5.1.2 The writeconf Procedure.................................................................................................52

5.5.1.3 On the Client...................................................................................................................53

5.6 Lustre Performance Monitoring......................................................................................................54

6 Licensing........................................................................................................................55

6.1 Checking for a Valid License...........................................................................................................55

6.2 Obtaining a New License................................................................................................................55

6.3 Installing a New License.................................................................................................................55

7 Known Issues and Workarounds................................................................................57

7.1 Server Reboot...................................................................................................................................57

7.2 Errors from install2....................................................................................................................57

7.3 Application File Locking.................................................................................................................57

7.4 MDS Is Unresponsive......................................................................................................................57

7.5 Changing group_upcall Value to Disable Group Validation.....................................................57

7.6 Configuring the mlocate Package on Client Nodes......................................................................58

7.7 System Behavior After LBUG..........................................................................................................58

4 Table of Contents

Page 5

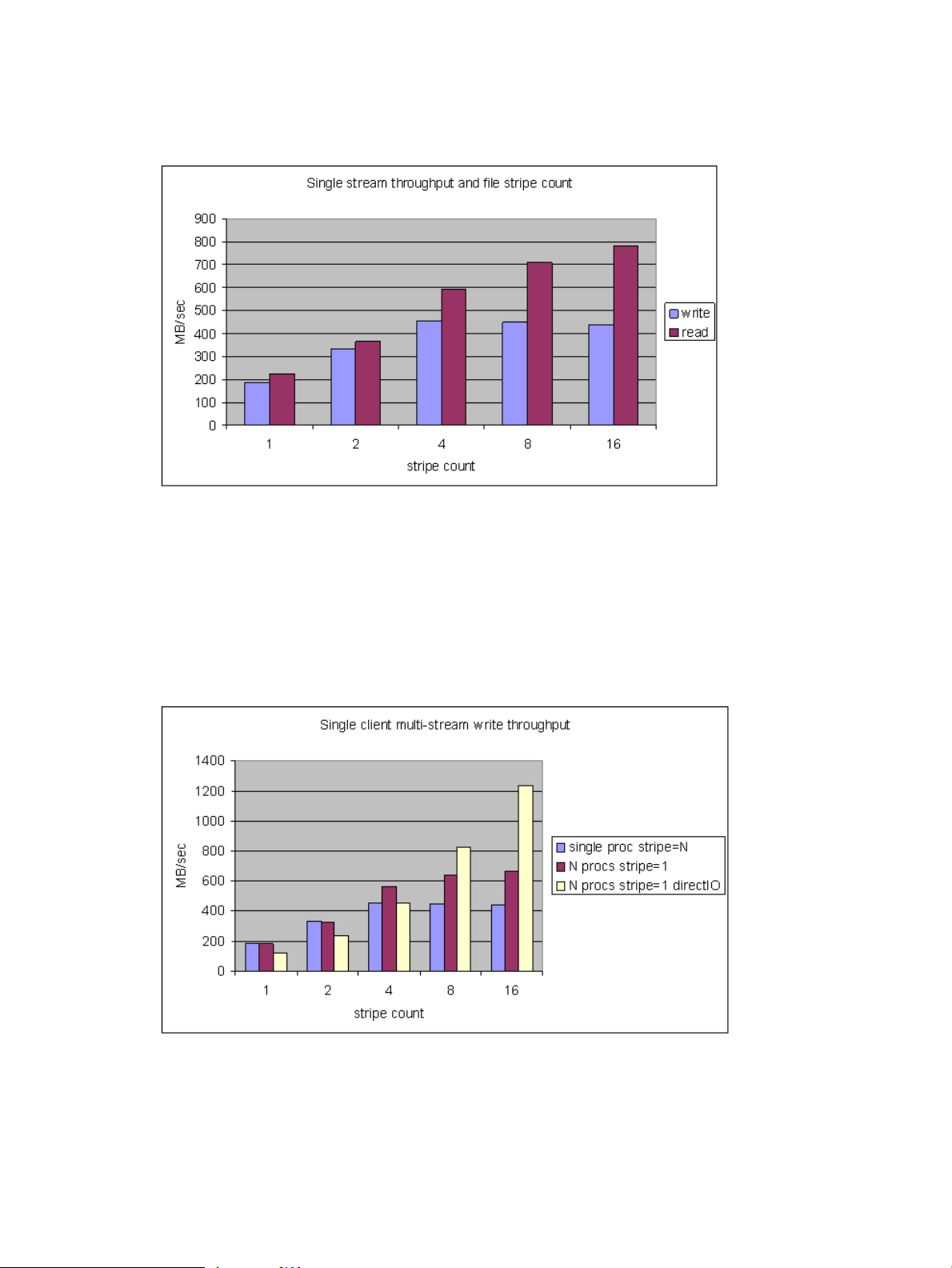

A HP SFS G3 Performance.............................................................................................59

A.1 Benchmark Platform.......................................................................................................................59

A.2 Single Client Performance..............................................................................................................60

A.3 Throughput Scaling........................................................................................................................62

A.4 One Shared File..............................................................................................................................64

A.5 Stragglers and Stonewalling...........................................................................................................64

A.6 Random Reads................................................................................................................................65

Index.................................................................................................................................67

Table of Contents 5

Page 6

List of Figures

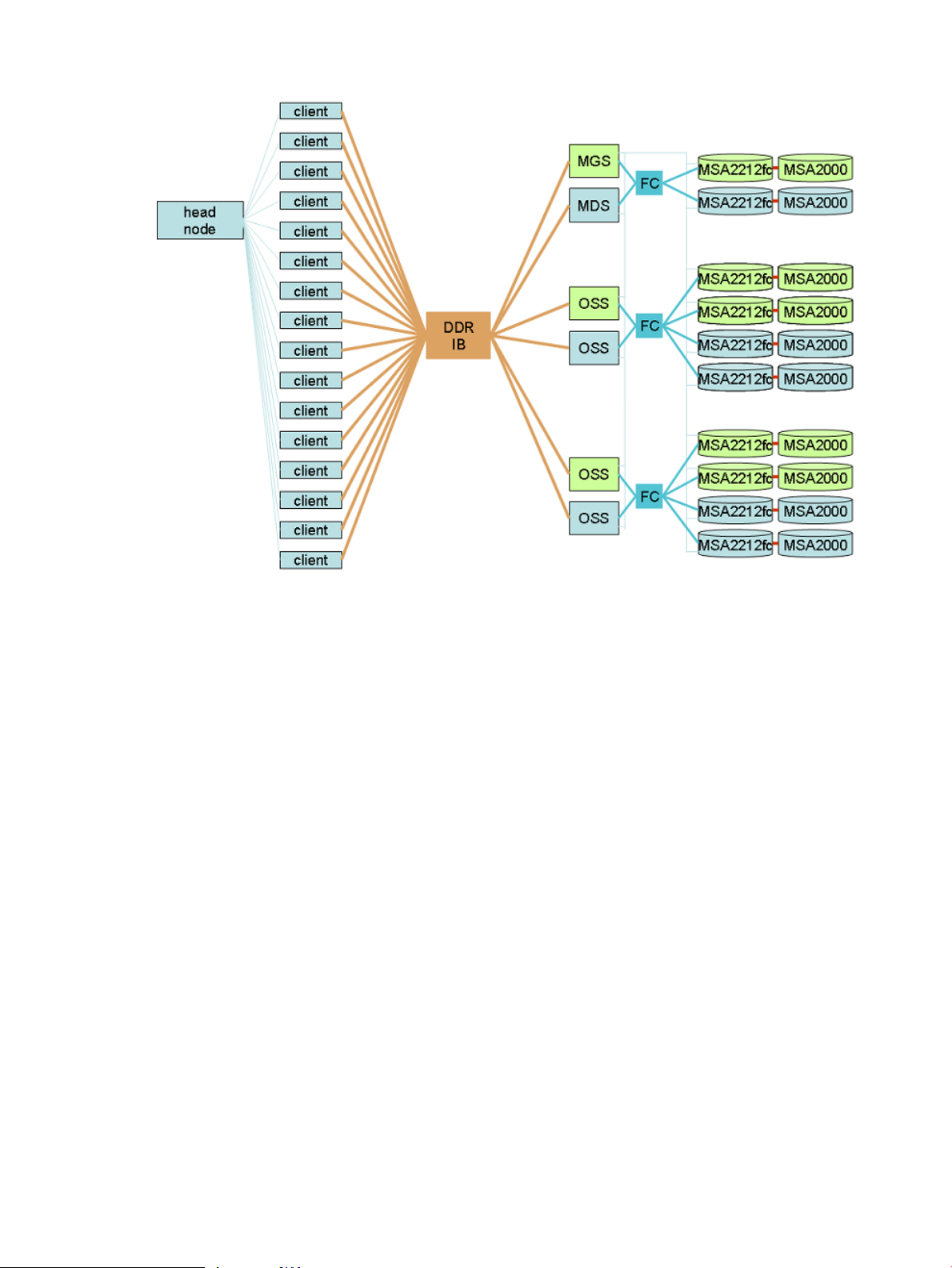

1-1 Platform Overview........................................................................................................................15

1-2 Server Pairs....................................................................................................................................16

A-1 Benchmark Platform......................................................................................................................59

A-2 Storage Configuration...................................................................................................................60

A-3 Single Stream Throughput............................................................................................................61

A-4 Single Client, Multi-Stream Write Throughput.............................................................................61

A-5 Writes Slow When Cache Fills.......................................................................................................62

A-6 Multi-Client Throughput Scaling..................................................................................................63

A-7 Multi-Client Throughput and File Stripe Count...........................................................................63

A-8 Stonewalling..................................................................................................................................64

A-9 Random Read Rate........................................................................................................................65

6 List of Figures

Page 7

List of Tables

1-1 Supported Configurations ............................................................................................................13

3-1 Minimum Firmware Versions.......................................................................................................24

7

Page 8

8

Page 9

About This Document

This document provides installation and configuration information for HP Scalable File Share

(SFS) G3.1-0. Overviews of installing and configuring the Lustre® File System and MSA2000

Storage Arrays are also included in this document.

Pointers to existing documents are provided where possible. Refer to those documents for related

information.

Intended Audience

This document is intended for anyone who installs and uses HP SFS. The information in this

guide assumes that you have experience with the following:

• The Linux operating system and its user commands and tools

• The Lustre File System

• Smart Array storage administration

• HP rack-mounted servers and associated rack hardware

• Basic networking concepts, network switch technology, and network cables

New and Changed Information in This Edition

• CentOS 5.2 support

• Lustre 1.6.7 support

• 10 GigE support

• License checking

• Upgrade path

Typographic Conventions

This document uses the following typographical conventions:

%, $, or #

audit(5) A manpage. The manpage name is audit, and it is located in

Command

Computer output

Ctrl+x A key sequence. A sequence such as Ctrl+x indicates that you

ENVIRONMENT VARIABLE The name of an environment variable, for example, PATH.

[ERROR NAME]

Key The name of a keyboard key. Return and Enter both refer to the

Term The defined use of an important word or phrase.

User input

Variable

[] The contents are optional in syntax. If the contents are a list

A percent sign represents the C shell system prompt. A dollar

sign represents the system prompt for the Bourne, Korn, and

POSIX shells. A number sign represents the superuser prompt.

Section 5.

A command name or qualified command phrase.

Text displayed by the computer.

must hold down the key labeled Ctrl while you press another

key or mouse button.

The name of an error, usually returned in the errno variable.

same key.

Commands and other text that you type.

The name of a placeholder in a command, function, or other

syntax display that you replace with an actual value.

separated by |, you must choose one of the items.

Intended Audience 9

Page 10

{} The contents are required in syntax. If the contents are a list

... The preceding element can be repeated an arbitrary number of

\ Indicates the continuation of a code example.

| Separates items in a list of choices.

WARNING A warning calls attention to important information that if not

CAUTION A caution calls attention to important information that if not

IMPORTANT This alert provides essential information to explain a concept or

NOTE A note contains additional information to emphasize or

Related Information

Pointers to existing documents are provided where possible. Refer to those documents for related

information.

For Sun Lustre documentation, see:

http://manual.lustre.org

separated by |, you must choose one of the items.

times.

understood or followed will result in personal injury or

nonrecoverable system problems.

understood or followed will result in data loss, data corruption,

or damage to hardware or software.

to complete a task.

supplement important points of the main text.

The Lustre 1.6 Operations Manual is installed on the system in /opt/hp/sfs/doc/

LustreManual_v1_15.pdf. Or refer to the Lustre website:

http://manual.lustre.org/images/8/86/820-3681_v15.pdf

For HP XC Software documentation, see:

http://docs.hp.com/en/linuxhpc.html

For MSA2000 products, see:

http://www.hp.com/go/msa2000

For HP servers, see:

http://www.hp.com/go/servers

For InfiniBand information, see:

http://www.hp.com/products1/serverconnectivity/adapters/infiniband/specifications.html

For Fibre Channel networking, see:

http://www.hp.com/go/san

For HP support, see:

http://www.hp.com/support

For product documentation, see:

http://www.hp.com/support/manuals

For collectl documentation, see:

http://collectl.sourceforge.net/Documentation.html

For Heartbeat information, see:

http://www.linux-ha.org/Heartbeat

For HP StorageWorks Smart Array documentation, see:

HP StorageWorks Smart Array Manuals

10

Page 11

For SFS Gen 3 Cabling Tables, see: http://docs.hp.com/en/storage.html and click the Scalable File

Share (SFS) link.

For SFS V2.3 Release Notes, see:

HP StorageWorks Scalable File Share Release Notes Version 2.3

For documentation of previous versions of HP SFS, see:

• HP StorageWorks Scalable File Share Client Installation and User Guide Version 2.2 at:

http://docs.hp.com/en/8957/HP_StorageWorks_SFS_Client_V2_2-0.pdf

Structure of This Document

This document is organized as follows:

Chapter 1 Provides information about what is included in this product.

Chapter 2 Provides information about installing and configuring MSA2000fc arrays.

Chapter 3 Provides information about installing and configuring the HP SFS Software on the

server nodes.

Chapter 4 Provides information about installing and configuring the HP SFS Software on the

client nodes.

Chapter 5 Provides information about using the HP SFS Software.

Chapter 6 Provides information about licensing.

Chapter 7 Provides information about known issues and workarounds.

Appendix A Provides performance data.

Documentation Updates

Documentation updates (if applicable) are provided on docs.hp.com. Use the release date of a

document to determine that you have the latest version.

HP Encourages Your Comments

HP encourages your comments concerning this document. We are committed to providing

documentation that meets your needs. Send any errors found, suggestions for improvement, or

compliments to:

http://docs.hp.com/en/feedback.html

Include the document title, manufacturing part number, and any comment, error found, or

suggestion for improvement you have concerning this document.

Structure of This Document 11

Page 12

12

Page 13

1 What's In This Version

1.1 About This Product

HP SFS G3.1-0 uses the Lustre File System on MSA2000fc hardware to provide a storage system

for standalone servers or compute clusters.

Starting with this release, HP SFS servers can be upgraded. If you are upgrading from one version

of HP SFS G3 to a more recent version, see the instructions in “Upgrade Installation” (page 32).

IMPORTANT: If you are upgrading from HP SFS version 2.3 or older, you must contact your

HP SFS 2.3 support representative to obtain the extra documentation and tools necessary for

completing that upgrade. The upgrade from HP SFS version 2.x to HP SFS G3 cannot be done

successfully with just the HP SFS G3 CD and the user's guide.

HP SFS 2.3 to HP SFS G3 upgrade documentation and tools change regularly and independently

of the HP SFS G3 releases. Verify that you have the latest available versions.

If you are upgrading from one version of HP SFS G3, on a system that was previously upgraded

from HP SFS version 2.3 or older, you must get the latest upgrade documentation and tools from

HP SFS 2.3 support.

1.2 Benefits and Features

HP SFS G3.1-0 consists of a software set required to providehigh performance and highly available

Lustre File System service over InfiniBand or 10 Gigabit Ethernet (GigE) for HP MSA2000fc

storage hardware. The software stack includes:

• Lustre Software 1.6.7

• Open Fabrics Enterprise Distribution (OFED) 1.3.1

• Mellanox 10 GigE driver

• Heartbeat V2.1.3

• HP multipath drivers

• collectl (for system performance monitoring)

• pdsh for running file system server-wide commands

• Other scripts, tests, and utilities

1.3 Supported Configurations

HP SFS G3.1-0 supports the following configurations:

Table 1-1 Supported Configurations

Server Operating System

SupportedComponent

CentOS 5.2, RHEL5U2, SLES10 SP2, XCV4Client Operating Systems

Opteron, XeonClient Platform

V1.6.7Lustre Software

CentOS 5.2

ProLiant DL380 G5Server Nodes

MSA2000fcStorage Array

OFED 1.3.1 InfiniBand or 10 GigEInterconnect

1

1.1 About This Product 13

Page 14

Table 1-1 Supported Configurations (continued)

1 CentOS 5.2 is available for download from the HP Software Depot at:

http://www.hp.com/go/softwaredepot

1.3.1 Hardware Configuration

A typical HP SFS system configuration consists of the base rack only that contains:

• ProLiant DL380 MetaData Servers (MDS), administration servers, and Object Storage Servers

(OSS)

• HP MSA2000fc enclosures

• Management network ProCurve Switch

• SAN switches

• InfiniBand or 10 GigE switches

• Keyboard, video, and mouse (KVM) switch

• TFT console display

All DL380 G5 file system servers must have their eth0 Ethernet interfaces connected to the

ProCurve Switch making up an internal Ethernet network. The iLOs for the DL380 G5 servers

should also be connected to the ProCurve Switch, to enable Heartbeat failover power control

operations. HP recommends at least two nodes with Ethernet interfaces be connected to an

external network.

DL380 G5 file system servers using HP SFS G3.1-0 must be configured with mirrored system

disks to protect against a server disk failure.Use the ROM-based HP ORCA ArrayConfiguration

utility to configure mirrored system disks (RAID 1) for each server by pressing F8 during system

boot. More information is available at:

http://h18004.www1.hp.com/products/servers/proliantstorage/software-management/acumatrix/

index.html

The MDS server, administration server, and each pair of OSS servers have associated HP

MSA2000fc enclosures. Figure 1-1 provides a high-level platform diagram. For detailed diagrams

of the MSA2000 controller and the drive enclosure connections, see the HP StorageWorks 2012fc

Modular Smart Array User Guide at:

http://bizsupport.austin.hp.com/bc/docs/support/SupportManual/c01394283/c01394283.pdf

SupportedComponent

SAS, SATAStorage Array Drives

8.10 and laterProLiant Support Pack (PSP)

14 What's In This Version

Page 15

Figure 1-1 Platform Overview

1.3 Supported Configurations 15

Page 16

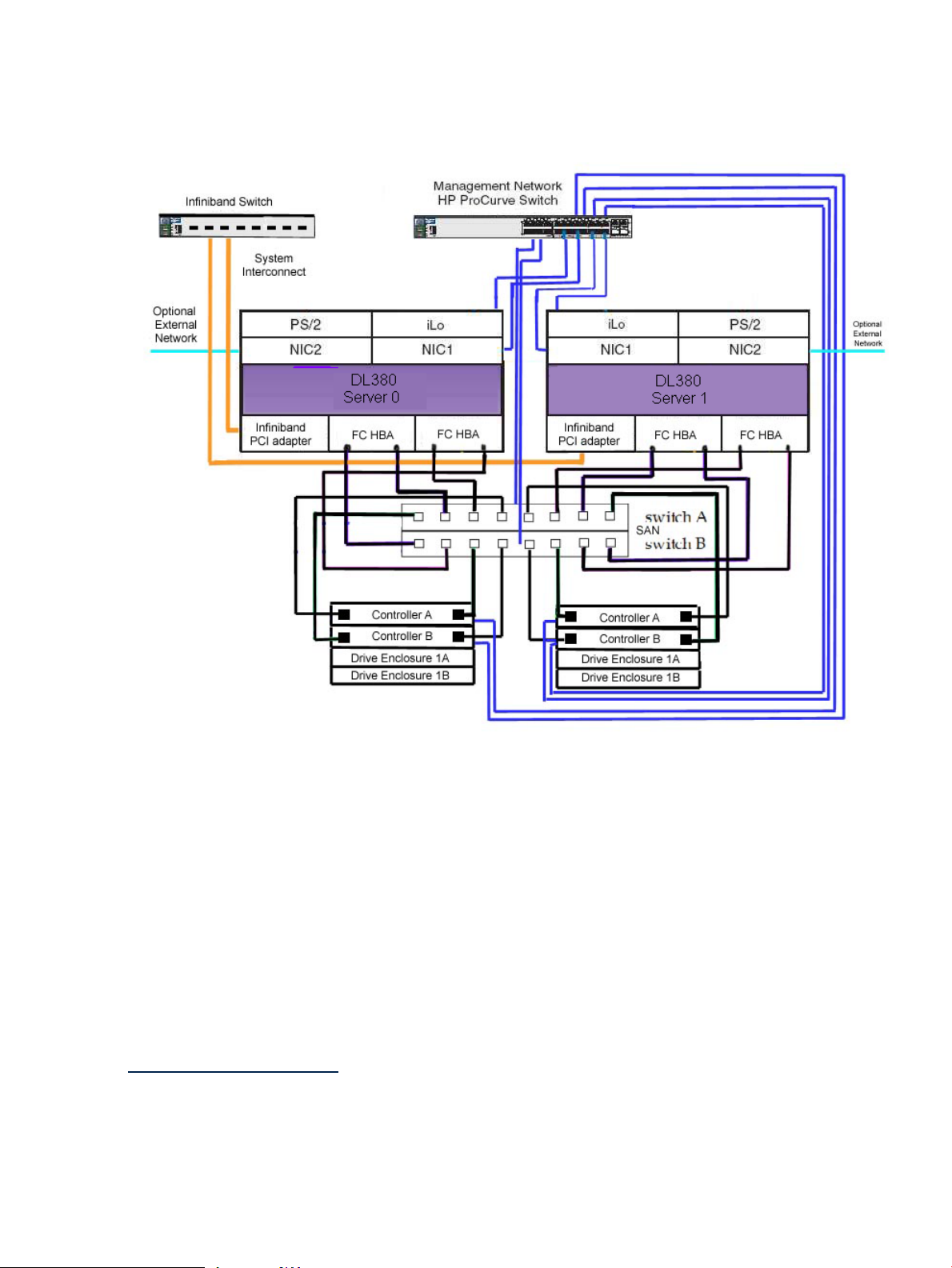

Figure 1-2 Server Pairs

Figure 1-2 shows typical wiring for server pairs.

1.3.1.1 Fibre Channel Switch Zoning

If your Fibre Channel is configured with a single Fibre Channel switch connected to more than

one server node failover pair and its associated MSA2000 storage devices, you must set up zoning

on the Fibre Channel switch. Most configurations are expected to require this zoning. The zoning

should be set up such that each server node failover pair only can see the MSA2000 storage

devices that are defined for it, similar to the logical view shown in Figure 1-1 (page 15). The

Fibre Channel ports for each server node pair, and its associated MSA2000 storage devices should

be put into the same switch zone.

For the commands used to set up Fibre Channel switch zoning, see the documentation for your

specific Fibre Channel B-series switch available from:

http://www.hp.com/go/san

1.4 Server Security Policy

The HP Scalable File Share G3 servers run a generic Linux operating system. Security

considerations associated with the servers are the responsibility of the customer. HP strongly

recommends that access to the SFS G3 servers be restricted to administrative users only. Doing

16 What's In This Version

Page 17

so will limit or eliminate user access to the servers, thereby reducing potential security threats

and the need to apply security updates. For information on how to modify validation of user

credentials, see “Configuring User Credentials” (page 31).

HP provides security updates for all non-operating-system components delivered by HP as part

of the HP SFS G3 product distribution. This includes all rpm's delivered in /opt/hp/

sfs. Additionally, HP SFS G3 servers run a customized kernel which is modified to provide

Lustre support. Generic kernels cannot be used on the HP SFS G3 servers. For this reason, HP

also provides kernel security updates for critical vulnerabilities as defined by CentOS kernel

releases which are based on RedHat errata kernels. These kernel security patches are delivered

via ITRC along with installation instructions.

It is the customer's responsibility to monitor, download, and install user space security updates

for the Linux operating system installed on the SFS G3 servers, as deemed necessary, using

standard methodsavailable for CentOS. CentOS security updatescan be monitored bysubscribing

to the CentOS Announce mailing list.

1.4 Server Security Policy 17

Page 18

18

Page 19

2 Installing and Configuring MSA Arrays

This chapter summarizes the installation and configuration steps for MSA2000fc arrays usee in

HP SFS G3.1-0 systems.

2.1 Installation

For detailed instructions of how to set up and install the MSA2000fc, see Chapter 4 of the HP

StorageWorks 2012fc Modular Smart Array User Guide on the HP website at:

http://bizsupport.austin.hp.com/bc/docs/support/SupportManual/c01394283/c01394283.pdf

2.2 Accessing the MSA2000fc CLI

You can use the CLI software, embedded in the controller modules, to configure, monitor, and

manage a storage system. CLI can be accessed using telnet over Ethernet. Alternatively, you can

use a terminal emulator if the management network is down. For information on setting up the

terminal emulator, see the HP StorageWorks 2000 Family Modular Smart Array CLI Reference Guide

on the HP website at:

http://bizsupport.austin.hp.com/bc/docs/support/SupportManual/c01505833/c01505833.pdf

NOTE: The MSA2000s must be connected to a server with HP SFS G3.1-0 software installed as

described in Chapter 3 (page 23) to use scripts to perform operations on multiple MSA2000

arrays.

2.3 Using the CLI to Configure Multiple MSA Arrays

The CLI is used for managing a number of arrays in a large HP SFS configuration because it

enables scripted automation of tasks that must be performed on each array. CLI commands are

executed on an array by opening a telnet session from the management server to the array. The

provided script, /opt/hp/sfs/msa2000/msa2000cmd.pl, handles the details of opening a

telnet session on an array, executing a command, and closing the session. This operation is quick

enough to be practical in a script that repeats the command on each array. For a detailed

description of CLI commands, see the HP StorageWorks 2000 Family Modular Smart Array CLI

Reference Guide.

2.3.1 Configuring New Volumes

Only a subset of commands is needed to configure the arrays for use with HP SFS. To configure

new volumes on the storage arrays, follow these steps:

1. Power on all the enclosures.

2. Use the rescan command on the array controllers to discover all the attached enclosures

and drives.

3. Use the create vdisk command to create one vdisk from the disks of each storage

enclosure. For MGS and MDS storage, HP SFS uses RAID10 with 10 data drives and 2 spare

drives. For OST storage, HP SFS uses RAID6 with 9 data drives, 2 parity drives, and 1 hot

spare. The command is executed for each enclosure.

4. Use the create volume command to create a single volume occupying the full extent of

each vdisk. In HP SFS, one enclosure contains one vdisk, which contains one volume, which

becomes one Lustre Object Storage Target (OST).

To examine the configuration and status of all the arrays, use the show commands. For more

information about show commands, see the HP StorageWorks 2000 Family Modular Smart Array

CLI Reference Guide.

2.1 Installation 19

Page 20

2.3.2 Creating New Volumes

To create new volumes on a set of MSA2000 arrays, follow these steps:

1. Power on all the MSA2000 shelves.

2. Define an alias.

One way to execute commands on a set of arrays is to define a shell alias that calls

/opt/hp/sfs/msa2000/msa2000cmd.pl for each array. The alias defines a shell for-loop

which is terminated with ; done. For example:

# alias forallmsas='for NN in `seq 101 2 119` ; do \

./msa2000cmd.pl 192.168.16.$NN'

In the above example, controller A of the first array has an IP address of 192.168.16.101,

controller B has the next IP address, and the rest of the arrays have consecutive IP addresses

up through 192.168.16.[119,120] on the last array. This command is only executed on one

controller of the pair.

For the command examples in this section, the MGS and MDS use the MSA2000 A controllers

assigned to IP addresses 192.168.16.101–103. The OSTs use the A controllers assigned to the

IP addresses 192.168.16.105–119. The vdisks and volumes created for MGS and MDS are not

the same as vdisks and volumes created for OSTs. So, for convenience, define an alias for

each set of MDS (MGS and MDS) and OST controllers.

# alias formdsmsas='for NN in `seq 101 2 103` ; do ./msa2000cmd.pl 192.168.16.$NN'

# alias forostmsas='for NN in `seq 105 2 119` ; do ./msa2000cmd.pl 192.168.16.$NN'

NOTE: You may receive the following error if a controller is down:

# alias forallmsas='for NN in `seq 109 2 115` ; do ./msa2000cmd.pl 192.168.16.$NN'

# forallmsas show disk 3 ; done

----------------------------------------------------

On MSA2000 at 192.168.16.109 execute < show disk 3 >

ID Serial# Vendor Rev. State Type Size(GB) Rate(Gb/s) SP

------------------------------------------------------------------------------

3 3LN4CJD700009836M9QQ SEAGATE 0002 AVAIL SAS 146 3.0

------------------------------------------------------------------------------

On MSA2000 at 192.168.16.111 execute < show disk 3 >

ID Serial# Vendor Rev. State Type Size(GB) Rate(Gb/s) SP

------------------------------------------------------------------------------

3 3LN4DX5W00009835TQX9 SEAGATE 0002 AVAIL SAS 146 3.0

------------------------------------------------------------------------------

On MSA2000 at 192.168.16.113 execute < show disk 3 >

problem connecting to "192.168.16.113", port 23: No route to host at ./msa2000cmd.pl line 12

----------------------------------------------------

On MSA2000 at 192.168.16.115 execute < show disk 3 >

problem connecting to "192.168.16.115", port 23: No route to host at ./msa2000cmd.pl line 12

3. Storage arrays consist of a controller enclosure with two controllers and up to three connected

disk drive enclosures. Each enclosure can contain up to 12 disks.

Use the rescan command to find all the enclosures and disks. For example:

# forallmsas rescan ; done

# forallmsas show disks ; done

The CLI syntax for specifying disks in enclosures differs based on the controller type used

in the array. The following vdisk and volume creation steps are organized by controller

types MSA2212fc and MSA2312fc, and provide examples of command-line syntax for

specifying drives. This assumes that all arrays in the system are using the same controller

type.

20 Installing and Configuring MSA Arrays

Page 21

• MSA2212fc Controller

Disks are identified by SCSI ID. The first enclosure has disk IDs 0-11, the second has

16-27, the third has 32-43, and the fourth has 48-59.

• MSA2312fc Controller

Disks are specified by enclosure ID and slot number. Enclosure IDs increment from 1.

Disk IDs increment from 1 in each enclosure. The first enclosure has disk IDs 1.1-12,

the second has 2.1-12, the third has 3.1-12, and the fourth has 4.1-12.

Depending on the order in which the controllers powered on, youmight see different ranges

of disk numbers. If this occurs, run the rescan command again.

4. If you have MSA2212fc controllers in your arrays, use the following commands to create

vdisks and volumes for each enclosure in all of the arrays. When creating volumes, all

volumes attached to a given MSA must be assigned sequential LUN numbers to ensure

correct assignment of multipath priorities.

a. Create vdisks in the MGS and MDS array. The following example assumes the MGS

and MDS do not have attached disk enclosures and creates one vdisk for the controller

enclosure. The disks 0-4 are mirrored by disks 5-9 in this configuration:

# formdsmsas create vdisk level raid10 disks 0-4:5-9 assigned-to a spare 10,11 mode offline vdisk1;

done

Creating vdisks using offline mode is faster, but in offline mode the vdisk must be

created before you can create the volume. Use the show vdisks command to check

the status. When the status changes from OFFL, you can create the volume.

# formdsmsas show vdisks; done

Make a note of the size of the vdisks and use that number <size> to create the volume

in the next step.

b. Create volumes on the MDS and MDS vdisk.

# formdsmsas create volume vdisk vdisk1 size <size> mapping 0-1.11 volume1; done

c. Create vdisks ineach OST array. For OST arrays with one attached disk drive enclosure,

create two vdisks, one for the controller enclosure and one for the attached disk

enclosure. For example:

# forostmsas create vdisk level raid6 disks 0-10 assigned-to a spare 11 mode offline vdisk1; done

# forostmsas create vdisk level raid6 disks 16-26 assigned-to b spare 27 mode offline vdisk2; done

Use the show vdisks command to check the status. When the status changes from

OFFL, you can create the volume.

# forostmsas show vdisks; done

Make a note of the size of the vdisks and use that number <size> to create the volume

in the next step.

d. Create volumes on all OST vdisks. In the following example, LUN numbers are 21 and

22.

# forostmsas create volume vdisk vdisk1 size <size> mapping 0-1.21 volume1; done

# forostmsas create volume vdisk vdisk2 size <size> mapping 0-1.22 volume2; done

5. If you have MSA2312fc controllers in your arrays, use the following commands to create

vdisks and volumes for each enclosure in all of the arrays. When creating volumes, all

volumes attached to a given MSA must be assigned sequential LUN numbers to ensure

correct assignmentof multipath priorities. HP recommends mapping all ports to each volume

to facilitate proper hardware failover.

2.3 Using the CLI to Configure Multiple MSA Arrays 21

Page 22

a. Create vdisks in the MGS and MDS array. The following example assumes the MGS

and MDS do not have attached disk enclosures and creates one vdisk for the controller

enclosure.

# formdsmsas create vdisk level raid10 disks 1.1-4:1.5-9 assigned-to a spare 1.11-12 mode offline

vdisk1; done

Creating vdisks using offline mode is faster, but in offline mode the vdisk must be

created before you can create the volume. Use the show vdisks command to check

the status. When the status changes from OFFL, you can create the volume.

# formdsmsas show vdisks; done

Make a note of the size of the vdisks and use that number <size> to create the volume

in the next step.

b. Create volumes on the MDS and MDS vdisk.

# formdsmsas create volume vdisk vdisk1 size <size> volume1 lun 31 ports a1,a2,b1,b2; done

c. Create vdisks in each OST array. For OST arrays with three attached disk drive

enclosures, create four vdisks, one for the controller enclosure and one for each of the

attached disk enclosures. For example:

# forostmsas create vdisk level raid6 disks 1.1-11 assigned-to a spare 1.12 mode offline vdisk1; done

# forostmsas create vdisk level raid6 disks 2.1-11 assigned-to b spare 2.12 mode offline vdisk2; done

# forostmsas create vdisk level raid6 disks 3.1-11 assigned-to a spare 3.12 mode offline vdisk3; done

# forostmsas create vdisk level raid6 disks 4.1-11 assigned-to b spare 4.12 mode offline vdisk3; done

Use the show vdisks command to check the status. When the status changes from

OFFL, you can create the volume.

# forostmsas show vdisks; done

Make a note of the size of the vdisks and use that number <size> to create the volume

in the next step.

d. Create volumes on all OST vdisks.

# forostmsas create volume vdisk vdisk1 size <size> volume1 lun 41 ports a1,a2,b1,b2; done

# forostmsas create volume vdisk vdisk2 size <size> volume2 lun 42 ports a1,a2,b1,b2; done

# forostmsas create volume vdisk vdisk3 size <size> volume3 lun 43 ports a1,a2,b1,b2; done

# forostmsas create volume vdisk vdisk4 size <size> volume4 lun 44 ports a1,a2,b1,b2; done

6. Use the following command to display the newly created volumes:

# forostmsas show volumes; done

7. Reboot the file system servers to discover the newly created volumes.

22 Installing and Configuring MSA Arrays

Page 23

3 Installing and Configuring HP SFS Software on Server

Nodes

This chapter provides information about installing and configuring HP SFS G3.1-0 software on

the Lustre file system server.

The following list is an overview of the installation and configuration procedure for file system

servers and clients. These steps are explained in detail in the following sections and chapters.

1. Update firmware.

2. Installation Phase 1

a. Choose an installation method.

1) DVD/NFS Kickstart Procedure

2) DVD/USB Drive Kickstart Procedure

3) Network Install

b. Edit the Kickstart template file with local information and copy it to the location specific

to the installation procedure.

c. Power on the server and Kickstart the OS and HP SFS G3.1-0 installation.

d. Run the install1.sh script if not run by Kickstart.

e. Reboot.

3. Installation Phase 2

a. Download patches from the HP IT Resource Center (ITRC) and follow the patch

installation instructions.

b. Run the install2.sh script.

c. Reboot.

4. Perform the following steps on each server node to complete the configuration:

a. Configure the management network interfaces if not configured by Kickstart.

b. Configure the InfiniBand interconnect ib0 interface.

c. Create an etc/hosts file and copy to each server.

d. Configure pdsh.

e. Configure ntp if not configured by Kickstart.

f. Configure user access.

5. When the configuration is complete, perform the following steps to create the Lustre file

system as described in Chapter 5 (page 41):

a. Create the Lustre file system.

b. Configure Heartbeat.

c. Start the Lustre file system.

6. When the file system has been created, install the Lustre software on the clients and mount

the file system as described in Chapter 4 (page 37):

a. Install Lustre software on client nodes.

b. Mount the Lustre file system on client nodes.

The entire file system server installation process must be repeated for additional file system

server nodes. If the configuration consists of a large number of file system server nodes, you

might want to use a cluster installation and monitoring system like HP Insight Control

Environment for Linux (ICE-Linux) or HP Cluster Management Utility (CMU).

23

Page 24

3.1 Supported Firmware

Follow theinstructions in the documentationwhich wasincluded with each hardware component

to ensure that you are running the latest qualified firmware versions. The associated hardware

documentation includes instructions for verifying and upgrading the firmware.

For the minimum firmware versions supported, see Table 3-1.

Upgrade the firmware versions, if necessary. You can download firmware from the HP IT

Resource Center on the HP website at:

http://www.itrc.hp.com

Table 3-1 Minimum Firmware Versions

MSA2212fc Storage Controller F300R22Memory Controller

Minimum Firmware VersionComponent

I.10.43, 08/15/2007HP J4903A ProCurve Switch 2824

J200P30Code Version

15.010Loader Version

MSA2212fc Management Controller

MSA2212fc RAID Controller

Hardware

MSA2312fc Storage Controller F300R22Memory Controller

MSA2312fc Management Controller

MSA2312fc RAID Controller

Hardware

SAN Switch v5.3.0Fabric OS

W420R52Code Version

12.013Loader Version

3022Code VersionMSA2212fc Enclosure Controller

LCA 56Hardware Version

27CPLD Version

3023Enclosure ControllerExpansion Shelf

M110R01Code Version

19.008Loader Version

W441a01Code Version

12.015Loader Version

1036Code VersionMSA2312fc Enclosure Controller

56Hardware Version

8CPLD Version

2.6.14Kernel

DL380 G5 Server

v2.5.0IB ConnectX NIC MT 25418

Mezzanine Card (448262-B21)

v1.0.54X DDR IB Switch Module for HP

c-Class BladeSystem (410398-B21)

IB Switch ISR 9024 D-M DDR

24 Installing and Configuring HP SFS Software on Server Nodes

4.6.4BootProm

P56 1/24/2008BIOS

1.60 7/11/2008iLO

v5.1.0-870 6/12/2008Software

v1.0.0.6Firmware

Page 25

3.2 Installation Requirements

A set of HP SFS G3.1-0 file system server nodes should be installed and connected by HP in

accordance with the HP SFS G3.1-0 hardware configuration requirements.

The file system server nodes use the CentOS 5.2 software as a base. The installation process is

driven by the CentOS 5.2 Kickstart process, which is used to ensure that required RPMs from

CentOS 5.2 are installed on the system.

NOTE: CentOS 5.2 is available for download from the HP Software Depot at:

http://www.hp.com/go/softwaredepot

3.2.1 Kickstart Template Editing

A Kickstart template file called sfsg3DVD.cfg is supplied with HP SFS G3.1-0. You can find

this file in the top-level directory of the HP SFS G3.1-0 DVD, and on an installed system in /opt/

hp/sfs/scripts/sfsg3DVD.cfg. You must copy the sfsg3DVD.cfg file from the DVD,

edit it, and make it available during installation.

This file must be modified by the installer to do the following:

• Set up the time zone.

• Specify the system installation disk device and other disks to be ignored.

• Provide root password information.

IMPORTANT: You must make these edits, or the Kickstart process will halt, prompt for input,

and/or fail.

You can also perform optional edits that make setting up the system easier, such as:

• Setting the system name

• Configuring network devices

• Configuring ntp servers

• Setting the system networking configuration and name

• Setting the name server and ntp configuration

While these are not strictly required, if they are not set up in Kickstart, you must manually set

them up after the system boots.

The areas to edit in the Kickstart file are flagged by the comment:

## Template ADD

Each line contains a variable name of the form %{text}. TYou must replace that variable with

the specific information for your system, and remove the ## Template ADD comment indicator.

For example:

## Template ADD timezone %{answer_timezone}

%{answer_timezone} must be replaced by your time zone, such as America/New_York

For example, the final edited line looks like:

timezone America/New_York

Descriptions of the remaining variables to edit follows:

## Template ADD rootpw %{answer_rootpw}

%{answer_rootpw} must be replaced by your root password, or the encrypted form from the

/etc/shadow file by using the --iscrypted option before the encrypted password.

The following optional, but recommended, line sets up an Ethernet network interface.More than

one Ethernet interface may be set up using additional network lines. The --hostname and

--nameserver specifications are needed only in one network line. For example, (on one line):

3.2 Installation Requirements 25

Page 26

## Template ADD network --bootproto static --device %{prep_ext_nic} \

--ip %{prep _ext_ip} --netmask %{prep_ext_net} --gateway %{prep_ext_gw} \

--hostname %{host_name}.%{prep_ext_search} --nameserver %{prep_ext_dns}

%{prep_ext_nic} must be replaced by the Ethernet interface name. eth1 is recommended for

the external interface and eth0 for the internal interface.

%{prep_ext_ip} must be replaced by the interface IP address.

%{prep_ext_net} must be replaced by the interface netmask.

%{prep_ext_gw} must be replaced by the interface gateway IP address.

%{host_name} must be replaced by the desired host name.

%{prep_ext_search} must be replaced by the domain name.

%{prep_ext_dns} must be replaced by the DNS name server IP address or Fully Qualified

Domain Name (FQDN).

IMPORTANT: The InfiniBand IPoIB interface ib0 cannot be set up using this method, and must

be manually set up using the procedures “Configuration Instructions” (page 30).

In all the following lines, %{ks_harddrive} must be replaced by the installation device, usually

cciss/c0d0 for a DL380 G5 server. The %{ks_ignoredisk} should list all other disk devices on

the system so they will be ignored during Kickstart. For a DL380 G5 server, this variable should

identify all other disk devices detected such as

cciss/c0d1,cciss/c0d2,sda,sdb,sdc,sdd,sde,sdf,sdg,sdh,... For example:

## Template ADD bootloader --location=mbr --driveorder=%{ks_harddrive}

## Template ADD ignoredisk --drives=%{ks_ignoredisk}

## Template ADD clearpart --drives=%{ks_harddrive} --initlabel

## Template ADD part /boot --fstype ext3 --size=150 --ondisk=%{ks_harddrive}

## Template ADD part / --fstype ext3 --size=27991 --ondisk=%{ks_harddrive}

## Template ADD part pv.100000 --size=0 --grow --ondisk=%{ks_harddrive}

These Kickstart files are set up for a mirrored system disk. If your system does not support this,

you must adjust the disk partitioning accordingly.

The following optional, but recommended lines set up the name server and ntp server.

## Template ADD echo "search %{domain_name}" >/etc/resolv.conf

## Template ADD echo "nameserver %{nameserver_path}" >>/etc/resolv.conf

## Template ADD ntpdate %{ntp_server}

## Template ADD echo "server %{ntp_server}" >>/etc/ntp.conf

%{domain_name} should be replaced with the system domain name.

%{nameserver_path} should be replaced with the DNS nameserver address or FQDN.

%{ntp_server} should be replaced with the ntp server address or FQDN.

3.3 Installation Phase 1

3.3.1 DVD/NFS Kickstart Procedure

The recommended software installation method is to install CentOS 5.2 and the HP SFS G3.1-0

software using the DVD copies of both. The installation process begins by inserting the CentOS

5.2 DVD into the DVD drive of the DL380 G5 server and powering on the server. At the boot

prompt, you must type the following on one command line, inserting your own specific

networking information for the node to be installed and the NFS location ofthe modified Kickstart

file:

boot: linux ks=nfs:install_server_network_address:/install_server_nfs_path/sfsg3DVD.cfg

ksdevice=eth1 ip=filesystem_server_network_address netmask=local_netmask gateway=local_gateway

Where the network addresses, netmask, and paths are specific to your configuration.

During the Kickstart post-installation phase, you are prompted to install the HP SFS G3.1-0 DVD

into the DVD drive:

26 Installing and Configuring HP SFS Software on Server Nodes

Page 27

Please insert the HP SFS G3.1-0 DVD and enter any key to continue:

After you insert the HP SFS G3.1-0 DVD and press enter, the Kickstart installs the HP SFS G3.1-0

software onto the system in the directory /opt/hp/sfs. Kickstart then runs the /opt/hp/sfs/

scripts/install1.sh script to perform the first part of the software installation.

NOTE: The output from Installation Phase 1 is contained in /var/log/postinstall.log.

After the Kickstart completes, the system reboots.

If for some reason, the Kickstart process does not install the HP SFS G3.1-0 software and run the

/opt/hp/sfs/scripts/install1.sh script automatically, you can manually load the

software onto the installed system, unpack it in /opt/hp/sfs, and then manually run the script.

For example, after inserting the HP SFS G3.1-0 DVD into the DVD drive:

# mount /dev/cdrom /mnt/cdrom

# mkdir -p /opt/hp/sfs

# cd /opt/hp/sfs

# tar zxvf /mnt/cdrom/hpsfs/SFSgen3.tgz

# ./scripts/install1.sh

Proceed to “Installation Phase 2” (page 28).

3.3.2 DVD/USB Drive Kickstart Procedure

You can also install without any network connection by putting the modified Kickstart file on a

USB drive.

On another system, if it has not already been done, you must create and mount a Linux file

system on the USB drive. After you insert the USB drive into the USB port, examine the dmesg

output to determine the USB drive device name. The USB drive name is the first unused

alphabetical device nameof the form /dev/sd[a-z]1. There might be some /dev/sd* devices

on your system already, some of which may map to MSA2000 drives. In the examples below,

the device name is /dev/sda1, but on many systems it can be /dev/sdi1 or it might use some

other letter. Also, the device name cannotbe the same on the system you use tocopy the Kickstart

file to and the target system to be installed.

# mke2fs /dev/sda1

# mkdir /media/usbdisk

# mount /dev/sda1 /media/usbdisk

Next, copy the modified Kickstart file to the USB drive and unmount it. For example:

# cp sfsg3DVD.cfg /media/usbdisk

# umount /media/usbdisk

The installation is started with the CentOS 5.2 DVD and USB drive inserted into the target system.

In that case, the initial boot command is similar to:

boot: linux ks=hd:sda1:/sfsg3DVD.cfg

NOTE: USB drives are not scanned before the installer reads the Kickstart file, so you are

prompted with a message indicating that the Kickstart file cannot be found. If you are sure that

the device you provided is correct, press Enter, and the installation proceeds. If you are not sure

which device the drive is mounted on, press Ctrl+Alt+F4 to display USB mount information.

Press Ctrl+Alt+F1 to return to the Kickstart file name prompt. Enter the correct device name, and

press Enter to continue the installation.

Proceed as directed in “DVD/NFS Kickstart Procedure” (page 26), inserting the HP SFS G3.1-0

DVD at the prompt and removing the USB drive before the system reboots.

3.3 Installation Phase 1 27

Page 28

3.3.3 Network Installation Procedure

As an alternative to the DVD installation described above, some experienced users may choose

to install the software over a network connection. A complete description of this method is not

provided here, and should only be attempted by those familiar with the procedure. See your

specific Linux system documentation to complete the process.

NOTE: The DL380 G5 servers must be set up to network boot for this installation option.

However, all subsequent reboots of the servers, including the reboot after the install1.sh

script has completed (“Installation Phase 2” (page 28)) must be from the local disk.

In this case, you must obtain ISO image files for CentOS 5.2 and the HP SFS G3.1-0 software

DVD and install them on an NFS server in their network. You must also edit the Kickstart template

file as described in “Kickstart Template Editing” (page 25), using the network installation

Kickstart templatefile called sfsg3.cfg instead. This file has additional configuration parameters

to specify the network address of the installation server, the NFS directories, and paths containing

the CentOS 5.2 and HP SFS G3.1-0 DVD ISO image files. This sfsg3.cfg file can be found in

the top-level directory of the HP SFS G3.1-0 DVD image, and also in /opt/hp/sfs/scripts/

sfsg3.cfg on an installed system.

The following edits are required in addition to the edits described in “Kickstart Template Editing”

(page 25):

## Template ADD nfs --server=%{nfs_server} --dir=%{nfs_iso_path}

## Template ADD mount %{nfs_server}:%{post_image_dir} /mnt/nfs

## Template ADD cp /mnt/nfs/%{post_image} /mnt/sysimage/tmp

## Template ADD losetup /dev/loop2 /mnt/sysimage/tmp/%{post_image}

%{nfs_server} must be replaced by the installation NFS server address or FQDN.

%{nfs_iso_path} must be replaced by the NFS path to the CentOS 5.2 ISO directory.

%{post_image_dir} must be replaced by the NFS path to the HP SFS G3.1-0 ISO directory.

%{post_image} must be replaced by the name of the HP SFS G3.1-0 ISO file.

Each server node installed must be accessible over a network from an installation server that

contains the Kickstart file, the CentOS 5.2 ISO image, and the HP SFS G3.1-0 software ISO image.

This installation server must be configured as a DHCP server to network boot the file system

server nodes to be installed. For this to work, the MAC addresses of the DL380 G5 server eth1

Ethernet interface must be obtained during the BIOS setup. These addresses must be put into

the /etc/dhcpd.conf file on the installation server to assign Ethernet addresses and network

boot the file system servers. See the standard Linux documentation for the proper procedure to

set up your installation server for DHCP and network booting.

The file system server installation starts with a CentOS 5.2 Kickstart install. If the installation

server has been set up to network boot the file system servers, the process starts by powering

on the file system server to be installed. When properly configured, the network boot first installs

Linux using the Kickstart parameters. The HP SFS G3.1-0 software, which must also be available

over the network, installs in the Kickstart post-installation phase, and the /opt/hp/sfs/

scripts/install1.sh script is run.

NOTE: The output from Installation Phase 1 is contained in /var/log/postinstall.log.

Proceed to “Installation Phase 2”.

3.4 Installation Phase 2

After the Kickstart and install1.sh have been run, the system reboots and you must log in

and complete the second phase of the HP SFS G3.1-0 software installation.

28 Installing and Configuring HP SFS Software on Server Nodes

Page 29

3.4.1 Patch Download and Installation Procedure

To download and install HP SFS patches from the ITRC website, follow this procedure:

1. Create a temporary directory for the patch download.

# mkdir /home/patches

2. Go to the ITRC website.

http://www.itrc.hp.com/

3. If you have not previously registered for the ITRC, choose Register from the menu on the

left. You will be assigned an ITRC User ID upon completion of the registration process. You

supply your own password. Remember this User ID and password because you must use

it every time you download a patch from the ITRC.

4. From the registration confirmation window, select the option to go directly to the ITRC

home page.

5. From the ITRC home page, select Patch database from the menu on the left.

6. Under find individual patches, select Linux.

7. In step 1: Select vendor and version, select hpsfsg3 as the vendor and select the

appropriate version.

8. In step 2: How would you like to search?, select Browse Patch List.

9. In step 4 Results per page?, select all.

10. Click search>> to begin the search.

11. Select all the available patches and click add to selected patch list.

12. Click download selected.

13. Choose the format and click download>>. Download all available patches into the temporary

directory you created.

14. Follow the patch installation instructions in the README file for each patch. See the Patch

Support Bulletin for more details, if available.

3.4.2 Run the install2.sh Script

Continue the installation by running the /opt/hp/sfs/scripts/install2.sh script

provided. The system must be rebooted again, and you can proceed with system configuration

tasks as described in “Configuration Instructions” (page 30).

NOTE: You might receive errors when running install2. They can be ignored. See “Errors

from install2” (page 57) for more information.

3.4.3 10 GigE Installation

If your system uses 10 GigE instead of InfiniBand, you must install the Mellanox 10 GigE drivers.

IMPORTANT: This step must be performed for 10 GigE systems only. Do not use this process

on InfiniBand systems.

If your system uses Mellanox ConnectX HCAs in 10 GigE mode, HP recommends that you

upgrade the HCA board firmware before installing the Mellanox 10 GigE driver. If the existing

board firmware revision is outdated, you might encounter errors if you upgrade the firmware

after the Mellanox 10 GigE drivers are installed. Use the mstflint tool to check the firmware

version and upgrade to the minimum recommended version 2.6 as follows:

# mstflint -d 08:00.0 q

Image type: ConnectX

FW Version: 2.6.0

Device ID: 25418

Chip Revision: A0

3.4 Installation Phase 2 29

Page 30

Description: Node Port1 Port2 Sys image

GUIDs: 001a4bffff0cd124 001a4bffff0cd125 001a4bffff0cd126 001a4bffff0

MACs: 001a4b0cd125 001a4b0cd126

Board ID: (HP_09D0000001)

VSD:

PSID: HP_09D0000001

# mstflint -d 08:00.0 -i fw-25408-2_6_000-448397-B21_matt.bin -nofs burn

To ensure the correct firmware version and files for your boards, obtain firmware files from your

HP representative.

Run the following script:

# /opt/hp/sfs/scripts/install10GbE.sh

This script removes the OFED InfiniBand drivers and installs the Mellanox 10 GigE drivers. After

the script completes, the system must be rebooted for the 10 GigE drivers to be operational.

3.5 Configuration Instructions

After the HP SFS G3.1-0 software has been installed, some additional configuration steps are

needed. These steps include the following:

IMPORTANT: HP SFS G3.1-0 requires a valid license. For license installation instructions, see

Chapter 6 (page 55).

• Configuring network interfaces for Ethernet and InfiniBand or 10 GigE

• Creating the /etc/hosts file and propagating it to each node

• Configuring the pdsh command for file system cluster-wide operations

• Configuring user credentials

• Verifying digital signatures (optional)

3.5.1 Configuring Ethernet and InfiniBand or 10 GigE Interfaces

Ethernet and InfiniBand IPoIB ib0 interface addresses must be configured, if not already

configured with network statements in the Kickstart file. Use the CentOS GUI, enter the

system-config-network command, or edit /etc/sysconfig/network-scripts/

ifcfg-xxx files.

The IP addresses and netmasks for the InfiniBand interfaces should be chosen carefully to allow

the file system server nodes to communicate with the client nodes.

The system name, if not already set by the Kickstart procedure, must be set by editing the /etc/

sysconfig/network file as follows:

HOSTNAME=mynode1

3.5.2 Creating the /etc/hosts file

Create an /etc/hosts file with the names and IP addresses of all the Ethernet interfaces on

each system in the file system cluster, including the following:

• Internal interfaces

• External interface

• iLO interfaces

• InfiniBand or 10 GigE interfaces

• Interfaces to the Fibre Channel switches

• MSA2000 controllers

• InfiniBand switches

• Client nodes (optional)

This file should be propagated to all nodes in the file system cluster.

30 Installing and Configuring HP SFS Software on Server Nodes

Page 31

3.5.3 Configuring pdsh

The pdsh command enables parallel shell commands to be run across the file system cluster.

The pdsh RPMs are installed by the HP SFS G3.1-0 software installation process, but some

additional steps are needed to enable passwordless pdsh and ssh access across the file system

cluster.

1. Put all host names in /opt/hptc/pdsh/nodes.

2. Verify the host names are also defined with their IP addresses in/etc/hosts.

3. Append /root/.ssh/id_rsa.pub from the node where pdsh is run to /root/.ssh/

authorized_keys on each node.

4. Enter the following command:

# echo "StrictHostKeyChecking no" >> /root/.ssh/config

This completes the process to run pdsh from one node. Repeat the procedure for each additional

node you want to use for pdsh.

3.5.4 Configuring ntp

The Network Time Protocol (ntp) should be configured to synchronize the time among all the

Lustre file system servers and the client nodes. This is primarily to facilitate the coordination of

time stamps in system log files to easily trace problems. This should have been performed with

appropriate editing to the initial Kickstart configuration file. But if it is incorrect, manually edit

the /etc/ntp.conf file and restart the ntpd service.

3.5.5 Configuring User Credentials

For proper operation, the Lustre file system requires the same User IDs (UIDs) and Group IDs

(GIDs) on all file system clients. The simplest way to accomplish this is with identical /etc/

passwd and /etc/group files across all the client nodes, but there are other user authentication

methods like Network Information Services (NIS) or LDAP.

By default, Lustre file systems are created with the capability to support Linux file system group

access semantics for secondary user groups. This behavior requires that UIDs and GIDs are

known to the file system server node providing the MDS service, and also the backup MDS node

in a failover configuration. When using standard Linux user authorization, you can do this by

adding the lines with UID information from the client /etc/passwd file and lines with GID

information from the client /etc/group file to the /etc/passwd and /etc/group files on

the MDS and backup MDS nodes. This allows the MDS to access the GID and UID information,

but does not provide direct user login access to the file system server nodes. If other user

authentication methods like NIS or LDAP are used, follow the procedures specific to those

methods to provide the user and group information to the MDS and backup MDS nodes without

enabling direct user login access to the file system server nodes. In particular, the shadow

password information should not be provided through NIS or LDAP.

IMPORTANT: HP requires that users do not have direct login access to the file system servers.

If support for secondary user groups is not desired, or to avoid the server configuration

requirements above, the Lustre file system can be created so that it does not require user credential

information. The Lustre method for validating user credentials can be modified in two ways,

depending on whetherthe file system has already been created. The preferredand easier method

is to do this before the file system is created, using step 1 below.

1. Before the file system is created,specify "mdt.group_upcall=NONE" in the file system's CSV

file, as shown in the example in “Generating Heartbeat Configuration Files Automatically”

(page 45).

2. After the file system is created, use the procedure outlined in “Changing group_upcall

Value to Disable Group Validation” (page 57).

3.5 Configuration Instructions 31

Page 32

3.5.6 Verifying Digital Signatures (optional)

Verifying digital signatures is an optional procedure for customers to verify that the contents of

the ISO image are supplied by HP. This procedure is not required.

Two keys can be imported on the system. One key is the HP Public Key, which is used to verify

the complete contents of the HP SFS image. The other key is imported into the rpm database to

verify the digital key signatures of the signed rpms.

3.5.6.1 Verifying the HP Public Key (optional)

To verify the digital signature of the contents of the ISO image, the HP Public Key must be

imported to the user's gpg key ring. Use the following commands to import the HP Public Key:

# cd <root-of-SFS-image>/signatures

# gpg --import *.pub

Use the following commands to verify the digital contents of the ISO image:

# cd <root-of-SFS-image>/

# gpg --verify Manifest.md5.sig Manifest.md5

The following is a sample output of importing the Public key:

# mkdir -p /mnt/loop

# mount -o loop "HPSFSG3-ISO_FILENAME".iso /mnt/loop/

# cd /mnt/loop/

# gpg --import /mnt/loop/signatures/*.pub

gpg: key 2689B887: public key "Hewlett-Packard Company (HP Codesigning Service)" imported

gpg: Total number processed: 1

gpg: imported: 1

And the verification of the digital signature:

# gpg --verify Manifest.md5.sig Manifest.md5

gpg: Signature made Tue 10 Feb 2009 08:51:56 AM EST using DSA key ID 2689B887

gpg: Good signature from "Hewlett-Packard Company (HP Codesigning Service)"

gpg: WARNING: This key is not certified with a trusted signature!

gpg: There is no indication that the signature belongs to the owner.

Primary key fingerprint: FB41 0E68 CEDF 95D0 6681 1E95 527B C53A 2689 B887

3.5.6.2 Verifying the Signed RPMs (optional)

HP recommends importingthe HP Public Key to the RPM database. Use the following command

as root to import this public key to the RPM database:

# rpm --import <root-of-SFS-image>/signatures/*.pub

This import command should be performed by root on each system that installs signed RPM

packages.

3.6 Upgrade Installation

In some situations you may upgrade an HP SFS system running an older version of HP SFS

software to the most recent version of HP SFS software.

If you are upgrading from version 2.3, contact your HP representative for details about upgrade

support for both servers and clients.

If you are upgrading from one version of HP SFS G3 to a more recent version, follow the general

guidelines that follow.

32 Installing and Configuring HP SFS Software on Server Nodes

Page 33

IMPORTANT: All existing file system data must be backed up before attempting an upgrade.

HP is not responsible for the loss of any file system data during an upgrade.

The safest and recommended method for performing an upgrade is to first unmount all clients,

then stop all file system servers before updating any software. Depending on the specific upgrade

instructions, you may need to save certain system configuration files for later restoration. After

the file system server software is upgraded and the configuration is restored, bring the file system

back up. At this point, the client system software can be upgraded if applicable, and the file

system can be remounted on the clients.

3.6.1 Rolling Upgrades

If you must keep the file system online for clients during an upgrade, a "rolling" upgrade

procedure is possible on an HP SFS G3 system with properly configured failover. As file system

servers are upgraded, the file system remains available to clients. However, client recovery delays

(typically around 5 minutes long) occur after each server configuration change or failover

operation. Additional risk is present with higher levels of client activity during the upgrade

procedure, and the procedure is not recommended when there is critical long running client

application activity underway.

Also, please note any rolling upgrade restrictions. Major system configuration changes, such as

changing system interconnect type, or changing system topology are not allowed during rolling

upgrades. For general rolling upgrade guidelines, see the Lustre 1.6 Operations Manual (http://

manual.lustre.org/images/8/86/820-3681_v15.pdf) section 13.2.2. For upgrade instructions

pertaining to the specific releases you are upgrading between, see the “Upgrading Lustre”

chapter.

IMPORTANT: HP SFS G3.1-0 requires a valid license. For license installation instructions, see

Chapter 6 (page 55).

Follow any additional instructions you may have received from HP SFS G3 support concerning

the upgrade you are performing.

In general, a rolling upgrade procedure is performed based on failover pairs of server nodes. A

rolling upgrade must start with the MGS/MDS failover pairs, followed by successive OST pairs.

For each failover pair, the procedure is:

1. For the first member of the failover pair, stop the Heartbeat service to migrate the Lustre

file system components from this node to its failover pair node.

# chkconfig heartbeat off

# service heartbeat stop

At this point, the node is no longer serving the Lustre file system and can be upgraded. The

specific procedures will vary depending on the type of upgrade to be performed. Upgrades

can be as simple as updating a few RPMs, or as complex as a complete reinstallation of the

server node. The upgrade from HP SFS G3.0-0 to HP SFS G3.1-0 requires a complete

reinstallation of the server node.

2. In the case of a complete server reinstallation, save any server specific configuration files

that will need to be restored or referenced later. Those files include, but are not limited to:

• /etc/fstab

• /etc/hosts

• /root/.ssh

• /etc/ha.d/ha.cf

• /etc/ha.d/haresources

• /etc/ha.d/authkeys

3.6 Upgrade Installation 33

Page 34

• /etc/modprobe.conf

• /etc/ntp.conf

• /etc/resolv.conf

• /etc/sysconfig/network

• /etc/sysconfig/network-scripts/ifcfg-ib0

• /etc/sysconfig/network-scripts/ifcfg-eth*

• /opt/hptc/pdsh/nodes

• /root/anaconda-ks.cfg

• /var/lib/heartbeat/crm/cib.xml

• /var/lib/multipath/bindings

• The CSVfile containing the definition of your file system as used by the lustre_config

and gen_hb_config_files.pl programs.

• The CSV file containing the definition of the ILOs on your file system as used by the

gen_hb_config_files.pl program.

• The Kickstart file used to install this node.

• The /mnt mount-points for the Lustre file system.

Many of these files are available from other server nodes in the cluster, or from the failover

pair node in the case of the Heartbeat configuration files. Other files may be re-created

automatically by Kickstart.

3. Upgrade the server according to the general installation instructions in Chapter 3 (page 23),

with specific instructions for this upgrade.

4. Reboot as necessary.

5. If applicable, restore the files saved in step 2.

Please note that some files should not be restored in their entirety. Only the HP SFS specific

parts of the older files should be restored. For example:

• /etc/fstab — Only the HP SFS mount lines

• /etc/modprobe.conf — Only the SFS added lines, for example:

# start lustre config

# Lustre module options added automatically by lc_modprobe

options lnet networks=o2ib0

# end lustre config

6. For the upgrade from SFSG3.0-0 to SFS G3.1-0, you must re-create the Heartbeat configuration

files to account for licensing. For the details, see Chapter 6 (page 55).