HP StorageWorks

MPX200 Multifunction Router

User Guide

This user guide describes the MPX200 Multifunction Router, which supports the EVA family of Fibre Channel storage systems for integrated iSCSI connectivity, FCIP, and data migration. The MPX200 hardware is integrated with up to four EVA storage systems and HP Command View EVA to deliver multi-protocol capabilities, including Fibre Channel, iSCSI, and FCIP. This document is intended for system administrators with knowledge of HP StorageWorks EVA4x00/6x00/8x00 storage systems, configuring LUNs using HP Command View EVA, HP Fibre Channel SANs, Ethernet, TCP/IP networking, iSCSI, and FCIP.

Part number: 5697-0202

Fifth edition: February 2010

Legal and notice information

© Copyright 2009-2010 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Microsoft and Windows are U.S. registered trademarks of Microsoft Corporation.

Contents

1 MPX200 Multifunction Router overview ............................................... 21

MPX200 features ...................................................................................................................... 21

MPX200 configuration options ................................................................................................... 23

Planning the MPX200 installation ................................................................................................ 27

Total number of initiators ..................................................................................................... 28

Number of paths required per initiator .................................................................................. 28

Use of iSNS ...................................................................................................................... 28

Use of iSCSI ports .............................................................................................................. 28

Balancing the load ............................................................................................................. 28

10-GbE initiators ................................................................................................................ 29

CHAP security .......................................................................................................................... 29

2 Installing the MPX200 ...................................................................... 31

Rack mounting the MPX200 ...................................................................................................... 31

3 Managing the MPX200 using HP Command View EVA ......................... 37

Setting up the MPX200 management port .................................................................................... 37

Fibre Channel zoning ................................................................................................................ 37

Open zoning ..................................................................................................................... 37

Fibre Channel switch-based zoning ....................................................................................... 38

HP Command View EVA discovery .............................................................................................. 39

Setting up the iSCSI IP ports ....................................................................................................... 41

Setting the date and time on the MPX200 .................................................................................... 42

Code load ........................................................................................................................ 43

Shutting down and restarting the MPX200 ............................................................................ 44

Saving or restoring the MPX200 configuration ....................................................................... 44

Locating the MPX200 ......................................................................................................... 45

Removing an iSCSI controller ............................................................................................... 46

4 MPX200 iSCSI configuration rules and guidelines ................................ 49

iSCSI rules and supported maximums ......................................................................................... 49

EVA storage system rules and guidelines ...................................................................................... 50

HP Command View EVA management rules and guidelines ............................................................ 50

EVA storage system software ...................................................................................................... 51

Features supported for iSCSI hosts ........................................................................................ 51

Features not supported for iSCSI hosts ................................................................................... 51

Fibre Channel switch and fabric support ...................................................................................... 52

Operating system and multipath software support ......................................................................... 52

iSCSI initiator rules and guidelines .............................................................................................. 52

Apple Mac OS X iSCSI initiator rules and guidelines .............................................................. 53

Microsoft Windows iSCSI initiator rules and guidelines ........................................................... 53

Linux iSCSI initiator rules and guidelines ............................................................................... 53

Sun Solaris iSCSI initiator rules and guidelines ...................................................................... 54

VMware iSCSI initiator rules and guidelines ........................................................................... 54

Supported IP network adapters .................................................................................................. 55

IP network requirements ............................................................................................................ 55

Setting up the iSCSI initiator ....................................................................................................... 55

Windows Server iSCSI initiator ........................................................................................... 55

Multipathing ...................................................................................................................... 61

Installing the MPIO feature for Windows Server 2008 ............................................................. 62

Installing the MPIO feature for Windows Server 2003 ............................................................. 64

Microsoft Windows Server 2003 Scalable Networking Pack .................................................... 64

Setting up SNP for the HP NC3xxx Multifunction Gigabit server adapter ............................ 65

iSCSI initiator setup for Apple Mac OS X (single-path) ............................................................ 65

Setting up the iSCSI initiator for Apple Mac OS X ............................................................ 65

Setting up storage for Apple Mac OS X .......................................................................... 70

iSCSI initiator setup for Linux ................................................................................................ 70

Installing and configuring the SUSE Linux Enterprise 10 iSCSI driver ................................... 70

Assigning device names ............................................................................................... 75

Target bindings ........................................................................................................... 76

Mounting file systems ................................................................................................... 76

Unmounting file systems ................................................................................................ 76

Presenting EVA storage for Linux .................................................................................... 77

iSCSI initiator setup for VMware ........................................................................................... 77

Configuring multipath with the Solaris 10 iSCSI initiator .......................................................... 82

MPxIO overview .......................................................................................................... 83

Configuring Microsoft MPIO iSCSI devices ............................................................................. 91

Microsoft MPIO for iSCSI load-balancing policies ................................................................... 92

Microsoft MPIO with QLogic iSCSI HBA ................................................................................ 93

Installing the QLogic iSCSI HBA ..................................................................................... 93

Installing the Microsoft iSCSI initiator services and MPIO .................................................. 93

Configuring the QLogic iSCSI HBA ................................................................................. 94

Adding targets to the QLogic iSCSI initiator ..................................................................... 96

Presenting LUNs to the QLogic iSCSI initiator ................................................................... 97

Installing the HP MPIO Full Featured DSM for EVA ........................................................... 97

Microsoft Windows Cluster support ....................................................................................... 98

Microsoft Cluster Server for Windows 2003 .................................................................... 98

Microsoft Cluster Server for Windows 2008 .................................................................... 99

Setting up authentication .................................................................................................. 100

CHAP restrictions ............................................................................................................ 100

Microsoft initiator CHAP secret restrictions .......................................................................... 101

Linux CHAP restrictions ..................................................................................................... 101

ATTO Macintosh CHAP restrictions .................................................................................... 101

Recommended CHAP policies ........................................................................................... 101

iSCSI session types .......................................................................................................... 101

MPX200 CHAP modes .................................................................................................... 101

Enabling single-direction CHAP during discovery session and normal session ........................... 102

Enabling CHAP for the MPX200-discovered iSCSI initiator entry ............................................ 103

Enabling CHAP for the Microsoft iSCSI initiator .................................................................... 103

Enabling CHAP for the open-iscsi iSCSI initiator .................................................................. 104

Enabling single-direction CHAP during discovery session and bi-directional CHAP during normal

session ........................................................................................................................... 106

Enabling bi-directional CHAP during discovery session and single-direction CHAP during normal

session ............................................................................................................................ 107

Enabling bi-directional CHAP during discovery session and bi-directional CHAP during normal

session ............................................................................................................................ 110

5 MPX200 FCIP ................................................................................ 113

MPX200 FCIP product description ............................................................................................. 113

Redundant FCIP network structure example ................................................................................. 114

Using FCIP to encapsulate FC packets ....................................................................................... 114

FCIP impact on existing iSCSI configurations .............................................................................. 114

FCIP and iSCSI performance .................................................................................................... 116

MPX200 iSCSI/FCIP configurations ........................................................................................... 116

HP Continuous Access EVA 3-site configurations .................................................................. 121

3-site configuration with four MPX200 routers ................................................................ 122

3-site configuration with six MPX200 routers .................................................................. 122

3-site configuration with eight MPX200 routers .............................................................. 123

3-site configuration with six MPX200 routers and full inter-site connectivity ......................... 124

Configuration rules and guidelines ..................................................................................... 125

General configuration rules ......................................................................................... 125

Operating system and multipath support ....................................................................... 126

EVA storage system rules and guidelines ....................................................................... 126

Fibre Channel switch and firmware support ................................................................... 126

IP performance tuning ............................................................................................................. 128

Distance ......................................................................................................................... 128

Bandwidth ....................................................................................................................... 128

Latency ........................................................................................................................... 128

MTU/Jumbo frames .......................................................................................................... 128

Compression ................................................................................................................... 129

TCP window size/scaling performance tuning ...................................................................... 129

Modifying the window size and scaling factor ..................................................................... 129

TCP window size recommendations .................................................................................... 130

Configuring an FCIP route ........................................................................................................ 133

Step 1. Verify your system requirements ............................................................................... 133

Step 2. Pre-installation checklist .......................................................................................... 133

Step 3. Rack-mount the MPX200 ........................................................................................ 134

Step 4. Install the SFPs ...................................................................................................... 134

Step 5. Set the MPX200 management port parameters ......................................................... 134

Step 6. Configure the MPX200 FCIP Route parameters .......................................................... 136

Install FCIP license ..................................................................................................... 136

Critical FCIP Performance settings ................................................................................ 136

Configure the FCIP routes using the CLI ......................................................................... 137

Step 7. Configure FC switch settings for the MPX200 ............................................................. 137

Step 8. Cable the MPX200 FC, GE and management ports ................................................... 138

Step 9. Verify FCIP links and firmware version ...................................................................... 138

6 Offline Data Migration ................................................................... 139

Features overview ................................................................................................................... 139

Feature details ........................................................................................................................ 140

Job scheduling ................................................................................................................. 140

Immediate schedule ................................................................................................... 140

Delayed schedule ...................................................................................................... 140

Serial schedule .......................................................................................................... 140

Data migration job groups ................................................................................................. 141

Array properties ............................................................................................................... 141

Protection ........................................................................................................................ 142

Data migration jobs .......................................................................................................... 142

Data migration job attributes ....................................................................................... 142

Data migration job states ............................................................................................ 143

Data migration job commands .................................................................................... 143

Logs ............................................................................................................................... 143

Users .............................................................................................................................. 144

Getting started ....................................................................................................................... 144

Supported configurations .................................................................................................. 144

Fabric configuration ................................................................................................... 144

Supported Fibre Channel fabrics .................................................................................. 145

Supported storage arrays ............................................................................................ 145

Best practices .................................................................................................................. 146

Migration considerations ............................................................................................ 146

High availability and redundant configurations .............................................................. 146

Choosing the right DMS options .................................................................................. 147

General precautions ................................................................................................... 147

Installing the MPX200 mpx Manager for Data Migration Services .......................................... 148

Download the application ........................................................................................... 148

HP StorageWorks mpx Manager for Data Migration Services for Windows .............................. 149

HP StorageWorks mpx Manager for Data Migration Services for Linux .................................... 149

HP StorageWorks mpx Manager for Data Migration Services for Mac OS X ............................ 149

Hardware and software setup ............................................................................................ 149

Hardware setup ......................................................................................................... 150

Software setup .......................................................................................................... 150

Using DMS ............................................................................................................................ 150

General steps for data migration ........................................................................................ 150

Setting array properties ..................................................................................................... 151

Setting array properties in the GUI ............................................................................... 151

Setting array properties in the CLI ................................................................................ 153

Creating a Data migration job group .................................................................................. 154

Setting array properties in the GUI ............................................................................... 154

Creating a Data migration job group in the CLI ............................................................. 155

Using the Data Migration Wizard ...................................................................................... 155

Starting the Data Migration Wizard ............................................................................. 155

Scheduling an individual data migration job in the GUI .................................................. 156

Scheduling an individual data migration job in the CLI ................................................... 158

Scheduling data migration jobs in batch mode .............................................................. 161

Starting serial scheduled jobs ............................................................................................. 163

Migrating data to an EVA array configured for iSCSI ............................................................ 164

Creating a DMS iSCSI host entry with HP Command View EVA ....................................... 164

Adding LUNs to the DMS iSCSI host entry with HP Command View EVA ........................... 165

Viewing the status of data migration jobs ............................................................................ 167

Viewing data migration job status in the GUI ................................................................. 167

Viewing data migration jobs status in the CLI ................................................................. 168

Rescanning targets ........................................................................................................... 169

Viewing job details and controlling job actions .................................................................... 170

Viewing job details and controlling job actions in the GUI .............................................. 170

Viewing job details and controlling job actions in the CLI ................................................ 172

Viewing system and data migration job logs ........................................................................ 173

Viewing logs in the GUI .............................................................................................. 174

Viewing logs in the CLI ............................................................................................... 177

Using the Verify Migration Job wizard ................................................................................. 178

Starting the Verify Migration Job wizard ....................................................................... 178

Scheduling verification of job options ........................................................................... 179

Acknowledging a data migration job .................................................................................. 180

Removing an offline array ................................................................................................. 181

Data migration licenses ........................................................................................................... 181

Types of data migration licenses ......................................................................................... 181

Capacity-based licenses ............................................................................................. 181

Array-based licenses .................................................................................................. 181

Installing a data migration license key ................................................................................. 181

Installing a data migration license key in the GUI ........................................................... 182

Installing a data migration license key in the CLI ............................................................ 183

Applying an array-based license to a specific array .............................................................. 183

Applying an array-based license to a specific array in the GUI ........................................ 184

Applying an array-based license to a specific array in the CLI .......................................... 186

Viewing data migration license usage ................................................................................. 187

Viewing data migration license usage in the GUI ........................................................... 187

Viewing data migration license usage in the CLI ............................................................ 187

Command Line Interface .......................................................................................................... 188

User accounts .................................................................................................................. 188

User sessions ................................................................................................................... 188

Admin session ........................................................................................................... 188

Miguser session ......................................................................................................... 189

Commands ...................................................................................................................... 189

set system ................................................................................................................. 191

set features ............................................................................................................... 192

set fc ........................................................................................................................ 193

set array ................................................................................................................... 194

set VPGroups ............................................................................................................ 195

migration_group add ................................................................................................. 196

migration_group remove ............................................................................................. 197

migration_group edit .................................................................................................. 198

migration add ........................................................................................................... 199

migration rm ............................................................................................................. 201

migration stop ........................................................................................................... 202

migration start ........................................................................................................... 203

migration pause ........................................................................................................ 204

migration resume ....................................................................................................... 205

migration acknowledge .............................................................................................. 206

start_serial_jobs ......................................................................................................... 207

compare_luns add ..................................................................................................... 208

compare_luns rm ....................................................................................................... 210

compare_luns stop ..................................................................................................... 211

compare_luns start ..................................................................................................... 212

compare_luns pause .................................................................................................. 213

compare_luns resume ................................................................................................. 214

compare_luns acknowledge ........................................................................................ 215

show fc .................................................................................................................... 216

show migration .......................................................................................................... 217

show array ............................................................................................................... 218

show luninfo ............................................................................................................. 220

show migration_luninfo ............................................................................................... 221

show migration_params .............................................................................................. 223

show mgmt ............................................................................................................... 224

show memory ........................................................................................................... 225

show properties ......................................................................................................... 226

show migration_usage ................................................................................................ 227

show perf ................................................................................................................. 228

show perf byte .......................................................................................................... 229

show features ............................................................................................................ 230

show migration_logs .................................................................................................. 231

show logs ................................................................................................................. 232

show luns ................................................................................................................. 233

show targets ............................................................................................................. 235

Page 8

show vpgroups .......................................................................................................... 236

target rescan ............................................................................................................. 237

save capture ............................................................................................................. 238

array rm ................................................................................................................... 239

array_licensed_port rm ............................................................................................... 240

readjust_priority ......................................................................................................... 241

show compare_luns ................................................................................................... 242

Performance ........................................................................................................................... 242

Performance factors .......................................................................................................... 242

Maximizing performance .................................................................................................. 243

Optimal configuration and zoning ...................................................................................... 243

Using the HP MSA2012fc Storage Array ................................................................................... 243

MSA2012fc array behavior ............................................................................................... 243

Using array-based licenses for MSA2012fc array ................................................................. 244

Workaround for the use of a single array license for the MSA2012fc ...................................... 244

Restrictions ............................................................................................................................. 245

Reconfiguring LUNs on a storage array ............................................................................... 245

Removing an array after completing data migration jobs ....................................................... 245

Troubleshooting ...................................................................................................................... 245

7 Diagnostics and troubleshooting ...................................................... 249

MPX200 chassis diagnostics .................................................................................................... 249

Input Power LED is extinguished .......................................................................................... 249

System Fault LED is illuminated ........................................................................................... 250

Power-on self-test (POST) diagnostics ................................................................................... 250

Heartbeat LED blink patterns ....................................................................................... 250

Locating the MPX200 blade .............................................................................................. 251

MPX200 log data ............................................................................................................ 252

MPX200 statistics ............................................................................................................. 252

MPX200 ping command ................................................................................................... 252

Troubleshooting using HP Command View EVA .................................................................... 253

Issues and solutions ................................................................................................................. 254

HP Command View EVA does not discover MPX200 ............................................................. 254

Initiator cannot log in to MPX200 iSCSI target ..................................................................... 254

Initiator logs in to MPX200 iSCSI target, but EVA-assigned LUNs do not appear on initiator ....... 254

EVA-presented virtual disk not seen by initiator ..................................................................... 255

Windows initiators may display Reconnecting if NIC MTU changes after connection has logged in ... 256

Communication between HP Command View EVA and MPX200 is down ................................ 256

HP Command View EVA issues and solutions ............................................................................. 256

8 Support and other resources ............................................................ 259

Intended audience .................................................................................................................. 259

Related documentation ............................................................................................................ 259

Contacting HP ........................................................................................................................ 260

HP technical support ......................................................................................................... 260

Subscription service .......................................................................................................... 260

Product feedback ............................................................................................................. 260

Documentation feedback ................................................................................................... 260

Related information ................................................................................................................. 260

HP Websites .................................................................................................................... 260

Typographical conventions ....................................................................................................... 261

Rack stability .......................................................................................................................... 262

Product warranties .................................................................................................................. 262

Page 9

A Command reference ....................................................................... 263

Command syntax .................................................................................................................... 263

Command line completion ................................................................................................. 263

Authority requirements ...................................................................................................... 263

Commands ............................................................................................................................ 264

Admin ............................................................................................................................ 264

Beacon ........................................................................................................................... 265

Blade ............................................................................................................................. 265

Clear .............................................................................................................................. 265

Date ............................................................................................................................... 266

Exit ................................................................................................................................ 266

Fciproute ......................................................................................................................... 267

FRU ................................................................................................................................ 269

Help ............................................................................................................................... 269

History ............................................................................................................................ 271

Image ............................................................................................................................. 271

Initiator ........................................................................................................................... 272

Logout ............................................................................................................................ 273

Lunmask .......................................................................................................................... 273

Passwd ........................................................................................................................... 275

Ping ............................................................................................................................... 275

Quit ............................................................................................................................... 276

Reboot ............................................................................................................................ 276

Reset .............................................................................................................................. 277

Save ............................................................................................................................... 277

Set ................................................................................................................................. 278

Set alias .......................................................................................................................... 279

Set CHAP ........................................................................................................................ 280

Set chassis ...................................................................................................................... 280

Set FC ............................................................................................................................ 281

Set features ..................................................................................................................... 281

Set iSCSI ......................................................................................................................... 282

Set iSNS ......................................................................................................................... 282

Set Mgmt ........................................................................................................................ 283

Set NTP .......................................................................................................................... 283

Set properties .................................................................................................................. 284

Set SNMP ....................................................................................................................... 284

Set system ....................................................................................................................... 285

Set VPGroups .................................................................................................................. 286

Show .............................................................................................................................. 286

Show CHAP .................................................................................................................... 288

Show chassis ................................................................................................................... 289

Show FC ......................................................................................................................... 290

Show fciproutes ............................................................................................................... 290

Show features .................................................................................................................. 291

Show initiators ................................................................................................................. 291

Show initiators LUNmask ................................................................................................... 291

Show iSCSI ..................................................................................................................... 292

Show iSNS ...................................................................................................................... 293

Show logs ....................................................................................................................... 293

Show LUNinfo ................................................................................................................. 294

Show LUNs ..................................................................................................................... 294

Show LUNmask ............................................................................................................... 295

Show memory ................................................................................................................. 295

Show mgmt ..................................................................................................................... 296

Page 10

Show NTP ....................................................................................................................... 296

Show perf ....................................................................................................................... 297

Show presented targets ..................................................................................................... 298

Show properties ............................................................................................................... 299

Show SNMP .................................................................................................................... 299

Show stats ....................................................................................................................... 300

Show system .................................................................................................................... 302

Show targets ................................................................................................................... 303

Show VPGroups ............................................................................................................... 303

Shutdown ........................................................................................................................ 304

Target ............................................................................................................................. 304

Traceroute ....................................................................................................................... 305

B Command Line Interface usage ........................................................ 307

Logging on to an MPX200 ....................................................................................................... 307

Understanding the guest account .............................................................................................. 308

Working with MPX200 router configurations .............................................................................. 309

Modifying a configuration ................................................................................................. 309

Saving and restoring router configurations ........................................................................... 309

Restoring router configuration and persistent data ................................................................. 310

C Simple Network Management Protocol setup ..................................... 311

SNMP parameters .................................................................................................................. 311

SNMP trap configuration parameters ......................................................................................... 312

Management Information Base ................................................................................................ 312

Network port table ........................................................................................................... 312

FC port table ................................................................................................................... 314

Initiator object table .......................................................................................................... 316

LUN table ....................................................................................................................... 318

VP group table ................................................................................................................. 320

Sensor table .................................................................................................................... 323

Notifications .......................................................................................................................... 324

System information objects ................................................................................................. 325

Notification objects .......................................................................................................... 326

Agent startup notification ................................................................................................... 326

Agent shutdown notification ............................................................................................... 326

Network port down notification .......................................................................................... 326

FC port down notification .................................................................................................. 327

Target device discovery ..................................................................................................... 327

Target presentation (mapping) ............................................................................................ 327

VP group notification ........................................................................................................ 328

Sensor notification ............................................................................................................ 328

Generic notification .......................................................................................................... 329

D Log messages ............................................................................... 331

E Regulatory compliance and safety .................................................... 355

Regulatory compliance ............................................................................................................ 355

Federal Communications Commission notice for Class A equipment ........................................ 355

Modifications ............................................................................................................ 355

Cables ..................................................................................................................... 355

Regulatory compliance identification numbers ...................................................................... 355

Laser device .................................................................................................................... 355

Page 11

Laser safety warning .................................................................................................. 356

Certification and classification information .................................................................... 356

Laser product label .................................................................................................... 356

International notices and statements .......................................................................................... 357

Canadian notice (avis Canadien) ....................................................................................... 357

Class A equipment ..................................................................................................... 357

BSMI notice ..................................................................................................................... 357

Japanese notice ............................................................................................................... 357

Korean notices ................................................................................................................. 358

Safety ................................................................................................................................... 358

Battery replacement notice ................................................................................................ 358

Taiwan battery recycling notice .......................................................................................... 359

Power cords ..................................................................................................................... 359

Japanese power cord statement .......................................................................................... 359

Waste Electrical and Electronic Equipment directive ..................................................................... 359

English notice .................................................................................................................. 359

Dutch notice .................................................................................................................... 360

Czechoslovakian notice ..................................................................................................... 360

Estonian notice ................................................................................................................ 360

Finnish notice ................................................................................................................... 360

French notice ................................................................................................................... 361

German notice ................................................................................................................. 361

Greek notice .................................................................................................................... 361

Hungarian notice ............................................................................................................. 362

Italian notice .................................................................................................................... 362

Latvian notice .................................................................................................................. 362

Lithuanian notice .............................................................................................................. 362

Polish notice .................................................................................................................... 363

Portuguese notice ............................................................................................................. 363

Slovakian notice ............................................................................................................... 363

Slovenian notice ............................................................................................................... 364

Spanish notice ................................................................................................................. 364

Swedish notice ................................................................................................................. 364

F Saving and restoring the MPX200 configuration ................................. 365

Saving the MPX200 configuration ............................................................................................ 365

Saving the configuration using the MPX200 CLI ......................................................................... 365

Restoring the MPX200 configuration .......................................................................................... 366

Restoring the configuration using the MPX200 CLI ................................................................ 366

G Data migration best practices .......................................................... 369

Overview ............................................................................................................................... 369

Terminology .................................................................................................................... 369

Relationship between application data and physical storage .................................................. 369

LUN access to a server ..................................................................................................... 370

General steps for data migration ........................................................................................ 370

Inventory checklists .................................................................................................................. 371

Step 1. List the source and destination storage array ............................................................. 371

Step 2. List the servers impacted by the data migration project ............................................... 371

Step 3. List the applications, mount points, and paths to the physical devices ........................... 371

Step 4. List and create LUN ID mappings ............................................................................. 372

Step 5. List and create Fibre Channel zoning ....................................................................... 373

Performance and downtime ...................................................................................................... 373

Optimizing performance during data migration .................................................................... 373

Page 12

Minimizing downtime ....................................................................................................... 374

Before application downtime ....................................................................................... 374

During application downtime ...................................................................................... 374

Validating integrity of the data .................................................................................... 375

Operating system dependencies .............................................................................................. 375

Windows operating system ................................................................................................ 375

UNIX operating system (HP_UX, Linux, AIX, Solaris) .............................................................. 375

Checklist examples ................................................................................................................. 376

Step 1. List the source and destination storage array ............................................................. 376

Step 2. List the servers impacted by the data migration project ............................................... 377

Step 3. List the applications, mount points, and paths to the physical device ............................. 377

Step 4. List and create LUN ID mappings ............................................................................. 378

Step 5. List and create Fibre Channel zoning ....................................................................... 378

Assigning LUNs to the MPX200 for data migration ...................................................................... 379

Assigning LUNs from an MSA2012fc array ......................................................................... 379

Assigning LUNs from an MSA1000/1500 array .................................................................. 382

Assigning LUNs from an HDS array .................................................................................... 384

Assigning LUNs from an IBM DS4K/DS5K/LSI array ............................................................. 389

Assigning LUNs from an EVA 4/6/8000 series array ........................................................... 394

Assigning LUNs from an EMC CLARiiON array .................................................................... 396

Index ............................................................................................... 403

Page 13

Figures

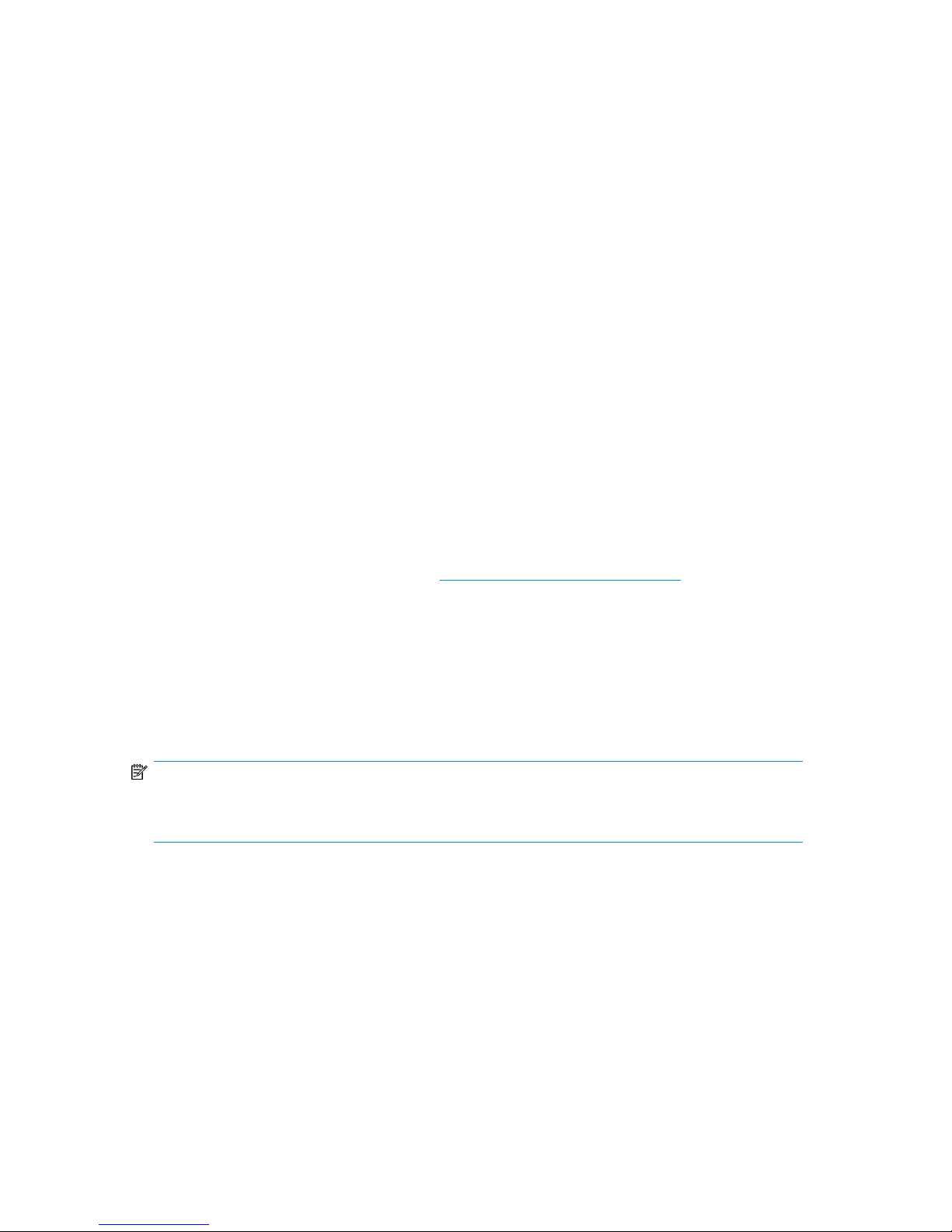

MPX200 single-blade fabric-attached configuration ..................................................... 241

MPX200 dual-blade fabric-attached configuration ....................................................... 242

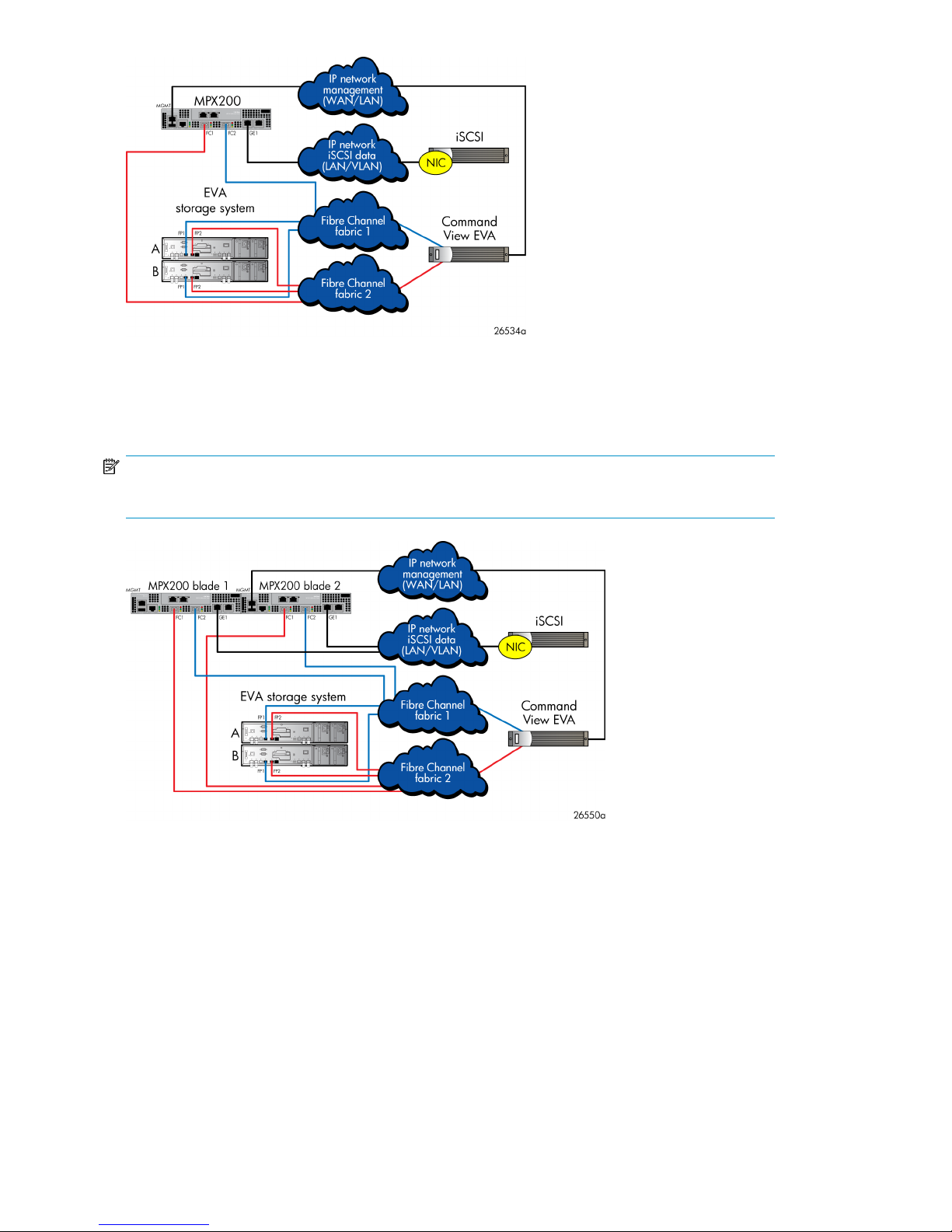

MPX200 single-blade multi-EVA configuration ............................................................. 253

MPX200 dual-blade multi-EVA configuration ............................................................... 254

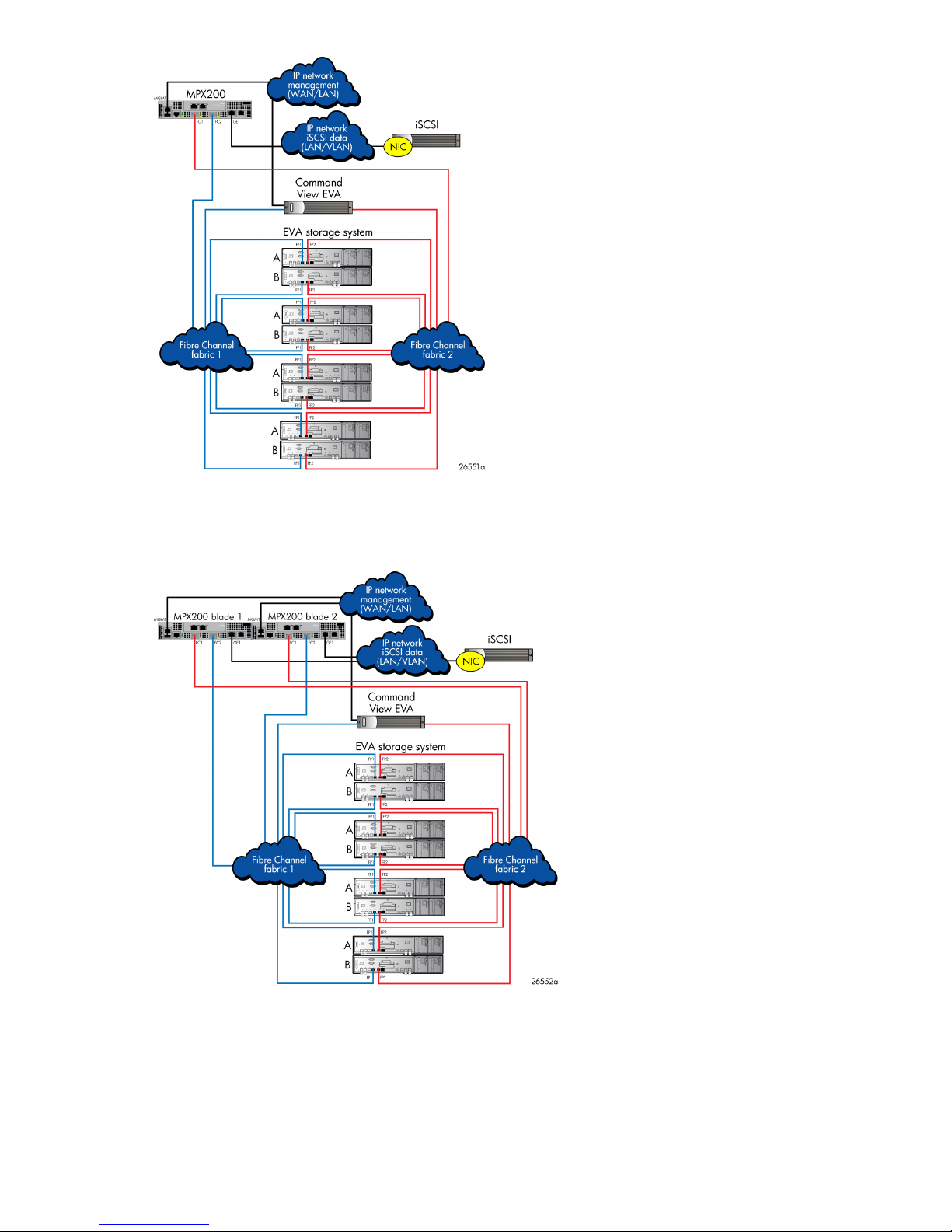

5 MPX200 dual-blade direct connect to one EVA configuration ........................................ 26

6 MPX200 single-blade direct connect to one EVA configuration ...................................... 26

7 MPX200 dual-blade direct connect to two EVA configuration ........................................ 26

8 MPX200 components .............................................................................................. 31

9 Chassis rails ........................................................................................................... 32

10 Rack rails ............................................................................................................... 32

11 Inner rack view ....................................................................................................... 33

12 Rear view .............................................................................................................. 34

13 Power cord ............................................................................................................. 35

14 Bezel view ............................................................................................................. 36

15 Open zone configuration ......................................................................................... 38

16 Fibre Channel zone configuration .............................................................................. 39

17 iSCSI devices folder properties .................................................................................. 39

18 Host properties ....................................................................................................... 40

19 iSCSI controller properties: Mgmt Port tab .................................................................. 41

20 iSCSI controller properties: IP Ports tab ....................................................................... 42

21 Set iSCSI controller time ........................................................................................... 43

22 Code Load page for the iSCSI controller .................................................................... 44

23 iSCSI controller shutdown options .............................................................................. 44

24 iSCSI controller configuration selection ....................................................................... 45

25 Blue LED locating beacon ........................................................................................ 46

26 Locate hardware device ........................................................................................... 46

27 iSCSI controller options: Remove controller ................................................................. 47

28 Installing the Windows Server 2003 kit ..................................................................... 56

29 Installation Wizard .................................................................................................. 56

30 iSCSI initiator properties: Discovery tab ..................................................................... 57

31 Adding a host ........................................................................................................ 58

32 Virtual disk properties .............................................................................................. 59

Page 14

33 Host details ............................................................................................................ 59

34 Targets tab ............................................................................................................. 60

35 Select Features page ............................................................................................... 62

36 MPIO Properties page before reboot ......................................................................... 63

37 MPIO Properties page after reboot ............................................................................ 63

38 Software update installation wizard ........................................................................... 64

39 Discover targets ...................................................................................................... 66

40 Add static IP address ............................................................................................... 66

41 Discovered targets list .............................................................................................. 67

42 iSNS discovery and verification ................................................................................ 67

43 Discovered targets ................................................................................................... 68

44 Selecting the newly added target .............................................................................. 69

45 Select status ........................................................................................................... 69

46 Presented EVA LUNs ................................................................................................ 70

47 Configure the iSCSI initiator and targets ..................................................................... 71

48 Discovered Targets tab ............................................................................................ 71

49 Log in to the target .................................................................................................. 72

50 Connected Targets tab ............................................................................................. 73

51 Configuration tab .................................................................................................... 78

52 Firewall Properties dialog box ................................................................................... 79

53 General Properties dialog box .................................................................................. 80

54 Add Send Target Server dialog box .......................................................................... 81

55 Rescan dialog box .................................................................................................. 82

56 iSCSI initiator MPIO properties ................................................................................. 92

57 Microsoft iSCSI Initiator Installation page ................................................................... 94

58 Connect to Host window .......................................................................................... 95

59 Start general configuration wizard ............................................................................. 95

60 HBA Port Target Configuration window ...................................................................... 96

61 Target Settings tab .................................................................................................. 96

62 iSCSI HBA port connections ..................................................................................... 97

63 HP MPIO DSM Manager with iSCSI devices ............................................................... 98

64 iSCSI Persistent Reservation Setup .............................................................................. 99

65 MPX200 basic FCIP configuration ........................................................................... 113

66 MPX200 high-availability configuration with fully-redundant long-distance links ............. 114

67 FCIP impact on existing iSCSI configurations – single fabric per blade ......................... 115

68 FCIP impact on existing iSCSI configurations – dual fabrics per blade ......................... 116

69 MPX200 basic FCIP configuration with one or two long-distance links .......................... 117

Page 15

70 MPX200 FCIP with B-series Integrated Routing .......................................................... 117

71 MPX200 high-availability configuration with one or two long-distance links .................. 118

72 MPX200 high-availability configuration with fully-redundant long-distance links ............. 118

73 MPX200 configuration with remote IP Distance gateway (mpx110) ............................. 118

74 MPX200 highly-redundant configuration with one or two long-distance links .................. 119

75 MPX200 highly redundant pairs of gateways with fully-redundant long-distance links ..... 119

76 MPX200 simultaneous iSCSI and FCIP with remote dedicated FCIP blade .................... 120

77 MPX200 simultaneous iSCSI and FCIP, both local and remote ..................................... 120

78 MPX200 iSCSI and FCIP dedicated blades, both local and remote .............................. 121

79 HP Continuous Access EVA 3–site configuration with four MPX200 routers ................... 122

80 HP Continuous Access EVA 3–site configuration with six MPX200 routers ..................... 123

81 HP Continuous Access EVA 3–site configuration with eight MPX200 routers .................. 124

82 HP Continuous Access EVA 3–site configuration with six MPX200 router full peer-to-peer connectivity .......................................................................................................... 125

83 MPX200 ports and LEDs ........................................................................................ 135

84 Setting IP addresses .............................................................................................. 136

85 FCIP License Add .................................................................................................. 136

86 Configuring the FCIP routes using the CLI ................................................................. 137

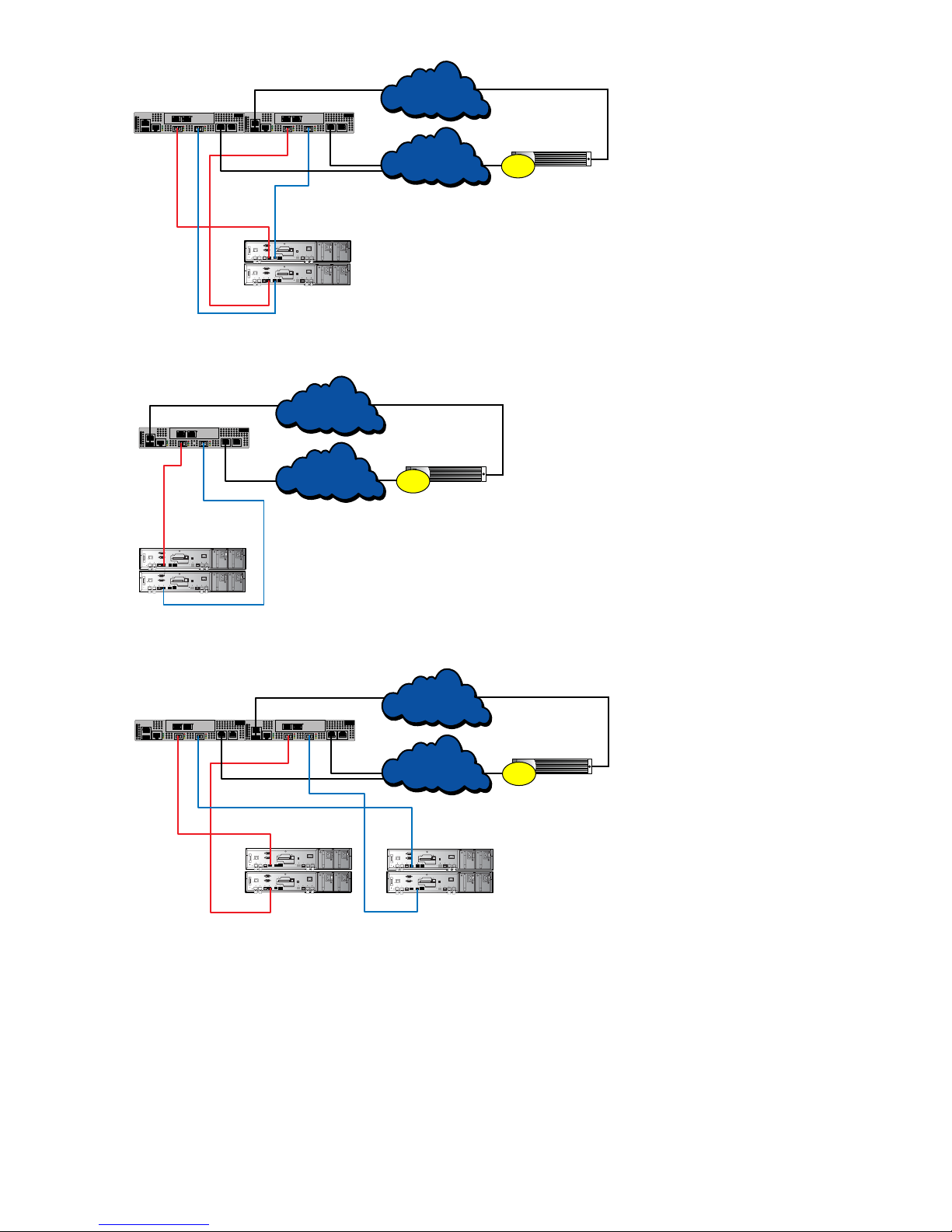

87 Single and dual blade high-availability setup ........................................................... 144

88 Migration between dissimilar vendor SANs .............................................................. 145

89 Setting array properties: target type ........................................................................ 152

90 Setting array bandwidth ........................................................................................ 153

91 Migration Schedule Options: individual job .............................................................. 156

92 Create data migration job: individual job ................................................................. 157

93 Data migration jobs: individual ............................................................................... 158

94 Migration Schedule Options: batch mode ................................................................ 161

95 Create Data Migration Job: batch ........................................................................... 162

96 Serial Data Migration Jobs Options ......................................................................... 163

97 DMS iSCSI host entry in HP Command View EVA ...................................................... 165

98 Virtual disk creation .............................................................................................. 166

99 DMS virtual disk presentation ................................................................................. 167

100 Active Data Migration jobs ..................................................................................... 168

101 Viewing migration job details ................................................................................. 171

102 mpx Log: System Log ............................................................................................. 174

103 mpx Log: Migration Log ......................................................................................... 176

104 Verify migration job wizard .................................................................................... 179

105 Verifying jobs options ............................................................................................ 180

Page 16

106 Features page: License Information .......................................................................... 182

107 New License Key dialog box .................................................................................. 182

108 Add license dialog box ......................................................................................... 183

109 License Array dialog box ....................................................................................... 185

110 Information page showing array is licensed .............................................................. 186

111 Data Migration Info ............................................................................................... 187

112 MPX200 chassis diagnostic LEDs ............................................................................ 249

113 Normal blink pattern ............................................................................................. 250

114 System error blink pattern ....................................................................................... 250

115 Management port IP address conflict blink pattern .................................................... 251