
HP StorageWorks

File System Extender Software installation guide for Linux

Part number: T3648-96011

First edition: October 2006


Legal and notice information

© Copyright 2005, 2006 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Microsoft, Windows, Windows NT, and Windows XP are U.S. registered trademarks of Microsoft Corporation.


Contents

Intended audience . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Related documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Document conventions and symbols . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

HP technical support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Subscription service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

HP web sites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Documentation feedback . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8


1 Introduction and preparation basics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

FSE implementation options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Consolidated implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Distributed implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

Mixed implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Preparing file systems for FSE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Reasons for organizing file systems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Organizing the file system layout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Estimating the size of file systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Formula for the expected HSM file system size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Formula for the expected size of Fast Recovery Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Formula for the expected File System Catalog size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Formula for the expected Hierarchical Storage Manager Database (HSMDB) size . . . . . . . . . . . . . . . . 16

Sample calculation for the expected sizes of HSM file system, FSC and HSMDB . . . . . . . . . . . . . . . . . 17

Sample calculation for the expected total size of the FRI files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Space requirements of FSE disk buffer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Storage space for FSE debug files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2 Installation overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3 Preparing the operating system environment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Preparing the operating system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Required operating system updates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Required third-party packages for SUSE Linux Enterprise Server 9 (SLES 9) . . . . . . . . . . . . . . . . . . . . . 21

Required third-party packages for Red Hat Enterprise Linux 4 (RHEL 4) . . . . . . . . . . . . . . . . . . . . . . . . 22

Verifying third-party packages. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Installing Firebird SuperServer on an FSE server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Disabling ACPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

4 Preparing file systems for FSE. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Preparing file systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Preparing Logical Volume Manager (LVM) volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Creating file systems on top of LVM logical volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Mounting file systems for FSE databases and system files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Creating a symbolic link for debug files directory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

5 Installing FSE software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Prerequisites. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Installation overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Installing an FSE release . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Installation procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Monitoring the installation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Verifying and repairing the installed FSE software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Determining the build number . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Repairing the FSE software installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38


Preparing the environment for the first startup of the FSE implementation . . . . . . . . . . . . . . . . . . . . . . . . . 39

Modifying the PATH environment variable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Modifying the LD_LIBRARY_PATH environment variable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Modifying the MANPATH environment variable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Preparing the FSE backup configuration file . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Configuring the FSE interprocess communication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Starting the FSE implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Starting the FSE processes in a consolidated FSE implementation. . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Starting FSE processes in a distributed or mixed FSE implementation . . . . . . . . . . . . . . . . . . . . . . . . . 44

Restarting FSE processes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Checking the status of a running FSE implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

Checking Firebird SuperServer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

Checking the omniNames daemon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Checking FSE Processes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Configuring and starting HSM Health Monitor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Configuring HSM Health Monitor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Starting the HSM Health Monitor daemon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Configuring and starting Log Analyzer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Configuring Log Analyzer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Starting the Log Analyzer daemon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Installing the FSE Management Console . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Installing the FSE Management Console server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Installing the FSE Management Console client . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Automating the mounting of HSM file systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Configuring the post-start and pre-stop helper scripts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

The post-start script . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

The pre-stop script . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

6 Upgrading from previous FSE releases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Prerequisites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Upgrade overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Shutting down the FSE implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Upgrading the operating system on Linux hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Upgrading the Linux FSE server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Installing FSE release 3.4 software on the Linux FSE server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Starting up the FSE server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

Upgrading the Windows FSE server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Upgrading Linux FSE clients . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Installing FSE release 3.4 software on a Linux FSE client. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Starting up a Linux FSE client . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Upgrading Windows FSE clients . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Configuring and starting HSM Health Monitor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Configuring HSM Health Monitor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Starting the HSM Health Monitor daemon on Linux systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Starting the HSM Health Monitor service on Windows systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Configuring and starting Log Analyzer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Configuring Log Analyzer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Starting the Log Analyzer daemons on Linux systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Starting the Log Analyzer service on Windows systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Upgrading the FSE Management Console . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Upgrading the FSE Management Console on Linux systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Verifying availability of the configured FSE partitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

7 Uninstalling FSE software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Uninstalling FSE software. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Uninstalling the FSE Management Console . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Uninstalling basic FSE software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

8 Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

General problems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67


Installation problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

A Integrating existing file systems in the FSE implementation . . . . . . . . . . . . . . . . . . . . . . . 71

Integrating existing file systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

B FSE system maintenance releases and hot fixes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

FSE software: releases, hot fixes, and system maintenance releases . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

FSE releases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

FSE hot fixes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

FSE system maintenance releases. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

FSE system maintenance releases and FSE hot fixes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Installing a system maintenance release . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Determining the installed system maintenance release . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Uninstalling a system maintenance release . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Installing a hot fix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Determining the installed hot fix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

Uninstalling a hot fix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75


About this guide

This guide provides information about:

• Checking detailed software requirements

• Preparing your environment prior to installing the FSE software

• Installing, upgrading, and uninstalling the FSE software on the Linux platform

• Performing mandatory and optional post-installation steps

• Troubleshooting installation, upgrade, and uninstallation problems

Intended audience

This guide is intended for system administrators with knowledge of:

• Linux platform

Related documentation

The following documents provide related information:

• FSE release notes

• FSE installation guide for Windows

• FSE user guide

You can find these documents on the Manuals page of the HP Business Support Center web site:

http://www.hp.com/support/manuals

In the Storage section, click Archiving and active archiving and then select your product.

Document conventions and symbols

Table 1 Document conventions

Convention | Element
Medium blue text: Table 1 | Cross-reference links and e-mail addresses
Medium blue, underlined text (http://www.hp.com) | Web site addresses
Bold text | Keys that are pressed; text typed into a GUI element, such as a box; GUI elements that are clicked or selected, such as menu and list items, buttons, tabs, and check boxes
Italic text | Text emphasis
Monospace text | File and directory names; system output; code; commands, their arguments, and argument values
Monospace, italic text | Code variables; command variables
Monospace, bold text | Emphasized monospace text

HP StorageWorks File System Extender Software installation guide for Linux 7

Page 8

CAUTION: Indicates that failure to follow directions could result in damage to equipment or data.

IMPORTANT: Provides clarifying information or specific instructions.

NOTE: Provides additional information.

TIP: Provides helpful hints and shortcuts.

HP technical support

Telephone numbers for worldwide technical support are listed on the HP support web site:

http://www.hp.com/support/

Collect the following information before calling:

• Technical support registration number (if applicable)

• Product serial numbers

• Product model names and numbers

• Error messages

• Operating system type and revision level

• Detailed questions


For continuous quality improvement, calls may be recorded or monitored.

Subscription service

HP recommends that you register your product at the Subscriber's Choice for Business web site:

http://www.hp.com/go/e-updates

After registering, you will receive e-mail notification of product enhancements, new driver versions, firmware updates, and other product resources.

HP web sites

For additional information, see the following HP web sites:

• http://www.hp.com

• http://www.hp.com/go/storage

• http://www.hp.com/service_locator

• http://www.hp.com/support/manuals

• http://www.hp.com/support/downloads

Documentation feedback

HP welcomes your feedback.

To make comments and suggestions about product documentation, please send a message to storagedocs.feedback@hp.com. All submissions become the property of HP.


1 Introduction and preparation basics

HP StorageWorks File System Extender (FSE) is a mass-storage-oriented software product based on client-server technology. It provides large storage space by combining disk storage and a tape library with high-capacity tape media, and by implementing Hierarchical Storage Management (HSM).

Refer to the FSE user guide for a detailed product description.

This installation guide tells you how to prepare the environment and install the FSE software. After installation, you need to configure FSE resources, such as disk media and tape libraries, HSM file systems, and partitions. You also need to configure migration policies. These tasks are described in the FSE user guide.

This chapter includes the following topics:

• FSE implementation options, page 9

• Licensing, page 12

• Preparing file systems for FSE, page 12

• Reasons for organizing file systems, page 13

• Organizing the file system layout, page 13

• Estimating the size of file systems, page 14

FSE implementation options

FSE supports both Linux and Windows servers, and Linux and Windows clients. An FSE implementation can be set up as one of the following:

• Consolidated implementation, where the FSE server and client are both installed on a single machine. See page 9.

• Distributed implementation, an FSE server system and one or more separate FSE clients. See page 10.

• Mixed implementation, a consolidated implementation with additional external FSE clients. See page 11.

An FSE implementation can therefore be customized for either heterogeneous or homogeneous operating system environments.

NOTE: Before installing FSE software, consider your current environment so that you can match the FSE implementation to your needs.

For a description of the specific components shown in Figure 1 on page 10 and Figure 2 on page 11, refer to the FSE user guide. Key to components:

fse-hsm Hierarchical Storage Manager

fse-mif Management Interface

fse-pm Partition Manager

fse-rm Resource Manager

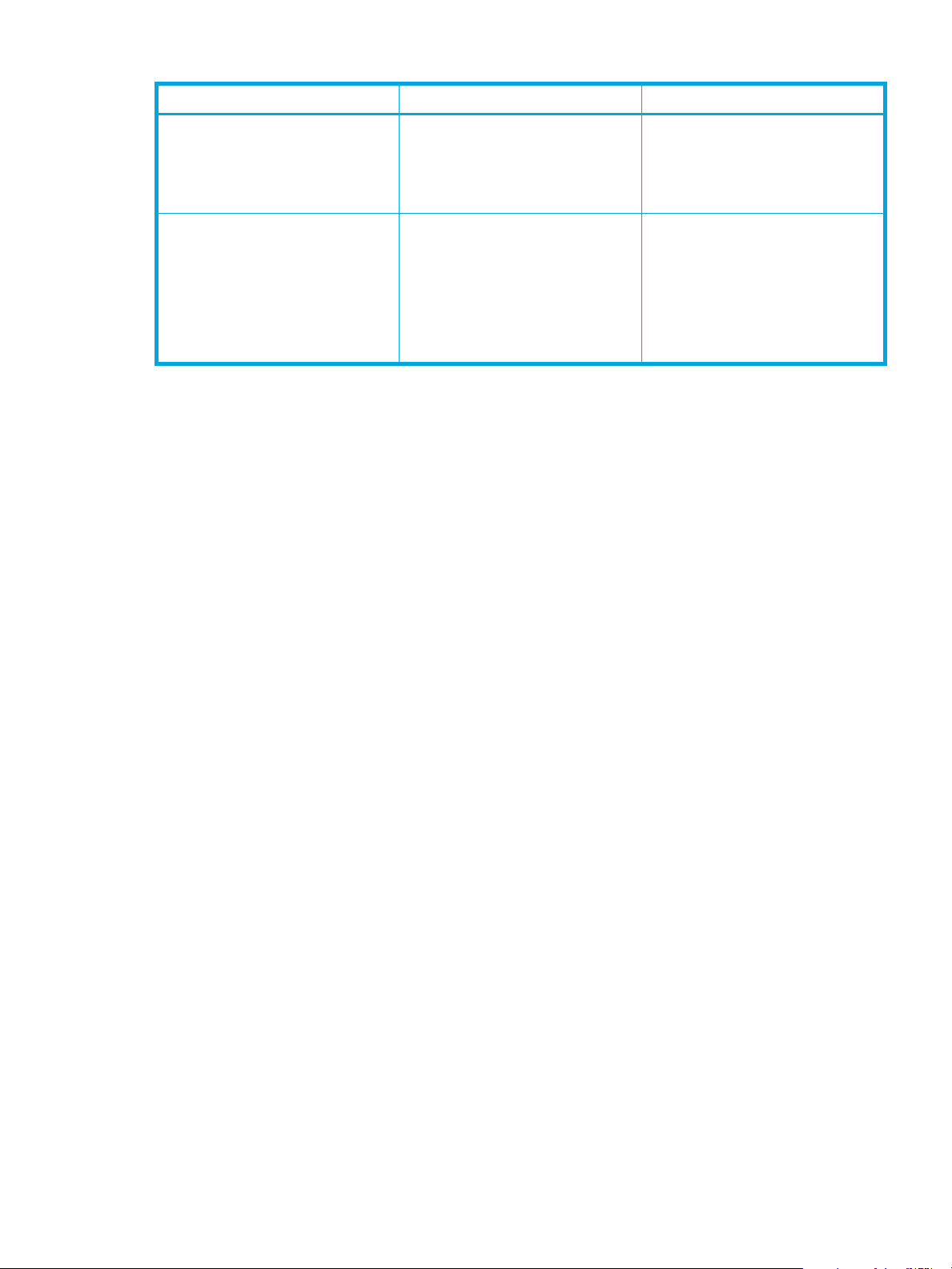

Consolidated implementation

A consolidated implementation integrates FSE server and client functionality in a single machine. This machine connects directly to the disk media and/or the SCSI tape library with FSE drives and slots, and it also hosts any number of HSM file systems used as storage space by FSE users. The machine runs all the processes of a working FSE environment. User data from the local HSM file systems is recorded on disk media or on tape media in the attached tape library.

Figure 1 Consolidated FSE implementation

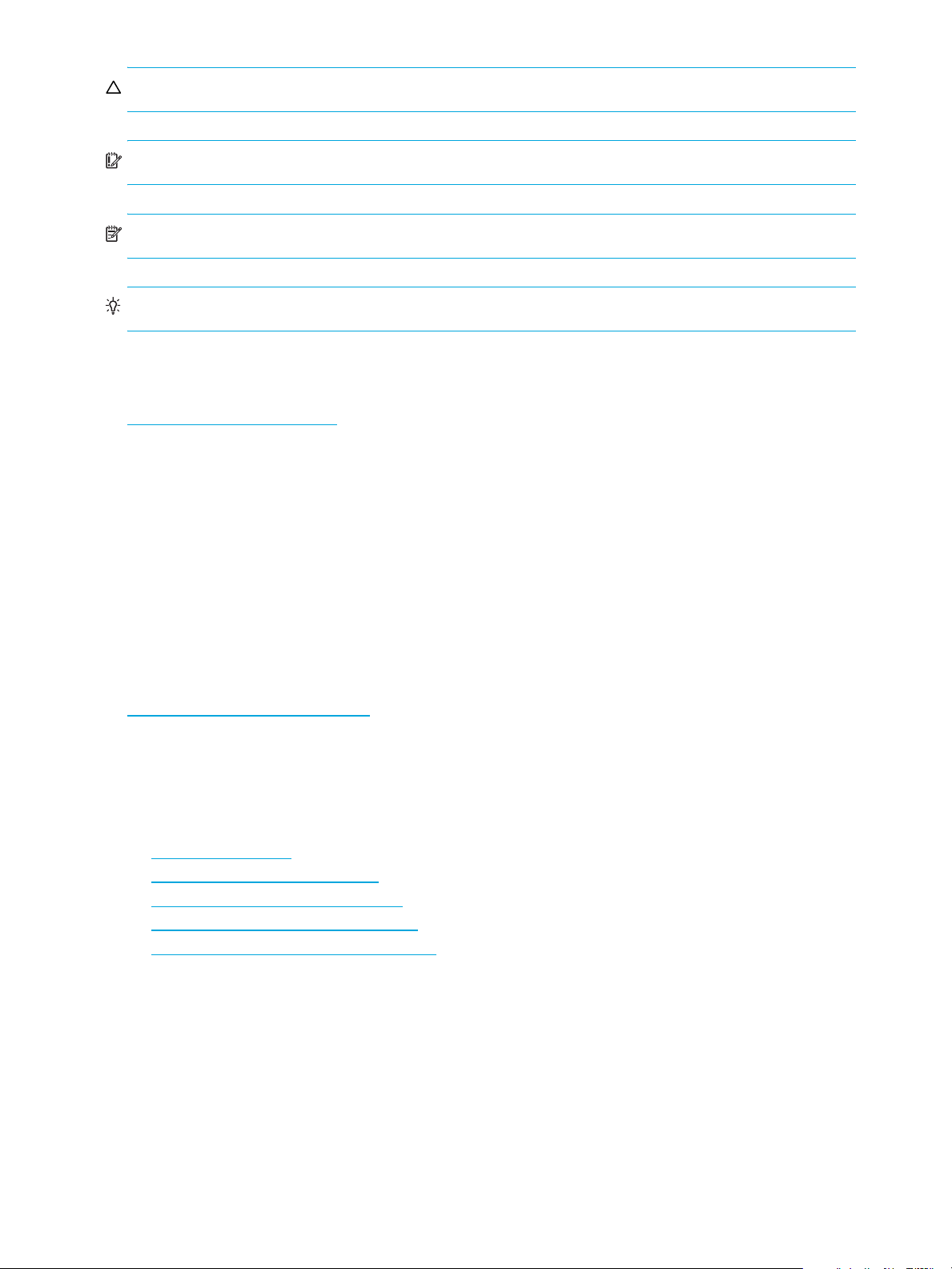

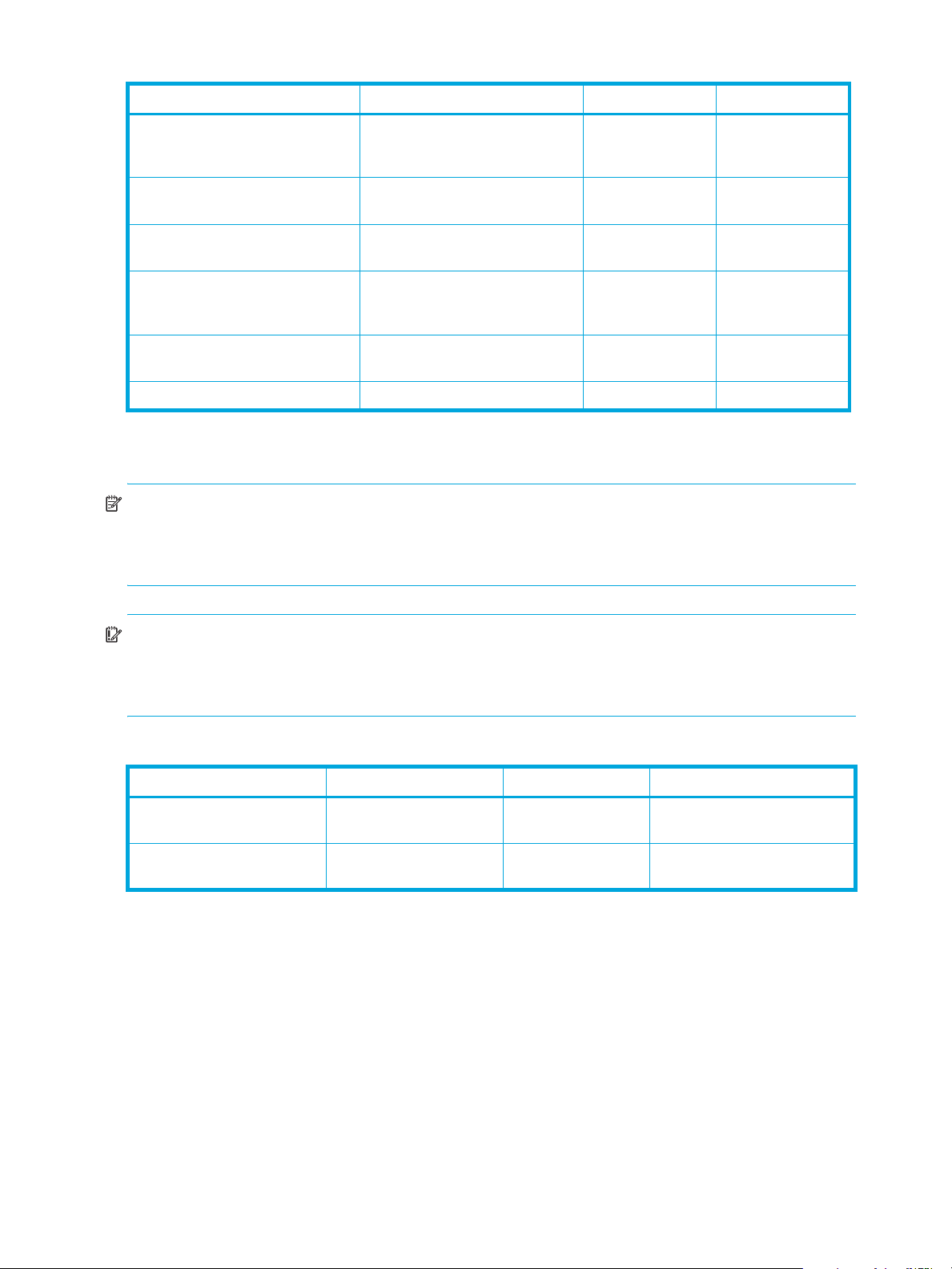

Distributed implementation

A distributed implementation consists of a central FSE server with disk media and/or a SCSI tape library attached, and one or more external FSE clients that are connected to the server. External FSE clients can run on different operating systems.

The FSE server is similar to a consolidated system in that all major services run on it, but it does not host any HSM file systems. All HSM file systems in a distributed environment reside on the FSE clients: machines that run only the essential processes for local HSM file system management and use the major services on the FSE server. User data from these remote file systems is transferred to the FSE server and recorded on the corresponding disk media or on tape media in the attached tape library.

Figure 2 Distributed FSE implementation

NOTE: Communication between the components of a distributed FSE implementation is based on CORBA (omniORB). A reliable bidirectional network connection from each external FSE client to the FSE server is an essential prerequisite for reliable operation of a distributed FSE implementation. Communication between FSE implementation components through a firewall is neither supported nor tested.

A distributed implementation is sometimes called a distributed system with separate server and clients.

IMPORTANT: In a distributed FSE implementation, if the FSE processes on the FSE server are restarted, you must restart the FSE processes on all external FSE clients to resume normal FSE operation.

Mixed implementation

Mixed implementations consist of a consolidated FSE system with additional clients connected to it. External FSE clients, which can run on different operating systems, are physically separate from the integrated server and client.

External FSE clients connect to the consolidated FSE system through a LAN and host additional HSM file systems. They run only the processes that manage these HSM file systems, and they communicate with the major services running on the consolidated system. User data from HSM file systems on the clients is transferred to the consolidated FSE system and recorded on disk media and/or on tape media in the attached SCSI tape library.

NOTE: The note about omniORB in the description of the distributed implementation above also applies to mixed implementations.


IMPORTANT: In a mixed FSE implementation, if the FSE processes on the consolidated FSE system are restarted, you must restart the FSE processes on all external FSE clients to resume normal FSE operation.
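The three implementation options differ only in whether the FSE server also hosts HSM file systems and whether external FSE clients exist. The following Python sketch encodes those definitions, together with the restart rule from the IMPORTANT notes above. FseImplementation, classify, clients_to_restart, and the client host name are hypothetical illustrations, not part of FSE.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FseImplementation:
    """Hypothetical description of an FSE deployment (not an FSE API)."""
    server_hosts_hsm_fs: bool  # True if the server also runs an FSE client
    external_clients: List[str] = field(default_factory=list)


def classify(impl: FseImplementation) -> str:
    """Name the implementation option according to the definitions above."""
    if impl.server_hosts_hsm_fs:
        # Server and client on one machine, optionally with external clients.
        return "mixed" if impl.external_clients else "consolidated"
    if impl.external_clients:
        # The server hosts no HSM file systems; all of them are on clients.
        return "distributed"
    raise ValueError("no machine hosts an HSM file system")


def clients_to_restart(impl: FseImplementation) -> List[str]:
    """External clients whose FSE processes must be restarted after the
    FSE processes on the server (or consolidated system) are restarted."""
    return list(impl.external_clients)


impl = FseImplementation(server_hosts_hsm_fs=True,
                         external_clients=["linux-client-1"])  # hypothetical host
print(classify(impl))            # mixed
print(clients_to_restart(impl))  # ['linux-client-1']
```

A consolidated system has no external clients, so clients_to_restart returns an empty list for it, which matches the fact that the restart rule is stated only for distributed and mixed implementations.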

Licensing

There are both per-machine and capacity-based licenses for HP File System Extender.

• For every machine that runs an FSE client, you need an FSE client license appropriate to its operating system.

• For every machine that runs the FSE server, you need a base license appropriate to its operating system. The base license includes a license to migrate 1 TB to secondary storage managed by the FSE server.

• To migrate more than 1 TB to secondary storage, you need additional FSE server capacity licenses, which are available in 1 TB increments. The migrated capacity is the sum of all capacity migrated to secondary storage from all associated FSE clients, including all copies (where two or more copies of migrated files are configured) and all versions of modified migrated files.

• To upgrade an FSE client-managed file system to WORM, you need a capacity-based license, which is available in 1 TB increments. The capacity for this WORM license is based on the physical capacity that the upgraded FSE file system occupies on the production disk.
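The capacity rules above reduce to simple arithmetic. The following Python sketch is illustrative only: the MigratedVersion model and the function names are hypothetical, and it assumes decimal units (1 TB = 1000 GB) and that any partial terabyte requires a whole additional license. Confirm both assumptions against your HP license terms.

```python
from dataclasses import dataclass
from typing import List

TB_IN_GB = 1000  # assumption: decimal terabytes


@dataclass
class MigratedVersion:
    """One version of migrated data from any FSE client (hypothetical model)."""
    size_gb: int  # size of this version of the data
    copies: int   # number of copies configured for it (1 if only one copy)


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division for non-negative a and positive b."""
    return -(-a // b)


def migrated_capacity_gb(versions: List[MigratedVersion]) -> int:
    # Every copy of every version counts toward the migrated capacity.
    return sum(v.size_gb * v.copies for v in versions)


def additional_capacity_licenses(migrated_gb: int) -> int:
    # The base license covers the first 1 TB; the rest is licensed per started TB.
    excess = max(0, migrated_gb - TB_IN_GB)
    return ceil_div(excess, TB_IN_GB)


def worm_licenses(physical_capacity_gb: int) -> int:
    # WORM capacity is the physical space the upgraded file system occupies.
    return ceil_div(physical_capacity_gb, TB_IN_GB)


versions = [
    MigratedVersion(size_gb=600, copies=2),  # data from client A, two copies
    MigratedVersion(size_gb=250, copies=2),  # data from client B, two copies
    MigratedVersion(size_gb=100, copies=1),  # an older version of modified files
]
total = migrated_capacity_gb(versions)
print(total)                                # 1800
print(additional_capacity_licenses(total))  # 1
print(worm_licenses(2300))                  # 3
```

In this example, 1800 GB of migrated capacity exceeds the 1 TB included in the base license by 800 GB, so one additional 1 TB capacity license would be needed.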

Preparing file systems for FSE

To optimize operation of the FSE implementation and increase its reliability, you should organize the file systems on the host that will become the FSE server, as well as on the FSE clients. If you intend to use disk media, you also need to prepare file systems to hold the disk media files.

The following sections explain why preparing file systems for FSE operation is important and provide formulas to estimate the space required for FSE components. These explanations and formulas apply generally when configuring an FSE implementation. The preparation itself is described in "Preparing file systems" on page 27.

The following table summarizes the main parameters to be considered when setting up the environment.

These parameters are discussed later in this chapter.

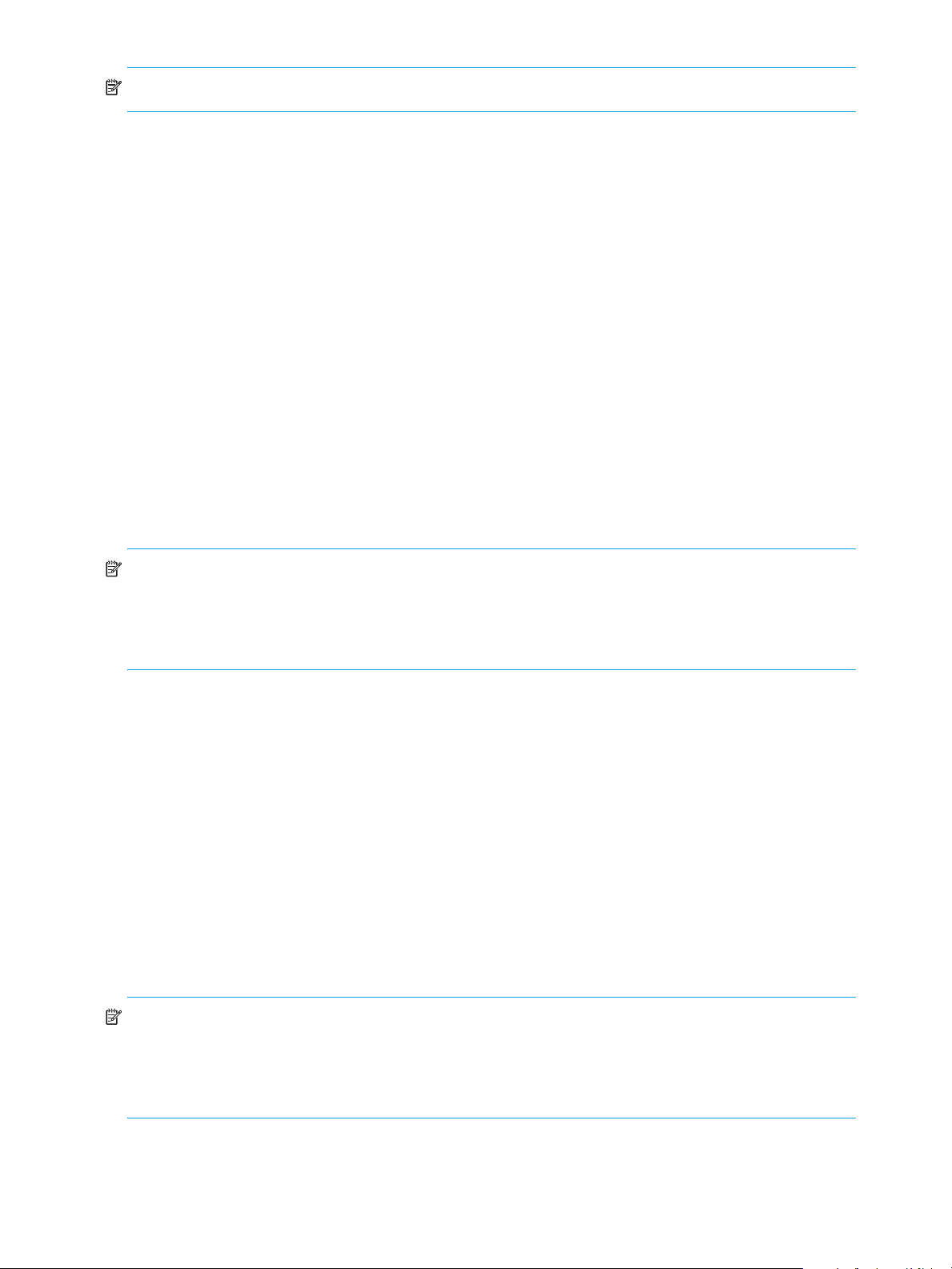

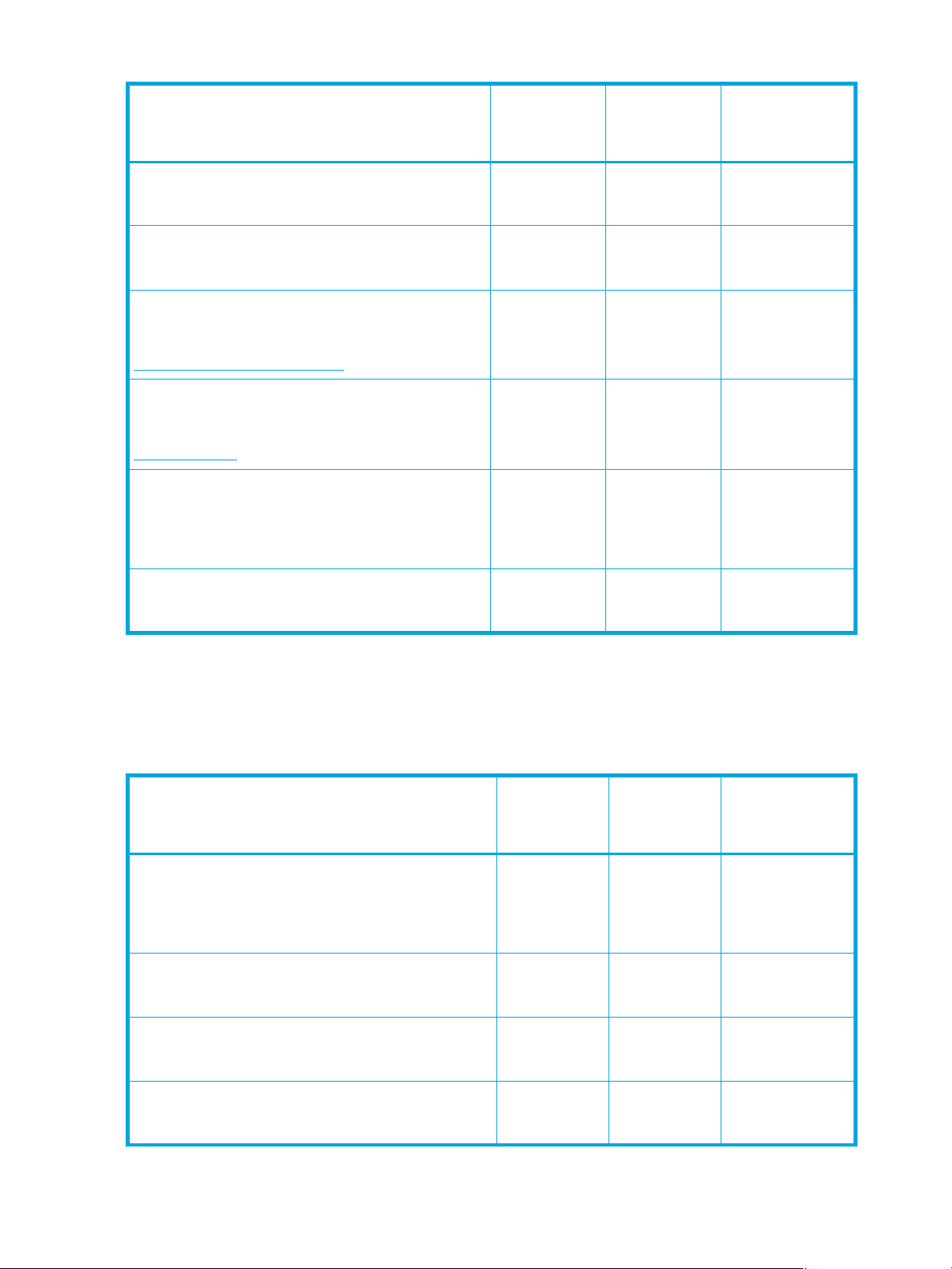

Table 2 Pre-installation size considerations

Parameter | Description | Reference
HSM file system size | Determine the minimum HSM file system size using data such as the expected number of files and the average file size. | "Formula for the expected HSM file system size" on page 15
Fast Recovery Information (FRI) size | Determine the expected size of FRI. | "Formula for the expected size of Fast Recovery Information" on page 15
File System Catalog (FSC) size | FSC contains location history and metadata. Determine the expected size of FSC. | "Formula for the expected File System Catalog size" on page 15
HSM database (HSMDB) size | Determine the expected HSM database size. | "Formula for the expected Hierarchical Storage Manager Database (HSMDB) size" on page 16
Temporary files in FSE disk buffer | Total storage space on file systems or volumes that are assigned to the FSE disk buffer should be at least 10% of the total storage space on all HSM file systems in the FSE implementation. | "Space requirements of FSE disk buffer" on page 17
Debug files | Debug files are optional but may grow and fill up the file system. Dedicate a separate file system to debug files and use a symbolic link, or mount another file system on the debug files directory. | "Storage space for FSE debug files" on page 18

Reasons for organizing file systems

There are several reasons to reorganize the file systems on the machine that will host the FSE software:

• Increase the reliability of the core FSE databases.

FSE databases are vital FSE components and must be protected so that the FSE implementation is as stable as possible. Splitting the file system that contains the FSE databases into several file systems increases their security.

• Reserve sufficient disk space for FSE databases, FSE log files, and FSE debug files.

FSE databases, FSE log files, and FSE debug files can grow quite large over time. Eventually, some of the file systems that hold these files can become full, which may lead to partial or complete data loss. For information on calculating the required disk space, see "Estimating the size of file systems" on page 14.

On the consolidated FSE system or FSE server, HP recommends that you use Logical Volume Manager (LVM) volumes for the file systems that store FSE disk media. This increases the flexibility and robustness of these file systems.

Organizing the file system layout

During the FSE installation process, several new directories are created and FSE-related files are copied to them. Some of the directories in the FSE directory layout are crucial for correct operation of an FSE implementation. For robustness and safety, they must be placed on separate file systems. This prevents a problem in one directory from affecting data in any of the others. For the FSE disk buffer, this may also improve the overall performance of the FSE system.

The directories and the required characteristics of the mounted file systems are listed in Table 3 and Table 4, according to their location in the FSE implementation.


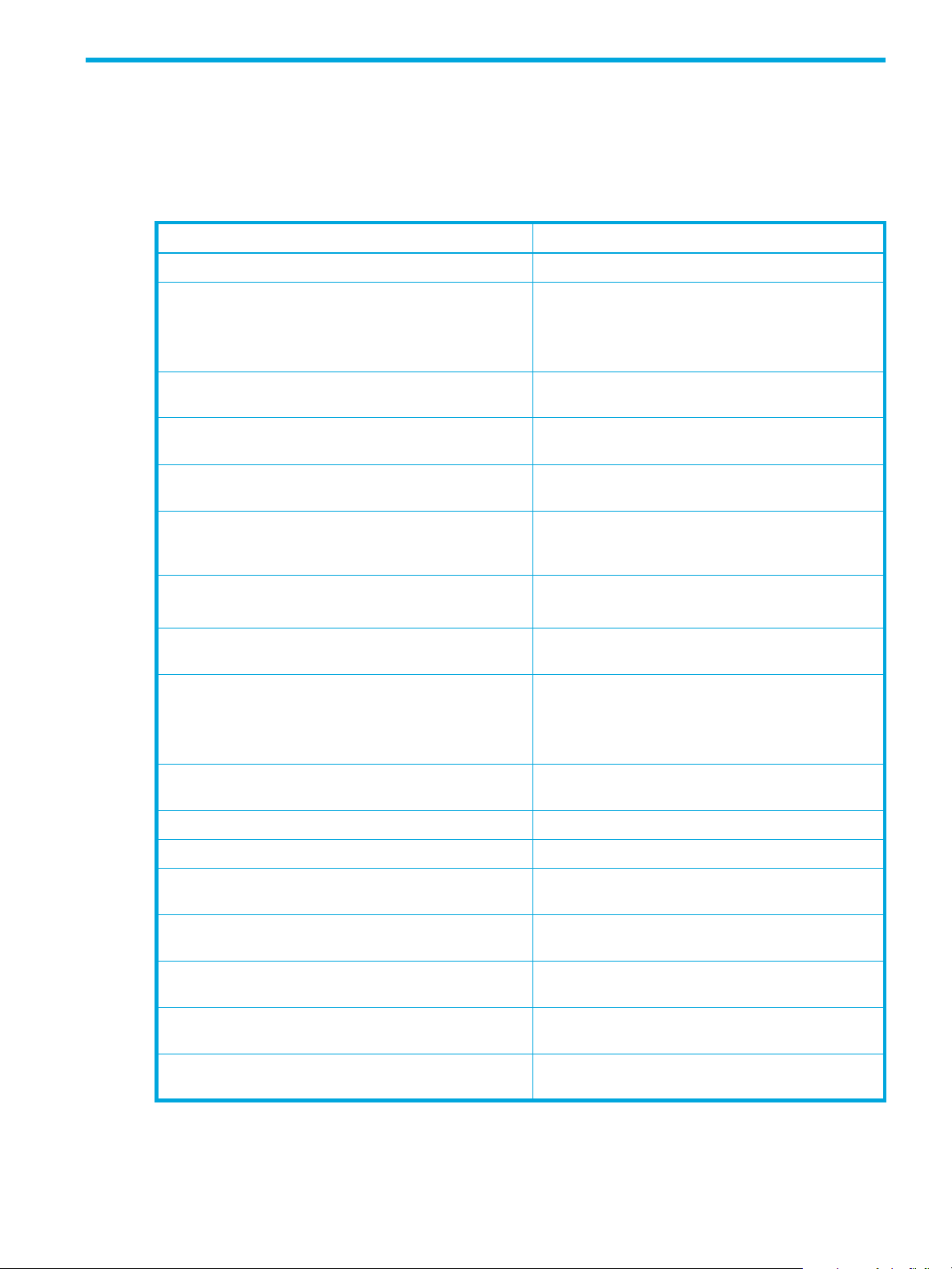

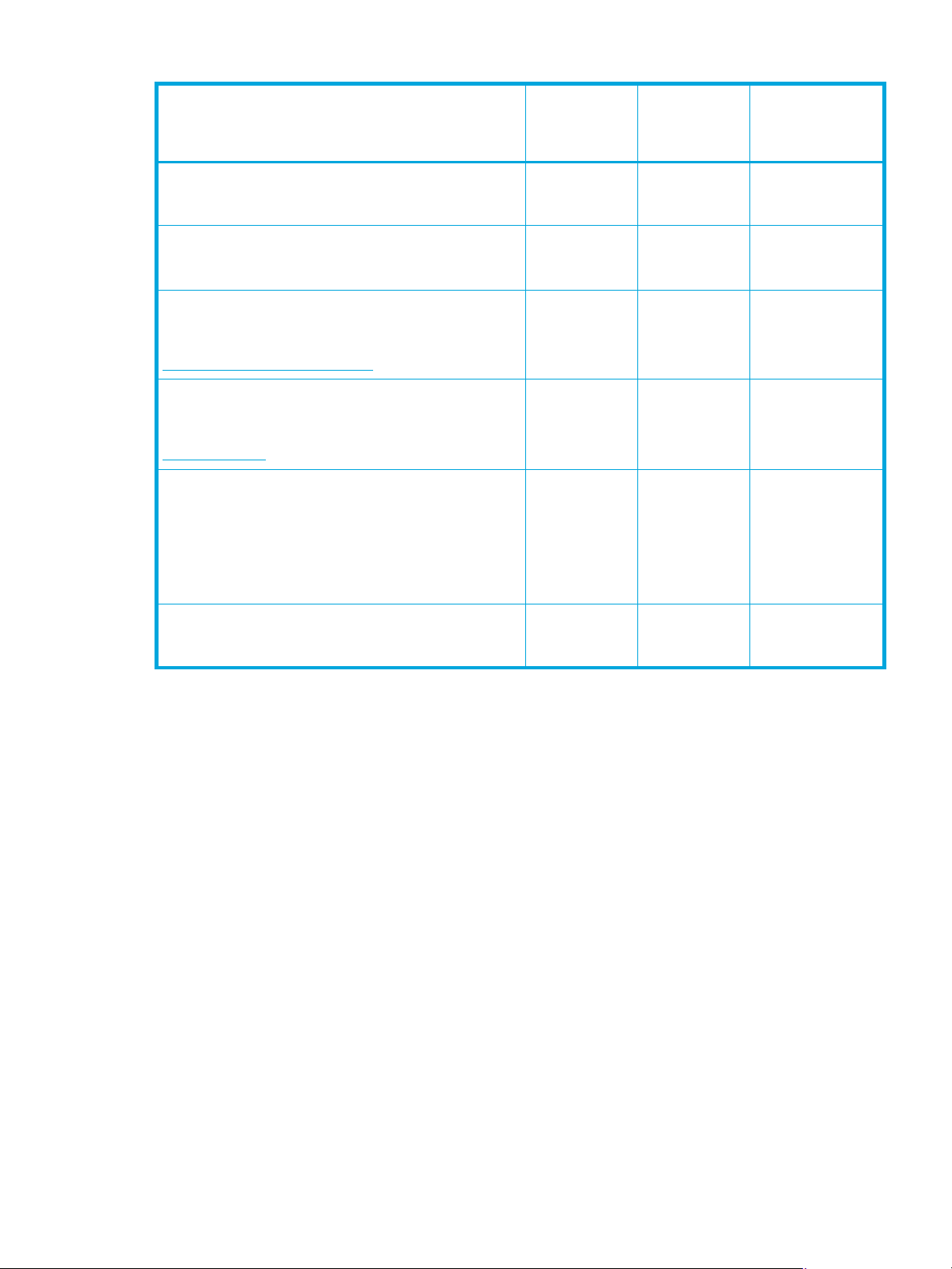

Table 3 FSE server directory layout

Directory | Contents | File system type | LVM volume
/var/opt/fse/ | Configuration Database, Resource Management Database, other FSE system files | Ext3 | required for the FSE backup
/var/opt/fse/part/ | File System Catalogs | Ext3 | required for the FSE backup
/var/opt/fse/fri/ | Fast Recovery Information (FRI) | Ext3 | required for the FSE backup
/var/opt/fse/diskbuf/FS1, /var/opt/fse/diskbuf/FS2, ... (1) | Temporary files of the FSE disk buffer | any | not required
/var/opt/fse/log/ | FSE log files, FSE debug files | any | required for the FSE backup
/var/opt/fse/dm/Barcode/ | FSE disk media | any | recommended

1. Mount points of file systems assigned to the FSE disk buffer, where /var/opt/fse/diskbuf/ is the root directory of the FSE disk buffer.

NOTE: You can assign additional file systems or volumes to the FSE disk buffer. These file systems must be

mounted on subdirectories one level below the root directory of the FSE disk buffer. For details, see the FSE

user guide, chapter ”Monitoring and maintaining FSE”, section ”Extending storage space of FSE disk

buffer”.

IMPORTANT: To achieve sufficient stability of the FSE disk media, a separate file system must be dedicated to each disk medium and mounted on the corresponding subdirectory of the FSE disk media directory. Therefore, the FSE disk media directory itself does not need to be located on a separate file system.
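Once the file systems are mounted, a quick check can confirm that the directories from Table 3 really are separate file systems. The following Python sketch only tests whether each directory is a mount point (os.path.ismount); it does not verify the file system type or LVM placement. The directory list and the function name not_mount_points are illustrative assumptions; add your own disk buffer and disk media mount points to the list.

```python
import os

# Assumed list: the fixed server directories from Table 3. Disk buffer
# (FS1, FS2, ...) and disk media (Barcode) mount points are site-specific,
# so append them yourself.
SERVER_DIRS = [
    "/var/opt/fse",
    "/var/opt/fse/part",
    "/var/opt/fse/fri",
    "/var/opt/fse/log",
]


def not_mount_points(dirs):
    """Return the directories that are missing or are not mount points."""
    problems = []
    for d in dirs:
        if not os.path.isdir(d) or not os.path.ismount(d):
            problems.append(d)
    return problems
```

For example, not_mount_points(SERVER_DIRS) returns an empty list when every listed directory has its own mounted file system.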

Table 4 FSE client directory layout

| Directory | Contents | File system type | LVM volume |
|---|---|---|---|
| /var/opt/fse/part/ | Hierarchical Storage Management Databases | Ext3 | required |
| /var/opt/fse/log/ | FSE log files, FSE debug files | any | required, if the client is part of the consolidated FSE system |

Estimating the size of file systems

Each of the file systems listed above holds large databases, system files, or both. Therefore, you need to calculate the space requirement for each of them before you create them.

The sizes of the HSM file system, Fast Recovery Information (FRI) files, File System Catalog (FSC), and Hierarchical Storage Manager Database (HSMDB) files depend on several parameters, including the number of files on an HSM file system and their average size.

By default, 32 MB of journal space is created on Ext3 file systems. This should be sufficient for the file systems of the following directories:

• /var/opt/fse

• /var/opt/fse/part

• /var/opt/fse/diskbuf


IMPORTANT: FSE includes the HSM Health Monitor utility, which helps prevent HSM file systems and the file systems for FSE databases and system files from running out of free space. For details, see the FSE user guide, chapter ”Monitoring and maintaining FSE”, section ”Low storage space detection”.

Formula for the expected HSM file system size

Use this simplified formula to calculate the minimum HSM file system size:

$$\mathit{minHSMFSsize} = \frac{125 \times \left[ (\mathit{afs} \times \mathit{nf} \times \mathit{pon}) + (\mathit{bks} \times 2 \times \mathit{nf}) \right]}{100}$$

The parameters have the following meaning:

minHSMFSsize ..... the minimum required HSM file-system size in bytes.

afs ..... the average file size in bytes.

nf ..... the expected number of files on an HSM file system.

pon ..... the percentage of online files (%).

bks ..... the file-system block size in bytes.
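A minimal Python sketch of the formula above follows. It assumes pon is passed as a fraction (20% as 0.20), because plugging in a percentage value such as 20 would make the result 100 times too large. The function name min_hsm_fs_size is hypothetical.

```python
def min_hsm_fs_size(afs, nf, pon, bks):
    """Minimum HSM file system size in bytes (simplified formula).

    afs -- average file size in bytes
    nf  -- expected number of files on the HSM file system
    pon -- share of online files as a fraction (assumption: 20% is 0.20)
    bks -- file system block size in bytes
    """
    online_bytes = afs * nf * pon   # (afs x nf x pon) term
    block_bytes = bks * 2 * nf      # (bks x 2 x nf) term
    return 125 * (online_bytes + block_bytes) / 100
```

Call it with your own estimates, for example min_hsm_fs_size(afs=100 * 1024, nf=10_000_000, pon=0.20, bks=4096), and compare the results for the block sizes you are considering.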

Formula for the expected size of Fast Recovery Information

Fast Recovery Information (FRI) consists of a set of files, each corresponding to a single open data volume

on a configured FSE medium, which grows in size as the percentage of used space on the volume

increases. FRI files reach maximum size when the corresponding data volume becomes full. The FRI files

are then copied to appropriate locations on the FSE medium and removed from disk.

Use this formula to calculate the expected maximum size of FRI files on disk:

$$\mathit{maxFRIsize} = \frac{\mathit{nv} \times \mathit{sv} \times \left[ \dfrac{(\mathit{lf} + 350) \times \mathit{nm}}{\mathit{tbks}} \right]}{\left[ \dfrac{\mathit{sf} \times \mathit{nm}}{\mathit{tbks}} \right]}$$

where the meaning of the parameters is:

maxFRIsize ..... the estimated maximum size of FRI files on disk in bytes.

nv ..... the total number of open FSE medium volumes.¹

sv ..... the size of an FSE medium volume on tape in bytes.

lf ..... the average file name length of files being migrated in bytes.

nm ..... the average number of files migrated together in the same migration job.

tbks ..... the block size on an FSE medium² in bytes.

sf ..... the average size of files being migrated in bytes.

[...] ..... square brackets indicate that the value inside is rounded up to an integer.

1. Normally, the number of configured FSE media pools containing media with migrated files.

2. Assuming all FSE media pools are configured with the same block size (block size is uniform on all FSE media).
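The FRI formula can be evaluated with integer arithmetic, implementing the square brackets as rounding up. In the following Python sketch all sizes are in bytes, and the function names are illustrative. With the inputs of the sample FRI calculation later in this chapter (8 open volumes of 5135 MB, 10-character names, 50 files of 100 KB per job, 128 KB tape blocks), it reproduces the documented 1027 MB.

```python
def ceil_div(a, b):
    """Integer division rounded up (the [...] brackets in the formula)."""
    return -(-a // b)


def max_fri_size(nv, sv, lf, nm, tbks, sf):
    """Estimated maximum size of FRI files on disk, in bytes.

    nv   -- total number of open FSE medium volumes
    sv   -- size of an FSE medium volume in bytes
    lf   -- average file name length in bytes
    nm   -- average number of files per migration job
    tbks -- block size on the FSE medium in bytes
    sf   -- average size of migrated files in bytes
    """
    numerator_blocks = ceil_div((lf + 350) * nm, tbks)   # [(lf + 350) x nm / tbks]
    denominator_blocks = ceil_div(sf * nm, tbks)         # [sf x nm / tbks]
    return nv * sv * numerator_blocks / denominator_blocks


KB, MB = 2**10, 2**20
# Inputs from the sample FRI calculation in this chapter.
size = max_fri_size(nv=8, sv=5135 * MB, lf=10, nm=50, tbks=128 * KB, sf=100 * KB)
print(size / MB)  # prints 1027.0
```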

Formula for the expected File System Catalog size

The File System Catalog (FSC) is a database that consists of the Data Location Catalog (DLC) and the Name Space Catalog (NSC). The DLC records the full history of file locations on FSE media. The NSC contains metadata of files on an HSM file system in the FSE implementation.

Factors used for FSC size estimation:

• Approximately 180 bytes per file are used for the FSC (DLC + NSC) for a typical file generation with two copies, a file name of 16 characters, and standard file attributes (Linux). On Windows, you need to add the size of additional attributes: access control lists (ACLs), extended attributes (EAs), and alternate data streams (ADSs).

• An additional 36 bytes per file copy are required for the media volume index when you run the FSC consistency check. The index is created on the first run of the consistency check.


NOTE: HP recommends that you add another 50% as a reserve when calculating the maximum FSC size.

The following examples present space usage for typical configurations.

Example 1: three copies, one generation:

First generation takes (189 for FSC) + (36 x 3 for volume index) = 297 bytes

Each additional generation takes 47 + (36 x 3) = 155 bytes

Total size = ((297 + add. attr. size) x max. number of files) + (155 x number of add. generations)

Example 2: two copies, one generation:

First generation takes (180 for FSC) + (36 x 2 for volume index) = 252 bytes

Each additional generation takes 38 + (36 x 2) = 110 bytes

Total size = ((252 + add. attr. size) x max. number of files) + (110 x number of add. generations)

Example 3: one copy, one generation:

First generation takes (162 for FSC) + (36 for volume index) = 198 bytes

Each additional generation takes 20 + 36 = 56 bytes

Total size = ((198 + add. attr. size) x max. number of files) + (56 x number of add. generations)
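The three examples above can be turned into a small calculator. This Python sketch hard-codes the per-file FSC figures from Examples 1 to 3 (so it only covers one to three copies), adds 36 bytes of volume index per copy, and applies the 50% reserve from the NOTE by default. It treats "number of add. generations" literally as in the Total size lines. The function and parameter names are illustrative assumptions.

```python
# Per-file FSC bytes from Examples 1-3:
# copies -> (first generation, each additional generation)
FSC_BYTES = {1: (162, 20), 2: (180, 38), 3: (189, 47)}
VOLUME_INDEX_BYTES = 36  # per file copy, needed once the consistency check runs


def fsc_size(copies, max_files, add_generations=0, add_attr_size=0, reserve=0.5):
    """Estimated FSC size in bytes, including the recommended reserve."""
    first, additional = FSC_BYTES[copies]
    first_gen = first + VOLUME_INDEX_BYTES * copies      # e.g. 252 for two copies
    add_gen = additional + VOLUME_INDEX_BYTES * copies   # e.g. 110 for two copies
    total = (first_gen + add_attr_size) * max_files + add_gen * add_generations
    return total * (1 + reserve)                         # NOTE: add another 50%
```

For example, fsc_size(2, 1_000_000) estimates the FSC for one million files with two copies and one generation, including the 50% reserve.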

NOTE: A well defined FSE backup policy with regular backups prevents the excessive growth of the

transaction log files of the File System Catalogs (FSCs). The transaction log files are committed into the

main databases during the FSE backup process.

For details, see the FSE user guide, chapter ”Backup, restore, and recovery”, section ”Backup”.

Formula for the expected Hierarchical Storage Manager Database (HSMDB) size

This is the formula for calculating the maximum Hierarchical Storage Management Database (HSMDB)

size:

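$$\mathit{maxHSMDBsize} = (\mathit{nf} \times 12) + \left[ \mathit{nf} \times \mathit{pdi} \times (\mathit{afnl} + 30) \right] + \left[ \mathit{nf} \times \mathit{pon} \times (\mathit{afnl} + 40) \right]$$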

where the meaning of the parameters is:

maxHSMDBsize ..... the maximum HSM database size in bytes.

nf ..... the expected number of files on an HSM file system.

pdi ..... the percentage of directories (%).

afnl ..... the average length of file names in bytes.

pon ..... the percentage of online files (%).
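As with the other formulas, the following Python sketch assumes that the percentages pdi and pon are passed as fractions. With the sample input used later in this chapter (10 million entities, 20% directories, 20% online files, 10-byte names), it gives 300,000,000 bytes, about 286 MiB, in line with the sample result. The function name is hypothetical.

```python
def max_hsmdb_size(nf, pdi, afnl, pon):
    """Maximum HSM database size in bytes.

    nf   -- expected number of files on the HSM file system
    pdi  -- share of directories as a fraction (assumption: 20% is 0.20)
    afnl -- average file name length in bytes
    pon  -- share of online files as a fraction
    """
    return (nf * 12) + (nf * pdi * (afnl + 30)) + (nf * pon * (afnl + 40))


size = max_hsmdb_size(nf=10_000_000, pdi=0.20, afnl=10, pon=0.20)
print(f"{size / 2**20:.0f} MiB")
```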

NOTE: A well defined FSE backup policy with regular backups prevents the excessive growth of the

transaction log files of the Hierarchical Storage Management Databases (HSMDBs). The transaction log

files are committed into the main databases during the FSE backup process.

For details, see the FSE user guide, chapter ”Backup, restore, and recovery”, section ”Backup”.


Sample calculation for the expected sizes of HSM file system, FSC and HSMDB

The following is an example of a calculation of the space required on an HSM file system and on the file systems holding the File System Catalog (FSC), HSM Database (HSMDB), and Fast Recovery Information (FRI) files.

Sample input for calculations:

• HSM file system can store 10 million entities (files and directories)

• average size of files being migrated is 100 KB

• 20% of the files are online

(online means they occupy space on the local HSM file system)

• 20% of all entities on the HSM file system are directories

• the average file name length of files being migrated is 10 characters

• files have only one generation

• average number of copies per file generation amounts to two

HSM file system size calculation:

$$\mathit{minHSMFSsize} = \frac{125 \times \left[ (\mathit{afs} \times \mathit{nf} \times \mathit{pon}) + (\mathit{bks} \times 2 \times \mathit{nf}) \right]}{100}$$

FSC size calculation:

First generation takes (162 for FSC) + (36 for volume index) = 198 bytes

There are no additional generations

Total FSC size = ((198 + add. attr. size) x 10000000) + (56 x 0)

HSMDB size calculation:

$$\mathit{maxHSMDBsize} = (10000000 \times 12) + \left[ 10000000 \times 20\% \times (10 + 30) \right] + \left[ 10000000 \times 20\% \times (10 + 40) \right]$$

Sample results:

• minimum HSM file system size: 216-306 GB, depending on the block size (1 KB, 2 KB, 4 KB)

• maximum File System Catalog size: 453 MB

• maximum HSM Database size: 287 MB

The File System Catalog and the HSMDB together require 740 MB, which is approximately 0.3% of the minimum required HSM file system size for this input.

Sample calculation for the expected total size of the FRI files

The following is an example of a calculation of space required on the file system holding the Fast Recovery

Information (FRI) files.

Sample input for calculation:

• total number of open FSE medium volumes in the FSE implementation is 8

• size of an FSE medium volume on tape is 5135 MB

• average size of files being migrated is 100 KB

• average file name length of files being migrated is 10 characters

• average number of files migrated together in the same migration job is 50

• block size on tape medium is 128 KB

Sample result:

• estimated maximum size of FRI files on disk: 1027 MB

Space requirements of FSE disk buffer

To determine the approximate storage space required by FSE disk buffer, you should consider the following

points:


• Total storage space on file systems or volumes that are assigned to the FSE disk buffer should be at least 10% of the total storage space on all HSM file systems in the FSE implementation.

• Each file system or volume assigned to FSE disk buffer should be at least twice as large as the largest

file that will be put under FSE control.

Additionally, if you plan to perform a duplication of FSE media, the following prerequisite must be fulfilled:

• At least one of the file systems or volumes assigned to the FSE disk buffer should be at least twice as large as the total storage space on the largest FSE medium.

For example, to enable duplication of a 100 GB medium, at least one file system or volume assigned to the FSE disk buffer should have at least 200 GB of storage space.
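The sizing guidelines above can be checked mechanically before you create the file systems. The following Python sketch encodes the three rules as stated (10% of total HSM storage, twice the largest file per buffer file system, and, if media duplication is planned, at least one buffer file system twice the size of the largest medium). The function name and messages are illustrative assumptions; all sizes are in bytes.

```python
def disk_buffer_problems(buffer_sizes, total_hsm_size, largest_file,
                         largest_medium=None):
    """Return a list of violated disk buffer sizing guidelines.

    buffer_sizes   -- sizes of the file systems/volumes assigned to the disk buffer
    total_hsm_size -- total storage space on all HSM file systems
    largest_file   -- largest file that will be put under FSE control
    largest_medium -- capacity of the largest FSE medium if media duplication
                      is planned, otherwise None
    """
    problems = []
    if sum(buffer_sizes) * 10 < total_hsm_size:
        problems.append("disk buffer is smaller than 10% of total HSM storage")
    for i, size in enumerate(buffer_sizes):
        if size < 2 * largest_file:
            problems.append(f"buffer file system {i} is smaller than twice the largest file")
    if largest_medium is not None and not any(
            size >= 2 * largest_medium for size in buffer_sizes):
        problems.append("no buffer file system is twice the size of the largest medium")
    return problems
```

For the 100 GB duplication example, disk_buffer_problems([200 * 10**9], 1000 * 10**9, 10 * 10**9, 100 * 10**9) returns an empty list.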

Storage space for FSE debug files

The /var/opt/fse/log/debug directory holds optional FSE debug files. These files contain a large amount of data and can grow very fast. To prevent them from filling up the /var/opt/fse file system, make the directory /var/opt/fse/log/debug a symbolic link to a directory outside the file system for /var/opt/fse. For example, you can make the symbolic link point to one of the following directories:

• /var/log/FSEDEBUG

• /tmp/FSEDEBUG

The directory /tmp can be used only if it provides large storage space and is not part of the root file

system, that is: if a separate file system is mounted to /tmp.

Create the symbolic link after you have created and mounted the required file systems.
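To confirm that the link target really lies outside the /var/opt/fse file system, you can compare device numbers. The following Python sketch resolves symbolic links and compares the st_dev values reported by os.stat; the function name on_separate_file_system is hypothetical. The same check covers the /tmp condition: on_separate_file_system("/tmp", "/") should be True before you use /tmp.

```python
import os


def on_separate_file_system(path, reference):
    """True if `path` (after resolving symbolic links) is on a different
    file system than `reference`, judged by the st_dev device number."""
    real = os.path.realpath(path)
    return os.stat(real).st_dev != os.stat(reference).st_dev
```

For example, on_separate_file_system("/var/opt/fse/log/debug", "/var/opt/fse") should return True once the link is in place.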

NOTE: If there is enough disk space that is not yet partitioned, you can also create a new partition for the debug files, create an Ext3 file system on it, and mount it on /var/opt/fse/log/debug.

In this case, add a line to the /etc/fstab file, for example:

/dev/mynewdebugpart /var/opt/fse/log/debug ext3 defaults 1 2


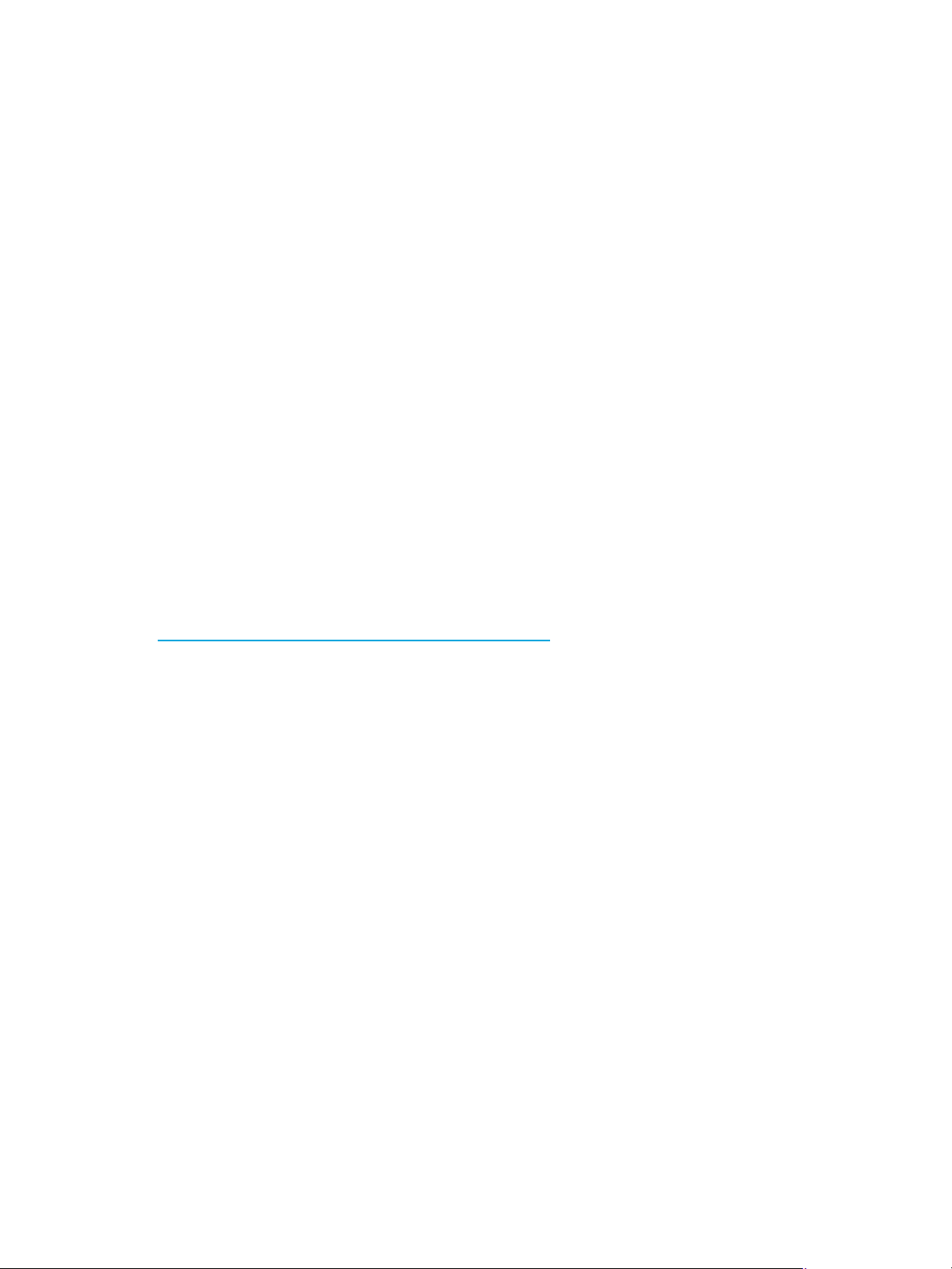

2 Installation overview

This chapter provides an installation overview, which summarizes the steps necessary to prepare the system

and to install the FSE software. Where appropriate, you are pointed to more detailed steps within this

document.

Action Comments & where to find details

1. Install the required operating system update. ”Required operating system updates” on page 21.

2. Install all necessary third-party packages. ”

3. Prepare logical volumes: ”

a. Prepare partitions. ”

b. Create logical volume groups. ”

c. Create logical volumes. ”

d. Create file system on each logical volume.

(command: mkfs.ext3

e. Create the mount points. ”

f. Update the /etc/fstab file with required

information.

)

Required third-party packages for SUSE Linux

E

nterprise Server 9 (SLES 9)

Required third-party packages for Red Hat

”

nterprise Linux 4 (RHEL 4)

E

Preparing Logical Volume Manager (LVM)

lumes

vo

vo

v

vo

l

”Creating file systems on top of LVM logical

vo

s

”

s

This is to mount the files systems (FSE databases and

system files) automatically at system startup time.

” on page 27.

Step 2: Define and initialize LVM physical

lumes

” on page 27.

Step 3: Create and initialize LVM logical

olume groups

Step 4: Create and initialize LVM logical

lumes

ogical volumes for HSM file systems

lumes

Mounting file systems for FSE databases and

ystem files

Mounting file systems for FSE databases and

ystem files

” on page 28.

” on page 28, ”Step 5: Create LVM

” on page 30.

” on page 31.

” on page 31.

” on page 21.

” on page 22.

” on page 29.

g. Mount the file systems for FSE databases and

system files on previously created directories.

4. Install the FSE software. ”

5. Start FSE. ”

6. Check the status of Firebird SuperServer and the FSE

processes.

7. Configure and start HSM Health Monitor. ”

8. Optionally, configure and start Log Analyzer. ”

9. Optionally, install the FSE Management Console. ”

10. Configure resources (libraries, drives, media pools,

partitions, media).

HP StorageWorks File System Extender Software installation guide for Linux 19

”

Mounting file systems for FSE databases and

ystem files

s

Installing an FSE release” on page 35.

Starting the FSE implementation” on page 43.

”

Checking the status of a running FSE

im

plementation

Configuring and starting HSM Health Monitor”

on page 48.

Configuring and starting Log Analyzer” on

page 48.

Installing the FSE Management Console” on

page 49.

FSE user guide, chapter ”

” on page 31.

” on page 46.

Configuring FSE”.

Page 20

Action Comments & where to find details

11. Mount HSM file systems:

a. Create directories.

b. Update the /etc/fstab file with required

information.

Automating the mounting of HSM file systems”

”

on page 49.

To mount HSM file systems, add entries for these file

systems to the local file system table in the file

/etc/fstab.

NOTE: This step is similar to steps 3e and 3f where

you mounted the FSE databases and system files. In this

step, you are mounting HSM file systems.

20 Installation overview

Page 21

3 Preparing the operating system environment

This chapter describes the necessary changes that need to be made to the operating system environment

on the computer that will host a consolidated FSE system (integrated server and client) or part of a

distributed FSE implementation (separate server and separate client). It also lists the required third-party

packages that must be installed prior to installing the FSE software.

Preparing the operating system

NOTE: You must be logged on to the system as root in order to prepare the operating system environment.

Required operating system updates

SUSE Linux Enterprise Server

You need to upgrade all SUSE Linux Enterprise Server 9 systems that will become FSE system components

with SLES 9 Service Pack 3 (SLES 9, SP 3, kernel: 2.6.5-7.244-default, 2.6.5-7.244-smp or

2.6.5-7.244-bigsmp) in order to enable installation of the FSE software.

For more information on specifics related to the supported kernel variants, see the FSE release notes.

Red Hat Enterprise Linux

Red Hat Enterprise Linux AS/ES 4 Update 3 (RHEL AS/ES 4, Update 3, kernel: 2.6.9-34.EL,

2.6.9-34.ELhugemem or 2.6.9-34.ELsmp) must be installed on all FSE system components running

on RHEL AS/ES 4.

For more information on specifics related to the supported kernel variants, see the FSE release notes.
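Before installing, you can compare the running kernel with the releases listed above. The following Python sketch uses platform.release(), which reports the same string as uname -r on Linux. It performs an exact match against only the six kernel variants named in this section; the FSE release notes may list further specifics, so treat a negative result as a prompt to check them. The set and function names are illustrative.

```python
import platform

# Kernel releases named under "Required operating system updates".
SUPPORTED_KERNELS = {
    # SLES 9 SP3
    "2.6.5-7.244-default", "2.6.5-7.244-smp", "2.6.5-7.244-bigsmp",
    # RHEL AS/ES 4 Update 3
    "2.6.9-34.EL", "2.6.9-34.ELhugemem", "2.6.9-34.ELsmp",
}


def is_supported_kernel(release=None):
    """Check a kernel release string; defaults to the running kernel."""
    if release is None:
        release = platform.release()
    return release in SUPPORTED_KERNELS
```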

Required third-party packages for SUSE Linux Enterprise Server 9 (SLES 9)

Table 5 lists the required package versions for a SUSE Linux Enterprise Server 9 (SLES 9) operating system

and the components of FSE that require each package. Most packages are already included in the

operating system distribution or service pack. Unless stated otherwise, later versions are also acceptable.

Table 5 Packages and their relation to FSE components on SUSE Linux Enterprise Server

| Package | Package name in the rpm -qa output (SLES) | Package file name (SLES) |
|---|---|---|
| Extended Attributes utilities | attr-2.4.16-1.2 | attr-2.4.16-1.2.i586.rpm |
| | libattr-2.4.16-1.2 | libattr-2.4.16-1.2.i586.rpm |
| Logical Volume Manager package | lvm2-2.01.14-3.6 | lvm2-2.01.14-3.6.rpm |
| E2fsprogs tools | e2fsprogs-1.38-4.9 | e2fsprogs-1.38-4.9.i586.rpm |
| libgcc C library | libgcc-3.3.3-43.41 | libgcc-3.3.3-43.41.i586.rpm |
| libstdc++ C++ library | libstdc++-3.3.3-43.41 | libstdc++-3.3.3-43.41.i586.rpm |
| glibc C library | glibc-2.3.3-98.61 | glibc-2.3.3-98.61.i586.rpm |
| glibc locale C library | glibc-locale-2.3.3-98.61 | glibc-locale-2.3.3-98.61.i586.rpm |
| Firebird SuperServer (sourceforge.net/projects/firebird/) | FirebirdSS-1.0.3.972-0.64IO | FirebirdSS-1.0.3.972-0.64IO.i386.rpm |
| Python interpreter (www.python.org) | python-2.3.3-88.6 | python-2.3.3-88.6.i586.rpm |
| customized Samba packages with offline file support | samba-3.0.20b-3.4.HSM.2 | samba-3.0.20b-3.4.HSM.2.i586.rpm |
| | samba-client-3.0.20b-3.4.HSM.2 | samba-client-3.0.20b-3.4.HSM.2.i586.rpm |
| tar archiving package | tar-1.13.25-325.3 | tar-1.13.25-325.3.i586.rpm |

Required third-party packages for Red Hat Enterprise Linux 4 (RHEL 4)

Table 6 lists the required package versions for a Red Hat Enterprise Linux 4 (RHEL 4) operating system and the components of FSE that require each package. Most packages are already included in the operating system distribution or service pack. Unless stated otherwise, later versions are also acceptable.

Required third-party packages for Red Hat Enterprise Linux 4 (RHEL 4)

Table 6 lists the required package versions for a Red Hat Enterprise Linux 4 (RHEL 4) operating system and

the components of FSE that require each package. Most packages are already included in the operating

system distribution or service pack. Unless stated otherwise, later versions are also acceptable.

Table 6 Packages and their relation to FSE components on Red Hat Enterprise Linux

| Package | Package name in the rpm -qa output (RHEL) | Package file name (RHEL) |
|---|---|---|
| Extended Attributes utilities | attr-2.4.16-3 | attr-2.4.16-3.i386.rpm |
| | libattr-2.4.16-3 | libattr-2.4.16-3.i386.rpm |
| Logical Volume Manager package | lvm2-2.02.01-1.3.RHEL4 | lvm2-2.02.01-1.3.RHEL4.i386.rpm |
| E2fsprogs tools | e2fsprogs-1.35-12.3.EL4 | e2fsprogs-1.35-12.3.EL4.i386.rpm |
| libgcc C library | libgcc-3.4.5-2 | libgcc-3.4.5-2.i386.rpm |
| libstdc++ C++ library | libstdc++-3.4.5-2 | libstdc++-3.4.5-2.i386.rpm |
| glibc C library | glibc-2.3.4-2.19 | glibc-2.3.4-2.19.i386.rpm |
| Firebird SuperServer (sourceforge.net/projects/firebird/) | FirebirdSS-1.0.3.972-0.64IO | FirebirdSS-1.0.3.972-0.64IO.i386.rpm |
| Python interpreter (www.python.org) | python-2.3.4-14.1 | python-2.3.4-14.1.i386.rpm |
| customized Samba packages with offline file support | samba-3.0.10-1.4E.6.HSM.2 | samba-3.0.10-1.4E.6.HSM.2.i386.rpm |
| | samba-common-3.0.10-1.4E.6.HSM.2 | samba-common-3.0.10-1.4E.6.HSM.2.i386.rpm |
| | samba-client-3.0.10-1.4E.6.HSM.2 | samba-client-3.0.10-1.4E.6.HSM.2.i386.rpm |
| tar archiving package | tar-1.14-8.RHEL4 | tar-1.14-8.RHEL4.i386.rpm |

Verifying third-party packages

To check whether the required package versions are installed, use rpm -q followed by the package name

without the version and suffix:

# rpm -q PackageName

If the package has been installed, the command responds with:

PackageName-PackageVersion

Otherwise, the response is:

package PackageName is not installed

Example

To check if libgcc-3.2.2-54.i586.rpm has been installed, enter rpm -q libgcc at the command

prompt. Note that later versions are also acceptable.
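The rpm -q check can be scripted for a whole package list. This Python sketch runs rpm -q through subprocess and interprets the two response forms shown above. It only detects whether a package is installed; it does not compare versions (RPM version comparison has its own rules), so compare the reported version with Table 5 or Table 6 yourself. The function names are illustrative.

```python
import subprocess


def parse_rpm_q(name, output):
    """Interpret `rpm -q name` output: return the installed
    name-version-release string, or None if the package is not installed."""
    lines = output.strip().splitlines()
    if not lines or lines[0] == f"package {name} is not installed":
        return None
    return lines[0]


def rpm_query(name):
    """Run `rpm -q name` (requires the rpm tool) and parse its output."""
    result = subprocess.run(["rpm", "-q", name], capture_output=True, text=True)
    return parse_rpm_q(name, result.stdout + result.stderr)
```

For example, looping over ("attr", "libattr", "lvm2", "e2fsprogs", "libgcc") and printing rpm_query(pkg) shows which packages are missing.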

Installing Firebird SuperServer on an FSE server

Consolidated FSE systems and FSE servers require the third-party software Firebird SuperServer, which is used to implement the Resource Management Database.

To install the RPM package (FirebirdSS-1.0.3.972-0.64IO.i386.rpm) to the appropriate

locations, use the RPM installation tool:

1. Install the FirebirdSS package using the command:

# rpm --install FirebirdSS-1.0.3.972-0.64IO.i386.rpm


2. In the /etc directory, create a plain text file gds_hosts.equiv containing the following two lines:

+

+localhost

3. If you are installing FirebirdSS to a SUSE Linux Enterprise Server system, once the Firebird SuperServer

is installed, open the file /etc/sysconfig/firebird with a text editor, search for the

START_FIREBIRD variable, and set its value to "yes". If the line does not exist, add it as follows:

# Start the Firebird RDBMS ?

#

START_FIREBIRD="yes"

If the file /etc/sysconfig/firebird does not exist, create it and add the above contents to it.
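Steps 2 and 3 can also be applied idempotently from a script. The following Python sketch writes the two gds_hosts.equiv lines and sets START_FIREBIRD to "yes", rewriting an existing START_FIREBIRD line or appending the block shown above (and creating the file if it is missing). Step 3 applies to SUSE Linux Enterprise Server only. The function names are illustrative assumptions; run it as root.

```python
import re

START_BLOCK = '# Start the Firebird RDBMS ?\n#\nSTART_FIREBIRD="yes"\n'
START_RE = re.compile(r"^START_FIREBIRD=.*$", re.MULTILINE)


def enable_firebird_start(text):
    """Return sysconfig text with START_FIREBIRD set to "yes"."""
    if START_RE.search(text):
        return START_RE.sub('START_FIREBIRD="yes"', text)
    if text and not text.endswith("\n"):
        text += "\n"
    return text + START_BLOCK


def update_sysconfig(path="/etc/sysconfig/firebird"):
    """Apply enable_firebird_start() to the file, creating it if needed."""
    try:
        with open(path) as f:
            text = f.read()
    except FileNotFoundError:
        text = ""
    with open(path, "w") as f:
        f.write(enable_firebird_start(text))


def write_gds_hosts_equiv(path="/etc/gds_hosts.equiv"):
    """Write the two lines required in step 2."""
    with open(path, "w") as f:
        f.write("+\n+localhost\n")
```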

Disabling ACPI

Some kernels have an incomplete implementation of support for the Advanced Configuration and Power Interface (ACPI). Enabled kernel support for ACPI causes problems on symmetric multiprocessing (SMP) machines (machines with multiple processors) and on machines with SCSI disk controllers. Therefore, you need to disable kernel support for ACPI before booting the SMP variant of a Linux kernel on an SMP machine, or any kernel variant on a machine with a SCSI disk controller. ACPI has to be disabled on all supported distributions. The following additional boot-loader parameter disables ACPI:

acpi=off

However, with some configurations, this single parameter does not give the desired effect. In such cases, a

different set of boot-loader parameters must be specified to disable ACPI. Instead of the acpi=off string,

you must provide the following options:

acpi=oldboot pci=noacpi apm=power-off

See http://portal.suse.com/sdb/en/2002/10/81_acpi.html for information on kernel parameters that control the ACPI code.

Depending on the boot loader you are using on the system, you need to modify the appropriate boot-loader configuration file to disable ACPI.

Disabling ACPI with GRUB boot loader

To disable ACPI, edit the GRUB configuration file /boot/grub/menu.lst and add acpi=off to the kernel line.

The following is an example of supplying the required boot parameter to the kernel image /boot/bzImage on the system’s first hard drive:

title Linux
root (hd0,0)
kernel /boot/bzImage acpi=off

Disabling ACPI with LILO boot loader

To disable ACPI, edit the LILO configuration file and add the line append = "acpi=off" to it. The LILO configuration file is usually /etc/lilo.conf.

This is an example of supplying the required boot parameter to the kernel image /boot/bzImage:

image = /boot/bzImage

label = Linux

read-only

append = "acpi=off"


After you add this option to the LILO configuration file, run lilo so that ACPI is disabled at the next boot.
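After rebooting, you can check that the kernel actually received one of the two parameter sets described above. The following Python sketch inspects a kernel command line, such as the contents of /proc/cmdline. It only confirms that the parameters were passed; it does not prove that the kernel disabled every part of ACPI. The function names are illustrative.

```python
def acpi_disabled(cmdline):
    """True if the command line contains acpi=off, or the complete
    alternative set acpi=oldboot pci=noacpi apm=power-off."""
    params = set(cmdline.split())
    if "acpi=off" in params:
        return True
    return {"acpi=oldboot", "pci=noacpi", "apm=power-off"} <= params


def running_kernel_acpi_disabled(path="/proc/cmdline"):
    """Apply acpi_disabled() to the running kernel's command line."""
    with open(path) as f:
        return acpi_disabled(f.read())
```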


4 Preparing file systems for FSE

To optimize the FSE implementation and increase its reliability, it may be necessary to reorganize the file systems on the host that will be dedicated to the FSE server as well as on the FSE client. When using disk media, you need to prepare file systems to hold disk media files.

Preparing file systems

The following sections describe the steps you need to perform on the operating systems to manually define

the necessary Logical Volume Manager (LVM) volumes, create file systems on top of them, and mount the

file systems created for FSE databases and system files.

Preparing Logical Volume Manager (LVM) volumes

Most of the file systems used by the FSE implementation (HSM file systems and file systems for FSE databases and system files) should be located on Logical Volume Manager (LVM) volumes. This is required by the FSE backup and restore functionality to enable file system snapshot creation. For details on which file systems must be located on LVM volumes, see chapter ”Introduction and preparation basics”, section ”Organizing the file system layout” on page 13.

For detailed instructions on LVM usage, see the LVM manuals, the LVM man pages, and the web site http://tldp.org/HOWTO/LVM-HOWTO.

CAUTION: Use the LVM command set with caution, as certain commands can destroy existing file systems!

Preparation overview

You need to perform the following steps to prepare LVM volumes:

1. Get a list of disks and disk partitions that exist on the system.

2. Define and initialize LVM physical volumes. Commands for managing LVM physical volumes begin with the letters pv (physical volume) and are located in the directory /sbin.

3. Once physical volumes are configured, create and initialize the LVM logical volume groups, which use the space on the physical volumes. Commands for managing LVM logical volume groups begin with the letters vg (volume group) and are located in the directory /sbin.

4. Create and initialize LVM logical volumes for the file systems you are going to use in the FSE implementation. Commands for managing LVM logical volumes begin with the letters lv (logical volume) and are located in the directory /sbin.


Step 1: Get a list of available disks and disk partitions

Before you define and initialize physical LVM volumes, you need to know the existing disk and disk

partition configuration.

Invoke the following command to get a list of disks and disk partitions that exist on the system:

# fdisk -l

Step 2: Define and initialize LVM physical volumes

LVM physical volumes can be either whole disks or disk partitions. HP recommends using disk partitions rather than whole disks, because whole disks used as physical volumes can mistakenly be considered free disks.

CAUTION: Any data on these disks or partitions will be lost as you initialize an LVM volume. Make sure

you specify the correct device or partition.


NOTE: Commands for managing LVM physical volumes begin with the letters pv (physical volume) and

are located in the /sbin directory.

In the example below, the first partition of the first SCSI disk and the first partition on the second SCSI disk

are initialized as LVM physical volumes and are dedicated to the LVM volumes. Use values according to

your actual disk configuration:

# pvcreate /dev/cciss/c0d_p1

# pvcreate /dev/cciss/c0d_p2

Step 3: Create and initialize LVM logical volume groups

LVM logical volume groups are a layer on top of the LVM physical volumes. One LVM logical volume group

can occupy one or more LVM physical volumes.

NOTE: Commands for managing LVM logical volume groups begin with the letters vg (volume group)

and are located in the /sbin directory.

CAUTION: HP recommends separating the FSE databases and system files from the user data on the HSM file systems by putting them on two separate LVM volume groups, as shown in the following examples. This helps increase data safety.

In the following example, the newly created LVM physical volume /dev/cciss/c0d_p1 is assigned to the LVM volume group vg_fse, and the LVM physical volume /dev/cciss/c0d_p2 is assigned to the LVM volume group vg_fsefs. The volume group vg_fse will store FSE databases and system files, and the volume group vg_fsefs will store the HSM file systems with user files and directories.

When creating the LVM volume groups, use names and values according to your preferences and your

actual LVM physical volume configuration.

To create the volume groups using the default physical extent size, invoke the following commands:

# vgcreate vg_fse /dev/cciss/c0d_p1

# vgcreate vg_fsefs /dev/cciss/c0d_p2

NOTE: If you intend to create LVM logical volumes larger than 256 GB, you must use the option -s

(--physicalextentsize) with vgcreate, and specify a physical extent larger than 4 MB.

For example: a physical extent of 4 MB enables LVM to address up to 256 GB and a physical extent of

32 MB allows addressing 2 TB of disk space. Note that the recommended physical extent size for the FSE

file system and disk media (if under LVM) volume groups is 32 MB.

For details on using the vgcreate command, see the vgcreate man page (man vgcreate).

To create the volume groups using the physical extent size of 32 MB, invoke the following commands:

# vgcreate -s 32M vg_fse /dev/cciss/c0d_p1

# vgcreate -s 32M vg_fsefs /dev/cciss/c0d_p2
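The two figures in the NOTE (4 MB extents for up to 256 GB, 32 MB extents for up to 2 TB) both correspond to 65536 extents per logical volume. The following Python sketch assumes that ratio holds and computes the smallest power-of-two extent size for a planned logical volume. The 65536 limit is derived from the NOTE rather than taken from LVM documentation, and the guide recommends 32 MB for FSE volume groups in any case. All names are illustrative.

```python
MB = 1024 ** 2
GB = 1024 ** 3

# Derived from the NOTE: 256 GB / 4 MB = 2 TB / 32 MB = 65536 extents.
MAX_EXTENTS_PER_LV = (256 * GB) // (4 * MB)


def max_lv_size(pe_size):
    """Largest logical volume (bytes) addressable with this extent size."""
    return pe_size * MAX_EXTENTS_PER_LV


def smallest_pe_size(lv_size, start=4 * MB):
    """Smallest power-of-two extent size, starting at 4 MB, that can
    address a logical volume of lv_size bytes."""
    pe = start
    while max_lv_size(pe) < lv_size:
        pe *= 2
    return pe


# The 400 GB HSM file system volume from Step 5:
print(smallest_pe_size(400 * GB) // MB)  # prints 8
```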

Step 4: Create and initialize LVM logical volumes

LVM logical volumes are virtual partitions and can be mounted like ordinary partitions once file systems

are created on them.


NOTE: Commands for managing LVM logical volumes begin with the letters lv (logical volume) and are

located in the /sbin directory.

In the following example, LVM logical volumes are created on the LVM volume group vg_fse for the

important directories that FSE uses, namely:

• /var/opt/fse/

• /var/opt/fse/part/

• /var/opt/fse/fri/

• /var/opt/fse/log/

• /var/opt/fse/diskbuf/FileSystemMountPoint

Optional additional file systems assigned to FSE disk buffer will use mount points conforming to the

following scheme, where NewFileSystemMountPoint is a unique subdirectory name:

• /var/opt/fse/diskbuf/NewFileSystemMountPoint

You should use logical volume names according to your preferences and sizes that correspond to your

actual LVM volume group configuration:

# lvcreate -L 6G -n fsevar vg_fse

# lvcreate -L 6G -n fsepart vg_fse

# lvcreate -L 6G -n fsefri vg_fse

# lvcreate -L 6G -n fselog vg_fse

# lvcreate -L 20G -n fsediskbuf vg_fse

In case of additional file systems assigned to the FSE disk buffer, you should use the following command for

creation of the LVM logical volume for each of them:

# lvcreate -L 20G -n fsediskbufNumber vg_fse

NOTE: You need to leave some free space for optional LVM snapshot volumes on the LVM volume group,

which are created during backup of the FSE implementation. The size of the reserved space should be approximately 15-20% of the whole LVM volume group size, as recommended by the LVM developers.

The exact value depends on the actual load of the LVM volumes: it should be increased if frequent changes

to the HSM file systems are expected during the FSE backup or if the FSE backup process is expected to

last longer.
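To plan the volume group size with the snapshot reserve included, divide the total size of the logical volumes by the share that may be allocated. The following Python sketch applies this to the logical volumes from the example above; the function name and the default reserve of 20% are illustrative choices within the recommended 15-20% range. For the example volumes (44 GB in total), it gives roughly 52 GB with a 15% reserve and 55 GB with a 20% reserve.

```python
def min_vg_size(lv_sizes, reserve=0.20):
    """Smallest volume group size that holds the given logical volumes and
    still leaves `reserve` (a fraction of the whole group) free for snapshots."""
    if not 0 <= reserve < 1:
        raise ValueError("reserve must be a fraction between 0 and 1")
    return sum(lv_sizes) / (1 - reserve)


# Logical volumes from the example above, in GB:
# fsevar, fsepart, fsefri, fselog, fsediskbuf
example = [6, 6, 6, 6, 20]
for reserve in (0.15, 0.20):
    print(f"{reserve:.0%} reserve: {min_vg_size(example, reserve):.1f} GB")
```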

The /var/opt/fse/log/debug directory is not as critical as the others and can be placed on an ordinary file system. For more details on configuration, see chapter "Introduction and preparation basics", section "Estimating the size of file systems", subsection "Storage space for FSE debug files".

Step 5: Create LVM logical volumes for HSM file systems

To create the LVM logical volume for a single HSM file system that will contain user files, use the lvcreate command. Choose a logical volume name according to your preferences, and a size that corresponds to your actual LVM volume group configuration:

# lvcreate -L 400G -n fsefs_01 vg_fsefs

Repeat the procedure to create an LVM logical volume for each additional HSM file system you are going to use.
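When several HSM file systems are planned, numbered volume names such as fsefs_01, fsefs_02 keep the configuration readable. Below is a minimal sketch that prints the corresponding lvcreate commands; the function name and the numbering scheme are illustrative assumptions, while vg_fsefs and the size come from the example above.

```shell
#!/bin/sh
# Hypothetical helper: print lvcreate commands for COUNT HSM volumes named
# fsefs_01, fsefs_02, ... in volume group vg_fsefs, each of the given SIZE.
#   usage: print_hsm_lvcreate COUNT SIZE
print_hsm_lvcreate() {
    count=$1
    size=$2
    i=1
    while [ "$i" -le "$count" ]; do
        # %02d zero-pads the index so the names sort in order
        printf 'lvcreate -L %s -n fsefs_%02d vg_fsefs\n' "$size" "$i"
        i=$((i + 1))
    done
}
```

For example, print_hsm_lvcreate 3 400G prints the commands for fsefs_01 to fsefs_03; review them, then pipe them to sh as root.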


Creating file systems on top of LVM logical volumes

After the LVM logical volumes have been successfully created, create file systems on top of them using the mkfs.ext3 command.

HP recommends that you use the mkfs.ext3 option -b 4096 to specify a block size of 4096 bytes.

TIP: To check the properties your file system will have without actually creating it, run the mkfs.ext3 command with the -n switch.

For example, checking the values for the example_fs file system produces output similar to the following:

# mkfs.ext3 -n -b 4096 /dev/vg_fsefs/example_fs

mkfs.ext3 1.28 (14-Mar-2002)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

456064 inodes, 911680 blocks

45584 blocks (5.00%) reserved for the super user

First data block=0

28 block groups

32768 blocks per group, 32768 fragments per group

16288 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736

NOTE: The inode count in the mkfs.ext3 output corresponds to the expected maximum number of files on the file system. If this number is not sufficient, you can explicitly tell mkfs.ext3 to reserve a certain number of inodes with the -N option.
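To read the relevant numbers from a dry run without scanning the whole output, you can filter it. The sketch below assumes the output contains a line of the form "456064 inodes, 911680 blocks", as in the example above; the function name is hypothetical. Besides the default inode count, it reports the block count, which is the largest value that -N can take on this file system (file system size divided by block size).

```shell
#!/bin/sh
# Hypothetical helper: read "mkfs.ext3 -n" output on stdin and print the
# default inode count and the block count (the upper limit for -N).
summarize_mkfs_dry_run() {
    awk '$2 == "inodes," && $4 == "blocks" {
        print "inodes=" $1
        print "max_inodes=" $3
    }'
}
```

For example, mkfs.ext3 -n -b 4096 /dev/vg_fsefs/example_fs 2>&1 | summarize_mkfs_dry_run prints inodes=456064 and max_inodes=911680 for the output shown above.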

Note that the upper limit for the number of inodes depends on two factors: the file system size and the file system block size. On a file system with size Fs and block size Bs, the maximum number of inodes, In, is determined by the equation In = Fs / Bs. If you specify a number larger than In, the mkfs.ext3 command creates In inodes.

For example, to create one million inodes, specify the -N 1000000 option. With a block size of 4096 bytes, the file system size must be at least 4 096 000 000 bytes (about 3.8 GB) for this number of inodes to actually be created:

# mkfs.ext3 -b 4096 -N 1000000 /dev/vg_fsefs/example_fs
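The relation In = Fs / Bs works in both directions: it gives the largest -N value for an existing volume, and the smallest volume size for a required inode count. A minimal sketch using shell integer arithmetic (the function names are hypothetical):

```shell
#!/bin/sh
# Hypothetical helpers for the relation In = Fs / Bs.
#   min_fs_bytes INODES BLOCK_SIZE  -> smallest file system size in bytes
#   max_inodes FS_BYTES BLOCK_SIZE  -> largest inode count (value for -N)
min_fs_bytes() {
    echo $(( $1 * $2 ))
}

max_inodes() {
    echo $(( $1 / $2 ))
}
```

min_fs_bytes 1000000 4096 prints 4096000000, matching the example above. To check a real volume, you could pass its size in bytes as reported by blockdev --getsize64, for example max_inodes "$(blockdev --getsize64 /dev/vg_fsefs/fsefs_01)" 4096. Assuming the 400G in the earlier lvcreate example means 400 GiB, that volume allows up to 104 857 600 inodes.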

Proceed as follows: first create the file systems for the FSE databases and system files, and then create the HSM file systems.

Step 1: Creating file systems for FSE databases and system files

Use the following command sequence to create the file systems for the FSE databases and system files (in our example):

# mkfs.ext3 -b 4096 /dev/vg_fse/fsevar

# mkfs.ext3 -b 4096 /dev/vg_fse/fsepart


# mkfs.ext3 -b 4096 /dev/vg_fse/fsefri

# mkfs.ext3 -b 4096 /dev/vg_fse/fselog

# mkfs.ext3 -b 4096 /dev/vg_fse/fsediskbuf

If you assign additional file systems to the FSE disk buffer, run the following command for each of them:

# mkfs.ext3 -b 4096 /dev/vg_fse/fsediskbufNumber

Each command reports the properties of the newly created file system.

NOTE: To improve the performance of the FSE disk buffer, you can use Ext2 file systems for its storage space. Ext2 file systems are non-journaled and therefore faster than Ext3. In this case, use the command mkfs.ext2 instead of mkfs.ext3 for the disk buffer file systems (fsediskbuf and any fsediskbufNumber volumes) in the above command sequence.
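The command sequence above, including the optional Ext2 variant for the disk buffer, can be generated by a short script. This is a sketch under the assumption that all volumes live in vg_fse with the example names; the function print_fse_mkfs and the DISKBUF_FS variable are hypothetical. It prints the commands rather than running them, because mkfs destroys any existing data on the volume.

```shell
#!/bin/sh
# Hypothetical helper: print the mkfs commands for the FSE system volumes.
# DISKBUF_FS=ext2 selects Ext2 for the disk buffer volumes (fsediskbuf*);
# all other volumes always get Ext3. Extra arguments name additional
# disk buffer volumes (their names must start with fsediskbuf).
print_fse_mkfs() {
    for lv in fsevar fsepart fsefri fselog fsediskbuf "$@"; do
        case $lv in
            fsediskbuf*) fs=${DISKBUF_FS:-ext3} ;;
            *)           fs=ext3 ;;
        esac
        printf 'mkfs.%s -b 4096 /dev/vg_fse/%s\n' "$fs" "$lv"
    done
}
```

For example, DISKBUF_FS=ext2 print_fse_mkfs fsediskbuf2 prints mkfs.ext2 for both disk buffer volumes and mkfs.ext3 for the others.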

Step 2: Creating HSM file systems

To create HSM file systems, proceed as follows:

1. Use the following command to create an HSM file system on top of the LVM logical volume fsefs_01:

# mkfs.ext3 -b 4096 -N 10000000 /dev/vg_fsefs/fsefs_01

In this example, the newly created HSM file system can store a maximum of 10 000 000 files, because the same number of inodes is reserved on it. Keep in mind the upper limit on the number of inodes that can be created on a file system of a given total size and block size.

Choose the number of inodes according to your HSM file system requirements, and the LVM logical volume name according to your actual LVM volume configuration.

2. Create HSM file systems on all other LVM logical volumes that will be used for FSE partitions. Use values according to your requirements and the particular purpose of each HSM file system in the FSE implementation.

NOTE: The number of inodes on a file system cannot be changed once the file system has been put into use.
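Because the inode count is fixed once the file system is in use, it is worth confirming it right after running mkfs. The tune2fs -l command prints the superblock contents, including an "Inode count:" line. The reported count may differ slightly from the value given with -N, because mke2fs distributes inodes evenly over the block groups. A minimal sketch that extracts the value (the function name is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: read "tune2fs -l" output on stdin and print the
# value of the "Inode count:" field.
inode_count() {
    awk -F: '$1 == "Inode count" {
        gsub(/[ \t]/, "", $2)   # strip the alignment padding
        print $2
    }'
}
```

For the HSM file system created above, tune2fs -l /dev/vg_fsefs/fsefs_01 | inode_count should print a value close to 10000000.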

The next section provides instructions on how to mount file systems for FSE databases and system files.

Mounting file systems for FSE databases and system files

The last step of the preparation procedure is to mount the file systems for the FSE databases and system files. Note that the file systems for the FSE partitions, that is, the HSM file systems, can only be mounted after the FSE daemons have been successfully started.

To mount the necessary file systems, do the following:

1. Create the first of the five important FSE directories (and its parent directories):

# mkdir -p /var/opt/fse

2. Run the following command to retrieve the name of the device file. Use the symbolic link from step 1 of section "Creating file systems on top of LVM logical volumes":

# ls -la /dev/vg_fse/fsevar

The command generates an output similar to the following:

lrwxrwxrwx 1 root root 25 Aug 17 09:12 /dev/vg_fse/fsevar -> /dev/mapper/vg_fse-fsevar


3. To ensure that the file system is mounted automatically at system startup, add the appropriate entry to the file system table in the file /etc/fstab. In the first column, use the device file that you retrieved in the previous step:

/dev/mapper/vg_fse-fsevar /var/opt/fse ext3 defaults 1 2

4. Manually mount the corresponding file system on the /var/opt/fse directory. Use the device (logical volume) name from your actual LVM configuration; because the entry now exists in /etc/fstab, mount takes the mount point and options from there:

# mount /dev/mapper/vg_fse-fsevar

5. Create the four remaining directories:

# mkdir /var/opt/fse/part

# mkdir /var/opt/fse/fri

# mkdir /var/opt/fse/log

# mkdir -p /var/opt/fse/diskbuf/FileSystemMountPoint

FileSystemMountPoint should be a unique subdirectory name.

6. Run the following commands to retrieve the names of the device files. Use the symbolic links from step 1 of section "Creating file systems on top of LVM logical volumes":

# ls -la /dev/vg_fse/fsepart

lrwxrwxrwx 1 root root 25 Aug 17 09:12 /dev/vg_fse/fsepart -> /dev/mapper/vg_fse-fsepart

# ls -la /dev/vg_fse/fsefri

lrwxrwxrwx 1 root root 25 Aug 17 09:12 /dev/vg_fse/fsefri -> /dev/mapper/vg_fse-fsefri

# ls -la /dev/vg_fse/fselog

lrwxrwxrwx 1 root root 25 Aug 17 09:12 /dev/vg_fse/fselog -> /dev/mapper/vg_fse-fselog

# ls -la /dev/vg_fse/fsediskbuf

lrwxrwxrwx 1 root root 25 Aug 17 09:12 /dev/vg_fse/fsediskbuf -> /dev/mapper/vg_fse-fsediskbuf

7. Add the appropriate entries to the file system table in the file /etc/fstab. In the first column, use the device files that you retrieved in the previous step:

/dev/mapper/vg_fse-fsepart /var/opt/fse/part ext3 data=journal 1 2

/dev/mapper/vg_fse-fsefri /var/opt/fse/fri ext3 defaults 1 2

/dev/mapper/vg_fse-fselog /var/opt/fse/log ext3 defaults 1 2

/dev/mapper/vg_fse-fsediskbuf /var/opt/fse/diskbuf/FileSystemMountPoint ext3 defaults 1 2

NOTE: The data=journal keyword must be specified in the fourth column of /etc/fstab for the file system on the /var/opt/fse/part directory to be mounted correctly. This improves file system stability and performance.

Note that all parameters in each entry must be given in the order shown.

NOTE: If you created an Ext2 file system for the FSE disk buffer, modify the fstab entry in the above example accordingly: use ext2 instead of ext3 in the third column.


8. Manually mount the corresponding file systems on these four directories. Use the device (logical volume) names from your actual LVM configuration:

# mount /dev/mapper/vg_fse-fsepart

# mount /dev/mapper/vg_fse-fsefri

# mount /dev/mapper/vg_fse-fselog

# mount /dev/mapper/vg_fse-fsediskbuf

9. If the FSE disk buffer is configured with additional file systems, perform the following substeps for each additional file system:

a. Create the file system mount point:

# mkdir /var/opt/fse/diskbuf/NewFileSystemMountPoint

NewFileSystemMountPoint should be a unique subdirectory name.

b. Run the following command to retrieve the name of the device file. Use the symbolic link from step 1 of section "Creating file systems on top of LVM logical volumes":

# ls -la /dev/vg_fse/fsediskbufNumber

lrwxrwxrwx 1 root root 25 Aug 17 09:12 /dev/vg_fse/fsediskbufNumber -> /dev/mapper/vg_fse-fsediskbufNumber

c. Add the appropriate entry to the file system table in the file /etc/fstab. In the first column, use the device file that you retrieved in the previous substep:

/dev/mapper/vg_fse-fsediskbufNumber /var/opt/fse/diskbuf/NewFileSystemMountPoint ext3 defaults 1 2

NOTE: If you created an Ext2 file system, use ext2 instead of ext3 in the third column of the above fstab entry.

d. Manually mount the corresponding file system. Use the device (logical volume) name from your actual LVM configuration:

# mount /dev/mapper/vg_fse-fsediskbufNumber

10. Check that all file systems have been mounted successfully, for example with the following command:

# cat /etc/mtab

/dev/hda2 / ext3 rw 0 0

proc /proc proc rw 0 0

devpts /dev/pts devpts rw,mode=0620,gid=5 0 0

/dev/hda1 /boot ext3 rw 0 0

/dev/hda5 /usr ext3 rw 0 0

/dev/hda6 /var ext3 rw 0 0

/dev/vg_fse/fsevar /var/opt/fse ext3 rw 0 2