
HP Scalable Visualization Array Version 2.1

User's Guide

HP Part Number: A-SVAUG-4A

Published: March 2007


© Copyright 2006, 2007 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein. UNIX is a registered trademark of The Open Group.

FLEXlm is a trademark of Macrovision Corporation.

InfiniBand is a registered trademark and service mark of the InfiniBand Trade Association.

Linux is a U.S. registered trademark of Linus Torvalds.

Myrinet and Myricom are registered trademarks of Myricom, Inc.

NVIDIA, NVIDIA Quadro are registered trademarks or trademarks of NVIDIA Corporation in the United States and/or other countries.

Red Hat is a registered trademark of Red Hat, Inc.


Table of Contents

About This Document

1 Intended Audience

2 Document Organization

3 Typographic Conventions

4 Related Information

5 Publishing History

6 HP Encourages Your Comments

1 Introduction

1.1 Where SVA Fits in the High Performance Computing Environment

1.2 SVA Clusters

1.3 Displays

1.4 SVA Functional Attributes

1.4.1 Scalability

1.4.2 Flexibility

1.5 Application Support

1.5.1 OpenGL Applications

1.5.2 Scenegraph Applications

2 SVA Architecture

2.1 SVA as a Cluster

2.1.1 Background on Linux Clusters

2.2 Architectural Design

2.2.1 Components of the HP Cluster Platform

2.2.2 Main Visualization Cluster Tasks

2.2.3 Components of an SVA

2.2.4 Configuration Flexibility

2.3 SVA Operation

2.3.1 Cluster Data Flow

2.3.2 File Access

3 SVA Hardware and Software

3.1 Hardware Component Summary

3.2 Bounded Configuration

3.3 Modular Packaging Configuration

3.4 Network Configurations

3.4.1 System Interconnect (SI)

3.4.2 Administrative Network Connections

3.5 Display Devices

3.6 SVA Software Summary

3.6.1 Linux Operating System

3.6.2 HP XC Clustering Software

3.6.3 Additional System Software

4 Quick Start

4.1 Typical Uses of SVA

4.2 Configuring Displays for Use with SVA

4.3 Run a Test Application

4.3.1 Set Up Control for an Application

4.3.2 Launch an Application in Interactive Mode

4.3.3 Launch an Application in Batch Mode

4.3.4 Run an Application Using HP RGS

5 Setting Up and Running a Visualization Session

5.1 Configuration Data Files

5.2 Running an Application Using Scripts

5.2.1 Selecting a Template or Script

5.2.2 Modifying a Script Template

5.2.3 Using a Script to Launch an Application

5.3 Running an Interactive Session

5.4 Use Head or Remote-Capable Nodes in a Job

5.4.1 Head Node

5.4.2 Remote-Capable Node

5.5 Using Nodes as a Different Type

5.6 Running a Stereo Application

5.7 G-Sync Framelock Support

5.7.1 Use the Framelock Script Option

5.7.2 Use the Framelock Script Function

5.7.3 Use the Framelock Utility

6 Application Examples

6.1 Running an Existing Application on a Single SVA Workstation

6.1.1 Assumptions and Goal

6.1.2 HP Remote Graphics Software and Use

6.1.2.1 Location for Application Execution and Control

6.1.2.2 Data Access

6.1.2.3 Use of Display Surfaces

6.1.2.4 Launch Script

6.1.2.4.1 Non-Interactive Example

6.1.2.4.2 Interactive Mode Example

6.1.3 VirtualGL and TurboVNC Applications and Use

6.1.3.1 Assumptions

6.1.3.2 Interactive Mode Example

6.1.3.3 Collaborative Viewing

6.1.3.4 Encrypt the Connection with SSH

6.1.3.4.1 Steps for Windows Desktops

6.1.3.4.2 Steps for Linux Desktops

6.2 Running Render and Display Applications Using ParaView

6.2.1 Assumptions and Goal

6.2.2 ParaView Overview

6.2.3 Location for Application Execution and Control

6.2.4 Data Access

6.2.5 Use of Display Surfaces

6.2.6 Launch Script Template

6.3 Running a Workstation Application Using a Multi-Tile Display

6.3.1 Assumptions and Goal

6.3.2 Chromium Overview and Usage Notes

6.3.3 Distributed Multi-Head X (DMX)

6.3.4 Location for Application Execution and Control

6.3.5 Data Access

6.3.6 Using Display Surfaces

6.3.7 Launch Script

Glossary

Index


List of Figures

1-1 System View of a Computing Environment with Integrated SVA

1-2 Standalone SVA Data Flow

1-3 Software Support for Application Development and Use

2-1 SVA Data Flow Overview

3-1 Sample SVA Bounded Configuration

3-2 Software Hierarchy in the SVA

6-1 Using a Single SVA Node from Local Desktop

6-2 ParaView Flow of Control on the SVA

6-3 Processes Running with Chromium-DMX Script


List of Tables

3-1 Operating System and Driver Components

3-2 HP XC System Components Relevant to SVA Operation

3-3 HP SVA System Software

3-4 Third Party System Software

3-5 Application Development Tools

6-1 Comparison Summary of Application Scenarios


About This Document

The SVA User's Guide introduces the components of the HP Scalable Visualization Array (SVA).

The SVA product has hardware and software components that together make up the HP high-performance visualization cluster. This document provides a high-level overview of SVA components.

The main purpose of the SVA is to give HP customers a platform on which to develop and run

graphics applications that require high performance combined with large data throughput on

single or multi-tile displays.

1 Intended Audience

The SVA User's Guide is intended for all users of the SVA. This includes visualization application developers, visualization application users, system managers, and technical managers who need a high-level understanding of SVA.

2 Document Organization

This manual is organized into the following sections:

Chapter 1 Overview of SVA and where it fits in the HP Cluster Platform environment. It also describes attributes of the SVA.

Chapter 2 Overview of SVA architecture, hardware, and software that make up the system.

Chapter 3 Additional detail on the hardware and software that make up the SVA.

Chapter 4 Summary of how to get SVA sample applications running.

Chapter 5 Description of how to run a visualization application on the SVA.

Chapter 6 Description of common application examples and how to set them up on the SVA.

3 Typographic Conventions

This document uses the following typographical conventions:

%, $, or #  A percent sign represents the C shell system prompt. A dollar sign represents the system prompt for the Bourne, Korn, and POSIX shells. A number sign represents the superuser prompt.

audit(5)  A manpage. The manpage name is audit, and it is located in Section 5.

\ (backslash)  Indicates the continuation of a command, where the line is too long for the current page width.

Command  A command name or qualified command phrase.

Computer output  Text displayed by the computer.

Ctrl+x  A key sequence. A sequence such as Ctrl+x indicates that you must hold down the key labeled Ctrl while you press another key or mouse button.

ENVIRONMENT VARIABLE  The name of an environment variable, for example, PATH.

[ERROR NAME]  The name of an error, usually returned in the errno variable.

Key  The name of a keyboard key. Return and Enter both refer to the same key.

Term  The defined use of an important word or phrase.

User input  Commands and other text that you type.

Variable  The name of a placeholder in a command, function, or other syntax display that you replace with an actual value.

[]  The contents are optional in syntax. If the contents are a list separated by a pipe ( | ), you must choose one of the items.

{}  The contents are required in syntax. If the contents are a list separated by a pipe ( | ), you must choose one of the items.

...  The preceding element can be repeated an arbitrary number of times.

⋮  Indicates the continuation of a code example.

|  Separates items in a list of choices.

WARNING  A warning calls attention to important information that if not understood or followed will result in personal injury or nonrecoverable system problems.

CAUTION  A caution calls attention to important information that if not understood or followed will result in data loss, data corruption, or damage to hardware or software.

IMPORTANT  This alert provides essential information to explain a concept or to complete a task.

NOTE  A note contains additional information to emphasize or supplement important points of the main text.

4 Related Information

Related documentation is available via links from the home page for the SVA Documentation Library on the HP XC Documentation CD. The home page also includes links to third-party documentation available on the Web that is relevant to users of SVA.

5 Publishing History

The document printing date and part number indicate the document’s current edition. The

printing date will change when a new edition is printed. Minor changes may be made at reprint

without changing the printing date. The document part number will change when extensive

changes are made. Document updates may be issued between editions to correct errors or

document product changes. To ensure that you receive the updated or new editions, subscribe

to the appropriate product support service. See your HP sales representative for details. You can

find the latest version of this document online at:

http://www.docs.hp.com.

Manufacturing Part Number | Supported Operating Systems | Supported Versions | Edition Number | Publication Date
A-SVAUG-4A | HP XC System Software Version 3.2 | Version 2.1 | 1 | March, 2007

6 HP Encourages Your Comments

HP encourages your comments concerning this document. We are committed to providing documentation that meets your needs. Send any errors found, suggestions for improvement, or compliments to:

feedback@fc.hp.com

Include the document title, manufacturing part number, and any comment, error found, or suggestion for improvement you have concerning this document.


1 Introduction


This chapter gives an overview of the HP Scalable Visualization Array (SVA). It describes how the SVA works within the context of overall HP cluster solutions. It also discusses attributes of the SVA that make it a powerful tool for running data-intensive graphics applications.

The SVA is a scalable visualization solution that brings the power of parallel computing to bear

on many demanding visualization challenges.

The SVA leverages the advances made across the industry in workstation class systems, graphics

technology, processors, and networks by integrating the latest generations of these components

into its clustering architecture. This base of scalable hardware underlies powerful Linux clustering

software from HP. It is further enhanced by a set of utilities and support software developed by

HP and its partners to facilitate the use of the system by new and existing user applications.

1.1 Where SVA Fits in the High Performance Computing Environment

The SVA is an HP Cluster Platform system. It can be a specialized, standalone system consisting entirely of visualization nodes, or it can be integrated into a larger HP Cluster Platform system and share a single System Interconnect with the compute nodes and a storage system. Either way, the SVA can integrate seamlessly into a customer's complete computational, storage, and display environment, as shown in Figure 1-1.

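Figure 1-1 System View of a Computing Environment with Integrated SVA (figure: compute and visualization nodes on the Cluster System Interconnect, with HP SFS storage, a remote PC, and a display surface)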

High-speed networks make it feasible to transfer large amounts of data among the following:

• Individual users at their desktops, or logged into a cluster.

• The compute nodes, the visualization nodes, and local and remote display devices.

• Servers that are part of data storage farms.

A typical usage model for the type of system shown in Figure 1-1 has the following characteristics:

• A compute intensive application, for example, an automobile crash test simulation, runs on

the supercomputing compute nodes of the cluster.

• The large dataset generated on the compute nodes can be stored in the storage servers for later retrieval, or directed in real time for rendering on the SVA portion of the overall system.

• One or more users can log into the SVA concurrently. The SVA allocates resources efficiently to meet the rendering and display requirements of each user application.

• Users’ visualization applications use parallel programming techniques and visualization

middleware software to distribute their graphical rendering across the SVA nodes, each of

which in turn renders a portion of the output for the final image. Image data can be

apportioned by a master application to a set of visualization nodes for rendering.

• Each portion of the final image rendered by a visualization node is sent to a tile of a single or multi-tile display. The complete image is available for display locally. It is also available for display remotely, but remote output is limited to single or two-tile output from a single graphics card.
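The idea of a master application apportioning data among visualization nodes can be illustrated with a simple block decomposition. This is a minimal sketch, assuming the dataset can be treated as a sequence of independent items (for example, mesh cells or data blocks); the function name `block_partition` is hypothetical and is not part of any SVA library.

```python
from typing import List, Tuple


def block_partition(n_items: int, n_nodes: int) -> List[Tuple[int, int]]:
    """Split n_items into n_nodes contiguous, half-open [start, end) ranges.

    Hypothetical helper: the first (n_items % n_nodes) nodes receive one
    extra item, so range sizes never differ by more than one.
    """
    if n_nodes <= 0:
        raise ValueError("n_nodes must be positive")
    base, extra = divmod(n_items, n_nodes)
    ranges = []
    start = 0
    for node in range(n_nodes):
        size = base + (1 if node < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


# Example: 10 data blocks spread over 4 render nodes.
print(block_partition(10, 4))  # [(0, 3), (3, 6), (6, 8), (8, 10)]
```

Keeping the range sizes within one item of each other is the simplest form of load balancing; data whose rendering cost varies strongly by region would need a cost-aware split instead.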

The SVA serves as a key unit in an integrated computing environment that displays the results

of generated data in locations where scientists and engineers can most effectively carry out

analyses individually or collaboratively.

1.2 SVA Clusters

This section gives a high-level description of a standalone SVA, that is, an HP Cluster Platform

system built to include visualization nodes. The SVA can also provide a visualization solution

that is fully integrated into an existing HP Cluster Platform system with compute and storage

components, as shown in Figure 1-1.

The SVA image-based approach works with a variety of visualization techniques, including isosurface extraction and volume visualization. Such a graphics architecture combines the high performance of clusters of rendering machines with the interactivity made possible by the speed, scalability, and low latency of the cluster network.

HP SVA offers a graphics visualization solution that can be used by a variety of applications that

run on distributed computing systems; in this case, a cluster of Linux workstations. Figure 1-2

illustrates the makeup of a standalone SVA.

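Figure 1-2 Standalone SVA Data Flow (figure: a master node running the user application and user interface transfers simulation data and drawing commands over the System Interconnect to render nodes and display nodes, each with an OpenGL graphics card; the display nodes drive a multi-tile display)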


Key points of Figure 1-2 are the following:

• Industry-standard workstations and servers with standard OpenGL 3D graphics cards serve as visualization nodes (render and display) and run clustering software and Linux. Using industry-standard graphics cards lets the system take advantage of new generations of cards as they become available.

• Depending on the design of the application, an application “master” can run the application

and the user interface for the application on a specified node.

• Display nodes transfer their rendered output to the display devices and can synchronize multi-tile displays. A range of displays is supported, both local to and remote from the SVA. Depending on the visualization application, a series of render nodes can also contribute composited images to the display nodes. The HP Parallel Compositing Library that ships with SVA can help application developers accomplish parallel rendering. See the SVA Parallel Compositing Reference Guide.

• The System Interconnect (SI) supports data transfer among visualization nodes. High-speed,

low-latency networks such as InfiniBand and Myrinet can be used for the SI to speed the

transfer of image data and drawing commands to the visualization nodes.
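Parallel compositing of the kind mentioned above is often done with sort-last depth compositing: each render node draws its share of the geometry into a full-size color and depth buffer, and the partial images are merged pixel by pixel by keeping the fragment nearest the viewer. The sketch below shows only that merge rule in plain Python. It is not the HP Parallel Compositing Library API, and the (depth, color) pixel representation is an assumption made for clarity.

```python
import math
from typing import List, Tuple

# A pixel is a (depth, color) pair; a smaller depth is closer to the viewer.
# Background pixels use math.inf so that any rendered geometry wins.
Pixel = Tuple[float, int]


def depth_composite(a: List[Pixel], b: List[Pixel]) -> List[Pixel]:
    """Merge two same-sized partial images, keeping the nearer pixel."""
    if len(a) != len(b):
        raise ValueError("partial images must be the same size")
    return [pa if pa[0] <= pb[0] else pb for pa, pb in zip(a, b)]


def composite_all(partials: List[List[Pixel]]) -> List[Pixel]:
    """Fold the partial images from several render nodes into one image."""
    if not partials:
        raise ValueError("need at least one partial image")
    result = partials[0]
    for image in partials[1:]:
        result = depth_composite(result, image)
    return result


# Two render nodes, each drawing part of the scene into a 3-pixel image.
node0 = [(0.5, 0xFF0000), (math.inf, 0), (0.9, 0x00FF00)]
node1 = [(0.7, 0x0000FF), (0.2, 0xFFFF00), (math.inf, 0)]
print(composite_all([node0, node1]))
```

This serial fold is the simplest correct ordering. Production compositors usually parallelize the merge across nodes, for example with binary-swap or tree reductions, so that no single node processes every pixel of every partial image.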

Each portion of an image is rendered on its visualization node as determined by the application

and the visualization middleware being used. For example, you can use Chromium or a

scenegraph application in conjunction with Distributed MultiHead X (DMX). The final images

are transmitted by the graphics cards in the display nodes to the display devices.
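When each display node drives one tile of a multi-tile display, a common way to divide the rendering is to give each tile its own slice of the overall viewing frustum, so that the tiles line up into one seamless image. The sketch below computes those slices for a grid of cols by rows tiles. It is a generic illustration that does not reproduce the internals of Chromium or DMX, and the names `Frustum`, `tile_frustums`, and `wall_resolution` are hypothetical. On each node, the resulting extents could be passed, together with shared near and far planes, to OpenGL's `glFrustum`.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Frustum:
    """Extents of a perspective view frustum on the near clipping plane."""
    left: float
    right: float
    bottom: float
    top: float


def tile_frustums(full: Frustum, cols: int, rows: int) -> Dict[Tuple[int, int], Frustum]:
    """Split one frustum into a sub-frustum per tile of a cols x rows wall.

    Tiles are indexed (col, row) from the lower-left corner, following the
    OpenGL convention that y increases upward.
    """
    width = full.right - full.left
    height = full.top - full.bottom
    tiles = {}
    for row in range(rows):
        for col in range(cols):
            tiles[(col, row)] = Frustum(
                left=full.left + width * col / cols,
                right=full.left + width * (col + 1) / cols,
                bottom=full.bottom + height * row / rows,
                top=full.bottom + height * (row + 1) / rows,
            )
    return tiles


def wall_resolution(cols: int, rows: int, tile_w: int, tile_h: int) -> Tuple[int, int]:
    """Total pixel resolution of a wall built from identical tiles."""
    return cols * tile_w, rows * tile_h


# A 2 x 1 wall: each tile sees one half of the original view.
for key, sub in sorted(tile_frustums(Frustum(-1.0, 1.0, -0.5, 0.5), 2, 1).items()):
    print(key, sub)
print(wall_resolution(3, 2, 1920, 1200))  # (5760, 2400)
```

The same split shows why resolution scales with tile count: a 3 x 2 wall of 1920 x 1200 panels presents 5760 x 2400 pixels, while each node still renders only its own 1920 x 1200 tile.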


Final images can also be transmitted to a remote workstation display over a network external to

the cluster. This lets users interact with applications running on the cluster from their offices.

Optionally, you can use HP Remote Graphics Software (RGS) or VirtualGL to accomplish this

more easily. See Chapter 6 for more information on both these packages.

Figure 1-2 also shows a master application node communicating with the other visualization

nodes over the SI. The SI carries file I/O and application communications; for example, MPI

traffic. The user interface for a visualization application can run on a master application node

and communicate with the visualization nodes over the SI, sending control information such as

changes in point of view, data, or OpenGL commands.
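As an illustration of the control traffic described above, the sketch below packs a viewpoint change into a fixed-size binary message that a master node could send to every visualization node. The message layout, the `ViewChange` type, and the function names are hypothetical. The transport is deliberately left out: an MPI application might broadcast such a message from the master rank, while other designs might use sockets.

```python
import struct
from typing import NamedTuple, Tuple

# Hypothetical wire format: a little-endian unsigned 32-bit frame number
# followed by six doubles (eye position, then look-at target).
VIEW_FORMAT = "<I6d"
VIEW_SIZE = struct.calcsize(VIEW_FORMAT)  # 4 + 6 * 8 = 52 bytes


class ViewChange(NamedTuple):
    frame: int
    eye: Tuple[float, float, float]
    target: Tuple[float, float, float]


def encode_view(msg: ViewChange) -> bytes:
    """Serialize a viewpoint change for transmission to the render nodes."""
    return struct.pack(VIEW_FORMAT, msg.frame, *msg.eye, *msg.target)


def decode_view(data: bytes) -> ViewChange:
    """Rebuild a ViewChange on the receiving node."""
    frame, ex, ey, ez, tx, ty, tz = struct.unpack(VIEW_FORMAT, data)
    return ViewChange(frame, (ex, ey, ez), (tx, ty, tz))


packet = encode_view(ViewChange(42, (0.0, 2.0, 10.0), (0.0, 0.0, 0.0)))
print(len(packet), decode_view(packet))
```

A fixed, explicitly little-endian layout keeps the message size predictable and avoids byte-order mismatches between nodes. Sending a small viewpoint change, rather than image data, keeps this kind of control traffic cheap on the SI.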

1.3 Displays

Display devices are not necessarily provided as part of the SVA. For example, your site can use projector display systems or immersive displays provided by third-party vendors.

Displays fall into a number of categories, including immersive CAVE displays, single monitors, multiheaded monitors, large wall displays, multiheaded desktops, flat panels, and projector displays used in theaters. SVA hardware and software deliver images to standard digital or analog interfaces. The SVA depends on the graphics cards to drive the image output, so any display device that the graphics cards support can be used.

See Section 3.5 for more information.

1.4 SVA Functional Attributes

The key to SVA scalability and flexibility is its combination of cluster technology with high-speed

graphics cards and networks to transfer data. The SVA enables scaling up the number of nodes

working on a problem in parallel to handle larger dataset sizes, to increase frame rates, and to

display at higher image resolutions.

1.4.1 Scalability

There are a number of ways that applications can be designed and implemented to take advantage

of an SVA for effective scaling:

• Performance scaling: Render image data on separate nodes in the SVA. In effect, the work

is divided up among nodes working in parallel. Larger datasets can be accommodated by

more render nodes. The system design can scale from four to forty visualization nodes. This

count does not include the required head node.

The parallel attributes of the rendering pipeline remove a key performance bottleneck of a conventional hardware-accelerated graphics architecture, which feeds data sequentially to a centralized pipeline.

In addition, choosing a network that transmits data among the visualization nodes with sufficiently low latency and high bandwidth maintains interactive frame rates for delivery to the display devices.

• Resolution scaling: Parallel rendering, combined with the parallel display of multiple tiles, makes such scaling possible. You can display high-resolution data and use large display surfaces, including immersive displays and display walls.

In general, adding nodes while keeping the dataset size fixed scales up the frame rate well. However, the speed-up is not linear, because portions of an application's code cannot be made parallel and add unavoidable overhead. A strength of SVA as a cluster visualization platform is that scalability is nearly linear when the dataset size and node count are both increased. For example, doubling the node count from four to eight makes it possible to double the distributed dataset size with virtually no loss of frame rate. To achieve such gains, an application must be a true parallel application that efficiently distributes data and balances the load across cluster nodes.
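The two scaling regimes described above correspond to the standard fixed-size (Amdahl) and scaled-size (Gustafson) speed-up models. The following Python sketch compares them. The 5% serial fraction used in the example is an assumed value chosen for illustration, not a measured SVA figure.

```python
def amdahl_speedup(serial_fraction: float, nodes: int) -> float:
    """Fixed dataset size: the serial fraction caps the achievable speed-up."""
    if not 0.0 <= serial_fraction <= 1.0 or nodes < 1:
        raise ValueError("serial_fraction must be in [0, 1] and nodes >= 1")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / nodes)


def gustafson_speedup(serial_fraction: float, nodes: int) -> float:
    """Dataset grows with node count: only the parallel part is multiplied."""
    if not 0.0 <= serial_fraction <= 1.0 or nodes < 1:
        raise ValueError("serial_fraction must be in [0, 1] and nodes >= 1")
    return serial_fraction + (1.0 - serial_fraction) * nodes


if __name__ == "__main__":
    s = 0.05  # assumed serial fraction, for illustration only
    for n in (4, 8, 16):
        print(f"{n:2d} nodes: fixed size {amdahl_speedup(s, n):5.2f}x, "
              f"scaled size {gustafson_speedup(s, n):5.2f}x")
```

With this assumed serial fraction, going from 8 to 16 nodes on a fixed dataset raises the speed-up from about 5.9x to about 9.1x. The scaled-dataset speed-up stays close to the node count (about 15.25x at 16 nodes).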


1.4.2 Flexibility

[Figure 1-3 graphic: software layers Applications, Visualization Libraries (optional), OpenGL, X Servers, HP XC Linux, and Cluster Nodes and Displays, with the SVA Software Utilities tasks Allocate, Launch, Initialize, and Cleanup alongside.]

One of the most powerful attributes of the SVA is its flexibility, which makes it possible to apply

the SVA effectively to a wide range of technical problems. This flexibility derives from the

architectural characteristics of the SVA.

When the SVA is integrated with an HP high-performance compute cluster (see Figure 1-1), you can select an optimal number of application or compute nodes and match them with an appropriate number of render and display nodes. Because render nodes can double as compute nodes, visual applications with high computation requirements can be distributed over both the compute nodes and the visualization nodes.

This flexibility is critical because visualization applications often need to perform intensive

computations to compute isosurfaces, streamlines, or particle traces. You can select application

nodes based on factors such as model size, and match them to the visualization nodes your

application needs to yield the desired performance and resolution.

1.5 Application Support

This section introduces software support for application developers. Chapter 3 contains more

information on the software tools available for application developers.

HP recognizes that a key capability of the SVA is to make it possible for serial applications to

run without extensive recoding. To that end, HP works with both commercial ISVs and the open

source community to ensure solutions are available for the SVA.

Figure 1-3 illustrates the layers of software support and their hierarchical interrelationships that

are part of the SVA. These include:

• Cluster management software (HP XC) and visualization resource management software

(SVA Software Utilities).

• Visualization toolkits and libraries.

• User and third-party visualization applications.

Figure 1-3 also shows the tasks carried out by the SVA Software Utilities (part of the Visualization

System Software (VSS)). These tasks — allocate, launch, initialize, cleanup — are aligned alongside

the software layers they impact.

Figure 1-3 Software Support for Application Development and Use

Visualization and graphics toolkits are provided by third-party vendors and the open source community. ISV applications and applications written by end users can run on the SVA, taking full advantage of the various toolkits and libraries. The SVA uses standards such as OpenGL, Linux, InfiniBand, and Gigabit Ethernet for portability and interoperability.

The HP Parallel Compositing Library that ships with SVA can help application developers

accomplish parallel rendering. See the SVA Parallel Compositing Reference Guide.

To achieve maximum performance scaling when running on the SVA, an application must be parallel and distributed. There are two main ways to achieve this. An application can be made parallel by design, or a serial application can be made parallel automatically through middleware libraries or toolkits such as Chromium.

1.5.1 OpenGL Applications

If your application is already parallel and distributed, you can use OpenGL directly.

Most visualization applications support OpenGL directly or through graphics toolkits.

Autoparallel toolkits such as Chromium enable standard OpenGL applications to run on an SVA at increased resolution, although without the performance advantages of a true parallel application.

1.5.2 Scenegraph Applications

Applications built on scenegraph middleware libraries and toolkits can run on the SVA and take advantage of its parallel scalability features.


2 SVA Architecture

This chapter gives a detailed look at the architecture of the HP Scalable Visualization Array

(SVA). It compares the SVA to other clusters and describes the flow of data within the cluster.

2.1 SVA as a Cluster

It is important to understand the cluster characteristics of the SVA. These characteristics have

implications for how SVA functions. They also affect how applications take advantage of cluster

features to achieve graphical performance and display goals.

2.1.1 Background on Linux Clusters

In the taxonomy of parallel computers, the SVA is most similar to a Beowulf-class Linux cluster. Beowulf clusters have many servers of the same type that communicate over high-speed connections such as channel-bonded Ethernet. In this way, the cluster provides high performance for applications capable of using parallel processing. This type of cluster can provide exceptional computational performance.

A Beowulf cluster falls somewhere between the class of systems known as Massively Parallel

Processors (MPP) and a network of workstations (NOW). Examples of MPP systems include the

nCube, CM5, Convex SPP, Cray T3D, and Cray T3E. Beowulf clusters benefit from developments

in both these classes of architecture.

MPPs are typically larger and have a lower latency interconnect than a Beowulf cluster. However,

programmers on MPPs must take into account locality, load balancing, granularity, and

communication overheads to obtain the best performance. Even on shared memory machines,

many programmers develop programs that use message passing. Programs that do not require

fine-grain computation and communication can usually be ported and run effectively on a Linux

cluster.

Programming a NOW is usually an attempt to harvest unused cycles on an already-installed

base of workstations in a lab or on a campus. Programming in this environment requires

algorithms that are extremely tolerant of load balancing problems and large communication

latency. Any program that runs on a NOW runs at least as well on a cluster.

A Beowulf cluster is distinguished from a NOW by several subtle but significant characteristics.

These characteristics are shared by the SVA.

• Nodes in the cluster are dedicated to the cluster. This helps ease load balancing problems

because the performance of individual nodes is not subject to external factors.

• Because the System Interconnect (SI) is isolated from the external network, the network load

is determined only by the applications being run on the cluster. This eases problems

associated with unpredictable latency in NOWs.

• All nodes in the cluster are within the administrative jurisdiction of the cluster. For example,

the SI for the cluster is less visible to the outside world. Often, the only authentication needed

between processors is for system integrity. On a NOW, network security is an issue.

2.2 Architectural Design

The SVA derives its most powerful attributes from its architectural design, which consists of a

cluster of visualization nodes, high-speed interconnects, and advanced graphics cards.

The SVA runs parallel visualization applications efficiently. The SVA is also an integral part of the HP Cluster Platform and storage (HP Scalable File Share) solutions. To accomplish this, the SVA architecture extends the HP Cluster Platform architecture with the addition of visualization nodes, which you can use as specialized compute nodes. Further, an SVA can be made up entirely of visualization nodes, or it can share an interconnect with compute nodes and a storage system.


Thus, the SVA provides the HP Cluster Platform with a visualization component for those

applications that require visualization in addition to computation.

The following sections describe the components that make up an HP Cluster Platform, followed

by those tasks and components that are unique to an SVA.

2.2.1 Components of the HP Cluster Platform

Because the SVA is an extension of the HP Cluster Platform, you can begin by understanding its

base components without any visualization nodes. The following are the key architectural

components of an HP Cluster Platform system without visualization nodes:

Compute Nodes and Administrative/Service Nodes The compute cluster consists of compute nodes and administrative or service nodes. Parallel applications are allocated exclusive use of the compute nodes on which they run. The other nodes provide administration, software installation, remote login, file I/O, external network access, and so on. These nodes are shared by multiple jobs, and are not allocated to individual jobs. One such node is designated as the head node, which is used for administration and connects to the external network.

System Interconnect (SI) A high-bandwidth, low-latency network which connects all nodes. This supports communication among the compute nodes (for example, MPI and sockets) and file I/O between compute nodes and a shared file system.

Administrative Network An Administrative Network connects all nodes in the cluster. In an HP XC compute cluster, this consists of two branches, the Administrative Network and the Console Network. This private local Ethernet network runs TCP/IP. The Administrative Network is Gigabit Ethernet (GigE); the Console Network is 10/100 BaseT. (Because visualization nodes do not support console functions, visualization nodes are not connected to a console branch.)

Linux The nodes of the cluster run a derivative of 64-bit Red Hat® Enterprise Linux Advanced Server.

Note:

All nodes must attach to two networks using different ports, one for the SI and one for the

Administrative Network.

2.2.2 Main Visualization Cluster Tasks

The SVA has a number of tasks that are unique to a visualization-capable cluster. It accomplishes

these tasks using a set of unique node types that differ in their hardware configurations, and so

are capable of different functional tasks. The main tasks are as follows:

Render images. A node must have a graphics card to render images. A visualization job uses multiple nodes to render image data in parallel. A render node typically communicates over the SI with other render and display nodes to composite and display images.

Display images. The final output of a visualization application is a complete displayed image that is the result of the parallel rendering that takes place during an application job. To make this possible, a display node must contain a graphics card connected to a display device. The display can show images integrated with the application user interface, or full-screen images. The output can be a complete display or one tile of an aggregate display.

Remote images. The SVA also supports the transmission of a complete image to a system external to the cluster over an external network for remote viewing; for example, to an office workstation outside the lab. A node with a port connected to the external network is recommended. Alternatively, you can connect to the external network by routing through another cluster node with such a port.

Integrate an application user interface. An application user interface (UI) usually runs on a cluster node. The UI typically controls the parts of the distributed application running on other nodes. A node that provides users with access to the UI can have an attached keyboard, mouse, and monitor for user interaction. Alternatively, the node can export the application UI to an external node using the X protocol, HP Remote Graphics Software (RGS), or VirtualGL. If you use RGS or VirtualGL, a port connected to the external network is recommended.

2.2.3 Components of an SVA

The main tasks described in Section 2.2.2 are supported by two types of visualization nodes. The two types differ in their configuration and in the tasks they carry out, and each type can carry out multiple tasks. These node types are unique to the SVA configuration and extend HP compute clusters to support visualization functions. See Chapter 3 for detailed information on the hardware configurations of these node types.

Display Nodes Display nodes carry out the display task. Typically, a display node contains one or two graphics cards, each connected to its display device(s). The output of each graphics card port (two ports per card) on a display node can be sent to a display device. The final output can be a complete image on a single tile, or a partial image on one tile of an aggregate multi-tile display.

The SVA supports up to eight display nodes in a Display Surface. The display nodes in your cluster can drive one or two display devices in the case of the xw8200, xw8400, DL140 G3, and DL145 G3 nodes, and one to four display devices in the case of xw9300 and xw9400 nodes. See the SVA System Administration Guide for more information on setting up display nodes, displays, and Display Surfaces.

Render Nodes Render nodes render images, as do display nodes. However, render nodes are not connected directly to display devices. Typically, render nodes are used by visualization applications that composite images. Each render node renders part of the final image, and these sub-images are combined with sub-images from other nodes. The composited image data is transferred to another render node, or to a display node to be routed to a display device. Render nodes are industry-standard workstations or servers with standard OpenGL 3D graphics cards.
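Sort-last depth compositing is one common way to combine the sub-images described above. In the following Python sketch, each render node produces a full-frame sub-image of (depth, color) pixels, and the composite keeps the fragment nearest the viewer at each pixel. This illustrates the general technique under an assumed pixel layout. It is not the API of the HP Parallel Compositing Library or of any SVA middleware.

```python
from typing import List, Tuple

# Assumed layout: one (depth, (r, g, b)) tuple per pixel, same pixel order on every node.
Pixel = Tuple[float, Tuple[int, int, int]]

# Empty pixels are infinitely far away, so any real fragment replaces them.
BACKGROUND: Pixel = (float("inf"), (0, 0, 0))


def depth_composite(sub_images: List[List[Pixel]]) -> List[Pixel]:
    """Combine full-frame sub-images by keeping the smallest depth at each pixel."""
    if not sub_images:
        raise ValueError("need at least one sub-image")
    size = len(sub_images[0])
    if any(len(img) != size for img in sub_images):
        raise ValueError("all sub-images must cover the same pixels")
    result = [BACKGROUND] * size
    for img in sub_images:
        for i, fragment in enumerate(img):
            if fragment[0] < result[i][0]:
                result[i] = fragment
    return result


if __name__ == "__main__":
    red, blue = (255, 0, 0), (0, 0, 255)
    node_a = [(0.5, red), BACKGROUND, (0.2, red)]
    node_b = [(0.3, blue), (0.7, blue), (0.9, blue)]
    print(depth_composite([node_a, node_b]))
```

In a real cluster, the same per-pixel comparison is usually distributed so that no single node receives every sub-image. Binary-swap and tree compositing are common schemes for this.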

Both types of nodes can perform UI and remote graphics functions. When nodes are allocated

to a job, the job typically requires specific display nodes that correspond to the display devices

intended for use. Typically, there is no requirement for specific render nodes.
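The allocation rule above requires specific display nodes but accepts any render nodes. It can be sketched as follows. The function, the node names, and the free-node table are hypothetical. They do not represent the SLURM or VSS interfaces that SVA actually uses to allocate jobs.

```python
from typing import Dict, List


def allocate(free_nodes: Dict[str, str], display_nodes: List[str],
             render_count: int) -> List[str]:
    """Hypothetical allocator: named display nodes plus any free render nodes.

    free_nodes maps a node name to its type ("display" or "render").
    """
    # The job needs exactly the display nodes wired to its display devices.
    missing = [n for n in display_nodes if free_nodes.get(n) != "display"]
    if missing:
        raise RuntimeError(f"required display nodes not free: {missing}")
    # Render nodes are interchangeable, so take any free ones (sorted for determinism).
    render_pool = sorted(n for n, kind in free_nodes.items() if kind == "render")
    if len(render_pool) < render_count:
        raise RuntimeError(
            f"only {len(render_pool)} free render nodes, need {render_count}")
    return list(display_nodes) + render_pool[:render_count]


if __name__ == "__main__":
    free = {"vis1": "display", "vis2": "display", "vis3": "render",
            "vis4": "render", "vis5": "render"}
    print(allocate(free, ["vis1", "vis2"], 2))
```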

2.2.4 Configuration Flexibility

The SVA supports several different configurations and uses. These include:

• Multiple displays with different resolutions.

• Use of a variable number of display and render nodes to solve the computational and rendering requirements of an application.

• Bounded configuration designed for a single user.

• Larger, modular, expandable systems designed for one or more concurrent users.

See Chapter 3 for more information on the physical configurations of the SVA.

2.3 SVA Operation

This section describes a common way data flows through an SVA.

2.3.1 Cluster Data Flow

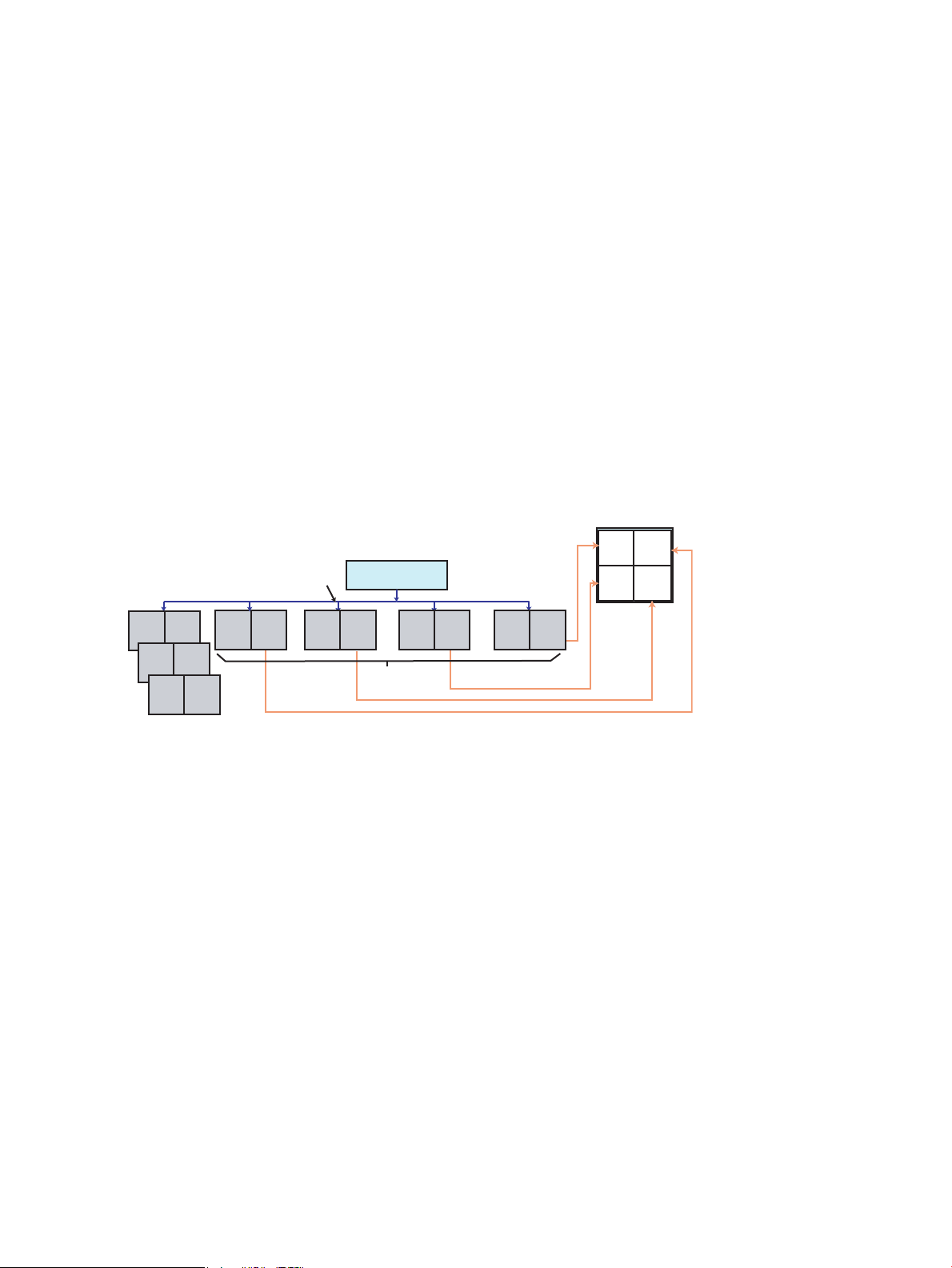

Figure 2-1 shows a high-level view of the basic components of an SVA.

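Figure 2-1 SVA Data Flow Overview (a master node runs the user application and user interface, and transfers simulation data and drawing commands over the System Interconnect to render nodes and display nodes, each with an OpenGL graphics card; the display nodes drive a multi-tile display)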

A common usage scenario includes a master application node that runs the controlling logic of

an application, processes the 3D data, and updates the virtual display or scene in the case of

scenegraph applications. The master node typically does no rendering. Because it transmits data

changes to other visualization nodes, it must be able to communicate with these nodes using the

cluster SI. The SI is the fastest network available to the SVA and is the best choice for internode

communication when performance is important.

A different scenario does not use a master application node. Instead, an application relies on

Distributed Multi-Head X (DMX) to distribute the display output to multiple nodes and displays.

It does this by controlling the back-end X Servers running on each of the display nodes. Partial

images routed to individual display devices are assembled and displayed as a single virtual

image.
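To make the single virtual image concrete, the following Python sketch maps a pixel on the virtual screen to the back-end tile that displays it and to that tile's local coordinates. It assumes a uniform grid of equally sized tiles numbered row by row. The 2x2 grid of 1920x1200 tiles in the example is also an assumption. DMX itself places each back-end screen according to its own configuration, which this sketch does not model.

```python
from typing import Tuple


def locate_pixel(x: int, y: int, cols: int, rows: int,
                 tile_w: int, tile_h: int) -> Tuple[int, int, int]:
    """Return (tile index, local x, local y) for a virtual-screen pixel."""
    if not (0 <= x < cols * tile_w and 0 <= y < rows * tile_h):
        raise ValueError("pixel lies outside the virtual screen")
    col, local_x = divmod(x, tile_w)
    row, local_y = divmod(y, tile_h)
    return row * cols + col, local_x, local_y  # tiles numbered row by row


if __name__ == "__main__":
    # Assumed 2x2 wall of 1920x1200 tiles: a 3840x2400 virtual screen.
    print(locate_pixel(2000, 1300, cols=2, rows=2, tile_w=1920, tile_h=1200))
```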

Other scenarios arise depending on the application and the capabilities of visualization

middleware running on the SVA. For example, several render nodes can carry out rendering

and compositing tasks. The render nodes can rely on middleware software to handle the

compositing of any partial images. The image data then flows to a display node before being sent to a display device, or to a remote node (for example, a desktop display outside the SVA).

See Chapter 6 for other usage scenarios.

2.3.2 File Access

Visualization applications typically read data from files in response to user input. For example,

after starting an application, you specify a data file to open and load. Without exiting the

application, you can select additional data files to open and load, replacing or adding to the data

already loaded. Much visualized data is static rather than time-varying. When visualizing

time-varying data, the application must read and cache multiple time steps. The application may not be able to visualize the data as it is being read. Each time step may need to be analyzed, and features extracted from it, based on application settings. The application then caches the results of the analysis or rendering to display an animation of the time steps.

Parallel visualization is a relatively new approach, but applications already use several file access patterns, including the following:

• Master portion of the application reads data from files and distributes data to visualization

nodes using the SI.

• Visualization nodes all read data from the same files.

• Visualization nodes all read data from different files.

• Master writes data; for example, to save an animation sequence.

Dataset sizes can range from less than 1GB to more than 100GB. Some examples include seismic

datasets that are 1GB to 128GB, and medical datasets that are 1GB to 50GB.
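When all visualization nodes read from the same file, each node needs its own slice. The following Python sketch splits a file into contiguous byte ranges, one per node, and reads the calling node's slice. The function names are hypothetical. Splitting on raw byte offsets assumes a format without record boundaries; real seismic or medical formats usually need splits aligned to traces, slices, or blocks. As a worked example, a 128GB dataset divided evenly over 16 nodes leaves 8GB per node.

```python
import os
from typing import List, Tuple


def byte_ranges(file_size: int, nodes: int) -> List[Tuple[int, int]]:
    """Split [0, file_size) into `nodes` contiguous (offset, length) ranges."""
    if nodes < 1 or file_size < 0:
        raise ValueError("nodes must be >= 1 and file_size >= 0")
    base, extra = divmod(file_size, nodes)
    ranges, offset = [], 0
    for rank in range(nodes):
        # The first `extra` ranks take one extra byte so every byte is covered once.
        length = base + (1 if rank < extra else 0)
        ranges.append((offset, length))
        offset += length
    return ranges


def read_my_slice(path: str, rank: int, nodes: int) -> bytes:
    """Read the slice of `path` assigned to node `rank` (0-based)."""
    offset, length = byte_ranges(os.path.getsize(path), nodes)[rank]
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)
```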

Applications access files using HP Scalable File Share (SFS) or NFS. When visualization nodes

are integrated into a cluster with HP SFS, they access this file system using the SI. When HP SFS is in a separate cluster and not accessible by the SI, access is over GigE.

See the SVA System Administration Guide for more information.


3 SVA Hardware and Software

This chapter provides information on the hardware and software that make up the SVA. It is a

useful reference for anyone involved in managing the SVA. It is also useful for anyone who

wants to understand the hardware that makes up the SVA and the software that is installed on

it.

The SVA combines commodity hardware components with software, including the following:

• A cluster of Intel EM64T or AMD-64 Opteron HP workstations and servers as visualization

nodes.

• A range of graphics cards that varies by workstation or server: NVIDIA® Quadro® FX 1500

(DL140 G3, DL145 G3), NVIDIA Quadro FX 3450 (xw8200, xw9300), NVIDIA Quadro FX

3500 (xw8400, xw9400, DL140 G3, DL145 G3), NVIDIA Quadro FX 4500 with optional G-sync

or hardware SLI (xw8200, xw8400, xw9300, xw9400), or NVIDIA Quadro FX 5500 with

optional G-sync or hardware SLI (xw8400, xw9400).

• InfiniBand, Gigabit Ethernet (GigE), or Myrinet system interconnects.

• Third-party software tools and libraries.

• Custom and enhanced software tools.

3.1 Hardware Component Summary

You can use the SVA with a variety of applications that run on distributed computing systems;

in this case, a cluster of Linux workstations. The SVA is a specialized version of the HP Cluster

Platform systems.

There are two SVA physical configurations:

Bounded Configuration Contains only visualization nodes and is limited in size to

four to seventeen workstations plus a workstation or server

head node. This configuration is based on racked

component building blocks, namely the Utility

Visualization Block (UVB) and the Visualization Building

Block (VBB).

The bounded configuration serves as a standalone

visualization cluster and is not integrated with compute

nodes. The bounded configuration meets the need for

relatively small, personal use clusters consisting of only

four workstations. When expanded to seventeen nodes,

such a cluster can be a visualization-specific, multi-user

system capable of driving a large display wall. Although

designed as a standalone cluster, it can be connected to a

larger HP XC cluster using external GigE connections. This

level of inter-cluster integration supports communication

with a compute cluster and data retrieval from a file share,

such as an HP Scalable File Share (SFS).

Modular Packaging Configuration This configuration has two or more racks as needed to contain from four to ninety-five workstations or servers, along with a server head node. All servers and workstations must have the same CPU type (EM64T or Opteron). This configuration is based on HP Cluster Platform building blocks, namely the Visualization Building Block (VBB) and the Utility Building Block (UBB). It can be exclusively visualization nodes or be combined with compute nodes as part of an integrated HP Cluster Platform system. When integrated into a larger Cluster Platform system, the visualization nodes can use a high-speed system interconnect to load data from an HP SFS.

The two SVA physical configurations are built using one or more of three types of cluster building blocks. Each building block uses a single rack.

Utility Visualization Block (UVB) Base utility unit of a Bounded Configuration. It contains network switches, PDU, workstations (xw8200, xw8400, xw9300, or xw9400), head node (xw8200, xw8400, xw9300, xw9400, DL380 G4, or DL385 G4), and an optional KVM.

Utility Building Block (UBB) Base utility unit of a Modular Packaging Configuration. It contains network switches, PDU, head node (DL380 G4 or DL385 G4), and an optional KVM.

Visualization Building Block (VBB) Rack of visualization nodes that can be added to either base unit to create a Bounded Configuration or a Modular Packaging Configuration. It contains PDU, workstation or server nodes, and an optional KVM.

3.2 Bounded Configuration

A Bounded Configuration is built from the UVB and VBB rack systems. It has the following components as summarized in Chapter 2:

• Render and Display nodes.

Workstations: xw8200, xw8400, xw9300, xw9400.

• Head node.

Workstations: xw8200, xw8400, xw9300, xw9400, or servers: DL380 G4 and DL385 G4.

• Optional KVM.

• System Interconnect and Administrative Network (found in HP Cluster Platform systems,

and thus not unique to the SVA).

The head node is a typical node type found in HP Cluster Platform systems.

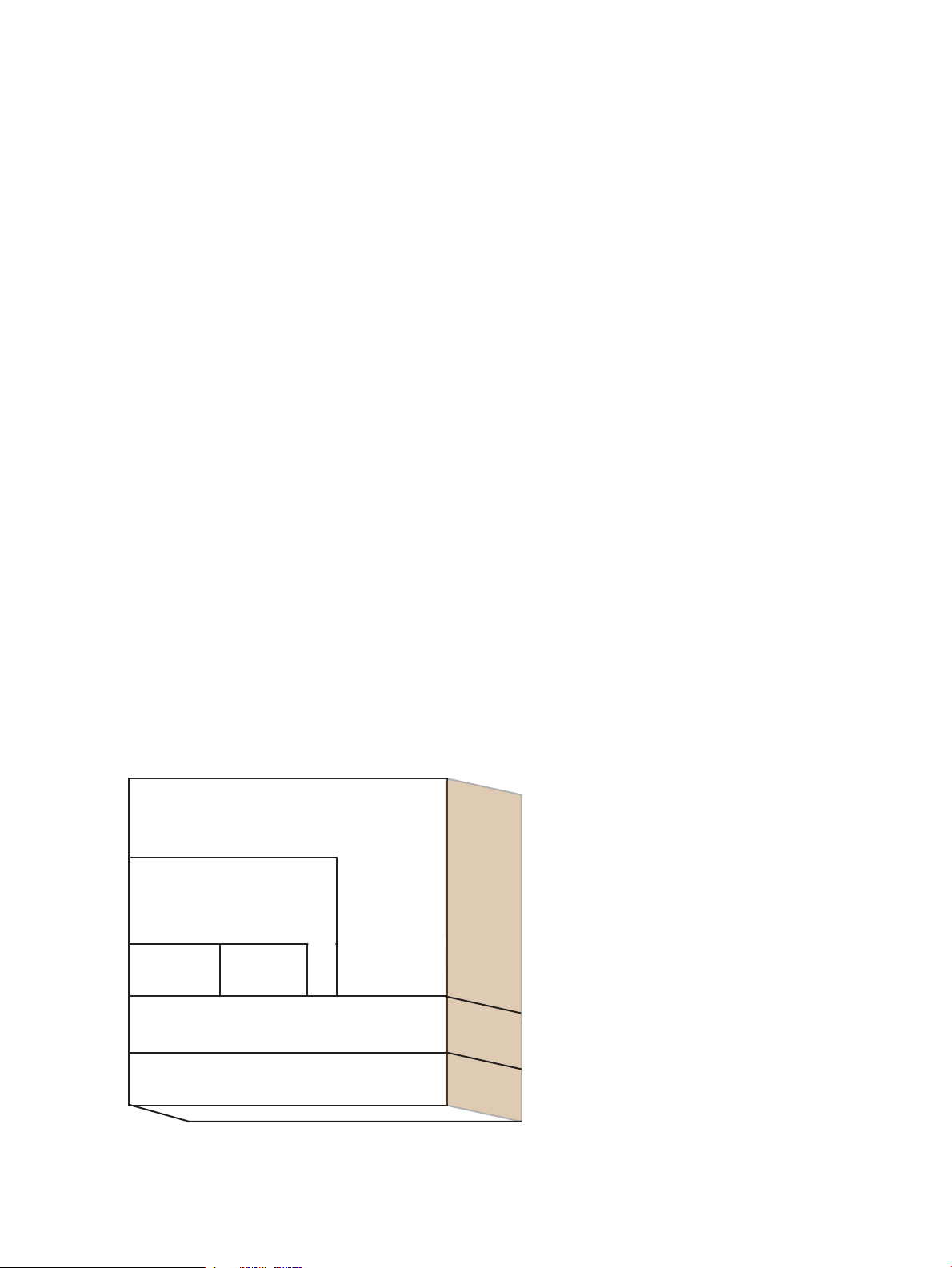

Figure 3-1 illustrates a sample Bounded Configuration. The UVB contains the network switches,

PDU, five visualization nodes, and the head node. The visualization nodes support a 2x2 multi-tile

display. Additional VBBs can be added to this configuration, with up to eight workstations in

each rack.


Figure 3-1 Sample SVA Bounded Configuration (labels: Base Rack (UVB), Display Devices, External Node connected over GigE)

3.3 Modular Packaging Configuration

A Modular Packaging Configuration is built from the UBB and VBB rack systems. It has the

following components as summarized in Chapter 2:

• Render and Display nodes.

Workstations: xw8200, xw8400, xw9300, xw9400, or servers: DL140 G3 or DL145 G3.

• Head node.

DL380 G4 or a DL385 G4 server.

• Optional KVM.

• System Interconnect and Administrative Network (found in HP Cluster Platform systems,

and thus not unique to the SVA).

The head node is a typical node type found in HP Cluster Platform systems. The server head

node must have the same architecture as any workstations in the cluster.

3.4 Network Configurations

This section describes the different networks used in the SVA.

3.4.1 System Interconnect (SI)

The SI for visualization nodes can be GigE, InfiniBand, or Myrinet. When the visualization nodes are integrated with compute nodes, the choice of SI is usually determined by the requirements of the compute nodes.

3.4.2 Administrative Network Connections

A GigE interconnect serves as the Administrative Network to control the operation of the cluster

(for example, boot, shutdown, restart) and to control the running parts of a distributed application

(for example, launching and stopping processes).

The SVA adds visualization capability to an HP XC cluster; therefore, the Administrative Network

is implemented with the logical configuration defined by the HP XC and Cluster Platform

architectures. However, the physical implementation differs.


The management switches are collected together in one rack. SVA nodes connect to branch

switches in the Administrative Network. SVA nodes do not connect to the console branch.

Nodes connect to the switches according to the Cluster Platform Administrative Network

connections for HP XC. Display and render node types are typically grouped together.

3.5 Display Devices

SVA supports a wide range of displays and configurations, including single displays, tiled

displays in walls, and immersive CAVE environments using projector systems. SVA relies on

the display capabilities of the graphics cards in the display nodes. This means that the SVA lets

you use the wide range of display devices that are supported by the graphics card.

Depending on the demands of the display devices, you can use digital or analog output. The aggregate resolution of these displays can range from tens to hundreds of megapixels.

The SVA supports up to eight display nodes¹ in a Display Surface. The display nodes in your cluster can drive one or two display devices in the case of the xw8200, xw8400, DL140 G3, and DL145 G3 nodes, and one to four display devices in the case of xw9300 or xw9400 nodes. (Note that the xw8400 can be used to drive four display devices when ordered and configured on an exceptional basis.) This means that you can drive a maximum of 32 display devices using eight xw9300 or xw9400 nodes.
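The sizing arithmetic above can be expressed as a small helper. The following Python sketch uses the per-node display limits and the eight-node Display Surface limit stated in this section. It ignores the exceptional-basis configurations (four-display xw8400 nodes, or more than eight display nodes). The 1920x1200 tile resolution in the example is an assumption.

```python
import math

# Per-node display limits and the Display Surface limit stated in this section.
MAX_DISPLAYS_PER_NODE = {
    "xw8200": 2, "xw8400": 2, "DL140 G3": 2, "DL145 G3": 2,
    "xw9300": 4, "xw9400": 4,
}
MAX_DISPLAY_NODES = 8


def display_nodes_needed(cols: int, rows: int, model: str) -> int:
    """Number of display nodes of one model needed to drive a cols x rows wall."""
    needed = math.ceil(cols * rows / MAX_DISPLAYS_PER_NODE[model])
    if needed > MAX_DISPLAY_NODES:
        raise ValueError(f"{cols}x{rows} wall needs {needed} {model} display nodes; "
                         f"limit is {MAX_DISPLAY_NODES}")
    return needed


def aggregate_megapixels(cols: int, rows: int, tile_w: int, tile_h: int) -> float:
    """Total resolution of a tiled wall, in megapixels."""
    return cols * rows * tile_w * tile_h / 1_000_000


if __name__ == "__main__":
    print(display_nodes_needed(4, 4, "xw9400"))    # 16 displays, 4 per node
    print(display_nodes_needed(8, 4, "xw9400"))    # the 32-display maximum
    print(aggregate_megapixels(8, 4, 1920, 1200))  # assumed 1920x1200 tiles
```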

Theoretically, SVA technology can scale to arbitrarily large displays. Realistically, the bandwidth

of the network delivering subparts of the image to various nodes, and the resolution of display

devices are limiting factors. You can create large displays by arranging a grid of smaller displays.

See the SVA System Administration Guide for more information on setting up display nodes and

devices.

3.6 SVA Software Summary

The SVA combines third party software tools and libraries with custom and enhanced software

tools and libraries. SVA software must be installed and run on each visualization node as well

as the head node of a valid cluster configuration, such as an HP Cluster Platform 3000 or HP

Cluster Platform 4000, properly configured for HP XC System Software with the SVA option.

This section describes the following software categories:

• Linux Operating System.

• HP XC clustering software.

• Additional system software.

Figure 3-2 illustrates the various software categories and their hierarchical interrelationships, as

well as the tasks carried out by the utilities provided by HP Visualization System Software (VSS).

These tasks — allocate, launch, initialize, cleanup — are aligned alongside the software categories

they impact.

1. More display nodes may be supported on an exceptional basis.


Figure 3-2 Software Hierarchy in the SVA (layers shown: Applications, Visualization Libraries (optional), OpenGL, X Servers, HP XC Linux, and Cluster Nodes and Displays, with the SVA Software Utilities tasks Allocate, Launch, Initialize, and Cleanup alongside)

3.6.1 Linux Operating System

The SVA software is layered on top of HP XC System Software Version 3.2, a clustering Linux

distribution compatible with Red Hat Enterprise Linux Advanced Server V4.0 Update 3. The

kernel version is V2.6.9–x.

See Section 3.6.2.

The windowing system used by Red Hat is the X.Org windowing system.

Table 3-1 summarizes the main operating system components as well as the low-level drivers.

Table 3-1 Operating System and Driver Components

| Component | Notes |
|---|---|
| Base Operating System | |
| Red Hat Enterprise Linux Advanced Server V4.0 Update 3 (www.redhat.com) | HP XC Linux is compatible with this version of Red Hat Enterprise Linux; however, it is built by HP and does not contain all the components distributed by Red Hat. |
| Clustering software: HP XC System Software Version 3.2 (http://docs.hp.com/en/highperfcomp.html) | |
| X.Org Windowing System (X.Org Foundation: www.x.org) | Official windowing system of the X.Org Foundation that is also included as part of the standard Red Hat distribution. |
| Low-Level Drivers | |
| Linux driver for the supported NVIDIA Quadro FX graphics cards | Device driver for the graphics cards provided by NVIDIA Corporation. Qualified by HP WGBU group. |
| Driver for selected high-speed interconnect | InfiniBand, GigE, and Myrinet are supported. |

3.6.2 HP XC Clustering Software

The SVA runs HP XC System Software Version 3.2 clustering software. The SVA uses HP XC for the following key system management tasks:

• Installing and reinstalling a uniform set of software on visualization nodes.

• Booting and shutting down the cluster.

• Managing user accounts and user directories across the cluster.

• Naming each of the nodes in the cluster and determining which nodes are up and running.

• Serializing application use of the cluster.

For more information on HP XC, consult the HP XC documentation set at the following Web

site:

http://docs.hp.com/en/highperfcomp.html

Table 3-2 summarizes the software components that are provided by the HP XC operating system

that relate to the SVA.

Table 3-2 HP XC System Components Relevant to SVA Operation

NotesComponent

Simple Linux Utility for Resource

Management (SLURM)

3.6.3 Additional System Software

The SVA Software Kit provides software that makes it easier to develop and run visualization applications. This section summarizes additional system software related to the SVA and tells you where to get information on packages or applications. The main categories of additional system software are:

• Main software components provided by HP (Table 3-3).

• Main software components provided by third parties (Table 3-4).

• Application development tools available on the SVA (Table 3-5).

Table 3-3 HP SVA System Software

Software | Description

SLURM | A resource manager for Linux clusters. Used to set up visualization sessions and launch visualization jobs. Preferred allocation utility of HP XC.

Platform Load Sharing Facility for High Performance Computing (LSF HPC) | Layered on top of SLURM to provide high-level scheduling services for the HP XC system software user. LSF can be used in parallel with SVA job launching techniques that rely on SLURM.

SystemImager | System file replication used to install cluster nodes.

pdsh | A parallel distributed shell that replaces rsh and ssh.

HP-MPI | Message Passing Interface.

HP Scalable File Share (HP SFS) | High performance file system (optional).

FlexLM® | License management.

Visualization System Software (VSS) | Collection of various categories, including:
• Data Access Functions that permit an application to access and use the Configuration Data Files for job allocation and launch.
• Command syntax for job launch script commands.
See the SVA Visualization System Software Reference Guide in the SVA Documentation Library.

HP Parallel Compositing Library | Shipped with SVA, the HP Parallel Compositing Library helps application developers easily create parallel rendering applications. See the SVA Parallel Compositing Library Reference Guide in the SVA Documentation Library.

HP Remote Graphics Software (optional purchase) | Transmits 2D and 3D images across standard computer networks to remote users. http://www.hp.com/workstations/software/remote/


Table 3-4 Third Party System Software

Software | Description

OpenGL | Primary interface programmers use to create images. http://www.opengl.org/

OpenGL Utility library (GLU) | Contains routines that build on the lower-level OpenGL library to perform such tasks as setting up matrices for specific viewing orientations and projections, performing polygon tessellation, and rendering surfaces.

freeglut | This library masks a number of the operating-system-specific calls for creating windows and managing input devices. http://freeglut.sourceforge.net/

OpenMotif | Royalty-free version of Motif®, the industry standard graphical user interface. It provides users with the industry's most widely used environment for standardizing application presentation on a wide range of platforms.

Distributed MultiHead X (DMX) | An open source application that provides a proxy X Server that distributes its display over multiple X Servers. http://dmx.sourceforge.net/

X Server (X.Org) | X Server available as part of the Red Hat distribution.

Chromium | A system for interactive rendering on clusters of graphics workstations. http://chromium.sourceforge.net/

Table 3-5 Application Development Tools

Software | Description

Default GNU C and C++ compilers and run-time | Included as part of the HP XC distribution.

gdb GNU debugger | Included as part of the HP XC distribution.

Perl, Tcl/tk, Python | Scripting tools available as part of the HP XC distribution.

64-bit Linux applications are supported on SVA systems; 32-bit applications may run if their providers have validated them to run on 64-bit Linux systems. You may need to install additional libraries. You may also find that certain libraries, for example, MPI, do not work with particular hardware configurations.
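Before you install a third-party binary, you can check its word size and any unresolved shared libraries from the shell. The following is a minimal sketch, not an SVA tool: the function name check_binary is hypothetical, and it relies only on the standard od(1) and ldd(1) utilities. It reads the ELF header class byte (1 for 32-bit, 2 for 64-bit) and lists each dependency that ldd reports as "not found". Run ldd only on binaries you trust.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper, not part of the SVA Kit): report whether a
# binary is 32-bit or 64-bit ELF and list shared libraries ldd cannot find.
# Run ldd only on binaries you trust; some ldd versions may execute them.

check_binary() {
  local bin="${1:-}"
  if [ ! -f "$bin" ]; then
    echo "error: $bin not found" >&2
    return 2
  fi

  # ELF files start with the bytes 7f 45 4c 46 ("\177ELF").
  local magic class
  magic=$(od -An -t x1 -N 4 "$bin" | tr -d ' \n')
  if [ "$magic" != "7f454c46" ]; then
    echo "$bin: not an ELF binary"
    return 1
  fi

  # Byte 4 of the ELF header (EI_CLASS) is 1 for 32-bit and 2 for 64-bit.
  class=$(od -An -t u1 -j 4 -N 1 "$bin" | tr -d ' \n')
  case "$class" in
    2) echo "$bin: 64-bit ELF" ;;
    1) echo "$bin: 32-bit ELF; check that its provider validated it for 64-bit Linux" ;;
    *) echo "$bin: unknown ELF class $class"; return 1 ;;
  esac

  # ldd marks each unresolved dependency with "not found".
  local missing
  missing=$(ldd "$bin" 2>/dev/null | awk '/not found/ {print $1}')
  if [ -n "$missing" ]; then
    echo "missing libraries:"
    echo "$missing"
    return 1
  fi
  echo "no missing shared libraries reported"
}

# Run directly with one argument; sourcing the file only defines the function.
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
  check_binary "$1"
  exit $?
fi
```

For example, if you save the sketch as check_binary.sh (a hypothetical file name), `% bash check_binary.sh /path/to/application` prints the word size and either the list of missing libraries or a note that none were reported.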


4 Quick Start

This chapter lets you quickly try some of the sample applications on the SVA Kit. Details on

using scripts are provided in other chapters of this HP SVA User's Guide and other documents

in the HP SVA Documentation Library.

4.1 Typical Uses of SVA

SVA has three primary usage scenarios, described in detail in Chapter 6:

• A workstation application that is launched remotely to use only a single node in the SVA.

• An application that uses render and display capabilities of the SVA (for example, ParaView).

• A workstation application that uses Chromium software and DMX to display on multiple

tiles using the SVA.

4.2 Configuring Displays for Use with SVA

There are several steps to getting the cluster display devices working, particularly in the case of

complex display systems, including stereo systems. These steps are typically done by the system

manager for the cluster.

• Physically set up display nodes and display devices.

Plan how you want the display devices arranged and how you want to use your display nodes to drive your display devices. This step also involves cabling the display nodes to the display devices.

• Configure Display Nodes and Display Surfaces.

This task involves using two SVA tools:

— Node Configuration Tool: Use this tool to define the tile orientation for individual

display nodes.

When the cluster is built by HP Manufacturing, each display node is configured by default to drive a single display device. If a cluster uses single display nodes to drive multiple display devices (a one-to-many relationship), you need to use the Node Configuration Tool. The output of a single display node is defined as a display block. The Node Configuration Tool is used when a display node drives more than one tile, that is, when it uses multiple ports on one or two graphics cards. A tile is assumed to be the image output from a single port of a graphics card.

The tool also lets you change the role of a node, for example, from render to display

and vice versa.

— Display Surface Configuration Tool: Use this tool to assemble the output of one or more

display nodes in a particular spatial orientation. This orientation is needed to define

Display Surfaces.

One or more display blocks are assembled into a Display Surface using the Display

Surface Configuration Tool.


TIP: See the HP SVA System Administration Guide for detailed information on how to define

Display Surfaces, including a recommended incremental series of steps for configuring SVA

for your displays.

• Verify and possibly modify supported resolutions, display modelines, and refresh rates.

This step is likely to be required for stereo displays and more exotic mono displays. Typical

desktop display devices (monitors and flat panels) are supported by default by SVA.

This topic is covered in detail in the HP SVA System Administration Guide. Note the following:

— You should not edit the standard X Configuration File (xorg.conf) on individual

visualization nodes. SVA creates its own set of SVA X Configuration Files during

installation. Only these are used by SVA when you launch a job.

— The SVA X Configuration Files support most desktop-style displays (flat panels and

monitors) without any changes. More exotic display devices (for example, projector

systems, extremely high-resolution devices, or stereo displays) require changes to the

settings in the SVA X Configuration Files.

— In the case of such exotic displays, you will need information from the display vendor,

namely, resolution, refresh rate, and modeline settings. Consult your display vendor

documentation.

— Use the display information to edit the settings in the SVA Monitor Properties Files.

After any edits, you need to regenerate the SVA X Configuration Files on all the nodes.

This process of editing and regenerating is detailed in the HP SVA System Administration

Guide.
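When you check the values a display vendor supplies, it can help to recompute the refresh rates that a modeline implies. In a standard X.Org modeline, the horizontal rate is the pixel clock divided by the total line length (htotal), and the vertical refresh is the pixel clock divided by htotal × vtotal, doubled for Interlace modes and halved for DoubleScan modes. The sketch below assumes a modeline in that standard field order with a mode name that contains no spaces; the function name modeline_rates is hypothetical, and this is not how SVA itself processes the SVA Monitor Properties Files.

```shell
#!/usr/bin/env bash
# Sketch: derive horizontal and vertical rates from an X.Org modeline.
# Assumed field order (standard X.Org ModeLine):
#   pclk(MHz) hdisp hsyncstart hsyncend htotal vdisp vsyncstart vsyncend vtotal [flags]
# An optional leading 'Modeline "name"' is skipped; the name must not contain spaces.
modeline_rates() {
  awk '{
    i = 1
    if ($1 == "Modeline" || $1 == "ModeLine") i = 3   # skip keyword and mode name
    pclk = $i; htotal = $(i + 4); vtotal = $(i + 8)
    vrefresh = pclk * 1e6 / (htotal * vtotal)
    # Interlaced modes draw two fields per frame; doublescan repeats each line.
    for (j = i + 9; j <= NF; j++) {
      f = tolower($j)
      if (f == "interlace") vrefresh *= 2
      if (f == "doublescan") vrefresh /= 2
    }
    printf "hsync %.2f kHz, vrefresh %.2f Hz\n", pclk * 1000 / htotal, vrefresh
  }' <<< "$*"
}
```

For example, the common VESA 1280x1024 modeline `modeline_rates 'Modeline "1280x1024_60" 108.00 1280 1328 1440 1688 1024 1025 1028 1066 +hsync +vsync'` prints `hsync 63.98 kHz, vrefresh 60.02 Hz`. If the rates you compute differ noticeably from those in the vendor documentation, recheck the modeline before you regenerate the SVA X Configuration Files.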

4.3 Run a Test Application

Once the hardware is installed properly and the Display Surfaces are defined, you can run a sample application to get a feel for how the cluster works. Several tasks help familiarize you with the cluster:

• Set up how you want to control an application.

• Use an SVA launch script with a sample application. We recommend running the city application with the sva_chromium_dmx.sh script, first in interactive mode and then in batch mode.

• Run an application remotely using HP Remote Graphics Software (HP RGS). (HP RGS is an

optional purchase that may not be available on your cluster.) VirtualGL is an alternative

package to HP RGS for remote viewing.

See Chapter 5 for more detail on launch scripts. See Chapter 6 for more information on how to

use HP RGS and VirtualGL and their accompanying scripts.

4.3.1 Set Up Control for an Application

You have several ways to control an application (that is, provide mouse and keyboard input):

• Use a keyboard, mouse, and monitor plugged directly into the node running the application.

Note that the node that runs the application is determined by the SVA_EXECUTION_HOST Configuration Data tag, as described in Section 4.3.3.

• Use a console other than one connected directly to the node running the application. For

example, this could be the console connected to the head node. Use a KVM to switch to the

node running the application.

• Use HP Remote Graphics Software (HP RGS) or VirtualGL to control the application from

a node remote from the cluster.


• In the case of third-party applications, for example, ParaView and EnSight, there is a separate

user interface whose location is determined by setting the DISPLAY environment variable

before you run the application.

• In the specific case of the SVA sva_chromium_dmx.sh script only: Use the -i option or

set the DISPLAY environment variable to specify the node from which you want to provide

input.

KVM/RKM Use:

If you use a KVM or RKM to control your application, the KVM/RKM console does not necessarily show the image as it appears on a large multi-tile display, because the KVM/RKM cannot support such a high resolution. The keyboard and mouse continue to work, and you should be able to see the cursor move on the large display as you use the mouse. Options for viewing and controlling a large display are described in Section 4.3.2.

TIP: You may choose to use the --local option with the sva_paraview.sh script. The main

advantage of using this option is to have the application GUI visible on your current machine,

for example, the head node. See Section 5.4 for details on how to do this.

4.3.2 Launch an Application in Interactive Mode

Interactive mode lets you launch and terminate the application without re-allocating cluster

nodes to the job.

Before you launch an application in interactive mode, for example, with the Chromium-DMX

script, you need to specify the node from which you intend to provide input to the application

and on which you want to display the DMX Console Window. This window lets you view and

interact with multiple tiles on your console display. You do this by defining the DISPLAY

environment variable, for example:

% export DISPLAY=node:0.0

Alternatively, you can specify the input node on the command line. Use the -i input-x-display option of the sva_chromium_dmx.sh script to force DMX to display the DMX Console Window on the input node you specify. Then use the DMX Console Window to control the large display.

Use a command similar to the following to launch an interactive session:

% sva_chromium_dmx.sh -I -d YOUR_DISPLAY_SURFACE -i YOUR_INPUT_NODE:0.0

To run an OpenGL application, use the Chromium/DMX script again from the terminal window. For example:

% sva_chromium_dmx.sh "city"
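If you launch interactive sessions often, a small wrapper can catch a malformed input display before DMX starts. The following sketch is hypothetical: the function names is_x_display and launch_interactive and the DRY_RUN variable are not part of SVA. It uses only the -I, -d, and -i options of sva_chromium_dmx.sh shown above, assumes the script is on your PATH, and falls back to DISPLAY when you give no input display. It checks only the form host:display[.screen], not whether that X server is reachable.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the interactive Chromium/DMX launch shown above.
# Uses only the -I, -d, and -i options documented in this guide.

# Succeed if $1 looks like an X display name: [host]:display[.screen]
is_x_display() {
  local re='^[A-Za-z0-9._-]*:[0-9]+(\.[0-9]+)?$'
  [[ "$1" =~ $re ]]
}

# launch_interactive SURFACE [INPUT_DISPLAY]
# INPUT_DISPLAY defaults to $DISPLAY. Set DRY_RUN=1 to print the command only.
launch_interactive() {
  local surface="${1:-}" input="${2:-${DISPLAY:-}}"
  if [ -z "$surface" ]; then
    echo "error: no Display Surface given" >&2
    return 2
  fi
  if ! is_x_display "$input"; then
    echo "error: '$input' is not an X display such as node:0.0" >&2
    return 2
  fi
  # Build the command as an array so arguments with spaces stay intact.
  local cmd=(sva_chromium_dmx.sh -I -d "$surface" -i "$input")
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

After you source the file into your shell, `% DRY_RUN=1 launch_interactive YOUR_DISPLAY_SURFACE YOUR_INPUT_NODE:0.0` prints the command that would run; drop DRY_RUN=1 to start the session.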

Note the following:

• You can launch the application from any node; the head node is a good choice because you can do other work there as well as control the application. However, the Display Surface definition determines the node on which the application runs, by means of the SVA_EXECUTION_HOST Configuration Data tag. Any image that you see comes from the SVA_EXECUTION_HOST node, not necessarily the console node. See Section 6.3.4 and