HP StorageWorks

P9000 Configuration Guide

P9500 Disk Array

Abstract

This guide provides requirements and procedures for connecting a P9000 disk array to a host system, and for configuring the disk array for use with a specific operating system. This document is intended for system administrators, HP representatives, and authorized service providers who are involved in installing, configuring, and operating P9000 disk arrays.

HP Part Number: AV400-96380

Published: May 2011

Edition: Second

© Copyright 2010, 2011 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Acknowledgments

Microsoft® and Windows® are U.S. registered trademarks of Microsoft Corporation.

UNIX is a registered trademark of The Open Group.

Oracle is a registered US trademark of Oracle Corporation, Redwood City, California.

Contents

1 Overview................................................................................................10

What's in this guide................................................................................................................10

Audience...............................................................................................................................10

Features and requirements.......................................................................................................10

Fibre Channel interface...........................................................................................................11

Device emulation types............................................................................................................12

Failover.................................................................................................................................12

SNMP configuration................................................................................................................13

RAID Manager command devices.............................................................................................13

2 HP-UX.....................................................................................................14

Installation roadmap...............................................................................................................14

Installing and configuring the disk array....................................................................................14

Defining the paths..............................................................................................................15

Setting the host mode and host group mode for the disk array ports.........................................15

Setting the system option modes..........................................................................................15

Configuring the Fibre Channel ports.....................................................................................16

Installing and configuring the host.............................................................................................16

Loading the operating system and software...........................................................................16

Installing and configuring the FCAs .....................................................................................16

Clustering and fabric zoning...............................................................................................16

Fabric zoning and LUN security for multiple operating systems.................................................17

Connecting the disk array........................................................................................................17

Verifying FCA installation....................................................................................................17

Verifying device recognition................................................................................................18

Configuring disk array devices.................................................................................................19

Verifying the device files and drivers.....................................................................................20

Creating the device files.....................................................................................................20

Creating the physical volumes.............................................................................................22

Creating new volume groups...............................................................................................22

Creating logical volumes....................................................................................................24

Creating the file systems.....................................................................................................26

Setting the I/O timeout parameter........................................................................................26

Creating the mount directories.............................................................................................27

Mounting and verifying the file systems.................................................................................27

Setting and verifying the auto-mount parameters....................................................................28

3 Windows................................................................................................30

Installation roadmap...............................................................................................................30

Installing and configuring the disk array....................................................................................30

Defining the paths..............................................................................................................30

Setting the host mode and host group mode for the disk array ports.........................................31

Setting the system option modes..........................................................................................32

Configuring the Fibre Channel ports.....................................................................................32

Installing and configuring the host.............................................................................................32

Loading the operating system and software...........................................................................32

Installing and configuring the FCAs .....................................................................................32

Fabric zoning and LUN security...........................................................................................33

Connecting the disk array........................................................................................................33

Verifying the host recognizes array devices............................................................................34

Configuring disk devices..........................................................................................................34

Writing signatures..............................................................................................................34

Creating and formatting disk partitions.................................................................................35

Verifying file system operations ...........................................................................................35

4 Novell NetWare......................................................................................36

Installation roadmap...............................................................................................................36

Installing and configuring the disk array....................................................................................36

Defining the paths..............................................................................................................36

Setting the host mode and host group mode for the disk array ports.........................................37

Configuring the Fibre Channel ports.....................................................................................37

Installing and configuring the host.............................................................................................37

Loading the operating system and software...........................................................................37

Installing and configuring the FCAs .....................................................................................37

Configuring NetWare client................................................................................................37

Configuring NetWare ConsoleOne......................................................................................38

Clustering and fabric zoning...............................................................................................38

Fabric zoning and LUN security for multiple operating systems.................................................39

Connecting the disk array........................................................................................................39

Verifying new device recognition.........................................................................................39

Configuring disk devices..........................................................................................................40

Creating the disk partitions.................................................................................................40

Assigning the new devices to volumes...................................................................................42

Mounting the new volumes..................................................................................................43

Verifying client operations...................................................................................................43

Middleware configuration........................................................................................................44

Host failover......................................................................................................................44

Multipath failover .........................................................................................................44

Helpful Multipath commands.....................................................................................45

Configuring NetWare 6.x servers for Cluster Services........................................................46

Installing Cluster Services...........................................................................................46

Creating logical volumes...........................................................................................47

5 NonStop.................................................................................................48

Installation roadmap...............................................................................................................48

Installing and configuring the disk array....................................................................................48

Defining the paths..............................................................................................................48

Setting the host mode and host group mode for the disk array ports.........................................49

Setting system option modes................................................................................................49

Configuring the Fibre Channel ports.....................................................................................50

Installing and configuring the host.............................................................................................50

Loading the operating system and software...........................................................................50

Installing and configuring the FCSAs ...................................................................................50

Fabric zoning and LUN security for multiple operating systems.................................................50

Connecting the disk array........................................................................................................51

Verifying disk array device recognition.................................................................................51

Configuring disk devices..........................................................................................................51

6 OpenVMS...............................................................................................52

Installation roadmap...............................................................................................................52

Installing and configuring the disk array....................................................................................52

Defining the paths..............................................................................................................53

Setting the host mode for the disk array ports........................................................................54

Setting the UUID................................................................................................................54

Setting the system option modes..........................................................................................55

Configuring the Fibre Channel ports.....................................................................................55

Installing and configuring the host.............................................................................................55

Loading the operating system and software...........................................................................56

Installing and configuring the FCAs .....................................................................................56

Clustering and fabric zoning...............................................................................................56

Fabric zoning and LUN security for multiple operating systems.................................................57

Configuring FC switches..........................................................................................................57

Connecting the disk array........................................................................................................57

Verifying disk array device recognition.................................................................................57

Configuring disk array devices.................................................................................................58

Initializing and labeling the devices.....................................................................................58

Mounting the devices.........................................................................................................58

Verifying file system operation.............................................................................................59

7 VMware..................................................................................................61

Installation roadmap...............................................................................................................61

Installing and configuring the disk array....................................................................................61

Defining the paths..............................................................................................................61

Setting the host mode and host group mode for the disk array ports.........................................62

Setting the system option modes..........................................................................................62

Configuring the Fibre Channel ports.....................................................................................62

Installing and configuring the host.............................................................................................62

Loading the operating system and software...........................................................................62

Installing and configuring the FCAs .....................................................................................62

Clustering and fabric zoning...............................................................................................63

Fabric zoning and LUN security for multiple operating systems.................................................63

Configuring VMware ESX Server..........................................................................................64

Connecting the disk array........................................................................................................64

Setting up virtual machines (VMs) and guest operating systems.....................................................65

Setting the SCSI disk timeout value for Windows VMs.............................................................65

Sharing LUNs....................................................................................................................65

Selecting the SCSI emulation driver......................................................................................67

8 Linux.......................................................................................................69

Installation roadmap...............................................................................................................69

Installing and configuring the disk array....................................................................................69

Defining the paths..............................................................................................................69

Setting the host mode and host group mode for the disk array ports.........................................70

Configuring the Fibre Channel ports.....................................................................................70

Setting the system option modes..........................................................................................70

Installing and configuring the host.............................................................................................71

Installing and configuring the FCAs .....................................................................................71

Loading the operating system and software...........................................................................71

Clustering and fabric zoning...............................................................................................71

Fabric zoning and LUN security for multiple operating systems.................................................71

Connecting the disk array........................................................................................................72

Restarting the Linux server...................................................................................................72

Verifying new device recognition.........................................................................................72

Configuring disk array devices.................................................................................................73

Partitioning the devices.......................................................................................................73

Creating the file systems.....................................................................................................74

Creating file systems with ext2........................................................................................74

Creating the mount directories.............................................................................................74

Creating the mount table....................................................................................................74

Verifying file system operation.............................................................................................75

9 Solaris....................................................................................................76

Installation roadmap...............................................................................................................76

Installing and configuring the disk array....................................................................................76

Defining the paths..............................................................................................................76

Setting the host mode and host group mode for the disk array ports.........................................77

Setting the system option modes..........................................................................................78

Configuring the Fibre Channel ports.....................................................................................78

Installing and configuring the host.............................................................................................78

Loading the operating system and software...........................................................................78

Installing and configuring the FCAs......................................................................................79

WWN.........................................................................................................................79

Setting the disk and device parameters............................................................................79

Configuring FCAs with the Oracle SAN driver stack...........................................................80

Configuring Emulex FCAs with the lpfc driver....................................................................81

Configuring QLogic FCAs with the qla2300 driver.............................................................82

Verifying the FCA configuration...........................................................................................82

Clustering and fabric zoning...............................................................................................83

Fabric zoning and LUN security for multiple operating systems.................................................83

Connecting the disk array........................................................................................................83

Adding the new device paths to the system............................................................................84

Verifying host recognition of disk array devices .....................................................................84

Configuring disk array devices.................................................................................................84

Labeling and partitioning the devices...................................................................................85

Creating the file systems.....................................................................................................85

Creating the mount directories.............................................................................................86

Configuring for use with Veritas Volume Manager 4.x and later....................................................86

10 IBM AIX.................................................................................................87

Installation roadmap...............................................................................................................87

Installing and configuring the disk array....................................................................................87

Defining the paths..............................................................................................................87

Setting the host mode and host group mode for the disk array ports.........................................88

Setting the system option modes..........................................................................................89

Configuring the Fibre Channel ports.....................................................................................89

Installing and configuring the host.............................................................................................89

Loading the operating system and software...........................................................................89

Installing and configuring the FCAs .....................................................................................89

Clustering and fabric zoning...............................................................................................89

Fabric zoning and LUN security for multiple operating systems.................................................90

Connecting the disk array........................................................................................................90

Verifying host recognition of disk array devices......................................................................90

Configuring disk array devices.................................................................................................91

Changing the device parameters.........................................................................................91

Assigning the new devices to volume groups.........................................................................93

Creating the journaled file systems.......................................................................................95

Mounting and verifying the file systems.................................................................................97

11 Citrix XenServer Enterprise........................................................................99

Installation roadmap...............................................................................................................99

Installing and configuring the disk array....................................................................................99

Defining the paths..............................................................................................................99

Setting the host mode and host group mode for the disk array ports.......................................100

Configuring the Fibre Channel ports...................................................................................100

Setting the system option modes........................................................................................100

Installing and configuring the host...........................................................................................100

Installing and configuring the FCAs ...................................................................................101

Loading the operating system and software.........................................................................101

Clustering and fabric zoning.............................................................................................101

Fabric zoning and LUN security for multiple operating systems...............................................101

Connecting the disk array......................................................................................................102

Restarting the Linux server.................................................................................................102

Verifying new device recognition.......................................................................................102

Configuring disk array devices...............................................................................................103

Configuring multipathing..................................................................................................103

Creating a Storage Repository...........................................................................................106

Adding a Virtual Disk to a domU.......................................................................................108

Adding a dynamic LUN....................................................................................................110

12 Troubleshooting....................................................................................111

Error conditions....................................................................................................................111

13 Support and other resources...................................................................113

Contacting HP......................................................................................................................113

Subscription service..........................................................................................................113

Documentation feedback..................................................................................................113

Related information...............................................................................................................113

Conventions for storage capacity values..................................................................................113

A Path worksheet.......................................................................................115

Worksheet...........................................................................................................................115

B Path worksheet (NonStop)........................................................................116

Worksheet...........................................................................................................................116

C Disk array supported emulations..............................................................117

HP-UX.................................................................................................................................117

Supported emulations.......................................................................................................117

Emulation specifications....................................................................................................117

LUSE device parameters....................................................................................................119

SCSI TID map for Fibre Channel adapters...........................................................................121

Windows............................................................................................................................122

Supported emulations.......................................................................................................122

Emulation specifications....................................................................................................122

Novell NetWare...................................................................................................................125

Supported emulations.......................................................................................................125

Emulation specifications....................................................................................................125

NonStop.............................................................................................................................128

Supported emulations.......................................................................................................128

Emulation specifications....................................................................................................128

OpenVMS...........................................................................................................................129

Supported emulations.......................................................................................................129

Emulation specifications....................................................................................................129

VMware..............................................................................................................................132

Supported emulations.......................................................................................................132

Emulation specifications....................................................................................................132

Linux...................................................................................................................................135

Supported emulations.......................................................................................................135

Emulation specifications....................................................................................................135

Solaris................................................................................................................................138

Supported emulations.......................................................................................................138

Emulation specifications....................................................................................................138

IBM AIX..............................................................................................................................141

Supported emulations.......................................................................................................141

Emulation specifications....................................................................................................141

Disk parameters by emulation type.....................................................................................143

Byte information table.......................................................................................................149

Physical partition size table...............................................................................................151

D Using Veritas Cluster Server to prevent data corruption................................153

Using VCS I/O fencing.........................................................................................................153

E Reference information for the HP System Administration Manager (SAM)........156

Configuring the devices using SAM.........................................................................................156

Setting the maximum number of volume groups using SAM........................................................157

F HP Clustered Gateway deployments..........................................................158

Windows............................................................................................................................158

HBA configuration............................................................................................................158

MPIO software................................................................................................................158

Array configuration..........................................................................................................158

LUN presentation........................................................................................................158

Membership partitions.................................................................................................158

Snapshots..................................................................................................................158

Dynamic volume and file system creation............................................................................158

Linux...................................................................................................................................159

HBA configuration............................................................................................................159

MPIO software................................................................................................................159

Array configuration..........................................................................................................159

LUN presentation........................................................................................................159

Membership partitions.................................................................................................159

Snapshots..................................................................................................................160

Dynamic volume and file system creation............................................................................160

Glossary..................................................................................................161

Index.......................................................................................................163

1 Overview

What's in this guide

This guide includes information on installing and configuring P9000 disk arrays. The following

operating systems are covered:

• HP-UX

• Windows

• Novell NetWare

• NonStop

• OpenVMS

• VMware

• Linux

• Solaris

• IBM AIX

For additional information on connecting disk arrays to a host system and configuring for a

mainframe, see the HP StorageWorks P9000 Mainframe Host Attachment and Operations Guide.

Audience

This document is intended for system administrators, HP representatives, and authorized service

providers who are involved in installing, configuring, and operating the HP P9000 storage systems.

Features and requirements

The disk array provides the following features:

• Storage capacity:

| Model | Maximum Drives | Maximum Capacity | Maximum FC Ports |
|---|---|---|---|
| P9500 | 2048 | 1.2 PB | 160 |

• Server support: Check with your HP representative for the servers and Fibre Channel adapters

supported by your disk arrays.

NOTE: See the following list for specific OS server support:

◦ OpenVMS server support: PCI-based AlphaServers; PCI-based Integrity (IA64) Servers.

◦ VMware server support: VMware-supported processor.

◦ Windows server support: Windows PC server with the latest HP supported patches.

• Operating system support: For supported disk array microcode and OS versions, see the HP

SPOCK website: http://www.hp.com/storage/spock.

For all operating systems, before installing the disk array, ensure the environment conforms to the

following requirements:

• Fibre Channel Adapters (FCAs): Install FCAs, all utilities, and drivers. For installation details,

see the adapter documentation.

• HP StorageWorks P9000 Remote Web Console or HP StorageWorks P9000 Command View

Advanced Edition Suite Software for configuring disk array ports and paths.

• HP StorageWorks P9000 Array Manager Software

• Check with your HP representative for other P9000 software available for your system.

NOTE:

• Linux, NonStop, and Novell NetWare: Make sure you have superuser (root) access.

• OpenVMS firmware version: Alpha System firmware version 5.6 or later for Fibre Channel

support. Integrity servers have no minimum firmware version requirement.

• HP does not support using Command View Advanced Edition Suite Software from a Guest

OS.

In addition, for Solaris, ensure the following requirements are also met before installing the disk

array:

• Volume Manager: Solaris Volume Manager or Veritas Volume Manager.

• Oracle SAN software: For Solaris 8/9 (if not using Emulex, QLogic, or JNI drivers), latest SAN

Foundation Software with current patches. For Solaris 10 (if not using Emulex or QLogic

drivers), latest SAN (Leadville driver stack) with current patches.

Oracle StorEdge Traffic Manager/Oracle VM Storage Multipathing requires that you configure

/kernel/drv/scsi_vhci.conf.

For Solaris 8/9 SAN information, see Oracle StorEdge SAN Foundation Software & Installation

Guide and Oracle StorEdge Traffic Manager Software Installation and Configuration Guide

at www.oracle.com.

For Solaris 10 and later SAN information, see Solaris Fibre Channel and Storage Multipathing

Administration Guide at www.oracle.com.

Fibre Channel interface

The P9000 family of disk arrays supports the following Fibre Channel elements:

• Connection speeds of 2 Gbps, 4 Gbps, and 8 Gbps

• Short-wave non-OFC (open fiber control) optical interface

• Multimode optical cables with SC or LC connectors

• Public or private arbitrated loop (FC-AL) or direct fabric attach

• Fibre Channel switches

Even though the interface is Fibre Channel, this guide uses the term “SCSI disk” because disk array

devices are defined to the host as SCSI disks.

Fibre Channel elements specific to NonStop:

• Connection speeds of 1 Gbps, 2 Gbps, and 4 Gbps

• Short-wave non-OFC (open fiber control) optical interface

• Multimode optical cables with LC connectors

• Direct connect (PriNL) or fabric switch connect (N-port or DFA)

• Fibre Channel switches

Device emulation types

The P9000 family of disk arrays supports these device emulation types:

• OPEN-x devices: OPEN-x logical units represent disk devices. Except for OPEN-V, these devices

are based on fixed sizes. OPEN-V is a user-defined size based on a CVS device. Supported

emulations include OPEN-3, OPEN-8, OPEN-9, OPEN-E, OPEN-L, and OPEN-V devices.

• LUSE devices (OPEN-x*n): Logical Unit Size Expansion (LUSE) devices combine 2 to 36 OPEN-x

devices to create expanded LDEVs larger than standard OPEN-x disk devices. For example,

an OPEN-x LUSE volume created from ten OPEN-x volumes is designated as OPEN-x*10.

• CVS devices (OPEN-x CVS): Volume Size Configuration (VSC) defines custom volumes (CVS)

that are smaller than normal fixed-sized logical disk devices (volumes). (OPEN-V is a CVS-based

custom disk size that you determine. OPEN-L does not support CVS.) Although OPEN-V is a

CVS-based device, the product name in its SCSI inquiry string is OPEN-V, whereas the

fixed-size OPEN-[389E] devices appear as OPEN-x-CVS.

• LUSE (expanded) CVS devices (OPEN-x*n CVS): LUSE CVS combines CVS devices to create

an expanded device. This is done by first creating CVS custom-sized devices and then using

LUSE to combine from 2 to 36 CVS devices. For example, if three OPEN-9 CVS volumes are

combined to create an expanded device, this device is designated as OPEN-9*3-CVS. OPEN-V

devices are designated as OPEN-V*n (without CVS).

• FX Devices (3390-3A/B/C, OPEN-x FXoto): The Data Exchange feature allows you to share

data across mainframe, UNIX, and PC server platforms using special multi-platform volumes.

The VLL feature can be applied to DE devices for maximum flexibility in volume size.

(FX Devices: not applicable to NonStop, Novell NetWare, OpenVMS, and VMware)
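
The designation rules in the list above can be summarized in a short shell sketch. The function name emulation_name and its three arguments are hypothetical and exist only for illustration. The disk array assigns these designations itself, so treat the sketch as a reading aid, not as a configuration tool.

```shell
# Build the designation for an OPEN-x device as described in the list above.
#   $1 = base emulation (for example OPEN-9 or OPEN-V)
#   $2 = number of combined devices (1 = no LUSE, 2 to 36 = LUSE)
#   $3 = "cvs" for a custom-size (CVS) volume; omit otherwise
# The function and argument names are hypothetical.
emulation_name() {
    base=$1
    count=$2
    cvs=${3:-}
    name=$base
    if [ "$count" -gt 1 ]; then
        if [ "$count" -gt 36 ]; then
            echo "LUSE combines 2 to 36 devices" >&2
            return 1
        fi
        name="$name*$count"
    fi
    if [ "$cvs" = cvs ]; then
        case $base in
            OPEN-V) ;;                                   # OPEN-V never carries the CVS suffix
            OPEN-L) echo "OPEN-L does not support CVS" >&2
                    return 1 ;;
            *)      name="$name-CVS" ;;
        esac
    fi
    printf '%s\n' "$name"
}

emulation_name OPEN-3 10        # prints OPEN-3*10
emulation_name OPEN-9 3 cvs     # prints OPEN-9*3-CVS
emulation_name OPEN-V 4 cvs     # prints OPEN-V*4
```

The OPEN-V case is handled separately because, as noted above, OPEN-V is always custom-sized and is designated without the CVS suffix.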

NOTE: When the P9500 is connected to external storage devices, HP recommends using OPEN-V

as the emulation the array makes visible to the host. This allows configuration of external storage

LDEVs without losing data. Using any other emulation might cause data loss in the external storage

LUNs. For new deployments, use OPEN-V, because some features (such as features available with

HP StorageWorks P9000 Snapshot Software or HP StorageWorks P9000 Continuous Access

Journal Software) are only supported with OPEN-V.

For detailed information, see “Emulation specifications (HP-UX)” (page 117).

Failover

Depending on the operating system used, the disk arrays support many standard software products

that provide host, application, or I/O path failover, and management.

HP-UX

• HP Multi-Computer/Serviceguard (MC/Serviceguard) software for application failover

• Alternate link for I/O path failover (included in HP-UX)

• Logical volume management (included in HP-UX)

OpenVMS

The P9000 family of disk arrays is supported with OpenVMS's resident Multipath software, which

provides I/O path failover.

Solaris

• The Veritas Cluster Server, Solaris Cluster, and Fujitsu Siemens Computers PRIMECLUSTER

host failover products are supported for the Solaris operating system. See the documentation

for these products and Oracle technical support for installation and configuration information.

Your HP representative might need to set specific disk array system modes for these products.

Check with your HP representative for the current versions supported.

• For I/O path failover, different products are available from Oracle, Veritas, and HP. Oracle

supplies software called STMS for Solaris 8/9 and Storage Multipathing for Solaris 10. Veritas

offers VxVM, which includes DMP. HP supplies HDLM. All these products provide multipath

configuration management, FCA I/O load balancing, and automatic failover support; however,

their configuration options and FCA support differ.

• For instructions on STMS, Storage Multipathing, or VxVM, see the manufacturers' manuals.

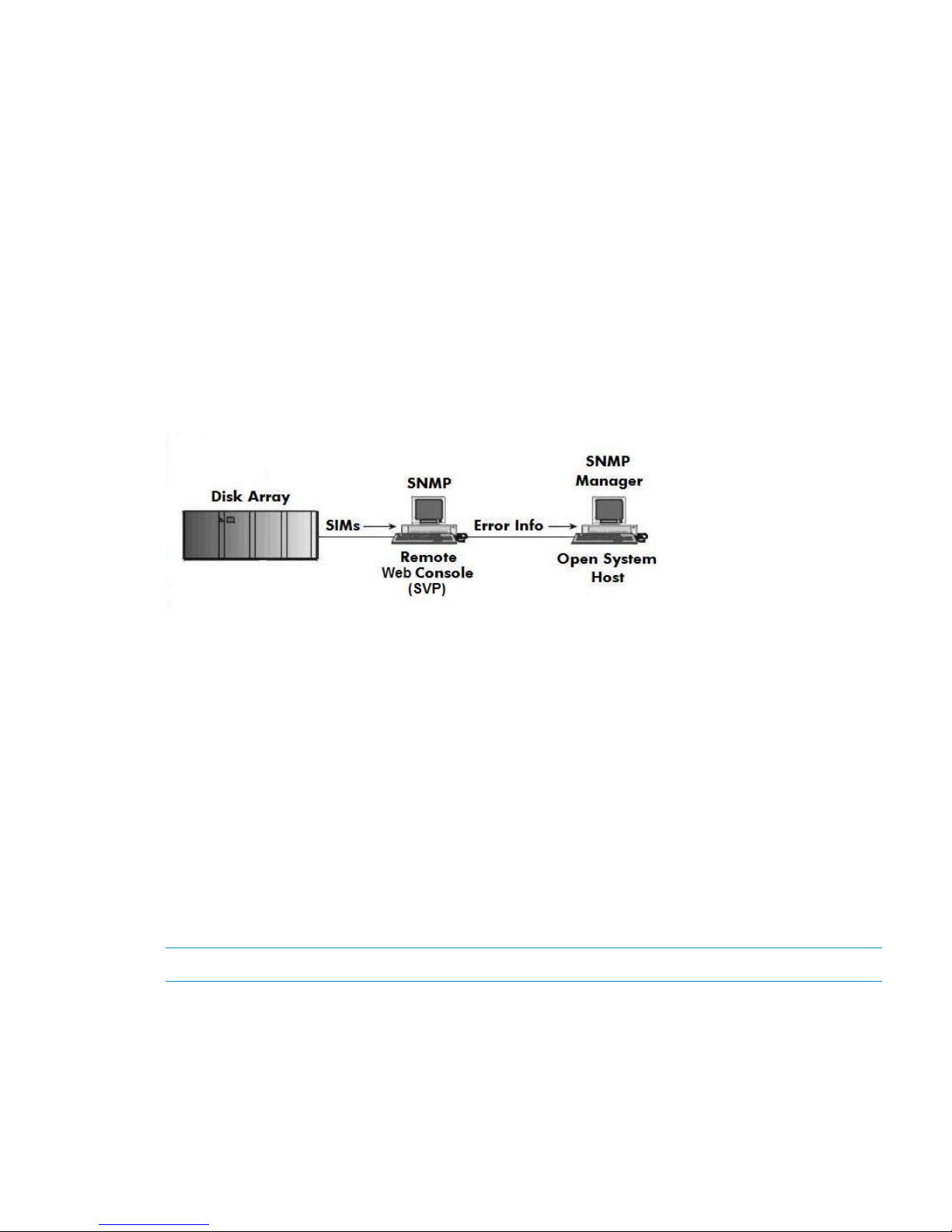

SNMP configuration

The P9000 family of disk arrays supports standard SNMP for remotely managing arrays. The

SNMP agent on the SVP performs error-reporting operations requested by the SNMP manager.

SNMP properties are usually set from the SVP but they can also be set remotely using Remote Web

Console or Command View Advanced Edition Suite Software. For specific procedures, see the

applicable user guide.

Figure 1 SNMP configuration

RAID Manager command devices

The following applies to OpenVMS, HP-UX, IBM AIX, Linux, Solaris, VMware, and Windows.

• HP StorageWorks P9000 RAID Manager Software manages HP StorageWorks P9000 Business

Copy Software or HP StorageWorks P9000 Continuous Access Synchronous Software

operations from a host server. To use RAID Manager, you must designate at least one LDEV

as a command device. This can be done with Remote Web Console or Command View

Advanced Edition Suite Software. For information about how to designate a command device,

see the applicable user guide.

• Creating scripts to configure all devices at once could save you considerable time.

The following applies to OpenVMS.

When creating a command device, HP recommends creating a LUN 0 device of 35 megabytes

(the smallest allowed). This allows you to use host-based RAID management tools available from

HP, and will allow HP support to perform some additional diagnostics.

NOTE: Storage assigned to the LUN 0 device is not accessible to OpenVMS.

2 HP-UX

You and the HP service representative each play a role in installation. The HP service representative

is responsible for installing the disk array and formatting the disk devices. You are responsible for

configuring the host server for the new devices with assistance from the HP service representative.

Installation roadmap

Perform these actions to install and configure the disk array:

1. “Installing and configuring the disk array” (page 14)

• “Defining the paths” (page 15)

• “Setting the host mode and host group mode for the disk array ports” (page 15)

• “Setting the system option modes” (page 15)

• “Configuring the Fibre Channel ports” (page 16)

2. “Installing and configuring the host” (page 16)

• “Loading the operating system and software” (page 16)

• “Installing and configuring the FCAs ” (page 16)

• “Clustering and fabric zoning” (page 16)

• “Fabric zoning and LUN security for multiple operating systems” (page 17)

3. “Connecting the disk array” (page 17)

• “Verifying FCA installation” (page 17)

• “Verifying device recognition” (page 18)

4. “Configuring disk array devices” (page 19)

• “Verifying the device files and drivers” (page 20)

• “Creating the device files” (page 20)

• “Creating the physical volumes” (page 22)

• “Creating new volume groups” (page 22)

• “Creating logical volumes” (page 24)

• “Creating the file systems” (page 26)

• “Setting the I/O timeout parameter” (page 26)

• “Creating the mount directories” (page 27)

• “Mounting and verifying the file systems” (page 27)

• “Setting and verifying the auto-mount parameters” (page 28)

Installing and configuring the disk array

The HP service representative performs these tasks:

• Assembling hardware and installing software

• Loading the microcode updates

• Installing and formatting devices

After these tasks are finished, use Remote Web Console, Command View Advanced Edition, or

Array Manager to complete the remaining disk array configuration tasks. If you do not have these

programs, your HP service representative can perform these tasks for you.

Defining the paths

Use Command View Advanced Edition or Remote Web Console to define paths between hosts

and volumes (LUNs) in the disk array.

This process is also called “LUN mapping.” In the Remote Web Console, LUN mapping includes:

• Configuring ports

• Enabling LUN security on the ports

• Creating host groups

• Assigning Fibre Channel adapter WWNs to host groups

• Mapping volumes (LDEVs) to host groups (by assigning LUNs)

In Command View Advanced Edition, LUN mapping includes:

• Configuring ports

• Creating storage groups

• Mapping volumes and WWN/host access permissions to the storage groups

For details see the HP StorageWorks P9000 Provisioning for Open Systems User Guide. Note the

LUNs and their ports, WWNs, nicknames, and LDEVs for later use in verifying host and device

configuration.

Setting the host mode and host group mode for the disk array ports

After the disk array is installed, you must set the host mode for each host group that is configured

on a disk array port to match the host OS. Set the host mode using LUN Manager in Remote Web

Console or Command View Advanced Edition. If these are not available, the HP service

representative can set the host mode using the SVP. The host mode setting for HP-UX is 08.

CAUTION: The correct host mode must be set for all new installations (newly connected ports)

to HP-UX hosts. Do not select a mode other than 08 for HP-UX. Changing a host mode after the

host has been connected is disruptive and requires the server to be rebooted.

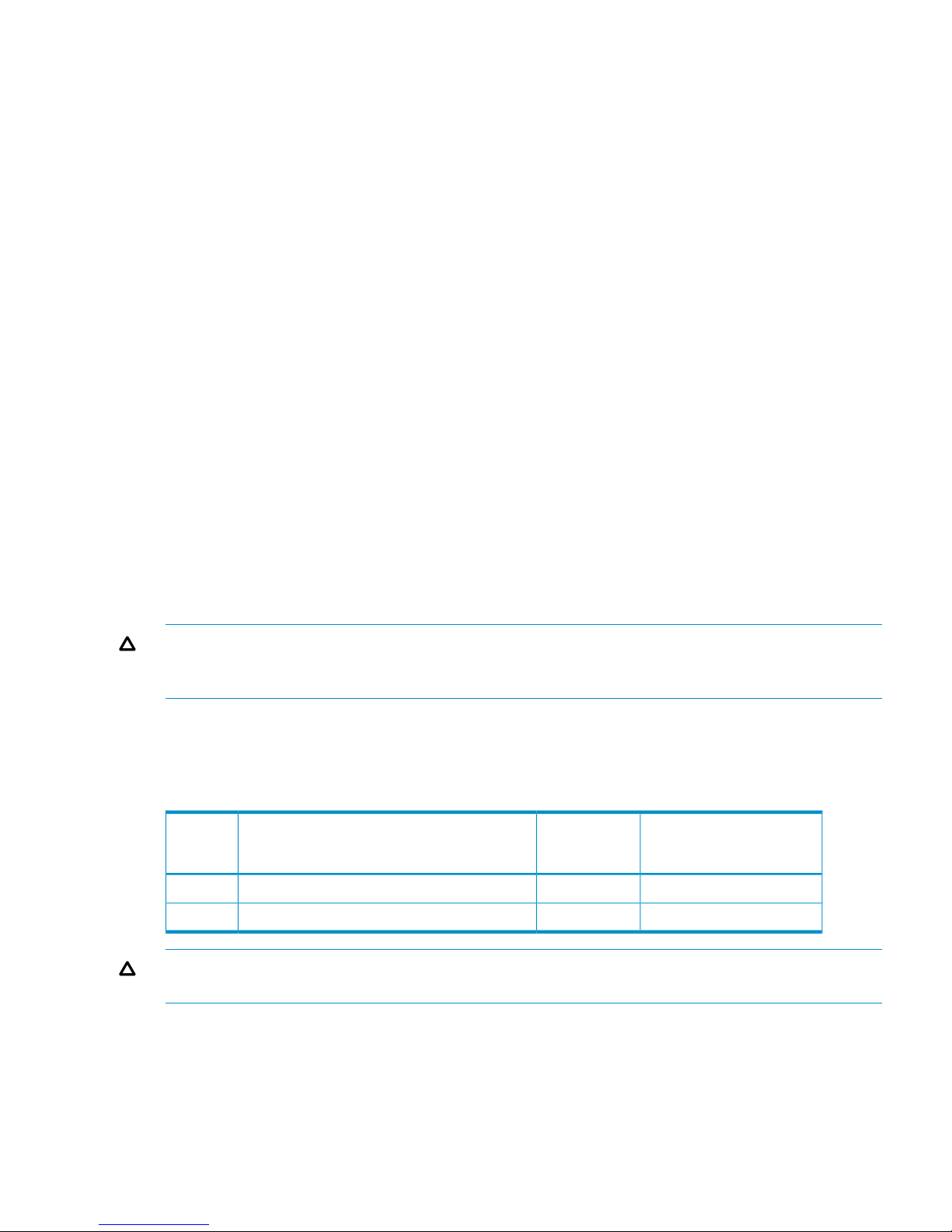

When a new host group is added, additional host group modes (options) can be configured. The

storage administrator must verify if an additional host group mode is required for the host group.

The following host group modes (options) are available for HP-UX:

Table 1 Host group modes (options) HP-UX

| Host Group Mode | Function | Default | Comments |
|---|---|---|---|
| 12 | Deletion of Ghost LUN | Inactive | Previously MODE280 |
| 33 | Task retry ID enable | Inactive | HP-UX 11.31 only |

CAUTION: Changing host group modes for ports where servers are already installed and

configured is disruptive and requires the server to be rebooted.

Setting the system option modes

The HP service representative sets system option modes based on the operating system and software

configuration of the host. In some situations, the system option modes shown in Table 2

(page 50) enable storage system behaviors that are more compatible with the requirements of a

NonStop system than the default modes. Ask your service representative if these modes apply in

your situation.

Table 2 System option modes (NonStop)

| System Option Mode | Minimum microcode version (P9500) |
|---|---|
| 142 | Available from initial release |
| 454 | Available from initial release |
| 685¹ | N/A |
| 724 | N/A |

HP also recommends setting host group mode 13 with P9000 storage systems that are connected

to HP NonStop systems.

Configuring the Fibre Channel ports

Configure the disk array Fibre Channel ports by using Command View Advanced Edition or Remote

Web Console. Select the settings for each port based on your SAN topology. Use switch zoning

if you connect different types of hosts to the array through the same switch.

Installing and configuring the host

This section explains how to install and configure Fibre Channel adapters (FCAs) that connect the

host to the disk array.

Loading the operating system and software

Follow the manufacturer's instructions to load the operating system and software onto the host.

Load all OS patches and configuration utilities supported by HP and the FCA manufacturer.

Installing and configuring the FCAs

Install and configure the Fibre Channel adapters using the FCA manufacturer's instructions.

Clustering and fabric zoning

If you plan to use clustering, install and configure the clustering software on the servers.

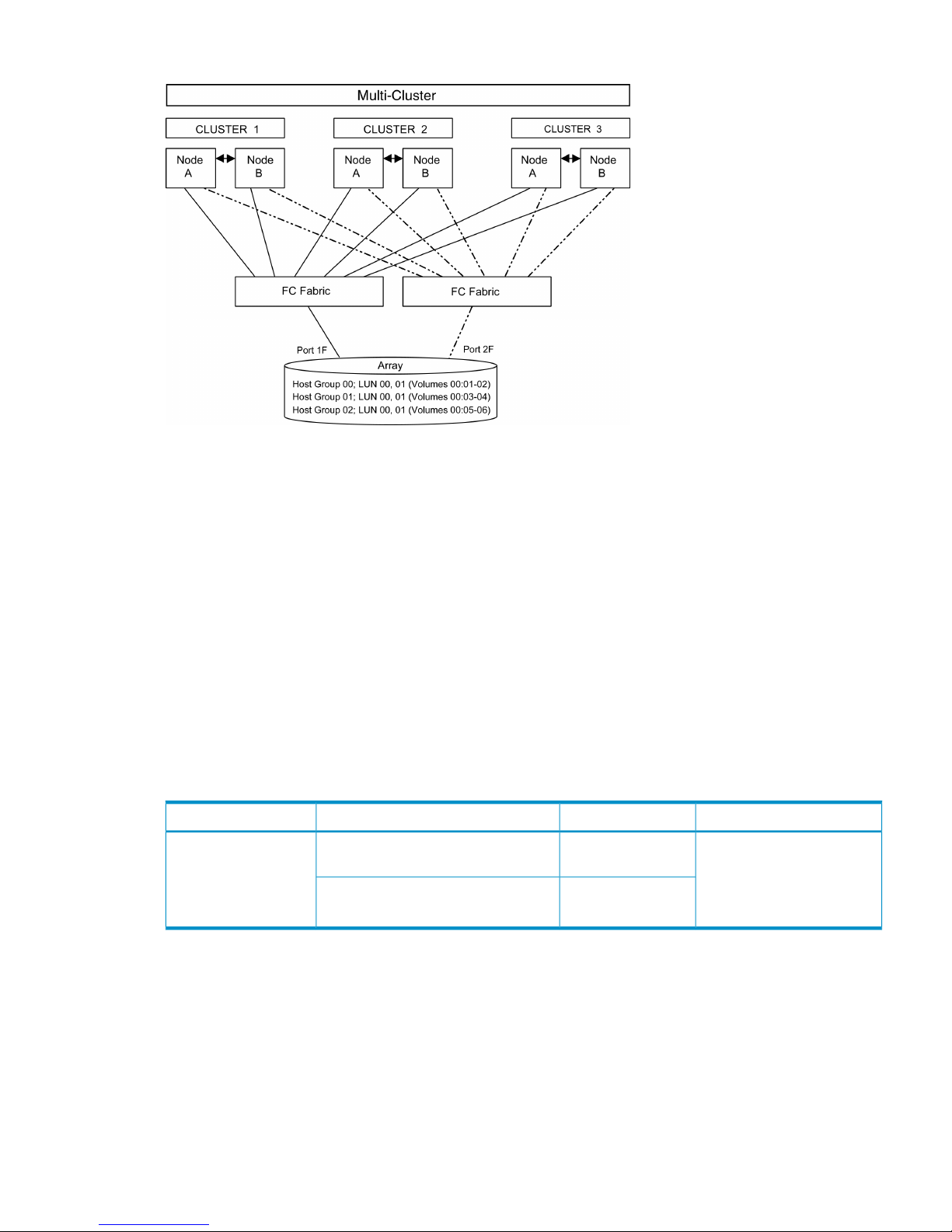

Clustering is the organization of multiple servers into groups. Within a cluster, each server is a

node. Multiple clusters compose a multi-cluster environment. The following example shows a

multi-cluster environment with three clusters, each containing two nodes. The nodes share access

to the disk array.

Figure 2 Multi-cluster environment (HP-UX)

Within the SAN, the clusters can be homogeneous (all the same operating system) or heterogeneous

(mixed operating systems). How you configure LUN security and fabric zoning depends on the

operating system mix and the SAN configuration.

Fabric zoning and LUN security for multiple operating systems

You can connect multiple clusters with multiple operating systems to the same switch and fabric

using appropriate zoning and LUN security as follows:

• Storage port zones can overlap if more than one operating system needs to share an array

port.

• Heterogeneous operating systems can share an array port if you set the appropriate host

group and mode. All others must connect to a dedicated array port.

• Use LUN Manager for LUN isolation when multiple hosts connect through a shared array port.

LUN Manager provides LUN security by allowing you to restrict which LUNs each host can

access.

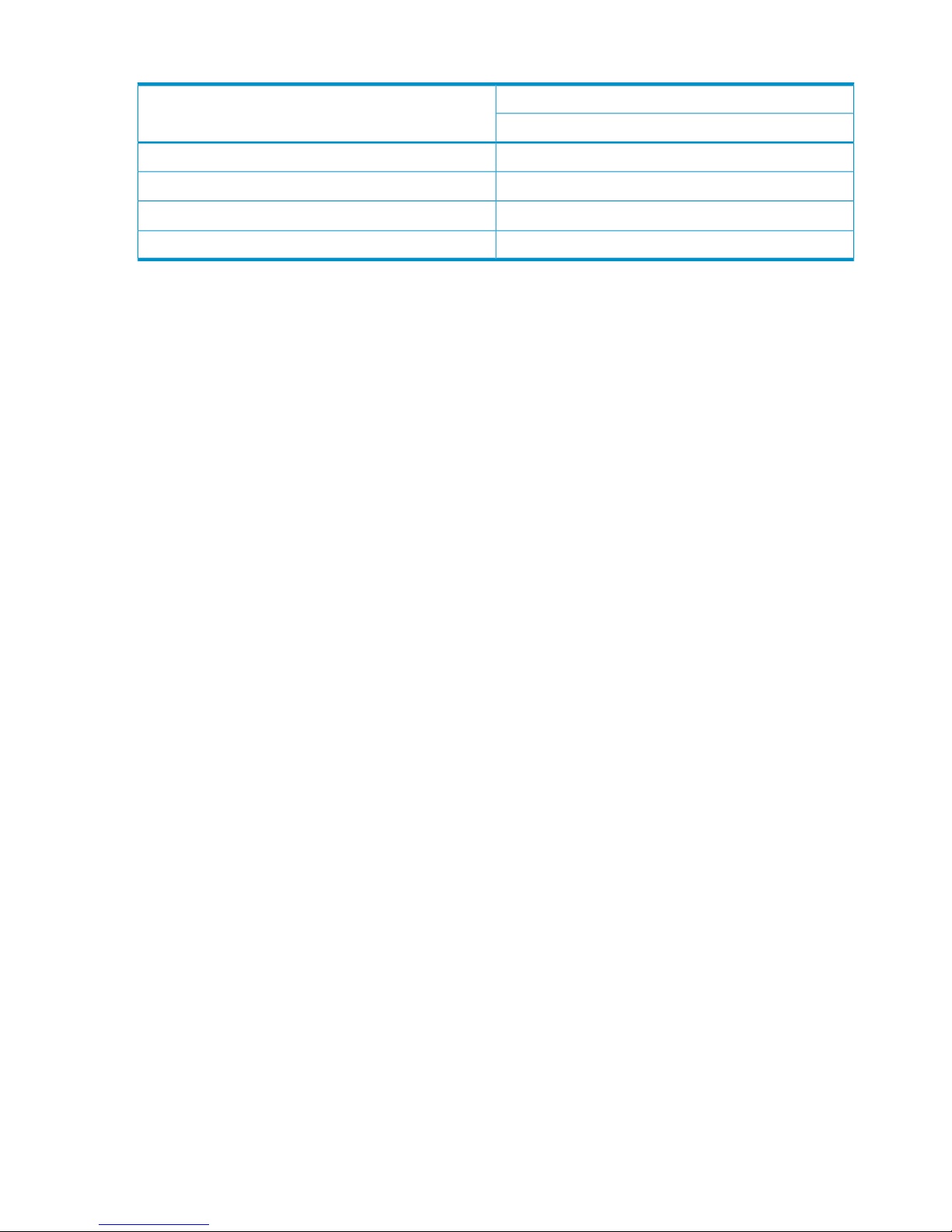

Table 3 Fabric zoning and LUN security settings

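| Environment | OS Mix | Fabric Zoning | LUN Security |
|---|---|---|---|
| Standalone SAN (non-clustered), Clustered SAN, Multi-Cluster SAN | homogeneous (a single OS type present in the SAN) | Not required | Must be used when multiple hosts or cluster nodes connect through a shared port |
| Standalone SAN (non-clustered), Clustered SAN, Multi-Cluster SAN | heterogeneous (more than one OS type present in the SAN) | Required | Must be used when multiple hosts or cluster nodes connect through a shared port |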

Connecting the disk array

The HP service representative performs the following steps to connect the disk array to the host:

1. Verifying operational status of the disk array channel adapters, LDEVs, and paths.

2. Connecting the Fibre Channel cables between the disk array and the fabric switch or host.

3. Verifying the ready status of the disk array and peripherals.

Verifying FCA installation

After configuring the ports on the disk array, verify that the FCAs are installed properly.

Use the ioscan -f command, and verify that the rows shown in the example are displayed. If

these rows are not displayed, check the host adapter installation (hardware and driver installation)

or the host configuration.

Example

# ioscan -f

Class I H/W Path Driver S/W State H/W Type Description

...

fc 0 8/12 fcT1 CLAIMED INTERFACE HP Fibre ...

lan 1 8/12.5 fcT1_cntl CLAIMED INTERFACE HP Fibre ...

fcp 0 8/12.8 fcp CLAIMED INTERFACE FCP Proto...

ext_bus 2 8/12.8.0.255.0 fcpdev CLAIMED INTERFACE FCP Devic...
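
When the ioscan listing is long, the check described above can be narrowed to the rows that need attention. The following is a minimal sketch that filters saved ioscan -f output for device rows that do not show CLAIMED as the software state. The function name unclaimed_rows is hypothetical, and the sample rows fed to it are illustrative only, not output captured from a real system.

```shell
# Print ioscan device rows whose software state is not CLAIMED.
# Reads `ioscan -f` output on standard input. The function name is hypothetical.
unclaimed_rows() {
    awk '
        $2 ~ /^-?[0-9]+$/ {            # device rows carry a numeric instance in column 2
            claimed = 0
            for (i = 3; i <= NF; i++)
                if ($i == "CLAIMED") claimed = 1
            if (!claimed) print
        }
    '
}

# Illustrative input only; on the host, run: ioscan -f | unclaimed_rows
unclaimed_rows <<'EOF'
Class I H/W Path Driver S/W State H/W Type Description
fc 0 8/12 fcT1 CLAIMED INTERFACE HP Fibre ...
unknown -1 8/12.8.0.0.0.0.1 UNCLAIMED UNKNOWN
EOF
```

An empty result means every device row reported CLAIMED. Any row that is printed points to the adapter or host configuration checks described above.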

Verifying device recognition

Verify that the HP-UX system recognizes the new devices on the disk array.

If the SCSI paths were defined after the system was powered on, you must halt and restart the system

to allow the system to recognize the new devices.

To verify device recognition:

1. Log in to the system as root.

2. Display the device data to verify that the system recognizes the newly installed devices on the

disk array. Use the ioscan -fn command to display the device data.

On a system with a large LUN configuration, HP-UX might not build device files for all LUNs.

Enter insf -e to build all missing device files.

Example

# ioscan -fn

Class I H/W Path Driver S/W State H/W Type...

bc 6 14 ccio CLAIMED BUS_NEXUS...

fc 1 14/12 fcT1 CLAIMED INTERFACE...

lan 2 14/12.5 fcT1_cntl CLAIMED INTERFACE...

fcp 1 14/12.8 fcp CLAIMED INTERFACE...

ext_bus 6 14/12.8.0.0.0 fcpmux CLAIMED INTERFACE...

disk 4 14/12.8.0.0.0.0.0 sdisk CLAIMED DEVICE...

disk 5 14/12.8.0.0.0.0.1 sdisk CLAIMED DEVICE...

ext_bus 7 14/12.8.0.255.0 fcpdev CLAIMED INTERFACE...

target 10 14/12.8.0.255.0.0 tgt CLAIMED DEVICE...

ctl 5 14/12.8.0.255.0.0.0 sctl CLAIMED DEVICE...

In the example:

• HP OPEN-9 device: SCSI bus number = 14/12, bus instance = 6, SCSI target ID = 0,

LUN = 0.

• HP OPEN-9*2 device: SCSI bus number = 14/12, bus instance = 6, SCSI target ID =

0, LUN = 1.

• If UNKNOWN is displayed for a disk, the HP 9000 system might not be configured properly.

See the HP documentation or contact HP customer support for assistance with the HP

9000 system or the HP-UX operating system.

3. Enter the device data for each disk array device in a table. See “Path worksheet” (page 115).

4. Construct the device file name for each device, using the device information, and enter the

file names in your table. Use the following formula to construct the device file name:

cxtydz

where:

• x = SCSI bus instance number

• y = SCSI target ID

• z = LUN

• c stands for controller

• t stands for target ID

• d stands for device

The numbers x, y, and z are hexadecimal.

Table 4 Device file name example (HP-UX)

| SCSI bus instance number | Hardware path | SCSI TID | LUN | File name |
|---|---|---|---|---|
| 00 | 14/12.6.0 | 6 | 0 | c6t0d0 |
| 00 | 14/12.6.1 | 6 | 2 | c6t0d1 |

5. Verify that the SCSI TIDs correspond to the assigned port address for all connected ports (see

mapping tables in SCSI TID map for Fibre Channel adapters (HP-UX), for values). If so, the

logical devices are recognized properly.

If the logical devices are not recognized properly:

• Check the AL-PA for each port using the LUN Manager software.

• If the same port address is set for multiple ports on the same loop (AL with HUB), all port

addresses except one are changed to another value, and the relationship between AL-PA and

TID no longer corresponds to the mapping given in SCSI TID map for Fibre Channel adapters

(HP-UX). Set a different address for each port, reboot the server, and then verify new

device recognition again.

• If unused device information remains, the TID-to-AL-PA mapping will not correspond to

the mapping given in SCSI TID map for Fibre Channel adapters (HP-UX). Renew the device

information, and then verify new device recognition again.
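
The device file name formula in step 4 can be sketched as a small shell function. The sketch assumes that the last two fields of a disk's hardware path are the SCSI target ID and the LUN, which matches the ioscan examples in this chapter. The function name devfile_from_path is hypothetical.

```shell
# Build a cxtydz device file name from an ext_bus instance and a disk hardware path.
#   $1 = SCSI bus instance number (the I column of the ext_bus row)
#   $2 = disk hardware path from ioscan, for example 14/12.8.0.0.0.0.1
# Assumes the last two dot-separated fields are target ID and LUN.
devfile_from_path() {
    instance=$1
    hw_path=$2
    lun=${hw_path##*.}        # text after the last dot
    rest=${hw_path%.*}        # path with the LUN field removed
    target=${rest##*.}        # last field of what remains
    printf 'c%st%sd%s\n' "$instance" "$target" "$lun"
}

devfile_from_path 6 14/12.8.0.0.0.0.1     # prints c6t0d1
devfile_from_path 2 8/12.8.8.255.0.6.0    # prints c2t6d0
```

The function only formats a name for the worksheet. It does not create or verify any device file.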

Configuring disk array devices

Disk arrays are configured using the same procedure for configuring any new disk on the host.

This includes the following procedures:

1. “Verifying the device files and drivers” (page 20)

2. “Creating the device files” (page 20)

3. “Creating the physical volumes” (page 22)

4. “Creating new volume groups” (page 22)

5. “Creating logical volumes” (page 24)

6. “Creating the file systems” (page 26)

7. “Setting the I/O timeout parameter” (page 26)

8. “Creating the mount directories” (page 27)

9. “Mounting and verifying the file systems” (page 27)

10. “Setting and verifying the auto-mount parameters” (page 28)

The HP-UX system uses the Logical Volume Manager (LVM) to manage the OPEN-x devices on the

disk array. The instructions in this section do not explicitly cover all LVM configuration issues. For

further information on LVM configuration, see the HP-UX user documentation.

HP System Administration Manager (SAM) can be used instead of UNIX commands to configure

SCSI disk devices. See “Reference information for the HP System Administration Manager (SAM)”

(page 156) for further information. Newer releases of HP-UX have deprecated the SAM tool and replaced it

with the System Management Homepage (SMH) tool.

Verifying the device files and drivers

The device files for new devices are usually created automatically during HP-UX startup. Each

device must have a block-type device file in the /dev/dsk directory and a character-type device

file in the /dev/rdsk directory.

However, some HP-compatible systems do not create the device files automatically. If verification

shows that the device files were not created, follow the instructions in “Creating the device files”

(page 20).

The following procedure verifies both types of device files:

1. Display the block-type device files in the /dev/dsk directory using the ls -l command with

the output piped to more. Verify there is one block-type device file for each disk array device.

Example

# ls -l /dev/dsk | more

total 0

brw-r----- 1 bin sys 28 0x006000 Dec 6 15:08 c6t0d0

brw-r----- 1 bin sys 28 0x006100 Dec 6 15:08 c6t0d1

2. Verify that the block-type device file name for each device is correct.

3. Display the character-type device files in the /dev/rdsk directory using the ls -l command

with the output piped to more. Verify that there is one character-type device file for each disk

array device.

Example

# ls -l /dev/rdsk | more

total 0

crw-r----- 1 bin sys 177 0x006000 Dec 6 15:08 c6t0d0

crw-r----- 1 bin sys 177 0x006100 Dec 6 15:08 c6t0d1

4. Use the device data table you created to verify that the character-type device file name for

each device is correct.

This task can also be accomplished with the lssf command.

5. After verifying the block-type and character-type device files, verify the HP-UX driver for the

disk array using the ioscan -fn command.

Example

# ioscan -fn

Class I H/W Path Driver S/W State H/W Type Desc

-----------------------------------------------------------

bc 0 root CLAIMED BUS_NEXUS...

bc 1 8 bc CLAIMED BUS_NEXUS...

fc 0 8/12 fcT1 CLAIMED INTERFACE...

fcp 0 8/12.8 fcp CLAIMED INTERFACE...

ext_bus 2 8/12.8.0.255.0 fcpdev CLAIMED INTERFACE...

disk 3 8/12.8.8.255.0.6.0 sdisk CLAIMED DEVICE...

/dev/dsk/c2t6d0 /dev/rdsk/c2t6d0

disk 4 8/12.8.8.255.0.6.1 sdisk CLAIMED DEVICE...

/dev/dsk/c2t6d1 /dev/rdsk/c2t6d1

disk 5 8/12.8.8.255.0.8.0 sdisk CLAIMED DEVICE...

/dev/dsk/c2t8d0 /dev/rdsk/c2t8d0
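
Steps 1 through 4 above can be scripted when many devices are involved. The following minimal sketch reports any device that lacks a block-type file in /dev/dsk or a character-type file in /dev/rdsk. The function name check_devfiles and the DEV_ROOT variable are hypothetical. DEV_ROOT exists only so the function can be tried against a directory other than /dev.

```shell
# Report disk array devices that lack a block-type or character-type device file.
#   Arguments: device file names from the worksheet, for example c6t0d0 c6t0d1
#   DEV_ROOT is a hypothetical override for dry runs; it defaults to /dev.
check_devfiles() {
    root=${DEV_ROOT:-/dev}
    status=0
    for name in "$@"; do
        # -b tests for a block special file, -c for a character special file
        [ -b "$root/dsk/$name" ]  || { echo "missing block file: $root/dsk/$name"; status=1; }
        [ -c "$root/rdsk/$name" ] || { echo "missing character file: $root/rdsk/$name"; status=1; }
    done
    return $status
}

# Example call on the host:
#   check_devfiles c6t0d0 c6t0d1
```

The function returns a nonzero status if any file is missing, so it can gate later steps in a configuration script. It does not check major or minor numbers, which still need to be compared against the device data table.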

Creating the device files

If the device files were not created automatically when the system was restarted, use the insf -e

command in the /dev directory to create the device files. After this command is executed,

repeat the procedures in “Verifying device recognition” (page 18) to verify new device recognition

and the device files and driver.

Example

# insf -e

insf: Installing special files for mux2 instance 0 address 8/0/0

: : : :

: : : :

#

Failure of the insf -e command indicates a SAN problem.

If the device files for the new disk array devices cannot be created automatically, you must create

the device files manually using the mknod command as follows:

1. Retrieve the device information you recorded earlier.

2. Construct the device file name for each device, using the device information, and enter the

file names in your table. Use the following formula to construct the device file name:

cxtydz

where:

• x = SCSI bus instance number

• y = SCSI target ID

• z = LUN

• c stands for controller

• t stands for target ID

• d stands for device

The numbers x, y, and z are hexadecimal.

3. Construct the minor number for each device, using the device information, and enter the minor

numbers in your table. Use the following formula to construct the minor number:

0xxxyz00 where

xx = SCSI bus instance number, y = SCSI target ID, and z = LUN.

4. Display the driver information for the system using the lsdev command.

Example

# lsdev

Character Block Driver Class

: : : :

188 31 sdisk disk

#

5. Enter the major numbers for the device drivers into the table. You should now have all required

device and driver information in the table.

6. Create the device files for all disk array devices (SCSI disk and multiplatform devices) using

the mknod command. Create the block-type device files in the /dev/dsk directory and the

character-type device files in the /dev/rdsk directory.

Example

# cd /dev/dsk                              Go to /dev/dsk directory.

# mknod /dev/dsk/c2t6d0 b 31 0x026000      Create block-type file: file name, b = block-type, 31 = major no., 0x026000 = minor no.

# cd /dev/rdsk                             Go to /dev/rdsk directory.

# mknod /dev/rdsk/c2t6d0 c 188 0x026000    Create character-type file: file name, c = character-type, 188 = major no., 0x026000 = minor no.

:

#

The character-type device file is required for volumes used as raw devices (for example,

3390-3A/B/C). The block-type device file is not required for volumes used as raw devices.

If you need to delete a device file, use the rm -i command.

Table 5 Device information example (HP-UX)

Bus   Inst  Disk  HW path             Driver  Dev type  TID  LUN  Dev file  Minor no.  Major no. (char. files)  Major no. (block files)
8/12  02    3     8/12.8.8.255.0.6.0  sdisk   OPEN-9    6    0    c2t6d0    0x026000   188                      31
8/12  02    4     8/12.8.8.255.0.6.1  sdisk   OPEN-9    6    1    c2t6d1    0x026100   188                      31
8/12  02    5     8/12.8.8.255.0.8.0  sdisk   3390-3B   8    0    c2t8d0    0x028000   188                      31
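
The minor-number formula in step 3 and the mknod syntax in step 6 can be sketched as a small POSIX shell helper. This is a minimal sketch, not an HP-supplied tool: the function names minor_number and print_mknod are hypothetical, the numeric inputs are assumed to be decimal, and the helper only prints the mknod commands so that they can be reviewed before being run as root.

```shell
#!/bin/sh
# Hypothetical helpers (not part of HP-UX); numeric inputs are decimal.

# minor_number BUS TID LUN
# Builds 0xXXYZ00: XX = bus instance, Y = target ID, Z = LUN (all hex).
minor_number() {
    if [ "$1" -gt 255 ] || [ "$2" -gt 15 ] || [ "$3" -gt 15 ]; then
        echo "minor_number: value does not fit the 0xXXYZ00 layout" >&2
        return 1
    fi
    printf '0x%02x%x%x00\n' "$1" "$2" "$3"
}

# print_mknod DEVFILE BLOCK_MAJOR CHAR_MAJOR MINOR
# Prints (does not run) the block-type and character-type mknod commands.
print_mknod() {
    printf 'mknod /dev/dsk/%s b %s %s\n' "$1" "$2" "$4"
    printf 'mknod /dev/rdsk/%s c %s %s\n' "$1" "$3" "$4"
}

# First row of the device information example: bus instance 02, TID 6, LUN 0,
# block major 31 and character major 188 as reported by lsdev.
minor=$(minor_number 2 6 0)
print_mknod c2t6d0 31 188 "$minor"
```

For the first table row this prints the same two mknod commands shown in the step 6 example, with minor number 0x026000.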

Creating the physical volumes

A physical volume must be created for each new SCSI disk device.

To create the physical volumes:

1. Use the pvcreate command to create the physical volumes with the character-type device

file as the argument. Specify the /dev/rdsk directory.

Example

# pvcreate /dev/rdsk/c6t0d0

Physical volume "/dev/rdsk/c6t0d0" has been successfully created.

:

# pvcreate /dev/rdsk/c6t0d1

Physical volume "/dev/rdsk/c6t0d1" has been successfully created.

Do not use the -f option with the pvcreate command. This option creates a new physical

volume forcibly and overwrites the existing volume. If you accidentally enter the character-type

device file for an existing volume, you will lose the data on that volume.

2. Repeat step 1 for each OPEN-x device on the disk array.

Creating new volume groups

You must create new volume groups for the new physical volumes. If desired, you can also add

any of the volumes on the disk array to existing volume groups using the vgextend command.

The physical volumes that make up one volume group can be located either in the same disk array

or in other disk arrays.

To allow more volume groups to be created, use SAM to modify the HP-UX system kernel

configuration. See “Reference information for the HP System Administration Manager (SAM)” for

details. Newer releases of HP-UX have deprecated SAM and replaced it with the System

Management Homepage (SMH) tool.

To create volume groups:

1. Use the vgdisplay command to display the existing volume groups.

2. Choose a unique name for the new volume group (for example: vg06).

3. Create the directory for the new volume group.

Example

# mkdir /dev/vg06

4. Use the ls -l command (with the output piped to grep to display only the files containing

“group”) to display the minor numbers for the existing group files.

Example

# ls -l /dev/vg* | grep group

crw-rw-rw- 1 root root 64 0x000000 Nov 7 08:13 group

:

5. Choose a unique minor number for the new group file in sequential order (for example, when

existing volume groups are vg00-vg05 and the next group name is vg06, use minor number

06 for the vg06 group file).

The minor numbers are hexadecimal (for example, the 10th minor number is 0x0a0000).

6. Use mknod to create the group file for the new directory. Specify the volume group name,

major number, and minor number. The major number for all group files is 64.

Example

In this example: group name = vg06, major number of group file = 64, minor number of

new group file = 06 (which must be unique for each volume group), and c = character.

# mknod /dev/vg06/group c 64 0x060000

:

7. Create the volume group.

To allocate more than one physical volume to the new volume group, add the other physical

volumes, separated by a space.

Example

# vgcreate /dev/vg06 /dev/dsk/c6t0d0

Volume group "/dev/vg06" has been successfully created.

Volume group configuration for /dev/vg06 has been saved in

/etc/lvmconf/vg06.conf.

For Logical Unit Size Expansion (LUSE) volumes that contain more than 17 OPEN-8/9 LDEVs

or more than 7043 MB (OPEN-8/9*n-CVS), use the -s and -e physical extent (PE) parameters

of the vgcreate command. See LUSE device parameters.

If you need to delete a volume group, use the vgremove command (for example, vgremove

/dev/vgnn). If the vgremove command does not work because the volume group is not

active, use the vgexport command (for example, vgexport /dev/vgnn).

8. Use the vgdisplay command to verify that the new volume group was created.

9. Use vgdisplay -v to verify that the volume group was created correctly. The -v option

displays the detailed volume group information.

Example

# vgdisplay -v /dev/vg06

--- Volume groups ---

VG Name /dev/vg06

VG Write Access read/write

VG Status available

Max LV 255

Cur LV 0

Open LV 0

Max PV 16

Cur PV 1

Act PV 1

Max PE per PV 1016

VGDA 2

PE Size (Mbytes) 4

Total PE 586

Alloc PE 0

Free PE 586

Total PVG 0

--- Physical volumes ---

PV Name /dev/dsk/c6t0d0

PV Status available

Total PE 586

Free PE 586
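
The numbering rule in step 5 can be sketched in POSIX shell. The examples in that step (0x060000 for vg06, 0x0a0000 for the 10th minor number) suggest the pattern 0xNN0000, where NN is the volume group number in hexadecimal. The function name group_minor is hypothetical, and the sketch only formats the number; it assumes you have already checked with ls -l that the number is not in use by another group file.

```shell
#!/bin/sh
# Hypothetical helper (not part of HP-UX): format the group-file minor
# number for volume group number N (decimal), for example 6 for vg06.
group_minor() {
    printf '0x%02x0000\n' "$1"
}

group_minor 6     # prints 0x060000 (vg06)
group_minor 10    # prints 0x0a0000 (the 10th minor number)
```

The result is the value passed as the last argument to mknod in step 6.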

Creating logical volumes

Use these commands for logical volume configuration:

• lvremove

Deletes a logical volume. Any file system attached to the logical volume must be unmounted

before executing the lvremove command.

Example

lvremove /dev/vgnn/lvolx

• lvextend

Increases the size of an existing logical volume.

Example

lvextend -L size /dev/vgnn/lvolx

• lvreduce

Decreases the size of an existing logical volume. Any file system attached to the logical volume

must be unmounted before executing the lvreduce command.

Example

lvreduce -L size /dev/vgnn/lvolx

CAUTION: Data within the file system can be lost after execution of lvreduce.

Create logical volumes after you create volume groups. A logical volume must be created for each

new SCSI disk device.

To create logical volumes:

1. Use the lvcreate -L command to create a logical volume.

Specify the volume size (in megabytes) and the volume group for the new logical volume.

HP-UX assigns the logical volume numbers automatically (lvol1, lvol2, lvol3). Use the following

capacity values for the size parameter:

OPEN-K = 1740

OPEN-3 = 2344

OPEN-8 = 7004

OPEN-9 = 7004

OPEN-E = 13888

OPEN-L = 34756

OPEN-V = 61432

To calculate the maximum size value (S1) for CVS, LUSE, and CVS LUSE volumes, first use the

vgdisplay command to display the physical extent size (PE Size) and usable number of physical

extents (Free PE) for the volume. Then calculate S1 (in MB) as follows:

S1 = (PE Size) × (Free PE)

Logical volumes can span multiple physical volumes. Use the diskinfo command for extended

LUNs.

2. Create an OPEN-3 logical volume the size of the physical volume, using 2344 for the size

parameter. An OPEN-9 volume uses 7040 for the size parameter to create a logical volume

the size of the physical volume.

Example

# lvcreate -L 2344 /dev/vg06

Logical volume "/dev/vg06/lvol1" has been successfully created with

character device "/dev/vg06/rlvol1".

Logical volume "/dev/vg06/lvol1" has been successfully extended.

Volume Group configuration for /dev/vg06 has been saved in

/etc/lvmconf/vg06.conf.

3. Use the lvdisplay command to verify that the logical volume was created correctly.

Example

# lvdisplay /dev/vg06/lvol1

--- Logical volume ---

LV Name /dev/vg06/lvol1

VG Name /dev/vg06

LV Permission read/write

LV Status available/syncd

Mirror copies 0

Consistency Recovery MWC

Schedule parallel

LV Size (Mbytes) 2344

Current LE 586

Allocated PE 586

Stripes 0

Stripe Size (Kbytes) 0

Bad block on

Allocation strict

4. Repeat steps 1–3 for each logical volume to be created.

You can create only one logical volume at a time. However, you can verify multiple logical

volumes at a time.
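
The size calculation in step 1, (PE Size) × (Free PE), can be sketched as a POSIX shell function that reads vgdisplay output and multiplies the two fields. The function name max_lv_size_mb is hypothetical, and the sketch assumes that the field labels match the vgdisplay example for /dev/vg06 and start at the beginning of the line.

```shell
#!/bin/sh
# Hypothetical helper: read vgdisplay output on stdin and print the
# maximum logical volume size in MB, (PE Size) x (Free PE).
max_lv_size_mb() {
    awk '
        /^PE Size/ && pe == ""   { pe = $NF }    # first "PE Size (Mbytes)" line
        /^Free PE/ && free == "" { free = $NF }  # first "Free PE" line
        END { if (pe != "" && free != "") print pe * free }
    '
}

# Sample lines copied from the vgdisplay example for /dev/vg06.
max_lv_size_mb <<'EOF'
PE Size (Mbytes) 4
Total PE 586
Alloc PE 0
Free PE 586
EOF
```

With the sample values (PE size 4 MB, 586 free extents) the result is 2344, which matches the OPEN-3 size used with lvcreate -L in step 2. On an HP-UX host the here-document would be replaced by a pipe from vgdisplay for the volume group in question.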

Creating the file systems

Create the file system for each new logical volume on the disk array. The default file system types

are:

• HP-UX OS version 10.20 = hfs or vxfs, depending on the entry in the /etc/default/fs

file.

• HP-UX OS version 11.0 = vxfs

• HP-UX OS version 11i = vxfs

To create file systems:

1. Use the newfs command to create the file system using the logical volume as the argument.

Example 1

# newfs /dev/vg06/rlvol1

newfs: /etc/default/fs determine the file system type

mkfs (hfs): Warning - 272 sectors in the last cylinder are not

allocated.

mkfs (hfs): /dev/vg06/rlvol1 - 2400256 sectors in 3847 cylinders

of 16 tracks,

2457.9MB in 241 cyl groups (16 c/g, 10.22Mb/g, 1600 ig/g)

Super block backups (for fsck -b) at:

16, 10040, 20064, 30088, 40112, 50136, 60160, 70184, 80208, 90232, ...

2396176

Example 2

# newfs /dev/vg06/rlvol1 create file system

newfs: /etc/default/fs determine the file system type

mkfs (hfs): ...

:

7188496, 7198520, 7208544

#

Example 3

# newfs -F vxfs /dev/vg06/rlvol1 Specify file system type

:

# newfs -F hfs /dev/vg06/rlvol2

2. Repeat step 1 for each logical volume on the disk array.

Setting the I/O timeout parameter

Set the I/O timeout value for each disk device to 60 seconds.

1. Verify the current I/O timeout value using the pvdisplay command:

Example

# pvdisplay /dev/dsk/c0t6d0

--- Physical volumes ---

PV Name /dev/dsk/c0t6d0

VG Name /dev/vg06

PV Status available

Allocatable yes

VGDA 2

Cur LV 1

PE Size (Mbytes) 4

Total PE 586

Free PE 0

Allocated PE 586 [OPEN-9]

Stale PE 0

IO Timeout (Seconds) default [I/O timeout value]

2. If the I/O timeout value is not 60, change the value to 60 using the pvchange -t command:

Example

# pvchange -t 60 /dev/dsk/c0t6d0

Physical volume "/dev/dsk/c0t6d0" has been successfully changed.

Volume Group configuration for /dev/vg06 has been saved in

/etc/lvmconf/vg06.conf.

3. Verify that the new I/O timeout value is 60 seconds using the pvdisplay command:

Example

# pvdisplay /dev/dsk/c0t6d0

--- Physical volumes ---

PV Name /dev/dsk/c0t6d0

VG Name /dev/vg06

PV Status available

:

Stale PE 0

IO Timeout (Seconds) 60 [New I/O timeout value]

4. Repeat steps 1–3 for each new disk connected to the system.
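
The check in steps 1 and 2 can be sketched as a POSIX shell filter over pvdisplay output. The function names io_timeout_value and needs_timeout_change are hypothetical, and the sketch assumes that the timeout appears as the last field of the "IO Timeout (Seconds)" line, as in the examples above (the bracketed callouts in those examples are annotations, not command output). The sketch only reports what it finds; it does not run pvchange.

```shell
#!/bin/sh
# Hypothetical helper: read pvdisplay output on stdin and print the value
# of the "IO Timeout (Seconds)" field (a number, or the word "default").
io_timeout_value() {
    awk '/IO Timeout/ { print $NF; exit }'
}

# needs_timeout_change VALUE: succeeds when VALUE is anything other than 60.
needs_timeout_change() {
    [ "$1" != "60" ]
}

# Sample lines modeled on the pvdisplay example in step 1.
value=$(printf 'Stale PE 0\nIO Timeout (Seconds) default\n' | io_timeout_value)
if needs_timeout_change "$value"; then
    echo "timeout is $value; run: pvchange -t 60 <device file>"
fi
```

On an HP-UX host, the sample text would be replaced by the pvdisplay output for each new disk, for example pvdisplay /dev/dsk/c0t6d0 piped into io_timeout_value.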

Creating the mount directories