QuickSpecs

HP Integrity Superdome Servers: 16-way, 32-way, and 64-way

Overview

DA - 11717 Worldwide — Version 1 — June 30, 2003


At A Glance

HP Integrity Superdome, the latest release of Superdome, supports the new and improved sx1000 chip set. HP Integrity Superdome supports Itanium 2 1.5-GHz processors in mid-2003, and the next-generation PA-RISC processor (PA-8800) and the mx2 processor module, based on two Itanium 2 processors, in early 2004.

Throughout the rest of this document, HP Integrity Superdome with Itanium 2 1.5-GHz processors is referred to simply as "Superdome".

Superdome with Itanium 2 1.5-GHz processors showcases HP's commitment to delivering a 64-way Itanium server and superior investment protection. It marks the dawn of a new era in high-end computing, with the emergence of commodity-based hardware.

Superdome supports a multi-OS environment. The operating systems supported by Superdome are listed below.

NOTE: Superdome supports both Red Hat Enterprise Linux AS 3 and Debian Linux. Throughout the rest of this document, the two flavors are collectively referred to as Linux.

NOTE: For information on upgrades from existing Superdome systems to HP Integrity Superdome systems, please refer to the "Upgrade" section.

NOTE: This information can also be found in ESP at: http://esp.mayfield.hp.com:2000/nav24/ppos/servers/gen/PriceAvailConfig/59815790/cgch2/cgch2sub8

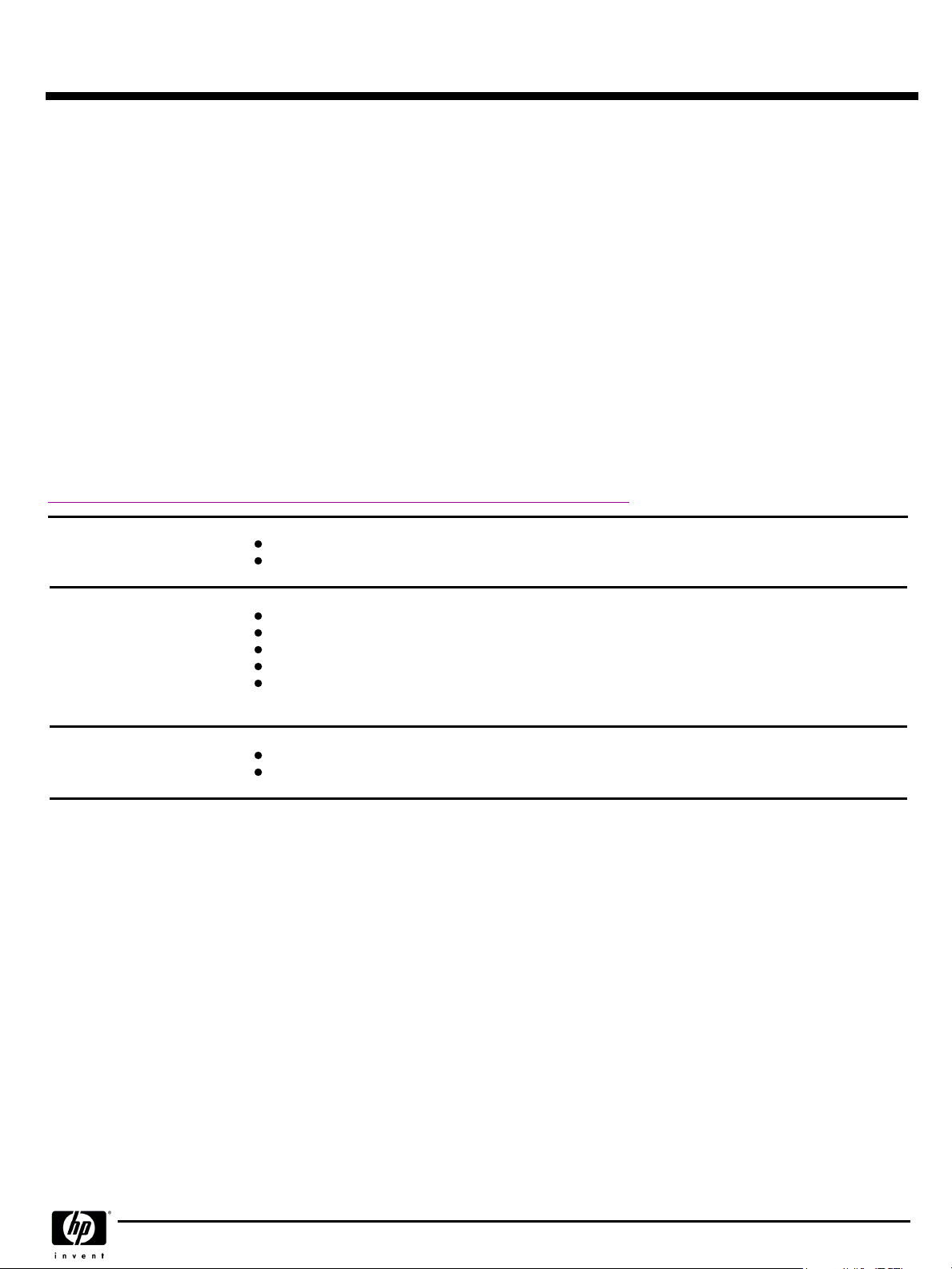

HP-UX 11i version 2

Improved performance over PA-8700

Investment protection through upgrades from existing Superdomes to next-generation Itanium 2 processors

Windows Server 2003 Datacenter Edition for Itanium 2

Extension of industry-standard computing with Windows further into the enterprise data center

Increased performance and scalability over 32-bit implementations

Lower cost of ownership versus proprietary solutions

Ideal for scale-up database opportunities such as SQL Server 2000 (64-bit)

Ideal for database consolidation opportunities, such as consolidation of legacy 32-bit versions of SQL Server 2000 to SQL Server 2000 (64-bit)

Linux

Extension of industry-standard computing with Linux further into the enterprise data center

Lower cost of ownership versus proprietary solutions


Superdome Service Solutions

Superdome continues to provide the same positive Total Customer Experience via industry-leading HP Services, as with

existing Superdome servers. The HP Services component of Superdome is as follows:

HP customers have consistently achieved higher levels of satisfaction when key components of their IT infrastructures

are implemented using the

maximum availability by examining customers' specific needs at each of five distinct phases (plan, design,

integrate, install, and manage) and then designing their Superdome solution around those needs. HP offers three

pre configured service solutions for Superdome that provides customers with a choice of lifecycle services to address

their own individual business requirements.

HP's Mission Critical Partnership:

HP's Mission Critical Partnership:

HP's Mission Critical Partnership:HP's Mission Critical Partnership:

agreement with Hewlett Packard to achieve the level of service that you need to meet your business requirements.

This level of service can help you reduce the business risk of a complex IT infrastructure, by helping you align IT

service delivery to your business objectives, enable a high rate of business change, and continuously improve service

levels. HP will work with you proactively to eliminate downtime, and improve IT management processes. S

Service Solution Enhancements:

Service Solution Enhancements:

Service Solution Enhancements:Service Solution Enhancements:

Solution in order to address your specific business needs. Services focused across multi operating systems as well as

other platforms such as storage and networks can be combined to compliment your total solution.

HP Integrity Superdome Servers: 16-way, 32-way,

HP Integrity Superdome Servers: 16-way, 32-way,

HP Integrity Superdome Servers: 16-way, 32-way,HP Integrity Superdome Servers: 16-way, 32-way,

and 64-way

and 64-way

and 64-wayand 64-way

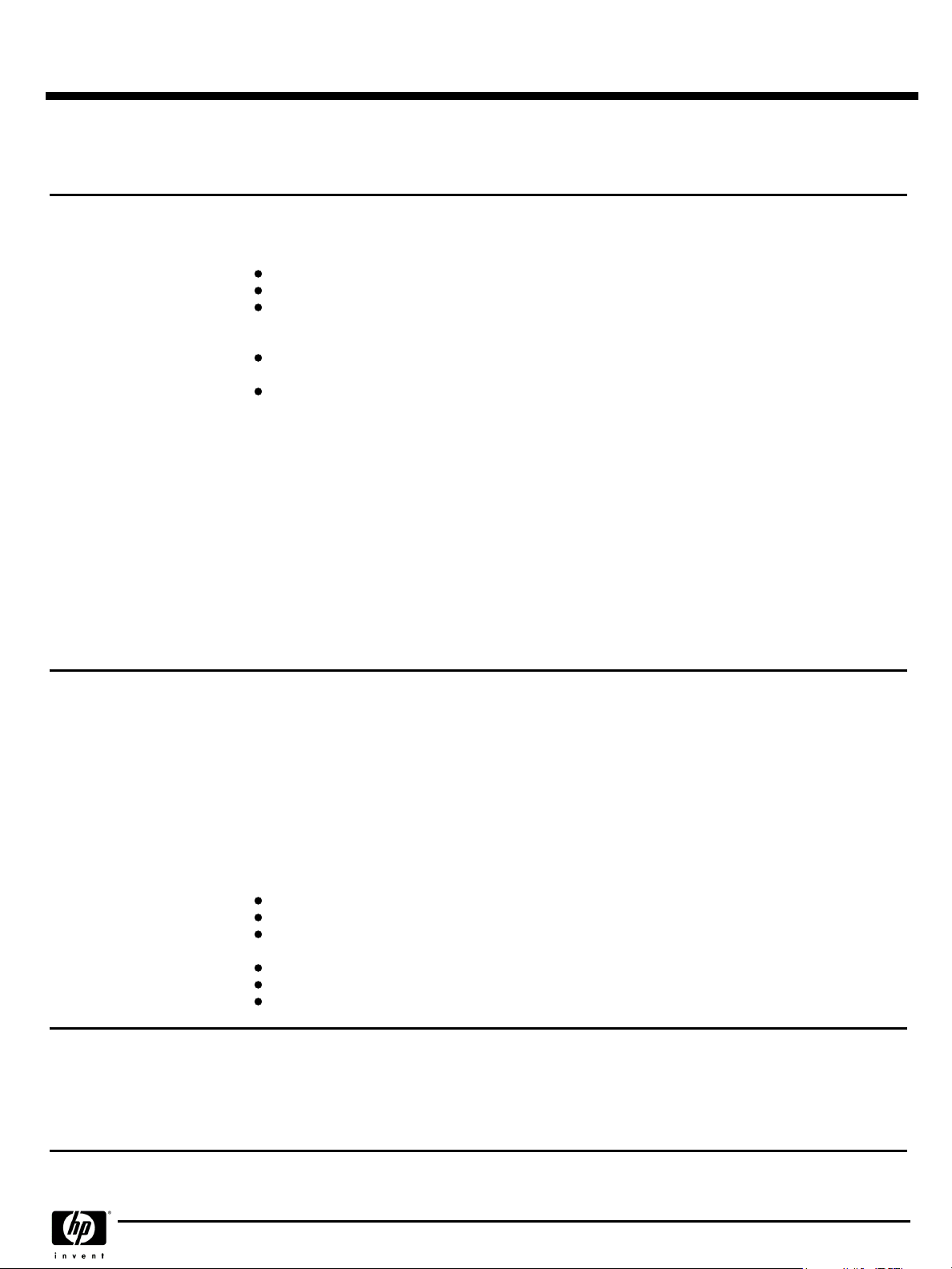

Solution Life Cycle

Solution Life Cycle

Solution Life CycleSolution Life Cycle

Foundation Service Solution:

Foundation Service Solution:

Foundation Service Solution:Foundation Service Solution:

lays the groundwork for long term system reliability by combining pre installation preparation and

integration services, hands on training and reactive support. This solution includes HP Support Plus 24 to

provide an integrated set of 24x7 hardware and software services as well as software updates for selected HP

and third party products.

Proactive Service Solution:

Proactive Service Solution:

Proactive Service Solution:Proactive Service Solution:

management phase of the Solution Life Cycle with HP Proactive 24 to complement your internal IT

resources with proactive assistance and reactive support. Proactive Service Solution helps reduce design

problems, speed time to production, and lay the groundwork for long term system reliability by combining

pre installation preparation and integration services with hands on staff training and transition assistance.

With HP Proactive 24 included in your solution, you optimize the effectiveness of your IT environment with

access to an HP certified team of experts that can help you identify potential areas of improvement in key IT

processes and implement necessary changes to increase availability.

Critical Service Solution:

Critical Service Solution:

Critical Service Solution:Critical Service Solution:

reactive support services to ensure maximum IT availability and performance for companies that can't

tolerate downtime without serious business impact. Critical Service Solution encompasses the full spectrum

of deliverables across the Solution Lifecycle and is enhanced by HP Critical Service as the core of the

management phase. This total solution provides maximum system availability and reduces design

problems, speeds time to production, and lays the groundwork for long term system reliability by combining

pre installation preparation and integration services, hands on training, transition assistance, remote

monitoring, and mission critical support. As part of HP Critical Service, you get the services of a team of HP

certified experts that will assist with the transition process, teach your staff how to optimize system

performance, and monitor your system closely so potential problems are identified before they can affect

availability.

This solution builds on the Foundation Service Solution by enhancing the

Mission Critical environments are maintained by combining proactive and

This service offering provides customers the opportunity to create a custom

HP's full portfolio of services is available to enhance your Superdome Service

. The Solution Life Cycle focuses on rapid productivity and

This solution reduces design problems, speeds time to production, and


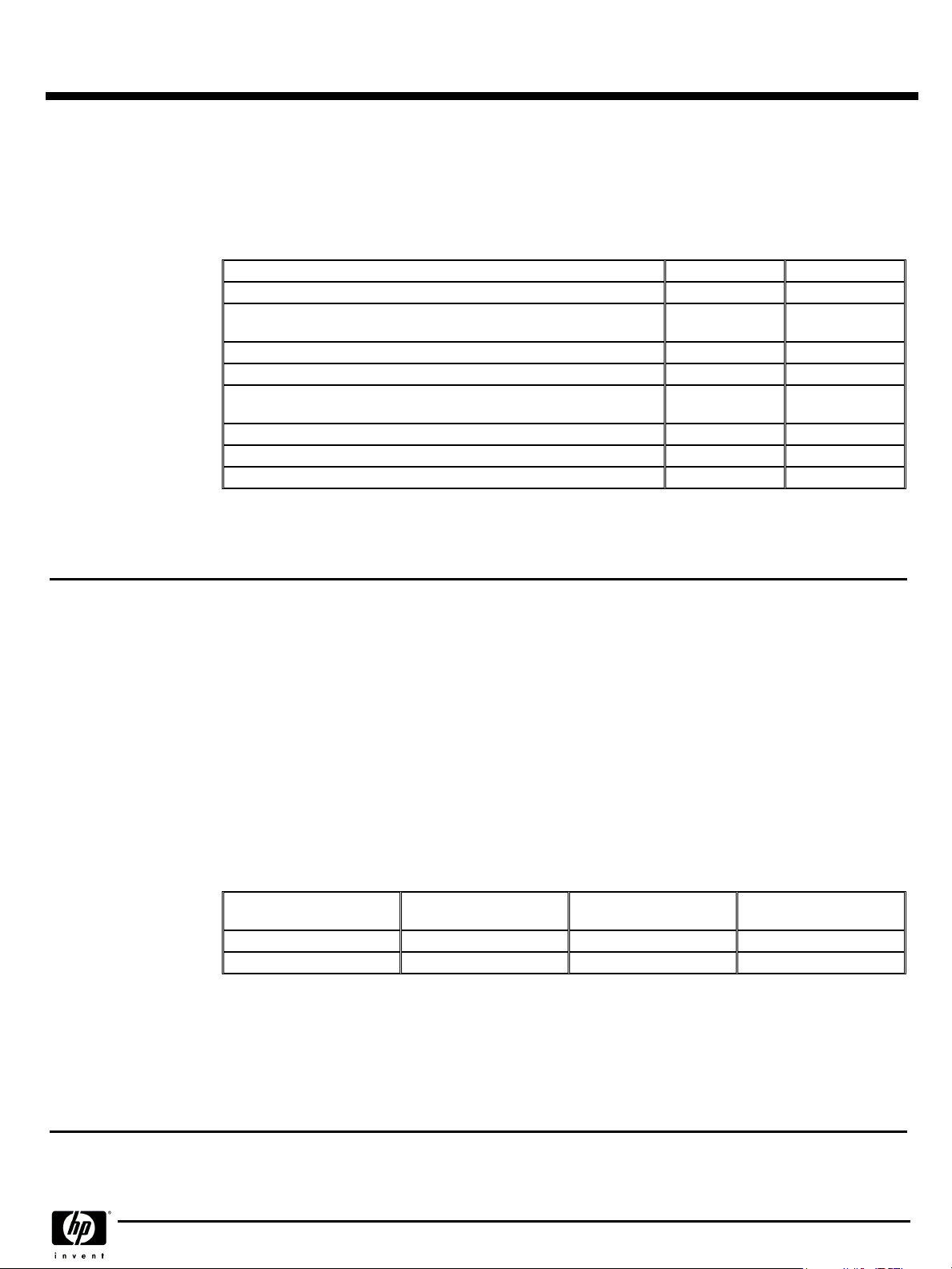

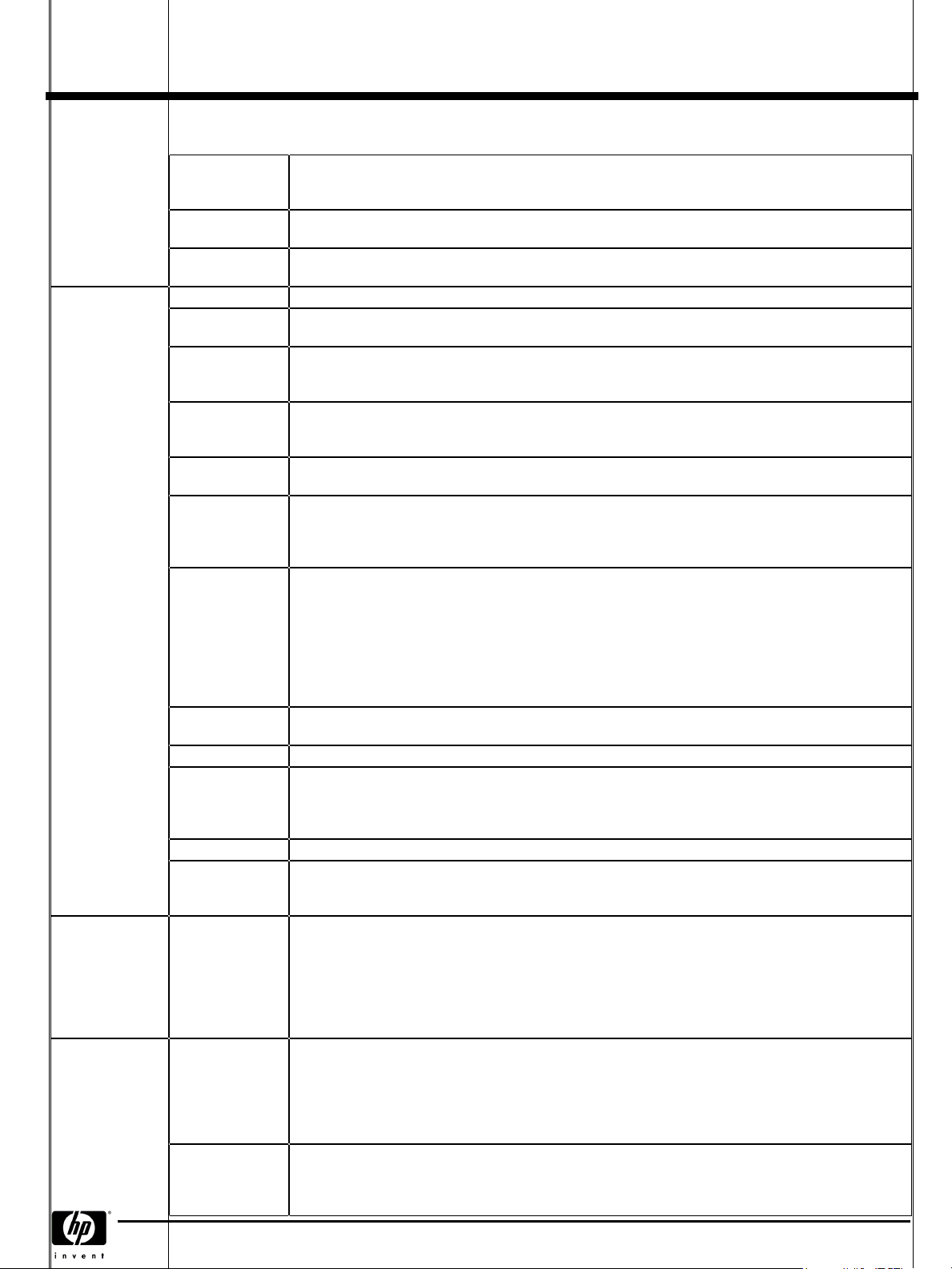

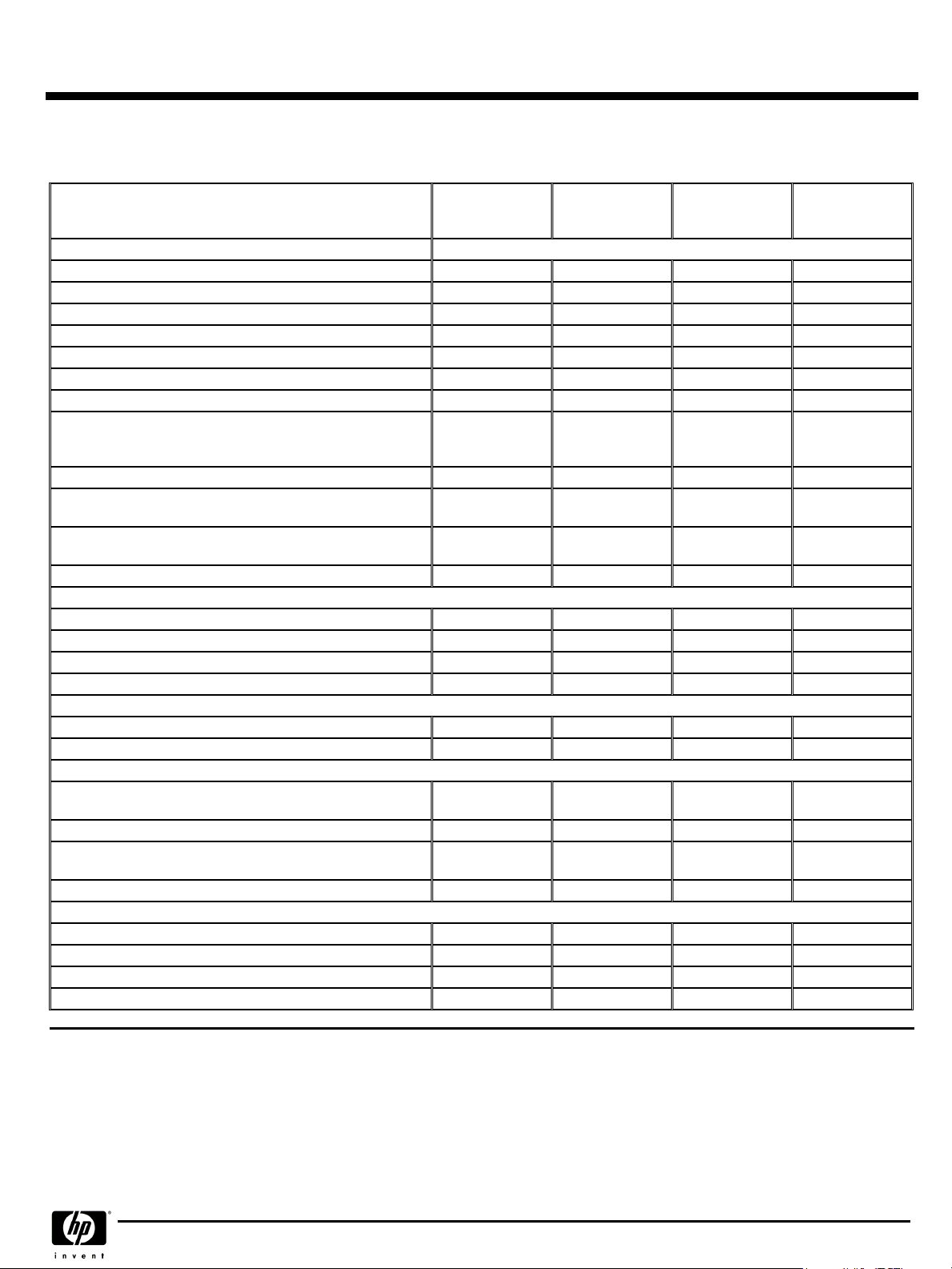

Standard Features

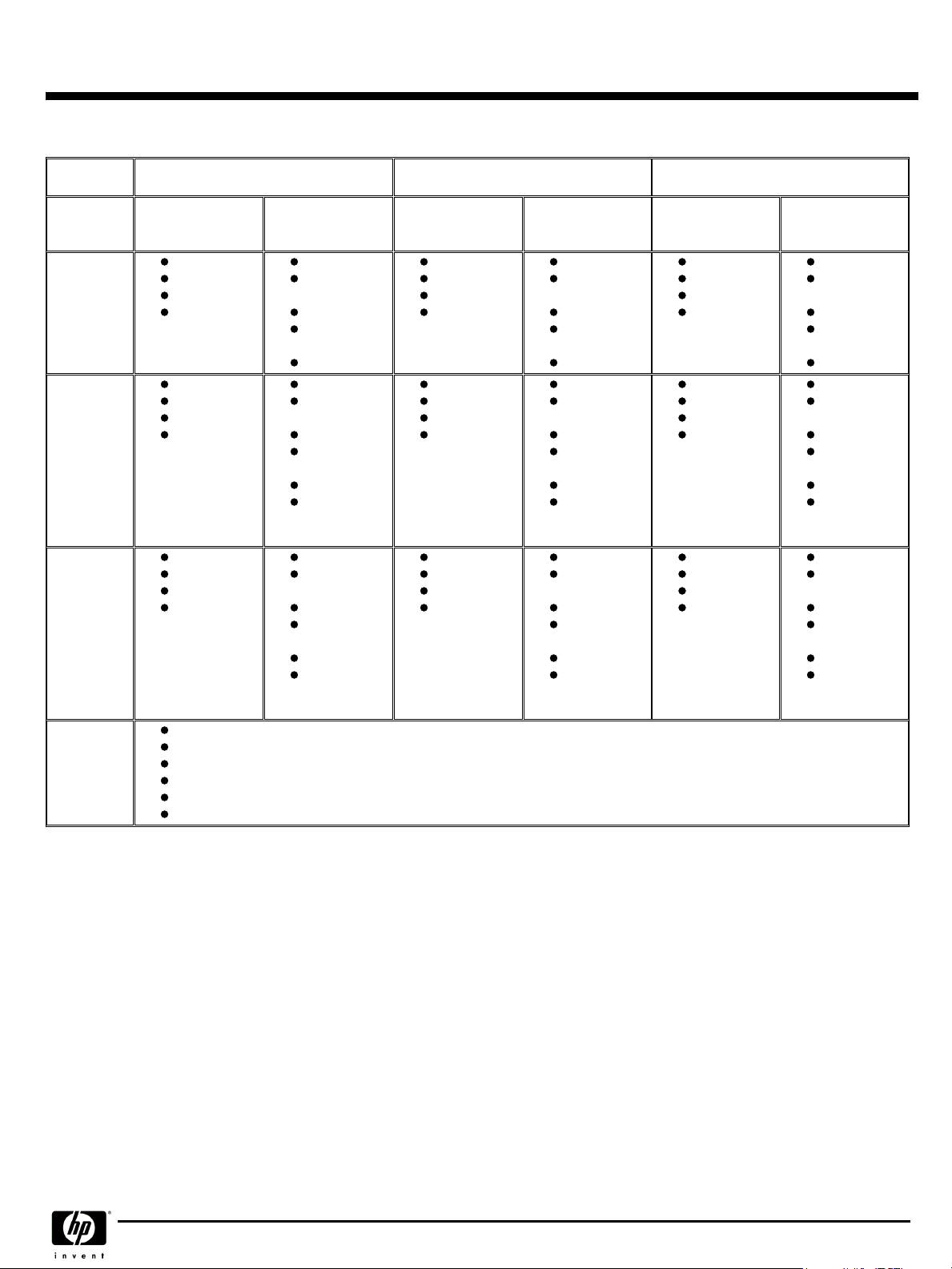

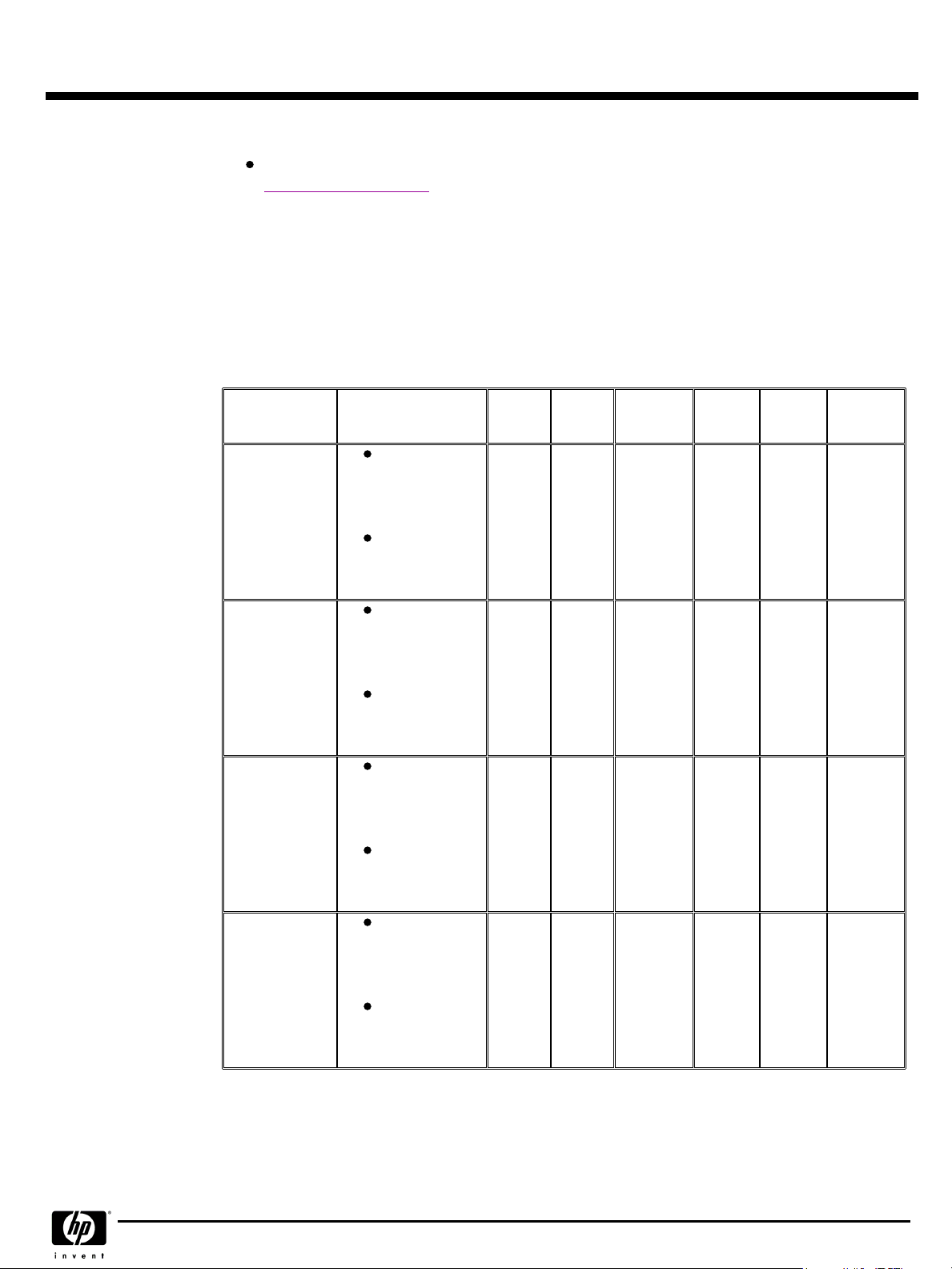

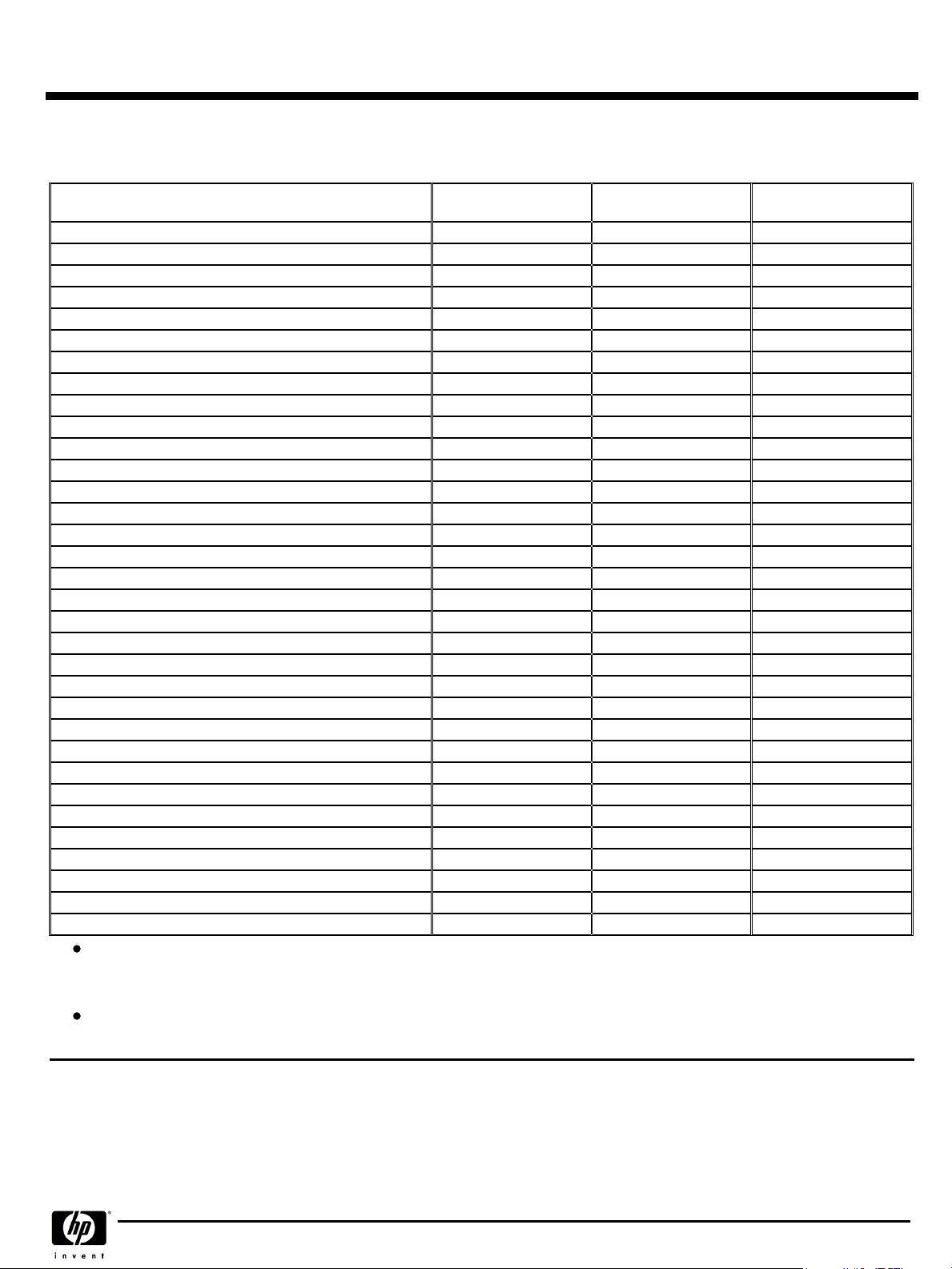

Standard Hardware Features (all models):

Redundant power supplies

Redundant fans

Factory integration of memory and I/O cards

Installation Guide, Operator's Guide and Architecture Manual

HP site planning and installation

One-year warranty with same-business-day on-site service response

| System | Superdome 16-way | Superdome 32-way | Superdome 64-way |
|---|---|---|---|
| HP-UX 11i version 2: Minimum | 2 CPUs, 2 GB memory, 1 cell board, 1 PCI-X chassis | 2 CPUs, 2 GB memory, 1 cell board, 1 PCI-X chassis | 6 CPUs, 6 GB memory, 3 cell boards, 1 PCI-X chassis |
| HP-UX 11i version 2: Maximum (in one partition) | 16 CPUs, 128 GB memory, 4 cell boards, 4 PCI-X chassis; 4 npars max | 32 CPUs, 256 GB memory, 8 cell boards, 8 PCI-X chassis; 8 npars max (IOX required if more than 4 npars) | 64 CPUs, 512 GB memory, 16 cell boards, 16 PCI-X chassis; 16 npars max (IOX required if more than 8 npars) |
| Windows Server 2003 Datacenter Edition: Minimum | 2 CPUs, 2 GB memory, 1 cell board, 1 PCI-X chassis | 2 CPUs, 2 GB memory, 1 cell board, 1 PCI-X chassis | 6 CPUs, 6 GB memory, 3 cell boards, 1 PCI-X chassis |
| Windows Server 2003 Datacenter Edition: Maximum (in one partition) | 16 CPUs, 128 GB memory, 4 cell boards, 4 PCI-X chassis; 4 npars max | 32 CPUs, 256 GB memory, 8 cell boards, 8 PCI-X chassis; 8 npars max (IOX required if more than 4 npars) | 64 CPUs, 512 GB memory, 16 cell boards, 16 PCI-X chassis; 16 npars max (IOX required if more than 8 npars) |
| Linux: Minimum | 2 CPUs, 2 GB memory, 1 cell board, 1 PCI-X chassis | 2 CPUs, 2 GB memory, 1 cell board, 1 PCI-X chassis | 6 CPUs, 6 GB memory, 3 cell boards, 1 PCI-X chassis |
| Linux: Maximum (in one partition) | 8 CPUs, 64 GB memory, 2 cell boards, 1 PCI-X chassis; 4 npars max | 8 CPUs, 64 GB memory, 2 cell boards, 1 PCI-X chassis; 8 npars max (IOX required if more than 4 npars) | 8 CPUs, 64 GB memory, 2 cell boards, 1 PCI-X chassis; 16 npars max (IOX required if more than 8 npars) |


Configuration

There are three basic building blocks in the Superdome system architecture: the cell, the crossbar backplane and the PCI-X based I/O subsystem.

Cabinets

Starting with the sx1000 chip set, Superdome servers will be released in the Graphite color. A Superdome system will consist of up to four different types of cabinet assemblies:

One Superdome left cabinet.

No more than one Superdome right cabinet (Superdome 64-way systems only).

The Superdome cabinets contain all of the processors, memory and core devices of the system. They will also house most (usually all) of the system's PCI-X cards. Systems may include both left and right cabinet assemblies, containing a left or right backplane, respectively.

One or more HP Rack System/E cabinets. These 19-inch rack cabinets are used to hold the system peripheral devices such as disk drives.

Optionally, one or more I/O expansion cabinets (Rack System/E). An I/O expansion cabinet is required when a customer requires more PCI-X cards than can be accommodated in their Superdome cabinets.

Superdome cabinets will be serviced from the front and rear of the cabinet only. This will enable customers to arrange the

cabinets of their Superdome system in the traditional row fashion found in most computer rooms. The width of the cabinet

will accommodate moving it through common doorways in the U.S. and Europe. The intake air to the main (cell) card

cage will be filtered. This filter will be removable for cleaning/replacement while the system is fully operational.

A status display will be located on the outside of the front and rear doors of each cabinet, so the customer and field engineers can determine the basic status of each cabinet without opening any cabinet doors.

Superdome 16-way and Superdome 32-way systems are available in single cabinets. Superdome 64-way systems are available in dual cabinets.

Each cabinet may contain a specific number of cell boards (consisting of CPUs and memory) and I/O. See the following sections for configuration rules pertaining to each cabinet.

Cells (CPUs and Memory)

A cell, or cell board, is the basic building block of a Superdome system. It is a symmetric multiprocessor (SMP) containing up to 4 processor modules and up to 16 GB of main memory using 512 MB DIMMs, or up to 32 GB of main memory using 1 GB DIMMs. It is also possible to mix 512 MB and 1 GB DIMMs on the same cell board. A connection to a 12-slot PCI-X card cage is optional for each cell.

The Superdome cell boards shipped from the factory are offered with 2 processors or 4 processors. These cell boards are different from those used in previous releases of Superdome.

A 2-way cell board contains a minimum of 2 active processors, and a 4-way cell board a minimum of 4 active processors.

The Superdome cell board contains:

Itanium 2 1.5-GHz CPUs (up to 4 processor modules)

Cell controller ASIC (application-specific integrated circuit)

Main memory DIMMs (up to 32 DIMMs per board in 4-DIMM increments, using 512 MB or 1 GB DIMMs, or some combination of both)

Voltage Regulator Modules (VRM)

Data buses

Optional link to 12 PCI-X I/O slots
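The cell memory rules above (up to 32 DIMMs per cell, loaded in 4-DIMM increments, using 512 MB and/or 1 GB DIMMs) can be checked with a short Python sketch. It assumes, beyond what this document states, that each DIMM size is loaded in whole groups of four; the function name `cell_memory_mb` is hypothetical and not part of any HP tool.

```python
MAX_DIMMS_PER_CELL = 32
DIMM_GROUP = 4  # DIMMs are loaded in 4-DIMM increments


def cell_memory_mb(dimms_512mb: int, dimms_1gb: int) -> int:
    """Return the cell's memory in MB, or raise ValueError for an invalid load."""
    for count in (dimms_512mb, dimms_1gb):
        # ASSUMPTION: each DIMM size is loaded in whole groups of four.
        if count < 0 or count % DIMM_GROUP != 0:
            raise ValueError("load each DIMM size in groups of 4")
    total = dimms_512mb + dimms_1gb
    if total > MAX_DIMMS_PER_CELL:
        raise ValueError(f"a cell holds at most {MAX_DIMMS_PER_CELL} DIMMs, got {total}")
    return dimms_512mb * 512 + dimms_1gb * 1024


if __name__ == "__main__":
    print(cell_memory_mb(32, 0))   # 16384 (16 GB, all 512 MB DIMMs)
    print(cell_memory_mb(0, 32))   # 32768 (32 GB, all 1 GB DIMMs)
    print(cell_memory_mb(16, 16))  # 24576 (mixed load)
```

The two extreme loads reproduce the 16 GB and 32 GB per-cell maximums quoted above.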

Crossbar Backplane

Each crossbar backplane contains two sets of two crossbar chips that provide a non-blocking connection between eight cells and the other backplane. Each backplane cabinet can support up to eight cells or 32 processors (a Superdome 32-way in a single cabinet). A backplane supporting four cells or 16 processors results in a Superdome 16-way. Two backplanes can be linked together with flex cables to produce a system that can support up to 16 cells or 64 processors (a Superdome 64-way in dual cabinets).


I/O Subsystem

Each I/O chassis provides twelve PCI-X slots: eight standard and four high-bandwidth PCI-X slots. An I/O Chassis Enclosure (ICE) holds two I/O chassis. Each I/O chassis connects to one cell board, and the number of I/O chassis supported depends on the number of cells present in the system. A PCI card inserted into a PCI-X slot cannot take advantage of the faster slot.

Each Superdome cabinet supports a maximum of four I/O chassis. The optional I/O expansion cabinet can support up to six I/O chassis.

A 4-cell Superdome (16-way) supports up to four I/O chassis for a maximum of 48 PCI-X slots.

An 8-cell Superdome (32-way) supports up to eight I/O chassis for a maximum of 96 PCI-X slots. Four of these I/O chassis will reside in an I/O expansion cabinet.

A 16-cell Superdome (64-way) supports up to sixteen I/O chassis for a maximum of 192 PCI-X slots. Eight of these I/O chassis will reside in two I/O expansion cabinets (either six chassis in one I/O expansion cabinet and two chassis in the other, or four chassis in each).

Core I/O

The core I/O in Superdome provides the base set of I/O functions required by every Superdome partition. Each partition must have at least one core I/O card in order to boot. Multiple core I/O cards may be present within a partition (one core I/O card is supported per I/O backplane); however, only one may be active at a time. Core I/O uses the standard long-card PCI-X form factor but adds a second card cage connection to the I/O backplane for additional non-PCI-X signals (USB and utilities). This secondary connector does not impede the ability to support standard PCI-X cards in the core slot when a core I/O card is not installed.
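The I/O chassis and slot arithmetic in the I/O Subsystem description can be sketched in Python. This is a minimal model under stated assumptions: each I/O chassis attaches to its own cell, chassis fill the Superdome cabinets (four per cabinet) before spilling into I/O expansion cabinets (six per cabinet), and the result gives the minimum number of expansion cabinets. `io_layout` and the `MODELS` table are hypothetical names, not an HP configurator.

```python
import math

SLOTS_PER_IO_CHASSIS = 12              # 8 standard + 4 high-bandwidth PCI-X slots
IO_CHASSIS_PER_SUPERDOME_CABINET = 4
IO_CHASSIS_PER_IOX_CABINET = 6

# model -> (maximum cells, number of Superdome cabinets)
MODELS = {"16-way": (4, 1), "32-way": (8, 1), "64-way": (16, 2)}


def io_layout(model: str, cells: int, io_chassis: int) -> dict:
    """Place the requested I/O chassis and count the resulting PCI-X slots."""
    max_cells, cabinets = MODELS[model]
    if not 1 <= cells <= max_cells:
        raise ValueError(f"{model} supports 1 to {max_cells} cells")
    # Each I/O chassis must be attached to its own cell.
    if not 0 <= io_chassis <= cells:
        raise ValueError("each I/O chassis needs its own cell")
    # ASSUMPTION: Superdome cabinet positions are used before any IOX cabinet.
    in_superdome = min(io_chassis, IO_CHASSIS_PER_SUPERDOME_CABINET * cabinets)
    in_iox = io_chassis - in_superdome
    return {
        "pci_x_slots": io_chassis * SLOTS_PER_IO_CHASSIS,
        "chassis_in_superdome_cabinets": in_superdome,
        "chassis_in_iox": in_iox,
        # Minimum count; the text also allows e.g. a 4 + 4 split over two cabinets.
        "min_iox_cabinets": math.ceil(in_iox / IO_CHASSIS_PER_IOX_CABINET),
    }


if __name__ == "__main__":
    for model, cells in (("16-way", 4), ("32-way", 8), ("64-way", 16)):
        print(model, io_layout(model, cells, cells))
```

For fully populated systems this reproduces the 48, 96 and 192 slot maximums, with zero, one and two expansion cabinets respectively.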


HP-UX Core I/O (A6865A)

Any I/O chassis can support a core I/O card, and one is required for each independent partition. A system configured with 16 cells, each with its own I/O chassis and core I/O card, could support up to 16 independent partitions. Note that cells can be configured without an I/O chassis attached, but an I/O chassis cannot be configured in the system unless it is attached to a cell.

The core I/O card's primary functions are:

Partitions (console support), including USB and RS-232 connections

10/100Base-T LAN (general purpose)

Other common functions, such as Ultra/Ultra2 SCSI, Fibre Channel, and Gigabit Ethernet, are not included on the core I/O card. These functions are, of course, supported as normal PCI-X add-in cards.

The unified 100Base-T core LAN driver code checks whether there is a cable connection on the RJ-45 port or on the AUI port. If no cable connection is found on the RJ-45 port, there is a 150 ms busy-wait pause while the driver checks for an AUI connection. Installing the loopback connector (described below) in the RJ-45 port makes the driver see an RJ-45 connection, so it does not go on to search for an AUI connection, eliminating the 150 ms busy-wait state:

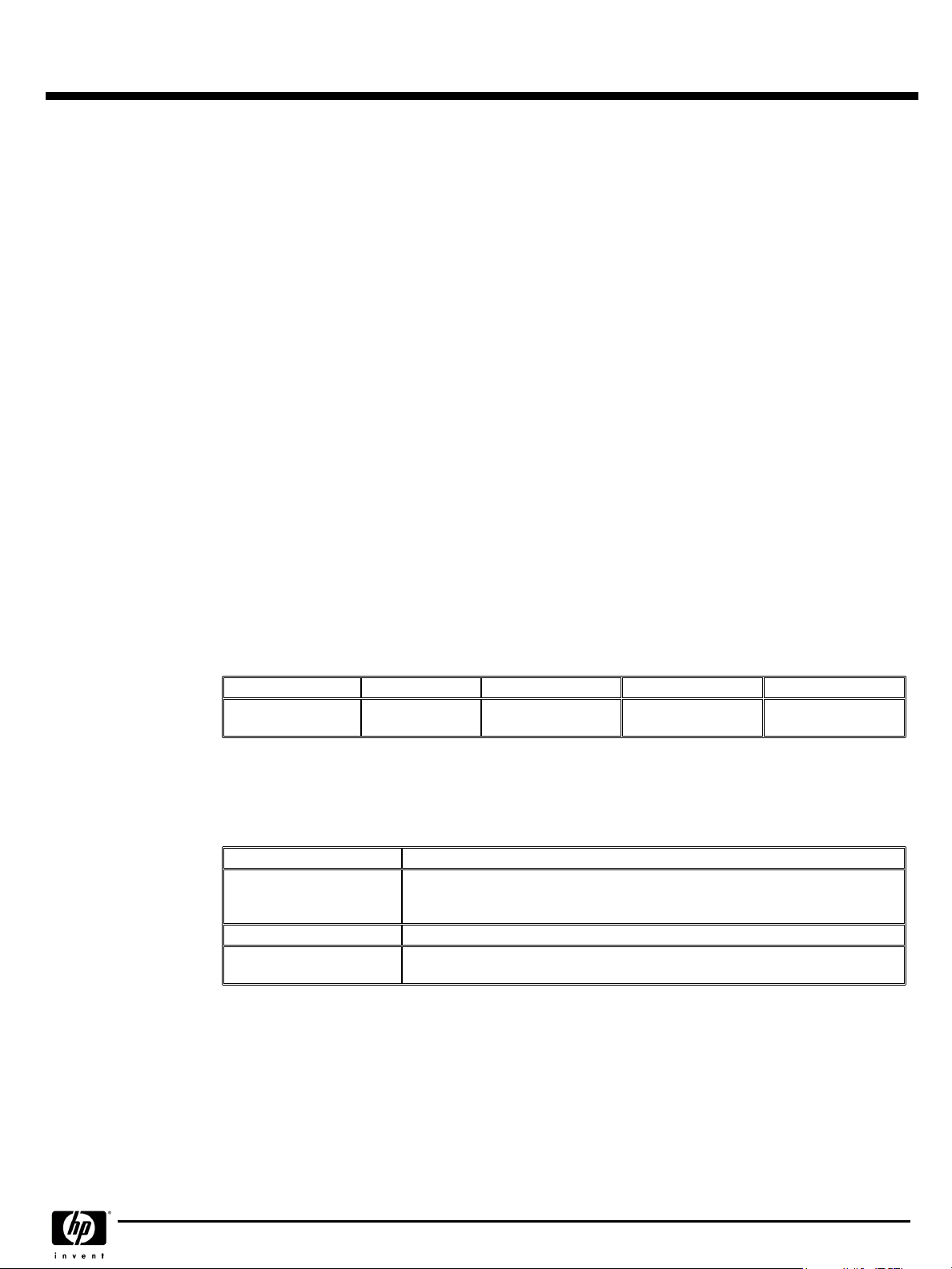

| Product/Option Number | Description |
|---|---|
| A7108A | RJ-45 Loopback Connector |
| 0D1 | Factory integration RJ-45 Loopback Connector |
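The port search described above can be modeled in a few lines of Python to show why the loopback connector removes the delay. This is a hypothetical illustration of the decision order only, not the actual HP-UX driver code; the 150 ms figure is the one quoted above, and `select_core_lan_port` is an invented name.

```python
from typing import Optional, Tuple

AUI_PROBE_BUSY_WAIT_MS = 150  # busy-wait figure quoted in this document


def select_core_lan_port(rj45_link: bool, aui_link: bool) -> Tuple[Optional[str], int]:
    """Model the search order; return (selected port or None, busy-wait in ms)."""
    if rj45_link:
        # A real cable or the A7108A loopback connector both look like an
        # RJ-45 connection, so the AUI probe (and its busy wait) is skipped.
        return "RJ-45", 0
    # No RJ-45 connection: the driver busy-waits while checking the AUI port.
    return ("AUI" if aui_link else None), AUI_PROBE_BUSY_WAIT_MS


if __name__ == "__main__":
    print(select_core_lan_port(rj45_link=False, aui_link=False))  # (None, 150)
    print(select_core_lan_port(rj45_link=True, aui_link=False))   # ('RJ-45', 0)
```

An unused core LAN port pays the full busy wait; fitting the loopback connector turns it into the zero-wait RJ-45 case.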

Windows Core I/O (A6865A and A7061A and optional VGA/USB A6869A)

For Windows Server 2003, two core I/O cards are required: the Superdome core I/O card (A6865A) and a 1000Base-T LAN card (A7061A). The Graphics/USB card (A6869A) is optional.


I/O Expansion Cabinet

The I/O expansion functionality is physically partitioned into four rack-mounted chassis: the I/O expansion utilities chassis (XUC), the I/O expansion rear display module (RDM), the I/O expansion power chassis (XPC) and the I/O chassis enclosure (ICE). Each ICE supports up to two 12-slot PCI-X chassis.

Field Racking

The only field-rackable I/O expansion components are the ICE and the 12-slot I/O chassis. Either component would be field installed when the customer has ordered additional I/O capability for a previously installed I/O expansion cabinet.

No I/O expansion cabinet components will be delivered to be field installed in a customer's existing rack other than a previously installed I/O expansion cabinet. The I/O expansion components were not designed to be installed in racks other than Rack System E. In other words, they are not designed for Rosebowl I, pre-merger Compaq, Rittal, or other third-party racks.

The I/O expansion cabinet is based on a modified HP Rack System E, and all expansion components mount in the rack. Each component is designed to install independently in the rack. The Rack System E cabinet has been modified to allow I/O interface cables to route between the ICE and cell boards in the Superdome cabinet. I/O expansion components are not designed for installation behind a rack front door; they are designed for use with the standard Rack System E perforated rear door.

I/O Chassis Enclosure (ICE)

The I/O chassis enclosure (ICE) provides expanded I/O capability for Superdome. Each ICE supports up to 24 PCI-X slots by using two 12-slot Superdome I/O chassis. Installing the I/O chassis in the ICE puts the PCI-X cards in a horizontal position. An ICE supports one or two 12-slot I/O chassis. The ICE is designed to mount in a Rack System E rack and consumes 9U of vertical rack space.

To provide online addition/replacement/deletion access to PCI or PCI-X cards and hot-swap access for I/O fans, all I/O chassis are mounted on a sliding shelf inside the ICE.

Four (N+1) I/O fans mounted in the rear of the ICE provide cooling for the chassis. Air is pulled in through the front as well as the I/O chassis lid (on the side of the ICE) and exhausted out the rear. The I/O fan assembly is hot-swappable. An LED on each I/O fan assembly indicates that the fan is operating.

Cabinet Height and Configuration Limitations

Although the individual I/O expansion cabinet components are designed for installation in any Rack System E cabinet, rack size limitations have been agreed upon. IOX cabinets will ship in either the 1.6-meter (33U) or the 1.96-meter (41U) cabinet. To allay service access concerns, the factory will not install IOX components higher than 1.6 meters from the floor. Open space in an IOX cabinet will be available for peripheral installation.

Peripheral Support

All peripherals qualified for use with Superdome and/or for use in a Rack System E are supported in the I/O expansion cabinet as long as there is available space. Peripherals not connected to or associated with the Superdome system to which the I/O expansion cabinet is attached may also be installed in the I/O expansion cabinet.

Peripherals installed in the I/O expansion cabinet cannot be powered by the XPC. AC power for peripherals must be provided by a PDU or other means.

Server Support

No servers except those required for Superdome system management, such as the Superdome Support Management Station or ISEE, may be installed in an I/O expansion cabinet.

Standalone I/O Expansion Cabinet

If an I/O expansion cabinet is ordered alone, its field installation can be ordered via option 750 in the ordering guide (option 950 for Platinum Channel partners).
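The ICE rules in the I/O Chassis Enclosure description (one or two 12-slot I/O chassis per ICE, 9U of rack space per ICE) and the six-chassis limit per I/O expansion cabinet can be turned into a quick rack-space estimate. This Python sketch is hypothetical (`ice_plan` is an invented name) and counts only the ICEs, because the heights of the XUC, RDM and XPC are not given in this document.

```python
import math

IO_CHASSIS_PER_ICE = 2          # an ICE holds one or two 12-slot I/O chassis
ICE_HEIGHT_U = 9                # each ICE consumes 9U of vertical rack space
SLOTS_PER_IO_CHASSIS = 12
MAX_IO_CHASSIS_PER_IOX = 6      # one I/O expansion cabinet holds up to six chassis


def ice_plan(io_chassis: int) -> dict:
    """Estimate ICE count and ICE rack space for one I/O expansion cabinet."""
    if not 1 <= io_chassis <= MAX_IO_CHASSIS_PER_IOX:
        raise ValueError(f"one IOX cabinet holds 1 to {MAX_IO_CHASSIS_PER_IOX} I/O chassis")
    ices = math.ceil(io_chassis / IO_CHASSIS_PER_ICE)
    return {
        "ices": ices,
        # Only the ICEs are counted; XUC, RDM and XPC heights are not given here.
        "ice_rack_units": ices * ICE_HEIGHT_U,
        "pci_x_slots": io_chassis * SLOTS_PER_IO_CHASSIS,
    }


if __name__ == "__main__":
    print(ice_plan(6))  # {'ices': 3, 'ice_rack_units': 27, 'pci_x_slots': 72}
    print(ice_plan(4))  # the four IOX chassis of a fully populated 32-way
```

For a fully populated 32-way (four chassis in the expansion cabinet), `ice_plan(4)` gives two ICEs occupying 18U.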


DVD Solution

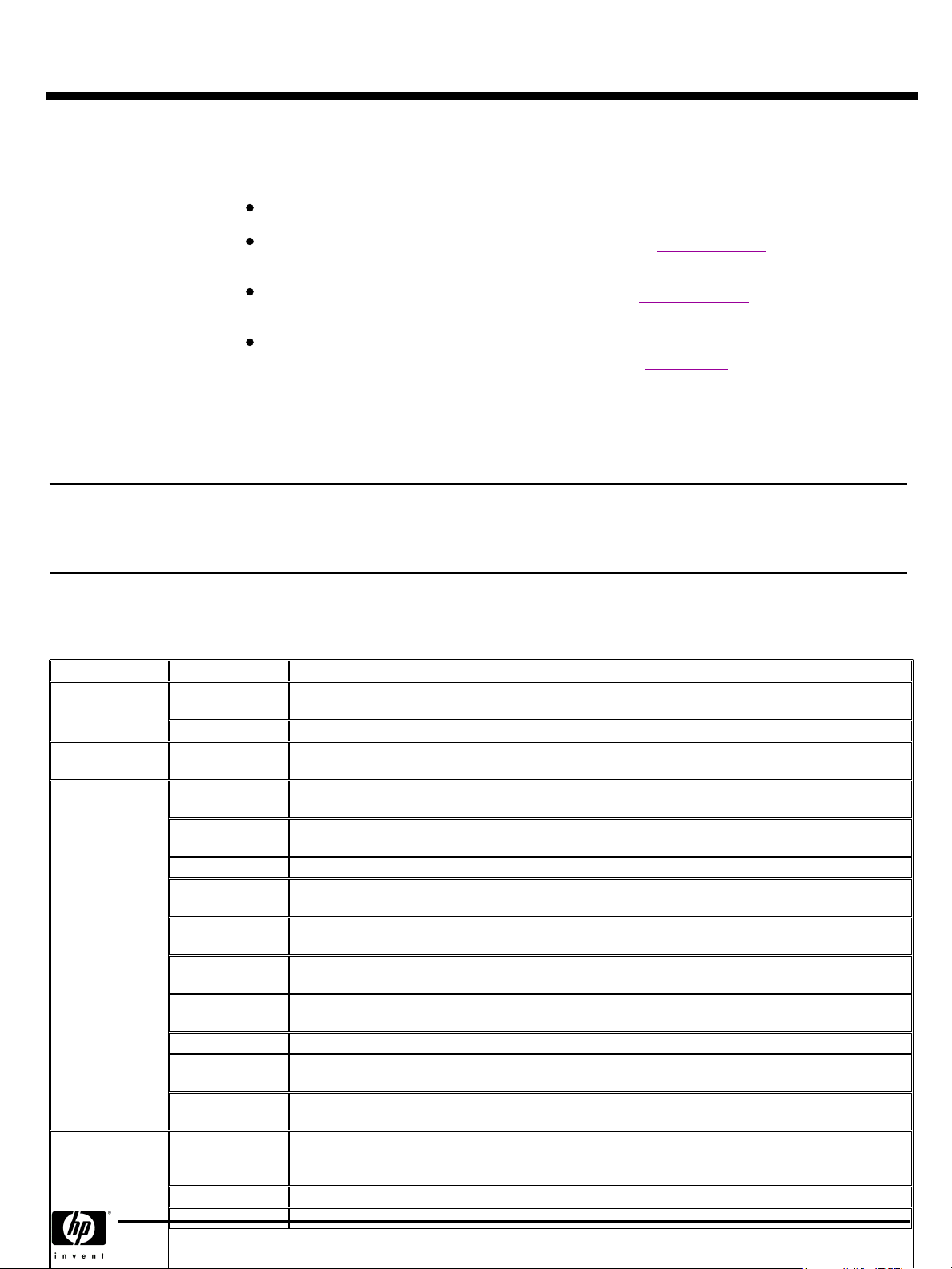

The DVD solution for Superdome requires the following components. These components are recommended per partition,

although it is acceptable to have only one DVD solution and connect it to one partition at a time. External racks A4901A

and A4902A must also be ordered with the DVD solution.

Superdome DVD Solutions

| Description | Part Number |
|---|---|
| PCI Ultra160 SCSI Adapter or PCI-X Dual Channel Ultra160 SCSI Adapter | A6828A or A6829A |
| PCI Ultra160 SCSI Adapter or PCI-X Dual Channel Ultra160 SCSI Adapter (Windows Server 2003) | A7059A or A7060A |
| Surestore Tape Array 5300 | C7508AZ |
| DVD (one per partition recommended) | C7499A |
| DDS-4 (optional)/DAT40 (DDS-5/DAT 72 is also supported; product number Q1524A) | C7497A |
| Jumper SCSI Cable for DDS-4 (optional; see note 1) | C2978B |
| SCSI Cable (see notes 2 and 3) | C2363B |
| SCSI Terminator | C2364A |

1. 0.5-meter HD HDTS68 is required if DDS-4 is used.
2. 5-meter multi-mode VH-HD68TS, available now (C2365B #0D1); can be used in place of the 10-meter cable on solutions that will be physically compact.
3. 10-meter multi-mode VH-HD68TS, available now (C2363B #0D1).

Option Number

0D1

0D1

0D1

0D1

0D1

0D1

0D1

Partitions

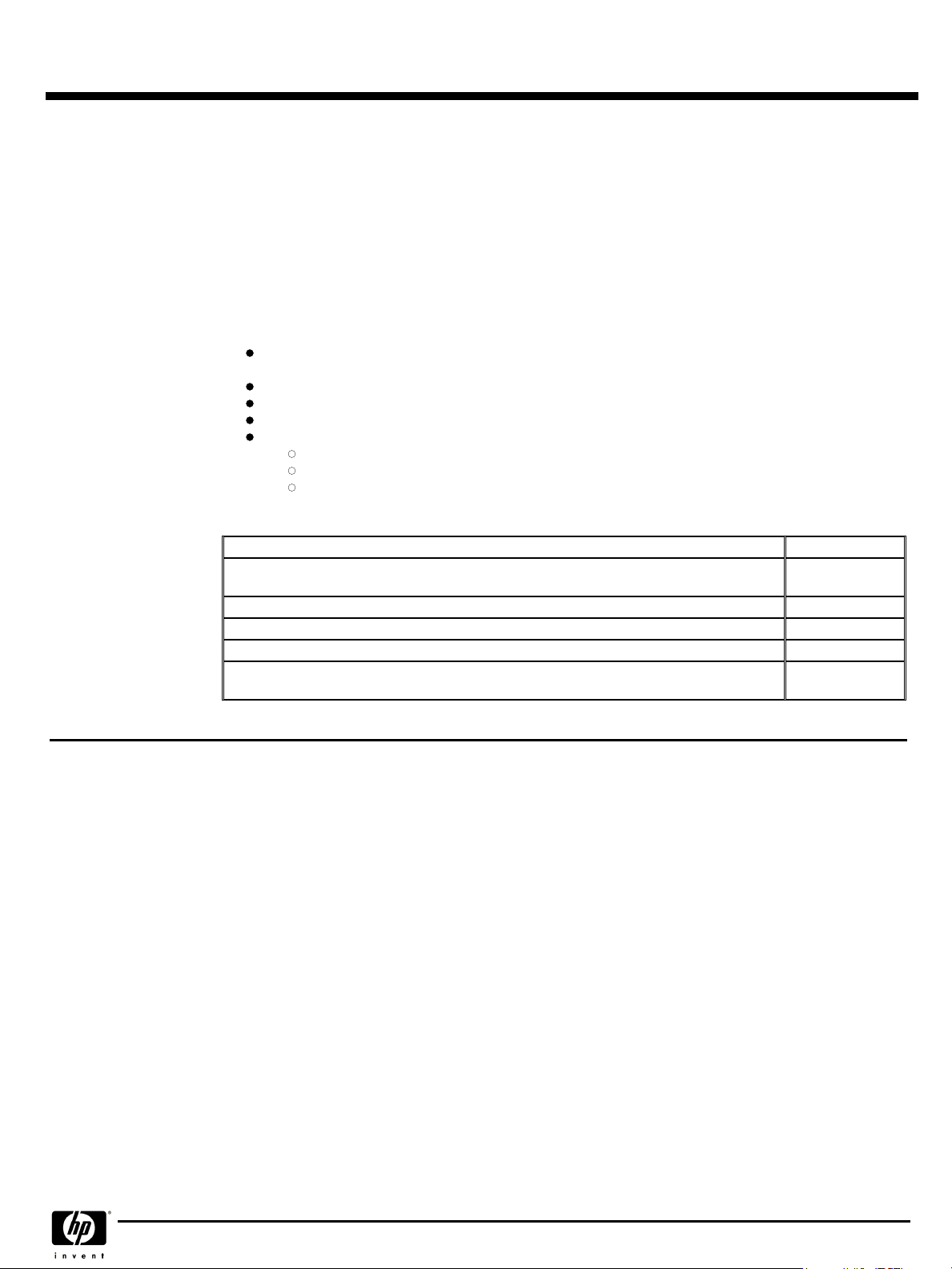

Superdome can be configured with hardware partitions (npars). Because HP-UX 11i version 2 does not support virtual partitions (vpars), Superdome systems running HP-UX 11i version 2 do not support vpars.

A hardware partition (npar) consists of one or more cells that communicate coherently over a high-bandwidth, low-latency crossbar fabric. Individual processors on a single cell board cannot be separately partitioned. Hardware partitions are logically isolated from each other such that transactions in one partition are not visible to the other hardware partitions within the same complex.

Each npar runs its own independent operating system. Different npars may be executing the same or different revisions of an operating system, or they may be executing different operating systems altogether. Superdome supports the HP-UX 11i version 2 (at first release), Windows Server 2003 (2 to 4 months after first release) and Linux (6 months after first release) operating systems.

Each npar has its own independent CPUs, memory and I/O resources consisting of the resources of the cells that make up

the partition. Resources (cell boards and/or I/O chassis) may be removed from one npar and added to another without

having to physically manipulate the hardware, but rather by using commands that are part of the System Management

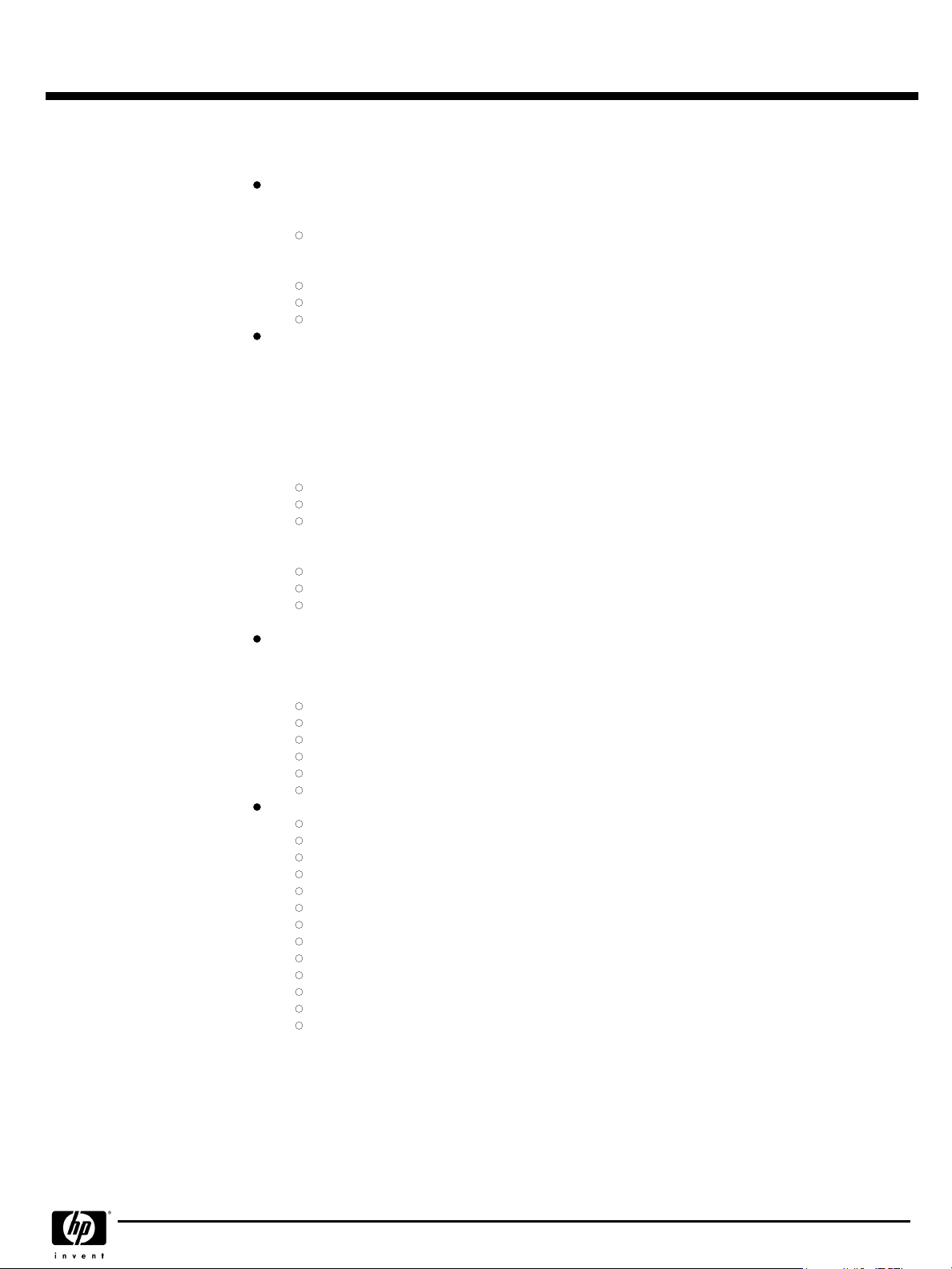

interface. The table below shows the maximum size of npars per operating system:

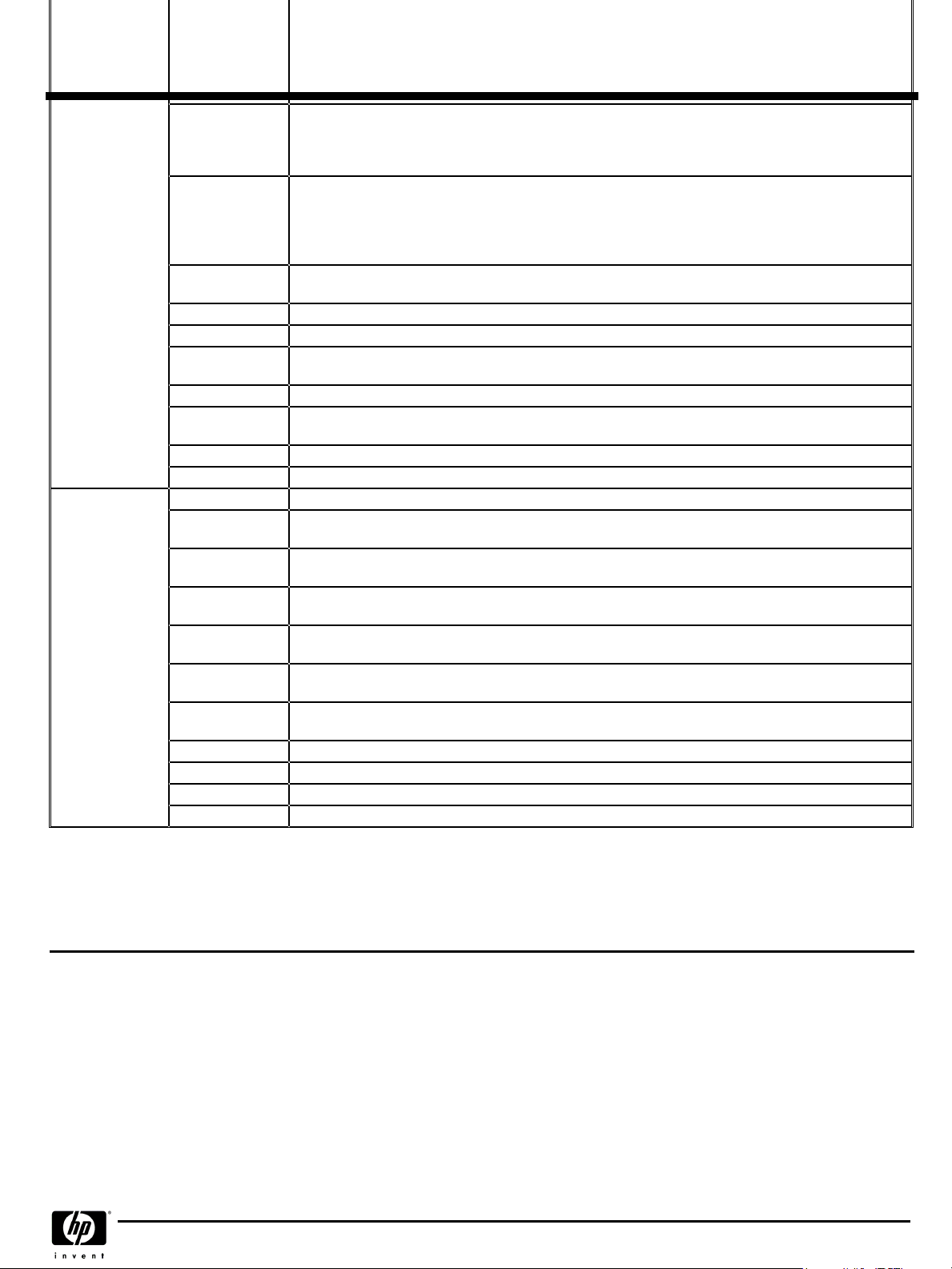

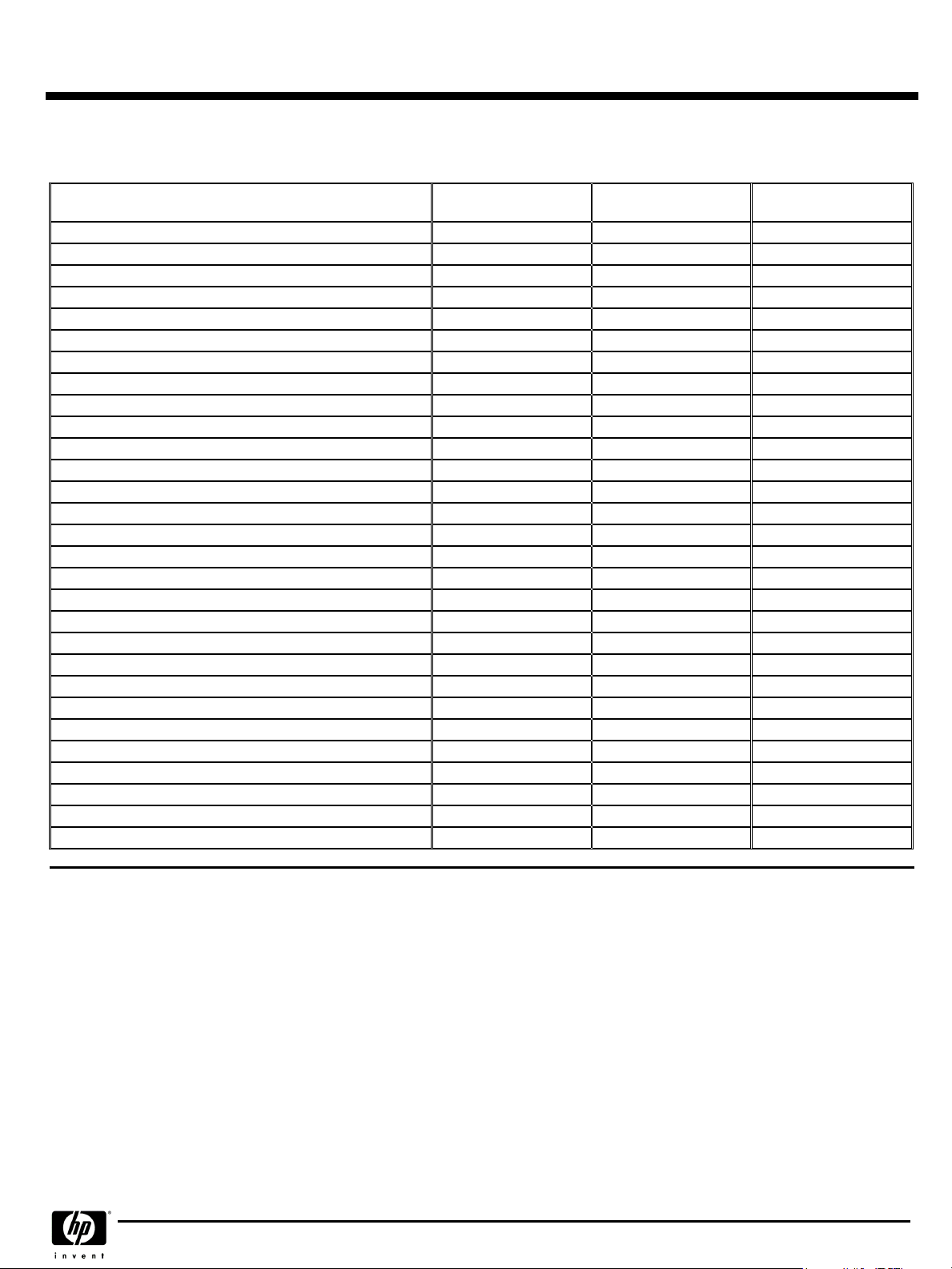

Maximum size of npar

Maximum number of npars

For information on type of I/O cards for networking and mass storage for each operating environment, please refer to the

Technical Specifications section.

Superdome supports static partitions. Static partitions imply that any npar configuration change requires a reboot of the

npar. In a future HP-UX and Windows release, dynamic npars will be supported. Dynamic npars imply that npar

configuration changes do not require a reboot of the npar. Using the related capabilities of dynamic reconfiguration (i.e. online addition, on-line removal), new resources may be added to an npar and failed modules may be removed and replaced

while the npar continues in operation. Adding new npars to Superdome system does not require a reboot of the system.

HP UX 11i Version 2

HP UX 11i Version 2

HP UX 11i Version 2HP UX 11i Version 2

64 CPUs, 512 GB RAM 64 CPUs, 512 GB RAM

16 16 16

For licensing information for each operating system, please refer to the Ordering Guide.

Windows Server 2003

Windows Server 2003

Windows Server 2003Windows Server 2003

Red Hat Enterprise Linux

Red Hat Enterprise Linux

Red Hat Enterprise LinuxRed Hat Enterprise Linux

AS 3 or Debian Linux

AS 3 or Debian Linux

AS 3 or Debian LinuxAS 3 or Debian Linux

8 CPUs, 64 GB RAM
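The npar size limits in the table above can be combined with two rules stated earlier: every npar needs a core I/O card, and therefore an I/O chassis attached to one of its own cells, and each Superdome cabinet holds at most four I/O chassis. The Python sketch below is a hypothetical planning check under those assumptions; with one chassis per npar it reproduces the "IOX required if more than 4 npars" (32-way) and "more than 8 npars" (64-way) notes in the Standard Features table. `NPar`, `check_npar_plan` and the OS labels are illustrative names only, and the per-cell limits assume fully loaded 4-way cells with 1 GB DIMMs.

```python
from dataclasses import dataclass
from typing import List

MAX_CPUS_PER_CELL = 4
MAX_GB_PER_CELL = 32                   # 32 x 1 GB DIMMs
IO_CHASSIS_PER_SUPERDOME_CABINET = 4

# Maximum npar size per OS as (CPUs, GB), from the table above.
MAX_NPAR_SIZE = {"hp-ux": (64, 512), "windows": (64, 512), "linux": (8, 64)}

# model -> (maximum cells, number of Superdome cabinets)
MODELS = {"16-way": (4, 1), "32-way": (8, 1), "64-way": (16, 2)}


@dataclass
class NPar:
    os: str          # "hp-ux", "windows" or "linux" (hypothetical labels)
    cells: int
    cpus: int
    memory_gb: int
    io_chassis: int  # I/O chassis attached to this npar's cells


def check_npar_plan(model: str, npars: List[NPar]) -> bool:
    """Validate a hypothetical npar plan; return True if an IOX cabinet is needed."""
    max_cells, cabinets = MODELS[model]
    if sum(p.cells for p in npars) > max_cells:
        raise ValueError(f"{model} has at most {max_cells} cells")
    for p in npars:
        max_cpus, max_gb = MAX_NPAR_SIZE[p.os]
        if p.cells < 1:
            raise ValueError("an npar needs at least one cell")
        if p.cpus > min(max_cpus, p.cells * MAX_CPUS_PER_CELL):
            raise ValueError(f"too many CPUs for a {p.os} npar of {p.cells} cells")
        if p.memory_gb > min(max_gb, p.cells * MAX_GB_PER_CELL):
            raise ValueError(f"too much memory for a {p.os} npar of {p.cells} cells")
        # Core I/O is mandatory, so each npar needs at least one I/O chassis,
        # and each chassis must hang off one of the npar's own cells.
        if not 1 <= p.io_chassis <= p.cells:
            raise ValueError("each npar needs between 1 and cells I/O chassis")
    total_chassis = sum(p.io_chassis for p in npars)
    return total_chassis > IO_CHASSIS_PER_SUPERDOME_CABINET * cabinets


if __name__ == "__main__":
    five_small = [NPar("hp-ux", 1, 4, 16, 1) for _ in range(5)]
    print(check_npar_plan("32-way", five_small))  # True: 5 core I/O chassis > 4
```

Because each npar consumes at least one cell, the npar count can never exceed the cell count, which matches the 4, 8 and 16 npar maximums for the three models.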

Single System Reliability/Availability Features

Superdome high availability offering is as follows:

NOTE: Online addition/replacement for cell boards is not currently supported and will be available in a future HP-UX release. (Online addition/replacement of individual CPUs and memory DIMMs will never be supported.)


CPU

CPU

CPUCPU

of CPU errors). If a CPU is exhibiting excessive cache errors, HP-UX 11i version 2 will ONLINE activate to take its

place. Furthermore, the CPU cache will automatically be repaired on reboot, eliminating the need for a service call.

Memory

Memory

MemoryMemory

each ECC word. Therefore, the only way to get a multiple bit memory error from SDRAMs is if more than one

SDRAM failed at the same time (rare event). The system is also resilient to any cosmic ray or alpha particle strike

because these failure modes can only affect multiple bits in a single SDRAM. If a location in memory is "bad", the

physical page is de allocated dynamically and is replaced with a new page without any OS or application

interruption. In addition, a combination of hardware and software scrubbing is used for memory. The software

scrubber reads/writes all memory locations periodically. However, it does not have access to "locked down" pages.

Therefore, a hardware memory scrubber is provided for full coverage. Finally data is protected by providing

address/control parity protection.

CPU

The features below nearly eliminate the down time associated with CPU cache errors (which are the majority of CPU errors).

Dynamic processor resilience with iCOD enhancement.

NOTE: Dynamic processor resilience and iCOD are not supported when running Windows Server 2003 or Linux in the partition.

CPU cache ECC protection and automatic de-allocation

CPU bus parity protection

Redundant DC conversion

Memory

The memory subsystem design is such that a single SDRAM chip does not contribute more than 1 bit to each ECC word.

Memory DRAM fault tolerance, i.e. recovery of a single SDRAM failure

DIMM address/control parity protection

Dynamic memory resilience, i.e. page de-allocation of bad memory pages during operation.

NOTE: Dynamic memory resilience is not supported when running Windows Server 2003 or Linux in the partition.

Hardware and software memory scrubbing

Redundant DC conversion

Cell COD.

NOTE: Cell COD is not supported when Windows Server 2003 or Linux is running in the partition.

I/O

Partitions configured with dual path I/O can be configured to have no shared components between them, thus preventing I/O cards from creating faults on other I/O paths. I/O cards in hardware partitions (nPars) are fully isolated from I/O cards in other hard partitions. It is not possible for an I/O failure to propagate across hard partitions. It is possible to dynamically repair and add I/O cards to an existing running partition.

Full single wire error detection and correction on I/O links

I/O cards fully isolated from each other

Hardware for the prevention of silent corruption of data going to I/O

Online addition/replacement (OLAR) for individual I/O cards, some external peripherals, SUB/HUB.

Parity protected I/O paths

Dual path I/O

Crossbar and Cabinet Infrastructure

Recovery of a single crossbar wire failure

Localization of crossbar failures to the partitions using the link

Automatic de-allocation of bad crossbar link upon boot

Redundant and hot-swap DC converters for the crossbar backplane

ASIC full burn-in and "high quality" production process

Full "test to failure" and accelerated life testing on all critical assemblies

Strong emphasis on quality for multiple-nPartition single points of failure (SPOFs)

System resilience to Management Processor (MP)

Isolation of nPartition failure

Protection of nPartitions against spurious interrupts or memory corruption

Hot-swap redundant fans (main and I/O) and power supplies (main and backplane power bricks)

Dual power source

Phone-Home capability

"HA Cluster-In-A-Box" Configuration

The "HA Cluster-In-A-Box" allows for failover of users' applications between hardware partitions (nPars) on a single Superdome system. All providers of mission critical solutions agree that failover between clustered systems provides the safest availability: no single points of failure (SPOFs) and no ability to propagate failures between systems. However, HP supports the configuration of HA cluster software in a single system to allow the highest possible availability for those users that need the benefits of a non-clustered solution, such as scalability and manageability. Superdome with this configuration will provide the greatest single system availability configurable. Since no single system solution in the industry provides protection against a SPOF, users that still need this kind of safety and HP's highest availability should use HA cluster software in a multiple system HA configuration. Multiple HA software clusters can be configured within a single Superdome system (e.g., two 4-node clusters configured within a 32-way Superdome system).


Multi-system High Availability

HP-UX: Serviceguard and Serviceguard Extension for RAC

Windows Server 2003: Microsoft Cluster Service (MSCS) - limited configurations supported

Linux: Serviceguard for Linux

HP-UX 11i v2:

Any Superdome partition that is protected by Serviceguard or Serviceguard Extension for RAC can be configured in a cluster with:

Another Superdome with Itanium 2 processors

One or more standalone non-Superdome systems with Itanium 2 processors

Another partition within the same single-cabinet Superdome (refer to "HA Cluster-In-A-Box" above for specific requirements)

Separate partitions within the same Superdome system can be configured as part of different Serviceguard clusters.

Geographically Dispersed Cluster Configurations

HP-UX 11i v2:

The following Geographically Dispersed Cluster solutions fully support cluster configurations using Superdome systems. The existing configuration requirements for non-Superdome systems also apply to configurations that include Superdome systems. An additional recommendation, when possible, is to configure the nodes of the cluster in each datacenter within multiple cabinets to allow for local failover in the case of a single cabinet failure. Local failover is always preferred over a remote failover to the other datacenter. The importance of this recommendation increases as the geographic distance between datacenters increases.

Extended Campus Clusters (using Serviceguard with MirrorDisk/UX)

MetroCluster with Continuous Access XP

MetroCluster with EMC SRDF

ContinentalClusters

From an HA perspective, it is always better to have the nodes of an HA cluster spread across as many system cabinets (Superdome and non-Superdome systems) as possible. This approach maximizes redundancy to further reduce the chance of a failure causing down time.

Windows Server 2003

Any Superdome partition that is protected by Microsoft Cluster Service for Windows Server 2003, Datacenter Edition can be configured in a cluster of up to 8 nodes with:

Another Superdome complex

Another partition within the same single-cabinet Superdome with an identical hardware configuration

Furthermore, geographically dispersed clusters are supported utilizing a single quorum resource (Cluster Extension XP for Windows). Specific Superdome Windows Server 2003 cluster configurations will be announced later in 2003.

Linux

Support of Serviceguard on Linux and Cluster Extension on Linux should be available in late 2003.

Supportability Features

Console Access (Management Processor [MP])

Superdome now supports the Console and Support Management Station in one device.

The optimal configuration of console device(s) depends on a number of factors, including the customer's data center layout, console security needs, customer engineer access needs, and the degree to which an operator must interact with server or peripheral hardware and a partition (e.g. changing disks or tapes). This section provides a few guidelines. However, the configuration that makes the most sense should be designed as part of site preparation, after consulting with the customer's system administration staff and the field engineering staff.

Customer data centers exhibit a wide range of configurations in terms of the preferred physical location of the console device. (The term "console device" refers to the physical screen/keyboard/mouse that administrators and field engineers use to access and control the server.) The Superdome server enables many different configurations by its flexible configuration of access to the MP, and by its support for multiple geographically distributed console devices.

Three common data center styles are:

The secure site where both the system and its console are physically secured in a small area.

The "glass room" configuration where all the systems' consoles are clustered in a location physically near the

machine room.

The geographically dispersed site, where operators administer systems from consoles in remote offices.

These can each drive different solutions to the console access requirement.

The considerations listed below apply to the design of console access to the server and must be addressed during site preparation.

The Superdome server can be operated from a VT100 or an hpterm-compatible terminal emulator. However, some programs (including some of those used by field engineers) have a friendlier user interface when operated from an hpterm.

LAN console device users connect to the MP (and thence to the console) using terminal emulators that establish

telnet connections to the MP. The console device(s) can be anywhere on the network connected to either port of the

MP.

Telnet data is sent between the client console device and the MP "in the clear", i.e. unencrypted. This may be a

concern for some customers, and may dictate special LAN configurations.

If an HP-UX workstation is used as a console device, an hpterm window running telnet is the recommended way to

connect to the MP. If a PC is used as a console device, Reflection1 configured for hpterm emulation and telnet

connection is the recommended way to connect to the MP.

The MP currently supports a maximum of 16 telnet-connected users at any one time.

It is desirable, and sometimes essential for rapid time to repair, to provide a reliable way to get console access that is physically close to the server, so that someone working on the server hardware can see the results of their actions immediately. There are a few options to achieve this:

Place a console device close to the server.

Ask the field engineer to carry in a laptop, or to walk to the operations center.

Use a system that is already in close proximity to the server, such as the Instant Support Enterprise Edition (ISEE) system or the Support Management Station, as a console device close to the system.

The system administrator is likely to want to run X applications or a browser using the same client that they access

the MP and partition consoles with. This is because the partition configuration tool, parmgr, has a graphical

interface. The system administrator's console device(s) should have X window or browser capability, and should be

connected to the system LAN of one or more partitions.

Functional capabilities

Local console physical connection (RS-232)

Display of system status on the console (Front panel display messages)

Console mirroring between LAN and RS-232 ports

System hard and soft (TOC or INIT) reset capability from the console.

Password secured access to the console functionality

Support of generic terminals (i.e. VT100 compatible).

Power supply control and monitoring from the console. It will be possible to get power supply status and to switch

power on/off from the console.

Console over the LAN. This means that a PC or HP workstation can become the system console if properly

connected on the customer LAN. This feature becomes especially important because of the remote power

management capability. The LAN will be implemented on a separate port, distinct from the system LAN, and

provide TCP/IP and Telnet access.

There is one MP per Superdome cabinet, thus there are two (2) for Superdome 64-way. But one, and only one, can

be active at a time. There is no redundancy or failover feature.

Windows

For Windows Server 2003 customers desiring full visibility to the Superdome Windows partition, an IP console solution is available to view the partition while the OS is rebooting (in addition to the normal Windows desktop). Windows Terminal Services (standard in Windows Server 2003) can provide remote access, but does not display VGA during reboot. For customers who mandate VGA access during reboot, the IP console switch (262586-B21), used in conjunction with a VGA/USB card in the partition (A6869A), is the solution.

In order to have full graphical console access when running Windows Server 2003 on Superdome, the 3×1×16 IP Console

Switch (product number 262586-B21) is required.

The features of this switch are as follows:

Provides keyboard, video and mouse (KVM) connections to 16 direct attached Windows partitions (or servers) expandable to 128.

Allows access to partitions (or servers) from a remote centralized console.

1 for local KVM

3 concurrent remote users (secure SSL data transfer across network)

Single screen switch management with the IP Console Viewer Software:

Authentication

Administration

Client Software
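The ordering rules for full graphical console access (one 3×1×16 switch per 16 OS instances, an 8-to-1 console expander once there are more than 16 instances, and one USB interface adapter and one CAT5 cable per OS instance) reduce to simple arithmetic. The Python sketch below assumes that each expander occupies one switch port and fans out to eight OS instances. That assumption is consistent with the switch being "expandable to 128" (16 × 8) but is not stated in this document. `kvm_order` and `KvmOrder` are hypothetical names, and the optional 1U local KVM is left out.

```python
import math
from dataclasses import dataclass

# Figures from this section; the expander topology below is an assumption.
SWITCH_PORTS = 16       # 3x1x16 IP console switch: 16 direct-attached partitions
EXPANDER_FANOUT = 8     # 8-to-1 console expander
MAX_OS_INSTANCES = 128  # "expandable to 128"

@dataclass
class KvmOrder:
    ip_console_switches: int
    console_expanders: int
    usb_interface_adapters: int
    cat5_cables: int

def kvm_order(os_instances: int) -> KvmOrder:
    """Hypothetical helper: order quantities for full graphical console access."""
    if not 1 <= os_instances <= MAX_OS_INSTANCES:
        raise ValueError(f"one switch covers 1..{MAX_OS_INSTANCES} OS instances")
    # Assumption: each expander takes one switch port and feeds 8 instances,
    # so e expanders yield (SWITCH_PORTS - e) + EXPANDER_FANOUT * e endpoints.
    overflow = max(0, os_instances - SWITCH_PORTS)
    expanders = math.ceil(overflow / (EXPANDER_FANOUT - 1))
    return KvmOrder(
        ip_console_switches=1,
        console_expanders=expanders,
        usb_interface_adapters=os_instances,  # one per OS instance
        cat5_cables=os_instances,             # one per OS instance
    )

if __name__ == "__main__":
    print(kvm_order(40))
```

Under these assumptions, `kvm_order(40)` calls for one switch, 4 expanders, and 40 each of USB interface adapters and CAT5 cables. Configurations of 16 or fewer OS instances need no expander.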

If full graphical console access is needed, the following must be ordered.

Component: 3×1×16 IP console switch (100-240V), 1 switch per 16 OS instances (n<=16), each connected to a VGA card. Product Number: 262586-B21

Component: 8-to-1 console expander, order an expander if there are more than 16 OS instances. Product Number: 262589-B21

Component: USB interface adapters, order one per OS instance. Product Number: 336057-001

Component: CAT5 cable, order one per OS instance. Product Number: C7542A

Component: AB243A 1U KVM, for local KVM; provides a full 15" digital display, keyboard, mouse and console switch in only 1U. Product Number: 221546-B21

Support Management Station

The purpose of the Support Management Station (SMS) is to provide Customer Engineers with an industry-leading set of support tools, and thereby enable faster troubleshooting and more precise root cause analysis of problems. It also enables remote support by factory experts who consult with and back up the HP Customer Engineer. The SMS complements the proactive role of HP's Instant Support Enterprise Edition (ISEE) (which is offered to Mission Critical customers) by focusing on reactive diagnosis, for both mission critical and non-mission critical Superdome customers.

The users of the SMS are HP Customer Engineers and HP Factory Support Engineers. Superdome customers benefit from their use of the SMS through faster return to normal operation of their Superdome servers and more accurate fault diagnosis, resulting in fewer callbacks. HP can also offer better service through reduced installation time.

Only one SMS is required per customer site (or data center), connected to each platform via Ethernet LAN. Physically, it is beneficial to have the SMS close to the associated platforms, because the customer engineer will run the scan tools and needs to be near the platform to replace failing hardware. The physical connection from the platform is an Ethernet connection, so the absolute maximum distance is not limited by physical constraints.

The SMS supports a single LAN interface that is connected to the Superdome and to the customer's management LAN.

When connected in this manner, SMS operations can be performed remotely.

Physical Connection:

The SMS will contain one physical Ethernet connection, namely a 10/100Base-T connection. Note that the connection on Superdome (MP) is also 10/100Base-T, as is the LAN connection on the core I/O card installed in each hardware partition. For connecting more than one Superdome server to the SMS, a LAN hub is required for the RJ-45 connection. When a single Superdome server is connected to one SMS, a point-to-point connection is all that is required.


Functional Capabilities:

Allows local access to the SMS by the CE.

Provides integrated console access, providing hpterm emulation over telnet and web browser, connecting over LAN or serial to a Superdome system.

Provides remote access over a LAN or dialup connection:

ftp server with capability to ftp the firmware files and logs

dialup modem access support (e.g. PC Anywhere or VNC)

Provides seamless integration with data center level management.

Provides partition logon capability, providing hpterm emulation over telnet, X-windows, and Windows Terminal Services capabilities.

Provides the following diagnostics tools:

Runs HP's proven, highly effective JTAG scan diagnostic tools, which offer rapid fault resolution down to the failing wire.

Superdome HPMC and MCA analyzer

Console log storage and viewing

Event log storage and viewing

Partition and memory adviser flash applications

Supports updating platform and system firmware.

Always-on event and console logging for Superdome systems, which captures and stores very long event and console histories, and allows HP specialists to analyze the first occurrence of a problem.

Allows more than one LAN-connected response center engineer to look at SMS logs simultaneously.

Can be disconnected from the Superdome systems without disrupting their operation.

Provides the ability to connect a new Superdome system to the SMS and have it recognized by the scan software.

Scans one Superdome system while other Superdome systems are connected, without disrupting the operational systems.

Supports multiple, heterogeneous Superdome platforms.

Minimum Hardware Requirements:

The SMS should meet the following minimum hardware requirements:

ProLiant ML350 G3 running Windows 2000 Server SP3 including:

Modem

DVD R/W

Keyboard/monitor/mouse

512-MB memory

Options:

Factory racked or field racked

Rack mount or desk mount keyboard/monitor/mouse/platform (bundled CPL line items)

NOTE: The rack-mount option of the SMS will not be available for ordering until July 1, 2003.

Software Requirements:

The SMS will run Windows 2000 SP3 as the default operating system. The SMS will follow the Windows OS roadmap and support later versions of this operating system as needed.

NOTE: The CE Tool is used by the CE to service the system and is not part of the purchased system.

System Management Features

HP-UX

HP-UX Servicecontrol Manager is the central point of administration for management applications that address the configuration, fault, and workload management requirements of an adaptive infrastructure.

Servicecontrol Manager integrates with many other HP-UX-specific system management tools, including the following, which are available on Itanium 2-based servers:

Ignite-UX: Ignite-UX addresses the need for HP-UX system administrators to perform fast deployment for one or many servers. It provides the means for creating and reusing standard system configurations, enables replication of systems, permits post-installation customizations, and is capable of both interactive and unattended operating modes.

Software Distributor (SD) is the HP-UX administration tool set used to deliver and maintain HP-UX operating systems and layered software applications. Delivered as part of HP-UX, SD can help you manage your HP-UX operating system, patches, and application software on HP Itanium 2-based servers.

System Administration Manager (SAM) maintains both effective and efficient management of computing resources. It is used to manage accounts for users and groups, perform auditing and security, and handle disk and file system management and peripheral device management. Servicecontrol Manager enables these tasks to be distributed to multiple systems and delegated using role-based security.

HP-UX Kernel Configuration (for self-optimizing kernel changes): The new HP-UX Kernel Configuration tool allows users to tune both dynamic and static kernel parameters quickly and easily from a Web-based GUI to optimize system performance. This tool also sets kernel parameter alarms that notify you when system usage levels exceed thresholds.

Partition Manager creates and manages nPartitions (hard partitions for high-end servers). Once the partitions are created, the systems running on those partitions can be managed consistently with all the other tools integrated into Servicecontrol Manager. Key features include:

Easy-to-use, familiar graphical user interface.

Runs locally on a partition, or remotely. The Partition Manager application can be run remotely on any system running HP-UX 11i Version 2 (and eventually select Windows releases) and remotely manage a complex either by 1) communicating with a booted OS on an nPartition in the target complex via WBEM, or 2) communicating with the service processor in the target complex via IPMI over LAN. The latter is especially significant because a complex can be managed with NONE of the nPartitions booted.

Full support for creating, modifying, and deleting hardware partitions.

Automatic detection of configuration and hardware problems.

Ability to view and print hardware inventory and status.

Big picture views that allow system administrators to graphically view the resources in a server and the partitions that the resources are assigned to.

Complete interface for the addition and replacement of PCI devices.

Comprehensive online help system.

Security Patch Check determines how current a system's security patches are, recommends patches for continuing security vulnerabilities, and warns administrators about recalled patches still present on the system.

System Inventory Manager is for change and asset management. It allows you to easily collect, store, and manage inventory and configuration information for HP-UX-based servers. It provides an easy-to-use, Web-based interface, superior performance, and comprehensive reporting capabilities.

Event Monitoring Service (EMS) keeps the administrator of multiple systems aware of system operation throughout the cluster, and notifies the administrator of potential hardware or software problems before they occur. HP Servicecontrol Manager can launch the EMS interface and configure EMS monitors for any node or node group that belongs to the cluster, resulting in increased reliability and reduced downtime.

Process Resource Manager (PRM) controls the resources that processes use during peak system load. PRM can manage the allocation of CPU, memory resources, and disk bandwidth. It allows administrators to run multiple mission critical applications on a single system, improve response time for critical users and applications, allocate resources on shared servers based on departmental budget contributions, provide applications with total resource isolation, and dynamically change configuration at any time, even under load. (fee based)

HP-UX Workload Manager (WLM): A key differentiator in the HP-UX family of management tools, Workload Manager provides automatic CPU resource allocation and application performance management based on prioritized service level objectives (SLOs). In addition, WLM allows administrators to set real memory and disk bandwidth entitlements (guaranteed minimums) to fixed levels in the configuration. The use of workload groups and SLOs improves response time for critical users, allows system consolidation, and helps manage user expectations for performance. (fee based)

HP's Management Processor enables remote server management over the Web regardless of the system state. In the unlikely event that none of the nPartitions are booted, the Management Processor can be accessed to power cycle the server, view event logs and status logs, enable console redirection, and more. The Management Processor is embedded into the server and does not take a PCI slot. And, because secure access to the Management Processor is available through SSL encryption, customers can be confident that its powerful capabilities will be available only to authorized administrators. New features that will be available include:

Support for Web Console that provides secure text mode access to the management processor

Reporting of error events from system firmware.

Ability to trigger the task of PCI OL* from the management processor.

Ability to scan a cell board while the system is running (only available for partitionable systems).

Implementation of management processor commands for security across partitions so that partitions do not modify system configuration (only available for partitionable systems).

OpenView Operations Agent: collects and correlates OS and application events (fee based)

OpenView Performance Agent: determines OS and application performance trends (fee based)

OpenView GlancePlus: shows real time OS and application availability and performance data to diagnose problems (fee based)

OpenView Data Protector (Omniback II): backs up and recovers data (fee based)

In addition, the Network Node Manager (NNM) management station will run on HP-UX Itanium 2-based servers. NNM automatically discovers, draws (maps), and monitors networks and the systems connected to them.


All other OpenView management tools, such as OpenView Operations, Service Desk, and Service Reporter, will be able to

collect and process information from the agents running on Itanium 2-based servers running HP-UX.

Windows Server 2003, Datacenter Edition

The HP Essentials Foundation Pack for Windows is a complete toolset to install, configure, and manage Itanium 2 servers running Windows. Included in the Pack is the Smart Setup DVD, which contains all the latest tested and compatible HP Windows drivers, HP firmware, HP Windows utilities, and HP management agents that assist in the server deployment process (by preparing the server for installation of the standard Windows operating system) and in the ongoing management of the server. Please note that this is available for HP service personnel but is not provided to end customers.

Partition Manager creates and manages nPartitions (hard partitions for high-end servers). Once the hard partitions are created, the Windows Server 2003 resources running on those partitions can be managed consistently with the Windows System Resource Manager and Insight Manager through the System Management Homepage (see below). Key features include full support for creating, modifying, and deleting hardware partitions.

NOTE: At first release, Partition Manager will require a PC SMS running Partition Manager Command Line (A9801A or A9802A), or an HP-UX 11i Version 2 partition or separate device (i.e. an Itanium 2-based workstation or server running HP-UX 11i Version 2), in order to configure Windows partitions. Refer to the HP-UX section above for key features of Partition Manager.

Insight Manager 7 maximizes system uptime and provides powerful monitoring and control. Insight Manager 7 delivers pre-failure alerting for servers, ensuring potential server failures are detected before they result in unplanned system downtime. Insight Manager 7 also provides inventory reporting capabilities that dramatically reduce the time and effort required to track server assets and help systems administrators make educated decisions about which systems may require hardware upgrades or replacement. Insight Manager 7 is also an effective tool for managing your HP desktops and notebooks as well as non-HP devices instrumented to SNMP or DMI.

System Management Homepage displays critical management information through a simple, task-oriented user interface. All system faults and major subsystem status are now reported within the initial System Management Homepage view. In addition, the new tab-based interface and menu structure provide one-click access to the server log. The System Management Homepage is accessible either directly through a browser (with the partition's IP address) or through a management application such as Insight Manager 7 or an enterprise management application.

HP's Management Processor enables remote server management over the Web regardless of the system state. In the unlikely event that the operating system is not running, the Management Processor can be accessed to power cycle the server, view event logs and status logs, enable console redirection, and more. The Management Processor is embedded into the server and does not take a PCI slot. And, because secure access to the Management Processor is available through SSL encryption, customers can be confident that its powerful capabilities will be available only to authorized administrators. New features on the management processor include:

Support for Web Console that provides secure text mode access to the management processor

Reporting of error events from system firmware.

Ability to trigger the task of PCI OL* from the management processor.

Ability to scan a cell board while the system is running.

Implementation of management processor commands for security across partitions so that partitions do not modify system configuration.

OpenView Management Tools, such as OpenView Operations and Network Node Manager, will be able to collect and process information from the SNMP agents and WMI running on Windows Itanium 2-based servers. In the future, OpenView agents will be able to directly collect and correlate event, storage, and performance data from Windows Itanium 2-based servers, thus enhancing the information OpenView management tools will process and present.

Linux

Insight Manager 7

Insight Manager 7 maximizes system uptime and provides powerful monitoring and control. Insight Manager 7 also provides inventory reporting capabilities that dramatically reduce the time and effort required to track server assets and help system administrators make informed decisions about which systems may require hardware upgrades or replacement. Insight Manager 7 is also an effective tool for managing HP desktops and notebooks, as well as non-HP devices instrumented for SNMP or DMI.

The HP Enablement Kit for Linux

The HP Enablement Kit for Linux facilitates setup and configuration of the operating system. This kit includes System Imager, an open source operating system deployment tool. System Imager is a golden-image-based tool that can be used for initial deployment as well as for updates.

Partition Manager

Partition Manager creates and manages nPartitions (hard partitions) for high-end servers. Once the partitions are created, the systems running on those partitions can be managed consistently with all the other tools integrated into Servicecontrol Manager.

NOTE: At first release, Partition Manager will require an HP-UX 11i Version 2 partition or a separate device (i.e., an Itanium 2 based workstation or server running HP-UX 11i Version 2) in order to configure Linux partitions. Refer to the HP-UX section above for key features of Partition Manager.

HP's Management Processor

HP's Management Processor

HP's Management ProcessorHP's Management Processor

the unlikely event that the operating system is not running, the Management Processor can be accessed to power

cycle the server, view event logs and status logs, enable console redirection, and more. The Management Processor

is embedded into the server and does not take a PCI slot. And, because secure access to the Management Processor

is available through SSL encryption, customers can be confident that its powerful capabilities will be available only

to authorized administrators.

General Site Preparation

General Site Preparation

General Site PreparationGeneral Site Preparation

Rules

Rules

RulesRules

AC Power Requirements

AC Power Requirements

AC Power RequirementsAC Power Requirements

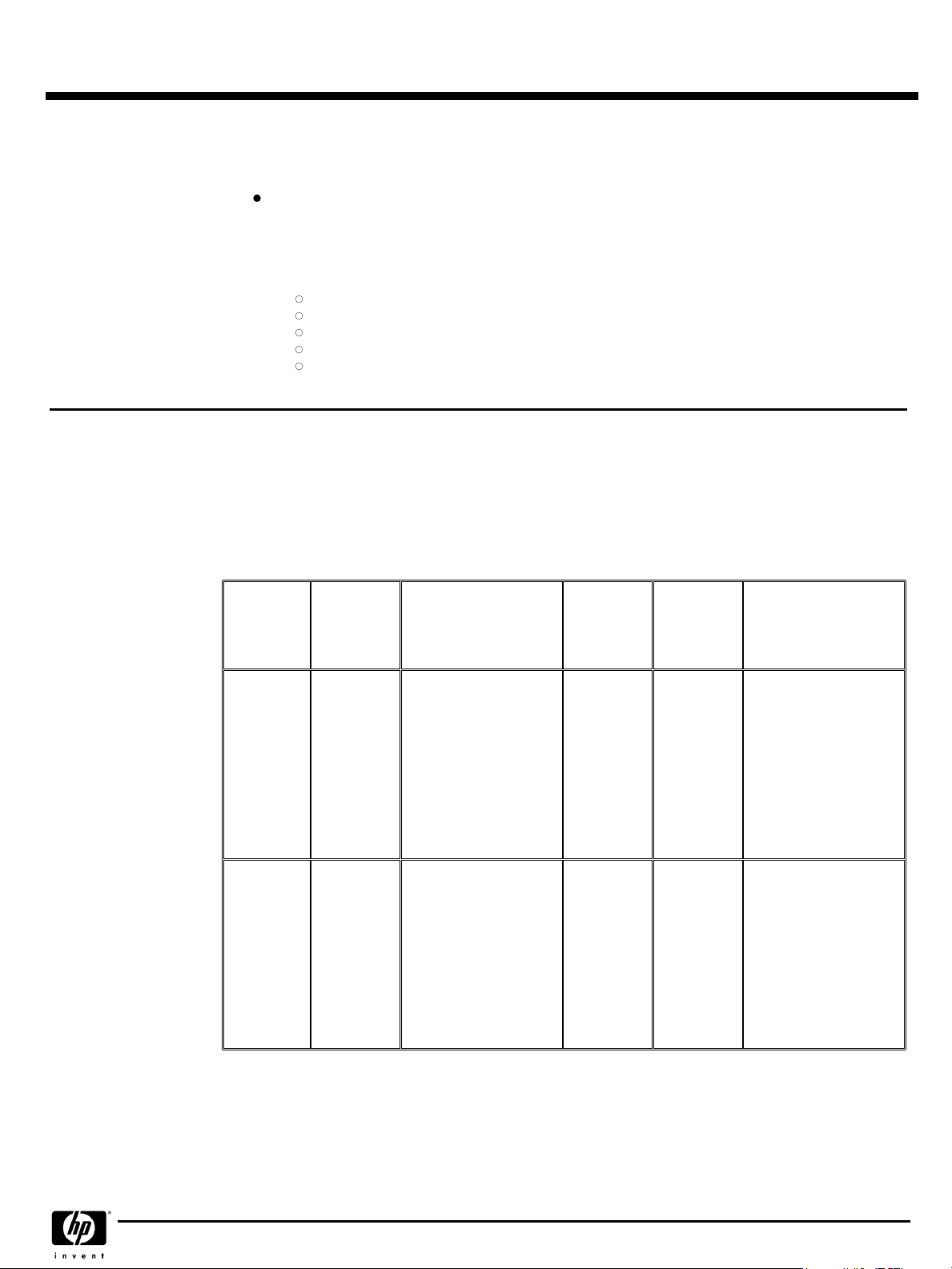

The modular, N+1 power shelf assembly is called the Front End Power Subsystem (FEPS). The redundancy of the FEPS is

achieved with 6 internal Bulk Power Supplies (BPS), any five of which can support the load and performance requirements.

Input Options

Input Options

Input OptionsInput Options

Reference the Site Preparation Guide for detailed power configuration options.

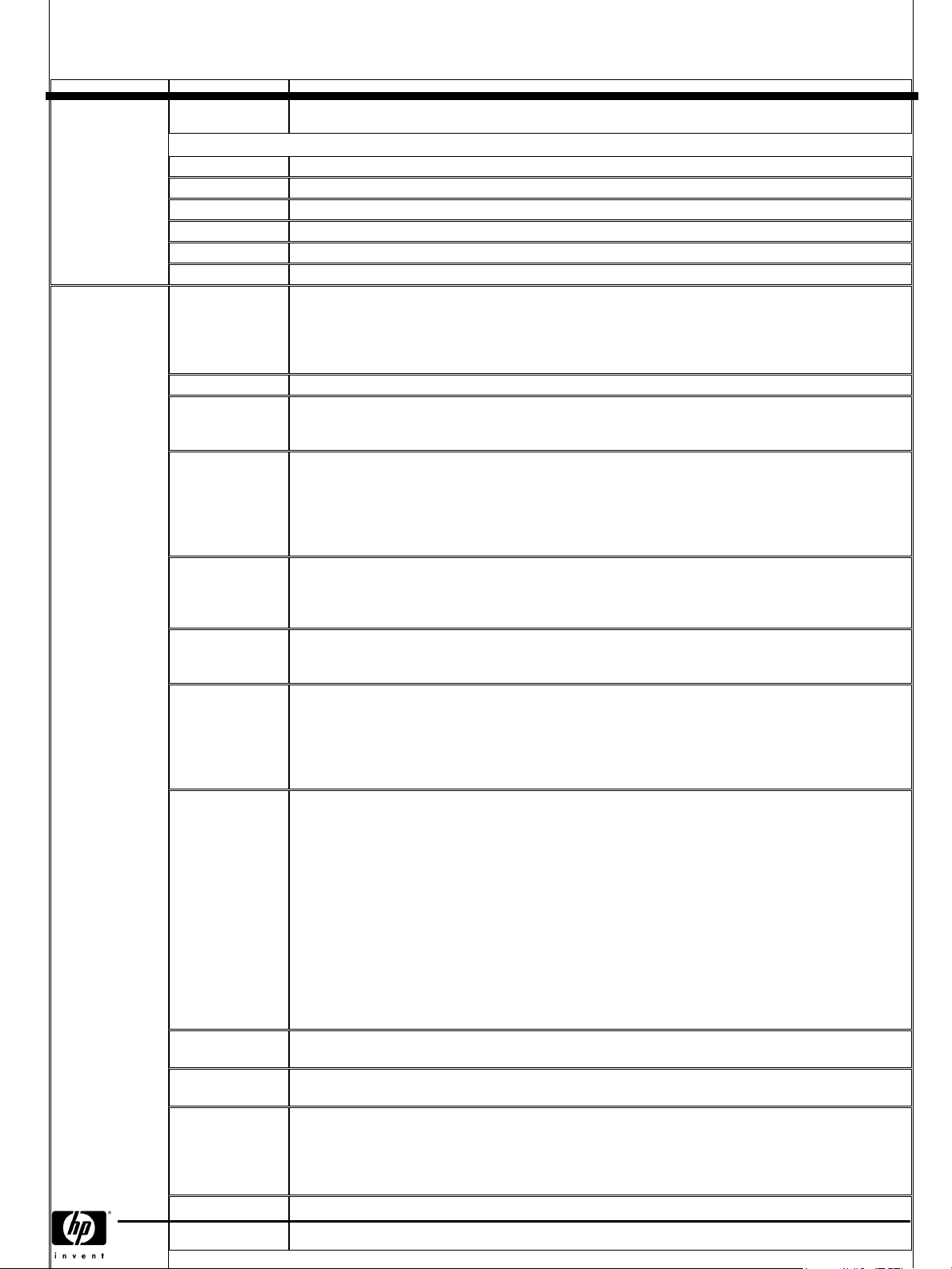

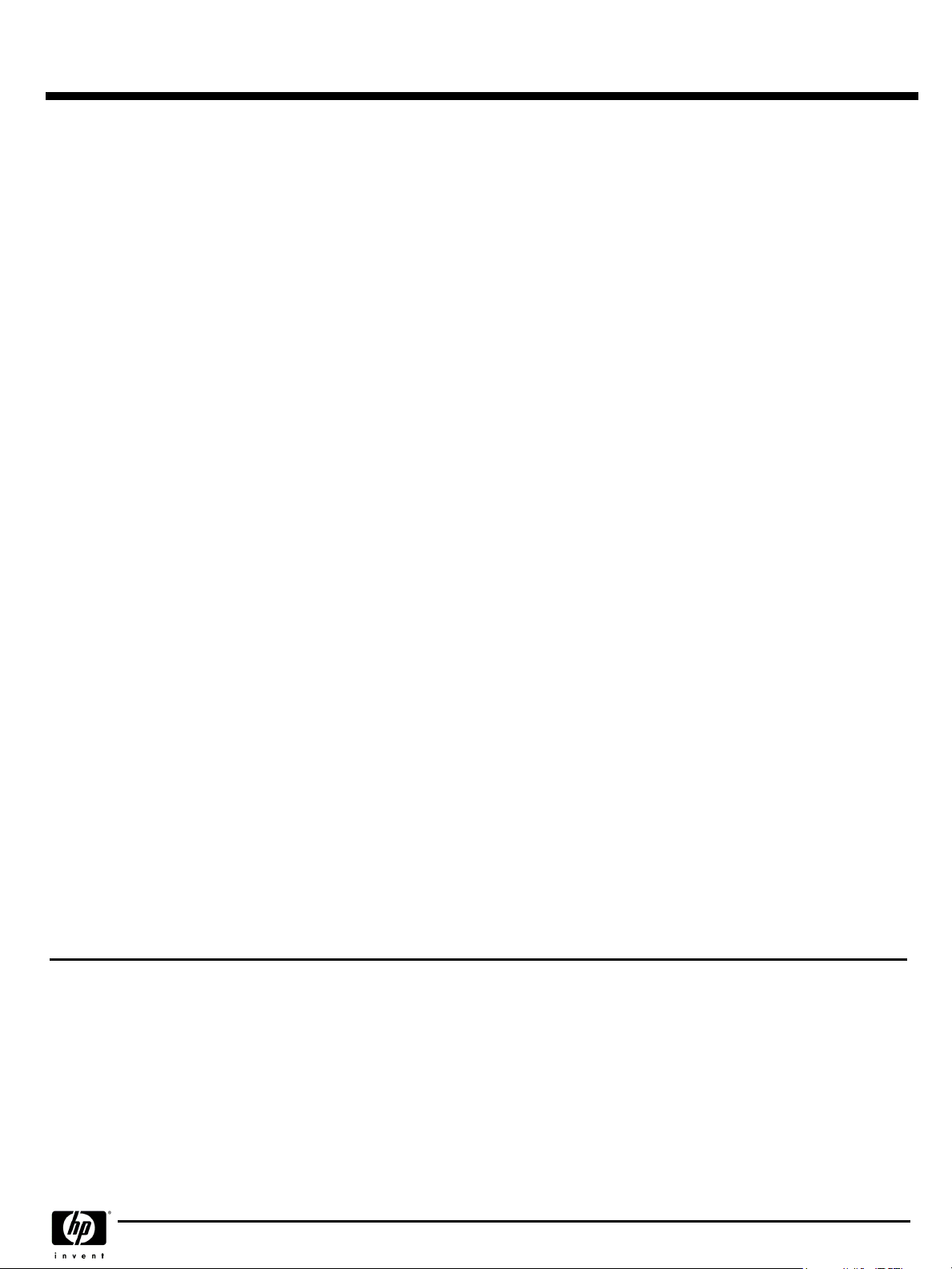

Input Power Options

Input Power Options

Input Power OptionsInput Power Options

PDCA

PDCA

PDCAPDCA

Product

Product

ProductProduct

Number

Number

NumberNumber

A5800A

Option 006

A5800A

Option 007

a. A dedicated branch is required for each PDCA installed.

b. In the U.S.A, site power is 60 Amps; in Europe site power is 63 Amps.

c. Refer to the Option 006 and 007 Specifics Table for detailed specifics related to this option.

d. In the U.S.A. site power is 30 Amps; in Europe site power is 32 Amps.

Option 006 and 007 Specifics

Option 006 and 007 Specifics

Option 006 and 007 SpecificsOption 006 and 007 Specifics

HP Integrity Superdome Servers: 16-way, 32-way,

HP Integrity Superdome Servers: 16-way, 32-way,

HP Integrity Superdome Servers: 16-way, 32-way,HP Integrity Superdome Servers: 16-way, 32-way,

and 64-way

and 64-way

and 64-wayand 64-way

enables remote server management over the Web regardless of the system state. In

Support for Web Console that provides secure text mode access to the management processor

Reporting of error events from system firmware.

Ability to trigger the task of PCI OL* from the management processor.

Ability to scan a cell board while the system is running. (only available for partitionable systems)

Implementation of management processor commands for security across partitions so that partitions do not

modify system configuration. (only available for partitionable systems)

Source Type

Source Type

Source TypeSource Type

3-phase

3-phase

Source Voltage

Source Voltage

Source VoltageSource Voltage

(nominal)

(nominal)

(nominal)(nominal)

Voltage range 200- 240

VAC, phase-to- phase,

50/60 Hz

Voltage range 200- 240

VAC, phase-to- neutral,

50/60 Hz

a

PDCA

PDCA

PDCAPDCA

Required

Required

RequiredRequired

4-wire

5-wire

Input

Input

InputInput

Current

Current

CurrentCurrent

Per Phase

Per Phase

Per PhasePer Phase

200-240

200-240

200-240200-240

VAC

VAC

VACVAC

44 A Maximum

per phase

24 A Maximum

per phase

Power Required

Power Required

Power RequiredPower Required

2.5 meter UL power cord

and OL approved plug

provided. The customer must

provide the mating in line

connector or purchase

quantity one A6440A opt

401 to receive a mating in

line connector. An

electrician must hardwire the

in- line connector to

60 A/63 A site power.

2.5 meter <HAR> power

cord and VDE approved

plug provided. The customer

must provide the mating in

line connector or purchase

quantity 1 A6440A opt 501

to receive a mating in line

connector. An electrician

must hardwire the in-line

connector to

30 A/32 A site power.

a,b,c

a,b,d


PDCA Product Number

A5800A Option 006

A5800A Option 007

a. An in-line connector is available from HP by purchasing A6440A, Option 401.

b. Panel-mount receptacles must be purchased by the customer from a local Mennekes supplier.

c. An in-line connector is available from HP by purchasing A6440A, Option 501.
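To put the per-phase current limits from the Input Power Options table into power terms, the following minimal Python sketch estimates an upper bound on the apparent power one PDCA could draw. It assumes a balanced load with every phase at the tabulated maximum current and the top of the 200-240 VAC range, and it assumes the usual meaning of 4-wire (three phase conductors plus protective earth) and 5-wire (three phase conductors plus neutral and protective earth). The results are illustrative figures derived from the table, not HP-published ratings; the Site Preparation Guide remains the authoritative source. The names `OPTIONS` and `max_apparent_power_va` are hypothetical.

```python
import math

# Figures transcribed from the Input Power Options table (hypothetical structure).
# Voltage is the top of the 200-240 VAC range; current is the tabulated maximum per phase.
OPTIONS = {
    "A5800A Option 006": {"wiring": "4-wire", "volts": 240, "amps": 44},  # phase-to-phase
    "A5800A Option 007": {"wiring": "5-wire", "volts": 240, "amps": 24},  # phase-to-neutral
}


def max_apparent_power_va(volts: float, amps_per_phase: float, wiring: str) -> float:
    """Upper-bound apparent power (VA) for a balanced three-phase load.

    4-wire: volts is phase-to-phase, so S = sqrt(3) * V * I.
    5-wire: volts is phase-to-neutral, so S = 3 * V * I.
    """
    if wiring == "4-wire":
        return math.sqrt(3) * volts * amps_per_phase
    if wiring == "5-wire":
        return 3 * volts * amps_per_phase
    raise ValueError(f"unknown wiring type: {wiring!r}")


if __name__ == "__main__":
    for name, opt in OPTIONS.items():
        va = max_apparent_power_va(opt["volts"], opt["amps"], opt["wiring"])
        # Prints about 18,290 VA for Option 006 and 17,280 VA for Option 007.
        print(f"{name}: about {va:,.0f} VA")
```

The square-root-of-three factor applies only to the phase-to-phase (4-wire) case; the 5-wire option is specified phase-to-neutral, so each phase contributes V × I directly. At 200 VAC the same formulas give roughly 15,242 VA and 14,400 VA.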

NOTE: A qualified electrician must wire the PDCA in-line connector to site power using copper wire and in compliance with all local codes.

Input Requirements

Reference the Site Preparation Guide for detailed power configuration requirements.

Requirements
Nominal Input Voltage (VAC rms)
Input Voltage Range (VAC rms)
Frequency Range (Hz)
Number of Phases
Maximum Input Current (A rms), 3-Phase 5-wire
Maximum Input Current (A rms), 3-Phase 4-wire
Maximum Inrush Current (A peak)
Circuit Breaker Rating (A), 3-Phase 5-wire
Circuit Breaker Rating (A), 3-Phase 4-wire
Power Factor Correction
Ground Leakage Current (mA)

Cooling Requirements

The cooling system in Superdome was designed to maintain reliable operation of the system in the specified environment. In addition, the system is designed to provide redundant cooling (i.e., N+1 fans and blowers) that allows all of the cooling components to be "hot swapped."
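Both the FEPS and the cooling system rely on N+1 redundancy: enough units to carry the full load, plus one spare. The minimal Python sketch below expresses that arithmetic using the FEPS figures stated in this document (six Bulk Power Supplies, any five of which support the load). The function names are hypothetical, and because the number of fans and blowers is not given here, no cooling figures are filled in.

```python
def tolerated_failures(installed: int, required: int) -> int:
    """How many units can fail while the remaining units still carry the full load."""
    if installed < 0 or required < 0:
        raise ValueError("unit counts must be non-negative")
    return max(installed - required, 0)


def still_carries_load(installed: int, failed: int, required: int) -> bool:
    """True if the units that are still working meet the required count."""
    if not 0 <= failed <= installed:
        raise ValueError("failed must be between 0 and installed")
    return installed - failed >= required


# FEPS figures from the text: six Bulk Power Supplies, any five of which carry the load.
BPS_INSTALLED = 6
BPS_REQUIRED = 5

if __name__ == "__main__":
    print(tolerated_failures(BPS_INSTALLED, BPS_REQUIRED))  # prints 1
    for failed in range(3):
        # 0 or 1 failed supplies: True; 2 failed supplies: False
        print(failed, still_carries_load(BPS_INSTALLED, failed, BPS_REQUIRED))
```

In other words, an N+1 group survives exactly one unit failure at full load; a second failure before the first unit is hot-swapped drops it below the required count.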

Superdome was designed to operate in all data center environments with any traditional room cooling scheme (i.e., raised floor environments), but in some cases where data centers have previously installed high power density systems,

Attached Power Cord

OLFLEX 190 (PN 600804), four-conductor, 6-AWG