Page 1

HP IBRIX X9000 Network Storage System Installation Guide

Abstract

This document describes how to install the X9000 File Serving Software. It is intended for HP Services personnel who configure X9000 series Network Storage systems at customer sites. For upgrade information, see the administration guide for your system. For the latest X9000 guides, browse to http://www.hp.com/support/manuals. In the storage section, select NAS Systems and then select HP X9000 Network Storage Systems from the IBRIX Storage Systems section.

HP Part Number: TA768-96058

Published: June 2012

Edition: 9

Page 2

© Copyright 2009, 2012 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Acknowledgements

Microsoft, Windows, Windows XP, and Windows NT are U.S. registered trademarks of Microsoft Corporation.

UNIX® is a registered trademark of The Open Group.

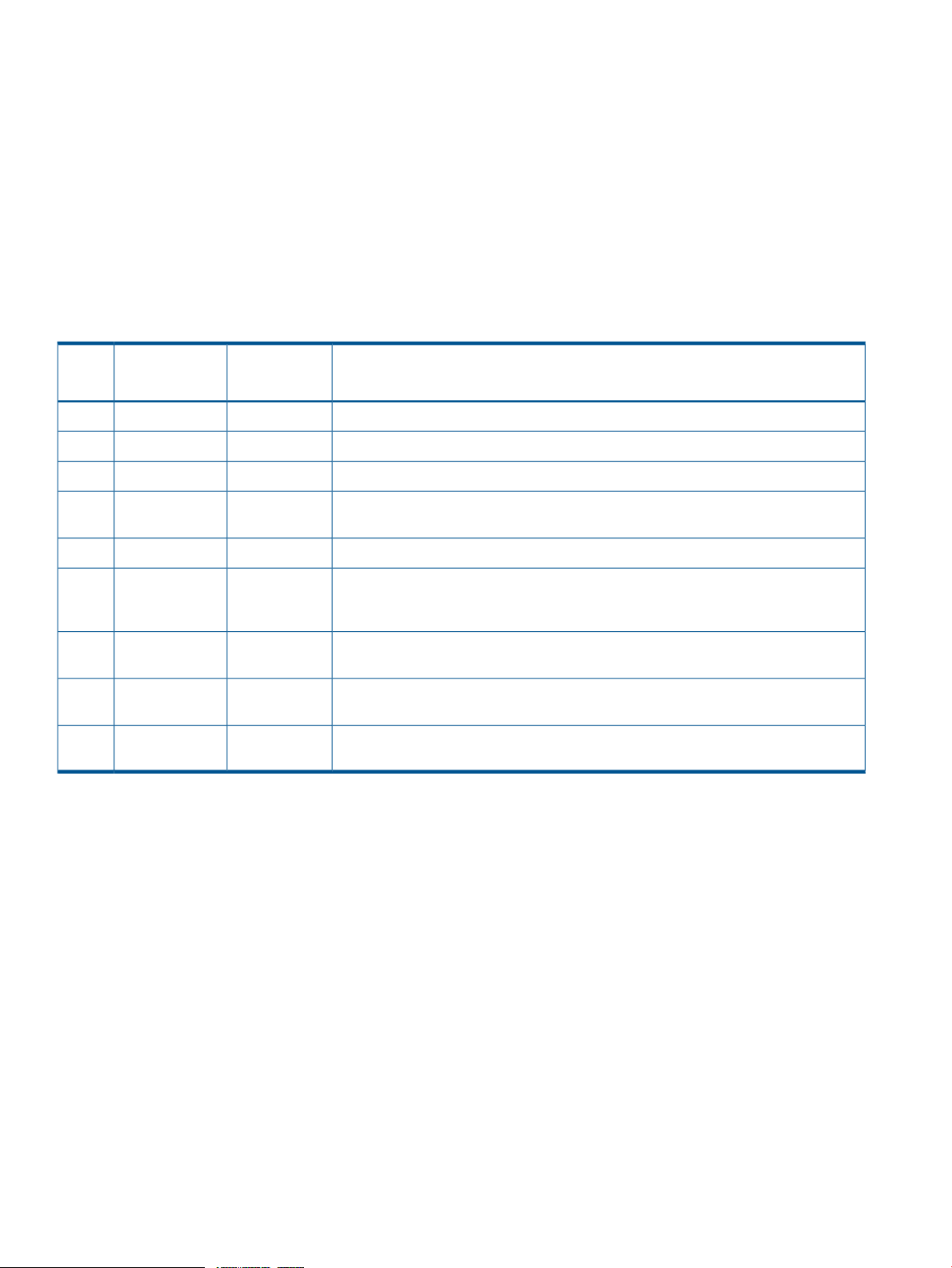

Revision History

| Edition | Date | Software Version | Description |
|---|---|---|---|
| 1 | November 2009 | 5.3.1 | Initial release of HP StorageWorks X9000 File Serving Software |
| 2 | December 2009 | 5.3.2 or later | Updated license and quotas information |
| 3 | April 2010 | 5.4 or later | Major revision of installation and configuration information |
| 4 | May 2010 | 5.4 or later | Added X9720 on-site commissioning information; revised system restore information; removed installation blueprints chapter |
| 5 | December 2010 | 5.5 or later | Updated for the X9000 Software 5.5 release |
| 6 | March 2011 | 5.5 or later | Added network best practices for X9720 systems, updated the network best practices for X9300 and X9320 systems, and updated the configuration procedure for the management console and file serving nodes |
| 7 | June 2011 | 5.6 or later | Updated network best practices, added installation and configuration information for X9720 systems |
| 8 | September 2011 | 6.0 or later | Updated installation and configuration information, replaced Support Ticket with Ibrix Collect, added information about ibrixinit |
| 9 | June 2012 | 6.1 or later | Added new installation procedures and wizards for X9300, X9320, and X9730 systems |

Page 3

Contents

1 Installing X9300 and X9320 systems.............................................................6

Network information.................................................................................................................6

Installation checklist..................................................................................................................6

Installing the latest IBRIX X9000 software release..........................................................................7

Starting the installation and configuration....................................................................................8

Completing the installation in text mode — unified network..........................................................11

Installing additional servers without the template....................................................................14

Installing additional servers using the template.......................................................................17

Completing the installation in text mode — separate cluster and user networks...............................19

Installing additional servers.................................................................................................23

2 Configuring the cluster with the Getting Started Wizard (X9300/X9320 systems).....................................................................................................31

Running the wizard.................................................................................................................31

Troubleshooting the Getting Started Wizard...............................................................................36

Cluster Settings page..........................................................................................................36

DNS/FTP page.................................................................................................................37

File Servers page...............................................................................................................38

Create a Default File System page........................................................................................42

3 Installing X9730 systems............................................................................44

X9730 network layouts............................................................................................................44

IP address requirements...........................................................................................................45

Installation checklist................................................................................................................46

Configuring OA1 IP addresses for Onboard Administrator...........................................................46

Installing the latest IBRIX X9000 software release........................................................................48

Starting the installation and configuring the chassis.....................................................................49

Creating the cluster on blade 1................................................................................................59

Installing additional X9730 blades............................................................................................63

Firmware updates...................................................................................................................72

Troubleshooting .....................................................................................................................74

4 Post-installation tasks.................................................................................77

Updating license keys.............................................................................................................77

Configuring and Enabling High Availability...............................................................................77

X9730 systems..................................................................................................................77

X9300/X9320 systems.......................................................................................................77

Using the management console GUI.........................................................................................77

Changing the GUI user password........................................................................................77

X9000 software manpages......................................................................................................78

Configuring data collection with Ibrix Collect..............................................................................78

Configuring HP Insight Remote Support.....................................................................................78

Creating file systems...............................................................................................................78

Configuring NFS exports (optional)...........................................................................................78

NFS client implementation tuning.........................................................................................78

Configuring CIFS shares (optional)............................................................................................79

Configuring other X9000 software features................................................................................79

5 Configuring virtual interfaces for client access..............................................80

Network and VIF guidelines.....................................................................................................80

Creating a bonded VIF............................................................................................................80

Configuring standby backup nodes...........................................................................................80

Configuring NIC failover.........................................................................................................81

Page 4

Configuring automated failover................................................................................................81

Example configuration.............................................................................................................81

Specifying VIFs in the client configuration...................................................................................82

Configuring link state monitoring for iSCSI network interfaces.......................................................82

6 Adding Linux and Windows X9000 clients..................................................83

Linux X9000 client..................................................................................................................83

Prerequisites for installing the Linux X9000 client....................................................................83

Installation procedure.........................................................................................................83

Registering Linux X9000 clients............................................................................................84

Registering multicluster clients..............................................................................................84

Preferring a network interface for a Linux X9000 client............................................................84

Preferring a network interface for a hostgroup........................................................................84

Removing an X9000 client from the cluster............................................................................85

Windows X9000 client...........................................................................................................85

System requirements...........................................................................................................85

Installing the Windows X9000 client....................................................................................85

Windows X9000 client setup...............................................................................................86

Setting up Windows Services for UNIX.................................................................................86

Configuring automatic user mapping....................................................................................86

Configuring static user mapping..........................................................................................87

Configuring groups and users on the Active Directory server...............................................87

Configuring Active Directory settings on the management console.......................................88

Registering Windows X9000 clients and starting services........................................................88

Importing UIDs/GIDs to the Active Directory server................................................................90

Using the Windows X9000 client GUI..................................................................................90

Preferring a user network interface for a Windows client.........................................................91

Enabling file system access.................................................................................................91

Managing Access Control Lists............................................................................................91

Uninstalling X9000 clients.......................................................................................................94

Uninstalling Linux X9000 clients...........................................................................................94

Uninstalling Windows X9000 clients....................................................................................95

7 Completing the X9730 Performance Module installation................................96

Prerequisites...........................................................................................................................96

Installing the latest IBRIX X9000 software release........................................................................96

Installing the first expansion blade............................................................................................97

Installing the second expansion blade.....................................................................................102

Using the new storage...........................................................................................................106

8 Expanding an X9720 or X9320 10GbE cluster by an X9730 module............109

Prerequisites.........................................................................................................................109

Installing the latest IBRIX X9000 software release......................................................................109

Installing the first expansion blade..........................................................................................110

Installing the second expansion blade.....................................................................................123

Using the new storage...........................................................................................................129

9 Expanding an X9320 cluster with an X9320 starter kit................................132

Installing the latest IBRIX X9000 software release......................................................................132

Installing the first expansion server..........................................................................................132

Completing the installation with the eWizard............................................................................135

Completing the installation in text mode..................................................................................136

10 Using ibrixinit.......................................................................................141

11 Setting up InfiniBand couplets.................................................................143

Downloading and installing the InfiniBand software..................................................................144

Installing the driver...............................................................................................................144

Page 5

Troubleshooting the InfiniBand network....................................................................................145

Enabling client access...........................................................................................................146

Setting up Voltaire InfiniBand ................................................................................................146

12 Support and other resources...................................................................148

Contacting HP......................................................................................................................148

Related information...............................................................................................................148

HP websites.........................................................................................................................148

13 Documentation feedback.......................................................................149

Glossary..................................................................................................150

Page 6

1 Installing X9300 and X9320 systems

The system is configured at the factory as follows:

• X9000 File Serving Software 6.1 is installed on the servers but is not configured

• For X9320 systems, LUNs are created and preformatted on the MSA storage system

You will need the following information when you perform the installation:

• One IP address per server to assign to the network bond

• One IP address per server to assign to the iLO interface

• One virtual IP address (VIF) to assign to the entire cluster for management use

• The IP addresses of your DNS servers

• The IP addresses of your NTP servers
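
Before starting, it can help to confirm that no address in your plan is assigned twice. The following is a minimal sketch and not part of the X9000 software. It assumes a hypothetical plain-text plan with one `role address` pair per line (the role names and addresses shown are illustrative only) and prints any address that appears more than once.

```shell
# Print every IP address that appears more than once in an address plan.
# Input (stdin): one "role address" pair per line. This format is an
# assumption made for this sketch, not an X9000 file format.
find_duplicate_ips() {
    awk 'NF >= 2 { print $2 }' | sort | uniq -d
}

# Example plan for a two-server cluster (all names and addresses are
# hypothetical). The cluster VIF reuses the node2 bond address here, so
# that address is reported.
find_duplicate_ips <<'EOF'
node1-bond0  10.10.1.11
node1-ilo    10.10.2.11
node2-bond0  10.10.1.12
node2-ilo    10.10.2.12
cluster-vif  10.10.1.12
EOF
```

An empty result means every address in the plan is unique.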

If you are performing the installation on an existing cluster, ensure that the same version of the X9000 software is installed on all nodes.

Network information

In previous releases, X9300/X9320 systems were set up with two networks:

• 1GbE models used the LOMs for the cluster and management networks and used the PCI card as the user network.

• 10GbE models placed the cluster and user networks on the 10GbE cards and used the LOMs as a management network.

By default, the 6.1 release uses a unified network that incorporates cluster and user operations. There is only one IP address per server by default, and the IP address exists on the public network. The unified network creates bond0 on specific interfaces. For 10GigE systems, bond0 uses the eth4/eth5 interfaces. For Quad GigE systems, bond0 uses interfaces eth4–eth7.

If necessary, you can create separate user and cluster networks, with bond0 as the cluster network and bond1 as the user network. The initial installation configures bond0 on the default interfaces. Later, you can customize bond0, selecting the correct interfaces for your network, and can then define bond1. This procedure is described in detail later in this chapter.

See the HP IBRIX X9000 Network Storage System Network Best Practices Guide for a detailed description of the unified network on X9300/X9320 systems, including the physical layout of the network.

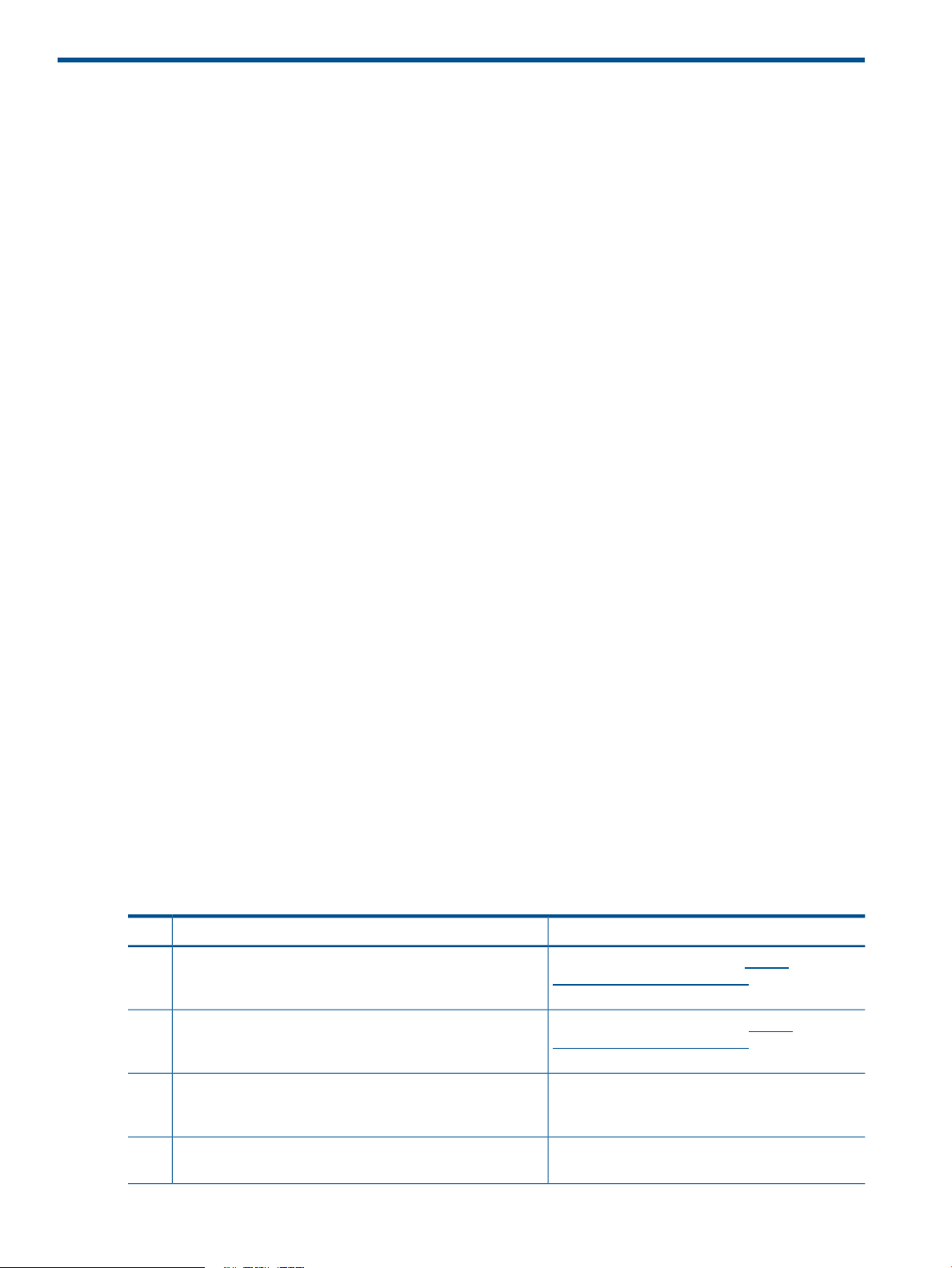

Installation checklist

| Step | Task | More information |
|---|---|---|
| 1. | For X9320 systems, set up the HP storage array | The array documentation is on http://www.hp.com/support/manuals under storage > Disk Storage Systems |
| 2. | Set up iLO on the ProLiant servers | The server documentation is on http://www.hp.com/support/manuals under servers > ProLiant ml/dl and tc series servers |
| 3. | For X9300 systems, ensure that the correct multipath solution for the storage arrays connected to the gateways has been configured and is running on each file serving node | See the documentation for the multipath solution |
| 4. | Install the latest IBRIX X9000 software release on each server | “Installing the latest IBRIX X9000 software release” (page 7) |
| 5. | Perform the installation | “Starting the installation and configuration” (page 8) |
| 6. | Set up IBRIX virtual IP addresses for client access | “Configuring virtual interfaces for client access” (page 80) |
| 7. | Perform post-installation tasks: update license keys if not done already; configure server standby pairs for High Availability; configure the Ibrix Collect feature; configure HP Insight Remote Support; create file systems if not already configured. Optionally, also configure the following features: NFS, CIFS, HTTP/HTTPS, FTP/FTPS shares; remote replication; data retention and validation; antivirus support; software snapshots; block snapshots; data tiering; NDMP Backup Protocol Support | “Post-installation tasks” (page 77) |
| 8. | Configure X9000 clients for Linux or Windows (optional) | “Adding Linux and Windows X9000 clients” (page 83) |

Page 7

Installing the latest IBRIX X9000 software release

Obtain the latest 6.1 release from the IBRIX X9000 software dropbox. Download the Quick Restore ISO image and then use either a DVD or a USB key to install the image.

Use a DVD

1. Burn the ISO image to a DVD.

2. Insert the Quick Restore DVD into the server's DVD-ROM drive.

3. Restart the server to boot from the DVD-ROM.

4. When the HP Network Storage System screen appears, enter qr to install the software.

Repeat steps 2–4 on each server.

Use a USB key

1. Copy the ISO to a Linux system.

2. Insert a USB key into the Linux system.

3. Execute cat /proc/partitions to find the USB device. The device is listed by name (sdX) and corresponds to /dev/sdX. For example:

cat /proc/partitions

major minor #blocks name

8 128 15633408 sdi

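4. Execute the following dd command to write the QR installer image to the USB key. Replace /dev/sdi with the device found in step 3; dd overwrites the contents of the target device.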

dd if=<ISO file name with path> of=/dev/sdi oflag=direct bs=1M

For example:

dd if=X9000-QRDVD-6.2.96-1.x86_64.iso of=/dev/sdi oflag=direct bs=1M

4491+0 records in

Page 8

4491+0 records out

4709154816 bytes (4.7 GB) copied, 957.784 seconds, 4.9 MB/s

5. Insert the USB key into the server to be installed.

6. Restart the server to boot from the USB key. (Press F11 and use option 3.)

7. When the “HP Network Storage System” screen appears, enter qr to install the software.

Repeat steps 5–7 on each server and then go to the next section, “Starting the installation and configuration.”
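
After dd finishes, you may want to confirm that the USB key holds an exact copy of the ISO before moving it to a server. The following is a sketch under stated assumptions, not an HP-supplied procedure: `verify_qr_image` is a hypothetical helper name, and the function relies on the `-n` byte-limit option of GNU cmp. It compares only as many bytes as the ISO contains, because the USB device is normally larger than the image.

```shell
# Compare an ISO file with the device (or file) it was written to.
# Usage: verify_qr_image ISO_FILE DEVICE
# Returns 0 when the first <ISO size> bytes of DEVICE match ISO_FILE.
verify_qr_image() {
    iso=$1
    device=$2
    # Size of the ISO in bytes. The arithmetic expansion strips any
    # padding that wc may print around the number.
    size=$(wc -c < "$iso") || return 1
    size=$((size))
    # -n limits the comparison to the length of the ISO (GNU cmp option).
    cmp -n "$size" "$iso" "$device"
}
```

For example, `verify_qr_image X9000-QRDVD-6.2.96-1.x86_64.iso /dev/sdi` returns 0 only if the copy matches. Reading the raw device typically requires root privileges.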

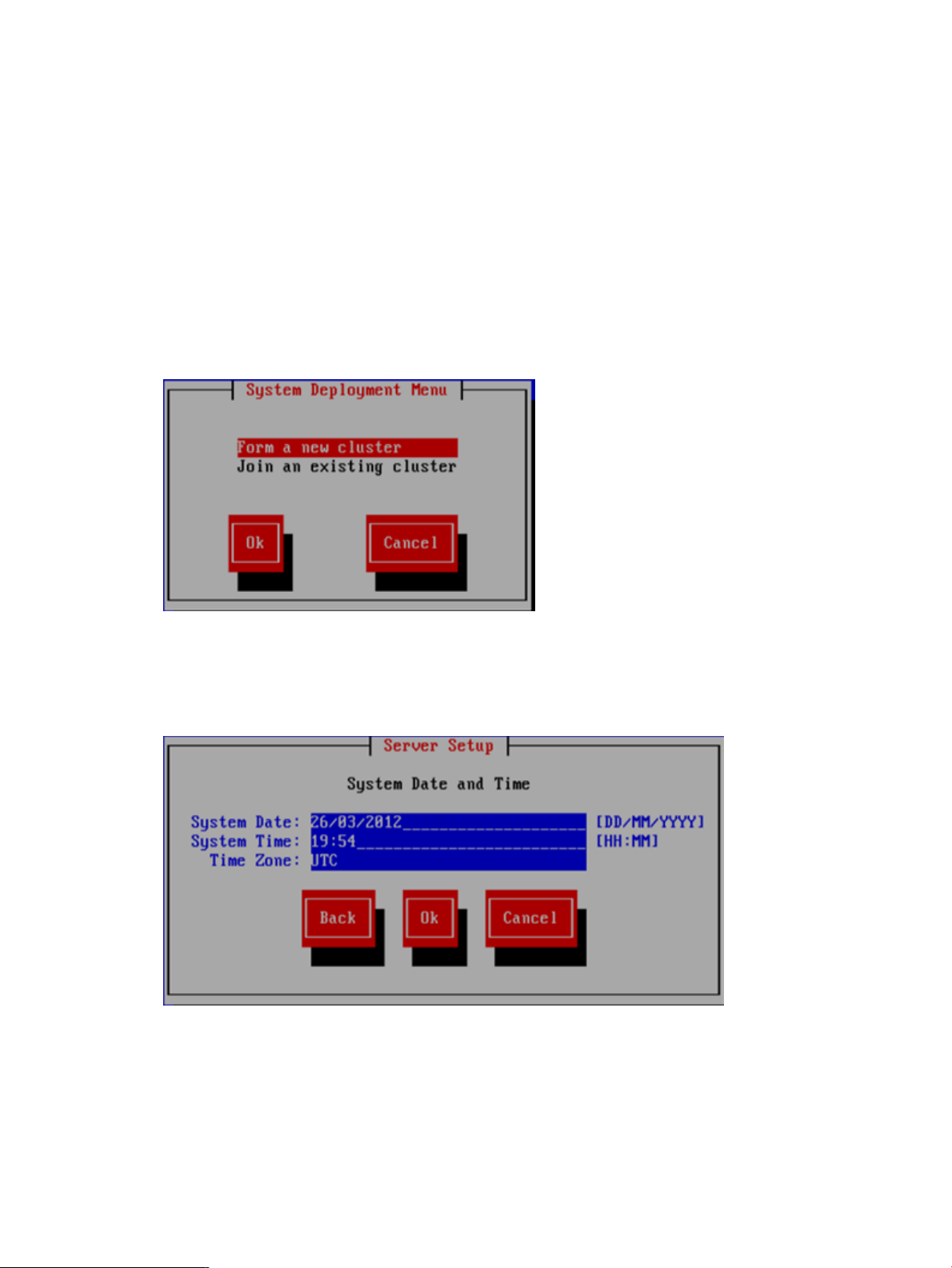

Starting the installation and configuration

Complete the following steps:

1. Boot the servers that will be in the cluster.

2. The setup wizard checks the network for an existing cluster. If a cluster is found, you will be

asked if you want to join an existing cluster or create a new cluster.

If there is not an existing cluster or you chose to create a new cluster, the setup process asks

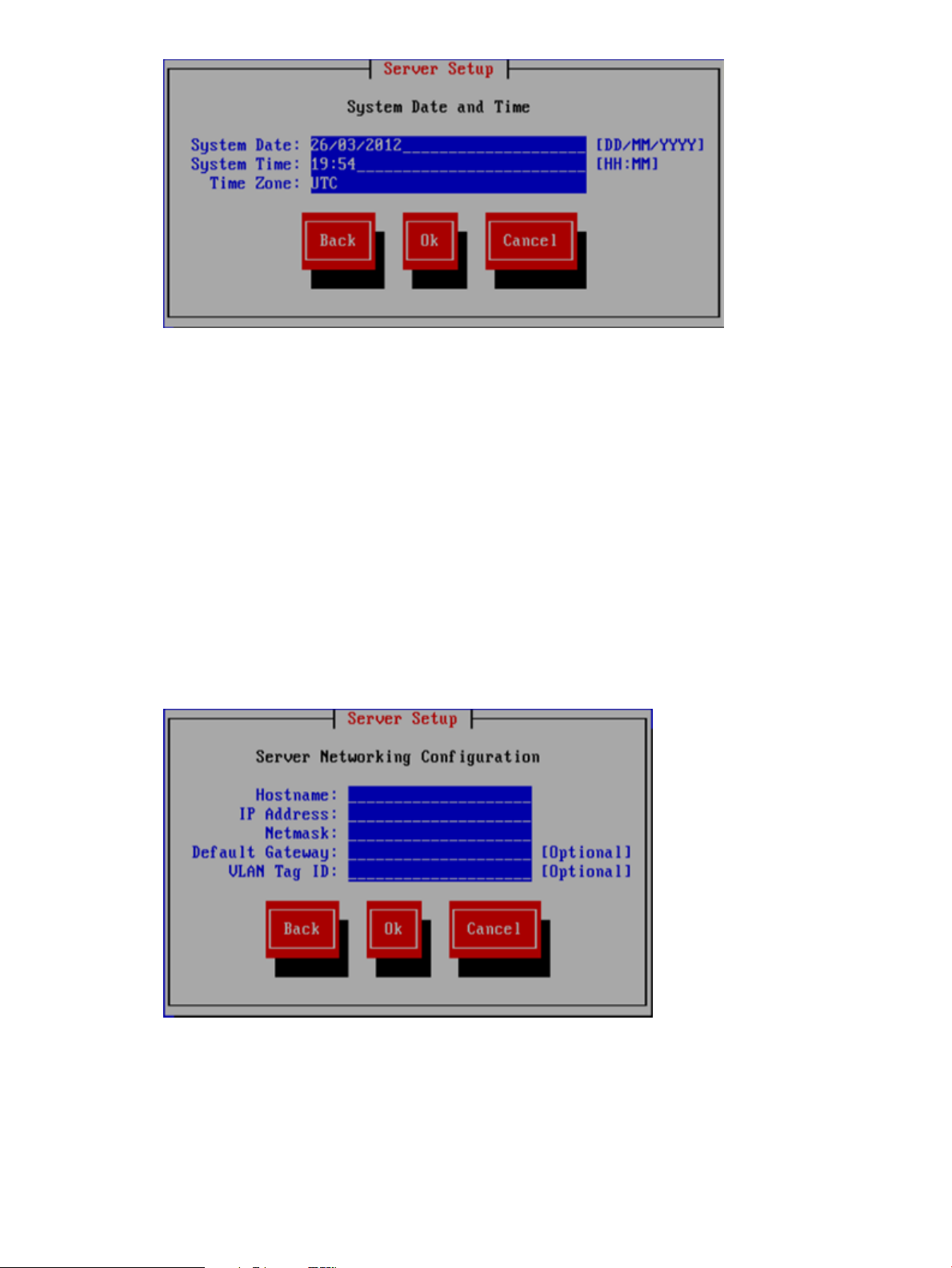

for information about the server you are using. On the System Date and Time dialog box,

enter the system date (day/month/year) and time (in 24-hour format). Tab to the Time Zone

field and press Enter to display a list of time zones. Select your time zone from the list.

3. The Server Networking Configuration dialog box defines the server on bond0. Note the following:

• The hostname can include alphanumeric characters and the hyphen (-) special character. It is a best practice to use only lowercase characters in hostnames; IBRIX issues can occur with uppercase characters. Do not use an underscore (_) in the hostname.

• The IP address is the address of the server on bond0.

Page 9

• The default gateway provides a route between networks. If your default gateway is on a different subnet than bond0, skip this field.

Later in this procedure, you can select either Web UI or ASCII text mode to complete the installation. A gateway address is required to use the Web UI.

• VLAN capabilities provide hardware support for running multiple logical networks over the same physical networking hardware. IBRIX supports the ability to associate a VLAN tag with an FSN interface. For more information, see the HP IBRIX X9000 Network Storage System Network Best Practices Guide.

NOTE: If you see a message reporting that link-down networking issues were detected, select Proceed.
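
The hostname rules in step 3 can be checked before you type a name into the dialog box. The following is a minimal sketch assuming only the rules stated above (alphanumeric characters and hyphens allowed, no underscore, lowercase preferred). `check_hostname` is a hypothetical helper, and the installer may enforce additional rules that are not modeled here.

```shell
# Check a candidate hostname against the rules listed in step 3.
# Prints "ok", a warning (uppercase present), or an error (invalid character).
# Returns 1 only when the name contains a disallowed character or is empty.
check_hostname() {
    name=$1
    # Only ASCII letters, digits, and hyphens are accepted; this also
    # rejects the underscore. LC_ALL=C keeps the ranges ASCII-only.
    if ! printf '%s\n' "$name" | LC_ALL=C grep -Eq '^[A-Za-z0-9-]+$'; then
        echo "invalid: use only letters, digits, and hyphens"
        return 1
    fi
    # Uppercase is permitted but discouraged.
    if printf '%s\n' "$name" | LC_ALL=C grep -q '[A-Z]'; then
        echo "warning: uppercase characters found; lowercase is recommended"
        return 0
    fi
    echo "ok"
}
```

For example, `check_hostname ib74s3` prints `ok`, while `check_hostname ib_74s3` reports an invalid name.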

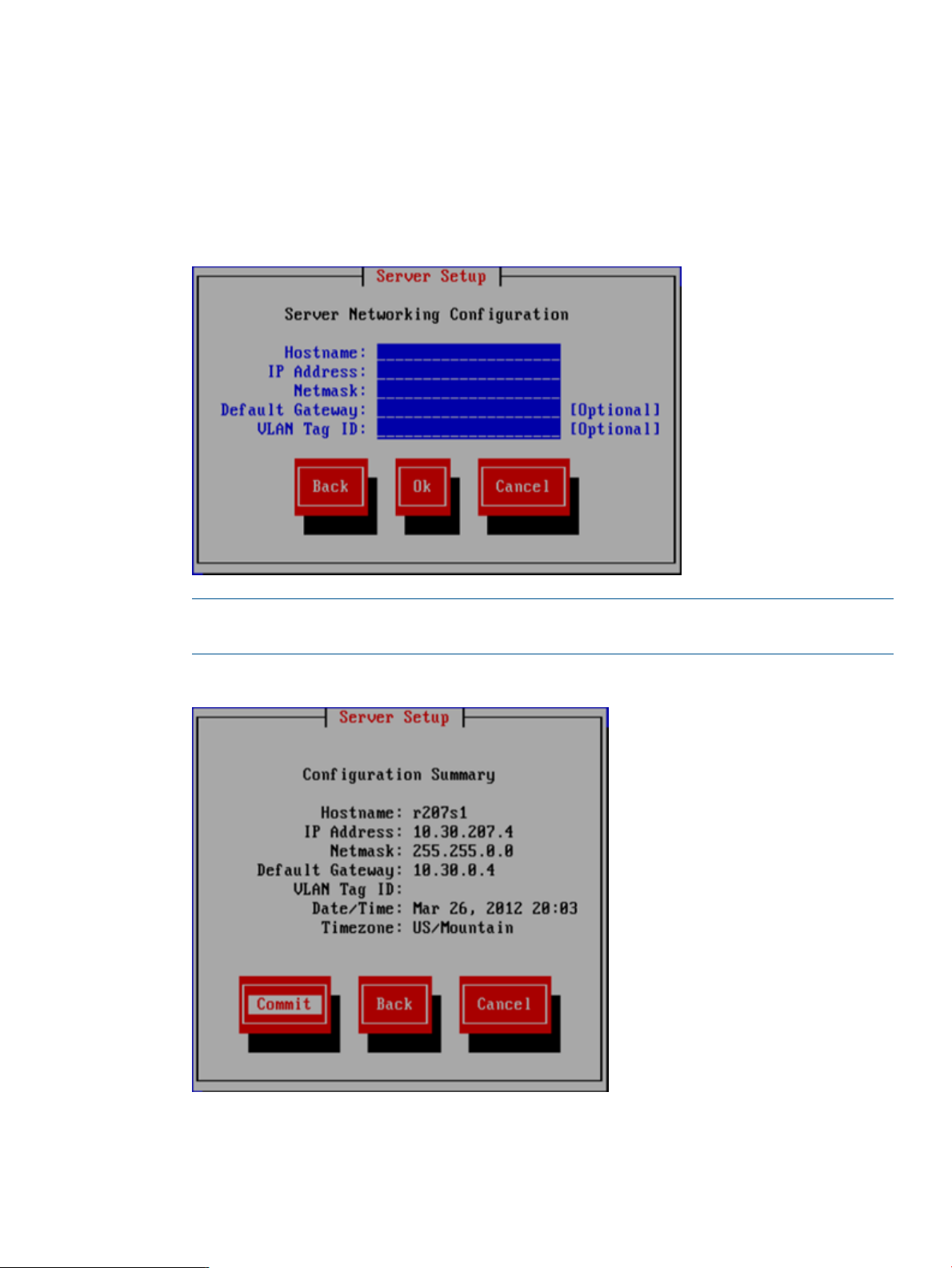

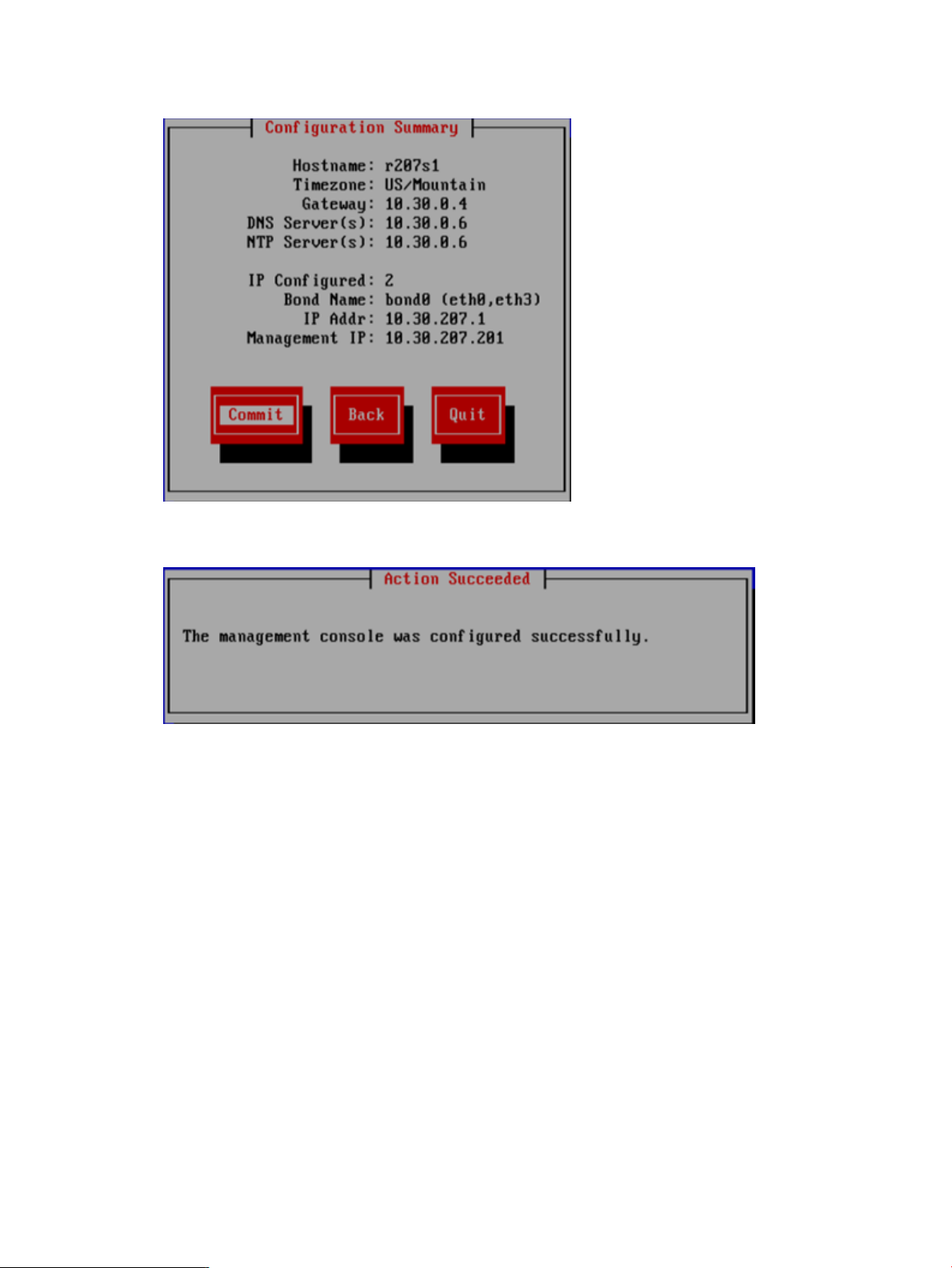

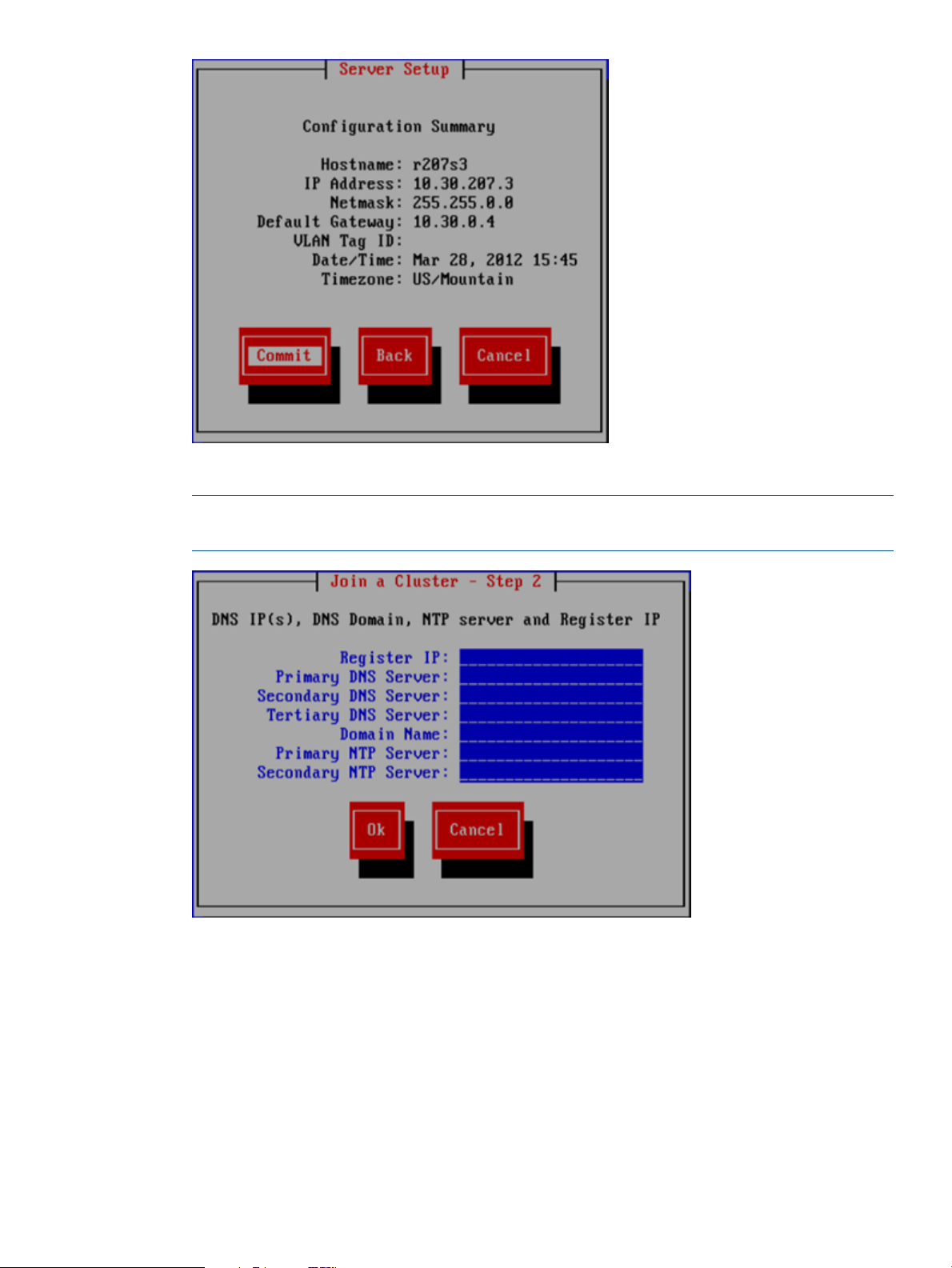

4. The Configuration Summary lists your configuration. Select Commit to continue the installation. The setup wizard now configures the server according to the information you entered.

Page 10

5. Select a method to complete the installation:

• Web UI over the network from a remote system. The URL for the IBRIX Getting Started Wizard is displayed on the console when you select this option.

• A local Web UI session on this console. Selecting this option launches the IBRIX Getting Started Wizard from a web browser on this console.

• ASCII text mode. This is a continuation of the menus you have been using.

IMPORTANT: To configure separate cluster and user networks, you must use ASCII text mode.

IMPORTANT: To use the Web UI, your cluster network must meet the following conditions:

• Network bond0 was configured without errors.

• Broadcast is enabled. The Web UI relies on broadcast traffic.

• The cluster has a single bond0 unified network for user/cluster traffic. (Using an external management network for iLO traffic is okay.) The Web UI assumes that a bond0 unified network should be used throughout the cluster.

• You entered a gateway IP address on the Server Setup screen.

If your cluster does not meet these conditions, select text mode to complete the installation.

If you would like to proceed with the Getting Started Wizard from some other laptop or desktop connected to the network, select Install in graphical mode remotely. To proceed with the Getting Started Wizard on this console, select Install in graphical mode at this console. If you are using web UI, see “Configuring the cluster with the Getting Started Wizard (X9300/X9320 systems)” (page 31).

If you are using text mode, go to the section corresponding to your network type.

• Unified network. Configure the bond0 interface and the active management console (Fusion Manager) on the server and, optionally, create a template for installing the remaining servers.

• Separate cluster and user networks. Customize the bond0 cluster interface if needed, define the bond1 user interface, and configure the active management console (Fusion Manager) on the server. See “Completing the installation in text mode — separate cluster and user networks” (page 19).

Page 11

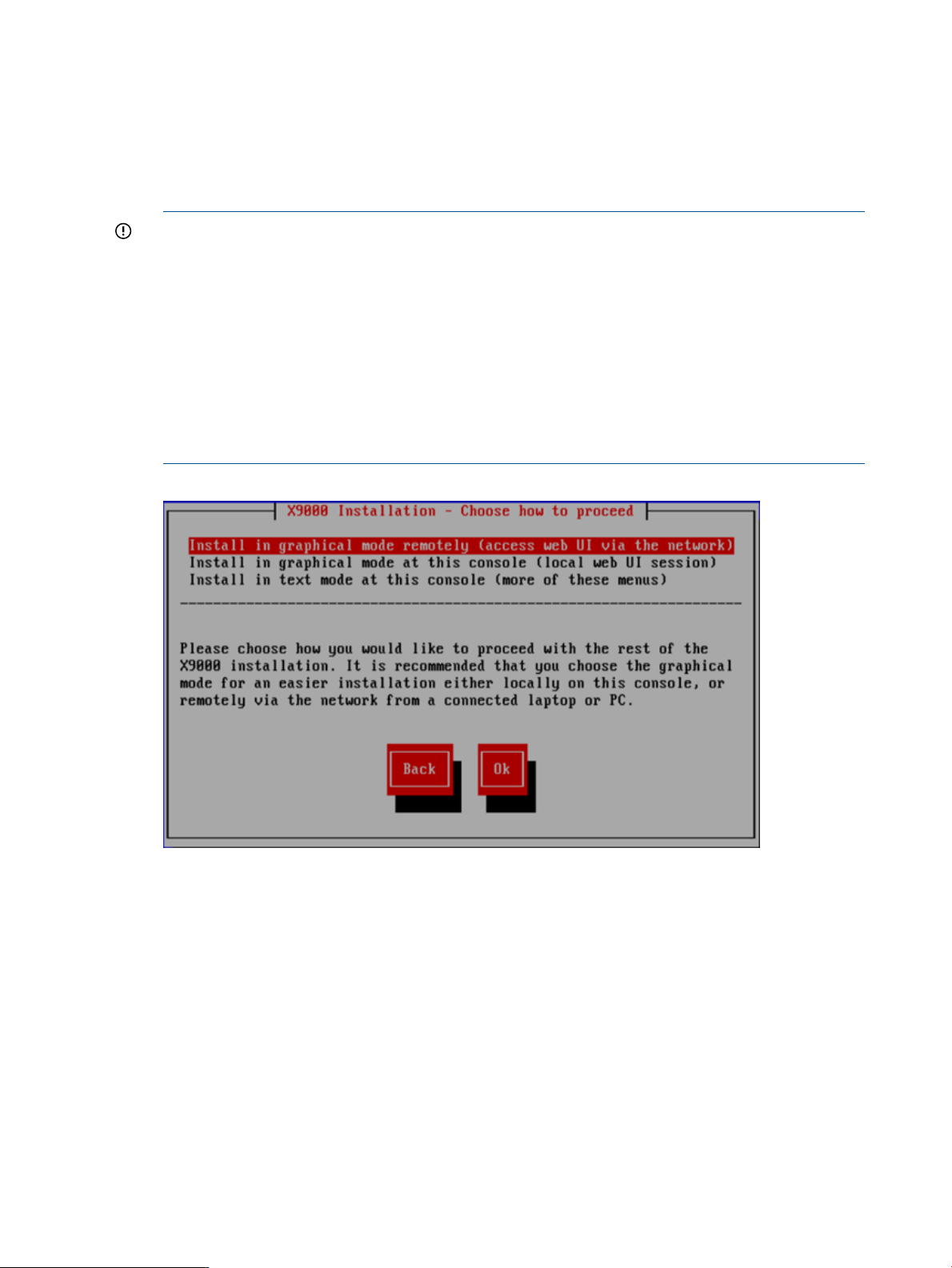

Completing the installation in text mode — unified network

To configure the first server, complete the following steps:

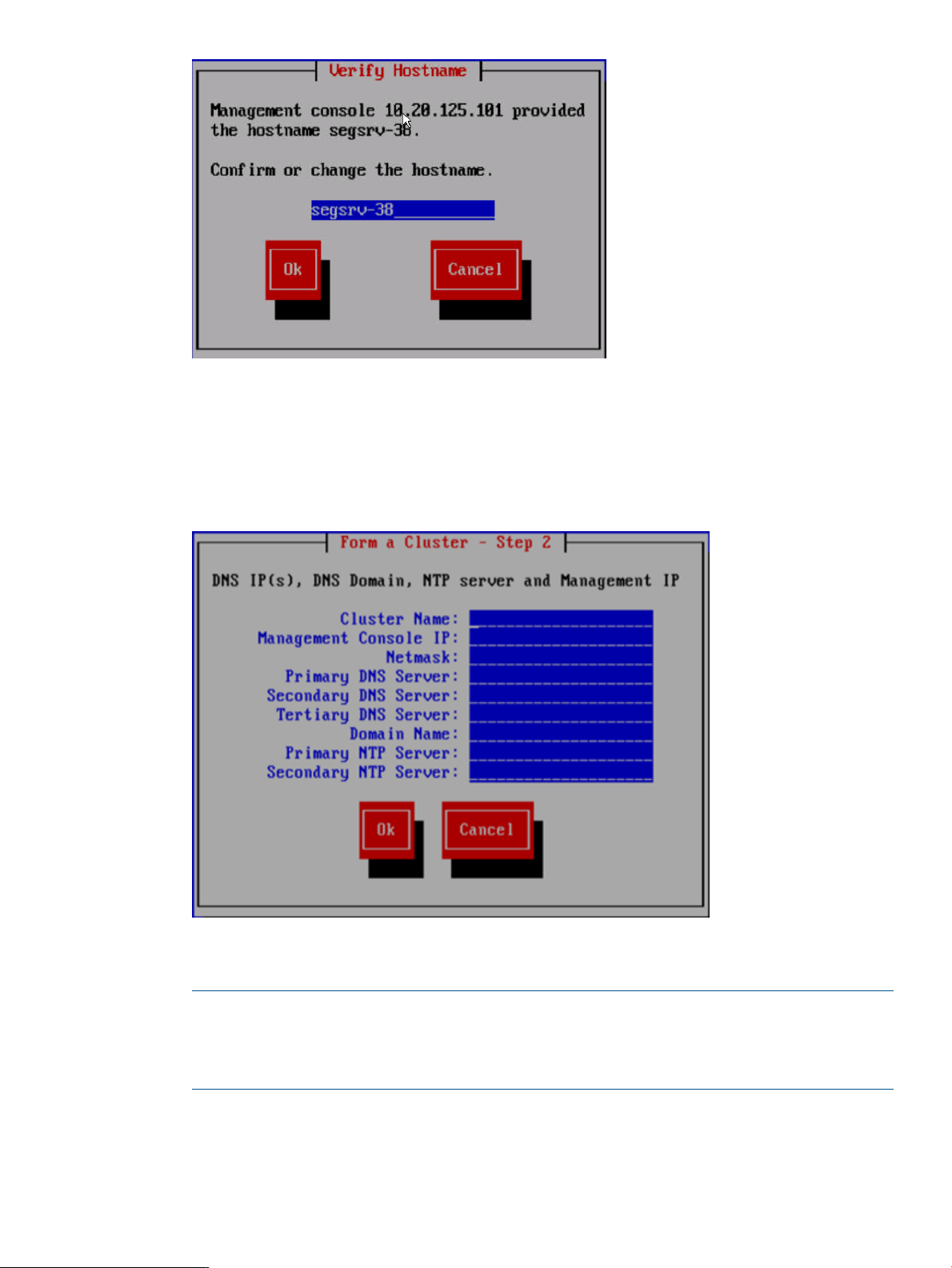

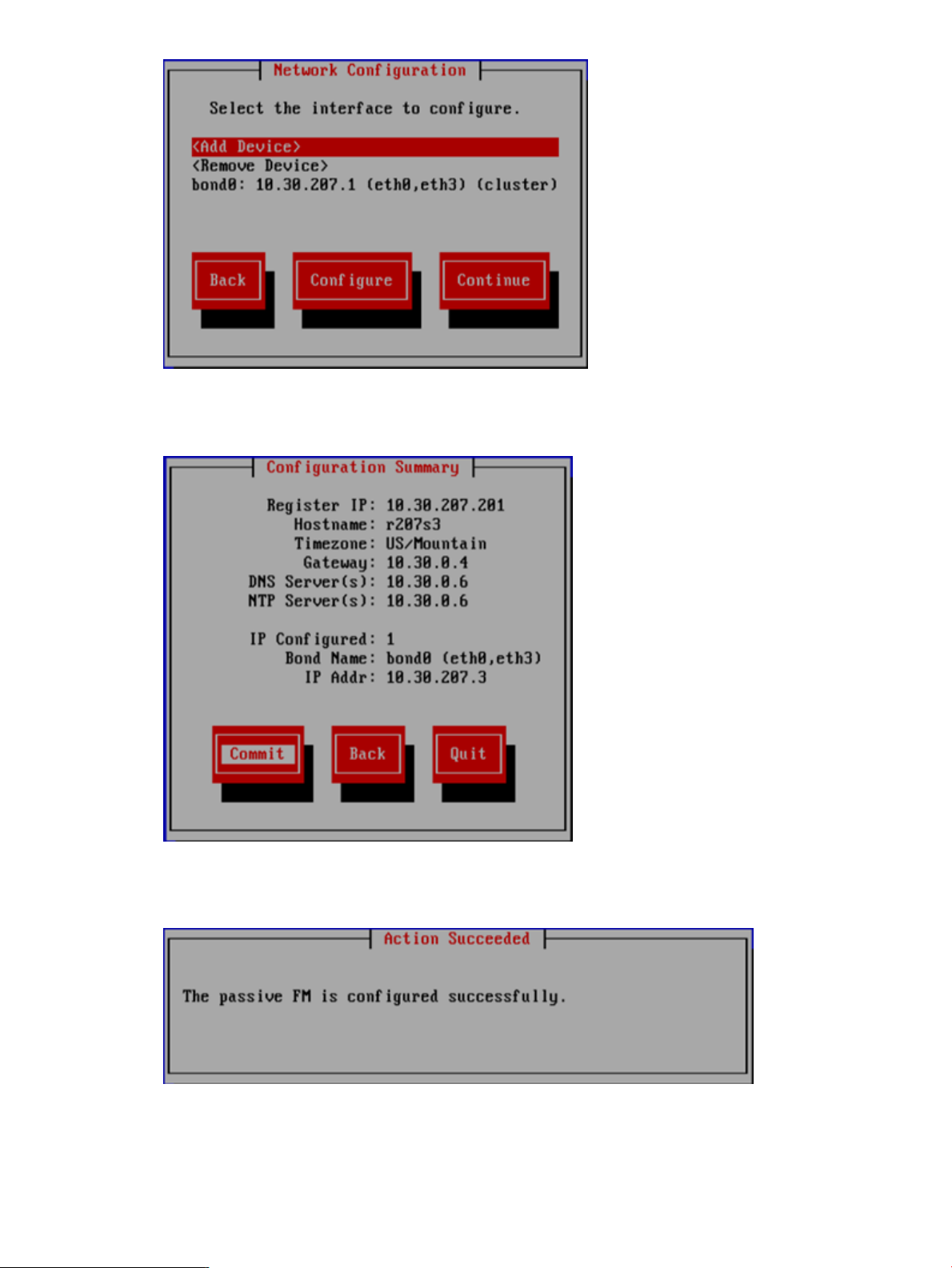

1. On the Form a Cluster — Step 2 dialog box, enter a name for the cluster and specify the IP address and netmask for the Management Console IP (also called the Cluster Management IP). This is a virtual IP address (VIF) assigned to the entire cluster for management use. Think of it as the “IP address of the cluster.” You should connect to this VIF in future GUI management sessions. The VIF remains highly available.

Also enter the IP addresses and domain for your DNS servers, and the IP addresses for your NTP servers.

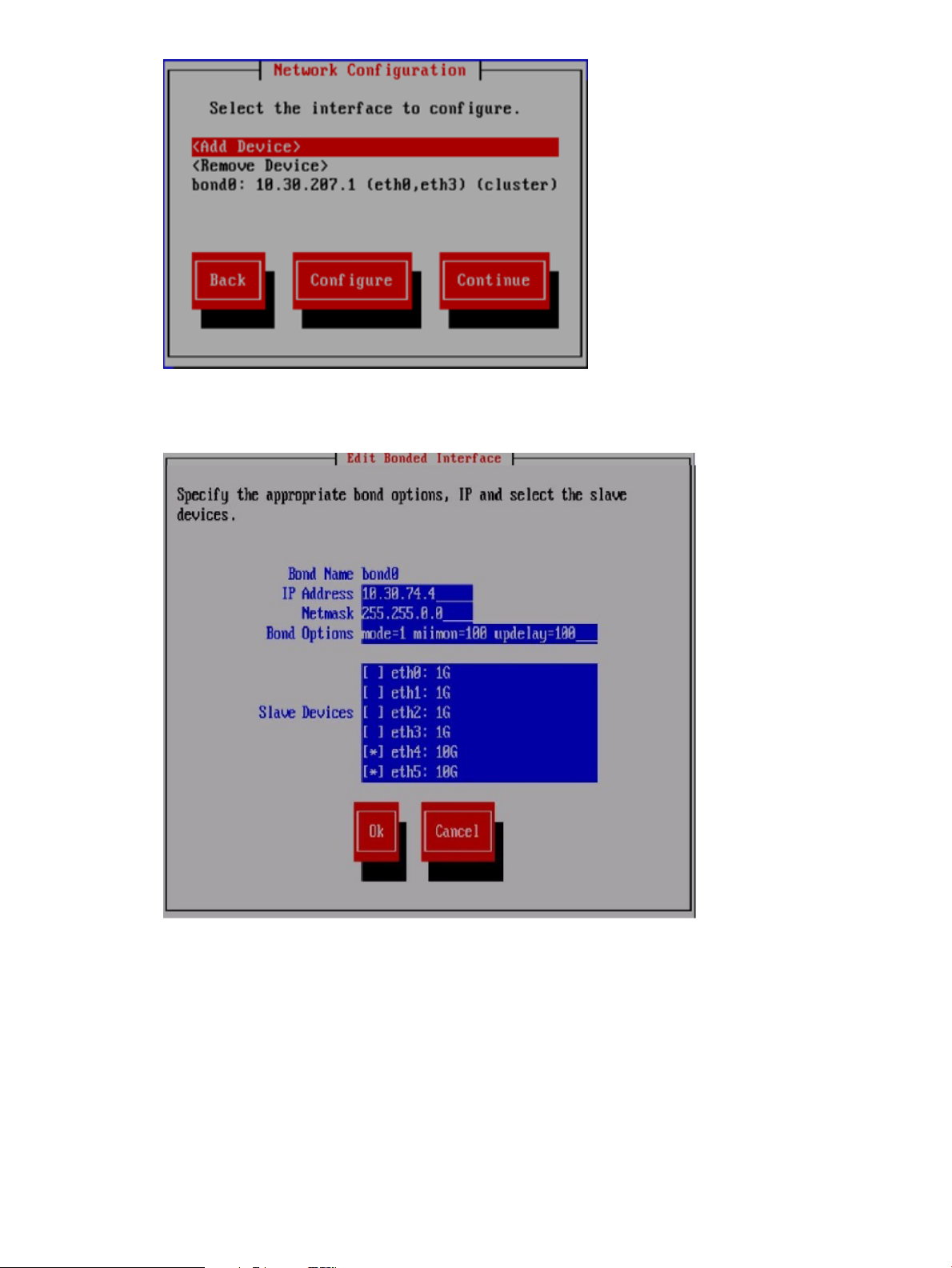

2. The Network Configuration dialog box lists the Ethernet devices included in bond0. The bond0 configuration is complete; select Continue and go to the next step.

NOTE: If required, the configuration of bond0 can be modified at this step by selecting bond0 in the list of devices and then selecting Configure. This should be necessary only if you have a non-standard configuration and the slave devices chosen by the installer for bond0 are not correct for your environment.

Page 12

3. The Configuration Summary lists the configuration you have specified. Select Commit to continue.

4. The wizard now configures the active management console (Fusion Manager) on the server.

Page 13

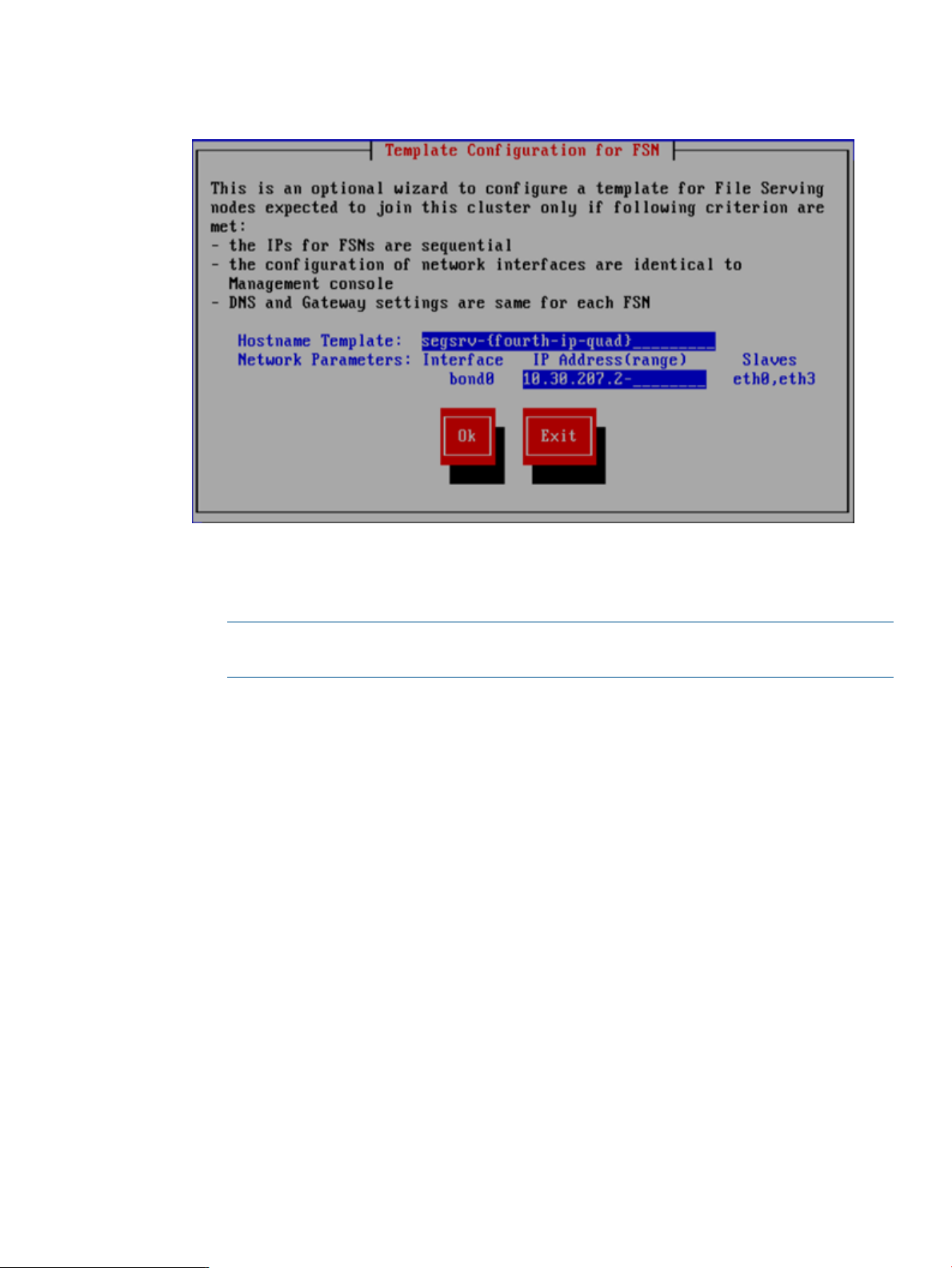

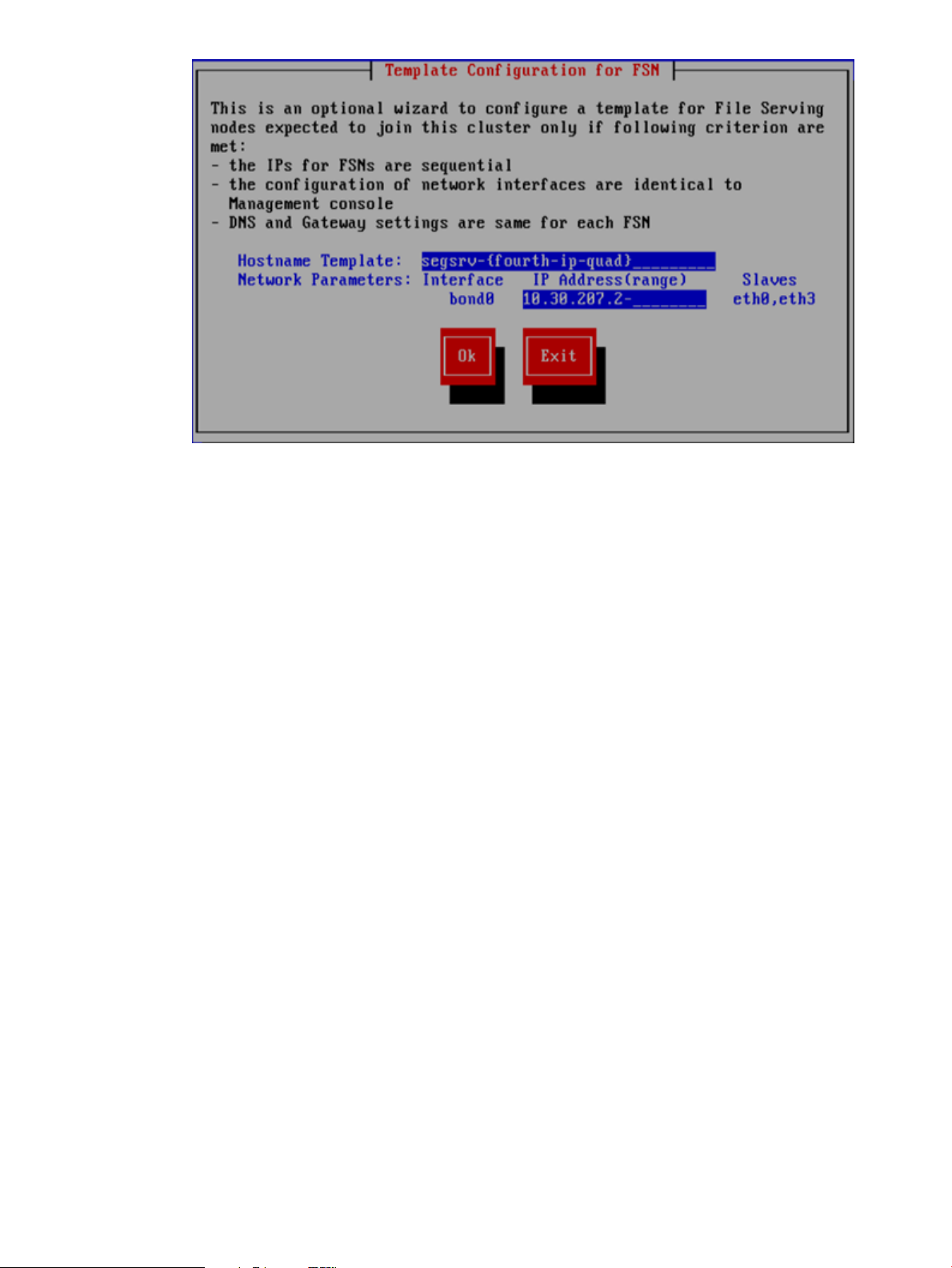

5. Optionally, create a template to configure the remaining nodes. To use the template, all nodes must have identical network configurations. If you are using separate cluster/user networks, or the node configurations will not be identical, select Exit.

In the hostname templates, the parameters enclosed in braces ({...}) expand in the following manner:

• number num: the number of file serving nodes in the cluster

NOTE: When using the number format, allow each file serving node to register before logging in to the next system.

• fourth-ip-quad ip4: the fourth section of an IP address (dotted quad format)

• third-ip-quad ip3: the third section of an IP address (dotted quad format)

• second-ip-quad ip2: the second section of an IP address (dotted quad format)

• first-ip-quad ip1: the first section of an IP address (dotted quad format)

• address ip: the IP address with dots replaced by dashes

• reverse-address rip: the IP address, reversed by quads, with dots replaced by dashes

• uuid: a Universally Unique Identifier

For example:

template: ib74s{fourth-ip-quad}

ip hostname

192.168.74.3 ib74s3

template: ib74s{first-ip-quad}

ip hostname

192.168.74.3 ib74s192

template: Noname-{address}

ip hostname

192.168.74.3 Noname-192-168-74-3

template: Noname-{reverse-address}

ip hostname

192.168.74.3 Noname-3-74-168-192
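
The expansions in the examples above can be reproduced outside the installer to preview the hostnames a template will generate. The following is a sketch only, not the installer's implementation. `expand_hostname_template` is a hypothetical helper that handles the IP-based parameters in their long form as used in the examples; `{number}` and `{uuid}` are left unexpanded because their values come from the cluster.

```shell
# Expand the IP-based parameters of a hostname template for one address.
# Usage: expand_hostname_template TEMPLATE IPV4_ADDRESS
# The body runs in a subshell (parentheses) so the IFS change and the
# reassigned positional parameters do not leak into the caller.
expand_hostname_template() (
    template=$1
    ip=$2
    # Split the dotted-quad address into $1..$4.
    IFS=.
    set -- $ip
    # Substitute each supported parameter; unknown ones are left as-is.
    printf '%s\n' "$template" | sed \
        -e "s/{first-ip-quad}/$1/g" \
        -e "s/{second-ip-quad}/$2/g" \
        -e "s/{third-ip-quad}/$3/g" \
        -e "s/{fourth-ip-quad}/$4/g" \
        -e "s/{reverse-address}/$4-$3-$2-$1/g" \
        -e "s/{address}/$1-$2-$3-$4/g"
)
```

For example, `expand_hostname_template 'ib74s{fourth-ip-quad}' 192.168.74.3` prints `ib74s3`, matching the first example above.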

Page 14

A configuration script now performs some tuning and imports the LUNs into the X9000 software. When the script is complete, you can install the remaining servers.

If you did not create a template, go to the next section.

If you created a template, go to “Installing additional servers using the template” (page 17).

Installing additional servers without the template

Complete the following steps:

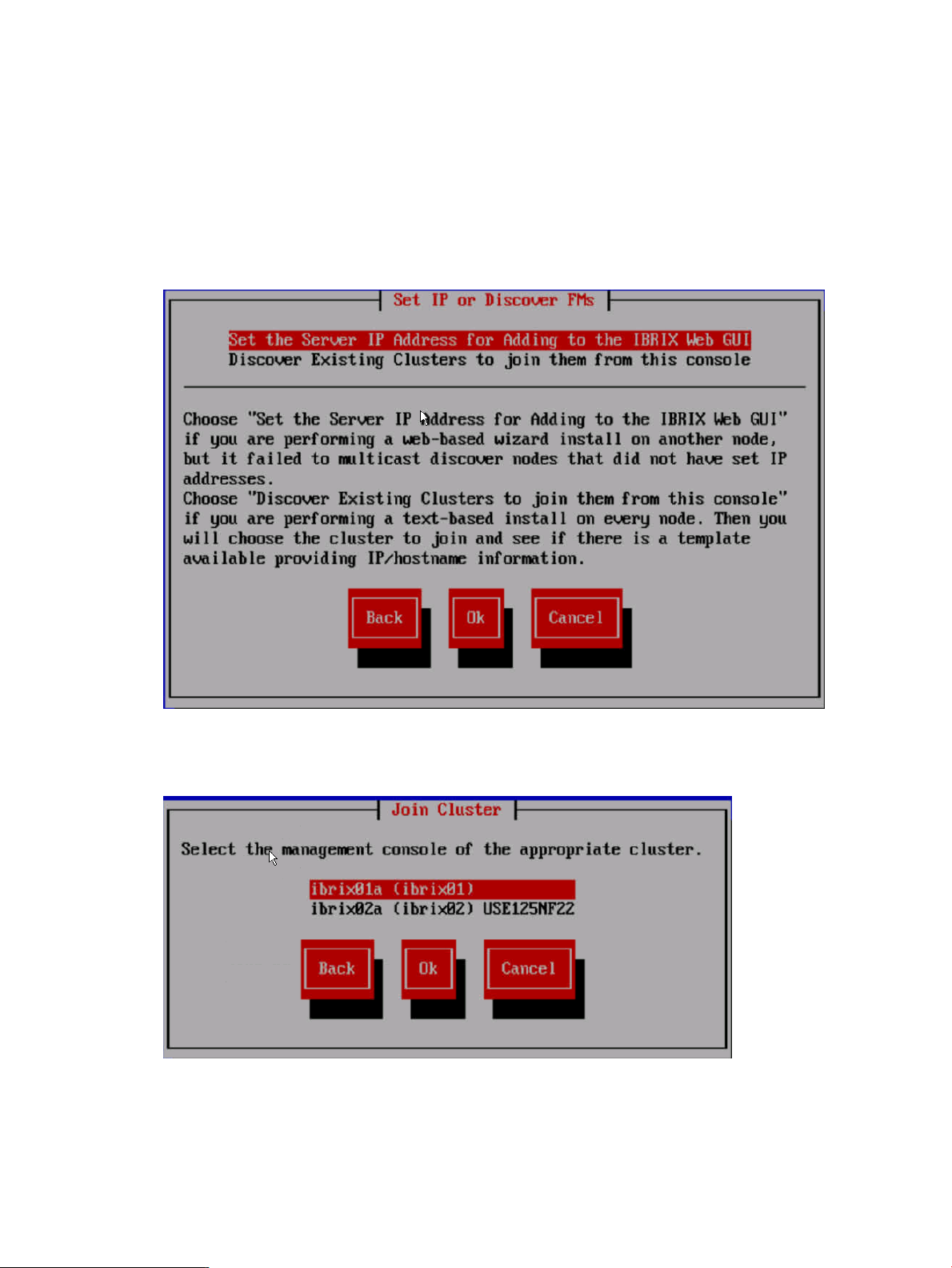

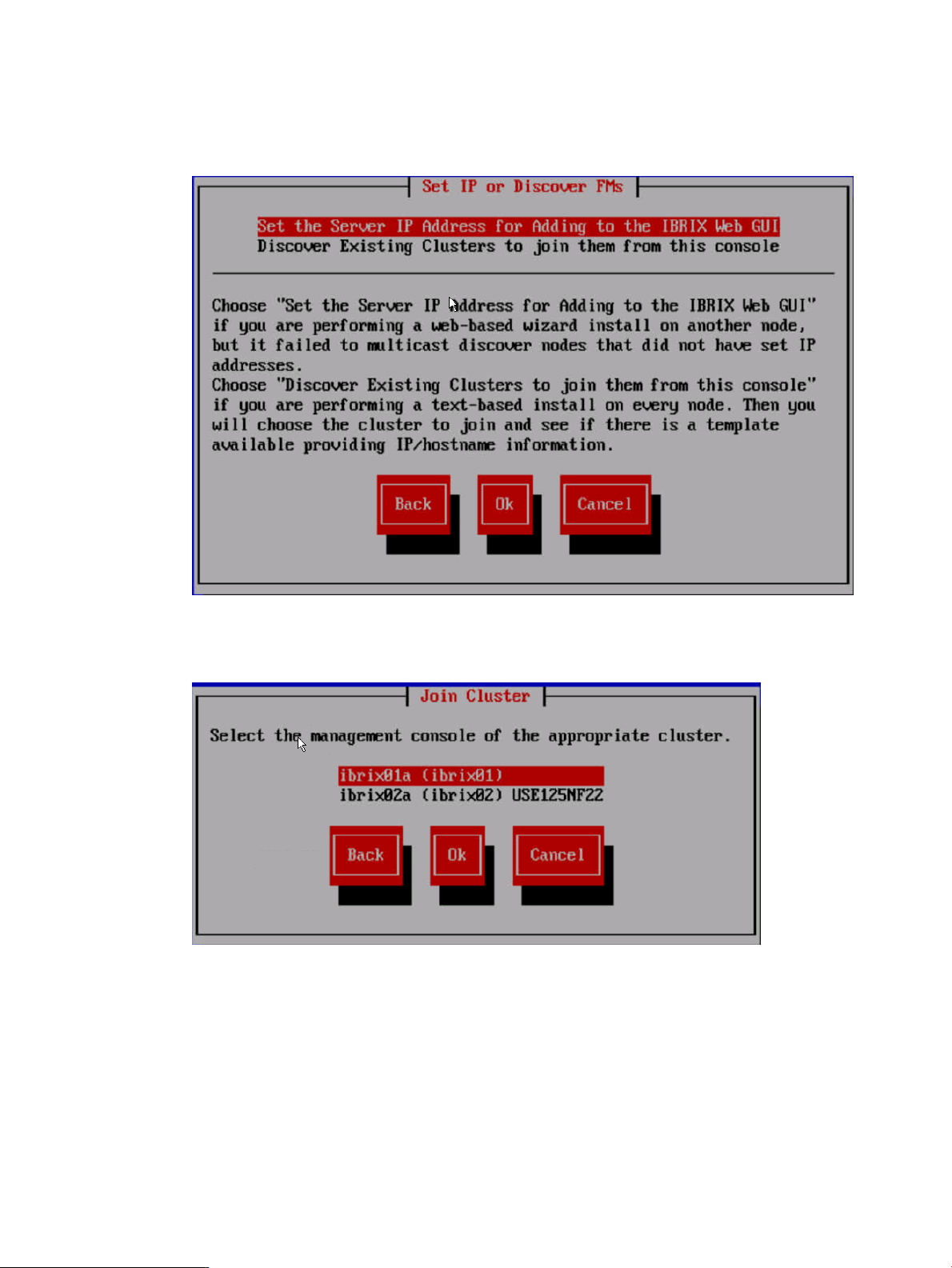

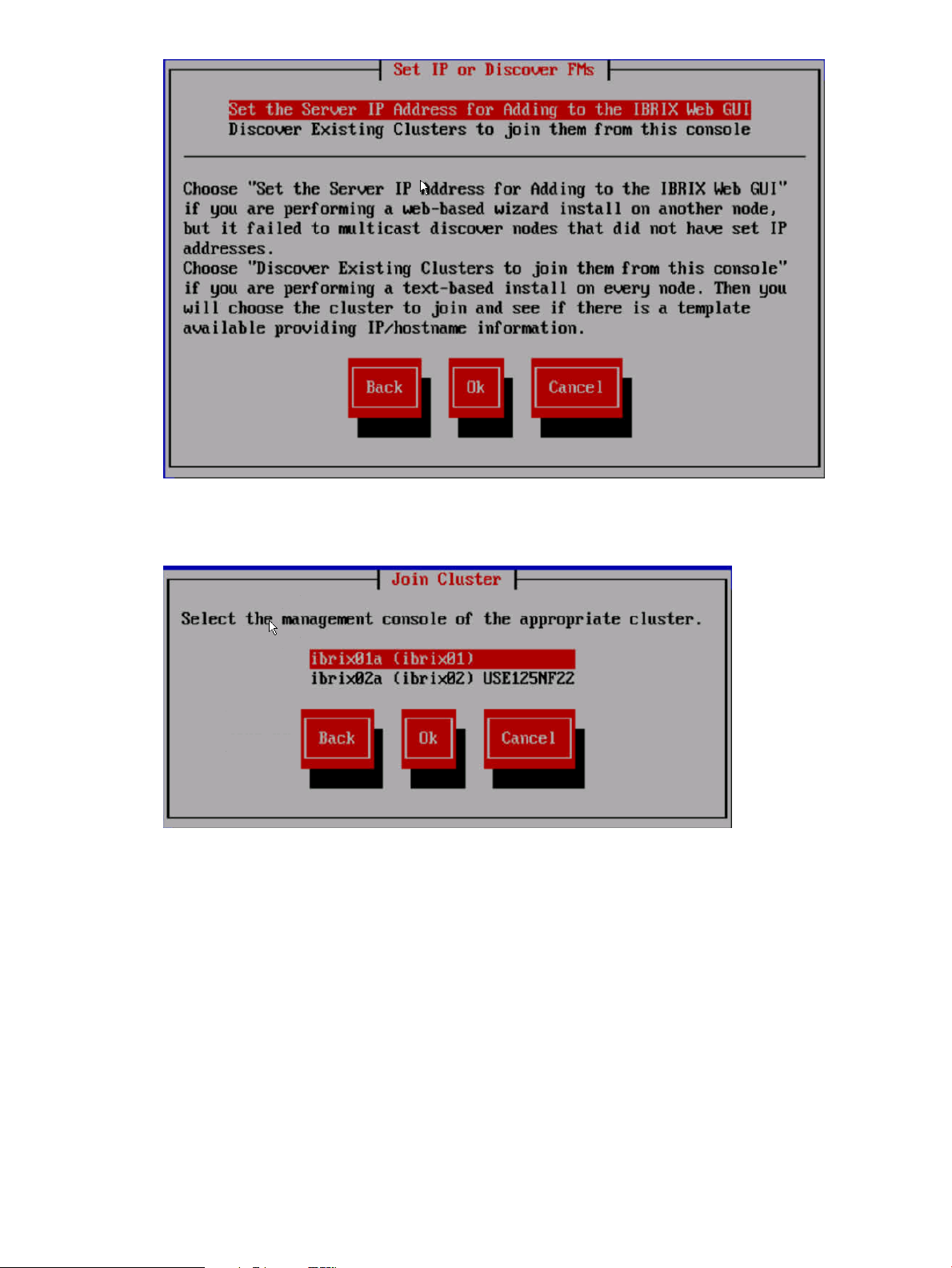

1. Using a console connection, log into the server you are installing. The Set IP or Discover FMs dialog box appears. Select Discover Existing Clusters to join them from this console.

2. The installation wizard scans the network for existing clusters and lists the clusters on the Join Cluster dialog box. Select the appropriate cluster.

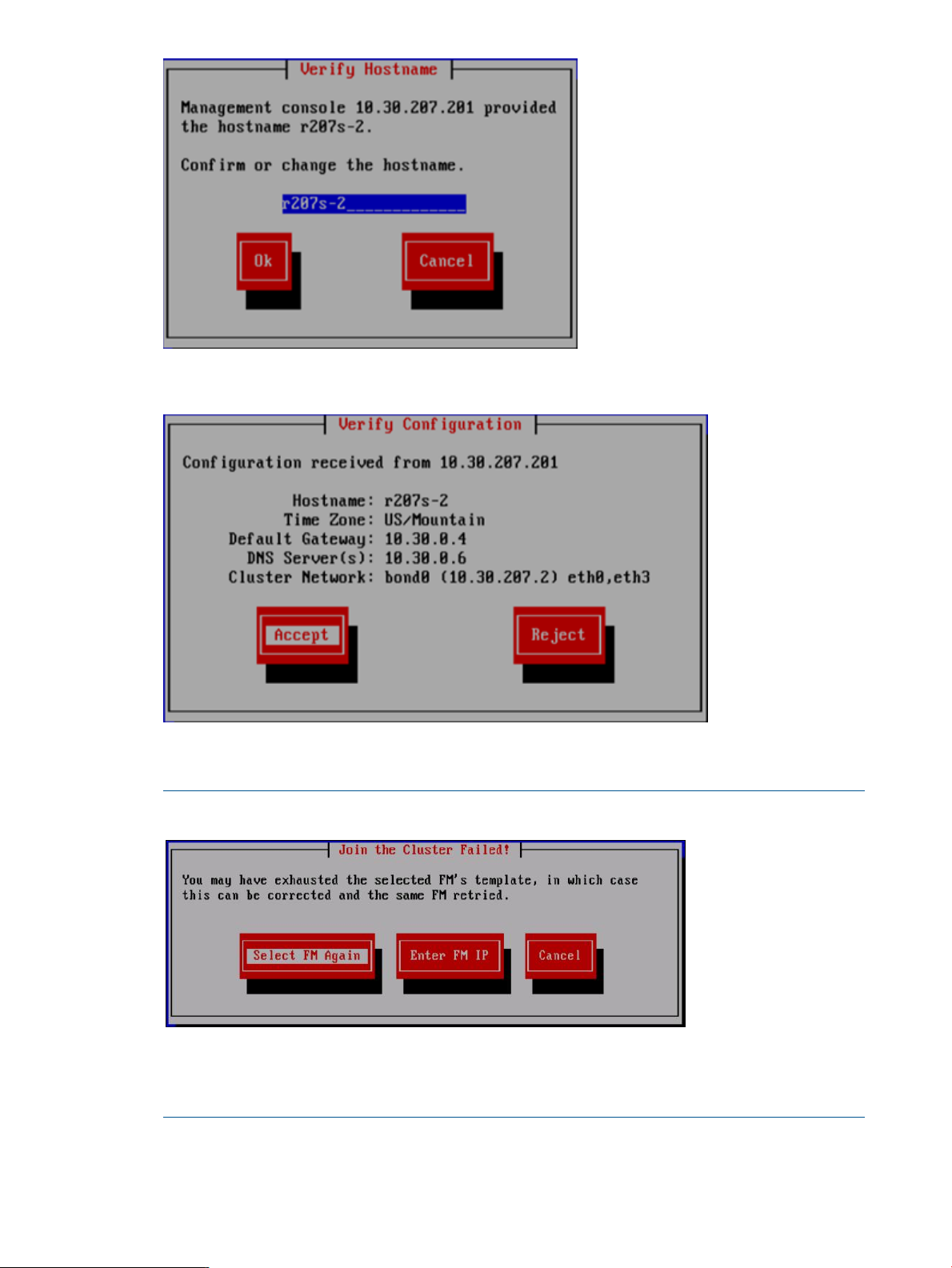

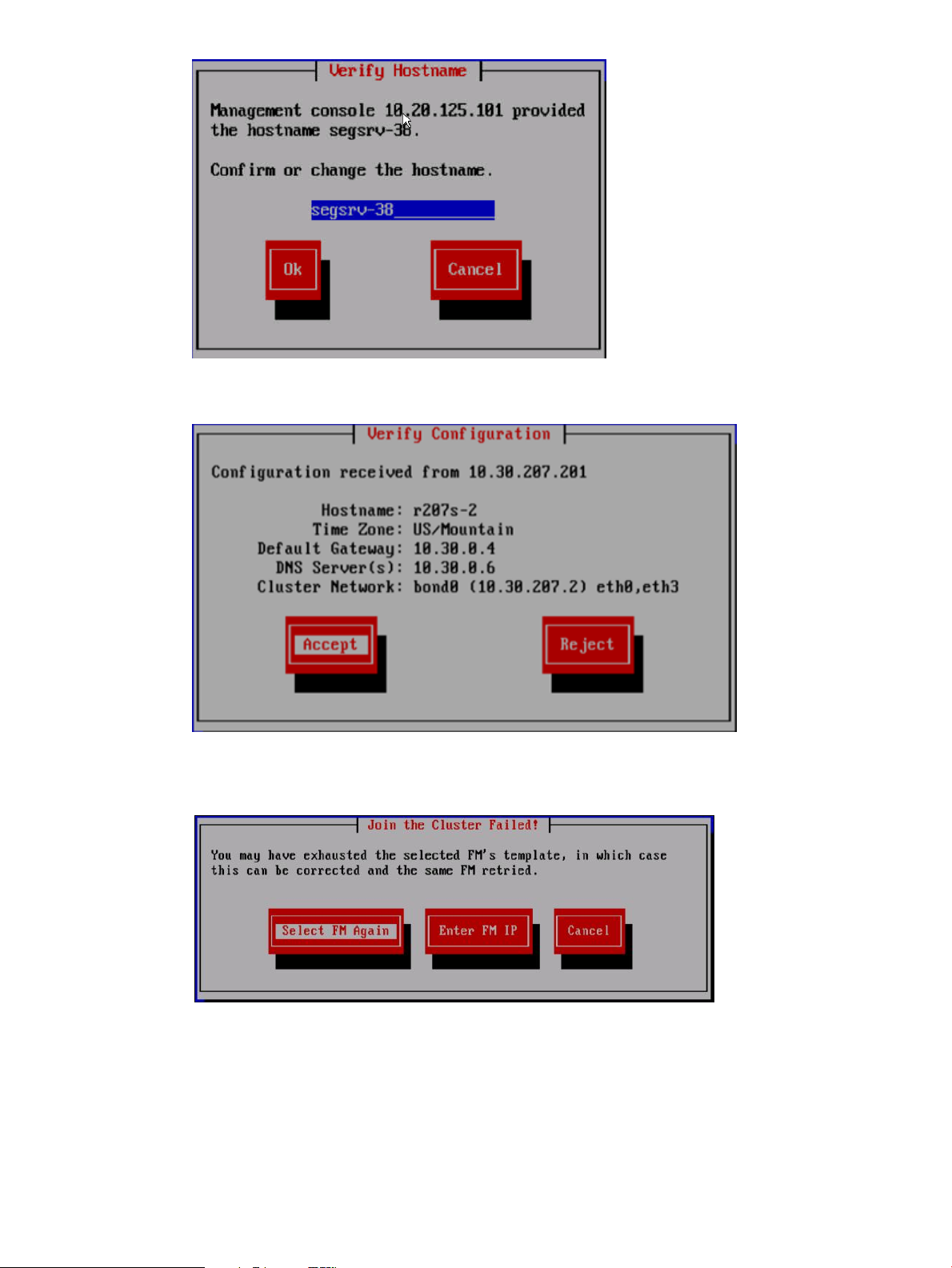

3. The Verify Hostname dialog box lists a hostname generated from the management console. Accept or change this hostname.

Page 15

4. The Verify Configuration dialog box shows the configuration for the server. Select Commit to continue.

5. On the Form a Cluster — Step 2 dialog box, enter the cluster name and specify the IP address and netmask for the Management Console IP (also called the Cluster Management IP). Also enter the IP addresses and domain for your DNS servers, and the IP addresses for your NTP servers.

6. The Network Configuration dialog box lists the Ethernet devices included in bond0. The bond0 configuration is complete; select Continue and go to the next step.

NOTE: If required, the configuration of bond0 can be modified at this step by selecting bond0 in the list of devices and then selecting Configure. This should be necessary only if you have a non-standard configuration and the slave devices chosen by the installer for bond0 are not correct for your environment.

Page 16

7. The Configuration Summary dialog box lists the configuration you specified. Select Commit to apply the configuration.

8. The wizard registers a passive Fusion Manager on the server, and then configures and starts it.

The installation is complete.

Page 17

Installing additional servers using the template

Complete the following steps:

1. Using a console connection, log into the server you are installing. The Set IP or Discover FMs dialog box appears. Select Discover Existing Clusters to join them from this console.

2. The installation wizard scans the network for existing clusters and lists the clusters on the Join Cluster dialog box. Select the appropriate Fusion Manager management console.

3. The server you are installing is assigned a hostname consisting of the appropriate name from the template plus the last octet of its IP address. If necessary, you can change the hostname on the Verify Hostname dialog box.

Page 18

4. The Verify Configuration dialog box shows the configuration for the server.

If the configuration is correct, select Accept and go to step 5.

NOTE: To change the configuration, select Reject, and the following screen appears.

Choose Select FM Again to reselect the Fusion Manager and use the template again. To

configure the server manually, select Enter FM IP and use the procedure corresponding to your

network type.


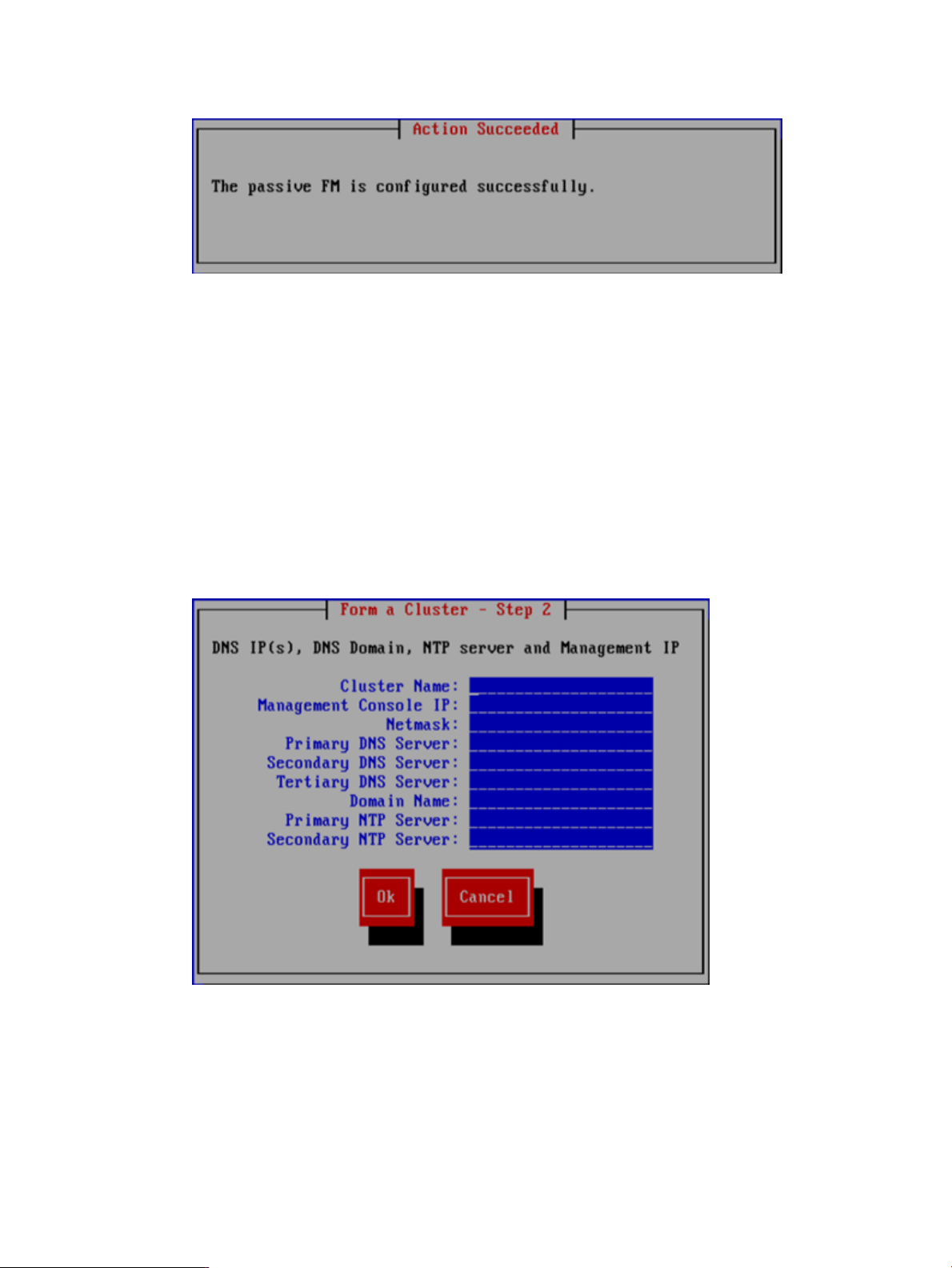

5. The wizard registers a passive Fusion Manager on the server, and then configures and starts

it.

The installation is complete.

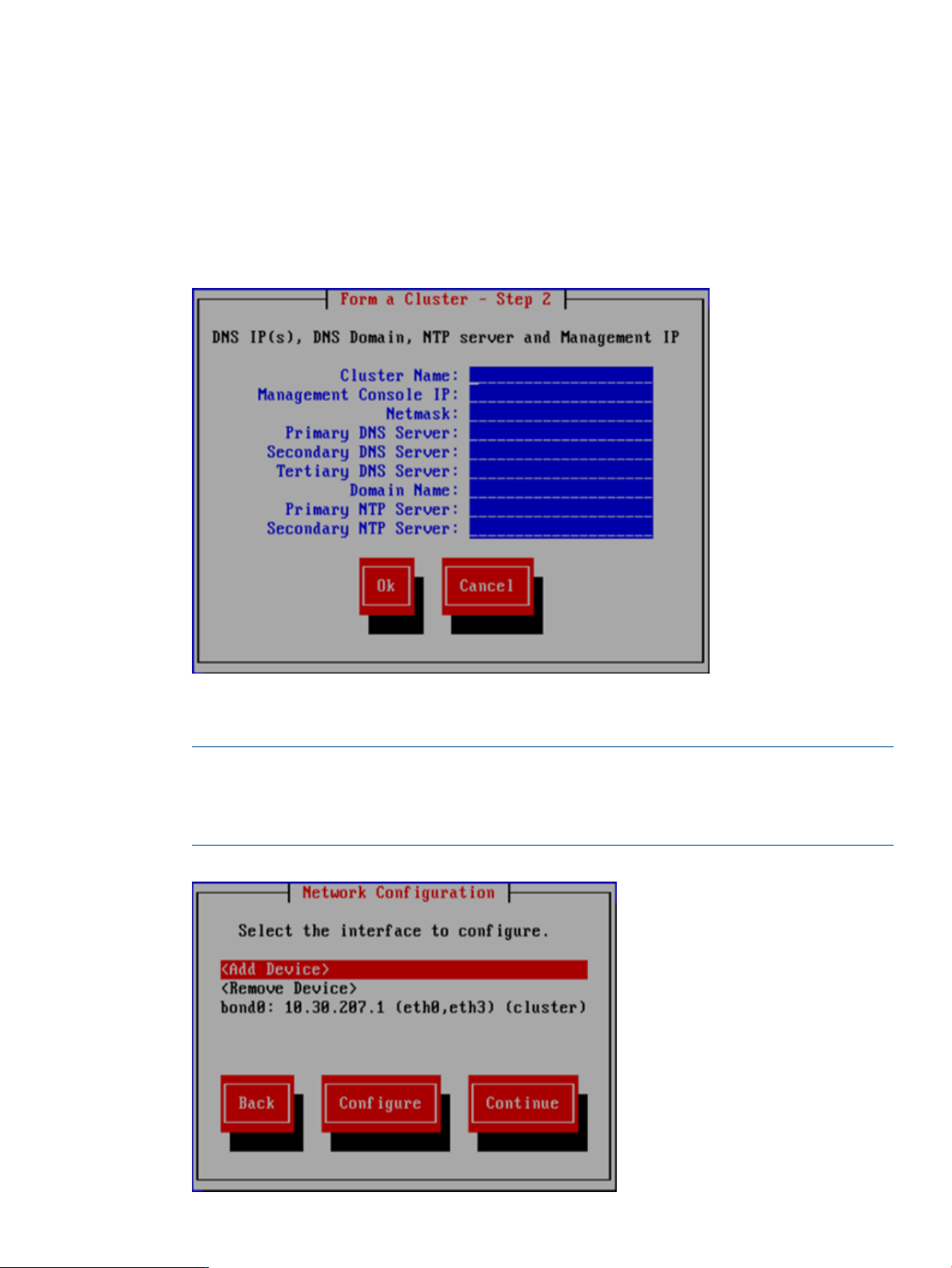

Completing the installation in text mode — separate cluster and user networks

To configure the first server, complete the following steps:

1. On the Form a Cluster — Step 2 dialog box, enter a name for the cluster and specify the IP

address and netmask for the Management Console IP (also called the Cluster Management

IP). This is a virtual IP address (VIF) assigned to the entire cluster for management use. Think of

it as the “IP address of the cluster.” You should connect to this VIF in future GUI management

sessions. The VIF remains highly available.

Also enter the IP addresses and domain for your DNS servers, and the IP addresses for your

NTP servers.

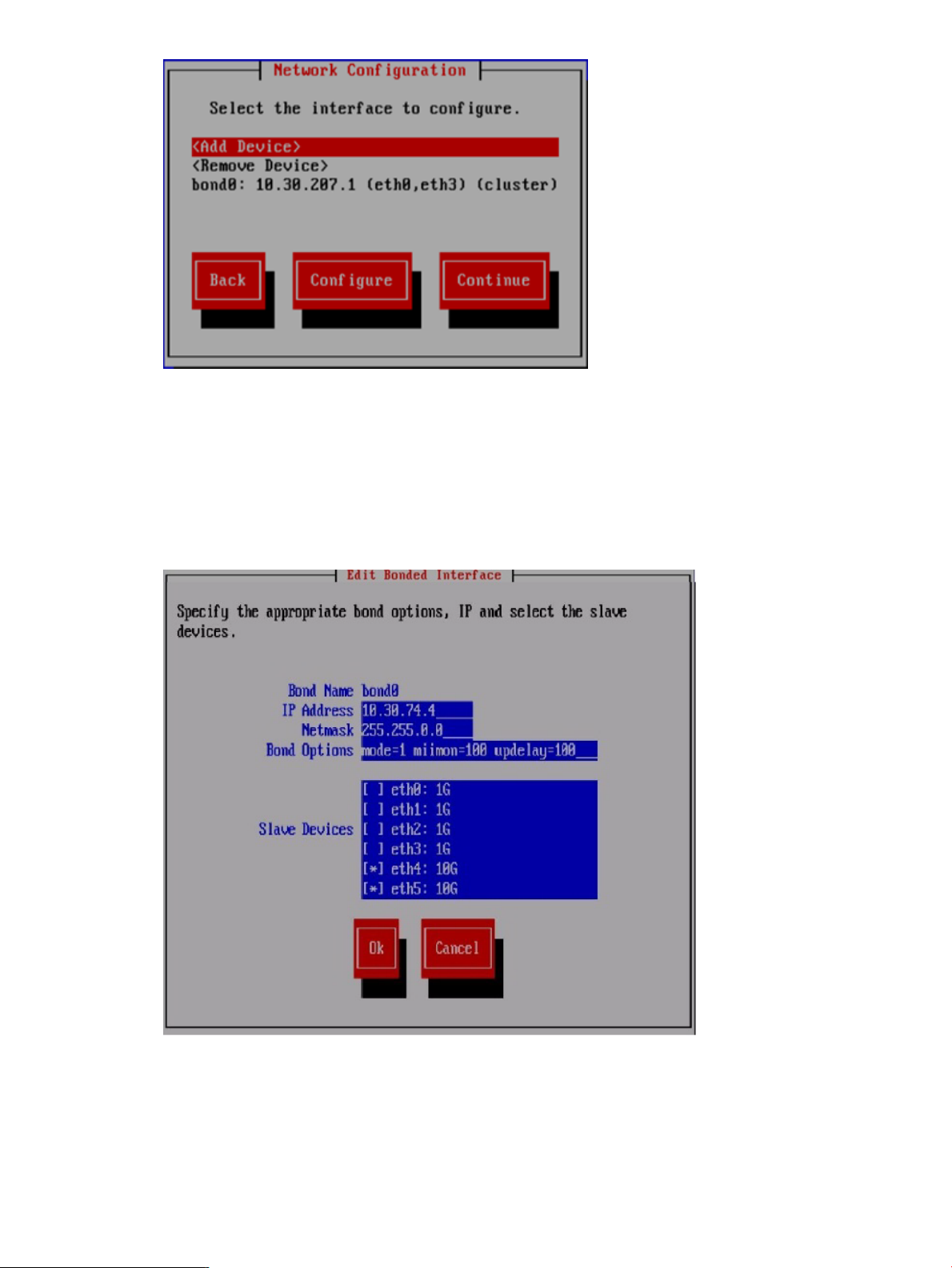

2. The Network Configuration dialog box lists the Ethernet devices included in bond0. If the

devices are correct, go to step 3. If you have a non-standard configuration and the slave

devices chosen by the installer for bond0 are not correct for your environment, select bond0

and then select Configure to customize the interface.


On the Edit Bonded Interface dialog box, enter the IP address and netmask and specify any

bond options. The slaves are selected by the system based on the following preference:

• 10G dual port PCI add-on card

• 1G quad port PCI add-on card

• Embedded server network ports

Change the slave devices as necessary for your configuration.

When you select Ok, the Configuration Summary dialog box appears. Select Back and return

to the Network Configuration dialog box.

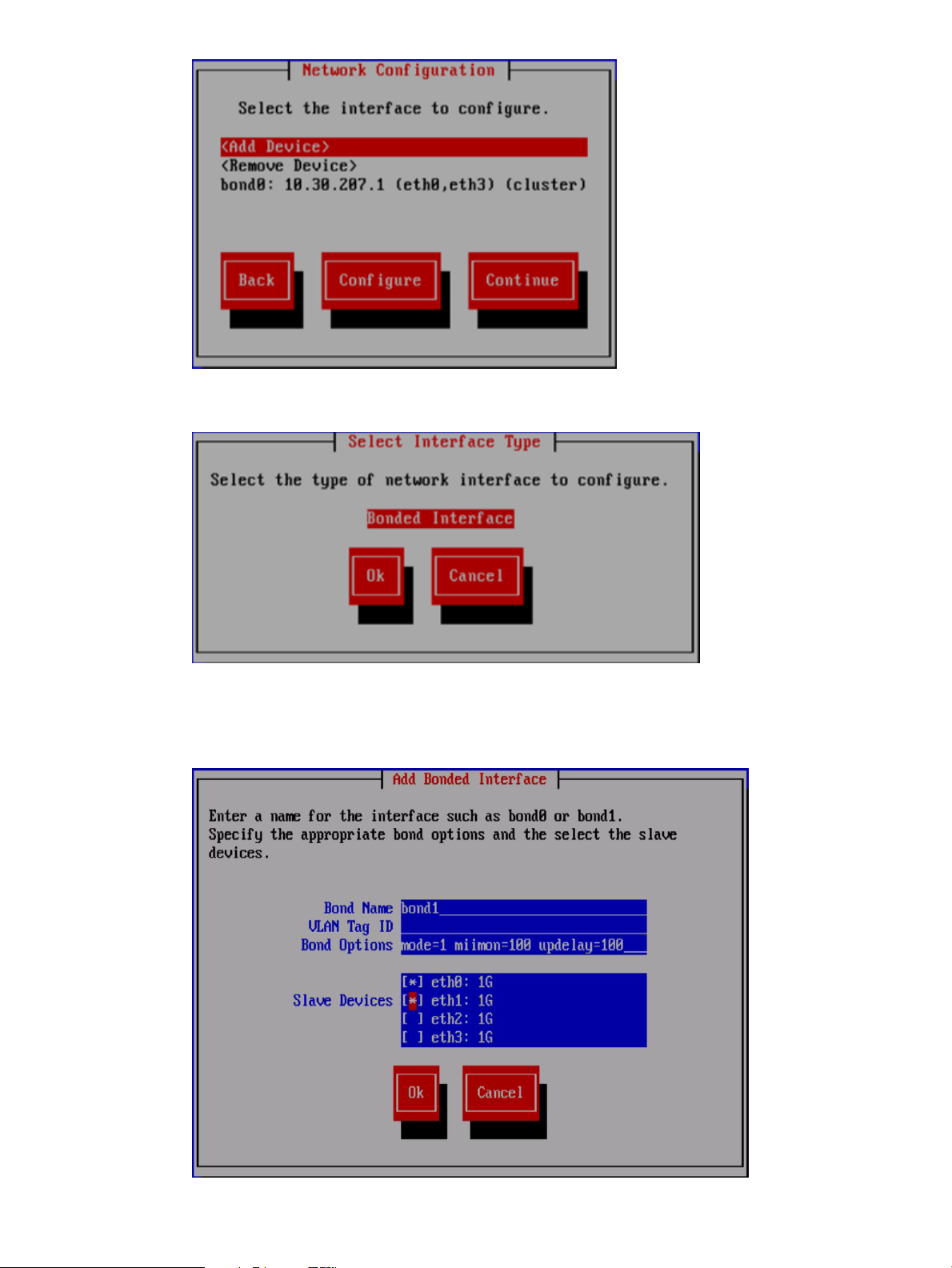

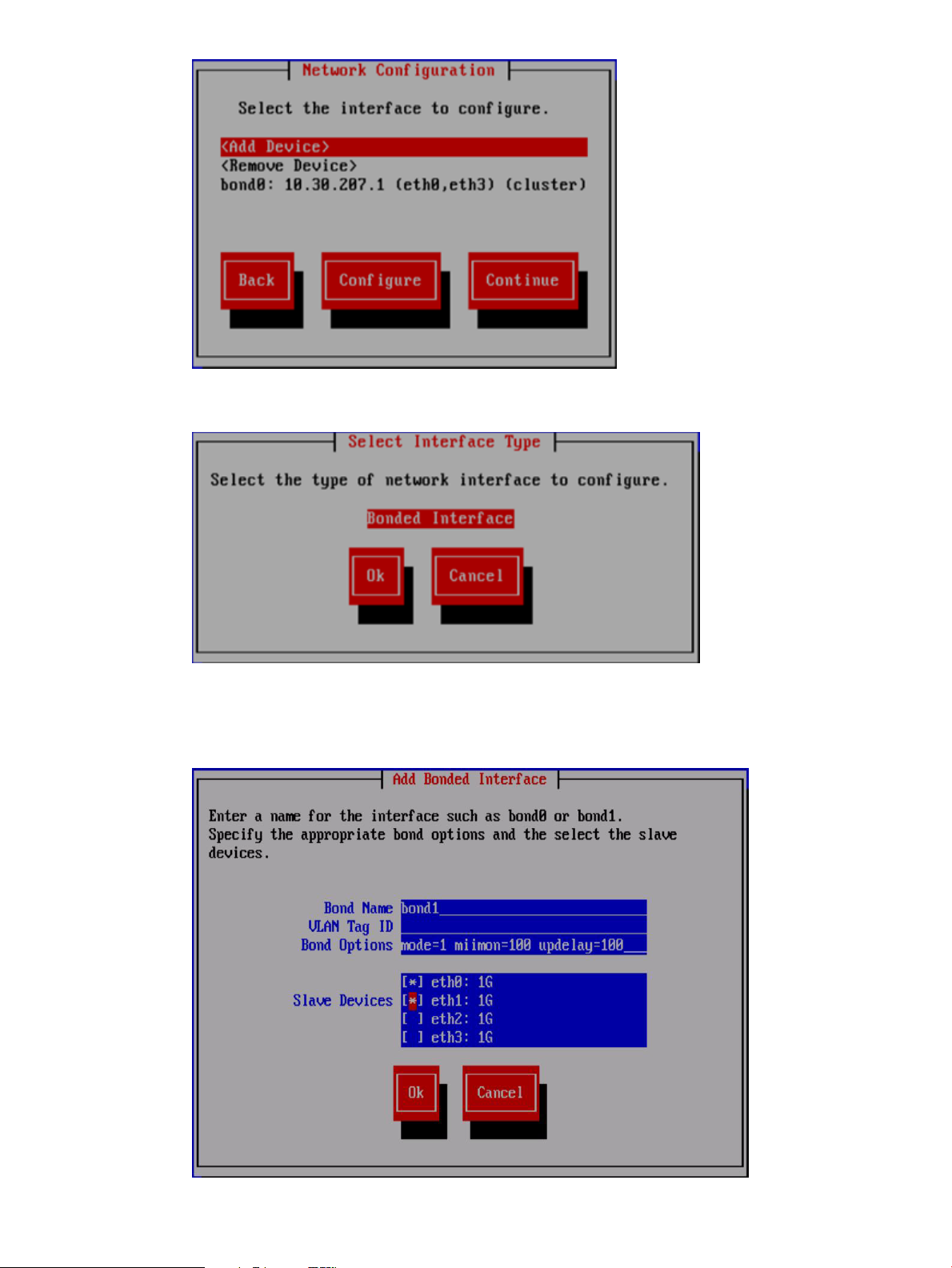

3. Set up bond1. On the Network Configuration dialog box, select <Add Device>.


On the Select Interface Type dialog box, select Ok to create a bonded interface.

On the Add Bonded Interface dialog box, enter bond1 as the name for the interface. Also

specify the appropriate options and slave devices. Use mode 6 bonding for a 1GbE network,

and mode 1 bonding for a 10GbE network.


When you select Ok, the Configure Network dialog box reappears. Select bond1, and then

select Configure. The Edit Bonded Interface dialog box is displayed. Enter the IP address and

netmask for bond1 and select Ok.

4. The Configuration Summary lists the configuration you have specified. Select Commit to

continue.

5. The wizard now configures the active management console (Fusion Manager) on the server.

6. The template does not apply when configuring separate cluster and user networks. Select Exit.


A configuration script now performs some tuning and imports the LUNs into the X9000 software.

7. If the bond0/cluster network is not routed and the bond1/user network is routed, complete

the following steps to define the default gateway for bond1:

• Set the default gateway in /etc/sysconfig/network.

• Add a default route for bond1:

ibrix_nic -r -n IFNAME -h HOSTNAME -A -R ROUTE

For example:

# ibrix_nic -r -n bond1 -h ibrix02a -A -R 10.10.125.1

8. If you want to be able to access the FM through the bond1/user network, create a management

VIF for bond1:

ibrix_fm -c <VIF IP address> -d <VIF device> -n <VIF netmask> -v <VIF type>

For example:

# ibrix_fm -c 10.10.125.101 -d bond1:1 -n 255.255.255.0 -v user

The installation is complete on the first server.
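The gateway and VIF settings in steps 7 and 8 can be sketched as a small shell helper. This is a minimal sketch, not part of the X9000 software: the function names `set_default_gateway` and `print_bond1_commands` are hypothetical, and the sketch assumes the `GATEWAY=` key that Red Hat-style systems read from /etc/sysconfig/network. The IBRIX commands are only printed, with the same options shown in the steps above, so that they can be reviewed before they are run.

```shell
# Hypothetical helper: set or replace the GATEWAY= entry in a
# sysconfig-style file such as /etc/sysconfig/network.
# Usage: set_default_gateway /etc/sysconfig/network 10.10.125.1
set_default_gateway() {
    file=$1
    gateway=$2
    tmp="${file}.tmp.$$"
    # Copy every line except an existing GATEWAY= entry.
    grep -v '^GATEWAY=' "$file" > "$tmp" || true
    # Append the new gateway value.
    echo "GATEWAY=$gateway" >> "$tmp"
    # Write back in place so the file keeps its owner and mode.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Hypothetical helper: print the bond1 route and management VIF commands
# from steps 7 and 8 for review. Nothing is executed.
# Usage: print_bond1_commands HOSTNAME GATEWAY VIF_IP NETMASK
print_bond1_commands() {
    host=$1
    gateway=$2
    vif_ip=$3
    netmask=$4
    echo "ibrix_nic -r -n bond1 -h $host -A -R $gateway"
    echo "ibrix_fm -c $vif_ip -d bond1:1 -n $netmask -v user"
}
```

For example, `print_bond1_commands ibrix02a 10.10.125.1 10.10.125.101 255.255.255.0` prints the two example commands shown in steps 7 and 8.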

Installing additional servers

Complete the following steps:

1. Using a console connection, log into the server you are installing.

2. The Set IP or Discover FMs dialog box appears. Select Discover Existing Clusters to join them

from this console.


3. The installation wizard scans the network for existing clusters and lists the clusters on the Join

Cluster dialog box. Select the appropriate cluster.

4. The Verify Hostname dialog box lists a hostname generated from the management console.

Accept or change this hostname.


5. The Verify Configuration dialog box shows the configuration for the server.

Because you need to change the configuration, select Reject, and the following screen appears.

Select Enter FM IP.

6. On the System Date and Time dialog box, enter the system date (day/month/year) and time

(24-hour format). Tab to the Time Zone field and press Enter to display a list of time zones.

Select your time zone from the list.


7. The Server Networking Configuration dialog box defines the server on bond0. Note the

following:

• The hostname can include alphanumeric characters and the hyphen (-) special character.

It is a best practice to use only lowercase characters in hostnames; uppercase characters

can cause issues with IBRIX software. Do not use an underscore (_) in the hostname.

• The IP address is the address of the server on bond0.

• The default gateway provides a route between networks. If your default gateway is on a

different subnet than bond0, skip this field.

Later in this procedure, you can select either Web UI or ASCII text mode to complete the

installation. A gateway address is required to use the Web UI.

• VLAN capabilities provide hardware support for running multiple logical networks over

the same physical networking hardware. IBRIX supports the ability to associate a VLAN

tag with an FSN interface. For more information, see the HP IBRIX X9000 Network Storage

System Network Best Practices Guide.

8. The Configuration Summary lists your configuration. Select Commit to continue the installation.


9. On the Join a Cluster – Step 2 dialog box, enter the requested information.

NOTE: Register IP is the Fusion Manager (management console) IP, not the IP you are

registering for this server.

10. The Network Configuration dialog box lists the Ethernet devices in bond0. If the devices are

correct, go to the next step. If the devices are not correct, select bond0 and then select Configure

to customize the interface.


On the Edit Bonded Interface dialog box, enter the IP address and netmask, specify any bond

options, and change the slave devices as necessary for your configuration.

When you select Ok, the Configuration Summary dialog box appears. Select Back and return

to the Network Configuration dialog box.

11. Configure bond1. Select <Add Device> from the Network Configuration dialog box.


On the Select Interface Type dialog box, select Ok to create a bonded interface.

On the Add Bonded Interface dialog box, enter bond1 as the name for the interface. Also

specify the appropriate options and slave devices. Use mode 6 bonding for a 1GbE network,

and mode 1 bonding for a 10GbE network.


When you select Ok, the Configure Network dialog box reappears. Select bond1, and then

select Configure. The Edit Bonded Interface dialog box is displayed. Enter the IP address and

netmask for bond1, specify any bond options, and change the slave devices if necessary.

12. The Configuration Summary dialog box lists the configuration you specified. Select Commit

to apply the configuration.

13. The wizard registers a passive Fusion Manager on the server, and then configures and starts

it.

14. If necessary, complete the bond1 configuration on the server:

• If the bond0/cluster network is not routed and the bond1/user network is routed, set the

default gateway in /etc/sysconfig/network.

• On the active FM, set the default route for bond1:

ibrix_nic -r -n <bond> -h <hostname> -A -R <route>

For example:

ibrix_nic -r -n bond1 -h ibrix02a -A -R 10.10.125.1

The installation is complete.
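The hostname rules listed in step 7 can be checked before you type a name into the Server Networking Configuration dialog box. The following is a minimal sketch under stated assumptions: `valid_hostname` is a hypothetical helper, not an IBRIX command, and it is deliberately strict in that it treats the lowercase best practice as a requirement.

```shell
# Hypothetical check for the hostname rules in step 7.
# Succeeds only when the name is non-empty and contains nothing but
# lowercase letters, digits, and hyphens (so underscores are rejected).
valid_hostname() {
    case $1 in
        '') return 1 ;;
        *[!abcdefghijklmnopqrstuvwxyz0123456789-]*) return 1 ;;
        *) return 0 ;;
    esac
}

# Example use (the name checked here is only an illustration):
if valid_hostname "ibrix02a"; then
    echo "hostname follows the step 7 rules"
fi
```

The character class is spelled out rather than written as a-z so that the check does not depend on the locale of the shell.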


2 Configuring the cluster with the Getting Started Wizard (X9300/X9320 systems)

The Getting Started Wizard configures the cluster in a few steps. Be sure to have the necessary IP

addresses available.

NOTE: This wizard can be used only for X9300 and X9320 systems.

Running the wizard

The Cluster Settings page asks for information to identify your cluster. Enter a name for the cluster

and specify the Cluster Management IP address.


To update your license keys, click Update. Typically, you will need a license key for each server.

Download your licenses from the HP website and place them in a location that can be accessed

by this server. Use Browse to locate a license key and then click Add. Repeat this step for each

license key.

Enter the DNS server addresses and search domain for your cluster. Also enter your NTP server

addresses.


The wizard attempts to access the addresses that you specify. If it cannot reach an address, a

message such as Primary DNS Server Unreachable will be displayed on the screen.

The File Servers page lists all servers the wizard found on your network. If the list includes servers

that do not belong in the cluster, select those servers and click Remove. If a server is not defined,

select the server and click Configure.


To configure a server, select the server on the File Servers page and click Configure. Enter the host

name and IP address of the server. If the wizard can locate the subnet masks and iLO IP address,

it will fill in those values.

If your cluster should include servers that were not listed on the File Servers page, configure an IP

address manually on the servers, using their local console setup screens. Then click Add on the

File Servers page to add the servers to the cluster. You can enter a single IP address on the Add

Servers dialog box, or you can enter a range of addresses and the wizard will locate all servers

with IP addresses in the range.

HP recommends that you create a single file system using all of the available storage. You can

create this file system on the Create a Default File System page. You can also create NFS and CIFS

shares on the file system.


If you create a CIFS share, you will need to configure the file serving nodes for CIFS and configure

authentication. You might also want to set certain CIFS parameters such as user permissions. Other

configuration options are also available for NFS shares. See the HP IBRIX X9000 Network Storage

System File System User Guide for more information.

The Summary lists any warnings or other items noted during the post-installation checks. Click the

items to determine whether further action is needed.


The wizard saves an installation log at /usr/local/ibrix/log/installtime.log on the

server where you ran the wizard. Click View Log to display the log.

When you click Finish to exit the wizard, the Fusion Manager is restarted. When the service is

running again, you can log into the GUI.

Troubleshooting the Getting Started Wizard

If you are unable to resolve an issue, contact HP support for assistance.

Cluster Settings page

The cluster name cannot be set

Probable cause: The command failed because of a timeout or other condition.

Troubleshooting steps: There may be network inconsistencies or an outage. Typically, a network

outage lasts only a few minutes. Check the network settings and retry the operation. You can also

use the following command to set the cluster name:

ibrix_fm_tune -S -o clusterName=<clusterName>,fusionPrimaryAddress=<VIF address>

The cluster name and IP address cannot be retrieved

Probable cause: The command timed out.

Troubleshooting steps: There may be network inconsistencies or an outage. Usually a network

outage lasts only a few minutes. Relaunch the wizard and try the operation again.

The VIF for the primary file server cannot be registered

Probable cause:

• The specified IP address is already assigned to another server.

• The Fusion Manager registration failed for the primary file server.

• The segment server registration failed for the primary file server.


Troubleshooting steps:

1. Relaunch the wizard, go to the Cluster Settings page, and click Next to retry the operation.

If the operation fails again, run ifconfig bond0:0. If the output is empty, use the following

command to set the VIF on the server:

ibrix_fm -c <VIF IP address> -d bond0:0 -n <VIF Netmask> -v cluster

2. Check the status of the Fusion Manager registration on the primary server:

ibrix_fm -i

If the output is FusionServer: fusion manager name not set! (active, quorum is not configured) and the output of ibrix_fm -l is empty, use the following command to perform the Fusion Manager registration:

ibrix_fm -R hostname -I ipaddress

3. Run the ibrix_server -l command. If there is no output, use the following command to register the segment server:

/usr/local/ibrix/bin/register_server -p -c bond0 -n <hostname> -a none -H /usr/local/ibrix <VIF IP address>

4. Restart the server:

/usr/local/ibrix/bin/init/ibrix_server start

If the registration continues to fail, use the text-based installation method to set the VIF. If that fails,

QR the system.
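The decisions in steps 2 and 3 depend only on the text that the status commands print, so they can be sketched as two small shell tests. This is an illustrative sketch, not part of the product: `fm_needs_registration` and `server_needs_registration` are hypothetical names, and the sketch assumes that an "empty" listing means the command printed nothing at all.

```shell
# Hypothetical helper for step 2.
# $1 = captured output of "ibrix_fm -i"
# $2 = captured output of "ibrix_fm -l"
# Succeeds when the Fusion Manager name is not set and the list is empty.
fm_needs_registration() {
    case $1 in
        *"fusion manager name not set"*) [ -z "$2" ] ;;
        *) return 1 ;;
    esac
}

# Hypothetical helper for step 3.
# $1 = captured output of "ibrix_server -l"
# Succeeds when the command printed nothing.
server_needs_registration() {
    [ -z "$1" ]
}

# Example use on a server (commented out because it needs the IBRIX CLI):
# if fm_needs_registration "$(ibrix_fm -i 2>&1)" "$(ibrix_fm -l 2>/dev/null)"; then
#     echo "Run: ibrix_fm -R hostname -I ipaddress"
# fi
```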

DNS/NTP page

DNS/NTP cannot be set

Probable cause:

• The specified DNS/NTP IP addresses are not valid.

• The specified domain name is not valid.

• A network outage or connectivity situation occurred.

Troubleshooting steps:

• Determine whether the specified IP addresses are valid and can be pinged. Run the following

command:

dig +short @<DNS server IP>

If the output is empty or the connection times out, contact the administrator for the DNS/NTP

server. Then relaunch the wizard to configure the DNS/NTP settings.

• If the DNS/NTP IP addresses are valid, the wizard will configure them. However, if you want

to set DNS and NTP manually, use the following Linux command to set the NTP server settings:

ntpdate -u <NTP server IP>

To set the DNS server, edit the /etc/resolv.conf file, where:

◦ search <domainname.com>: The search list is normally determined from the local

domain name. By default, it contains only the local domain name.

◦ nameserver <Name-server-IP-address>: Point to your name server or the ISP

name server. You can list up to three name servers.

For example:

search mydomain.com
nameserver 201.52.1.10
nameserver 202.52.1.11

If the operation still fails, there may be network inconsistencies or an outage. Typically, a network

outage lasts only a few minutes. Try the operation later. If the operation continues to fail, contact

your network administrator.
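The /etc/resolv.conf layout described above can be generated rather than typed by hand. The following is a minimal sketch: `make_resolv_conf` is a hypothetical helper that prints the file content for one search domain and keeps only the first three name servers, matching the limit stated above. It writes to standard output only, so the result can be reviewed before it replaces the real file.

```shell
# Hypothetical helper: print resolv.conf content.
# Usage: make_resolv_conf DOMAIN NAMESERVER [NAMESERVER ...]
# Name servers after the third are ignored.
make_resolv_conf() {
    domain=$1
    shift
    echo "search $domain"
    count=0
    for ns in "$@"; do
        if [ "$count" -ge 3 ]; then
            break
        fi
        echo "nameserver $ns"
        count=$((count + 1))
    done
}

# Prints the same three lines as the example above.
make_resolv_conf mydomain.com 201.52.1.10 202.52.1.11
```

After reviewing the output, you could redirect it to /etc/resolv.conf on the server.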

DNS/NTP information cannot be retrieved

Probable cause: The command timed out.

Troubleshooting steps: The network is experiencing inconsistencies or an outage. Usually a network

outage lasts only a few minutes. Relaunch the wizard and start the operation again.

File Servers page

No servers are detected

Probable cause:

• The discovery service is not running on the servers.

• The X9000 version on the servers does not match the version on the primary (active) server.

• The servers belong to another cluster.

• Multicast is not enabled on the servers.

• The network is behaving inconsistently, causing a timeout.

Troubleshooting steps:

• Check the system type. Run the following command on the servers you expected to discover:

/opt/hp/platform/bin/hpsp_srvid

If the system type is X9720, the servers will not be displayed. The installation does not support

X9720 systems.

• Check the status of the discovery service on all expected servers. (If the expected servers do not have an IP address, use the iLO to check the status.)

service ibrix_discovery status

If the discovery service is stopped, restart the service:

service ibrix_discovery start

Then relaunch the wizard and go to the File Servers page.

NOTE: If the discovery service does not start, files may be missing or deleted. QR the system.

• Check the X9000 software version on the servers. (If the expected servers do not have an IP address, use the iLO to check the version.)

ibrix_version -l

If the major and minor version numbers on the expected servers do not match the version on

the active server, update the X9000 software on the expected servers. Then relaunch the

wizard, go to the File Servers page, and check for the servers.

• Determine whether multicast broadcasting is enabled on the active server:

ibrix_discovery -d

If the command does not discover any nodes, multicast broadcasting may be disabled. If so,

enable multicast on the server, relaunch the wizard, go to the File Servers page, and check

for the servers. You can also use the text-based installation method to form the cluster.

• Check the network connection. The discovery period for servers is approximately one minute.

If the active server does not receive a response within that time, the servers will not be


displayed. This situation could be caused by network inconsistencies or an outage. Usually a

network outage lasts only a few minutes. Try relaunching the wizard and revisiting this page.

If the operation continues to fail, use the text-based installation method to form the cluster.
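The version check above compares only the major and minor numbers. As a minimal sketch, assuming dotted version strings such as 6.1.228 (the exact format of the ibrix_version -l output is not shown in this guide, so copy the version string from it by hand), the comparison can be written as follows. The function names are hypothetical.

```shell
# Hypothetical helper: print the major.minor part of a dotted version.
major_minor() {
    echo "$1" | cut -d. -f1,2
}

# Hypothetical helper: succeed when two versions share major.minor.
# Usage: same_major_minor ACTIVE_SERVER_VERSION EXPECTED_SERVER_VERSION
same_major_minor() {
    [ "$(major_minor "$1")" = "$(major_minor "$2")" ]
}

# Example with illustrative version strings:
if same_major_minor 6.1.228 6.1.196; then
    echo "versions are compatible for discovery"
else
    echo "update the X9000 software on the expected server"
fi
```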

Server registration fails with “Error: Registering server” or “Error: Passive FM”

Probable cause:

• The operation failed when checking and configuring network details for a passive server.

• The hostname is not valid and cannot be reached.

• The operation failed when registering the server with the active Fusion Manager.

• The operation failed when setting passive mode on the server.

• The operation failed when setting nofailover mode on the server.

• The operation failed when restarting the Fusion Manager.

• The operation failed because of a timeout or other condition.

Troubleshooting steps:

1. Try to ping the server. If the server cannot be reached, the network settings on that server may

have failed. This can be due to temporary connectivity conditions. Try using the iLO to assign

network details such as the IP address and hostname and then launch the wizard again. If

connectivity is restored, the server will be discovered as already configured, and it can be

used to form the cluster.

2. Check the status of the Fusion Manager registration on the primary server:

ibrix_fm -i

If the output is FusionServer: fusion manager name not set! (active, quorum is not configured) and the output of ibrix_fm -l is empty, use the following command to perform the Fusion Manager registration:

ibrix_fm -R hostname -I ipaddress

3. Run the ibrix_server -l command. If there is no output, use the following command to register the segment server:

/usr/local/ibrix/bin/register_server -p -c bond0 -n <hostname> -a none -H /usr/local/ibrix <VIF IP address>

4. Restart the server:

/usr/local/ibrix/bin/init/ibrix_server start

Alternatively, you can use the text-based installation method to perform the registration, but you

will need to enable the HA configuration after the registration is complete.

If the registration continues to fail, there may be a network inconsistency or outage. A network

outage usually lasts only a few minutes. If the operation continues to fail, contact your network

administrator.

An HA pair cannot be configured

Probable cause:

• Network configuration failed on the server.

• The command timed out.

Troubleshooting steps:

• Try to ping the server. If you cannot reach the server, the network settings on the server may

have failed. This can be due to temporary connectivity conditions. Use the iLO to assign


network details such as the IP address and hostname, relaunch the wizard, and try to set the

HA pair again.

If the operation still fails, use the following command to set the HA pair:

ibrix_server -b -h SERVERNAME1,SERVERNAME2

• Network inconsistencies or an outage could cause the wizard to fail. Usually a network outage

lasts only a few minutes. Relaunch the wizard, go to the File Servers page, and click Next to

try to set the HA pair again.

If the operation continues to fail, QR the system and retry the operation.

An HA backup server cannot be configured

Probable cause:

• No storage was detected.

• A backup candidate server was not listed.

Troubleshooting steps:

• If the backup candidate server is not listed on the screen, restart the listener on that server

using service ibrix_discovery restart, relaunch the wizard, and go to the File

Servers page.

• Run the ibrix_version -l command and check the X9000 software version on the backup

server. If the version does not match the version on the active server, update the X9000

software and QR the system again.

• If the backup candidate server is listed but the Back-up not found error occurs, possibly

storage was not detected on either or both of the servers, or the storage was detected but it

did not match with the expected HA pair. If so, run the appropriate command on both servers:

X9730 systems: ccu show controllers all

X9300/X9320 systems: multipath -ll

If the output matches on both servers, restart the discovery service:

service ibrix_discovery restart

Then relaunch the wizard and go to the File Servers page.

If the output does not match, the two servers are not considered to be an HA pair. Check the

storage settings and mapping to the controllers/servers.

If the HA pair detection fails after taking these actions, use the text-based installation method to

form the cluster.
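On X9300/X9320 systems, one way to compare the multipath -ll output from the two servers is to compare the sets of device WWIDs. The following is a sketch under stated assumptions: it interprets "the output matches" as "both servers see the same WWIDs", it assumes each multipath device line shows its WWID in parentheses, and the helper names `wwids` and `same_storage` are hypothetical. Save the output of multipath -ll from each server to a file first.

```shell
# Hypothetical helper: list the unique WWIDs in a saved "multipath -ll"
# output file. Assumes lines such as:
#   mpath0 (3600508b4000156d700012000000b0000) dm-0 HP,HSV210
wwids() {
    sed -n 's/.*(\([0-9A-Fa-f]\{16,\}\)).*/\1/p' "$1" | sort -u
}

# Hypothetical helper: succeed when both files list the same,
# non-empty set of WWIDs.
# Usage: same_storage server1_multipath.txt server2_multipath.txt
same_storage() {
    a=$(wwids "$1")
    b=$(wwids "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

If `same_storage` fails, check the storage settings and the mapping to the controllers and servers, as described above.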

A power source cannot be added

Probable cause:

• The iLO IP address is not reachable.

• The command timed out.

Troubleshooting steps:

• Use the following command to determine whether the iLO can be accessed:

ping <iLO address>

If the iLO cannot be reached, use the route command to check the gateway used for the

server. Both the server and iLO should be on the same network. After verifying this, relaunch

the wizard and go to the File Servers page. Click Next to add the power source again.

If the operation still fails, use the following command to set the power source:


ibrix_powersrc -a -t ilo -h HOSTNAME -I IPADDR [-u USERNAME -p PASSWORD] [-s]

• If the network is experiencing inconsistencies or an outage, the wizard can fail to add the

power source. Typically, a network outage lasts only a few minutes. Relaunch the wizard, go

to the File Servers page, and click Next to try to add the power source again.

If the operation continues to fail, QR the system again and retry the operation.
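The requirement that the server and its iLO be on the same network can be checked with simple address arithmetic before you rerun the wizard. This is a minimal sketch: `ip_to_int` and `same_subnet` are hypothetical helpers, the addresses are illustrative, and the netmask must be taken from your own configuration.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    # Split the address into its four octets.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed when two addresses fall in the same
# subnet for the given netmask.
# Usage: same_subnet SERVER_IP ILO_IP NETMASK
same_subnet() {
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
}

# Example with illustrative addresses:
if same_subnet 10.10.125.20 10.10.125.200 255.255.255.0; then
    echo "server and iLO are on the same network"
fi
```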

Bond creation fails with “Error: Fetching bond details”

Probable cause:

• The bond0 device is not present on the server.

• The operation to get the bond details failed.

Troubleshooting steps:

• Use the ifconfig bond0 command to check for bond0. If the bond is not present, restart

the discovery service:

service ibrix_discovery restart

• If restarting the discovery service fails, run the /opt/hp/platform/bin/hpsp_srvid

script and verify that the system type is not X9720. The installation does not support X9720

systems.

• The discovery service does not create the bond if network configurations already exist on

eth0. If network settings exist on the eth0 port, remove the network configuration and then

restart the discovery service.

• Check the value of the LISTENER_BONDED_INTERFACE entry in the /usr/local/ibrix/

bin/listener.conf file. If it is not bond0, edit the file, setting the value for

LISTENER_BONDED_INTERFACE to bond0, and then restart the discovery service:

service ibrix_discovery restart

• If the previous step fails to create the bond0 interface, either use the text-based installation

method to perform the network settings or create the bond interface on the server using the

following command:

/usr/local/ibrix/autocfg/bin/create_bonds -d 0 <Bond Mode> bond0 <eth port(s)>

Restart the discovery application. To determine values for the bond mode and Ethernet ports,

contact HP Support or your network administrator, or follow these steps:

◦ On X9730 systems, create the bond on internal NIC ports.

◦ On X9300/X9320 systems, create the bond on external NIC ports. To detect the external

NIC ports connected to the server, run the following command to list all available NIC

ports:

ip addr | grep eth

Run the following command to list only internal NIC ports:

dmidecode | grep "MAC address"

The external NIC ports appear in the ip addr output but not in the dmidecode output.


Use ethtool <eth port> to detect the speed information. The port value for X9300/X9320

systems is detected as described in the previous step. For X9730 systems, the ports are internal.

◦ If the speed of the NIC ports selected for bond creation is 10GB, use any two NIC ports

in bond mode 1.

◦ If the speed of the NIC ports selected for bond creation is 1GB, use four NIC ports in

bond mode 6.

• If these steps do not resolve the condition, use the text-based installation method to form the

cluster.
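The speed-based guidance above (two ports in bond mode 1 at 10 Gb, four ports in bond mode 6 at 1 Gb) can be sketched as two shell functions. This is an illustrative sketch: the function names are hypothetical, and it assumes that ethtool reports the link speed on a line such as "Speed: 10000Mb/s".

```shell
# Hypothetical helper: read "ethtool <eth port>" output on standard input
# and print the link speed in Mb/s.
speed_from_ethtool() {
    sed -n 's/^[[:space:]]*Speed:[[:space:]]*\([0-9][0-9]*\)Mb\/s.*/\1/p'
}

# Hypothetical helper: print "<bond mode> <number of ports>" for a speed
# in Mb/s, following the guidance above. Fails for any other speed.
bond_plan_for_speed() {
    case $1 in
        10000) echo "1 2" ;;   # 10 Gb ports: any two ports, bond mode 1
        1000)  echo "6 4" ;;   # 1 Gb ports: four ports, bond mode 6
        *)     return 1 ;;     # not covered by this guide
    esac
}

# Example use on a server (commented out because it needs ethtool and
# a real port name):
# speed=$(ethtool eth1 | speed_from_ethtool)
# bond_plan_for_speed "$speed"
```

The first value printed by `bond_plan_for_speed` is the value to supply as <Bond Mode> in the create_bonds command, and the second is the number of ports to list.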

Create a Default File System page

The default file system cannot be created

Probable cause:

• The file system mount operation failed.

• The specified volume group name already exists.

• The storage is not preformatted properly.

Troubleshooting steps:

• Determine if the file system was actually created. Run the ibrix_fs -l command. If the file system exists but is not mounted, use the GUI or the following command to mount the file system:

ibrix_mount -f FSNAME [-o { RW | RO } ] [-O MOUNTOPTIONS] [-h HOSTLIST] -m MOUNTPOINT

• The storage might be unavailable. To determine the storage exposed to the server, run the following command to discover devices:

ibrix_pv -a

List the discovered physical devices:

ibrix_pv -l

If the output of ibrix_pv -l is not empty, relaunch the wizard, go to the Create a Default File System page, and create the file system. If the output is empty, no storage is available to create the file system. Add storage to the server and run the previous commands again to make the storage available for the file system.

• The storage exposed to the servers might be in an inconsistent state. Preformat the storage

before trying to create the default file system. Complete the following steps on any server:

1. On the GUI, unmount the file system created in a previously formed cluster.

2. Delete all shares and the file system.

3. Use putty or ssh to connect to each node you need to QR and run the following commands:

a. Reinitialize the physical volume so that it can be used by LVM. A new physical volume

is not created.

pvcreate -ff device path

For example:

pvcreate -ff /dev/sda

b. Preformat the device:

/usr/local/ibrix/bin/preformat_segment -d device path

For example:

/usr/local/ibrix/bin/preformat_segment -d /dev/sda


QR the preformatted systems.

An NFS/CIFS share cannot be created

Probable cause:

• The server on which the file system is mounted is not available for share creation.

• The storage configuration is incorrect.

Troubleshooting steps:

Run the ibrix_fs -l command to determine whether the file system was actually created. If the file system exists but is unmounted, use the GUI or the following command to mount the file system:

ibrix_mount -f FSNAME [-o { RW | RO } ] [-O MOUNTOPTIONS] [-h HOSTLIST] -m MOUNTPOINT

After mounting the file system on the servers, use the GUI share creation wizard or a CLI command

to create the export or share.

Create an NFS export:

ibrix_exportfs -f FSNAME -h HOSTLIST -p CLIENT1:PATHNAME1,CLIENT2:PATHNAME2, [-o "OPTIONS"] [-b]

Create a CIFS share:

ibrix_cifs -a -f FSNAME -s SHARENAME -p SHAREPATH [-D SHAREDESCRIPTION] [-S SETTINGLIST] [-h HOSTLIST]

Check the output of the appropriate command to verify that the export or share was created:

• ibrix_exportfs -l (For NFS export)

• ibrix_cifs -i (For CIFS shares)

If the NFS or CIFS share still cannot be created, preformat the storage. See “The default file system

cannot be created” (page 42).


3 Installing X9730 systems

The system is configured at the factory as follows:

• X9000 File Serving Software 6.1 is installed on the servers.

• LUNs are created and preformatted on the X9730 CX storage system.

• Depending on the system size, the X9730 system is partially or totally preracked and cabled.

You will need the following information when you perform the installation:

• IP addresses as specified in “IP address requirements” (page 45).

• The administrator passwords for OA and VC. The default passwords are on the labels affixed

to the back of the chassis.

If you are performing the installation on an existing cluster, ensure that the same version of the

X9000 software is installed on all nodes.

X9730 network layouts

The X9730 supports four networking layouts. The default is to use a single, unified network for all

cluster and user traffic. The other layouts provide a dedicated management network, a user/cluster

network, and, optionally, additional user networks. To use a network layout other than the default,

you need to specify the number of networks needed when you run the X9730 Setup Wizard.

NOTE: Use layout 2 when adding an X9730 system to an existing 2-network IBRIX cluster.

See the HP IBRIX X9000 Network Storage System Network Best Practices Guide for detailed

information about the network layouts.

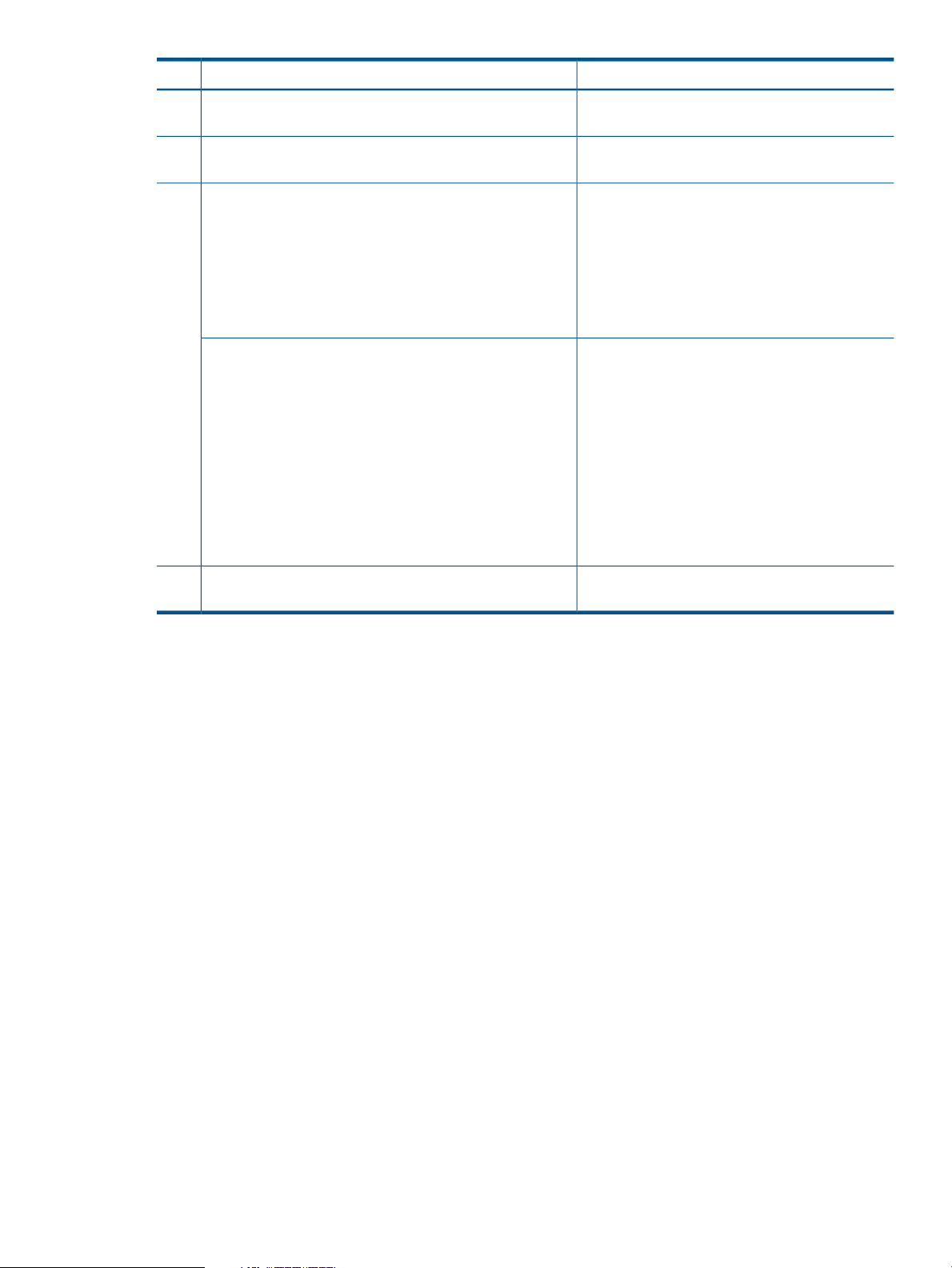

Table 1 Supported X9730 network layouts

| Layout | Description | Number of networks | Network name | Ethernet devices | Bond speed |
|---|---|---|---|---|---|
| 1 | Unified network (default) | 1 | bond0 | eth0, eth3 | 10GB |
| 2 | Dedicated management network | 2 | bond0 | eth0, eth3 | 1GB |
| 2 | User/cluster network | 2 | bond1 | eth1, eth2 | 9GB |
| 3 | Dedicated management network | 3 | bond0 | eth0, eth3 | 1GB |
| 3 | User/cluster network | 3 | bond1 | eth1, eth2 | 4.5GB |
| 3 | User2 network | 3 | bond2 | eth4, eth5 | 4.5GB |
| 4 | Dedicated management network | 4 | bond0 | eth0, eth3 | 1GB |
| 4 | User/cluster network | 4 | bond1 | eth1, eth2 | 3.5GB |
| 4 | User2 | 4 | bond2 | eth4, eth5 | 3.5GB |
| 4 | User3 | 4 | bond3 | eth6, eth7 | 2GB |


IMPORTANT: In previous releases, the X9720 system shipped with access to the management,

user and cluster networks through a single FSN bond. The field setup moved the user and cluster

network access to a separate bond once the connection between an FSN and the customer network

was established.

X9730 systems default to a unified network configuration that places the chassis management

components on one subnet and the user/cluster components on a separate subnet. File serving

nodes are configured to access the user, cluster, and management networks through a single bond

attached to the user/cluster subnet. File serving node access to the components on the management

subnet requires a route in the customer network that connects the management and user/cluster

subnets.

IMPORTANT: The default unified network layout requires the customer to configure a router to
provide a level-3 route that connects the user/cluster subnet and the management subnet. The setup
process will not complete unless this route is in place. During setup, the installer software sends
an ICMP ping to the OA to verify the route between the user/cluster and management subnets. If the
ping fails, the installer software prompts the user to correct the problem before proceeding.
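Before running the setup wizard, the route requirement can be checked from any Linux host on the user/cluster subnet. The following Python sketch is an illustration only and does not reproduce the installer's own test; the function names and the example addresses are hypothetical, and the `ping` options assume the Linux iputils `ping` command.

```python
import ipaddress
import subprocess


def needs_level3_route(node_interface, oa_address):
    """Return True when the OA lies outside the node's subnet, so a router is required.

    node_interface is an address with prefix length, for example "10.10.1.21/24".
    """
    network = ipaddress.ip_interface(node_interface).network
    return ipaddress.ip_address(oa_address) not in network


def ping_command(host, count=1, timeout_s=2):
    """Build a Linux ping command: -c is the packet count, -W the reply timeout in seconds."""
    return ["ping", "-c", str(count), "-W", str(timeout_s), host]


def oa_reachable(host):
    """Send one ICMP echo request and return True if ping exits with status 0."""
    result = subprocess.run(
        ping_command(host),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


if __name__ == "__main__":
    node = "10.10.1.21/24"   # hypothetical bond0 address on the user/cluster subnet
    oa = "10.10.2.5"         # hypothetical active OA address on the management subnet
    if needs_level3_route(node, oa):
        print("OA is on a different subnet; a level-3 route is required")
    print("OA reachable:", oa_reachable(oa))
```

If `oa_reachable` returns False while `needs_level3_route` returns True, the router configuration in the customer network is the first thing to check.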

IP address requirements

You will need the following IP addresses when you perform the installation for the unified network.

The HP IBRIX X9000 Network Storage System Network Best Practices Guide describes the

components in more detail, and also lists IP address requirements for the other supported network

layouts.

Table 2 IP addresses for unified network layout

| Component | Subnet | Minimum number of addresses | Maximum number of addresses |
|---|---|---|---|
| Cluster management VIF | user/cluster | 1 (one virtual IP address to assign to the entire cluster for management use) | 1 |
| User VIF | user/cluster | 2 (virtual IP address for failover) | Variable. At least 1 per node (16 included in total) |
| File serving nodes | user/cluster | 2 (one IP address per server to assign to the network bond) | 16 |
| iLO | management | 16 | 16 |
| Interconnect bays | management | 8 | 8 |
| OA module | management | 2 (OA primary and secondary) | 2 |
| Total | | 31 for minimum configuration | 59 for maximum configuration |

You also need to provide the IP addresses of the DNS servers, NTP servers, and, optionally, the

default gateway.

HP recommends that you use sequential addresses for the blades, iLOs, and Interconnect.
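The totals in Table 2 can be reproduced with a short calculation. The following Python sketch is an illustration only; the function names and the example starting address are hypothetical. It assumes exactly one user VIF per node, which is the minimum that the table allows, so a site that assigns more user VIFs needs more addresses than the sketch reports.

```python
import ipaddress

# Fixed management-subnet counts taken from Table 2.
ILO_ADDRESSES = 16
INTERCONNECT_ADDRESSES = 8
OA_ADDRESSES = 2


def unified_address_count(nodes):
    """Count the IP addresses needed for the unified layout with the given node count.

    Assumption: one user VIF per node (the table states "at least 1 per node").
    """
    if not 2 <= nodes <= 16:
        raise ValueError("Table 2 covers 2 to 16 file serving nodes")
    cluster_vif = 1
    user_vifs = nodes      # assumed: exactly one per node
    node_bonds = nodes     # one address per server for the network bond
    user_cluster = cluster_vif + user_vifs + node_bonds
    management = ILO_ADDRESSES + INTERCONNECT_ADDRESSES + OA_ADDRESSES
    return {
        "user/cluster": user_cluster,
        "management": management,
        "total": user_cluster + management,
    }


def sequential_addresses(first, count):
    """Return `count` consecutive IP addresses starting at `first`."""
    start = ipaddress.ip_address(first)
    return [str(start + offset) for offset in range(count)]


if __name__ == "__main__":
    print(unified_address_count(2))
    print(unified_address_count(16))
    # Hypothetical starting address for the 16 iLO ports.
    print(sequential_addresses("10.10.2.101", ILO_ADDRESSES))
```

`sequential_addresses` reflects the recommendation to use sequential addresses for the blades, iLOs, and Interconnect.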

Page 46

Installation checklist

| Step | Task | More information |
|---|---|---|
| 1. | Configure active and standby IP addresses for Onboard Administrator | “Configuring OA1 IP addresses for Onboard Administrator” (page 46) |
| 2. | Install the latest IBRIX X9000 software release | “Installing the latest IBRIX X9000 software release” (page 48) |
| 3. | Perform the installation | “Starting the installation and configuring the chassis” (page 49) |
| 4. | Set up virtual IP addresses for client access | “Configuring virtual interfaces for client access” (page 80) |
| 5. | Perform post-installation tasks: update license keys if needed; enable High Availability; configure Ibrix Collect; configure HP Insight Remote Support; create file systems if not already configured. Optionally, also configure the following features: NFS, CIFS, HTTP/HTTPS, FTP/FTPS shares; remote replication; data retention and validation; antivirus support; software snapshots; data tiering; NDMP Backup Protocol Support | “Post-installation tasks” (page 77) |
| 6. | Configure X9000 clients for Linux or Windows (optional) | “Adding Linux and Windows X9000 clients” (page 83) |

Configuring OA1 IP addresses for Onboard Administrator

Complete the following steps:

1. From the Main Menu of the Insight Display, navigate to Enclosure Settings and press OK.

2. On the Enclosure Settings screen, select Active OA and press OK.

Page 47

3. On the Network Settings: OA1 Active screen, select Network Properties and press OK.

4. On the Change OA Network Properties screen, set Enclosure IP to Enable and press OK to

Accept.

5. On the Network Settings: OA1 Active screen, navigate to Active IPv4 and press OK.

6. On the Change: OA1 Network Mode screen, change DHCP to Static and press OK to Accept.

Page 48

7. On the Change: OA1 IP Address screen, set the IP address, subnet mask, and gateway

(optional) and Accept the changes.

8. On the Network Settings: OA1 Active screen, select Accept All and press OK.

9. On the Enclosure Settings screen, select Standby OA or OA2 and press OK.

10. On the Network Settings: OA2 screen, navigate to Active IPv4 and press OK.

11. Set the IP address, subnet mask, and gateway (optional) and Accept the changes.

12. Back on the Network Settings: OA2 screen, navigate to Accept All and press OK.

The Main Menu reappears and the procedure is complete.

Installing the latest IBRIX X9000 software release

Obtain the latest 6.1 release from the IBRIX X9000 software dropbox. Download the Quick Restore

ISO image and transfer it to a DVD or USB key.

Use a DVD

1. Burn the ISO image to a DVD.

2. Insert the Quick Restore DVD into a USB DVD drive cabled to the Onboard Administrator or

to the Dongle connecting the drive to the front of the blade.

IMPORTANT: Use an external USB drive that has external power; do not rely on the USB

bus for power to drive the device.

3. Restart the server to boot from the DVD-ROM.

4. When the HP Network Storage System screen appears, enter qr to install the software.

Repeat steps 2–4 on each server.

Use a USB key

1. Copy the ISO to a Linux system.

2. Insert a USB key into the Linux system.

3. Execute cat /proc/partitions to find the USB device, which is listed as sdX and is addressed
as /dev/sdX. For example:

Page 49

cat /proc/partitions

major minor #blocks name

8 128 15633408 sdi

4. Execute the following dd command to write the Quick Restore (QR) installer to the USB key. Replace /dev/sdi with the device found in step 3:

dd if=<ISO file name with path> of=/dev/sdi oflag=direct bs=1M

For example:

dd if=X9000-QRDVD-6.2.96-1.x86_64.iso of=/dev/sdi oflag=direct bs=1M

4491+0 records in

4491+0 records out

4709154816 bytes (4.7 GB) copied, 957.784 seconds, 4.9 MB/s

5. Insert the USB key into the server to be installed.

6. Restart the server to boot from the USB key. (Press F11 and use option 3.)

7. When the “HP Network Storage System” screen appears, enter qr to install the software.

Repeat steps 5–7 on each server.
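The device lookup and the dd result in the USB key procedure can be sanity-checked with a few lines of code. The following Python sketch is an illustration only; the function names are hypothetical. It parses text in the /proc/partitions format, where the #blocks column is in 1 KiB units, confirms that the ISO image fits on the device, and converts a dd record count into bytes for comparison with the byte total that dd prints.

```python
def parse_partitions(text):
    """Parse /proc/partitions text into a {device name: size in bytes} mapping."""
    sizes = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows have four fields and begin with a numeric major number;
        # this skips the header row and blank lines.
        if len(fields) == 4 and fields[0].isdigit():
            _major, _minor, blocks, name = fields
            sizes[name] = int(blocks) * 1024  # #blocks is counted in 1 KiB units
    return sizes


def dd_bytes(records, block_size=1024 * 1024):
    """Bytes written by dd for a number of full records (bs=1M by default)."""
    return records * block_size


def image_fits(iso_bytes, device_bytes):
    """Return True when the ISO image is no larger than the target device."""
    return iso_bytes <= device_bytes


if __name__ == "__main__":
    # Sample taken from the example output in step 3.
    sample = "major minor  #blocks  name\n\n   8   128   15633408 sdi\n"
    devices = parse_partitions(sample)
    iso_size = dd_bytes(4491)  # "4491+0 records out" with bs=1M
    print(devices)
    print(iso_size, image_fits(iso_size, devices["sdi"]))
```

On a live system, pass the contents of /proc/partitions to `parse_partitions` instead of the sample string, and check that the chosen name is the USB key and not a system disk before running dd.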

Starting the installation and configuring the chassis

To start the installation, complete the following steps:

1. Boot the blades in the cluster.

2. Log into blade1. The X9730 Setup dialog box is displayed.

3. The setup wizard verifies the firmware on the system and notifies you if a firmware update is

needed. See “Firmware updates” (page 72) for more information.

Page 50

IMPORTANT: HP recommends that you update the firmware before continuing with the

installation. X9730 systems have been tested with specific firmware recipes. Continuing the

installation without upgrading to a supported firmware recipe can result in a defective system.

4. The setup wizard checks the network for an existing cluster. If a cluster is found, you will be

asked if you want to join an existing cluster or create a new cluster.

If there is no existing cluster, or you chose to create a new cluster, the setup wizard asks for
information about the blade you are using. On the System Date and Time dialog box, enter
the system date (day/month/year) and time (24-hour format). Tab to the Time Zone field and
press Enter to display a list of time zones. Then select your time zone from the list.

Page 51

5. The Server Networking Configuration dialog box defines the server on bond0. Note the

following:

• The hostname can include alphanumeric characters and the hyphen (-) special character.

It is a best practice to use only lowercase characters in hostnames; uppercase characters

can cause issues with IBRIX software. Do not use an underscore (_) in the hostname.

• The IP address is the address of the server on bond0.

• The default gateway provides a route between networks. If your default gateway is on a

different subnet than bond0, skip this field.

Later in this procedure, you can select either Web UI or ASCII text mode to complete the

installation. A gateway address is required to use the Web UI.

• VLAN capabilities provide hardware support for running multiple logical networks over
the same physical networking hardware. IBRIX can associate a VLAN tag with an FSN
interface. For more information, see the HP IBRIX X9000 Network Storage System Network
Best Practices Guide.

6. The Configuration Summary lists your configuration. Select Commit to continue the installation.

Page 52

The wizard now sets up the blade based on the information you entered.

7. The setup wizard next configures the chassis on the X9730 system. See the HP IBRIX X9000

Network Storage System Network Best Practices Guide for detailed information about the

chassis components. (This step will fail if the OA IP address has not been set up or if the blade

cannot communicate with the OA.) The Active Virtual Connect is by default the Virtual Connect

in interconnect bay 1. If the system is at factory defaults, the administrator passwords for OA

and Virtual Connect are on the labels affixed to the back of the chassis. If the passwords have

been reset, enter the new passwords.

IMPORTANT: If you are using the default unified network layout, select OK on the Confirming

Onboard Administrator and Virtual Connect Settings dialog box and go to step 8. To use a

different network layout, press F2.

When you press F2, the following screen appears.

When you answer Yes to configure a dedicated management network, the following screen

appears. Specify the number of networks for your layout on the Get Total Networks dialog

box.

Page 53

8. The wizard now validates the information you have entered. It performs the following tests:

• Pings the active OA.

• Verifies the OA password.

• Verifies that the OA at that IP address is the active OA.

If any of these tests fails, verify the configuration of the OA and VC modules as described in
the failure report displayed in the GUI. If you need to re-enter the IP address for the OA, select
Back to return to the Confirming Onboard Administrator and Virtual Connect Settings dialog
box, where you can make your changes.

NOTE: If the initialization of the credential manager fails, the GUI displays a message asking

you to initialize the credential manager manually. See “Credential Manager initialization

failed” (page 76) for more information.

9. The wizard now verifies the OA firmware.

10. Set the iLO IP addresses. On the Get iLO IP Addresses dialog box, select the method you want

to use to set up iLO IP addresses. Use the space bar to select/deselect the check boxes.

To configure the iLO IP addresses in sequence, enter the first iLO IP address on the Set iLO IP