
Distributed Systems Administration Utilities User's Guide

HP Part Number: T2786-90327

Published: March 2009

Edition: 1.5


© Copyright 2009 Hewlett-Packard Development Company, L.P.

Legal Notices

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

HP-UX Release 10.20 and later and HP-UX Release 11.00 and later (in both 32-bit and 64-bit configurations) on all HP 9000 computers are Open Group UNIX 95 branded products.

UNIX is a registered trademark of The Open Group.

Java is a U.S. trademark of Sun Microsystems, Inc.

Intel and Itanium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Microsoft and Windows are U.S. registered trademarks of Microsoft Corporation.


Table of Contents

About this Document..........................................................................................................7

Intended Audience.................................................................................................................................7

Typographic Conventions......................................................................................................................7

Related Information................................................................................................................................7

Publishing History..................................................................................................................................7

Product Support......................................................................................................................................8

HP Encourages Your Comments............................................................................................................8

1 Introduction......................................................................................................................9

1.1 Distributed Systems Administration Utilities Commands.............................................................10

1.2 Open Source Components...............................................................................................................11

1.3 Distributed Systems Administration Utilities Manual Pages.........................................................12

2 Configuration Synchronization....................................................................................13

2.1 cfengine Overview...........................................................................................................................13

2.1.1 cfengine Daemons and Commands........................................................................................14

2.2 cfengine Master Server Deployment Models..................................................................................15

2.3 Configuring cfengine.......................................................................................................................16

2.3.1 Using the Configuration Synchronization Wizard.................................................................16

2.3.1.1 Using the Wizard to Configure a Standalone Synchronization Server...........................17

2.3.1.2 Using the Wizard to Configure a Serviceguard Cluster Synchronization Server..........19

2.3.1.3 Cluster Configuration Notes for cfengine.......................................................................23

2.3.1.4 Serviceguard Automation Features................................................................................23

2.3.1.5 Using the Wizard to Configure a Synchronization Client..............................................25

2.3.2 Manual Configuration ............................................................................................................26

2.3.2.1 Manually Configuring a Standalone Synchronization Server........................................27

2.3.2.2 Manually Configuring a Serviceguard Cluster Synchronization Server........................29

2.3.2.3 Configuring a Synchronization Managed Client............................................................35

2.3.2.4 Choosing a Synchronization Invocation Method...........................................................36

2.4 Security Notes..................................................................................................................................36

2.4.1 Key Exchange..........................................................................................................................37

2.4.2 csync Network Port Usage......................................................................................................37

2.4.3 Encryption...............................................................................................................................37

2.4.4 Checksum Alerts.....................................................................................................................38

2.5 Disabling Use of cfengine................................................................................................................38

2.6 Logging Options..............................................................................................................................38

2.7 cfengine Troubleshooting................................................................................................................39

3 Consolidated Logging.................................................................................................41

3.1 Introduction to syslog.....................................................................................................................41

3.1.1 syslog Message Format...........................................................................................................41

3.1.2 Message Filtering....................................................................................................................42

3.2 Log Consolidation Overview..........................................................................................................42

3.2.1 Improved Log Consolidation .................................................................................................42

3.2.2 syslog Co-existence.................................................................................................................43

3.3 Log Consolidation Configuration...................................................................................................45

3.3.1 Using the Log Consolidation Wizard......................................................................................46

3.3.1.1 Configuring a Log Consolidation Standalone Server with clog_wizard........................46


3.3.1.2 Configuring a Serviceguard Cluster as a Log Consolidation Server with clog_wizard....................49

3.3.1.3 Cluster Configuration Notes for clog..............................................................................52

3.3.1.4 Serviceguard Automation Features................................................................................52

3.3.1.5 Minimizing Message Loss During Failover....................................................................53

3.3.1.6 Configuring a Log Forwarding Client Using clog_wizard.............................................54

3.3.2 Manually Configuring Log Consolidation..............................................................................56

3.3.2.1 Manually Configuring a Standalone Log Consolidation Server.....................................56

3.3.2.2 Manually Configuring a Serviceguard Cluster as a Log Consolidation Server.............59

3.3.2.2.1 Creating the clog Package.......................................................................................63

3.3.2.2.2 Testing and Starting the clog Package.....................................................................65

3.3.2.2.3 Using VxVM Instead of LVM..................................................................................66

3.3.2.3 Manually Configuring Log Forwarding Clients.............................................................66

3.3.2.3.1 Manually Configuring a Standalone Log Forwarding Client.................................66

3.3.2.3.2 Manually Configuring a Serviceguard Cluster as a Log Forwarding Client..........68

3.3.2.3.3 Forwarding ASCII Log Data...................................................................................72

3.3.2.4 Consolidating Package Logs on the Log Consolidation Server......................................74

3.4 Disabling Log Consolidation...........................................................................................................75

3.4.1 Disabling a Standalone Log Consolidation System................................................................75

3.4.2 Disabling a Serviceguard Cluster Log Consolidation System................................................76

3.4.3 Disabling a Standalone Log Forwarding Client......................................................................76

3.4.4 Disabling a Serviceguard Cluster Log Forwarding Client......................................................77

3.5 Securing Consolidated Logs............................................................................................................78

3.5.1 Log File Protections.................................................................................................................78

3.5.2 ssh Port Forwarding................................................................................................................78

3.5.2.1 ssh Port Forwarding to a Serviceguard Cluster Log Consolidator.................................78

3.5.3 clog Network Port Usage........................................................................................................79

3.5.4 Using Bastille to Harden the System.......................................................................................79

3.6 Viewing System and Consolidated Logs.........................................................................................80

3.6.1 Starting System Management Homepage...............................................................................80

3.6.2 Using the System and Consolidated Log Viewer....................................................................80

4 Command Fanout.........................................................................................................83

4.1 Parallel Distributed Shell.................................................................................................................83

4.2 pdsh Utility Wrappers.....................................................................................................................84

4.3 Security Configuration....................................................................................................................85

4.3.1 Remote Shell Security Setup....................................................................................................85

4.3.2 ssh Security Setup....................................................................................................................85

4.3.3 Security Notes.........................................................................................................................85

4.4 Command Fanout Troubleshooting................................................................................................86

A HP-Supported Open Source pdsh Options...............................................................87

Index.................................................................................................................................89


List of Figures

2-1 cfengine Overview.........................................................................................................................15

3-1 syslog-ng Log-Forwarding Configuration....................................................................................44

3-2 syslog-ng Log Consolidator Configuration...................................................................................45

4-1 pdsh Architecture .........................................................................................................................84

5

Page 6

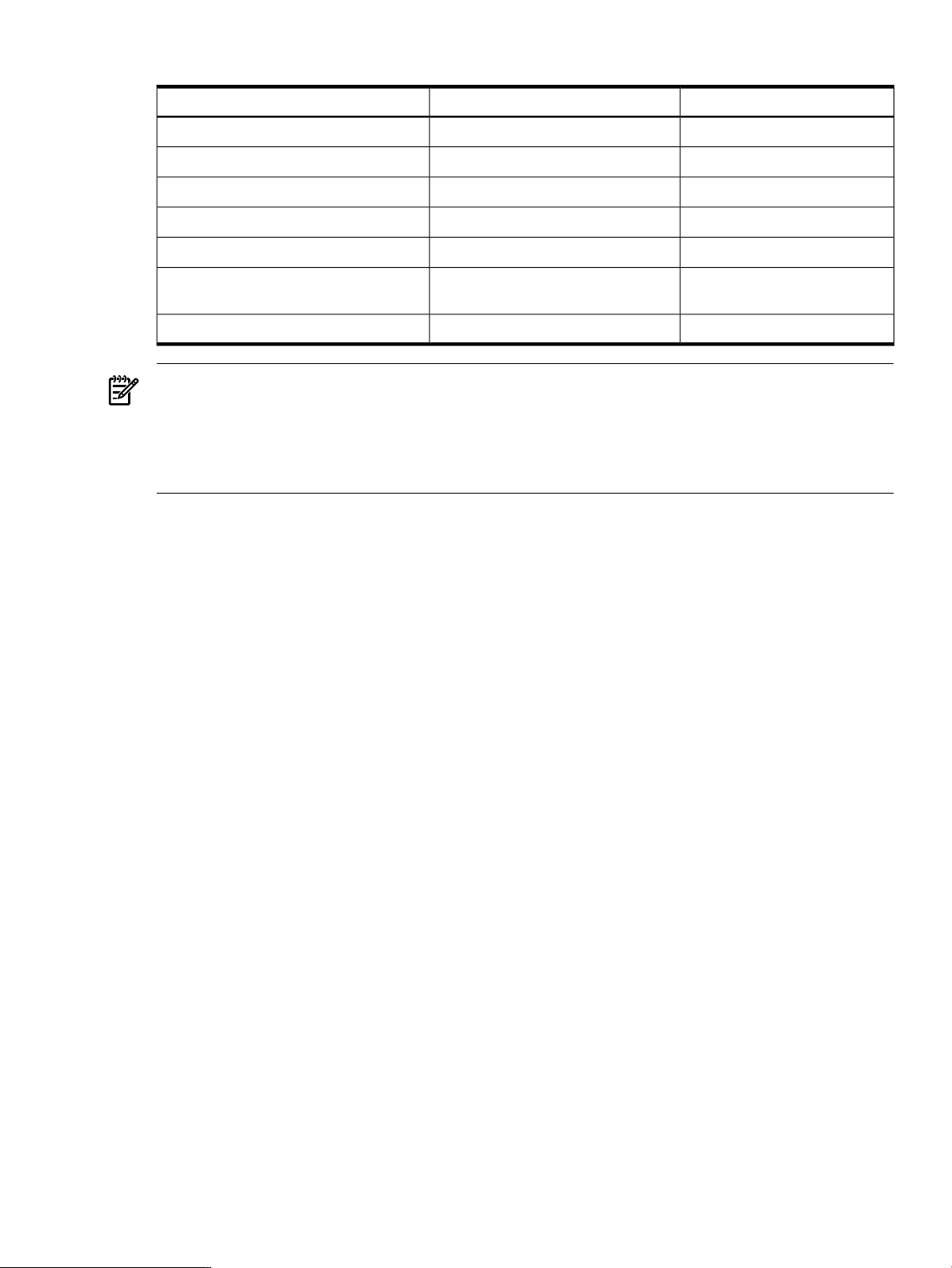

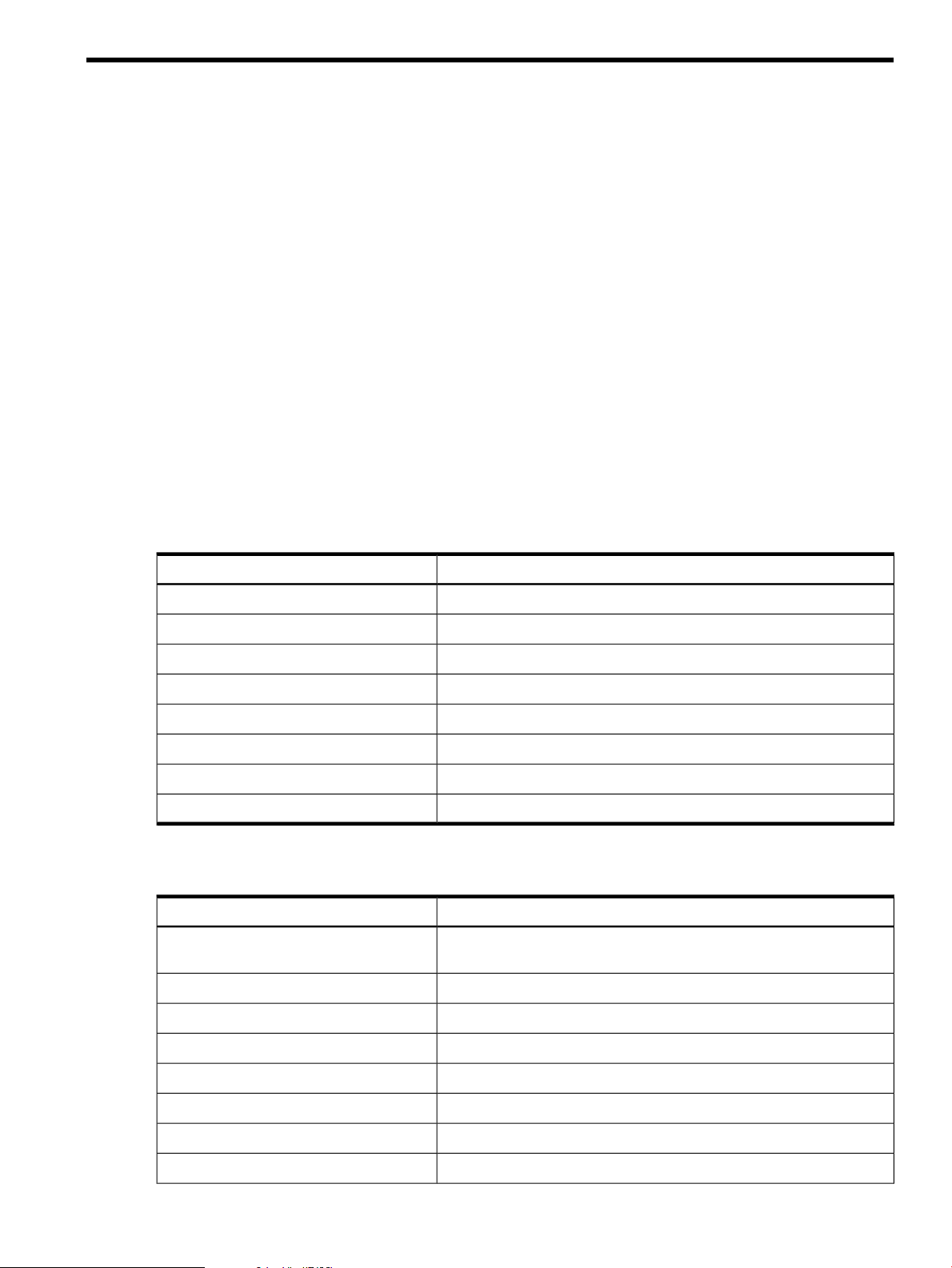

List of Tables

1 Conventions.....................................................................................................................................7

2 DSAU Publishing History...............................................................................................................7

1-1 Configuration Synchronization Command...................................................................................10

1-2 Consolidated Logging Commands...............................................................................................10

1-3 Command Fanout Commands......................................................................................................10

1-4 Utility Setup Command................................................................................................................11

1-5 Open Source cfengine Commands................................................................................................11

1-6 Open Source pdsh Commands......................................................................................................11

1-7 Open Source syslog-ng Command................................................................................................12

1-8 DSAU Manual Page Sections.........................................................................................................12

2-1 Configuration Data for csync_wizard...........................................................................................17

3-1 syslog Priority Levels....................................................................................................................41

3-2 syslog Facilities Messages.............................................................................................................41

3-3 Configuration Data for clog_wizard.............................................................................................46

4-1 ssh Command Messages..............................................................................................................86

4-2 rsh Command Messages..............................................................................................................86

4-3 Target Node Error Messages.........................................................................................................86


About this Document

Distributed Systems Administration Utilities provide tools to simplify the management of groups of systems and of Serviceguard clusters. This document describes each component tool in a separate chapter.

Intended Audience

This document is written for system administrators to assist them in learning about Distributed

Systems Administration Utilities and how to use them.

Typographic Conventions

Table 1 Conventions

| Convention | Meaning |
|---|---|
| Book Title | Title of a book or other document. |
| command | Command name or qualified command phrase. |
| user input | Commands and other text that you type. |
| computer output | Text displayed by the computer. |
| Enter | The name of a keyboard key. Note that Return and Enter both refer to the same key. A sequence such as Ctrl+A indicates that you must hold down the key labeled Ctrl while pressing the A key. |
| variable | The name of an environment variable, for example PATH or errno. |
| value | A value that you replace in a command or function, or information in a display that represents several possible values. |
| find(1) | Manual page (manpage). In this example, “find” is the manpage name and “1” is the manpage section. |

Related Information

The following documents will also be useful in extending your knowledge of Distributed Systems Administration Utilities (DSAU).

• DSAU manpages

• DSAU GUI online help

• DSAU Release Notes

Publishing History

Table 2 DSAU Publishing History

| Publication Date | Document Edition | DSAU Version | Manufacturing Part Number |
|---|---|---|---|
| March 2009 | 1.5 | 2.4 | T2786-90327 |
| September 2008 | 1.4 | 2.2 | T2786-90291 |
| March 2008 | 1.3 | 2.0 | T2786-90265 |
| September 2007 | 1.2 | 1.5 | T2786-90137 |
| February 2007 | 1.1 | 1.2/1.3 | T2786-90090 |
| September 2006 | 1.0 | 1.2/1.3 | T2786-90003 |


For specific versions of HP-UX, Serviceguard, and open source components, see the Distributed Systems Administration Utilities V2.4 Release Notes for HP-UX 11i v3 March 2009, available on the HP Technical Documentation web site at http://www.docs.hp.com.

Product Support

For product support, contact your HP Support Representative, your HP Services Representative,

or your authorized HP reseller. For more information about support services, see the HP Support

web site at http://www.hp.com/go/support.

HP Encourages Your Comments

HP encourages your comments concerning this document. We are truly committed to providing

documentation that meets your needs. Please submit comments to:

http://docs.hp.com/assistance/feedback.html

Please include the following information:

• Document title (Distributed Systems Administration Utilities User’s Guide)

• Manufacturing part number (T2786-90327)

• Any comment, error found, or suggestion for improvement you have concerning this

document.


1 Introduction

The Distributed Systems Administration Utilities provide several tools for simplifying the

management of groups of systems and of Serviceguard clusters.

There are three utilities:

• Configuration Synchronization: With this utility, which is based on the open source tool cfengine (“configuration engine”), the administrator can centrally define management actions to be applied to a set of managed systems. cfengine is a client/server based tool. The central configuration master system hosts the configuration description file that defines the management actions to be performed on each managed client. The configuration master also hosts the “golden image” files, which are master copies of files that are distributed to the clients. The administrator can use cfengine to perform tasks such as:

— Ensure that client systems are using a correct set of configuration files

— Disable inappropriately configured files on the client

— Check file permissions, ownership, and track checksum changes

— Perform edits to files

— Execute arbitrary shell commands on each client

— Check for processes, signal processes

A Configuration Synchronization Wizard is available to help the administrator quickly

configure cfengine for managing a set of distributed systems or configuring it as a highly

available service in a Serviceguard cluster. This wizard is described in Chapter 2:

“Configuration Synchronization” (page 13). For additional information, see the cfengine

and csync_wizard manpages.

• Consolidated Logging: Standard UNIX syslogd offers UDP-based log forwarding to a central log consolidator. DSAU provides the open source tool syslog-ng (“syslog next generation”), which offers additional features that make it a powerful tool for log forwarding, log centralization, and log consolidation.

The Log Consolidation Wizard (clog_wizard) helps configure syslog-ng on a log consolidation server and on log forwarding clients. Centralized log consolidation offers the following benefits:

— Easier log file analysis

A centralized log provides a single location for the administrator to perform log file

analysis. It offers a single view of events that impact multiple systems.

The DSAU utilities are specifically designed to optimize this method for managing a

Serviceguard cluster. Member syslogs and package logs can be centralized for simpler

log file access and analysis. DSAU utilities also allow the cluster to offer a highly available

consolidated logging service.

— Increased security

A security breach might compromise the local logs but not the centralized copy.

— Simplified archiving of logs

It is usually simpler to archive a set of centralized logs rather than per-system logs.

This wizard is described in Chapter 3: “Consolidated Logging” (page 41). For additional

information, refer to the clog_wizard and syslog-ng manpages.

• Command Fanout: This utility is based on the open source tool Parallel Distributed Shell (pdsh). pdsh enables the administrator to execute shell commands in parallel across a set of systems. It can use remsh or ssh as its network transport. The csshsetup tool is provided to simplify the distribution of ssh keys. The companion utility Parallel Distributed Copy (pdcp) enables file and directory copies to be performed in parallel to a set of remote systems. The dshbak filter formats and consolidates the output from multiple systems for better on-screen presentation.

The cexec, ccp, ckill, cps, and cuptime tools are wrappers around the pdsh and

pdcp commands optimized for use in a Serviceguard cluster. They default to executing

commands cluster-wide. These wrappers do the following:

— cexec - Like pdsh but with additional reporting and retry features

— ccp - Copies files cluster-wide

— ckill - Kills the named process cluster-wide or on the specified systems

— cps - Issues a ps command cluster-wide or on the specified systems

— cuptime - Runs the uptime command cluster-wide

These commands can also be used outside a cluster, but like pdsh and pdcp, the user must

specify a list of target hosts. The cexec command operates like pdsh and adds reporting

capabilities. Saved reports can be used to reissue previous commands and target only those

systems where the command originally failed, originally succeeded, or both. Command

fanout is more fully described in Chapter 4: “Command Fanout” (page 83).
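The report-based retargeting that cexec offers can be pictured with a short Python sketch. It is a conceptual model only: the report layout used here (a mapping from host name to exit status) and the function name select_targets are hypothetical and do not reflect the actual cexec report file format or options, which are documented in the cexec(1) manpage.

```python
"""Sketch: choose retry targets from a saved command report (hypothetical format)."""
from typing import Dict, List

# Hypothetical stand-in for a saved cexec report:
# host name -> exit status of the command on that host (0 means success).
Report = Dict[str, int]


def select_targets(report: Report, mode: str) -> List[str]:
    """Return the hosts to re-run the command on.

    mode is "failed", "succeeded", or "both", matching the three
    choices the guide describes for reissuing a saved command.
    """
    if mode == "failed":
        hosts = [host for host, status in report.items() if status != 0]
    elif mode == "succeeded":
        hosts = [host for host, status in report.items() if status == 0]
    elif mode == "both":
        hosts = list(report)
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return sorted(hosts)


if __name__ == "__main__":
    saved = {"node1": 0, "node2": 1, "node3": 0, "node4": 255}
    print(select_targets(saved, "failed"))     # ['node2', 'node4']
    print(select_targets(saved, "succeeded"))  # ['node1', 'node3']
```

Keeping the per-host status in the report is what makes the "failed only" retry cheap: nothing needs to be re-run on hosts that already succeeded.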

IMPORTANT: On HP-UX 11i v3 Integrity systems, pdsh requires additional software, LibcExt, which provides functions that are not shipped with the standard C library (libc) in HP-UX 11i v3. LibcExt contains the setegid() and seteuid() POSIX APIs, which pdsh requires to function properly.

LibcExt is part of the Portability Package (product number PortPkg) depot. You can download the Portability Package from the HP Software Depot web site at www.software.hp.com.

The next section describes the commands provided with each DSAU component.

1.1 Distributed Systems Administration Utilities Commands

Table 1-1 Configuration Synchronization Command

| Command | What it Does | When to Use it |
|---|---|---|
| csync_wizard | Helps set up the cfengine environment. | When setting up the configuration master. |

Table 1-2 Consolidated Logging Commands

| Command | What it Does | When to Use it |
|---|---|---|
| clog | Displays log files. | To examine log files. |
| clog_wizard | Helps set up log consolidation servers and clients. | When setting up log consolidation. |

Table 1-3 Command Fanout Commands

| Command | What it Does | When to Use it |
|---|---|---|
| ccp | Copies files to multiple hosts in parallel. In a Serviceguard cluster, copies files cluster-wide. | To perform on-demand synchronization of files across a set of systems or a Serviceguard cluster. |
| cexec | Issues commands to multiple hosts in parallel. In a Serviceguard cluster, issues the command cluster-wide. | To execute a non-interactive shell command across a set of systems or cluster. To consolidate identical output, pipe the output to dshbak -c. |
| ckill | Distributes a kill command to multiple hosts in parallel. In a Serviceguard cluster, issues the command cluster-wide by default. | To send a signal to a named process across multiple systems or a cluster. |
| cps | Distributes a ps(1) command to multiple hosts in parallel. In a Serviceguard cluster, issues the command cluster-wide by default. | To collect process information from groups of systems simultaneously. |
| cuptime | Reports uptime(1) information for multiple systems. In a Serviceguard cluster, issues the command cluster-wide by default. | To check uptime, users, and load averages. |
| cwall | Displays a wall(1M) broadcast message on multiple hosts. In a Serviceguard cluster, issues the command cluster-wide by default. | To broadcast a message to all logged-in users across a group of systems. |

Table 1-4 Utility Setup Command

| Command | What it Does | When to Use it |
|---|---|---|
| csshsetup | For the current user, performs a secure shell (ssh) public key distribution to multiple systems. | To greatly simplify ssh key distribution. pdsh and the command fanout (cexec-related) commands all rely on proper ssh key distribution. The csync_wizard requires ssh access to managed clients. For example, in a Serviceguard cluster, this allows ssh access from any member to any other member, so pdsh and cexec can be used from any cluster member. |

1.2 Open Source Components

The open source components and their commands are described in the following tables. The cfengine component is based on the high-level cfengine policy language; for additional information on cfengine, see the cfengine manpage. For the individual commands, see their respective manpages and the open source documentation in /opt/dsau/doc. For the supported open source pdsh options, see Appendix A: “HP-Supported Open Source pdsh Options” (page 87).

Table 1-5 Open Source cfengine Commands

| Command | What it Does |
|---|---|
| cfagent | System configuration agent that performs the configuration actions defined in a configuration policy file. |
| cfexecd | A scheduling and report service. This is an optional component. |
| cfkey | Security key generation tool. cfkey is run once on every host to create a public/private key pair. |
| cfrun | Tool to activate a remote cfagent. |
| cfservd | A file server and remote activation service. |

Table 1-6 Open Source pdsh Commands

| Command | What it Does |
|---|---|
| dshbak | Formats output from pdsh commands; consolidates identical output from multiple hosts. |
| pdcp | Tool to make file and directory copies in parallel to a set of remote systems. |
| pdsh | Tool to execute shell commands in parallel across a set of systems. |
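To illustrate what dshbak -c does with pdsh output, the following Python sketch groups lines of the form host: text by host and then merges hosts whose complete output is identical. It is a simplified model, not the dshbak implementation: the function name consolidate and the sample host names are hypothetical, and the real dshbak output format (for example, its header layout and host-range compression) differs from the simple printout shown here.

```python
"""Sketch: consolidate identical per-host output, in the spirit of dshbak -c."""
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def consolidate(lines: Iterable[str]) -> List[Tuple[List[str], List[str]]]:
    """Return (hosts, output) groups; hosts in a group printed the same lines."""
    per_host: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        host, sep, text = line.rstrip("\n").partition(": ")
        if sep:  # ignore lines that are not in "host: text" form
            per_host[host].append(text)

    # Hosts whose complete output (all lines, in order) matches share a key.
    groups: Dict[Tuple[str, ...], List[str]] = defaultdict(list)
    for host, output in per_host.items():
        groups[tuple(output)].append(host)

    return sorted((sorted(hosts), list(output)) for output, hosts in groups.items())


if __name__ == "__main__":
    sample = [
        "node1: B.11.31",
        "node3: B.11.23",
        "node2: B.11.31",
    ]
    for hosts, output in consolidate(sample):
        print("-" * 16)
        print(",".join(hosts))
        print("-" * 16)
        print("\n".join(output))
```

Grouping on the whole per-host output, rather than line by line, is what lets a single header stand for every host that produced exactly the same result.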


Table 1-7 Open Source syslog-ng Command

| Command | What it Does |
|---|---|
| syslog-ng | Tool that performs consolidated logging. |

1.3 Distributed Systems Administration Utilities Manual Pages

In addition to the manpages for its open source components, DSAU provides the following manpages:

Table 1-8 DSAU Manual Page Sections

SectionManpage

ccp

cexec

ckill

clog

clog_wizard

cps

csshsetup

csync_wizard

cuptime

1

1

1

1m

1

1

1m

1

cwall

1m


2 Configuration Synchronization

Managing the configuration and configuration drift of a set of distributed systems is a constant challenge for system administrators. A variety of tools are available to help manage various aspects of multi-system configuration management. For example, standard solutions for account management include the Network Information System (NIS) and Lightweight Directory Access Protocol (LDAP). For file-level synchronization, tools such as rdist (see the rdist(1) manpage) and rsync are available. HP Systems Insight Manager helps to discover, monitor, and manage groups of systems.

A new tool in this toolkit is Configuration Engine (cfengine), a popular open source tool for configuration synchronization. cfengine provides policy-based, or goal-based, configuration management: the administrator defines the management actions to be applied to groups of systems so that those systems reach a desired state.

cfengine is a client/server based tool. A central configuration master system, or policy server, hosts a configuration policy file that defines the management actions to be performed on each managed client. The configuration master also hosts the “golden image” files, which are reference copies of files that should be distributed to the clients. The administrator can use cfengine to perform tasks such as:

• Ensure that client systems are using a correct set of configuration files by copying over

reference files or directories.

• Disable inappropriately configured files on the client.

• Check file permissions, ownership, and track checksum changes.

• Edit files.

• Execute specified shell commands on each client.

• Check for processes or signal processes.

• Check for specific filesystem mounts.
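The first task above, keeping clients in line with reference copies, is a convergent operation: compare, and act only when the client has drifted. The following Python sketch models that idea for a single file using SHA-256 checksums. It is a conceptual illustration under stated assumptions, not how cfengine implements copy operations; in cfengine the equivalent behavior is declared in the policy file rather than coded, and the function names digest and converge are hypothetical.

```python
"""Sketch: converge a client file onto a golden reference copy by checksum."""
import hashlib
import shutil
import tempfile
from pathlib import Path


def digest(path: Path) -> str:
    """Return the SHA-256 checksum of a file's contents."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def converge(reference: Path, target: Path) -> bool:
    """Make target match reference; return True only if a repair was needed.

    Repeated runs are harmless: once target matches, nothing is changed.
    """
    if target.exists() and digest(target) == digest(reference):
        return False  # already in the desired state
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(reference, target)  # copies contents plus permission bits and times
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        golden = Path(tmp, "master", "hosts")
        client = Path(tmp, "client", "hosts")
        golden.parent.mkdir()
        golden.write_text("127.0.0.1 localhost\n")
        print(converge(golden, client))  # True: file was missing
        print(converge(golden, client))  # False: already converged
        client.write_text("drifted\n")
        print(converge(golden, client))  # True: drift repaired
```

Because the check comes before the action, the same run can be scheduled as often as desired without causing unnecessary changes on clients that are already correct.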

A Configuration Synchronization Wizard (csync_wizard) is available to help the administrator

quickly configure cfengine for managing a set of distributed systems or configuring it as a highly

available service in a Serviceguard cluster.

2.1 cfengine Overview

The administrator starts by defining a central system or Serviceguard cluster to act as the master

configuration server or policy server. The Configuration Synchronization Wizard (csync_wizard)

is a user-friendly front-end to the initial configuration process. This central system will house

the master policy files (for example, cfagent.conf) which define the desired configuration

policies, and also reference copies or master copies of files that should be distributed to the

managed clients.

Each managed client copies the master policy files from the central configuration server and evaluates its current state against the desired state defined by the policy files. Any differences cause configuration rules to run to resynchronize the client. The administrator can initiate synchronization operations on the managed clients in two ways: with a push operation or a pull operation.

• Using the cfrun command (see the cfrun(1) manpage) on the master configuration server, the administrator can push changes. cfrun reads the file cfrun.hosts to determine the list of managed clients. It then contacts each managed client and requests a cfagent synchronization run. Thus, push operations are really requests to the managed clients to perform an immediate pull.

• Pull operations are performed using cron or cfengine’s own cron-like cfexecd daemon. Either technique invokes the cfagent command at fixed intervals to perform client-initiated configuration synchronization. The administrator defines the appropriate interval for each group of managed clients, for example every five minutes, once an hour, or once a day. The administrator can also invoke cfagent directly for on-demand synchronization runs.
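The push model relies on the cfrun.hosts file. The following Python sketch shows how a list of managed clients might be read from such a file and turned into push requests. It assumes a simple layout (one host name per line, # comments, and key=value settings lines such as domain=example.com); check the cfrun(1) manpage for the exact syntax your cfengine version accepts. The function names parse_cfrun_hosts and push, the request_run callback, and the sample host names are all hypothetical.

```python
"""Sketch: read a cfrun.hosts-style list and issue push (pull-request) calls."""
from typing import Callable, Dict, List, Tuple


def parse_cfrun_hosts(text: str) -> Tuple[Dict[str, str], List[str]]:
    """Split the file into key=value settings and managed client names.

    Assumed layout: '#' starts a comment, 'key=value' lines are settings,
    and any other non-blank line names one managed client.
    """
    settings: Dict[str, str] = {}
    hosts: List[str] = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if "=" in line:
            key, _, value = line.partition("=")
            settings[key.strip()] = value.strip()
        else:
            hosts.append(line.split()[0])
    return settings, hosts


def push(hosts: List[str], request_run: Callable[[str], bool]) -> List[str]:
    """Ask each client to run cfagent now; return clients that did not accept.

    request_run is a hypothetical stand-in for contacting a client's cfservd.
    The client still does the work by pulling from the master server.
    """
    return [host for host in hosts if not request_run(host)]


if __name__ == "__main__":
    sample = """
    # managed clients (hypothetical names)
    domain=example.com
    node1
    node2   # second cluster member
    """
    settings, hosts = parse_cfrun_hosts(sample)
    print(settings)  # {'domain': 'example.com'}
    print(hosts)     # ['node1', 'node2']
    print(push(hosts, lambda host: host != "node2"))  # ['node2']
```

The push helper never copies files itself; it only asks each client to start a run, which mirrors the point above that a push is a request for an immediate pull.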

2.1.1 cfengine Daemons and Commands

cfengine employs several daemons and commands to perform configuration synchronization

operations. The following list describes the primary cfengine components.

• cfagent -- the cfagent command is cfengine’s workhorse. It runs on each managed client,

and bootstraps itself using the file update.conf, which describes the set of files to transfer

from the master server to the local managed client. The files transferred include the main

policy file, cfagent.conf, and any related policy files. In the DSAU implementation,

cfagent.conf imports the file cf.main which has examples of many cfengine features.

After the configuration files are transferred, cfagent evaluates the configuration instructions

in these files. If the client system’s current configuration deviates from the desired

configuration, cfagent executes the defined actions to return the client to the proper state.

• cfservd -- the cfservd daemon has two roles:

— cfservd runs on the master configuration server and is the clearinghouse for file

transfer requests from the managed clients. cfagent on the managed clients contacts

the master server’s cfservd and requests copies of the master policy files and copies

of any reference files that are needed as part of the defined configuration synchronization

operations. The master cfservd is responsible for authenticating remote clients using

a public/private key exchange mechanism and optionally encrypting the files that are

transferred to the managed clients.

— cfservd can optionally run on each managed client in order to process cfrun requests.

cfrun allows the administrator to push changes to the managed clients instead of waiting

for the clients to synchronize using some client-defined time interval. The cfrun

command must be initiated from the master configuration server. It contacts each

managed client listed in the cfrun.hosts file and connects to the managed client’s

cfservd asking it to invoke cfagent to perform the synchronization work.

cfservd is configured using cfservd.conf and started using /sbin/init.d/cfservd.

• cfexecd -- cfexecd is a scheduling and reporting tool. If the administrator uses cron to

perform synchronization runs at fixed intervals, cfexecd is the command placed in the

crontab file to wrap the invocation of cfagent. It stores the output of the cfagent run

in the outputs directory (see cfagent.conf for details) and optionally sends email.

cfexecd has its own cron-like features based on cfengine’s time classes. The administrator

can choose to run cfexecd in daemon mode and use it to invoke cfagent at defined

intervals instead of cron. The default is to invoke cfagent every hour. HP recommends

adding an entry for cfexecd in the crontab file for the initial configuration.

• cfrun -- the cfrun command contacts the managed clients asking each to perform an

immediate synchronization run. Specifically, it connects to the optional cfservd on each

managed client which in turn launches cfagent.
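The two-stage cfagent run described above (update.conf first, then cfagent.conf) can be sketched as follows. This is an illustrative Python model only, not cfengine’s implementation: policies are reduced to dictionaries that map a managed item (such as a file path) to its desired content, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

State = Dict[str, str]  # item name (for example, a file path) -> content


def run_update_phase(master_inputs: State, local_inputs: State) -> List[str]:
    """Stage 1 (update.conf): copy changed policy files from the master."""
    copied = []
    for name, content in sorted(master_inputs.items()):
        if local_inputs.get(name) != content:
            local_inputs[name] = content
            copied.append(name)
    return copied


def run_policy_phase(desired: State, current: State) -> List[Tuple[str, str]]:
    """Stage 2 (cfagent.conf): correct every item that deviates from policy."""
    actions = []
    for item, want in sorted(desired.items()):
        have = current.get(item)
        if have != want:
            actions.append((item, "create" if have is None else "repair"))
            current[item] = want
    return actions
```

Running the policy stage twice against the same desired state yields no actions the second time, which mirrors cfengine’s convergent behavior: only deviations trigger work.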

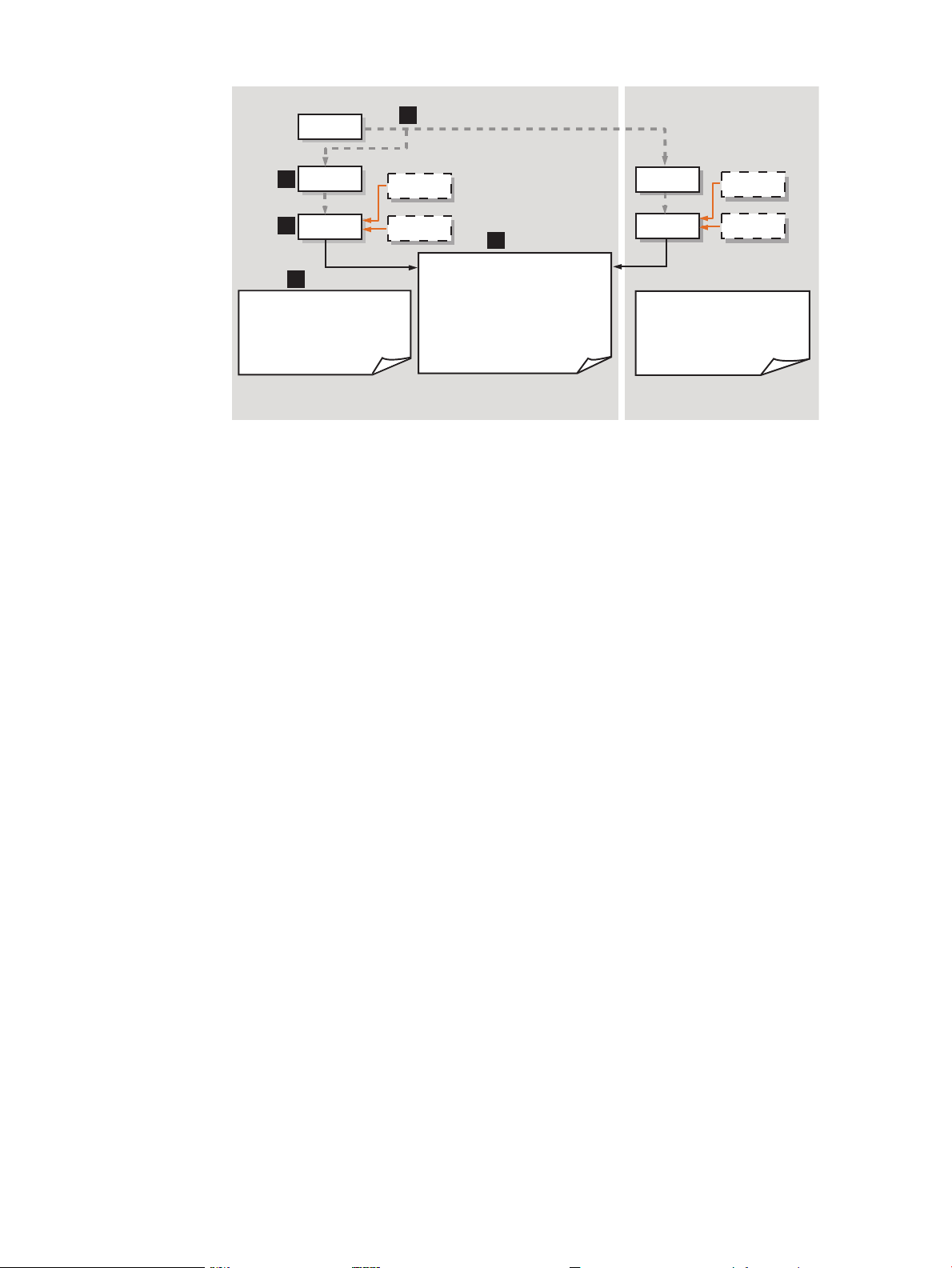

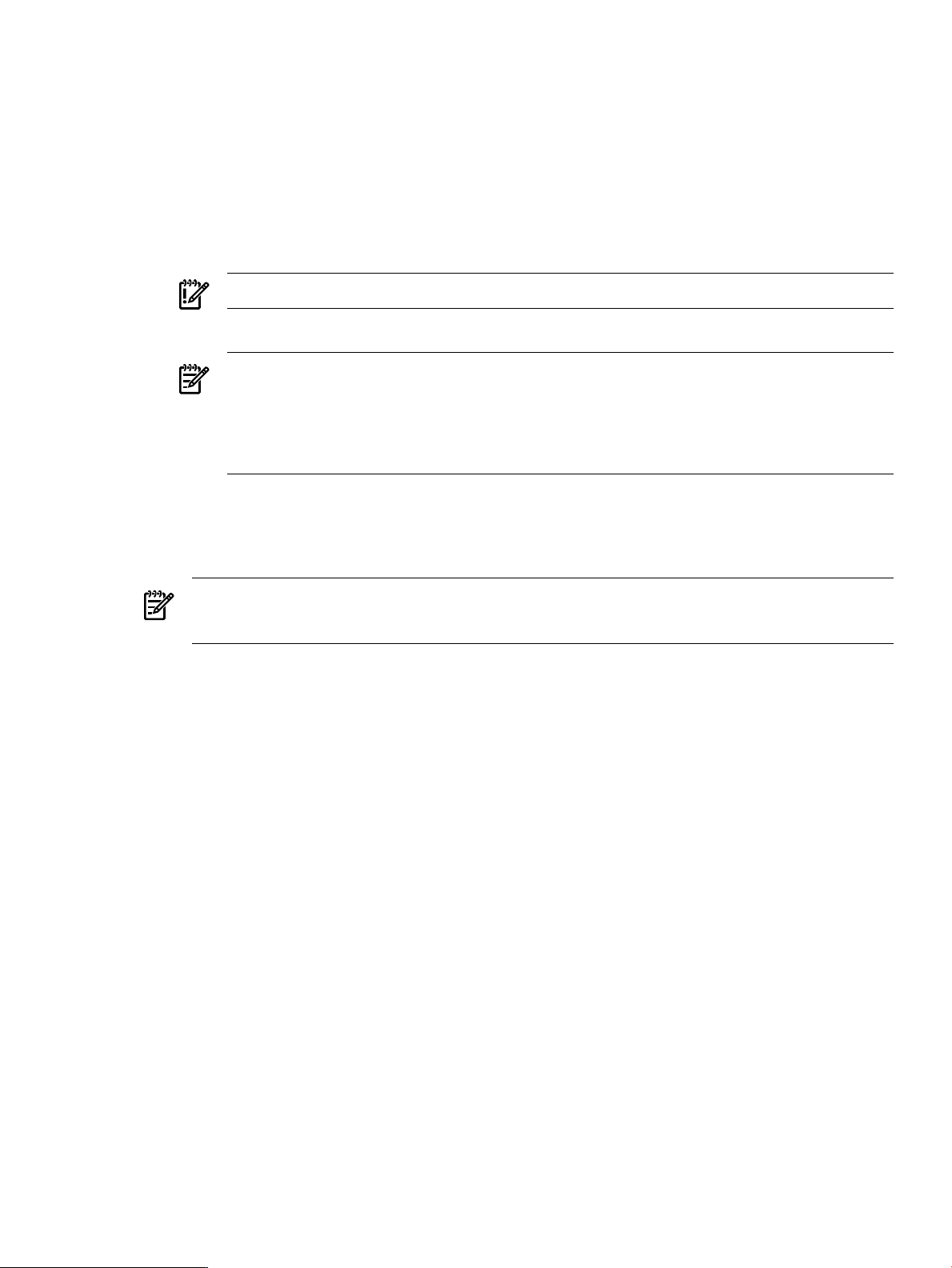

Figure 2-1: “cfengine Overview” illustrates the relationship of the cfengine commands and

daemons, and shows an example of the administrator using cfrun. The dashed lines in the

diagram indicate calling sequences (for example, A calls B). Solid lines indicate that data is being

read from configuration files.


Figure 2-1 cfengine Overview

[Diagram: the Master Server holds the master policy files (/dir/cfengine_master/inputs/: update.conf, cfagent.conf, cfservd.conf, cfrun.hosts) and reference files (/dir/cfengine_master/master_files/) and runs cfrun. Both the Master Server and the Client run cfservd, cfagent, and cfexecd or cron, and keep local copies of the policy files in /var/opt/dsau/cfengine/inputs. Callouts 1 through 5 are described below.]

1. The administrator, logged in to the master configuration synchronization server, makes a change and uses the cfrun command to propagate it to the managed clients. cfrun checks the file cfrun.hosts for the list of managed clients. Note that the master server can be a client of itself. In this diagram, there are two clients: the master server and a remote client.

2. cfrun contacts cfservd on each managed client, which in turn invokes cfagent.

3. cfagent first checks the master server for an updated copy of the update.conf file and

transfers it to the client if needed.

4. If a standalone system is the master server, by default the master copy of update.conf is

located in /var/opt/dsau/cfengine_master/inputs/. The master copies of other

configuration files such as cfagent.conf, cfservd.conf, cf.main, and cfrun.hosts

are also located here. If the master server is a Serviceguard cluster, the master configuration

files are located in the mount point associated with the package. For example, if this mount

point is named csync, the path would be /csync/dsau/cfengine_master/inputs.

5. When copying the configuration files to the local system, cfagent places them in /var/opt/dsau/cfengine/inputs for both standalone systems and clusters. cfagent first evaluates the contents of update.conf to update any changed cfengine binaries and to get the latest version of the policy files (cfagent.conf and related files). cfagent then evaluates cfagent.conf to determine whether the client is in the desired state. If there are deltas, cfagent performs the defined actions to correct the client’s configuration.

2.2 cfengine Master Server Deployment Models

The cfengine master server can be a standalone HP-UX system servicing groups of distributed clients. The clients can themselves be standalone systems or members of a Serviceguard cluster. If you are already using a Systems Insight Manager central management server, it can be an ideal system to use as a cfengine master server. Master servers can also act as clients, so configuration synchronization tasks can be performed on the master servers as well as on the remote clients.

If you are managing Serviceguard clusters, cfengine can be deployed strictly for intra-cluster use to synchronize the members of a single cluster. In this configuration, cfservd is configured as a package for high availability, but the only cfengine clients are the cluster members themselves. The package’s DNS name (and its IP address) identifies the cfengine master server.

In addition to providing configuration synchronization as an intra-cluster service, a Serviceguard cluster can provide the highly available configuration synchronization service to groups of remote client systems. Those clients can be standalone systems or Serviceguard clusters. The cluster providing the cfengine service can be a client of itself and also take advantage of cfengine’s configuration synchronization features. A possible but unusual configuration is to have a fixed member of a Serviceguard cluster act as the master server without configuring a package. Because cfservd is then not highly available, this configuration is valid but not recommended.
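Callout 4 of Figure 2-1 gives the default location of the master policy files for each deployment model, and callout 5 gives the location of the local copies. The following Python sketch restates those rules. The function name is hypothetical, and the cluster case assumes the package filesystem mount point (for example, /csync) is already known.

```python
from pathlib import PurePosixPath
from typing import Optional

STANDALONE_MASTER_INPUTS = PurePosixPath("/var/opt/dsau/cfengine_master/inputs")
# Local copies on every managed client, standalone or cluster member.
CLIENT_INPUTS = PurePosixPath("/var/opt/dsau/cfengine/inputs")


def master_inputs_dir(package_mount_point: Optional[str] = None) -> PurePosixPath:
    """Return where the master copies of update.conf, cfagent.conf, etc. live.

    Standalone master: /var/opt/dsau/cfengine_master/inputs
    Serviceguard package master: <mount point>/dsau/cfengine_master/inputs
    """
    if package_mount_point is None:
        return STANDALONE_MASTER_INPUTS
    return PurePosixPath(package_mount_point) / "dsau" / "cfengine_master" / "inputs"
```

For example, `master_inputs_dir("/csync")` gives /csync/dsau/cfengine_master/inputs, matching the cluster example in callout 4.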

2.3 Configuring cfengine

The following sections provide detailed instructions for setting up a configuration synchronization

master server and its clients. The quickest way to get started is to use the Configuration

Synchronization Wizard (csync_wizard), described in the next section. Completely manual

configurations are also described.

Configuring Synchronization Master (csync master) Server in Cross-Subnet Cluster Environments

In a cluster environment, if all the nodes are within the same subnet, then you can configure a

server within that cluster environment as the csync master server.

However, in a cross-subnet cluster environment, the csync master server must be an external system, preferably a quorum server, outside the cross-subnet cluster. A cross-subnet cluster can be configured only as a client, with an external system acting as the cfengine master. After you configure an external system as the csync master, you can configure the cross-subnet cluster nodes as cfengine managed clients.
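The placement rule above can be expressed as a simple check. In this Python sketch, the node addresses and the subnet prefix length are assumptions supplied by the caller (a /24 prefix is used only for illustration), and the function names are hypothetical; it does not query Serviceguard for the cluster’s actual subnet configuration.

```python
import ipaddress
from typing import Iterable


def is_cross_subnet(node_addresses: Iterable[str], prefix_len: int) -> bool:
    """True if the cluster nodes' addresses fall in more than one subnet."""
    subnets = {
        ipaddress.ip_interface(f"{addr}/{prefix_len}").network
        for addr in node_addresses
    }
    return len(subnets) > 1


def master_placement(node_addresses: Iterable[str], prefix_len: int) -> str:
    """Apply the csync master placement rule described above."""
    if is_cross_subnet(node_addresses, prefix_len):
        # Use an external system, preferably a quorum server, as csync master.
        return "external"
    # All nodes share one subnet: a server in the cluster may be the csync master.
    return "in-cluster"
```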

2.3.1 Using the Configuration Synchronization Wizard

The csync_wizard (see csync_wizard(1)) automates the task of setting up a configuration synchronization master server and its managed clients. It supports setting up a standalone system or a Serviceguard cluster as the master server. The wizard configures all managed clients to run a cfservd so that cfrun (see cfrun(8)) can be used on the master server.

When you run the csync_wizard on a cross-subnet cluster, the system displays a message

indicating that the csync master must be an external system outside the cross-subnet cluster.

Depending on whether the csync master is already configured, one of the following is possible:

• If the csync master is already configured outside the cross-subnet cluster, the system displays

the hostname or the IP address of the csync master.

• If the csync master is not configured outside the cross-subnet cluster, you must first configure an external system, preferably a quorum server, as the csync master server, and then run the csync_wizard on the cross-subnet cluster.

With the csync_wizard, you can perform the following tasks:

• Set up a master server

• Add a client

• Remove a client

• Manage keys for cfengine clients

• Display the current configuration

See the appropriate sections below for details. Table 2-1 lists the information you will need to

gather before running the csync_wizard to set up the master server.

When running the wizard on a Serviceguard cluster, the default is to set up cfengine as a highly available service (Serviceguard package). The administrator must provision the storage for the package and obtain the package IP address and its DNS name registration. The wizard supports LVM storage configurations; non-LVM configurations must be completed manually. The package information that the wizard requires is given in Table 2-1.


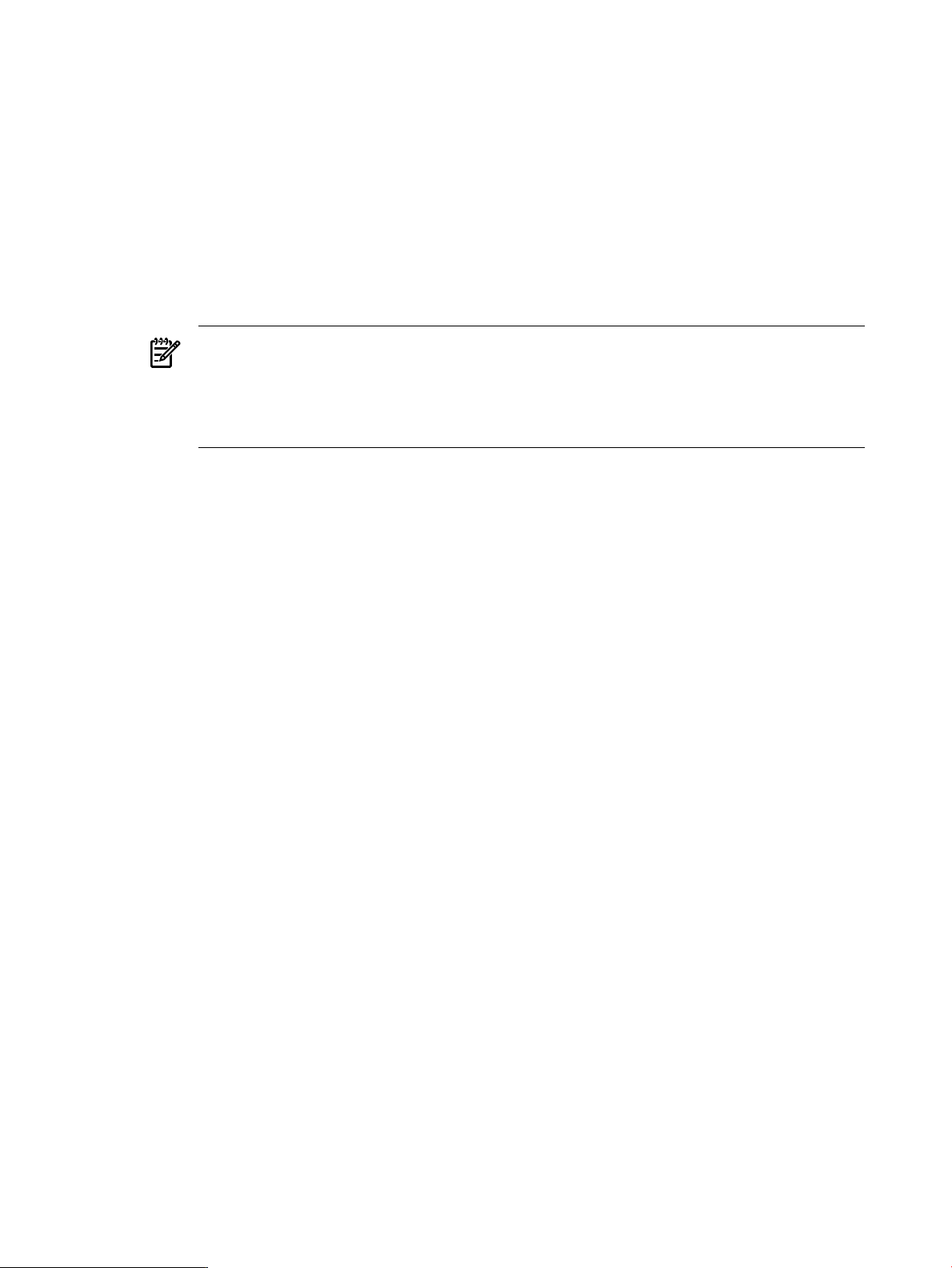

Table 2-1 Configuration Data for csync_wizard

| Configuration Data | Example | Your Value |
|---|---|---|
| LVM volume group | /dev/vgcsync | |
| Logical volume | /dev/vgcsync/lvol1 | |
| Filesystem mount point | /csync | |
| Mount options | -o rw,largefiles | |
| Filesystem type | vxfs | |
| Package IP address (a registered DNS name) | 192.10.25.12 | |
| Package subnet | 192.10.25.0 | |

NOTE: If you have used the wizard previously to configure a cfengine master server and rerun

it to reconfigure the master server, stop the currently running configuration first. For example,

use the following command to stop the running cfservd: /sbin/init.d/cfservd stop

If you run cfagent from cron or use cfexecd, disable them so they do not run while the wizard reconfigures the system.

Configuring a single node in a Serviceguard cluster as a master server is not a highly available configuration and is not recommended. By default, when run on a cluster, the wizard creates a package for cfengine’s cfservd. (To rerun the wizard in a Serviceguard cluster and change your configuration from one that is highly available to one that is not, halt the existing csync package (use cmhaltpkg) and delete it before running the wizard.)
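One way to disable cron-driven runs before rerunning the wizard is to comment out the relevant crontab entries: for example, save the output of crontab -l to a file, edit it, and reinstall it with crontab. The Python sketch below shows only the text transformation. The helper is hypothetical, and it matches entries solely by the command names cfagent and cfexecd, so review the result before installing it.

```python
import re

# A command word named cfagent or cfexecd, optionally preceded by a path.
# "cfagent.conf" does not match because the name must be followed by
# whitespace or the end of the line.
_CFENGINE_CMD = re.compile(r"(?:^|[\s/])(?:cfagent|cfexecd)(?=\s|$)")


def disable_cfengine_entries(crontab_text: str) -> str:
    """Comment out active crontab lines that run cfagent or cfexecd."""
    out = []
    for line in crontab_text.splitlines():
        stripped = line.lstrip()
        if stripped and not stripped.startswith("#") and _CFENGINE_CMD.search(line):
            out.append("#" + line)
        else:
            out.append(line)
    return "\n".join(out) + ("\n" if crontab_text.endswith("\n") else "")
```

To re-enable the entries after the wizard completes, remove the leading # that was added.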

2.3.1.1 Using the Wizard to Configure a Standalone Synchronization Server

To configure a synchronization server for a standalone system, run the csync_wizard(1) on the

standalone system you wish to configure as the master synchronization server:

# /opt/dsau/sbin/csync_wizard

The wizard displays the following introductory screen:

Querying the system local_hostname for current status, one moment...

This Configuration Synchronization Wizard (csync_wizard) helps you set

up the Configuration Engine (cfengine) environment. Cfengine is a powerful

tool for performing policy-based management for groups of systems and

cluster environments.

csync_wizard is a client/server based utility. With csync_wizard, the

user can configure a standalone system or Serviceguard cluster as the

cfengine “master”. The master contains the configuration description and

configuration files that will be used by all the clients. Clients copy the

configuration description from the master and apply it to themselves.

The configuration description supports a rich set of management actions

such as copying configuration files from the master to the client,

performing edits to files, checking file ownerships, permissions, and

checksums, executing shell commands, checking for processes, etc.

For a detailed description of the cfengine management actions,

please refer to the cfengine man page.

The csync wizard helps you set up this system as a cfengine master,

add or remove cfengine-managed clients, and perform the required

security setup.

Press “Enter” to continue...


Press Enter to continue; choose item 1 from the menu below to configure a master server:

Configuration Synchronization Wizard Menu

=========================================

(1) Set up a cfengine master server

(2) Add a client

(3) Remove a client

(4) Manage keys for cfengine clients

(5) Display current configuration

(9) Exit

Enter choice: 1

The cfengine master server is being configured on:

local_hostname

The wizard then asks if you would like to additionally configure managed clients immediately

after configuring the server. For this example, answer no. Managed clients will be added later.

You can optionally specify additional remote clients to manage at this

time. If you are running in an HA environment, you do not need to specify the

cluster members.

Would you like to manage clients? [N]: n

The wizard proceeds to configure the system as a master server:

******* WARNING!!!! ********

To protect against possible corruption of sensitive configuration files,

control-c has been disabled for the remainder of this configuration.

Configuration of the cfengine master server is starting.

Configuration files have been saved at

/var/opt/dsau/cfengine/backups

cfengine keys are being created...

cfengine keys have been created, now distributing....

Verifying that the master has an entry in the /etc/hosts file

on each client...

Starting cfengine on the master server and any managed clients. This may

take a few minutes....

When the configuration is completed, the wizard displays the following summary screens, which direct the administrator to the main policy file, /var/opt/dsau/cfengine_master/inputs/cf.main, and to the recorded answer file for this run of the wizard.

The Configuration Synchronization Wizard has completed the

configuration of cfengine:

- The master configuration description template is here:

</var/opt/dsau/cfengine_master/inputs/cf.main>

This default template has examples of typical configuration

synchronization actions performed in a cluster. For example,


synchronizing critical files such as /etc/hosts, package

scripts, etc.

All the actions in the template are disabled by default

(commented out). Uncomment the lines corresponding to the desired

synchronization actions for the cluster. See the cfengine

reference documentation for a description of additional cfengine

features: /opt/dsau/doc/cfengine/

Press “Enter” to continue...

The cfengine environment consists of:

Master server (policy host):

local_hostname

Managed clients:

Note that when you configure a master server without adding any managed clients, the Managed clients list is empty, as shown in the above example.

A file containing the answers for this run of the Configuration

Synchronization Wizard is stored here...

/var/opt/dsau/cfengine/tmpdir/csync_wizard_input.txt

This configuration can be reestablished by issuing the following command:

/opt/dsau/sbin/csync_wizard \

-f /var/opt/dsau/cfengine/tmpdir/csync_wizard_input.txt

2.3.1.2 Using the Wizard to Configure a Serviceguard Cluster Synchronization Server

To configure a synchronization server for a Serviceguard cluster, there are two configuration

choices:

• Create a Serviceguard package for the configuration service to ensure high availability.

• Configure a single member of the cluster as if it were a standalone system. This configuration is not recommended: the configuration synchronization service will not be highly available, and it will not work properly with the Serviceguard automation features discussed in “Serviceguard Automation Features” (page 23).

This section focuses on using the wizard to configure a highly available configuration

synchronization service.

NOTE: Before starting the wizard, all the current cluster members should be up and running

in the cluster. Make sure that this cluster’s MAX_CONFIGURED_PACKAGES value can

accommodate the additional package. For more information on this setting, refer to the Managing

Serviceguard manual that is part of the Serviceguard documentation set.

Start by running the Configuration Synchronization Wizard, csync_wizard(1), with the following command:

# /opt/dsau/sbin/csync_wizard

Querying the system local_hostname for current status, one moment...

This Configuration Synchronization Wizard (csync_wizard) helps you set

up the Configuration Engine (cfengine) environment. Cfengine is a powerful

tool used to perform policy-based management for groups of systems and

cluster environments.

csync_wizard is a client/server based utility. With csync_wizard, the

user can configure a standalone system or Serviceguard cluster as the


cfengine “master”. The master contains the configuration description and

configuration files that will be used by all the clients. Clients copy the

configuration description from the master and apply it to themselves.

The configuration description supports a rich set of management actions

such as copying configuration files from the master to the client,

performing edits to files, checking file ownerships, permissions, and

checksums, executing shell commands, checking for processes, etc.

For a detailed description of the cfengine management actions,

please refer to the cfengine man page.

The csync_wizard helps you set up this system as a cfengine master,

add or remove cfengine-managed clients, and perform the required

security setup.

Press “Enter” to continue...

Press Enter to continue and choose item 1 from the menu below to configure a master server:

Configuration Synchronization Wizard Menu

=========================================

(1) Set up a cfengine master server

(2) Add a client

(3) Remove a client

(4) Manage keys for cfengine clients

(5) Display current configuration

(9) Exit

Enter choice: 1

After you choose 1 and press Enter, the wizard displays the following text:

This system is a member of a Serviceguard cluster. The cfengine

configuration will be defined as a package for high availability

unless you answer no to the question below. If you answer no, for the

purposes of cfengine control, this machine will be treated as a single

machine without failover capability for cfengine.

If you accept the default answer of ‘HA’ to the question below,

cfengine will be configured as a highly available Serviceguard package.

This ensures that your cfengine master server is available as long

as one of the cluster members that can run the package is also available.

You will need a free IP address for this package and you must

configure storage for the package before proceeding. For details

on creating highly available file systems, please refer to the

Managing Serviceguard manual.

Will this master server be Highly Available (HA) [Y]:

The wizard names the package “csync” (for configuration synchronization); this specific package name is required. The LVM storage configuration and network configuration for the package must be set up before you continue past this prompt, preferably before you run the wizard. All the cluster members should also be up and available. For details, refer to the Managing Serviceguard manual, under “Building an HA Cluster Configuration”, “Creating a Storage Infrastructure with LVM.”


NOTE: The wizard only supports creating packages based on LVM volume groups. When

using CFS or VxVM, manual configuration is required. See the section on “Manually Configuring

a Serviceguard Cluster Synchronization Server” (page 29) for details on manually configuring

the csync package.

The wizard prompts for the following, all of which should have already been configured:

• LVM volume group name (for example, /dev/vgcsync)

• Logical volume in the volume group; must be the full path name of the logical volume (for

example, /dev/vgcsync/lvol1)

• The filesystem mount point (for example, /csync)

• The filesystem mount options (for example, -o rw,largefiles). The mount options are

used verbatim in the Serviceguard package control script’s FS_MOUNT_OPT[0] field. Note that

the mount options must agree with the filesystem you created on the logical volume. For

example, if the filesystem was created with largefiles support, the largefiles mount option

should be specified.

• The filesystem type (for example, vxfs)

• The package IP address. This should also be a registered DNS name so the configuration

synchronization is easy to configure on client systems.

• The package subnet. (Use netstat -i to determine the correct subnet.)
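Before starting the wizard, you may want to check your answers against the rules above. The following Python sketch is hypothetical and is not part of csync_wizard. It checks that the logical volume is a full path inside the volume group, that the mount options are a single comma-separated list after -o (no space after a comma, because the value is used verbatim), that largefiles is present when the filesystem was created with large-file support, and that the package IP address lies in the package subnet. The /24 prefix is an assumption; use the netmask of your own subnet.

```python
import ipaddress
from typing import List


def check_csync_answers(vg: str, lv: str, mount_opts: str, fs_created_largefiles: bool,
                        ip: str, subnet: str, prefix_len: int = 24) -> List[str]:
    """Return a list of problems found in the planned csync package answers."""
    problems = []
    if not lv.startswith(vg.rstrip("/") + "/"):
        problems.append(f"logical volume {lv} is not a full path inside {vg}")

    # Options are copied verbatim into FS_MOUNT_OPT[0]; expect "-o opt1,opt2".
    opts = mount_opts.split()
    option_list: List[str] = []
    if opts:
        if opts[0] != "-o" or len(opts) != 2:
            problems.append(f"mount options {mount_opts!r} should look like '-o opt1,opt2'")
        else:
            option_list = opts[1].split(",")
    if fs_created_largefiles and "largefiles" not in option_list:
        problems.append("filesystem has largefiles support; add the largefiles option")

    network = ipaddress.ip_network(f"{subnet}/{prefix_len}")
    if ipaddress.ip_address(ip) not in network:
        problems.append(f"package IP {ip} is not in subnet {network}")
    return problems
```

With the example values from Table 2-1 (and largefiles support), the function returns an empty list.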

Once the storage infrastructure is configured and the IP address obtained, press Return to accept the default answer of ‘yes’ and proceed with creating the package. The wizard then prompts for the package information:

Configuring the csync Serviceguard package for a

highly available cfengine master.

The cfengine master server is being configured as a

HA Serviceguard Package on this cluster.

Please provide the following information for the package:

Enter the Volume group [/dev/vgcsync]:

Enter the Logical Volume [/dev/vgcsync/lvol1]:

Enter the Filesystem (Mount Point) [/csync]:

Enter the Mount Options [-o rw, largefiles]:

Enter the Filesystem Type [vxfs]:

Enter the IP address [192.10.25.12]:

Enter the Subnet [192.10.25.0]:

The wizard now asks if you would like to manage additional remote clients, that is, systems

outside the cluster. The wizard automatically configures the current members of the cluster. This

is why all members must be up and accessible when running the wizard. In the example shown

below, the administrator chose ‘no’ so only cluster members are configured as clients initially.

Additional remote clients can easily be added later using the wizard. When adding members to

the cluster, it is not necessary to run the wizard to specify the new members as new client systems.

They are automatically configured to participate in the current cfengine configuration. Refer to

“Serviceguard Automation Features” (page 23) for details.

You can optionally specify additional remote clients to manage at this

time. If you are running in an HA environment, you do not need to

specify the cluster members.


Would you like to manage clients? [N]:

The wizard now has all the data it needs to configure the cluster and proceeds to do so:

******* WARNING!!!! ********

To protect against possible corruption of sensitive configuration files,

control-c has been disabled for the remainder of this configuration.

Configuring the “csync” Serviceguard package.

Applying the “csync” Serviceguard package configuration file.

This will take a moment.

Starting the “csync” Serviceguard package. This will take a few moments...

The “csync” Serviceguard package has been started on local_hostname.

Configuration of the cfengine master server is starting.

Configuration files have been saved at:

/var/opt/dsau/cfengine/backups

cfengine keys are being created...

cfengine keys have been created, now distributing....

Verifying that the master has an entry in the /etc/hosts file

on each client...

Starting cfengine on the master server and any managed clients.

This may take a few minutes....

When the configuration is done, the wizard displays the following summary screens, which direct the administrator to the main policy file, /mount_point/dsau/cfengine_master/inputs/cf.main, and to the recorded answer file for this run of the wizard. The policy file is located on the newly configured filesystem associated with the package. In this example, the administrator chose to mount the package filesystem at /csync.

If cfengine was previously configured, the wizard backs up any existing configuration files before overwriting them, in the directory:

/var/opt/dsau/cfengine/backups

The top-level files in this directory are the most recent backup files. Earlier configurations are saved in timestamped subdirectories named v_timestamp.
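The backup layout described above (latest backup at the top level, older backups in v_timestamp subdirectories) can be reproduced with a short Python sketch. The function name and the timestamp format (YYYYmmddHHMMSS) are assumptions for illustration; the wizard’s actual timestamp format is not documented here.

```python
import shutil
import time
from pathlib import Path
from typing import Iterable, Optional


def backup_before_overwrite(backup_dir: Path, files: Iterable[Path],
                            stamp: Optional[str] = None) -> Optional[Path]:
    """Archive the previous top-level backup, then copy in the current files.

    Returns the v_<stamp> directory that received the older backup, or None
    if there was no previous backup to archive.
    """
    backup_dir.mkdir(parents=True, exist_ok=True)
    previous = [p for p in backup_dir.iterdir() if p.is_file()]
    archived = None
    if previous:
        # Timestamp format is an assumption; the wizard's format may differ.
        stamp = stamp or time.strftime("%Y%m%d%H%M%S")
        archived = backup_dir / f"v_{stamp}"
        archived.mkdir()  # fails rather than mixing two backups in one directory
        for p in previous:
            shutil.move(str(p), str(archived / p.name))
    for f in files:
        shutil.copy2(f, backup_dir / f.name)  # copies contents, mode, and timestamps
    return archived
```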

The Configuration Synchronization Wizard has completed the

configuration of cfengine:

- The master configuration description template is here:

</csync/dsau/cfengine_master/inputs/cf.main>

This default template has examples of typical configuration

synchronization actions performed in a cluster. For example,

synchronizing critical files such as /etc/hosts, package

scripts, etc.

All the actions in the template are disabled by default

(commented out).

Uncomment the lines corresponding to the desired

synchronization actions for this cluster. See the cfengine

reference documentation for a description of additional cfengine

features: /opt/dsau/doc/cfengine/


Press “Enter” to continue...

The cfengine environment consists of:

Master server (policy host): package_hostname

Managed clients:

cluster_member_1, cluster_member_2, ...

A file containing the answers for this run of the Configuration

Synchronization Wizard is stored here:

/var/opt/dsau/cfengine/tmpdir/csync_wizard_input.txt

This configuration can be reestablished by issuing the following command:

/opt/dsau/sbin/csync_wizard \

-f /var/opt/dsau/cfengine/tmpdir/csync_wizard_input.txt

2.3.1.3 Cluster Configuration Notes for cfengine

This section describes the details of a high availability configuration of cfengine in a Serviceguard

cluster. For more information on the role of the various cfengine daemons and commands, refer

to “cfengine Daemons and Commands” (page 14). The Serviceguard package ensures that

cfengine's cfservd daemon remains highly available. The cfengine configuration files

update.conf and cfagent.conf define the master configuration synchronization server to

be the registered DNS name for the relocatable IP address of the package. When managed clients

run cfagent (see cfagent(8)), cfagent connects to cfservd on the package’s adoptive node.

The cluster members themselves are all managed clients, and the member hosting the package additionally acts as the master server for the policy files.

When booting the cluster, each member will start a client cfservd. This is the cfservd that

responds to cfrun requests. When the package starts on a member, that cfservd now has

access to the filesystem of the package and becomes the master cfservd that serves the policy

files to all managed clients. This cfservd is monitored by the package. If cfservd fails, the

package will attempt to restart on another member. That member’s cfservd will then become

the master cfservd.

Halting the package does not stop the cfservd daemon on the adoptive member since the

expectation is that the daemon is present to respond to future cfrun requests. Also, unlike some

other high availability services, if the csync package is down or unavailable, remote clients are

not adversely impacted. The clients continue to run with their currently defined configurations.

The administrator would need to make sure the package is up and running in order to distribute

any new configuration instructions to the managed clients.
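The role changes described above can be summarized in a small conceptual Python model. It does not call Serviceguard commands, and the member names are hypothetical. Every member runs a client cfservd; the member hosting the csync package additionally serves the policy files, and halting the package leaves every cfservd running in the client role. The failover choice uses list order as a simplifying assumption; the real adoptive node is determined by the package configuration.

```python
from typing import Dict, List, Optional


def cfservd_roles(members: List[str], package_host: Optional[str]) -> Dict[str, str]:
    """Role of each member's cfservd for a given csync package location.

    package_host=None models a halted package: all cfservd daemons keep
    running as clients so they can still answer cfrun requests.
    """
    return {m: ("master" if m == package_host else "client") for m in members}


def fail_over(members: List[str], failed_host: str) -> Optional[str]:
    """Pick the next member to host the package after cfservd fails.

    Uses list order as a stand-in for Serviceguard's adoptive-node selection.
    """
    if failed_host not in members:
        raise ValueError(f"{failed_host} is not a cluster member")
    i = members.index(failed_host)
    rotated = members[i + 1:] + members[:i]
    return rotated[0] if rotated else None
```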

The wizard automates cfengine key distribution to all cluster members. For a detailed description

of key distribution steps performed, refer to “Security Notes” (page 36).

2.3.1.4 Serviceguard Automation Features

The Distributed Systems Administration Utilities require Serviceguard 11.17 or later. With

Serviceguard 11.17 or later, when members are added to or deleted from the cluster, the

configuration synchronization tools automatically take the appropriate configuration actions.

Specifically:

• When adding a member to the cluster, the new member is automatically configured to

participate in configuration synchronization. The following configuration actions occur

automatically on the added member:

1. /etc/rc.config.d/cfservd is changed to set CSYNC_CONFIGURED to 1.

2. The appropriate cfengine public/private keys are created for the new member and

placed in the member's /var/opt/dsau/cfengine/ppkeys directory. The new keys


for this member are also distributed to the /var/opt/dsau/cfengine/ppkeys

directories on the other cluster members.

3. The new member’s /var/opt/dsau/cfengine/inputs directory is populated.

4. cfservd is started on the new member.

5. The package files are copied to /etc/cmcluster/csync/ on the new member.

6. A cfagent synchronization run is performed on the master to populate the master’s

/var/opt/dsau/cfengine/inputs directory.

7. A cfagent synchronization run is performed on the newly added member.

If there are any errors when performing these automated actions, messages are posted to

syslog on the master server. Use cmviewcl -p csync to determine which member is

currently the master server. Alternatively, if the cluster is using consolidated logging, check

for messages in the consolidated syslog.

• When deleting a member from a cluster, the public key of the deleted member is deleted

from the /var/opt/dsau/cfengine/ppkeys directory cluster-wide.

• The administrator can define cfengine groups or classes that enumerate all the members of

a particular Serviceguard cluster. These class definitions are not updated automatically and

the administrator must manually update the cfagent.conf and related files for cluster

membership changes.
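Step 1 of the member-added processing sets CSYNC_CONFIGURED to 1 in /etc/rc.config.d/cfservd. DSAU performs this step automatically. The Python sketch below only illustrates the edit on the file’s text, assuming the usual rc.config.d shell-assignment style (NAME=value). The helper is hypothetical.

```python
import re


def set_rc_variable(text: str, name: str, value: str) -> str:
    """Set NAME=value in rc.config.d-style text, appending it if absent.

    Only the first uncommented assignment is changed; commented-out lines
    such as '# NAME=0' are left alone.
    """
    pattern = re.compile(rf"^([ \t]*){re.escape(name)}=.*$", re.MULTILINE)
    if pattern.search(text):
        return pattern.sub(lambda m: f"{m.group(1)}{name}={value}", text, count=1)
    sep = "" if not text or text.endswith("\n") else "\n"
    return f"{text}{sep}{name}={value}\n"
```

For example, `set_rc_variable(text, "CSYNC_CONFIGURED", "1")` turns a line `CSYNC_CONFIGURED=0` into `CSYNC_CONFIGURED=1` and leaves the rest of the file unchanged.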


NOTE: When adding members to a cluster, consider the following:

• When adding a member to a cluster that is configured as a highly available master server,

the csync package must be running when the member is added. The add-member processing

task copies the configuration data from the package’s mounted filesystem to the new

member’s /var/opt/dsau/cfengine directories. If the package is not running, the

filesystem will not be accessible and the new member will not be properly configured. In

that case, the administrator can manually configure the new member as follows:

1. Make sure that the csync package is running. If not, start it.

2. Log in to the member running the package.

3. Execute the following command exactly as shown:

/opt/dsau/bin/csync_dispatcher MEMBER_ADDED: member_hostname

For example, if the new member’s unqualified hostname is newhost, use the following

command:

/opt/dsau/bin/csync_dispatcher MEMBER_ADDED: newhost

• When adding a member to the cluster that is configured as a highly available master server,

the cfengine security key of the new member is distributed cluster-wide. This enables the

new member to operate as an adoptive node. If the csync package fails over to the new

member, the new member will correctly handle cfagent requests from all managed clients.

However, a cfrun executed from the new member will fail when contacting the managed

clients. For cfrun to work properly, each managed client must have a copy of each cluster

member’s key. (This is unlike cfagent on the managed client which needs only the key

that corresponds to the IP address of the csync package.)

For the new member to issue cfrun requests, its key must be manually created on each

managed client. There are two ways to distribute the key:

— Use the csync_wizard “Manage keys for cfengine clients” function, which regenerates

keys for all systems. All managed clients must be reachable for the regeneration to

complete.

— Copy an existing member’s key under the new member’s name. This approach takes advantage of the fact that the new member’s key is identical to the keys for the other cluster members. On each managed client, copy any existing cluster member’s key to the file name for the newly added member.

For example,

# cd /var/opt/dsau/cfengine/ppkeys

# cp root-existing_member_IP_address.pub \

root-new_member_IP_address.pub
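The recovery procedure in the first item above can be wrapped in a small shell function. This is a minimal sketch, not a tool supplied with DSAU: the function names short_hostname and notify_member_added and the DRY_RUN variable are hypothetical, and the sketch assumes it runs as root on the cluster member that is currently running the csync package. It strips any DNS domain from its argument so that csync_dispatcher receives the unqualified hostname, as in the newhost example.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Run as root on the
# cluster member that is currently running the csync package.

DISPATCHER=/opt/dsau/bin/csync_dispatcher

# Print the unqualified form of a hostname:
# "newhost.example.com" -> "newhost"; "newhost" is printed unchanged.
short_hostname() {
    printf '%s\n' "${1%%.*}"
}

# Notify csync_dispatcher that a member was added to the cluster.
# With DRY_RUN=1, print the command instead of running it.
notify_member_added() {
    member=$(short_hostname "$1")
    if [ -z "$member" ]; then
        echo "usage: notify_member_added member_hostname" >&2
        return 1
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$DISPATCHER MEMBER_ADDED: $member"
    else
        "$DISPATCHER" MEMBER_ADDED: "$member"
    fi
}
```

For example, DRY_RUN=1 notify_member_added newhost.example.com prints the csync_dispatcher command for newhost without running it; omit DRY_RUN=1 to run the command.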
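When the key-copy method in the second item above is applied on many managed clients, a guarded copy helps avoid overwriting an existing key or copying from a mistyped address. This is an illustrative sketch only: copy_member_key is a hypothetical function, and it assumes the default key directory /var/opt/dsau/cfengine/ppkeys used in the example.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Run as root on each
# managed client.

PPKEYS=/var/opt/dsau/cfengine/ppkeys

# copy_member_key EXISTING_IP NEW_IP [KEYDIR]
# Copies root-EXISTING_IP.pub to root-NEW_IP.pub in KEYDIR
# (default $PPKEYS). Never overwrites an existing key file.
copy_member_key() {
    if [ $# -lt 2 ]; then
        echo "usage: copy_member_key existing_ip new_ip [keydir]" >&2
        return 1
    fi
    keydir=${3:-$PPKEYS}
    src="$keydir/root-$1.pub"
    dst="$keydir/root-$2.pub"
    if [ ! -f "$src" ]; then
        echo "no key file $src" >&2
        return 1
    fi
    if [ -e "$dst" ]; then
        echo "$dst already exists; not overwriting" >&2
        return 1
    fi
    cp -p "$src" "$dst"
}
```

For example, on a managed client, copy_member_key 10.0.0.5 10.0.0.6 copies root-10.0.0.5.pub to root-10.0.0.6.pub (the addresses are placeholders). Because the function refuses to overwrite, a key that is already in place is never silently replaced.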

2.3.1.5 Using the Wizard to Configure a Synchronization Client

You can use the Configuration Synchronization Wizard to add managed clients to an existing cfengine configuration. Run the wizard on the master server, not on the client system. When a Serviceguard cluster is the master server, run the wizard on the adoptive node for the csync package. When a Serviceguard cluster is configured as a highly available master server, new members added to the cluster do not need to be configured with the wizard; they are configured automatically. For more information, see “Serviceguard Automation Features” (page 23).

If the client is not a cluster member, it must be configured for non-interactive ssh access by the root account of the master server so that cfengine keys can be distributed securely. The csshsetup tool (see csshsetup(1)) makes it easy to configure ssh access to a remote system and is used in the examples below.


A remote Serviceguard cluster can be configured as a managed client. However, each member must be configured individually: repeat the configuration tasks described below for each member of the cluster.

Start by logging in as root on the master server and configuring ssh access to the remote system:

# csshsetup hostname_of_managed_client

csshsetup first tests ssh access to the remote system. If access is not already configured, ssh prompts for the managed client’s password.
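Because each member of a remote cluster must be configured individually, the csshsetup step can be repeated in a loop. In this minimal sketch, setup_ssh_for_hosts is a hypothetical function; it assumes csshsetup is on root's PATH on the master server and calls it only with a hostname argument, as shown above. csshsetup may still prompt for each host's password.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Run as root on the
# master server. Calls csshsetup once per host and reports failures.

# setup_ssh_for_hosts HOST...
setup_ssh_for_hosts() {
    failed=""
    for host in "$@"; do
        echo "Configuring ssh access to $host"
        if ! csshsetup "$host"; then
            failed="$failed $host"
        fi
    done
    if [ -n "$failed" ]; then
        echo "csshsetup failed for:$failed" >&2
        return 1
    fi
    return 0
}
```

For example: setup_ssh_for_hosts member1.example.com member2.example.com (placeholder names). The loop continues past a failed host and reports all failures at the end, so one unreachable member does not hide problems with the others.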

Run the Configuration Synchronization Wizard and choose option 2 to add a new client:

Configuration Synchronization Wizard Menu
=========================================
(1) Set up a cfengine master server
(2) Add a client
(3) Remove a client
(4) Manage keys for cfengine clients
(5) Display current configuration
(9) Exit
Enter choice: 2

When prompted, enter the name of the client to add:

This option will configure additional clients to the cfengine domain.
Enter the name of the client to add: new_client

The wizard then configures the client and reports its progress:

Verifying that the master has an entry in the /etc/hosts file on each client...
cfengine keys are being created...
cfengine keys have been created, now distributing....
The client new_client has been added to the cfengine domain

The wizard configures each new client to run cfservd so that it can respond to cfrun requests, and adds the client to the master’s cfrun.hosts file.

2.3.2 Manual Configuration

The following sections describe how to configure cfengine master configuration synchronization servers and managed clients manually. It is typically easier to start with the csync_wizard (see csync_wizard(1m)) and then modify the resulting configuration than to start from scratch. This is especially true in a Serviceguard cluster, where the wizard helps set up the package and propagates the correct configuration files to all members of the cluster.

Manual configuration can produce configurations that the csync_wizard cannot subsequently manage. The wizard relies on two conventions that a manual configuration might not follow:

• The wizard requires that all managed clients have ssh configured so that cfengine’s security keys can be initially distributed or subsequently changed.

• The wizard places all managed clients in the cfrun.hosts file. This list of managed clients is used to identify systems for operations such as regenerating cfengine’s keys on all machines. cfrun.hosts is an optional cfengine configuration file used by the cfrun command. Manual configurations need not use this file, but the wizard requires it.
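Because the wizard treats cfrun.hosts as its list of managed clients, it can be useful to print that list before running wizard operations such as key regeneration. This sketch assumes the layout described in this guide (one hostname per line plus an optional "domain = " line) and, as a further assumption, treats text after # as a comment; list_managed_clients is a hypothetical function, and the default path is the one used by the default configuration.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Prints the managed
# clients listed in a cfrun.hosts file, one per line.

CFRUN_HOSTS=/var/opt/dsau/cfengine_master/inputs/cfrun.hosts

# list_managed_clients [FILE]
list_managed_clients() {
    file=${1:-$CFRUN_HOSTS}
    # Strip comments and surrounding whitespace, then drop blank lines
    # and assignment lines such as "domain = ...".
    sed -e 's/#.*//' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' "$file" |
        grep -v -e '^$' -e '='
}
```

For example, list_managed_clients | wc -l counts the managed clients, and list_managed_clients | grep -x new_client confirms that a client added by the wizard is listed.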


NOTE: You can use csshsetup to configure a trust relationship between the master server and the managed clients. This allows you to use command fan-out tools such as cexec and ccp (see cexec(1) and ccp(1)), which can simplify the configuration steps described below when files need to be distributed to managed clients.

2.3.2.1 Manually Configuring a Standalone Synchronization Server

Perform the following one-time steps to configure a standalone system as a cfengine master server:

1. Create the master copies of the cfengine configuration files. These files are placed in a well-known directory and distributed to each managed client. The default directory, referenced in the default templates, is /var/opt/dsau/cfengine_master/inputs.

Start by creating the directory:

# mkdir -p /var/opt/dsau/cfengine_master/inputs

2. Copy the default template files into this directory:

# cd /var/opt/dsau/cfengine_master/inputs
# cp /opt/dsau/share/cfengine/templates/cf.main.template cf.main
# cp /opt/dsau/share/cfengine/templates/update.conf.template update.conf
# cp /opt/dsau/share/cfengine/templates/cfagent.conf.template cfagent.conf
# cp /opt/dsau/share/cfengine/templates/cfrun.hosts.template cfrun.hosts
# cp /opt/dsau/share/cfengine/templates/cfservd.conf.template cfservd.conf

3. Next, edit update.conf. This file has a format similar to that of cfengine’s main configuration file, cfagent.conf. It is used to transfer and update cfengine binaries and any updated configuration files (for example, cfagent.conf) to the managed clients. Keep this file very simple and free of errors: because the managed clients rely on update.conf to receive updated files, an error in it can be corrected only by manually copying a new version to each managed client.

The file contains tokens of the form <%token-name%>, which the csync_wizard replaces with the administrator’s answers to its questions. Replace the tokens as follows:

a. Replace the <%POLICYHOST_NAME%> token with the fully qualified domain name of the master server. The name must be fully qualified: this file is copied to and evaluated on the managed clients, and a managed client in a different DNS domain from the master server cannot communicate with the master server if the hostname is not fully qualified.

b. Note that the cfengine domain variable is set as follows:

domain = ( ExecResult(/bin/sh -c ${dblquote}nslookup `hostname`| awk ${quote}/Name:/ {print $2}${quote} | cut -d . -f 2-${dblquote}) )

The domain variable is used by cfagent’s “resolve” action. The ExecResult command above assumes that the client’s /etc/resolv.conf and /etc/nsswitch.conf are already appropriately configured: it expects nslookup of the client’s own hostname to return a fully qualified hostname. If this assumption is not appropriate for your environment, other techniques for setting the domain are possible. For example, the client’s domain could be determined from the client’s IP address or subnet, as follows:

classes:
    # hosts in these IP address ranges
    xyz_domain = ( IPRange(10.0.0.1-15) )
    abc_domain = ( IPRange(192.0.0.1-254) )

control:
    xyz_domain::
        domain = ( "xyz.example.com" )
    abc_domain::
        domain = ( "abc.example.com" )


Use the cfagent -p (or --parse-only) flag to verify the syntax of update.conf.

4. Distribute the master update.conf to each managed client. This step is described in “Configuring a Synchronization Managed Client” (page 35).

5. Create the master server’s security keys. cfengine uses a public/private key exchange to authenticate remote clients. A public/private key pair is generated on the master server and on all managed clients. Each managed client’s public key is copied to the master server, and the master server’s public key is copied to the managed clients. It is important to exchange keys securely, using a tool such as secure copy (see scp(1)) or using tape or CD-ROM. Start by generating the keys for the master server:

# /opt/dsau/sbin/cfkey
# cd /var/opt/dsau/cfengine/ppkeys

The cfkey command creates the files localhost.pub and localhost.priv in this directory.

Copy the public key to root-master_server_IP_address.pub. For example, if this system’s IP address is 10.0.0.5, use this command:

# cp localhost.pub root-10.0.0.5.pub

See “Configuring a Synchronization Managed Client” (page 35) for details on copying the client keys to this master server.

6. On the master server, configure the cfservd daemon to start at system startup. Edit /etc/rc.config.d/cfservd and change the line CSYNC_CONFIGURED=0 to CSYNC_CONFIGURED=1. Optionally, if you want to push changes out to the managed clients using cfrun, make the same change on all of the managed clients.

7. cfrun requires that the managed clients be listed in the file cfrun.hosts. In the default configuration, this file is located in /var/opt/dsau/cfengine_master/inputs. Edit it and add the hostname of each managed client, one per line. It is simplest to make sure that all the hostnames are fully qualified. When you use fully qualified hostnames, the "domain = " line is not required and can be deleted. If you use unqualified hostnames, find the "domain = " line and replace its token with the DNS domain of the master system. This restricts all of the unqualified clients to that single domain.

8. The file /var/opt/dsau/cfengine_master/inputs/cfagent.conf is the master policy file. The default cfagent.conf includes the default cf.main template file, which contains examples of common synchronization actions for both standalone systems and Serviceguard clusters. cf.main contains the POLICYHOST_NAME token and the "domain = " variable; perform the same edits described in Step 3 above.

Note that this default cf.main file performs no management actions: all the action lines are commented out. It is a starting point for creating a custom set of cfengine policies and actions for your managed clients. The cfengine reference manual, which documents the syntax and all the management actions used in this file, is located in /opt/dsau/doc/cfengine.
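Steps 1 and 2 above can be combined into one function. This is a sketch that assumes the default template and inputs directories shown in those steps; copy_templates is a hypothetical function. Unlike the plain cp commands, it skips any file that already exists, so rerunning it does not discard edits already made to cf.main, update.conf, or the other copied files.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Creates the master
# inputs directory and copies each default template into it without
# the .template suffix, skipping files that already exist.

TEMPLATES=/opt/dsau/share/cfengine/templates
INPUTS=/var/opt/dsau/cfengine_master/inputs

# copy_templates [TEMPLATE_DIR] [INPUTS_DIR]
copy_templates() {
    tdir=${1:-$TEMPLATES}
    idir=${2:-$INPUTS}
    mkdir -p "$idir" || return 1
    for f in cf.main update.conf cfagent.conf cfrun.hosts cfservd.conf; do
        if [ -e "$idir/$f" ]; then
            echo "skipping $f: $idir/$f already exists" >&2
            continue
        fi
        cp "$tdir/$f.template" "$idir/$f" || return 1
    done
    return 0
}
```

Run as root with no arguments, copy_templates uses the default directories; the optional arguments make it possible to stage a configuration in another directory first.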
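The token replacement in Steps 3a and 8 can be done with sed. In this sketch, set_policyhost is a hypothetical function. It rejects a name that contains no dot (to enforce the fully qualified name that Step 3a requires) or that contains characters other than letters, digits, dots, and hyphens, and it fails if the file has no <%POLICYHOST_NAME%> token. It does not change the "domain = " definition.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Replaces the
# <%POLICYHOST_NAME%> token in update.conf or cf.main with the
# master server's fully qualified name.

# set_policyhost FILE FQDN
set_policyhost() {
    file=$1
    fqdn=$2
    case $fqdn in
        *[!A-Za-z0-9.-]*)
            echo "invalid hostname: $fqdn" >&2; return 1 ;;
        *.*) ;;
        *)
            echo "$fqdn is not fully qualified" >&2; return 1 ;;
    esac
    if ! grep -q '<%POLICYHOST_NAME%>' "$file"; then
        echo "no <%POLICYHOST_NAME%> token in $file" >&2
        return 1
    fi
    # Write the result to a scratch file, then copy it back over the
    # original so that the file keeps its permissions.
    sed "s/<%POLICYHOST_NAME%>/$fqdn/g" "$file" > "$file.new" || return 1
    cat "$file.new" > "$file" && rm -f "$file.new"
}
```

For example, run set_policyhost /var/opt/dsau/cfengine_master/inputs/update.conf master.example.com and then the same command for cf.main, where master.example.com is a placeholder. Afterwards, verify the syntax with cfagent -p as described in Step 3.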
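The edit in Step 6 can also be scripted. This minimal sketch, built around the hypothetical function enable_cfservd, assumes that /etc/rc.config.d/cfservd contains a line beginning with CSYNC_CONFIGURED=0 as described in that step. It leaves the file unchanged if CSYNC_CONFIGURED=1 is already set, and otherwise keeps a copy of the original file with a .bak suffix.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Changes
# CSYNC_CONFIGURED=0 to CSYNC_CONFIGURED=1 so that cfservd starts
# at system startup.

RC_FILE=/etc/rc.config.d/cfservd

# enable_cfservd [FILE]
enable_cfservd() {
    file=${1:-$RC_FILE}
    if grep -q '^CSYNC_CONFIGURED=1' "$file"; then
        return 0    # already enabled; nothing to do
    fi
    if ! grep -q '^CSYNC_CONFIGURED=0' "$file"; then
        echo "no CSYNC_CONFIGURED=0 line in $file" >&2
        return 1
    fi
    # Keep a backup, then rewrite the original in place.
    cp -p "$file" "$file.bak" &&
        sed 's/^CSYNC_CONFIGURED=0/CSYNC_CONFIGURED=1/' "$file.bak" > "$file"
}
```

Run enable_cfservd as root on the master server, and on each managed client as well if you want to push changes with cfrun.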
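For Step 7, hostnames can be appended to cfrun.hosts without creating duplicates. This sketch follows the simplest approach described in that step and accepts only names containing a dot, on the assumption that such names are fully qualified; add_cfrun_hosts is a hypothetical function.

```shell
#!/bin/sh
# Hypothetical helper, not supplied with DSAU. Appends fully
# qualified hostnames to cfrun.hosts, one per line, skipping any
# that are already listed.

CFRUN_HOSTS=/var/opt/dsau/cfengine_master/inputs/cfrun.hosts

# add_cfrun_hosts FILE HOST...
add_cfrun_hosts() {
    if [ $# -lt 1 ]; then
        echo "usage: add_cfrun_hosts file host..." >&2
        return 1
    fi
    file=$1
    shift
    for host in "$@"; do
        case $host in
            *.*) ;;
            *) echo "skipping $host: not fully qualified" >&2; continue ;;
        esac
        # -F: match the name literally; -x: match the whole line.
        if grep -qxF "$host" "$file" 2>/dev/null; then
            continue    # already listed
        fi
        printf '%s\n' "$host" >> "$file" || return 1
    done
    return 0
}
```

For example: add_cfrun_hosts "$CFRUN_HOSTS" client1.example.com client2.example.com (placeholder names).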