Page 1

HPE Integrity Superdome X Service Guide for Users

Abstract

This guide describes the HPE Integrity Superdome X and provides user service information.

Part Number: 794235-009

Published: November 2018

Edition: Ninth

Page 2

Contents

HPE Integrity Superdome X overview................................................... 8

System specifications.......................................................................... 23

Complex components................................................................................................................... 8

Power subsystem..........................................................................................................................8

Powering off the compute enclosure..................................................................................8

Manageability subsystem..............................................................................................................9

Server blades................................................................................................................................9

I/O subsystem............................................................................................................................... 9

Compute enclosure overview......................................................................................................10

Server blade overview................................................................................................................ 20

Dimensions and weights............................................................................................................. 23

Rack specifications..................................................................................................................... 24

Internal and external site door requirements.............................................................................. 24

Electrical specifications...............................................................................................................25

Environmental specifications...................................................................................................... 28

Temperature and humidity specifications......................................................................... 28

Cooling requirements....................................................................................................... 29

Air quality specifications...................................................................................................29

Acoustic noise specifications........................................................................................... 29

Sample site inspection checklist for site preparation.................................................................. 30

Updating firmware.................................................................................34

Prerequisites............................................................................................................................... 34

Installing the latest complex firmware using SUM...................................................................... 34

Manually updating the complex firmware....................................................................................34

Download firmware bundle...............................................................................................35

Update the complex firmware.......................................................................................... 35

I/O firmware and drivers...................................................................................................36

SMH and WBEM providers.............................................................................................. 36

Drivers and firmware for other devices.............................................................................36

Superdome X operating systems........................................................ 37

OSs supported............................................................................................................................ 37

Using Microsoft Windows Server................................................................................................ 38

Using VMware.............................................................................................................................38

Using Red Hat Linux................................................................................................................... 38

Using SuSE Linux....................................................................................................................... 38

Partitioning............................................................................................ 39

Partition Identification..................................................................................................................39

Partition Number......................................................................................................................... 39

Partition Name............................................................................................................................ 39

Partition Power Operations......................................................................................................... 39

PARSTATUS............................................................................................................................... 40

2

Page 3

UUID for nPartitions.................................................................................................................... 40

nPartition states.......................................................................................................................... 40

nPartition runstate.......................................................................................................................41

nPartition and resource health status......................................................................................... 42

Troubleshooting.................................................................................... 44

General troubleshooting methodology........................................................................................ 44

LED status information.....................................................................................................44

OA access........................................................................................................................44

OA CLI..............................................................................................................................44

Gathering power related information................................................................................45

Gathering cooling related information.............................................................................. 47

Gathering failure information............................................................................................49

Recommended troubleshooting methodology............................................................................ 50

Developer log collection................................................................................................... 51

Troubleshooting tables................................................................................................................52

Troubleshooting tools..................................................................................................................57

LEDs and components.....................................................................................................57

OA GUI.............................................................................................................................65

Health Repository viewer............................................................................................................ 65

Indictment Records.......................................................................................................... 65

Acquitting indictments...................................................................................................... 66

Viewing the list of indicted components........................................................................... 67

Viewing deconfigured components.................................................................................. 67

Viewing indictment acquittals........................................................................................... 68

Viewing recent service history..........................................................................................68

Physical Location installation and health history..............................................................68

Subcomponent isolation and deconfiguration displays.................................................... 70

Using event logs......................................................................................................................... 75

Live viewer....................................................................................................................... 75

SEL and FPL viewers.......................................................................................................77

Core Analysis Engine.......................................................................................................80

OA.................................................................................................................................... 82

Troubleshooting processors........................................................................................................84

Troubleshooting memory............................................................................................................ 85

Troubleshooting cards and drivers..............................................................................................87

Troubleshooting compute enclosure events............................................................................... 87

Troubleshooting firmware............................................................................................................88

Identifying and troubleshooting firmware issues.............................................................. 88

Verifying and installing the latest firmware version.......................................................... 89

System firmware...............................................................................................................89

FRU replacement firmware update procedures............................................................... 90

I/O firmware......................................................................................................................92

Interconnect module firmware..........................................................................................93

Troubleshooting partitions...........................................................................................................94

Troubleshooting the network.......................................................................................................94

Troubleshooting fabric issues..................................................................................................... 96

Troubleshooting clock-related issues..........................................................................................97

Troubleshooting MCAs................................................................................................................97

Troubleshooting the blade interface (system console)................................................................98

Websites.............................................................................................. 100

3

Page 4

Support and other resources.............................................................101

Accessing Hewlett Packard Enterprise Support....................................................................... 101

Accessing updates....................................................................................................................101

Customer self repair..................................................................................................................102

Remote support........................................................................................................................ 102

Warranty information.................................................................................................................102

Regulatory information..............................................................................................................103

Documentation feedback.......................................................................................................... 103

Utilities................................................................................................. 104

UEFI..........................................................................................................................................104

UEFI Shell and POSSE commands..........................................................................................104

Boot Maintenance Manager......................................................................................................108

Onboard Administrator.............................................................................................................. 110

Connecting to the OA with a local PC............................................... 111

Connecting a PC to the OA service port....................................................................................111

Connecting a PC to the OA serial port...................................................................................... 112

Modifying the serial connection baud rate.................................................................................113

Insight Display.....................................................................................114

Insight Display overview............................................................................................................114

Navigating the Insight Display...................................................................................................114

Health Summary screen.................................................................................................116

Enclosure Settings screen..............................................................................................117

Enclosure Info screen.....................................................................................................117

Blade and Port Info screen.............................................................................................118

Turn Enclosure UID On/Off screen.................................................................................119

View User Note screen...................................................................................................120

Chat Mode screen..........................................................................................................120

Insight Display errors................................................................................................................ 121

Power errors...................................................................................................................121

Cooling errors.................................................................................................................122

Location errors............................................................................................................... 122

Configuration errors....................................................................................................... 122

Device failure errors....................................................................................................... 122

Warranty and regulatory information................................................124

Warranty information.................................................................................................................124

Regulatory information..............................................................................................................124

Belarus Kazakhstan Russia marking............................................................................. 124

Turkey RoHS material content declaration.....................................................................125

Ukraine RoHS material content declaration................................................................... 125

Standard terms, abbreviations, and acronyms................................ 126

4

Page 5

©

Copyright 2014, 2017 Hewlett Packard Enterprise Development LP

Notices

The information contained herein is subject to change without notice. The only warranties for Hewlett

Packard Enterprise products and services are set forth in the express warranty statements accompanying

such products and services. Nothing herein should be construed as constituting an additional warranty.

Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained

herein.

Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession,

use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer

Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government

under vendor's standard commercial license.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard

Enterprise has no control over and is not responsible for information outside the Hewlett Packard

Enterprise website.

Acknowledgments

Intel®, Itanium®, Pentium®, Xeon®, Intel Inside®, and the Intel Inside logo are trademarks of Intel

Corporation in the U.S. and other countries.

Microsoft® and Windows® are either registered trademarks or trademarks of Microsoft Corporation in the

United States and/or other countries.

Adobe® and Acrobat® are trademarks of Adobe Systems Incorporated.

Java® and Oracle® are registered trademarks of Oracle and/or its affiliates.

UNIX® is a registered trademark of The Open Group.

Revision History

HPE Part

Number

794235–001 First December 2014

794235–002 Second March 2015

Edition Publication Date Changes

Table Continued

Page 6

HPE Part

Number

Edition Publication Date Changes

794235–003 Third September 2015

794235–004 Fourth January 2016

• Added BL920s Gen9 blade support

• Added SLES 11 SP4 and SLES 12 OS

support

• Added RHEL 6.6, RHEL 6.7, and RHEL

7.1 OS support

• Added Windows 2012 R2 OS support

(Gen8)

• Added ESXi OS support (Gen8)

• Moved firmware update information from

installation chapter to dedicated chapter.

Refer to firmware matrix and release

notes for correct information.

• Removed detailed SLES boot/shutdown

information and add reference to Linux

and Windows white papers.

• Minor text changes and clarifications

throughout

794235–005 Fifth July 2016

• Added details for safely powering off an

enclosure

• Added BL920s Gen9+ blade support

• Added FlexFabric 20 Gb 2P 650FLB and

650M adapter support

• Added note about scrolling the Insight

Display

• Added instructions to save EFI variables

to disk

• Added sections on troubleshooting the

OA battery

• Updated illustrations for new HPE

standards.

• Updated Insight Display screens.

• Added troubleshooting scenario where

PXE fails to find the boot file.

• Updated references to the new XFM2

crossbar modules.

Table Continued

Page 7

HPE Part

Number

Edition Publication Date Changes

794235–006 Sixth September 2016

794235–007 Seventh November 2016

794235–008 Eighth April 2017

• Updated access to OS white papers for

firmware updates

• Updated Insight Display screenshots

• Included component ID for both XFM and

XFM2 modules

• Added notes that both XFM and XFM2

modules are referred to as XFM in this

document and not to mix module types in

the same system

• Updated OS support list

• Added links to current OS and spare

parts information

• Added vSphere 6.0U3 and RHEL 6.9 in

OSs supported

• Added XFM2 firmware version in FRU

replacement firmware update

procedures

794235-009 Ninth November 2018 Updated Health LED in LEDs and

components

Page 8

HPE Integrity Superdome X overview

HPE Integrity Superdome X is a blade-based, high-end server platform supporting the x86 processor

family which incorporates a modular design and uses the sx3000 crossbar fabric to interconnect

resources. The system also includes remote system management functionality through the HPE Onboard

Administrator (OA), which helps monitor and manage complex resources.

Integrity Superdome X supports the SuSE Linux Enterprise Server, Red Hat Enterprise Linux, and

Microsoft Windows OSs, as well as VMware ESXi. For the latest list of supported OSs, see the HPE

Integrity Superdome X Operating System Reference at

(Servers > Integrity Servers > Integrity Superdome X) or Firmware Matrix for HPE Integrity

Superdome X servers.

Complex components

Integrity Superdome X consists of a single compute enclosure containing one to eight BL920s Gen8 or

Gen9 blades. It also includes interconnect modules, manageability modules, fans, power supplies, and an

integrated LCD Insight Display. The Insight Display can be used for basic enclosure maintenance and

displays the overall enclosure health. The compute enclosure supports four XFMs that provide the

crossbar fabric which carries data between blades.

NOTE: HPE Integrity Superdome X systems may contain XFM or XFM2 crossbar modules. Unless

specifically stated otherwise, this document refers to all crossbar modules as XFMs, but the information

will generally apply to either XFM or XMF2 modules.

http://www.hpe.com/info/enterprise/docs

More information

Integrity Superdome X QuickSpecs

Power subsystem

The Integrity Superdome X compute enclosure supports two power input modules, using either single

phase or 3-phase power cords. Connecting two AC sources to each power input module provides 2N

redundancy for AC input and DC output of the power supplies.

There are 12 power supplies per Integrity Superdome X compute enclosure. Six power supplies are

installed in the upper section of the enclosure, and six power supplies are installed in the lower section of

the enclosure.

More information

Integrity Superdome X QuickSpecs

Powering off the compute enclosure

IMPORTANT: To power off the enclosure, disconnect the power cables from the lower power

supplies first, and then disconnect the power cables from the upper power supplies.

To service any internal compute enclosure component, complete the following steps in order:

Procedure

1. Power off the partition.

2. Power off all XFMs.

8 HPE Integrity Superdome X overview

Page 9

3. Disconnect the power cables from the lower power supplies.

4. Disconnect the power cables from the upper power supplies.

Manageability subsystem

The Integrity Superdome X is managed by two OAs that monitor both individual components and complex

health. This information can be accessed in the following ways:

• A GUI using a remote terminal

• A CLI using a remote or local terminal

NOTE: Only one OA is required for operation. The second OA provides redundancy and automatic

failover capabilities.

Two GPSMs in the Integrity Superdome X enclosure manage CAMNET distribution to all server blades

and XFMs in the complex and provide the redundant global clock source for the complex. Fans and

power supplies in the upper section of the enclosure are monitored and controlled by the OA through the

GPSMs.

More information

Integrity Superdome X QuickSpecs

Server blades

Each BL920s server blade contains two x86 processors and up to 48 DIMMs.

Server blades and partitions

Integrity Superdome X supports multiple nPartitions of 2, 4, 6, 8, 12, or 16 sockets (1, 2, 3, 4, 6, or 8

blades). Each nPartition must include blades of the same type but the system can include nPartitions with

different blade types.

More information

Integrity Superdome X QuickSpecs

I/O subsystem

Integrity Superdome X provides I/O through mezzanine cards and FlexLOMs on individual server blades.

Each BL920s blade has two FLB slots and three Mezzanine slots.

FLB slots can contain any of these cards:

• HPE FlexFabric 10 Gb 2–port 534FLB Adapter (BL920s Gen8)

• HPE Ethernet 10 Gb 2–port 560FLB

• HPE FlexFabric 20 Gb 2P 630FLB (BL920s Gen9)

• HPE FlexFabric 20 Gb 2P 650FLB (BL920s Gen9)

Mezzanine slots can contain any of these cards:

• HPE FlexFabric 10 Gb 2–port 534M Adapter (BL920s Gen8)

• HPE Ethernet 10 Gb 2–port 560M

HPE Integrity Superdome X overview 9

Page 10

• HPE FlexFabric 20 Gb 2P 630M (BL920s Gen9)

• HPE FlexFabric 20 Gb 2P 650M (BL920s Gen9)

• HPE QMH2672 16 Gb 2P FC HBA

• Infiniband HPE IB FDR 2P 545M (BL920s Gen9)

Not all types of cards are supported on Gen8 and Gen9 blades. For a complete list of supported I/O cards

and firmware requirements, see the Firmware Matrix for HPE Integrity Superdome X servers at http://

www.hpe.com/info/superdomeX-firmware-matrix.

Fibre channel and LAN connectivity are supported by the interconnect modules in the rear of the compute

enclosure. For more information, see

More information

• Interconnect bay numbering

• Integrity Superdome X QuickSpecs

• Firmware Matrix for HPE Integrity Superdome X servers

• Connecting a PC to the OA service port

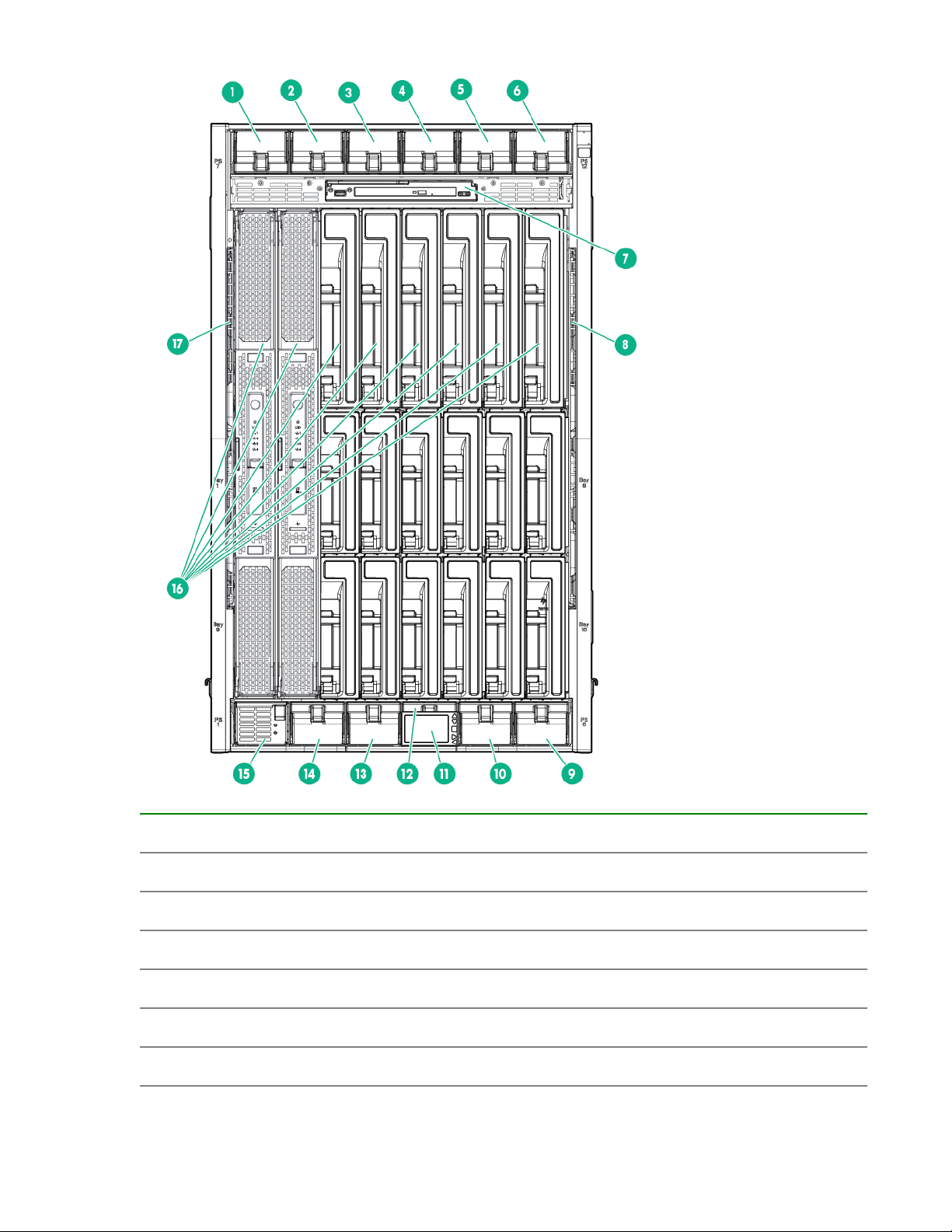

Compute enclosure overview

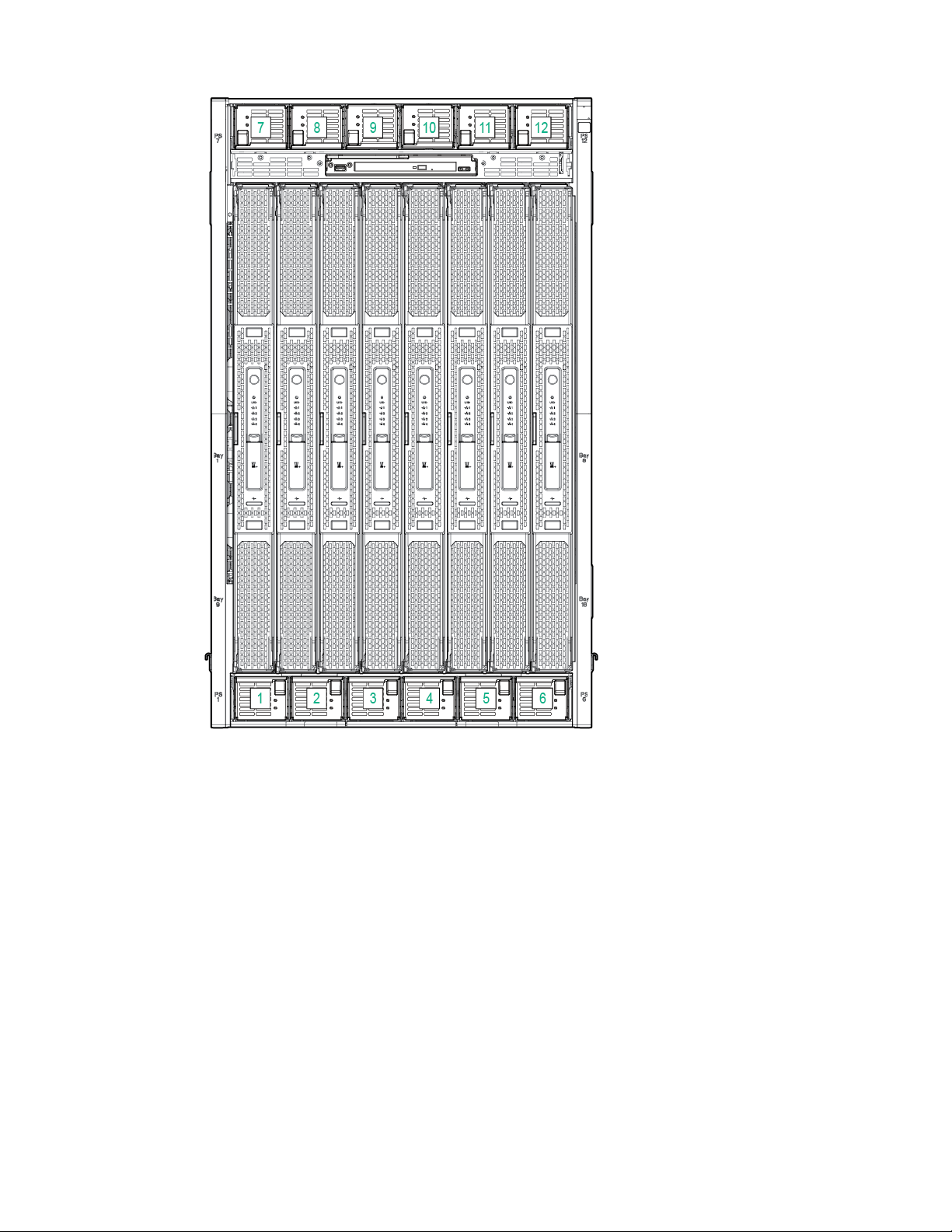

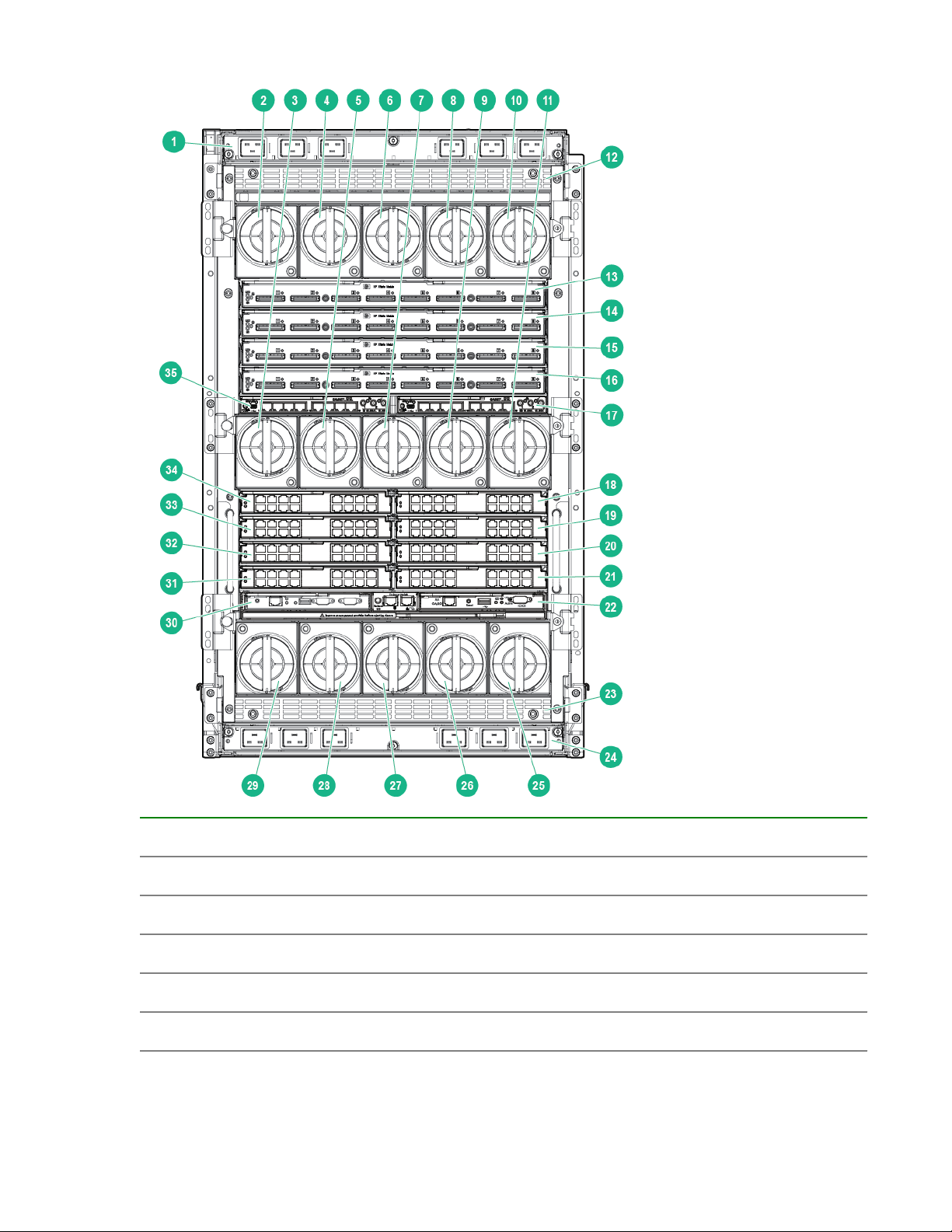

Compute enclosure front components

NOTE: Images might not represent supported configurations.

10 HPE Integrity Superdome X overview

Page 11

Item Description

1 Power supply bay 7

2 Power supply bay 8

3 Power supply bay 9

4 Power supply bay 10

5 Power supply bay 11

6 Power supply bay 12

HPE Integrity Superdome X overview 11

Table Continued

Page 12

Item Description

7 DVD module

8 Air intake slot (Do not block)

9 Power supply bay 6

10 Power supply bay 5

11 Insight Display

12 Power supply bay 4

13 Power supply bay 3

14 Power supply bay 2

15 Power supply bay 1

16 Blade slots

17 Air intake slot (Do not block)

12 HPE Integrity Superdome X overview

Page 13

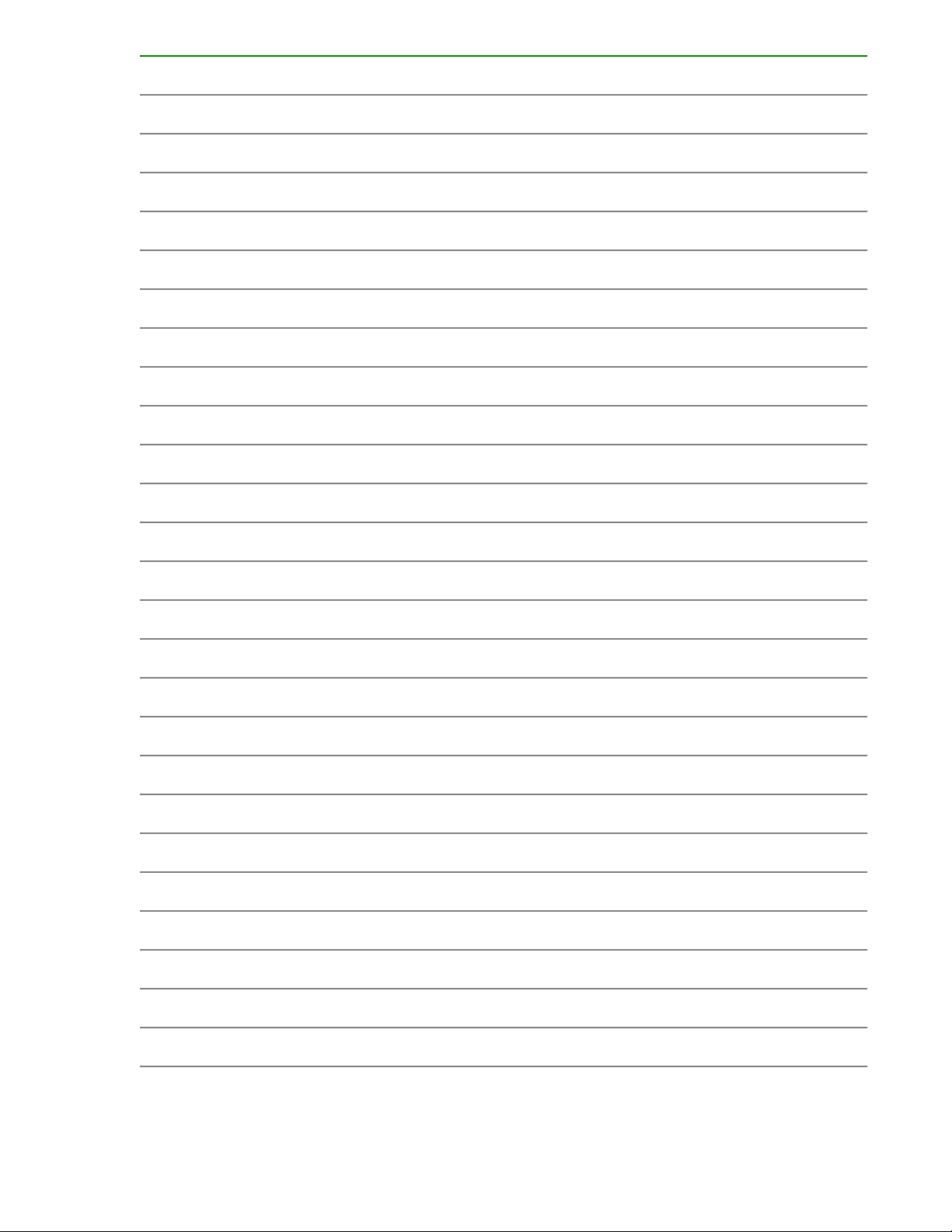

Power supply bay numbering

HPE Integrity Superdome X overview 13

Page 14

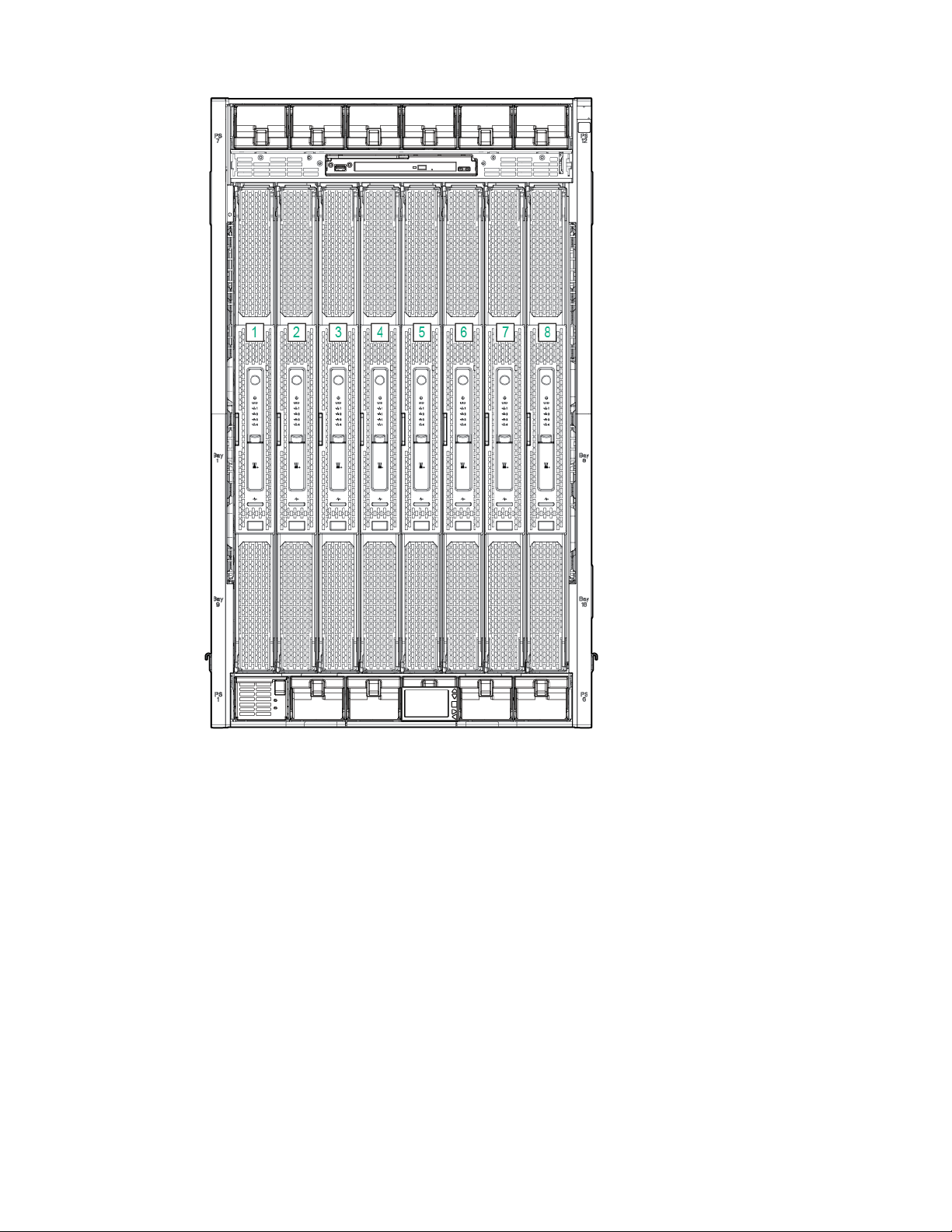

Server blade slot numbering

14 HPE Integrity Superdome X overview

Page 15

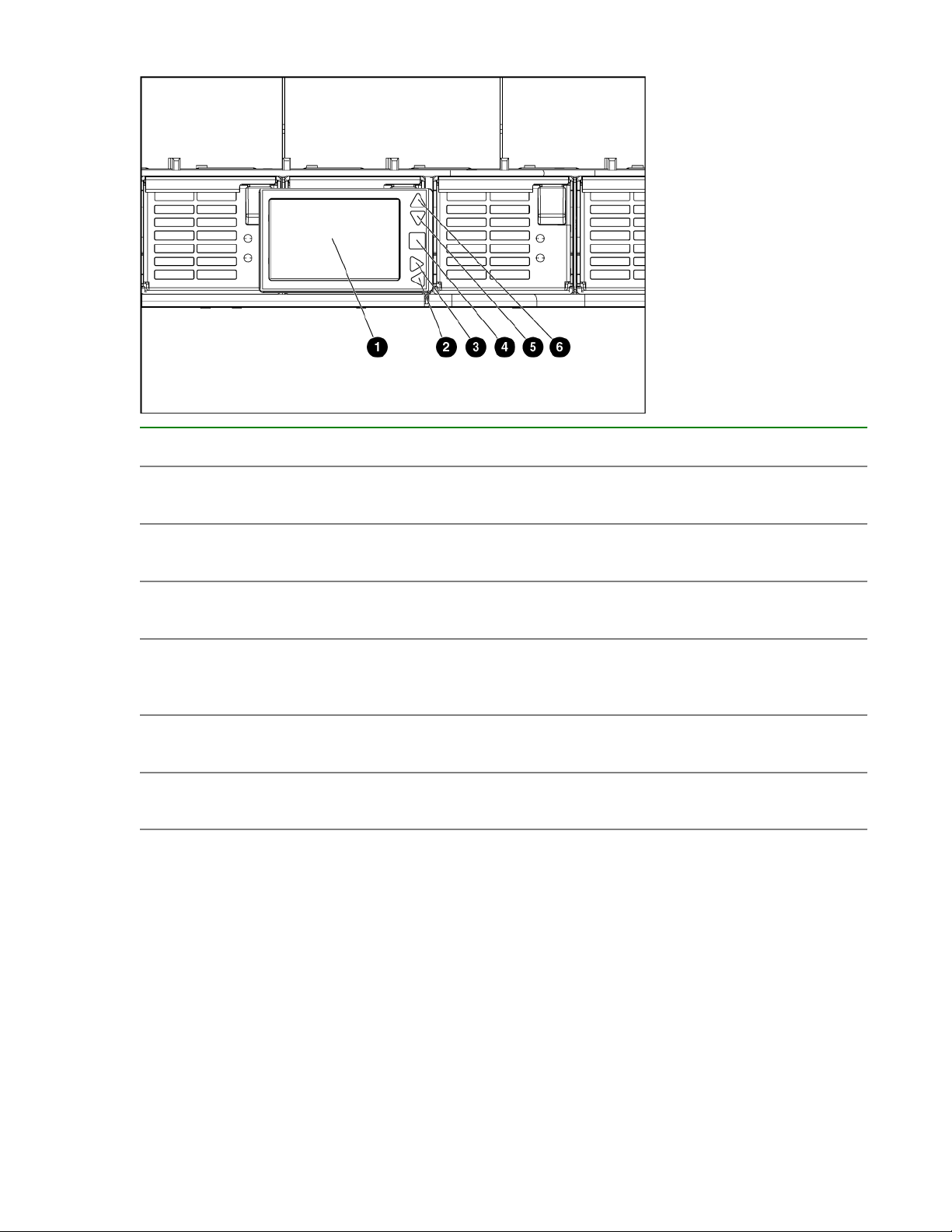

Insight Display components

Item Description Function

1 Insight Display screen Displays Main Menu error

messages and instructions

2 Left arrow button Moves the menu or navigation

bar selection left one position

3 Right arrow button Moves the menu or navigation

bar selection right one position

4 OK button Accepts the highlighted selection

and navigates to the selected

menu

5 Down arrow button Moves the menu selection down

one position

6 Up arrow button Moves up the menu selection one

position

HPE Integrity Superdome X overview 15

Page 16

Compute enclosure rear components

Item Description

1 AC power connectors (upper)

2 Fan bay 1

3 Fan bay 6

4 Fan bay 2

5 Fan bay 7

16 HPE Integrity Superdome X overview

Table Continued

Page 17

Item Description

6 Fan bay 3

7 Fan bay 8

8 Fan bay 4

9 Fan bay 9

10 Fan bay 5

11 Fan bay 10

12 Power supply exhaust vent (Do not block)

13 XFM bay 1

14 XFM bay 2

15 XFM bay 3

16 XFM bay 4

17 GPSM bay 2

18 Interconnect bay 2

19 Interconnect bay 4

20 Interconnect bay 6

21 Interconnect bay 8

22 OA bay 2

23 Power supply exhaust vent (Do not block)

24 AC power connectors (lower)

25 Fan bay 15

26 Fan bay 14

27 Fan bay 13

28 Fan bay 12

29 Fan bay 11

30 OA bay 1

Table Continued

HPE Integrity Superdome X overview 17

Page 18

Item Description

31 Interconnect bay 7

32 Interconnect bay 5

33 Interconnect bay 3

34 Interconnect bay 1

35 GPSM bay 1

Fan bay numbering

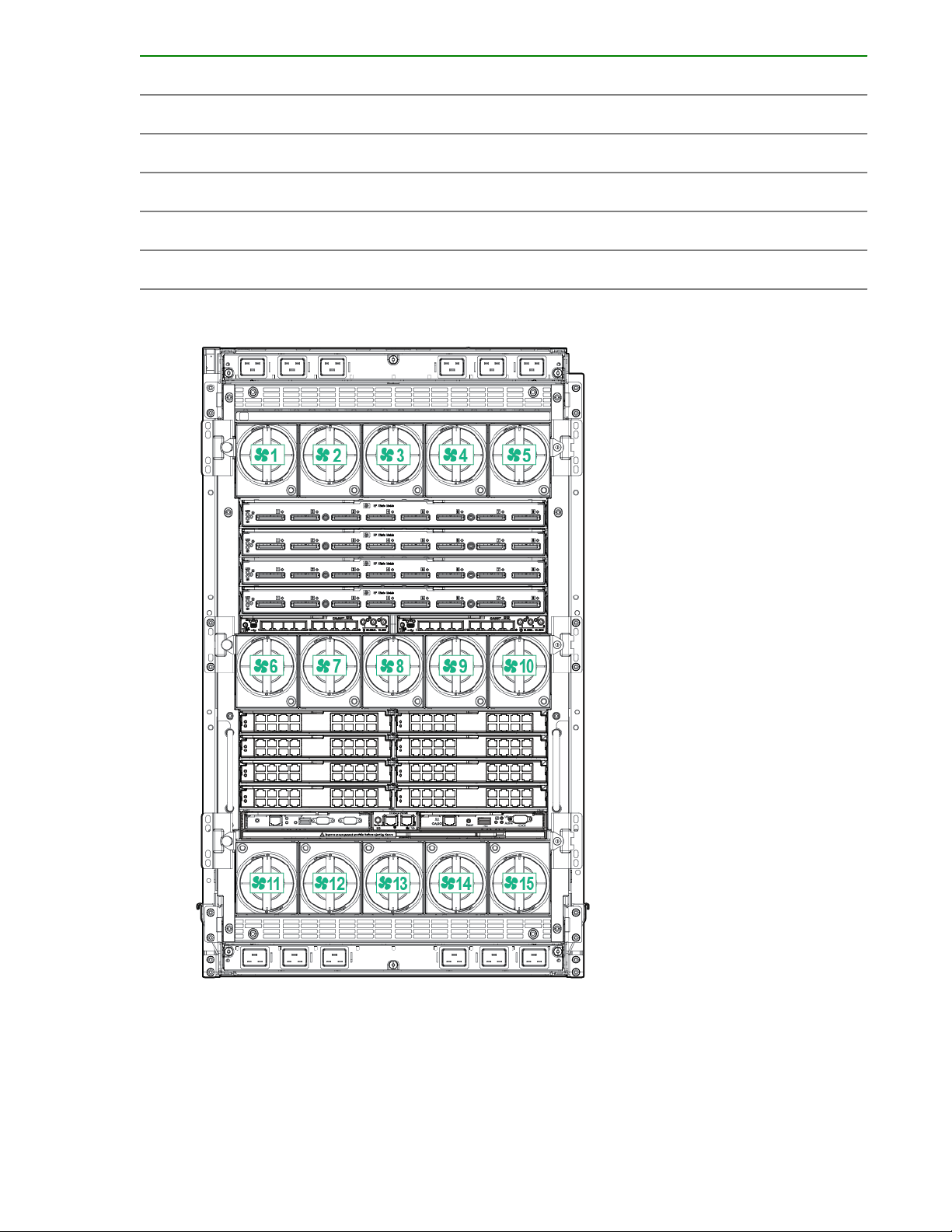

Interconnect bay numbering

Each Integrity Superdome X enclosure requires interconnect modules to provide network access for data

transfer. Interconnect modules reside in bays located in the rear of the enclosure. Review blade slot

numbering to determine which external network connections on the interconnect modules are active.

18 HPE Integrity Superdome X overview

Page 19

To support server blade LAN and Fibre Channel I/O connections, an appropriate type of interconnect

module is installed according to bay location.

Server blade port Compute enclosure

interconnect bay

FlexLOM 1 port 1 1

FlexLOM 1 port 2 2

FlexLOM 2 port 1 1

FlexLOM 2 port 2 2

Mezzanine 1 port 1 3

Interconnect bay label

Table Continued

HPE Integrity Superdome X overview 19

Page 20

Server blade port Compute enclosure

interconnect bay

Mezzanine 1 port 2 4

Mezzanine 1 port 3 3

Mezzanine 1 port 4 4

Mezzanine 2 port 1 5

Mezzanine 2 port 2 6

Mezzanine 2 port 3 7

Mezzanine 2 port 4 8

Mezzanine 3 port 1 7

Mezzanine 3 port 2 8

Mezzanine 3 port 3 5

Interconnect bay label

Mezzanine 3 port 4 6

NOTE: For information on the location of LEDs and ports on individual interconnect modules, see the

documentation that ships with the interconnect module.

More information

Integrity Superdome X QuickSpecs

Server blade overview

Product Processors DIMM slots Supported

BL920s Gen8

BL920s Gen9

2 48 16 GB and 32

DIMM size

GB (Gen8)

16 GB, 32 GB,

and 64 GB

(Gen9)

PCIe I/O

Mezzanine

card capacity

3 2

PCI I/O

FlexLOM card

capacity

20 HPE Integrity Superdome X overview

Page 21

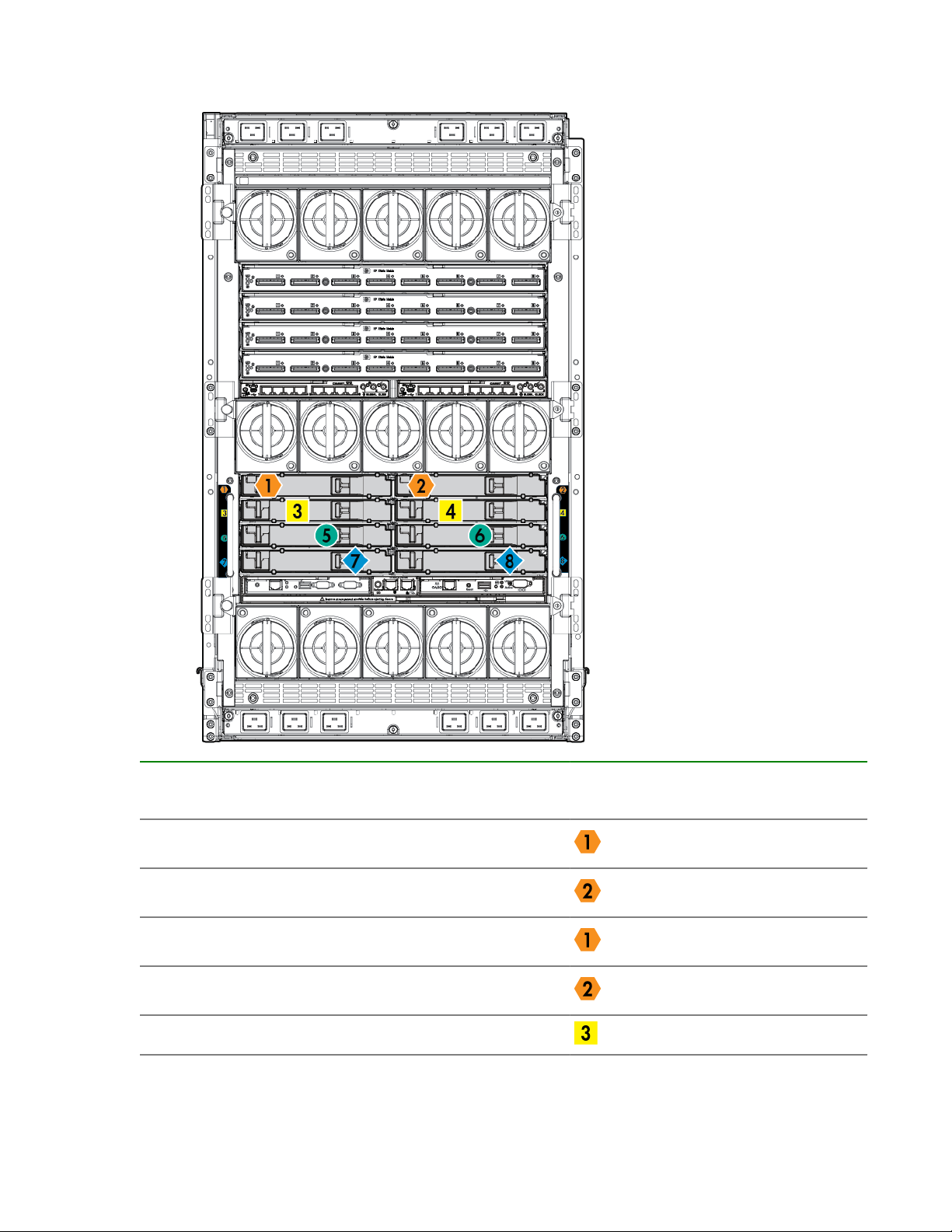

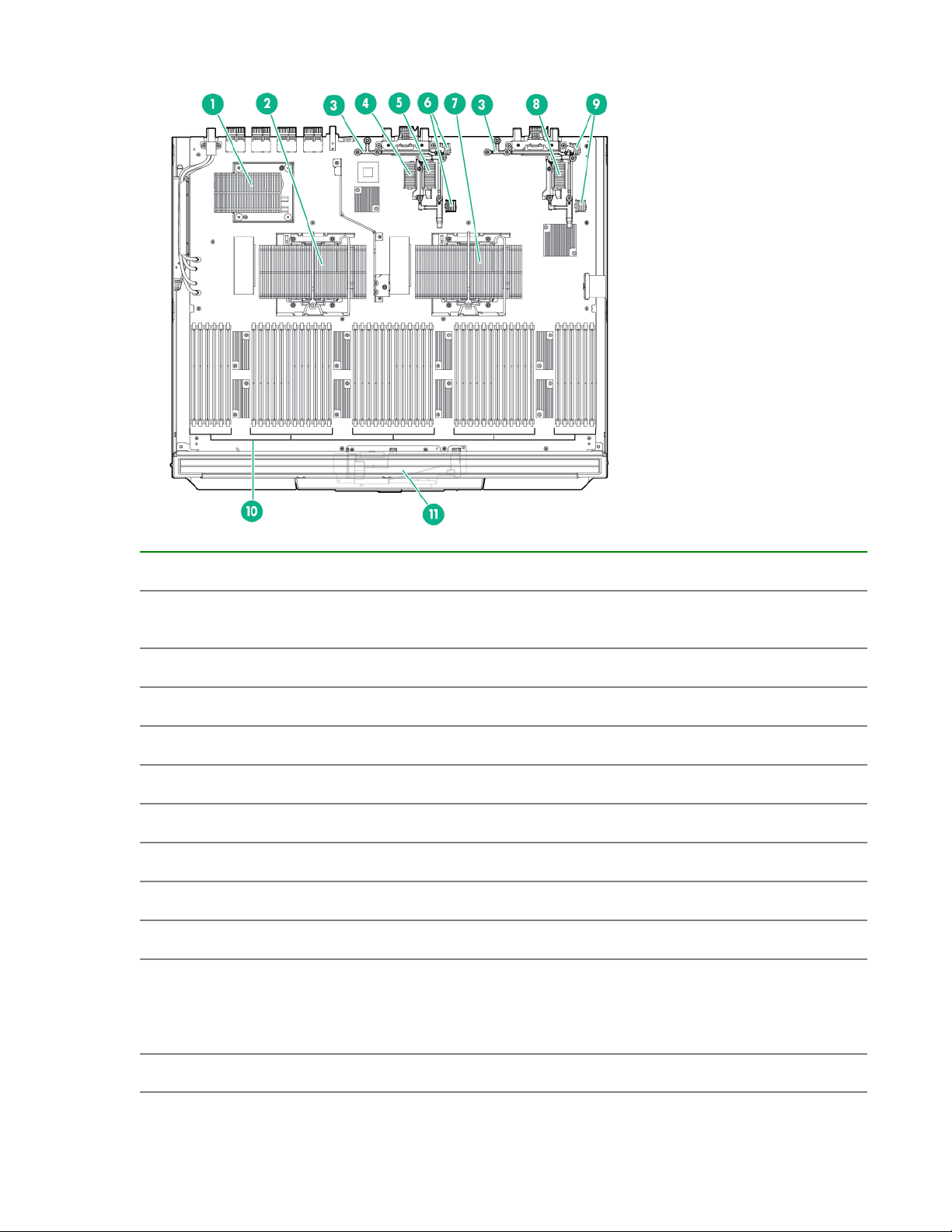

Server blade components

Item Description

1 sx3000 crossbar fabric ASIC (referred to as XNC

by the Health Repository and in event logs)

2 CPU 1

3 Mezzanine bracket

4 Mezzanine connector 1 Type A

5 Mezzanine connector 2 Type A/B

6 FlexLOM slot 2

7 CPU 0

8 Mezzanine connector 3 Type A/B

9 FlexLOM slot 1

10 DDR3 DIMM slots (48) — BL920s Gen8

DDR4 DIMM slots (48) — BL920s Gen9

LR DIMM slots (48) — BL920s Gen9

11 SUV board

HPE Integrity Superdome X overview 21

Page 22

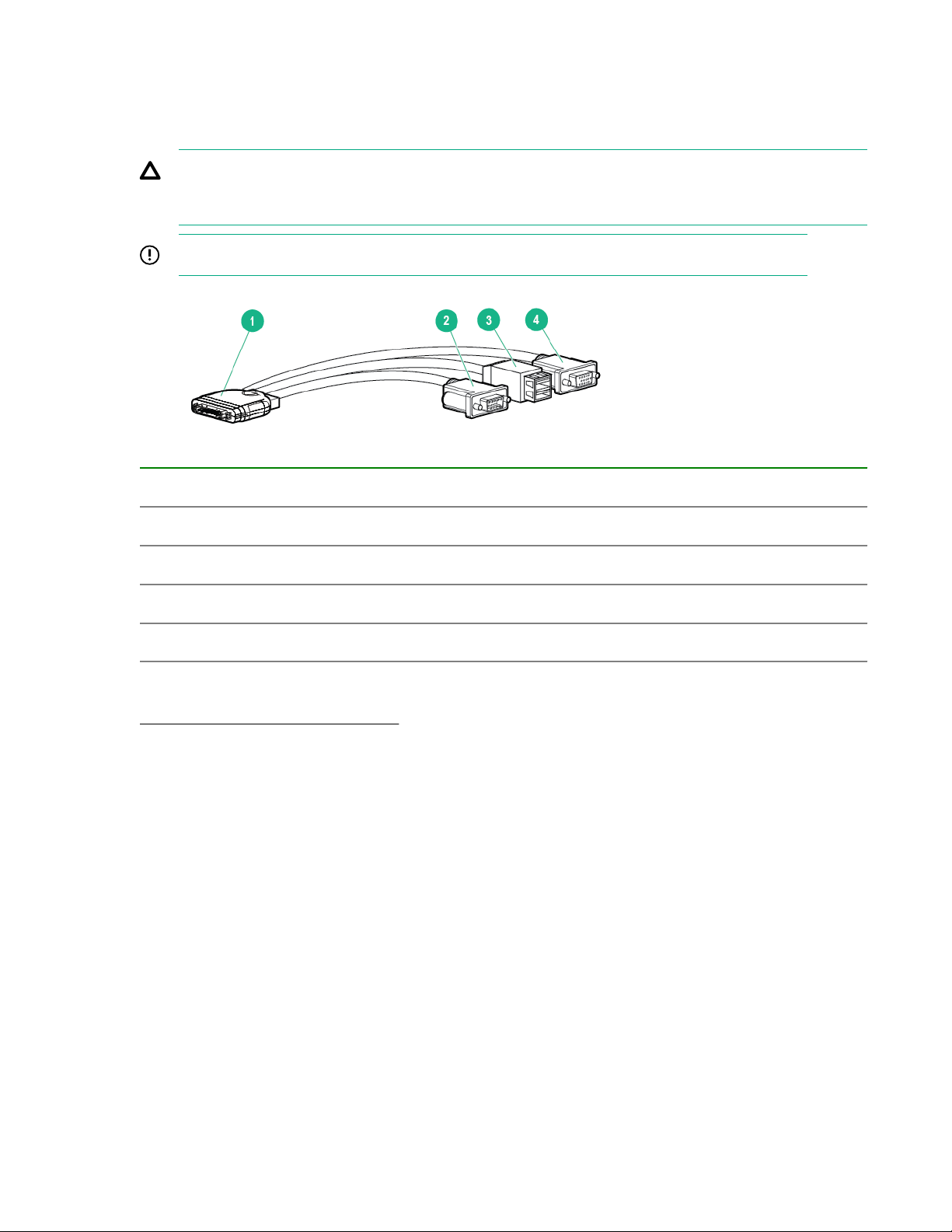

SUV cable and ports

The SUV port on the front of the server blade is used with an SUV cable to connect the blade to external

devices (serial terminal or monitor) or USB devices. The SUV port is located behind a door that stays

closed when an SUV cable is not installed.

CAUTION: The SUV cable is not designed to be used as a permanent connection; therefore be

careful when walking near the server blade. Hitting or bumping the cable might cause the port on

the server blade to break and damage the blade.

IMPORTANT: The SUV port does not provide console access and the serial port is unused.

Item Description

1 Server blade connector

2 Serial

3 USB ports (2)

4 Video

More information

Integrity Superdome X QuickSpecs

22 HPE Integrity Superdome X overview

Page 23

System specifications

Dimensions and weights

Component dimensions

Table 1: Component dimensions

Component Width Depth Height

Compute enclosure 44.7 cm

17.6 in

Server blade 5.13 cm

2.02 in

Component weights

82.8 cm

32.6 in

52.25 cm

20.60 in

79.8 cm

31.4 in

62.18 cm

24.48 in

Table 2: Compute enclosure weights

Component Weight Max. quantity per enclosure

Compute enclosure chassis 64.9 kg

143.0 lb

I/O chassis 22.1 kg

48.7 lb

Midplane Brick 18.8 kg

41.5 lb

1

1

1

OA tray 3.6 kg

8.0 lb

Active Cool Fan 0.9 kg

2.7 lb

Power supply module 2.3 kg

5.0 lb

Enclosure DVD module 2.1 kg

4.7 lb

OA module 0.8 kg

1.8 lb

1

15

12

1

2

Table Continued

System specifications 23

Page 24

Component Weight Max. quantity per enclosure

GPSM 1.2 kg

XFM 3.3 kg

I/O interconnect module 1.3 kg

Server blade 12-16 kg

More information

Generic Site Preparation Guide

Rack specifications

Table 3: Rack specifications

Rack Total

cabinet area

with packing

materials

2

2.6 lb

4

7.3 lb

8

2.9 lb

8

26-35 lb

U height Width Depth Dynamic

load

(gross)

Static load

(H x D x W)

HPE 642

1075 mm

Intelligent

Rack

HPE 642

1200 mm

Shock

Intelligent

Rack

More information

Generic Site Preparation Guide

246.80 x

129.20 x

90 cm

(85.35 x

50.87 x

35.43 in)

218.00 x

147.00 x

90 cm

(85.82 x

57.87 x

35.43 in)

42U 597.8 mm

(23.54 in)

42U 597.8 mm

(23.54 in)

1,085.63 mm

(42.74 in)

1,300.2 mm

(51.19 in)

Internal and external site door requirements

Internal site doorways must obey the following height requirements:

1,134 kg

(2,500 lb)

1,460.11 kg

(3,219 lb)

1,360.8 kg

(3,000 lb)

1,360.78 kg

(3,000 lb)

24 System specifications

Page 25

• For the 642 1075 mm rack — no less than 200.19 cm (78.816 in)

• For the 642 1200 mm rack — no less than 200.66 cm (79.00 in)

To account for the lifted height of the pallet, external doorways must obey the following height

requirements:

• For the 642 1075 mm rack — no less than 216.80 cm (85.35 in)

• For the 642 1200 mm rack — no less than 215.00 cm (84.65 in)

More information

Generic Site Preparation Guide

Electrical specifications

Table 4: Enclosure power options

Source type Source voltage

(nominal)

3–phase 200 VAC to 240

VAC line-to-line

(phase-tophase), 3phase

50/60 Hz

3–phase 220 VAC to 240

VAC line-toneutral 3-phase

50/60 Hz

Single-phase 200 VAC to 240

VAC

50/60 Hz

Plug or

connector type

NEMA L15-30p,

3-Pole, 4-wire,

3 m (10 ft)

power cord

IEC 309, 4pole, 5-wire,

Red, 3 m (10 ft)

power cord

IEC 320

C19-C20

Table 5: Single-phase power cords

Circuit type Power

receptacle

required

30 A 3-phase L15-30R. 3-

pole, 4-wire

16 A IEC 309, 4-

pole, 5-wire, red

16/20 A Singlephase

IEC 320

C19

Number of

power cords

required (per

enclosure)

4

4

12

Part number Description Where used

8120-6895 Stripped end, 240 V International - other

8120-6897 Male IEC309, 240 V International

8121-0070 Male GB-1002, 240 V China

8120-6903 Male NEMA L6-20, 240 V North America/Japan

System specifications 25

Page 26

Table 6: Enclosure single-phase HPE 2400 W power supply specifications

Specification Value

Power cord IEC-320 C19-C20

Output 2450 W per power supply

Input requirements

Rated input voltage 200–240 VAC

Rated input frequency 50-60 Hz

Rated input current per power supply (maximum) 13.8 A at 200 VAC

13.3 A at 208 VAC

12.6 A at 220 VAC

Maximum inrush current 100 A for 10 ms

Ground leakage current 3.5 mA

Power factor correction 0.98

Table 7: Enclosure 3-phase 2400 W power supply specifications (North America/

Japan)

Specification Value

Power cords (4) NEMA L15-30p

3.0 m (10 ft)

Max input current per line cord 24.0 A at 200 VAC

23.1 A at 208 VAC

Output 2450 W per power supply

Input requirements

Rated input voltage 200–240 VAC line-to-line 3-phase

Rated input frequency 50–60 Hz

Maximum inrush current 100 A for 10 ms

Ground leakage current 3.5 mA

Power factor correction 0.98

26 System specifications

Page 27

Table 8: Enclosure 3-phase 2400 W power supply specifications (International)

Specification Value

Power cords (4) IEC-309 220–240 VAC, 5-pin, 16 A

3.0 m (10 ft)

Max input current per line cord 12.1 A at 220 VAC

11.1 A at 240 VAC

Output 2450 W per power supply

Input requirements

Rated input voltage 200–240 VAC line-to-neutral 3-phase

Rated input frequency 50-60 Hz

Maximum inrush current 100 A for 10 ms

Ground leakage current 3.5 mA

Power factor correction 0.98

Table 9: Enclosure power requirements

Power required (50–60 Hz) Watts VA

User expected maximum power 9065 9250

Table 10: Enclosure PDU power options

Source/Circuit

type

3–phase 60 A 200–240 VAC line-

3–phase 32 A 220–240 VAC line-

Source voltage

(nominal)

to-line (phase-tophase), 3–phase

50/60 Hz

to-neutral 3–phase

50/60 Hz

Plug or connector

type

IEC 309 60 A 3Pole, 4 wire, Blue,

3.6 m (11.8 ft)

power cord

IEC 309 32 A 4Pole, 5 wire, Red,

3.6 m (11.8 ft)

power cord

Power receptacle

required

IEC 309 60 A 3Pole, 4 wire, Blue

IEC 309 32 A 4Pole, 5 wire, Red

Number of power

cords required

(per enclosure

leaving the rack)

2

2

Table Continued

System specifications 27

Page 28

Source/Circuit

type

Source voltage

(nominal)

Plug or connector

type

Power receptacle

required

Number of power

cords required

(per enclosure

leaving the rack)

Single-phase 63 A 200–240 VAC

50/60 Hz

IEC 309 63 A

Single Phase Blue,

3.6 m (11.8 ft)

power cord

Single-phase 30 A 200–240 VAC

50/60 Hz

NEMA L6-30P

Single Phase,

3.6 m (11.8 ft)

power cord

More information

Generic Site Preparation Guide

Environmental specifications

Temperature and humidity specifications

The following table contains the allowed and recommended temperature and humidity limits for both

operating and nonoperating Integrity Superdome X systems.

Specification Value

Temperature range

IEC 309 63 A

Single Phase, Blue

NEMA L6-30R

Single Phase

4

6

Allowable Operating Range

Recommended Operating Range

2

2

+5° C to +40° C (41° F to 104° F)

+18° C to +27° C (64° F to 81° F)

Nonoperating (powered off) +5° C to +45° C (41° F to 113° F)

Nonoperating (storage) -40° C to +80° C (-40° F to 176° F)

Humidity Range (noncondensing)

Allowable Operating Range

Recommended Operating Range

2

2

-12° C DP and 8% RH to +24° C DP and 85% RH

+5.5° C DP to +15° C DP and 65% RH

Nonoperating (powered off) 8% RH to 90% RH and 29° C DP

Nonoperating (storage) 8% RH to 90% RH and 32° C DP

1

The Recommended Operating Range is recommended for continuous operation. Operating within the Allowable

Operating Range is supported but might result in a decrease in system performance.

More information

Generic Site Preparation Guide

28 System specifications

Page 29

Cooling requirements

Integrity Superdome X is a rack-mounted system that cools by drawing air in the front and exhausting it

out the rear. General ASHRAE best practices must be followed when installing the system in a data

center.

• Hot/cold aisle layout

• Appropriate blanking panels in any unused space in the rack.

• No gaps exist between adjacent racks, which ensures minimal air recirculation.

• An adequate hot-air return path to the computer room air conditioners (CRAC) or computer room air

handlers (CRAH), which minimizes the flow of hot air over any rack.

Integrity Superdome X utilizes variable speed fans to realize the most efficient use of air. The volume of

air required varies with the temperature of the air supplied to the inlet.

IMPORTANT: The optimal equipment orientation is a parallel layout to the air flow supply and

return. Supply air will flow down cold aisles which are parallel to equipment rows, and return air to

CRAC through parallel air flow. Perpendicular air flow causes too much room mixing, places higher

electrical loads on the room, and can lead to unexpected equipment problems.

More information

Generic Site Preparation Guide

Air quality specifications

Chemical contaminant levels in customer environments for Hewlett Packard Enterprise hardware

products must not exceed G1 (mild) levels of Group A chemicals at any time. These contaminant levels

are described in the current version of ISA–71.04 Environmental Conditions for Process Measurement

and Control Systems: Airborne Contaminants.

More information

• Generic Site Preparation Guide

• ISA–71.04 Environmental Conditions for Process Measurement and Control Systems: Airborne

Contaminants

Acoustic noise specifications

The acoustic noise specifications are 8.6 bel (86 dB) (sound power level).

IMPORTANT: Hewlett Packard Enterprise recommends that anyone in the immediate vicinity of the

product for extended periods of time wear hearing protection or use other means to reduce noise

exposure.

This level of noise is appropriate for dedicated computer room environments, not office environments.

Understand the acoustic noise specifications relative to operator positions within the computer room when

adding Integrity Superdome X systems to computer rooms with existing noise sources.

More information

Generic Site Preparation Guide

System specifications 29

Page 30

Sample site inspection checklist for site preparation

See Customer and Hewlett Packard Enterprise Information and Site inspection checklist. You can

use these tables to measure your progress.

Table 11: Customer and Hewlett Packard Enterprise Information

Customer Information

Name: Phone number:

Street address: City or Town:

State or province: Country

Zip or postal code:

Primary customer contact: Phone number:

Secondary customer contact: Phone number:

Traffic coordinator: Phone number:

Hewlett Packard Enterprise information

Sales representative: Order number:

Representative making survey: Date:

Scheduled delivery date:

Table 12: Site inspection checklist

Check either Yes or No. If No, include comment or date.

Computer Room

Number Area or condition Yes No Comment or

Date

1. Do you have a completed floor plan?

2. Is adequate space available for maintenance needs?

Front 91.4 cm (36 inches) minimum and rear 91.4 cm

(36 inches) minimum are recommended clearances.

3. Is access to the site or computer room restricted?

4. Is the computer room structurally complete? Expected

date of completion?

30 System specifications

Table Continued

Page 31

Check either Yes or No. If No, include comment or date.

Computer Room

Number Area or condition Yes No Comment or

Date

5. Is a raised floor installed and in good condition?

What is the floor to ceiling height? [228 cm (7.5 ft)

minimum]

6. Is the raised floor adequate for equipment loading?

7. Are channels or cutouts available for cable routing?

8. Is a network line available?

9. Is a telephone line available?

10. Are customer-supplied peripheral cables and LAN cables

available and of the proper type?

11. Are floor tiles in good condition and properly braced?

12. Is floor tile underside shiny or painted?

If painted, judge the need for particulate test.

Power and Lighting

13. Are lighting levels adequate for maintenance?

14. Are AC outlets available for servicing needs (for example,

laptop usage)?

15. Does the input voltage correspond to equipment

specifications?

15a. Is dual source power used? If so, identify types and

evaluate grounding.

16. Does the input frequency correspond to equipment

specifications?

17. Are lightning arrestors installed inside the building?

18. Is power conditioning equipment installed?

19. Is a dedicated branch circuit available for equipment?

20. Is the dedicated branch circuit less than 22.86 m (75 ft)?

21. Are the input circuit breakers adequate for equipment

loads?

Safety

22. Is an emergency power shutoff switch available?

23. Is a telephone available for emergency purposes?

24. Does the computer room have a fire protection system?

Table Continued

System specifications 31

Page 32

Check either Yes or No. If No, include comment or date.

Computer Room

Number Area or condition Yes No Comment or

Date

25. Does the computer room have anti-static flooring

installed?

26. Do any equipment servicing hazards exist (loose ground

wires, poor lighting, and so on)?

Cooling

27. Can cooling be maintained between 5° C (41° F) and

40° C (104° F) up to 1,525 m (5,000 ft)? Derate 1° C/

305 m (1.8° F/1,000 ft) above 1,525 m (5,000 ft) and up to

3,048 m (10,000 ft).

28. Can temperature changes be held to 5° C (9° F) per hour

with tape media? Can temperature changes be held to

20° C (36° F) per hour without tape media?

The following are examples of different types of

temperature changes.

• Unidirectional changes

— Storage operating temperature changes in excess of

20° C (36° F) is not within tolerance. Allow one hour

per 20° C (36° F) to acclimate.

• Multidirectional spurious changes

— Operating temperatures that increase 10° C (18° F)

and then decrease 10° C (18° F). This temperature

change Is within tolerance as a 20° C (36° F) change

per hour.

• Repetitive changes

— Every 15 minutes, there is a repetitive, consistent

5° C (9° F) up and down change. This repetitive

temperature change is a 40° C (72° F) change per hour

and not within tolerance.

Also note that rapid changes to temperature over a short

period are more damaging than gradual changes over

time.

29. Can humidity level be maintained at 40% to 55% at 35° C

(95 ° F) noncondensing?

30. Are air-conditioning filters installed and clean?

Storage

31. Are cabinets available for tape and disc media?

32. Is shelving available for documentation?

32 System specifications

Table Continued

Page 33

Check either Yes or No. If No, include comment or date.

Computer Room

Number Area or condition Yes No Comment or

Date

Training

33. Are personnel enrolled in the System Administrator

Course?

34. Is on-site training required?

More information

Generic Site Preparation Guide

System specifications 33

Page 34

Updating firmware

Hewlett Packard Enterprise recommends that all firmware on all devices in your system be updated to the

latest version after hardware installation is complete. Hewlett Packard Enterprise also encourages you to

check back often for any updates that might have been posted.

There are two methods for updating the complex firmware; using SUM or manually.

Prerequisites

Before updating firmware, Hewlett Packard Enterprise strongly recommends implementing these security

best practices:

• Isolate the management network by keeping it separate from the production network and not putting it

on the open internet without additional access authentication.

• Patch and maintain LDAP and web servers.

• Run latest virus and malware scanners in your network environment.

Installing the latest complex firmware using SUM

The SUM utility enables you to deploy firmware components from either an easy-to-use interface or a

command line. It has an integrated hardware discovery engine that discovers the installed hardware and

the current versions of firmware in use on target servers. SUM contains logic to install updates in the

correct order and ensure that all dependencies are met before deployment of a firmware update. It also

contains logic to prevent version-based dependencies from destroying an installation and ensures that

updates are handled in a manner that reduces any downtime required for the update process. SUM does

not require an agent for remote installations.

SUM is included in the downloadable firmware bundles.

For more information about SUM, see the Smart Update Manager User Guide (http://www.hpe.com/

info/sum-docs).

NOTE: You can also update firmware manually. There are different firmware bundles for each method.

See the detailed instructions provided in the release notes for the firmware bundle for more information

about manually updating firmware. Also see Manually updating the complex firmware on page 34.

Manually updating the complex firmware

To update the complex firmware manually, you will:

Procedure

1. Download the firmware bundle.

2. Update the complex and nPartition firmware.

3. Update I/O firmware and SMH and WBEM providers.

4. Be sure to use only the recommended I/O firmware to avoid incompatibility with other system

firmware.

5. Check for driver and firmware updates for other devices.

34 Updating firmware

Page 35

To use SUM to update the complex firmware, see Installing the latest complex firmware using SUM on

page 34.

Download firmware bundle

Hewlett Packard Enterprise recommends running only approved firmware versions. For the latest

approved firmware versions, see the Firmware Matrix for HPE Integrity Superdome X servers at http://

www.hpe.com/info/superdomeX-firmware-matrix. Follow the instructions provided in the bundle

Release Notes.

For special OS requirements, see the Superdome X firmware bundle Release Notes and these OS white

papers:

• Running Linux on HPE Integrity Superdome X white paper at http://www.hpe.com/support/

superdomeXlinux-whitepaper

• Running Microsoft Windows Server on HPE Integrity Superdome X white paper at http://

www.hpe.com/support/superdomeXwindows-whitepaper

• Running VMware vSphere on HPE Integrity Superdome X white paper at http://www.hpe.com/

support/superdomeXvmware-whitepaper

Update the complex firmware

To manually update the complex firmware:

Procedure

1. Refer to the Firmware Matrix for HPE Integrity Superdome X servers document at http://

www.hpe.com/info/superdomeX-firmware-matrix.

2. Select the complex firmware version for your OS to download and extract the latest HPE Integrity

Superdome X firmware bundle. Follow the instructions provided in the bundle Release Notes.

3. Copy the bundle to a media accessible from the OA.

4. Connect a PC to OA over Telnet or SSH and login to the CLI. For more information, see Connecting a

PC to the OA service port.

5. At the CLI prompt, use the connect blade <blade#> command to connect to each blade, and

then use the exit command to return to the OA prompt.

For example:

OA> connect blade 1

</>hpiLO-> exit

IMPORTANT: This will ensure that there is communication between OA and all blades. The

firmware update will fail if communication from OA to any blade is not working.

6. Use the Health Repository to discover currently indicted and deconfigured components.

Launch the Health Repository viewer with the SHOW HR command on the Monarch OA. List indicted

and deconfigured components with the SHOW INDICT and SHOW DECONFIG commands.

Address all indicted and deconfigured components before proceeding. Replace a deconfigured blade

or OA before starting the firmware update.

Updating firmware 35

Page 36

7. To start the firmware update, use the UPDATE FIRMWARE command; for example update firmware

<uri> all , where <uri> is the path to the firmware bundle. The "all" option must be used to update

complex AND partition firmware.

The Firmware update process can take up to 1 hour to complete. During this process, you might notice

no progress for long periods of time and connection to OA will be lost when OA reboots between

updates.

NOTE: For more information about using the UPDATE FIRMWARE command, see the HPE Integrity

Superdome X and Superdome 2 Onboard Administrator Command Line Interface User Guide.

8. After OA is rebooted, reconnect to OA and login to confirm successful updates. Run the UPDATE

SHOW FIRMWARE command to display the complex bundle version and the firmware versions installed.

Example:

Configured complex firmware bundle version: 7.6.0

Firmware on all devices matches the complex configured bundle version

NOTE: The bundle contains firmware for the complex and npartition. The bundle does not contain I/O

card drivers or firmware.

9. Verify that all partitions are ready for use with the parstatus -P command.

I/O firmware and drivers

It is important that you install the recommended I/O adapter firmware and drivers for the appropriate

complex firmware bundle. For information about supported firmware and drivers for supported I/O cards,

see Firmware Matrix for HPE Integrity Superdome X servers at http://www.hpe.com/info/superdomeX-

firmware-matrix. Use the information provided in this document to download the correct firmware bundle

and drivers.

IMPORTANT: Installing incorrect or unsupported firmware can cause unpredictable behavior. The

latest IO device firmware versions might not be supported for your system. Be sure to use only the

firmware versions that are qualified and recommended for your system. Do not use the SPP as a

source of device firmware for Superdome X systems.

SMH and WBEM providers

Hewlett Packard Enterprise recommends that you install the latest versions of the SMH and WBEM

providers for your OS.

NOTE: You must install the SMH package before the WBEM providers or in the same session.

Use the information provided in the Firmware Matrix for HPE Integrity Superdome X servers

document to download the correct WBEM providers.

Reboot is not required for SMH and WBEM providers changes to take effect.

Drivers and firmware for other devices

Interconnect modules also contain firmware which can be updated.

Before installing any firmware or drivers, be sure to see the Firmware Matrix for HPE Integrity Superdome

X servers at http://www.hpe.com/info/superdomeX-firmware-matrix. Use only the specified firmware

and drivers. Use the information provided in this document to download the correct versions. Also see the

Linux and Windows white papers for additional updates that might be needed.

36 Updating firmware

Page 37

Superdome X operating systems

This is the current OS support information for Superdome X systems.

OSs supported

Integrity Superdome X supports these operating systems:

• Microsoft Windows Server

◦ 2012 R2 (BL920s, all versions)

◦ 2016 (BL920s, all versions)

• VMware

◦ vSphere 5.5 U2 (BL920s Gen8 up to 8 sockets)

◦ vSphere 5.5 U3 (BL920s Gen8 and Gen9 v3 up to 8 sockets)

◦ vSphere 6.0 (BL920s Gen8 up to 8 sockets)

◦ vSphere 6.0 U1 (BL920s Gen8 up to 16 sockets and Gen9 v3 up to 8 sockets)

◦ vSphere 6.0 U2 (BL920s Gen8 up to 16 sockets and Gen9 v3 & v4 up to 8 sockets)

◦ vSphere 6.0 U3 (BL920s Gen8 up to 16 sockets and Gen9 v3 & v4 up to 8 sockets)

• Red Hat Linux

◦ RHEL 6.5 (BL920s Gen8)

◦ RHEL 6.6 (BL920s Gen8 and Gen9 v3)

◦ RHEL 6.7 (BL920s, all versions)

◦ RHEL 6.8 (BL920s, all versions)

◦ RHEL 6.9 (BL920s, all versions)

◦ RHEL 7.0 (BL920s Gen8)

◦ RHEL 7.1 (BL920s Gen8 and Gen9 v3)

◦ RHEL 7.2 (BL920s, all versions)

◦ RHEL 7.3 (BL920s, all versions)

• SuSE Linux

◦ SLES 11 SP3 (BL920s Gen8 and Gen9 v3)

◦ SLES 11 SP3 for SAP (BL920s Gen8 and Gen9 v3)

◦ SLES 11 SP4 (BL920s, all versions)

◦ SLES 12 (BL920s Gen8 and Gen9 v3)

◦ SLES 12 SP1 (BL920s, all versions)

◦ SLES 12 SP2 (BL920s, all versions)

Superdome X operating systems 37

Page 38

Support for some OSs requires a minimum firmware version. For the minimum required firmware

versions, see the Firmware Matrix for HPE Integrity Superdome X servers at http://www.hpe.com/info/

superdomeX-firmware-matrix.

For the latest list of supported OSs, see the HPE Integrity Superdome X Operating System Reference at

http://www.hpe.com/info/enterprise/docs (Servers > Integrity Servers > Integrity Superdome X) or

the Firmware Matrix for HPE Integrity Superdome X servers at http://www.hpe.com/info/superdomeX-

firmware-matrix.

Using Microsoft Windows Server

For detailed information about using the Windows OS on Integrity Superdome X systems, see the

Running Microsoft Windows Server on HPE Integrity Superdome X white paper at http://www.hpe.com/

support/superdomeXwindows-whitepaper.

Using VMware

For detailed information about using VMware on Integrity Superdome X systems, see the Running

VMware vSphere on HPE Integrity Superdome X white paper at

superdomeXvmware-whitepaper.

Using Red Hat Linux

For detailed information about using RHEL on Integrity Superdome X systems, see the Running Linux on

HPE Integrity Superdome X white paper at http://www.hpe.com/support/superdomeXlinux-

whitepaper.

http://www.hpe.com/support/

Using SuSE Linux

For detailed information about using SLES on Integrity Superdome X systems, see the Running Linux on

HPE Integrity Superdome X white paper at http://www.hpe.com/support/superdomeXlinux-

whitepaper.

38 Superdome X operating systems

Page 39

Partitioning

This chapter provides information on partition identification and operations.

Partition Identification

Every partition has two identifiers: a partition number (the primary identifier from an internal perspective)

and a partition name (a more meaningful handle for administrators).

Partition Number

• A numeric value that is well suited for programmatic use and required by the hardware for configuring

routing, firewalls, etc. related to nPartitions.

• Once a partition has been created, its partition number cannot be changed. In effect, a different

partition number implies a different partition.

• Only one instance of an nPartition with a given partition number can exist within a complex.

• The range of partition numbers for nPartitions is 1 – 255.

Partition Name

• A partition name is a string value which directly conveys meaning.

• The name of a partition can be changed; this includes after the partition has been created and even if

a partition is active (such is the nature of an alias).

• A partition name should at least have one of the following non-numeric characters:

◦ a-z

◦ A-Z

◦ - (dash)

◦ _ (underscore)

◦ . (period)

Any other non-numeric character is not allowed in a partition name.

• nPartition names are unique within a complex.

Partition Power Operations

To activate an inactive nPartition, use the poweron partition command on the OA CLI.

To make an active partition inactive, use the poweroff partition command on the OA CLI.

To reboot an active nPartition, use the reboot partition command on the OA CLI.

To do a TOC on the nPartition and obtain a core dump, use the toc partition command from the OA

CLI.

To list all the nPartitions and their boot states and runstates (active or inactive states), use the

parstatus -P command on the OA CLI.

Partitioning 39

Page 40

For more information on the usage of these commands, see “Partition commands” in the HPE Integrity

Superdome X and Superdome 2 Onboard Administrator Command Line Interface User Guide.

PARSTATUS

The status of a partition and its assigned resources can be obtained by exercising various options

available with the OA CLI command parstatus. For more information on the parstatus command,

see “Partition commands” in the HPE Integrity Superdome X and Superdome 2 Onboard Administrator

Command Line Interface User Guide.

UUID for nPartitions

The partition firmware subsystem will generate an unique nPar UUID when a user creates an nPartition.

The UUID will be communicated to system firmware, which places the UUID on the SMBIOS for the OS

and the management applications to pick up and use this as “Universally Unique Identifiers” of the

partition. The UUID would also be available for the manageability and the deployment tools and

applications through established SOAP interfaces that can query UUID. Customers can view the UUID of

the nPartition by issuing parstatus –p <npar_id> -V under the field “Partition UUID”.

nPartition states

The nPartition state indicates whether the nPartition has booted and represents the power state of

nPartition. The nPartitions will have one of the following states:

• Active nPartition

• Inactive nPartition

• Unknown

Active nPartition

An nPartition is active when a poweron operation is initiated on the nPartition and the firmware boot

process is started.

Inactive nPartition

An nPartition is considered inactive when it is not powered on. An nPartition is in inactive state after it has

been created or shut down.

Unknown nPartition

An nPartition might report a partition state of “Unknown” and a runstate of “DETACHED” after an OA

restart. This state is possible when the firmware is not able to identify the correct nPartition state due to

internal firmware errors at OA startup. The state is persistent and can only be cleared by force powering

off the nPartition from the OA. A partition in this state will not accept any partition operation for the

nPartition, except parstatus and force poweroff. Any active OS instances continue to run unhindered

even when the nPartition is in an Unknown state.

If any attempts are made to issue partition administration operations, the following error occurs:

Error: Partition state unavailable due to firmware errors. All OS instances

running in this partition will continue unimpacted.

40 Partitioning

Page 41

NOTE: To clear this partition state:

1. Shut down all OS instances in the nPartition.

2. Force power off the nPartition from the OA.

3. Power on the nPartition from the OA.

This is an example of parstatus output for a partition in the DETACHED state:

parstatus -P

[Partition]

Par State/RunState Status* # of # of ILM/(GB)** Partition Name

=== =============== ======= ==== ==== ============= ==============

1 Unknown/DETACHED OK 8 0 0.0/8192.0 nPar0001

* D-Degraded

** Actual allocated for Active and User requested for Inactive partitions

To list all the nPartitions and their boot states and runstates (active or inactive states), use the

parstatus -P command on the OA CLI.

parstatus -P

[Partition]

Par State/RunState Status* # of # of ILM/(GB)** Partition Name

=== =============== ======= ==== ==== ============= ==============

1 Inactive/DOWN OK 4 0 0.0/4096.0 nPar0001

2 Active/EFI OK 4 0 0.0/4096.0 nPar0002

* D-Degraded

** Actual allocated for Active and User requested for Inactive partitions

nPartition runstate

The partition runstates displayed by the status commands show the actual state of the partition varying

from a firmware boot state to a state where an OS has successfully booted in a partition. The following

table lists the runstates for an nPartition.

State Description

DOWN The partition is inactive and powered off.

ACTIVATING A boot operation has been initiated for this partition.

FWBOOT The boot process is in the firmware boot phase for this partition and the

EFI The partition is at the EFI shell.

OSBOOT The boot process has started booting the OS in this partition.

UP The OS in this partition is booted and running.

SHUT A shutdown/reboot/reset operation has been initiated on this partition.

partition has transitioned into the active status.

1

Table Continued

Partitioning 41

Page 42

State Description

DEACTIVATING The partition is being deactivated (powered down) as part of a shutdown

or reboot operation.

RESETTING A partition reset is in progress.

MCA A machine check (MCA) has occurred in the partition and is being

processed.

DETACHED The status is not known. This might reflect an error condition or a

transitional state while partition states are being discovered.

1

OS WBEM drivers must be installed to see this runstate.

nPartition and resource health status

The nPartition and resource status reveals the current health of the hardware. The nPartition resources

can have one of the following usage status:

Resource Usage Description

Empty The slot has no resource.

Inactive Resource is inactive.

Unintegrated Firmware is in the process of discovering or integrating the resource. It cannot

be used for partition operations.

Active The resource is active in the partition.

The partition resources might display one of the following health status:

Resource

health

OK Okay/healthy Resource is present and usable.

D Deconfigured Resource has been deconfigured.

I Indicted Resource has been indicted.

PD Parent Deconfigured A parent resource has been deconfigured. An example is the

PI Parent Indicted Similar to PD except the parent resource has been indicted.

Meaning Comment

status of a memory DIMM which is healthy when the blade in

which it is located is deconfigured. The DIMM status is then PD.

I D Indicted and

PI PD Parent Indicted and

42 Partitioning

A resource has been indicted and deconfigured

Deconfigured

A parent resource has been indicted and deconfigured.

Parent Deconfigured

Page 43

The health of an nPartition depends on the health of its own resources. If there are unhealthy resources,

the health of the partition is marked as Degraded. If all the resources in the partition are healthy, the

health of the partition is reported as OK.

Partitioning 43

Page 44

Troubleshooting

Symptom

The purpose of this chapter is to provide a preferred methodology (strategies and procedures) and tools

for troubleshooting complex error and fault conditions.

This section is not intended to be a comprehensive guide to all of the tools that can be used for

troubleshooting the system. See the HPE Integrity Superdome X and Superdome 2 Onboard

Administrator User Guide and the HPE Integrity Superdome X and Superdome 2 Onboard Administrator

Command Line Interface User Guide for additional information on troubleshooting using the OA.

General troubleshooting methodology

The system provides the following sources of information for troubleshooting:

• LED status information

• Insight Display

• OA CLI, Health Repository (HR) and Core Analysis Engine (CAE)

• OA GUI

NOTE:

Examples in this section might reflect other systems and not the currently supported configuration of the

Integrity Superdome X system.

LED status information

The LEDs provide initial status and health information. LED information should be verified by the other

sources of status information.

See LEDs and components on page 57 for more information.

TIP:

The OA CLI is the most efficient way to verify the information provided from LEDs.

OA access

You can access the OA by entering the 169.254.1.x address using either a Telnet session or a SSH

connection. This can be done by connecting a laptop to the service port on the OA tray using a standard

LAN cable using Telnet or by using a system which has access to the OA-management LAN (customer

LAN connected to the OA RJ45–port). See Connecting a PC to the OA service port for more

information about connecting to the OA service port.

IMPORTANT: The OA service (Link Up) port is not to be confused with the serial port. The OA serial

port is only used for initial system setup. Once the network is configured, the OA should be always

be accessed using Telnet or SSH connection to the Service port.

OA CLI

The central point of communication for gaining system status is the active OA.

44 Troubleshooting

Page 45

Hewlett Packard Enterprise recommends checking the system status information using show complex

status before continuing with troubleshooting:

sd-oa1> show complex status

Status: OK

Enclosure ID: OK

Enclosure: OK

Robust Store: OK

CAMNET: OK

Product ID: OK

Xfabric: OK

Diagnostic Status:

Thermal Danger OK

Cooling OK

Device Failure OK

Device Degraded OK

Firmware Mismatch OK

If no issues are seen in the command output, then more troubleshooting information is required.

Gathering power related information

Gather the power information for all of the system components.

Compute enclosure

Use the show enclosure status and show enclosure powersupply all commands.

sd-oa1> show enclosure status

Enclosure 1:

Status: OK

Enclosure ID: OK

Unit Identification LED: Off

Diagnostic Status:

Internal Data OK

Thermal Danger OK

Cooling OK

Device Failure OK

Device Degraded OK

Redundancy OK

Indicted OK

Onboard Administrator:

Status: OK

Standby Onboard Administrator:

Status: OK

Power Subsystem:

Status: OK

Power Mode: Not Redundant

Power Capacity: 14400 Watts DC

Power Available: 2270 Watts DC

Present Power: 6024 Watts AC

Cooling Subsystem:

Status: OK

Fans Good/Wanted/Needed: 15/15/15

Fan 1: 10760 RPM (60%)

Fan 2: 10758 RPM (60%)

Fan 3: 10760 RPM (60%)

Fan 4: 10760 RPM (60%)

Troubleshooting 45

Page 46

Fan 5: 10759 RPM (60%)

Fan 6: 8600 RPM (48%)

Fan 7: 8600 RPM (48%)

Fan 8: 8600 RPM (48%)

Fan 9: 8599 RPM (48%)

Fan 10: 8599 RPM (48%)

Fan 11: 8602 RPM (48%)

Fan 12: 8601 RPM (48%)

Fan 13: 8600 RPM (48%)

Fan 14: 8597 RPM (48%)

Fan 15: 8600 RPM (48%)

sd-oa1> show enclosure powersupply all

Power Supply #1 Information:

Status: OK

AC Input Status: OK

Capacity: 2450 Watts

Current Power Output: 918 Watts

Serial Number: 5BGXF0AHL4B0S6

Product Name: HPE 2400W 80 PLUS PLATINUM

Part Number: 588603-B21

Spare Part Number: 588733-001

Product Ver: 07

Diagnostic Status:

Internal Data OK

Device Failure OK

Power Cord OK

Indicted OK

Similar information will be displayed for all other power supplies.

Collecting power status information for components at the compute enclosure

Use the show xfm status all, show blade status all, and show interconnect status

all commands to gather information on compute enclosure component power if in use:

NOTE: OA displays XFM2 information as SXFM.

NOTE: Similar information should be displayed for XFMs 1 through 3.

sd-oa1> show xfm status all

Bay 4 SXFM Status:

Health: OK

Power: On

Unit Identification LED: Off

Diagnostic Status:

Internal Data OK

Management Processor OK

Thermal Warning OK

Thermal Danger OK

Power OK <<<<

Firmware Mismatch OK

Indicted OK

Link 1: Dormant

Link 2: Dormant

Link 3: Dormant

Link 4: Dormant

sd-oa1> show blade status all

46 Troubleshooting

Page 47

Blade #1 Status:

Power: On

Current Wattage used: 1325 Watts

Health: OK

Unit Identification LED: Off

Diagnostic Status:

Internal Data OK

Management Processor OK

Thermal Warning OK

Thermal Danger OK

I/O Configuration OK

Power OK <<<

Cooling OK

Device Failure OK

Device Degraded OK

Device Info OK

Firmware Mismatch OK

PDHC OK

Indicted OK

sd-oa1> show interconnect status all

Interconnect Module #1 Status:

Status: OK

Thermal: OK

CPU Fault: OK

Health LED: OK

UID: Off

Powered: On

Diagnostic Status:

Internal Data OK

Management Processor OK

Thermal Warning OK

Thermal Danger OK

I/O Configuration OK

Power OK <<<

Device Failure OK

Device Degraded OK

Gathering cooling related information

Use the following commands to gather all complex cooling information:

• show enclosure fan all

sd-oa1> show enclosure fan all

Fan #1 Information:

Status: OK

Speed: 60 percent of Maximum speed

Maximum speed: 18000 RPM

Minimum speed: 10 RPM

Power consumed: 32 Watts

Product Name: Active Cool 200 Fan

Part Number: 412140-B21

Spare Part Number: 413996-001

Version: 2.9

Diagnostic Status:

Internal Data OK

Location OK

Device Failure OK

Device Degraded OK

Troubleshooting 47

Page 48

Missing Device OK

Indicted OK

• show blade status all

sd-oa1> show blade status all

Blade #1 Status:

Power: On

Current Wattage used: 1100 Watts

Health: OK

Unit Identification LED: Off

Virtual Fan: 36%

Diagnostic Status:

Internal Data OK

Management Processor OK

Thermal Warning OK

Thermal Danger OK

I/O Configuration OK

Power OK

Cooling OK

Location OK

Device Failure OK

Device Degraded OK

iLO Network OK

Device Info OK

Firmware Mismatch OK

Mezzanine Card OK

Deconfigured OK

PDHC OK

Indicted OK

• show xfm status all

sd-oa1> show xfm status all

Bay 4 SXFM Status:

Health: OK

Power: On

Unit Identification LED: Off

Diagnostic Status:

Internal Data OK

Management Processor OK

Thermal Warning OK <<<

Thermal Danger OK <<<

Power OK

Firmware Mismatch OK

Indicted OK

Link 1: Dormant

Link 2: Dormant

Link 3: Dormant

Link 4: Dormant

• show interconnect status all

Interconnect Module #1 Status:

Status: OK

Thermal: OK

CPU Fault: OK

Health LED: OK

UID: Off

Powered: On

Diagnostic Status:

Internal Data OK

Management Processor OK

Thermal Warning OK <<<<

48 Troubleshooting

Page 49

Thermal Danger OK <<<<

I/O Configuration OK

Power OK

Device Failure OK

Device Degraded OK

Gathering failure information

To obtain information about failures recorded by the system, use the following commands:

• Show cae –L

sd-oa1> show cae -L

Sl.No Severity EventId EventCategory PartitionId

EventTime Summary

###########################################################################

#####

71 Critical 3040 System Coo... N/A Fri May 18 06:26:34

2012 SXFM air intake

or exhaust temperature...

70 Critical 3040 System Coo... N/A Fri May 18 04:56:22

2012 SXFM air intake

or exhaust temperature...