HP Data Protector

Data Protector Cluster Cell Manager Configuration and Integration on RHCS

Technical white paper

Table of contents

Abstract

Introduction

Integrating a cluster-aware Cell Manager on RHCS

Configuring the shared DISK and GFS volume

Installing Data Protector Cell Manager on each node

Creating the Data Protector cluster resources

Checking the order of appearance of Data Protector resources

Testing the start/stop order of Data Protector resources

Deploying the Data Protector Cluster Cell Manager to live operation

For more information

Call to action

Abstract

This document describes the steps needed to install and integrate HP Data Protector Cluster Cell Manager on the Red Hat Cluster Suite (RHCS).

Introduction

This document describes the installation and integration of a cluster-aware Cell Manager on a Linux cluster, using the Red Hat Cluster Suite (RHCS) as the example.

Cluster-aware application: An application that calls cluster APIs to determine the context under

which it is running, such as the virtual server name, and that can fail over between nodes for high

availability.

Cluster-aware Cell Manager: A Data Protector Cell Manager (CM) that runs as a cluster-aware application and supports the cluster Application Programming Interface (API). Each cluster-aware application declares its own critical resources. For a Cell Manager, the critical resources are volume groups, application services, and the virtual server.

To configure the cluster-aware Cell Manager, the following resources are mandatory:

• Shared disk group, configured with either a gfs or gfs2 file system

• Virtual server that can be pinged by host name and that will act as the Cell Manager

• Data Protector cluster services

This white paper describes the following:

• How to integrate the cluster-aware Cell Manager in a cluster environment

• How to set up a Data Protector cluster service in failover mode on the Red Hat Cluster Suite

Integrating a cluster-aware Cell Manager on RHCS

Prerequisites

• Two servers with RHEL OS installed and RHCS (HA) configured on them

• Two NICs on each machine: one configured with a private IP address, used for the heartbeat between nodes, and the other with a public IP address, used for external communication

For example (public/private IP):

Node1: 10.10.1.82/192.168.0.10

Node2: 10.10.1.81/192.168.0.11

• Two disks of 4 GB capacity, shared between the two systems (nodes) over either an FC SAN or an iSCSI SAN

• OS repository

• Virtual server configured on the cluster. For example: server dpi00180 with IP address 10.10.1.9

• Basic understanding of RHCS, HA, and Data Protector Cell Manager

• A decision on which systems are going to be the Primary Cell Manager and the Secondary Cell Manager. Both of them must be configured as cluster members. For example: Primary Node: dpi00182, Secondary Node: dpi00181

• Valid HP Data Protector Cluster Cell Manager license
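The name-resolution and connectivity prerequisites above can be spot-checked before starting. The following is a minimal sketch, not part of the Data Protector or RHCS tooling: the function name unreachable_hosts and the PROBE override are hypothetical, and the host names are the examples used in this paper.

```shell
#!/bin/sh
# Sketch: list every host that does not answer a probe.
# PROBE is a hypothetical override hook; by default one ICMP echo is sent.
PROBE=${PROBE:-"ping -c 1"}

unreachable_hosts() {
    for host in "$@"; do
        # $PROBE is left unquoted on purpose so "ping -c 1" splits into words.
        $PROBE "$host" >/dev/null 2>&1 || printf '%s\n' "$host"
    done
}

# Example with the node and virtual server names used in this paper:
# unreachable_hosts dpi00182 dpi00181 dpi00180
```

An empty result means that every listed name resolved and answered; any name that is printed should be fixed in DNS or /etc/hosts before continuing.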


Configuring the shared DISK and GFS volume

1. To set up the gfs/gfs2 volume, first ensure that the GFS/GFS2 and RHCS components are installed on each machine, and install any that are missing:

GFS components: gfs-utils, kmod-gfs, Distributed Lock Manager (DLM)

RHCS components: OpenAIS, CCS, fenced, CMAN, and CLVMD (Clustered LVM)

$ yum install gfs-utils kmod-gfs (or $ yum install gfs2-utils)

2. Start the gfs/gfs2, cman, rgmanager, and clvmd services, and then enable cluster-wide locking in LVM, on all the nodes by using the following commands:

$service gfs start (or $service gfs2 start)

$service cman start

$service rgmanager start

$service clvmd start

$lvmconf --enable-cluster
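Because the same commands have to be repeated on every node, they can be wrapped in a small function. This is a sketch under stated assumptions: the function name start_cluster_services and the RUN variable are hypothetical, and the commands are issued in the order listed in this step. By default the function only prints the commands; setting RUN to an empty string executes them.

```shell
#!/bin/sh
# Sketch: start the cluster services in the order listed in step 2.
# RUN is a hypothetical dry-run switch: "echo" prints, empty executes.
RUN=${RUN-echo}

start_cluster_services() {
    fs_service=${1:-gfs}    # pass gfs2 for a GFS2 file system
    for svc in "$fs_service" cman rgmanager clvmd; do
        $RUN service "$svc" start || {
            echo "failed to start $svc" >&2
            return 1
        }
    done
    # Switch LVM to cluster-wide locking, as in the last command of step 2.
    $RUN lvmconf --enable-cluster
}

# Dry run:  start_cluster_services gfs2
# Execute:  RUN= ; start_cluster_services gfs2
```

Stopping at the first failure keeps a node from being left with only part of the stack running.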

3. To create a shared volume in HA mode, execute the following commands on any one node:

$pvcreate /dev/sd[bcde]1

Physical volume "/dev/sdb1" successfully created

$vgcreate DP_Grp /dev/sd[bcde]1

Volume group "DP_Grp" successfully created

$lvcreate -n DP_Vol -L 3G DP_Grp

Logical volume "DP_Vol" created

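4. Create the GFS file system on the new logical volume from the master node (any one node). In the -t option, home is the cluster name and mygfs is the file system name; -j 2 creates one journal for each of the two nodes. The command and its output are as follows: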

$gfs_mkfs -p lock_dlm -t home:mygfs -j 2 /dev/DP_Grp/DP_Vol

This will destroy any data on /dev/DP_Grp/DP_Vol.

It appears to contain a gfs filesystem.

Are you sure you want to proceed? [y/n] y

Device: /dev/DP_Grp/DP_Vol

Blocksize: 4096

Filesystem Size: 616384

Journals: 2

Resource Groups: 10

Locking Protocol: lock_dlm

Lock Table: home:mygfs

Syncing...

All Done
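The lock table name and journal count are the two arguments most often mistyped, so it can help to assemble the command from named values first. The sketch below only prints the command line and does not run gfs_mkfs; the function name gfs_mkfs_command is hypothetical, and the values are the ones used in this step.

```shell
#!/bin/sh
# Sketch: build (but do not run) the gfs_mkfs command line.
# Arguments: cluster name, file system name, number of nodes, device.
gfs_mkfs_command() {
    cluster=$1
    fsname=$2
    nodes=$3
    device=$4
    # -t takes ClusterName:FSName; -j needs one journal per mounting node.
    printf 'gfs_mkfs -p lock_dlm -t %s:%s -j %s %s\n' \
        "$cluster" "$fsname" "$nodes" "$device"
}

gfs_mkfs_command home mygfs 2 /dev/DP_Grp/DP_Vol
# prints: gfs_mkfs -p lock_dlm -t home:mygfs -j 2 /dev/DP_Grp/DP_Vol
```

The cluster name passed here must match the name of the running cluster; otherwise the nodes cannot mount the file system.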

5. Enable the gfs and clvmd services so that they start at boot, and create the mount point directory, namely /FileShare, on all the nodes.

$ chkconfig gfs on

$ chkconfig clvmd on

$ mkdir -p /FileShare

Mount the GFS volume on each cluster member by adding the device and mount point information to the /etc/fstab file.

$ vi /etc/fstab

/dev/DP_Grp/DP_Vol /FileShare gfs defaults 0 0

$mount /FileShare

$ mount -l

/dev/mapper/DP_Grp-DP_Vol on /FileShare type gfs

(rw,hostdata=jid=0:id=262145:first=1) [home:mygfs]
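Since the /etc/fstab edit is repeated on each node, it is easy to add the same line twice. The following sketch appends the entry only when no active line already uses the mount point. The function name add_fstab_entry is hypothetical, and the file is passed as an argument so that the sketch can be tried on a copy before it is pointed at /etc/fstab.

```shell
#!/bin/sh
# Sketch: append an fstab entry unless the mount point is already listed.
# Arguments: fstab file, device, mount point, file system type.
add_fstab_entry() {
    fstab=$1
    device=$2
    mountpoint=$3
    fstype=$4
    # awk exits 0 when a non-comment line has the mount point in field 2.
    if awk -v mp="$mountpoint" \
        '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"
    then
        return 0
    fi
    printf '%s %s %s defaults 0 0\n' "$device" "$mountpoint" "$fstype" >> "$fstab"
}

# Example with the values from this step, run against a copy:
# cp /etc/fstab /tmp/fstab.test
# add_fstab_entry /tmp/fstab.test /dev/DP_Grp/DP_Vol /FileShare gfs
```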

Installing Data Protector Cell Manager on each node

Installing and integrating on the primary node

Note: This procedure uses Data Protector v6.11 to install the Cell Manager on all the nodes. In general, the procedure also works with later versions of Data Protector.

1. Copy the Data Protector RPMs/depots and the omnisetup.sh script to the /tmp folder of the primary node, and run the script to install Data Protector on the primary node:

$./omnisetup_243D.sh -source `pwd` -CM -IS -build 243D

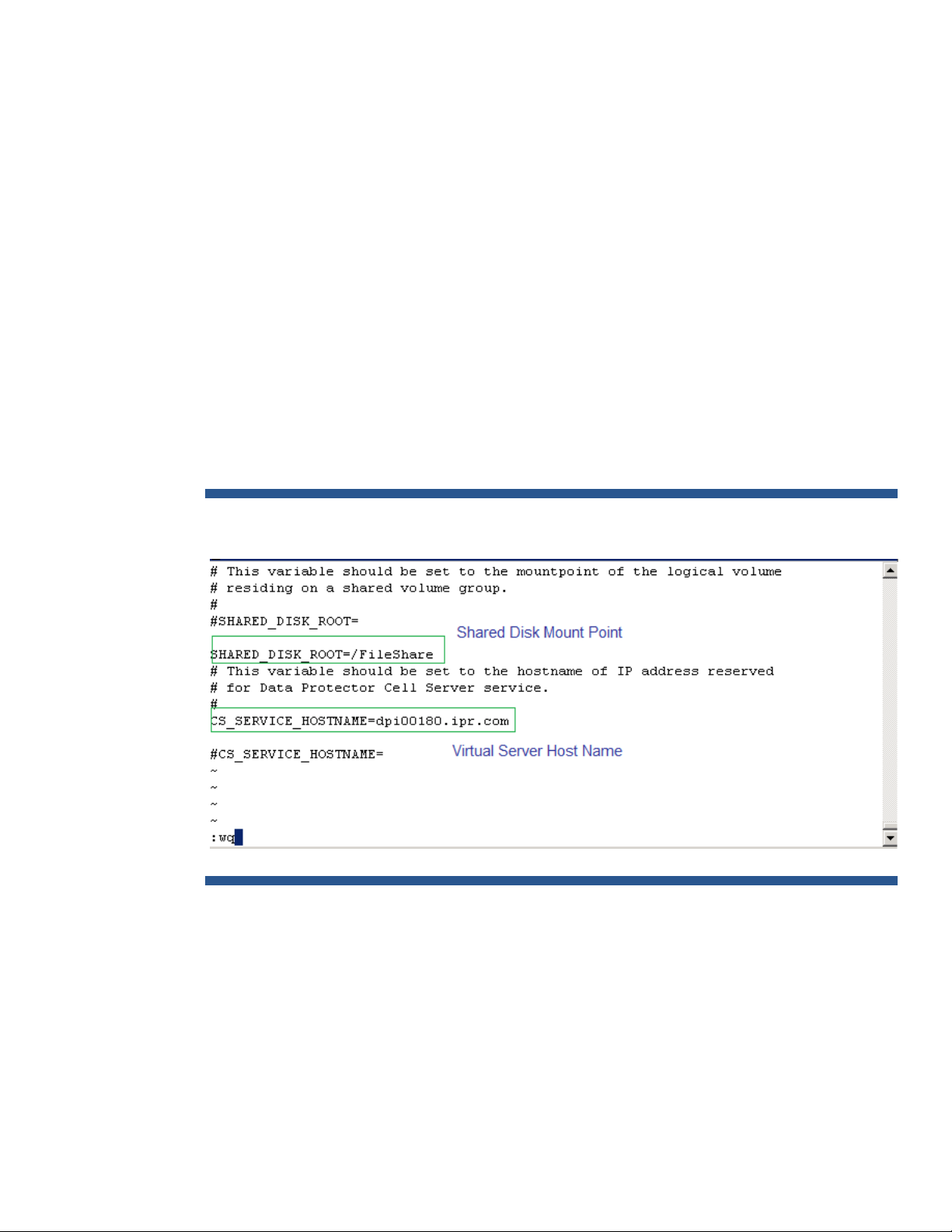

2. After installing Data Protector on the primary node, edit the file

/etc/opt/omni/server/sg/sg.conf as follows:

Figure 1: The file sg.conf, updated with mount point and hostname on the primary node

Note:

– FileShare is the mount point of the shared disk. It is created on all the nodes.

– dpi00180.ipr.com is the virtual server with a proper DNS entry. It acts as the Cell Manager.
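After editing sg.conf, a quick text search can confirm that both values made it into the file. This sketch makes no assumption about the variable names inside sg.conf and only checks that each given string appears somewhere in the file; the function name conf_mentions_all is hypothetical.

```shell
#!/bin/sh
# Sketch: succeed only if every given string occurs in the file.
# Arguments: file, then one or more strings to look for.
conf_mentions_all() {
    file=$1
    shift
    for needle in "$@"; do
        # -F: fixed string, -i: ignore case, -q: quiet (status only).
        grep -qiF -- "$needle" "$file" || return 1
    done
    return 0
}

# Example with the values from this paper:
# conf_mentions_all /etc/opt/omni/server/sg/sg.conf /FileShare dpi00180.ipr.com \
#     && echo "sg.conf mentions the mount point and the virtual server"
```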

3. Check that the shared disk is mounted on the primary node by executing the df command as

follows:

$df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup00-LogVol00 19425972 3236244 15187028 18% /

/dev/sda1 101086 12613 83254 14% /boot
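The same check can be scripted so that later steps refuse to run when the shared disk is missing. This is a minimal sketch that reads the kernel mount table instead of parsing df output; the function name is_mounted is hypothetical, and the optional second argument exists only so that the function can be tried against a sample file.

```shell
#!/bin/sh
# Sketch: succeed if the given directory is a mount point.
# Arguments: mount point, optional mount table (default /proc/mounts).
is_mounted() {
    mountpoint=$1
    table=${2:-/proc/mounts}
    # Field 2 of each mount table line is the mount point.
    awk -v mp="$mountpoint" '$2 == mp { found = 1 } END { exit !found }' "$table"
}

# Example with the mount point used in this paper:
# is_mounted /FileShare || echo "shared disk is not mounted" >&2
```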
