Hp COMPAQ PROLIANT 6400R Parallel Database Cluster Model PDC/O5000 for Oracle8i and Windows 2000 Administrator Guide


Parallel Database Cluster Model

PDC/O5000 for Oracle8i and

Windows 2000

Administrator Guide

Second Edition (June 2001)

Part Number 225081-002

Compaq Computer Corporation


Notice

© 2001 Compaq Computer Corporation

Compaq, the Compaq logo, Compaq Insight Manager, SmartStart, ROMPaq, ProLiant, and StorageWorks Registered in U.S. Patent and Trademark Office. ActiveAnswers is a trademark of Compaq Information Technologies Group, L.P. in the United States and other countries.

Microsoft, Windows, and Windows NT are trademarks of Microsoft Corporation in the United States and other countries.

All other product names mentioned herein may be trademarks of their respective companies.

Compaq shall not be liable for technical or editorial errors or omissions contained herein. The information in this document is provided “as is” without warranty of any kind and is subject to change without notice. The warranties for Compaq products are set forth in the express limited warranty statements accompanying such products. Nothing herein should be construed as constituting an additional warranty.


Page 3

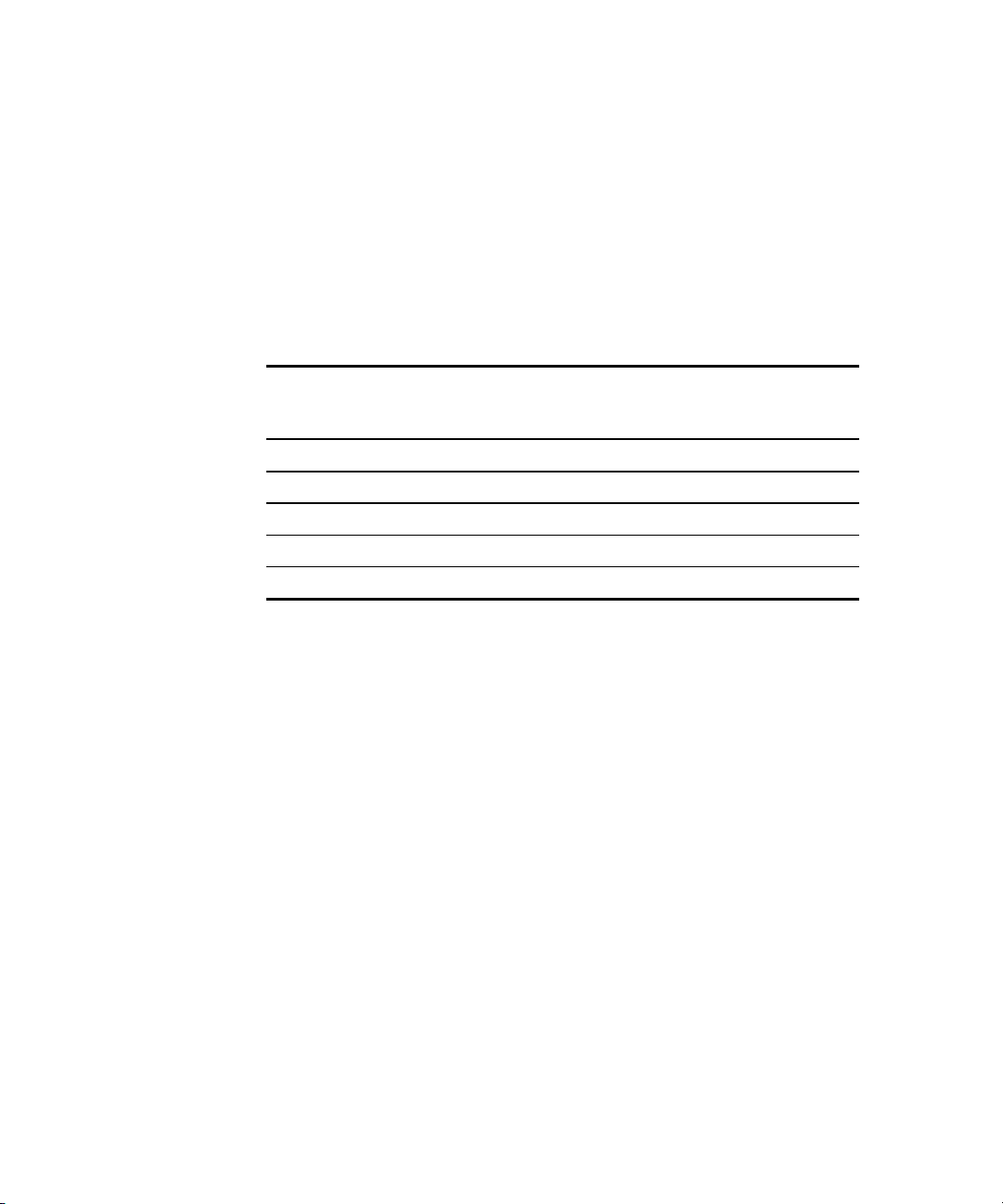

Contents

About This Guide

Purpose .................................................................................................................... xiii

Audience.................................................................................................................. xiii

Scope ........................................................................................................................xiv

Referenced Manuals ..................................................................................................xv

Supplemental Documents .........................................................................................xvi

Text Conventions......................................................................................................xvi

Symbols in Text.......................................................................................................xvii

Symbols on Equipment............................................................................................xvii

Rack Stability ........................................................................................................ xviii

Getting Help .......................................................................................................... xviii

Compaq Technical Support ...............................................................................xix

Compaq Website................................................................................................xix

Compaq Authorized Reseller..............................................................................xx

Chapter 1

Clustering Overview

Clusters Defined ...................................................................................................... 1-2

Availability .............................................................................................................. 1-4

Scalability ................................................................................................................ 1-4

Compaq Parallel Database Cluster Overview.......................................................... 1-5

Chapter 2

Cluster Architecture

Compaq ProLiant Servers........................................................................................ 2-2

High-Availability Features of ProLiant Servers ............................................... 2-3

Shared Storage Components.................................................................................... 2-3

MA8000/EMA12000 Storage Subsystem ........................................................ 2-4

HSG80 Array Controller .................................................................................. 2-4

Fibre Channel SAN Switch .............................................................................. 2-5


Storage Hub ...................................................................................................... 2-6

KGPSA-BC and KGPSA-CB Host Adapter..................................................... 2-6

Gigabit Interface Converter-Shortwave ............................................................ 2-7

Fibre Channel Cables........................................................................................ 2-7

Configuring and Cabling the MA8000/EMA12000 Storage Subsystem Components ..................... 2-7

Configuring LUNs for Storagesets................................................................... 2-7

SCSI Cabling Examples.................................................................................... 2-8

UltraSCSI Cables.............................................................................................. 2-9

Using I/O Modules in the Controller Enclosure ............................................... 2-9

Connecting EMUs Between MA8000/EMA12000 Storage Subsystems........ 2-12

I/O Path Configurations for Redundant Fibre Channel Fabrics ............................. 2-13

Overview of Fibre Channel Fabric SAN Topology ........................................ 2-13

Redundant Fibre Channel Fabrics................................................................... 2-13

Multiple Redundant Fibre Channel Fabrics.................................................... 2-15

Maximum Distances Between Nodes and Shared Storage Subsystem Components ........ 2-16

I/O Data Paths in a Redundant Fibre Channel Fabric ..................................... 2-17

I/O Path Definitions for Redundant Fibre Channel Fabrics............................ 2-20

I/O Path Configuration Examples for Redundant Fibre Channel Fabrics....... 2-21

Summary of I/O Path Failure and Failover Scenarios for Redundant Fibre Channel Fabrics ........ 2-25

I/O Path Configurations for Redundant Fibre Channel Arbitrated Loops.............. 2-26

Overview of FC-AL SAN Topology............................................................... 2-26

Redundant Fibre Channel Arbitrated Loops ................................................... 2-26

Multiple Redundant Fibre Channel Arbitrated Loops..................................... 2-28

Maximum Distances Between Nodes and Shared Storage Subsystem Components ........ 2-30

I/O Data Paths in a Redundant FC-AL ........................................................... 2-32

I/O Path Definitions for Redundant FC-ALs .................................................. 2-34

I/O Path Configuration Examples for Redundant FC-ALs............................. 2-35

Summary of I/O Path Failure and Failover Scenarios for Redundant FC-ALs ........ 2-38

Cluster Interconnect Requirements........................................................................ 2-39

Ethernet Cluster Interconnect.......................................................................... 2-39

Local Area Network........................................................................................ 2-42

Chapter 3

Cluster Software Components

Overview of the Cluster Software............................................................................ 3-1

Microsoft Windows 2000 Advanced Server ............................................................ 3-2

Compaq Software..................................................................................................... 3-2

Compaq SmartStart and Support Software ....................................................... 3-2

Compaq System Configuration Utility ............................................................. 3-3

Compaq Insight Manager.................................................................................. 3-3


Compaq Insight Manager XE ........................................................................... 3-3

Compaq Options ROMPaq............................................................................... 3-4

Compaq StorageWorks Command Console ..................................................... 3-4

Compaq StorageWorks Secure Path for Windows 2000 .................................. 3-4

Compaq Operating System Dependent Modules.............................................. 3-5

Oracle Software ....................................................................................................... 3-5

Oracle8i Server Enterprise Edition................................................................... 3-6

Oracle8i Server................................................................................................. 3-6

Oracle8i Parallel Server Option........................................................................ 3-6

Oracle8i Enterprise Manager............................................................................ 3-7

Oracle8i Certification ....................................................................................... 3-7

Application Failover and Reconnection Software ................................................... 3-7

Chapter 4

Cluster Planning

Site Planning............................................................................................................ 4-2

Capacity Planning for Cluster Hardware ................................................................. 4-2

Compaq ProLiant Servers................................................................................. 4-2

Planning Shared Storage Components for Redundant Fibre Channel Fabrics ........ 4-3

Planning Shared Storage Components for Redundant Fibre Channel Arbitrated Loops ........ 4-4

Planning Cluster Interconnect and Client LAN Components........................... 4-6

Planning Cluster Configurations for Redundant Fibre Channel Fabrics.................. 4-7

Planning Dual Redundancy Configurations ..................................................... 4-7

Planning Quad Redundancy Configurations..................................................... 4-9

Planning Cluster Configurations for Redundant Fibre Channel Arbitrated Loops ........ 4-11

Planning Dual Redundancy Configurations ................................................... 4-11

Planning Quad Redundancy Configurations................................................... 4-13

RAID Planning for the MA8000/EMA12000 Storage Subsystem ........................ 4-15

Supported RAID Levels ................................................................................. 4-16

Raw Data Storage and Database Size............................................................. 4-17

Selecting the Appropriate RAID Level .......................................................... 4-18

Planning the Grouping of Physical Disk Storage Space ........................................ 4-19

Disk Drive Planning .............................................................................................. 4-20

Nonshared Disk Drives................................................................................... 4-20

Shared Disk Drives......................................................................................... 4-20

Network Planning .................................................................................................. 4-21

Windows 2000 Advanced Server Host Files for an Ethernet Cluster Interconnect ........ 4-21

Client LAN ..................................................................................................... 4-22


Chapter 5

Installation and Configuration

Reference Materials for Installation......................................................................... 5-1

Installation Overview............................................................................................... 5-2

Installing the Hardware............................................................................................ 5-3

Setting Up the Nodes ........................................................................................ 5-3

Installing the KGPSA-BC and KGPSA-CB Host Adapters.............................. 5-4

Installing GBIC-SW Modules for the Host Adapters ....................................... 5-4

Cabling the Host Adapters to Fibre Channel SAN Switches or Storage Hubs ........ 5-5

Installing the Cluster Interconnect Adapters..................................................... 5-6

Installing the Client LAN Adapters .................................................................. 5-6

Installing GBIC-SW Modules for the Array Controllers .................................. 5-6

Installing Hardware Into an MA8000/EMA12000 Storage Subsystem.......... 5-10

Cabling the Controller Enclosure to Disk Enclosures..................................... 5-11

Cabling EMUs to Each Other ......................................................................... 5-13

Cabling Array Controllers to Fibre Channel SAN Switches........................... 5-14

Cabling Array Controllers to Storage Hubs.................................................... 5-15

Installing Operating System Software.................................................................... 5-16

Guidelines for Clusters ................................................................................... 5-17

Automated Installation Using SmartStart ....................................................... 5-17

Setting up and Configuring an MA8000/EMA12000 Storage Subsystem............. 5-21

Designating a Server as a Maintenance Terminal........................................... 5-21

Powering On the MA8000/EMA12000 Storage Subsystem........................... 5-21

Installing the StorageWorks Command Console (SWCC) Client................... 5-22

Configuring a Storage Subsystem for Secure Path Operation ........................ 5-22

Verifying Array Controller Properties ............................................................ 5-27

Configuring a Storageset................................................................................. 5-29

Installing Secure Path Software for Windows 2000 .............................................. 5-32

Overview of Secure Path Software Installation .............................................. 5-32

Description of the Secure Path Software ........................................................ 5-33

Installing the Host Adapter Drivers ................................................................ 5-33

Installing the Fibre Channel Software Setup (FCSS) Utility .......................... 5-34

Installing the Secure Path Drivers, Secure Path Agent, and Secure Path Manager ........ 5-35

Specifying the Preferred_Path for Storage Units ............................................ 5-36

Powering Up All Other Fibre Channel SAN Switches or Storage Hubs ........ 5-38

Creating Partitions .......................................................................................... 5-38

Installing Compaq OSDs ....................................................................................... 5-39

Verifying Cluster Communications ................................................................ 5-40

Mounting Remote Drives and Verifying Administrator Privileges ................ 5-41

Installing the Ethernet OSDs .......................................................................... 5-42

Installing Oracle Software ..................................................................................... 5-52

Configuring Oracle8i Software .............................................................................. 5-53

Installing Object Link Manager ............................................................................. 5-53

Additional Notes on Configuring Oracle Software......................................... 5-54


Verifying the Hardware and Software Installation ................................................ 5-55

Cluster Communications ................................................................................ 5-55

Access to Shared Storage from All Nodes...................................................... 5-55

OSDs .............................................................................................................. 5-55

Other Verification Tasks ................................................................................ 5-56

Power Distribution and Power Sequencing Guidelines ......................................... 5-56

Overview ........................................................................................................ 5-56

Server Power Distribution .............................................................................. 5-57

Storage Subsystem Power Distribution .......................................................... 5-57

Power Sequencing .......................................................................................... 5-58

Chapter 6

Cluster Management

Cluster Management Concepts ................................................................................ 6-2

Powering Off a Node Without Interrupting Cluster Services........................... 6-2

Managing a Cluster in a Degraded Condition................................................... 6-2

Managing Network Clients Connected to a Cluster ......................................... 6-3

Cluster Events................................................................................................... 6-3

Management Applications ....................................................................................... 6-4

Monitoring Server and Network Hardware ...................................................... 6-4

Monitoring Storage Subsystem Hardware........................................................ 6-5

Managing Shared Storage................................................................................. 6-5

Monitoring the Database .................................................................................. 6-7

Remotely Managing a Cluster .......................................................................... 6-7

Software Maintenance ............................................................................................. 6-8

Deinstalling the OSDs ...................................................................................... 6-8

Upgrading Oracle8i Server ............................................................................. 6-11

Upgrading the OSDs....................................................................................... 6-11

Deinstalling a Partial OSD Installation........................................................... 6-13

Managing Changes to Shared Storage Components.............................................. 6-14

Adding New Storagesets to Increase Shared Storage Capacity...................... 6-14

Replacing a Failed Drive in a Storage Subsystem.......................................... 6-15

Replacing a Host Adapter............................................................................... 6-16

Adding a Shared Storage Subsystem.............................................................. 6-19

Replacing a Cluster Node ...................................................................................... 6-19

Removing the Node........................................................................................ 6-20

Adding the Replacement Node....................................................................... 6-20

Adding a Cluster Node .......................................................................................... 6-24

Preparing the New Node................................................................................. 6-25

Preparing the Existing Cluster Nodes............................................................. 6-27

Installing the Cluster Software ....................................................................... 6-27

Monitoring Cluster Performance ........................................................................... 6-29

Tools Overview .............................................................................................. 6-29

Using Secure Path Manager............................................................................ 6-30

Uninstalling Secure Path ................................................................................ 6-33


Chapter 7

Troubleshooting

Basic Troubleshooting Tips ..................................................................................... 7-2

Power ................................................................................................................ 7-2

Physical Connections........................................................................................ 7-2

Access to Cluster Components ......................................................................... 7-3

Software Revisions ........................................................................................... 7-3

Firmware Revisions .......................................................................................... 7-4

Troubleshooting Oracle8i and OSD Installation Problems and Error Messages ..... 7-5

Potential Difficulties Installing the OSDs with the Oracle Universal Installer ........ 7-5

Unable to Start OracleCMService..................................................................... 7-6

Unable to Start OracleNMService .................................................................... 7-6

Unable to Start the Database............................................................................. 7-7

Initialization of the Dynamic Link Library NM.DLL Failed............................ 7-7

Troubleshooting Node-to-Node Connectivity Problems.......................................... 7-7

Nodes Are Unable to Communicate with Each Other ...................................... 7-7

Unable to Ping the Cluster Interconnect or the Client LAN ............................. 7-8

Troubleshooting Client-to-Cluster Connectivity Problems...................................... 7-9

A Network Client Cannot Communicate With the Cluster............................... 7-9

Troubleshooting Shared Storage Subsystem Problems.......................................... 7-10

Verifying Host Adapter Device Driver Installation........................................ 7-10

Verifying KGPSA-BC Device Driver Initialization ....................................... 7-10

Verifying Connectivity to a Redundant Fibre Channel Fabric........................ 7-12

Verifying Connectivity to a Redundant Fibre Channel Arbitrated Loop........ 7-13

A Cluster Node Cannot Connect to the Shared Drives ................................... 7-15

Disk Management Shows Storagesets With the Same Label (Dual Image).... 7-15

Device or Devices Were Not Found by KGPSA-BC Device Driver.............. 7-15

Devices on One I/O Connection Path Cannot Be Seen by the Cluster Nodes ........ 7-16

Troubleshooting Secure Path ................................................................................. 7-18

Secure Path Guidelines for Windows 2000 Advanced Server........................ 7-18

Secure Path Manager Shows Reversed Locations for Top and Bottom Array Controllers ........ 7-20

Secure Path Manager Cannot Start With Hosts That Use Hyphenated Host Names ........ 7-20

Secure Path Manager Is Delayed In Reporting Path Failure Information....... 7-20

The Addition of New LUNs Causes an Error................................................. 7-21

A Configuration of More Than 64 LUNs Prevents the Secure Path Agent From Starting ........ 7-21

Appendix A

Diagnosing and Resolving Shared Disk Problems

Introduction............................................................................................................. A-1

Run Object Link Manager on All Nodes ................................................................ A-3


Restart All Affected Nodes in the Cluster ............................................................... A-4

Rerun and Validate Object Link Manager On All Affected Nodes ......................... A-4

Run and Validate Secure Path Manager On All Nodes ........................................... A-5

Run Disk Management On All Nodes ..................................................................... A-5

Run and Validate the StorageWorks Command Console From All Storage Subsystems ........ A-6

Perform Cluster Software and Firmware Checks .................................................... A-6

Perform Cluster Hardware Checks .......................................................................... A-7

Contact Your Compaq Support Representative ....................................................... A-8

Glossary

Index


List of Figures

Figure 1-1. Example of a two-node PDC/O5000 cluster ........................................ 1-3

Figure 2-1. SCSI bus numbers for I/O modules in the controller enclosure ......... 2-10

Figure 2-2. UltraSCSI cabling between a controller enclosure and three disk enclosures ........ 2-11

Figure 2-3. UltraSCSI cabling between a controller enclosure and six disk enclosures ........ 2-12

Figure 2-4. Two-node PDC/O5000 with a four-fabric redundant Fibre Channel Fabric ........ 2-14

Figure 2-5. Two-node PDC/O5000 with two redundant Fibre Channel Fabrics ........ 2-16

Figure 2-6. Maximum distances between PDC/O5000 cluster nodes and shared storage subsystem components in a redundant Fibre Channel Fabric ........ 2-17

Figure 2-7. Host adapter-to-Fibre Channel SAN Switch I/O data paths ............... 2-18

Figure 2-8. Fibre Channel SAN Switch-to-array controller I/O data paths........... 2-19

Figure 2-9. Dual redundancy configuration for a redundant Fibre Channel Fabric ........ 2-22

Figure 2-10. Quad redundancy configuration for a redundant Fibre Channel Fabric ........ 2-24

Figure 2-11. Two-node PDC/O5000 with a four-loop redundant Fibre Channel Arbitrated Loop ........ 2-27

Figure 2-12. Two-node PDC/O5000 with two redundant Fibre Channel Arbitrated Loops ........ 2-29

Figure 2-13. Maximum distances between PDC/O5000 cluster nodes and shared storage subsystem components in a redundant FC-AL ........ 2-31

Figure 2-14. Host adapter-to-Storage Hub I/O data paths..................................... 2-32

Figure 2-15. Storage Hub-to-array controller I/O data paths ................................ 2-33

Figure 2-16. Dual redundancy configuration for a redundant FC-AL .................. 2-36

Figure 2-17. Quad redundancy configuration for a redundant FC-AL.................. 2-37

Figure 2-18. Redundant Ethernet cluster interconnect for a two-node PDC/O5000 cluster ........ 2-41

Figure 4-1. Dual redundancy configuration for a redundant Fibre Channel Fabric ........ 4-8

Figure 4-2. Quad redundancy configuration for a redundant Fibre Channel Fabric ........ 4-10

Figure 4-3. Dual redundancy configuration for a redundant FC-AL .................... 4-12

Figure 4-4. Quad redundancy configuration for a redundant FC-AL.................... 4-14

Figure 4-5. MA8000/EMA12000 Storage Subsystem disk grouping for a PDC/O5000 cluster ........ 4-19

Figure 5-1. Connecting host adapters to Fibre Channel SAN Switches or Storage Hubs ........ 5-5

Figure 5-2. Redundant Ethernet cluster interconnect for a two-node PDC/O5000 cluster ........ 5-8

Figure 5-3. SCSI bus numbers for I/O modules in the controller enclosure ......... 5-11


Figure 5-4. UltraSCSI cabling between a controller enclosure and three disk enclosures ........ 5-12

Figure 5-5. UltraSCSI cabling between a controller enclosure and six disk enclosures ........ 5-13

Figure 5-6. Dual redundancy configuration for a redundant Fibre Channel Fabric ........ 5-15

Figure 5-7. Dual redundancy configuration for a redundant FC-AL .................... 5-16

Figure 5-8. Server power distribution in a three-node cluster............................... 5-57

Figure A-1. Tasks for diagnosing and resolving shared storage problems .............A-2

List of Tables

Table 2-1. High-Availability Components of ProLiant Servers

Table 2-2. SCSI bus address ID assignments for the MA8000/EMA12000 Storage Subsystem

Table 2-3. I/O Path Failure and Failover Scenarios for Redundant Fibre Channel Fabrics

Table 2-4. I/O Path Failure and Failover Scenarios for Redundant FC-ALs

Table 5-1. Controller Properties


About This Guide

Purpose

This administrator guide provides information about the planning, installation, configuration, implementation, management, and troubleshooting of the Compaq Parallel Database Cluster Model PDC/O5000 on Oracle8i software running on the Microsoft Windows 2000 Advanced Server operating system.

Audience

The expected audience of this guide consists primarily of MIS professionals whose jobs include designing, installing, configuring, and maintaining Compaq Parallel Database Clusters.

The audience of this guide must have a working knowledge of Microsoft

Windows 2000 Advanced Server and Oracle databases or have the assistance

of a database administrator.

This guide contains information for network administrators, database

administrators, installation technicians, systems integrators, and other

technical personnel in the enterprise environment for the purpose of cluster

planning, installation, implementation, and maintenance.

IMPORTANT: This guide contains installation, configuration, and maintenance

information that can be valuable for a variety of users. If you are installing the PDC/O5000

but will not be administering the cluster on a daily basis, please make this guide available

to the person or persons who will be responsible for the clustered servers after you have

completed the installation.


Scope

This guide offers significant background information about clusters as well as

basic concepts associated with designing clusters. It also contains detailed

product descriptions and installation steps.

This administrator guide is designed to help you achieve the following objectives:

■ Understanding basic concepts of clustering technology

■ Recognizing and using the high-availability features of the PDC/O5000

■ Planning and designing a PDC/O5000 cluster configuration to meet your

business needs

■ Installing and configuring PDC/O5000 hardware and software

■ Managing the PDC/O5000

■ Troubleshooting the PDC/O5000

The following summarizes the contents of this guide:

■ Chapter 1, “Clustering Overview,” provides an introduction to

clustering technology features and benefits.

■ Chapter 2, “Cluster Architecture,” describes the hardware components

of the PDC/O5000 and provides detailed I/O path configuration

information.

■ Chapter 3, “Cluster Software Components,” describes software

components used with the PDC/O5000.

■ Chapter 4, “Cluster Planning,” outlines an approach to planning and

designing cluster configurations that meet your business needs.

■ Chapter 5, “Installation and Configuration,” outlines the steps you will

take to install and configure the PDC/O5000 hardware and software.

■ Chapter 6, “Cluster Management,” includes techniques for managing

and maintaining the PDC/O5000.

■ Chapter 7, “Troubleshooting,” contains troubleshooting information for

the PDC/O5000.

■ Appendix A, “Diagnosing and Resolving Shared Disk Problems,”

describes procedures to diagnose and resolve shared disk problems.

■ Glossary contains definitions of terms used in this guide.


Some clustering topics are mentioned, but not detailed, in this guide. For

example, this guide does not describe how to install and configure Oracle8i on

a cluster. For information about these topics, see the referenced and

supplemental documents listed in subsequent sections.

Referenced Manuals

For additional information, refer to documentation related to the specific

hardware and software components of the Compaq Parallel Database Cluster.

These related manuals include, but are not limited to:

■ Documentation related to the ProLiant servers you are clustering (for

example, guides, posters, and performance and tuning guides)

■ Compaq StorageWorks documentation provided with the

MA8000/EMA12000 Storage Subsystem, HSG80 Array Controller,

Fibre Channel SAN Switches, Storage Hubs, and the KGPSA-BC or

KGPSA-CB Host Adapters

■ Microsoft Windows 2000 Advanced Server documentation, including:

  - Microsoft Windows 2000 Advanced Server Administrator’s Guide

■ Oracle8i documentation, including:

  - Oracle8i Parallel Server Setup and Configuration Guide

  - Oracle8i Parallel Server Concepts

  - Oracle8i Parallel Server Administration, Deployment, and Performance

  - Oracle Enterprise Manager Administrator’s Guide

  - Oracle Enterprise Manager Configuration Guide

  - Oracle Enterprise Manager Concepts Guide


Supplemental Documents

The following technical documents contain important supplemental

information for the Compaq Parallel Database Cluster Model PDC/O5000:

■ Compaq Parallel Database Cluster Model PDC/O5000 Certification

Matrix for Windows 2000, at

www.compaq.com/solutions/enterprise/ha-pdc.html

■ Configuring Compaq RAID Technology for Database Servers, at

www.compaq.com/highavailability

■ Various technical white papers on Oracle and cluster sizing, which are

available from the Compaq ActiveAnswers website, at

www.compaq.com/activeanswers

Text Conventions

This document uses the following conventions to distinguish elements of text:

Keys: Keys appear in boldface. A plus sign (+) between two keys indicates that they should be pressed simultaneously.

USER INPUT: User input appears in a different typeface and in uppercase.

FILENAMES: File names appear in uppercase italics.

Menu Options, Command Names, Dialog Box Names: These elements appear in initial capital letters and may be bolded for emphasis.

COMMANDS, DIRECTORY NAMES, and DRIVE NAMES: These elements appear in uppercase.

Type: When you are instructed to type information, type the information without pressing the Enter key.

Enter: When you are instructed to enter information, type the information and then press the Enter key.

Symbols in Text

These symbols may be found in the text of this guide. They have the following

meanings:

WARNING: Text set off in this manner indicates that failure to follow directions

in the warning could result in bodily harm or loss of life.

CAUTION: Text set off in this manner indicates that failure to follow directions

could result in damage to equipment or loss of information.

IMPORTANT: Text set off in this manner presents clarifying information or specific

instructions.

NOTE: Text set off in this manner presents commentary, sidelights, or interesting points

of information.

Symbols on Equipment


These icons may be located on equipment in areas where hazardous conditions

may exist.

Any surface or area of the equipment marked with these symbols

indicates the presence of electrical shock hazards. The enclosed area contains no operator-serviceable parts.

WARNING: To reduce the risk of injury from electrical shock hazards,

do not open this enclosure.

Any RJ-45 receptacle marked with these symbols indicates a Network

Interface Connection.

WARNING: To reduce the risk of electrical shock, fire, or damage to

the equipment, do not plug telephone or telecommunications

connectors into this receptacle.


Any surface or area of the equipment marked with these symbols

indicates the presence of a hot surface or hot component. If this

surface is contacted, the potential for injury exists.

WARNING: To reduce the risk of injury from a hot component, allow

the surface to cool before touching.

Power supplies or systems marked with these symbols indicate that the equipment is supplied by multiple sources of power.

WARNING: To reduce the risk of injury from electrical shock,

remove all power cords to completely disconnect power from

the system.

Rack Stability

WARNING: To reduce the risk of personal injury or damage to the equipment, be sure that:

■ The leveling jacks are extended to the floor.

■ The full weight of the rack rests on the leveling jacks.

■ The stabilizing feet are attached to the rack in single-rack installations.

■ The racks are coupled together in multiple-rack installations.

■ Only one component is extended at a time. A rack may become unstable if more than one component is extended for any reason.

Getting Help

If you have a problem and have exhausted the information in this guide, you can get further information and other help in the following locations.

Compaq Technical Support

You are entitled to free hardware technical telephone support for your product for as long as you own the product. A technical support specialist will help you diagnose the problem or guide you to the next step in the warranty process.

In North America, call the Compaq Technical Phone Support Center at 1-800-OK-COMPAQ. This service is available 24 hours a day, 7 days a week. For continuous quality improvement, calls may be recorded or monitored.

Outside North America, call the nearest Compaq Technical Support Phone Center. Telephone numbers for worldwide Technical Support Centers are listed on the Compaq website. Access the Compaq website by logging on to the Internet at

www.compaq.com

Be sure to have the following information available before you call Compaq:

■ Technical support registration number (if applicable)

■ Product serial number(s)

■ Product model name(s) and number(s)

■ Applicable error messages

■ Add-on boards or hardware

■ Third-party hardware or software

■ Operating system type and revision level

■ Detailed, specific questions

Compaq Website

The Compaq website has information on this product as well as the latest drivers and Flash ROM images. You can access the Compaq website by logging on to the Internet at

www.compaq.com


Compaq Authorized Reseller

For the name of your nearest Compaq Authorized Reseller:

■ In the United States, call 1-800-345-1518.

■ In Canada, call 1-800-263-5868.

■ Elsewhere, see the Compaq website for locations and telephone

numbers.


Chapter 1

Clustering Overview

For many years, companies have depended on clustered computer systems to

fulfill two key requirements: to ensure users can access and process

information that is critical to the ongoing operation of their business, and to

increase the performance and throughput of their computer systems at minimal

cost. These requirements are known as availability and scalability,

respectively.

Historically, these requirements have been fulfilled with clustered systems

built on proprietary technology. Over the years, open systems have

progressively and aggressively moved proprietary technologies into

industry-standard products. Clustering is no exception. Its primary features,

availability and scalability, have been moving into client/server products for

the last few years.

The absorption of clustering technologies into open systems products is

creating less expensive, non-proprietary solutions that deliver levels of

function commonly found in traditional clusters. While some uses of the

proprietary solutions will always exist, such as those controlling stock

exchange trading floors and aerospace mission controls, many critical

applications can reach the desired levels of availability and scalability with

non-proprietary client/server-based clustering.

These clustering solutions use industry-standard hardware and software,

thereby providing key clustering features at a lower price than proprietary

clustering systems. Before examining the features and benefits of the Compaq

Parallel Database Cluster Model PDC/O5000 (referred to here as the

PDC/O5000), it is helpful to understand the concepts and terminology of

clustered systems.


Clusters Defined

A cluster is an integration of software and hardware products that enables a set

of loosely coupled servers and shared storage subsystem components to

present a single system image to clients and to operate as a single system. As a

cluster, the group of servers and shared storage subsystem components offers a

level of availability and scalability far exceeding that obtained if each cluster

node operated as a stand-alone server.

The PDC/O5000 uses the Oracle8i Parallel Server software, which is a parallel

database that can distribute its workload among the cluster nodes. Refer to

Chapter 3, “Cluster Software Components,” to determine the specific releases

your cluster kit supports.

Figure 1-1 shows an example of a PDC/O5000 that includes two nodes

(Compaq ProLiant™ servers), two sets of storage subsystems, two Compaq

StorageWorks™ Fibre Channel SAN Switches or Compaq StorageWorks Fibre

Channel Storage Hubs, a redundant cluster interconnect, and a client local area

network (LAN).

Figure 1-1. Example of a two-node PDC/O5000 cluster (diagram: Node 1 and Node 2, each with two host adapters, connect through Fibre Channel SAN Switch/Storage Hub #1 and #2 to ports P1 and P2 of array controllers A and B in Storage Subsystem #1 and Storage Subsystem #2; the nodes also connect to the client LAN and to a cluster interconnect switch/hub)

The PDC/O5000 can support redundant Fibre Channel Fabric Storage Area

Network (SAN) and redundant Fibre Channel Arbitrated Loop (FC-AL) SAN

topologies. In the example shown in Figure 1-1, the clustered nodes are

connected to the database on the shared storage subsystems through a

redundant Fibre Channel Fabric or redundant FC-AL. Clients access the

database through the client LAN, and the cluster nodes communicate across an

Ethernet cluster interconnect.


Availability

When computer systems experience outages, the amount of time the system is

unavailable is referred to as downtime. Downtime has several primary causes:

hardware faults, software faults, planned service, operator error, and

environmental factors. Minimizing downtime is a primary goal of a cluster.

Simply defined, availability is the measure of how well a computer system can

continuously deliver services to clients.

Availability is a system-wide endeavor. The hardware, operating system, and

applications must be designed for availability. Clustering requires stability in

these components, then couples them in such a way that failure of one item

does not render the system unusable. By using redundant components and

mechanisms that detect and recover from faults, clusters can greatly increase

the availability of applications critical to business operations.
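The following sketch is not part of the PDC/O5000 documentation. It uses the standard textbook model of availability (uptime divided by total time) to show why redundancy raises availability. It assumes that redundant components fail independently and that one surviving component keeps the service running. The MTBF and MTTR figures and all function names are hypothetical.

```python
# Textbook availability arithmetic, shown only to illustrate why redundancy
# helps. It is NOT a PDC/O5000 specification: it assumes failures are
# independent and that one surviving component keeps the service running.

HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: uptime / (uptime + downtime)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def redundant_availability(single: float, copies: int) -> float:
    """The redundant group is down only when every copy is down at once."""
    return 1.0 - (1.0 - single) ** copies


def downtime_hours_per_year(avail: float) -> float:
    """Expected hours per year during which the service is unavailable."""
    return (1.0 - avail) * HOURS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical figures: a node that fails every 990 hours and takes
    # 10 hours to repair is available 99% of the time.
    node = availability(990.0, 10.0)
    for copies in (1, 2):
        a = redundant_availability(node, copies)
        print(f"{copies} node(s): availability {a:.4f}, "
              f"about {downtime_hours_per_year(a):.1f} hours down per year")
```

With these made-up numbers, a single node is down about 87.6 hours per year, while two independent redundant nodes are down for less than one hour per year. Real clusters share some failure causes, such as power, operator error and software faults, so actual gains are smaller than this idealized model suggests.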

Scalability

Simply defined, scalability is a computer system characteristic that enables

improved performance or throughput when supplementary hardware resources

are added. Scalable systems allow increased throughput by adding components

to an existing system without the expense of adding an entire new system.

In a stand-alone server configuration, scalable systems allow increased

throughput by adding processors or more memory. In a cluster configuration,

this result is usually obtained by adding cluster nodes.

Not only must the hardware benefit from additional components, but also

software must be constructed in such a way as to take advantage of the

additional processing power. Oracle8i Parallel Server distributes the workload

among the cluster nodes. As more nodes are added to the cluster, cluster-aware

applications can use the parallel features of Oracle8i Parallel Server to

distribute workload among more servers, thereby obtaining greater throughput.

Compaq Parallel Database Cluster Overview

As traditional clustering technology has moved into the open systems of

client/server computing, Compaq has provided innovative, customer-focused

solutions. The PDC/O5000 moves client/server computing one step closer to

the capabilities found in expensive, proprietary cluster solutions, at a fraction

of the cost.

The PDC/O5000 combines the popular Microsoft Windows 2000 Advanced

Server operating system and the industry-leading Oracle8i Parallel Server with

award-winning Compaq ProLiant servers and shared storage subsystems.


Chapter 2

Cluster Architecture

The Compaq Parallel Database Cluster Model PDC/O5000 (referred to here as

the PDC/O5000) is an integration of a number of different hardware and

software products. This chapter discusses how these products play a role in

bringing a complete clustering solution to your computing environment.

The hardware products include:

■ Compaq ProLiant servers

■ Shared storage components

  - Compaq StorageWorks Modular Array 8000 Fibre Channel Storage Subsystems or the Compaq StorageWorks Enterprise Modular Array 12000 Fibre Channel Storage Subsystems (MA8000/EMA12000 Storage Subsystems)

  - Compaq StorageWorks HSG80 Array Controllers (HSG80 Array Controllers)

  - Compaq StorageWorks Fibre Channel SAN Switches (Fibre Channel SAN Switches) for redundant Fibre Channel Fabrics

  - Compaq StorageWorks Storage Hubs (Storage Hubs) for redundant Fibre Channel Arbitrated Loops

  - KGPSA-BC PCI-to-Optical Fibre Channel Host Adapters (KGPSA-BC Host Adapters) or KGPSA-CB PCI-to-Optical Fibre Channel Host Adapters (KGPSA-CB Host Adapters)

  - Gigabit Interface Converter-Shortwave (GBIC-SW) modules

  - Fibre Channel cables

■ Cluster interconnect components

  - Ethernet NIC adapters

  - Ethernet cables

  - Ethernet switches/hubs

The software products include:

■ Microsoft Windows 2000 Advanced Server with Service Pack 1 or later

■ Compaq drivers and utilities

■ Oracle8i Enterprise Edition with the Oracle8i Parallel Server Option

For a description of the software products used with the PDC/O5000, refer to

Chapter 3, “Cluster Software Components.”

Compaq ProLiant Servers

A primary component of any cluster is the server. Each Compaq Parallel

Database Cluster Model PDC/O5000 consists of cluster nodes in which each

node is a Compaq ProLiant server.

With some exceptions, all nodes in a PDC/O5000 must be identical in model.

In addition, all components common to all nodes in a cluster, such as memory,

number of CPUs, and the interconnect adapters, should be identical and

identically configured.

NOTE: Certain restrictions apply to the server models and server configurations that are

supported by the Compaq Parallel Database Cluster. For a current list of PDC-certified

servers and details on supported configurations, refer to the Compaq Parallel Database

Cluster Model PDC/O5000 Certification Matrix for Windows 2000. This document is

available on the Compaq website at

www.compaq.com/solutions/enterprise/ha-pdc.html


High-Availability Features of ProLiant Servers

In addition to the increased application and data availability enabled by

clustering, ProLiant servers include many reliability features that provide a

solid foundation for effective clustered server solutions. The PDC/O5000 is

based on ProLiant servers, most of which offer excellent reliability through

redundant power supplies, redundant cooling fans, and Error Checking and

Correcting (ECC) memory. The high-availability features of ProLiant servers

are a critical foundation of Compaq clustering products. Table 2-1 lists the

high-availability features found in many ProLiant servers.

Table 2-1
High-Availability Components of ProLiant Servers

Hot-pluggable hard drives | Redundant power supplies
Digital Linear Tape (DLT) Array (optional) | ECC-protected processor-memory bus
Uninterruptible power supplies (optional) | Redundant processor power modules
ECC memory | PCI Hot Plug slots (in some servers)
Offline backup processor | Redundant cooling fans

Shared Storage Components

The PDC/O5000 is based on a cluster architecture known as shared storage

clustering, in which clustered nodes share access to a common set of shared

disk drives. In this discussion, the shared storage includes these components:

■ MA8000/EMA12000 Storage Subsystem

■ HSG80 Array Controllers

■ Fibre Channel SAN Switches for redundant Fibre Channel Fabrics

■ Storage Hubs for redundant Fibre Channel Arbitrated Loops

■ KGPSA-BC or KGPSA-CB Host Adapters

■ Gigabit Interface Converter-Shortwave (GBIC-SW) modules

■ Fibre Channel cables


MA8000/EMA12000 Storage Subsystem

The MA8000/EMA12000 Storage Subsystem is the shared storage solution for

the PDC/O5000. Each storage subsystem consists of one controller enclosure

and up to six disk enclosures.

For detailed information about storage subsystem components, refer to the

Compaq StorageWorks documentation provided with the

MA8000/EMA12000 Storage Subsystem.

Controller Enclosure Components

The controller enclosure for the MA8000/EMA12000 Storage Subsystem

houses the two HSG80 Array Controllers, one cache module for each

controller, an environmental monitoring unit (EMU), one or two power

supplies, and three dual-speed fans. In addition, the controller enclosure

houses the six I/O modules that connect the enclosure’s six SCSI buses to up

to six disk enclosures.

Disk Enclosure Components

Each disk enclosure houses up to 12 or 14 form factor hard disk drives,

depending on the number of SCSI buses connected to the enclosure’s I/O

module. A single-bus or dual-bus I/O module in each disk enclosure is

connected by UltraSCSI cable to one single-bus I/O module in the controller

enclosure. Disk enclosures using a single-bus I/O module can contain up to

12 disk drives. Disk enclosures using both connectors on a dual-bus I/O

module can contain up to 14 disk drives (7 per SCSI bus). When you have

more than three disk enclosures in your subsystem, they must all use

single-bus I/O modules, for a maximum of 72 disk drives in each

MA8000/EMA12000 Storage Subsystem.

Each disk enclosure also contains redundant power supplies, an EMU, and two

variable-speed blowers.
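The drive limits above can be summarized as a small calculation. This Python sketch is only an illustration, and its function name is hypothetical. It also relies on an inference that the text does not state directly: a dual-bus disk enclosure is assumed to use two of the six controller SCSI buses. That assumption is consistent with the rule that dual-bus modules are allowed only with three or fewer disk enclosures.

```python
# Disk capacity of one MA8000/EMA12000 Storage Subsystem, using the limits
# stated in this section. Assumption (inferred, not stated explicitly): a
# dual-bus disk enclosure uses two of the six controller SCSI buses, which
# is why dual-bus modules only fit with three or fewer enclosures.

CONTROLLER_SCSI_BUSES = 6   # six I/O modules / SCSI buses in the controller enclosure
MAX_DISK_ENCLOSURES = 6
SINGLE_BUS_DRIVES = 12      # per disk enclosure with a single-bus I/O module
DUAL_BUS_DRIVES = 14        # per disk enclosure with a dual-bus I/O module (7 per bus)


def max_disk_drives(disk_enclosures: int) -> int:
    """Return the largest supported drive count for a given enclosure count."""
    if not 0 <= disk_enclosures <= MAX_DISK_ENCLOSURES:
        raise ValueError("a storage subsystem supports 0 to 6 disk enclosures")
    if 2 * disk_enclosures <= CONTROLLER_SCSI_BUSES:
        # Three or fewer enclosures: dual-bus I/O modules fit on the six buses.
        return disk_enclosures * DUAL_BUS_DRIVES
    # More than three enclosures: all must use single-bus I/O modules.
    return disk_enclosures * SINGLE_BUS_DRIVES


if __name__ == "__main__":
    for n in range(1, MAX_DISK_ENCLOSURES + 1):
        print(n, "enclosure(s):", max_disk_drives(n), "drives")
```

Under these assumptions, the count reaches 72 drives with six single-bus enclosures, which matches the stated maximum. Three dual-bus enclosures give 42 drives, compared with the 36 drives that single-bus modules would allow.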

HSG80 Array Controller

Two dual-port HSG80 Array Controllers are installed in the controller

enclosure of each MA8000/EMA12000 Storage Subsystem.

From the perspective of the cluster nodes, each HSG80 Array Controller port

is simply another device connected to one of the cluster’s I/O connection paths

between the host adapters and the MA8000/EMA12000 Storage Subsystems.

Consequently, each node sends its I/O requests to the array controllers just as


it would to any SCSI device. An array controller port receives the I/O requests

from a host adapter in a cluster node and directs them to the shared storage

disks to which it has been configured.

Because the array controller processes the I/O requests, the cluster nodes are

not burdened with the I/O processing tasks associated with reading and writing

data to multiple shared storage devices.

Each HSG80 Array Controller port combines all of the logical disk drives that

have been configured to it into a single, high-performance storage unit called a

storageset. RAID technology ensures that every unpartitioned storageset,

whether it uses 12 disks or 14 disks, looks like a single storage unit to the

cluster nodes.

Both ports on each of the two HSG80 Array Controllers in the controller

enclosure are simultaneously active, and access to a specific logical unit

number (LUN) is distributed among and shared by these ports. This provides

redundant access to the same LUNs if one port or array controller fails. If an

HSG80 Array Controller in an MA8000/EMA12000 Storage Subsystem fails,

Secure Path failover software detects the failure and automatically transfers all

I/O activity to the defined backup path.

To further ensure redundancy, connect the two ports on each HSG80 Array

Controller by Fibre Channel cables to different Fibre Channel SAN Switches

or Storage Hubs.

For further information, refer to the Compaq StorageWorks documentation

provided with the array controllers.

Fibre Channel SAN Switch

IMPORTANT: For detailed information about cascading two Fibre Channel SAN Switches,

refer to the latest Compaq StorageWorks documentation. This guide does not document

cascaded configurations for the Fibre Channel SAN Switch.

Fibre Channel SAN Switches are installed between cluster nodes and shared

storage subsystems in clusters to create redundant Fibre Channel Fabrics.

An 8-port Fibre Channel SAN Switch and 16-port Fibre Channel SAN Switch

are supported. From two to four Fibre Channel SAN Switches can be used in

each redundant Fibre Channel Fabric.

Fibre Channel SAN Switches provide full 100 MBps bandwidth on every port.

Adding new devices to Fibre Channel SAN Switch ports increases the

aggregate bandwidth.


For further information, refer to these manuals provided with each Fibre

Channel SAN Switch:

■ Compaq StorageWorks Fibre Channel SAN Switch 8 Installation and

Hardware Guide

■ Compaq StorageWorks Fibre Channel SAN Switch 16 Installation and

Hardware Guide

■ Compaq StorageWorks Fibre Channel SAN Switch Management Guide

Storage Hub

Storage Hubs are installed between cluster nodes and shared storage

subsystems in clusters to create redundant Fibre Channel Arbitrated Loops.

Storage Hubs connect the host adapters in cluster nodes with the HSG80 Array

Controllers in MA8000/EMA12000 Storage Subsystems. From two to four

Storage Hubs are used in each redundant FC-AL of a PDC/O5000. Using two

or more Storage Hubs provides fault tolerance and supports the redundant

architecture implemented by the PDC/O5000.

You can use either the Storage Hub 7 (with 7 ports) or the Storage Hub 12

(with 12 ports). Using the Storage Hub 7 may limit the size of the

PDC/O5000. For more detailed information, refer to Chapter 4, “Cluster

Planning,” in this guide.

Refer to the Compaq StorageWorks Fibre Channel Storage Hub 7 Installation

Guide and the Compaq StorageWorks Fibre Channel Storage Hub 12

Installation Guide for further information about the Storage Hubs.

KGPSA-BC and KGPSA-CB Host Adapters

Each redundant Fibre Channel Fabric or redundant FC-AL contains a

dedicated set of KGPSA-BC or KGPSA-CB Host Adapters in every cluster

node. Each host adapter in a node should be connected to a different Fibre

Channel SAN Switch or Storage Hub.

If the cluster contains multiple redundant Fibre Channel Fabrics or multiple

redundant FC-ALs, then host adapters cannot be shared between them. Each

redundant Fibre Channel Fabric or redundant FC-AL must have its own set of

host adapters installed in each cluster node.


Compaq StorageWorks Secure Path failover software is installed on every

cluster node to ensure the proper operation of components along each I/O path.

For information about installing Secure Path, see the section

“Installing Secure Path for Windows 2000” in Chapter 5, “Installation and

Configuration.”

Gigabit Interface Converter-Shortwave

A Gigabit Interface Converter-Shortwave (GBIC-SW) module is installed at

both ends of a Fibre Channel cable. A GBIC-SW module must be installed in

each host adapter, active Fibre Channel SAN Switch or Storage Hub port, and

array controller.

GBIC-SW modules provide 100 MBps performance. Fibre Channel

cables connected to these modules can be up to 500 meters in length.

Fibre Channel Cables

Shortwave (multi-mode) fibre optic cables are used to connect the server

nodes, Fibre Channel SAN Switches or Storage Hubs, and array controllers in

a PDC/O5000.
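Every Fibre Channel cable carries a GBIC-SW module at each end, so the number of modules follows directly from the cabling plan. The Python sketch below is only an illustration, and the class and function names are hypothetical. It makes three assumptions:

■ Each host adapter has one cable to a Fibre Channel SAN Switch or Storage Hub.

■ All four HSG80 ports in every storage subsystem are cabled.

■ The Fibre Channel SAN Switches are not cascaded.

```python
# Rough Fibre Channel parts count for one cluster layout.
# Assumptions (hypothetical, for illustration): every host adapter has exactly
# one cable to a SAN Switch or Storage Hub, all four HSG80 ports in each
# subsystem are cabled, and switches are not cascaded.
from dataclasses import dataclass

MAX_FC_CABLE_METERS = 500       # shortwave cable limit with GBIC-SW modules
HSG80_PORTS_PER_SUBSYSTEM = 4   # two dual-port HSG80 Array Controllers


@dataclass
class FibreChannelLayout:
    nodes: int
    host_adapters_per_node: int
    storage_subsystems: int


def fc_cable_count(layout: FibreChannelLayout) -> int:
    """One cable per host adapter plus one per array controller port."""
    return (layout.nodes * layout.host_adapters_per_node
            + layout.storage_subsystems * HSG80_PORTS_PER_SUBSYSTEM)


def gbic_sw_count(layout: FibreChannelLayout) -> int:
    """A GBIC-SW module sits at both ends of every Fibre Channel cable."""
    return 2 * fc_cable_count(layout)


def overlong_cables(lengths_m: list) -> list:
    """Return the planned cable lengths that exceed the 500 m limit."""
    return [length for length in lengths_m if length > MAX_FC_CABLE_METERS]


if __name__ == "__main__":
    # The two-node, two-subsystem cluster in Figure 1-1.
    figure_1_1 = FibreChannelLayout(nodes=2, host_adapters_per_node=2,
                                    storage_subsystems=2)
    print("cables:", fc_cable_count(figure_1_1))   # 4 + 8 = 12
    print("GBIC-SW modules:", gbic_sw_count(figure_1_1))
    print("too long:", overlong_cables([15, 120, 650]))
```

The cluster in Figure 1-1 has two nodes with two host adapters each and two storage subsystems. For that layout, the sketch counts 12 cables and therefore 24 GBIC-SW modules: one in each host adapter, one in each of the 12 active switch or hub ports, and one in each of the 8 array controller ports.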


Configuring and Cabling the MA8000/EMA12000 Storage Subsystem Components

Configuring LUNs for Storagesets

If you install the optional Compaq StorageWorks Large LUN utility on your

PDC/O5000 cluster, you can distribute a maximum of 256 LUNs among the

four array controller ports in each storage subsystem.

If you do not install the Large LUN utility, you can distribute a maximum of

16 LUNs among the four array controller ports in each storage subsystem.

This 16-LUN restriction is imposed by the Compaq Secure Path software and

the MA8000/EMA12000 Storage Subsystems.

IMPORTANT: When offsets are used, always assign LUNs 0-99 to port 1 on each HSG80

Array Controller and assign LUNs 100-199 to port 2.
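You can check the LUN limits and the offset rule mechanically before you configure the array controllers. In this Python sketch, the plan format and all function names are hypothetical and are not an HSG80 or Secure Path interface. The plan is a dictionary that maps each LUN number to an array controller and port. The sketch also assumes that the plan uses unit offsets and that the Large LUN utility is not installed, so the 16-LUN limit applies.

```python
# Checks a LUN plan against the limits described above. The plan format
# (LUN number -> (controller, port)) is hypothetical and used only for
# illustration; it is not an HSG80 or Secure Path file format.
from typing import Dict, List, Tuple

Plan = Dict[int, Tuple[str, int]]  # LUN number -> (array controller "A"/"B", port 1/2)


def max_luns(large_lun_installed: bool) -> int:
    """LUNs allowed per storage subsystem, as stated in this section."""
    return 256 if large_lun_installed else 16


def expected_port(lun: int) -> int:
    """With unit offsets, LUNs 0-99 go on port 1 and LUNs 100-199 on port 2."""
    if 0 <= lun <= 99:
        return 1
    if 100 <= lun <= 199:
        return 2
    raise ValueError(f"LUN {lun} is outside the 0-199 offset ranges")


def check_offset_plan(plan: Plan) -> List[str]:
    """Return the problems found; an empty list means the plan passes.

    Assumes the Large LUN utility is NOT installed (16-LUN limit).
    """
    problems = []
    limit = max_luns(large_lun_installed=False)
    if len(plan) > limit:
        problems.append(f"{len(plan)} LUNs exceeds the limit of {limit}")
    for lun, (controller, port) in sorted(plan.items()):
        try:
            wanted = expected_port(lun)
        except ValueError as err:
            problems.append(str(err))
            continue
        if wanted != port:
            problems.append(f"LUN {lun} on controller {controller} port {port} "
                            f"belongs on port {wanted}")
    return problems


if __name__ == "__main__":
    print(check_offset_plan({0: ("A", 1), 100: ("A", 2), 150: ("B", 1)}))
```

In the sample plan, LUN 150 is flagged because it falls in the port 2 range (100-199) but is assigned to port 1.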


If you do not install the optional Large LUN utility in your cluster, there are

two accepted methods for distributing LUNs for storagesets:

■ You can distribute eight LUNs between the same-numbered ports on

both array controllers so that a total of 16 LUNs are assigned across all

four controller ports in each storage subsystem. For example, assign

LUNs 0 through 3 to array controller A port 1, LUNs 4 through 7 to

array controller B port 1, LUNs 100 through 103 to array controller A

port 2, and LUNs 104 through 107 to array controller B port 2. If array

controller A fails in multibus failover mode, Secure Path automatically

gives controller B control over I/O transfers for LUNs 0-3 on port 1 and

LUNs 100-103 on port 2.

■ You can distribute eight LUNs across all four array controller ports. For

example, assign LUNs 0 and 1 to array controller A port 1, LUNs 2 and

3 to array controller A port 2, LUNs 4 and 5 to array controller B port 1,

and LUNs 6 and 7 to array controller B port 2. In this configuration, a

single controller port can access all eight LUNs in the event of a path

failure on the other three ports.
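The two distribution methods, and the failover behavior described for each, can be modeled as simple LUN-to-path tables. This Python sketch is a simplified illustration and does not reproduce the actual Secure Path failover logic. It assumes that a failed controller's LUNs move to the same-numbered port on the surviving controller. It also assumes that a single surviving port can take over every LUN whose path failed. The names METHOD_1, METHOD_2, fail_controller, and fail_paths are hypothetical.

```python
# Models the two LUN distributions described above and what happens when a
# path or controller fails. This is a simplified illustration of multibus
# failover, not Secure Path's actual algorithm.
from typing import Dict, Set, Tuple

Path = Tuple[str, int]            # (array controller, port)
Plan = Dict[int, Path]            # LUN -> path currently serving it

# Method 1: eight LUNs on each pair of same-numbered ports (16 total).
METHOD_1: Plan = {
    **{lun: ("A", 1) for lun in range(0, 4)},
    **{lun: ("B", 1) for lun in range(4, 8)},
    **{lun: ("A", 2) for lun in range(100, 104)},
    **{lun: ("B", 2) for lun in range(104, 108)},
}

# Method 2: eight LUNs spread across all four ports.
METHOD_2: Plan = {0: ("A", 1), 1: ("A", 1), 2: ("A", 2), 3: ("A", 2),
                  4: ("B", 1), 5: ("B", 1), 6: ("B", 2), 7: ("B", 2)}


def fail_controller(plan: Plan, failed: str, survivor: str) -> Plan:
    """Move LUNs from the failed controller to the same port on the survivor."""
    return {lun: (survivor, port) if ctrl == failed else (ctrl, port)
            for lun, (ctrl, port) in plan.items()}


def fail_paths(plan: Plan, failed: Set[Path], fallback: Path) -> Plan:
    """Move LUNs on failed paths to a single surviving fallback path."""
    if fallback in failed:
        raise ValueError("fallback path has also failed")
    return {lun: fallback if path in failed else path
            for lun, path in plan.items()}


if __name__ == "__main__":
    after = fail_controller(METHOD_1, failed="A", survivor="B")
    print(sorted(lun for lun, path in after.items() if path == ("B", 1)))
    # Method 2: three ports fail and A port 1 carries all eight LUNs.
    down = {("A", 2), ("B", 1), ("B", 2)}
    print(set(fail_paths(METHOD_2, down, ("A", 1)).values()))
```

Under method 1, after controller A fails, controller B port 1 serves LUNs 0 through 7: its own LUNs 4-7 plus LUNs 0-3 taken over from controller A, as the text describes. Under method 2, the single remaining path ("A", 1) serves all eight LUNs.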

If you do install the optional Large LUN utility in your cluster, then you must

follow these guidelines:

■ Do not assign unit offsets to any connections.

■ Use unit identifiers D0 through D63 for port 1 connections and D100

through D163 for port 2 connections.

■ Distribute the LUNs evenly across all four array controller ports.
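The three Large LUN guidelines lend themselves to a pre-configuration check. In this Python sketch, the plan format, the connection names such as NODE1_HBA1, and the function names are hypothetical. "Evenly" is interpreted here, as an assumption, to mean that the LUN counts on the four array controller ports differ by at most one.

```python
# Checks a plan against the three Large LUN guidelines listed above.
# Connection names and the plan format are hypothetical illustrations.
import re
from collections import Counter
from typing import Dict, List, Tuple

UNIT_PATTERN = re.compile(r"D(\d+)")
ALL_PORTS = [(ctrl, port) for ctrl in ("A", "B") for port in (1, 2)]


def port_for_unit(unit: str) -> int:
    """D0-D63 belong on port 1 connections, D100-D163 on port 2."""
    match = UNIT_PATTERN.fullmatch(unit)
    if match is None:
        raise ValueError(f"{unit!r} is not a unit identifier such as D0")
    number = int(match.group(1))
    if 0 <= number <= 63:
        return 1
    if 100 <= number <= 163:
        return 2
    raise ValueError(f"{unit} is outside D0-D63 and D100-D163")


def check_large_lun_plan(units: Dict[str, Tuple[str, int]],
                         offsets: Dict[str, int]) -> List[str]:
    """Return the problems found; an empty list means the plan passes."""
    problems = []
    # Guideline 1: no unit offsets on any connection.
    problems += [f"connection {name} has offset {off}"
                 for name, off in offsets.items() if off != 0]
    # Guideline 2: unit numbers must match the port they are presented on.
    per_port = Counter()
    for unit, (ctrl, port) in units.items():
        try:
            if port_for_unit(unit) != port:
                problems.append(f"{unit} is on port {port}")
        except ValueError as err:
            problems.append(str(err))
        per_port[(ctrl, port)] += 1
    # Guideline 3, interpreted here as counts differing by at most one.
    counts = [per_port[p] for p in ALL_PORTS]
    if max(counts) - min(counts) > 1:
        problems.append(f"uneven distribution across ports: {counts}")
    return problems
```

For example, a plan that places D0 and D1 on port 1 of controllers A and B, places D100 and D101 on port 2, and gives every connection an offset of 0 returns an empty list. A plan that uses D64 or gives any connection a nonzero offset is flagged.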

SCSI Cabling Examples

SCSI Bus Addressing

The two array controllers in each MA8000/EMA12000 Storage Subsystem

controller enclosure receive I/O data from the cluster nodes, process it, and

distribute this data over single-ended UltraSCSI buses to as many as six disk

enclosures. Each bus has 16 possible SCSI bus IDs (0-15).

The following devices use a SCSI bus address ID and are classified as SCSI

bus “nodes”:

■ Array controllers (A and B)

■ EMUs in the controller enclosure

■ Physical disk drives in the disk enclosures


Every node on a SCSI bus must have a unique SCSI bus ID.

Table 2-2 shows the SCSI bus address ID assignments for the

MA8000/EMA12000 Storage Subsystem.

Table 2-2
SCSI bus address ID assignments for the MA8000/EMA12000 Storage Subsystem

SCSI Bus ID | SCSI Bus Node
0-5 | Physical drives in disk enclosure
6 | Array controller B
7 | Array controller A
8, 9 | EMUs in the controller enclosure
10-15 | Physical drives in disk enclosure

Each disk enclosure has two internal SCSI buses, with each bus controlling

half of the total available disk drive slots in the enclosure. The single-bus

I/O module places all physical disk drives in the enclosure on a single bus of

12 devices. The dual-bus I/O module maintains two internal buses with seven

physical disk drive slots on each bus.

For more detailed information about SCSI bus addressing for the

MA8000/EMA12000 Storage Subsystem, refer to the Compaq documentation

provided with the storage subsystem.
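Table 2-2 can be encoded as a lookup to check that every device on a bus has a unique ID. The encoding also appears consistent with the 12-device limit of the single-bus I/O module: once IDs 6 through 9 are taken by the array controllers and EMUs, 12 IDs (0-5 and 10-15) remain for disk drives. This is an inference from the table, not a statement from the Compaq documentation. The function names below are hypothetical.

```python
from typing import Dict, List

# Table 2-2 as a lookup: SCSI bus ID -> bus node that uses it.
SCSI_ID_MAP: Dict[int, str] = {
    **{i: "disk drive" for i in range(0, 6)},
    6: "array controller B",
    7: "array controller A",
    8: "EMU",
    9: "EMU",
    **{i: "disk drive" for i in range(10, 16)},
}


def drive_ids() -> List[int]:
    """SCSI IDs left for physical disk drives on one bus."""
    return sorted(i for i, node in SCSI_ID_MAP.items() if node == "disk drive")


def duplicate_ids(assignments: Dict[str, int]) -> Dict[int, List[str]]:
    """Return {id: [devices]} for every SCSI ID claimed by more than one device on a bus."""
    by_id: Dict[int, List[str]] = {}
    for device, scsi_id in assignments.items():
        if not 0 <= scsi_id <= 15:
            raise ValueError(f"{device}: SCSI ID {scsi_id} is outside 0-15")
        by_id.setdefault(scsi_id, []).append(device)
    return {i: devices for i, devices in by_id.items() if len(devices) > 1}


if __name__ == "__main__":
    print(len(drive_ids()), drive_ids())
```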

UltraSCSI Cables

Compaq recommends using the shortest cable length possible to connect disk

enclosures to the controller enclosure. The maximum supported cable length is

2 meters (6.6 ft). Refer to the documentation provided with your storage

subsystem for further information.

Using I/O Modules in the Controller Enclosure

As Figure 2-1 shows, both array controllers (A and B) connect to six I/O

modules in the controller enclosure. UltraSCSI cables are installed between

these I/O modules in the controller enclosure and one I/O module in each disk

enclosure.


Figure 2-1. SCSI bus numbers for I/O modules in the controller enclosure (rear view: array controllers A and B; I/O modules for SCSI Buses 1 through 6)

Using Dual-Bus I/O Modules in the Disk Enclosures

Figure 2-2 shows UltraSCSI cables installed between a controller enclosure

and three disk enclosures. When three or fewer disk enclosures are present in a

storage subsystem, use one dual-bus I/O module in each disk enclosure to

support up to 14 disk drives in each disk enclosure. Using single-bus I/O

modules limits you to 12 disk drives per disk enclosure.

Figure 2-2. UltraSCSI cabling between a controller enclosure and three disk enclosures (one dual-bus I/O module in each disk enclosure; six I/O modules and array controllers A and B in the controller enclosure)

Using Single-Bus I/O Modules in the Disk Enclosures

Figure 2-3 shows UltraSCSI cables installed between a controller enclosure

and six disk enclosures. When four or more disk enclosures are present in a

storage subsystem, use one single-bus I/O module in each disk enclosure.

Figure 2-3. UltraSCSI cabling between a controller enclosure and six disk enclosures (one single-bus I/O module in each disk enclosure; six I/O modules and array controllers A and B in the controller enclosure)
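The two cabling rules can be combined into a small planning function that picks the I/O module type and reports drive capacity. This Python sketch assumes that a dual-bus I/O module occupies two of the controller enclosure's six SCSI buses and a single-bus module occupies one; that assumption is consistent with Figures 2-2 and 2-3 (three dual-bus enclosures use all six buses) but is not stated explicitly in this guide. The function name is hypothetical.

```python
from typing import Tuple

SCSI_BUSES = 6           # I/O modules (SCSI Buses 1-6) in the controller enclosure
DUAL_BUS_DRIVES = 14     # two internal buses x 7 drive slots
SINGLE_BUS_DRIVES = 12   # one bus of 12 devices


def plan_disk_enclosures(enclosures: int) -> Tuple[str, int, int, int]:
    """Return (I/O module type, drives per enclosure, total drives, SCSI buses used).

    Assumption: a dual-bus I/O module consumes two of the controller
    enclosure's SCSI buses and a single-bus I/O module consumes one.
    """
    if not 1 <= enclosures <= SCSI_BUSES:
        raise ValueError("a storage subsystem supports one to six disk enclosures")
    if enclosures <= 3:
        module, per_enclosure, buses_each = "dual-bus", DUAL_BUS_DRIVES, 2
    else:
        module, per_enclosure, buses_each = "single-bus", SINGLE_BUS_DRIVES, 1
    return module, per_enclosure, enclosures * per_enclosure, enclosures * buses_each
```

For example, three disk enclosures with dual-bus modules hold up to 42 drives on all six buses, while six enclosures with single-bus modules hold up to 72 drives.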

Connecting EMUs Between MA8000/EMA12000 Storage Subsystems

For information about connecting the EMUs between enclosures in an

MA8000/EMA12000 Storage Subsystem and between different subsystems,

refer to the Compaq documentation provided with the storage subsystem.


I/O Path Configurations for Redundant Fibre Channel Fabrics

Overview of Fibre Channel Fabric SAN Topology

Fibre Channel standards define a multi-layered architecture for moving data

across the storage area network (SAN). This layered architecture can be

implemented using the Fibre Channel Fabric or the Fibre Channel Arbitrated

Loop (FC-AL) topology. The PDC/O5000 supports both topologies.

A redundant Fibre Channel Fabric consists of two to four Fibre Channel SAN Switches installed between the host adapters in a PDC/O5000’s cluster nodes and the array controllers in the shared storage subsystems. Fibre Channel SAN Switches provide the full 100 MBps of bandwidth on each switch port. Adding devices to an FC-AL Storage Hub divides the hub’s shared bandwidth among more devices, whereas adding devices to a Fibre Channel SAN Switch increases the switch’s aggregate bandwidth.
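The contrast between hub and switch bandwidth can be illustrated with an idealized calculation. This Python sketch assumes the FC-AL loop also runs at 100 MBps and ignores protocol overhead and contention, so the figures show the trend only, not measured throughput. The function names are hypothetical.

```python
PORT_MBPS = 100  # full bandwidth per Fibre Channel SAN Switch port, as stated above


def hub_share_mbps(devices: int, loop_mbps: float = PORT_MBPS) -> float:
    """Idealized per-device bandwidth when `devices` share one FC-AL loop."""
    return loop_mbps / devices


def switch_aggregate_mbps(ports_in_use: int, port_mbps: float = PORT_MBPS) -> float:
    """Idealized aggregate bandwidth of a switch whose ports each run at full speed."""
    return port_mbps * ports_in_use


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(n, hub_share_mbps(n), switch_aggregate_mbps(n))
```

Doubling the number of devices halves each device's share on the hub, but doubles the aggregate bandwidth on the switch.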

Redundant Fibre Channel Fabrics


A redundant Fibre Channel Fabric consists of the PDC/O5000 cluster

hardware used to connect host adapters to a particular set of shared storage

devices using Fibre Channel SAN Switches. Each redundant Fibre Channel

Fabric consists of the following hardware:

■ From two to four host adapters in each node

■ From two to four Fibre Channel SAN Switches

■ MA8000/EMA12000 Storage Subsystems, each containing two

dual-port HSG80 Array Controllers

■ Fibre Channel cables used to connect the host adapters to the Fibre

Channel SAN Switches and the Fibre Channel SAN Switches to the

array controllers

■ GBIC-SW modules installed in host adapters, Fibre Channel SAN

Switches, and array controllers
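The hardware list above can serve as a planning checklist. The following Python sketch checks the component counts of a planned redundant Fibre Channel Fabric against that list; the `RedundantFabric` class is hypothetical, cables and GBIC-SW modules are not modeled, and the minimum of one storage subsystem is an added assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RedundantFabric:
    host_adapters_per_node: int
    san_switches: int
    storage_subsystems: int
    controllers_per_subsystem: int = 2  # two dual-port HSG80 Array Controllers


def fabric_problems(fabric: RedundantFabric) -> List[str]:
    """Compare a planned redundant Fibre Channel Fabric with the hardware list above."""
    problems: List[str] = []
    if not 2 <= fabric.host_adapters_per_node <= 4:
        problems.append("each node needs two to four host adapters")
    if not 2 <= fabric.san_switches <= 4:
        problems.append("the fabric needs two to four Fibre Channel SAN Switches")
    if fabric.storage_subsystems < 1:
        problems.append("at least one MA8000/EMA12000 Storage Subsystem is required")
    if fabric.controllers_per_subsystem != 2:
        problems.append("each storage subsystem needs two HSG80 Array Controllers")
    return problems
```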

IMPORTANT: For detailed information about cascading two Fibre Channel SAN Switches,

refer to the latest Compaq StorageWorks documentation. This guide does not document

cascaded configurations for the Fibre Channel SAN Switch.


A redundant Fibre Channel Fabric consists of two to four individual fabrics. The number of fabrics is determined by the number of host adapters installed in the redundant Fibre Channel Fabric: two host adapters create two fabrics, and four host adapters create four fabrics.

Figure 2-4 shows a two-node PDC/O5000 with a redundant Fibre Channel

Fabric that contains four fabrics, one for each host adapter.

Figure 2-4. Two-node PDC/O5000 with a four-fabric redundant Fibre Channel Fabric (two host adapters in each of Node 1 and Node 2; Fibre Channel SAN Switches #1 and #2; Storage Subsystems #1 and #2, each with array controllers A and B and ports P1 and P2)

Used in conjunction with the I/O path failover capabilities of Compaq Secure

Path software, this redundant Fibre Channel Fabric configuration gives cluster

resources increased availability and fault tolerance.


Multiple Redundant Fibre Channel Fabrics

The PDC/O5000 supports the use of multiple redundant Fibre Channel Fabrics

within the same cluster. You can install additional redundant Fibre Channel

Fabrics in a PDC/O5000 to:

■ Increase the amount of shared storage space available to the cluster’s nodes. Each redundant Fibre Channel Fabric can connect to a limited number of MA8000/EMA12000 Storage Subsystems; this limit is set by the number of ports available on the fabric’s Fibre Channel SAN Switches. Storage subsystems connected to a redundant Fibre Channel Fabric are available only to the host adapters connected to that same fabric.

■ Increase the cluster’s I/O performance.

Adding a second redundant Fibre Channel Fabric to the cluster involves

duplicating the hardware components used in the first redundant Fibre Channel

Fabric.

The maximum number of redundant Fibre Channel Fabrics you can install in a

PDC/O5000 is restricted by the number of host adapters your Compaq servers

support. Refer to the Compaq server documentation for this information.
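Both limits described above, switch ports per fabric and host adapters per server, reduce to simple integer arithmetic. This Python sketch is a hypothetical estimate only: it assumes each node connects one host adapter to each switch, that every switch in a redundant fabric is cabled identically, and that each storage subsystem uses a fixed number of ports on each switch. The switch port counts in the example are illustrative, not specifications of any Compaq switch.

```python
def max_subsystems_per_fabric(switch_ports: int, nodes: int, controller_ports_per_subsystem: int) -> int:
    """Hypothetical estimate of storage subsystems one redundant fabric can serve.

    Assumes one switch port per cluster node (one host adapter per node per
    switch) and `controller_ports_per_subsystem` ports per storage subsystem
    on each switch; because every switch is cabled alike, one switch's port
    budget sets the fabric's limit.
    """
    free_ports = switch_ports - nodes
    if free_ports < 0:
        raise ValueError("not enough switch ports for the host adapters")
    return free_ports // controller_ports_per_subsystem


def max_redundant_fabrics(adapters_supported_per_node: int, adapters_per_fabric_per_node: int) -> int:
    """Redundant fabrics limited by the number of host adapters each server supports."""
    return adapters_supported_per_node // adapters_per_fabric_per_node
```

Under these assumptions, a hypothetical 16-port switch in a two-node cluster with two controller ports per subsystem leaves room for seven storage subsystems, and a server that supports six host adapters can host three redundant fabrics that use two adapters each.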

Figure 2-5 shows a two-node PDC/O5000 with two redundant Fibre Channel

Fabrics. In this example, each redundant Fibre Channel Fabric has its own pair

of host adapters in each node, a pair of Fibre Channel SAN Switches, and two

MA8000/EMA12000 Storage Subsystems. In Figure 2-5, the hardware

components that constitute the second redundant Fibre Channel Fabric are

shaded.


Figure 2-5. Two-node PDC/O5000 with two redundant Fibre Channel Fabrics (four host adapters in each of Node 1 and Node 2; each redundant fabric has its own Fibre Channel SAN Switches #1 and #2 and Storage Subsystems #1 and #2, each subsystem with array controllers A and B and ports P1 and P2)

Maximum Distances Between Nodes and Shared Storage Subsystem Components

By using standard short-wave Fibre Channel cables with Gigabit Interface

Converter-Shortwave (GBIC-SW) modules, the following maximum distances

apply:

■ Each MA8000/EMA12000 Storage Subsystem’s controller enclosure

can be placed up to 500 meters from the Fibre Channel SAN Switches.

■ Each Fibre Channel SAN Switch can be placed up to 500 meters from

the host adapters in the cluster nodes.


Figure 2-6 illustrates these maximum cable distances for a redundant Fibre

Channel Fabric.

Figure 2-6. Maximum distances between PDC/O5000 cluster nodes and shared storage subsystem components in a redundant Fibre Channel Fabric (500 meters maximum between the host adapters and the Fibre Channel SAN Switches, and 500 meters maximum between the switches and the storage subsystems)
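The 500-meter limits can be checked against a planned cable layout. In this Python sketch, the link names are hypothetical labels; the only figure taken from this guide is the 500-meter limit per short-wave link. Because a host adapter reaches a controller enclosure through one switch (cascaded switches are not covered here), the longest node-to-controller run is two such links.

```python
from typing import Dict, List

MAX_GBIC_SW_RUN_M = 500  # per-link limit for short-wave cables with GBIC-SW modules


def overlong_links(link_lengths_m: Dict[str, float]) -> List[str]:
    """Names of links longer than the 500-meter short-wave limit."""
    return sorted(name for name, length in link_lengths_m.items() if length > MAX_GBIC_SW_RUN_M)


def max_node_to_controller_m() -> int:
    """Longest node-to-controller-enclosure run: host adapter -> switch -> controller, 500 m each."""
    return 2 * MAX_GBIC_SW_RUN_M
```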

I/O Data Paths in a Redundant Fibre Channel Fabric

Within a redundant Fibre Channel Fabric, an I/O path connection exists

between each host adapter and all four array controller ports in each storage

subsystem.
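Because every host adapter has a path to all four array controller ports in every storage subsystem, the number of I/O paths is the product of host adapters, subsystems, and four. This Python sketch enumerates them; the adapter and subsystem names are hypothetical labels.

```python
from itertools import product
from typing import List, Tuple

CONTROLLER_PORTS = [("A", 1), ("A", 2), ("B", 1), ("B", 2)]  # four ports per storage subsystem


def io_paths(host_adapters: List[str], subsystems: List[str]) -> List[Tuple[str, str, str, int]]:
    """One (host adapter, subsystem, controller, port) tuple per I/O path described above."""
    return [
        (adapter, subsystem, controller, port)
        for adapter, subsystem, (controller, port) in product(host_adapters, subsystems, CONTROLLER_PORTS)
    ]
```

For the configuration in Figure 2-4 (two nodes with two host adapters each and two storage subsystems), this yields 4 x 2 x 4 = 32 paths.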


Host Adapter-to-Fibre Channel SAN Switch I/O Data Paths

Figure 2-7 highlights the I/O data paths that run between the host adapters and

the Fibre Channel SAN Switches. Each host adapter has its own I/O data path.
