Page 1

HP Cluster Test Administration Guide

HP Part Number: 5900-3310

Published: January 2014

Edition: 6

Page 2

© Copyright 2010, 2014 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial

Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under

vendor’s standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products

and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as

constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Acknowledgments

Intel®, Itanium®, Pentium®, Intel Inside®, Intel® Xeon®, and the Intel Inside logo are trademarks or registered trademarks of Intel Corporation or

its subsidiaries in the United States and other countries. Microsoft®, Windows®, Windows® XP, and Windows NT® are U.S. registered trademarks

of Microsoft Corporation. UNIX® is a registered trademark of The Open Group.

Revision history

Publication dateEdition numberManufacturing part number

January 201465900-3310

September 201255900-2457

October 201145900-1970

May 201135070-6699

October 201025900-1262

September 201015900-1039

Page 3

Contents

1 Varieties of Cluster Test................................................................................5

CT Image................................................................................................................................5

CT Image using a network.........................................................................................................5

RPM........................................................................................................................................6

2 The Cluster Test GUI....................................................................................7

Starting Cluster Test...................................................................................................................7

Files generated by Cluster Test....................................................................................................7

Running cluster tests..................................................................................................................7

Configuration settings.........................................................................................................10

Running tests in a batch......................................................................................................11

Using scripts to run tests......................................................................................................12

Test descriptions.................................................................................................................14

Monitoring tests and viewing results..........................................................................................15

The nodes monitoring window.............................................................................................15

The test output window.......................................................................................................16

Performance analysis..........................................................................................................18

Test report.........................................................................................................................19

Checking the InfiniBand fabric..................................................................................................20

The Cluster Test toolbar menus..................................................................................................21

3 The accelerator test GUI............................................................................22

Starting accelerator tests..........................................................................................................22

Files generated by accelerator test............................................................................................22

Running accelerator tests.........................................................................................................23

4 Cluster Test procedure as recommended by HP.............................................26

Additional software.................................................................................................................26

Configuring Cluster Test when using RPM...................................................................................26

Accelerator test procedure.......................................................................................................27

The Cluster Test procedure.......................................................................................................29

5 The performance monitor...........................................................................33

The performance monitor utility................................................................................................33

The xperf utility......................................................................................................................35

6 Cluster Test tools.......................................................................................36

Hardware Inventory................................................................................................................36

Firmware Summary.................................................................................................................36

Server health check.................................................................................................................37

Excluding the head node from tests...........................................................................................38

Disk Scrubber........................................................................................................................39

7 Running tests in parallel.............................................................................41

8 Creating and changing per node files.........................................................42

An example per-node directory.................................................................................................42

An example cloned per-node directory......................................................................................42

9 NFS performance tuning...........................................................................43

10 Troubleshooting......................................................................................44

Detecting new hardware..........................................................................................................44

Troubleshooting Cluster Test.....................................................................................................45

11 Support and other resources.....................................................................46

Scope of this document...........................................................................................................46

Contents 3

Page 4

Intended audience..................................................................................................................46

Contacting HP .......................................................................................................................46

Before you contact HP........................................................................................................46

HP contact information.......................................................................................................46

Documentation feedback....................................................................................................46

New and changed information in this edition ............................................................................47

Related information.................................................................................................................47

Documentation..................................................................................................................47

Websites..........................................................................................................................47

Typographic conventions.........................................................................................................48

Customer self repair................................................................................................................48

A Useful files and directories.........................................................................50

B Utility commands......................................................................................51

analyze.................................................................................................................................51

apply_new_model_updates......................................................................................................51

archive_logs..........................................................................................................................51

checkadm..............................................................................................................................51

checkic..................................................................................................................................51

conrep..................................................................................................................................52

cpu_rate................................................................................................................................52

getMP...................................................................................................................................52

hponcfg................................................................................................................................52

ib_fabric_check......................................................................................................................52

inspect_ib_fabric.pl.................................................................................................................54

ipmitool.................................................................................................................................57

light......................................................................................................................................57

list_messages.........................................................................................................................57

pdsh.....................................................................................................................................58

run_cluster_script.pl.................................................................................................................58

setMP....................................................................................................................................58

C Sample test output....................................................................................59

CrissCross.............................................................................................................................59

Test4.....................................................................................................................................61

Pallas....................................................................................................................................62

Stream..................................................................................................................................65

Disk Test................................................................................................................................67

Linpack.................................................................................................................................68

D Documentation feedback...........................................................................72

Glossary....................................................................................................73

Index.........................................................................................................74

4 Contents

Page 5

1 Varieties of Cluster Test

All the Cluster Test varieties are designed to:

• verify the validity of a cluster configuration.

• test the functionality of the cluster as a whole, with emphasis on testing interconnect, including

the interconnect switch, cabling, and interface cards.

• provide stress testing on the cluster nodes.

Cluster Test detects failures of hardware and network connections to the node level early, so the

failed components can be replaced or corrected before cluster software (HP Insight Cluster

Management Utility (CMU), XC, Scali, Scyld, etc.) installation is started. This makes cluster software

integration much easier and faster, particularly on a complex solution with several hundred nodes.

Cluster Test is available in the following versions:

• Image

• RPM

• Ctlite

The Cluster Test Image and RPM versions have a common GUI and suite of tests. Ctlite is a

lightweight text-based CLI version.

For the requirements of each version of Cluster Test, see the HP Cluster Test Installation Guide

available at http://www.hp.com/go/ct-docs.

CT Image

Cluster Test Image is installed on one or more head nodes by means of a DVD or USB media. This

method destroys any information on the head node's installation hard drive. The Cluster Test Image

compute node installation uses a diskless setup, which does not destroy or alter any information

on the compute nodes.

For Cluster Test Image it is recommended that even when the cluster solution comes with disk drives

in its compute nodes, the diskless test set up should be followed, for both time savings during cluster

test and for simplicity.

The Image version of Cluster Test:

• allows you to configure and test a High-Performance Computing (HPC) cluster without being

• contains CentOS, libraries, software packages, scripts, and CT GUIs.

• provides the ability to test and diagnose HPC cluster hardware that doesn't yet have an OS

• configures compute nodes in a diskless cluster environment – compute nodes are not altered

• requires installation on the head nodes' hard drives.

• may be distributed via DVD ISO image, USB flash drive, or over the network.

• allows compute nodes to boot over Mellanox 10GigE.

a Linux or cluster expert.

installed.

any way.

CT Image using a network

Cluster Test Image can be installed on a cluster using a network installation server. A network

installation server is a separate Linux server required to serve the Cluster Test Image. This server

must have an available NIC to serve the image, and run DHCP, TFTP, and NFS on this NIC. This

method is useful for installing Cluster Test on multiple head nodes.

CT Image 5

Page 6

RPM

Cluster Test RPM is installed on an existing cluster as an additional software package. The current

cluster settings are not modified. Once Cluster Test RPM is installed and set up, the testing process

is the same as Cluster Test Image. The RPM version of Cluster Test:

• is available as an RPM.

• allows someone with average computing skills to set up and test a High-Performance Computing

(HPC) cluster in very little time.

• contains software packages, scripts, and CT GUIs.

• does not include the Linux OS – you test the cluster using your existing OS. The RPM kit includes

binaries for RHEL6 and the equivalent CentOS releases.

• requires the OS, drivers, and other software already installed on each cluster node.

• is a good solution for clusters that are already up and running.

• currently supports only X86–64 systems.

• includes accelerator tests for Nvidia GPUs.

Cluster Test RPM files are available from www.hp.com/go/ct-download.

The Cluster Test RPM file is Clusterx64_rpm-vx.x-XXXX.bin.

6 Varieties of Cluster Test

Page 7

2 The Cluster Test GUI

Starting Cluster Test

The entire suite of cluster tests can be invoked through the Cluster Test interface. Start Cluster Test

with the command

# /opt/clustertest/bin/testnodes.pl

A directory for Cluster Test output messages will be created under /opt/clustertest/logs,

if it does not already exist. Sequencing will be set to avoid conflict with any other testnodes.pl

processes already running. See “Running tests in parallel” (page 41) for important information

regarding running multiple cluster tests.

NOTE: Make sure the X server is running and the DISPLAY environment variable is set

appropriately.

If you want to test accelerators, first validate the performance of the cluster with the procedures in

this chapter, then perform the accelerator tests described in “The accelerator test GUI” (page 22).

NOTE: Accelerator tests are only available with the RPM version of Cluster Test.

Files generated by Cluster Test

As you run the tests, output is collected in log files. You can set the log directory using command

line options. For example, testnodes.pl <logdirectory>.

Upon each new invocation of Cluster Test, a new directory is generated under /opt/

clustertest/logs with the current timestamp (year/month/day/time). The log, error, and

script files are saved in this directory.

NOTE: Remove all files when you are finished testing with Cluster Test.

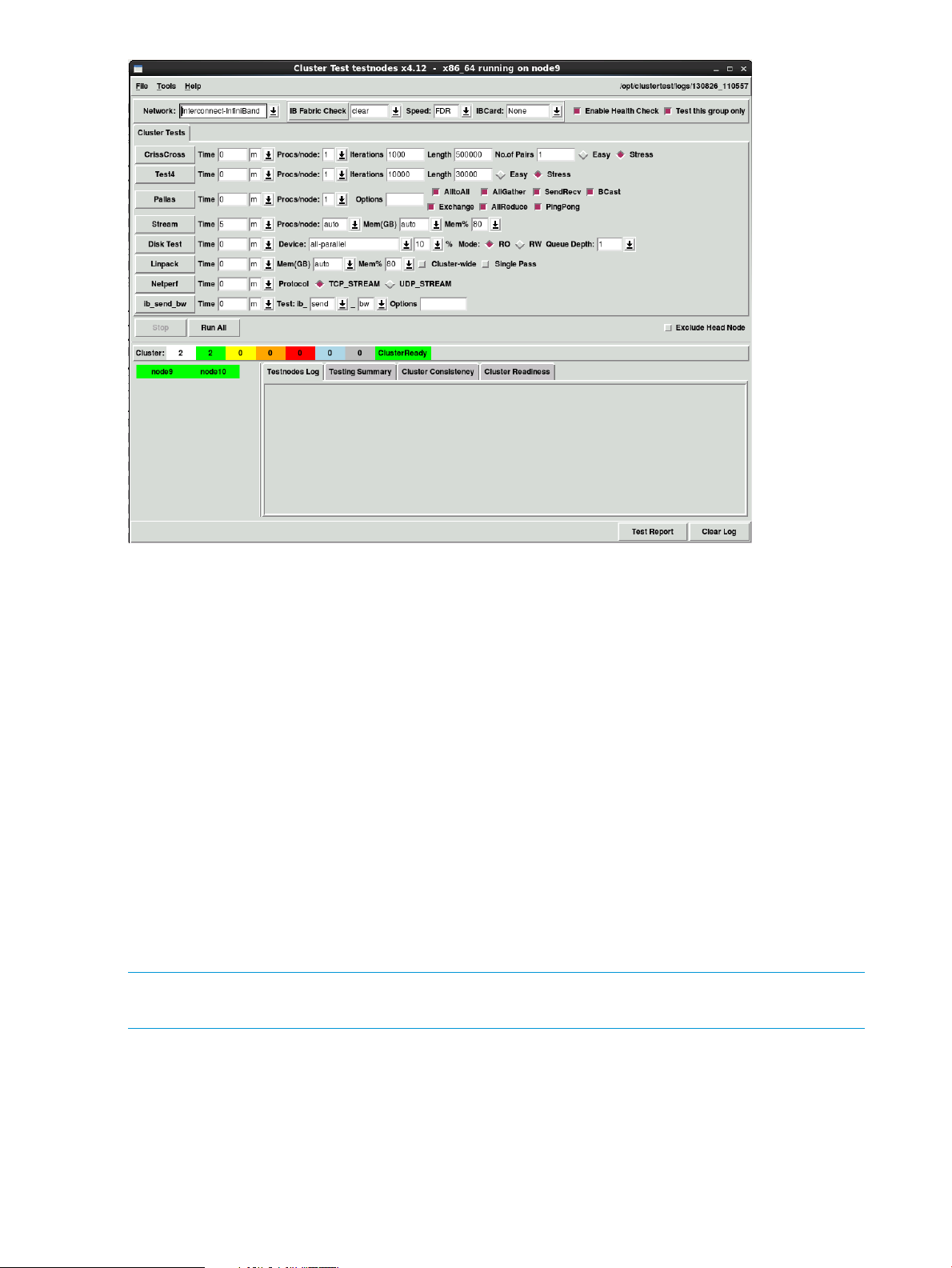

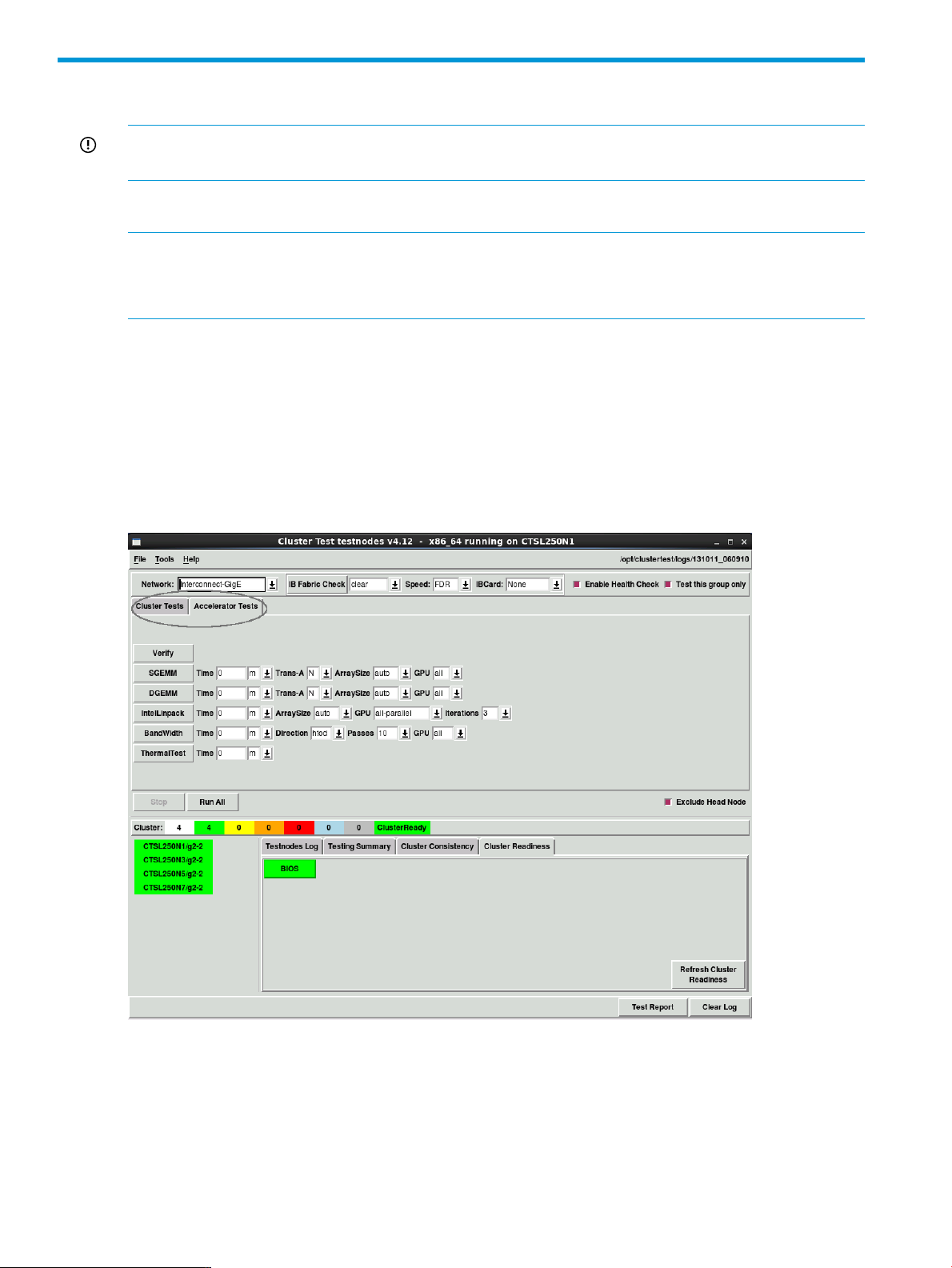

Running cluster tests

Cluster Test includes the following test buttons:

• CrissCross

• Test4

• Pallas

• Stream

• Disk Test

• Linpack

• Netperf

• InfiniBand verbs tests (ib_send_bw)

Tests are described in detail in “Test descriptions” (page 14).

Starting Cluster Test 7

Page 8

To start an individual test, select that test's button. Test parameters are on the same row as the test

button.

For each of the tests, the run time can be specified in minutes (m), hours (h), or days (d). The default

run time of 0 will run the test for one pass. Normally, the default values are good enough and

won't need to be changed. However, in a large cluster solution, these values might need to be

changed to reduce the load on CPUs or shorten the test time.

When a test is running, all test buttons are disabled (in grey color).

After each test, all nodes in the test are checked for disk and memory errors – the test fails if the

error count exceeds the threshold.

The Network: pull-down is at the top of the interface. This is for selecting the cluster interconnect

type: Admin, Interconnect, or Alternate networks. The Admin network can be a GigE or 10GigE

network, the Interconnect and Alternate networks may be GigE, InfiniBand, 10GigE, or None, if

they have not been configured. For example, if you are testing an InfiniBand-based cluster with

one IB connection per node, you will see Admin, and Interconnect-InfiniBand as options in the

pull-down. If you are testing a dual-rail IB cluster, you will see Admin, Interconnect-InfiniBand,

Alternate-InfiniBand, and Combined-InfiniBand. In this case, Interconnect-InfiniBand will test the

first rail, Alternate-InfiniBand will test the second rail, and Combined-InfiniBand will use both rails

for testing.

NOTE: Only MPI applications can use both rails for testing; the Ibverbs tests (ib_send_bw,

ib_read_bw, etc.) and Netperf will only work on one rail at a time.

The Stop button halts the current test. When no test is running, this button is disabled.

The Test this group only check box allows tests to be run on either a group of nodes or on the

whole cluster. If this box is checked, the tests will run on the group of nodes that includes the head

node and compute nodes under its control. If this box is unchecked, the tests run on the whole

cluster. When there is only one head node in the cluster solution, Test this group only has no effect.

8 The Cluster Test GUI

Page 9

The Clear Log button clears the Cluster Test interface output window. The Test Report button allows

users to generate a summary pass/fail report from all test runs. The test report can be found in the

current test directory under /opt/clustertest/logs.

Running cluster tests 9

Page 10

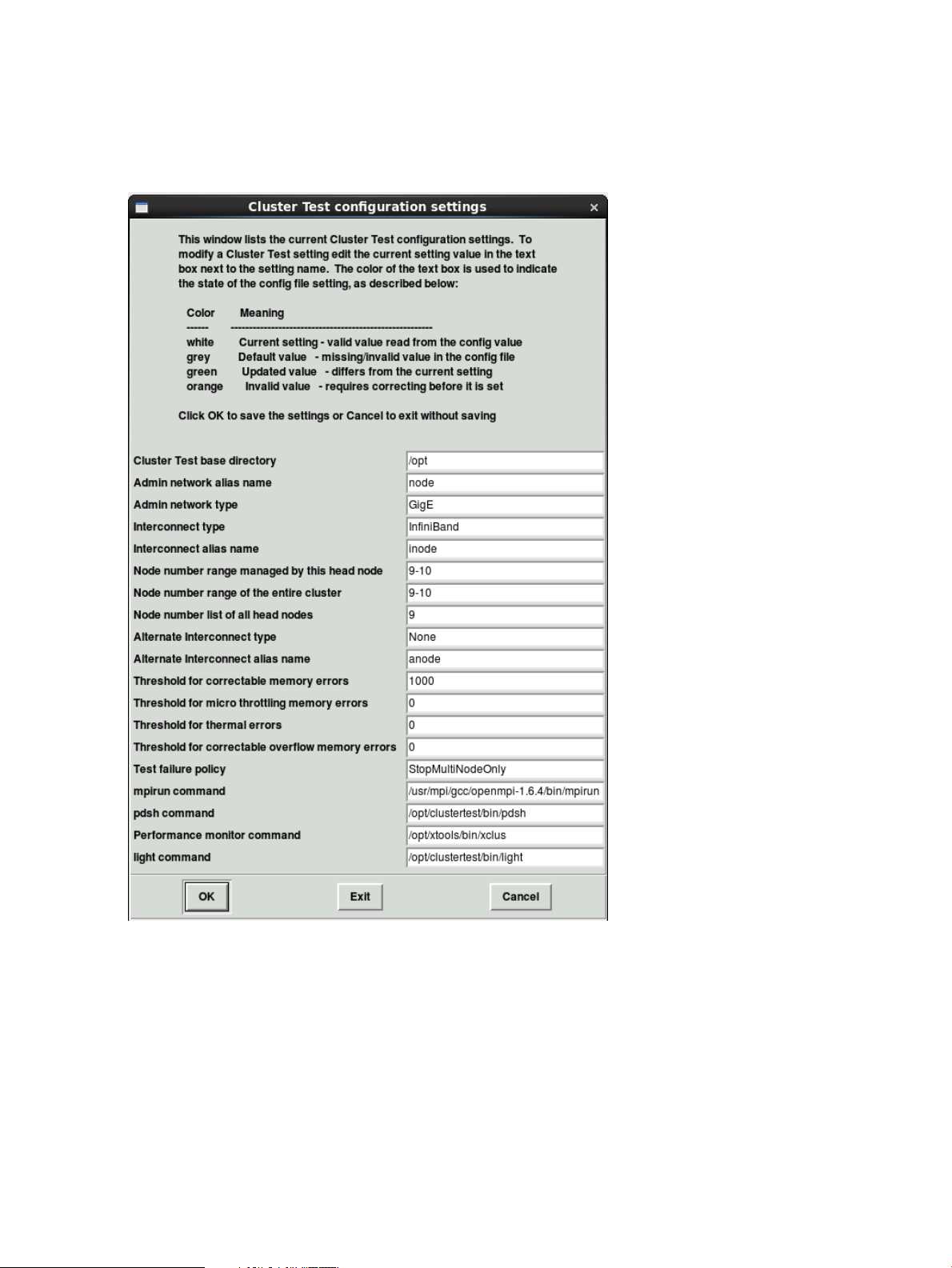

Configuration settings

Cluster Test provides an interface to modify the Cluster Test configuration settings. The window

displays the current configuration settings and allows you to modify any setting. You can access

the Cluster Test configuration settings dialog box from the Cluster Test toolbar: File→Config File

Settings .

This window displays the current admin and interconnect node name settings along with the range

of nodes being tested. Simply select a field and modify it to make any changes. The color of the

field will change to indicate if the updated setting is valid (green) or invalid (orange). The “Node

number range managed by this head node” setting is the range of nodes being tested when Test

this group only is selected on the Cluster Test interface. The “Node number range of the entire

cluster” setting is the range of nodes being tested when the Test this group only button is not

selected.

Both Open MPI and HP MPI may be used for the tests, provided they are installed. By default,

Open MPI is used. To use Open MPI, select File→Config File Settings... and edit the “mpirun

command” field to /usr/mpi/gcc/openmpi-<version>/bin/mpirun. Look in the /usr/

mpi/gcc file on your system to find what version number to use. You can change back to HP MPI

by changing the “mpirun command” field to /opt/hpmpi/bin/mpirun.

10 The Cluster Test GUI

Page 11

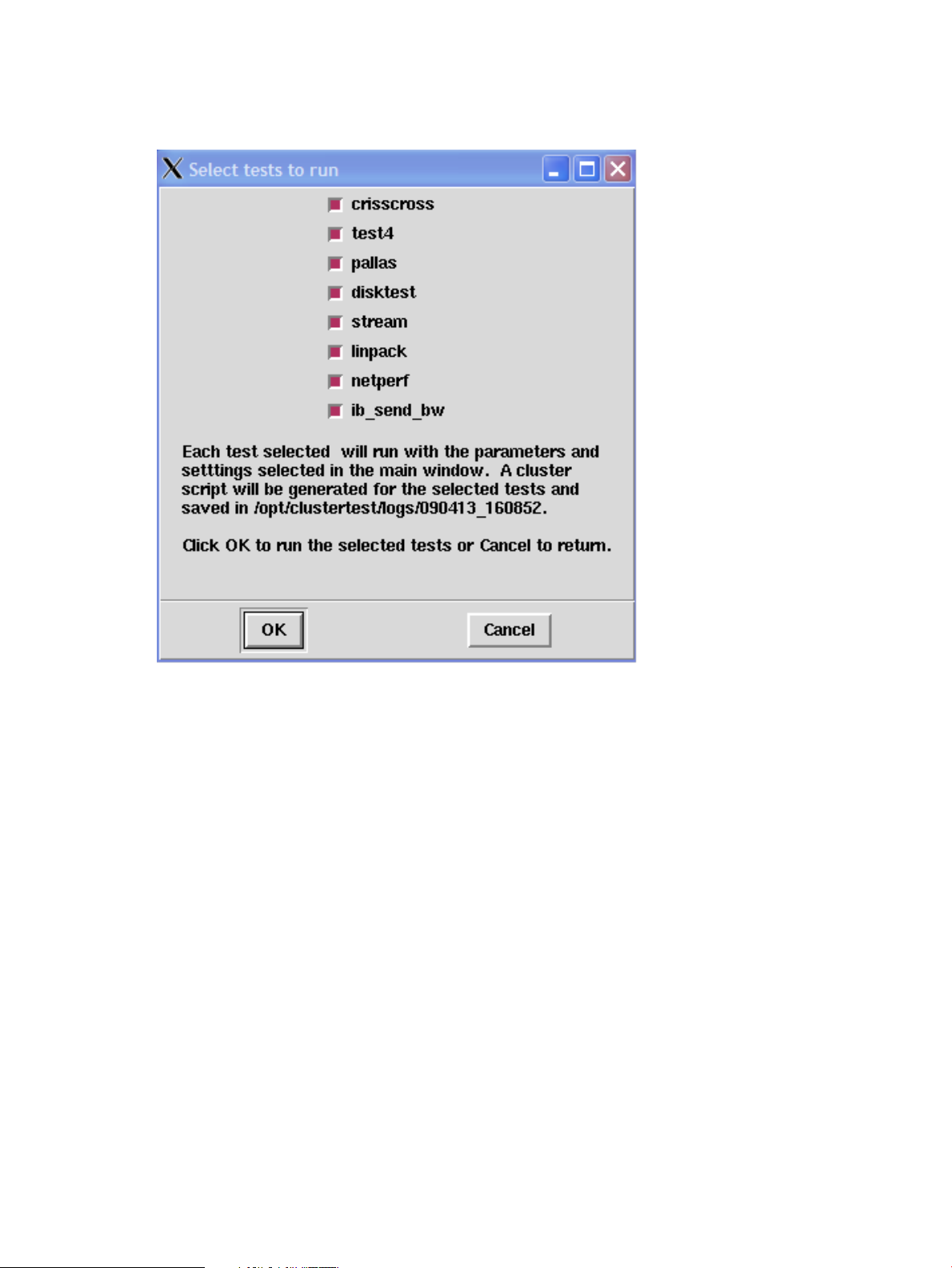

Running tests in a batch

The Run All button runs a selection of tests serially. Choose the tests for the batch from the Run All

dialog box.

Individual tests can be selected by checking or un-checking the boxes.

The order test are run in may be changed by editing the Run Order field. This number expresses

the ascending order in which tests will be run. To change the order, edit this number to a lower

or higher value. Duplicate numbers are allowed. For example, if you select only the tests CrissCross,

Test4, and Pallas, and change the CrissCross Run Order to 3, the run order may be Test4, CrissCross,

and Pallas, or it may be Test4, Pallas, and CrissCross.

Selected tests are run serially in the specified order in a loop for an iteration, a duration, or

continuously. Based on the selected Run Options, the test run will loop:

• iteration – for the number of times specified in this field. The default is 1 iteration.

• duration – for the duration in hours and minutes starting when the dialog box OK button is

clicked. The test run stops when the time is expired. Specify the duration in hours and minutes

using the form HH:MM in 24-hour-clock format. Days can be expressed in 24 hour units. For

example, 3 days is 72 hours.

• continuous – continuously until the Stop button is clicked in the main window.

The dialog box lists the output file names for all the tests. Each selected test will run and a cluster

script will be saved in the current directory. When one of the tests is running, the Run All button is

disabled.

The ib_send_bw test is disabled if InfiniBand is not selected.

Running cluster tests 11

Page 12

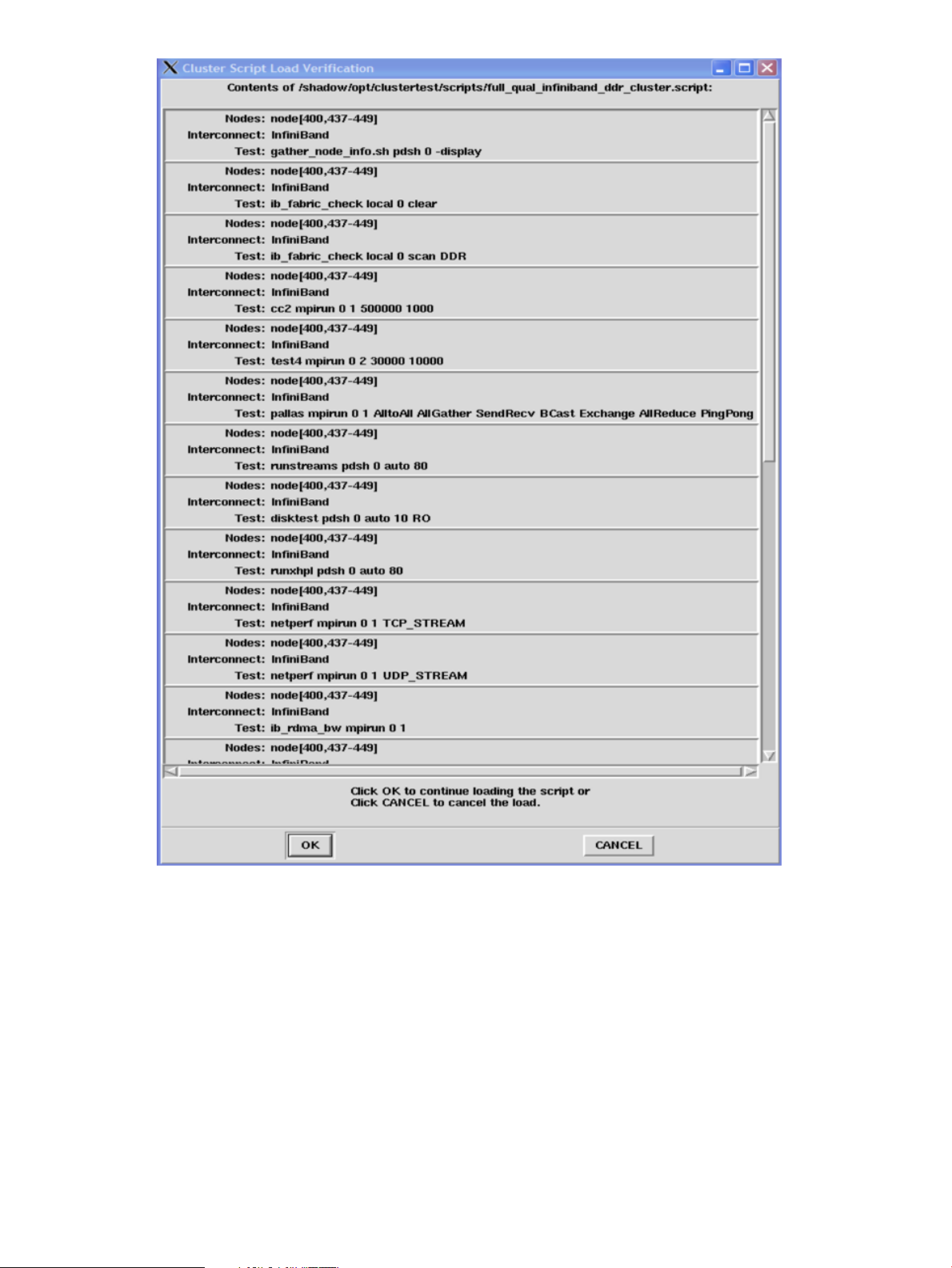

Using scripts to run tests

The Load Cluster Script window (File Load Script) allows you to repeat a previous test. Each time

a test is run, the test parameters selected in the GUI are saved to a script file. This script file is then

added to a cluster script file that also includes the selected interconnect and the list of active nodes

at the time of the test’s invocation. A single cluster script can contain one or more test scripts, as

is the case when Run All is used. When a cluster test script is loaded and run, neither a new test

script nor a new cluster script is created. By default, the scripts are written to and listed from the

current logs directory.

After a script is selected, a confirmation window is displayed listing the tests that will be run by

that cluster script. You have the option to continue (OK) or cancel (CANCEL) loading the selected

cluster script.

12 The Cluster Test GUI

Page 13

The run_cluster_script command basically does the same thing as File Load Script, except

you can use run_cluster_script to run tests on independent nodes in the same cluster in

parallel. See “Running tests in parallel” (page 41) for important information regarding running

multiple cluster tests.

Running cluster tests 13

Page 14

Test descriptions

CrissCross

In the CrissCross test, nodes take turns sending data packets to every other process. This test is

used to determine the maximum point-to-point bandwidth for the interconnect network. CrissCross

can be run in Easy or Stress mode. The Easy mode provides a basic connectivity test with small

packets. In this mode the actual transfer rates will vary significantly from node to node because of

the small packet size. The default parameters are 1 process per node and 300 bytes for an Easy

test. Once the Easy test passes, the Stress option should be run. A much larger packet, depending

on the interconnect type, is required for a network Stress test. The Stress test is expected to return

true bandwidth values, which are generally greater than 3000 MBs for a QDR IB network.

Test4

In this test, the nodes send small packets to all other nodes at the same time. This test basically

floods the network with data, and in the process also stresses the CPUs. The default number of

CPUs per node is the number of processors (cores) the node has. It is recommended that this test

should not be run in a large group of nodes, because the time it takes to run the test grows

exponentially with the number of nodes. The time setting for Test4 limits the runtime so a setting of

3 hours takes close to 3 hours regardless of how many iterations were specified. Test4 can be run

in Easy or Stress modes. In easy mode, the test uses smaller data packets and is intended to verify

basic connectivity of the selected network. Stress mode is a much longer test that more fully verifies

network performance.

Pallas

This is the industry standard test suite formerly known as Pallas, now known as IMB (Intel MPI

Benchmark). Only a few of the original tests are available: AllGather, SendRecv, Bcast, Exchange,

AllReduce, AlltoAll, and PingPong. You may select individual tests with their check boxes. The

number of processors (cores) can be selected. Most of the current platforms have up to 4 cores,

maximum.

Stream

This test is a standard memory benchmark. Depending on the hardware configuration, the memory

size selected for this test should be a little less than the actual memory size on the node. Otherwise,

the test will take a very long time to complete. The default setting, auto, will select an amount of

memory based on the available memory on each node. You can control the percentage of memory

used with the Mem% pull-down. You can also explicitly select a different amount of memory using

the Mem(GB) pull-down. You can also select the number of CPUs used in this test.

Disk test

This test takes the parameters:

• Disk device

• Percentage of the disk to be tested

• Queue Depth

For Queue Depth:, the default value of 4 will produce bandwidth measurements that are more

stable than using a queue depth of 0. If auto is entered for Device, then the first block device will

be automatically selected. If all or all-parallel is entered for Device, then all block devices on

each node will be tested in parallel. If all-sequential is entered for the device, then all block devices

on each node will be tested on at a time. On the head node (the node serving NFS to the group)

the test will run a read-only (RO) test. On all other nodes, the test can be run read-only or read-write

(RW).

14 The Cluster Test GUI

Page 15

Linpack

Linpack is an industry standard cluster performance test used for submitting numbers to the Top

500 Supercomputers list. The test is implemented to run separately on each node using all CPU

cores and about 80% or less of memory. Two parameters need to be specified: Mem(GB) and

Mem(%). Mem(GB) is the total memory in gigabytes on each node, and Mem(%) is the percentage

of total memory used in the test. By default, this test will not give cluster-wide performance, but it

exercises CPU and memory at the same time, and it provides another set of numbers you can use

to compare the performance among the cluster nodes. The amount of memory required is set to

auto by default – this will run each node at the specified percentage of the memory available. For

example, using auto and 80%, a 2 GB node will use 80% of 2 GB, while a 16 GB node will use

80% of 16 GB. The amount of memory used on any head node is adjusted to allow for increased

memory consumption on head nodes. The default Linpack test run performs three iterations of the

test using the same input data for each run. To limit the number of iterations to one, select the Single

Pass option.

Netperf

Netperf is a benchmark used to measure network performance (for example, GigE and 10GigE).

It currently provides tests for unidirectional throughput. You must select TCP (TCP_STREAM) or UDP

(UDP_STREAM) protocol. The first half of the selected nodes receives messages from the second

half. For example, in a 10 node cluster, node 5 sends to node 0; node 6 sends to node 1; node

7 sends to node 2; and so on. An even number of nodes must be selected to run this test.

InfiniBand verbs tests

This is a collection of InfiniBand verbs bandwidth/latency performance tests provided with OFED.

Verbs-layer performance is usually higher than what is reported by the MPI-level tests, such as

Pallas. The InfiniBand verbs test (shown in the GUI as ib_send_bw) is selected by choosing the

test name from the first two spin boxes. These selections will update the test button used to run the

test. Test options can be specified in the Options box. Please refer to the documentation for each

individual InfiniBand verbs test for the available options. Some of the useful options include –a to

include all message sizes, and –b for bi-directional test. The node settings are identical to the

Netperf test above. These tests are only available with the InfiniBand interconnect on non-QLogic

IB hardware.

Deviation test

This test measures the consistency of bandwidth and latency between nodes using MPI over IB.

The node settings are identical to the Netperf test above. This test is only available with the InfiniBand

interconnect on QLogic IB hardware.

Monitoring tests and viewing results

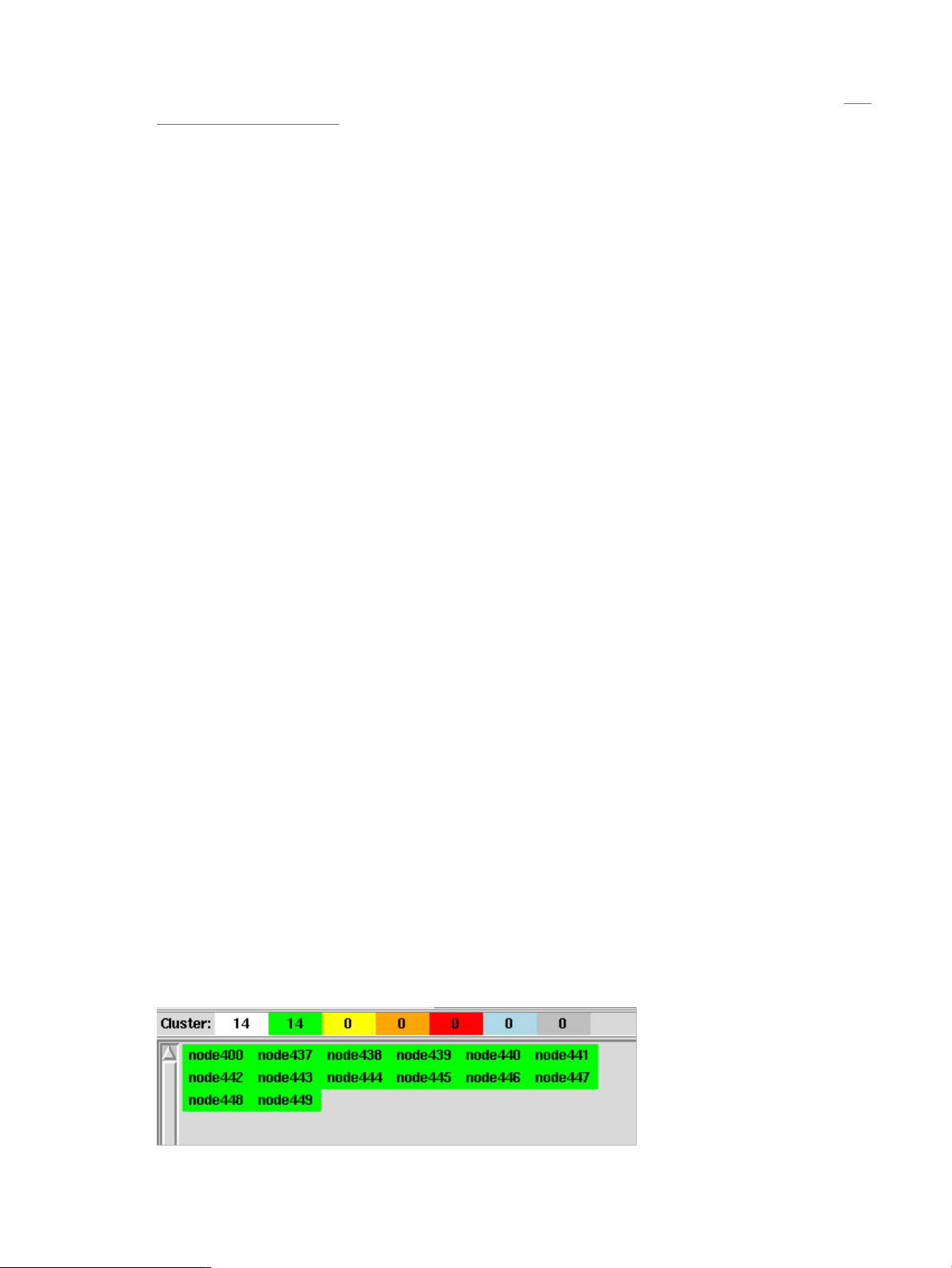

The nodes monitoring window

The bottom left window on the Cluster Test interface is the nodes monitoring window. It can be

resized to give more space to the test output window.

This window indicates whether a node is active (online) or inactive (offline).

Monitoring tests and viewing results 15

Page 16

An overall cluster node status bar, Cluster:, indicates the total number of nodes in each state as

listed below:

• White: currently configured for testing

• Green: active admin and interconnect networks

• Yellow: only the admin network is active

• Orange: only the interconnect network is active

• Red: no active networks

• Blue: status update is pending

• Gray: excluded from this testing

A node can be disabled (removed from the test) or enabled (added to the test) by clicking on the

node name. If a node is enabled, clicking on its node name disables it. In reverse, if a node is

disabled, clicking on its node name enables it. When a node is disabled, its node name is shown

in gray color. Right-clicking on any active node name and holding it will pop up a menu as follows:

• UID – turns on/off the UID of the node

• Terminal – opens a terminal to the node

• Reboot – reboots the node from the OS

• Power Off – powers off the node from the OS

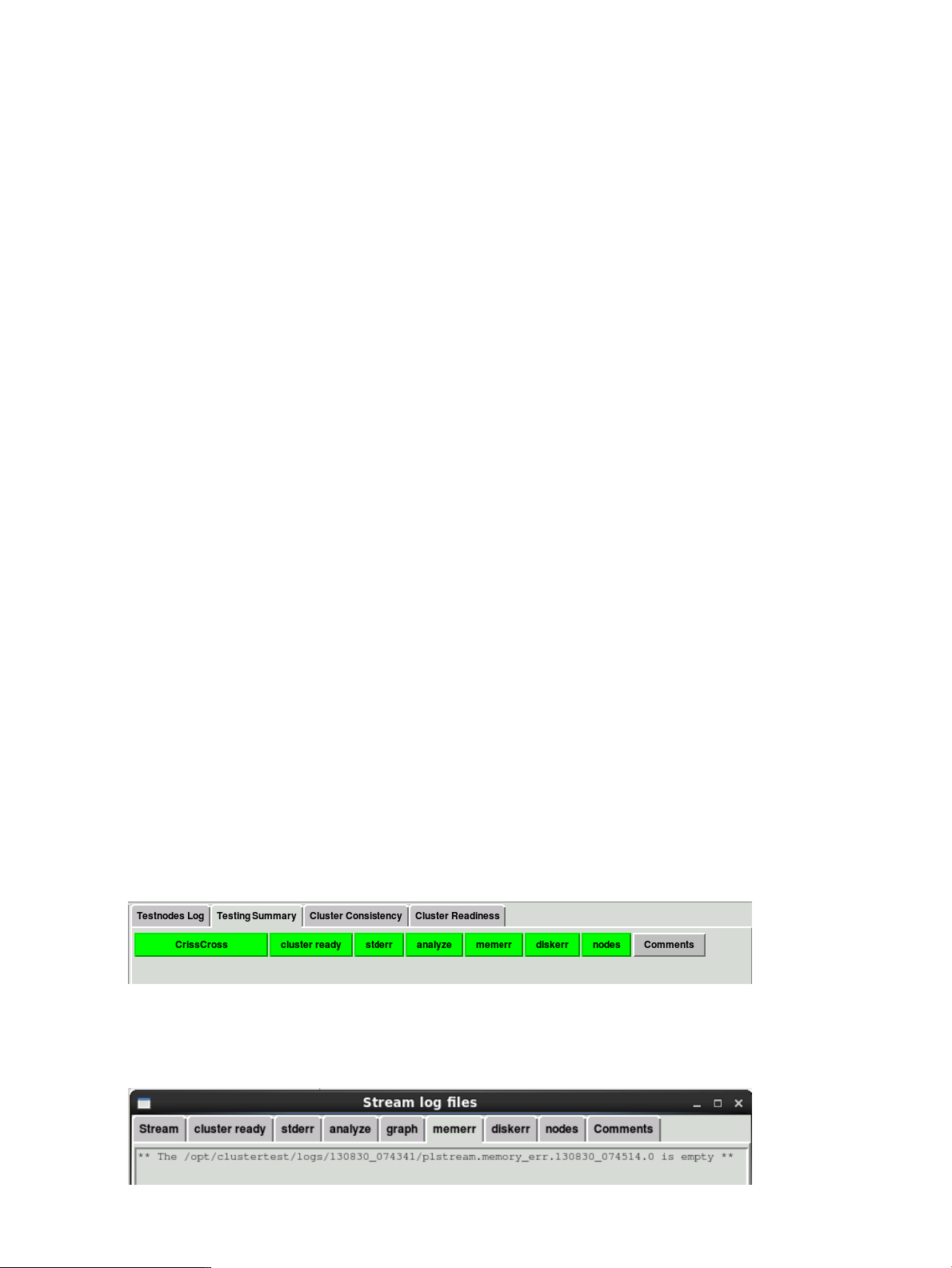

The test output window

The bottom right window on the Cluster Test interface is the test output window. It has four tabs:

• Testnodes Log – All tests display results in this window while they run. You can save the contents

of this window by clicking Save Log, then entering a file name.

• Testing Summary – Use this to track test progress. Progress is indicated by color: yellow

indicates the test/check is in progress; green indicates the test/check is complete. The newest

run is at the top.

• Cluster Consistency – This tab collects information from all the nodes in the cluster into categories

like Model, ILO/MP, BIOS, Processors, Memory, Network, Storage, Location, and so on. The

categories are displayed with a pass (green) or fail (red) status. Pass or fail is determined by

matching the data fields for each node in each category. Nodes are sorted by matching fields.

Clicking on one of the categories displays its details in a pop-up dialog box.

• Cluster Readiness – This tab verifies that all enabled nodes are up and BIOS hyper-threading

is disabled on all the nodes. A Cluster Readiness failure status in any aspect is used to mark

CT tests as failed if cluster ready status is not green.

You can customize the displayed output for a particular test by selecting the test name (CrissCross

in this example), cluster ready, stderr, analyze, memerr, diskerr, nodes, or Comments. An example

of memerr output is shown below.

16 The Cluster Test GUI

Page 17

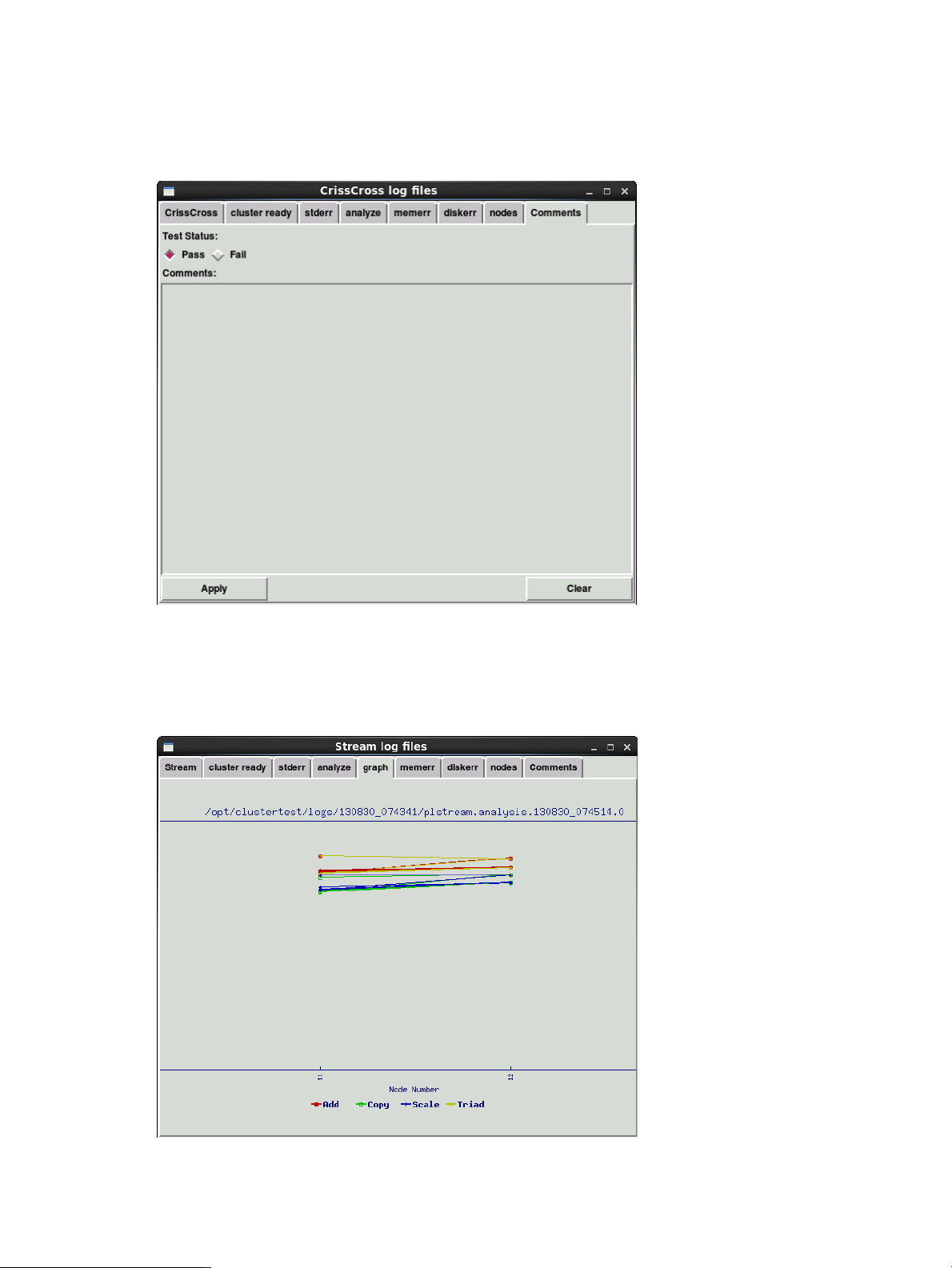

The Comments button allows you to override the test status (Pass or Fail) and enter a comment

describing why that status better reflects the actual results. When a test status is overridden, the

Comments button on the Testing Summary display changes from grey (no comments or override)

to whatever test status the user chose, as does the test name button. The check buttons (stderr,

analyze, memerr, and diskerr) will continue to reflect their original status.

Select Apply to set the selected status. Select Clear to restore the original test status, thus eliminating

the override.

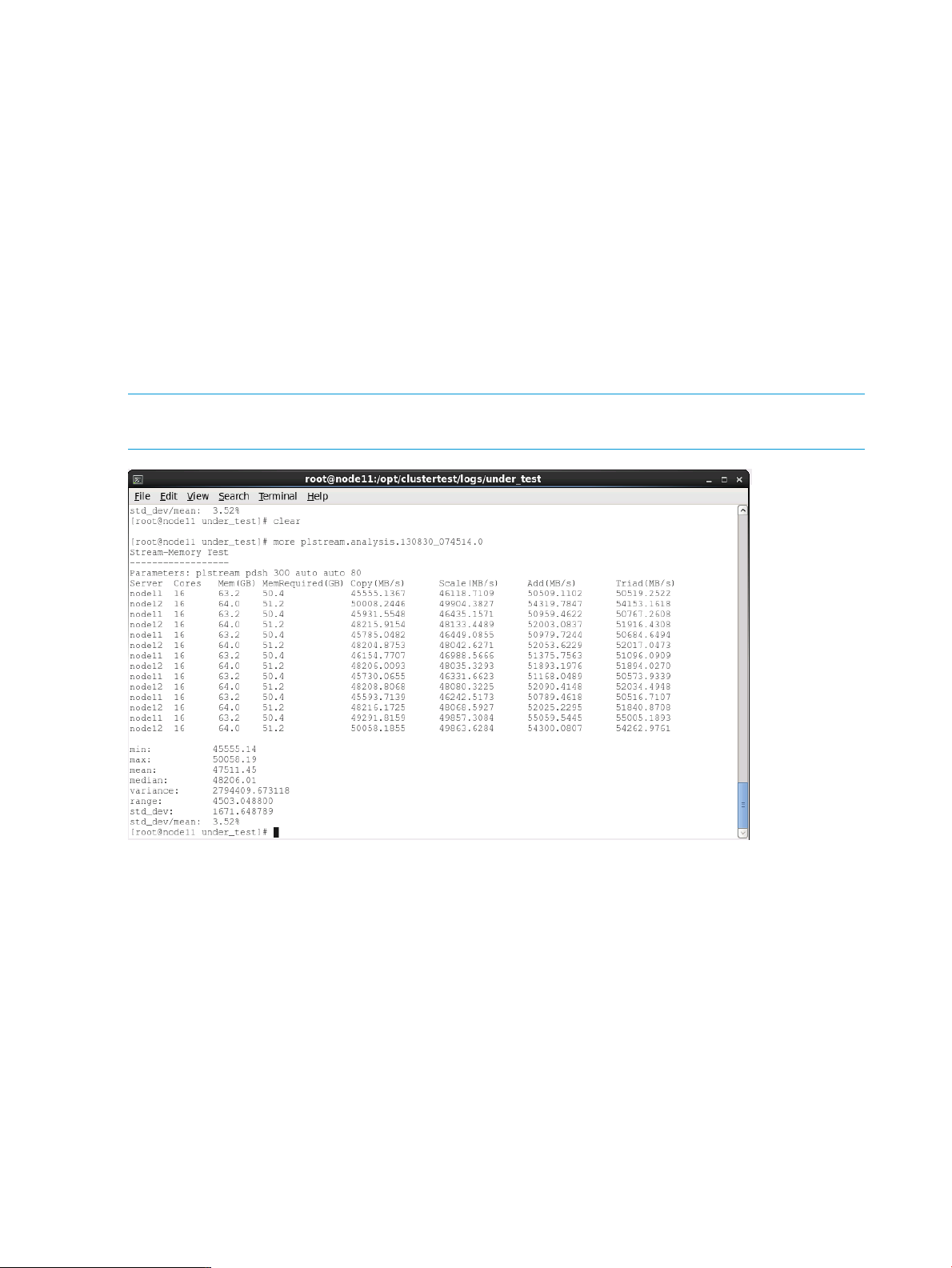

If you run a Stream test, Disk Test, or Linpack test, a graph tab is also available. An example graph

is shown below.

Monitoring tests and viewing results 17

Page 18

Performance analysis

After a test has completed, the analyze tool (see “Utility commands” (page 51)) runs on the test

output. The analyze tool compares the performance of all tested nodes to the mean value, and

identifies the nodes outside the acceptable range. The performance of each node is also compared

to the standard deviation. The full analysis is saved to a analysis log file (for example

090406_070956_runstreams.analysis) in the current logs directory. Currently, only

CrissCross, Stream, Linpack, Disk Test, Netperf, InfiniBand Verbs Test, and Pallas Test have

performance analyses.

If the performance of some nodes is less than the mean value by three standard deviations, these

nodes are marked as outside the acceptable range. The nodes outside the acceptable range are

included in the analysis summary. In cases where a large standard deviation is detected, a warning

is issued – you should review the analysis file in order to detect nodes with lower performance.

For Stream, Disk Test, and Linpack, a graphical view of the analyze results is generated. It can

be viewed by selecting the graph tab from the Testing Summary window as described in “The test

output window” (page 16).

NOTE: For the analysis to be meaningful, it’s recommended you run it on a minimum of

approximately 20 homogeneous nodes.

Although multiple values may be parsed from the log file during analysis, only the following values

are used for reporting the statistical information and test run performance:

• CrissCross: interconnect bandwidth

• Stream: memory bandwidth; only Copy bandwidth is used for summary analysis

• Linpack: floating-point performance (in gigaFLOPS)

• Disk Test: disk bandwidth (read or write)

• Netperf: network bandwidth (TCP or UDP)

• InfiniBand verbs tests: InfiniBand bandwidth (average and peak) and latency (typical, best

and worst)

• Pallas: interconnect bandwidth and time taken (minimum, maximum and average)

The analyze tool can also be used from the command line. See “Utility commands” (page 51)

for details.

18 The Cluster Test GUI

Page 19

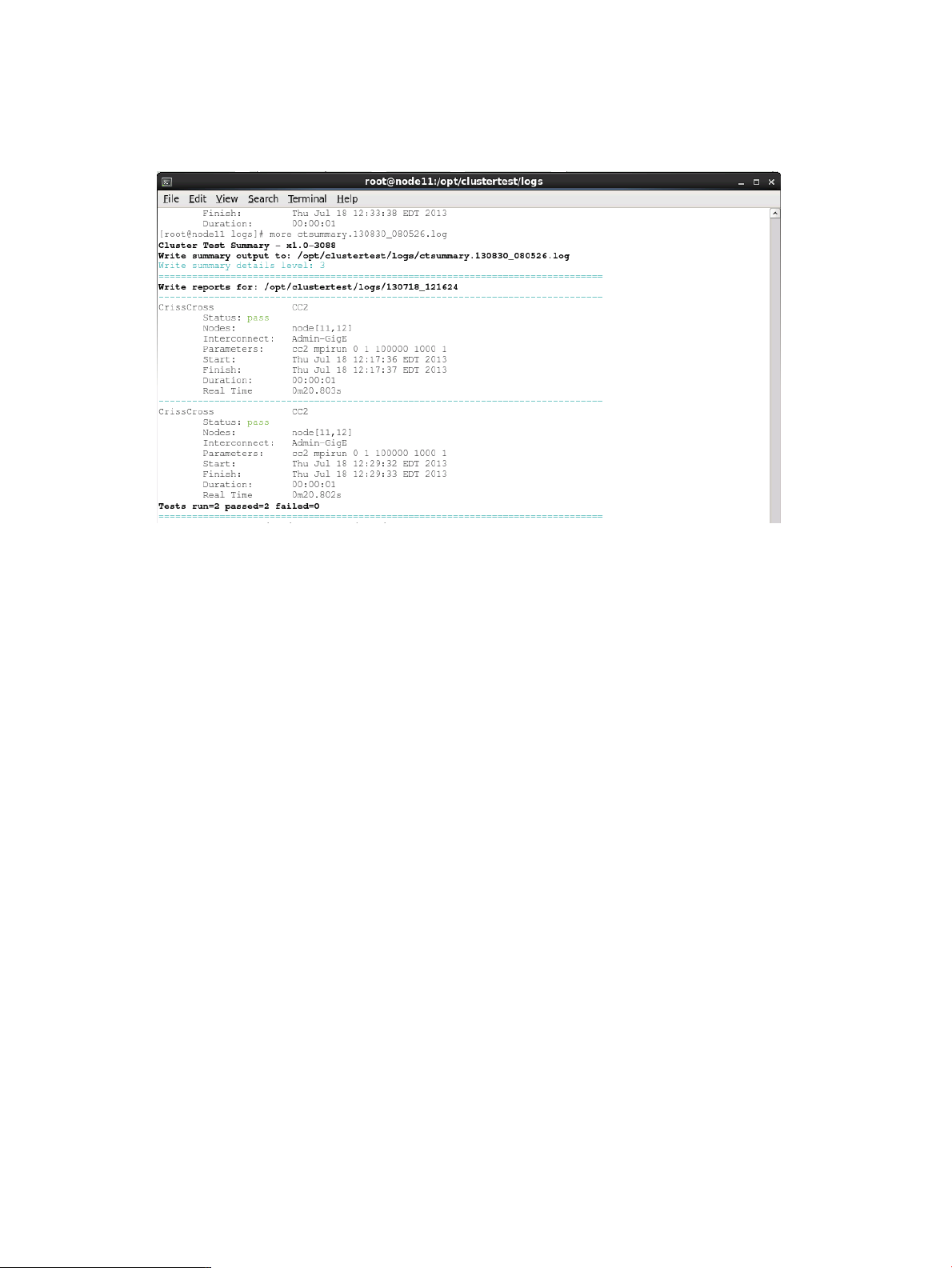

Test report

The Test Report button generates a summary pass/fail report from all test runs. The test report can

be found in the current test directory under /opt/clustertest/logs. An example of a test

report is shown below.

Monitoring tests and viewing results 19

Page 20

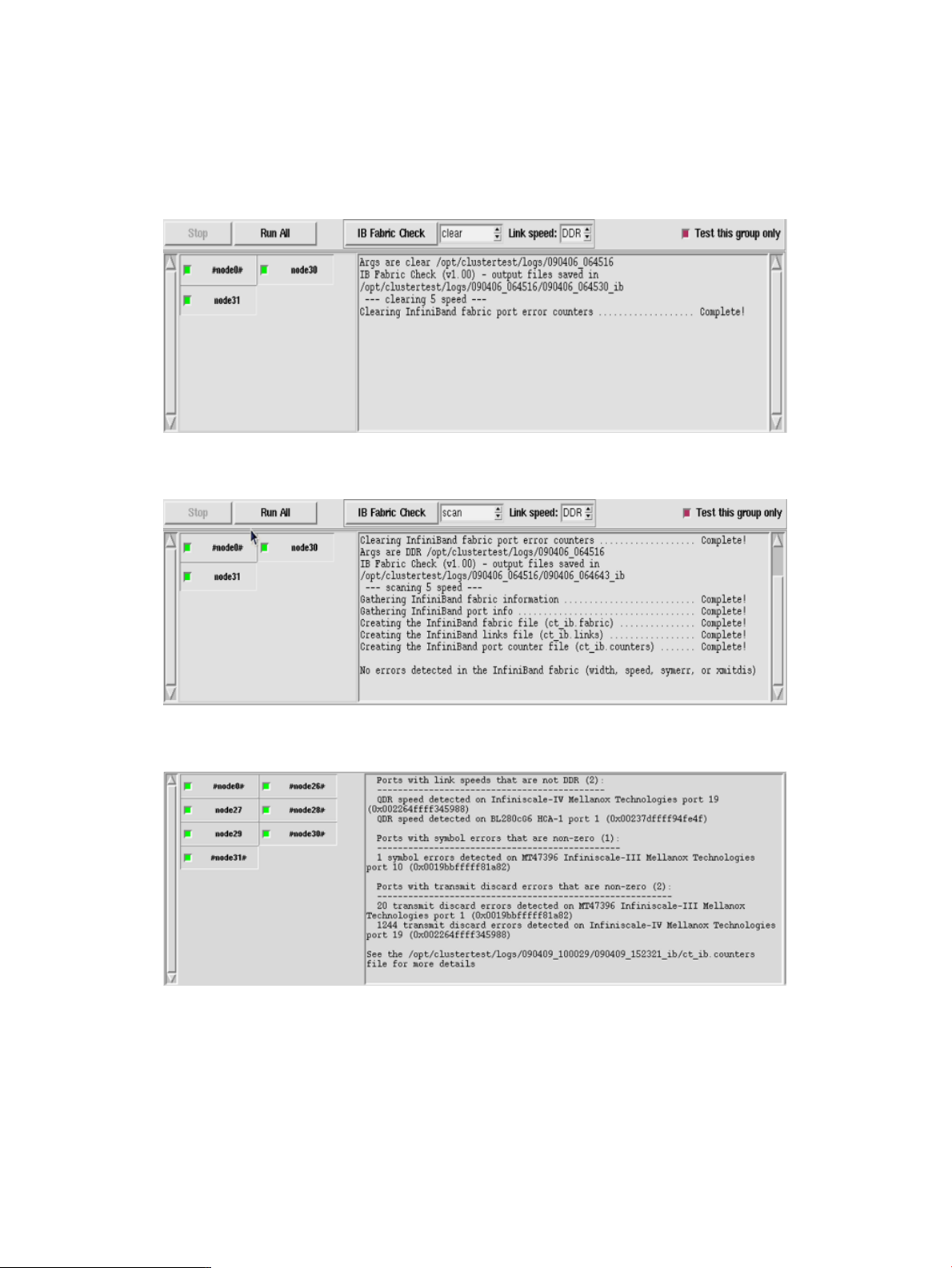

Checking the InfiniBand fabric

The IB Fabric Check allows you to clear and scan the InfiniBand fabric for errors. The

ib_fabric_check utility uses scripts provided with the OFED drivers to gather information about

the InfiniBand fabric and clear the port counters. See “Utility commands” (page 51) for more

details, including sample log files. The recommend usage is as follows:

1. Select the appropriate link speed and clear the fabric.

2. Run the initial scan of the fabric.

3. Run the tests and then scan the fabric again to check for InfiniBand fabric errors.

20 The Cluster Test GUI

Page 21

The Cluster Test toolbar menus

The menus File, Tools, and Help are at the top of the Cluster Test interface.

Table 1 Cluster Test toolbar menus

This function has been deprecated.DebugFile

For Cluster Test RPM only. See “Configuration settings” (page 10).Config File Settings

Load Script

Performance MonitorTools

Hardware Inventory

Firmware Summary

Select a cluster script to run. See “Using scripts to run tests” (page

12).

Close the Cluster Test interface – tests continue running.Exit

Launches the Performance Monitor. See the HP Cluster Test Procedures

Manual for more information.

Allow all nodes in the group or cluster to be tested.Enable All

No nodes in the group or cluster will be tested.Disable All

Selects all active nodes for testing.Select Active

Selects all active nodes with good interconnect for testing.Select Active IC

Turns UID on for all nodes in the group or cluster.All UID ON

Turns UID off for all nodes in the group or cluster.All UID OFF

View and save a hardware inventory. For more information, see

“Hardware Inventory” (page 36).

View and save a firmware inventory. For more information, see

“Firmware Summary” (page 36).

Invoke the Forge data gathering script.Forge Cluster Inventory

Invoke the Intel Xeon Phi status display. Requires Xeon Phi.MIC Status Panel

Launches the Firmware Update utility.Firmware Update

Server Health

Disk Scrubber

Set date/time for all selected nodes to be the same as headnode time.Sync Node Time

Interface to report any Cluster Test issue to the support team.Report Problem

Opens window to display available server health data. For more

information, see “Server health check” (page 37).

Erases the disk contents of all compute nodes' hard disks by running

simple dd commands. For more information, see “Disk Scrubber”

(page 39).

Displays author information.InfoHelp

The Cluster Test toolbar menus 21

Page 22

3 The accelerator test GUI

IMPORTANT: Accelerator tests are only available with the RPM version of Cluster Test. The Image

version does not include accelerator tests.

Starting accelerator tests

NOTE: Make sure the X server is running and the DISPLAY environment variable is set

appropriately.

NOTE: Cluster Test will recognize and test nodes with one to eight GPU cards.

Start accelerator tests with the command

# /opt/clustertest/bin/testnodes.pl -gpu

or

# testnodes.pl -gpu

The Cluster Test testnodes window has the following tabs:

• Cluster Tests – contains all the Cluster Tests described in “The Cluster Test GUI” (page 7).

• Accelerator Tests – contains the accelerator tests described in “Running accelerator tests”

(page 23).

Files generated by accelerator test

As you run the tests, output is collected in log files. You can set the log directory using command

line options. For example, testnodes.pl <logdirectory>.

Upon each new invocation of accelerator test, a new directory is generated under /opt/

clustertest/logs with the current timestamp (year/month/day/time). The log, error, and

script files are saved in this directory.

22 The accelerator test GUI

Page 23

NOTE: Remove all files when you are finished testing with accelerator test.

Running accelerator tests

GPU detection

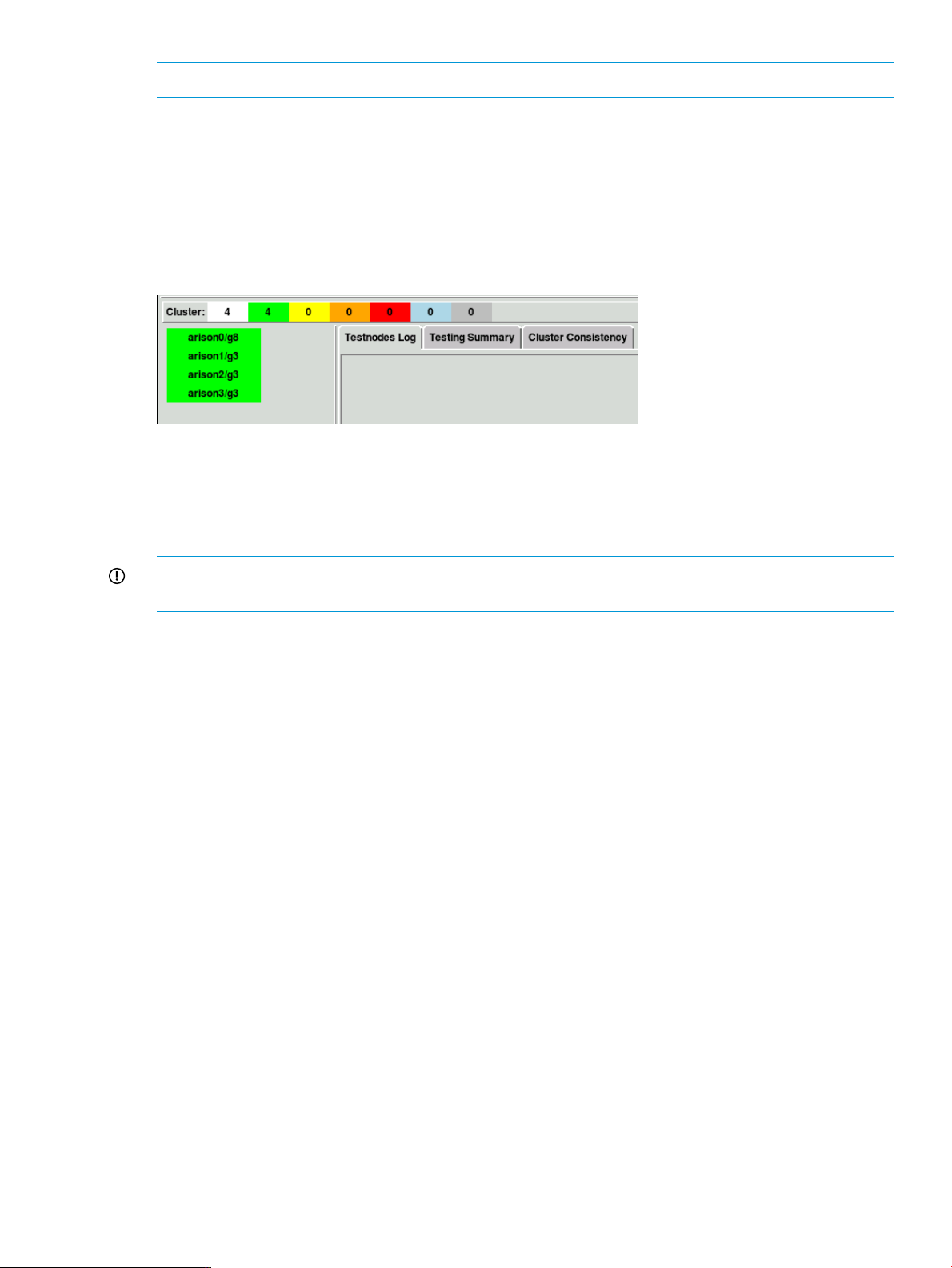

When you start testnodes.pl -gpu, a test is launched to check all nodes for the presence of

accelerator cards (GPUs). If any GPUs are detected and they are responsive to communication,

the node will be marked by adding /g<number of nodes> to the node name in the nodes

window. In the example below, each node has three detected and responsive GPUs.

You should compare the number of GPUs indicated in the nodes monitoring window to the actual

number of GPUs for each node. Any discrepancies indicate a problem with GPUs on that node.

It might be helpful to run the Verify test, described below, to get more information about problem

nodes. Additional information on the nodes monitoring window is available at “The nodes

monitoring window” (page 15).

IMPORTANT: For all the accelerator tests, only nodes with detected GPUs should be selected.

Deselect any nodes that do not have GPUs.

Verify

The Verify test is similar to the GPU detection run on testnodes.pl -gnu startup. Each selected

node is tested for the presence of GPUs using lspci and is then queried. The test report shows

the accelerators detected for each node and whether communication with the GPU was successful.

If a GPU is installed on a node but not detected, reseat the GPU and repeat the test. An example

test report is shown below.

---------------n21

---------------** The lspci command shows that there are 3 GPGPUs installed on node

** All 3 GPGPUs appear to be functional on this node

GPU Model Video BIOS Link Speed Width Bus ID

0 Tesla S2050 70.00.2f.00.03 5GT/s, x16, 06.00.0

1 Tesla S2050 70.00.2f.00.03 5GT/s, x16, 14.00.0

2 Tesla S2050 70.00.2f.00.03 5GT/s, x16, 11.00.0

To use the Verify test report:

• Make sure all GPUs are listed for each node.

• Verify the Model numbers.

• Verify the Video BIOS.

• The Link Speed can be reported as either 2.5, 5, or UNKNOWN. A report of 5 or UNKNOWN

indicates the GPU is running at Gen2 speed and is acceptable. A value of 2.5 might indicate

the GPU is not properly configured. However this test is timing sensitive, so it is recommended

you retest any nodes reporting 2.5. If the test consistently reports 2.5, the GPU should be

re-seated and the test repeated. If all the GPUs report 2.5, there might be a BIOS setting

error.

Running accelerator tests 23

Page 24

• A Width of x16 is expected for Gen2 GPUs.

• The Bus ID can be used to identify the physical location of each GPU.

SGEMM: Single Precision General Matrix Multiply Test

• The Trans-A setting determines whether the matrix A is to be transposed. The default is N.

• ArraySize sets the size of the array to be used. The default is Auto, which means the test

will automatically compute the array size. Test results are very sensitive to array size.

• GPU sets which GPU to test. The default is all.

Expected results for Nvidia GPUs: All nodes should report 520 – 550 GFlop/s.

Expected results for AMD GPUs: All nodes should report about 430-440 Gflop/s.

DGEMM: Double Precision General Matrix Multiply Test

• The Trans-A setting determines whether the matrix A is to be transposed. The default is N.

• ArraySize sets the size of the array to be used. The default is Auto, which means the test

will automatically compute the array size. Test results are very sensitive to array size.

• GPU sets which GPU to test. The default is all.

Expected results for Nvidia GPUs: All nodes should report 200 – 250 GFlop/s.

Expected results for AMD GPUs: All nodes should report about 200 Gflop/s.

BandWidth: GPU Bandwidth Test

• Direction sets the direction of the transfers. Available options are htod (host-to-device)

and dtoh (device-to-host). The default is htod.

• TransferSize is the number of bytes in a transfer block. The default is 32 GB.

• Iterations is the number of times to repeat the test. The default is 10.

• GPU sets which GPU to test. The default is all.

Expected results for Nvidia GPUs: All GPUs should report 5650-5750 MBs. Values of half the

expected range might indicate the GPU is running at Gen1 speed instead of Gen2 speed. This

might be caused by a BIOS setting or might indicate a GPU hardware issue.

Expected results for AMD GPUs: All GPUs should report about 3000-3300 MB/s.

Memory Test

NOTE: For Nvidia GPUs only.

This test writes and then reads a pattern to memory and tests for errors. GPU sets which GPU to

test. The default is all. All GPUs tested should report zero errors.

Thermal Test

NOTE: For Nvidia GPUs only.

This test reports GPU temperatures for five minutes while a benchmark runs in the background. The

GPU temperature should remain below 81° C. GPU temperatures are obtained using the IPMI

ipmitool command. It is necessary for IPMI to be installed and enabled for this test to run.

NOTE: The Thermal Test does not report meaningful results for accelerators installed in

Workstations (WS490).

24 The accelerator test GUI

Page 25

Nvidia Linpack: CUDA Accelerated Linpack Benchmark

NOTE: For Nvidia GPUs only.

This test is implemented to run separately on each node using all CPU cores and all GPUs with

about 80% or less of memory. Two parameters must be specified: Mem (GB) and Mem (%). Mem

(GB) is the total memory in Giga Bytes on each node and Mem (%) is the percentage of total

memory used in the test. This test does not give the cluster wide performance but it exercises CPU,

GPU, and memory at the same time and it provides another set of numbers used to compare the

relative performance among the cluster nodes. The amount of memory required is set to auto by

default. This runs each node at the specified percentage of the memory available. For example,

using auto and 80% a 2GB node uses 80% of 2GB while a 16GB node uses 80% of 16GB. The

amount of memory used on any management node is adjusted to allow for increased memory

consumption on management nodes.

Running accelerator tests 25

Page 26

4 Cluster Test procedure as recommended by HP

A good cluster solution must pass five mandatory cluster tests: CrissCross, Test4, Stream, Linpack,

and Disk Test. The Pallas test is another interconnect test and is recommended as a supplement to

CrissCross and Test4.

If you have accelerators (GPUs) in your cluster, run the accelerator tests first as described in

“Accelerator test procedure” (page 27).

If you replace any node in the cluster, it is suggested you run all mandatory tests again.

Cluster Test uses the following directory structure.

Table 2 Directory structure for Cluster Test

/opt/clustertest/bin/

CAUTION: Make sure no one else is running tests on the nodes you will be testing. Running

simultaneous tests on a single node will most likely cause all tests to fail.

Additional software

Some of the features of Cluster Test rely on additional software packages that might not be installed

on your cluster. These features, while not vital to running Cluster Test, can assist in troubleshooting

problems with the cluster.

One such feature is the ability to turn on or off the UID light on a server. In order to make this

feature available, either the ipmitool or hponcfg software (depending on the node model)

must be installed and set up on each node of the cluster. In general, ipmitool is used for nodes

with LO100 and hponcfg is used with nodes with iLO and iLO 2. See “Utility commands”

(page 51) for more information.

Configuring Cluster Test when using RPM

When running the RPM version of Cluster Test for the first time, you will need to provide some

configuration settings. A warning message will appear when you launch Cluster Test, as shown

below.

location of tests, including the testnodes.pl Cluster Test

user interface

location of test output/opt/clustertest/logs/

location of install/uninstall scripts and sources/opt/clustertest/setup/

The configuration settings screen, shown below, will be displayed after accepting the warning

message. Verify the default settings and update any incorrect settings. At a minimum, the two node

number range fields should be updated to reflect the nodes in your cluster.

26 Cluster Test procedure as recommended by HP

Page 27

Accelerator test procedure

This is the Cluster Test procedure recommended by HP when your cluster contains nodes with

accelerator cards. You should run these accelerator tests and the tests described in “The Cluster

Test procedure” (page 29).

Each of these tests use default values. You may change parameters as desired, but be careful the

current system limits are not exceeded.

1. Make sure the X server is running and the DISPLAY environment variable is set appropriately.

2. Make sure no one else is running tests on the nodes you will be testing.

3. Run /opt/clustertest/bin/testnodes.pl -gpu on the first head node.

Accelerator test procedure 27

Page 28

As soon as you start testnodes.pl -gpu a test is launched to check all nodes for the

presence of accelerator cards (GPUs). If any GPUs are detected and they are responsive to

communication, the node will be marked by adding /g<number of nodes> to the node

name in the nodes window. In the example below, each node has three detected and

responsive GPUs.

4. Compare the number of GPUs indicated in the nodes monitoring window to the actual number

of GPUs for each node. Any discrepancies indicate a problem with GPUs on that node.

5. Deselect any nodes that do not have GPUs.

6. Select Verify and use the generated report for the following checklist.

• Make sure all GPUs are listed for each node.

• Verify the Model numbers.

• Verify the Video BIOS.

• The Link Speed can be reported as either 2.5, 5, or UNKNOWN. A report of 5 or

UNKNOWN indicates the GPU is running at Gen2 speed and is acceptable. A value of 2.5

might indicate the GPU is not properly configured. However this test is timing sensitive,

so it is recommended you retest any nodes reporting 2.5. If the test consistently reports

2.5, the GPU should be re-seated and the test repeated. If all the GPUs report 2.5, there

might be a BIOS setting error.

28 Cluster Test procedure as recommended by HP

Page 29

• A Width of x16 is expected for Gen2 GPUs.

• The Bus ID can be used to identify the physical location of each GPU.

7. Select SGEMM to start the Single Precision General Matrix Multiply Test. All nodes should

report a rate of 520 – 545 Gflop/s.

8. Select DGEMM to start the Double Precision General Matrix Multiply Test. All nodes should

report a rate of 200 – 220 Gflop/s.

9. Select BandWidth to start that test. All GPUs should report 5650-5750 MBs. Values of half

the expected range might indicate the GPU is running at Gen1 speed instead of Gen2 speed.

This might be caused by a BIOS setting or might indicate a GPU hardware issue.

10. Run the Memory Test.

11. Run the Thermal Test. All GPU temperatures should remain below 81 °C.

The Cluster Test procedure

This procedure outlines the Cluster Test procedure recommended by HP.

If your cluster has accelerator cards (GPUs), first run the accelerator tests as described in “Accelerator

test procedure” (page 27).

The test parameters for each of these tests are default values. You may change parameters as

desired, but be careful the current system limits are not exceeded.

See “Sample test output” (page 59) for example output of these tests.

Refer to “Troubleshooting” (page 44) if any of the following tests fail.

This procedure assumes a diskless cluster of 77 nodes has been set up successfully.

NOTE: All commands and utilities in this procedure reside in the /opt/clustertest/bin

directory. Cluster Test does not modify the PATH environment variable on the head node, therefore

any calls to commands and utilities referenced in this section must be preceded with the path to

the command.

1. Make sure the X server is running and the DISPLAY environment variable is set appropriately.

2. Make sure no one else is running tests on the nodes you will be testing.

3. Run testnodes.pl on the first head node. This will launch the Cluster Test interface.

A log directory will be created under /opt/clustertest/logs with a timestamp

(year/month/day/time). The log, error, and script files are saved to this directory.

You can override the default test logging directory with the command testnodes.pl

<my_log_dir>. This will create a directory my_log_dir in /opt/clustertest/logs

and set the sequence to avoid any conflict with other testnodes.pl jobs already running.

The Cluster Test procedure 29

Page 30

4. Deselect Test this group only so you will be running tests on the entire cluster.

5. Run the checkadm script to ping all the nodes on the admin network.

# checkadm

Fix any errors before continuing. See the “Troubleshooting” (page 44) section for information.

6. If the cluster solution has an interconnect network other than the admin network (such as GigE

or InfiniBand), run the checkic script to ping all nodes on the interconnection network. To

run the script, open a terminal window and enter the following command.

# checkic

Fix any errors before continuing. See the “Troubleshooting” (page 44) section for information.

TIP: If all nodes are shown as green on the Node Status display (lower left corner of the

Cluster Test GUI), you will get no errors from checkadm or checkic.

7. Run CrissCross Easy on the admin and interconnect networks to check the networks'

functionality. The output of the CrissCross Easy test might indicate a slow network; that's okay

and is to be expected.

The CrissCross test takes two to three minutes on a cluster of 350 nodes.

a. Select the admin network by choosing Admin from the Network: pull-down menu on the

Cluster Test interface. Then, select Easy for the CrissCross test and enter the test parameters

(it's suggested you use the default values). Select CrissCross to run the test on the admin

network.

b. Select a GigE or InfiniBand network by choosing from the Network: pull-down menu on

the Cluster Test interface. For this example, we choose InfiniBand. Select CrissCross to

run the test on the interconnect (InfiniBand) network.

c. Select Clear Log to clear the output window.

8. If the CrissCross Easy test completes successfully, run CrissCross Stress on the interconnect

network. The CrissCross Stress test takes approximately 30 minutes on a cluster of 350 nodes

with InfiniBand interconnect.

30 Cluster Test procedure as recommended by HP

Page 31

a. Select Stress for the CrissCross test on the Cluster Test interface. Leave the Procs/node

value unchanged. Unless you have a cluster of several hundred nodes, leave the default

values for Length and Iterations. If you do have a cluster of several hundred nodes, you

might need to change these parameters to smaller values, something like Length: 50000,

Iterations: 1000, to shorten the test time.

NOTE: In general, CrissCross runtime goes up as the square of the number of nodes.

For example, for a fixed message length and number of iterations, if it takes 5 minutes

on 10 nodes, it will take 20 minutes on 20 nodes, and 500 minutes on 100 nodes.

CrissCross will stop when it hits the specified time limit, but will not have tested all node

pairs. You can control the number of node pairs tested simultaneously, which will make

CrissCross run faster at the cost of sometimes having more variation in performance due

to congestion.

b. Select CrissCross to start the test.

c. When the CrissCross test finishes, select Save Log to save your test result to a file, then

select Clear Log to clear the output window.

d. If this test fails, see “Troubleshooting” (page 44) for more information. Repair or replace

any failed components before continuing with other tests.

9. Run Test4 on the interconnect network, first on Easy and then on Stress.

a. Select Easy, then Test4 to start the test. You don’t need to change the test parameters.

b. Wait for Test4 Easy to finish. Test4 Easy takes less than 15 minutes on a cluster of 350

nodes.

c. Select Stress. You don’t need to change the value in the Length box; the default value is

appropriate. Run Test4 Stress for at least four hours by specifying an appropriate time

limit. The test will stop when the time limit is reached. If necessary, the test will repeat

until the time limit is reached.

d. Select Test4 to start the test.

e. While Test4 is running, use the Performance Monitor, accessed from Tools→Performance

Monitor on the Cluster Test toolbar, to verify that all nodes are being exercised. See “The

performance monitor” (page 33)for more information.

f. Select Save Log to save your test results to a file, then select Clear Log to clear the output

window.

10. At this point, the cluster should have undergone the CrissCross and Test4 tests – the cluster

network connections are fully functional. The next step is to test memory with the Stream test.

Usually, the default settings (auto/80%) for Stream are sufficient. If you want to choose an

explicit memory size, select the memory size that is a little smaller than the actual memory

size on the nodes. In this example, each server has 4 GB of memory, so we select 3 GB.

Select Stream to start the test. This test takes approximately 30 minutes on a cluster of 350

nodes.

11. The next test focuses on CPU testing – Linpack. The Linpack test requires two parameters:

Mem(GB) and Mem%.

Linpack can either run separate instances on each node, or a single instance covering the

whole cluster. To run one instance of Linpack covering the entire cluster, select Cluster-wide.

Linpack runtime varies depending on how much memory is available on each node, and the

number and speed of the CPUs.

The Cluster Test procedure 31

Page 32

a. The auto Mem(GB) setting selects a memory size for each node that will accommodate

the amount of memory available on that node. You may select an explicit size by clicking

on the arrow adjacent to the Mem(GB) box. If you have more than one memory

configuration in the cluster, select the one with lowest memory. For example, if one node

in the cluster is configured with 8 GB and one with 16 GB, you should select 8 in the

Mem(GB) box. When using the Cluster-wide option with Linpack, the Mem(GB) box will

still be the amount of memory on each node – the values will be collected together to

calculate how large the matrix should be for the whole cluster.

b. Next, select the percentage of total memory for the Linpack test by clicking on the arrow

adjacent to the Mem% box. The default value is 80%. A Mem% value over 80% is likely

to cause swapping or running out of memory.

c. Select Linpack to start the test.

d. Linpack running on individual nodes takes approximately one hour. The output of Linpack

test is saved to files named HPL-node<x> where x is a node number. In this example,

output is saved to files HPL-node0 – HPL-node76.

12. The final required test is Disk Test.

a. Enter the disk device (for example, /dev/sda) in the Device box. If nodes in the cluster

have only one disk installed, you can select auto and let Disk Test figure out the device.

You don’t need to change the % (percentage) parameter – the default value (10) is good.

Do not run the test with more than 10% of the disk size because it will take a very long

time to complete.

Time limits can be specified to control the amount of time that Disk Test runs.

When run with all, all-parallel, or all-sequential, Disk Test avoids testing physical disks

and the logical volumes containing them.

b. Select Disk Test to begin the test.

NOTE: There is a known issue with Disk Test that causes Cluster Test to always indicate

errors in Disk Test’s standard error output. As long as the Disk Test .err file in the logs

directory only lists the node names of the systems tested with Disk Test, this error can be

ignored.

c. If you want to save your test results to a file, select Save Log. To clear the output window,

select Clear Log.

13. Remember to run a Hardware Inventory (Tools→Hardware Inventory from the Cluster Test

toolbar) to check and save the cluster hardware configuration. See “Hardware Inventory”

(page 36) for more information.

14. When all the testing is complete, uninstall Cluster Test and remove all associated log, error,

and script files. Refer to the HP Cluster Test Installation Guide, available at http://www.hp.com/

go/ct-docs, for the appropriate uninstall instructions for Cluster Test.

32 Cluster Test procedure as recommended by HP

Page 33

5 The performance monitor

The performance monitor utility

The Performance Monitor (or the xcxclus utility) is a graphic utility that monitors nodes in a cluster

simultaneously. The Performance Monitor displays an array of icons. Each icon represents a node

in the cluster and provides the following information:

• Ethernet activity

• Core utilization

• Memory utilization

• Interconnect I/O activity

• Disk I/O activity

The Performance Monitor can be invoked from the Tools menu at the top of the Cluster Test interface.

The data are color-coded with respect to the percent of utilization. For example, red indicates 90%

to 100% utilization; purple represents 0% to 10% utilization. The color legend is displayed at the

bottom of the window. The description of various boxes is provided by positioning a mouse over

the box.

Except for the values in the arrows, numbers are in MB; the icons show the node utilization statistics

as a percentage of total resource utilization.

Positioning the mouse pointer over a node icon opens a popup that describes the purpose of that

portion of the icon. This information is also presented in the status bar at the bottom of the window.

The performance monitor utility 33

Page 34

• The node designator, ct465g1n1, is in the upper left corner.

• The center portion displays core usage data for each CPU core in the node. As many as four

CPU cores can be displayed. This examples shows four cores utilized at 100%.

• The right portion of the icon displays memory statistics. This example shows 82% memory

utilization.

• The leftmost arrows at the bottom of the icon represent Ethernet connections. This example

shows two Ethernet connections. As many as four Ethernet connections may be displayed.

• The single rightmost arrow represents I/O activity.

Clicking on an icon launches the xcxperf utility. See “The xperf utility” (page 35) for more

information.

The toolbar menu options are described below.

Table 3 The Performance Monitor toolbar menu

Terminates the Performance MonitorExitFile

Specify the utilization data in terms of cumulative or incremental utilizationUtilizationOptions

Opens a dialog box for setting the refresh rateRefresh...

Displays the core utilization in terms of user or system statistics, or bothCPU

Displays the system's total memory or memory used by the applicationSystem Memory

Names

Displays network utilization or bandwidthNetwork

Hide the color key at the bottom of the displayKeyView

Hide the values in the node iconsValues

View the full node name instead of the node number for each nodeFull Node

Scale the Performance Monitor window to 50%, 75%, 100%, and 125%Zoom

Suspends the display until the Hold button is releasedHold

34 The performance monitor

Page 35

The xperf utility

The xperf utility is a dynamic graph that displays performance on a number of measurements for

a single node. It is started by clicking on a node icon on the Performance Monitor display.

Statistics for each measurement are shown real-time in a color-coded histogram. To see the meaning

of the color-coding for an item, click on the toolbar for that measurement. For example, to see the

color key for an Ethernet connection histogram, select Ethernet from the xperf toolbar.

The xperf utility 35

Page 36

6 Cluster Test tools

The tools in this section are run from the Cluster Test interface. Be sure to check for additional tools

that run from the command line. Utility commands are listed in “Utility commands” (page 51).

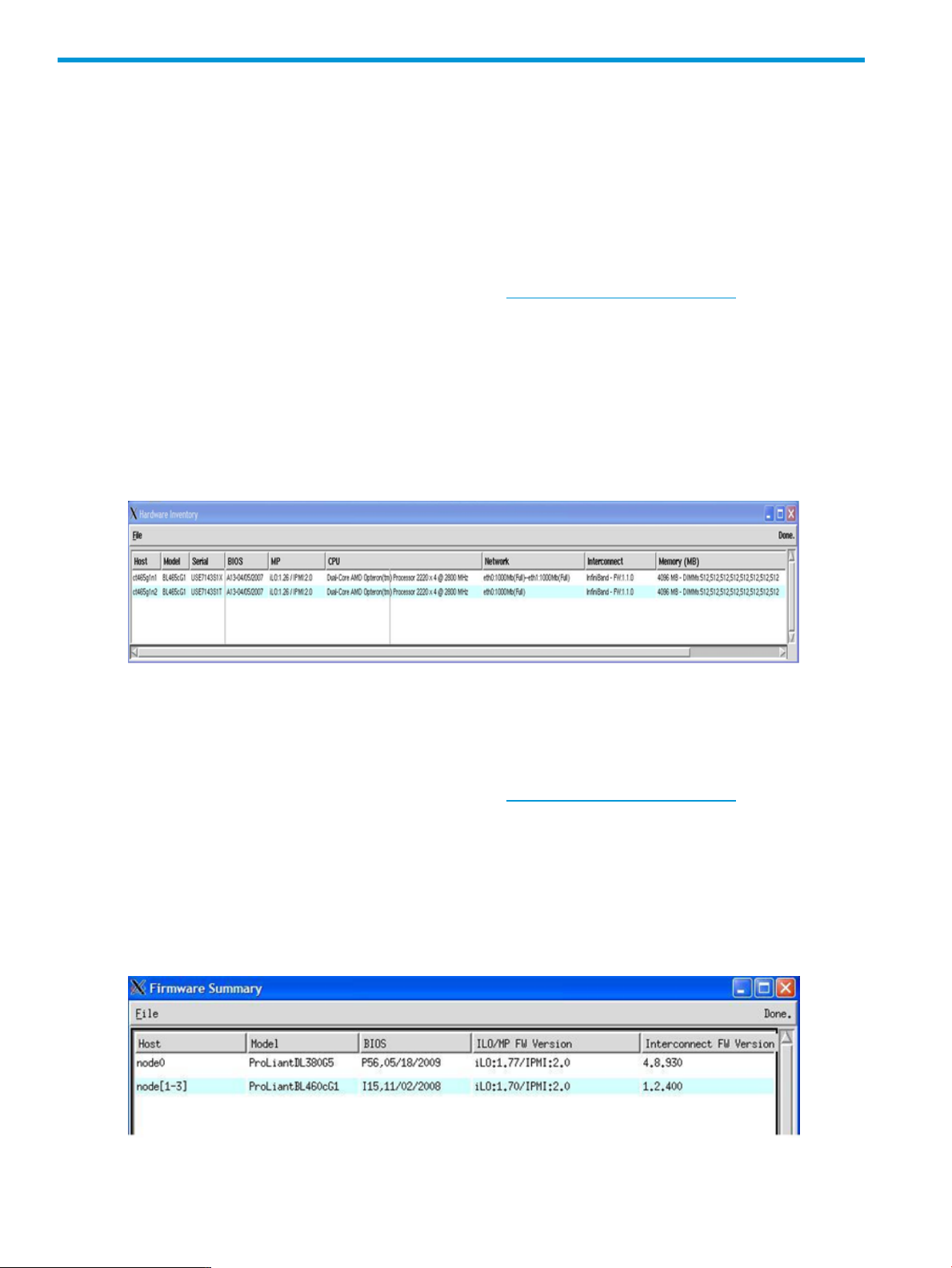

Hardware Inventory

For the Hardware Inventory tool to work correctly, you must have the HP Lights-Out Online

Configuration Utility, hponcfg, or the ipmitool installed, depending on the node model. In

general, if your nodes are in the list of supported models for hponcfg, then you will need that

tool, otherwise you will need ipmitool. See the HP SmartStart Scripting Toolkit page and look

for the HP SmartStart Scripting Toolkit Linux and Windows Editions Support Matrix for a list of

supported models for hponcfg.

To get a hardware inventory, select Tools→Hardware Inventory from the Cluster Test Interface

toolbar. This tool performs a hardware inventory on all active nodes in the cluster. Reported

hardware includes server models, serial numbers, BIOS versions, BMC firmware versions, number

of CPU’s, NIC’s status, and memory in MBytes.

The Hardware Inventory report can be saved to a file by selecting File→Save from the Hardware

Inventory toolbar.

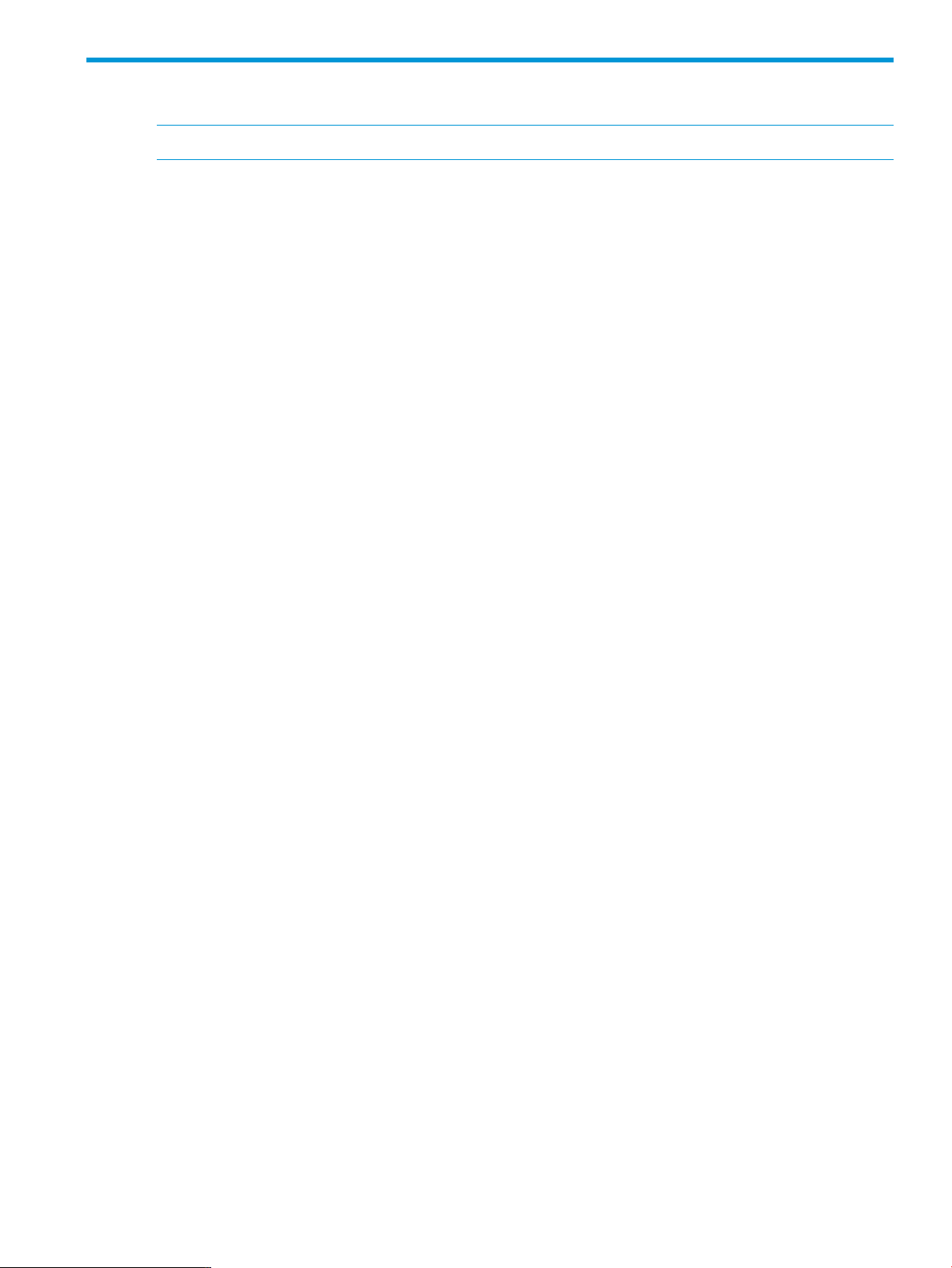

Firmware Summary

For the Firmware Summary tool to work correctly, you must have the HP Lights-Out Online

Configuration Utility, hponcfg, or the ipmitool installed, depending on the node model. In

general, if your nodes are in the list of supported models for hponcfg, then you will need that

tool, otherwise you will need ipmitool. See the HP SmartStart Scripting Toolkit page and look

for the HP SmartStart Scripting Toolkit Linux and Windows Editions Support Matrix for a list of

supported models for hponcfg.

To get a firmware summary, select Tools→Firmware Summary from the Cluster Test toolbar. This

tool summarizes the firmware versions on all nodes in the cluster. Each row in the Firmware Summary

window represents a group of nodes of the same model, BIOS firmware version, iLO/MP firmware

version, and Interconnect HCA firmware version; the window is sorted based on node type

(hardware model) so it’s easier to check for correct firmware versions.

36 Cluster Test tools

Page 37

Server health check

The server health check tool reports the overall health status of the nodes. It generates Temperature,

Fan, and Power reports based on values retrieved from the management interface (LO100i or

iLO2) of the server. This tool provides reports for every active node in the cluster. The health data

is polled every five minutes on the head node if the Enable Health Check option on the Cluster Test

interface is selected.

Once you select Enable Health Check, Cluster Test starts polling the health data for all servers,

including the head node. Results are written to the following location:

/opt/clustertest/logs/server-health/<node-name>.

You may also see the health check results via the Cluster Test toolbar at Tools→Server Health.

From the Server Health Status window, hold the left mouse button down over a node item to display

a menu with the items Temperature, Fan, and Power. From this menu, select the report you'd like

to view for that node.

Below is an example Temperature report. The data in the report are historic, beginning from the

time Enable Health Check is selected on the Cluster Test interface.

Server health check 37

Page 38

Below is an example Power report. Check a node's Present Power, Average Power, Maximum

Power, and Minimum Power.

Zeros in power readings indicate that the firmware is not responding to the Power Monitoring

module at that time stamp.

IMPORTANT: Power Monitoring is not supported on all Cluster Test server models. For more

information, contact HP support.

Excluding the head node from tests

To exempt the head nodes in the current cluster configuration from tests, select Exclude Head Node

on the Cluster Test interface.

38 Cluster Test tools

Page 39

Disk Scrubber

The Disk Scrubber tool erases the disk contents of all the compute nodes' hard disks by running

simple dd commands.

CAUTION: Make sure you back up the contents of your compute nodes, both local and attached

disks, before using Disk Scrubber.

To start Disk Scrubber, select Tools→Disk Scrubber from the Cluster Test interface toolbar.

Disk Scrubber 39

Page 40

40 Cluster Test tools

Page 41

7 Running tests in parallel

It is possible to run tests on independent groups of nodes in the same cluster in parallel. This is

done from the command line, using the run_cluster_script command (see “Utility commands”

(page 51)). The run_cluster_script command does basically what the FileLoad Script menu

item does in the Cluster Test GUI – loads and runs a cluster script describing one or more tests.

(See “Using scripts to run tests” (page 12).)

You may run as many simultaneous cluster scripts as you like, provided they don’t use the same

nodes. For example, in a 256-node cluster of HP ProLiant BL280c G6 Server Blades, you could

run 16 separate cluster scripts, each on the nodes in one enclosure. No attempt is made to check

that the nodes in question are actually unused.

CAUTION: Running tests in parallel is an advanced use of Cluster Test. The responsibility of

making sure a node is not subjected to multiple, simultaneous tests is yours. Make sure you assign

specific nodes to testers. Running simultaneous tests on a single node will most likely cause all tests

to fail.

By default, files associated with tests run from a single run_cluster_script command go into

a separate time-stamped directory under /opt/clustertest/logs, the same as a single

invocation of testnodes.pl. If you would like to have all your separate run_cluster_script

files go into the same directory, you can use the –logdir option to control which directory the

test files go into – perhaps the same directory being used by the current .pl. File names are

assigned sequence numbers, so several run_cluster_script commands can share a single

directory without conflict.

Scripts used with run_cluster_script.pl should adhere to one of the following forms:

• <full path to test script><full path to machines file><interconnect>

• <full path to machines file><interconnect><full path to test

script><[optional test script parameters]>

• <full path to machines file><interconnect><test name><[optional test

parameters]>

This last form is for a test script with the machines file and interconnect at the beginning of

the line.

41

Page 42

8 Creating and changing per node files

NOTE: This section applies only to the image version of Cluster Test.

In Cluster Test Image v3.2 and later, per-node files for the compute nodes (used for /tmp, /etc/

sysconfig/network-scripts, and a few other things) are implemented differently than in

previous versions. Instead of seeing per-node files on the head node under /shadow with suffixes

like $$IP=192.168.1.2$$, they will appear as symbolic links to something under

/node-specific/thisnode . The Cluster Test set up process does all of this for you

automatically, but if you need to create or change per-node files yourself, this chapter describes

how to do it.

There are two ways per-node files and directories can be represented, depending on what is

needed. A simple per-node file is visible on the head node as a symbolic link somewhere under

/shadow to the same location under /node-specific/thisnode. The actual per-node file or

directory is located in /node-specific/<ip-addr>/.

A cloned per-node directory is where individual nodes are expected to have unique files present,

but share some files. In this case, the original directory under /shadow is copied to the

corresponding location under /node-specific/clone and replaced with a symbolic link to

the corresponding directory under /node-specific/thisnode. The real per-node directory

located under /node-specific/<ip-addr>/ is filled with symbolic links to the corresponding

files, now located under /node-specific/clone. Any files in the directory that must be added,

changed, or removed for a particular node are handled by removing the symbolic link under

/node-specific/<ip-addr> and creating or changing the file as necessary.

An example per-node directory

The per-node directory, /shadow/tmp, is visible on the head node as a symbolic link to

/node-specific/thisnode/tmp. Each compute node has its own copy. The

/node-specific/thisnode/tmp directory doesn’t exist on the head node.

The actual per-node /tmp directory appears on the head node in

/node-specific/<ip-addr>/tmp and is NFS-mounted appropriately so it appears as

/node-specific/thisnode/tmp on the compute nodes. So, on the head node, /tmp for

node2 (IP address 10.0.1.2) is located in /node-specific/10.0.1.2/tmp, and /tmp for

node10 (IP address 10.0.1.10) is located in /node-specific/10.0.1.10/tmp.

An example cloned per-node directory

The /etc/sysconfig/network-scripts directory is a typical cloned per-node directory. It

contains configuration scripts for setting up the network on a node, with files named ifcfg-eth0,

ifcfg-eth1, and so forth, corresponding to Ethernet NICs. Different node models have different

numbers of NICs, and of course different nodes will have different IP addresses assigned to the

NIC of the same name.

There are some additional scripts associated with starting and stopping the network that are the

same across all nodes. In Cluster Test, this scripts directory is a cloned per-node directory (after

running clsetup.pl). The /shadow/etc/sysconfig/network-scripts directory has been

copied to /node-specific/clone/etc/sysconfig/network-scripts. The /shadow/

etc/sysconfig/network-scripts directory is then replaced with a symbolic link to

/node-specific/thisnode/etc/sysconfig/network-scripts. Each node has a

/node-specific/<ip-addr>/etc/sysconfig/network-scripts directory created,

initially populated with symbolic links to the contents of /node-specific/clone/etc/

sysconfig/network-scripts. The clsetup.pl command replaces entries such as