ClusterPack

Index of Tutorial Sections


Administrators Guide

1.0 ClusterPack Install QuickStart

1.1 ClusterPack General Overview

1.2 Comprehensive Install Instructions

1.3 Installation and Configuration of Optional Components

1.4 Software Upgrades and Reinstalls

1.5 Golden Image Tasks

1.6 System Maintenance Tasks

1.7 System Monitoring Tasks

1.8 Workload Management Tasks

1.9 System Troubleshooting Tasks

Users Guide

2.1 Job Management Tasks

2.2 File Transfer Tasks

2.3 Miscellaneous Tasks


Tool Overview

3.1 Cluster Management Utility Zone Overview

3.2 ServiceControl Manager (SCM) Overview

3.3 System Inventory Manager Overview

3.4 Application ReStart (AppRS) Overview

3.5 Cluster Management Utility (CMU) Overview

3.6 NAT/IPFilter Overview

3.7 Platform Computing Clusterware Pro V5.1 Overview

3.8 Management Processor (MP) Card Interface Overview

3.9 HP Systems Insight Manager (HPSIM) Overview

Related Documents

4.1 Related Documents

Dictionary of Cluster Terms


Copyright 1994-2004 Hewlett-Packard Company


ClusterPack Install QuickStart


1.0.1 How Can I Get My HP-UX Cluster Running?

Step Q1 Fill Out the ClusterPack Installation Worksheet

Step Q2 Install Prerequisites

Step Q3 Allocate File System Space

Step Q4 Obtain a License File

Step Q5 Prepare Hardware Access

Step Q6 Power Up the Management Server

Step Q7 Configure the ProCurve Switch

Step Q8 Copy the License Files to the Management Server

Step Q9 Install ClusterPack on the Management Server

Step Q10 Run manager_config on the Management Server

Step Q11 Run mp_register on the Management Server

Step Q12 Power up the Compute Nodes

Step Q13 Run compute_config on the Management Server

Step Q14 Run finalize_config on the Management Server


1.0.1 How Can I Get My HP-UX Cluster Running?


If you have installed ClusterPack before, follow the instructions in this section as a quick

reminder. You can refer to the detailed instructions for any given step via the links provided.

If you have not installed ClusterPack before, use the Comprehensive Install Instructions

section instead. This QuickStart Guide does NOT cover the use of Golden Images. If you

wish to use Golden Images, use the Comprehensive Install Instructions section instead.

Note:

If you do the installation steps out of order or omit steps, your installation

will leave your systems in an unknown and non-deterministic state.


Step Q1 Fill Out the ClusterPack Installation Worksheet


Print out this form and fill out all information for each node in your cluster.

Installation Worksheet (pdf)

Note:

You will not be able to complete the following steps if you have not

collected all of this information.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 1 Fill Out the ClusterPack Installation Worksheet

Step Q2 Install Prerequisites

Install the following software on the Management Server.
* HP-UX 11i Ignite-UX
* HP-UX 11i V2.0 TCOE

Install the following software on each Compute Node.
* HP-UX 11i Ignite-UX
* HP-UX 11i V2.0 TCOE

Allow the default choices to install.

ClusterPack requires a homogeneous operating system environment. That is, all Compute
Nodes and the Management Server must have the same release of HP-UX installed as well
as the same operating environment.

The Management Server requires at least one LAN connection. The manager must be able
to contact all the Compute Nodes using a "management network" that will be configured by
ClusterPack. In addition, the Management Server must be able to connect to all the MP cards
on the Compute Nodes. No network connections need to be configured before installing
ClusterPack; the console interface can be used for all installation and configuration steps.

The Compute Nodes must have Management Processor (MP) cards.

ClusterPack depends on certain open source software which is normally installed as a part

of the operating environment. The minimum release versions required are:

* MySQL Version 3.23.58 or higher
* Perl Version 5.8 or higher

For more information, see the Comprehensive Instructions for this step.

References:
* Step 2 Install Prerequisites

Step Q3 Allocate File System Space

Allocate file system space on the Management Server. Minimum requirements are listed
below.
* /var - 4GB
* /opt - 4GB
* /share - 500MB (Clusterware edition only)

For more information, see the Comprehensive Instructions for this step.

References:
* Step 3 Allocate File System Space

Step Q4 Obtain a License File

* Get the Host ID number of the Management Server.
* Contact Hewlett-Packard Licensing Services to redeem your license certificates.
* If you purchased the ClusterPack Base Edition, redeem the Base Edition license certificate.
* If you purchased the ClusterPack Clusterware Edition, redeem the Base Edition certificate
  and the Clusterware Edition certificate.

Note:

It may take up to 24 hours to receive the license file. Plan accordingly.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 4 Obtain a License File

Step Q5 Prepare Hardware Access

Get a serial console cable long enough to reach all the Compute Nodes from the

Management Server.

Note:

If you are installing ClusterPack on Compute Nodes for the first time, DO
NOT power up the systems. ClusterPack will do that for you automatically.
If you do accidentally power up the Compute Nodes, DO NOT answer the HP-UX boot questions.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 5 Prepare Hardware Access

Step Q6 Power Up the Management Server

Perform a normal first boot for the Management Server.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 6 Power Up the Management Server

Step Q7 Configure the ProCurve Switch

* Select an IP address from the same IP subnet that will be used for the Compute Nodes.
* Connect a console to the switch.
* Log onto the switch through the console.
* Type 'set-up'.
* Select IP Config and select the "manual" option.
* Select the IP address field and enter the IP address to be used for the switch.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 7 Configure the ProCurve Switch

Step Q8 Copy the License Files to the Management Server

Put the files in any convenient directory on the Management Server (e.g. /tmp).

For more information, see the Comprehensive Instructions for this step.

References:
* Step 8 Copy the License Files to the Management Server

Step Q9 Install ClusterPack on the Management Server

* Mount and register the ClusterPack DVD as a software depot.
* Install the ClusterPack Manager software (CPACK-MGR) using swinstall.
* Leave the DVD in the DVD drive for the next step.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 9 Install ClusterPack on the Management Server

Step Q10 Run manager_config on the Management Server

Provide the following information to the manager_config program:

* The path to the license file(s),
* The DNS domain and optional NIS domain for the cluster,
* The host name of the manager and the name of the cluster,
* The management LAN interface on the Management Server,
* The IP address(es) of the Compute Node(s),
* Whether to mount a home directory,
* Whether to configure SCM / SysInvMgr or HP SIM software,
* The LSF admin password. (Clusterware edition only)

For more information, see the Comprehensive Instructions for this step.

References:
* Step 10 Run manager_config on the Management Server

Step Q11 Run mp_register on the Management Server

Provide the following information to the mp_register program about each Management

Processor card that is connected to a Compute Node:

* IP address,
* netmask,
* gateway IP address.

The information can be provided to mp_register in a file. See the mp_register(1) man page
for the file format.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 11 Run mp_register on the Management Server

Step Q12 Power up the Compute Nodes

Use the clbootnodes program to power up all Compute Nodes that have a connected

Management Processor that you specified in the previous step. The clbootnodes program

will provide the following information to the Compute Nodes:

* Language to use,
* Host name,
* Time and time zone settings,
* Network configuration,
* Root password.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 12 Power up the Compute Nodes

Step Q13 Run compute_config on the Management Server

The compute_config program will register the nodes with various programs.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 13 Run compute_config on the Management Server

Step Q14 Run finalize_config on the Management Server

This program completes the installation and configuration process, verifies the Cluster

Management Software, and validates the installation. If it reports diagnostic error messages,

repeat the installation process, performing all steps in the order specified.

For more information, see the Comprehensive Instructions for this step.

References:
* Step 16 Run finalize_config on the Management Server


ClusterPack General Overview


1.1.1 ClusterPack Overview

1.1.2 Who should use the material in this tutorial?

1.1.3 What is the best order to review the material in the tutorial?

1.1.4 Operating System and Operating Environment Requirements

1.1.5 System Requirements

1.1.1 ClusterPack Overview

Compute Cluster

A cluster is a set of independent computers combined into a unified system through system

software and networking technologies. Compute clusters are important options for a

growing number of technical and commercial customers. The primary driver for clustering

of compute workstations and servers is that it provides a low cost alternative to

supercomputers. It also has the following key benefits:

* horizontally scalable by adding more nodes
* vertically scalable by using larger SMP nodes
* fault isolation: failure of a single Compute Node will not shut down the entire cluster system
* asymmetry: mix and match of different nodes in a cluster
* configuration flexibility: nodes, interconnect
* re-deployable nodes

A compute cluster consists of Compute Nodes that incorporate multiple processors in a

symmetric multiprocessor configuration. These nodes are connected through interconnection

network(s) and are supervised within a single administrative domain. A compute cluster

usually resides in a single machine room and is managed as a single computer system. The

interconnection network employs standard local area network (LAN) and/or high-performance
interconnect technologies based on the application requirements. A compute
cluster has to support both time-to-solution jobs and throughput jobs. HP's high-performance
technical computing cluster is available on HP Integrity servers with interconnection options
of Gigabit Ethernet or InfiniBand. The common components of a cluster are:


* Head Node - provides user access to the cluster. In smaller clusters, the Head Node
  may also serve as a Management Server.
* Management Server - server that provides a single point of management for all
  system components in the cluster.
* Management LAN/switch - usually an Ethernet network used to monitor and control
  all the major system components. May also handle traffic to the file server.
* Interconnect switch - provides high speed connectivity between Compute Nodes.
  Used for message passing and remote memory access capabilities for parallel
  applications.
* Storage - includes both local disk space on each Compute Node and optionally an
  independent storage unit accessible to the Compute Nodes (see NAS below).
* Cluster management software - ClusterPack for system administrators and end-users.
* Management Processor (MP) - controls the system console, reset and power
  management functions of a server.
* Console LAN - network for remote management of consoles (e.g., using the
  Management Processor LAN).
* Network Attached Storage (NAS) - attaches directly to Ethernet networks, providing
  easy installation, low maintenance, and high uptime.

Compute Nodes

Compute Nodes in a cluster are normal compute servers that provide system computing
resources and storage capability. A ClusterPack cluster is built with HP Integrity servers:
2-way or 4-way server platforms based on Intel Itanium 2-based processors and HP's zx1
chipset technologies.

The HP Integrity rx2600 server, powered by Intel Itanium 2-based processors, is the
industry's first dual-processor Itanium 2-based server. The rx2600 dramatically improves
price/performance for technical applications and gives customers a cost-effective yet
powerful entry into a technology with tremendous growth potential. Its 2U rack density is
ideal for clustering solutions.

HP's extensive Itanium 2-based systems experience and co-developer insights have resulted

in premium performance through the development of the HP Scalable Processor Chipset

zx1. Invented by HP, the HP zx1 chipset fully unleashes the power of Intel Itanium 2-based

processors by lowering memory latencies and increasing memory and I/O subsystem

scalability. With the HP zx1 chipset, HP Integrity servers and Itanium 2-based servers

achieve even better performance and memory expandability.

Interconnection Networks

A ClusterPack cluster is built with industry-standard Gigabit Ethernet. This
interconnect network is capable of transferring data packets between Compute Nodes for
both file serving and inter-node communication for applications. When applications benefit
from lower latency, HP's InfiniBand can be configured into the cluster to provide lower
latency and higher bandwidth. A cluster LAN is also configured to separate the system
management traffic from application message passing and file serving traffic.

Management Software and Head Node

The ability to manage and use a cluster as easily as a single compute system is critical to the

success of any cluster solution. To facilitate ease of use for both system administrators and

end-users, HP has created a software package called ClusterPack. The ClusterPack cluster

can be managed and used just as easily as a single server platform.

The ClusterPack is an integrated solution that offers the following key features:

Installation and configuration
* automated cluster setup
* network services setup (NFS, NTP, NIS, Ignite-UX)
* remote power-on
* network configuration

System administration
* single point of administration
* role-based management
* software and patch management
* user account maintenance
* resource control
* cluster health monitoring
* cluster troubleshooting
* cluster tuning
* golden image creation and distribution
* cluster reconfiguration
* cluster system hardware and software inventory management
* cluster server nodes consistency checking

Distributed resource management
* cluster resource scheduling
* policy based queues and multiple queue management
* job submission, monitoring, and control
* user specified job priority
* Application ReStart file migration

The ClusterPack integrates HP's enterprise system management solution, HP Systems Insight
Manager (HP SIM), and Platform Computing's Clusterware. HP SIM has been widely used
in large-scale data centers for managing hundreds of HP systems through a single point of
control. Its multi-system management capabilities, such as group operations and role-based
management, enable customers to achieve optimal IT resource efficiency. Platform
Computing's Clusterware is based on the industry distributed resource management solution,
Load Sharing Facility (LSF), and is specially designed for cluster management and ease of
use for both system administrators and end-users. ClusterPack is available on HP-UX 11i
Version 2.0. The ClusterPack has a server component that runs on a Management Server,
and client agents that run on the managed Integrity compute servers.

NAS 8000

The NAS 8000 High Availability Cluster was designed to significantly reduce downtime and
maximize the availability of storage by providing heterogeneous file-sharing and file-serving
functionality across a wide variety of application areas, including content delivery
and distribution, consolidated storage management, technical computing, and Web serving.
It allows capacity to be added without server downtime, so additions can be made without
losing access to valuable data. The HP NAS 8000 series provides interoperability with other
applications. It also provides storage flexibility.

The critical need for more accessible data, and more reliable storage, is met with a NAS

solution.


1.1.2 Who should use the material in this tutorial?

This tutorial is divided into five main sections:
* Administrators Guide
* Users Guide
* Tool Overview
* Related Documents
* Data Dictionary

The Administrators Guide is intended for the system administrators who will be responsible

for the initial setup and continuing operation of the cluster. The Administrators section of

the tutorial covers a range of topics including: installation and setup of the ClusterPack

software on the cluster, creating and managing golden images, system maintenance tasks,

adding users to the cluster, adding third party software to the cluster, system monitoring

tasks, and basic troubleshooting of the ClusterPack software.

The Users Guide is intended for users who will be using the ClusterPack software to submit
jobs to the cluster. The Users section of the tutorial covers a range of topics including: basic

user account setup and access, the layout of the cluster, submitting jobs to the cluster,

monitoring jobs currently running on the cluster, collecting data in a central location, and

general troubleshooting of the ClusterPack software.

The Tool Overview is a general overview of each major software component of the

ClusterPack solution. For each tool, a basic functional overview is presented. This section

includes information that is useful for both system administrators and end users.

The Related Documents section gives the location of additional information for components of
ClusterPack and HP Integrity clusters.

The Data Dictionary contains definitions for common terms that are used throughout the
tutorial.


1.1.3 What is the best order to review the material in the tutorial?

System Administrators

Initial installation and configuration of the cluster requires a complete understanding of the
steps involved and the information required. The system administrator should read and
understand all of the steps before beginning the actual installation. It is assumed that
anyone setting up a cluster is familiar with Unix system administration, HP-UX OS
installation, the Management Processor interface, and network setup and configuration.
Before installing a cluster for the first time, read and understand the following material:
* Section 1.2 Comprehensive Install Instructions
* Section 1.3 Installation and Configuration of Optional Components
* Section 1.4 Software Upgrades and Reinstalls
* Section 1.5 Golden Image Tasks

It is helpful prior to installation to review and be familiar with several additional sections of

the tutorial. This material does not need to be completely reviewed, but should be read and

available during the initial testing of the new cluster:

* Section 1.6 System Maintenance Tasks
* Section 1.7 System Monitoring Tasks
* Section 1.8 Workload Management Tasks
* Section 1.9 System Troubleshooting Tasks
* Tool Overview

Users

Before beginning to use the cluster for daily tasks, it is helpful to review several sections in
order to be familiar with the tools that are provided on the cluster to submit and monitor
jobs. This material should be kept readily available for reference.
* Section 2.1 Job Management Tasks
* Section 2.2 File Transfer Tasks
* Section 2.3 Miscellaneous Tasks
* Tool Overview

Is the Online Tutorial available in a single printable file?

The Online Tutorial is available in a single file. From the Master Index of Sections, there is

a link to the printable version at the bottom of the page.

References:
* Printable Version

1.1.4 Operating System and Operating Environment Requirements

The key components of the HP Integrity Server Technical Cluster are:

* Management Server: HP Integrity server with HP-UX 11i Version 2.0 TCOE
* Compute Nodes: HP Integrity servers with HP-UX 11i Version 2.0 TCOE
* Cluster Management Software: ClusterPack V2.4

The following prerequisites are assumed:
* HP-UX 11i V2.0 TCOE installed on the Management Server
* HP-UX 11i V2.0 TCOE installed on each Compute Node

The following software components must be installed for all features of ClusterPack V2.4 to
function effectively:
* HP-UX 11i Ignite-UX on the Management Server
* HP-UX 11i Ignite-UX on each Compute Node

1.1.5 System Requirements

In order to install the ClusterPack solution, the Management Server must have access to a

DVD drive.

The Management Server hard drive must be partitioned to allow sufficient disk space for the
following directories:
* /var - 4 GB
* /opt - 4 GB
* /share - 500 MB


Comprehensive Install Instructions


1.2.1 Comprehensive Installation Overview

Step 1 Fill Out the ClusterPack Installation Worksheet

Step 2 Install Prerequisites

Step 3 Allocate File System Space

Step 4 Obtain a License File

Step 5 Prepare Hardware Access

Step 6 Power Up the Management Server

Step 7 Configure the ProCurve Switch

Step 8 Copy the License Files to the Management Server

Step 9 Install ClusterPack on the Management Server

Step 10 Run manager_config on the Management Server

Step 11 Run mp_register on the Management Server

Step 12 Power up the Compute Nodes

Step 13 Run compute_config on the Management Server

Step 14 Set up HyperFabric (optional)

Step 15 Set up InfiniBand (optional)

Step 16 Run finalize_config on the Management Server

Step 17 Create a Golden Image of a Compute Node from the Management Server

Step 18 Add nodes to the cluster that will receive the Golden Image

Step 19 Distribute the Golden Image to the remaining Compute Nodes

Step 20 Install and Configure the remaining Compute Nodes

Step 21 Verify the final cluster configuration


1.2.1 Comprehensive Installation Overview

ClusterPack uses a two-stage process for setting up an HP-UX Cluster:

* Create a base configuration with a Management Server and one Compute Node.
  - Prepare for installation.
  - Install and configure the Management Server.
  - Install and configure the initial Compute Node and its Management Processor.
  - Verify the Management Server and the initial Compute Node.
* Configure the remaining Compute Nodes with a Golden Image.
  - Create a Golden Image.
  - Add nodes to the configuration that will receive the Golden Image.
  - Distribute the Golden Image to remaining nodes.
  - Install and configure the Compute Nodes that received the Golden Image.
  - Verify the final cluster configuration.

These processes are further broken down into a number of discrete steps. Each step contains the following
sections:
* Background
* Overview
* Details

The background section explains why this step is necessary and what will be done for you. The overview
section tells you what this step entails in general terms. The details section gives the exact commands you
must enter.

Note:

The steps in this section have to be followed in the specified order to ensure that

everything works correctly. Please read all of the following steps BEFORE beginning the

installation process.


Step 1 Fill Out the ClusterPack Installation Worksheet

Background

ClusterPack simplifies the creation and administration of a cluster of HP Integrity Servers running HP-UX
by automating the collection, recording, and distribution of information about the systems in a network.
You, the system administrator, must still make decisions about how to identify and secure those network
components. You can record all of your decisions on a form that you can keep nearby as you execute the
steps of the installation process.

Overview

Print out this form and fill out all information for each node in your cluster.

Installation Worksheet (pdf)

Note:

You will not be able to complete the following steps if you have not collected all of this
information.

Details

At various points during the configuration you will be queried for the following information:

* DNS Domain name [ex. domain.com]
* NIS Domain name [ex. hpcluster]
* Network Connectivity:
  - Information on which network cards in each Compute Node connect to the
    Management Server.
  - Information on which network card in the Management Server connects to the
    Compute Nodes.
* MySQL root password (You will be asked to set it).
* HP SIM Administrator password (You will be asked to set it).
* LSF Administrator password (You will be asked to set it).
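As an optional aid, the non-secret worksheet entries listed above could be recorded as shell variables and checked for gaps before starting. This is only a sketch: the variable names and the `missing_worksheet_fields` helper are hypothetical and are not part of ClusterPack, and passwords are deliberately left out because they should not be kept in a plain-text file.

```shell
#!/bin/sh
# Hypothetical variable names for worksheet entries; the values are examples.
DNS_DOMAIN="domain.com"
NIS_DOMAIN="hpcluster"
MGMT_SERVER_LAN=""      # network card on the Management Server (left blank here)
COMPUTE_NODE_LAN=""     # network card on each Compute Node (left blank here)

# missing_worksheet_fields NAME...: print the name of each variable that is empty.
missing_worksheet_fields() {
    for name in "$@"; do
        # Look up the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        [ -n "$value" ] || echo "$name"
    done
}

# Report any entries that still need to be filled in.
missing_worksheet_fields DNS_DOMAIN NIS_DOMAIN MGMT_SERVER_LAN COMPUTE_NODE_LAN
```

Any name printed by the last line is an entry that still has to be collected before continuing.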


Step 2 Install Prerequisites

Background

ClusterPack works on HP Integrity Servers running HP-UX. In order to install ClusterPack, you must have
the Technical Computing Operating Environment (TCOE) version of HP-UX installed. You must also have
the Ignite-UX software, which is used for installation. Installing Ignite-UX on the Compute Nodes makes it
possible to create and distribute "golden images" from the Compute Nodes.

ClusterPack requires a homogeneous operating system environment. That is, all Compute Nodes and the

Management Server must have the same release of HP-UX installed as well as the same operating

environment.

Overview

Install the following software on the Management Server.

* HP-UX 11i Ignite-UX
* HP-UX 11i V2.0 TCOE

Install the following software on each Compute Node.
* HP-UX 11i Ignite-UX
* HP-UX 11i V2.0 TCOE

ClusterPack depends on certain open source software which is normally installed as a part of the operating
environment. The minimum release versions required are:
* MySQL Version 3.23.58 or higher
* Perl Version 5.8 or higher
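The minimum versions above can be compared against an installed version string with a small helper. The following is a minimal sketch, not a ClusterPack tool: `version_at_least` is a hypothetical function name, it assumes purely numeric dotted versions with at most three fields, and how you obtain the installed version string is left to you.

```shell
#!/bin/sh
# version_at_least HAVE WANT: succeed when dotted version HAVE >= WANT.
# Compares up to three numeric fields; missing fields count as 0.
# The body runs in a subshell so the IFS change does not leak to the caller.
version_at_least() (
    want=$2
    IFS=.
    set -- $1
    h1=${1:-0} h2=${2:-0} h3=${3:-0}
    set -- $want
    w1=${1:-0} w2=${2:-0} w3=${3:-0}
    if   [ "$h1" -ne "$w1" ]; then [ "$h1" -gt "$w1" ]
    elif [ "$h2" -ne "$w2" ]; then [ "$h2" -gt "$w2" ]
    else [ "$h3" -ge "$w3" ]
    fi
)

# Example: compare sample version strings against the documented minimums.
if version_at_least 3.23.58 3.23.58; then echo "MySQL version is sufficient"; fi
if ! version_at_least 5.6.1 5.8; then echo "Perl version is too old"; fi
```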

The Management Server requires a minimum of two LAN connections. One connection must be configured
prior to installing ClusterPack.

The Compute Nodes must have Management Processor (MP) cards.

Details

Install these items when you do a fresh install of HP-UX on the Management Server and the Compute
Nodes. Alternatively, you can install Ignite-UX after rebooting by the following method.
* Using the HP-UX 11i V2.0 TCOE DVD, mount and register the DVD as a software depot.
* Install the Ignite-UX software on the Management Server using swinstall.

On the Management Server:

% /usr/sbin/swinstall -s <source_machine>:/mnt/dvdrom IgniteUX

Note:

Allow the default choices to install.


Step 3 Allocate File System Space

Background

ClusterPack installs software in the /opt and /share file systems. It stores data in the /var file system. You
must allocate sufficient space in these file systems for ClusterPack to operate correctly.

Overview

Allocate file system space on the Management Server. Minimum requirements are listed below.

* /opt - 4GB
* /var - 4GB
* /share - 500MB (Clusterware edition only)
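The minimums above can be checked against the output of `df`. The sketch below is illustrative only: the `fs_size_ok` and `check_clusterpack_space` names are hypothetical, it assumes that `df -Pk` prints the POSIX six-column layout (total size in KB in column 2, mount point in column 6), and it interprets the requirement as total file system size rather than free space.

```shell
#!/bin/sh
# fs_size_ok MOUNT MIN_KB: read df -Pk style output on stdin and succeed
# when the file system mounted at MOUNT is at least MIN_KB kilobytes in size.
fs_size_ok() {
    awk -v mnt="$1" -v min="$2" '
        NR > 1 && $6 == mnt { found = 1; ok = ($2 + 0 >= min + 0) }
        END { if (found && ok) exit 0; exit 1 }
    '
}

# Minimums from this step in KB: 4 GB = 4194304 KB, 500 MB = 512000 KB.
check_clusterpack_space() {
    for spec in /opt:4194304 /var:4194304 /share:512000; do
        mnt=${spec%%:*}
        min=${spec##*:}
        if df -Pk "$mnt" 2>/dev/null | fs_size_ok "$mnt" "$min"; then
            echo "$mnt: OK"
        else
            echo "$mnt: below minimum or not a separate file system"
        fi
    done
}
```

Run `check_clusterpack_space` on the Management Server to get one line per file system. The /share entry only matters for the Clusterware edition.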

Details

Allocate space for these file systems when you do a fresh install of HP-UX on the Management Server.

To resize /opt:

1. Go to single user mode.
   # /usr/sbin/shutdown -r now
2. Interrupt auto boot.
3. Select the EFI shell.
4. Select the appropriate file system. (Should be fs0: but may be fs1:)
   Shell> fs0:
5. Boot HP-UX.
   fs0:\>hpux
6. Interrupt auto boot.
7. Boot to single user mode.
   HPUX> boot vmunix -is
8. Determine the lvol of /opt.
   # cat /etc/fstab
9. Look for the lvol that corresponds to /opt.
10. Extend the file system. (Use the lvol from step 9.)
   # lvextend -L 4096 /dev/vg00/lvol4
   (May not be lvol4.)
   # umount /dev/vg00/lvol4
   (This should fail.)
   # extendfs /dev/vg00/lvol4
   # mount /dev/vg00/lvol4
11. Repeat steps 8 through 10 for /var.
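Steps 8 and 9 above (finding the lvol for a mount point in /etc/fstab) can be done with a one-line awk filter instead of reading the file by eye. This is a sketch under the assumption that /etc/fstab uses whitespace-separated fields with the device first and the mount point second; `lvol_for_mount` is a hypothetical helper name.

```shell
#!/bin/sh
# lvol_for_mount MOUNT [FSTAB]: print the device whose mount point is MOUNT.
# FSTAB defaults to /etc/fstab; comment lines starting with # are skipped.
lvol_for_mount() {
    awk -v mnt="$1" '$1 !~ /^#/ && $2 == mnt { print $1; exit }' "${2:-/etc/fstab}"
}

# Example use before running lvextend and extendfs:
#   lvol=$(lvol_for_mount /opt)
#   echo "The lvol for /opt is: $lvol"
```

The function prints nothing if the mount point has no entry, so check for an empty result before passing it to lvextend.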

Step 4 Obtain a License File

Background

For ClusterPack Base Edition, please refer to the Base Edition License certificate for instructions on

redeeming your license.

For ClusterPack Clusterware Edition, you will need to redeem BOTH the Base Edition license certificate

AND the Clusterware Edition license certificate. You will need TWO license files in order to run

manager_config.

As part of the normal installation and configuration process, you will be asked to provide the license key.
ClusterPack will install the license files in the correct location(s), and all licensing services will be started.

Overview

* Get the Host ID number of the Management Server.
* Contact Hewlett-Packard Licensing Services to redeem your license certificates.
* If you purchased the ClusterPack Base Edition, redeem the Base Edition license
  certificate.
* If you purchased the ClusterPack Clusterware Edition, redeem the Base Edition certificate
  and the Clusterware Edition certificate.

Note:

It may take up to 24 hours to receive the license file. Plan accordingly.

Details

You will need to contact HP licensing to redeem your license certificates. You can call, e-mail, or fax your
request to Hewlett-Packard Software Licensing Services. Refer to your Software License Certificate for
contact information. Prior to installing ClusterPack V2.4, you can request a key by providing the Host ID
number of the Management Server. The Host ID can be found using the 'uname' command:

% /bin/uname -i

The number returned by this command must be preceded by a # when making your request. For example,
if 'uname -i' returns 2005771344, provide the number as #2005771344 in your key request.
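The formatting rule above can be captured in a small helper so the Host ID is always written the same way in a key request. This is a sketch only; `format_host_id` is a hypothetical function name and not a ClusterPack command.

```shell
#!/bin/sh
# format_host_id ID: print the Host ID with the leading '#' used in key requests.
# An existing leading '#' is dropped first so it is never doubled.
format_host_id() {
    id=${1#\#}
    if [ -z "$id" ]; then
        echo "format_host_id: no Host ID given" >&2
        return 1
    fi
    printf '#%s\n' "$id"
}

# Example use on the Management Server:
#   format_host_id "$(/bin/uname -i)"
```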


Step 5 Prepare Hardware Access

Background


This document does not cover hardware details. It is necessary, however, to make certain hardware
preparations in order to run the software.

Overview

Get a serial console cable long enough to reach all the Compute Nodes from the Management Server.

Details

To allow the Management Server to aid in configuring the Management Processors, it is necessary to have a
serial console cable to connect the serial port on the Management Server to the console port on the

Management Processor to be configured. Be sure that the serial cable is long enough to reach all of the

Compute Nodes. It is also possible to configure the Management Processors manually by connecting a

console to each card.

Note:

If you are installing ClusterPack on Compute Nodes for the first time, DO NOT power up the systems; ClusterPack will do that for you automatically. If you do accidentally power up the Compute Nodes, DO NOT answer the HP-UX boot questions.

Step 6 Power Up the Management Server

Background

This is the first step in actually configuring your system.

Overview

Perform a normal boot process for the Management Server.

Note:

If you are using DHCP for IP assignment, DO NOT boot the Compute Nodes at this time.

Details

If you use DHCP to assign the host names and IP addresses to the Compute Nodes, the DHCP server must be running on the Management Server before the Compute Nodes are booted. The manager_config program will set up the DHCP server.

% /opt/clusterpack/bin/manager_config

Step 7 Configure the ProCurve Switch

Background

The ProCurve Switch is used for the management network of the cluster.

Overview

The IP address for the ProCurve Switch should be selected from the same IP subnet that will be used for the Compute Nodes.

Details

• Select an IP address from the same IP subnet that will be used for the Compute Nodes.
• Connect a console to the switch.
• Log onto the switch through the console.
• Type 'set-up'.
• Select IP Config and select the "manual" option.
• Select the IP address field and enter the IP address to be used for the switch.
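Before entering the address on the switch, it can help to confirm that the candidate address really is on the Compute Node subnet. The following is a minimal sketch in plain sh. The function names ip_to_int and same_subnet are hypothetical, and the netmask 255.255.255.0 is an assumption, since this document does not state the mask used for the cluster subnet. It also assumes a shell with at least 64-bit arithmetic.

```shell
#!/bin/sh
# Hypothetical helpers (not part of ClusterPack or the ProCurve CLI).

# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet ADDR1 ADDR2 NETMASK
# Succeeds when both addresses have the same network part under NETMASK.
same_subnet() {
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
}

# Example: candidate switch address, first Compute Node address, assumed mask.
if same_subnet 10.1.1.250 10.1.1.1 255.255.255.0; then
    echo "same subnet"
else
    echo "different subnet"
fi
```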

Step 8 Copy the License Files to the Management Server

Background

Copy the license files onto the Management Server. The license files can be placed in any convenient directory that is accessible to the Management Server. During the invocation of the manager_config tool, you will be asked to provide a path to the license files. As part of manager_config, the license files will be installed into the correct locations on the machine, and all licensing services will be started.

Overview

Put the files in any convenient directory on the Management Server.

Details

% /usr/bin/ftp your_host
> cd your_home
> lcd /tmp
> get cpack.lic
> bye
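Because manager_config will later ask for the path to the license files, a quick check that the copied file is present and not empty can save a failed run. This is only a sketch: check_license_file is a hypothetical helper, and it checks readability and size only, not whether the license contents are valid. The path /tmp/cpack.lic follows the ftp example above.

```shell
#!/bin/sh
# Hypothetical helper (not part of ClusterPack): confirm that a license
# file exists, is readable, and is not empty. It does not validate the
# license contents.
check_license_file() {
    if [ -r "$1" ] && [ -s "$1" ]; then
        echo "license file looks usable: $1"
    else
        echo "license file missing, unreadable, or empty: $1" >&2
        return 1
    fi
}

# Example, using the location from the ftp session above.
check_license_file /tmp/cpack.lic ||
    echo "copy the license file before running manager_config"
```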

Step 9 Install ClusterPack on the Management Server

Background

The ClusterPack software is delivered on a DVD.

Overview

• Mount and register the ClusterPack DVD as a software depot.
• Install the ClusterPack Manager software (CPACK-MGR) using swinstall.
• Leave the DVD in the DVD drive for the next step.

Details

How to mount a DVD on a remote system to a local directory

On the system with the DVD drive (i.e. remote system):

1. Mount the DVD.

% mount /dev/dsk/xxx /mnt/dvdrom

2. Edit the /etc/exports file. DVDs must be exported read-only ('ro'). If required, root permission can be given to other machines mounting the file system ("root=<machine_foo:machine_bar:machine_baz>"). Add a line to /etc/exports:

/mnt/dvdrom -ro,root=<local_system>

3. Export the file system, using all the directives found in /etc/exports.

% exportfs -a

4. Check to be sure that the line you added is actually exported.

% exportfs

On the local machine:

5. Mount the DVD to an existing directory.

% /etc/mount <remote_system>:/mnt/dvdrom /mnt/dvdrom

Note:

You cannot be in the /mnt/dvdrom directory when you try to mount. You will get a file busy error.

When you are finished, on the local machine:

6. Unmount the DVD file system.

% /etc/umount /mnt/dvdrom

On the remote system:

7. Unexport the DVD file system.

% exportfs -u -i /mnt/dvdrom

8. Unmount the DVD.

% /etc/umount /mnt/dvdrom

How to enable a DVD as a software depot

During the installation process, two DVDs will be required. Generic instructions for making a DVD accessible as a software depot for installation onto the Management Server are provided here. Please refer to the steps that follow for the specific DVDs that are required.

The steps to mount a DVD for use as a software depot are:

• Insert the DVD into the drive.
• Mount the DVD drive locally on that system.
• Register the depot on the DVD using swreg.
• Check the contents of the DVD using swlist.

These commands can only be executed as the super user (i.e. root).

A DVD drive installed in the Management Server can be used for software installations. If the Management Server does not include a DVD drive, use one of these two methods:

1. Connect a portable DVD drive to the Management Server.
2. Use an HP-UX system with a DVD drive, which is network accessible from the Management Server, as a source for installation.

For example, to mount the DVD device (shown here as /dev/dsk/xxx) to the directory /mnt/dvdrom, register it as a depot, and list its contents, execute the following commands on the "source machine" with the DVD drive:

% /sbin/mount -r /dev/dsk/xxx /mnt/dvdrom
% /usr/sbin/swreg -l depot /mnt/dvdrom
% /usr/sbin/swlist @ /mnt/dvdrom
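The mount, register, and list sequence can be wrapped in a small script. The sketch below is an illustration only: run and register_dvd_depot are hypothetical names, and DRY_RUN is an assumed convention that defaults to printing the commands instead of executing them. The device name /dev/dsk/xxx is the same placeholder used above and must be replaced with the real device.

```shell
#!/bin/sh
# Hypothetical wrapper (not part of ClusterPack). With DRY_RUN=1 (the
# default here) the commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# register_dvd_depot DEVICE [MOUNT_POINT]
# Mount the DVD read-only, register it as a depot, then list its contents.
# Stops at the first command that fails.
register_dvd_depot() {
    dev=$1
    mnt=${2:-/mnt/dvdrom}
    run /sbin/mount -r "$dev" "$mnt" &&
    run /usr/sbin/swreg -l depot "$mnt" &&
    run /usr/sbin/swlist @ "$mnt"
}

register_dvd_depot /dev/dsk/xxx
```

To execute the commands for real, run the script as root on the source machine with DRY_RUN=0.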

• Using the ClusterPack DVD, mount and register the DVD as a software depot.
• Install the ClusterPack Manager software (CPACK-MGR) on the Management Server using swinstall.

On the Management Server:

% /usr/sbin/swinstall -s <source_machine>:/mnt/dvdrom CPACK-MGR

• The ClusterPack DVD will be referenced again in the installation process. Please leave it in the DVD drive until the "Run manager_config on the Management Server" step has completed.

Step 10 Run manager_config on the Management Server

Background

This program is the main installation and configuration driver. It should be executed on the Management

Server.

Some of the steps are:

• Install the appropriate license files (i.e. ONE Base Edition license file or TWO Clusterware Edition license files), and start the licensing services.
• Assign the DNS domain name and NIS domain name based on the inputs provided.
• Select and configure the cluster LAN interface on the Management Server that interfaces with the Compute Nodes.
• Specify how many Compute Nodes are in the cluster and the starting IP address of the first Compute Node. This information is used to assign names and IP addresses when Compute Nodes are brought up. The first 5 characters of the Management Server's hostname are used as a base for the Compute Node names. For example, if the starting IP address is 10.1.1.1, there are 16 Compute Nodes, and the name of the Management Server is hpnode, then the first Compute Node will be called hpnod001 with address 10.1.1.1, the next hpnod002 with address 10.1.1.2, and so on. If the tool is invoked with the -f option, the input file will be the source for this information.
• Set up the Management Server as NTP server, NIS server, NFS server, Ignite-UX server, and Web server.
• Install all of the dependent software components from the ClusterPack DVD:
  ◦ This step looks for the source of the CPACK-MGR install and queries for an alternate source if the source is not found. A local depot is set up. All of the agent components are copied. Other dependent software pieces in the Management Server are validated and installed.
• Modify configuration files on the Management Server to enable auto-startup of the Cluster Management Software components after reboots.
• Configure Cluster Management Software tools. The Management Server components of HP System Management Tools (HP Systems Insight Manager) are also configured if selected.
• Print a PASS diagnostic message if all of the configuration steps are successful.
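The naming rule described in the list above (first 5 characters of the Management Server's hostname, a three-digit counter, and consecutive IP addresses) can be sketched as follows. This is an illustration of the rule as stated, not the code manager_config itself uses. gen_compute_hosts is a hypothetical name, and the sketch assumes all addresses fit within the last octet of the starting address.

```shell
#!/bin/sh
# Hypothetical sketch (not part of ClusterPack).
# gen_compute_hosts MANAGER_HOSTNAME START_IP COUNT
# Prints one "IP<TAB>hostname" line per Compute Node, in /etc/hosts style.
# Assumption: START_IP's last octet plus COUNT stays within one subnet.
gen_compute_hosts() {
    manager=$1
    start_ip=$2
    count=$3
    base=$(printf '%s' "$manager" | cut -c1-5)   # first 5 characters
    prefix=${start_ip%.*}                        # e.g. 10.1.1
    last=${start_ip##*.}                         # e.g. 1
    i=0
    while [ "$i" -lt "$count" ]; do
        printf '%s.%d\t%s%03d\n' "$prefix" $((last + i)) "$base" $((i + 1))
        i=$((i + 1))
    done
}

# The example from the text: hpnode, 10.1.1.1, 16 nodes.
# First line printed: 10.1.1.1<TAB>hpnod001
gen_compute_hosts hpnode 10.1.1.1 16
```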

Overview

Provide the following information to the manager_config program:

• The path to the license file(s)
• Whether to store passwords
• The DNS domain and NIS domain for the cluster
• The host name of the manager and the name of the cluster
• The cluster LAN interface on the Management Server
• The count and starting IP address of the Compute Nodes
• Whether to mount a home directory
• The SCM admin password, if SCM is configured
• The LSF admin password (Clusterware Edition only)

Details

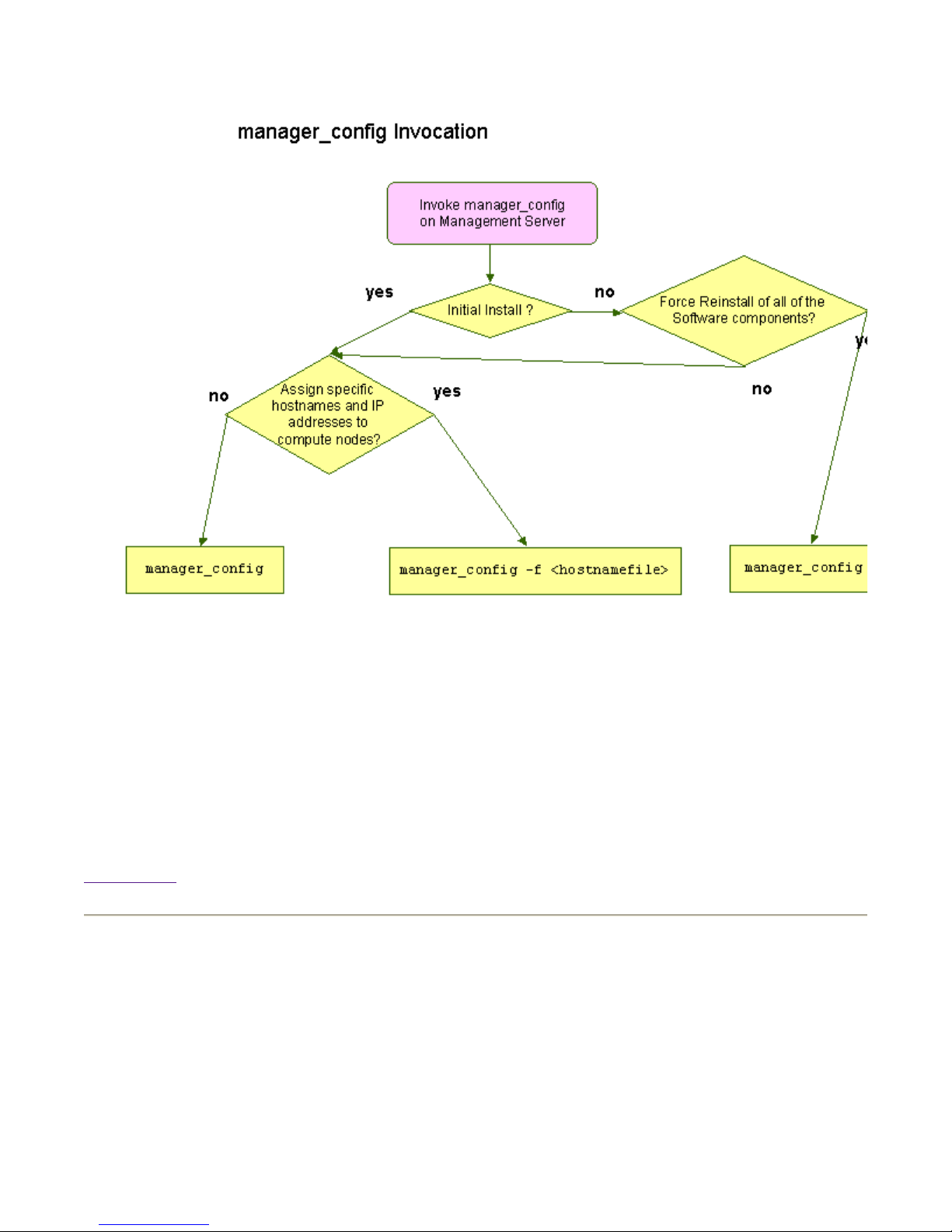

This tool can be invoked in two ways, based on your specific requirements:

• If you want manager_config to drive the allocation of hostnames and IP addresses of the Compute Nodes in the cluster (based on some basic queries), invoke /opt/clusterpack/bin/manager_config with no arguments:

% /opt/clusterpack/bin/manager_config

• If you want manager_config to assign specific hostnames and IP addresses to the Compute Nodes in the cluster, supply an input file in the same format as /etc/hosts, and invoke the tool as follows:

% /opt/clusterpack/bin/manager_config -f input_file
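Since the -f input file uses the /etc/hosts format, a simple sanity check before invoking the tool can catch lines that lack an address or a hostname. The sketch below is an assumption-laden illustration: check_hosts_file is a hypothetical helper, it accepts only "IPv4-address hostname" lines plus comments and blank lines, and it does not reproduce whatever validation manager_config performs itself.

```shell
#!/bin/sh
# Hypothetical helper (not part of ClusterPack).
# check_hosts_file FILE
# Succeeds when every non-comment, non-blank line starts with a
# dotted-quad address followed by at least one hostname.
check_hosts_file() {
    awk '
        BEGIN { bad = 0 }
        /^[ \t]*(#|$)/ { next }   # skip comments and blank lines
        NF < 2 || $1 !~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ {
            print "line " NR ": expected \"IP hostname\": " $0
            bad = 1
        }
        END { exit bad }
    ' "$1"
}

# Possible use on the Management Server:
#   check_hosts_file input_file &&
#       /opt/clusterpack/bin/manager_config -f input_file
```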

After this step, the ClusterPack DVD is no longer required during installation. On the source machine, unmount the DVD drive and remove the DVD.

% /sbin/umount /mnt/dvdrom

manager_config Invocation

manager_config is an interactive tool that configures the Management Server based on some simple queries. Most of the queries have default values assigned, and you just need to press RETURN to accept those default values.

Step 11 Run mp_register on the Management Server

Background

A Management Processor (MP) allows you to remotely monitor and control the state of a Compute Node. By configuring and registering the MP cards for each Compute Node, clbootnodes can be used to automatically answer the first boot questions for each Compute Node.
