
DIGITAL

HSG80 Array Controller ACS Version 8.2

EK-HSG80-UG.B01

Digital Equipment Corporation

Maynard, Massachusetts

User’s Guide


July 1998

While Digital Equipment Corporation believes the information included in this manual is correct as of the date of publication, it is subject to change without notice. DIGITAL makes no representations that the interconnection of its products in the manner described in this document will not infringe existing or future patent rights, nor do the descriptions contained in this document imply the granting of licenses to make, use, or sell equipment or software in accordance with the description. No responsibility is assumed for the use or reliability of firmware on equipment not supplied by DIGITAL or its affiliated companies. Possession, use, or copying of the software or firmware described in this documentation is authorized only pursuant to a valid written license from DIGITAL, an authorized sublicensor, or the identified licensor.

Commercial Computer Software, Computer Software Documentation and Technical Data for Commercial Items are licensed to the U.S. Government with DIGITAL’s standard commercial license and, when applicable, the rights in DFAR 252.227-7015, “Technical Data—Commercial Items.”

© Digital Equipment Corporation, 1998.

Printed in U.S.A.

All rights reserved.

DIGITAL, DIGITAL UNIX, DECconnect, HSZ, StorageWorks, VMS, OpenVMS, and the DIGITAL logo are trademarks of Digital Equipment Corporation.

UNIX is a registered trademark in the United States and other countries exclusively through X/Open Company Ltd.

Windows NT is a trademark of the Microsoft Corporation. Sun is a registered trademark of Sun Microsystems, Inc.

Hewlett-Packard and HP-UX are registered trademarks of the Hewlett-Packard Company. IBM and AIX are registered trademarks of International Business Machines Corporation. All other trademarks and registered trademarks are the property of their respective owners.

This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial environment. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the manuals, may cause harmful interference to radio communications. Operation of this equipment in a residential area is likely to cause harmful interference, in which case the user will be required to correct the interference at his own expense. Restrictions apply to the use of the local-connection port on this series of controllers; failure to observe these restrictions may result in harmful interference. Always disconnect this port as soon as possible after completing the setup operation. Any changes or modifications made to this equipment may void the user's authority to operate the equipment.

Warning!

This is a Class A product. In a domestic environment this product may cause radio interference in which case the user may be required to take adequate measures.

Warning! (German-language notice)

This is a Class A radio-interference device. Operating this device in residential areas may cause radio interference, in which case the user is responsible for taking appropriate countermeasures.

Warning! (French-language notice)

This device is a Class A device. In a residential environment, this device may cause radio interference. In that case, the user may be required to take appropriate measures.


Preface

Precautions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xviii

Electrostatic Discharge Precautions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xviii

Component Precaution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

Maintenance Port Precautions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xx

Typographical Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xx

Special Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxi

Required Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xxii

Related Publications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxiii

Revision History. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxiv

Chapter 1 General Description

The HSG80 Array Controller Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–2

Summary of HSG80 Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–4

The HSG80 Array Controller. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–7

Operator Control Panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–13

Maintenance Port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–14

Utilities and Exercisers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–14

Cache Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–18

Caching Techniques . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–20

Fault-Tolerance for Write-Back Caching . . . . . . . . . . . . . . . . . . . . . . . . . . .1–21

External Cache Battery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–28

Charging Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–29


Chapter 2 Configuring an HSG80 Array Controller

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–2

Configuration Rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–2

Configuring an HSG80 Array Controller. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–3

Setting the PVA Module ID Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–6

Establishing a Local Connection to the Controller . . . . . . . . . . . . . . . . . . . . . . . .2–7

Selecting a Failover Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–10

Using Transparent Failover. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–10

Using Multiple-Bus Failover . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–11

Enabling Mirrored Write-Back Cache. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–12


Selecting a Cache Mode. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–12

Fault-Tolerance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–12

Backing up Power with a UPS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–13

Connecting the Subsystem to the Host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–14

Connecting a Dual-Redundant Configuration to the Host. . . . . . . . . . . . . . 2–16

Chapter 3 Creating Storagesets

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–2

Planning and Configuring Storagesets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–4

Creating a Storageset and Device Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–5

Determining Storage Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–7

Choosing a Storageset Type . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–8

Using Stripesets to Increase I/O Performance . . . . . . . . . . . . . . . . . . . . . . . . 3–8

Using Mirrorsets to Ensure Availability. . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–12

Using RAIDsets to Increase Performance and Availability. . . . . . . . . . . . . 3–15

Using Striped Mirrorsets for Highest Performance and Availability. . . . . . 3–17

Cloning Data for Backup. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–19

Backing Up Your Subsystem Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–23

Saving Subsystem Configuration Information to a Single Disk . . . . . . . . . 3–23

Saving Subsystem Configuration Information to Multiple Disks . . . . . . . . 3–23

Saving Subsystem Configuration Information to a Storageset . . . . . . . . . . 3–24

Controller and Port Worldwide Names (Node IDs) . . . . . . . . . . . . . . . . . . . . . . 3–26

Restoring Worldwide Names (Node IDs) . . . . . . . . . . . . . . . . . . . . . . . . . . 3–26

Unit World Wide Names (LUN IDs) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–27

Assigning Unit Numbers for Host Access to Storagesets . . . . . . . . . . . . . . . . . . 3–28

Assigning Unit Offsets. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–29

Assigning Access Paths . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–30

Creating a Storageset Map . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–32

Device PTL Addressing Convention within the Controller. . . . . . . . . . . . . 3–33

Planning Partitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–37

Defining a Partition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–37

Guidelines for Partitioning Storagesets and Disk Drives. . . . . . . . . . . . . . . 3–38

Choosing Switches for Storagesets and Devices. . . . . . . . . . . . . . . . . . . . . . . . . 3–39

Enabling Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–39

Changing Switches. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–39

RAIDset Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–40


Replacement Policy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–40

Reconstruction Policy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–40

Membership . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–41

Mirrorset Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–42

Replacement Policy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–42

Copy Speed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–42

Read Source . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–43

Device Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–44

Transportability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–44

Device Transfer Rate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–46

Initialize Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–47

Chunk Size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–47

Save Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–50

Destroy/Nodestroy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–52

Unit Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–54

Configuring Storagesets with CLI Commands . . . . . . . . . . . . . . . . . . . . . . . . . .3–55

Adding Disk Drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–55

Configuring a Stripeset. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–55

Configuring a Mirrorset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–56

Configuring a RAIDset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–57

Configuring a Striped Mirrorset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–59

Configuring a Single-Disk Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–60

Partitioning a Storageset or Disk Drive . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–61

Adding a Disk Drive to the Spareset. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–63

Removing a Disk Drive from the Spareset . . . . . . . . . . . . . . . . . . . . . . . . . .3–64

Enabling Autospare. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–65

Deleting a Storageset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–65

Changing Switches for a Storageset or Device. . . . . . . . . . . . . . . . . . . . . . .3–66

Configuring with the Command Console LUN . . . . . . . . . . . . . . . . . . . . . . . . . .3–68

Enabling and Disabling the CCL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–68

Finding the CCL Location . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–69

Multiple-Port and Multiple-Host Use. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–69

Troubleshooting with the CCL. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–70

Adding Storage Units: Where Is the CCL? . . . . . . . . . . . . . . . . . . . . . . . . .3–70

Moving Storagesets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–71


Chapter 4 Troubleshooting

Maintenance Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–1

Troubleshooting Checklist . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–2

Troubleshooting Table. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–4

Significant Event Reporting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–14

Events that cause controller termination . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–14

Events that do not cause controller operation to terminate . . . . . . . . . . . . . 4–15

Fault Management Utility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–17

Displaying Failure Entries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–17

Translating Event Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–18

Controlling the Display of Significant Events and Failures. . . . . . . . . . . . . 4–20

Using VTDPY to Check for Communication Problems . . . . . . . . . . . . . . . . . . . 4–23

Checking Controller-to-Host Communications . . . . . . . . . . . . . . . . . . . . 4–24

Checking Controller-to-Device Communications . . . . . . . . . . . . . . . . . . . . 4–24

Checking Unit Status and I/O Activity . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–29

Checking Fibre Channel Link Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–31

Checking for Disk-Drive Problems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–36

Finding a Disk Drive in the Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–36

Testing the Read Capability of a Disk Drive . . . . . . . . . . . . . . . . . . . . . . . . 4–36

Testing the Read and Write Capabilities of a Disk Drive . . . . . . . . . . . . . . 4–37

DILX Error Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–40

Running the Controller’s Diagnostic Test . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–41

Chapter 5 Replacement Procedures

Replacing Modules in a Single Controller Configuration. . . . . . . . . . . . . . . . . . . 5–2

Replacing the Controller and Cache Module in a Single Controller

Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–2

Replacing the Controller in a Single Controller Configuration. . . . . . . . . . . 5–3

Replacing the Cache Module in a Single Controller Configuration . . . . . . . 5–6

Replacing Modules in a Dual-Redundant Controller Configuration. . . . . . . . . . . 5–8

Replacing a Controller and Cache Module in a Dual-Redundant Controller

Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–9

Replacing a Controller in a Dual-Redundant Controller Configuration . . . 5–15

Replacing a Cache Module in a Dual-Redundant Controller

Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–21


Replacing the External Cache Battery Storage

Building Block . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–27

Replacing the External Cache Battery Storage Building Block

With Cabinet Powered On . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–28

Replacing the External Cache Battery Storage Building Block

With Cabinet Powered Off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–29

Replacing the GLM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–32

Replacing a PVA Module. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–34

Replacing the PVA in the Master Enclosure (ID 0) . . . . . . . . . . . . . . . . . . .5–34

Replacing the PVA in the First Expansion (ID 2) or Second

Expansion Enclosure (ID 3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–36

Replacing an I/O Module. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–39

Replacing DIMMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–42

Removing DIMMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–43

Installing DIMMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–44

Replacing a Fibre Cable or Hub. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–45

Replacing a PCMCIA Card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–46

Replacing a Failed Storageset Member . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–47

Removing a Failed RAIDset or Mirrorset Member . . . . . . . . . . . . . . . . . . .5–47

Installing the New Member . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–47

Shutting Down the Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–48

Disabling and Enabling the External Cache Batteries . . . . . . . . . . . . . . . . .5–48

Restarting the Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5–50

Chapter 6 Upgrading the Subsystem

Upgrading Controller Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6–2

Installing a New Program Card. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6–2

Downloading New Software. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6–3

Using CLCP to Install, Delete, and List Software Patches. . . . . . . . . . . . . . .6–6

Upgrading Firmware on a Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6–11

HSUTIL Messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6–14

Upgrading to a Dual-Redundant Controller Configuration . . . . . . . . . . . . . . . . .6–16

Installing a New Controller, Cache Module, and ECB. . . . . . . . . . . . . . . . .6–16

Upgrading Cache Memory. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6–20


Appendix A System Profiles

Device Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .A–2

Storageset Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .A–3

Enclosure Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .A–4

Appendix B CLI Commands

CLI Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–2

Using the CLI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–2

Command Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–2

Getting Help. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–3

Entering CLI Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–3

Changing the CLI Prompt . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–4

Command Syntax. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–5

ADD CONNECTIONS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–7

ADD DISK . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–11

ADD MIRRORSET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–15

ADD RAIDSET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–19

ADD SPARESET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–23

ADD STRIPESET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–25

ADD UNIT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–27

CLEAR_ERRORS CLI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–35

CLEAR_ERRORS controller INVALID_CACHE . . . . . . . . . . . . . . . . . . . . . .B–37

CLEAR_ERRORS device-name UNKNOWN . . . . . . . . . . . . . . . . . . . . . . . . .B–39

CLEAR_ERRORS unit-number LOST_DATA . . . . . . . . . . . . . . . . . . . . . . . . .B–41

CLEAR_ERRORS unit-number UNWRITEABLE_DATA . . . . . . . . . . . . . . . .B–43

CONFIGURATION RESET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–45

CONFIGURATION RESTORE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–47

CONFIGURATION SAVE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–49

CREATE_PARTITION . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–51

DELETE connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–55

DELETE container-name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–57

DELETE FAILEDSET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–59

DELETE SPARESET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–61

DELETE unit-number . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–63

DESTROY_PARTITION . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–65

DIRECTORY . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–67


HELP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–69

INITIALIZE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–71

LOCATE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–77

MIRROR . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–79

POWEROFF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–83

REDUCE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–85

RENAME . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–89

RESTART controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–91

RETRY_ERRORS UNWRITEABLE_DATA . . . . . . . . . . . . . . . . . . . . . . . . . B–93

RUN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–95

SELFTEST controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–99

SET connection-name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–101

SET controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–103

SET device-name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–111

SET EMU . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–113

SET FAILEDSET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–117

SET FAILOVER . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–119

SET mirrorset-name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–121

SET MULTIBUS_FAILOVER . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–127

SET NOFAILOVER . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–129

SET NOMULTIBUS_FAILOVER . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–131

SET RAIDset-name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–133

SET unit-number . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–137

SHOW . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–143

SHUTDOWN controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–149

UNMIRROR . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B–151


Appendix C LED Codes

Operator Control Panel LED Codes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C–2

Solid OCP Patterns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C–3

Flashing OCP Patterns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C–8

Appendix D Event Reporting: Templates and Codes

Passthrough Device Reset Event Sense Data Response. . . . . . . . . . . . . . . . . . . . D–2

Last Failure Event Sense Data Response . . . . . . . . . . . . . . . . . . . . . . . . . . . D–3

Multiple-Bus Failover Event Sense Data Response . . . . . . . . . . . . . . . . . . . D–5


Failover Event Sense Data Response. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–6

Nonvolatile Parameter Memory Component Event Sense

Data Response . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–8

Backup Battery Failure Event Sense Data Response. . . . . . . . . . . . . . . . . . .D–9

Subsystem Built-In Self Test Failure Event Sense Data Response . . . . . . .D–10

Memory System Failure Event Sense Data Response . . . . . . . . . . . . . . . . .D–12

Device Services Non-Transfer Error Event Sense Data Response . . . . . . .D–13

Disk Transfer Error Event Sense Data Response. . . . . . . . . . . . . . . . . . . . .D–14

Instance Codes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–16

Instance Code Structure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–16

Instance Codes and FMU. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–16

Last Failure Codes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–36

Last Failure Code Structure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–36

Last Failure Codes and FMU . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–36

Recommended Repair Action Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–77

Component Identifier Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–82

Event Threshold Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–84

ASC/ASCQ Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–85

Appendix E Controller Specifications

Physical and Electrical Specifications for the Controller . . . . . . . . . . . . . . . . . . . E–2

Environmental Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E–3

Glossary

Index

Figures

The HSG80 Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–3

A Host and Its Storage Subsystem. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–7

HSG80 Array Controller–Fibre Channel Copper Cabling. . . . . . . . . . . . . . . . . . .1–8

Optional Maintenance Port Cable for a Terminal Connection. . . . . . . . . . . . . . .1–10

HSG80 Array Controller–Fibre Channel Optical Cabling. . . . . . . . . . . . . . . . . .1–11

Location of Controllers and Cache Modules . . . . . . . . . . . . . . . . . . . . . . . . . . .1–13

HSG80 Controller Operator Control Panel (OCP). . . . . . . . . . . . . . . . . . . . . . . .1–14

Cache Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–19

ECB for Dual-Redundant Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–28

SCSI Target ID Numbers on the Controller Device Bus and PVA Settings

in an Extended Subsystem. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–6

Terminal to Local-Connection Port Connection . . . . . . . . . . . . . . . . . . . . . . . . . .2–7

“This Controller” and “Other Controller” . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–9

Cabling for Single Configuration with Fibre Channel Copper Support . . . . . . .2–14

Cabling for Single Configuration with Fibre Channel Optical Support . . . . . . .2–15

Cabling for Dual-Redundant Configuration with Two Hubs using

Fibre Channel Copper Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–17

Cabling for Dual-Redundant Configuration with Two Hubs using

Fibre Channel Optical Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–18

Cabling for Dual-Redundant Configuration with One Hub using

Fibre Channel Copper Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–20

Cabling for Dual-Redundant Configuration with One Hub using

Fibre Channel Optical Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–21

Units Created from Storagesets, Partitions, and Drives . . . . . . . . . . . . . . . . . . . . .3–3

A Typical Storageset Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–6

Striping Lets Several Disk Drives Participate in Each I/O Request. . . . . . . . . . . .3–9

Distribute Members across Ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–11

Mirrorsets Maintain Two Copies of the Same Data. . . . . . . . . . . . . . . . . . . . . . .3–12

First Mirrorset Members on Different Buses . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–13


Parity Ensures Availability; Striping Provides Good Performance. . . . . . . . . . . 3–15

Striping and Mirroring in the Same Storageset. . . . . . . . . . . . . . . . . . . . . . . . . . 3–17

CLONE Steps for Duplicating Unit Members . . . . . . . . . . . . . . . . . . . . . . . . . . 3–20

Controller Port ID and Unit Numbers in Transparent Failover Mode . . . . . . . . 3–28

Controller Port ID Numbers and Unit Numbers in Multiple Bus Failover

Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–29

LUN Presentation Using Unit Offset on a Per-Host Basis . . . . . . . . . . . . . . . . . 3–30

Storageset Map . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–32

PTL Naming Convention . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–34

PTL Addressing in a Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–35

Locating Devices using PTLs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–36

Partitioning a Single-Disk Unit. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–37

Chunk Size Larger than the Request Size. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–48

Chunk Size Smaller than the Request Size. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–49

Moving a Storageset from one Subsystem to Another . . . . . . . . . . . . . . . . . . . . 3–71

Troubleshooting: Host Cannot Access Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–12

Xfer Rate Region of the Default Display . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–24

Regions on the Device Display . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–25

Unit Status on the Cache Display . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–29

Fibre Channel Host Status Display . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–32

Single Controller Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–2

Dual-Redundant Controller Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–8

Single-Battery ECB SBB Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–27

Dual-Battery ECB SBB Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–27

Location of GLMs in Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–32

I/O Module Locations in a BA370 Enclosure. . . . . . . . . . . . . . . . . . . . . . . . . . . 5–39

Cache-Module Memory Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–42

Installing a DIMM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–44

Battery Disable Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–49

Location of Write-Protection Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–4

Upgrading Device Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–11

Pass-through Device Reset Event Sense Data Response Format . . . . . . . . . . . . .D–2

Template 01 - Last Failure Event Sense Data Response Format. . . . . . . . . . . . . .D–4

Template 04 - Multiple-Bus Failover Event Sense Data Response Format . . . . .D–5


Template 05 - Failover Event Sense Data Response Format . . . . . . . . . . . . . . . . D–7

Template 11 - Nonvolatile Parameter Memory Component Event Sense Data

Response Format . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D–8

Template 12 - Backup Battery Failure Event Sense Data Response Format. . . . D–9

Template 13 - Subsystem Built-In Self Test Failure Event Sense Data

Response Format . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D–11

Template 14 - Memory System Failure Event Sense Data Response Format . . D–12

Template 41 - Device Services Non-Transfer Error Event Sense Data

Response Format . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D–13

Template 51 - Disk Transfer Error Event Sense Data Response Format. . . . . . D–15

Structure of an Instance Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D–16

Instance Code Format . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D–16

Structure of a Last Failure Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D–36

Last Failure Code Format. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D–36

Tables

Key to Figure 1–1 The HSG80 Subsystem . . . . . . . . . . . . . . . . . . .1–3

Controller Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–4

Key to Figure 1–3 HSG80 Array Controller–Fibre Channel

Copper Cabling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–9

Key to Figure 1–4: Optional Maintenance Port Cable for a

Terminal Connection. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–10

Key to Figure 1–5 HSG80 Array Controller–Fibre Channel

Optical Cabling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1–11

Cache Module Memory Configurations. . . . . . . . . . . . . . . . . . . . . .1–18

Cache Policies and Cache Module Status . . . . . . . . . . . . . . . . . . . .1–22

Cache Policies Resulting and ECB Status . . . . . . . . . . . . . . . . . . . .1–24

ECB Capacity Based on Memory Size. . . . . . . . . . . . . . . . . . . . . . .1–29

Key to Figure 2–4 Cabling for Single Configuration (copper). . . . .2–14

Key to Figure 2–5 Cabling for Single Configuration (optical). . . . .2–15

Key to Figure 2–6 Cabling for Dual-Redundant Configuration

with Two Hubs (copper) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–17

Key to Figure 2–7 Cabling for Dual-Redundant Configuration

with Two Hubs (optical) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–18

Key to Figure 2–8 Cabling for Dual-Redundant Configuration

with One Hub (copper) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–20

Key to Figure 2–9 Cabling for Dual-Redundant Configuration

with One Hub (optical) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2–21

Controller Limitations for RAIDsets . . . . . . . . . . . . . . . . . . . . . . . . .3–3

A Comparison of Different Kinds of Storagesets. . . . . . . . . . . . . . . .3–8

Maximum Chunk Sizes for a RAIDset . . . . . . . . . . . . . . . . . . . . . .3–50

Unit Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3–54

Troubleshooting Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4–4

Event-Code Types. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4–19

FMU SET Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4–20


VTDPY Key Sequences and Commands. . . . . . . . . . . . . . . . . . . . . 4–23

Device Map Columns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–25

Device Status Columns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–26

Device-Port Status Columns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–28

Unit Status Columns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–29

Fibre Channel Host Status Display - Known Hosts (Connections) . 4–32

Fibre Channel Host Status Display - Port Status . . . . . . . . . . . . . . . 4–33

Fibre Channel Host Status Display - Link Error Counters . . . . . . . 4–33

Tachyon First Digit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–35

Tachyon Second Digit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–35

DILX Control Sequences. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–37

Data Patterns for Phase 1: Write Test . . . . . . . . . . . . . . . . . . . . . . . 4–38

DILX Error Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–40

Cache Module Memory Configurations. . . . . . . . . . . . . . . . . . . . . . 5–42

HSUTIL Messages and Inquiries . . . . . . . . . . . . . . . . . . . . . . . . . . 6–14

Cache Module Memory Configurations. . . . . . . . . . . . . . . . . . . . . . 6–20

Recall and Edit Command Keys . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–4

ADD UNIT Switches for Storagesets . . . . . . . . . . . . . . . . . . . . . . .B–28

POWEROFF Switch Settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–84

SET controller Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–104

EMU Set Point Temperatures. . . . . . . . . . . . . . . . . . . . . . . . . . . . .B–114

SET unit-number Switches for Existing Containers . . . . . . . . . . .B–138

Solid OCP Patterns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C–3

Flashing OCP Patterns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C–8

Instance Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–18

Controller Restart Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–37

Last Failure Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–38

Recommended Repair Action Codes . . . . . . . . . . . . . . . . . . . . . . .D–77

Component Identifier Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–82

Event Notification/Recovery Threshold Classifications . . . . . . . . .D–84

ASC and ASCQ Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .D–85

Controller Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E–2

StorageWorks Environmental Specifications . . . . . . . . . . . . . . . . . . E–3

Preface

This book describes the features of the HSG80 array controller and configuration procedures for the controller and storagesets running Array Controller Software (ACS) Version 8.2G.

This book does not contain information about the operating environments to which the controller may be connected, nor does it contain detailed information about subsystem enclosures or their components. See the documentation that accompanied these peripherals for information about them.


Precautions

Follow these precautions when carrying out the procedures in this book.

Electrostatic Discharge Precautions

Static electricity collects on all nonconducting material, such as paper, cloth, and plastic. An electrostatic discharge (ESD) can easily damage a controller or other subsystem component even though you may not see or feel the discharge. Follow these precautions whenever you're servicing a subsystem or one of its components:

n

Always use an ESD wrist strap when servicing the controller or other components in the subsystem. Make sure that the strap contacts bare skin and fits snugly, and that its grounding lead is attached to a bus that is a verified earth ground.

n

Before touching any circuit board or component, always touch a verifiable earth ground to discharge any static electricity that may be present in your clothing.

n

Always keep circuit boards and components away from nonconducting material.

n

Always keep clothing away from circuit boards and components.

n

Always use antistatic bags and grounding mats for storing circuit boards or components during replacement procedures.

n

Always keep the ESD cover over the program card when the card is in the controller. If you remove the card, put it in its original carrying case. Never touch the contacts or twist or bend the card while you're handling it.

n

Never touch the connector pins of a cable when it is attached to a

component or host.


Component Precaution

System components referenced in this manual comply with the regulatory standards documented herein. Use of other components in their place may violate country standards, negate regulatory compliance, or invalidate the warranty on your product.

Maintenance Port Precautions

The maintenance port generates, uses, and radiates radio-frequency energy through cables that are connected to it. This energy may interfere with radio and television reception. Do not leave a cable connected to this port when you're not communicating with the controller.


Conventions

This book uses the following typographical conventions and special notices to help you find what you're looking for.

Typographical Conventions

Convention Meaning

ALLCAPS BOLD: Command syntax that must be entered exactly as shown, for example: SET FAILOVER COPY=OTHER_CONTROLLER

ALLCAPS: Command discussed within text, for example: “Use the SHOW SPARESET command to show the contents of the spareset.”

Monospaced: Screen display.

Sans serif italic: Command variable or numeric value that you supply, for example: SHOW RAIDset-name or SET THIS_CONTROLLER ID=(n,n,n,n,)

italic: Reference to other books or publications, for example: “See the HSG80 Array Controller ACS V8.2 Release Notes for details.”

. . . (vertical ellipsis): Indicates that a portion of an example or figure has been omitted.

“this controller”: The controller serving your current CLI session through a local or remote terminal.

“other controller”: The controller in a dual-redundant pair that's connected to the controller serving your current CLI session.

Special Notices

This book doesn’t contain detailed descriptions of standard safety procedures. However, it does contain warnings for procedures that could cause personal injury and cautions for procedures that could damage the controller or its related components. Look for these symbols when you’re carrying out the procedures in this book:

Warning A warning indicates the presence of a hazard that can cause personal injury if you do not observe the precautions in the text.

Caution A caution indicates the presence of a hazard that might

damage hardware, corrupt software, or cause a loss of data.

Tip A tip provides alternative methods or procedures that may not be immediately obvious. A tip may also alert customers that the controller behavior being discussed differs from that of prior software or hardware versions.

Note A note provides additional information that’s related to the completion of an instruction or procedure.


Required Tools

You will need the following tools to service the controller, cache module, external cache battery (ECB), the Power Verification and Addressing (PVA) module, the Gigabit Link Module (GLM), and the I/O module:

n

A flathead screwdriver for loosening and tightening the I/O module retaining screws.

n

A small Phillips screwdriver for loosening and tightening the GLM access door screws.

n

An antistatic wrist strap.

n

An antistatic mat on which to place modules during servicing.

n

A Storage Building Block (SBB) Extractor for removing StorageWorks building blocks. This tool is not required, but it lets you work more efficiently.


Related Publications

The following table lists some of the documents that are related to the use of the controller, cache module, and external cache battery.

Document Title Part Number

Fibre Channel Arbitrated Loop Hub (DS-DHGGA-CA) User’s Guide: EK–DHGGA–UG

KGPSA PCI-to-Fibre Channel Host Adapter: EK–KGPSA–UG

DIGITAL StorageWorks Ultra SCSI RAID Enclosure (BA370-Series) User’s Guide: EK–BA370–UG

The RAIDBOOK—A Source for RAID Technology: RAID Advisory Board

DIGITAL StorageWorks HSG80 Array Controller ACS V8.2 Release Notes: AA–RDY8A–TE


Revision History

This is a revised document. Previous documents include:

EK-HSG80-UG.A01 ACS Version 8.0 January 1998


CHAPTER 1

General Description

This chapter illustrates and describes in general terms your subsystem and its major components: the HSG80 array controller, its cache module, and its external cache battery. See the Fibre Channel Arbitrated Loop Hub User’s Guide and the KGPSA PCI-to-Fibre Channel Host Adapter User Guide for information about the Fibre Channel arbitrated loop hub and adapter that connect the subsystem to your host.

1–2 HSG80 User’s Guide

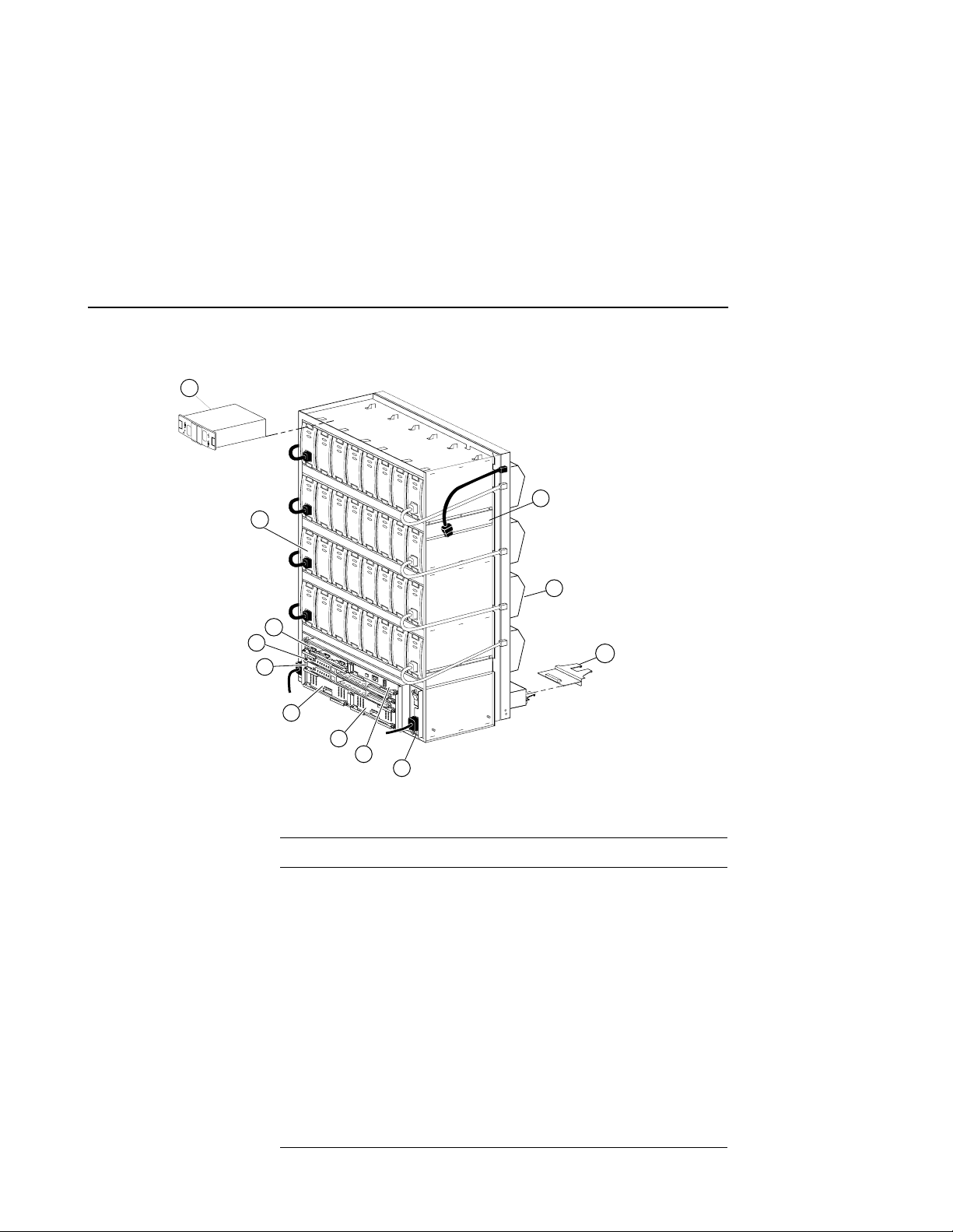

The HSG80 Array Controller Subsystem

Take a few moments to familiarize yourself with the major components of the HSG80 Array Controller subsystem. Figure 1–1 shows the components of a typical installation, which includes:

n

One BA370 rack-mountable pedestal enclosure.

n

Two controllers, each supported by its own cache module.

n

Two external cache batteries (ECBs) in one Storage Building Block (SBB), which provide backup power to the cache modules during a primary power failure.

n

One environmental monitoring unit (EMU) that monitors the subsystem’s environment and alerts the controller to equipment failures that could cause an abnormal environment.

n

One power verification and addressing (PVA) module that provides

a unique address to each enclosure in an extended subsystem.

n

Six I/O modules that integrate the SBB shelf with an 8-bit single-ended, 16-bit single-ended, or 16-bit differential SCSI bus.

n

Two cache modules, which support nonvolatile memory and dynamic cache policies to protect the availability of their unwritten (write-back) data.


Figure 1–1 The HSG80 Subsystem


Table 1–1 Key to Figure 1–1 The HSG80 Subsystem

Item Description Part No.

1 BA370 rack-mountable enclosure DS–BA370–AA

2 Cooling fan DS–BA35X–MK

3 I/O module 70–32876–01

4 AC input module DS–BA35X–HE

5 PVA module DS–BA35X–EC

6 Cache module B 70–33256–01

7 Cache module A 70–33256–01

8 HSG80 controller B 70–33259–01

9 HSG80 controller A 70–33259–01

10 EMU 70–32866–01

11 180-watt power supply DS–BA35X–HH

12 ECB, single DS–HS35X–BC

ECB, dual DS–HS35X–BD

Summary of HSG80 Features

Table 1–2 summarizes the features of the controller.

Table 1–2 Controller Features

Feature Supported

Controller failover: Transparent Failover; Multiple Bus Failover

Topology: FC–AL; 8 nodes per loop, maximum 4 initiators per loop; single and dual host adapter(s); 2 controller subsystems, maximum 4 controllers (2 dual-redundant configurations); 128 LUNs in Transparent and Multiple Bus Failover Mode

Supported operating systems: WINNT/Intel; WINNT/Alpha

Host protocol: FC–AL

Host bus interconnect: Copper; Optical (MultiMode 50 micron; do not mix media types); Gigabit link module (GLM)

Device protocol: SCSI–2; Limited SCSI–3

Device bus interconnect: Ultra/Fast Wide Single-ended

Number of SCSI device ports: 6

Number of SCSI device targets per port: 12

Maximum number of SCSI devices: 72

Disk drives: 4 and 9 GB Ultra & Fast Wide; 18 GB Ultra; 4 GB 10K Ultra

RAID levels supported: 0; 1; 0+1; 3/5

Cache capacity: 64 MB and 128 MB (32 MB DIMMs only); 256 MB and 512 MB (128 MB DIMMs only)

Caching features: Mirrored Cache; Sequential Read Ahead; Graceful Power Down Policy; UPS support with “auto cache flush”

Maximum number of RAID-5 and RAID-1 storagesets: 30

Maximum number of RAID-5 storagesets: 20

Maximum number of RAID-5, RAID-1, and RAID-0 storagesets: 45

Maximum number of partitions per storageset or individual disk: 8

Maximum number of units presented to each host: 16 (8 on each of 2 ports). This is a driver limitation.

Maximum number of devices per unit: 48

Serial interconnect speed: 1 Gbit/second

Maximum device, storageset, or unit size: 512 GB LUN capacity

General features: Configuration Save; Transfer configuration from HSZ70 subsystem to HSG80 controller; Transfer configuration from ACS V8.0 to ACS V8.2; Host Modes/Access Privileges; Persistent Reserves; Program card updates; Device warm swap; Utilities to test disks
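Several rows of Table 1–2 are hard limits that a planned configuration must stay within, and some are related (6 device ports times 12 targets per port gives the 72-device maximum). The Python sketch below shows one way to check a planned layout against those limits before you create storagesets. The Plan fields and the check_plan function are hypothetical planning aids, not part of ACS or its CLI, and the sketch assumes each storageset is counted once under its own RAID level; the table does not say how, for example, striped mirrorsets are counted.

```python
# Hypothetical planning helper: limits are transcribed from Table 1-2.
# Neither Plan nor check_plan is part of ACS or the controller CLI.
from dataclasses import dataclass
from typing import List

DEVICE_PORTS = 6
TARGETS_PER_PORT = 12
MAX_DEVICES = DEVICE_PORTS * TARGETS_PER_PORT  # 6 x 12 = 72, as in Table 1-2
MAX_RAID5 = 20
MAX_RAID5_PLUS_RAID1 = 30
MAX_RAID5_RAID1_RAID0 = 45
MAX_PARTITIONS = 8          # per storageset or individual disk
MAX_DEVICES_PER_UNIT = 48
MAX_UNITS_PER_HOST = 16     # 8 on each of 2 ports (driver limitation)


@dataclass
class Plan:
    devices: int                   # total SCSI devices in the subsystem
    raid5: int                     # RAIDsets (assumed counted once each)
    raid1: int                     # mirrorsets
    raid0: int                     # stripesets
    partitions_per_container: int  # largest partition count on any container
    devices_per_unit: int          # largest member count of any unit
    units_per_host: int            # units presented to a single host


def check_plan(p: Plan) -> List[str]:
    """Return one message per Table 1-2 limit the plan exceeds (empty if none)."""
    checks = [
        (p.devices, MAX_DEVICES, "SCSI devices"),
        (p.raid5, MAX_RAID5, "RAID-5 storagesets"),
        (p.raid5 + p.raid1, MAX_RAID5_PLUS_RAID1, "RAID-5 plus RAID-1 storagesets"),
        (p.raid5 + p.raid1 + p.raid0, MAX_RAID5_RAID1_RAID0,
         "RAID-5, RAID-1, and RAID-0 storagesets"),
        (p.partitions_per_container, MAX_PARTITIONS, "partitions per container"),
        (p.devices_per_unit, MAX_DEVICES_PER_UNIT, "devices per unit"),
        (p.units_per_host, MAX_UNITS_PER_HOST, "units per host"),
    ]
    return [f"{name}: {value} exceeds limit of {limit}"
            for value, limit, name in checks if value > limit]


if __name__ == "__main__":
    # Every checked quantity is at, but not over, its limit.
    at_limits = Plan(devices=72, raid5=20, raid1=10, raid0=15,
                     partitions_per_container=8, devices_per_unit=48,
                     units_per_host=16)
    print(check_plan(at_limits))
    # One device too many and one RAIDset too many.
    too_big = Plan(devices=73, raid5=21, raid1=0, raid0=0,
                   partitions_per_container=8, devices_per_unit=48,
                   units_per_host=16)
    for problem in check_plan(too_big):
        print(problem)
```

Checking combined totals separately from the per-level RAID-5 limit mirrors the way the table states its limits: a plan can pass the 20-RAIDset limit and still fail the combined RAID-5 plus RAID-1 limit of 30.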


The HSG80 Array Controller

Your controller is the intelligent bridge between your host and the devices in your subsystem, as Figure 1–2 illustrates.

Figure 1–2 A Host and Its Storage Subsystem

(Figure 1–2 labels: Host, Hub, Controller, Storage subsystem)

The controller is an integral part of any storage subsystem because it provides a host with high-performance and high-availability access to storage devices.

The controller provides the ability to combine several ordinary disk drives into a single, high-performance entity called a storageset. Storagesets are implementations of RAID technology. Every unpartitioned storageset, whether it uses two disk drives or ten, looks like a single storage unit to the host. See Chapter 3, “Creating Storagesets,” for more information about storagesets and how to configure them.

From the host’s perspective, the controller is simply another device connected to one of its I/O buses. Consequently, the host sends its I/O requests to the controller just as it would to any Fibre Channel device. From the subsystem’s perspective, the controller receives the I/O requests and directs them to the devices in the subsystem. Because the controller processes the I/O requests, the host isn’t burdened by the processing that’s typically associated with reading and writing data to multiple storage devices.

For the most recent list of supported devices and operating systems, see the product-specific release notes that accompanied your controller’s software. To determine which specific parts you need for your configuration, see “Connecting the Subsystem to the Host,” page 2–14.

Figure 1–3 and Figure 1–4 detail the HSG80 Array Controller and its Fibre Channel components. Figure 1–5 highlights the variant parts for an optical configuration.

Figure 1–3 HSG80 Array Controller Fibre Channel Copper Cabling


Table 1–3 Key to Figure 1–3 HSG80 Array Controller–Fibre Channel Copper Cabling

Item Description Part No.

1 Backplane connectors —

2 Access Door 70–33287–01

3 GLM 30–49226–01

4 Program-card slot —

5 Program-card ejection button —

6 Program card BG–R8Q3B–BA

7 ESD/PCMCIA card cover 74–52628–01

8 5-meter Fibre Channel copper cable 17–04718–06

10-meter Fibre Channel copper cable 17–04718–07

9 Maintenance Port Cable 17–04074–02

See Figure 1–4 for information on an optional maintenance port cable and its parts.

10 Maintenance Port —

11 Operator control panel (OCP) —

12 Lever for removing, installing, and retaining controller module —


Figure 1–4 Optional Maintenance Port Cable for a Terminal

Connection


Table 1–4 Key to Figure 1–4: Optional Maintenance Port Cable for a Terminal Connection

Item Description Part Number

1 BC16E-xx Cable Assembly 17–04074–01

2 Ferrite Bead 16–25105–14

3 RJ-11 Adapter 12–43346–01

4 RJ-11 Extension Cable 17–03511–01

5 PC Serial Port Adapter, 9 pin D-sub to 25 pin SKT D-sub for a PC 12–45238–01

PC Serial Port Adapter, 9 pin D-sub to 25 pin D-sub for Sun operating system 12–45238–02

PC Serial Port Adapter, 9 pin D-sub to 25 pin D-sub, mod for an HP800 operating system 12–45238–03


Figure 1–5 HSG80 Array Controller–Fibre Channel Optical Cabling

Table 1–5 Key to Figure 1–5 HSG80 Array Controller–Fibre Channel Optical Cabling

Item Description Part No.

1 GLM 30–50124–01

2 2 M Fibre Channel optical cable 17–04820–03

5 M Fibre Channel optical cable 17–04820–05

10 M Fibre Channel optical cable 17–04820–06

20 M Fibre Channel optical cable 17–04820–07

30 M Fibre Channel optical cable 17–04820–08

50 M Fibre Channel optical cable 17–04820–09

Caution If the Fibre Channel optical cable is not properly connected to the controller, controller failure may result. In addition, if the cable is not regularly maintained, its performance and lifespan will be affected. Before proceeding, take the precautionary measures detailed in the following sections.

Fibre Channel Optical Cable Precautions

Prior to connecting the Fibre Channel cable to the controller, look for the white stripe on each side of the coupling. After the cable is seated into the controller, be sure that the white stripes are hidden. Also, when connecting the Fibre Channel cable to the controller, listen for a distinctive “snap” sound, which indicates that the cable is properly inserted into the controller.

Fibre Channel Optical Cable Cleaning Instructions

It is essential to keep cables clean to ensure optimum performance and lifespan of the cable. Figure 1–6 illustrates the proper cleaning procedures, as outlined in the following steps:

1. Open the prep cleaner using the lever on the side of the cable cartridge.

2. Rotate the end face of the ferrule 180 degrees.

3. Slide the ferrule end face along the opening to one side of the coupling.

4. Insert a lint-free polyester swab to dust out the cavity.

5. Remove the lint-free polyester swab, and return the ferrule to its original position.

6. Repeat the 180-degree rotation in the opposite direction.

7. Slide the ferrule end face along the opening to the opposite side.

8. Insert the lint-free polyester swab to dust out the cavity.

9. Return to step 5, and repeat the remaining procedures until all areas of the cartridge are cleaned.

Note Be sure to clean both cartridges of the Fibre Channel coupling.

Figure 1–6 Fibre Channel Optical Cleaning Procedures

(Figure labels: Small-diameter lint-free polyester swab, Ferrule)


The HSG80 Array Controller components that you will use most often, such as the maintenance port and the OCP, are conveniently located on the controller’s front panel. The host port and program-card slot are also located on the front panel, making it easy to update the controller’s software or to connect the controller to a different host.

Each controller is supported by its own cache module. Figure 1–7 shows which cache module supports which controller in a dual-redundant configuration in a BA370 rack-mountable enclosure.

Figure 1–7 Location of Controllers and Cache Modules

Tip DIGITAL recommends that you use the slots for controller A and cache module A for single configurations. Slot A responds to SCSI target ID number 7 on the device buses; slot B responds to SCSI target ID number 6 on the device buses.

Operator Control Panel

The operator control panel (OCP) contains a reset button and six port LED buttons, as shown in Figure 1–8. The reset button flashes about once per second to indicate that the controller is operating normally. The port button LEDs correspond to the controller’s device ports and remain off during normal operation. If an error occurs, the reset button and LEDs illuminate in a solid or flashing pattern to help you diagnose the problem. See Appendix C, “LED Codes,” for further explanation of these codes.


Figure 1–8 HSG80 Controller Operator Control Panel (OCP)

(Figure 1–8 labels: Maintenance port, Reset button/LED, Port buttons/LEDs 1 through 6)

To identify the exact location of the OCP, refer to Figure 1–3.

Under normal circumstances, you will not need to remove the controller from its enclosure. For this reason, the components that you will use most often are conveniently located on the front panel. For example, the maintenance port provides a convenient way to connect a PC or terminal to your controller so that you can interact with it.

After you configure your controller, periodically check its control panel. If an error occurs, one or more of the LEDs on the control panel flash in a pattern that helps you diagnose the problem. See Chapter 4, “Troubleshooting,” for details about troubleshooting your controller.

You can access the controller through a PC or a local terminal via the maintenance port, or through a remote terminal (sometimes called a virtual terminal or host console) via the host. DIGITAL recommends that you use a PC or a local terminal to carry out the troubleshooting and servicing procedures in this manual. See “Establishing a Local Connection to the Controller,” page 2–7, for more information on connecting the controller with a maintenance port cable.

Utilities and Exercisers

The controller’s software includes the following utilities and exercisers to assist in troubleshooting and maintaining the controller and the other modules that support its operation:

Fault Management Utility

The Fault Management Utility (FMU) provides a limited interface to the controller’s fault-management system. FMU is a troubleshooting tool: you can use it to display last-failure and memory-system-failure entries, translate many of the code values contained in event messages, and set the display characteristics of significant events and failures.

See “Fault Management Utility,” page 4–17, for more information about using this utility.

Virtual Terminal Display

Use the virtual terminal display (VTDPY) utility to troubleshoot communication between the controller and its host and between the controller and the devices in the subsystem, and to monitor the state and I/O activity of the logical units, devices, and device ports in the subsystem.

See “Using VTDPY to Check for Communication Problems,” page 4–23, for more information about using this utility.

Disk Inline Exerciser

Use the disk inline exerciser (DILX) to investigate the data-transfer capabilities of disk drives. DILX tests and verifies operation of the controller and the SCSI–2 disk drives attached to it. DILX generates intense read and write loads to the disk drive while monitoring the drive’s performance and status. See “Checking for Disk-Drive Problems,” page 4–37, for more information about this exerciser.

Configuration Utility

Use the configuration (CONFIG) utility to add one or more storage devices to the subsystem. This utility checks the device ports for new disk drives, then adds them to the controller’s configuration and automatically names them. See “Adding Several Disk Drives at a Time,” page 3–55, for more information about using the CONFIG utility.


HSUTIL

Use HSUTIL to upgrade the firmware on disk drives in the subsystem and to format disk drives. See “Upgrading Firmware on a Device,” page 6–11, for more information about this utility.

Code Load and Code Patch Utility

Use the Code Load/Code Patch (CLCP) utility to upgrade the controller software and the EMU software. You can also use it to patch the controller software. When you install a new controller, you must have the correct software version and patch number. See “Upgrading Controller Software,” page 6–2, for more information about using this utility.

Note: Only DIGITAL field service personnel are authorized to upload EMU microcode updates. Contact the Customer Service Center (CSC) for directions on obtaining the appropriate EMU microcode and installation guide.

Clone Utility

Use the Clone utility to duplicate the data on any unpartitioned single-disk unit, stripeset, or mirrorset. You can then back up the cloned data while the original storageset remains online. See “Cloning Data for Backup,” page 3–19, for more information about using the Clone utility.

Field Replacement Utility

Use the field replacement utility (FRUTIL) to replace a failed controller (in a dual-redundant configuration) without shutting down the subsystem. You can also use this menu-driven utility to replace cache modules and external cache batteries. See Chapter 5, “Replacement Procedures,” for a more detailed explanation of how to use FRUTIL.


Change Volume Serial Number Utility

Only DIGITAL authorized service personnel may use this utility.

The Change Volume Serial Number (CHVSN) utility generates a new volume serial number (VSN) for the specified device and writes it to the media. It provides a way to eliminate duplicate volume serial numbers by giving the duplicates different volume serial numbers.

Device Statistics Utility

The Device Statistics (DSTAT) utility allows you to log I/O activity on a controller over an extended period of time. Later, you can analyze that log to determine where the bottlenecks are and how to tune the controller for optimum performance.


Cache Module

Each controller requires a companion cache module, as shown in Figure 1–9. Figure 1–7 on page 1–13 shows the location of a controller’s companion cache module. The cache module, which can contain up to 512 MB of memory, increases the subsystem’s I/O performance by providing read, read-ahead, write-through, and write-back caching.

The size of the memory contained in the cache module depends on the configuration of the DIMMs, with the supported combinations shown in Table 1–6. For placement of the DIMMs, see “Replacing DIMMs,” page 5–42.

Table 1–6 Cache Module Memory Configurations

DIMM Size    Quantity    Total Memory
32 MB        2           64 MB
32 MB        4           128 MB
128 MB       2           256 MB
128 MB       4           512 MB
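The total memory in Table 1–6 is the DIMM size multiplied by the number of DIMMs installed. The following Python sketch encodes the four supported combinations from the table and computes the resulting cache size. It is illustrative only: the names `SUPPORTED_CONFIGS` and `cache_size_mb` are hypothetical and are not part of any controller software.

```python
# Supported (DIMM size in MB, quantity) pairs, taken from Table 1-6.
# Illustrative model only; these names are hypothetical, not controller software.
SUPPORTED_CONFIGS = {(32, 2), (32, 4), (128, 2), (128, 4)}


def cache_size_mb(dimm_size_mb: int, quantity: int) -> int:
    """Return the total cache memory (MB) for a configuration listed in Table 1-6."""
    if (dimm_size_mb, quantity) not in SUPPORTED_CONFIGS:
        raise ValueError(f"unsupported configuration: {quantity} x {dimm_size_mb} MB")
    return dimm_size_mb * quantity


# Print every supported combination with its resulting cache size.
for size, qty in sorted(SUPPORTED_CONFIGS):
    print(f"{qty} x {size} MB DIMMs -> {cache_size_mb(size, qty)} MB")
```

Rejecting unlisted pairs mirrors the table's role as a list of supported combinations rather than a formula that applies to any DIMM count.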


Figure 1–9 Cache Module

Item   Description                                  Part No.
1      Cache-memory power LED button                None
2      ECB Y cable for the BA370 Enclosure          17–04479–03
       ECB Y cable for the Data Center Cabinet      17–04479–04
3      Retaining lever                              None
4      Backplane connector                          None
5      64 MB cache upgrade                          DS-HSDIM-AB
       256 MB cache upgrade                         DS-MSDIM-AC


Caching Techniques

The cache module supports the following caching techniques to increase the subsystem’s read and write performance:

• Read caching
• Read-ahead caching
• Write-through caching
• Write-back caching

Read Caching

When the controller receives a read request from the host, it reads the data from the disk drives, delivers it to the host, and stores the data in its cache module. This process is called read caching.

Read caching can decrease the subsystem’s response time to many of the host’s read requests. If the host requests some or all of the cached data, the controller satisfies the request from its cache module rather than from the disk drives. By default, read caching is enabled for all storage units.

See SET unit-number MAXIMUM_CACHED_TRANSFER in Appendix B, “CLI Commands,” for more details.
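To make the read-caching flow concrete, here is a minimal Python model of it. It is a conceptual sketch, not HSG80 firmware: the class `ReadCache` and the `read_from_disk` callback are hypothetical, and the model ignores cache capacity, eviction, and the MAXIMUM_CACHED_TRANSFER limit.

```python
from typing import Callable, Dict


class ReadCache:
    """Conceptual model of read caching: data read from disk is kept in cache memory."""

    def __init__(self, read_from_disk: Callable[[int], bytes]) -> None:
        self.read_from_disk = read_from_disk  # hypothetical stand-in for the disk drives
        self.cache: Dict[int, bytes] = {}     # no capacity limit or eviction in this model
        self.disk_reads = 0

    def read(self, block: int) -> bytes:
        if block in self.cache:               # cache hit: satisfied from the cache module
            return self.cache[block]
        data = self.read_from_disk(block)     # cache miss: read from the disk drives
        self.disk_reads += 1
        self.cache[block] = data              # keep a copy for later requests
        return data


cache = ReadCache(lambda block: f"block-{block}".encode())
cache.read(7)
cache.read(7)
print(cache.disk_reads)  # 1: the second request was served from cache memory
```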

Read-Ahead Caching

Read-ahead caching begins when the controller, having already processed a read request, receives a sequential read request from the host. If the controller does not find the data in the cache memory, it reads the data from the disks and sends it to the cache memory.

The controller then anticipates subsequent read requests and begins to prefetch the next blocks of data from the disks while it sends the requested read data to the host; these two actions occur in parallel. The controller notifies the host of the read completion, and subsequent sequential read requests are satisfied from the cache memory. By default, read-ahead caching is enabled for all disk units.
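The read-ahead behavior can be sketched as a small Python model: the second of two consecutive block requests triggers a prefetch of the following blocks, so later sequential reads hit the cache. This is an assumption-laden illustration, not the controller's algorithm: the class name, the `prefetch_depth` of 4, and the rule "request N+1 after request N counts as sequential" are all hypothetical, and the model prefetches after the read instead of in parallel with it.

```python
from typing import Callable, Dict, Optional


class ReadAheadCache:
    """Conceptual model of read-ahead caching; not controller firmware."""

    def __init__(self, read_from_disk: Callable[[int], bytes], prefetch_depth: int = 4) -> None:
        self.read_from_disk = read_from_disk  # hypothetical stand-in for the disks
        self.prefetch_depth = prefetch_depth  # hypothetical number of blocks to prefetch
        self.cache: Dict[int, bytes] = {}
        self.last_block: Optional[int] = None
        self.hits = 0

    def _fetch(self, block: int) -> bytes:
        # Read a block from disk into cache memory unless it is already cached.
        if block not in self.cache:
            self.cache[block] = self.read_from_disk(block)
        return self.cache[block]

    def read(self, block: int) -> bytes:
        if block in self.cache:
            self.hits += 1  # request satisfied from cache memory
        data = self._fetch(block)
        sequential = self.last_block is not None and block == self.last_block + 1
        if sequential:
            # Prefetch the next blocks. The controller overlaps this with sending
            # data to the host; this model simply does it afterward.
            for nxt in range(block + 1, block + 1 + self.prefetch_depth):
                self._fetch(nxt)
        self.last_block = block
        return data


cache = ReadAheadCache(lambda block: f"block-{block}".encode())
for block in range(5):
    cache.read(block)
print(cache.hits)  # 3: blocks 2, 3, and 4 had already been prefetched
```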


Write-Through Caching

When the controller receives a write request from the host, it stores the data in its cache module, writes the data to the disk drives, then notifies the host when the write operation is complete. This process is called write-through caching because the data actually passes through (and is stored in) the cache memory on its way to the disk drives.

If you enable read caching for a storage unit, write-through caching is automatically enabled. Likewise, if you disable read caching, write-through caching is automatically disabled.
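The ordering that defines write-through caching (store in cache, write to disk, and only then acknowledge) can be sketched in Python as follows. The class and its dictionaries are hypothetical stand-ins for the cache module and disk drives. The `read_caching` flag models the coupling described above; what the model does when it is off (writing straight to disk without caching) is an assumption for illustration.

```python
from typing import Dict


class WriteThroughCache:
    """Conceptual model of write-through caching; not controller firmware."""

    def __init__(self, read_caching: bool = True) -> None:
        self.cache: Dict[int, bytes] = {}  # stands in for the cache module
        self.disk: Dict[int, bytes] = {}   # stands in for the disk drives
        # Write-through caching follows the unit's read-caching setting.
        self.write_through = read_caching

    def write(self, block: int, data: bytes) -> str:
        if self.write_through:
            self.cache[block] = data  # 1. store the data in cache memory
        self.disk[block] = data       # 2. write the data to the disk drives
        return "complete"             # 3. only now notify the host


wt = WriteThroughCache()
print(wt.write(1, b"data"), wt.disk[1])  # complete b'data'
```

Because the acknowledgment is returned only after the disk write, write-through caching speeds up later reads of the same data but not the write itself.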

Write-Back Caching

This caching technique improves the subsystem’s response time to write requests by allowing the controller to declare the write operation “complete” as soon as the data reaches its cache memory. The controller performs the slower operation of writing the data to the disk drives at a later time.

By default, write-back caching is enabled for all units. However, the controller provides write-back caching to a unit only if the cache memory is nonvolatile, as described in the next section.
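A minimal Python model of write-back caching follows. It acknowledges a write as soon as the data is in cache memory and defers the disk write to a later `flush`, but only when the cache is marked nonvolatile (backed by an ECB or UPS). All names are hypothetical, and the fallback for a volatile cache (writing to disk before acknowledging) is an assumption chosen for illustration.

```python
from typing import Dict, Set


class WriteBackCache:
    """Conceptual model of write-back caching; not controller firmware."""

    def __init__(self, nonvolatile: bool) -> None:
        self.nonvolatile = nonvolatile  # True if an ECB or UPS backs the cache memory
        self.cache: Dict[int, bytes] = {}
        self.disk: Dict[int, bytes] = {}
        self.dirty: Set[int] = set()    # unwritten (write-back) blocks

    def write(self, block: int, data: bytes) -> str:
        self.cache[block] = data
        if self.nonvolatile:
            self.dirty.add(block)       # acknowledge now; write to disk later
        else:
            self.disk[block] = data     # assumed fallback: write before acknowledging
        return "complete"

    def flush(self) -> None:
        """Perform the deferred (slower) writes to the disk drives."""
        for block in sorted(self.dirty):
            self.disk[block] = self.cache[block]
        self.dirty.clear()


wb = WriteBackCache(nonvolatile=True)
wb.write(3, b"data")
print(3 in wb.disk)  # False: the host was told "complete" before the disk write
wb.flush()
print(wb.disk[3])    # b'data'
```

The `dirty` set is exactly the unwritten data that would be lost if the cache memory lost power, which is why the model refuses to defer writes when `nonvolatile` is false.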

Fault Tolerance for Write-Back Caching

The cache module supports nonvolatile memory and dynamic cache policies to protect the availability of its unwritten (write-back) data:

Nonvolatile Memory

The controller can provide write-back caching for any storage unit as long as the controller’s cache memory is nonvolatile. In other words, to enable write-back caching, you must provide a backup power source to the cache module to preserve the unwritten cache data in the event of a power failure. If the cache memory were volatile (that is, if it did not have a backup power supply), the unwritten cache data would be lost during a power failure.

By default, the controller expects to use an external cache battery (ECB) as the backup power source for its cache module. See “External Cache Battery,” page 1–28, for more information about the ECB. However, if your subsystem is backed up by a UPS (uninterruptible power supply), you can tell the controller to use the UPS as the backup power source with the SET THIS CONTROLLER CACHE_UPS command. See Appendix B, “CLI Commands,” for instructions on using this command.

Cache Policies Resulting from Cache Module Failures

If the controller detects a full or partial failure of its cache module or ECB, it automatically reacts to preserve the unwritten data in its cache module. Depending upon the severity of the failure, the controller chooses an interim caching technique (also called the cache policy), which it uses until you repair or replace the cache module or ECB.

Table 1–7 shows the cache policies resulting from a full or partial failure of cache module A in a dual-redundant controller configuration. The consequences shown in this table are the same for cache module B.

Table 1–7 Cache Policies and Cache Module Status

Cache Module Status Cache Policy