Page 1

HP EVA 4000/6000/8000 and EVA 4100/6100/8100 User Guide

Abstract

This document is intended for customers who operate and manage the EVA 4000/6000/8000 and EVA 4100/6100/8100

storage systems. These models are sometimes referred to as EVA4x00, EVA6x00, and EVA8x00 or as EVAx000 and x100.

IMPORTANT: With the release of the P6300/P6500 EVA, the EVA family name has been rebranded to HP P6000 EVA. The

names for all existing EVA array models will not change. The rebranding also affects related EVA software. The following

product names have been rebranded:

• HP P6000 Command View (formerly HP StorageWorks Command View EVA)

• HP P6000 Business Copy (formerly HP StorageWorks Business Copy EVA)

• HP P6000 Continuous Access (formerly HP StorageWorks Continuous Access EVA)

• HP P6000 Performance Data Collector (formerly EVAPerf)

HP Part Number: 5697-1119

Published: January 2012

Edition: 12

Page 2

© Copyright 2005, 2012 Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express

warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall

not be liable for technical or editorial errors or omissions contained herein.

Warranty

To obtain a copy of the warranty for this product, see the warranty information website:

http://www.hp.com/go/storagewarranty

Acknowledgements

Microsoft® and Windows® are U.S. registered trademarks of Microsoft Corporation.

UNIX® is a registered trademark of The Open Group.

Page 3

Contents

1 Enterprise Virtual Array startup...................................................................11

EVA8000/8100 storage system connections..............................................................................11

EVA6000/6100 storage system connections...............................................................................12

EVA4000/4100 storage system connections..............................................................................12

Direct connect........................................................................................................................13

iSCSI connection configurations................................................................................................14

Fabric connect iSCSI..........................................................................................................14

Direct connect iSCSI...........................................................................................................14

Procedures for getting started...................................................................................................15

Gathering information........................................................................................................15

Host information...........................................................................................................15

Setting up a controller pair using the OCP............................................................................15

Entering the WWN.......................................................................................................16

Entering the WWN checksum.........................................................................................17

Entering the storage system password..............................................................................17

Installing HP P6000 Command View....................................................................................17

Installing optional EVA software licenses...............................................................................18

2 Enterprise Virtual Array hardware components.............................................19

Physical layout of the storage system.........................................................................................19

Fibre Channel drive enclosures.................................................................................................20

Enclosure layout.................................................................................................................20

I/O modules.....................................................................................................................21

I/O module status indicators..........................................................................................22

Fiber Optic Fibre Channel cables.........................................................................................23

Copper Fibre Channel cables..............................................................................................23

Fibre Channel disk drives....................................................................................................23

Disk drive status indicators..............................................................................................24

Disk drive status displays................................................................................................24

Disk drive blank............................................................................................................25

Power supplies and blowers................................................................................................25

Power supplies..............................................................................................................25

Blowers........................................................................................................................26

Drive enclosure EMU..........................................................................................................26

Controls and displays....................................................................................................27

EMU functions..............................................................................................................27

EMU monitoring functions..............................................................................................28

EMU displays...............................................................................................................28

EMU indicator displays..................................................................................................29

Using the alphanumeric display......................................................................................29

Alphanumeric display description...............................................................................29

Display groups.........................................................................................................29

EMU pushbutton status indicators....................................................................................30

Audible alarm operations ..............................................................................................30

Audible alarm patterns..............................................................................................30

Controlling the audible alarm.....................................................................................31

Enabling the audible alarm............................................................................................31

Muting or unmuting the audible alarm.............................................................................31

Disabling the audible alarm...........................................................................................32

Enclosure number feature...............................................................................................32

En description..........................................................................................................32

Enclosure address bus...............................................................................................33

Contents 3

Page 4

Enclosure address bus connections..............................................................................34

Error Condition Reporting...............................................................................................34

Error condition categories..........................................................................................35

Error queue.............................................................................................................35

Error condition report format......................................................................................36

Navigating the error condition display........................................................................36

Reporting group feature.................................................................................................37

Reporting group numbers..........................................................................................37

Fibre Channel loop switches.....................................................................................................38

30-10022-01 loop switch.....................................................................................................38

Power-on self test (POST).................................................................................................39

30-10010-02 loop switch....................................................................................................39

Power-on self test (POST).................................................................................................40

Reading the switch status indicators.................................................................................40

Problem isolation..........................................................................................................41

HSV controllers.......................................................................................................................41

High availability features....................................................................................................43

Operator control panel.......................................................................................................43

Status indicators............................................................................................................44

Navigation buttons........................................................................................................45

Alphanumeric display....................................................................................................45

Displaying the OCP menu tree........................................................................................45

Displaying system information.........................................................................................47

Displaying versions system information.............................................................................47

Shutting down the system...............................................................................................47

Shutting the controller down...........................................................................................48

Restarting the system......................................................................................................48

Uninitializing the system.................................................................................................48

Password options..........................................................................................................49

Changing a password...................................................................................................49

Clearing a password.....................................................................................................49

Power supplies...................................................................................................................50

Blowers............................................................................................................................50

Cache battery...................................................................................................................51

HSV controller cabling........................................................................................................51

Racks....................................................................................................................................52

Rack configurations............................................................................................................52

Power distribution...............................................................................................................52

PDUs...........................................................................................................................54

PDU 1.....................................................................................................................54

PDU 2.....................................................................................................................54

PDMs..........................................................................................................................54

Rack AC power distribution............................................................................................55

Rack System/E power distribution components..................................................................56

Rack AC power distribution........................................................................................56

Moving and stabilizing a rack.............................................................................................56

3 Enterprise Virtual Array operation...............................................................59

Best practices.........................................................................................................................59

Operating tips and information................................................................................................59

Reserving adequate free space............................................................................................59

Using FATA disk drives........................................................................................................59

Changing the host port topology..........................................................................................59

Host port connection limit on B-series 3200 and 3800 switches...............................................59

Enabling Boot from SAN for Windows direct connect.............................................................60

4 Contents

Page 5

Windows 2003 MSCS cluster installation..............................................................................60

Connecting to C-series switches...........................................................................................60

HP Insight Remote Support software.....................................................................................60

Failback preference setting for HSV controllers............................................................................62

Changing virtual disk failover/failback setting.......................................................................64

Storage system shutdown and startup........................................................................................64

Shutting down the storage system.........................................................................................64

Starting the storage system..................................................................................................65

Saving storage system configuration data...................................................................................65

Adding disk drives to the storage system....................................................................................67

Creating disk groups..........................................................................................................68

Adding a disk drive...........................................................................................................69

Removing the drive blank...............................................................................................69

Changing the Device Addition Policy...............................................................................69

Installing the disk drive...................................................................................................69

Checking status indicators..............................................................................................70

Adding the disk to a disk group......................................................................................71

Handling fiber optic cables......................................................................................................71

4 Configuring application servers..................................................................72

Overview..............................................................................................................................72

Clustering..............................................................................................................................72

Multipathing..........................................................................................................................72

Installing Fibre Channel adapters..............................................................................................72

Testing connections to the EVA.................................................................................................73

Adding hosts..........................................................................................................................73

Creating and presenting virtual disks.........................................................................................73

Verifying virtual disk access from the host...................................................................................74

Configuring virtual disks from the host.......................................................................................74

HP-UX...................................................................................................................................74

Scanning the bus...............................................................................................................74

Creating volume groups on a virtual disk using vgcreate.........................................................75

IBM AIX................................................................................................................................75

Accessing IBM AIX utilities..................................................................................................75

Adding hosts.....................................................................................................................76

Creating and presenting virtual disks....................................................................................76

Verifying virtual disks from the host.......................................................................................76

Linux.....................................................................................................................................77

Driver failover mode...........................................................................................................77

Installing a Qlogic driver....................................................................................................77

Upgrading Linux components..............................................................................................78

Upgrading qla2x00 RPMs..............................................................................................78

Detecting third-party storage...........................................................................................78

Compiling the driver for multiple kernels...........................................................................79

Uninstalling the Linux components........................................................................................79

Using the source RPM.........................................................................................................79

Verifying virtual disks from the host.......................................................................................80

OpenVMS.............................................................................................................................80

Updating the AlphaServer console code, Integrity Server console code, and Fibre Channel FCA

firmware...........................................................................................................................80

Verifying the Fibre Channel adapter software installation........................................................80

Console LUN ID and OS unit ID...........................................................................................80

Adding OpenVMS hosts.....................................................................................................81

Scanning the bus...............................................................................................................81

Configuring virtual disks from the OpenVMS host...................................................................82

Contents 5

Page 6

Setting preferred paths.......................................................................................................83

Oracle Solaris........................................................................................................................83

Loading the operating system and software...........................................................................83

Configuring FCAs with the Oracle SAN driver stack...............................................................83

Configuring Emulex FCAs with the lpfc driver....................................................................84

Configuring QLogic FCAs with the qla2300 driver.............................................................85

Fabric setup and zoning.....................................................................................................87

Oracle StorEdge Traffic Manager (MPxIO)/Sun Storage Multipathing.......................................87

Configuring with Veritas Volume Manager............................................................................87

Configuring virtual disks from the host...................................................................................89

Verifying virtual disks from the host..................................................................................90

Labeling and partitioning the devices...............................................................................91

VMware................................................................................................................................92

Installing or upgrading VMware .........................................................................................92

Configuring the EVA with VMware host servers......................................................................92

Configuring an ESX server ..................................................................................................93

Loading the FCA NVRAM..............................................................................................93

Setting the multipathing policy........................................................................................93

Specifying DiskMaxLUN.................................................................................................94

Verifying connectivity.....................................................................................................94

Verifying virtual disks from the host.......................................................................................95

5 Customer replaceable units........................................................................96

Customer self repair (CSR).......................................................................................................96

Parts only warranty service..................................................................................................96

Best practices for replacing hardware components......................................................................96

Component replacement videos...........................................................................................96

Verifying component failure.................................................................................................96

Procuring the spare part......................................................................................................96

Replaceable parts.........................................................................................................97

Replacing the failed component...........................................................................................99

Returning the defective part...............................................................................................100

6 Support and other resources....................................................................101

Contacting HP......................................................................................................................101

Subscription service..............................................................................................................101

Documentation feedback.......................................................................................................101

Related information...............................................................................................................101

Documents......................................................................................................................101

Websites........................................................................................................................101

Document conventions and symbols........................................................................................102

Rack stability........................................................................................................................102

Customer self repair..............................................................................................................103

A Regulatory notices and specifications........................................................104

Regulatory notices................................................................................................................104

Federal Communications Commission (FCC) notice...............................................................104

FCC Class A certification.............................................................................................104

Class A equipment......................................................................................................104

Class B equipment......................................................................................................104

Declaration of conformity for products marked with the FCC logo, United States only...........105

Modifications.............................................................................................................105

Cables.......................................................................................................................105

Laser device....................................................................................................................105

Laser safety warnings..................................................................................................105

Compliance with CDRH regulations...............................................................................105

6 Contents

Page 7

Certification and classification information..........................................................................106

Canadien notice (avis Canadien).......................................................................................106

Class A equipment......................................................................................................106

Class B equipment......................................................................................................106

European union notice......................................................................................................106

Notice for France.............................................................................................................106

WEEE Recycling Notices...................................................................................................106

English notice.............................................................................................................106

Dutch notice...............................................................................................................107

Czechoslovakian notice...............................................................................................107

Estonian notice...........................................................................................................107

Finnish notice.............................................................................................................107

French notice..............................................................................................................108

German notice............................................................................................................108

Greek notice..............................................................................................................108

Hungarian notice .......................................................................................................109

Italian notice..............................................................................................................109

Latvian notice.............................................................................................................109

Lithuanian notice.........................................................................................................109

Polish notice...............................................................................................................109

Portuguese notice........................................................................................................110

Slovakian notice.........................................................................................................110

Slovenian notice.........................................................................................................110

Spanish notice............................................................................................................110

Swedish notice............................................................................................................111

Germany noise declaration...............................................................................................111

Japanese notice...............................................................................................................111

Harmonics conformance (Japan)...................................................................................111

Taiwanese notice.............................................................................................................111

Japanese power cord notice..............................................................................................111

Country-specific certifications.............................................................................................112

Storage system specifications..................................................................................................112

Physical specifications......................................................................................................112

Environmental specifications..............................................................................................112

Power specifications.........................................................................................................113

B EMU-generated condition reports..............................................................117

Condition report format.........................................................................................................117

Correcting errors..................................................................................................................117

Drive conditions...............................................................................................................118

0.1.en.01 CRITICAL condition—Drive configuration or drive link rate...................................118

0.1.en.02 INFORMATION condition—Drive missing.........................................................119

0.1.en.03 INFORMATION condition—Drive software lock active........................................119

0.1.en.04 CRITICAL condition—Loop a drive link rate incorrect..........................................119

0.1.en.05 CRITICAL condition—Loop b drive link rate incorrect..........................................120

Power supply conditions....................................................................................................120

0.2.en.01 NONCRITICAL Condition—Power supply AC input missing................................120

0.2.en.02 UNRECOVERABLE condition—Power supply missing ........................................121

0.2.en.03 CRITICAL condition—Power supply load unbalanced .......................................121

Blower conditions............................................................................................................121

0.3.en.01 NONCRITICAL condition—Blower speed.........................................................122

0.3.en.02 CRITICAL condition—Blower speed.................................................................122

0.3.en.03 UNRECOVERABLE condition—Blower failure ..................................................122

0.3.en.04 UNRECOVERABLE condition—Blower internal..................................................122

0.3.en.05 NONCRITICAL condition—Blower missing......................................................122

Contents 7

Page 8

0.3.en.06 UNRECOVERABLE condition—No blowers installed .........................................123

Temperature conditions.....................................................................................................123

0.4.en.01 NONCRITICAL condition—High temperature...................................................123

0.4.en.02 CRITICAL condition—High temperature...........................................................124

0.4.en.03 NONCRITICAL condition—Low temperature....................................................124

0.4.en.04 CRITICAL condition—Low temperature............................................................124

0.4.en.05 UNRECOVERABLE condition—High temperature .............................................124

EMU conditions...............................................................................................................125

Resetting the EMU.......................................................................................................125

07.01.01 CRITICAL condition—EMU internal clock...........................................................125

07.01.02 UNRECOVERABLE condition—EMU interrupted ................................................125

0.7.01.03 UNRECOVERABLE Condition—Power supply shutdown .....................................126

0.7.01.04 INFORMATION condition—EMU internal data.................................................126

0.7.01.05 UNRECOVERABLE condition—Backplane NVRAM ...........................................126

0.7.01.10 NONCRITICAL condition—NVRAM invalid read data .......................................126

0.7.01.11 NONCRITICAL condition—EMU NVRAM write failure .......................................126

0.7.01.12 NONCRITICAL condition—EMU cannot read NVRAM data ...............................127

0.7.01.13 UNRECOVERABLE condition—EMU load failure ...............................................127

0.7.01.14 NONCRITICAL condition—EMU enclosure address ...........................................127

0.7.01.15 UNRECOVERABLE condition—EMU hardware failure ........................................127

0.7.01.16 INFORMATION condition—EMU internal ESI data corrupted ..............................127

0.7.01.17 UNRECOVERABLE condition—Power shutdown failure........................................128

0.7.01.18 UNRECOVERABLE condition—EMU hardware failure.........................................128

0.7.01.19 UNRECOVERABLE condition—EMU ESI driver failure.........................................128

Transceiver conditions.......................................................................................................128

0.F.en.01 CRITICAL condition—Transceiver incompatibility ...............................................129

0.F.en.02 CRITICAL condition—Transceiver data signal lost ..............................................129

0.F.en.03 CRITICAL condition—Transceiver fibre channel drive enclosure bus fault...............129

0.F.en.04 CRITICAL condition—Transceiver removed........................................................129

0.F.en.05 CRITICAL condition—Invalid fibre channel character..........................................130

CAN bus communication port conditions............................................................................130

Resetting the EMU.......................................................................................................130

1.1.03.01 NONCRITICAL condition—Communication error...............................................130

1.1.03.02 INFORMATION condition—Recovery completed...............................................130

1.1.03.03 INFORMATION condition—Overrun recovery...................................................131

Voltage sensor and current sensor conditions.......................................................................131

1.2.en.01 NONCRITICAL condition—High voltage .........................................................131

1.2.en.02 CRITICAL condition—High voltage .................................................................131

1.2.en.03 NONCRITICAL condition—Low voltage ..........................................................131

1.2.en.04 CRITICAL condition—Low voltage ..................................................................132

1.3.en.01 NONCRITICAL condition—High current ..........................................................132

1.3.en.02 CRITICAL condition—High current ..................................................................132

Backplane conditions.......................................................................................................132

8.2.01.10 NONCRITICAL condition—Backplane NVRAM read .........................................132

8.2.01.11 NONCRITICAL condition—Backplane NVRAM write failure ...............................132

8.2.01.12 NONCRITICAL condition—Backplane NVRAM read failure ...............................132

8.2.01.13 NONCRITICAL condition—Backplane WWN is blank.......................................132

I/O Module conditions.....................................................................................................133

8.7.en.01 CRITICAL condition—I/O module unsupported ................................................133

8.7.en.02 CRITICAL condition—I/O module communication ............................................133

8.7.en.10 NONCRITICAL condition—I/O module NVRAM read ......................................133

8.7.en.11 NONCRITICAL condition—I/O module NVRAM write........................................133

8.7.en.12 NONCRITICAL condition—I/O Module NVRAM read failure .............................134

8.7.en.13 NONCRITICAL condition—I/O module removed...............................................134

Host conditions................................................................................................................134

8 Contents

Page 9

C Controller fault management....................................................................135

Using HP P6000 Command View ..........................................................................................135

GUI termination event display................................................................................................135

GUI event display............................................................................................................135

Fault management displays...............................................................................................136

Displaying Last Fault Information...................................................................................136

Displaying Detailed Information....................................................................................136

Interpreting fault management information......................................................................137

D Non-standard rack specifications..............................................................138

Rack specifications................................................................................................................138

Internal component envelope.............................................................................................138

EIA310-D standards..........................................................................................................138

EVA cabinet measures and tolerances.................................................................................138

Weights, dimensions and component CG measurements.......................................................138

Airflow and Recirculation..................................................................................................139

Component Airflow Requirements..................................................................................139

Rack Airflow Requirements...........................................................................................139

Configuration Standards...................................................................................................139

Environmental and operating specifications..............................................................................139

Power requirements..........................................................................................................140

UPS Selection.............................................................................................................141

Environmental specifications..............................................................................................143

Shock and vibration specifications......................................................................................144

E Single Path Implementation......................................................................145

High-level solution overview...................................................................................................145

Benefits at a glance..............................................................................................................145

Installation requirements........................................................................................................146

Recommended mitigations.....................................................................................................146

Supported configurations.......................................................................................................146

General configuration components.....................................................................................146

Connecting a single path HBA server to a switch in a fabric zone..........................................146

HP-UX configuration.........................................................................................................148

Requirements..............................................................................................................148

HBA configuration.......................................................................................................148

Risks..........................................................................................................................148

Limitations..................................................................................................................148

Windows Server (32-bit) configuration................................................................................149

Requirements..............................................................................................................149

HBA configuration.......................................................................................................149

Risks..........................................................................................................................149

Limitations..................................................................................................................149

Windows Server (64-bit) configuration................................................................................150

Requirements..............................................................................................................150

HBA configuration.......................................................................................................150

Risks..........................................................................................................................150

Limitations..................................................................................................................150

Oracle Solaris configuration..............................................................................................151

Requirements..............................................................................................................151

HBA configuration.......................................................................................................151

Risks..........................................................................................................................151

Limitations..................................................................................................................152

Tru64 UNIX configuration.................................................................................................152

Requirements..............................................................................................................152

HBA configuration.......................................................................................................152

Contents 9

Page 10

Risks..........................................................................................................................152

OpenVMS configuration...................................................................................................153

Requirements..............................................................................................................153

HBA configuration.......................................................................................................153

Risks..........................................................................................................................153

Limitations..................................................................................................................154

Linux (32-bit) configuration................................................................................................154

Requirements..............................................................................................................154

HBA configuration.......................................................................................................154

Risks..........................................................................................................................154

Limitations..................................................................................................................155

Linux (64-bit) configuration................................................................................................155

Requirements..............................................................................................................155

HBA configuration.......................................................................................................155

Risks..........................................................................................................................155

Limitations..................................................................................................................156

IBM AIX configuration......................................................................................................156

Requirements..............................................................................................................156

HBA configuration.......................................................................................................156

Risks..........................................................................................................................157

Limitations..................................................................................................................157

VMware configuration......................................................................................................157

Requirements..............................................................................................................157

HBA configuration.......................................................................................................157

Risks..........................................................................................................................158

Limitations..................................................................................................................158

Failure scenarios...................................................................................................................158

HP-UX.............................................................................................................................158

Windows Server..............................................................................................................159

Oracle Solaris.................................................................................................................159

OpenVMS and Tru64 UNIX..............................................................................................160

Linux..............................................................................................................................160

IBM AIX..........................................................................................................................161

VMware.........................................................................................................................161

Glossary..................................................................................................163

Index.......................................................................................................175

10 Contents

Page 11

1 Enterprise Virtual Array startup

This chapter describes the procedures to install and configure the Enterprise Virtual Array. When

these procedures are complete, you can begin using your storage system.

NOTE: Installation of the Enterprise Virtual Array should be done only by an HP authorized

service representative. The information in this chapter provides an overview of the steps involved

in the installation and configuration of the storage system.

This chapter consists of:

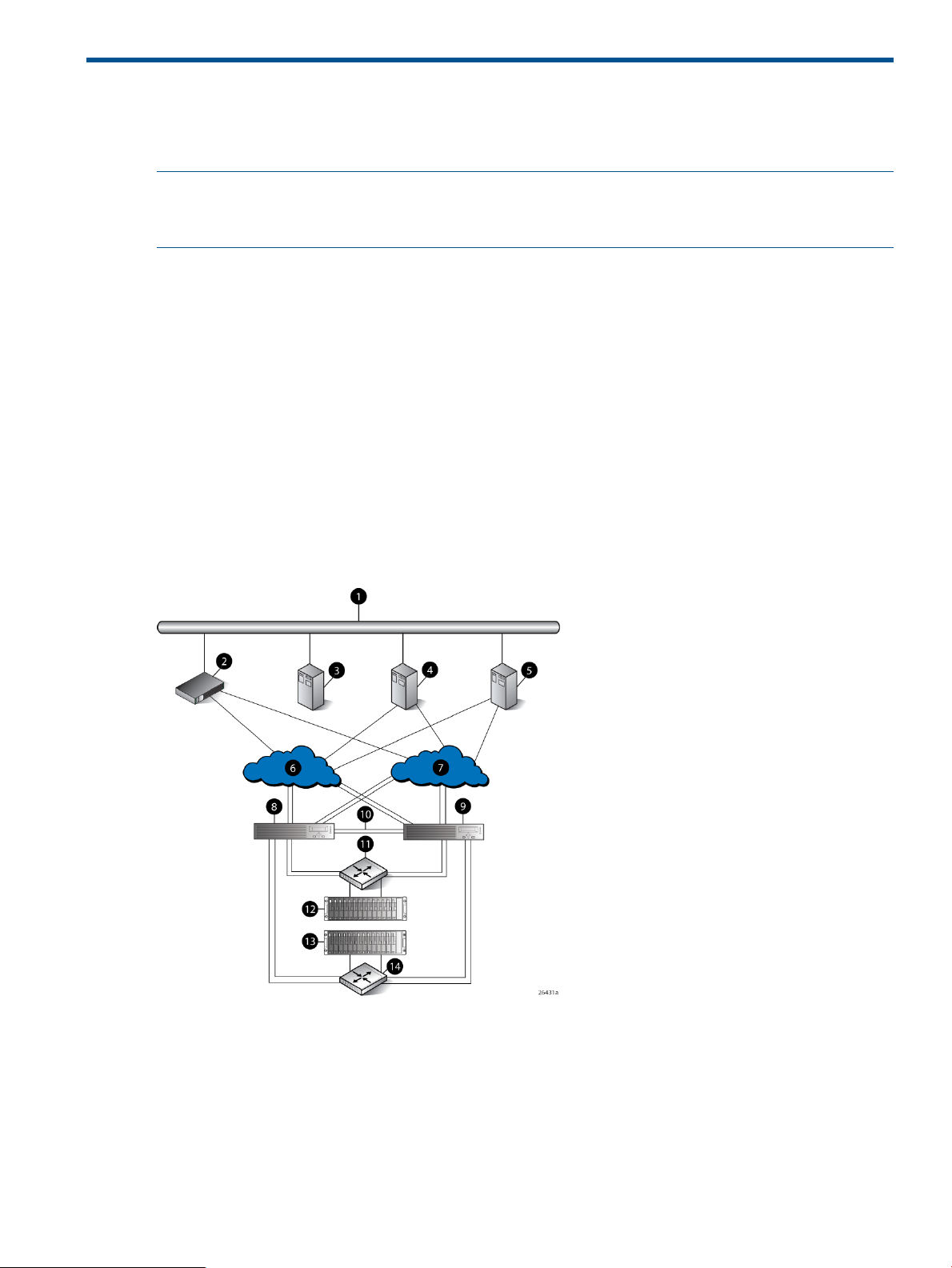

EVA8000/8100 storage system connections

Figure 1 (page 11) shows how the storage system is connected to other components of the storage

solution.

• The HSV210-A and HSV210-B controllers connect via four host ports (FP1, FP2, FP3, and FP4)

to the Fibre Channel fabrics. The hosts that will access the storage system are connected to

the same fabrics.

• The HP P6000 Command View management server also connects to the fabric.

• The controllers connect through two loop pairs to the drive enclosures. Each loop pair consists

of two independent loops, each capable of managing all the disks should one loop fail. Four

FC loop switches are used to connect the controllers to the disk enclosures.

Figure 1 EVA8000/8100 configuration

8 Controller A1 Network interconnection

9 Controller B2 Management server

10 Cache mirror ports3 Non-host

11 FC loop switch4 Host X

12 Drive enclosure 15 Host Z

13 Drive enclosure 26 Fabric 1

14 FC loop switch7 Fabric 2

EVA8000/8100 storage system connections 11

Page 12

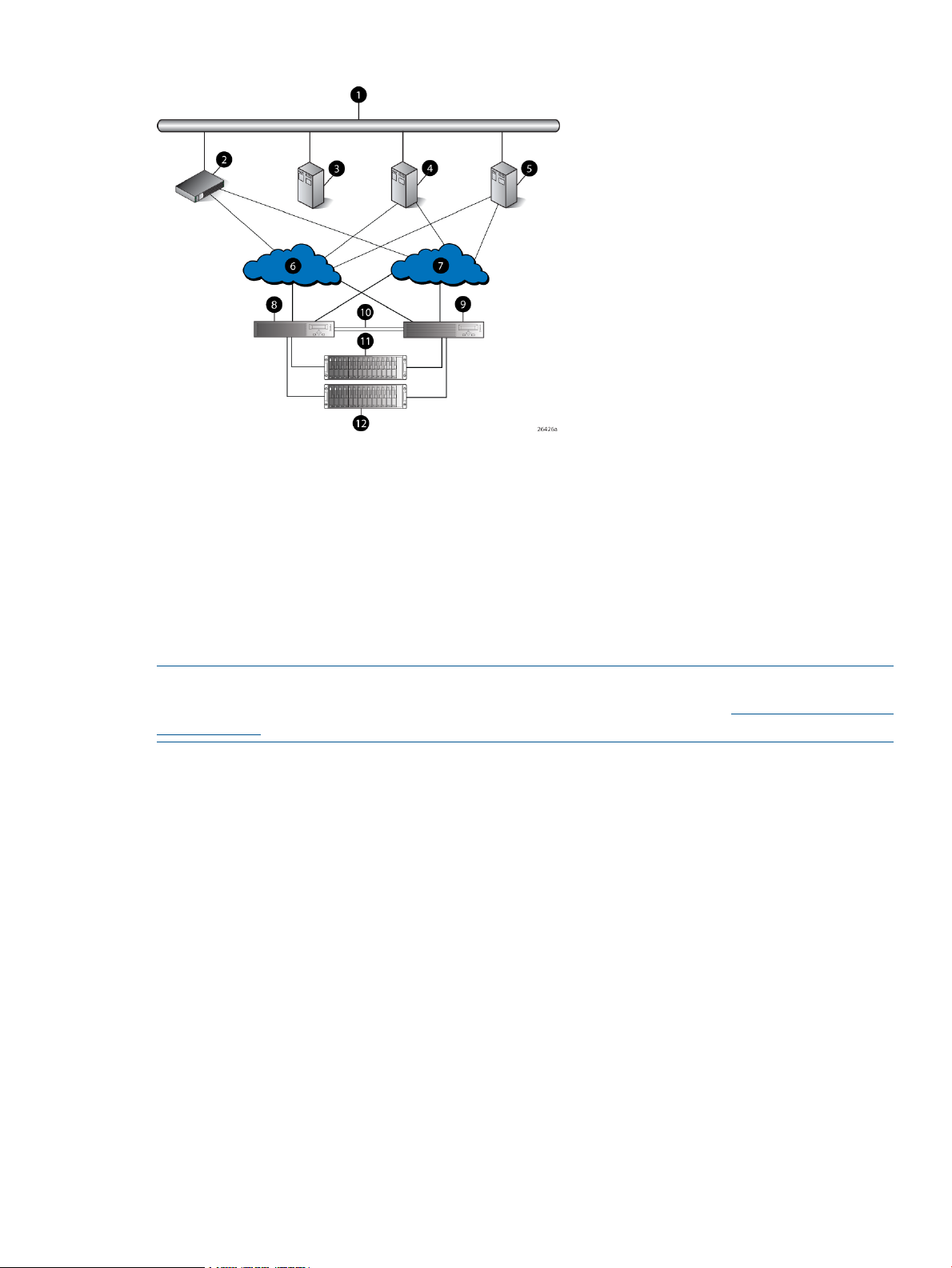

EVA6000/6100 storage system connections

Figure 2 (page 12) shows a typical EVA6000/6100 SAN topology:

• The HSV200-A and HSV200-B controllers connect via two host ports (FP1 and FP2) to the

Fibre Channel fabrics. The hosts that will access the storage system are connected to the same

fabrics.

• The HP Command View EVA management server also connects to both fabrics.

• The controllers connect through one loop pair to the drive enclosures. The loop pair consists

of two independent loops, each capable of managing all the disks should one loop fail. Two

FC loop switches are used to connect the controllers to the disk enclosures.

Figure 2 EVA6000/6100 configuration

8 Controller A1 Network interconnection

9 Controller B2 Management server

10 Cache mirror ports3 Non-host

11 FC loop switch4 Host X

12 Drive enclosure 15 Host Z

13 Drive enclosure 26 Fabric 1

7 Fabric 2

EVA4000/4100 storage system connections

Figure 3 (page 13) shows a typical EVA 4000/4100 SAN topology:

• The HSV200-A and HSV200-B controllers connect via two host ports (FP1 and FP2) to the

Fibre Channel fabrics. The hosts that will access the storage system are connected to the same

fabrics.

• The HP P6000 Command View management server also connects to both fabrics.

• The controllers connect through one loop pair to the drive enclosures. The loop pair consists

of two independent loops, each capable of managing all the disks should one loop fail. The

controllers connect directly to the disk enclosures.

12 Enterprise Virtual Array startup

Page 13

Figure 3 EVA4000/4100 configuration

7 Fabric 21 Network interconnection

8 Controller A2 Management server

9 Controller B3 Non-host

10 Cache mirror ports4 Host X

11 Drive enclosure 15 Host Z

12 Drive enclosure 26 Fabric 1

Direct connect

NOTE: Direct connect is currently supported on Microsoft Windows only. For more information

on direct connect, go the Single Point of Connectivity Knowledge (SPOCK) at: http://www.hp.com/

storage spock.

Direct connect provides a lower cost solution for smaller configurations. When using direct connect,

the storage system controllers are connected directly to the host(s), not to SAN Fibre Channel

switches. Make sure the following requirements are met when configuring your environment for

direct connect:

• A management server running HP P6000 Command View must be connected to one port on

each EVA controller. The management host must use dual HBAs for redundancy.

• To provide redundancy, it is recommended that dual HBAs be used for each additional host

connected to the storage system. Using this configuration, up to four hosts (including the

management host) can be connected to an EVA8x00, and up to two hosts can be connected

to an EVA6x00 or EVA4x00.

• The Host Port Configuration must be set to Direct Connect using the OCP.

• HP P6000 Continuous Access cannot be used with direct connect configurations.

• The HSV controller firmware cannot differentiate between an empty host port and a failed

host port in a direct connect configuration. As a result, the Connection state dialog box on

the Controller Properties window displays Connection failed for an empty host port. To fix this

problem, insert an optical loop-back connector into the empty host port; the Connection state

will display Connected. For more information about optical loop-back connectors, contact

your HP-authorized service provider.

Direct connect 13

Page 14

iSCSI connection configurations

The EVA4x00/6x00/8x00 support iSCSI attach configurations using the HP MPX100. Both fabric

connect and direct connect are supported for iSCSI configurations. For complete information on

iSCSI configurations, go to the following website:

http://h18006.www1.hp.com/products/storageworks/evaiscsiconnect/index.html

NOTE: An iSCSI connection configuration supports mixed direct connect and fabric connect.

Fabric connect iSCSI

Fabric connect provides an iSCSI solution for EVA Fibre Channel configurations that want to

continue to use all EVA ports on FC or if the EVA is also used for HP P6000 Continuous Access.

Make sure the following requirements are met when configuring your MPX100 environment for

fabric connect:

• A maximum of two MPX100s per storage system are supported

• Each storage system port can connect to a maximum of two MPX100 FC ports.

• Each MPX100 FC port can connect to a maximum of one storage system port.

• In a single MPX100 configuration, if both MPX100 FC ports are used, each port must be

connected to one storage system controller.

• In a dual MPX100 configuration, at least one FC port from each MPX100 must be connected

to one storage system controller.

• The Host Port Configuration must be set to Fabric Connect using the OCP.

• HP P6000 Continuous Access is supported on the same storage system connected in MPX100

fabric connect configurations.

Direct connect iSCSI

Direct connect provides a lower cost solution for configurations that want to dedicate controller

ports to iSCSI I/O. When using direct connect, the storage system controllers are connected directly

to the MPX100(s), not to SAN Fibre Channel switches.

Make sure the following requirements are met when configuring your MPX100 environment for

direct connect:

• A maximum two MPX100s per storage system are supported.

• In a single MPX100 configuration, if both MPX100 FC ports are used each port must be

connected to one storage system controller.

• In a dual MPX100 configuration, at least one FC port from each MPX100 must be connected

to one storage system controller.

• The Host Port Configuration must be set to Direct Connect using the OCP.

• HP P6000 Continuous Access cannot be used with direct connect configurations.

• EVAs cannot be directly connected to each other to create an HP P6000 Continuous Access

configuration. However, hosts can be directly connected to the EVA in an HP P6000 Continuous

Access configuration. At least one port from each array in an HP P6000 Continuous Access

configuration must be connected to a Fabric connection for remote array connectivity.

14 Enterprise Virtual Array startup

Page 15

Procedures for getting started

documentation.

hardware configuration information.

View.

storage system software documentation for each host's

operating system.

Gathering information

The following items should be available when installing and configuring an Enterprise Virtual Array.

They provide information necessary to set up the storage system successfully.

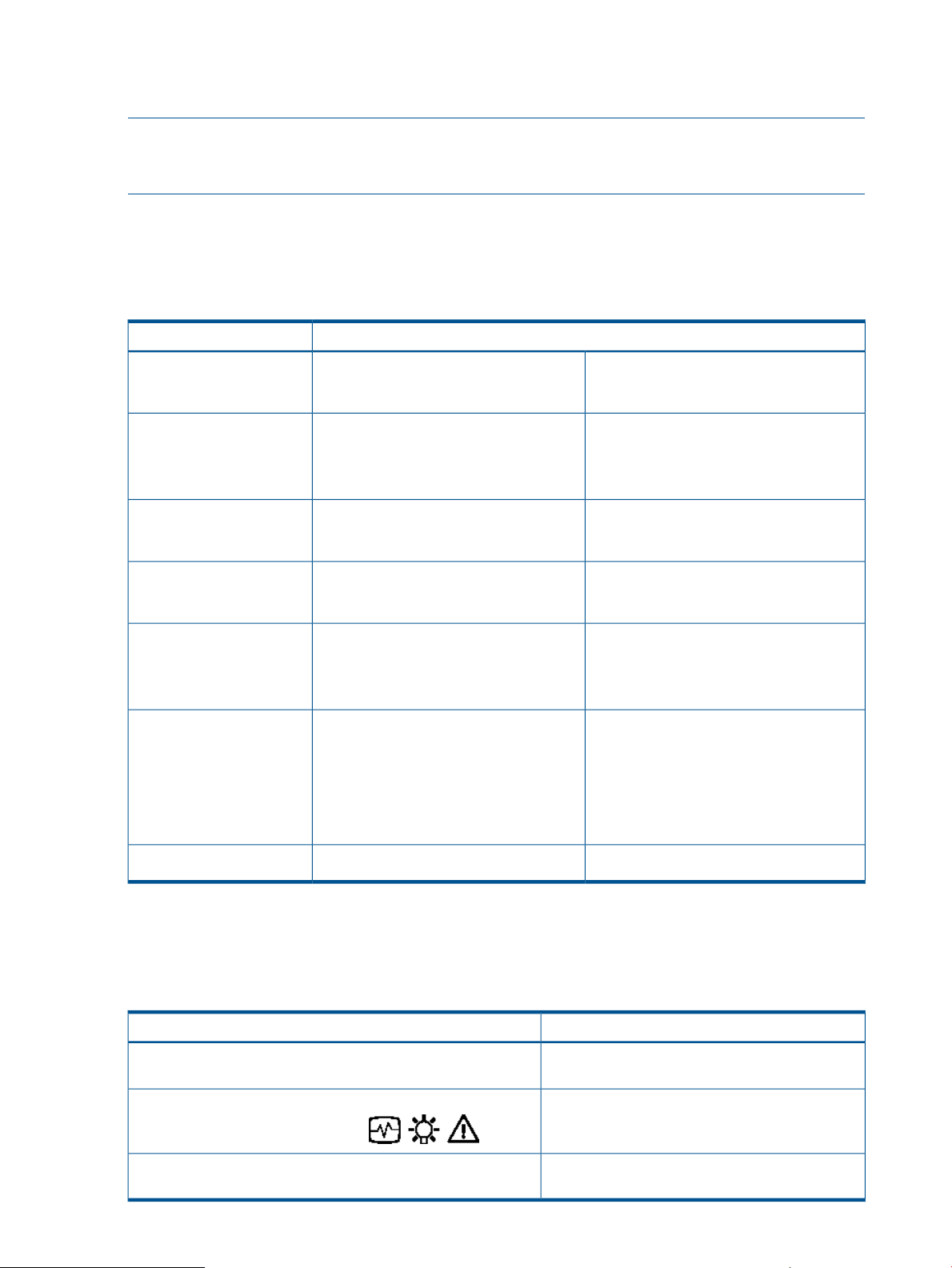

ResponsibilityStep

Customer1. Gather information and identify all related storage

Customer2. Contact an authorized service representative for

HP Service Engineer3. Enter the World Wide Name (WWN) into the OCP.

HP Service Engineer4. Configure HP P6000 Command View.

Customer5. Prepare the hosts.

HP Service Engineer6. Configure the system through HP P6000 Command

HP Service Engineer7. Make virtual disks available to their hosts. See the

• HP 4x00/6x00/8x00 Enterprise Virtual Array World Wide Name label, which is shipped

with the system

• HP EVA 4000/6000/8000 and EVA 4100/6100/8100 Read Me First

• HP EVA 4000/6000/8000 and EVA 4100/6100/8100 Release Notes (XCS 6.250)

• The latest HP P6000 Command View software (Check the HP P6000 Enterprise Virtual Array

Compatibility Reference for controller software and HP P6000 Command View compatibility.)

Locate these items and keep them handy. You will need them for the procedures in this manual.

Host information

Make a list of information for each host computer that will be accessing the storage system. You

will need the following information for each host:

• The LAN name of the host

• A list of World Wide Names of the FC adapters, also called host bus adapters, through which

the host will connect to the fabric that provides access to the storage system, or to the storage

system directly if using direct connect.

• Operating system type

• Available LUN numbers

Setting up a controller pair using the OCP

NOTE: This procedure should be performed by an HP authorized service representative.

Two pieces of data must be entered during initial setup using the controller OCP:

• World Wide Name (WWN) — Required to complete setup. This procedure should be

performed by an HP authorized service representative.

• Storage system password — Optional. A password provides security allowing only specific

instances of HP P6000 Command View to access the storage system.

Procedures for getting started 15

Page 16

The OCP on either controller can be used to input the WWN and password data. For more

information about the OCP, see “Operator control panel” (page 43).

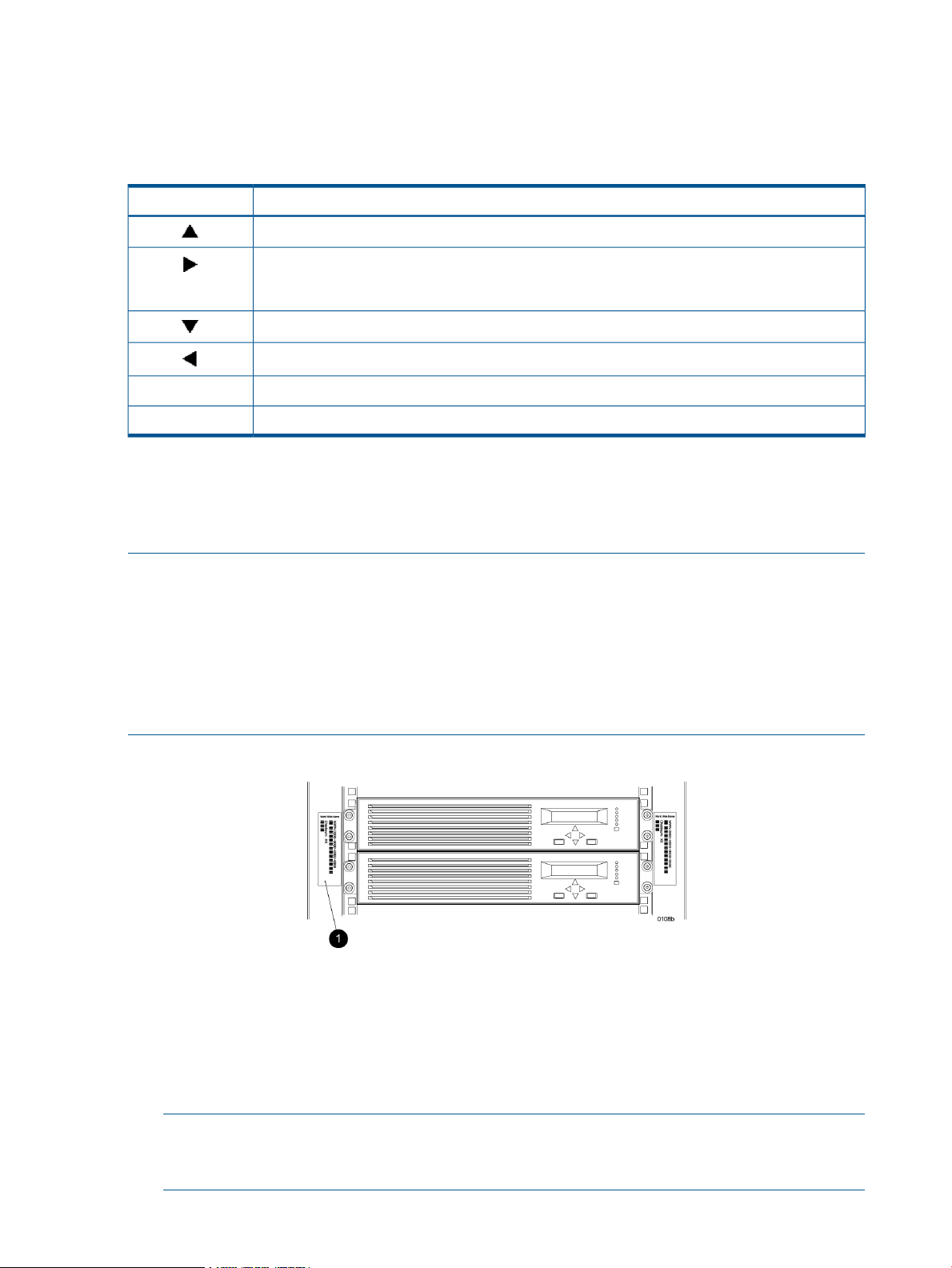

Table 1 (page 16) lists the push-button functions when entering the WWN, WWN checksum, and

password data.

Table 1 Push button functions

Entering the WWN

Fibre Channel protocol requires that each controller pair have a unique WWN. This 16-character

alphanumeric name identifies the controller pair on the storage system. Two WWN labels attached

to the rack identify the storage system WWN and checksum. See Figure 4 (page 16).

FunctionButton

Selects a character by scrolling up through the character list one character at a time.

Moves forward one character. If you accept an incorrect character, you can move through all 16

characters, one character at a time, until you display the incorrect character. You can then change

the character.

Selects a character by scrolling down through the character list one character at a time.

Moves backward one character.

Returns to the default display.ESC

Accepts all the characters entered.ENTER

NOTE:

• The WWN is unique to a controller pair and cannot be used for any other controller pair or

device anywhere on the network.

• This is the only WWN applicable to any controller installed in a specific physical location,

even a replacement controller.

• Once a WWN is assigned to a controller, you cannot change the WWN while the controller

is part of the same storage system.

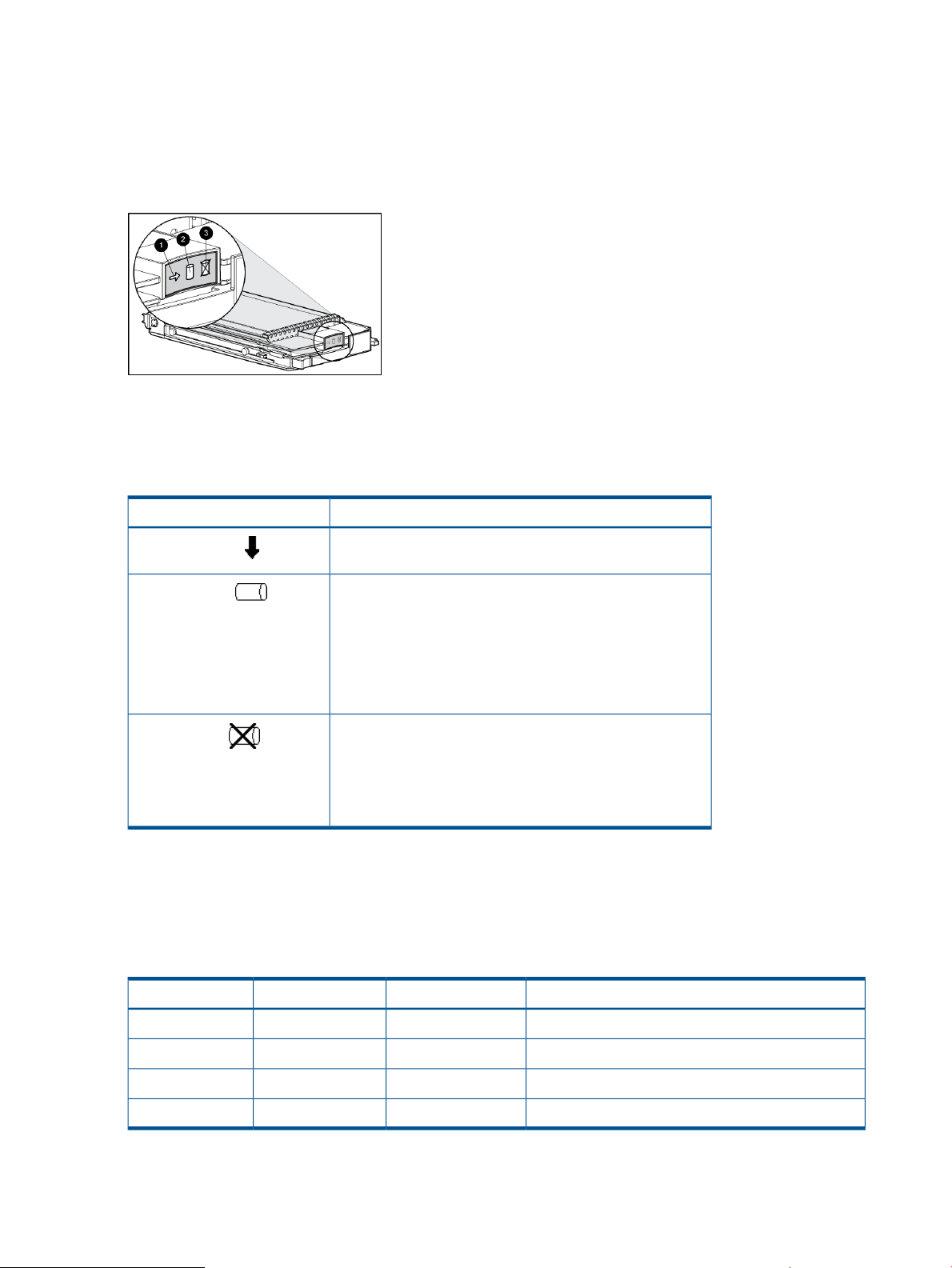

Figure 4 Location of the World Wide Name labels

1. World Wide Name labels

Complete the following procedure to assign the WWN to each pair of controllers.

1. Turn the power switches on both controllers off.

2. Apply power to the rack.

3. Turn the power switch on both controllers on.

NOTE: Notifications of the startup test steps that have been executed are displayed while

the controller is booting. It may take up to two minutes for the steps to display. The default

WWN entry display has a 0 in each of the 16 positions.

16 Enterprise Virtual Array startup

Page 17

4. Press or until the first character of the WWN is displayed. Press to accept this character

and select the next.

5. Repeat Step 4 to enter the remaining characters.

6. Press Enter to accept the WWN and select the checksum entry mode.

Entering the WWN checksum

The second part of the WWN entry procedure is to enter the two-character checksum, as follows.

1. Verify that the initial WWN checksum displays 0 in both positions.

2. Press or until the first checksum character is displayed. Press to accept this character

and select the second character.

3. Press or until the second character is displayed. Press Enter to accept the checksum and

exit.

4. Verify that the default display is automatically selected. This indicates that the checksum is

valid.

NOTE: If you enter an incorrect WWN or checksum, the system will reject the data and you must

repeat the procedure.

Entering the storage system password

The storage system password feature enables you to restrict management access to the storage

system. The password must meet the following requirements:

• 8 to 16 characters in length

• Can include upper or lower case letters

• Can include numbers 0 - 9

• Can include the following characters: ! “ # $ % & ‘ ( ) * + , - . / : ; < = > ? @ [ ] ^ _ ` {

| }

• Cannot include the following characters: space ~ \

NOTE: You must be running HP Command View EVA 6.0 or later to use passwords of more than

eight characters. HP Command View EVA 8.0.1 is required with XCS 6.200. If you set a password

longer than eight characters, you will no longer be able to manage the storage system with an

earlier version of HP P6000 Command View. In this case, it will be necessary to clear the long

password and reenter a password of no more than eight characters.

Complete the following procedure to enter the password:

1. Select a unique password of 8 to 16 characters.

2. With the default menu displayed, press three times to display System Password.

3. Press to display Change Password?

4. Press Enter for yes.

The default password, AAAAAAAA~~~~~~~~, is displayed.

5. Press or to select the desired character.

6. Press to accept this character and select the next character.

7. Repeat the process to enter the remaining password characters.

8. Press Enter to enter the password and return to the default display.

Installing HP P6000 Command View

HP P6000 Command View is installed on a management server. Installation may be skipped if

the latest version of HP P6000 Command View is running. Verify the latest version at the HP website:

http://h18006.www1.hp.com/storage/software.html.

Procedures for getting started 17

Page 18

See the HP P6000 Command View Installation Guide for information on installing the software.

Installing optional EVA software licenses

If you purchased optional EVA software, it will be necessary to install the license. Optional software

available for the Enterprise Virtual Array includes HP Business Copy EVA and HP P6000 Continuous

Access. Installation instructions are included with the license.

18 Enterprise Virtual Array startup

Page 19

2 Enterprise Virtual Array hardware components

The Enterprise Virtual Array includes the following hardware components:

• Fibre Channel drive enclosure — Contains disk drives, power supplies, blowers, I/O modules,

and an Environmental Monitoring Unit (EMU).

• Fibre Channel loop switches — Provides twelve-port central interconnect for Fibre Channel

drive enclosure FC Arbitrated Loops. The loop switches are required for EVA6000/6100 and

EVA8000/8100 configurations with more than four disk enclosures.

• HSV controller — Manages all aspects of storage system operation, including communications

between host systems and other devices. A pair of HSV controllers is included in the Enterprise

Virtual Array.

• Rack — A variety of free-standing racks are available.

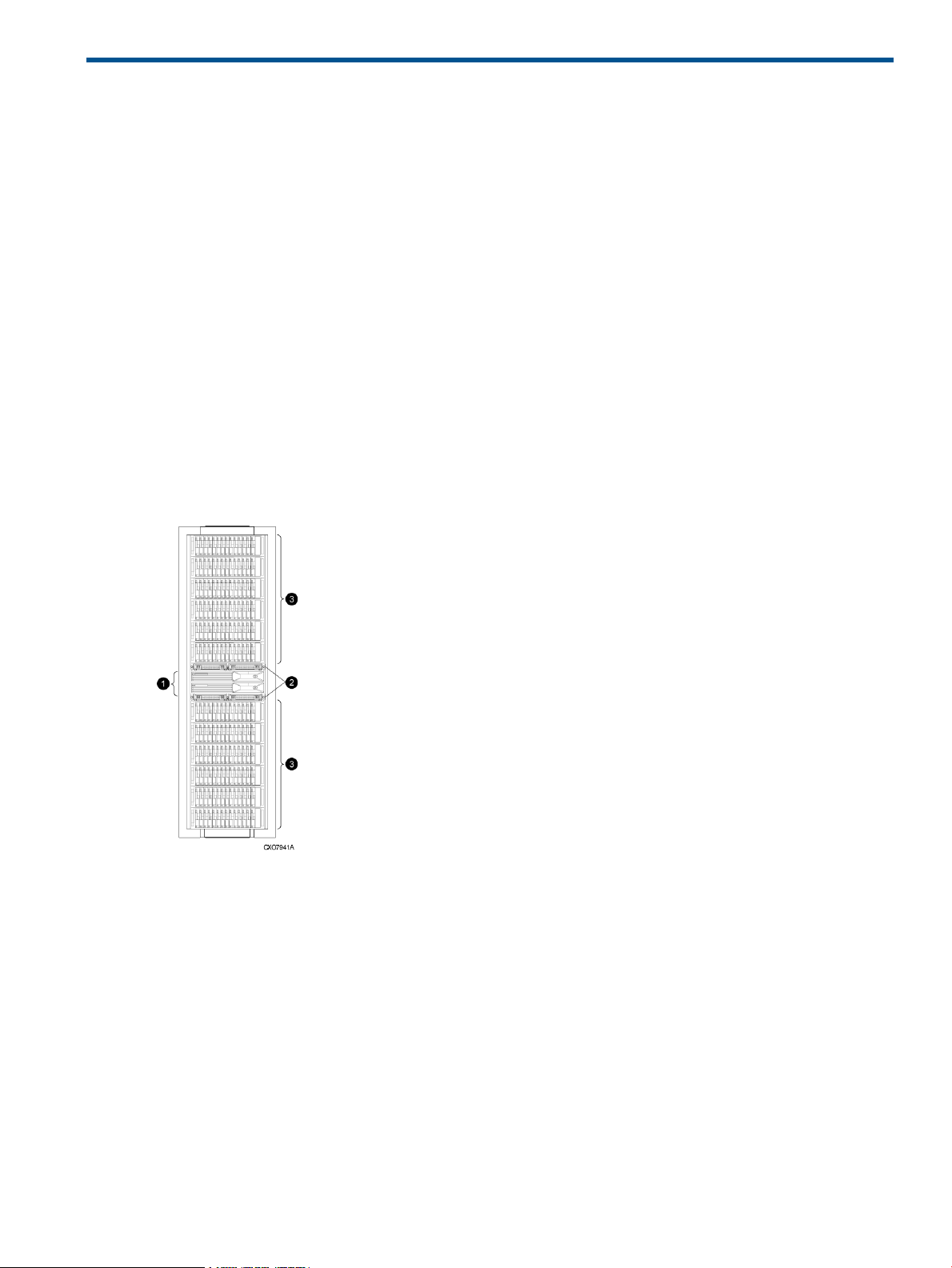

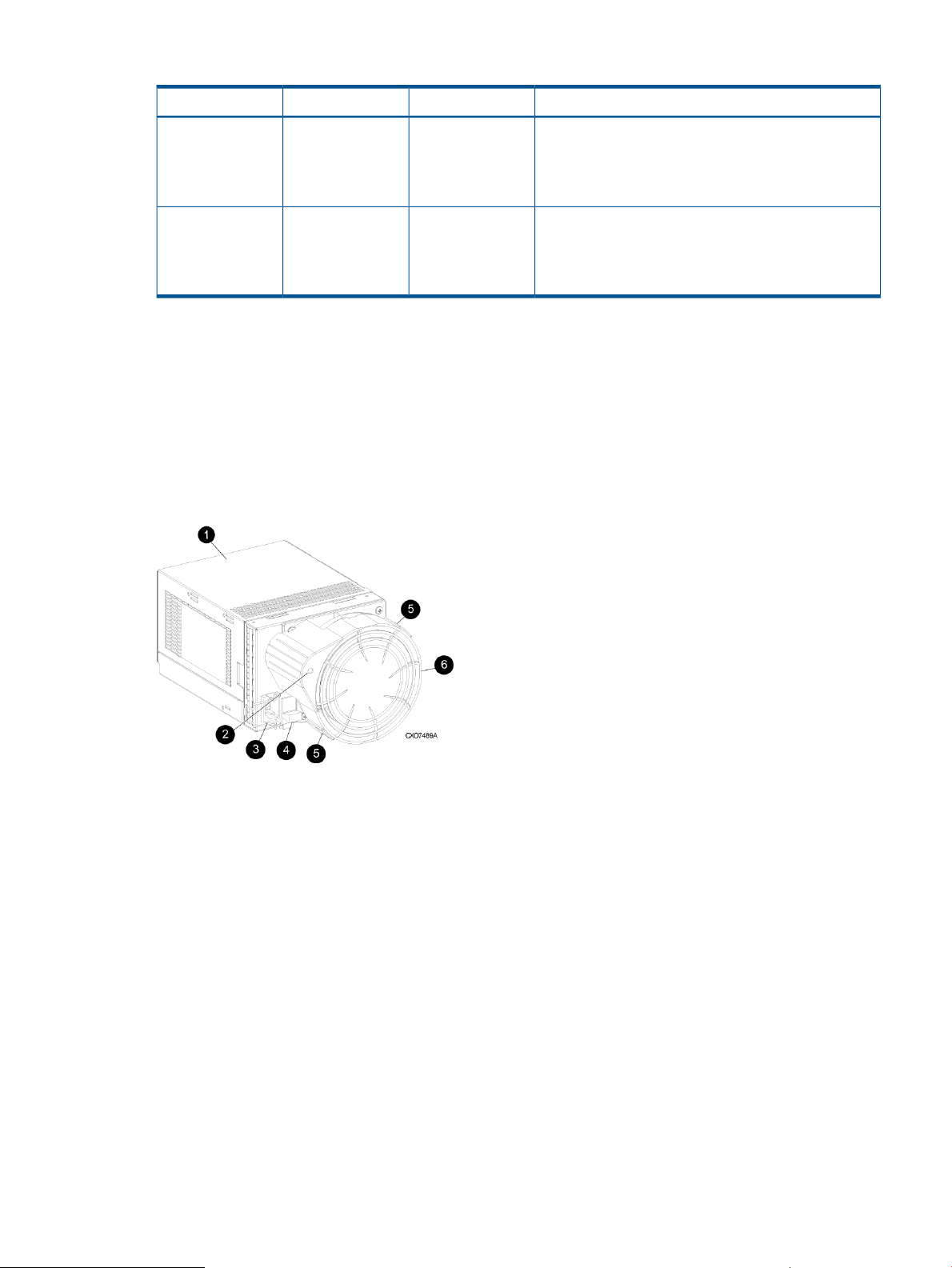

Physical layout of the storage system

The basic physical components are shown in Figure 5 (page 19). The disk drives are installed in

the disk enclosures, which connect to Fibre Channel loop switches, except on the EVA4000/4100

which does not use switches. The controller pair also connects to the loop switches.

Figure 5 Storage system hardware components

1. controllers

2. loop switches

3. disk enclosures

The EVA8000/8100, EVA6000/6100, and EVA4000/4100 are available as follows:

• EVA8000/8100 — available in multiple configurations ranging from the single-rack 2C2D

configuration to the multi-rack 2C18D. The EVA8000 includes two HSV210-A controllers and

four Fibre Channel loop switches. The EVA8100 includes two HSV210-B controllers and four

Fibre Channel loop switches.

• EVA6000/6100 — available in configurations ranging from the 2C4D configuration to the

2C8D configuration. The EVA6000 includes two HSV200-A controllers and two Fibre Channel

Physical layout of the storage system 19

Page 20

loop switches. The EVA6100 includes two HSV200-B controllers with two Fibre Channel loop

switches.

• EVA4000/4100 — available in configurations ranging from the 2C1D configuration to the

2C4D configuration without loop switches. The EVA4000 includes two HSV200-A controllers.

The EVA4100 includes two HSV200-B controllers. Multiple EVA4000/4100s can be installed

in a single rack.

See the HP 4x00/6x00/8x00 Enterprise Virtual Array Hardware Configuration Guide for more

information about configurations. See “Related information” (page 101) for links to this document.

Fibre Channel drive enclosures

The drive enclosure contains the disk drives used for data storage. A storage system includes

multiple drive enclosures. The major components of the enclosure are:

• 3U enclosure

• Dual redundant, active-to-active 2 Gbps FC loops

• 2.125-Gbps, dual loop, 14-drive enclosure

• Dual 2 Gbps FC I/O modules (A and B loops)

• Copper Fibre Channel cables

• Fibre Channel disk drives and drive blanks

• Dual redundant power supplies

• Dual redundant blowers

• Environmental Monitoring Unit (EMU)

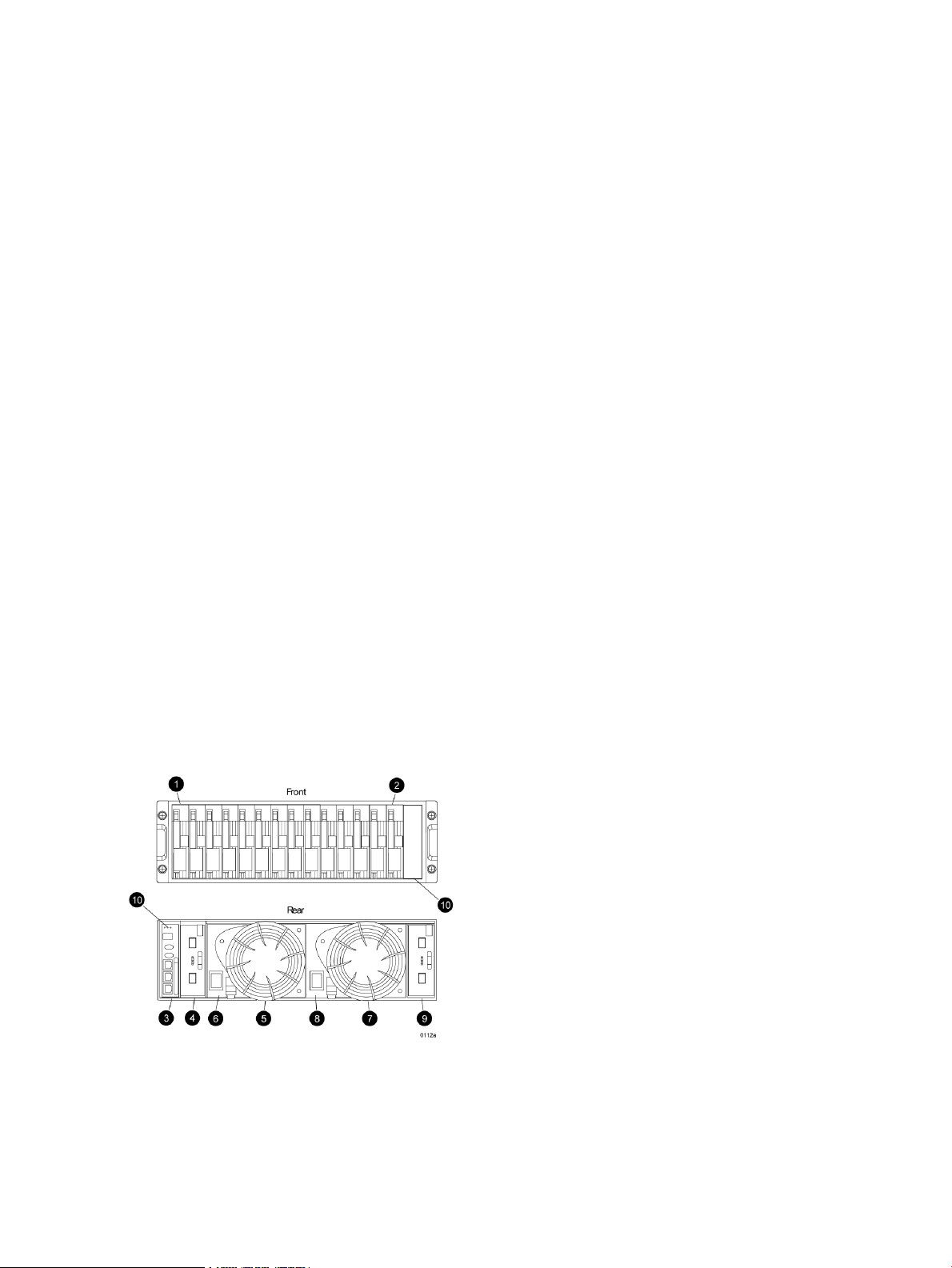

Enclosure layout

The disk drives mount in bays in the front of the enclosure. The bays are numbered sequentially

from left to right. A drive is referred to by its bay number. Enclosure status indicators are located

in the lower-right, front corner. Figure 6 (page 20) shows the front and rear views of the FC drive

enclosure.

Figure 6 FC drive enclosure—front and rear views

20 Enterprise Virtual Array hardware components

2. Drive bay 141. Drive bay 1

4. I/O module B3. EMU

6. Power supply 15. Blower 1

Page 21

9. I/O module A

I/O modules

Two I/O modules provide the interface between the drive enclosure and the host controllers. See

Figure 7 (page 21). They route data to and from the disk drives using Loop A and Loop B, the

dual-loop configuration. For redundancy, only dual-controller, dual-loop operation is supported.

Each controller is connected to both I/O modules in the drive enclosure.

Figure 7 I/O module

8. Power supply 27. Blower 2

10. Status indicators (EMU, enclosure power, enclosure

fault)

1. Status indicators (Upper port, Power, and Lower port)

2. Upper port

3. Lower port

The I/O modules are functionally identical, but are not interchangeable. Module A can only be

installed at the right end of the enclosure, and module B can only be installed at the left end of the

enclosure. See Figure 6 (page 20).

Each I/O module has two ports that can both transmit and receive data for bidirectional operation.

Activating a port requires connecting a FC cable to the port. The port function depends upon the

loop. See Figure 8 (page 21).

Figure 8 Input and output ports

2. Loop A upper port1. Loop A lower port

4. Loop B upper port3. Loop B lower port

Fibre Channel drive enclosures 21

Page 22

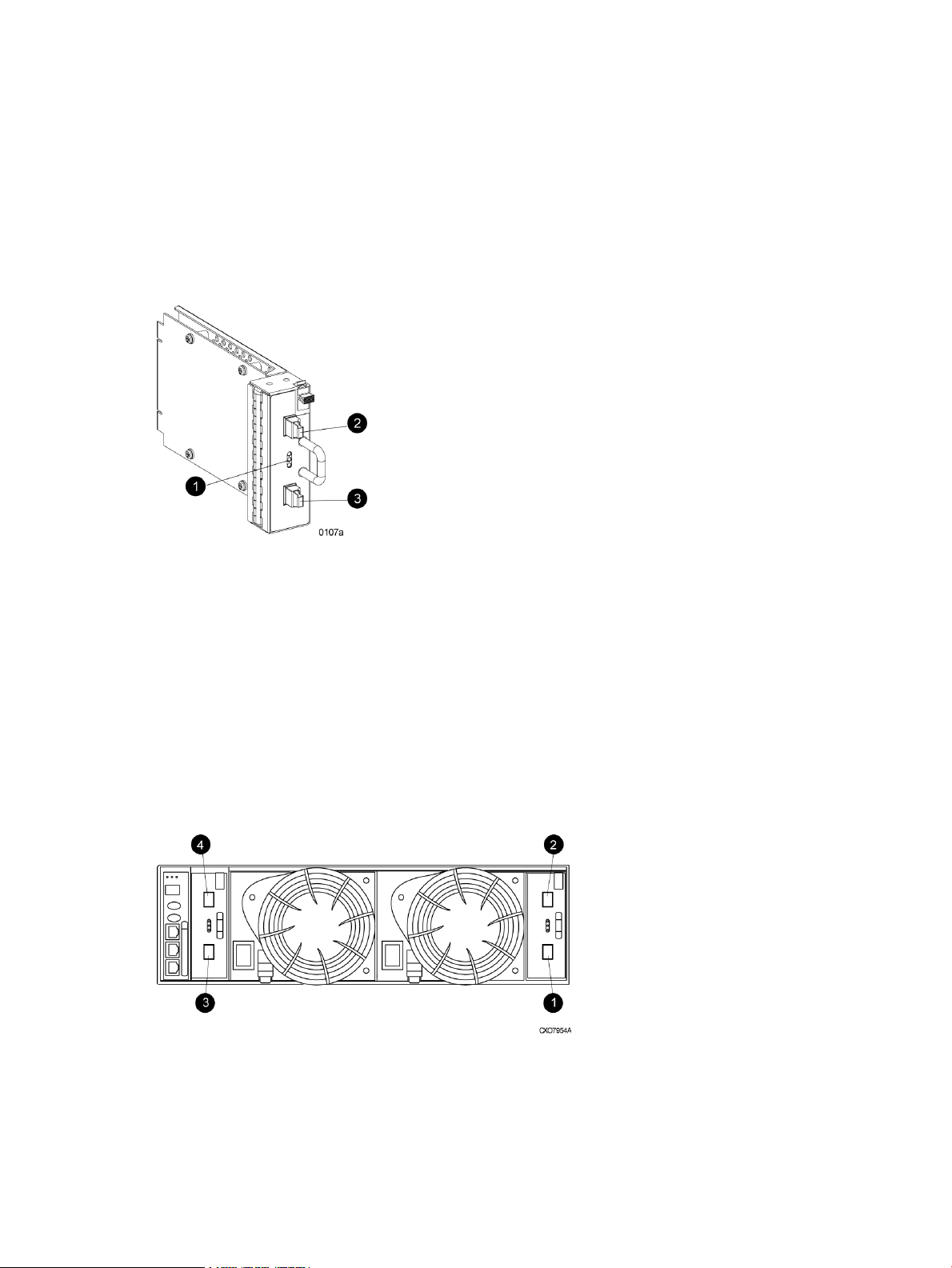

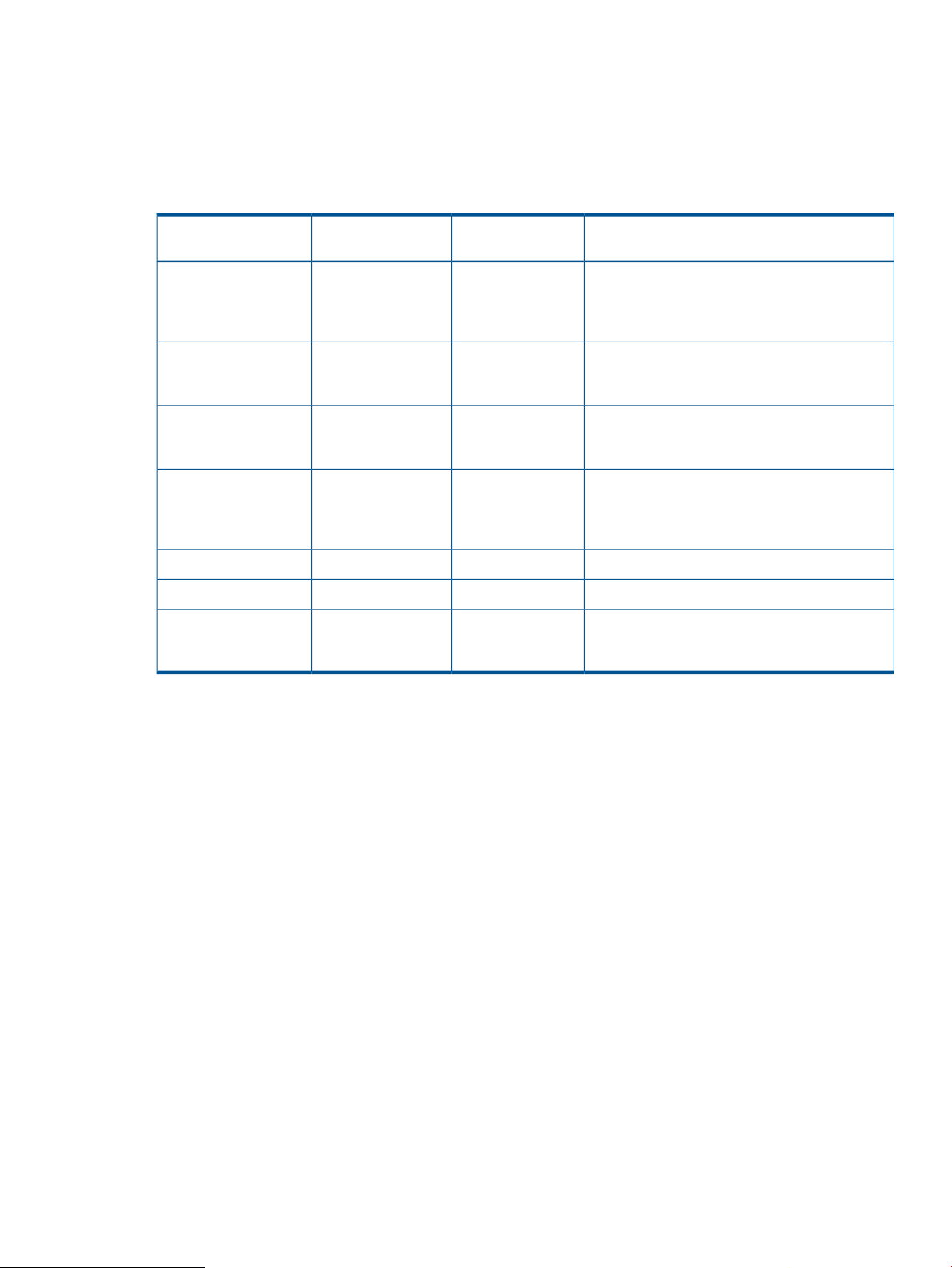

I/O module status indicators

There are three status indicators on the I/O module. See Figure 7 (page 21). The status indicator

states for an operational I/O module are shown in Table 2 (page 22). Table 3 (page 22) shows

the status indicator states for a non-operational I/O module.

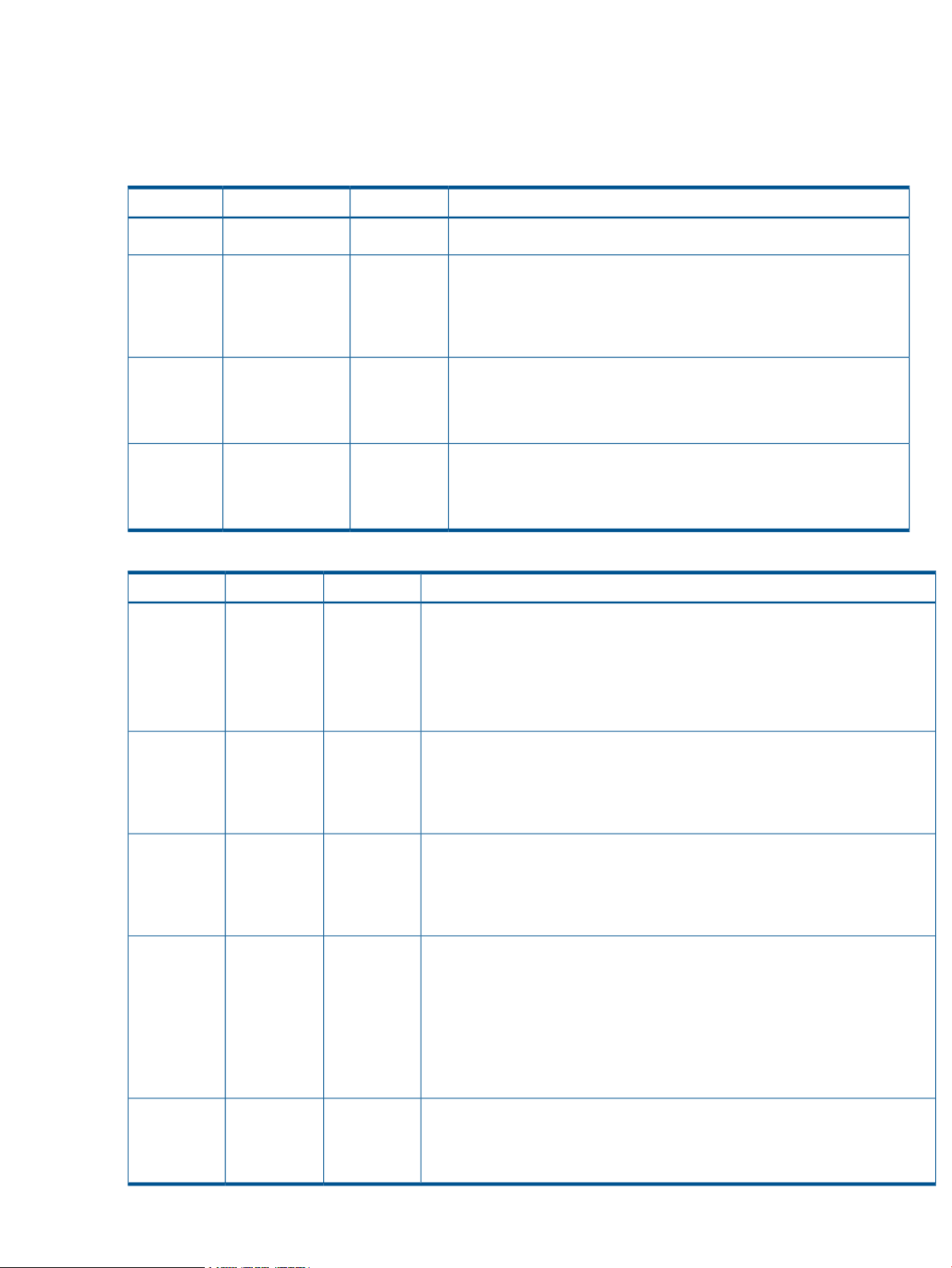

Table 2 Operational I/O module status indicators

DescriptionsLowerPowerUpper

OffOnOff

OnFlashing, then OnOn

OnOnOn

FlashingFlashingFlashing

• I/O Module is operational.

• Top port—Fibre Channel drive enclosure signal detected.

• Power—Flashes for about 90 seconds after initial power application,

then remains constant.

• Bottom port—Fibre Channel drive enclosure signal detected.

• Top port—Fibre Channel drive enclosure signal detected.

• Power—Present.

• Bottom port—Fibre Channel drive enclosure signal detected.

• When the locate function is active, all three indicators flash

simultaneously. The Locate function overrides all other indicator

functions. Therefore, an error could be detected while the Locate

function is active and not be indicated until the Locate action terminates.

Table 3 Non-operational I/O module status indicators

OffOnOn

• Top port—Fibre Channel drive enclosure signal detected.

• Power—Present.

• Bottom port—No Fibre Channel drive enclosure signal detected. Check

transceiver and fiber cable connections.

NOTE: This status applies to configurations with and without FC loop switches.

DescriptionsLowerPowerUpper

OnOnOff

OnOnFlashing

FlashingOnOn

OffOffOff

• Top port—No Fibre Channel drive enclosure signal detected. Check transceiver

and fiber cable connections.

• Power—Present.

• Bottom port—Fibre Channel drive enclosure signal detected .

• Top port—EMU detected possible transceiver problem. Check transceiver and

fiber cable connections.

• Power—Present.

• Bottom port—Fibre Channel drive enclosure signal detected .

• Top port—Fibre Channel drive enclosure signal detected.

• Power—Present.

• Bottom port—EMU detected possible transceiver problem. Check transceiver

and fiber cable connections.

NOTE: The EMU will not flash the lower indicator on its own. It will flash only

in response to a locate command. You can flash each of the lights independently

during a locate action.

• No I/O module power.

• I/O module is nonoperational.

• Check power supplies. If power supplies are operational, replace I/O module.

22 Enterprise Virtual Array hardware components

Page 23

Fiber Optic Fibre Channel cables

The Enterprise Virtual Array uses orange, 50-µm, multi-mode, fiber optic cables for connection to

the SAN. The fiber optic cable assembly consists of two 2-m fiber optic strands and small form-factor

connectors on each end. See Figure 9 (page 23).

To ensure optimum operation, the fiber optic cable components require protection from

contamination and mechanical hazards. Failure to provide this protection can cause degraded

operation. Observe the following precautions when using fiber optic cables.

• To avoid breaking the fiber within the cable:

Do not kink the cable◦

◦ Do not use a cable bend-radius of less than 30 mm (1.18 in)

• To avoid deforming, or possibly breaking the fiber within the cable, do not place heavy objects

on the cable.

• To avoid contaminating the optical connectors:

Do not touch the connectors◦

◦ Never leave the connectors exposed to the air

◦ Install a dust cover on each transceiver and fiber cable connector when they are

disconnected

If an open connector is exposed to dust, or if there is any doubt about the cleanliness of the

connector, clean the connector as described in “Handling fiber optic cables” (page 71).

Figure 9 Fiber Optic Fibre Channel cable