Dell FluidFS V3 NAS Solutions For PowerVault

NX3500, NX3600, And NX3610

Administrator's Guide

Notes, Cautions, and Warnings

NOTE: A NOTE indicates important information that helps you make better use of your computer.

CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.

WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

Copyright © 2014 Dell Inc. All rights reserved. This product is protected by U.S. and international copyright and intellectual property laws. Dell™ and the Dell logo are trademarks of Dell Inc. in the United States and/or other jurisdictions. All other marks and names mentioned herein may be trademarks of their respective companies.

2014 - 01

Rev. A02

Contents

1 Introduction
How PowerVault FluidFS NAS Works
FluidFS Terminology
Key Features Of PowerVault FluidFS Systems
Overview Of PowerVault FluidFS Systems
Internal Cache
Internal Backup Power Supply
Internal Storage
PowerVault FluidFS Architecture
Client/LAN Network
MD System
SAN Network
Internal Network
Data Caching And Redundancy
File Metadata Protection
High Availability And Load Balancing
Failure Scenarios
Ports Used by the FluidFS System
Required Ports
Feature-Specific Ports
Other Information You May Need

2 Upgrading to FluidFS Version 3
Supported Upgrade Paths
FluidFS V2 and FluidFS V3 Feature and Configuration Comparison
Performing Pre-Upgrade Tasks
Upgrading from FluidFS Version 2.0 to 3.0

3 FluidFS Manager User Interface Overview
FluidFS Manager Layout
Navigating Views
Working With Panes, Menus, And Dialogs
Showing And Hiding Panes
Opening A Pane Menu
Opening A Table Element Menu
Changing Settings Within A Dialog
Accessing NAS Volume SubTopics
Working With The Event Log
Viewing The Event Log
Viewing Event Details
Sorting The Event Log
Searching the Event Log

4 FluidFS 3.0 System Management
Connecting to the FluidFS Cluster
Connecting to the FluidFS Cluster Using the FluidFS Manager Web Client
Connecting to the FluidFS Cluster CLI Using a VGA Console
Connecting to the FluidFS Cluster CLI through SSH Using a Password
Connecting to the FluidFS Cluster CLI through SSH without Using a Password
Managing Secured Management
Adding a Secured Management Subnet
Changing the Netmask for the Secured Management Subnet
Changing the VLAN ID for the Secured Management Subnet
Changing the VIP for the Secured Management Subnet
Changing the NAS Controller IP Addresses for the Secured Management Subnet
Deleting the Secured Management Subnet
Enabling Secured Management
Disabling Secured Management
Managing the FluidFS Cluster Name
Viewing the FluidFS Cluster Name
Renaming the FluidFS Cluster
Managing Licensing
Viewing License Information
Accepting the End-User License Agreement
Managing the System Time
Viewing the Time Zone
Setting the Time Zone
Viewing the Time
Setting the Time Manually
Viewing the NTP Servers
Add or Remove NTP Servers
Enabling NTP
Disabling NTP
Managing the FTP Server
Accessing the FTP Server
Enabling or Disabling the FTP Server
Managing SNMP
Obtaining SNMP MIBs and Traps
Changing the SNMP Read-Only Community
Changing the SNMP Trap System Location or Contact
Adding or Removing SNMP Trap Recipients
Enabling or Disabling SNMP Traps
Managing the Health Scan Throttling Mode
Viewing the Health Scan Throttling Mode
Changing the Health Scan Throttling Mode
Managing the Operation Mode
Viewing the Operation Mode
Changing the Operation Mode
Managing Client Connections
Displaying the Distribution of Clients between NAS Controllers
Viewing Clients Assigned to a NAS Controller
Assigning a Client to a NAS Controller
Unassigning a Client from a NAS Controller
Manually Migrating Clients to another NAS Controller
Failing Back Clients to Their Assigned NAS Controller
Rebalancing Client Connections across NAS Controllers
Shutting Down and Restarting NAS Controllers
Shutting Down the FluidFS Cluster
Starting Up the FluidFS Cluster
Rebooting a NAS Controller
Managing NAS Appliance and NAS Controller
Enabling or Disabling NAS Appliance and Controller Blinking

5 FluidFS 3.0 Networking
Managing the Default Gateway
Viewing the Default Gateway
Changing the Default Gateway
Managing DNS Servers and Suffixes
Viewing DNS Servers and Suffixes
Adding DNS Servers and Suffixes
Removing DNS Servers and Suffixes
Managing Static Routes
Viewing the Static Routes
Adding a Static Route
Changing the Target Subnet for a Static Route
Changing the Gateway for a Static Route
Deleting a Static Route
Managing the Internal Network
Viewing the Internal Network IP Address
Changing the Internal Network IP Address
Managing the Client Networks
Viewing the Client Networks
Creating a Client Network
Changing the Netmask for a Client Network
Changing the VLAN Tag for a Client Network
Changing the Client VIPs for a Client Network
Changing the NAS Controller IP Addresses for a Client Network
Deleting a Client Network
Viewing the Client Network MTU
Changing the Client Network MTU
Viewing the Client Network Bonding Mode
Changing the Client Network Bonding Mode
Managing SAN Fabrics
Managing SAN Fabrics/Subnets
Viewing the SAN Network Configuration
Adding an iSCSI Fabric
Modifying an iSCSI Fabric’s Configuration
Deleting an iSCSI Fabric
Modifying iSCSI Portals
Viewing Storage Identifiers

6 FluidFS 3.0 Account Management And Authentication
Account Management and Authentication
Default Administrative Accounts
Administrative Account
Support Account
Enabling or Disabling the Support Account
Changing the Support Account Password
Using the Escalation Account
CLI Account
Default Local User and Local Group Accounts
Managing Administrator Accounts
Viewing Administrators
Adding an Administrator
Assigning NAS Volumes to a Volume Administrator
Changing an Administrator’s Permission Level
Changing an Administrator’s Email Address
Changing a Local Administrator Password
Deleting an Administrator
Managing Local Users
Adding a Local User
Changing a Local User’s Group
Enabling or Disabling a Local User
Changing a Local User Password
Deleting a Local User
Managing Password Age and Expiration
Changing the Maximum Password Age
Enabling or Disabling Password Expiration
Managing Local Groups
Viewing Local Groups
Adding a Local Group
Changing the Users Assigned to a Local Group
Deleting a Local Group
Managing Active Directory
Enabling Active Directory Authentication
Modifying Active Directory Authentication Settings
Disabling Active Directory Authentication
Managing LDAP
Enabling LDAP Authentication
Changing the LDAP Base DN
Adding or Removing LDAP Servers
Enabling or Disabling LDAP on Active Directory Extended Schema
Enabling or Disabling Authentication for the LDAP Connection
Enabling or Disabling TLS Encryption for the LDAP Connection
Disabling LDAP Authentication
Managing NIS
Enabling NIS Authentication
Changing the NIS Domain Name
Changing the Order of Preference for NIS Servers
Disabling NIS Authentication
Managing User Mappings between Windows and UNIX/Linux Users
User Mapping Policies
User Mapping Policy and NAS Volume Security Style
Managing the User Mapping Policy
Managing User Mapping Rules

7 FluidFS 3.0 NAS Volumes, Shares, and Exports
Managing the NAS Pool
Discovering New or Expanded LUNs
Viewing Internal Storage Reservations
Viewing the Size of the NAS Pool
Expanding the Size of the NAS Pool
Enabling or Disabling the NAS Pool Used Space Alert
Enabling or Disabling the NAS Pool Unused Space Alert
Managing NAS Volumes
File Security Styles
Thin and Thick Provisioning for NAS Volumes
Choosing a Strategy for NAS Volume Creation
Example NAS Volume Creation Scenarios
NAS Volumes Storage Space Terminology
Configuring NAS Volumes
Cloning a NAS Volume
NAS Volume Clone Defaults
NAS Volume Clone Restrictions
Managing NAS Volume Clones
Managing CIFS Shares
Configuring CIFS Shares
Viewing and Disconnecting CIFS Connections
Using CIFS Home Shares
Changing the Owner of a CIFS Share
Managing ACLs or SLPs on a CIFS Share
Accessing a CIFS Share Using Windows
Accessing a CIFS Share Using UNIX/Linux
Managing NFS Exports
Configuring NFS Exports
Setting Permissions for an NFS Export
Accessing an NFS Export
Managing Quota Rules
Viewing Quota Rules for a NAS Volume
Setting the Default Quota per User
Setting the Default Quota per Group
Adding a Quota Rule for a Specific User
Adding a Quota Rule for Each User in a Specific Group
Adding a Quota Rule for an Entire Group
Changing the Soft Quota or Hard Quota for a User or Group
Enabling or Disabling the Soft Quota or Hard Quota for a User or Group
Deleting a User or Group Quota Rule
Managing Data Reduction
Enabling Data Reduction at the System Level
Enabling Data Reduction on a NAS Volume
Changing the Data Reduction Type for a NAS Volume
Changing the Candidates for Data Reduction for a NAS Volume
Disabling Data Reduction on a NAS Volume

8 FluidFS 3.0 Data Protection
Managing the Anti-Virus Service
Excluding Files and Directory Paths from Scans
Supported Anti-Virus Applications

Configuring Anti-Virus Scanning................................................................................................120

Viewing Anti-Virus Events .......................................................................................................... 123

Managing Snapshots......................................................................................................................... 123

Creating On-Demand Snapshots............................................................................................... 124

Managing Scheduled Snapshots ................................................................................................124

Modifying and Deleting Snapshots.............................................................................................126

Restoring Data from a Snapshot.................................................................................................127

Managing NDMP............................................................................................................................... 129

Supported DMAs......................................................................................................................... 130

Configuring NDMP .....................................................................................................................130

Specifying NAS Volumes Using the DMA................................................................................... 132

Viewing NDMP Jobs and Events.................................................................................................132

Managing Replication........................................................................................................................133

How Replication Works...............................................................................................................134

Target NAS Volumes................................................................................................................... 136

Managing Replication Partnerships............................................................................................ 136

Replicating NAS Volumes............................................................................................................138

Recovering an Individual NAS Volume....................................................................................... 141

Restoring the NAS Volume Configuration................................................................................. 142

Restoring Local Users .................................................................................................................144

Restoring Local Groups...............................................................................................................145

Using Replication for Disaster Recovery.................................................................................... 146

9 FluidFS 3.0 Monitoring........................................................................................ 153

Viewing the Status of Hardware Components.................................................................................153

Viewing the Status of the Interfaces ..........................................................................................153

Viewing the Status of the Disks...................................................................................................153

Viewing the Status of the Power Supplies..................................................................................154

Viewing the Status of a Backup Power Supply...........................................................................154

Viewing the Status of the Fans....................................................................................................154

Viewing the Status of FluidFS Cluster Services................................................................................ 154

Viewing the Status of Background Processes..................................................................................156

Viewing FluidFS Cluster NAS Pool Trends........................................................................................156

Viewing Storage Usage..................................................................................................................... 156

Viewing FluidFS NAS Pool Storage Usage.................................................................................. 156

Viewing Volume Storage Usage..................................................................................................156

Viewing FluidFS Traffic Statistics.......................................................................................................156

Viewing NAS Controller Load Balancing Statistics...........................................................................157

10 FluidFS 3.0 Maintenance...................................................................................159

Adding and Deleting NAS Appliances in a FluidFS Cluster ............................................................. 159

Adding NAS Appliances to the FluidFS Cluster...........................................................................159


Deleting a NAS Appliance from the FluidFS Cluster...................................................................161

Detaching, Attaching, and Replacing a NAS Controller...................................................................161

Detaching a NAS Controller........................................................................................................ 161

Attaching a NAS Controller ........................................................................................................162

Replacing a NAS Controller........................................................................................................ 162

Managing Service Packs....................................................................................................................163

Viewing the Upgrade History .....................................................................................................163

Managing Firmware Updates............................................................................................................164

Reinstalling FluidFS from the Internal Storage Device.................................................................... 164

11 Troubleshooting..................................................................................................167

Viewing the Event Log.......................................................................................................................167

Running Diagnostics......................................................................................................................... 167

Running FluidFS Diagnostics on a FluidFS Cluster .................................................................... 167

Launching the iBMC Virtual KVM......................................................................................................168

Troubleshooting Common Issues....................................................................................................169

Troubleshooting Active Directory Issues................................................................................... 169

Troubleshooting Backup Issues..................................................................................................170

Troubleshooting CIFS Issues....................................................................................................... 171

Troubleshooting NFS Issues........................................................................................................175

Troubleshooting NAS File Access And Permissions Issues........................................................179

Troubleshooting Networking Issues...........................................................................................181

Troubleshooting Replication Issues........................................................................................... 182

Troubleshooting System Issues.................................................................................................. 185

12 Getting Help.........................................................................................................189

Contacting Dell................................................................................................................................. 189

Locating Your System Service Tag................................................................................................... 189

Documentation Feedback................................................................................................................ 189


1 Introduction

The Dell Fluid File System (FluidFS) network attached storage (NAS) solution is a highly available file storage solution. The solution aggregates multiple NAS controllers into one system and presents them to UNIX, Linux, and Microsoft Windows clients as one virtual file server.

How PowerVault FluidFS NAS Works

PowerVault FluidFS NAS leverages the PowerVault FluidFS appliances and Dell PowerVault MD storage to provide scale‐out file storage to Microsoft Windows, UNIX, and Linux clients. The FluidFS system supports SMB (CIFS) and NFS clients installed on dedicated servers or on VMware virtual machines.

The MD storage systems manage the “NAS pool” of storage capacity. The FluidFS system administrator can create NAS volumes in the NAS pool, and then create CIFS shares and/or NFS exports to serve NAS clients working on different platforms.

To the clients, the FluidFS system appears as a single file server, hosting multiple CIFS shares and NFS exports, with a single IP address and namespace. Clients connect to the FluidFS system using their respective operating systems’ NAS protocols:

• UNIX and Linux users access files through the NFS protocol

• Windows users access files through the SMB (CIFS) protocol

The FluidFS system serves data to all clients concurrently, with no performance degradation.

FluidFS Terminology

The following table defines terminology related to FluidFS scale‐out NAS.


Term | Description

Fluid File System (FluidFS) | A special‐purpose, Dell proprietary operating system providing enterprise‐class, high‐performance, scalable NAS services using Dell PowerVault, EqualLogic, or Dell Compellent SAN storage systems.

FluidFS Controller (NAS controller) | Dell hardware device capable of running the FluidFS firmware.

FluidFS Appliance (NAS appliance) | Enclosure containing two NAS controllers. The controllers in an appliance are called peers, are hot‐swappable, and operate in active‐active mode.

Backup Power Supply (BPS) | A backup power supply that keeps a FluidFS controller running in the event of power failure and allows it to dump its cache to a nonvolatile storage device.

FluidFS system (cluster) | Multiple NAS controllers appropriately connected and configured to form a single functional unit.

PowerVault FluidFS Manager | Web‐based management user interface used for managing PowerVault FluidFS systems.

NAS reserve (pool) | The SAN storage system LUNs (and their aggregate size) allocated and provisioned to a FluidFS system.

NAS volume | File system (single‐rooted directory/folder and file hierarchy), defined using FluidFS management functions over a portion of the NAS reserve.

Client Network (Client LAN) | The network through which clients access CIFS shares or NFS exports and also through which the PowerVault FluidFS Manager is accessed.

Client VIP | Virtual IP address(es) that clients use to access CIFS shares and NFS exports hosted by the FluidFS system.

CIFS share | A directory in a NAS volume that is shared on the Client Network using the SMB (CIFS) protocol.

NFS export | A directory in a NAS volume that is shared on the Client Network using the Network File System (NFS) protocol.

Network Data Management Protocol (NDMP) | Protocol used for NDMP backup and restore.

Replication partnership | A relation between two FluidFS systems enabling them to replicate NAS volumes between themselves.

Snapshot | A point‐in‐time view of a NAS volume's data.

Key Features Of PowerVault FluidFS Systems

The following table summarizes key features of PowerVault FluidFS scale‐out NAS.

Feature | Description

Shared back‐end infrastructure | The MD system SAN and NX36X0 scale‐out NAS leverage the same virtualized disk pool.

Unified block and file | Unified block (SAN) and file (NAS) storage.

High performance NAS | Support for a single namespace spanning up to two NAS appliances (four NAS controllers).

Capacity scaling | Ability to scale a single namespace up to 1024 TB capacity.

Connectivity options | 1GbE and 10GbE, copper and optical options for connectivity to the client network.

Highly available and active‐active design | Redundant, hot‐swappable NAS controllers in each NAS appliance. Both NAS controllers in a NAS appliance process I/O. The BPS maintains data integrity in the event of a power failure by keeping a NAS controller online long enough to write the cache to the internal storage device.

Automatic load balancing | Automatic balancing of client connections across network ports and NAS controllers, as well as back‐end I/O across MD array LUNs.

Multi‐protocol support | Support for CIFS/SMB (on Windows) and NFS (on UNIX and Linux) protocols, with the ability to share user data across both protocols.

Client authentication | Control access to files using local and remote client authentication, including LDAP, Active Directory, and NIS.

Quota rules | Support for controlling client space usage.

File security style | Choice of file security mode for a NAS volume (UNIX or NTFS).

Cache mirroring | The write cache is mirrored between NAS controllers, which ensures a high performance response to client requests and maintains data integrity in the event of a NAS controller failure.

Journaling mode | In the event of a NAS controller failure, the cache in the remaining NAS controller is written to storage and the NAS controller continues to write directly to storage, which protects against data loss.

NAS volume thin clones | Clone NAS volumes without the need to physically copy the data set.

Deduplication | Policy‐driven post‐process deduplication technology that eliminates redundant data at rest.

Compression | LZPS (Level Zero Processing System) compression algorithm that intelligently shrinks data at rest.

Metadata protection | Metadata is constantly check‐summed and stored in multiple locations for data consistency and protection.

Replication | NAS‐volume level, snapshot‐based, asynchronous replication to enable disaster recovery.

Snapshots | Redirect‐on‐write, user‐accessible snapshots.

NDMP backups | Snapshot‐based, asynchronous backup (remote NDMP) over Ethernet to certified third‐party backup solutions.

Anti‐virus scanning | CIFS anti‐virus scanning by deploying certified third‐party ICAP‐enabled anti‐virus solutions.

Monitoring | Built‐in performance monitoring and capacity planning.

Overview Of PowerVault FluidFS Systems

A PowerVault FluidFS system consists of one or two PowerVault NX36x0 appliances connected and configured to use a PowerVault MD storage array and provide NAS services. PowerVault FluidFS systems can start with one NX36x0 appliance and expand with another (identical) appliance as required.


NOTE: To identify the physical hardware displayed in PowerVault FluidFS Manager, match the Service Tag shown in FluidFS Manager with the Service Tag printed on a sticker on the front right side of the NAS appliance.

All NAS appliances in a FluidFS system must use the same type of NAS controller; mixing 1 GbE and 10 GbE appliances or controllers is not supported. The following appliances are supported:

• NX3500 (legacy): 1 Gb Ethernet client connectivity with 1 Gb iSCSI back‐end connectivity to the MD system(s)

• NX3600: 1 Gb Ethernet client connectivity with 1 Gb iSCSI back‐end connectivity to the MD system(s)

• NX3610: 10 Gb Ethernet client connectivity with 10 Gb Ethernet iSCSI back‐end connectivity to the MD system(s)

NAS appliance numbers start at 1 and NAS controller numbers start at 0. So, NAS Appliance 1 contains NAS Controllers 0 and 1, and NAS Appliance 2 contains NAS Controllers 2 and 3.
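The numbering convention above can be expressed as a few lines of arithmetic. The following Python sketch is a hypothetical helper, not part of any FluidFS interface. It assumes exactly two NAS controllers per appliance, as the terminology table states, and maps in both directions between appliance and controller numbers.

```python
# Hypothetical helpers illustrating the FluidFS numbering convention:
# NAS appliances are numbered from 1, NAS controllers from 0,
# and each appliance holds two peer controllers.

CONTROLLERS_PER_APPLIANCE = 2


def controllers_in_appliance(appliance: int) -> tuple[int, int]:
    """Return the two NAS controller numbers housed in a NAS appliance."""
    if appliance < 1:
        raise ValueError("NAS appliance numbers start at 1")
    first = (appliance - 1) * CONTROLLERS_PER_APPLIANCE
    return first, first + 1


def appliance_of_controller(controller: int) -> int:
    """Return the number of the NAS appliance that contains a controller."""
    if controller < 0:
        raise ValueError("NAS controller numbers start at 0")
    return controller // CONTROLLERS_PER_APPLIANCE + 1


def peer_of(controller: int) -> int:
    """Return the peer controller in the same appliance (0<->1, 2<->3)."""
    return controller ^ 1


if __name__ == "__main__":
    for appliance in (1, 2):
        print(f"NAS Appliance {appliance}: controllers {controllers_in_appliance(appliance)}")
```

Flipping the lowest bit is a compact way to find a controller's peer, because peers always share every bit except the last.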

Internal Cache

Each NAS controller has an internal cache that provides fast reads and reliable writes.

Internal Backup Power Supply

Each NAS controller is equipped with an internal Backup Power Supply (BPS) that protects data during a power failure. The BPS units provide continuous power to the NAS controllers for a minimum of 5 minutes and have sufficient battery power to allow the NAS controllers to write all data from the cache to non‐volatile internal storage before they shut down.

The NAS controllers regularly monitor the BPS battery status for the minimum level of power required for normal operation. To ensure that the BPS battery status is accurate, the NAS controllers routinely undergo battery calibration cycles. During a battery calibration cycle, the BPS goes through charge and discharge cycles; therefore, battery error events during this process are expected. A battery calibration cycle takes up to seven days to complete. If a NAS controller starts a battery calibration cycle and the peer NAS controller BPS has failed, the NAS controllers enter journaling mode, which might impact performance. Therefore, Dell recommends repairing a failed BPS as soon as possible.

Internal Storage

Each NAS controller has an internal storage device that is used only for the FluidFS images and as a cache storage offload location in the event of a power failure. The internal storage device does not provide NAS storage capacity.

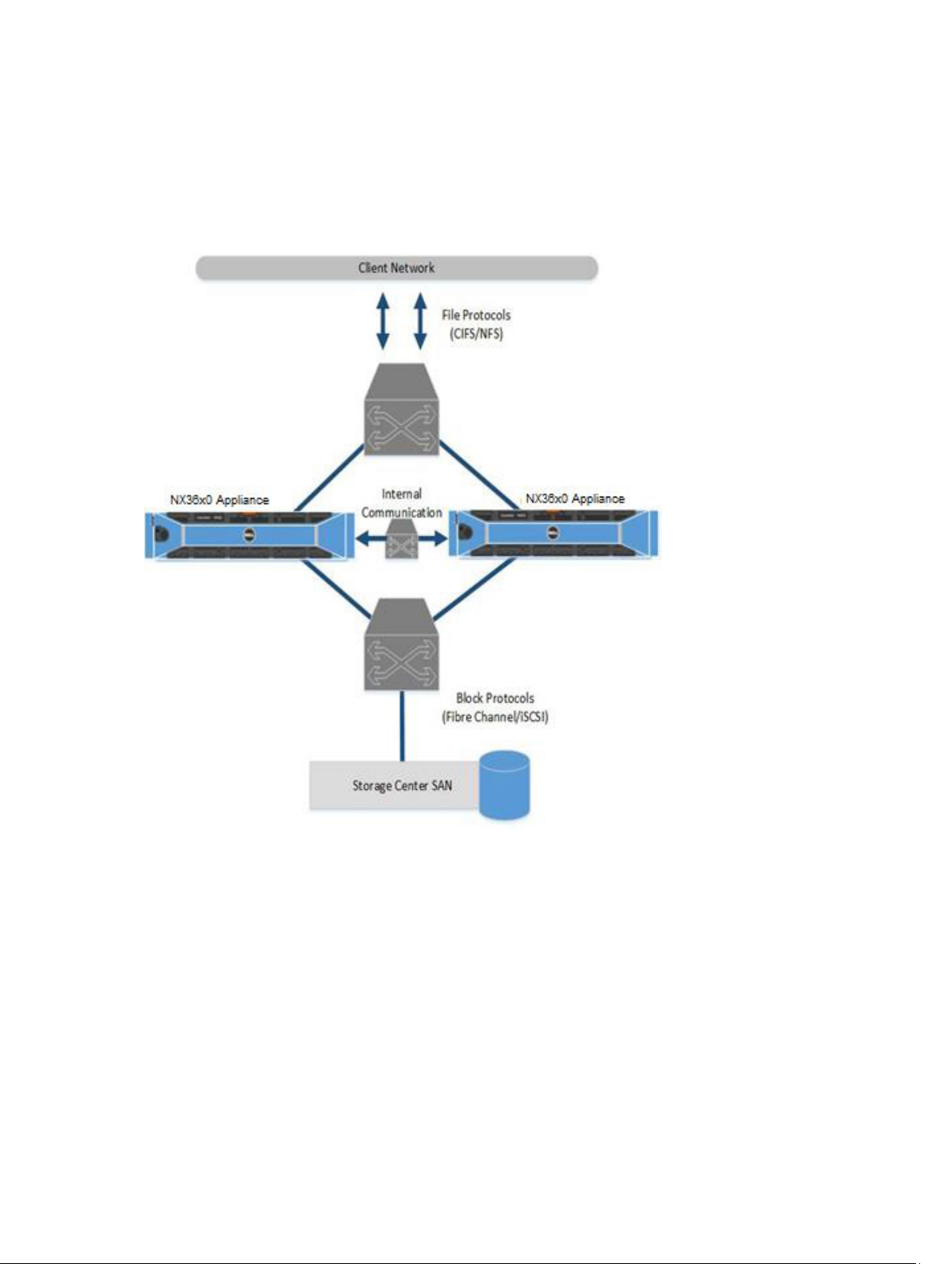

PowerVault FluidFS Architecture

PowerVault FluidFS scale‐out NAS consists of:

• Hardware:

– FluidFS appliance(s)

– MD system


• NAS appliance network interface connections:

– Client/LAN network

– SAN network

– Internal network

The following figure shows an overview of the PowerVault FluidFS architecture:

Figure 1. PowerVault FluidFS Architecture Overview

Client/LAN Network

The client/LAN network is used for client access to the CIFS shares and NFS exports. It is also used by the storage administrator to manage the FluidFS system. The FluidFS system is assigned one or more virtual IP addresses (client VIPs) that allow clients to access the FluidFS system as a single entity. The client VIP also enables load balancing between NAS controllers and ensures failover in the event of a NAS controller failure.

If client access to the FluidFS system is not through a router (in other words, the network has a “flat” topology), define one client VIP. Otherwise, define a client VIP for each client interface port per NAS controller. If you deploy FluidFS in an LACP environment, contact Dell Support for information about the optimal number of VIPs for your system.
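The VIP guideline above reduces to a small decision rule. The Python sketch below encodes it under stated assumptions. The function name and parameters are hypothetical. "Routed" means clients reach the cluster through a router. The number of client interface ports per controller is an input you supply, because it depends on the appliance model. LACP returns no number, since the guide defers that case to Dell Support.

```python
from typing import Optional


def recommended_client_vips(
    routed: bool,
    controllers: int,
    client_ports_per_controller: int,
    lacp: bool = False,
) -> Optional[int]:
    """Apply the client VIP guideline from the text above.

    Returns None for LACP deployments, where the guide defers to Dell Support.
    """
    if lacp:
        return None  # ask Dell Support for the optimal number of VIPs
    if not routed:
        return 1  # flat topology: clients reach the cluster without a router
    # Routed access: one VIP for each client interface port on each NAS controller.
    return controllers * client_ports_per_controller


# Hypothetical routed deployment: two appliances (four controllers),
# assuming two client ports per controller.
print(recommended_client_vips(routed=True, controllers=4, client_ports_per_controller=2))
```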


MD System

The PowerVault MD array provides the storage capacity for NAS; the NX36x0 cannot be used as a stand‐alone NAS appliance. The MD array eliminates the need for separate storage capacity for block and file storage.

SAN Network

The NX36x0 shares a back‐end infrastructure with the MD array. The SAN network connects the NX36x0 to the MD system and carries the block‐level traffic. The NX36x0 communicates with the MD system using the iSCSI protocol.

Internal Network

The internal network is used for communication between NAS controllers. Each of the NAS controllers in the FluidFS system must have access to all other NAS controllers in the FluidFS system to achieve the following goals:

• Provide connectivity for FluidFS system creation

• Act as a heartbeat mechanism to maintain high availability

• Enable internal data transfer between NAS controllers

• Enable cache mirroring between NAS controllers

• Enable balanced client distribution between NAS controllers

Data Caching And Redundancy

New or modified file blocks are first written to a local cache and then immediately mirrored to the peer NAS controller (mirroring mode). Data caching provides high performance, while cache mirroring between peer NAS controllers ensures data redundancy. Cache data is ultimately (and asynchronously) transferred to permanent storage using optimized data‐placement schemes.

When cache mirroring is not possible, such as during a single NAS controller failure or when the BPS battery status is low, NAS controllers write directly to storage (journaling mode).
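The choice between the two write paths can be modeled in a few lines. The following Python sketch is an in-memory illustration only. The names, the boolean inputs, and the list-based "cache" and "storage" are hypothetical simplifications. The real controller logic, including the BPS calibration case described earlier, is not documented in this guide. The sketch mirrors only when the peer is reachable and the BPS battery is sufficient, and otherwise writes straight to storage.

```python
from enum import Enum


class CacheMode(Enum):
    MIRRORING = "mirroring"    # write to local cache, then mirror to the peer's cache
    JOURNALING = "journaling"  # write directly to storage


def select_cache_mode(peer_available: bool, bps_battery_ok: bool) -> CacheMode:
    """Mirror only when the peer is reachable and the BPS battery is sufficient."""
    if peer_available and bps_battery_ok:
        return CacheMode.MIRRORING
    return CacheMode.JOURNALING


def write_block(block: bytes, peer_available: bool, bps_battery_ok: bool,
                local_cache: list, peer_cache: list, storage: list) -> CacheMode:
    """Route one write according to the selected mode (in-memory model only)."""
    mode = select_cache_mode(peer_available, bps_battery_ok)
    if mode is CacheMode.MIRRORING:
        local_cache.append(block)
        peer_cache.append(block)  # flushing to storage would happen later, asynchronously
    else:
        storage.append(block)
    return mode
```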

File Metadata Protection

File metadata includes information such as name, owner, permissions, date created, date modified, and a soft link to the file’s storage location.

The FluidFS system has several built‐in measures to store and protect file metadata:

• Metadata is managed through a separate caching scheme and replicated on two separate volumes.

• Metadata is check-summed to protect file and directory structure.

• All metadata updates are journaled to storage to avoid potential corruption or data loss in the event of a power failure.

• There is a background process that continuously checks and fixes incorrect checksums.
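The guide does not name the checksum algorithm or describe the repair procedure. The Python sketch below is therefore a generic illustration of the idea, not FluidFS internals. It models two replicas of a metadata record and uses CRC32 from Python's `zlib` as a stand-in checksum. It rebuilds a corrupt replica from one that still verifies, which is one plausible reading of "checks and fixes incorrect checksums".

```python
import zlib
from dataclasses import dataclass


@dataclass
class MetadataCopy:
    payload: bytes
    checksum: int


def make_copy(payload: bytes) -> MetadataCopy:
    """Store a metadata record together with its checksum (CRC32 as a stand-in)."""
    return MetadataCopy(payload, zlib.crc32(payload))


def is_valid(copy: MetadataCopy) -> bool:
    """Recompute the checksum and compare it with the stored value."""
    return zlib.crc32(copy.payload) == copy.checksum


def scrub(first: MetadataCopy, second: MetadataCopy) -> tuple[MetadataCopy, MetadataCopy]:
    """Verify both replicas; rebuild a corrupt one from the replica that verifies."""
    first_ok, second_ok = is_valid(first), is_valid(second)
    if first_ok and second_ok:
        return first, second
    if first_ok:
        return first, make_copy(first.payload)
    if second_ok:
        return make_copy(second.payload), second
    raise RuntimeError("both metadata replicas failed checksum verification")
```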


High Availability And Load Balancing

To optimize availability and performance, client connections are load balanced across the available NAS controllers. Both NAS controllers in a NAS appliance operate simultaneously. If one NAS controller fails, clients are automatically failed over to the remaining controllers. When failover occurs, some CIFS clients reconnect automatically, while in other cases, a CIFS application might fail, and the user must restart it. NFS clients experience a temporary pause during failover, but client network traffic resumes automatically.
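The guide does not say which balancing algorithm FluidFS uses. The Python sketch below assumes a simple least-loaded policy purely to illustrate the failover behavior described above. Clients are keyed by hypothetical names. When a controller fails, each of its clients moves to whichever surviving controller currently serves the fewest clients.

```python
def least_loaded(load: dict[int, int]) -> int:
    """Pick the controller with the fewest clients (ties go to the lowest number)."""
    if not load:
        raise RuntimeError("no NAS controller is available")
    return min(load, key=lambda c: (load[c], c))


def fail_over(clients: dict[str, int], failed: int, controllers: set[int]) -> dict[str, int]:
    """Move every client of a failed controller onto the remaining controllers."""
    healthy = controllers - {failed}
    load = {c: 0 for c in healthy}
    for owner in clients.values():
        if owner in healthy:
            load[owner] += 1  # count existing clients that stay where they are
    result = {}
    for client, owner in sorted(clients.items()):
        if owner == failed:
            owner = least_loaded(load)  # reassign an orphaned client
            load[owner] += 1
        result[client] = owner
    return result
```

A least-loaded rule keeps the surviving controllers evenly used after a failure. Any real implementation would also weigh factors such as port and network placement.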

Failure Scenarios

The FluidFS system can tolerate a NAS controller failure without impact to data availability and without data loss. If one NAS controller becomes unavailable (for example, because the NAS controller failed, is turned off, or is disconnected from the network), the NAS appliance status is degraded. Although the FluidFS system is still operational and data is available to clients, the administrator cannot perform most configuration modifications. Performance might also decrease, because the remaining NAS controller writes directly to storage (journaling mode) instead of caching data.

The impact to data availability and data integrity following a multiple NAS controller failure depends on the circumstances of the failure scenario. Dell recommends detaching a failed NAS controller as soon as possible, so that it can be safely taken offline for service. Data access remains intact as long as one of the NAS controllers in each NAS appliance in a FluidFS system is functional.

The following table summarizes the impact of various failure scenarios on data availability and data integrity.

Scenario | System Status | Data Integrity | Comments

Single NAS controller failure | Available, degraded | Unaffected | Peer NAS controller enters journaling mode. Failed NAS controller can be replaced while keeping the file system online.

Sequential dual‐NAS controller failure in single NAS appliance system | Unavailable | Unaffected | Sequential failure assumes that there is enough time between NAS controller failures to write all data from the cache to disk (MD system or non‐volatile internal storage).

Simultaneous dual‐NAS controller failure in single NAS appliance system | Unavailable | Data in cache is lost | Data that has not been written to disk is lost.

Sequential dual‐NAS controller failure in multiple NAS appliance system, same NAS appliance | Unavailable | Unaffected | Sequential failure assumes that there is enough time between NAS controller failures to write all data from the cache to disk (MD system or non‐volatile internal storage).

Simultaneous dual‐NAS controller failure in multiple NAS appliance system, same NAS appliance | Unavailable | Data in cache is lost | Data that has not been written to disk is lost.

Dual‐NAS controller failure in multiple NAS appliance system, separate NAS appliances | Available, degraded | Unaffected | Peer NAS controller enters journaling mode. Failed NAS controller can be replaced while keeping the file system online.
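The rule behind the table above is that data stays available while every NAS appliance still has at least one working controller. Data in cache is lost only when both peers of an appliance fail at the same moment. The Python sketch below is a hypothetical helper that classifies a scenario that way, and its returned strings paraphrase the table's columns. It assumes that controllers 2k and 2k+1 form the peer pair in NAS appliance k+1. It also uses a single `simultaneous` flag for the whole scenario, which simplifies the table's per-appliance timing.

```python
def evaluate_failure(appliances: int, failed: set[int], simultaneous: bool) -> tuple[str, str]:
    """Return (system status, data integrity) for a set of failed controllers.

    Controllers 2k and 2k+1 are assumed to be the peer pair in NAS appliance k+1.
    """
    status, integrity = "Available", "Unaffected"
    if failed:
        status = "Available, degraded"
    for k in range(appliances):
        if {2 * k, 2 * k + 1} <= failed:  # both peers in one appliance are down
            status = "Unavailable"
            if simultaneous:
                # Cache contents had no chance to reach disk before the second failure.
                integrity = "Data in cache is lost"
    return status, integrity
```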

Ports Used by the FluidFS System

The FluidFS system uses the ports listed in the following tables. You might need to adjust your firewall settings to allow the traffic on these ports. Some ports might not be used, depending on which features are enabled.

Required Ports

The following table summarizes ports that are required for all FluidFS systems.

Port Protocol Service Name

22 TCP SSH

53 TCP DNS

80 TCP Internal system use

111 TCP and UDP portmap

427 TCP and UDP SLP

443 TCP Internal system use

445 TCP and UDP CIFS/SMB

2049–2049+(domain number ‐ 1) TCP and UDP NFS

4000–4000+(domain number ‐ 1) TCP and UDP statd

4050–4050+(domain number ‐ 1) TCP and UDP NLM (lock manager)

5001–5001+(domain number ‐ 1) TCP and UDP mount

5051–5051+(domain number ‐ 1) TCP and UDP quota

44421 TCP FTP

44430–44439 TCP FTP (Passive)
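Several required-port entries are ranges that grow with the "domain number". The guide does not define that value in this section. The Python sketch below treats it as a positive integer N that you obtain from your system, so each range runs from the base port through base + (N ‑ 1). The helper names are hypothetical.

```python
def domain_port_range(base: int, domains: int) -> range:
    """Ports base through base + (domains - 1), inclusive."""
    if domains < 1:
        raise ValueError("domain number must be at least 1")
    return range(base, base + domains)


# Base ports of the per-domain services in the Required Ports table.
PER_DOMAIN_BASES = {
    "NFS": 2049,
    "statd": 4000,
    "NLM (lock manager)": 4050,
    "mount": 5001,
    "quota": 5051,
}


def per_domain_ports(domains: int) -> dict[str, range]:
    """Expand every per-domain entry for a given domain number."""
    return {name: domain_port_range(base, domains) for name, base in PER_DOMAIN_BASES.items()}


for name, ports in per_domain_ports(4).items():
    print(f"{name}: {ports.start}-{ports.stop - 1} TCP and UDP")
```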

Feature-Specific Ports

The following table summarizes ports that are required, depending on enabled features.


Port Protocol Service Name

88 TCP and UDP Kerberos

123 UDP NTP

135 TCP AD ‐ RPC

138 UDP NetBIOS

139 TCP NetBIOS

161 UDP SNMP Agent

162 TCP SNMP trap

389 TCP and UDP LDAP

464 TCP and UDP Kerberos v5

543 TCP Kerberos login

544 TCP Kerberos remote shell

636 TCP LDAP over TLS/SSL

711 UDP NIS

714 TCP NIS

749 TCP and UDP Kerberos administration

1344 TCP Anti-virus ‐ ICAP

3268 TCP LDAP global catalog

3269 TCP LDAP global catalog over TLS/SSL

8004 TCP ScanEngine server WebUI (AV host)

9445 TCP Replication trust setup

10000 TCP NDMP

10550‐10551, 10560‐10568 TCP Replication
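When preparing firewall rules, it can help to derive the feature-specific ports from the set of features you actually enable. The Python sketch below transcribes the table above into a lookup. The feature keys (`"kerberos"`, `"ldap"`, and so on) are hypothetical labels chosen for this example, and grouping the rows under them is an assumption based on the service names. The sketch returns a sorted, de-duplicated list of (port, protocol) pairs.

```python
# (port, protocol) pairs transcribed from the feature-specific table above.
# The feature keys are hypothetical labels chosen for this sketch.
FEATURE_PORTS: dict[str, list[tuple[int, str]]] = {
    "kerberos": [(88, "tcp"), (88, "udp"), (464, "tcp"), (464, "udp"),
                 (543, "tcp"), (544, "tcp"), (749, "tcp"), (749, "udp")],
    "ntp": [(123, "udp")],
    "active_directory_rpc": [(135, "tcp")],
    "netbios": [(138, "udp"), (139, "tcp")],
    "snmp": [(161, "udp"), (162, "tcp")],
    "ldap": [(389, "tcp"), (389, "udp"), (636, "tcp"), (3268, "tcp"), (3269, "tcp")],
    "nis": [(711, "udp"), (714, "tcp")],
    # 8004 is the ScanEngine server WebUI on the anti-virus host.
    "antivirus": [(1344, "tcp"), (8004, "tcp")],
    "ndmp": [(10000, "tcp")],
    "replication": [(9445, "tcp"), (10550, "tcp"), (10551, "tcp")]
    + [(port, "tcp") for port in range(10560, 10569)],
}


def ports_to_open(enabled: set[str]) -> list[tuple[int, str]]:
    """Return the sorted, de-duplicated (port, protocol) pairs for enabled features."""
    unknown = enabled - set(FEATURE_PORTS)
    if unknown:
        raise ValueError(f"unknown feature labels: {sorted(unknown)}")
    return sorted({pair for feature in enabled for pair in FEATURE_PORTS[feature]})
```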

Other Information You May Need

WARNING: See the safety and regulatory information that shipped with your system. Warranty information may be included within this document or as a separate document.

• The Getting Started Guide provides an overview of setting up your system and technical specifications.

• The Owner's Manual provides information about solution features and describes how to troubleshoot the system and install or replace system components.

• The rack documentation included with your rack solution describes how to install your system into a rack, if required.

• The System Placemat provides information on how to set up the hardware and install the software on your NAS solution.

• Any media that ships with your system that provides documentation and tools for configuring and managing your system, including those pertaining to the operating system, system management software, system updates, and system components that you purchased with your system.

• For the full name of an abbreviation or acronym used in this document, see the Glossary at dell.com/support/manuals.


NOTE: Always check for updates on dell.com/support/manuals and read the updates first because they often supersede information in other documents.


2 Upgrading to FluidFS Version 3

Supported Upgrade Paths

To upgrade to FluidFS version 3.0, the FluidFS cluster must be at FluidFS version 2.0.7630 or later. If the FluidFS cluster is at a pre‐2.0.7630 version, upgrade to version 2.0.7680 before upgrading to version 3.0.

The following table summarizes the supported upgrade paths.

Version 2.0 Release Upgrades to Version 3.0.x Supported?

2.0.7680 Yes

2.0.7630 Yes

2.0.7170 No

2.0.6940 No

2.0.6730 No

2.0.6110 No
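The upgrade rule above compares dotted release numbers. The Python sketch below turns them into integer tuples so that, for example, 2.0.7170 correctly sorts below 2.0.7630. It then returns the sequence of releases to install. The function names are hypothetical, and "3.0" stands for whichever 3.0.x release you install.

```python
MIN_DIRECT_UPGRADE = (2, 0, 7630)  # oldest 2.0 release that upgrades straight to 3.0
INTERMEDIATE_RELEASE = "2.0.7680"  # release to install first from older 2.0 builds


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version such as '2.0.7630' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def upgrade_plan(current: str) -> list[str]:
    """Return the ordered list of releases to install to reach FluidFS 3.0."""
    version = parse_version(current)
    if version[:2] != (2, 0):
        raise ValueError("this sketch only covers FluidFS 2.0.x releases")
    if version >= MIN_DIRECT_UPGRADE:
        return ["3.0"]
    return [INTERMEDIATE_RELEASE, "3.0"]


for release in ("2.0.7680", "2.0.7630", "2.0.7170"):
    print(release, "->", " -> ".join(upgrade_plan(release)))
```

Comparing integer tuples avoids the pitfall of string comparison, which would order "2.0.10000" before "2.0.7630".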

FluidFS V2 and FluidFS V3 Feature and Configuration Comparison

This section summarizes functionality differences between FluidFS version 2.0 and 3.0. Review the functionality comparison before upgrading FluidFS to version 3.0.

NOTE: In some cases, the Version 3.0 column in the following table describes changes that you must make to accommodate version 3.0 configuration options.
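Several rows in the following table impose naming rules that must hold before upgrading. The FluidFS cluster name must match the NetBIOS name, must be no longer than 15 characters, and must not begin with a digit. In addition, no local user name may end with a period. The Python sketch below checks those rules against names you supply. The function name and inputs are hypothetical. The exact-match comparison is an assumption, and it may be stricter than the system requires if it compares names case-insensitively.

```python
def pre_upgrade_name_issues(cluster_name: str, netbios_name: str,
                            local_users: list[str]) -> list[str]:
    """List naming problems that the comparison table says block a 3.0 upgrade."""
    issues = []
    # Exact comparison; possibly stricter than needed if the system
    # treats NetBIOS names case-insensitively (an assumption either way).
    if cluster_name != netbios_name:
        issues.append("cluster name and NetBIOS name must match")
    if len(cluster_name) > 15:
        issues.append("cluster name is longer than 15 characters")
    if cluster_name[:1].isdigit():
        issues.append("cluster name begins with a digit")
    for user in local_users:
        if user.endswith("."):
            issues.append(f"local user name {user!r} ends with a period")
    return issues
```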

Feature Version 2.0 Version 3.0

Management interface The NAS ManagerUser Interface has been

updated.

Management

connections

Default management

account

The FluidFS cluster is

managed using a dedicated

Management VIP.

The default administrator

account is named admin.

Version 3.0 does not use a Management VIP—

the FluidFS cluster can be managed using any

client VIP.

During the upgrade, the Management VIP from

version 2.0 is converted to a client VIP.

The default administrator account is named

Administrator.

During the upgrade, the admin account from

version 2.0 is deleted and the CIFS

Administrator account becomes the version

3.0 Administrator account. During the upgrade,

you will be prompted to reset the CIFS

Administrator password if you have not reset it

within the last 24 hours. Make sure to

21

Page 22

Feature Version 2.0 Version 3.0

remember this password because it is required

to manage the FluidFS cluster in version 3.0.

User‐defined

management accounts

Command Line

Interface (CLI) access

and commands

Dell Technical Support

Services remote

troubleshooting

account

FluidFS cluster name

and NetBIOS name

Data reduction

overhead

Anti‐virus scanning You can specify which file

Supported NFS

protocol versions

Supported SMB

protocol versions

Version 2.0: Only local administrator accounts can be created.

Version 3.0: You can create local administrator accounts or create administrator accounts for remote users (members of Active Directory, LDAP, or NIS repositories). During the upgrade, any user‐defined administrator accounts from version 2.0 are deleted.

Workaround: Use one of the following options:

• Convert the administrator accounts to local users before upgrading and convert them back to administrator accounts after upgrading.

• Re‐create administrator accounts after the upgrade.

Version 2.0: Administrator accounts log into the CLI directly.

Version 3.0: Introduces a cli account that must be used in conjunction with an administrator account to log into the CLI. In addition, the command set is significantly different in version 3.0.

Version 2.0: The remote troubleshooting account is named fse (field service engineer).

Version 3.0: The remote troubleshooting account is named support. During the upgrade, the fse account from version 2.0 is deleted. After the upgrade, the support account is disabled by default.

Version 2.0: The FluidFS cluster name and NetBIOS name do not have to match. The NetBIOS name can begin with a digit.

Version 3.0: The FluidFS cluster name is used as the NetBIOS name. Before upgrading, the FluidFS cluster name and NetBIOS name must be changed to match. Also, the FluidFS cluster name cannot be longer than 15 characters and cannot begin with a digit.

Version 2.0: Does not include a data reduction feature.

Version 3.0: Introduces a data reduction feature. If data reduction is enabled, the system deducts an additional 100GB per NAS appliance from the NAS pool for data reduction processing. This is in addition to the amount of space that the system deducts from the NAS pool for internal use.

Version 2.0: You can specify which file types to scan.

Version 3.0: You cannot specify which file types to scan; all files smaller than the specified file size threshold are scanned. You can specify whether to allow or deny access to files larger than the file size threshold. As in version 2.0, you can specify file types and directories to exclude from anti‐virus scanning.

Version 2.0: Supports NFS protocol version 3.

Version 3.0: Supports NFS protocol versions 3 and 4.

Version 2.0: Supports SMB protocol version 1.0.

Version 3.0: Supports SMB protocol versions 1.0, 2.0, and 2.1.


CIFS home shares

Version 2.0: Clients can access CIFS home shares in two ways:

• \\<client_VIP_or_name>\<path_prefix>\<username>

• \\<client_VIP_or_name>\homes

Both access methods point to the same folder.

Version 3.0: Does not include the “homes” access method. After the upgrade, the “homes” share will not be present, and clients will need to use the “username” access method instead. If you have a policy that mounts the \\<client_VIP_or_name>\homes share when client systems start, you must change the policy to mount the \\<client_VIP_or_name>\<path_prefix>\<username> share.

Local user names

Version 2.0: A period can be used as the last character of a local user name.

Version 3.0: A period cannot be used as the last character of a local user name. Before upgrading, delete local user names that have a period as the last character and re‐create the accounts with a different name.

Local users and local groups UID/GID range

Version 2.0: A unique UID (user ID) or GID (group ID) can be configured for local users and local groups.

Version 3.0: The UID/GID range for local users and local groups is 1001 to 100,000. There is no way to configure or determine the UID/GID of local users and local groups; this information is internal to the FluidFS cluster. During the upgrade, any existing local users and local groups from version 2.0 with a UID/GID that is outside the version 3.0 UID/GID range remain unchanged. Local users and local groups created after the upgrade use the version 3.0 UID/GID range.

Guest account mapping policy

Version 2.0: By default, unmapped users are mapped to the guest account, which allows a guest account to access a file if the CIFS share allows guest access.

Version 3.0: Unmapped users cannot access any CIFS share, regardless of whether the CIFS share allows guest access. Guest access is enabled automatically after the upgrade only if guest users are already defined for any CIFS shares in version 2.0.

NDMP client port

Version 2.0: The NDMP client port must be in the range 1–65536.

Version 3.0: The NDMP client port must be in the range 10000–10100. Before upgrading, the NDMP client port must be changed to be in the range 10000–10100. You must also make the reciprocal change on the DMA servers.

Replication ports

Version 2.0: TCP ports 10560–10568 and 26 are used for replication.

Version 3.0: TCP ports 10550–10551 and 10560–10568 are used for replication.

Snapshot schedules

Version 2.0: Snapshot schedules can be disabled.

Version 3.0: Snapshot schedules cannot be disabled. During the upgrade, disabled snapshot schedules from version 2.0 are deleted.

Internal subnet

Version 2.0: The internal (interconnect) subnet can be changed from a Class C subnet during or after deployment.

Version 3.0: The internal subnet must be a Class C subnet. Before upgrading, the internal subnet must be changed to a Class C subnet; otherwise, the service pack installation fails with the following message:

“Please allocate a new C-class subnet for FluidFS Internal Network, run the following command, and then repeat the upgrade: system networking subnets add NEWINTER Primary 255.255.255.0 -PrivateIPs x.y.z.1,x.y.z.2 (where x.y.z.* is the new subnet)”.

Note: If you receive this message while attempting to upgrade, obtain a Class C subnet that is not used in your network, run the command to set the internal subnet (for example: system networking subnets add NEWINTER Primary 255.255.255.0 -PrivateIPs 172.41.64.1,172.41.64.2), and retry the service pack installation.

Port for management and remote KVM

Version 2.0: Only subnet‐level isolation of management traffic is supported.

Version 3.0: The following features are available:

• Physical isolation of management traffic

• Remote KVM that allows you to view and manage the NAS controller console remotely over a network

These features are implemented using the Ethernet port located on the lower right side of the back panel of a NAS controller.

1GbE to 10GbE client connectivity upgrade

Version 2.0: Does not support upgrading an appliance from 1GbE client connectivity to 10GbE client connectivity.

Version 3.0: Introduces support for upgrading an appliance from 1GbE client connectivity to 10GbE client connectivity. Upgrades must be performed by a Dell installer or certified business partner.
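Several of the version 3.0 constraints in the comparison table above can be checked against your current configuration values before upgrading. The following Python sketch is a hypothetical helper, not a Dell tool: it does not query the FluidFS cluster, so the cluster name, NetBIOS name, local user names, and NDMP client port must be read from FluidFS Manager or the CLI and passed in by hand. It also assumes that “Class C” here means a /24 network (netmask 255.255.255.0), as the example command suggests, and builds the internal subnet command using the .1 and .2 addresses, following the x.y.z.1,x.y.z.2 pattern in the error message.

```python
import ipaddress

# Version 3.0 limits quoted in the comparison table.
MAX_CLUSTER_NAME_LEN = 15              # the cluster name is also used as the NetBIOS name
NDMP_PORT_RANGE = range(10000, 10101)  # 10000-10100 inclusive


def check_v3_constraints(cluster_name, netbios_name, local_users, ndmp_port):
    """Return a list of problems found; an empty list means no issues were detected."""
    problems = []
    # NetBIOS names are case-insensitive, so compare the upper-cased forms.
    if cluster_name.upper() != netbios_name.upper():
        problems.append("cluster name and NetBIOS name do not match")
    if len(cluster_name) > MAX_CLUSTER_NAME_LEN:
        problems.append("cluster name is longer than 15 characters")
    if cluster_name[:1].isdigit():
        problems.append("cluster name begins with a digit")
    for user in local_users:
        if user.endswith("."):
            problems.append(f"local user name {user!r} ends with a period")
    if ndmp_port not in NDMP_PORT_RANGE:
        problems.append(f"NDMP client port {ndmp_port} is outside 10000-10100")
    return problems


def internal_subnet_command(network):
    """Build the subnet command from the upgrade error message for a /24 network.

    Assumption: "Class C" means a 255.255.255.0 (/24) netmask, and the two
    private IPs are the .1 and .2 addresses of that network.
    """
    net = ipaddress.IPv4Network(network)  # raises ValueError if host bits are set
    if net.prefixlen != 24:
        raise ValueError(f"{network} is not a /24 (255.255.255.0) subnet")
    first = net.network_address + 1
    second = net.network_address + 2
    return ("system networking subnets add NEWINTER Primary "
            f"{net.netmask} -PrivateIPs {first},{second}")


if __name__ == "__main__":
    # Hypothetical example values.
    print(check_v3_constraints("NX3600-A", "NX3600-A", ["jsmith", "ops."], 10000))
    # ["local user name 'ops.' ends with a period"]
    print(internal_subnet_command("172.41.64.0/24"))
```

Keeping the checks as plain functions that return a list of messages, rather than raising on the first failure, lets you see every item that needs fixing in one pass before scheduling the maintenance window.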

Performing Pre-Upgrade Tasks

Complete the following tasks before upgrading.

• The FluidFS cluster must be at FluidFS version 2.0.7630 or later before upgrading to FluidFS version

3.0.

• When upgrading to version 3.0, the “admin” user is no longer available, and the local “Administrator” account must be used instead. Make sure that you know the Administrator password. The Administrator password must be changed no more than 24 hours before the upgrade. To change the Administrator password, log in to the CLI using SSH and run the following command: system authentication local-accounts users change-password Administrator

CAUTION: The password you set will be required to manage the FluidFS cluster after

upgrading to version 3.0. Make sure that you remember this password or record it in a secure

location.

• Change the FluidFS cluster name to match the NetBIOS name, if needed. Also, ensure that the FluidFS

cluster name is no longer than 15 characters and does not begin with a digit.

• Change the internal subnet to a Class C subnet, if needed.

• Convert user‐defined administrator accounts to local users, if needed.

• Change policies that mount the \\<client_VIP_or_name>\homes share to mount the \\<client_VIP_or_name>\<path_prefix>\<username> share, if needed.


• Delete local user names that have a period as the last character and re‐create the accounts with a

different name, if needed.

• Change the NDMP client port to be in the range 10000–10100, if needed. You must also make the

reciprocal change on the DMA servers.

• Stop all NDMP backup sessions, if needed. If an NDMP backup session is in progress during the

upgrade, the temporary NDMP snapshot is left in place.

• Open additional ports on your firewall to allow replication between replication partners, if needed.

• Remove parentheses from the Comment field of CIFS shares and NFS exports.

• Ensure the NAS volumes do not have the following names (these names are reserved for internal

FluidFS cluster functions):

– .

– ..

– .snapshots

– acl_stream

– cifs

– int_mnt

– unified

– Any name starting with locker_

• Ensure that at least one of the defined DNS servers is accessible using ping and dig (DNS lookup

utility).

• Ensure that the Active Directory domain controller is accessible using ping and that the FluidFS cluster

system time is in sync with the Active Directory time.

• Ensure that the NAS controllers are running, attached, and accessible using ping, SSH, and rsync.

• Although the minimum requirement to upgrade is that at least one NAS controller in each NAS

appliance must be running, Dell recommends ensuring that all NAS controllers are running before

upgrading.

• Ensure that the FluidFS cluster system status shows running.
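A few of the pre-upgrade checks above can be scripted from an administrative workstation. The following Python sketch is a hypothetical helper, not part of FluidFS: the NAS volume names and share/export comments must be collected manually and passed in. It covers only part of the list. Because ICMP ping normally requires raw-socket privileges, it substitutes a TCP connection to port 22 (SSH) for reachability, and `resolves()` uses the workstation's own resolver rather than querying a specific DNS server the way `dig @server` does. Active Directory time synchronization and rsync access are not checked.

```python
import socket

# Names reserved for internal FluidFS cluster functions, per the list above.
RESERVED_VOLUME_NAMES = {".", "..", ".snapshots", "acl_stream", "cifs", "int_mnt", "unified"}


def reserved_volume_names(volume_names):
    """Return NAS volume names that collide with reserved names or the locker_ prefix."""
    return [name for name in volume_names
            if name in RESERVED_VOLUME_NAMES or name.startswith("locker_")]


def comments_with_parentheses(comments):
    """Return CIFS share / NFS export comments that still contain '(' or ')'."""
    return [c for c in comments if "(" in c or ")" in c]


def resolves(hostname):
    """True if the local resolver can look up hostname (a rough stand-in for dig)."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def tcp_reachable(host, port=22, timeout=3.0):
    """True if a TCP connection to host:port succeeds; port 22 is the SSH default."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or no route to host
        return False


if __name__ == "__main__":
    # Hypothetical example values.
    print(reserved_volume_names(["projects", "cifs", "locker_01"]))   # ['cifs', 'locker_01']
    print(comments_with_parentheses(["Home dirs (old)", "Engineering"]))  # ['Home dirs (old)']
```

To check the NAS controllers, call `tcp_reachable()` with each controller's IP address. A `False` result only means the SSH port did not answer within the timeout, so confirm it with ping and an SSH login before treating the controller as unreachable.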

Upgrading from FluidFS Version 2.0 to 3.0

Use the following procedure to upgrade a Dell PowerVault NX3500/NX3600/NX3610 FluidFS cluster from

FluidFS version 2.0 to 3.0.


NOTE:

• Perform pre‐upgrade tasks.

• Installing a service pack causes the NAS controllers to reboot during the installation process.

This might cause interruptions in CIFS and NFS client connections. Therefore, Dell recommends

scheduling a maintenance window to perform service pack installations.

• Contact Dell Technical Support Services to obtain the latest FluidFS version 3.0 service pack. Do

not modify the service pack filename.

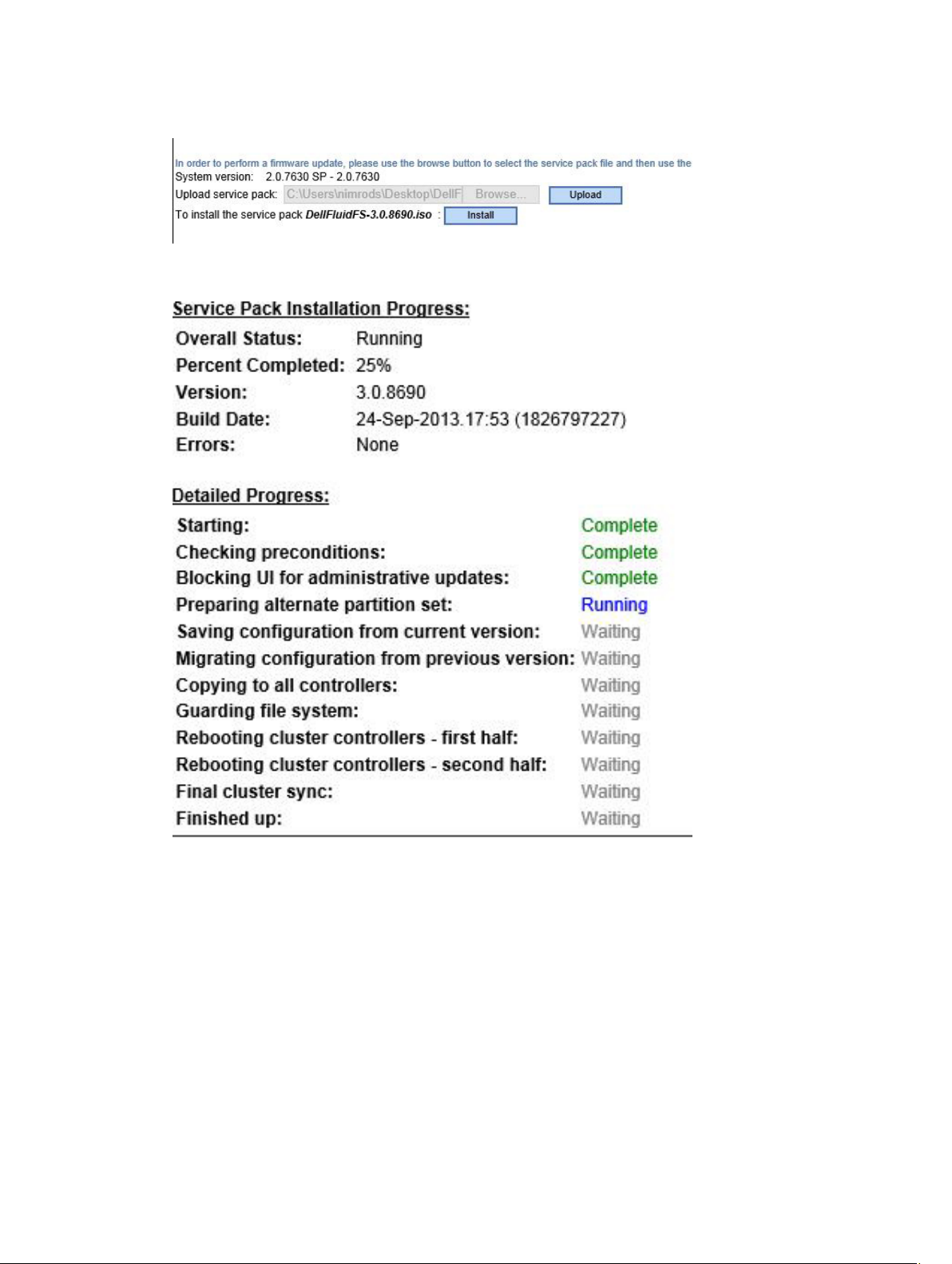

1. Log in to the FluidFS v2 Manager application using a browser and go to Cluster Management → Cluster Management → Maintenance → Service Packs.

2. Browse to the ISO location and click Upload.

The system starts uploading the service pack file.


3. When the file is uploaded, click Install.

The upgrade process starts and may take an hour or more. The upgrade’s progress is displayed as

follows:

4. During the upgrade, you will be notified that a node has been rebooted. After receiving this message, wait an additional 15 minutes so that the reboot of both nodes and the Final Sync are completed.

5. Log in again as the Administrator user (the admin user is no longer available). The new FluidFS version 3 Manager UI is displayed.

6. Before you start working with the system, make sure that it is fully operational and that all components are in Optimal status.


3

FluidFS Manager User Interface Overview

FluidFS Manager Layout

The following image and legend describe the layout of the FluidFS Manager.

Figure 2. FluidFS Manager Web User Interface Layout

FluidFS Manager Sections

❶ Left-hand tabs, used to select a view topic.

❷ Upper tabs, used to select a view subtopic.

❸ Main view area, containing one or more panes. Each pane refers to a different FluidFS element or configuration setting, which can be viewed, modified, or deleted.

❹ The event log, which shows a sortable table of event messages.

❺ The dashboard, which displays various system statistics, statuses and services at a glance.

Navigating Views

To display a specific FluidFS Manager view, select a topic by clicking a topic tab on the left, and then select a subtopic by clicking a subtopic tab at the top.

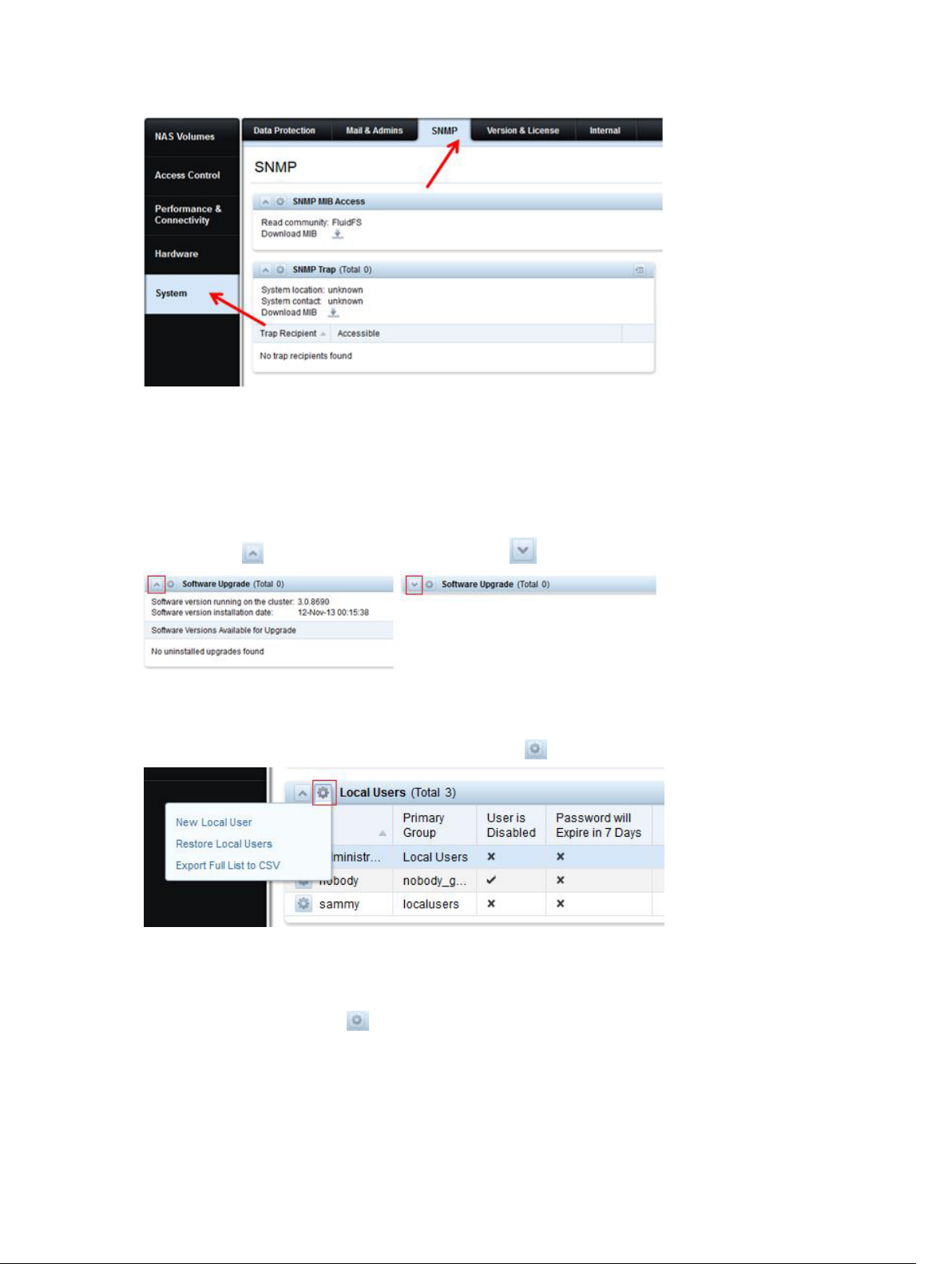

For example, to display the System\SNMP view, click the System tab on the left and the SNMP tab on

top.

The FluidFS elements and settings related to the view you selected are displayed in the main view area.


Figure 3. Navigating Views in FluidFS Manager

Working With Panes, Menus, And Dialogs

Showing And Hiding Panes

Panes within the main view area display FluidFS elements and settings. A pane’s contents may be hidden

by clicking the button, and displayed by clicking the button.

Opening A Pane Menu

To modify a setting or add an element to a pane, click the button and select the desired menu option.

Opening A Table Element Menu

Some panes display a table of elements, each of which may be edited independently. To modify or delete

an element in a table, click the button in the row of the element you want to change, then select the