Dell DL4300 Appliance

User's Guide

Notes, cautions, and warnings

NOTE: A NOTE indicates important information that helps you make better use of your computer.

CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.

WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

Copyright © 2016 Dell Inc. All rights reserved. This product is protected by U.S. and international copyright and intellectual property laws. Dell™ and the Dell logo are trademarks of Dell Inc. in the United States and/or other jurisdictions. All other marks and names mentioned herein may be trademarks of their respective companies.

2015 - 12

Rev. A01

Contents

1 Introduction to Dell DL4300 Appliance......................................................... 10

Core technologies...............................................................................................................................10

Live Recovery................................................................................................................................. 11

Verified Recovery........................................................................................................................... 11

Universal Recovery.........................................................................................................................11

True Global Deduplication.............................................................................................................11

True Scale architecture........................................................................................................................11

Deployment architecture.................................................................................................................... 12

Smart Agent................................................................................................................................... 14

DL4300 Core................................................................................................................................. 14

Snapshot process.......................................................................................................................... 14

Replication of disaster recovery site or service provider............................................................. 15

Recovery.........................................................................................................................................15

Product features ................................................................................................................................. 15

Repository...................................................................................................................................... 16

True Global Deduplication ........................................................................................................... 16

Encryption...................................................................................................................................... 17

Replication..................................................................................................................................... 18

Recovery-as-a-Service (RaaS).......................................................................................................19

Retention and archiving................................................................................................................ 19

Virtualization and cloud................................................................................................................ 20

Alerts and event management......................................................................................................20

License portal................................................................................................................................ 20

Web console..................................................................................................................................20

Service management APIs.............................................................................................................21

2 Working with the DL4300 Core.......................................................................22

Accessing the DL4300 Core Console................................................................................................22

Updating trusted sites in Internet Explorer...................................................................................22

Configuring browsers to remotely access the Core Console.....................................................22

Roadmap for configuring the Core ...................................................................................................23

Managing licenses ..............................................................................................................................24

Changing a license key ................................................................................................................ 24

Contacting the license portal server ........................................................................................... 24

Changing the AppAssure language manually.................................................................................... 25

Changing the OS language during installation.................................................................................. 25

Managing Core settings .....................................................................................................................26

Changing the Core display name ................................................................................................26

Adjusting the nightly job time ......................................................................................................26

Modifying the transfer queue settings .........................................................................................26

Adjusting the client time-out settings ......................................................................................... 27

Configuring deduplication cache settings .................................................................................. 27

Modifying engine settings ............................................................................................................28

Modifying database connection settings .................................................................................... 29

About repositories ..............................................................................................................................29

Roadmap for managing a repository ................................................................................................ 30

Creating a repository ................................................................................................................... 30

Viewing repository details.............................................................................................................33

Modifying repository settings .......................................................................................................33

Expanding an existing repository..................................................................................................34

Adding a storage location to an existing repository ................................................................... 34

Checking a repository ..................................................................................................................36

Deleting a repository ....................................................................................................................36

Remounting volumes....................................................................................................................36

Recovering a repository................................................................................................................ 37

Managing security ..............................................................................................................................38

Adding an encryption key ............................................................................................................ 38

Editing an encryption key ............................................................................................................ 39

Changing an encryption key passphrase .................................................................................... 39

Importing an encryption key ....................................................................................................... 39

Exporting an encryption key ........................................................................................................40

Removing an encryption key .......................................................................................................40

Managing cloud accounts .................................................................................................................40

Adding a cloud account................................................................................................................40

Editing a cloud account................................................................................................................42

Configuring cloud account settings.............................................................................................42

Understanding replication ................................................................................................................. 43

About protecting workstations and servers ................................................................................ 43

About replication ..........................................................................................................................43

About seeding .............................................................................................................................. 44

About failover and failback .......................................................................................................... 45

About replication and encrypted recovery points ...................................................................... 45

About retention policies for replication ...................................................................................... 46

Performance considerations for replicated data transfer .......................................................... 46

Roadmap for performing replication .................................................................................................47

Replicating to a self-managed core............................................................................................. 47

Replicating to a core managed by a third party........................................................................... 51

Monitoring replication ..................................................................................................................53

Managing replication settings ......................................................................................................55

Removing replication ......................................................................................................................... 55

Removing a protected machine from replication on the source Core...................................... 55

Removing a protected machine on the target Core................................................................... 56

Removing a target Core from replication.................................................................................... 56

Removing a source Core from replication...................................................................................56

Recovering replicated data .......................................................................................................... 56

Roadmap for failover and failback .....................................................................................................57

Setting up an environment for failover ........................................................................................57

Performing failover on the target Core ....................................................................................... 57

Performing failback ...................................................................................................................... 58

Managing events ................................................................................................................................ 59

Configuring notification groups .................................................................................................. 59

Configuring an email server and email notification template .................................................... 61

Configuring repetition reduction .................................................................................................62

Configuring event retention ........................................................................................................ 62

Managing recovery .............................................................................................................................62

About system information ................................................................................................................. 63

Viewing system information ........................................................................................................ 63

Downloading installers ...................................................................................................................... 63

About the agent installer ....................................................................................................................63

Downloading and installing the agent installer ...........................................................................63

About the local mount utility .............................................................................................................64

Downloading and installing the local mount utility ....................................................................64

Adding a core to the local mount utility ......................................................................................65

Mounting a recovery point by using the local mount utility ...................................................... 66

Dismounting a recovery point by using the local mount utility .................................................66

About the local mount utility tray menu ..................................................................................... 67

Using Core and agent options......................................................................................................67

Managing retention policies ..............................................................................................................68

Archiving to a cloud............................................................................................................................68

About archiving ..................................................................................................................................68

Creating an archive ...................................................................................................................... 68

Setting a scheduled archive .........................................................................................................69

Pausing or resuming scheduled archive ..................................................................................... 70

Editing a scheduled archive ..........................................................................................................71

Checking an archive .....................................................................................................................72

Importing an archive .................................................................................................................... 72

Managing SQL attachability ............................................................................................................... 73

Configuring SQL attachability settings ........................................................................................ 73

Configuring nightly SQL attachability checks and log truncation ............................................. 74

Managing Exchange database mountability checks and log truncation ......................................... 74

Configuring Exchange database mountability and log truncation ............................................ 74

Forcing a mountability check .......................................................................................................75

Forcing checksum checks ............................................................................................................75

Forcing log truncation ..................................................................................................................75

Recovery point status indicators ..................................................................................................76

3 Managing Your Appliance.................................................................................78

Monitoring the status of the Appliance..............................................................................................78

Provisioning storage............................................................................................................................78

Provisioning selected storage.......................................................................................................79

Deleting space allocation for a virtual disk........................................................................................80

Resolving failed tasks..........................................................................................................................80

Upgrading your Appliance..................................................................................................................80

Repairing your Appliance.................................................................................................................... 81

4 Protecting workstations and servers..............................................................82

About protecting workstations and servers ......................................................................................82

Configuring machine settings ........................................................................................................... 82

Viewing and modifying configuration settings ........................................................................... 82

Viewing system information for a machine ................................................................................ 83

Configuring notification groups for system events .................................................................... 83

Editing notification groups for system events .............................................................................85

Customizing retention policy settings .........................................................................................87

Viewing license information ........................................................................................................ 89

Modifying protection schedules ..................................................................................................89

Modifying transfer settings .......................................................................................................... 90

Restarting a service ...................................................................................................................... 92

Viewing machine logs ..................................................................................................................93

Protecting a machine .........................................................................................................................93

Deploying the agent software when protecting an agent.......................................................... 95

Creating custom schedules for volumes .................................................................................... 96

Modifying Exchange Server settings ............................................................................................96

Modifying SQL Server settings ......................................................................................................97

Deploying an agent (push install) ...................................................................................................... 97

Replicating a new agent .................................................................................................................... 98

Managing machines ...........................................................................................................................99

Removing a machine ................................................................................................................... 99

Replicating agent data on a machine ..........................................................................................99

Setting replication priority for an agent .................................................................................... 100

Canceling operations on a machine ......................................................................................... 100

Viewing machine status and other details .................................................................................101

Managing multiple machines .......................................................................................................... 102

Deploying to multiple machines ................................................................................................102

Monitoring the deployment of multiple machines ...................................................................106

Protecting multiple machines ....................................................................................................106

Monitoring the protection of multiple machines ..................................................................... 108

Managing snapshots and recovery points ...................................................................................... 108

Viewing recovery points ............................................................................................................ 109

Viewing a specific recovery point...............................................................................................109

Mounting a recovery point for a Windows machine .................................................................110

Dismounting select recovery points............................................................................................111

Dismounting all recovery points..................................................................................................111

Mounting a recovery point volume on a Linux machine ...........................................................111

Removing recovery points ..........................................................................................................112

Deleting an orphaned recovery point chain...............................................................................112

Forcing a snapshot ......................................................................................................................113

Pausing and resuming protection ..............................................................................................113

Restoring data ...................................................................................................................................114

Backup..........................................................................................................................................114

About exporting protected data from Windows machines to virtual machines.......................115

Exporting backup information from your Microsoft Windows machine to a virtual machine ................................117

Exporting Windows data using ESXi export ............................................................................... 117

Exporting Windows data using VMware workstation export ....................................................119

Exporting Windows data using Hyper-V export ........................................................................ 121

Exporting Microsoft Windows data using Oracle VirtualBox export ........................................124

Virtual Machine Management.....................................................................................................126

Performing a rollback .................................................................................................................130

Performing a rollback for a Linux machine by using the command line.................................. 131

About bare metal restore for Windows machines .......................................................................... 132

Prerequisites for performing a bare metal restore for a Windows machine ............................132

Roadmap for performing a bare metal restore for a Windows machine .......................................133

Creating a bootable CD ISO image............................................................................................ 133

Loading a boot CD...................................................................................................................... 135

Launching a restore from the Core ...........................................................................................136

Mapping volumes ....................................................................................................................... 136

Viewing the recovery progress ...................................................................................................137

Starting the restored target server ............................................................................................. 137

Repairing startup problems.........................................................................................................137

Performing a bare metal restore for a Linux machine ....................................................................138

Installing the screen utility.......................................................................................................... 139

Creating bootable partitions on a Linux machine......................................................................139

Viewing events and alerts ................................................................................................................ 140

5 Protecting server clusters............................................................................... 141

About server cluster protection ....................................................................................................... 141

Supported applications and cluster types ..................................................................................141

Protecting a cluster ..........................................................................................................................142

Protecting nodes in a cluster ...........................................................................................................143

Process of modifying cluster node settings ....................................................................................144

Roadmap for configuring cluster settings .......................................................................................144

Modifying cluster settings .......................................................................................................... 145

Configuring cluster event notifications .....................................................................................145

Modifying the cluster retention policy ...................................................................................... 146

Modifying cluster protection schedules .................................................................................... 147

Modifying cluster transfer settings .............................................................................................147

Converting a protected cluster node to an agent ..........................................................................148

Viewing server cluster information ................................................................................................. 148

Viewing cluster system information ..........................................................................................148

Viewing summary information .................................................................................................. 149

Working with cluster recovery points ............................................................................................. 149

Managing snapshots for a cluster ....................................................................................................149

Forcing a snapshot for a cluster ................................................................................................ 150

Pausing and resuming cluster snapshots ..................................................................................150

Dismounting local recovery points ................................................................................................. 150

Performing a rollback for clusters and cluster nodes ..................................................................... 151

Performing a rollback for CCR (Exchange) and DAG clusters .................................................. 151

Performing a rollback for SCC (Exchange, SQL) clusters...........................................................151

Replicating cluster data .................................................................................................................... 151

Removing a cluster from protection ................................................................................................151

Removing cluster nodes from protection .......................................................................................152

Removing all nodes in a cluster from protection ......................................................................152

Viewing a cluster or node report .....................................................................................................153

6 Reporting........................................................................................................... 154

About reports ....................................................................................................................................154

About the reports toolbar ................................................................................................................ 154

About compliance reports ...............................................................................................................154

About errors reports .........................................................................................................................155

About the Core Summary Report ....................................................................................................155

Repositories summary ................................................................................................................155

Agents summary .........................................................................................................................156

Generating a report for a Core or agent ......................................................................................... 156

About the Central Management Console Core reports ................................................................. 157

Generating a report from the Central Management Console ........................................................157

7 Completing a full recovery of the DL4300 Appliance............................... 158

Creating a RAID 1 partition for the operating system......................................................................158

Page 9

Installing the operating system.........................................................................................................159

Running the recovery and update utility..........................................................................................159

8 Changing the host name manually............................................................... 161

Stopping the Core service.................................................................................................................161

Deleting server certificates................................................................................................................161

Deleting Core server and registry keys.............................................................................................161

Launching the Core with the new host name................................................................................. 162

Changing the display name ............................................................................................................. 162

Updating trusted sites in Internet Explorer...................................................................................... 162

9 Appendix A— scripting.................................................................................... 163

About powershell scripting ..............................................................................................................163

Powershell scripting prerequisites .............................................................................................163

Testing scripts .............................................................................................................................163

Input parameters ..............................................................................................................................164

VolumeNameCollection (namespace Replay.Common.Contracts.Metadata.Storage) ..........168

Pretransferscript.ps1 ...................................................................................................................169

Posttransferscript.ps1 .................................................................................................................169

Preexportscript.ps1 .....................................................................................................................170

Postexportscript.ps1 ....................................................................................................................171

Prenightlyjobscript.ps1 ................................................................................................................171

Postnightlyjobscript.ps1...............................................................................................................173

Sample scripts ...................................................................................................................................175

10 Getting help.....................................................................................................176

Finding documentation and software updates................................................................................176

Contacting Dell..................................................................................................................................176

Page 10

1

Introduction to Dell DL4300 Appliance

This chapter provides an introduction and overview of DL4300. It describes the features, functionality,

and architecture, and consists of the following topics:

• Core technologies

• True Scale architecture

• Deployment architecture

• Product features

Your appliance sets a new standard for unified data protection by combining backup, replication, and

recovery in a single solution that is engineered to be the fastest and most reliable backup for protecting

virtual machines (VM), physical machines, and cloud environments.

Your appliance is capable of handling up to petabytes of data with built-in global deduplication,

compression, encryption, and replication to any private or public cloud infrastructure. Server applications

and data can be recovered in minutes for data retention and compliance.

Your appliance supports multi-hypervisor environments on VMware vSphere and Microsoft Hyper-V

private and public clouds.

Your appliance combines the following technologies:

• Live Recovery

• Verified Recovery

• Universal Recovery

• True Global Deduplication

These technologies are engineered with secure integration for cloud disaster recovery and deliver fast

and reliable recovery. With its scalable object store, your appliance is uniquely capable of handling up to

petabytes of data very rapidly with built-in global deduplication, compression, encryption, and replication

to any private or public cloud infrastructure.

AppAssure addresses the complexity and inefficiency of legacy tools through its core technology and

support of multi-hypervisor environments including those running on VMware vSphere and Microsoft

Hyper-V, which comprise both private and public clouds. AppAssure offers these technological advances

while dramatically reducing IT management and storage costs.

Core technologies

Details about the core technologies of AppAssure are described in the following topics.

Page 11

Live Recovery

Live Recovery is instant recovery technology for VMs or servers. It gives you near-continuous access to

data volumes on virtual or physical servers. You can recover an entire volume with near-zero RTO and an

RPO of minutes.

The backup and replication technology records concurrent snapshots of multiple VMs or servers,

providing near instantaneous data and system protection. You can resume the use of the server directly

from the backup file without waiting for a full restore to production storage. Users remain productive and

IT departments reduce recovery windows to meet today's increasingly stringent Recovery Time Objective

(RTO) and Recovery Point Objective (RPO) service-level agreements.

Verified Recovery

Verified Recovery enables you to perform automated recovery testing and verification of backups. It

covers, but is not limited to, file systems; Microsoft Exchange 2007, 2010, and 2013; and Microsoft

SQL Server 2005, 2008, 2008 R2, 2012, and 2014. Verified Recovery provides

recoverability of applications and backups in virtual and physical environments. It features a

comprehensive integrity checking algorithm based on 256-bit SHA keys that check the correctness of

each disk block in the backup during archiving, replication, and data seeding operations. This ensures that

data corruption is identified early and prevents corrupted data blocks from being maintained or

transferred during the backup process.
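
As a rough illustration of block-level integrity checking, the following Python sketch records one SHA-256 digest per fixed-size block and later reports which blocks no longer match. The block size, the function names, and the in-memory layout are assumptions made for this example; they do not describe the internal implementation of the appliance.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size, for illustration only


def block_digests(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Return one SHA-256 hex digest per fixed-size block of data."""
    return [
        hashlib.sha256(data[offset:offset + block_size]).hexdigest()
        for offset in range(0, len(data), block_size)
    ]


def corrupted_blocks(data: bytes, expected: list[str],
                     block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the indexes of blocks whose digest differs from the recorded one."""
    actual = block_digests(data, block_size)
    mismatched = [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]
    # A changed block count is also a mismatch: flag the unpaired indexes.
    mismatched.extend(range(min(len(actual), len(expected)),
                            max(len(actual), len(expected))))
    return mismatched


if __name__ == "__main__":
    original = bytes(3 * BLOCK_SIZE)
    recorded = block_digests(original)
    damaged = bytearray(original)
    damaged[BLOCK_SIZE + 10] ^= 0xFF  # flip bits inside the second block
    print(corrupted_blocks(bytes(damaged), recorded))
```

Recording the digests when a block is first written and comparing them again before archiving or replication is what lets a check of this kind catch corruption before it propagates.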

Universal Recovery

Universal Recovery technology gives you unlimited machine restoration flexibility. You can restore your

backups from physical systems to virtual machines, virtual machines to virtual machines, virtual machines

to physical systems, or physical systems to physical systems, and carry out bare metal restores to

dissimilar hardware (for example, P2V, V2V, V2P, P2P, P2C, V2C, C2P, and C2V).

Universal Recovery technology also accelerates cross-platform moves among virtual machines, for

example, moving from VMware to Hyper-V or from Hyper-V to VMware. It builds in application-level,

item-level, and object-level recovery (individual files, folders, e-mail, calendar items, databases, and

applications). With AppAssure, you can recover or export physical to cloud, or virtual to cloud.

True Global Deduplication

Your appliance provides true global deduplication that reduces your physical disk drive capacity

requirements by offering space reduction ratios exceeding 50:1, while still meeting the data storage

requirements. AppAssure True Scale inline block-level compression and deduplication with line speed

performance, along with built-in integrity checking, prevents data corruption from affecting the quality of

the backup and archiving processes.

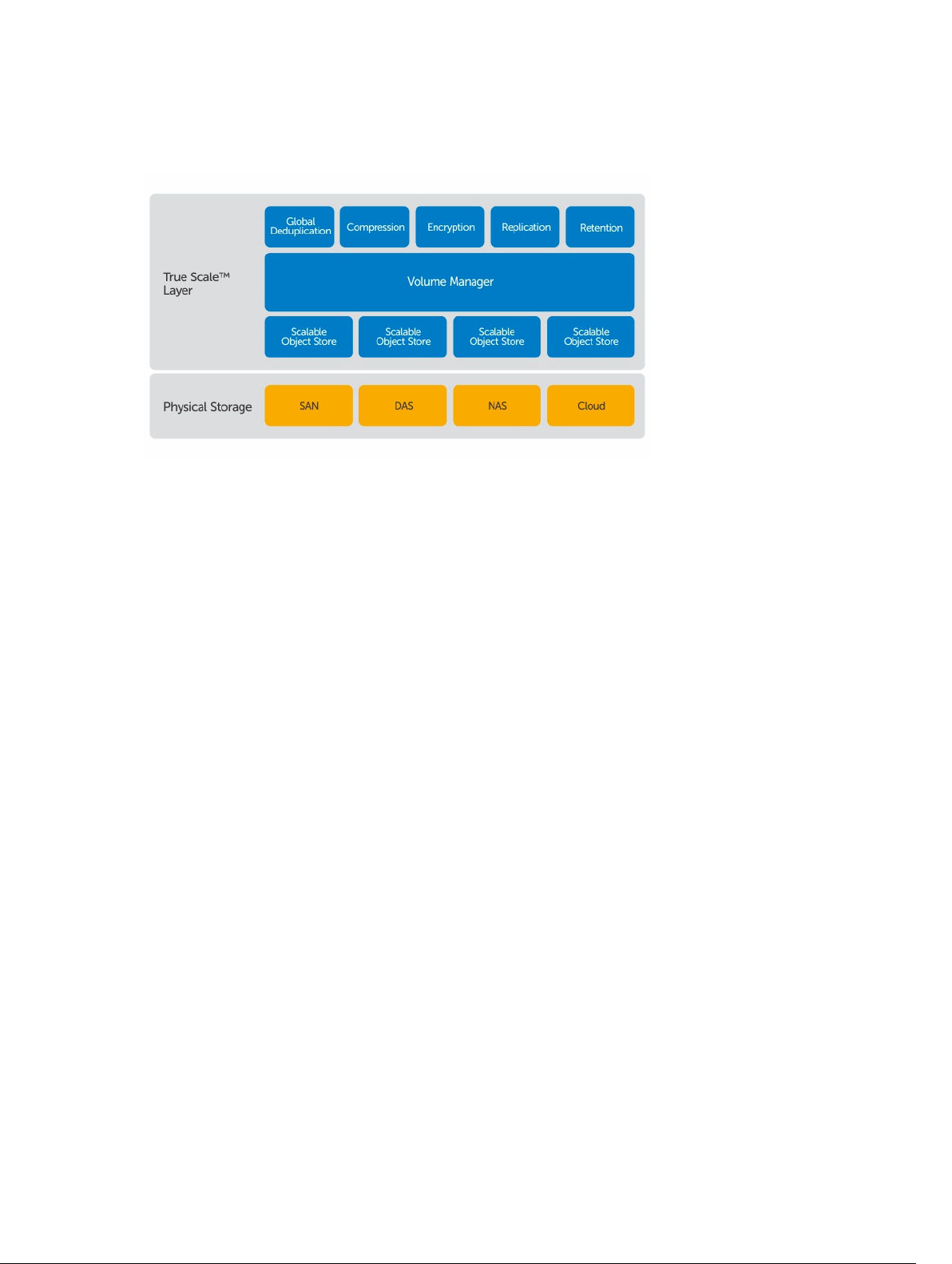

True Scale architecture

Your appliance is built on AppAssure True Scale architecture. It leverages a dynamic, multi-core pipeline

architecture that is optimized to consistently deliver solid performance for your enterprise environments.

True Scale is designed from the ground up to scale linearly, to efficiently store and manage big data, and to

deliver RTOs and RPOs of minutes without compromising performance. It comprises a purpose-built

Page 12

object store and a volume manager with integrated global deduplication, compression, encryption, replication,

and retention. The following diagram describes the AppAssure True Scale architecture.

Figure 1. AppAssure True Scale architecture

The AppAssure Volume Manager and Scalable Object Store serve as the foundation of the AppAssure

True Scale architecture. The scalable object store stores block-level snapshots that are captured from

virtual and physical servers. The volume manager manages the numerous object stores by providing a

common repository or just-in-time storage for only what is needed. The object store supports concurrent

operations with asynchronous I/O, which delivers high throughput with minimal latency and

maximizes system utilization. The repository can reside on different storage technologies, such as Storage

Area Network (SAN), Direct Attached Storage (DAS), or Network Attached Storage (NAS).

The role of the AppAssure Volume Manager is similar to the role of the volume manager in an operating

system. It takes various storage devices which can be of different sizes and types and combines them into

logical volumes, using striped or sequential allocation policies. The object store saves, retrieves,

maintains, and then replicates objects that are derived from application-aware snapshots. The volume

manager delivers scalable I/O performance in tandem with global data deduplication, encryption, and

retention management.
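
The difference between striped and sequential allocation can be sketched in a few lines of Python. This is a toy model only: the `Device` class, the `allocate` function, and the notion of an "allocation unit" are hypothetical names introduced for this example and are not part of the AppAssure Volume Manager.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    capacity: int  # total allocation units on this device (assumed unit)
    used: int = 0

    @property
    def free(self) -> int:
        return self.capacity - self.used


def allocate(devices: list[Device], units: int, policy: str) -> list[str]:
    """Place `units` allocation units and return the device chosen for each.

    "sequential" fills one device before moving to the next;
    "striped" rotates across every device that still has free space.
    """
    if policy not in ("sequential", "striped"):
        raise ValueError(f"unknown policy: {policy}")
    if units > sum(d.free for d in devices):
        raise ValueError("not enough free space in the logical volume")

    placement: list[str] = []
    cursor = 0
    while len(placement) < units:
        device = devices[cursor % len(devices)]
        if device.free > 0:
            device.used += 1
            placement.append(device.name)
            if policy == "striped":
                cursor += 1  # move to the next device after every unit
        else:
            cursor += 1  # this device is full; try the next one
    return placement


if __name__ == "__main__":
    print(allocate([Device("san", 2), Device("das", 4)], 4, "sequential"))
    print(allocate([Device("san", 2), Device("das", 4)], 5, "striped"))
```

Striping spreads I/O across devices of different sizes and types, while sequential allocation keeps data contiguous on one device until it is full.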

Deployment architecture

Your appliance is a scalable backup and recovery product that is flexibly deployed within the enterprise or

as a service delivered by a managed service provider. The type of deployment depends on the size and

requirements of the customer. Preparing to deploy your appliance involves planning the network storage

topology, core hardware and disaster recovery infrastructure, and security.

The deployment architecture consists of local and remote components. The remote components may be

optional for those environments that do not require leveraging a disaster recovery site or a managed

service provider for off-site recovery. A basic local deployment consists of a backup server called the

Core and one or more protected machines. The off-site component is enabled using replication that

provides full recovery capabilities at the disaster recovery (DR) site. The Core uses base images and incremental snapshots to

compile recovery points of protected machines.

Additionally, your appliance is application-aware because it can detect the presence of Microsoft

Exchange and SQL and their respective databases and log files, and then automatically group these

Page 13

volumes with dependency for comprehensive protection and effective recovery. This ensures that you

never have incomplete backups when you are performing recoveries. Backups are performed by using

application-aware block-level snapshots. Your appliance can also perform log truncation of the

protected Microsoft Exchange and SQL servers.

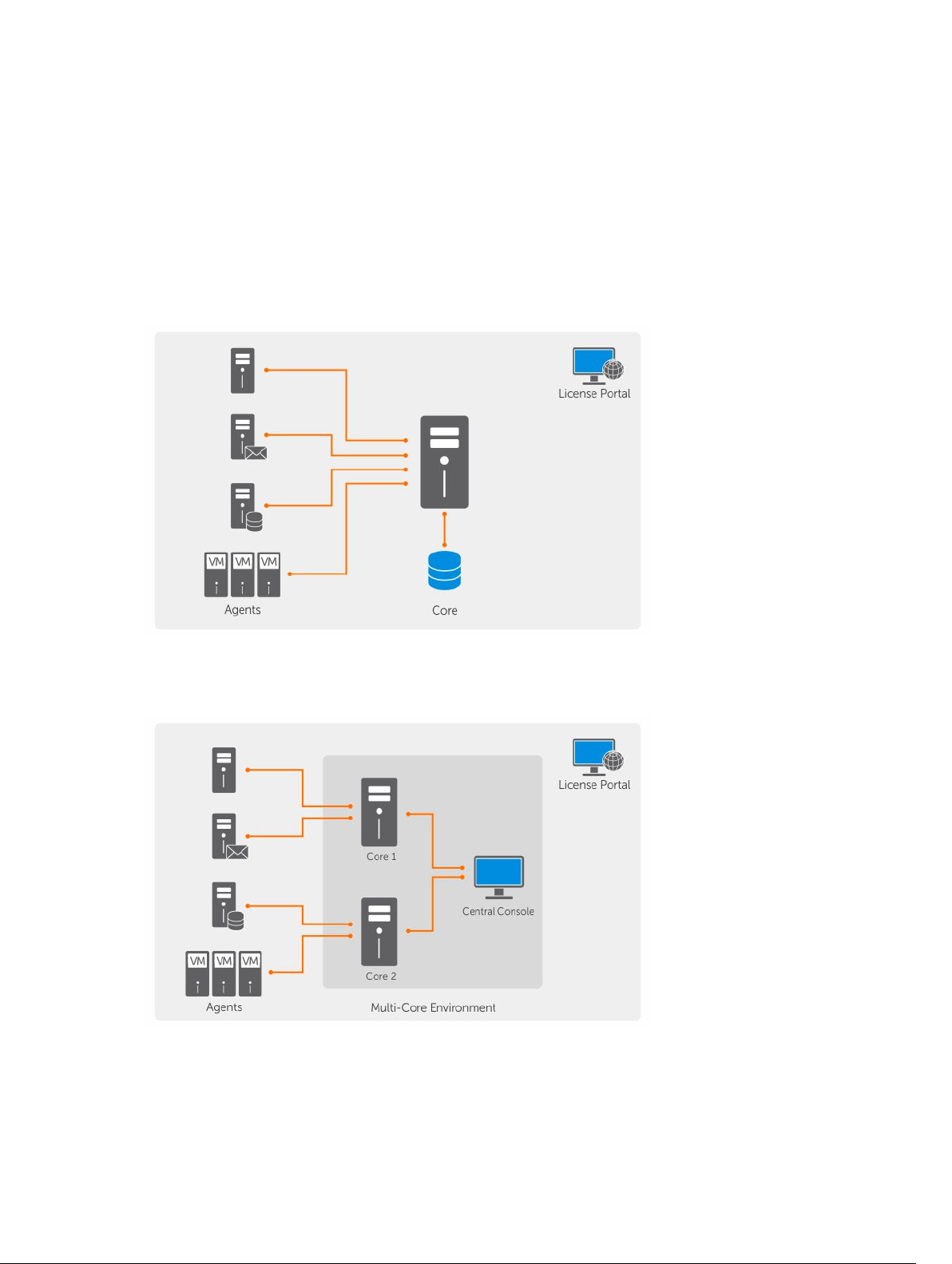

The following diagram depicts a simple deployment. In this diagram, AppAssure Agent software is installed

on machines such as a file server, email server, database server, or virtual machines. These machines connect to and

are protected by a single Core, which also contains the central repository. The License Portal manages

license subscriptions, groups and users for the protected machines and cores in your environment. The

License Portal allows users to log in, activate accounts, download software, and deploy protected

machines and cores per your license for your environment.

Figure 2. Basic deployment architecture

You can also deploy multiple Cores as shown in the following diagram. A central console manages

multiple cores.

Figure 3. Multi-Core deployment architecture

Page 14

Smart Agent

Smart Agent tracks the changed blocks on the disk volume and then snaps an image of the changed

blocks at a predefined interval of protection. The incremental forever block-level snapshots approach

prevents repeated copying of the same data from the protected machine to the Core. The Smart Agent is

installed on the machines that are protected by the Core.
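
The incremental-forever idea can be illustrated with a small Python model: writes mark blocks as changed, and each snapshot carries only the blocks changed since the previous one. The class name, the block size, and the in-memory "volume" are assumptions for this sketch; the actual Smart Agent tracks changes at the disk volume level and is not implemented this way.

```python
class ChangedBlockTracker:
    """Toy model of incremental-forever change tracking for one volume."""

    def __init__(self, block_count: int, block_size: int = 4096):
        self.block_size = block_size
        self.blocks = [bytes(block_size) for _ in range(block_count)]
        # Every block starts out dirty, so the first snapshot is a base image.
        self.dirty = set(range(block_count))

    def write(self, index: int, data: bytes) -> None:
        """Overwrite one block and remember that it changed."""
        if len(data) != self.block_size:
            raise ValueError("data must be exactly one block long")
        self.blocks[index] = data
        self.dirty.add(index)

    def snapshot(self) -> dict[int, bytes]:
        """Return only the blocks changed since the previous snapshot."""
        changed = {i: self.blocks[i] for i in sorted(self.dirty)}
        self.dirty.clear()
        return changed


if __name__ == "__main__":
    tracker = ChangedBlockTracker(block_count=4, block_size=8)
    base_image = tracker.snapshot()      # all four blocks
    tracker.write(2, b"B" * 8)
    incremental = tracker.snapshot()     # only block 2
    print(len(base_image), sorted(incremental))
```

Because unchanged blocks never appear in later snapshots, the same data is not copied repeatedly from the protected machine to the Core.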

The Smart Agent is dormant when not in use, with near zero (0) percent CPU

utilization and less than 20 MB of memory overhead. When the Smart Agent is active, it uses 2 to 4

percent of processor capacity and less than 150 MB of memory, which includes transferring the snapshots to

the Core.

The Smart Agent is application-aware: it detects the type of application that is installed and also the

location of the data. It automatically groups data volumes with dependency, such as database and log

volumes, for effective protection and rapid recovery. After the AppAssure Agent software is

configured, it uses smart technology to keep track of changed blocks on the protected disk volumes.

When the snapshot is ready, it is rapidly transferred to the Core using intelligent multi-threaded,

socket-based connections. To preserve CPU bandwidth and memory on the protected machines, the Smart

Agent does not encrypt or deduplicate the data at the source. Protected machines are paired with a

Core for protection.

DL4300 Core

The Core is the central component of the deployment architecture. The Core stores and manages all of

the machine backups and provides core services for backup, recovery, retention, replication, archival,

and management. The Core is a self-contained network-addressable computer that runs a 64-bit version

of the Microsoft Windows operating system. Your appliance performs target-based inline compression,

encryption, and deduplication of the data received from the protected machine. The Core then stores the

snapshot backups in repositories on storage such as a Storage Area Network (SAN) or Direct Attached Storage (DAS).

The repository can also reside on internal storage within the Core. The Core is managed by accessing the

following URL from a Web browser: https://CORENAME:8006/apprecovery/admin. Internally, all core

services are accessible through REST APIs. The Core services can be accessed from within the core or

directly over the Internet from any application that can send an HTTP/HTTPS request and receive an

HTTP/HTTPS response. All API operations are performed over SSL and mutually authenticated using

X.509 v3 certificates.
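
For readers who script against the Core, the following Python sketch builds the console URL given above and prepares a TLS context that presents a client certificate, which is the general shape of mutual authentication. The function names and the certificate and key file paths are hypothetical; the sketch does not call any Core API, because this guide does not list the REST endpoints here.

```python
import ssl
from typing import Optional
from urllib.parse import urlunsplit

CORE_PORT = 8006                    # port from the console URL in this guide
ADMIN_PATH = "/apprecovery/admin"   # path from the console URL in this guide


def core_console_url(core_name: str) -> str:
    """Build the Core console URL for a given host name."""
    return urlunsplit(("https", f"{core_name}:{CORE_PORT}", ADMIN_PATH, "", ""))


def mutual_tls_context(client_cert: Optional[str] = None,
                       client_key: Optional[str] = None) -> ssl.SSLContext:
    """Return a context that verifies the server and, if a certificate file
    is given, also presents a client certificate (hypothetical file paths)."""
    context = ssl.create_default_context()  # server certificate is verified
    if client_cert:
        context.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return context


if __name__ == "__main__":
    print(core_console_url("CORENAME"))
```

A context built this way can be passed to any HTTPS client in the standard library, for example through the `context` argument of `urllib.request.urlopen`.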

Cores are paired with other cores for replication.

Snapshot process

The snapshot process begins when a base image is transferred from a protected machine to the Core. This is the only

time a full copy of the machine is transported across the network under normal operation; all subsequent

transfers are incremental snapshots. AppAssure Agent software for Windows uses Microsoft Volume Shadow Copy

Service (VSS) to freeze and quiesce application data to disk to capture a file-system-consistent and an

application-consistent backup. When a snapshot is created, VSS and the writer on the target server

prevent content from being written to the disk. When the writing of content to disk is halted, all disk I/O

operations are queued and resume only after the snapshot is complete, while the operations already in

flight are completed and all open files are closed. The process of creating a shadow copy does not

significantly impact the performance of the production system.

Page 15

AppAssure uses Microsoft VSS because it has built-in support for all Windows internal technologies, such

as NTFS, Registry, and Active Directory, to flush data to disk before the snapshot. Additionally, other enterprise

applications, such as Microsoft Exchange and SQL, use VSS Writer plug-ins to get notified when a

snapshot is being prepared and when they have to flush their used database pages to disk to bring the

database to a consistent transactional state. It is important to note that VSS is used to quiesce system and

application data to disk; it is not used to create the snapshot. The captured data is immediately

transferred and stored on the Core. Using VSS for backup does not leave the application server in

backup mode for an extended period of time because the time taken to create the snapshot is seconds

and not hours. Another benefit of using VSS for backups is that it lets the AppAssure Agent software

take a snapshot of large quantities of data at one time because the snapshot works at the volume level.

Replication of disaster recovery site or service provider

The replication process requires a paired source-target relationship between two cores. The source core

copies the recovery points of the protected machines and then asynchronously and continuously

transmits them to a target core at a remote disaster recovery site. The off-site location can be a

company-owned data center (self-managed core) or a third-party managed service provider’s (MSP’s)

location, or cloud environment. When replicating to an MSP, you can use built-in workflows that let you

request connections and receive automatic feedback notifications. For the initial transfer of data, you can

perform data seeding using external media, which is useful for large sets of data or sites with slow links.

Your appliance supports failover and failback in replicated environments.

In the case of a comprehensive outage, the target core in the secondary site can recover instances from

replicated protected machines and immediately commence protection on the failed-over machines.

After the primary site is restored, the replicated core can fail back data from the recovered instances

to protected machines at the primary site.

Recovery

Recovery can be performed in the local site or the replicated remote site. After the deployment is in

steady state with local protection and optional replication, the Core allows you to perform recovery using

Verified Recovery, Universal Recovery, or Live Recovery.

Product features

You can manage protection and recovery of critical data using the following features and functionality:

• Repository

• True Global Deduplication (Features)

• Encryption

• Replication

• Recovery-as-a-Service (RaaS)

• Retention and archiving

• Virtualization and cloud

• Alerts and event management

• License portal

• Web console

• Service Management APIs

Page 16

Repository

The repository uses Deduplication Volume Manager (DVM) to implement a volume manager that provides

support for multiple volumes, each of which could reside on different storage technologies such as

Storage Area Network (SAN), Direct Attached Storage (DAS), Network Attached Storage (NAS), or cloud

storage. Each volume consists of a scalable object store with deduplication. The scalable object store

behaves as a records-based file system, where the unit of storage allocation is a fixed-sized data block

called a record. This architecture allows you to configure block-sized support for compression and

deduplication. Rollup operations are reduced from disk-intensive operations to metadata operations

because the rollup no longer moves data but only moves the records.

The DVM can combine a set of object stores into a volume and they can be expanded by creating

additional file systems. The object store files are pre-allocated and can be added on demand as storage

requirements change. It is possible to create up to 255 independent repositories on a single Core and to

further increase the size of a repository by adding new file extents. An extended repository may contain

up to 4,096 extents that span across different storage technologies. The maximum size of a repository is

32 exabytes.

True Global Deduplication

True global deduplication is an effective method of reducing backup storage needs by eliminating

redundant or duplicate data. Deduplication is effective because only one unique instance of the data

across multiple backups is stored in the repository. The redundant data is stored, but not physically; it is

simply replaced with a pointer to the one unique data instance in the repository.
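
The pointer mechanism described above can be sketched as a small content-addressed store in Python. The class and method names, and the use of SHA-256 digests as pointers, are assumptions chosen for this example rather than a description of the repository format.

```python
import hashlib


class DedupStore:
    """Toy repository: each unique block is stored once; backups hold pointers."""

    def __init__(self) -> None:
        self.records: dict[str, bytes] = {}      # digest -> unique block
        self.backups: dict[str, list[str]] = {}  # backup name -> list of pointers

    def add_backup(self, name: str, blocks: list[bytes]) -> None:
        pointers = []
        for block in blocks:
            digest = hashlib.sha256(block).hexdigest()
            self.records.setdefault(digest, block)  # stored only if not seen yet
            pointers.append(digest)
        self.backups[name] = pointers

    def restore(self, name: str) -> list[bytes]:
        """Follow the pointers to rebuild the original block sequence."""
        return [self.records[digest] for digest in self.backups[name]]

    def ratio(self) -> float:
        """Logical blocks referenced divided by unique blocks stored."""
        logical = sum(len(p) for p in self.backups.values())
        return logical / len(self.records) if self.records else 0.0


if __name__ == "__main__":
    store = DedupStore()
    os_blocks = [b"os-block-1", b"os-block-2"]
    store.add_backup("server-a", os_blocks + [b"data-a"])
    store.add_backup("server-b", os_blocks + [b"data-b"])
    print(len(store.records), store.ratio())
```

Two servers that share operating system blocks store those blocks once, which is why common operating system images across many machines improve the reduction ratio.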

Conventional backup applications have been performing repetitive full backups every week, but your

appliance performs incremental block-level backups of the machine. The incremental-forever approach

in tandem with data deduplication helps to drastically reduce the total quantity of data committed to the

disk.

The typical disk layout of a server consists of the operating system, application, and data. In most

environments, the administrators often use a common flavor of the server and desktop operating system

across multiple systems for effective deployment and management. When backup is performed at the

block level across multiple machines at the same time, it provides a more granular view of what is in the

backup and what is not, irrespective of the source. This data includes the operating system, the

applications, and the application data across the environment.

Page 17

Figure 4. Diagram of deduplication

Your appliance performs target-based inline data deduplication, where the snapshot data is transmitted

to the Core before it is deduplicated. Inline data deduplication simply means the data is deduplicated

before it is committed to disk. This differs from at-source and post-process deduplication. With at-source

deduplication, the data is deduplicated at the source before it is transmitted to the target for storage. With

post-process deduplication, the data is sent raw to the target, where it is analyzed and deduplicated after it

has been committed to disk. At-source deduplication consumes precious system resources on the protected

machine, whereas post-process deduplication needs all the requisite data on disk (a greater initial capacity

overhead) before commencing the deduplication process. On the other hand, inline data deduplication

does not require additional disk capacity and CPU cycles on the source or on the Core for the

deduplication process. Lastly, conventional backup applications perform repetitive full backups every

week, while your appliance performs incremental block-level backups of the machines forever. This

incremental-forever approach in tandem with data deduplication helps to drastically reduce the total

quantity of data committed to the disk, with a reduction ratio of as much as 50:1.

Encryption

Your appliance provides integrated encryption to protect backups and data-at-rest from unauthorized

access and use, ensuring data privacy. Only a user with the encryption key can access and decrypt the

data. There is no limit to the number of encryption keys that can be created and stored on a system. DVM

uses AES 256-bit encryption in the Cipher Block Chaining (CBC) mode with 256-bit keys. Encryption is

performed inline on snapshot data, at line speeds without impacting performance. This is because DVM

implementation is multi-threaded and uses hardware acceleration specific to the processor on which it is

deployed.

Encryption is multi-tenant ready. Deduplication has been specifically limited to records that have been

encrypted with the same key; two identical records that have been encrypted with different keys will not

be deduplicated against each other. This design ensures that deduplication cannot be used to leak data

between different encryption domains. This is a benefit for managed service providers, as replicated

backups for multiple tenants (customers) can be stored on a single core without any tenant being able to

see or access other tenants’ data. Each active tenant encryption key creates an encryption domain within

the repository where only the owner of the keys can see, access, or use the data. In a multi-tenant

scenario, data is partitioned and deduplicated within the encryption domains.
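
One way to picture deduplication that is confined to an encryption domain is to make the encryption key identifier part of the lookup key, as in the Python sketch below. The class name, the `key_id` strings, and the use of SHA-256 are assumptions for illustration; the sketch does not perform any encryption and does not reflect how DVM stores records.

```python
import hashlib


class EncryptionDomainIndex:
    """Deduplication index that never matches records across encryption keys."""

    def __init__(self) -> None:
        self._records: set[tuple[str, str]] = set()

    def ingest(self, key_id: str, block: bytes) -> bool:
        """Return True if the block is stored as a new record, or False if it
        is deduplicated against an existing record in the same domain."""
        record_id = (key_id, hashlib.sha256(block).hexdigest())
        if record_id in self._records:
            return False
        self._records.add(record_id)
        return True

    def record_count(self, key_id: str) -> int:
        """Number of unique records held for one encryption domain."""
        return sum(1 for domain, _ in self._records if domain == key_id)


if __name__ == "__main__":
    index = EncryptionDomainIndex()
    print(index.ingest("tenant-a", b"same block"))  # new record
    print(index.ingest("tenant-a", b"same block"))  # deduplicated
    print(index.ingest("tenant-b", b"same block"))  # different domain, stored again
```

Because identical data under a different key is always stored as a new record, one tenant cannot learn from deduplication behavior whether another tenant holds the same data.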

Page 18

In replication scenarios, your appliance uses SSL 3.0 to secure the connections between the two cores in

a replication topology to prevent eavesdropping and tampering.

Replication

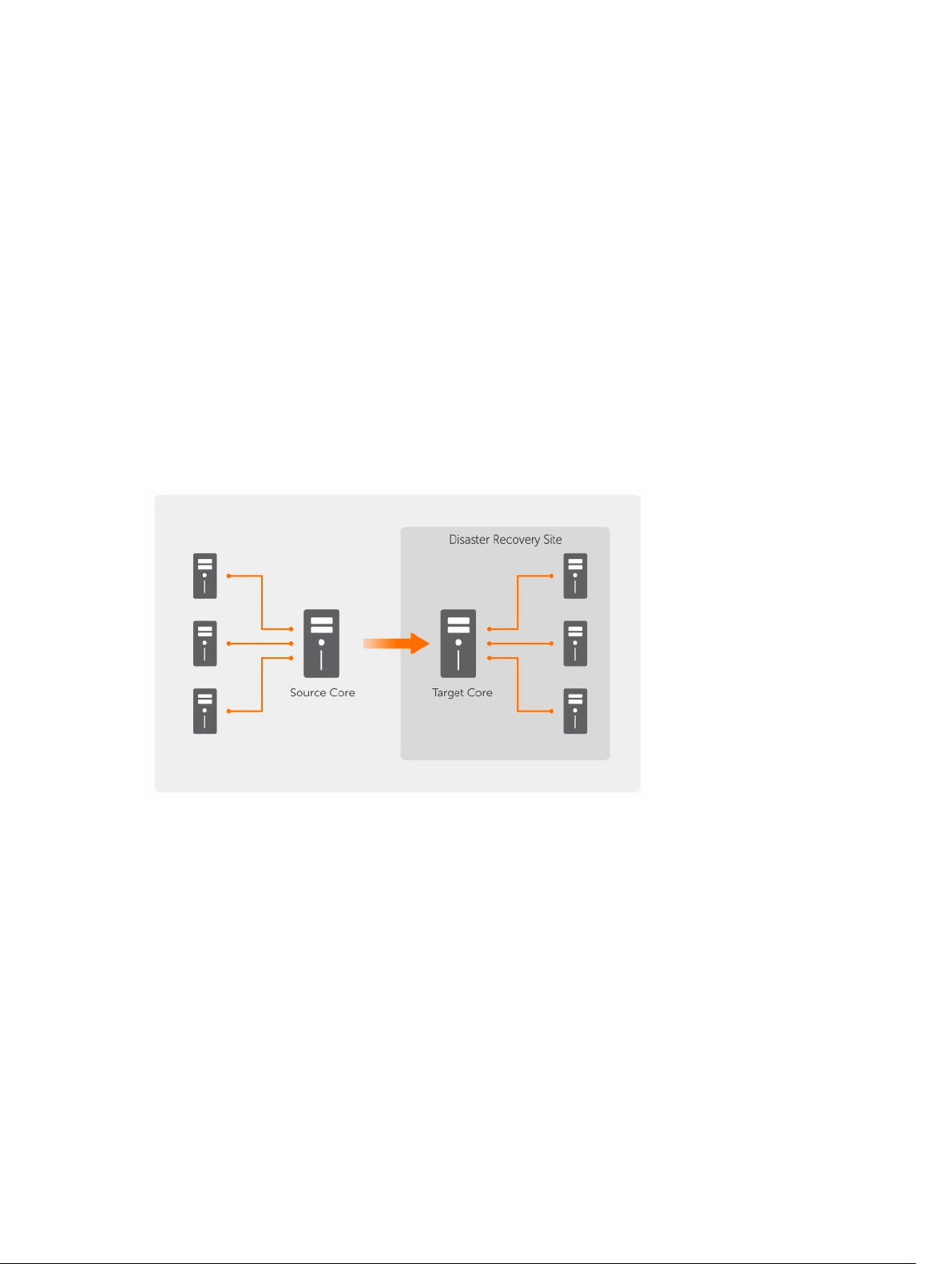

Replication is the process of copying recovery points from an AppAssure core and transmitting them to

another AppAssure core in a separate location for the purpose of disaster recovery. The process requires

a paired source-target relationship between two or more cores.

The source core copies the recovery points of selected protected machines, and then asynchronously

and continually transmits the incremental snapshot data to the target core at a remote disaster recovery

site. You can configure outbound replication to a company-owned data center or remote disaster

recovery site (that is, a self-managed target core). Or, you can configure outbound replication to a

third-party managed service provider (MSP) or the cloud that hosts off-site backup and disaster recovery

services. When replicating to a third-party target core, you can use built-in workflows that let you

request connections and receive automatic feedback notifications.

Replication is managed on a per-protected-machine basis. Any machine (or all machines) protected or

replicated on a source core can be configured to replicate to a target core.

Figure 5. Basic replication architecture

Replication is self-optimizing with a unique Read-Match-Write (RMW) algorithm that is tightly coupled

with deduplication. With RMW replication, the source and target replication services match keys before

transferring data and then replicates only the compressed, encrypted, deduplicated data across the WAN,

resulting in a 10x reduction in bandwidth requirements.
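
This guide names the Read-Match-Write algorithm but does not describe its internals. The Python sketch below shows one plausible reading of "match keys before transferring data": the source reads a block, checks whether the target already holds its key, and sends the block only when there is no match. The function names, the SHA-256 keys, and the dictionary standing in for the target core are all assumptions.

```python
import hashlib


def block_key(block: bytes) -> str:
    """Key used to match blocks between source and target (assumed: SHA-256)."""
    return hashlib.sha256(block).hexdigest()


def replicate(source_blocks: list[bytes],
              target_store: dict[str, bytes]) -> dict[str, int]:
    """Send only the blocks whose keys the target does not already hold."""
    stats = {"matched": 0, "sent": 0, "bytes_sent": 0}
    for block in source_blocks:              # read
        key = block_key(block)
        if key in target_store:              # match: nothing crosses the WAN
            stats["matched"] += 1
        else:                                # write: transfer the missing block
            target_store[key] = block
            stats["sent"] += 1
            stats["bytes_sent"] += len(block)
    return stats


if __name__ == "__main__":
    target = {block_key(b"aaaa"): b"aaaa"}
    print(replicate([b"aaaa", b"bbbb", b"bbbb"], target))
```

Only keys travel for blocks the target already has, so the bandwidth saved depends on how much of the source data is already present at the target.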

Replication begins with seeding. Seeding is the initial transfer of deduplicated base images and

incremental snapshots of the protected machines. The data can add up to hundreds or thousands of

gigabytes. Initial replication can be seeded to the target core using external media. This is useful for large

sets of data or sites with slow links. The data in the seeding archive is compressed, encrypted and

deduplicated. If the total size of the archive is larger than the space available on the external media, the

archive can span across multiple devices. During the seeding process, the incremental recovery points

Page 19

replicate to the target site. After data has been transferred to the target core, the newly replicated

incremental recovery points automatically synchronize.

Recovery-as-a-Service (RaaS)

Managed service providers (MSPs) can fully leverage the appliance as a platform for delivering recovery as

a service (RaaS). RaaS facilitates complete recovery-in-the-cloud by replicating customers' physical and

virtual servers along with their data to the service provider's cloud as virtual machines to support recovery

testing or actual recovery operations. Customers wanting to perform recovery-in-the-cloud can

configure replication on their protected machines on the local cores to an AppAssure service provider. In

the event of a disaster, the MSPs can instantly spin up virtual machines for the customer.

MSPs can deploy multi-tenant AppAssure RaaS infrastructure that can host multiple and discrete

organizations or business units (the tenants) that ordinarily do not share security or data on a single server

or a group of servers. The data of each tenant is isolated and secure from other tenants and the service

provider.

Retention and archiving

In your appliance, backup and retention policies are flexible and, therefore, easily configurable. The ability

to tailor retention policies to the needs of an organization not only helps to meet compliance

requirements, but does so without compromising on RTO.

Retention policies enforce the periods of time in which backups are stored on short-term (fast and

expensive) media. Sometimes certain business and technical requirements mandate extended retention

of these backups, but use of fast storage is cost prohibitive. Therefore, this requirement creates a need

for long-term (slow and cheap) storage. Businesses often use long-term storage for archiving both

compliance and non-compliance data. The archive feature supports extended retentions for compliance

and non-compliance data. It can also be used for seeding replication data to a target core.

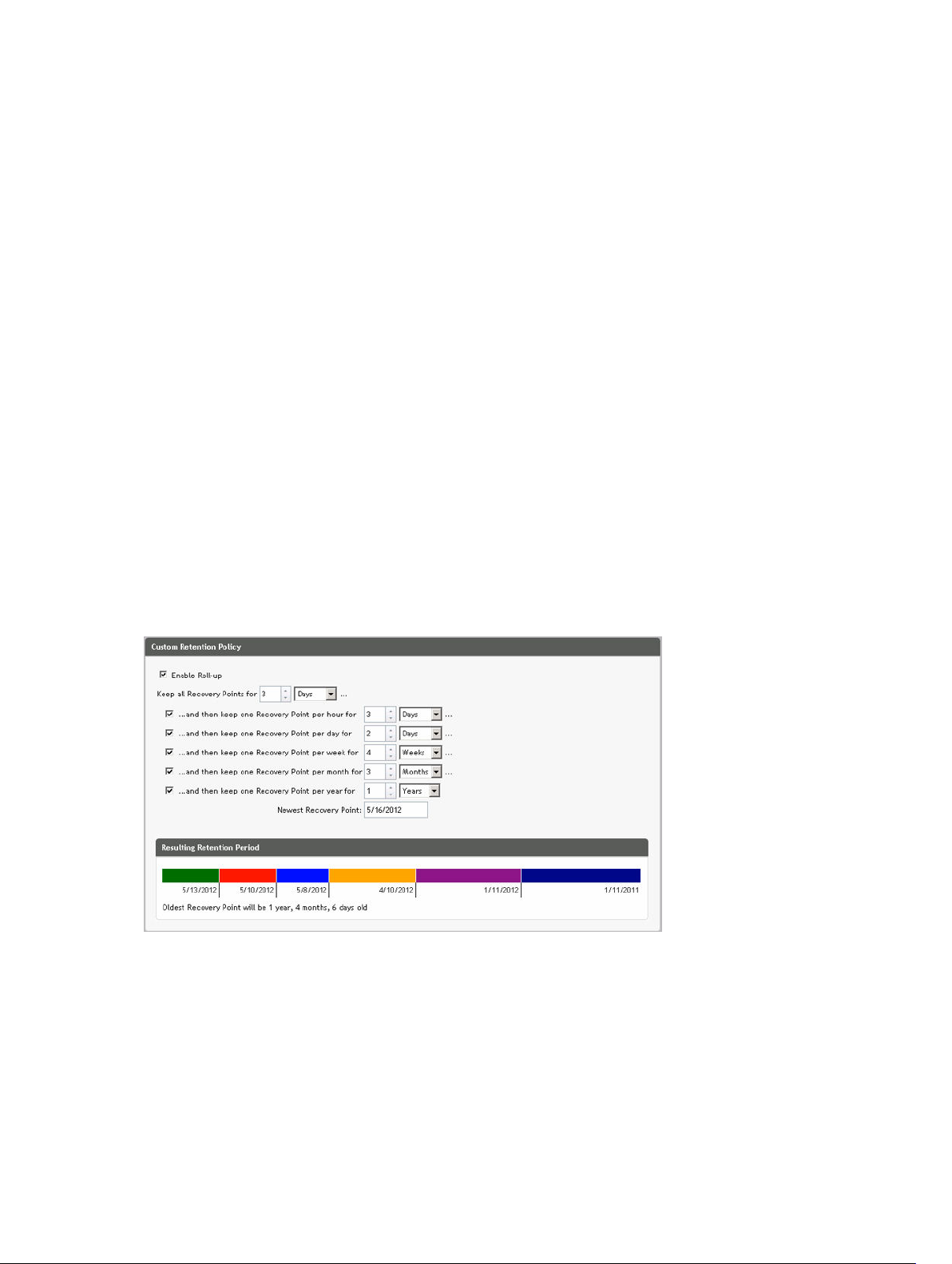

Figure 6. Custom retention policy

In your appliance, retention policies can be customized to specify the length of time a backup recovery

point is maintained. As the age of the recovery points approaches the end of their retention period, the

recovery points age out and are removed from the retention pool. Typically, this process becomes

inefficient and eventually fails as the amount of data and the period of retention start to grow rapidly. Your

Page 20

appliance solves the big data problem by managing the retention of large amounts of data with complex

retention policies and performing rollup operations for aging data using efficient metadata operations.

Backups can be performed with an interval of a few minutes. As these backups age over days, months,

and years, retention policies manage the aging and deletion of old backups. A simple waterfall method

defines the aging process. The levels within the waterfall are defined in minutes, hours, days, weeks,

months, and years. The retention policy is enforced by the nightly rollup process.
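
A waterfall of this kind can be sketched as an ordered list of tiers, each with an age limit and a granularity. In the Python example below, the `rollup` function, the tier values, and the use of `strftime` patterns as bucket keys are assumptions for illustration; the actual nightly rollup works on repository metadata and is configured through the retention policy settings.

```python
from datetime import datetime, timedelta

# Each tier: (maximum age, bucket pattern). None keeps every recovery point;
# a strftime pattern keeps only the newest point per bucket (assumed values).
EXAMPLE_TIERS = [
    (timedelta(days=1), None),         # keep everything for one day
    (timedelta(days=7), "%Y-%m-%d"),   # then one point per day for a week
]


def rollup(points: list[datetime], now: datetime, tiers=EXAMPLE_TIERS) -> list[datetime]:
    """Return the recovery points that survive the waterfall, oldest first."""
    kept = []
    seen_buckets = set()
    for point in sorted(points, reverse=True):  # newest first
        age = now - point
        for limit, pattern in tiers:
            if age <= limit:
                if pattern is None:
                    kept.append(point)
                else:
                    bucket = (pattern, point.strftime(pattern))
                    if bucket not in seen_buckets:
                        seen_buckets.add(bucket)
                        kept.append(point)
                break  # the first tier that covers this age decides
        # points older than every tier match nothing and are dropped
    return sorted(kept)


if __name__ == "__main__":
    now = datetime(2016, 1, 10, 12, 0)
    points = [datetime(2016, 1, 10, 11), datetime(2016, 1, 8, 9),
              datetime(2016, 1, 8, 15), datetime(2016, 1, 1)]
    for kept_point in rollup(points, now):
        print(kept_point)
```

Adding weekly, monthly, or yearly tiers follows the same pattern with a longer age limit and a coarser bucket.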

For long-term archiving, your appliance provides the ability to create an archive of the source or target

core on any removable media. The archive is internally optimized and all data in the archive is

compressed, encrypted, and deduplicated. If the total size of the archive is larger than the space available

on the removable media, the archive spans across multiple devices based on the available space on the

media. The archive also can be locked with a passphrase. Recovery from an archive does not require a

new core; any core can ingest the archive and recover data if the administrator has the passphrase and

the encryption keys.
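
How an archive might be spread over several removable media based on their available space can be shown with a short Python sketch. The function name and the byte counts are hypothetical, and the sketch only plans the split; it does not write an archive.

```python
def span_archive(archive_size: int, media_free: list[int]) -> list[int]:
    """Return how many bytes to place on each medium, in the order given.

    Raises ValueError if the media together cannot hold the archive.
    """
    remaining = archive_size
    plan = []
    for free in media_free:
        if remaining == 0:
            break  # the archive already fits on the media used so far
        chunk = min(free, remaining)
        plan.append(chunk)
        remaining -= chunk
    if remaining:
        raise ValueError(f"{remaining} bytes do not fit on the available media")
    return plan


if __name__ == "__main__":
    print(span_archive(250, [100, 100, 100]))
```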

Virtualization and cloud

The Core is cloud-ready, which allows you to leverage the compute capacity of the cloud for recovery.

Your appliance can export any protected or replicated machine to a virtual machine, such as licensed

versions of VMware or Hyper-V. You can perform a one-time virtual export, or you can establish a virtual

standby VM by establishing a continuous virtual export. With continuous exports, the virtual machine is

incrementally updated after every snapshot. The incremental updates are very fast and provide standby

clones that are ready to be powered up with a click of a button. The supported virtual machine export

types are VMware Workstation/Server on a folder; direct export to a vSphere/VMware ESX(i) host; export

to Oracle VirtualBox; and export to Microsoft Hyper-V Server on Windows Server 2008 (x64), 2008 R2,

2012 (x64), and 2012 R2 (including support for Hyper-V generation 2 VMs).

Additionally, you can now archive your repository data to the cloud using Microsoft Azure, Amazon S3,

Rackspace Cloud Block Storage, or other OpenStack-based cloud services.

Alerts and event management

In addition to the HTTP REST API, your appliance also includes an extensive set of features for event logging

and notification using e-mail, Syslog, or Windows Event Log. E-mail notifications can be used to alert users

or groups of the health or status of different events in response to an alert. The Syslog and Windows

Event Log methods are used for centralized logging to a repository in a multi-operating system

environment. In Windows-only environments, only the Windows Event Log is used.

License portal

The License Portal provides easy-to-use tools for managing license entitlements. You can download,

activate, view, and manage license keys and create a company profile to track your license assets.

Additionally, the portal enables service providers and re-sellers to track and manage their customer

licenses.

Web console

Your appliance features a new web-based central console that manages distributed cores from one

central location. MSPs and enterprise customers with multiple distributed cores can deploy the central

console to get a unified view for central management. The central console provides the ability to

Page 21

organize the managed cores in hierarchical organizational units. These organizational units can represent

business units, locations, or customers for MSPs with role-based access. The central console can also run

reports across managed cores.

Service management APIs

Your appliance comes bundled with a service management API that provides programmatic access to all

of the functionality available through the Central Management Console. The service management API is a

REST API. All the API operations are performed over SSL and are mutually authenticated using X.509 v3

certificates. The management service can be accessed from within the environment or directly over the

Internet from any application that can send and receive an HTTPS request and response. This approach

facilitates easy integration with any web application such as remote monitoring and management

(RMM) tools or billing systems. Also included is an SDK client for PowerShell scripting.
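
The following Python sketch shows one way a client application might prepare an HTTPS connection that is mutually authenticated with X.509 certificates, using only the Python standard library. The function names, the certificate file arguments, and any URL passed to get are hypothetical; this guide does not list the API endpoints, so none are shown here.

```python
import ssl
import urllib.request


def make_mutual_tls_context(client_cert=None, client_key=None, ca_file=None):
    """Create an SSL context that verifies the server certificate and,
    when a client certificate is supplied, presents it to the server."""
    context = ssl.create_default_context(cafile=ca_file)
    if client_cert:
        # Hypothetical PEM files that hold the client's X.509 certificate
        # and the matching private key.
        context.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return context


def get(url, context):
    """Send an HTTPS GET request and return the status code and raw body."""
    request = urllib.request.Request(url)
    with urllib.request.urlopen(request, context=context) as response:
        return response.status, response.read()
```

A caller would build the context once with its own certificate files and reuse it for every request to the management service.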

Page 22

2

Working with the DL4300 Core

Accessing the DL4300 Core Console

To access the Core Console:

1. Update trusted sites in your browser. See Updating Trusted Sites In Internet Explorer.

2. Configure your browsers to remotely access the Core Console. See Configuring Browsers To

Remotely Access The Core Console.

3. Perform one of the following to access the Core Console:

• Log on locally to your DL4300 core server, and then double-click the Core Console icon.

• Type one of the following URLs in your web browser:

– https://<yourCoreServerName>:8006/apprecovery/admin/core

– https://<yourCoreServerIPaddress>:8006/apprecovery/admin/core
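
For scripts that need to open or check the console address, the URL pattern above can be assembled as in the following Python sketch. The function name core_console_url is hypothetical; the port and path are taken from the URLs listed in this procedure.

```python
def core_console_url(host, port=8006):
    """Build the Core Console URL for a core server name or IP address."""
    host = host.strip()
    if not host:
        raise ValueError("host must not be empty")
    return f"https://{host}:{port}/apprecovery/admin/core"
```

For example, core_console_url("core01") returns https://core01:8006/apprecovery/admin/core, where core01 is a placeholder server name.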

Updating trusted sites in Internet Explorer

To update trusted sites in Microsoft Internet Explorer:

1. Open Internet Explorer.

2. If the File, Edit, View, and other menus are not displayed, press <F10>.

3. Click the Tools menu, and select Internet Options.

4. In the Internet Options window, click the Security tab.

5. Click Trusted Sites and then click Sites.

6. In Add this website to the zone, enter https://[Display Name], using the new name you provided for

the Display Name.

7. Click Add.

8. In Add this website to the zone, enter about:blank.

9. Click Add.

10. Click Close and then OK.

Configuring browsers to remotely access the Core Console

To access the Core Console from a remote machine, you need to modify your browser settings.

NOTE: To modify the browser settings, log in to the system as an administrator.

NOTE: Google Chrome uses Microsoft Internet Explorer settings. Change Chrome browser settings

using Internet Explorer.

Page 23

NOTE: Ensure that the Internet Explorer Enhanced Security Configuration is turned on when you

access the Core Web Console either locally or remotely. To turn on the Internet Explorer

Enhanced Security Configuration:

1. Open Server Manager.

2. Select Local Server → IE Enhanced Security Configuration displayed on the right. Ensure that it is

On.

Configuring browser settings in Internet Explorer and Chrome

To modify browser settings in Internet Explorer and Chrome:

1. Open Internet Explorer.

2. From the Tools menu, select Internet Options, Security tab.

3. Click Trusted Sites and then click Sites.

4. Deselect the option Require server verification (https:) for all sites in the zone, and then add

http://<hostname or IP Address of the Appliance server hosting the AppAssure Core> to Trusted

Sites.

5. Click Close, select Trusted Sites, and then click Custom Level.

6. Scroll to Miscellaneous → Display Mixed Content and select Enable.

7. Scroll to the bottom of the screen to User Authentication → Logon, and then select Automatic

logon with current user name and password.

8. Click OK, and then select the Advanced tab.

9. Scroll to Multimedia and select Play animations in webpages.

10. Scroll to Security, check Enable Integrated Windows Authentication, and then click OK.

Configuring Mozilla Firefox browser settings

NOTE: To modify Mozilla Firefox browser settings in the latest versions of Firefox, disable

protection. Right-click the Site Identity button (located to the left of the URL), go to Options and

click on Disable protection for now.

To modify Mozilla Firefox browser settings:

1. In the Firefox address bar, type about:config, and then click I’ll be careful, I promise if prompted.

2. Search for the term ntlm.

The search should return at least three results.

3. Double-click network.automatic-ntlm-auth.trusted-uris and enter the following setting as

appropriate for your machine:

• For local machines, enter the host name.

• For remote machines, enter the host name or IP address, separated by a comma, of the appliance

system hosting the AppAssure Core; for example, IPAddress, host name.

4. Restart Firefox.
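
If the same Firefox preference must be set on several machines, the value can also be placed in a user.js file in the Firefox profile folder. The following Python sketch formats such a line from a list of hosts. The function name is hypothetical, and using user.js is an alternative that this guide does not describe.

```python
def ntlm_trusted_uris_pref(hosts):
    """Return a user.js line that sets the NTLM trusted-URIs preference."""
    # Drop empty entries and surrounding spaces, then join with commas.
    cleaned = [h.strip() for h in hosts if h.strip()]
    value = ",".join(cleaned)
    return f'user_pref("network.automatic-ntlm-auth.trusted-uris", "{value}");'
```

Passing the IP address and the host name of the appliance produces a single comma-separated value, matching the format described for remote machines.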

Roadmap for configuring the Core

Configuration includes tasks such as creating and configuring the repository for storing backup

snapshots, defining encryption keys for securing protected data, and setting up alerts and notifications.

After you complete the configuration of the Core, you can then protect agents and perform recovery.

Page 24

Configuring the Core involves understanding certain concepts and performing the following initial

operations:

• Create a repository

• Configure encryption keys

• Configure event notification

• Configure retention policy

• Configure SQL attachability

NOTE: If you are using this Appliance, it is recommended that you use the Appliance tab to

configure the Core. For more information about configuring the Core after initial installation, see

the Dell DL4300 Appliance Deployment Guide at dell.com/support/home.

Managing licenses

You can manage licenses directly from the Core Console. From the console, you can change the license

key and contact the license server. You can also access the License Portal from the Licensing page in the

Core Console.

The Licensing page includes the following information:

• License type

• License status

• License constraints

• Number of machines protected

• Status of last response from the licensing server

• Time of last contact with the licensing server

• Next scheduled attempt of contact with the licensing server

Changing a license key

To change a license key:

1. Navigate to the Core Console.

2. Select Configuration → Licensing.

The Licensing page appears.

3. From the License Details section, click Change License.

The Change License dialog box appears.

4. In the Change License dialog box, enter the new license key and then click Continue.

Contacting the license portal server

The Core Console frequently contacts the portal server to remain current with any changes made in the

license portal. Typically, communication with the portal server occurs automatically at designated

intervals; however, you can initiate communication on demand.

To contact the portal server:

1. Navigate to the Core Console.

2. Click Configuration → Licensing.

3. From the License Server option, click Contact Now.

Page 25

Changing the AppAssure language manually

AppAssure allows you to change the language that you had selected while running AppAssure Appliance

Configuration Wizard to any of the supported languages.

To change the AppAssure language to the desired language:

1. Launch the Registry Editor using the regedit command.

2. Navigate to HKEY_LOCAL_MACHINE → SOFTWARE → AppRecovery → Core → Localization.

3. Open Lcid.

4. Select decimal.

5. Enter the required language value in the Value data box. The supported language values are:

a. English: 1033

b. Brazilian Portuguese: 1046

c. Spanish: 1034

d. French: 1036

e. German: 1031

f. Simplified Chinese: 2052

g. Japanese: 1041

h. Korean: 1042

6. Right-click and restart the services in the given order:

a. Windows Management Instrumentation

b. SRM Web Service

c. AppAssure Core

7. Clear the browser cache.

8. Close the browser and restart the Core Console from the desktop icon.
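
Steps 1 to 5 of this procedure can be scripted. The following Python sketch writes the language value with the standard winreg module, which is available only on Windows. It assumes that Lcid is stored as a 32-bit DWORD value and that the script runs with administrator rights; the names LANGUAGE_LCIDS and set_core_language are hypothetical. The services must still be restarted and the browser cache cleared as described in steps 6 to 8.

```python
# Supported language values, as listed in step 5.
LANGUAGE_LCIDS = {
    "English": 1033,
    "Brazilian Portuguese": 1046,
    "Spanish": 1034,
    "French": 1036,
    "German": 1031,
    "Simplified Chinese": 2052,
    "Japanese": 1041,
    "Korean": 1042,
}

# Registry key from step 2, under HKEY_LOCAL_MACHINE.
REGISTRY_PATH = r"SOFTWARE\AppRecovery\Core\Localization"


def set_core_language(language):
    """Write the LCID for the given language to the registry (Windows only)."""
    lcid = LANGUAGE_LCIDS[language]  # raises KeyError for unsupported names
    import winreg  # imported here because the module exists only on Windows

    with winreg.OpenKey(
        winreg.HKEY_LOCAL_MACHINE, REGISTRY_PATH, 0, winreg.KEY_SET_VALUE
    ) as key:
        # Assumption: Lcid is a REG_DWORD value.
        winreg.SetValueEx(key, "Lcid", 0, winreg.REG_DWORD, lcid)
    return lcid
```

For example, set_core_language("German") would write the decimal value 1031.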

Changing the OS language during installation

On a running Windows installation, you can use the control panel to select language packs and configure

additional international settings.

To change OS language:

NOTE: It is recommended that the OS language and the AppAssure language be set to the same

language; otherwise, some messages may be displayed in mixed languages.

NOTE: It is recommended to change the OS language before changing the AppAssure language.

1. On the Start page, type language, and make sure that the search scope is set to Settings.

2. In the Results panel, select Language.

3. In the Change your language preferences pane, select Add a language.

4. Browse or search for the language that you want to install.

For example, select Catalan, and then select Add. Catalan is now added as one of your languages.

5. In the Change your language preferences pane, select Options next to the language that you added.

6. If a language pack is available for your language, select Download and install language pack.

7. When the language pack is installed, the language is displayed as available to use for the Windows

display language.

Page 26

8. To make this language your display language, move it to the top of your language list.

9. Log out and log in again to Windows for the change to take effect.

Managing Core settings

The Core settings are used to define various settings for configuration and performance. Most settings

are configured for optimal use, but you can change the following settings as necessary:

• General

• Nightly Jobs

• Transfer Queue

• Client Timeout Settings

• Deduplication Cache Configuration

• Database Connection Settings

Changing the Core display name

NOTE: It is recommended that you select a permanent display name during the initial configuration

of your Appliance. If you change it later, you must perform several steps manually to ensure that the

new host name takes effect and the appliance functions properly. For more information, see