Avaya Media Processing Server Series System

Reference Manual

(Software Release 2.1)

Avaya Business Communications Manager

Release 6.0

Document Status: Standard

Document Number: P0602477

Document Version: 3.1.12

Date: June 2010

© 2010 Avaya Inc.

All Rights Reserved.

Notices

While reasonable efforts have been made to ensure that the information in this document is complete and accurate at the time of printing,

Avaya assumes no liability for any errors. Avaya reserves the right to make changes and corrections to the information in this document

without the obligation to notify any person or organization of such changes.

Documentation disclaimer

Avaya shall not be responsible for any modifications, additions, or deletions to the original published version of this documentation unless such modifications, additions, or deletions were performed by Avaya. End User agrees to indemnify and hold harmless Avaya, Avaya’s agents, servants and employees against all claims, lawsuits, demands and judgments arising out of, or in connection with, subsequent modifications, additions or deletions to this documentation, to the extent made by End User.

Link disclaimer

Avaya is not responsible for the contents or reliability of any linked Web sites referenced within this site or documentation(s) provided by

Avaya. Avaya is not responsible for the accuracy of any information, statement or content provided on these sites and does not

necessarily endorse the products, services, or information described or offered within them. Avaya does not guarantee that these links will

work all the time and has no control over the availability of the linked pages.

Warranty

Avaya provides a limited warranty on this product. Refer to your sales agreement to establish the terms of the limited warranty. In

addition, Avaya’s standard warranty language, as well as information regarding support for this product, while under warranty, is

available to Avaya customers and other parties through the Avaya Support Web site: http://www.avaya.com/support

Please note that if you acquired the product from an authorized reseller, the warranty is provided to you by said reseller and not by Avaya.

Licenses

THE SOFTWARE LICENSE TERMS AVAILABLE ON THE AVAYA WEBSITE, HTTP://SUPPORT.AVAYA.COM/LICENSEINFO/ ARE APPLICABLE TO ANYONE WHO DOWNLOADS, USES AND/OR INSTALLS AVAYA SOFTWARE, PURCHASED FROM AVAYA INC., ANY AVAYA AFFILIATE, OR AN AUTHORIZED AVAYA RESELLER (AS APPLICABLE) UNDER A COMMERCIAL AGREEMENT WITH AVAYA OR AN AUTHORIZED AVAYA RESELLER. UNLESS OTHERWISE AGREED TO BY AVAYA IN WRITING, AVAYA DOES NOT EXTEND THIS LICENSE IF THE SOFTWARE WAS OBTAINED FROM ANYONE OTHER THAN AVAYA, AN AVAYA AFFILIATE OR AN AVAYA AUTHORIZED RESELLER, AND AVAYA RESERVES THE RIGHT TO TAKE LEGAL ACTION AGAINST YOU AND ANYONE ELSE USING OR SELLING THE SOFTWARE WITHOUT A LICENSE. BY INSTALLING, DOWNLOADING OR USING THE SOFTWARE, OR AUTHORIZING OTHERS TO DO SO, YOU, ON BEHALF OF YOURSELF AND THE ENTITY FOR WHOM YOU ARE INSTALLING, DOWNLOADING OR USING THE SOFTWARE (HEREINAFTER REFERRED TO INTERCHANGEABLY AS "YOU" AND "END USER"), AGREE TO THESE TERMS AND CONDITIONS AND CREATE A BINDING CONTRACT BETWEEN YOU AND AVAYA INC. OR THE APPLICABLE AVAYA AFFILIATE ("AVAYA").

Copyright

Except where expressly stated otherwise, no use should be made of the Documentation(s) and Product(s) provided by Avaya. All content in this documentation(s) and the product(s) provided by Avaya including the selection, arrangement and design of the content is owned either by Avaya or its licensors and is protected by copyright and other intellectual property laws including the sui generis rights relating to the protection of databases. You may not modify, copy, reproduce, republish, upload, post, transmit or distribute in any way any content, in whole or in part, including any code and software. Unauthorized reproduction, transmission, dissemination, storage, and/or use without the express written consent of Avaya can be a criminal, as well as a civil, offense under the applicable law.

Third Party Components

Certain software programs or portions thereof included in the Product may contain software distributed under third party agreements ("Third Party Components"), which may contain terms that expand or limit rights to use certain portions of the Product ("Third Party Terms"). Information regarding distributed Linux OS source code (for those Products that have distributed the Linux OS source code), and identifying the copyright holders of the Third Party Components and the Third Party Terms that apply to them is available on the Avaya Support Web site: http://support.avaya.com/Copyright.

Trademarks

The trademarks, logos and service marks ("Marks") displayed in this site, the documentation(s) and product(s) provided by Avaya are the registered or unregistered Marks of Avaya, its affiliates, or other third parties. Users are not permitted to use such Marks without prior written consent from Avaya or such third party which may own the Mark. Nothing contained in this site, the documentation(s) and product(s) should be construed as granting, by implication, estoppel, or otherwise, any license or right in and to the Marks without the express written permission of Avaya or the applicable third party. Avaya is a registered trademark of Avaya Inc. All non-Avaya trademarks are the property of their respective owners.

Downloading documents

For the most current versions of documentation, see the Avaya Support Web site: http://www.avaya.com/support

Contact Avaya Support

Avaya provides a telephone number for you to use to report problems or to ask questions about your product. The support telephone number is 1-800-242-2121 in the United States. For additional support telephone numbers, see the Avaya Web site: http://www.avaya.com/support

Table of Contents

Preface
  Scope
  Intended Audience
  How to Use This Manual
  Organization of This Manual
  Conventions Used in This Manual
  Solaris and Windows 2000 Conventions
  Trademark Conventions

Avaya MPS Architectural Overview
  Overview of the Avaya Media Processing Server (MPS) System
  System Architecture
  Hardware Overview
  Front Control Panel (FCP)
  Variable Resource Chassis (VRC)
  Power Supplies
  VRC Rear Panel
  Drive Bays
  Application Processor
  Network Interface Controller (NIC) or Hub-NIC
  Telephony Media Server (TMS)
  Phone Line Interface
  Multiple DSP Module (MDM)
  System LAN Interface
  Field Programmable Gate Arrays (FPGA) and the Boot ROM
  TelCo Connector Panel (TCCP)
  Software Overview
  Software Environment
  ASE Processes
  ASE/VOS Integration Layer
  VOS Processes
  System Utilities and Software
  alarm
  dlog
  dlt
  log
  PeriProducer
  PeriReporter
  PeriStudio
  PeriView
  PeriWeb
  vsh

# P0602477 Ver: 3.1.11 Page 3

Base System Configuration
  Base System Configuration
  System Startup
  Solaris Startup/Shutdown
  Windows Startup/Shutdown
  SRP (Startup and Recovery Process)
  Manually Starting and Stopping SRP
  VPS Topology Database Server (VTDB)
  Restart of Abnormally Terminated Programs
  Communication with VOS Processes
  SRP Configuration Command Line Arguments
  VSH Shell Commands
  SRP Status
  Call Control Manager (CCM/CCMA)
  Startup Files
  The hosts File
  User Configuration Files
  The .xhtrahostsrc File
  The MPSHOME Directory
  The MPSHOME/common Directory
  The MPSHOME/common/etc Directory
  The srp.cfg File
  The vpshosts File
  The compgroups File
  The gen.cfg File
  The global_users.cfg File
  The alarmd.cfg and alarmf.cfg Files
  The pmgr.cfg File
  The periview.cfg File
  The MPSHOME/common/etc/tms Directory
  The sys.cfg File
  The tms.cfg File
  Protocol Configuration Files
  The $MPSHOME/packages Directory
  %MPSHOME%\PERIase - /opt/vps/PERIase
  The /etc/ase.conf file
  The /etc/services File
  %MPSHOME%\PERIbrdge - /opt/vps/PERIbrdge
  %MPSHOME%\PERIdist - /opt/vps/PERIdist
  %MPSHOME%\PERIglobl - /opt/vps/PERIglobl
  %MPSHOME%\PERIview - /opt/vps/PERIview
  %MPSHOME%\PERIplic - /opt/vps/PERIplic
  %MPSHOME%\PERItms - /opt/vps/PERItms
  The /cfg/atm_triplets.cfg File
  The /cfg/ps_triplets.cfg File

  The /cfg/tms_triplets.cfg File
  %MPSHOME%\PERImps - /opt/vps/PERImps
  The MPSHOME/tmscommN Directory
  MPS 500
  MPS 1000
  The MPSHOME/mpsN Directory
  The MPSHOME/mpsN/apps Directory
  The MPSHOME/mpsN/etc Directory
  VMM Configuration Files
  The vmm.cfg File
  The vmm-mmf.cfg File
  ASE Configuration Files
  The ase.cfg File
  The aseLines.cfg File
  CCM Configuration Files
  The ccm_phoneline.cfg File
  The ccm_admin.cfg File
  TCAD Configuration Files
  The tcad-tms.cfg File
  The tcad.cfg File
  TRIP Configuration Files
  The trip.cfg File
  TMS Watchdog Functions

Common Configuration
  Multi-Media Format Files (MMFs)
  How to Create an MMF File
  Vocabulary MMF Files vs. CMR MMF Files
  Activating MMF Files
  Delimited and Partial Loading
  Audio Playback
  Custom Loading
  Using Hash Tables
  System MMF Files
  Application-Specific MMF Files
  Default Vocabulary and Record MMF Files
  Diagnostics and Reports
  Synchronizing MMF Files Across Nodes
  ZAP and MMF files on the MPS
  MMF Abbreviated Content (MAC) File
  Basic Implementation (Low Volume/Traffic)
  Advanced Implementation (High Volume/Traffic)
  Updating a Specific Element
  Exception Processing
  Log Files

  Synchronization (ZAP) Command Summary
  Troubleshooting
  Call Simulator Facility
  VEMUL Script Format
  Script Control
  Configuration Parameters
  Script Commands
  Statements
  Primitives
  Phone Line Behavior During Simulation
  Call Simulator Conditions and Usage
  Command Line Interface
  Example Call Simulation Script Files
  Alarm Filtering
  Filtering Precepts
  Command Line Interface
  alarmf Command Line Options
  Notation Functionality
  Logical Conditions
  Action Functions
  Filtering Examples
  Interapplication/Host Service Daemon Data Exchange
  VMST (VMS)
  Starting Under SRP
  PeriPro Interaction
  Arguments
  Examples:
  VTCPD
  Single Connection to Host
  Multiple Connections to Multiple Hosts
  One Connection Per Line
  Multiple VTCPD Daemons
  Host Connections
  Attaching to VMST
  Message Format
  Message Identification (ID)
  Connection Capacity
  Application-Host Interaction Configuration Options
  Queuing Requests
  Monitoring Host Connections
  Backup LAN
  VFTPD
  Specifying a Port
  Automatic Startup
  Automatic FTP Logins
  Identifying the Configured Host Computers

Configuration Procedures and Considerations
  Making Changes to an Existing System
  Adding Spans
  Modifying the Span Resource Set
  Changing Pool/Class Names
  Renumbering a Component
  Renaming a Solaris MPS Node
  Renaming a Windows MPS Node
  Introducing a New Node
  Enabling Statistics Collection
  Debug Terminal Connection
  Connection Using a Dumb Terminal or PC
  Connection from the System Console
  Verifying/Modifying Boot ROM Settings
  DCC Boot ROM
  TMS Boot ROM
  NIC Boot ROM
  Resetting the NIC
  TMS Computer Telephony (CT) Bus Clocking
  N+1 Redundancy
  Sample MPS 1000 N+1 Redundancy System Configuration
  TRIP Failback
  Directory Layout on a Secondary (Backup) Node
  Least Cost Routing Daemon
  Redundancy Configuration Daemon (RCD)
  The Failover/Failback Process
  Installation and Configuration
  Create the Secondary Node
  TMSCOMM Component Configuration
  Edit the vpshosts File
  Edit the tms.cfg File
  Edit TRIP and RCD Configuration Files
  Edit the gen.cfg file
  PMGR configuration
  Media Directories
  First Startup After Configuration
  Verifying N+1 Functionality
  Failover
  Failback
  Speech Server Resources in N+1 Redundancy
  Pool Manager (PMGR)
  Terminology
  Resource Object
  Allocation/Deallocation
  Pool

  Resource Identifier/String
  Scheme
  Configuration
  Port Service States
  Network Failure Detection (Pinging)
  Database Conversion
  Platform Conversion
  Starting a Reader
  Starting a Writer
  Database Format Conversion
  Reader/Writer Synchronization
  File Size Limitations
  Call Monitoring
  Listening to Calls

Security
  Antivirus Software
  Secure Shell

Index

Preface

Scope

The Avaya Media Processing Server Series System Reference Manual details the procedures and parameters for configuring the Avaya Media Processing Server (MPS) Series system for online operation in a variety of telephony environments. In addition, this manual provides configuration parameters and basic file information for elements common to all MPS systems within the network. Note, however, that although two basic products are available in the MPS system (a single rack-mounted version known as the Avaya MPS Series and a cabinet-enclosed network configuration that relies on the MPS 500), this manual deals almost exclusively with the latter.

In addition to this document, the Avaya Media Processing Server Series System Operator’s Guide may be particularly helpful. It provides a road map through the major functions in the daily operation and monitoring of the MPS system. For a list of other user manuals, see the Reference Material link in PeriDoc.

Intended Audience

This manual is intended for the persons who will be configuring the MPS for a

specific site and/or maintaining it from a particular perspective. The reader should be

familiar with telecommunications and computer equipment, their functions, and

associated terminology. In addition, the reader must be familiar with the

characteristics of the specific installation site, including site-specific power systems,

computer systems, peripheral components, and telephone networks.

Some of the material covered here involves the configuration of basic and critical

MPS parameters. Small inaccuracies in the configuration of these parameters can

impede system performance. Individuals without highly specialized knowledge in this

area should not attempt to change the defaults.

This guide assumes that the user has completed an on-site system familiarization

training program conducted as part of the initial system installation. Basic knowledge

of the Solaris and/or Windows 2000 operating system(s) is also assumed.

How to Use This Manual

This manual uses many standard terms relating to computer system and software

application functions. However, it contains some terminology that can only be

explained in the context of the MPS system. Refer to the Glossary of Avaya Media

Processing Server Series Terminology for definitions of product specific terms.

It is not essential that this document be read cover to cover, as not all of its contents apply to every MPS environment. It is essential, however, that you clearly understand exactly what information pertains to your environment and that you can identify, locate, and apply the information documented in this manual. Later, you can use the Table of Contents to locate topics of interest for reference and review.

If you are reading this document online, use the hypertext links to quickly locate related topics. Position the cursor over a hypertext link and click once. Click on any point in a Table of Contents entry to move to that topic. Click on the page number of any Index entry to access that topic page. Use the hyperlinks at the top and bottom of each HTML “page” to help you navigate the documentation. Pass your cursor over the Avaya Globemark to display the title, software release, publication number, document release, and release date for the HTML manual you are using.

For additional related information, use the Reference Material link in PeriDoc. To familiarize yourself with various specialized textual references within the manual, see Conventions Used in This Manual.

Periphonics is now part of Avaya. The name Periphonics, and variations thereof, appears in this manual only where it forms part of a product name (for example, a PeriProducer application, the PERImps package, or the perirev command).

Organization of This Manual

This document is designed to identify the procedures and configuration parameters

required for successful MPS operations. It provides an overview of the MPS system

and proceeds to document both basic and common system parameters. The following

passages provide an overview of the information contained in each area of this

manual.

Chapter 1 - Avaya Media Processing Server Series Architectural Overview

Provides a description of the MPS system and an overview of its hardware and software. Diagrams and describes the MPS structure and its software processes, and identifies other system utilities.

Chapter 2 - Base System Configuration

Describes and diagrams the system directory structure and startup and

shutdown, delineates the Startup and Recovery Process (SRP), and details

MPSHOME and all required configuration files.

Chapter 3 - Common Configuration

Documents the facilities available on all (common) MPS platforms. Details

MultiMedia Format (MMF) file creation and utilization. Also covers call

simulation, alarm filtering, and exchange of data between applications, hosts,

and MPS.

Chapter 4 - Configuration Procedures and Considerations

Contains common procedures and comprehensive considerations for

modifying existing systems and adding features.

Appendix A - Process and Utility Command Summary

Lists commands for some of the processes and utilities most commonly

interacted with in the MPS system. Provides brief definitions for each and

links to more detailed information.

Appendix B - Avaya MPS Specifications

Contains physical, electrical, environmental, and interface specifications for

the MPS.

Conventions Used in This Manual

This manual uses different fonts and symbols to differentiate between document

elements and types of information. These conventions are summarized in the

following table.

Conventions Used in This Manual (Sheet 1 of 2)

Notation - Description

Normal text - Normal text font is used for most of the document.

important term - The Italics font is used to introduce new terms, to highlight meaningful words or phrases, or to distinguish specific terms from nearby text.

system command - This font indicates a system command and/or its arguments. Such keywords are to be entered exactly as shown (i.e., users are not to fill in their own values).

command, condition and alarm - Command, Condition and Alarm references appear on the screen in magenta text and reference the Command Reference Manual, the PeriProducer User’s Guide, or the Alarm Reference Manual, respectively. Refer to these documents for detailed information about Commands, Conditions, and Alarms.

file name / directory - This font is used for highlighting the names of disk directories, files, and extensions for file names. It is also used to show displays on text-based screens (e.g., to show the contents of a file).

on-screen field - This font is used for field labels, on-screen menu buttons, and action buttons.

<KEY NAME> - A term that appears within angled brackets denotes a terminal keyboard key, a telephone keypad button, or a system mouse button.

Book Reference - This font indicates the names of other publications referenced within the document.

cross reference - A cross reference appears on the screen in blue text. Click on the cross reference to access the referenced location. A cross reference that refers to a section name accesses the first page of that section.

(Note icon) - The Note icon identifies notes, important facts, and other keys to understanding.

! (Caution icon) - The Caution icon identifies procedures or events that require special attention. The icon indicates a warning that serious problems may arise if the stated instructions are improperly followed.

Page 14

Conventions Used in This Manual (Sheet 2 of 2)

Notation - Description

(Windows icon) - The flying Window icon identifies procedures or events that apply to the Windows 2000 operating system only. (See footnote 1.)

(Solaris icon) - The Solaris icon identifies procedures or events that apply to the Solaris operating system only. (See footnote 2.)

1. Windows 2000 and the flying Window logo are either trademarks or registered trademarks of the Microsoft Corporation.

2. Solaris is a trademark or registered trademark of Sun Microsystems, Inc. in the United States and other countries.

Page 15

Solaris and Windows 2000 Conventions

This manual depicts examples (command line syntax, configuration files, and screen
shots) in Solaris format. Where required, Windows 2000 specific commands,
procedures, or screen shots are also shown. The following table lists examples of
general operating system conventions to keep in mind when using this manual with
either the Solaris or Windows 2000 operating system.

              Solaris                 Windows 2000

Environment   $MPSHOME                %MPSHOME%

Paths         $MPSHOME/common/etc     %MPSHOME%\common\etc

Command       <command> &             start /b <command>
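
The conventions in the table can be captured in a few lines of code. The following is a minimal Python sketch, not part of the MPS software: the class name OsConvention and its fields are hypothetical, and only the notation itself ($MPSHOME, %MPSHOME%, the path separators, and the two command forms) comes from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OsConvention:
    """Notation rules for one operating system (hypothetical helper)."""
    var_open: str    # text placed before an environment variable name
    var_close: str   # text placed after an environment variable name
    path_sep: str    # separator between path components
    launch_fmt: str  # command-line form from the "Command" row

    def env(self, name):
        # Render an environment variable reference, e.g. $MPSHOME.
        return f"{self.var_open}{name}{self.var_close}"

    def path(self, var_name, *parts):
        # Join a variable reference and path components with the OS separator.
        return self.path_sep.join([self.env(var_name), *parts])

    def launch(self, command):
        # Render the command in the form shown in the table.
        return self.launch_fmt.format(command=command)


SOLARIS = OsConvention("$", "", "/", "{command} &")
WINDOWS_2000 = OsConvention("%", "%", "\\", "start /b {command}")

for os_rules in (SOLARIS, WINDOWS_2000):
    print(os_rules.path("MPSHOME", "common", "etc"))
    print(os_rules.launch("<command>"))
```

Reading a Solaris example from this manual on a Windows 2000 system amounts to applying the second set of rules in place of the first.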

Trademark Conventions

The following trademark information is presented here and applies throughout for

third party products discussed within this manual. Trademarking information is not

repeated hereafter.

Solaris is a trademark or registered trademark of Sun Microsystems, Inc. in the United

States and other countries.

Microsoft, Windows, Windows 2000, Internet Explorer, and the Flying Windows logo

are either trademarks or registered trademarks of Microsoft Corporation.

Netscape® and Netscape Navigator® are registered trademarks of Netscape

Communications Corporation in the United States and other countries. Netscape's

logos and Netscape product and service names are also trademarks of Netscape

Communications Corporation, which may be registered in other countries.

Page 17

Avaya MPS Architectural Overview

This chapter covers:

1. Overview of the Avaya

Media Processing Server

Series System

2. System Architecture

3. System Utilities and

Software

Page 18

Overview of the Avaya Media Processing Server System

The Avaya Media Processing Server (MPS) Series products comprise hardware and

software to create a call and web-based processing environment. These systems

integrate the call processing environment with speech, telephony, data

communications, and transaction processing functions. The platform is based on the

Avaya Telephony Media Server (TMS) which provides high phone port densities and

increased user flexibility and extensibility. The basic TMS assembly provides

resources for telephony media management including switching/bridging, digital

signal processing, voice and data memory, and network interfaces. A variety of

interactive voice processing applications are accommodated, from simple information

delivery services to complex multimedia (voice/fax/data/web) call processing

implementations with local databases, multiple services, and transaction processing

functions.

The MPS system supports a wide selection of telephony and host computer
connectivity interfaces for easy integration into an existing
data-processing/communications environment. It also includes a set of easy-to-use,
object-oriented Graphical User Interface (GUI) tools. These tools are used for:

• application and vocabulary development

• system configuration, control, and monitoring

• collection and reporting of statistical data

• access to on-line documentation and its concurrent implementations

The application development environment is fully graphical across the entire
application life cycle, and it also allows applications written for phone lines to be
ported to Internet-based Web usage. PeriProducer is the recommended GUI tool for
application development. The PeriWeb package allows these phone line applications
to run as interactive World Wide Web applications.

The MPS systems employ industry standards and distributed processing in an open

architecture, allowing plug-in integration of future technological developments. In

addition, networking elements of the MPS support multiple LAN/WAN interfaces,

providing an environment ready for distributed computing.

This chapter of the Avaya Media Processing Server Series System Reference Manual

presents an overall view of the MPS hardware and software, describes the software

processes responsible for operations, and provides a series of diagrams that illustrate

both hardware and software relationships.

Base System Configuration on page 64 documents the process of getting the MPS
system up and running, identifies the individual configuration files, details some of
the newer processes, and describes the directory structure of the operating
environment and the predefined environment variables.

Page 19

System Architecture

The MPS family is designed with a flexible hardware and software architecture that is

highly scalable. System models range from small (48 ports) to large networked

configurations of tens of thousands of ports. The same basic hardware and software

components are used for all configurations. Individual systems usually vary only in

application/transaction processor performance, capacity for additional ports (TMS’),

and optional feature software/hardware (for example, Call Progress Detection, Speech

Recognition, or Caller Message Recording).

Architecture of the MPS is based on a Sun Microsystems SPARC system processor

running the Solaris operating system or an Intel processor running Windows 2000.

The system processor is connected to one or more Telephony Media Servers (TMS).

The TMS is a flexible platform that provides switching, bridging, programmable

resources, memory, and network interfaces to execute a comprehensive set of

telephony and media functions.

Each MPS system consists of a Solaris or Windows host node running OS and MPS

software, and one or more TMS’ responsible for the bulk of the actual telephony

processing. One TMS is required for each MPS defined on the node. A multiple node

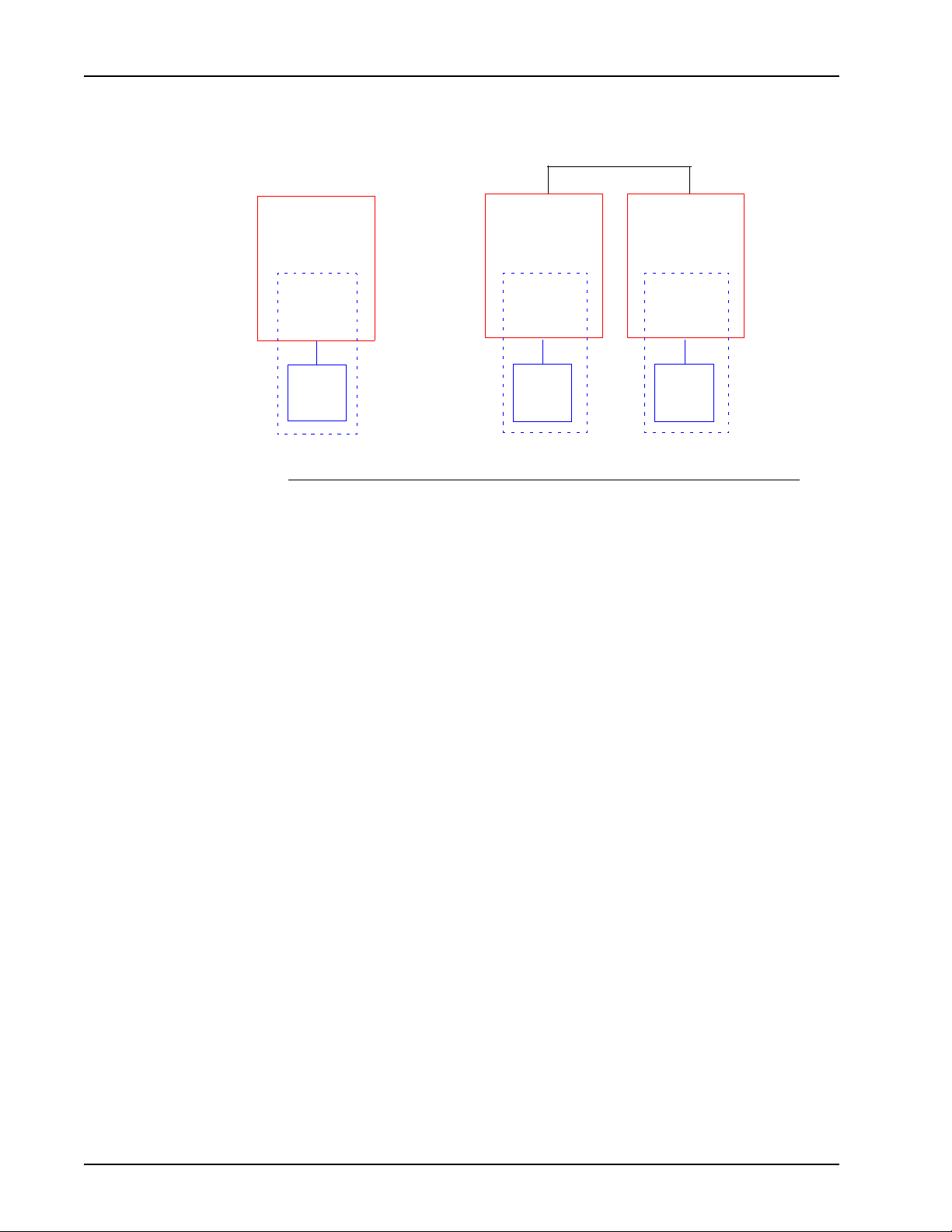

configuration is referred to as the MPS Network. The following diagrams illustrate the

two basic products available in the MPS system: a single rack-mounted version,

known as the MPS100, which is available on the Windows platform only, and a

cabinet enclosed networked configuration which relies on the MPS1000 and is

available on both the Windows and Solaris platforms. Typically, the MPS100 contains

only 2 spans (though it may contain up to 8) and only 1 Digital Communications

Controller (DCC) card, and does not support bridging outside the TMS. Conversely,

the MPS1000 is the high-capacity model, with 4 TMS’ per chassis and up to 4 chassis

per cabinet. It can support up to ten thousand ports with the ability to bridge between

any two regardless of the chassis the ports are in with respect to each other. This

manual deals almost exclusively with the MPS1000.

The flexibility inherent in the product line allows the MPS networks to incorporate

numerous different designs. For additional information and configurations, see the

Avaya Media Processing Server Series 1000 Transition Guide. For information on

using the MPS, see the Avaya System Operator’s Guide.

Though the Avaya Media Processing Server Series 1000 Transition Guide is typically

used by those migrating from a previous version of our transaction processing

systems, it also contains information of interest to those new to the product line. Such

information should be used in that context only.

Page 20

[Figure: Single Media Processing Server 100 and Basic Media Processing Server 1000 Network. Panels: MPS100 (Windows; MPS with ASE, VOS, and TMS) and MPS1000 Network (Node A: MPS 1 with ASE, VOS, and TMS; Node B: MPS 2 with ASE, VOS, and TMS).]

Page 21

Hardware Overview

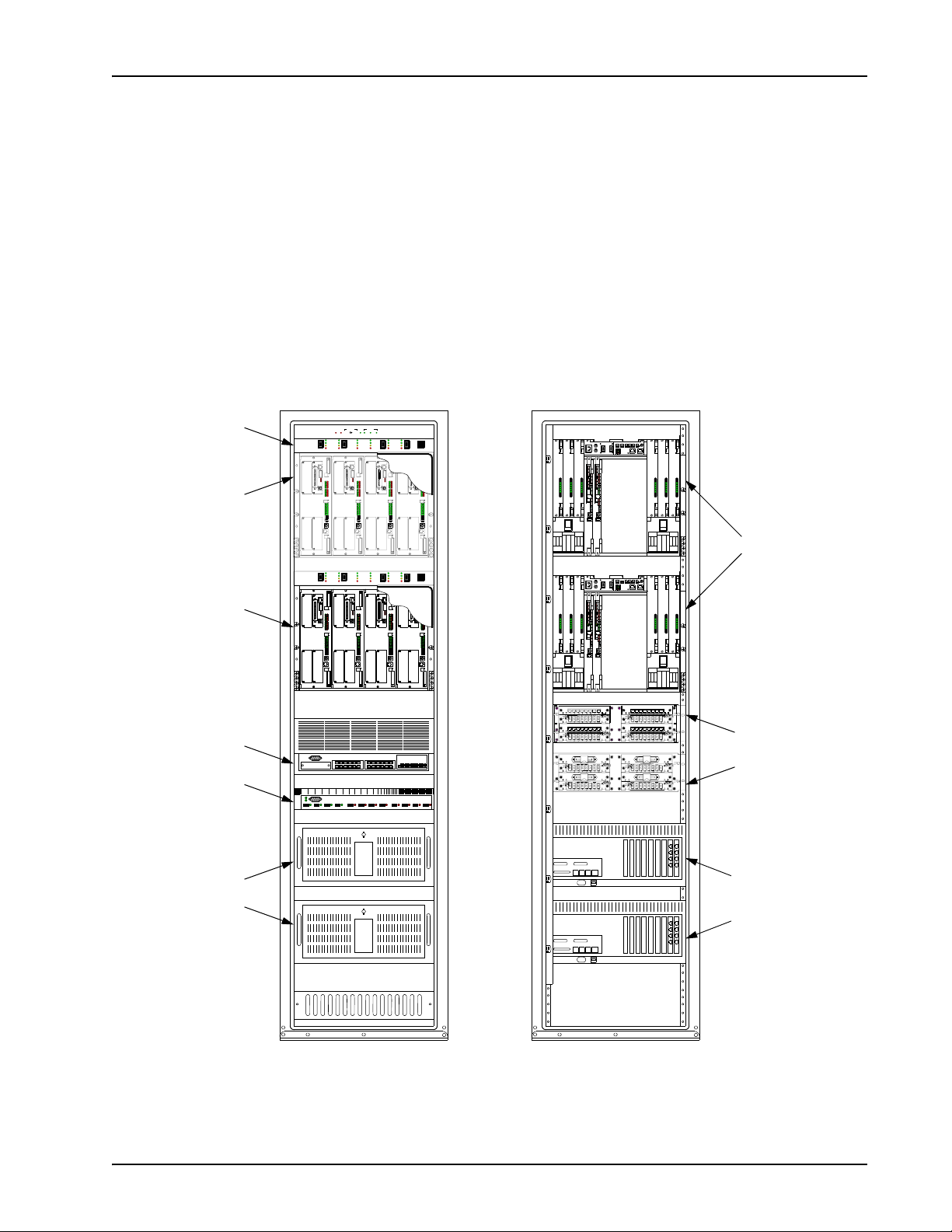

Typical system hardware includes a SPARC (Solaris) or Intel (Windows)

application/transaction processor and related computer components (such as hard

drive and RAM) and TMS hardware, including storage for speech and data files, a

telephone interface card, network interface cards, power supplies, and various voice

processing modules. The major hardware components that make up the MPS1000 are

shown in the following illustration (MPS100 information is contained in a separate

manual). Each of these is further dissected and discussed in the paragraphs that

follow. See the Avaya Media Processing Server Series System Operator’s Guide

regarding details on system monitoring and control and specific analysis of panel

switches and LEDs.

[Figure: Major hardware components of the MPS1000, Front View and Rear View. Callouts: Front Control Panel (FCP); Variable Resource Chassis (VRCs), populated with Telephony Media Server (TMS) assemblies; Network (Ethernet) Switch; Asynchronous Transfer Mode (ATM) Fiber Optic Switch; Rear of VRCs; TelCo Connector Panels (TCCP); Application Processors; Rear of Application Processors.]

Page 22

For detailed information on the physical, electrical, environmental, and interface
specifications of the Avaya Media Processing Server (MPS) Series, please refer to the
MPS Specifications chapter in the Avaya MPS Hardware Installation and
Maintenance manual.

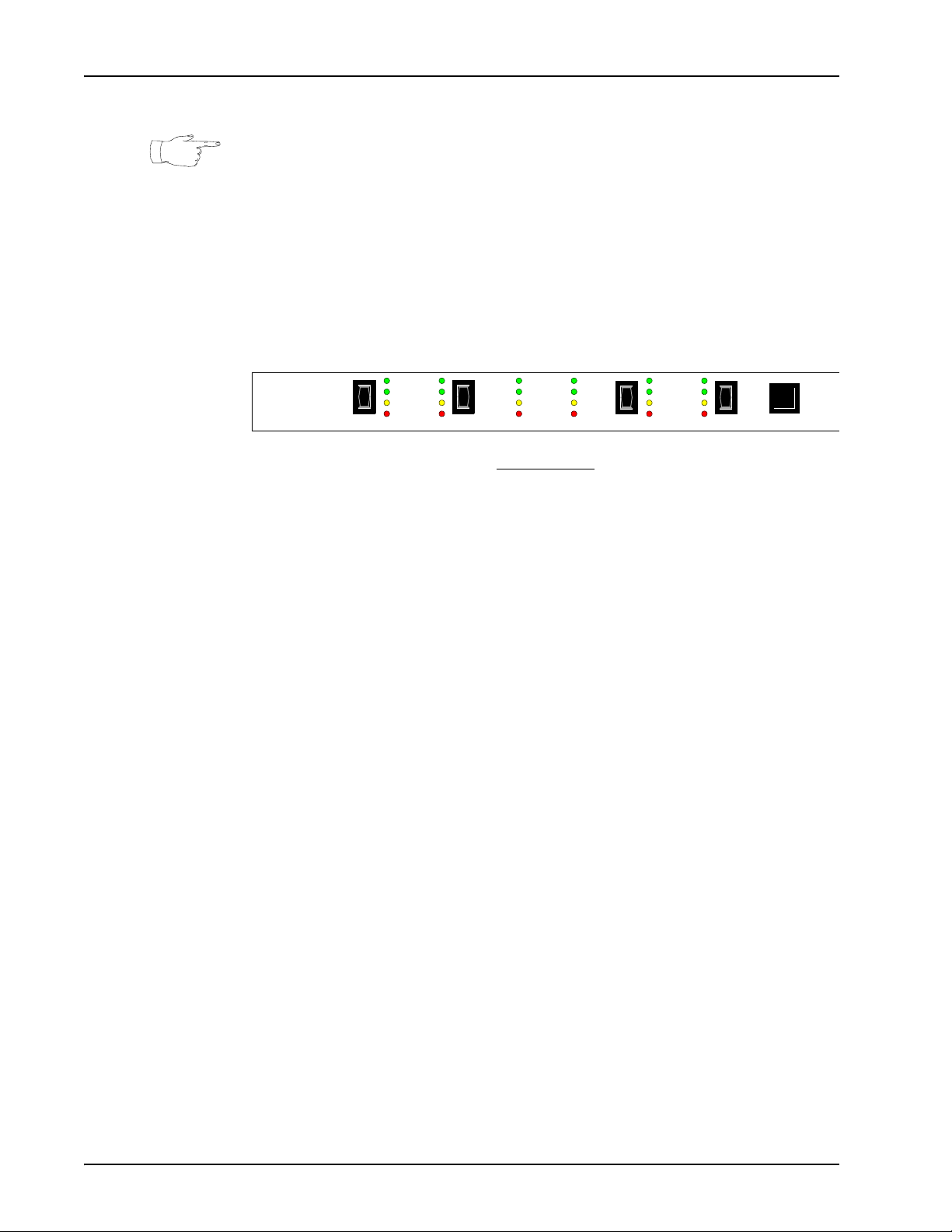

Front Control Panel (FCP)

One FCP is present for each VRC in the system. The FCP provides separate power

controls and status indicators for each TMS (by chassis slot).

[Figure: FCP Front View. Panel labels: SLOT 1 through 6; TEST, ON, and OFF switch positions; POWER ON, NORMAL, MINOR ALARM, and MAJOR ALARM indicators; RESET.]

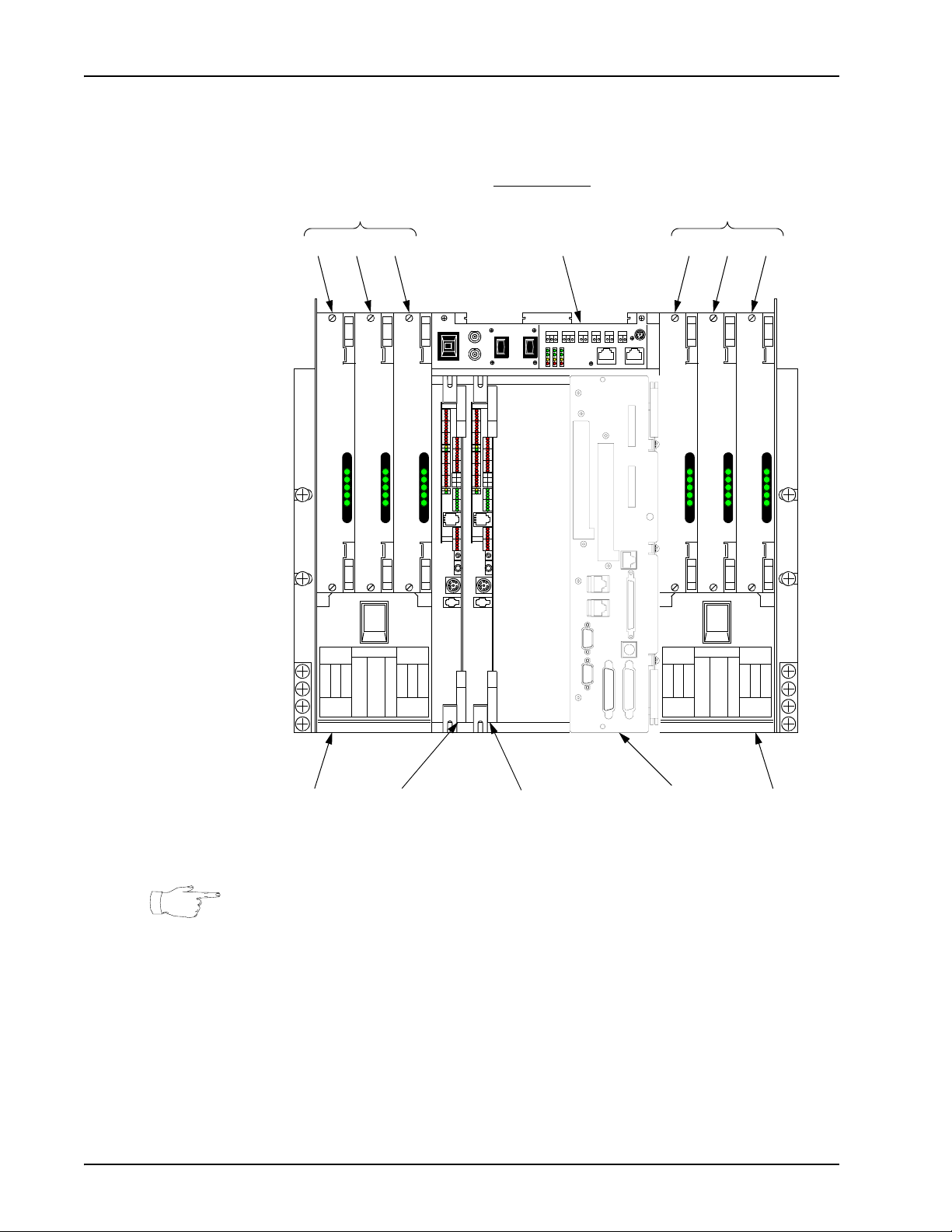

Variable Resource Chassis (VRC)

The VRC is a versatile chassis assembly that is used in several Avaya product lines.

The VRC has four front and two rear plug-in slots, and contains:

• Up to four TMS assemblies

• One or two application processor board(s) (rear; not present if rack mounted

application processor(s) are used)

• Two Network Interface Controllers (NICs) or one Hub-NIC

• Up to six power supplies, one for each populated slot

• Two available drive bays

Page 23

[Figure: VRC Front View (Populated with Four TMS’). Slot 1 through Slot 4 are shown; panel labels include HUB A, HUB B, AUDIO, CONSOLE, and numbered positions 0 through 15.]

The VRC backplane is located midway between the front and rear of the chassis. The

backplane contains connectors for the modules that plug into each slot, front and back.

The backplane provides connections for:

• Inter-module signals

• Power from the power supplies to the module slots

• A Time Division Multiplexing (TDM) bus for PCM (voice/audio) communications between the TMS assemblies

• Clocking signals for the TDM bus

Page 24

[Figure: VRC Rear View. Callouts: Power Supplies for slots (labeled 4 3 5 and 6 2 1); VRC Rear Panel; Alternate Application Processor Location (Slot 5); Drive Bays; Hub-NIC, or NIC (Primary) (Logical Slot 7) and NIC (Secondary) (Logical Slot 8); Application Processor (Slot 6) (if rack-mounted AP is not used).]

In multiple chassis and cabinet systems, some VRCs do not contain all the assemblies

listed above.

Power Supplies

Each slot in the VRC has a separate power supply dedicated to it. The power supplies

are identical and can be installed in any of the six locations for a slot that requires

power. The slot that each power supply is associated with is indicated on the decals on

the drive bay doors. There is no dedicated power supply for the NIC slot.

Page 25

[Figure: Power supply. Labels: +3.3V, +5V, +12V, -12V, MISMATCH.]

Page 26

VRC Rear Panel

The rear panel of the VRC contains indicators, switches, and connectors for

maintenance, configuration, and connection to other system components. The power

switches for slots 5 and 6 are also located here, as well as the chassis ID wheel.

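[Figure: VRC rear panel. Labels: CHASSIS ID; EXT CLK A; EXT CLK B; MC1 IN; MC1 OUT; TEST, ON, and OFF switch positions for SLOT 5 and SLOT 6; ALARM (MAJ and MIN, each with NC, C, and NO); NIC; S5 and S6; PWR ON; NORMAL; MIN ALARM; MAJ ALARM; EXTERNAL SENSORS A, B, C, and D; ENET-A; ENET-B; CSL.]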

Drive Bays

These bays contain the slots for and physical location of the system hard drives when

VRC-mounted application processors are used. Generally one drive is present per

processor, but additional drives may be added if system performance requires them.

Application Processor

In VRC-mounted configurations, the application processor is a “stripped down”
version of a Solaris or Windows computer: it contains the CPU, memory, and printed
circuit boards needed for both standard OS functions and basic MPS1000
transaction processing. One application processor is present per VRC in slot 6. If
the VRC is populated with multiple TMS’ (which may in turn contain more than one
phone line interface card) and large numbers of spans, system performance may
degrade and another processor may need to be added.

In typical rack-mounted configurations, there is one application processor per VRC,
and the processors are mounted at the bottom of the cabinet. This application
processor is similar in makeup to a typical Solaris or Windows computer. In either
form, an additional application processor may be added where dual redundancy is
desired.

Page 27

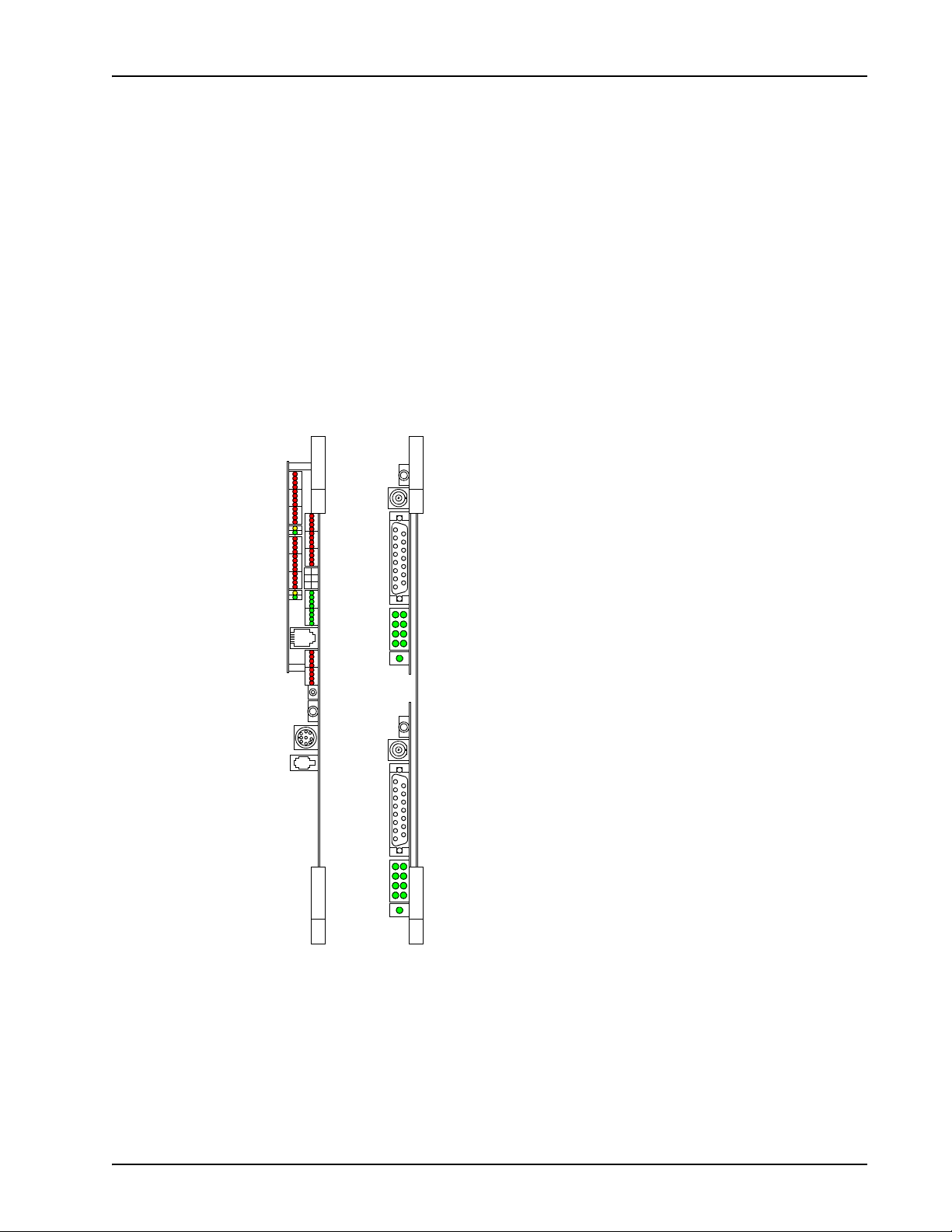

Network Interface Controller (NIC) or Hub-NIC

Each VRC in the system contains either two NICs (primary and secondary) or a single

Hub-NIC. The Hub-NIC plugs into the NIC slot in back of the VRC, and contains two

network hubs for the chassis Ethernet. It is generally used only in single chassis

systems. In multiple chassis systems, two NICs are used. In this case a midplane board

is installed over the backplane connector of the NIC slot, effectively splitting the slot

and providing separate connectors for each NIC. The two connectors on the midplane

board are logically assigned to slot 7 (primary) and slot 8 (secondary) for addressing.

The NICs have additional functionality such as system monitor capabilities, watchdog

timer, and alarm drivers, and can interface from the intra-chassis Pulse Code

Modulation (PCM) highways to a fiber optic Asynchronous Transfer Mode (ATM)

switching fabric. The NICs receive power from any installed power supply that is on.

[Figure: NIC and Hub-NIC]

Page 28

Telephony Media Server (TMS)

The TMS is the core functional module of the Avaya Media Processing Server (MPS)

Series system. It provides a versatile platform architecture for a broad range of

telephony functions with potential for future enhancement. The basic TMS assembly

consists of a motherboard and mounting plate containing front panel connectors and

indicators.

[Figure: TMS Assembly Front View. Panel labels: SLOT 1 through SLOT 4, HUB A, HUB B, MPC, EXT, AUDIO, CONSOLE, and numbered positions 0 through 15.]

The TMS motherboard provides most essential functions for telephony and telephony

media management, including network and backplane bus interfaces, local memory,

digital signal processors, tone generators, local oscillators, and Phase-Lock Loop

(PLL) for Computer Telephony (CT) bus synchronization with other TMS’ and the

chassis. The motherboard contains a riser board that allows up to four additional

modules to be plugged in. The TMS motherboard also contains six Digital Signal

Processors (DSPs) which can be configured for communications protocols and to

provide resources.

Phone Line Interface

A TMS contains at least one phone line interface card, which can be a single Digital
Communications Controller (DCC) (see page 29) or up to three Analog Line Interface
(ALI) cards (see page 30). A second DCC is present if Voice over Internet Protocol
(VoIP) is installed. Though digital and analog line interfaces cannot be combined in
the same TMS, multiple TMS systems can contain any combination of digital and
analog lines in the VRC. Any line can be either incoming or outgoing, and all ports are
nonblocking (i.e., any port can be bridged to any other port). The TMS can also be
populated with a Multiple DSP Module (MDM) (see page 31) in one or more of the
remaining open slots. Although the motherboard has local digital signal processors,
the MDM provides additional resources for systems that require them.

Page 29

A single TMS can support up to eight digital T1 (24 channels/span for a total of 192

lines) or E1 (30 channels/span for a total of 240 lines) spans by using an individual

DCC to connect to the Public Switched Telephone Network (PSTN). If some of the

lines are used exclusively for IVR resources, one or more spans may be dedicated.

Spans dedicated as such are connected directly in clear channel protocol. Supported

digital protocols include in-band T1/E1 and out-of-band SS7 and ISDN.

In addition a TMS can support up to 72 analog lines by using three ALI boards (24

lines per ALI). The standard analog interface supports common two-wire loop-start

circuits.
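
The line counts quoted above follow directly from the per-span and per-board figures. The short Python sketch below reproduces that arithmetic; the function and constant names are illustrative only and do not correspond to any MPS utility.

```python
# Figures taken from the text above.
T1_CHANNELS_PER_SPAN = 24
E1_CHANNELS_PER_SPAN = 30
MAX_SPANS_PER_TMS = 8
LINES_PER_ALI = 24


def digital_lines(spans, channels_per_span):
    """Lines provided by a DCC carrying the given number of spans."""
    if not 0 <= spans <= MAX_SPANS_PER_TMS:
        raise ValueError(f"a single TMS supports 0 to {MAX_SPANS_PER_TMS} spans")
    return spans * channels_per_span


def analog_lines(ali_boards):
    """Lines provided by the given number of ALI boards."""
    if ali_boards < 0:
        raise ValueError("the number of ALI boards cannot be negative")
    return ali_boards * LINES_PER_ALI


print(digital_lines(8, T1_CHANNELS_PER_SPAN))  # 8 x 24 = 192
print(digital_lines(8, E1_CHANNELS_PER_SPAN))  # 8 x 30 = 240
print(analog_lines(3))                         # 3 x 24 = 72
```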

Information on configuration and application of phone line protocols and interfaces
can be found in the Avaya Media Processing Server Series Telephony Reference
Manual.

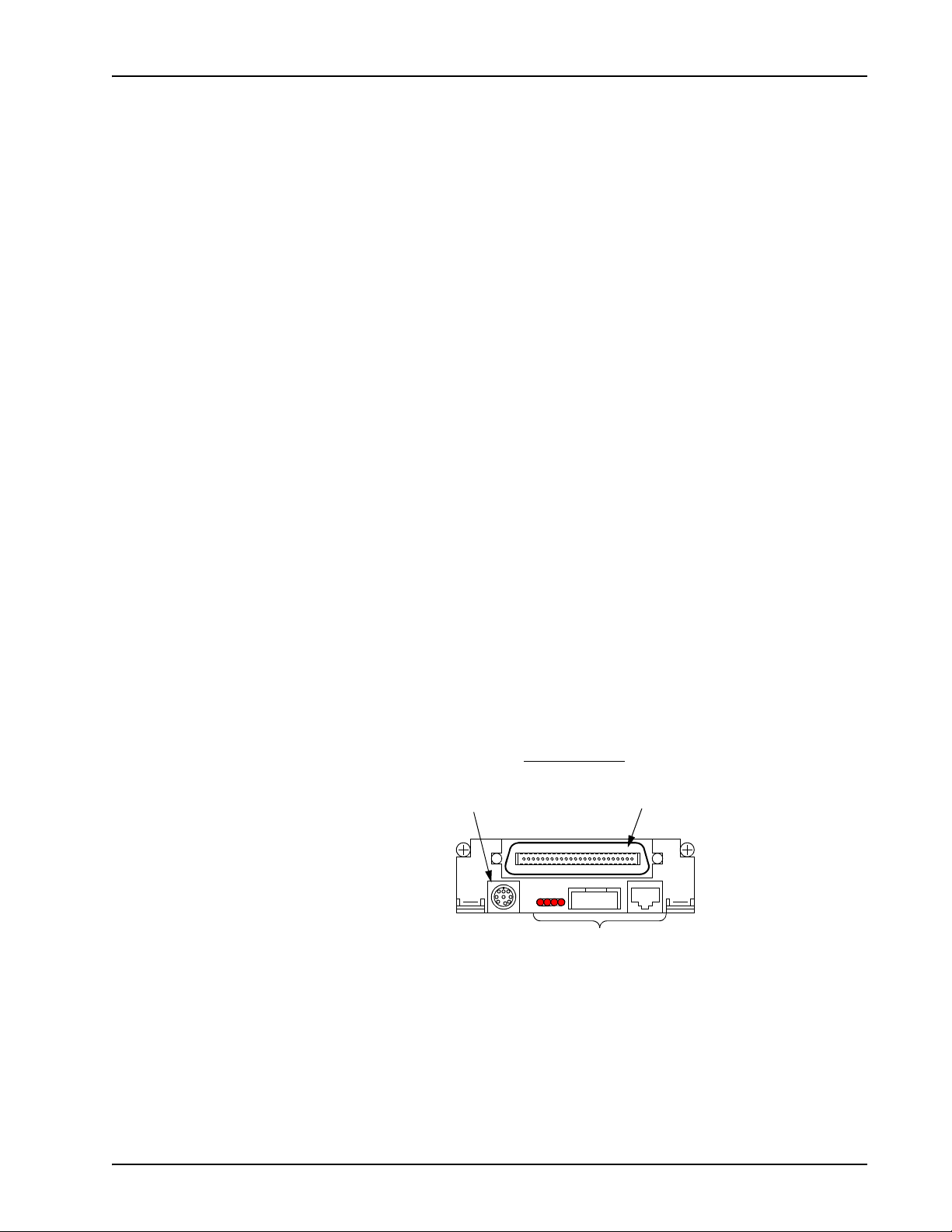

Digital Communications Controller (DCC)

The DCC provides the digital phone line interfaces for the system. It can be plugged

into any of the four slots of the TMS. The DCC is dedicated for either a T1 or E1

system, and connects to the PSTN via an RJ48M connector (up to eight spans).

The DCC is also capable of interfacing with a telephony network using VoIP. A

DCC-VoIP has no telephony connector on the front panel. Only one DCC is typically

installed in the TMS, unless the system is also using VoIP, in which case the

DCC-VoIP will also be installed. The DCC cannot be combined with an ALI in the

same TMS.

A serial console connector is provided for diagnostic purposes and for verifying and

configuring the boot ROM (see Verifying/Modifying Boot ROM Settings on page 252

for details). Other connectors and indicators are provided on the DCC front panel but

are reserved for future enhancement.

[Figure: DCC Front View. Callouts: Console Connector; connectors and indicators reserved for future enhancement; RJ48M Connector.]

Page 30

Analog Line Interface (ALI)

The ALI provides a phone line interface to the system for up to 24 analog phone lines.

It connects to the PSTN via an RJ21X connector on the front panel. The standard

analog interface supports common two-wire loop-start circuits. There are no other

connectors or indicators on the front of the ALI.

Up to four ALIs can be installed in a TMS, although three is typical since one of the

four TMS slots is usually occupied by an MDM. ALIs cannot be combined with a

DCC in the same TMS.

[Figure: ALI Front View. Callout: RJ21X Connector.]

Page 31

Multiple DSP Module (MDM)

A resource must be available on the system for an application to use it. If the resident

DSPs are fully allocated to resources or protocols, capacity for more resources can be

added by installing a Multiple DSP Module (MDM) in an open TMS slot and loading

the image definitions for the resources required. These resources are in addition to the

MPS resource itself. Examples of TMS supported resources are:

• Player (ply) - Vocabularies or audio data can be played from local memory

on the TMS motherboard.

• DTMF Receiver (dtmf) and Call Progress Detection (cpd) - Phone line

events such as touch-tone entry, hook-flash, dial tone, busy signals, etc. can

be detected.

• Tone Generator (tgen) - In lieu of playing tones as vocabularies, DTMF

and other tones can be generated.

• R1 Transmit (r1tx), R1 Receive (r1rx), and R2 (r2) - Tone generators

and detectors to support R1 and R2 protocols.

The MDM contains 12 DSPs for configuration of additional resources. There are no

indicators or connectors on the front panel of the MDM. The only visible indication

that an MDM is installed in a TMS slot (versus a blank), is the presence of bend tabs

near the center of the front bracket that secure it to the MDM circuit board.

MDM Front View

Configuration of resources and protocols is covered in Base System Configuration on

page 64.

Page 32

System LAN Interface

The TMS interfaces with the system Local Area Network (LAN) via Ethernets using

TCP/IP. The chassis Ethernet is connected via the VRC backplane to separate hubs on

the chassis NIC or Hub-NIC (see VRC Rear View on page 24). If there is a failure on

the master Ethernet (controlled by the first NIC), the secondary NIC takes control of

all Ethernet A, system clocking, and ATM functions. The switchover is virtually

instantaneous and the inherent error correction of TCP/IP prevents loss of data.

The redundant Ethernet is only for backup of the primary Ethernet. Ethernet A

is the ONLY Ethernet supported between the chassis and the Application

Processor. There is no support for dual redundant Ethernet.

Field Programmable Gate Arrays (FPGA) and the Boot ROM

The TMS and the modules that plug into it (i.e., DCC, MDM, and ALI) contain

FPGAs. An FPGA is a generic microchip that has no inherent functionality. It

contains arrays of generic logic elements (e.g., gates) that are software configurable.

The software that configures the FPGA is called an image, and the image typically

commands the FPGA to assume the functionality of a designed logic circuit. A

hardware architecture based on FPGAs is very powerful and flexible because:

• A greater degree of complex logic functionality can be achieved in a

relatively smaller board space with fewer circuit components than if dedicated

circuit components and hard board wiring were used. This also provides

greater circuit reliability.

• Functionality can be enhanced without hardware redesign or even removal

and replacement. Upgrades can be done in the field by loading a new image

definition.

FPGAs are dynamic devices in that they do not retain their image definition when

power is removed. The image definition for each device is loaded from an image

definition file (*.idf) during the system boot sequence. The TMS contains a boot

ROM that statically stores the names of the .idf files for the devices contained on its

motherboard and the modules that are plugged in.
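
The relationship between the volatile FPGAs and the boot ROM can be pictured with a small model. The Python sketch below is purely conceptual and assumes nothing about the real firmware: the class names, the device names, and the .idf file names are all hypothetical. It shows only the behavior described above: the FPGA forgets its image when power is removed, while the boot ROM keeps the file names so the images can be reloaded at the next boot.

```python
class Fpga:
    """A device whose configuration is lost when power is removed."""

    def __init__(self, device):
        self.device = device
        self.image = None  # no inherent functionality until an image is loaded

    def load(self, idf_name):
        self.image = idf_name

    def power_off(self):
        self.image = None  # image definition is not retained


class BootRom:
    """Statically stores the .idf file name for each device."""

    def __init__(self, idf_names):
        self._idf_names = dict(idf_names)

    def idf_for(self, device):
        return self._idf_names[device]


def boot(rom, fpgas):
    """Load every FPGA with the image named for it in the boot ROM."""
    for fpga in fpgas:
        fpga.load(rom.idf_for(fpga.device))


# Hypothetical device and file names, for illustration only.
rom = BootRom({"motherboard": "example_tms.idf", "dcc": "example_dcc.idf"})
devices = [Fpga("motherboard"), Fpga("dcc")]
boot(rom, devices)
```

In this model, replacing a module changes the set of devices but not the ROM contents, so the two can disagree until the ROM is updated.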

Whenever a new system is installed, has components added or replaced, or the system

is upgraded, the boot ROM should be verified and, if necessary, modified by Certified

Avaya Support Personnel. Details concerning boot ROM verification can be found at

Verifying/Modifying Boot ROM Settings on page 252.

Page 33

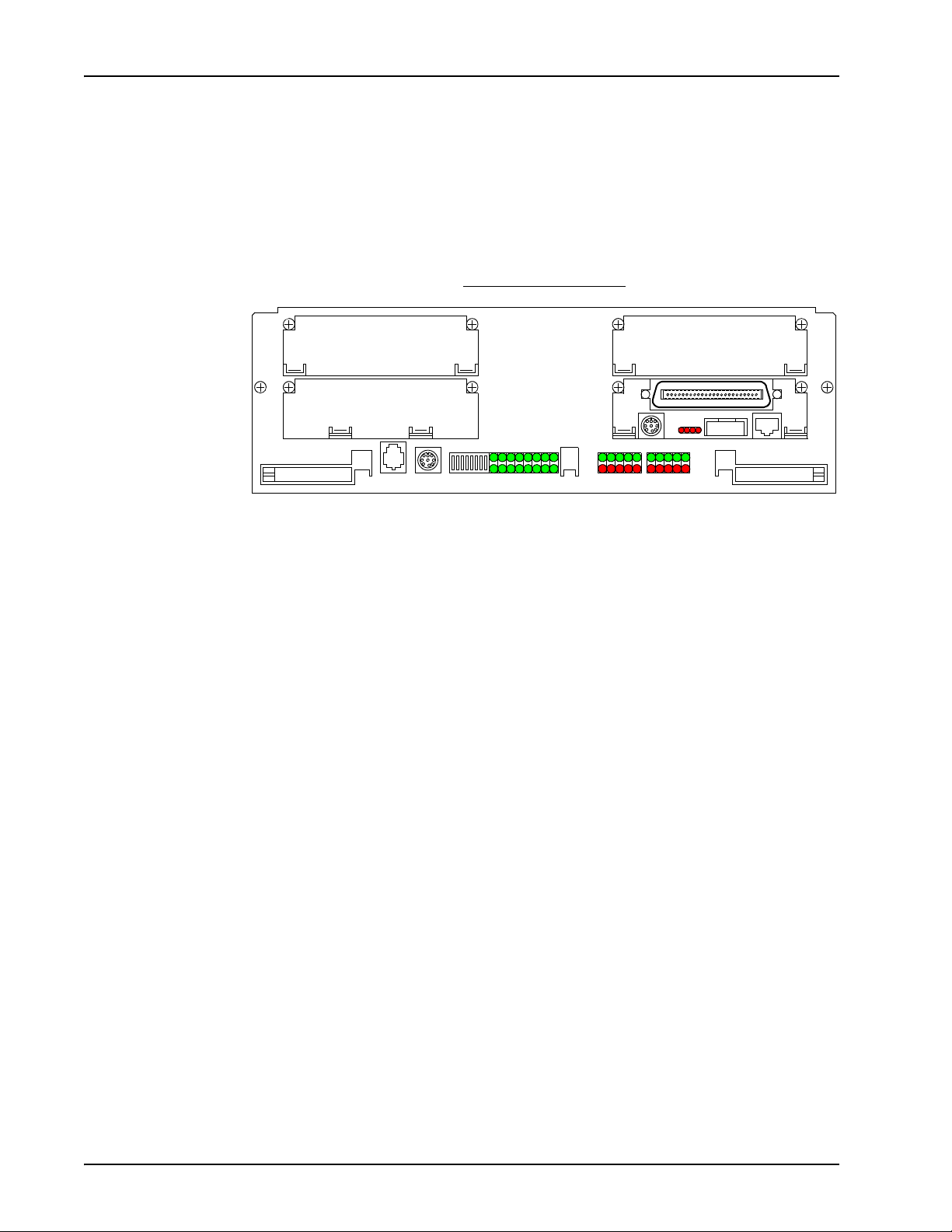

TelCo Connector Panel (TCCP)

The TCCP provides a built-in platform for connecting to the Public Switched

Telephone Network (PSTN) and for conveniently breaking out and looping-back

spans for monitoring or off-line testing. One TCCP can support up to four TMSs and

can be configured with RJ48M or RJ48C connectors for each TMS.

[Figure: TCCP with RJ48M Interfaces]

[Figure: TCCP with RJ48C Interfaces]

[Figure: TCCP Rear View. Connectors J1 through J4 each connect to one of the four TMSs.]

The TCCP is connected to each TMS from the corresponding connector on the TCCP

back panel by a direct feed RJ48M cable. In TCCP equipped systems, PSTN

connections are made at the TCCP using the RJ48M or RJ48C connectors on the front

of the panel. A pair of bantam jacks (SND and RCV) is provided for each span

connected to the TCCP. The bantam jacks are resistor isolated and can be used for

monitoring only. The bantam jacks cannot be used to create span loop-back

connections. Loop-back connections for testing purposes can be made between TMSs

or spans using special crossover cables. For details, see the Avaya Media Processing

Server Series 1000 Transition Guide.

Page 34

Software Overview

The following illustration shows the functional arrangement of the ASE and VOS

processes for MPS release 1.x. Though many of the processes are similar to those of

release 5.x, there are several new and revised processes, all of which are described in

the paragraphs that follow.

[Figure: Functional arrangement of the ASE and VOS processes. Groups: ASE Processes (PPro App. and VENGINE pairs); ASE/VOS Integration Layer; VOS Process Group (1 per TMS); Common Processes (1 each per node); TMS Common Processes (1 per node). Labels shown: SRP, CFG, VMST, VSUPD, VAMP, COMMGR, protocol, CCMA, CCM, host, CONFIGD, VSTAT, CONOUT, ALARMD, VSH, MMF, TCAD, PMGR, CONSOLED, VMM, TRIP, NCD, VMem, TMS (LRM, ADSM, SIM), and Master/Slave NIC Pairs. Connections shown: to host, to system console, to alarm viewer, and to TRIPs of other VOS process groups (if present).]

Page 35

Software Environment

The MPS software components are categorized into two process groups: VOS (Voice

Operating Software) and ASE (Application Services Environment).

The VOS software process group comprises the main system software required to run

the MPS system. The ASE software process group contains the software required to

develop and execute applications.

VOS and ASE software processes have been designed to operate in an open systems

Solaris or Windows environment. All speech, telephony, and communications

functions run under Solaris or Windows, and require no additional device drivers.

VOS uses the standard Solaris or Windows file system for managing all speech/fax

data. A set of GUI tools provides for application development and system

management.

Some VOS and ASE software processes are common to all MPS components defined

on a specific host node; these are located in the GEN subcomponent of the common

component on that node (and defined in the file

$MPSHOME/common/etc/gen.cfg). Other VOS processes are unique to each

defined MPS component, and are part of the VOS subcomponent of the MPS

component (and defined in $MPSHOME/mpsN/etc/vos.cfg). The NCD process,

on the other hand, is part of the VOS subcomponent of the tmscomm component

(and defined in $MPSHOME/tmscommN/etc/vos.cfg). This TMS-specific

process requires one instance per node; other common processes also require that only

a single instance of the process execute on a node. Processes that are unique to each

component require an instance of each process be executed for each MPS component

defined on the node. When uncommented in their respective gen.cfg or

vos.cfg files, these processes are started by the Startup and Recovery Process

(SRP). (For a more comprehensive discussion about SRP, see SRP (Startup and

Recovery Process) on page 70.)
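
The mapping from component to configuration file described above can be sketched as follows. This is only an illustration: the function name config_file_for, the component-kind labels, and the /opt/vps value used for MPSHOME in the usage lines are hypothetical; only the three path layouts come from the text.

```python
import os
from pathlib import PurePosixPath


def config_file_for(kind, number=None, mpshome=None):
    """Return the configuration file that lists a component's processes.

    kind is "common", "mps", or "tmscomm" (labels chosen for this sketch);
    number is the N in mpsN or tmscommN.
    """
    # Fall back to a literal placeholder so the sketch runs without an MPS install.
    root = PurePosixPath(mpshome or os.environ.get("MPSHOME", "$MPSHOME"))
    if kind == "common":
        # Processes common to all MPS components on the node (GEN subcomponent).
        return root / "common" / "etc" / "gen.cfg"
    if kind in ("mps", "tmscomm"):
        if number is None:
            raise ValueError(f"{kind} components need a component number")
        # Per-component VOS processes; NCD is defined under tmscommN.
        return root / f"{kind}{number}" / "etc" / "vos.cfg"
    raise ValueError(f"unknown component kind: {kind!r}")


# Usage with a hypothetical MPSHOME of /opt/vps:
print(config_file_for("common", mpshome="/opt/vps"))
print(config_file_for("mps", 1, mpshome="/opt/vps"))
print(config_file_for("tmscomm", 1, mpshome="/opt/vps"))
```

The sketch only builds paths; it does not read the files, because the text does not describe their syntax beyond noting that a process entry must be uncommented for SRP to start it.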

Individual applications are executed by means of a separate instance of the ASE

process VENGINE for each instance of the application’s execution. There are three

major types of applications:

• Call processing applications are assigned to physical phone lines. A separate

instance of both the application and VENGINE process is required for each

physical phone line to which the application is assigned.

• Administrative applications perform system maintenance functions and

support the call processing applications. They are not assigned to physical

phone lines. However, they also require a separate instance of VENGINE for

each instance of the application.

• Web-based applications make up the third type. Each instance likewise

requires a separate instance of VENGINE.

Applications can communicate with each other by means of shared memory or

message passing.
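
The one-VENGINE-per-instance rule in the list above can be illustrated with a short tally. This is a sketch under stated assumptions: the Application class, its field names, the kind labels, and the example application names are all hypothetical and are not part of the MPS software.

```python
from dataclasses import dataclass


@dataclass
class Application:
    name: str
    kind: str            # "call_processing" or "administrative" (labels for this sketch)
    lines: int = 0       # physical phone lines assigned (call processing only)
    instances: int = 1   # running instances (administrative only)


def vengine_instances(app):
    """Number of VENGINE processes one application needs."""
    if app.kind == "call_processing":
        # One application instance, and one VENGINE, per assigned phone line.
        return app.lines
    # Not tied to phone lines: one VENGINE per instance of the application.
    return app.instances


# Hypothetical node: one call processing application on 24 lines,
# plus one administrative application running as a single instance.
apps = [
    Application("orders", "call_processing", lines=24),
    Application("nightly_cleanup", "administrative", instances=1),
]
total = sum(vengine_instances(a) for a in apps)
print(total)  # 24 + 1 = 25 VENGINE processes
```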

ASE Processes

The Application Services Environment (ASE) process group comprises the software

required to develop and execute applications. ASE processes include:

Process    Description

VENGINE    The application execution process. One VENGINE process is
           required for each MPS application (call processing, web based,
           and administrative).

VMST       VENGINE Message Server - Extended. Manages MPS
           messages related to VENGINE applications. This process also
           can be used to bridge messages in a multi-MPS environment.

VSUPD      Collects application-specific statistics (as opposed to system
           statistics).

• VMST and VSUPD are node-specific processes and require only one

occurrence of the process for each host node regardless of the number of

components defined on the node.

• VENGINE is an application-specific process. One occurrence of VENGINE

must execute for each application assigned to an MPS line.

VENGINE

VENGINE is the application-specific ASE software process. It is responsible for the

execution of each occurrence of an application that is assigned to an MPS. One

VENGINE process is required to execute for each occurrence of a call processing,

web based, or administrative application. Administrative applications are not

associated with physical phone lines and perform system maintenance operations and

support call processing applications.

Additionally, VENGINE is used to execute all or part of an application while it is

under development. It can run all or part of the application so that the logic paths and

application functions may be tested.

VENGINE is located in $MPSHOME/PERIase/bin on Solaris systems and

%MPSHOME%\bin on Windows systems, and can be initiated from the command

line or by starting an application with the PeriView Assign/(Re)Start Lines tool (see

the PeriView Reference Manual for more information on the latter). Applications that

require ASE processes are located in the $MPSHOME/mpsN/apps directory. For

additional information about these applications, see The MPSHOME/mpsN/apps

Directory on page 140. VENGINE makes connections to both these applications and

VMST. For additional information on VENGINE, see the PeriProducer User’s

Guide.
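
The platform-dependent location of VENGINE given above can be expressed as a small lookup. This is a minimal sketch: the function name vengine_dir, the platform labels, and the MPSHOME values in the usage lines are hypothetical; only the two directory layouts come from the text.

```python
import ntpath
import posixpath


def vengine_dir(platform, mpshome):
    """Return the directory that holds VENGINE for the given platform."""
    if platform == "solaris":
        # $MPSHOME/PERIase/bin
        return posixpath.join(mpshome, "PERIase", "bin")
    if platform == "windows":
        # %MPSHOME%\bin
        return ntpath.join(mpshome, "bin")
    raise ValueError(f"unsupported platform: {platform!r}")


# Usage with hypothetical MPSHOME values:
print(vengine_dir("solaris", "/opt/vps"))
print(vengine_dir("windows", r"C:\MPS"))
```

Using posixpath and ntpath explicitly, rather than os.path, keeps the result independent of the machine the sketch runs on.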

VMST

VMST (VENGINE Message Server - Extended) is an ASE software process that

performs message server functions for VENGINE. It funnels VOS messages that have

been translated by VAMP to VENGINE processes and service daemons. VMST

interprets and supports all pre-existing VMS options, allowing scripts incorporating

them to continue functioning under the present release without any modifications.

The advent of the TMS brings about an increase in the number of lines supportable on

a single platform, as well as an increase in potential message traffic. In order to handle

the increase in addressable lines, this modified version of VMS was created

(previously, VMS addressing was limited to a one-to-one correspondence of VMS to

CPS/VPS). Though VMST can still act on behalf of a single MPS, VMST can also

address the new paradigm by supporting many real or virtual MPSs in a single process

(the VMST process assumes the functions of one or more VMSs running on the same

node). In addition, VMST: