USER’S GUIDE

MegaRAID® Configuration Software

March 2006

Version 2.0

DB15-000269-01


This document contains proprietary information of LSI Logic Corporation. The

information contained herein is not to be used by or disclosed to third parties

without the express written permission of an officer of LSI Logic Corporation.

LSI Logic products are not intended for use in life-support appliances, devices,

or systems. Use of any LSI Logic product in such applications without written

consent of the appropriate LSI Logic officer is prohibited.

Purchase of I²C components of LSI Logic Corporation, or one of its sublicensed Associated Companies, conveys a license under the Philips I²C Patent Rights to use these components in an I²C system, provided that the system conforms to the I²C standard Specification as defined by Philips.

Document DB15-000269-01, Second Edition (March 2006)

This document describes LSI Logic Corporation’s MegaRAID software tools and

utilities. This document will remain the official reference source for all

revisions/releases of these products until rescinded by an update.

LSI Logic Corporation reserves the right to make changes to any products herein

at any time without notice. LSI Logic does not assume any responsibility or

liability arising out of the application or use of any product described herein,

except as expressly agreed to in writing by LSI Logic; nor does the purchase or

use of a product from LSI Logic convey a license under any patent rights,

copyrights, trademark rights, or any other of the intellectual property rights of

LSI Logic or third parties.

Copyright © 2003–2006 by LSI Logic Corporation. All rights reserved.

TRADEMARK ACKNOWLEDGMENT

LSI Logic, the LSI Logic logo design, FlexRAID, MegaRAID, MegaRAID

Configuration Utility, MegaRAID Manager, and Power Console Plus are

trademarks or registered trademarks of LSI Logic Corporation. Intel and Pentium

are registered trademarks of Intel Corporation. Linux is a registered trademark of

Linus Torvalds. Microsoft, MS-DOS, Windows, and Windows NT are registered

trademarks of Microsoft Corporation. NetWare, Novell, and SUSE are trademarks

or registered trademarks of Novell, Inc. PCI-X is a registered trademark of PCI

SIG. Red Hat is a registered trademark of Red Hat Software, Inc. All other brand

and product names may be trademarks of their respective companies.


To receive product literature, visit us at http://www.lsilogic.com.

For a current list of our distributors, sales offices, and design resource

centers, view our web page located at

http://www.lsilogic.com/contacts/index.html


Version 2.0 Copyright © 2003–2006 by LSI Logic Corporation. All rights reserved.


Preface

Audience

This book is the primary reference and user’s guide for the MegaRAID

software tools and utilities. These include the MegaRAID BIOS

Configuration Utility (CU), WebBIOS CU, MegaRAID Manager™, and

Power Console Plus™, which enable configuration and management of

RAID systems using the MegaRAID controllers.

This document assumes that you have familiarity with storage systems,

and are knowledgeable about PCI, SCSI, and Serial ATA interfaces. It

also assumes that you are familiar with computer systems and know how

to use the keyboard, mouse, clipboard functions, toolbars, and drop

down menus.

The people who benefit from this book are:

• Users who want to configure, monitor, or manage RAID systems that

use MegaRAID controllers

• Engineers and managers who are evaluating MegaRAID controllers

for use in a system

• Engineers who are designing MegaRAID controllers into a system


Organization

This document has the following chapters and appendix:

• Chapter 1, Overview, introduces the MegaRAID software tools and

utilities, and provides operating system information.

• Chapter 2, Introduction to RAID, describes the components,

functions and benefits of RAID, along with RAID levels, configuration

strategies, and configuration planning.


• Chapter 3, BIOS Configuration Utility and MegaRAID Manager,

describes the MegaRAID BIOS CU and the MegaRAID Manager utility.

• Chapter 4, WebBIOS Configuration Utility, describes the

WebBIOS CU.

• Chapter 5, Start the Power Console Plus Utility, describes the

Power Console Plus tool.

• Chapter 6, Virtual Sizing and Online Capacity Expansion,

describes the FlexRAID Virtual Sizing feature and capacity

expansion under Linux.

• Appendix A, MegaRAID Service Monitor, describes the messages

used by the MegaRAID Service Monitor.

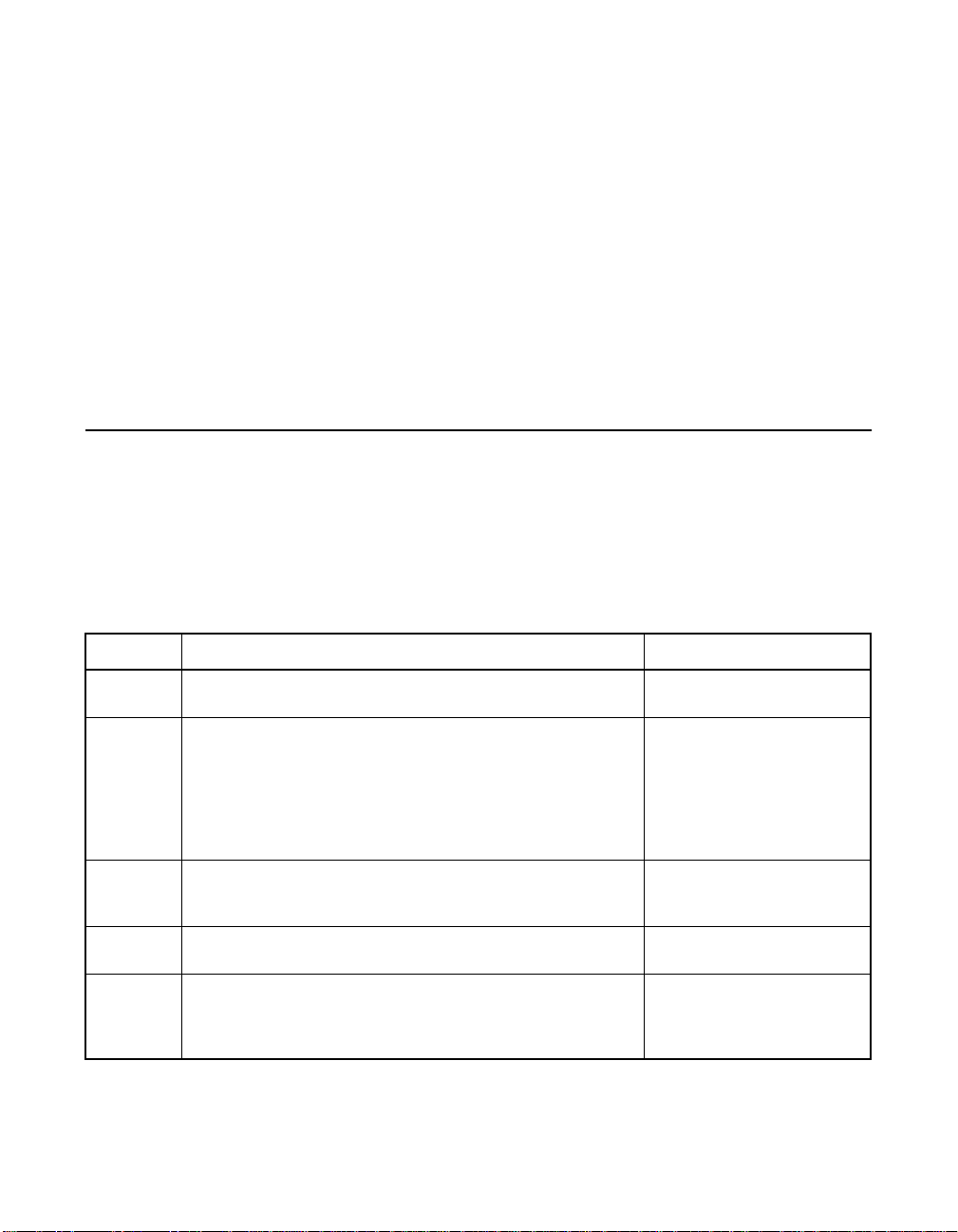

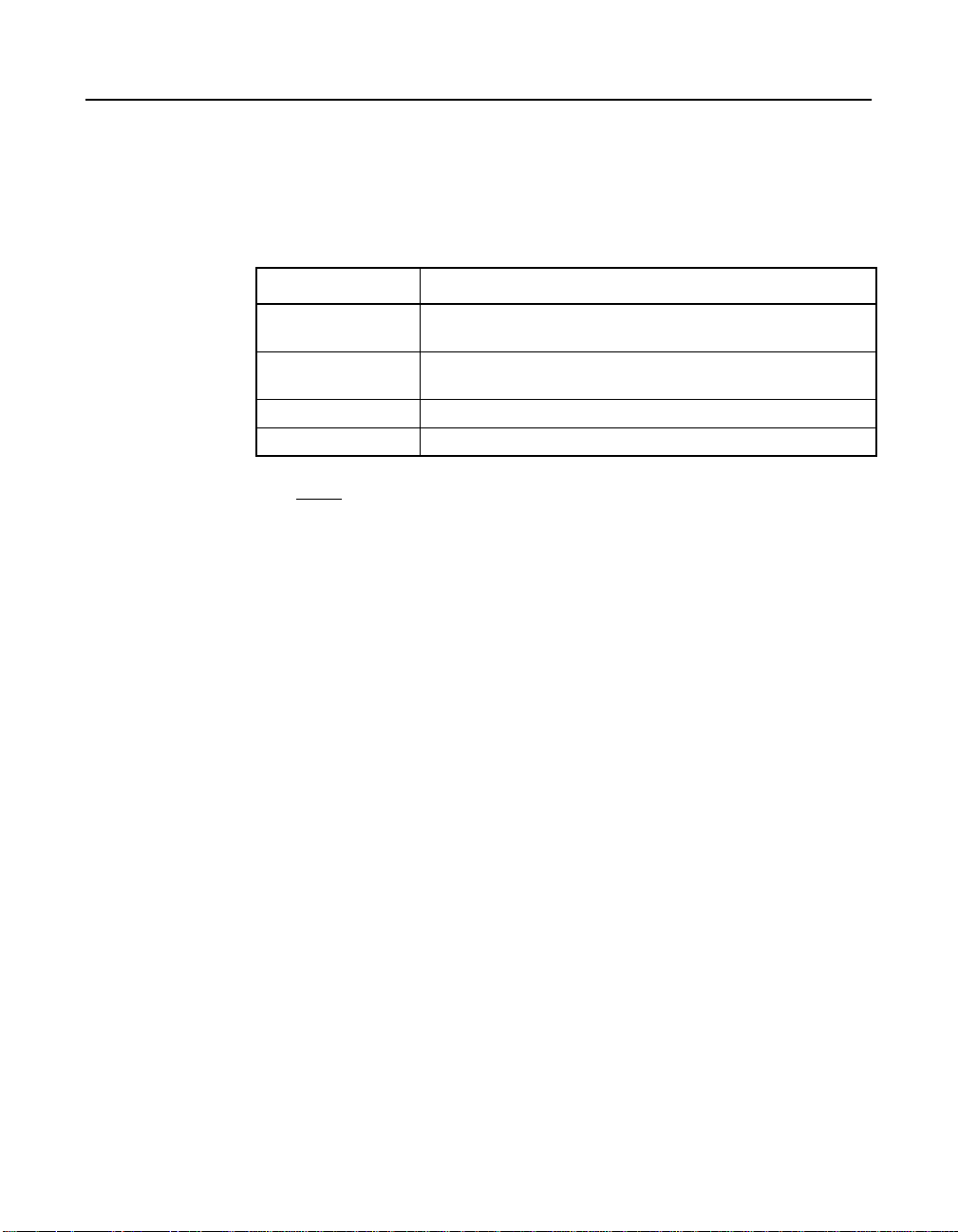

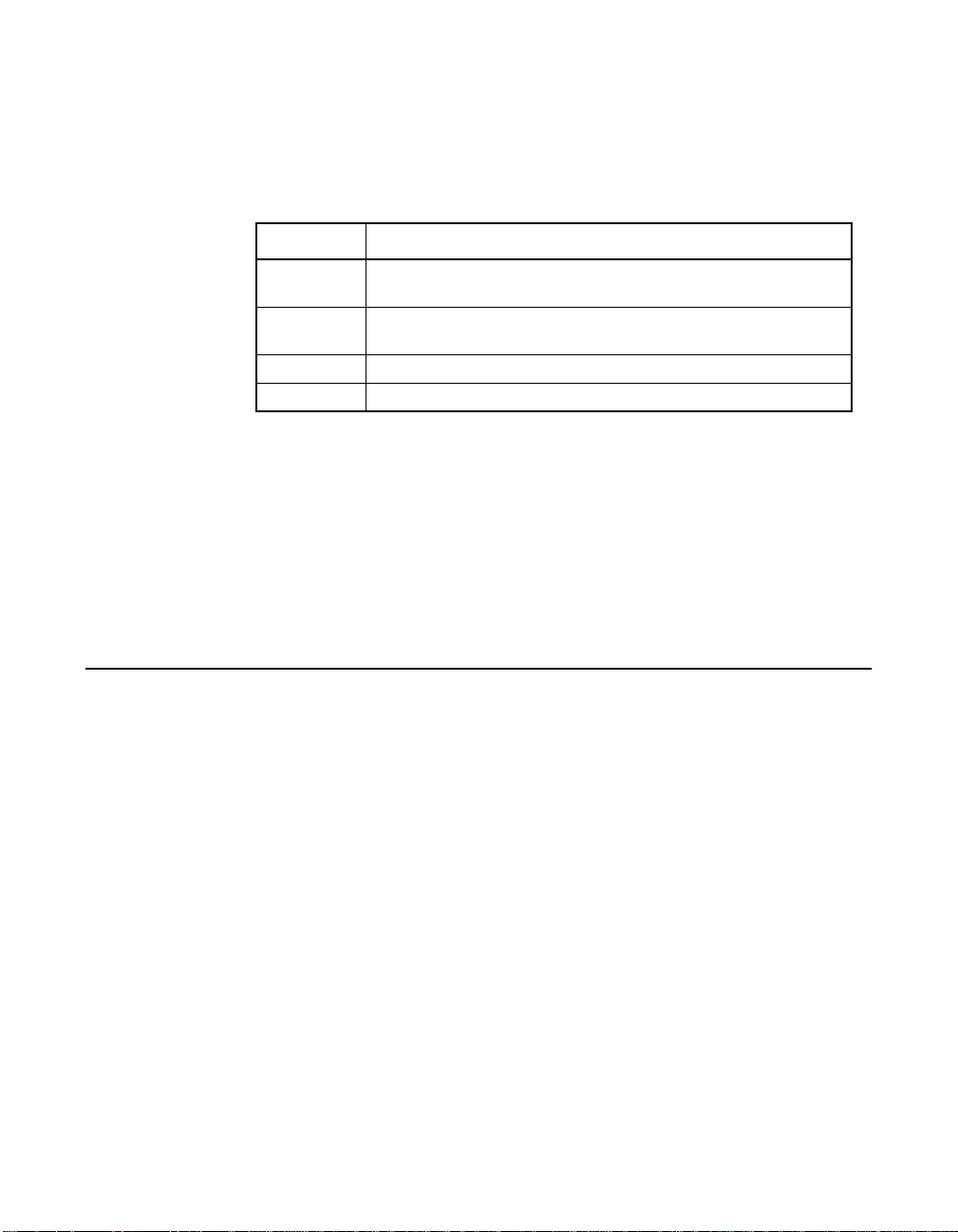

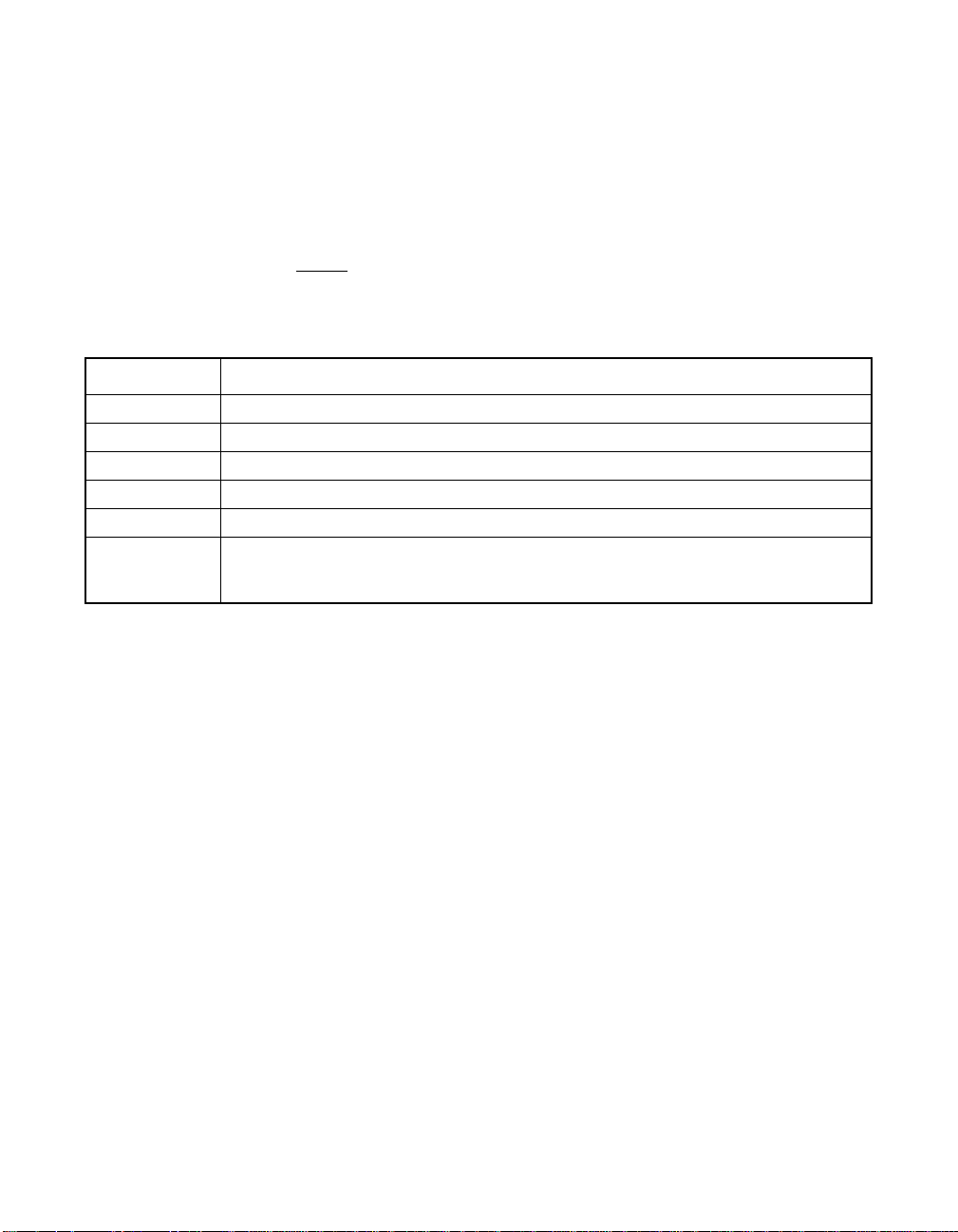

MegaRAID System Installation Sequences and Document Organization

The following table outlines the installation, configuration, and

management sequences for a MegaRAID Serial ATA system. Each

sequence consists of a series of steps and operations that the reference

manual explains. LSI Logic recommends performing the sequences in

the order listed when you install and configure your Serial ATA system.

| Sequence | Task | Reference Manual |
|---|---|---|
| 1 | Understand RAID system theory and operation. | MegaRAID Configuration Software User’s Guide |
| 2 | Install the MegaRAID Serial ATA (SATA) or SCSI storage adapter and the related hardware. | MegaRAID 320 Storage Adapters User’s Guide, MegaRAID SATA150 PCI to Serial ATA Storage Adapters User’s Guide, and MegaRAID SATA 300 Storage Adapters User’s Guide |
| 3 | Configure the physical arrays and logical devices using either the MegaRAID Configuration Utility™ (CU) or the WebBIOS CU. | MegaRAID Configuration Software User’s Guide |
| 4 | Install the MegaRAID device drivers. | MegaRAID Device Driver Installation User’s Guide |
| 5 | Manage, monitor, and reconfigure the RAID array using either the MegaRAID Manager tool or the Power Console Plus tool. Each tool runs under an operating system and can manage the RAID array while the system is operating. | MegaRAID Configuration Software User’s Guide |

Related Publications

MegaRAID SATA150 PCI to Serial ATA Storage Adapters User’s Guide

Document Number: DB15-000272-04

This document explains how to install your MegaRAID SATA 150 storage

adapter in the host system. It provides the electrical and physical

specifications, jumper definitions, and connector locations for the

storage adapter.

MegaRAID SATA 300 Storage Adapters User’s Guide

Document Number: DB15-000311-02

This document explains how to install your MegaRAID SATA 300 storage

adapter in the host system. It provides the electrical and physical

specifications, jumper definitions, and connector locations for the

storage adapter.

MegaRAID 320 Storage Adapters User’s Guide

Document Number: DB15-000260-06

This document explains how to install your MegaRAID 320 storage

adapter in the host system. It provides the electrical and physical

specifications, jumper definitions, and connector locations for the

storage adapter.

MegaRAID Device Driver Installation User’s Guide

Document Number: DB11-000018-02

This document explains how to install the MegaRAID device driver for

your operating system. The information in this document is independent

of the back-end bus and applies to both MegaRAID SCSI storage

adapters and Serial ATA storage adapters.

Preface v

Version 2.0 Copyright © 2003–2006 by LSI Logic Corporation. All rights reserved.

Page 6

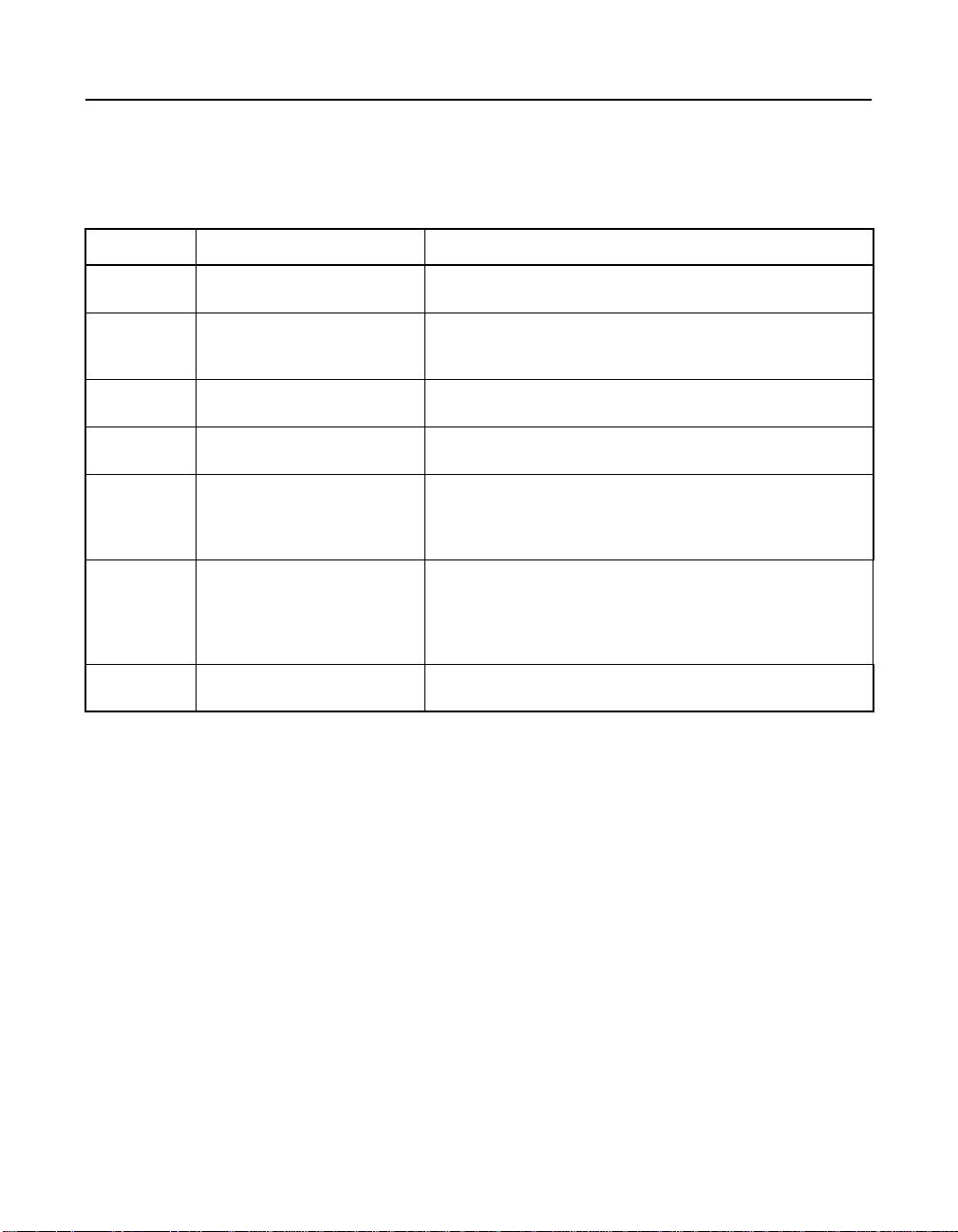

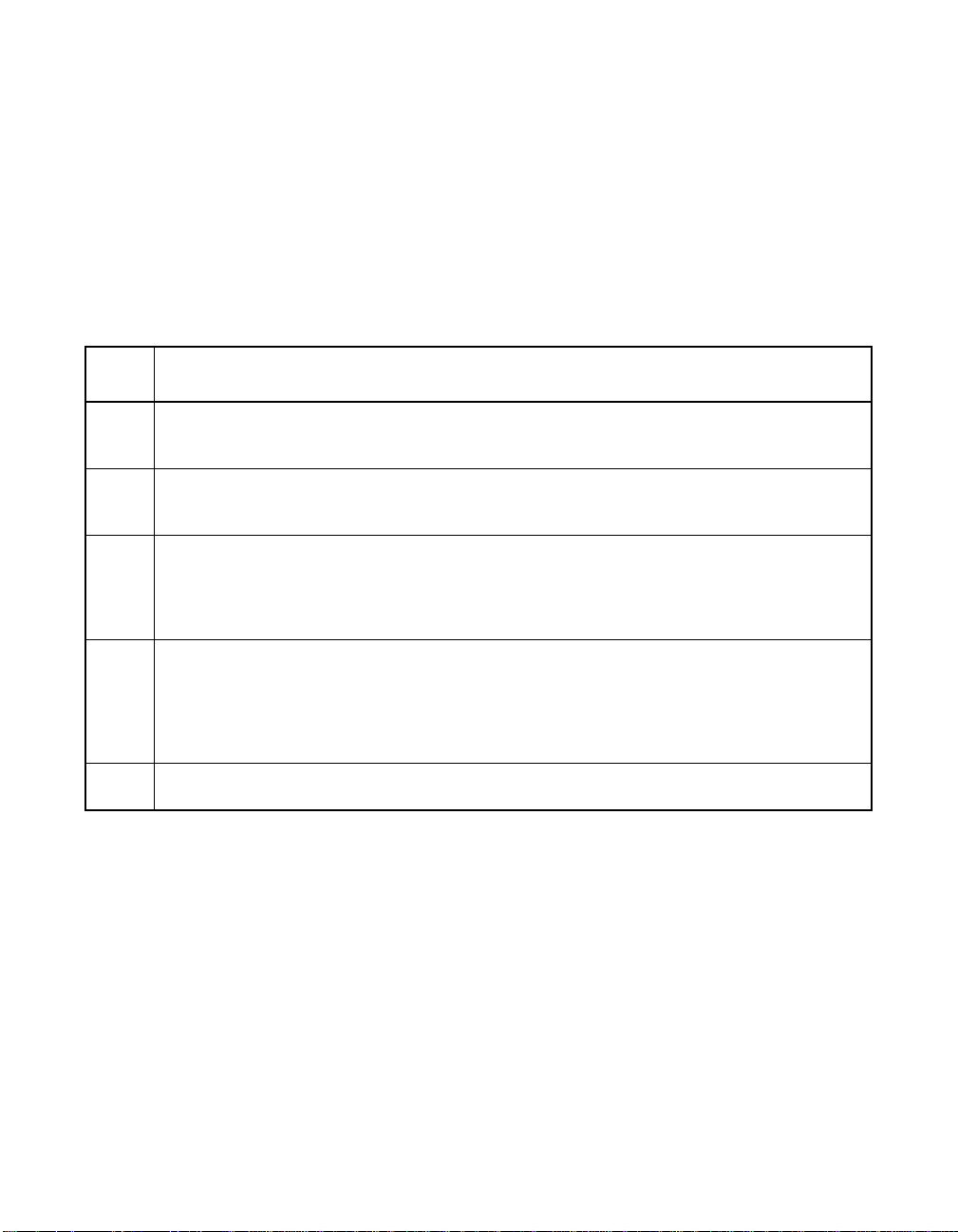

Conventions

Throughout the manual, the following conventions are used to describe

user interaction with the product.

| Notation | Examples | Meaning and Use |
|---|---|---|
| → | Start → Programs | Used to indicate a series of selections in a GUI. |
| all caps, plus sign | ENTER, ALT+CTRL+DEL, TAB | Key presses are in all caps, with a plus sign (+) between key presses in a sequence. |
| courier typeface | .nwk file | Names of commands, directories, file names, and on-screen text are shown in courier typeface. |
| bold typeface | fd1sp | In a command line, keywords are shown in bold, nonitalic typeface. Enter them exactly as shown. |
| italics | module | In command lines and names, italics indicate user variables. Italicized text must be replaced with appropriate user-specified items. Enter items of the type called for, using lowercase. |
| Initial Capital letters | Undo, Edit, Apply | Names of menu commands, options, check buttons, text buttons, options buttons, text boxes, list boxes, etc., are shown in text with Initial Capital lettering to avoid misreading. These elements may appear on your screen in all lowercase. |
| brackets | [version] | You may, but need not, select one item enclosed within brackets. Do not enter the brackets. |

vi Preface

Version 2.0 Copyright © 2003–2006 by LSI Logic Corporation. All rights reserved.

Page 7

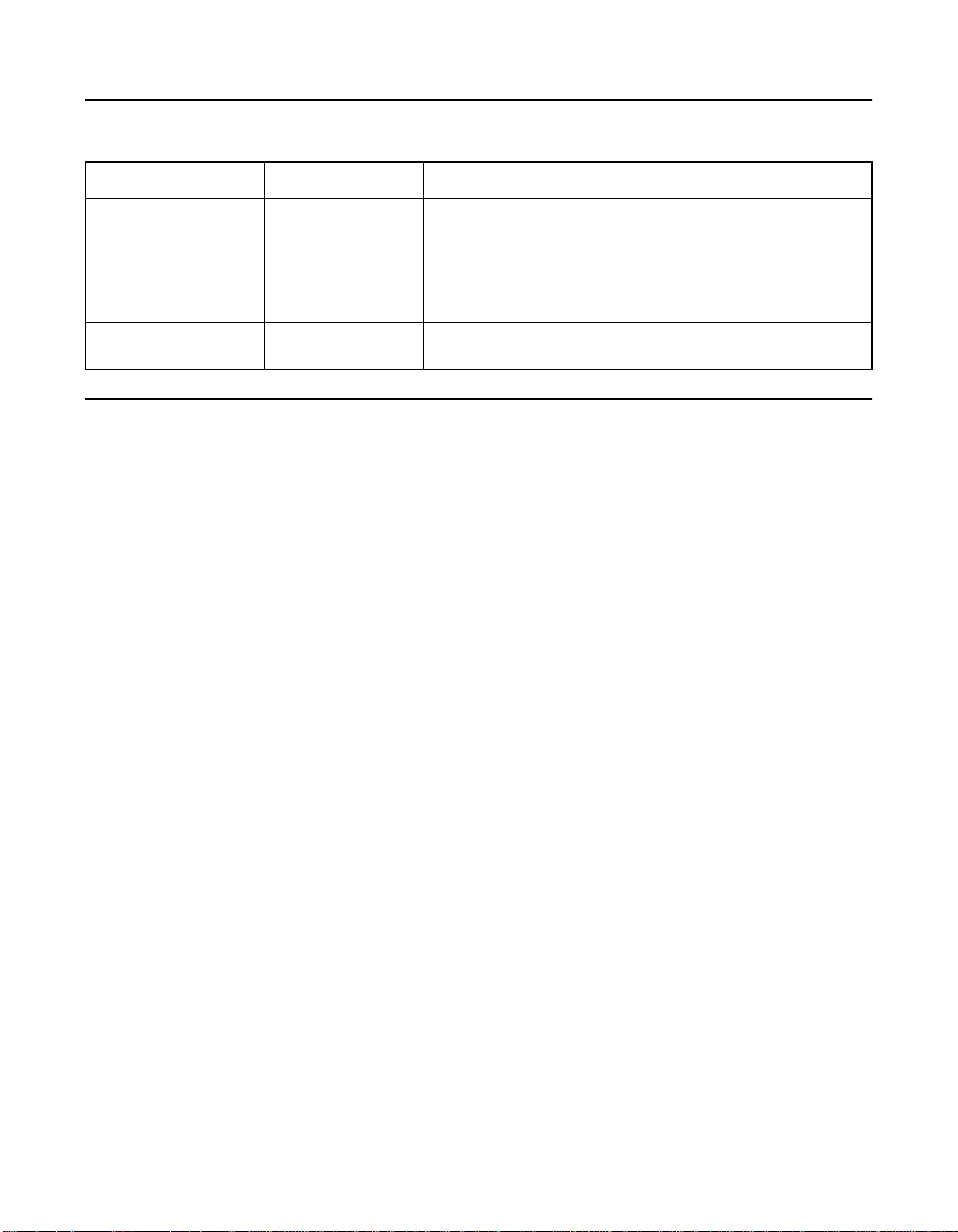

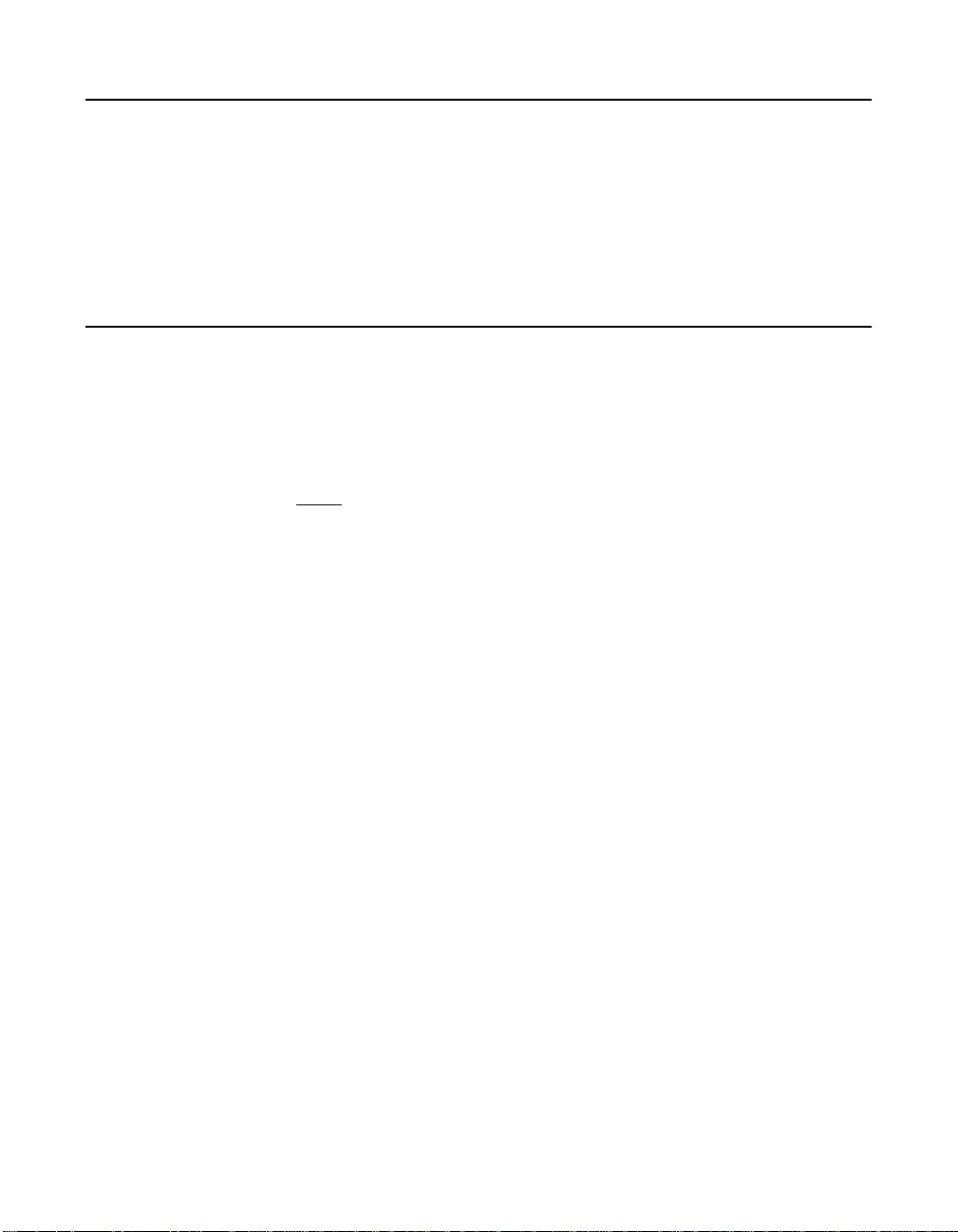

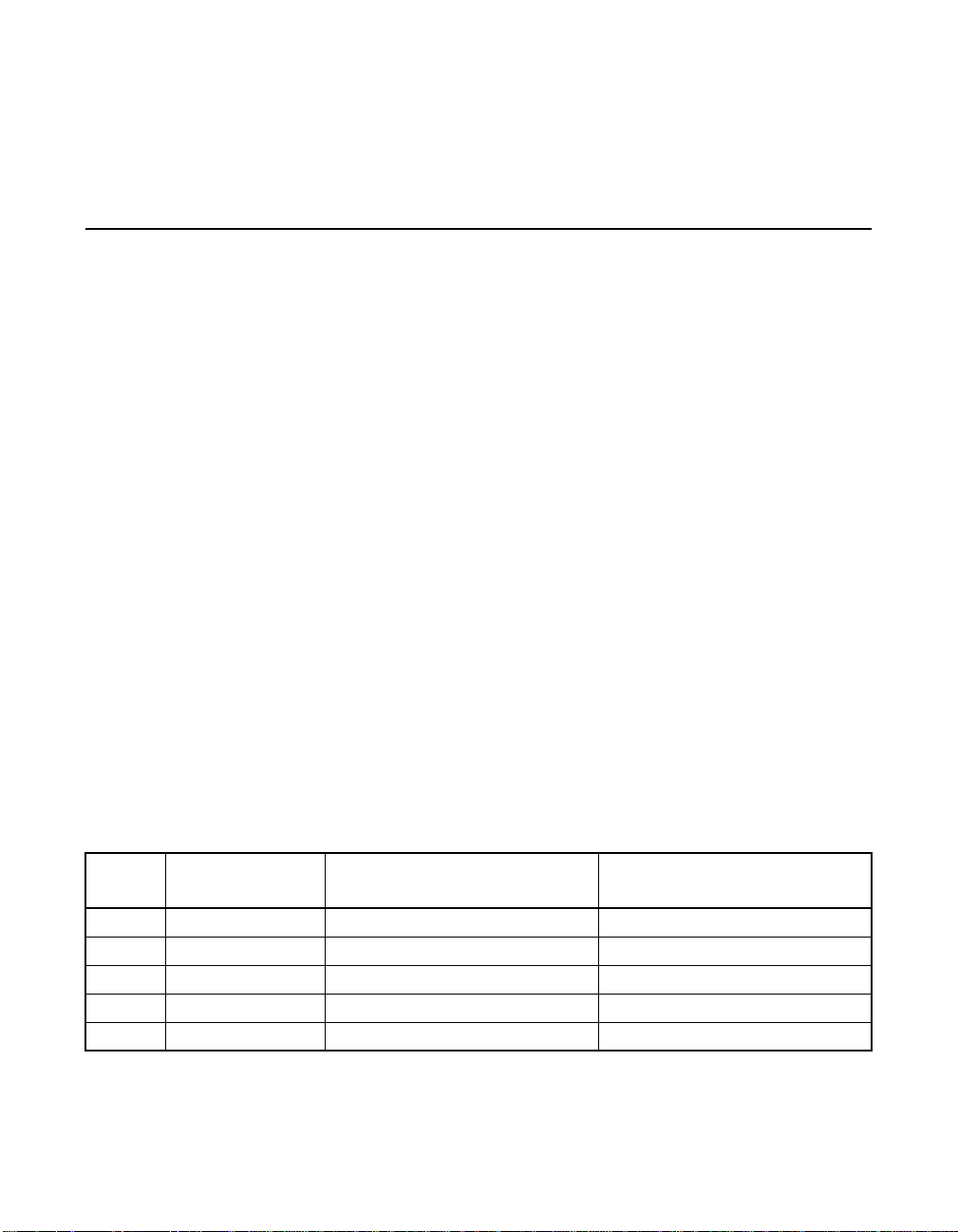

Revision History

| Document Number | Version/Date | Description |
|---|---|---|
| DB15-000269-01 | Version 2.0, February 2006 | Added the introduction to RAID chapter. Revised supported operating systems, spanning, and menu options, and added port multiplier information in the BIOS Configuration Utility. Revised information about virtual sizing. Revised the WebBIOS Adapter Properties Menu and the Configuration Wizard procedure. |
| DB15-000269-00 | Version 1.0, February 2003 | Initial release of document. |

Technical Support

LSI provides technical support only for LSI products purchased directly from LSI or from an LSI-authorized reseller.

If you purchased the MegaRAID controller from LSI or from a certified LSI reseller, contact LSI technical support at support@lsil.com, 1-800-633-4545 #3, or 1-678-728-1250. Please be prepared to specify the 10-digit number preceded by the letter “E”.

In Europe, you can contact LSI Technical Support at eurosupport@lsil.com or +44.1344.413.441 (English).

If the MegaRAID controller was installed as part of a system manufactured by a company other than LSI, or if you purchased an LSI product from an unauthorized reseller, call the technical support department of the computer manufacturer or the unauthorized reseller. LSI does not provide direct technical support in these cases.

To download drivers or documentation, go to the LSI web site at:

http://www.lsilogic.com/downloads/selectDownload.do.


Contents

Chapter 1 Overview

1.1 MegaRAID Tool Description 1-1

1.1.1 MegaRAID BIOS Configuration Utility (CTRL+M) 1-2

1.1.2 MegaRAID Manager Configuration Utility 1-2

1.1.3 WebBIOS Configuration Utility (CTRL+H) 1-2

1.1.4 Power Console Plus Configuration Utility 1-2

1.2 Operating System Support 1-3

Chapter 2 Introduction to RAID

2.1 RAID Description 2-1

2.2 RAID Benefits 2-2

2.3 RAID Functions 2-2

2.4 RAID Components and Features 2-3

2.4.1 Physical Array 2-3

2.4.2 Logical Drive 2-3

2.4.3 RAID Array 2-3

2.4.4 Fault Tolerance 2-3

2.4.5 Consistency Check 2-4

2.4.6 Background Initialization 2-4

2.4.7 Patrol Read 2-5

2.4.8 Disk Striping 2-6

2.4.9 Disk Mirroring 2-7

2.4.10 Parity 2-7

2.4.11 Disk Spanning 2-8

2.4.12 Hot Spares 2-9

2.4.13 Disk Rebuilds 2-11

2.4.14 SCSI Physical Drive States 2-12

2.4.15 Logical Drive States 2-13

2.4.16 Enclosure Management 2-13


2.5 RAID Levels 2-13

2.5.1 Summary of RAID Levels 2-13

2.5.2 Selecting a RAID Level 2-14

2.6 RAID Configuration Strategies 2-19

2.6.1 Maximizing Fault Tolerance 2-19

2.6.2 Maximizing Performance 2-21

2.6.3 Maximizing Storage Capacity 2-22

2.7 RAID Availability 2-23

2.7.1 Spare Drives 2-23

2.7.2 Rebuilding 2-23

2.8 RAID Configuration Planning 2-24

2.8.1 Number of Physical Disk Drives 2-24

2.8.2 Array Purpose 2-25

Chapter 3 BIOS Configuration Utility and MegaRAID Manager

3.1 Quick Configuration Steps for the BIOS Configuration Utility 3-2

3.2 Quick Configuration Steps for MegaRAID Manager 3-3

3.3 Configuration Utility Menu 3-4

3.3.1 Configure Menu 3-5

3.3.2 Initialize Option 3-6

3.3.3 Objects Menu 3-6

3.3.4 Clear Option 3-14

3.3.5 Rebuild Option 3-14

3.3.6 Check Consistency Option 3-15

3.3.7 Reconstruct Option 3-15

3.3.8 Select Adapter Menu 3-15

3.4 Detailed Configuration Instructions 3-16

3.4.1 Starting the MegaRAID Configuration Utility 3-16

3.4.2 Resolving a Configuration Mismatch 3-16

3.4.3 Starting MegaRAID Manager 3-17

3.4.4 Configuring Arrays and Logical Drives 3-18

3.4.5 Selecting a Configuration Method 3-18

3.4.6 Designating Drives as Hot Spares 3-18

3.4.7 Creating Physical Arrays and Logical Drives 3-19

3.4.8 Initializing Logical Drives 3-26


3.5 Deleting Logical Drives 3-27

3.6 Performing Drive Roaming 3-27

3.7 Performing Drive Migration 3-29

3.8 Rebuilding Failed Disks 3-30

3.8.1 Rebuild Types 3-30

3.8.2 Manual Rebuild – Rebuilding an Individual Drive 3-31

3.8.3 Manual Rebuild – Rebuilding in Batch Mode 3-31

3.9 FlexRAID Virtual Sizing 3-32

3.10 Checking Data Consistency 3-32

3.11 Reconstructing Logical Drives 3-33

3.12 Replacing a Failed Controller Containing Data in the TBBU 3-34

3.13 Using a Preloaded System Drive 3-35

3.14 Exiting MegaRAID Configuration Utility 3-36

Chapter 4 WebBIOS Configuration Utility

4.1 General Description 4-1

4.2 Quick Configuration Steps 4-2

4.3 Starting the WebBIOS Configuration Utility on the Host Computer 4-2

4.4 Screen and Option Descriptions 4-4

4.4.1 WebBIOS Toolbar Options 4-4

4.4.2 Main Screen 4-5

4.4.3 Adapter Properties Screen 4-6

4.4.4 Scan Devices Option 4-9

4.4.5 SCSI Channel Properties 4-9

4.4.6 Logical Drive Screen 4-9

4.4.7 Physical Drive Screen 4-12

4.4.8 Configuration Mismatch Screen 4-13

4.4.9 Configuration Wizard Option 4-14

4.4.10 Adapter Selection Option 4-14

4.4.11 Physical View/Logical View Option 4-14

4.4.12 Exit 4-14

4.5 Configuring RAID Arrays and Logical Drives 4-14


Chapter 5 Start the Power Console Plus Utility

5.1 Quick Configuration Steps 5-1

5.2 Power Console Plus Overview 5-2

5.2.1 Power Console Plus Components 5-2

5.2.2 Features 5-3

5.2.3 Client System Requirements 5-4

5.2.4 MegaService Monitor 5-4

5.3 Installing the Power Console Plus Utility 5-5

5.3.1 Windows Installations 5-5

5.3.2 Deregistering and Reregistering under the Power Console Plus Utility 5-11

5.4 Power Console Plus Interface Description 5-11

5.4.1 Power Console Plus Main Window Description 5-12

5.4.2 Power Console Plus Menus 5-14

5.4.3 Physical Drive Menu 5-16

5.4.4 Logical Drive Menu 5-17

5.4.5 Progress Menu 5-18

5.5 Configuring Arrays and Logical Drives 5-19

5.5.1 Starting the Power Console Plus Utility 5-19

5.5.2 Choosing an Adapter 5-20

5.5.3 Running the Configuration Wizard 5-20

5.5.4 Defining Logical Drives 5-22

5.5.5 Saving the Configuration 5-24

5.5.6 Initializing Logical Drives 5-25

5.5.7 Checking Rebuild Rate 5-25

5.5.8 Exiting the Power Console Plus Utility 5-25

5.6 Reclaiming Hot Spare Disks 5-25

5.7 Reconfiguring Existing Arrays 5-26

5.7.1 Adding a Physical Drive to an Existing Array 5-26

5.7.2 Removing a Physical Drive from an Array 5-26

5.8 Add Capacity Steps 5-27

Chapter 6 Virtual Sizing and Online Capacity Expansion

6.1 FlexRAID Virtual Sizing 6-1

6.2 Capacity Expansion under the Linux Operating System 6-2


Appendix A MegaRAID Service Monitor

A.1 Power Console Plus Internal Messages A-1

A.2 MegaRAID Service Monitor Event Types A-3

A.3 Event Message IDs A-7

Index

Customer Feedback


Figures

2.1 Disk Striping (RAID 0) Example 2-6

2.2 Disk Mirroring (RAID 1) Example 2-7

2.3 Distributed Parity (RAID 5) Example 2-8

2.4 Disk Spanning (RAID 10) Example 2-9

2.5 RAID 10 Logical Drive 2-17

2.6 RAID 50 Logical Drive 2-19

3.1 MegaRAID Configuration Utility Menu Tree 3-4

3.2 Port Multiplier Option 3-9

3.3 Port and Drive Information 3-9

4.1 WebBIOS Adapter Selection Screen 4-3

4.2 WebBIOS Main Screen 4-5

4.3 WebBIOS Adapter Properties Screen 4-6

4.4 WebBIOS Logical Drive Screen 4-10

4.5 WebBIOS Physical Drive Screen 4-12

4.6 WebBIOS Configuration Mismatch Screen 4-13

4.7 WebBIOS Configuration Wizard Screen 4-15

4.8 WebBIOS Logical Drive Definition Screen 4-16


Tables

1.1 MegaRAID Tool Operating System Support 1-3

2.1 Types of Parity 2-8

2.2 Disk Spanning for RAID 10 and RAID 50 2-9

2.3 SCSI Physical Drive States 2-12

2.4 Logical Drive States 2-13

2.5 RAID 0 Overview 2-15

2.6 RAID 1 Overview 2-15

2.7 RAID 5 Overview 2-16

2.8 RAID 10 Overview 2-17

2.9 RAID 50 Overview 2-18

2.10 RAID Levels and Fault Tolerance 2-20

2.11 RAID Levels and Performance 2-21

2.12 RAID Levels and Capacity 2-22

2.13 Physical Drives Required for Each RAID Level 2-24

2.14 Factors to Consider for Array Configuration 2-25

3.1 Configuration Utility Configure Menu 3-5

3.2 Configuration Utility Objects Menu 3-6

3.3 Configuration Utility Adapter Submenu 3-7

3.4 Configuration Utility Logical Drive Submenu 3-10

3.5 Configuration Utility View/Update Parameters Submenu 3-11

3.6 Configuration Utility Physical Drive Submenu 3-13

3.7 Configuration Utility Channel Submenu 3-13

3.8 Configuration Utility Battery Backup Submenu 3-14

3.9 Configuration Hot Keys 3-16

3.10 Logical Drive Parameters and Descriptions 3-21

3.11 Spanning Mode Options 3-25

3.12 Rebuild Types 3-30

4.1 WebBIOS Toolbar Icon Descriptions 4-4

4.2 WebBIOS Adapter Properties Menu Options 4-7

5.1 Power Console Plus Screen Elements 5-12

5.2 Power Console Plus Toolbar Icons 5-13

5.3 Power Console Plus Configuration Menu 5-14

5.4 Power Console Plus Adapter Properties Menu 5-15

5.5 Power Console Plus Physical Drive Menu 5-16

5.6 Power Console Plus Change Status Submenu 5-16

5.7 Power Console Plus Logical Drive Menu 5-17


5.8 Power Console Plus Change Configuration Submenu 5-17

5.9 Power Console Plus Read Policy Menu 5-18

5.10 Power Console Plus Write Policy Menu 5-18

5.11 Drive State Description 5-19

5.12 Custom Configuration Wizard Options 5-21

A.1 Log Messages A-2

A.2 General Events Types and Log Messages A-3

A.3 Logical Drive Status Messages A-4

A.4 Physical Drive Status and Error Messages A-4

A.5 Messages for SAF-TE Compliant Boxes A-5

A.6 Battery Status Messages A-6

A.7 General Event Message IDs A-7

A.8 Test-Related Event Message IDs A-7


Chapter 1

Overview

This book describes the following software tools and utilities that enable

configuration and management of RAID systems using the MegaRAID

controllers:

• MegaRAID BIOS Configuration Utility (CU)

• WebBIOS CU

• MegaRAID Manager

• Power Console Plus

This chapter provides an overview of the MegaRAID software tools and

explains the intended use of each tool. It consists of the following sections:

• Section 1.1, “MegaRAID Tool Description”

• Section 1.2, “Operating System Support”

1.1 MegaRAID Tool Description

MegaRAID products provide a powerful set of software products for

configuring and managing Redundant Array of Independent Disks (RAID)

systems. The following subsections describe each software product.

Subsequent chapters provide detailed information concerning

each product.

You can use any of the listed utilities to configure your RAID system. Or,

you can configure your RAID system with one utility and update it later

with a different utility. All MegaRAID tools provide a full set of RAID array

configuration and monitoring features.


1.1.1 MegaRAID BIOS Configuration Utility (CTRL+M)

The MegaRAID BIOS Configuration Utility (CU) provides full-featured,

character-based configuration and management of RAID arrays. The

MegaRAID CU resides in the BIOS and is independent of the operating

system. For information about the BIOS CU, refer to Chapter 3, “BIOS

Configuration Utility and MegaRAID Manager.”

1.1.2 MegaRAID Manager Configuration Utility

The MegaRAID Manager utility provides full-featured configuration and

management of RAID arrays. The MegaRAID Manager utility enables

configuration and management of RAID systems while the operating

system is running. The MegaRAID Manager utility provides the same

feature set as the MegaRAID CU. For information about MegaRAID

Manager, refer to Chapter 3, “BIOS Configuration Utility and

MegaRAID Manager.”

1.1.3 WebBIOS Configuration Utility (CTRL+H)

The WebBIOS CU tool provides full-featured, HTML-based configuration

and management of RAID arrays. WebBIOS resides in the BIOS and is

independent of the operating system. The WebBIOS CU provides the

same feature set as the MegaRAID CU. In addition, it allows you to add

drives and migrate between RAID levels. For information about the

WebBIOS CU, refer to Chapter 4, “WebBIOS Configuration Utility.”

1.1.4 Power Console Plus Configuration Utility

The Power Console Plus utility provides on-the-fly RAID migration,

creating almost limitless adaptability and expansion of any logical drive

while the system remains operational. For information about the

Power Console Plus utility, refer to Chapter 5, “Start the Power Console

Plus Utility.”

The Power Console Plus utility is an object-oriented GUI utility that

configures and monitors RAID systems locally or over a network. The

Power Console Plus manager runs on the Microsoft Windows NT,

Windows 2000, Windows XP, and Windows Server 2003 operating

systems. With the Power Console Plus manager, you can perform the

same tasks as with the MegaRAID Manager.

1-2 Overview

Version 2.0 Copyright © 2003–2006 by LSI Logic Corporation. All rights reserved.

Page 21

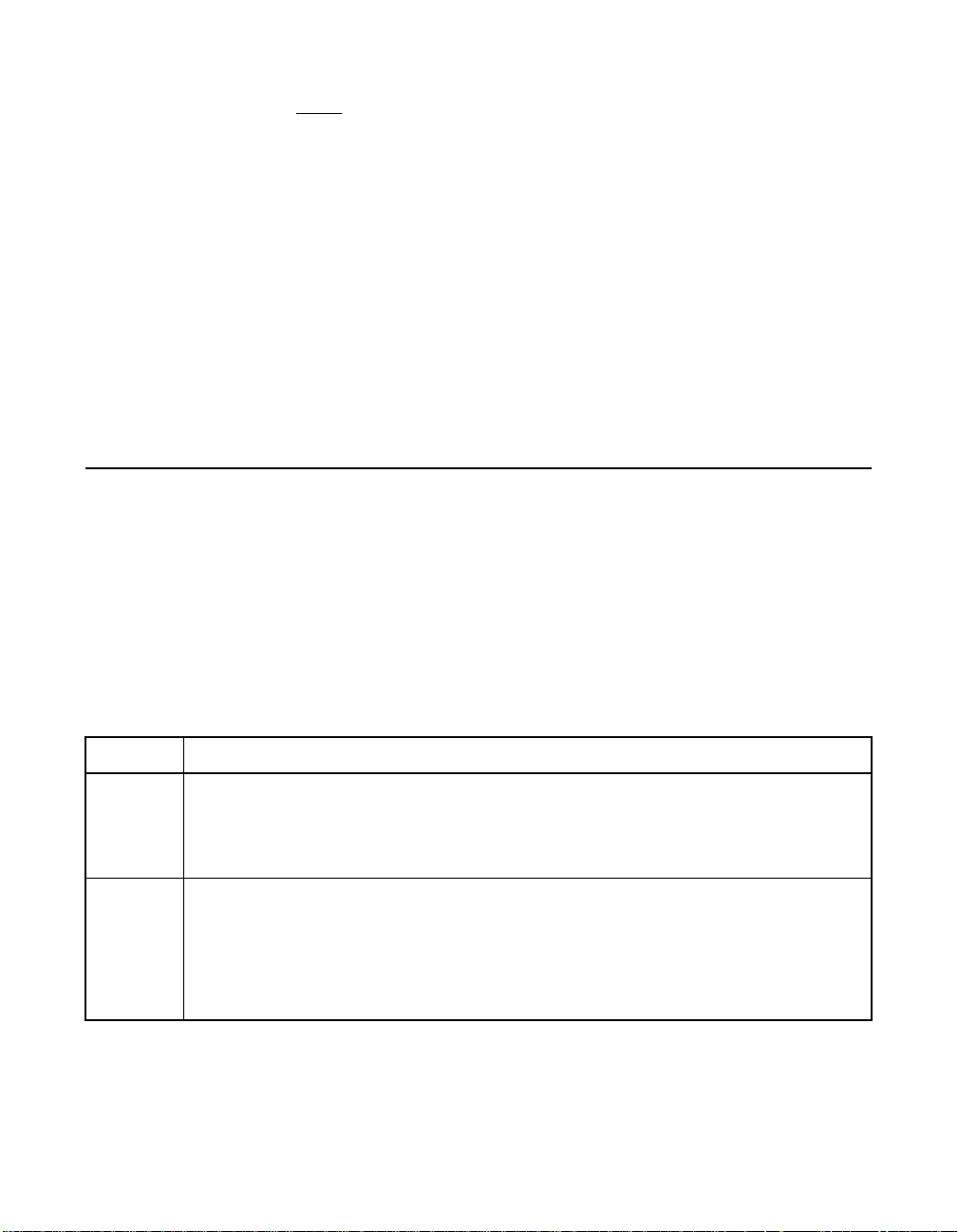

1.2 Operating System Support

Table 1.1 lists the operating system support for each of the

MegaRAID tools.

Table 1.1 MegaRAID Tool Operating System Support

| MegaRAID Tool | Supported Operating Systems |
|---|---|
| BIOS CU | Operating system (OS) support is not required. The CU runs from the BIOS. |
| MegaRAID Manager | MS-DOS, Novell NetWare, Red Hat Linux, SUSE Linux Enterprise Server (SLES). |
| WebBIOS CU | OS support is not required. The CU runs from the BIOS. |
| Power Console Plus | Windows 2000, Windows XP, Windows Server 2003. |

Note: For information about drivers for the operating systems, refer

to the MegaRAID Device Driver Installation User’s Guide.


Chapter 2

Introduction to RAID

This chapter describes RAID features and the advantages that RAID

systems offer in terms of fault tolerance, improved I/O performance, and

data storage reliability. In addition, it discusses RAID configuration

strategies and planning.

This chapter consists of the following sections:

• Section 2.1, “RAID Description”

• Section 2.2, “RAID Benefits”

• Section 2.3, “RAID Functions”

• Section 2.4, “RAID Components and Features”

• Section 2.5, “RAID Levels”

• Section 2.6, “RAID Configuration Strategies”

• Section 2.7, “RAID Availability”

• Section 2.8, “RAID Configuration Planning”

2.1 RAID Description

RAID is an array of multiple independent hard disk drives that provides

high performance and fault tolerance. The RAID array appears to the

host computer as a single storage unit or as multiple logical units. Data

throughput improves because several disks can be accessed

simultaneously. RAID systems also improve data storage availability and

fault tolerance. Data loss caused by a hard drive failure can be recovered

by rebuilding missing data from the remaining data or parity drives.


2.2 RAID Benefits

RAID systems improve data storage reliability and fault tolerance

compared to single-drive storage systems. Data loss resulting from a

hard drive failure can be prevented by reconstructing missing data from

the remaining hard drives. RAID has gained popularity because it

improves I/O performance and increases storage subsystem reliability.

2.3 RAID Functions

Logical drives, also known as virtual disks, are arrays or spanned arrays

that are available to the operating system. The storage space in a logical

drive is spread across all the physical drives in the array.

Note: The maximum logical drive size for all supported RAID levels (0, 1, 5, 10, and 50) is 2 Tbytes. You can create multiple logical drives on the same physical disks.

Your SCSI hard drives must be organized into logical drives in an array and must be able to support the RAID level that you select. Following are some common RAID functions:

• Creating hot spare drives.

• Configuring physical arrays and logical drives.

• Initializing one or more logical drives.

• Accessing controllers, logical drives, and physical drives individually.

• Rebuilding failed hard drives.

• Verifying that the redundancy data in logical drives using RAID level

1, 5, 10, or 50 is correct.

• Reconstructing logical drives after changing RAID levels or adding a

hard drive to an array.

• Selecting a host controller to work on.


2.4 RAID Components and Features

RAID levels describe a system for ensuring the availability and

redundancy of data stored on large disk subsystems. See Section 2.5,

“RAID Levels,” page 2-13 for detailed information about RAID levels.

2.4.1 Physical Array

A physical array is a group of physical disk drives. The physical disk

drives are managed in partitions known as logical drives.

2.4.2 Logical Drive

A logical drive is a partition in a physical array of disks that is made up

of contiguous data segments on the physical disks. A logical drive can

consist of an entire physical array, more than one entire physical array, a

part of an array, parts of more than one array, or a combination of any

two of these conditions.

Note: The maximum logical drive size for all supported RAID

levels (0, 1, 5, 10, and 50) is 2 Tbytes. You can create

multiple logical drives within the same physical array.

2.4.3 RAID Array

A RAID array is one or more logical drives controlled by the RAID

controller.

2.4.4 Fault Tolerance

Fault tolerance is the capability of the subsystem to undergo a single failure without compromising data integrity and processing capability. The RAID controller provides this support through redundant arrays in RAID levels 1, 5, 10, and 50. The system can still work properly even with a single disk failure in an array, though performance can be degraded to some extent.

Note: RAID level 0 is not fault-tolerant. If a drive in a RAID 0 array fails, the whole logical drive (all physical drives associated with the logical drive) fails.

Fault tolerance is often associated with system availability because it

allows the system to be available during the failures. However, this

means it is also important for the system to be available during repair.

A hot spare is an unused physical disk that, in case of a disk failure in a

redundant RAID array, can be used to rebuild the data and re-establish

redundancy. After the hot spare is automatically moved into the RAID

array, the data is automatically rebuilt on the hot spare drive. The RAID

array continues to handle requests while the rebuild occurs.

Auto-rebuild allows a failed drive to be replaced and the data

automatically rebuilt by hot-swapping the drive in the same drive bay. The

RAID array continues to handle requests while the rebuild occurs.
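The rebuild described above can be illustrated with a minimal sketch, assuming a parity-protected stripe in which the parity block is the bytewise XOR of the data blocks. This guide does not describe how the MegaRAID firmware performs a rebuild, so the function names `xor_blocks` and `rebuild_missing` and the sample data are hypothetical and serve only to show why the contents of one failed drive can be recovered from the remaining drives.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the bytewise XOR of a list of equally sized blocks."""
    if not blocks:
        raise ValueError("need at least one block")
    size = len(blocks[0])
    if any(len(block) != size for block in blocks):
        raise ValueError("blocks must be the same length")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks):
    """Recover the one missing block from the surviving data and parity blocks.

    Assumption: parity is the XOR of all data blocks, so XOR-ing every
    surviving block (data plus parity) yields the missing block.
    """
    return xor_blocks(surviving_blocks)


# Hypothetical three-drive data stripe plus one parity block.
data = [b"\x01\x02", b"\x10\x20", b"\xaa\x55"]
parity = xor_blocks(data)

# Simulate losing the second drive and rebuilding it onto a hot spare.
rebuilt = rebuild_missing([data[0], data[2], parity])
```

In this sketch the rebuilt block equals the lost block only when exactly one block of the stripe is missing, which matches the single-failure tolerance described in this section.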

2.4.5 Consistency Check

The Consistency Check operation verifies correctness of the data in

logical drives that use RAID levels 1, 5, 10, and 50. (RAID 0 does not

provide data redundancy.) For example, in a system with parity, checking

consistency means computing the data on one drive and comparing the

results to the contents of the parity drive.

Note: LSI recommends that you perform a consistency check at

least once a month.
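As a rough illustration of the consistency check described in this section, the following sketch recomputes the redundancy data for one stripe and compares it with what is stored. It assumes XOR parity for parity-based levels and a byte-for-byte copy for mirrored levels; the function names and sample values are hypothetical and do not represent the controller firmware.

```python
def parity_of(data_blocks):
    """Return the bytewise XOR of equally sized data blocks."""
    if not data_blocks:
        raise ValueError("need at least one data block")
    size = len(data_blocks[0])
    parity = bytearray(size)
    for block in data_blocks:
        if len(block) != size:
            raise ValueError("all blocks in a stripe must be the same length")
        for i, byte in enumerate(block):
            parity[i] ^= byte
    return bytes(parity)


def stripe_is_consistent(data_blocks, stored_parity):
    """Parity-based check: recomputed parity must match the stored parity."""
    return parity_of(data_blocks) == stored_parity


def mirror_is_consistent(primary, mirror):
    """Mirror-based check: both copies must be identical."""
    return primary == mirror


# Hypothetical stripe with two data blocks and its stored parity block.
stripe = [b"\x0f\xf0", b"\x33\x33"]
good_parity = b"\x3c\xc3"
bad_parity = b"\x3c\xc2"
```

A check of this kind only reports that the data and the redundancy information disagree; it cannot tell by itself which of the two is wrong.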

2.4.6 Background Initialization

Background initialization is a consistency check that is forced when you

create a logical drive. The difference between a background initialization

and a consistency check is that a background initialization is forced on

new logical drives. This is an automatic operation that starts 5 minutes

after you create the drive.

Background initialization is a check for media errors on physical drives.

It ensures that striped data segments are the same on all physical drives

in an array. The background initialization rate is controlled by the rebuild

rate set using the BIOS Configuration Utility. The default, and

recommended, rate is 30%. Before you change the rebuild rate, you must

stop the background initialization or the rate change does not affect the

background initialization rate. After you stop background initialization and

change the rebuild rate, the rate change takes effect when you restart

background initialization.


2.4.7 Patrol Read

Patrol read involves the review of your system for possible hard drive

errors that could lead to drive failure, then action to correct errors. The

goal is to protect data integrity by detecting physical drive failure before

the failure can damage data. The corrective actions depend on the array

configuration and type of errors.

Patrol read starts only when the controller is idle for a defined period of

time and no other background tasks are active, though it can continue to

run during heavy I/O processes.

You can use the BIOS Configuration Utility to select the patrol read options,

which you can use to set automatic or manual operation, or disable patrol

read. Perform the following steps to select a patrol read option:

Step 1. Select Objects → Adapter from the Management Menu.

The Adapter menu displays.

Step 2. Select Patrol Read Options from the Adapter menu.

Step 3. The following options display:

Patrol Read Mode

Patrol Read Status

Patrol Read Control

Step 4. Select Patrol Read Mode to display the patrol read options:

Manual – In manual mode, you must initiate the patrol read.

Auto – In auto mode, the firmware initiates the patrol read on a

scheduled basis.

Manual Halt – Use manual halt to stop the automatic operation,

then switch to manual mode.

Disable – Use this option to disable the patrol read.

Step 5. If you use Manual mode, perform the following steps to initiate

a patrol read:

a. Select Patrol Read Control and press ENTER.

b. Select Start and press ENTER.

Note. Pause/Resume is not a valid operation when Patrol Read

is set to Manual mode.

Step 6. Select Patrol Read Status to display the number of iterations
completed, the current state of the patrol read (active or stopped),
and the schedule for the next execution of patrol read.

2.4.8 Disk Striping

Disk striping allows you to write data across multiple physical disks
instead of just one physical disk. Disk striping involves partitioning each
drive storage space into stripes that can vary in size from 8 Kbytes to
128 Kbytes. These stripes are interleaved in a repeated sequential
manner. The combined storage space is composed of stripes from each
drive. It is recommended that you keep stripe sizes the same across
RAID arrays.

For example, in a four-disk system using only disk striping (used in RAID
level 0), segment 1 is written to disk 1, segment 2 is written to disk 2,
and so on. Disk striping enhances performance because multiple drives
are accessed simultaneously, but disk striping does not provide data
redundancy.

Figure 2.1 shows an example of disk striping.

Note: Do not install an operating system on a logical drive with
less than a 16 Kbyte stripe size.

Figure 2.1 Disk Striping (RAID 0) Example

Segment 1     Segment 2     Segment 3     Segment 4
Segment 5     Segment 6     Segment 7     Segment 8
Segment 9     Segment 10    Segment 11    Segment 12

2.4.8.1 Stripe Width

Stripe width is the number of disks involved in an array where striping is
implemented. For example, a four-disk array with disk striping has a
stripe width of four.

2.4.8.2 Stripe Size

The stripe size is the length of the interleaved data segments that the

RAID controller writes across multiple drives.
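The relationship between stripe size, stripe width, and the disk that receives a given segment can be sketched as follows. This is only an illustration of the round-robin layout described in Section 2.4.8, not the controller firmware's actual algorithm; the function name `locate_segment` and its parameters are hypothetical.

```python
def locate_segment(logical_offset, stripe_size, stripe_width):
    """Map a byte offset in the logical drive to (disk index, byte offset on that disk).

    Hypothetical helper: disks and segments are numbered from 0 here,
    whereas the guide numbers them from 1.
    """
    if stripe_size <= 0 or stripe_width <= 0:
        raise ValueError("stripe_size and stripe_width must be positive")
    if logical_offset < 0:
        raise ValueError("logical_offset must not be negative")
    segment = logical_offset // stripe_size   # 0-based segment number
    disk = segment % stripe_width             # segments rotate across the disks
    row = segment // stripe_width             # complete rows written before this one
    offset_on_disk = row * stripe_size + logical_offset % stripe_size
    return disk, offset_on_disk


KB = 1024
# Four-disk array with a 64 Kbyte stripe size: print where the first
# five segments start (the fifth wraps around to the first disk).
for seg in range(5):
    disk, offset = locate_segment(seg * 64 * KB, 64 * KB, 4)
    print(f"segment {seg + 1} -> disk {disk + 1}, offset {offset}")
```

With a stripe width of four, the fifth segment lands back on the first disk, one stripe further in, which mirrors the segment 1, segment 2, and so on ordering in the four-disk example.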

2.4.9 Disk Mirroring

With disk mirroring (used in RAID 1), data written to one disk is

simultaneously written to another disk. If one disk fails, the contents of the

other disk can be used to run the system and reconstruct the failed disk.

The primary advantage of disk mirroring is that it provides 100% data

redundancy. Because the disk contents are completely written to a second

disk, it does not matter whether one of the disks fails. Both disks contain

the same data at all times. Either drive can act as the operational drive.

Disk mirroring provides 100% redundancy, but is expensive because

each drive in the system must be duplicated. Figure 2.2 shows an

example of disk mirroring.

Figure 2.2 Disk Mirroring (RAID 1) Example

Segment 1     Segment 1 Duplicated
Segment 2     Segment 2 Duplicated
Segment 3     Segment 3 Duplicated
Segment 4     Segment 4 Duplicated

2.4.10 Parity

Parity generates a set of redundancy data from two or more parent data

sets. The redundancy data can reconstruct one of the parent data sets.

Parity data does not fully duplicate the parent data sets. In RAID, this

method is applied to entire drives or stripes across all disk drives in an

array. The types of parity are described in Table 2.1.

Table 2.1 Types of Parity

Parity Type    Description

Dedicated      The parity of the data on two or more disk drives is stored on
               an additional disk.

Distributed    The parity data is distributed across more than one drive in the
               system.

If a single disk drive fails, it can be rebuilt from the parity and the data

on the remaining drives. RAID level 5 combines distributed parity with

disk striping, as shown in Figure 2.3. Parity provides redundancy for one

drive failure without duplicating the contents of entire disk drives, but

parity generation can slow the write process.
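As an illustration of how parity can reconstruct one parent data set, the sketch below uses a byte-wise exclusive-or, the operation this guide names for RAID 5 parity. The helper names `xor_parity` and `rebuild_missing` are hypothetical, and the sample blocks are made up; real controllers operate on whole stripes with hardware assist.

```python
from functools import reduce


def xor_parity(blocks):
    """Return the byte-wise XOR of a list of equally sized blocks."""
    if not blocks:
        raise ValueError("need at least one block")
    length = len(blocks[0])
    if any(len(block) != length for block in blocks):
        raise ValueError("all blocks must have the same length")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """Recreate the single missing block from the survivors plus the parity block."""
    return xor_parity(list(surviving_blocks) + [parity])


data = [b"AAAA", b"BBBB", b"CCCC"]      # blocks on three data drives
parity = xor_parity(data)               # redundancy data for the stripe
recovered = rebuild_missing([data[0], data[2]], parity)  # second drive lost
print(recovered == data[1])
```

The parity block is the same size as one data block, which is why parity gives redundancy for one drive failure without duplicating entire drives, and why a second simultaneous failure in the same stripe cannot be recovered this way.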

Figure 2.3 Distributed Parity (RAID 5) Example

[Figure: Segments 1 through 12 striped across the drives in the array,
with the Parity (1–4), Parity (5–8), and Parity (9–12) blocks placed
among them.]

Note: Parity is distributed across multiple drives in the array.

2.4.11 Disk Spanning

Disk spanning allows multiple physical drives to function like one big

drive. Disk spanning overcomes lack of disk space and simplifies storage

management by combining existing resources or adding relatively

inexpensive resources. For example, four 20 Gbyte drives can be

combined to appear to the operating system as a single 80 Gbyte drive.

Spanning alone does not provide reliability or performance

enhancements. Spanned logical drives must have the same stripe size

and must be contiguous. In Figure 2.4, RAID 1 arrays are turned into a

RAID 10 array.

Important: Make sure that the spans are in different backplanes, so

that if one span fails, you do not lose the whole array.

Figure 2.4 Disk Spanning (RAID 10) Example

[Figure: two pairs of 60 Gbyte drives. Each pair can be accessed as
one 120 Gbyte drive.]

Note: Spanning two contiguous RAID 0 logical drives does not

produce a new RAID level or add fault tolerance. It

increases the size of the logical volume and improves

performance by doubling the number of spindles.

Disk Spanning for RAID 10 or RAID 50. Table 2.2 describes how to

configure RAID 10 and RAID 50 by spanning. The logical drives must

have the same stripe size, and the maximum number of spans is eight.

The full drive size is used when you span logical drives; you cannot

specify a smaller drive size.

Table 2.2 Disk Spanning for RAID 10 and RAID 50

Level   Description

10      Configure RAID 10 by spanning two contiguous RAID 1 logical drives.
        The RAID 1 logical drives must have the same stripe size.

50      Configure RAID 50 by spanning two contiguous RAID 5 logical drives.
        The RAID 5 logical drives must have the same stripe size.

2.4.12 Hot Spares

A hot spare is an extra, unused disk drive that is part of the disk

subsystem. It is usually in standby mode, ready for service if a drive fails.

Hot spares permit you to replace failed drives without system shutdown

or user intervention. MegaRAID 320 controllers can implement automatic

and transparent rebuilds of failed drives using hot spare drives, providing

a high degree of fault tolerance and zero downtime.


Note. When running RAID 0 and RAID 5 logical drives on the

same set of physical drives (a sliced configuration), a

rebuild to a hot spare does not occur after a drive failure

until the RAID 0 logical drive is deleted.


The RAID management software allows you to specify physical drives as

hot spares. When a hot spare is needed, the RAID controller assigns the

hot spare that has a capacity closest to and at least as great as that of

the failed drive to replace the failed drive. The failed drive is removed

from the logical drive and marked ready awaiting removal after the

rebuild to a hot spare begins. See Table 2.13 for detailed information

about the minimum and maximum number of physical drives supported

by each RAID level for each RAID controller. You can make hot spares

of the physical drives that are not in a RAID logical drive.

Note: If a rebuild to a hot spare fails for any reason, the hot spare
drive is marked as failed. If the source drive fails, both the
source drive and the hot spare drive are marked as failed.

There are two types of hot spares:

• Global Hot Spare

• Dedicated Hot Spare

2.4.12.1 Global Hot Spare

A global hot spare can replace any failed drive in a redundant array as
long as its capacity is equal to or larger than the coerced capacity of the
failed drive. A global hot spare defined on any channel should be
available to replace a failed drive on both channels.

2.4.12.2 Dedicated Hot Spare

A dedicated hot spare can replace a failed drive only in a selected array.

One or more drives can be designated as members of a spare drive pool;

the most suitable drive from the pool is selected for failover. A dedicated

hot spare is used before one from the global hot spare pool.

Hot spare drives can be located on any RAID channel. Standby hot

spares (not being used in a RAID array) are polled every 60 seconds at a

minimum, and their status is made available in the array management

software. RAID controllers can rebuild with a disk that is in a system, but

not initially set to be a hot spare.

Observe the following parameters when using hot spares:


• Hot spares are used only in arrays with data redundancy, for

example, RAID levels 1, 5, 10, and 50.

• A hot spare connected to a specific RAID controller can rebuild a

drive that is connected to the same controller only.

• You must assign the hot spare to one or more drives through the

controller BIOS or use array management software to place it in the

hot spare pool.

• A hot spare must have free space equal to or greater than the drive

it would replace. For example, to replace an 18 Gbyte drive, the hot

spare must be 18 Gbytes or larger.
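The selection rules described in this section (a dedicated hot spare is used before a global one, and the chosen spare has the capacity closest to and at least as great as that of the failed drive) can be sketched as below. This is a simplified model, not the controller's implementation: the `HotSpare` class, the `pick_hot_spare` function, and the drive and array names are hypothetical, and coerced capacity and the same-controller restriction are ignored.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class HotSpare:
    name: str
    capacity_gb: float
    dedicated_to: Optional[str] = None  # array name, or None for a global spare


def pick_hot_spare(spares: Sequence[HotSpare],
                   failed_capacity_gb: float,
                   failed_array: str) -> Optional[HotSpare]:
    """Return the spare to rebuild onto, or None if no spare is large enough."""

    def best(candidates):
        # Smallest spare that is still at least as large as the failed drive.
        fitting = [s for s in candidates if s.capacity_gb >= failed_capacity_gb]
        return min(fitting, key=lambda s: s.capacity_gb, default=None)

    dedicated = [s for s in spares if s.dedicated_to == failed_array]
    global_pool = [s for s in spares if s.dedicated_to is None]
    # Dedicated spares for the failed drive's array are tried first.
    return best(dedicated) or best(global_pool)


spares = [
    HotSpare("hs0", 36),
    HotSpare("hs1", 18),
    HotSpare("hs2", 73, dedicated_to="A1"),
]
print(pick_hot_spare(spares, 18, "A0"))   # a global spare is chosen
print(pick_hot_spare(spares, 18, "A1"))   # the dedicated spare is chosen
print(pick_hot_spare(spares, 100, "A0"))  # no spare is large enough
```

Choosing the smallest adequate spare leaves larger spares available for larger drives that might fail later.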

2.4.13 Disk Rebuilds

When a physical drive in a RAID array fails, you can rebuild the drive by

recreating the data that was stored on the drive before it failed. The RAID

controller uses hot spares to rebuild failed drives automatically and

transparently, at user-defined rebuild rates. If a hot spare is available, the

rebuild can start automatically when a drive fails. If a hot spare is not

available, the failed drive must be replaced with a new drive so the data

on the failed drive can be rebuilt. Rebuilding can be done only in arrays

with data redundancy, which includes RAID 1, 5, 10, and 50.

The failed physical drive is removed from the logical drive and marked

ready awaiting removal after the rebuild to a hot spare begins. If the

system goes down during a rebuild, the RAID controller automatically

restarts the rebuild after the system reboots.

Note: When the rebuild to a hot spare begins, the failed drive is

often removed from the logical drive before management

applications detect the failed drive. When this occurs, the

event logs show the drive rebuilding to the hot spare

without showing the failed drive. The formerly failed drive is

marked as ready after a rebuild begins to a hot spare.

Note: If a rebuild to a hot spare fails for any reason, the hot spare

drive is marked as failed. If the source drive fails, both the

source drive and the hot spare drive are marked as failed.

An automatic drive rebuild does not start if you replace a drive during an

online capacity expansion or RAID level migration. The rebuild must be

started manually after the expansion or migration procedure is complete.


2.4.13.1 Rebuild Rate

The rebuild rate is the percentage of the compute cycles dedicated to

rebuilding failed drives. A rebuild rate of 100 percent means the system

gives priority to rebuilding the failed drives.

The rebuild rate can be configured between 0 and 100 percent. At

0 percent, the rebuild is done only if the system is not doing anything

else. At 100 percent, the rebuild has a higher priority than any other

system activity. LSI recommends not using 0 or 100 percent. The default

rebuild rate is 30 percent.
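A script that prepares controller settings might check a proposed rebuild rate against the limits given above before applying it. The sketch below is an assumption-laden illustration: `check_rebuild_rate` is a hypothetical helper, not part of any MegaRAID utility, and treating the rate as a whole number is an assumption the guide does not state.

```python
DEFAULT_REBUILD_RATE = 30  # percent; the default stated in this section


def check_rebuild_rate(rate=DEFAULT_REBUILD_RATE):
    """Validate a rebuild rate and return (rate, warning), where warning may be None."""
    # Assumption: the rate is an integer percentage.
    if isinstance(rate, bool) or not isinstance(rate, int) or not 0 <= rate <= 100:
        raise ValueError("rebuild rate must be an integer from 0 to 100")
    if rate == 0:
        return rate, "not recommended: rebuild runs only when the system is otherwise idle"
    if rate == 100:
        return rate, "not recommended: rebuild has priority over all other system activity"
    return rate, None


for candidate in (30, 0, 100):
    print(check_rebuild_rate(candidate))
```

Both extremes are accepted but flagged, because the guide permits 0 and 100 percent while recommending against them.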

2.4.13.2 Hot Swap

A hot swap is the manual replacement of a defective physical disk unit

while the computer is still running. When a new drive has been installed,

a rebuild occurs automatically if:

• The newly inserted drive is the same size as or larger than the
failed drive

• The drive is placed in the same drive bay as the failed drive it
is replacing

The RAID controller can be configured to detect the new disks and
rebuild the contents of the disk drive automatically.

2.4.14 SCSI Physical Drive States

The SCSI Physical drive states are described in Table 2.3.

Table 2.3 SCSI Physical Drive States

State       Description

Online      The physical drive is working normally and is a part of a
            configured logical drive.

Ready       The physical drive is functioning normally but is not part of a
            configured logical drive and is not designated as a hot spare.

Hot Spare   The physical drive is powered up and ready for use as a spare in
            case an online drive fails.

Fail        A fault has occurred in the physical drive, placing it out of service.

Rebuild     The physical drive is being rebuilt with data from a failed drive.

2.4.15 Logical Drive States

The logical drive states are described in Table 2.4.

Table 2.4 Logical Drive States

State       Description

Optimal     The logical drive operating condition is good. All configured
            physical drives are online.

Degraded    The logical drive operating condition is not optimal. One of the
            configured physical drives has failed or is offline.

Failed      The logical drive has failed.

Offline     The logical drive is not available to the RAID controller.

2.4.16 Enclosure Management

Enclosure management is the intelligent monitoring of the disk

subsystem by software and/or hardware. The disk subsystem can be part

of the host computer or can reside in an external disk enclosure.

Enclosure management helps you stay informed of events in the disk

subsystem, such as a drive or power supply failure. Enclosure

management increases the fault tolerance of the disk subsystem.

2.5 RAID Levels

The RAID controller supports RAID levels 0, 1, 5, 10, and 50. The

supported RAID levels are summarized in Section 2.5.1, “Summary of

RAID Levels.” In addition, it supports independent drives (configured as

RAID 0). The following subsections describe the RAID levels in detail.

2.5.1 Summary of RAID Levels

RAID 0 uses striping to provide high data throughput, especially for large

files in an environment that does not require fault tolerance.

RAID 1 uses mirroring so that data written to one disk drive is

simultaneously written to another disk drive. This is good for small

databases or other applications that require small capacity, but complete

data redundancy.


RAID 5 uses disk striping and parity data across all drives

(distributed parity) to provide high data throughput, especially for small

random access.

RAID 10, a combination of RAID 0 and RAID 1, consists of striped data

across mirrored spans. It provides high data throughput and complete

data redundancy, but uses a larger number of spans.

RAID 50, a combination of RAID 0 and RAID 5, uses distributed parity

and disk striping and works best with data that requires high reliability,

high request rates, high data transfers, and medium-to-large capacity.

LSI does not recommend having RAID 0 and RAID 5 logical drives in the

same physical array. If a drive in the physical array has to be rebuilt, the

RAID 0 logical drive causes a failure during the rebuild.

2.5.2 Selecting a RAID Level

To ensure the best performance, you should select the optimal RAID

level when you create a system drive. The optimal RAID level for your

disk array depends on a number of factors:

• Number of physical drives in the disk array

• Capacity of the physical drives in the array

• Need for data redundancy

• Disk performance requirements

2.5.2.1 RAID 0

RAID 0 provides disk striping across all drives in the RAID array. RAID 0

does not provide any data redundancy, but does offer the best

performance of any RAID level. RAID 0 breaks up data into smaller

blocks and then writes a block to each drive in the array. The size of each

block is determined by the stripe size parameter, set during the creation

of the RAID set. RAID 0 offers high bandwidth.

Note: RAID level 0 is not fault-tolerant. If a drive in a RAID 0 array

fails, the whole logical drive (all physical drives associated

with the logical drive) fails.


By breaking up a large file into smaller blocks, the RAID controller can

use several drives to read or write the file faster. RAID 0 involves no

parity calculations to complicate the write operation. This makes RAID 0

ideal for applications that require high bandwidth but do not require fault

tolerance. RAID 0 also denotes an independent or single drive.

Table 2.5 provides an overview of RAID 0.

Table 2.5 RAID 0 Overview

Feature         Description

Uses            Provides high data throughput, especially for large files. Any
                environment that does not require fault tolerance.

Strong Points   Provides increased data throughput for large files. No
                capacity loss penalty for parity.

Weak Points     Does not provide fault tolerance. All data lost if any
                drive fails.

Drives          1 to (14 drives x the number of channels).

2.5.2.2 RAID 1

In RAID 1, the RAID controller duplicates all data from one drive to a

second drive. RAID 1 provides complete data redundancy, but at the cost

of doubling the required data storage capacity.

Table 2.6 provides an overview of RAID 1.

Table 2.6 RAID 1 Overview

Feature Description

Uses Appropriate for small databases or any other environment

that requires fault tolerance but small capacity.

Strong Points Provides complete data redundancy. RAID 1 is ideal for any

application that requires fault tolerance and minimal capacity.

Weak Points Requires twice as many disk drives. Performance is impaired

during drive rebuilds.

Drives 2


2.5.2.3 RAID 5

RAID 5 includes disk striping at the block level and parity. In RAID 5, the

parity information is written to several drives. RAID 5 is best suited for

networks that perform a lot of small input/output (I/O) transactions

simultaneously.

RAID 5 addresses the bottleneck issue for random I/O operations.

Because each drive contains both data and parity, numerous writes can

take place concurrently. In addition, robust caching algorithms and

hardware-based, exclusive-or assist make RAID 5 performance

exceptional in many different environments.

Table 2.7 provides an overview of RAID 5.

Table 2.7 RAID 5 Overview

Feature         Description

Uses            Provides high data throughput, especially for large files. Use
                RAID 5 for transaction processing applications because
                each drive can read and write independently. If a drive fails,
                the RAID controller uses the parity data to recreate all
                missing information. Use also for office automation and
                online customer service that requires fault tolerance. Use for
                any application that has high read request rates but low write
                request rates.

Strong Points   Provides data redundancy, high read rates, and good
                performance in most environments. Provides data
                redundancy with lowest loss of capacity.

Weak Points     Not well-suited to tasks requiring numerous writes. Suffers
                more impact if no cache is used (clustering). Disk drive
                performance is reduced if a drive is being rebuilt.
                Environments with few processes do not perform as well
                because the RAID overhead is not offset by the performance
                gains in handling simultaneous processes.

Drives          3 to (14 drives x the number of channels).

2.5.2.4 RAID 10

RAID 10 is a combination of RAID 0 and RAID 1. RAID 10 consists of

striped data across mirrored spans. RAID 10 breaks up data into smaller

blocks, then mirrors the blocks of data to each RAID 1 set. Each RAID 1

set then duplicates its data to its other drive. The size of each block is

determined by the stripe size parameter, which is set during the creation

of the RAID set. Up to 8 spans can be supported by RAID 10.


Table 2.8 provides an overview of RAID 10.

Table 2.8 RAID 10 Overview

Feature Description

Uses Appropriate when used with data storage that needs 100%

redundancy of mirrored arrays and that also needs the

enhanced I/O performance of RAID 0 (striped arrays). RAID

10 works well for medium-sized databases or any

environment that requires a higher degree of fault tolerance

and moderate to medium capacity.

Strong Points Provides both high data transfer rates and complete data

redundancy.

Weak Points Requires twice as many drives as all other RAID levels

except RAID 1.

Drives 2n, where n is greater than 1.

In Figure 2.5, logical drive 0 is created by distributing data across four

arrays (arrays 0 through 3). Spanning is used because one logical drive

is defined across more than one array. Logical drives defined across

multiple RAID 1 level arrays are referred to as RAID 10 logical drives. To

increase performance, data is striped across arrays, which enables

access to multiple arrays simultaneously.

Using RAID level 10, rather than a simple RAID set, up to 8 spans can

be supported, and up to 8 drive failures can be tolerated, though less

than total disk drive capacity is available. Though multiple drive failures

can be tolerated, only one drive failure can be tolerated in each RAID 1

level array.
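The rule that multiple drive failures can be tolerated only when no single RAID 1 array loses more than one drive can be checked with a few lines of code. The sketch below is illustrative only; the function name `survives` and the drive names are hypothetical, and each span is modeled simply as a list of its member drives.

```python
def survives(failed_drives, spans):
    """Return True if every span has lost at most one of its drives.

    spans: a list of lists of drive names, one inner list per spanned array.
    """
    failed = set(failed_drives)
    return all(len(failed.intersection(span)) <= 1 for span in spans)


# Four RAID 1 arrays (arrays 0 through 3) spanned into one RAID 10 logical drive.
raid10 = [["d0", "d1"], ["d2", "d3"], ["d4", "d5"], ["d6", "d7"]]

print(survives(["d0", "d2", "d5", "d6"], raid10))  # one failure in each array
print(survives(["d0", "d1"], raid10))              # both drives of array 0
```

Four failures spread one per array leave the logical drive available, while two failures in the same array do not, even though fewer drives were lost.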

Figure 2.5 RAID 10 Logical Drive

RAID 10

Segment 1 Segment 1

Segment 5

...

Duplicate

Segment 5

Duplicate

RAID 1 RAID 1 RAID 1 RAID 1

RAID Levels 2-17

Copyright © 2003–2006 by LSI Logic Corporation. All rights reserved.

Segment 2 Segment 3

Segment 6

... ... ...

Segment 2

Duplicate

Segment 6

Duplicate

Segment 3 Segment 4

Segment 7

RAID 0

Duplicate

Segment 7

Duplicate

Segment 8

Segment 4

Duplicate

Segment 8

Duplicate

Page 40

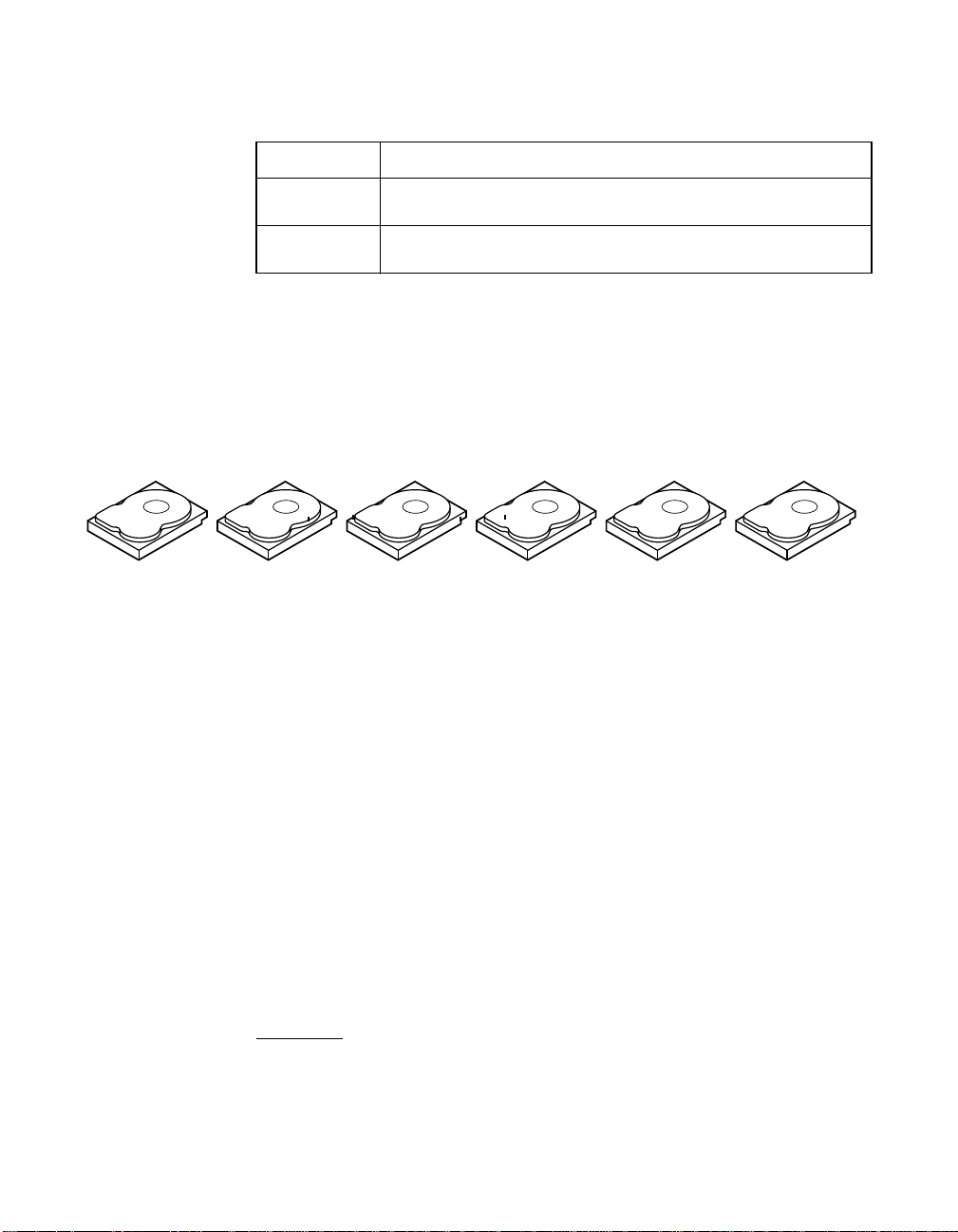

2.5.2.5 RAID 50

RAID 50 provides the features of both RAID 0 and RAID 5. RAID 50

includes both distributed parity and disk striping across multiple arrays.

RAID 50 is best implemented on two RAID 5 disk arrays with data striped

across both disk arrays.

RAID 50 breaks up data into smaller blocks, then stripes the blocks of

data to each RAID 5 disk set. RAID 5 breaks up data into smaller blocks,

calculates parity by performing an exclusive-or on the blocks, then writes

the blocks of data and parity to each drive in the array. The size of each

block is determined by the stripe size parameter, which is set during the

creation of the RAID set.

RAID 50 can support up to 8 spans and tolerate up to 8 drive failures,

though less than total disk drive capacity is available. Though multiple

drive failures can be tolerated, only one drive failure can be tolerated in

each RAID 5 level array.

Table 2.9 provides an overview of RAID 50.

Table 2.9 RAID 50 Overview

Feature         Description

Uses            Appropriate when used with data that requires high
                reliability, high request rates, high data transfer, and medium
                to large capacity.

Strong Points   Provides high data throughput, data redundancy and very
                good performance.

Weak Points     Requires 2 to 8 times as many parity drives as RAID 5.

Drives          6 to (14 drives x the number of channels)

Figure 2.6 provides an example of a RAID 50 level logical drive.

Figure 2.6 RAID 50 Logical Drive

[Figure: a RAID 50 logical drive striped (RAID 0) across two RAID 5
arrays. One array holds Segments 1, 2, 5, 6, 9, and 10 together with the
blocks labeled (Segment 1, 2), (Segment 5, 6), and (Segment 9, 10). The
other holds Segments 3, 4, 7, 8, 11, and 12 together with the blocks
labeled (Segment 3, 4), (Segment 7, 8), and (Segment 11, 12).]

2.6 RAID Configuration Strategies

The most important factors in RAID array configuration are:

• Logical drive availability (fault tolerance)

• Logical drive performance

• Logical drive capacity

You cannot configure a logical drive that optimizes all three factors, but
it is easy to choose a logical drive configuration that maximizes one
factor at the expense of another factor. For example, RAID 1 (mirroring)
provides excellent fault tolerance, but requires a redundant drive. The
following subsections describe how to use the RAID levels to maximize
logical drive availability (fault tolerance), logical drive performance, and
logical drive capacity.

2.6.1 Maximizing Fault Tolerance

Fault tolerance is achieved through the ability to perform automatic and

transparent rebuilds using hot spare drives and hot swaps. A hot spare

drive is an available, unused online drive that the RAID controller

instantly plugs into the system when an active drive fails. After the hot

spare is automatically moved into the RAID array, the failed drive is

automatically rebuilt on the spare drive. The RAID array continues to

handle requests while the rebuild occurs.


A hot swap is the manual substitution of a replacement unit in a disk

subsystem for a defective one, where the substitution can be performed

while the subsystem is running. Auto-Rebuild in the

BIOS Configuration Utility allows a failed drive to be replaced and

automatically rebuilt by hot-swapping the drive in the same drive bay. The

RAID array continues to handle requests while the rebuild occurs,

providing a high degree of fault tolerance and zero downtime. Table 2.10

describes the fault tolerance features of each RAID level.

Table 2.10 RAID Levels and Fault Tolerance

RAID Level   Fault Tolerance

0    Does not provide fault tolerance. All data lost if any drive fails. Disk
     striping writes data across multiple disk drives instead of just one disk
     drive. It involves partitioning each drive storage space into stripes that
     can vary in size. RAID 0 is ideal for applications that require high
     bandwidth but do not require fault tolerance.

1    Provides complete data redundancy. If one disk drive fails, the contents of
     the other disk drive can run the system and reconstruct the failed drive.
     The primary advantage of disk mirroring is that it provides 100% data
     redundancy. Since the contents of the disk drive are completely written to
     a second drive, no data is lost if one of the drives fails. Both drives
     contain the same data at all times. RAID 1 is ideal for any application
     that requires fault tolerance and minimal capacity.

5    Combines distributed parity with disk striping. Parity provides redundancy
     for one drive failure without duplicating the contents of entire disk
     drives. If a drive fails, the RAID controller uses the parity data to
     rebuild all missing information. In RAID 5, this method is applied to
     entire drives or stripes across all disk drives in an array. Using
     distributed parity, RAID 5 offers fault tolerance with limited overhead.

10   Provides complete data redundancy using striping across spanned RAID 1
     arrays. RAID 10 works well for any environment that requires the 100
     percent redundancy offered by mirrored arrays. RAID 10 can sustain a drive
     failure in each mirrored array and maintain drive integrity.

50   Provides data redundancy using distributed parity across spanned RAID 5
     arrays. RAID 50 includes both parity and disk striping across multiple
     drives. If a drive fails, the RAID controller uses the parity data to
     recreate all missing information. RAID 50 can sustain one drive failure
     per RAID 5 array and still maintain data integrity.

2.6.2 Maximizing Performance

A RAID disk subsystem improves I/O performance. The RAID array

appears to the host computer as a single storage unit or as multiple

logical units. I/O is faster because drives can be accessed simultaneously.

Table 2.11 describes the performance for each RAID level.

Table 2.11 RAID Levels and Performance

RAID Level   Performance

0    RAID 0 (disk striping) offers the best performance of any RAID level.
     RAID 0 breaks up data into smaller blocks, then writes a block to each
     drive in the array. Disk striping writes data across multiple disk drives
     instead of just one disk drive. It involves partitioning each drive
     storage space into stripes that can vary in size from 8 Kbytes to
     128 Kbytes. These stripes are interleaved in a repeated sequential manner.
     Disk striping enhances performance because multiple drives are accessed
     simultaneously.

1    With RAID 1 (disk mirroring), each drive in the system must be duplicated,
     which requires more time and resources than striping. Performance is
     impaired during drive rebuilds.

5    RAID 5 (distributed parity) provides high data throughput, especially for
     large files. Use this RAID level for any application that requires high
     read request rates, but low write request rates, such as transaction
     processing applications, because each drive can read and write
     independently. Since each drive contains both data and parity, numerous
     writes can take place concurrently. In addition, robust caching algorithms
     and hardware based exclusive-or assist make RAID 5 performance exceptional
     in many different environments.
     Parity generation can slow the write process, making write performance
     significantly lower for RAID 5 than for RAID 0 or RAID 1. Disk drive
     performance is reduced if a drive is being rebuilt. Clustering can also
     reduce drive performance. Environments with few processes do not perform
     as well because the RAID overhead is not offset by the performance gains
     in handling simultaneous processes.

10   RAID 10 (disk spanning) works best for data storage that needs the
     enhanced I/O performance of RAID 0 (striped arrays), which provides high
     data transfer rates. Spanning increases the size of the logical volume and
     improves performance by doubling the number of spindles. The system
     performance improves as the number of spans increases. (The maximum number
     of spans is eight.) As the storage space in the spans is filled, the
     system stripes data over fewer and fewer spans and RAID performance
     degrades to that of a RAID 1 or RAID 5 array.

50   RAID 50 (disk spanning) works best when used with data that requires high
     reliability, high request rates, and high data transfer. It provides high
     data throughput, data redundancy, and very good performance. Spanning
     increases the size of the logical volume and improves performance by
     doubling the number of spindles. The system performance improves as the
     number of spans increases. (The maximum number of spans is eight.) As the
     storage space in the spans is filled, the system stripes data over fewer
     and fewer spans and RAID performance degrades to that of a RAID 1 or
     RAID 5 array.

2.6.3 Maximizing Storage Capacity

Storage capacity is an important factor when selecting a RAID level.

There are several variables to consider. Mirrored data and parity data

require more storage space than striping alone (RAID 0). Parity

generation uses algorithms to create redundancy and requires less

space than mirroring. Table 2.12 explains the effects of the RAID levels

on storage capacity.
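The capacity trade-off between striping, mirroring, and parity can be made concrete with a small calculation. The sketch below assumes that all drives in the logical drive have the same capacity and uses the standard capacity relationships for each level (one drive's worth of parity per RAID 5 array, half the drives for mirroring). The function `usable_capacity` and its parameters are hypothetical, and the drive-count checks follow the minimums given in the overview tables in Section 2.5.2.

```python
def usable_capacity(level, drives, drive_gb, spans=1):
    """Return usable capacity in Gbytes, assuming equal-size drives."""
    if drives < 1 or drive_gb <= 0:
        raise ValueError("need at least one drive with positive capacity")
    if level == 0:
        return drives * drive_gb                 # no redundancy overhead
    if level == 1:
        if drives != 2:
            raise ValueError("RAID 1 uses 2 drives")
        return drive_gb                          # the second drive is a copy
    if level == 5:
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_gb           # one drive's worth of parity
    if level == 10:
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs 2n drives, n greater than 1")
        return drives // 2 * drive_gb            # every drive is mirrored
    if level == 50:
        if spans < 2 or drives % spans or drives // spans < 3:
            raise ValueError("RAID 50 needs 2 or more equal RAID 5 spans of 3+ drives")
        return (drives - spans) * drive_gb       # one parity drive per span
    raise ValueError("unsupported RAID level")


# Six 20 Gbyte drives configured at different RAID levels.
for level, spans in ((0, 1), (5, 1), (10, 1), (50, 2)):
    print(level, usable_capacity(level, 6, 20, spans))
```

For the same six drives, striping alone exposes all of the raw capacity, parity gives up one drive per RAID 5 array, and mirroring gives up half.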

Table 2.12 RAID Levels and Capacity

| RAID Level | Capacity |
|---|---|
| 0 | RAID 0 (disk striping) involves partitioning each drive storage space into stripes that can vary in size. The combined storage space is composed of stripes from each drive. RAID 0 provides maximum storage capacity for a given set of physical disks. |
| 1 | With RAID 1 (disk mirroring), data written to one disk drive is simultaneously written to another disk drive, which doubles the required data storage capacity. This is expensive because each drive in the system must be duplicated. |
| 5 | RAID 5 (distributed parity) provides redundancy for one drive failure without duplicating the contents of entire disk drives. RAID 5 breaks up data into smaller blocks, calculates parity by performing an exclusive-or on the blocks, then writes the blocks of data and parity to each drive in the array. The size of each block is determined by the stripe size parameter, which is set during the creation of the RAID set. |
| 10 | RAID 10 (disk spanning) requires twice as many drives as all other RAID levels except RAID 1. RAID 10 works well for medium-sized databases or any environment that requires a higher degree of fault tolerance and moderate to medium capacity. Disk spanning allows multiple disk drives to function like one big drive. Spanning overcomes lack of disk space and simplifies storage management by combining existing resources or adding relatively inexpensive resources. |
| 50 | RAID 50 (disk spanning) requires two to four times as many parity drives as RAID 5. This RAID level works best when used with data that requires medium to large capacity. |
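
The capacity trade-offs in Table 2.12 can be approximated with simple arithmetic. The following Python sketch is illustrative only: the function `usable_capacity` and its parameters are hypothetical names, it assumes that all drives are the same size, and it ignores any space a controller may reserve for its own use.

```python
def usable_capacity(raid_level, num_drives, drive_size_gb, spans=2):
    """Approximate usable capacity in GB for identically sized drives.

    Hypothetical helper for illustration; not part of any MegaRAID utility.
    `spans` applies only to RAID 50 (the number of RAID 5 arrays spanned).
    """
    if raid_level == 0:
        # Striping only: no capacity is given up for redundancy.
        return num_drives * drive_size_gb
    if raid_level in (1, 10):
        # Mirroring (plain or spanned): half of the drives hold copies.
        return (num_drives // 2) * drive_size_gb
    if raid_level == 5:
        # Distributed parity: one drive's worth of space holds parity.
        return (num_drives - 1) * drive_size_gb
    if raid_level == 50:
        # Spanned RAID 5 arrays: one drive's worth of parity per span.
        return (num_drives - spans) * drive_size_gb
    raise ValueError(f"unsupported RAID level: {raid_level}")
```

For example, under these assumptions six 100 GB drives yield about 600 GB as RAID 0, 500 GB as RAID 5, 400 GB as a two-span RAID 50, and 300 GB as RAID 10.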


2.7 RAID Availability

Data availability without downtime is essential for many types of data

processing and storage systems. Businesses want to avoid the financial

costs and customer frustration associated with downed servers. RAID

helps you maintain data availability and avoid downtime for the servers

that provide that data. RAID offers several features, such as spare drives

and rebuilds, that you can use to fix any hard drive problems, while

keeping the server(s) running and data available. The following

subsections describe these features.

2.7.1 Spare Drives

You can use spare drives to replace failed or defective drives in an array.

A replacement drive must be at least as large as the drive it replaces.

Spare drives include hot swaps, hot spares, and cold swaps.

A hot swap is the manual substitution of a replacement unit in a disk

subsystem for a defective one, where the substitution can be performed

while the subsystem is running (performing its normal functions). The

backplane and enclosure must support hot swap in order for the

functionality to work.

Hot spare drives are physical drives that power up along with the RAID

drives and operate in a standby state. If a hard drive used in a RAID

logical drive fails, a hot spare automatically takes its place and the data

on the failed drive is rebuilt on the hot spare. Hot spares can be used for

RAID levels 1, 5, 10, and 50.

A cold swap requires that you power down the system before replacing

a defective hard drive in a disk subsystem.
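
The size rule for replacement drives can be expressed as a short check. This is a minimal sketch, not controller behavior: `pick_hot_spare` is a hypothetical helper, and choosing the smallest adequate spare is an assumption made here so that larger spares stay available for larger drives.

```python
def pick_hot_spare(failed_drive_gb, spare_sizes_gb):
    """Return the size of a spare that can replace the failed drive, or None.

    Hypothetical helper. A spare qualifies only if it is at least as large
    as the drive it replaces. Preferring the smallest qualifying spare is
    an assumption of this sketch, not documented controller behavior.
    """
    candidates = [size for size in spare_sizes_gb if size >= failed_drive_gb]
    return min(candidates) if candidates else None
```

If no spare is large enough, the function returns `None`, which corresponds to the case where a suitable replacement drive must be supplied manually.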

2.7.2 Rebuilding

If a hard drive fails in an array that is configured as a RAID 1, 5, 10, or 50 logical drive, you can recover the lost data by rebuilding the drive. If you have configured hot spares, the RAID controller automatically tries to use them to rebuild failed disks. Manual rebuild is necessary if no hot spares with enough capacity to rebuild the failed drives are available. You must insert a drive with enough storage into the subsystem before rebuilding the failed drive.

Note: If a rebuild to a hot spare fails for any reason, the hot spare drive is marked as failed. If the source drive fails, both the source drive and the hot spare drive are marked as failed.
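
For parity-based levels, a rebuild relies on the exclusive-or relationship described for RAID 5 in Table 2.12: XORing the surviving blocks of a stripe with the parity block reproduces the missing block. The Python sketch below demonstrates only that principle. The helper `xor_blocks` and the sample byte values are made up for illustration, and the sketch does not model stripe size, parity placement, or how a controller actually schedules a rebuild.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three data blocks of one stripe (arbitrary sample values).
data = [b"\x0f\xf0", b"\x33\xcc", b"\x55\xaa"]
parity = xor_blocks(data)

# Simulate losing the second block, then rebuild it from the
# surviving data blocks plus the parity block.
rebuilt = xor_blocks([data[0], data[2], parity])
assert rebuilt == data[1]
```

Mirrored levels do not need this calculation, because the rebuild can copy the data from the surviving mirror.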

2.8 RAID Configuration Planning

Factors to consider when planning a configuration are the number of

hard disk drives the RAID controller can support, the purpose of the

array, and the availability of spare drives.

Each type of data stored in the disk subsystem has a different frequency

of read and write activity. If you know the data access requirements, you

can more successfully determine a strategy for optimizing the disk

subsystem capacity, availability, and performance.

Servers that support video on demand typically read the data often, but

write data infrequently. Both the read and write operations tend to be

long. Data stored on a general-purpose file server involves relatively

short read and write operations with relatively small files.

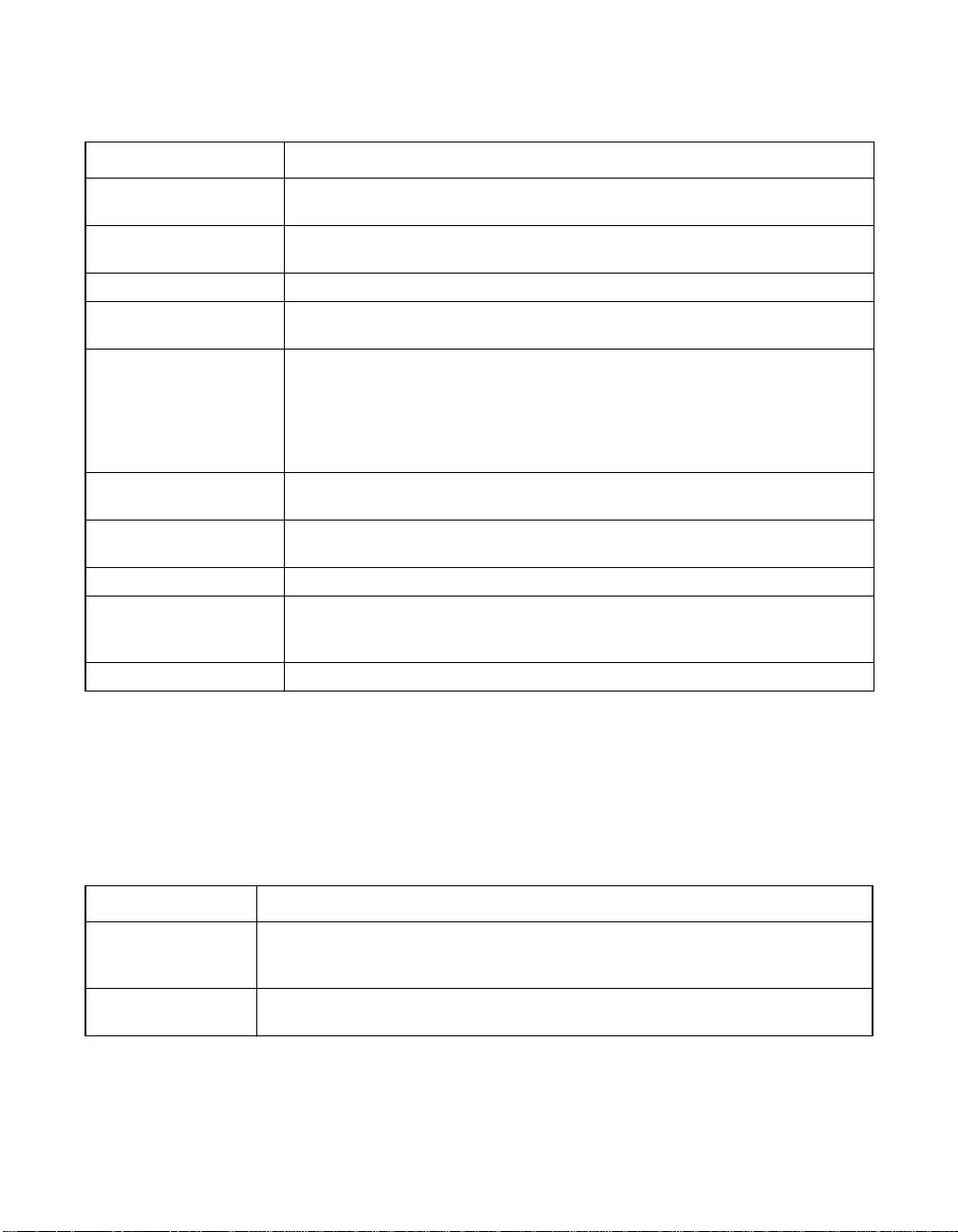

2.8.1 Number of Physical Disk Drives

Your configuration planning depends in part on the number of physical

disk drives that you want to use in a RAID array. The number of drives

in an array determines the RAID levels that can be supported. Only one

RAID level can be assigned to each logical drive. Table 2.13 shows the

minimum and maximum number of drives required for each RAID level.

Table 2.13 Physical Drives Required for Each RAID Level

| RAID Level | Minimum # of Physical Drives | Maximum # of Physical Drives for Single-Channel Controller | Maximum # of Physical Drives for Dual-Channel Controller |
|---|---|---|---|
| 0 | 1 | 14 | 28 |
| 1 | 2 | 2 | 2 |
| 5 | 3 | 14 | 28 |
| 10 | 4 | 14 | 28 |
| 50 | 6 | 14 | 28 |
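
The limits in Table 2.13 lend themselves to a simple lookup. The following Python sketch is a planning aid only: `DRIVE_LIMITS` and `supported_raid_levels` are hypothetical names, and the check covers just the minimum and maximum drive counts from the table, not any other constraint a RAID level may impose, such as needing an even number of drives for mirroring.

```python
# (minimum, maximum for single-channel, maximum for dual-channel),
# copied from Table 2.13.
DRIVE_LIMITS = {
    0: (1, 14, 28),
    1: (2, 2, 2),
    5: (3, 14, 28),
    10: (4, 14, 28),
    50: (6, 14, 28),
}

def supported_raid_levels(num_drives, dual_channel=False):
    """List the RAID levels whose drive-count limits admit num_drives."""
    levels = []
    for level, (minimum, max_single, max_dual) in DRIVE_LIMITS.items():
        maximum = max_dual if dual_channel else max_single
        if minimum <= num_drives <= maximum:
            levels.append(level)
    return levels
```

With three drives, for example, the lookup returns RAID 0 and RAID 5; with six drives it returns RAID 0, 5, 10, and 50.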

2.8.2 Array Purpose

Important factors to consider when creating RAID arrays include

availability, performance, and capacity. Define the major purpose of the

disk array by answering questions related to these factors, such as the

following, which are followed by suggested RAID levels for each situation:

• Does this disk array increase the system storage capacity for

general-purpose file and print servers? Use RAID 5, 10, or 50.

• Does this disk array support any software system that must be

available 24 hours per day? Use RAID 1, 5, 10, or 50.

• Does the information stored in this disk array contain large audio or

video files that must be available on demand? Use RAID 0.

• Does this disk array contain data from an imaging system? Use

RAID 0 or 10.

Fill out Table 2.14 to help you plan the array configuration. Rank the

requirements for your array, such as storage space and data redundancy,

in order of importance, then review the suggested RAID levels.

Table 2.14 Factors to Consider for Array Configuration

| Requirement | Rank | Suggested RAID Level(s) |
|---|---|---|
| Storage space | | RAID 0, RAID 5 |
| Data redundancy | | RAID 5, RAID 10, RAID 50 |
| Hard drive performance and throughput | | RAID 0, RAID 10 |
| Hot spares (extra hard drives required) | | RAID 1, RAID 5, RAID 10, RAID 50 |
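
One way to work through Table 2.14 is to start with the levels suggested for the top-ranked requirement and narrow the list with each lower-ranked one. The Python sketch below follows that idea. The narrowing rule, the dictionary keys, and the function name `suggest_levels` are assumptions made for illustration; the guide itself only asks you to rank the requirements and review the suggestions.

```python
# Suggested RAID levels per requirement, copied from Table 2.14.
SUGGESTED_LEVELS = {
    "storage space": {0, 5},
    "data redundancy": {5, 10, 50},
    "performance and throughput": {0, 10},
    "hot spares": {1, 5, 10, 50},
}

def suggest_levels(ranked_requirements):
    """Narrow candidate RAID levels, most important requirement first.

    A lower-ranked requirement is skipped if applying it would leave
    no candidates (an assumption of this sketch).
    """
    candidates = None
    for requirement in ranked_requirements:
        levels = SUGGESTED_LEVELS[requirement]
        if candidates is None:
            candidates = set(levels)
        elif candidates & levels:
            candidates &= levels
    return sorted(candidates or [])
```

Ranking data redundancy first and performance second, for instance, narrows the suggestions to RAID 10.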


Chapter 3

BIOS Configuration Utility and MegaRAID Manager

The MegaRAID BIOS Configuration Utility (CU) configures disk arrays

and logical drives. Because the CU resides in the BIOS, it is independent

of the operating system.

MegaRAID Manager is a character-based, non-GUI utility that changes

policies and parameters, and monitors RAID systems.

MegaRAID Manager runs under the DOS, Red Hat Linux, and NetWare

operating systems.

The BIOS Configuration Utility and MegaRAID Manager use the same

command structure to configure controllers and disks. The following

sections describe the steps to start either utility and detailed instructions

to perform configuration steps using either utility.

Note: MegaRAID Manager screens differ slightly from the BIOS

Configuration Utility screens, but the utilities have

similar functions.

Use the configuration utilities to do the following:

• Select a configuration method for physical arrays and logical disks

• Create physical arrays

• Define logical drives

• Initialize logical drives

• Access controllers, logical drives, and physical arrays to display their

properties

• Create hot spare drives

• Verify that the redundancy data in logical drives using RAID level

1, 5, 10, or 50 is correct

• Rebuild failed drives


• Reconstruct logical drives after changing RAID levels or adding a

hard drive to an array

• Select a MegaRAID host adapter

This chapter consists of the following sections:

• Section 3.1, “Quick Configuration Steps for the BIOS

Configuration Utility”

• Section 3.2, “Quick Configuration Steps for MegaRAID Manager”

• Section 3.3, “Configuration Utility Menu”