3ware® SATA+SAS

RAID Controller Card

CLI Guide

Command Line Interface

Version 10.0—Supports the 9750 and 9000 Series

(9750, 9690SA, and 9650SE)

PN: 45413-00, Rev. A

November 2009

Document Description

Document 45413-00, Rev. A. November 2009.

This document will remain the official reference source for all revisions and

releases of this product until rescinded by an update.

Disclaimer

It is the policy of LSI Corporation to improve products as new technology,

components, software, and firmware become available. LSI reserves the right

to make changes to any products herein at any time without notice. All

features, functions, and operations described herein may not be marketed by

LSI in all parts of the world. In some instances, photographs and figures are of

equipment prototypes. Therefore, before using this document, consult your

LSI representative for information that is applicable and current. LSI DOES

NOT ASSUME ANY RESPONSIBILITY OR LIABILITY FOR THE USE

OF ANY PRODUCTS DESCRIBED HEREIN EXCEPT AS EXPRESSLY

AGREED TO IN WRITING BY LSI.

LSI products are not intended for use in life-support appliances, devices, or

systems. Use of any LSI product in such applications without written consent

of the appropriate LSI officer is prohibited.

License Restriction

The purchase or use of an LSI Corporation product does not convey a license

under any patent, copyright, trademark, or other intellectual property right of

LSI or third parties.

Copyright Notice

© 2009 LSI Corporation. All rights reserved.

Trademark Acknowledgments

LSI, the LSI logo design, 3ware®, 3DM®, StorSwitch®, and TwinStor® are all
registered trademarks of LSI Corporation. StorSave and StreamFusion are
trademarks of LSI. Linux® is a registered trademark of Linus Torvalds in the
United States, other countries, or both. SUSE® is a registered trademark of
Novell, Inc. Windows® is a registered trademark of Microsoft Corporation in
the United States and other countries. Firefox® is a registered trademark of
the Mozilla Foundation. Safari® is a registered trademark of Apple Inc.,
registered in the U.S. and other countries. PCI Express® is a registered
trademark of PCI-SIG®. All other brand and product names may be
trademarks of their respective companies.

Table of Contents

About this CLI Guide . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Chapter 1. Introduction to the 3ware Command Line Interface. . . . . . . . . . . . . . . . . .1

Features of the CLI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Supported Operating Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Installing the 3ware CLI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Installing the 3ware CLI on Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Installing the 3ware CLI on Linux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

Working with 3ware CLI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

Using the command interface interactively . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

Using a single command with output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

Using an input file to execute a script . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

Outputting the CLI to a Text File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Understanding RAID Levels and Concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

RAID Concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Available RAID Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Determining What RAID Level to Use . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Using Drive Capacity Efficiently . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Support for Over 2 Terabytes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Chapter 2. CLI Syntax Reference . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .18

Common Tasks Mapped to CLI Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Syntax Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Shell Object Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

focus Object . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

commit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

flush . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

rescan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

show alarms [reverse] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

show events [reverse] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

show AENs [reverse] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

show diag . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

show rebuild . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

show selftest . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

show ver . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

show verify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

update fw=filename_with_path [force] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Controller Object Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

/cx show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

/cx show attribute [attribute ...] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

/cx show achip . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

/cx show allunitstatus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

/cx show autocarve . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

/cx show autorebuild . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

/cx show bios . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

www.lsi.com/channel/products iii

/cx show carvesize . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

/cx show ctlbus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

/cx show driver . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

/cx show dpmstat [type=inst|ra|ext] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

/cx show drivestatus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

/cx show firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

/cx show memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

/cx show model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

/cx show monitor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

/cx show numdrives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

/cx show numports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

/cx show numunits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

/cx show ondegrade . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

/cx show pcb . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

/cx show pchip . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

/cx show serial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

/cx show spinup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

/cx show stagger . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

/cx show unitstatus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

/cx show all . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

/cx show alarms [reverse] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

/cx show events [reverse] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

/cx show AENs [reverse] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

/cx show diag . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

/cx show phy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

/cx show rebuild . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

/cx show rebuildmode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

/cx show rebuildrate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

/cx show selftest . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

/cx show verify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

/cx show verifymode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

/cx show verifyrate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

/cx add type=<RaidType> disk=<p:-p> [stripe=size] [noscan]

[group=<3|4|5|6|7|8|9|10|11|12|13|14|15|16>] [nocache|nowrcache] [nordcache|

rdcachebasic] [autoverify] [noqpolicy] [ignoreECC] [name=string]

[storsave=<protect|balance|perform>] [rapidrecovery=all|rebuild|disable]

[v0=n|vol=a:b:c:d] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

/cx rescan [noscan] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

/cx commit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

/cx flush . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

/cx update fw=filename_with_path [force] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

/cx add rebuild=ddd:hh:duration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

/cx add verify=ddd:hh:duration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

/cx add selftest=ddd:hh . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

/cx del rebuild=slot_id . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

/cx del verify=slot_id . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

/cx del selftest=slot_id . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

/cx set dpmstat=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

/cx set rebuild=enable|disable|1..5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

/cx set rebuildmode=<adaptive|lowlatency> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

/cx set rebuildrate=<1...5> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

/cx set verify=enable|disable|1..5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

/cx set verify=advanced|basic|1..5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

/cx set verify=basic [pref=ddd:hh] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

/cx set verifymode=<adaptive|lowlatency> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

/cx set verifyrate=<1..5> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

/cx set selftest=enable|disable [task=UDMA|SMART] . . . . . . . . . . . . . . . . . . . . . . 74

/cx set ondegrade=cacheoff|follow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

/cx set spinup=nn . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

/cx set stagger=nn . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

/cx set autocarve=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

/cx set carvesize=[1024..32768] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

/cx set autorebuild=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

/cx set autodetect=on|off disk=<p:-p>|all . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

/cx start mediascan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

/cx stop mediascan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Unit Object Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

/cx/ux show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

/cx/ux show attribute [attribute ...] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

/cx/ux show autoverify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

/cx/ux show cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

/cx/ux show wrcache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

/cx/ux show rdcache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

/cx/ux show identify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

/cx/ux show ignoreECC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

/cx/ux show initializestatus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

/cx/ux show name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

/cx/ux show qpolicy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

/cx/ux show rapidrecovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

/cx/ux show rebuildstatus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

/cx/ux show serial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

/cx/ux show status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

/cx/ux show storsave . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

/cx/ux show verifystatus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

/cx/ux show volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

/cx/ux show all . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

/cx/ux remove [noscan] [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

/cx/ux del [noscan] [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

/cx/ux start rebuild disk=<p:-p...> [ignoreECC] . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

/cx/ux start verify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

/cx/ux pause rebuild . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

/cx/ux resume rebuild . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

/cx/ux stop verify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

/cx/ux flush . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

/cx/ux set autoverify=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

/cx/ux set cache=on|off [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

/cx/ux set wrcache=on|off [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

/cx/ux set rdcache=basic|intelligent|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

/cx/ux set identify=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

/cx/ux set ignoreECC=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

/cx/ux set name=string . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

/cx/ux set qpolicy=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

/cx/ux set rapidrecovery all|rebuild|disable [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . 95

/cx/ux set storsave=protect|balance|perform [quiet] . . . . . . . . . . . . . . . . . . . . . . . 95

/cx/ux migrate type=RaidType [disk=p:-p]

[group=3|4|5|6|7|8|9|10|11|12|13|14|15|16] [stripe=size] [noscan] [nocache]

[autoverify] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Port Object Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

/cx/px show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

/cx/px show attribute [attribute ...] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

/cx/px show capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

/cx/px show driveinfo . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

/cx/px show firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

/cx/px show identify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

/cx/px show lspeed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

/cx/px show model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

/cx/px show ncq . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

/cx/px show serial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

/cx/px show smart . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

/cx/px show status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

/cx/px show all . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

/cx/px show dpmstat type=inst|ra|lct|histdata|ext . . . . . . . . . . . . . . . . . . . . . . . . 110

/cx/px remove [noscan] [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

/cx/px set identify=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

/cx/px set dpmstat=clear [type=ra|lct|ext] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Phy Object Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

/cx/phyx show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

/cx/phyx set link=auto|1.5|3.0|6.0 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

BBU Object Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

/cx/bbu show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

/cx/bbu show attribute [attribute ...] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

/cx/bbu show batinst . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

/cx/bbu show bootloader . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

/cx/bbu show cap . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

/cx/bbu show fw . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

/cx/bbu show lasttest . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

/cx/bbu show pcb . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

/cx/bbu show serial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

/cx/bbu show status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

/cx/bbu show temp . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

/cx/bbu show tempstat . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

/cx/bbu show tempval . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

/cx/bbu show volt . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

/cx/bbu show all . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

/cx/bbu test [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

/cx/bbu enable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

/cx/bbu disable [quiet] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Enclosure Object and Element Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

/cx/ex show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

/cx/ex show attribute [attribute ...] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

/cx/ex show controllers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

/cx/ex show diag=helptext . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

/cx/ex show slots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

/cx/ex show fans . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

/cx/ex show temp . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

/cx/ex show pwrs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

/cx/ex show alarms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

/cx/ex show all . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

/cx/ex/slotx show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

/cx/ex/slotx show identify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

/cx/ex/slotx set identify=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

/cx/ex/fanx show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

/cx/ex/fanx show identify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

/cx/ex/fanx set identify=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

/cx/ex/fanx set speed=<0..7> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

/cx/ex/pwrsx show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

/cx/ex/pwrsx show identify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

/cx/ex/pwrsx set identify=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

/cx/ex/tempx show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

/cx/ex/tempx show identify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

/cx/ex/tempx set identify=on|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

/cx/ex/almx show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

/cx/ex/almx set alarm=mute|unmute|off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

Help Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

Help with specific commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

Help with attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

help . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

help show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

help flush . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

help rescan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

help update . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

help commit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

help focus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

help /cx . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

help /cx/phyx . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

help /cx/ux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

help /cx/px . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

help /cx/bbu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

help /cx/ex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

help /cx/ex/slotx . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

help /cx/ex/fanx . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

help /cx/ex/tempx . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

help /cx/ex/pwrsx . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

help /cx/ex/almx . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Command Logging . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Location of the Log File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Disabling and enabling command logging . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Return Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

About this CLI Guide

3ware SATA+SAS RAID Controller Card CLI Guide, Version 10.0 provides
instructions for configuring and maintaining your 3ware® controller using
3ware's command line interface (CLI).

This guide assumes that you have already installed your 3ware RAID
controller in your system. If you have not yet done so, see the installation
guide that came with your 3ware RAID controller for instructions. This guide
is available in PDF format on your 3ware CD, or can be downloaded from the
LSI® website at http://www.lsi.com/channel/ChannelDownloads.

Table 1: Sections in this CLI Guide

Chapter                          Description
1. Introduction to 3ware         Installation, features, concepts
   Command Line Interface
2. CLI Syntax Reference          Describes individual commands using the
                                 primary syntax

There are often multiple ways to accomplish the same configuration and
maintenance tasks for your 3ware controller. While this manual includes
instructions for performing tasks using the command line interface, you can
also use the following applications:

• 3ware BIOS Manager

• 3DM® 2 (3ware Disk Manager)

For details, see the user guide or the 3ware HTML Bookshelf.

Introduction to the 3ware Command Line Interface

The 3ware SATA+SAS Controller Card Command Line Interface (CLI)

manages multiple 9750, 9690SA, and 9650SE 3ware RAID controllers.

Note: Older 3ware RAID controllers also share the vast majority of CLI commands.

Wherever possible, commands are labeled to indicate when they are supported for

only a subset of controllers.

For example, commands that apply only to 3ware 9000 series controllers are

labeled as such and are not supported for 3ware 7000/8000 controllers.

Within the 9000 series, some commands apply only to models 9750, 9690SA, and 9650SE; some apply to 9690SA, 9650SE, 9590SE, and 9550SX(U), but not to 9500S; and a few apply only to model 9500S. All are labeled as such.

If a command is labeled as applying to the SX controller, it is available for both 9550SX and 9550SXU.

You may need to install particular firmware and drivers for some features to take

effect. See the Release Notes for details.

Important!

For all of the functions of the 3ware CLI to work properly, you must have the proper

CLI, firmware, and driver versions installed. For the latest versions and upgrade

instructions, check http://www.lsi.com/channel/ChannelDownloads.

This chapter includes the following sections:

• “Features of the CLI” on page 2

• “Installing the 3ware CLI” on page 2

• “Working with 3ware CLI” on page 5

• “Understanding RAID Levels and Concepts” on page 8


Features of the CLI

3ware CLI is a command line interface for managing 3ware RAID

Controllers. It provides controller, logical unit, drive, enclosure, and BBU

(Battery Backup Unit) management. It can be used in both interactive and

batch mode, providing higher level API (application programming interface)

functionalities.

You can use the CLI to view unit status and version information and perform

maintenance functions such as adding or removing drives. 3ware CLI also

includes advanced features for creating and deleting RAID units online.

For a summary of what you can do using the CLI, see “Common Tasks

Mapped to CLI Commands” on page 18.

Supported Operating Systems

The 10.0 version of the 3ware CLI is supported under the following operating systems:

• Windows®. Windows 7, Vista, Windows Server 2008, and Windows Server 2003 SP2 (32-bit and 64-bit versions of each).

• Linux®. Redhat Enterprise, openSUSE Linux, SUSE® Linux Enterprise Server, and other versions of Linux, using the open source Linux 2.6 kernel driver sources.

Additional operating systems will be supported in later releases. For specific operating system versions that are supported in a given release, see the Release Notes available at http://www.lsi.com/channel/ChannelDownloads, or the file versions.txt, available on the 3ware CD.

Installing the 3ware CLI

This section includes information on installing the 3ware CLI under various operating systems.

Installing the 3ware CLI on Windows

3ware CLI can be installed or run directly from the 3ware software CD, or the latest version can be downloaded from the LSI web site, http://www.lsi.com/channel/ChannelDownloads. Online manual pages are also available in nroff and html formats. These are located in /packages/cli/tw_cli.8.html or tw_cli.8.nroff.


To install 3ware CLI on Windows

Do one of the following:

• Run the installer from the 3ware CD. Start the 3ware CD and at the 3ware menu, click Install Software.

  Step through the pages of the installation wizard and make sure that Command Line Interface (tw_cli) is selected.

• Copy the file from the 3ware CD. Copy the file tw_cli.exe to the directory from which you want to run the program.

  CLI is located on the 3ware CD in the directory \packages\cli\windows

  Note: CLI comes in both 32-bit and 64-bit versions. If you are copying the file directly, be sure to copy the correct version for the version of the operating system you are using.

Permissions Required to Run CLI

To run CLI, you can be logged onto Windows with one of the following sets

of permissions:

• Administrator

• User with administrator rights

• Domain administrator

• Domain user with Domain Admin or Administrator membership

Without the correct privileges, CLI will prompt and then exit when the

application is executed.

If you are uncertain whether you have the correct permissions, contact your

network administrator.

To start CLI, do one of the following:

• Start the 3ware CD and at the 3ware menu, click Run CLI.

• Or, open a console window, change to the directory where tw_cli is located, and at the command prompt, enter

tw_cli

• Or, double-click the CLI icon in a folder.

The CLI prompt is displayed in a DOS console window.


Installing the 3ware CLI on Linux

3ware CLI can be installed or run directly from the 3ware software CD, or the

latest version can be downloaded from the LSI web site,

http://www.lsi.com/channel/ChannelDownloads.

To install 3ware CLI on Linux

Do one of the following:

•

Copy the file. Copy the file tw_cli to the directory from which you want

to run the program.

CLI is located on the 3ware CD in the following directory:

/packages/cli/linux

Online manual pages are also available in nroff and html formats. These

are located in

/packages/cli/tw_cli.8.html or tw_cli.8.nroff.

You will need to be root or have root privileges to install the CLI to /usr/sbin and to run the CLI.

Notes:

The installation location needs to be in the environment path for root to execute the CLI without using complete paths. For example, if the CLI is installed to /usr/sbin/, you can type tw_cli on the command line; otherwise you will have to type the complete path, such as:

/home/user/tw_cli

The 3ware CLI comes in both 32-bit and 64-bit versions. If you are copying the

file directly, be sure to copy the correct version for the version of the operating

system you are using.

• Use the setup command from a command line.

   • Navigate to one of the following directories on the 3ware CD:

     /packages/installers/tools/linux/x86

     /packages/installers/tools/linux/x86_64

   • Type one of the following, depending upon which directory you are in:

     ./setupLinux_x86.bin -console

     or

     ./setupLinux_x64.bin -console

   • Press Enter to begin installation.


• Use a GUI. If you are using a graphical user interface, insert and mount

the 3ware CD.

Under Linux, at the 3ware menu, click Install Software. If the 3ware

menu does not appear automatically, open a command window and type

./autorun from the CD directory.

The installer application will start in graphical mode.

Step through the pages of the installation wizard. On the 3ware Disk

Management Tools screen, make sure that Command Line Interface

(tw_cli) is selected. The default directory location used by 3ware is

/opt/3ware/CLI/tw_cli.

Working with 3ware CLI

You can work with the 3ware CLI in different ways:

• Interactively, entering commands at the main prompt


• As a series of single commands

• By creating a script—an input file with multiple commands

The next few topics show examples of these different methods.

• “Using the command interface interactively” on page 5

• “Using a single command with output” on page 6

• “Using an input file to execute a script” on page 6

• “Outputting the CLI to a Text File” on page 7

Examples shown in the CLI Syntax Reference chapter reflect the interactive

method.

Using the command interface interactively

You can use 3ware CLI interactively, entering commands at the main prompt

and observing the results on the screen.

To use the CLI interactively

1 If necessary, change to the directory that contains CLI.

2 Enter the following command:

tw_cli

(Under Linux, if the directory containing the CLI is not in your path, you

may need to type ./tw_cli )


The main prompt is displayed, indicating that the program is awaiting a

command.

//localhost>

3 At the CLI prompt, you can enter commands to show or act on 3ware

controllers, units, and drives.

For example,

//localhost> show

displays all controllers in the system and shows summary information

about them, like this:

Ctl   Model      Ports   Drives   Units   NotOpt   RRate   VRate   BBU
------------------------------------------------------------------------
c0    9750-4I    4       12       2       0        1       1       -
c1    9650SE-4   4       4        1       0        3       5       TESTING
c2    7500-12    12      8        3       1        2       -       -

Using a single command with output

You can use 3ware CLI with line arguments, processing a single command at

a time. To do so, simply enter the command and the arguments.

Single commands can be useful when you want to perform a task such as

redirecting the output of the command to a file. It also allows you to use the

command line history to eliminate some typing.

Syntax

tw_cli <command_line_arguments>

Example

tw_cli /c0 show diag > /tmp/3w_diag.out

Using an input file to execute a script

You can operate 3ware CLI scripts by executing a file. The file is a text file

containing a list of CLI commands which you have entered in advance. Each

command must be on a separate line.

Syntax

tw_cli -f <filename>

Where <filename> is the name of the text file you want to execute.

Example

tw_cli -f clicommand.txt


This example executes the file clicommand.txt, and runs the CLI commands

included in that file.

Scripting examples

Following is a scripting example for a 4-port controller using a text file called

config_unit.txt, containing three commands. This example sets up a 4-port

controller with two units, each with 2 drives mirrored. It then prints the

configurations for verification. The commands included in the script file are:

/c0 add type=raid1 disk=0-1

/c0 add type=raid1 disk=2-3

/c0 show

Following is a scripting example for a 12-port controller using a text file

called config_unit.txt, containing three commands. This example sets up a 12-port controller with two units: one with the first 2 drives mirrored, and another

with the remaining drives in a RAID 5 array. It then prints the configurations

for verification. The commands included in the script file are:

/c0 add type=raid1 disk=0-1

/c0 add type=raid5 disk=2-11

/c0 show

To run either of the scripts, enter:

tw_cli -f config_unit.txt
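When the same layout is needed on several systems, the script file itself can be generated. The following is a minimal Python sketch, not part of the 3ware software: the function names mirror_script_lines and write_script are hypothetical, and the sketch assumes that adjacent ports are paired into RAID 1 units exactly as in the 4-port example above. It uses only the /cx add and /cx show syntax already shown.

```python
from pathlib import Path
from typing import List


def mirror_script_lines(controller: int, port_count: int) -> List[str]:
    """Return CLI script lines that pair adjacent ports into RAID 1 units."""
    if port_count < 2 or port_count % 2:
        raise ValueError("port_count must be an even number of at least 2")
    lines = [
        f"/c{controller} add type=raid1 disk={first}-{first + 1}"
        for first in range(0, port_count, 2)
    ]
    # Finish with a show command so the new configuration is printed.
    lines.append(f"/c{controller} show")
    return lines


def write_script(path: str, lines: List[str]) -> None:
    """Write one command per line, the layout the -f option expects."""
    Path(path).write_text("\n".join(lines) + "\n", encoding="ascii")
```

Calling write_script("config_unit.txt", mirror_script_lines(0, 4)) would produce the three commands of the 4-port example, ready to run with tw_cli -f config_unit.txt.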

Outputting the CLI to a Text File

You can have the output of the 3ware CLI, including errors, sent to a text file

by adding 2>&1 to the end of the line. This could be useful, for example, if

you want to email the output to LSI Technical Support.

Examples

tw_cli /c2/p0 show >> controller2port0info.txt 2>&1

or

tw_cli /c0 show diag >> Logfile.txt 2>&1
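The same capture can be done from a program instead of a shell. The sketch below is an illustration only: the helper name append_output is hypothetical, and it assumes that tw_cli is on the path when it is used with the CLI. It appends both standard output and standard error of any command to a file, which is the effect of >> file 2>&1.

```python
import subprocess


def append_output(argv, logfile):
    """Run argv and append its stdout and stderr to logfile."""
    with open(logfile, "a", encoding="utf-8") as log:
        completed = subprocess.run(
            argv,
            stdout=log,
            stderr=subprocess.STDOUT,  # merge stderr into stdout, as 2>&1 does
            check=False,
        )
    return completed.returncode
```

For example, append_output(["tw_cli", "/c0", "show", "diag"], "Logfile.txt") corresponds to the second command line above.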

Conventions

The following conventions are used throughout this guide:

• In text, monospace font is used for code and for things you type.

• In descriptions and explanations of commands, a bold font indicates the name of commands and parameters, for example, /c0/p0 show all.

• In commands, an italic font indicates items that are variable, but that you must specify, such as a controller ID, or a unit ID, for example, /c0/p0 show attribute, and /cx/px show all

• In commands, brackets around an item indicate that it is optional.

• In commands, ellipses (...) indicate that more than one parameter at a time

can be included, for example, /c0/p0 show attribute [attribute ...], or that

there is a range between two values from which you can pick a value, for

example, /cx set carvesize=[1024...2048].

• In commands, a vertical bar (|) indicates an 'or' situation where the user

has a choice between more than one attribute, but only one can be

specified.

Example: In the command to rescan all ports and reconstitute all units, the

syntax appears as /cx rescan [noscan]. The brackets [ ] indicate that you may

omit the noscan parameter, so that the operation will be reported to the

operating system.

Understanding RAID Levels and Concepts

3ware RAID controllers use RAID (Redundant Array of Independent Disks)

to increase your storage system’s performance and provide fault tolerance

(protection against data loss).

This section organizes information about RAID concepts and configuration

levels into the following topics:

• “RAID Concepts” on page 8

• “Available RAID Configurations” on page 9

• “Determining What RAID Level to Use” on page 15

RAID Concepts

The following concepts are important to understand when working with a

RAID controller:

• Arrays and Units. In the storage industry, the term “array” is used to describe two or more disk drives that appear to the operating system as a single unit. When working with a 3ware RAID controller, “unit” is the term used to refer to an array of disks that is configured and managed through the 3ware software. Single-disk units can also be configured in the 3ware software.

• Mirroring. Mirrored arrays (RAID 1) write data to paired drives simultaneously. If one drive fails, the data is preserved on the paired drive. Mirroring provides data protection through redundancy. In addition, mirroring using a 3ware RAID controller provides improved performance because 3ware’s TwinStor® technology reads from both drives simultaneously.

• Striping. Striping across disks allows data to be written and accessed on

more than one drive, at the same time. Striping combines each drive’s

capacity into one large volume. Striped disk arrays (RAID 0) achieve

highest transfer rates and performance at the expense of fault tolerance.

• Distributed Parity. Parity works in combination with striping on RAID 5, RAID 6, and RAID 50. Parity information is written to each of the striped drives, in rotation. Should a failure occur, the data on the failed drive can be reconstructed from the data on the other drives.

• Hot Swap. The process of exchanging a drive without having to shut down the system. This is useful when you need to exchange a defective drive in a redundant unit.

• Array Roaming. The process of removing a unit from a controller and putting it back later, either on the same controller, or a different one, and having it recognized as a unit. The disks may be attached to different ports than they were originally attached to, without harm to the data.

Available RAID Configurations

RAID is a method of combining several hard drives into one unit. It can offer

fault tolerance and higher throughput levels than a single hard drive or group

of independent hard drives. LSI's 3ware controllers support RAID 0, 1, 5, 6,

10, 50, and Single Disk. The information below provides a more in-depth

explanation of the different RAID levels.

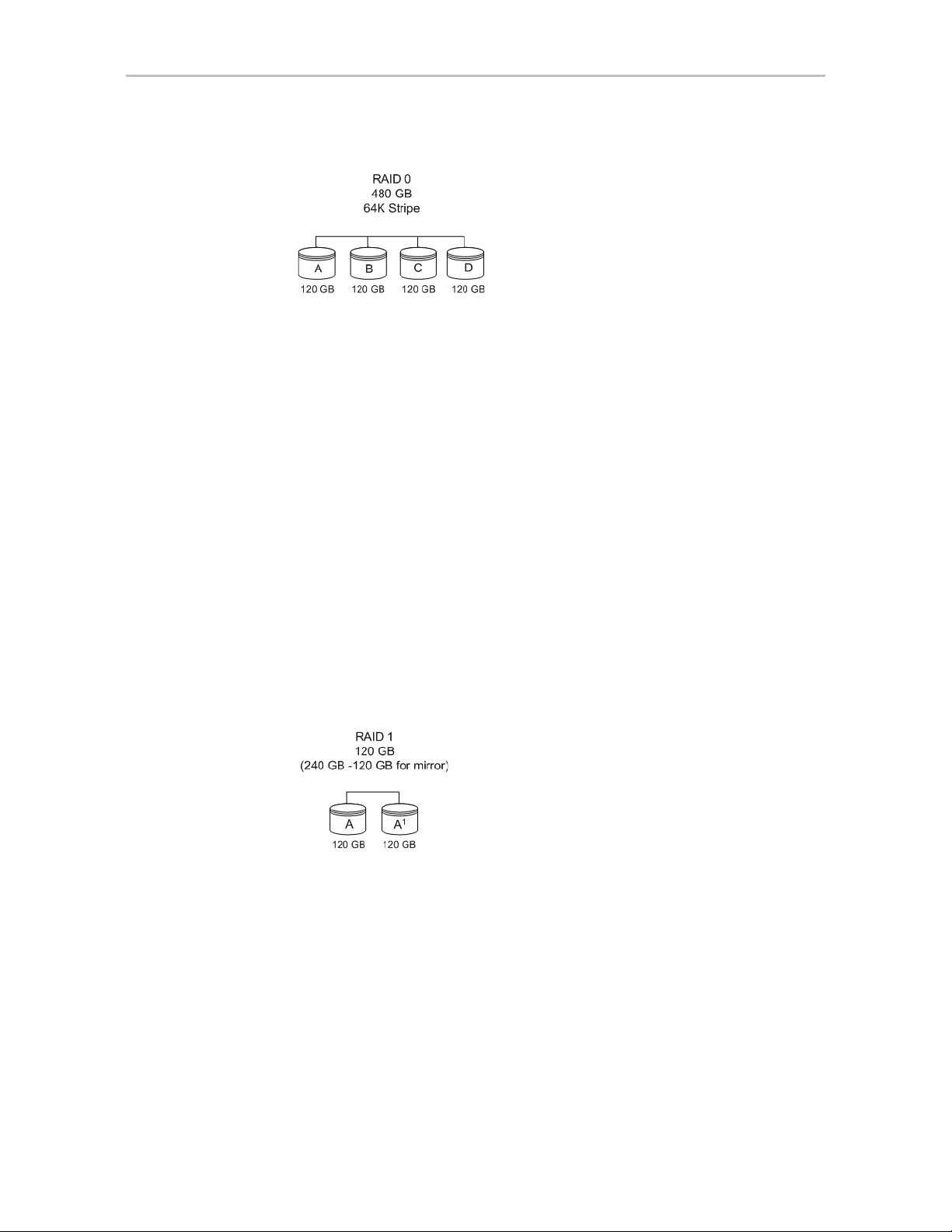

RAID 0

RAID 0 provides improved performance, but no fault tolerance. Since the

data is striped across more than one disk, RAID 0 disk arrays achieve high

transfer rates because they can read and write data on more than one drive

simultaneously. The stripe size is configurable during unit creation. RAID 0

requires a minimum of two drives.

When drives are configured in a striped disk array (see Figure 1), large files

are distributed across the multiple disks using RAID 0 techniques.

Striped disk arrays give exceptional performance, particularly for data

intensive applications such as video editing, computer-aided design and

geographical information systems.

RAID 0 arrays are not fault tolerant. The loss of any drive results in the loss of

all the data in that array, and can even cause a system hang, depending on

your operating system. RAID 0 arrays are not recommended for high

availability systems unless additional precautions are taken to prevent system

hangs and data loss.


Figure 1. RAID 0 Configuration Example

RAID 1

RAID 1 provides fault tolerance and a speed advantage over non-RAID disks.

RAID 1 is also known as a mirrored array. Mirroring is done on pairs of

drives. Mirrored disk arrays write the same data to two different drives using

RAID 1 algorithms (see Figure 2). This gives your system fault tolerance by

preserving the data on one drive if the other drive fails. Fault tolerance is a

basic requirement for critical systems like web and database servers.

3ware uses a patented technology, TwinStor®, on RAID 1 arrays for improved performance during sequential read operations. With TwinStor technology, read performance is twice the speed of a single drive during sequential read operation.

The adaptive algorithms in TwinStor technology boost performance by distinguishing between random and sequential read requests. For the sequential requests generated when accessing large files, both drives are used, with the heads simultaneously reading alternating sections of the file. For the smaller random transactions, the data is read from a single optimal drive head.

Figure 2. RAID 1 Configuration Example

RAID 5

RAID 5 provides performance, fault tolerance, high capacity, and storage

efficiency. It requires a minimum of three drives and combines striping data

with parity (exclusive OR) to restore data in case of a drive failure.

Performance and efficiency increase as the number of drives in a unit

increases.

Parity information is distributed across all of the drives in a unit rather than

being concentrated on a single disk (see Figure 3). This avoids throughput

loss due to contention for the parity drive.


RAID 5 is able to tolerate 1 drive failure in the unit.

Figure 3. RAID 5 Configuration Example

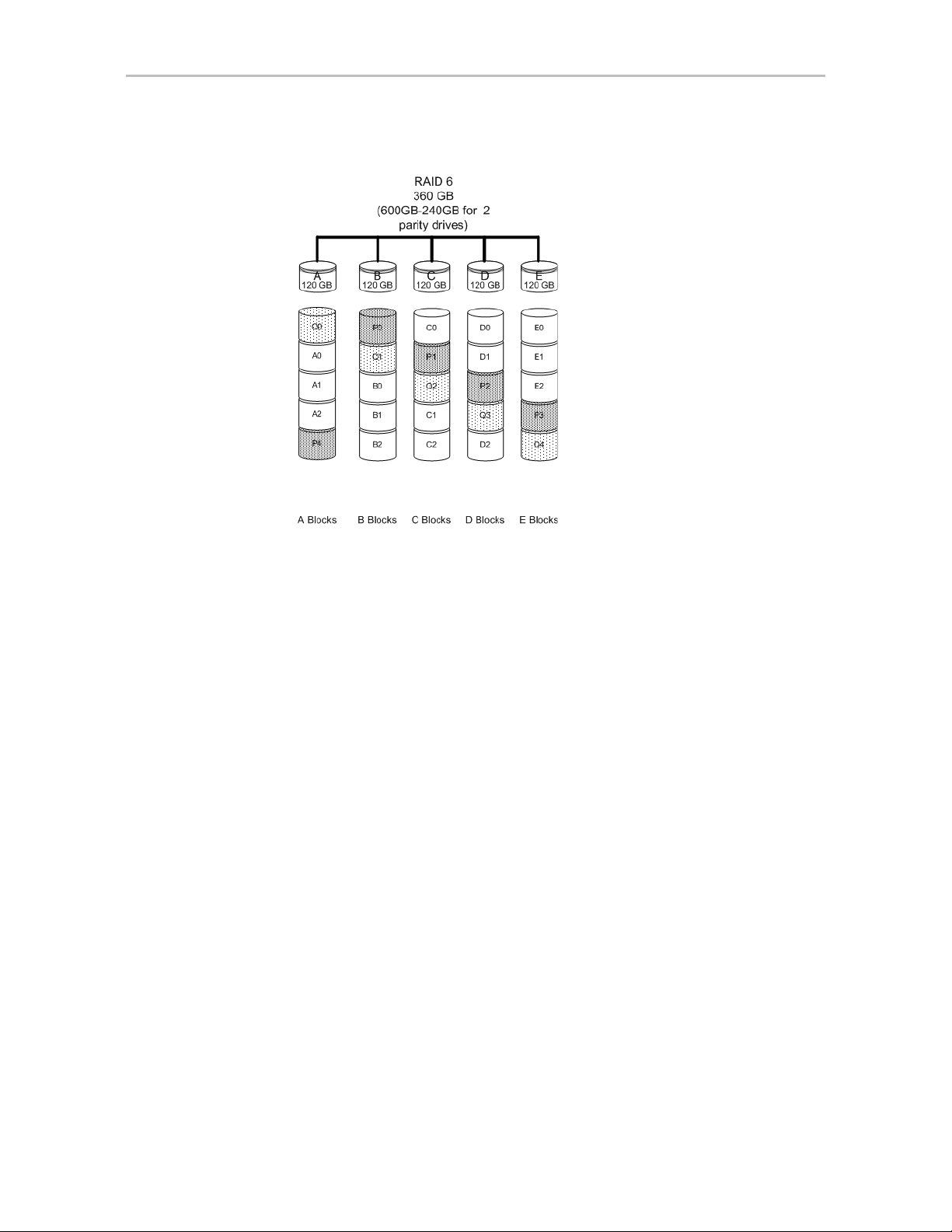
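The exclusive OR relationship behind RAID 5 parity can be illustrated in a few lines of Python. This is a conceptual sketch only, not the controller's implementation: the function name xor_blocks and the sample data are hypothetical, and the rotation of parity across drives is ignored. It shows that the parity of a stripe, combined with the surviving blocks, reproduces a lost block.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data blocks of one stripe, and the parity computed from them.
data = [b"\x01\x02\x03", b"\x10\x20\x30", b"\xaa\xbb\xcc"]
parity = xor_blocks(data)

# Lose the second block, then rebuild it from the survivors plus parity.
rebuilt = xor_blocks([data[0], data[2], parity])
```

Because XOR is its own inverse, the same function serves both to compute parity and to reconstruct a missing block. Losing two blocks of the same stripe leaves too little information, which is why a RAID 5 unit tolerates only one drive failure.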

RAID 6

RAID 6 provides greater redundancy and fault tolerance than RAID 5. It is

similar to RAID 5, but has two blocks of parity information (P+Q) distributed

across all the drives of a unit, instead of the single block of RAID 5.

Due to the two parities, a RAID 6 unit can tolerate two hard drives failing

simultaneously. This also means that a RAID 6 unit may be in two different

states at the same time. For example, one sub-unit can be degraded, while

another may be rebuilding, or one sub-unit may be initializing, while another

is verifying.

The 3ware implementation of RAID 6 requires a minimum of five drives.

Performance and storage efficiency also increase as the number of drives increases.


Figure 4. RAID 6 Configuration Example

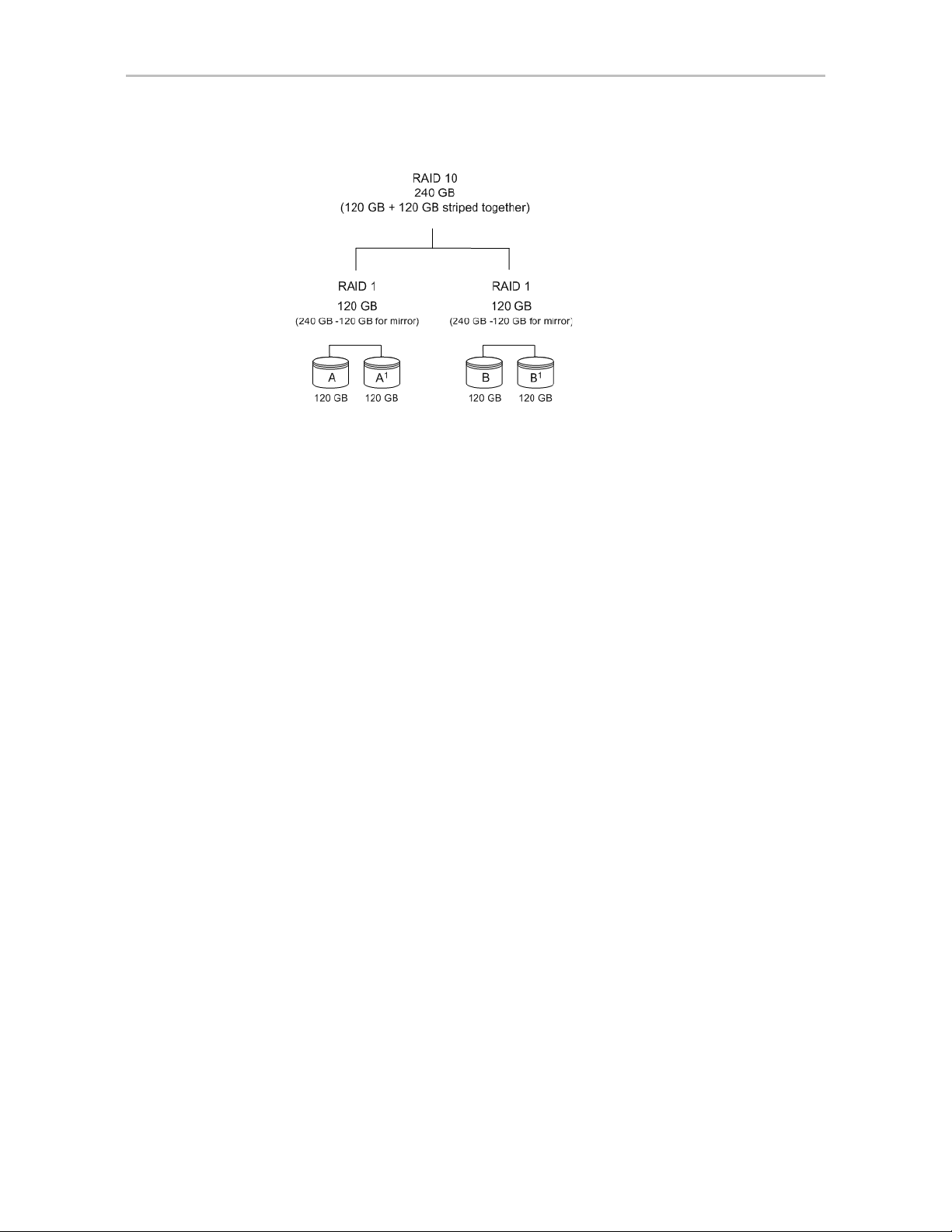

RAID 10

RAID 10 is a combination of striped and mirrored arrays for fault tolerance

and high performance.

When drives are configured as a striped mirrored array, the disks are

configured using both RAID 0 and RAID 1 techniques, thus the name RAID

10 (see Figure 5). A minimum of four drives are required to use this

technique. The first two drives are mirrored as a fault tolerant array using

RAID 1. The third and fourth drives are mirrored as a second fault tolerant

array using RAID 1. The two mirrored arrays are then grouped as a striped

RAID 0 array using a two tier structure. Higher data transfer rates are

achieved by leveraging TwinStor and striping the arrays.

In addition, RAID 10 arrays offer a higher degree of fault tolerance than

RAID 1 and RAID 5, since the array can sustain multiple drive failures

without data loss. For example, in a twelve-drive RAID 10 array, up to six

drives can fail (half of each mirrored pair) and the array will continue to

function. Please note that if both halves of a mirrored pair in the RAID 10

array fail, then all of the data will be lost.
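The failure rule above can be expressed as a short check. The following Python sketch is illustrative only: the function name raid10_survives is hypothetical, and it assumes that drives are numbered from 0 and mirrored in adjacent pairs (0 and 1, 2 and 3, and so on), following the description of the first two and the third and fourth drives.

```python
def raid10_survives(drive_count, failed):
    """Return True if no mirrored pair has lost both of its drives."""
    if drive_count < 4 or drive_count % 2:
        raise ValueError("RAID 10 needs an even number of at least 4 drives")
    failed = set(failed)
    for first in range(0, drive_count, 2):
        # Assumption: drives first and first + 1 form one mirrored pair.
        if first in failed and first + 1 in failed:
            return False
    return True
```

With twelve drives, raid10_survives(12, {0, 2, 4, 6, 8, 10}) is True because each pair keeps one member, while raid10_survives(12, {0, 1}) is False because one pair is lost entirely.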


Figure 5. RAID 10 Configuration Example

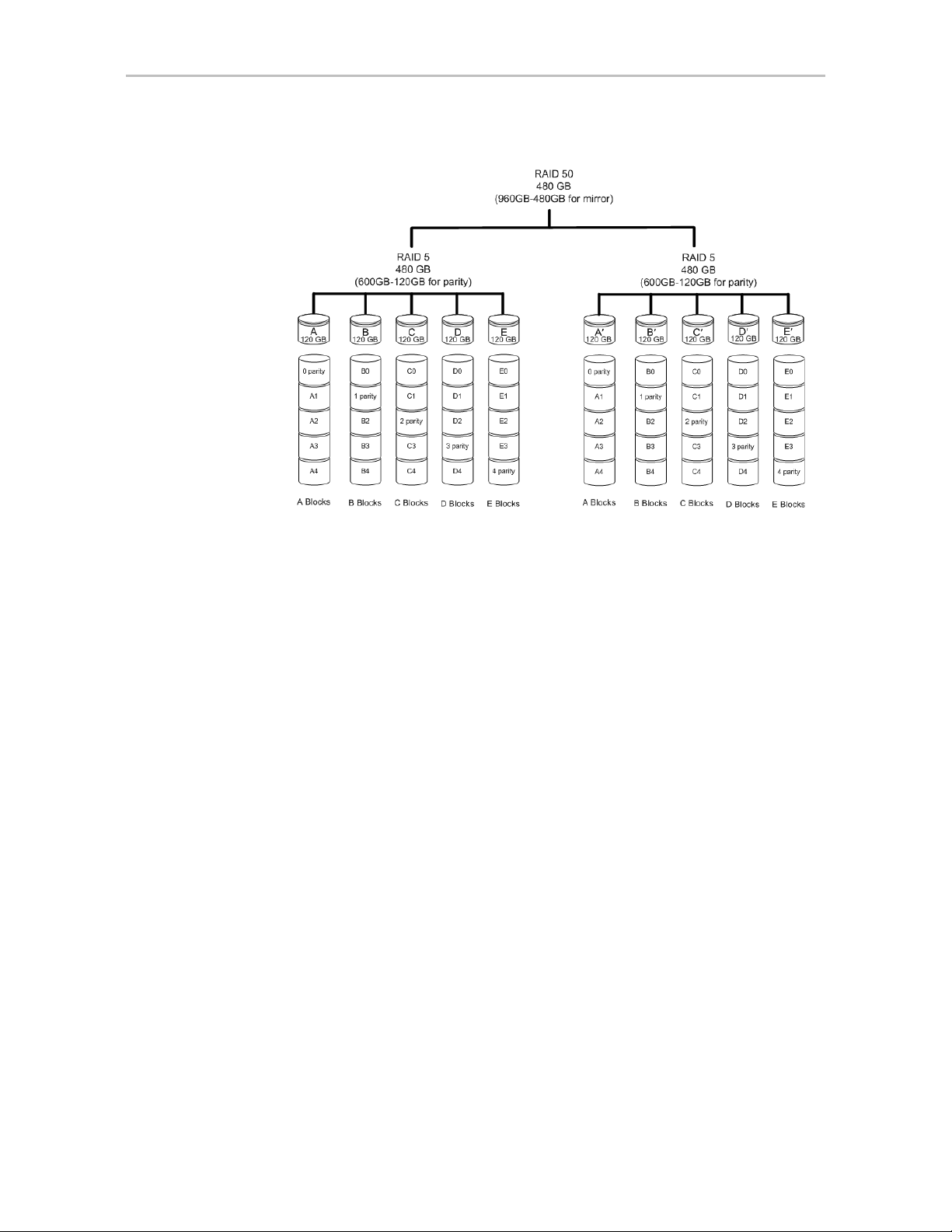

RAID 50

RAID 50 is a combination of RAID 5 with RAID 0. This array type provides

fault tolerance and high performance. RAID 50 requires a minimum of six

drives.

Several combinations are available with RAID 50. For example, on a 12-port

controller, you can have a grouping of 3, 4, or 6 drives. A grouping of 3 means

that the RAID 5 arrays used have 3 disks each; four of these 3-drive RAID 5

arrays are striped together to form the 12-drive RAID 50 array. On a 16-port

controller, you can have a grouping of 4 or 8 drives.

No more than four RAID 5 subunits are allowed in a RAID 50 unit. For

example, a 24-drive RAID 50 unit may have groups of 12, 8, or 6 drives, but

not groups of 4 or 3.
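The grouping rules can be checked with a small calculation. The sketch below is an assumption-laden illustration, not a statement of controller behavior: the function name raid50_group_sizes is hypothetical, and it assumes that all RAID 5 subunits are the same size, that each has at least three drives, and that a RAID 50 unit has between two and four subunits.

```python
def raid50_group_sizes(drive_count):
    """List the drives-per-subunit values that satisfy the stated limits."""
    sizes = []
    for size in range(3, drive_count):
        if drive_count % size:
            continue  # subunits are assumed to be of equal size
        subunits = drive_count // size
        if 2 <= subunits <= 4:
            sizes.append(size)
    return sizes
```

Under these assumptions the function reproduces the examples in the text: 12 drives allow groups of 3, 4, or 6; 16 drives allow groups of 4 or 8; and 24 drives allow groups of 6, 8, or 12.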

In addition, RAID 50 arrays offer a higher degree of fault tolerance than

RAID 1 and RAID 5, since the array can sustain multiple drive failures

without data loss. For example, in a twelve-drive RAID 50 array, up to one

drive in each RAID 5 set can fail and the array will continue to function.

Please note that if two or more drives in a RAID 5 set fail, then all of the data

will be lost.


Figure 6. RAID 50 Configuration Example

Single Disk

A single drive can be configured as a unit through 3ware software (3BM, 3DM 2, or CLI). Like disks in other RAID configurations, single disks contain 3ware Disk Control Block (DCB) information and are seen by the OS as available units.

Single drives are not fault tolerant and therefore not recommended for high

availability systems unless additional precautions are taken to prevent system

hangs and data loss.

JBOD

A JBOD (acronym for “Just a Bunch of Disks”) is an unconfigured disk

attached to your 3ware RAID controller. Creation of JBOD configuration is

not supported in the 3ware 9750 series. New single disk units must be created

as “Single Disk.”

JBOD units are not fault tolerant and therefore not recommended for high

availability systems unless additional precautions are taken to prevent system

hangs and data loss.


Hot Spare

A hot spare is a single drive, available online, so that a redundant unit can be

automatically rebuilt in case of drive failure.

Determining What RAID Level to Use

Your choice of which type of RAID unit (array) to create will depend on your

needs. You may wish to maximize speed of access, total amount of storage, or

redundant protection of data. Each type of RAID unit offers a different blend

of these characteristics.

The following table provides a brief summary of RAID type characteristics.

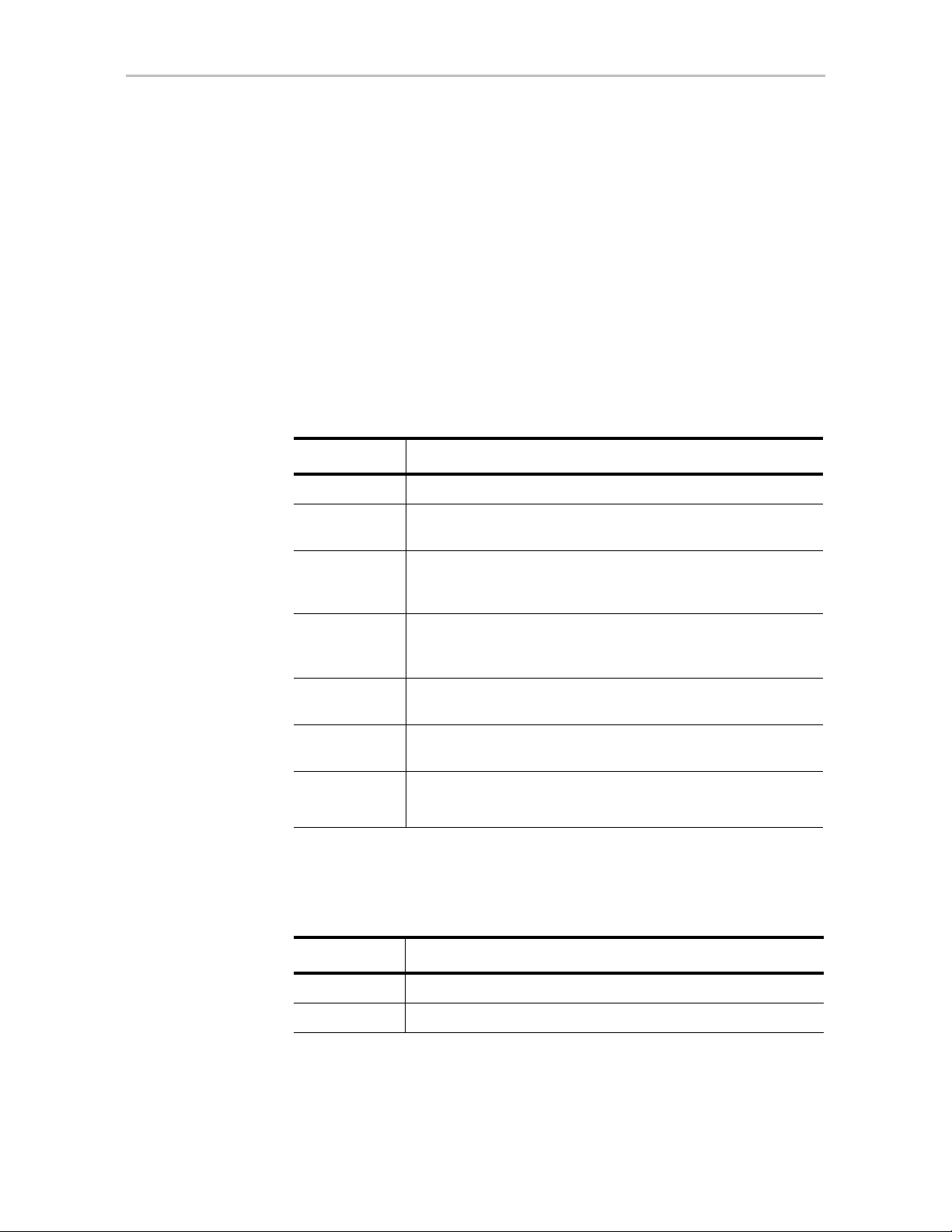

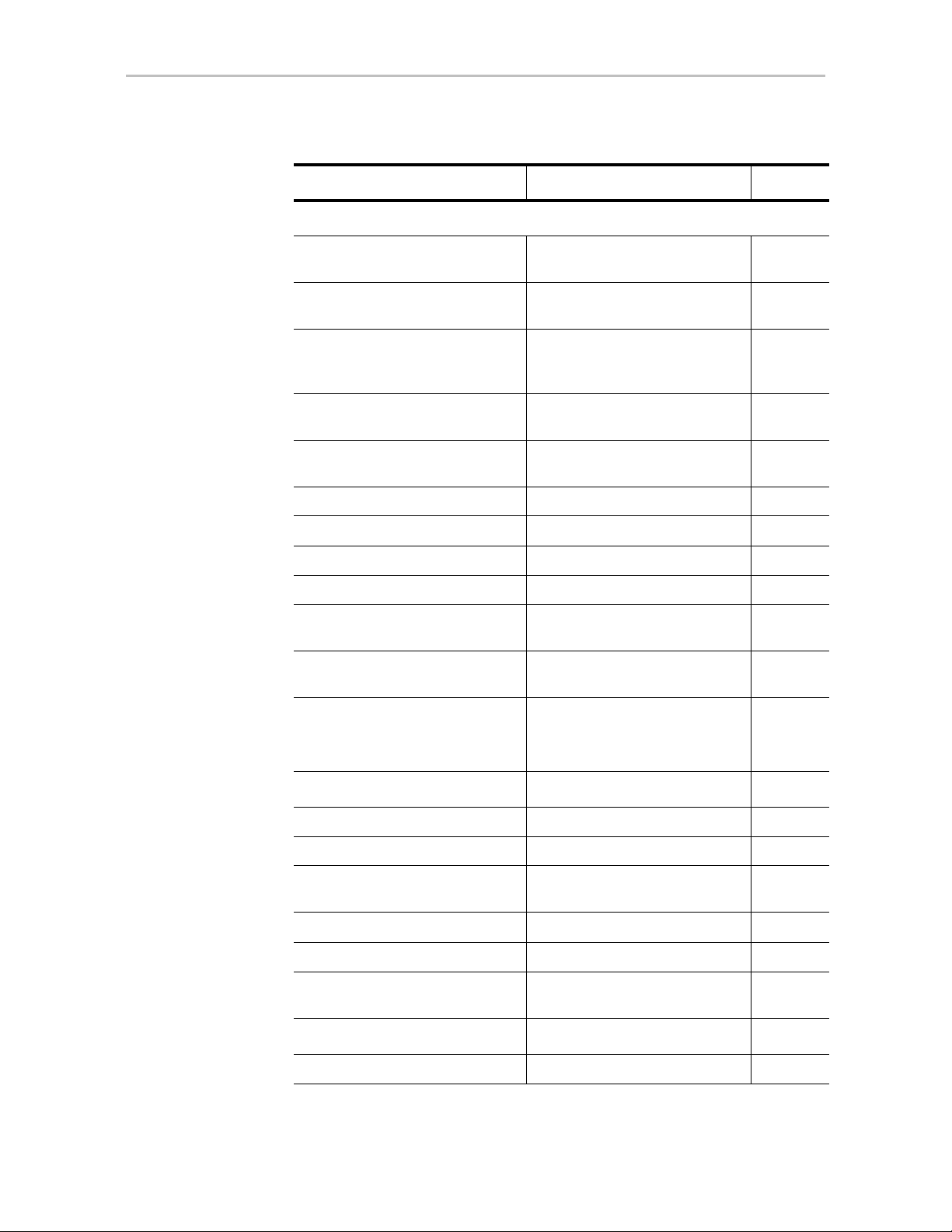

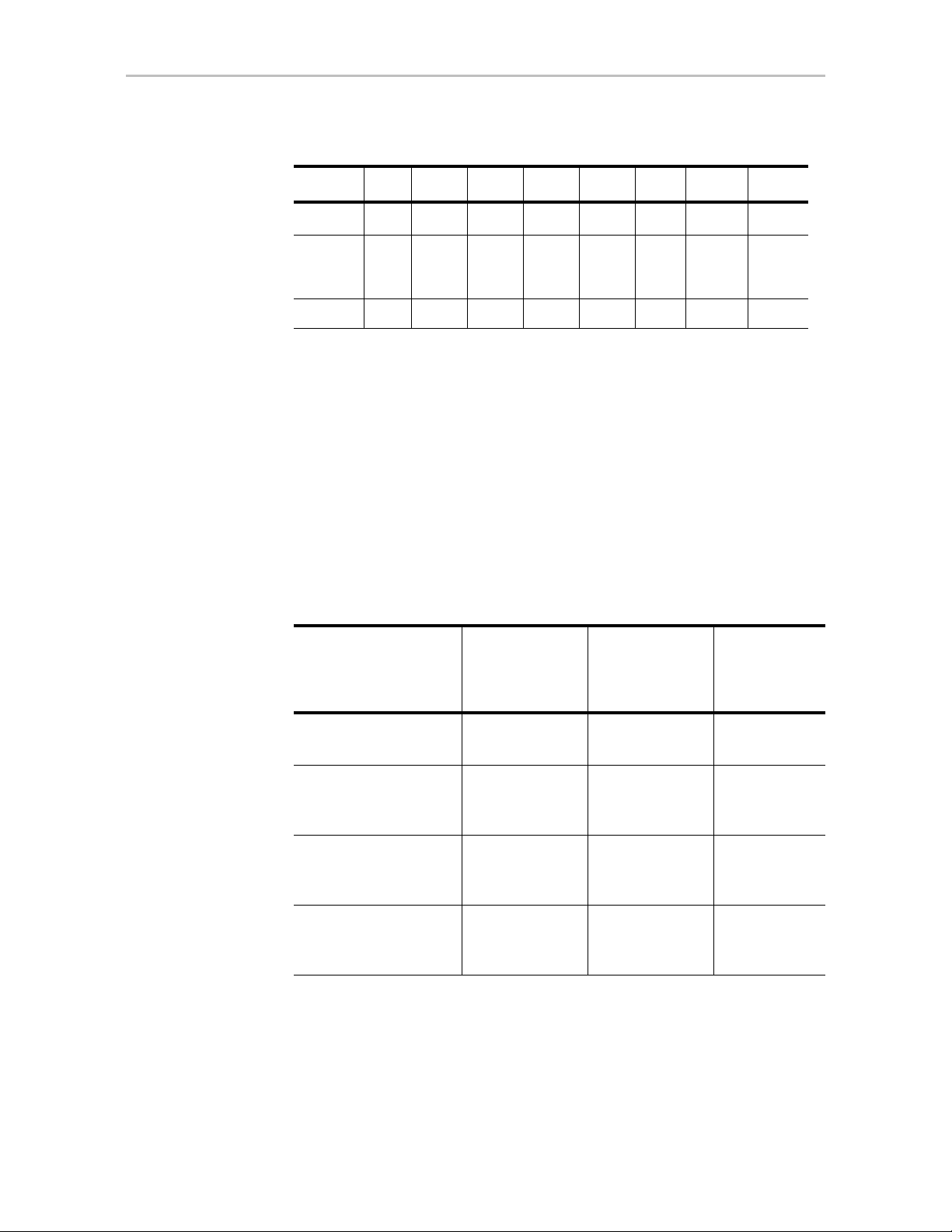

Table 2: RAID Configuration Types

RAID Type     Description
RAID 0        Provides performance, but no fault tolerance.
RAID 1        Provides fault tolerance and a read speed advantage over non-RAID disks.
RAID 5        This type of unit provides performance, fault tolerance, and high
              storage efficiency. RAID 5 units can tolerate one drive failing
              before losing data.
RAID 6        Provides very high fault tolerance with the ability to protect
              against two consecutive drive failures. Performance and
              efficiency increase with higher numbers of drives.
RAID 10       A combination of striped and mirrored units for fault tolerance
              and high performance.
RAID 50       A combination of RAID 5 and RAID 0. It provides high fault
              tolerance and performance.
Single Disk   Not a RAID type, but supported as a configuration.
              Provides for maximum disk capacity with no redundancy.

You can create one or more units, depending on the number of drives you

have installed.

Table 3: Possible Configurations Based on Number of Drives

# Drives Possible RAID Configurations

1 Single disk

2 RAID 0 or RAID 1

www.lsi.com/channel/products 15

Page 24

Chapter 1. Introduction to the 3ware Com m a nd Line Int er fa ce

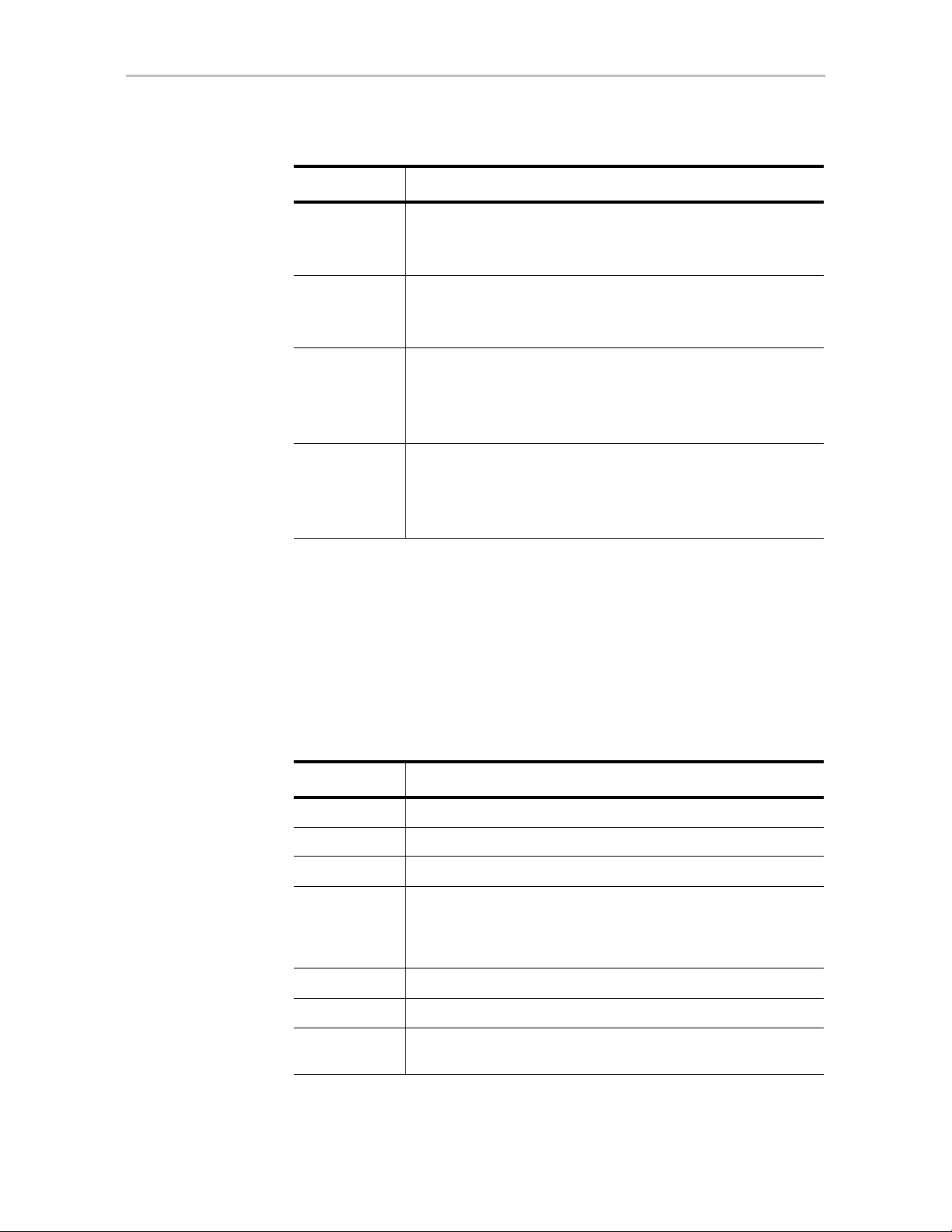

Table 3: Possible Configurations Based on Number of Drives

# Drives Possible RAID Configurations

3RAID 0

RAID 1 with hot spare

RAID 5

4 RAID 5 with hot spare

RAID 10

Combination of RAID 0, RAID 1, single disk

5RAID 6

RAID 5 with hot spare

RAID 10 with hot spare

Combination of RAID 0, RAID 1, hot spare, single disk

6 or more RAID 6

RAID 6 with hot spare

RAID 50

Combination of RAID 0, 1, 5, 6,10, hot spare, single disk

Using Drive Capacity Efficiently

To make the most efficient use of drive capacity, it is advisable to use drives

of the same capacity in a unit. This is because the capacity of each drive is

limited to the capacity of the smallest drive in the unit.

The total unit capacity is defined as follows:

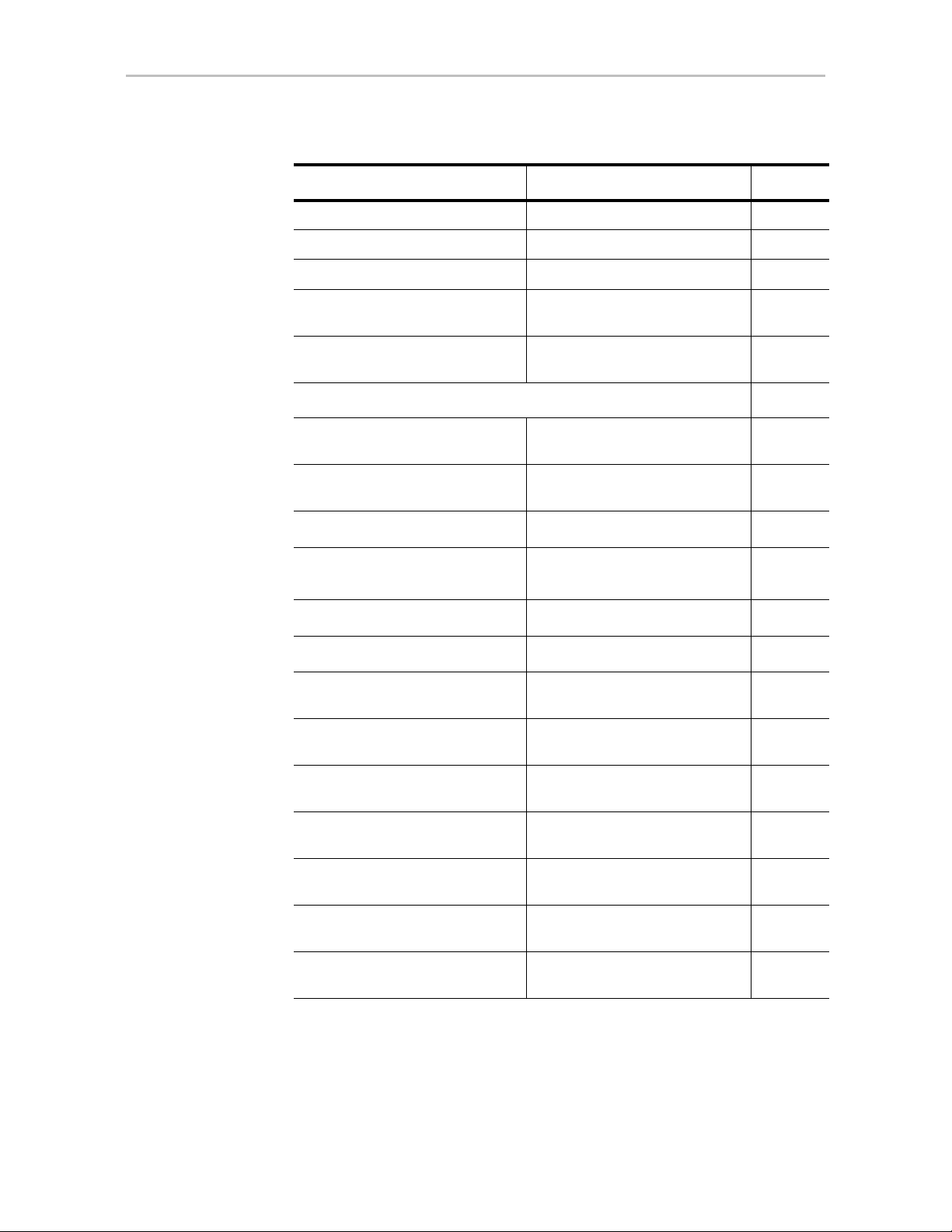

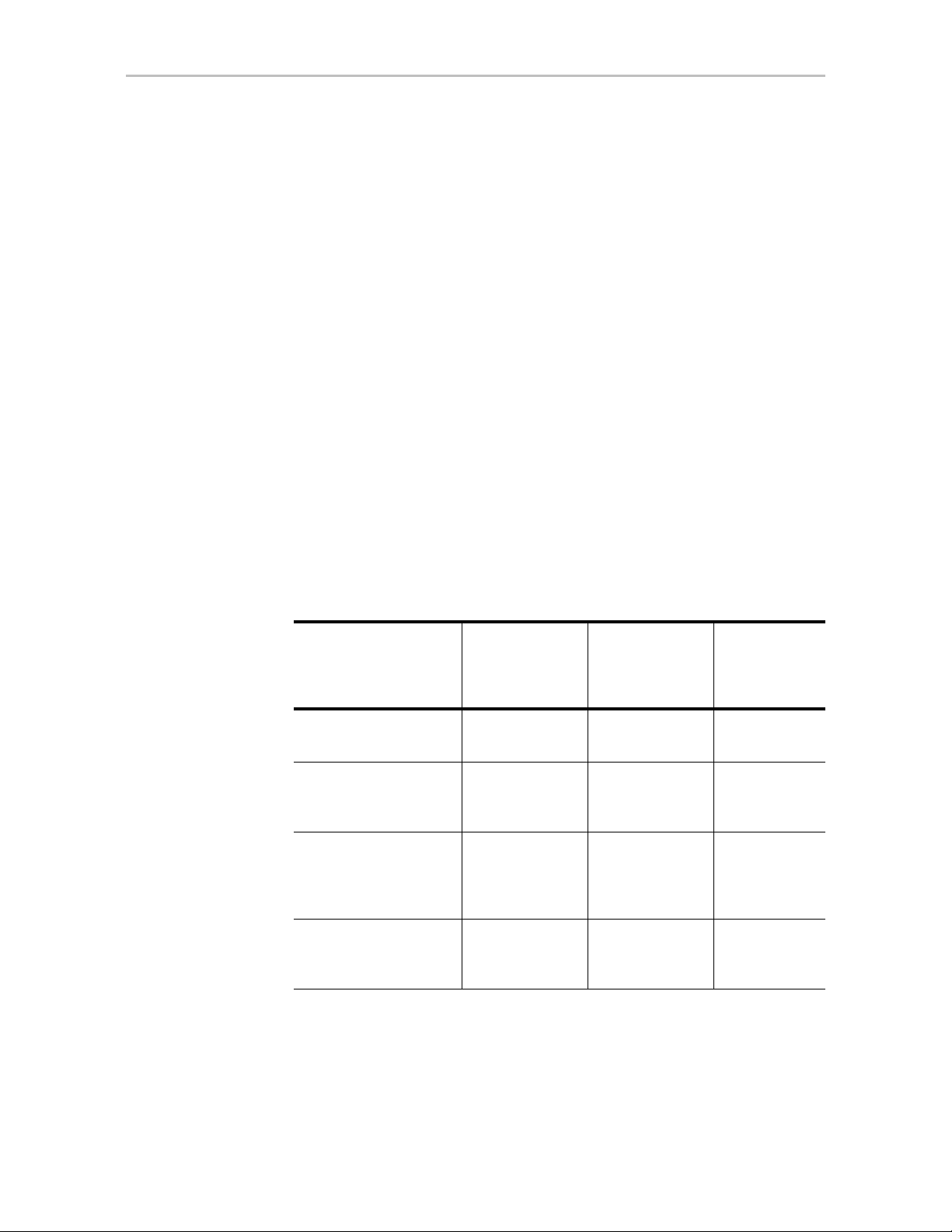

Table 4: Drive Capacity

RAID Level    Capacity
Single Disk   Capacity of the drive
RAID 0        (number of drives) x (capacity of the smallest drive)
RAID 1        Capacity of the smallest drive
RAID 5        (number of drives - 1) x (capacity of the smallest drive)
              Storage efficiency increases with the number of disks:
              storage efficiency = (number of drives - 1) / (number of drives)
RAID 6        (number of drives - 2) x (capacity of the smallest drive)
RAID 10       (number of drives / 2) x (capacity of the smallest drive)
RAID 50       (number of drives - number of groups of drives) x (capacity of the smallest drive)
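The capacity formulas in Table 4 can be turned into a small calculator. This Python sketch is for illustration only: the function name unit_capacity and the level labels are hypothetical, minimum drive counts are not validated, and drive coercion is not applied. Capacities can be given in any one unit, such as GB.

```python
def unit_capacity(level, drive_sizes, groups=None):
    """Apply the Table 4 formula for the given RAID level."""
    count = len(drive_sizes)
    smallest = min(drive_sizes)
    if level == "single":
        return drive_sizes[0]
    if level == "raid0":
        return count * smallest
    if level == "raid1":
        return smallest
    if level == "raid5":
        return (count - 1) * smallest
    if level == "raid6":
        return (count - 2) * smallest
    if level == "raid10":
        return (count // 2) * smallest
    if level == "raid50":
        if groups is None:
            raise ValueError("raid50 needs the number of RAID 5 groups")
        return (count - groups) * smallest
    raise ValueError("unknown RAID level: " + level)
```

For example, a RAID 5 unit built from two 500 GB drives and one 400 GB drive yields (3 - 1) x 400 = 800 GB, which shows why mixing capacities wastes space on the larger drives.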


Through drive coercion, the capacity used for each drive is rounded down so

that drives from differing manufacturers are more likely to be able to be used

as spares for each other. The capacity used for each drive is rounded down to

the nearest GB for drives under 45 GB (45,000,000,000 bytes), and rounded

down to the nearest 5 GB for drives over 45 GB. For example, a 44.3 GB

drive will be rounded down to 44 GB, and a 123 GB drive will be rounded

down to 120 GB.
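The rounding rule can be written out as follows. This is a sketch of the rule as described in the paragraph above, not of the firmware itself: the function name coerced_capacity is hypothetical, and 1 GB is taken to be 1,000,000,000 bytes, as in the 45 GB figure given in the text.

```python
GB = 1_000_000_000


def coerced_capacity(size_bytes):
    """Round a drive size down to 1 GB steps below 45 GB, else 5 GB steps."""
    step = GB if size_bytes < 45 * GB else 5 * GB
    return size_bytes // step * step
```

With this rule, coerced_capacity(44_300_000_000) gives 44 GB and coerced_capacity(123 * GB) gives 120 GB, matching the two examples in the text. A drive of exactly 45 GB gives the same result under either step.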

Note: All drives in a unit must be of the same type, either SAS or SATA.

Support for Over 2 Terabytes

Legacy operating systems such as Windows 2000, Windows XP (32-bit),

Windows 2003 (32-bit and 64-bit without SP1), and Linux 2.4, do not

recognize unit capacity in excess of 2 TB.


If the combined capacity of the drives to be connected to a unit exceeds 2

Terabytes (TB), you can enable auto-carving when you configure your units.

Auto-carving divides the available unit capacity into multiple chunks of 2 TB

or smaller that can be addressed by the operating systems as separate

volumes. The carve size is adjustable from 1024 GB to 2048 GB (default)

prior to unit creation.
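As a rough illustration of how a carve size divides a unit, consider the following Python sketch. It rests on assumptions that are not stated in this guide: the function name carve is hypothetical, every volume except possibly the last is taken to be exactly the carve size, and the remainder is taken to form one final smaller volume. Sizes are in GB.

```python
def carve(unit_gb, carvesize_gb=2048):
    """Split a unit capacity into volumes no larger than the carve size."""
    if not 1024 <= carvesize_gb <= 2048:
        raise ValueError("carve size must be between 1024 and 2048 GB")
    full, rest = divmod(unit_gb, carvesize_gb)
    volumes = [carvesize_gb] * full
    if rest:
        # Assumption: leftover capacity becomes one smaller final volume.
        volumes.append(rest)
    return volumes
```

Under these assumptions, a 5000 GB unit with the default carve size would appear as volumes of 2048, 2048, and 904 GB.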

If a unit over 2 TB was created prior to enabling the auto-carve option, its

capacity visible to the operating system will still be 2 TB; no additional

capacity will be registered. To change this, the unit has to be recreated.

You may also want to refer to Knowledge Base article # 13431, at

https://selfservice.lsi.com/service/main.jsp. (Use Advanced search and enter

the KB # as a keyword.)


2

CLI Syntax Reference

This chapter provides detailed information about using the command syntax

for the 3ware CLI.

Throughout this chapter the examples reflect the interactive method of

executing 3ware CLI.

Note: The output of some commands varies somewhat for different types of

controllers, and may vary if you have an enclosure attached. For most commands

where this is the case, examples are provided to show the differences.

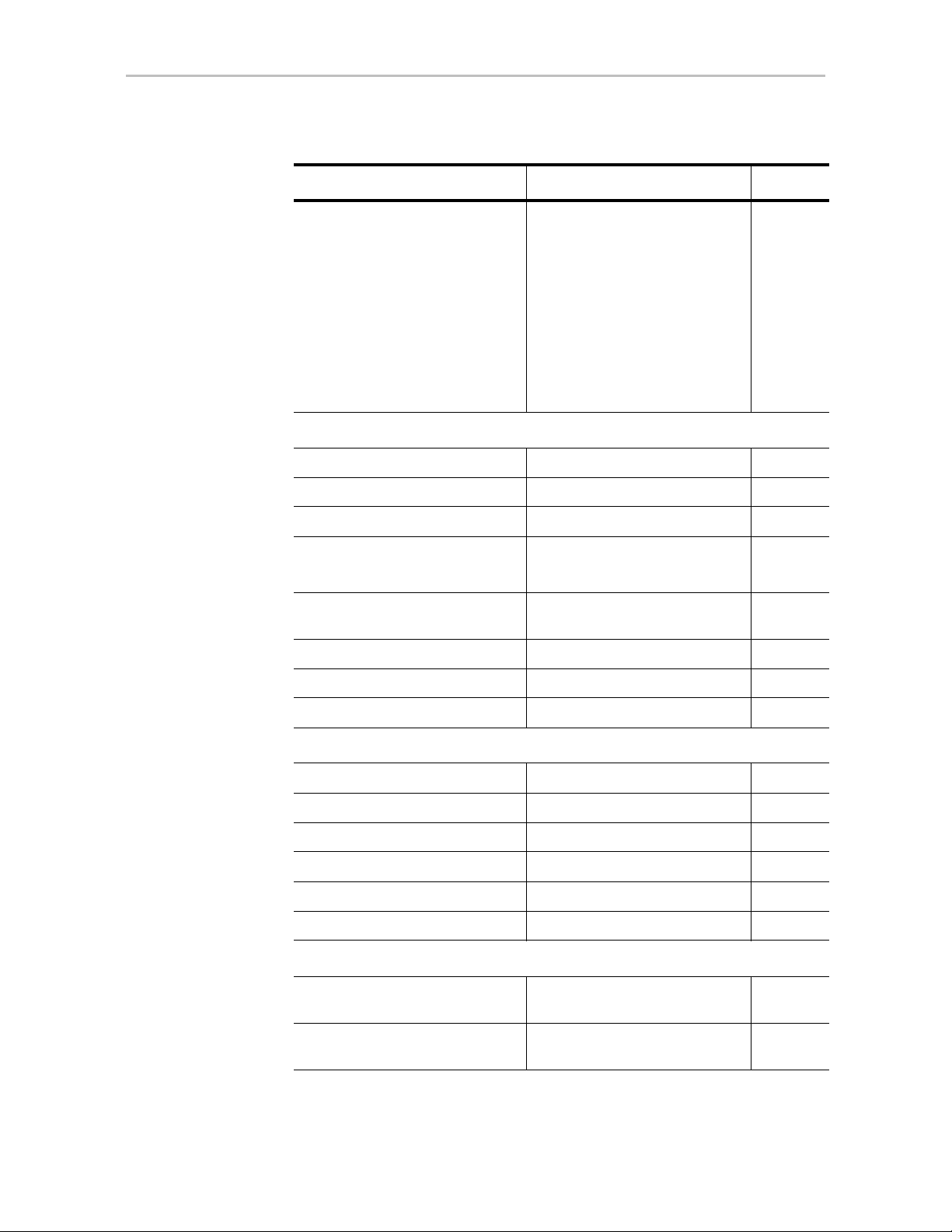

Common Tasks Mapped to CLI Commands

The table below lists many of the tasks people use to manage their RAID

controllers and units, and lists the primary CLI command associated with

those tasks.

Table 5: Common Tasks Mapped to CLI Commands

T ask CLI Command Page

Controller Configuration Tasks

View information about a controller /cx show 33

View controller policies and other

details

View drive performance statistics /cx show dpmstat

/cx show [attribute] [attribute] 35

[type=inst|ra|ext]

38

18 3ware SATA+SAS RAID Controller Card CLI Guide, Version 10.0

Page 27

Common Tasks Mapped to CLI Commands

Table 5: Common Tasks Mapped to CLI Commands

T ask CLI Command Page

Set policies for a controller

• Modify staggered spinup

• Disable write cache on unit

degrade

• Enable/disable autocarving

• Enable/disable autorebuild

• Set the autocarve volume size

• Enable/disable drive

performance monitoring

statistics (dpmstat)

Unit Configuration Tasks

View information about a uni t /cx/ux show 80

Create a new unit /cx add 56

/cx set stagger and /cx set spinup

/cx set ondegrade

/cx set autocarve

/cx set autorebuild

/cx set carvesize

/cx set dpmstat

75

75

75

76

75

68

Create a hot spare /cx add 56

Enable/disable unit write cache /cx/ux set cache

/cx/ux set wrcache

Enable Basic or Intelligent read

cache, or disable both.

Set the queue policy /cx/ux set qpolicy 94

Set the rapid RAID recovery policy /cx/ux set rapidrecovery 95

Set the storsave profile /cx/ux set storsave 95

Unit Configuration Changes

Change RAID level /cx/ux migrate 97

Change stripe size /cx/ux migrate 97

Expand unit capacity /cx/ux migrate 97

Delete a unit /cx/ux del 87

Remove a unit (export) /cx/ux remove 87

Name a unit /cx/ux set name 94

Controller Maintenance Tasks

/cx/ux set rdcache 92

92

Update controller with new

firmware

Add a time slot to a rebuild

schedule

/cx update 63

/cx add rebuild 64

www.lsi.com/channel/products 19

Page 28

Chapter 2. CLI Syntax Reference

Table 5: Common Tasks Mapped to CLI Commands

Task                                     CLI Command                            Page

Controller Maintenance Tasks (continued)
Add a time slot to a verify schedule     /cx add verify                         65
Add a time slot to a selftest schedule   /cx add selftest                       67
Enable/disable the initialize/rebuild/   /cx set rebuild                        69
  migrate schedule and set the task rate
Enable/disable the verify schedule and   /cx set verify                         71
  set the task rate
Set the verify schedule to advanced or   /cx set verify=advanced|basic|1..5     72
  basic
Set the rebuild/migrate task rate        /cx set rebuildrate                    70
Set the rebuild/migrate task mode        /cx set rebuildmode                    70
Set the verify task rate                 /cx set verifyrate                     74
Set the verify task mode                 /cx set verifymode                     73
Set the basic verify start time and day  /cx set verify=basic [pref=ddd:hh]     72
Enable/disable the selftest schedule     /cx set selftest                       74
View controller alarms                   /cx show alarms                        46
                                         /cx show events
                                         /cx show AENs

Unit Maintenance Tasks
Start a rebuild                          /cx/ux start rebuild                   88
Start a verify                           /cx/ux start verify                    88
Pause/resume rebuild                     /cx/ux pause rebuild and               90
                                         /cx/ux resume rebuild
Stop verify                              /cx/ux stop verify                     90
Enable/disable autoverify                /cx/ux set autoverify                  90
Identify all drives that make up a unit  /cx/ux set identify                    64
  by blinking associated LEDs

Port Tasks
Locate drive by blinking an LED          /cx/px set identify                    113
Check if LED is set to on or off         /cx/px show identify                   106
View information for specific drive      /cx/px show                            104
View the status of specific drive        /cx/px show status                     107
Show statistics for the drive on a       /cx/px show dpmstat                    110
  particular port                        type=inst|ra|lct|histdata|ext
Clear statistics counters for a          /cx/px set dpmstat=clear               114
  particular drive                       [type=ra|lct|ext]

PHY Tasks
View details about link speed for a      /cx/phyx show                          115
  specified phy
Set the link speed for a specified phy   /cx/phyx set link=auto|1.5|3.0|6.0     115

BBU Tasks
Check on charge and condition of battery /cx/bbu show status                    118
Start a test of the battery              /cx/bbu test [quiet]                   120

Enclosure Tasks
View information about an enclosure and  /cx/ex show                            123
  its components
Locate a drive slot in an enclosure by   /cx/ex/slotx set identify              128
  blinking an LED
Locate a fan in an enclosure by blinking /cx/ex/fanx set identify               129
  an LED
Set the speed for a fan in an enclosure  /cx/ex/fanx set speed                  129
Locate a power supply in an enclosure by /cx/ex/pwrsx set identify              131
  blinking an LED
Locate a temperature sensor in an        /cx/ex/tempx set identify              132
  enclosure by blinking an LED
Turn off or mute an audible alarm in an  /cx/ex/almx set alarm                  132
  enclosure
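
The command templates in Table 5 use placeholders such as cx, ux, and px, where the trailing x stands for a numeric ID. The following Python sketch shows one way a script might turn a template from the table into a concrete command string. It is illustrative only and is not part of the 3ware CLI: the function name fill_template and the keyword names (controller, unit, and so on) are assumptions made for this example.

```python
# Placeholder tokens as they appear in Table 5, mapped to a hypothetical
# keyword name used only by this sketch. The trailing "x" stands for an ID.
PLACEHOLDERS = {
    "cx": "controller", "ux": "unit", "px": "port", "phyx": "phy",
    "ex": "enclosure", "slotx": "slot", "fanx": "fan",
    "pwrsx": "power_supply", "tempx": "temp_sensor", "almx": "alarm",
}

def fill_template(template, **ids):
    """Replace placeholders in the object path (the first word) with IDs."""
    path, _, rest = template.partition(" ")
    parts = []
    for segment in path.split("/"):
        if segment in PLACEHOLDERS:
            key = PLACEHOLDERS[segment]
            if key not in ids:
                raise KeyError(f"missing ID for {segment!r} ({key})")
            # Drop the trailing "x" and append the numeric ID.
            segment = segment[:-1] + str(int(ids[key]))
        parts.append(segment)
    filled = "/".join(parts)
    return f"{filled} {rest}" if rest else filled

print(fill_template("/cx/ux start rebuild", controller=0, unit=1))
print(fill_template("/cx/ex/slotx set identify", controller=0, enclosure=0, slot=3))
```

Only the object path is rewritten; attribute text after the first space, such as [quiet], is passed through unchanged.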


Terminology

3ware SATA+SAS RAID Controller Card CLI Guide, Version 10.0 uses the

following terminology:

Logical Units. Usually shortened to “units.” These are block devices

presented to the operating system. A logical unit can be a one-tier, two-tier, or

three-tier arrangement. Spare and Single logical units are examples of one-tier

units. RAID 1 and RAID 5 are examples of two-tier units and as such will

have sub-units. RAID 10 and RAID 50 are examples of three-tier units and as

such will have sub-sub-units.

Port. 3ware controller models up to the 9650SE series have one or many ports

(typically 4, 8, 12, 16, or 24). Each port can be attached to a single disk drive.

On a controller such as the 9650SE with a multilane serial port connector, one

connector supports four ports. On 9750 and 9690SA series controllers,

connections are made with phys and vports (virtual ports).

Phy. Phys are transceivers that transmit and receive the serial data stream that

flows between the controller and the drives. 3ware 9750 and 9690SA

controllers have 8 phys. These “controller phys” are associated with virtual

ports (vports) by 3ware software to establish up to 128 potential connections

with SAS or SATA hard drives. Each controller phy can be connected directly

to a single drive, or can be connected through an expander to additional

drives.

VPort. Connections from 3ware 9750 and 9690SA controllers to SAS or

SATA drives are referred to as virtual ports, or VPorts. A VPort indicates the

ID of a drive, whether it is directly connected to the controller, or cascaded

through one or more expanders. The VPort, in essence, is a handle in the

software to uniquely identify a drive. The VPort ID or port ID allows a drive

to be consistently identified, used in a RAID unit, and managed. For dual-ported drives, although there are two connections to a drive, the drive is still

identified with one VPort handle.

Note: For practical purposes, port and VPort are used interchangeably in this

document in reference to a drive (or disk). Therefore, unless otherwise specified,

the mention of port implies VPort as well. For example, when “port” is used to

indicate a drive, it is implied that for the applicable controller series, the reference

also applies to VPort.

For additional information about 3ware controller concepts and terminology,

see the user guide PDF for your 3ware RAID controller or the user guide

portions of the 3ware HTML Bookshelf.


Syntax Overview

The command syntax uses the general form:

Object Command Attributes

Objects are shell commands, controllers, units, ports (drives), BBUs (battery

backup units), and enclosures.

Commands can either select (show, get, present, read) attributes or alter (add,

change, set, write) attributes.

Attributes are either Boolean Attributes or Name-Value Attributes.

• The value of a boolean attribute is deduced from its presence or absence;
that is, the attribute is either specified or not. For example, the command

show alarms by default lists controller alarms with the oldest alarm first.

If you include the attribute reverse, as in the command show alarms

reverse, alarms are listed in reverse order, with the most recent alarm

first.

• The values of name-value attributes are expressed in the format

attribute=value.


Example: When adding (creating) a unit to the controller with the following
command string,

/c1 add type=raid1 disk=0-1

c1 is the object, add is the command, type (for type of array) is an attribute
with raid1 as the value of the attribute, and disk is another attribute with
0-1 as the value (ports 0 through 1).
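
The Object Command Attributes form can be illustrated with a short Python sketch that splits a command string into its parts. This is a minimal illustration, not the parser used by the CLI. The function name parse_command and the returned dictionary layout are assumptions made for this example, and the sketch assumes that an explicit object always begins with a slash.

```python
def parse_command(line):
    """Split an 'Object Command Attributes' string into its parts."""
    tokens = line.split()
    # Assumption: an explicit object starts with "/". Without one, the
    # command applies to the shell (or to the currently focused object).
    obj = tokens.pop(0) if tokens and tokens[0].startswith("/") else None
    if not tokens:
        raise ValueError("expected a command")
    command = tokens.pop(0)
    booleans, named = [], {}
    for attr in tokens:
        name, sep, value = attr.partition("=")
        if sep:
            named[name] = value      # name-value attribute, e.g. type=raid1
        else:
            booleans.append(name)    # boolean attribute, present or absent
    return {"object": obj, "command": command,
            "boolean": booleans, "named": named}

print(parse_command("/c1 add type=raid1 disk=0-1"))
print(parse_command("show alarms reverse"))
```

In the second call, reverse carries no value; its presence alone changes the listing order, which is what makes it a boolean attribute.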

Information about commands is organized by the object on which the

commands act:

Shell Object Commands. Shell object commands set the focus or provide

information (such as alarms, diagnostics, rebuild schedules, and so forth)

about all controllers in the system. For details, see “Shell Object Commands”

on page 24.

Controller Object Commands. Controller object commands provide

information and perform actions related to a specific controller. For example,

you use controller object commands for such tasks as seeing alarms specific

to a controller, creating schedules during which background tasks are run, and

setting policies for the controller. You also use the controller object command

/cx add type to create RAID arrays. For details, see “Controller Object

Commands” on page 31.

Unit Object Commands. Unit object commands provide information and

perform actions related to a specific unit on a specific controller. For example,

you use unit object commands for such tasks as seeing the rebuild, verify, or

initialize status of a unit, starting, stopping, and resuming verifies, starting

and stopping rebuilds, and setting policies for the unit. You also use the


unit object command /cx/ux migrate to change the configuration of a

RAID array. For details, see “Unit Object Commands” on page 79.

Phy Object Commands. Phy object commands provide information and

perform actions related to a specific phy on a 9750 or 9690SA controller.

Port Object Commands. Port object commands provide information and

perform actions related to a drive on a specific port or vport. You can use port

object commands for such tasks as seeing the status, model, or serial number

of the drive. For details, see “Port Object Commands” on page 104.

BBU Object Commands. BBU object commands provide information and

perform actions related to a Battery Backup Unit on a specific controller. For

details, see “BBU Object Commands” on page 116.

Enclosure Object Commands. Enclosure object commands provide

information and perform actions related to a particular enclosure. For

example, you can use enclosure object commands to see information about an

enclosure and its elements (slots, fan, and temperature sensor elements).

Help Commands. Help commands allow you to display help information for

all commands and attributes. For details, see “Help Commands” on page 133.
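
Because commands are grouped by the object they act on, a script that builds CLI commands may want to check which kind of object a path names. The Python sketch below classifies a path using patterns inferred from the object forms shown in this guide (/cx, /cx/ux, /cx/px, /cx/phyx, /cx/bbu, and /cx/ex). The patterns and the function name object_kind are assumptions made for illustration; they are not an exhaustive grammar of the CLI.

```python
import re

# Patterns inferred from the object paths used in this guide. They are an
# assumption for this sketch, not a definition of what the CLI accepts.
OBJECT_PATTERNS = [
    ("unit", re.compile(r"^/c\d+/u\d+$")),
    ("phy", re.compile(r"^/c\d+/phy\d+$")),
    ("port", re.compile(r"^/c\d+/p\d+$")),
    ("bbu", re.compile(r"^/c\d+/bbu$")),
    # An enclosure, or an element inside it such as slot3 or fan0.
    ("enclosure", re.compile(r"^/c\d+/e\d+(/[a-z]+\d+)?$")),
    ("controller", re.compile(r"^/c\d+$")),
]

def object_kind(path):
    """Return the object category for a path, or 'unknown'."""
    for kind, pattern in OBJECT_PATTERNS:
        if pattern.match(path):
            return kind
    # The root (or no object at all) corresponds to the shell object.
    return "shell" if path in ("", "/") else "unknown"

for p in ["/", "/c0", "/c0/u1", "/c0/p3", "/c0/phy2", "/c1/bbu", "/c0/e0/slot3"]:
    print(p, "->", object_kind(p))
```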

Shell Object Commands

Shell object commands are either applicable to all the controllers in the

system (such as show, rescan, flush, commit), or redirect the focused object.

Syntax

focus object
commit
flush
rescan
show [attribute [modifier]]
    alarms [reverse]
    diag
    rebuild
    selftest
    ver
    verify
update fw=filename_with_path [force]


focus object

The focus command is active in interactive mode only and is provided to
reduce typing.

The focus command will set the specified object in focus and change the
prompt to reflect this. This allows you to enter a command that applies to the
focus, instead of having to type the entire object name each time.

For example, where normally you might type:

/c0/u0 show

If you set the focus to /c0/u0, the prompt changes to reflect that, and you
only have to type show. The concept is similar to being in a particular location
in a file system and requesting a listing of the current directory.

object can have the following forms:

/cx/ux specifies the fully qualified URI (Uniform Resource Identifier) of an
object on controller cx, unit ux.

.. specifies one level up (the parent object).

/ specifies the root.

./object specifies the next level of the object.

/c0/bbu specifies a relative path with respect to the current focused
hostname.
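
The object forms accepted by focus behave much like paths in a file system. The following Python sketch models how a new focus could be derived from the current focus and the argument given to focus. It is a simplified model written for illustration, not the CLI's own implementation; the function names resolve_focus and prompt, and the default host name localhost, are assumptions.

```python
def resolve_focus(current, target):
    """Return the new focus path for 'focus <target>' issued at 'current'."""
    if target.startswith("/"):
        parts = []                   # fully qualified path: start at the root
    else:
        parts = [p for p in current.split("/") if p]
    for piece in target.split("/"):
        if piece in ("", "."):
            continue                 # empty or "." keeps the current level
        if piece == "..":
            if parts:
                parts.pop()          # one level up; the root has no parent
        else:
            parts.append(piece)      # descend to the next level
    return "/" + "/".join(parts)

def prompt(path, host="localhost"):
    """Build a prompt string in the style shown in this guide."""
    return f"//{host}{'' if path == '/' else path}>"

focus = resolve_focus("/", "/c0/u0")
print(prompt(focus))
focus = resolve_focus(focus, "..")
print(prompt(focus))
focus = resolve_focus(focus, "u0")
print(prompt(focus))
focus = resolve_focus(focus, "/")
print(prompt(focus))
```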

Example:

//localhost> focus /c0/u0
//localhost/c0/u0>
//localhost/c0/u0> focus ..
//localhost/c0>
//localhost/c0> focus u0
//localhost/c0/u0>
//localhost/c0> focus /
//localhost>

The focus command is available by default. You can disable focus by setting
TW_CLI_INPUT_STYLE to old. (See “Return Code” on page 141.)

commit

This command sends a commit command to all 3ware controllers in the

system. For more information, see “/cx commit” on page 63.


flush

This command sends a flush command to all 3ware controllers in the system.

For more information, see “/cx flush” on page 63.

rescan

This command sends a rescan command to all 3ware controllers in the system.

For more information, see “/cx rescan [noscan]” on page 62.

show

This command shows a general summary of all detected controllers and

enclosures.

The output of this command will vary, depending upon your controller model

and whether there is an enclosure with an expander attached.

Note that the device drivers for the appropriate operating system should be

loaded for the list to show all controllers. The intention is to provide a global

view of the environment.

Example for controller without an enclosure and expander: