
SGI® InfiniteStorage 15000 RAID User’s Guide

007-5510-002


COPYRIGHT

© 2008 SGI. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein.

No permission is granted to copy, distribute, or create derivative works from the contents of this electronic

documentation in any manner, in whole or in part, without the prior written permission of SGI.

LIMITED RIGHTS LEGEND

The software described in this document is “commercial computer software” provided with restricted rights (except

as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive

sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and

conventions. This document is provided with limited rights as defined in 52.227-14.

The electronic (software) version of this document was developed at private expense; if acquired under an agreement

with the USA government or any contractor thereto, it is acquired as “commercial computer software” subject to the

provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for

Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto.

Contractor/manufacturer is SGI, 1140 E. Arques Avenue, Sunnyvale, CA 94085.

TRADEMARKS AND ATTRIBUTIONS

SGI and the SGI logo are registered trademarks of SGI in the United States and/or other countries worldwide.

Windows is a registered trademark of Microsoft Corporation in the United States and/or other countries.

All other trademarks mentioned herein are the property of their respective owners.


Contents

1 Introduction ..................................................................................................................................... 1

1.1 Controller Features ................................................................................................................... 1

1.2 The Controller Hardware ............................................................................................................. 2

1.2.1 Power Supply and Fan Modules ........................................................................................ 4

1.2.2 I/O Connectors and Status LED Indicators ........................................................................ 5

1.2.3 Uninterruptible Power Supply (UPS) .................................................................................. 9

2 Controller Installation ................................................................................................................... 11

2.1 Setting Up the Controller ......................................................................................................... 11

2.2 Unpacking the System .............................................................................................................. 12

2.2.1 Rack-Mounting the Controller Chassis ............................................................................. 12

2.2.2 Connecting the Controller in Dual Mode .......................................................................... 12

2.2.3 Connecting the Controller ................................................................................................. 13

2.2.4 Selecting SAS-ID for Your Drives ..................................................................................... 13

2.2.5 Laying Out your Storage Drives ....................................................................................... 13

2.2.6 Connecting the RS-232 Terminal ..................................................................................... 14

2.2.7 Powering On the Controller .............................................................................................. 15

2.3 Configuring the Controller ......................................................................................................... 16

2.3.1 Planning Your Setup and Configuration ........................................................................... 16

2.3.2 Configuration Interface ..................................................................................................... 17

2.3.3 Login as Administrator ...................................................................................................... 17

2.3.4 Setting System Time & Date ............................................................................................ 17

2.3.5 Setting Tier Mapping Mode .............................................................................................. 18

2.3.6 Checking Tier Status and Configuration ........................................................................... 19

2.3.7 Cache Coherency and Labeling in Dual Mode ................................................................. 20

2.3.8 Configuring the Storage Arrays ........................................................................................ 21

2.3.9 Setting Security Levels ..................................................................................................... 24

3 Controller Management ................................................................................................................ 29

3.1 Managing the Controller ............................................................................................................ 29

3.1.1 Management Interface ..................................................................................................... 29

3.1.2 Available Commands ....................................................................................................... 30

3.1.3 Administrator and User Logins ......................................................................................... 30

3.2 Configuration Management ....................................................................................................... 32

3.2.1 Configure and Monitor Status of Host Ports ..................................................................... 32

3.2.2 Configure and Monitor Status of Storage Assets ............................................................. 34

3.2.3 Tier Mapping for Enclosures ............................................................................................ 42

3.2.4 System Network Configuration ......................................................................................... 43

3.2.5 Restarting the Controller .................................................................................................. 45

3.2.6 Setting the System’s Date and Time ................................................................................ 46

3.2.7 Saving the Controller’s Configuration ............................................................................... 47

3.2.8 Restoring the System’s Default Configuration .................................................................. 47

3.2.9 LUN Management ............................................................................................................ 48

3.2.10 Automatic Drive Rebuild .................................................................................................. 50

3.2.11 SMART Command .......................................................................................................... 51

3.2.12 Couplet Controller Configuration (Cache/Non-Cache Coherent) ..................................... 53

3.3 Performance Management ........................................................................................................ 55

3.3.1 Optimizing I/O Request Patterns ...................................................................................... 55

3.3.2 Audio/Visual Settings of the System ................................................................................ 58

3.3.3 Locking LUN in Cache ...................................................................................................... 59

3.3.4 Resources Allocation ........................................................................................................ 66

3.4 Security Administration ............................................................................................................. 72

3.4.1 Monitoring User Logins .................................................................................................... 73

3.4.2 Zoning (Anonymous Access) ........................................................................................... 73

3.4.3 User Authentication .......................................................................................................... 74

3.5 Firmware Update Management ................................................................................................. 75

3.5.1 Displaying Current Firmware Version .............................................................................. 75

3.5.2 Firmware Update Procedure ............................................................................................ 75

3.6 Remote Login Management ...................................................................................................... 76

3.6.1 When a Telnet Session is Active ..................................................................................... 77

3.7 System Logs .............................................................................................................................. 79

3.7.1 Message Log ................................................................................................................... 79

3.7.2 System and Drive Enclosure Faults ................................................................................. 79

3.7.3 Displaying System Uptime ............................................................................................... 80

3.7.4 Saving a Comment to the Log ......................................................................................... 80

3.8 Other Utilities ............................................................................................................................. 81

3.8.1 APC UPS SNMP Trap Monitor ........................................................................................ 81

3.8.2 API Server Connections ................................................................................................... 81

3.8.3 Changing Baud Rate for the CLI Interface ....................................................................... 81

3.8.4 CLI/Telnet Session Control Settings ................................................................................ 82

3.8.5 Disk Diagnostics .............................................................................................................. 82

3.8.6 Disk Reassignment and Miscellaneous Disk Commands ................................................ 83

3.8.7 SPARE Commands ......................................................................................................... 83

4 Controller Remote Management and Troubleshooting ............................................................. 85

4.1 Remote Management of the Controller ..................................................................................... 85

4.1.1 Network Connection ......................................................................................................... 85

4.1.2 Network Interface Set Up ................................................................................................. 85

4.1.3 Login Names and Passwords .......................................................................................... 87

4.1.4 SNMP Set Up on Host Computer .................................................................................... 88

4.2 Troubleshooting the Controller .................................................................................................. 89

4.2.1 Component Failure Recovery ........................................................................................... 89

4.2.2 Recovering from Drive Failures ....................................................................................... 90

4.2.3 Component Failure on Enclosures ................................................................................... 94

5 Drive Enclosure System ............................................................................................................... 95

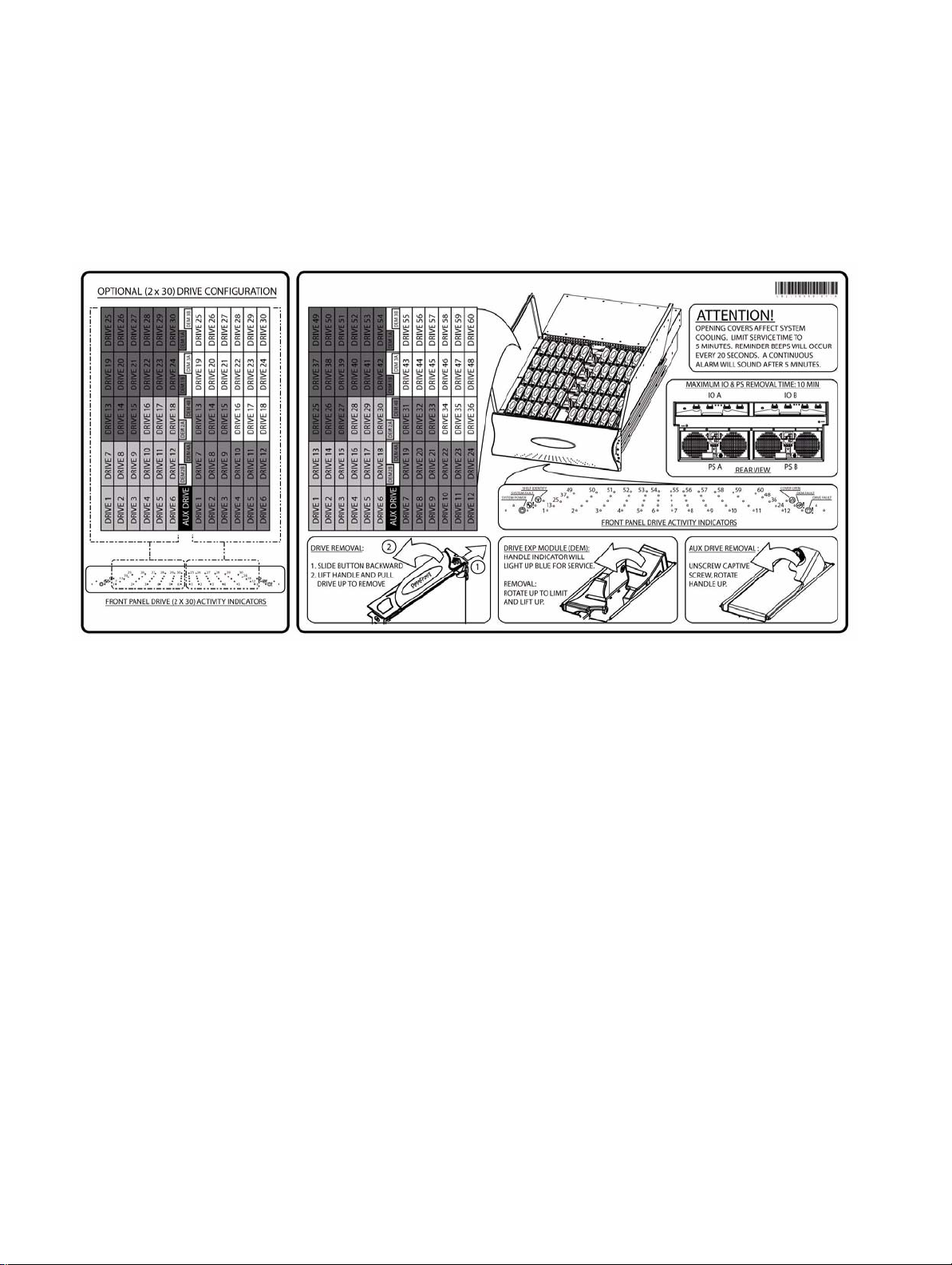

5.1 The SGI InfiniteStorage 15000 Drive Enclosure ....................................................................... 95

5.2 Enclosure Core Product ............................................................................................................ 96

5.2.1 Enclosure Chassis ........................................................................................................... 97

5.3 The Plug-in Modules ................................................................................................................. 97

5.3.1 Power Cooling Module (PCM) ......................................................................................... 97

5.3.2 Input/Output (I/O) Module ................................................................................................ 98

5.3.3 Drive Carrier Module and Status Indicator ..................................................................... 100

5.3.4 DEM Card ...................................................................................................................... 100

5.4 Indicators ................................................................................................................................. 101

5.4.1 Front Panel Drive Activity Indicators .............................................................................. 101

5.4.2 Internal Indicators .......................................................................................................... 104

5.4.3 Rear of Enclosure Activity Indicators ............................................................................. 104

5.5 Visible and Audible Alarms ..................................................................................................... 105

5.6 Drive Enclosure Technical Specification ................................................................................. 105

5.6.1 Dimensions .................................................................................................................... 105

5.6.2 Weight ............................................................................................................................ 106


5.6.3 AC INPUT PCM .............................................................................................................. 106

5.6.4 DC INPUT PCM .............................................................................................................. 106

5.6.5 DC OUTPUT PCM .......................................................................................................... 107

5.6.6 PCM Safety and EMC Compliance ................................................................................ 107

5.6.7 Power Cord .................................................................................................................... 107

5.7 Environment ............................................................................................................................ 107

6 Drive Enclosure Installation ....................................................................................................... 109

6.1 Introduction .............................................................................................................................. 109

6.2 Planning Your Installation ........................................................................................................ 109

6.2.1 Enclosure Bay Numbering Convention .......................................................................... 110

6.3 Enclosure Installation Procedures ........................................................................................... 112

6.4 I/O Module Configurations ...................................................................................................... 112

6.4.1 Controller Options .......................................................................................................... 112

6.5 SAS DEM ................................................................................................................................ 113

6.6 SATA Interposer Features ....................................................................................................... 113

6.7 Drive Enclosure Device Addressing ........................................................................................ 113

6.8 Grounding Checks ................................................................................................................... 113

7 Drive Enclosure Operation ......................................................................................................... 115

7.1 Before You Begin ..................................................................................................................... 115

7.2 Power On / Power Down .......................................................................................................... 115

7.2.1 PCM LEDs ...................................................................................................................... 115

7.2.2 I/O Panel LEDs ............................................................................................................... 116

8 Drive Enclosure Troubleshooting ............................................................................................. 117

8.1 Overview ................................................................................................................................. 117

8.2 Initial Start-up Problems .......................................................................................................... 117

8.2.1 Faulty Cords ................................................................................................................... 117

8.2.2 Alarm Sounds On Power Up ........................................................................................... 117

8.2.3 Green “Signal Good” LED on I/O Module Not Lit ........................................................... 117

8.2.4 Computer Doesn’t Recognize the Drive Enclosure Subsystem .................................... 117

8.3 LEDs ........................................................................................................................................ 118

8.3.1 HDD (Hard Disk Drive) ................................................................................................... 118

8.3.2 PCM (Power Cooling Module) ........................................................................................ 118

8.3.3 DEM (Drive Expander Module) ...................................................................................... 118

8.3.4 I/O Module ...................................................................................................................... 119

8.3.5 Front Panel Drive Activity Indicators .............................................................................. 120

8.4 Audible Alarm .......................................................................................................................... 121

8.4.1 Top Cover Open ............................................................................................................. 121

8.4.2 SES Command ............................................................................................................... 121

8.5 Troubleshooting ....................................................................................................................... 121

8.5.1 Thermal Control .............................................................................................................. 121

8.5.2 Thermal Alarm ................................................................................................................ 123

8.5.3 Thermal Shutdown .......................................................................................................... 123

8.6 Dealing with Hardware Faults ................................................................................................. 123

8.7 Continuous Operation During Replacement ............................................................................ 124

8.8 Replacing a Module ................................................................................................................. 124

8.8.1 Power Cooling Modules ................................................................................................. 124

8.8.2 I/O Module ...................................................................................................................... 126

8.8.3 Replacing the Drive Carrier Module ............................................................................... 126


8.9 Replacing the DEM ................................................................................................................. 127

Appendix A. Controller Technical Specifications. . . . . . . . . . . . . . . . . . . . . . . 129

Appendix B. Drive Addressing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Appendix C. Cabling Controllers and Drive Enclosures . . . . . . . . . . . . . . . . . 135


Preface


What is in this guide

This user guide gives you step-by-step instructions on how to install, configure, and connect the

SGI InfiniteStorage 15000 system to your host computer system, as well as to use and maintain the

system.

Who should use this guide

This user guide assumes that you have a working knowledge of the Serial Attached SCSI (SAS) protocol

environments into which you are installing this system.

International Standards

The SGI InfiniteStorage 15000 system complies with the requirements of the following agencies and

standards:

• CE

• UL

• cUL

Potential for Radio Frequency Interference

USA Federal Communications Commission (FCC)

Note This equipment has been tested and found to comply with the limits for a class A digital device, pursuant

to Part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful

interference when the equipment is operated in a commercial environment. This equipment generates,

uses and can radiate radio frequency energy and, if not installed and used in accordance with the

instruction manual, may cause harmful interference to radio communications. Operation of this

equipment in a residential area is likely to cause harmful interference in which case the user will be

required to correct the interference at his own expense.

Properly shielded and grounded cables and connectors must be used in order to meet FCC emission

limits. The supplier is not responsible for any radio or television interference caused by using other than

recommended cables and connectors or by unauthorized changes or modifications to this equipment.

Unauthorized changes or modifications could void the user’s authority to operate the equipment.

This device complies with Part 15 of the FCC Rules. Operation is subject to the following two

conditions: (1) this device may not cause harmful interference, and (2) this device must accept any

interference received, including interference that may cause undesired operation.


European Regulations

This equipment complies with European Regulations EN 55022 Class A: Limits and Methods of

Measurement of Radio Disturbance Characteristics of Information Technology Equipment and

EN50082-1: Generic Immunity.

Qualified Personnel

Qualified personnel are defined as follows:

• Service Person: A person having appropriate technical training and experience necessary to be

aware of hazards to which that person may be exposed in performing a task and of measures to

minimize the risks to that person or other persons.

• User/Operator: Any person other than a Service Person.

Safe Handling

• Remove drives to minimize weight.

• Do not try to lift the enclosure by yourself.

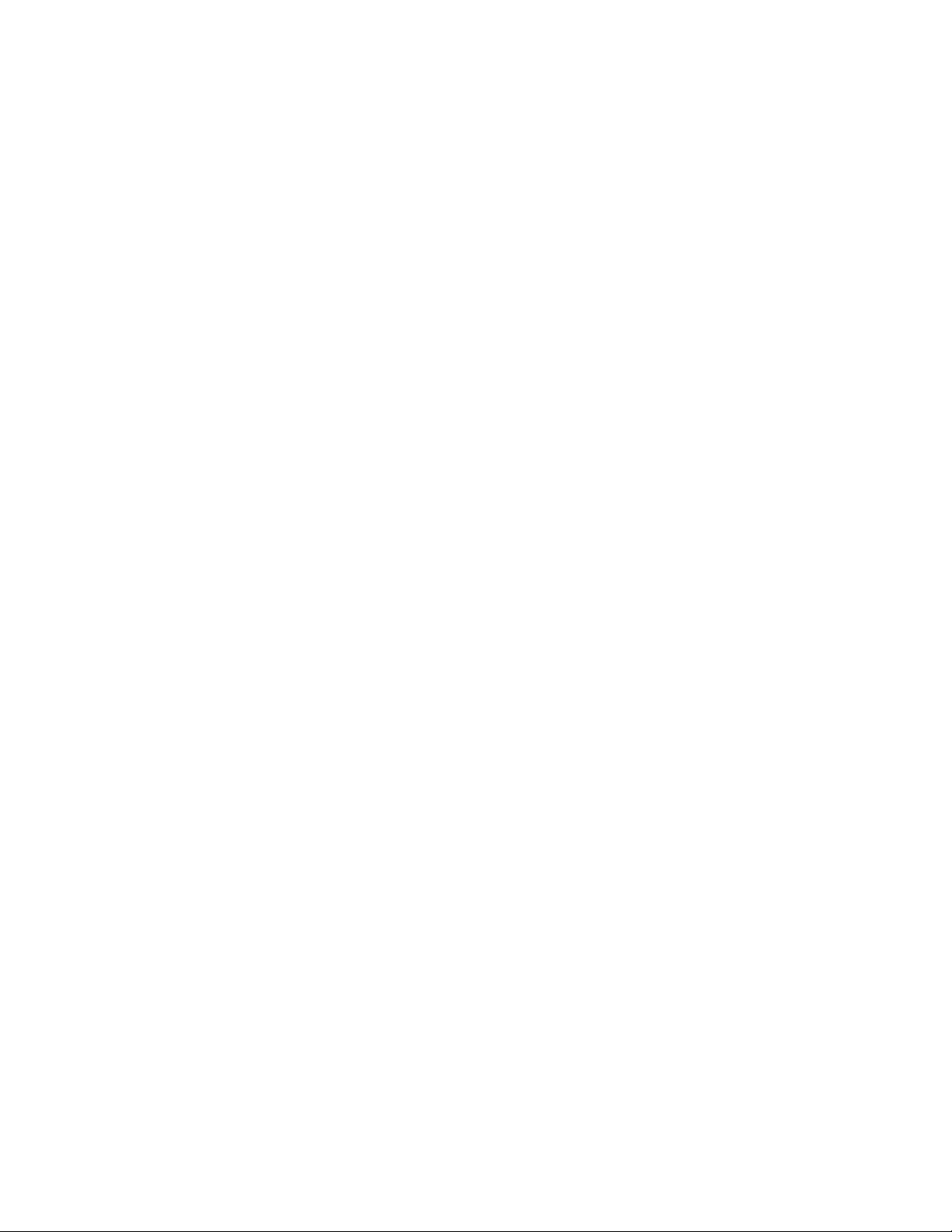

Weight Handling Label: Lifting and Tipping

Pinch Hazard Label: Keep Hands Clear


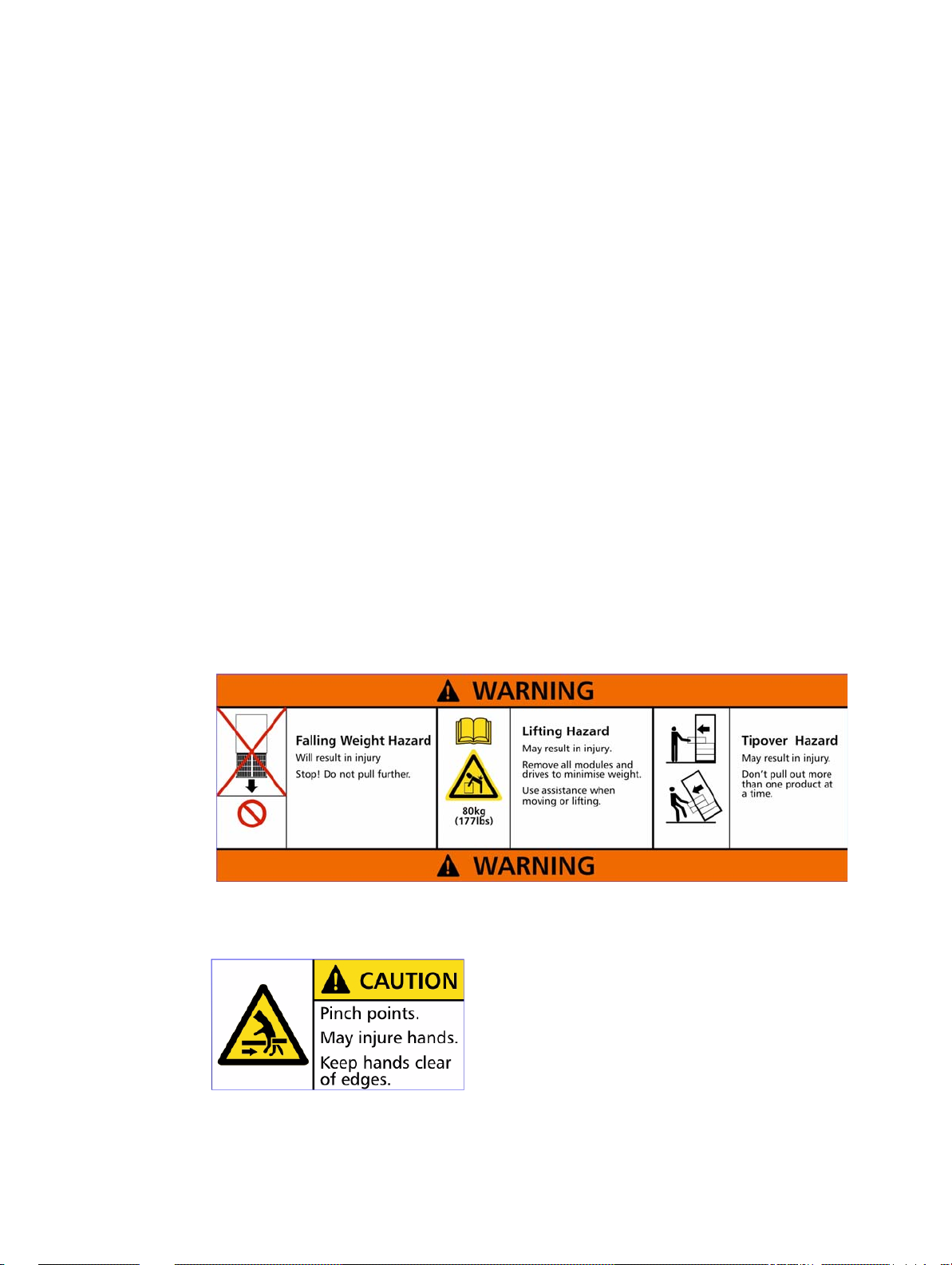

Chassis Warning Label: Weight Hazard

• Do not lift the drive enclosure by the handles on the power cooling module (PCM); they are not

designed to support the weight of the populated enclosure.

Safety


Important SGI InfiniteStorage 15000 drive enclosures must always be installed in SGI InfiniteStorage 15000

racks. SGI does not authorize or support the use of these drive enclosures in any standalone

benchtop or enclosure-on-enclosure stacking configuration.

If this equipment is used in a manner not specified by the manufacturer, the protection provided

by the equipment may be impaired.

Warning The SGI InfiniteStorage 15000 MUST be grounded before applying power.

Unplug the unit if you think that it has become damaged in any way and before you move it.

Caution Plug-in modules are part of the fire enclosure and must only be removed when a replacement can be

immediately added. The system must not be run without all units in place. Operate the system with the

enclosure top cover closed.

• In order to comply with applicable safety, emission and thermal requirements no covers should be

removed.

• The drive enclosure unit must only be operated from a power supply input voltage range of 200 V

AC to 240 V AC.

• The plug on the power supply cord is used as the main disconnect device. Ensure that the socket

outlets are located near the equipment and are easily accessible.

Warning To ensure protection against electric shock caused by HIGH LEAKAGE CURRENT (TOUCH

CURRENT), the SGI InfiniteStorage 15000 must be connected to at least two separate and

independent sources. This is to ensure a reliable earth connection.

• The equipment is intended to operate with two (2) working PCMs. Before removal/replacement of

any module disconnect all supply power for complete isolation.

• A faulty PCM must be replaced with a fully operational module within 24 hours.


Power Cooling Module (PCM) Caution Label: Do not operate with modules missing

Warning To ensure your system has warning of a power failure please disconnect the power from the

power supply, by either the switch (where present) or by physically removing the power source,

prior to removing the PCM from the enclosure/shelf.

• Do not remove a faulty PCM unless you have a replacement unit of the correct type ready for

insertion.

PCM Warning Label: Power Hazards

• The power connection must always be disconnected prior to removal of the PCM from the

enclosure.

• A safe electrical earth connection must be provided to the power cord.

• Provide a suitable power source with electrical overload protection to meet the requirements laid

down in the technical specification.

Warning Do not remove covers from the PCM. Danger of electric shock inside. Return the PCM to your

supplier for repair.

PCM Safety Label: Electric Shock Hazard Inside


Warning Operation of the Enclosure with ANY modules missing will disrupt the airflow and the drives will

not receive sufficient cooling. It is ESSENTIAL that all apertures are filled before operating the

unit.

• Drive Carrier Module Caution Label: Drive spin down time 30 seconds

Recycling of Waste Electrical and Electronic Equipment (WEEE)

At the end of the product’s life, all scrap/waste electrical and electronic equipment should be recycled

in accordance with National regulations applicable to the handling of hazardous/toxic electrical and

electronic waste materials.

Please contact your supplier for a copy of the Recycling Procedures applicable to your product.

Important Observe all applicable safety precautions, e.g. weight restrictions, handling batteries and lasers

etc., detailed in the preceding paragraphs when dismantling and disposing of this equipment.

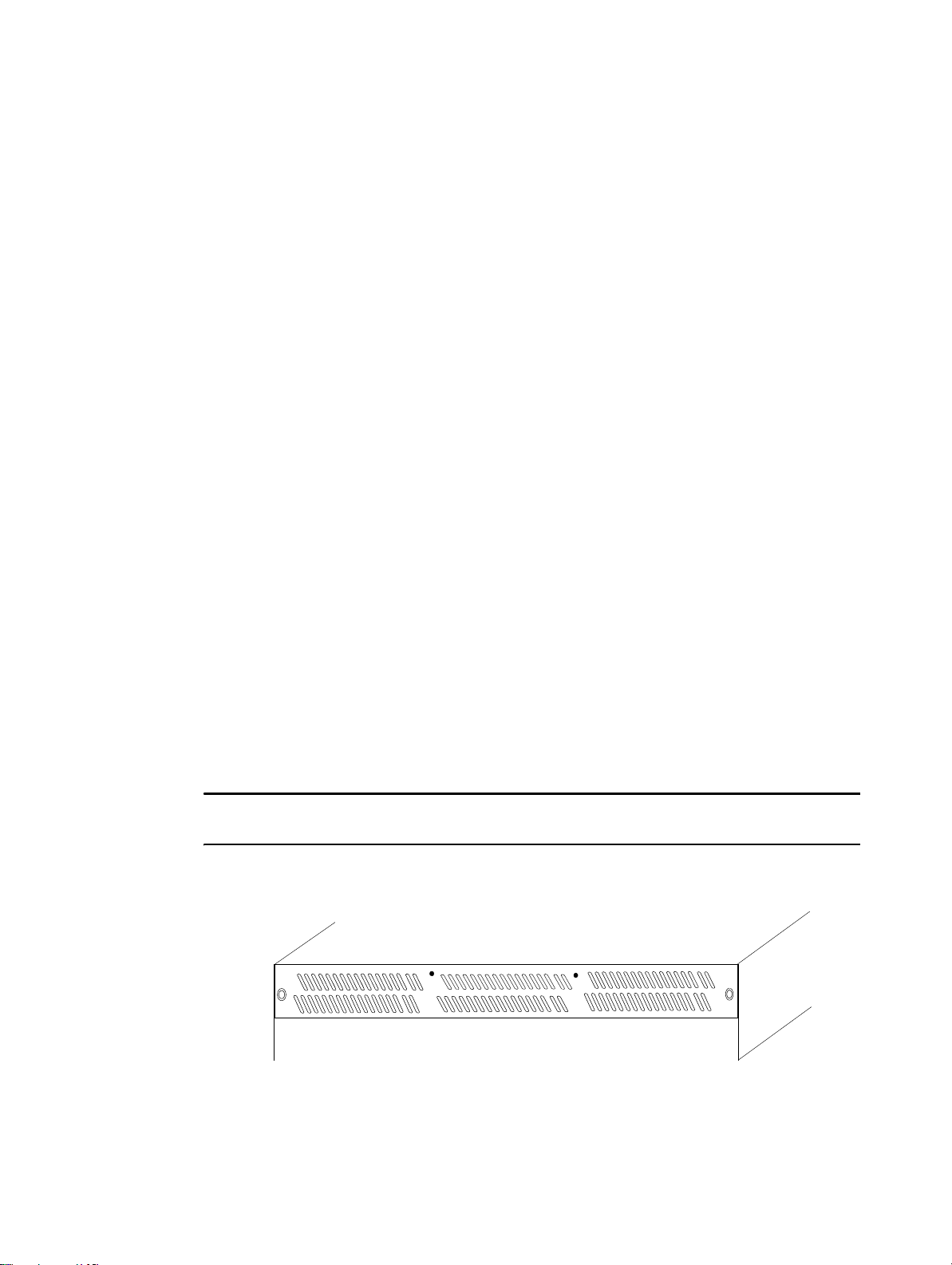

Rack System Precautions

Important SGI InfiniteStorage 15000 drive enclosures should only be installed in SGI InfiniteStorage 15000

racks. Mounting and installing these drive enclosures in any other rack is not authorized or

supported by SGI.

The SGI InfiniteStorage 15000 drive enclosures are pre-installed in the rack before shipment. If the drive

enclosures must be re-installed and mounted, the following safety requirements must be considered

when the unit is mounted in a rack.

• The rack stabilizing (anti-tip) plates should be installed and secured to prevent the rack from tipping

or being pushed over during installation or normal use.

• When loading a rack with the units, fill the rack from the bottom up and empty from the top down.

• Always remove all modules and drives, to minimize weight, before loading the chassis into a rack.

Warning It is recommended that you do not slide more than one enclosure out of the rack at a time, to avoid

danger of the rack tipping over.

• When mounting in a rack, ensure that the enclosure is pushed fully back into the rack.


• The electrical distribution system must provide a reliable earth ground for each unit and the rack.

• Each power supply in each unit has an earth leakage current of 1.5mA. The design of the electrical

distribution system must take into consideration the total earth leakage current from all the power

supplies in all the units.
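As an illustration of the leakage-current guidance above, the following minimal sketch totals the earth leakage current for a rack. The 1.5 mA figure comes from this guide; the function name, the default of two power supplies per unit (based on the two PCMs per drive enclosure), and the 10-enclosure rack are assumptions made for the example only.

```python
LEAKAGE_PER_SUPPLY_MA = 1.5  # earth leakage current per power supply, as stated in this guide


def total_earth_leakage_ma(units: int, supplies_per_unit: int = 2) -> float:
    """Return the summed earth leakage current, in mA, for a rack.

    supplies_per_unit defaults to 2, an assumption based on the two PCMs
    per drive enclosure; adjust it for units with a different count.
    """
    if units < 0 or supplies_per_unit < 0:
        raise ValueError("counts must be non-negative")
    return units * supplies_per_unit * LEAKAGE_PER_SUPPLY_MA


if __name__ == "__main__":
    # Hypothetical rack holding 10 drive enclosures with two supplies each.
    print(f"{total_earth_leakage_ma(10):.1f} mA")
```

The result is only an input to the electrical distribution design; the applicable limits must come from the local electrical code and the technical specification.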

Cover Label

ESD Precautions

Caution It is recommended that you fit and check a suitable anti-static wrist or ankle strap and observe all

conventional ESD precautions when handling plug-in modules and components. Avoid contact with

backplane components and module connectors, etc.

Data Security

• Power down your host computer and all attached peripheral devices before beginning installation.

• Each enclosure contains up to 60 removable disk drive modules. Disk units are fragile. Handle them

with care, and keep them away from strong magnetic fields.

• All the supplied plug-in modules and blanking plates must be in place for the air to flow correctly

around the enclosure and also to complete the internal circuitry.


• If the subsystem is used with modules or blanking plates missing for more than a few minutes, the

enclosure can overheat, causing power failure and data loss. Such use may also invalidate the

warranty.

• If you remove any drive module, you may lose data.

– If you remove a drive module, replace it immediately. If it is faulty, replace it with a drive

module of the same type and capacity.

• Ensure that all disk drives are removed from the enclosure before attempting to move the rack

installation.

• Do not abandon your backup routines. No system is completely foolproof.



Chapter 1

Introduction

The SGI InfiniteStorage 15000 controller is an intelligent storage infrastructure device designed and

optimized for the high bandwidth and capacity requirements of IT departments, rich media, and high

performance workgroup applications.

The controller plugs seamlessly into existing SAN environments, protecting and upgrading investments

made in legacy storage and networking products to substantially improve their performance, availability,

and manageability.

The controller’s design is based on an advanced pipelined, parallel processing architecture, caching,

RAID, and system and file management technologies. These technologies have been integrated into a

single plug-and-play device—the SGI InfiniteStorage 15000—providing simple, centralized, and secure

data and SNMP management.

The SGI InfiniteStorage 15000 is designed specifically to support high bandwidth, rich content, and

shared access to and backup of large banks of data. It enables a multi-vendor environment comprised of

standalone and clustered servers, workstations, and PCs to access and back up data stored in centralized

or distributed storage devices in an easy, cost-effective, and reliable manner.

Each controller orchestrates a coherent flow of data throughout the storage area network (SAN) from

users to storage, managing data at speeds of up to 3000 MB/second (or 3 GB/second). This is

accomplished through virtualized host and storage connections, a DMA-speed shared data access space,

and advanced network-optimized RAID data protection and security—all acting in harmony with

sophisticated SAS storage management intelligence embedded within the controller.

The controller can be “coupled” to form data access redundancy while maintaining fully pipelined,

parallel bandwidth to the same disk storage. This modular architecture ensures high data availability and

uptime along with application performance. With its PowerLUN technology, the system provides full

bandwidth to all host ports simultaneously and without host striping.

1.1 Controller Features

The SGI InfiniteStorage 15000 controller incorporates the following features:

• Simplifies Deployment of Complex SANs

The controller provides SAN administration with the management tools required for a large number of clients.

• Infiniband or Fibre Channel (FC-8) Connectivity Throughput

The controller provides up to four (4) individual double data rate four-lane Infiniband or FC-8 host

port connections, including simultaneous access to the same data through multiple ports. Each IB

host port supports point-to-point and switched fabric operation.

• Highly Scalable Performance and Capacity

The RAID engine provides both fault-tolerance and capacity scalability. Performance remains the

same, even in degraded mode. Internal data striping provides generic load balancing across drives.

The RAID engine can support from 10 drives minimum to 1200 drives maximum. Formattable

capacity is drive capacity.


• Comprehensive, Centralized Management Capability

The controller provides a wide range of management capabilities: Configuration Management,

Performance Management, Logical Unit Number (LUN) Management, Security Administration,

and Firmware Update Management.

• Management Options via RS-232 and Ethernet (Telnet)

An RS-232 port and Ethernet port are included to provide local and remote management capabilities.

SNMP is also supported.

• Data Security with Dual-Level Protection

Non-host based data security is maintained with scalable security features including restricted

management access, dual-level protection, and authentication against authorized listing (up to 256

direct host logins per host port are supported). No security software is required on the host

computers.

• Storage Virtualization and Pooling

Storage pooling enables different types of storage to be aggregated into a single logical storage

resource from which virtual volumes can be served up to multi-vendor host computers. Up to 1024

LUNs are supported.

• SES (SCSI Enclosure Services) Support for Enclosure Monitoring

Status information on the condition of the enclosure, disk drives, power supplies, and cooling systems is obtained via the SES interface.

• Absolute Data Integrity and Availability

Automatic drive failure recovery procedures are transparent to users.

• Hot-Swappable and Redundant Components

The controller utilizes redundant, hot-swappable power supplies and a hot-swappable fan module

that contains redundant cooling fans.

1.2 The Controller Hardware

The basic controller (See Figure 1–1.) includes:

• A chassis enclosure (with a minimum of 2.56GB cache memory)

• 10 SAS connectors that connect the controller to the drive enclosures

• Connector(s) for host Infiniband or Fibre Channel (FC-8) connection(s)

• Serial connectors for maintenance/diagnostics

• Ethernet RJ-45 connector

[Figure 1–1 panels: Front (behind cover panel) and Rear. Rear-panel labels include DISK CHANNELS A through H, P, and S; HOST 1 through 4; CLI; COM; TELNET; LINK; TEST; AC FAIL; MUTE / ALARM SILENCE; and the SYSTEM, CTRL, TEMP, DISK, DC, and FAN STATUS LEDs.]

Figure 1–1 SGI InfiniteStorage 15000 IB - Front and Rear Views

The controller is a high-performance controller designed to be rack-mounted in standard 19" racks. Each

controller is 3.5" in height, requiring 2U of rack space. The system uses 10 independent SAS drive

channels to manage data distribution and storage for up to 120 disk drives per channel (which can be

limited by drive enclosure type).
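The drive-count limits quoted in this chapter follow directly from the channel arithmetic above. The following is a minimal Python sketch of that arithmetic; the constant and function names are illustrative only and are not part of any controller interface.

```python
# Illustrative arithmetic only; every name here is hypothetical.
DISK_CHANNELS = "ABCDEFGHPS"      # ten SAS drive channels, as labeled on the rear panel
MAX_DRIVES_PER_CHANNEL = 120      # documented upper limit; the enclosure type may reduce it


def max_drives(drives_per_channel: int = MAX_DRIVES_PER_CHANNEL) -> int:
    """Return the total drive count for a given number of drives per channel."""
    if not 1 <= drives_per_channel <= MAX_DRIVES_PER_CHANNEL:
        raise ValueError("drives per channel must be between 1 and 120")
    return len(DISK_CHANNELS) * drives_per_channel


print(max_drives())     # 1200, the documented maximum
print(max_drives(1))    # 10, the documented minimum (one drive on each channel)
```

Because a tier takes one drive from each of the ten channels, the same per-channel limit also gives the maximum number of tiers.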


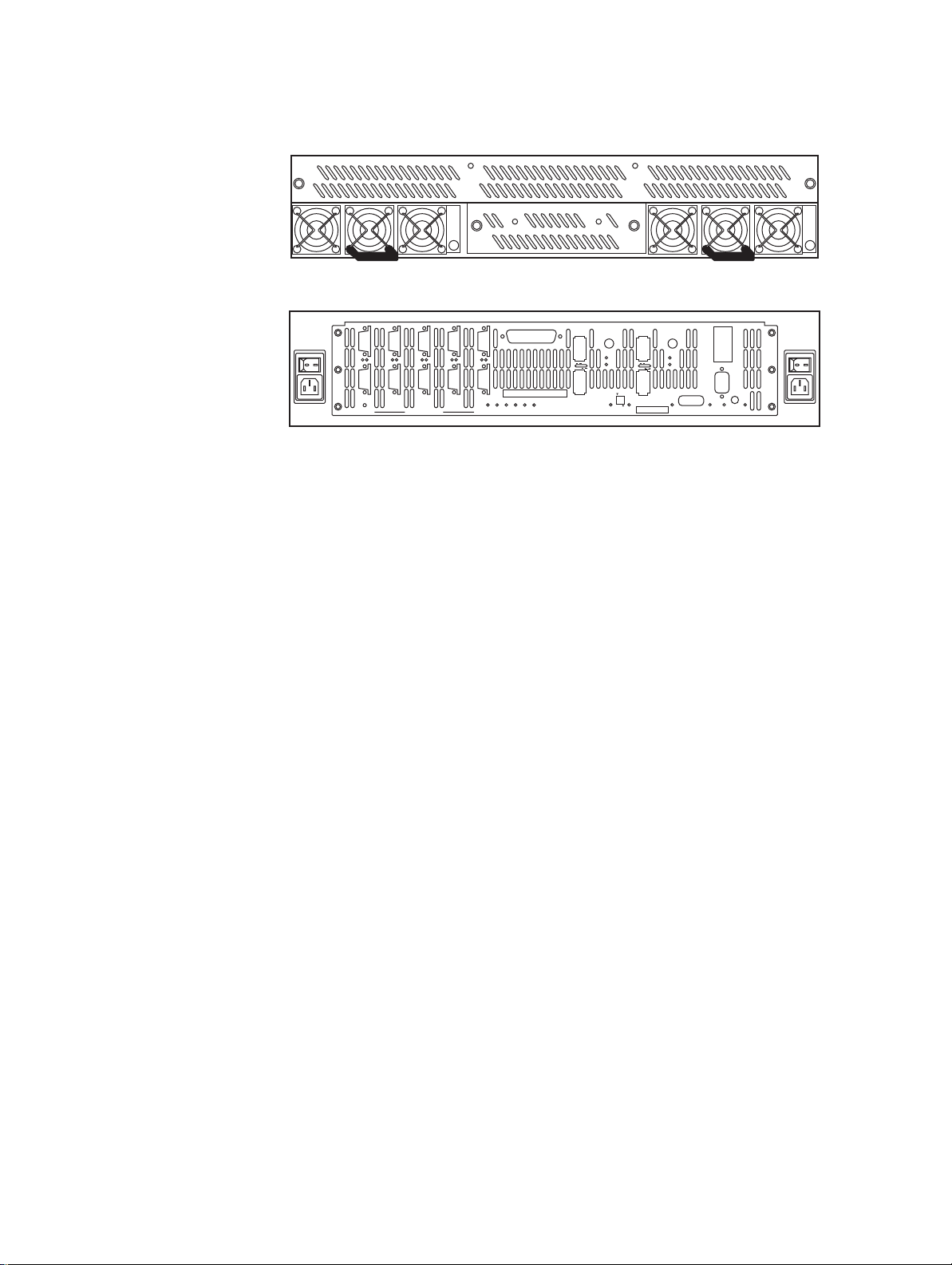

1.2.1 Power Supply and Fan Modules

Each controller is equipped with two (2) power supply modules and one (1) fan module. The PSU (power

supply unit) voltage operating ranges are nominally 110V to 230V AC, and are autoranging.

The two power supply modules provide redundant power. If one module fails, the other will maintain the power supply and cooling while you replace the faulty module. The faulty module will still be providing proper air flow for the system, so do not remove it until a new module is available for replacement.

The two power supply modules are installed in the lower left and right slots at the front of the unit, behind the cover panel (Figure 1–1). Each PSU module is held in place by one thumbscrew.

The fan module (Figure 1–1) is installed in the front top slot, behind the cover panel, and held in place by two thumbscrews.

The two LEDs mounted on the front of the power supply module (located on the right and left of the power supply handle) indicate the status of the PSU:

• Both LEDs will be lit green when the supply is active and the output is within operating limits with no faults.

• The left LED indicates the status of the AC input. The LED is lit green as long as the AC input is

present.

• The right LED indicates the status of the DC output of the power supply. The LED is lit green when

the supply is enabled and the outputs are within specification. The LED will be off when AC input

is not present, the outputs are disabled (after a SHUTDOWN command), or the outputs are not within

specification. A cooling fan failure will not turn this LED off unless the failure results in a thermal

shutdown of the supply.

The AC switch for each supply is located on the rear of the controller unit.

The fan module contains multiple fans for cooling the controller. It is the primary source of cooling and

must be installed at all times during operation (except when it is being replaced due to a faulty fan).

NOTE :

For more information on fan status, see the description of the Status LEDs on rear panel in

the next section.

Figure 1–2 Fan Module (front panel)


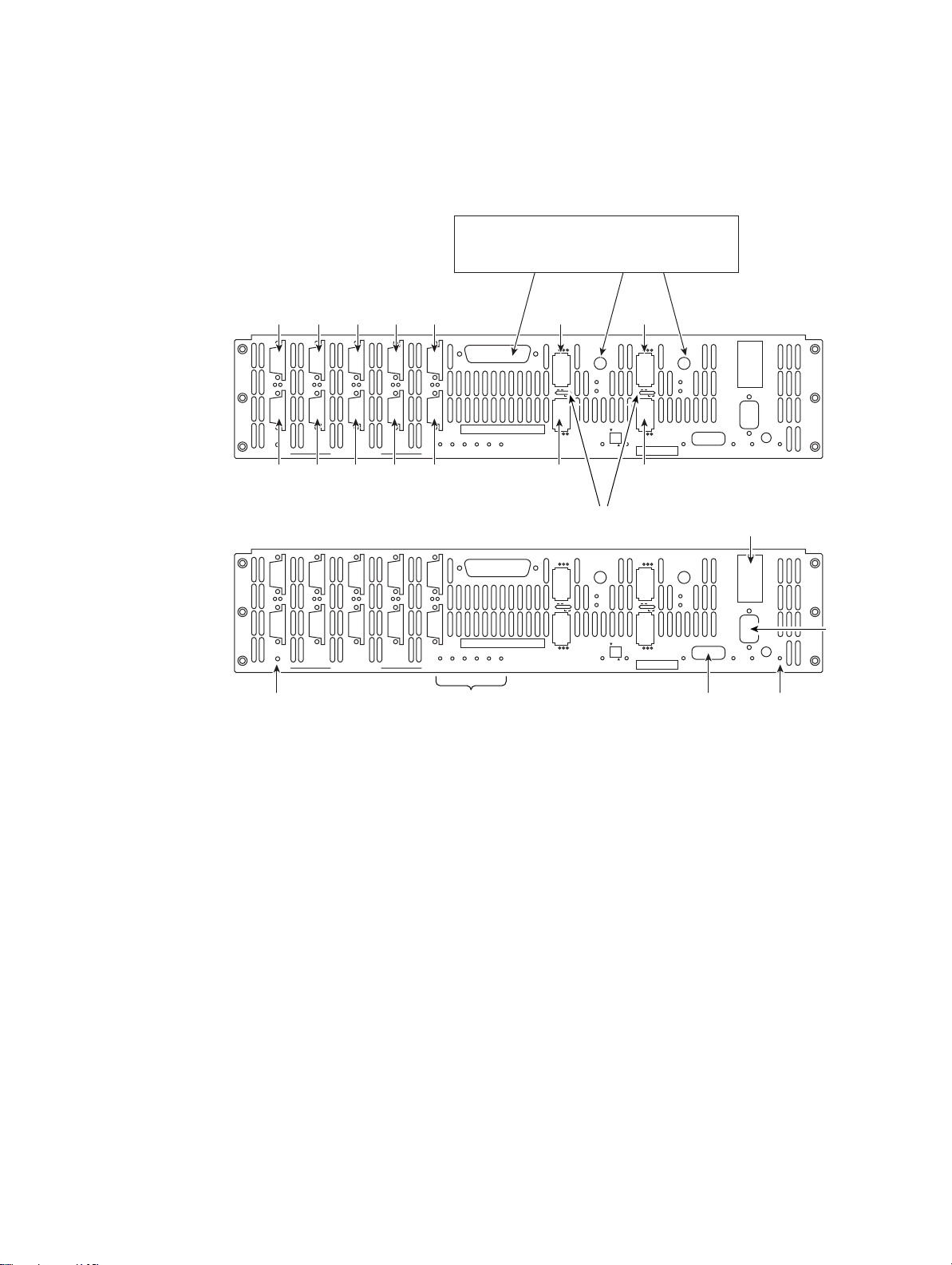

1.2.2 I/O Connectors and Status LED Indicators


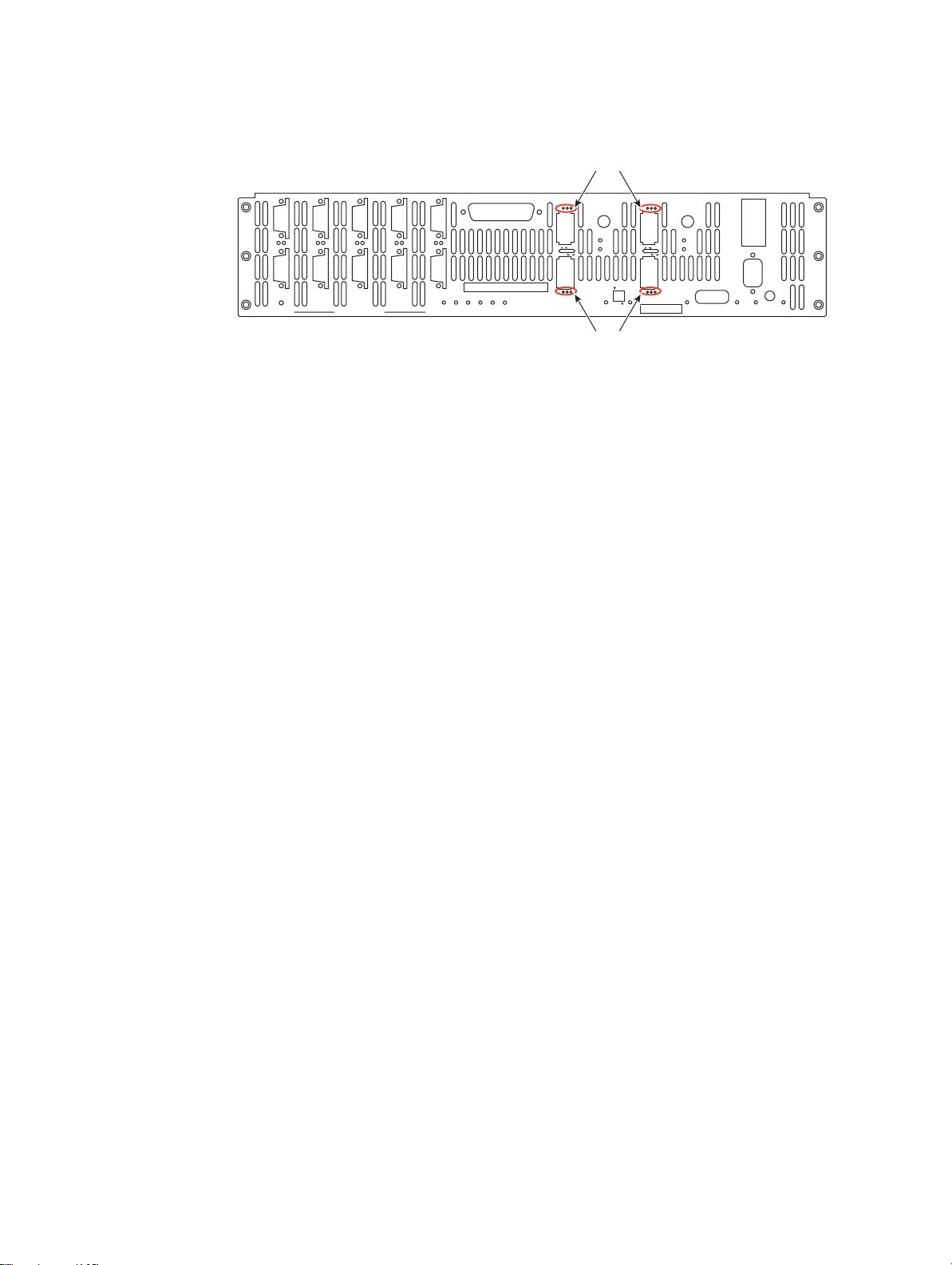

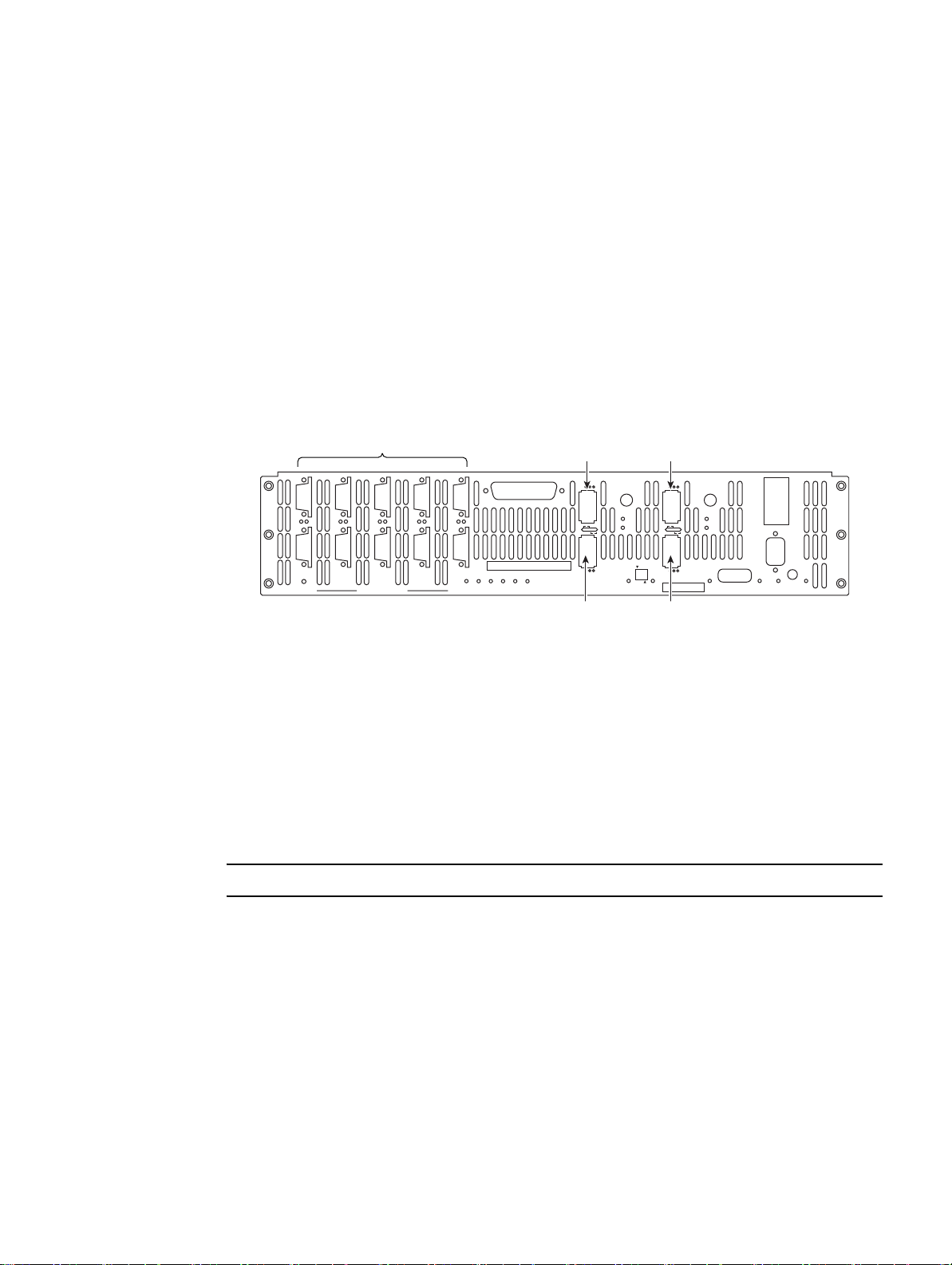

Figure 1–3 shows the ports at the back of the four-port Infiniband (IB) controller unit.

These ports are for the test of the RAID

engine by the manufacturer or other authorized

personnel only.

[Rear-panel view with callout: IB LEDs]

Figure 1–3 I/O Ports on the Rear Panel of the Controller

[Figure 1–3 callouts: Host 1, Host 2, Host 3, Host 4, Ethernet, RS-232 interface, COM, AC fail LEDs, Status LEDs]

Figure 1–4 Host Port LEDs

The four HOST ports are used for IB or FC-8 host connections. You can connect your host server's IB HCA port(s) or FC HBA port(s) directly to these ports. Additionally, you can connect these ports to your IB or FC switches and hubs.

The IB LEDs (the Infiniband LEDs), located between the host ports, are solid green when there is physical connectivity with the host and steady amber when the subnet manager is communicating with the host.

On FC-8 models, the FC LEDs are located next to each FC host port. There are 3 LEDs for each host port, which indicate whether the connection is running at 8 Gb/s (left LED), 4 Gb/s (middle LED), or 2 Gb/s (right LED). The respective LED is solid green to show that there is a physical connection. If the respective LED is flashing, data is being transferred. If the connector is removed from the host port, all 3 LEDs for that port will flash.

The DISK CHANNEL ports (jackscrew style connectors) are for disk connections. The ten ports are labeled by data channels (ABCDEFGHPS). Flashing LEDs indicate activity.

The RS-232 connector provides local system monitoring and configuration capabilities and uses a standard DB-9 null modem female-to-male cable.

The TELNET port provides remote monitoring and configuration capabilities. The ACT (Activity) LED flashes green when there is Ethernet activity. It is unlit when there is no Ethernet link. The LINK LED turns green when the link speed is 1000 Mb/s, amber when the link speed is 100 Mb/s, and is unlit when the link speed is 10 Mb/s.

The LINK port is used to connect single controller units in order to form a couplet via a cross-over Ethernet cable. The ACT (Activity) LED flashes green when there is Ethernet activity. The LED is unlit when there is no Ethernet link. The LINK LED turns green when the link speed is 1000 Mb/s, amber when the link speed is 100 Mb/s, and is unlit when the link speed is 10 Mb/s.
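The speed indication of the LINK LEDs on the TELNET and LINK ports can be summarized as a small lookup. The sketch below is illustrative only: the function name is hypothetical, and the speeds are taken to be the standard Ethernet rates of 10, 100, and 1000 megabits per second.

```python
def link_led_state(speed_mbps: int) -> str:
    """Map an Ethernet link speed (megabits per second) to the LINK LED state."""
    states = {1000: "green", 100: "amber", 10: "unlit"}
    if speed_mbps not in states:
        raise ValueError(f"unsupported link speed: {speed_mbps} Mb/s")
    return states[speed_mbps]


for speed in (1000, 100, 10):
    print(speed, "->", link_led_state(speed))
```

Note that an unlit LINK LED is ambiguous on its own: it indicates a 10 Mb/s link, so check the ACT LED as well before concluding that there is no link.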

The COM port is an RS-232 interface that uses an RJ-45 cable and connects controller units. The COM port has two (2) LEDs associated with it: HDD ACT (Activity) and LINK ACT.

The Controller ID Selection Switch (labeled as 1/2) allows the user to configure the units as Unit 1 or Unit 2. Each unit has an activity LED. It is green for the selected unit. The switch is comprised of two DIP switches. The first DIP switch (indicated by the 1/2 label) is used to select the unit configuration. Flip the switch up for Unit 1 or down for Unit 2. When two controllers are paired together to form a couplet, one controller must be configured as Unit 1 and its partner must be configured as Unit 2.

There are two AC Fail LEDs. Each LED is connected to its power supply independent of the other

supply. The LEDs are green to indicate that the AC input to the supply is present. The LEDs turn red

if the AC input to the supply is not present. If this occurs, check the LEDs on the front side of the unit.

If you lose AC power from one supply cord, the LED for that supply outlet will turn red.

Figure 1–5 shows the following status LEDs: System, Controller, Disk, Temperature, DC, and Fan.


Figure 1–5 LED Status Indicators - Rear Panel of the Controller

The SYSTEM STATUS LED is solid green when the entire storage system is operating normally.

The CTRL (CONTROLLER) STATUS LED is green when the controller is operating normally and turns red when the controller unit has failed.

The DISK STATUS LED is green when a disk enclosure is operating normally and turns amber when there is a problem.

The TEMP STATUS LED is green when the temperature sensors (6 total) indicate that the system is operating normally, amber when one (1) temp sensor indicates an over-temperature condition, and red when two (2) or more sensors indicate an over-temperature condition.
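The TEMP STATUS rule above (green, amber, or red depending on how many of the six sensors report an over-temperature condition) can be expressed as a short function. This is a minimal sketch for illustration; the names are hypothetical and do not correspond to any controller firmware interface.

```python
TEMP_SENSOR_COUNT = 6  # the guide states six temperature sensors in total


def temp_status_led(over_temp: list[bool]) -> str:
    """Return the TEMP STATUS LED color for six over-temperature flags."""
    if len(over_temp) != TEMP_SENSOR_COUNT:
        raise ValueError(f"expected {TEMP_SENSOR_COUNT} sensor readings")
    tripped = sum(1 for flag in over_temp if flag)
    if tripped == 0:
        return "green"   # all sensors normal
    if tripped == 1:
        return "amber"   # exactly one sensor over temperature
    return "red"         # two or more sensors over temperature


print(temp_status_led([False] * 6))                   # green
print(temp_status_led([True] + [False] * 5))          # amber
print(temp_status_led([True, True] + [False] * 4))    # red
```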

The DC STATUS LED is green when indicating normal operating status. It turns amber if there is a non-critical power supply DC fault (that is, a power supply is not installed or is not indicating “Power Good”). It turns red if an on-board supply fails or if there is a critical supply fault. If this occurs, check the LEDs on the front side of the unit.

The FAN STATUS LED is solid green when fans are operating normally. A flashing green LED indicates system monitoring activity, that is, the monitoring data is being updated. The LED flashes amber if one of the fans in the module fails. If two or more fans fail, the LED turns solid red and the system begins the shutdown process at 5 seconds, taking a total of 30 seconds to complete shutdown.

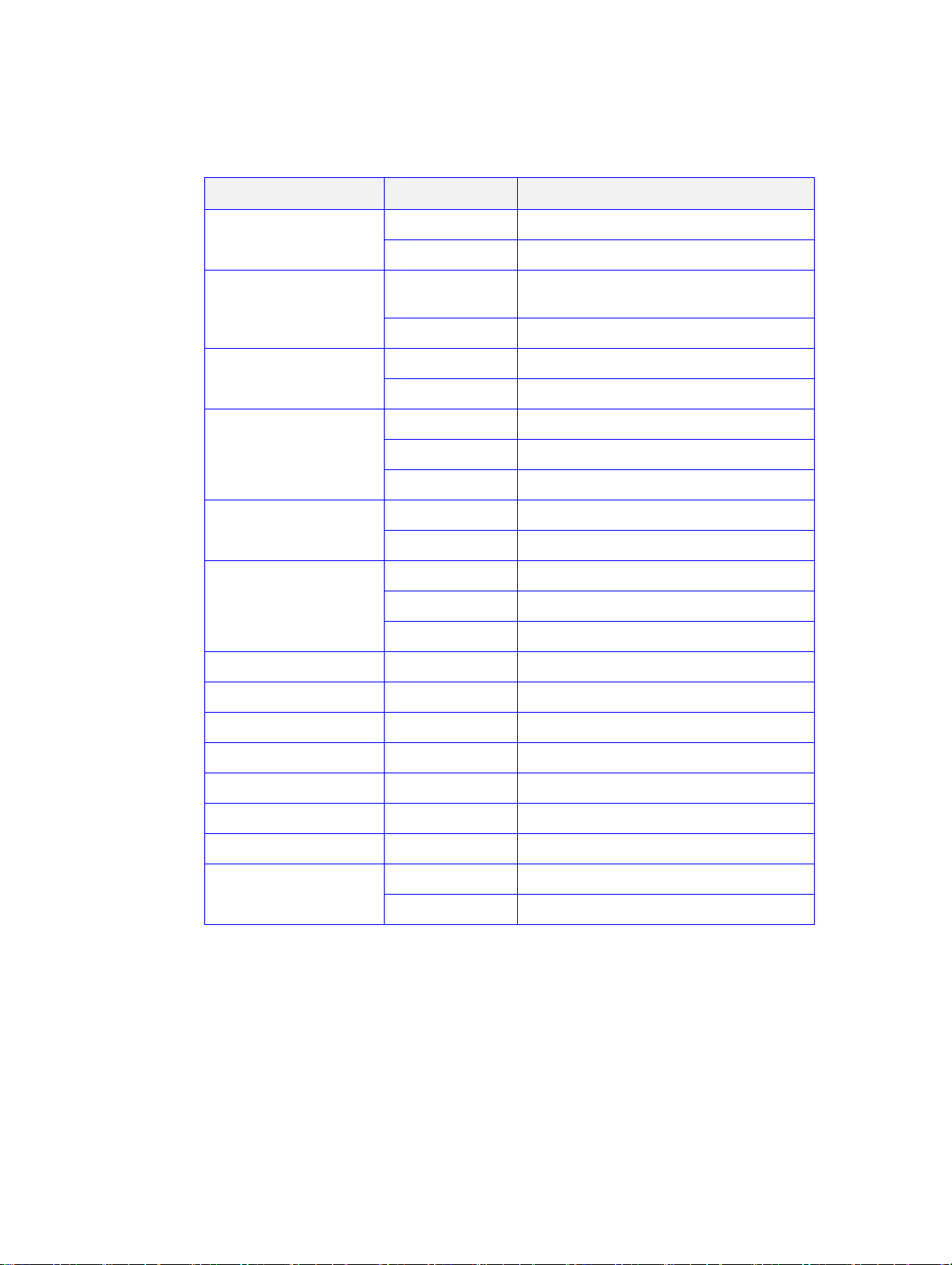

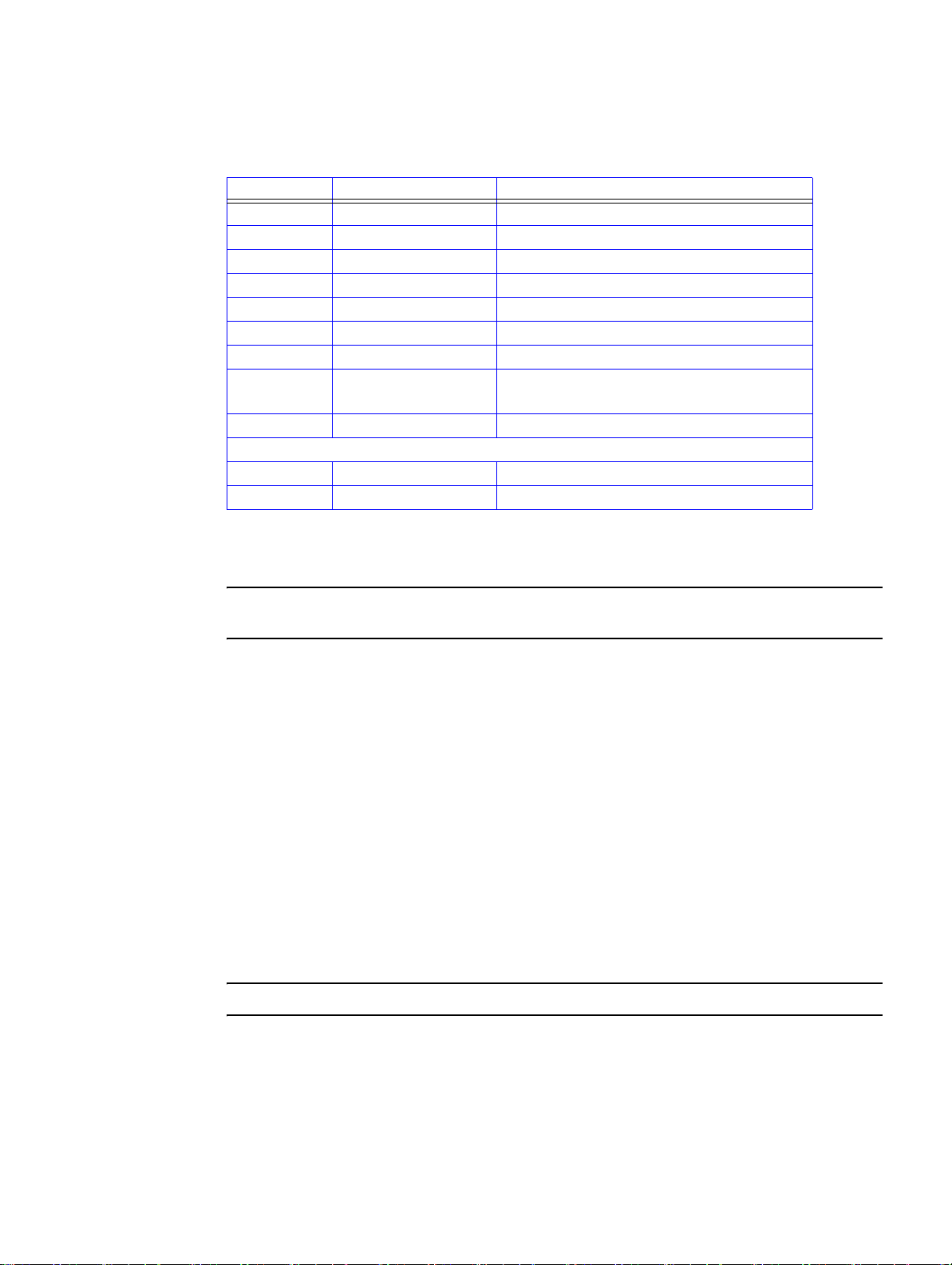

Table 1–1 LED Indicators

IB Solid Green (Infiniband) Physical Connectivity with host

DISK ports Flashing Green Activity. There is an LED for each of the ten

Telnet ACT Flashing Green Activi ty

Telnet LINK (Speed) Solid Green Link Speed=1000 mb/s

Link ACT Flashing Green Activity

Status Indicator Led Activity Explanation

Solid Amber Subnet Manager communicating with host

ports/channels (ABCDEFGPS)

Unlit

Unlit No activity

Solid Amber Link Speed=100 mb/s

Unlit Link Speed= 0 mb/s

Unlit No activity

Link LINK (Speed) Solid Green Link Speed=1000 mb/s

Solid Amber Link Speed=100 mb/s

Unlit Link Speed=10 mb/s

Com Port HDD ACT Open

Com Port LINK ACT Open

Host 1/2 CLI STATUS Open

Host 1/2 CLI STATUS Open

Host 3/4 CLI ACT Open

Host 3/4 CLI ACT Open

System Solid Green System is operating normally

Ctrl Solid Green System is operating normally

Solid Amber System is shutting down

8 007-5510-002

Page 23

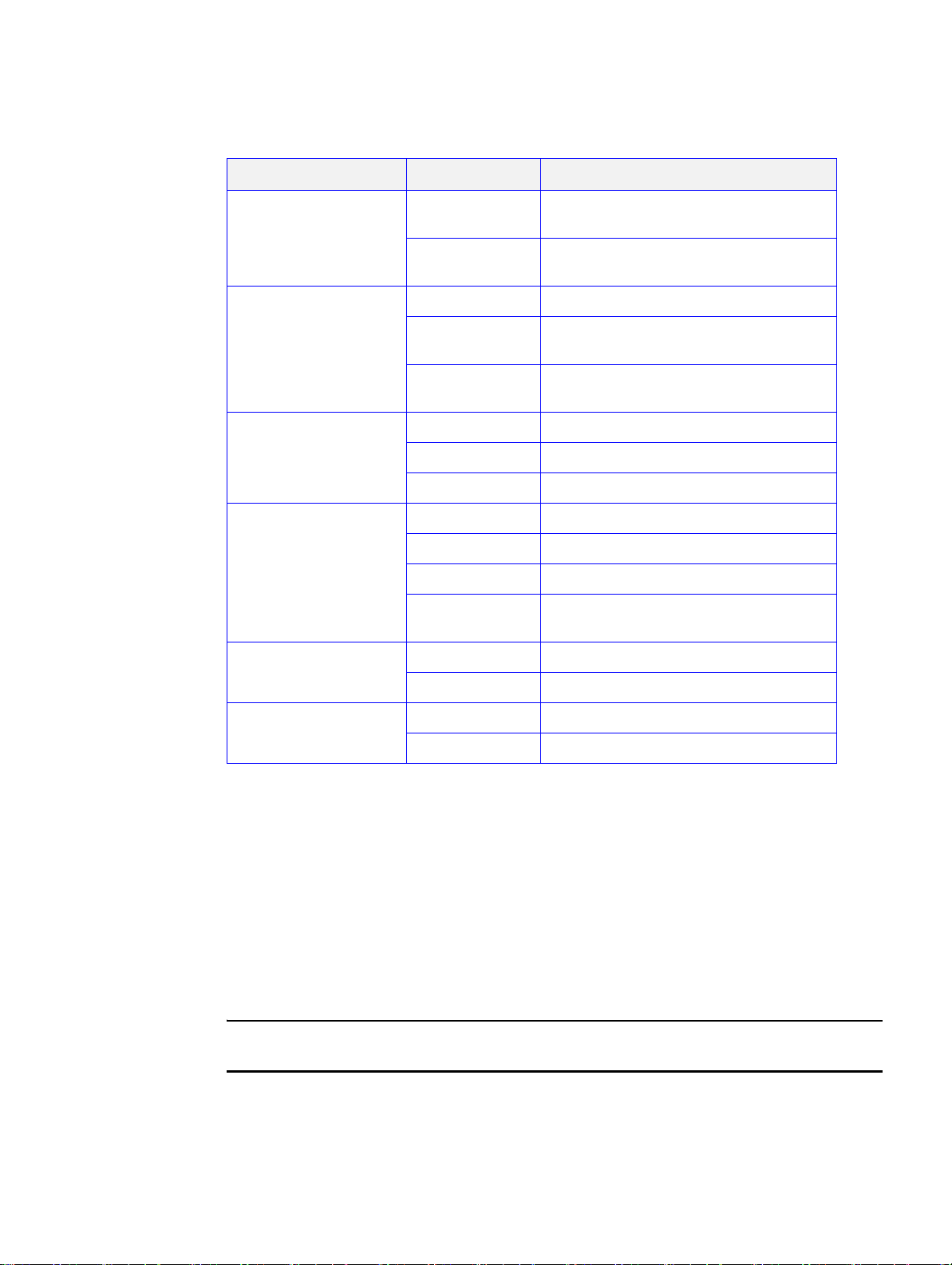

Table 1–1 LED Indicators

Status Indicator Led Activity Explanation

Disk Solid Green All related disk enclosures are operating

Temp S tatus Solid Green All temp sensors operating normally

DC Solid Green Operating normally

Fan Status Solid Green Operating normally

Introduction

normally

Solid Amber There is a problem with 1 or more of the disk

enclosures

Solid Amber At least 1 temp sensor has reported over-

temperature conditions

Solid Red 2 or more temp sensors has reported over-

temperature condition

Solid Amber Non-critical power supply fault

Solid Red Critical power supply fault

Flashing Green System monitoring activity

Flashing Amber 1 fan has failed and needs to be replaced

Solid Red 2 or more fans have failed or are undetected and

the system will shutdown in 30 seconds

AC Fail Solid Green Operating normally

Solid Red Power input to supply not present. AC failure

FC (FC-8 only) Solid Physical connection has been made.

Flashing Data is being transferred.

1.2.3 Uninterruptible Power Supply (UPS)

Using an Uninterruptible Power Supply (UPS) with the controller is highly recommended. The UPS can

guarantee power to the system in the event of a power failure for a short time, which will allow the system

to power down properly.

SGI offers two types of UPS: basic and redundant. The basic UPS is rack-mountable. It can maintain

power to a five (5) enclosure system for seven (7) minutes while the system safely shuts down during a

power failure. The redundant UPS contains power cells that provide a redundant UPS solution.

NOTE :

The UPS should be installed by a licensed electrician. Contact SGI to obtain circuit and

power requirements.

Chapter 2

Controller Installation

These steps provide an overview of the controller installation process. The steps are explained in detail

in the following sections of this chapter.

1. Unpack the controller system.

2. If it is necessary to install the controller in the 19-inch cabinet(s), contact your service provider.

NOTE :

Most controller configurations arrive at sites pre-mounted in a 42U or 45U rack supplied by SGI.

3. Set up and connect the drive enclosures to the controller.

4. Connect the controller to your Infiniband (IB) or Fibre Channel (FC) switch and host computer(s).

5. Connect your RS-232 terminal to the controller.

6. Power up the system.

7. Configure the storage array (create and format LUNs - Logical Units) via RS-232 interface, Telnet, or GUI.

8. Define and provide access rights for the clients in your SAN environment. Shared LUNs need to be managed by SAN management software. Individual dedicated LUNs appear to the client as local storage and do not require management software.

9. Initialize the system LUNs for use with your server/client systems. Partition disk space and create file systems as needed.

2.1 Setting Up the Controller

This section details the installation of the hardware components of the controller system.

!

Warning

The SGI InfiniteStorage 15000 must be removed from the shipping pallet using a minimum of 4 people. The racked unit may not be tipped more than 10 degrees, either from a level surface or rolling down an incline (such as a ramp).

NOTE :

Follow the safety guidelines for rack installation given in the “Preface”.

2.2 Unpacking the System

Before you unpack your controller, inspect the shipping container(s) for damage. If you detect damage,

report it to your carrier immediately. Retain all boxes and packing materials in case you need to store or

ship the system in the future.

While removing the components from their boxes/containers, inspect the controller chassis and all

components for signs of damage. If you detect any problems, contact SGI immediately.

Your controller ships with the following:

• controller chassis

• two (2) power cords

• RS-232 and Ethernet cables for monitoring and configuration

• cover panel and rack-mounting hardware

!

Warning

Wear an ESD wrist strap or otherwise ground yourself when handling controller

modules and components. Electrostatic discharge can damage the circuit boards.

2.2.1 Rack-Mounting the Controller Chassis

For instructions on mounting the controller in the rack, contact your service provider.

2.2.2 Connecting the Controller in Dual Mode

For dual mode configuration only:

1. Connect the LINK ports on the two controller units using the supplied cable.

2. Connect the COM ports on the two units using the supplied cable.


2.2.3 Connecting the Controller

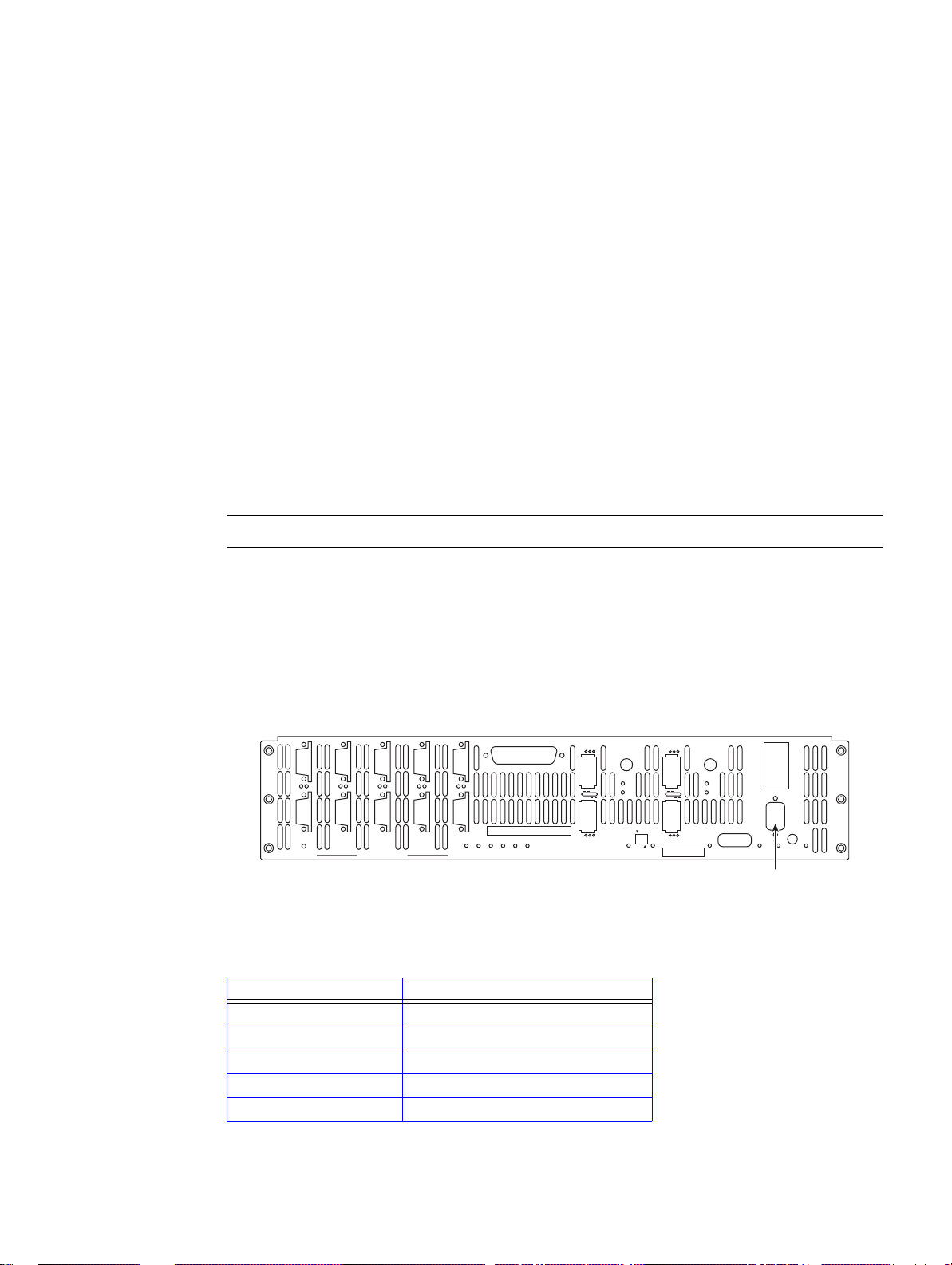

To set up the disk enclosures and connect them to the controller, do the following.

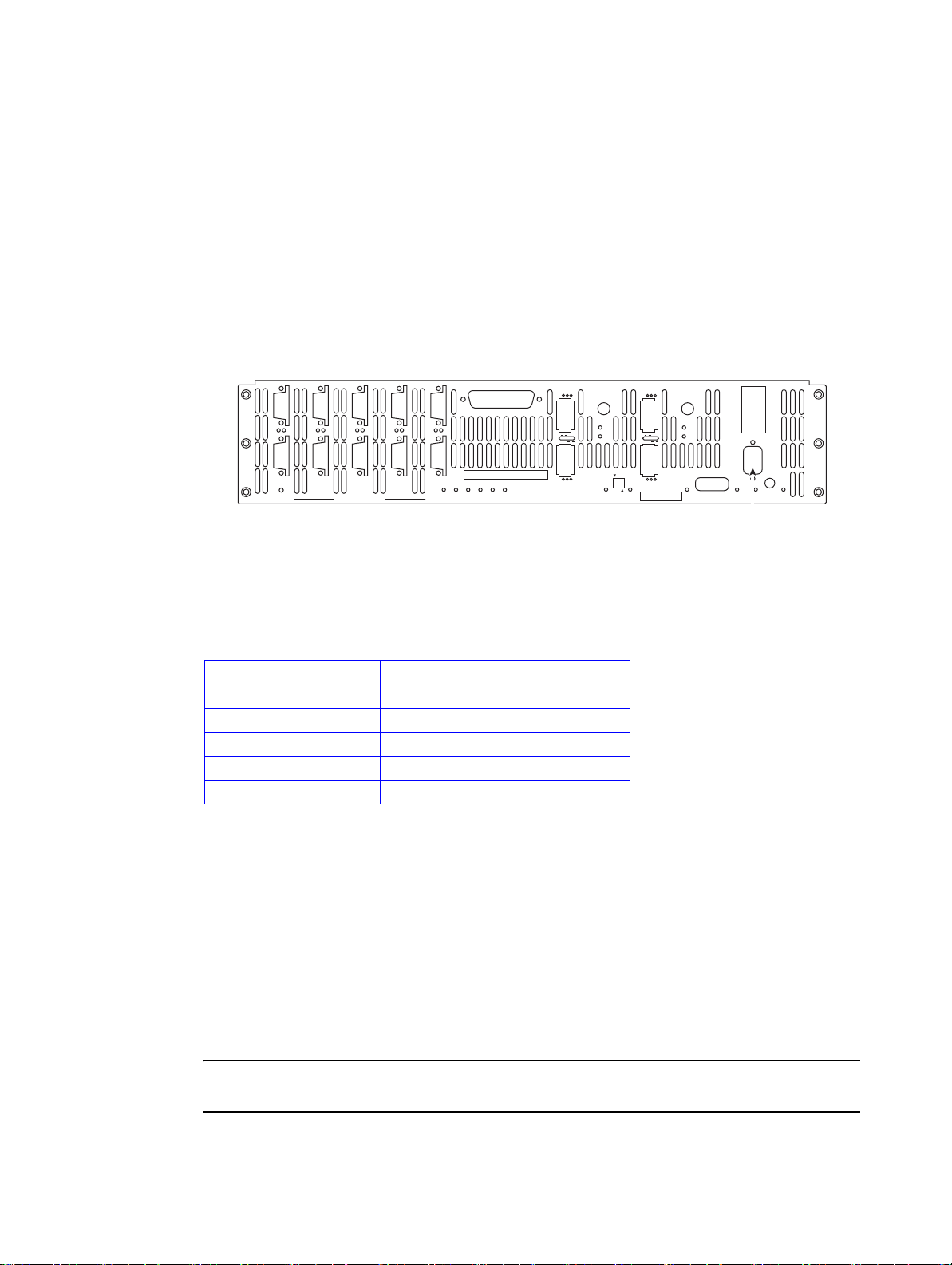

1. There are 10 disk channels on the controller. They correspond with disk ports. The disk ports are labeled as follows (Figure 2–1):

DISK A = Channel A          DISK B = Channel B

DISK C = Channel C          DISK D = Channel D

DISK E = Channel E          DISK F = Channel F

DISK G = Channel G          DISK H = Channel H

DISK P = Channel P (parity)

DISK S = Channel S (spare)

Using the 10 copper SAS cables provided, connect these disk ports to your ten disk channels.

[Figure 2–1 callout: Disk ports]

Figure 2–1 I/O Connectors

2. Each controller supports up to four host connections. You may connect more than four client systems to the controller using switches, and you can restrict user access to the LUNs (as described in Section 2.3 "Configuring the Controller" of this guide).

The Host ports are numbered 1 through 4 as shown in Figure 2–1. Connect your host system(s) or switches to these ports. For FC-8 models, ensure that the latches on the transceivers are engaged.

2.2.4 Selecting SAS-ID for Your Drives

NOTE :

The controller uses a select ID of 1.


2.2.5 Laying Out your Storage Drives

Tiers, or RAID groups, are the basic building blocks of the controller. A tier can be catalogued as 8+1

or 8+2. In 8+1 mode, a tier contains 10 drives—eight (8) data drives (Channels A through H), one parity

drive (Channel P), and one optional spare drive (Channel S). In 8+2 mode, a tier contains 10 drives—

eight (8) data drives (Channels A through H) and two parity drives (Channel P and S).

The controller can manage up to 120 tiers.
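The difference between the two tier modes comes down to the role of the drive on Channel S. The following is a minimal Python sketch of the layout described above; the function name and role strings are illustrative and are not controller commands.

```python
DATA_CHANNELS = "ABCDEFGH"  # eight data drives per tier in both modes


def tier_roles(mode: str) -> dict[str, str]:
    """Return the role of each of the ten channels for an 8+1 or 8+2 tier."""
    if mode == "8+1":
        extra = {"P": "parity", "S": "spare (optional)"}
    elif mode == "8+2":
        extra = {"P": "parity", "S": "parity"}
    else:
        raise ValueError(f"unknown tier mode: {mode!r}")
    roles = {channel: "data" for channel in DATA_CHANNELS}
    roles.update(extra)
    return roles


for mode in ("8+1", "8+2"):
    roles = tier_roles(mode)
    print(mode, "->", "P:", roles["P"], "| S:", roles["S"])
```

In both modes the data capacity of a tier comes from the eight drives on Channels A through H; only the protection provided by Channels P and S changes.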

Configuration of disks in the enclosures must be in sets of complete tiers (Channels A through P). Allocating one spare drive per tier gives you the best data protection, but this is not required. The spare drives on the controller are global hot spares.

2.2.6 Connecting the RS-232 Terminal

For first time set-up, you will need access to an RS-232 terminal or terminal emulator (such as Windows HyperTerminal). Then you may set up the remote management functions and configure/monitor the controller remotely via Telnet.

1. Connect your terminal to the CLI port at the back of the controller using a standard DB-9 female-

to-male null modem cable (Figure 2–2).

[Figure 2–2 callout: CLI (RS-232 Interface)]

Figure 2–2 Controller CLI Port

2. Open the terminal window.

3. Use the following settings for your serial port:

Setting             Value

Bits per second:    115,200

Data bits:          8

Parity:             None

Stop bits:          1

Flow Control:       None
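If you script your terminal setup, it can help to keep these settings in one place. The sketch below simply records the values from the list above in a small Python data class; the class and field names are hypothetical, and no serial library is assumed. Pass the values to whatever terminal emulator or serial package you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SerialSettings:
    """Serial port settings for the controller CLI port, as listed in this section."""
    baud_rate: int = 115200       # bits per second
    data_bits: int = 8
    parity: str = "N"             # N = none
    stop_bits: int = 1
    flow_control: str = "none"

    def summary(self) -> str:
        """Return the settings in the conventional short form, e.g. '115200 8N1'."""
        return (f"{self.baud_rate} {self.data_bits}{self.parity}{self.stop_bits}, "
                f"flow control {self.flow_control}")


print(SerialSettings().summary())   # 115200 8N1, flow control none
```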

2.2.6.1 Basic Key Operations


The command line editing and history features support ANSI and VT-100 terminal modes. The

command history buffer can hold up to 64 commands. The full command line editing and history only

work on main CLI and telnet sessions when entering new commands. Basic Key Assignments are listed

in Table 2–1 on page 15.

Only simple (not full) command line editing is supported when:

• the CLI prompts the user for more information.

• the alternate CLI prompt is active. (The alternate CLI is used on the RS-232 connection during an active telnet session.)

NOTE :

Not all telnet programs support all the keys listed in Table 2–1 "Basic Key Assignments".

The Backspace key in the terminal program should be set up to send ‘Ctrl-H’.


Table 2–1 Basic Key Assignments

Key           Escape Sequence         Description
Backspace     Ctrl-H, 0x08            deletes preceding character
Del           Del, 0x7F or Esc [3~    deletes current character
Up Arrow      Esc [A                  retrieves previous command in the history buffer
Down Arrow    Esc [B                  retrieves next command in the history buffer
Right Arrow   Esc [C                  moves cursor to the right by one character
Left Arrow    Esc [D                  moves cursor to the left by one character
Home          Esc [H or Esc [1~       moves cursor to the start of the line
End           Esc [K or Esc [4~       moves cursor to the end of the line
Ins           Esc [2~                 toggles character insert mode, on and off
                                      NOTE: Insert mode is ON by default and resets to ON for each new command.
PgUp          Esc [5~                 retrieves oldest command in the history buffer
PgDn          Esc [6~                 retrieves latest command in the history buffer
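When checking whether a terminal program sends the sequences the CLI expects, it can be useful to have the key assignments in machine-readable form. The following Python sketch encodes the key table as a lookup from the raw byte sequence to the editing action; the dictionary and function names are illustrative and are not part of the controller software.

```python
ESC = "\x1b"  # the Escape character that starts each multi-character sequence

KEY_ACTIONS = {
    "\x08": "delete preceding character",        # Backspace (Ctrl-H)
    "\x7f": "delete current character",          # Del
    ESC + "[3~": "delete current character",     # Del (alternate)
    ESC + "[A": "previous command in history",   # Up Arrow
    ESC + "[B": "next command in history",       # Down Arrow
    ESC + "[C": "cursor right one character",    # Right Arrow
    ESC + "[D": "cursor left one character",     # Left Arrow
    ESC + "[H": "cursor to start of line",       # Home
    ESC + "[1~": "cursor to start of line",      # Home (alternate)
    ESC + "[K": "cursor to end of line",         # End
    ESC + "[4~": "cursor to end of line",        # End (alternate)
    ESC + "[2~": "toggle insert mode",           # Ins
    ESC + "[5~": "oldest command in history",    # PgUp
    ESC + "[6~": "latest command in history",    # PgDn
}


def describe_key(sequence: str) -> str:
    """Return the editing action for a received key sequence, or 'unassigned'."""
    return KEY_ACTIONS.get(sequence, "unassigned")


print(describe_key("\x1b[A"))   # previous command in history
print(describe_key("\x08"))     # delete preceding character
```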


2.2.7 Powering On the Controller

NOTE :

Systems that have dual controllers (couplets) should have the controllers powered on simultaneously to ensure correct system configuration.

1. Verify that the power switches on the two (2) power supply modules at the back of each controller are off.

2. Using the power cords provided, connect the two AC connectors at the back of each controller unit to the AC power source. For maximum redundancy, connect the two power connectors to two different AC power circuits for each unit.

3. Check that all your drive enclosures are powered up.

4. Check that the drives are spun up and ready.

5. Turn on the power supplies on the controller unit(s). The controller will undergo a series of system diagnostics and the bootup sequence is displayed on your terminal.

6. Wait until the bootup sequence is complete and the controller system prompt is displayed.

NOTE :

Do not interrupt the boot sequence without guidance from SGI support personnel.

You may now configure the system as described in Section 2.3 "Configuring the Controller".

2.3 Configuring the Controller

This section provides information on configuring your controller.

NOTE :

The configuration examples provided here represent only a general guideline. These

examples should not be used directly to configure your particular controller.

The CLI (command line interface) commands used in these examples are fully documented in sections 3.1 through 3.8, though exact commands may change depending on your firmware version. To access the most up-to-date commands, use the CLI’s online HELP feature.

2.3.1 Planning Your Setup and Configuration

Before proceeding with your controller configuration, determine the requirements for your SAN

environment, including the types of I/O access (random or sequential), the number of storage arrays

(LUNs) and their sizes, and user access rights.

The controller uses either an 8+2 or an 8+1+1 parity scheme. It is a unique implementation that combines the virtues of RAID 3, RAID 0, and RAID 6 (Figure 2–3). Like RAID 3, a dedicated parity drive is used per 8+1 parity group; two parity drives are dedicated in the case of an 8+2 parity group or RAID 6. A parity group is also known as a Tier.

This RAID implementation exhibits RAID 3 characteristics such as tremendous large block-transfer (READ and WRITE) capability with no performance degradation in crippled mode. This capability also extends to RAID 6, delivering data protection against a double disk drive failure in the same tier with no loss of performance.

[Figure 2–3 shows a tier configuration listing with the columns Tier, Capacity (Mbytes), Space Available (Mbytes), Disk Status, and Lun List for three tiers (each with disk status ABCDEFGHPS), annotated with "Parity Protection within same tier" and "Striping across tiers when a LUN is created across multiple tiers".]

Figure 2–3 Striping Across Tiers - RAID

However, like RAID 5, this RAID implementation does not lock drive spindles and does allow the disks

to re-order commands to minimize seek latency, and the RAID 0-like functionality allows multiple tiers

to be striped, providing “PowerLUNs” that can span hundreds of disk drives. These PowerLUNs support

very high throughput and have a greatly enhanced ability to handle small I/O (particularly as disk

spindles are added) and many streams of real-time content.

LUNs can be created on just a part of a tier, a full tier, across a fraction of multiple tiers, or across multiple

full tiers. A minimum configuration for tiers of drives requires either 9 drives in an 8+1 configuration or

10 drives in an 8+2 configuration. When configured in 8+1+1 mode, the tenth data segment is reserved

for global hot spare drives. When configured in 8+2 mode, spares may reside on each data segment and

are global only to that data segment.


The controller supports various disk drive enclosures that can be used to populate the 10

<ABCDEFGHPS> disk channels in both SAS 1x and SAS 2x modes. Each chassis has a limit to the tiers

that can be created and supported. Refer to the specific disk enclosure user guides for further information.

You can create up to 1024 LUNs in a controller. LUNs can be shared or dedicated to individual users,

according to your security level setup, with Read or Read/Write privileges granted per user. Users only

have access to their own and “allowed-to-share” LUNs. Shared LUNs need to be managed by SAN

management software. Individual dedicated LUNs appear to users as local storage and do not require

external management software.

NOTE :

In dual mode, LUNs are “owned” by the controller unit on which they are created. Hosts only

see the LUNs on the controller to which they are connected, unless cache coherency is enabled.

For random I/O applications, use as many tiers as possible and create one or more LUNs. For

applications that employ sequential I/O, use individual or small grouping of tiers. If you need guidance

in determining your requirements, contact SGI support.

2.3.2 Configuration Interface

You can use the Command Line Interface (CLI) to configure the controller system. This user guide

provides information for setup using the CLI.

2.3.3 Login as Administrator

The default Administrator account name is admin and its default password is password. (See

Section 3.1.3 "Administrator and User Logins" for information on how to change the user and

administrator passwords.) Only users with administrator rights are allowed to change the configuration.

To log in:

1. At the login prompt, type:

login admin

<Enter>

2. At the password prompt, type:

password

<Enter>

2.3.4 Setting System Time & Date

The system time and date for the controller are factory-configured for the U.S. Pacific Standard Time

(PST) zone. If you are located in a different time zone, you need to change the system date and time so

that the time stamps for all events are correct. In dual mode, changes should always be made on Unit 1.

New settings are automatically applied to both units.

To set the system date, at the prompt, type:

date mm dd yyyy

<Enter>

where mm represents the two-digit value for month, dd represents the two-digit value for day, and yyyy represents the four-digit value for year.

For example, to change the system date to March 1, 2009, enter:

date 3 1 2009

<Enter>

To set the system time, at the prompt, type:

time hh:mm:ss

<Enter>

where hh represents the two digit value for hour (00 to 24), mm is the two digit value for minutes, and

ss represents the two digit value for seconds.

For example, to change the system time to 2:15:32 p.m., enter:

time 14:15:32

<Enter>

NOTE :

The system records time using the military method, which records hours from 00 to 24, not

in a.m. and p.m. increments of 1 to 12.
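If you prepare the date and time commands from a script (for example, to paste them into a terminal session), the command strings can be built from a timestamp. The following Python sketch is an illustration under stated assumptions: the function name is hypothetical, and it emits zero-padded two-digit fields as the syntax description specifies. The date example in this section shows unpadded values, so check the CLI's online HELP for what your firmware accepts.

```python
from datetime import datetime


def clock_commands(when: datetime) -> tuple[str, str]:
    """Build the 'date' and 'time' CLI command strings for a given timestamp."""
    date_cmd = when.strftime("date %m %d %Y")    # mm dd yyyy
    time_cmd = when.strftime("time %H:%M:%S")    # 24-hour hh:mm:ss
    return date_cmd, time_cmd


date_cmd, time_cmd = clock_commands(datetime(2009, 3, 1, 14, 15, 32))
print(date_cmd)   # date 03 01 2009
print(time_cmd)   # time 14:15:32
```

In dual mode, the resulting commands would be entered on Unit 1 only, since new settings are applied to both units automatically.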

2.3.5 Setting Tier Mapping Mode

When the controller system is first configured, it is necessary to select a tier mapping mode for the

attached enclosures.

The controller currently supports SAS drive enclosures.

To display the current mapping mode, type:

tier map

<Enter>

To change the mapping mode (Figure 2–4):

1. Enter:

tier changemap

<Enter>

2. Enter the appropriate mapping mode.

• For 10 and 20 box solutions, choose SAS_2X.

• For 5 box solutions, choose SAS_1X.

3. For the changes to take effect, enter:

restart

<Enter>

Figure 2–4 Tier Changemap Screen

Page 33

2.3.6 Checking Tier Status and Configuration

Use the tier command to display your current tier status. Figure 2–5 illustrates the status of a system containing 80 drives on 8 tiers, with a mix of tiers in 8+1 and 8+2 parity modes. The plus sign (+) adjacent to the tier number indicates that the tier is in 8+2 mode.

15000 [1]: tier

                          Tier Status

                Capacity   Space Available
Tier    Owner   (Mbytes)   (Mbytes)          Disk Status   Lun List
-----------------------------------------------------------------
 1 +      1     280012     280012            ABCDEFGHPS
 2        1     280012     280012            ABCD FGHPS
 3        1     280012     280012            ABCDEFGHPS
 4        1     280012     280012            ABCDEFGHPS
 5        1     280012     280012            AB.DEFGHPS
 6 +      1     280012     280012            ABCDEFGHPS
 7        1     280012     280012            ABCDEFG?PS
 8 +      1     280012     280012            ABCDEFGHPS

Automatic disk rebuilding is Enabled
System rebuild extend: 32 Mbytes
System rebuild delay: 60

System Capacity 2240096 Mbytes, 2240096 Mbytes available.

Figure 2–5 Tier Status Screen
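As a quick cross-check of the figures on this screen, the totals can be recomputed from the per-tier values. The sketch below simply repeats that arithmetic; the constant names are illustrative, and the assumption that each tier holds ten drives comes from the ten characters in each Disk Status entry.

```python
TIERS = 8
TIER_CAPACITY_MBYTES = 280012          # capacity reported for each tier
DRIVES_PER_TIER = len("ABCDEFGHPS")    # one character per drive channel

total_capacity_mbytes = TIERS * TIER_CAPACITY_MBYTES
total_drives = TIERS * DRIVES_PER_TIER

print(total_capacity_mbytes)  # 2240096, matching the System Capacity line
print(total_drives)           # 80, matching the drive count stated above
```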

Each letter under the “Disk Status” column represents a healthy drive at that channel (as shown in Figure 2–5). Verify that all drives can be seen by the controller.

“Unhealthy” drives appear as follows:

• A blank space indicates that the drive is not present (or detected) at that location.

• A period (.) denotes that the disk was failed by the system.

• A question mark (?) indicates that the disk has failed the diagnostics tests or is not configured

correctly.

• The character “r” indicates that the disk at that location is being replaced by a spare drive.
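The legend above can be sketched as a small decoder for one Disk Status entry. This is a hedged illustration rather than a tool shipped with the controller: the function names are hypothetical, and the channel order A through H, P, S is an assumption read off Figure 2–5. The second helper reflects the follow-up checks described in this section, where a channel that is blank on every tier points to that channel's cabling rather than to a single drive.

```python
CHANNELS = "ABCDEFGHPS"  # assumed channel order, read off the Disk Status column

SYMBOL_MEANINGS = {
    " ": "not present or not detected",
    ".": "failed by the system",
    "?": "failed diagnostics or not configured correctly",
    "r": "being replaced by a spare",
}


def decode_disk_status(status: str) -> dict[str, str]:
    """Map each channel letter to the state of the drive at that position."""
    # Pad on the right so trailing blanks lost in copying still count as missing.
    padded = status.ljust(len(CHANNELS))
    report = {}
    for channel, symbol in zip(CHANNELS, padded):
        if symbol == channel:
            report[channel] = "healthy"
        else:
            report[channel] = SYMBOL_MEANINGS.get(
                symbol, f"unrecognized symbol {symbol!r}"
            )
    return report


def channels_missing_everywhere(tier_status: dict[int, str]) -> list[str]:
    """Return the channels that are blank on every tier supplied."""
    missing = []
    for position, channel in enumerate(CHANNELS):
        if tier_status and all(
            status.ljust(len(CHANNELS))[position] == " "
            for status in tier_status.values()
        ):
            missing.append(channel)
    return missing


if __name__ == "__main__":
    # Tier 2 from Figure 2-5 has no drive detected on channel E.
    print(decode_disk_status("ABCD FGHPS")["E"])
```

Passing the Disk Status strings for all tiers to channels_missing_everywhere gives a short list of channels whose cable connections are worth checking first.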

After entering the tier command, perform the following steps if necessary:

1. If a drive is not displayed at all (that is, it is “missing”), check to ensure that the drive is properly

seated and in good condition. To search for the drive, enter:

disk scan

<Enter>

2. If the same channel is missing on all tiers, check the cable connections for that channel.

3. If “automatic disk rebuilding” is not enabled, enable it by entering:

tier autorebuild=on

<Enter>

4. To display the detailed disk configuration information for all of the tiers (Figure 2–6) enter:

tier config

<Enter>

Figure 2–6 Current Tier Configuration

2.3.6.1 Heading Definitions

• Total LUNs. LUNs that currently reside on the tier.

• Healthy Disk. The “health” of the spare disk currently being used (if any is being used) to replace a disk on the listed tier. The health indication for the spare channel that is physically on the listed tier is found under Sp H.

• F indicates the failed disk (if any) on the tier.

• R indicates the replaced disk (if any) on the tier.

• Sp H indicates if the spare disk that is physically on the tier is healthy.

• Sp A indicates if the spare disk that is physically on the tier is available for use as a replacement.

• Spare Owner indicates the current owner of the physical spare, where ownership is assigned when

the spare is used as a replacement. “RES-#” will appear under the Spare Owner heading while a

replacement operation is underway to indicate that unit “#” currently has the spare reserved.

• Spare Used on indicates the tier (if any) where this physical spare is being used as a replacement.

• Repl Spare from indicates the tier (if any) whose spare disk is being used as a replacement for this

tier.

NOTE :

Tiers are in 8+1 mode by default.

2.3.7 Cache Coherency and Labeling in Dual Mode

Use the DUAL command to check that the units are healthy and to verify that the “Dual” (COM2) and “Ethernet” (LINK) communication paths between the two controller units are established (Figure 2–7).


Figure 2–7 Dual Controller Configuration

If you require multi-pathing to the LUNs, enable cache coherency. If you do not require multi-pathing, disable cache coherency.

To enable/disable the cache coherency function, enter the following (ON enables, OFF disables):

dual coherency=on|off

<Enter>

You may change the label assigned to each unit. This allows you to uniquely identify each unit in the system. A label can be up to 31 characters long.

1. To change the label, enter:

dual label

<Enter>

2. Select which unit you want to re-label (see Figure 2–8).

3. When prompted, type in the new label for the selected unit. The new name is displayed.

15000 [1]: dual label

Enter the number of the Unit you wish to rename.

LABEL=1 for Unit 1, Test System[1]

LABEL=2 for Unit 2, Test System[2]

Unit: 1

Enter a new label for Unit 1, or DEFAULT to return to the default label.

Up to 31 characters are permitted.

Current Unit name: Test System[1]

New Unit name: System[1]

15000 [1]:

Figure 2–8 Labeling a Controller Unit

2.3.8 Configuring the Storage Arrays

When you have determined your array configuration, you need to create and format the LUNs. You have

the option of creating a 32-bit or a 64-bit address LUN.

In the example below, two LUNs (32-bit addressing) are created:

• LUN 0 on Tiers 1 to 8 with capacity of 8192MB each.

• LUN 1 on Tiers 1 and 2 with capacity of 8192MB each.
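The two example LUNs can be written down as data before any commands are typed, which makes the limits quoted in this section easy to check in advance. The sketch below is an assumption-laden illustration, not a controller API: the LunRequest class, its field names, and the labels data0 and data1 are hypothetical. The limits (LUN numbers 0 to 1023, labels of up to 12 characters, tiers numbered 1 through 125) and the order of the responses follow the prompts described in the procedure in this section.

```python
from dataclasses import dataclass


@dataclass
class LunRequest:
    number: int
    label: str
    capacity_mbytes: int
    tiers: list[int]
    block_size: int = 512  # the recommended block size

    def validate(self) -> None:
        """Raise ValueError if a value falls outside the documented limits."""
        if not 0 <= self.number <= 1023:
            raise ValueError("LUN number must be between 0 and 1023")
        if len(self.label) > 12:
            raise ValueError("LUN label is limited to 12 characters")
        if not self.tiers:
            # Sanity check added for this sketch, not a documented rule.
            raise ValueError("at least one tier is required")
        if any(not 1 <= tier <= 125 for tier in self.tiers):
            raise ValueError("tier numbers must be between 1 and 125")

    def console_lines(self) -> list[str]:
        """Return the command and the prompt responses, in the order they are entered."""
        self.validate()
        return [
            f"lun add={self.number}",
            self.label,
            str(self.capacity_mbytes),
            str(len(self.tiers)),
            *[str(tier) for tier in self.tiers],
            str(self.block_size),
        ]


if __name__ == "__main__":
    lun0 = LunRequest(0, "data0", 8192, list(range(1, 9)))  # Tiers 1 to 8
    lun1 = LunRequest(1, "data1", 8192, [1, 2])             # Tiers 1 and 2
    for request in (lun0, lun1):
        print(request.console_lines())
```

Each entry in the returned list corresponds to one line typed at the console and followed by <Enter>; the format confirmation that comes afterward is not modeled here.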

NOTE :

You may press e at any time to exit and cancel the command completely.

NOTE :

In dual mode, LUNs are “owned” by the controller unit where they are created. Hosts only see the LUNs on the unit to which they are connected, unless cache coherency is enabled.

1. To display the current cache settings, type:

cache

<Enter>

2. Select a cache segment size for your array. For example, to set the segment size to 128KBytes,

type:

cache size=128

<Enter>

This setting can also be adjusted on-the-fly for specific application tuning: see section 3.2.12

"Couplet Controller Configuration (Cache/Non-Cache Coherent)". The default setting is 1024.

3. Type:

lun

<Enter>

The Logical Unit Status chart should be empty, as no LUN is present on the array.

4. To create a new LUN, type:

lun add=x

<Enter>

where x is the LUN number. Valid LUN numbers are 0..1023. If only lun add is entered, you are prompted to enter a LUN number.

5. You will be prompted to enter the parameter values for the LUN. In this example:

- Enter a label for the LUN (you can include up to 12 characters). The label may be changed later using the LUN LABEL command.

- Enter the capacity (in Mbytes) for a single LUN in the LUN group:

8192

<Enter>

- Enter the number of tiers to use:

8

<Enter>

- Select the tier(s) by entering the Tier number. Enter each one on a new line. Tiers are numbered from 1 through 125.

1 <Enter>
2 <Enter>
3 <Enter>
4 <Enter>
5 <Enter>
6 <Enter>
7 <Enter>
8 <Enter>

- Enter the block size in Bytes:

512

<Enter>

NOTE :

512 is the recommended block size. A larger block size may give better performance. However, verify that your OS and file system can support a larger block size before changing the block size from its default value.

This message will display: Operation successful: LUN 0 added to the system

6. When you are asked to format the LUN, type:

y

<Enter>

After you have initiated the LUN format, the message Starting Format of LUN is displayed. You can monitor the format progress by entering the LUN command (see Figure 2–9). Upon completion, the message Finished Format of LUN 0 is displayed.

15000 [1]: lun

                          Logical Unit Status

                                   Capacity   Block
LUN   Label   Owner   Status       (Mbytes)   Size   Tiers   Tier List
-----------------------------------------------------------------------------
  0             1     Format 14%   8192       512    8       1 2 3 4 5 6 7 8

System Capacity 2240096 Mbytes, 2207328 Mbytes available.

Figure 2–9 Logical Unit Status - Formatting

7. Enter the LUN command to check the status of the LUN, which should be “Ready” (see Figure 2–10).

15000 [1]: lun

LUN Owner Tier ListCapacity

Label

-----------------------------------------------------------------------------1 1 2 3 4 5 6 7 8

System Capacity 2240096 Mbytes, 2207328 Mbytes available.

Logical Unit Status

Status

(Mbytes)

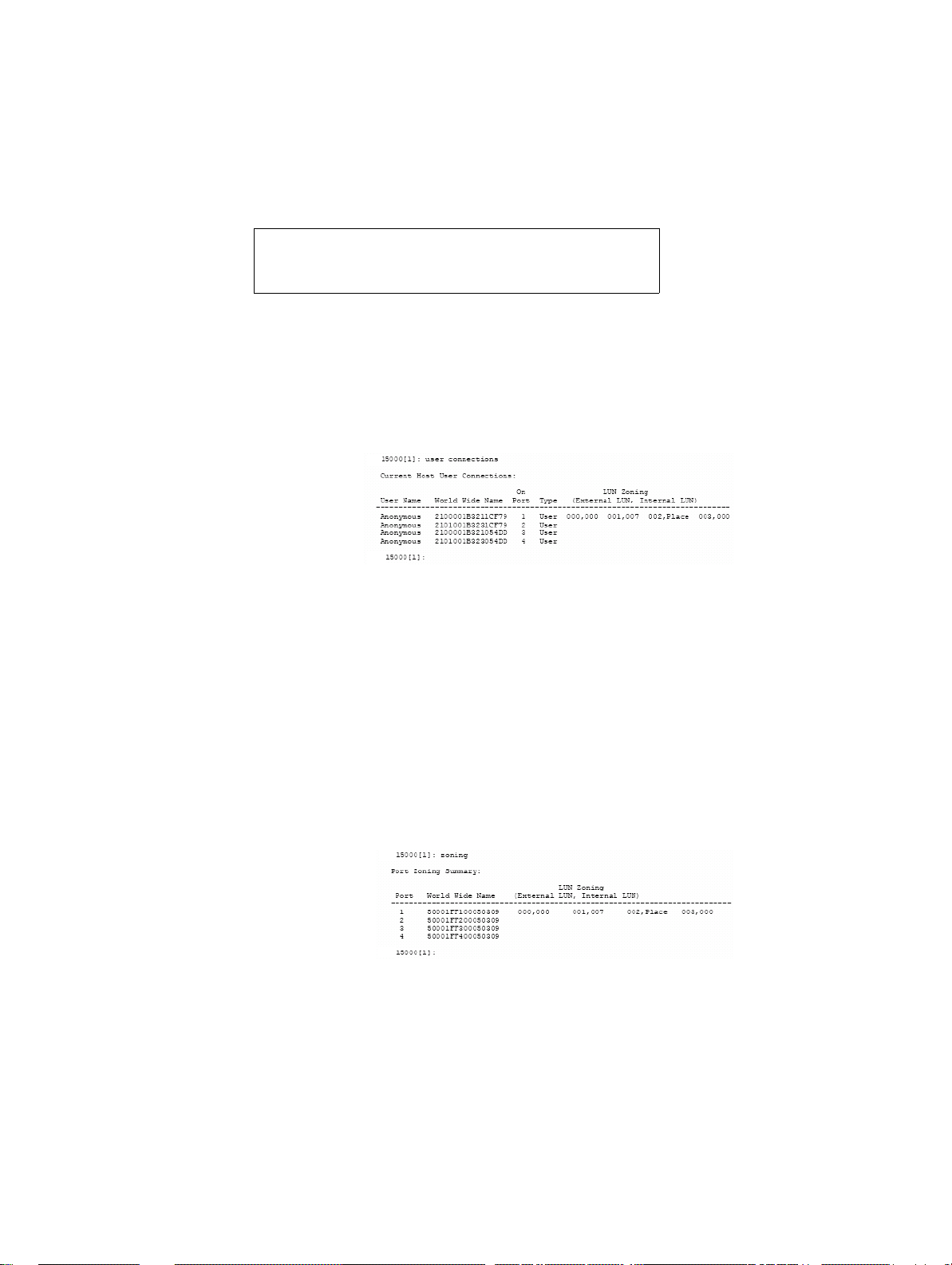

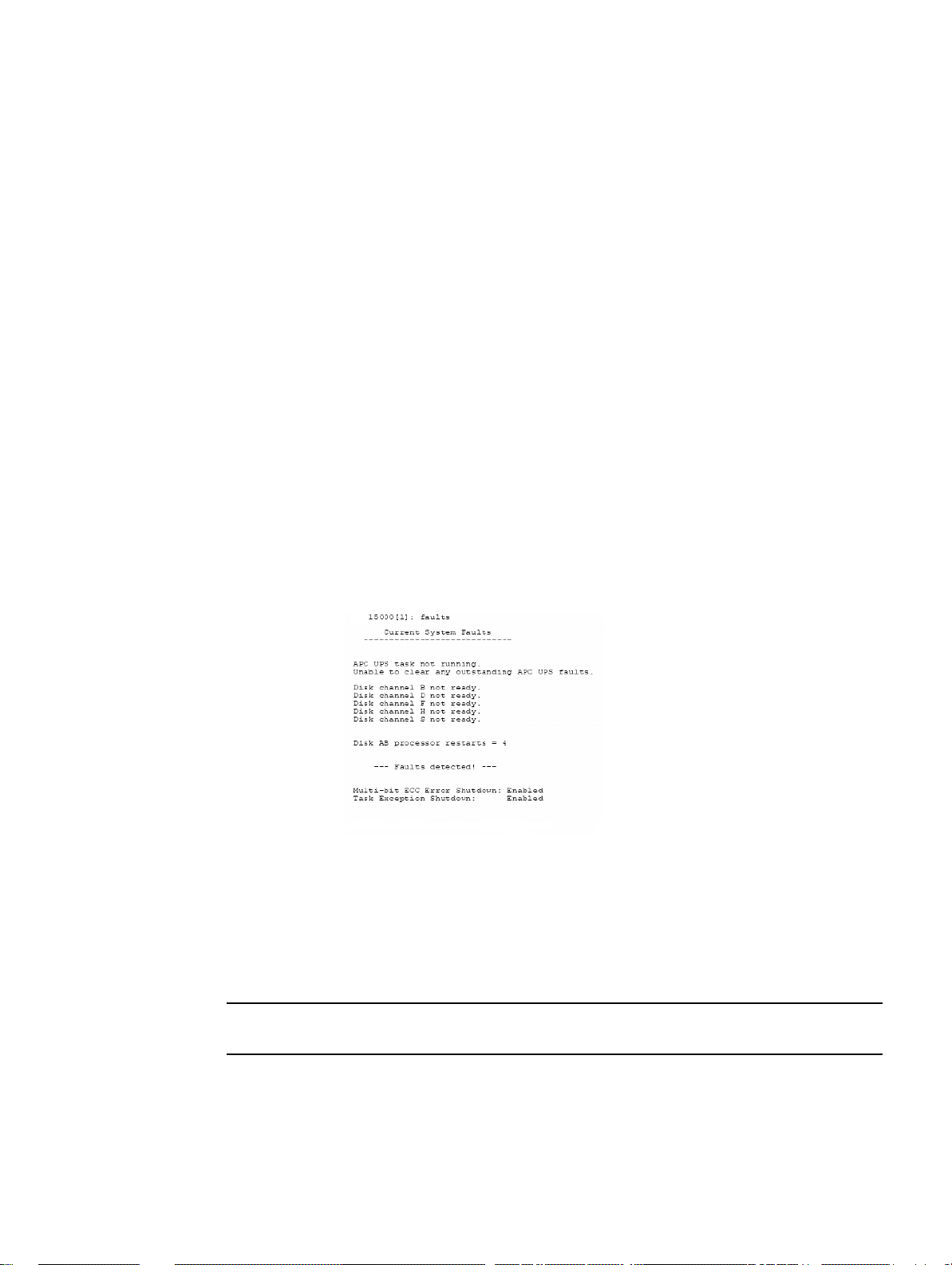

8192Ready0