Page 1

W3.5

Kernel (VDK) User’s Guide

for 16-Bit Processors

Analog Devices, Inc.

Digital Signal Processor Division

One Technology Way

Norwood, Mass. 02062-9106

Revision 1.0, October 2003

Part Number

82-000035-03

a

Page 2

Copyright Information

© 2003 Analog Devices, Inc., ALL RIGHTS RESERVED. This document may not be reproduced in any form without prior, express written

consent from Analog Devices, Inc.

Printed in the USA.

Disclaimer

Analog Devices, Inc. reserves the right to change this product without

prior notice. Information furnished by Analog Devices is believed to be

accurate and reliable. However, no responsibility is assumed by Analog

Devices for its use; nor for any infringement of patents or other rights of

third parties which may result from its use. No license is granted by implication or otherwise under the patent rights of Analog Devices, Inc.

Trademark and Service Mark Notice

The Analog Devices logo, VisualDSP, the VisualDSP logo,

CROSS-CORE, the CROSSCORE logo, and EZ-KIT Lite are registered

trademarks of Analog Devices, Inc.

VisualDSP++ and the VisualDSP++ logo are trademarks of Analog

Devices, Inc.

Trademarks and registered trademarks are the property of their respective

owners.

Page 3

CONTENTS

PREFACE

Purpose of this Manual ................................................................. xvii

Intended Audience ....................................................................... xviii

Manual Contents ......................................................................... xviii

What’s New in this Manual ............................................................ xix

Technical or Customer Support ....................................................... xx

Supported Processors ....................................................................... xx

Product Information ...................................................................... xxi

MyAnalog.com ......................................................................... xxi

DSP Product Information ......................................................... xxi

Related Documents ................................................................. xxii

Online Documentation ......................................................... xxiii

From VisualDSP++ ........................................................... xxiii

From Windows .................................................................. xxiv

From the Web .................................................................... xxiv

Printed Manuals ..................................................................... xxiv

VisualDSP++ Documentation Set ........................................ xxv

Hardware Manuals .............................................................. xxv

Data Sheets ......................................................................... xxv

VisualDSP++ 3.5 Kernel (VDK) User’s Guide iii

for 16-bit Processors

Page 4

CONTENTS

Contacting DSP Publications ................................................. xxvi

Notation Conventions ................................................................. xxvi

INTRODUCTION TO VDK

Motivation ................................................................................... 1-1

Rapid Application Development .............................................. 1-2

Debugged Control Structures .................................................. 1-2

Code Reuse ............................................................................. 1-3

Hardware Abstraction ............................................................. 1-3

Partitioning an Application ........................................................... 1-4

Scheduling ................................................................................... 1-5

Priorities ................................................................................. 1-5

Preemption ............................................................................. 1-7

Protected Regions ......................................................................... 1-7

Disabling Scheduling .............................................................. 1-7

Disabling Interrupts ................................................................ 1-8

Thread and Hardware Interaction ................................................. 1-8

Thread Domain with Software Scheduling ............................... 1-9

Interrupt Domain with Hardware Scheduling ........................ 1-10

Device Drivers ...................................................................... 1-10

CONFIGURATION AND DEBUGGING OF VDK

PROJECTS

Configuring VDK Projects ............................................................ 2-1

Linker Description File ........................................................... 2-2

iv VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 5

CONTENTS

Thread Safe Libraries ............................................................... 2-2

Header Files for the VDK API ................................................. 2-2

Debugging VDK Projects .............................................................. 2-3

Instrumented Build Information .............................................. 2-3

VDK State History Window .................................................... 2-3

Target Load Graph Window .................................................... 2-4

VDK Status Window ............................................................... 2-4

General Tips ........................................................................... 2-5

Kernel Panic ............................................................................ 2-5

USING VDK

Threads ........................................................................................ 3-1

Thread Types .......................................................................... 3-2

Thread Parameters .............................................................. 3-2

Stack Size ........................................................................ 3-2

Priority ........................................................................... 3-3

Required Thread Functionality ............................................ 3-3

Run Function ................................................................. 3-3

Error Function ................................................................ 3-4

Create Function .............................................................. 3-4

Init Function/Constructor ............................................... 3-5

Destructor ...................................................................... 3-5

Writing Threads in Different Languages .............................. 3-6

C++ Threads ................................................................... 3-6

C and Assembly Threads ................................................. 3-7

VisualDSP++ 3.5 Kernel (VDK) User’s Guide v

for 16-bit Processors

Page 6

CONTENTS

Global Variables ................................................................. 3-8

Error Handling Facilities ..................................................... 3-8

Scheduling ................................................................................... 3-9

Ready Queue .......................................................................... 3-9

Scheduling Methodologies ..................................................... 3-10

Cooperative Scheduling .................................................... 3-10

Round-robin Scheduling ................................................... 3-11

Preemptive Scheduling ...................................................... 3-11

Disabling Scheduling ............................................................ 3-12

Entering the Scheduler From API Calls .................................. 3-13

Entering the Scheduler From Interrupts ................................. 3-13

Idle Thread ........................................................................... 3-14

Signals ........................................................................................ 3-15

Semaphores ........................................................................... 3-16

Behavior of Semaphores .................................................... 3-16

Thread’s Interaction With Semaphores .............................. 3-17

Pending on a Semaphore ............................................... 3-17

Posting a Semaphore ..................................................... 3-18

Periodic Semaphores ..................................................... 3-21

Messages ............................................................................... 3-21

Behavior of Messages ........................................................ 3-22

Thread’s Interaction With Messages .................................. 3-23

Pending on a Message ................................................... 3-23

Posting a Message ......................................................... 3-24

vi VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 7

CONTENTS

Multiprocessor Messaging ...................................................... 3-26

Routing Threads (RThreads) ............................................. 3-27

Data Transfer (Payload Marshalling) .................................. 3-33

Device Drivers for Messaging ............................................ 3-36

Routing Topology ............................................................. 3-37

Events and Event Bits ............................................................ 3-38

Behavior of Events ............................................................ 3-38

Global State of Event Bits .............................................. 3-39

Event Calculation .......................................................... 3-39

Effect of Unscheduled Regions on Event Calculation ..... 3-41

Thread’s Interaction With Events ...................................... 3-41

Pending on an Event ..................................................... 3-42

Setting or Clearing of Event Bits .................................... 3-42

Loading New Event Data into an Event ......................... 3-44

Device Flags .......................................................................... 3-45

Behavior of Device Flags ................................................... 3-45

Thread’s Interaction With Device Flags ............................. 3-45

Interrupt Service Routines ........................................................... 3-46

Enabling and Disabling Interrupts ......................................... 3-46

Interrupt Architecture ............................................................ 3-47

Vector Table ...................................................................... 3-47

Global Data ...................................................................... 3-48

Communication with the Thread Domain ......................... 3-49

Timer ISR ............................................................................. 3-50

VisualDSP++ 3.5 Kernel (VDK) User’s Guide vii

for 16-bit Processors

Page 8

CONTENTS

Reschedule ISR ..................................................................... 3-50

I/O Interface .............................................................................. 3-51

I/O Templates ....................................................................... 3-51

Device Drivers ...................................................................... 3-51

Execution ......................................................................... 3-52

Parallel Scheduling Domains ............................................. 3-53

Using Device Drivers ........................................................ 3-55

Dispatch Function ........................................................ 3-56

Device Flags ..................................................................... 3-64

Pending on a Device Flag .............................................. 3-65

Posting a Device Flag .................................................... 3-66

General Notes .................................................................. 3-67

Variables ....................................................................... 3-67

Critical/Unscheduled Regions ....................................... 3-67

Memory Pools ............................................................................ 3-68

Memory Pool Functionality ................................................... 3-68

Multiple Heaps ........................................................................... 3-69

Thread Local Storage .................................................................. 3-70

VDK DATA TYPES

Data Type Summary ..................................................................... 4-1

Bitfield ......................................................................................... 4-4

DeviceDescriptor .......................................................................... 4-5

DeviceFlagID ............................................................................... 4-6

DeviceInfoBlock ........................................................................... 4-7

viii VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 9

CONTENTS

DispatchID ................................................................................... 4-8

DispatchUnion ............................................................................. 4-9

DSP_Family ............................................................................... 4-11

DSP_Product .............................................................................. 4-12

EventBitID ................................................................................. 4-14

EventID ...................................................................................... 4-15

EventData ................................................................................... 4-16

HeapID ...................................................................................... 4-17

HistoryEnum .............................................................................. 4-18

IMASKStruct .............................................................................. 4-20

IOID .......................................................................................... 4-21

IOTemplateID ............................................................................ 4-22

MarshallingCode ......................................................................... 4-23

MarshallingEntry ........................................................................ 4-25

MessageDetails ............................................................................ 4-26

MessageID .................................................................................. 4-27

MsgChannel ............................................................................... 4-28

MsgWireFormat .......................................................................... 4-30

PanicCode .................................................................................. 4-32

PayloadDetails ............................................................................ 4-33

PFMarshaller .............................................................................. 4-34

PoolID ....................................................................................... 4-36

Priority ....................................................................................... 4-37

RoutingDirection ........................................................................ 4-38

VisualDSP++ 3.5 Kernel (VDK) User’s Guide ix

for 16-bit Processors

Page 10

CONTENTS

SemaphoreID ............................................................................. 4-39

SystemError ............................................................................... 4-40

ThreadCreationBlock ................................................................. 4-44

ThreadID ................................................................................... 4-46

ThreadStatus .............................................................................. 4-47

ThreadType ................................................................................ 4-49

Ticks .......................................................................................... 4-50

VersionStruct .............................................................................. 4-51

VDK API REFERENCE

Calling Library Functions ............................................................. 5-2

Linking Library Functions ............................................................ 5-2

Working With VDK Library Header ............................................. 5-3

Passing Function Parameters ......................................................... 5-3

Library Naming Conventions ........................................................ 5-3

API Summary ............................................................................... 5-5

API Functions ............................................................................ 5-10

AllocateThreadSlot() ............................................................. 5-11

AllocateThreadSlotEx() ......................................................... 5-13

ClearEventBit() ..................................................................... 5-15

ClearInterruptMaskBits() ...................................................... 5-17

ClearThreadError() ............................................................... 5-18

CloseDevice() ....................................................................... 5-19

CreateDeviceFlag() ................................................................ 5-21

CreateMessage() .................................................................... 5-22

x VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 11

CONTENTS

CreatePool() .......................................................................... 5-24

CreatePoolEx() ...................................................................... 5-26

CreateSemaphore() ................................................................ 5-28

CreateThread() ...................................................................... 5-30

CreateThreadEx() .................................................................. 5-32

DestroyDeviceFlag() .............................................................. 5-34

DestroyMessage() .................................................................. 5-35

DestroyMessageAndFreePayload() .......................................... 5-37

DestroyPool() ........................................................................ 5-39

DestroySemaphore() .............................................................. 5-41

DestroyThread() .................................................................... 5-43

DeviceIOCtl() ....................................................................... 5-45

DispatchThreadError() .......................................................... 5-47

ForwardMessage() .................................................................. 5-49

FreeBlock() ............................................................................ 5-52

FreeDestroyedThreads() ......................................................... 5-54

FreeMessagePayload () ........................................................... 5-55

FreeThreadSlot() ................................................................... 5-57

GetClockFrequency() ............................................................ 5-59

GetEventBitValue() ............................................................... 5-60

GetEventData() ..................................................................... 5-61

GetEventValue() .................................................................... 5-62

GetHeapIndex() .................................................................... 5-63

GetInterruptMask() ............................................................... 5-65

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xi

for 16-bit Processors

Page 12

CONTENTS

GetLastThreadError() ........................................................... 5-66

GetLastThreadErrorValue() ................................................... 5-67

GetMessageDetails () ............................................................ 5-68

GetMessagePayload() ............................................................ 5-70

GetMessageReceiveInfo() ...................................................... 5-72

GetNumAllocatedBlocks() ..................................................... 5-74

GetNumFreeBlocks() ............................................................ 5-75

GetPriority() ......................................................................... 5-76

GetSemaphoreValue() ........................................................... 5-77

GetThreadHandle() .............................................................. 5-78

GetThreadID() ..................................................................... 5-79

GetThreadSlotValue() ........................................................... 5-80

GetThreadStackUsage() ......................................................... 5-81

GetThreadStatus() ................................................................ 5-83

GetThreadType() .................................................................. 5-84

GetTickPeriod() .................................................................... 5-85

GetUptime() ......................................................................... 5-86

GetVersion() ......................................................................... 5-87

InstallMessageControlSemaphore () ....................................... 5-88

InstrumentStack() ................................................................. 5-90

LoadEvent() .......................................................................... 5-92

LocateAndFreeBlock() ........................................................... 5-94

LogHistoryEvent() ................................................................ 5-95

MakePeriodic() ..................................................................... 5-96

xii VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 13

CONTENTS

MallocBlock() ....................................................................... 5-98

MessageAvailable() ............................................................... 5-100

OpenDevice() ...................................................................... 5-102

PendDeviceFlag() ................................................................ 5-104

PendEvent() ........................................................................ 5-106

PendMessage() ..................................................................... 5-108

PendSemaphore() ................................................................ 5-111

PopCriticalRegion() ............................................................. 5-113

PopNestedCriticalRegions() ................................................. 5-115

PopNestedUnscheduledRegions() ......................................... 5-117

PopUnscheduledRegion() ..................................................... 5-118

PostDeviceFlag() .................................................................. 5-120

PostMessage() ...................................................................... 5-121

PostSemaphore() .................................................................. 5-124

PushCriticalRegion() ........................................................... 5-126

PushUnscheduledRegion() ................................................... 5-127

RemovePeriodic() ................................................................ 5-128

ResetPriority() ..................................................................... 5-130

SetClockFrequency() ........................................................... 5-132

SetEventBit() ....................................................................... 5-133

SetInterruptMaskBits() ........................................................ 5-135

SetMessagePayload() ............................................................ 5-136

SetPriority() ........................................................................ 5-138

SetThreadError() ................................................................. 5-140

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xiii

for 16-bit Processors

Page 14

CONTENTS

SetThreadSlotValue() .......................................................... 5-141

SetTickPeriod() ................................................................... 5-142

Sleep() ................................................................................ 5-143

SyncRead() ......................................................................... 5-145

SyncWrite() ........................................................................ 5-147

Yield() ................................................................................ 5-149

Assembly Macros ...................................................................... 5-151

VDK_ISR_ACTIVATE_DEVICE_() .................................. 5-152

VDK_ISR_CLEAR_EVENTBIT_() .................................... 5-153

VDK_ISR_LOG_HISTORY_EVENT_() ............................ 5-154

VDK_ISR_POST_SEMAPHORE_() .................................. 5-155

VDK_ISR_SET_EVENTBIT_() ......................................... 5-156

PROCESSOR-SPECIFIC NOTES

VDK for Blackfin Processors

(AD6532, ADSP-BF531, ADSP-BF532, ADSP-BF533, ADSP-BF535,

and ADSP-BF561) ..................................................................... A-1

User and Supervisor Modes ..................................................... A-1

Thread, Kernel, and Interrupt Execution Levels ....................... A-2

Critical and Unscheduled Regions ........................................... A-3

Exceptions .............................................................................. A-3

ISR APIs ................................................................................. A-3

Interrupts ............................................................................... A-4

Timer ..................................................................................... A-5

ADSP-BF531, ADSP-BF532 and ADSP-BF533 Processor Memory A-5

xiv VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 15

CONTENTS

ADSP-BF535 and AD6532 Processor Memory ........................ A-6

ADSP-BF561 Processor Memory ............................................ A-6

Interrupt Nesting ................................................................... A-7

Thread Stack Usage by Interrupts ........................................... A-7

Interrupt Latency ................................................................... A-8

Multiprocessor Messaging ....................................................... A-8

VDK for ADSP-219x DSPs

(ADSP-2191, ADSP-2192-12, ADSP-2195, and ADSP-2196) ... A-9

Thread, Kernel, and Interrupt Execution Levels ....................... A-9

Critical and Unscheduled Regions ......................................... A-10

Interrupts on ADSP-2192 DSPs ........................................... A-10

Interrupts on ADSP-2191 DSPs ........................................... A-11

Timer ................................................................................... A-11

Memory ............................................................................... A-12

Interrupt Nesting ................................................................. A-12

Interrupt Latency ................................................................. A-13

Multiprocessor Messaging ..................................................... A-13

MIGRATING DEVICE DRIVERS

Step 1: Saving Existing Sources ..................................................... B-1

Step 2: Revising Properties ........................................................... B-2

Step 3: Revising Sources ............................................................... B-3

Step 4: Creating Boot Objects ...................................................... B-4

INDEX

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xv

for 16-bit Processors

Page 16

CONTENTS

xvi VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 17

PREFACE

Thank you for purchasing Analog Devices (ADI) development software

for digital signal processor (DSP) applications.

Purpose of this Manual

The VisualDSP++ Kernel (VDK) User's Guide contains information about

VisualDSP++™ Kernel, a Real Time Operating System kernel integrated

with the rest of the VisualDSP++ 3.5 development tools. The VDK incorporates state-of-art scheduling and resource allocation techniques tailored

specially for the memory and timing constraints of DSP programming.

The kernel facilitates development of fast performance structured applications using frameworks of template files.

The kernel is specially designed for effective operations on Analog Devices

DSP architectures: ADSP-219x, ADSP-BF53x Blackfin®, ADSP-21xxx

SHARC®, and ADSP-TSxxx TigerSHARC® processors.

The majority of the information in this manual is generic. Information

applicable to only a particular target processor, or to a particular processor

family, is provided in Appendix A, “Processor-Specific Notes”.

This manual is designed so that you can quickly learn about the kernel

internal structure and operation.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xvii

for 16-bit Processors

Page 18

Intended Audience

Intended Audience

The primary audience for this manual is programmers who are familiar

with Analog Devices DSPs. This manual assumes the audience has a working knowledge of the appropriate processor architecture and instruction

set. Programmers who are unfamiliar with Analog Devices DSPs can use

this manual but should supplement it with other texts, such as Hardware

Reference and Instruction Set Reference manuals, that describe your target

architecture.

Manual Contents

The manual consists of:

• Chapter 1, “Introduction to VDK”

Concentrates on concepts, motivation, and general architectural principles of the VDK software.

• Chapter 2, “Configuration and Debugging of VDK Projects”

Describes the Integrated Development and Debugging

Environment (IDDE) support for configuring and debugging a VDK enabled project. For specific procedures on

how to create, modify, and manage the kernel’s components, refer to the VisualDSP++ online Help.

• Chapter 3, “Using VDK”

Describes the kernel’s internal structure and components.

• Chapter 4, “VDK Data Types”

Describes built-in data types supported in the current

release of the VDK.

xviii VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 19

• Chapter 5, “VDK API Reference”

Describes library functions and macros included in the current release of the VDK.

• Appendix A, “Processor-Specific Notes”

Provides processor-specific information for Blackfin and

ADSP-219x processor architectures.

• Appendix B, “Migrating Device Drivers”

Describes how to convert the device driver components created with VisualDSP++ 2.0 for use in projects built with

VisualDSP++ 3.5.

What’s New in this Manual

Preface

This first revision of the VisualDSP++ 3.5 Kernel (VDK) User’s Guide documents VDK support for the new Blackfin processor ADSP-BF561 in

addition to the existing processors in the Blackfin and ADSP-219x families that were supported in previous releases. This manual documents

VDK functionality that is new for VisualDSP++ 3.5, including the following: multiprocessor messaging, kernel panic, the option not to throw

errors on timeout, runtime access/setting of timing parameters, and

increased configurability (choice of timer interrupt and use of multiple

heaps to specify allocations for VDK components).

The Blackfin processors are embedded processors that sport a Media

Instruction Set Computing (MISC) architecture. This architecture is the

natural merging of RISC, media functions, and digital signal processing

characteristics towards delivering signal processing performance in a

microprocessor-like environment.

The manual documents VisualDSP++ Kernel version 3.5.00.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xix

for 16-bit Processors

Page 20

Technical or Customer Support

Technical or Customer Support

You can reach DSP Tools Support in the following ways.

• Visit the DSP Development Tools website at

www.analog.com/technology/dsp/developmentTools/index.html

• Email questions to

dsptools.support@analog.com

• Phone questions to 1-800-ANALOGD

• Contact your ADI local sales office or authorized distributor

• Send questions by mail to

Analog Devices, Inc.

DSP Division

One Technology Way

P.O. Box 9106

Norwood, MA 02062-9106

USA

Supported Processors

VisualDSP++ 3.5 Kernel currently supports the following Analog Devices

DSPs.

• AD6532, ADSP-BF531, ADSP-BF532, ADSP-BF533,

ADSP-BF535, and ADSP-BF561

• ADSP-2191, ADSP-2192-12, ADSP-2195, and ADSP-2196

xx VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 21

Preface

Product Information

You can obtain product information from the Analog Devices website,

from the product CD-ROM, or from the printed publications (manuals).

Analog Devices is online at www.analog.com. Our website provides information about a broad range of products—analog integrated circuits,

amplifiers, converters, and digital signal processors.

MyAnalog.com

MyAnalog.com is a free feature of the Analog Devices website that allows

customization of a webpage to display only the latest information on

products you are interested in. You can also choose to receive weekly email

notification containing updates to the webpages that meet your interests.

MyAnalog.com provides access to books, application notes, data sheets,

code examples, and more.

Registration:

Visit www.myanalog.com to sign up. Click Register to use MyAnalog.com.

Registration takes about five minutes and serves as means for you to select

the information you want to receive.

If you are already a registered user, just log on. Your user name is your

email address.

DSP Product Information

For information on digital signal processors, visit our website at

www.analog.com/dsp, which provides access to technical publications, data

sheets, application notes, product overviews, and product announcements.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xxi

for 16-bit Processors

Page 22

Product Information

You may also obtain additional information about Analog Devices and its

products in any of the following ways.

• Email questions or requests for information to

dsp.support@analog.com

• Fax questions or requests for information to 1-781-461-3010

(North America) or +49 (0) 89 76903-157 (Europe)

• Access the Digital Signal Processing Division’s FTP website at

ftp.analog.com or ftp 137.71.23.21 or ftp://ftp.analog.com

Related Documents

For information on product related development software, see the following publications for the appropriate processor family.

VisualDSP++ 3.5 Getting Started Guide

VisualDSP++ 3.5 User’s Guide

VisualDSP++ 3.5 C/C++ Compiler and Library Manual

VisualDSP++ 3.5 Assembler and Preprocessor Manual

VisualDSP++ 3.5 Linker and Utilities Manual

VisualDSP++ 3.5 Kernel (VDK) User’s Guide

Quick Installation Reference Card

For hardware information, refer to your DSP Hardware Reference,

Programming Reference, and data sheet.

All documentation is available online. Most documentation is available in

printed form.

xxii VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 23

Online Documentation

Online documentation comprises Microsoft HTML Help (.CHM), Adobe

Portable Documentation Format (.PDF), and HTML (.HTM and .HTML)

files. A description of each file type is as follows.

File Description

.CHM VisualDSP++ online Help system files and VisualDSP++ manuals are provided in

Microsoft HTML Help format. Installing VisualDSP++ automatically copies these

files to the

tools manual set. Invoke Help from the VisualDSP++ Help menu or via the

Windows Start button.

.PDF Manuals and data sheets in Portable Documentation Format are located in the

installation CD’s

reader, such as Adobe Acrobat Reader (4.0 or higher). Running setup.exe on the

installation CD provides easy access to these documents. You can also copy .PDF

files from the installation CD onto another disk.

VisualDSP\Help folder. Online Help is ideal for searching the entire

Docs folder. Viewing and printing a .PDF file requires a PDF

Preface

.HTM

or

.HTML

Dinkum Abridged C++ library and FlexLM network license manager software

documentation is located on the installation CD in the Docs\Reference folder.

Viewing or printing these files requires a browser, such as Internet Explorer 4.0 (or

higher). You can copy these files from the installation CD onto another disk.

Access the online documentation from the VisualDSP++ environment,

Windows Explorer, or Analog Devices website.

From VisualDSP++

VisualDSP++ provides access to online Help. It does not provide access to

.PDF files or the supplemental reference documentation (Dinkum

Abridged C++ library and FlexLM network licence). Access Help by:

• Choosing Contents, Search, or Index from the VisualDSP++ Help

menu

• Invoking context-sensitive Help on a user interface item

(toolbar button, menu command, or window)

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xxiii

for 16-bit Processors

Page 24

Product Information

From Windows

In addition to shortcuts you may construct, Windows provides many ways

to open VisualDSP++ online Help or the supplementary documentation.

Help system files (

.CHM) are located in the VisualDSP 3.5 16-Bit\Help

folder. Manuals and data sheets in PDF format are located in the Docs

folder of the installation CD. The installation CD also contains the Dinkum Abridged C++ library and FlexLM network license manager software

documentation in the \Reference folder.

Using Windows Explorer

• Double-click any file that is part of the VisualDSP++ documentation set.

• Double-click vdsp-help.chm, the master Help system, to access all

the other .CHM files.

From the Web

To download the tools manuals, point your browser at

www.analog.com/technology/dsp/developmentTools/gen_purpose.html.

Select a DSP family and book title. Download archive (.ZIP) files, one for

each manual. Use any archive management software, such as WinZip, to

decompress downloaded files.

Printed Manuals

For general questions regarding literature ordering, call the Literature

Center at 1-800-ANALOGD (1-800-262-5643) and follow the prompts.

xxiv VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 25

Preface

VisualDSP++ Documentation Set

Printed copies of VisualDSP++ manuals may be purchased through Analog Devices Customer Service at 1-781-329-4700; ask for a Customer

Service representative. The manuals can be purchased only as a kit. For

additional information, call 1-603-883-2430.

If you do not have an account with Analog Devices, you will be referred to

Analog Devices distributors. To get information on our distributors, log

onto

www.analog.com/salesdir/continent.asp.

Hardware Manuals

Printed copies of hardware reference and instruction set reference manuals

can be ordered through the Literature Center or downloaded from the

Analog Devices website. The phone number is 1-800-ANALOGD

(1-800-262-5643). The manuals can be ordered by a title or by product

number located on the back cover of each manual.

Data Sheets

All data sheets can be downloaded from the Analog Devices website. As a

general rule, printed copies of data sheets with a letter suffix (L, M, N, S)

can be obtained from the Literature Center at 1-800-ANALOGD

(1-800-262-5643) or downloaded from the website. Data sheets without

the suffix can be downloaded from the website only—no hard copies are

available. You can ask for the data sheet by part name or by product

number.

If you want to have a data sheet faxed to you, the phone number for that

service is 1-800-446-6212. Follow the prompts and a list of data sheet

code numbers will be faxed to you. Call the Literature Center first to find

out if requested data sheets are available.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xxv

for 16-bit Processors

Page 26

Notation Conventions

Contacting DSP Publications

Please send your comments and recommendations on how to improve our

manuals and online Help. You can contact us by:

• Emailing dsp.techpubs@analog.com

• Filling in and returning the attached Reader’s Comments Card

found in our manuals

Notation Conventions

The following table identifies and describes text conventions used in this

manual.

L

Example Description

Close command

(File menu) or OK

{this | that} Alternative required items in syntax descriptions appear within curly

[this | that] Optional items in syntax descriptions appear within brackets and sepa-

[this,…] Optional item lists in syntax descriptions appear within brackets

.SECTION Commands, directives, keywords, code examples, and feature names

filename Non-keyword placeholders appear in text with italic style format.

appear throughout this document.

Tex t in bold style indicates the location of an item within the

VisualDSP++ environment’s menu system and user interface items.

brackets separated by vertical bars; read the example as this or that.

rated by vertical bars; read the example as an optional

delimited by commas and terminated with an ellipsis; read the example

as an optional comma-separated list of

are in text with

letter gothic font.

this or that.

this.

Additional conventions, which apply only to specific chapters, may

xxvi VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 27

Example Description

A note providing information of special interest or identifying a

related topic. In the online version of this book, the word Note appears

instead of this symbol.

A caution providing information about critical design or programming

issues that influence operation of a product. In the online version of

this book, the word Caution appears instead of this symbol.

Preface

VisualDSP++ 3.5 Kernel (VDK) User’s Guide xxvii

for 16-bit Processors

Page 28

Notation Conventions

xxviii VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 29

1 INTRODUCTION TO VDK

This chapter concentrates on concepts, motivation, and general architectural principles of the operating system kernel. It also provides

information on how to partition a VDK application into independent,

reusable functional units that are easy to maintain and debug.

The following sections provide information about the operating system

kernel concepts.

• “Motivation” on page 1-1

• “Partitioning an Application” on page 1-4

• “Scheduling” on page 1-5

• “Protected Regions” on page 1-7

• “Thread and Hardware Interaction” on page 1-8

Motivation

All applications require control code as support for the algorithms that are

often thought of as the “real” program. The algorithms require data to be

moved to and/or from peripherals, and many algorithms consist of more

than one functional block. For some systems, this control code may be as

simple as a “superloop” blindly processing data that arrives at a constant

rate. However, as processors become more powerful, considerably more

sophisticated control may be needed to realize the processor’s potential, to

allow the DSP to absorb the required functionality of previously sup-

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 1-1

for 16-bit Processors

Page 30

Motivation

ported chips, and to allow a single DSP to do the work of many. The

following sections provide an overview of some of the benefits of using a

kernel on a DSP.

Rapid Application Development

The tight integration between the VisualDSP++ environment and the

VDK allows rapid development of applications compared to creating all of

the control code required by hand. The use of automatic code generation

and file templates, as well as a standard programming interface to device

drivers, allows you to concentrate on the algorithms and the desired control flow rather than on the implementation details. VDK supports the use

of C, C++, and assembly language. You are encouraged to develop code

that is highly readable and maintainable, yet to retain the option of hand

optimizing if necessary.

Debugged Control Structures

Debugging a traditional DSP application can be laborious because development tools (compiler, assembler, and linker among others) are not

aware of the architecture of the target application and the flow of control

that results. Debugging complex applications is much easier when instantaneous snapshots of the system state and statistical run-time data are

clearly presented by the tools. To help offset the difficulties in debugging

software, VisualDSP++ includes three versions of the VDK libraries containing full instrumentation (including error checking), only error

checking, and neither instrumentation nor error checking.

In the instrumented mode, the kernel maintains statistical information

and logging of all significant events into a history buffer. When the execution is paused, the debugger can traverse this buffer and present a

graphical trace of the program’s execution including context switches,

pending and posting of signals, changes in a thread’s status, and more.

Statistics are presented for each thread in a tabular view and show the total

1-2 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 31

Introduction to VDK

amount of time the thread has executed, the number of times it has been

run, the signal it is currently blocked on, and other data. For more information, see “Debugging VDK Projects” on page 2-3 and the online Help.

Code Reuse

Many programmers begin a new project by writing the infrastructure portions that transfers data to, from, and between algorithms. This necessary

control logic usually is created from scratch by each design team and infrequently reused on subsequent projects. The VDK provides much of this

functionality in a standard, portable and reusable library. Furthermore,

the kernel and its tight integration with the VisualDSP++ environment are

designed to promote good coding practice and organization by partitioning large applications into maintainable and comprehensible blocks. By

isolating the functionality of subsystems, the kernel helps to prevent the

morass all too commonly found in systems programming.

The kernel is designed specifically to take advantage of commonality in

user applications and to encourage code reuse. Each thread of execution is

created from a user defined template, either at boot time or dynamically

by another thread. Multiple threads can be created from the same template, but the state associated with each created instance of the thread

remains unique. Each thread template represents a complete encapsulation

of an algorithm that is unaware of other threads in the system unless it has

a direct dependency.

Hardware Abstraction

In addition to a structured model for algorithms, the VDK provides a

hardware abstraction layer. Presented programming interfaces allow you

to write most of the application in a platform independent, high level language (C or C++). The VDK API is identical for all Analog Devices

processors, allowing code to be easily ported to a different DSP core.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 1-3

for 16-bit Processors

Page 32

Partitioning an Application

When porting an application to a new platform, programmers must

address the two areas necessarily specific to a particular processor: interrupt service routines and device drivers. The VDK architecture identifies a

crisp boundary around these subsystems and supports the traditionally difficult development with a clear programming framework and code

generation. Both interrupts and device drivers are declared with a graphical user interface in the IDDE, which generates well commented code that

can be compiled without further effort.

Partitioning an Application

A VDK thread is an encapsulation of an algorithm and its associated data.

When beginning a new project, use this notion of a thread to leverage the

kernel architecture and to reduce the complexity of your system. Since

many algorithms may be thought of as being composed of “subalgorithm”

building blocks, an application can be partitioned into smaller functional

units that can be individually coded and tested. These building blocks

then become reusable components in more robust and scalable systems.

You define the behavior of VDK threads by creating thread types. Types

are templates that define the behavior and data associated with all threads

of that type. Like data types in C or C++, thread types are not used

directly until an instance of the type is created. Many threads of the same

thread type can be created, but for each thread type, only one copy of the

code is linked into the executable code. Each thread has its own private set

of variables defined for the thread type, its own stack, and its own C

run-time context.

When partitioning an application into threads, identify portions of your

design in which a similar algorithm is applied to multiple sets of data.

These are, in general, good candidates for thread types. When data is

present in the system in sequential blocks, only one instance of the thread

type is required. If the same operation is performed on separate sets of

1-4 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 33

Introduction to VDK

data simultaneously, multiple threads of the same type can coexist and be

scheduled for prioritized execution (based on when the results are

needed).

Scheduling

The VDK is a preemptive multitasking kernel. Each thread begins execu-

tion at its entry point. Then, it either runs to completion or performs its

primary function repeatedly in an infinite loop. It is the role of the scheduler to preempt execution of a thread and to resume its execution when

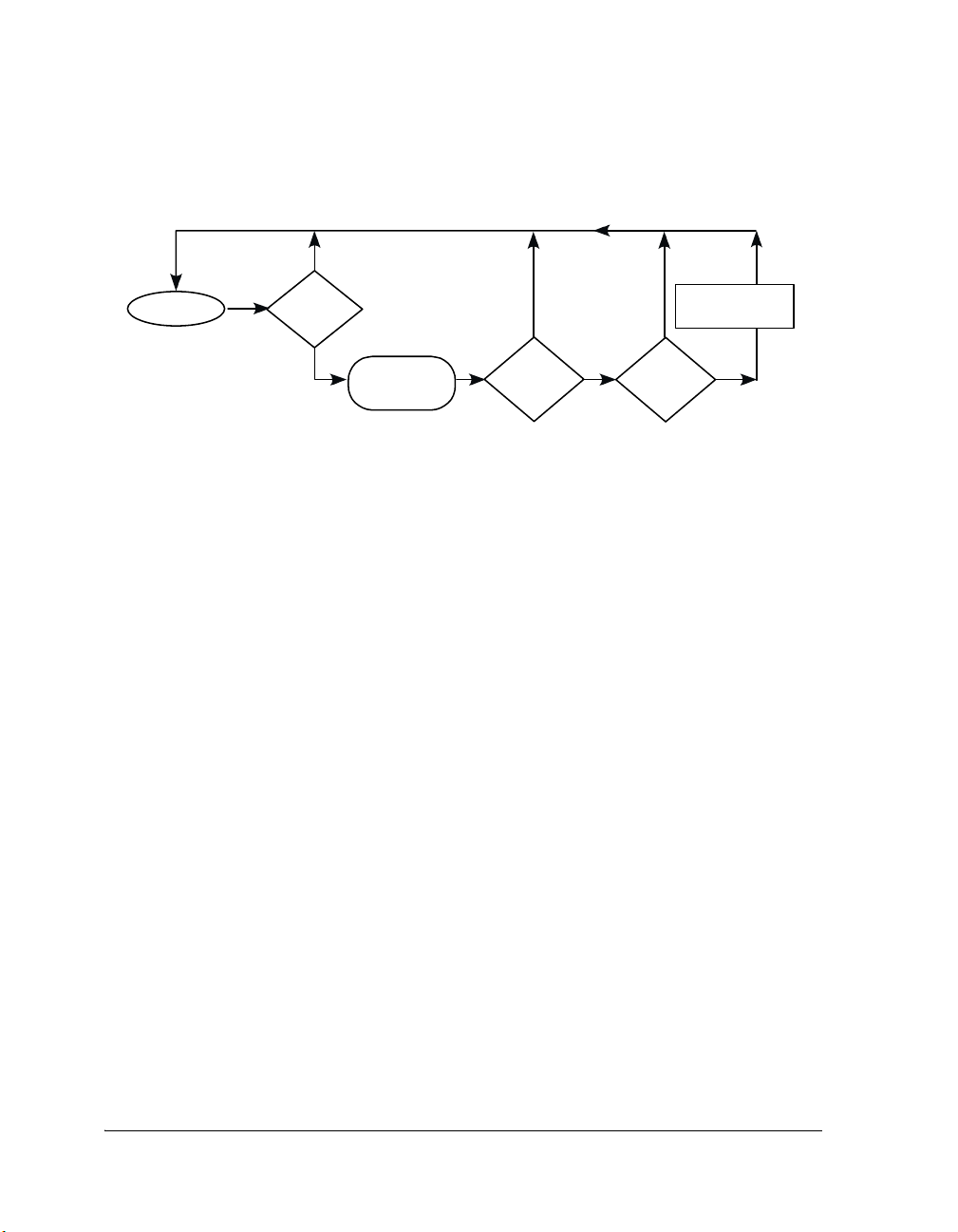

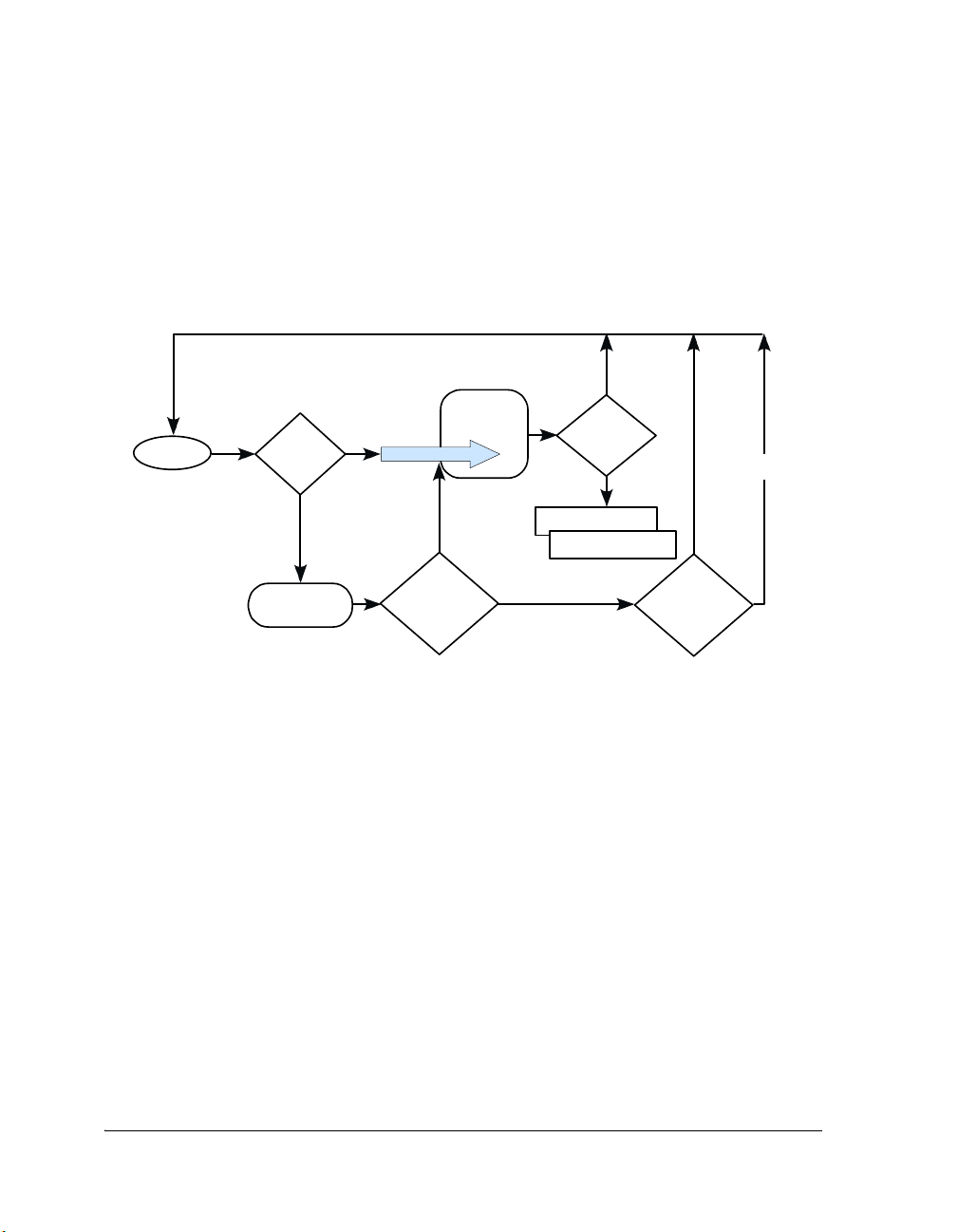

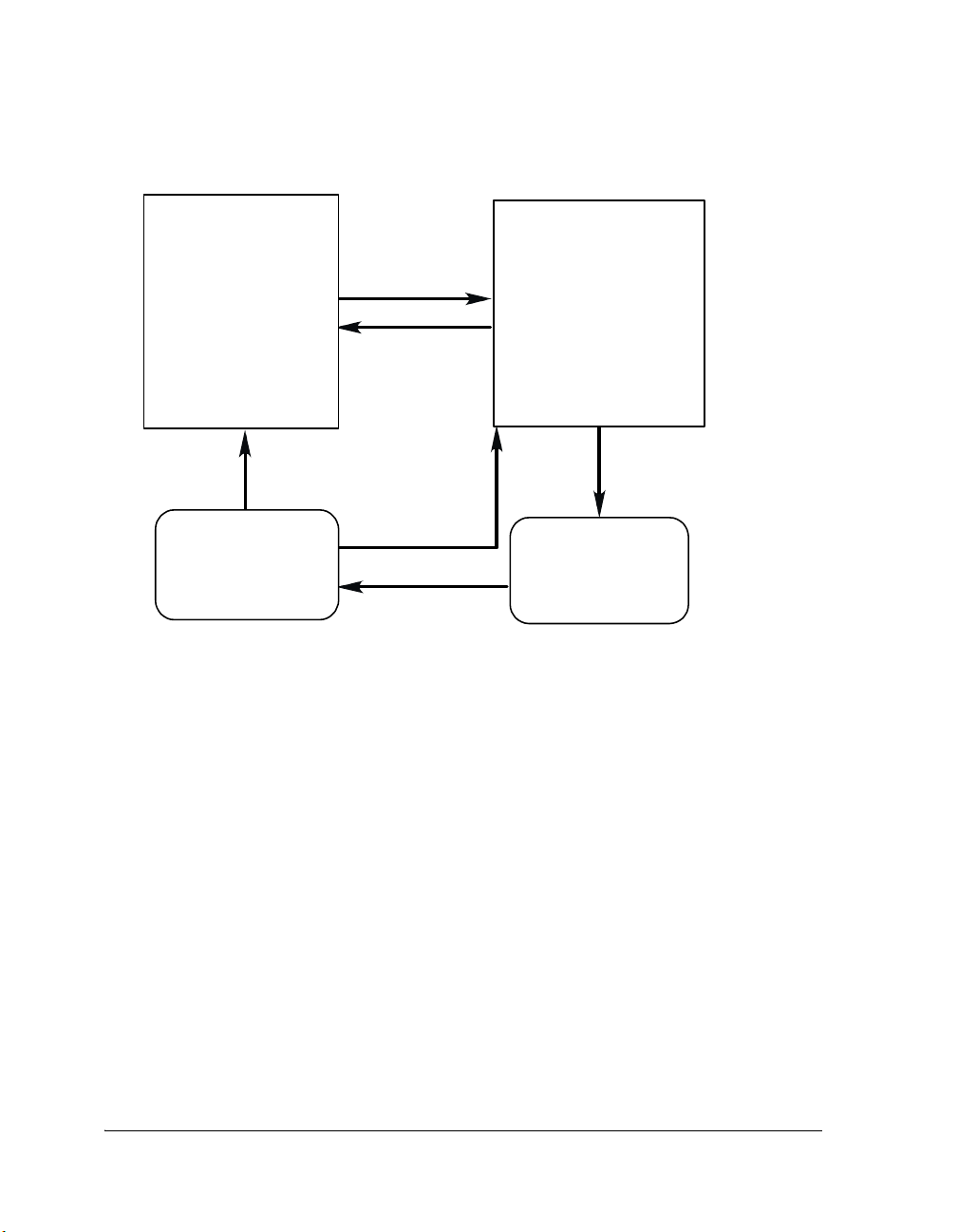

appropriate. Each thread is given a priority to assist the scheduler in determining precedence of threads (see Figure 1-1 on page 1-6).

The scheduler gives processor time to the thread with the highest priority

that is in the ready state (see Figure 3-2 on page 3-14). A thread is in the

ready state when it is not waiting for any system resources it has requested.

A reference to each ready thread is stored in a structure that is internal to

the kernel and known as the ready queue. For more information, see

“Scheduling” on page 3-9.

Priorities

Each thread is assigned a dynamically modifiable priority based on the

default for its thread type declared in VisualDSP++ environment’s Project

window. An application is limited to either fourteen or thirty priority levels, depending on the processor’s architecture. However, the number of

threads at each priority is limited, in practice, only by system memory.

Priority level one is the highest priority, and priority fourteen (or thirty) is

the lowest. The system maintains an idle thread that is set to a priority

lower than that of the lowest user thread.

Assigning priorities is one of the most difficult tasks of designing a real

time preemptive system. Although there has been research in the area of

rigorous algorithms for assigning priorities based on deadlines (e.g., rate

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 1-5

for 16-bit Processors

Page 34

Scheduling

Request or

free

resources

Executing

All ISRs serviced &

no scheduling state

has changed

Interrupt

Done

ISR

Push/pop

nested

interrupts

All ISRs serviced &

scheduling state

has changed

Highest priority

thre ad is

thre a d las t

executed

Context Switch

Highest p riority

thread has

changed

Resources Reallocation

& Priorities Assessment

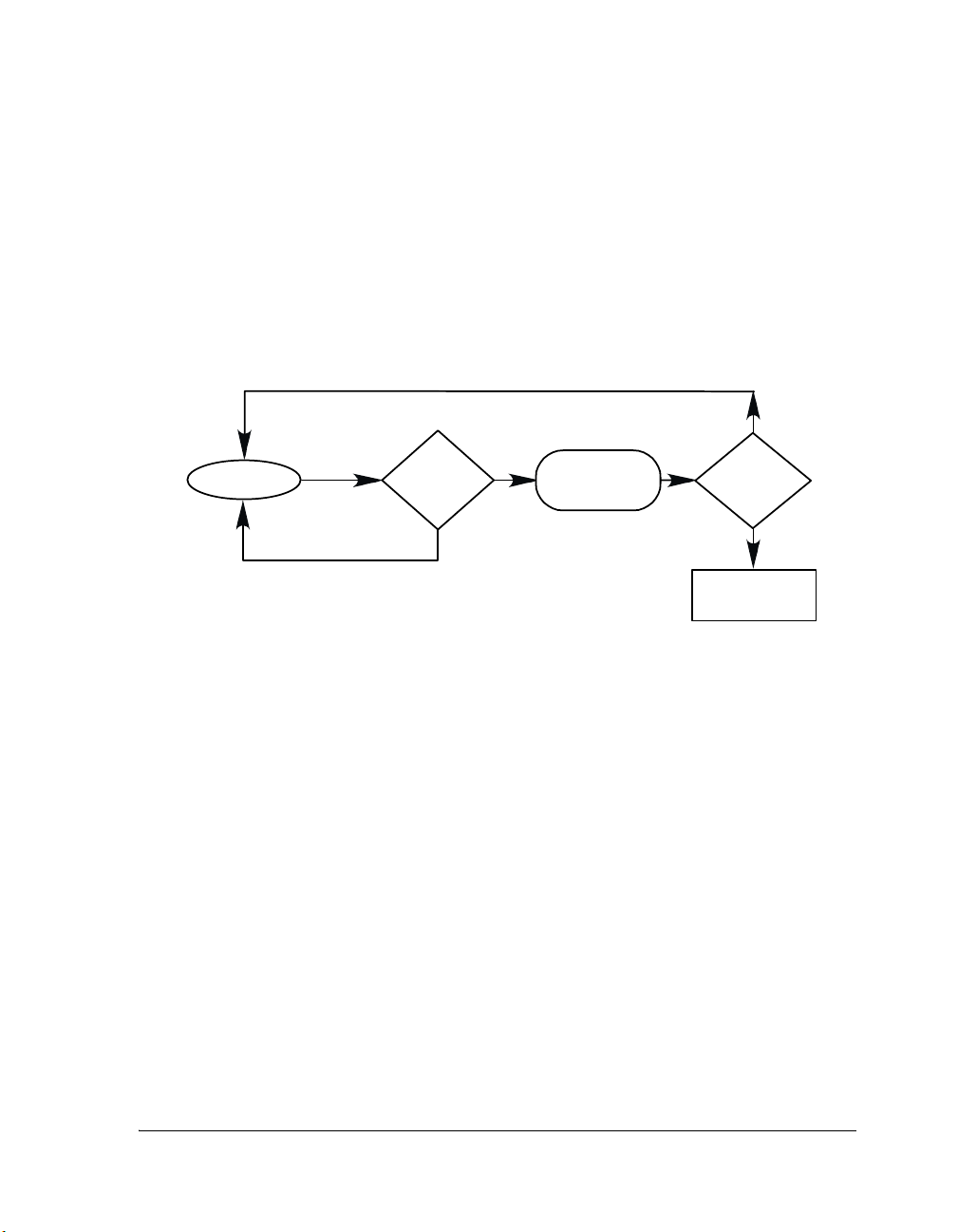

Figure 1-1. VDK State Diagram

monotonic scheduling), most systems are designed by considering the

interrupts and signals that are triggering the execution, while balancing

the deadlines imposed by the system’s input and output streams. For more

information, see “Thread Parameters” on page 3-2.

1-6 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 35

Introduction to VDK

Preemption

A running thread continues execution unless it requests a system resource

using a kernel API. When a thread requests a signal (semaphore, event,

device flag, or message) and the signal is available, the thread resumes execution. If the signal is not available, the thread is removed from the ready

queue—the thread is blocked (see Figure 3-2 on page 3-14). The kernel

does not perform a context switch as long as the running thread maintains

the highest priority in the ready queue, even if the thread frees a resource

and enables other threads to move to the ready queue at the same or lower

priority. A thread can also be interrupted. When an interrupt occurs, the

kernel yields to the hardware interrupt controller. When the ISR completes, the highest priority thread resumes execution. For more

information, see “Preemptive Scheduling” on page 3-11.

Protected Regions

Frequently, system resources must be accessed atomically. The kernel provides two levels of protection for code that needs to execute sequentially—

unscheduled regions and critical regions.

Critical and unscheduled regions can be intertwined. You can enter critical regions from within unscheduled regions, or enter unscheduled regions

from within critical regions. For example, if you are in an unscheduled

region and call a function that pushes and pops a critical region, the system is still in an unscheduled region when the function returns.

Disabling Scheduling

The VDK scheduler can be disabled by entering an unscheduled region.

The ability to disable scheduling is necessary when you need to free multiple system resources without being switched out, or access global variables

that are modified by other threads without preventing interrupts from

being serviced. While in an unscheduled region, interrupts are still

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 1-7

for 16-bit Processors

Page 36

Thread and Hardware Interaction

enabled and ISRs execute. However, the kernel does not perform a thread

context switch even if a higher priority thread becomes ready. Unscheduled regions are implemented using a stack style interface. This enables

you to begin and end an unscheduled region within a function without

concern for whether or not the calling code is already in an unscheduled

region.

Disabling Interrupts

On occasions, disabling the scheduler does not provide enough protection

to keep a block of thread code reentrant. A critical region disables both

scheduling and interrupts. Critical regions are necessary when a thread is

modifying global variables that may also be modified by an Interrupt Service Routine. Similar to unscheduled regions, critical regions are

implemented as a stack. Developers can enter and exit critical regions in a

function without being concerned about the critical region state of the

calling code. Care should be taken to keep critical regions as short as possible as they may increase interrupt latency.

Thread and Hardware Interaction

Threads should have minimal knowledge of hardware; rather, they should

use device drivers for hardware control. A thread can control and interact

with a device in a portable and hardware abstracted manner through a

standard set of APIs.

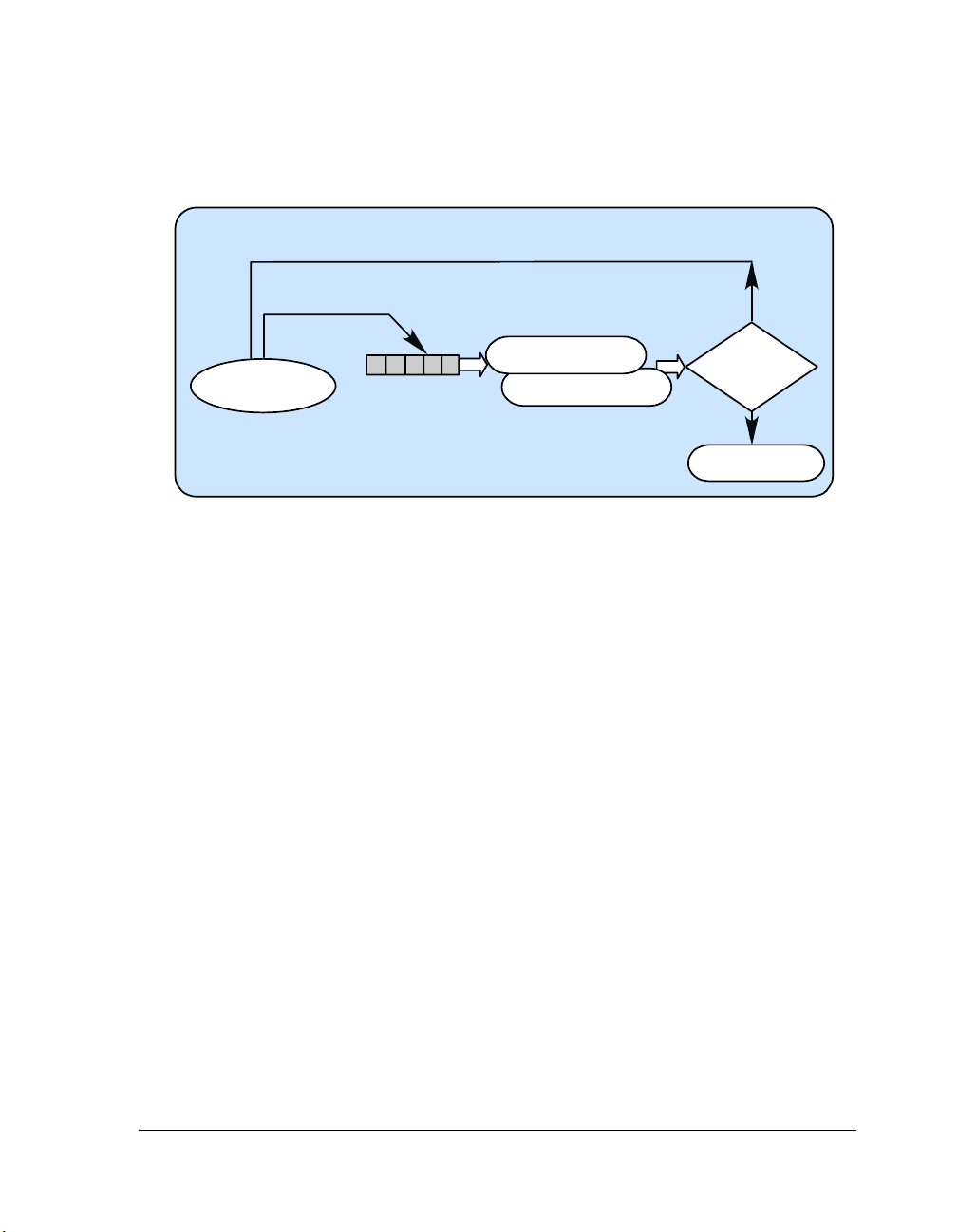

The VDK Interrupt Service Routine framework encourages you to remove

specific knowledge of hardware from the algorithms encapsulated in

threads (see Figure 1-2). Interrupts relay information to threads through

signals to device drivers or directly to threads. Using signals to connect

hardware to the algorithms allows the kernel to schedule threads based on

asynchronous events.

1-8 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 37

Introduction to VDK

Kernel

• init()

Function at Boot Time

Communication Manager

MyDevice::DispatchFunction()

Dispatc h ID:

Interrupt Service Routine

•

VDK_ISR_ACTIVATE_DEVICE_()

Application Algorithm

Device Driver

•kIO_Init

•kIO_Activate

• kIO_Ope n

•kIO_Close

• kIO_SyncRead

• kIO_SyncWrite

•kIO_IOCtl

Macr o

• OpenDevice()

APIs:

• CloseDevice( )

• SyncRead()

•

•

SyncWrite()

DeviceIO Ctl()

(Thread)

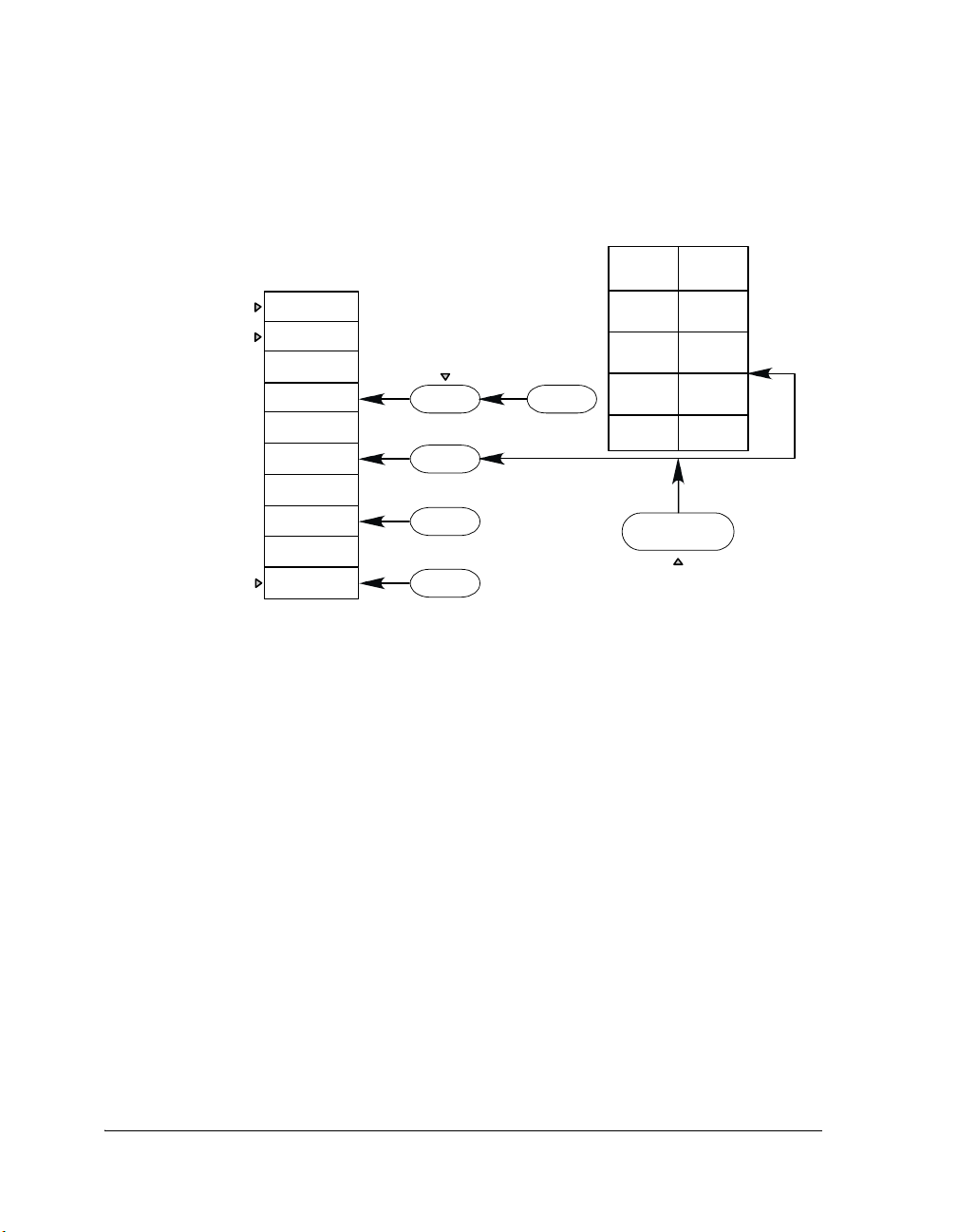

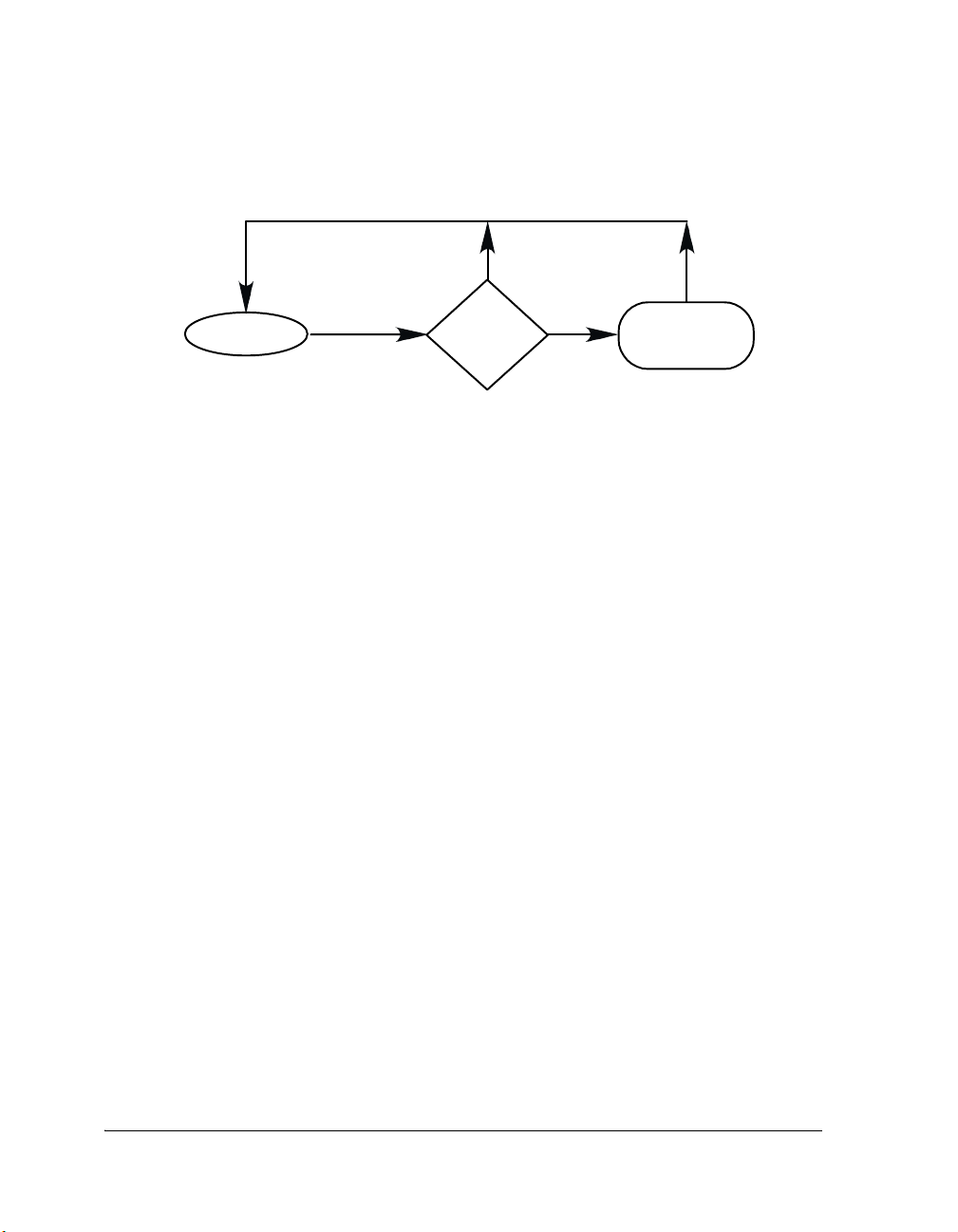

Figure 1-2. Device Drivers Entry Points

The VDK run-time environment can be thought of as a bridge between

two domains, the thread domain and the interrupt domain. The interrupt

domain services the hardware with minimal knowledge of the algorithms,

and the thread domain is abstracted from the details of the hardware.

Device drivers and signals bridge the two domains. For more information,

see “Threads” on page 3-1.

Thread Domain with Software Scheduling

The thread domain runs under a C/C++ run-time model. The prioritized

execution is maintained by a software scheduler with full context switching. Threads should have little or no direct knowledge of the hardware;

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 1-9

for 16-bit Processors

Page 38

Thread and Hardware Interaction

rather, threads should request resources and then wait for them to become

available. Threads are granted processor time based on their priority and

requested resources. Threads should minimize time spent in critical and

unscheduled regions to avoid short-circuiting the scheduler and interrupt

controller.

Interrupt Domain with Hardware Scheduling

The interrupt domain runs outside the C/C++ run-time model. The prioritized execution is maintained by the hardware interrupt controller. ISRs

should be as small as possible. They should only do as much work as is

necessary to acknowledge asynchronous external events and to allow

peripherals to continue operations in parallel with the processor. ISRs

should only signal that more processing can occur and leave the processing

to threads. For more information, see “Interrupt Service Routines” on

page 3-46.

Device Drivers

Interrupt Service Routines can communicate with threads directly using

signals. Alternatively, an interVisualDSP++ 3.5 Kernel (VDK) User’s

Guidecomplex device-specific functionality that is abstracted from the

algorithm. A device driver is a single function with multiple entry conditions and domains of execution. For more information, see “Device

Drivers” on page 3-51.

1-10 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 39

2 CONFIGURATION AND

DEBUGGING OF VDK

PROJECTS

This chapter contains information about the VisualDSP++ Integrated

Development and Debugging Environment (IDDE) support for VDK

enabled projects. You can access VDK components and services through

the set of menus, commands, and windows in the IDDE.

If you are new to VisualDSP++ application development software, we recommend that you start with the VisualDSP++ 3.5 Getting Started Guide

for your target processor family.

The IDDE support for the VDK can be broken into two areas:

• “Configuring VDK Projects” on page 2-1

• “Debugging VDK Projects” on page 2-3

Configuring VDK Projects

VisualDSP++ is extended to manage all of the VDK components. You

start developing a VDK based application by creating a set of source files.

The IDDE automatically generates a source code framework for each user

requested kernel object. Use the interface to supply the required information for these objects.

For specific procedures on how to set up VDK system parameters or how

to create, modify, or delete a VDK component, refer to the VisualDSP++

online Help. Following the online procedures ensures your VDK projects

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 2-1

for 16-bit Processors

Page 40

Configuring VDK Projects

build consistently and accurately with minimal project management. The

process reduces development time and allows you to concentrate on algorithm development.

Linker Description File

When a new project makes use of the kernel, a reference to a VDK-specific

default Linker Description File (

will be copied to your project directory to allow you to modify it to suit

your individual hardware configurations.

.LDF) is added to the project. This file

Thread Safe Libraries

Just as user threads must be reentrant, special “thread safe” versions of the

standard C and C++ libraries are included for use with the VDK. The

default .LDF included in VDK projects links with these libraries. If you

modify your Linker Description File, ensure that the file links with the

thread safe libraries. Your project’s LDF resides in the Linker Files folder

and is accessible via the Project tab of the Project window in

VisualDSP++.

Header Files for the VDK API

When a VDK project is created in the development environment, one of

the automatically generated files in the project directory is

header file contains enumerations for every user defined object in the

development environment and all VDK API declarations. Your source

files must include vdk.h to access any kernel services.

2-2 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

vdk.h. This

for 16-bit Processors

Page 41

Configuration and Debugging of VDK Projects

Debugging VDK Projects

Debugging embedded software is a difficult task. To help offset the initial

difficulties present in debugging VDK enabled projects, the kernel offers

special instrumented builds.

Instrumented Build Information

When building a VDK project, you have an option to include instrumentation in your executable by choosing Full Instrumentation as the

instrumentation level in the Kernel tab of the Project window. An instrumented build differs from a release or non-instrumented build because the

build includes extra code for thread statistic logging. In addition, an

instrumented build creates a circular buffer of important system events.

The extra logging introduces slight overhead in thread switches and certain API calls but helps you to trace system activities.

VDK State History Window

The VDK logs user defined events and certain system state changes in a

circular buffer. An event is logged in the history buffer with a call to

LogHistoryEvent(). The call to LogHistoryEvent() logs four data values:

the ThreadID of the calling thread, the tick when the call happened, the

enumeration, and a value that is specific to the enumeration. Enumerations less than zero are reserved for use by the VDK. For more

information about the history enumeration type, see HistoryEnum

on page 4-18.

Using the history log, the IDDE displays a graph of running threads and

system state changes in the State History window. Note that the data displayed in this window is only updated at halt. The State History window,

the Thread Status and Thread Event legends are described in detail in the

online Help.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 2-3

for 16-bit Processors

Page 42

Debugging VDK Projects

Target Load Graph Window

Instrumented VDK builds allow you to analyze the average load of the

processor over a period of time. The calculated load is displayed in the

Target Load graph window. Although the calculation is not exact, the

graph helps you to estimate the utilization level of the processor. Note

that the information is updated at halt.

The Target Load graph shows the percent of time the target spent in the

idle thread. A load of 0% means the VDK spent all of its time in the idle

thread. A load of 100% means the target did not spend any time in the

idle thread. Load data is processed using a moving window average. The

load percentage is calculated for every clock tick, and all the ticks are averaged. The following formula is used to calculate the percentage of

utilization for every clock tick.

Load = 1 – (# of times idle thread ran this tick) / (# of threads

run this tick)

For more information about the Target Load graph, refer to the online

Help.

VDK Status Window

Besides history and processor load information, an instrumented build

collects statistics for relevant VDK components, such as when a thread

was created, last run, the number of times run, etc. This data is displayed

in the Status window and is updated at halt.

For more information about the VDK Status window, refer to the online

Help.

2-4 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 43

Configuration and Debugging of VDK Projects

General Tips

Even with the data collection features built into the VDK, debugging

thread code is a difficult task. Due to the fact that multiple threads in a

system are interacting asynchronously with device drivers, interrupts, and

the idle thread, it can become difficult to track down the source of an

error.

Unfortunately, one of the oldest and easiest debugging methods—inserting breakpoints—can have uncommon side effects in VDK projects. Since

multiple threads (either multiple instantiations of the same thread type or

different threads of different thread types) can execute the same function

with completely different contexts, the utilization of non-thread-aware

breakpoints is diminished. One possible workaround involves inserting

some ‘thread-specific’ breakpoints:

if (VDK_GetThreadID() == <thread_with_bug>)

{

<some statement>; /* Insert breakpoint */

}

Kernel Panic

When VDK detects an error that cannot be handled by dispatching a

thread error, it calls an internal function called KernelPanic(). By default,

this function loops forever so that users can determine that a problem has

occurred and to provide information to facilitate debugging.

Panic()

intended location.

disables interrupts on entry to ensure that execution loops in the

KernelPanic() can be overridden by users in order to

handle these types of errors differently, for example resetting the

hardware.

To allow users to determine the cause of the panic VDK sets up the following variables.

VDK::PanicCode VDK::g_KernelPanicCode

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 2-5

for 16-bit Processors

Kernel-

Page 44

Debugging VDK Projects

VDK::SystemError VDK::g_KernelPanicError

int VDK::g_KernelPanicValue

int VDK::g_KernelPanicPC

• g_KernelPanicCode indicates the reason why VDK needed to raise

a Kernel Panic. For more information on the possible values of this

variable see “PanicCode” on page 4-32.

• g_KernelPanicError indicates in more detail the cause of the error.

For example, if g_KernelPanicCode indicates a boot error,

g_KernelPanicError specifies if the boot problem is in a sema-

phore, device flag, and so on. For more information, see

“SystemError” on page 4-40.

• g_KernelPanicValue is a value whose meaning is determined by the

error enumeration. For example, if the problem is creating the boot

thread with ID 4, g_KernelPanicValue is 4.

• g_KernelPanicPC provides the address that produced the Kernel

Panic.

2-6 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 45

3 USING VDK

This chapter describes how the VDK implements the general concepts

described in Chapter 2, “Introduction to VDK”. For information about

the kernel library, see Chapter 5, “VDK API Reference”.

The following sections provide information about the operating system

kernel components and operations.

• “Threads” on page 3-1

• “Scheduling” on page 3-9

• “Signals” on page 3-15

• “Interrupt Service Routines” on page 3-46

• “I/O Interface” on page 3-51

• “Memory Pools” on page 3-68

• “Multiple Heaps” on page 3-69

• “Thread Local Storage” on page 3-70

Threads

When designing an application, you partition it into threads, where each

thread is responsible for a piece of the work. Each thread operates independently of the others. A thread performs its duty as if it has its own

processor but can communicate with other threads.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 3-1

for 16-bit Processors

Page 46

Threads

Thread Types

You do not directly define threads; instead, you define thread types. A

thread is an instance of a thread type and is similar to any other user

defined type.

You can create multiple instantiations of the same thread type. Each

instantiation of the thread type has its own stack, state, priority, and other

local variables. In order to distinguish between different instances of the

same thread type an 'initializer' value can be passed to a boot thread (see

online Help for further information). Each thread is individually identified by its ThreadID, a handle that can be used to reference that thread in

kernel API calls. A thread can gain access to its ThreadID by calling

GetThreadID(). A ThreadID is valid for the life of the thread—once a

thread is destroyed, the ThreadID becomes invalid.

Old ThreadIDs are eventually reused, but there is significant time

between a thread’s destruction and the ThreadID reuse: other threads

have to recognize that the original thread is destroyed.

Thread Parameters

When a thread is created, the system allocates space in the heap to store a

data structure that holds the thread-specific parameters. The data structure contains internal information required by the kernel and the thread

type specifications provided by the user.

Stack Size

Each thread has its own stack. The full C/C++ run-time model, as specified in the appropriate VisualDSP++ 3.5 C/C++ Compiler and Library

Manual, is maintained on a per thread basis. It is your responsibility to

assure that each thread has enough room on its stack for all function calls’

return addresses and passed parameters appropriate to the particular

3-2 VisualDSP++ 3.5 Kernel (VDK) User’s Guide

for 16-bit Processors

Page 47

Using VDK

run-time model, user code structure, use of libraries, etc. Stack overflows

do not generate an exception, so an undersized stack has the potential to

cause difficulties when reproducing bugs in your system.

Priority

Each thread type specifies a default priority. Threads may change their

own (or another thread’s) priority dynamically using the SetPriority() or

ResetPriority() functions. Priorities are predefined by the kernel as an enu-

meration of type Priority with a value of

kPriority1 being the highest

priority (or the first to be scheduled) in the system. The priority enumeration is set up such that kPriority1 > kPriority2 > …. The number of

priorities is limited to the processor’s word size minus two.

Required Thread Functionality

Each thread type requires five particular functions to be declared and

implemented. Default null implementations of all five functions are provided in the templates generated by the VisualDSP++ development

environment. The thread’s run function is the entry point for the thread.

For many thread types, the thread’s run and error functions are the only

ones in the template you need to modify. The other functions allocate and

free up system resources at appropriate times during the creation and

destruction of a thread.

Run Function

The run function—called Run() in C++ and RunFunction() in C/assembly

implemented threads—is the entry point for a fully constructed thread;

Run() is roughly equivalent to main() in a C program. When a thread’s

run function returns, the thread is moved to the queue of threads waiting

to free their resources. If the run function never returns, the thread

remains running until destroyed.

VisualDSP++ 3.5 Kernel (VDK) User’s Guide 3-3

for 16-bit Processors

Page 48

Threads

Error Function

The thread’s error function is called by the kernel when an error occurs in

an API call made by the thread. The error function passes a description of

the error in the form of an enumeration (see SystemError on page 4-40 for

more details). It also can pass an additional piece of information whose

exact definition depends on the error enumeration. A thread’s default

error-handling behavior makes VDK go into Kernel Panic. See “Error

Handling Facilities” on page 3-8 for more information about error han-

dling in the VDK.

Create Function

The create function is similar to the C++ constructor. The function provides an abstraction used by the kernel APIs CreateThread() and

CreateThreadEx() to enable dynamic thread creation. The create function

is the first function called in the process of constructing a thread; it is also

responsible for calling the thread’s init function/constructor. Similar to

the constructor, the create function executes in the context of the thread

that is spawning a new thread by calling CreateThread() or CreateTh-

readEx(). The thread being constructed does not have a run-time context

fully established until after these functions complete.

A create function calls the constructor for the thread and ensures that all

of the allocations that the thread type required have taken place correctly.

If any of the allocations failed, the create function deletes the partially created thread instantiation and returns a null pointer. If the thread has been

constructed successfully, the create function returns the pointer to the

thread. A create function should not call DispatchThreadError() because