Software Optimization

Guide

for

AMD64 Processors

25112Publication # 3.06Revision:

September 2005Issue Date:

© 2001 – 2005 Advanced Micro Devices, Inc. All rights reserved.

The contents of this document are provided in connection with Advanced Micro Devices,

Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the

accuracy or completeness of the contents of this publication and reserves the right to make

changes to specifications and product descriptions at any time without notice. No license,

whether express, implied, arising by estoppel or otherwise, to any intellectual property

rights is granted by this publication. Except as set forth in AMD’s Standard Terms and

Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or

implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right.

AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications

intended to support or sustain life, or in any other application in which the failure of

AMD’s product could create a situation where personal injury, death, or severe property or

environmental damage may occur. AMD reserves the right to discontinue or make changes

to its products at any time without notice.

Trademarks

AMD, the AMD Arrow logo, AMD Athlon, AMD Opteron, and combinations thereof, 3DNow! and AMD-8151 are trademarks of

Advanced Micro Devices, Inc.

HyperTransport is a licensed trademark of the HyperTransport Technology Consortium.

Microsoft is a registered trademark of Microsoft Corporation.

MMX is a trademark of Intel Corporation.

Other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Contents

Revision History . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xv

Chapter 1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1

1.1 Intended Audience . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1

1.2 Getting Started Quickly . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1

1.3 Using This Guide . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2

1.4 Important New Terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4

1.5 Key Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6

Chapter 2 C and C++ Source-Level Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7

2.1 Declarations of Floating-Point Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9

2.2 Using Arrays and Pointers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10

2.3 Unrolling Small Loops . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .13

2.4 Expression Order in Compound Branch Conditions . . . . . . . . . . . . . . . . . . . . . . . . .14

2.5 Long Logical Expressions in If Statements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .16

2.6 Arrange Boolean Operands for Quick Expression Evaluation . . . . . . . . . . . . . . . . . .17

2.7 Dynamic Memory Allocation Consideration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .19

2.8 Unnecessary Store-to-Load Dependencies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .20

2.9 Matching Store and Load Size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .22

2.10 SWITCH and Noncontiguous Case Expressions . . . . . . . . . . . . . . . . . . . . . . . . . . . .25

2.11 Arranging Cases by Probability of Occurrence . . . . . . . . . . . . . . . . . . . . . . . . . . . . .28

2.12 Use of Function Prototypes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .29

2.13 Use of const Type Qualifier . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .30

2.14 Generic Loop Hoisting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .31

2.15 Local Static Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .34

2.16 Explicit Parallelism in Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .35

2.17 Extracting Common Subexpressions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .37

2.18 Sorting and Padding C and C++ Structures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .39

2.19 Sorting Local Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .41

Contents iii

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.20 Replacing Integer Division with Multiplication . . . . . . . . . . . . . . . . . . . . . . . . . . . . .43

2.21 Frequently Dereferenced Pointer Arguments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .44

2.22 Array Indices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .46

2.23 32-Bit Integral Data Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .47

2.24 Sign of Integer Operands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .48

2.25 Accelerating Floating-Point Division and Square Root . . . . . . . . . . . . . . . . . . . . . . .50

2.26 Fast Floating-Point-to-Integer Conversion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .52

2.27 Speeding Up Branches Based on Comparisons Between Floats . . . . . . . . . . . . . . . .54

2.28 Improving Performance in Linux Libraries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .57

Chapter 3 General 64-Bit Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .59

3.1 64-Bit Registers and Integer Arithmetic . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .60

3.2 64-Bit Arithmetic and Large-Integer Multiplication . . . . . . . . . . . . . . . . . . . . . . . . .62

3.3 128-Bit Media Instructions and Floating-Point Operations . . . . . . . . . . . . . . . . . . . .67

3.4 32-Bit Legacy GPRs and Small Unsigned Integers . . . . . . . . . . . . . . . . . . . . . . . . . .68

Chapter 4 Instruction-Decoding Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .71

4.1 DirectPath Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .72

4.2 Load-Execute Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .73

4.2.1 Load-Execute Integer Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .73

4.2.2 Load-Execute Floating-Point Instructions with Floating-Point Operands . . .74

4.2.3 Load-Execute Floating-Point Instructions with Integer Operands . . . . . . . . .74

4.3 Branch Targets in Program Hot Spots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .76

4.4 32/64-Bit vs. 16-Bit Forms of the LEA Instruction . . . . . . . . . . . . . . . . . . . . . . . . . .77

4.5 Take Advantage of x86 and AMD64 Complex Addressing Modes . . . . . . . . . . . . . .78

4.6 Short Instruction Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .80

4.7 Partial-Register Reads and Writes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .81

4.8 Using LEAVE for Function Epilogues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .83

4.9 Alternatives to SHLD Instruction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .85

4.10 8-Bit Sign-Extended Immediate Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .87

4.11 8-Bit Sign-Extended Displacements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .88

4.12 Code Padding with Operand-Size Override and NOP . . . . . . . . . . . . . . . . . . . . . . . .89

iv Contents

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Chapter 5 Cache and Memory Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .91

5.1 Memory-Size Mismatches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .92

5.2 Natural Alignment of Data Objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .95

5.3 Cache-Coherent Nonuniform Memory Access (ccNUMA) . . . . . . . . . . . . . . . . . . . .96

5.4 Multiprocessor Considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .99

5.5 Store-to-Load Forwarding Restrictions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .100

5.6 Prefetch Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .104

5.7 Streaming-Store/Non-Temporal Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .112

5.8 Write-combining . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .113

5.9 L1 Data Cache Bank Conflicts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .114

5.10 Placing Code and Data in the Same 64-Byte Cache Line . . . . . . . . . . . . . . . . . . . . .116

5.11 Sorting and Padding C and C++ Structures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .117

5.12 Sorting Local Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .119

5.13 Memory Copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .120

5.14 Stack Considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .122

5.15 Cache Issues when Writing Instruction Bytes to Memory . . . . . . . . . . . . . . . . . . . .123

5.16 Interleave Loads and Stores . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .124

Chapter 6 Branch Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .125

6.1 Density of Branches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .126

6.2 Two-Byte Near-Return RET Instruction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .128

6.3 Branches That Depend on Random Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .130

6.4 Pairing CALL and RETURN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .132

6.5 Recursive Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .133

6.6 Nonzero Code-Segment Base Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .135

6.7 Replacing Branches with Computation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .136

6.8 The LOOP Instruction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .141

6.9 Far Control-Transfer Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .142

Chapter 7 Scheduling Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .143

7.1 Instruction Scheduling by Latency . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .144

7.2 Loop Unrolling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .145

Contents v

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

7.3 Inline Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .149

7.4 Address-Generation Interlocks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .151

7.5 MOVZX and MOVSX . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .153

7.6 Pointer Arithmetic in Loops . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .154

7.7 Pushing Memory Data Directly onto the Stack . . . . . . . . . . . . . . . . . . . . . . . . . . . .157

Chapter 8 Integer Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .159

8.1 Replacing Division with Multiplication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .160

8.2 Alternative Code for Multiplying by a Constant . . . . . . . . . . . . . . . . . . . . . . . . . . .164

8.3 Repeated String Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .167

8.4 Using XOR to Clear Integer Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .169

8.5 Efficient 64-Bit Integer Arithmetic in 32-Bit Mode . . . . . . . . . . . . . . . . . . . . . . . . .170

8.6 Efficient Implementation of Population-Count Function in 32-Bit Mode . . . . . . . .179

8.7 Efficient Binary-to-ASCII Decimal Conversion . . . . . . . . . . . . . . . . . . . . . . . . . . .181

8.8 Derivation of Algorithm, Multiplier, and Shift Factor for Integer

Division by Constants . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .186

8.9 Optimizing Integer Division . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .192

Chapter 9 Optimizing with SIMD Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .193

9.1 Ensure All Packed Floating-Point Data are Aligned . . . . . . . . . . . . . . . . . . . . . . . . .195

9.2 Improving Scalar SSE and SSE2 Floating-Point Performance with MOVLPD and

MOVLPS When Loading Data from Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . .196

9.3 Use MOVLPx/MOVHPx Instructions for Unaligned Data Access . . . . . . . . . . . . .198

9.4 Use MOVAPD and MOVAPS Instead of MOVUPD and MOVUPS . . . . . . . . . . .199

9.5 Structuring Code with Prefetch Instructions to Hide Memory Latency . . . . . . . . . .200

9.6 Avoid Moving Data Directly Between

General-Purpose and MMX™ Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .206

9.7 Use MMX™ Instructions to Construct Fast Block-Copy

Routines in 32-Bit Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .207

9.8 Passing Data between MMX™ and 3DNow!™ Instructions . . . . . . . . . . . . . . . . . .208

9.9 Storing Floating-Point Data in MMX™ Registers . . . . . . . . . . . . . . . . . . . . . . . . . .209

9.10 EMMS and FEMMS Usage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .210

vi Contents

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

9.11 Using SIMD Instructions for Fast Square Roots and Fast

Reciprocal Square Roots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .211

9.12 Use XOR Operations to Negate Operands of SSE, SSE2, and

3DNow!™ Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .215

9.13 Clearing MMX™ and XMM Registers with XOR Instructions . . . . . . . . . . . . . . . .216

9.14 Finding the Floating-Point Absolute Value of Operands of SSE, SSE2, and

3DNow!™ Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .217

9.15 Accumulating Single-Precision Floating-Point Numbers Using SSE, SSE2,

and 3DNow!™ Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .218

9.16 Complex-Number Arithmetic Using SSE, SSE2, and 3DNow!™ Instructions . . . .221

9.17 Optimized 4 × 4 Matrix Multiplication on 4 × 1 Column Vector Routines . . . . . . .230

Chapter 10 x87 Floating-Point Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .237

10.1 Using Multiplication Rather Than Division . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .238

10.2 Achieving Two Floating-Point Operations per Clock Cycle . . . . . . . . . . . . . . . . . .239

10.3 Floating-Point Compare Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .244

10.4 Using the FXCH Instruction Rather Than FST/FLD Pairs . . . . . . . . . . . . . . . . . . . .245

10.5 Floating-Point Subexpression Elimination . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .246

10.6 Accumulating Precision-Sensitive Quantities in x87 Registers . . . . . . . . . . . . . . . .247

10.7 Avoiding Extended-Precision Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .248

Appendix A Microarchitecture for AMD Athlon™ 64 and AMD Opteron™ Processors . .249

A.1 Key Microarchitecture Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .250

A.2 Microarchitecture for AMD Athlon™ 64 and AMD Opteron™ Processors . . . . . .251

A.3 Superscalar Processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .251

A.4 Processor Block Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .251

A.5 L1 Instruction Cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .252

A.6 Branch-Prediction Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .253

A.7 Fetch-Decode Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .254

A.8 Instruction Control Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .254

A.9 Translation-Lookaside Buffer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .254

A.10 L1 Data Cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .255

A.11 Integer Scheduler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .256

Contents vii

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

A.12 Integer Execution Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .256

A.13 Floating-Point Scheduler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .257

A.14 Floating-Point Execution Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .258

A.15 Load-Store Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .258

A.16 L2 Cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .259

A.17 Write-combining . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .260

A.18 Buses for AMD Athlon™ 64 and AMD Opteron™ Processor . . . . . . . . . . . . . . . .260

A.19 Integrated Memory Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .260

A.20 HyperTransport™ Technology Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .260

Appendix B Implementation of Write-Combining . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .263

B.1 Write-Combining Definitions and Abbreviations . . . . . . . . . . . . . . . . . . . . . . . . . . .263

B.2 Programming Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .264

B.3 Write-combining Operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .264

B.4 Sending Write-Buffer Data to the System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .266

B.5 Write-Combining Optimization on Revision D and E

AMD Athlon™ 64 and AMD Opteron™ Processors . . . . . . . . . . . . . . . . . . . . . . . .266

Appendix C Instruction Latencies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .269

C.1 Understanding Instruction Entries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .270

C.2 Integer Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .273

C.3 MMX™ Technology Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .303

C.4 x87 Floating-Point Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .307

C.5 3DNow!™ Technology Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .314

C.6 3DNow!™ Technology Extensions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .316

C.7 SSE Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .317

C.8 SSE2 Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .326

C.9 SSE3 Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .342

Appendix D AGP Considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .345

D.1 Fast-Write Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .345

D.2 Fast-Write Optimizations for Graphics-Engine Programming . . . . . . . . . . . . . . . . .346

D.3 Fast-Write Optimizations for Video-Memory Copies . . . . . . . . . . . . . . . . . . . . . . .349

viii Contents

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

D.4 Memory Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .351

D.5 Memory Optimizations for Graphics-Engine Programming

Using the DMA Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .352

D.6 Optimizations for Texture-Map Copies to AGP Memory . . . . . . . . . . . . . . . . . . . .353

D.7 Optimizations for Vertex-Geometry Copies to AGP Memory . . . . . . . . . . . . . . . . .353

Appendix E SSE and SSE2 Optimizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .355

E.1 Half-Register Operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .356

E.2 Zeroing Out an XMM Register . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .357

E.3 Reuse of Dead Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .359

E.4 Moving Data Between XMM Registers and GPRs . . . . . . . . . . . . . . . . . . . . . . . . .360

E.5 Saving and Restoring Registers of Unknown Format . . . . . . . . . . . . . . . . . . . . . . .361

E.6 SSE and SSE2 Copy Loops . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .362

E.7 Explicit Load Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .363

E.8 Data Conversion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .364

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .367

Contents ix

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

x Contents

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Tables

Table 1. Instructions, Macro-ops and Micro-ops ............................................................................5

Table 2. Optimizations by Rank......................................................................................................6

Table 3. Comparisons against Zero...............................................................................................55

Table 4. Comparisons against Positive Constant ..........................................................................55

Table 5. Comparisons among Two Floats.....................................................................................55

Table 6. Latency of Repeated String Instructions .......................................................................167

Table 7. L1 Instruction Cache Specifications by Processor........................................................253

Table 8. L1 Instruction TLB Specifications................................................................................255

Table 9. L1 Data TLB Specifications..........................................................................................255

Table 10. L2 TLB Specifications by Processor.............................................................................255

Table 11. L1 Data Cache Specifications by Processor..................................................................256

Table 12. Write-Combining Completion Events...........................................................................265

Table 13. Integer Instructions........................................................................................................273

Table 14. MMX™ Technology Instructions.................................................................................303

Table 15. x87 Floating-Point Instructions.....................................................................................307

Table 16. 3DNow!™ Technology Instructions.............................................................................314

Table 17. 3DNow!™ Technology Extensions ..............................................................................316

Table 18. SSE Instructions............................................................................................................317

Table 19. SSE2 Instructions..........................................................................................................326

Table 20. SSE3 Instructions..........................................................................................................342

Table 21. Clearing XMM Registers ..............................................................................................357

Table 22. Converting Scalar Values .............................................................................................364

Table 23. Converting Vector Values.............................................................................................365

Table 24. Converting Directly from Memory ...............................................................................365

Tables xi

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

xii Tables

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Figures

Figure 1. Simple SMP Block Diagram...........................................................................................97

Figure 2. Dual-Core AMD Opteron™ Processor Configuration ...................................................97

Figure 3. Memory-Limited Code .................................................................................................109

Figure 4. Processor-Limited Code ...............................................................................................109

Figure 5. AMD Athlon™ 64 and AMD Opteron™ Processors Block Diagram .........................252

Figure 6. Integer Execution Pipeline............................................................................................256

Figure 7. Floating-Point Unit .......................................................................................................258

Figure 8. Load-Store Unit ............................................................................................................259

Figure 9. AGP 8x Fast-Write Transaction ...................................................................................346

Figure 10. Cacheable-Memory Command Structure .....................................................................347

Figure 11. Northbridge Command Flow ........................................................................................352

Figures xiii

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

xiv Figures

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

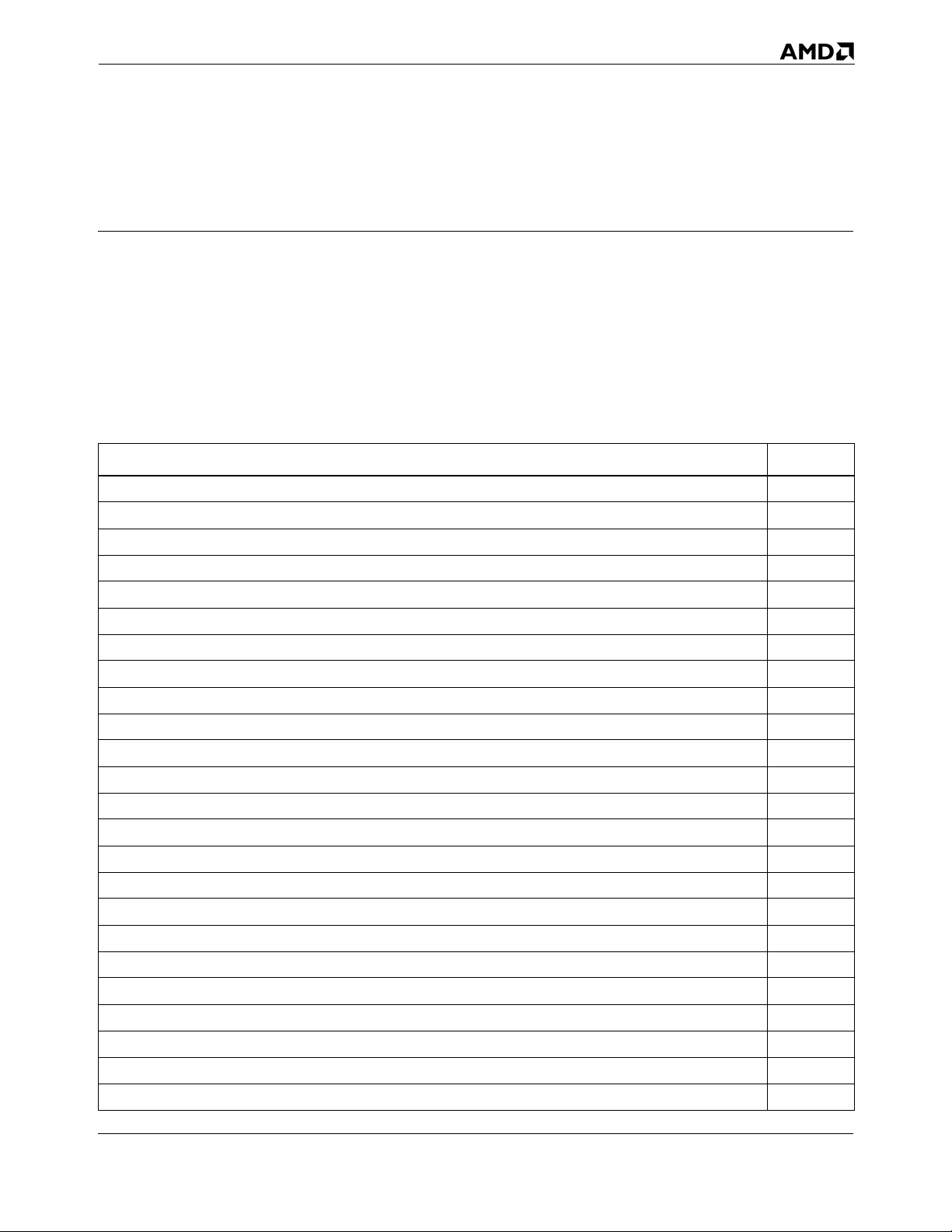

Revision History

Date Rev. Description

August 2005 3.06 Updated latency tables in Appendix C. Added section 8.9 on optimizing integer

division. Clarified the use of non-temporal PREFETCHNTA instruction in section

5.6. Added explanatory information to section 5.3 on ccNUMA. Added section 4.5

on AMD64 complx addressing modes. Added new section 5.13 on memory copies.

October 2004 3.05 Updated information on write-combining optimizations in Appendix B,

Implementation of Write-Combining; Added latency information for SSE3

instructions.

March 2004 3.04 Incorporated a section on ccNUMA in Chapter 5. Added sections on moving

unaligned versus unaligned data. Added to PREFETCHNTA information in Chapter

5. Fixed many minor typos.

September 2003 3.03 Made several minor typographical and formatting corrections.

July 2003 3.02 Added index references. Corrected information pertaining to L1 and L2 data and

instruction caches. Corrected information on alignment in Chapter 5, “Cache and

Memory Optimizations”. Amended latency information in Appendix C.

April 2003 3.01 Clarified section 2.22 'Array Indices'. Corrected factual errors and removed

misleading examples from Cache and Memory chapter..

April 2003 3.00 Initial public release.

Revision History xv

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

xvi Revision History

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Chapter 1 Introduction

This guide provides optimization information and recommendations for the AMD Athlon™ 64 and

AMD Opteron™ processors. These optimizations are designed to yield software code that is fast,

compact, and efficient. Toward this end, the optimizations in each of the following chapters are listed

in order of importance.

This chapter covers the following topics:

Topic Page

Intended Audience 1

Getting Started Quickly 1

Using This Guide 2

Important New Terms 4

Key Optimizations 6

1.1 Intended Audience

This book is intended for compiler and assembler designers, as well as C, C++, and assemblylanguage programmers writing performance-sensitive code sequences. This guide assumes that you

are familiar with the AMD64 instruction set and the AMD64 architecture (registers and programming

modes). For complete information on the AMD64 architecture and instruction set, see the

multivolume AMD64 Architecture Programmer’s Manual available from AMD.com. Documentation

volumes and their order numbers are provided below.

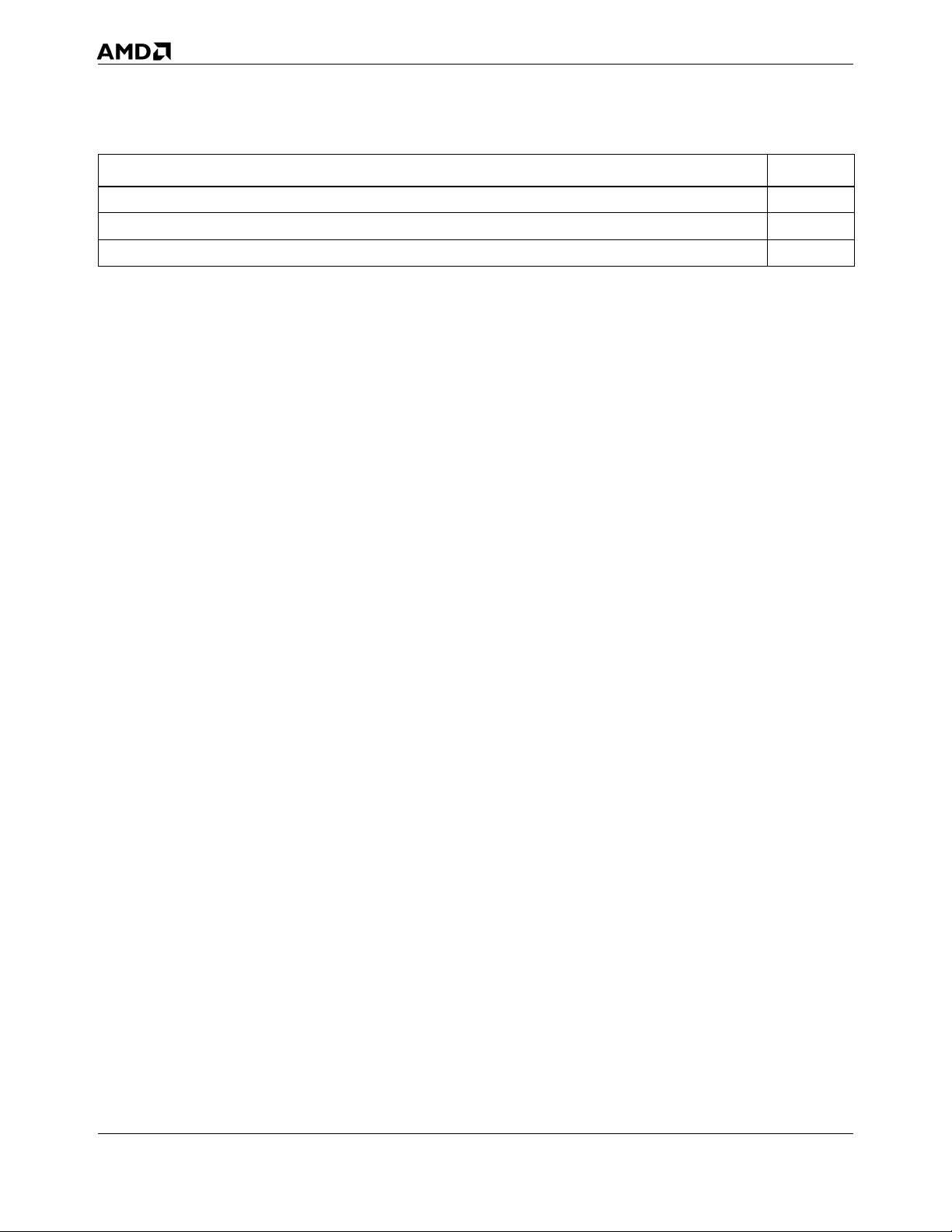

Title Order no.

Volume 1 , Application Programming 24592

Volume 2 , System Programming 24593

Volume 3 , General-Purpose and System Instructions 24594

Volume 4 , 128-Bit Media Instructions 26568

Volume 5 , 64-Bit Media and x87 Floating-Point Instructions 26569

1.2 Getting Started Quickly

More experienced readers may skip to “Key Optimizations” on page 6, which identifies the most

important optimizations.

Chapter 1 Introduction 1

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

1.3 Using This Guide

This chapter explains how to get the most benefit from this guide. It defines important new terms you

will need to understand before reading the rest of this guide and lists the most important optimizations

by rank.

Chapter 2 describes techniques that you can use to optimize your C and C++ source code. The

“Application” section for each optimization indicates whether the optimization applies to 32-bit

software, 64-bit software, or both.

Chapter 3 presents general assembly-language optimizations that improve the performance of

software designed to run in 64-bit mode. All optimizations in this chapter apply only to 64-bit

software.

The remaining chapters describe assembly-language optimizations. The “Application” section under

each optimization indicates whether the optimization applies to 32-bit software, 64-bit software, or

both.

Chapter 4 Instruction-Decoding Optimizations

Chapter 5 Cache and Memory Optimizations

Chapter 6 Branch Optimizations

Chapter 7 Scheduling Optimizations

Chapter 8 Integer Optimizations

Chapter 9 Optimizing with SIMD Instructions

Chapter 10 x87 Floating-Point Optimizations

Appendix A discusses the internal design, or microarchitecture, of the processor and provides

specifications on the translation-lookaside buffers. It also provides information on other functional

units that are not part of the main processor but are integrated on the chip.

Appendix B describes the memory write-combining feature of the processor.

Appendix C provides a complete listing of all AMD64 instructions. It shows each instruction’s

encoding, decode type, execution latency, and—where applicable—the pipe used in the floating-point

unit.

Appendix D discusses optimizations that improve the throughput of AGP transfers.

Appendix E describes coding practices that improve performance when using SSE and SSE2

instructions.

2 Introduction Chapter 1

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Special Information

Special information in this guide looks like this:

❖ This symbol appears next to the most important, or key, optimizations.

Numbering Systems

The following suffixes identify different numbering systems:

This suffix Identifies a

b Binary number. For example, the binary equivalent of the number 5 is written 101b.

d Decimal number. Decimal numbers are followed by this suffix only when the possibility of

confusion exists. In general, decimal numbers are shown without a suffix.

h Hexadecimal number. For example, the hexadecimal equivalent of the number 60 is

written 3Ch.

Typographic Notation

This guide uses the following typographic notations for certain types of information:

This type of text Identifies

italic Placeholders that represent information you must provide. Italicized text is also used

for the titles of publications and for emphasis.

monowidth Program statements and function names.

Providing Feedback

If you have suggestions for improving this guide, we would like to hear from you. Please send your

comments to the following e-mail address:

code.optimization@amd.com

Chapter 1 Introduction 3

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

1.4 Important New Terms

This section defines several important terms and concepts used in this guide.

Primitive Operations

AMD Athlon 64 and AMD Opteron processors perform four types of primitive operations:

• Integer (arithmetic or logic)

• Floating-point (arithmetic)

• Load

• Store

Internal Instruction Formats

The AMD64 instruction set is complex; instructions have variable-length encodings and many

perform multiple primitive operations. AMD Athlon 64 and AMD Opteron processors do not execute

these complex instructions directly, but, instead, decode them internally into simpler fixed-length

instructions called macro-ops. Processor schedulers subsequently break down macro-ops into

sequences of even simpler instructions called micro-ops, each of which specifies a single primitive

operation.

A macro-op is a fixed-length instruction that:

• Expresses, at most, one integer or floating-point operation and one load and/or store operation.

• Is the primary unit of work managed (that is, dispatched and retired) by the processor.

A micro-op is a fixed-length instruction that:

• Expresses one and only one of the primitive operations that the processor can perform (for

example, a load).

• Is executed by the processor’s execution units.

4 Introduction Chapter 1

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

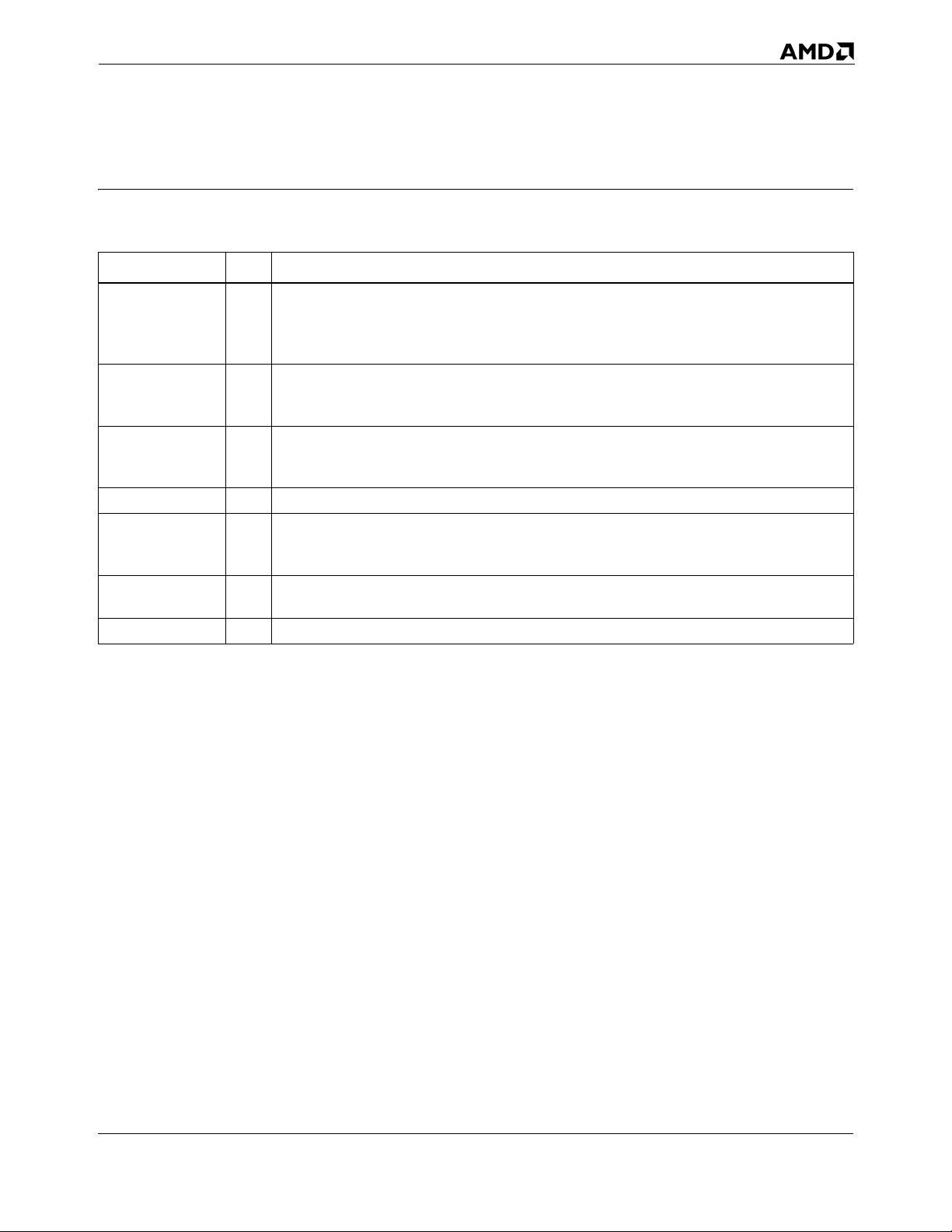

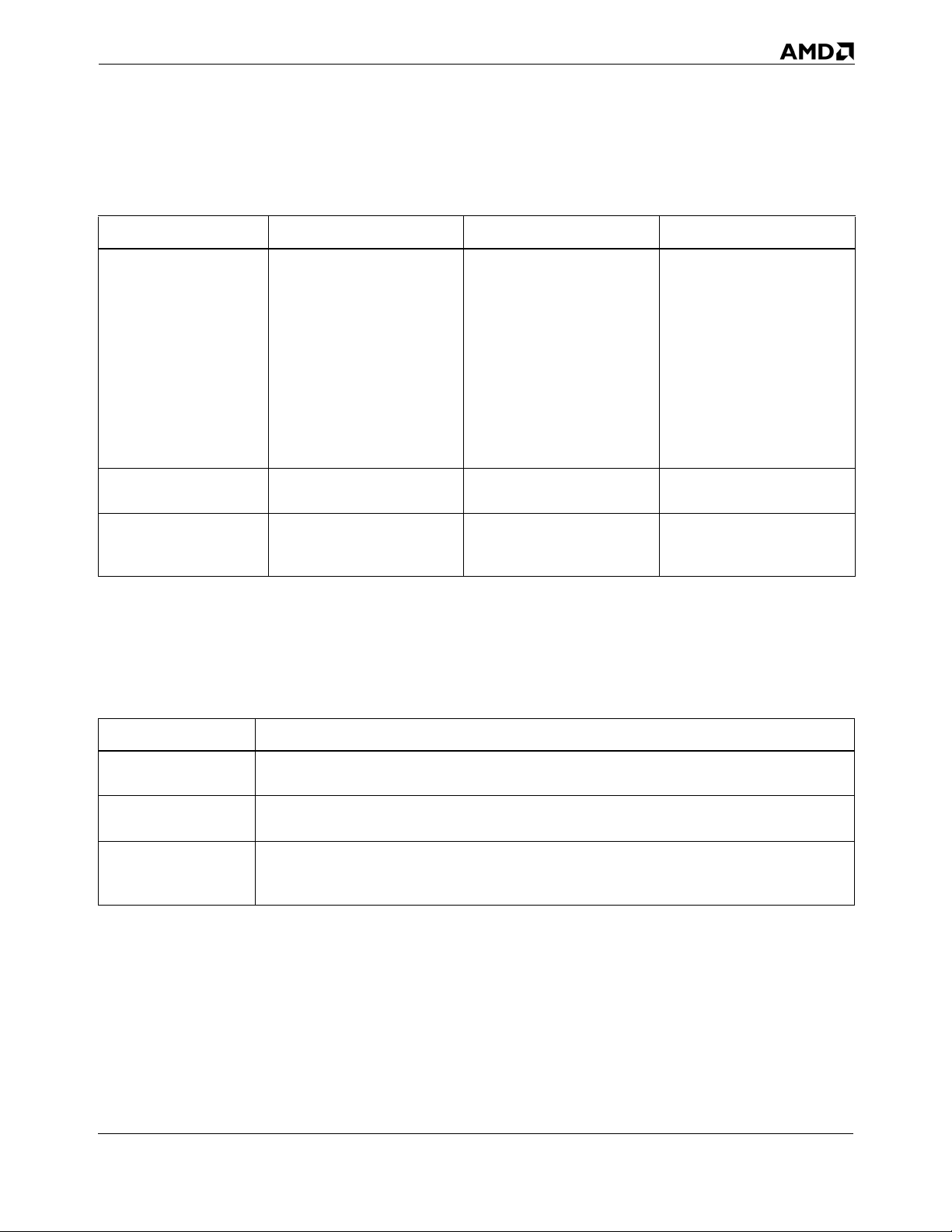

Table 1 summarizes the differences between AMD64 instructions, macro-ops, and micro-ops.

Table 1. Instructions, Macro-ops and Micro-ops

Comparing AMD64 instructions Macro-ops Micro-ops

Complexity Complex

A single instruction may

specify one or more of

each of the following

operations:

• Integer or floating-point

operation

• Load

•Store

Encoded length Variable (instructions are

different lengths)

Regularized

instruction fields

No (field locations and

definitions vary among

instructions)

Average

A single macro-op may

specify—at most—one

integer or floating-point

operation and one of the

following operations:

• Load

•Store

• Load and store to the

same address

Fixed (all macro-ops are

the same length)

Yes (field locations and

definitions are the same

for all macro-ops)

Simple

A single micro-op

specifies only one of the

following primitive

operations:

• Integer or floating-point

• Load

•Store

Fixed (all micro-ops are

the same length)

Yes (field locations and

definitions are the same

for all micro-ops)

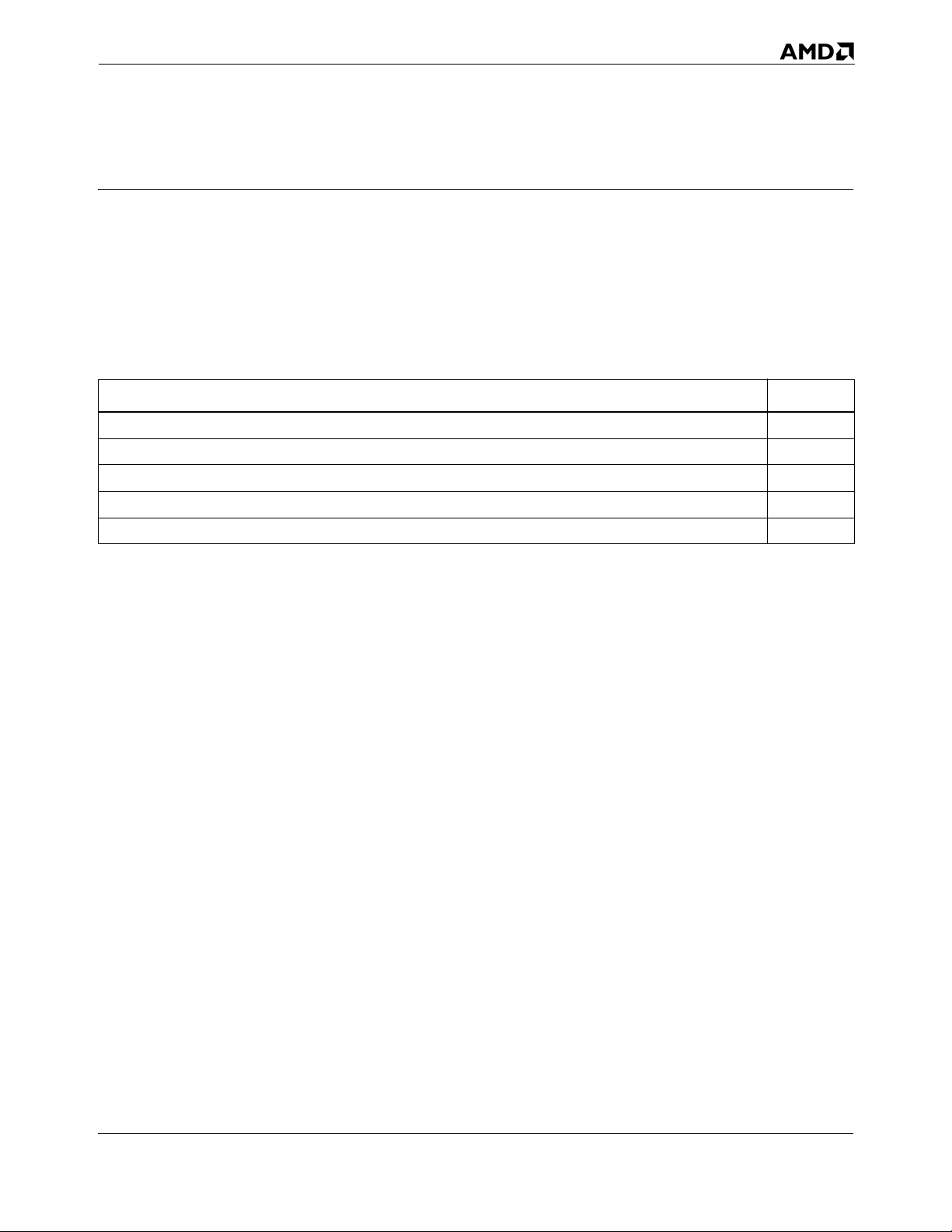

Types of Instructions

Instructions are classified according to how they are decoded by the processor. There are three types

of instructions:

Instruction Type Description

DirectPath Single A relatively common instruction that the processor decodes directly into one macro-op

in hardware.

DirectPath Double A relatively common instruction that the processor decodes directly into two macro-

ops in hardware.

VectorPath A sophisticated or less common instruction that the processor decodes into one or

more (usually three or more) macro-ops using the on-chip microcode-engine ROM

(MROM).

Chapter 1 Introduction 5

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

1.5 Key Optimizations

While all of the optimizations in this guide help improve software performance, some of them have

more impact than others. Optimizations that offer the most improvement are called key optimizations.

Guideline

Concentrate your efforts on implementing key optimizations before moving on to other optimizations,

and incorporate higher-ranking key optimizations first.

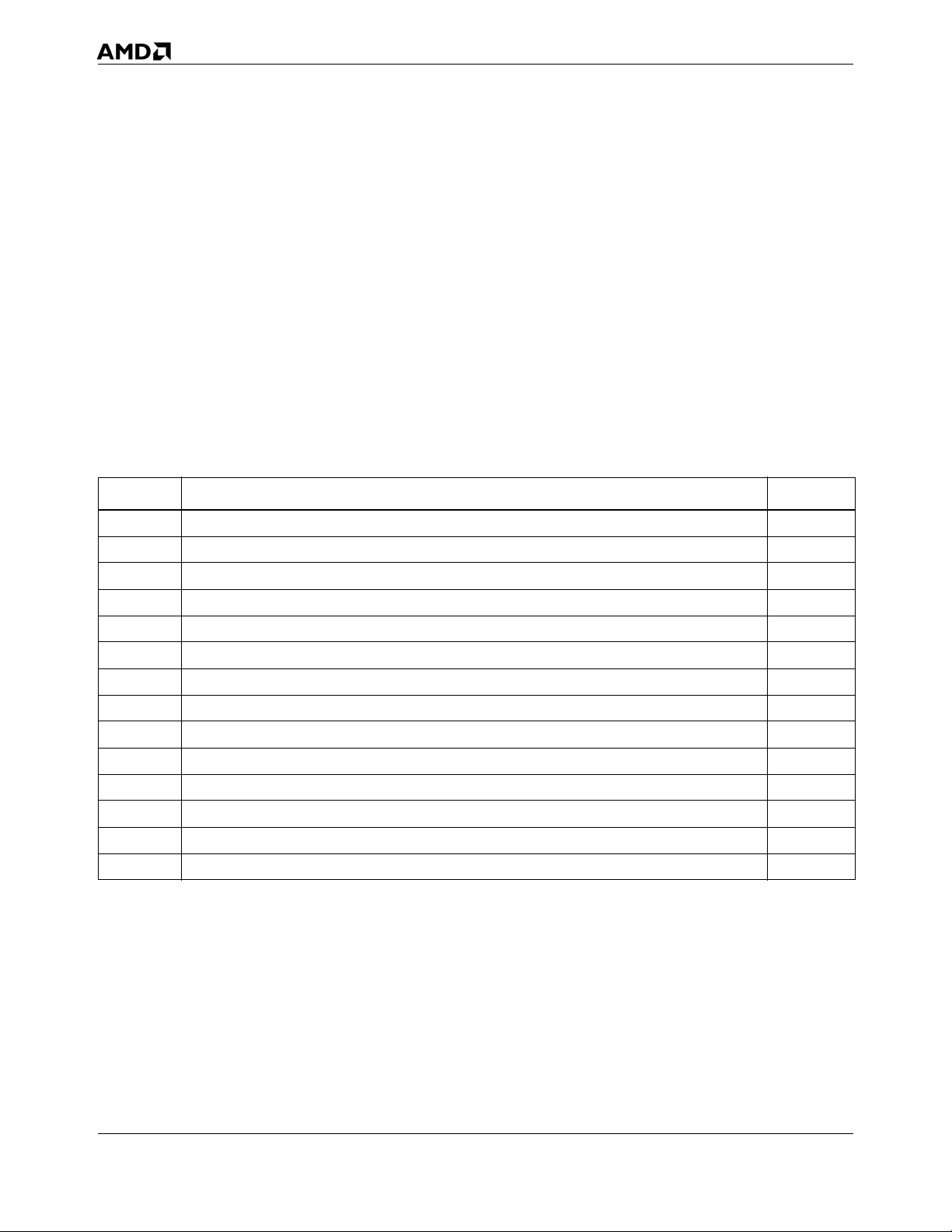

Key Optimizations by Rank

Table 1 lists the key optimizations by rank.

Table 2. Optimizations by Rank

Rank Optimization Page

1 Memory-Size Mismatches 92

2 Natural Alignment of Data Objects 95

3 Memory Copy 120

4 Density of Branches 126

5 Prefetch Instructions 104

6 Two-Byte Near-Return RET Instruction 128

7 DirectPath Instructions 72

8 Load-Execute Integer Instructions 73

9 Load-Execute Floating-Point Instructions with Floating-Point Operands 74

10 Load-Execute Floating-Point Instructions with Integer Operands 74

11 Write-combining 113

12 Branches That Depend on Random Data 130

13 Half-Register Operations 356

14 Placing Code and Data in the Same 64-Byte Cache Line 116

6 Introduction Chapter 1

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Chapter 2 C and C++ Source-Level

Optimizations

Although C and C++ compilers generally produce very compact object code, many performance

improvements are possible by careful source code optimization. Most such optimizations result from

taking advantage of the underlying mechanisms used by C and C++ compilers to translate source

code into sequences of AMD64 instructions. This chapter includes guidelines for writing C and C++

source code that result in the most efficiently optimized AMD64 code.

This chapter covers the following topics:

Topic Page

Declarations of Floating-Point Values 9

Using Arrays and Pointers 10

Unrolling Small Loops 13

Expression Order in Compound Branch Conditions 14

Long Logical Expressions in If Statements 16

Arrange Boolean Operands for Quick Expression Evaluation 17

Dynamic Memory Allocation Consideration 19

Unnecessary Store-to-Load Dependencies 20

Matching Store and Load Size 22

SWITCH and Noncontiguous Case Expressions 25

Arranging Cases by Probability of Occurrence 28

Use of Function Prototypes 29

Use of const Type Qualifier 30

Generic Loop Hoisting 31

Local Static Functions 34

Explicit Parallelism in Code 35

Extracting Common Subexpressions 37

Sorting and Padding C and C++ Structures 39

Sorting Local Variables 41

Replacing Integer Division with Multiplication 43

Frequently Dereferenced Pointer Arguments 44

Array Indices 46

32-Bit Integral Data Types 47

Sign of Integer Operands 48

Chapter 2 C and C++ Source-Level Optimizations 7

Software Optimization Guide for AMD64 Processors

Topic Page

Accelerating Floating-Point Division and Square Root 50

Fast Floating-Point-to-Integer Conversion 52

Speeding Up Branches Based on Comparisons Between Floats 54

25112 Rev. 3.06 September 2005

8 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.1 Declarations of Floating-Point Values

Optimization

When working with single precision (float) values:

• Use the f or F suffix (for example, 3.14f) to specify a constant value of type float.

• Use function prototypes for all functions that accept arguments of type float.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

C and C++ compilers treat floating-point constants and arguments as double precision (double)

unless you specify otherwise. However, single precision floating-point values occupy half the

memory space as double precision values and can often provide the precision necessary for a given

computational problem.

Chapter 2 C and C++ Source-Level Optimizations 9

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.2 Using Arrays and Pointers

Optimization

Use array notation instead of pointer notation when working with arrays.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

C allows the use of either the array operator ([]) or pointers to access the elements of an array.

However, the use of pointers in C makes work difficult for optimizers in C compilers. Without

detailed and aggressive pointer analysis, the compiler has to assume that writes through a pointer can

write to any location in memory, including storage allocated to other variables. (For example, *p and

*q can refer to the same memory location, while x[0] and x[2] cannot.) Using pointers causes

aliasing, where the same block of memory is accessible in more than one way. Using array notation

makes the task of the optimizer easier by reducing possible aliasing.

10 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Example

Avoid code, such as the following, which uses pointer notation:

typedef struct {

float x, y, z, w;

} VERTEX;

typedef struct {

float m[4][4];

} MATRIX;

void XForm(float *res, const float *v, const float *m, int numverts) {

float dp;

int i;

const VERTEX* vv = (VERTEX *)v;

for (i = 0; i < numverts; i++) {

dp = vv->x * *m++;

dp += vv->y * *m++;

dp += vv->z * *m++;

dp += vv->w * *m++;

*res++ = dp; // Write transformed x.

dp = vv->x * *m++;

dp += vv->y * *m++;

dp += vv->z * *m++;

dp += vv->w * *m++;

*res++ = dp; // Write transformed y.

dp = vv->x * *m++;

dp += vv->y * *m++;

dp += vv->z * *m++;

dp += vv->w * *m++;

*res++ = dp; // Write transformed z.

dp = vv->x * *m++;

dp += vv->y * *m++;

dp += vv->z * *m++;

dp += vv->w * *m++;

*res++ = dp; // Write transformed w.

++vv; // Next input vertex

m -= 16; // Reset to start of transform matrix.

}

}

Chapter 2 C and C++ Source-Level Optimizations 11

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

Instead, use the equivalent array notation:

typedef struct {

float x, y, z, w;

} VERTEX;

typedef struct {

float m[4][4];

} MATRIX;

void XForm(float *res, const float *v, const float *m, int numverts) {

int i;

const VERTEX* vv = (VERTEX *)v;

const MATRIX* mm = (MATRIX *)m;

VERTEX* rr = (VERTEX *)res;

for (i = 0; i < numverts; i++) {

rr->x = vv->x * mm->m[0][0] + vv->y * mm->m[0][1] +

vv->z * mm->m[0][2] + vv->w * mm->m[0][3];

rr->y = vv->x * mm->m[1][0] + vv->y * mm->m[1][1] +

vv->z * mm->m[1][2] + vv->w * mm->m[1][3];

rr->z = vv->x * mm->m[2][0] + vv->y * mm->m[2][1] +

vv->z * mm->m[2][2] + vv->w * mm->m[2][3];

rr->w = vv->x * mm->m[3][0] + vv->y * mm->m[3][1] +

vv->z * mm->m[3][2] + vv->w * mm->m[3][3];

++rr; // Increment the results pointer.

++vv; // Increment the input vertex pointer.

}

}

Additional Considerations

Source-code transformations interact with a compiler’s code generator, making it difficult to control

the generated machine code from the source level. It is even possible that source-code transformations

aimed at improving performance may conflict with compiler optimizations. Depending on the

compiler and the specific source code, it is possible for pointer-style code to compile into machine

code that is faster than that generated from equivalent array-style code. Compare the performance of

your code after implementing a source-code transformation with the performance of the original code

to be sure that there is an improvement.

12 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.3 Unrolling Small Loops

Optimization

Completely unroll loops that have a small fixed loop count and a small loop body.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Many compilers do not aggressively unroll loops. Manually unrolling loops can benefit performance,

especially if the loop body is small, which makes the loop overhead significant.

Example

Avoid a small loop like this:

// 3D-transform: Multiply vector V by 4x4 transform matrix M.

for (i = 0; i < 4; i++) {

r[i] = 0;

for (j = 0; j < 4; j++) {

r[i] += m[j][i] * v[j];

}

}

Instead, replace it with its completely unrolled equivalent, as shown here:

r[0] = m[0][0] * v[0] + m[1][0] * v[1] + m[2][0] * v[2] + m[3][0] * v[3];

r[1] = m[0][1] * v[0] + m[1][1] * v[1] + m[2][1] * v[2] + m[3][1] * v[3];

r[2] = m[0][2] * v[0] + m[1][2] * v[1] + m[2][2] * v[2] + m[3][2] * v[3];

r[3] = m[0][3] * v[0] + m[1][3] * v[1] + m[2][3] * v[2] + m[3][3] * v[3];

Related Information

For information on loop unrolling at the assembly-language level, see “Loop Unrolling” on page 145.

Chapter 2 C and C++ Source-Level Optimizations 13

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.4 Expression Order in Compound Branch Conditions

Optimization

In the most active areas of a program, order the expressions in compound branch conditions to take

advantage of short circuiting of compound conditional expressions.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Branch conditions in C programs often consist of compound conditions consisting of multiple

boolean expressions joined by the logical AND (&&) and logical OR (||) operators. C compilers

guarantee short-circuit evaluation of these operators. In a compound logical OR expression, the first

operand to evaluate to true terminates the evaluation, and subsequent operands are not evaluated at all.

Similarly, in a logical AND expression, the first operand to evaluate to false terminates the evaluation.

Because of this short-circuit evaluation, it is not always possible to swap the operands of logical OR

and logical AND. This is especially true when the evaluation of one of the operands causes a side

effect. However, in most cases the order of operands in such expressions is irrelevant.

When used to control conditional branches, expressions involving logical OR and logical AND are

translated into a series of conditional branches. The ordering of the conditional branches is a function

of the ordering of the expressions in the compound condition and can have a significant impact on

performance. It is impossible to give an easy, closed-form formula on how to order the conditions.

Overall performance is a function of a variety of the following factors:

• Probability of a branch misprediction for each of the branches generated

• Additional latency incurred due to a branch misprediction

• Cost of evaluating the conditions controlling each of the branches generated

• Amount of parallelism that can be extracted in evaluating the branch conditions

• Data stream consumed by an application (mostly due to the dependence of misprediction

probabilities on the nature of the incoming data in data-dependent branches)

It is recommended to experiment with the ordering of expressions in compound branch conditions in

the most active areas of a program (so-called “hot spots,” where most of the execution time is spent).

14 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Such hot spots can be found through the use of profiling by feeding a typical data stream to the

program while doing the experiments.

Chapter 2 C and C++ Source-Level Optimizations 15

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.5 Long Logical Expressions in If Statements

Optimization

In if statements, avoid long logical expressions that can generate dense conditional branches that

violate the guideline described in “Density of Branches” on page 126.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Listing 1. Preferred for Data that Falls Mostly Within the Range

if (a <= max && a >= min && b <= max && b >= min)

If most of the data falls within the range, the branches will not be taken, so the above code is

preferred. Otherwise, the following code is preferred.

Listing 2. Preferred for Data that Does Not Fall Mostly Within the Range

if (a > max || a < min || b > max || b < min)

16 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

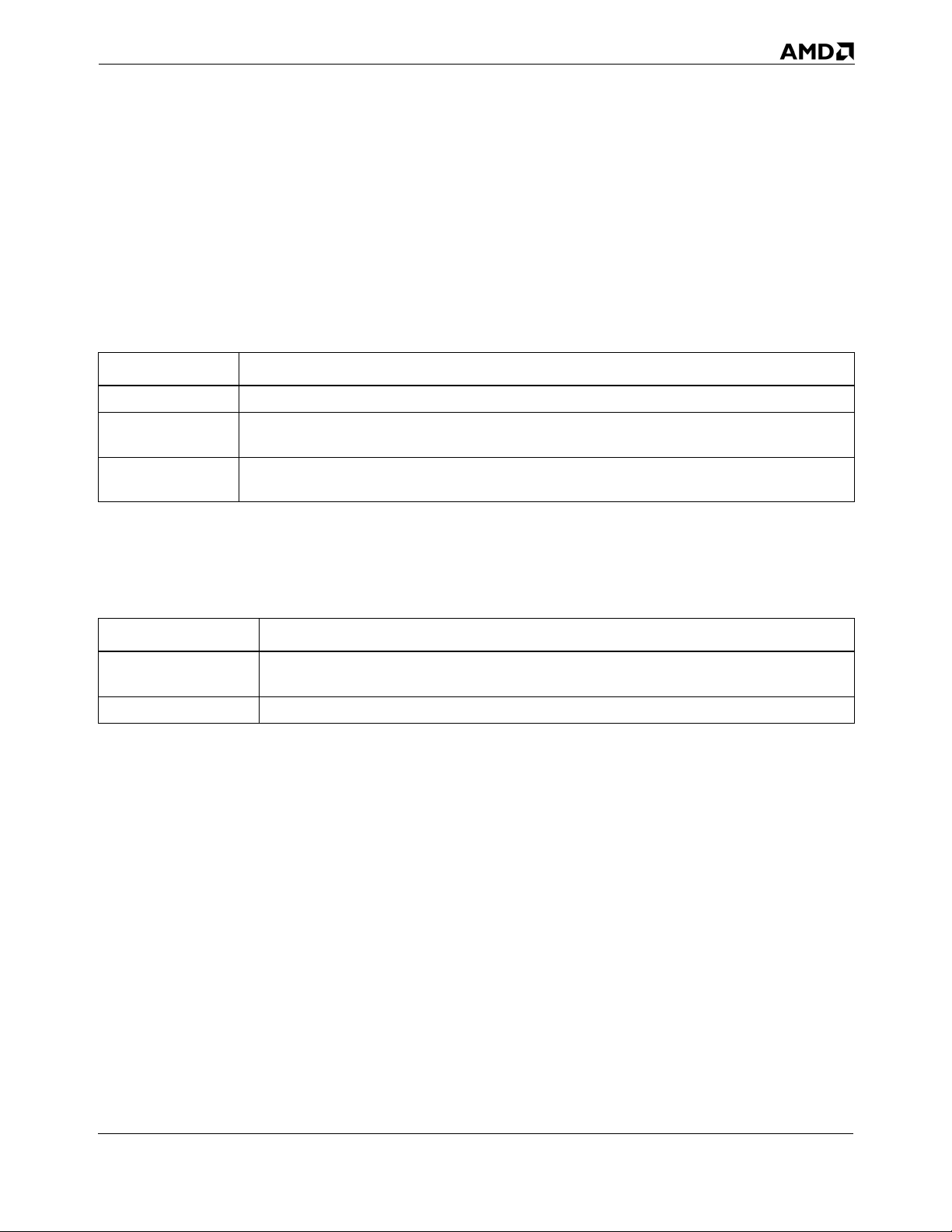

2.6 Arrange Boolean Operands for Quick Expression Evaluation

Optimization

In expressions that use the logical AND (&&) or logical OR (||) operator, arrange the operands for

quick evaluation of the expression:

If the expression uses this

operator

&& (logical AND) False

|| (logical OR) True

Then arrange the operands from left to right in decreasing

probablity of being

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

C and C++ compilers guarantee short-circuit evaluation of the boolean operators && and ||. In an

expression that uses &&, the first operand to evaluate to false terminates the evaluation; subsequent

operands are not evaluated. In an expression that uses ||, the first operand to evaluate to true terminates

the evaluation.

When used to control program flow, expressions involving && and || are translated into a series of

conditional branches. This optimization minimizes the total number of conditions evaluated and

branches executed.

Example 1

In the following code, the operands of && are not arranged for quick expression evaluation because the

first operand is not the condition case most likely to be false (it is far less likely for an animal name to

begin with a ‘y’ than for it to have fewer than four characters):

char animalname[30];

char *p;

p = animalname;

if ((strlen(p) > 4) && (*p == 'y')) { ... }

Chapter 2 C and C++ Source-Level Optimizations 17

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

Because the odds that the animal name begins with a ‘y’ are comparatively low, it is better to put that

operand first:

if ((*p == 'y') && (strlen(p) > 4)) { ... }

Example 2

In the following code (assuming a uniform random distribution of i), the operands of || are not

arranged for quick expression evaluation because the first operand is not the condition most likely to

be true:

unsigned int i;

if ((i < 4) || (i & 1)) { ... }

Because it is more likely for the least-significant bit of i to be 1, it is better to put that operand first:

if ((i & 1) || (i < 4)) { ... }

18 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.7 Dynamic Memory Allocation Consideration

Dynamic memory allocation—accomplished through the use of the malloc library function in C—

should always return a pointer that is suitably aligned for the largest base type (quadword alignment).

Where this aligned pointer cannot be guaranteed, use the technique shown in the following code to

make the pointer quadword aligned, if needed. This code assumes that it is possible to cast the pointer

to a long.

double *p;

double *np;

p = (double *)malloc(sizeof(double) * number_of_doubles + 7L);

np = (double *)((((long)(p)) + 7L) & (-8L));

Then use np instead of p to access the data. The pointer p is still needed in order to deallocate the

storage.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Chapter 2 C and C++ Source-Level Optimizations 19

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.8 Unnecessary Store-to-Load Dependencies

A store-to-load dependency exists when data is stored to memory, only to be read back shortly

thereafter. For details, see “Store-to-Load Forwarding Restrictions” on page 100. The

AMD Athlon™ 64 and AMD Opteron™ processors contain hardware to accelerate such store-to-load

dependencies, allowing the load to obtain the store data before it has been written to memory.

However, it is still faster to avoid such dependencies altogether and keep the data in an internal

register.

Avoiding store-to-load dependencies is especially important if they are part of a long dependency

chain, as may occur in a recurrence computation. If the dependency occurs while operating on arrays,

many compilers are unable to optimize the code in a way that avoids the store-to-load dependency. In

some instances the language definition may prohibit the compiler from using code transformations

that would remove the store-to-load dependency. Therefore, it is recommended that the programmer

remove the dependency manually, for example, by introducing a temporary variable that can be kept

in a register, as in the following example. This can result in a significant performance increase.

Listing 3. Avoid

double x[VECLEN], y[VECLEN], z[VECLEN];

unsigned int k;

for (k = 1; k < VECLEN; k++) {

x[k] = x[k-1] + y[k];

}

for (k = 1; k < VECLEN; k++) {

x[k] = z[k] * (y[k] - x[k-1]);

}

Listing 4. Preferred

double x[VECLEN], y[VECLEN], z[VECLEN];

unsigned int k;

double t;

t = x[0];

for (k = 1; k < VECLEN; k++) {

t = t + y[k];

x[k] = t;

}

t = x[0];

for (k = 1; k < VECLEN; k++) {

t = z[k] * (y[k] - t);

x[k] = t;

}

20 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Software Optimization Guide for AMD64 Processors

Chapter 2 C and C++ Source-Level Optimizations 21

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.9 Matching Store and Load Size

Optimization

Align memory accesses and match addresses and sizes of stores and dependent loads.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

The AMD Athlon 64 and AMD Opteron processors contain a load-store buffer to speed up the

forwarding of store data to dependent loads. However, this store-to-load forwarding (STLF) inside the

load-store buffer occurs, in general, only when the addresses and sizes of the store and the dependent

load match, and when both memory accesses are aligned. For details, see “Store-to-Load Forwarding

Restrictions” on page 100.

It is impossible to control load and store activity at the source level so as to avoid all cases that violate

restrictions placed on store-to-load-forwarding. In some instances it is possible to spot such cases in

the source code. Size mismatches can easily occur when different-size data items are joined in a

union. Address mismatches could be the result of pointer manipulation.

The following examples show a situation involving a union of different-size data items. The examples

show a user-defined unsigned 16.16 fixed-point type and two operations defined on this type.

Function

portion of a fixed-point number. Listing 5 shows an inappropriate implementation of

which, when used on the result of

fixed_add adds two fixed-point numbers, and function fixed_int extracts the integer

fixed_int,

fixed_add, causes misalignment, address mismatch, or size

mismatch between memory operands, such that no store-to-load forwarding in the load-store buffer

takes place. Listing 6 shows how to properly implement fixed_int in order to allow store-to-load

forwarding in the load-store buffer.

Examples

Listing 5. Avoid

typedef union {

unsigned int whole;

struct {

unsigned short frac; /* Lower 16 bits are fraction. */

unsigned short intg; /* Upper 16 bits are integer. */

} parts;

} FIXED_U_16_16;

22 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

__inline FIXED_U_16_16 fixed_add(FIXED_U_16_16 x, FIXED_U_16_16 y) {

FIXED_U_16_16 z;

z.whole = x.whole + y.whole;

return (z);

}

__inline unsigned int fixed_int(FIXED_U_16_16 x) {

return((unsigned int)(x.parts.intg));

}

...

FIXED_U_16_16 y, z;

unsigned int q;

...

label1:

y = fixed_add (y, z);

q = fixed_int (y);

label2:

...

Software Optimization Guide for AMD64 Processors

The object code generated for the source code between label1 and label2 typically follows one of

these two variants:

; Variant 1

mov edx, DWORD PTR [z]

mov eax, DWORD PTR [y] ; -+

add eax, edx ; |

mov DWORD PTR [y], eax ; |

mov EAX, DWORD PTR [y+2] ; <+ Address mismatch--no forwarding in LSU

and EAX, 0FFFFh

mov DWORD PTR [q], eax

; Variant 2

mov edx, DWORD PTR [z]

mov eax, DWORD PTR [y] ; -+

add eax, edx ; |

mov DWORD PTR [y], eax ; |

movzx eax, WORD PTR [y+2] ; <+ Size and address mismatch--no forwarding in LSU

mov DWORD PTR [q], eax

Listing 6. Preferred

typedef union {

unsigned int whole;

struct {

unsigned short frac; /* Lower 16 bits are fraction. */

unsigned short intg; /* Upper 16 bits are integer. */

} parts;

} FIXED_U_16_16;

__inline FIXED_U_16_16 fixed_add(FIXED_U_16_16 x, FIXED_U_16_16 y) {

Chapter 2 C and C++ Source-Level Optimizations 23

Software Optimization Guide for AMD64 Processors

FIXED_U_16_16 z;

z.whole = x.whole + y.whole;

return(z);

}

__inline unsigned int fixed_int(FIXED_U_16_16 x) {

return (x.whole >> 16);

}

...

FIXED_U_16_16 y, z;

unsigned int q;

...

label1:

y = fixed_add (y, z);

q = fixed_int (y);

label2:

...

25112 Rev. 3.06 September 2005

The object code generated for the source code between label1 and label2 typically looks like this:

mov edx, DWORD PTR [z]

mov eax, DWORD PTR [y]

add eax, edx

mov DWORD PTR [y], eax ; -+

mov eax, DWORD PTR [y] ; <+ Aligned (size/address match)--forwarding in LSU

shr eax, 16

mov DWORD PTR [q], eax

24 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.10 SWITCH and Noncontiguous Case Expressions

Optimization

Use if-else statements in place of switch statements that have noncontiguous case expressions.

(Case expressions are the individual expressions to which the single switch expression is compared.)

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

If the case expressions are contiguous or nearly contiguous integer values, most compilers translate

the switch statement as a jump table instead of a comparison chain. Jump tables generally improve

performance because:

• They reduce the number of branches to a single procedure call.

• The size of the control-flow code is the same no matter how many cases there are.

• The amount of control-flow code that the processor must execute is the same for all values of the

switch expression.

However, if the case expressions are noncontiguous values, most compilers translate the switch

statement as a comparison chain. Comparison chains are undesirable because:

• They use dense sequences of conditional branches, which interfere with the processor’s ability to

successfully perform branch prediction.

• The size of the control-flow code increases with the number of cases.

• The amount of control-flow code that the processor must execute varies with the value of the

switch expression.

Chapter 2 C and C++ Source-Level Optimizations 25

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

Example 1

A switch statement like this one, whose case expressions are contiguous integer values, usually

provides good performance:

switch (grade)

{

case ‘A’:

...

break;

case ‘B’:

...

break;

case ‘C’:

...

break;

case ‘D’:

...

break;

case ‘F’:

...

break;

}

Example 2

Because the case expressions in the following switch statement are not contiguous values, the

compiler will likely translate the code into a comparison chain instead of a jump table:

switch (a)

{

case 8:

// Sequence for a==8

break;

case 16:

// Sequence for a==16

break;

...

default:

// Default sequence

break;

}

26 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

To avoid a comparison chain and its undesirable effects on branch prediction, replace the switch

statement with a series of if-else statements, as follows:

if (a==8) {

// Sequence for a==8

}

else if (a==16) {

// Sequence for a==16

}

...

else {

// Default sequence

}

Related Information

For information on preventing branch-prediction interference at the assembly-language level, see

“Density of Branches” on page 126.

Chapter 2 C and C++ Source-Level Optimizations 27

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.11 Arranging Cases by Probability of Occurrence

Optimization

Arrange switch statement cases by probability of occurrence, from most probable to least probable.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Arranging switch statement cases by probability of occurrence improves performance when the

switch statement is translated as a comparison chain; this arrangement has no negative impact when

the statement is translated as a jump table.

Example

Avoid switch statements such as the following, in which the cases are not arranged by probability of

occurrence:

int days_in_month, short_months, normal_months, long_months;

switch (days_in_month) {

case 28:

case 29: short_months++; break;

case 30: normal_months++; break;

case 31: long_months++; break;

default: printf("Month has fewer than 28 or more than 31 days.\n");

}

Instead, arrange the cases to test for frequently occurring values first:

switch (days_in_month) {

case 31: long_months++; break;

case 30: normal_months++; break;

case 28:

case 29: short_months++; break;

default: printf("Month has fewer than 28 or more than 31 days.\n");

}

28 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.12 Use of Function Prototypes

Optimization

In general, use prototypes for all functions.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Prototypes can convey additional information to the compiler that might enable more aggressive

optimizations.

Chapter 2 C and C++ Source-Level Optimizations 29

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.13 Use of const Type Qualifier

Optimization

For objects whose values will not be changed, use the const type qualifier.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Using the const type qualifier makes code more robust and may enable the compiler to generate

higher-performance code. For example, under the C standard, a compiler is not required to allocate

storage for an object that is declared const, if its address is never used.

30 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.14 Generic Loop Hoisting

Optimization

To improve the performance of inner loops, reduce redundant constant calculations (that is, loopinvariant calculations). This idea can also be extended to invariant control structures.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale and Examples

The following example demonstrates the use of an invarient condition in an if statement in a for

loop. The second listing shows the preferred optimization.

Listing 7. (Avoid)

for (i...) {

if (CONSTANT0) {

DoWork0(i); // Does not affect CONSTANT0.

}

else {

DoWork1(i); // Does not affect CONSTANT0.

}

}

Listing 8. (Preferred Optimzation)

if (CONSTANT0) {

for (i...) {

DoWork0(i);

}

}

else {

for (i...) {

DoWork1(i);

}

}

The preferred optimization in Listing 8 tightens the inner loops by avoiding repetitious evaluation of a

known

if control structure. Although the branch would be easily predicted, the extra instructions and

decode limitations imposed by branching (which are usually advantageous) are saved.

Chapter 2 C and C++ Source-Level Optimizations 31

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

To generalize the example in Listing 8 further for multiple-constant control code, more work may be

needed to create the proper outer loop. Enumeration of the constant cases reduces this to a simple

switch statement.

Listing 9.

for (i...) {

if (CONSTANT0) {

DoWork0(i); // Does not affect CONSTANT0 or CONSTANT1.

}

else {

DoWork1(i); // Does not affect CONSTANT0 or CONSTANT1.

}

if (CONSTANT1) {

DoWork2(i); // Does not affect CONSTANT0 or CONSTANT1.

}

else {

DoWork3(i); // Does not affect CONSTANT0 or CONSTANT1.

}

}

32 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

Transform the loop in Listing 9 (by using the switch statement) into:

#define combine(c1, c2) (((c1) << 1) + (c2))

switch (combine(CONSTANT0 != 0, CONSTANT1 != 0)) {

case combine(0, 0):

for(i...) {

DoWork0(i);

DoWork2(i);

}

break;

case combine(1, 0):

for(i...) {

DoWork1(i);

DoWork2(i);

}

break;

case combine(0, 1):

for(i...) {

DoWork0(i);

DoWork3(i);

}

break;

case combine( 1, 1 ):

for(i...) {

DoWork1(i);

DoWork3(i);

}

break;

default:

break;

}

Some introductory code is necessary to generate all the combinations for the switch constant and the

total amount of code has doubled. However, the inner loops are now free of

cases where the

to greater parallelism than possible in the presence of intervening

DoWorkn functions are inlined, the successive functions have greater overlap, leading

if statements.

if statements. In ideal

The same idea can be applied to constant switch statements or to combinations of switch statements

and

if statements inside of for loops. The method used to combine the input constants becomes

more complicated but benefits performance.

However, the number of inner loops can also substantially increase. If the number of inner loops is

prohibitively high, then only the most common cases must be dealt with directly, and the remaining

cases can fall back to the old code in the default clause of the

switch statement. This situation is

typical of run-time generated code. While the performance of run-time generated code can be

improved by means similar to those presented here, it is much harder to maintain and developers must

do their own code-generation optimizations without the help of an available compiler.

Chapter 2 C and C++ Source-Level Optimizations 33

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

2.15 Local Static Functions

Optimization

Declare as static functions that are not used outside the file where they are defined.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Declaring a function as static forces internal linkage. Functions that are not declared as static

default to external linkage, which may inhibit certain optimizations—for example, aggressive

inlining—with some compilers.

34 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.16 Explicit Parallelism in Code

Optimization

Where possible, break long dependency chains into several independent dependency chains that can

then be executed in parallel, exploiting the execution units in each pipeline.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale and Examples

This is especially important to break long dependency chains into smaller executing units in floatingpoint code, whether it is mapped to x87, SSE, or SSE2 instructions, because of the longer latency of

floating-point operations. Because most languages (including ANSI C) guarantee that floating-point

expressions are not reordered, compilers cannot usually perform such optimizations unless they offer

a switch to allow noncompliant reordering of floating-point expressions according to algebraic rules.

Reordered code that is algebraically identical to the original code does not necessarily produce

identical computational results due to the lack of associativity of floating-point operations. There are

well-known numerical considerations in applying these optimizations (consult a book on numerical

analysis). In some cases, these optimizations may lead to unexpected results. In the vast majority of

cases, the final result differs only in the least-significant bits.

Listing 10. Avoid

double a[100], sum;

int i;

sum = 0.0f;

for (i = 0; i < 100; i++) {

sum += a[i];

}

Chapter 2 C and C++ Source-Level Optimizations 35

Software Optimization Guide for AMD64 Processors

25112 Rev. 3.06 September 2005

Listing 11. Preferred

double a[100], sum1, sum2, sum3, sum4, sum;

int i;

sum1 = 0.0;

sum2 = 0.0;

sum3 = 0.0;

sum4 = 0.0;

for (i = 0; i < 100; i + 4) {

sum1 += a[i];

sum2 += a[i+1];

sum3 += a[i+2];

sum4 += a[i+3];

}

sum = (sum4 + sum3) + (sum1 + sum2);

Notice that the four-way unrolling is chosen to exploit the four-stage fully pipelined floating-point

adder. Each stage of the floating-point adder is occupied on every clock cycle, ensuring maximum

sustained utilization.

36 C and C++ Source-Level Optimizations Chapter 2

25112 Rev. 3.06 September 2005

Software Optimization Guide for AMD64 Processors

2.17 Extracting Common Subexpressions

Optimization

Manually extract common subexpressions where C compilers may be unable to extract them from

floating-point expressions due to the guarantee against reordering of such expressions in the ANSI

standard.

Application

This optimization applies to:

• 32-bit software

• 64-bit software

Rationale

Specifically, the compiler cannot rearrange the computation according to algebraic equivalencies

before extracting common subexpressions. Rearranging the expression may give different

computational results due to the lack of associativity of floating-point operations, but the results

usually differ in only the least-significant bits.

Examples

Listing 12. Avoid

double a, b, c, d, e, f;

e = b * c / d;

f = b / d * a;

Listing 13. Preferred

double a, b, c, d, e, f, t;

t = b / d;

e = c * t;

f = a * t;

Listing 14. Avoid

double a, b, c, e, f;