
Acronis Cyber Infrastructure 3.0

Installation Guide

November 20, 2019


Copyright Statement

Copyright ©Acronis International GmbH, 2002-2019. All rights reserved.

"Acronis" and "Acronis Secure Zone" are registered trademarks of Acronis International GmbH.

"Acronis Compute with Confidence", "Acronis Startup Recovery Manager", "Acronis Instant Restore", and the Acronis logo are trademarks of Acronis International GmbH.

Linux is a registered trademark of Linus Torvalds.

VMware and VMware Ready are trademarks and/or registered trademarks of VMware, Inc. in the United States and/or other jurisdictions.

Windows and MS-DOS are registered trademarks of Microsoft Corporation.

All other trademarks and copyrights referred to are the property of their respective owners.

Distribution of substantively modified versions of this document is prohibited without the explicit permission of the copyright holder.

Distribution of this work or derivative work in any standard (paper) book form for commercial purposes is prohibited unless prior permission is obtained from the copyright holder.

DOCUMENTATION IS PROVIDED "AS IS" AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID.

Third-party code may be provided with the Software and/or Service. The license terms for such third parties are detailed in the license.txt file located in the root installation directory. You can always find the latest up-to-date list of the third-party code and the associated license terms used with the Software and/or Service at http://kb.acronis.com/content/7696.

Acronis patented technologies

Technologies used in this product are covered and protected by one or more U.S. Patent Numbers: 7,047,380; 7,246,211; 7,275,139; 7,281,104; 7,318,135; 7,353,355; 7,366,859; 7,383,327; 7,475,282; 7,603,533; 7,636,824; 7,650,473; 7,721,138; 7,779,221; 7,831,789; 7,836,053; 7,886,120; 7,895,403; 7,934,064; 7,937,612; 7,941,510; 7,949,635; 7,953,948; 7,979,690; 8,005,797; 8,051,044; 8,069,320; 8,073,815; 8,074,035; 8,074,276; 8,145,607; 8,180,984; 8,225,133; 8,261,035; 8,296,264; 8,312,259; 8,347,137; 8,484,427; 8,645,748; 8,732,121; 8,850,060; 8,856,927; 8,996,830; 9,213,697; 9,400,886; 9,424,678; 9,436,558; 9,471,441; 9,501,234; and patent pending applications.


Contents

1. Deployment Overview

2. Planning Infrastructure

2.1 Storage Architecture Overview

2.1.1 Storage Role

2.1.2 Metadata Role

2.1.3 Supplementary Roles

2.2 Compute Architecture Overview

2.3 Planning Node Hardware Configurations

2.3.1 Hardware Limits

2.3.2 Hardware Requirements

2.3.2.1 Requirements for Management Node with Storage and Compute

2.3.2.2 Requirements for Storage and Compute

2.3.2.3 Hardware Requirements for Backup Gateway

2.3.3 Hardware Recommendations

2.3.3.1 Storage Cluster Composition Recommendations

2.3.3.2 General Hardware Recommendations

2.3.3.3 Storage Hardware Recommendations

2.3.3.4 Network Hardware Recommendations

2.3.4 Hardware and Software Limitations

2.3.5 Minimum Storage Configuration

2.3.6 Recommended Storage Configuration

2.3.6.1 HDD Only

2.3.6.2 HDD + System SSD (No Cache)

2.3.6.3 HDD + SSD

2.3.6.4 SSD Only

2.3.6.5 HDD + SSD (No Cache), 2 Tiers

2.3.6.6 HDD + SSD, 3 Tiers

2.3.7 Raw Disk Space Considerations

2.3.8 Checking Disk Data Flushing Capabilities

2.4 Planning Virtual Machine Configurations

2.4.1 Running on VMware

2.5 Planning Network

2.5.1 General Network Requirements

2.5.2 Network Limitations

2.5.3 Per-Node Network Requirements

2.5.4 Network Recommendations for Clients

2.6 Understanding Data Redundancy

2.6.1 Redundancy by Replication

2.6.2 Redundancy by Erasure Coding

2.6.3 No Redundancy

2.7 Understanding Failure Domains

2.8 Understanding Storage Tiers

2.9 Understanding Cluster Rebuilding

3. Installing Using GUI

3.1 Obtaining Distribution Image

3.2 Preparing for Installation

3.2.1 Preparing for Installation from USB Storage Drives

3.3 Starting Installation

3.4 Step 1: Accepting the User Agreement

3.5 Step 2: Configuring the Network

3.5.1 Creating Bonded Connections

3.5.2 Creating VLAN Adapters

3.6 Step 3: Choosing the Time Zone

3.7 Step 4: Configuring the Storage Cluster

3.7.1 Deploying the Primary Node

3.7.2 Deploying Secondary Nodes

3.8 Step 5: Selecting the System Partition

3.9 Step 6: Setting the Root Password

3.10 Finishing Installation

4. Installing Using PXE

4.1 Preparing Environment

4.1.1 Installing PXE Components

4.1.2 Configuring TFTP Server

4.1.3 Setting Up DHCP Server

4.1.4 Setting Up HTTP Server

4.2 Installing Over the Network

4.3 Creating Kickstart File

4.3.1 Kickstart Options

4.3.2 Kickstart Scripts

4.3.2.1 Installing Packages

4.3.2.2 Installing Admin Panel and Storage

4.3.2.3 Installing Storage Component Only

4.3.3 Kickstart File Example

4.3.3.1 Creating the System Partition on Software RAID1

4.4 Using Kickstart File

5. Additional Installation Modes

5.1 Installing via VNC

6. Troubleshooting Installation

6.1 Installing in Basic Graphics Mode

6.2 Booting into Rescue Mode


CHAPTER 1

Deployment Overview

To deploy Acronis Cyber Infrastructure for evaluation purposes or in production, you will need to do the following:

1. Plan the infrastructure.

2. Install and configure Acronis Cyber Infrastructure on required servers.

3. Create the storage cluster.

4. Create a compute cluster and/or set up data export services.


CHAPTER 2

Planning Infrastructure

To plan the infrastructure, you will need to decide on the hardware configuration of each server, plan networks, choose a redundancy method and mode, and decide which data will be kept on which storage tier.

Information in this chapter is meant to help you complete all of these tasks.

2.1 Storage Architecture Overview

The fundamental component of Acronis Cyber Infrastructure is a storage cluster: a group of physical servers interconnected by a network. Each server in a cluster is assigned one or more roles and typically runs services that correspond to these roles:

• storage role: chunk service or CS

• metadata role: metadata service or MDS

• supplementary roles: SSD cache, system

Any server in the cluster can be assigned a combination of storage, metadata, and network roles. For example, a single server can be an S3 access point, an iSCSI access point, and a storage node at once.

Each cluster also requires that a web-based admin panel be installed on one (and only one) of the nodes. The panel enables administrators to manage the cluster.


Chapter 2. Planning Infrastructure

2.1.1 Storage Role

Storage nodes run chunk services, store all data in the form of fixed-size chunks, and provide access to these chunks. All data chunks are replicated and the replicas are kept on different storage nodes to achieve high availability of data. If one of the storage nodes fails, the remaining healthy storage nodes continue providing the data chunks that were stored on the failed node.

The storage role can only be assigned to a server with disks of certain capacity.

2.1.2 Metadata Role

Metadata nodes run metadata services, store cluster metadata, and control how user files are split into chunks and where these chunks are located. Metadata nodes also ensure that chunks have the required number of replicas. Finally, they log all important events that happen in the cluster.

To provide system reliability, Acronis Cyber Infrastructure uses the Paxos consensus algorithm. It guarantees fault tolerance as long as a majority of the nodes running metadata services are healthy.

To ensure high availability of metadata in a production environment, metadata services must be run on at least three cluster nodes. In this case, if one metadata service fails, the remaining two will still be controlling the cluster. However, it is recommended to have at least five metadata services to ensure that the cluster can survive the simultaneous failure of two nodes without data loss.
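The three-versus-five guidance follows from majority-quorum arithmetic. The following is a minimal sketch of that arithmetic only, not Acronis code; the function name `metadata_fault_tolerance` is hypothetical.

```python
def metadata_fault_tolerance(mds_count: int) -> int:
    """Number of MDS instances that can fail while a strict majority stays healthy."""
    if mds_count < 1:
        raise ValueError("at least one metadata service is required")
    majority = mds_count // 2 + 1  # smallest strict majority (the quorum)
    return mds_count - majority


for n in (3, 4, 5):
    print(f"{n} MDS: quorum {n // 2 + 1}, tolerates {metadata_fault_tolerance(n)} failure(s)")
```

Under this rule, four metadata services tolerate no more failures than three, which is why odd counts are the usual choice for majority-based consensus.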

2.1.3 Supplementary Roles

SSD cache

Boosts chunk read/write performance by creating write caches on selected solid-state drives (SSDs). It is also recommended to use such SSDs for metadata; see Metadata Role (section 2.1.2). The use of write journals may more than double the write speed in the cluster.

System

One disk per node that is reserved for the operating system and unavailable for data storage.


Networking

service

Compute

service

Image

service

Storage

service

Identity

service

Provides VM network

connectivity

Stores VM images

Provides storage volumes to VMs

Admin panel

QEMU/KVM

Storage

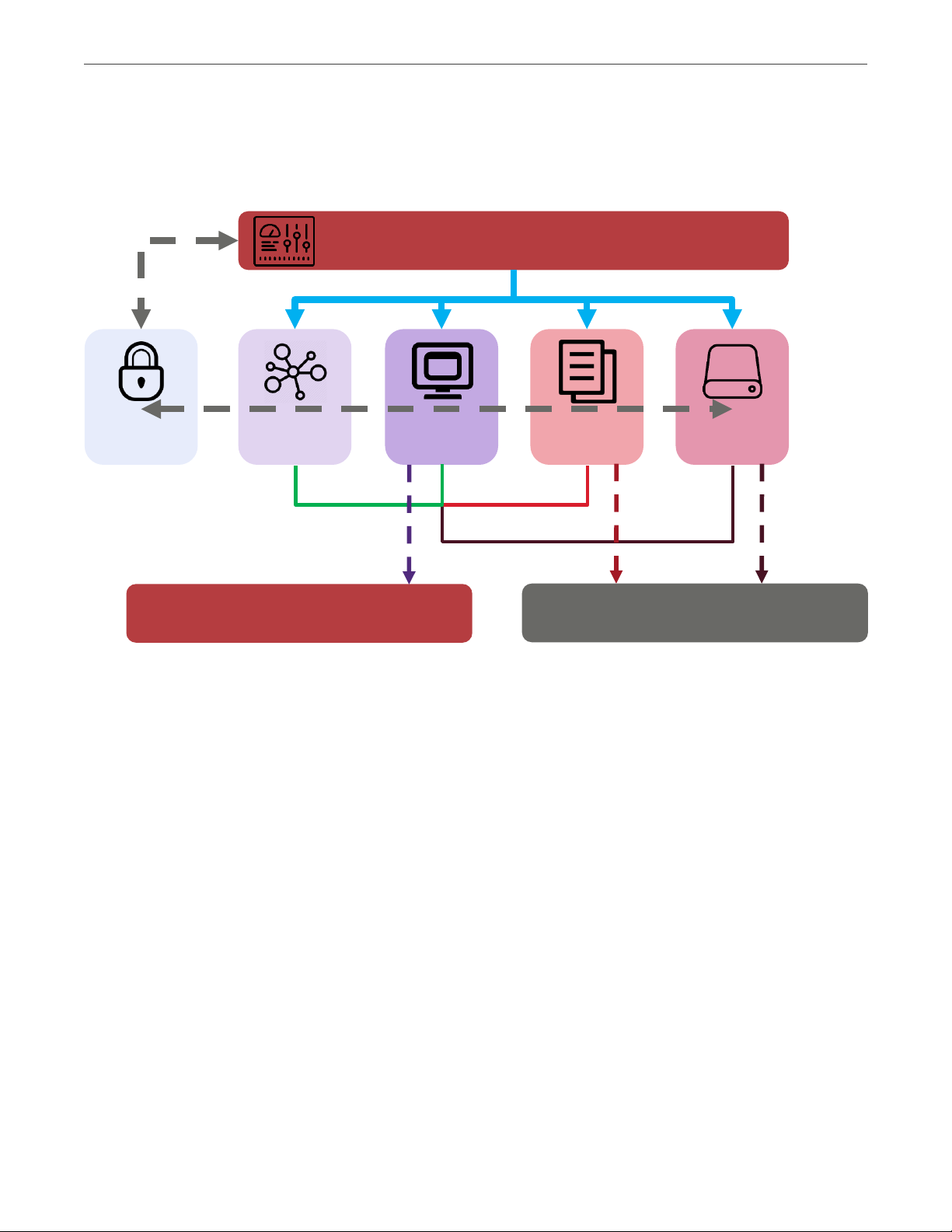

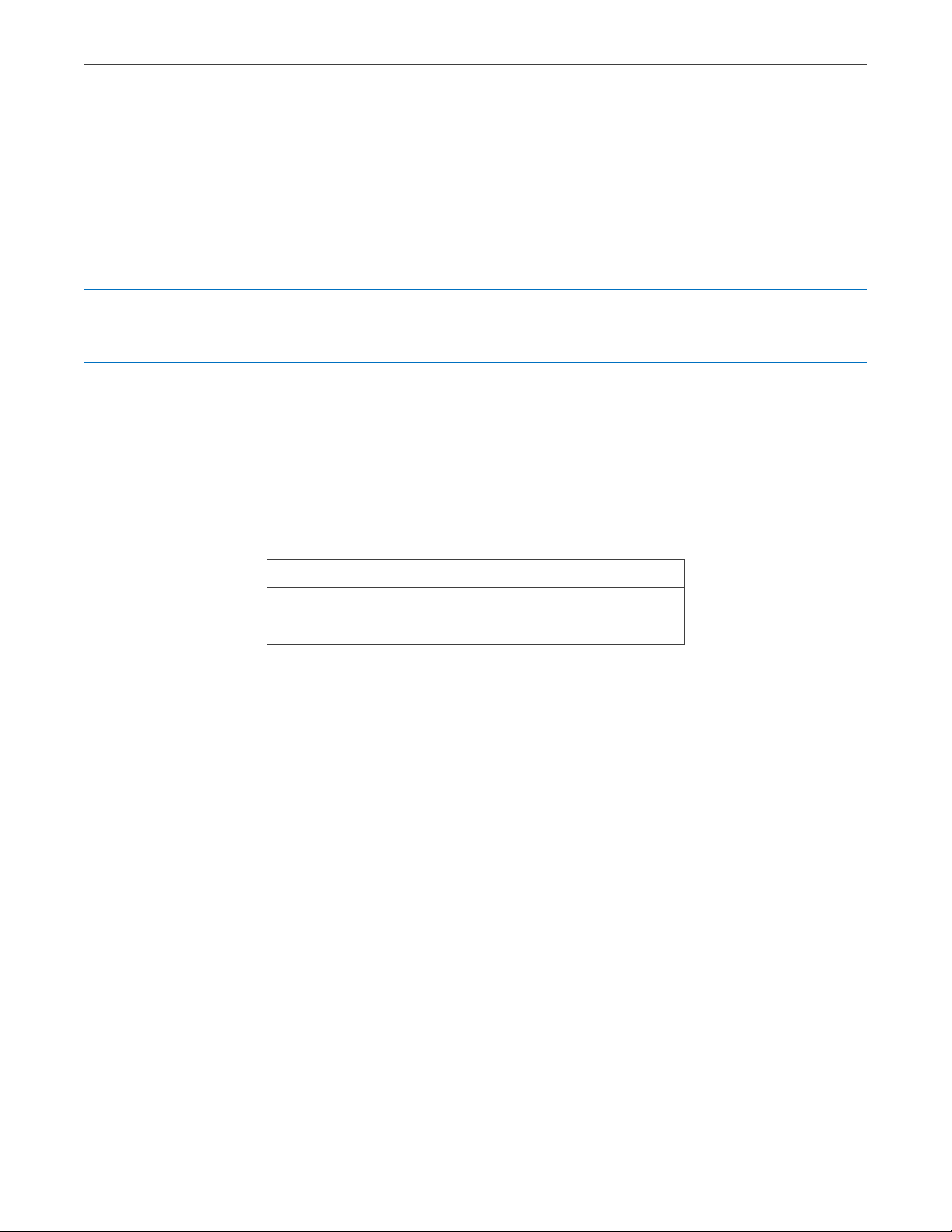

2.2 Compute Architecture Overview

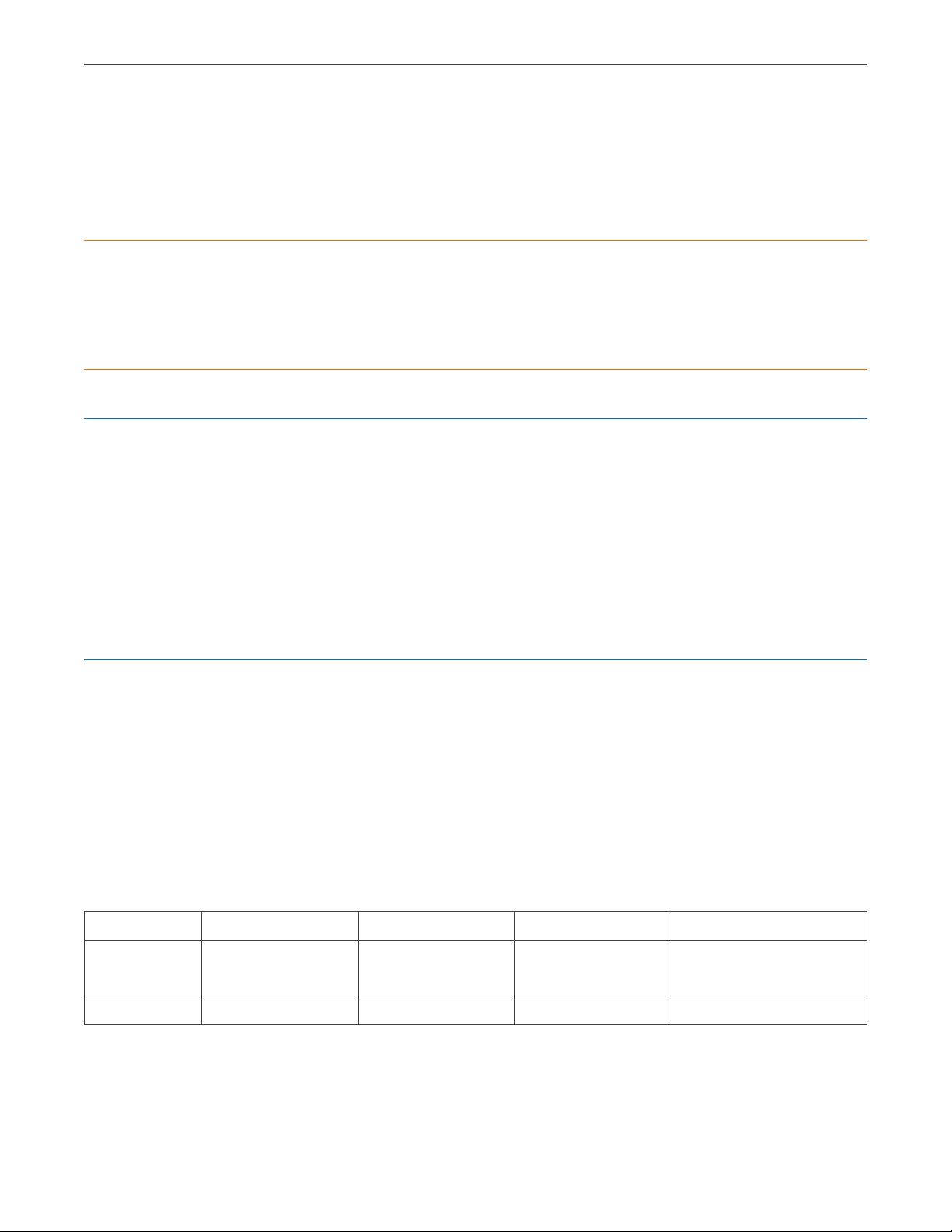

The following diagram shows the major compute components of Acronis Cyber Infrastructure.

• The storage service provides virtual disks to virtual machines. This service relies on the base storage cluster for data redundancy.

• The image service enables users to upload, store, and use images of supported guest operating systems and virtual disks. This service relies on the base storage cluster for data redundancy.

• The identity service provides authentication and authorization capabilities for Acronis Cyber Infrastructure.

• The compute service enables users to create, run, and manage virtual machines. This service relies on the custom QEMU/KVM hypervisor.

• The networking service provides private and public network capabilities for virtual machines.

• The admin panel service provides a convenient web user interface for managing the entire infrastructure.


2.3 Planning Node Hardware Configurations

Acronis Cyber Infrastructure works on top of commodity hardware, so you can create a cluster from regular servers, disks, and network cards. Still, to achieve optimal performance, a number of requirements must be met and a number of recommendations should be followed.

Note: If you are unsure of what hardware to choose, consult your sales representative. You can also use the online hardware calculator.

2.3.1 Hardware Limits

The following table lists the current hardware limits for Acronis Cyber Infrastructure servers:

Table 2.3.1.1: Server hardware limits

| Hardware | Theoretical | Certified |
|---|---|---|
| RAM | 64 TB | 1 TB |
| CPU | 5120 logical CPUs | 384 logical CPUs |

A logical CPU is a core (thread) in a multicore (multithreading) processor.

2.3.2 Hardware Requirements

A deployed Acronis Cyber Infrastructure installation consists of a single management node and a number of storage and compute nodes. The following subsections list node hardware requirements for each usage scenario.

2.3.2.1 Requirements for Management Node with Storage and Compute

The following table lists the minimal and recommended hardware requirements for a management node that also runs the storage and compute services.

If you plan to enable high availability for the management node (recommended), all the servers that you will add to the HA cluster must meet the requirements listed in this table.


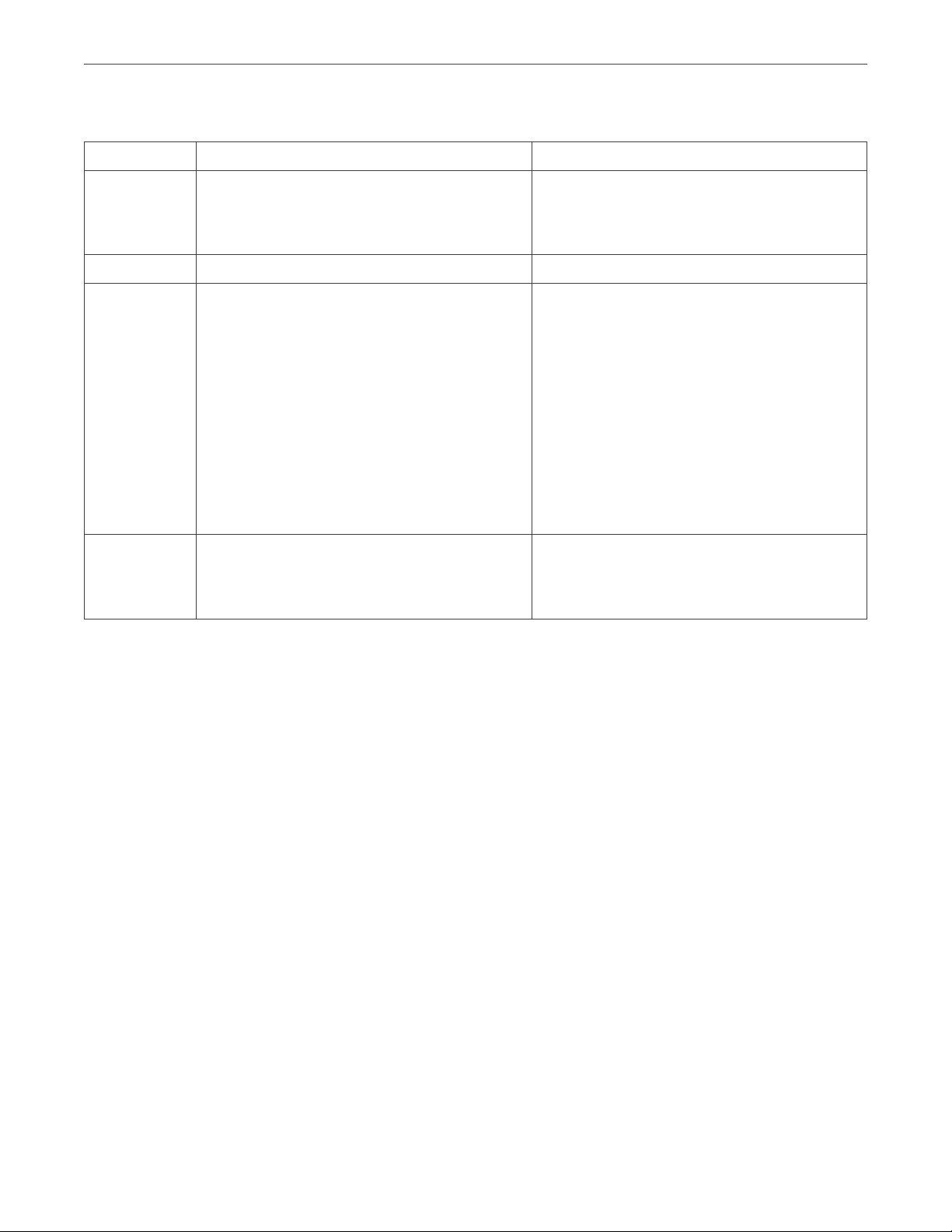

Table 2.3.2.1.1: Hardware for management node with storage and compute

| Type | Minimal | Recommended |
|---|---|---|
| CPU | 64-bit x86 Intel processors with “unrestricted guest” and VT-x with Extended Page Tables (EPT) enabled in BIOS; 16 logical CPUs in total* | 64-bit x86 Intel processors with “unrestricted guest” and VT-x with Extended Page Tables (EPT) enabled in BIOS; 32+ logical CPUs in total* |
| RAM | 32 GB** | 64+ GB** |
| Storage | 1 disk: system + metadata, 100+ GB SATA HDD; 1 disk: storage, SATA HDD, size as required | 2+ disks: system + metadata + cache, 100+ GB enterprise-grade SSDs in a RAID1 volume, with power loss protection and 75 MB/s sequential write performance per serviced HDD (e.g., 750+ MB/s total for a 10-disk node); 4+ disks: HDD or SSD, 1 DWPD endurance minimum, 10 DWPD recommended |
| Network | 1 GbE for storage traffic; 1 GbE (VLAN tagged) for other traffic | 2 x 10 GbE (bonded) for storage traffic; 2 x 1 GbE or 2 x 10 GbE (bonded, VLAN tagged) for other traffic |

* A logical CPU is a core (thread) in a multicore (multithreading) processor.

** Each chunk server (CS), i.e., storage disk, requires 1 GB of RAM (0.5 GB anonymous memory + 0.5 GB page cache). The total page cache limit is 12 GB. In addition, each metadata server (MDS) requires 0.2 GB of RAM + 0.1 GB per 100 TB of physical storage space.
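The RAM footnote can be turned into a quick estimate. This is a minimal sketch under two stated assumptions: the 12 GB limit caps only the page-cache half of the per-CS budget, and the MDS term scales linearly with physical space. The function name is hypothetical, and the result covers storage services only, not the OS, compute services, or VMs, which is why the table's RAM figures are much higher.

```python
def storage_ram_gb(cs_count: int, mds_count: int = 0, physical_tb: float = 0.0) -> float:
    """Estimate RAM (GB) used by storage services from the footnote figures."""
    anonymous = 0.5 * cs_count                     # 0.5 GB anonymous memory per CS
    page_cache = min(0.5 * cs_count, 12.0)         # 0.5 GB page cache per CS, 12 GB total cap
    mds = mds_count * (0.2 + 0.1 * physical_tb / 100.0)  # 0.2 GB + 0.1 GB per 100 TB
    return anonymous + page_cache + mds


# A node with 10 storage disks (10 CSs) that also runs one MDS, 100 TB of physical space:
print(round(storage_ram_gb(10, mds_count=1, physical_tb=100), 2))  # 10.3
```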

2.3.2.2 Requirements for Storage and Compute

The following table lists the minimal and recommended hardware requirements for a node that runs the storage and compute services.


Table 2.3.2.2.1: Hardware for storage and compute

| Type | Minimal | Recommended |
|---|---|---|
| CPU | 64-bit x86 processor(s) with Intel VT hardware virtualization extensions enabled; 8 logical CPUs* | 64-bit x86 processor(s) with Intel VT hardware virtualization extensions enabled; 32+ logical CPUs* |
| RAM | 8 GB** | 64+ GB** |
| Storage | 1 disk: system, 100 GB SATA HDD; 1 disk: metadata, 100 GB SATA HDD (only on the first three nodes in the cluster); 1 disk: storage, SATA HDD, size as required | 2+ disks: system, 100+ GB SATA HDDs in a RAID1 volume; 1+ disk: metadata + cache, 100+ GB enterprise-grade SSD with power loss protection and 75 MB/s sequential write performance per serviced HDD (e.g., 750+ MB/s total for a 10-disk node); 4+ disks: HDD or SSD, 1 DWPD endurance minimum, 10 DWPD recommended |
| Network | 1 GbE for storage traffic; 1 GbE (VLAN tagged) for other traffic | 2 x 10 GbE (bonded) for storage traffic; 2 x 1 GbE or 2 x 10 GbE (bonded) for other traffic |

* A logical CPU is a core (thread) in a multicore (multithreading) processor.

** Each chunk server (CS), i.e., storage disk, requires 1 GB of RAM (0.5 GB anonymous memory + 0.5 GB page cache). The total page cache limit is 12 GB. In addition, each metadata server (MDS) requires 0.2 GB of RAM + 0.1 GB per 100 TB of physical storage space.
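The 75 MB/s-per-HDD rule for the metadata + cache SSD is simple multiplication. The following is a minimal sketch for checking a candidate drive; the function names and the 500 MB/s drive figure are hypothetical.

```python
PER_HDD_WRITE_MBPS = 75.0  # sequential write the cache SSD should sustain per serviced HDD


def cache_ssd_min_write_mbps(serviced_hdds: int) -> float:
    """Minimum sustained sequential write speed (MB/s) for a cache SSD."""
    if serviced_hdds < 0:
        raise ValueError("HDD count cannot be negative")
    return serviced_hdds * PER_HDD_WRITE_MBPS


def ssd_is_sufficient(ssd_seq_write_mbps: float, serviced_hdds: int) -> bool:
    """True if the drive's sustained sequential write meets the per-HDD rule."""
    return ssd_seq_write_mbps >= cache_ssd_min_write_mbps(serviced_hdds)


print(cache_ssd_min_write_mbps(10))  # 750.0
print(ssd_is_sufficient(500, 10))    # False
```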

2.3.2.3 Hardware Requirements for Backup Gateway

The following table lists the minimal and recommended hardware requirements for a management node that also runs the storage and Backup Gateway (ABGW) services.


Table 2.3.2.3.1: Hardware for Backup Gateway

| Type | Minimal | Recommended |
|---|---|---|
| CPU | 64-bit x86 processor(s) with AMD-V or Intel VT hardware virtualization extensions enabled; 4 logical CPUs* | 64-bit x86 processor(s) with AMD-V or Intel VT hardware virtualization extensions enabled; 4+ logical CPUs*, at least one CPU per 8 HDDs |
| RAM | 4 GB** | 16+ GB** |
| Storage | 1 disk: system + metadata, 120 GB SATA HDD; 1 disk: storage, SATA HDD, size as required | 1 disk: system + metadata + cache, 120+ GB enterprise-grade SSD with power loss protection and 75 MB/s sequential write performance per serviced HDD; 1 disk: storage, SATA HDD, 1 DWPD endurance minimum, 10 DWPD recommended, size as required |
| Network | 1 GbE | 2 x 10 GbE (bonded) |

* A logical CPU is a core (thread) in a multicore (multithreading) processor.

** Each chunk server (CS), i.e., storage disk, requires 1 GB of RAM (0.5 GB anonymous memory + 0.5 GB page cache). The total page cache limit is 12 GB. In addition, each metadata server (MDS) requires 0.2 GB of RAM + 0.1 GB per 100 TB of physical storage space.
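The recommended CPU count for a Backup Gateway node combines a floor of four logical CPUs with a per-HDD ratio. The following is a minimal sketch that assumes "one CPU per 8 HDDs" rounds up; the function name is hypothetical.

```python
import math


def abgw_recommended_cpus(hdd_count: int) -> int:
    """At least 4 logical CPUs, and at least one per 8 HDDs (rounded up)."""
    return max(4, math.ceil(hdd_count / 8))


print(abgw_recommended_cpus(8))   # 4
print(abgw_recommended_cpus(36))  # 5
print(abgw_recommended_cpus(48))  # 6
```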

2.3.3 Hardware Recommendations

In general, Acronis Cyber Infrastructure works on the same hardware that is recommended for Red Hat Enterprise Linux 7, both for servers and for components.

The following recommendations further explain the benefits added by specific hardware in the hardware requirements table. Use them to configure your cluster in an optimal way.


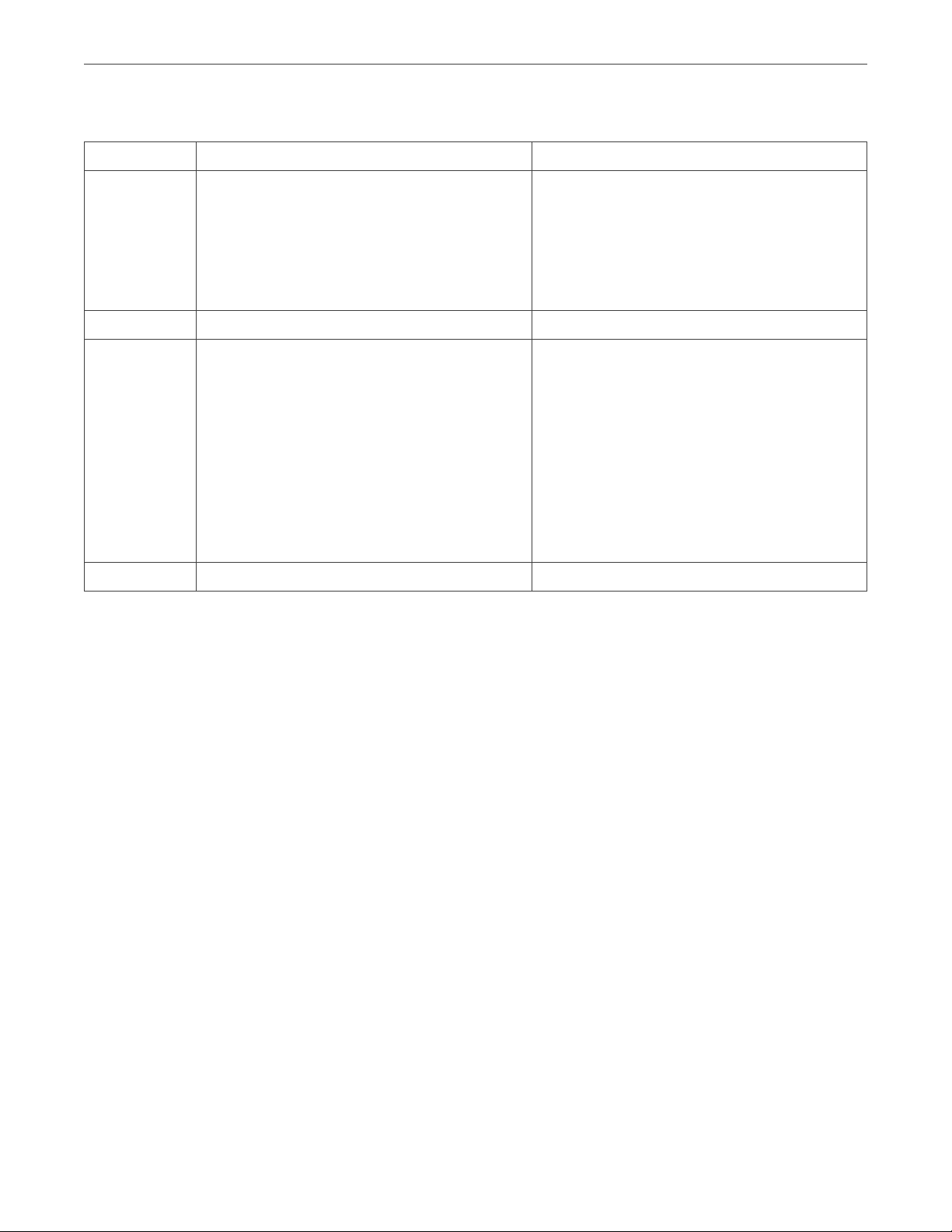

2.3.3.1 Storage Cluster Composition Recommendations

Designing an efficient storage cluster means finding a compromise between performance and cost that suits your purposes. When planning, keep in mind that a cluster with many nodes and few disks per node offers higher performance, while a cluster with the minimal number of nodes (3) and a lot of disks per node is cheaper. See the following table for more details.

Table 2.3.3.1.1: Cluster composition recommendations

| Design considerations | Minimum nodes (3), many disks per node | Many nodes, few disks per node (all-flash configuration) |
|---|---|---|
| Optimization | Lower cost. | Higher performance. |
| Free disk space to reserve | More space to reserve for cluster rebuilding as fewer healthy nodes will have to store the data from a failed node. | Less space to reserve for cluster rebuilding as more healthy nodes will share the data from a failed node. |
| Redundancy | Fewer erasure coding choices. | More erasure coding choices. |
| Cluster balance and rebuilding performance | Worse balance and slower rebuilding. | Better balance and faster rebuilding. |
| Network capacity | More network bandwidth required to maintain cluster performance during rebuilding. | Less network bandwidth required to maintain cluster performance during rebuilding. |
| Favorable data type | Cold data (e.g., backups). | Hot data (e.g., virtual environments). |
| Sample server configuration | Supermicro SSG-6047R-E1R36L (Intel Xeon E5-2620 v1/v2 CPU, 32GB RAM, 36 x 12TB HDDs, a 500GB system disk). | Supermicro SYS-2028TP-HC0R-SIOM (4 x Intel E5-2620 v4 CPUs, 4 x 16GB RAM, 24 x 1.9TB Samsung PM1643 SSDs). |

Take note of the following:

1. These considerations only apply if the failure domain is host.

2. The speed of rebuilding in the replication mode does not depend on the number of nodes in the cluster.

3. Acronis Cyber Infrastructure supports hundreds of disks per node. If you plan to use more than 36 disks per node, contact sales engineers who will help you design a more efficient cluster.
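The "Free disk space to reserve" row can be illustrated by how much extra data each surviving node absorbs when a failed node's data is re-created. This is a minimal sketch assuming the failure domain is host and the data spreads evenly across survivors; it ignores whether the chosen redundancy mode leaves anywhere to rebuild to. The function name and the 36 TB figure are hypothetical.

```python
def rebuild_load_per_survivor(node_count: int, failed_node_data_tb: float) -> float:
    """Extra data (TB) each surviving node receives when a failed node's data is re-created."""
    if node_count < 2:
        raise ValueError("at least one node must survive")
    return failed_node_data_tb / (node_count - 1)


for nodes in (3, 5, 10):
    print(nodes, rebuild_load_per_survivor(nodes, 36.0))
```

With 36 TB on the failed node, each survivor takes 18 TB in a 3-node cluster but only 4 TB in a 10-node cluster.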


2.3.3.2 General Hardware Recommendations

• At least five nodes are required for a production environment. This is to ensure that the cluster can survive the failure of two nodes without data loss.

• One of the strongest features of Acronis Cyber Infrastructure is scalability. The bigger the cluster, the better Acronis Cyber Infrastructure performs. It is recommended to create production clusters from at least ten nodes for improved resilience, performance, and fault tolerance in production scenarios.

• Even though a cluster can be created on top of varied hardware, using similar hardware in each node will yield better cluster performance, capacity, and overall balance.

• Any cluster infrastructure must be tested extensively before it is deployed to production. Common points of failure such as SSD drives and network adapter bonds must always be thoroughly verified.

• Running Acronis Cyber Infrastructure in production on top of SAN/NAS hardware that has its own redundancy mechanisms is not recommended. Doing so may negatively affect performance and data availability.

• To achieve the best performance, keep at least 20% of cluster capacity free.

• During disaster recovery, Acronis Cyber Infrastructure may need additional disk space for replication. Make sure to reserve at least as much space as any single storage node has.

• It is recommended to have the same CPU models on each node to avoid VM live migration issues. For more details, see the Administrator’s Command Line Guide.

• If you plan to use Backup Gateway to store backups in the cloud, make sure the local storage cluster has plenty of logical space for staging (keeping backups locally before sending them to the cloud). For example, if you perform backups daily, provide enough space for at least 1.5 days’ worth of backups. For more details, see the Administrator’s Guide.
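The free-space and staging recommendations can be combined into a rough capacity plan. This is a minimal sketch that assumes the 20% free-space reserve and the largest-node reserve overlap, so only the larger of the two is kept free; adding them together would be more conservative. The function names are hypothetical.

```python
from typing import Sequence


def max_planned_usage_tb(node_capacities_tb: Sequence[float],
                         min_free_fraction: float = 0.2) -> float:
    """Data volume to plan for while keeping the larger of the two reserves free."""
    total = float(sum(node_capacities_tb))
    reserve = max(min_free_fraction * total, max(node_capacities_tb))
    return max(total - reserve, 0.0)


def staging_space(daily_backup_volume: float, days: float = 1.5) -> float:
    """Logical space to keep for Backup Gateway staging of daily backups."""
    return daily_backup_volume * days


print(max_planned_usage_tb([10, 10, 10]))  # 20.0: the largest node (10 TB) outweighs 20% (6 TB)
print(max_planned_usage_tb([10] * 10))     # 80.0: 20% (20 TB) outweighs the largest node
print(staging_space(2.0))                  # 3.0
```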

2.3.3.3 Storage Hardware Recommendations

• It is possible to use disks of different sizes in the same cluster. However, keep in mind that, given the same IOPS, smaller disks will offer higher performance per terabyte of data compared to bigger disks. It is recommended to group disks with the same IOPS per terabyte in the same tier.

• Using the recommended SSD models may help you avoid loss of data. Not all SSD drives can withstand enterprise workloads; some may break down in the first months of operation, resulting in TCO spikes.


• SSD memory cells can withstand a limited number of rewrites. An SSD drive should be viewed as a consumable that you will need to replace after a certain time. Consumer-grade SSD drives can withstand a very low number of rewrites (so low, in fact, that these numbers are not shown in their technical specifications). SSD drives intended for storage clusters must offer at least 1 DWPD endurance (10 DWPD is recommended). The higher the endurance, the less often SSDs will need to be replaced, improving TCO.

• Many consumer-grade SSD drives can ignore disk flushes and falsely report to operating systems that data was written while it, in fact, was not. Examples of such drives include OCZ Vertex 3, Intel 520, Intel X25-E, and Intel X25-M G2. These drives are known to be unsafe in terms of data commits; they should not be used with databases, and they may easily corrupt the file system in case of a power failure. For these reasons, use enterprise-grade SSD drives that obey the flush rules (for more information, see http://www.postgresql.org/docs/current/static/wal-reliability.html). Enterprise-grade SSD drives that operate correctly usually have the power loss protection property in their technical specification. Some of the market names for this technology are Enhanced Power Loss Data Protection (Intel), Cache Power Protection (Samsung), Power-Failure Support (Kingston), and Complete Power Fail Protection (OCZ).

• It is highly recommended to check the data flushing capabilities of your disks as explained in Checking Disk Data Flushing Capabilities (section 2.3.8).

• Consumer-grade SSD drives usually have unstable performance and are not suited to sustained enterprise workloads. For this reason, pay attention to sustained load tests when choosing SSDs. We recommend the following enterprise-grade SSD drives, which offer the best balance of performance, endurance, and cost: Intel S3710, Intel P3700, Huawei ES3000 V2, Samsung SM1635, and SanDisk Lightning.

• Performance of SSD disks may depend on their size. Lower-capacity drives (100 to 400 GB) may perform much slower (sometimes up to ten times slower) than higher-capacity ones (1.9 to 3.8 TB). Consult drive performance and endurance specifications before purchasing hardware.

• Using NVMe or SAS SSDs for write caching improves random I/O performance and is highly recommended for all workloads with heavy random access (e.g., iSCSI volumes). In turn, SATA disks are best suited for SSD-only configurations but not for write caching.

• Using shingled magnetic recording (SMR) HDDs is strongly discouraged, even for backup scenarios. Such disks have unpredictable latency that may lead to unexpected temporary service outages and sudden performance degradations.


• Running metadata services on SSDs improves cluster performance. To also minimize CAPEX, the same SSDs can be used for write caching.

• If capacity is the main goal and you need to store infrequently accessed data, choose SATA disks over SAS ones. If performance is the main goal, choose NVMe or SAS disks over SATA ones.

• The more disks per node, the lower the CAPEX. As an example, a cluster created from ten nodes with two disks in each will be less expensive than a cluster created from twenty nodes with one disk in each.

• Using SATA HDDs with one SSD for caching is more cost-effective than using only SAS HDDs without such an SSD.

• Create hardware or software RAID1 volumes for system disks using RAID or HBA controllers, respectively, to ensure their high performance and availability.

• Use HBA controllers as they are less expensive and easier to manage than RAID controllers.

• Disable all RAID controller caches for SSD drives. Modern SSDs have good performance that can be reduced by a RAID controller’s write and read cache. It is recommended to disable caching for SSD drives and leave it enabled only for HDD drives.

• If you use RAID controllers, do not create RAID volumes from HDDs intended for storage. Each storage HDD needs to be recognized by Acronis Cyber Infrastructure as a separate device.

• If you use RAID controllers with caching, equip them with backup battery units (BBUs) to protect against cache loss during power outages.

• Disk block size (e.g., 512 B or 4 KB) is not important and has no effect on performance.
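Two figures from the storage recommendations are easy to compute when comparing drives: the endurance implied by a DWPD rating and the IOPS per terabyte used to group disks into tiers. This is a minimal sketch; the DWPD-to-TBW conversion is the common vendor formula (DWPD x capacity x 365 x warranty years), and the drive capacities, warranty period, and IOPS values are hypothetical examples.

```python
def tbw_from_dwpd(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Total terabytes that can be written over the warranty period."""
    return dwpd * capacity_tb * 365 * warranty_years


def iops_per_tb(iops: float, capacity_tb: float) -> float:
    """IOPS density: how many IOPS each terabyte of the disk can get."""
    return iops / capacity_tb


# Hypothetical 1.92 TB SSD with a 5-year warranty.
print(round(tbw_from_dwpd(1, 1.92, 5)))    # 3504
print(round(tbw_from_dwpd(10, 1.92, 5)))   # 35040
# Hypothetical HDDs with the same ~150 IOPS but different capacities.
print(iops_per_tb(150, 4), iops_per_tb(150, 12))  # 37.5 12.5
```

The two HDDs in the last line would belong in different tiers, because the larger disk offers a third of the IOPS per terabyte.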

2.3.3.4 Network Hardware Recommendations

• Use separate networks (and, ideally albeit optionally, separate network adapters) for internal and public traffic. Doing so will prevent public traffic from affecting cluster I/O performance and also prevent possible denial-of-service attacks from the outside.

• Network latency dramatically reduces cluster performance. Use quality network equipment with low-latency links. Do not use consumer-grade network switches.

• Do not use desktop network adapters like Intel EXPI9301CTBLK or Realtek 8129 as they are not designed for heavy load and may not support full-duplex links. Also use non-blocking Ethernet switches.

• To avoid intrusions, Acronis Cyber Infrastructure should be on a dedicated internal network inaccessible from outside.


• Use one 1 Gbit/s link per each two HDDs on the node (rounded up). For one or two HDDs on a node,

two bonded network interfaces are still recommended for high network availability. The reason for this

recommendation is that 1 Gbit/s Ethernet networks can deliver 110-120 MB/s of throughput, which is

close to sequential I/O performance of a single disk. Since several disks on a server can deliver higher

throughput than a single 1 Gbit/s Ethernet link, networking may become a bottleneck.

• For maximum sequential I/O performance, use one 1Gbit/s link per each hard drive, or one 10Gbit/s

link per node. Even though I/O operations are most often random in real-life scenarios, sequential I/O is

important in backup scenarios.

• For maximum overall performance, use one 10 Gbit/s link per node (or two bonded for high network

availability).

• It is not recommended to configure 1 Gbit/s network adapters to use non-default MTUs (e.g., 9000-byte

jumbo frames). Such settings require additional configuration of switches and often lead to human

error. 10+ Gbit/s network adapters, on the other hand, need to be configured to use jumbo frames to

achieve full performance.

• The currently supported Fibre Channel host bus adapters (HBAs) are QLogic QLE2562-CK and QLogic

ISP2532.

• It is recommended to use Mellanox ConnectX-4 and ConnectX-5 InfiniBand adapters. Mellanox

ConnectX-2 and ConnectX-3 cards are not supported.
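The link-count rule and the jumbo-frame advice above reduce to simple arithmetic. The following is a minimal bash sketch, not a tool shipped with the product: the hypothetical `links_for_hdds` applies the one-1 Gbit/s-link-per-two-HDDs rule with a floor of two bonded links, and the hypothetical `ping_payload_for_mtu` computes the largest ICMP payload that fits into one frame (the MTU minus a 20-byte IPv4 header and an 8-byte ICMP header), which is handy when checking that jumbo frames pass end to end.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating the sizing rules above; not part of the product.

# Number of 1 Gbit/s links for a node with N storage HDDs:
# one link per two HDDs (rounded up), but never fewer than two,
# so that the links can be bonded for high availability.
links_for_hdds() {
  local hdds=$1
  local links=$(( (hdds + 1) / 2 ))
  (( links < 2 )) && links=2
  echo "$links"
}

# Largest ICMP echo payload that fits into one frame of the given MTU
# without fragmentation: MTU - 20 (IPv4 header) - 8 (ICMP header).
ping_payload_for_mtu() {
  echo $(( $1 - 28 ))
}

for hdds in 1 2 4 5 12; do
  echo "$hdds HDDs -> $(links_for_hdds "$hdds") x 1 Gbit/s links"
done
echo "payload for MTU 9000: $(ping_payload_for_mtu 9000)"
```

With jumbo frames configured on both nodes and on every switch in between, `ping -M do -s 8972 <peer>` (Linux iputils `ping`, where `-M do` forbids fragmentation) should succeed; if it fails while smaller payloads succeed, some hop is still using a smaller MTU.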

2.3.4 Hardware and Software Limitations

Hardware limitations:

• Each management node must have at least two disks (one system+metadata, one storage).

• Each compute or storage node must have at least three disks (one system, one metadata, one storage).

• Three servers are required to test all the features of the product.

• Each server must have at least 4 GB of RAM and two logical cores.

• The system disk must have at least 100 GB of space.

• The admin panel requires a Full HD monitor to be displayed correctly.

• The maximum supported physical partition size is 254 TiB.
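As a pre-installation aid, the hardware minimums above can be checked mechanically. This is a minimal bash sketch with a hypothetical function name; it takes the values as arguments rather than probing the hardware, so on a real server you would supply them yourself (for example, from `nproc` and `lsblk`).

```shell
#!/usr/bin/env bash
# Hypothetical pre-installation check against the hardware limitations above.
# Usage: check_node_minimums <management|compute|storage> <RAM GB> <logical cores> <system disk GB> <disk count>
check_node_minimums() {
  local role=$1 ram_gb=$2 cores=$3 sys_gb=$4 disks=$5
  local min_disks=3 status=0
  # A management node combines system and metadata on one disk, so two disks suffice.
  [[ $role == management ]] && min_disks=2

  if (( ram_gb < 4 )); then echo "RAM: ${ram_gb} GB, need at least 4 GB"; status=1; fi
  if (( cores < 2 )); then echo "CPU: ${cores} logical cores, need at least 2"; status=1; fi
  if (( sys_gb < 100 )); then echo "System disk: ${sys_gb} GB, need at least 100 GB"; status=1; fi
  if (( disks < min_disks )); then echo "Disks: ${disks}, a ${role} node needs at least ${min_disks}"; status=1; fi
  return "$status"
}

check_node_minimums management 16 8 240 2 && echo "management node: OK"
check_node_minimums storage 16 8 240 2 || echo "storage node: below minimums"
```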

Software limitations:

• Maintenance mode is not supported. Use SSH to shut down or reboot a node.

• One node can be a part of only one cluster.

• Only one S3 cluster can be created on top of a storage cluster.

• Only predefined redundancy modes are available in the admin panel.

• Thin provisioning is always enabled for all data and cannot be configured otherwise.

• The admin panel has been tested to work at resolutions of 1280x720 and higher in the following web browsers: latest Firefox, Chrome, Safari.

For network limitations, see Network Limitations (page 22).

2.3.5 Minimum Storage Configuration

The minimum configuration described in the table will let you evaluate the features of the storage cluster. It is not meant for production.

Table 2.3.5.1: Minimum cluster configuration

| Node # | 1st disk role | 2nd disk role | 3rd+ disk roles | Access points |
|---|---|---|---|---|
| 1 | System | Metadata | Storage | iSCSI, S3 private, S3 public, NFS, ABGW |
| 2 | System | Metadata | Storage | iSCSI, S3 private, S3 public, NFS, ABGW |
| 3 | System | Metadata | Storage | iSCSI, S3 private, S3 public, NFS, ABGW |
| 3 nodes in total | | 3 MDSs in total | 3+ CSs in total | Access point services run on three nodes in total. |

Note: SSD disks can be assigned System, Metadata, and Cache roles at the same time, freeing up more disks for the storage role.

Although three nodes are recommended even for the minimum configuration, you can start evaluating Acronis Cyber Infrastructure with just one node and add more nodes later. At the very least, a storage cluster must have one metadata service and one chunk service running. A single-node installation will let you evaluate services such as iSCSI, ABGW, etc. However, such a configuration will have two key limitations:

1. Just one MDS will be a single point of failure. If it fails, the entire cluster will stop working.

2. Just one CS will be able to store just one chunk replica. If it fails, the data will be lost.
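Majority-quorum arithmetic makes the MDS counts in this guide concrete: one MDS is a single point of failure, three are used in the minimum configuration, and five are recommended for production. The quorum model is an assumption of this sketch. It is consistent with this guide's statement that five MDSs survive two simultaneous failures. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch assuming metadata services (MDSs) need a majority quorum to keep working.
# Under that assumption, N MDSs tolerate floor((N - 1) / 2) simultaneous failures.
mds_failures_tolerated() {
  echo $(( ($1 - 1) / 2 ))
}

# Smallest MDS count that survives F simultaneous failures: 2F + 1.
mds_needed_for() {
  echo $(( 2 * $1 + 1 ))
}

for n in 1 3 4 5; do
  echo "$n MDS(s) tolerate $(mds_failures_tolerated "$n") failure(s)"
done
```

Under this model an even MDS count adds little: four MDSs tolerate the same single failure as three.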

Important: If you deploy Acronis Cyber Infrastructure on a single node, you must take care of making its storage persistent and redundant to avoid data loss. If the node is physical, it must have multiple disks so you can replicate the data among them. If the node is a virtual machine, make sure that this VM is made highly available by the solution it runs on.

Note: Backup Gateway works with the local object storage in the staging mode. This means that the data to be replicated, migrated, or uploaded to a public cloud is first stored locally and only then sent to the destination. It is vital that the local object storage is persistent and redundant so the local data does not get lost. There are multiple ways to ensure the persistence and redundancy of the local storage. You can deploy your Backup Gateway on multiple nodes and select a good redundancy mode. If your gateway is deployed on a single node in Acronis Cyber Infrastructure, you can make its storage redundant by replicating it among multiple local disks. If your entire Acronis Cyber Infrastructure installation is deployed in a single virtual machine with the sole purpose of creating a gateway, make sure this VM is made highly available by the solution it runs on.

2.3.6 Recommended Storage Configuration

It is recommended to have at least five metadata services to ensure that the cluster can survive the simultaneous failure of two nodes without data loss. The following configuration will help you create clusters for production environments:

Table 2.3.6.1: Recommended cluster configuration

| Node # | 1st disk role | 2nd disk role | 3rd+ disk roles | Access points |
|---|---|---|---|---|
| Nodes 1 to 5 | System | SSD; metadata, cache | Storage | iSCSI, S3 private, S3 public, ABGW |
| Nodes 6+ | System | SSD; cache | Storage | iSCSI, S3 private, ABGW |
| 5+ nodes in total | | 5 MDSs in total | 5+ CSs in total | All nodes run required access points. |

A production-ready cluster can be created from just five nodes with recommended hardware. However, it is recommended to enter production with at least ten nodes if you are aiming to achieve significant performance advantages over direct-attached storage (DAS) or improved recovery times.

Following are a number of more specific configuration examples that can be used in production. Each configuration can be extended by adding chunk servers and nodes.

2.3.6.1 HDD Only

This basic configuration requires a dedicated disk for each metadata server.

Table 2.3.6.1.1: HDD only configuration

| Disk # | Nodes 1-5 (base): type | Nodes 1-5 (base): roles | Nodes 6+ (extension): type | Nodes 6+ (extension): roles |
|---|---|---|---|---|
| 1 | HDD | System | HDD | System |
| 2 | HDD | MDS | HDD | CS |
| 3 | HDD | CS | HDD | CS |
| … | … | … | … | … |
| N | HDD | CS | HDD | CS |

2.3.6.2 HDD + System SSD (No Cache)

This configuration is good for creating capacity-oriented clusters.

Table 2.3.6.2.1: HDD + system SSD (no cache) configuration

| Disk # | Nodes 1-5 (base): type | Nodes 1-5 (base): roles | Nodes 6+ (extension): type | Nodes 6+ (extension): roles |
|---|---|---|---|---|
| 1 | SSD | System, MDS | SSD | System |
| 2 | HDD | CS | HDD | CS |
| 3 | HDD | CS | HDD | CS |
| … | … | … | … | … |
| N | HDD | CS | HDD | CS |

2.3.6.3 HDD + SSD

This configuration is good for creating performance-oriented clusters.

Table 2.3.6.3.1: HDD + SSD configuration

| Disk # | Nodes 1-5 (base): type | Nodes 1-5 (base): roles | Nodes 6+ (extension): type | Nodes 6+ (extension): roles |
|---|---|---|---|---|
| 1 | HDD | System | HDD | System |
| 2 | SSD | MDS, cache | SSD | Cache |
| 3 | HDD | CS | HDD | CS |
| … | … | … | … | … |
| N | HDD | CS | HDD | CS |

2.3.6.4 SSD Only

This configuration does not require SSDs for cache.

When choosing hardware for this configuration, keep in mind the following:

• Each Acronis Cyber Infrastructure client will be able to obtain up to about 40K sustainable IOPS (read + write) from the cluster.

• If you use the erasure coding redundancy scheme, each erasure coding file, e.g., a single virtual disk of a VM, will get up to 2K sustainable IOPS. That is, a user working inside a VM will have up to 2K sustainable IOPS per virtual disk at their disposal. Multiple VMs on a node can utilize more IOPS, up to the client's limit.

• In this configuration, network latency defines more than half of overall performance, so make sure that the network latency is minimal. One recommendation is to have one 10 Gbps switch between any two nodes in the cluster.
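The two IOPS figures above combine as a simple capped sum. This minimal bash sketch gives an upper-bound estimate only. It assumes every virtual disk is an erasure-coded file running at its own limit and that all of them are served through the same client. The function and variable names are hypothetical.

```shell
#!/usr/bin/env bash
# Rough upper bound from the figures above for SSD-only clusters with erasure
# coding: about 2K sustainable IOPS per virtual disk, capped at about 40K
# sustainable IOPS per client. Real numbers depend on hardware and network.
PER_VDISK_IOPS=2000
PER_CLIENT_IOPS=40000

client_iops_estimate() {
  local vdisks=$1
  local total=$(( vdisks * PER_VDISK_IOPS ))
  (( total > PER_CLIENT_IOPS )) && total=$PER_CLIENT_IOPS
  echo "$total"
}

for n in 1 5 20 30; do
  echo "$n busy virtual disks on one client -> up to $(client_iops_estimate "$n") IOPS"
done
```

Beyond about 20 busy virtual disks per client, the per-client limit rather than the per-disk limit becomes the bound.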

Table 2.3.6.4.1: SSD only configuration

| Disk # | Nodes 1-5 (base): type | Nodes 1-5 (base): roles | Nodes 6+ (extension): type | Nodes 6+ (extension): roles |
|---|---|---|---|---|
| 1 | SSD | System, MDS | SSD | System |
| 2 | SSD | CS | SSD | CS |
| 3 | SSD | CS | SSD | CS |
| … | … | … | … | … |
| N | SSD | CS | SSD | CS |

2.3.6.5 HDD + SSD (No Cache), 2 Tiers

In this configuration example, tier 1 is for HDDs without cache and tier 2 is for SSDs. Tier 1 can store cold data (e.g., backups), tier 2 can store hot data (e.g., high-performance virtual machines).

Table 2.3.6.5.1: HDD + SSD (no cache) 2-tier configuration

| Disk # | Nodes 1-5 (base): type | Nodes 1-5 (base): roles | Nodes 1-5 (base): tier | Nodes 6+ (extension): type | Nodes 6+ (extension): roles | Nodes 6+ (extension): tier |
|---|---|---|---|---|---|---|
| 1 | SSD | System, MDS | | SSD | System | |
| 2 | SSD | CS | 2 | SSD | CS | 2 |
| 3 | HDD | CS | 1 | HDD | CS | 1 |
| … | … | … | … | … | … | … |
| N | HDD/SSD | CS | 1/2 | HDD/SSD | CS | 1/2 |

2.3.6.6 HDD + SSD, 3 Tiers

In this configuration example, tier 1 is for HDDs without cache, tier 2 is for HDDs with cache, and tier 3 is for SSDs. Tier 1 can store cold data (e.g., backups), tier 2 can store regular virtual machines, and tier 3 can store high-performance virtual machines.

Table 2.3.6.6.1: HDD + SSD 3-tier configuration

| Disk # | Nodes 1-5 (base): type | Nodes 1-5 (base): roles | Nodes 1-5 (base): tier | Nodes 6+ (extension): type | Nodes 6+ (extension): roles | Nodes 6+ (extension): tier |
|---|---|---|---|---|---|---|
| 1 | HDD/SSD | System | | HDD/SSD | System | |
| 2 | SSD | MDS, T2 cache | | SSD | T2 cache | |
| 3 | HDD | CS | 1 | HDD | CS | 1 |
| 4 | HDD | CS | 2 | HDD | CS | 2 |
| 5 | SSD | CS | 3 | SSD | CS | 3 |
| … | … | … | … | … | … | … |
| N | HDD/SSD | CS | 1/2/3 | HDD/SSD | CS | 1/2/3 |

2.3.7 Raw Disk Space Considerations

When planning the infrastructure, keep in mind the following to avoid confusion:

• The capacity of HDDs and SSDs is measured and specified with decimal, not binary, prefixes, so "TB" in disk specifications usually means "terabyte" (10^12 bytes). The operating system, however, displays drive capacity using binary prefixes, meaning that "TB" is "tebibyte" (2^40 bytes), which is a noticeably larger unit. As a result, disks may show a capacity smaller than the one marketed by the vendor. For example, a disk with 6 TB in its specifications may be shown to have 5.45 TB of actual disk space in Acronis Cyber Infrastructure.

• 5% of disk space is reserved for emergency needs.

Therefore, if you add a 6 TB disk to a cluster, the available physical space should increase by about 5.2 TB.
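The 6 TB example above can be reproduced with two conversions: decimal terabytes to binary tebibytes, then minus the 5% reserve. This is a minimal bash sketch using `awk` for floating-point arithmetic. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Converts a vendor capacity in decimal terabytes (10^12 bytes) into binary
# tebibytes (2^40 bytes), as the OS displays it.
tb_to_tib() {
  awk -v tb="$1" 'BEGIN { printf "%.3f\n", tb * 10^12 / 2^40 }'
}

# Same conversion, minus the 5% of disk space reserved for emergency needs.
usable_tib() {
  awk -v tb="$1" 'BEGIN { printf "%.3f\n", tb * 10^12 / 2^40 * 0.95 }'
}

echo "6 TB disk: $(tb_to_tib 6) TiB shown, about $(usable_tib 6) TiB usable"
```

The results, about 5.457 TiB shown and 5.184 TiB added to the cluster, match the 5.45 TB and "about 5.2 TB" figures above up to rounding.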

2.3.8 Checking Disk Data Flushing Capabilities

It is highly recommended to make sure that all storage devices you plan to include in your cluster can flush data from cache to disk if power goes out unexpectedly. This way, you will identify devices that may lose data in a power failure.

Acronis Cyber Infrastructure ships with the vstorage-hwflush-check tool that checks how a storage device flushes data to disk in emergencies. The tool is implemented as a client/server utility:

• The client continuously writes blocks of data to the storage device. When a data block is written, the client increases a special counter and sends it to the server that keeps it.

• The server keeps track of counters incoming from the client and always knows the next counter number. If the server receives a counter smaller than the one it has (e.g., because the power has failed and the storage device has not flushed the cached data to disk), the server reports an error.

To check that a storage device can successfully flush data to disk when power fails, follow the procedure below:

1. Install the tool from the vstorage-ctl package available in the official repository. For example:

# wget http://repo.virtuozzo.com/hci/releases/3.0/x86_64/os/Packages/v/vstorage-ctl-7.9.198-1.vl7.x86_64.rpm

# yum install vstorage-ctl-7.9.198-1.vl7.x86_64.rpm

Do this on all the nodes involved in tests.

2. On one node, run the server:

# vstorage-hwflush-check -l

3. On a different node that hosts the storage device you want to test, run the client, for example:

# vstorage-hwflush-check -s vstorage1.example.com -d /vstorage/stor1-ssd/test -t 50

where

• vstorage1.example.com is the host name of the server.

• /vstorage/stor1-ssd/test is the directory to use for data flushing tests. During execution, the client creates a file in this directory and writes data blocks to it.

• 50 is the number of threads for the client to write data to disk. Each thread has its own file and counter. You can increase the number of threads (max. 200) to test your system in more stressful conditions. You can also specify other options when running the client. For more information on available options, see the vstorage-hwflush-check man page.

4. Wait for at least 10-15 seconds, cut power from the client node (either press the Power button or pull the power cord out), and then power it on again.

5. Restart the client:

# vstorage-hwflush-check -s vstorage1.example.com -d /vstorage/stor1-ssd/test -t 50

Once launched, the client will read all previously written data, determine the version of data on the disk, and restart the test from the last valid counter. It will then send this valid counter to the server, and the server will compare it to the latest counter it has. You may see output like:

id<N>:<counter_on_disk> -> <counter_on_server>

which means one of the following:

• If the counter on the disk is lower than the counter on the server, the storage device has failed to flush the data to the disk. Avoid using this storage device in production, especially for CS or journals, as you risk losing data.

• If the counter on the disk is higher than the counter on the server, the storage device has flushed the data to the disk but the client has failed to report it to the server. The network may be too slow or the storage device may be too fast for the set number of load threads, so consider increasing it. This storage device can be used in production.

• If both counters are equal, the storage device has flushed the data to the disk and the client has reported it to the server. This storage device can be used in production.
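The three cases above can be applied mechanically to each result line. This is a minimal bash sketch with a hypothetical function name. It only accepts the bare `id<N>:<counter_on_disk> -> <counter_on_server>` form shown in this guide; the tool's real output may contain additional text that this sketch does not handle.

```shell
#!/usr/bin/env bash
# Hypothetical helper that interprets one result line of the form
#   id<N>:<counter_on_disk> -> <counter_on_server>
# following the three cases described above.
classify_flush_line() {
  local re='^id([0-9]+):([0-9]+)[[:space:]]*->[[:space:]]*([0-9]+)$'
  if [[ ! $1 =~ $re ]]; then
    echo "unparsed"
    return 2
  fi
  local disk=${BASH_REMATCH[2]} server=${BASH_REMATCH[3]}
  if (( disk < server )); then
    echo "unsafe"            # device lost acknowledged writes: avoid in production
    return 1
  elif (( disk > server )); then
    echo "ok-client-behind"  # data flushed, report lagged: consider more threads
  else
    echo "ok"                # data flushed and reported
  fi
}

for line in "id0:1200 -> 1250" "id1:1300 -> 1290" "id2:1275 -> 1275"; do
  echo "$line: $(classify_flush_line "$line")"
done
```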

To be on the safe side, repeat the procedure several times. Once you have checked your first storage device, continue with all the remaining devices you plan to use in the cluster. You need to test all devices you plan to use in the cluster: SSD disks used for CS journaling, disks used for MDS journals, and chunk servers.
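When many devices need testing, it can help to generate the client command from step 3 for each test directory and enforce the 200-thread maximum. This is a minimal bash sketch that only prints the commands and does not run them. The function name and every directory except /vstorage/stor1-ssd/test are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper that prints (does not run) the client command from step 3
# for a test directory, rejecting thread counts outside 1..200.
hwflush_client_cmd() {
  local server=$1 dir=$2 threads=${3:-50}
  if (( threads < 1 || threads > 200 )); then
    echo "threads must be between 1 and 200" >&2
    return 1
  fi
  printf 'vstorage-hwflush-check -s %s -d %s -t %s\n' "$server" "$dir" "$threads"
}

# Placeholder directories, one per device under test.
for dir in /vstorage/stor1-ssd/test /vstorage/stor1-hdd1/test /vstorage/stor1-hdd2/test; do
  hwflush_client_cmd vstorage1.example.com "$dir" 50
done
```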

2.4 Planning Virtual Machine Configurations

Even though Acronis Cyber Infrastructure performs best on bare metal, it can also run inside virtual machines. However, in this case only storage services will be available and you will not be able to create the compute cluster.

2.4.1 Running on VMware

To be able to run the storage services on VMware, make sure the following requirements are met:

• Minimum ESXi version: ESXi 6.7.0 (build 8169922)

• VM version: 14

21

Page 27

Chapter 2. Planning Infrastructure

Management +

Compute +

Storage

10

GB 10GB

10

GB 10GB

Bond Bond

Management +

Compute +

Storage

10

GB 10GB

10

GB 10GB

Bond Bond

Management +

Compute +

Storage

10

GB 10GB

10

GB 10GB

Bond Bond

Compute +

Storage

10

GB 10GB

10

GB 10GB

Bond Bond

Compute +

Storage

10

GB 10GB

10

GB 10GB

Bond Bond

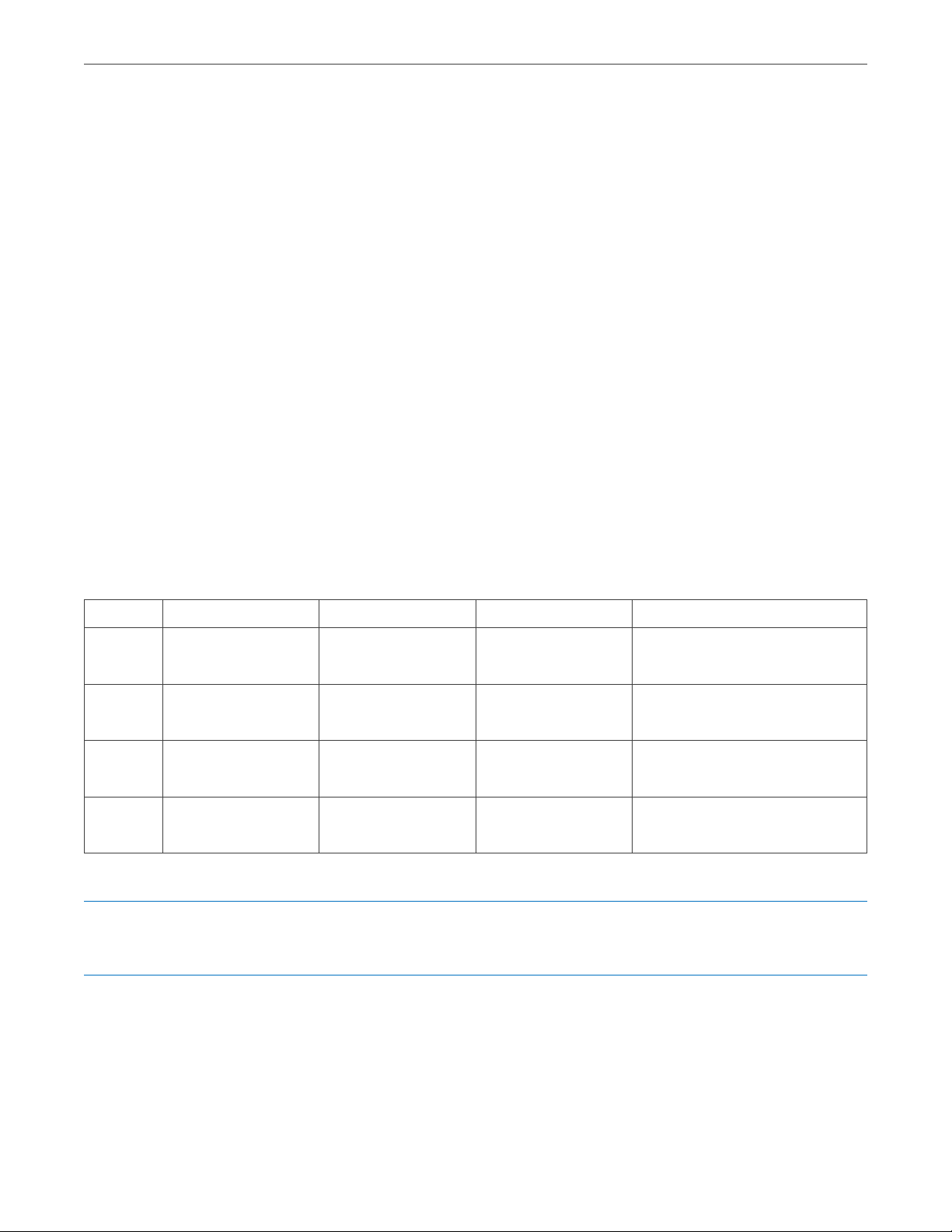

10.0.0.0/24 – Storage internal (traffic types: "Storage", "Internal management", "OSTOR private", "ABGW private")

192.168.0.0/24 - Overlay networking (traffic type "VM private ")

172.16.0.0/24 - Management and API (traffic types: "Admin panel", "Compute API", "SSH", "SNMP")

10.64.0.0/24 - External networking (traffic types: "VM public", "S3 public", "ABGW public", "iSCSI", "NFS")

2.5 Planning Network

The recommended network configuration for Acronis Cyber Infrastructure is as follows:

• One bonded connection for internal storage traffic;

• One bonded connection for service traffic divided into these VLANs:

  ◦ Overlay networking (VM private networks)

  ◦ Management and API (admin panel, SSH, SNMP, compute API)

  ◦ External networking (VM public networks, public export of iSCSI, NFS, S3, and ABGW data)
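Every network interface on a node must be connected to a different subnet (see Per-Node Network Requirements). A planned set of interface addresses can be checked against this rule before installation. This is a minimal bash sketch with hypothetical function names; the sample addresses are taken from the subnets listed with the network diagram.

```shell
#!/usr/bin/env bash
# Hypothetical check that no two interfaces of a node share a subnet.
# Input: one IPv4 address/prefix per interface.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Network address of an address/prefix pair, e.g. 10.0.0.17/24 -> 10.0.0.0/24.
network_of() {
  local ip=${1%/*} prefix=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local net=$(( $(ip_to_int "$ip") & mask ))
  echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))/$prefix"
}

# Succeeds if all interfaces are on distinct subnets; prints shared ones otherwise.
distinct_subnets() {
  local dupes
  dupes=$(for cidr in "$@"; do network_of "$cidr"; done | sort | uniq -d)
  [[ -z $dupes ]] || { echo "shared subnet(s): $dupes"; return 1; }
}

distinct_subnets 10.0.0.11/24 192.168.0.11/24 172.16.0.11/24 10.64.0.11/24 && echo "OK"
```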

2.5.1 General Network Requirements

• Internal storage traffic must be separated from other traffic types.

2.5.2 Network Limitations

• Nodes are added to clusters by their IP addresses, not FQDNs. Changing the IP address of a node in the cluster will remove that node from the cluster. If you plan to use DHCP in a cluster, make sure that IP addresses are bound to the MAC addresses of nodes' network interfaces.

• Each node must have Internet access so updates can be installed.

• The MTU value is set to 1500 by default. See Step 2: Configuring the Network (page 36) for information on setting an optimal MTU value.

• Network time synchronization (NTP) is required for correct statistics. It is enabled by default using the chronyd service. If you want to use ntpdate or ntpd, stop and disable chronyd first.

• The Internal management traffic type is assigned automatically during installation and cannot be changed in the admin panel later.

• Even though the management node can be accessed from a web browser by the hostname, you still need to specify its IP address, not the hostname, during installation.

2.5.3 Per-Node Network Requirements

Network requirements for each cluster node depend on the services that will run on this node:

• Each node in the cluster must have access to the internal network and have port 8888 open to listen for incoming connections from the internal network.

• All network interfaces on a node must be connected to different subnets. A network interface can be a VLAN-tagged logical interface, an untagged bond, or an Ethernet link.

• Each storage and metadata node must have at least one network interface for the internal network traffic. The IP addresses assigned to this interface must be either static or, if DHCP is used, mapped to the adapter's MAC address. The figure below shows a sample network configuration for a storage and metadata node.

• The management node must have a network interface for internal network traffic and a network interface for public network traffic (e.g., to the datacenter or a public network), so the admin panel can be accessed via a web browser.

By default, the management node must have port 8888 open. This will allow access to the admin panel from the public network as well as access to the cluster node from the internal network.

The figure below shows a sample network configuration for a storage and management node.

• A node that runs one or more storage access point services must have a network interface for the internal network traffic and a network interface for the public network traffic.

The figure below shows a sample network configuration for a node with an iSCSI access point. iSCSI access points use TCP port 3260 for incoming connections from the public network.

The next figure shows a sample network configuration for a node with an S3 storage access point. S3 access points use ports 443 (HTTPS) and 80 (HTTP) to listen for incoming connections from the public network.

In the scenario pictured above, the internal network is used for both the storage and S3 cluster traffic.

The next figure shows a sample network configuration for a node with a Backup Gateway storage access point. Backup Gateway access points use port 44445 for incoming connections from both internal and public networks and ports 443 and 8443 for outgoing connections to the public network.

• A node that runs compute services must have a network interface for the internal network traffic and a network interface for the public network traffic.
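The inbound TCP ports named in this section can be collected into a per-role lookup for firewall planning. This is a minimal bash sketch. The role names are illustrative, not product identifiers. It lists only the ports mentioned in this section, so other services may need additional ports.

```shell
#!/usr/bin/env bash
# Hypothetical lookup of the inbound TCP ports named in this section, per role.
# Every node needs port 8888 open on the internal network.
required_inbound_ports() {
  local ports="8888"
  case $1 in
    storage|metadata|compute|management) ;;  # 8888 only (management: also from the public network)
    iscsi) ports+=" 3260" ;;
    s3)    ports+=" 443 80" ;;
    abgw)  ports+=" 44445" ;;                 # Backup Gateway also needs outgoing 443 and 8443
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
  echo "$ports"
}

for role in management iscsi s3 abgw; do
  echo "$role: $(required_inbound_ports "$role")"
done
```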

2.5.4 Network Recommendations for Clients

The following table lists the maximum network performance a client can get with the specified network interface. The recommendation for clients is to use 10 Gbps network hardware between any two cluster nodes and minimize network latencies, especially if SSD disks are used.

Table 2.5.4.1: Maximum client network performance

| Storage network interface | Node max. I/O | VM max. I/O (replication) | VM max. I/O (erasure coding) |
|---|---|---|---|
| 1 Gbps | 100 MB/s | 100 MB/s | 70 MB/s |
| 2 x 1 Gbps | ~175 MB/s | 100 MB/s | ~130 MB/s |
| 3 x 1 Gbps | ~250 MB/s | 100 MB/s | ~180 MB/s |
| 10 Gbps | 1 GB/s | 1 GB/s | 700 MB/s |
| 2 x 10 Gbps | 1.75 GB/s | 1 GB/s | 1.3 GB/s |

2.6 Understanding Data Redundancy

Acronis Cyber Infrastructure protects every piece of data by making it redundant. This means that copies of each piece of data are stored across different storage nodes to ensure that the data is available even if some of the storage nodes are inaccessible.

Acronis Cyber Infrastructure automatically maintains the required number of copies within the cluster and ensures that all the copies are up-to-date. If a storage node becomes inaccessible, copies from it are replaced by new ones that are distributed among healthy storage nodes. If a storage node becomes accessible again after downtime, out-of-date copies on it are updated.

The redundancy is achieved by one of two methods: replication or erasure coding (explained in more detail in the next section). The chosen method affects the size of one piece of data and the number of its copies that will be maintained in the cluster. In general, replication offers better performance while erasure coding leaves more storage space available for data (see table).

Acronis Cyber Infrastructure supports a number of modes for each redundancy method. The following table illustrates the data overhead of various redundancy modes. The first three rows are replication modes and the rest are erasure coding modes.

Table 2.6.1: Redundancy mode comparison

| Redundancy mode | Min. number of nodes required | How many nodes can fail without data loss | Storage overhead, % | Raw space needed to store 100GB of data |
|---|---|---|---|---|
| 1 replica (no redundancy) | 1 | 0 | 0 | 100GB |
| 2 replicas | 2 | 1 | 100 | 200GB |
| 3 replicas | 3 | 2 | 200 | 300GB |
| Encoding 1+0 (no redundancy) | 1 | 0 | 0 | 100GB |
| Encoding 1+2 | 3 | 2 | 200 | 300GB |
| Encoding 3+2 | 5 | 2 | 67 | 167GB |
| Encoding 5+2 | 7 | 2 | 40 | 140GB |
| Encoding 7+2 | 9 | 2 | 29 | 129GB |
| Encoding 17+3 | 20 | 3 | 18 | 118GB |
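The columns of the table follow directly from the mode parameters. For an M+N erasure coding mode, the minimum node count is M + N, up to N nodes can fail, the overhead is N/M × 100%, and the raw space for 100 GB is (M + N)/M × 100 GB. For k replicas, the corresponding values are k, k − 1, (k − 1) × 100%, and k × 100 GB. This minimal bash sketch, with hypothetical function names, reproduces the table rows.

```shell
#!/usr/bin/env bash
# Reproduces a row of the redundancy table.
# Output: <min nodes> <nodes that can fail> <overhead %> <raw GB per 100 GB of data>

# Erasure coding M+N: overhead and raw space rounded to whole numbers, as in the table.
ec_stats() {
  awk -v m="$1" -v n="$2" 'BEGIN {
    printf "%d %d %.0f %.0f\n", m + n, n, n / m * 100, (m + n) / m * 100
  }'
}

# Replication with k replicas.
replica_stats() {
  local k=$1
  echo "$k $(( k - 1 )) $(( (k - 1) * 100 )) $(( k * 100 ))"
}

replica_stats 3
ec_stats 5 2
ec_stats 17 3
```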


Note: The 1+0 and 1+2 encoding modes are meant for small clusters that have insufficient nodes for other erasure coding modes but will grow in the future. As the redundancy type cannot be changed once chosen (from replication to erasure coding or vice versa), these modes allow you to choose erasure coding even if your cluster is smaller than recommended. Once the cluster has grown, more beneficial redundancy modes can be chosen.

You choose a data redundancy mode when configuring storage services and creating storage volumes for virtual machines. No matter what redundancy mode you choose, it is highly recommended to protect against a simultaneous failure of two nodes, as that happens often in real-life scenarios.

All redundancy modes allow write operations when one storage node is inaccessible. If two storage nodes are inaccessible, write operations may be frozen until the cluster heals itself.

2.6.1 Redundancy by Replication

With replication, Acronis Cyber Infrastructure breaks the incoming data stream into 256MB chunks. Each chunk is replicated, and the replicas are stored on different storage nodes, so that each node has only one replica of a given chunk.

The following diagram illustrates the 2 replicas redundancy mode.

Replication in Acronis Cyber Infrastructure is similar to the RAID rebuild process but has two key differences:

• Replication in Acronis Cyber Infrastructure is much faster than a typical online RAID 1/5/10 rebuild. The reason is that Acronis Cyber Infrastructure replicates chunks in parallel, to multiple storage nodes.

• The more storage nodes are in a cluster, the faster the cluster will recover from a disk or node failure.

High replication performance minimizes the periods of reduced redundancy for the cluster. Replication performance is affected by:

• The number of available storage nodes. As replication runs in parallel, the more available replication sources and destinations there are, the faster it is.

• Performance of storage node disks.

• Network performance. All replicas are transferred between storage nodes over the network. For example, 1 Gbps throughput can be a bottleneck (see Per-Node Network Requirements (page 23)).

• Distribution of data in the cluster. Some storage nodes may have much more data to replicate than others and may become overloaded during replication.

• I/O activity in the cluster during replication.
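The chunking behind replication can be counted directly: data is split into 256MB chunks, each chunk is stored k times, and the replicas of one chunk need k distinct storage nodes. This is a minimal bash sketch with a hypothetical function name. For counting purposes it treats a partial last chunk as a whole chunk. Actual on-disk allocation may differ.

```shell
#!/usr/bin/env bash
# Replication arithmetic: 256 MB chunks, each stored once on each of k nodes.
CHUNK_MB=256

# Prints: <chunks> <replicas stored> <distinct nodes needed>
replication_layout() {
  local size_mb=$1 replicas=$2
  local chunks=$(( (size_mb + CHUNK_MB - 1) / CHUNK_MB ))  # round up; last chunk may be partial
  echo "$chunks $(( chunks * replicas )) $replicas"
}

replication_layout 1000 2   # a 1000 MB file in the 2 replicas mode
```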

2.6.2 Redundancy by Erasure Coding

With erasure coding, Acronis Cyber Infrastructure breaks the incoming data stream into fragments of a certain size, then splits each fragment into a certain number (M) of 1-megabyte pieces and creates a certain number (N) of parity pieces for redundancy. All pieces are distributed among M+N storage nodes, that is, one piece per node. On storage nodes, pieces are stored in regular chunks of 256MB, but such chunks are not replicated, as redundancy is already achieved. The cluster can survive the failure of any N storage nodes without data loss.

The values of M and N are indicated in the names of erasure coding redundancy modes. For example, in the 5+2 mode, the incoming data is broken into 5MB fragments, each fragment is split into five 1MB pieces, and two more 1MB parity pieces are added for redundancy. In addition, if N is 2, the data is encoded using the RAID6 scheme, and if N is greater than 2, erasure codes are used.

The diagram below illustrates the 5+2 mode.

[Figure: The 5+2 mode. A fragment of the data stream is split into M data chunks and N parity chunks, and the M + N chunks are placed on storage nodes 1 to 7.]
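The product uses RAID6 for N = 2 and erasure codes for N > 2. Both are more involved than can be shown briefly. The sketch below swaps in the simplest parity scheme, a single XOR parity piece (the N = 1 case, as in RAID 5). It only illustrates how a lost piece is recomputed from the surviving pieces. It is not the encoding Acronis Cyber Infrastructure uses, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Illustration only: single XOR parity (N = 1, as in RAID 5).
# Each integer stands for one byte of a data piece.
xor_parity() {
  local p=0 x
  for x in "$@"; do p=$(( p ^ x )); done
  echo "$p"
}

# A single lost piece equals the XOR of all surviving data pieces and the parity piece.
recover_piece() {
  xor_parity "$@"
}

data=(12 7 200 33 90)              # M = 5 data pieces
parity=$(xor_parity "${data[@]}")  # N = 1 parity piece
# Lose piece 3 (value 200) and rebuild it from the other four plus the parity.
echo "recovered: $(recover_piece 12 7 33 90 "$parity")"
```

With two parity pieces, as in the 5+2 mode, any two lost pieces can be rebuilt, which requires a stronger code than plain XOR.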

2.6.3 No Redundancy

Warning: Danger of data loss!

Without redundancy, each chunk is stored as a single copy on one storage node. If that node fails, the data may be lost. Running without redundancy is strongly discouraged in any scenario, unless you only want to evaluate Acronis Cyber Infrastructure on a single server.

2.7 Understanding Failure Domains

A failure domain is a set of services which can fail in a correlated manner. To provide high availability of data, Acronis Cyber Infrastructure spreads data replicas evenly across failure domains, according to a replica placement policy.

The following policies are available:

• Host as a failure domain (default). If a single host running multiple CS services fails (e.g., due to a power outage or network disconnect), all CS services on it become unavailable at once. To protect against data loss under this policy, Acronis Cyber Infrastructure never places more than one data replica per host. This policy is highly recommended for clusters of three or more nodes.

• Disk, the smallest possible failure domain. Under this policy, Acronis Cyber Infrastructure never places more than one data replica per disk or CS. While protecting against disk failure, this option may still result in data loss if data replicas happen to be on different disks of the same host and that host fails. This policy can be used with small clusters of up to three nodes (down to a single node).
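The difference between the two policies shows up when choosing where replicas may go. This is a minimal bash sketch (bash 4+ for associative arrays) using a hypothetical "host:disk" notation for candidate locations. It places replicas greedily in the given order and skips any location whose failure domain already holds a replica. It is a simplification, not the product's placement logic, which also balances load across CSs.

```shell
#!/usr/bin/env bash
# Illustration of the host and disk placement policies (bash 4+).
# Candidate locations use a hypothetical "host:disk" notation.
place_replicas() {
  local policy=$1 count=$2
  shift 2
  local -a chosen=()
  local -A used=()
  local slot domain
  for slot in "$@"; do
    (( ${#chosen[@]} == count )) && break
    if [[ $policy == host ]]; then
      domain=${slot%%:*}    # host policy: the whole host is one failure domain
    else
      domain=$slot          # disk policy: each disk (CS) is its own failure domain
    fi
    [[ -n ${used[$domain]:-} ]] && continue
    used[$domain]=1
    chosen+=("$slot")
  done
  (( ${#chosen[@]} == count )) || return 1   # not enough failure domains
  echo "${chosen[*]}"
}

slots=(h1:d1 h1:d2 h2:d1 h3:d1)
echo "host policy: $(place_replicas host 3 "${slots[@]}")"
echo "disk policy: $(place_replicas disk 3 "${slots[@]}")"
```

Under the disk policy, two of the three replicas land on host h1, which is exactly the data-loss risk described above if that host fails.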

2.8 Understanding Storage Tiers

In Acronis Cyber Infrastructure terminology, tiers are disk groups that allow you to organize storage workloads based on your criteria. For example, you can use tiers to separate workloads produced by different tenants. Or you can have a tier of fast SSDs for service or virtual environment workloads and a tier of high-capacity HDDs for backup storage.

When assigning disks to tiers (which you can do at any time), keep in mind that faster storage drives should be assigned to higher tiers. For example, you can use tier 0 for backups and other cold data (CS without SSD cache), tier 1 for virtual environments with a lot of cold data but fast random writes (CS with SSD cache), and tier 2 for hot data (CS on SSD), caches, specific disks, and such.

This recommendation is related to how Acronis Cyber Infrastructure works with storage space. If a storage tier runs out of free space, Acronis Cyber Infrastructure will attempt to temporarily use the space of the lower tiers down to the lowest. If the lowest tier also becomes full, Acronis Cyber Infrastructure will attempt to use a higher one. If you add more storage to the original tier later, the data temporarily stored elsewhere will be moved to the tier where it should have been stored originally. For example, if you try to write data to tier 2 and it is full, Acronis Cyber Infrastructure will attempt to write that data to tier 1, then to tier 0. If you add more storage to tier 2 later, the aforementioned data, now stored on tier 1 or 0, will be moved back to tier 2 where it was meant to be stored originally.

Inter-tier data allocation as well as the transfer of data to the original tier occurs in the background. You can disable such migration and keep tiers strict as described in the Administrator's Command Line Guide.

Note: With the exception of out-of-space situations, automatic migration of data between tiers is not supported.
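The out-of-space fallback described above is an ordering rule. This is a minimal bash sketch with a hypothetical function name. It lists the target tier, then each lower tier down to tier 0, then the higher tiers. Two assumptions go beyond the text: tiers are numbered 0 to a given maximum, and higher tiers are tried in ascending order, since the guide only says "a higher one".

```shell
#!/usr/bin/env bash
# Order in which tiers would be tried for a write aimed at TARGET:
# the target tier, each lower tier down to 0, then higher tiers.
# Assumptions: tiers are numbered 0..MAX_TIER; higher tiers are tried in ascending order.
tier_fallback_order() {
  local target=$1 max_tier=$2 t
  local -a order=()
  for (( t = target; t >= 0; t-- )); do order+=("$t"); done
  for (( t = target + 1; t <= max_tier; t++ )); do order+=("$t"); done
  echo "${order[*]}"
}

tier_fallback_order 2 3
```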

2.9 Understanding Cluster Rebuilding

The storage cluster is self-healing. If a node or disk fails, a cluster will automatically try to restore the lost data, i.e., rebuild itself.

The rebuild process involves the following steps. Every CS sends a heartbeat message to an MDS every 5 seconds. If a heartbeat is not sent, the CS is considered inactive, and the MDS informs all cluster components to stop requesting operations on its data. If no heartbeats are received from a CS for 15 minutes, the MDS considers that CS offline and starts cluster rebuilding (if the prerequisites below are met). In the process, the MDS finds CSs that do not have pieces (replicas) of the lost data and restores the data, one piece (replica) at a time, as follows:

• If replication is used, the existing replicas of a degraded chunk are locked (to make sure all replicas remain identical) and one is copied to the new CS. If at this time a client needs to read some data that has not been rebuilt yet, it reads any remaining replica of that data.

• If erasure coding is used, the new CS requests almost all the remaining data pieces to rebuild the missing ones. If at this time a client needs to read some data that has not been rebuilt yet, that data is rebuilt out of turn and then read.

Self-healing requires more network traffic and CPU resources if replication is used. On the other hand, rebuilding with erasure coding is slower.
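The heartbeat rules above map the time since a CS's last heartbeat to one of three states. This is a minimal bash sketch with a hypothetical function name. The 5-second interval and 15-minute timeout come from the text. How the exact boundaries are handled is an assumption.

```shell
#!/usr/bin/env bash
# CS state as seen by the MDS, from the seconds elapsed since the last heartbeat.
# Interval and timeout are from the text; boundary handling is an assumption.
HEARTBEAT_INTERVAL=5
OFFLINE_AFTER=$(( 15 * 60 ))

cs_state() {
  local since=$1
  if (( since >= OFFLINE_AFTER )); then
    echo "offline"    # MDS starts rebuilding (if the prerequisites are met)
  elif (( since > HEARTBEAT_INTERVAL )); then
    echo "inactive"   # cluster components stop requesting operations on its data
  else
    echo "active"
  fi
}

for s in 3 60 900; do echo "${s}s since last heartbeat: $(cs_state "$s")"; done
```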

For a cluster to be able to rebuild itself, it must have at least:

1. As many healthy nodes as required by the redundancy mode

2. Enough free space to accommodate as much data as any one node can store

The first prerequisite is illustrated by the following example. In a cluster that works in the 5+2 erasure coding mode and has seven nodes (i.e. the minimum), each piece of user data is distributed to 5+2 nodes for redundancy, i.e. every node is used. If one or two nodes fail, the user data will not be lost, but the cluster will become degraded and will not be able to rebuild itself until at least seven nodes are healthy again (that is, until you add the missing nodes). For comparison, in a cluster that works in the 5+2 erasure coding mode and has ten nodes, each piece of user data is distributed to 5+2 randomly chosen nodes out of ten to even out the load on CSs. If up to three nodes fail, such a cluster will still have the seven healthy nodes it needs to rebuild itself.
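
The node-count prerequisite reduces to simple arithmetic: after failures, the number of healthy nodes must still be at least k+m for a k+m erasure coding mode. The sketch below checks only this first prerequisite; the function names `can_rebuild` and `rebuild_headroom` are hypothetical, and the sketch does not model whether data survives several simultaneous failures.

```bash
#!/usr/bin/env bash
# Hypothetical check of the first rebuild prerequisite for a K+M erasure
# coding mode: enough healthy nodes must remain to place all K+M pieces.
# Usage: can_rebuild TOTAL_NODES FAILED_NODES K M
can_rebuild() {
  local total=$1 failed=$2 k=$3 m=$4
  (( total - failed >= k + m ))
}

# How many nodes may fail while the cluster can still rebuild itself.
# Usage: rebuild_headroom TOTAL_NODES K M
rebuild_headroom() {
  local total=$1 k=$2 m=$3
  echo $(( total - (k + m) ))
}

for total in 7 10; do
  for failed in 1 3; do
    if can_rebuild "$total" "$failed" 5 2; then verdict=yes; else verdict=no; fi
    echo "nodes=$total failed=$failed mode=5+2 can rebuild: $verdict"
  done
done
echo "10-node 5+2 cluster can lose $(rebuild_headroom 10 5 2) nodes and still rebuild"
```

For the seven-node cluster both cases report `no`; for the ten-node cluster both report `yes`, and the headroom is 3, matching the example above.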

The second prerequisite is illustrated by the following example. In a cluster that has ten 10 TB nodes, at least 1 TB on each node should be kept free, so if a node fails, its 9 TB of data can be rebuilt on the remaining nine nodes (1 TB per node). If, however, a cluster has ten 10 TB nodes and one 20 TB node, each smaller node should have at least 2 TB free in case the largest node fails (while the largest node should have 1 TB free).
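
The free-space rule can be checked node by node: the free space on all other nodes together must be able to hold the data stored on the failed node. The sketch below is a simplified illustration under that assumption only; the function name and the `capacity:used` argument format are hypothetical, and real chunk placement rules (one piece per node, tiers, failure domains) are ignored, so passing the check is necessary but not sufficient.

```bash
#!/usr/bin/env bash
# Simplified check of the second rebuild prerequisite: for each node, the free
# space on all other nodes together must be able to hold the data on that node.
# Ignores placement rules (e.g. one piece per node, tiers, failure domains).
# Arguments: one hypothetical "capacity:used" pair per node, in whole TB.
can_absorb_any_failure() {
  local -a cap=() used=()
  local pair i j free_elsewhere
  for pair in "$@"; do
    cap+=("${pair%%:*}")
    used+=("${pair##*:}")
  done
  for i in "${!cap[@]}"; do
    free_elsewhere=0
    for j in "${!cap[@]}"; do
      if (( i != j )); then
        free_elsewhere=$(( free_elsewhere + cap[j] - used[j] ))
      fi
    done
    if (( free_elsewhere < used[i] )); then
      echo "node $i: ${used[i]} TB to rebuild, only $free_elsewhere TB free elsewhere"
      return 1
    fi
  done
  echo ok
}

# Ten 10 TB nodes with only 1 TB free each, plus one 20 TB node with 1 TB free.
nodes=()
for _ in {1..10}; do nodes+=(10:9); done
nodes+=(20:19)
can_absorb_any_failure "${nodes[@]}" || echo "free up space before relying on self-healing"
```

This example fails for the 20 TB node (19 TB to rebuild, only 10 TB free elsewhere). With 2 TB free on each smaller node (`10:8`), as recommended above, the same check prints `ok`.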

The following recommendations help reduce rebuilding overhead:

• To simplify rebuilding, keep uniform disk counts and capacity sizes on all nodes.

• Rebuilding places additional load on the network and increases the latency of read and write operations. The more network bandwidth the cluster has, the faster rebuilding completes and the sooner bandwidth is freed up.
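
To get a feel for how bandwidth affects rebuild time, an idealized lower bound is the amount of data to rebuild divided by the aggregate network bandwidth available for rebuilding. The helper below is a back-of-the-envelope sketch under that assumption; its name is hypothetical, and it ignores disk throughput, erasure coding read amplification, protocol overhead, and competing client I/O, so real rebuilds take longer.

```bash
#!/usr/bin/env bash
# Idealized lower bound on rebuild time, in seconds.
# Usage: min_rebuild_seconds DATA_TB AGGREGATE_GBIT_PER_S
# 1 TB = 10^12 bytes = 8 * 10^12 bits; 1 Gbit/s = 10^9 bits/s,
# so seconds = DATA_TB * 8 * 10^12 / (GBIT * 10^9) = DATA_TB * 8000 / GBIT.
min_rebuild_seconds() {
  local data_tb=$1 gbit=$2
  echo $(( data_tb * 8000 / gbit ))
}

# Rebuilding the 9 TB of a failed node from the earlier example:
for gbit in 10 40; do
  secs=$(min_rebuild_seconds 9 "$gbit")
  echo "${gbit} Gbit/s: at least ${secs} s (~$(( secs / 60 )) min)"
done
```

Quadrupling the aggregate bandwidth cuts the bound from two hours to 30 minutes, which is why more bandwidth both speeds up rebuilding and frees the network sooner.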

CHAPTER 3

Installing Using GUI

After planning out the infrastructure, proceed to install the product on each server included in the plan.

Important: Time must be synchronized via NTP on all nodes in the same cluster. Make sure that the nodes can access the NTP server.
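
After installation, you can verify synchronization on each node. The sketch below assumes the node provides systemd's `timedatectl`; the helper name `clock_synced` is hypothetical. It accepts both the older "NTP synchronized" and the newer "System clock synchronized" wording, because the exact label depends on the systemd version.

```bash
#!/usr/bin/env bash
# Reads `timedatectl status` output on stdin and succeeds if the clock is synced.
# Older systemd versions print "NTP synchronized: yes", newer ones print
# "System clock synchronized: yes"; both forms are accepted.
clock_synced() {
  grep -Eq '^[[:space:]]*(NTP|System clock) synchronized:[[:space:]]*yes'
}

if timedatectl status 2>/dev/null | clock_synced; then
  echo "clock is synchronized"
else
  echo "clock is not synchronized: check that the node can reach the NTP server" >&2
fi
```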

3.1 Obtaining Distribution Image

To obtain the distribution ISO image, visit the product page and submit a request for the trial version.

3.2 Preparing for Installation

Acronis Cyber Infrastructure can be installed from:

• IPMI virtual drives

• PXE servers (in this case, time synchronization via NTP is enabled by default)

• USB drives

3.2.1 Preparing for Installation from USB Storage Drives

To install Acronis Cyber Infrastructure from a USB storage drive, you will need a USB drive with a capacity of 4 GB or more and the Acronis Cyber Infrastructure distribution ISO image.

Make a bootable USB drive by transferring the distribution image to it with dd.

Important: Be careful to specify the correct drive to transfer the image to. dd overwrites the target drive without asking for confirmation, destroying any data on it.

For example, on Linux:

# dd if=storage-image.iso of=/dev/sdb

And on Windows (with dd for Windows):

C:\>dd if=storage-image.iso of=\\?\Device\Harddisk1\Partition0
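
On Linux, a small guard can reduce the risk of overwriting the wrong disk. The sketch below is an assumption-laden convenience, not part of the product: the functions `looks_like_usb_disk` and `write_image` are hypothetical, and they rely on `lsblk` reporting the drive as a whole (`TYPE=disk`), removable (`RM=1`) device. Some USB drives and card readers report `RM=0` and would be rejected, so the check errs on the side of caution. `status=progress` requires GNU coreutils 8.24 or later.

```bash
#!/usr/bin/env bash
# Succeeds only if lsblk's "RM TYPE" output describes a whole removable disk
# (RM=1, TYPE=disk) rather than a partition or a fixed disk.
looks_like_usb_disk() {
  local rm type
  read -r rm type <<< "$1"
  [[ $rm == 1 && $type == disk ]]
}

# Hypothetical wrapper: write_image ISO DEVICE
# Refuses to run dd unless DEVICE passes the check above.
write_image() {
  local iso=$1 dev=$2
  if ! looks_like_usb_disk "$(lsblk -dno RM,TYPE "$dev" 2>/dev/null)"; then
    echo "refusing to write: $dev is not a whole removable disk" >&2
    return 1
  fi
  # bs=4M speeds up the copy; conv=fsync flushes data to the drive before dd exits.
  dd if="$iso" of="$dev" bs=4M conv=fsync status=progress
}

# Example (run as root, after confirming the device name with lsblk):
# write_image storage-image.iso /dev/sdb
```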

3.3 Starting Installation

The installation program requires a minimum screen resolution of 800x600. With 800x600, however, you may experience issues with the user interface; for example, some elements may be inaccessible. The recommended screen resolution is at least 1024x768.

To start the installation, do the following:

1. Configure the server to boot from the chosen media.

2. Boot the server and wait for the welcome screen.

3. On the welcome screen, do one of the following:

• If you want to set installation options manually, choose Install Acronis Cyber Infrastructure.

• If you want to install Acronis Cyber Infrastructure in the unattended mode, press E to edit the menu entry, append the kickstart file location to the linux line, and press Ctrl+X. For example:

linux /images/pxeboot/vmlinuz inst.stage2=hd:LABEL=<ISO_image> quiet ip=dhcp logo.nologo=1 inst.ks=<URL>

For instructions on how to create and use a kickstart file, see Creating Kickstart File (page 50) and Using Kickstart File (page 56), respectively.
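
As an illustration of where the kickstart location goes, the hypothetical helper below prints the edited linux line for a given ISO label and kickstart URL. The label `ACI-3.0` and the URL are placeholders; use the label shown in your own boot menu entry and the address where you actually host the kickstart file. Anaconda's `inst.ks=` option accepts, among others, `http://`, `https://`, `ftp://`, and `nfs:` locations.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the boot menu "linux" line with a kickstart URL appended.
# Usage: boot_line ISO_LABEL KICKSTART_URL
boot_line() {
  local label=$1 ks_url=$2
  printf 'linux /images/pxeboot/vmlinuz inst.stage2=hd:LABEL=%s quiet ip=dhcp logo.nologo=1 inst.ks=%s\n' \
    "$label" "$ks_url"
}

# Placeholder label and address (192.0.2.0/24 is reserved for documentation).
boot_line ACI-3.0 http://192.0.2.10/ks/node1.cfg
```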

If you choose Install Acronis Cyber Infrastructure, you will be asked to complete these steps:

1. Read and accept the user agreement.

2. Set up the network.

3. Choose a time zone. The date and time will be configured via NTP.

4. Choose which storage cluster node you are installing: the first one or a second/subsequent one. You can also skip this step and add the node to the storage cluster manually later.

5. Choose the destination disk to install Acronis Cyber Infrastructure on.

6. Create the root password and start installation.

These steps are described in detail in the following sections.

3.4 Step 1: Accepting the User Agreement

On this step, carefully read the End-User License Agreement. Accept it by selecting the I accept the End-User License Agreement checkbox, and click Next.

3.5 Step 2: Configuring the Network

Acronis Cyber Infrastructure requires one network interface per server for management. You will need to specify the network interface to which the network with the Internal management traffic type will be assigned. After installation, you will not be able to remove this traffic type from the preconfigured network in the admin panel.

On the Network and hostname screen, you need to have at least one network card configured. Usually the network is configured automatically (via DHCP). If manual configuration is required, select a network card, click Configure…, and specify the necessary parameters.

In particular, consider setting a more suitable MTU value. As mentioned in Network Limitations (page 22), MTU is set to 1500 by default, while 9000 is recommended. If you are integrating Acronis Cyber Infrastructure into an existing network, adjust the MTU value to match that of the network. If you are deploying Acronis Cyber Infrastructure from scratch alongside a new network, set the MTU value to the recommended 9000.

Important: The MTU value must be the same across the entire network.

You will need to configure the same MTU value on:

• Each router and switch on the network (consult your network equipment manuals)

• Each node’s network card as well as each bond or VLAN
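
A mismatched MTU somewhere on the path usually shows up only with large packets, so one way to confirm the setting is to ping another node with fragmentation prohibited. The sketch below assumes Linux iputils `ping` (for `-M do`) and IPv4 without header options; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet:
# MTU minus 20 bytes of IPv4 header (no options) and 8 bytes of ICMP header.
icmp_payload_for_mtu() {
  echo $(( $1 - 28 ))
}

# Hypothetical helper: check_path_mtu HOST MTU
# Sends three pings with fragmentation prohibited (iputils "ping -M do").
# They fail (with an error or no replies) if any interface or device on the
# path uses a smaller MTU.
check_path_mtu() {
  local host=$1 mtu=$2
  ping -c 3 -M do -s "$(icmp_payload_for_mtu "$mtu")" "$host"
}

echo "payload for MTU 9000: $(icmp_payload_for_mtu 9000) bytes"
# Example (the address is a placeholder for another node on the same network):
# check_path_mtu 10.0.0.12 9000
```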

It is also recommended to create two bonded connections as described in Planning Network (page 22) and create three VLAN interfaces on one of the bonds. One of the VLAN interfaces must be created in the installer and assigned to the admin panel network so that you can access the admin panel after the installation. The remaining VLAN interfaces can be more conveniently created and assigned to networks in the admin panel as described in the Administrator’s Guide.

In addition, you need to provide a unique hostname, either a fully qualified domain name (<hostname>.<domainname>) or a short name (<hostname>), in the Host name field.
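
Before typing a hostname into the installer, you can check it against the general hostname syntax rules from RFC 1123. This is a generic sketch, not a rule set published for this product: the function name `valid_hostname` is hypothetical, and it cannot check uniqueness, which you still need to ensure yourself.

```bash
#!/usr/bin/env bash
# Checks a hostname against the usual RFC 1123 syntax rules: dot-separated labels
# of 1-63 letters, digits, or hyphens that neither start nor end with a hyphen,
# and at most 253 characters overall. Uniqueness must be checked separately.
valid_hostname() {
  local name=$1 label
  local re='^[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?$'
  local -a labels
  [[ -n $name && ${#name} -le 253 ]] || return 1
  IFS=. read -ra labels <<< "$name"
  for label in "${labels[@]}"; do
    [[ $label =~ $re ]] || return 1
  done
  return 0
}

for h in node1 node1.example.com -node1 node_1; do
  if valid_hostname "$h"; then echo "$h: ok"; else echo "$h: invalid"; fi
done
```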

Having set up the network, click Next.

3.5.1 Creating Bonded Connections

Bonded connections offer increased throughput beyond the capabilities of a single network card as well as improved redundancy.

You can create network bonds on the Network and hostname screen as described below.

1. To add a new bonded connection, click the plus button at the bottom, select Bond from the drop-down list, and click Add.

2. In the Editing Bond connection… window, set the following parameters for an Ethernet bonding interface:

1. Mode to XOR

2. Link Monitoring to MII (recommended)

3. Monitoring frequency, Link up delay, and Link down delay to 300 (milliseconds)

Note: It is also recommended to manually set xmit_hash_policy to layer3+4 after the installation.

3. In the Bonded connections section on the Bond tab, click Add.

4. In the Editing bond slave… window, select a network interface to bond from the Device drop-down list.

5. Configure MTU if required and click Save.

6. Repeat steps 3 to 5 for each network interface you need to add to the bonded connection.

7. Configure IPv4 settings if required and click Save.

The connection will appear in the list on the Network and hostname screen.
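
After installation, you can confirm the recommended xmit_hash_policy setting from the note above by reading it back from sysfs. The Linux bonding driver exposes the policy as `<name> <number>`, for example `layer3+4 1`. The sketch below only reads the value; the function name is hypothetical, the default path assumes a bond named `bond0`, and making the setting persistent depends on the node's network configuration tooling, which is not shown here.

```bash
#!/usr/bin/env bash
# Succeeds if a bond's transmit hash policy is layer3+4.
# Usage: hash_policy_is_l34 [SYSFS_FILE]
# The default path assumes a bond named bond0 (hypothetical; use your bond's name).
hash_policy_is_l34() {
  local file=${1:-/sys/class/net/bond0/bonding/xmit_hash_policy}
  local policy rest
  read -r policy rest 2>/dev/null < "$file" || return 1
  [[ $policy == "layer3+4" ]]
}

if hash_policy_is_l34; then
  echo "bond0 uses layer3+4"
else
  echo "bond0 is missing or does not use layer3+4" >&2
fi
```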

3.5.2 Creating VLAN Adapters

While installing Acronis Cyber Infrastructure, you can also create virtual local area network (VLAN) adapters based on physical adapters or bonded connections on the Network and hostname screen as described below.

1. To add a new VLAN adapter, click the plus button at the bottom, select VLAN from the drop-down list, and click Add.

2. In the Editing VLAN connection… window:

1. From the Parent interface drop-down list, select a physical adapter or bonded connection that the VLAN adapter will be based on.

2. Specify a VLAN adapter identifier in the VLAN ID field. The value must be in the 1-4094 range.

3. Configure IPv4 settings if required and click Save.

The VLAN adapter will appear in the list on the Network and hostname screen.
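
The 1-4094 range comes from IEEE 802.1Q: VLAN IDs are 12-bit values, and 0 and 4095 are reserved. The sketch below validates an ID and, as a dry run, prints the equivalent iproute2 command for creating a VLAN adapter outside the installer. The helper names are hypothetical, and the `<parent>.<id>` interface name is a common convention rather than something the installer is documented to use.

```bash
#!/usr/bin/env bash
# 802.1Q VLAN IDs are 12-bit values; 0 and 4095 are reserved, leaving 1-4094.
valid_vlan_id() {
  [[ $1 =~ ^[0-9]+$ ]] && (( 10#$1 >= 1 && 10#$1 <= 4094 ))
}

# Hypothetical dry-run helper: prints the iproute2 command that would create a
# VLAN adapter on PARENT, named with the common "<parent>.<id>" convention.
# Usage: vlan_cmd PARENT VLAN_ID
vlan_cmd() {
  local parent=$1 id=$2
  if ! valid_vlan_id "$id"; then
    echo "invalid VLAN ID: $id (must be 1-4094)" >&2
    return 1
  fi
  echo "ip link add link $parent name $parent.$id type vlan id $id"
}

vlan_cmd bond0 100
```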

3.6 Step 3: Choosing the Time Zone

On this step, select your time zone. The date and time will be set via NTP. You will need an Internet connection for synchronization to complete.

3.7 Step 4: Configuring the Storage Cluster

On this step, you will need to specify what type of node you are installing:

• Choose No, add it to an existing cluster if this is a secondary node that you are adding to an existing storage cluster. Such nodes will run services related to data storage and will be added to the infrastructure during installation.

• Choose Yes, create a new cluster if you are just starting to set up Acronis Cyber Infrastructure and want to create a new storage cluster. This primary node, also called the management node, will host cluster management services and the admin panel. It will also serve as a storage node. Only one primary node is required.

• Choose Skip cluster configuration if you want to register the deployed node in the admin panel manually later on (see “Re-Adding Nodes to the Unassigned List” in the Administrator’s Guide).

Click Next to proceed to the next substep, which depends on your choice.

3.7.1 Deploying the Primary Node

If you chose to deploy the primary node, do the following:

1. In the Internal management network drop-down list, select a network interface for internal management and configuration purposes.