
vRealize Operations Manager vApp

Deployment and Configuration Guide

vRealize Operations Manager 6.3

This document supports the version of each product listed and

supports all subsequent versions until the document is

replaced by a new edition. To check for more recent editions of

this document, see http://www.vmware.com/support/pubs.

EN-002057-00


You can find the most up-to-date technical documentation on the VMware Web site at:

http://www.vmware.com/support/

The VMware Web site also provides the latest product updates.

If you have comments about this documentation, submit your feedback to:

docfeedback@vmware.com

Copyright © 2016 VMware, Inc. All rights reserved. Copyright and trademark information.

VMware, Inc.

3401 Hillview Ave.

Palo Alto, CA 94304

www.vmware.com


Contents

About vApp Deployment and Configuration

1 Preparing for vRealize Operations Manager Installation
    About vRealize Operations Manager Virtual Appliance Installation
    Complexity of Your Environment
    vRealize Operations Manager Cluster Nodes
    General vRealize Operations Manager Cluster Node Requirements
    vRealize Operations Manager Cluster Node Networking Requirements
    vRealize Operations Manager Cluster Node Best Practices
    Using IPv6 with vRealize Operations Manager
    Sizing the vRealize Operations Manager Cluster
    Add Data Disk Space to a vRealize Operations Manager vApp Node
    Custom vRealize Operations Manager Certificates
    Custom vRealize Operations Manager Certificate Requirements
    Sample Contents of Custom vRealize Operations Manager Certificates
    Verifying a Custom vRealize Operations Manager Certificate
    Create a Node by Deploying an OVF

2 Creating the vRealize Operations Manager Master Node
    About the vRealize Operations Manager Master Node
    Run the Setup Wizard to Create the Master Node

3 Scaling vRealize Operations Manager Out by Adding a Data Node
    About vRealize Operations Manager Data Nodes
    Run the Setup Wizard to Add a Data Node

4 Adding High Availability to vRealize Operations Manager
    About vRealize Operations Manager High Availability
    Run the Setup Wizard to Add a Master Replica Node

5 Gathering More Data by Adding a vRealize Operations Manager Remote Collector Node
    About vRealize Operations Manager Remote Collector Nodes
    Run the Setup Wizard to Create a Remote Collector Node

6 Continuing With a New vRealize Operations Manager Installation
    About New vRealize Operations Manager Installations
    Log In and Continue with a New Installation

7 Connecting vRealize Operations Manager to Data Sources
    VMware vSphere Solution in vRealize Operations Manager
    Add a vCenter Adapter Instance in vRealize Operations Manager
    Configure User Access for Actions
    Endpoint Operations Management Solution in vRealize Operations Manager
    Endpoint Operations Management Agent Installation and Deployment
    Roles and Privileges in vRealize Operations Manager
    Registering Agents on Clusters
    Manually Create Operating System Objects
    Managing Objects with Missing Configuration Parameters
    Mapping Virtual Machines to Operating Systems
    Endpoint Operations Management Agent Upgrade for vRealize Operations Manager 6.3
    Installing Optional Solutions in vRealize Operations Manager
    Managing Solution Credentials
    Managing Collector Groups
    Migrate a vCenter Operations Manager Deployment into this Version

8 vRealize Operations Manager Post-Installation Considerations
    About Logging In to vRealize Operations Manager
    Secure the vRealize Operations Manager Console
    Log in to a Remote vRealize Operations Manager Console Session
    The Customer Experience Improvement Program
    Join or Leave the Customer Experience Improvement Program for vRealize Operations Manager

9 Updating Your Software
    Obtain the Software Update PAK File
    Create a Snapshot as Part of an Update
    Install a Software Update

Index


About vApp Deployment and Configuration

The vRealize Operations Manager vApp Deployment and Configuration Guide provides information about deploying the VMware® vRealize Operations Manager virtual appliance, including how to create and configure the vRealize Operations Manager cluster.

The vRealize Operations Manager installation process consists of deploying the vRealize Operations Manager virtual appliance once for each cluster node, and accessing the product to finish setting up the application.

Intended Audience

This information is intended for anyone who wants to install and configure vRealize Operations Manager by using a virtual appliance deployment. The information is written for experienced virtual machine administrators who are familiar with enterprise management applications and datacenter operations.

VMware Technical Publications Glossary

VMware Technical Publications provides a glossary of terms that might be unfamiliar to you. For definitions of terms as they are used in VMware technical documentation, go to http://www.vmware.com/support/pubs.


Chapter 1 Preparing for vRealize Operations Manager Installation

You prepare for vRealize Operations Manager installation by evaluating your environment and deploying

enough vRealize Operations Manager cluster nodes to support how you want to use the product.

This chapter includes the following topics:

- About vRealize Operations Manager Virtual Appliance Installation
- Complexity of Your Environment
- vRealize Operations Manager Cluster Nodes
- Using IPv6 with vRealize Operations Manager
- Sizing the vRealize Operations Manager Cluster
- Custom vRealize Operations Manager Certificates
- Create a Node by Deploying an OVF


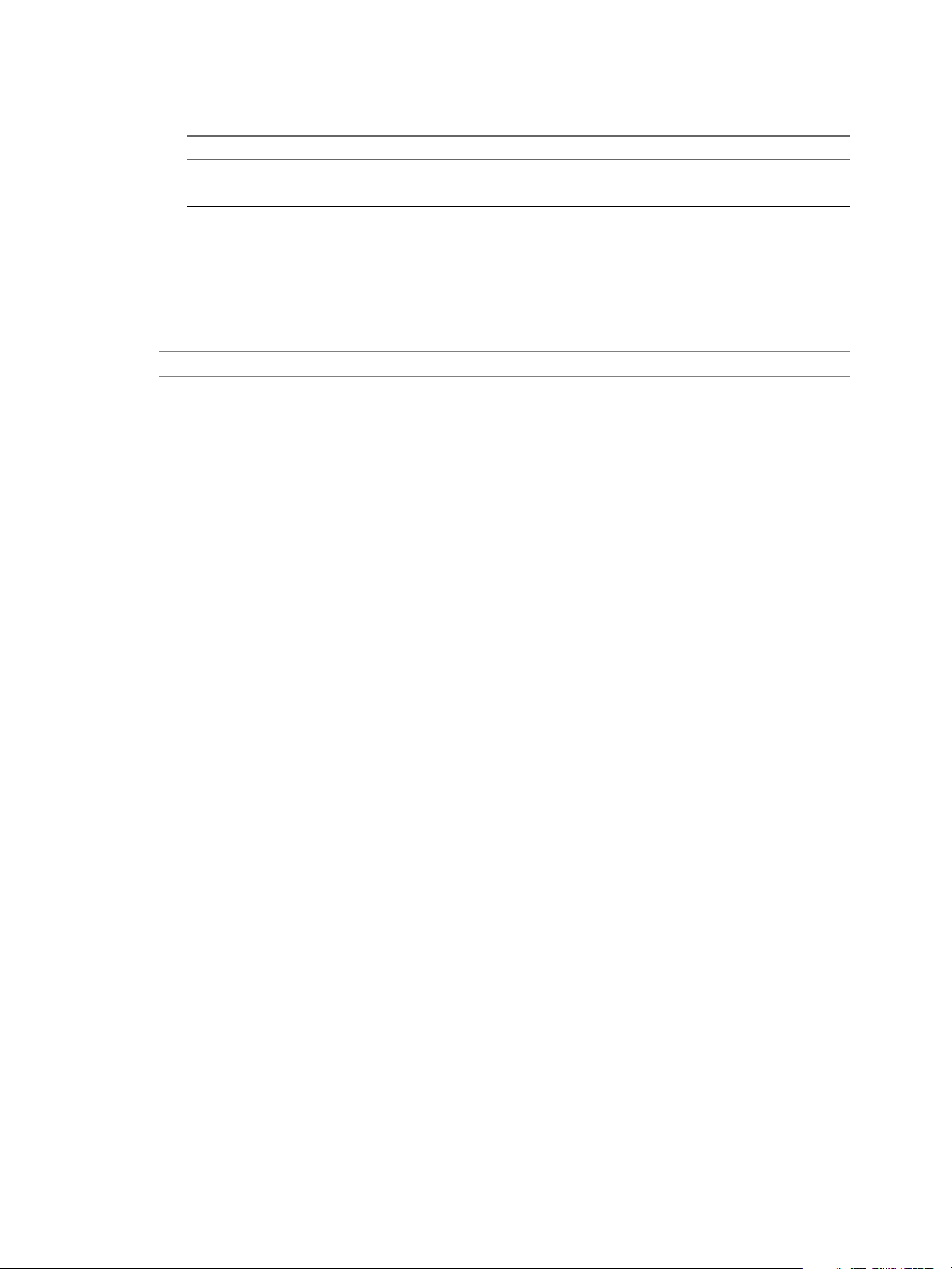

Start

Deploy OVF to create master node.

(optional) Deploy OVFs for master replica, data,

or remote collector nodes

Run master node setup

(optional)

Enable master

replica

(optional)

Run data nodes

setup

(optional)

Run remote collector

nodes setup

First-time login to the product

Monitor your environment

Select, license,

and upload

Customer Experience Improvement Program

(optional) Add more solutions

Configure solutions

Configure monitoring policies

Licensing

vRealize Operations Manager vApp Deployment and Configuration Guide

About vRealize Operations Manager Virtual Appliance Installation

The vRealize Operations Manager virtual appliance installation process consists of deploying the

vRealize Operations Manager OVF once for each cluster node, accessing the product to set up cluster nodes

according to their role, and logging in to congure the installation.

Figure 1‑1. vRealize Operations Manager Installation

8 VMware, Inc.


Complexity of Your Environment

When you deploy vRealize Operations Manager, the number and nature of the objects that you want to

monitor might be complex enough to recommend a Professional Services engagement.

Complexity Levels

Every enterprise is different in terms of the systems that are present and the level of experience of deployment personnel. The following table presents a color-coded guide to help you determine where you are on the complexity scale.

- Green
  Your installation only includes conditions that most users can understand and work with, without assistance. Continue your deployment.
- Yellow
  Your installation includes conditions that might justify help with your deployment, depending on your level of experience. Consult your account representative before proceeding, and discuss using Professional Services.
- Red
  Your installation includes conditions that strongly recommend a Professional Services engagement. Consult your account representative before proceeding, and discuss using Professional Services.

Note that these color-coded levels are not firm rules. Your product experience, which increases as you work with vRealize Operations Manager and in partnership with Professional Services, must be taken into account when deploying vRealize Operations Manager.

Table 1‑1. Effect of Deployment Conditions on Complexity

| Complexity Level | Current or New Deployment Condition | Additional Notes |
|---|---|---|
| Green | You run only one vRealize Operations Manager deployment. | Lone instances are usually easy to create in vRealize Operations Manager. |
| Green | Your deployment includes a management pack that is listed as Green according to the compatibility guide on the VMware Solutions Exchange Web site. | The compatibility guide indicates whether the supported management pack for vRealize Operations Manager is a compatible 5.x one or a new one designed for this release. In some cases, both might work but produce different results. Regardless, users might need help in adjusting their configuration so that associated data, dashboards, alerts, and so on appear as expected. Note that the terms solution, management pack, adapter, and plug-in are used somewhat interchangeably. |
| Yellow | You run multiple instances of vRealize Operations Manager. | Multiple instances are typically used to address scaling or operator use patterns. |
| Yellow | Your deployment includes a management pack that is listed as Yellow according to the compatibility guide on the VMware Solutions Exchange Web site. | The compatibility guide indicates whether the supported management pack for vRealize Operations Manager is a compatible 5.x one or a new one designed for this release. In some cases, both might work but produce different results. Regardless, users might need help in adjusting their configuration so that associated data, dashboards, alerts, and so on appear as expected. |
| Yellow | You are deploying vRealize Operations Manager remote collector nodes. | Remote collector nodes gather data but leave the storage and processing of the data to the analytics cluster. |
| Yellow | You are deploying a multiple-node vRealize Operations Manager cluster. | Multiple nodes are typically used for scaling out the monitoring capability of vRealize Operations Manager. |
| Yellow | Your new vRealize Operations Manager instance will include a Linux or Windows based deployment. | Linux and Windows deployments are not as common as vApp deployments and often need special consideration. |
| Yellow | Your vRealize Operations Manager instance will use high availability (HA). | High availability and its node failover capability is a unique multiple-node feature that you might want additional help in understanding. |
| Yellow | You want help in understanding the new or changed features in vRealize Operations Manager and how to use them in your environment. | vRealize Operations Manager is different than vCenter Operations Manager in areas such as policies, alerts, compliance, custom reporting, or badges. In addition, vRealize Operations Manager uses one consolidated interface. |
| Red | You run multiple instances of vRealize Operations Manager, where at least one includes virtual desktop infrastructure (VDI). | Multiple instances are typically used to address scaling, operator use patterns, or because separate VDI (V4V monitoring) and non-VDI instances are needed. |
| Red | Your deployment includes a management pack that is listed as Red according to the compatibility guide on the VMware Solutions Exchange Web site. | The compatibility guide indicates whether the supported management pack for vRealize Operations Manager is a compatible 5.x one or a new one designed for this release. In some cases, both might work but produce different results. Regardless, users might need help in adjusting their configuration so that associated data, dashboards, alerts, and so on appear as expected. |
| Red | You are deploying multiple vRealize Operations Manager clusters. | Multiple clusters are typically used to isolate business operations or functions. |
| Red | Your current vRealize Operations Manager deployment required a Professional Services engagement to install it. | If your environment was complex enough to justify a Professional Services engagement in the previous version, it is possible that the same conditions still apply and might warrant a similar engagement for this version. |
| Red | Professional Services customized your vRealize Operations Manager deployment. Examples of customization include special integrations, scripting, nonstandard configurations, multiple level alerting, or custom reporting. | If your environment was complex enough to justify a Professional Services engagement in the previous version, it is possible that the same conditions still apply and might warrant a similar engagement for this version. |

vRealize Operations Manager Cluster Nodes

All vRealize Operations Manager clusters consist of a master node, an optional replica node for high availability, optional data nodes, and optional remote collector nodes.

When you install vRealize Operations Manager, you use a vRealize Operations Manager vApp deployment, Linux installer, or Windows installer to create role-less nodes. After the nodes are created and have their names and IP addresses, you use an administration interface to configure them according to their role.

You can create role-less nodes all at once or as needed. A common as-needed practice might be to add nodes

to scale out vRealize Operations Manager to monitor an environment as the environment grows larger.

The following node types make up the vRealize Operations Manager analytics cluster:

Master Node

The initial, required node in vRealize Operations Manager. All other nodes

are managed by the master node.

In a single-node installation, the master node manages itself, has adapters

installed on it, and performs all data collection and analysis.

Data Node

In larger deployments, additional data nodes have adapters installed and

perform collection and analysis.

Larger deployments usually include adapters only on the data nodes so that

master and replica node resources can be dedicated to cluster management.

Replica Node

To use vRealize Operations Manager high availability (HA), the cluster

requires that you convert a data node into a replica of the master node.

The following node type is a member of the vRealize Operations Manager cluster but not part of the

analytics cluster:

Remote Collector Node

Distributed deployments might require a remote collector node that can navigate firewalls, interface with a remote data source, reduce bandwidth across data centers, or reduce the load on the vRealize Operations Manager analytics cluster. Remote collectors only gather objects for the inventory, without storing data or performing analysis. In addition, remote collector nodes may be installed on a different operating system than the rest of the cluster.


General vRealize Operations Manager Cluster Node Requirements

When you create the cluster nodes that make up vRealize Operations Manager, you have general

requirements that you must meet.

General Requirements

- vRealize Operations Manager Version. All nodes must run the same vRealize Operations Manager version.
  For example, do not add a version 6.1 data node to a cluster of vRealize Operations Manager 6.2 nodes.
- Analytics Cluster Deployment Type. In the analytics cluster, all nodes must be the same kind of deployment: vApp, Linux, or Windows.
  Do not mix vApp, Linux, and Windows nodes in the same analytics cluster.
- Remote Collector Deployment Type. A remote collector node does not need to be the same deployment type as the analytics cluster nodes.
  When you add a remote collector of a different deployment type, the following combinations are supported:
  - vApp analytics cluster and Windows remote collector
  - Linux analytics cluster and Windows remote collector
- Analytics Cluster Node Sizing. In the analytics cluster, CPU, memory, and disk size must be identical for all nodes.
  Master, replica, and data nodes must be uniform in sizing.
- Remote Collector Node Sizing. Remote collector nodes may be of different sizes from each other or from the uniform analytics cluster node size.
- Geographical Proximity. You may place analytics cluster nodes in different vSphere clusters, but the nodes must reside in the same geographical location.
  Different geographical locations are not supported.
- Virtual Machine Maintenance. When any node is a virtual machine, you may only update the virtual machine software by directly updating the vRealize Operations Manager software.
  For example, going outside of vRealize Operations Manager to access vSphere to update VMware Tools is not supported.
- Redundancy and Isolation. If you expect to enable HA, place analytics cluster nodes on separate hosts.
  See “About vRealize Operations Manager High Availability,” on page 27.
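
The general requirements above lend themselves to a quick check of a planned node list before you deploy anything. The following Python sketch is illustrative only: the `Node` record, its field names, and the `check_cluster_plan` function are hypothetical planning aids and are not part of vRealize Operations Manager. It covers only the version, deployment type, sizing, and remote collector combination rules listed in this topic.

```python
from dataclasses import dataclass

# Hypothetical planning record; the field names are assumptions for illustration.
@dataclass(frozen=True)
class Node:
    name: str
    role: str          # "master", "replica", "data", or "remote_collector"
    deployment: str    # "vapp", "linux", or "windows"
    version: str
    cpu: int
    memory_gb: int
    disk_gb: int

ANALYTICS_ROLES = {"master", "replica", "data"}
# Mixed combinations named in this guide: (analytics cluster type, remote collector type).
SUPPORTED_MIXED = {("vapp", "windows"), ("linux", "windows")}

def check_cluster_plan(nodes):
    """Return a list of problems; an empty list means no listed rule was violated."""
    problems = []
    analytics = [n for n in nodes if n.role in ANALYTICS_ROLES]
    collectors = [n for n in nodes if n.role == "remote_collector"]

    if len({n.version for n in nodes}) > 1:
        problems.append("all nodes must run the same version")
    types = {n.deployment for n in analytics}
    if len(types) > 1:
        problems.append("analytics cluster mixes deployment types")
    if len({(n.cpu, n.memory_gb, n.disk_gb) for n in analytics}) > 1:
        problems.append("analytics cluster nodes are not uniformly sized")
    if len(types) == 1:
        cluster_type = next(iter(types))
        for rc in collectors:
            mixed = (cluster_type, rc.deployment)
            if rc.deployment != cluster_type and mixed not in SUPPORTED_MIXED:
                problems.append(f"remote collector {rc.name}: combination not listed as supported")
    return problems
```

Remote collector sizing is deliberately not compared, because remote collectors may differ in size from each other and from the analytics cluster nodes.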

Requirements for Solutions

Be aware that solutions might have requirements beyond those for vRealize Operations Manager itself. For example, vRealize Operations Manager for Horizon View has specific sizing guidelines for its remote collectors.

See your solution documentation, and verify any additional requirements before installing solutions. Note that the terms solution, management pack, adapter, and plug-in are used somewhat interchangeably.


vRealize Operations Manager Cluster Node Networking Requirements

When you create the cluster nodes that make up vRealize Operations Manager, the associated setup within

your network environment is critical to inter-node communication and proper operation.

Networking Requirements

IMPORTANT: vRealize Operations Manager analytics cluster nodes need frequent communication with one another. In general, your underlying vSphere architecture might create conditions where some vSphere actions affect that communication. Examples include, but are not limited to, vMotions, storage vMotions, HA events, and DRS events.

- The master and replica nodes must be addressed by static IP address, or fully qualified domain name (FQDN) with a static IP address.
  Data and remote collector nodes may use Dynamic Host Configuration Protocol (DHCP).
- You must be able to successfully reverse-DNS all nodes, including remote collectors, to their FQDN, currently the node hostname.
  Nodes deployed by OVF have their hostnames set to the retrieved FQDN by default.
- All nodes, including remote collectors, must be bidirectionally routable by IP address or FQDN.
- Analytics cluster nodes must not be separated by network address translation (NAT), load balancer, firewall, or a proxy that inhibits bidirectional communication by IP address or FQDN.
- Analytics cluster nodes must not have the same hostname.
- Place analytics cluster nodes within the same data center and connect them to the same local area network (LAN).
- Place analytics cluster nodes on the same Layer 2 network and IP subnet.
  A stretched Layer 2 or routed Layer 3 network is not supported.
- Do not span the Layer 2 network across sites, which might create network partitions or network performance issues.
- One-way latency between analytics cluster nodes must be 5 ms or lower.
- Network bandwidth between analytics cluster nodes must be 1 Gbps or higher.
- Do not distribute analytics cluster nodes over a wide area network (WAN).
  To collect data from a WAN, a remote or separate data center, or a different geographic location, use remote collectors.
- Remote collectors are supported through a routed network but not through NAT.
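
Two of the requirements above, reverse DNS resolution to the FQDN and unique hostnames, can be spot-checked from any machine that uses the same DNS servers as the nodes. The following Python sketch is one possible way to do that with the standard `socket` module. The function names are hypothetical, and the forward lookup shown uses `socket.gethostbyname`, which resolves IPv4 addresses only. The check does not test routing, latency, or bandwidth.

```python
import socket

def check_reverse_dns(fqdn, resolve=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Check that fqdn resolves to an address whose reverse lookup returns fqdn.

    The resolver callables are injectable so that the check can be exercised
    without touching a real DNS server.
    """
    try:
        address = resolve(fqdn)
        reverse_name = reverse(address)[0]   # gethostbyaddr returns (name, aliases, addresses)
    except OSError as err:                   # gaierror and herror are OSError subclasses
        return False, f"lookup failed: {err}"
    if reverse_name.rstrip(".").lower() != fqdn.rstrip(".").lower():
        return False, f"{address} reverse-resolves to {reverse_name}, expected {fqdn}"
    return True, address

def find_duplicate_hostnames(fqdns):
    """Return the hostnames that appear more than once, compared case-insensitively."""
    seen, dupes = set(), set()
    for name in fqdns:
        key = name.lower()
        if key in seen:
            dupes.add(key)
        else:
            seen.add(key)
    return sorted(dupes)
```

Calling `check_reverse_dns("node.example.com")` with the default resolvers performs real lookups; the host name here is a placeholder.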

vRealize Operations Manager Cluster Node Best Practices

When you create the cluster nodes that make up vRealize Operations Manager, additional best practices

improve performance and reliability in vRealize Operations Manager.

Best Practices

- Deploy vRealize Operations Manager analytics cluster nodes in the same vSphere cluster.
- If you deploy analytics cluster nodes in a highly consolidated vSphere cluster, you might need resource reservations for optimal performance.
  Determine whether the virtual to physical CPU ratio is affecting performance by reviewing CPU ready time and co-stop.
- Deploy analytics cluster nodes on the same type of storage tier.
- To continue to meet analytics cluster node size and performance requirements, apply storage DRS anti-affinity rules so that nodes are on separate datastores.
- To prevent unintentional migration of nodes, set storage DRS to manual.
- To ensure balanced performance from analytics cluster nodes, use ESXi hosts with the same processor frequencies. Mixed frequencies and physical core counts might affect analytics cluster performance.
- To avoid a performance decrease, vRealize Operations Manager analytics cluster nodes need guaranteed resources when running at scale. The vRealize Operations Manager Knowledge Base includes sizing spreadsheets that calculate resources based on the number of objects and metrics that you expect to monitor, use of HA, and so on. When sizing, it is better to over-allocate than under-allocate resources.
  See Knowledge Base article 2093783.
- Because nodes might change roles, avoid machine names such as Master, Data, Replica, and so on. Examples of changed roles might include making a data node into a replica for HA, or having a replica take over the master node role.
- NUMA placement is removed in vRealize Operations Manager 6.3 and later. Procedures related to NUMA settings from the OVA file follow:

Table 1‑2. NUMA Setting

| Action | Description |
|---|---|
| Set the vRealize Operations Manager cluster status to offline | 1. Shut down the vRealize Operations Manager cluster. 2. Right-click the cluster and click Edit Settings > Options > Advanced General. 3. Click Configuration Parameters. In the vSphere Client, repeat these steps for each VM. |
| Remove the NUMA setting | 1. From the Configuration Parameters, remove the setting numa.vcpu.preferHT and click OK. 2. Click OK. 3. Repeat these steps for all the VMs in the vRealize Operations cluster. 4. Power on the cluster. |

NOTE: To ensure the availability of adequate resources and continued product performance, monitor vRealize Operations performance by checking its CPU usage, CPU ready, and CPU contention time.

Using IPv6 with vRealize Operations Manager

vRealize Operations Manager supports Internet Protocol version 6 (IPv6), the network addressing

convention that will eventually replace IPv4. Use of IPv6 with vRealize Operations Manager requires that

certain limitations be observed.

Using IPv6

- All vRealize Operations Manager cluster nodes, including remote collectors, must have IPv6 addresses. Do not mix IPv6 and IPv4.
- All vRealize Operations Manager cluster nodes, including remote collectors, must be vApp or Linux based. vRealize Operations Manager for Windows does not support IPv6.
- Use global IPv6 addresses only. Link-local addresses are not supported.
- If any nodes use DHCP, your DHCP server must be configured to support IPv6.
- DHCP is only supported on data nodes and remote collectors. Master nodes and replica nodes still require fixed addresses, which is true for IPv4 as well.
- Your DNS server must be configured to support IPv6.
- When adding nodes to the cluster, remember to enter the IPv6 address of the master node.
- When registering a VMware vCenter® instance within vRealize Operations Manager, place square brackets around the IPv6 address of your VMware vCenter Server® system if vCenter is also using IPv6.
  For example: [2015:0db8:85a3:0042:1000:8a2e:0360:7334]
  Note that, even when vRealize Operations Manager is using IPv6, vCenter Server may still have an IPv4 address. In that case, vRealize Operations Manager does not need the square brackets.
- You cannot register an Endpoint Operations Management agent in an environment that supports both IPv4 and IPv6. In the event that you attempt to do so, the following error appears:

    Connection failed. Server may be down (or wrong IP/port were used). Waiting for 10 seconds before retrying.
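
The square bracket rule and the global address rule above can be illustrated with the standard Python `ipaddress` module. This is a minimal sketch with hypothetical function names, not a tool that ships with the product. `format_vcenter_host` adds brackets only to IPv6 literals, and `is_global_ipv6` relies on the `is_global` property of `ipaddress`, which rejects link-local and other non-global ranges.

```python
import ipaddress

def format_vcenter_host(host):
    """Wrap an IPv6 literal in square brackets; return IPv4 addresses and host names unchanged."""
    bare = host.strip("[]")
    try:
        address = ipaddress.ip_address(bare)
    except ValueError:
        return host  # not an IP literal, so treat it as a host name or FQDN
    return f"[{bare}]" if address.version == 6 else bare

def is_global_ipv6(host):
    """Return True only for an IPv6 address that the ipaddress module classifies as global."""
    address = ipaddress.ip_address(host)
    return address.version == 6 and address.is_global
```

For example, passing the sample address from the list above to `format_vcenter_host` returns the same address enclosed in square brackets, while an IPv4 address such as 192.0.2.10 is returned without brackets.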

Sizing the vRealize Operations Manager Cluster

The resources needed for vRealize Operations Manager depend on how large an environment you expect to monitor and analyze, how many metrics you plan to collect, and how long you need to store the data.

It is difficult to broadly predict the CPU, memory, and disk requirements that will meet the needs of a particular environment. There are many variables, such as the number and type of objects collected, which includes the number and type of adapters installed, the presence of HA, the duration of data retention, and the quantity of specific data points of interest, such as symptoms, changes, and so on.

VMware expects vRealize Operations Manager sizing information to evolve, and maintains Knowledge Base

articles so that sizing calculations can be adjusted to adapt to usage data and changes in versions of

vRealize Operations Manager.

Knowledge Base article 2093783

The Knowledge Base articles include overall maximums, plus spreadsheet calculators in which you enter the

number of objects and metrics that you expect to monitor. To obtain the numbers, some users take the

following high-level approach, which uses vRealize Operations Manager itself.

1. Review this guide to understand how to deploy and configure a vRealize Operations Manager node.
2. Deploy a temporary vRealize Operations Manager node.
3. Configure one or more adapters, and allow the temporary node to collect overnight.
4. Access the Cluster Management page on the temporary node.
5. Using the Adapter Instances list in the lower portion of the display as a reference, enter object and metric totals of the different adapter types into the appropriate sizing spreadsheet from Knowledge Base article 2093783.
6. Deploy the vRealize Operations Manager cluster based on the spreadsheet sizing recommendation. You can build the cluster by adding resources and data nodes to the temporary node or by starting over.

If you have a large number of adapters, you might need to reset and repeat the process on the temporary

node until you have all the totals you need. The temporary node will not have enough capacity to

simultaneously run every connection from a large enterprise.


Another approach to sizing is through self monitoring. Deploy the cluster based on your best estimate, but create an alert for when capacity falls below a threshold, one that allows enough time to add nodes or disk to the cluster. You also have the option to create an email notification when thresholds are passed.

During internal testing, a single-node vApp deployment of vRealize Operations Manager that monitored

8,000 virtual machines ran out of disk storage within one week.
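
To choose a threshold that leaves enough time to add nodes or disk, it can help to estimate how quickly the data disk is filling. The following Python sketch is a rough illustration under a simplifying assumption of linear growth. The function names and all input values are hypothetical, and the result is not a substitute for the sizing spreadsheets in the Knowledge Base.

```python
def days_until_full(capacity_gb, used_gb, daily_growth_gb):
    """Estimate the days left before the data disk fills, assuming linear growth."""
    if daily_growth_gb <= 0:
        return float("inf")  # no growth observed, so no projected fill date
    return max(capacity_gb - used_gb, 0) / daily_growth_gb

def should_alert(capacity_gb, used_gb, daily_growth_gb, lead_time_days):
    """Return True when the projected time to full is shorter than the time needed to add capacity."""
    return days_until_full(capacity_gb, used_gb, daily_growth_gb) < lead_time_days
```

With a 1,000 GB disk, 400 GB used, and 30 GB of growth per day, the estimate is 20 days, so a 14-day lead time would not yet trigger an alert.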

Add Data Disk Space to a vRealize Operations Manager vApp Node

You add to the data disk of vRealize Operations Manager vApp nodes when space for storing the collected

data runs low.

Prerequisites

- Note the disk size of the analytics cluster nodes. When adding disk, you must maintain uniform size across analytics cluster nodes.
- Use the vRealize Operations Manager administration interface to take the node offline.
- Verify that you are connected to a vCenter Server system with a vSphere client, and log in to the vSphere client.

Procedure

1. Shut down the virtual machine for the node.
2. Edit the hardware settings of the virtual machine, and do one of the following:
   - Increase the size of Hard Disk 2.
     You cannot increase the size when the virtual machine has snapshots.
   - Add another disk.
3. Power on the virtual machine for the node.

During the power-on process, the virtual machine expands the vRealize Operations Manager data partition.

Custom vRealize Operations Manager Certificates

By default, vRealize Operations Manager includes its own authentication certificates. The default certificates cause the browser to display a warning when you connect to the vRealize Operations Manager user interface.

Your site security policies might require that you use another certificate, or you might want to avoid the warnings caused by the default certificates. In either case, vRealize Operations Manager supports the use of your own custom certificate. You can upload your custom certificate during initial master node configuration or later.

Custom vRealize Operations Manager Certificate Requirements

A certificate used with vRealize Operations Manager must conform to certain requirements. Using a custom certificate is optional and does not affect vRealize Operations Manager features.

Requirements for Custom Certificates

Custom vRealize Operations Manager certificates must meet the following requirements.

- The certificate file must include the terminal (leaf) server certificate, a private key, and all issuing certificates if the certificate is signed by a chain of other certificates.
- In the file, the leaf certificate must be first in the order of certificates. After the leaf certificate, the order does not matter.
- In the file, all certificates and the private key must be in PEM format. vRealize Operations Manager does not support certificates in PFX, PKCS12, PKCS7, or other formats.
- In the file, all certificates and the private key must be PEM-encoded. vRealize Operations Manager does not support DER-encoded certificates or private keys.
  PEM encoding is base-64 ASCII and contains legible BEGIN and END markers, while DER is a binary format. Also, the file extension might not match the encoding. For example, a generic .cer extension might be used with PEM or DER. To verify the encoding format, examine a certificate file using a text editor.
- The file extension must be .pem.
- The private key must be generated by the RSA or DSA algorithm.
- The private key must not be encrypted by a pass phrase if you use the master node configuration wizard or the administration interface to upload the certificate.
- The REST API in this vRealize Operations Manager release supports private keys that are encrypted by a pass phrase. Contact VMware Technical Support for details.
- The vRealize Operations Manager Web server on all nodes will have the same certificate file, so it must be valid for all nodes. One way to make the certificate valid for multiple addresses is with multiple Subject Alternative Name (SAN) entries.
- SHA1 certificates create browser compatibility issues. Therefore, ensure that all certificates that are created and uploaded to vRealize Operations Manager are signed using SHA2 or newer.
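
Several of the requirements above concern only the text structure of the file, so they can be checked before you upload anything. The following Python sketch is a hypothetical pre-flight check that uses only the standard `re` module. It looks at the file extension, the presence of PEM BEGIN and END markers, the presence of a certificate and a private key, and whether the key is marked as encrypted. It does not parse the certificates, so it cannot confirm the leaf-first order, the issuing chain, the key algorithm, the SAN entries, or the signature hash.

```python
import re

# Matches one PEM block and captures its label and body; text outside blocks is ignored.
PEM_BLOCK = re.compile(r"-----BEGIN ([A-Z0-9 ]+)-----\r?\n(.*?)-----END \1-----", re.DOTALL)

def check_pem_bundle(filename, text):
    """Return a list of structural problems; an empty list means none were found."""
    problems = []
    if not filename.lower().endswith(".pem"):
        problems.append("file extension must be .pem")
    blocks = PEM_BLOCK.findall(text)
    if not blocks:
        return problems + ["no PEM BEGIN/END blocks found (file might be DER-encoded)"]
    labels = [label for label, _ in blocks]
    if "CERTIFICATE" not in labels:
        problems.append("no certificate found")
    keys = [(label, body) for label, body in blocks if label.endswith("PRIVATE KEY")]
    if not keys:
        problems.append("no private key found")
    for label, body in keys:
        # PKCS#8 encrypted keys use a distinct label; traditional encrypted keys
        # carry a "Proc-Type: 4,ENCRYPTED" header inside the block.
        if label == "ENCRYPTED PRIVATE KEY" or "Proc-Type: 4,ENCRYPTED" in body:
            problems.append("private key is encrypted by a pass phrase")
    return problems
```

A typical use is to read the file as text and print each returned problem before running the master node configuration wizard.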

Sample Contents of Custom vRealize Operations Manager Certificates

For troubleshooting purposes, you can open a custom certificate file in a text editor and inspect its contents.

PEM Format Certificate Files

A typical PEM format certificate file resembles the following sample.

-----BEGIN CERTIFICATE-----

MIIF1DCCBLygAwIBAgIKFYXYUwAAAAAAGTANBgkqhkiG9w0BAQ0FADBhMRMwEQYK

CZImiZPyLGQBGRYDY29tMRUwEwYKCZImiZPyLGQBGRYFdm13Y3MxGDAWBgoJkiaJ

<snip>

vKStQJNr7z2+pTy92M6FgJz3y+daL+9ddbaMNp9fVXjHBoDLGGaLOvyD+KJ8+xba

aGJfGf9ELXM=

-----END CERTIFICATE-----

-----BEGIN RSA PRIVATE KEY-----

MIIEowIBAAKCAQEA4l5ffX694riI1RmdRLJwL6sOWa+Wf70HRoLtx21kZzbXbUQN

mQhTRiidJ3Ro2gRbj/btSsI+OMUzotz5VRT/yeyoTC5l2uJEapld45RroUDHQwWJ

<snip>

DAN9hQus3832xMkAuVP/jt76dHDYyviyIYbmxzMalX7LZy1MCQVg4hCH0vLsHtLh

M1rOAsz62Eht/iB61AsVCCiN3gLrX7MKsYdxZcRVruGXSIh33ynA

-----END RSA PRIVATE KEY-----

-----BEGIN CERTIFICATE-----

MIIDnTCCAoWgAwIBAgIQY+j29InmdYNCs2cK1H4kPzANBgkqhkiG9w0BAQ0FADBh

MRMwEQYKCZImiZPyLGQBGRYDY29tMRUwEwYKCZImiZPyLGQBGRYFdm13Y3MxGDAW

<snip>

ukzUuqX7wEhc+QgJWgl41mWZBZ09gfsA9XuXBL0k17IpVHpEgwwrjQz8X68m4I99

dD5Pflf/nLRJvR9jwXl62yk=

-----END CERTIFICATE-----

Private Keys

Private keys can appear in different formats but are enclosed with clear BEGIN and END markers.


Valid PEM sections begin with one of the following markers.

-----BEGIN RSA PRIVATE KEY-----

-----BEGIN PRIVATE KEY-----

Encrypted private keys begin with the following marker.

-----BEGIN ENCRYPTED PRIVATE KEY-----
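The markers above can be used to tell whether a key is protected by a pass phrase. The following Python sketch is an illustration, not a product tool, and the function name classify_private_keys is hypothetical. In addition to the markers listed in this guide, it treats an RSA key that carries the OpenSSL "Proc-Type: 4,ENCRYPTED" header as encrypted, because that legacy form keeps the RSA marker.

```python
import re

# Private key sections that use the markers listed in this guide.
KEY_BLOCK = re.compile(
    r"-----BEGIN ((?:RSA |ENCRYPTED )?PRIVATE KEY)-----\r?\n(.*?)-----END \1-----",
    re.DOTALL,
)


def classify_private_keys(text: str) -> list[str]:
    """Return 'encrypted' or 'unencrypted' for each private key section found."""
    results = []
    for match in KEY_BLOCK.finditer(text):
        label, body = match.group(1), match.group(2)
        # PKCS#8 encrypted keys have their own marker. Legacy OpenSSL keys
        # keep the RSA marker and add a "Proc-Type: 4,ENCRYPTED" header line.
        if label == "ENCRYPTED PRIVATE KEY" or "Proc-Type: 4,ENCRYPTED" in body:
            results.append("encrypted")
        else:
            results.append("unencrypted")
    return results
```

A result of encrypted indicates a key that the setup wizard and administration interface would not accept, according to the certificate requirements.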

Bag Attributes

Microsoft certificate tools sometimes add Bag Attributes sections to certificate files.

vRealize Operations Manager safely ignores content outside of BEGIN and END markers, including Bag Attributes sections.

Bag Attributes

Microsoft Local Key set: <No Values>

localKeyID: 01 00 00 00

Microsoft CSP Name: Microsoft RSA SChannel Cryptographic Provider

friendlyName: le-WebServer-8dea65d4-c331-40f4-aa0b-205c3c323f62

Key Attributes

X509v3 Key Usage: 10

-----BEGIN PRIVATE KEY-----

MIICdwIBADANBgkqhkiG9w0BAQEFAASCAmEwggJdAgEAAoGBAKHqyfc+qcQK4yxJ

om3PuB8dYZm34Qlt81GAAnBPYe3B4Q/0ba6PV8GtWG2svIpcl/eflwGHgTU3zJxR

gkKh7I3K5tGESn81ipyKTkPbYebh+aBMqPKrNNUEKlr0M9sa3WSc0o3350tCc1ew

5ZkNYZ4BRUVYWm0HogeGhOthRn2fAgMBAAECgYABhPmGN3FSZKPDG6HJlARvTlBH

KAGVnBGHd0MOmMAbghFBnBKXa8LwD1dgGBng1oOakEXTftkIjdB+uwkU5P4aRrO7

vGujUtRyRCU/4fjLBDuxQL/KpQfruAQaof9uWUwh5W9fEeW3g26fzVL8AFZnbXS0

7Z0AL1H3LNcLd5rpOQJBANnI7vFu06bFxVF+kq6ZOJFMx7x3K4VGxgg+PfFEBEPS

UJ2LuDH5/Rc63BaxFzM/q3B3Jhehvgw61mMyxU7QSSUCQQC+VDuW3XEWJjSiU6KD

gEGpCyJ5SBePbLSukljpGidKkDNlkLgbWVytCVkTAmuoAz33kMWfqIiNcqQbUgVV

UnpzAkB7d0CPO0deSsy8kMdTmKXLKf4qSF0x55epYK/5MZhBYuA1ENrR6mmjW8ke

TDNc6IGm9sVvrFBz2n9kKYpWThrJAkEAk5R69DtW0cbkLy5MqEzOHQauP36gDi1L

WMXPvUfzSYTQ5aM2rrY2/1FtSSkqUwfYh9sw8eDbqVpIV4rc6dDfcwJBALiiDPT0

tz86wySJNeOiUkQm36iXVF8AckPKT9TrbC3Ho7nC8OzL7gEllETa4Zc86Z3wpcGF

BHhEDMHaihyuVgI=

-----END PRIVATE KEY-----

Bag Attributes

localKeyID: 01 00 00 00

1.3.6.1.4.1.311.17.3.92: 00 04 00 00

1.3.6.1.4.1.311.17.3.20: 7F 95 38 07 CB 0C 99 DD 41 23 26 15 8B E8

D8 4B 0A C8 7D 93

friendlyName: cos-oc-vcops

1.3.6.1.4.1.311.17.3.71: 43 00 4F 00 53 00 2D 00 4F 00 43 00 2D 00

56 00 43 00 4D 00 35 00 37 00 31 00 2E 00 76 00 6D 00 77 00 61 00

72 00 65 00 2E 00 63 00 6F 00 6D 00 00 00

1.3.6.1.4.1.311.17.3.87: 00 00 00 00 00 00 00 00 02 00 00 00 20 00

00 00 02 00 00 00 6C 00 64 00 61 00 70 00 3A 00 00 00 7B 00 41 00

45 00 35 00 44 00 44 00 33 00 44 00 30 00 2D 00 36 00 45 00 37 00

30 00 2D 00 34 00 42 00 44 00 42 00 2D 00 39 00 43 00 34 00 31 00

2D 00 31 00 43 00 34 00 41 00 38 00 44 00 43 00 42 00 30 00 38 00

42 00 46 00 7D 00 00 00 70 00 61 00 2D 00 61 00 64 00 63 00 33 00

2E 00 76 00 6D 00 77 00 61 00 72 00 65 00 2E 00 63 00 6F 00 6D 00

5C 00 56 00 4D 00 77 00 61 00 72 00 65 00 20 00 43 00 41 00 00 00

31 00 32 00 33 00 33 00 30 00 00 00

subject=/CN=cos-oc-vcops.eng.vmware.com


issuer=/DC=com/DC=vmware/CN=VMware CA

-----BEGIN CERTIFICATE-----

MIIFWTCCBEGgAwIBAgIKSJGT5gACAAAwKjANBgkqhkiG9w0BAQUFADBBMRMwEQYK

CZImiZPyLGQBGRYDY29tMRYwFAYKCZImiZPyLGQBGRYGdm13YXJlMRIwEAYDVQQD

EwlWTXdhcmUgQ0EwHhcNMTQwMjA1MTg1OTM2WhcNMTYwMjA1MTg1OTM2WjAmMSQw
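If you want to inspect a file without the Bag Attributes text, you can keep only the marked sections. The following Python sketch shows one way to do that. It is illustrative only: the function name keep_pem_sections is hypothetical, and the sketch does not describe how vRealize Operations Manager itself parses the file.

```python
import re

# A complete PEM section, from its BEGIN marker to the matching END marker.
PEM_SECTION = re.compile(
    r"-----BEGIN ([A-Z0-9 ]+)-----.*?-----END \1-----",
    re.DOTALL,
)


def keep_pem_sections(text: str) -> str:
    """Drop everything outside BEGIN/END markers, such as Bag Attributes."""
    sections = [m.group(0) for m in PEM_SECTION.finditer(text)]
    return "\n".join(sections) + ("\n" if sections else "")
```

Cleaning the file is optional, because content outside the markers is ignored on upload.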

Verifying a Custom vRealize Operations Manager Certificate

When you upload a custom certificate file, the vRealize Operations Manager interface displays summary information for all certificates in the file.

For a valid custom certificate file, you should be able to follow the chain by matching the issuer of each certificate to the subject of the next certificate, back to a self-signed certificate where the issuer and subject are the same.

In the following example, OU=MBU,O=VMware\, Inc.,CN=vc-ops-slice-32 is issued by OU=MBU,O=VMware\, Inc.,CN=vc-ops-intermediate-32, which is issued by OU=MBU,O=VMware\, Inc.,CN=vc-ops-cluster-ca_33717ac0-ad81-4a15-ac4e-e1806f0d3f84, which is issued by itself.

Thumbprint: 80:C4:84:B9:11:5B:9F:70:9F:54:99:9E:71:46:69:D3:67:31:2B:9C

Issuer Distinguished Name: OU=MBU,O=VMware\, Inc.,CN=vc-ops-intermediate-32

Subject Distinguished Name: OU=MBU,O=VMware\, Inc.,CN=vc-ops-slice-32

Subject Alternate Name:

PublicKey Algorithm: RSA

Valid From: 2015-05-07T16:25:24.000Z

Valid To: 2020-05-06T16:25:24.000Z

Thumbprint: 72:FE:95:F2:90:7C:86:24:D9:4E:12:EC:FB:10:38:7A:DA:EC:00:3A

Issuer Distinguished Name: OU=MBU,O=VMware\, Inc.,CN=vc-ops-cluster-ca_33717ac0-ad81-4a15-ac4e-e1806f0d3f84

Subject Distinguished Name: OU=MBU,O=VMware\, Inc.,CN=vc-ops-intermediate-32

Subject Alternate Name: localhost,127.0.0.1

PublicKey Algorithm: RSA

Valid From: 2015-05-07T16:25:19.000Z

Valid To: 2020-05-06T16:25:19.000Z

Thumbprint: FA:AD:FD:91:AD:E4:F1:00:EC:4A:D4:73:81:DB:B2:D1:20:35:DB:F2

Issuer Distinguished Name: OU=MBU,O=VMware\, Inc.,CN=vc-ops-cluster-ca_33717ac0-ad81-4a15-ac4e-e1806f0d3f84

Subject Distinguished Name: OU=MBU,O=VMware\, Inc.,CN=vc-ops-cluster-ca_33717ac0-ad81-4a15-ac4e-e1806f0d3f84

Subject Alternate Name: localhost,127.0.0.1

PublicKey Algorithm: RSA

Valid From: 2015-05-07T16:24:45.000Z

Valid To: 2020-05-06T16:24:45.000Z
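The issuer-to-subject matching described in this section can be sketched in Python. The function name chain_is_complete is hypothetical, and the input is assumed to be (subject, issuer) pairs copied from the summary display, with the leaf certificate first. The sketch compares names only and does not verify signatures or validity dates.

```python
def chain_is_complete(certs: list[tuple[str, str]]) -> bool:
    """certs holds (subject, issuer) pairs, leaf first.

    Walk from the leaf, matching each issuer to another certificate's
    subject, until a self-signed certificate (issuer == subject) is reached.
    """
    if not certs:
        return False
    by_subject = {subject: issuer for subject, issuer in certs}
    subject, issuer = certs[0]
    seen = {subject}
    while issuer != subject:
        if issuer not in by_subject or issuer in seen:
            return False  # issuing certificate is missing, or the names loop
        subject, issuer = issuer, by_subject[issuer]
        seen.add(subject)
    return True


# The three certificates from the example above, leaf first.
ca = "OU=MBU,O=VMware\\, Inc.,CN=vc-ops-cluster-ca_33717ac0-ad81-4a15-ac4e-e1806f0d3f84"
intermediate = "OU=MBU,O=VMware\\, Inc.,CN=vc-ops-intermediate-32"
leaf = "OU=MBU,O=VMware\\, Inc.,CN=vc-ops-slice-32"
example_ok = chain_is_complete([(leaf, intermediate), (intermediate, ca), (ca, ca)])
```

For the example certificates, the walk goes from the slice certificate to the intermediate certificate and ends at the self-signed cluster CA certificate.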

Create a Node by Deploying an OVF

vRealize Operations Manager consists of one or more nodes in a cluster. To create nodes, you use the vSphere client to download and deploy the vRealize Operations Manager virtual machine, once for each cluster node.

Prerequisites

- Verify that you have permissions to deploy OVF templates to the inventory.

- If the ESXi host is part of a cluster, enable DRS in the cluster. If an ESXi host belongs to a non-DRS cluster, all resource pool functions are disabled.

- If this node is to be the master node, reserve a static IP address for the virtual machine, and know the associated domain name server, default gateway, and network mask values.

  Plan to keep the IP address because it is difficult to change the address after installation.

- If this node is to be a data node that will become the HA replica node, reserve a static IP address for the virtual machine, and know the associated domain name server, default gateway, and network mask values.

  Plan to keep the IP address because it is difficult to change the address after installation.

  In addition, familiarize yourself with HA node placement as described in “About vRealize Operations Manager High Availability,” on page 27.

- Preplan your domain and machine naming so that the deployed virtual machine name begins and ends with a letter (a–z) or digit (0–9), and contains only letters, digits, or hyphens (-). The underscore character (_) must not appear in the host name or anywhere in the fully qualified domain name (FQDN).

  Plan to keep the name because it is difficult to change the name after installation.

  For more information, review the host name specifications from the Internet Engineering Task Force. See www.ietf.org.

- Preplan node placement and networking to meet the requirements described in “General vRealize Operations Manager Cluster Node Requirements,” on page 12 and “vRealize Operations Manager Cluster Node Networking Requirements,” on page 13.

- If you expect the vRealize Operations Manager cluster to use IPv6 addresses, review the IPv6 limitations described in “Using IPv6 with vRealize Operations Manager,” on page 14.

- Download the vRealize Operations Manager .ova file to a location that is accessible to the vSphere client.

- If you download the virtual machine and the file extension is .tar, change the file extension to .ova.

- Verify that you are connected to a vCenter Server system with a vSphere client, and log in to the vSphere client.

  Do not deploy vRealize Operations Manager from an ESXi host. Deploy only from vCenter Server.
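The naming prerequisite above can be checked with a short script before deployment. The following Python sketch is illustrative, and the function name is_valid_fqdn is hypothetical. It applies the rule to every dot-separated part of the name and accepts uppercase letters as well as lowercase, which is an assumption based on the sample node names used in this guide.

```python
import re

# One name part: starts and ends with a letter or digit; hyphens only inside.
LABEL = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]*[A-Za-z0-9])?")


def is_valid_fqdn(name: str) -> bool:
    """Check every dot-separated part of a host name or FQDN."""
    return all(LABEL.fullmatch(label) for label in name.split("."))
```

For example, ops-a.example.com passes, while ops_a.example.com fails because of the underscore.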

Procedure

1 Select the vSphere Deploy OVF Template option.

2 Enter the path to the vRealize Operations Manager .ova file.

3 Follow the prompts until you are asked to enter a name for the node.

4 Enter a node name. Examples might include Ops1, Ops2 or Ops-A, Ops-B.

Do not include nonstandard characters such as underscores (_) in node names.

Use a different name for each vRealize Operations Manager node.

5 Follow the prompts until you are asked to select a configuration size.

6 Select the size configuration that you need. Your selection does not affect disk size.

Default disk space is allocated regardless of which size you select. If you need additional space to accommodate the expected data, add more disk after deploying the vApp.


7 Follow the prompts until you are asked to select the disk format.

Option                         Description

Thick Provision Lazy Zeroed    Creates a virtual disk in a default thick format.

Thick Provision Eager Zeroed   Creates a type of thick virtual disk that supports clustering features such as Fault Tolerance. Thick provisioned eager-zeroed format can improve performance depending on the underlying storage subsystem. Select the thick provisioned eager-zero option when possible.

Thin Provision                 Creates a disk in thin format. Use this format to save storage space.

Snapshots can negatively affect the performance of a virtual machine and typically result in a 25–30 percent degradation for the vRealize Operations Manager workload. Do not use snapshots.

8 Click Next.

9 From the drop-down menu, select a Destination Network, for example, Network 1 = TEST, and click Next.

10 In Properties, under Application, Timezone Setting, leave the default of UTC or select a time zone.

The preferred approach is to standardize on UTC. Alternatively, configure all nodes to the same time zone.

11 (Optional) Select the option for IPv6.

12 Under Networking Properties, leave the entries blank for DHCP, or fill in the default gateway, domain name server, static IP address, and network mask values.

The master node and replica node require a static IP. A data node or remote collector node may use DHCP or static IP.

13 Click Next.

14 Review the settings and click Finish.

15 If you are creating a multiple-node vRealize Operations Manager cluster, repeat Step 1 through Step 14 to deploy each node.
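The networking values entered in Step 12 can be reviewed before deployment. The following Python sketch is a planning aid only. The function name check_network and the role names are hypothetical, a blank IP address is taken to mean DHCP, and the check that the default gateway lies inside the node subnet is a general networking assumption rather than a requirement stated in this guide.

```python
import ipaddress

# Node roles that the procedure says require a static IP address.
STATIC_ONLY_ROLES = {"master", "replica"}


def check_network(role: str, ip: str = "", netmask: str = "", gateway: str = "") -> list[str]:
    """Return problems with the planned settings; a blank ip means DHCP."""
    problems = []
    if not ip:
        if role in STATIC_ONLY_ROLES:
            problems.append(f"{role} node requires a static IP address")
        return problems
    # strict=False accepts a host address together with its network mask.
    network = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    if ipaddress.ip_address(gateway) not in network:
        problems.append("default gateway is outside the node's subnet")
    return problems
```

For example, check_network("master") reports that a static IP address is required, while check_network("data") reports no problems because a data node may use DHCP.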

What to do next

Use a Web browser client to configure a newly added node as the vRealize Operations Manager master node, a data node, a high availability master replica node, or a remote collector node. The master node is required first.

CAUTION For security, do not access vRealize Operations Manager from untrusted or unpatched clients, or from clients using browser extensions.


Chapter 2 Creating the vRealize Operations Manager Master Node

All vRealize Operations Manager installations require a master node.

This chapter includes the following topics:

- “About the vRealize Operations Manager Master Node,” on page 23

- “Run the Setup Wizard to Create the Master Node,” on page 23

About the vRealize Operations Manager Master Node

The master node is the required, initial node in your vRealize Operations Manager cluster.

In single-node clusters, administration and data are on the same master node. A multiple-node cluster

includes one master node and one or more data nodes. In addition, there might be remote collector nodes,

and there might be one replica node used for high availability.

The master node performs administration for the cluster and must be online before you configure any new nodes. In addition, the master node must be online before other nodes are brought online. If the master node and replica node go offline together, bring them back online separately. Bring the master node completely online first, and then bring the replica node online. For example, if the entire cluster were offline for any reason, you would bring the master node online first.

Creating the Master Node (http://link.brightcove.com/services/player/bcpid2296383276001?bctid=ref:video_vrops_create_master_node)

Run the Setup Wizard to Create the Master Node

All vRealize Operations Manager installations require a master node. With a single node cluster,

administration and data functions are on the same master node. A multiple-node

vRealize Operations Manager cluster contains one master node and one or more nodes for handling

additional data.

Prerequisites

- Create a node by deploying the vRealize Operations Manager vApp.

- After it is deployed, note the fully qualified domain name (FQDN) or IP address of the node.

- If you plan to use a custom authentication certificate, verify that your certificate file meets the requirements for vRealize Operations Manager. See “Custom vRealize Operations Manager Certificates,” on page 16.


Procedure

1 Navigate to the name or IP address of the node that will be the master node of

vRealize Operations Manager.

The setup wizard appears, and you do not need to log in to vRealize Operations Manager.

2 Click New Installation.

3 Click Next.

4 Enter and confirm a password for the admin user account, and click Next.

Passwords require a minimum of 8 characters, one uppercase letter, one lowercase letter, one digit, and one special character.

The user account name is admin by default and cannot be changed.

5 Select whether to use the certificate included with vRealize Operations Manager or to install one of your own.

a To use your own certificate, click Browse, locate the certificate file, and click Open to load the file in the Certificate Information text box.

b Review the information detected from your certificate to verify that it meets the requirements for vRealize Operations Manager.

6 Click Next.

7 Enter a name for the master node.

For example: Ops-Master

8 Enter the URL or IP address for the Network Time Protocol (NTP) server with which the cluster will

synchronize.

For example: time.nist.gov

9 Click Add.

Leave the NTP blank to have vRealize Operations Manager manage its own synchronization by having

all nodes synchronize with the master node and replica node.

10 Click Next, and click Finish.

The administration interface appears, and it takes a moment for vRealize Operations Manager to finish adding the master node.
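The password rule in Step 4 can be checked in advance when you prepare credentials. The following Python sketch is illustrative, and the function name password_problems is hypothetical. The guide does not define which characters count as special, so the sketch assumes ASCII punctuation.

```python
import string


def password_problems(password: str) -> list[str]:
    """Return the parts of the Step 4 password rule that are not met."""
    problems = []
    if len(password) < 8:
        problems.append("fewer than 8 characters")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no digit")
    # Assumption: "special character" means ASCII punctuation.
    if not any(c in string.punctuation for c in password):
        problems.append("no special character")
    return problems
```

An empty list means that the candidate password meets all five conditions as interpreted here. The setup wizard performs the authoritative check.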

What to do next

After creating the master node, you have the following options.

- Create and add data nodes to the unstarted cluster.

- Create and add remote collector nodes to the unstarted cluster.

- Click Start vRealize Operations Manager to start the single-node cluster, and log in to finish configuring the product.

  The cluster might take from 10 to 30 minutes to start, depending on the size of your cluster and nodes. Do not make changes or perform any actions on cluster nodes while the cluster is starting.


Chapter 3 Scaling vRealize Operations Manager Out by Adding a Data Node

You can deploy and configure additional nodes so that vRealize Operations Manager can support larger environments.

This chapter includes the following topics:

- “About vRealize Operations Manager Data Nodes,” on page 25

- “Run the Setup Wizard to Add a Data Node,” on page 25

About vRealize Operations Manager Data Nodes

Data nodes are the additional cluster nodes that allow you to scale out vRealize Operations Manager to

monitor larger environments.

A data node always shares the load of performing vRealize Operations Manager analysis and might also

have a solution adapter installed to perform collection and data storage from the environment. You must

have a master node before you add data nodes.

You can dynamically scale out vRealize Operations Manager by adding data nodes without stopping the

vRealize Operations Manager cluster. When you scale out the cluster by 25% or more, you should restart the

cluster to allow vRealize Operations Manager to update its storage size, and you might notice a decrease in

performance until you restart. A maintenance interval provides a good opportunity to restart the

vRealize Operations Manager cluster.
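The 25% guideline above can be expressed as a small calculation. The following Python sketch is illustrative, and the function name restart_recommended is hypothetical. The guide does not state how the growth is measured, so the sketch assumes that it is the number of added nodes relative to the number of nodes before the scale-out.

```python
def restart_recommended(nodes_before: int, nodes_added: int) -> bool:
    """True when the cluster grows by 25% or more.

    Assumption: growth is measured by node count relative to the cluster
    size before the scale-out; the guide does not define the measure.
    """
    if nodes_before <= 0:
        raise ValueError("nodes_before must be positive")
    # nodes_added / nodes_before >= 0.25, written without floating point.
    return nodes_added * 4 >= nodes_before
```

Under this assumption, adding one node to a four-node cluster reaches the threshold, while adding one node to an eight-node cluster does not.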

In addition, the product administration options include an option to rebalance the cluster, which can be done without restarting. Rebalancing adjusts the vRealize Operations Manager workload across the cluster nodes.

NOTE Do not shut down online cluster nodes externally or by using any means other than the vRealize Operations Manager interface. Shut down a node externally only after taking it offline in the vRealize Operations Manager interface.

Creating a Data Node (http://link.brightcove.com/services/player/bcpid2296383276001?bctid=ref:video_vrops_create_data_node)

Run the Setup Wizard to Add a Data Node

Larger environments with multiple-node vRealize Operations Manager clusters contain one master node

and one or more data nodes for additional data collection, storage, processing, and analysis.

Prerequisites

- Create nodes by deploying the vRealize Operations Manager vApp.

- Create and configure the master node.

- Note the fully qualified domain name (FQDN) or IP address of the master node.

Procedure

1 In a Web browser, navigate to the name or IP address of the node that will become the data node.

The setup wizard appears, and you do not need to log in to vRealize Operations Manager.

2 Click Expand an Existing Installation.

3 Click Next.

4 Enter a name for the node (for example, Data-1).

5 From the Node Type drop-down, select Data.

6 Enter the FQDN or IP address of the master node and click Validate.

7 Select Accept this and click Next.

If necessary, locate the certificate on the master node and verify the thumbprint.

8 Verify the vRealize Operations Manager administrator username of admin.

9 Enter the vRealize Operations Manager administrator password.

Alternatively, instead of a password, type a pass-phrase that you were given by your

vRealize Operations Manager administrator.

10 Click Next, and click Finish.

The administration interface appears, and it takes a moment for vRealize Operations Manager to finish adding the data node.

What to do next

After creating a data node, you have the following options.

- New, unstarted clusters:

  - Create and add more data nodes.

  - Create and add remote collector nodes.

  - Create a high availability master replica node.

  - Click Start vRealize Operations Manager to start the cluster, and log in to finish configuring the product.

    The cluster might take from 10 to 30 minutes to start, depending on the size of your cluster and nodes. Do not make changes or perform any actions on cluster nodes while the cluster is starting.

- Established, running clusters:

  - Create and add more data nodes.

  - Create and add remote collector nodes.

  - Create a high availability master replica node, which requires a cluster restart.


Chapter 4 Adding High Availability to vRealize Operations Manager

You can dedicate one vRealize Operations Manager cluster node to serve as a replica node for the

vRealize Operations Manager master node.

This chapter includes the following topics:

- “About vRealize Operations Manager High Availability,” on page 27

- “Run the Setup Wizard to Add a Master Replica Node,” on page 28

About vRealize Operations Manager High Availability

vRealize Operations Manager supports high availability (HA). HA creates a replica for the

vRealize Operations Manager master node and protects the analytics cluster against the loss of a node.

With HA, data stored on the master node is always 100% backed up on the replica node. To enable HA, you

must have at least one data node deployed, in addition to the master node.

- HA is not a disaster recovery mechanism. HA protects the analytics cluster against the loss of only one node, and because only one loss is supported, you cannot stretch nodes across vSphere clusters in an attempt to isolate nodes or build failure zones.

- When HA is enabled, the replica can take over all functions that the master provides, were the master to fail for any reason. If the master fails, failover to the replica is automatic and requires only two to three minutes of vRealize Operations Manager downtime to resume operations and restart data collection.

  When a master node problem causes failover, the replica node becomes the master node, and the cluster runs in degraded mode. To get out of degraded mode, take one of the following steps.

  - Return to HA mode by correcting the problem with the master node, which allows vRealize Operations Manager to configure the node as the new replica node.

  - Return to HA mode by converting a data node into a new replica node and then removing the old, failed master node. Removed master nodes cannot be repaired and re-added to vRealize Operations Manager.

  - Change to non-HA operation by disabling HA and then removing the old, failed master node. Removed master nodes cannot be repaired and re-added to vRealize Operations Manager.

- In the administration interface, after an HA replica node takes over and becomes the new master node, you cannot remove the previous, offline master node from the cluster. In addition, the previous node continues to be listed as a master node. To refresh the display and enable removal of the node, refresh the browser.

- When HA is enabled, the cluster can survive the loss of one data node without losing any data. However, HA protects against the loss of only one node at a time, of any kind, so simultaneously losing data and master/replica nodes, or two or more data nodes, is not supported. Instead, vRealize Operations Manager HA provides additional application level data protection to ensure application level availability.

- When HA is enabled, it lowers vRealize Operations Manager capacity and processing by half, because HA creates a redundant copy of data throughout the cluster, as well as the replica backup of the master node. Consider your potential use of HA when planning the number and size of your vRealize Operations Manager cluster nodes. See “Sizing the vRealize Operations Manager Cluster,” on page 15.

- When HA is enabled, deploy analytics cluster nodes on separate hosts for redundancy and isolation. One option is to use anti-affinity rules that keep nodes on specific hosts in the vSphere cluster.

  If you cannot keep the nodes separate, you should not enable HA. A host fault would cause the loss of more than one node, which is not supported, and all of vRealize Operations Manager would become unavailable.

  The opposite is also true. Without HA, you could keep nodes on the same host, and it would not make a difference. Without HA, the loss of even one node would make all of vRealize Operations Manager unavailable.

- When you power off the data node and change the network settings of the VM, this affects the IP address of the data node. After this point, the HA cluster is no longer accessible and all the nodes have a status of "Waiting for analytics". Verify that you have used a static IP address.
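Two of the planning points above, node placement on separate hosts and the halved capacity, can be sketched in Python. The function names shared_hosts and usable_capacity_with_ha are hypothetical, as are the node names, host names, and capacity figure used with them. The placement mapping is something you would compile yourself from the vSphere inventory.

```python
from collections import Counter


def shared_hosts(placement: dict[str, str]) -> list[str]:
    """placement maps an analytics node name to its ESXi host name.

    Return the hosts that carry more than one analytics node. With HA
    enabled, a fault on such a host would take out more than one node.
    """
    counts = Counter(placement.values())
    return sorted(host for host, count in counts.items() if count > 1)


def usable_capacity_with_ha(sized_capacity: float) -> float:
    """HA lowers capacity by half because data is stored redundantly."""
    return sized_capacity / 2
```

An empty list from shared_hosts means that every analytics node in the mapping runs on its own host. For actual capacity figures, use the sizing guidelines referenced in this guide.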

Creating a Replica Node for High Availability (http://link.brightcove.com/services/player/bcpid2296383276001?bctid=ref:video_vrops_create_replica_node_ha)

Run the Setup Wizard to Add a Master Replica Node

You can convert a vRealize Operations Manager data node to a replica of the master node, which adds high

availability (HA) for vRealize Operations Manager.

NOTE If the cluster is running, enabling HA restarts the cluster.

If you convert a data node that is already in use for data collection and analysis, adapters and data

connections that were provided through that data node fail over to other data nodes.

You may add HA to the vRealize Operations Manager cluster at installation time or after

vRealize Operations Manager is up and running. Adding HA at installation is less intrusive because the

cluster has not yet started.

Prerequisites

- Create nodes by deploying the vRealize Operations Manager vApp.

- Create and configure the master node.

- Create and configure a data node with a static IP address.

- Note the fully qualified domain name (FQDN) or IP address of the master node.

Procedure

1 In a Web browser, navigate to the master node administration interface.

https://master-node-name-or-ip-address/admin

2 Enter the vRealize Operations Manager administrator username of admin.


3 Enter the vRealize Operations Manager administrator password and click Log In.

4 Under High Availability, click Enable.

5 Select a data node to serve as the replica for the master node.

6 Select the Enable High Availability for this cluster option, and click OK.

If the cluster was online, the administration interface displays progress as vRealize Operations Manager configures, synchronizes, and rebalances the cluster for HA.

7 If the master node and replica node go offline, and the master remains offline for any reason while the replica goes online, the replica node does not take over the master role. In that case, take the entire cluster offline, including data nodes, and log in to the replica node command line console as root.

8 Open $ALIVE_BASE/persistence/persistence.properties in a text editor.

9 Locate and set the following properties:

db.role=MASTER

db.driver=/data/vcops/xdb/vcops.bootstrap

10 Save and close persistence.properties.

11 In the administration interface, bring the replica node online, verify that it becomes the master node, and then bring the remaining cluster nodes online.
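The property change in Step 9 is a plain key=value edit. The following Python sketch shows the effect of that edit on sample text. It is an illustration, not a supported tool: the function name set_properties is hypothetical, the sample file content is invented, and the sketch assumes simple key=value lines. Making the change in a text editor, as described in the procedure, remains the documented method.

```python
def set_properties(text: str, updates: dict[str, str]) -> str:
    """Return properties text with each key in updates set to its new value.

    The first existing key=value line for a key is rewritten in place, and
    keys that are not present are appended. Other lines are left untouched.
    """
    remaining = dict(updates)
    out = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip()
        if "=" in line and not line.lstrip().startswith("#") and key in remaining:
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    out.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(out) + "\n"


# Invented sample content; the real file holds other entries as well.
before = "# sample\ndb.role=REPLICA\nother=1\n"
after = set_properties(
    before,
    {"db.role": "MASTER", "db.driver": "/data/vcops/xdb/vcops.bootstrap"},
)
```

In the sample, the existing db.role line is rewritten and the db.driver line is appended because it was not present.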

What to do next

After creating a master replica node, you have the following options.

- New, unstarted clusters:

  - Create and add data nodes.

  - Create and add remote collector nodes.

  - Click Start vRealize Operations Manager to start the cluster, and log in to finish configuring the product.

    The cluster might take from 10 to 30 minutes to start, depending on the size of your cluster and nodes. Do not make changes or perform any actions on cluster nodes while the cluster is starting.

- Established, running clusters:

  - Create and add data nodes.

  - Create and add remote collector nodes.


Chapter 5 Gathering More Data by Adding a vRealize Operations Manager Remote Collector Node

You deploy and configure remote collector nodes so that vRealize Operations Manager can add to its inventory of objects to monitor without increasing the processing load on vRealize Operations Manager analytics.

This chapter includes the following topics:

- “About vRealize Operations Manager Remote Collector Nodes,” on page 31

- “Run the Setup Wizard to Create a Remote Collector Node,” on page 31

About vRealize Operations Manager Remote Collector Nodes

A remote collector node is an additional cluster node that allows vRealize Operations Manager to gather

more objects into its inventory for monitoring. Unlike data nodes, remote collector nodes only include the

collector role of vRealize Operations Manager, without storing data or processing any analytics functions.

A remote collector node is usually deployed to navigate firewalls, reduce bandwidth across data centers, connect to remote data sources, or reduce the load on the vRealize Operations Manager analytics cluster.

Remote collectors do not buffer data while the network is experiencing a problem. If the connection between remote collector and analytics cluster is lost, the remote collector does not store data points that occur during that time. In turn, and after the connection is restored, vRealize Operations Manager does not retroactively incorporate associated events from that time into any monitoring or analysis.

You must have at least a master node before adding remote collector nodes.

Run the Setup Wizard to Create a Remote Collector Node

In distributed vRealize Operations Manager environments, remote collector nodes increase the inventory of

objects that you can monitor without increasing the load on vRealize Operations Manager in terms of data

storage, processing, or analysis.

Prerequisites

- Create nodes by deploying the vRealize Operations Manager vApp.

  During vApp deployment, select a remote collector size option.

- Create and configure the master node.

- Note the fully qualified domain name (FQDN) or IP address of the master node.

Procedure

1 In a Web browser, navigate to the name or IP address of the deployed OVF that will become the remote

collector node.

The setup wizard appears, and you do not need to log in to vRealize Operations Manager.

2 Click Expand an Existing Installation.

3 Click Next.

4 Enter a name for the node, for example, Remote-1.

5 From the Node Type drop-down menu, select Remote Collector.

6 Enter the FQDN or IP address of the master node and click Validate.

7 Select Accept this and click Next.

If necessary, locate the certificate on the master node and verify the thumbprint.

8 Verify the vRealize Operations Manager administrator username of admin.

9 Enter the vRealize Operations Manager administrator password.

Alternatively, instead of a password, type a passphrase that you were given by the

vRealize Operations Manager administrator.

10 Click Next, and click Finish.

The administration interface appears, and it takes several minutes for vRealize Operations Manager to finish adding the remote collector node.

What to do next

After creating a remote collector node, you have the following options.

- New, unstarted clusters:

  - Create and add data nodes.

  - Create and add more remote collector nodes.

  - Create a high availability master replica node.

  - Click Start vRealize Operations Manager to start the cluster, and log in to finish configuring the product.

    The cluster might take from 10 to 30 minutes to start, depending on the size of your cluster and nodes. Do not make changes or perform any actions on cluster nodes while the cluster is starting.

- Established, running clusters:

  - Create and add data nodes.

  - Create and add more remote collector nodes.

  - Create a high availability master replica node, which requires a cluster restart.


Chapter 6 Continuing With a New vRealize Operations Manager Installation

After you deploy the vRealize Operations Manager nodes and complete the initial setup, you continue with installation by logging in for the first time and configuring a few settings.

This chapter includes the following topics:

- “About New vRealize Operations Manager Installations,” on page 33

- “Log In and Continue with a New Installation,” on page 33

About New vRealize Operations Manager Installations

A new vRealize Operations Manager installation requires that you deploy and configure nodes. Then, you add solutions for the kinds of objects to monitor and manage.

After you add solutions, you configure them in the product and add monitoring policies that gather the kind of data that you want.

Logging In for the First Time (http://link.brightcove.com/services/player/bcpid2296383276001?bctid=ref:video_vrops_first_time_login)

Log In and Continue with a New Installation

To finish a new vRealize Operations Manager installation, you log in and complete a one-time process to license the product and configure solutions for the kinds of objects that you want to monitor.

Prerequisites

- Create the new cluster of vRealize Operations Manager nodes.
- Verify that the cluster has enough capacity to monitor your environment. See “Sizing the vRealize Operations Manager Cluster,” on page 15.

Procedure

1 In a Web browser, navigate to the IP address or fully qualified domain name of the master node.

2 Enter the username admin and the password that you defined when you configured the master node, and click Login.

Because this is the first time you are logging in, the administration interface appears.

3 To start the cluster, click Start vRealize Operations Manager.

4 Click Yes.

The cluster might take from 10 to 30 minutes to start, depending on your environment. Do not make

changes or perform any actions on cluster nodes while the cluster is starting.


Page 34


5 When the cluster finishes starting and the product login page appears, enter the admin username and password again, and click Login.

A one-time licensing wizard appears.

6 Click Next.

7 Read and accept the End User License Agreement, and click Next.

8 Enter your product key, or select the option to run vRealize Operations Manager in evaluation mode.

Your level of product license determines what solutions you may install to monitor and manage objects.

- Standard. vCenter only
- Advanced. vCenter plus other infrastructure solutions
- Enterprise. All solutions

vRealize Operations Manager does not license managed objects in the same way that vSphere does, so

there is no object count when you license the product.

NOTE When you transition to the Standard edition, you no longer have the Advanced and Enterprise features. After the transition, delete any content that you created in the other versions to ensure that you comply with the EULA, and verify the license key that supports the Advanced and Enterprise features.

9 If you entered a product key, click Validate License Key.

10 Click Next.

11 Select whether or not to return usage statistics to VMware, and click Next.

12 Click Finish.

The one-time wizard finishes, and the vRealize Operations Manager interface appears.

What to do next

- Use the vRealize Operations Manager interface to configure the solutions that are included with the product.
- Use the vRealize Operations Manager interface to add more solutions.
- Use the vRealize Operations Manager interface to add monitoring policies.


Page 35

Chapter 7 Connecting vRealize Operations Manager to Data Sources

Configure solutions in vRealize Operations Manager to connect to and analyze data from external data sources in your environment. Once connected, you use vRealize Operations Manager to monitor and manage objects in your environment.

A solution might be only a connection to a data source, or it might include predefined dashboards, widgets, alerts, and views.

vRealize Operations Manager includes the VMware vSphere and Endpoint Operations Management

solutions. These solutions are installed when you install vRealize Operations Manager.

Other solutions can be added to vRealize Operations Manager as management packs, such as the VMware

Management Pack for NSX for vSphere. To download VMware management packs and other third-party

solutions, visit the VMware Solution Exchange.

This chapter includes the following topics:

- “VMware vSphere Solution in vRealize Operations Manager,” on page 35
- “Endpoint Operations Management Solution in vRealize Operations Manager,” on page 39
- “Installing Optional Solutions in vRealize Operations Manager,” on page 78
- “Migrate a vCenter Operations Manager Deployment into this Version,” on page 79

VMware vSphere Solution in vRealize Operations Manager

The VMware vSphere solution connects vRealize Operations Manager to vCenter Server instances. You

collect data and metrics from those instances, monitor them, and run actions in them.

vRealize Operations Manager evaluates the data in your environment, identifying trends in object behavior, calculating possible problems and future capacity for objects in your system based on those trends, and alerting you when an object exhibits defined symptoms. The vSphere solution includes actions that you can run on the vCenter Server from vRealize Operations Manager to manage those objects as you respond to problems and alerts. Actions are run from toolbars in vRealize Operations Manager.


Page 36

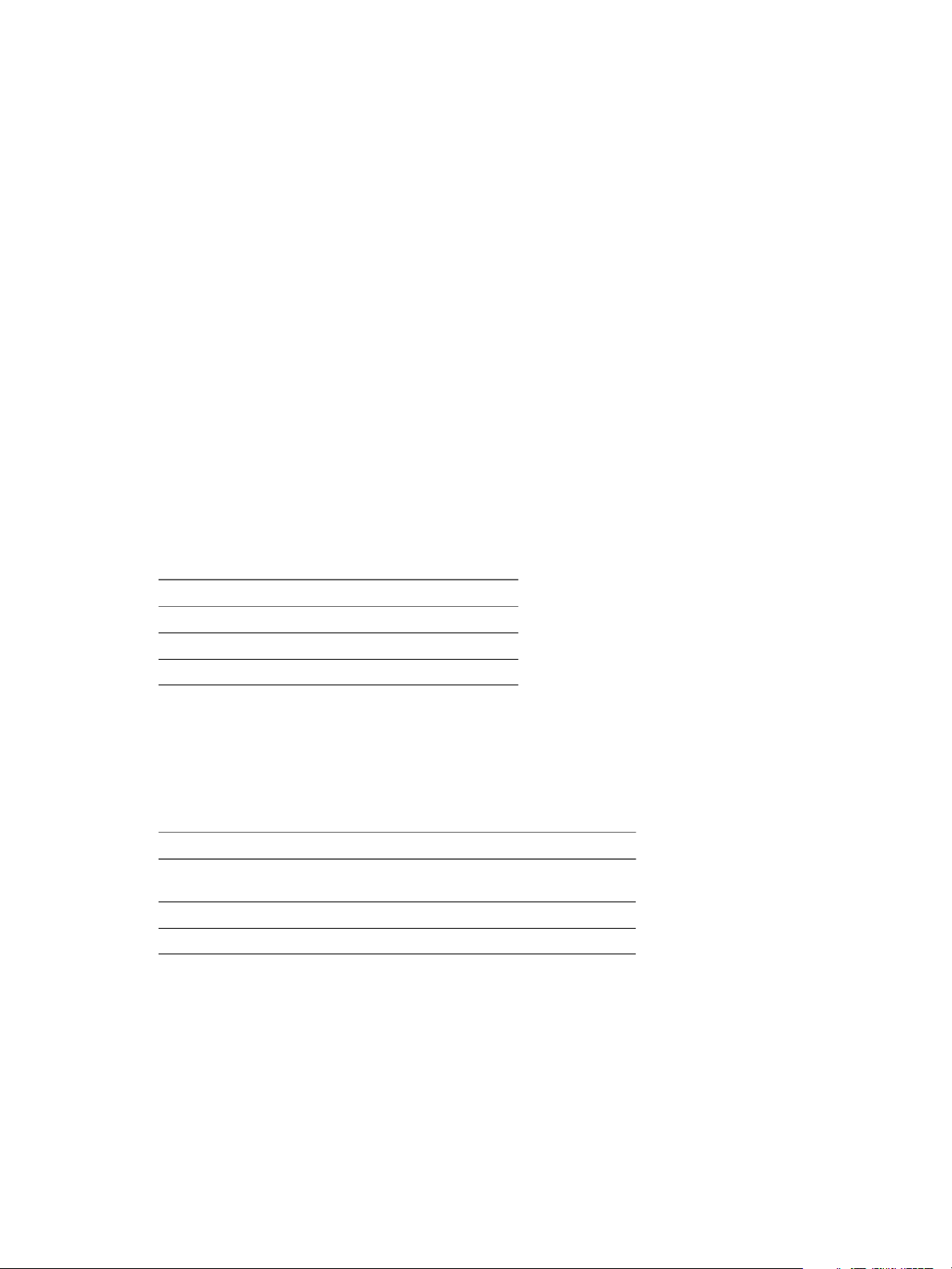

Configure and manage

vCenter adapter instances in

one central workplace

Configure user access so that

users can run actions on objects

in vCenter Server from vRealize

Operations Manager

Enable/disable actions

Update the default monitoring policy

Register/unregister vCenter instances

Add vCenter adapter instances

Configure the vSphere Solution to

connect vRealize Operations Manager

to one or more vCenter instances

To begin, access

Administration > Solutions

Create roles with permissions to determine

who can access actions

Create user groups, and assign them

action-specific roles and access to adapter

instances


Configuring the vSphere Solution

The vSphere solution is provided with vRealize Operations Manager. It includes the vCenter Server adapter.

To configure the vSphere solution, you configure one or more vCenter Server adapter instances, and configure user access so that users can run actions.

How Adapter Credentials Work

The vCenter Server credentials that you use to connect vRealize Operations Manager to a vCenter Server instance determine which objects vRealize Operations Manager monitors. Understand how these adapter credentials and user privileges interact so that you configure adapters and users correctly and avoid issues such as the following.

- If you configure the adapter to connect to a vCenter Server instance with credentials that have permission to access only one of your three hosts, every user who logs in to vRealize Operations Manager sees only the one host, even when an individual user has privileges on all three of the hosts in the vCenter Server.
- If the provided credentials have limited access to objects in the vCenter Server, even vRealize Operations Manager administrative users can run actions only on the objects for which the vCenter Server credentials have permission.
- If the provided credentials have access to all the objects in the vCenter Server, any vRealize Operations Manager user who runs actions is using this account.
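The first issue in the list above can be pictured as a set intersection. The following Python sketch is only an illustrative model, not product code. The function name `visible_objects` and the host names are hypothetical, and treating visibility as the intersection of the adapter scope and the user's privileges is an assumption made for this example.

```python
def visible_objects(adapter_scope, user_scope):
    """Return the objects a user can see in this simplified model.

    adapter_scope: objects the adapter credentials can access (assumed input).
    user_scope: objects the individual user has privileges on (assumed input).
    The adapter scope acts as an upper bound on what any user can see.
    """
    return set(adapter_scope) & set(user_scope)


# Hypothetical environment with three hosts.
all_hosts = {"host-1", "host-2", "host-3"}

# The adapter credentials have permission on only one host.
adapter_scope = {"host-1"}

# The individual user has privileges on all three hosts.
user_scope = all_hosts

print(sorted(visible_objects(adapter_scope, user_scope)))  # ['host-1']
```

In this model, widening the user's privileges never reveals more objects than the adapter credentials can access, which matches the one-host example in the list.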

Controlling User Access to Actions

You control user access for local users based on how you configure user privileges in vRealize Operations Manager. If users log in using their vCenter Server account, then the way their account is configured in vCenter Server determines their privileges.


Page 37


For example, you might have a vCenter Server user with a read-only role in vCenter Server. If you give this user the vRealize Operations Manager Power User role in vCenter Server rather than a more restrictive role, the user can run actions on objects because the adapter is configured with credentials that have privileges to change objects. To avoid this type of unexpected result, configure local vRealize Operations Manager users and vCenter Server users with the privileges you want them to have in your environment.
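The read-only example above can be sketched as a small decision function. This is a simplified model under stated assumptions, not the product's actual authorization logic. The `User` class, its fields, and `can_run_action` are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    vcenter_role: str       # role the user holds directly in vCenter Server
    can_run_actions: bool   # whether the user's vRealize Operations Manager role allows actions


def can_run_action(user, target, adapter_writable):
    """Model whether an action on `target` succeeds.

    Assumption: the action runs under the adapter account, so only the
    adapter's privileges on the target matter. The user's own vCenter
    Server role (user.vcenter_role) is deliberately not consulted.
    """
    return user.can_run_actions and target in adapter_writable


# Objects that the adapter credentials are allowed to change (hypothetical).
adapter_writable = {"vm-1", "vm-2"}

# Read-only in vCenter Server, but granted a role that allows actions.
alice = User("alice", vcenter_role="read-only", can_run_actions=True)

print(can_run_action(alice, "vm-1", adapter_writable))  # True
```

The sketch shows why the result can be unexpected: restricting the user's vCenter Server role alone does not block the action, so the role that grants actions must also be restricted.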

Add a vCenter Adapter Instance in vRealize Operations Manager

To manage your vCenter Server instances in vRealize Operations Manager, you must configure an adapter instance for each vCenter Server instance. The adapter requires the credentials that are used for communication with the target vCenter Server.

CAUTION Any adapter credentials you add are shared with other adapter administrators and