UltraSPARC User’s Manual

UltraSPARC-I

UltraSPARC-II

July 1997

Sun Microelectronics

901 San Antonio Road

Palo Alto, CA 94303

Part No: 802-7220-02

This July 1997 -02 Revision is only available on-line. The only changes made were to support hypertext links in the pdf file.

Artisan Technology Group - Quality Instrumentation ... Guaranteed | (888) 88-SOURCE | www.artisantg.com

Copyright © 1997 Sun Microsystems, Inc. All Rights Reserved.

THE INFORMATION CONTAINED IN THIS DOCUMENT IS PROVIDED “AS

IS” WITHOUT ANY EXPRESS REPRESENTATIONS OR WARRANTIES. IN

ADDITION, SUN MICROSYSTEMS, INC. DISCLAIMS ALL IMPLIED

REPRESENTATIONS AND WARRANTIES, INCLUDING ANY WARRANTY OF

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, OR NONINFRINGEMENT OF THIRD PARTY INTELLECTUAL PROPERTY RIGHTS.

This document contains proprietary information of Sun Microsystems, Inc. or

under license from third parties. No part of this document may be reproduced in

any form or by any means or transferred to any third party without the prior

written consent of Sun Microsystems, Inc.

Sun, Sun Microsystems, and the Sun logo are trademarks or registered

trademarks of Sun Microsystems, Inc. in the United States and other countries.

All SPARC trademarks are used under license and are trademarks or registered

trademarks of SPARC International, Inc. in the United States and other countries.

Products bearing SPARC trademarks are based upon an architecture developed

by Sun Microsystems, Inc.

The information contained in this document is not designed or intended for use

in on-line control of aircraft, air traffic, aircraft navigation or aircraft

communications; or in the design, construction, operation or maintenance of any

nuclear facility. Sun disclaims any express or implied warranty of fitness for such

uses.

Printed in the United States of America.

Contents

Preface ..................................................................................................................................... 9

Overview ...................................................................................................................... 9

A Brief History of SPARC.......................................................................................... 9

How to Use This Book................................................................................................ 10

Section I — Introducing UltraSPARC

1. UltraSPARC Basics................................................................................................................ 3

1.1 Overview ...................................................................................................................... 3

1.2 Design Philosophy ...................................................................................................... 3

1.3 Component Overview ................................................................................................ 5

1.4 UltraSPARC Subsystem.............................................................................................. 10

2. Processor Pipeline................................................................................................................. 11

2.1 Introductions................................................................................................................11

2.2 Pipeline Stages............................................................................................................. 12

3. Cache Organization .............................................................................................................. 17

3.1 Introduction.................................................................................................................. 17

4. Overview of the MMU......................................................................................................... 21

4.1 Introduction.................................................................................................................. 21

4.2 Virtual Address Translation ...................................................................................... 21

Section II — Going Deeper

5. Cache and Memory Interactions ........................................................................................ 27

5.2 Cache Flushing............................................................................................................. 27

5.3 Memory Accesses and Cacheability ......................................................................... 29

5.4 Load Buffer................................................................................................................... 39

5.5 Store Buffer................................................................................................................... 40

6.1 Introduction.................................................................................................................. 41

6.2 Translation Table Entry (TTE) ................................................................................... 41

6.3 Translation Storage Buffer (TSB)............................................................................... 44

6.4 MMU-Related Faults and Traps................................................................................ 47

6.5 MMU Operation Summary........................................................................................ 50

6.6 ASI Value, Context, and Endianness Selection for Translation............................ 52

6.7 MMU Behavior During Reset, MMU Disable, and RED_state............................. 54

6.8 Compliance with the SPARC-V9 Annex F............................................................... 55

6.9 MMU Internal Registers and ASI Operations ......................................................... 55

6.10 MMU Bypass Mode..................................................................................................... 68

6.11 TLB Hardware.............................................................................................................. 69

7.1 Introduction.................................................................................................................. 73

7.2 Overview of UltraSPARC External Interfaces......................................................... 73

7.3 Interaction Between E-Cache and UDB.................................................................... 76

7.4 SYSADDR Bus Arbitration Protocol......................................................................... 84

7.5 UltraSPARC Interconnect Transaction Overview .................................................. 92

7.6 Cache Coherence Protocol.......................................................................................... 94

7.7 Cache Coherent Transactions .................................................................................... 102

7.8 Non-Cached Data Transactions................................................................................. 109

7.9 S_RTO/S_ERR ............................................................................................................. 111

7.10 S_REQ............................................................................................................................ 111

7.11 Writeback Issues.......................................................................................................... 112

7.12 Interrupts (P_INT_REQ)............................................................................................. 116

7.13 P_REPLY and S_REPLY.............................................................................................. 117

7.14 Multiple Outstanding Transactions.......................................................................... 126

7.15 Transaction Set Summary........................................................................................... 129

7.16 Transaction Sequences................................................................................................ 131

7.17 Interconnect Packet Formats...................................................................................... 138

7.18 WriteInvalidate............................................................................................................ 143

8.1 Overview....................................................................................................................... 145

8.2 Physical Address Space .............................................................................................. 145

8.4 Ancillary State Registers............................................................................................. 156

8.5 Other UltraSPARC Registers ..................................................................................... 158

8.6 Supported Traps.......................................................................................................... 158

9. Interrupt Handling ............................................................................................................... 161

9.1 Interrupt Vectors ......................................................................................................... 161

9.2 Interrupt Global Registers.......................................................................................... 163

9.3 Interrupt ASI Registers............................................................................................... 163

9.4 Software Interrupt (SOFTINT) Register................................................................... 166

10. Reset and RED_state............................................................................................................. 169

10.1 Overview ...................................................................................................................... 169

10.2 RED_state Trap Vector ............................................................................................... 171

10.3 Machine State after Reset and in RED_state............................................................ 171

11. Error Handling....................................................................................................................... 175

11.1 Overview ...................................................................................................................... 175

11.2 Memory Errors............................................................................................................. 178

11.3 Memory Error Registers............................................................................................. 179

11.4 UltraSPARC Data Buffer (UDB) Control Register.................................................. 185

11.5 Overwrite Policy.......................................................................................................... 185

Section III — UltraSPARC and SPARC-V9

12. Instruction Set Summary..................................................................................................... 189

13. UltraSPARC Extended Instructions................................................................................... 195

13.1 Introduction.................................................................................................................. 195

13.2 SHUTDOWN ............................................................................................................... 195

13.3 Graphics Data Formats............................................................................................... 196

13.4 Graphics Status Register (GSR)................................................................................. 197

13.5 Graphics Instructions.................................................................................................. 198

13.6 Memory Access Instructions...................................................................................... 225

14. Implementation Dependencies.......................................................................................... 235

14.1 SPARC-V9 General Information ............................................................................... 235

14.2 SPARC-V9 Integer Operations.................................................................................. 240

14.3 SPARC-V9 Floating-Point Operations...................................................................... 242

14.4 SPARC-V9 Memory-Related Operations................................................................. 247

14.5 Non-SPARC-V9 Extensions ....................................................................................... 249

15. SPARC-V9 Memory Models ............................................................................................... 255

15.2 Supported Memory Models....................................................................................... 256

Section IV — Producing Optimized Code

16.1 Hardware / Software Synergy.................................................................................. 261

16.2 Instruction Stream Issues ........................................................................................... 261

16.3 Data Stream Issues....................................................................................................... 272

17.1 Introduction.................................................................................................................. 281

17.2 General Grouping Rules............................................................................................. 282

17.3 Instruction Availability............................................................................................... 283

17.4 Single Group Instructions .......................................................................................... 283

17.5 Integer Execution Unit (IEU) Instructions ............................................................... 284

17.6 Control Transfer Instructions..................................................................................... 287

17.7 Load / Store Instructions ........................................................................................... 290

17.8 Floating-Point and Graphic Instructions.................................................................. 295

Appendixes

A.1 Overview....................................................................................................................... 303

A.2 Diagnostics Control and Accesses............................................................................. 303

A.3 Dispatch Control Register.......................................................................................... 303

A.4 Floating-Point Control................................................................................................ 304

A.5 Watchpoint Support.................................................................................................... 304

A.6 LSU_Control_Register................................................................................................ 306

A.7 I-Cache Diagnostic Accesses...................................................................................... 309

A.8 D-Cache Diagnostic Accesses.................................................................................... 314

A.9 E-Cache Diagnostics Accesses................................................................................... 315

B.1 Overview....................................................................................................................... 319

B.2 Performance Control and Counters.......................................................................... 319

B.3 PCR/PIC Accesses....................................................................................................... 321

B.4 Performance Instrumentation Counter Events ....................................................... 321

C.1 Overview....................................................................................................................... 327

C.3 Power-Up...................................................................................................................... 328

D. IEEE 1149.1 Scan Interface................................................................................................... 329

D.1 Introduction.................................................................................................................. 329

D.2 Interface........................................................................................................................ 329

D.3 Test Access Port (TAP) Controller............................................................................ 330

D.4 Instruction Register..................................................................................................... 333

D.5 Instructions................................................................................................................... 333

D.6 Public Test Data Registers.......................................................................................... 335

E. Pin and Signal Descriptions ............................................................................................... 337

E.1 Introduction.................................................................................................................. 337

E.2 Pin Descriptions........................................................................................................... 337

E.3 Signal Descriptions...................................................................................................... 341

F. ASI Names.............................................................................................................................. 345

F.1 Introduction.................................................................................................................. 345

G. Differences Between UltraSPARC Models...................................................................... 351

G.1 Introduction.................................................................................................................. 351

G.2 Summary....................................................................................................................... 351

G.3 References to Model-Specific Information............................................................... 352

Back Matter

Glossary .................................................................................................................................. 357

Bibliography .......................................................................................................................... 363

General References...................................................................................................... 363

Sun Microelectronics (SME) Publications................................................................ 364

How to Contact SME................................................................................................... 365

On Line Resources....................................................................................................... 365

Preface

Overview

Welcome to the UltraSPARC User’s Manual. This book contains information about

the architecture and programming of UltraSPARC, Sun Microsystems’ family of

SPARC-V9-compliant processors. It describes the UltraSPARC-I and

UltraSPARC-II processor implementations.

This book contains information on:

• The UltraSPARC system architecture

• The components that make up an UltraSPARC processor

• Memory and low-level system management, including detailed information

needed by operating system programmers

• Extensions to and implementation dependencies of the SPARC-V9 architecture

• Techniques for managing the pipeline and for producing optimized code

A Brief History of SPARC

SPARC stands for Scalable Processor ARChitecture, which was first announced in

1987. Unlike more traditional processor architectures, SPARC is an open standard, freely available through license from SPARC International, Inc. Any company that obtains a license can manufacture and sell a SPARC-compliant processor.

By the early 1990s SPARC processors were available from over a dozen different

vendors, and over 8,000 SPARC-compliant applications had been certified.

In 1994, SPARC International, Inc. published The SPARC Architecture Manual, Version 9, which defined a powerful 64-bit enhancement to the SPARC architecture.

SPARC-V9 provided support for:

• 64-bit virtual addresses and 64-bit integer data

• Fault tolerance

• Fast trap handling and context switching

• Big- and little-endian byte orders

UltraSPARC is the first family of SPARC-V9-compliant processors available from

Sun Microsystems, Inc.

This book is a companion to The SPARC Architecture Manual, Version 9, which is

available from many technical bookstores or directly from its copyright holder:

SPARC International, Inc.

535 Middlefield Road, Suite 210

Menlo Park, CA 94025

(415) 321-8692

The SPARC Architecture Manual, Version 9 provides a complete description of the

SPARC-V9 architecture. Since SPARC-V9 is an open architecture, many of the implementation decisions have been left to the manufacturers of SPARC-compliant

processors. These “implementation dependencies” are introduced in The SPARC

Architecture Manual, Version 9; they are numbered throughout the body of the text,

and are cross referenced in Appendix C of that book.

This book, the UltraSPARC User’s Manual, describes the UltraSPARC-I and

UltraSPARC-II implementations of the SPARC-V9 architecture. It provides specific information about UltraSPARC processors, including how each SPARC-V9 implementation dependency was resolved. (See Chapter 14, “Implementation

Dependencies,” for specific information.) This manual also describes extensions

to SPARC-V9 that are available (currently) only on UltraSPARC processors.

A great deal of background information and a number of architectural concepts

are not contained in this book. You will find cross references to The SPARC

Architecture Manual, Version 9 located throughout this book. You should have a copy of

that book at hand whenever you are working with the UltraSPARC User’s Manual.

For detailed information about the electrical and mechanical characteristics of the

processor, including pin and pad assignments, consult the UltraSPARC-I Data

Textual Conventions

This book uses the same textual conventions as The SPARC Architecture Manual,

Version 9. They are summarized here for convenience.

Fonts are used as follows:

• Italic font is used for register names, instruction fields, and read-only register

fields.

• Typewriter font is used for literals and software examples.

• Bold font is used for emphasis.

• UPPER CASE items are acronyms, instruction names, or writable register

fields.

• Italic sans serif font is used for exception and trap names.

• Underbar characters (_) join words in register, register field, exception, and

trap names. Such words can be split across lines at the underbar without an

intervening hyphen.

The following notational conventions are used:

• Square brackets ‘[ ]’ indicate a numbered register in a register file.

• Angle brackets ‘< >’ indicate a bit number or colon-separated range of bit

numbers within a field.

• Curly braces ‘{ }’ are used to indicate textual substitution.

• The symbol designates concatenation of bit vectors. A comma ‘,’ on the left

side of an assignment separates quantities that are concatenated for the

purpose of assignment.
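The bit-range and concatenation conventions above correspond to ordinary shift-and-mask operations. The following Python sketch is illustrative only: the helper names `bits` and `concat` and the sample value are assumptions made for this example and do not appear in the manual.

```python
def bits(value: int, hi: int, lo: int) -> int:
    """Return value<hi:lo>, the inclusive bit range hi..lo of value."""
    if hi < lo or lo < 0:
        raise ValueError("expected hi >= lo >= 0")
    width = hi - lo + 1
    return (value >> lo) & ((1 << width) - 1)


def concat(high: int, low: int, low_width: int) -> int:
    """Concatenate two bit vectors; `low` fills the low_width least significant bits."""
    return (high << low_width) | (low & ((1 << low_width) - 1))


word = 0xABCD                        # arbitrary 16-bit sample value (hypothetical)
upper = bits(word, 15, 8)            # word<15:8>
lower = bits(word, 7, 0)             # word<7:0>
rejoined = concat(upper, lower, 8)   # concatenation restores the original value
print(hex(upper), hex(lower), hex(rejoined))
```

Reading `word<15:8>` as "shift right by 8, keep 8 bits" is one common interpretation of the angle-bracket notation; the range is inclusive at both ends.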

Contents

This manual has the following organization.

Section I, “Introducing UltraSPARC,” presents an overview of the UltraSPARC

architecture. Section I contains the following chapters:

• Chapter 1, “UltraSPARC Basics,” describes the architecture in general terms

and introduces its components.

• Chapter 2, “Processor Pipeline,” describes UltraSPARC’s 9-stage pipeline.

• Chapter 4, “Overview of the MMU,” describes the UltraSPARC MMU, its

architecture, how it performs virtual address translation, and how it is

programmed.

Section II, “Going Deeper,” presents detailed information about UltraSPARC architecture and programming. Section II contains the following chapters:

• Chapter 5, “Cache and Memory Interactions,” describes cache coherency and

cache flushing.

• Chapter 6, “MMU Internal Architecture,” describes in detail the internal

architecture of the MMU and how to program it.

• Chapter 7, “UltraSPARC External Interfaces,” describes in detail the external

transactions that UltraSPARC performs, including interactions with the caches

and the SYSADDR bus, and interrupts.

• Chapter 8, “Address Spaces, ASIs, ASRs, and Traps,” describes the address

spaces that UltraSPARC supports, and how it handles traps.

• Chapter 9, “Interrupt Handling,” describes how UltraSPARC processes

interrupts.

• Chapter 10, “Reset and RED_state,” describes how UltraSPARC handles the

various SPARC-V9 reset conditions, and how it implements RED_state.

• Chapter 11, “Error Handling,” discusses how UltraSPARC handles system

errors and describes the available error status registers.

Section III, “UltraSPARC and SPARC-V9,” describes UltraSPARC as an implementation of the SPARC-V9 architecture. Section III contains the following chapters:

• Chapter 12, “Instruction Set Summary,” lists all supported instructions,

including both SPARC-V9 core instructions and UltraSPARC extended

instructions.

• Chapter 13, “UltraSPARC Extended Instructions,” contains detailed

documentation of the extended instructions that UltraSPARC has added to the

SPARC-V9 instruction set.

• Chapter 14, “Implementation Dependencies,” discusses how UltraSPARC has

resolved each of the implementation-dependencies defined by the SPARC-V9

• Chapter 15, “SPARC-V9 Memory Models,” describes the supported memory

models (which are documented fully in The SPARC Architecture Manual,

Version 9). Low-level programmers and operating system implementors

should study this chapter to understand how their code will interact with the

UltraSPARC cache and memory systems.

Section IV, “Producing Optimized Code,” contains detailed information for assembly language programmers and compiler developers. Section IV contains the

following chapters:

• Chapter 16, “Code Generation Guidelines,” contains detailed information

about generating optimum UltraSPARC code.

• Chapter 17, “Grouping Rules and Stalls,” describes instruction

interdependencies and optimal instruction ordering.

Appendixes contain low-level technical material or information not needed for a

general understanding of the architecture. The manual contains the following appendixes:

• Appendix A, “Debug and Diagnostics Support,” describes diagnostics

registers and capabilities.

• Appendix B, “Performance Instrumentation,” describes built-in capabilities to

measure UltraSPARC performance.

• Appendix C, “Power Management,” describes UltraSPARC’s Energy Star

compliant power-down mode.

• Appendix D, “IEEE 1149.1 Scan Interface,” contains information about the

scan interface for UltraSPARC.

• Appendix E, “Pin and Signal Descriptions,” contains general information

about the pins and signals of the UltraSPARC and its components.

• Appendix F, “ASI Names,” contains an alphabetical listing of the names and

suggested macro syntax for all supported ASIs.

A Glossary, Bibliography, and Index complete the book.

Section I — Introducing UltraSPARC

1. UltraSPARC Basics ............................................................................. 3

2. Processor Pipeline ............................................................................... 11

3. Cache Organization ............................................................................ 17

4. Overview of the MMU ........................................................................ 21

1. UltraSPARC Basics

1.1 Overview

UltraSPARC is a high-performance, highly integrated superscalar processor implementing the 64-bit SPARC-V9 RISC architecture. UltraSPARC is capable of sustaining the execution of up to four instructions per cycle, even in the presence of conditional branches and cache misses. This is due mainly to the asynchronous aspect of the units feeding instructions and data to the rest of the pipeline. Instructions predicted to be executed are issued in program order to multiple functional units, execute in parallel and, for added parallelism, can complete out of order. In order to further increase the number of instructions executed per cycle (IPC), instructions from two basic blocks (that is, instructions before and after a conditional branch) can be issued in the same group.

UltraSPARC is a full implementation of the 64-bit SPARC-V9 architecture. It supports a 44-bit virtual address space and a 41-bit physical address space. The core instruction set has been extended to include graphics instructions that provide the most common operations related to two-dimensional image processing, two- and three-dimensional graphics and image compression algorithms, and parallel operations on pixel data with 8- and 16-bit components. Support for high bandwidth bcopy is also provided through block load and block store instructions.

1.2 Design Philosophy

The execution time of an application is the product of three factors: the number of instructions generated by the compiler, the average number of cycles required per instruction, and the cycle time of the processor. The architecture and implementation of UltraSPARC, coupled with new compiler techniques, make it possible to reduce each factor without degrading the other two.
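The product of the three factors can be illustrated with a short calculation. The sketch below is only an illustration: the function name, the instruction count, and the 5 ns cycle time are hypothetical values chosen for the example and are not figures from this manual.

```python
def execution_time_ns(instruction_count, cpi, cycle_time_ns):
    """Execution time = instruction count x cycles per instruction x cycle time."""
    return instruction_count * cpi * cycle_time_ns


# Hypothetical workload: one million instructions on a 5 ns clock.
scalar = execution_time_ns(1_000_000, 1.0, 5.0)        # one instruction per cycle
superscalar = execution_time_ns(1_000_000, 0.25, 5.0)  # four instructions per cycle

# Lowering the CPI while the other two factors stay fixed scales the total directly.
print(scalar, superscalar, scalar / superscalar)
```

Because the three factors multiply, an improvement in one is only a net gain if it does not inflate either of the others, which is the balance this section describes.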


The number of instructions for a given task depends on the instruction set and on compiler optimizations (dead code elimination, constant propagation, profiling for code motion, and so on). Since it is based on the SPARC-V9 architecture, UltraSPARC offers features that can help reduce the total instruction count:

• 64-bit integer processing

• Additional floating-point registers (beyond the number offered in SPARC-V8), which can be used to eliminate floating-point loads and stores

• Enhanced trap model with alternate global registers

The average number of cycles per instruction (CPI) depends on the architecture of the processor and on the ability of the compiler to take advantage of the hardware features offered. The UltraSPARC execution units (ALUs, LD/ST, branch, two floating-point, and two graphics) allow the CPI to be as low as 0.25 (four instructions per cycle). To support this high execution bandwidth, sophisticated hardware is provided to supply:

1. Up to four instructions per cycle, even in the presence of conditional branches

2. Data at a rate of 16 bytes per cycle from the external cache to the data cache, or 8 bytes per cycle into the register files

To reduce instruction dependency stalls, UltraSPARC has short-latency operations and provides direct bypassing between units or within the same unit. The impact of cache misses, usually a large contributor to the CPI, is reduced significantly through the use of decoupled units (prefetch unit, load buffer, and store buffer), which operate asynchronously with the rest of the pipeline.

Other features, such as a fully pipelined interface to the external cache (E-Cache) and support for speculative loads, coupled with sophisticated compiler techniques such as software pipelining and cross-block scheduling, also reduce the CPI significantly.

A balanced architecture must be able to provide a low CPI without affecting the cycle time. Several of UltraSPARC’s architectural features, coupled with an aggressive implementation and state-of-the-art technology, have made it possible to achieve a short cycle time (see Table 1-1). The pipeline is organized so that large scalarity (four), short latencies, and multiple bypasses do not affect the cycle time significantly.

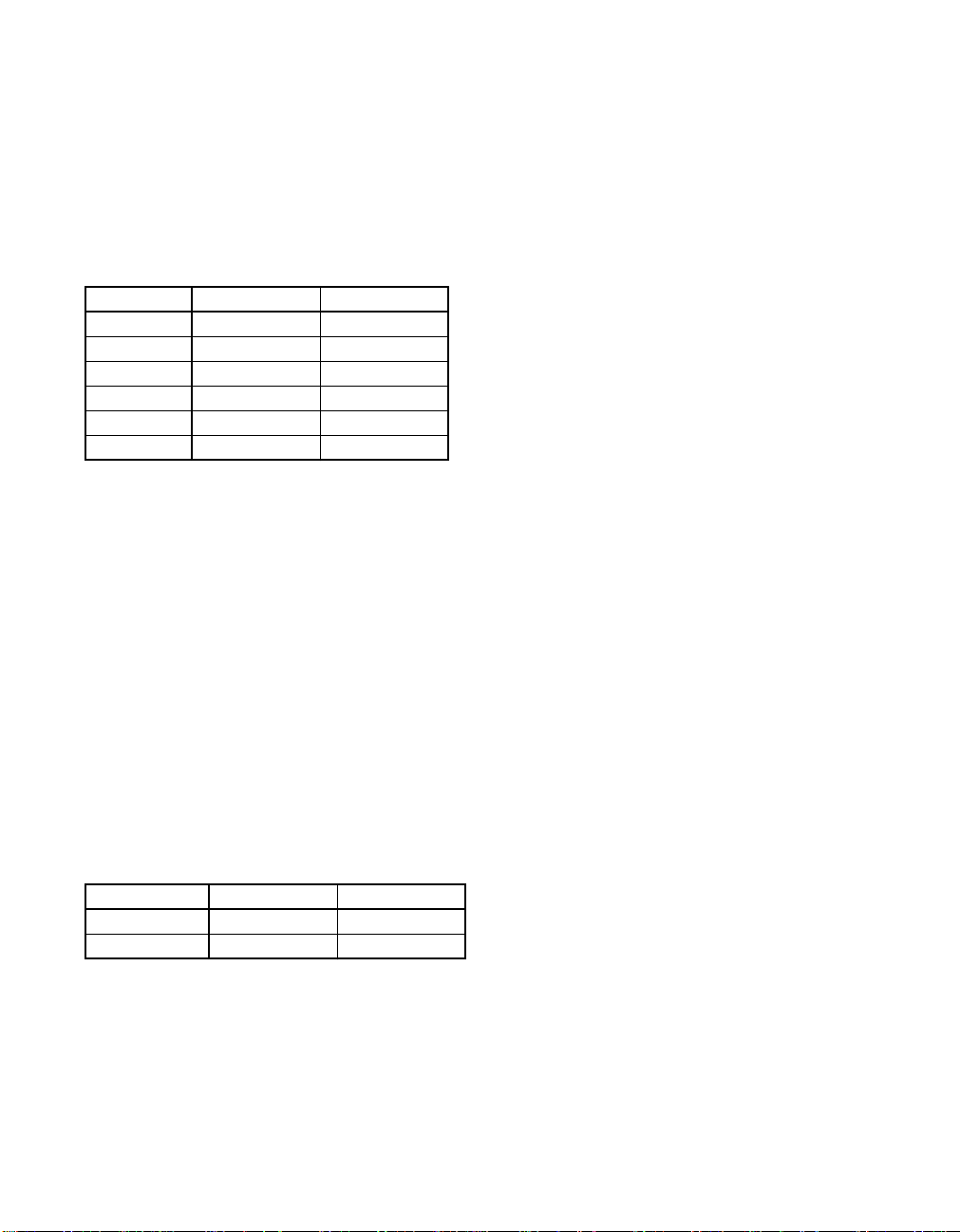

Table 1-1 Implementation Technologies and Cycle Times

              UltraSPARC-I    UltraSPARC-II
Technology    0.5 µ CMOS      0.35 µ CMOS


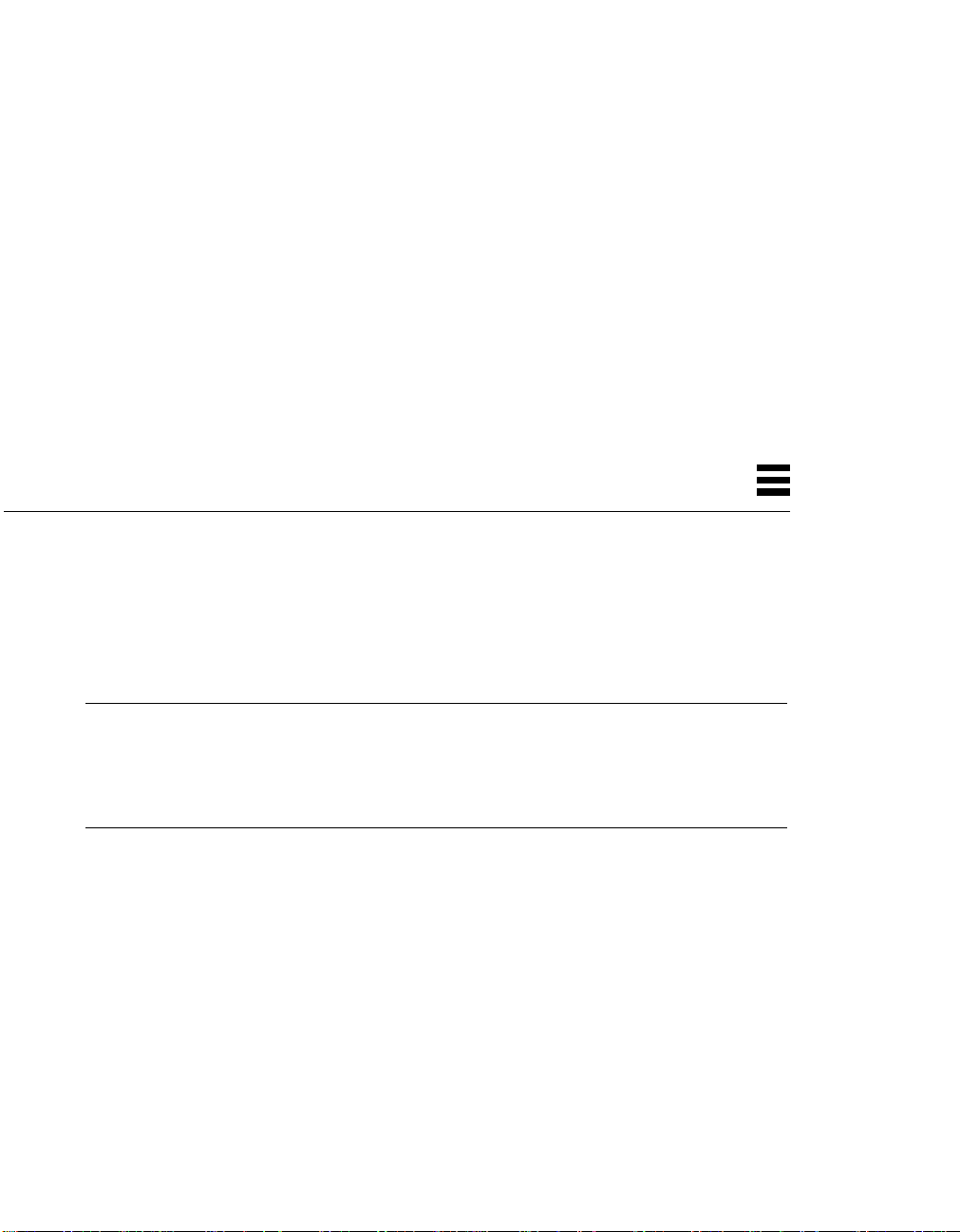

1.3 Component Overview

Figure 1-1 shows a block diagram of the UltraSPARC processor.

Figure 1-1 UltraSPARC Block Diagram

The block diagram illustrates the following components:

• Prefetch and Dispatch Unit (PDU), including logic for branch prediction

• 16Kb Instruction Cache (I-Cache)

• Memory Management Unit (MMU), containing a 64-entry Instruction Translation Lookaside Buffer (iTLB) and a 64-entry Data Translation Lookaside Buffer (dTLB)


• Integer Execution Unit (IEU) with two Arithmetic and Logic Units (ALUs)

• Load/Store Unit (LSU) with a separate address generation adder

• Load buffer and store buffer, decoupling data accesses from the pipeline

• A 16Kb Data Cache (D-Cache)

• Floating-Point Unit (FPU) with independent add, multiply, and divide/square root sub-units

• Graphics Unit (GRU) with two independent execution pipelines

• External Cache Unit (ECU), controlling accesses to the External Cache (E-Cache)

• Memory Interface Unit (MIU), controlling accesses to main memory and I/O space

1.3.1 Prefetch and Dispatch Unit (PDU)

The prefetch and dispatch unit fetches instructions before they are actually needed in the pipeline, so the execution units do not starve for instructions. Instructions can be prefetched from all levels of the memory hierarchy; that is, from the instruction cache, the external cache, and main memory. In order to prefetch across conditional branches, a dynamic branch prediction scheme is implemented in hardware. The predicted outcome of a branch is based on a two-bit history of that branch. A “next field” associated with every four instructions in the instruction cache (I-Cache) points to the next I-Cache line to be fetched. The use of the next field makes it possible to follow taken branches and to provide nearly the same instruction bandwidth achieved while running sequential code. Prefetched instructions are stored in the Instruction Buffer until they are sent to the rest of the pipeline; up to 12 instructions can be buffered.
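The text above says only that prediction uses a two-bit history per branch. As a rough illustration of how two bits of state can drive a prediction, the sketch below models a two-bit saturating counter, a common scheme that is assumed here for the example; it is not claimed to be the exact state machine UltraSPARC implements. The class and function names are hypothetical.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, state=1):
        self.state = state  # assumed initial state: weakly not taken

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def count_mispredictions(outcomes, predictor):
    """Replay a sequence of branch outcomes and count wrong predictions."""
    misses = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            misses += 1
        predictor.update(taken)
    return misses


# A loop-closing branch: taken nine times, then not taken once, run twice.
history = ([True] * 9 + [False]) * 2
print(count_mispredictions(history, TwoBitPredictor()))
```

With two bits, a single loop exit does not flip the prediction for the next pass through the loop, which is the usual motivation for keeping more than one bit of history.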

1.3.2 Instruction Cache (I-Cache)

The instruction cache is a 16 Kbyte two-way set-associative cache with 32-byte blocks. The cache is physically indexed and contains physical tags. The set is predicted as part of the “next field”; thus, only the index bits of an address (13 bits, which matches the minimum page size) are needed to address the cache. The I-Cache returns up to 4 instructions from an 8-instruction-wide cache line.
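The 13-bit figure follows from the cache geometry given above. The sketch below works through the arithmetic; the constant and function names are hypothetical, and the split into tag, line index, and byte offset is the conventional set-associative decomposition, assumed here for illustration.

```python
CACHE_BYTES = 16 * 1024  # 16 Kbyte I-Cache
WAYS = 2                 # two-way set associative
BLOCK_BYTES = 32         # 32-byte blocks (eight 4-byte instructions)

bytes_per_way = CACHE_BYTES // WAYS             # 8192 bytes, the minimum page size
lines_per_way = bytes_per_way // BLOCK_BYTES    # 256 lines
offset_bits = BLOCK_BYTES.bit_length() - 1      # 5 bits select a byte in the block
index_bits = lines_per_way.bit_length() - 1     # 8 bits select a line


def split_address(addr):
    """Split an address into (tag, line index, byte offset)."""
    offset = addr & (BLOCK_BYTES - 1)
    index = (addr >> offset_bits) & (lines_per_way - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


print(offset_bits + index_bits)   # total low-order bits needed to address the cache
print(split_address(0x2345))
```

Since one way covers exactly 8 Kbytes, the index and offset bits together fall entirely within the page offset of the smallest page.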


1.3.3 Integer Execution Unit (IEU)

The IEU contains the following components:

• Two ALUs

• A multi-cycle integer multiplier

• A multi-cycle integer divider

• Eight register windows

• Four sets of global registers (normal, alternate, MMU, and interrupt globals)

• The trap registers (See Table 1-2 for supported trap levels)

1.3.4 Floating-Point Unit (FPU)

The FPU is partitioned into separate execution units, which allows the UltraSPARC processor to issue and execute two floating-point instructions per cycle. Source and result data are stored in the 32-entry register file, where each entry can contain a 32-bit value or a 64-bit value. Most instructions are fully pipelined (with a throughput of one per cycle), have a latency of three cycles, and are not affected by the precision of the operands (same latency for single- or double-precision). The divide and square root instructions are not pipelined and take 12/22 cycles (single/double) to execute, but they do not stall the processor. Other instructions following the divide/square root can be issued, executed, and retired to the register file before the divide/square root finishes. A precise exception model is maintained by synchronizing the floating-point pipe with the integer pipe and by predicting traps for long-latency operations. See Section 7.3.1, “Precise Traps,” in The SPARC Architecture Manual, Version 9.
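The difference between latency and throughput can be made concrete with an idealized cycle count. The sketch below uses the latencies quoted above (three cycles for pipelined operations, 12/22 cycles for divide and square root) and the standard pipelining formula; it ignores grouping rules, register dependencies other than the ones modeled, and cache effects, and all function names are hypothetical.

```python
FP_LATENCY = 3                                 # cycles, pipelined FP operations
DIVIDE_CYCLES = {"single": 12, "double": 22}   # cycles, non-pipelined divide/sqrt


def independent_ops_cycles(n_ops, latency=FP_LATENCY):
    """Independent operations issued one per cycle overlap in the pipeline."""
    return 0 if n_ops == 0 else latency + (n_ops - 1)


def dependent_chain_cycles(n_ops, latency=FP_LATENCY):
    """Each operation waits for the full latency of the previous one."""
    return n_ops * latency


def back_to_back_divide_cycles(n_ops, precision):
    """A non-pipelined unit cannot overlap consecutive divides."""
    return n_ops * DIVIDE_CYCLES[precision]


print(independent_ops_cycles(10), dependent_chain_cycles(10))
print(back_to_back_divide_cycles(2, "double"))
```

The gap between the first two results is what instruction scheduling tries to close by interleaving independent operations.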

1.3.5 Graphics Unit (GRU)

UltraSPARC introduces a comprehensive set of graphics instructions that provide fast hardware support for two-dimensional and three-dimensional image and video processing, image compression, audio processing, etc. 16-bit and 32-bit partitioned add, boolean, and compare are provided. 8-bit and 16-bit partitioned multiplies are supported. Single cycle pixel distance, data alignment, packing,

Table 1-2 Supported Trap Levels

               UltraSPARC-I    UltraSPARC-II
MAXTL          4               4
Trap Levels    5               5


1.3.6 Memory Management Unit (MMU)

The MMU provides mapping between a 44-bit virtual address and a 41-bit physical address. This is accomplished through a 64-entry iTLB for instructions and a 64-entry dTLB for data; both TLBs are fully associative. UltraSPARC provides hardware support for a software-based TLB miss strategy. A separate set of global registers is available to process MMU traps. Page sizes of 8Kb (13-bit offset), 64Kb (16-bit offset), 512Kb (19-bit offset), and 4Mb (22-bit offset) are supported.

1.3.7 Load/Store Unit (LSU)

The LSU is responsible for generating the virtual address of all loads and stores (including atomics and ASI loads), for accessing the D-Cache, for decoupling load misses from the pipeline through the Load Buffer, and for decoupling stores through the Store Buffer. One load or one store can be issued per cycle.

1.3.8 Data Cache (D-Cache)

The D-Cache is a write-through, non-allocating, 16Kb direct-mapped cache with two 16-byte sub-blocks per line. It is virtually indexed and physically tagged (VIPT). The tag array is dual ported, so tag updates due to line fills do not collide with tag reads for incoming loads. Snoops to the D-Cache use the second tag port, so they do not delay incoming loads.

1.3.9 External Cache Unit (ECU)

The main role of the ECU is to handle I-Cache and D-Cache misses efficiently. The ECU can handle one access per cycle to the External Cache (E-Cache). Accesses to the E-Cache are pipelined, which effectively makes the E-Cache part of the instruction pipeline. Programs with large data sets can keep data in the E-Cache and can schedule instructions with load latencies based on E-Cache latency. Floating-point code can use this feature to effectively hide D-Cache misses.

Table 1-3 shows the E-Cache sizes that each UltraSPARC model supports. Regardless of model, however, the E-Cache line size is always 64 bytes.

UltraSPARC uses a MOESI (Modified, Own, Exclusive, Shared, Invalid) protocol


The ECU provides overlap processing during load and store misses. For instance, stores that hit the E-Cache can proceed while a load miss is being processed. The ECU can process reads and writes indiscriminately, without a costly turn-around penalty (only 2 cycles). Finally, the ECU handles snoops.

Block loads and block stores, which transfer a 64-byte line of data between memory and the floating-point register file, are also processed efficiently by the ECU, providing high transfer bandwidth without polluting the E-Cache.

1.3.9.1 E-Cache SRAM Modes

Different UltraSPARC models support various E-Cache SRAM configurations using one or more SRAM “modes.” Table 1-4 shows the modes that each UltraSPARC model supports. The modes are described below.

1–1–1 (Pipelined) Mode:

The E-Cache SRAMs have a cycle time equal to the processor cycle time. The name “1–1–1” indicates that it takes one processor clock to send the address, one to access the SRAM array, and one to return the E-Cache data. 1–1–1 mode has a 3-cycle pin-to-pin latency and provides the best possible E-Cache throughput.

2–2 (Register-Latched) Mode:

The E-Cache SRAMs have a cycle time equal to twice the processor cycle time (that is, they are clocked at one-half the processor frequency).

The name “2–2” indicates that it takes two processor clocks to send the address

and two clocks to access and return the E-Cache data. 2–2 mode has a 4 cycle pin-

Table 1-3 Supported E-Cache Sizes

E-Cache Size    UltraSPARC-I    UltraSPARC-II
512 Kb          ✓               ✓
1 Mb            ✓               ✓
2 Mb            ✓               ✓
4 Mb            ✓               ✓
8 Mb            -               ✓
16 Mb           -               ✓

Table 1-4 Supported E-Cache SRAM Modes

SRAM Mode    UltraSPARC-I    UltraSPARC-II
1–1–1        ✓               ✓
2–2          -               ✓


1.3.10 Memory Interface Unit (MIU)

The MIU handles all transactions to the system controller; for example, external cache misses, interrupts, snoops, writebacks, and so on. The MIU communicates with the system at some model-dependent fraction of the UltraSPARC frequency. Table 1-5 shows the possible ratios between the processor and system clock frequencies for each UltraSPARC model.

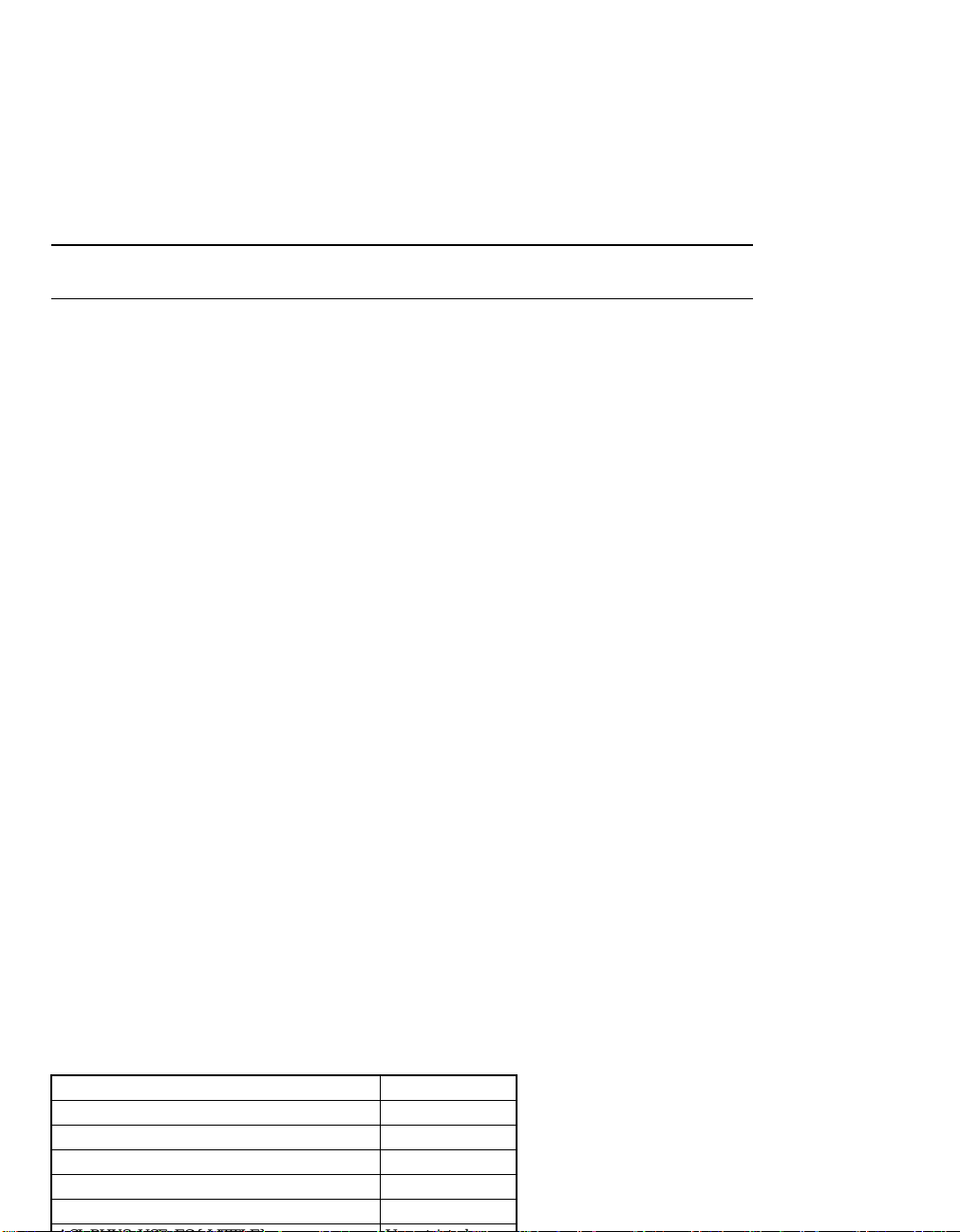

Figure 1-2 shows a complete UltraSPARC subsystem, which consists of the UltraSPARC processor, synchronous SRAM components for the E-Cache tags and data, and two UltraSPARC Data Buffer (UDB) chips. The UDBs isolate the E-Cache from the system, provide data buffers for incoming and outgoing system transactions, and provide ECC generation and checking.

Table 1-5 Model-Dependent Processor : System Clock Frequency Ratios

Frequency Ratio    UltraSPARC-I    UltraSPARC-II
2 : 1              ✓               ✓
3 : 1              ✓               ✓
4 : 1              -               ✓

[Figure 1-2 diagram labels: UltraSPARC Processor; E-Cache Tag SRAM; E-Cache Data SRAM; UDB; Tag Address; Tag Data; Data Address; E-Cache Data; System Address Bus; System Data Bus]


Processor Pipeline 2

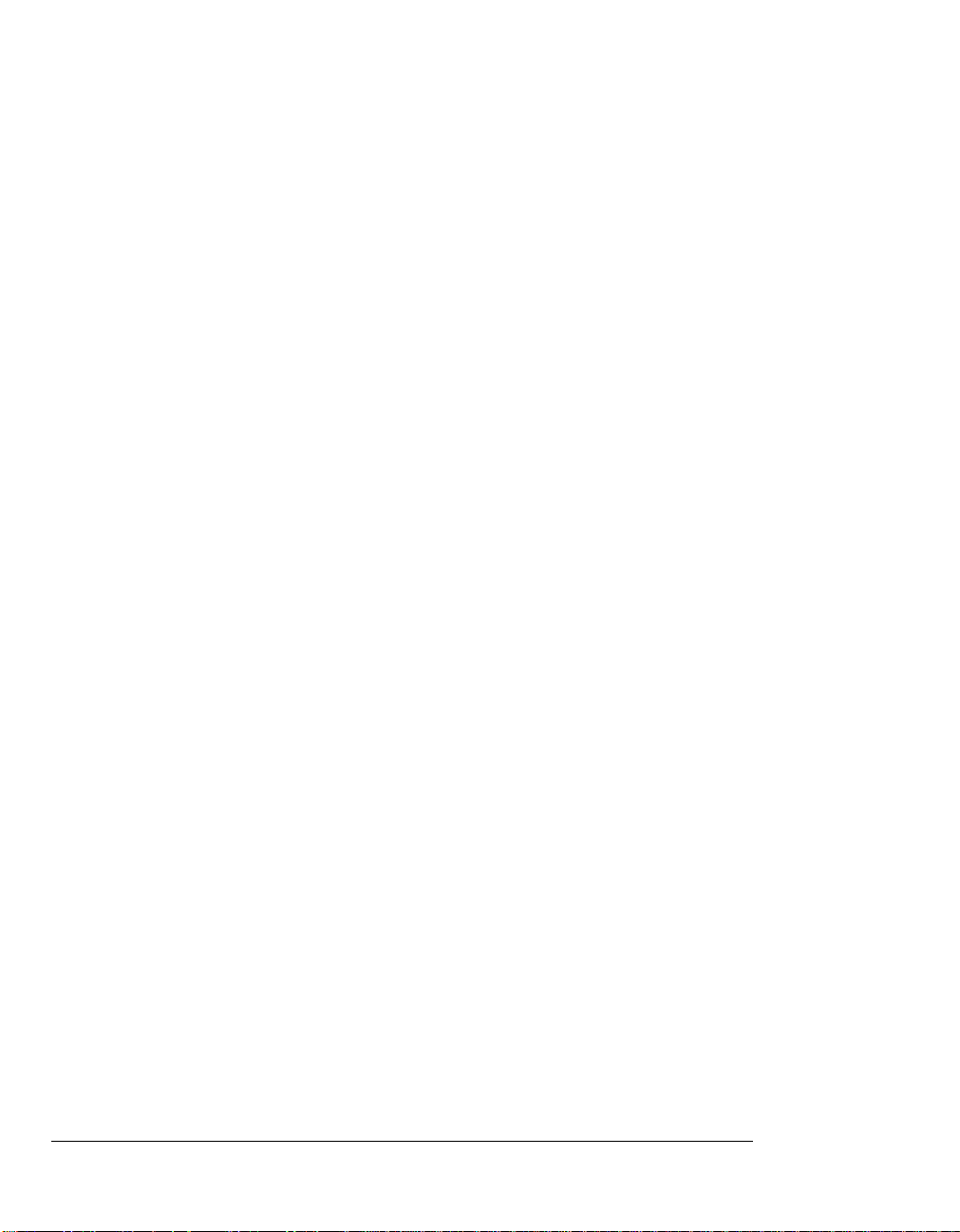

2.1 Introduction

UltraSPARC contains a 9-stage pipeline. Most instructions go through the pipeline in exactly 9 stages. The instructions are considered terminated after they go through the last stage (W), after which changes to the processor state are irreversible. Figure 2-1 shows a simplified diagram of the integer and floating-point pipeline stages.

Figure 2-1 UltraSPARC Pipeline Stages (Simplified)

Three additional stages are added to the integer pipeline to make it symmetrical with the floating-point pipeline. This simplifies pipeline synchronization and exception handling. It also eliminates the need to implement a floating-point queue.

Floating-point instructions with a latency greater than three (divide, square root, and inverse square root) behave differently than other instructions; the pipe is “extended” when the instruction reaches stage N1. See Chapter 16, “Code Generation Guidelines” for more information. Memory operations are allowed to proceed asynchronously with the pipeline in order to support latencies longer than

[Figure 2-1 stage labels. Integer Pipeline: Fetch, Decode, Group, Execute, Cache, N1, N2, N3, Write. Floating-Point & Graphics Pipeline: Register, X1, X2, X3.]


This section describes each pipeline stage in detail. Figure 2-2 illustrates the pipeline stages.

Figure 2-2 UltraSPARC Pipeline Stages (Detail)

[Figure 2-2 diagram labels: G, R, X1, X2, X3, EC, N1, N2, N3, W (Results in Annex); IEU, FPU, GRU, LSU, ECU; IU Register File, Annex, Icc, FP RF 32 x 64, FP add, FP mul, G ALU, G mul; D-Cache, Tag, D-Cache Data, TLB, Tag Check, Hit, align, VA, PA, LDQ/STQ, SB; IST_data, FPST_data; address bus, data bus, instruction bus]


2.2.1 Stage 1: Fetch (F) Stage

Prior to their execution, instructions are fetched from the Instruction Cache (I-Cache) and placed in the Instruction Buffer, where eventually they will be selected to be executed. Accessing the I-Cache is done during the F Stage. Up to four instructions are fetched along with branch prediction information, the predicted target address of a branch, and the predicted set of the target. The high bandwidth provided by the I-Cache (4 instructions/cycle) allows UltraSPARC to prefetch instructions ahead of time based on the current instruction flow and on branch prediction. Providing a fetch bandwidth greater than or equal to the maximum execution bandwidth assures that, for well-behaved code, the processor does not starve for instructions. Exceptions to this rule occur when branches are hard to predict, when branches are very close to each other, or when the I-Cache miss rate is high.

2.2.2 Stage 2: Decode (D) Stage

After being fetched, instructions are pre-decoded and then sent to the Instruction Buffer. The pre-decoded bits generated during this stage accompany the instructions during their stay in the Instruction Buffer. Upon reaching the next stage (where the grouping logic resides), these bits speed up the parallel decoding of up to 4 instructions.

While it is being filled, the Instruction Buffer also presents up to 4 instructions to the next stage. A pair of pointers manages the Instruction Buffer, ensuring that as many instructions as possible are presented in order to the next stage.

2.2.3 Stage 3: Grouping (G) Stage

The G Stage logic’s main task is to group and dispatch a maximum of four valid instructions in one cycle. It receives a maximum of four valid instructions from the Prefetch and Dispatch Unit (PDU), it controls the Integer Core Register File (ICRF), and it routes valid data to each integer functional unit. The G Stage sends up to two floating-point or graphics instructions out of the four candidates to the Floating-Point and Graphics Unit (FGU). The G Stage logic is responsible for comparing register addresses for integer data bypassing and for handling pipeline stalls due to interlocks.


2.2.4 Stage 4: Execution (E) Stage

Data from the integer register file is processed by the two integer ALUs during this cycle (if the instruction group includes ALU operations). Results are computed and are available for other instructions (through bypasses) in the very next cycle. The virtual address of a memory operation is also calculated during the E Stage, in parallel with ALU computation.

FLOATING-POINT AND GRAPHICS UNIT: The Register (R) Stage of the FGU. The floating-point register file is accessed during this cycle. The instructions are also further decoded and the FGU control unit selects the proper bypasses for the current instructions.

2.2.5 Stage 5: Cache Access (C) Stage

The virtual address of memory operations calculated in the E Stage is sent to the tag RAM to determine if the access (load or store type) is a hit or a miss in the D-Cache. In parallel, the virtual address is sent to the data MMU to be translated into a physical address. On a load when there are no other outstanding loads, the data array is accessed so that the data can be forwarded to dependent instructions in the pipeline as soon as possible.

ALU operations executed in the E Stage generate condition codes in the C Stage. The condition codes are sent to the PDU, which checks whether a conditional branch in the group was correctly predicted. If the branch was mispredicted, instructions in earlier stages of the pipe are flushed and the correct instructions are fetched. The results of ALU operations are not modified after the E Stage; the data merely propagates down the pipeline (through the annex register file), where it is available for bypassing for subsequent operations.

FLOATING-POINT AND GRAPHICS UNIT: The X1 Stage of the FGU. Floating-point and graphics instructions start their execution during this stage. Instructions of latency one also finish their execution phase during the X1 Stage.

2.2.6 Stage 6: N1 Stage

A data cache miss/hit or a TLB miss/hit is determined during the N1 Stage. If a load misses the D-Cache, it enters the Load Buffer. The access will arbitrate for the E-Cache if there are no older unissued loads. If a TLB miss is detected, a trap will be taken and the address translation is obtained through a software routine.


The physical address of a store is sent to the Store Buffer during this stage. To avoid pipeline stalls when store data is not immediately available, the store address and data parts are decoupled and sent to the Store Buffer separately.

FLOATING-POINT AND GRAPHICS UNIT: The X2 Stage of the FGU. Execution continues for most operations.

2.2.7 Stage 7: N2 Stage

Most floating-point instructions finish their execution during this stage. After N2, data can be bypassed to other stages or forwarded to the data portion of the Store Buffer. All loads that have entered the Load Buffer in N1 continue their progress through the buffer; they will reappear in the pipeline only when the data comes back. Normal dependency checking is performed on all loads, including those in the Load Buffer.

FLOATING-POINT AND GRAPHICS UNIT: The X3 Stage of the FGU.

2.2.8 Stage 8: N3 Stage

UltraSPARC resolves traps at this stage.


2.2.9 Stage 9: Write (W) Stage

All results are written to the register files (integer and floating-point) during this stage. All actions performed during this stage are irreversible. After this stage, instructions are considered terminated.
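The nine stages described above, and the way the floating-point and graphics stages line up with the integer stages, can be summarized in a small lookup. The sketch below assumes an idealized pipeline with no stalls, in which an instruction advances exactly one stage per cycle; the list and function names are hypothetical.

```python
# Stage order for the two pipelines; R, X1, X2, X3 occupy the same cycles
# as E, C, N1, N2 in the integer pipeline.
INTEGER_STAGES = ["F", "D", "G", "E", "C", "N1", "N2", "N3", "W"]
FGU_STAGES = ["F", "D", "G", "R", "X1", "X2", "X3", "N3", "W"]


def stage_in_cycle(fetch_cycle, cycle, stages=INTEGER_STAGES):
    """Stage occupied in `cycle` by an instruction fetched in `fetch_cycle`.

    Returns None if the instruction has not been fetched yet or has already
    passed the W Stage. Stalls and flushes are not modeled.
    """
    position = cycle - fetch_cycle
    if 0 <= position < len(stages):
        return stages[position]
    return None


print(stage_in_cycle(0, 3), stage_in_cycle(0, 3, FGU_STAGES))
print(stage_in_cycle(2, 10))
```

Because both lists have the same length, an integer instruction and a floating-point instruction fetched together reach the W Stage in the same cycle, which is the symmetry the extra integer stages provide.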


Cache Organization 3

3.1 Introduction

3.1.1 Level-1 Caches

UltraSPARC’s Level-1 D-Cache is virtually indexed, physically tagged (VIPT). Virtual addresses are used to index into the D-Cache tag and data arrays while accessing the D-MMU (that is, the dTLB). The resulting tag is compared against the translated physical address to determine D-Cache hits.

A side effect inherent in a virtually indexed cache is address aliasing; this issue is addressed in Section 5.2.1, “Address Aliasing Flushing,” on page 28.
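Aliasing can arise because a 16 Kbyte direct-mapped cache needs 14 address bits to select a byte, while the smallest page leaves only 13 bits untranslated. The sketch below shows the consequence using the D-Cache geometry given in this manual (16 Kbytes, direct mapped, two 16-byte sub-blocks per line, 8 Kbyte minimum page). The function names are hypothetical, and the check is a simplified illustration rather than a description of the hardware.

```python
DCACHE_BYTES = 16 * 1024  # direct mapped, so the index spans the whole cache
LINE_BYTES = 32           # two 16-byte sub-blocks per line
PAGE_BYTES = 8 * 1024     # minimum page size

# Index bits that lie above the page offset and therefore come from the
# virtual page number rather than from untranslated offset bits.
virtual_only_index_bits = (DCACHE_BYTES // PAGE_BYTES).bit_length() - 1


def dcache_line_index(va):
    """Line selected by a virtual address in the direct-mapped D-Cache."""
    return (va % DCACHE_BYTES) // LINE_BYTES


def can_alias(va_a, va_b):
    """True if two virtual addresses with the same page offset select
    different D-Cache lines, so that mapping both to one physical page
    could leave the same data in two places."""
    same_offset = va_a % PAGE_BYTES == va_b % PAGE_BYTES
    return same_offset and dcache_line_index(va_a) != dcache_line_index(va_b)


print(virtual_only_index_bits)
print(can_alias(0x0000, 0x2000), can_alias(0x0000, 0x4000))
```

Two virtual addresses that differ in bit 13 but map to the same physical page are the case software has to manage.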

UltraSPARC’s Level-1 I-Cache is physically indexed, physically tagged (PIPT). The lowest 13 bits of instruction addresses are used to index into the I-Cache tag and data arrays while accessing the I-MMU (that is, the iTLB). The resulting tag is compared against the translated physical address to determine I-Cache hits.

3.1.1.1 Instruction Cache (I-Cache)

The I-Cache is a 16 Kb pseudo-two-way set-associative cache with 32-byte blocks. The set is predicted based on the next fetch address; thus, only the index bits of an address are necessary to address the cache (that is, the lowest 13 bits, which matches the minimum page size of 8Kb). Instruction fetches bypass the instruction cache under the following conditions:

• When the I-Cache enable or I-MMU enable bits in the LSU_Control_Register are clear (see Section A.6, “LSU_Control_Register,” on page 306)


• When the I-MMU maps the fetch as noncacheable.

The instruction cache snoops stores from other processors or DMA transfers, but it is not updated by stores in the same processor, except for block commit stores (see Section 13.6.4, “Block Load and Store Instructions,” on page 230). The FLUSH instruction can be used to maintain coherency. Block commit stores update the I-Cache but do not flush instructions that have already been prefetched into the pipeline. A FLUSH, DONE, or RETRY instruction can be used to flush the pipeline. For block copies that must maintain I-Cache coherency, it is more efficient to use block commit stores in the loop, followed by a single FLUSH instruction to flush the pipeline.

Note: The size of each I-Cache set is the same as the page size in UltraSPARC-I and UltraSPARC-II; thus, the virtual index bits equal the physical index bits.

3.1.1.2 Data Cache (D-Cache)

The D-Cache is a write-through, nonallocating-on-write-miss, 16-Kb direct-mapped cache with two 16-byte sub-blocks per line. Data accesses bypass the data cache when the D-Cache enable bit in the LSU_Control_Register is clear (see Section A.6, “LSU_Control_Register,” on page 306). Load misses will not allocate in the D-Cache if the D-MMU enable bit in the LSU_Control_Register is clear or the access is mapped by the D-MMU as virtually noncacheable.

Note: A noncacheable access may access data in the D-Cache from an earlier cacheable access to the same physical block, unless the D-Cache is disabled. Software must flush the D-Cache when changing a physical page from cacheable to noncacheable (see Section 5.2, “Cache Flushing”).

UltraSPARC’s level-2 (external) cache (the E-Cache) is physically indexed, physically tagged (PIPT). This cache has no references to virtual address and context information. The operating system needs no knowledge of such caches after initialization, except for stable storage management and error handling.

Memory accesses must be cacheable in the E-Cache to allow use of UltraSPARC’s

ECC checking. As a result, there is no E-Cache enable bit in the


Instruction fetches bypass the E-Cache when:

• The I-MMU is disabled, or

• The processor is in RED_state, or

• The access is mapped by the I-MMU as physically noncacheable

Data accesses bypass the E-Cache when:

• The D-MMU enable bit (DM) in the LSU_Control_Register is clear, or

• The access is mapped by the D-MMU as physically noncacheable (unless ASI_PHYS_USE_EC is used).

The system must provide a noncacheable, ECC-less scratch memory for use by the boot code until the MMUs are enabled.

The E-Cache is a unified, write-back, allocating, direct-mapped cache. The E-Cache always includes the contents of the I-Cache and D-Cache. The E-Cache size is model dependent (see Table 1-3); its line size is 64 bytes.

Block loads and block stores, which transfer a 64-byte line of data between memory and the floating-point register file, do not allocate into the E-Cache, in order to avoid pollution.


Overview of the MMU 4

4.1 Introduction

This chapter describes the UltraSPARC Memory Management Unit as it is seen by the operating system software. The UltraSPARC MMU conforms to the requirements set forth in The SPARC Architecture Manual, Version 9.

Note: The UltraSPARC MMU does not conform to the SPARC-V8 Reference MMU Specification. In particular, the UltraSPARC MMU supports a 44-bit virtual address space, software TLB miss processing only (no hardware page table walk), simplified protection encoding, and multiple page sizes. All of these differ from features required of SPARC-V8 Reference MMUs.

4.2 Virtual Address Translation

The UltraSPARC MMU supports four page sizes: 8 Kb, 64 Kb, 512 Kb, and 4 Mb. It supports a 44-bit virtual address space, with 41 bits of physical address. During each processor cycle the UltraSPARC MMU provides one instruction and one data virtual-to-physical address translation. In each translation, the virtual page number is replaced by a physical page number, which is concatenated with the page offset to form the full physical address, as illustrated in Figure 4-1 on page 22. (This figure shows the full 64-bit virtual address, even though UltraSPARC supports only 44 bits of VA.)
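The concatenation of physical page number and page offset can be sketched in a few lines. The example below assumes the physical page number has already been obtained (for instance, from a TLB entry) and shows only the final address formation; the dictionary, function name, and sample values are hypothetical.

```python
# Page offset width in bits for each supported page size.
PAGE_OFFSET_BITS = {"8K": 13, "64K": 16, "512K": 19, "4M": 22}
PA_BITS = 41  # width of the physical address


def form_physical_address(va, ppn, page_size):
    """Concatenate a physical page number with the page offset taken from va."""
    bits = PAGE_OFFSET_BITS[page_size]
    offset = va & ((1 << bits) - 1)    # low bits pass through untranslated
    pa = (ppn << bits) | offset        # page number replaces the upper bits
    if pa >= (1 << PA_BITS):
        raise ValueError("physical address does not fit in 41 bits")
    return pa


# The same virtual address keeps more of its low bits as the page grows.
print(hex(form_physical_address(0x12345, 0x7, "8K")))
print(hex(form_physical_address(0x12345, 0x7, "64K")))
```

Larger pages translate fewer bits per access, so a single TLB entry covers more memory.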


Figure 4-1 Virtual-to-physical Address Translation for all Page Sizes

UltraSPARC implements a 44-bit virtual address space in two equal halves at the extreme lower and upper portions of the full 64-bit virtual address space. Virtual addresses between 0000 0800 0000 0000₁₆ and FFFF F7FF FFFF FFFF₁₆, inclusive, are termed “out of range” for UltraSPARC and are illegal. (In other words, virtual address bits VA<63:43> must be either all zeros or all ones.) Figure 4-2 on page 23 illustrates the UltraSPARC virtual address space.
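The two ways of stating the rule (a forbidden address interval, or a condition on bits VA<63:43>) are equivalent, as the following sketch checks. Addresses are treated as unsigned 64-bit integers; the constant and function names are hypothetical.

```python
VA_BITS = 44
HOLE_START = 0x0000_0800_0000_0000  # first out-of-range address
HOLE_END = 0xFFFF_F7FF_FFFF_FFFF    # last out-of-range address


def in_range(va):
    """True if VA<63:43> is all zeros or all ones (va is an unsigned 64-bit value)."""
    upper = va >> (VA_BITS - 1)           # the 21 bits VA<63:43>
    return upper == 0 or upper == (1 << 21) - 1


# Check the boundaries of both valid halves and of the hole.
samples = [
    0x0000_0000_0000_0000,
    0x0000_07FF_FFFF_FFFF,
    HOLE_START,
    HOLE_END,
    0xFFFF_F800_0000_0000,
    0xFFFF_FFFF_FFFF_FFFF,
]
for va in samples:
    print(f"{va:016X}", in_range(va))
```

The bit test needs no comparison against the interval bounds, which is why the rule is usually stated in terms of VA<63:43>.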

[Figure 4-1 field layout: for each page size, the MMU replaces the virtual page number with the physical page number; the page offset is unchanged.]

Page Size    Virtual Page Number    Physical Page Number    Page Offset
8 Kb         VA<63:13>              PA<40:13>               <12:0>
64 Kb        VA<63:16>              PA<40:16>               <15:0>
512 Kb       VA<63:19>              PA<40:19>               <18:0>
4 Mb         VA<63:22>              PA<40:22>               <21:0>


Figure 4-2 UltraSPARC’s 44-bit Virtual Address Space, with Hole (Same as Figure 14-2)

Note: Throughout this document, when virtual address fields are specified as 64-bit quantities, they are assumed to be sign-extended based on VA<43>.
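Sign extension based on VA<43> copies bit 43 into bits 63:44. A minimal sketch, with hypothetical names and with results held as unsigned 64-bit integers, is shown below.

```python
VA_BITS = 44
MASK_64 = (1 << 64) - 1


def sign_extend_va(low_bits):
    """Extend a 44-bit virtual address to 64 bits by replicating VA<43>."""
    value = low_bits & ((1 << VA_BITS) - 1)       # keep VA<43:0>
    if value & (1 << (VA_BITS - 1)):              # VA<43> set: upper half
        value |= MASK_64 & ~((1 << VA_BITS) - 1)  # fill VA<63:44> with ones
    return value


print(f"{sign_extend_va(0x7FF_FFFF_FFFF):016X}")
print(f"{sign_extend_va(0x800_0000_0000):016X}")
```

A 44-bit value with VA<43> clear lands in the lower valid half, and one with VA<43> set lands in the upper valid half, so a sign-extended address is never inside the hole.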

The operating system maintains translation information in a data structure called the Software Translation Table. The I- and D-MMU each contain a hardware Translation Lookaside Buffer (iTLB and dTLB); these act as independent caches of the Software Translation Table, providing one-cycle translation for the more frequently accessed virtual pages.

Figure 4-3 on page 24 shows a general software view of the UltraSPARC MMU. The TLBs, which are part of the MMU hardware, are small and fast. The Software Translation Table, which is kept in memory, is likely to be large and complex. The Translation Storage Buffer (TSB), which acts like a direct-mapped cache, is the interface between the two. The TSB can be shared by all processes running on a processor, or it can be process specific. The hardware does not require any particular scheme.
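To illustrate what "acts like a direct-mapped cache" means for the TSB, the sketch below models a software-managed table in which each virtual page number maps to exactly one slot. Everything here is an assumption made for illustration: the class and function names, the number of entries, the use of a (context, page number) pair as the tag, and the dictionary standing in for the Software Translation Table. The actual TSB entry format and indexing are described elsewhere in the manual and are not reproduced here.

```python
class TranslationStorageBuffer:
    """Toy direct-mapped cache of translations, indexed by virtual page number."""

    def __init__(self, entries=512):   # entry count is a hypothetical choice
        self.entries = entries
        self.slots = [None] * entries

    def _index(self, vpn):
        return vpn % self.entries      # one possible slot per page number

    def lookup(self, context, vpn):
        slot = self.slots[self._index(vpn)]
        if slot is not None and slot[0] == (context, vpn):
            return slot[1]             # hit: tag matches
        return None                    # miss: empty slot or different tag

    def insert(self, context, vpn, ppn):
        # Direct mapped: a new entry simply replaces whatever was in the slot.
        self.slots[self._index(vpn)] = ((context, vpn), ppn)


def find_translation(tsb, software_table, context, vpn):
    """Try the TSB first; fall back to the larger software table on a miss."""
    ppn = tsb.lookup(context, vpn)
    if ppn is None:
        ppn = software_table[(context, vpn)]   # slow path; KeyError = no mapping
        tsb.insert(context, vpn, ppn)
    return ppn


tsb = TranslationStorageBuffer(entries=4)
table = {(0, 1): 10, (0, 5): 50}
print(find_translation(tsb, table, 0, 1), find_translation(tsb, table, 0, 5))
```

In this toy model, page numbers 1 and 5 share a slot in a four-entry table, so the second lookup evicts the first; that conflict behavior is the trade-off a direct-mapped organization accepts in exchange for a single, cheap probe.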

The term “TLB hit” means that the desired translation is present in the MMU’s on-chip TLB. The term “TLB miss” means that the desired translation is not present in the MMU’s on-chip TLB. On a TLB miss the MMU immediately traps to software for TLB miss processing. The TLB miss handler has the option of filling the TLB by any means available, but it is likely to take advantage of the TLB miss support features provided by the MMU, since the TLB miss handler is time-critical code. Hardware support is described in Section 6.3.1, “Hardware Support

[Figure 4-2 address ranges]
FFFF F800 0000 0000 to FFFF FFFF FFFF FFFF: in range (upper half)
0000 0800 0000 0000 to FFFF F7FF FFFF FFFF: Out of Range VA (VA “Hole”)
0000 0000 0000 0000 to 0000 07FF FFFF FFFF: in range (lower half)


Figure 4-3 Software View of the UltraSPARC MMU

[Diagram labels: Translation Look-aside Buffers (MMU); Translation Storage Buffer (Memory); Software Translation Table (O/S Data Structure)]

Aliasing between pages of different size (when multiple VAs map to the same PA) may take place, as with the SPARC-V8 Reference MMU. The reverse case, when multiple mappings from one VA/context to multiple PAs produce a multiple TLB match, is not detected in hardware; it produces undefined results.

Note: The hardware ensures the physical reliability of the TLB on multiple matches.


Section II — Going Deeper

5. Cache and Memory Interactions
6. MMU Internal Architecture
7. UltraSPARC External Interfaces
8. Address Spaces, ASIs, ASRs, and Traps
9. Interrupt Handling
10. Reset and RED_state
11. Error Handling


5. Cache and Memory Interactions

5.1 Introduction

This chapter describes various interactions between the caches and memory, and

the management processes that an operating system must perform to maintain

data integrity in these cases. In particular, it discusses:

• When and how to invalidate one or more cache entries

• The differences between cacheable and non-cacheable accesses

• The ordering and synchronization of memory accesses

• Accesses to addresses that cause side effects (I/O accesses)

• Non-faulting loads

• Instruction prefetching

• Load and store buffers

This chapter addresses coherence only in a uniprocessor environment. For more information about coherence in multi-processor environments, see Chapter 15,

“SPARC-V9 Memory Models.”

5.2 Cache Flushing

Data in the level-1 (read-only or write-through) caches can be flushed by invalidating the entry in the cache. Modified data in the level-2 (writeback) cache must


Cache flushing is required in the following cases:

I-Cache:

Flush is needed before executing code that is modified by a local store instruction

other than block commit store; see Section 3.1.1.1, “Instruction Cache (I-Cache).”

This is done with the FLUSH instruction or using ASI accesses. See Section A.7,

“I-Cache Diagnostic Accesses,” on page 309. When ASI accesses are used, software must ensure that the flush is done on the same processor as the stores that

modified the code space.

D-Cache:

Flush is needed when a physical page is changed from (virtually) cacheable to

(virtually) noncacheable, or when an illegal address alias is created (see Section

5.2.1, “Address Aliasing Flushing,” on page 28). This is done with a displacement

flush (see Section 5.2.3, “Displacement Flushing,” on page 29) or using ASI

accesses. See Section A.8, “D-Cache Diagnostic Accesses,” on page 314.

E-Cache:

Flush is needed for stable storage. Examples of stable storage include battery-backed memory and transaction logs. This is done with either a displacement

flush (see Section 5.2.3, “Displacement Flushing,” on page 29) or a store with

ASI_BLK_COMMIT_{PRIMARY,SECONDARY}. Flushing the E-Cache will flush

the corresponding blocks from the I- and D-Caches, because UltraSPARC maintains inclusion between the external and internal caches. See Section 5.2.2, “Committing Block Store Flushing,” on page 29.

5.2.1 Address Aliasing Flushing

A side-effect inherent in a virtual-indexed cache is illegal address aliasing. Aliasing

occurs when multiple virtual addresses map to the same physical address. Since

UltraSPARC’s D-Cache is indexed with the virtual address bits and is larger than

the minimum page size, it is possible for the different aliased virtual addresses to

end up in different cache blocks. Such aliases are illegal because updates to one

cache block will not be reflected in aliased cache blocks.

Normally, software avoids illegal aliasing by forcing aliases to have the same address bits (virtual color) up to an alias boundary. For UltraSPARC, the minimum

alias boundary is 16 Kbytes; this size may increase in future designs. When the alias

boundary is violated, software must flush the D-Cache if the page was virtual

cacheable. In this case, only one mapping of the physical page can be allowed in

the D-MMU at a time. Alternatively, software can turn off virtual caching of illegally aliased pages. This allows multiple mappings of the alias to be in the

D-MMU and avoids flushing the D-Cache each time a different mapping is referenced.


Note: A change in virtual color when allocating a free page does not require a

D-Cache flush, because the D-Cache is write-through.
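The virtual color rule described in this section can be sketched as follows. This is an illustration only, not code from this manual. The helper names are hypothetical, and the 8-Kbyte minimum page size is an assumption that does not appear in this section; the alias boundary is the minimum value given above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define ALIAS_BOUNDARY UINT64_C(0x4000)  /* 16-Kbyte minimum alias boundary */
#define MIN_PAGE_SIZE  UINT64_C(0x2000)  /* ASSUMPTION: 8-Kbyte minimum page */

/* Hypothetical helper: the virtual color of a page is formed by the
 * page-number bits of its VA that lie below the alias boundary. */
static inline uint64_t virtual_color(uint64_t va)
{
    assert(ALIAS_BOUNDARY % MIN_PAGE_SIZE == 0);
    return (va & (ALIAS_BOUNDARY - 1)) / MIN_PAGE_SIZE;
}

/* Hypothetical helper: two virtual mappings of one physical page are a
 * legal alias pair only if they have the same virtual color. */
static inline bool alias_is_legal(uint64_t va_a, uint64_t va_b)
{
    return virtual_color(va_a) == virtual_color(va_b);
}
```

Under these assumptions there are two colors. Mappings at 0x10000 and 0x24000 share color 0 and may coexist, whereas 0x10000 and 0x12000 differ in color and would require either a D-Cache flush or a non-virtual-cacheable mapping.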

5.2.2 Committing Block Store Flushing

In UltraSPARC, stable storage must be implemented by software cache flush.

Data that is present and modified in the E-Cache must be written back to the stable storage.

UltraSPARC implements two ASIs (ASI_BLK_COMMIT_{PRIMARY,SECONDARY}) to perform these writebacks efficiently when software can ensure exclusive

write access to the block being flushed. Using these ASIs, software can write back

data from the floating-point registers to memory and invalidate the entry in the

cache. The data in the floating-point registers must first be loaded by a block load

instruction. A MEMBAR #Sync instruction is needed to ensure that the flush is

complete. See also Section 13.6.4, “Block Load and Store Instructions,” on page

230.

5.2.3 Displacement Flushing

Cache flushing also can be accomplished by a displacement flush. This is done by

reading a range of read-only addresses that map to the corresponding cache line

being flushed, forcing out modified entries in the local cache. Care must be taken

to ensure that the range of read-only addresses is mapped in the MMU before

starting a displacement flush, otherwise the TLB miss handler may put new data

into the caches.

Note: Diagnostic ASI accesses to the E-Cache can be used to invalidate a line,

but they are generally not an alternative to displacement flushing. Modified data

in the E-Cache will not be written back to memory using these ASI accesses. See

Section A.9, “E-Cache Diagnostics Accesses,” on page 315.
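The effect of a displacement flush can be illustrated with a toy software model of a writeback cache. The sketch below is not a model of the real E-Cache: the direct-mapped organization, the eight-line size, and all names are assumptions chosen for illustration. It shows only the principle that reading a cache-sized region of other addresses forces modified lines back to memory.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define LINE_SIZE  64u                     /* bytes per line */
#define NUM_LINES  8u                      /* toy size, not the real cache */
#define CACHE_SIZE (LINE_SIZE * NUM_LINES)
#define MEM_SIZE   (4u * CACHE_SIZE)

struct toy_line { bool valid, dirty; uint32_t tag; uint8_t data[LINE_SIZE]; };
struct toy { struct toy_line cache[NUM_LINES]; uint8_t mem[MEM_SIZE]; };

static inline void toy_init(struct toy *t) { memset(t, 0, sizeof *t); }

/* Bring the line holding addr into the cache. A modified victim with a
 * different tag is written back to memory before it is replaced. */
static inline struct toy_line *toy_fill(struct toy *t, uint32_t addr)
{
    assert(addr < MEM_SIZE);
    uint32_t index = (addr / LINE_SIZE) % NUM_LINES;
    uint32_t tag = addr / CACHE_SIZE;
    struct toy_line *l = &t->cache[index];
    if (l->valid && l->tag == tag)
        return l;                                      /* hit */
    if (l->valid && l->dirty)                          /* write back victim */
        memcpy(&t->mem[l->tag * CACHE_SIZE + index * LINE_SIZE],
               l->data, LINE_SIZE);
    memcpy(l->data, &t->mem[addr - addr % LINE_SIZE], LINE_SIZE);
    l->valid = true;
    l->dirty = false;
    l->tag = tag;
    return l;
}

static inline uint8_t toy_load(struct toy *t, uint32_t addr)
{
    return toy_fill(t, addr)->data[addr % LINE_SIZE];
}

static inline void toy_store(struct toy *t, uint32_t addr, uint8_t value)
{
    struct toy_line *l = toy_fill(t, addr);
    l->data[addr % LINE_SIZE] = value;
    l->dirty = true;                       /* memory is now stale */
}

/* Displacement flush: read one byte per line over a cache-sized region.
 * flush_base must be line-aligned and must name a region that does not
 * contain the data being flushed. */
static inline void toy_displacement_flush(struct toy *t, uint32_t flush_base)
{
    for (uint32_t off = 0; off < CACHE_SIZE; off += LINE_SIZE)
        (void)toy_load(t, flush_base + off);
}
```

In this model a store to address 100 leaves memory stale until toy_displacement_flush() is called with a region such as the one starting at 2 * CACHE_SIZE; afterwards the stored value is present in memory.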

5.3 Memory Accesses and Cacheability

Note: Atomic load-store instructions are treated as both a load and a store; they

can be performed only in cacheable address spaces.


Two types of memory operations are supported in UltraSPARC: cacheable and

noncacheable accesses, as indicated by the page translation. Cacheable accesses

are inside the coherence domain; noncacheable accesses are outside the coherence

domain.

SPARC-V9 does not specify memory ordering between cacheable and noncacheable accesses. In TSO mode, UltraSPARC maintains TSO ordering, regardless of

the cacheability of the accesses. For SPARC-V9 compatibility while in PSO or

RMO mode, a MEMBAR #Lookaside should be used between a store and a subsequent load to the same noncacheable address. See Section 8, “Memory Models,”

in The SPARC Architecture Manual, Version 9 for more information about the

SPARC-V9 memory models.

Note: On UltraSPARC, a MEMBAR #Lookaside executes more efficiently than

a MEMBAR #StoreLoad.

Accesses that fall within the coherence domain are called cacheable accesses.

They are implemented in UltraSPARC with the following properties:

• Data resides in real memory locations.

• They observe supported cache coherence protocol(s).

• The unit of coherence is 64 bytes.
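Because the unit of coherence is 64 bytes, two physical addresses are kept coherent together whenever they fall in the same aligned 64-byte block. A minimal sketch of that arithmetic follows; the helper names are hypothetical and are not part of any UltraSPARC interface.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define COHERENCE_UNIT UINT64_C(64)   /* bytes, as stated in the list above */

/* Hypothetical helper: base address of the 64-byte block holding pa. */
static inline uint64_t coherence_block(uint64_t pa)
{
    /* The mask below is valid only for a power-of-two unit size. */
    assert((COHERENCE_UNIT & (COHERENCE_UNIT - 1)) == 0);
    return pa & ~(COHERENCE_UNIT - 1);
}

/* Hypothetical helper: true if both addresses share one coherence unit. */
static inline bool same_coherence_block(uint64_t pa_a, uint64_t pa_b)
{
    return coherence_block(pa_a) == coherence_block(pa_b);
}
```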

Accesses that are outside the coherence domain are called noncacheable accesses.

Some of these memory (-mapped) locations may have side-effects when accessed.

They are implemented in UltraSPARC with the following properties:

• Data may or may not reside in real memory locations.

• Accesses may result in program-visible side-effects; for example, memory-

mapped I/O control registers in a UART may change state when read.

• They may not observe supported cache coherence protocol(s).
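The UART case mentioned in the list above can be pictured with a toy software model of a receive data register whose read removes a byte from a queue. This is an illustrative sketch only; the structure, the FIFO depth, and the function names are hypothetical and do not describe any particular device.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_FIFO_DEPTH 4u

/* Hypothetical receive FIFO behind a memory-mapped data register. */
struct toy_uart {
    uint8_t  fifo[TOY_FIFO_DEPTH];
    unsigned head;    /* index of the oldest queued byte */
    unsigned count;   /* number of bytes currently queued */
};

/* Device side: a byte arrives. Returns 0 on success, -1 if the FIFO is full. */
static inline int toy_uart_receive(struct toy_uart *u, uint8_t byte)
{
    assert(u->count <= TOY_FIFO_DEPTH);
    if (u->count == TOY_FIFO_DEPTH)
        return -1;
    u->fifo[(u->head + u->count) % TOY_FIFO_DEPTH] = byte;
    u->count++;
    return 0;
}

/* Processor side: a load from the data register. The read itself pops
 * the FIFO, so device state changes. Returns -1 when the FIFO is empty. */
static inline int toy_uart_read_data(struct toy_uart *u)
{
    assert(u->count <= TOY_FIFO_DEPTH);
    if (u->count == 0)
        return -1;
    uint8_t byte = u->fifo[u->head];
    u->head = (u->head + 1) % TOY_FIFO_DEPTH;
    u->count--;
    return byte;
}
```

Two successive reads return different values, and a read that is repeated or issued when the program did not intend it consumes data. This is why accesses to such locations cannot be treated like accesses to ordinary memory.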


Noncacheable accesses with the E-bit set (that is, those having side-effects) are all

strongly ordered with respect to other noncacheable accesses with the E-bit set. In

addition, store buffer compression is disabled for these accesses. Speculative

loads with the E-bit set cause a data_access_exception trap (with SFSR.FT=2, speculative load to page marked with E-bit).

Note: The side-effect attribute does not imply noncacheability.

5.3.1.3 Global Visibility and Memory Ordering

A memory access is considered globally visible when it has been acknowledged

by the system. In order to ensure the correct ordering between the cacheable and

noncacheable domains, explicit memory synchronization is needed in the form of

MEMBARs or atomic instructions. Code Example 5-1 illustrates the issues involved in mixing cacheable and noncacheable accesses.

Code Example 5-1 Memory Ordering and MEMBAR Examples

Assume that all accesses go to non-side-effect memory locations.

Process A:
While (1)
{
    Store D1:data produced
1   MEMBAR #StoreStore (needed in PSO, RMO)
    Store F1:set flag
    While F1 is set (spin on flag)
        Load F1
2   MEMBAR #LoadLoad | #LoadStore (needed in RMO)
    Load D2
}

Process B:
While (1)
{
    While F1 is cleared (spin on flag)
        Load F1
2   MEMBAR #LoadLoad | #LoadStore (needed in RMO)
    Load D1
    Store D2
1   MEMBAR #StoreStore (needed in PSO, RMO)
    Store F1:clear flag
}


Note: A MEMBAR #MemIssue or MEMBAR #Sync is needed if ordering of

cacheable accesses following noncacheable accesses must be maintained in PSO

or RMO.

Due to load and store buffers implemented in UltraSPARC, the above example

may not work in PSO and RMO modes without the MEMBARs shown in the program segment.
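For readers working in C, the flag and data handoff of Code Example 5-1 can be approximated with C11 atomics. The sketch below is a simplified analogy, not UltraSPARC code: the structure and function names are hypothetical, D2 is omitted, and the C11 fences are assumed to stand in for the MEMBARs. A release fence orders earlier loads and stores before later stores, so it is at least as strong as MEMBAR #StoreStore; an acquire fence orders earlier loads before later loads and stores, which corresponds to MEMBAR #LoadLoad | #LoadStore.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

struct mailbox {
    int        data;   /* plays the role of D1 */
    atomic_int flag;   /* plays the role of F1 */
};

/* Process A side: produce the data, then set the flag. */
static inline void mailbox_publish(struct mailbox *m, int value)
{
    m->data = value;                                           /* Store D1 */
    atomic_thread_fence(memory_order_release);                 /* MEMBAR 1 */
    atomic_store_explicit(&m->flag, 1, memory_order_relaxed);  /* Store F1 */
}

/* Process B side: poll the flag once. If it is set, read the data and
 * clear the flag. Returns true if a value was taken. */
static inline bool mailbox_try_take(struct mailbox *m, int *out)
{
    assert(out != NULL);
    if (atomic_load_explicit(&m->flag, memory_order_relaxed) == 0)
        return false;                                          /* Load F1  */
    atomic_thread_fence(memory_order_acquire);                 /* MEMBAR 2 */
    *out = m->data;                                            /* Load D1  */
    atomic_thread_fence(memory_order_release);                 /* MEMBAR 1 */
    atomic_store_explicit(&m->flag, 0, memory_order_relaxed);  /* clear F1 */
    return true;
}
```

In use, one thread calls mailbox_publish() and another calls mailbox_try_take() in a loop until it returns true. Without the fences, a compiler or a processor running a relaxed memory model would be free to make the flag update visible before the data.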

In TSO mode, loads and stores (except block stores) cannot pass earlier loads, and

stores cannot pass earlier stores; therefore, no MEMBAR is needed.

In PSO mode, loads are completed in program order, but stores are allowed to

pass earlier stores; therefore, only the MEMBAR at #1 is needed between updating data and the flag.

In RMO mode, there is no implicit ordering between memory accesses; therefore,

the MEMBARs at both #1 and #2 are needed.
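The per-mode requirements just described can be summarized as a small lookup. This is a sketch for illustration only; the enumeration and function names are hypothetical, and the numbers 1 and 2 refer to the MEMBAR positions marked in Code Example 5-1.

```c
#include <assert.h>

enum mem_model { MODEL_TSO, MODEL_PSO, MODEL_RMO };

enum {
    NEED_MEMBAR_1 = 1u << 0,  /* MEMBAR #StoreStore at position 1 */
    NEED_MEMBAR_2 = 1u << 1   /* MEMBAR #LoadLoad | #LoadStore at position 2 */
};

/* Hypothetical helper: which MEMBARs the example needs in each mode. */
static inline unsigned membars_needed(enum mem_model model)
{
    switch (model) {
    case MODEL_TSO: return 0;                              /* none needed */
    case MODEL_PSO: return NEED_MEMBAR_1;                  /* stores may pass stores */
    case MODEL_RMO: return NEED_MEMBAR_1 | NEED_MEMBAR_2;  /* no implicit order */
    }
    assert(0 && "unknown memory model");
    return NEED_MEMBAR_1 | NEED_MEMBAR_2;  /* conservative fallback */
}
```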

The MEMBAR (STBAR in SPARC-V8) and FLUSH instructions are provided for explicit control of memory ordering in program execution. MEMBAR has several

variations; their implementations in UltraSPARC are described below. See Section

A.31, “Memory Barrier,” Section 8.4.3, “The MEMBAR Instruction,” and Section J,