
Solaris 10 Container Guide

- Functionality status up to Solaris 10 10/09 and OpenSolaris 2009.06 -

Detlef Drewanz, Ulrich Gräf, et al.

Sun Microsystems GmbH

Effective: 30/11/2009

Functionalities

Use Cases

Best Practices

Cookbooks


Version 3.1-en Solaris 10 Container Guide - 3.1 Effective: 30/11/2009

Table of contents

Disclaimer

Revision control

1. Introduction

2. Functionality

2.1. Solaris Containers and Solaris Zones

2.1.1. Overview

2.1.2. Zones and software installation

2.1.3. Zones and security

2.1.4. Zones and privileges

2.1.5. Zones and resource management

2.1.5.1. CPU resources

2.1.5.2. Memory resource management

2.1.5.3. Network resource management (IPQoS = IP Quality of Service)

2.1.6. User interfaces for zones

2.1.7. Zones and high availability

2.1.8. Branded zones (Linux and Solaris 8/Solaris 9 compatibility)

2.1.9. Solaris container cluster (aka "zone cluster")

2.2. Virtualization technologies compared

2.2.1. Domains/physical partitions

2.2.2. Logical partitions

2.2.3. Containers (Solaris zones) in an OS

2.2.4. Consolidation in one computer

2.2.5. Summary of virtualization technologies

3. Use Cases

3.1. Grid computing with isolation

3.2. Small web servers

3.3. Multi-network consolidation

3.4. Multi-network monitoring

3.5. Multi-network backup

3.6. Consolidation development/test/integration/production

3.7. Consolidation of test systems

3.8. Training systems

3.9. Server consolidation

3.10. Confidentiality of data and processes

3.11. Test systems for developers

3.12. Solaris 8 and Solaris 9 containers for development

3.13. Solaris 8 and Solaris 9 containers as revision systems

3.14. Hosting for several companies on one computer

3.15. SAP portals in Solaris containers

3.16. Upgrade- and Patch-management in a virtual environment

3.17. "Flying zones" – Service-oriented Solaris server infrastructure

3.18. Solaris Container Cluster (aka "zone cluster")

4. Best Practices

4.1. Concepts

4.1.1. Sparse-root zones

4.1.2. Whole-root zones

4.1.3. Comparison between sparse-root zones and whole-root zones

4.1.4. Software in zones

4.1.5. Software installations in Solaris and zones


4.1.5.1. Software installation by the global zone – usage in all zones

4.1.5.2. Software installation by the global zone – usage in a local zone

4.1.5.3. Software installation by the global zone – usage in the global zone

4.1.5.4. Installation by the local zone – usage in the local zone

4.1.6. Storage concepts

4.1.6.1. Storage for the root file system of the local zones

4.1.6.2. Data storage

4.1.6.3. Program/application storage

4.1.6.4. Root disk layout

4.1.6.5. ZFS within a zone

4.1.6.6. Options for using ZFS in local zones

4.1.6.7. NFS and local zones

4.1.6.8. Volume manager in local zones

4.1.7. Network concepts

4.1.7.1. Introduction into networks and zones

4.1.7.2. Network address management for zones

4.1.7.3. Shared IP instance and routing between zones

4.1.7.4. Exclusive IP instance

4.1.7.5. Firewalls between zones (IP filter)

4.1.7.6. Zones and limitations in the network

4.1.8. Additional devices in zones

4.1.8.1. Configuration of devices

4.1.8.2. Static configuration of devices

4.1.8.3. Dynamic configuration of devices

4.1.9. Separate name services in zones

4.1.9.1. hosts database

4.1.9.2. User database (passwd, shadow, user_attr)

4.1.9.3. Services

4.1.9.4. Projects

4.2. Paradigms

4.2.1. Delegation of admin privileges to the application department

4.2.2. Applications in local zones only

4.2.3. One application per zone

4.2.4. Clustered containers

4.2.5. Solaris Container Cluster

4.3. Configuration and administration

4.3.1. Manual configuration of zones with zonecfg

4.3.2. Manual installation of zones with zoneadm

4.3.3. Manual uninstallation of zones with zoneadm

4.3.4. Manual removal of a configured zone with zonecfg

4.3.5. Duplication of an installed zone

4.3.6. Standardized creation of zones

4.3.7. Automatic configuration of zones by script

4.3.8. Automated provisioning of services

4.3.9. Installation and administration of a branded zone

4.4. Lifecycle management

4.4.1. Patching a system with local zones

4.4.2. Patching with live upgrade

4.4.3. Patching with upgrade server

4.4.4. Patching with zoneadm attach -u

4.4.5. Moving zones between architectures (sun4u/sun4v)

4.4.6. Re-installation and service provisioning instead of patching

4.4.7. Backup and recovery of zones

4.4.8. Backup of zones with ZFS

4.4.9. Migration of a zone to another system

4.4.10. Moving a zone within a system


4.5. Management and monitoring

4.5.1. Using boot arguments in zones

4.5.2. Consolidating log information of zones

4.5.3. Monitoring zone workload

4.5.4. Extended accounting with zones

4.5.5. Auditing operations in the zone

4.5.6. DTrace of processes within a zone

4.6. Resource management

4.6.1. Types of resource management

4.6.2. CPU resources

4.6.2.1. Capping of CPU time for a zone

4.6.2.2. General resource pools

4.6.2.3. Fair share scheduler (FSS)

4.6.2.4. Fair share scheduler in a zone

4.6.2.5. Dynamic resource pools

4.6.2.6. Lightweight processes (LWP)

4.6.3. Limiting memory resources

4.6.3.1. Assessing memory requirements for global and local zones

4.6.3.2. Limiting virtual memory

4.6.3.3. Limiting a zone's physical memory requirement

4.6.3.4. Limiting locked memory

4.6.4. Network limitation (IPQoS)

4.6.5. IPC limits (Semaphore, shared memory, message queues)

4.6.6. Privileges and resource management

4.7. Solaris container navigator

5. Cookbooks

5.1. Installation and configuration

5.1.1. Configuration files

5.1.2. Special commands for zones

5.1.3. Root disk layout

5.1.4. Configuring a sparse root zone: required actions

5.1.5. Configuring a whole root zone: required actions

5.1.6. Zone installation

5.1.7. Zone initialization with sysidcfg

5.1.8. Uninstalling a zone

5.1.9. Configuration and installation of a Linux branded zone with CentOS

5.1.10. Configuration and installation of a Solaris 8/Solaris 9 container

5.1.11. Optional settings

5.1.11.1. Starting zones automatically

5.1.11.2. Changing the set of privileges of a zone

5.1.12. Storage within a zone

5.1.12.1. Using a device in a local zone

5.1.12.2. The global zone supplies a file system per lofs to the local zone

5.1.12.3. The global zone mounts a file system when the local zone is booted

5.1.12.4. The local zone mounts a UFS file system from a device

5.1.12.5. User level NFS server in a local zone

5.1.12.6. Using a DVD drive in the local zone

5.1.12.7. Dynamic configuration of devices

5.1.12.8. Several zones share a file system

5.1.12.9. ZFS in a zone

5.1.12.10. User attributes for ZFS within a zone

5.1.13. Configuring a zone by command file or template

5.1.14. Automatic quick installation of zones

5.1.15. Accelerated automatic creation of zones on a ZFS file system

5.1.16. Zones hardening


5.2. Network

5.2.1. Change network configuration for shared IP instances

5.2.2. Set default router for shared IP instance

5.2.3. Network interfaces for exclusive IP instances

5.2.4. Change network configuration from shared IP instance to exclusive IP instance

5.2.5. IP filter between shared IP zones on a system

5.2.6. IP filter between exclusive IP zones on a system

5.2.7. Zones, networks and routing

5.2.7.1. Global and local zone with shared network

5.2.7.2. Zones in separate network segments using the shared IP instance

5.2.7.3. Zones in separate network segments using exclusive IP instances

5.2.7.4. Zones in separate networks using the shared IP instance

5.2.7.5. Zones in separate networks using exclusive IP instances

5.2.7.6. Zones connected to independent customer networks using the shared IP instance

5.2.7.7. Zones connected to independent customer networks using exclusive IP instances

5.2.7.8. Connection of zones via external routers using the shared IP instance

5.2.7.9. Connection of zones through an external load balancing router using exclusive IP instances

5.3. Lifecycle management

5.3.1. Booting a zone

5.3.2. Boot arguments in zones

5.3.3. Software installation per mount

5.3.4. Software installation with provisioning system

5.3.5. Zone migration among systems

5.3.6. Zone migration within a system

5.3.7. Duplicating zones with zoneadm clone

5.3.8. Duplicating zones with zoneadm detach/attach and zfs clone

5.3.9. Moving a zone between a sun4u and a sun4v system

5.3.10. Shutting down a zone

5.3.11. Using live upgrade to patch a system with local zones

5.4. Management and monitoring

5.4.1. DTrace in a local zone

5.4.2. Zone accounting

5.4.3. Zone audit

5.5. Resource management

5.5.1. Limiting the /tmp-size within a zone

5.5.2. Limiting the CPU usage of a zone (CPU capping)

5.5.3. Resource pools with processor sets

5.5.4. Fair share scheduler

5.5.5. Static CPU resource management between zones

5.5.6. Dynamic CPU resource management between zones

5.5.7. Static CPU resource management in a zone

5.5.8. Dynamic CPU resource management in a zone

5.5.9. Dynamic resource pools for zones

5.5.10. Limiting the physical main memory consumption of a project

5.5.11. Implementing memory resource management for zones

Supplement

A. Solaris Container in OpenSolaris

A.1. OpenSolaris – general

A.1. ipkg-Branded zones

A.1. Cookbook: Configuring an ipkg zone

A.2. Cookbook: Installing an ipkg zone

B. References


Disclaimer

Sun Microsystems GmbH does not offer any guarantee regarding the completeness and accuracy of the information and examples contained in this document.

Revision control

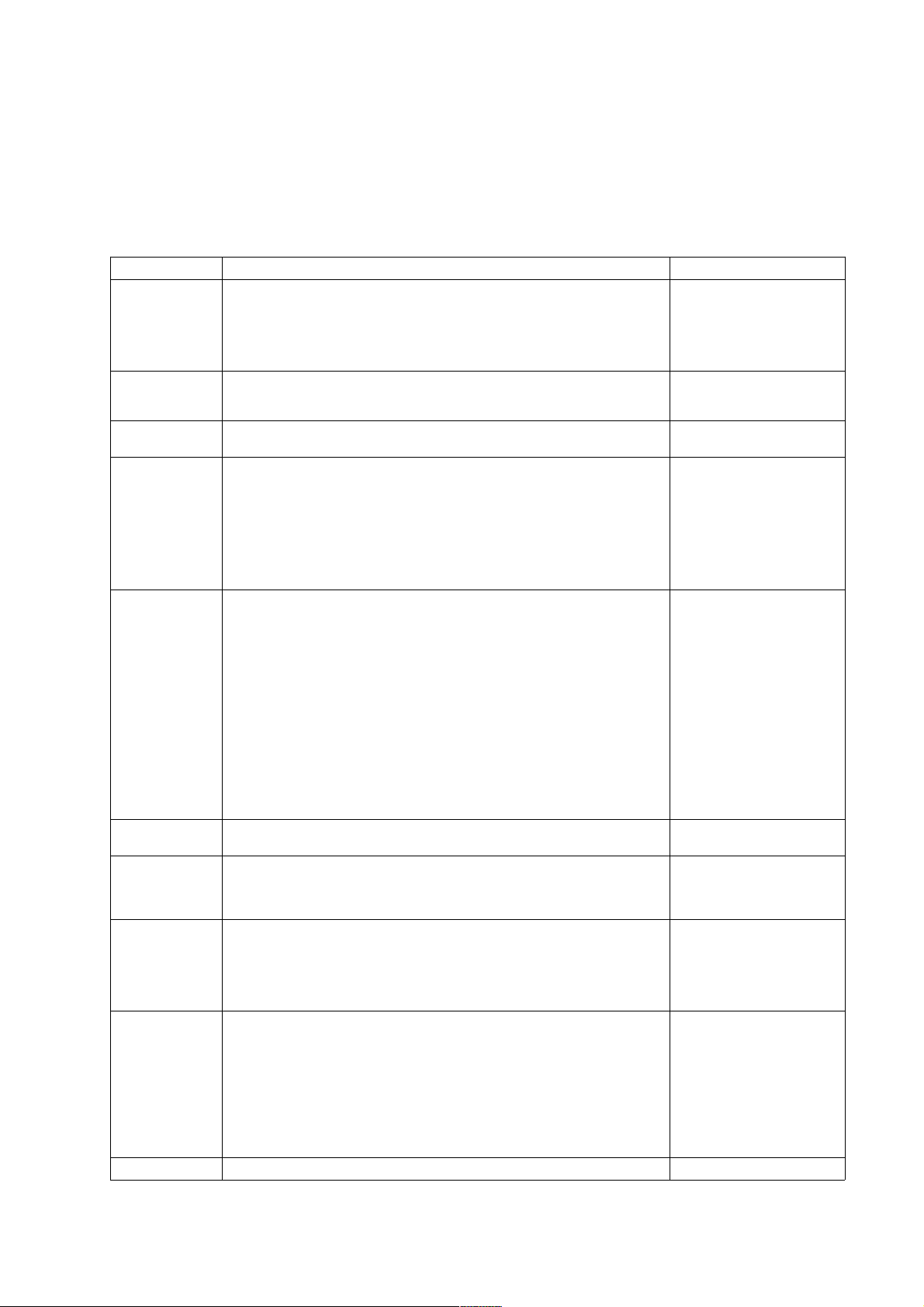

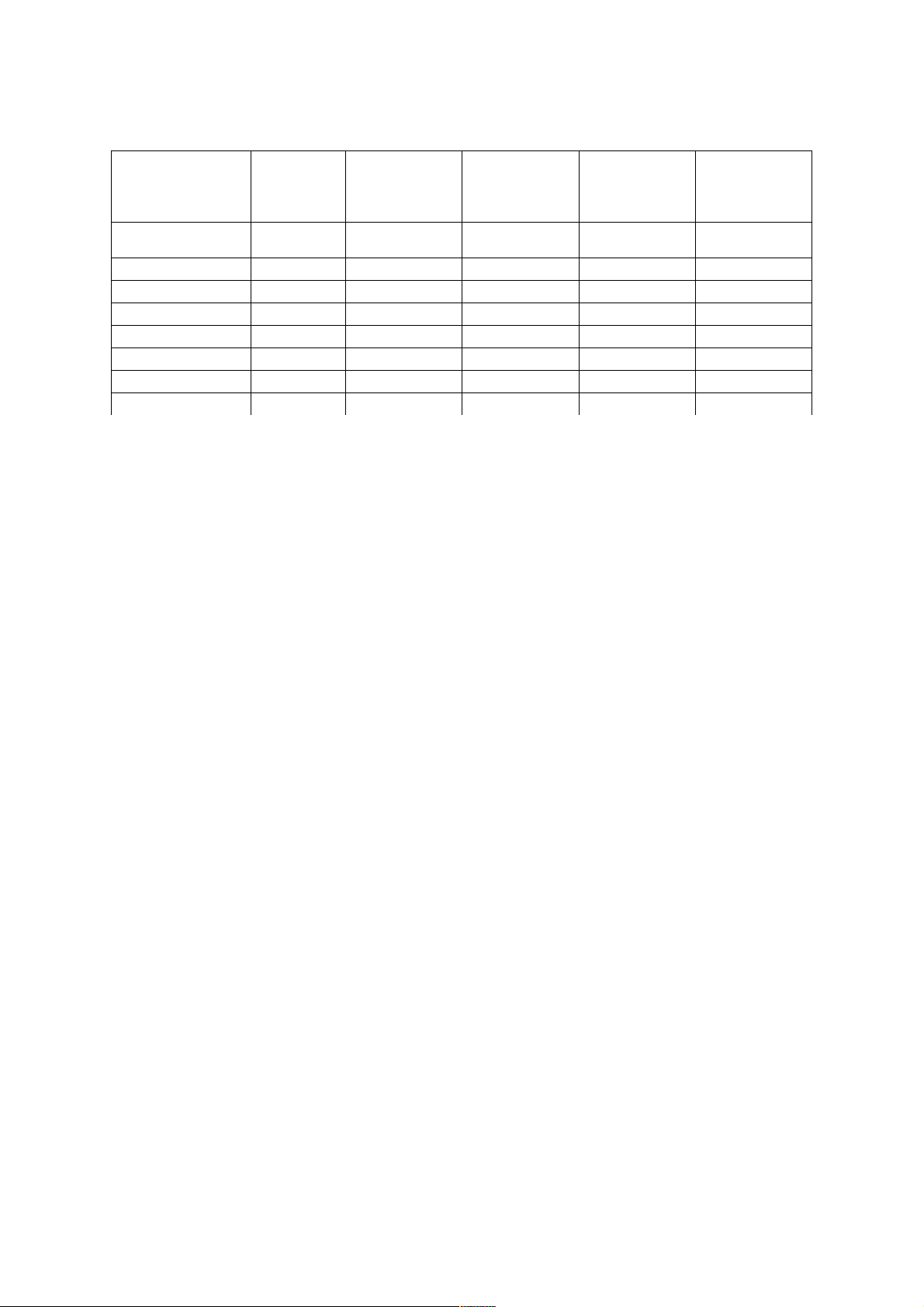

Version Contents Who

3.1 30/11/2009

Aligned with the content of "Solaris Container Leitfaden 3.1" (German edition)

Table of contents with HTML links for easier navigation through the document

Correction: "Patching of systems with local zones"

Correction: "Patching with zoneadm attach -u"

Correction Solaris Container Navigator

3.0-en 27/11/2009

Review and corrections after translation

Document renamed to "Solaris 10 Container Guide - 3.0"

3.0-en Draft 1 27/07/2009

Original English translation received

3.0 19/06/2009

General corrections

Additions in the general part and in resource management

Additions in patch management of zones

Formatting: URLs and hyperlinks for better legibility

Formatting: Numbering repaired

Detlef Drewanz

Ulrich Gräf

Detlef Drewanz

Ulrich Gräf

Detlef Drewanz,

Uwe Furchheim,

Ulrich Gräf,

Franz Haberhauer,

Joachim Knoke,

Hartmut Streppel,

Thomas Wagner,

Insertion: Possibilities for using ZFS in local zones

3.0 Draft 1 10/06/2009

More hyperlinks in the document

General corrections

Insertion: Solaris Container Navigator

Incorporation: functionality of Solaris 10 5/08, 10/08 and 5/09

Insertion: Firewall between zones

Revision: Zones and ZFS

Revision: Storage concepts

Revision: Network concepts

Insertion: Dynamic configuration of devices in zones

Revision: Resource management

Addition: Patching zones

Revision: Zones and high availability

Insertion: Solaris Container Cluster

Insertion: Solaris Container in OpenSolaris

Insertion: Upgrade and patch management in a virtual operating environment

2.1 13/02/2008

Incorporation of corrections (tagged vlanID) Detlef Drewanz, Ulrich Gräf

2.0 21/01/2008

Incorporation of comments on 2.0-Draftv27

Insertion: Consolidation of log information

Insertion: Cookbooks for resource management

2.0-Draftv27 11/01/2008

Incorporation of comments on 2.0-Draftv22

Incorporation Live Upgrade and zones

Insertion of several hyperlinks

Accelerated automatic installation of zones on a ZFS file system

2.0-Draftv22 20/12/2007

Incorporation Resource Management

Incorporation of Solaris 10 08/07

Incorporation of Solaris 10 11/06

Incorporation of comments

Complete revision of the manual

Heiko Stein

Dirk Augustin,

Detlef Drewanz,

Ulrich Gräf,

Hartmut Streppel

Detlef Drewanz, Ulrich Gräf

Detlef Drewanz, Ulrich Gräf

Bernd Finger, Ulrich Gräf

Detlef Drewanz, Ulrich Gräf

Incorporation "SAP Portals in Containers”

Incorporation "Flying Zones”

Revision "Zones and High Availability”

1.3 07/11/2006

Dirk Augustin

Oliver Schlicker

Detlef Ulherr, Thorsten Früauf


Drawings 1 - 6 as an image Detlef Drewanz

1.2 06/11/2006

1.1

1.0 24/10/2006

General chapter virtualization

Additional network examples

27/10/2006

Revision control table reorganized

(the latest at the top)

References amended

Hardening of zones amended

Feedback incorporated, corrections

Use cases and network

Detlef Drewanz, Ulrich Gräf

Detlef Drewanz

Detlef Drewanz

Zones added in the cluster

Complete revision of various chapters

Draft 2.2 1st network example, corrections 31/07/2006 Ulrich Gräf

Draft 2.0 2nd draft published – 28/07/2006 Detlef Drewanz, Ulrich Gräf

Draft 1.0 1st draft (internal) – 28/06/2006 Detlef Drewanz, Ulrich Gräf

Thorsten Früauf/Detlef Ulherr

Ulrich Gräf


1. Introduction

[dd/ug] This guide is about Solaris Containers: how they work and how to use them. Although the original guide was written in German [25], starting with version 3.1 we also provide an English version.

With the release of Solaris 10 on 31 January 2005, Sun Microsystems provided an operating system with groundbreaking innovations. Among these innovations are Solaris Containers, which can be used, among other things, to consolidate and virtualize OS environments, to isolate applications, and for resource management. Solaris Containers can contribute considerably to the advanced reconfiguration of IT processes and environments, as well as to cost savings in IT operations.

Using these new possibilities requires know-how, decision guidance and examples, which we have summarized in this guide. It is aimed at decision makers, data center managers, IT groups and system administrators. The document is divided into the following chapters: Introduction, Functionality, Use Cases, Best Practices, Cookbooks, and a list of references.

A brief introduction is followed by the functionality part, which describes today's typical data center requirements in terms of virtualization and consolidation, and describes and compares Solaris Container technology. This is followed by a discussion of the fields of application for Solaris Containers in a variety of use cases. Their conceptual implementation is demonstrated by means of Best Practices. In the chapter on Cookbooks, the commands used to implement the Best Practices are demonstrated with concrete examples. All cookbooks were tested and verified by the authors themselves. The appendix discusses the specifics of Solaris Containers in OpenSolaris.

The document itself is designed as a reference document. Although it is possible to read it from beginning to end, this is not necessary. A data center manager can get an overview of Solaris Container technology or look at the use cases. An IT architect can go over the Best Practices in order to build solutions, while a system administrator can try out the commands listed in the cookbooks to gain experience. The document thus offers something for everyone and, in addition, provides references to other areas.

Many thanks to all who have contributed to this document through comments, examples and additions. Special thanks go to our colleagues (in alphabetical order): Dirk Augustin[da], Bernd Finger[bf], Constantin Gonzalez, Uwe Furchheim, Thorsten Früauf[tf], Franz Haberhauer, Claudia Hildebrandt, Kristan Klett, Joachim Knoke, Matthias Pfützner, Roland Rambau, Oliver Schlicker[os], Franz Stadler, Heiko Stein[hes], Hartmut Streppel[hs], Detlef Ulherr[du], Thomas Wagner and Holger Weihe.

Please do not hesitate to contact the authors with feedback and suggestions.

Berlin and Langen, November 2009

Detlef Drewanz (Detlef.Drewanz@sun.com), Ulrich Gräf (Ulrich.Graef@sun.com)


2. Functionality

2.1. Solaris Containers and Solaris Zones

2.1.1. Overview

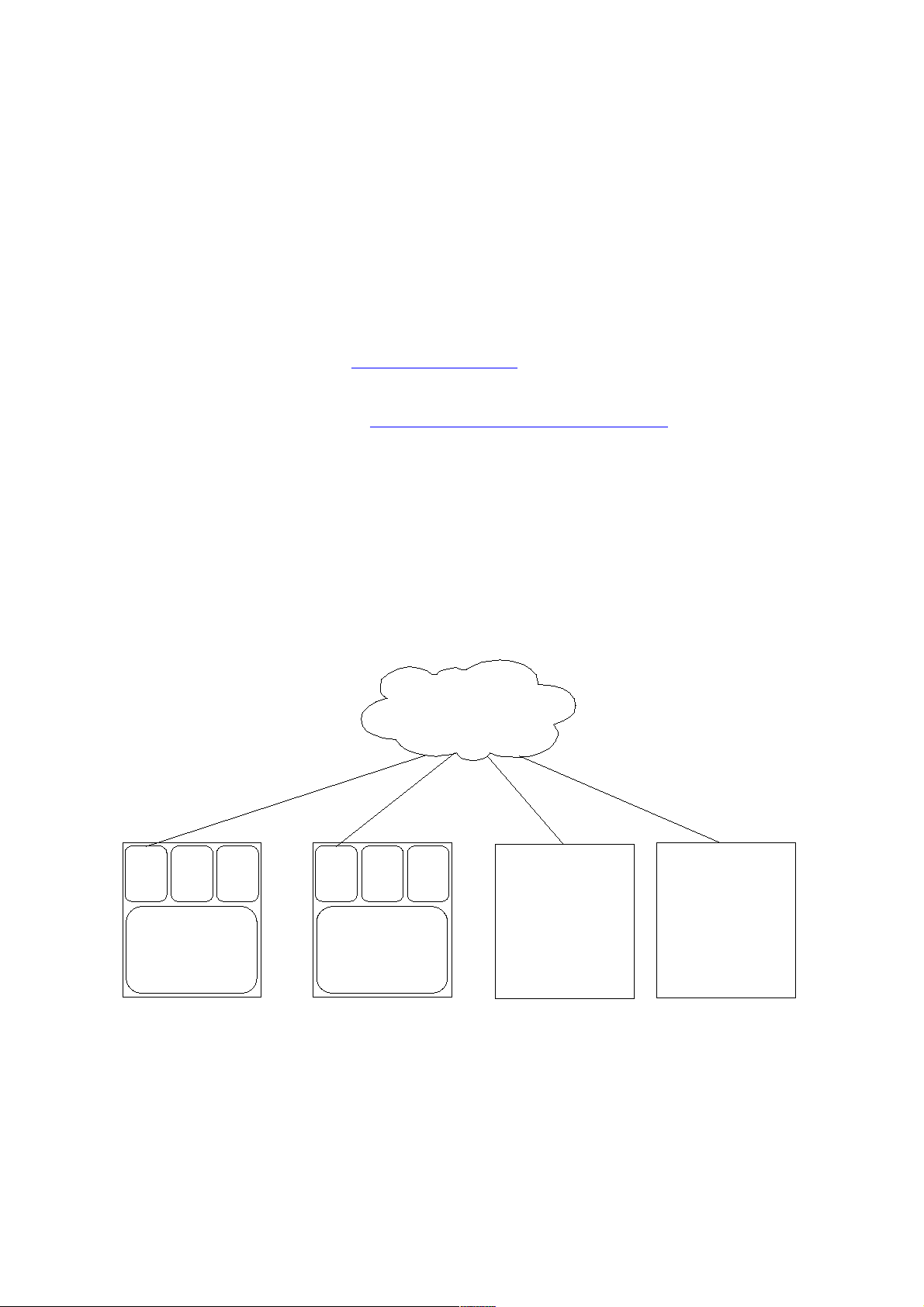

[ug] Solaris Zones is the term for a virtualized execution environment: virtualization at the operating system level (in contrast to hardware virtualization).

Solaris Containers are Solaris Zones with Resource Management. The term is frequently used (in this document as well) as a synonym for Solaris Zones.

Resource Management was already introduced with Solaris 9 and allows the definition of CPU, main memory and network resources.

Solaris Zones represent virtualization at the interface between the operating system and the application.

• There is a global zone, which is essentially the same as a Solaris operating system in earlier versions.

• In addition, local zones, also called non-global zones, can be defined as virtual execution environments.

• All local zones use the kernel of the global zone and are thus part of a single physical operating system installation, unlike hardware virtualization, where several operating systems are started on virtualized hardware instances.

• All shared objects (programs, libraries, the kernel) are loaded only once; therefore, unlike with hardware virtualization, additional main memory consumption is very low.

• The file system of a local zone is separated from the global zone. It uses a subdirectory of the global zone's file system as its root directory (as in chroot environments).

• A zone can have one or several network addresses and network interfaces of its own.

• Physical devices are not visible in local zones by default but can optionally be configured.

• Local zones have their own OS settings, e.g. for the name service.

• Local zones are separated from each other and from the global zone with respect to processes; that is, a local zone cannot see the processes of a different zone.

• The separation also extends to shared memory segments and to logical or physical network interfaces.

• Access to another local zone on the same computer is therefore possible only through the network.

• The global zone, however, can see all processes in the local zones for the purpose of control and monitoring (accounting).

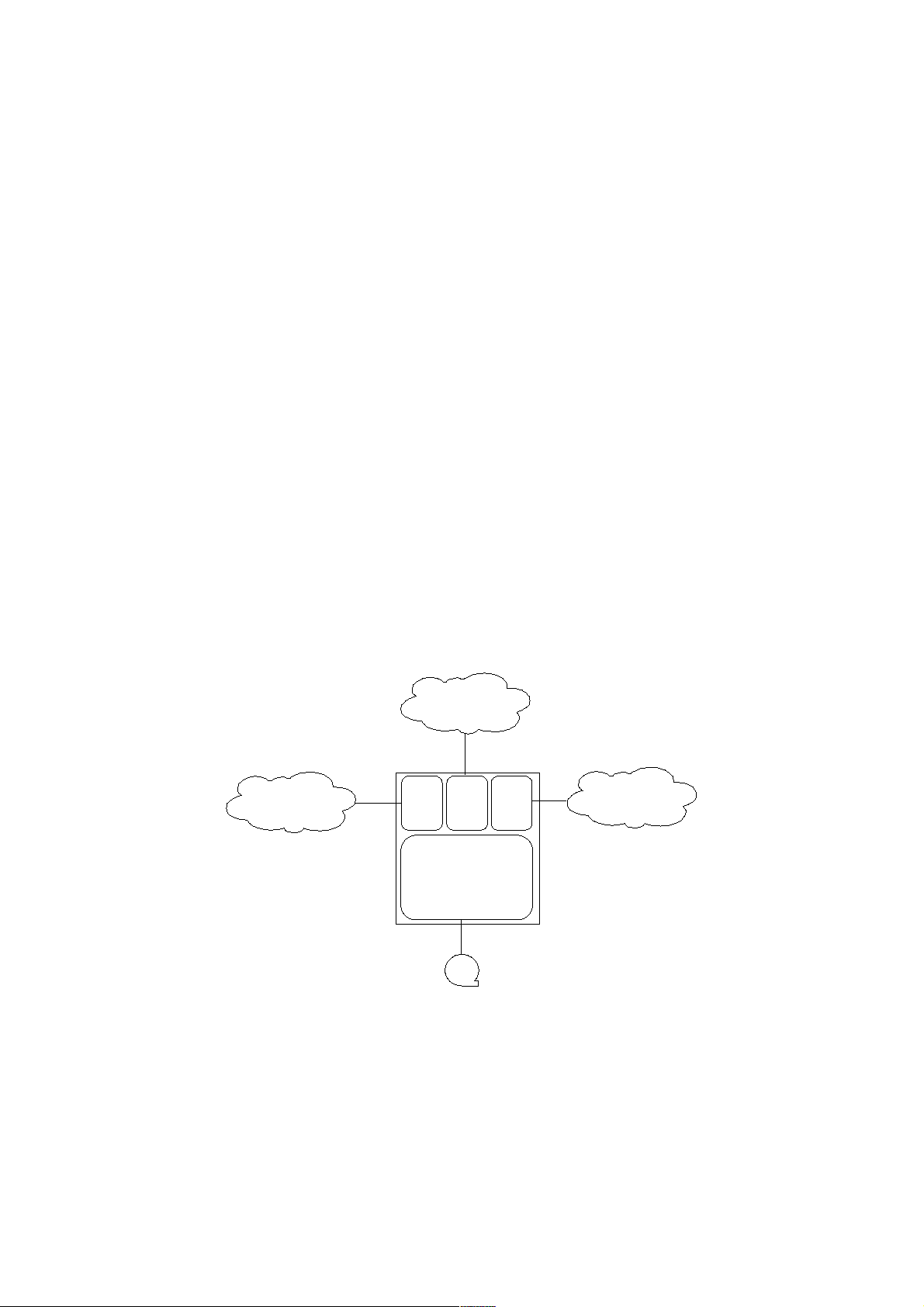

Local

zone

Global zone

Figure 1: [dd] Schematic representation of zones

Local

zone

App

Local

zone

OS

Server


Thus, a local zone is a Solaris environment that is separated from other zones and can be used independently. At the same time, many hardware and operating system resources are shared with other local zones, which causes little additional runtime overhead.

Local zones run the same Solaris version as the global zone. Alternatively, virtual execution environments for older Solaris versions (SPARC: Solaris 8 and 9) or other operating systems (x86: Linux) can be installed in so-called Branded Zones. In this case, the original environment is executed on the Solaris 10 kernel; differences in the system calls are emulated.

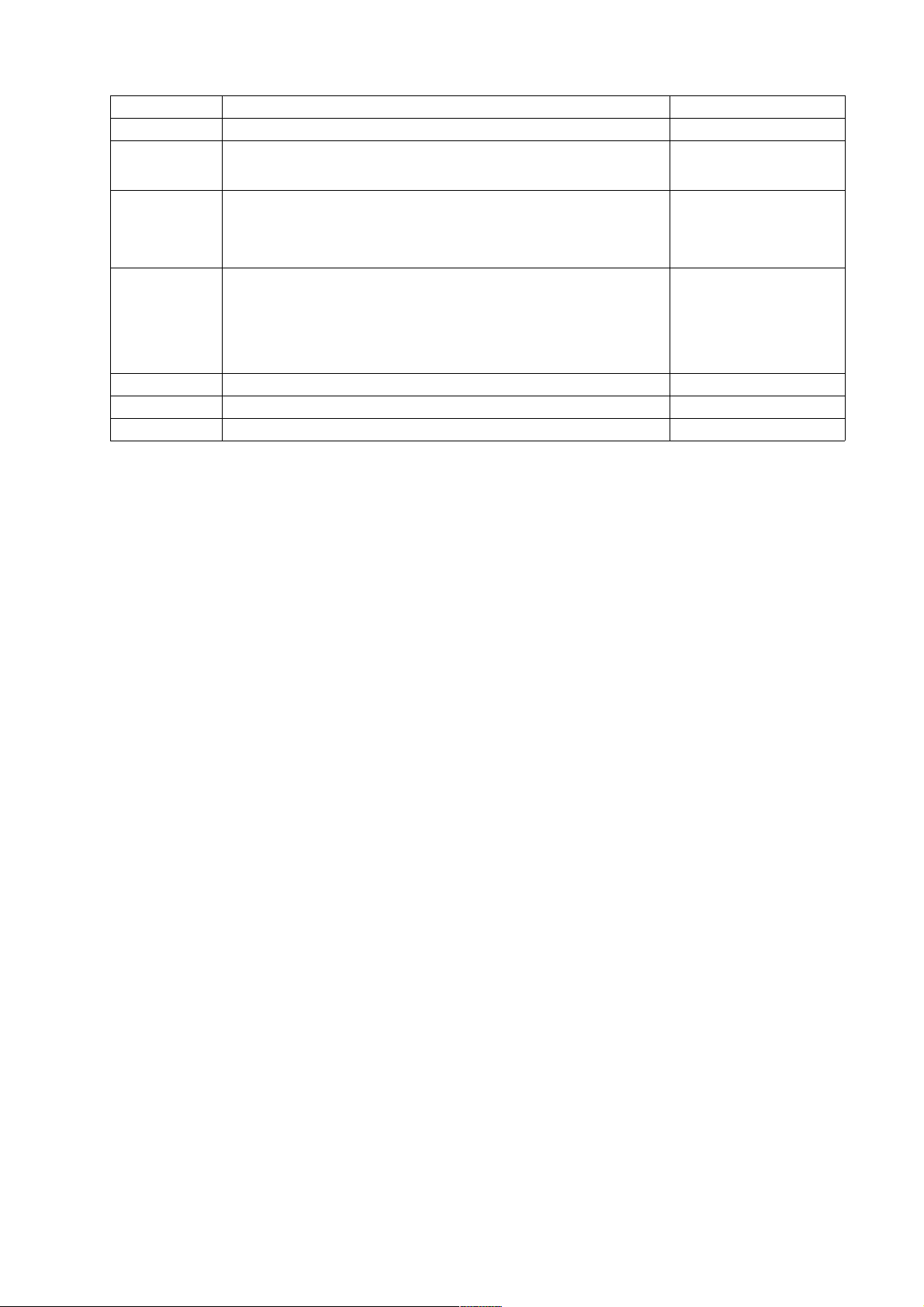

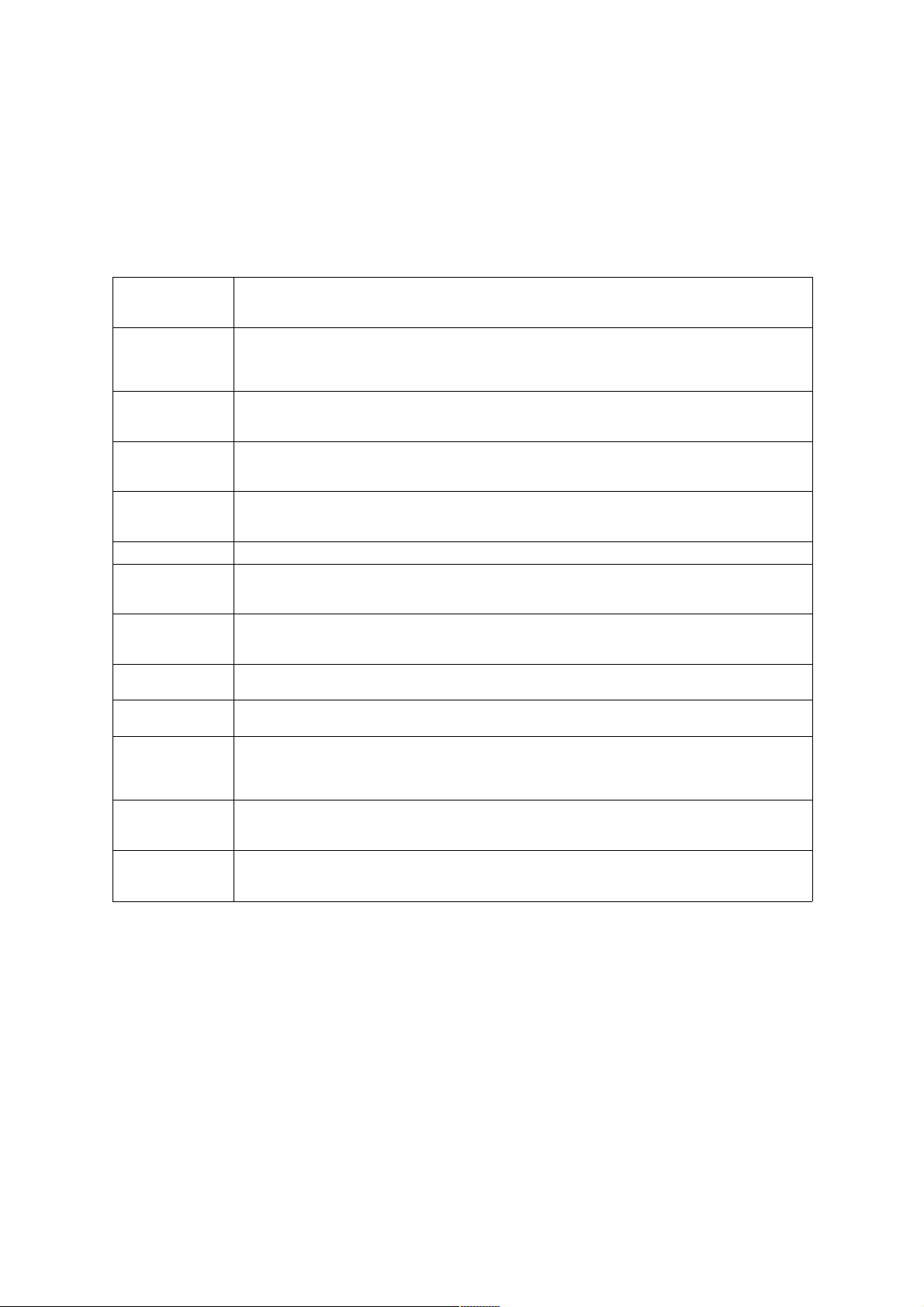

Additional details are summarized in the following table:

Shared kernel: The kernel is shared by the global zone and the local zones. The resources needed by the OS are needed only once. Costs for a local zone are therefore low, as measured by main memory, CPU consumption and disk space.

Shared objects: In Unix, all objects such as programs, files and shared libraries are loaded only once as a shared memory segment, which improves overall performance. In Solaris 10, this also applies across zones: no matter how often e.g. a program or a shared library is used in zones, it occupies space in main memory only once (unlike in virtual machines).

File system: The visible portion of the file system of the local zone can be limited to one or several subtrees of the global zone. The files in the local zone can be configured on the basis of directories shared with the global zone or as copies.

Patches: For packages (Solaris packages) installed as copies in the local zone, patches can also be installed separately. The patch level for non-application patches should be the same, because all zones share the same kernel.

Network: Zones have their own IP addresses on one or more virtual or physical interfaces. Network communication between zones takes place, if possible, via the shared network layers or, when exclusive IP instances are used, via external network connections.

Process: Each local zone can see only its own processes. The global zone sees all processes of the local zones.

Separation: Access to the resources of the global zone or other local zones (devices, memory) is prevented unless explicitly configured. Any software errors that may occur are confined to their respective local zone by means of error isolation.

Assigned devices: No physical devices are contained in the standard configuration of a local zone. It is, however, possible to assign devices (e.g. disks, volumes, DVD drives, etc.) to one or more local zones. Special drivers can be used this way as well.

Shared disk space: In addition, further parts of the file tree (file systems or directories) can be assigned from the global zone to one or more local zones.

Physical devices: Physical devices are administered from the global zone. Local zones have no access to the assignment of these devices.

Root delegation: A local zone has an individual root account (zone administrator). Therefore, the administration of applications and services in a local zone, including root privileges, can be delegated completely to other persons. Operational safety in the global zone or in other local zones is not affected by this. The global zone root has general access to all local zones.

Naming environment: Local zones have an independent naming environment with host names, network services, users, roles and process environments. The name service of one zone can be configured from local files, while another zone on the same computer uses e.g. LDAP or NIS.

System settings: Settings in /etc/system apply to the kernel used by all zones. However, the most important settings of earlier Solaris versions (shared memory, semaphores and message queues) can be modified from Solaris 10 onwards by the Solaris resource manager for each zone independently.

Table 1: [ug] Characteristics of Solaris 10 Zones


2.1.2. Zones and software installation

[dd] The respective requirements on local zones determine the manner in which software is installed in zones.

There are two ways of supplying software in zones:

1. Software is usually supplied in pkg format. If such software is installed in the global zone with pkgadd, it automatically becomes available to all local zones as well. This considerably simplifies the installation and maintenance of software since, even if many zones are installed, software maintenance can be performed centrally from the global zone.

2. Software can be installed exclusively for a local zone or for the global zone, e.g. to be able to make software changes in one zone independently of other zones. This can be achieved by installing with special pkgadd options (such as pkgadd -G, which adds a package to the current zone only) or by special types of software installation.

In any case, the Solaris kernel and the drivers are shared by all zones, but they can be installed and modified directly in the global zone only.

2.1.3. Zones and security

[dd] Because each zone has its own root directory, security settings can be defined separately in each zone through its local name service environment (RBAC, Role Based Access Control; passwd database). Furthermore, each zone has a separate passwd database with its own user accounts. This makes it possible to build separate user environments for each zone as well as to introduce separate administrator accounts for each zone.

Solaris 10 5/08, like earlier Solaris versions, is certified according to Common Criteria EAL4+. This certification was performed by the Canadian CCS. The Canadian CCS is a member of the group of certification authorities of Western states, of which the German Federal Office for Information Security (BSI, Bundesamt für Sicherheit in der Informationstechnik) is also a member. This certification is therefore also recognized by the BSI. Constituent components of the certification are protection against break-ins, separation and, new in Solaris 10, zone differentiation. Details are available at:

http://www.sun.com/software/security/securitycert/

Solaris Trusted Extensions allow customers who are subject to specific laws or data protection requirements to use labeling features that have so far only been available in highly specialized operating systems and appliances. Labeled security is implemented using so-called compartments. In Solaris Trusted Extensions, these compartments are implemented as Solaris zones.

2.1.4. Zones and privileges

[dd] Local zones have fewer process privileges than the global zone, so some commands cannot be executed within a local zone. In the standard zone configuration, these operations are permitted only in the global zone. The restrictions include, among other things:

• Configuration of swap space and processor sets

• Modifications to the process scheduler and to shared memory

• Setting up device files

• Loading and unloading kernel modules

• For shared-IP instances:

− Access to the physical network interface

− Setting up IP addresses

Since Solaris 10 11/06, additional process privileges can be assigned to local zones during zone configuration (zonecfg: set limitpriv=). These extend what is possible in a local zone, but not all privileges can be granted. The possible combinations and the privileges usable in zones are shown here:

http://docs.sun.com/app/docs/doc/817-1592/6mhahuotq?a=view


2.1.5. Zones and resource management

[ug] In Solaris 9, resource management was introduced on the basis of projects, tasks and resource pools. In Solaris 10, resource management can be applied to zones as well. The following resources can be managed:

• CPU resources (processor sets, CPU capping and fair share scheduler)

• Memory use (real memory, virtual memory, shared segments)

• Monitoring network traffic (IPQoS = IP Quality of Service)

• Zone-specific settings for shared memory, semaphores and swap (System V IPC resource controls)

2.1.5.1. CPU resources

[ug] Three levels of resource management can be used for zones:

• Partitioning of CPUs into processor sets that can be assigned to resource pools. Resource pools are then assigned to local zones, thus defining the usable CPU quantity.

• Using the fair share scheduler (FSS) in a resource pool that is used by one or more local zones. This allows fine-grained allocation of CPU resources to zones in a defined ratio as soon as zones compete for CPU time, which is the case when system capacity is used at 100%. If configured accordingly, the FSS thus ensures the response time for zones.

• Using the FSS in a local zone. This allows fine-grained allocation of CPU resources to projects (groups of processes) in a defined ratio if projects compete for CPU. This happens when the CPU time available to this zone is used at 100%. The FSS thus ensures the response time of processes.

Processor sets in a resource pool

Just like a project, a local zone can have a resource pool assigned to it in which all zone processes run (zonecfg: set pool=). CPUs can be assigned to a resource pool. Zone processes then run only on the CPUs assigned to the resource pool. Several zones (or even projects) can also be assigned to one resource pool and then share its CPU resources.

The most frequent case is to create a separate resource pool with CPUs for each zone. To simplify matters, the number of CPUs for a zone can be configured directly in the zone configuration (zonecfg: add dedicated-cpu). When the zone boots, a temporary resource pool containing the configured number of CPUs is generated automatically. When the zone is shut down, the resource pool and its CPUs are released again (since Solaris 10 8/07).
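The allocate-on-boot and release-on-halt behaviour of dedicated-cpu can be pictured with a small toy model. This is a sketch under stated assumptions, not the real pools framework: the class `CpuPools` and its methods are hypothetical, and the rule that the default pool must keep at least one CPU is an assumption of the model.

```python
class CpuPools:
    """Toy model of zonecfg 'dedicated-cpu' (hypothetical class).

    Mimics only the behaviour described in the text: a temporary pool is
    created when a zone boots and dissolved again when it is halted.
    """

    def __init__(self, cpu_ids):
        # Initially all CPUs belong to the default pool, which is shared
        # by every zone without a pool of its own (global zone included).
        self.default = set(cpu_ids)
        self.temporary = {}  # zone name -> set of CPU ids

    def boot(self, zone, ncpus):
        # Assumption of this model: the default pool keeps at least one CPU.
        if ncpus > len(self.default) - 1:
            raise ValueError(f"not enough free CPUs to boot {zone}")
        taken = set(sorted(self.default)[:ncpus])
        self.default -= taken
        self.temporary[zone] = taken
        return taken

    def halt(self, zone):
        # Shutting the zone down returns its CPUs to the default pool.
        self.default |= self.temporary.pop(zone)


pools = CpuPools(range(8))
print(sorted(pools.boot("web", 2)))  # [0, 1]
print(sorted(pools.default))         # [2, 3, 4, 5, 6, 7]
pools.halt("web")
print(len(pools.default))            # 8
```

In this model the CPUs are simply moved between sets; the real system also takes care of binding the zone's processes to the new processor set.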

Fair share scheduler in a resource pool

If several zones run together in a resource pool, the fair share scheduler (FSS) allows the allocation of CPU resources within the resource pool to be managed. To this end, each zone or project can be assigned a share. The settings for zones and projects in a resource pool are used to manage the CPU resources when the local zones or projects compete for CPU time:

• If the workload of the processor set is below 100%, no management takes place, since free CPU capacity is still available.

• If the workload is at 100%, the fair share scheduler is activated and modifies the priority of the participating processes such that the CPU capacity assigned to a zone or project corresponds to its defined share.

• The defined share is calculated as the share value of an active zone/project divided by the sum of the shares of all active zones/projects.

The allocation can be changed dynamically while running.
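The share formula from the list above can be expressed in a few lines. This is a minimal sketch assuming a fully loaded processor set; the function name `fss_entitlements` and the example zone names are hypothetical, and the share values stand for what would be set with zonecfg's cpu-shares property.

```python
from fractions import Fraction


def fss_entitlements(shares, active):
    """Fraction of the pool's CPU capacity for each active zone or project.

    shares: dict name -> configured share value
    active: names currently competing for CPU; idle ones are left out,
            so their shares are effectively redistributed to the others.
    Only meaningful when the processor set is 100% busy; below that the
    FSS does not intervene.
    """
    total = sum(shares[name] for name in active)
    return {name: Fraction(shares[name], total) for name in active}


shares = {"web": 3, "db": 1, "batch": 1}
print({n: float(f) for n, f in fss_entitlements(shares, ["web", "db", "batch"]).items()})
# {'web': 0.6, 'db': 0.2, 'batch': 0.2}
print({n: float(f) for n, f in fss_entitlements(shares, ["web", "db"]).items()})
# {'web': 0.75, 'db': 0.25}
```

The second call shows why the text stresses *active* zones: when "batch" is idle, its share does not reserve any CPU, and the remaining zones divide the capacity among themselves.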

CPU resource management within a zone

In a local zone, it is furthermore possible to define projects and resource pools and to allocate CPU resources via FSS to the projects running in the zone (see previous paragraph).
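Combining FSS at zone level with FSS inside a zone yields a two-level split: zones divide the pool, and projects divide their zone's portion. The following sketch is idealized; it assumes that all zones and projects are busy, and the functions `split` and `project_entitlements`, as well as the zone and project names, are hypothetical.

```python
from fractions import Fraction


def split(shares, names):
    """Divide capacity among names in proportion to their share values."""
    total = sum(shares[n] for n in names)
    return {n: Fraction(shares[n], total) for n in names}


def project_entitlements(zone_shares, project_shares):
    """Two-level FSS: zones split the pool, projects split their zone.

    zone_shares: dict zone -> share value (all zones assumed busy)
    project_shares: dict zone -> {project: share value}
    Returns dict (zone, project) -> fraction of the whole pool.
    """
    per_zone = split(zone_shares, zone_shares)
    result = {}
    for zone, projects in project_shares.items():
        for project, frac in split(projects, projects).items():
            result[(zone, project)] = per_zone[zone] * frac
    return result


ent = project_entitlements(
    {"zoneA": 2, "zoneB": 1},
    {"zoneA": {"app": 3, "admin": 1}, "zoneB": {"default": 1}},
)
for key, frac in ent.items():
    print(key, frac)
# ('zoneA', 'app') 1/2
# ('zoneA', 'admin') 1/6
# ('zoneB', 'default') 1/3
```

Project shares inside zoneA never affect zoneB: they only divide the two thirds that zoneA receives at pool level.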

CPU capping

The maximum CPU usage of zones can be limited (CPU caps, configured with zonecfg: add capped-cpu). This setting is an absolute limit on the CPU capacity used and can be set in steps of 1/100 CPU (starting with Solaris 10 5/08). With this configuration option, the allocation can be adjusted much more finely than with processor sets (1/100 CPU instead of 1 CPU).

Furthermore, CPU capping offers an additional control option if several zones run within one resource pool (with or without FSS): it can be used to limit all users to the capacity that will be available to them later on.
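The difference in granularity can be made concrete with a short calculation. The capped-cpu resource takes an ncpus value, which corresponds to the zone.cpu-cap resource control expressed in percent of one CPU (1.25 CPUs = 125). The two helper functions below are hypothetical and only illustrate this arithmetic.

```python
import math
from decimal import Decimal


def ncpus_to_cpu_cap(ncpus: str) -> int:
    """Translate a capped-cpu ncpus value (e.g. "1.25") into a cap in
    percent of one CPU (e.g. 125); reject anything finer than 1/100 CPU."""
    cap = Decimal(ncpus) * 100
    if cap <= 0 or cap != cap.to_integral_value():
        raise ValueError(f"ncpus must be a positive multiple of 0.01: {ncpus}")
    return int(cap)


def processor_set_cpus(needed: str) -> int:
    """A processor set only holds whole CPUs, so the need is rounded up."""
    return math.ceil(Decimal(needed))


print(ncpus_to_cpu_cap("1.25"))    # 125
print(processor_set_cpus("1.25"))  # 2
```

Decimal is used instead of float so that a value such as "1.15" is checked exactly rather than through a binary approximation.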


2.1.5.2. Memory resource management

[ug] In Solaris 10 (and in an update of Solaris 9 as well), main memory consumption can be limited at the level of zones, projects and processes. This is implemented by the so-called resource capping daemon (rcapd).

A limit on physical memory consumption is defined for each of these objects. If the consumption of one of the objects exceeds the defined limit, rcapd causes little-used main memory pages of its processes to be paged out. The sum of the memory consumption of the processes is used as the measurement parameter.

In this manner, the defined main memory limit is enforced. The performance of the processes in the corresponding object drops, because they may have to page memory back in when those memory areas are used again. Continuous paging of main memory pages indicates that the available main memory is too low, that the settings are too tight, or that the application currently needs more than the negotiated amount of memory.
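The enforcement principle can be sketched as follows: the cap applies to the sum over all processes of the object, and pages that have not been used recently are paged out first until the sum is back under the cap. This is a simplification. The real rcapd selects pages differently, and the explicit `last_used` timestamps and the function `rcapd_pass` are assumptions of this toy model.

```python
def rcapd_pass(processes, cap_kb):
    """One enforcement pass over a capped object (zone or project).

    processes: dict pid -> list of (size_kb, last_used) resident pages.
    The cap applies to the sum over all processes of the object.
    Returns the amount of memory (KB) paged out.
    """
    resident = sum(kb for pages in processes.values() for kb, _ in pages)
    if resident <= cap_kb:
        return 0  # under the cap: nothing to do
    # Least recently used pages first, across all processes of the object.
    candidates = sorted(
        (last_used, pid, kb)
        for pid, pages in processes.items()
        for kb, last_used in pages
    )
    paged_out = 0
    for last_used, pid, kb in candidates:
        if resident - paged_out <= cap_kb:
            break
        processes[pid].remove((kb, last_used))  # page now lives on swap
        paged_out += kb
    return paged_out


procs = {1: [(4, 10), (4, 50)], 2: [(4, 20), (4, 30)]}
print(rcapd_pass(procs, cap_kb=8))  # 8
print(procs)                        # {1: [(4, 50)], 2: [(4, 30)]}
```

If the processes touch the paged-out memory again, it has to be paged back in; repeated passes that keep finding the object over its cap correspond to the continuous paging described above.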

For simplification, starting with Solaris 10 8/07, memory limits can be set for each zone (zonecfg: add capped-memory). The amount of physical memory used (physical), the virtual memory (swap) and locked segments (locked; main memory pages that cannot be paged out, e.g. shared memory segments) can be limited. The settings for virtual and locked memory are hard limits; that is, once the corresponding value has been reached, an application's request for more memory is denied. The limit for physical memory, however, is monitored by rcapd, which successively pages out main memory pages if the limit is exceeded.
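The different behaviour of hard and soft limits can be shown with a small model of the three capped-memory properties. The class `ZoneMemoryLimits` is hypothetical and tracks usage as plain counters; it only illustrates that swap and locked requests are refused, whereas exceeding the physical limit is allowed and left to rcapd.

```python
class ZoneMemoryLimits:
    """Illustrative model of capped-memory semantics (hypothetical class)."""

    HARD = ("swap", "locked")

    def __init__(self, physical_kb, swap_kb, locked_kb):
        self.limits = {"physical": physical_kb, "swap": swap_kb, "locked": locked_kb}
        self.used = {"physical": 0, "swap": 0, "locked": 0}

    def allocate(self, kind, kb):
        """Return True if the request is granted.

        swap and locked are hard limits: a request that would exceed
        them is denied outright.
        """
        if kind in self.HARD and self.used[kind] + kb > self.limits[kind]:
            return False
        self.used[kind] += kb
        return True

    def over_physical_cap(self):
        """physical is a soft limit: exceeding it is allowed, and rcapd is
        then expected to page memory out until usage drops again."""
        return self.used["physical"] > self.limits["physical"]


zone = ZoneMemoryLimits(physical_kb=100, swap_kb=200, locked_kb=50)
print(zone.allocate("swap", 150), zone.allocate("swap", 60))  # True False
print(zone.allocate("physical", 120), zone.over_physical_cap())  # True True
```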

2.1.5.3. Network resource management (IPQoS = IP Quality of Service)

[ug] In Solaris 10 (and Solaris 9), it is possible to classify network traffic and to manage the data rate of the classes. One example is giving a web server's network traffic priority over network backup, so that service to customers does not suffer while a network backup is running.

Configuration is done using rules in a file that is activated with the command ipqosconf. The rules consist of a part that classifies network traffic and of actions that manage the data rate/burst rate. Classification can take place, among other things, according to some or all of the following parameters:

• Address of sender or recipient

• Port number

• Data traffic type (UDP, TCP)

• User ID of the local process

• Project of the local process (/etc/project)

• IP traffic TOS field (change priority in an ongoing connection)
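The two parts of an IPQoS rule, classification and rate management, can be sketched in Python. This does not reproduce the ipqosconf file syntax: the classes `Packet`, `Rule` and `TokenBucket` and the first-match-wins policy are assumptions of this sketch, and TOS matching is omitted. The token bucket illustrates the idea behind IPQoS metering modules such as tokenmt, not their exact behaviour.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Packet:
    src: str
    dst: str
    dport: int
    proto: str    # "tcp" or "udp"
    uid: int      # user ID of the local sending process
    project: str  # project of the local sending process


@dataclass
class Rule:
    """Hypothetical filter; a field left as None matches any value."""
    cls: str
    src: Optional[str] = None
    dst: Optional[str] = None
    dport: Optional[int] = None
    proto: Optional[str] = None
    uid: Optional[int] = None
    project: Optional[str] = None

    def matches(self, pkt: Packet) -> bool:
        for name in ("src", "dst", "dport", "proto", "uid", "project"):
            wanted = getattr(self, name)
            if wanted is not None and getattr(pkt, name) != wanted:
                return False
        return True


def classify(pkt: Packet, rules, default: str = "default") -> str:
    # Assumption of this sketch: the first matching rule decides the class.
    for rule in rules:
        if rule.matches(pkt):
            return rule.cls
    return default


class TokenBucket:
    """Single-rate token bucket: rate in bytes/s, burst in bytes."""

    def __init__(self, rate: float, burst: float):
        self.rate, self.burst = rate, burst
        self.tokens, self.last = burst, 0.0

    def conforms(self, size: int, now: float) -> bool:
        # Refill for the elapsed time, but never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False  # a real action would drop or re-mark the packet


rules = [Rule("web", dport=80, proto="tcp"), Rule("backup", project="backup")]
pkt = Packet("10.0.0.5", "10.0.0.9", 9000, "tcp", 100, "backup")
print(classify(pkt, rules))  # backup
```

A separate bucket per class would then throttle, for example, the "backup" class while leaving "web" traffic unrestricted.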

2.1.6. User interfaces for zones

[dd] A variety of tools are available for working with zones and containers. Solaris itself provides a series of command line interface (CLI) tools such as zoneadm, zonecfg and zlogin that allow you to configure and install zones on the command line or in scripts.

The Solaris Container Manager is available as a graphical user interface (GUI) (http://www.sun.com/software/products/container_mgr/). This is a separate product operated together with the Sun Management Center (SunMC). The Container Manager allows simple and rapid creation, reconfiguration or migration of zones through its user interface, as well as effective use of resource management.

With its zone module, Webmin supplies a browser user interface (BUI) for the installation and management of zones. The module can be downloaded from http://www.webmin.com/standard.html as zones.wbm.gz.


2.1.7. Zones and high availability

[tf/du/hs] Even with all RAS capabilities in place, a zone has only the availability of the computer it runs on, and this availability decreases with the number of components of the machine (MTBF).
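Why more components mean lower availability can be illustrated with the standard textbook approximation: a component's availability is MTBF / (MTBF + MTTR), and a system without redundancy is only available when all of its components are. The sketch below assumes independent failures and uses made-up example figures; the function names are hypothetical.

```python
from math import prod


def availability(mtbf_hours, mttr_hours):
    """Steady-state availability of one component."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def series_availability(parts):
    """Availability of a system that is down whenever any part is down
    (no redundancy), assuming independent failures."""
    return prod(parts)


single = availability(999, 1)                        # 0.999
print(round(series_availability([single] * 10), 4))  # 0.99
```

Ten components of 99.9% each already reduce the system to roughly 99%, which is the motivation for the cluster-based approaches described next.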

If this availability is not sufficient, so-called failover zones can be implemented using the HA Solaris Container Agent, which allows zones to be moved between cluster nodes (since Sun Cluster 3.1 08/05). This increases the availability of the total system considerably. In addition, the container becomes a flexible container: it is completely irrelevant on which of the computers participating in the cluster the container is running. The container can be relocated manually by administrative action or automatically in the event of a computer malfunction.

Alternatively, the HA Solaris Container Agent can also be used to start and stop local zones including their services. In this case, identical zones with identical services exist on several computers that have no(!) shared storage. Failover of a zone is not possible in such a configuration, because the zone rootpath cannot be moved between the systems. Instead, one of the zones can be stopped and an identical zone can then be started on another system.

Container clusters (since Sun Cluster 3.2 1/09) offer a third option. A Sun Cluster in which virtual clusters can be configured is installed in the global zone. The virtual clusters consist of virtual computer nodes that are local zones. Administration can be transferred to the zone operators (see 2.1.9 Solaris container cluster (aka "zone cluster")).

2.1.8. Branded zones (Linux and Solaris 8/Solaris 9 compatibility)

[dd/ug] Branded zones allow you to run an OS environment that is different from the one installed in the global zone (BrandZ, since Solaris 10 8/07).

Branded zones extend zone configuration as follows:

• A brand is a zone attribute.

• Each brand contains mechanisms for the installation of a branded zone.

• Each brand can use its own pre-/post-boot procedures.

At runtime, system calls are intercepted and either forwarded to Solaris or emulated. Emulation is done by modules in libraries contained in the brand. This design has the advantage that additional brands can easily be introduced without changing the Solaris kernel.
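The forward-or-emulate principle can be sketched as a dispatch table per brand. This is a conceptual model only: `native_solaris`, `make_brand` and the emulated uname() result are hypothetical and do not show how the lx brand is actually implemented.

```python
def native_solaris(name, *args):
    """Stand-in for passing a call straight to the Solaris kernel."""
    return ("solaris", name, args)


def make_brand(emulations):
    """Build a system-call dispatcher for one brand.

    emulations: dict syscall name -> function from the brand's library.
    Calls without an entry are forwarded to Solaris unchanged, so adding a
    brand means supplying a new table, not changing the kernel.
    """
    def dispatch(name, *args):
        handler = emulations.get(name)
        if handler is not None:
            return handler(*args)
        return native_solaris(name, *args)

    return dispatch


# Illustrative only: pretend the lx brand emulates uname().
lx = make_brand({"uname": lambda: ("Linux", "2.4")})
print(lx("uname"))         # ('Linux', '2.4')
print(lx("read", 3, 512))  # ('solaris', 'read', (3, 512))
```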

The following brands are currently available:

• native: Brand for zones with the Solaris 10 version of the global zone

• lx: Solaris Container for Linux Applications (abbreviated: SCLA)

With this brand, unmodified 32-bit Linux programs can be run under Solaris on x86 systems. To do so, a 32-bit Linux distribution that can use a Linux 2.4 kernel must be installed in the zone. The required Linux programs can then be used and/or installed in the zone.

The Linux distribution and its license are not contained in Solaris and must be installed in each zone (as a new installation or as a copy of an existing installation). At least the following Linux distributions work:

− CentOS 3.5 – 3.8, RedHat Linux 3.5 – 3.8 (certified)

− Debian (unsupported; for instructions, see the blog by Nils Nieuwejaar: http://blogs.sun.com/nilsn/entry/installing_a_debian_zone_with)

• solaris8/solaris9: Solaris 8 or Solaris 9 container

This allows you to operate a Solaris 8 or Solaris 9 environment in a zone (SPARC only). Such containers can be made highly available with the HA Solaris Container Agent. However, these zones cannot be used as virtual nodes in a virtual cluster.

• solaris10: Solaris 10 branded zones for OpenSolaris are in the planning stage (see http://opensolaris.org/os/project/s10brand/)


2.1.9. Solaris container cluster (aka "zone cluster")

[hs] In autumn 2008, zone clusters were announced within the scope of the Open HA Cluster Project. Since Sun Cluster 3.2 1/09, they have also been available in a commercial product as Solaris Container Cluster. A Solaris Container Cluster extends Solaris zone technology into a virtual cluster, also called a "zone cluster". The installation and configuration of a container cluster is described in the Sun Cluster Installation Guide [http://docs.sun.com/app/docs/doc/820-4677/ggzen?a=view].

The Open HA Cluster provides a complete, virtual cluster environment whose elements are zones acting as virtualized Solaris instances. The administrator of such an environment notices almost no difference from the global cluster that is being virtualized.

Two principal reasons have driven the development of virtual cluster technology:

• the desire to round off container technology

• the customer requirement to be able to run Oracle RAC (Real Application Cluster) within a container environment.

Solaris containers operated by Sun Cluster offer an excellent way to run applications safely and with high availability. Two options, Flying Containers and Flying Services, are available for implementation.

A clean separation in system administration allows container administration to be delegated to the application operators who install, configure and operate their application within a container. However, it was unsatisfactory for an application operator to have administrator privileges in his container while being limited in handling the cluster resources belonging to his zone.

In the cluster implementation prior to Sun Cluster 3.2 1/09, the cluster consisted mainly of components installed, configured and running in the global zone. Only a very small number of cluster components were actually active within a container, and even these allowed only very limited administrative intervention.

Now, virtual clusters give the zone administrator almost complete control of his cluster. Restrictions apply to services that continue to exist only once in the cluster, such as quorum devices or heartbeats.

Oracle RAC users could not understand why this product could not simply be installed and operated within a container. It must be noted, however, that Oracle CRS, the so-called Oracle Clusterware (an operating system independent cluster layer), requires rights that the original security concept of Solaris containers did not delegate to a non-global zone. Since Solaris 10 5/08, however, it has been possible to administer network interfaces even within a zone, so that Oracle CRS can be operated there.

The goal of providing a cluster environment that requires no adjustment of applications whatsoever has been achieved. Even Oracle RAC can be installed and configured just as in a normal Solaris instance.

Certification of Oracle RAC in a Solaris Container Cluster has not yet been finalized (as of June 2009). However, Sun Microsystems offers support for such an architecture.

It is also possible to install Oracle RAC with CRS in a Solaris container without a zone cluster, but such a configuration is not yet certified. Its disadvantage is that only exclusive-IP configurations can be used, which unnecessarily increases the number of required network interfaces (unless VLAN interfaces are used).


2.2. Virtualization technologies compared

[ug] Conventional data center technologies include:

• Applications on separate computers

This also includes multi-tier architectures with firewall, load balancing, web and application servers and databases.

• Applications on a network of computers

This includes distributed applications and job systems.

• Many applications on a large computer

Running applications on separate computers simplifies the installation of the applications but increases administrative costs, since the operating systems must be installed several times and maintained separately. Furthermore, the computers are usually underutilized (< 30%).

Distributed applications are applications running simultaneously on several computers that communicate via a network (TCP/IP, Infiniband, Myrinet, etc.), for example on MPP computers or with MPI software. In job systems, the computation is broken up into self-contained sub-jobs with dependencies, which a job scheduler distributes to several computers; the scheduler also handles data transport (grid system).

Both alternatives require high network capacity and suitable applications, and they make sense only in areas where the applications are already adapted to this type of computing. Modifying an application is a major step and is rarely done for today's standard applications. However, this technology may become more interesting in the future with new applications.

Mainframes and larger Unix systems are typically operated today by running many applications on one computer. The advantages are better system utilization (several applications share the system) and fewer operating system installations to maintain. It is therefore exactly this variant that is of interest for consolidation in the data center.
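The utilization argument can be made concrete with simple arithmetic. The Python sketch below, with a hypothetical function name and hypothetical figures, estimates how many hosts are needed when the loads of several underutilized computers are combined. It assumes identical hardware, loads that simply add up and peaks that do not coincide; real sizing must also account for peak loads and headroom.

```python
import math


def hosts_needed(utilizations: list[float], target: float = 0.7) -> int:
    """Estimate how many hosts of equal capacity can carry the combined load.

    `utilizations` are the average loads of the original computers as
    fractions of one host (0.25 = 25%). No host may exceed `target`.
    Simplifying assumptions: identical hardware, loads add up linearly,
    and the peaks of different applications do not coincide.
    """
    if not 0 < target <= 1:
        raise ValueError("target must be in the range (0, 1]")
    total_load = sum(utilizations)
    return max(1, math.ceil(total_load / target))


# Ten computers, each about 25% utilized (hypothetical figures).
print(hosts_needed([0.25] * 10))  # 4
```

Ten computers at 25% add up to 2.5 machines' worth of load; at a target utilization of 70%, this fits on four hosts instead of ten.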

The challenge is to create an environment in which the applications can run independently of each other (separation) while still sharing resources to save costs. The following aspects are of particular interest:

• Separation: How well are the environments of the applications separated from each other?

• Application: How well does the application fit into the environment?

• Software maintenance: How is software maintenance affected?

• Hardware maintenance: How is hardware maintenance affected?

• Delegation: Can administrative tasks be delegated to the environment?

• Scalability: How can the environment be scaled?

• Overhead: How much overhead does the virtualization technology cause?

• OS versions: Can different OS versions be used in the environments?

A variety of virtualization techniques were developed for this purpose and are presented below.

For comparison, see also: http://en.wikipedia.org/wiki/Virtualization


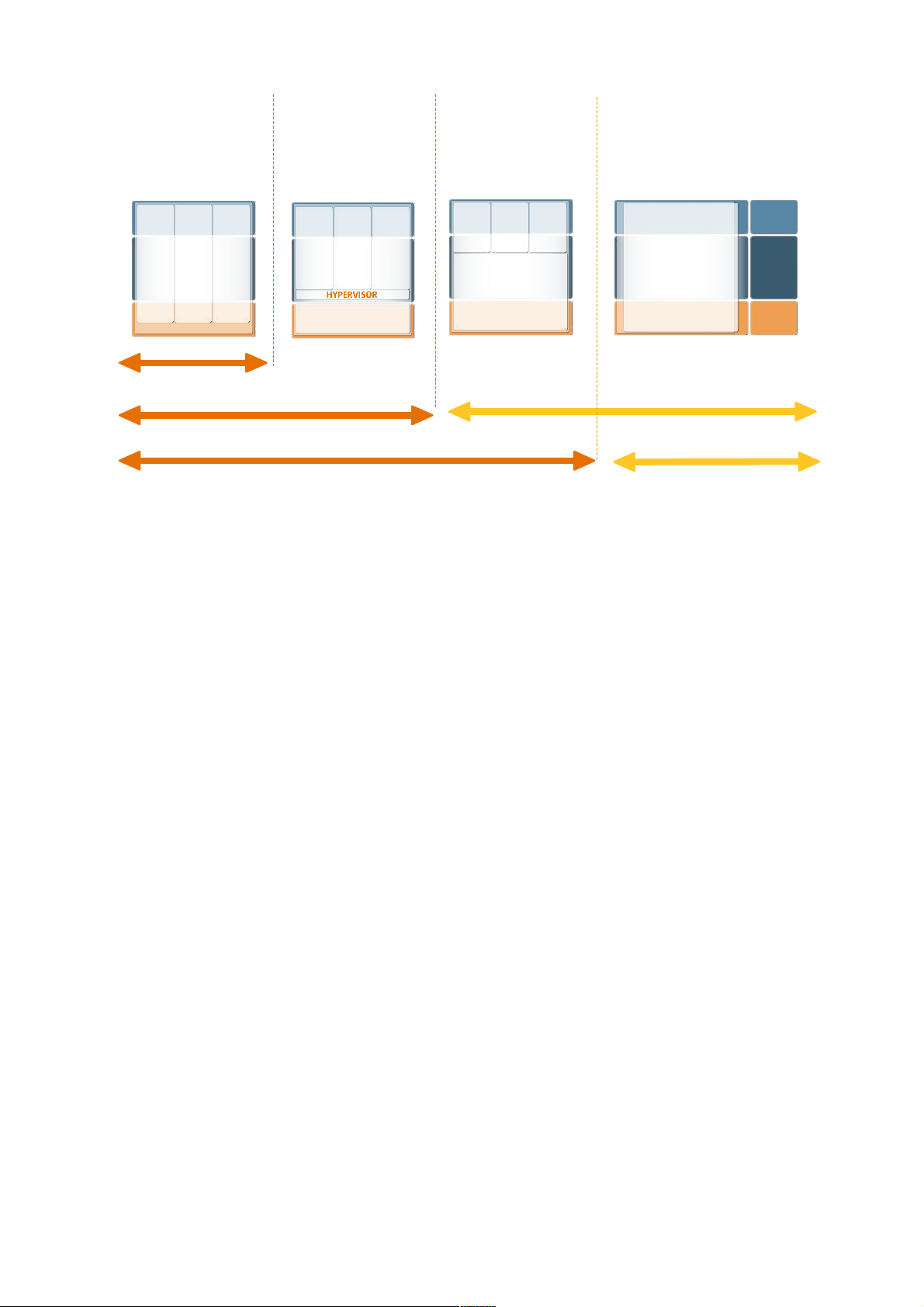

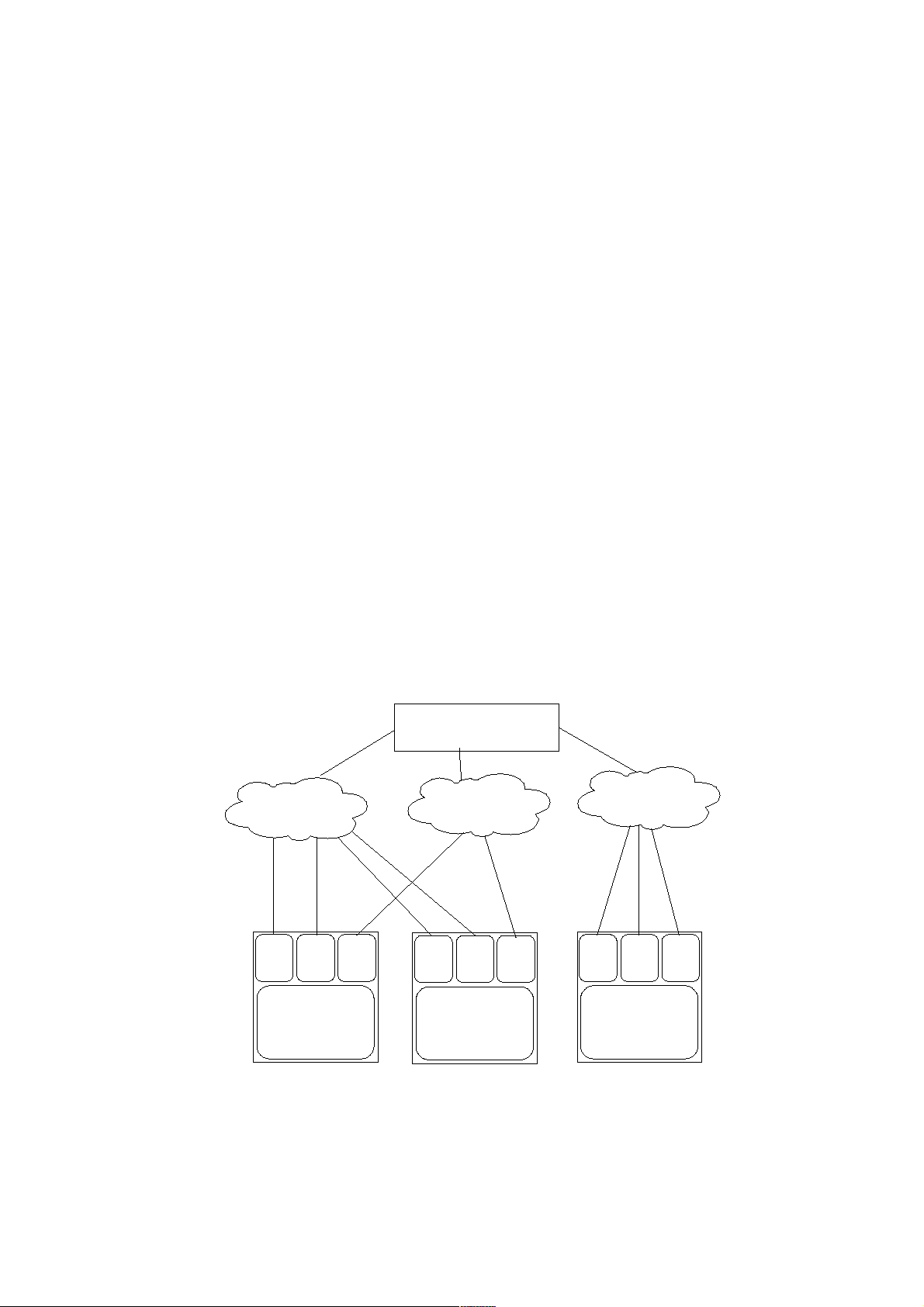

2.2.1. Domains/physical partitions

[ug] A computer can be divided by configuration into sub-computers (domains or partitions). Domains are almost completely physically separated, since the electrical connections between them are switched off. Shared parts are either highly fail-safe (cabinet) or redundant (service processor, power supplies).

Advantages:

• Separation: Applications are well separated from each other; mutual influence via the OS or via failed shared hardware is not possible.

• Application: All applications are executable as long as they are executable in the basic operating system.

• Scalability: In some implementations, the capacity of a virtualization instance (here: a domain) can be changed while running (dynamic reconfiguration) by relocating hardware resources between domains.

• HW maintenance: If one component fails and the domain is configured appropriately, the application can continue to run. In redundant setups, dynamic reconfiguration allows repairs to be performed while running. A cluster is needed only to protect against total failures (power supply, fire in the building, data center failure, software errors).

• OS versions: The partitions are able to run different operating systems/versions.

Disadvantages:

• OS maintenance: Each machine has to be administered separately. OS installation, patches and the implementation of in-house standards must be done separately for each machine.

• Delegation: The department responsible for the application/service either requires root privileges or must coordinate modifications with computer operations. With root privileges, all aspects of the operating system in the physical partition can be administered, which can affect security; coordinating with computer operations, on the other hand, can become costly and time-consuming.

• Overhead: Each machine has a separate operating system overhead.

Sun offers domains on the high-end servers Sun Fire E20K and E25K, the mid-range servers Sun Fire E2900, E4900 and E6900, and the Sun SPARC Enterprise M4000, M5000, M8000 and M9000 servers.

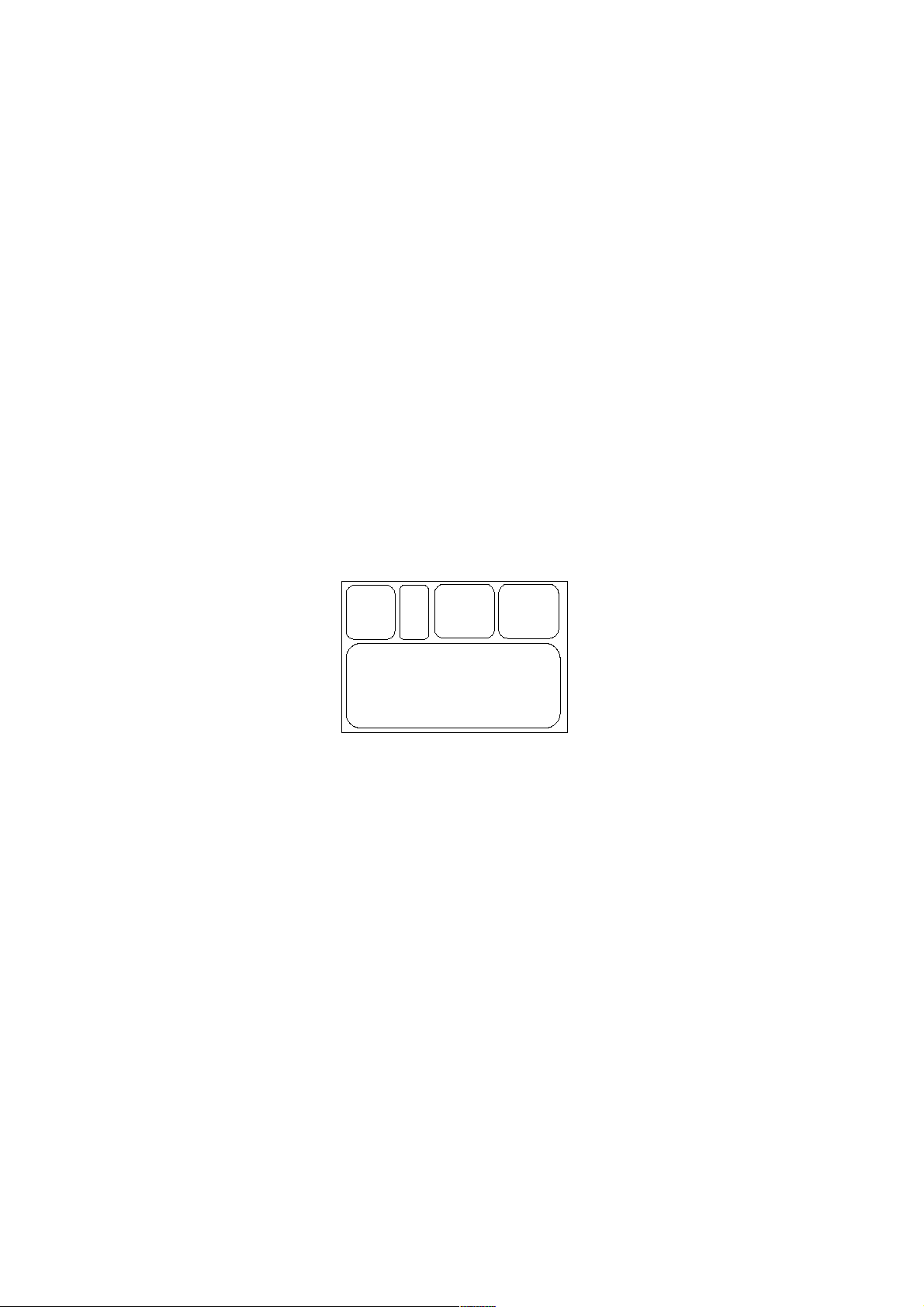

Figure 2: [dd] Domains/Physical domains (diagram labels: App 1, App 2, App 3, App, OS, Server)

This virtualization technology is provided by several manufacturers (Sun Dynamic System Domains, Fujitsu-Siemens Partitions, HP nPars). It requires hardware support and a small amount of OS support.
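The scalability advantage of domains, moving hardware between running domains by dynamic reconfiguration, can be pictured with a small Python model. This is a hypothetical sketch: the class and method names as well as the board sizes are invented for illustration and do not correspond to any Sun administration command; real dynamic reconfiguration also has to detach drivers and relocate memory contents, which the model ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Board:
    """A system board and the resources it contributes (hypothetical sizes)."""
    name: str
    cpus: int
    memory_gb: int


class PartitionedServer:
    """Toy model of a server whose boards are each assigned to exactly one domain."""

    def __init__(self) -> None:
        self.boards: dict[str, Board] = {}
        self.domain_of: dict[str, str] = {}  # board name -> domain name

    def assign(self, board: Board, domain: str) -> None:
        self.boards[board.name] = board
        self.domain_of[board.name] = domain

    def capacity(self, domain: str) -> tuple[int, int]:
        """Return (CPUs, memory in GB) currently owned by `domain`."""
        owned = [self.boards[n] for n, d in self.domain_of.items() if d == domain]
        return sum(b.cpus for b in owned), sum(b.memory_gb for b in owned)

    def move_board(self, board_name: str, target: str) -> None:
        """Relocate a board to another domain while both domains keep running.

        A running domain must keep at least one board.
        """
        source = self.domain_of[board_name]
        others = [n for n, d in self.domain_of.items() if d == source and n != board_name]
        if not others:
            raise ValueError(f"cannot remove the last board of domain {source!r}")
        self.domain_of[board_name] = target


server = PartitionedServer()
server.assign(Board("SB0", cpus=8, memory_gb=64), "db")
server.assign(Board("SB1", cpus=8, memory_gb=64), "db")
server.assign(Board("SB2", cpus=4, memory_gb=32), "web")
server.move_board("SB1", "web")  # shift capacity from "db" to "web"
print(server.capacity("db"), server.capacity("web"))  # (8, 64) (12, 96)
```

Because every board belongs to exactly one domain, capacity is moved rather than shared, which is also why domains are so well separated from each other.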


2.2.2. Logical partitions

[ug] A minimal operating system called the hypervisor runs on the computer's hardware and virtualizes the interface between the hardware and the OS. A separate operating system (guest operating system) can be installed on each of the resulting virtual machines.

In some implementations, the hypervisor runs as a normal application program on top of a host operating system; this increases the overhead.

Virtual devices are usually created from real devices by emulation; real and virtual devices are assigned to the logical partitions by configuration.

Advantages:

• Application: All applications of the guest operating system are executable.

• Scalability: In some cases, the capacity of a logical partition can be modified while running, if the OS and the hypervisor support this.

• Separation: Applications are separated from each other; direct mutual influence via the OS is not possible.

• OS versions: The partitions are able to run different operating systems/versions.

Disadvantages:

• HW maintenance: If a shared component fails, many or all logical partitions may be affected. However, preventive analysis attempts to recognize the symptoms of future failures so that errors can be isolated in advance.

• Separation: The applications can influence each other via shared hardware. One example is the virtual network, since the hypervisor has to emulate a switch. Another example is virtual disks located together on the same real disk, which “pull” the disk head away from each other. To prevent this, real network interfaces or dedicated disks can be used; however, this increases the cost of using logical partitions.

• OS maintenance: Each partition has to be administered separately. OS installation, patches and the implementation of in-house standards must be done separately for each partition.

• Delegation: The department responsible for the application/service either requires root privileges or must coordinate modifications with computer operations. With root privileges, all aspects of the operating system in the logical partition can be administered, which can affect security; coordinating with computer operations, on the other hand, can become costly and time-consuming.

• Overhead: Each logical partition has its own operating system overhead; in particular, each guest operating system requires its own main memory.
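Why a virtual network couples the partitions becomes clearer when one looks at what "emulating a switch" involves. The following Python sketch is a hypothetical, minimal MAC-learning switch; the class name, port names and MAC addresses are invented, and real hypervisor switches are considerably more complex. The point it shows is that every frame between partitions is handled in software by the hypervisor, whose CPU time all partitions share.

```python
class VirtualSwitch:
    """Minimal MAC-learning switch, as a hypervisor must emulate in software
    to connect the virtual network interfaces of its logical partitions."""

    BROADCAST = "ff:ff:ff:ff:ff:ff"

    def __init__(self) -> None:
        self.ports: set[str] = set()         # one port per virtual NIC
        self.mac_table: dict[str, str] = {}  # learned MAC address -> port

    def connect(self, port: str) -> None:
        self.ports.add(port)

    def forward(self, in_port: str, src_mac: str, dst_mac: str) -> list[str]:
        """Return the ports a frame is delivered to.

        Every call stands for CPU work done by the hypervisor, which is
        shared by all partitions.
        """
        self.mac_table[src_mac] = in_port  # learn where the sender is attached
        out_port = self.mac_table.get(dst_mac)
        if dst_mac != self.BROADCAST and out_port is not None:
            return [] if out_port == in_port else [out_port]
        # Unknown destination or broadcast: flood to all other ports.
        return sorted(p for p in self.ports if p != in_port)


switch = VirtualSwitch()
for port in ("vnic0", "vnic1", "vnic2"):
    switch.connect(port)
print(switch.forward("vnic0", "02:00:00:00:00:01", "02:00:00:00:00:02"))  # ['vnic1', 'vnic2']
print(switch.forward("vnic1", "02:00:00:00:00:02", "02:00:00:00:00:01"))  # ['vnic0']
```

A partition generating heavy network traffic therefore consumes hypervisor resources that the other partitions also depend on.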

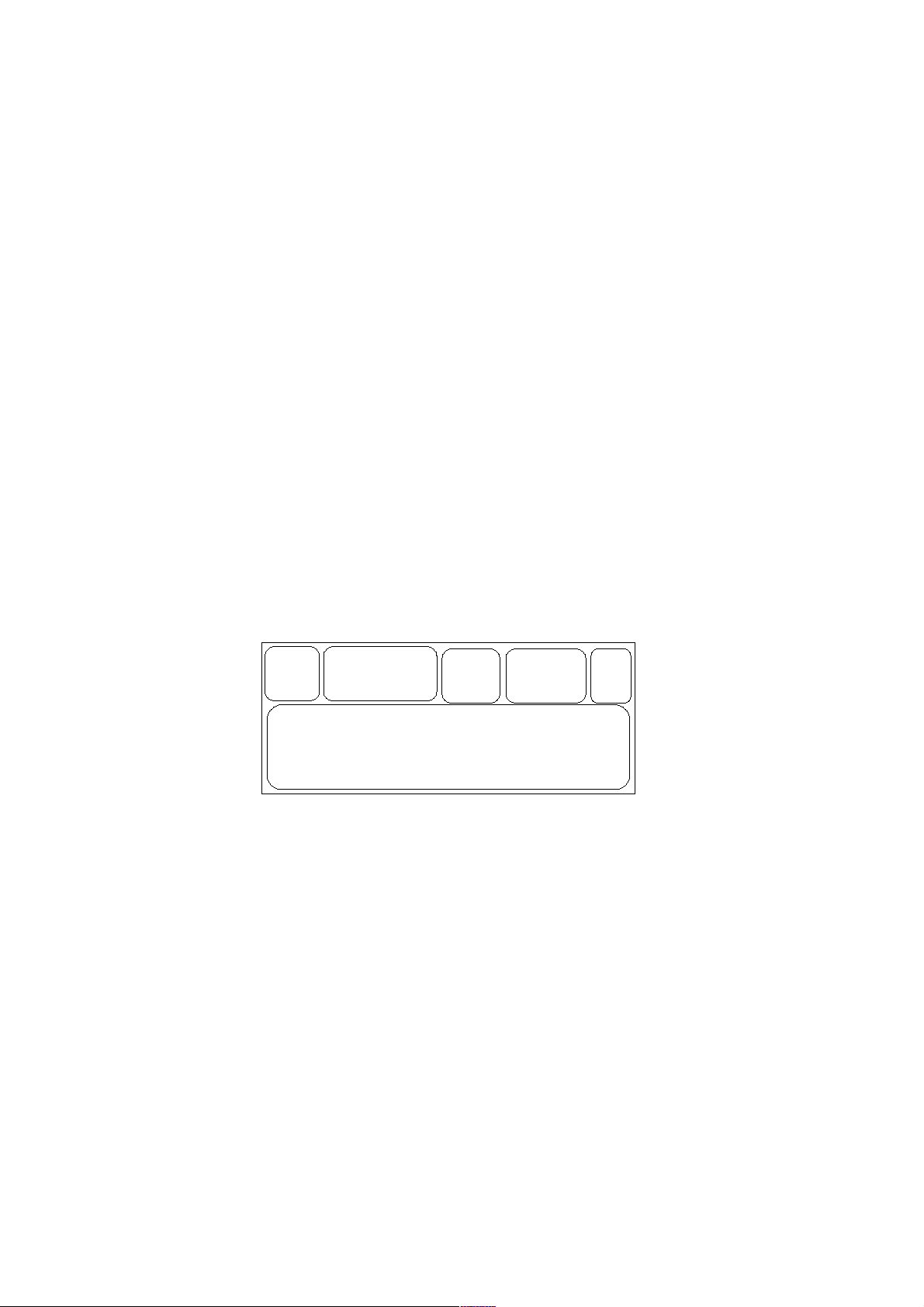

Figure 3: [dd] Logical partitions (diagram labels: App 1, App 2, App 3, App, OS, Server)

Logical partitioning systems include the IBM VM operating system, IBM LPARs on z/OS and AIX, HP vPars, as well as VMware and Xen. Sun offers Logical Domains (SPARC: since Solaris 10 11/06) as well as Sun xVM VirtualBox (x86 and x64 architectures).

Sun xVM Server for x64 architectures is being developed in collaboration with the Xen community. Its virtualization component (xVM Hypervisor) can already be used as of OpenSolaris 2009.06.


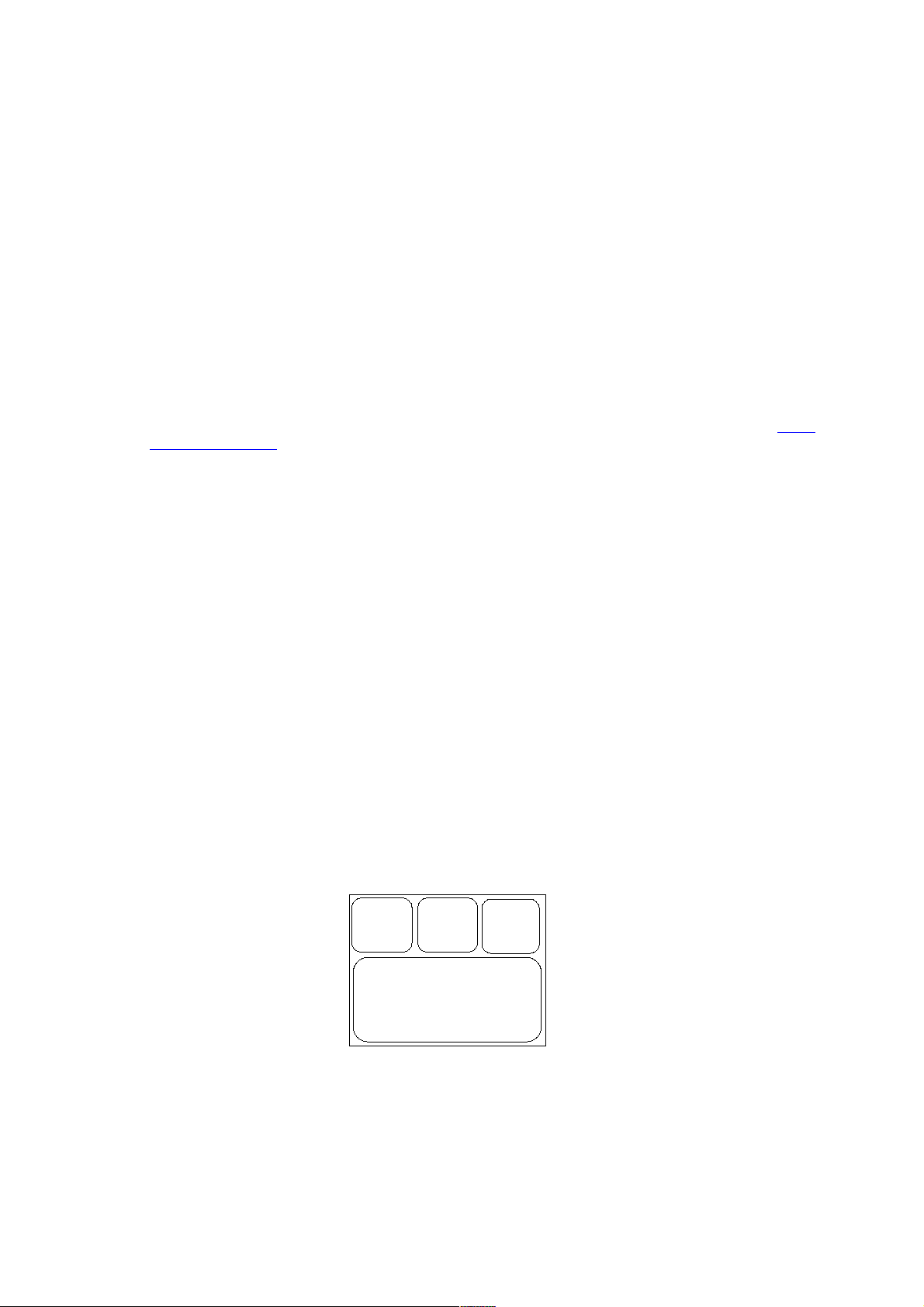

2.2.3. Containers (Solaris zones) in an OS

[ug] Within a single operating system installation, execution environments for applications and services are created that are independent of each other. The kernel becomes multi-tenant capable: it exists only once but appears in each zone as though it were assigned to that zone exclusively.

Separation is implemented by restricting access to resources, e.g. the visibility of processes (modified procfs), the usability of devices (modified devfs) and the visibility of the file tree (as with chroot).
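The visibility rule for processes can be modelled in a few lines. This Python sketch is a simplified, hypothetical model: it filters one shared process table by zone name, whereas Solaris enforces the restriction inside the kernel and the modified procfs. Names such as `visible_processes` and the example processes are invented for illustration.

```python
from dataclasses import dataclass

GLOBAL_ZONE = "global"


@dataclass(frozen=True)
class Process:
    pid: int
    zone: str
    command: str


def visible_processes(all_procs: list[Process], viewer_zone: str) -> list[Process]:
    """Return the processes that a viewer in `viewer_zone` can see.

    There is only one process table (one kernel), but a non-global zone
    sees only its own processes, while the global zone sees all of them.
    """
    if viewer_zone == GLOBAL_ZONE:
        return list(all_procs)
    return [p for p in all_procs if p.zone == viewer_zone]


procs = [
    Process(1, "global", "init"),
    Process(1201, "web", "httpd"),
    Process(2305, "db", "oracle"),
]
print([p.pid for p in visible_processes(procs, "web")])     # [1201]
print([p.pid for p in visible_processes(procs, "global")])  # [1, 1201, 2305]
```

The asymmetry is deliberate: administration and resource allocation stay in the global zone, which therefore needs a view of everything.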

Advantages:

• Application: All applications are executable unless they use their own drivers or other system-oriented features. Separate drivers can, however, be used if they are installed in the global zone.

• Scalability: Container capacity can be configured (through resource management, processor sets and CPU caps).

• Separation: Applications are separated from each other; direct mutual influence via the OS is not possible.

• OS maintenance: OS installation, patches and the implementation of in-house standards need to be carried out only once, in a central location (the global zone).

• Delegation: The department responsible for the application/service requires root privileges for part of the administration. It can obtain root privileges within its zone without being able to affect other local zones or the global zone. The right to allocate resources is reserved for the global zone.

• Overhead: From the point of view of the global zone, all local zone processes are merely normal application processes. The OS overhead (memory management, scheduling, kernel) and the memory required for shared objects (files, programs, libraries) are incurred only once. Each zone adds only a small number of system processes. For that reason, it is possible to run hundreds of zones on a single-processor system.

Disadvantages:

• HW maintenance: If a shared component fails, many or all zones may be affected. Solaris 10 recognizes symptoms of a future failure through FMA (Fault Management Architecture) and can deactivate the affected components (CPU, memory, bus systems) while running, or switch to alternative components if they are available. Cluster software (Sun Cluster, with Solaris Container Cluster or the Solaris Container Agent) can further improve the availability of the application in the zone.

• Separation: The applications can influence each other through shared hardware. In Solaris, this influence can be minimized with resource management and network bandwidth management.

• OS versions: Different operating systems/versions are possible only with branded zones. In this case, a virtual process environment for another operating system is created in a zone, but the branded zone still uses the kernel of the global zone.
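How shares and CPU caps divide processor time between zones can be sketched as follows. The model assumes that all zones are permanently CPU-bound, that CPU time is distributed in proportion to the zones' shares (as the Fair Share Scheduler does under full load) and that a cap limits a zone to a fixed number of CPUs, with CPU time a capped zone cannot use going to the other zones. The function name and figures are hypothetical, and the result is only a long-term average, not the scheduler's actual time-slice behavior.

```python
def cpu_allocation(shares: dict[str, int], caps: dict[str, float], ncpus: float) -> dict[str, float]:
    """Return the number of CPUs each busy zone receives on average.

    `shares` must be positive; `caps` maps a zone to its CPU cap
    (zones without an entry are uncapped).
    """
    alloc: dict[str, float] = {}
    active = dict(shares)  # zones still competing for the remaining CPUs
    remaining = float(ncpus)
    while active:
        total = sum(active.values())
        # Zones whose proportional share would exceed their cap.
        capped = [z for z, s in active.items()
                  if remaining * s / total > caps.get(z, float("inf"))]
        if not capped:
            for z, s in active.items():
                alloc[z] = remaining * s / total
            break
        for z in capped:  # give capped zones their cap, redistribute the rest
            alloc[z] = caps[z]
            remaining -= caps[z]
            del active[z]
    return alloc


print(cpu_allocation({"web": 1, "db": 3}, caps={}, ncpus=4))           # {'web': 1.0, 'db': 3.0}
print(cpu_allocation({"web": 1, "db": 3}, caps={"db": 2.0}, ncpus=4))  # {'db': 2.0, 'web': 2.0}
```

Shares only take effect when zones compete for CPU time, while a cap also limits a zone on an otherwise idle system; combining both bounds the mutual influence described above.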

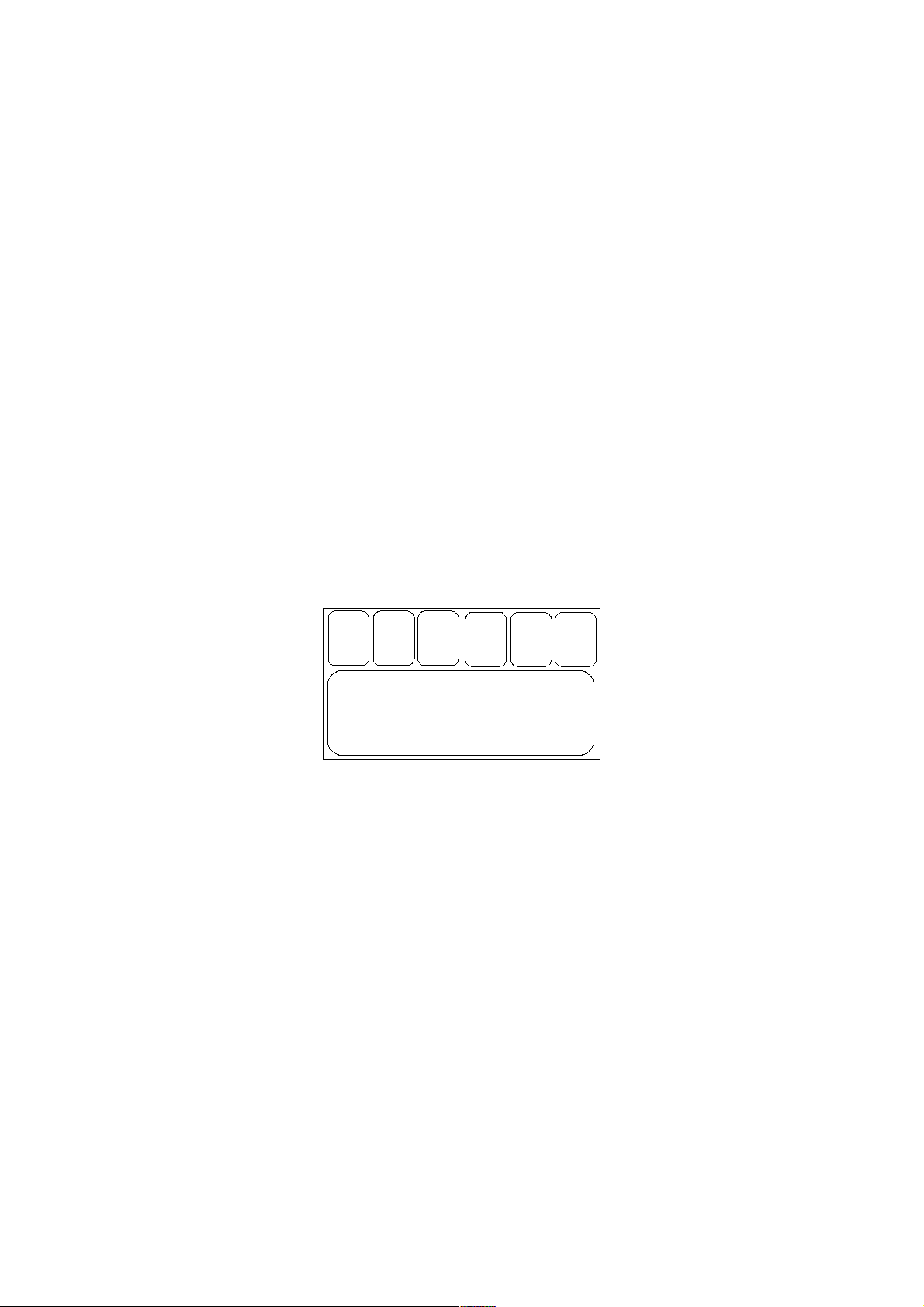

Figure 4: [dd] Container (Solaris zones) in an OS (diagram labels: App 1, App 2, App 3, App, BrandZ, OS, Server)

Implementations of this concept are Jails in BSD, zones in Solaris and the Linux-VServer project in Linux. No special hardware support is required.
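The overhead difference between logical partitions and zones is essentially arithmetic. The following Python sketch compares main memory requirements under deliberately simple, hypothetical assumptions (a fixed amount of memory per OS instance, per application and per zone, and no further sharing between applications); the function names and figures are placeholders, not measurements.

```python
def memory_logical_partitions(n: int, os_gb: float, app_gb: float) -> float:
    """Each logical partition runs its own OS, so the OS memory is needed n times."""
    return n * (os_gb + app_gb)


def memory_zones(n: int, os_gb: float, app_gb: float, per_zone_gb: float) -> float:
    """Zones share one kernel and its shared objects; each zone only adds a few
    system processes (per_zone_gb) on top of its application."""
    return os_gb + n * (per_zone_gb + app_gb)


# Hypothetical figures: 10 environments, 2 GB per OS instance,
# 4 GB per application, 0.25 GB for the extra system processes of a zone.
print(memory_logical_partitions(10, 2.0, 4.0))  # 60.0
print(memory_zones(10, 2.0, 4.0, 0.25))         # 44.5
```

The gap grows linearly with the number of environments, since the OS term is multiplied by n only for logical partitions.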


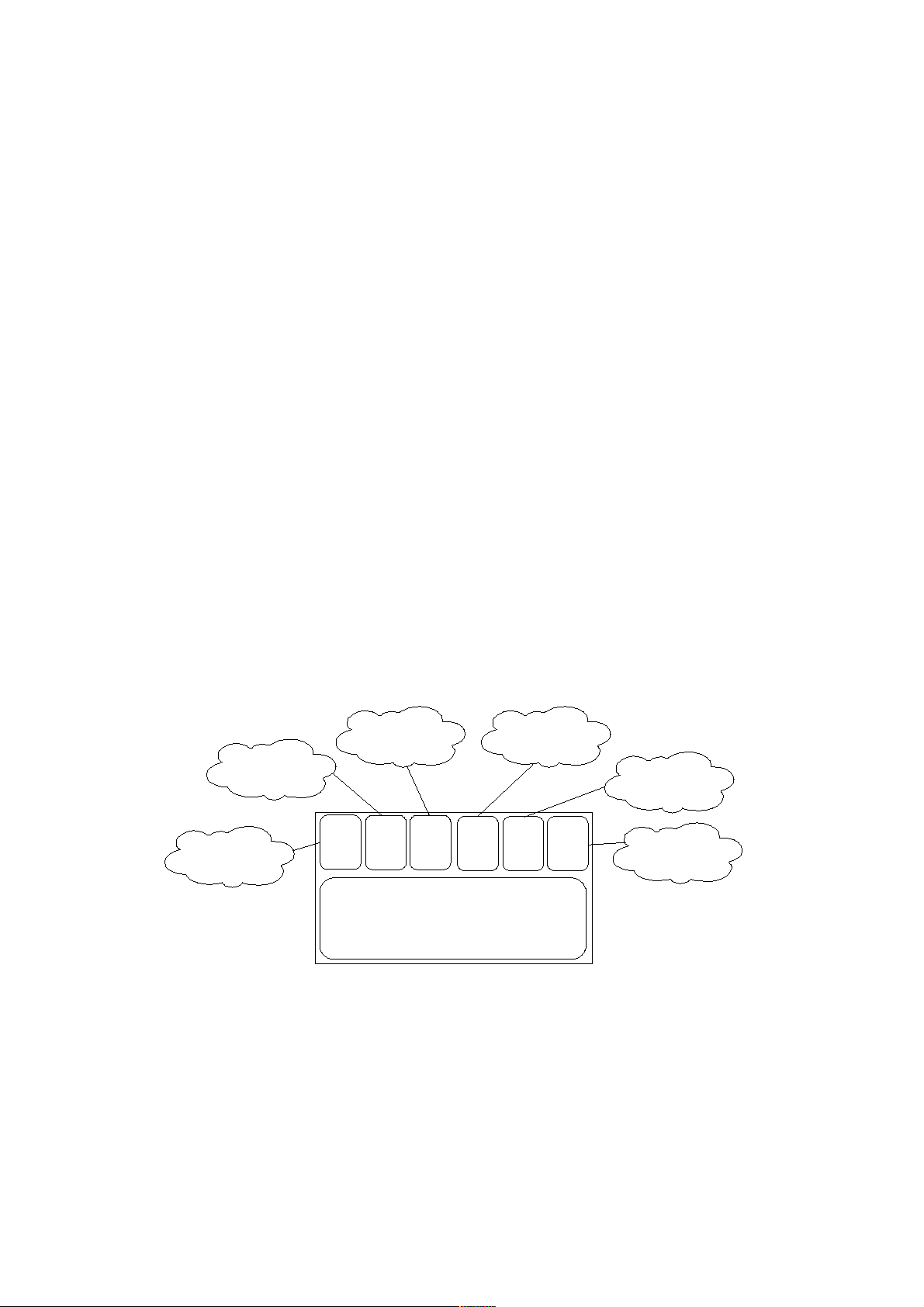

2.2.4. Consolidation in one computer

[ug] The applications are installed on one computer and run under different user IDs. This is the type of consolidation that is feasible with modern operating systems.

Advantages:

• Application: All applications are executable as long as they are executable in the basic operating system and do not use their own OS drivers. However, there are restrictions if different versions of an application with a fixed installation directory are required, if two instances require the same user ID (e.g. Oracle instances), or if their configuration files are located at the same place in the file system.

• Scalability: The capacity of an application can be modified online.

• OS maintenance: OS installation, patches and the implementation of in-house standards need to be done for only one OS. In other words, many applications can be run with the administrative effort of a single machine.

• Overhead: Overhead is low, since only the application processes must run for each application.

Disadvantages:

• HW maintenance: If a shared component fails, many or all applications may be affected.

• OS maintenance: Administration becomes complicated as soon as applications depend on different versions of a piece of software (e.g. versions of Oracle, WebLogic, Java, etc.). Without accurate documentation and change management, such a system becomes difficult to control. Any error in the documentation that is not noticed immediately can have fatal consequences during a later upgrade (HW or OS).

• Separation: The applications can influence each other through shared hardware and the OS. In Solaris, this influence can be reduced by using resource management and network bandwidth management.
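The restrictions listed under "Application" can be checked before consolidating several applications into one OS instance. The Python sketch below is a hypothetical helper (the class, function, instance names and paths are illustrative assumptions) that reports user IDs, installation directories and configuration files claimed by more than one application instance; each reported conflict is a reason to separate the instances further, for example in containers.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AppInstance:
    name: str
    user: str
    install_dir: str
    config_files: tuple[str, ...]


def find_conflicts(apps: list[AppInstance]) -> list[str]:
    """List the user IDs, install directories and configuration files that
    more than one application instance would claim in a single OS instance."""
    claims: dict[tuple[str, str], set[str]] = defaultdict(set)
    for app in apps:
        claims[("user", app.user)].add(app.name)
        claims[("install_dir", app.install_dir)].add(app.name)
        for path in app.config_files:
            claims[("config", path)].add(app.name)
    return sorted(
        f"{kind} {value!r} used by {', '.join(sorted(owners))}"
        for (kind, value), owners in claims.items()
        if len(owners) > 1
    )


apps = [
    AppInstance("ora_prod", "oracle", "/u01/app/oracle/product/10.2", ("/var/opt/oracle/oratab",)),
    AppInstance("ora_test", "oracle", "/u01/app/oracle/product/11.1", ("/var/opt/oracle/oratab",)),
]
for conflict in find_conflicts(apps):
    print(conflict)
```

For the two hypothetical Oracle instances, the shared user ID and the shared configuration file are reported, while the different installation directories do not conflict.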