Page 1

Sun Java System Web Server 7.0 Update 1 Performance Tuning, Sizing, and Scaling Guide

Sun Microsystems, Inc.

4150 Network Circle

Santa Clara, CA 95054

U.S.A.

Part No: 820–1067

Page 2

Copyright 2007 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved.

Sun Microsystems, Inc. has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more U.S. patents or pending patent applications in the U.S. and in other countries.

U.S. Government Rights – Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions of the FAR and its supplements.

This distribution may include materials developed by third parties.

Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd.

Sun, Sun Microsystems, the Sun logo, the Solaris logo, the Java Coffee Cup logo, docs.sun.com, JavaServer Pages, JSP, JVM, JDBC, Java HotSpot, Java, and Solaris are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. Netscape is a trademark or registered trademark of Netscape Communications Corporation in the United States and other countries.

The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun's licensees who implement OPEN LOOK GUIs and otherwise comply with Sun's written license agreements.

Products covered by and information contained in this publication are controlled by U.S. Export Control laws and may be subject to the export or import laws in other countries. Nuclear, missile, chemical or biological weapons or nuclear maritime end uses or end users, whether direct or indirect, are strictly prohibited. Export or reexport to countries subject to U.S. embargo or to entities identified on U.S. export exclusion lists, including, but not limited to, the denied persons and specially designated nationals lists is strictly prohibited.

DOCUMENTATION IS PROVIDED “AS IS” AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID.



Page 3

Contents

Preface ...................................................................................................................................................11

1 Performance and Monitoring Overview .......................................................................................... 19

Performance Issues .............................................................................................................................. 19

Configuration ...................................................................................................................................... 20

Virtual Servers ..................................................................................................................................... 20

Server Farms ......................................................................................................................................... 21

64–Bit Servers ...................................................................................................................................... 21

SSL Performance .................................................................................................................................. 21

Monitoring Server Performance ........................................................................................................ 22

About Statistics ............................................................................................................................. 23

Monitoring Current Activity Using the Admin Console ........................................................ 25

▼ To Monitor Statistics from the Admin Console ............................................................... 25

Monitoring Current Activity Using the CLI ............................................................................. 26

▼ To Monitor Statistics from the CLI .................................................................................... 26

Monitoring Current Activity Using stats.xml .......................................................................... 29

▼ To Enable the stats-xml URI from the Admin Console ................................................... 29

▼ To Enable the stats-xml URI from the CLI ........................................................................ 30

▼ To Limit the stats-xml Statistics Displayed in the URI .................................................... 30

▼ To View stats-xml Output from the CLI ............................................................................ 31

Monitoring Current Activity Using perfdump ........................................................................ 31

▼ To Enable the perfdump URI from the Admin Console ................................................. 31

▼ To Enable the perfdump URI from the CLI ...................................................................... 32

▼ To View the perfdump Data from the CLI ........................................................................ 33

Monitoring Current Activity Using the Java ES Monitoring Console ................................... 37


Page 4


2 Tuning Sun Java System Web Server ................................................................................................ 39

General Tuning Tips ........................................................................................................................... 39

Understanding Threads, Processes, and Connections ................................................................... 40

Connection-Handling Overview ............................................................................................... 40

Custom Thread Pools .................................................................................................................. 42

The Native Thread Pool ............................................................................................................... 43

Process Modes .............................................................................................................................. 44

Mapping Web Server 6.1 Tuning Parameters to Web Server 7.0 ................................................... 46

Using Monitoring Data to Tune Your Server .................................................................................. 48

Connection Queue Information ................................................................................................ 49

HTTP Listener (Listen Socket) Information ............................................................................ 51

Keep-Alive Information .............................................................................................................. 53

Session Creation (Thread) Information .................................................................................... 57

File Cache Information (Static Content) .................................................................................. 59

Thread Pool Information ............................................................................................................ 65

DNS Cache Information .............................................................................................................68

Java Virtual Machine (JVM) Information ................................................................................ 70

Web Application Information .................................................................................................... 71

▼ To Access Web Application Statistics From the Admin Console ................................... 71

JDBC Resource Information ...................................................................................................... 72

Tuning the ACL User Cache .............................................................................................................. 77

Tuning Java Web Application Performance .................................................................................... 78

Using Precompiled JSPs .............................................................................................................. 78

Using Servlet/JSP Caching .......................................................................................................... 79

Configuring the Java Security Manager ..................................................................................... 79

Configuring Class Reloading ...................................................................................................... 79

Avoiding Directories in the Classpath ....................................................................................... 80

Configuring the Web Application’s Session Settings ............................................................... 80

Tuning CGI Stub Processes (UNIX/Linux) ..................................................................................... 81

Using find-pathinfo-forward ............................................................................................................. 82

Using nostat ......................................................................................................................................... 82

Using Busy Functions ......................................................................................................................... 83

3 Common Performance Problems ......................................................................................................85

check-acl Server Application Functions ........................................................................................... 85


Page 5


Low-Memory Situations ..................................................................................................................... 86

Too Few Threads ................................................................................................................................. 86

Cache Not Utilized .............................................................................................................................. 87

Keep-Alive Connections Flushed ...................................................................................................... 87

Log File Modes ..................................................................................................................................... 88

4 Platform-Specific Issues and Tips ..................................................................................................... 89

Solaris Platform-Specific Issues ......................................................................................................... 89

Files Open in a Single Process (File Descriptor Limits) ........................................................... 89

Failure to Connect to HTTP Server ........................................................................................... 90

Connection Refused Errors ........................................................................................................ 91

Tuning TCP Buffering ................................................................................................................. 91

Using the Solaris Network Cache and Accelerator (SNCA) ................................................... 91

▼ To Enable SNCA to Work With Web Server .................................................................... 92

Solaris File System Tuning ................................................................................................................. 93

High File System Page-In Rate .................................................................................................... 93

Reduce File System Housekeeping ............................................................................................. 93

Long Service Times on Busy Disks or Volumes ....................................................................... 93

Solaris Platform-Specific Performance Monitoring ........................................................................ 94

Short-Term System Monitoring ................................................................................................ 94

Long-Term System Monitoring ................................................................................................. 95

“Intelligent” Monitoring ............................................................................................................. 95

Solaris 10 Platform-Specific Tuning Information ........................................................................... 95

Tuning Solaris for Performance Benchmarking .............................................................................. 96

Tuning UltraSPARC T1–Based Systems for Performance Benchmarking .................................. 97

Tuning Operating System and TCP Settings ............................................................................ 97

Disk Configuration ...................................................................................................................... 98

Network Configuration ............................................................................................................... 98

Web Server Start Options ...........................................................................................................99

5 Sizing and Scaling Your Server ........................................................................................................ 101

64-Bit Server ....................................................................................................................................... 101

Processors ........................................................................................................................................... 101

Memory .............................................................................................................................................. 102

Drive Space ......................................................................................................................................... 102


Page 6


Networking ........................................................................................................................................ 102

6 Scalability Studies .............................................................................................................................103

Study Goals ......................................................................................................................................... 103

Study Conclusion .............................................................................................................................. 104

Hardware ............................................................................................................................................ 104

Software .............................................................................................................................................. 105

Configuration and Tuning ............................................................................................................... 105

Network Configuration ............................................................................................................. 106

Web Server Tuning .................................................................................................................... 107

Performance Tests and Results ........................................................................................................ 108

Static Content Test ..................................................................................................................... 108

Dynamic Content Test: Servlet ................................................................................................ 110

Dynamic Content Test: C CGI ................................................................................................. 111

Dynamic Content Test: Perl CGI ............................................................................................. 113

Dynamic Content Test: NSAPI ................................................................................................ 114

PHP Scalability Tests ................................................................................................................. 115

SSL Performance Test: Static Content ..................................................................................... 118

SSL Performance Test: Perl CGI ............................................................................................... 119

SSL Performance Test: C CGI ................................................................................................... 120

SSL Performance Test: NSAPI .................................................................................................. 121

E-Commerce Web Application Test ....................................................................................... 122

Index ................................................................................................................................................... 127


Page 7

Tables

TABLE 1–1 Methods of Monitoring Performance ..................................................................... 22

TABLE 2–1 Parameter Mapping to server.xml ........................................................................ 46

TABLE 2–2 Connection Queue Statistics .................................................................................... 50

TABLE 2–3 Keep-Alive Statistics .................................................................................................. 53

TABLE 2–4 File Cache Statistics ................................................................................................... 60

TABLE 2–5 Thread Pools Statistics .............................................................................................. 66

TABLE 2–6 DNS Cache Statistics ................................................................................................. 69

TABLE 2–7 Java Virtual Machine (JVM) Statistics .................................................................... 70

TABLE 2–8 Web Application Statistics ....................................................................................... 72

TABLE 2–9 JDBC Resource Statistics .......................................................................................... 73

TABLE 4–1 Tuning Solaris for Performance Benchmarking .................................................... 96

TABLE 4–2 Tuning 64–bit Systems for Performance Benchmarking ..................................... 97

TABLE 6–1 Web Server Tuning Settings ................................................................................... 107

TABLE 6–2 SSL Session Cache Tuning Settings ....................................................................... 107

TABLE 6–3 File Cache Configuration ....................................................................................... 109

TABLE 6–4 Static Content Scalability ........................................................................................109

TABLE 6–5 JVM Tuning Settings ............................................................................................... 110

TABLE 6–6 Dynamic Content Test: Servlet Scalability ...........................................................111

TABLE 6–7 CGI Tuning Settings ...............................................................................................112

TABLE 6–8 Dynamic Content Test: C CGI Scalability ............................................................112

TABLE 6–9 CGI Tuning Settings ...............................................................................................113

TABLE 6–10 Dynamic Content Test: Perl CGI Scalability ........................................................ 113

TABLE 6–11 Dynamic Content Test: NSAPI Scalability ...........................................................114

TABLE 6–12 Tuning Settings for FastCGI Plug-in Test ............................................................ 116

TABLE 6–13 PHP Scalability with FastCGI ................................................................................ 116

TABLE 6–14 NSAPI Plug-in Configuration for PHP ................................................................ 117

TABLE 6–15 PHP Scalability with NSAPI ................................................................................... 118

TABLE 6–16 SSL Performance Test: Static Content Scalability ................................................ 119


Page 8


TABLE 6–17 SSL Performance Test: Perl CGI Scalability ......................................................... 120

TABLE 6–18 SSL Performance Test: C CGI Scalability .............................................................121

TABLE 6–19 SSL Performance Test: NSAPI Scalability ............................................................122

TABLE 6–20 Performance Test Pass Criteria .............................................................................. 124

TABLE 6–21 E-Commerce Web Application Scalability .......................................................... 125


Page 9

Figures

FIGURE 2–1 Web Server Connection Handling .......................................................................... 40


Page 10


Page 11

Preface

This guide discusses adjustments you can make that may improve the performance of Sun Java System Web Server (henceforth known as Web Server). The guide provides tuning, scaling, and sizing tips and suggestions; possible solutions to common performance problems; and data from scalability studies. It also addresses miscellaneous configuration and platform-specific issues.

Who Should Use This Book

This guide is intended for advanced administrators only. Be sure to read this guide and other relevant server documentation before making any changes. Be very careful when tuning your server, and always back up your configuration files before making any changes.

Before You Read This Book

Web Server can be installed as a stand-alone product or as a component of Sun Java

Enterprise System (Java ES), a software infrastructure that supports enterprise applications

distributed across a network or Internet environment. If you are installing Web Server as a

component of Java ES, you should be familiar with the system documentation at

http://docs.sun.com/coll/1286.3.

Web Server Documentation Set

The Web Server documentation set describes how to install and administer the Web Server.

You can access the Web Server documentation at http://docs.sun.com/coll/1653.1. For an

introduction to Web Server, refer to the books in the order in which they are listed in the

following table.


Page 12


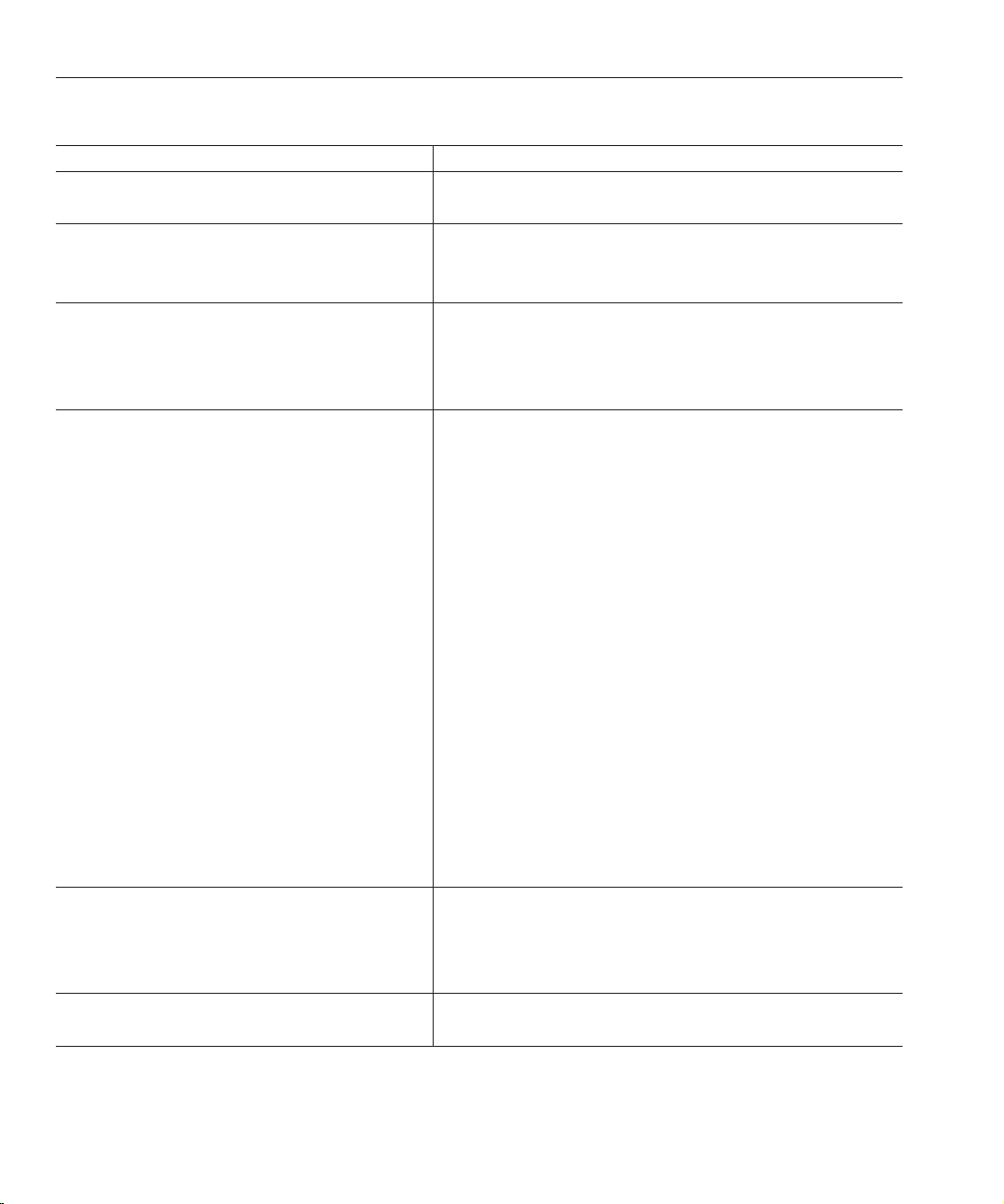

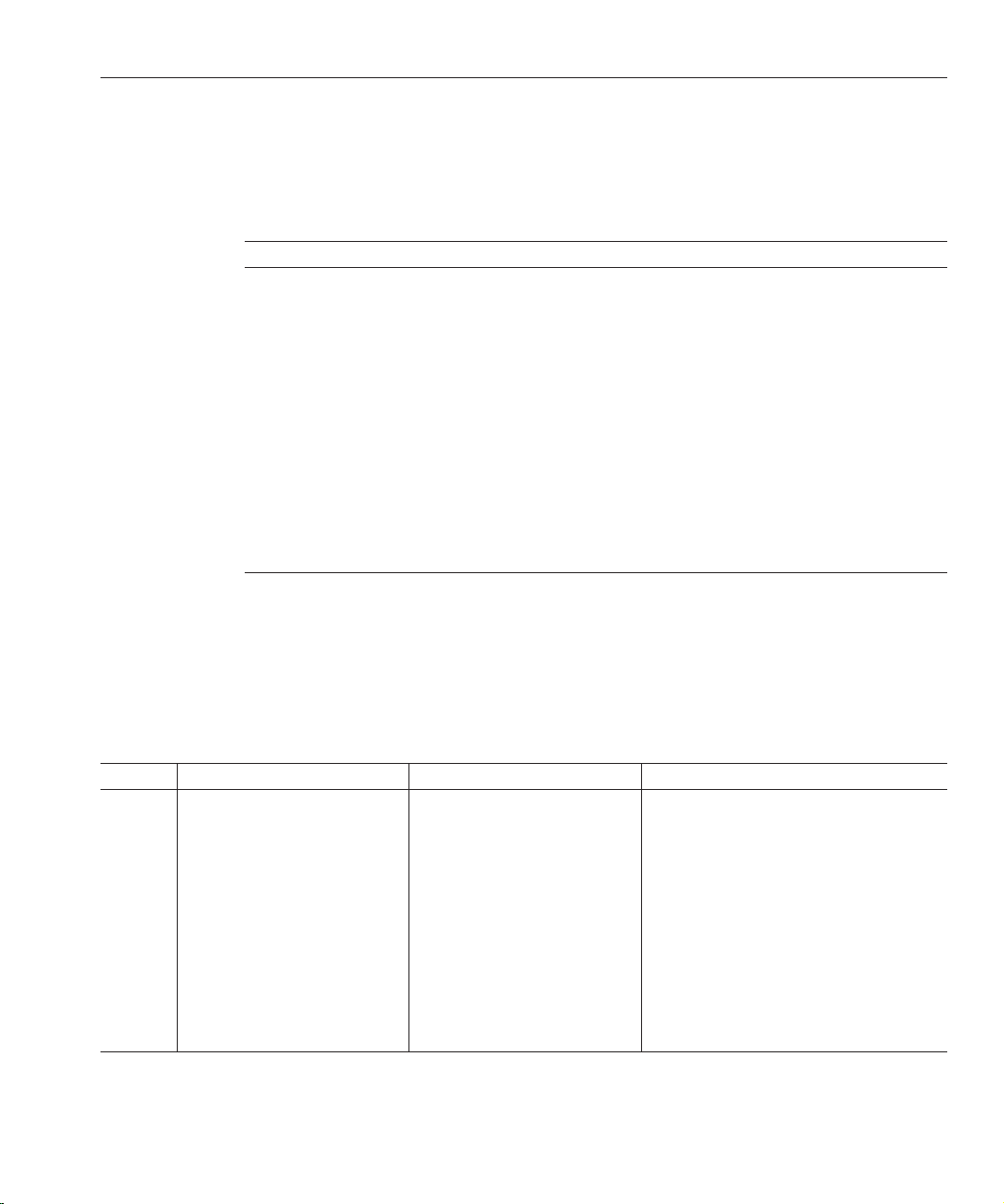

TABLE P–1 Books in the Web Server Documentation Set

| Documentation Title | Contents |
| --- | --- |
| Sun Java System Web Server 7.0 Update 1 Documentation Center | Web Server documentation topics organized by tasks and subject |
| Sun Java System Web Server 7.0 Update 1 Release Notes | ■ Late-breaking information about the software and documentation ■ Supported platforms and patch requirements for installing Web Server |
| Sun Java System Web Server 7.0 Update 1 Installation and Migration Guide | Performing installation and migration tasks: ■ Installing Web Server and its various components ■ Migrating data from Sun ONE Web Server 6.0 or 6.1 to Sun Java System Web Server 7.0 |
| Sun Java System Web Server 7.0 Update 1 Administrator’s Guide | Performing the following administration tasks: ■ Using the Administration GUI and command-line interface ■ Configuring server preferences ■ Using server instances ■ Monitoring and logging server activity ■ Using certificates and public key cryptography to secure the server ■ Configuring access control to secure the server ■ Using Java Platform Enterprise Edition (Java EE) security features ■ Deploying applications ■ Managing virtual servers ■ Defining server workload and sizing the system to meet performance needs ■ Searching the contents and attributes of server documents, and creating a text search interface ■ Configuring the server for content compression ■ Configuring the server for web publishing and content authoring using WebDAV |
| Sun Java System Web Server 7.0 Update 1 Developer’s Guide | Using programming technologies and APIs to do the following: ■ Extend and modify Sun Java System Web Server ■ Dynamically generate content in response to client requests and modify the content of the server |
| Sun Java System Web Server 7.0 Update 1 NSAPI Developer’s Guide | Creating custom Netscape Server Application Programmer’s Interface (NSAPI) plug-ins |

Page 13

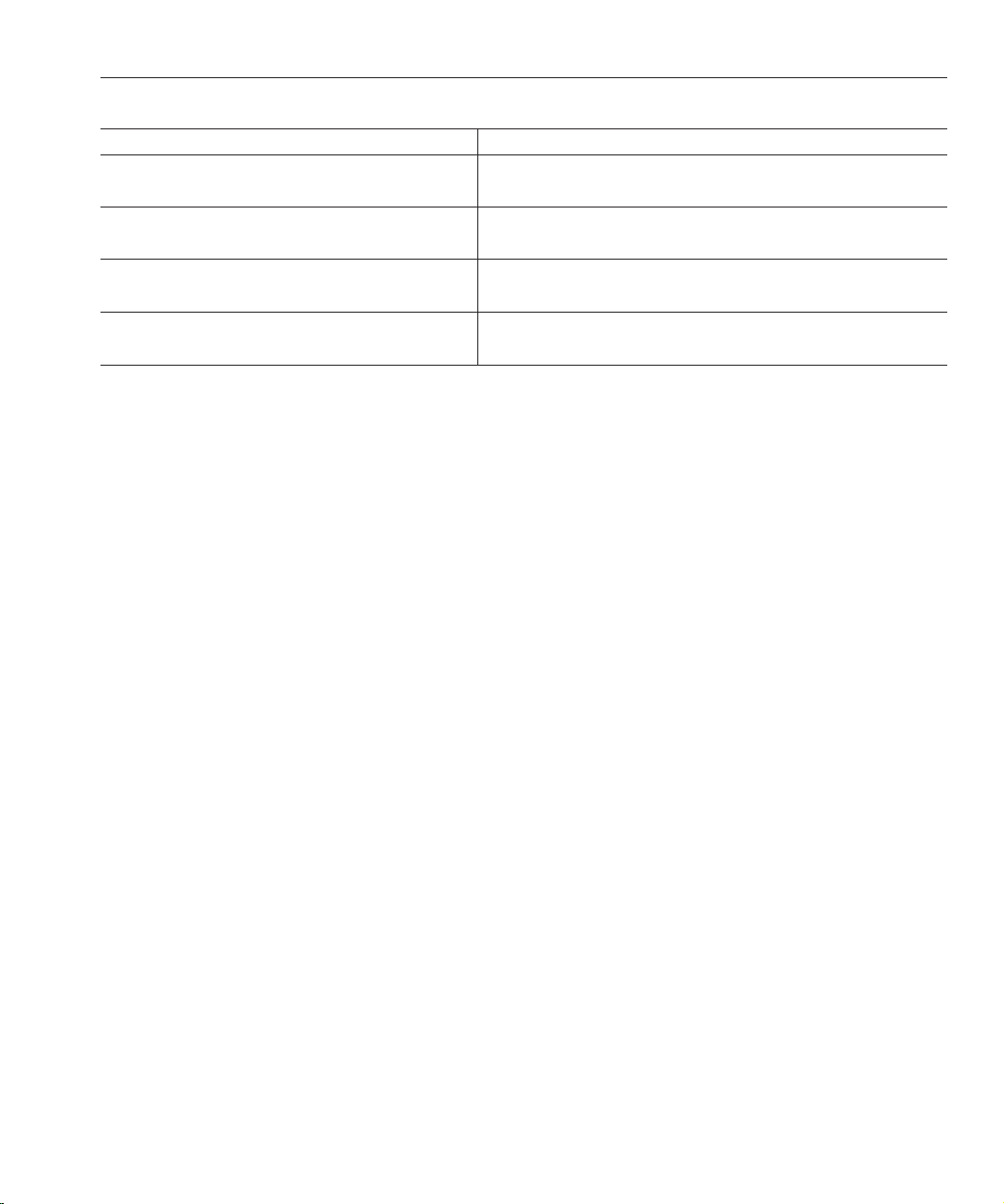

TABLE P–1 Books in the Web Server Documentation Set (Continued)

| Documentation Title | Contents |
| --- | --- |
| Sun Java System Web Server 7.0 Update 1 Developer’s Guide to Java Web Applications | Implementing Java Servlets and JavaServer Pages™ (JSP™) technology in Sun Java System Web Server |
| Sun Java System Web Server 7.0 Update 1 Administrator’s Configuration File Reference | Editing configuration files |
| Sun Java System Web Server 7.0 Update 1 Performance Tuning, Sizing, and Scaling Guide | Tuning Sun Java System Web Server to optimize performance |
| Sun Java System Web Server 7.0 Update 1 Troubleshooting Guide | Troubleshooting Web Server |

Related Books

The URL for all documentation about Sun Java Enterprise System (Java ES) and its components is http://docs.sun.com/coll/1286.3.

Default Paths and File Names

The following table describes the default paths and file names that are used in this book.


Page 14


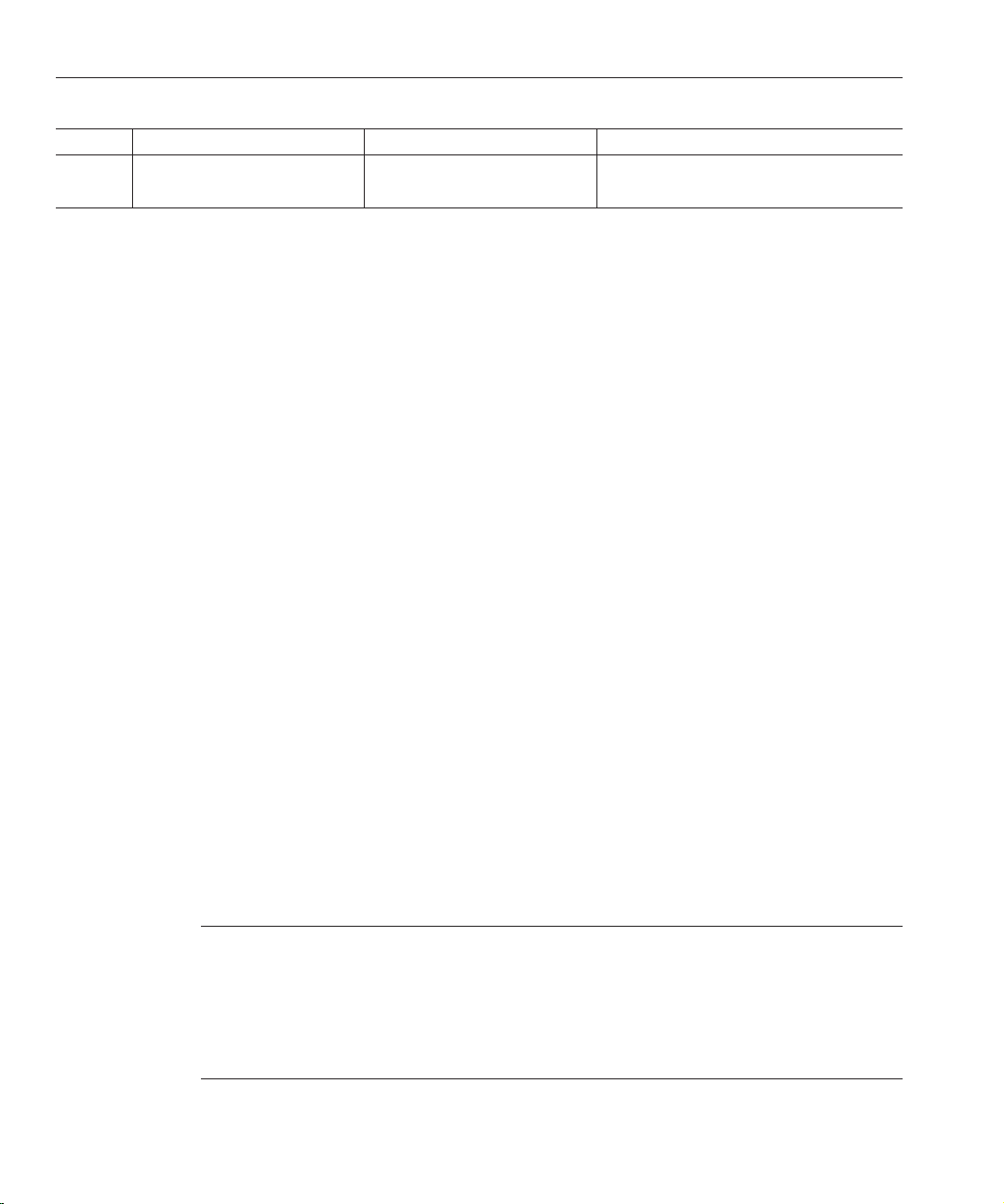

TABLE P–2 Default Paths and File Names

| Placeholder | Description | Default Value |
| --- | --- | --- |
| install-dir | Represents the base installation directory for Web Server | Sun Java Enterprise System (Java ES) installations on the Solaris™ platform: /opt/SUNWwbsvr7 |
| | | Java ES installations on the Linux and HP-UX platform: /opt/sun/webserver/ |
| | | Java ES installations on the Windows platform: system-drive:\Program Files\Sun\JavaES5\WebServer7 |
| | | Other Solaris, Linux, and HP-UX installations, non-root user: home-directory/sun/webserver7 |
| | | Other Solaris, Linux, and HP-UX installations, root user: /sun/webserver7 |
| | | Windows, all installations: system-drive:\Program Files\Sun\WebServer7 |
| instance-dir | Directory that contains the instance-specific subdirectories. | For Java ES installations, the default location for instances on Solaris: /var/opt/SUNWwbsvr7 |
| | | For Java ES installations, the default location for instances on Linux and HP-UX: /var/opt/sun/webserver7 |
| | | For Java ES installations, the default location for instances on Windows: system-drive:\Program Files\Sun\JavaES5\WebServer7 |
| | | For stand-alone installations, the default location for instances on Solaris, Linux, and HP-UX: install-dir |
| | | For stand-alone installations, the default location for instances on Windows: system-drive:\Program Files\sun\WebServer7 |


Page 15

Typographic Conventions

The following table describes the typographic changes that are used in this book.

TABLE P–3 Typographic Conventions

| Typeface | Meaning | Example |
|---|---|---|
| AaBbCc123 | The names of commands, files, and directories, and onscreen computer output | Edit your .login file. Use ls -a to list all files. machine_name% you have mail. |
| AaBbCc123 | What you type, contrasted with onscreen computer output | machine_name% su Password: |
| AaBbCc123 | A placeholder to be replaced with a real name or value | The command to remove a file is rm filename. |
| AaBbCc123 | Book titles, new terms, and terms to be emphasized (note that some emphasized items appear bold online) | Read Chapter 6 in the User's Guide. A cache is a copy that is stored locally. Do not save the file. |

Symbol Conventions

The following table explains symbols that might be used in this book.

TABLE P–4 Symbol Conventions

| Symbol | Description | Example | Meaning |
|---|---|---|---|
| [ ] | Contains optional arguments and command options. | ls [-l] | The -l option is not required. |
| {\|} | Contains a set of choices for a required command option. | -d {y\|n} | The -d option requires that you use either the y argument or the n argument. |
| ${ } | Indicates a variable reference. | ${com.sun.javaRoot} | References the value of the com.sun.javaRoot variable. |
| - | Joins simultaneous multiple keystrokes. | Control-A | Press the Control key while you press the A key. |
| + | Joins consecutive multiple keystrokes. | Ctrl+A+N | Press the Control key, release it, and then press the subsequent keys. |
| → | Indicates menu item selection in a graphical user interface. | File → New → Templates | From the File menu, choose New. From the New submenu, choose Templates. |

Documentation, Support, and Training

The Sun web site provides information about the following additional resources:

■ Documentation (http://www.sun.com/documentation/)

■ Support (http://www.sun.com/support/)

■ Training (http://www.sun.com/training/)

Searching Sun Product Documentation

Besides searching Sun product documentation from the docs.sun.com web site, you can use a search engine by typing the following syntax in the search field:

search-term site:docs.sun.com

For example, to search for “Web Server,” type the following:

Web Server site:docs.sun.com

To include other Sun web sites in your search (for example, java.sun.com, www.sun.com, and developers.sun.com), use “sun.com” in place of “docs.sun.com” in the search field.

Third-Party Web Site References

Third-party URLs are referenced in this document and provide additional, related information.

Note – Sun is not responsible for the availability of third-party web sites mentioned in this document. Sun does not endorse and is not responsible or liable for any content, advertising, products, or other materials that are available on or through such sites or resources. Sun will not be responsible or liable for any actual or alleged damage or loss caused or alleged to be caused by or in connection with use of or reliance on any such content, goods, or services that are available on or through such sites or resources.

Sun Welcomes Your Comments

Sun is interested in improving its documentation and welcomes your comments and suggestions. To share your comments, go to http://docs.sun.com and click Send Comments. In the online form, provide the full document title and part number. The part number is a 7-digit or 9-digit number that can be found on the book's title page or in the document's URL. For example, the part number of this book is 820-1067.

CHAPTER 1

Performance and Monitoring Overview

Sun Java System Web Server (henceforth known as Web Server) is designed to meet the needs of the most demanding, high-traffic sites in the world. It can serve both static and dynamically generated content. Web Server can also run in SSL mode, enabling the secure transfer of information.

This guide helps you to define your server workload and size a system to meet your performance needs. Your environment is unique, however, so the impacts of the suggestions provided here also depend on your specific environment. Ultimately you must rely on your own judgement and observations to select the adjustments that are best for you.

This chapter provides a general discussion of server performance considerations, and more specific information about monitoring server performance.

This chapter includes the following topics:

■ “Performance Issues” on page 19

■ “Configuration” on page 20

■ “Virtual Servers” on page 20

■ “Server Farms” on page 21

■ “64–Bit Servers” on page 21

■ “SSL Performance” on page 21

■ “Monitoring Server Performance” on page 22

Performance Issues

The first step toward sizing your server is to determine your requirements. Performance means different things to users than to webmasters. Users want fast response times (typically less than 100 milliseconds), high availability (no “connection refused” messages), and as much interface control as possible. Webmasters and system administrators, on the other hand, want to see high connection rates, high data throughput, and uptime approaching 100%. In addition, for virtual servers the goal might be to provide a targeted level of performance at different price points. You need to define what performance means for your particular situation.

Here are some areas to consider:

■ The number of peak concurrent users

■ Security requirements

Encrypting your Web Server’s data streams with SSL makes an enormous difference to your site’s credibility for electronic commerce and other security conscious applications, but it can also seriously impact your CPU load. For more information, see “SSL Performance” on page 21.

■ The size of the document tree

■ Dynamic or static content

The content you serve affects your server’s performance. A Web Server delivering mostly static HTML can run much faster than a server that must execute CGIs for every query.

Configuration

Certain tuning parameters are set at the configuration level, so that every server instance that is based on the configuration has the same tuning information. In addition, some monitoring information is available at the configuration level, so you can monitor the performance of all instances based on the configuration. However, the bulk of the monitoring information is available at the individual server instance, or virtual server level. If you are using a single Web Server instance per configuration (your server is not part of a server farm), the configuration-level statistics show the information for the single server instance based on that configuration.

Virtual Servers

Virtual servers add another layer to the performance improvement process. Certain settings are tunable for the configuration, while others are based on an individual virtual server.

You can also use the quality of service (QoS) features to set resource utilization constraints for an individual virtual server. For example, you can use QoS features to limit the amount of bandwidth and the number of connections allowed for a virtual server. You can set these performance limits, track them, and optionally enforce them.

For more information about using the quality of service features, see Sun Java System Web Server 7.0 Update 1 Administrator’s Guide.

Server Farms

The clustering features of Web Server allow you to easily deploy to a server farm. Because all servers in a server farm share identical configurations, tuning is not done on a server-by-server basis.

64–Bit Servers

The performance for the 64–bit Web Server is not necessarily better than the performance for the 32–bit Web Server, but the 64–bit server scales better. Because the 32–bit Web Server process is confined to 4 GB of address space, it can run out of address space attempting to support simultaneous sessions beyond a certain limit. Even if the host machine has available memory and CPU resources, the 32–bit Web Server might not be able to take advantage of them because of the address space limit. The 64–bit Web Server can run more applications and servlets than the 32–bit server. Also, the 64–bit Web Server can cache several GBs of static content, while the 32–bit Web Server is confined to 4 GB of address space.
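The 4 GB figure follows from pointer width: a 32-bit process can address 2^32 bytes. As a rough illustration only, the following Python sketch estimates how many sessions fit before address space, rather than physical memory, becomes the limit. The reserved overhead and the per-session footprint are hypothetical numbers chosen for the example, not measurements of Web Server.

```python
GIB = 1024 ** 3

def address_space_bytes(pointer_bits: int) -> int:
    """Bytes addressable by a process with the given pointer width."""
    return 2 ** pointer_bits

def max_sessions(pointer_bits: int, reserved_bytes: int, bytes_per_session: int) -> int:
    """Upper bound on sessions that fit in the address space that remains
    after reserved_bytes (code, heap, caches) is subtracted."""
    usable = address_space_bytes(pointer_bits) - reserved_bytes
    return max(usable // bytes_per_session, 0)

# Hypothetical workload: 1 GiB reserved, 256 KiB of address space per session.
print(address_space_bytes(32) // GIB)          # 4
print(max_sessions(32, 1 * GIB, 256 * 1024))   # 12288
```

With the same hypothetical footprint, a 64-bit process is limited by available memory long before it exhausts its address space, which is the scaling advantage described above.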

In general, the tuning for the 64–bit Web Server is similar to the tuning for the 32–bit Web Server. The differences are mostly tuned at the operating system level. Tuning specifics are discussed in “Tuning UltraSPARC T1–Based Systems for Performance Benchmarking” on page 97.

SSL Performance

SSL always has a significant impact on throughput, so for best performance minimize your use of SSL, or consider using a multi-CPU server to handle it.

For SSL, the Web Server uses the NSS library. However, there are other options available for SSL:

■ If you are using the Solaris 10 operating system, kernel SSL (KSSL) is available. It does not support all the algorithms that NSS does, but it often provides better performance.

■ A cryptographic card hardware accelerator for SSL can also improve performance.

■ If you are using the 64–bit Web Server on Solaris, you can use the cryptographic accelerator of the UltraSPARC T1 processor.

Monitoring Server Performance

Making the adjustments described in this guide without measuring their effects doesn’t make sense. If you don’t measure the system’s behavior before and after making a change, you won’t know whether the change was a good idea, a bad idea, or merely irrelevant. You can monitor the performance of Web Server in several different ways.

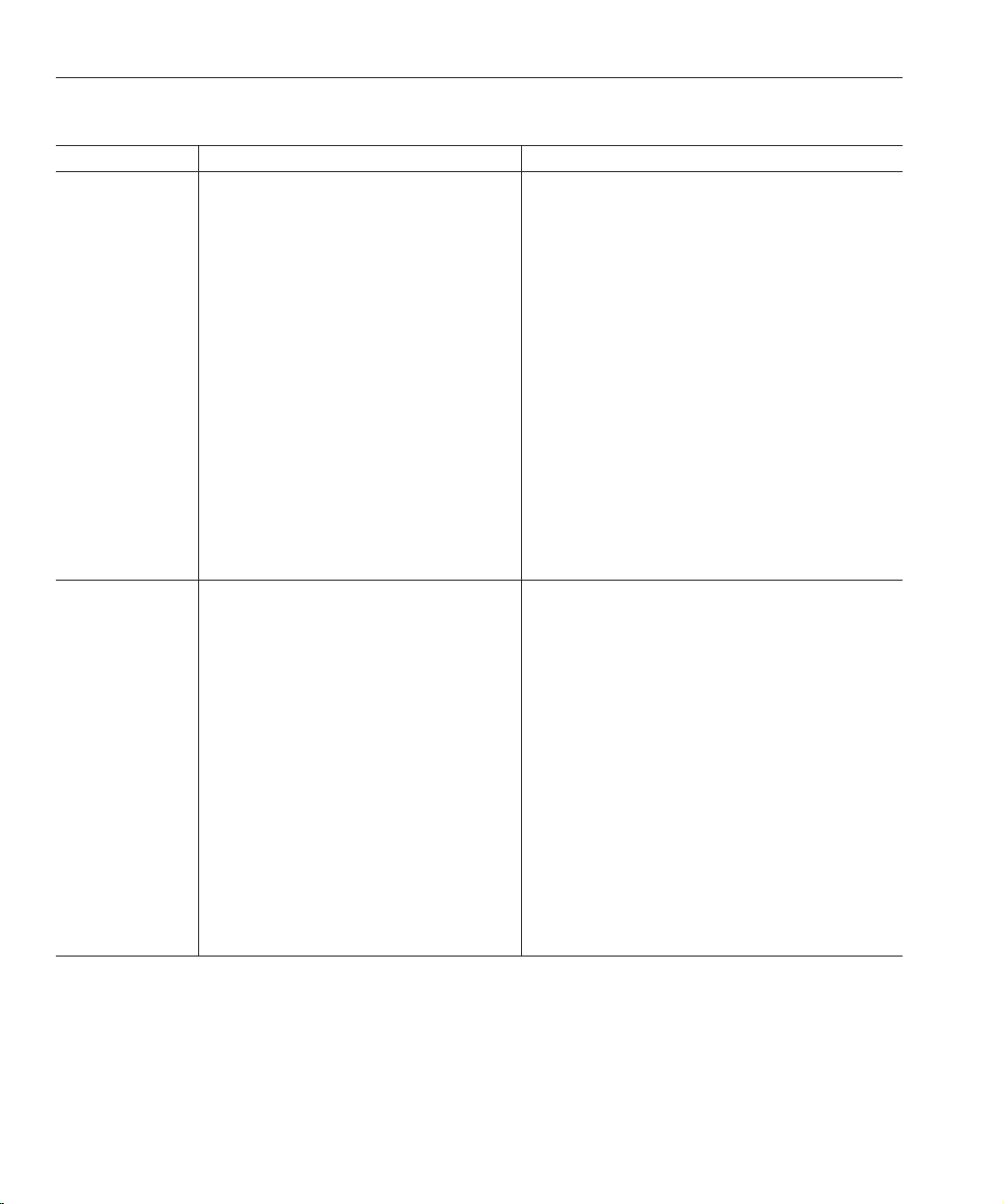

TABLE 1–1 Methods of Monitoring Performance

| Monitoring Method | How to Enable | How to Access | Advantages and Requirements |
|---|---|---|---|
| Statistics through the Admin Console | Enabled by default | In the Admin Console, for a configuration, click the Monitor tab | Accessible when session threads are hanging. Administration Server must be running. |
| Statistics through individual wadm commands | Enabled by default | Through wadm commands: get-config-stats, get-virtual-server-stats, get-webapp-stats, get-servlet-stats | Accessible when session threads are hanging. Administration Server must be running. |
| XML-formatted statistics (stats-xml) through a browser | Enable through Admin Console or through editing a configuration file | Through a URI | Administration Server need not be running. |
| XML-formatted statistics (stats-xml) through the command-line interface | Enabled by default | Through the wadm command get-stats-xml | Accessible when session threads are hanging. Administration Server must be running. |
| perfdump through a browser | Enable through Admin Console or through editing a configuration file | Through a URI | Administration Server need not be running. |
| perfdump through the command-line interface | Enabled by default | Through wadm command get-perfdump | Accessible when session threads are hanging. Administration Server must be running. |
| Java ES Monitoring | Enabled by default | Through the Java ES Monitoring Console | Only for Java ES installations. Administration Server must be running. |

Monitoring the server does have some impact on computing resources. In general, using perfdump through the URI is the least costly, followed by using stats-xml through a URI. Because using the Administration Server takes computing resources, the command-line interface and the Admin Console are the most costly monitoring methods.

For more information on these monitoring methods, see the following sections:

■ “About Statistics” on page 23

■ “Monitoring Current Activity Using the Admin Console” on page 25

■ “Monitoring Current Activity Using the CLI” on page 26

■ “Monitoring Current Activity Using stats.xml” on page 29

■ “Monitoring Current Activity Using perfdump” on page 31

■ “Monitoring Current Activity Using the Java ES Monitoring Console” on page 37

About Statistics

You can monitor many performance statistics through the Admin Console user interface, through the command-line interface, through the stats-xml URI, and through perfdump. For all these monitoring methods, the server uses statistics it collects. None of these monitoring methods will work if statistics are not collected.

The statistics give you information at the configuration level, the server instance level, or the virtual server level. The statistics are broken up into functional areas.

For the configuration, statistics are available in the following areas:

■ Requests

■ Errors

■ Response Time

For the server instance, statistics are available in the following areas:

■ Requests

■ Errors

■ Response Time

■ General

■ Java Virtual Machine (JVM™)

■ Connection Queue

■ Keep Alive

■ DNS

■ File Cache

■ Thread Pools

■ Session Replication

■ Session Threads, including Profiling data (available if profiling is enabled)

■ Java DataBase Connectivity (JDBC™) (available if a JDBC resource is created and the connection pool is accessed)

For the virtual server, statistics are available in the following areas:

■ General

■ Response

■ Web Applications

■ Profiling Data (available if profiling is enabled)

■ Servlet and Servlet Response Cache (available if the Servlet cache is enabled in sun-web.xml)

Some statistics default to zero if Quality of Service (QoS) is not enabled, for example, the count of open connections, the maximum open connections, the rate of bytes transmitted, and the maximum byte transmission rate.

Enabling Statistics

Statistics are activated by default on Web Server. However, if you have disabled them, you need to enable them again to monitor your server for performance. To enable statistics, use the Admin Console or the wadm command-line utility (CLI).

Note – Collecting statistics causes a slight hit to performance.

▼ To Enable Statistics from the Admin Console

1. From the Admin Console Common Tasks page, select the configuration.

2. Click Edit Configuration.

3. Click the General tab.

4. Click the Monitoring Settings sub tab.

5. On the Monitoring Settings page, under General Settings, select the Statistics Enabled checkbox.

6. Configure the interval and profiling.

■ The Interval is the period in seconds between statistics updates. A higher setting (less frequent) improves performance. The minimum value is .001 seconds; the default value is 5 seconds.

■ Profiling is activated by default. Deactivating it results in slightly less monitoring overhead.

7. Restart the server.

▼ To Enable Statistics from the CLI

1. Enter the following CLI command to enable statistics collection:

./wadm set-stats-prop --user=admin_user --password-file=password-file --config=myconfig enabled=true

To disable statistics, set enabled to false.

2. To set the interval and enable profiling, use the set-stats-prop interval and profiling properties. For more information, see the help for set-stats-prop.

3. Restart the server.
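When statistics settings are scripted, it can help to assemble the command line in one place. The following Python sketch builds the argument list for the set-stats-prop command shown above and rejects intervals below the documented .001 second minimum. It assumes that interval and profiling are passed as name=value pairs in the same way as enabled; that form is an assumption, so confirm it against the help for set-stats-prop. The function name and its parameters are hypothetical.

```python
def set_stats_prop_argv(user, password_file, config, enabled=True,
                        interval=None, profiling=None, wadm="./wadm"):
    """Build the argument list for `wadm set-stats-prop` (illustrative helper)."""
    # The guide documents a minimum interval of .001 seconds.
    if interval is not None and interval < 0.001:
        raise ValueError("interval must be at least 0.001 seconds")
    argv = [
        wadm, "set-stats-prop",
        f"--user={user}",
        f"--password-file={password_file}",
        f"--config={config}",
        f"enabled={'true' if enabled else 'false'}",
    ]
    # Assumption: interval and profiling use the same name=value form as enabled.
    if interval is not None:
        argv.append(f"interval={interval}")
    if profiling is not None:
        argv.append(f"profiling={'true' if profiling else 'false'}")
    return argv

print(" ".join(set_stats_prop_argv("admin_user", "password-file", "myconfig")))
```

The resulting list can be passed to subprocess.run(argv, check=True) on a host where wadm is installed. The server still has to be restarted afterward, as in step 3.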

Monitoring Current Activity Using the Admin Console

Frequently used statistics are available through the Admin Console, viewed as general statistics, instance statistics, and virtual server statistics.

▼ To Monitor Statistics from the Admin Console

1. In the Admin Console, from the Common Tasks page, select the Monitoring tab.

2. Select the configuration.

The configuration statistics are displayed.

3. From the drop-down list, select a View interval.

The statistics displayed in your browser are automatically updated at this interval.

4. Select the type of statistics to display.

The initial list of statistics types includes General Statistics, Instance Statistics, and Virtual Server Statistics.

If you choose Instance Statistics, click the name of the instance to monitor. Detailed statistics are then displayed, including information on processes and session replications.

If you choose Virtual Server Statistics, click the name of the virtual server to monitor. Statistics for the virtual server are displayed, including response statistics and web application statistics. This information is not provided through perfdump.

Monitoring Current Activity Using the CLI

You can also view statistics information using the wadm commands get-config-stats,

get-virtual-server-stats, get-webapp-stats and get-servlet-stats. Note that the

examples below do not contain all possible command options. For the complete syntax, see the

help for the command.

▼ To Monitor Statistics from the CLI

1. To get statistics for a configuration deployed on a single node, enter:

./wadm get-config-stats --user=admin-user --password-file=admin-password-file --config=config-name --node=node-name

Using the node option in this syntax restricts the output to a single node. To get the statistics at the configuration level, use the command without the node option.

The following shows an example of the output for a single node:

timeStarted=1168035653

secondsRunning=1404

countRequests=690546

rpsLast1MinAvg=4491.7666

rpsLast5MinAvg=1844.6061

rpsLast15MinAvg=637.37305

countErrors=0

epsLast1MinAvg=0.0

epsLast5MinAvg=0.0

epsLast15MinAvg=0.0

maxResponseTime=0.30789953

rtLast1MinAvg=5.3970284

rtLast5MinAvg=5.208407

rtLast15MinAvg=35.56042

countBytesReceived=96800935

countBytesTransmitted=689929574

countChildDied=0

countVirtualServers=2

instanceName=https-test

process.1.countThreadPools=2

process.1.jdbcPoolCount=1

process.1.countThreads=64

process.1.fractionSystemMemoryUsage=2887.0

process.1.countConnectionQueues=1

process.1.sizeResident=0

process.1.countIdleThreads=32

process.1.mode=1

process.1.sizeVirtual=0

process.1.countConfigurations=1

process.1.pid=15874


process.1.timeStarted=Jan 5, 2007 2:20:53 PM

process.1.DNSCache.countCacheHits=687804

process.1.DNSCache.countAsyncNameLookup=0

process.1.DNSCache.countAsyncLookupsInProgress=0

process.1.DNSCache.flagAsyncEnabled=false

process.1.DNSCache.countAsyncAddrLookups=0

process.1.DNSCache.flagCacheEnabled=true

process.1.DNSCache.countCacheMisses=75

process.1.JDBCPool.1.countQueued=32

process.1.JDBCPool.1.countFreeConnections=0

process.1.JDBCPool.1.peakConnections=32

process.1.JDBCPool.1.millisecondsPeakWait=72

process.1.JDBCPool.1.countWaitQueueTimeouts=288

process.1.JDBCPool.1.peakQueued=64

process.1.JDBCPool.1.maxConnections=32

process.1.JDBCPool.1.currentConnections=32

process.1.JDBCPool.1.millisecondsAverageQueued=1.0

process.1.JDBCPool.1.countTotalFailedValidationConnections=0

process.1.JDBCPool.1.countLeasedConnections=32

process.1.JDBCPool.1.countTotalLeasedConnections=414

process.1.JDBCPool.1.countConnectionIdleTimeouts=1

process.1.JDBCPool.1.name=jdbc/jdbc-simple_1

process.1.connectionQueue.1.countQueued15MinuteAverage=4.3203125

process.1.connectionQueue.1.countQueued=0

process.1.connectionQueue.1.countQueued1MinuteAverage=0.046875

process.1.connectionQueue.1.countTotalQueued=79171

process.1.connectionQueue.1.countQueued5MinuteAverage=4.03125

process.1.connectionQueue.1.countOverflows=0

process.1.connectionQueue.1.maxQueued=1288

process.1.connectionQueue.1.ticksTotalQueued=724956383

process.1.connectionQueue.1.countTotalConnections=863

process.1.connectionQueue.1.peakQueued=64

process.1.connectionQueue.1.name=cq1

process.1.fileCache.countContentMisses=7

process.1.fileCache.maxMmapCacheSize=0

process.1.fileCache.sizeHeapCache=27520

process.1.fileCache.countMisses=22

process.1.fileCache.countContentHits=620662

process.1.fileCache.maxEntries=1024

process.1.fileCache.flagEnabled=true

process.1.fileCache.secondsMaxAge=30

process.1.fileCache.sizeMmapCache=0

process.1.fileCache.countInfoHits=1862013

process.1.fileCache.maxHeapCacheSize=10747924

process.1.fileCache.countOpenEntries=0

process.1.fileCache.countHits=2482682

process.1.fileCache.maxOpenEntries=1024

process.1.fileCache.countEntries=12


process.1.fileCache.countInfoMisses=19

process.1.jvm.countGarbageCollections=96

process.1.jvm.sizeHeap=67762048

process.1.jvm.countThreads=79

process.1.jvm.countClassesUnloaded=0

process.1.jvm.vMVendor=Sun Microsystems Inc.

process.1.jvm.countTotalClassesLoaded=3170

process.1.jvm.vMName=Java HotSpot(TM) Server VM

process.1.jvm.countTotalThreadsStarted=81

process.1.jvm.countClassesLoaded=3170

process.1.jvm.peakThreads=79

process.1.jvm.millisecondsGarbageCollection=1981

process.1.jvm.vMVersion=1.5.0_09-b03

process.1.keepalive.countConnections=32

process.1.keepalive.maxConnections=200

process.1.keepalive.countFlushes=0

process.1.keepalive.countRefusals=0

process.1.keepalive.countTimeouts=6

process.1.keepalive.countHits=686943

process.1.keepalive.secondsTimeout=30

process.1.threadPool.1.countQueued=0

process.1.threadPool.1.countThreadsIdle=1

process.1.threadPool.1.threadPoolId=NativePool

process.1.threadPool.1.maxThreads=128

process.1.threadPool.1.countThreads=1

process.1.threadPool.1.maxQueued=0

process.1.threadPool.1.peakQueued=0

process.1.threadPool.1.name=NativePool

process.1.threadPool.2.countQueued=0

process.1.threadPool.2.countThreadsIdle=1

process.1.threadPool.2.threadPoolId=my-custom-pool

process.1.threadPool.2.maxThreads=128

process.1.threadPool.2.countThreads=1

process.1.threadPool.2.maxQueued=0

process.1.threadPool.2.peakQueued=0

process.1.threadPool.2.name=my-custom-pool

2. To get statistics for a virtual server, enter:

./wadm get-virtual-server-stats --user=admin-user --password-file=admin-password-file --config=config-name --vs=virtual-server-name

Because the node option is not used, this syntax gives the aggregate statistics for the virtual server across all the nodes where the configuration has been deployed. Using the node option restricts the output to a single node.

3. To get statistics for a deployed web application, enter:

./wadm get-webapp-stats --user=admin-user --password-file=admin-password-file --config=config-name --node=node-name --vs=virtual-server-name --uri=URI

The syntax gets the statistics for a given web application deployed on the given virtual server of the given instance. To get the aggregated web application statistics for a given configuration across all the nodes where the configuration has been deployed, use the command without the node option.

The following example shows the output for the URI hello:

countActiveSessions=1

countExpiredSessions=0

countJsps=1

countRejectedSessions=0

countReloadedJsps=1

countSessions=1

peakActiveSessions=1

secondsSessionAliveAverage=0

secondsSessionAliveMax=0

uri=/hello

vsName=myvs.sun.com
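The name=value output shown in this procedure is easy to post-process. The following Python sketch parses such output into a dictionary and derives two figures from values that appear in the sample get-config-stats output. The function names are hypothetical, and both derived figures rest on assumptions about how the counters relate: that countHits and countMisses are disjoint, and that busy threads are total threads minus idle threads.

```python
def parse_stats(text):
    """Parse wadm-style `name=value` lines into a dict of strings."""
    stats = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or "=" not in line:
            continue  # skip blank lines and anything that is not name=value
        key, _, value = line.partition("=")  # split on the first '=' only
        stats[key.strip()] = value.strip()
    return stats

def hit_ratio(hits, misses):
    """Fraction of lookups served from cache, assuming hits and misses are disjoint."""
    total = hits + misses
    return hits / total if total else 0.0

# A few lines copied from the sample get-config-stats output.
sample = """
process.1.fileCache.countHits=2482682
process.1.fileCache.countMisses=22
process.1.countThreads=64
process.1.countIdleThreads=32
"""

stats = parse_stats(sample)
file_cache_ratio = hit_ratio(int(stats["process.1.fileCache.countHits"]),
                             int(stats["process.1.fileCache.countMisses"]))
# Assumption: busy threads = total threads - idle threads.
busy_threads = int(stats["process.1.countThreads"]) - int(stats["process.1.countIdleThreads"])

print(f"file cache hit ratio: {file_cache_ratio:.4%}")
print(f"busy threads: {busy_threads}")
```

Values are kept as strings because some entries, such as process.1.timeStarted or vsName, are not numeric. Convert only the counters you need.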

Monitoring Current Activity Using stats.xml

You can also display statistics using stats-xml, which displays statistics in XML format. The

output of stats-xml is in XML so that various tools can easily parse the statistics. You can view

the stats-xml output through a URI, which you have to enable, or you can view the stats-xml

output through the CLI, which is enabled by default.

▼ To Enable the stats-xml URI from the Admin Console

If you enable the stats-xml URI, you can access statistics for your server in XML format through a browser. Note that when you use the stats-xml URI, you can access statistics even when the Administration Server is not running. Also, with the stats-xml URI activated, users can see the statistics information for your server, unless you take precautions to deny access.

1. On the Common Tasks page, select the configuration from the pull-down menu on the left.

2. Select the virtual server from the pull-down menu on the right, then click Edit Virtual Server.

3. On the Server Settings tab, click the Monitoring Settings sub tab.

4. Select the XML Report enabled checkbox.

5. Provide a URI, for example, /stats-xml.

6. Click Save.

7. Deploy the configuration.

8. Access the stats-xml URI, for example:

http://yourhost:port/stats-xml

The statistics are displayed in XML format.

▼ To Enable the stats-xml URI from the CLI

1. Use the following command to enable stats-xml:

./wadm enable-stats-xml --user=admin-user --password-file=admin-password-file [--uri-prefix=prefix] --config=config-name --vs=virtual-server-name

Use the uri-prefix option to set the stats-xml URI.

2. Deploy the configuration using the wadm deploy-config command.

3. Access the stats-xml URI, for example:

http://yourhost:port/stats-xml

The statistics are displayed in XML format.

▼ To Limit the stats-xml Statistics Displayed in the URI

You can modify the stats-xml URI to limit the data it provides.

● Modify the stats-xml URI to limit the information by setting elements to 0 or 1. An element set to 0 is not displayed in the stats-xml output. For example:

http://yourhost:port/stats-xml?thread=0&process=0

This syntax limits the stats-xml output so that thread and process statistics are not included. By default all statistics are enabled (set to 1).

Most of the statistics are available at the server level, but some are available at the process level.

Use the following syntax elements to limit stats-xml:

■ cache-bucket

■ connection-queue

■ connection-queue-bucket (process-level)

■ cpu-info

■ dns-bucket

■ jdbc-resource-bucket

■ keepalive-bucket

■ process

■ profile

■ profile-bucket (process-level)

■ request-bucket

■ servlet-bucket

■ session-replication

■ thread

■ thread-pool

■ thread-pool-bucket (process-level)

■ virtual-server

■ web-app-bucket
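The query string described above can be generated rather than typed by hand. The following Python sketch appends element=0 for each element to suppress. The function name is hypothetical, and the host and port in the example are placeholders.

```python
from urllib.parse import urlencode

def stats_xml_url(base_url, hide=()):
    """Return base_url with `element=0` appended for each element to suppress."""
    if not hide:
        return base_url  # by default all statistics are enabled
    query = urlencode([(name, 0) for name in hide])
    return f"{base_url}?{query}"

# Placeholder host and port; the element names come from the list above.
print(stats_xml_url("http://yourhost:8080/stats-xml", ["thread", "process"]))
# http://yourhost:8080/stats-xml?thread=0&process=0
```

Suppressing elements that you do not need reduces the size of the XML that the server has to produce and that your tools have to parse.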

▼ To View stats-xml Output from the CLI

In addition to a URI, you can also access stats-xml output through the command-line interface. It is enabled by default. Unlike viewing stats-xml output through the URI, the Administration Server must be running to view stats-xml output at the command-line. However, if request processing threads are hanging in your server (for example, because they are busy), and you cannot use the URI, you can still access stats-xml output through the CLI.

● To view the stats-xml output through the command-line interface, enter:

./wadm get-stats-xml --user=admin-user --password-file=admin-password-file --config=config-name --node=node-name
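Because the output is XML, a standard parser can summarize it. The following Python sketch counts how often each element name occurs. The sample document is a hypothetical, heavily simplified stand-in: the element names thread, process, thread-pool, and virtual-server are taken from the list of stats-xml elements in this guide, but the root element, the nesting, and the absence of attributes are assumptions made for illustration, not the real stats-xml schema.

```python
import xml.etree.ElementTree as ET
from collections import Counter

def count_elements(xml_text):
    """Count how many times each element name occurs in the document."""
    root = ET.fromstring(xml_text)
    return Counter(elem.tag for elem in root.iter())

# Hypothetical stand-in for real stats-xml output (structure is assumed).
sample = """<stats>
  <server>
    <process>
      <thread/>
      <thread/>
      <thread-pool/>
    </process>
    <virtual-server/>
  </server>
</stats>"""

counts = count_elements(sample)
print(counts["thread"])          # 2
print(counts["virtual-server"])  # 1
```

A count like this is a quick way to check which sections a given server actually reports before writing more specific queries against the real output.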

Monitoring Current Activity Using perfdump

perfdump is a Server Application Function (SAF) built into Web Server that collects various pieces of performance data from the Web Server internal statistics and displays them in ASCII text. The perfdump output does not display all the statistics available through the command-line statistics or the Admin Console, but it can still be a useful tool. For example, you can still use perfdump even if the Administration Server is not running. You can view the perfdump output through the CLI, which is enabled by default, or you can view the perfdump output through a URI, which you have to enable. If you enable the URI, you must control access to the perfdump URI; otherwise, it can be visible to users.

With perfdump, the statistics are unified. Rather than monitoring a single process, statistics are multiplied by the number of processes, which gives you an accurate view of the server as a whole.

For information on tuning the information displayed by perfdump, see “Using Monitoring Data to Tune Your Server” on page 48.

▼ To Enable the perfdump URI from the Admin Console

You can enable perfdump URI for a virtual server through the Admin Console.

Note – The statistics displayed by perfdump are for the server as a whole. If you enable perfdump on one virtual server, it displays statistics for the whole server, not an individual virtual server.

1. From Common Tasks, select a configuration.

2. Select the virtual server and click Edit Virtual Server.

3. Click the Monitoring Settings tab.

4. Select the Plain Text Report Enabled checkbox.

5. Provide a URI for accessing the report, for example /.perf.

6. Click Save.

7. Deploy the configuration.

8. To access perfdump, access the URI on the virtual server.

For example: http://localhost:80/.perf

You can request the perfdump statistics and specify how frequently (in seconds) the browser should automatically refresh. The following example sets the refresh to every 5 seconds:

http://yourhost/.perf?refresh=5

▼ To Enable the perfdump URI from the CLI

1. Use the following command to enable perfdump:

./wadm enable-perfdump --user=admin-user --password-file=admin-password-file [--uri=uri] --config=config-name --vs=virtual-server-name

Use the uri option to set the perfdump URI.

2. Deploy the configuration using the wadm deploy-config command.

3. To access perfdump, access the URI on the virtual server.

For example: http://localhost:80/.perf

You can request the perfdump statistics and specify how frequently (in seconds) the browser should automatically refresh. The following example sets the refresh to every 5 seconds:

http://yourhost/.perf?refresh=5

▼ To View the perfdump Data from the CLI

In addition to a URI, you can also access perfdump output through the command-line interface. It is enabled by default. Unlike viewing perfdump output through the URI, the Administration Server must be running to view perfdump output at the command-line. However, if request processing threads are hanging in your server (for example, because they are busy), and you cannot use the URI, you can still access perfdump output through the CLI.

● To view the perfdump output through the command-line interface, enter:

./wadm get-perfdump --user=admin-user --password-file=admin-password-file --config=config-name --node=node-name

The output appears in your command window.
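Because perfdump output is plain text, individual fields can be pulled out with a short script. The following Python sketch extracts slash-separated counters such as the Current/Peak/Limit queue length and the KeepAliveCount pair, using two lines from the sample perfdump output in this guide. The function names are hypothetical, and the regular expressions assume the label and the numbers stay on one line, as they do in that sample.

```python
import re

def parse_triple(text, label):
    """Return (a, b, c) as ints from a line like '<label> 2/237/1352', or None."""
    match = re.search(re.escape(label) + r"\s+(\d+)/(\d+)/(\d+)", text)
    return tuple(int(g) for g in match.groups()) if match else None

def parse_pair(text, label):
    """Return (a, b) as ints from a line like '<label> 198/200', or None."""
    match = re.search(re.escape(label) + r"\s+(\d+)/(\d+)", text)
    return tuple(int(g) for g in match.groups()) if match else None

# Lines copied from the sample perfdump output in this guide.
sample = """
ConnectionQueue:
Current/Peak/Limit Queue Length 2/237/1352
KeepAliveInfo:
KeepAliveCount 198/200
"""

current, peak, limit = parse_triple(sample, "Current/Peak/Limit Queue Length")
keepalive_used, keepalive_max = parse_pair(sample, "KeepAliveCount")

print(f"peak queue length used {peak / limit:.1%} of the limit")
print(f"keep-alive connections in use: {keepalive_used} of {keepalive_max}")
```

Comparing a peak or current value with its limit in this way is a simple check for counters that are approaching their configured maximum.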

Sample perfdump Output

The following is sample perfdump output:

webservd pid: 29133

Sun Java System Web Server 7.0 B07/13/2006 17:09 (SunOS DOMESTIC)

Server started Fri Jul 14 14:34:15 2006

Process 29133 started Fri Jul 14 14:34:17 2006


ConnectionQueue:

----------------------------------------

Current/Peak/Limit Queue Length 2/237/1352

Total Connections Queued 67364017

Average Queue Length (1, 5, 15 minutes) 4.52, 4.73, 4.85

Average Queueing Delay 13.63 milliseconds

ListenSocket ls1:

-----------------------

Address https://0.0.0.0:2014

Acceptor Threads 1

Default Virtual Server https-test

KeepAliveInfo:

-------------------

KeepAliveCount 198/200

KeepAliveHits 0

KeepAliveFlushes 0

KeepAliveRefusals 56844280

KeepAliveTimeouts 365589

KeepAliveTimeout 10 seconds

SessionCreationInfo:

-----------------------

Active Sessions 128

Keep-Alive Sessions 0

Total Sessions Created 128/128

Server cache disabled

Native pools:

---------------------------

NativePool:

Idle/Peak/Limit 1/1/128

Work Queue Length/Peak/Limit 0/0/0

TestPool:

Idle/Peak/Limit 5/5/10

Work Queue Length/Peak/Limit 0/0/15

DNSCacheInfo:

-----------------

enabled yes

CacheEntries 4/1024

HitRatio 62854802/62862912 ( 99.99%)

Async DNS disabled

Performance Counters:

-----------------------------------------------

Average Total Percent

Total number of requests: 62647125

Request processing time: 0.0343 2147687.2500

default-bucket (Default bucket)

Number of Requests: 62647125 (100.00%)

Number of Invocations: 3374170785 (100.00%)

Latency: 0.0008 47998.2500 ( 2.23%)

Function Processing Time: 0.0335 2099689.0000 ( 97.77%)

Total Response Time: 0.0343 2147687.2500 (100.00%)

Sessions:

-----------------------------------------------------------------------------------------------------------

Process Status Client Age VS Method URI Function

29133 response 192.6.7.7 115 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 response 192.6.7.7 8 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 response 192.6.7.7 4 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 response 10.5.8.19 4 https-test GET /perf service-dump

29133 response 192.6.7.7 3 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 response 192.6.7.7 3 https-test GET /qa_webapp/CheckNetwork.class service-j2ee


29133 response 192.6.7.7 2 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 response 192.6.7.7 2 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 response 192.6.7.7 2 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 response 192.6.7.7 2 https-test GET /qa_webapp/CheckNetwork.class service-j2ee

29133 request 192.6.7.7 0

29133 request 192.6.7.7 0

29133 request 192.6.7.7 0

29133 request 192.6.7.7 0

29133 request 192.6.7.7 0

29133 response 192.6.7.7 0 https-test GET /file1.shtml shtml_send

29133 request 192.6.7.7 0

29133 request 192.6.7.7 0

29133 response 192.6.7.7 0 https-test GET /find-pathinfo-forward/pathinfo.pl/p/info send-cgi

29133 request 192.6.7.7 0

29133 updating 192.6.7.7

29133 updating 192.6.7.7

29133 updating 192.6.7.7

29133 updating 192.6.7.7

.

.

.
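Several lines in the sample output above pack related counters into one slash-separated value, such as Current/Peak/Limit Queue Length 2/237/1352. The following Python sketch shows one way to pull those values out of saved perfdump text. It assumes the line layout seen in the sample (a label followed by whitespace and the slash-separated integers). Real output may be spaced differently, and the function name is hypothetical.

```python
import re

# A few lines copied from the sample output above.
SAMPLE = """\
ConnectionQueue:
----------------------------------------
Current/Peak/Limit Queue Length 2/237/1352
Total Connections Queued 67364017
KeepAliveInfo:
-------------------
KeepAliveCount 198/200
KeepAliveTimeouts 365589
"""

# A label, whitespace, then two or more integers separated by slashes.
_SLASH_LINE = re.compile(r"^(?P<label>.*?)\s+(?P<values>\d+(?:/\d+)+)\s*$")


def parse_slash_counters(text):
    """Map each label to a tuple of the integers that follow it."""
    counters = {}
    for line in text.splitlines():
        match = _SLASH_LINE.match(line.strip())
        if match:
            values = match.group("values").split("/")
            counters[match.group("label")] = tuple(int(v) for v in values)
    return counters


if __name__ == "__main__":
    counters = parse_slash_counters(SAMPLE)
    current, peak, limit = counters["Current/Peak/Limit Queue Length"]
    # How close the connection queue has come to its limit.
    print(f"peak queue usage: {peak / limit:.1%}")
```

Lines that carry a single number, such as Total Connections Queued, are deliberately skipped because they contain no slash-separated group.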

Using Performance Buckets

Performance buckets allow you to define buckets and link them to various server functions. Every time one of these functions is invoked, the server collects statistical data and adds it to the bucket. For example, send-cgi and service-j2ee are functions used to serve the CGI and Java servlet requests respectively. You can either define two buckets to maintain separate counters for CGI and servlet requests, or create one bucket that counts requests for both types of dynamic content. The cost of collecting this information is minimal, and the impact on the server performance is usually negligible. This information can later be accessed using perfdump.

The following information is stored in a bucket:

■

Name of the bucket. This name associates the bucket with a function.

■

Description. A description of the functions with which the bucket is associated.

■

Number of requests for this function. The total number of requests that caused this

function to be called.

■

Number of times the function was invoked. This number might not coincide with the

number of requests for the function, because some functions might be executed more than

once for a single request.

■

Function latency or the dispatch time. The time taken by the server to invoke the function.

■

Function time. The time spent in the function itself.

The default-bucket is predefined by the server. It records statistics for the functions not associated with any user-defined bucket.
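To make the list of stored items concrete, the following Python sketch models a bucket as a small record with one field per item. It is an illustration only, not the server's internal data structure. The class name, field names, and the record method are assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class PerfBucket:
    """Illustrative model of the data a performance bucket holds."""

    name: str                       # associates the bucket with a function
    description: str                # describes the associated functions
    requests: int = 0               # requests that caused the function to be called
    invocations: int = 0            # times the function was invoked
    latency_seconds: float = 0.0    # dispatch time accumulated so far
    function_seconds: float = 0.0   # time spent inside the function so far

    def record(self, latency, function_time, new_request=True):
        """Add one invocation; count a request only the first time it is seen."""
        self.invocations += 1
        if new_request:
            self.requests += 1
        self.latency_seconds += latency
        self.function_seconds += function_time


if __name__ == "__main__":
    bucket = PerfBucket("cgi-bucket", "CGI Stats")
    bucket.record(0.001, 0.020)                      # first call for a request
    bucket.record(0.001, 0.030, new_request=False)   # same request, second call
    print(bucket.requests, bucket.invocations)
```

The two counters diverge in the usage example because one request invoked the function twice, which is the situation the invocation count is meant to capture.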


Configuration

You must specify all configuration information for performance buckets in the magnus.conf and obj.conf files. Only the default-bucket is automatically enabled.

First, you must enable performance statistics collection and perfdump.

The following examples show how to define new buckets in magnus.conf:

Init fn="define-perf-bucket" name="acl-bucket" description="ACL bucket"

Init fn="define-perf-bucket" name="file-bucket" description="Non-cached responses"

Init fn="define-perf-bucket" name="cgi-bucket" description="CGI Stats"

The examples above create three buckets: acl-bucket, file-bucket, and cgi-bucket. To associate these buckets with functions, add bucket=bucket-name to the obj.conf directive for each function whose performance you want to measure.

Example

PathCheck fn="check-acl" acl="default" bucket="acl-bucket"

...

Service method="(GET|HEAD|POST)" type="*~magnus-internal/*" fn="send-file" bucket="file-bucket"

...

<Object name="cgi">

ObjectType fn="force-type" type="magnus-internal/cgi"

Service fn="send-cgi" bucket="cgi-bucket"

</Object>

For more information, see “The bucket Parameter” in Sun Java System Web Server 7.0 Update 1 Administrator’s Configuration File Reference.
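If you maintain many buckets, the two kinds of lines shown above can be generated rather than typed by hand. The Python sketch below reproduces the define-perf-bucket line format and the bucket parameter exactly as they appear in the examples. The helper names are hypothetical, and the sketch does no escaping, so it assumes names and descriptions contain no double quotes.

```python
def define_bucket_directive(name, description):
    """Return a magnus.conf Init line in the form shown in the examples above."""
    return f'Init fn="define-perf-bucket" name="{name}" description="{description}"'


def with_bucket(directive, bucket):
    """Append bucket="..." to an existing obj.conf directive line."""
    return f'{directive.rstrip()} bucket="{bucket}"'


if __name__ == "__main__":
    # Bucket names and descriptions taken from the examples above.
    buckets = {
        "acl-bucket": "ACL bucket",
        "file-bucket": "Non-cached responses",
        "cgi-bucket": "CGI Stats",
    }
    for name, description in buckets.items():
        print(define_bucket_directive(name, description))
    print(with_bucket('Service fn="send-cgi"', "cgi-bucket"))
```

Review any generated lines before copying them into magnus.conf or obj.conf, and back up both files first.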

Performance Report

The server statistics in buckets can be accessed using perfdump. The performance buckets

information is located in the last section of the report returned by perfdump.

The report contains the following information:

■

Average, Total, and Percent columns give data for each requested statistic.

■

Request Processing Time is the total time required by the server to process all requests it

has received so far.

■

Number of Requests is the total number of requests for the function.


■

Number of Invocations is the total number of times that the function was invoked. This differs from the number of requests in that a function could be called multiple times while processing one request. The percentage column for this row is calculated in reference to the total number of invocations for all of the buckets.

■

Latency is the time in seconds that Web Server takes to prepare for calling the function.

■

Function Processing Time is the time in seconds that Web Server spent inside the function.

The percentage of Function Processing Time and Total Response Time is calculated with

reference to the total Request Processing Time.

■

Total Response Time is the sum in seconds of Function Processing Time and Latency.

The following is an example of the performance bucket information available through

perfdump:

Performance Counters:

-----------------------------------------------

Average Total Percent

Total number of requests: 62647125

Request processing time: 0.0343 2147687.2500

default-bucket (Default bucket)

Number of Requests: 62647125 (100.00%)

Number of Invocations: 3374170785 (100.00%)

Latency: 0.0008 47998.2500 ( 2.23%)

Function Processing Time: 0.0335 2099689.0000 ( 97.77%)

Total Response Time: 0.0343 2147687.2500 (100.00%)
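The relationships described in the list above can be checked against the sample counters. The following Python sketch recomputes the Average and Percent columns from the Total column. The variable names are introduced here for illustration, and the sketch assumes that each average is the total divided by the number of requests, which is consistent with the sample figures but is not stated explicitly in this guide.

```python
# Totals copied from the sample Performance Counters output above.
requests = 62647125
invocations = 3374170785
request_processing_total = 2147687.25   # seconds
latency_total = 47998.25                # seconds
function_total = 2099689.0              # seconds

# Total Response Time is the sum of Function Processing Time and Latency.
response_total = latency_total + function_total

# Assumed: Average = Total / number of requests.
avg_latency = latency_total / requests
avg_function = function_total / requests
avg_response = response_total / requests

# Percentages are relative to the total Request Processing Time.
latency_pct = 100 * latency_total / request_processing_total
function_pct = 100 * function_total / request_processing_total

# A function can be invoked several times while one request is processed.
invocations_per_request = invocations / requests

print(f"Latency:                  {avg_latency:.4f} {latency_total:.4f} ({latency_pct:.2f}%)")
print(f"Function Processing Time: {avg_function:.4f} {function_total:.4f} ({function_pct:.2f}%)")
print(f"Total Response Time:      {avg_response:.4f} {response_total:.4f}")
print(f"Invocations per request:  {invocations_per_request:.2f}")
```

In this sample, the default bucket averages roughly 54 function invocations for each request, which illustrates why the invocation count and the request count are reported separately.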

Monitoring Current Activity Using the Java ES Monitoring Console

The statistics available through the Web Server Admin Console and the command-line interface are also available through the Java ES Monitoring Console. Though the information is the same, it is presented in a different format, using the Common Monitoring Data Model (CMM). Though this guide covers monitoring using tools available in the Web Server, you could also monitor your server using the Java ES monitoring tools. For more information on using the Java ES monitoring tools, see Sun Java Enterprise System 5 Monitoring Guide. Use the same settings to tune the server, regardless of which monitoring method you are using.


CHAPTER 2


Tuning Sun Java System Web Server

This chapter describes specific adjustments you can make that might improve Sun Java System Web Server performance. It provides an overview of Web Server's connection-handling process so that you can better understand the tuning settings. The chapter includes the following topics:

■

“General Tuning Tips” on page 39

■

“Understanding Threads, Processes, and Connections” on page 40

■

“Mapping Web Server 6.1 Tuning Parameters to Web Server 7.0” on page 46

■

“Using Monitoring Data to Tune Your Server” on page 48

■

“Tuning the ACL User Cache” on page 77

■

“Tuning Java Web Application Performance” on page 78

■

“Tuning CGI Stub Processes (UNIX/Linux)” on page 81

■

“Using find-pathinfo-forward” on page 82

■

“Using nostat” on page 82

■

“Using Busy Functions” on page 83

Note – Be very careful when tuning your server. Always back up your configuration files before making any changes.

General Tuning Tips

As you tune your server, it is important to remember that your specific environment is unique.

The impacts of the suggestions provided in this guide will vary, depending on your specific