
REFERENCE MANUAL

Ranger E/D

MultiScan 3D camera with Gigabit Ethernet (E)

3D camera with Gigabit Ethernet (D)


Please read the complete manual before attempting to operate your Ranger.

WARNING


Turn off power before connecting

Never connect any signals while the Ranger unit is powered.

Never connect a powered Ranger E/D Power-I/O terminal or powered I/O signals to a Ranger.

Do not open the Ranger

The Ranger unit should not be opened. The Ranger contains no user-serviceable parts inside.

Safety hints if used with laser equipment

The Ranger is often intended to be used in combination with laser products.

The user is responsible for complying with all laser safety requirements according to the laser safety standards IEC 60825-1 and 21 CFR 1040.10/11 (CDRH) respectively.

Please read the chapter Laser Safety in Appendix B carefully.

Turn off the laser power before maintenance

If the Ranger is used with a laser (accessory), the power to the laser must be turned off

before any maintenance is performed. Failure to turn this power off when maintaining the

unit may result in hazardous radiation exposure.

ISM Radio Frequency Classification - EN55011 - Group 1, Class A

Class A equipment is intended for use in an industrial environment. There may be potential difficulties in ensuring electromagnetic compatibility in other environments, due to conducted as well as radiated disturbances.

Explanations:

Group 1 – ISM equipment (ISM = Industrial, Scientific and Medical)

Group 1 contains all ISM equipment in which there is intentionally generated and/or used conductively coupled radio-frequency energy which is necessary for the internal functioning of the equipment itself.

Class A equipment is equipment suitable for use in all establishments other than domestic and those directly connected to a low voltage power supply network which supplies buildings used for domestic purposes.

Class A equipment shall meet class A limits.

Note: Although class A limits have been derived for industrial and commercial establishments, administrations may allow, with whatever additional measures are necessary, the installation and use of class A ISM equipment in a domestic establishment or in an establishment connected directly to domestic electricity power supplies.

Please read and follow ALL Warning statements throughout this manual.

Windows and Visual Studio are registered trademarks of Microsoft Corporation.

All other mentioned trademarks or registered trademarks are the trademarks or registered trademarks of their respective owners.

SICK uses standard IP technology for its products, e.g. IO Link, industrial PCs. The focus here is on providing

availability of products and services. SICK always assumes that the integrity and confidentiality of data and rights

involved in the use of the above-mentioned products are ensured by customers themselves. In all cases, the

appropriate security measures, e.g. network separation, firewalls, antivirus protection, patch management, etc.,

are always implemented by customers themselves, according to the situation.

© SICK AG 2011-06-17

All rights reserved

Subject to change without prior notice.


Contents

1 Introduction

2 Overview

2.1 Measuring with the Ranger

2.2 Mounting the Ranger

2.3 Configuring the Ranger

2.3.1 Ranger Studio

2.3.2 Measurement Methods

2.4 Developing Applications

2.5 Triggering

3 Mounting Rangers and Lightings

3.1 Range (3D) Measurement

3.1.1 Occlusion

3.1.2 Height Range and Resolution

3.1.3 Main Geometries

3.2 Intensity and Scatter Measurements

3.3 MultiScan

3.4 Color Measurements

3.5 Light sources for Color and Gray Measurements

3.5.1 Incandescent lamps

3.5.2 Halogen lamps

3.5.3 Fluorescent tubes

3.5.4 White LEDs

3.5.5 Colored LEDs

4 Ranger Studio

4.1 Ranger Studio Main Window

4.1.1 Visualization Tabs

4.2 Zoom Windows

4.3 Parameter Editor

4.3.1 Flash retrieve and store of parameters

4.4 Using Ranger Studio

4.4.1 Connect and Get an Image

4.4.2 Adjust Exposure Time

4.4.3 Set Region-of-Interest

4.4.4 Collection of 3D Data

4.4.5 Getting a Complete Object in One Image

4.4.6 White Balancing the Color Data

4.4.7 Visualizing Color Images

4.4.8 Save Visualization Windows

4.4.9 Save and Load Measurement Data

5 Configuring Ranger E and D

5.1 Selecting Configurations and Components

5.2 Setting Region-of-Interest

5.3 Different Triggering Concepts

5.4 Enable Triggering

5.5 Pulse Triggering Using an Encoder

5.5.1 Triggering Scans

5.5.2 Embedding Mark Data

©SICK AG • Advanced Industrial Sensors • www.sick.com • All rights reserved 3


5.6 Setting Exposure Time

5.7 Range Measurement Settings

5.8 Details on 3D Profiling Algorithms

5.9 Color Data Acquisition

5.9.1 White Balancing

5.9.2 Color Channel Registration

5.10 Calibration

5.10.1 Calibrated Data

5.10.2 Rectified Images

5.10.3 Physical Setup

5.10.4 Calibration and 3D Cameras

6 Ranger D Parameters

6.1 System settings

6.2 Ethernet Settings

6.3 Image Configuration

6.4 Measurement Configuration

7 Ranger E Parameters

7.1 System settings

7.2 Ethernet Settings

7.3 Image Configuration

7.4 Measurement Configuration

7.5 Measurement Components

7.5.1 Horizontal Threshold (HorThr)

7.5.2 Horizontal Max (HorMax)

7.5.3 Horizontal Max and Threshold (HorMaxThr)

7.5.4 High-resolution 3D (Hi3D)

7.5.5 High-resolution 3D (Hi3D COG)

7.5.6 Gray

7.5.7 HiRes Gray

7.5.8 Scatter

7.5.9 Color and HiRes color

8 iCon API

8.1 Connecting to an Ethernet Camera

8.2 Retrieving Measurement Data

8.2.1 IconBuffers, Scans, Profiles and Data Format

8.2.2 Accessing the Measurement Data

8.2.3 Polling and Call-back

8.2.4 Handling Buffers

8.2.5 Mark Data

8.3 Changing Camera Configuration

8.3.1 Using Parameter Files

8.3.2 Setting Single Parameter Values

8.4 Error Handling

8.5 Calibration and Post Processing of Buffers

8.5.1 Filter Classes

8.5.2 Extraction Filter

8.5.3 Calibration Filter

8.5.4 Rectification Filter

8.5.5 Color Registration filter

8.5.6 Color Generation Filter


9 Hardware Description

9.1 Sensor

9.1.1 Light Sensitivity

9.1.2 Color Filter Layout

9.1.3 High-resolution Rows

9.1.4 Color response

9.1.5 Standard and high-resolution color differences

9.2 Electrical Connections

9.3 Technical Data

9.4 Dimensional Drawing

Appendix

A Ranger E and D Models

B Laser Safety

C Recommended Network Card Settings

D Recommended Switches

E iCon Device Configuration

F Connecting Encoders

G Ranger E/D Power-I/O terminal

H Laser Safety Key Box (ICT-B)


1 Introduction

The Ranger is a high-speed 3D camera intended to be the vision component in a machine vision system. The Ranger makes measurements on the objects that pass in front of the camera, and sends the measurement results to a PC for further processing. The measurements can be started and stopped from the PC, and triggered by encoders and photoelectric switches in the vision system.

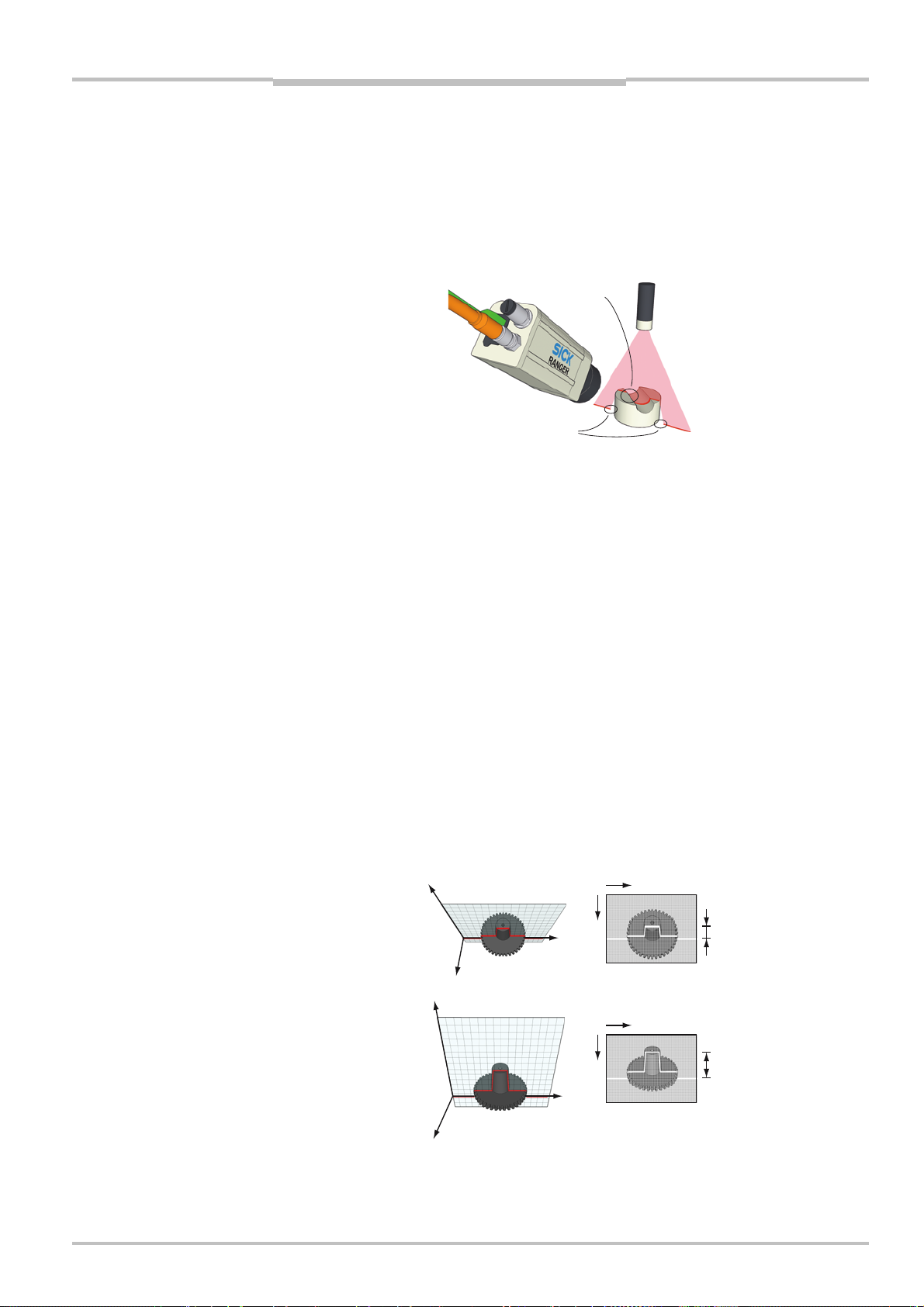

Figure 1.1 – The Ranger as the vision component in a machine vision system.

The main function of the Ranger is to measure the 3D shape of objects. Depending on model and configuration, the Ranger can measure up to 35 000 profiles per second.

In addition to measuring 3D – or range – the Ranger can also measure color, intensity and scatter:

Range: measures the 3D shape of the object by the use of laser triangulation. This can be used, for example, for generating 3D images of the object, for size rejection or volume measurement, or for finding shape defects.

Intensity: measures the amount of light that is reflected by the object. This can, for example, be used for identifying text on objects or detecting defects on the objects’ surface.

Color: measures the red, green, and blue wavelength content of the light that is reflected by the object. This can be used to verify the color of objects or to get increased contrast for more robust defect detection.

Scatter: measures how the incoming light is distributed beneath the object’s surface. This is, for example, useful for finding the fiber direction in wood or detecting delamination defects.


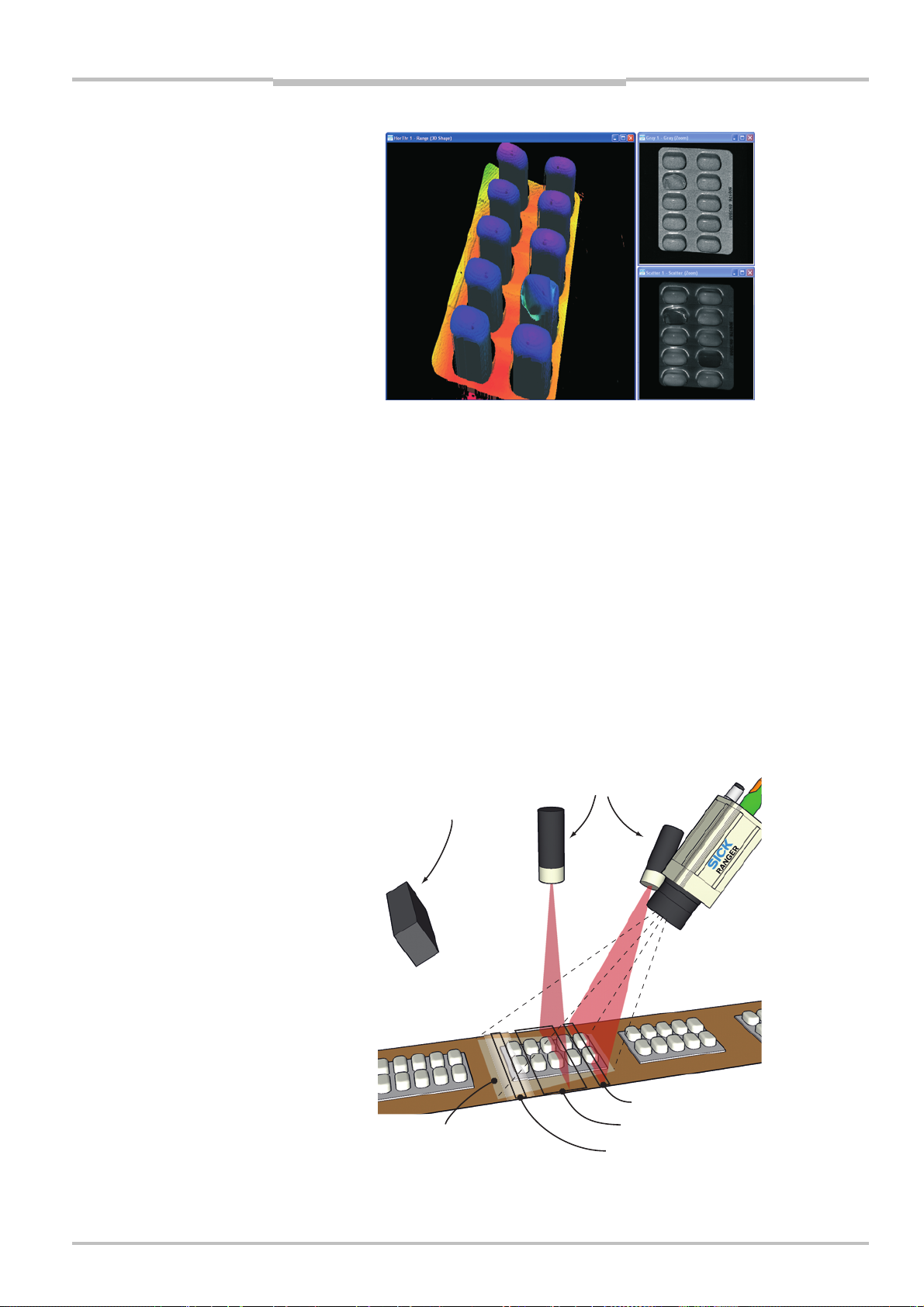

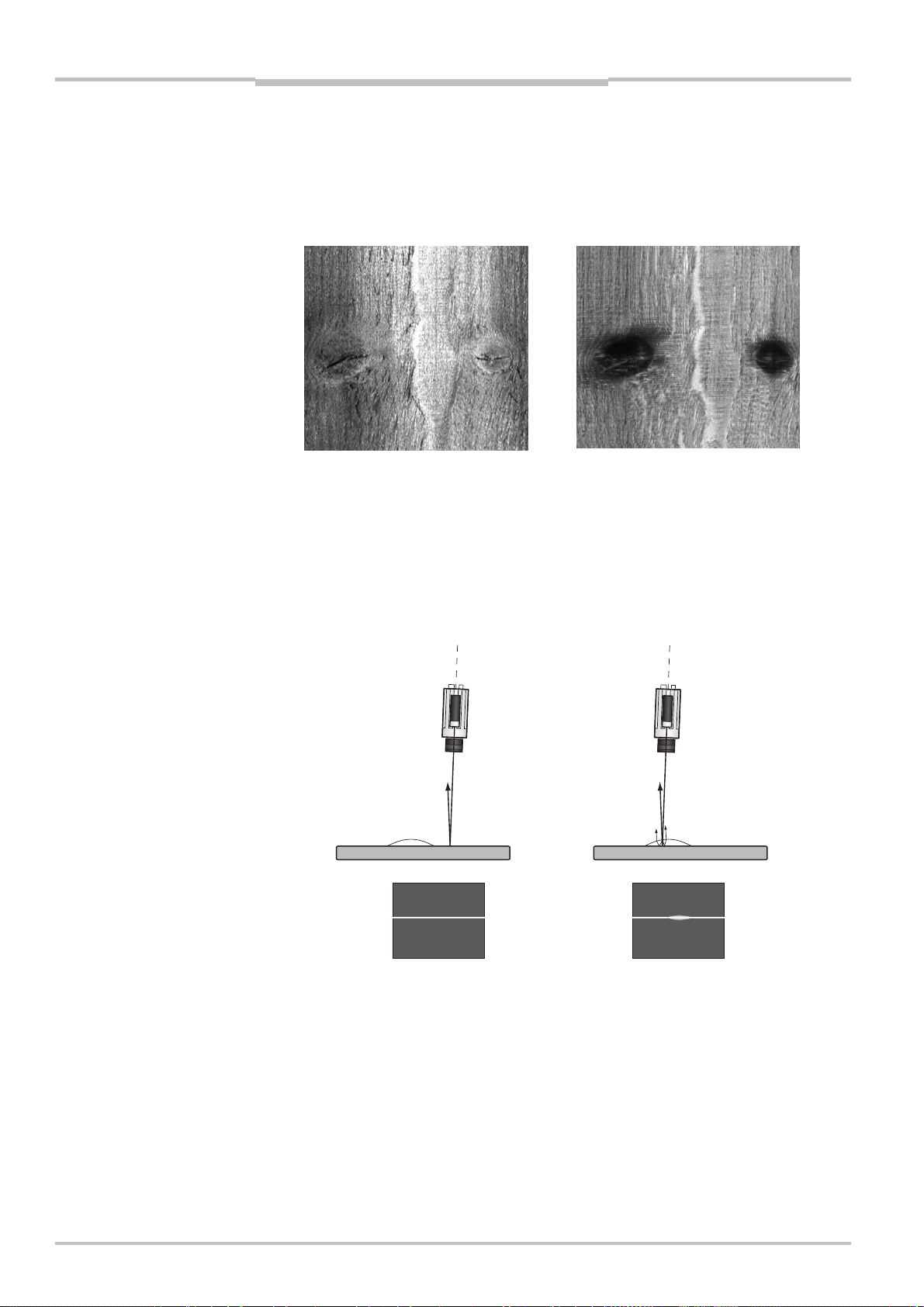

Figure 1.2 – 3D (left), intensity (top right), and scatter (bottom right) images of a blister

pack with one damaged blister and two empty blisters.

There are four different models of the Ranger available:

Ranger C: Connects to the PC via CameraLink.

Ranger E: Connects to the PC through a Gigabit Ethernet network.

ColorRanger E: Combines the function of a Ranger E camera and a three-color line scan camera.

Ranger D: A low-cost, mid-performance version of the Ranger E, suitable for measuring 3D only in applications without high-speed requirements. The Ranger D can measure up to 1000 profiles per second.

The Ranger C, E and ColorRanger E models are MultiScan cameras, which means that they can make several types of measurements on the object in parallel. This is achieved by applying different measurement methods to different parts of the sensor.

By selecting appropriate illumination for the different areas of the measurement scene, the Ranger can be used for measuring several features of the objects at the very same time.

Lasers

White light

Scatte

Field-of-vie

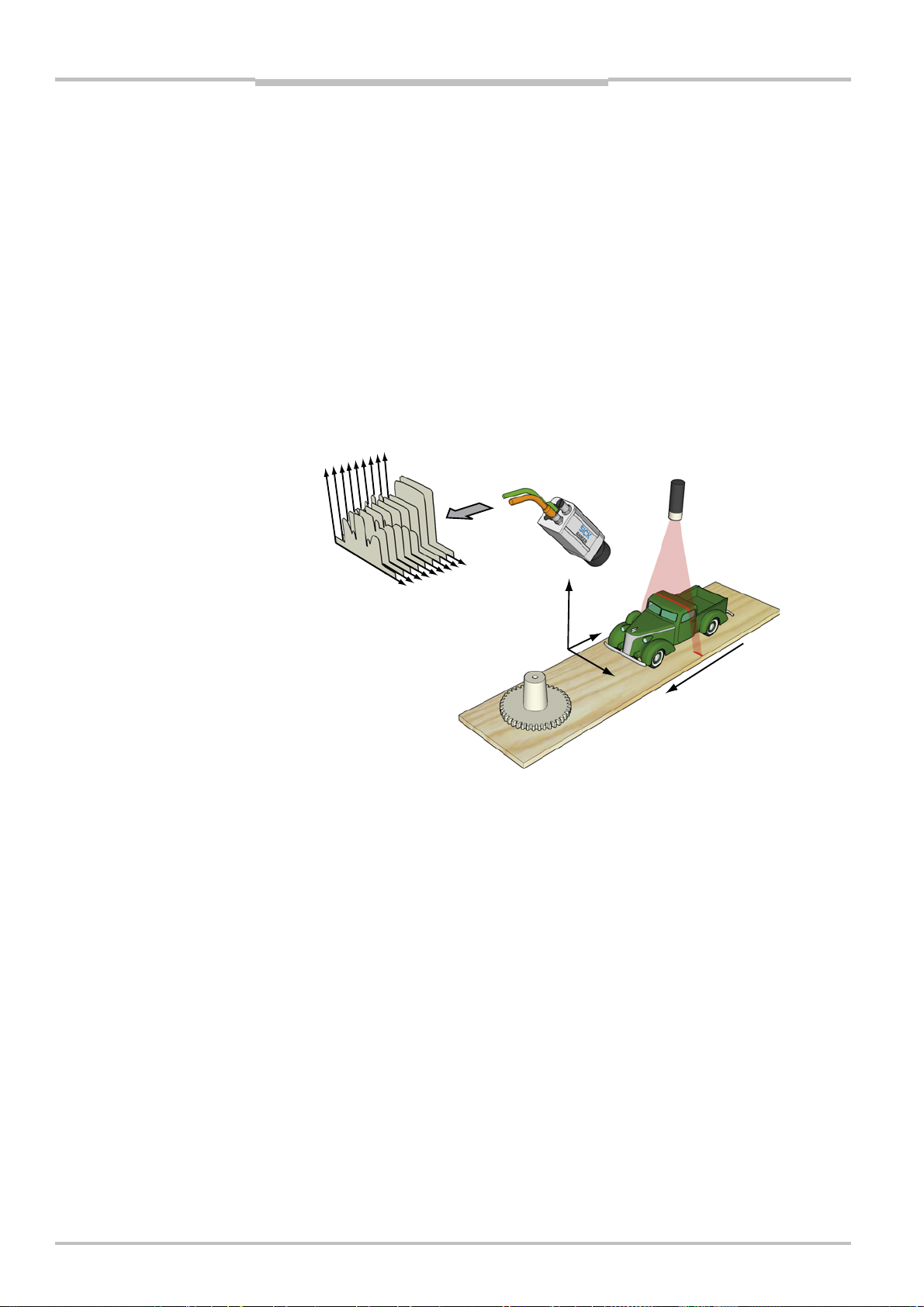

Figure 1.3 – Measuring several properties of the objects at once with MultiScan, using

multiple light sources.

3D measurement

Grayscale


2 Overview

2.1 Measuring with the Ranger

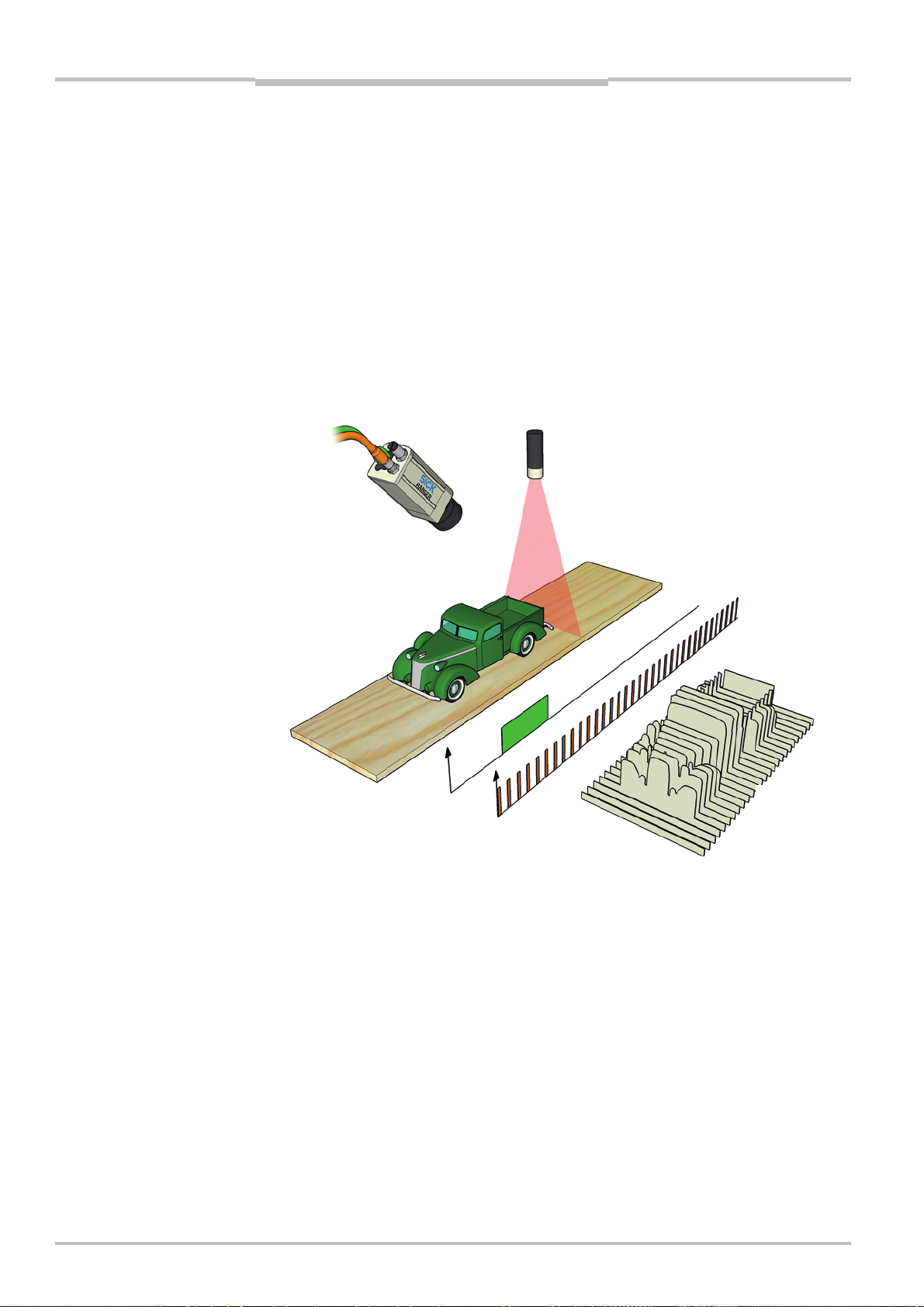

Each time the Ranger makes a measurement, it measures along a cross-section of the

object in front of it. The result of a measurement is a profile, containing one value for each

measured point along the cross-section – for example the height of the object along its

width.

For the Ranger to measure an entire object, the object (or the Ranger and illumination)

must be moved so that the Ranger can make a series of measurements along the object.

The result of such a measurement is a collection of profiles, where each profile contains

the measurement of a cross-section at a certain location along the transportation direction.

Figure 2.1 – Measuring the range of a cross-section of an object. (Labels in the figure: profiles; axes x (width), y (transportation) and z (range).)

For some types of measurements, the Ranger will produce more than one profile when

measuring one cross-section. For example, certain types of range measurements will

result in one range profile and one intensity profile, where the intensity profile contains the

reflected intensity at each measured point.

In addition, the Ranger C, Ranger E and ColorRanger E models – being MultiScan cameras – can also make parallel measurements on the object. This could, for example, be used for measuring surface properties of the objects at the same time as the shape. If the Ranger is configured for MultiScan measurements, the Ranger may produce a number of profiles each time it makes one measurement – including multiple profiles from one cross-section of the object, as well as profiles from parallel cross-sections.

In this manual, the term scan is used for the collection of measurements made by the

Ranger at one point in time.
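The relationship between scans and profiles can be pictured with a small Python sketch. It is only an illustration of the terminology: the class name `Scan`, its fields and the profile names are hypothetical and are not taken from the Ranger software or the iCon API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Scan:
    """Hypothetical container: everything measured at one point in time."""
    scan_id: int
    # One profile per measurement, keyed by an illustrative name.
    profiles: Dict[str, List[int]] = field(default_factory=dict)

    def add_profile(self, name: str, values: List[int]) -> None:
        # Each profile holds one value per measured point of the cross-section.
        self.profiles[name] = list(values)


# One scan holding a range profile and the matching intensity profile.
scan = Scan(scan_id=0)
scan.add_profile("range", [0, 12, 14, 13])
scan.add_profile("intensity", [0, 180, 210, 190])
```

In this picture, measuring an entire object yields a sequence of such scans, one per position along the transport direction.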

Note that the range measurement values from the Ranger are not calibrated by default – that is:

Range values (z coordinates) are given as row – or pixel – locations on the sensor.

The location of a point along the cross-section (x coordinate) is given as a number representing the column on the sensor in which the point was measured.

The location of a point along the transport direction (y coordinate) is represented by, for example, the sequence number of the measurement, or the encoder value at the time the scan was made.


To get calibrated measurements – for example coordinates and heights in millimeters – you need to transform the sensor coordinates (row, column, profile id) into world coordinates (x, y, z). This transformation depends on a number of factors, for example the distance between the Ranger and the object, the angle between the Ranger and the laser, and properties of the lens. You can do the transformation yourself, or you can use the 3D Camera Coordinator – a tool that performs the transformation from sensor coordinates (row, column) to world coordinates (x, z). The world coordinate in the movement direction (y) is obtained by the use of an encoder. For more information about the Coordinator tool, see the 3D Camera Coordinator Reference Manual.
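As a rough illustration of how the y coordinate might be derived from encoder values, the following Python sketch converts encoder counts to millimeters. The function name and the `mm_per_pulse` parameter are hypothetical; the sketch assumes an encoder count that increases steadily without wrapping and a known, constant travel per pulse. It does not cover the (row, column) to (x, z) transformation, which depends on the geometry and the lens.

```python
from typing import List


def y_positions_mm(encoder_values: List[int], mm_per_pulse: float) -> List[float]:
    """Convert per-scan encoder counts to y positions relative to the first scan.

    Assumes the counts increase monotonically (no counter wrap-around) and
    that each pulse corresponds to a fixed travel of `mm_per_pulse`.
    """
    if not encoder_values:
        return []
    first = encoder_values[0]
    return [(value - first) * mm_per_pulse for value in encoder_values]


# Three scans taken 4 pulses apart, with 0.5 mm of travel per pulse.
positions = y_positions_mm([100, 104, 108], 0.5)
```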

In a machine vision system, the Ranger acts as a data streamer. It is connected to a PC through either a CameraLink connection (Ranger C) or a Gigabit Ethernet network (Ranger D and E). The Ranger sends the profiles to the computer, and the computer runs a custom application that retrieves the profiles and processes the measurement data in them. This application can, for example, analyze the data to find defects in the objects and control a lever that pushes faulty objects to the side.

Before the Ranger can be used in a machine vision system, the following needs to be

done:

Find the right way to mount the Ranger and light sources.

Configure the Ranger to make the proper measurements.

Write the application that retrieves and processes the profiles sent from the Ranger.

The application is developed in, for example, Microsoft Visual Studio, using the APIs that are installed with the Ranger development software.

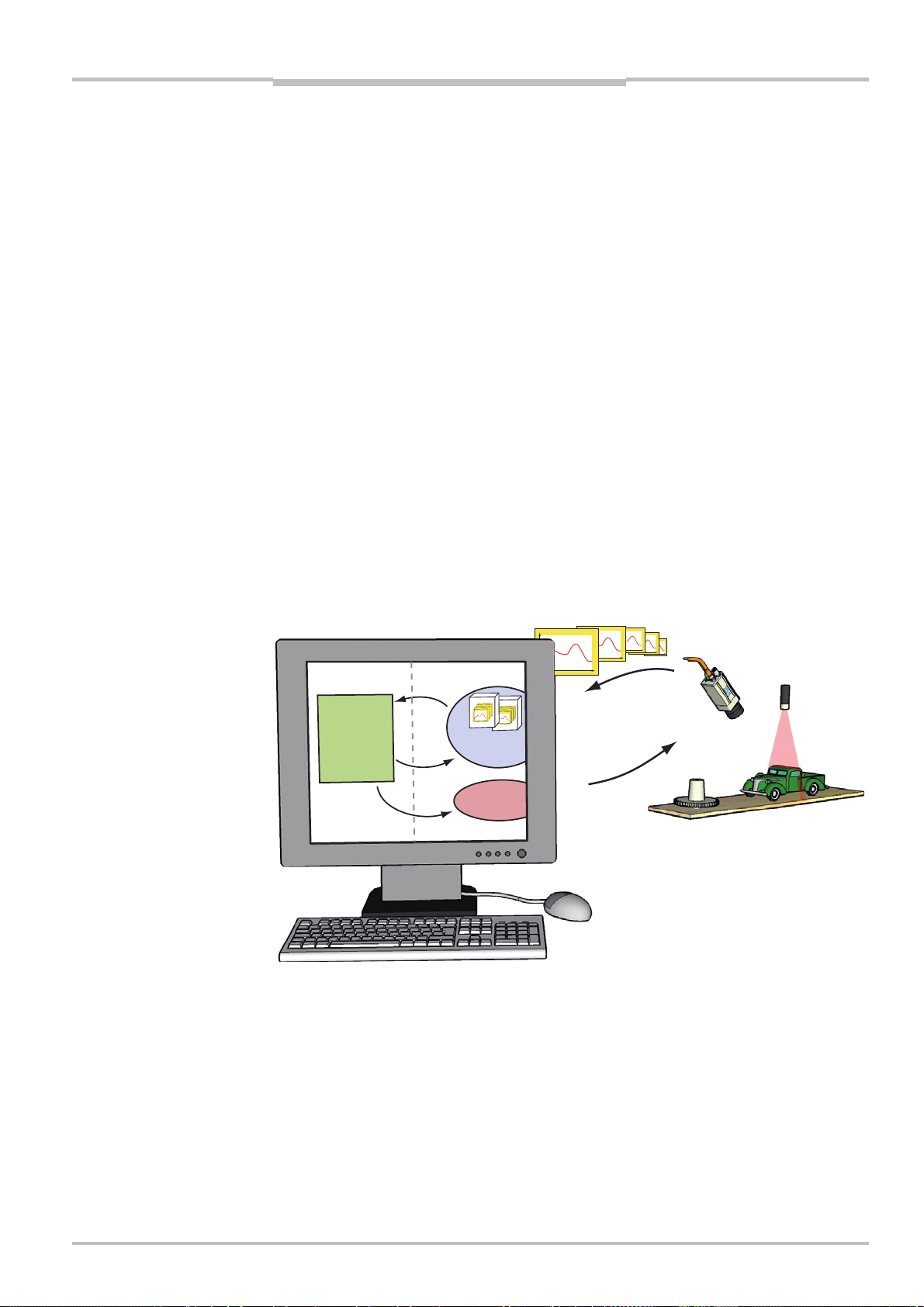

Figure 2.2 – Profiles are sent from the Ranger to a PC, where they are analyzed by a

custom application.

2.2 Mounting the Ranger

Selecting the right way of illuminating the objects to measure, and finding the right way in

which to mount the Ranger and lightings are usually critical factors for building a vision

system that is efficient and robust.


The Ranger must be able to capture images with good quality of the objects in order to

make proper measurements. Good quality in vision applications usually means that there

is a high contrast between the features that are interesting and those that are not, and

that the exposure of the images does not vary too much over time.

A basic recommendation is therefore to always eliminate ambient light – for example by

using a cover – and instead use illumination specifically selected for the measurements to

be made.

The geometries of the set-up – that is the placement of the Ranger, the lightings and the

objects in relation to each other – are also important for the quality of the measurement

result. The angles between the Ranger and the lights will affect the type and amount of

light that is measured, and the resolution in range measurements.

Chapter 3 'Mounting Rangers and Lightings' contains an introduction to factors to consider when mounting the Ranger and lightings.

2.3 Configuring the Ranger

Before the Ranger can be used in a machine vision application, the Ranger has to be

configured to make the proper measurements, and to deliver the profiles with sufficient

quality and speed. This is usually done by setting up the camera in a production-like

environment and evaluating different ways of mounting, measurement methods and

parameter settings until the result is satisfactory.

2.3.1 Ranger Studio

The Ranger Studio application – which is a part of the Ranger development software – can

be used for evaluating different set-ups of the camera, and for visualizing the measurements. With Ranger Studio, you can change the settings for the camera and instantly see

how the changes affect the measurement result.

Once the Ranger has been set up to deliver measurement data that meets the requirements, the settings can be saved in a parameter file from the Ranger Studio. This parameter file is later used when connecting to the Ranger from the machine vision application.

Figure 2.3 – Configuring the Ranger with Ranger Studio.


2.3.2 Measurement Methods

One part of configuring the Ranger is selecting which measurement method to use for

measuring. The Ranger has a number of built-in measurement methods – or components

– to choose from.

Which component to use depends on what to measure – range, intensity, color, or scatter – but also on the following factors:

Required speed and resolution of the measurements

Characteristics of the objects to measure

Conditions in the environment

The MultiScan feature of the Ranger C, Ranger E and ColorRanger E models means that

different components can be applied on different areas of the sensor. These components

will then be measuring simultaneously.

For each component there are a number of settings – parameters – that can be used for

fine-tuning the quality and performance of the measurements. These parameters specify

for example exposure time and which part of the sensor to use (Region-of-interest, ROI).

Range Components

The Range components are used for making 3D measurement of objects.

The Ranger uses laser triangulation when measuring range, which means that the object is

illuminated with a laser line from one direction, and the Ranger is viewing the object from

another direction. The laser line shows up as a cross-section of the object on Ranger’s

sensor, and the Ranger determines the height of each point of the cross-section by locating the vertical location of the laser line.

The Ranger and the laser line should be oriented so that the laser line is parallel to the

rows on the Ranger’s sensor. The Ranger E and D have a laser line indicator on the back

plate, indicating in which direction it expects the laser line to be oriented.

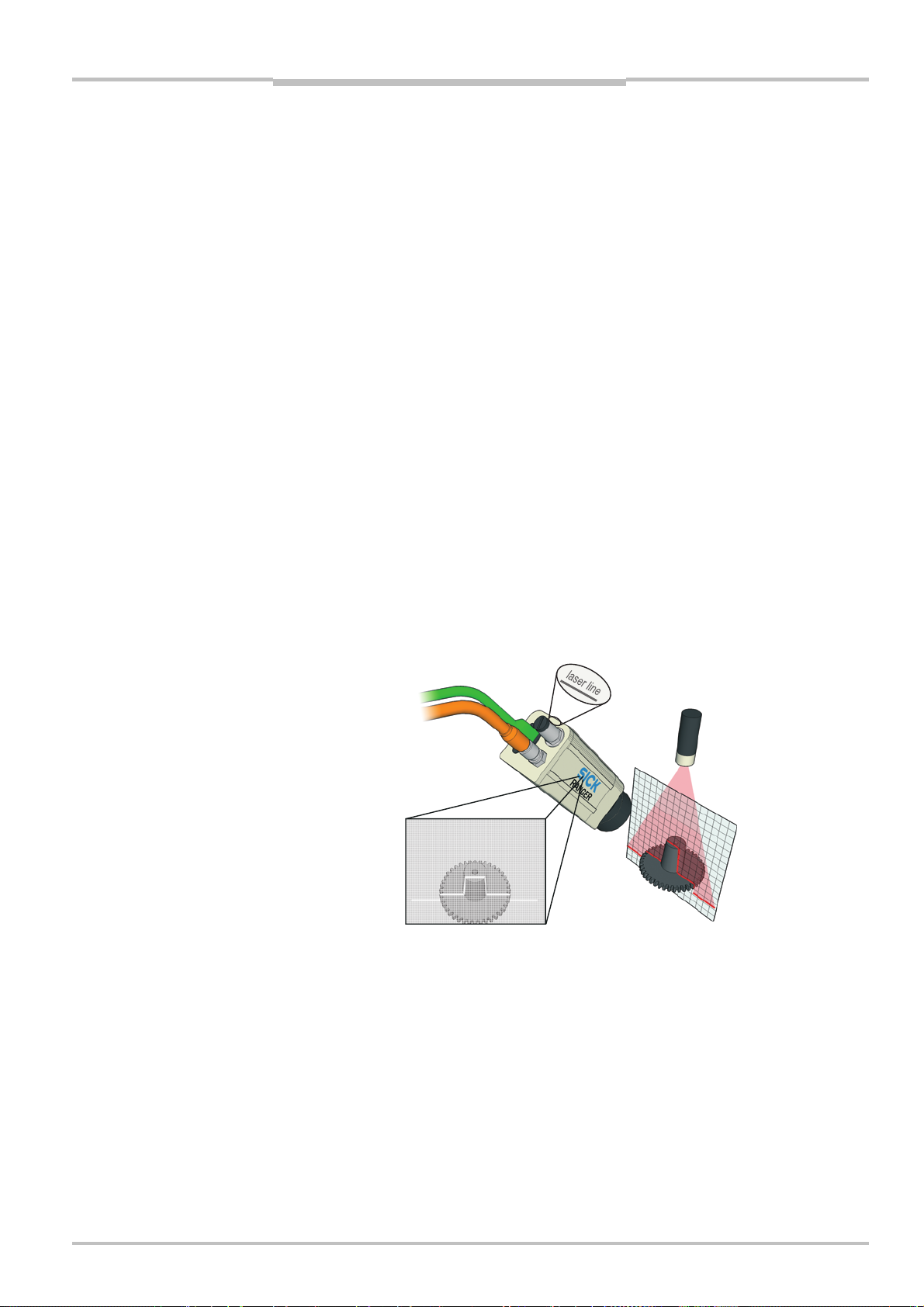

Sensor image

FIgure 2.4 – Laser triangulation.

Laser line indicator

(Ranger E and D only)

Laser line


The Ranger E and ColorRanger E models have five different components for measuring

range, the Ranger C has three components, and the Ranger D has one component. They

differ in which method is used for locating the laser line:

| Range component | E | C | D | Description |
|---|---|---|---|---|
| Horizontal threshold | X | X | | Fast method, using one or two intensity thresholds. |
| Horizontal max | X | X | | Uses the maximum intensity. |
| Horizontal max and threshold | X | | | Uses one intensity threshold. |
| High-resolution 3D (Hi3D) | X | | X | Measures with higher resolution, using an algorithm similar to calculating the center-of-gravity of the intensity. The algorithm used by the Hi3D component differs between Ranger E and Ranger D, as does the format of the output. |
| High-resolution 3D (Hi3D COG) | X | X | | Measures with higher resolution, using a true center-of-gravity algorithm. |

For each measured point, the Ranger returns a range value that represents the number of rows – or vertical pixels – from the bottom or top of the ROI to where it detected the laser line.

Figure 2.5 – Different methods for determining the range by analyzing the light intensity in each column of the sensor image: Threshold determines the range by locating intensities above a certain level, while Max locates the maximum intensity in each column. (Labels in the figure: sensor image with rows and columns, projected laser line, intensity, threshold, max.)


If the Ranger was unable to locate the laser line for a point (for example because of insufficient exposure, because the laser line was hidden from view, or because the laser line appeared outside of the ROI), the Ranger returns the value 0. This is usually referred to as missing data.

In addition to the range values, the Horizontal max, Horizontal max and threshold, and Hi3D components for Ranger E/C and ColorRanger E also deliver intensity values for the measured points along the laser line. The intensity values are the maximum intensity in each column of the sensor, which in the normal case is the intensity of the reflected laser line. (1)

The resolution in the measurements depends on which component is used. For example, the Horizontal max and threshold method returns the location of the laser line with ½ pixel resolution, while the Hi3D method has a resolution of 1/16th of a pixel.

Note that the Ranger delivers the measured range values as integer values, which represent the number of “sub-pixels” from the bottom or top of the ROI. For example, if the

Ranger is configured to measure with ½ pixel resolution, a measured range of 14.5 pixels

is delivered from the Ranger as the integer value 29.
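As an illustration of the sub-pixel encoding described above, the following minimal sketch converts raw integer range values back to pixel positions. The function name `raw_to_pixels` and the parameter `subpixels_per_pixel` are assumptions made for this example and are not part of the iCon API. The value 0 is treated as missing data, as described earlier.

```python
from typing import List, Optional


def raw_to_pixels(raw_values: List[int],
                  subpixels_per_pixel: int) -> List[Optional[float]]:
    """Convert raw integer range values to positions in whole pixels.

    subpixels_per_pixel is 2 for 1/2 pixel resolution and 16 for 1/16 pixel
    resolution. A raw value of 0 means missing data and is returned as None.
    (Hypothetical helper, not an iCon API function.)
    """
    if subpixels_per_pixel < 1:
        raise ValueError("subpixels_per_pixel must be at least 1")
    return [None if raw == 0 else raw / subpixels_per_pixel
            for raw in raw_values]


# The example from the text: the integer 29 at 1/2 pixel resolution is 14.5 pixels.
print(raw_to_pixels([29, 0, 30], 2))   # [14.5, None, 15.0]
print(raw_to_pixels([232], 16))        # [14.5]
```

Keeping missing data as `None` rather than 0.0 avoids mistaking an undetected laser line for a valid range at the edge of the ROI.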

Besides the measurement method, the resolution in the measurements depends on how

the Ranger and the laser are mounted, as well as the distance to the object. For more

information on how the resolution is affected by how the Ranger is mounted, see chapter

3 'Mounting Rangers and Lightings'.

The performance of the Ranger – that is, the maximum number of profiles it can deliver

each second – depends on the chosen measurement method, but also on the height of

the ROI in which to search for the profile. The more rows in the ROI, the longer it takes to

search.

Therefore, one way of increasing the performance of the Ranger is to use a smaller ROI.

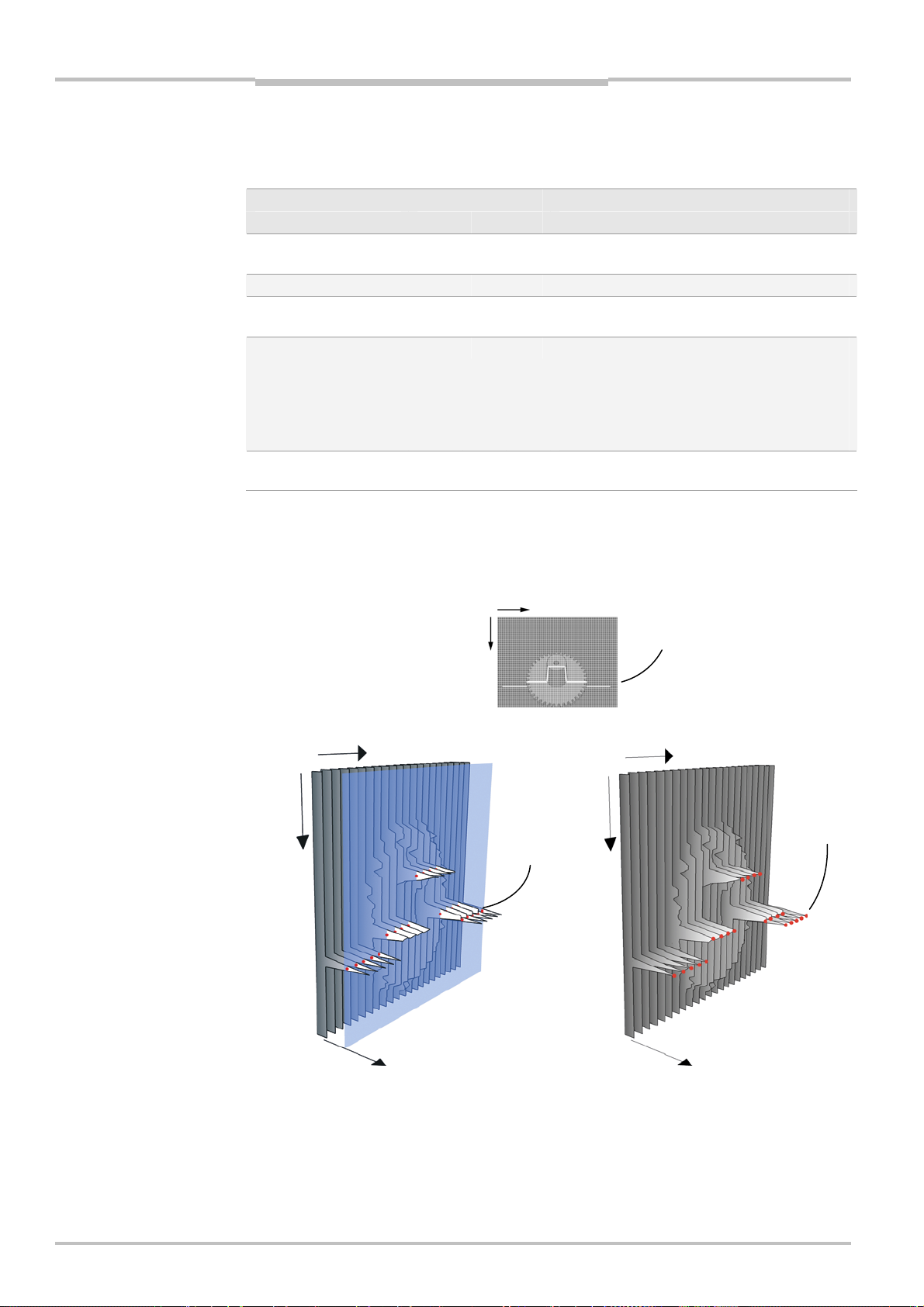

Figure 2.6 – A ROI with few rows will be faster to analyze than a ROI with many rows.

Note that the maximum usable profile rate can be limited by the characteristics of the

object’s surface and conditions in the environment.

(1) The intensity value from Ranger C’s Hi3D component is the accumulated intensity in each column, which in the normal case can still be used as a measure of the intensity of the reflected laser line.


Intensity Components

The Intensity components are used for measuring light reflected from the object. They can

be used for example for measuring gloss, inspecting the structure of the object surface, or

inspecting print properties. They can also be used for measuring how objects respond to

light of different wavelengths, by using for example colored or IR lightings.

There are two different intensity components:

Gray: Measures reflected light along one or several rows on the sensor.

HiRes Gray: Available in Ranger models C55 and E55. Uses a special row on the sensor that contains twice as many pixels as the rest of the sensor (3072 pixels versus 1536 pixels). The profiles delivered by the HiRes Gray component therefore have twice the resolution compared with the ordinary Gray component.

Figure 2.7 – Grayscale (left) and gloss (right) images of a CD. Both the text and the crack

are present in both images, but the text is easier to detect in the left image

while the crack is easier to detect in the right.

Some of the range components also deliver intensity measurements. The difference

between using these components and using the Gray or HiRes Gray component is that the

Gray and HiRes Gray components measure the intensity on the same rows for every column on the sensor, whereas the range components measure the intensity along the

triangulation laser line, which may be located on different sensor rows for each column.


Color Components

The Color components are used for measuring the red, green and blue wavelength content

of the light reflected from the object. They can be used for inspecting color properties, for

example to detect discolorations or to sort colored objects.

The Color components are only available on the ColorRanger models, which are equipped

with a sensor where some of the rows are coated with a red, green, or blue filter. The filter

layout is described in chapter 9 “Hardware Description”.

There are two different color components:

Color: Measures reflected light along three color filtered rows on the sensor.

HiRes Color: Available in the ColorRanger E55. Uses special rows on the sensor that contain twice as many pixels as the rest of the sensor (3072 pixels versus 1536 pixels). The profiles delivered by the HiRes Color component therefore have twice the resolution compared with the ordinary Color component.

Figure 2.8 – Grayscale (left) and color (right) images of candy. The color image makes it

possible to differentiate between the colors, for example for counting or sorting.

The Color components make measurements in three different regions on the sensor

simultaneously. The data is delivered as separate color channels – one channel for each

sensor area. The color channels can then be merged into high quality color images on the

PC by using the APIs in the Ranger development software.


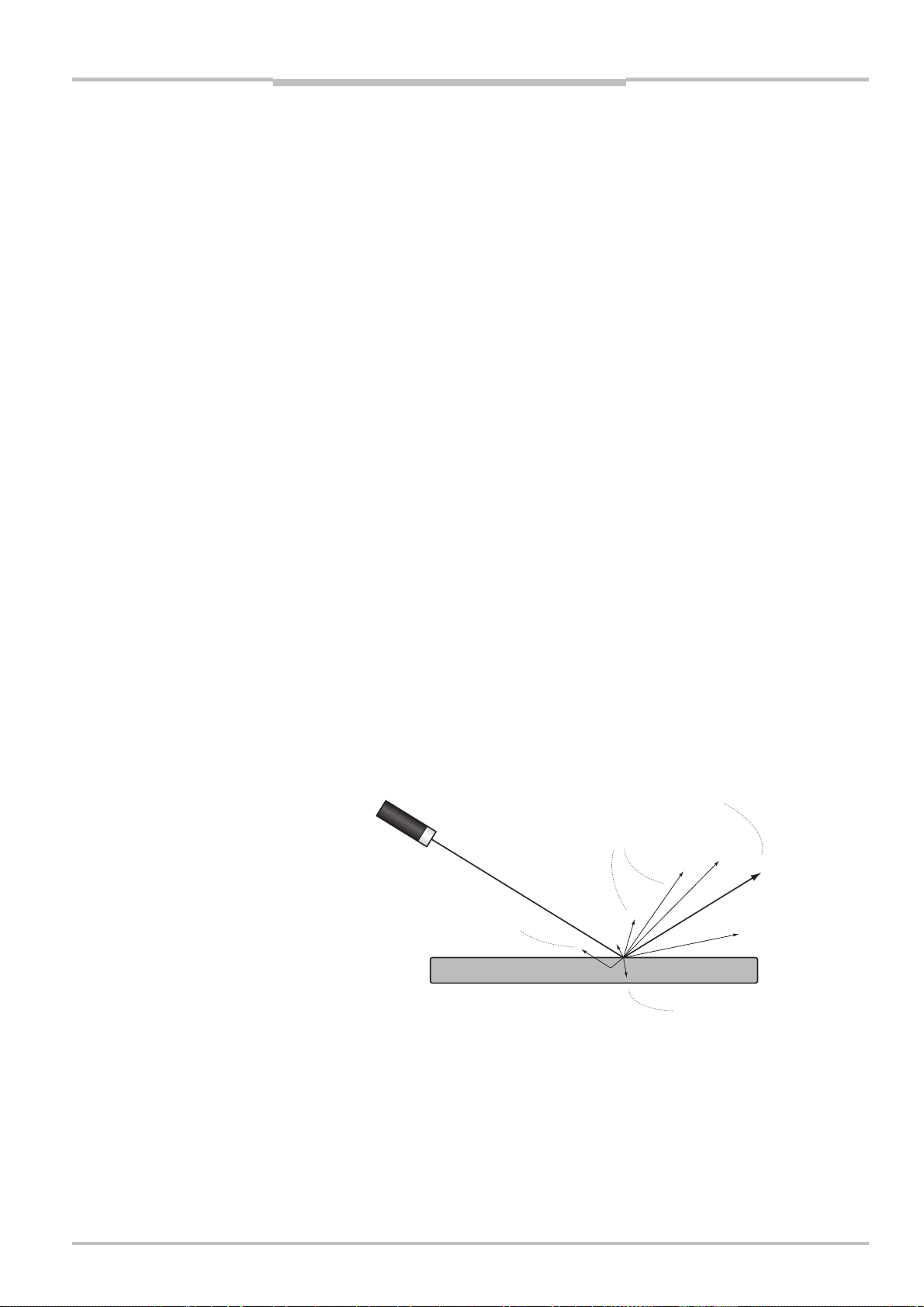

Scatter Component

The Scatter component is used for measuring how the light is distributed just below the

surface of the object. This can be used for emphasizing properties that can be hard to

detect in ordinary grayscale images, and is useful for example for detecting knots in wood,

finding delamination defects, or detecting what is just below a semi-transparent surface.

Figure 2.9 – Grayscale (left) and scatter (right) images of wood. The two knots are easy to

detect in the scatter image.

The scatter component measures the intensity along two rows on the sensor, and the result is two intensity profiles: one from the row that should be at the center of the laser line (direct), and one from a row a number of rows away from the first row (scatter).

The scatter profile can be used as it is as a measure of the distribution of the light, but the result will usually be better if the scatter profile is normalized with the direct intensity profile.
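One simple way to normalize is to divide each scatter value by the direct intensity in the same column. The sketch below assumes this interpretation; the function name `normalize_scatter` and the `min_direct` cut-off are illustrative choices, not something prescribed by the Ranger software.

```python
from typing import List, Optional


def normalize_scatter(scatter: List[float],
                      direct: List[float],
                      min_direct: float = 1.0) -> List[Optional[float]]:
    """Divide the scatter profile by the direct profile, column by column.

    Columns where the direct intensity is below min_direct (for example
    because the laser line was not seen) are returned as None instead of
    producing a division by zero or a meaningless ratio.
    """
    if len(scatter) != len(direct):
        raise ValueError("profiles must have the same number of columns")
    return [s / d if d >= min_direct else None
            for s, d in zip(scatter, direct)]


# Three columns: low scatter, high scatter, and no direct reflection.
print(normalize_scatter([10, 40, 5], [200, 100, 0]))  # [0.05, 0.4, None]
```

The ratio makes the scatter response less dependent on how bright the surface is, so a dark area with little scatter is not confused with a bright area with little scatter.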

Figure 2.10 – Using scatter to detect delamination defects. Where there are no defects, very little light is reflected below the surface, resulting in a sharp reflex and low scatter response. Where there is a defect, the light is scattered in the gap between the layers, resulting in a wider reflection and thus high scatter response. (Labels in the figure: Ranger, laser, bubble.)


2.4 Developing Applications

Once the Ranger has been configured to deliver measurement data of the right type and quality, you need to write an application that receives and uses the data. This application is developed in, for example, Visual Studio, using one of the APIs that are delivered with the Ranger.

There are two APIs included with the development software for Ranger: iCon C++ for use

with C++ in Visual Studio 2005/2008/2010, and iCon C for use with C. Both APIs contain

the same functions but differ in the syntax.

The APIs handle all of the communication with the Ranger, and contain functions for:

Starting and stopping the Ranger

Retrieving profiles from the Ranger

Changing Ranger configuration

Most of these functions are encapsulated in two classes:

Camera: Used for controlling the Ranger.

FrameGrabber: Collects the measurement data from the Ranger.

Your application establishes contact with the Ranger camera by creating a Camera object.

It then creates a FrameGrabber object to set up the PC for collecting the measurement

data sent from the Ranger. When your application needs measurement data, it retrieves it

from the FrameGrabber object. (2)

Figure 2.11 – All communication with the Ranger is handled by the API. (Labels in the figure: Application, iCon API, Camera, FrameGrabber, Request, Control, Profiles, Buffers.)

When the Ranger is measuring, it will send a profile to the PC as soon as it has finished

measuring a cross-section. The FrameGrabber object collects the profiles and puts them in

buffers – buffers that your application then retrieves from the FrameGrabber. Your application can specify the number of profiles in each buffer, and it is possible to set it to 1 in

order to receive one profile at a time. However, this will also add overhead to the application and put extra load on the CPU.
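The effect of the buffer size on the number of retrievals can be sketched as follows. This is a stand-alone illustration of the grouping idea only: `group_into_buffers` is a hypothetical function written for this example, and it does not use or reproduce the FrameGrabber class or any other iCon API call.

```python
from typing import Iterable, Iterator, List


def group_into_buffers(profiles: Iterable[List[int]],
                       profiles_per_buffer: int) -> Iterator[List[List[int]]]:
    """Yield lists ("buffers") holding profiles_per_buffer profiles each.

    Hypothetical stand-in for the buffering described in the text. A final,
    partly filled buffer is yielded when the input ends; how the real API
    handles that case is not covered here.
    """
    if profiles_per_buffer < 1:
        raise ValueError("profiles_per_buffer must be at least 1")
    buffer: List[List[int]] = []
    for profile in profiles:
        buffer.append(profile)
        if len(buffer) == profiles_per_buffer:
            yield buffer
            buffer = []
    if buffer:
        yield buffer


# 1000 simulated profiles, each with 4 range values.
stream = [[i, i, i, i] for i in range(1000)]
print(len(list(group_into_buffers(stream, 1))))    # 1000 retrievals
print(len(list(group_into_buffers(stream, 100))))  # 10 retrievals
```

With one profile per buffer the application handles a hundred times as many buffers for the same data, which is where the extra overhead comes from.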

(2) For Ranger C, this requires that the Ranger is connected to a frame grabber board that is supported by the Ranger APIs. If a different frame grabber is used, the measurement data is retrieved using the APIs for that frame grabber.


2.5 Triggering

There are two different ways in which external signals can be used for triggering the Ranger to make measurements:

Enable: Triggers the Ranger to start making a series of scans. When the Enable signal goes high, the Ranger will start measuring a specified number of scans. If the signal is low after that, the Ranger will pause and wait for the Enable signal to go high again; otherwise it will continue making another series of scans.

The Enable signal could for example come from a photoelectric switch located along the conveyor belt. It is also useful for synchronizing two or more Rangers.

Pulse triggering: Triggers the Ranger to make one scan. This signal could for example come from an encoder on the conveyor belt. The Ranger C can also be triggered by the CC1 signal on the CameraLink interface.
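The Enable behavior described above can be modeled with a small simulation. This is a simplified sketch under stated assumptions: time is divided into slots of one scan each, a series that has started always runs to completion, and the Enable level is only checked when no series is running. The function `simulate_enable` is hypothetical and is not part of the Ranger software.

```python
from typing import List, Sequence


def simulate_enable(enable_levels: Sequence[int],
                    scans_per_series: int) -> List[bool]:
    """Return, for each time slot, whether a scan is made.

    enable_levels[i] is the Enable level in slot i (1 = high, 0 = low).
    A new series of scans_per_series scans starts whenever Enable is high
    and no series is running (assumed model, see the text above).
    """
    remaining = 0
    scanning: List[bool] = []
    for level in enable_levels:
        if remaining == 0 and level:
            remaining = scans_per_series      # start a new series
        if remaining > 0:
            scanning.append(True)
            remaining -= 1
        else:
            scanning.append(False)            # paused, waiting for Enable
    return scanning


# A short pulse gives one series; a long high period gives two series.
levels = [0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0]
print(sum(simulate_enable(levels, 3)))  # 9 scans in total
```

In the example, the first pulse starts one series of three scans, and the longer high period starts two consecutive series because Enable is still high when the first of them ends.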

Figure 2.12 – Triggering the Ranger with Enable and Pulse triggering signals. (Labels in the figure: Enable, Pulse triggering, Profiles, Number of scans (scan height).)

If pulse triggering is not used, the Ranger will measure in free-running mode – that is,

make measurements with a regular time interval determined by the Ranger’s cycle time.

The actual distance on the object between two profiles is then determined by the speed of

the object – that is, how far the object has moved during that time.
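As a worked example of the free-running case, the distance between two profiles is the object speed multiplied by the cycle time. The function name and the numeric values below are assumptions chosen for illustration.

```python
def profile_spacing_mm(object_speed_mm_per_s: float,
                       cycle_time_s: float) -> float:
    """Distance on the object between two consecutive profiles (free-running)."""
    return object_speed_mm_per_s * cycle_time_s


# Assumed example values: conveyor speed 500 mm/s, cycle time 1 ms.
print(round(profile_spacing_mm(500.0, 0.001), 6))  # 0.5
# If the conveyor speeds up to 600 mm/s, the spacing changes with it.
print(round(profile_spacing_mm(600.0, 0.001), 6))  # 0.6
```

The second call shows why free-running mode alone is not enough for measuring true shape: any variation in speed changes the spacing between profiles.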

When measuring the true shape of an object, you should always use an encoder with the

Ranger. With the signals from the encoder as pulse triggering signals, it is guaranteed that

the distance that the object has moved between two profiles is well known.

You can find the actual distance between two profiles even if the Ranger is measuring in

free-running mode, as long as you have an encoder connected to the Ranger. The encoder

information can then be embedded with the profiles sent to the PC as mark data. Your

application can then use this information to calculate the distance between the profiles.
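The calculation could look like the sketch below. It assumes that each profile carries one encoder counter value in its mark data and that the distance per encoder count is known from the installation; both the data layout and the names used here are hypothetical, and counter wrap-around is ignored.

```python
from typing import List, Sequence


def distances_between_profiles(encoder_counts: Sequence[int],
                               mm_per_count: float) -> List[float]:
    """Distance in mm between consecutive profiles.

    encoder_counts holds one (assumed) encoder counter value per profile.
    mm_per_count is the distance the object moves per encoder count.
    Counter wrap-around is not handled in this sketch.
    """
    return [(later - earlier) * mm_per_count
            for earlier, later in zip(encoder_counts, encoder_counts[1:])]


# Four profiles with uneven spacing, 0.125 mm per encoder count.
print(distances_between_profiles([100, 104, 109, 113], 0.125))
# [0.5, 0.625, 0.5]
```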


3 Mounting Rangers and Lightings

Choosing the right way of mounting the Ranger and illuminating the objects to be measured is often crucial for the result of the measurement. Which method to use depends on

a number of factors, for example:

What is going to be measured (range, gloss, grayscale, scatter, etc.)

Characteristics of the surface of the objects (glossy, matte, transparent)

Variations in the shape of the objects (flat or varying height)

Requirements on resolution in the measurement results

Measuring with the Ranger means measuring light that is reflected by objects, and from these measurements drawing conclusions about certain properties of the objects.

For a machine vision application to be efficient and robust, it is therefore important to

measure the right type of light.

Reflections

An illuminated object reflects the light in different directions. On glossy surfaces, all light is

reflected with the same angle as the incoming light, measured from the normal of the

surface. This is called the specular or direct reflection.

Matte surfaces reflect the light in many different directions. Light reflected in any other

direction than the specular reflection is called diffuse reflection.

Light that is not reflected is absorbed by or transmitted through the object. Objects absorb

light with different wavelengths differently. This can for instance be used for measuring

color or IR properties of objects.

The amount of light that is absorbed usually decreases as the incoming light becomes

parallel with the surface. For certain angles, almost all light will be reflected regardless of

wavelength. This phenomenon is used when measuring gloss, which can be used for

example for detecting surface scratches (see the example on page 26).

On some materials, the light may also penetrate the surface and travel into the object, and then emerge from the object again some distance away from where it entered. If such a surface is illuminated for example with a laser, it appears as if the object “glows” around the laser spot. This phenomenon is used when measuring scatter. The amount and direction of the scattered light depend on the material of the object.

Figure 3.1 – Direct and diffuse reflections on opaque and semi-transparent objects. (Labels in the figure: specular reflection, diffuse reflections, scattered light, absorbed light, transmitted light.)

The Ranger measures one cross-section of the object at a time. The most useful illumination for this type of measurement is usually a line light, such as a line-projecting laser or a bar light.


3.1 Range (3D) Measurement

The Ranger measures range by using triangulation, which means that the object is illuminated with a line light from one direction, and the Ranger is measuring the object from

another direction. The most common light source used when measuring range is a line

projecting laser.

The Ranger analyzes the sensor images to locate the laser line in them. The higher up the

laser line is found for a point along the x axis (the width of the object), the higher up is that

point on the object.

Figure 3.2 – Coordinate system when measuring range: x (width), y (transport), z (range).

When measuring range, there are two angles that are interesting:

The angle at which the Ranger is mounted

The angle of the incoming light (incidence)

Both angles are measured from the normal of the transport direction. The angle of the

Ranger is measured to the optical axis of the Ranger – that is, the axis through the center

of the lens.

Figure 3.3 – Angles and optical axis. (Labels in the figure: optical axis, incidence angle.)

The following is important to get correct measurement results:

The laser line is aligned properly with the sensor rows in the Ranger.

The lens is focused so that the images contain a sharp laser line.

The laser is focused so that there is a sharp line on the objects, and that the laser line

covers a few rows on the sensor.


3.1.1 Occlusion

Occlusion occurs when there is no laser line for the Ranger to detect in the sensor image.

Occlusion will result in missing data for the affected points in the measurement result.

There are two types of occlusion:

Camera occlusion: When the laser line is hidden from the camera by the object.

Laser occlusion: When the laser cannot properly illuminate parts of the object.

Figure 3.4 – Different types of occlusion: camera occlusion and laser occlusion.

Adjusting the angles of the Ranger and the laser can reduce the effects of occlusion.

If adjusting the angle is not suitable or sufficient, occlusion can be avoided by using

multiple lasers illuminating the objects from different angles (laser occlusion) or by using

multiple cameras viewing the objects from different angles (camera occlusion).

3.1.2 Height Range and Resolution

The height range of the measurement is the distance between the highest and the lowest point that can be measured within a ROI. A large height range means that objects that vary much in height can be measured.

The resolution is the smallest height variation that can be measured. High resolution

means that small variations can be measured. But a high resolution also means that the

height range will be smaller, compared with using a lower resolution in the same ROI.

In general, the height range and the resolution depend on the angle between the laser and

the Ranger. If the angle is very small, the location of the laser line will not vary much in the

sensor images even if the object varies a lot in height. This results in a large height range,

but low resolution.

On the other hand if the angle is large, even a small variation in height would be enough to

move the laser line some pixels up or down in the sensor image. This results in high resolution, but small height range.

Figure 3.5 – The resolution in the measured range is higher if the angle between the laser and the Ranger is large. (Panels: small angle and large angle, each showing the view from the Ranger, the sensor image, and the measured range in pixels.)


3.1.3 Main Geometries

There are four main principles for mounting the camera and the laser:

Ordinary: The Ranger is mounted right above the object, perpendicular to the direction of movement, and the laser is illuminating the object from the side.

This geometry gives the highest resolution when measuring range, but also results in miss-register, that is, a high range value in a profile corresponds to a different y coordinate than a low range value.

Reversed ordinary: As the Ordinary setup, but the placement of the laser and the Ranger has been switched so that the lighting is placed above the object.

When measuring range, the reversed ordinary geometry does not result in miss-register, but gives slightly lower resolution than the ordinary geometry.

Specular: The Ranger and the lighting are mounted on opposite sides of the normal.

Specular geometries are useful for measuring dark or matte objects, since they require less light than the other geometries.

Look-away: The Ranger and the lighting are mounted on the same side of the normal.

This geometry can be useful for avoiding unwanted reflexes but requires more light than the other methods and gives lower resolution.

Figure 3.6 – Main geometries for mounting the Ranger and laser. (Panels: Ordinary, Reversed ordinary, Specular, and Look-away, with the angles α and β marked.)


As a rule of thumb, the height resolution increases with the angle between the Ranger and the laser, but the resolution also depends on the angle between the Ranger and the height direction (z axis).

The following formulas can be used for approximating the resolution for the different

geometries, in for example mm/pixel:

Geometry: Approximate range resolution

Ordinary: ∆Z ≈ ∆X / tan(β)

Reversed ordinary: ∆Z ≈ ∆X / sin(α)

Specular: ∆Z ≈ ∆X · cos(β) / sin(α + β)

If α = β: ∆Z ≈ ∆X / (2 · sin(α))

Look-away: ∆Z ≈ ∆X · cos(β) / sin(|α – β|)

where:

∆Z = Height resolution (mm/pixel)

∆X = Width resolution (mm/pixel)

α = Angle between Ranger and vertical axis (see figure 3.6)

β = Angle between laser and vertical axis (see figure 3.6)

Note that these approximations give the resolution for whole pixels. If the measurement is made with sub-pixel resolution, the resolution in the measurement is the approximated resolution divided by the sub-pixel factor. For example, if the measurement is made with the Hi3D component, which has a resolution of 1/16th pixel, the approximate resolution is ∆Z/16.
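The approximations above can be evaluated with a short script. This is a minimal sketch: the function name `range_resolution`, its parameters, and the example values are assumptions made for illustration. The angles must be chosen so that the denominators are non-zero.

```python
import math


def range_resolution(geometry: str, delta_x: float,
                     alpha_deg: float = 0.0, beta_deg: float = 0.0,
                     subpixel_factor: int = 1) -> float:
    """Approximate height resolution (same unit as delta_x, per sub-pixel).

    delta_x is the width resolution (for example mm/pixel), alpha_deg is the
    angle between Ranger and vertical axis, and beta_deg is the angle between
    laser and vertical axis. subpixel_factor is 1 for whole pixels, 2 for
    1/2 pixel resolution, and 16 for 1/16 pixel resolution.
    """
    a = math.radians(alpha_deg)
    b = math.radians(beta_deg)
    if geometry == "ordinary":
        dz = delta_x / math.tan(b)
    elif geometry == "reversed ordinary":
        dz = delta_x / math.sin(a)
    elif geometry == "specular":
        dz = delta_x * math.cos(b) / math.sin(a + b)
    elif geometry == "look-away":
        dz = delta_x * math.cos(b) / math.sin(abs(a - b))
    else:
        raise ValueError("unknown geometry: " + geometry)
    return dz / subpixel_factor


# Assumed example: ordinary geometry, 0.1 mm/pixel width resolution, laser at 45 degrees.
print(round(range_resolution("ordinary", 0.1, beta_deg=45.0), 6))       # 0.1
# The same setup measured with 1/16 pixel resolution.
print(round(range_resolution("ordinary", 0.1, beta_deg=45.0,
                             subpixel_factor=16), 6))                   # 0.00625
```

For the specular geometry with α = β, the general expression reduces to ∆X / (2 · sin(α)), which can be checked by calling the function with equal angles.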

3.2 Intensity and Scatter Measurements

For other types of measurements than range, a general recommendation is to align the

light with the Ranger’s optical axis (as in figure 1.7 on page 26), or mount the lighting so

that the light intersects the optical axis at the lens’ entrance pupil. By doing so, the light

will always be registered by the same rows on the sensor, regardless of the height of the

object, and triangulation effects can be avoided.

An exception is when gloss is going to be measured, since this type of measurement requires a specular geometry and usually a large angle. However, the triangulation effect is strong if the objects vary in height. Therefore it is difficult, if not impossible, to measure gloss on objects that have large height variations.

3.3 MultiScan

When measuring with MultiScan, it is important to separate the light sources, so that the

light used for illuminating one part of the sensor does not disturb the measurements made

on other parts of the sensor.

If separating the light sources is difficult, the measurements may be improved by only

measuring light with specific wavelengths, using filters and colored (or IR) lightings.

For example, an IR band pass filter can be mounted so that it covers a part of the sensor,

and an IR laser can be used for illuminating the object in that part. This way, range can be

measured in the IR filtered part of the sensor, and at the same time intensity can be

measured in the non-filtered area using white light, without disturbing the range measurements.

For certain Ranger models, a built-in IR filter is available as an option. The IR filter is

mounted so that rows with low row numbers are unaffected by the filter (0–10 for Ranger,

0–16 for ColorRanger), and rows 100–511 are filtered. Please refer to “Ranger E and D

Models” on page 113 for a list of available models.


Optical axis

IR laser

scatter

Ranger with

IR option

Entrance pupil

of lens

IR laser

3D

White light

source

Field-of-vie

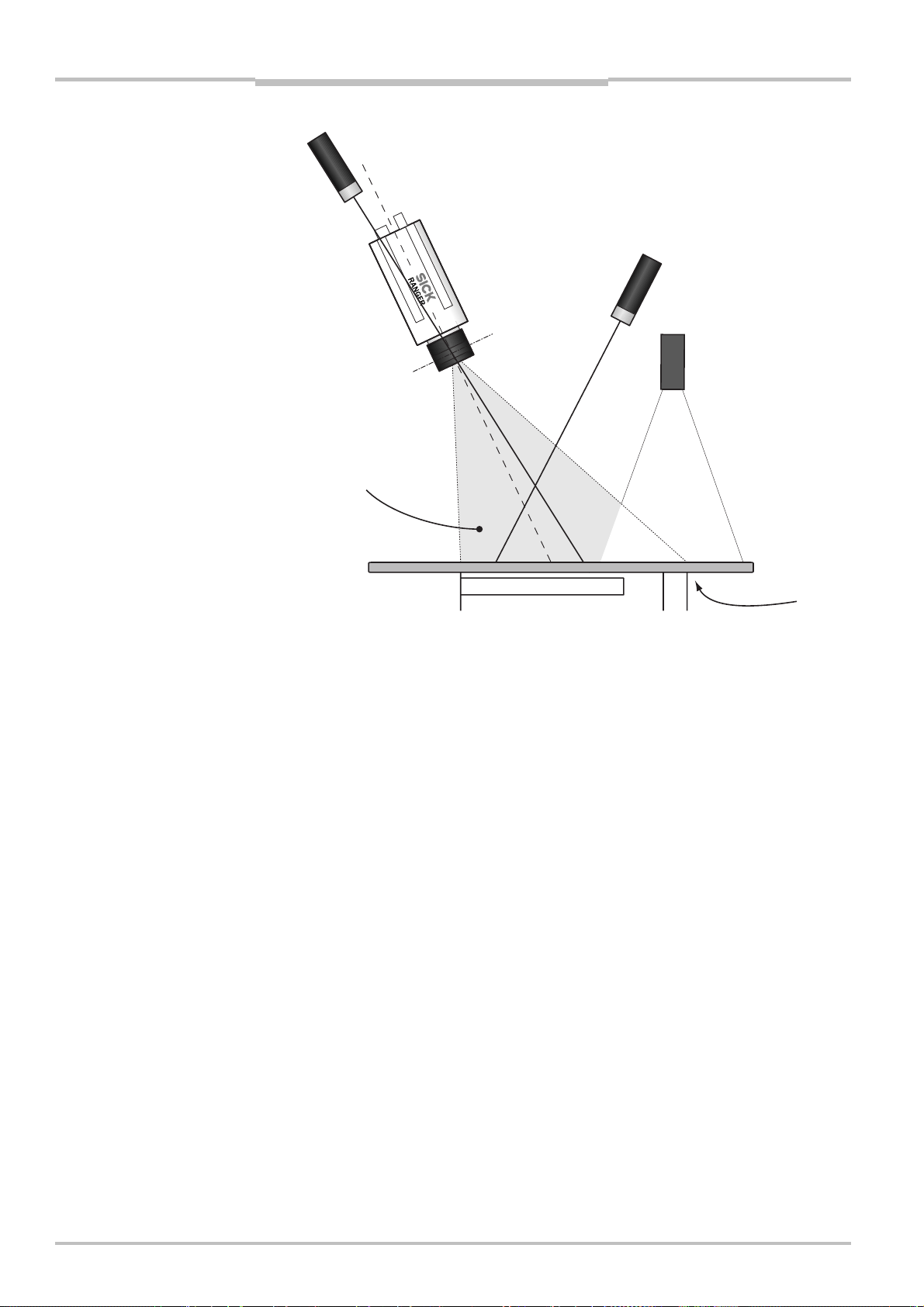

Figure 3.7 – Example of MultiScan set-up using one white light source, one IR laser for

scatter measurement and one IR laser for 3D measurement, and a Ranger

with the IR filter option. Note that the scatter laser is mounted so that the

light beam intersects the optical axis at the lens’ entrance pupil.

IR filtered rows

0 Row: 511

512

High-resolution

row

3.4 Color Measurements

The setup for color data acquisition can follow the general guidelines for MultiScan setup,

with the following additions:

Geometry: It is recommended to use the ordinary geometry with the camera mounted more or less vertically above the object, since this makes the light source alignment easier.

Note that it is typically good to tilt the setup slightly off true vertical alignment to avoid specular reflections.

Illumination: The white light source for color acquisition needs to cover all color rows on the sensor, that is, at least around 10 rows. It must also be ensured that the illumination covers the color region for the entire height range in the FOV.

When using the high-resolution grayscale row together with the standard color rows, the illumination line must cover approximately 50 rows.

Alignment: Since color image acquisition with ColorRanger requires that data from different channels are registered together, it is important that the camera is well aligned with the object’s direction of movement. If this is not the case, the color channel registration must also compensate for a sideways shift, which is currently not supported by the iCon API.


Figure 3.8 – Correct alignment between camera and object motion. The camera’s y-direction should be parallel with the direction of transportation.

3.5 Light sources for Color and Gray Measurements

Different light sources have different spectral properties – that is, different composition of

wavelengths. This section lists some typical light sources, some of which are commonly

used for line-scan gray and color imaging applications.

A measure often used for the spectral content of a light source is color temperature. A high color temperature (4000–6000 K) indicates a “cold” bluish light and a low color temperature (2000–3000 K) a “warm” yellow-reddish light. Color temperature is measured in kelvin (K).

3.5.1 Incandescent lamps

Incandescent lamps are not often used in line-scan machine vision applications, because they commonly use a low-frequency AC drive current, which causes oscillations in the light.

Their light is warm, with a typical color temperature of ~2700 K.

3.5.2 Halogen lamps

Halogen lamps are common in machine vision applications, and are often coupled to a

fiber-optic extension so that shapes such as a line or ring can be generated. In the optical

path a filter can be placed to alter the color temperature of the lamp.

Halogen lamps typically have a color temperature of ~3000 K, which means that the

illumination has a fairly red appearance.

In a ColorRanger application using halogen illumination, it is expected that the balance of the blue and green channels needs to be set much higher than that of the red channel, due to the strong red content. To shift the color temperature of the lamp it is also possible to insert additional filters in the light source. Photographic cooling color temperature filters in the 80A/B series are recommended for this.


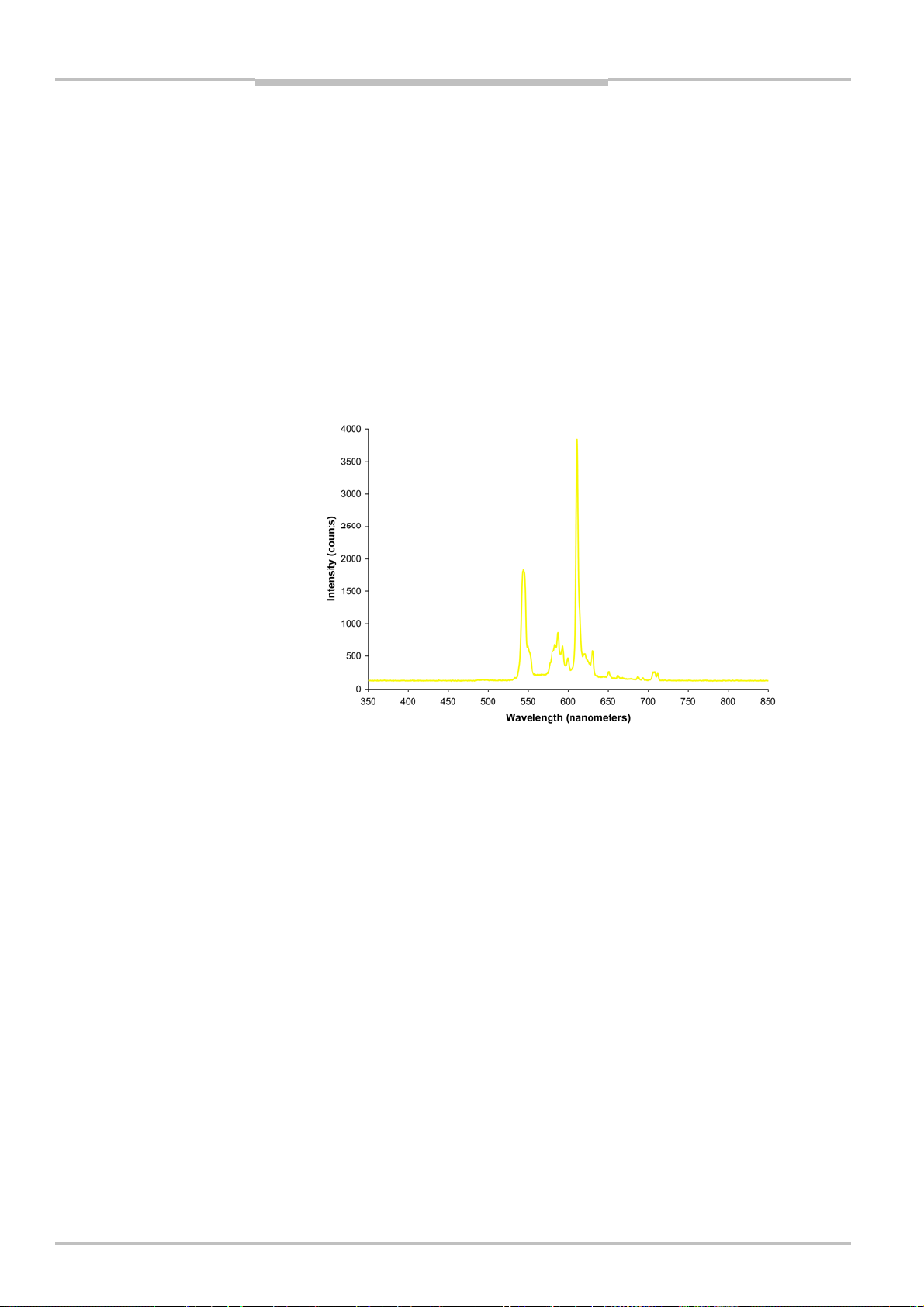

3.5.3 Fluorescent tubes

A fluorescent tube illumination has a very uneven spectral distribution, as shown in the

figure below.

Furthermore, there are many different versions with different color temperatures and therefore different color balance. Warm white fluorescent tubes typically have color temperatures of ~2700 K, neutral white 3000 K or 3500 K, cool white 4100 K, and daylight white in the range of 5000 K to 6500 K.

In line-scan machine vision applications it is important that the drive frequency of the

fluorescent tube is higher than the scan rate of the camera to avoid flicker in the images.

Fluorescent tubes are light efficient and have low IR content, but they are difficult to focus

to a narrow line. If using IR lasers and the IR pass filter option the white illumination may

cover the same region as the lasers without interference, reducing the focusing problem.

Figure 3.9 – Illustration of spectrum from “yellow” fluorescent tube illumination. [Picture

from Wikipedia.]

3.5.4 White LEDs

LEDs are commonly used in machine vision since they can be focused to different shapes

and give high light power.

White LEDs have a strong blue peak from the main LED and then a wider spectrum from

the phosphorescence giving the white appearance.

This type of illumination is expected to require approximately 60-70% balance on the blue

and green channels compared with the red.
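One way to arrive at such balance values is to image a white reference and compare the mean response of the three channels. The sketch below assumes that “balance” can be treated as a per-channel gain relative to the red channel; the exact definition of the balance setting in the Ranger software may differ, and the function name and sample values are hypothetical.

```python
from typing import Tuple


def balance_relative_to_red(mean_red: float, mean_green: float,
                            mean_blue: float) -> Tuple[float, float]:
    """Return (green, blue) gains relative to a red gain of 1.0.

    The means are the average channel responses measured on a white
    reference. Multiplying each channel by its gain makes the three
    channels equal on that reference (assumed meaning of "balance").
    """
    if min(mean_red, mean_green, mean_blue) <= 0:
        raise ValueError("channel means must be positive")
    return mean_red / mean_green, mean_red / mean_blue


# Assumed white-reference means under white LED light (weak red response).
green_gain, blue_gain = balance_relative_to_red(130.0, 200.0, 185.0)
print(round(green_gain, 2), round(blue_gain, 2))  # 0.65 0.7
```

With these assumed measurements, the green and blue gains end up at roughly 65% and 70% of the red gain, in line with the range given above.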


Response

[ADu per pixel / (µJ/cm

3000

2500

2000

1500

1000

500

0

300 400 500 600 700 800 900 1000 1100

2

)]

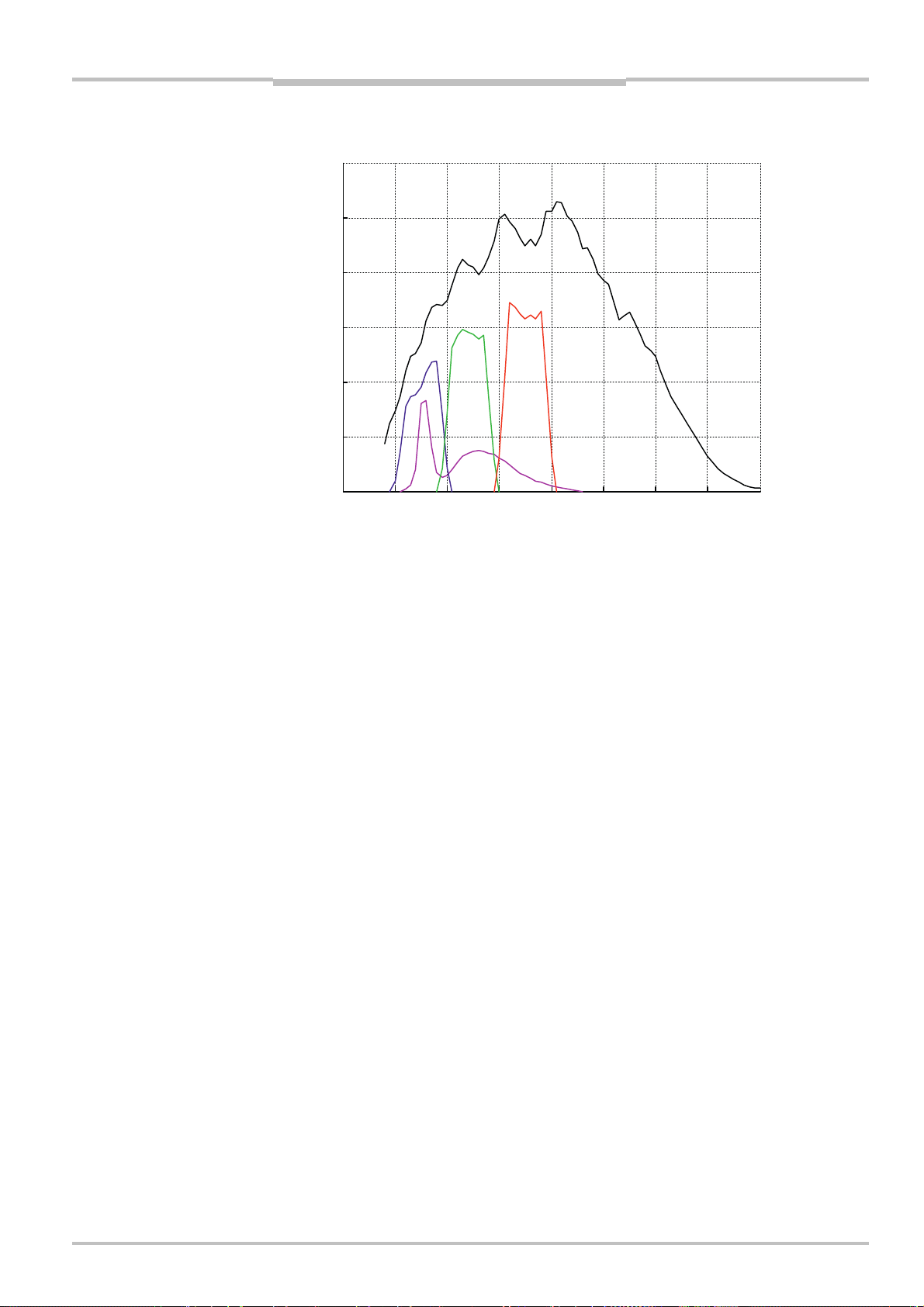

M12 Color Response

ht wavelength [nm]

Li

Figure 3.10 –The spectrum of a white LED plotted in magenta. It has a peak in the blue

range that fits well with the blue filter on the sensor, and has a fairly low

amount of red in the spectrum.

3.5.5 Colored LEDs

An LED illumination can also be made from individual red, green and blue LEDs. In this case the spectrum of each LED must fall within the respective filter band, and the balance depends on the individual power of the LEDs.


4 Ranger Studio

The Ranger Studio application is a tool for evaluating data and different set-ups of the camera.

With Ranger Studio, you can change the settings for the camera and instantly see how the

changes affect the measurement result from the Ranger.

Once the Ranger has been set up to deliver measurement data that meets the requirements, the settings can be saved in a parameter file.

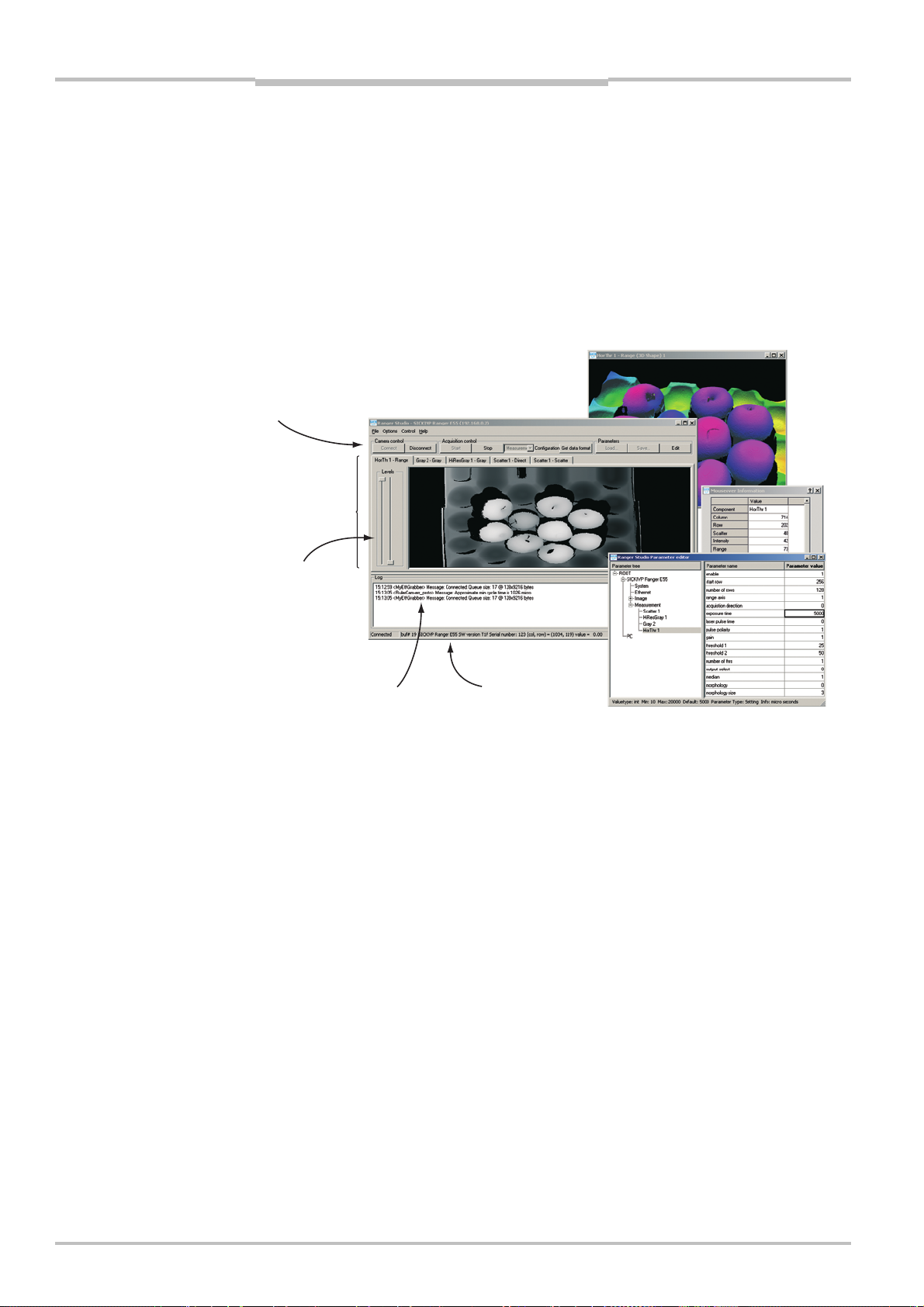

Ranger Studio consists of a Main Window, Zoom Windows, Mouseover Information and a

Parameter Editor.

Figure 4.1 – Ranger Studio windows. (Labels in the figure: Main window with control bar, visualization tab, levels, log, and status bar; Zoom window; Mouseover Information window; Parameter editor.)

4.1 Ranger Studio Main Window

The main window is the core of the application. It consists of a menu bar, a control bar

with buttons, tabs with visualizations of the measurement data and levels sliders, a log

area, and a status bar.

Menu bar – menus with access to visualization windows and options.

Control bar – contains the functions for controlling the Ranger.

Visualization tabs – used for visualizing the measurements made by the camera.

Levels – used for adjusting which measurement values are visualized in the Visualization tab.

Log – shows error and status messages.

Status bar – shows information such as the number of scans that Ranger Studio has

received from a Ranger, and the coordinates and value for a pixel under the mouse

pointer.

Mouseover Information window – can be used for showing detailed information of the data under the mouse pointer in a visualization tab. The window is enabled and disabled in the menu View → Mouseover information.

The buttons in the control bar are grouped into three categories:

Camera control – contains buttons to connect and disconnect the camera.

Acquisition control – to start and stop the scanning loop and to change between measuring in Image or Measurement mode.

Image mode is used for set-up purposes.

Measurement mode is used for collecting measurement data.


Parameters – to handle parameter files and to start the parameter editor.

All these tools are also available in the menus.

4.1.1 Visualization Tabs

The visualization tabs are used for visualizing the result from the camera. The main window has one tab for each type of measurement made by the Ranger with the current configuration. The visualization can be disabled and enabled by selecting Options → Visualize. This can be useful when streaming to file, see 4.4.9 “Save and Load Measurement Data”.

The number of tabs is automatically updated as components are activated or deactivated in the configuration.

Image Mode

In Image mode (when the image configuration is active), there is one visualization tab showing a grayscale 2D image. This view can be useful for example when adjusting the exposure time, or deciding the region of interest.

Figure 4.2 – Visualization tab with grayscale 2D image.

The displayed image is the sensor image from the Ranger, which represents what is in the

Ranger’s field of view.

For the ColorRanger E, available high-resolution rows (depending on model) can also be displayed in the image. The high-resolution rows are shown at the top of the image, above the standard rows. The display of the high-resolution rows is adapted to maintain the proportions of the sensor in the following ways:

For ColorRanger E55, the high-resolution rows have twice as many columns as standard rows, so Ranger Studio displays only every other column to keep the width of the image the same.

The high-resolution rows are taller than normal sensor rows (gray 3 times, color 4 times), thereby covering a larger cross-section on the object in front of the camera. Each high-resolution row is therefore displayed in the image on 3 and 4 lines (pixels) respectively.

The black lines in the image correspond to the area between the high-resolution rows, and between the high-resolution rows and the standard sensor.

Color high-resolution rows have a higher sensitivity, so the image from these rows appears brighter than other rows.
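The display rules above can be illustrated with a short sketch. It is not taken from Ranger Studio; the function name `display_hires_row` and the list-based row representation are assumptions made purely for illustration. It follows the ColorRanger E55 case, where every other column is kept, and repeats each row on 3 (gray) or 4 (color) display lines.

```python
def display_hires_row(row, is_color):
    """Return the display lines for one high-resolution row (illustrative only).

    row      -- list of pixel values, twice as wide as a standard row
    is_color -- True for a color high-resolution row, False for a gray one
    """
    # Keep every other column so the width matches a standard row.
    narrowed = row[::2]
    # Gray rows are 3 times as tall as a standard row, color rows 4 times.
    line_count = 4 if is_color else 3
    return [list(narrowed) for _ in range(line_count)]


gray_lines = display_hires_row([10, 11, 20, 21, 30, 31], is_color=False)
color_lines = display_hires_row([10, 11, 20, 21, 30, 31], is_color=True)
print(len(gray_lines), len(color_lines), gray_lines[0])  # 3 4 [10, 20, 30]
```

Because each high-resolution row occupies several display lines, a row index read from the displayed image cannot be used directly as a sensor row number.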

The high-resolution rows are displayed in image mode by enabling the Show hires parameter in the image component. Note that when displaying the high-resolution rows, the row

number shown in the status bar and Info window does not match the number of the

sensor row.

Figure 4.3 – An image showing the high-resolution part and the first 32 sensor rows of the standard sensor region. Note the black areas, the brighter color high-resolution part, and the reduced vertical resolution of the high-resolution rows.

Labels in the figure: Image in Ranger Studio; Sensor layout; Hi-Res gray row; Hi-Res color rows; Area between rows; Standard sensor rows; row numbers 512, 514, 518, 522, 528.

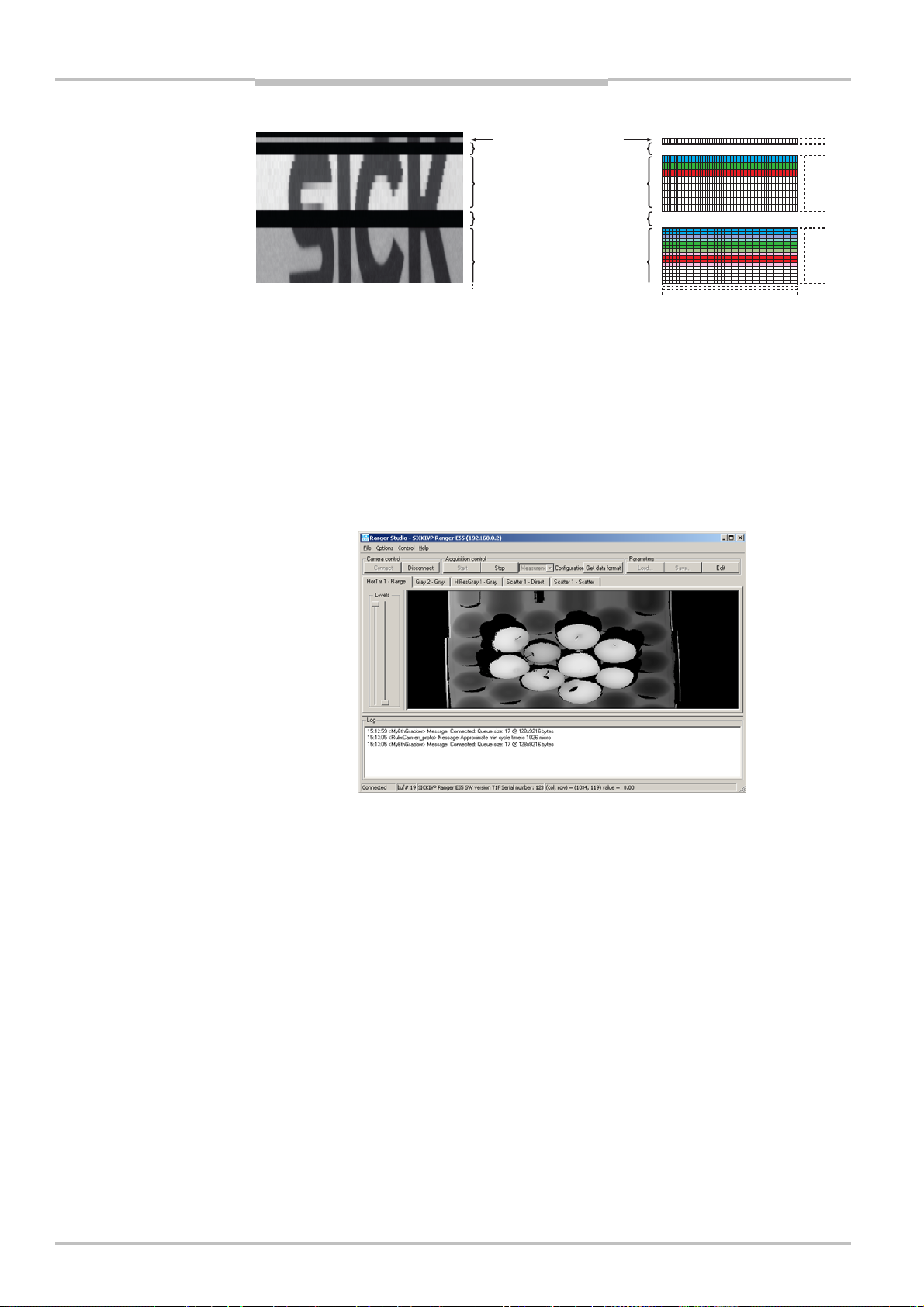

Measurement Mode

When the Ranger is running in Measurement mode, the main window contains visualization tabs for each active component in the configuration. If a component produces more than one type of profile, there is one tab for each type of profile. Each tab shows an image made from the corresponding profiles sent from the Ranger.

Figure 4.4 – Main window with tabs for range, scatter and intensity images.

The visualization tabs always show the range measurement data as an 8-bit grayscale image. This means that the original range measurement values are translated to 255 grayscale values, where 1 (black) corresponds to the lowest range value and 255 (white) corresponds to the highest value. The value 0 means missing data.

To display the actual measured value for a point in the visualized image, place the pointer

over the point in the image. The value, together with the coordinates for the point, will be

displayed in the status bar and in the Info window, if open.

When measuring color, the color information for each acquired color is displayed as grayscale images in one tab each, and one tab with a compound color image. To get a proper

compound color image, you have to set up the registration parameters. See “Visualizing

Color Images” on page 39 for more information.

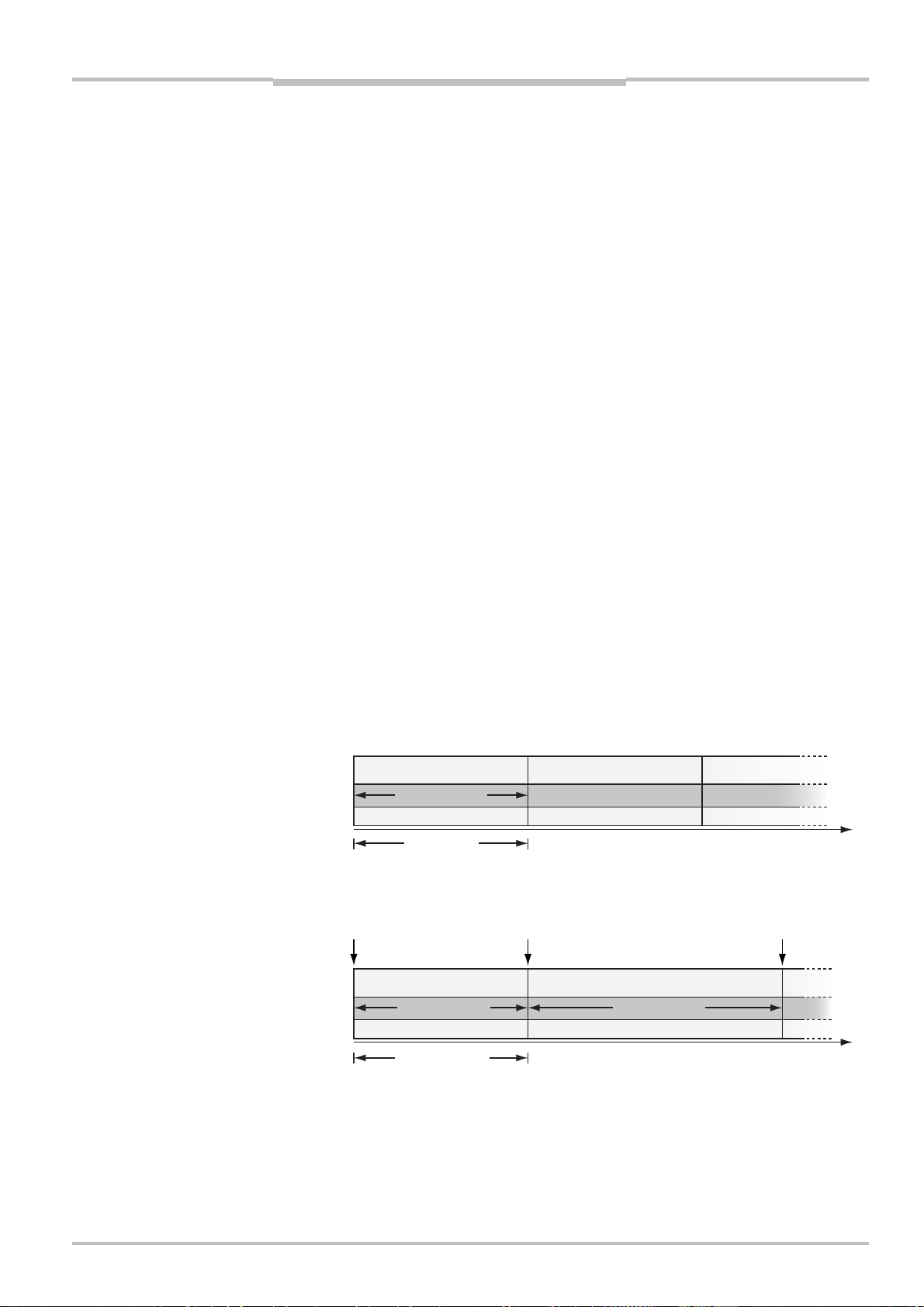

The Levels sliders to the left in each tab can be used for visualizing only a certain range of

measurement values. The right slider sets the highest value to visualize, and the left slider

the lowest value. Measurement values within the range will be translated to the 255

available grayscale values, and the values outside the range will be displayed as black (1)

or white (255).

This can be useful for example when studying small range variations on a part of an object

that varies a lot in height. By adjusting the levels, the variations can be easier to view in

the visualization image, while parts higher or lower than the selected range are ignored.
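The effect of the Levels sliders can be sketched as a mapping from raw measurement values to display values. The sketch below is only an illustration: the function name `to_gray`, the linear scaling, and the use of raw value 0 as the missing-data marker are assumptions, not details confirmed by this manual.

```python
def to_gray(value, low, high, missing=0):
    """Map one raw measurement value to an 8-bit display value (illustrative).

    Assumptions (not from the manual): linear scaling between the two
    levels, and the raw value `missing` marks missing data.
    """
    if value == missing:
        return 0            # 0 is reserved for missing data
    if value <= low:
        return 1            # at or below the lower level: black
    if value >= high:
        return 255          # at or above the upper level: white
    # Spread the selected range linearly over the gray values 1..255.
    return 1 + round((value - low) * 254 / (high - low))


profile = [0, 90, 100, 150, 200, 250]
print([to_gray(v, low=100, high=200) for v in profile])
# [0, 1, 1, 128, 255, 255]
```

Narrowing the interval between `low` and `high` spends all 255 gray values on a smaller height range, which is why small variations become easier to see.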

Figure 4.5 – The original measurement values in a range profile (left), and the corresponding row in the image displayed in the visualization window (right), using different Levels settings. Labels in the figure: “Full range of values is visualized” and “Only selected range of values is visualized”.

4.2 Zoom Windows

The zoom function is available for any visualization tab. Open a zoom window by right-clicking in the image and choosing the type of window:

8-bit grayscale zoom window

3D zoom window

Profile zoom window

Figure 4.6 – Range visualization tab with Profile and 3D zoom windows.

When choosing a zoom window from this menu a rectangle or a line is shown at the upper

left corner of the active visualization tab, and a new zoom window is opened. Several zoom

windows can be shown simultaneously.

8-bit grayscale – Green rectangle. The region is displayed as a grayscale or color 2D image.

3D zoom – Yellow rectangle. The data is displayed as a 3D surface, where variation in height is also indicated by different colors. The lowest value is shown in black.

Before generating the 3D surface, the data is filtered by a small

median filter to reduce noise peaks. The 3D zoom window can be of

use even if the data is intensity data.

Profile zoom – Blue line. The data is displayed as a profile, where range, intensity or scatter is displayed as the y-coordinate. For color data, three profiles are displayed: one each for red, green and blue.
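The noise reduction applied before the 3D surface is generated can be illustrated with a small median filter. The manual only states that the filter is "small", so the 3x3 kernel, the border handling (edge pixels are repeated) and the function name `median_filter_3x3` in the sketch below are all assumptions.

```python
def median_filter_3x3(image):
    """Apply a 3x3 median filter to a 2D list of numbers (illustrative).

    The kernel size and the border handling (edge pixels are repeated)
    are assumptions; the manual only says the filter is "small".
    """
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        out_row = []
        for x in range(width):
            window = []
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp coordinates so border pixels reuse their neighbors.
                    yy = min(max(y + dy, 0), height - 1)
                    xx = min(max(x + dx, 0), width - 1)
                    window.append(image[yy][xx])
            out_row.append(sorted(window)[4])  # middle of 9 sorted values
        result.append(out_row)
    return result


noisy = [
    [5, 5, 5],
    [5, 99, 5],
    [5, 5, 5],
]
print(median_filter_3x3(noisy))  # [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
```

A median filter removes isolated peaks such as the single value 99 while leaving flat regions unchanged, which suits the stated purpose of reducing noise peaks.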

The contents of the zoom image are updated as the line or rectangle is moved and resized

in the active visualization window:

A rectangle is resized by pressing the left mouse button with the cursor on the frame, and moved by pressing the left mouse button with the cursor in the middle of the rectangle.

A line is moved by pressing the left mouse button while pointing the cursor on the line. A line cannot be resized; it always goes across the entire image in the visualization tab.

When viewing the 3D zoom, you can change the perspective and coloring of the 3D surface:

Hold down the left mouse button and move it to change the viewing direction, so that

the object can be viewed from various angles.

Hold down the right mouse button and move it up or down in the image to remap the

coloring of the surface. This can be used to emphasize different parts of the current

data.

When viewing the Profile zoom, you can zoom in on a part of the profile, by pressing the

left mouse button and dragging a rectangle over the area to zoom in on. By clicking with

the right mouse button in the Profile zoom window, the entire profile will be displayed in

the zoom window again.

4.3 Parameter Editor

The Parameter Editor button in Ranger Studio main window opens the Parameter Editor

window, which retrieves the current parameters from the system and allows you to modify

parameters in the camera.

The Parameter Editor consists of three areas: Parameter tree, Parameter list and a Status

bar.

Figure 4.7 – The Parameter editor window. Labels in the figure: Parameter tree, Parameter list, Status bar.

The parameter tree shows a hierarchical structure of the system configuration. When

selecting an item in the parameter tree all available parameters for that item are shown in

the Parameter list on the right.

The parameter list is a table containing the parameter names and parameter values. When selecting a parameter name, or its value, information about parameter type, range, etc. is displayed in the status bar at the bottom. The value of a parameter can be changed in the parameter value column.

The status bar at the bottom of the parameter editor displays additional information about

the selected parameter.

Value – The value type of the parameter, for example int for integer.

Min – The lower limit of the parameter.

Max – The upper limit of the parameter.

Default – The default value of the parameter.

Parameter type – Whether the parameter is of type Argument, Setting or Property:

    Argument – The camera needs to be stopped before changing this parameter.

    Setting – This parameter can be changed at any time.

    Property – Read-only parameter that cannot be changed.

Info – Additional information about typical valid values, units, use, etc.
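The rules for the three parameter types can be summarized in a short sketch. The `Parameter` class, the `can_change` function and the example parameter names below are hypothetical and are not part of any Ranger API; they only restate the fields and rules listed above.

```python
from dataclasses import dataclass


@dataclass
class Parameter:
    """Hypothetical model of one Parameter Editor entry (not a real API)."""
    name: str
    value: int
    minimum: int
    maximum: int
    kind: str  # "Argument", "Setting" or "Property"


def can_change(param, new_value, camera_running):
    """Return True if the change is allowed under the rules listed above."""
    if param.kind == "Property":
        return False                      # read-only
    if param.kind == "Argument" and camera_running:
        return False                      # the camera must be stopped first
    return param.minimum <= new_value <= param.maximum


# Example parameters with made-up names and limits.
argument = Parameter("example argument", 10, 0, 100, "Argument")
setting = Parameter("example setting", 10, 0, 100, "Setting")

print(can_change(argument, 20, camera_running=True))   # False
print(can_change(argument, 20, camera_running=False))  # True
print(can_change(setting, 20, camera_running=True))    # True
print(can_change(setting, 200, camera_running=True))   # False, above Max
```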

When you are satisfied with the parameter settings in the camera, use the Save parameters button in the Ranger Studio main window to save them as a parameter file.

For detailed information about parameters, see chapters 6 “Ranger D Parameters” or

7 “Ranger E Parameters”.

4.3.1 Flash retrieve and store of parameters

Normally the parameters are reset to the factory default configuration when restarting the

camera. If you want to permanently save a parameter file in the camera flash memory the

following menu items can be used (in the Options->Flash parameters menu):

Store to flash – Stores the currently active parameters in camera flash. Before using the command, upload the desired parameter file to the camera using Parameters->Load….

Retrieve from flash – Retrieves the parameters stored in camera flash to the active parameters.

Auto retrieve on boot – Enable the option to make the camera automatically perform the command Retrieve from flash each time the camera boots.
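How the three flash commands interact with a camera restart can be pictured with a toy model. The class `CameraParameterModel` and the parameter name `example` are hypothetical, and two behaviors are assumptions rather than documented facts: retrieving from an empty flash does nothing, and the Auto retrieve on boot option itself survives a restart.

```python
class CameraParameterModel:
    """Toy model of active versus flash-stored parameters (illustrative only)."""

    def __init__(self, factory_defaults):
        self.factory_defaults = dict(factory_defaults)
        self.active = dict(factory_defaults)
        self.flash = None                  # nothing stored yet
        self.auto_retrieve_on_boot = False

    def store_to_flash(self):
        # Copy the currently active parameters into flash.
        self.flash = dict(self.active)

    def retrieve_from_flash(self):
        # Assumption: an empty flash leaves the active parameters untouched.
        if self.flash is not None:
            self.active = dict(self.flash)

    def reboot(self):
        # Parameters normally return to the factory defaults on restart.
        self.active = dict(self.factory_defaults)
        if self.auto_retrieve_on_boot:
            self.retrieve_from_flash()


camera = CameraParameterModel({"example": 1})
camera.active["example"] = 7
camera.store_to_flash()

camera.reboot()
print(camera.active)   # {'example': 1}

camera.auto_retrieve_on_boot = True
camera.reboot()
print(camera.active)   # {'example': 7}
```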

4.4 Using Ranger Studio

This section introduces the basics of Ranger Studio and describes how to:

Get an Image from the Ranger

Adjust the Exposure time

Set the Region-of-Interest (ROI)

Collect 3D data