
Ranger3

3D vision

OPERATING INSTRUCTIONS


Described product

2011/65/EU

Ranger3

Manufacturer

SICK AG

Erwin-Sick-Str. 1

79183 Waldkirch

Germany

Legal information

This work is protected by copyright. Any rights derived from the copyright shall be reserved for SICK AG. Reproduction of this document or parts of this document is only permissible within the limits of the legal determination of Copyright Law. Any modification, abridgment or translation of this document is prohibited without the express written permission of SICK AG.

The trademarks stated in this document are the property of their respective owners.

© SICK AG. All rights reserved.

Original document

This document is an original document of SICK AG.


OPERATING INSTRUCTIONS | Ranger3 8020774/14IM/2019-07 | SICK

Subject to change without notice


Contents


1 About this document........................................................................ 7

1.1 Information on the operating instructions.............................................. 7

1.2 Explanation of symbols............................................................................ 7

2 Safety information............................................................................ 8

2.1 Correct use................................................................................................ 8

2.2 Improper use............................................................................................. 8

2.3 Limitation of liability................................................................................. 8

2.4 Modifications and conversions................................................................ 8

2.5 Requirements for skilled persons and operating personnel.................. 9

2.6 Operational safety and particular hazards.............................................. 9

2.7 Laser safety............................................................................................... 10

3 Product description........................................................................... 11

3.1 Introduction............................................................................................... 11

3.2 Measuring with a 3D camera................................................................... 11

3.3 Hardware description............................................................................... 12

3.3.1 Sensor...................................................................................... 12

3.4 Standards.................................................................................................. 13

3.4.1 GenICam™............................................................................... 13

3.4.2 GigE Vision®............................................................................ 13

4 Transport and storage....................................................................... 14

4.1 Transport................................................................................................... 14

4.2 Unpacking.................................................................................................. 14

4.3 Transport inspection................................................................................. 14

4.4 Storage...................................................................................................... 14

5 Mounting............................................................................................. 16

5.1 Mounting instructions............................................................................... 16

5.2 Required parts.......................................................................................... 16

5.3 Mounting the camera............................................................................... 16

5.3.1 Mounting an optical filter or a Scheimpflug adapter............. 17

6 Electrical installation........................................................................ 18

6.1 Wiring notes.............................................................................................. 18

6.2 Security...................................................................................................... 18

6.3 Connecting the camera............................................................................ 19

6.4 Electrical connections.............................................................................. 19

7 Configuration..................................................................................... 23

7.1 Software installation................................................................................. 23

7.1.1 System recommendations...................................................... 23

7.1.2 Network preparations.............................................................. 23

7.1.3 Installing PC software.............................................................. 23


7.2 Concepts................................................................................................... 23

7.2.1 Selectors.................................................................................. 23

7.3 Configuring Ranger3................................................................................. 24

7.4 Regions...................................................................................................... 24

7.4.1 Sensor regions......................................................................... 25

7.4.2 Extraction regions.................................................................... 25

7.4.3 Device scan type...................................................................... 26

7.4.4 Maximum buffer size............................................................... 27

7.5 Exposure time and measurement speed................................................ 27

7.6 Laser strobe output signals..................................................................... 27

7.7 3D profiling................................................................................................ 28

7.7.1 Laser impact position on the sensor...................................... 28

7.7.2 Measurement method............................................................. 29

7.7.3 Detection threshold................................................................. 29

7.8 3D data formats........................................................................................ 29

7.9 Reflectance data....................................................................................... 30

7.10 High dynamic range (HDR) imaging......................................................... 30

7.11 Triggering................................................................................................... 31

7.11.1 3D triggering concepts............................................................ 31

7.11.2 Triggering modes..................................................................... 32

7.11.3 Triggering using an encoder.................................................... 33

7.11.4 Frame triggering....................................................................... 34

7.12 Chunk data................................................................................................ 35

7.13 Features.................................................................................................... 35

7.13.1 Device control.......................................................................... 36

7.13.2 Image format control............................................................... 38

7.13.3 Scan 3D control....................................................................... 39

7.13.4 Acquisition control................................................................... 40

7.13.5 Digital I/O control..................................................................... 41

7.13.6 Timer control............................................................................ 41

7.13.7 Encoder control........................................................................ 42

7.13.8 Event control............................................................................ 42

7.13.9 File access control................................................................... 42

7.13.10 Chunk data control.................................................................. 43

7.13.11 Test control............................................................................... 43

7.13.12 Transport layer control............................................................. 44

7.13.13 Firmware update...................................................................... 45

8 Operation............................................................................................ 46

8.1 Description of the graphical user interface............................................ 46

8.1.1 Menus....................................................................................... 46

8.1.2 Parameter editor...................................................................... 47

8.1.3 Workflow steps......................................................................... 48

8.1.4 Image handling controls.......................................................... 50

8.1.5 Image view options.................................................................. 51

8.1.6 Log and statistics tabs............................................................ 52


8.1.7 General information................................................................. 52

8.2 Using the interface................................................................................... 52

8.2.1 Connecting and getting a 2D image....................................... 52

8.2.2 Adjusting focus......................................................................... 54

8.2.3 Recording images.................................................................... 54

8.2.4 Loading and saving parameter files....................................... 55

8.2.5 Editing parameters.................................................................. 55

8.2.6 Collecting 3D data................................................................... 60

8.2.7 View modes.............................................................................. 61

8.2.8 Color range............................................................................... 62

8.2.9 Data presentation.................................................................... 63

8.2.10 Height map scaling.................................................................. 64

8.2.11 Light control............................................................................. 64

8.2.12 Loading and saving image buffers......................................... 65

8.2.13 Handling log messages........................................................... 66

8.2.14 Updating firmware................................................................... 66

9 Maintenance...................................................................................... 67

9.1 Maintenance plan..................................................................................... 67

9.2 Cleaning..................................................................................................... 67

10 Troubleshooting................................................................................. 68

10.1 Over triggering........................................................................................... 68

10.2 Encoder line trigger setup tips................................................................. 68

10.3 Network card settings.............................................................................. 68

10.4 Rescue mode............................................................................................ 68

10.5 Repairs...................................................................................................... 69

10.6 Returns...................................................................................................... 69

11 Decommissioning............................................................................. 70

11.1 Disposal..................................................................................................... 70

12 Technical data.................................................................................... 71

12.1 Product data.............................................................................................. 71

12.2 Features.................................................................................................... 71

12.3 Performance............................................................................................. 71

12.3.1 Light sensitivity........................................................................ 71

12.3.2 Maximum line rate................................................................... 72

12.4 Interfaces.................................................................................................. 72

12.5 Ambient data............................................................................................. 73

12.6 Mechanics and electronics...................................................................... 73

12.7 Dimensional drawings.............................................................................. 74

13 Accessories........................................................................................ 75

14 Glossary.............................................................................................. 76

14.1 Terms and abbreviations.......................................................................... 77


15 Annex.................................................................................................. 78

15.1 Range (3D) measurement........................................................................ 78

15.1.1 Occlusion.................................................................................. 79

15.1.2 Width resolution and resolution in the motion direction....... 80

15.1.3 Height-range and height resolution........................................ 80

15.1.4 Main geometries...................................................................... 80

15.1.5 Sensor coordinate system....................................................... 82

15.2 Recommended network card settings.................................................... 83

15.2.1 Connecting multiple cameras................................................. 84

15.3 Connecting encoders................................................................................ 84

15.4 EU declaration of conformity / Certificates............................................. 86

15.5 Scheimpflug adapters.............................................................................. 86


1 About this document

1.1 Information on the operating instructions

These operating instructions provide important information on how to use devices from SICK AG.

Prerequisites for safe work are:

• Compliance with all safety notes and handling instructions supplied.
• Compliance with local work safety regulations and general safety regulations for device applications.

The operating instructions are intended to be used by qualified personnel and electrical specialists.

NOTE

Read these operating instructions carefully before starting any work on the device, in order to familiarize yourself with the device and its functions.

The instructions constitute an integral part of the product and are to be stored in the immediate vicinity of the device so they remain accessible to staff at all times. Should the device be passed on to a third party, these operating instructions should be handed over with it.


These operating instructions do not provide information on operating the machine or system in which the device is integrated. For information about this, refer to the operating instructions of the specific machine.

1.2 Explanation of symbols

Warnings and important information in this document are labeled with symbols. The warnings are introduced by signal words that indicate the extent of the danger. These warnings must be observed at all times and care must be taken to avoid accidents, personal injury, and material damage.

DANGER

… indicates a situation of imminent danger, which will lead to a fatality or serious injuries if not prevented.

WARNING

… indicates a potentially dangerous situation, which may lead to a fatality or serious injuries if not prevented.

CAUTION

… indicates a potentially dangerous situation, which may lead to minor/slight injuries if not prevented.

NOTICE

… indicates a potentially harmful situation, which may lead to material damage if not prevented.

NOTE

… highlights useful tips and recommendations as well as information for efficient and trouble-free operation.


2 Safety information

2.1 Correct use

Streaming cameras are the vision image acquisition component in a machine vision system. They make measurements on the objects that pass in front of the camera, and send the measurement results to an external processing unit for further processing.

Comply with the data on the type label.

Misuse

Different or additional use is considered to be improper use. SICK AG shall not be held liable for personal injury and damage to property resulting from this.

2.2 Improper use

Any use outside of the stated areas, in particular use outside of the technical specifications and the requirements for intended use, will be deemed to be incorrect use.

• The device does not constitute a safety component in accordance with the respective applicable safety standards for machines.
• The device must not be used in explosion-hazardous areas, in corrosive environments or under extreme environmental conditions.
• Any use of accessories not specifically approved by SICK AG is at your own risk.

WARNING

Danger due to improper use!

Any improper use can result in dangerous situations.

Therefore, observe the following information:

■ The device should be used only in accordance with its intended use.
■ All information in these operating instructions must be strictly observed.

2.3 Limitation of liability

Applicable standards and regulations, the latest state of technological development, and our many years of knowledge and experience have all been taken into account when assembling the data and information contained in these operating instructions.

The manufacturer accepts no liability for damage caused by:

■ Failing to observe the operating instructions
■ Incorrect use
■ Use by untrained personnel
■ Unauthorized conversions
■ Technical modifications
■ Use of unauthorized spare parts, consumables, and accessories

With special variants, where optional extras have been ordered, or owing to the latest technical changes, the actual scope of delivery may vary from the features and illustrations shown here.

2.4 Modifications and conversions

NOTICE

Modifications and conversions to the device may result in unforeseeable dangers.


Interrupting or modifying the device or SICK software will invalidate any warranty claims against SICK AG. This applies in particular to opening the housing, even as part of mounting and electrical installation.

2.5 Requirements for skilled persons and operating personnel

WARNING

Risk of injury due to insufficient training.

Improper handling of the device may result in considerable personal injury and material damage.

■ All work must only ever be carried out by the stipulated persons.

The operating instructions state the following qualification requirements for the various areas of work:

■ Instructed personnel have been briefed by the operator about the tasks assigned to them and about potential dangers arising from improper action.
■ Skilled personnel have the specialist training, skills, and experience, as well as knowledge of the relevant regulations, to be able to perform tasks delegated to them and to detect and avoid any potential dangers independently.
■ Electricians have the specialist training, skills, and experience, as well as knowledge of the relevant standards and provisions to be able to carry out work on electrical systems and to detect and avoid any potential dangers independently. In Germany, electricians must meet the specifications of the BGV A3 Work Safety Regulations (e.g. Master Electrician). Other relevant regulations applicable in other countries must be observed.


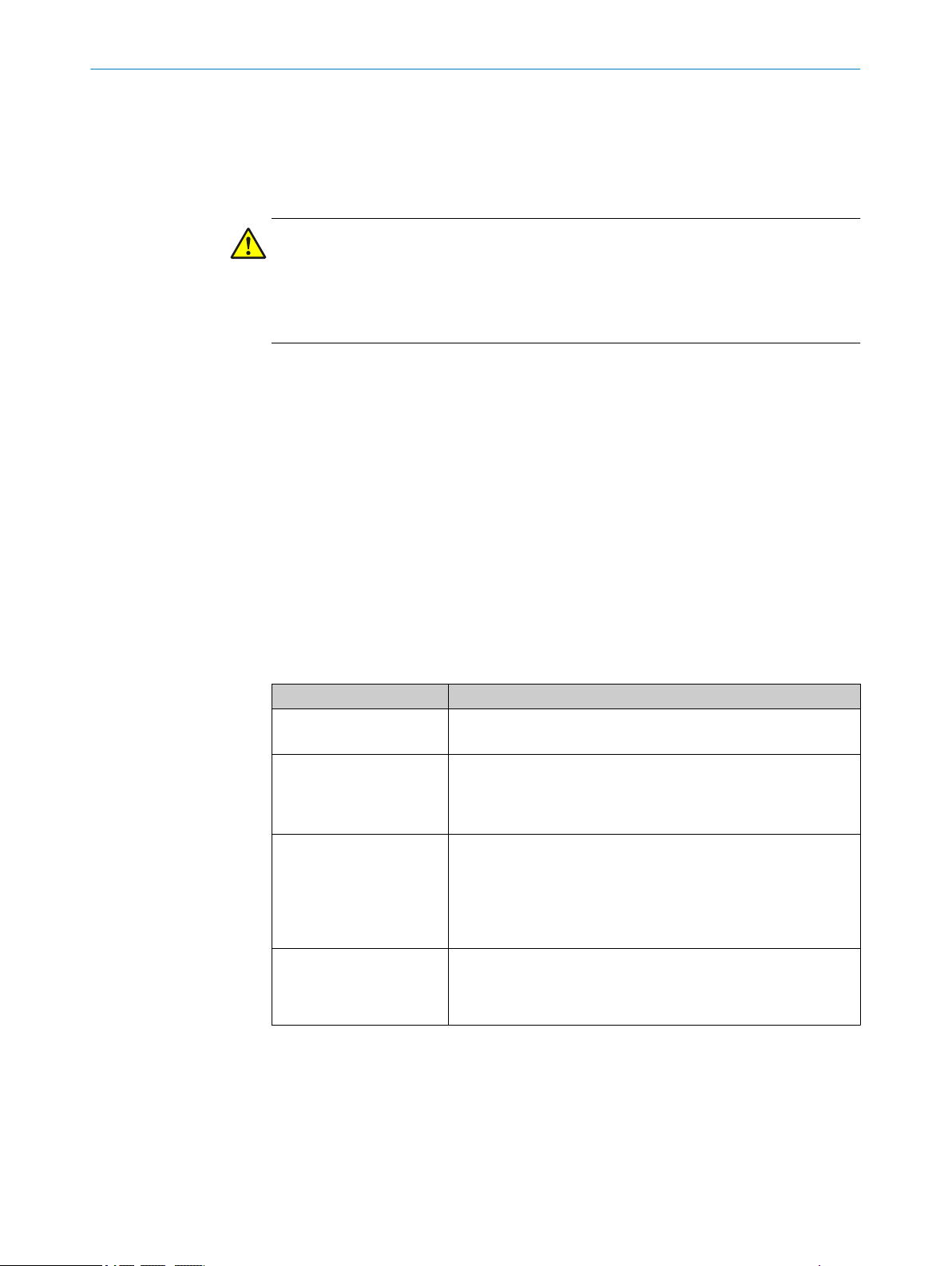

The following qualifications are required for various activities:

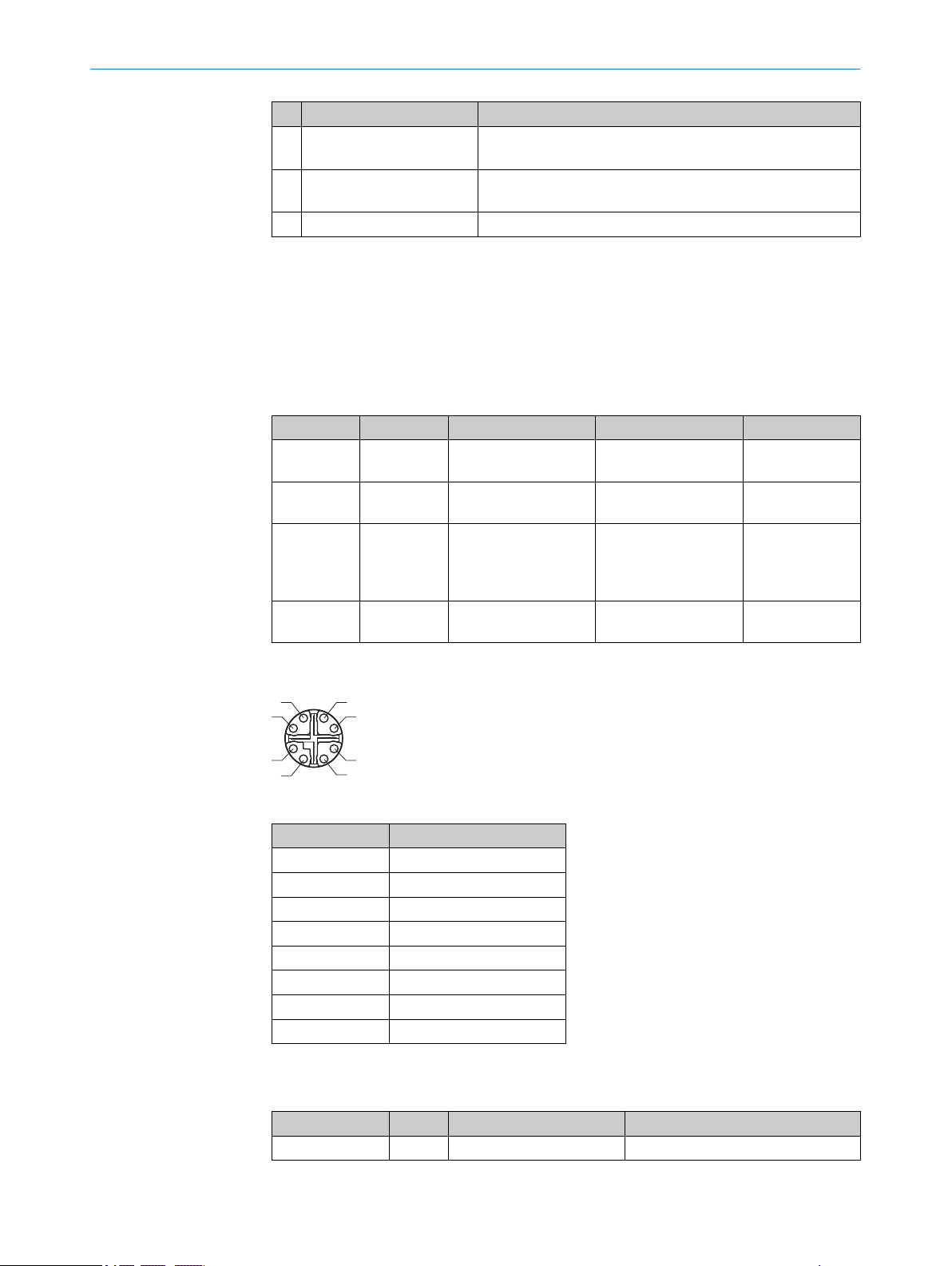

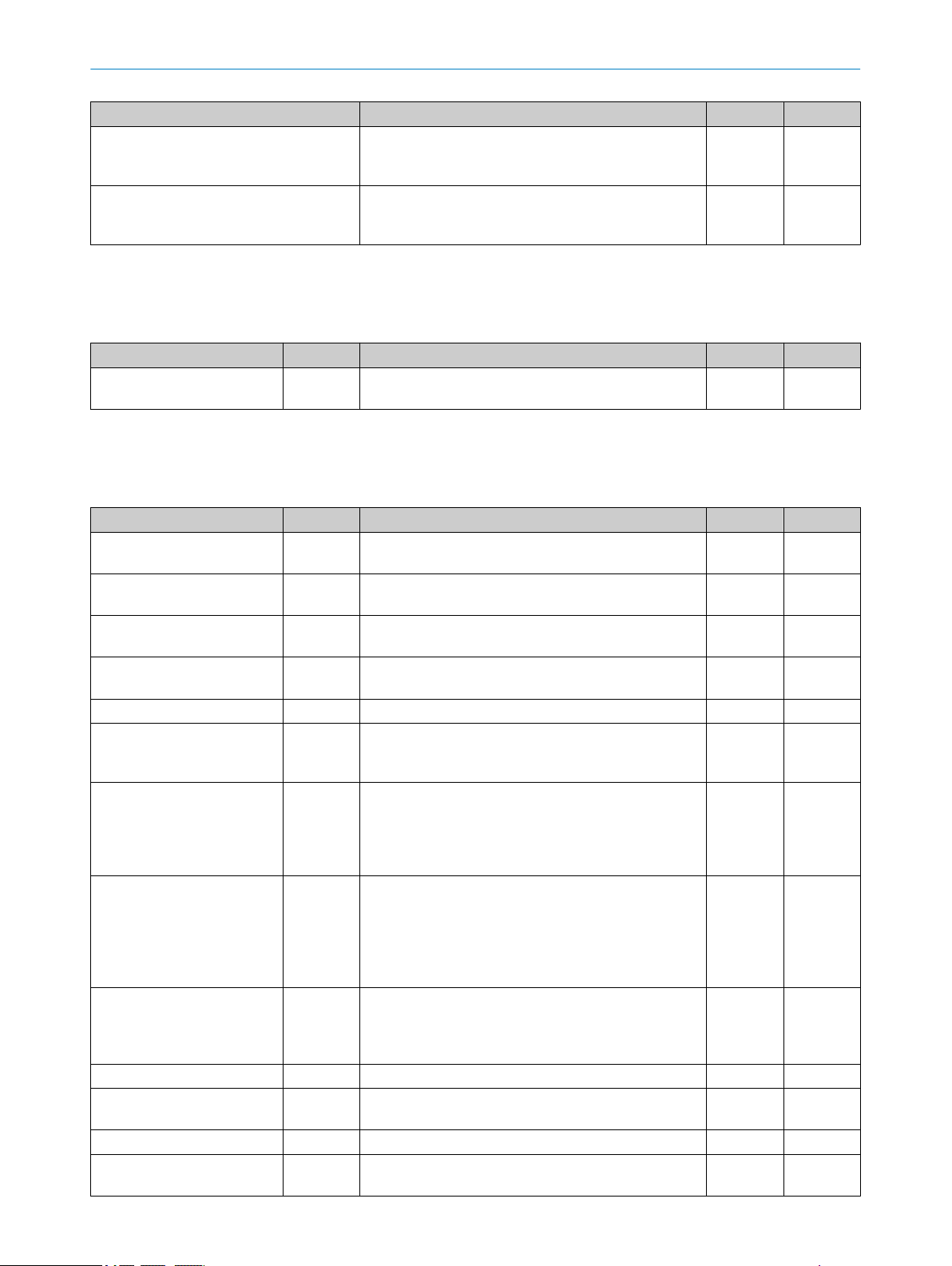

Table 1: Activities and technical requirements

Activity: Mounting, maintenance
Qualification:
■ Basic practical technical training
■ Knowledge of the current safety regulations in the workplace

Activity: Electrical installation, device replacement
Qualification:
■ Practical electrical training
■ Knowledge of current electrical safety regulations
■ Knowledge of the operation and control of the devices in their particular application

Activity: Commissioning, configuration
Qualification:
■ Basic knowledge of the Windows™ operating system in use
■ Basic knowledge of the design and setup of the described connections and interfaces
■ Basic knowledge of data transmission
■ Knowledge of the programming of image-processing systems and network components

Activity: Operation of the device for the particular application
Qualification:
■ Knowledge of the operation and control of the devices in their particular application
■ Knowledge of the software and hardware environment for the particular application

2.6 Operational safety and particular hazards

Please observe the safety notes and the warnings listed here and in other chapters of these operating instructions to reduce the possibility of risks to health and avoid dangerous situations.


The product is fitted with LEDs of risk group 0. The accessible radiation from these LEDs does not pose a danger to the eyes or skin.

WARNING

Electrical voltage!

Electrical voltage can cause severe injury or death.

■ Work on electrical systems must only be performed by qualified electricians.
■ The power supply must be disconnected when attaching and detaching electrical connections.
■ The product must only be connected to a voltage supply as set out in the requirements in the operating instructions.
■ National and regional regulations must be complied with.
■ Safety requirements relating to work on electrical systems must be complied with.

WARNING

Dangerous equipotential bonding currents!

Improper grounding can lead to dangerous equipotential bonding currents, which may in turn lead to dangerous voltages on metallic surfaces, such as the housing. Electrical voltage can cause severe injury or death.

■ Work on electrical systems must only be performed by qualified electricians.
■ Follow the notes in the operating instructions.
■ Install the grounding for the product and the system in accordance with national and regional regulations.

2.7 Laser safety

Whenever a laser module is used in combination with a 3D camera, the camera is considered to be a part of a laser system. This system has to incorporate additional safety features, depending on the applicable laser class. Refer to the safety instructions of the manufacturer of the laser module used.

WARNING

When a SICK device is used in combination with a laser, all requirements for laser products and laser systems according to the laser safety standards EN/IEC 60825-1 and 21 CFR 1040.10/11 must be fulfilled.


3 Product description

3.1 Introduction

Ranger3 is a high-speed 3D camera intended to be the vision component in a machine vision system. Ranger3 makes measurements on the objects that pass in front of the camera, and sends the measurement results to a PC for further processing. The measurements can be started and stopped from the PC, and triggered by encoders and photoelectric switches in the vision system.

The main function of Ranger3 is to measure the 3D shape of objects using laser line triangulation. This can be used, for example, to generate 3D images of the object, for size rejection or volume measurement, or to find shape defects. In the image below, the colors represent depth.


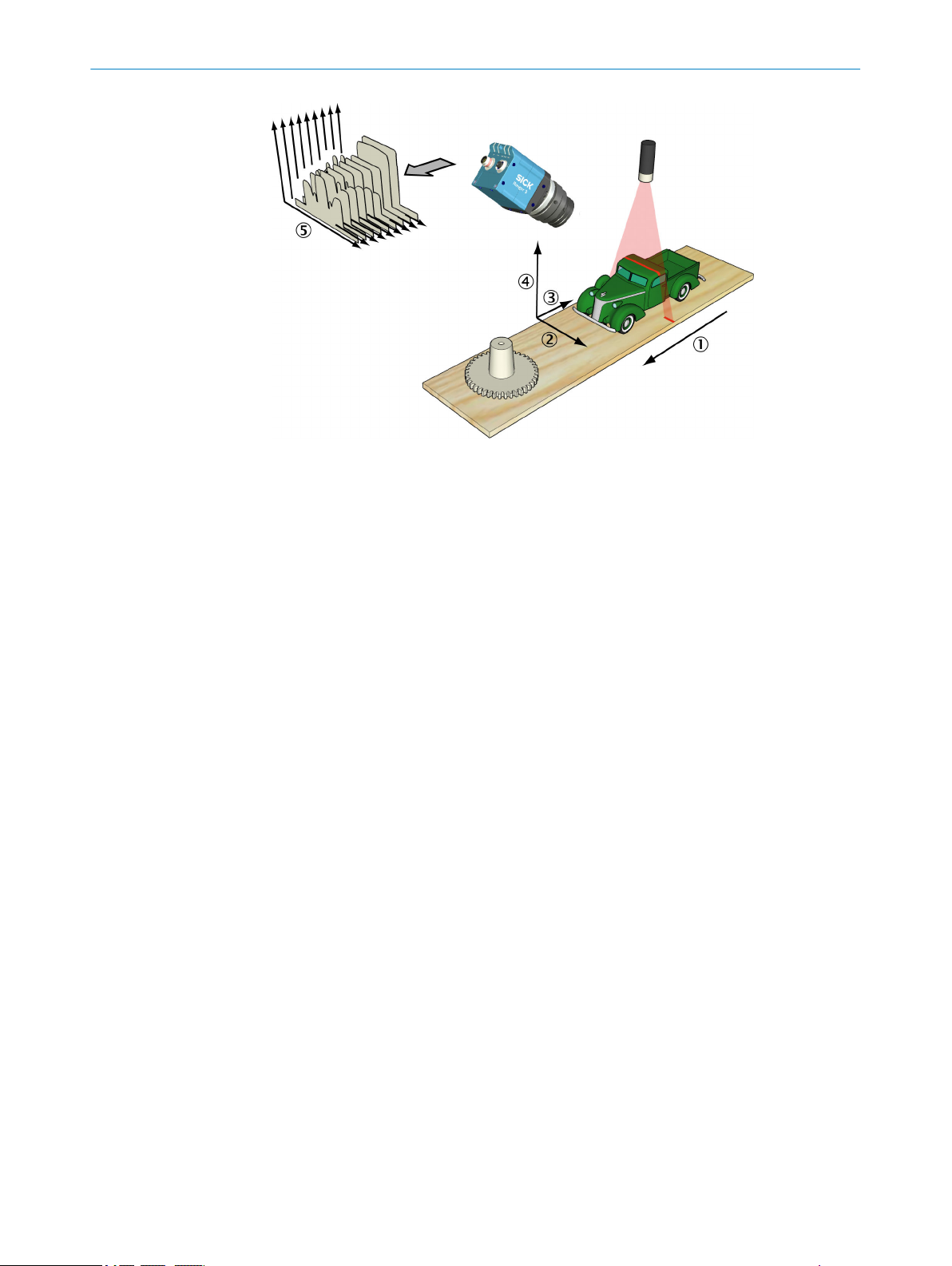

Figure 1: Example of 3D image

3.2 Measuring with a 3D camera

Each time the 3D camera makes a measurement, it measures along a cross-section of the object in front of it. The result of a measurement is a profile, containing one value for each measured point along the cross-section, for example the height of the object along its width.

For the camera to measure an entire object, the object (or the camera and lighting) must be moved so that the camera can make a series of measurements along the object. The result of such a measurement is a collection of profiles, where each profile contains the measurement of a cross-section at a certain location along the transportation direction.
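The collection of profiles described above can be pictured as a 2D array, with one row per measurement along the transportation direction and one column per point across the cross-section. The following Python sketch illustrates only that data layout. `stack_profiles` is a hypothetical helper, not part of any SICK or GenICam API, and the sample values are invented.

```python
from typing import List, Sequence


def stack_profiles(profiles: Sequence[Sequence[float]]) -> List[List[float]]:
    """Stack profiles (one per measurement) into a 2D height map.

    Row index    = measurement order along the transportation direction (Y)
    Column index = measured point across the cross-section (X)
    Value        = measured range (Z) for that point
    """
    if not profiles:
        return []
    width = len(profiles[0])
    height_map: List[List[float]] = []
    for index, profile in enumerate(profiles):
        # Every profile covers the same cross-section, so the widths must match.
        if len(profile) != width:
            raise ValueError(
                f"profile {index} has {len(profile)} points, expected {width}"
            )
        height_map.append(list(profile))
    return height_map


# Three hypothetical profiles of a small object passing the camera.
scan = stack_profiles([
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 2.0, 2.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
])
print(len(scan), "profiles x", len(scan[0]), "points")  # 3 profiles x 4 points
```

Storing each profile as one row keeps the acquisition order intact. A whole scan can then be treated as an ordinary image in which pixel values are ranges.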


Figure 2: Measuring the range of a cross-section of an object

1 Transportation direction
2 X (width)
3 Y (negative transport direction)
4 Z (range)
5 Profiles

By default, the range measurement values from the camera are not calibrated, that is:

• X and Z (range) coordinates are represented by column and row positions on the sensor, instead of real-world positions and distances.
• Y coordinates are represented, for example, by the sequence number of the measurement, or by the encoder value at the time the profile was captured.

In a machine vision system, the Ranger3 camera acts as a data streamer. It is connected to a PC through a Gigabit Ethernet network. The camera sends the profiles to the computer, and the computer runs a custom application that retrieves the profiles and processes the measurement data in them.

Before the camera can be used in a machine vision system, the following needs to be done:

• Find the right way to mount the camera and lighting.
• Configure (and optionally calibrate) the camera to make the proper measurements.
• Write the application that retrieves and processes the profiles sent from the camera.

For more information about 3D measurements, see "Range (3D) measurement", page 78.

3.3 Hardware description

3.3.1 Sensor

The Ranger3 camera is based on a unique SICK CMOS sensor which has a 2D pixel matrix, row-parallel AD-converters, and a processor architecture that enables image processing directly on the sensor. The technology is called ROCC, which means Rapid On-Chip Calculation. For technical details, see "Technical data", page 71.
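The default, uncalibrated output maps directly onto the sensor's pixel matrix. X is the sensor column, Z is the row position of the laser line in that column, and Y is a sequence number or an encoder value. The Python sketch below shows this convention by turning one profile into (x, y, z) points in sensor units. The `UncalibratedProfile` type, its field names, and the use of `None` for columns without a detected laser line are illustrative assumptions, not the camera's actual data format.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class UncalibratedProfile:
    """Hypothetical container for one uncalibrated profile (not a SICK API type)."""
    sequence_number: int            # order in which the profile was measured
    encoder_value: Optional[int]    # encoder reading at capture time, if an encoder is used
    ranges: List[Optional[float]]   # row position per sensor column; None = no laser found


def to_sensor_points(profile: UncalibratedProfile,
                     use_encoder: bool = False) -> List[Tuple[int, int, float]]:
    """Return (x, y, z) points in sensor units.

    x: sensor column, y: sequence number or encoder value, z: row position.
    """
    if use_encoder:
        if profile.encoder_value is None:
            raise ValueError("profile has no encoder value")
        y = profile.encoder_value
    else:
        y = profile.sequence_number
    # Skip columns where no laser line was detected.
    return [(column, y, row)
            for column, row in enumerate(profile.ranges)
            if row is not None]


p = UncalibratedProfile(sequence_number=7, encoder_value=1200,
                        ranges=[None, 310.5, 311.0])
print(to_sensor_points(p))                    # [(1, 7, 310.5), (2, 7, 311.0)]
print(to_sensor_points(p, use_encoder=True))  # [(1, 1200, 310.5), (2, 1200, 311.0)]
```

Converting these sensor units into real-world distances requires calibration, which this sketch deliberately leaves out.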


3.4 Standards

Ranger3 complies with the GenICam™ and the GigE Vision® standards.

3.4.1 GenICam™

GenICam™ is a standard that provides a generic programming interface for different kinds of cameras and devices. The standard is owned by EMVA (European Machine Vision Association) and consists of multiple modules. Ranger3 complies with the following modules:

• GenApi: Application programming interface (API) for configuring the camera.
• Standard Feature Naming Convention (SFNC): Standardized names and types for common device features.
• GenTL: Transport layer interface for grabbing images.
• GenTL SFNC: Standardized names and types for the transport layer interface.

For further information, see www.emva.org/standards-technology/genicam/.

3.4.2 GigE Vision®

GigE Vision® is a camera interface standard that is based on the Gigabit Ethernet communication protocol. The GigE Vision® standard is owned by AIA (Automated Imaging Association).

For further information, see https://www.visiononline.org/vision-standards.cfm.


4 Transport and storage

4.1 Transport

For your own safety, please read and observe the following notes:

NOTICE

Damage to the product due to improper transport.

■ The device must be packaged for transport with protection against shock and damp.
■ Recommendation: Use the original packaging as it provides the best protection.
■ Transport should be performed by trained specialist staff only.
■ The utmost care and attention is required at all times during unloading and transportation on company premises.
■ Note the symbols on the packaging.
■ Do not remove packaging until immediately before you start mounting.

4.2 Unpacking

■ Before unpacking, it may be necessary to equalize the temperature to protect the device from condensation.
■ Handle the device with care and protect it from mechanical damage.
■ Remove the protective caps on the electrical connections immediately before connecting the connecting cable to prevent dirt and water from entering.

4.3 Transport inspection

Immediately upon receipt in Goods-in, check the delivery for completeness and for any damage that may have occurred in transit. In the case of transit damage that is visible externally, proceed as follows:

■ Do not accept the delivery or only do so conditionally.
■ Note the scope of damage on the transport documents or on the transport company's delivery note.
■ File a complaint.

NOTE

Complaints regarding defects should be filed as soon as these are detected. Damage claims are only valid before the applicable complaint deadlines.

4.4 Storage


Store the device under the following conditions:

■ Recommendation: Use the original packaging.
■ Electrical connections are provided with protective caps and plugs (as they are on delivery).
■ Do not store outdoors.
■ Store in a dry area that is protected from dust.
■ So that any residual damp can evaporate, do not package in airtight containers.
■ Do not expose to any aggressive substances.
■ Protect from sunlight.
■ Avoid mechanical shocks.


■ Storage temperature: see "Technical data", page 71.
■ For storage periods of longer than 3 months, check the general condition of all components and packaging on a regular basis.


5 Mounting

5.1 Mounting instructions

• Observe the technical data.
• To prevent condensation, avoid exposing the device to rapid changes in temperature.
• The mounting site has to be designed for the weight of the device.
• Mount the device so that it is exposed to as little shock and vibration as possible. Optional mounting accessories are available, see "Accessories", page 75.
• Protect the device from moisture, contamination, and damage.
• A sufficient level of cooling using ambient air/convection and/or heat dissipation through mechanical mounting must be ensured. Observe the permitted operating temperature, see "Technical data", page 71.

5.2 Required parts

You need the following parts to get started with Ranger3:

• Ranger3 camera.

• PC with a network interface card (NIC) that supports Gigabit Ethernet. For information about requirements, see "Recommended network card settings", page 83.

• Ethernet cable for Gigabit Ethernet, with M12 connector for the camera.

• Power supply.

• Line-projecting laser.

5.3 Mounting the camera

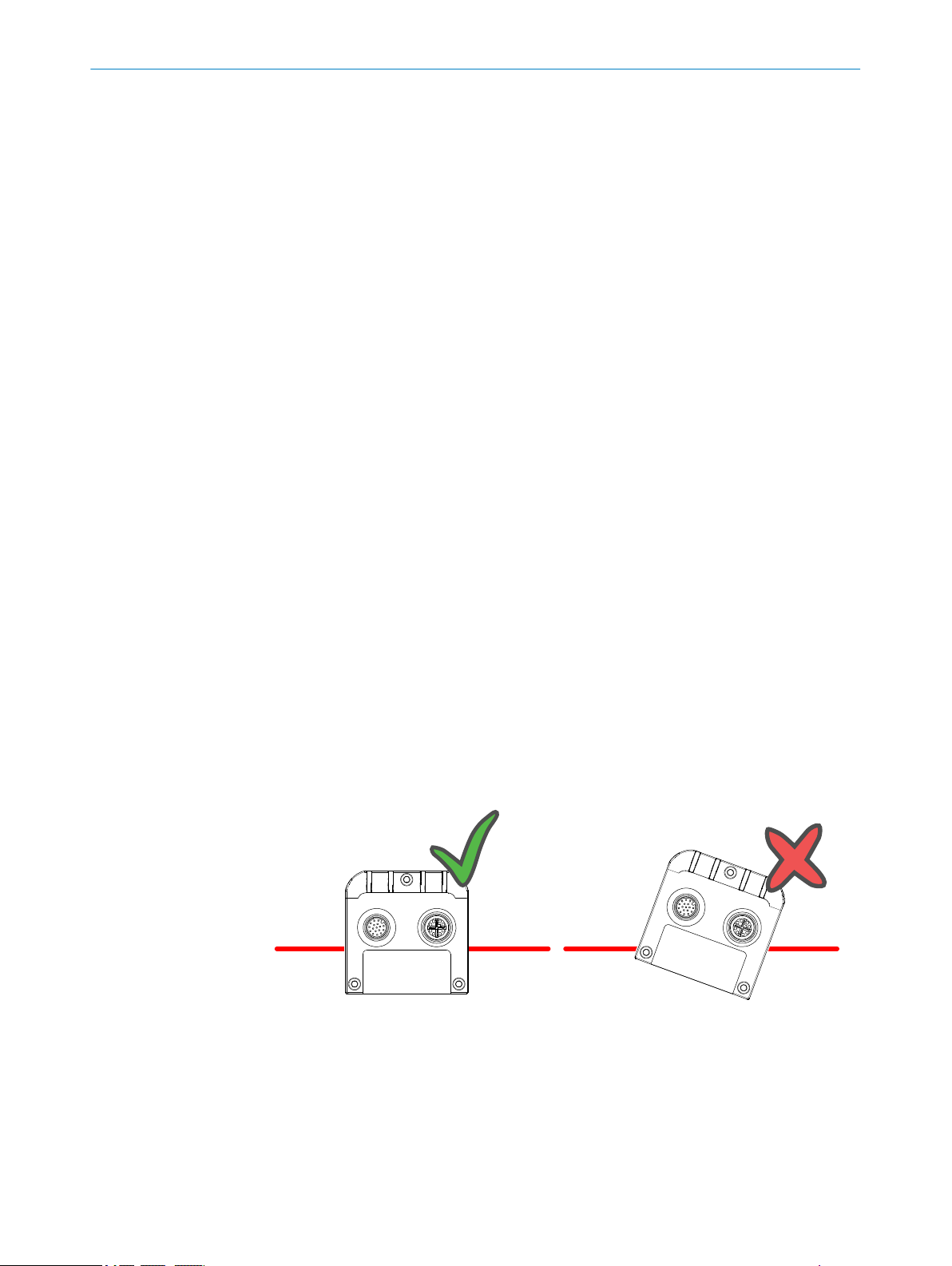

When measuring range, the camera is used together with a line-projecting laser that illuminates the cross-section of the object to be measured. The camera and the laser are mounted so that the laser illuminates the object from one direction and the camera views the object from another direction.

The laser line must be orthogonal to the movement direction of the object. Also mount the camera so that it is not tilted sideways relative to the laser line, see figure 3. This makes the laser line appear along the rows of the sensor in the camera.

Figure 3: Correct (left) and incorrect (right) mounting of the camera

1 Laser line


For best results, shield the field of view from direct sunlight and other disturbing light. An optical band-pass filter is recommended to reduce ambient light.


It is also important to select a lens that is suitable for the field of view in which the camera should measure. Select a high-quality 1" C-mount lens that gives sharp images and low distortion, as this can be essential for a successful vision application.

If needed, you can mount a protective cover that makes the camera compliant with IP65 and IP67. For available brackets, filters, lenses, and protective covers, see "Accessories", page 75.

Exactly how to mount the camera and the laser depends on a number of factors. For more information, see "Range (3D) measurement", page 78.

5.3.1 Mounting an optical filter or a Scheimpflug adapter

On delivery, there is a dummy filter in the camera to protect the sensor. To mount an optical filter or a Scheimpflug adapter, you must remove the dummy filter, which leaves the sensor unprotected. Work in a dust-free environment and pay special attention to cleanliness.

Mounting an optical filter

1. Use the provided tool and remove the dummy filter.

2. Mount the optical filter.

NOTICE

Do not remove the dummy filter without mounting another filter.

• Using the camera without a filter can damage the sensor.

• The distance from the lens to the image sensor is adapted to the thickness of the optical filter. Without a filter, the focusing of the lens may not work properly.


6 Electrical installation

6.1 Wiring notes

NOTE

Preassembled cables can be found online at:

• www.sick.com/Ranger3

NOTICE

Faults due to incorrect wiring.

Incorrect wiring may result in operational faults.

■ Follow the wiring notes precisely.

We recommend using shielded cables.

Connect the connecting cables in a de-energized state. Switch on the supply voltage only after all connecting cables have been completely installed and connected to the device and the control system.

6.2 Security

WARNING

Risk of injury and damage caused by electrical current!

As a result of equipotential bonding currents between the device and other grounded devices in the system, faulty grounding of the device can give rise to the following dangers and faults:

■ Dangerous voltages are applied to the metal housings.

■ Devices will behave incorrectly or be destroyed.

■ Cable shielding will be damaged by overheating and cause cable fires.

Remedial measures

■ Only skilled electricians should be permitted to carry out work on the electrical system.

■ If the cable insulation is damaged, disconnect the voltage supply immediately and have the damage repaired.

■ Ensure that the ground potential is the same at all grounding points.

■ Where local conditions do not meet the requirements for a safe earthing method, take appropriate measures (e.g., ensuring low-impedance and current-carrying equipotential bonding).

Only skilled electricians with appropriate training and qualifications are permitted to perform electrical installation. Observe the following safety measures:


• Standard safety requirements must be met when working in electrical systems.

• Only connect and disconnect electrical connections when there is no power to the system. Otherwise, the devices may be damaged.

• Use only shielded cables. The shield has to be terminated at both ends of the cable.

• Ensure that loose cable ends are insulated.

• Connect unused pins to GND.

• Wire cross sections of the supply cable from the customer's power system should be designed and protected in accordance with the applicable standards.


• Make sure that the Power-I/O cable is protected by a separate slow-blow fuse with a maximum rating of 2.0 A. This fuse must be located at the start of the supply circuit.

• The 24 V power supply must meet the requirements of SELV+LPS relating to "UL/EN60950-1:2014-08", or ES1 according to "EN/UL62368", or "CAN/CSA-C22.2 No 223-M91(R2008)-Power supplies with Extra-Low-Voltage class 2 outputs", or "UL1310 (6th Edition)-standard for class 2 power units".

• All circuits connected to the device must be designed as ES1 circuits (according to EN/UL62368) or as SELV (Safety Extra Low Voltage) circuits (according to EN/UL60950).

6.3 Connecting the camera

NOTICE

• Never connect any signals while the camera is powered.

• Never connect a powered Power-I/O terminal or powered I/O signals to a camera.

NOTICE

• Never connect a powered encoder interface unit to a camera.

• Never connect signal levels that exceed the input specification to the encoder inputs.

Failure to follow these rules can damage the camera.

NOTE

The function of the camera is neither tested nor guaranteed for Power I/O cables longer than 10 meters.

NOTE

Use only shielded cables. The shield has to be terminated at both ends of the cable.

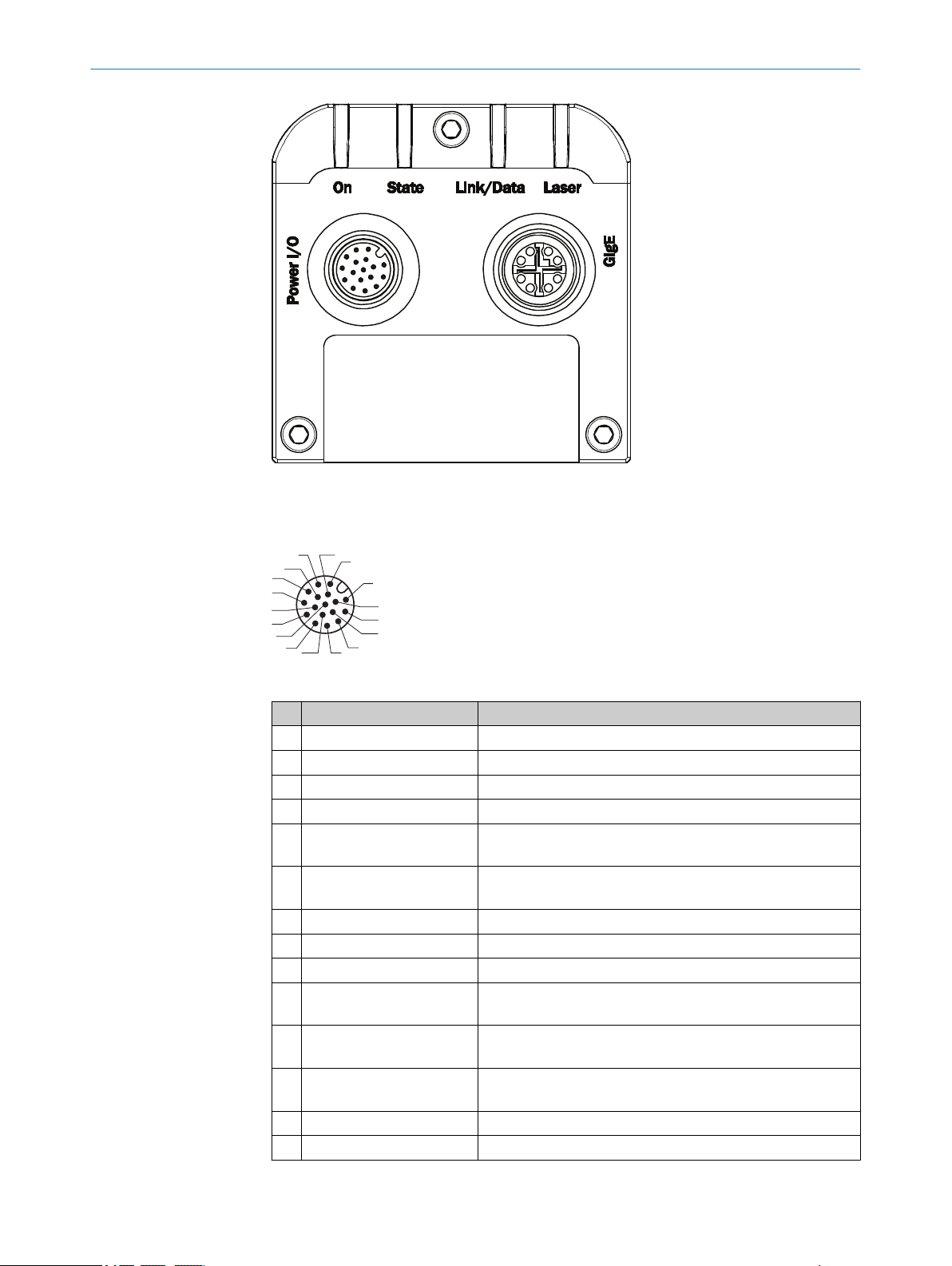

There are two connectors on the back of the camera: Gigabit Ethernet (GigE) and Power I/O (see figure 4, page 20).

To prepare the camera for operation, do as follows:

1. Remove the protective caps that cover the Gigabit Ethernet (GigE) and Power I/O connections.

2. Connect the Ethernet cable to the GigE connector on the camera. Connect the other end of the Ethernet cable to the network interface card (NIC) in the PC.

3. Connect the connecting module to the Power I/O connector on the camera.

4. Connect the unpowered power supply to the connecting module.

5. Connect the laser to its power supply.

6. Switch on the power to the system.

For more information on how to connect I/O signals to the camera, see the following sections:

• Electrical connections

• Connecting encoders

6.4 Electrical connections

There are two connectors and four LEDs on the back plate of Ranger3.


Figure 4: Back plate of the Ranger3 device

Power I/O connector

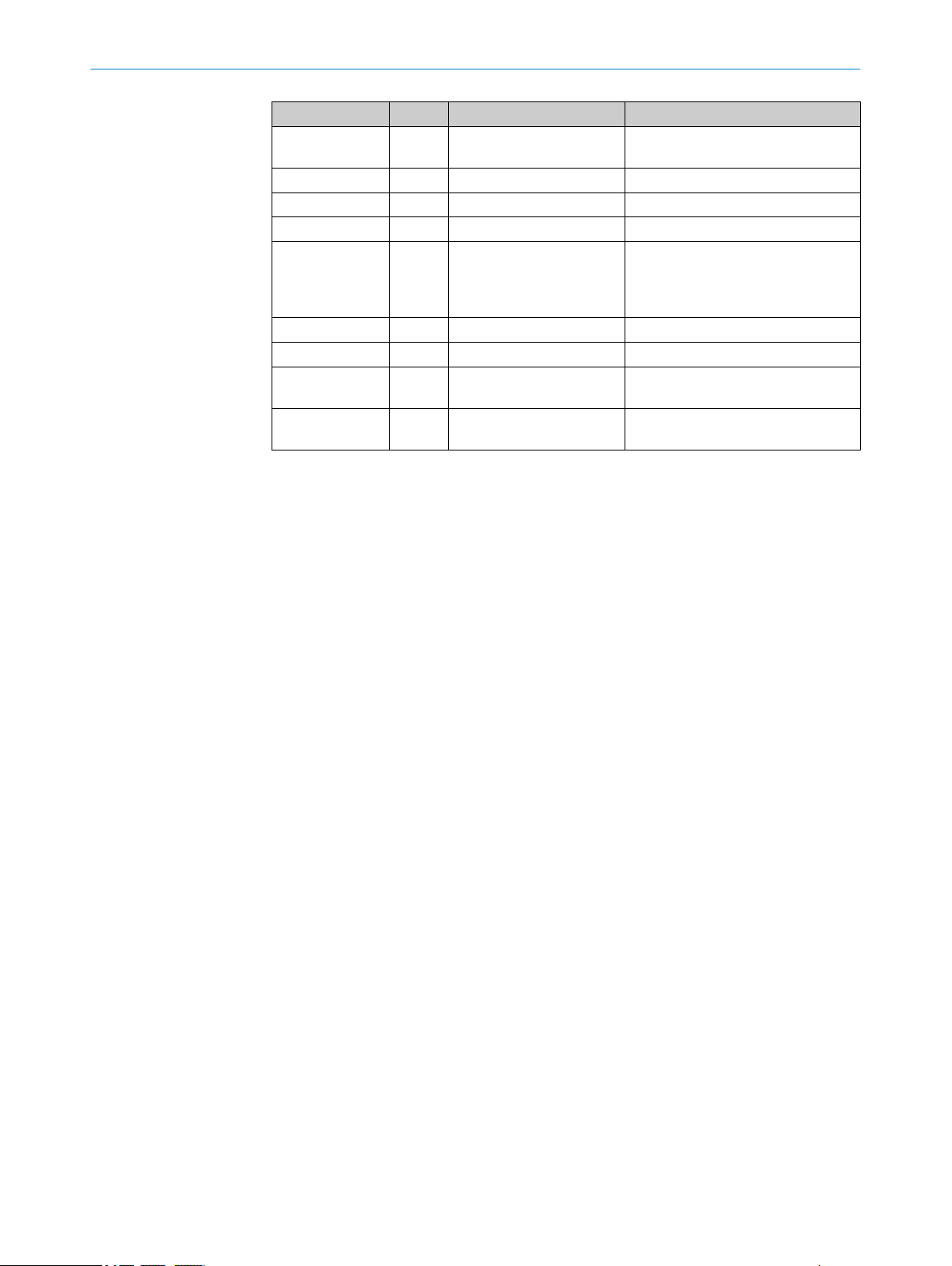

Table 2: Power I/O connector, 17 pin

| Pin | Signal | Description |
|---|---|---|
| 1 | GND | Power/signal ground |
| 2 | POWER SUPPLY | Power supply DC 24 V ±20% |
| 3 | - | Not connected |
| 4 | - | Not connected |
| 5 | ENC IN A+ | Encoder Input A+. Default: RS422 TTL |
| 6 | ENC IN A- | Encoder Input A-. Default: RS422 TTL |
| 7 | - | Reserved |
| 8 | - | Reserved |
| 9 | - | Not connected |
| 10 | FRAME TRIGGER IN 24 V | Frame trigger input or configurable 24 V Input/Output. Default: Frame trigger input |
| 11 | ENC IN B+ | Encoder Input B+. Default: RS422 TTL |
| 12 | ENC IN B- | Encoder Input B-. Default: RS422 TTL |
| 13 | LASER STROBE OUT1 ¹ | 5 V trigger output for Laser or Strobe |
| 14 | LASER STROBE OUT2 ¹ | 5 V trigger output for Laser or Strobe |
| 15 | LINE TRIGGER IN 24 V ¹ | Line trigger input or configurable 24 V Input/Output. Default: Line trigger input |
| 16 | I/O 3 ¹ | Encoder reset input or configurable 24 V Input/Output. Default: Encoder reset |
| 17 | I/O 4 ¹ | Configurable 24 V Input/Output |

¹ Not connected for article number 1083672

Notes

• Make sure that, at all times, the voltage at the I/O pins is lower than or equal to the voltage at the supply pins. Otherwise, you risk powering the camera through the I/O pins while it is turned off (V_supply = 0 V), which is strictly forbidden.

• When using a single-channel encoder, connect it to Encoder Input A+/A- (pins 5 and 6).

Table 3: Signal levels for Power I/O connector

| Signal | Pins | Low | High | Remark |
|---|---|---|---|---|
| 24 V inputs | 10, 15, 16, 17 | 0 ... 9 V | 12.5 V ... V_Supply | Pulldown: 22.5 kΩ |
| TTL | 5, 6, 11, 12 | 0 ... +0.8 V | +2 V ... V_Supply | RC termination: 112 Ω / 340 pF |
| 24 V outputs | 16, 17 | | | Output type: push-pull. Max output current: 100 mA |
| 5 V outputs | 13, 14 | | | Output type: push-pull |
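As an illustration of how the Table 3 thresholds and the supply-voltage rule in the notes fit together, for example in a test-bench script, the hypothetical helper below classifies a static voltage measured on an input pin. Only the threshold values come from Table 3; the function name and the treatment of the band between Low and High as "undefined" are assumptions of this sketch.

```python
# Thresholds from Table 3: (upper limit of Low, lower limit of High), in volts.
INPUT_LEVELS = {
    "24V": (9.0, 12.5),  # pins 10, 15, 16, 17
    "TTL": (0.8, 2.0),   # pins 5, 6, 11, 12
}


def classify_input(kind: str, volts: float, v_supply: float) -> str:
    """Classify a static input voltage as 'low', 'high' or 'undefined'.

    Hypothetical helper: raises if the I/O voltage exceeds the supply voltage,
    which the notes above forbid.
    """
    if volts > v_supply:
        raise ValueError("I/O voltage must not exceed the supply voltage")
    low_max, high_min = INPUT_LEVELS[kind]
    if volts <= low_max:
        return "low"
    if volts >= high_min:
        return "high"
    return "undefined"  # between the Low and High bands of Table 3


print(classify_input("24V", 24.0, v_supply=24.0))  # high
print(classify_input("TTL", 1.5, v_supply=24.0))   # undefined
```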

GigE connector

Table 4: GigE connector, 8 pin

| Pin | Signal |
|---|---|
| 1 | GETH L1+ |
| 2 | GETH L1- |
| 3 | GETH L2+ |
| 4 | GETH L2- |
| 5 | GETH L4+ |
| 6 | GETH L4- |
| 7 | GETH L3- |
| 8 | GETH L3+ |

LED definitions

Table 5: LED definitions

| Indicator | LED | Color | Function |
|---|---|---|---|
| On | O | Green | Power ON |
| State | Ö | Yellow | Booting (slow flashing) or firmware upgrade (fast flashing) |
| State | O | Yellow | Idle (or acquiring single frames) |
| State | O | Green | Continuous acquisition |
| State | Ö | Red | Thermal warning (risk of overheating) |
| State | O | Red | The device is in rescue mode due to software problems or overheating. For more information, see "Rescue mode", page 68. |
| Link/Data | Off | - | No Ethernet connection |
| Link/Data | O | Green | Connection established, 1 gigabit/s |
| Link/Data | Ö | Green | Ethernet frames are being transmitted or received |
| Laser | O | Green | Laser output active (not implemented) |

O = illuminated, Ö = flashing


7 Configuration

7.1 Software installation

7.1.1 System recommendations

The PC requirements for the vision system depend on your application, but as a general guideline, the following is recommended for minimal operation:

• Windows 7 or Windows 10, 64 bit.

• Gigabit Ethernet adapter that supports Jumbo Frames and is dedicated to camera communication, see "Recommended network card settings", page 83.

7.1.2 Network preparations

Due to the large amount of data that the camera delivers per second, the camera must be connected to the PC through a dedicated Gigabit Ethernet network, without other interfering traffic. If the PC must be connected to other equipment, for example network printers, the PC should be equipped with at least two network interface cards (NICs).

Multiple cameras can be connected using a NIC with multiple ports, or using multiple NICs. Connecting multiple cameras to a single NIC limits the maximum speed of the cameras. For best performance, connect each camera to a separate NIC.


For recommended network settings, see "Recommended network card settings", page 83.
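To see why a dedicated link matters, the data rate of a camera can be estimated from the region width, the bits per value and the line rate. The sketch below is a rough estimate under stated assumptions: packed data, protocol overhead ignored, and the nominal 1 Gbit/s line rate taken as capacity. The 2560-column, 7 kHz camera in the example is hypothetical.

```python
# Back-of-the-envelope load on one Gigabit Ethernet link.
# Assumptions: packed pixel data, protocol overhead ignored, nominal 1 Gbit/s.
GIGE_BITS_PER_S = 1_000_000_000


def payload_bits_per_s(width: int, component_bits: list[int], line_rate_hz: float) -> float:
    """Raw data rate of one camera: `width` values per enabled component, per profile."""
    return width * sum(component_bits) * line_rate_hz


def link_utilization(cameras: list[tuple[int, list[int], float]]) -> float:
    """Fraction of one GigE link used when all listed cameras share it."""
    return sum(payload_bits_per_s(*cam) for cam in cameras) / GIGE_BITS_PER_S


one_camera = (2560, [12], 7000.0)  # hypothetical: 2560 columns, 12-bit range, 7 kHz
print(link_utilization([one_camera]))              # about 0.215
print(link_utilization([one_camera, one_camera]))  # about 0.43 on a shared NIC
```

With 8-bit reflectance enabled as well (component_bits=[12, 8]), the same hypothetical camera would use roughly 0.36 of the link, which shows how quickly a shared NIC fills up.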

7.1.3 Installing PC software

The latest version of the Ranger3 software development kit (SDK) can be downloaded from the SICK Support Portal, supportportal.sick.com.

1. Log in to the SICK Support Portal.

2. Navigate to the Ranger3 product page.

3. Under Releases, click the link corresponding to the latest version of the Ranger3 SDK.

4. Download the SDK zip file.

5. Unzip the SDK and follow the instructions in the README.txt file.

The SDK contains the Ranger3 Studio software application, which is used for the configuration and operation procedures described in this manual. To start the application, open the Ranger3 Studio sub-folder and click the Ranger3 Studio.exe file.

7.2 Concepts

The GenICam™ standard uses "feature" as a common word for parameters, commands, and selectors.

7.2.1 Selectors

In a GenICam™ device, such as Ranger3, selectors are used to access parameters that are organized in arrays. That is, the selector acts as the index for the affected parameters. Changing the selector does not change any parameter. A parameter indexed by a selector is notated ParameterA[SelectorX].

Example: The parameter Width[RegionSelector] sets the width of a region. The value of RegionSelector determines which region is manipulated. This means that Width[Region1] is the width of the region named Region1.
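The selector mechanism can be pictured as an index into a table of values. The toy model below is a hypothetical illustration of the behavior described above, not the Ranger3 SDK API: the class name, attribute names and the placeholder widths are all assumptions made for the example.

```python
class SelectorModel:
    """Toy model of one selector-indexed feature, Width[RegionSelector].

    Hypothetical illustration only; it is not part of any Ranger3 or GenICam SDK.
    """

    def __init__(self, widths: dict[str, int]):
        self._width = dict(widths)                      # one Width entry per region
        self.region_selector = next(iter(self._width))  # start at the first region

    @property
    def width(self) -> int:
        # Reading Width returns the entry indexed by the current selector.
        return self._width[self.region_selector]

    @width.setter
    def width(self, value: int) -> None:
        # Writing Width changes only the entry indexed by the current selector.
        self._width[self.region_selector] = value


dev = SelectorModel({"Region0": 2560, "Region1": 2560})  # placeholder widths
dev.region_selector = "Region1"
dev.width = 1024                 # sets Width[Region1]
dev.region_selector = "Region0"  # changing the selector changes no parameter
print(dev.width)                 # 2560
```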


7.3 Configuring Ranger3

Before the camera can be used in a machine vision system, it has to be configured. This is usually done by setting up the camera in a production-like environment and evaluating different parameter settings until the result is satisfactory, see "Editing parameters", page 55.

The following can be specified when configuring the Ranger3:

| Setting | Description |
|---|---|
| Regions | Where on the sensor to measure and dimensions of the 3D output frame. |
| Exposure time | For how long to expose the sensor. |
| Triggering settings | When to make a measurement. |
| Component-specific settings | How to process the measurement result before sending it to the PC. |

All this is specified by setting parameters in Ranger3. The parameters, as well as the selectors and commands, are organized in hierarchical groups. Each group belongs to one of the following categories¹:

| Category | Description |
|---|---|
| DeviceControl | Contains the features related to the control and information of the device. |
| ImageFormatControl | Contains the features related to the format of the acquired and transmitted images. |
| AcquisitionControl | Contains the features related to image acquisition, including trigger control. |
| DigitalIOControl | Contains the digital input and output control features. |
| TimerControl | Contains the Timer control features. |
| EncoderControl | Contains the features related to the usage of quadrature encoders. |
| EventControl | Contains the features related to the generation of Event notifications by the device. |
| FileAccessControl | Contains the File Access control features. |
| Scan3dControl | Contains the features related to the control of the 3D scan features. |
| ChunkDataControl | Contains the features related to the Chunk Data Control. |
| TestControl | Contains the features related to the control of the test features. |
| TransportLayerControl | Contains the features related to the Transport Layer Control (Gigabit Ethernet). |

¹ According to GenICam™ SFNC version 2.4.

7.4 Regions

There are two types of regions:

| Region type | RegionSelector | Description |
|---|---|---|
| Sensor regions | Region0, Region1 | Defines the sensor image dimensions and readout conditions, see "Sensor regions", page 25. |
| Extraction regions | Scan3dExtraction1 | Defines the processing and formatting conditions of the generated 3D linescan output data, see "Extraction regions", page 25. |

It is possible to define multiple regions for both 2D and 3D. The number of sensor and extraction regions that can be defined differs between device versions. Typically, you use at least one sensor region for imaging and one sensor and extraction region pair for 3D profiling purposes. Use the selector RegionSelector to select the region whose parameters you want to view and manipulate, see "Selectors", page 23.

7.4.1 Sensor regions


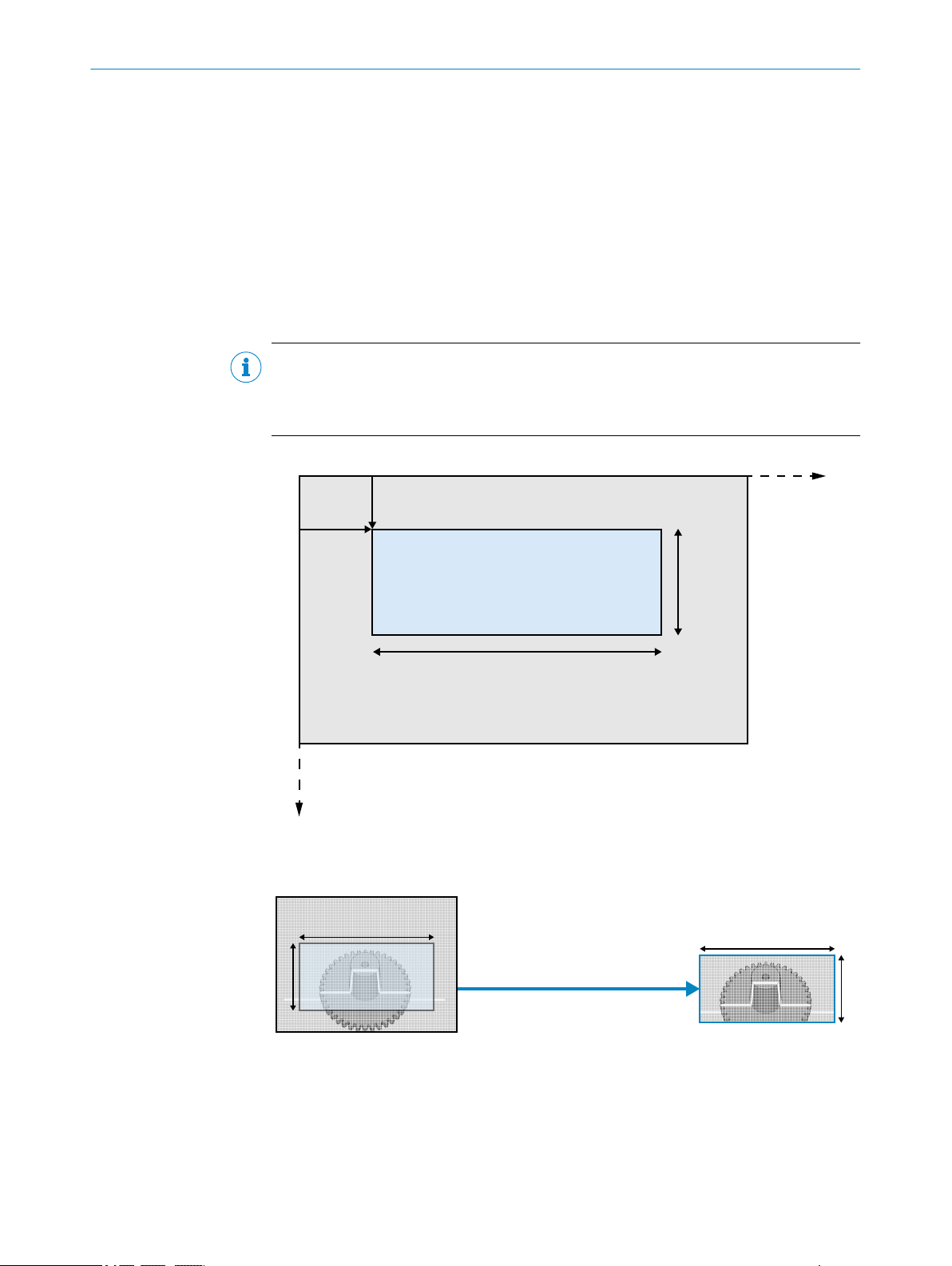

Data from the sensor is used as standard 2D image output and as input to the calculation of the 3D data. The sensor region that is used when viewing the 2D intensity image (Region0) and the sensor region used as input to the processing module (Region1) are defined independently of each other.

The sensor region defines which area of the sensor to use. Using a smaller region on the sensor enables measurements at a higher rate. The region is specified by the parameters OffsetX, OffsetY, Width, and Height, see figure 5. The resulting image generated by the device has Width times Height pixels. OffsetX and OffsetY are given with respect to the upper left corner of the image area. This corner has the coordinates (0,0) in the imager (x, y) coordinate system. All measures are given in pixels.
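The region parameters can be checked offline before they are written to the camera. The sketch below models a sensor region with the four parameters described above, computes its pixel count, checks that it fits on the sensor, and converts a pixel inside the region to imager (x, y) coordinates. The 2560 × 832 sensor size in the example is an assumption for illustration; use the values from "Technical data" for your device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorRegion:
    """OffsetX, OffsetY, Width and Height of a sensor region, all in pixels."""
    offset_x: int
    offset_y: int
    width: int
    height: int

    def pixel_count(self) -> int:
        # The image generated from the region has Width x Height pixels.
        return self.width * self.height

    def fits_on(self, sensor_width: int, sensor_height: int) -> bool:
        # Offsets are measured from the (0, 0) upper-left corner of the image area.
        return (self.offset_x >= 0 and self.offset_y >= 0
                and self.width > 0 and self.height > 0
                and self.offset_x + self.width <= sensor_width
                and self.offset_y + self.height <= sensor_height)

    def to_imager(self, x: int, y: int) -> tuple[int, int]:
        # Convert a pixel position inside the region to imager (x, y) coordinates.
        return self.offset_x + x, self.offset_y + y


SENSOR_W, SENSOR_H = 2560, 832  # hypothetical sensor size for the example
r = SensorRegion(offset_x=0, offset_y=300, width=2560, height=200)
print(r.pixel_count(), r.fits_on(SENSOR_W, SENSOR_H), r.to_imager(10, 5))  # 512000 True (10, 305)
```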

NOTE

In GenICam™ devices, such as Ranger3, imager coordinates are defined as (x,y). In more general terms, image sensor coordinates are usually defined as (u,v), see "Sensor coordinate system", page 82.

Figure 5: Image area and 2D region

The 2D intensity image is based on the sensor region Region0, see the figure below.

Figure 6: 2D image

7.4.2 Extraction regions

The extraction region (Scan3dExtraction1) uses input from a sensor region (Region1).

Together with a processing module, the extraction region defines the processing and formatting conditions of the generated 3D linescan output data.


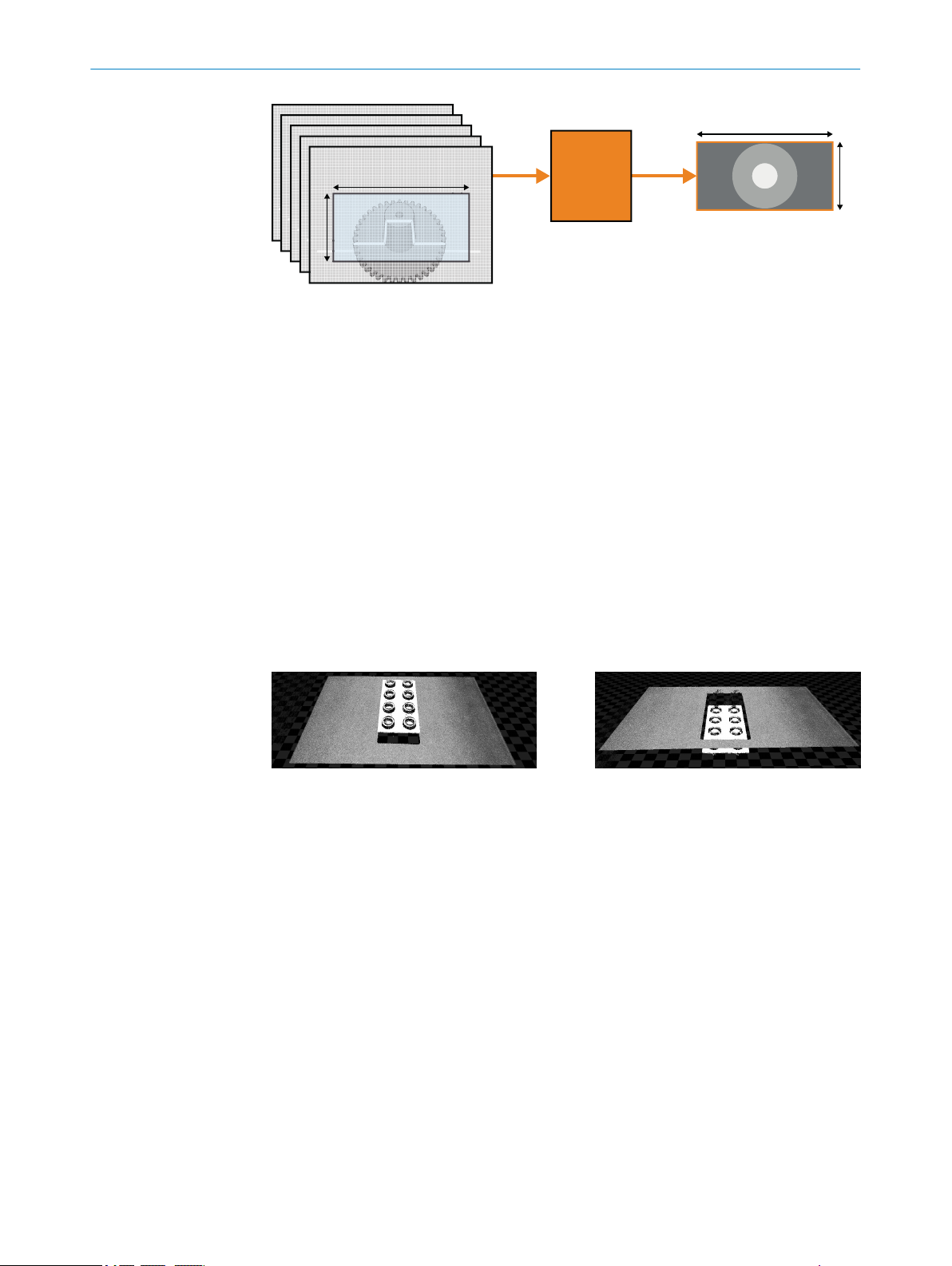

Figure 7: 3D image

1 3D extraction processing module

In Linescan 3D mode, the Height and Width parameters of the region Scan3dExtraction1 define the dimensions of the 3D extraction output frame. Typically, the Width parameter of an extraction region is locked to the same value as the Width of the source region on the sensor.

To get a 3D image, several 2D images are required. Each 2D image corresponds to one profile, see "Measuring with a 3D camera", page 11. The 2D sensor images are transformed into lines in the 3D extraction output frame, with each line corresponding to one 2D image. This means that the Height value of the frame specifies how many 2D images are used to generate the resulting 3D image. The 3D image has Width times Height pixels.
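The relationship between profiles and the 3D extraction output frame can be sketched as grouping a stream of profiles, one per 2D sensor image, into frames of Height lines. This is a model of the data layout only, not of how the camera implements it; the function name is hypothetical, and discarding a trailing partial frame is a choice made for this example.

```python
from typing import Iterable, Iterator, List, Sequence


def frames_from_profiles(profiles: Iterable[Sequence[int]], width: int,
                         height: int) -> Iterator[List[List[int]]]:
    """Group a stream of profiles into 3D extraction output frames.

    Each profile (one value per column, derived from one 2D sensor image)
    becomes one line of the frame, so each complete frame holds width x height
    values. Profiles that do not complete a frame at the end are not yielded.
    """
    frame: List[List[int]] = []
    for profile in profiles:
        if len(profile) != width:
            raise ValueError(f"expected {width} values per profile, got {len(profile)}")
        frame.append(list(profile))
        if len(frame) == height:
            yield frame
            frame = []


profiles = [[row] * 4 for row in range(5)]  # five 4-column profiles
frames = list(frames_from_profiles(profiles, width=4, height=2))
print(len(frames))  # 2 (the fifth profile does not complete a frame)
```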

The RangeAxis parameter defines how the lines in the 3D extraction output frame are visualized. The parameter is set to Reversed by default, which means that high values in the range data correspond to low values on the imager Y axis. If the parameter is set to Standard, high range values correspond to high values on the imager Y axis and the visualized 3D object appears upside down. See figure 8 and figure 9 for examples.

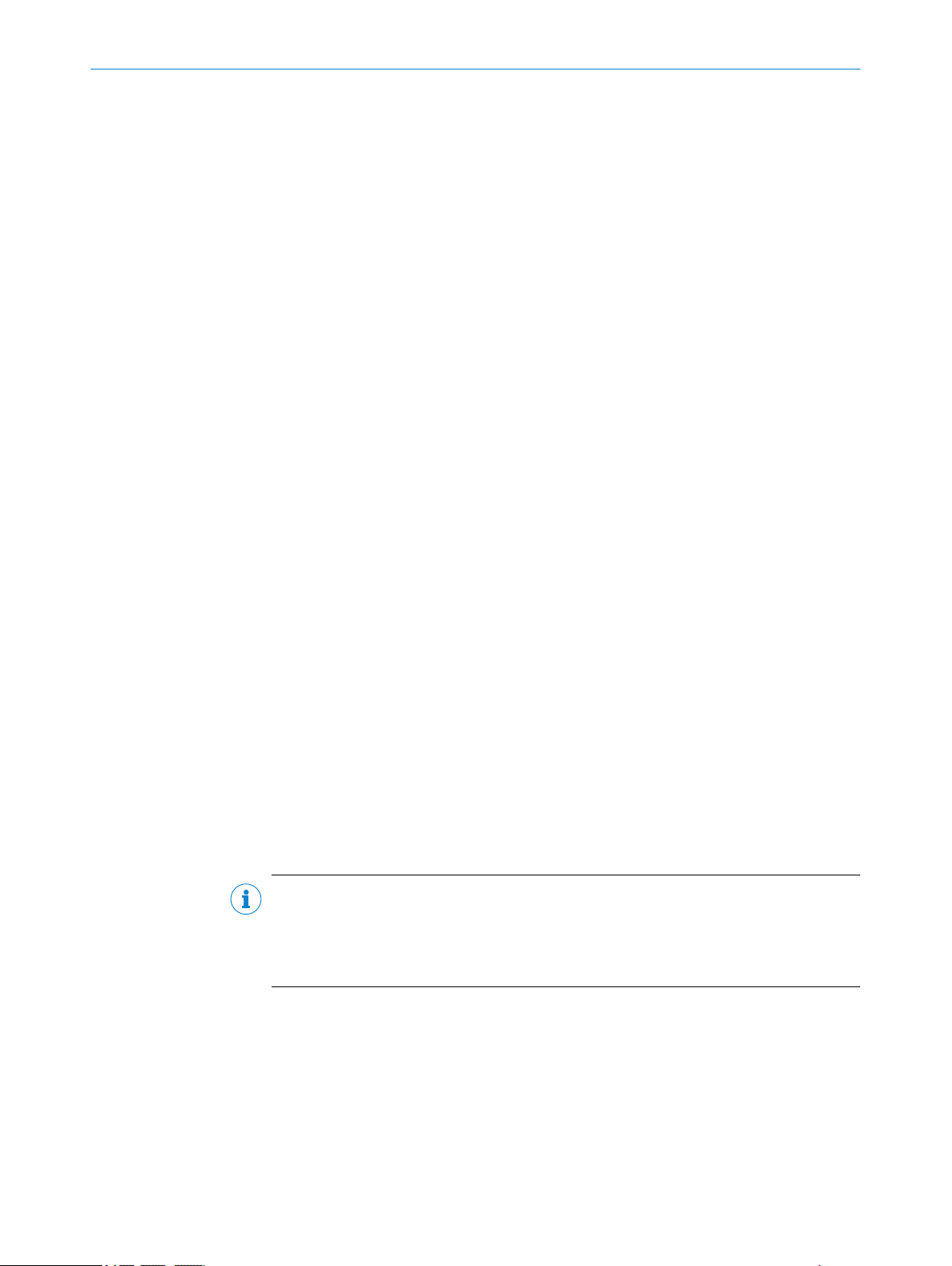

Figure 8: RangeAxis set to Reversed

7.4.3 Device scan type

The camera can be configured to output either the raw data from the image sensor or

the 3D profile data. In the user interface, you select Image to see the raw sensor data as

a 2D image or Data collection to get the 3D profile data, see "Workflow steps", page 48.

The camera uses the parameter DeviceScanType to control if 2D images or 3D profiles

are acquired. This parameter is set when you select Image or Data collection. Setting the

DeviceScanType automatically sets the relevant features for the correct mode, as

described below.

Image

Figure 9: RangeAxis set to Standard

26

O PE R AT I NG IN S TR U CT I ON S | Ranger3 8020774/14IM/2019-07 | SICK

DeviceScanType is set to Areascan.

The following settings are done automatically:

RegionMode[Region0] = On

RegionMode[Region1] = Off

RegionMode[Scan3dExtraction] = Off

Subject to change without notice

Page 27

Data collection

DeviceScanType is set to Linescan3d.

The following settings are done automatically:

RegionMode[Region0] = Off

RegionMode[Region1] = On

RegionMode[Scan3dExtraction] = On
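The automatic settings above amount to a fixed mapping from the user-interface choice to DeviceScanType and the RegionMode values. The plain-data sketch below restates that mapping; it does not talk to the camera, and the function and table names are hypothetical.

```python
# Plain-data sketch of the automatic settings listed above (not an SDK call).
UI_TO_SCAN_TYPE = {"Image": "Areascan", "Data collection": "Linescan3d"}

REGION_MODES = {
    "Areascan": {"Region0": "On", "Region1": "Off", "Scan3dExtraction": "Off"},
    "Linescan3d": {"Region0": "Off", "Region1": "On", "Scan3dExtraction": "On"},
}


def select_mode(ui_choice: str) -> tuple[str, dict[str, str]]:
    """Return DeviceScanType and the RegionMode values it implies."""
    scan_type = UI_TO_SCAN_TYPE[ui_choice]
    return scan_type, dict(REGION_MODES[scan_type])


scan_type, region_mode = select_mode("Data collection")
print(scan_type, region_mode["Region1"])  # Linescan3d On
```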

7.4.4 Maximum buffer size

The maximum size of an image buffer sent from Ranger3 to the host PC is around 40 MB, and the maximum supported buffer height is 16383 profiles. The buffer size limit is due to the limited GigE Vision retransmission buffer memory in the device.

The maximum buffer height (Height[Scan3dExtraction1]) depends on the data format, the region width (Width[Region1]), and the number of enabled components (e.g. reflectance, see "Reflectance data", page 30). For example, with default settings, the maximum Height is about 11000 profiles. When reflectance is enabled, the maximum Height decreases to about 7000 profiles.

If the buffer size is at its maximum, the camera may block user actions that would further increase the size. Examples of such actions are:

• Enabling another component, such as reflectance

• Increasing the bit depth of a pixel format

• Increasing the region width

To make the blocked actions available again, decrease the buffer size by changing the data format, decreasing the region width, or decreasing the number of enabled components.
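The dependency of the maximum Height on data format, width and components can be estimated with simple arithmetic. The sketch below assumes that "40 MB" means 40 MiB, that components are packed without per-line padding, that no other data (such as chunk data) shares the buffer, and that the defaults are a 2560-pixel region width and 12-bit range data; none of these assumptions are confirmed by this manual. Under them, the results land close to the figures of about 11000 and 7000 profiles given above.

```python
# Assumptions: 40 MB read as 40 MiB, packed components without padding,
# no chunk data in the buffer.
BUFFER_LIMIT_BYTES = 40 * 1024 * 1024
MAX_PROFILES = 16383


def bytes_per_profile(width: int, component_bits: list[int]) -> int:
    """Bytes needed for one profile: `width` values per enabled component."""
    total_bits = width * sum(component_bits)
    return -(-total_bits // 8)  # round up to whole bytes


def max_buffer_height(width: int, component_bits: list[int]) -> int:
    """Largest Height[Scan3dExtraction1] that respects both limits."""
    return min(MAX_PROFILES, BUFFER_LIMIT_BYTES // bytes_per_profile(width, component_bits))


print(max_buffer_height(2560, [12]))     # 10922: 12-bit range only
print(max_buffer_height(2560, [12, 8]))  # 6553: range plus 8-bit reflectance
print(max_buffer_height(256, [16]))      # 16383: capped by the profile limit
```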

7.5 Exposure time and measurement speed

Once the height of the sensor region is set, two other parameters affect the line rate of the camera:

AcquisitionLineRate: The rate (Hz) at which the lines in a frame are captured. Only applicable when the camera is in free-running mode.

ExposureTime: The time (μs) during which the sensor region is exposed.

The exposure time and the line rate are interdependent. The exposure time cannot be longer than the time between two profiles minus about three microseconds, which are needed for readout and reset.

NOTE

The maximum exposure time and the maximum line rate are stored as floating-point values, and rounding effects may make it impossible to set the exact value returned by the GUI. The maximum deviation is 0.01 μs for the exposure time and 0.01 Hz for the line rate.
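The relationship between exposure time and line rate can be written as two small formulas. The sketch below uses the approximate 3 μs readout/reset overhead from the text and assumes linear (non-HDR) mode. Backing off by 0.01 μs is our own suggestion for avoiding the rounding issue in the NOTE, not a documented requirement.

```python
READOUT_RESET_US = 3.0     # "about three microseconds" (approximate, from the text)
ROUNDING_MARGIN_US = 0.01  # maximum deviation stated in the NOTE


def max_exposure_us(line_rate_hz: float) -> float:
    """Longest exposure that fits between two profiles at the given line rate."""
    return 1e6 / line_rate_hz - READOUT_RESET_US


def max_line_rate_hz(exposure_us: float) -> float:
    """Highest line rate that still leaves room for the given exposure."""
    return 1e6 / (exposure_us + READOUT_RESET_US)


def safe_exposure_us(line_rate_hz: float) -> float:
    """Maximum exposure minus the rounding margin (a conservative choice)."""
    return max_exposure_us(line_rate_hz) - ROUNDING_MARGIN_US


print(max_exposure_us(10_000))  # 97.0
print(max_line_rate_hz(97.0))   # 10000.0
```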

7.6 Laser strobe output signals

The camera has two laser strobe output signals, LASER STROBE OUT1 and LASER STROBE OUT2, see "Electrical connections", page 19. These signals are individually controlled and can be used to control two separate lasers. You can use them to turn a laser on only when it is needed, for example when two separate cameras and lasers are used at the same time.


In figure 10, the laser is turned on slightly before the sensor exposure starts, to allow for the laser turn-on time. When the exposure is finished, the laser is turned off.

The LineStart trigger signal from the encoder is used as a reference. The TimerDelay parameter sets the delay time (t1 - t0) from the LineStart signal (t0) until the laser is turned on. The TimerDuration parameter defines how long the laser is on. When TriggerSelector is set to ExposureStart, the TriggerDelay parameter sets the delay time (t2 - t0) from the LineStart signal until the exposure starts.

The shortest possible time (in seconds) between two LineStart signals is 1/(maximum AcquisitionLineRate). The higher the line rate, the shorter the cycle time.

Figure 10: Laser is on only during exposure
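The timing in figure 10 can be checked numerically before the parameters are set. The sketch below models TimerDelay, TimerDuration, TriggerDelay and ExposureTime as plain numbers measured from LineStart, and checks that the laser is on for the whole exposure. The additional requirement that the sequence ends before the next LineStart is an assumption of this sketch, as is the class name.

```python
from dataclasses import dataclass


@dataclass
class StrobeTiming:
    """All times in microseconds, measured from the LineStart signal (t0)."""
    timer_delay: float     # TimerDelay: laser on at t1 = t0 + timer_delay
    timer_duration: float  # TimerDuration: how long the laser stays on
    trigger_delay: float   # TriggerDelay (TriggerSelector = ExposureStart): t2 - t0
    exposure_time: float   # ExposureTime

    def laser_window(self) -> tuple[float, float]:
        return self.timer_delay, self.timer_delay + self.timer_duration

    def exposure_window(self) -> tuple[float, float]:
        return self.trigger_delay, self.trigger_delay + self.exposure_time

    def laser_covers_exposure(self) -> bool:
        laser_on, laser_off = self.laser_window()
        exp_start, exp_end = self.exposure_window()
        return laser_on <= exp_start and laser_off >= exp_end

    def fits_in_cycle(self, line_rate_hz: float) -> bool:
        # Assumption: the whole sequence must end before the next LineStart.
        cycle_us = 1e6 / line_rate_hz
        return max(self.laser_window()[1], self.exposure_window()[1]) <= cycle_us


timing = StrobeTiming(timer_delay=5, timer_duration=60, trigger_delay=10, exposure_time=50)
print(timing.laser_covers_exposure(), timing.fits_in_cycle(10_000))  # True True
```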

7.7 3D profiling

7.7.1 Laser impact position on the sensor

The basic function of the 3D measurement is to compute the impact position of the laser line for all columns of the selected region of interest (ROI). The light intensity distribution along a sensor column, across the laser line, can be described as in the figure below.

Figure 11: The impact position of the laser in one column

1 Intensity, 2 Sensor row, 3 ROI end, 4 ROI start

The laser line produces a distinct light peak distributed over a number of pixels along the sensor column. The center of this peak is defined as the impact position of the laser line on that sensor column, which is the range value.

7.7.2 Measurement method

The default algorithm in Ranger3 is called Hi3D. It measures the impact position using a high-resolution peak-fitting algorithm based on the pixels in a window around the extracted intensity peak position. The size of the window is set by the WAMSize parameter.

This method measures range with a resolution of 1/16 pixel.
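The general idea of window-based subpixel peak estimation can be illustrated with a center-of-gravity calculation. This is a swapped-in, simpler technique: the manual does not describe how Hi3D fits the peak, so the sketch below is not Hi3D. The window_size argument plays a role similar to WAMSize, but we do not claim the same definition. The result is rounded to 1/16 pixel to mirror the output resolution.

```python
from typing import Optional, Sequence


def impact_position(column: Sequence[float], window_size: int) -> Optional[float]:
    """Estimate the laser impact row in one sensor column.

    Center of gravity over a window around the brightest pixel, rounded to
    1/16 pixel. A simple stand-in, not the Hi3D algorithm.
    """
    peak = max(range(len(column)), key=lambda row: column[row])
    half = window_size // 2
    lo, hi = max(0, peak - half), min(len(column), peak + half + 1)
    window = column[lo:hi]
    total = sum(window)
    if total <= 0:
        return None  # no light in the window
    center = sum((lo + i) * value for i, value in enumerate(window)) / total
    return round(center * 16) / 16


print(impact_position([0, 0, 10, 20, 10, 0, 0], window_size=3))  # 3.0
print(impact_position([0, 0, 10, 20, 20, 0], window_size=3))     # 3.1875
```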

7.7.3 Detection threshold

The parameter DetectionThreshold defines the minimum reflectance signal that can be detected as a peak position. Ideally, this parameter is set to a value that is higher than the amplitude of the noise but still low enough to detect the laser signal, see the figure below. If DetectionThreshold is too low, noise is registered as laser peaks, which results in poor image quality. If DetectionThreshold is too high, not all laser peaks are registered, which results in an image where some parts are missing.


Figure 12: Analog signal with noise

1 Intensity, 2 Sensor row, 3 ROI end, 4 ROI start, 5 Detected peak, 6 Not detected peaks
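The effect of DetectionThreshold can be illustrated per column: a column yields a range value only if its brightest pixel reaches the threshold. The sketch below is a simplification under stated assumptions. It uses whole-pixel peaks only, the function name is hypothetical, and whether the device compares with >= or > is not stated in this manual. Column 1 of the example image contains only noise, so a low threshold registers it as a peak.

```python
from typing import List, Optional, Sequence


def extract_profile(image: Sequence[Sequence[float]],
                    detection_threshold: float) -> List[Optional[int]]:
    """Return the peak row for each column, or None where no peak is detected.

    image[row][col] holds sensor intensities for one 2D sensor image.
    Whole-pixel peaks only, to keep the example short.
    """
    n_rows, n_cols = len(image), len(image[0])
    profile: List[Optional[int]] = []
    for col in range(n_cols):
        column = [image[row][col] for row in range(n_rows)]
        peak_row = max(range(n_rows), key=lambda row: column[row])
        profile.append(peak_row if column[peak_row] >= detection_threshold else None)
    return profile


image = [
    [0, 5, 0],
    [50, 6, 0],  # column 0: laser peak; column 1: noise only; column 2: no signal
    [0, 5, 0],
]
print(extract_profile(image, detection_threshold=20))  # [1, None, None]
print(extract_profile(image, detection_threshold=4))   # [1, 1, None]  noise registered
```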

7.8 3D data formats

The 3D data is delivered with 4 subpixel bits, that is, with a resolution of 1/16 pixel. The data can be represented with 12 or 16 bits. 12 bits allows a higher scan rate given the bandwidth limitations of the Gigabit Ethernet link. With 12 bits, a maximum region height of 256 rows can be represented. Any data outside this range is clipped to the maximum value.

The pixel value 0 is reserved for missing data, that is, no valid peak was found. This means that the lowest possible value for valid data is 0.0625 (1/16).

The coordinate system of the data is independent of the region position. That is, the value 0.0625 always represents a position 1/16 of a pixel from the start of the region. If a buffer is saved to file, the offsetY information is stored in the XML description so that the actual position on the sensor can be calculated.
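The rules above (1/16-pixel steps, 0 for missing data, clipping to the maximum value, and adding the region's offsetY to get a sensor position) can be expressed as an encode/decode pair. This is a sketch of the arithmetic, not the device firmware; forcing valid values to at least 1 (0.0625) so that they never collide with the missing-data code is an assumption of this example.

```python
from typing import Optional

SUBPIXEL_STEPS = 16  # 4 subpixel bits -> 1/16 pixel


def encode_range(position: Optional[float], bits: int) -> int:
    """Encode a position (pixels from the region start) as a 12- or 16-bit pixel value.

    None means no valid peak and is encoded as 0. Valid positions are clipped to
    the maximum value and kept at 1 or above (an assumption of this sketch).
    """
    if position is None:
        return 0
    max_code = (1 << bits) - 1
    return max(1, min(round(position * SUBPIXEL_STEPS), max_code))


def decode_range(code: int, offset_y: int = 0) -> Optional[float]:
    """Decode a pixel value to a sensor row, adding the region's offsetY."""
    if code == 0:
        return None
    return offset_y + code / SUBPIXEL_STEPS


print(encode_range(1 / 16, bits=12))   # 1
print(encode_range(300.0, bits=12))    # 4095 (clipped: 12 bits cover < 256 rows)
print(decode_range(4095))              # 255.9375
print(decode_range(32, offset_y=100))  # 102.0
```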


According to the GenICam™ and GigE Vision® standards, 3D data should be represented using coordinate pixel formats. For Ranger3, these formats are Coord3d_C12p and Coord3d_16. To support receivers that are not compatible with these newer formats, the binary-compatible grayscale formats mono_12p and mono_16 can be used.
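A receiver that handles the packed 12-bit formats itself must unpack two 12-bit values from every three bytes. The sketch below assumes the GenICam Pixel Format Naming Convention (PFNC) "p" packing, in which values are packed least significant bits first; verify this against the PFNC documentation before relying on it. Odd widths and line padding are not handled.

```python
def unpack_12p(data: bytes) -> list[int]:
    """Unpack LSB-first packed 12-bit values: two values per three bytes."""
    if len(data) % 3:
        raise ValueError("expected a whole number of 3-byte groups")
    values = []
    for i in range(0, len(data), 3):
        b0, b1, b2 = data[i], data[i + 1], data[i + 2]
        values.append(b0 | ((b1 & 0x0F) << 8))  # first value: byte 0 + low nibble of byte 1
        values.append((b1 >> 4) | (b2 << 4))    # second value: high nibble of byte 1 + byte 2
    return values


def pack_12p(values: list[int]) -> bytes:
    """Inverse of unpack_12p, for an even number of values in 0..4095."""
    if len(values) % 2:
        raise ValueError("expected an even number of values")
    out = bytearray()
    for a, b in zip(values[0::2], values[1::2]):
        out += bytes([a & 0xFF, (a >> 8) | ((b & 0x0F) << 4), b >> 4])
    return bytes(out)


packed = pack_12p([0x123, 0x456])
print(packed.hex())        # 236145
print(unpack_12p(packed))  # [291, 1110]
```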

7.9 Reflectance data

The reflectance values along the laser line can be collected in parallel with the 3D data. The reflectance values are saved as an 8-bit grayscale image, with one value corresponding to each point in the range data set.

For information about how to enable reflectance measurements, see "Enabling reflectance measurements", page 58.

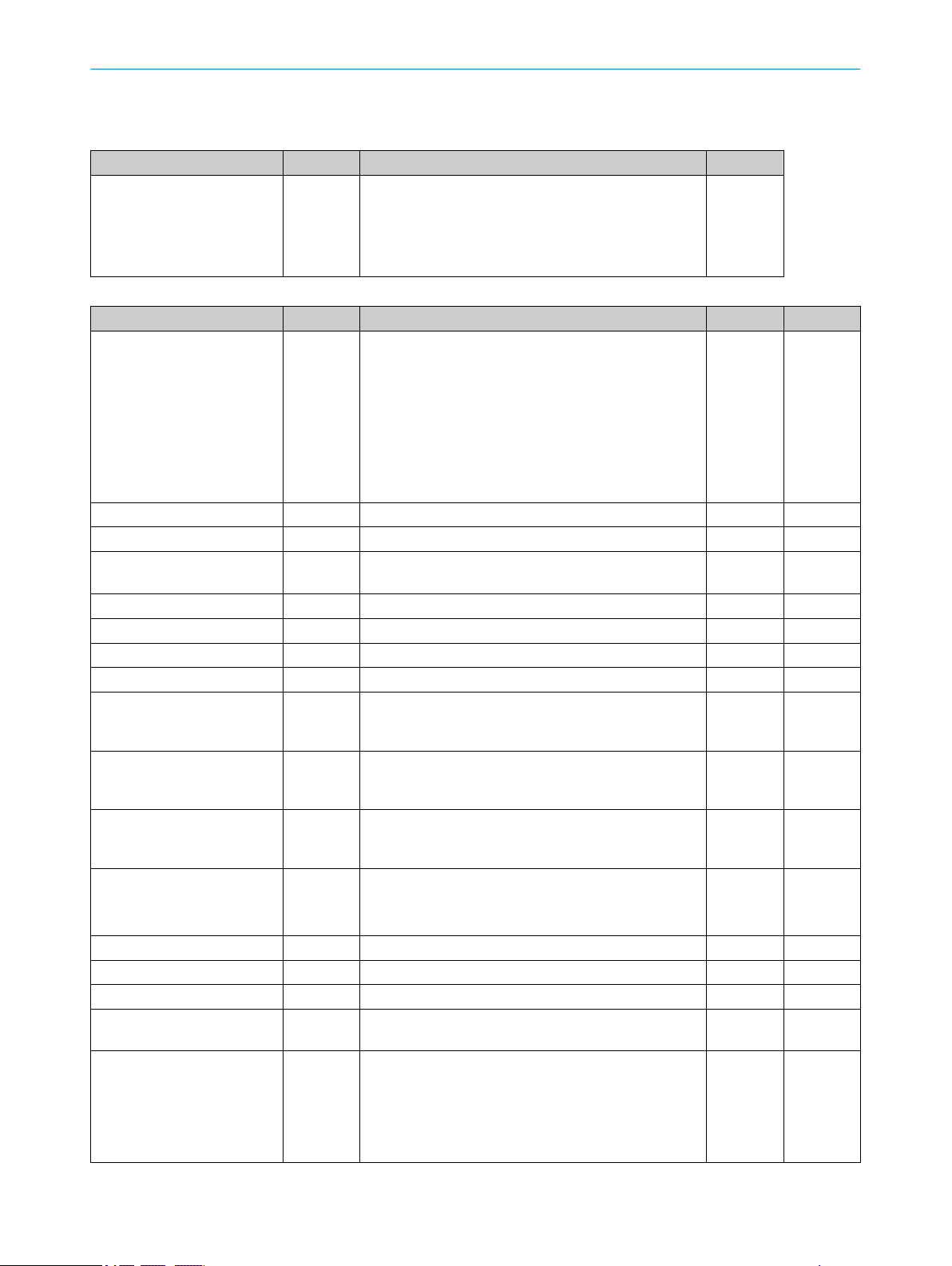

7.10 High dynamic range (HDR) imaging

Ranger3 supports high dynamic range (HDR) imaging, which increases the sensor's ability to adequately reproduce both bright and dark areas in a scene. HDR is suitable for improving the localization of the laser line in images that contain both dark and bright materials, such as bright objects on a dark background or dark objects with bright prints.

Figure 13: Acquisition of profiles

Figure 14: Resulting profile, linear (non-HDR) mode

Figure 15: Resulting profile, HDR mode

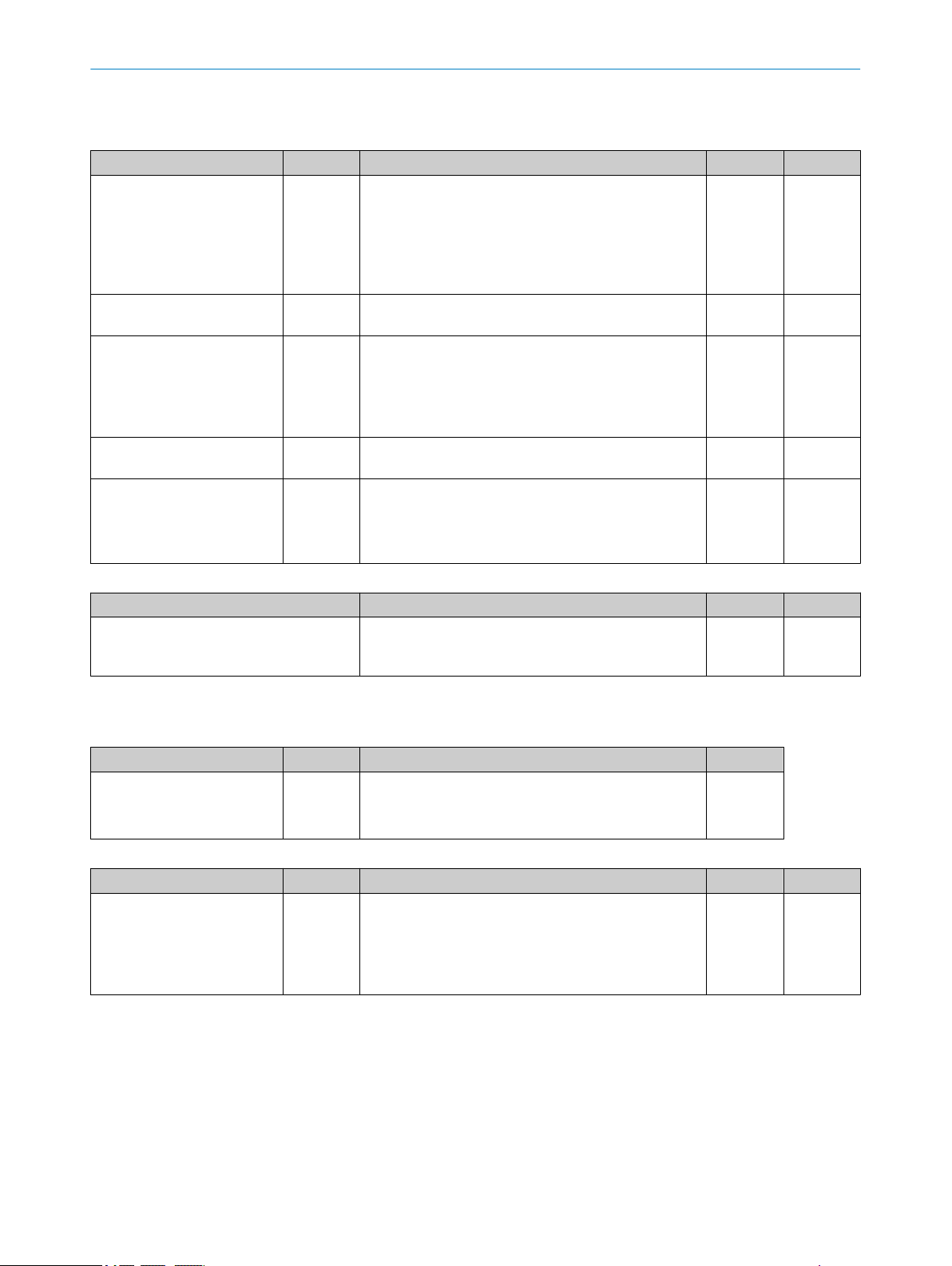

Ranger3 uses an HDR principle called multiple-slope with one knee-point, which means that the normal linear relationship between the received light and the resulting pixel value (reflectance) is broken into two linear segments. The result is a compressed light-to-pixel-value characteristic for high light intensities, see figure 16.

Figure 16: HDR multiple-slope principle

1 Pixel value, 2 Light intensity, 3 Maximum pixel value, 4 Linear (non-HDR) mode, 5 HDR mode, 6 Knee-point


The knee-point position and the slope after the knee-point are controlled by the MultiSlopeMode parameter in the camera. There are three predefined settings (soft, medium, and aggressive), which correspond to different amounts of compression. These settings increase the dynamic range by a factor of approximately 2, 6, and 15, respectively. See figure 17.

Figure 17: HDR settings for Ranger3

1 Pixel value, 2 Light intensity, 3 Linear (non-HDR) mode, 4 Soft pre-set, 5 Medium pre-set, 6 Aggressive pre-set
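The multiple-slope principle in figures 16 and 17 can be modeled as a two-segment piecewise linear function. The knee-point and slope values of the soft, medium, and aggressive pre-sets are not given in this manual, so the numbers below are purely hypothetical. Light is expressed in units where linear mode gives pixel value = light, so linear mode saturates when the light reaches the maximum pixel value.

```python
def multislope_response(light: float, knee: float, slope_after: float,
                        max_pixel: float) -> float:
    """Pixel value for a two-segment (one knee-point) response.

    Unit slope up to the knee, `slope_after` above it, saturating at max_pixel.
    Assumes 0 < knee < max_pixel and 0 < slope_after <= 1.
    """
    if light <= knee:
        value = light
    else:
        value = knee + (light - knee) * slope_after
    return min(value, max_pixel)


def dynamic_range_gain(knee: float, slope_after: float, max_pixel: float) -> float:
    """Saturation light level in HDR mode divided by that in linear mode."""
    saturation_light = knee + (max_pixel - knee) / slope_after
    return saturation_light / max_pixel  # linear mode saturates at light == max_pixel


# Hypothetical settings: knee at 192 of 256, slope 1/8 above the knee.
print(multislope_response(400, knee=192, slope_after=0.125, max_pixel=256))  # 218.0
print(dynamic_range_gain(knee=192, slope_after=0.125, max_pixel=256))        # 2.75
```

A lower knee or a flatter slope after the knee gives more compression and a larger dynamic range gain, which is the trade-off the three pre-sets make.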

In HDR mode, the sensor readout must be finished before a new exposure can start, so the minimum cycle time is the sum of the exposure time and the readout time. In linear mode, the sensor readout and a new exposure can be done in parallel.

For example, if the readout time is 33 μs and the exposure time is 30 μs, the total cycle time is 33 μs in linear mode and 63 μs in HDR mode.
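The cycle-time rule can be written as a small function. In linear mode this simplified model takes the longer of the readout time and the exposure time plus the approximate 3 μs readout/reset overhead from "Exposure time and measurement speed"; in HDR mode it adds exposure and readout. The model reproduces the 33 μs and 63 μs of the example above, but it is a simplification, not a device specification.

```python
READOUT_RESET_US = 3.0  # approximate overhead from "Exposure time and measurement speed"


def min_cycle_time_us(exposure_us: float, readout_us: float, hdr: bool) -> float:
    """Shortest time between two profiles, in microseconds (simplified model)."""
    if hdr:
        # HDR: the readout must finish before the next exposure starts.
        return exposure_us + readout_us
    # Linear mode: readout overlaps the next exposure, so the longer one limits the rate.
    return max(readout_us, exposure_us + READOUT_RESET_US)


for hdr in (False, True):
    cycle = min_cycle_time_us(exposure_us=30, readout_us=33, hdr=hdr)
    print("HDR" if hdr else "linear", cycle, "us ->", round(1e6 / cycle), "Hz")
```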

For information about how to enable HDR imaging for Ranger3, see "Enabling HDR imaging", page 59.

7.11 Triggering

Triggering is used to control the initiation and rate of data acquisition. Different triggering concepts and modes are presented below.

For information about how to activate triggering and set the parameters in the user interface, see "Setting triggering parameters", page 60.

7.11.1 3D triggering concepts

Different application types require different triggering concepts. Below is a table of the most common triggering situations.

| Application | Triggering concept |
|---|---|
| Continuous flow | No photoelectric switch is used. Profiles are sent continuously to the PC, typically arranged in frames of at least a few hundred profiles. Examples: Crushed stone, grain, sawdust. |
| Continuous flow of discrete objects | No photoelectric switch is used. Profiles are sent continuously to the PC, typically arranged in frames of at least a few hundred lines. The resulting image buffers in the PC can be analyzed in a rolling buffer fashion, ensuring that all objects are analyzed completely. Example: Cookies. |
| Shorter objects of equal length | A photoelectric switch is used. One image per object. Examples: Bottles, automotive parts, mobile phones. |
| Longer objects of variable size | A photoelectric switch is used. Acquire profiles as long as the object remains in front of the camera. Several sub-images can be stitched together in the PC. Examples: Logs, fish, postal packages. |

7.11.2 Triggering modes

There are different ways to trigger the camera to acquire frames and profiles. You can use an external signal to trigger each frame or each single profile. The camera can also be configured to acquire frames or profiles at regular time intervals, without an external trigger signal.

Note that when the camera acquires 2D images, a frame is the same thing as a complete 2D image. When the camera acquires 3D images, each frame is a set of profiles. This means that profile acquisition and the Line triggering concept apply only to 3D images, while the 2D image triggering concept applies only to 2D images.

Frame triggering
The camera acquires frames based on an external input signal, for example from a photoelectric switch. The acquisition of profiles within each frame can either be free-running or triggered by an input signal, as described under Free-running and Line triggering below.

Line triggering
The camera acquires each profile based on an external input signal. There are two possibilities:
• Connect an external input signal to the line trigger input of the device.
• Use an encoder for line triggering. In that case, pulses are received on the encoder inputs, and the distance between two profiles is determined by the number of pulses received.
Triggering each profile from an encoder preserves the object proportions even if the speed of the object, as tracked by the encoder, changes. Four-phase encoders also allow different motion patterns to be tracked, see "Triggering using an encoder", page 33. The motion pattern is defined by the EncoderOutputMode parameter.
When you use the line trigger input, each pulse on the line trigger input triggers one profile. Profiles are triggered whether the object is moving backward or forward, and the EncoderOutputMode parameter is not used.


Free-running
The camera acquires 2D images (in Areascan mode) or profiles (in Linescan3D mode) at a regular time interval. In Areascan mode, the time interval is controlled by the AcquisitionFrameRate parameter; in Linescan3D mode, it is controlled by the AcquisitionLineRate parameter.
When the acquisition of profiles is free-running, the distance between two profiles varies if the speed of the object is not constant, which may distort the image. To avoid distortion, you can use an encoder and record the counter value for each profile. This information makes it possible to calculate a geometrically correct image.

2D image triggering
The camera acquires 2D images based on external pulses on the encoder inputs. The use of a four-phase encoder allows different motion patterns to be tracked. The principles for motion tracking during 2D image triggering are the same as for line triggering, see "Triggering using an encoder", page 33.

7.11.3 Triggering using an encoder

When you use an encoder for triggering, the camera counts the pulses received on the encoder inputs with an internal counter. When the specified number of pulses has been received, a profile or a 2D image is triggered and the camera resets the triggering condition counter.
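The counting behaviour described above can be modelled in a few lines. This is a hypothetical sketch: the class name and the pulses_per_trigger value are illustrative and do not correspond to a documented Ranger3 parameter name.

```python
class TriggerCounter:
    """Illustrative model of the camera's triggering condition counter."""

    def __init__(self, pulses_per_trigger: int):
        if pulses_per_trigger < 1:
            raise ValueError("pulses_per_trigger must be at least 1")
        self.pulses_per_trigger = pulses_per_trigger
        self.count = 0

    def pulse(self) -> bool:
        """Register one encoder pulse; return True if it triggers a profile."""
        self.count += 1
        if self.count >= self.pulses_per_trigger:
            self.count = 0  # reset the triggering condition counter
            return True
        return False


counter = TriggerCounter(pulses_per_trigger=4)
triggers = [i for i in range(10) if counter.pulse()]
print(triggers)  # [3, 7]
```

With four pulses per trigger, every fourth pulse triggers a profile, so the profile spacing on the object is four times the travel per encoder pulse.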

Four-phase (dual-channel) encoder

The default definition of a pulse is a full four-phase cycle on the encoder inputs. This

gives a pulse counter that is robust to jitter and noise on the inputs.
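Counting only full four-phase cycles can be illustrated with a generic quadrature decoder. This is a sketch of the standard decoding technique, not the camera's internal implementation; the state order chosen as "forward" and all names are assumptions. A glitch that toggles one channel back and forth adds and then removes a quarter step, so it never completes a cycle, which is why this pulse definition is robust to jitter.

```python
# (A, B) channel states in the order they occur during one forward cycle.
# Which rotation counts as "forward" is an assumption of this sketch.
FORWARD_SEQUENCE = [(0, 0), (1, 0), (1, 1), (0, 1)]
_POSITION_IN_CYCLE = {state: i for i, state in enumerate(FORWARD_SEQUENCE)}


def count_full_cycles(states):
    """Return the net number of complete four-phase cycles in a state list."""
    quarter_steps = 0
    for previous, current in zip(states, states[1:]):
        if previous == current:
            continue  # no change on either channel
        delta = (_POSITION_IN_CYCLE[current] - _POSITION_IN_CYCLE[previous]) % 4
        if delta == 1:
            quarter_steps += 1  # one quarter step forward
        elif delta == 3:
            quarter_steps -= 1  # one quarter step backward
        else:
            # Both channels changed at once: the direction is ambiguous.
            raise ValueError(f"invalid transition {previous} -> {current}")
    # Only complete cycles count as pulses; partial cycles are dropped.
    sign = 1 if quarter_steps >= 0 else -1
    return sign * (abs(quarter_steps) // 4)


forward = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]
jitter = [(0, 0), (1, 0), (0, 0), (1, 0), (0, 0)]
print(count_full_cycles(forward))        # 1
print(count_full_cycles(jitter))         # 0
print(count_full_cycles(forward[::-1]))  # -1
```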

A four-phase encoder can handle movements in both directions (forward and backward). The camera can be configured to react to the pulses in different ways, which results in different ways to trigger profiles. The line triggering modes are described below.

Mode: Position
Parameter: EncoderOutputMode = PositionUp or PositionDown
Description: The encoder triggers a profile for each object position. If the object has moved backward, no profiles are triggered until the object has moved (at least) an equal distance forward.
PositionUp: "Forward" is defined as the positive direction.
PositionDown: "Forward" is defined as the negative direction.

Mode: Direction
Parameter: EncoderOutputMode = DirectionUp or DirectionDown
Description: The encoder triggers profiles when the object is moving forward. If the object has moved backward, new profiles are triggered as soon as the object moves forward again.
DirectionUp: "Forward" is defined as the positive direction.
DirectionDown: "Forward" is defined as the negative direction.

Mode: Motion
Parameter: EncoderOutputMode = Motion
Description: The encoder triggers profiles when the object is moving either backward or forward.

Table 6: Triggering modes

Illustrations for table 6, panels Position, Direction and Motion: 1 Moving forward, 2 Moving backward, 3 Moving forward again.
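The behaviour of the three modes in table 6 can be compared with a small simulation. This is a sketch under simplifying assumptions: each list entry represents one already-counted encoder pulse (+1 in the positive direction, -1 in the negative direction), and the function name is hypothetical. The mode strings are the EncoderOutputMode values from table 6, but the function only models the behaviour described there, not the camera firmware.

```python
MODES = ("PositionUp", "PositionDown", "DirectionUp", "DirectionDown", "Motion")


def triggered_pulses(steps, mode):
    """Return the indices of the pulses that trigger a profile in `mode`."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    # "Forward" is the positive direction for *Up modes, negative for *Down.
    forward = -1 if mode.endswith("Down") else 1
    position = 0  # current position along "forward", in pulses
    furthest = 0  # furthest forward position that has already been imaged
    triggers = []
    for i, step in enumerate(steps):
        position += step * forward
        if mode == "Motion":
            triggers.append(i)  # any movement triggers a profile
        elif mode.startswith("Direction"):
            if step * forward > 0:
                triggers.append(i)  # only forward movement triggers
        elif position > furthest:  # Position modes
            furthest = position  # a new, not yet imaged position
            triggers.append(i)
    return triggers


steps = [1, 1, -1, -1, 1, 1, 1]  # forward 2, backward 2, forward 3
print(triggered_pulses(steps, "PositionUp"))   # [0, 1, 6]
print(triggered_pulses(steps, "DirectionUp"))  # [0, 1, 4, 5, 6]
print(triggered_pulses(steps, "Motion"))       # [0, 1, 2, 3, 4, 5, 6]
```

In Position mode, the two pulses that bring the object back to its furthest point do not trigger, because those positions were already imaged; only the genuinely new position does.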

Single-channel encoder

A single-channel encoder uses only one encoder channel. The input from the encoder to the camera is a differential signal, and a profile is triggered each time the signal goes high.

Because a single channel carries no direction information, the camera cannot differentiate between forward and backward movement. The single-channel encoder mode (EncoderMode = SingleChannel) is therefore only visible and selectable when EncoderOutputMode (see table 6) is set to Motion.
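A single channel only yields a train of edges, so a decoder can count the transitions to high but has nothing from which to infer direction. The sketch below, a hypothetical helper that is not part of the camera API, finds the low-to-high transitions in a sampled signal. Moving back over the same encoder marks produces the same kind of edges, which is why only Motion mode is meaningful here.

```python
def rising_edges(samples):
    """Return the indices at which a sampled single-channel signal goes high."""
    edges = []
    for i in range(1, len(samples)):
        if samples[i] and not samples[i - 1]:
            edges.append(i)  # low-to-high transition: one profile trigger
    return edges


print(rising_edges([0, 1, 1, 0, 1, 0, 0, 1]))  # [1, 4, 7]
```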

7.11.4 Frame triggering

The Frame trigger input is used to make the camera start acquiring profiles when the object passes a photoelectric switch. If the same photoelectric switch is connected to several cameras, the cameras can be synchronized at the microsecond level.

When the Frame trigger input is used, the Height[Scan3dExtraction1] parameter specifies the number of profiles that the camera acquires after the Frame trigger signal goes high. After the specified number of profiles, the camera either idles or continues to acquire another series of profiles, depending on the state of the Frame trigger signal and the settings of the acquisition control parameters. See the table and the figure below.


Parameter settings and resulting behavior

TriggerSelector = FrameStart
TriggerMode[TriggerSelector] = Off
• The camera acquires profiles continuously.

TriggerSelector = FrameStart
TriggerMode[TriggerSelector] = On
• The acquisition of a new series of profiles (that is, the capture of a new frame) starts each time the Frame trigger input is at a high level.
• Further Frame trigger signals are ignored until all profiles (as specified by Height[Scan3dExtraction1]) have been acquired.
• Note that for the very first profile, the camera requires not only a high level but also a rising edge.
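The rules for TriggerMode[TriggerSelector] = On can be checked with a small simulation. This is a sketch under stated assumptions: the Frame trigger level is sampled once per profile period, a signal that is already high when acquisition starts does not count as a rising edge, and the function name is hypothetical. It models the described behaviour, not the camera firmware.

```python
def simulate_frame_trigger(levels, height):
    """Return (first, last) profile indices of each acquired frame.

    levels: Frame trigger input level (True = high), one sample per
            profile period (a simplification assumed for this sketch).
    height: profiles per frame, as set by Height[Scan3dExtraction1].
    """
    if height < 1:
        raise ValueError("height must be at least 1")
    frames = []
    armed = False     # True once the first rising edge has been seen
    previous = True   # a signal that is already high is not a rising edge
    remaining = 0     # profiles left in the frame being acquired
    start = 0
    for i, level in enumerate(levels):
        rising = level and not previous
        previous = level
        if remaining == 0 and (rising or (armed and level)):
            # Idle: the first frame needs a rising edge; later frames
            # start whenever the level is high.
            armed = True
            remaining = height
            start = i
        if remaining > 0:
            # Acquiring: trigger edges during this phase are ignored.
            remaining -= 1
            if remaining == 0:
                frames.append((start, i))
    return frames


levels = [False, True, True, True, True, True, False, False]
print(simulate_frame_trigger(levels, 3))  # [(1, 3), (4, 6)]
```

Because the input is still high when the first frame completes, the second frame starts immediately; once the level drops, the camera idles.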

The system adapts the buffer height for the frame grabber and the camera to the Height[Scan3dExtraction1] parameter, so that the image stays synchronized and a full image is received when the image capture is completed. To avoid unnecessary CPU load, set the Height[Scan3dExtraction1] parameter to at least 100 pixels.
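One way to see why small heights are costly: during continuous acquisition, the number of buffers delivered per second is the line rate divided by the frame height. The sketch below uses an illustrative line rate of 10 kHz; the function name is hypothetical. The link between buffer rate and CPU load (a roughly fixed handling cost per buffer on the PC) is an assumption, not a figure from this manual.

```python
def buffers_per_second(line_rate_hz: float, height: int) -> float:
    """Frames (buffers) delivered per second during continuous acquisition."""
    if height < 1:
        raise ValueError("height must be at least 1")
    return line_rate_hz / height


for height in (10, 100, 1000):
    # Illustrative line rate of 10 kHz (assumption, not a device limit).
    print(height, buffers_per_second(10_000, height))
```

At 10 kHz, a height of 10 gives 1000 buffers per second, while a height of 100 gives 100 buffers per second.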

Figure 18: Timing diagram for Frame trigger signal
1 Frame trigger signal
2 Profile acquisition (level sensitive)
3 Frame trigger signal rising edge. Acquisition of profiles starts.
4 Frame trigger signal rising edge. Ignored.
5 Acquisition complete. The camera evaluates the Frame trigger signal to decide whether to acquire another series of profiles.

7.12 Chunk data

Chunk data are tagged blocks of data that are sent together with the image data. In Ranger3, chunk data are used to add encoder data to the image data. In addition to the image data, each buffer contains a chunk of metadata. The following metadata are available for each buffer: