Accelerating Microsoft SQL Server Beyond

Large Server Memory

The QLogic 10000 Series Adapter Provides

Greater Benets Than DRAM Caching

The QLogic FabricCache™ 10000 Series Fibre Channel

Adapter addresses the shortcomings of server-based DRAM

caching and accelerates the performance of SQL databases

White Paper

to new levels.

EXECUTIVE SUMMARY

With the volume of data worldwide expected to grow by 50 percent

each month, new challenges are being created for companies that

are determined to get the highest performance from their database

resources while increasing efciencies wherever possible. With

dropping DRAM prices, a tempting solution may be to use large

amounts of server DRAM as cache for Microsoft® SQL Server®

database acceleration. While this method can provide positive results,

the approach is not without burdens and limitations. Among them,

DRAM cache is “captive” to the individual server it is housed in.

Thus, it is not sharable with clustered server groups. DRAM cache is

cost-prohibitive for larger-sized databases when specialized servers

or ultra-high density DRAM modules must be employed. Combined,

these drawbacks prescribe that the best use for DRAM caching is for

smaller databases in single-node servers where database sizes and

high-availability requirements are not expected to evolve signicantly.

QLogic® FabricCache™ 10000 Series Adapter delivers an optimized

solution to address enterprise application acceleration challenges

head on. It transparently combines enterprise server I/O connectivity

with industry-standard, ash-based storage to enable sharable,

server-based caching. This shared caching architecture provides

dramatic and scalable performance improvements for today’s

virtualized and distributed workloads. The enterprise workloads span

the data center and run complex, business-critical applications like

online transaction processing (OLTP) and online analytical processing

(OLAP) on Microsoft SQL Server databases. The QLogic 10000

Series Adapter is a distinctive approach to server-based caching and

addresses the problems of using large amounts of server DRAM for

caching SQL databases.

KEY FINDINGS

Acceleration of Microsoft SQL Server databases is driven by the

performance capability of the storage solution. While server-based

Dynamic Random Access Memory (DRAM) caching can accelerate SQL

performance, it has several limitations. The QLogic FabricCache 10000

Series Fibre Channel Adapter addresses the shortcomings of server-based

DRAM caching and accelerates the performance of SQL databases to new

levels. By integrating Fibre Channel storage network connectivity, sharable

ash caching, and full hardware ofoad, the QLogic FabricCache 10000

Series Adapter makes caching I/O data from SQL entirely transparent to

the host, improves scalability, and reduces complexity.

INTRODUCTION

Storage I/O limitations are the primary performance bottleneck for

enterprise database applications like Microsoft SQL Server. Recent

advancements in multi-core CPU technology have only served to widen

the gap between processors and storage performance. SQL database

administrators have long been searching for ways to accelerate and scale

performance as the size of the database and the number of users grows

larger. Caching has evolved to be the most effective method to accelerate

SQL performance, and there are several ways to implement caching.

This paper compares two of the methods: server DRAM caching and

sharable, server-based, ash-based caching as supported by the QLogic

FabricCache 10000 Series Adapter. The information provided includes

guidance on when it is appropriate to use DRAM caching and when it is

advantageous to use the FabricCache Adapter to accelerate Microsoft SQL

Server application performance.

SN0430970-00 Rev. A 02/14 1

Accelerating Microsoft SQL Server Beyond

Large Server Memory

DRAM CACHING

Like most database applications, SQL was designed at a time when

server main memory (DRAM) was very expensive. DRAM pricing has since

dropped, especially over the last few years. With tremendous advances

in multi-core server computing and an ever-widening gap between CPU

and storage I/O capabilities, a case could be made that the time is now for

caching databases with server DRAM. But while the low latency benets

derived from DRAM caching can lead to desirable database performance

improvements, the approach has its limitations. Some of the drawbacks to

DRAM caching include the following:

White Paper

• Using DRAM for cache is an all-or-nothing proposition. A DRAM cache is

owned and managed by the application, and every transaction is going

to compete for cache resources under the processing domain of the

server CPU. If the CPU is spending signicant cycles running caching

algorithms, it may have a negative effect on other CPU tasks. With

today’s multi-core processors, the impact may be minimal. However,

with older CPUs, this may affect the performance of other server

applications or services, or it may change the server’s ability to scale

virtual machines (VMs).

• DRAM cache is “captive” to the individual server it is housed in. Thus,

it is not sharable with clustered servers. While a DRAM cache can

be very effective at improving the performance of individual servers,

providing storage acceleration across clustered server environments or

virtualized infrastructures that use multiple physical servers is outside a

DRAM cache’s capability. This limits the performance benets of DRAM

caching to a relatively small set of single-server SQL situations.

• DRAM cache becomes cost-prohibitive for larger-sized databases

when specialized servers or ultra-high density DRAM modules must be

employed. Tier-1 server companies typically offer servers with a base

conguration of 12 DRAM DIMM sockets and charge a premium for 24

socket servers. DRAM modules show an increasing “price per gigabyte”

(GB) as bit density increases.

• Best practice recommendations from Microsoft call for local “swap and

crash” recovery dump les equal to 2.5 × the physical DRAM capacity.

The swap le and the dump le become so large that it creates an

enormous amount of disk fragmentation. It can take additional free

space and many hours of defragmentation to repair the damage done

by these massive les. However, maintenance windows are already

tight. In addition, more DRAM leads to larger swap and dump les,

which lead to longer maintenance cycles. The swap and dump les also

create additional virus scanning overhead, incurring more contention

and latency.

• Most enterprise SQL servers will also need access to networked storage

to service requests for data not held within the DRAM cache, known as

a “cache miss”. To handle a cache miss, an additional Fibre Channel

Adapter or similar storage network Host Bus Adapter will be required in

the server and it will have to be managed separately.

CACHING BENEFITS WITHOUT LIMITATIONS

The QLogic FabricCache 10000 Series Adapter is a new approach to

server-based caching. It is designed to address the drawbacks of DRAMbased and other types of server-based caching. Rather than creating a

discrete captive cache for each server, the QLogic FabricCache 10000

Series Adapter integrates Host Bus Adapter functionality as part of the

ash-based cache functionality. The QLogic 10000 Series Adapter features

a caching implementation that uses the existing SAN infrastructure to

create a shared cache resource distributed over multiple physical servers,

as identied in the graphic below, “Shared Cache within Application

Clusters.” This capability eliminates the limitations of single-server

caching and enables the performance benets of ash-based acceleration

for Microsoft SQL Server environments.

Owned

Cached LUNs

1

2

Application

Cluster

Nodes

3

4

SN0430970-00 Rev. A 02/14 2

Data01

SAN LUNs

Data02

FC SAN

Data03

SAN LUNs

Data04

SAN LUNs

Shared Cache within Application Clusters

Shared LUNs

Data01

Data02

Data03

Data04

Accelerating Microsoft SQL Server Beyond

Large Server Memory

White Paper

QLogic FabricCache 10000 Series Adapter incorporates adapter-based,

intelligent I/O optimization engines that provide integrated storage

network connectivity, ash-based storage capacity, and the embedded

processing required to make all ash management and caching

tasks entirely transparent to the host. All “heavy lifting” is performed

transparently onboard the caching Host Bus Adapter by the embedded

The QLogic 10000 Series Adapter delivers something that no other ashbased caching solution has today: the ability to provide clustered caching

for SAN adapters and then share the adapters’ caches between servers.

Clustering FabricCache Adapters creates a logical group that cooperates to

maintain cache coherence, delivering a single point of management, high

availability, and optimal allocation of cache resources.

multi-core processor. By delivering full hardware ofoad, the FabricCache

Adapter provides signicant performance benets, including conserving

server memory and processor cycles for applications and services and

maximizing the number of VMs in virtualized environments. The only

host-resident software required for operation is a standard host operating

system (OS) device driver. In fact, the device appears to the host as a

standard QLogic Fibre Channel Host Bus Adapter and uses a common Host

Bus Adapter driver and protocol stack that is the same as the one used

by the traditional QLogic Fibre Channel Adapters that already make up the

existing SAN infrastructure.

FabricCache Adapters communicate with each other using the Fibre

Channel infrastructure. This capability allows the cluster of adapters

to share and manage caches across multiple server nodes. After

administrators dene caches for specic LUNs on the SAN, each

FabricCache Adapter can identify which adapter is the owner of each

cache. Because all FabricCache Adapters recognize the cache owner for

each specic LUN, I/Os are redirected to the cache owner. This distributed

cache model enables a single copy of cache data, which ensures coherent

cache operation, maximizes the use of ash resources, simplies the

architecture, and increases scalability.

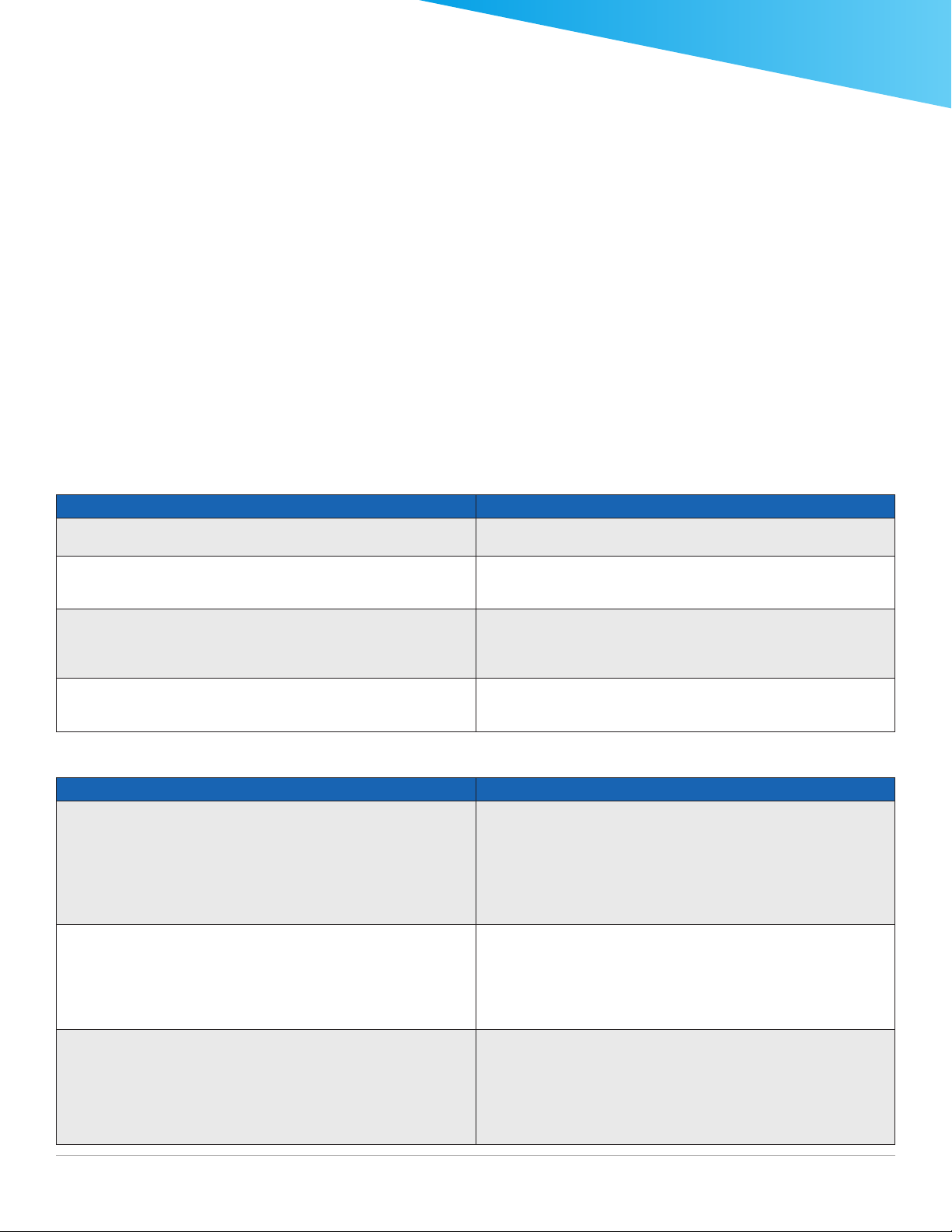

COMPARING DRAM CACHING LIMITATIONS TO THE QLOGIC FABRICCACHE 10000 SERIES ADAPTER

DRAM Caching QLogic FabricCache 10000 Series Adapter

DRAM cache is “captive” to the individual server; it is not sharable.

DRAM cache is cost-prohibitive for larger-sized databases.

DRAM cache best practice recommendations from Microsoft place

additional burdens on a server in the form of processor resource

consumption as well as larger and more fragmented storage capacity.

Servers utilizing DRAM caching for SQL will need an additional Fibre

Channel or similar Host Bus Adapter for networked storage access.

The QLogic FabricCache 10000 Series Adapter allows for the sharing of

cache resources between clustered servers and virtualized environments.

The QLogic FabricCache 10000 Series Adapter is unaffected by server and

DRAM premiums and is the most cost-effective way to scale out database

size and performance.

The QLogic FabricCache 10000 Series Adapter manages all caching with

full hardware ofoad, preserving server processing and memory resources

for applications and services as well as maximizing the number of VMs in

virtualized environments.

The QLogic FabricCache 10000 Series Adapter combines ash cache

with the industry’s most popular Fibre Channel Host Bus Adapter, and it is

managed as a single solution.

SELECTING DRAM CACHING VERSUS FABRICCACHE TO ACCELERATE MICROSOFT SQL SERVER

DRAM Caching QLogic FabricCache 10000 Series Adapter

Analysis 1

Example 1

A single-server SQL database with a current size of 150GB. The size of

the database is expected to grow about 33 percent each year. The existing

server node has 32GB of DRAM with an ability to expand to 128GB using its

12 DRAM sockets.

Example 2

A single-server SQL database with a current size of 600GB. The size of

the database is expected to grow about 40 percent each year. The existing

server node has 96GB of DRAM with an ability to expand to 768GB using its

24 DRAM sockets.

Example 3

A single-server SQL database with a current size of 500GB. The size of

the database is expected to grow about 70 percent each year. The existing

server node has 128GB of DRAM with an ability to expand to 384GB using

its 12 DRAM sockets.

SN0430970-00 Rev. A 02/14 3

A good rule of thumb is to cache up to 30 percent of a database for the

most cost-effective and optimal performance acceleration. Caching a

higher percentage tends to provide diminishing returns. With an ability to

scale to 128GB of DRAM and a 30 percent cache ratio, a database with a

size of up to 427GB can be accelerated efciently. This example is a good

candidate for DRAM caching accommodating three years of growth.

Analysis 2

While this higher-end server has plenty of DRAM expansion capabilities,

buying hundreds of gigabytes of DRAM from the server vendor is

cost-prohibitive. The QLogic FabricCache 10000 Series Adapter will be

the more cost-effective choice now and provide scalable performance

acceleration for several years of database growth.

Analysis 3

Mapping out database sizes, year one will be 850GB, and year two will

be 1,445GB. Using the 30 percent cache ratio tells us that this server’s

maximum 384GB will be sufcient for year one, but by year two it will fall

short of the need. Clearly, choosing DRAM caching in this example would

be short sited. The QLogic FabricCache 10000 Series Adapter is certainly

the better choice longer term.

Accelerating Microsoft SQL Server Beyond

Large Server Memory

White Paper

The tables on page 3 compare limitations of DRAM caching to the QLogic

FabricCache 10000 Series Adapter and provide three examples that

illustrate when DRAM caching or the QLogic FabricCache 10000 Series

Adapter is the appropriate choice to accelerate Microsoft SQL Server.

Note: Example 3 (in the table on the previous page) points out the need for

further analysis. In general, for existing server platforms with limited DRAM

capacity expansion, DRAM caching can be a good choice for smaller-sized

databases where the growth rate is low to moderate. Higher growth rates

or larger database sizes will require more DRAM for effective performance

acceleration. Higher amounts of DRAM will require more costly server

platforms and perhaps a need for higher density DRAM modules. These

modules come with a greater price per GB, and should be purchased from

the server vendor to maintain warranty and support. Adding DRAM to

existing servers near-term, then replacing these servers with higher-end

versions that can support higher DRAM capacities, is one way to keep

pace with growing database sizes, but it is much more expensive and

resource intensive in the long run than using the FabricCache Adapter from

the start. In the end, the decision is between the cost of the QLogic 10000

Series Adapter versus the cost of DRAM, the Fibre Channel Adapter, and

any server replacement.

SUMMARY

The use of DRAM caching for accelerating the performance of a Microsoft

SQL Server database can be an effective tool under the following

conditions:

• Non-clustered or non-virtualized single server congurations

• Databases sizes under and not expected to exceed 600GB

• Enough DRAM to cache 30 percent of the database size

• The aggregate cost of any server, DRAM, and SAN Host Bus Adapter

acquisitions is less than the cost of FabricCache Adapter

The QLogic FabricCache 10000 Series Adapter provides cost-effective

acceleration of Microsoft SQL Server databases under the following

conditions:

• Single-server congurations with database sizes above or expected to

grow beyond 600GB

• Clustered or virtualized multi-server congurations with any size

database

DISCLAIMER

Reasonable efforts have been made to ensure the validity and accuracy of these performance tests. QLogic Corporation is not liable for any error in this

published white paper or the results thereof. Variation in results may be a result of change in conguration or in the environment. QLogic specically

disclaims any warranty, expressed or implied, relating to the test results and their accuracy, analysis, completeness or quality.

Follow us:

Corporate Headquarters QLogic Corporation 26650 Aliso Viejo Parkway Aliso Viejo, CA 92656 949-389-6000

International Offices UK | Ireland | Germany | France | India | Japan | China | Hong Kong | Singapore | Taiwan

www.qlogic.com

© 2014 QLogic Corporation. Specications are subject to change without notice. All rights reserved worldwide. QLogic, the QLogic logo, and FabricCache are trademarks or registered trademarks of QLogic Corporation. Microsoft and SQL Server are registered trademarks of Microsoft

Corporation. All other brand and product names are trademarks or registered trademarks of their respective owners. Information supplied by QLogic Corporation is believed to be accurate and reliable. QLogic Corporation assumes no responsibility for any errors in this document. QLogic

Corporation reserves the right, without notice, to make changes in product design or specications.

SN0430970-00 Rev. A 02/14 4

Share:

Loading...

Loading...