WHITE PAPER

Accelerating Microsoft Exchange Servers with I/O Caching

QLogic FabricCache Caching Technology Designed for High-Performance Microsoft Exchange Servers

Key Findings

The QLogic® FabricCache™ 10000 Series 8Gb Fibre Channel Adapter improves transaction throughput and latency for Microsoft® Exchange Servers by accelerating the random, read-intensive workloads inherent in Exchange and e-mail applications. The QLogic 10000 Series is a PCIe®-based intelligent I/O device that integrates Fibre Channel storage network connectivity, flash memory caching, and embedded processing. With the 10000 Series, caching of Exchange I/O data is entirely transparent to the host.

QLogic’s caching technology in the QLogic 10000 Series Fibre Channel Adapter enhances Microsoft Exchange with:

• Increased transactional IOPS by up to 400 percent

• Decreased Exchange I/O read latency by up to 80 percent

• Overall improved performance in the Exchange environment

Executive Summary


Increased demands on Microsoft Exchange Server make it critical to optimize its I/O performance in the SAN. The QLogic 10000 Series 8Gb Fibre Channel Adapter meets that need for high performance. By combining a unique I/O caching architecture with Fibre Channel connectivity, the 10000 Series Adapter significantly increases transactional I/O (IOPS) and reduces I/O read latency for large Exchange data volumes. This combination of enterprise server I/O with server-based flash memory caching delivers dramatic and smoothly scalable application performance.

E-mail has become one of the most important communication and collaboration applications for businesses worldwide. According to a report from The Radicati Group¹, the total installed base of Microsoft Exchange Server is expected to reach 470 million by 2014, with an average annual growth rate of 12 percent. This expanding penetration of Exchange makes it imperative that users remain unaffected by increased server loads. Exchange IOPS and latency relate directly to the usability of Exchange and user e-mail systems, as well as to the number of mailboxes that a server can host.

To help IT decision-makers make the best-informed adapter choice, QLogic has assessed performance benchmarks that show the I/O performance and scalability advantages of the QLogic 10000 Series 8Gb Fibre Channel Adapter. This white paper examines the advantages of QLogic's technology in the 10000 Series Adapter and offers guidance for evaluating the value of flash memory in enhancing server performance.

A peak-performing Exchange and e-mail system is one with maximized transactional I/O throughput and minimized I/O latency. By optimizing these values, the platform can absorb additional users, thereby reducing server, management, and overall infrastructure costs. The key benefit identified in this paper is that the QLogic 10000 Series Adapter improves Exchange Server performance in ways that reduce overall costs.

¹ The Radicati Group, Inc. study, Microsoft Exchange Server and Outlook Market Analysis 2010–2014

Server-Side Storage Acceleration

Storage I/O is the primary performance bottleneck for data-intensive applications such as Microsoft Exchange Server. Processor and memory performance have grown in step with Moore's Law, becoming faster and smaller, while storage performance has lagged far behind. Over the past two decades, delivering performance from data storage has become the bane of application administrators, and recent challenges are poised to severely constrain infrastructure capabilities in the face of increasing virtualization, consolidation, and user loads.

A new class of server-side storage acceleration is the latest innovation addressing this performance disparity. The idea is simple: fast, reliable, solid-state flash memory connected to the server places Exchange data closer to the server's CPU, so it can be accessed faster. Flash memory is widely available and promises to perform much faster than any rotational disk under the small, highly random I/O typical of enterprise workloads.

The QLogic 10000 Series Adapter provides I/O acceleration across the storage area network for Exchange servers. The 10000 Series Adapter is a PCIe-based I/O device that provides the integrated storage network (Fibre Channel) connectivity, I/O caching technology, integrated flash memory, and embedded processing required to make management and caching tasks transparent to the host server. The QLogic patent-pending FabricCache solution delivers the application performance benefits of a transparent, server-based cache without the limitations of solutions that require separate, server-based storage management software and OS filter drivers.

SSG-WP13001D SN0430934-00 Rev. D 05/13 2

Exchange Server Benchmark Using Microsoft Jetstress

Microsoft provides the Jetstress 2010 tool for simulating Microsoft Exchange Server 2010 database I/O load and for validating hardware deployments against design targets. In this paper, Jetstress was used to simulate Exchange 2010 against both non-cached and cached I/O configurations of the QLogic 10000 Series 8Gb Fibre Channel Adapter.

Test Setup

The test setup consisted of an Intel® Xeon® server connected to an HP® Enterprise Virtual Array (EVA) storage array through the QLogic 10000 Series Adapter and a QLogic 5800V/5802V Fibre Channel Switch. Jetstress runs were executed with cache sizes ranging from zero to 100 percent of the actual Exchange database size.


Figure 1 shows the basic setup for the Exchange test.

Figure 1. Exchange Test Setup

Test Hardware and Software

Table 1 lists the hardware and Table 2 lists the software used for Exchange Server testing.

Table 1. Hardware Used for Exchange Jetstress Testing

| Hardware | Description |
|---|---|
| Host Server | Dual 6-core Intel Xeon CPU E5-2640 at 2.50GHz, 24 logical processors, 32GB RAM |
| Host Bus Adapter | QLogic FabricCache 10000 Series 8Gb Fibre Channel Adapter |
| Quantity of Host Bus Adapters per Host | 1 |
| Fabric Switch | QLogic 5800V/5802V 8Gbps Fibre Channel Switch |
| Storage Array | HP EVA 6300 |
| Drive Speed | 10K RPM |
| Quantity of Drives on Array | 24 |
| LUN Size | 100GB × 7 |
| RAID Type | RAID 5 |

Table 2. Software Used for Exchange Jetstress Testing

| Software | Description |
|---|---|
| Operating System | Windows Server 2008 R2 |
| Exchange Performance Validation Tool | Exchange Jetstress 2010 64-bit version 14.01.0225.017 |
| Exchange 2010 System Simulation ESE.dll File | 14.01.0218.012 (Microsoft Exchange Server 2010 SP1) |
| Quantity of Mailboxes | 1,000 |
| Mailbox Size | 200MB |
| Database Size | 200GB |
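As a quick consistency check on Table 2, the database size follows from the mailbox count and mailbox size, and the cache capacities in Table 3 follow from expressing each cache level as a percentage of that database, as the Test Setup section describes. The sketch below assumes decimal units (1GB = 1,000MB); the function and constant names are illustrative only.

```python
# Sizing arithmetic for the Jetstress configuration in Table 2.
# Assumption: decimal units (1GB = 1,000MB); names are illustrative.

MAILBOXES = 1_000
MAILBOX_SIZE_MB = 200
CACHE_LEVELS_PCT = [0, 25, 30, 50, 75, 100]  # cache levels tested in Table 3


def database_size_gb(mailboxes: int, mailbox_mb: int) -> int:
    """Total database size in GB from mailbox count and per-mailbox size in MB."""
    return mailboxes * mailbox_mb // 1_000


def cache_capacity_gb(db_gb: int, level_pct: int) -> int:
    """Cache capacity in GB for a cache level given as a percent of the database."""
    return db_gb * level_pct // 100


if __name__ == "__main__":
    db_gb = database_size_gb(MAILBOXES, MAILBOX_SIZE_MB)
    print(f"Database size: {db_gb}GB")  # Database size: 200GB
    for pct in CACHE_LEVELS_PCT:
        print(f"{pct:>3}% cache -> {cache_capacity_gb(db_gb, pct)}GB")
```

Under these assumptions the tested cache levels correspond to 0, 50, 60, 100, 150, and 200GB of cached data.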

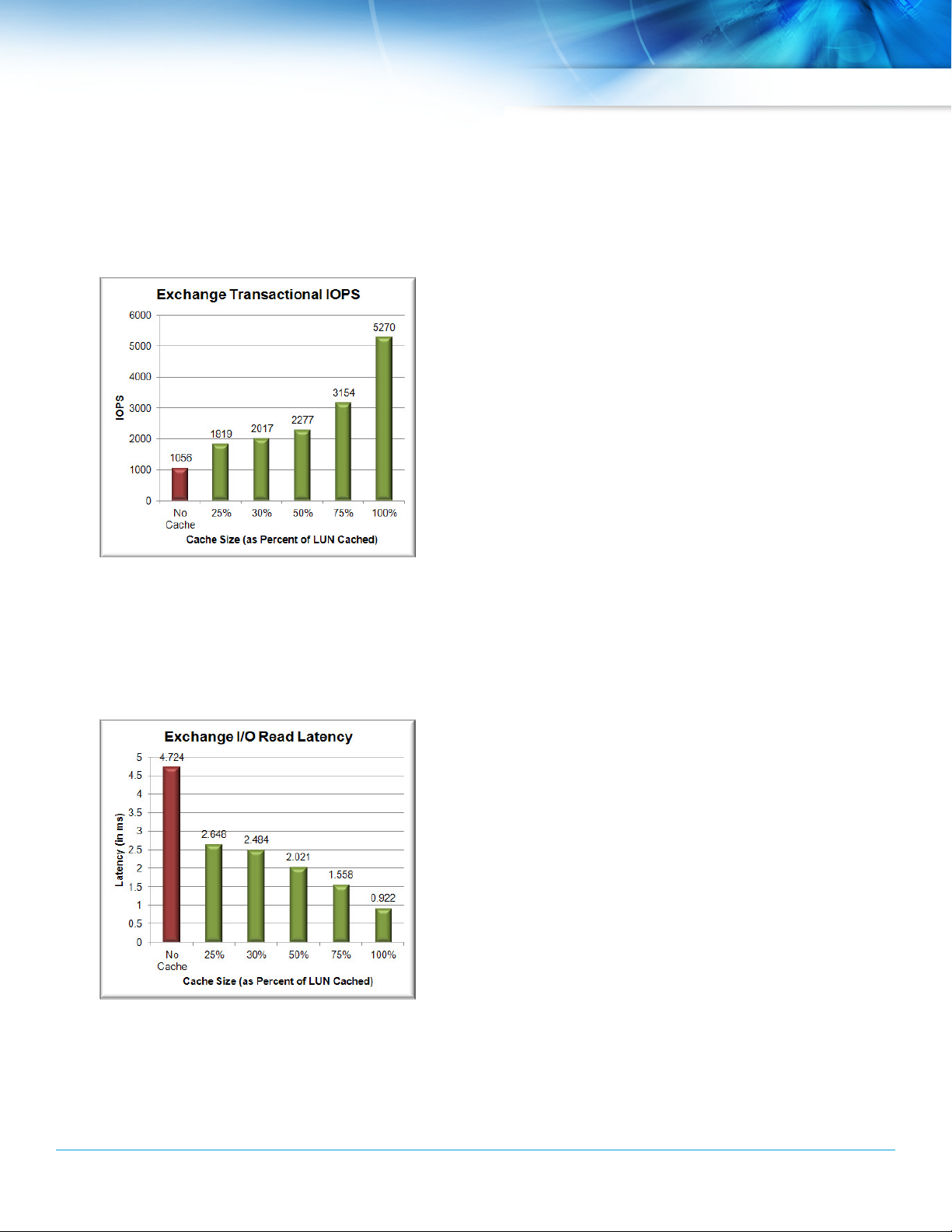

Exchange Performance Results Using Jetstress

The tests demonstrate that, at various levels of LUN caching in the 10000 Series Adapter, caching delivers as much as a 400 percent increase in IOPS and an 80 percent reduction in I/O read latency. These improvements result directly from caching data closer to the Exchange Server processor, which eliminates the Fibre Channel round trip across the SAN for reads served from the cache. Table 3 shows these results.

Table 3. Jetstress Data for Increasing Cache Sizes in the 10000 Series Adapter

| Criteria / Cache Size | No Cache | 25% | 30% | 50% | 75% | 100% |
|---|---|---|---|---|---|---|
| Exchange I/O Read Latency (ms) | 4.724 | 2.648 | 2.484 | 2.021 | 1.558 | 0.922 |
| Exchange Transactions (IOPS) | 1056 | 1819 | 2017 | 2277 | 3154 | 5270 |
| Latency Reduction | 0% | (44%) | (47%) | (57%) | (67%) | (80%) |
| IOPS Increase | 0% | 72% | 91% | 116% | 199% | 399% |
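The two derived rows of Table 3 can be reproduced from the two measured rows: the IOPS increase is each cached IOPS value divided by the no-cache baseline, minus one, and the latency reduction is one minus each cached latency divided by the no-cache latency. The following is a minimal sketch using the Table 3 measurements, rounding to whole percent as the table does; the function names are illustrative.

```python
# Recompute the derived rows of Table 3 from the measured Jetstress values.
CACHE_LEVELS = ["No Cache", "25%", "30%", "50%", "75%", "100%"]
READ_LATENCY_MS = [4.724, 2.648, 2.484, 2.021, 1.558, 0.922]
IOPS = [1056, 1819, 2017, 2277, 3154, 5270]


def pct_increase(baseline: float, value: float) -> int:
    """Percent increase of value over baseline, rounded to a whole percent."""
    return round((value / baseline - 1) * 100)


def pct_reduction(baseline: float, value: float) -> int:
    """Percent reduction of value relative to baseline, rounded to a whole percent."""
    return round((1 - value / baseline) * 100)


if __name__ == "__main__":
    base_iops, base_lat = IOPS[0], READ_LATENCY_MS[0]
    for level, iops, lat in zip(CACHE_LEVELS, IOPS, READ_LATENCY_MS):
        print(
            f"{level:>8}: IOPS +{pct_increase(base_iops, iops)}%, "
            f"latency -{pct_reduction(base_lat, lat)}%, "
            f"speedup {iops / base_iops:.2f}x"
        )
```

At 100 percent cache this gives a 399 percent IOPS increase (about 4.99 times the no-cache throughput) and an 80 percent latency reduction, matching the table; the "nearly 400 percent" figure in the text refers to this 399 percent value.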


Figure 2 shows the total Exchange transactional IOPS as measured by Jetstress. With no cache enabled in the QLogic 10000 Series Adapter, Jetstress measured 1,056 transactional IOPS. In this case, all I/O traffic makes the round trip from the server to the SAN storage array. At 100 percent cache, where the cache size equals the LUN size in this test, Jetstress measured 5,270 IOPS, an increase of nearly 400 percent.

Figure 2: Exchange Transactional IOPS Measured by Jetstress at Varying Cache Levels

Figure 3 shows the Exchange I/O read latency as measured by Jetstress. With no cache enabled in the QLogic 10000 Series Adapter, Jetstress measured a latency of 4.724ms; as with transactional IOPS, all I/O traffic makes the round trip from the server to the SAN storage array. At 100 percent cache, latency fell to 0.922ms, an 80 percent reduction in this test.

Summary and Conclusion

QLogic continues to be the industry leader in delivering high-performance I/O solutions to data center customers. The IOPS and latency of the QLogic 10000 Series 8Gb Fibre Channel Adapter are best-in-class, providing an unprecedented level of performance, superior scalability, and enhanced reliability that exceed the requirements of next-generation Exchange environments. The unique caching solution of the 10000 Series Adapter delivers the increased performance needed to meet the escalating requirements of Microsoft Exchange Server. The benchmark results demonstrate the I/O performance and scalability advantages that the QLogic 10000 Series Adapter brings to Exchange Server, which result in a reduced overall total cost of ownership (TCO).

Because Microsoft Exchange is one of the most prevalent business-critical applications in enterprises, administrators go to great lengths to ensure optimal performance, and they treat scalability and performance as major factors when working to improve it. The QLogic 10000 Series Adapter provides the technology to improve Exchange scalability and performance, which makes it the right choice for Exchange administrators.

Exchange environments are carefully designed to handle varying and unpredictable loads. As demands on the data center grow, the SAN must meet minimum business requirements for higher performance. The QLogic 10000 Series Adapter supports the required performance scalability, with up to a 400 percent increase in IOPS and an 80 percent reduction in latency in these tests, which has a positive impact on an organization's bottom line. The QLogic FabricCache 10000 Series 8Gb Fibre Channel Adapter with built-in I/O caching is designed to meet the ever-increasing needs of Microsoft Exchange platforms.

Figure 3: Exchange I/O Read Latency Measured by Jetstress at Varying Cache Levels


Disclaimer

Reasonable efforts have been made to ensure the validity and accuracy of these performance tests. QLogic Corporation is not liable for any error in this published white paper or the results thereof. Variation in results may be a result of changes in configuration or in the environment. QLogic specifically disclaims any warranty, expressed or implied, relating to the test results and their accuracy, analysis, completeness, or quality.


Corporate Headquarters QLogic Corporation 26650 Aliso Viejo Parkway Aliso Viejo, CA 92656 949-389-6000

www.qlogic.com

© 2013 QLogic Corporation. Specifications are subject to change without notice. All rights reserved worldwide. QLogic, the QLogic logo, and FabricCache are trademarks or registered trademarks of QLogic Corporation. HP is a registered trademark of Hewlett-Packard Company. Intel is a registered trademark of Intel Corporation. Microsoft is a registered trademark of Microsoft Corporation. Xeon is a registered trademark of Intel Corporation. All other brand and product names are trademarks or registered trademarks of their respective owners. Information supplied by QLogic Corporation is believed to be accurate and reliable. QLogic Corporation assumes no responsibility for any errors in this brochure. QLogic Corporation reserves the right, without notice, to make changes in product design or specifications.


International Offices UK | Ireland | Germany | France | India | Japan | China | Hong Kong | Singapore | Taiwan
