User Guide

DGX-2 SYSTEM

DU-09130-001_v03 | November 2018

TABLE OF CONTENTS

Introduction to the NVIDIA DGX-2 System .............................. 5

About this Document ................................................................................. 6

Hardware Overview .................................................................................. 6

Network Ports ....................................................................................... 11

Recommended Ports to Use for External Storage .............................................. 12

DGX OS Software ................................................................................... 13

Additional Documentation ......................................................................... 13

Customer Support .................................................................................. 14

Connecting to the DGX-2 Console ......................................... 15

Direct Connection ................................................................................... 16

Remote Connection through the BMC ........................................................... 17

SSH Connection ..................................................................................... 19

Setting Up the DGX-2 System ............................................... 20

Quick Start Instructions ...................................................... 24

Registration .......................................................................................... 24

Installation and Configuration..................................................................... 25

Obtaining an NVIDIA GPU Cloud Account ....................................................... 25

Getting Your NGC API Key and Selecting Container Tags for the Verification Examples . 25

Verifying Basic Functionality....................................................................... 26

Network Configuration ........................................................ 28

BMC Security ........................................................................................ 28

Configuring Network Proxies ...................................................................... 28

Configuring Docker IP Addresses ................................................................. 29

Opening Ports ....................................................................................... 31

Connectivity Requirements ........................................................................ 31

Configuring Static IP Address for the BMC ...................................................... 32

Configuring Static IP Addresses for the Network Ports ........................................ 36

Switching Between InfiniBand and Ethernet .................................................... 38

Configuring Storage – NFS Mount and Cache .......................... 44

Restoring the DGX-2 Software Image .................................... 46

Obtaining the DGX-2 Software ISO Image and Checksum File .............................. 47

Re-Imaging the System Remotely ................................................................ 47

Creating a Bootable Installation Medium ........................................................ 48

Re-Imaging the System From a USB Flash Drive............................................... 51

Retaining the RAID Partition While Installing the OS .......................................... 52

Updating the DGX OS Software ............................................. 54

Connectivity Requirements For Software Updates ............................................. 54

Update Instructions ................................................................................ 55

Updating Firmware ............................................................. 56

General Firmware Update Guidelines ............................................................ 56

Obtaining the Firmware Update Container ...................................................... 57

DGX-2 System User Guide ii

Querying the Firmware Manifest ................................................................. 57

Querying the Currently Installed Firmware Versions .......................................... 57

Updating the Firmware ............................................................................. 58

Additional Options .................................................................................. 60

Command Summary ................................................................................ 61

Removing the Container ........................................................................... 61

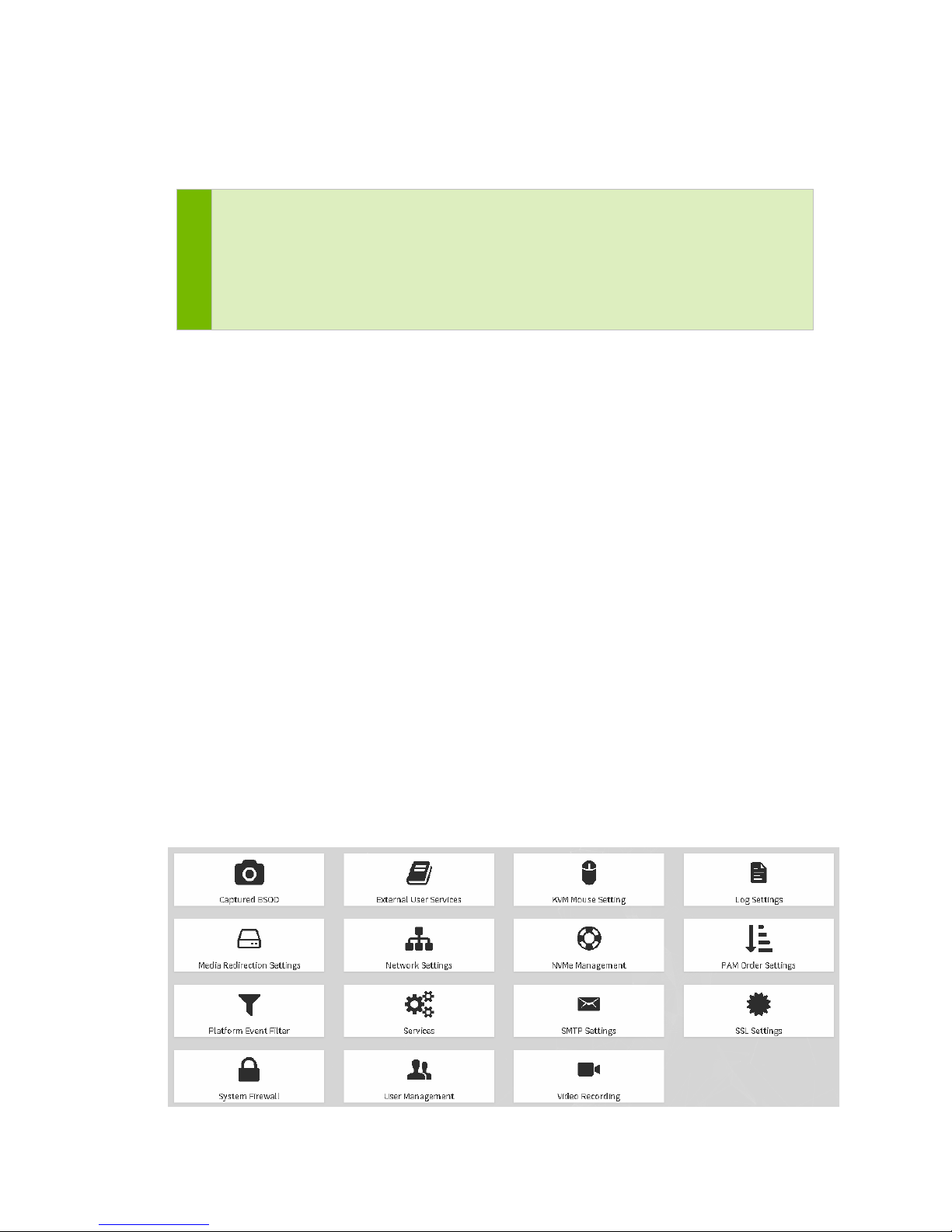

Using the BMC ................................................................ 62

Connecting to the BMC .......................................................................... 62

Overview of BMC Controls ....................................................................... 63

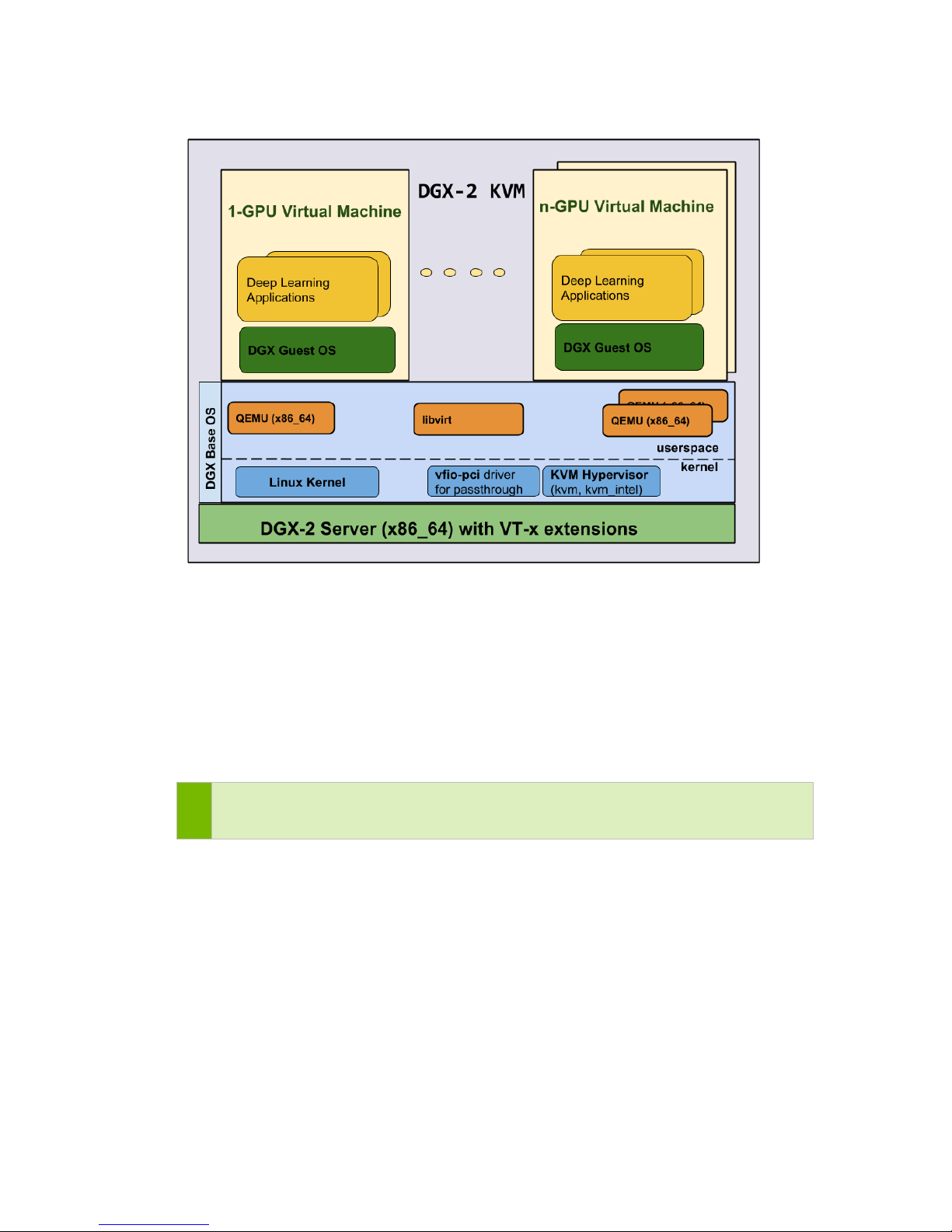

Using DGX-2 System in KVM Mode ...................................... 66

Overview ........................................................................................... 66

Preliminary Setup - Converting the DGX-2 System to a DGX-2 KVM Host ............... 69

Launching a Guest GPU VM Instance .......................................................... 70

Stopping, Restarting, and Deleting a Guest GPU VM ........................................ 72

Connecting to Your Guest GPU VM ............................................................. 74

Managing Images ................................................................................. 76

Using the Guest OS Drives and Data Drives .................................................. 77

Updating the Software ........................................................................... 80

Supplemental Information ....................................................................... 82

Appendix A. Installing Software on Air-gapped DGX-2 Systems ................ 86

A.1. Installing NVIDIA DGX-2 Software ............................................................. 86

A.2. Re-Imaging the System .......................................................................... 87

A.3. Creating a Local Mirror of the NVIDIA and Canonical Repositories ........................ 87

A.4. Installing Docker Containers .................................................................... 88

Appendix B. Safety ............................................................................ 89

B.1. Safety Information ............................................................................... 89

B.2. Safety Warnings and Cautions .................................................................. 90

B.3. Intended Application Uses ..................................................................... 91

B.4. Site Selection ..................................................................................... 91

B.5. Equipment Handling Practices ................................................................ 91

B.6. Electrical Precautions ............................................................................. 92

B.7. System Access Warnings ........................................................................ 93

B.8. Rack Mount Warnings ............................................................................ 94

B.9. Electrostatic Discharge (ESD) .................................................................. 95

B.10. Other Hazards ................................................................................. 95

Appendix C. Compliance ..................................................................... 97

C.1. United States ...................................................................................... 97

C.2. United States / Canada .......................................................................... 98

C.3. Canada ............................................................................................. 98

C.4. CE ................................................................................................... 98

C.5. Japan ............................................................................................... 99

C.6. Australia and New Zealand ..................................................................... 101

C.7. China .............................................................................................. 102

C.8. Israel .............................................................................................. 103

C.9. Russia/Kazakhstan/Belarus ..................................................................... 103


C.10. Vietnam ........................................................................................ 103

C.11. South Korea ................................................................................... 104

C.12. Taiwan ......................................................................................... 107


INTRODUCTION TO THE NVIDIA DGX-2 SYSTEM

The NVIDIA® DGX-2™ System is the world’s first two-petaFLOPS system that engages 16 fully interconnected GPUs for accelerated deep learning performance. The DGX-2 System is powered by the NVIDIA® DGX™ software stack and an architecture designed for deep learning, high-performance computing, and analytics.


ABOUT THIS DOCUMENT

This document is for users and administrators of the DGX-2 System. It is organized as follows:

Chapters 1-4: Overview of the DGX-2 System, including basic first-time setup and operation.

Chapters 5-6: Network and storage configuration instructions.

Chapters 7-9: Software and firmware update instructions.

Chapter 10: How to use the BMC.

Chapter 11: How to configure and use the DGX-2 System as a Kernel-based Virtual Machine (KVM) host.

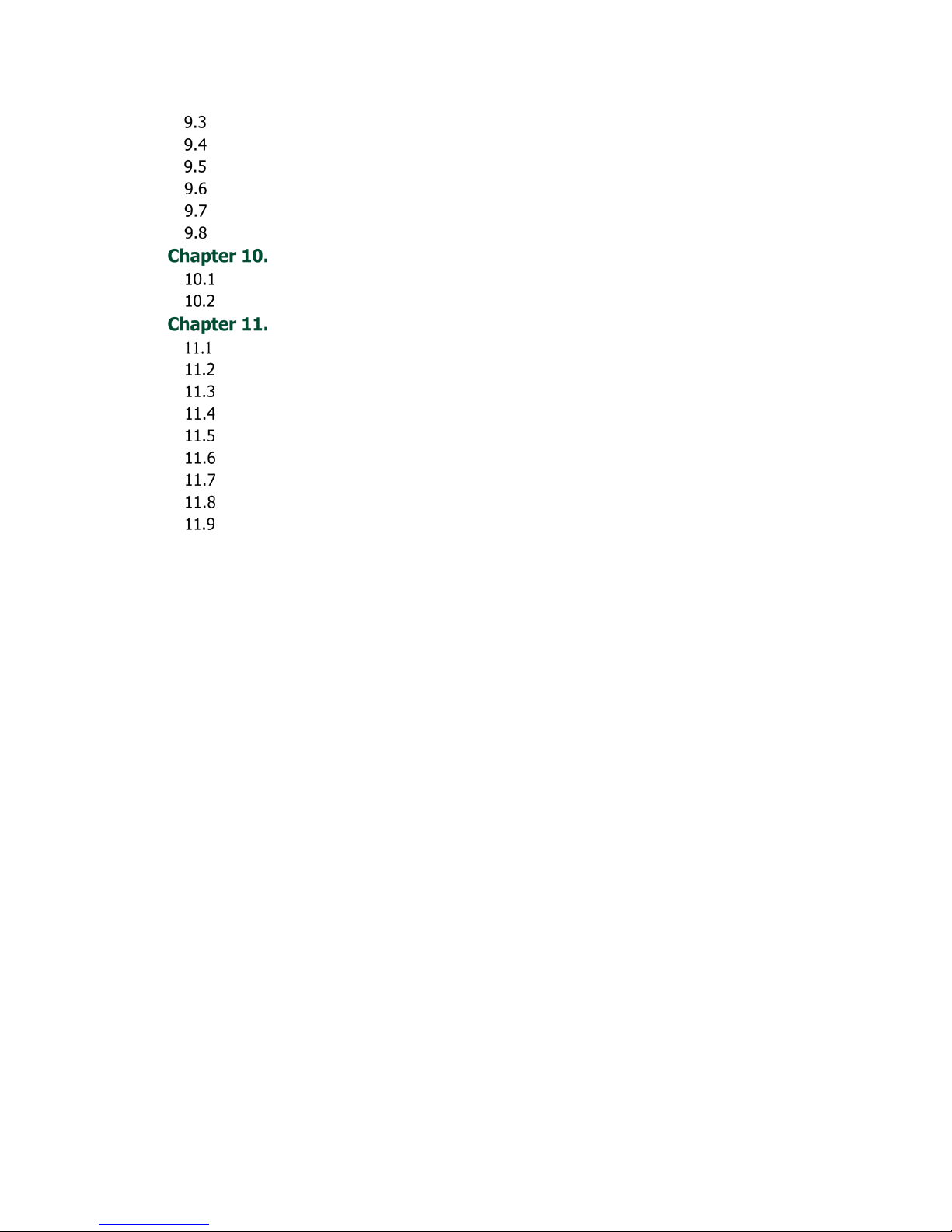

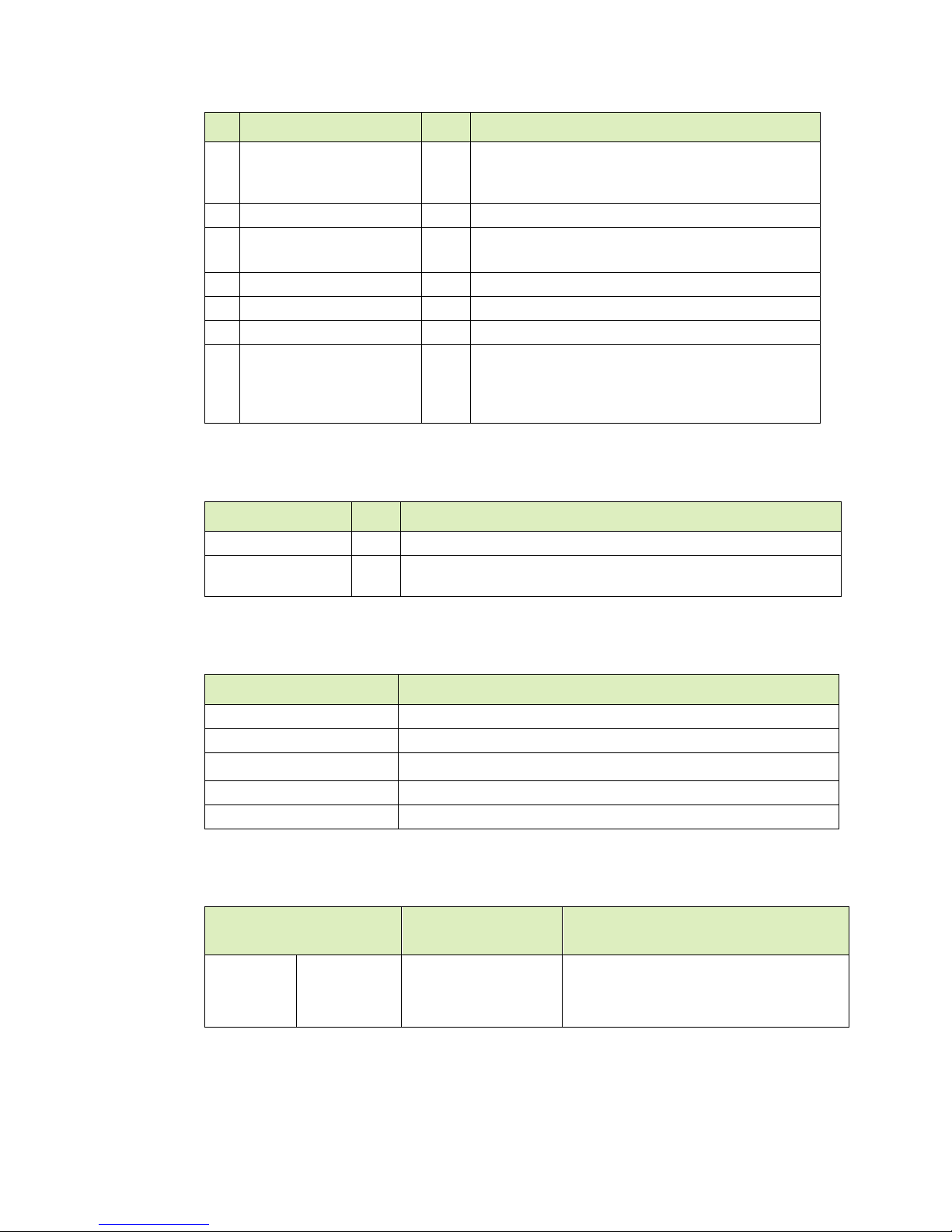

HARDWARE OVERVIEW

1.2.1 Major Components

The following diagram shows the major components of the DGX-2 System.

| ID | Component | Qty | Description |
|---|---|---|---|
| 1 | GPU | 16 | NVIDIA® Tesla V100: 2 petaFLOPS; 512GB total GPU memory; 81920 NVIDIA CUDA® Cores; 10240 NVIDIA Tensor Cores |
| 2 | GPU Boards | 2 | Each board consists of 8x NVIDIA® Tesla V100 and 6x NVSwitches; 512GB total HBM2 memory |
| 3 | NVSwitch | 12 | 2.4 TB/s bi-section bandwidth |
| 4 | Network (cluster) | 8 | EDR InfiniBand or 100 GbE (1600 Gb/s total bi-directional bandwidth) |
| 5 | CPU | 2 | Dual Intel Xeon Platinum 8168, 2.7 GHz, 24-cores |
| 6 | System Memory | | 1.5 TB |
| 7 | Storage (RAID 0) (Cache) | 8 | 3.84TB each (30TB total) NVMe SSDs |
| 8 | Network (storage) | 2 | High speed Ethernet 10/25/40/100 GbE. Can be expanded with the purchase and installation of a second dual-port network adapter. |

1.2.2 Other Components not in Exploded View

| Component | Qty | Description |
|---|---|---|
| Power Supply | 6 | 3000 W each |
| Storage (RAID 1) (OS) | 2 | 960GB NVMe SSDs |

1.2.3 Mechanical Specifications

| Feature | Description |
|---|---|
| Form Factor | 10U Rackmount |
| Height | 17.32" (440 mm) |
| Width | 19" (482.6 mm) |
| Depth | 31.3" (795 mm) |
| Gross Weight | 360 lbs (163.29 kg) |

1.2.4 Power Specifications

| Input Specification for Each Power Supply | Comments |
|---|---|
| 200-240 volts AC; 10 kW max.; 3000 W @ 200-240 V, 16 A, 50-60 Hz | The DGX-2 System contains six load-balancing power supplies. |
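The aggregate figures in the component list can be cross-checked with simple arithmetic. The following is a minimal sketch, not an NVIDIA tool: the constants are copied from the Major Components table, the per-GPU values are derived on the assumption that all 16 GPUs are identical, and the function names are illustrative.

```python
# Cross-check aggregate figures from the Major Components table.
# Assumption: all 16 GPUs are identical, so totals divide evenly.

GPU_COUNT = 16
TOTAL_GPU_MEMORY_GB = 512
TOTAL_CUDA_CORES = 81920
TOTAL_TENSOR_CORES = 10240

CLUSTER_PORTS = 8
PORT_SPEED_GBPS = 100      # EDR InfiniBand or 100 GbE, per direction
CACHE_SSD_COUNT = 8
CACHE_SSD_TB = 3.84


def per_gpu(total: int, count: int = GPU_COUNT) -> int:
    """Divide a system-wide total evenly across the GPUs."""
    if total % count:
        raise ValueError("total does not divide evenly across the GPUs")
    return total // count


def cluster_bandwidth_gbps(ports: int = CLUSTER_PORTS,
                           speed: int = PORT_SPEED_GBPS) -> int:
    """Total bi-directional bandwidth: every port carries `speed` each way."""
    return ports * speed * 2


def cache_capacity_tb(count: int = CACHE_SSD_COUNT,
                      size_tb: float = CACHE_SSD_TB) -> float:
    """Raw RAID 0 capacity is the sum of the member drives."""
    return round(count * size_tb, 2)


print("GPU memory per GPU (GB):", per_gpu(TOTAL_GPU_MEMORY_GB))
print("CUDA cores per GPU:", per_gpu(TOTAL_CUDA_CORES))
print("Tensor cores per GPU:", per_gpu(TOTAL_TENSOR_CORES))
print("Cluster bandwidth (Gb/s):", cluster_bandwidth_gbps())
print("Cache capacity (TB):", cache_capacity_tb())
```

The cluster bandwidth works out to the 1600 Gb/s quoted in the table, and the raw cache capacity of 30.72 TB is what the table rounds to 30TB.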


1.2.5 Environmental Specifications

| Feature | Description |
|---|---|
| Operating Temperature | 5 °C to 35 °C (41 °F to 95 °F) |
| Relative Humidity | 20% to 85% noncondensing |
| Airflow | 1000 CFM @ 35 °C |
| Heat Output | 34122 BTU/hr |
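The heat output figure is consistent with the 10 kW maximum power draw listed under Power Specifications. The sketch below shows the conversion; the conversion factor is the standard watts-to-BTU/hr constant, and the function names are illustrative rather than part of any DGX software.

```python
# Relate the environmental figures to the power specification.
# 1 watt is approximately 3.412142 BTU/hr.
WATTS_TO_BTU_PER_HR = 3.412142


def heat_output_btu_per_hr(power_watts: float) -> float:
    """Convert electrical power dissipated as heat into BTU/hr."""
    return power_watts * WATTS_TO_BTU_PER_HR


def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


print(round(heat_output_btu_per_hr(10_000)))   # close to the 34122 BTU/hr in the table
print(celsius_to_fahrenheit(5), celsius_to_fahrenheit(35))
```

Figures like these are typically what a facilities team needs when sizing cooling for the rack.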

1.2.6 Front Panel Connections and Controls

| ID | Qty | Description |
|---|---|---|
| 1 | 4 | Upper GPU tray fans |
| 2 | 4 | Lower GPU tray fans |
| 3 | 8 (default) | Solid State Drives. Additional SSDs available for purchase to expand to 16. |
| 4 | 2 | Motherboard tray fans |
| 5 | 1 | Front console board: USB 3.0 (2x), VGA (1x) |
| 6 | 1 | Power and ID buttons. Top: Power button and LED. Press to turn the DGX-2 System on or off. Green steady: Power is On. Red steady: Power is Off. Red blinking: Status warning/error. Bottom: ID button. Press to cause an LED on the back of the unit to flash as an identifier during servicing. |

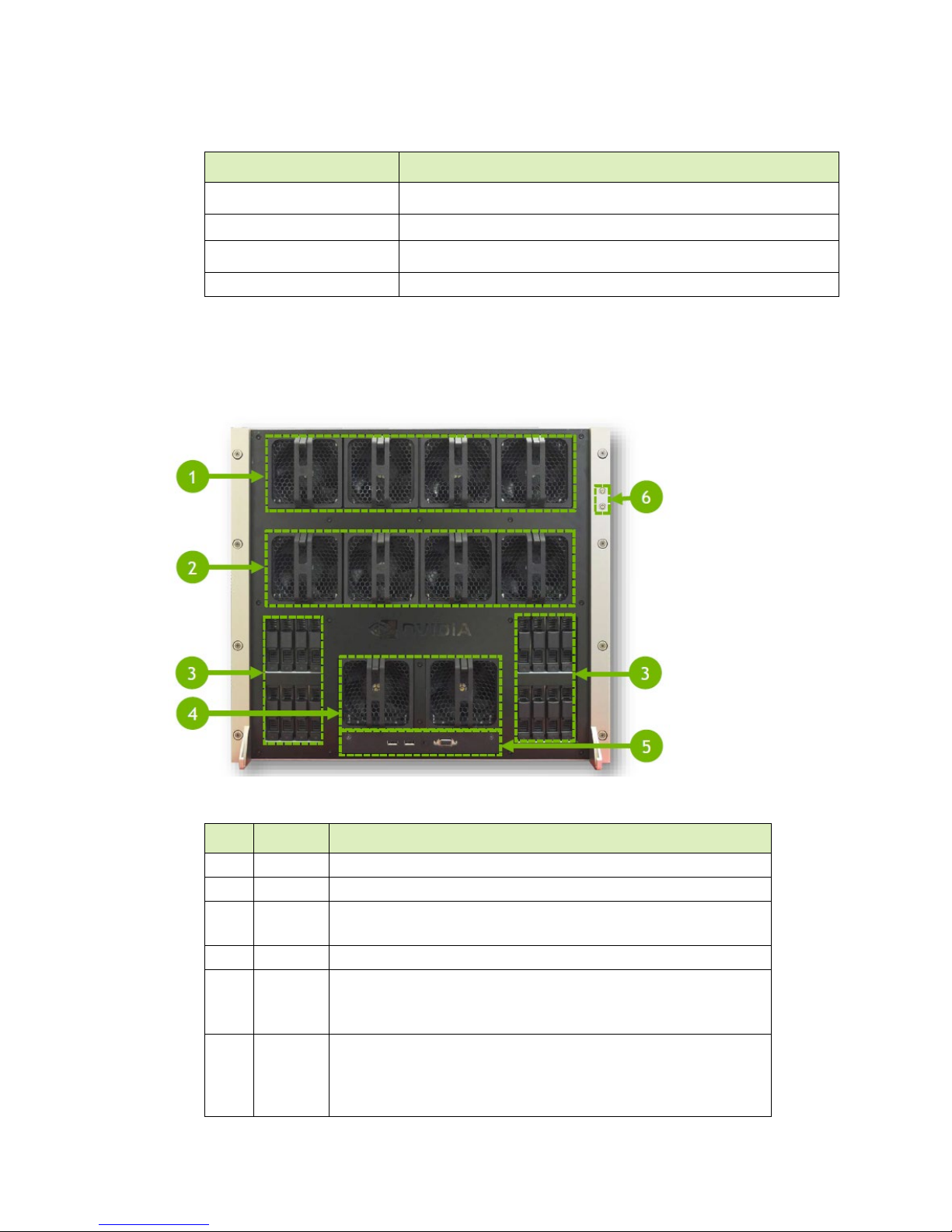

1.2.7 Rear Panel Connections and Controls

With EMI Shield Installed

| ID | Qty | Description |
|---|---|---|
| 1 | 1 | EMI shield |
| 2 | 6 | Power supplies and connectors |
| 3 | 1 | I/O tray |
| 4 | 1 | Motherboard tray |
| 5 | 2 | Handles to pull power supply carrier |


With EMI Shield Removed

ID

Qty

Description

ID

Qty

Description

enp134s0f1

1 2 NVIDIA NVLink™ plane card

Introduction to the NVIDIA DGX-2 System

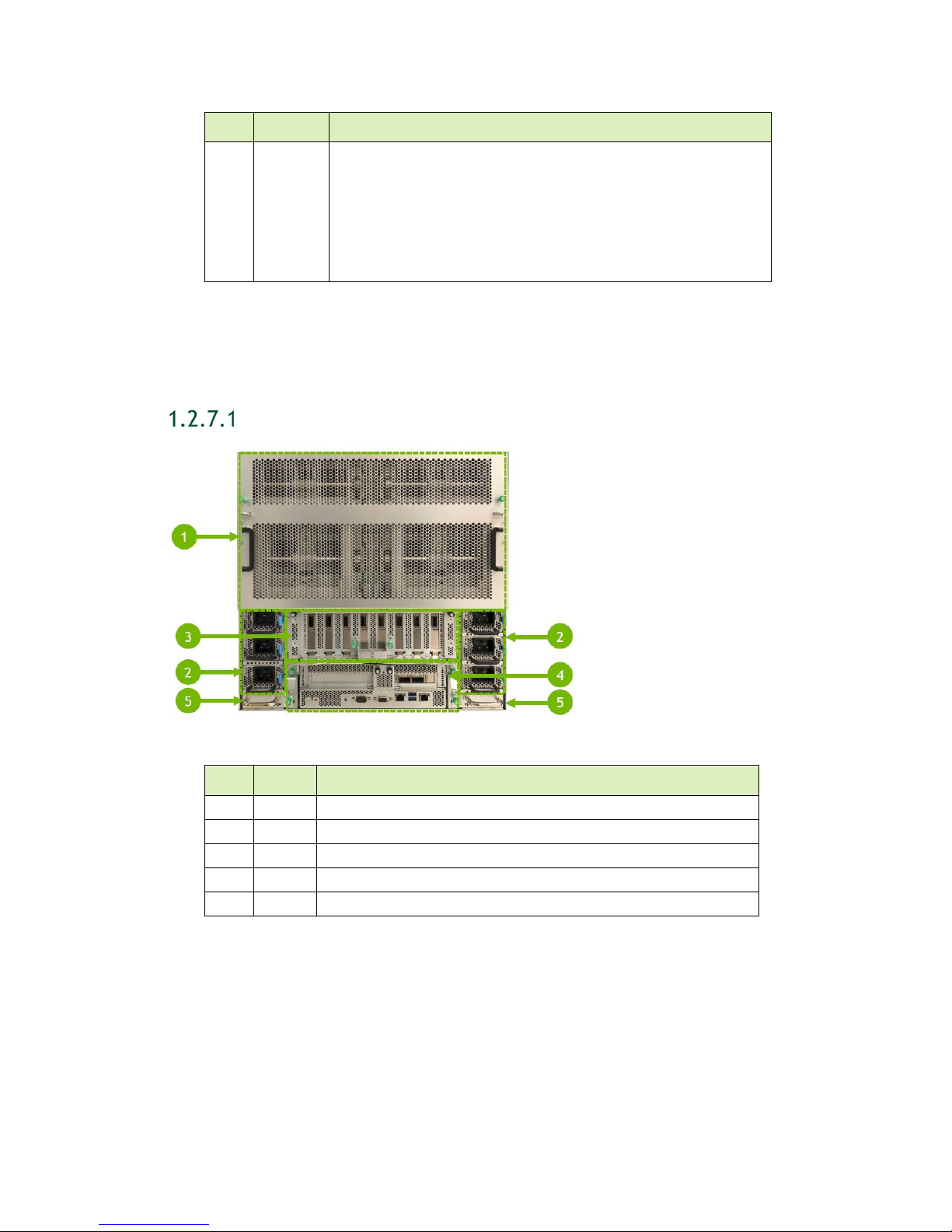

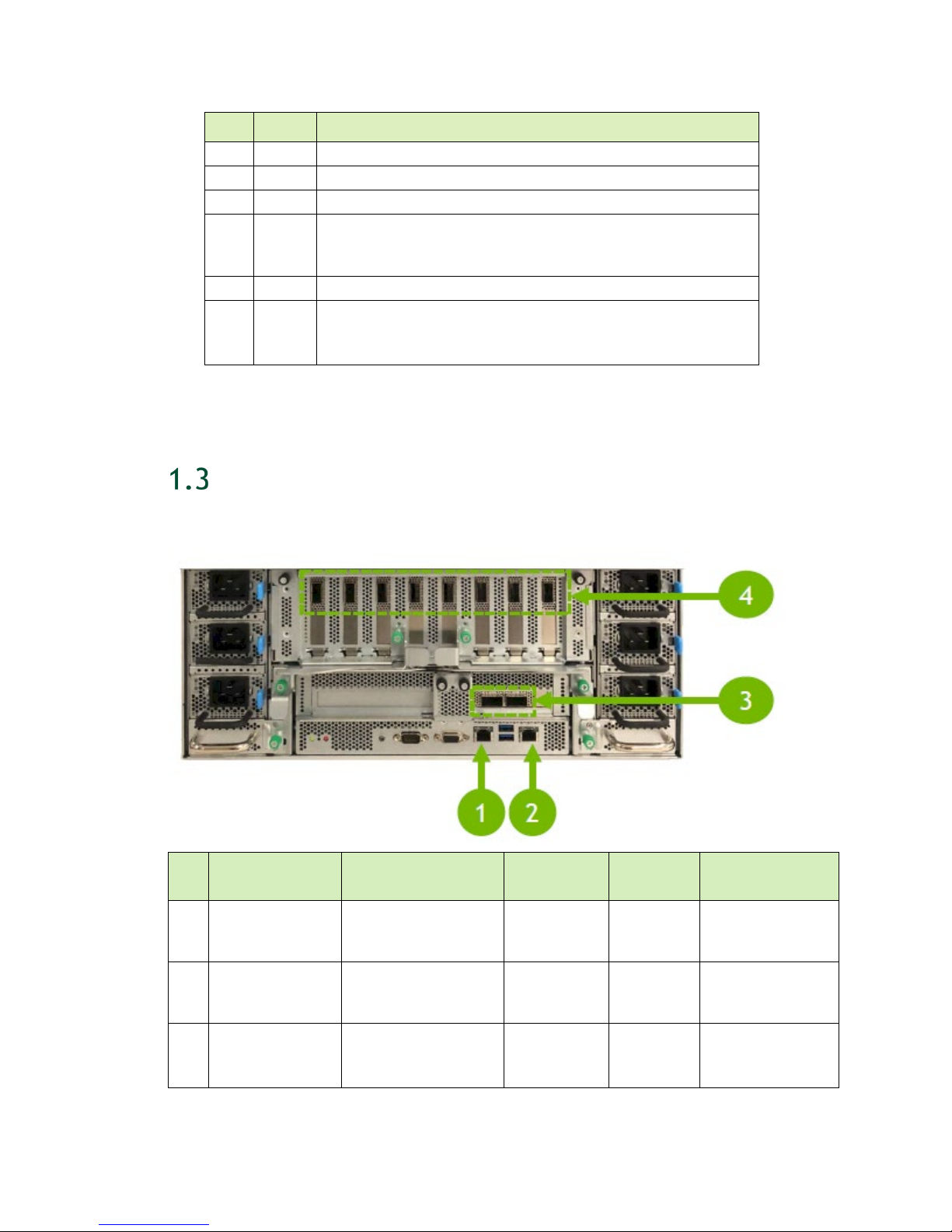

1.2.8 Motherboard Tray Ports and Controls

1 1 (Optional) High profile PCI card slot (for network storage)

2 2 (Default) QSFP28 network ports (for network storage)

Left side port designation: enp134s0f0

Right side port designation:

3 1 RJ45 network port (for in-band management)

4 2 USB 3.0 ports

DGX-2 System User Guide

10

Page 11

ID Qty Description

Ports

5 1 IPMI port (for out-of-band management (BMC))

6 1 VGA port

7 1 Serial port (DB-9)

8 1 System ID LED

Blinks blue when ID button is pressed from the front of the unit

as an aid in identifying the unit needing servicing

9 1 BMC reset button

10 1 Power and BMC heartbeat LED

On/Off – BMC is not ready

Blinking – BMC is ready

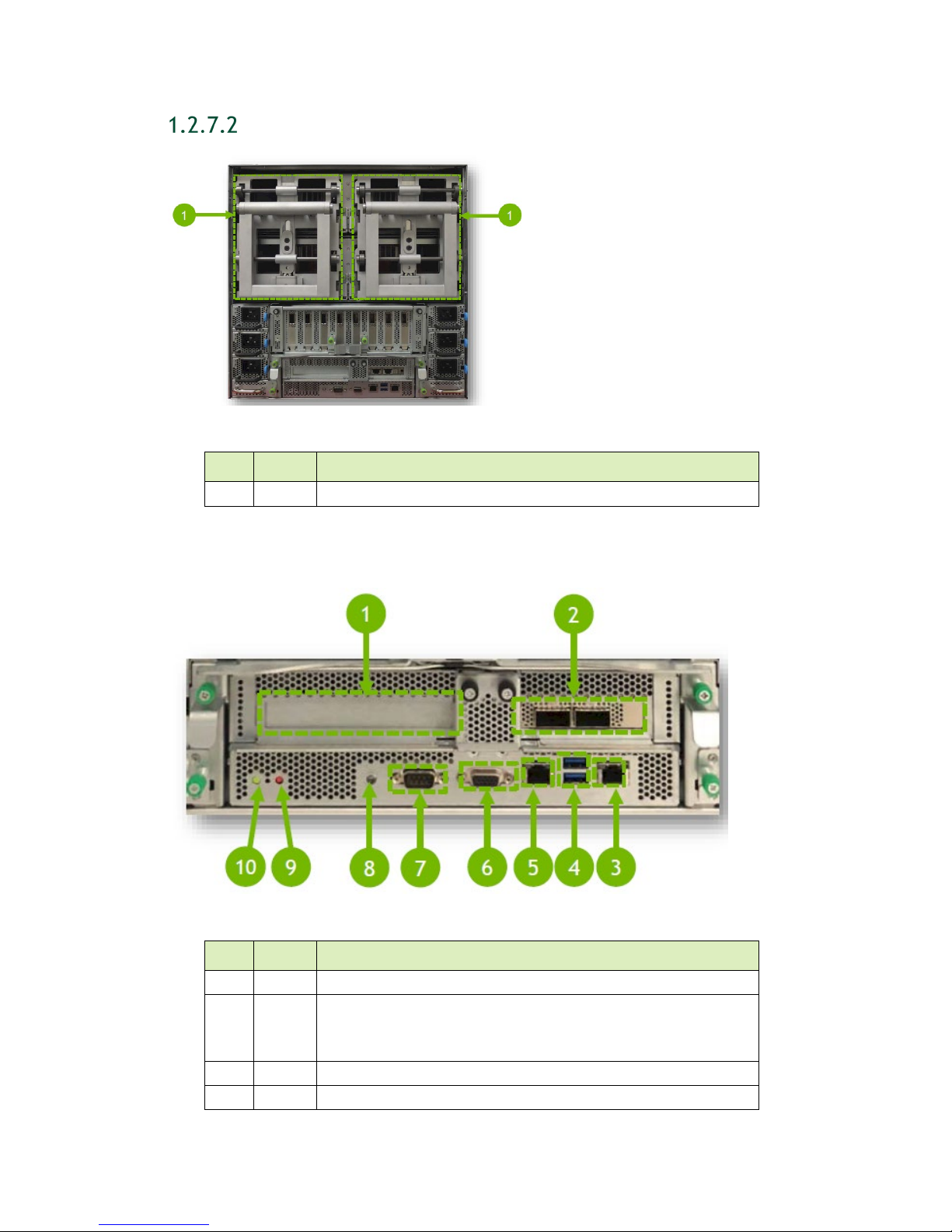

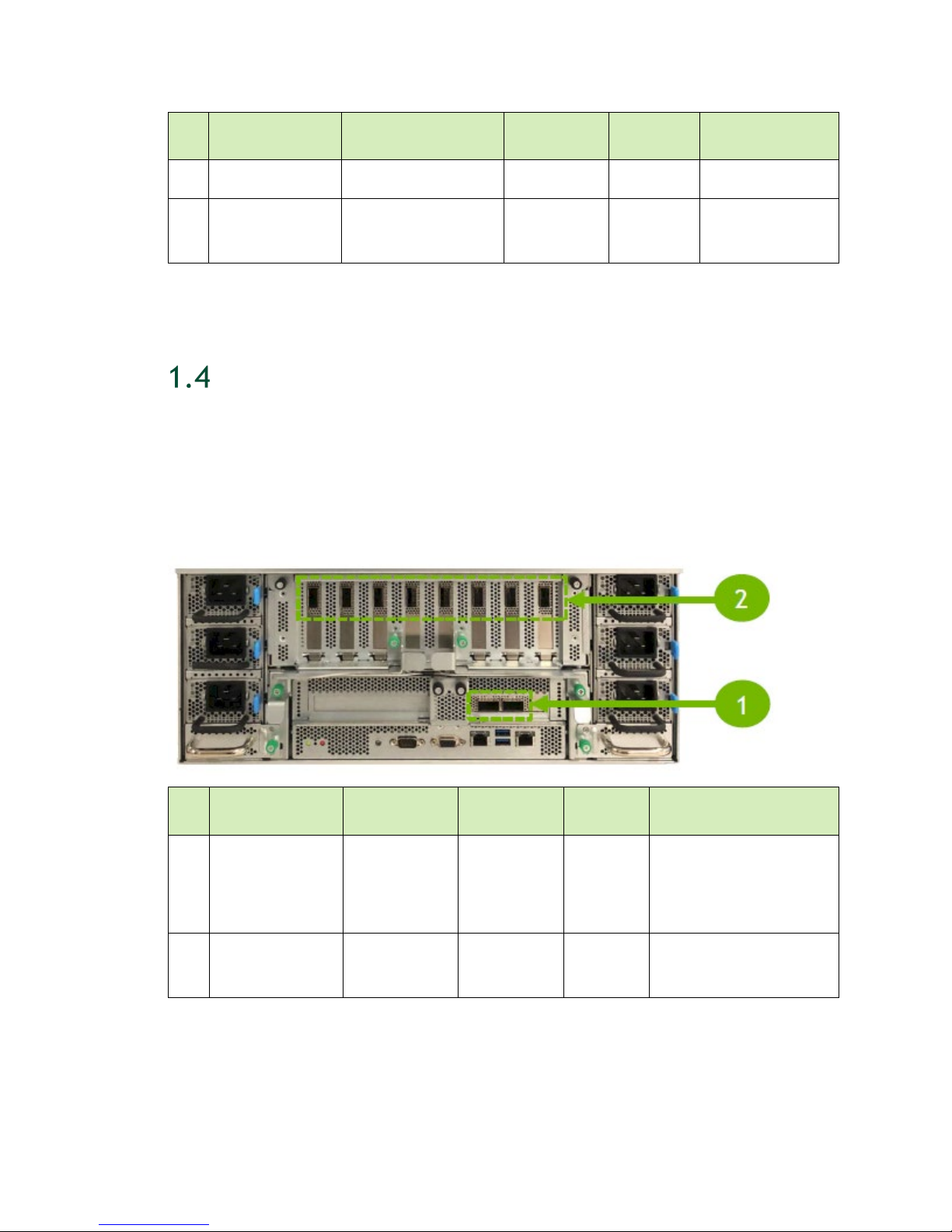

NETWORK PORTS

The following figure highlights the available network ports and their purpose.

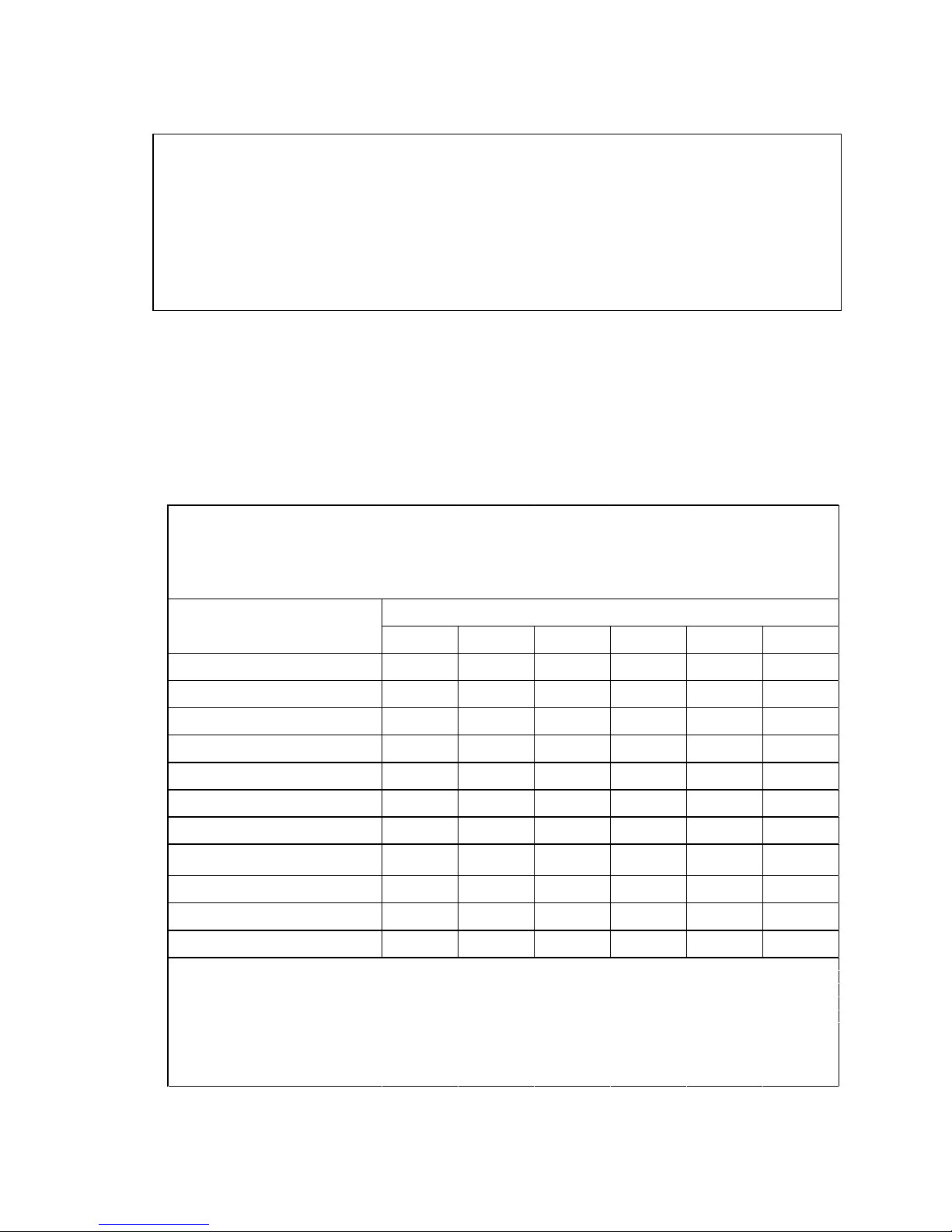

ID Connectivity Uses

BMC (remote

1

management and

monitoring)

Motherboard

2

RJ45

3 ConnectX-5 (LP)

Ethernet mode

Out-of-band

management

In-band

management,

administration

Storage (NFS)

System

communication

Number of

1

1

2

(Left):

enp134s0f0

Port Type Cable Type

100/1000

RJ45

RJ45

QSFP28

Ethernet

Cat5E/6 Ethernet

100/1000

Ethernet

Cat5E/6 Ethernet

100 GbE (QSFP28)

10/25/40 GbE

DGX-2 System User Guide

11

Page 12

Introduction to the NVIDIA DGX-2 System

Ports

(Right):

enp134s0f1

(QSFP28 to SFP28

Ports

Type

enp134s0f1

ID Connectivity Uses

4 ConnectX-5

InfiniBand mode

Ethernet mode

Clustering

Storage

Number of

8

Port Type Cable Type

QSFP28

or SFP+)

InfiniBand EDR

100

Ethernet 100GbE

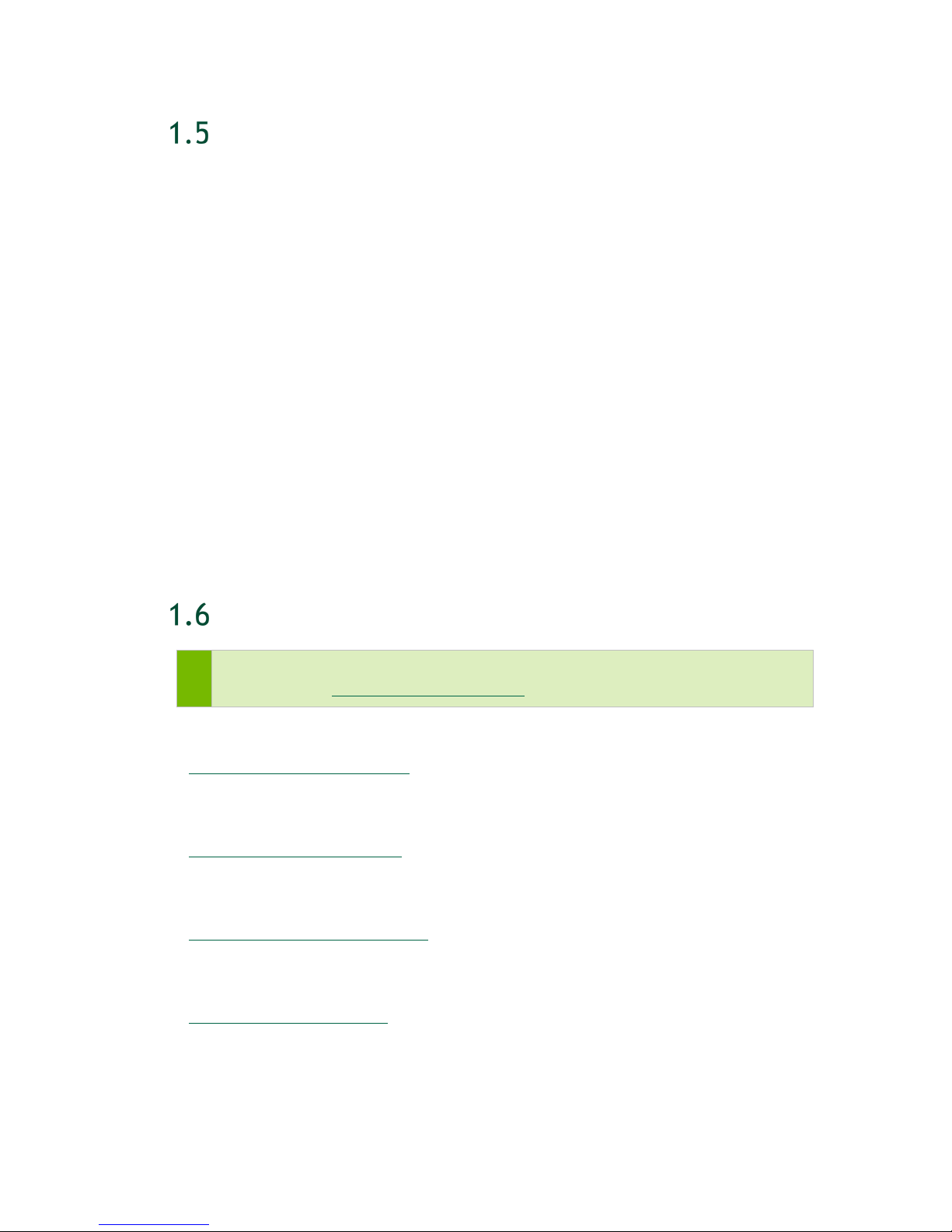

RECOMMENDED PORTS TO USE FOR EXTERNAL

STORAGE

For clarity, the following figure reiterates the recommended ports to use for external

storage. In most configurations, the storage ports (ID 1 below) should be used for

connecting to high-speed NAS storage, while the cluster ports (ID 2 below) should be

used for communication between nodes.

ID Connectivity Uses Number of

1 ConnectX-5 (LP) Storage (NFS) 2

2 ConnectX-5

InfiniBand mode

Ethernet mode

DGX-2 System User Guide

Port

(Left):

enp134s0f0

(Right):

Cluster

12

8

QSFP28 1/10/25/40/100 GbE

QSFP28

Cable Type

EDR InfiniBand or 100

GbE

Page 13

Introduction to the NVIDIA DGX-2 System

DGX OS SOFTWARE

The DGX-2 System comes installed with a base OS incorporating:

An Ubuntu server distribution with supporting packages

The NVIDIA driver

Docker CE

NVIDIA Container Runtime for Docker

The following health monitoring software:

● NVIDIA System Management (NVSM)

Provides active health monitoring and system alerts for NVIDIA DGX nodes in a data center. It also provides simple commands for checking the health of the DGX-2 System from the command line.

● Data Center GPU Management (DCGM)

This software enables node-wide administration of GPUs, and can be used for cluster and data-center level management.

ADDITIONAL DOCUMENTATION

Note: Some of the documentation listed below was not available at the time of publication. See https://docs.nvidia.com/dgx/ for the latest status.

DGX-2 System Service Manual

Instructions for servicing the DGX-2 System, including how to replace select components.

DGX OS Server Release Notes

Provides software component versions as well as a list of changes and known issues in the installed OS software.

NGC Container Registry for DGX

How to access the NGC container registry for using containerized deep learning GPU-accelerated applications on your DGX-2 System.

NVSM Software User Guide

Contains instructions for using the NVIDIA System Management software.

DCGM Software User Guide

Contains instructions for using the Data Center GPU Management software.


CUSTOMER SUPPORT

Contact NVIDIA Enterprise Support for assistance in reporting, troubleshooting, or diagnosing problems with your DGX-2 System. Also contact NVIDIA Enterprise Support for assistance in installing or moving the DGX-2 System. You can contact NVIDIA Enterprise Support in the following ways.

1.7.1 NVIDIA Enterprise Support Portal

The best way to file an incident is to log on to the NVIDIA Enterprise Support portal.

1.7.2 NVIDIA Enterprise Support Email

You can also send an email to enterprisesupport@nvidia.com.

1.7.3 NVIDIA Enterprise Support - Local Time Zone Phone Numbers

Visit the NVIDIA Enterprise Support page.


CONNECTING TO THE DGX-2 CONSOLE

Connect to the DGX-2 console using a direct connection, a remote connection through the BMC, or an SSH connection.

CAUTION: Connect directly to the DGX-2 console if the DGX-2 System is connected to a 172.17.xx.xx subnet.

DGX OS Server software installs Docker CE, which uses the 172.17.xx.xx subnet by default for Docker containers. If the DGX-2 System is on the same subnet, you will not be able to establish a network connection to the DGX-2 System.

Refer to the section Configuring Docker IP Addresses for instructions on how to change the default Docker network settings.
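Before choosing a connection method, it can help to check whether the address planned for the DGX-2 System falls inside Docker's default range. The following is a minimal sketch using Python's standard ipaddress module. The function name and the sample addresses are illustrative assumptions, and 172.17.0.0/16 is the default Docker subnet stated in the section Configuring Docker IP Addresses.

```python
import ipaddress

# Default Docker bridge subnet, as stated in this guide.
DOCKER_DEFAULT_SUBNET = ipaddress.ip_network("172.17.0.0/16")


def conflicts_with_docker_default(address: str) -> bool:
    """Return True if `address` lies inside Docker's default subnet."""
    return ipaddress.ip_address(address) in DOCKER_DEFAULT_SUBNET


# Hypothetical addresses, used only to illustrate the check.
for candidate in ("172.17.42.10", "172.18.0.1", "192.168.1.20"):
    verdict = "conflict" if conflicts_with_docker_default(candidate) else "ok"
    print(f"{candidate}: {verdict}")
```

If the check reports a conflict, use a direct console connection and change the Docker network settings before relying on remote access.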


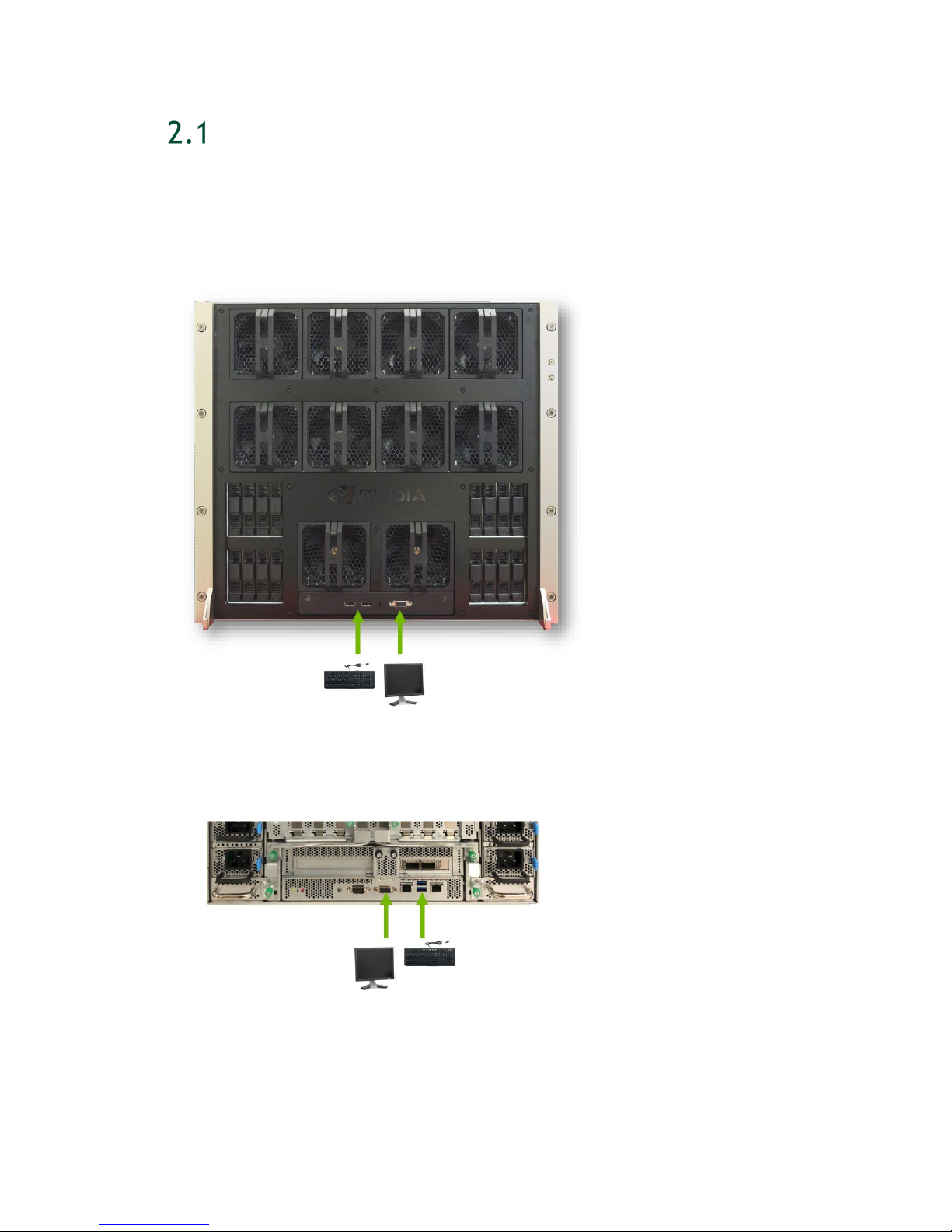

DIRECT CONNECTION

At either the front or the back of the DGX-2 System, connect a display to the VGA

connector, and a keyboard to any of the USB ports.

DGX-2 Server Front

DGX-2 Server Back


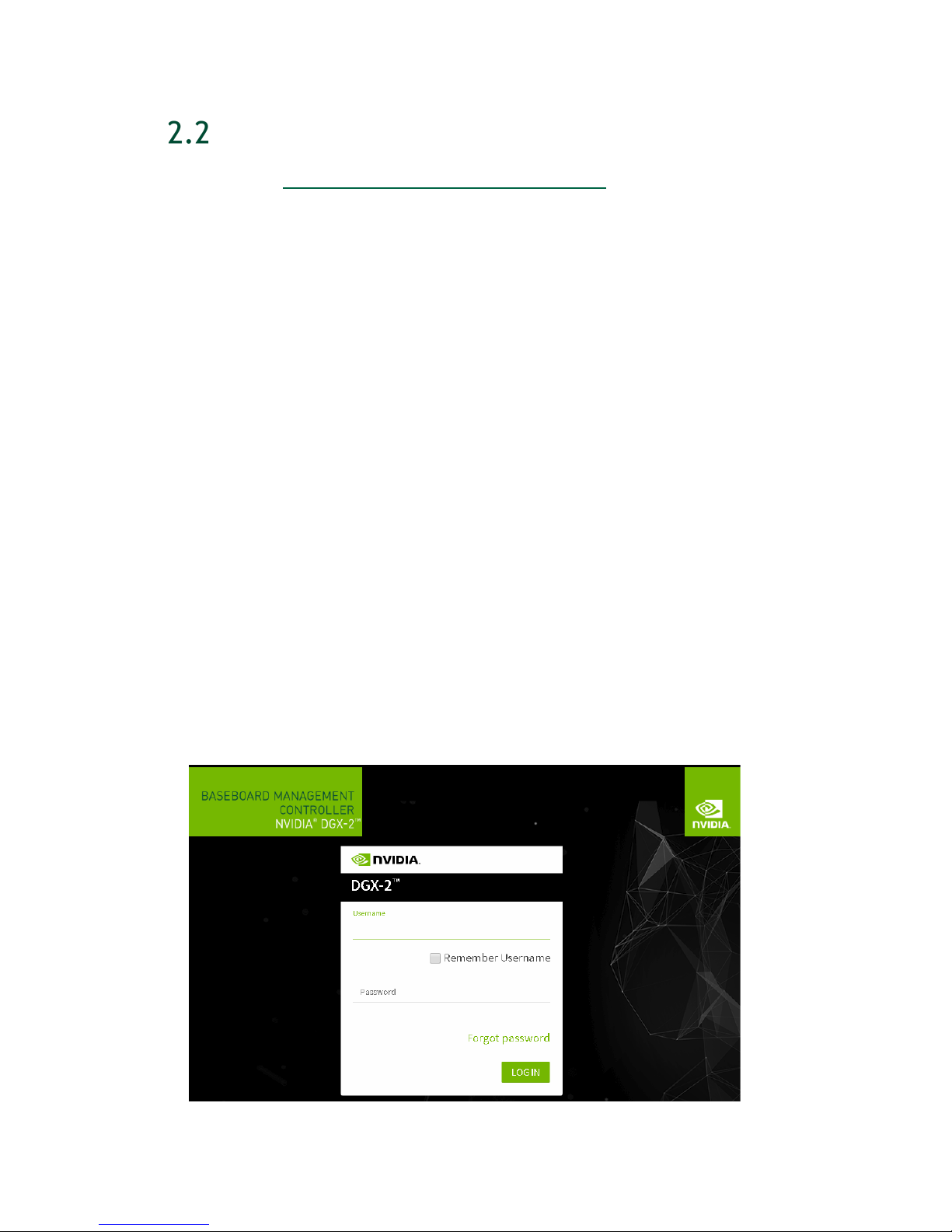

REMOTE CONNECTION THROUGH THE BMC

See the section Configuring Static IP Address for the BMC if you need to configure a static IP address for the BMC.

This method requires that you have the BMC login credentials. These credentials depend on the following conditions:

Prior to first-time boot: The default credentials are

Username: admin

Password: admin

After first boot setup: The administrative username that was set up during the initial boot is used for both the BMC username and the BMC password.

Username: <administrator-username>

Password: <administrator-username>

After first boot setup with changed password: The BMC password can be changed from “<administrator-username>”, in which case the credentials are

Username: <administrator-username>

Password: <new-bmc-password>

1. Make sure you have connected the BMC port on the DGX-2 System to your LAN.

2. Open a browser within your LAN and go to:

https://<ipmi-ip-address>/

Make sure popups are allowed for the BMC address.

3. Log in.


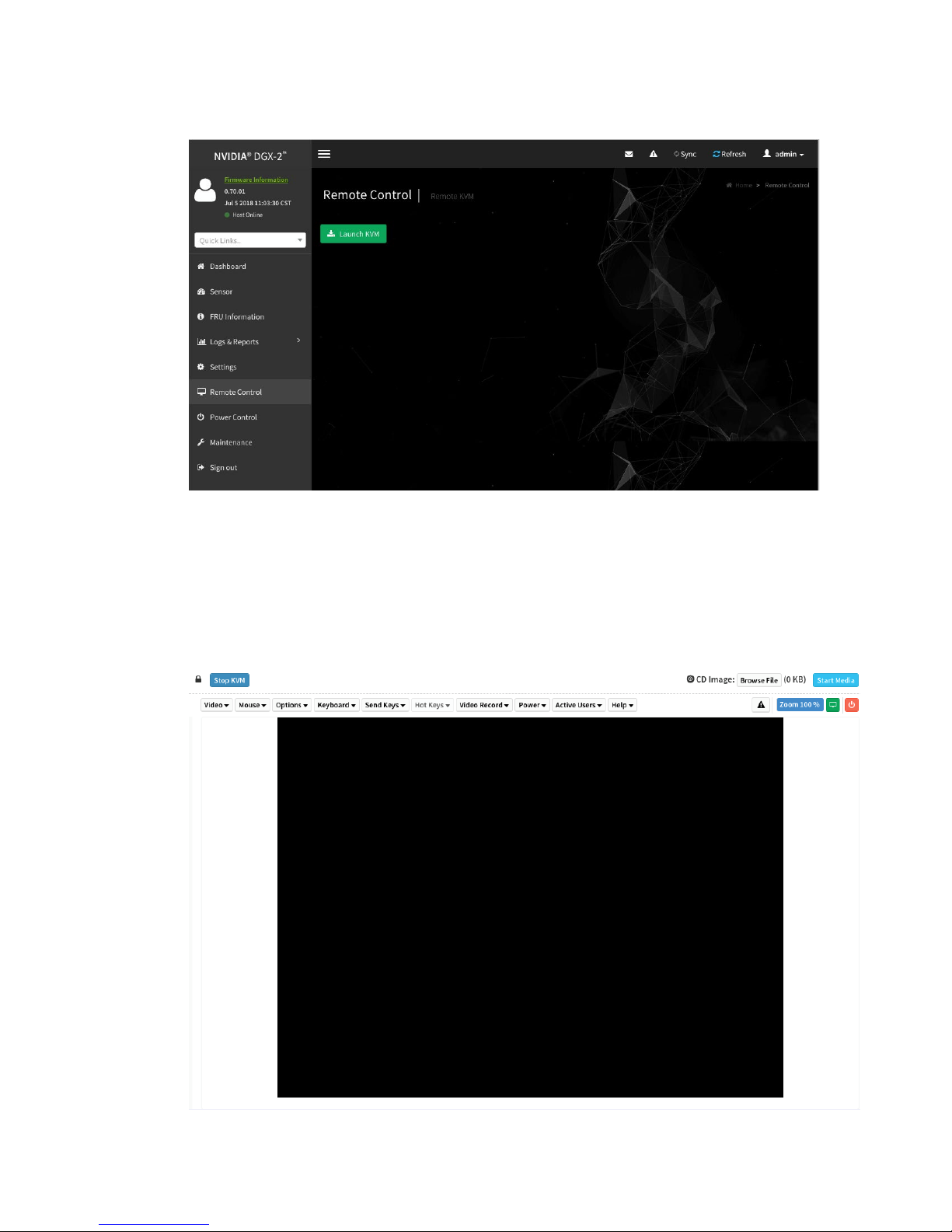

4. From the left-side navigation menu, click Remote Control.

The Remote Control page allows you to open a virtual Keyboard/Video/Mouse

(KVM) on the DGX-2 System, as if you were using a physical monitor and keyboard

connected to the front of the system.

5. Click Launch KVM.

The DGX-2 console appears in your browser.


SSH CONNECTION

You can also establish an SSH connection to the DGX-2 System through the network port. See the section Network Ports to identify the port to use, and the section Configuring Static IP Addresses for the Network Ports if you need to configure a static IP address.


SETTING UP THE DGX-2 SYSTEM

While NVIDIA service personnel will install the DGX-2 System at the site and perform

the first boot setup, the first boot setup instructions are provided here for reference and

to support any re-imaging of the server.

These instructions describe the setup process that occurs the first time the DGX-2 System

is powered on after delivery or after the server is re-imaged.

Be prepared to accept all End User License Agreements (EULAs) and to set up your

username and password.

1. Connect to the DGX-2 console as explained in Connecting to the DGX-2 Console.

2. Power on the DGX-2 System.

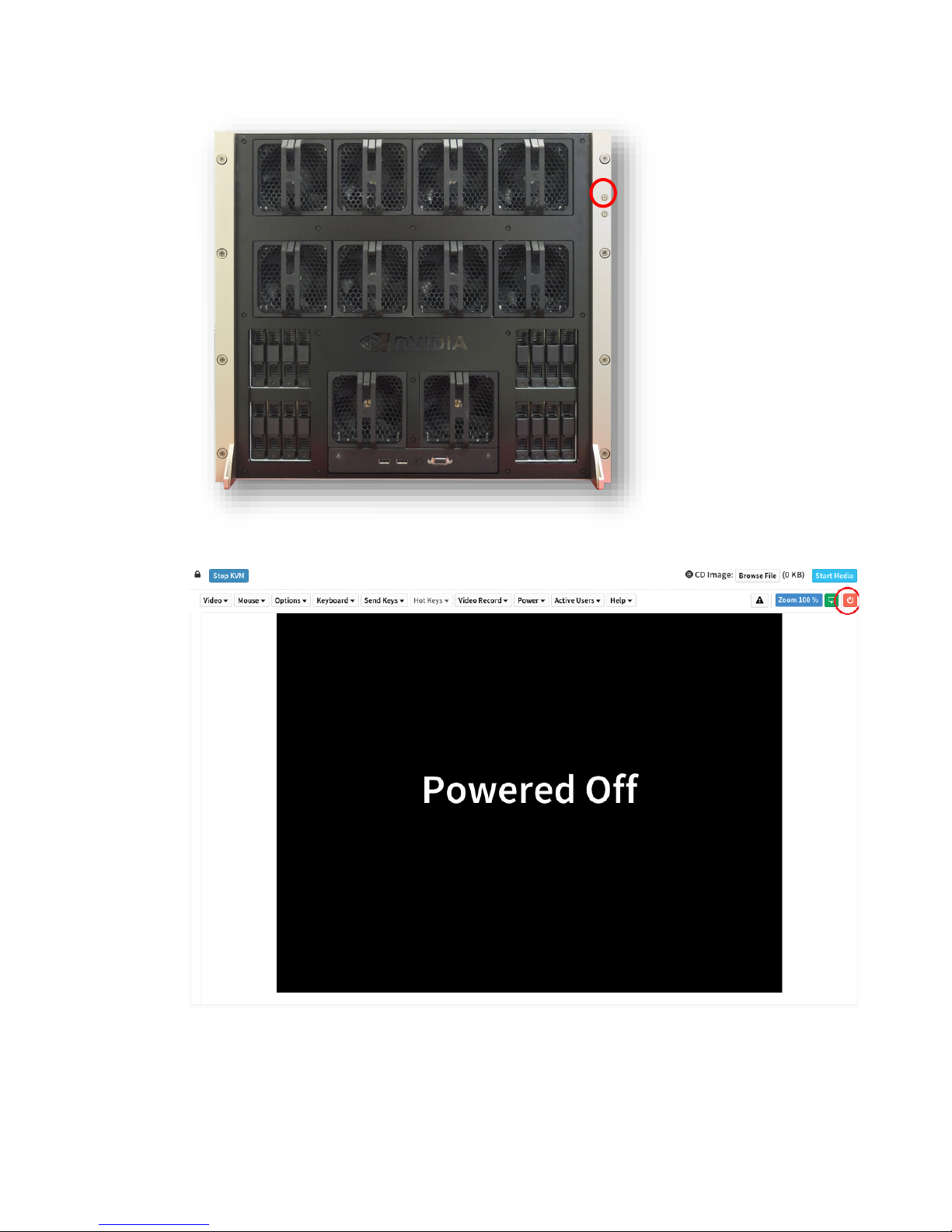

● Using the physical power button


● Using the Remote BMC

The system will take a few minutes to boot.

You are presented with end user license agreements (EULAs) for the NVIDIA

software.


3. Accept all EULAs to proceed with the installation.

The system boots and you are prompted to configure the DGX-2 software.

4. Perform the steps to configure the DGX-2 software.

● Select your language and location.

● Create a user account with your name, username, and password.

You will need these credentials to log in to the DGX-2 System as well as to log in to the BMC remotely. When logging in to the BMC, enter your username for both the User ID and the password. Be sure to create a unique BMC password at the first opportunity.

CAUTION: Once you create your login credentials, the default admin/admin login will no longer work.

Note: The BMC software will not accept "sysadmin" for a user name. If you create this user name for the system login, "sysadmin" will not be available for logging in to the BMC.

● Choose a primary network interface for the DGX-2 System; for example, enp6s0.

This should typically be the interface that you will use for subsequent system

configuration or in-band management.

Note: After you select the primary network interface, the system attempts to configure

the interface for DHCP and then asks you to enter a hostname for the system. If DHCP

is not available, you will have the option to configure the network manually. If you

need to configure a static IP address on a network interface connected to a DHCP

network, select Cancel at the Network configuration – Please enter the

hostname for the system screen. The system will then present a screen with the

option to configure the network manually.

● Choose a host name for the DGX-2 System.

After completing the setup process, the DGX-2 System reboots automatically and

then presents the login prompt.

5. Update the software to ensure you are running the latest version.

Updating the software ensures your DGX-2 System contains important updates, including security updates. The Ubuntu Security Notice site (https://usn.ubuntu.com/) lists known Common Vulnerabilities and Exposures (CVEs), including those that can be resolved by updating the DGX OS software.

a) Update the list of available packages.

$ sudo apt update

b) Upgrade to the latest version.

$ sudo apt full-upgrade

Note: RAID 1 Rebuild in Progress - When the system is booted after restoring the

image, software RAID begins the process of rebuilding the RAID 1 array - creating a

mirror of (or resynchronizing) the drive containing the software. System performance

may be affected during the RAID 1 rebuild process, which can take an hour to

complete.

During this time, the command “nvsm show health” will report a warning that the RAID

volume is resyncing.

You can check the status of the RAID 1 rebuild process using “sudo mdadm -D

/dev/md0”.
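When the rebuild needs to be checked from a script, the "State" line that mdadm prints in its detail report can be parsed. The sketch below is illustrative only: the sample text is a hand-written excerpt rather than captured output from a DGX-2 System, and the function names and the set of state keywords treated as "rebuilding" are assumptions.

```python
import re
from typing import List

# State keywords treated here as "rebuild in progress" (an assumption).
REBUILD_STATES = ("resyncing", "recovering")


def raid_states(mdadm_detail: str) -> List[str]:
    """Return the comma-separated values from the first 'State :' line."""
    for line in mdadm_detail.splitlines():
        match = re.match(r"\s*State\s*:\s*(.*)", line)
        if match:
            return [s.strip() for s in match.group(1).split(",") if s.strip()]
    return []


def is_rebuilding(mdadm_detail: str) -> bool:
    """True if the array reports a resync or recovery in progress."""
    return any(state in REBUILD_STATES for state in raid_states(mdadm_detail))


# Hand-written sample resembling `sudo mdadm -D /dev/md0` output.
SAMPLE = """\
/dev/md0:
        Raid Level : raid1
             State : clean, resyncing
"""

print(raid_states(SAMPLE))
print(is_rebuilding(SAMPLE))
```

In practice the text passed to these functions would be the captured output of the mdadm command shown in the note above.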


QUICK START INSTRUCTIONS

This chapter provides basic requirements and instructions for using the DGX-2 System, including how to perform a preliminary health check and how to prepare for running containers. Be sure to visit the DGX documentation website at https://docs.nvidia.com/dgx/ for additional product documentation.

REGISTRATION

Be sure to register your DGX-2 System with NVIDIA as soon as you receive your

purchase confirmation e-mail. Registration enables your hardware warranty and allows

you to set up an NVIDIA GPU Cloud for DGX account.

To register your DGX-2 System, you will need information provided in your purchase

confirmation e-mail. If you do not have the information, send an e-mail to NVIDIA

Enterprise Support at enterprisesupport@nvidia.com.

1. From a browser, go to the NVIDIA DGX Product Registration page (https://www.nvidia.com/object/dgx-product-registration).

2. Enter all required information and then click SUBMIT to complete the registration process and receive all warranty entitlements and DGX-2 support services entitlements.


INSTALLATION AND CONFIGURATION

Your DGX-2 System will be installed by NVIDIA service personnel or an authorized

installation partner.

Before installation, make sure you have completed the Site Survey and have given all

relevant site information to your Installation Partner.

OBTAINING AN NVIDIA GPU CLOUD ACCOUNT

NVIDIA GPU Cloud (NGC) provides simple access to GPU-optimized software tools for

deep learning and high-performance computing (HPC) that take full advantage of

NVIDIA GPUs. An NGC account grants you access to these tools as well as the ability to

set up a private registry to manage your customized tools.

Work with NVIDIA Enterprise Support to set up an NGC enterprise account if you are the organization administrator for your DGX-2 purchase. See the NGC Container Registry for DGX User Guide (https://docs.nvidia.com/dgx/ngc-registry-for-dgx-user-guide/) for detailed instructions on getting an NGC enterprise account.

GETTING YOUR NGC API KEY AND SELECTING CONTAINER TAGS FOR THE VERIFICATION EXAMPLES

Before using the DGX-2 System to run containers from the NGC container registry, you

must visit the NGC web site to obtain your NGC API Key and to determine which

containers are available to run.

4.4.1 Getting Your NGC API Key

Your NGC API Key authenticates your access to the NGC container registry with its

NVIDIA tuned, tested, certified, and maintained containers for the top deep learning

frameworks.

You only need to generate an API Key once. Should you lose your API Key, you can

generate a new one from the NGC website. When you generate a new API Key, the old

one is invalidated.

Perform the following instructions from any system with internet access and a browser.


1. Log in to the NGC website (https://ngc.nvidia.com).

2. Click Get API Key from the Registry page.

3. Click Generate API Key from the Configuration->API Key page.

4. Click Confirm at the Generate a New API Key dialog.

Your NGC API Key is displayed at the bottom of the Configuration->API Key page

with examples of how to use it.

NGC does not save your key, so store it in a secure place. You can copy your API Key

to the clipboard by clicking the copy icon to the right of the API key.

4.4.2 Selecting CUDA Container Tags for Verification Examples

While you are logged in to the web site, select a CUDA container tag to use for the

verification procedure in the next section.

1. Select Registry from the left side menu.

2. Select a CUDA container tag.

a) Click the cuda repository (under the nvidia registry space).

b) In the Tag section, scroll down to find the latest ‘-runtime’ version. For example, ‘10.0-runtime’.

Note this tag, as you will need to specify it when running the CUDA container in the next section.

VERIFYING BASIC FUNCTIONALITY

This section walks you through the steps of performing a health check on the DGX-2

System, and verifying the Docker and NVIDIA driver installation.

1. Establish an SSH connection to the DGX-2 System.

2. Run a basic system check.

sudo nvsm show health

Verify that the output summary shows that all checks are Healthy and that the overall

system status is Healthy.

3. Verify that Docker is installed by viewing the installed Docker version.

sudo docker --version

This should return the version as “Docker version 18.03-ce”, where the actual

version may differ depending on the specific release of the DGX OS Server software.


4. Verify connection to the NVIDIA repository and that the NVIDIA Driver is installed.

sudo docker container run --runtime=nvidia --rm nvcr.io/nvidia/cuda:<cuda-tag-obtained-from-previous-section> nvidia-smi

Docker pulls the nvidia/cuda container image layer by layer, then runs nvidia-smi. When completed, the output should show the NVIDIA Driver version and a description of each installed GPU.

See the NVIDIA Containers and Deep Learning Frameworks User Guide at https://docs.nvidia.com/deeplearning/dgx/user-guide/index.html for further instructions, including an example of logging into the NGC container registry and launching a deep learning container.
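The verification command in step 4 takes the tag noted in the previous section. As a minimal sketch, the helper below assembles that command from a tag so it can be reviewed or logged before being run. The function name and the tag validation rules are illustrative assumptions, and '10.0-runtime' is the example tag mentioned earlier in this chapter.

```python
import shlex
from typing import List

REGISTRY_IMAGE = "nvcr.io/nvidia/cuda"


def verification_command(cuda_tag: str) -> List[str]:
    """Build the argument list for the CUDA container verification run."""
    # Reject empty tags and characters that cannot appear in an image tag.
    if not cuda_tag or ":" in cuda_tag or any(ch.isspace() for ch in cuda_tag):
        raise ValueError(f"invalid container tag: {cuda_tag!r}")
    return [
        "sudo", "docker", "container", "run",
        "--runtime=nvidia", "--rm",
        f"{REGISTRY_IMAGE}:{cuda_tag}",
        "nvidia-smi",
    ]


# Print the command as a single shell-safe line.
print(shlex.join(verification_command("10.0-runtime")))
```

Building the command as a list keeps each argument separate, which avoids quoting problems if it is later passed to a process launcher.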


NETWORK CONFIGURATION

This chapter describes key network considerations and instructions for the DGX-2

System.

BMC SECURITY

NVIDIA recommends that customers follow best security practices for BMC

management (IPMI port). These include, but are not limited to, such measures as:

Restricting the DGX-2 IPMI port to an isolated, dedicated, management network

Using a separate, firewalled subnet

Configuring a separate VLAN for BMC traffic if a dedicated network is not available

CONFIGURING NETWORK PROXIES

If your network requires use of a proxy server, you will need to set up configuration

files to ensure the DGX-2 System communicates through the proxy.

5.2.1 For the OS and Most Applications

Edit the file /etc/environment and add the following proxy addresses to the file,

below the PATH line.

http_proxy="http://<username>:<password>@<host>:<port>/"
ftp_proxy="ftp://<username>:<password>@<host>:<port>/"
https_proxy="https://<username>:<password>@<host>:<port>/"
no_proxy="localhost,127.0.0.1,localaddress,.localdomain.com"
HTTP_PROXY="http://<username>:<password>@<host>:<port>/"
FTP_PROXY="ftp://<username>:<password>@<host>:<port>/"
HTTPS_PROXY="https://<username>:<password>@<host>:<port>/"
NO_PROXY="localhost,127.0.0.1,localaddress,.localdomain.com"

Where username and password are optional.

Example:

http_proxy="http://myproxy.server.com:8080/"
ftp_proxy="ftp://myproxy.server.com:8080/"
https_proxy="https://myproxy.server.com:8080/"
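Because the same proxy address is repeated in lowercase and uppercase variables, generating the lines can reduce copy-and-paste mistakes. The following is a minimal sketch, not an NVIDIA utility. The function names are illustrative, and percent-encoding of the credentials is an added assumption for passwords that contain special characters.

```python
from typing import List, Optional
from urllib.parse import quote

DEFAULT_NO_PROXY = "localhost,127.0.0.1,localaddress,.localdomain.com"


def proxy_url(scheme: str, host: str, port: int,
              username: Optional[str] = None,
              password: Optional[str] = None) -> str:
    """Build one proxy URL; the credentials are optional."""
    credentials = ""
    if username:
        credentials = quote(username, safe="")
        if password:
            credentials += ":" + quote(password, safe="")
        credentials += "@"
    return f"{scheme}://{credentials}{host}:{port}/"


def environment_lines(host: str, port: int,
                      username: Optional[str] = None,
                      password: Optional[str] = None,
                      no_proxy: str = DEFAULT_NO_PROXY) -> List[str]:
    """Return the lowercase and uppercase lines for /etc/environment."""
    values = {
        "http_proxy": proxy_url("http", host, port, username, password),
        "ftp_proxy": proxy_url("ftp", host, port, username, password),
        "https_proxy": proxy_url("https", host, port, username, password),
        "no_proxy": no_proxy,
    }
    lower = [f'{name}="{value}"' for name, value in values.items()]
    upper = [f'{name.upper()}="{value}"' for name, value in values.items()]
    return lower + upper


print("\n".join(environment_lines("myproxy.server.com", 8080)))
```

The printed lines can then be pasted below the PATH line in /etc/environment.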

5.2.2 For apt

Edit (or create) a proxy config file /etc/apt/apt.conf.d/myproxy and include the

following lines

Acquire::http::proxy "http://<username>:<password>@<host>:<port>/";

Acquire::ftp::proxy "ftp://<username>:<password>@<host>:<port>/";

Acquire::https::proxy "https://<username>:<password>@<host>:<port>/";

Where username and password are optional.

Example:

Acquire::http::proxy "http://myproxy.server.com:8080/";

Acquire::ftp::proxy "ftp://myproxy.server.com:8080/";

Acquire::https::proxy "https://myproxy.server.com:8080/";

5.2.3 For Docker

Docker uses environment variables to access the NGC container registry through a proxy. For best practice recommendations on configuring proxy environment variables for Docker, see https://docs.docker.com/engine/admin/systemd/#http-proxy.
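The approach described in the Docker documentation is a systemd drop-in file that sets the proxy variables for the Docker service. The sketch below generates the text of such a file. The function name, the proxy addresses, and the suggested file location are illustrative assumptions; confirm the details against the Docker documentation linked above.

```python
def docker_proxy_dropin(http_proxy: str, https_proxy: str,
                        no_proxy: str = "localhost,127.0.0.1") -> str:
    """Return the contents of a systemd drop-in that sets Docker's proxy."""
    lines = [
        "[Service]",
        f'Environment="HTTP_PROXY={http_proxy}"',
        f'Environment="HTTPS_PROXY={https_proxy}"',
        f'Environment="NO_PROXY={no_proxy}"',
    ]
    return "\n".join(lines) + "\n"


# Hypothetical proxy address, reusing the example host from this chapter.
print(docker_proxy_dropin("http://myproxy.server.com:8080/",
                          "http://myproxy.server.com:8080/"), end="")
```

A file such as /etc/systemd/system/docker.service.d/http-proxy.conf (an example name) would hold this text. The systemd daemon then needs to be reloaded and Docker restarted for the setting to take effect.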

CONFIGURING DOCKER IP ADDRESSES

To ensure that the DGX-2 System can access the network interfaces for Docker

containers, Docker should be configured to use a subnet distinct from other network

resources used by the DGX-2 System.


By default, Docker uses the 172.17.0.0/16 subnet. Consult your network administrator

to find out which IP addresses are used by your network.

If your network does not

conflict with the default Docker IP address range, then no changes are needed and

you can skip this section.

However, if your network uses the addresses within this range for the DGX-2 System,

you should change the default Docker network addresses.
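The conflict check described above can be sketched in a few lines of shell. This is a minimal illustration, not an NVIDIA tool: the helper names ip_to_int and in_cidr and the sample address 10.10.10.2 are assumptions, and the check tests a single address against a CIDR block rather than comparing whole ranges.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on "."
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies inside the CIDR block $2 (for example 172.17.0.0/16).
in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# 10.10.10.2 is only an illustrative address for a DGX-2 network interface.
if in_cidr 10.10.10.2 172.17.0.0/16; then
  echo "conflict with the default Docker subnet"
else
  echo "no conflict with the default Docker subnet"
fi
```

Run the check once for each address that your network administrator reports as in use by the DGX-2 System.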

You can change the default Docker network addresses by modifying either the /etc/docker/daemon.json file or the /etc/systemd/system/docker.service.d/docker-override.conf file. These instructions provide an example of modifying /etc/systemd/system/docker.service.d/docker-override.conf to override the default Docker network addresses.

1. Open the docker-override.conf file for editing.

$ sudo vi /etc/systemd/system/docker.service.d/docker-override.conf

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd -H fd:// -s overlay2

LimitMEMLOCK=infinity

LimitSTACK=67108864

2. Make the changes indicated in bold below, setting the correct bridge IP address and

IP address ranges for your network. Consult your IT administrator for the correct

addresses.

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd -H fd:// -s overlay2 --bip=192.168.127.1/24 --fixed-cidr=192.168.127.128/25

LimitMEMLOCK=infinity

LimitSTACK=67108864

Save and close the /etc/systemd/system/docker.service.d/docker-override.conf file when done.

3. Reload the systemctl daemon.

$ sudo systemctl daemon-reload

4. Restart Docker.

$ sudo systemctl restart docker


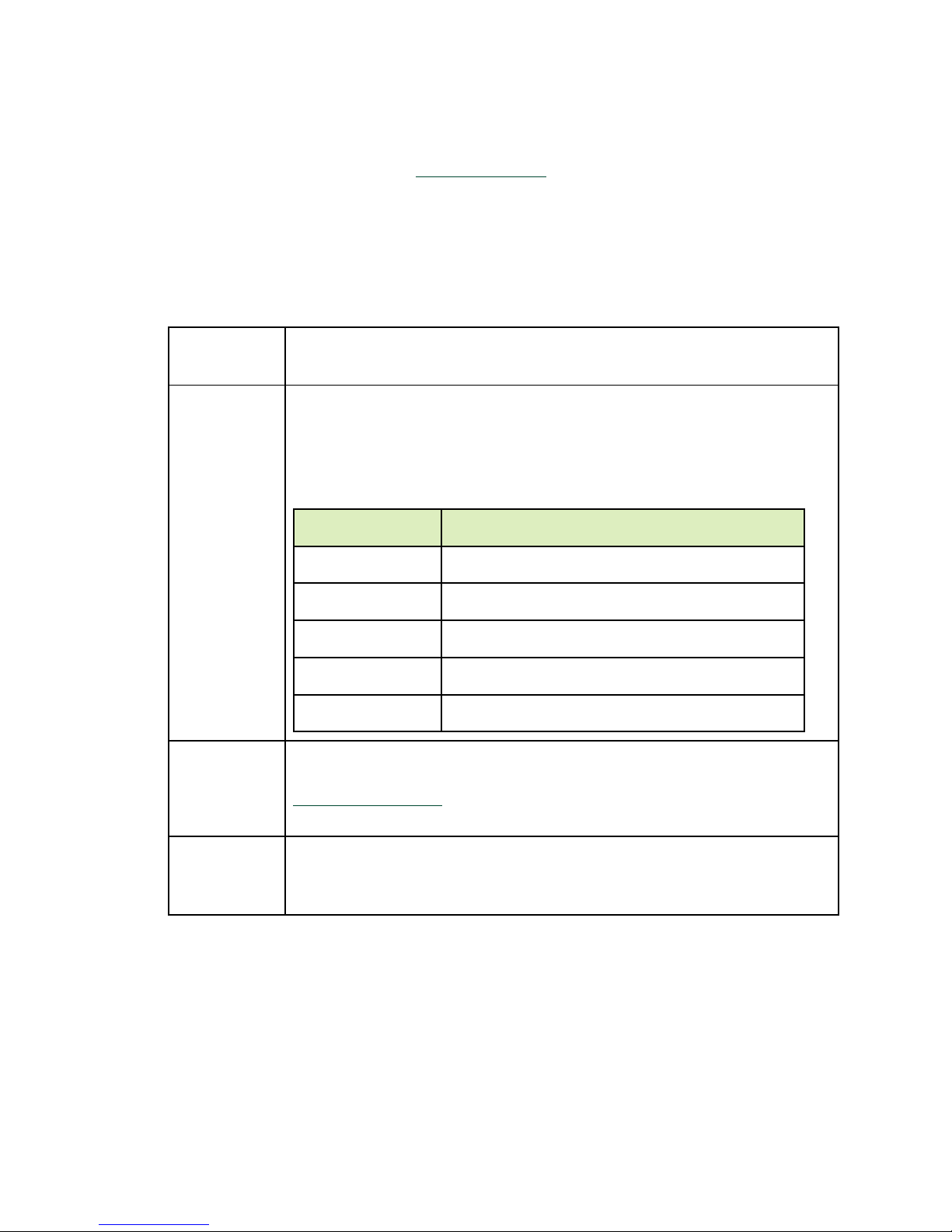

OPENING PORTS

Make sure that the ports listed in the following table are open and available on your firewall to the DGX-2 System:

| Port (Protocol) | Direction | Use |
|---|---|---|
| 22 (TCP) | Inbound | SSH |
| 53 (UDP) | Outbound | DNS |
| 80 (TCP) | Outbound | HTTP, package updates |
| 443 (TCP) | Outbound | For internet (HTTP/HTTPS) connection to NVIDIA GPU Cloud. If port 443 is proxied through a corporate firewall, then WebSocket protocol traffic must be supported. |
| 443 (TCP) | Inbound | For BMC web services, remote console services, and cd-media service. If port 443 is proxied through a corporate firewall, then WebSocket protocol traffic must be supported. |

CONNECTIVITY REQUIREMENTS

To run NVIDIA NGC containers from the NGC container registry, your network must

be able to access the following URLs:

http://archive.ubuntu.com/ubuntu/

http://security.ubuntu.com/ubuntu/

http://international.download.nvidia.com/dgx/repos/

https://apt.dockerproject.org/repo/

https://download.docker.com/linux/ubuntu/

https://nvcr.io/

To verify connection to nvcr.io, run

$ wget https://nvcr.io/v2

You should see connecting verification followed by a 401 error.

--2018-08-01 19:42:58-- https://nvcr.io/v2

Resolving nvcr.io (nvcr.io)... 52.8.131.152, 52.9.8.8

Connecting to nvcr.io (nvcr.io)|52.8.131.152|:443... connected.

HTTP request sent, awaiting response... 401 Unauthorized
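The same check can be scripted. The sketch below swaps wget for curl, which can print only the HTTP status code, and treats 401 as proof that the registry answered. The function names and the 10-second timeout are assumptions made for this illustration.

```shell
#!/bin/sh
# Interpret the HTTP status returned by https://nvcr.io/v2.
# 401 means the registry answered and merely wants credentials.
interpret_nvcr_status() {
  case "$1" in
    401) echo "reachable" ;;
    000) echo "unreachable" ;;
    *)   echo "unexpected status $1" ;;
  esac
}

# Fetch only the status code; curl reports 000 when it received no response.
check_nvcr() {
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 https://nvcr.io/v2)
  interpret_nvcr_status "$code"
}
```

On the DGX-2 System, calling check_nvcr should print reachable when the connection works, including through a correctly configured proxy.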


CONFIGURING STATIC IP ADDRESS FOR THE

BMC

This section explains how to set a static IP address for the BMC. You will need to do this

if your network does not support DHCP.

Use one of the methods described in the following sections:

Configuring a BMC Static IP Address Using ipmitool

Configuring a BMC Static IP Address Using the System BIOS

Configuring a BMC Static IP Address Using the BMC Dashboard

5.6.1 Configuring a BMC Static IP Address Using

ipmitool

This section describes how to set a static IP address for the BMC from the Ubuntu

command line.

Note: If you cannot access the DGX-2 System remotely, then connect a display

(1440x900 or lower resolution) and keyboard directly to the DGX-2 System.

To view the current settings, enter the following command.

$ sudo ipmitool lan print 1

To set a static IP address for the BMC, do the following.

1. Set the IP address source to static.

$ sudo ipmitool lan set 1 ipsrc static

2. Set the appropriate address information.

● To set the IP address (“Station IP address” in the BIOS settings), enter the

following and replace the italicized text with your information.

$ sudo ipmitool lan set 1 ipaddr 10.31.241.190

● To set the subnet mask, enter the following and replace the italicized text with

your information.

$ sudo ipmitool lan set 1 netmask 255.255.255.0


● To set the default gateway IP (“Router IP address” in the BIOS settings), enter the

following and replace the italicized text with your information.

$ sudo ipmitool lan set 1 defgw ipaddr 10.31.241.1
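The commands from steps 1 and 2 can be collected in one place. The following sketch only prints them so that the addresses can be reviewed first. The function name bmc_static_ip_commands is hypothetical, and the addresses are the example values used in this section.

```shell
#!/bin/sh
# Print (do not run) the ipmitool commands for a static BMC address.
# Arguments: $1 = IP address, $2 = subnet mask, $3 = default gateway.
bmc_static_ip_commands() {
  echo "sudo ipmitool lan set 1 ipsrc static"
  echo "sudo ipmitool lan set 1 ipaddr $1"
  echo "sudo ipmitool lan set 1 netmask $2"
  echo "sudo ipmitool lan set 1 defgw ipaddr $3"
}

bmc_static_ip_commands 10.31.241.190 255.255.255.0 10.31.241.1
```

Once the printed commands look correct, run them one at a time and confirm the result with sudo ipmitool lan print 1.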

5.6.2 Configuring a BMC Static IP Address Using the

System BIOS

This section describes how to set a static IP address for the BMC when you cannot access

the DGX-2 System remotely. This process involves setting the BMC IP address during

system boot.

1. Connect a keyboard and display (1440 x 900 maximum resolution) to the DGX-2

System, then turn on the DGX-2 System.

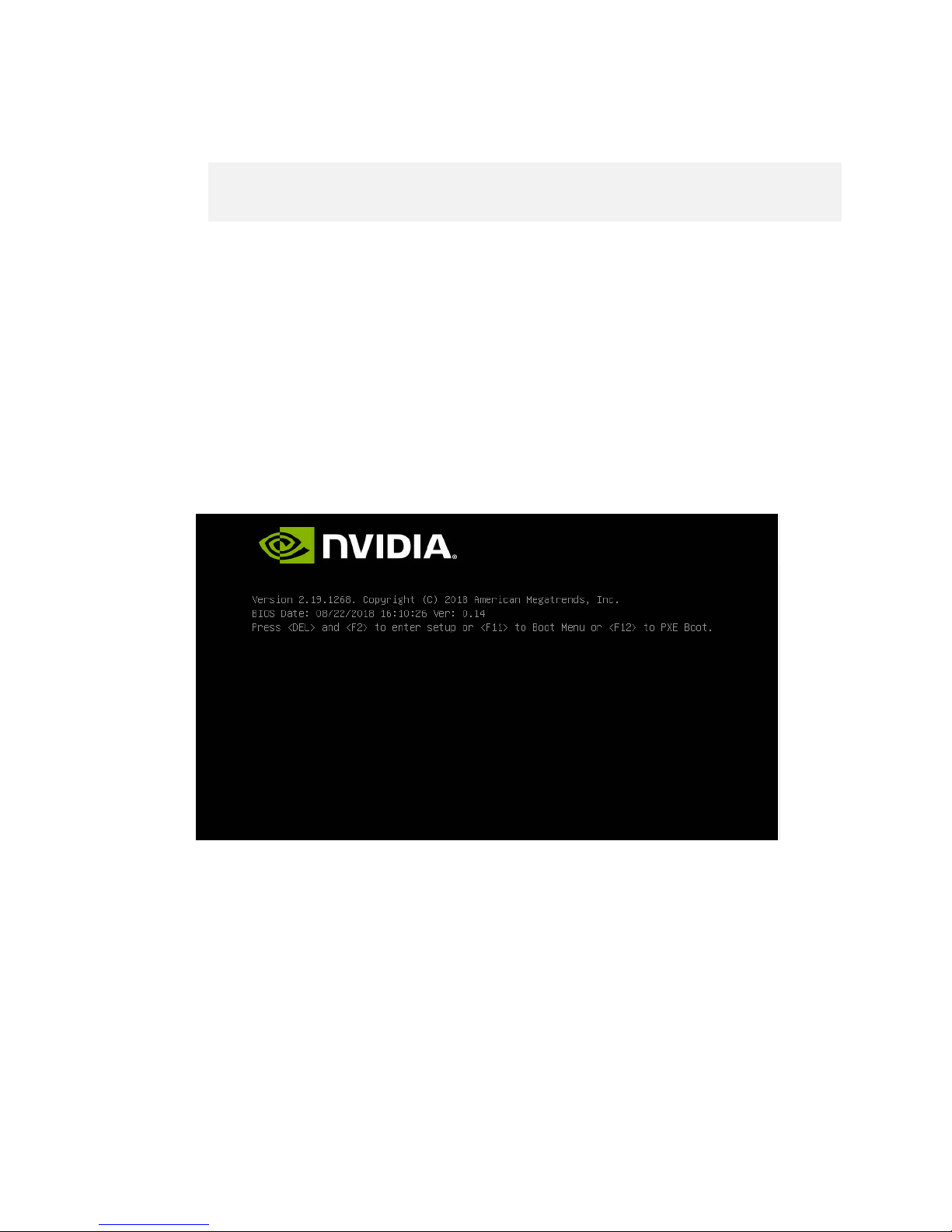

2. When you see the SBIOS version screen, press Del or F2 to enter the BIOS Setup

Utility screen.

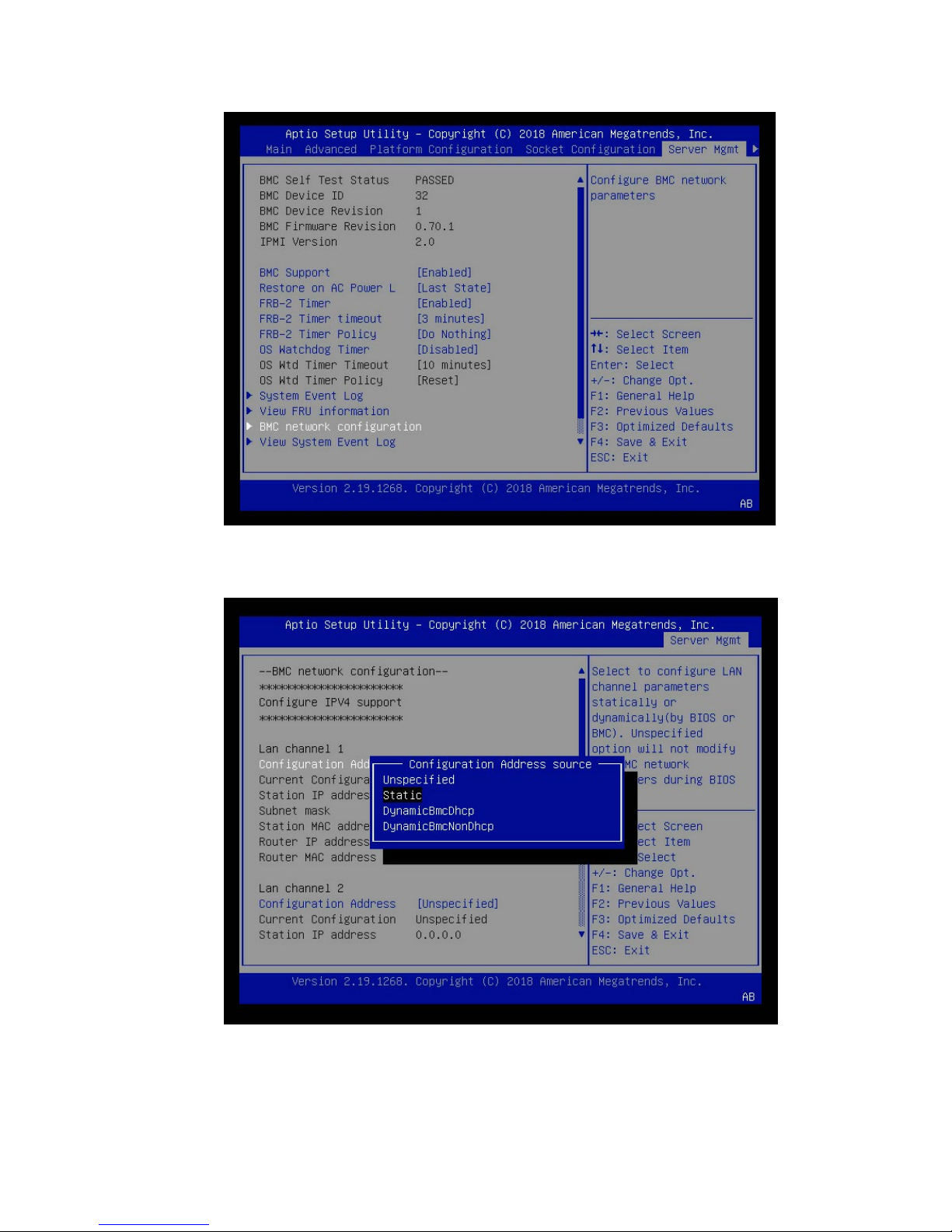

3. At the BIOS Setup Utility screen, navigate to the Server Mgmt tab on the top menu,

then scroll to BMC network configuration and press Enter.


4. Scroll to Configuration Address Source and press Enter, then at the Configuration

Address source pop-up, select Static and then press Enter.

5. Set the addresses for the Station IP address, Subnet mask, and Router IP address as

needed by performing the following for each:


a) Scroll to the specific item and press Enter.

b) Enter the appropriate information at the pop-up, then press Enter.

6. When finished making all your changes, press F10 to save and exit.

You can now access the BMC over the network.

5.6.3 Configuring a BMC Static IP Address Using the

BMC Dashboard

These instructions describe IPv4 addressing, but IPv6 addressing to the BMC can be

configured if needed through the corresponding IPv6 fields.

1. Log into the BMC, then click Settings->Network Settings->Network IP Settings.

2. Clear the Enable IPv4 DHCP check box, then enter the appropriate values for the IPv4 Address, IPv4 Subnet, and IPv4 Gateway fields.


3. Click Save when done.

CONFIGURING STATIC IP ADDRESSES FOR THE

NETWORK PORTS

During the initial boot setup process for the DGX-2 System, you had an opportunity to

configure static IP addresses for a single network interface. If you did not set this up at

that time, you can configure the static IP addresses from the Ubuntu command line

using the following instructions.

Note: If you cannot access the DGX-2 System remotely, then connect a display

(1440x900 or lower resolution) and keyboard directly to the DGX-2 System.

1. Determine the port designation that you want to configure, based on the physical

ethernet port that you have connected to your network.

| Ethernet Port Position | <port-designation> |
|---|---|
| 1 | enp134s0f0 |
| 2 | enp134s0f1 |
| 3 | enp6s0 |

2. Edit the network configuration yaml file.

$ sudo vi /etc/netplan/01-netcfg.yaml

network:
  version: 2
  renderer: networkd
  ethernets:
    <port-designation>:
      dhcp4: no
      dhcp6: no
      addresses: [10.10.10.2/24]
      gateway4: 10.10.10.1
      nameservers:
        search: [<mydomain>, <other-domain>]
        addresses: [10.10.10.1, 1.1.1.1]

Consult your network administrator for the appropriate information for the items in

bold, such as network, gateway, and nameserver addresses, and use the port

designations that you determined in step 1.

3. When finished with your edits, press ESC to switch to command mode, then save

the file to the disk and exit the editor.

4. Apply the changes.

$ sudo netplan apply


Note: If you are not returned to the command line prompt after a minute, then reboot

the system.

For additional information, see https://help.ubuntu.com/lts/serverguide/network-configuration.html.en.

SWITCHING BETWEEN INFINIBAND AND

ETHERNET

The NVIDIA DGX-2 System is equipped with eight QSFP28 network ports on the I/O

board, typically used for cluster communications. By default these are configured as

InfiniBand ports, but you have the option to convert these to Ethernet ports.

For these changes to work properly, the configured port must connect to a networking

switch that matches the port configuration. In other words, if the port configuration is

set to InfiniBand, then the external switch should be an InfiniBand switch with the

corresponding InfiniBand cables. Likewise, if the port configuration is set to Ethernet,

then the switch should also be Ethernet.

5.8.1 Starting the Mellanox Software Tools

1. Start the mst driver.

$ sudo mst start

2. To verify that the Mellanox Software Tools (MST) services are running, enter the

following.

$ sudo mst status

● The following output indicates the services are not running.

MST modules:

------------

MST PCI module is not loaded

MST PCI configuration module is not loaded

● The following output indicates the services are running.

MST modules:

------------


MST PCI module is not loaded

MST PCI configuration module loaded

MST devices:

------------

/dev/mst/mt4119_pciconf0 - PCI configuration cycles access.

domain:bus:dev.fn=0000:35:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf1 - PCI configuration cycles access.

domain:bus:dev.fn=0000:3a:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf2 - PCI configuration cycles access.

domain:bus:dev.fn=0000:58:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf3 - PCI configuration cycles access.

domain:bus:dev.fn=0000:5d:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf4 - PCI configuration cycles access.

domain:bus:dev.fn=0000:b8:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf5 - PCI configuration cycles access.

domain:bus:dev.fn=0000:bd:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf6 - PCI configuration cycles access.

domain:bus:dev.fn=0000:e1:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf7 - PCI configuration cycles access.

domain:bus:dev.fn=0000:e6:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

/dev/mst/mt4119_pciconf0 - PCI configuration cycles access.

domain:bus:dev.fn=0000:86:00.0 addr.reg=88 data.reg=92

Chip revision is: 00

$


5.8.2 Determining the Current Port Configuration

To determine the current port configuration, enter the following:

$ sudo mlxconfig query | egrep -e Device\|LINK_TYPE

Device #1:

Device type: ConnectX5

Device: 0000:bd:00.0

LINK_TYPE_P1 IB(1)

Device #2:

Device type: ConnectX5

Device: 0000:b8:00.0

LINK_TYPE_P1 IB(1)

Device #3:

Device type: ConnectX5

Device: 0000:3a:00.0

LINK_TYPE_P1 IB(1)

Device #4:

Device type: ConnectX5

Device: 0000:e1:00.0

LINK_TYPE_P1 IB(1)

Device #5:

Device type: ConnectX5

Device: 0000:35:00.0

LINK_TYPE_P1 IB(1)

Device #6:

Device type: ConnectX5

Device: 0000:5d:00.0

LINK_TYPE_P1 IB(1)

Device #7:

Device type: ConnectX5

Device: 0000:e6:00.0

LINK_TYPE_P1 IB(1)

Device #8:

Device type: ConnectX5

Device: 0000:58:00.0

LINK_TYPE_P1 IB(1)

Device #9:

Device type: ConnectX5

Device: 0000:86:00.0

LINK_TYPE_P1 ETH(2)

LINK_TYPE_P2 ETH(2)

This output shows that the first eight cards are configured for InfiniBand and correspond to the network cluster ports. The last card has two ports, which correspond to the two network storage ports. These are configured for Ethernet and should not be changed.

Map the Device bus numbers from your output to the device name from the mst

status output on your system. For example, this example output shows that the

device name for bus bd is /dev/mst/mt4119_pciconf5. You will need the device

name when changing the configuration.
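The mapping from bus address to device name can be extracted with a short awk filter instead of being read off by eye. This is a sketch that assumes the mst status layout shown in the example output earlier in this section. The function name map_mst_devices is hypothetical.

```shell
#!/bin/sh
# Read `mst status` output on stdin and print "<bus address> <device name>" pairs.
map_mst_devices() {
  awk '
    $1 ~ /^\/dev\/mst\//        { dev = $1 }
    $1 ~ /^domain:bus:dev\.fn=/ { split($1, kv, "="); print kv[2], dev }
  '
}

# Sample input copied from the example mst status output in this section.
map_mst_devices <<'EOF'
/dev/mst/mt4119_pciconf5 - PCI configuration cycles access.
domain:bus:dev.fn=0000:bd:00.0 addr.reg=88 data.reg=92
Chip revision is: 00
/dev/mst/mt4119_pciconf6 - PCI configuration cycles access.
domain:bus:dev.fn=0000:e1:00.0 addr.reg=88 data.reg=92
Chip revision is: 00
EOF
```

On a live system the filter would be fed directly, for example sudo mst status | map_mst_devices, and each output line pairs a Device address from the mlxconfig query with its /dev/mst name.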


5.8.3 Switching the Port from InfiniBand to

Ethernet

Make sure that you have started the Mellanox Software Tools (MST) services as explained in the section Starting the Mellanox Software Tools, and have identified the correct ports to change.

1. Change the configuration for the network cluster ports to Ethernet by setting

LINK_TYPE_P1=2 for each port.

The following example configures the 8 network cluster ports.

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf0 set LINK_TYPE_P1=2

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf1 set LINK_TYPE_P1=2

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf2 set LINK_TYPE_P1=2

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf3 set LINK_TYPE_P1=2

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf4 set LINK_TYPE_P1=2

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf5 set LINK_TYPE_P1=2

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf6 set LINK_TYPE_P1=2

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf7 set LINK_TYPE_P1=2

2. Reboot the server.

3. Verify the configuration changes have been applied.

$ sudo mlxconfig query | egrep -e Device\|LINK_TYPE

Device #1:
Device type: ConnectX5
Device: 0000:bd:00.0
LINK_TYPE_P1 ETH(2)
Device #2:
Device type: ConnectX5
Device: 0000:b8:00.0
LINK_TYPE_P1 ETH(2)
Device #3:
Device type: ConnectX5
Device: 0000:3a:00.0
LINK_TYPE_P1 ETH(2)
Device #4:
Device type: ConnectX5
Device: 0000:e1:00.0
LINK_TYPE_P1 ETH(2)
Device #5:
Device type: ConnectX5
Device: 0000:35:00.0
LINK_TYPE_P1 ETH(2)
Device #6:
Device type: ConnectX5
Device: 0000:5d:00.0
LINK_TYPE_P1 ETH(2)
Device #7:
Device type: ConnectX5
Device: 0000:e6:00.0
LINK_TYPE_P1 ETH(2)
Device #8:
Device type: ConnectX5
Device: 0000:58:00.0
LINK_TYPE_P1 ETH(2)
Device #9:
Device type: ConnectX5
Device: 0000:86:00.0
LINK_TYPE_P1 ETH(2)
LINK_TYPE_P2 ETH(2)
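The eight near-identical mlxconfig commands in step 1 can be generated with a loop. The sketch below only prints the commands, and it assumes that the cluster ports map to mt4119_pciconf0 through mt4119_pciconf7 as in the example. Confirm the device names with mst status on your own system first. The function name link_type_commands is hypothetical.

```shell
#!/bin/sh
# Print (do not run) one mlxconfig command per network cluster port.
# Argument: $1 = link type value, 1 for InfiniBand or 2 for Ethernet.
link_type_commands() {
  for i in 0 1 2 3 4 5 6 7; do
    echo "sudo mlxconfig -y -d /dev/mst/mt4119_pciconf$i set LINK_TYPE_P1=$1"
  done
}

link_type_commands 2
```

Passing 1 instead of 2 produces the commands for switching the same ports back to InfiniBand.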

5.8.4 Switching the Port from Ethernet to

InfiniBand

Make sure that you have started the Mellanox Software Tools (MST) services as explained in the section Starting the Mellanox Software Tools, and have identified the correct ports to change.

1. Change the configuration for the network cluster ports to InfiniBand by setting

LINK_TYPE_P1=1 for each port.

The following example configures all 8 network cluster ports.

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf0 set LINK_TYPE_P1=1

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf1 set LINK_TYPE_P1=1

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf2 set LINK_TYPE_P1=1

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf3 set LINK_TYPE_P1=1

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf4 set LINK_TYPE_P1=1

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf5 set LINK_TYPE_P1=1

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf6 set LINK_TYPE_P1=1

~$ sudo mlxconfig -y -d /dev/mst/mt4119_pciconf7 set LINK_TYPE_P1=1

2. Verify the configuration changes have been applied.

$ sudo mlxconfig query | egrep -e Device\|LINK_TYPE

Device #1:
Device type: ConnectX5
Device: 0000:bd:00.0
LINK_TYPE_P1 IB(1)
Device #2:
Device type: ConnectX5
Device: 0000:b8:00.0
LINK_TYPE_P1 IB(1)
Device #3:
Device type: ConnectX5
Device: 0000:3a:00.0
LINK_TYPE_P1 IB(1)
Device #4:
Device type: ConnectX5
Device: 0000:e1:00.0
LINK_TYPE_P1 IB(1)
Device #5:
Device type: ConnectX5
Device: 0000:35:00.0
LINK_TYPE_P1 IB(1)
Device #6:
Device type: ConnectX5
Device: 0000:5d:00.0
LINK_TYPE_P1 IB(1)
Device #7:
Device type: ConnectX5
Device: 0000:e6:00.0
LINK_TYPE_P1 IB(1)
Device #8:
Device type: ConnectX5
Device: 0000:58:00.0
LINK_TYPE_P1 IB(1)
Device #9:
Device type: ConnectX5
Device: 0000:86:00.0
LINK_TYPE_P1 ETH(2)
LINK_TYPE_P2 ETH(2)


CONFIGURING STORAGE – NFS MOUNT

AND CACHE

By default, the DGX-2 System includes eight SSDs in a RAID 0 configuration. These

SSDs are intended for application caching, so you must set up your own NFS storage for

long term data storage. The following instructions describe how to mount the NFS onto

the DGX-2 System, and how to cache the NFS using the DGX-2 SSDs for improved

performance.

Make sure that you have an NFS server with one or more exports with data to be

accessed by the DGX-2 System, and that there is network access between the DGX-2

System and the NFS server.

1. Configure an NFS mount for the DGX-2 System.

a) Edit the filesystem tables configuration.

sudo vi /etc/fstab

b) Add a new line for the NFS mount, using the local mount point of /mnt.

<nfs_server>:<export_path> /mnt nfs rw,noatime,rsize=32768,wsize=32768,nolock,tcp,intr,fsc,nofail 0 0

― /mnt is used here as an example mount point.

― Consult your Network Administrator for the correct values for <nfs_server> and

<export_path>.

― The nfs arguments presented here are a list of recommended values based on

typical use cases. However, "fsc" must always be included as that argument

specifies use of FS-Cache.

c) Save the changes.

2. Verify the NFS server is reachable.

ping <nfs_server>


Use the server IP address or the server name provided by your network

administrator.

3. Mount the NFS export.

sudo mount /mnt

/mnt is an example mount point.

4. Verify caching is enabled.

cat /proc/fs/nfsfs/volumes

Look for the text FSC=yes in the output.

The NFS will be mounted and cached on the DGX-2 System automatically upon

subsequent reboot cycles.
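Because caching depends on the fsc mount option, it can be worth checking the /etc/fstab entry before mounting. The following is a minimal sketch. The function name fstab_entry_uses_fsc and the placeholder nfs-server:/export are assumptions for illustration.

```shell
#!/bin/sh
# Succeed if the mount options (fourth field) of an fstab entry include "fsc".
fstab_entry_uses_fsc() {
  opts=$(printf '%s\n' "$1" | awk '{ print $4 }')
  case ",$opts," in
    *,fsc,*) return 0 ;;
    *)       return 1 ;;
  esac
}

# nfs-server:/export stands in for <nfs_server>:<export_path>.
entry='nfs-server:/export /mnt nfs rw,noatime,rsize=32768,wsize=32768,nolock,tcp,intr,fsc,nofail 0 0'
if fstab_entry_uses_fsc "$entry"; then
  echo "FS-Cache option present"
else
  echo "FS-Cache option missing"
fi
```

The comma-delimited match keeps an option such as nofsc from being mistaken for fsc.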


RESTORING THE DGX-2 SOFTWARE IMAGE

If the DGX-2 software image becomes corrupted (or both OS NVMe drives are replaced), restore the DGX-2 software image to its original factory condition from a pristine copy of the image.

The process for restoring the DGX-2 software image is as follows:

1. Obtain an ISO file that contains the image from NVIDIA Enterprise Support as explained in Obtaining the DGX-2 Software ISO Image and Checksum File.

2. Restore the DGX-2 software image from this file either remotely through the BMC or locally from a bootable USB flash drive.

● If you are restoring the image remotely, follow the instructions in Re-Imaging the

System Remotely.

● If you are restoring the image locally, prepare a bootable USB flash drive and

restore the image from the USB flash drive as explained in the following topics:

― Creating a Bootable Installation Medium

― Re-Imaging the System From a USB Flash Drive

Note: The DGX OS Server software is restored on one of the two NVMe M.2 drives. When the system is booted after restoring the image, software RAID begins the process of rebuilding the RAID 1 array, creating a mirror of (or resynchronizing) the drive containing the software. System performance may be affected during the RAID 1 rebuild process, which can take an hour to complete.


OBTAINING THE DGX-2 SOFTWARE ISO IMAGE

AND CHECKSUM FILE

To ensure that you restore the latest available version of the DGX-2 software image,

obtain the current ISO image file from NVIDIA Enterprise Support. A checksum file is

provided for the image to enable you to verify the bootable installation medium that you

create from the image file.

1. Log on to the NVIDIA Enterprise Support site.

2. Click the Announcements tab to locate the download links for the DGX-2 software

image.

3. Download the ISO image and its checksum file and save them to your local disk.

The ISO image is also available in an archive file. If you download the archive file, be

sure to extract the ISO image before proceeding.
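A downloaded image can be compared against its checksum before it is used. The sketch below assumes that the checksum file carries a SHA-256 digest, which this guide does not state. If the file supplied with your image uses a different algorithm, substitute the matching tool (for example md5sum). The function name verify_sha256 is hypothetical.

```shell
#!/bin/sh
# Compare a file's SHA-256 digest with an expected hex digest.
# Assumption: the checksum is SHA-256; adjust the tool if it is not.
# Arguments: $1 = path to the file, $2 = expected digest in hex.
verify_sha256() {
  actual=$(sha256sum "$1" | awk '{ print $1 }')
  [ "$actual" = "$2" ]
}
```

A typical call would be verify_sha256 <iso-file> <digest-from-checksum-file> && echo "checksum OK", with both arguments taken from the files that you downloaded.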

RE-IMAGING THE SYSTEM REMOTELY

These instructions describe how to re-image the system remotely through the BMC. For information about how to restore the system locally, see Re-Imaging the System from a USB Flash Drive.

Before re-imaging the system remotely, ensure that the correct DGX-2 software image is saved to your local disk. For more information, see Obtaining the DGX-2 Software ISO Image and Checksum File.

1. Log in to the BMC.

2. Click Remote Control and then click Launch KVM.

3. Set up the ISO image as virtual media.

a) From the top bar, click Browse File and then locate the re-image ISO file and click Open.

b) Click Start Media.

4. Reboot, install the image, and complete the DGX-2 System setup.

a) From the top menu, click Power and then select Hard Reset, then click Perform

Action.

b) Click Yes and then OK at the Power Control dialogs, then wait for the system to

power down and then come back online.

c) At the boot selection screen, select Install DGX Server.

If you are an advanced user who is not using the RAID disks as cache and want to keep data on the RAID disks, then select Install DGX Server without formatting RAID. See the section Retaining the RAID Partition While Installing the OS for more information.

d) Press Enter.

The DGX-2 System will reboot from ISO image and proceed to install the image.

This can take approximately 15 minutes.

Note: The Mellanox InfiniBand driver installation may take up to 10 minutes.

After the installation is completed, the system ejects the virtual CD and then reboots into

the OS.

Refer to Setting Up the DGX-2 System for the steps to take when booting up the DGX-2 System for the first time after a fresh installation.

CREATING A BOOTABLE INSTALLATION

MEDIUM

After obtaining an ISO file that contains the software image from NVIDIA Enterprise

Support, create a bootable installation medium, such as a USB flash drive or DVD-ROM,

that contains the image.

Note: If you are restoring the software image remotely through the BMC, you do not

need a bootable installation medium and you can omit this task.

If you are creating a bootable USB flash drive, follow the instructions for the platform

that you are using:

● On a text-only Linux distribution, see Creating a Bootable USB Flash Drive by Using

the dd Command.

● On Windows, see Creating a Bootable USB Flash Drive by Using Akeo Rufus.

If you are creating a bootable DVD-ROM, you can use any of the methods described

in

Burning the ISO on to a DVD on the Ubuntu Community Help Wiki.


7.3.1 Creating a Bootable USB Flash Drive by Using

the dd Command

On a Linux system, you can use the dd command to create a bootable USB flash drive

that contains the DGX-2 software image.

Note: To ensure that the resulting flash drive is bootable, use the dd command

to perform a device bit copy of the image. If you use other commands to

perform a simple file copy of the image, the resulting flash drive may not be

bootable.

Ensure that the following prerequisites are met:

The correct DGX-2 software image is saved to your local disk. For more information, see Obtaining the DGX-2 Software ISO Image and Checksum File.

The USB flash drive capacity is at least 4 GB.

1. Plug the USB flash drive into one of the USB ports of your Linux system.

2. Obtain the device name of the USB flash drive by running the fdisk command.

sudo fdisk -l

You can identify the USB flash drive from its size, which is much smaller than the size of the SSDs in the DGX-2 System.

3. Create a mount point.

Example:

sudo mkdir /media/usb

4. Mount the USB flash drive.

sudo mount -t vfat /dev/sdb1 /media/usb -o uid=1000,gid=100,utf8,dmask=027,fmask=137

5. As root, convert and copy the image to the USB flash drive.

sudo dd if=path-to-software-image bs=2048 of=usb-drive-device-name

CAUTION: The dd command erases all data on the device that you specify in the of

option of the command. To avoid losing data, ensure that you specify the correct path

to the USB flash drive.


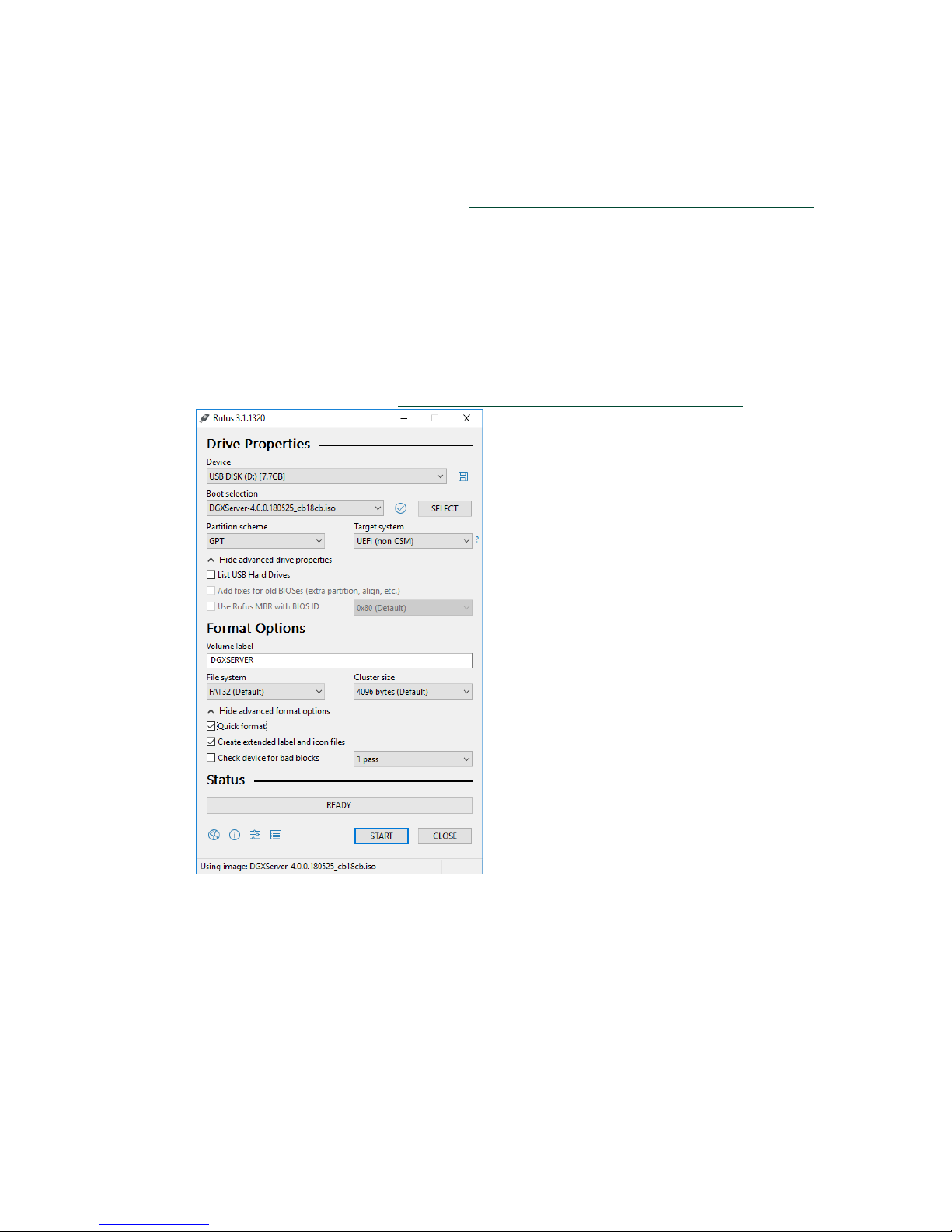

7.3.2 Creating a Bootable USB Flash Drive by Using

Akeo Rufus

On a Windows system, you can use the Akeo Reliable USB Formatting Utility (Rufus) to

create a bootable USB flash drive that contains the DGX-2 software image.

Ensure that the following prerequisites are met:

The correct DGX-2 software image is saved to your local disk. For more information, see Obtaining the DGX-2 Software ISO Image and Checksum File.

The USB flash drive has a capacity of at least 4 GB.

1. Plug the USB flash drive into one of the USB ports of your Windows system.

2. Download and launch the Akeo Reliable USB Formatting Utility (Rufus).

3. Under Boot selection, click SELECT and then locate and select the ISO image.

4. Under Partition scheme, select GPT.

5. Under File system, select FAT32.

6. Click Start. Because the image is a hybrid ISO file, you are prompted to select whether to write the image in ISO Image (file copy) mode or DD Image (disk image) mode.

7. Select Write in ISO Image mode and click OK.

RE-IMAGING THE SYSTEM FROM A USB FLASH

DRIVE

These instructions describe how to re-image the system from a USB flash drive. For information about how to restore the system remotely, see Re-Imaging the System Remotely.

Before re-imaging the system from a USB flash drive, ensure that you have a bootable USB flash drive that contains the current DGX-2 software image.

1. Plug the USB flash drive containing the OS image into the DGX-2 System.

2. Connect a monitor and keyboard directly to the DGX-2 System.

3. Boot the system and press F11 when the NVIDIA logo appears to get to the boot menu.

4. Select the USB volume name that corresponds to the inserted USB flash drive, and boot the system from it.

5. When the system boots up, select Install DGX Server on the startup screen.

If you are an advanced user who is not using the RAID disks as cache and want to keep data on the RAID disks, then select Install DGX Server without formatting RAID. See the section Retaining the RAID Partition While Installing the OS for more information.

6. Press Enter.

The DGX-2 System will reboot and proceed to install the image. This can take more

than 15 minutes.

Note: The Mellanox InfiniBand driver installation may take up to 10 minutes.


After the installation is completed, the system then reboots into the OS.

Refer to Setting Up the DGX-2 System

for the steps to take when booting up the DGX-2

System for the first time after a fresh installation.

RETAINING THE RAID PARTITION WHILE

INSTALLING THE OS

The re-imaging process creates a fresh installation of the DGX OS. During the OS installation or re-image process, you are presented with a boot menu when booting the installer image. The default selection is Install DGX Software. The installation process then repartitions all the SSDs, including the OS SSD as well as the RAID SSDs, and the RAID array is mounted as /raid. This overwrites any data or file systems that may exist on the OS disk as well as the RAID disks.

Since the RAID array on the DGX-2 System is intended to be used as a cache and not for long-term data storage, this should not be disruptive. However, if you are an advanced user and have set up the disks for a non-cache purpose and want to keep the data on those drives, then select the Install DGX Server without formatting RAID option at the boot menu during the boot installation. This option retains data on the RAID disks and performs the following:

Installs the cache daemon but leaves it disabled by commenting out the RUN=yes line

in /etc/default/cachefilesd.

Creates a /raid directory, leaves it out of the file system table by commenting out the

entry containing “/raid” in /etc/fstab.

Does not format the RAID disks.

When the installation is completed, you can repeat any configuration steps that you had performed to use the RAID disks for a purpose other than caching.

You can always choose to use the RAID disks as cache disks at a later time by enabling cachefilesd and adding /raid to the file system table as follows:

1. Uncomment the #RUN=yes line in /etc/default/cachefilesd.

2. Uncomment the /raid line in /etc/fstab.

3. Run the following:

a) Mount /raid.

sudo mount /raid

b) Start the cache daemon.

sudo systemctl start cachefilesd

These changes are preserved across system reboots.

UPDATING THE DGX OS SOFTWARE

You must register your DGX-2 System in order to receive email notification whenever a

new software update is available.

These instructions explain how to update the DGX-2 software through an internet

connection to the NVIDIA public repository. The process updates a DGX-2 System

image to the latest QA’d versions of the entire DGX-2 software stack, including the

drivers.

CONNECTIVITY REQUIREMENTS FOR

SOFTWARE UPDATES

Before attempting to perform the update, verify that the DGX-2 System network

connection can access the public repositories and that the connection is not blocked by a

firewall or proxy.

Enter the following on the DGX-2 System.

$ wget -O f1-changelogs http://changelogs.ubuntu.com/meta-release-lts
$ wget -O f2-archive http://archive.ubuntu.com/ubuntu/dists/bionic/Release
$ wget -O f3-usarchive http://us.archive.ubuntu.com/ubuntu/dists/bionic/Release
$ wget -O f4-security http://security.ubuntu.com/ubuntu/dists/bionic/Release
$ wget -O f5-download http://download.docker.com/linux/ubuntu/dists/bionic/Release
$ wget -O f6-international http://international.download.nvidia.com/dgx/repos/dists/bionic/Release


All the wget commands should be successful and there should be six files in the

directory with non-zero content.
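The six-file check can be automated. This sketch tests each expected file name for existence and non-zero size in a given directory. The function name check_downloads is hypothetical, and the file names are the ones passed to wget -O in this section.

```shell
#!/bin/sh
# Report any of the six downloaded files that is missing or empty.
# Argument: $1 = directory in which the wget commands were run.
check_downloads() {
  status=0
  for f in f1-changelogs f2-archive f3-usarchive f4-security f5-download f6-international; do
    if [ ! -s "$1/$f" ]; then
      echo "missing or empty: $f"
      status=1
    fi
  done
  return $status
}
```

For example, check_downloads . && echo "all six files present" run from the download directory prints the confirmation only when every file has content.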

UPDATE INSTRUCTIONS

CAUTION: These instructions update all software for which updates are available from

your configured software sources, including applications that you installed yourself. If

you want to prevent an application from being updated, you can instruct the Ubuntu

package manager to keep the current version. For more information, see Introduction

to Holding Packages on the Ubuntu Community Help Wiki.

Perform the updates using commands on the DGX-2 console.

1. Run the package manager.

$ sudo apt update

2. Check to see which software will get updated.

$ sudo apt full-upgrade -s

To prevent an application from being updated, instruct the Ubuntu package manager to keep the current version. See Introduction to Holding Packages.

3. Upgrade to the latest version.

$ sudo apt full-upgrade

Answer any questions that appear.

Most questions require a Yes or No response. If asked to select the grub configuration

to use, select the current one on the system.

Other questions will depend on what other packages were installed before the update

and how those packages interact with the update. Typically, you can accept the

default option when prompted.

4. Reboot the system.


UPDATING FIRMWARE

This section provides instructions for updating firmware for the NVIDIA® DGX server

BIOS and BMC using a Docker container.

GENERAL FIRMWARE UPDATE GUIDELINES

Before updating the firmware, do the following to prevent corrupting the firmware

due to a system crash or disruption to the update process.

● Ensure the system is healthy

● Stop system activities

Do not terminate the firmware update console while updating the firmware.

Component firmware corruption may occur if the update process is interrupted.

Certain components, such as the system BIOS, require a system reboot for the new

firmware to take effect.

Reboot the system if prompted.

When updating the BMC firmware, system management services are shut down first

to allow the update to occur. Consequently, system management is not available

during the BMC update.

In the event of a firmware update failure, run nvsm dump health and then send

the resulting archive containing the output to NVIDIA Enterprise Support

(https://nvid.nvidia.com/dashboard/) for failure analysis.

Do not attempt any further firmware updates until the issue is resolved or cleared

by NVIDIA Enterprise Support.

OBTAINING THE FIRMWARE UPDATE CONTAINER

1. Obtain the container tarball from the NVIDIA Enterprise Support portal and transfer it

to the DGX-2 System.

The container is provided in the tarball <image-name>.tar.gz.

2. From the directory where you copied the tarball file, enter the following command.

$ sudo docker load -i <image-name>.tar.gz

3. To verify that the container image is loaded, enter the following.

$ sudo docker images

Example output after loading nvfw-dgx2_18.09.3.tar.gz.

REPOSITORY          TAG      IMAGE ID       CREATED       SIZE

nvfw-dgx2_18.09.3   latest   aa681a4ae600   1 hours ago   278MB
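
For scripted checks, the listing can be tested for the expected repository name. This is a minimal sketch that assumes the output layout shown above (a header line followed by one line per image, with the repository name in the first column); image_loaded is a hypothetical helper name.

```shell
#!/bin/sh
# Succeed if the given repository name appears in `docker images` output on stdin.
# image_loaded is a hypothetical helper name.
image_loaded() {
    # Skip the header line, then compare the first column with the requested name.
    awk -v repo="$1" 'NR > 1 && $1 == repo { found = 1 } END { exit !found }'
}

# Typical use on the DGX-2 (not run here):
#   sudo docker images | image_loaded nvfw-dgx2_18.09.3 && echo "image is loaded"
```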

QUERYING THE FIRMWARE MANIFEST

The manifest displays a listing of firmware components embedded in the containers that

are qualified by NVIDIA.

To query the firmware manifest, enter the following:

$ sudo docker run --rm --privileged -v /:/hostfs <image-name> show_fw_manifest

QUERYING THE CURRENTLY INSTALLED FIRMWARE VERSIONS

Display the onboard firmware version level of each component supported by the

container. The output will show which component firmware is up to date, or whether it

needs to be updated to the firmware level listed in the manifest.

To query the version information, enter the following.

$ sudo docker run --rm --privileged -v /:/hostfs <image-name> show_version

The output shows the onboard version, the version in the manifest, and whether the

firmware is up-to-date.

UPDATING THE FIRMWARE

You can update all the down-level firmware components at one time, or update

only selected components.

9.5.1 Command Syntax

sudo docker run --rm [-e auto=1] --privileged -ti -v /:/hostfs <image-name> update_fw [-f] <target>

Where <target> specifies the hardware to update, and is either

all      to update all firmware components (SBIOS, BMC)

or one or more of the following:

SBIOS    to update the SBIOS

BMC      to update the BMC firmware

Note: Other components may be supported beyond those listed here. Query the

firmware manifest to see all the components supported by the container.

The command will scan the specified firmware components and update any that are

down-level.

See the section Additional Options for an explanation of the [-e auto=1] and [-f]

options.
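
To make the optional parts of the syntax concrete, the sketch below assembles the command line from its pieces and prints it without running anything. The helper name fw_update_cmd and its -a switch are made up for illustration; only the docker options and update_fw arguments come from the syntax above.

```shell
#!/bin/sh
# Build (but do not run) an update_fw command line from the documented syntax.
# fw_update_cmd is a hypothetical helper name.
# Usage: fw_update_cmd [-a] [-f] <image-name> <target>...
#   -a  non-interactive: adds -e auto=1 and uses -t instead of -ti
#   -f  force: passes -f to update_fw
fw_update_cmd() {
    auto=""; force=""; tty="-ti"
    while [ $# -gt 0 ]; do
        case "$1" in
            -a) auto="-e auto=1 "; tty="-t"; shift ;;
            -f) force="-f "; shift ;;
            *) break ;;
        esac
    done
    # An image name and at least one target are required.
    if [ $# -lt 2 ]; then
        echo "usage: fw_update_cmd [-a] [-f] <image-name> <target>..." >&2
        return 1
    fi
    image="$1"; shift
    echo "sudo docker run --rm ${auto}--privileged ${tty} -v /:/hostfs ${image} update_fw ${force}$*"
}

fw_update_cmd nvfw-dgx2_18.09.3 all
fw_update_cmd -f nvfw-dgx2_18.09.3 BMC SBIOS
```

Printing the command first lets you review it before pasting it into the DGX-2 console.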

9.5.2 Updating All Firmware Components

The following instructions are an example of attempting to update all the firmware

components using the container nvfw-dgx2_18.09.3. In this example, the SBIOS and

BMC require an update.

1. Enter the following.

$ sudo docker run --rm --privileged -ti -v /:/hostfs nvfw-dgx2_18.09.3 update_fw all

The container will scan the components and then prompt for confirmation before

starting the update.

Following components will be updated with new firmware version:

SBIOS

BMC

IMPORTANT: Firmware update is disruptive and may require system reboot.

Stop system activities before performing the update.

Ok to proceed with firmware update? <Y/N>

2. Press Y to proceed.

The firmware update progress is displayed for each component.

Note: While the progress output shows the current and manifest firmware versions, the

versions may be truncated due to space limitations. You can confirm the updated

version after the update is completed using the show_version option.

When the update completes successfully, the following message is displayed.

Firmware update completed

Component: SBIOS, update status: success, reboot required: yes

Component: BMC, update status: success, new version: 3.20.30

3. If directed by the update message, reboot the system.
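
If the update output has been saved to a file, the reboot decision in step 3 can be checked mechanically. The sketch below assumes the completion message has the format shown above; needs_reboot and the log file name are hypothetical.

```shell
#!/bin/sh
# Succeed if the update output on stdin reports that a reboot is required.
# needs_reboot is a hypothetical helper; it matches the message format shown above.
needs_reboot() {
    grep -q 'reboot required: yes'
}

# Typical use (not run here; update.log is a hypothetical file holding the output):
#   needs_reboot < update.log && echo "Reboot the system to activate the new firmware"
```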

9.5.3 Updating Specific Firmware Components

The following is an example of updating the SBIOS firmware using the container

nvfw-dgx2_18.09.3.

1. Enter the following.

$ sudo docker run --rm --privileged -ti -v /:/hostfs nvfw-dgx2_18.09.3 update_fw SBIOS

The container will scan the components and then prompt for confirmation before

starting the update.

Following components will be updated with new firmware version:

IMPORTANT: Firmware update is disruptive and may require system reboot.

Stop system activities before performing the update.

Ok to proceed with firmware update? <Y/N>

2. Press Y to proceed. When the update completes successfully, the following message is

displayed.

Firmware update completed

Component: SBIOS, update status: success, reboot required: yes

You can also update a subset of all the components. For example, to update both the

BMC firmware and the system BIOS, enter the following:

$ sudo docker run --rm --privileged -ti -v /:/hostfs nvfw-dgx2_18.09.3 update_fw BMC SBIOS

9.5.4 Updating Firmware for Individual NVMe or PSU Units

To update firmware for an individual PSU or NVMe unit, use the -s option along with

the component ID, where the PSU component ID is a number from 1 to 6, and the NVMe

component ID is the nvme device name.

Example of updating the firmware for PSU 5:

$ sudo docker run --rm --privileged -ti -v /:/hostfs nvfw-dgx2_18.10.2 update_fw PSU -s 5

Example of updating the firmware for nvme0n1:

$ sudo docker run --rm --privileged -ti -v /:/hostfs nvfw-dgx2_18.10.2 update_fw SSD -s nvme0n1
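
Because PSU component IDs run from 1 to 6, the per-unit commands can be generated in a loop. The sketch below only prints the commands; psu_update_cmds is a hypothetical helper name. Whether several PSUs can safely be updated back to back is not stated here, so review the list before running any of it.

```shell
#!/bin/sh
# Print one update_fw command per PSU, using component IDs 1 through 6.
# psu_update_cmds is a hypothetical helper; it prints commands and runs nothing.
psu_update_cmds() {
    image="$1"
    for id in 1 2 3 4 5 6; do
        echo "sudo docker run --rm --privileged -ti -v /:/hostfs ${image} update_fw PSU -s ${id}"
    done
}

psu_update_cmds nvfw-dgx2_18.10.2
```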

ADDITIONAL OPTIONS

9.6.1 Forcing the Firmware Update

To update the firmware regardless of whether it is down-level, use the -f option as

follows.

$ sudo docker run --rm --privileged -ti -v /:/hostfs <image-name> update_fw -f <target>

The container will not check the onboard versions against the manifest.

9.6.2 Updating the Firmware Non-interactively

The standard way to run the container is interactively (-ti option). The container will

prompt you to confirm before initiating the update.

To update the firmware without encountering the prompt, omit the -ti option and

instead use the -e auto=1 and -t options as follows.

$ sudo docker run -e auto=1 --rm --privileged -t -v /:/hostfs <image-name> update_fw <target>

COMMAND SUMMARY

Show the manifest.

$ sudo docker run --rm --privileged -v /:/hostfs <image-name> show_fw_manifest

Show version information.

$ sudo docker run --rm --privileged -v /:/hostfs <image-name> show_version

Check the onboard firmware against the manifest and update any down-level firmware.

$ sudo docker run --rm --privileged -ti -v /:/hostfs <image-name> update_fw <target>

Bypass the version check and update the firmware.

$ sudo docker run --rm --privileged -ti -v /:/hostfs <image-name>