Mellanox ConnectX-6 VPI Manual

NVIDIA Mellanox ConnectX-6 InfiniBand/VPI

Adapter Cards User Manual

Exported on Oct/22/2020 07:16 PM

https://docs.mellanox.com/x/NCQuAQ

Table of Contents |

|

Table of Contents ....................................................................................................... |

2 |

Introduction................................................................................................................ |

9 |

Product Overview .................................................................................................................... |

9 |

ConnectX-6 PCIe x8 Card...................................................................................................... |

10 |

ConnectX-6 PCIe x16 Card.................................................................................................... |

11 |

ConnectX-6 Socket Direct™ Cards....................................................................................... |

11 |

ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16) .............................................. |

12 |

ConnectX-6 Single-slot Socket Direct Cards (2x PCIe x8 in a row) ............................... |

13 |

Package Contents ................................................................................................................. |

14 |

ConnectX-6 PCIe x8/x16 Adapter Cards.......................................................................... |

14 |

ConnectX-6 Socket Direct Cards (2x PCIe x16)............................................................... |

14 |

Features and Benefits........................................................................................................... |

15 |

Operating Systems/Distributions .................................................................................... |

17 |

Connectivity ...................................................................................................................... |

17 |

Manageability ................................................................................................................... |

17 |

Interfaces ................................................................................................................. |

18 |

InfiniBand Interface............................................................................................................... |

18 |

Ethernet QSFP56 Interfaces ................................................................................................. |

18 |

PCI Express Interface ........................................................................................................... |

18 |

LED Interface......................................................................................................................... |

18 |

Heat Sink Interface................................................................................................................ |

19 |

SMBus Interface.................................................................................................................... |

20 |

Voltage Regulators................................................................................................................ |

20 |

Hardware Installation .............................................................................................. |

21 |

Safety Warnings..................................................................................................................... |

21 |

Installation Procedure Overview........................................................................................... |

21 |

System Requirements........................................................................................................... |

22 |

Hardware Requirements ................................................................................................. |

22 |

Airflow Requirements ........................................................................................................... |

22 |

Software Requirements ................................................................................................... |

23 |

Safety Precautions ................................................................................................................ |

23 |

Pre-Installation Checklist..................................................................................................... |

24 |

2

Bracket Replacement Instructions ...................................................................................... |

24 |

Installation Instructions........................................................................................................ |

25 |

Cables and Modules......................................................................................................... |

25 |

Identifying the Card in Your System ..................................................................................... |

26 |

ConnectX-6 PCIe x8/16 Installation Instructions ................................................................. |

27 |

Installing the Card............................................................................................................ |

27 |

Uninstalling the Card ....................................................................................................... |

29 |

ConnectX-6 Socket Direct (2x PCIe x16) Installation Instructions ...................................... |

30 |

Installing the Card............................................................................................................ |

31 |

Uninstalling the Card ....................................................................................................... |

37 |

Driver Installation .................................................................................................... |

39 |

Linux Driver Installation........................................................................................................ |

39 |

Prerequisites .................................................................................................................... |

39 |

Downloading Mellanox OFED .......................................................................................... |

39 |

Installing Mellanox OFED ................................................................................................ |

41 |

Installation Script ........................................................................................................ |

41 |

Installation Procedure ................................................................................................ |

42 |

Installation Results ..................................................................................................... |

44 |

Installation Logs.......................................................................................................... |

44 |

openibd Script.............................................................................................................. |

44 |

Driver Load Upon System Boot .................................................................................. |

45 |

mlnxofedinstall Return Codes .................................................................................... |

45 |

Uninstalling MLNX_OFED........................................................................................... |

46 |

Installing MLNX_OFED Using YUM ................................................................................. |

46 |

Setting up MLNX_OFED YUM Repository................................................................... |

46 |

Installing MLNX_OFED Using the YUM Tool .............................................................. |

47 |

Uninstalling MLNX_OFED Using the YUM Tool ......................................................... |

48 |

Installing MLNX_OFED Using apt-get Tool..................................................................... |

48 |

Setting up MLNX_OFED apt-get Repository .............................................................. |

48 |

Installing MLNX_OFED Using the apt-get Tool.......................................................... |

48 |

Uninstalling MLNX_OFED Using the apt-get Tool..................................................... |

49 |

Updating Firmware After Installation.............................................................................. |

49 |

Updating the Device Online......................................................................................... |

49 |

Updating the Device Manually .................................................................................... |

50 |

3

Updating the Device Firmware Automatically upon System Boot |

............................ 50 |

UEFI Secure Boot ............................................................................................................. |

51 |

Enrolling Mellanox's x.509 Public Key on Your Systems........................................... |

51 |

Removing Signature from kernel Modules ................................................................ |

51 |

Performance Tuning ........................................................................................................ |

52 |

Windows Driver Installation.................................................................................................. |

52 |

Software Requirements ................................................................................................... |

53 |

Downloading Mellanox WinOF-2 Driver .......................................................................... |

53 |

Attended Installation ................................................................................................... |

54 |

Unattended Installation............................................................................................... |

58 |

Installation Results ..................................................................................................... |

59 |

Uninstalling Mellanox WinOF-2 Driver............................................................................ |

60 |

Attended Uninstallation .............................................................................................. |

60 |

Unattended Uninstallation.......................................................................................... |

60 |

Extracting Files Without Running Installation ................................................................ |

60 |

Firmware Upgrade ........................................................................................................... |

63 |

VMware Driver Installation ................................................................................................... |

63 |

Hardware and Software Requirements........................................................................... |

63 |

Installing Mellanox NATIVE ESXi Driver for VMware vSphere........................................ |

64 |

Removing Earlier Mellanox Drivers................................................................................. |

64 |

Firmware Programming .................................................................................................. |

65 |

Updating Adapter Firmware .................................................................................... |

66 |

Troubleshooting ....................................................................................................... |

67 |

General Troubleshooting...................................................................................................... |

67 |

Linux Troubleshooting .......................................................................................................... |

67 |

Windows Troubleshooting..................................................................................................... |

68 |

Specifications ........................................................................................................... |

69 |

MCX651105A-EDAT Specifications ...................................................................................... |

69 |

MCX653105A-HDAT Specifications....................................................................................... |

70 |

MCX653106A-HDAT Specifications....................................................................................... |

71 |

MCX653105A-ECAT Specifications ....................................................................................... |

73 |

MCX653106A-ECAT Specifications ....................................................................................... |

74 |

MCX654105A-HCAT Specifications....................................................................................... |

75 |

MCX654106A-HCAT Specifications....................................................................................... |

77 |

4

MCX654106A-ECAT Specifications ....................................................................................... |

78 |

MCX653105A-EFAT Specifications........................................................................................ |

80 |

MCX653106A-EFAT Specifications........................................................................................ |

81 |

Adapter Card and Bracket Mechanical Drawings and Dimensions.................................... |

82 |

ConnectX-6 PCIe x16 Adapter Card ................................................................................ |

83 |

ConnectX-6 PCIe x8 Adapter Card .................................................................................. |

83 |

Auxiliary PCIe Connection Card ...................................................................................... |

84 |

Tall Bracket ...................................................................................................................... |

84 |

Short Bracket ................................................................................................................... |

84 |

PCIe Express Pinouts Description for Single-Slot Socket Direct Card.................... |

85 |

Finding the GUID/MAC on the Adapter Card ............................................................ |

86 |

Document Revision History ...................................................................................... |

88 |

5

About This Manual

This User Manual describes NVIDIA® Mellanox® ConnectX®-6 InfiniBand/VPI adapter cards. It provides details as to the interfaces of the board, specifications, required software and firmware for operating the board, and relevant documentation.

Ordering Part Numbers

The table below provides the ordering part numbers (OPN) for the available ConnectX-6 InfiniBand/VPI adapter cards.

OPN |

Marketing Description |

MCX654106A-ECAT |

ConnectX®-6 VPI adapter card, 100Gb/s (HDR100, EDR |

|

InfiniBand and 100GbE), dual-port QSFP56, Socket Direct 2x |

|

PCIe 3.0/4.0 x16, tall bracket |

MCX653105A-EFAT |

ConnectX®-6 VPI adapter card, 100Gb/s (HDR100, EDR IB and |

|

100GbE), single-port QSFP56, PCIe3.0/4.0 Socket Direct 2x8 in |

|

a row, tall bracket |

MCX653106A-EFAT |

ConnectX®-6 VPI adapter card, 100Gb/s (HDR100, EDR |

|

IBand100GbE), dual-port QSFP56, PCIe3.0/4.0 Socket Direct |

|

2x8 in a row, tall bracket |

MCX651105A-EDAT |

ConnectX®-6 VPI adapter card, 100Gb/s (HDR100, EDR IB and |

|

100GbE, single-port QSFP56, PCIe4.0 x8, tall bracket |

MCX653105A-ECAT |

ConnectX®-6 VPI adapter card, 100Gb/s (HDR100, EDR IB and |

|

100GbE), single-port QSFP56, PCIe3.0/4.0 x16, tall bracket |

MCX653106A-ECAT |

ConnectX®-6 VPI adapter card, 100Gb/s (HDR100, EDR IB and |

|

100GbE), dual-port QSFP56, PCIe3.0/4.0 x16, tall bracket |

MCX653105A-HDAT |

ConnectX®-6 VPI adapter card, HDR IB (200Gb/s) and 200GbE, |

|

single-port QSFP56, PCIe3.0/4.0 x16, tall bracket |

MCX653106A-HDAT |

ConnectX®-6 VPI adapter card, HDR IB (200Gb/s) and 200GbE, |

|

dual-port QSFP56, PCIe3.0/4.0 x16, tall bracket |

MCX654105A-HCAT |

ConnectX®-6 VPI adapter card, HDR IB (200Gb/s) and 200GbE, |

|

single-port QSFP56, Socket Direct 2x PCIe3.0/4.0x16, tall |

|

bracket |

MCX654106A-HCAT |

ConnectX®-6 VPI adapter card, HDR IB (200Gb/s) and 200GbE, |

|

dual-port QSFP56, Socket Direct 2x PCIe3.0/4.0x16, tall |

|

bracket |

Intended Audience

This manual is intended for the installer and user of these cards.

The manual assumes basic familiarity with InfiniBand and Ethernet network and architecture specifications.

Technical Support

Customers who purchased Mellanox products directly from Mellanox are invited to contact us through the following methods:

•URL: http://www.mellanox.com > Support

•E-mail: support@mellanox.com

6

• Tel: +1.408.916.0055

Customers who purchased Mellanox M-1 Global Support Services, please see your contract for details regarding Technical Support.

Customers who purchased Mellanox products through a Mellanox approved reseller should first seek assistance through their reseller.

Related Documentation

Mellanox OFED

for Linux User

Manual and

Release Notes

WinOF-2 for

Windows User

Manual and Release Notes

Mellanox VMware for Ethernet User Manual

Mellanox VMware for Ethernet Release Notes

Mellanox

Firmware Utility

(mlxup) User

Manual and

Release Notes

Mellanox

Firmware Tools

(MFT) User

Manual

IEEE Std 802.3

Specification

PCI Express

3.0/4.0

Specifications

User Manual describing OFED features, performance, band diagnostic, tools content and configuration. See Mellanox OFED for Linux Documentation.

User Manual describing WinOF-2 features, performance, Ethernet diagnostic, tools content and configuration. See WinOF-2 for Windows Documentation.

User Manual describing the various components of the Mellanox ConnectX® NATIVE ESXi stack. See http://www.mellanox.com Products > Software > Ethernet Drivers > VMware Driver > User Manual

Release notes for Mellanox ConnectX® NATIVE ESXi stack. See http:// www.mellanox.com Software > Ethernet Drivers > VMware Driver > Release Notes

Mellanox firmware update and query utility used to update the firmware. See http:// www.mellanox.com > Products > Software > Firmware Tools > mlxup Firmware Utility

User Manual describing the set of MFT firmware management tools for a single node. See MFT User Manual.

IEEE Ethernet specification at http://standards.ieee.org

Industry Standard PCI Express Base and Card Electromechanical Specifications at https://pcisig.com/specifications

7

Mellanox LinkX

Interconnect

Solutions

Mellanox LinkX InfiniBand cables and transceivers are designed to maximize the performance of High-Performance Computing networks, requiring high-bandwidth, lowlatency connections between compute nodes and switch nodes. Mellanox offers one of industry’s broadest portfolio of QDR/FDR10 (40Gb/s), FDR (56Gb/s), EDR/HDR100 (100Gb/s) and HDR (200Gb/s) cables, including Direct Attach Copper cables (DACs), copper splitter cables, Active Optical Cables (AOCs) and transceivers in a wide range of lengths from 0.5m to 10km. In addition to meeting IBTA standards, Mellanox tests every product in an end-to-end environment ensuring a Bit Error Rate of less than 1E-15. Read more at https://www.mellanox.com/products/interconnect/infiniband-overview.php

Document Conventions

When discussing memory sizes, MB and MBytes are used in this document to mean size in mega

Bytes. The use of Mb or Mbits (small b) indicates size in mega bits. IB is used in this document to mean InfiniBand. In this document PCIe is used to mean PCI Express.

Revision History

A list of the changes made to this document are provided in Document Revision History.

8

Introduction

Product Overview

This is the user guide for Mellanox technologies VPI adapter cards based on the ConnectX®-6 integrated circuit device. ConnectX-6 connectivity provides the highest performing low latency and most flexible interconnect solution for PCI Express Gen 3.0/4.0 servers used in enterprise datacenters and high-performance computing environments.

ConnectX-6 Virtual Protocol Interconnect® adapter cards provide up to two ports of 200Gb/s for InfiniBand and Ethernet connectivity, sub-600ns latency and 200 million messages per second, enabling the highest performance and most flexible solution for the most demanding HighPerformance Computing (HPC), storage, and datacenter applications.

ConnectX-6 is a groundbreaking addition to the Mellanox ConnectX series of industry-leading adapter cards. In addition to all the existing innovative features of past ConnectX versions, ConnectX-6 offers a number of enhancements that further improve the performance and scalability of datacenter applications.

ConnectX-6 adapter cards are offered in a variety of PCIe configurations, as described in the below table.

Make sure to use a PCIe slot that is capable of supplying the required power and airflow to the ConnectX-6 as stated in Specifications.

Configuration |

OPN |

Marketing Description |

|||

ConnectX-6 PCIe x8 Card |

MCX651105A-EDAT |

ConnectX®-6 VPI adapter card, 100Gb/s |

|||

|

|

|

|

|

(HDR100, EDR IB and 100GbE, single-port |

|

|

|

|

|

QSFP56, PCIe4.0 x8, tall bracket |

|

|

|

|

MCX653105A-HDAT |

ConnectX®-6 VPI adapter card, HDR IB |

ConnectX-6 PCIe x16 Card |

|

(200Gb/s) and 200GbE, single-port QSFP56, |

|||

|

PCIe4.0 x16, tall bracket |

||||

|

|

|

|

|

|

|

|

|

|

MCX653106A-HDAT |

ConnectX®-6 VPI adapter card, HDR IB |

|

|

|

|

|

(200Gb/s) and 200GbE, dual-port QSFP56, |

|

|

|

|

|

PCIe3.0/4.0 x16, tall bracket |

|

|

|

|

MCX653105A-ECAT |

ConnectX®-6 VPI adapter card, 100Gb/s |

|

|

|

|

|

(HDR100, EDR IB and 100GbE), single-port |

|

|

|

|

|

QSFP56, PCIe3.0/4.0 x16, tall bracket |

|

|

|

|

MCX653106A-ECAT |

ConnectX®-6 VPI adapter card, 100Gb/s |

|

|

|

|

|

(HDR100, EDR IB and 100GbE), dual-port |

|

|

|

|

|

QSFP56, PCIe3.0/4.0 x16, tall bracket |

ConnectX-6 Dual-slot Socket Direct |

MCX654105A-HCAT |

ConnectX®-6 VPI adapter card kit, HDR IB |

|||

Cards (2x PCIe x16) |

|

(200Gb/s) and 200GbE, single-port QSFP56, |

|||

|

|

|

|

|

Socket Direct 2x PCIe3.0 x16, tall brackets |

|

|

|

|

MCX654106A-HCAT |

ConnectX®-6 VPI adapter card, HDR IB |

|

|

|

|

|

(200Gb/s) and 200GbE, dual-port QSFP56, |

|

|

|

|

|

Socket Direct 2x PCIe3.0/4.0x16, tall bracket |

9

Configuration |

OPN |

Marketing Description |

|

|

|

MCX654106A-ECAT |

ConnectX®-6 VPI adapter card, 100Gb/s |

|

|

|

(HDR100, EDR InfiniBand and 100GbE), dual- |

|

|

|

port QSFP56, Socket Direct 2x PCIe3.0/4.0 |

|

|

|

x16, tall bracket |

ConnectX-6 Single-slot Socket Direct |

MCX653105A-EFAT |

ConnectX®-6 VPI adapter card, 100Gb/s |

|

Cards (2x PCIe x8 in a row) |

|

(HDR100, EDR IB and 100GbE), single-port |

|

|

|

|

QSFP56, PCIe3.0/4.0 Socket Direct 2x8 in a |

|

|

|

row, tall bracket |

|

|

MCX653106A-EFAT |

ConnectX®-6 VPI adapter card, 100Gb/s |

|

|

|

(HDR100, EDR IBand100GbE), dual-port |

|

|

|

QSFP56, PCIe3.0/4.0 Socket Direct 2x8 in a |

|

|

|

row, tall bracket |

ConnectX-6 PCIe x8 Card

ConnectX-6 with a single PCIe x8 slot can support a bandwidth of up to 100Gb/s in a PCIe Gen 4.0 slot.

Part Number |

MCX651105A-EDAT |

Form Factor/Dimensions |

PCIe Half Height, Half Length / 167.65mm x 68.90mm |

Data Transmission Rate |

Ethernet: 10/25/40/50/100 Gb/s |

|

InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 |

Network Connector Type |

Single-port QSFP56 |

PCIe x8 through Edge Connector |

PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s |

RoHS |

RoHS Compliant |

Adapter IC Part Number |

MT28908A0-XCCF-HVM |

10

ConnectX-6 PCIe x16 Card

ConnectX-6 with a single PCIe x16 slot can support a bandwidth of up to 100Gb/s in a PCIe Gen 3.0 slot, or up to 200Gb/s in a PCIe Gen 4.0 slot.

Part Number |

MCX653105A- |

MCX653106A- |

MCX653105A- |

MCX653106A- |

|

ECAT |

ECAT |

HDAT |

HDAT |

Form Factor/Dimensions |

PCIe Half Height, Half Length / 167.65mm x 68.90mm |

|

||

Data Transmission Rate |

Ethernet: 10/25/40/50/100 Gb/s |

Ethernet: 10/25/40/50/100/200 Gb/s |

||

|

InfiniBand: SDR, DDR, QDR, FDR, |

InfiniBand: SDR, DDR, QDR, FDR, |

||

|

EDR, HDR100 |

|

EDR, HDR100, HDR |

|

Network Connector Type |

Single-port |

Dual-port |

Single-port |

Dual-port QSFP56 |

|

QSFP56 |

QSFP56 |

QSFP56 |

|

PCIe x16 through Edge Connector |

PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s |

|

||

RoHS |

RoHS Compliant |

|

|

|

Adapter IC Part Number |

MT28908A0-XCCF-HVM |

|

|

|

ConnectX-6 Socket Direct™ Cards

The Socket Direct technology offers improved performance to dual-socket servers by enabling direct access from each CPU in a dual-socket server to the network through its dedicated PCIe interface.

Please note that ConnectX-6 Socket Direct cards do not support Multi-Host functionality (i.e. connectivity to two independent CPUs). For ConnectX-6 Socket Direct card with Multi-Host functionality, please contact Mellanox.

11

ConnectX-6 Socket Direct cards are available in two configurations: Dual-slot Configuration (2x PCIe x16) and Single-slot Configuration (2x PCIe x8).

ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16)

In order to obtain 200Gb/s speed, Mellanox offers ConnectX-6 Socket Direct that enable 200Gb/s connectivity also for servers with PCIe Gen 3.0 capability. The adapter’s 32-lane PCIe bus is split into two 16-lane buses, with one bus accessible through a PCIe x16 edge connector and the other bus through an x16 Auxiliary PCIe Connection card. The two cards should be installed into two PCIe x16 slots and connected using two Cabline SA-II Plus harnesses, as shown in the below figure.

Part Number |

MCX654105A- |

MCX654106A- |

MCX654106A-ECAT |

|

HCAT |

HCAT |

|

Form Factor/Dimensions |

Adapter Card: PCIe Half Height, Half Length / 167.65mm x 68.90mm |

||

|

Auxiliary PCIe Connection Card: 5.09 in. x 2.32 in. (129.30mm x 59.00mm) |

||

|

Two 35cm Cabline CA-II Plus harnesses |

||

Data Transmission Rate |

Ethernet: 10/25/40/50/100/200 |

Ethernet: 10/25/40/50/100 Gb/s |

|

|

Gb/s |

|

InfiniBand: SDR, DDR, QDR, FDR, EDR, |

|

InfiniBand: SDR, DDR, QDR, FDR, |

HDR100 |

|

|

EDR, HDR100, HDR |

|

|

Network Connector Type |

Single-port |

Dual-port QSFP56 |

|

|

QSFP56 |

|

|

PCIe x16 through Edge Connector |

PCIe Gen 3.0 / 4.0SERDES@ 8.0GT/s / 16.0GT/s |

||

PCIe x16 through Auxiliary Card |

PCIe Gen 3.0SERDES@ 8.0GT/s |

|

|

RoHS |

RoHS Compliant |

|

|

Adapter IC Part Number |

MT28908A0-XCCF-HVM |

|

|

12

ConnectX-6 Single-slot Socket Direct Cards (2x PCIe x8 in a row)

The PCIe x16 interface comprises two PCIe x8 in a row, such that each of the PCIe x8 lanes can be connected to a dedicated CPU in a dual-socket server. In such a configuration, Socket Direct brings lower latency and lower CPU utilization as the direct connection from each CPU to the network means the interconnect can bypass a QPI (UPI) and the other CPU, optimizing performance and improving latency. CPU utilization is improved as each CPU handles only its own traffic and not traffic from the other CPU.

A system with a custom PCI Express x16 slot that includes special signals is required for installing the card. Please refer to PCIe Express Pinouts Description for Single-Slot Socket Direct Card for pinout definitions.

Part Number |

MCX653105A-EFAT |

MCX653106A-EFAT |

Form Factor/Dimensions |

PCIe Half Height, Half Length / 167.65mm x 68.90mm |

|

Data Transmission Rate |

Ethernet: 10/25/40/50/100 Gb/s |

|

|

InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 |

|

Network Connector Type |

Single-port QSFP56 |

Dual-port QSFP56 |

PCIe x16 through Edge Connector |

PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s Socket Direct 2x8 in a row |

|

RoHS |

RoHS Compliant |

|

Adapter IC Part Number |

MT28908A0-XCCF-HVM |

|

13

Package Contents

ConnectX-6 PCIe x8/x16 Adapter Cards

Applies to MCX651105A-EDAT, MCX653105A-ECAT, MCX653106A-ECAT, MCX653105A-HDAT, MCX653106A-HDAT, MCX653105A-EFAT, MCX653106A-EFAT.

Category |

Qty |

Item |

Cards |

1 |

ConnectX-6 adapter card |

Accessories |

1 |

Adapter card short bracket |

|

1 |

Adapter card tall bracket (shipped assembled on the |

|

|

card) |

ConnectX-6 Socket Direct Cards (2x PCIe x16)

Applies to MCX654105A-HCAT, MCX654106A-HCAT and MCX654106A-ECAT.

Category |

Qty. |

Item |

Cards |

1 |

ConnectX-6 adapter card |

|

1 |

PCIe Auxiliary Card |

Harnesses |

1 |

35cm Cabline CA-II Plus harness (white) |

135cm Cabline CA-II Plus harness (black)

2Retention Clip for Cablline harness (optional accessory)

1 |

Adapter card short bracket |

Accessories

1Adapter card tall bracket (shipped assembled on the card)

1 |

PCIe Auxiliary card short bracket |

1PCIe Auxiliary card tall bracket (shipped assembled on the card)

14

Features and Benefits

Make sure to use a PCIe slot that is capable of supplying the required power and airflow to the ConnectX-6 cards as stated in Specifications.

PCI Express (PCIe)

Uses the following PCIe interfaces:

•PCIe x8/x16 configurations:

PCIe Gen 3.0 (8GT/s) and Gen 4.0 (16GT/s) through an x8/x16 edge connector.

•2x PCIe x16 configurations:

PCIe Gen 3.0/4.0 SERDES @ 8.0/16.0 GT/s through Edge Connector PCIe Gen 3.0 SERDES @ 8.0GT/s through PCIe Auxiliary Connection Card

200Gb/s |

ConnectX-6 offers the highest throughput VPI adapter, supporting HDR 200b/s InfiniBand and |

Virtual |

200Gb/s Ethernet and enabling any standard networking, clustering, or storage to operate |

Protocol |

seamlessly over any converged network leveraging a consolidated software stack. |

Interconne |

|

ct (VPI) |

|

Adapter |

|

InfiniBand |

ConnectX-6 delivers low latency, high bandwidth, and computing efficiency for performance-driven |

Architectu |

server and storage clustering applications. ConnectX-6 is InfiniBand Architecture Specification |

re |

v1.3 compliant. |

Specificati |

|

on v1.3 |

|

compliant |

|

Up to 200 Mellanox adapters comply with the following IEEE 802.3 standards:

Gigabit 200GbE / 100GbE / 50GbE / 40GbE / 25GbE / 10GbE / 1GbE

Ethernet - IEEE 802.3bj, 802.3bm 100 Gigabit Ethernet

-IEEE 802.3by, Ethernet Consortium25, 50 Gigabit Ethernet, supporting all FEC modes

-IEEE 802.3ba 40 Gigabit Ethernet

-IEEE 802.3by 25 Gigabit Ethernet

-IEEE 802.3ae 10 Gigabit Ethernet

-IEEE 802.3ap based auto-negotiation and KR startup

-IEEE 802.3ad, 802.1AX Link Aggregation

-IEEE 802.1Q, 802.1P VLAN tags and priority

-IEEE 802.1Qau (QCN)

-Congestion Notification

-IEEE 802.1Qaz (ETS)

-IEEE 802.1Qbb (PFC)

-IEEE 802.1Qbg

-IEEE 1588v2

-Jumbo frame support (9.6KB)

InfiniBand |

A standard InfiniBand data rate, where each lane of a 2X port runs a bit rate of 53.125Gb/s with a |

HDR100 |

64b/66b encoding, resulting in an effective bandwidth of 100Gb/s. |

InfiniBand |

A standard InfiniBand data rate, where each lane of a 4X port runs a bit rate of 53.125Gb/s with a |

HDR |

64b/66b encoding, resulting in an effective bandwidth of 200Gb/s. |

Memory |

• SPI Quad - includes 256Mbit SPI Quad Flash device (MX25L25645GXDI-08G device by |

Componen |

Macronix) |

ts |

• FRU EEPROM - Stores the parameters and personality of the card. The EEPROM capacity is |

|

128Kbit. FRU I2C address is (0x50) and is accessible through the PCIe SMBus. |

15

Overlay In order to better scale their networks, datacenter operators often create overlay networks that Networks carry traffic from individual virtual machines over logical tunnels in encapsulated formats such as

NVGRE and VXLAN. While this solves network scalability issues, it hides the TCP packet from the hardware offloading engines, placing higher loads on the host CPU. ConnectX-6 effectively addresses this by providing advanced NVGRE and VXLAN hardware offloading engines that encapsulate and de-capsulate the overlay protocol.

RDMA and ConnectX-6, utilizing IBTA RDMA (Remote Data Memory Access) and RoCE (RDMA over Converged RDMA over Ethernet) technology, delivers low-latency and high-performance over InfiniBand and Ethernet Converged networks. Leveraging datacenter bridging (DCB) capabilities as well as ConnectX-6 advanced Ethernet congestion control hardware mechanisms, RoCE provides efficient low-latency RDMA services (RoCE) over Layer 2 and Layer 3 networks.

Mellanox PeerDirect™ communication provides high efficiency RDMA access by eliminating unnecessary PeerDirect internal data copies between components on the PCIe bus (for example, from GPU to CPU), and

™therefore significantly reduces application run time. ConnectX-6 advanced acceleration technology enables higher cluster efficiency and scalability to tens of thousands of nodes.

CPU |

Adapter functionality enabling reduced CPU overhead allowing more available CPU for |

Offload |

computation tasks. |

|

• Flexible match-action flow tables |

|

• Open VSwitch (OVS) offload using ASAP2(TM) |

|

• Tunneling encapsulation / decapsulation |

Quality of |

Support for port-based Quality of Service enabling various application requirements for latency |

Service |

and SLA. |

(QoS) |

|

Hardware- |

ConnectX-6 provides dedicated adapter resources and guaranteed isolation and protection for |

based I/O |

virtual machines within the server. |

Virtualizati |

|

on |

|

Storage |

A consolidated compute and storage network achieves significant cost-performance advantages |

Accelerati |

over multi-fabric networks. Standard block and file access protocols can leverage: |

on |

• RDMA for high-performance storage access |

|

|

|

• NVMe over Fabric offloads for target machine |

|

• Erasure Coding |

|

• T10-DIF Signature Handover |

SR-IOV |

ConnectX-6 SR-IOV technology provides dedicated adapter resources and guaranteed isolation and |

|

protection for virtual machines (VM) within the server. |

High- |

• Tag Matching and Rendezvous Offloads |

Performan |

• Adaptive Routing on Reliable Transport |

ce |

• Burst Buffer Offloads for Background Checkpointing |

Accelerati |

|

ons |

|

16

Operating Systems/Distributions

ConnectX-6 Socket Direct cards 2x PCIe x16 (OPNs: MCX654105A-HCAT, MCX654106A-HCAT and MCX654106A-ECAT) are not supported in Windows and WinOF-2.

•OpenFabrics Enterprise Distribution (OFED)

•RHEL/CentOS

•Windows

•FreeBSD

•VMware

•OpenFabrics Enterprise Distribution (OFED)

•OpenFabrics Windows Distribution (WinOF-2)

Connectivity

•Interoperable with 1/10/25/40/50/100/200 Gb/s InfiniBand/VPI and Ethernet switches

•Passive copper cable with ESD protection

•Powered connectors for optical and active cable support

Manageability

ConnectX-6 technology maintains support for manageability through a BMC. ConnectX-6 PCIe stand-up adapter can be connected to a BMC using MCTP over SMBus or MCTP over PCIe protocols as if it is a standard Mellanox PCIe stand-up adapter. For configuring the adapter for the specific manageability solution in use by the server, please contact Mellanox Support.

17

Interfaces

InfiniBand Interface

The network ports of the ConnectX®-6 adapter cards are compliant with the InfiniBand Architecture Specification, Release 1.3. InfiniBand traffic is transmitted through the cards' QSFP56 connectors.

Ethernet QSFP56 Interfaces

The adapter card includes special circuits to protect from ESD shocks to the card/server when plugging copper cables.

The network ports of the ConnectX-6 adapter card are compliant with the IEEE 802.3 Ethernet standards listed in Features and Benefits. Ethernet traffic is transmitted through the QSFP56 connectors on the adapter card.

PCI Express Interface

ConnectX®-6 adapter cards support PCI Express Gen 3.0/4.0 (1.1 and 2.0 compatible) through x8/x16 edge connectors. The device can be either a master initiating the PCI Express bus operations, or a slave responding to PCI bus operations.

The following lists PCIe interface features:

•PCIe Gen 3.0 and 4.0 compliant, 2.0 and 1.1 compatible

•2.5, 5.0, 8.0, or 16.0 GT/s link rate x16/x32

•Auto-negotiates to x32, x16, x8, x4, x2, or x1

•Support for MSI/MSI-X mechanisms

LED Interface

The adapter card includes special circuits to protect from ESD shocks to the card/server when plugging copper cables.

There are two I/O LEDs per port:

•LED 1 and 2: Bi-color I/O LED which indicates link status. LED behavior is described below for Ethernet and InfiniBand port configurations.

18

• LED 3 and 4: Reserved for future use.

LED1 and LED2 Link Status Indications (Physical and Logical) - Ethernet Protocole:

LED Color and State |

Description |

Off |

A link has not been established |

Blinking amber |

1 Hz Blinking amber occurs due to running a beacon command |

|

for locating the adapter card |

|

4 Hz blinking amber indicates a problem with the physical link |

Solid green |

Indicates a valid link with no active traffic |

Blinking green |

Indicates a valid logical link with active traffic |

LED1 and LED2 Link Status Indications (Physical and Logical) - InfiniBand Protocole:

LED Color and State |

Description |

Off |

A physical link has not been established |

Solid amber |

Indicates an active physical link |

Blinking amber |

1 Hz Blinking amber occurs due to running a beacon command |

|

for locating the adapter card |

|

4 Hz blinking amber indicates a problem with the physical link |

Solid green |

Indicates a valid logical (data activity) link with no active traffic |

Blinking green |

Indicates a valid logical link with active traffic |

Heat Sink Interface

The heatsink is attached to the ConnectX-6 IC in order to dissipate the heat from the ConnectX-6 IC. It is attached either by using four spring-loaded push pins that insert into four mounting holes, or by screws.

ConnectX-6 IC has a thermal shutdown safety mechanism which automatically shuts down the

ConnectX-6 card in cases of high-temperature event, improper thermal coupling or heatsink removal. For the required airflow (LFM) per OPN, please refer to Specifications.

19

SMBus Interface

ConnectX-6 technology maintains support for manageability through a BMC. ConnectX-6 PCIe stand-up adapter can be connected to a BMC using MCTP over SMBus or MCTP over PCIe protocols as if it is a standard Mellanox PCIe stand-up adapter. For configuring the adapter for the specific manageability solution in use by the server, please contact Mellanox Support.

Voltage Regulators

The voltage regulator power is derived from the PCI Express edge connector 12V supply pins. These voltage supply pins feed on-board regulators that provide the necessary power to the various components on the card.

20

Hardware Installation

Installation and initialization of ConnectX-6 adapter cards require attention to the mechanical attributes, power specification, and precautions for electronic equipment.

Safety Warnings

Safety warnings are provided here in the English language. For safety warnings in other languages, refer to the Adapter Installation Safety Instructions document available on mellanox.com.

Please observe all safety warnings to avoid injury and prevent damage to system components. Note that not all warnings are relevant to all models.

Unable to render include or excerpt-include. Could not retrieve page.

Installation Procedure Overview

The installation procedure of ConnectX-6 adapter cards involves the following steps:

Step |

Procedure |

Direct Link |

|||||||||

1 |

Check the system’s hardware and software |

System Requirements |

|||||||||

|

requirements. |

|

|

|

|

|

|

|

|

|

|

2 |

Pay attention to the airflow consideration within |

Airflow Requirements |

|||||||||

|

the host system |

|

|

|

|

|

|

|

|

|

|

3 |

Follow the safety precautions |

Safety Precautions |

|

||||||||

4 |

Unpack the package |

Unpack the package |

|

||||||||

5 |

Follow the pre-installation checklist |

Pre-Installation Checklist |

|

||||||||

6 |

(Optional) Replace the full-height mounting |

Bracket Replacement Instructions |

|||||||||

|

bracket with the supplied short bracket |

|

|

|

|

|

|

|

|

|

|

7 |

Install the ConnectX-6 PCIe x8/x16 adapter card |

ConnectX-6 PCIe x8/x16 Adapter Cards |

|||||||||

|

in the system |

Installation Instructions |

|

||||||||

|

Install the ConnectX-6 2x PCIe x16 Socket Direct |

ConnectX-6 Socket Direct (2x PCIe x16) |

|||||||||

|

adapter card in the system |

Installation Instructions |

|

||||||||

8 |

Connect cables or modules to the card |

Cables and Modules |

|

||||||||

9 |

Identify ConnectX-6 in the system |

Identifying Your Card |

|

||||||||

21

System Requirements

Hardware Requirements

Unless otherwise specified, Mellanox products are designed to work in an environmentally controlled data center with low levels of gaseous and dust (particulate) contamination.

The operating environment should meet severity level G1 as per ISA 71.04 for gaseous contamination and ISO 14644-1 class 8 for cleanliness level.

For proper operation and performance, please make sure to use a PCIe slot with a corresponding bus width and that can supply sufficient power to your card. Refer to the Specifications section of the manual for more power requirements.

Please make sure to install the ConnectX-6 cards in a PCIe slot that is capable of supplying the required power as stated in Specifications.

ConnectX-6 Configuration |

|

Hardware Requirements |

||

PCIe x8/x16 |

A system with a PCI Express x8/x16 slot is required for |

|||

|

installing the card. |

|||

Socket Direct 2x PCIe x8 in a row (single slot) |

A system with a custom PCI Express x16 slot (four special |

|||

|

pins) is required for installing the card. Please refer to PCIe |

|||

|

|

|

|

|

|

Express Pinouts Description for Single-Slot Socket Direct |

|

||

|

Card for pinout definitions. |

|||

|

|

|

|

|

Socket Direct 2x PCIe x16 (dual slots)

A system with two PCIe x16 slots is required for installing the cards.

Airflow Requirements

ConnectX-6 adapter cards are offered with two airflow patterns: from the heatsink to the network ports, and vice versa, as shown below.

Please refer to the Specifications section for airflow numbers for each specific card model.

Airflow from the heatsink to the network ports:

22

Airflow from the network ports to the heatsink:

All cards in the system should be planned with the same airflow direction.

Software Requirements

•See Operating Systems/Distributions section under the Introduction section.

•Software Stacks - Mellanox OpenFabric software package MLNX_OFED for Linux, WinOF-2 for Windows, and VMware. See the Driver Installation section.

Safety Precautions

The adapter is being installed in a system that operates with voltages that can be lethal. Before opening the case of the system, observe the following precautions to avoid injury and prevent damage to system components.

•Remove any metallic objects from your hands and wrists.

•Make sure to use only insulated tools.

•Verify that the system is powered off and is unplugged.

•It is strongly recommended to use an ESD strap or other antistatic devices.

23

Pre-Installation Checklist

•Unpack the ConnectX-6 Card; Unpack and remove the ConnectX-6 card. Check against the package contents list that all the parts have been sent. Check the parts for visible damage that may have occurred during shipping. Please note that the cards must be placed on an antistatic surface. For package contents please refer to Package Contents.

Please note that if the card is removed hastily from the antistatic bag, the plastic ziplock may harm the EMI fingers on the networking connector. Carefully remove the card from the antistatic bag to avoid damaging the EMI fingers.

•Shut down your system if active; Turn off the power to the system, and disconnect the power cord. Refer to the system documentation for instructions. Before you install the ConnectX-6 card, make sure that the system is disconnected from power.

•(Optional) Check the mounting bracket on the ConnectX-6 or PCIe Auxiliary Connection Card; If required for your system, replace the full-height mounting bracket that is shipped mounted on the card with the supplied low-profile bracket. Refer to Bracket Replacement Instructions.

Bracket Replacement Instructions

The ConnectX-6 card and PCIe Auxiliary Connection card are usually shipped with an assembled highprofile bracket. If this form factor is suitable for your requirements, you can skip the remainder of this section and move to Installation Instructions. If you need to replace the high-profile bracket with the short bracket that is included in the shipping box, please follow the instructions in this section.

Due to risk of damaging the EMI gasket, it is not recommended to replace the bracket more than three times.

To replace the bracket you will need the following parts:

•The new brackets of the proper height

•The 2 screws saved from the removal of the bracket

Removing the Existing Bracket

1.Using a torque driver, remove the two screws holding the bracket in place.

2.Separate the bracket from the ConnectX-6 card.

Be careful not to put stress on the LEDs on the adapter card.

3.Save the two screws.

Installing the New Bracket

1.Place the bracket onto the card until the screw holes line up.

Do not force the bracket onto the adapter card.

2.Screw on the bracket using the screws saved from the bracket removal procedure above.

24

Use a torque driver to apply up to 2 lbs-in torque on the screws.

Installation Instructions

This section provides detailed instructions on how to install your adapter card in a system.

Choose the installation instructions according to the ConnectX-6 configuration you have purchased.

OPNs |

Installation Instructions |

|

MCX651105A-EDAT |

ConnectX-6 (PCIe x8/x16) Adapter Card |

|

MCX653105A-HDAT |

|

|

MCX653106A-HDAT |

|

|

MCX653105A-ECAT |

|

|

MCX653106A-ECAT |

|

|

MCX653105A-EFAT |

|

|

MCX653106A-EFAT |

|

|

MCX654105A-HCAT |

ConnectX-6 Socket Direct (2x PCIe x16) Adapter Card |

|

MCX654106A-HCAT |

|

|

MCX654106A-ECAT |

|

|

Cables and Modules

To obtain the list of supported Mellanox cables for your adapter, please refer to the Cables Reference Table at http://www.mellanox.com/products/interconnect/cables-configurator.php.

Cable Installation

1.All cables can be inserted or removed with the unit powered on.

2.To insert a cable, press the connector into the port receptacle until the connector is firmly seated.

a.Support the weight of the cable before connecting the cable to the adapter card. Do this by using a cable holder or tying the cable to the rack.

b.Determine the correct orientation of the connector to the card before inserting the connector. Do not try and insert the connector upside down. This may damage the adapter card.

c.Insert the connector into the adapter card. Be careful to insert the connector straight into the cage. Do not apply any torque, up or down, to the connector cage in the adapter card.

d.Make sure that the connector locks in place.

When installing cables make sure that the latches engage.

Always install and remove cables by pushing or pulling the cable and connector in a straight line with the card.

3.After inserting a cable into a port, the Green LED indicator will light when the physical connection is established (that is, when the unit is powered on and a cable is plugged into the port with the other end of the connector plugged into a functioning port). See LED

Interface under the Interfaces section.

25

4.After plugging in a cable, lock the connector using the latching mechanism particular to the cable vendor. When data is being transferred the Green LED will blink. See LED Interface under the Interfaces section.

5.Care should be taken as not to impede the air exhaust flow through the ventilation holes. Use cable lengths which allow for routing horizontally around to the side of the chassis before bending upward or downward in the rack.

6.To remove a cable, disengage the locks and slowly pull the connector away from the port receptacle. LED indicator will turn off when the cable is unseated.

Identifying the Card in Your System

On Linux

Get the device location on the PCI bus by running lspci and locating lines with the string “Mellanox Technologies”:

ConnectX-6 Card |

lspci Command Output Example |

|

Configuration |

|

|

Single-port Socket |

|

|

Direct Card (2x PCIe |

[root@mftqa-009 ~]# lspci |grep mellanox -i |

|

x16) |

||

a3:00.0 Infiniband controller: Mellanox Technologies MT28908 Family [ConnectX-6] |

||

|

e3:00.0 Infiniband controller: Mellanox Technologies MT28908 Family [ConnectX-6] |

|

Dual-port Socket |

|

|

Direct Card (2x PCIe |

[root@mftqa-009 ~]# lspci |grep mellanox -i |

|

x16) |

||

05:00.0 Infiniband controller: Mellanox Technologies MT28908A0 Family [ConnectX-6] |

||

|

05:00.1 Infiniband controller: Mellanox Technologies MT28908A0 Family [ConnectX-6] |

|

|

82:00.0 Infiniband controller: Mellanox Technologies MT28908A0 Family [ConnectX-6] |

|

|

82:00.1 Infiniband controller: Mellanox Technologies MT28908A0 Family [ConnectX-6] |

|

|

In the output example above, the first |

|

|

two rows indicate that one card is installed in a PCI slot with PCI Bus address 05 |

|

|

(hexadecimal), PCI Device number 00 and PCI Function number 0 and 1. The other |

|

|

card is installed in a PCI slot with PCI Bus address 82 (hexa-decimal), PCI Device numb |

|

|

er 00 and PCI Function number 0 and 1. |

|

|

Since the two PCIe cards are installed in two PCIe slots, each card |

|

|

gets a unique PCI Bus andDevicenumber.EachofthePCIex16bussesseestwonetwork |

|

|

ports;ineffect,thetwo physical ports of the ConnectX-6 Socket Direct adapter are viewe |

|

|

d as four net devices by thesystem. |

|

Single-port PCIe x8/ |

|

|

x16 Card |

[root@mftqa-009 ~]# lspci |grep mellanox -i |

|

|

||

|

3:00.0 Infiniband controller: Mellanox Technologies MT28908 Family [ConnectX-6] |

|

Dual-port PCIe x16 |

|

|

Card |

[root@mftqa-009 ~]# lspci |grep mellanox -i |

|

|

||

|

86:00.0 Network controller: Mellanox Technologies MT28908A0 Family [ConnectX-6] 86:00.1 |

|

|

Network controller: Mellanox Technologies MT28908A0 Family [ConnectX-6] |

On Windows

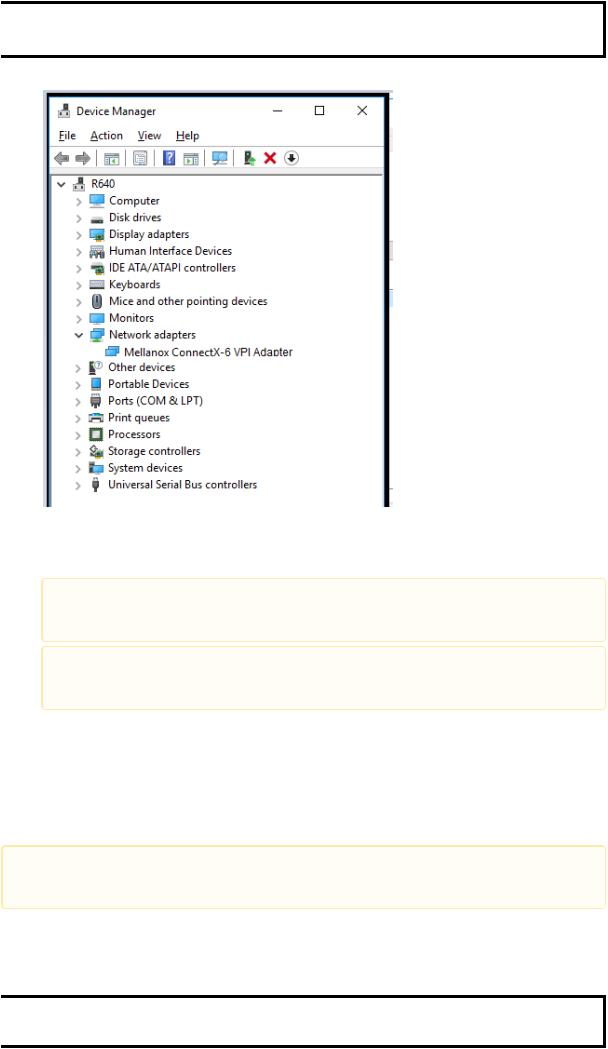

1.Open Device Manager on the server. Click Start => Run, and then enter devmgmt.msc.

2.Expand System Devices and locate your Mellanox ConnectX-6 adapter card.

3.Right click the mouse on your adapter's row and select Properties to display the adapter card properties window.

4.Click the Details tab and select Hardware Ids (Windows 2012/R2/2016) from the Property pulldown menu.

26

PCI Device (Example)

5.In the Value display box, check the fields VEN and DEV (fields are separated by ‘&’). In the display example above, notice the sub-string “PCI\VEN_15B3&DEV_1003”: VEN is equal to 0x15B3 – this is the Vendor ID of Mellanox Technologies; and DEV is equal to 1018 (for ConnectX-6) – this is a valid Mellanox Technologies PCI Device ID.

If the PCI device does not have a Mellanox adapter ID, return to Step 2 to check another device.

The list of Mellanox Technologies PCI Device IDs can be found in the PCI ID repository at http://pci-ids.ucw.cz/read/PC/15b3.

ConnectX-6 PCIe x8/16 Installation Instructions

Installing the Card

Applies to MCX651105A-EDAT, MCX654105A-HCAT, MCX654106A-HCAT and MCX654106AECAT.

27

Loading...

Loading...